Latest Posts

What happens when you open a support case with Red Hat?

Most of us have hit a moment of frustration with personal technology: a forgotten password, an unresponsive smartphone screen, or an ongoing issue with an internet service provider or a bank. Once you’ve tracked down the support number and dialed in, here is what often happens:

- A really nice, well-intentioned representative of the company answers your call and asks you to describe the issue.

- Their questions are likely based on a flowchart-like decision tree that acts as a script to identify the most common issues with the product you are using.

- This works well if your issue is on the list, but if it isn’t, that’s when you start using stronger language, asking your own questions and becoming a bold advocate for yourself, hoping to get “escalated” to Tier 2 Support.

- Reaching Tier 2 might seem like a minor victory in getting the help you need, but often it starts out just like Tier 1. You redescribe the issue and answer questions (albeit from a more experienced support representative), and in this moment you feel like you’ve started all over again.

- While you are waiting on and off hold for various resources, you start clicking around the self-help section of the company’s website, only to find descriptions of your issue paired with a recommendation to call support!

What we do in Red Hat Support is different from that.

At Red Hat we know a community is more capable than an individual and that a single perspective rarely develops the best idea. We create our products by leveraging the input and innovation contributed by thousands of members upstream.

Wouldn’t it be better to share this problem on a larger scale and receive input from knowledgeable people from all over Red Hat?

We organize our support teams around the product they support. We train our support engineers on the entirety of the product, and then deep dive into one of the many dozens of technologies that bring these products to life. By allowing this path to specialization, our Support Engineers rapidly earn credibility in their domain. Rather than learning a script, they learn the resources available to them for collaboration during troubleshooting with cross-functional teams. Rather than transferring ownership to another tier of Support, everyone within this specialty group already has access to the issue and works concurrently with their peers to make progress solving your problem.

While we route the specifics of your case to specialized Support Engineers, we also share the takeaways with key stakeholders within Red Hat to improve our products and services. Teams in engineering want to know the problems reported by users. Teams in consulting want to know how to avoid issues when developing implementation plans for other customers. Teams in marketing want to know the best solutions already documented so they can share them with other customers proactively.

We believe in the benefits of sharing information to develop the best products and services, but in this case it also works directly to solve your problem as quickly as possible. Your ability to participate in the refinement of our products continues as you share your perspective through working your support case - Red Hat and our community of other customers thank you for your contributions!

Posted: November 30 2017 at 6:04 PM

Why New Relic Synthetics?

Do you think it's important for a web property to have the following?

- Ability to detect application outages from a customer's perspective for both web apps and APIs

- An accurate uptime score, based on whether the application is up for the customer

- Ability to be alerted to user experience degradation

- Ability to diagnose and troubleshoot problems with CDN or DNS

- Ability to show performance gains over time from a customer's perspective

- Ability to track performance gains by introducing changes to the CDN

- A public status page that reflects the operational status of components from a customer's perspective

If so, then APM and other origin-based monitoring is not enough. To achieve the above you need Synthetic monitoring and Real User Monitoring (RUM), which New Relic calls Browser monitoring, in addition to APM. The Red Hat Customer Portal did have an older synthetic monitoring platform, but shortcomings such as a proprietary scripting language limited its usefulness. In 2015 a cross-departmental team with members from System Engineering, Subscriber Platform, PlatOps and IT-Pnt evaluated several Synthetic monitoring tools from different vendors to fill this need.

In that evaluation, New Relic outclassed the competition on every point.

How we are using Synthetics and RUM today

Since we chose New Relic as our Synthetic and RUM monitoring tool, we have been using it extensively on the Customer Portal with great success. We currently have over 50 New Relic Synthetic monitors, plus their RUM product (Browser), monitoring Red Hat Customer Portal applications such as:

- Case Management

- Downloads

- Documentation

- Knowledgebase

- RHSM

- RHN

It gives us:

- Outage detection from the customer's view

- Alerts when there is an outage that affects customers even when origin server monitors are fine

- Accurate uptime score

- Insight into users' experience as they browse

- Diagnosis and troubleshooting of problems related to the CDN and DNS

- Show performance changes over time

- Pre-prod and internal monitoring with Private Locations

- Automated public status page status.redhat.com

We continue to expand, adding more monitors as we identify parts of the portal that need them. We are also looking into consolidating APM and Synthetics in New Relic under one alert system.

Our Monitoring Architecture

Real Examples

Here is an example of a Synthetic monitor detecting a DNS failure. Because we had monitoring outside of Akamai, we knew this was an external problem:

And we knew when it was fixed:

Here is an example of a RUM monitor reflecting a spike in page load time reported from customers' browsers, which accurately alerted us to a user experience problem:

Here is our 7-day uptime score based on Synthetic monitors:

We would not be able to show this score with confidence without our suite of New Relic Synthetic monitors.
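
As a rough illustration of how an uptime score can be derived from synthetic check results, the sketch below counts successful checks against total checks. The `uptime_score` function and its one-word-per-line input format are assumptions made for this example; this is not New Relic's actual calculation.

```shell
#!/bin/bash
# Hypothetical helper: compute an uptime percentage from synthetic check
# outcomes read on stdin, one "up" or "down" per line.
# Illustrative formula only, not how New Relic computes its score.
uptime_score() {
    awk '
        $1 == "up" { up++ }
        $1 == "up" || $1 == "down" { total++ }
        END {
            if (total == 0) { print "n/a"; exit }
            printf "%.2f\n", (up / total) * 100
        }
    '
}

# Example: three successful checks and one failure.
printf 'up\nup\ndown\nup\n' | uptime_score   # prints 75.00
```

Because the checks run from outside the origin, a score built this way reflects what a customer would have seen rather than what the origin servers reported.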

We also depend on New Relic alerts as the engine that powers our public status page, status.redhat.com.

These are a few of the many reasons why we love New Relic Synthetics and Browser on the Red Hat Customer Portal.

Posted: June 15 2017 at 2:51 PM

The New Red Hat Product Security Center

The Customer Portal team here at Red Hat talks A LOT about content. We talk about creating it, serving it, indexing it and maintaining it. The list goes on. One aspect of content we’ve been focusing on a lot lately, and one I talked about in a previous post, is presentation. Even the best, most detailed and accurate content can still fail customers if it’s not presented well.

As a result of this insight, we’ve turned our collective eyes toward security content and its presentation in the Customer Portal. The result? The newly redesigned Red Hat Product Security Center. In order to introduce you to this new area of the Portal I’d like to walk you through some of the objectives of the changes and enhancements the team has made to this crucial portion of the site.

Organized content. In-context tools. On those occasions when a new vulnerability surfaces, content presentation is key. Our customers need concise, well-organized content to know if they are affected and, if so, to remediate the risks. To this end we’ve created a custom Vulnerability content type for high-priority responses. Organized in action-oriented tabs, these pages are designed to provide the information customers need to judge impact, diagnose and resolve. Customers can even use Red Hat Access Labs designed to detect whether systems are vulnerable, right in the page.

Find the updates you need, when you need them most. Whether during an active vulnerability response or while performing preventative maintenance, security-related administration is especially vital for our customers. To provide the best customer experience during these crucial workflows, we wanted to cut out all the noise and provide a security-specific search experience that returns only security updates, CVEs and Red Hat Access Labs.

Keep ‘em coming back. On the new Product Security Center, we’ve made fresh, rich content a focus. To achieve this, we’ve tapped into our most important security content resource - Red Hat Product Security. Red Hat Product Security creates world-class content detailing the latest in security research and how it relates to your Red Hat products. This content is front and center in the Product Security Center. We believe featuring this high-quality, timely content will provide new incentive for customers to visit this area of the Portal more frequently, thereby staying up-to-date on the vital security news essential to keeping their data, and the data of their customers safe and secure.

We hope you find these new resources helpful, and as always we look forward to your feedback in the comments below.

Posted: December 17 2015 at 8:21 PM

Recent Enhancements to the Customer Portal

Here in the Red Hat Customer Portal we're always striving for the best possible customer experience. We constantly examine feedback, identify pain points, and test to make sure we're delivering on the subscription value that sets Red Hat apart from its competitors. We make enhancements continuously, but in this post I wanted to round up a few recent changes that hopefully improve your experience as a user of the Red Hat Customer Portal.

Knowledgebase content redesign - If you’re a regular Customer Portal user, this is the one you’ve probably already noticed. Reading Articles, Solutions and Announcements is now a much improved experience when using a mobile device. On desktop, we’ve shifted some elements around to dedicate more of the available screen space to content. Actions for commenting on, rating and following content have been made more accessible. Have feedback on the redesign? Leave it here.

Knowledgebase search - We’ve added additional search-specific metrics to better understand the usefulness of content found through search. This data will help identify improvements in our content and will result in more of the right content being found by our customers. A significant portion of Customer Portal searches are for software downloads. Improvements in the findability of this content will allow our customers to find the right software, faster. Lastly, we continue to make incremental UI/ UX changes to convey more relevant information about the result. This will help our customers recognize the right result faster and more easily.

Support case management - The recent redesign of the case management interface is focused on improving efficiency for customers when creating and managing cases, as well as internal efficiency when servicing them.

New Red Hat Access Labs - The team is constantly cranking out apps focused on simplifying common configuration, security, performance and troubleshooting tasks. Some useful recent additions to the Labs ecosystem are RHEL Upgrade Helper, Product Life Cycle Checker, and NTP Configuration.

Faster authentication - Improvements made to our user service means faster authentication times, giving you easier access to the content and services you rely on.

Faster content downloading - Users should see a marked improvement in the performance of Red Hat Network (RHN) with relation to downloading content as a result of optimizations made to the way Red Hat delivers this content.

More tailored Red Hat Subscription Management (RHSM) errata notifications - Users with systems registered through the Red Hat Subscription Management (RHSM) interface can now be subscribed to get notifications on a system-level basis. Users subscribed in this way will receive a notification only when a package on the user organization’s account is affected by errata. Previously, RHSM errata notifications were at the subscription level only. Manage these preferences here.

Product feature voting - New to the Customer Portal is the ability for customers to vote on possible upcoming product features. This gives you the opportunity to prioritize the features you'd most like to see added in upcoming releases and directly influence the direction of the product(s) you rely on. Currently, feature voting is open on Red Hat Enterprise Linux OpenStack Platform and will be coming soon to more products.

Posted: September 23 2015 at 2:56 PM

Red Hat IT: OpenShift Has Streamlined our Workload. Let It Streamline Yours.

Are you looking for ways to deliver your applications more quickly and with less effort? Do you need to move more services to the cloud?

Red Hat has these same issues and goals. By using our own product OpenShift, we have been able to shorten our release cycle times and support our developers better.

We built and deployed our own OpenShift Enterprise Platform-as-a-Service (PaaS) offering and are running it in Amazon Web Services (AWS). This has allowed our teams to have more control over creating and developing their own applications in the cloud. OpenShift's automated workflows and tooling help our developers access what they need, when they need it.

We hope our success story can become your success story with OpenShift.

Case Study: IT OpenShift Enterprise

In 2013, Red Hat IT launched a new internal PaaS deployment, available to all associates and based on OpenShift Enterprise.

The Challenge

Before the launch of IT’s OpenShift Enterprise offering, IT did not distinguish much between major enterprise applications and smaller applications that do not require strict enterprise management and change control. In addition, our release engineering tooling was falling behind modern standards.

While management processes are valuable in many cases, our Red Hat associates were asking for a self-service, application-deployment platform for lighter workloads. At the same time, IT wanted to:

- shorten our release cycle times

- support developers

- expand our services to the cloud

Because we needed multiple application security zones and platform customizations (for some enterprise applications), we could not move into OpenShift Online.

The Solution

IT's Cloud Enablement team, in collaboration with OpenShift Engineering, IT Operations, and Global Support Services (GSS), built and deployed a 90-day proof-of-concept OpenShift Enterprise PaaS offering, running in AWS, within 5 weeks. Developers and teams began creating and managing their own applications immediately, which moved us toward our goal of running more services in the cloud.

At the end of the proof-of-concept phase, IT delivered additional releases on the platform. IT also made it a production service, expanded the number of available security zones, and added other new features.

The Details

The IT OpenShift service currently supports 3 major security zones, each with infrastructure to support 3 different application sizes (small, medium, and large districts). These zones are spread across multiple AWS regions and accounts to ensure higher availability and security. The security zones offer different options depending on the intended customer base and application security requirements, including:

- internal/intranet only

- externally accessible

- IT development

The OpenShift Enterprise PaaS provides a high level of availability through load-balanced broker services, MongoDB replica sets and redundant components at every level. Individual application owners can now deliver application high availability within the platform.

The Collaboration

IT team members included both associates who had previously worked on OpenShift Online and associates who had never before touched OpenShift. To facilitate cooperation, the IT team worked closely with the OpenShift Engineering teams, some of whom were former IT team members.

This unique setup allowed for a rapid flow of information between IT and Engineering. The teams met on a regular basis while implementing the project. This collaborative environment made it easier to discuss and deliver feature requests, bugs, and patches.

As we began to form our OpenShift Enterprise support team for our external customers, GSS was able to work closely with IT and use this effort as a training ground for our support associates. Our internal partnership allowed Red Hat to serve our current and future customers even better.

The Products

OpenShift Enterprise 2.2

Red Hat® Enterprise Linux® 6.6

Red Hat Cloud Access for AWS

Puppet

Nagios

Graphite

The Numbers

- 31 Amazon Elastic Compute Cloud (EC2) nodes in AWS for OpenShift infrastructure (brokers + app nodes)

- 7 EC2 nodes in AWS for supporting OpenShift infrastructure

- 1038 Red Hat associates logged into the system

- 1093 applications deployed

- 618 domains

- 11 bugs opened with Engineering

- 5 feature requests opened with Engineering

The Workloads

- Access Labs, https://access.redhat.com/labs/

- Partner Enablement Training for JBoss BPMS6 and FSW

- SalesForce.com connector to sync our Partner Center Salesforce data to a SaaS (Software-as-a-Service) Learning Management System

- Conference room reservation mobile app

And many others.

The Tools

- Red Hat IT’s standard Nagios active monitoring, plus 5 custom scripts to monitor broker services.

- Red Hat IT’s standard Graphite (for data collection and trending)

- Red Hat IT’s standard MongoDB monitoring tools

- AWS CloudFormation Stacks (for provisioning EC2 infrastructure across availability zones in 3 Virtual Private Clouds (VPCs))

- Custom administrative scripts to manage new dynamic cloud services

The Lessons Learned

- Agile (Scrum) methodologies are effective for managing an OpenShift implementation.

- Building services in the cloud (AWS) is significantly different from building them in a datacenter. But with proper tooling and understanding, the cloud model offers many opportunities.

- Working directly with GSS and Engineering helped us make this project more visible throughout the company.

- Mojo can provide a good place to manage documentation and provide a support portal for our users.

- Developers waited to do most major user application development until the Production PaaS service was available. Users want to know their platform will be supported.

The Benefits

- A flexible and stable PaaS platform is now available to all Red Hat associates.

- OpenShift Enterprise allows us to customize the platform as needed.

- Developers can now code, test, and deploy applications directly into our internal OpenShift Enterprise system with minimal paperwork.

- We now have more services running in the cloud and more experience with the cloud model.

The Future

- We will soon be moving to OpenShift Enterprise v3.0, with Docker and Kubernetes technologies built in.

- We are also working to integrate Red Hat Atomic into our PaaS offerings.

Subject Matter Experts: Tom Benninger and Andrew Butcher

Product Owner: Hope Lynch, hlynch@redhat.com (please copy on correspondence)

Posted: May 15 2015 at 7:53 PM

Drupal in Docker

Containerizing Drupal

Docker has emerged only in the past few years, though many know that Linux containers have been around longer than that. With the excitement around containers and Docker, the Customer Portal team at Red Hat wanted to take a look at how we could leverage Docker to deploy our application. We always hope to iterate on how code gets released to our customers, and containers seemed like a logical step toward speeding up our application delivery.

Current Process

Our method of defining installed Drupal modules centered around RPM packaging. Bundling Drupal modules inside RPMs made sense for aligning ourselves with the release processes of other workstreams. The issue with this process for Drupal is managing many RPMs (we use over 100 Drupal modules on the Customer Portal). As our developers began to take over more of the operational aspects of Drupal releases, RPM packaging didn't flow well with the rest of the code our developers were maintaining. Packaging a Drupal module inside an RPM also adds an extra abstraction layer of versioning, so our developers didn't always know exactly which version of a module, with which patches, was installed.

Along with RPM packaging, there wasn't a unified way in which code was installed per environment. For our close-to-production and production environments, this centered around versioned RPM updates via puppet. However, for our CI environment, in order to speed up the application delivery there, contributed Drupal module installs had to be done manually by the developer (mainly to support integration testing in case that module needed to be removed quickly). Developer environments were also different in that they didn't always have a packaged RPM for delivering code, but instead the custom code repository would need to be directly accessible to the developer.

Delivering a Docker image

You can probably see how unwieldy juggling over one hundred modules with custom code can become. Our Drupal team wanted to deliver one artifact that defined the entire release of our application. Docker doesn't solve this simply by shoving all the code inside a container, but it does allow for an agreement to be established as to what our Drupal developers are responsible for delivering. The host that runs this container can have the tools it needs to run Drupal with the appropriate monitoring. The application developer can define the entirety of the Drupal application, all the way from installing Drupal core to supplying our custom code. Imagine drawing a circle around all the parts that existed on the host that define our Drupal app. This is essentially how our Docker image was formed.

Below is the Dockerfile we use to deploy Drupal inside a container. You might notice one important piece that is missing: the database! It is assumed that your database host exists outside this container and can be connected to from this application (it could even be another running container on the host).

Also note, this is mostly an image used for testing and spinning up environments quickly right now, but as we vet our continuous delivery process the structure of this file would likely change.

Dockerfile summary

This is some basic start configuration. We use the rhel7 Docker image as a base for running Drupal. A list of published Red Hat containers can be found here.

    # Docker - Customer Portal Drupal
    #
    # VERSION dev
    # Use RHEL 7 image as the base image for this build.
    # Depends on subscription-manager correctly being setup on the RHEL 7 host VM that is building this image
    # With a correctly setup RHEL 7 host with subscriptions, those will be fed into the docker image build and yum repos
    # will become available
    FROM rhel7:latest
    MAINTAINER Ben Pritchett

Here we install yum packages and drush. The yum cache is cleared on the same line as the install in order to save space between image snapshots.

    # Install all the necessary packages for Drupal and our application. Immediately yum update and yum clean all in this step
    # to save space in the image
    RUN yum -y --enablerepo rhel-7-server-optional-rpms install tar wget git httpd php python-setuptools vim php-dom php-gd memcached php-pecl-memcache mc gcc make php-mysql mod_ssl php-soap hostname rsyslog php-mbstring; yum -y update; yum clean all
    # Still need drush installed
    RUN pear channel-discover pear.drush.org && pear install drush/drush

Supervisord can be used to manage several processes at once, since the Docker container goes away when the last foreground process exits. We also change ownership of some files to the appropriate process users.

    # Install supervisord (since this image runs without systemd)
    RUN easy_install supervisor
    RUN chown -R apache:apache /usr/sbin/httpd
    RUN chown -R memcached:memcached /usr/bin/memcached
    RUN chown -R apache:apache /var/log/httpd

With Docker, you could run a configuration management tool inside the container. However, this generally increases the scope of what your app needs. These PHP config options are the same for all environments, so they are simply changed in place here. A tool like Augeas would be helpful for making these line edits to configuration files that don't change often.

    # we run Drupal with a memory_limit of 512M
    RUN sed -i "s/memory_limit = 128M/memory_limit = 512M/" /etc/php.ini
    # we run Drupal with an increased file size upload limit as well
    RUN sed -i "s/upload_max_filesize = 2M/upload_max_filesize = 100M/" /etc/php.ini
    RUN sed -i "s/post_max_size = 8M/post_max_size = 100M/" /etc/php.ini
    # we comment out this rsyslog config because of a known bug (https://bugzilla.redhat.com/1088021)
    # single quotes keep the shell from expanding $OmitLocalLogging as an (empty) variable
    RUN sed -i 's/^\$OmitLocalLogging on/#$OmitLocalLogging on/' /etc/rsyslog.conf
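
One caveat with in-place `sed` edits is that they succeed silently when the pattern does not match, for example if a newer base image ships a different default value. A minimal sketch of a guard follows. It assumes GNU `sed` (as on RHEL) and a hypothetical helper named `set_ini_value`, which is not part of the original Dockerfile; the helper replaces the value and then confirms the new line is actually present.

```shell
#!/bin/bash
# Hypothetical helper: set "key = value" in an ini-style file and fail
# loudly if the key was not found, instead of silently doing nothing.
set_ini_value() {
    local file=$1 key=$2 value=$3
    # Replace any existing "key = ..." line with the new value.
    sed -i "s|^${key} = .*|${key} = ${value}|" "$file"
    # Verify the edit actually landed (exact, fixed-string line match).
    if ! grep -qxF "${key} = ${value}" "$file"; then
        echo "error: ${key} not set in ${file}" >&2
        return 1
    fi
}

# Demonstration against a temporary file rather than the real /etc/php.ini.
tmp_ini=$(mktemp)
printf 'memory_limit = 128M\nupload_max_filesize = 2M\n' > "$tmp_ini"
set_ini_value "$tmp_ini" memory_limit 512M
set_ini_value "$tmp_ini" upload_max_filesize 100M
cat "$tmp_ini"
```

In an image build, a helper like this could live in a small script that is added to the image and called from a `RUN` step, so that a changed default breaks the build instead of shipping an unintended setting.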

Here we add the makefile for our Drupal environment. This is the definition of where Drupal needs to be installed and with what modules/themes.

    # Uses the drush make file in this Docker repo to correctly install all the modules we need
    # https://www.drupal.org/project/drush_make
    ADD drupal.make /tmp/drupal.make

Here we add some Drush config and the Registry Rebuild tool (which we have gotten a lot of value out of).

    # Add a drushrc file to point to default site
    ADD drushrc.php /etc/drush/drushrc.php
    # Install registry rebuild tool. This is helpful when your Drupal registry gets
    # broken from moving modules around
    RUN drush @none dl registry_rebuild --nocolor

Finally, we install Drupal using drush.make. This takes a bit of time while all the modules are downloaded from Drupal.org.

    # Install Drupal via drush.make.
    RUN rm -rf /var/www/html ; drush make /tmp/drupal.make /var/www/html --nocolor;

Some file permissions changes occur here. Notice we don't have a settings.php file; that will be added with the running Docker container.

    # Do some miscellaneous cleanup of the Drupal file system. If certain files are volume linked into the container (via -v at runtime)
    # some of these files will get overwritten inside the container
    RUN chmod 664 /var/www/html/sites/default && mkdir -p /var/www/html/sites/default/files/tmp && mkdir /var/www/html/sites/default/private && chmod 775 /var/www/html/sites/default/files && chmod 775 /var/www/html/sites/default/files/tmp && chmod 775 /var/www/html/sites/default/private
    RUN chown -R apache:apache /var/www/html

Here we add our custom code, and link it into the appropriate location on the filesystem. Host name is filtered in this example ($INTERNAL_GIT_REPO). Another way to approach this would be to house the Dockerfile with our custom code to centralize things a bit.

    # Pull in our custom code for the Customer Portal Drupal application
    RUN git clone $INTERNAL_GIT_REPO /opt/drupal-custom
    # Put our custom code in the appropriate places on disk
    RUN ln -s /opt/drupal-custom/all/themes/kcs /var/www/html/sites/all/themes/kcs
    RUN ln -s /opt/drupal-custom/all/modules/custom /var/www/html/sites/all/modules/custom
    RUN ln -s /opt/drupal-custom/all/modules/features /var/www/html/sites/all/modules/features
    RUN rm -rf /var/www/html/sites/all/libraries
    RUN ln -s /opt/drupal-custom/all/libraries /var/www/html/sites/all/

Here's an example of installing a module outside of drush.make. I believe due to drush.make's issues with git submodules, this was done in a custom manner.

    # get version 0.8.0 of raven-php. This is used for integration with Sentry
    RUN git clone https://github.com/getsentry/raven-php.git /opt/raven; cd /opt/raven; git checkout d4b741736125f2b892e07903cd40450b53479290
    RUN ln -s /opt/raven /var/www/html/sites/all/libraries/raven

Next we add the configuration files that need more than one-line changes. Again, these can be added here since they do not change between environments.

    # Add all our config files from the Docker build repo
    ADD supervisord /etc/supervisord.conf
    ADD drupal.conf /etc/httpd/conf.d/site.conf
    ADD ssl_extras.conf /etc/httpd/conf.d/ssl.conf
    ADD docker-start.sh /docker-start.sh
    ADD drupal-rsyslog.conf /etc/rsyslog.d/drupal.conf

The user for this Docker image is root, but supervisord handles running different processes as their appropriate users (apache, memcached, etc).

    USER root
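
The contents of the `supervisord` file added to the image are not shown in this post. As a hedged sketch only, a configuration along the following lines would run each service under supervisord with its own user. The program names, commands and options are assumptions for illustration, not the Customer Portal's actual file, and `write_supervisord_conf` is a hypothetical helper used here just to make the example self-contained.

```shell
#!/bin/bash
# Write an illustrative supervisord.conf to the path given as $1 (defaults
# to a temp file) and print that path. All settings are assumptions.
write_supervisord_conf() {
    local target=${1:-$(mktemp)}
    cat > "$target" <<'EOF'
[supervisord]
logfile=/var/log/supervisord.log

; httpd starts as root so it can bind port 80, then drops to the
; apache user through its own User directive (assumed here).
[program:httpd]
command=/usr/sbin/httpd -DFOREGROUND
autorestart=true

; supervisord switches to the memcached user before launching it.
[program:memcached]
command=/usr/bin/memcached
user=memcached
autorestart=true
EOF
    echo "$target"
}

conf_path=$(write_supervisord_conf)
cat "$conf_path"
```

Each program is run in the foreground so that supervisord, rather than an init system, owns the process and can restart it.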

The docker-start.sh script handles the database configuration once the Drupal container is running.

CMD ["/bin/bash", "/docker-start.sh"]

Docker start script

Once the Docker image containing all the Drupal code we want to run is built, the container is started in the appropriate environment. Notice that environment-specific configuration was left out of the image build, so this image should be deployable to any of our environments (assuming it gets the right configuration). With a configuration tool like Puppet or Ansible, we can provide the correct settings.php file to each host before our Docker container is deployed, and "configure" the container on startup with a command similar to the one below:

/usr/bin/docker run -td -p 80:80 -p 11211:11211 -e DRUPAL_START=1 -e DOMAIN=ci -v /var/log/httpd:/var/log/httpd -v /opt/settings.php:/var/www/html/sites/default/settings.php:ro drupal-custom:latest

A summary of some of the arguments:

* -p 80:80 -p 11211:11211 (open/map the correct ports for apache and memcached)

* -e DRUPAL_START=1 (apply the database configuration stored in Drupal code, described in the docker-start.sh script)

* -e DOMAIN=ci (let this container know it belongs in the ci domain)

* -v /var/log/httpd:/var/log/httpd (write apache logs inside the container to the host. In this way, we always have our logs stored between container restarts)

* -v /opt/settings.php:/var/www/html/sites/default/settings.php:ro (let the container know its configuration from what exists on the host)

When the container starts up, the assumed action to take is to start up supervisord, and apply database configuration if it was requested with DRUPAL_START. Mainly this involves running some drush helper commands, like features revert, applying module updates to the database, and ensuring our list of installed modules is correct with the Master module.

    #!/bin/bash
    if [[ ! -z $DRUPAL_START ]]; then
      supervisord -c /etc/supervisord.conf &
      sleep 20
      cd /var/www/html && drush cc all
      status=$?
      if [[ $status != 0 ]]; then
        drush rr
      fi
      cd /var/www/html && drush en master -y
      cd /var/www/html && drush master-execute --no-uninstall --scope=$DOMAIN -y && drush fra -y && drush updb -y && drush cc all
      status=$?
      if [[ $status != 0 ]]; then
        echo "Drupal release errored out on database config commands, please see output for more detail"
        exit 1
      fi
      echo "Finished applying updates for Drupal database"
      kill $(jobs -p)
      sleep 20
    fi
    supervisord -n -c /etc/supervisord.conf

That's it! On your host, you should now be able to access the Drupal environment over port 80, assuming that the database connection within settings.php is correct. Depending on whether DRUPAL_START was set, the environment may take some time to configure itself against a current database.
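The start script relies on a fixed `sleep 20` to give supervisord time to bring its services up. As a rough sketch of an alternative, the wait could poll for readiness instead. The `wait_for` helper below is hypothetical and not part of the original script, and the probe command suggested in the comment is an assumption about what is installed in the image.

```shell
#!/bin/bash
# Hypothetical helper (not from the original start script):
# retry a command until it succeeds or the attempts run out.
# Usage: wait_for <max_attempts> <delay_seconds> <command> [args...]
wait_for() {
  local attempts delay i
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Run the probe quietly; success ends the wait immediately.
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  # The probe never succeeded within the allowed attempts.
  return 1
}

# Possible use in place of "sleep 20" (assumes curl exists in the image
# and that apache answering on port 80 is a good enough readiness signal):
#   wait_for 20 1 curl -sf http://localhost/ || exit 1
```

The trade-off is that startup proceeds as soon as the probe passes rather than always waiting the full 20 seconds, and a service that never comes up produces an explicit failure instead of a later drush error.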

The entirety of this example Dockerfile can be found here.
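Since only the domain and the DRUPAL_START flag vary between environments, the docker run invocation shown earlier could be wrapped in a small script. This is a minimal sketch, assuming the same ports, paths, and image tag as the example command. The function name `drupal_run_cmd` and its arguments are hypothetical.

```shell
#!/bin/bash
# Hypothetical wrapper around the docker run command from this post.
# Usage: drupal_run_cmd <domain> [yes|no]
#   domain          value passed to the container as DOMAIN
#   yes|no          whether to set DRUPAL_START=1 (default: no)
drupal_run_cmd() {
  local domain apply_db_config cmd
  domain=$1
  apply_db_config=${2:-no}

  cmd="/usr/bin/docker run -td -p 80:80 -p 11211:11211"
  # Only request database configuration when explicitly asked for.
  if [ "$apply_db_config" = yes ]; then
    cmd="$cmd -e DRUPAL_START=1"
  fi
  cmd="$cmd -e DOMAIN=$domain"
  cmd="$cmd -v /var/log/httpd:/var/log/httpd"
  cmd="$cmd -v /opt/settings.php:/var/www/html/sites/default/settings.php:ro"
  cmd="$cmd drupal-custom:latest"

  # Print the command rather than running it, so it can be reviewed first.
  printf '%s\n' "$cmd"
}

# Print the command for the ci domain with database configuration enabled.
drupal_run_cmd ci yes
```

Printing the command instead of executing it keeps the sketch safe to run anywhere. A configuration tool could call the same function and execute the result on each host.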

In the next blog post, we'll talk about using this container approach with Jenkins to automate the delivery pipeline.

Posted: April 8 2015 at 8:55 PM -

Explore certified partner solutions with the new Red Hat Certification Catalog

Today, we are excited to announce the addition of a new Red Hat Certification Catalog to the numerous resources within the Customer Portal.

Red Hat collaborates with hundreds of companies that develop hardware, devices, plug-ins, software applications, and services that are tested, supported, and certified to run on Red Hat technologies. Empowered with the new Red Hat Certification Catalog, you can explore a wide variety of partner solutions to:

- Ensure your Red Hat solution is running on tested, verified and supported hardware

- Find third-party software solutions tested specifically on the Red Hat platform

- Choose a Red Hat certified public cloud provider to run your Red Hat technologies

Our ecosystem of thousands of certified partner solutions continues to expand with each new release of Red Hat products, and the new catalog has been designed with you (our customers) in mind. Using the new Red Hat Certification Catalog, you can find hardware and cloud providers that are certified to run our latest product releases such as Red Hat Enterprise Linux 7 or Red Hat Enterprise Linux OpenStack Platform 5. Furthermore, you can use the new catalog to find a public cloud provider certified to offer the latest version of Red Hat Enterprise Linux within a specific region or supporting a specific language.

Check out the new Red Hat Certification Catalog for yourself today, available via Certification in the navigation, to be equipped with the best combination of Red Hat technologies and certified partner solutions.

Posted: July 14 2014 at 9:00 PM -

Red Hat Customer Portal Wins Association of Support Professionals Award

We're excited to announce that for the fourth consecutive year, the Red Hat Customer Portal has been named one of the industry’s "Ten Best Web Support Sites" by the Association of Support Professionals (ASP). ASP is an international membership organization for customer support managers and professionals.

The "Ten Best Web Support Sites" competition is an awards program that showcases excellence in online service and support, and this year we were honored alongside other technology industry leaders including Cisco, Google AdWords, IBM, McAfee, Microsoft Surface Support, and PTC. Winners were selected based on performance criteria including overall usability, design and navigation, knowledgebase and search implementation, interactive features, and customer experience.

Since receiving our ASP award last year, we've been working diligently to improve our user experience even more. Armed with both customer and ASP feedback, we have enhanced our portal navigation and added features to better help customers find what they need, how and when they need it. The Customer Portal team's focus on analytics has brought rapid development and change. Using email and website statistics, A/B testing, and customer feedback, we are doing everything we can to better understand how our subscribers are using the portal.

While we're honored to receive the ASP award again, we're committed to continued innovation and improvement to give our customers the best experience. That commitment is evidenced by our most recent addition, the Certification Catalog, which unifies Red Hat certifications into a single platform and allows users to find certified hardware, certified third-party software solutions, and certified public cloud providers for their specific environment.

Learn more about the ASP award:

- ASP's 2014 press release: http://www.asponline.com/14announcement.html

- Red Hat's press blog: http://www.redhat.com/en/about/blog/red-hat-customer-portal-named-one-ten-best-web-support-sites-2014

Posted: July 14 2014 at 7:21 PM -

Red Hat Enterprise Linux 7 is here: new improvements to downloads, product page, and documentation

With the upcoming release of Red Hat Enterprise Linux 7 on Tuesday, June 10th, we've made several improvements to give our customers the best experience yet:

- Two new ways to download a product: a product page download section and a revamped downloads area

- A refined getting started guide, using icons to help prep and set expectations for a basic deployment of RHEL 7

- Curated content for user tasks to help customers accomplish their most important tasks

- A redesigned look and feel to the docs, including removal of the html-single and epub formats

A draft of the page can be seen here: https://access.redhat.com/site/products/red-hat-enterprise-linux-7. At launch, this page will replace the existing RHEL product page at this URL: https://access.redhat.com/site/products/red-hat-enterprise-linux

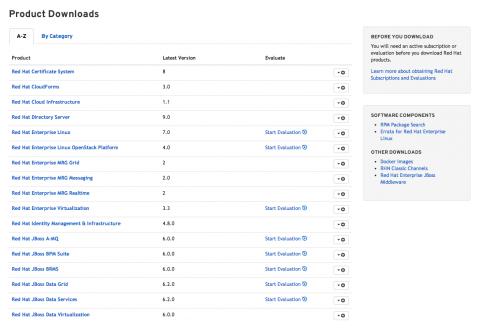

New Downloads:

There are two new ways to download RHEL now:

- The product page tab for Downloads

- A new downloads page leading to a new application

As of today, all versions of Red Hat Enterprise Linux are available here.

New Get Started:

We've also introduced a new getting started guide for RHEL 7, with simplified requirements and steps.

Curated Content:

We've also tried to reorganize our content by some of the most common tasks our customers read about. By looking at documentation trends, we've organized our resources based on what our customers have shown us is high priority. From there, we worked to display and organize different types of content to help them quickly learn about all the benefits available in RHEL 7.

Documentation Changes:

We've also changed how we handle documentation in a few ways. To improve our documentation with comments and future enhancements, we've removed the html-single and epub formats. We did this because we really want to take this opportunity to focus on the html version and do what we can to improve that experience in the future.

Additionally, we've set PDF documentation to require a login. While this may be an adjustment for some users, we believe that we'll be able to offer a much better experience with future improvements focusing on html.

We're really excited about these changes, and would love to hear back about what we can do to make your experience even better.

Posted: June 9 2014 at 8:33 PM -