Automation Controller Administration Guide

Administrator Guide for Automation Controller

Abstract

Preface

The automation controller Administration Guide describes the administration of automation controller through custom scripts, management jobs, and more. Written for DevOps engineers and administrators, the automation controller Administration Guide assumes a basic understanding of the systems requiring management with automation controller's easy-to-use graphical interface.

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

If you have a suggestion to improve this documentation, or find an error, please contact technical support at https://access.redhat.com to create an issue on the Ansible Automation Platform Jira project using the docs-product component.

Chapter 1. Automation controller licensing, updates and support

Automation controller is provided as part of your annual Red Hat Ansible Automation Platform subscription.

Ansible is an open source software project and is licensed under the GNU General Public License version 3, as described in the Ansible Source Code.

You must have valid subscriptions attached before installing Ansible Automation Platform.

For more information, see Attaching Subscriptions.

1.1. Trial and evaluation

You require a license to run automation controller. You can start by using a free trial license.

- Trial licenses for Ansible Automation Platform are available at: http://ansible.com/license

- Support is not included in a trial license or during an evaluation of the automation controller software.

1.2. Subscription Types

Red Hat Ansible Automation Platform is provided at various levels of support and number of machines as an annual subscription.

Standard:

- Manage any size environment

- Enterprise 8x5 support and SLA

- Maintenance and upgrades included

- Review the SLA at Product Support Terms of Service

- Review the Red Hat Support Severity Level Definitions

Premium:

- Manage any size environment, including mission-critical environments

- Premium 24x7 support and SLA

- Maintenance and upgrades included

- Review the SLA at Product Support Terms of Service

- Review the Red Hat Support Severity Level Definitions

All subscription levels include regular updates and releases of automation controller, Ansible, and any other components of the Platform.

For more information, contact Ansible through the Red Hat Customer Portal or at http://www.ansible.com/contact-us/.

1.3. Node counting in licenses

The automation controller license defines the number of Managed Nodes that can be managed as part of a Red Hat Ansible Automation Platform subscription.

A typical license says "License Count: 500", which sets the maximum number of Managed Nodes at 500.

For more information on managed node requirements for licensing, see https://access.redhat.com/articles/3331481.

Ansible does not recycle node counts or reset automated hosts.

1.4. Attaching Subscriptions

You must have valid Ansible Automation Platform subscriptions attached before installing Ansible Automation Platform.

Attaching subscriptions is unnecessary if your Red Hat account has enabled Simple Content Access Mode. However, you must register to Red Hat Subscription Management (RHSM) or Red Hat Satellite before installing Ansible Automation Platform.

Procedure

To find the pool_id of your subscription, enter the following command:

# subscription-manager list --available --all | grep "Ansible Automation Platform" -B 3 -A 6

The command returns the following:

Subscription Name: Red Hat Ansible Automation Platform, Premium (5000 Managed Nodes)
Provides: Red Hat Ansible Engine
          Red Hat Single Sign-On
          Red Hat Ansible Automation Platform
SKU: MCT3695
Contract: ********
Pool ID: ********************
Provides Management: No
Available: 4999
Suggested: 1

To attach this subscription, enter the following command:

# subscription-manager attach --pool=<pool_id>

If all nodes have attached, then the repositories are found.

To check whether the subscription attached successfully, enter the following command:

# subscription-manager list --consumed

To remove this subscription, enter the following command:

# subscription-manager remove --pool=<pool_id>
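The lookup step in the procedure above can be scripted. The following is a minimal sketch, assuming the listing has the field layout shown earlier; the sample text and its Pool ID value are made-up placeholders, and the helper names are hypothetical rather than part of any Red Hat tooling.

```python
import re

# Placeholder listing in the same layout as the command output shown above.
# The Pool ID value is invented for illustration only.
SAMPLE_OUTPUT = """\
Subscription Name:   Red Hat Ansible Automation Platform, Premium (5000 Managed Nodes)
SKU:                 MCT3695
Contract:            ********
Pool ID:             0123456789abcdef0123456789abcdef
Provides Management: No
Available:           4999
Suggested:           1
"""


def find_pool_ids(listing: str) -> list[str]:
    """Return every value that follows a 'Pool ID:' label in the listing."""
    return re.findall(r"^Pool ID:\s*(\S+)\s*$", listing, flags=re.MULTILINE)


def attach_command(pool_id: str) -> list[str]:
    """Build the argument list for the attach command from the procedure."""
    return ["subscription-manager", "attach", f"--pool={pool_id}"]


if __name__ == "__main__":
    for pool_id in find_pool_ids(SAMPLE_OUTPUT):
        print(" ".join(attach_command(pool_id)))
```

The sketch only builds the command. Running it still requires root privileges on a registered system.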

1.5. Component licenses

To view the license information for the components included in automation controller, refer to /usr/share/doc/automation-controller-<version>/README.

where <version> refers to the version of automation controller you have installed.

To view a specific license, refer to /usr/share/doc/automation-controller-<version>/*.txt.

where * is the license file name to which you are referring.

Chapter 2. Start, stop, and restart automation controller

Automation controller ships with an administrator utility script, automation-controller-service. The script can start, stop, and restart all automation controller services running on the current single automation controller node. The script includes the message queue components and the database if it is an integrated installation.

External databases must be explicitly managed by the administrator. You can find the services script in /usr/bin/automation-controller-service, which can be invoked with the following command:

root@localhost:~$ automation-controller-service restart

In clustered installs, the automation-controller-service restart does not include PostgreSQL as part of the services that are restarted. This is because it exists external to automation controller, and PostgreSQL does not always need to be restarted. Use systemctl restart automation-controller to restart services on clustered environments instead.

You must also restart each cluster node for certain changes to persist as opposed to a single node for a localhost install.

For more information on clustered environments, see the Clustering section.

You can also invoke the services script using distribution-specific service management commands. Distribution packages often provide a similar script, sometimes as an init script, to manage services. For more information, see your distribution-specific service management system.

When running automation controller in a container, do not use the automation-controller-service script. Restart the pod using the container environment instead.

Chapter 3. Custom Inventory Scripts

Inventory scripts have been discontinued.

For more information, see Export old inventory scripts in the Automation controller User Guide.

If you use custom inventory scripts, migrate to sourcing these scripts from a project. For more information, see Inventory File Importing, and Inventory sources in the Automation controller User Guide.

If you are setting up an inventory file, see Editing the Red Hat Ansible Automation Platform installer inventory file and find examples specific to your setup.

If you are migrating to execution environments, see:

- Upgrading to execution environments.

- Creating and consuming execution environments.

- Automation mesh design patterns.

- Mesh Topology in the Ansible Automation Platform Upgrade and Migration Guide to validate your topology.

For more information about automation mesh on a VM-based installation, see the Red Hat Ansible Automation Platform automation mesh guide for VM-based installations.

For more information about automation mesh on an operator-based installation, see the Red Hat Ansible Automation Platform automation mesh for operator-based installations.

If you already have a mesh topology set up and want to view node type, node health, and specific details about each node, see Topology Viewer.

Chapter 4. Inventory File Importing

Automation controller enables you to choose an inventory file from source control, rather than creating one from scratch. This function is the same as for custom inventory scripts, except that the contents are obtained from source control instead of editing their contents in a browser. This means that the files are non-editable, and as inventories are updated at the source, the inventories within the projects are also updated accordingly, including the group_vars and host_vars files or directory associated with them. SCM types can consume both inventory files and scripts. Both inventory files and custom inventory types use scripts.

Imported hosts have a description of imported by default. This can be overridden by setting the _awx_description variable on a given host. For example, if importing from a sourced .ini file, you can add the following host variables:

[main]
127.0.0.1 _awx_description="my host 1"
127.0.0.2 _awx_description="my host 2"

Similarly, group descriptions also default to imported, but can also be overridden by _awx_description.
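The default-and-override rule for descriptions can be illustrated with a short sketch. It is a simplified model only: automation controller relies on ansible-inventory for real parsing, and the helper below (a hypothetical name) ignores section types such as [group:vars].

```python
import shlex

DEFAULT_DESCRIPTION = "imported"


def host_descriptions(ini_text: str) -> dict[str, str]:
    """Map each host line to its description, defaulting to 'imported'.

    Simplified: skips blank lines, comments, and every section header,
    and treats each remaining line as 'host key=value ...'.
    """
    descriptions = {}
    for raw_line in ini_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", ";", "[")):
            continue
        # shlex keeps quoted values such as "my host 1" together.
        host, *pairs = shlex.split(line)
        variables = dict(pair.split("=", 1) for pair in pairs if "=" in pair)
        descriptions[host] = variables.get("_awx_description", DEFAULT_DESCRIPTION)
    return descriptions


if __name__ == "__main__":
    sample = '[main]\n127.0.0.1 _awx_description="my host 1"\n127.0.0.3\n'
    print(host_descriptions(sample))
```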

To use old inventory scripts in source control, see Export old inventory scripts in the Automation controller User Guide.

4.1. Custom Dynamic Inventory Scripts

A custom dynamic inventory script stored in version control can be imported and run. This makes it much easier to make changes to an inventory script. Rather than having to copy and paste a script into automation controller, it is pulled directly from source control and then executed. The script must handle any credentials required for its task. You are responsible for installing any Python libraries required by the script. (Custom dynamic inventory scripts have the same requirement.) This applies to both user-defined inventory source scripts and SCM sources as they are both exposed to Ansible virtualenv requirements related to playbooks.

You can specify environment variables when you edit the SCM inventory source. For some scripts, this is sufficient. However, this is not a secure way to store secret information that gives access to cloud providers or inventory.

A better way is to create a new credential type for the inventory script you are going to use. The credential type must specify all the necessary types of inputs. Then, when you create a credential of this type, the secrets are stored in an encrypted form. If you apply that credential to the inventory source, the script has access to those inputs.

For more information, see Custom Credential Types in the Automation controller User Guide.
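As an illustration, a credential type for an inventory script might declare its inputs and the environment variables it injects as follows. This is a sketch under assumptions: the field ids, labels, and environment variable names are hypothetical, and the Custom Credential Types documentation remains the reference for the exact schema.

```python
import json

# Hypothetical input definition: one plain field and one secret field.
INPUTS = {
    "fields": [
        {"id": "api_url", "type": "string", "label": "API URL"},
        {"id": "api_token", "type": "string", "label": "API token", "secret": True},
    ],
    "required": ["api_url", "api_token"],
}

# Hypothetical injector definition: expose the inputs to the script
# as environment variables at run time.
INJECTORS = {
    "env": {
        "MY_CMDB_URL": "{{ api_url }}",
        "MY_CMDB_TOKEN": "{{ api_token }}",
    }
}


def secret_field_ids(inputs: dict) -> list[str]:
    """List the ids of the fields that are flagged as secret."""
    return [field["id"] for field in inputs["fields"] if field.get("secret")]


if __name__ == "__main__":
    print(json.dumps({"inputs": INPUTS, "injectors": INJECTORS}, indent=2))
    print("secret fields:", secret_field_ids(INPUTS))
```

With a definition along these lines, the inventory script reads MY_CMDB_TOKEN from its environment instead of from a plain-text source variable.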

4.2. SCM Inventory Source Fields

The source fields used are:

- source_project: the project to use.

- source_path: the relative path inside the project indicating a directory or a file. If left blank, "" is still a relative path indicating the root directory of the project.

- source_vars: if set on a "file" type inventory source then they are passed to the environment variables when running.
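The three fields above can be pictured as part of a request body for creating an inventory source. The sketch below is an assumption-laden illustration: the helper name is hypothetical, and the name, inventory, and source keys are not described in this section and should be checked against the API browser for your version.

```python
import json


def scm_inventory_source_payload(name, inventory_id, project_id,
                                 source_path="", source_vars=None):
    """Build a request body for an inventory source sourced from a project.

    Assumption: 'name', 'inventory', and 'source' are the companion keys;
    only source_project, source_path, and source_vars come from this guide.
    """
    return {
        "name": name,
        "inventory": inventory_id,
        "source": "scm",
        "source_project": project_id,
        # An empty path still refers to the root directory of the project.
        "source_path": source_path,
        # Variables are serialized to text before being sent.
        "source_vars": json.dumps(source_vars or {}),
    }


if __name__ == "__main__":
    payload = scm_inventory_source_payload(
        "lab hosts", 3, 12, "inventories/lab.ini", {"REGION": "east"}
    )
    print(json.dumps(payload, indent=2))
```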

Additionally:

- An update of the project automatically triggers an inventory update where it is used.

- An update of the project is scheduled immediately after creation of the inventory source.

- Neither inventory nor project updates are blocked while a related job is running.

- In cases where you have a large project (around 10 GB), disk space on /tmp can be an issue.

You can specify a location manually in the automation controller UI from the Create Inventory Source page. Refer to Adding a source for instructions on creating an inventory source.

When you update a project, refresh the listing to use the latest SCM information. If no inventory sources use a project as an SCM inventory source, then the inventory listing might not be refreshed on update.

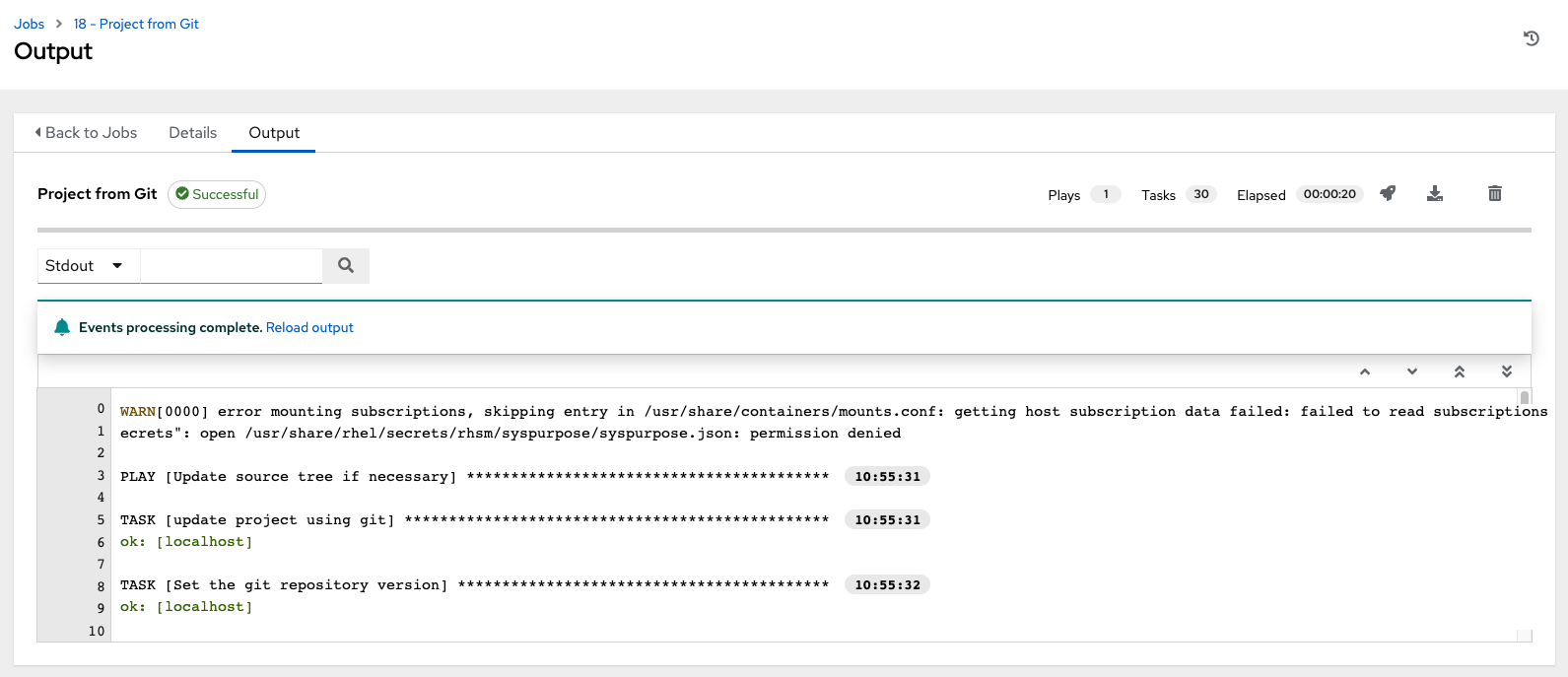

For inventories with SCM sources, the Job Details page for inventory updates displays a status indicator for the project update and the name of the project.

The status indicator links to the project update job.

The project name links to the project.

You can perform an inventory update while a related job is running.

4.2.1. Supported File Syntax

Automation controller uses the ansible-inventory module from Ansible to process inventory files, and supports all valid inventory syntax that automation controller requires.

Chapter 5. Multi-Credential Assignment

Automation controller provides support for assigning zero or more credentials to a job template.

5.1. Background

Before automation controller v3.3, job templates had the following requirements with respect to credentials:

- All job templates (and jobs) were required to have exactly one Machine/SSH or Vault credential (or one of both).

- All job templates (and jobs) could have zero or more "extra" credentials.

- Extra credentials represented "Cloud" and "Network" credentials that could be used to provide authentication to external services through environment variables, for example, AWS_ACCESS_KEY_ID.

This model required a variety of disjoint interfaces for specifying credentials on a job template and it lacked the ability to associate multiple Vault credentials with a playbook run, a use case supported by Ansible core from Ansible 2.4 onwards.

This model also posed a stumbling block for certain playbook execution workflows, such as having to attach a "dummy" Machine/SSH credential to the job template to satisfy the requirement.

5.2. Important Changes

All automation controller 4.4 Job templates have a single interface for credential assignment.

From the API endpoint:

GET /api/v2/job_templates/N/credentials/

You can associate and disassociate credentials using POST requests, similar to the behavior in the deprecated extra_credentials endpoint:

POST /api/v2/job_templates/N/credentials/ {'associate': true, 'id': 'X'}

POST /api/v2/job_templates/N/credentials/ {'disassociate': true, 'id': 'Y'}

With this model, a job template is considered valid even when there are no credentials assigned to it. This model also provides users the ability to assign multiple Vault credentials to a job template.
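The associate and disassociate calls can be prepared from a script. The following sketch only builds the request object and does not send it. The base URL, the bearer-token header, and the helper name are assumptions for illustration.

```python
import json
import urllib.request


def credential_request(base_url, template_id, credential_id, *,
                       associate=True, token="<token>"):
    """Build (but do not send) a POST that associates or disassociates
    a credential with a job template."""
    action = "associate" if associate else "disassociate"
    body = json.dumps({action: True, "id": credential_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/v2/job_templates/{template_id}/credentials/",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: token-based authentication is in use.
            "Authorization": f"Bearer {token}",
        },
    )


if __name__ == "__main__":
    request = credential_request("https://controller.example.com", 42, 7)
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the request to urllib.request.urlopen would send it. That step is left out so the sketch has no side effects.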

5.3. Launch Time Considerations

Before automation controller v3.3, job templates used a configurable attribute, ask_credential_on_launch. This value was used at launch time to determine which missing credential values were necessary for launch. This was a way to specify a Machine or SSH credential to satisfy the minimum credential requirement.

Under the unified credential list model, this attribute still exists, but it no longer requires a credential. Now when ask_credential_on_launch is true, it signifies that you can specify a list of credentials at launch time to override those defined on the job template. For example:

POST /api/v2/job_templates/N/launch/ {'credentials': [A, B, C]}

If ask_credential_on_launch is false, it signifies that custom credentials provided in the POST /api/v2/job_templates/N/launch/ are ignored.

Under this model, the only purpose for ask_credential_on_launch is to signal API clients to prompt the user for (optional) changes at launch time.
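The launch-time rule described in this section can be summarized in a few lines. This is an illustrative model of the documented behavior, not the controller's implementation, and the function name is hypothetical.

```python
def effective_credentials(template_credentials, ask_credential_on_launch,
                          launch_credentials=None):
    """Return the credential ids a launch would use.

    - ask_credential_on_launch is True and a list is supplied:
      the supplied list overrides the template's credentials.
    - otherwise: credentials supplied at launch are ignored.
    """
    if ask_credential_on_launch and launch_credentials is not None:
        return list(launch_credentials)
    return list(template_credentials)


if __name__ == "__main__":
    print(effective_credentials([1, 2], True, [7, 8, 9]))
    print(effective_credentials([1, 2], False, [7, 8, 9]))
```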

5.4. Multi-Vault Credentials

Because you can assign multiple credentials to a job, you can specify multiple Vault credentials to decrypt when your job template runs. This functionality mirrors the support for Managing vault passwords.

Vault credentials now have an optional field, vault_id, which is similar to the --vault-id argument of ansible-playbook.

Use the following procedure to run a playbook which makes use of multiple vault passwords:

Procedure

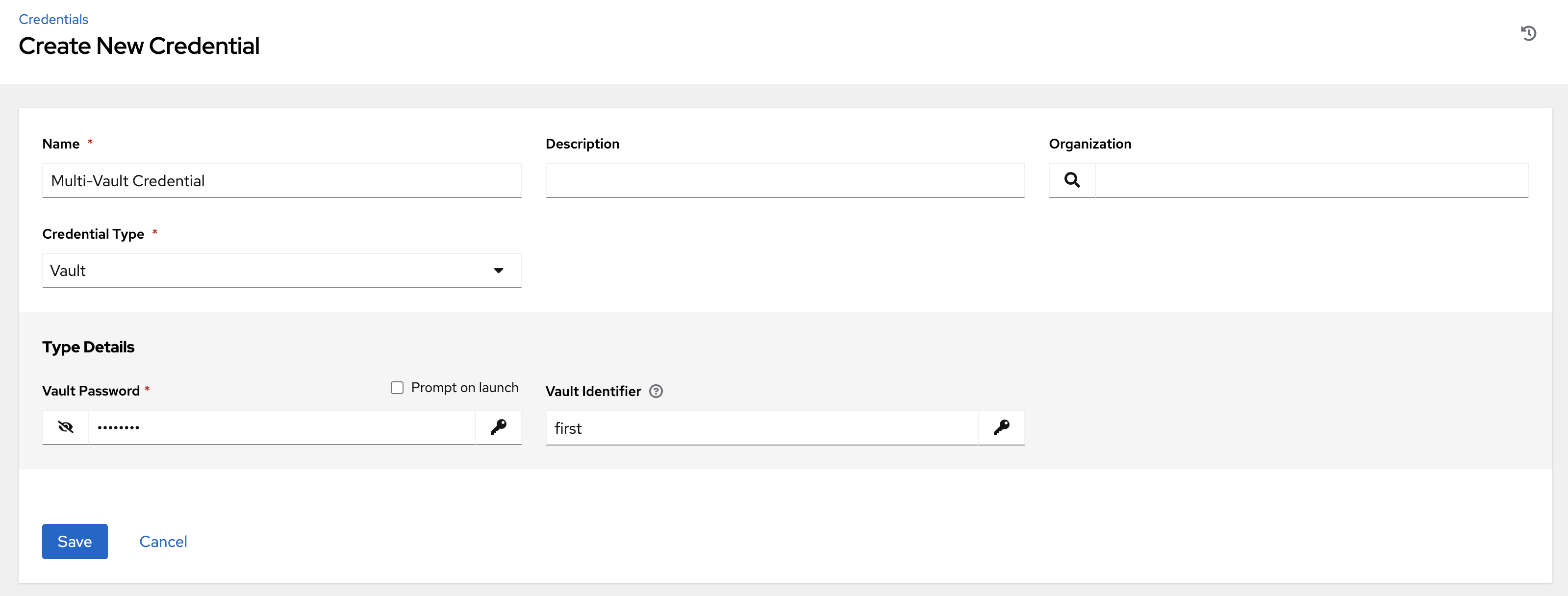

- Create a Vault credential in automation controller for each vault password.

- Specify the Vault ID as a field on the credential and input the password (which is encrypted and stored).

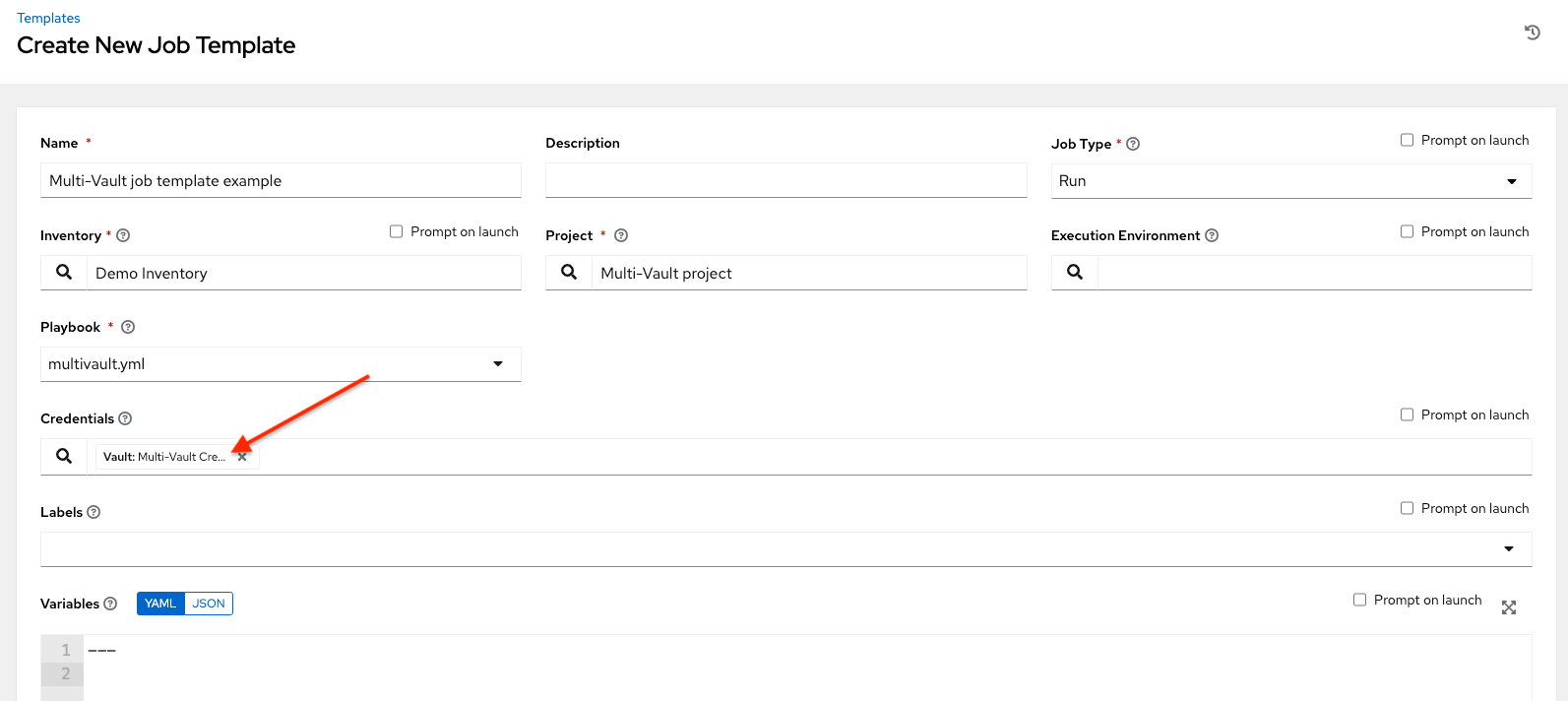

- Assign multiple vault credentials to the job template using the new credentials endpoint:

POST /api/v2/job_templates/N/credentials/
{
    'associate': true,
    'id': X
}

Alternatively, you can perform the same assignment in the automation controller UI in the Create Credential page:

In this example, the credential created specifies the secret to be used by its Vault Identifier ("first") and password pair. When this credential is used in a Job Template, as in the following example, it only decrypts the secret associated with the "first" Vault ID:

If you have a playbook that is set up the traditional way with all the secrets in one big file without distinction, then leave the Vault Identifier field blank when setting up the Vault credential.
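To make the matching between Vault IDs and encrypted content concrete, the sketch below reads the label from a vault header line and looks up the corresponding password. It assumes the header layout used by labeled vault files (format 1.2 carries the label as a fourth field, format 1.1 has none) and models a blank Vault Identifier as an empty string. The function names and passwords are hypothetical.

```python
def vault_label(header_line: str) -> str:
    """Extract the vault ID label from a vault header line.

    Returns an empty string for headers without a label field.
    """
    parts = header_line.strip().split(";")
    if parts[0] != "$ANSIBLE_VAULT":
        raise ValueError("not an Ansible Vault header")
    return parts[3] if len(parts) > 3 else ""


def pick_password(header_line: str, credentials: dict):
    """Return the password stored under the header's label, or None."""
    return credentials.get(vault_label(header_line))


if __name__ == "__main__":
    # Hypothetical credentials keyed by Vault Identifier.
    creds = {"first": "first-vault-password", "second": "second-vault-password"}
    print(pick_password("$ANSIBLE_VAULT;1.2;AES256;first", creds))
```

This is a simplified selection model. How Ansible tries secrets in practice also depends on its vault configuration.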

5.4.1. Prompted Vault Credentials

For Vault credentials whose passwords are marked with Prompt on launch, the launch endpoint of any related job template communicates the necessary Vault passwords using the passwords_needed_to_start parameter:

GET /api/v2/job_templates/N/launch/
{
    'passwords_needed_to_start': [
        'vault_password.X',
        'vault_password.Y',
    ]
}

Where X and Y are primary keys of the associated Vault credentials. Provide the requested passwords in credential_passwords when you launch the job template:

POST /api/v2/job_templates/N/launch/
{
    'credential_passwords': {
        'vault_password.X': 'first-vault-password',
        'vault_password.Y': 'second-vault-password'
    }
}

5.4.2. Linked credentials

Instead of uploading sensitive credential information into automation controller, you can link credential fields to external systems and use them to run your playbooks.

For more information, see Secret Management System in the Automation controller User Guide.

Chapter 6. Management Jobs

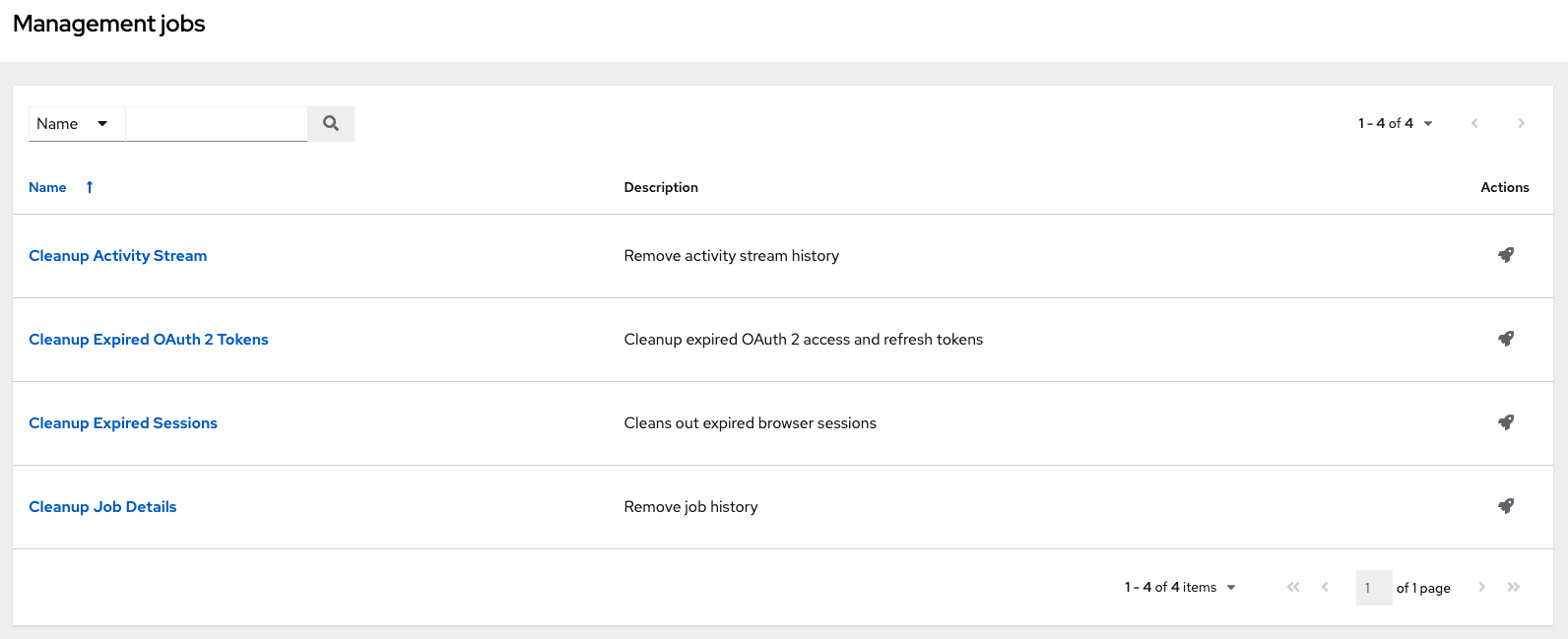

Management Jobs assist in the cleaning of old data from automation controller, including system tracking information, tokens, job histories, and activity streams. You can use this if you have specific retention policies or need to decrease the storage used by your automation controller database.

From the navigation panel, select Administration → Management Jobs.

The following job types are available for you to schedule and launch:

- Cleanup Activity Stream: Remove activity stream history older than a specified number of days

- Cleanup Expired OAuth 2 Tokens: Remove expired OAuth 2 access tokens and refresh tokens

- Cleanup Expired Sessions: Remove expired browser sessions from the database

- Cleanup Job Details: Remove job history older than a specified number of days

6.1. Removing Old Activity Stream Data

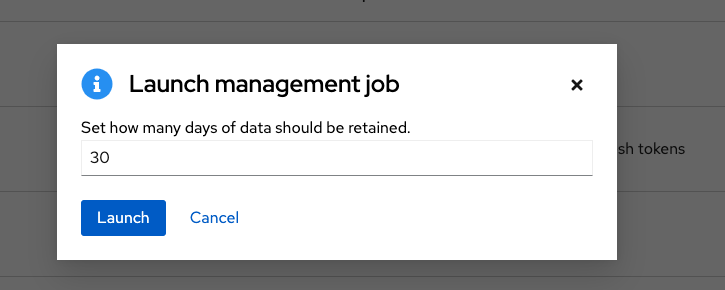

To remove older activity stream data, click the launch icon beside Cleanup Activity Stream.

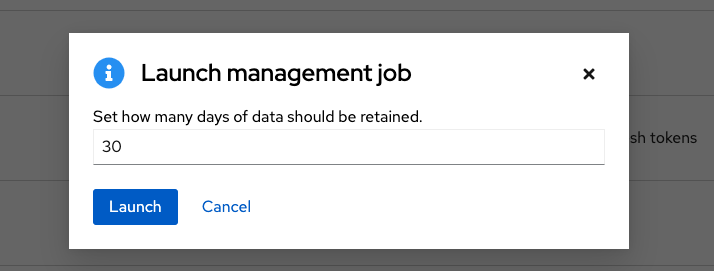

Enter the number of days of data you would like to save and click Launch.
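The same launch can in principle be driven through the API. The sketch below only builds the path and body. Both the system_job_templates endpoint and the days variable are assumptions that this guide does not document, so verify them in the API browser of your installation before relying on them.

```python
import json


def cleanup_launch_path(system_job_template_id: int) -> str:
    """Path of the launch endpoint for a management job (assumed layout)."""
    return f"/api/v2/system_job_templates/{system_job_template_id}/launch/"


def cleanup_launch_body(days_to_keep: int) -> str:
    """JSON body carrying the number of days of data to keep (assumed key)."""
    if days_to_keep < 0:
        raise ValueError("days_to_keep must not be negative")
    return json.dumps({"extra_vars": {"days": days_to_keep}})


if __name__ == "__main__":
    # The id 2 is a placeholder; look up the real id of the cleanup job.
    print("POST", cleanup_launch_path(2))
    print(cleanup_launch_body(30))
```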

6.1.1. Scheduling deletion

Use the following procedure to review or set a schedule for purging data marked for deletion:

Procedure

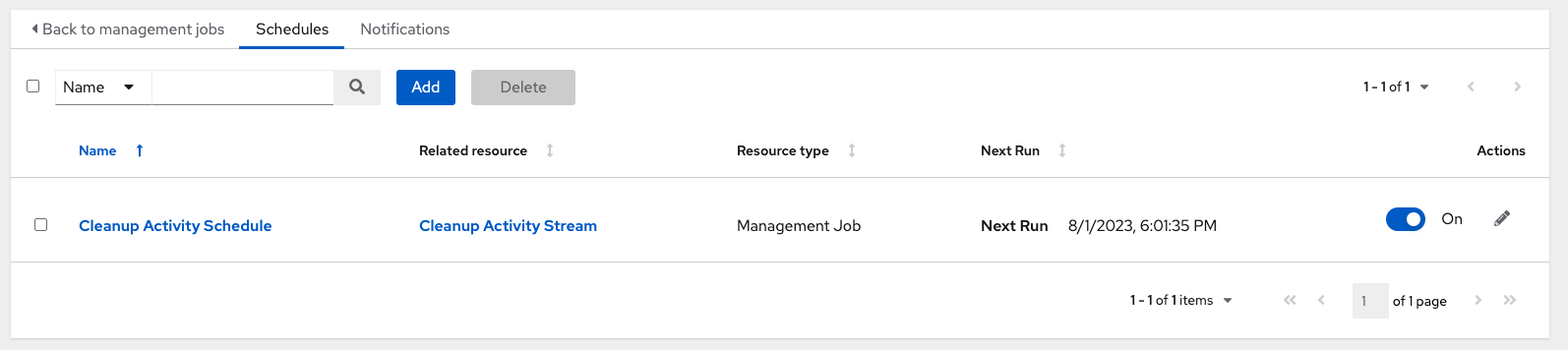

For a particular cleanup job, click the Schedules tab.

Note that you can turn this scheduled management job on and off using the ON/OFF toggle button.

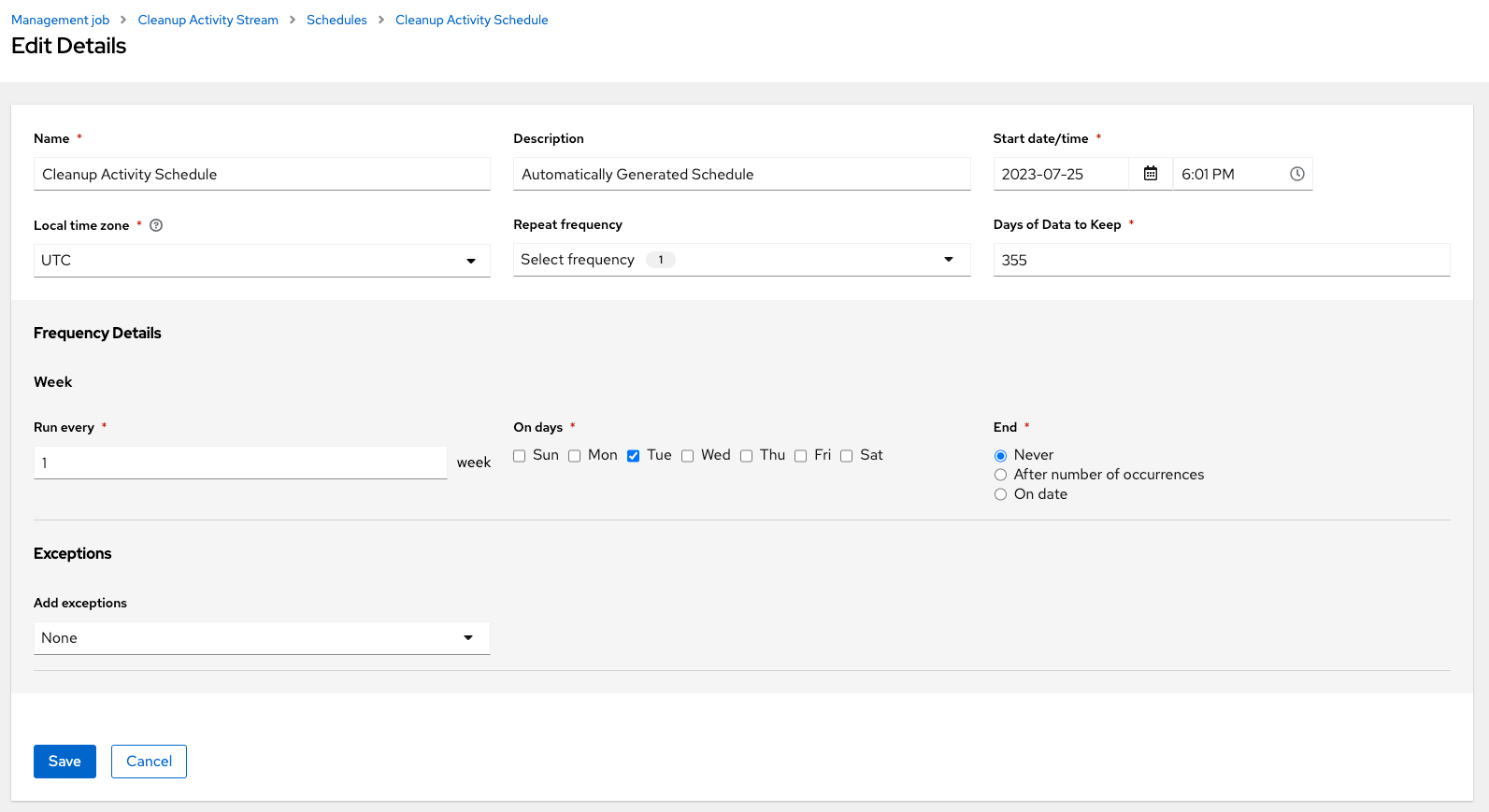

- Click the name of the job, "Cleanup Activity Schedule" in this example, to review the schedule settings.

Click Edit to modify them. You can also click Add to create a new schedule for this management job.

Enter the appropriate details into the following fields and click Save:

- Name (required)

- Start Date (required)

- Start Time (required)

- Local time zone: the entered Start Time should be in this time zone.

- Repeat frequency: the appropriate options display as the update frequency is modified. You can exclude data that you do not want to include by specifying exceptions.

- Days of Data to Keep (required): specify how much data you want to retain.

The Details tab displays a description of the schedule and a list of the scheduled occurrences in the selected Local Time Zone.

Jobs are scheduled in UTC. Repeating jobs that run at a specific time of day can move relative to a local timezone when Daylight Saving Time shifts occur.
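The effect of Daylight Saving Time on a UTC-based schedule can be seen with a short calculation. The sketch assumes a job that repeats at 14:00 UTC and a viewer in the America/New_York time zone. Both are arbitrary choices for illustration, and the helper name is hypothetical.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def local_run_time(utc_run: datetime, tz_name: str) -> str:
    """Render a UTC run time in the given local time zone."""
    return utc_run.astimezone(ZoneInfo(tz_name)).strftime("%Y-%m-%d %H:%M %Z")


# The same 14:00 UTC slot, before and after the 2024 US spring shift.
BEFORE_SHIFT = datetime(2024, 3, 9, 14, 0, tzinfo=timezone.utc)
AFTER_SHIFT = datetime(2024, 3, 11, 14, 0, tzinfo=timezone.utc)

if __name__ == "__main__":
    print(local_run_time(BEFORE_SHIFT, "America/New_York"))  # 2024-03-09 09:00 EST
    print(local_run_time(AFTER_SHIFT, "America/New_York"))   # 2024-03-11 10:00 EDT
```

The UTC time never changes, but the local wall-clock time moves from 09:00 to 10:00 once Daylight Saving Time begins.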

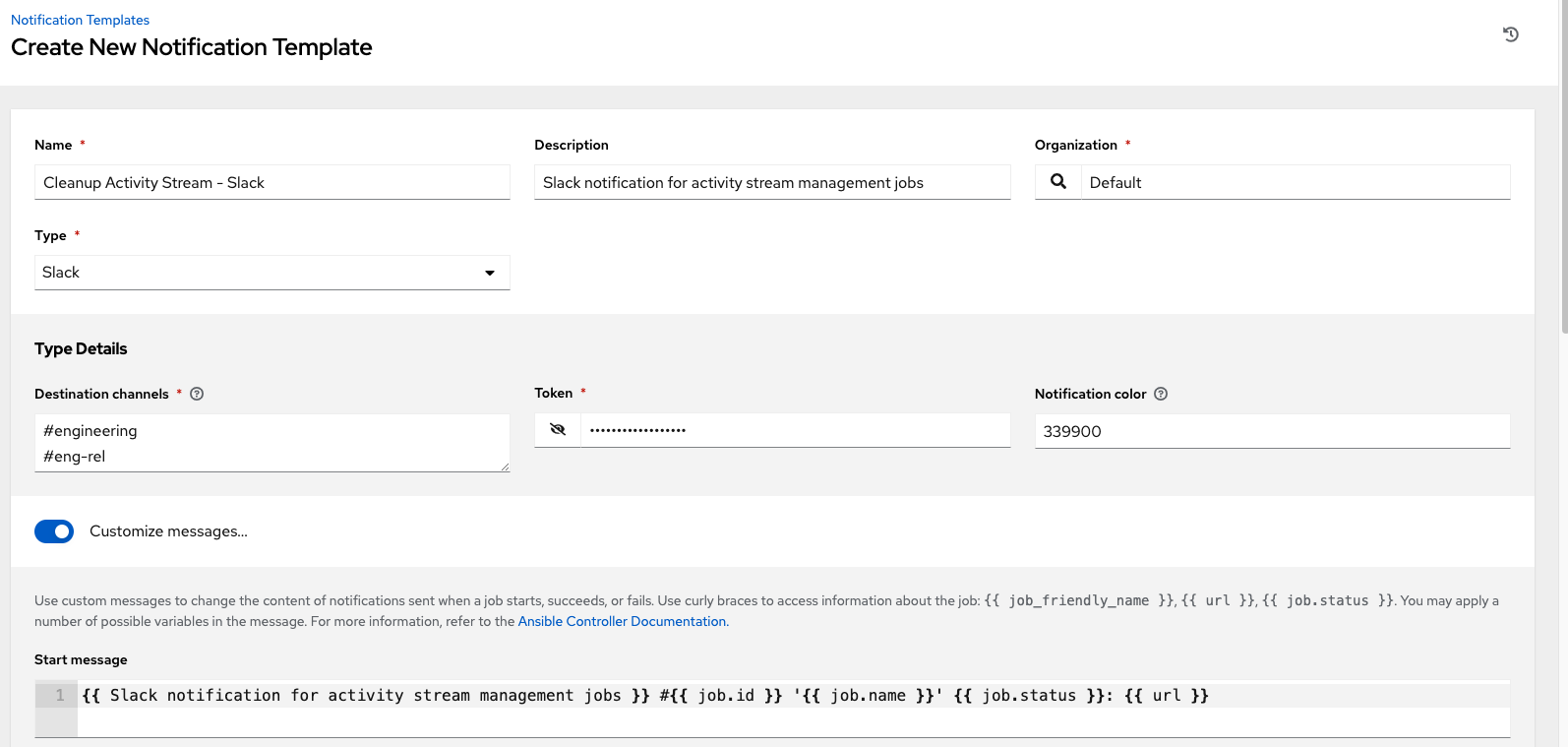

6.1.2. Setting notifications

Use the following procedure to review or set notifications associated with a management job:

Procedure

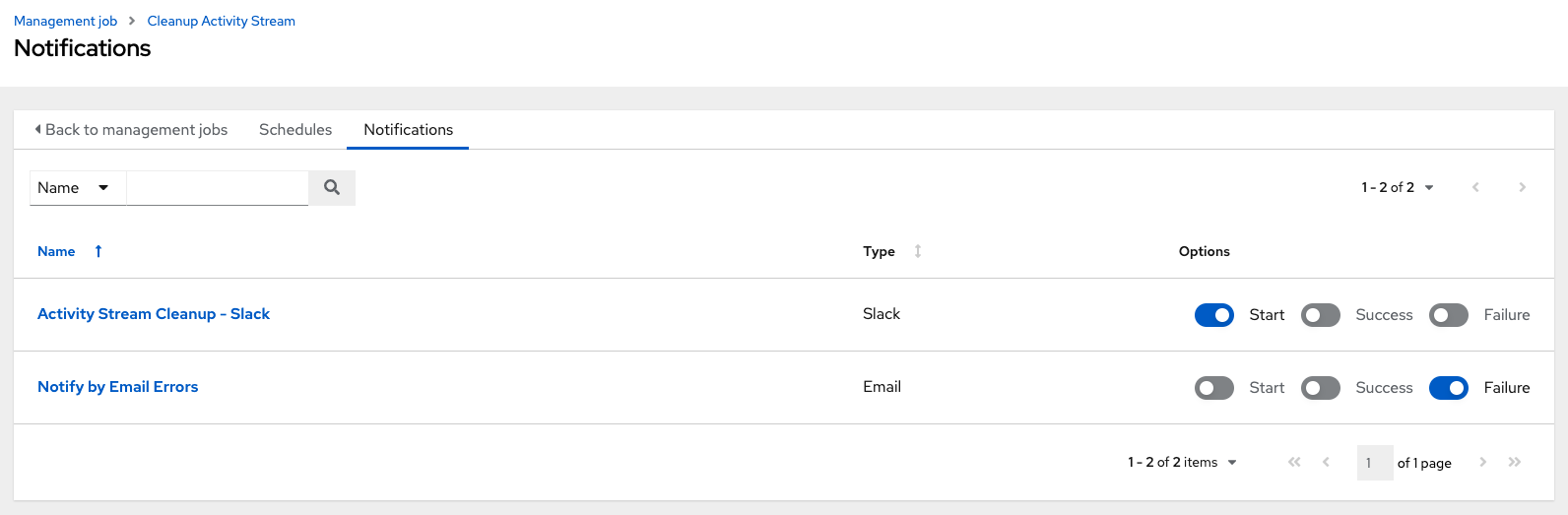

- For a particular cleanup job, select the Notifications tab.

If none exist, see Notifications in the Automation controller User Guide for more information.

The following shows an example of a notification with details specified:

6.2. Cleanup Expired OAuth2 Tokens

To remove expired OAuth2 tokens, click the launch icon next to Cleanup Expired OAuth2 Tokens.

You can review or set a schedule for cleaning up expired OAuth2 tokens by performing the same procedure described for activity stream management jobs.

For more information, see Scheduling deletion.

You can also set or review notifications associated with this management job the same way as described in setting notifications for activity stream management jobs.

For more information, see Notifications in the Automation controller User Guide.

6.2.1. Cleanup Expired Sessions

To remove expired sessions, click the launch icon beside Cleanup Expired Sessions.

You can review or set a schedule for cleaning up expired sessions by performing the same procedure described for activity stream management jobs. For more information, see Scheduling deletion.

You can also set or review notifications associated with this management job the same way as described in Notifications for activity stream management jobs.

For more information, see Notifications in the Automation controller User Guide.

6.2.2. Removing Old Job History

To remove job history older than a specified number of days, click the launch icon beside Cleanup Job Details.

Enter the number of days of data you would like to save and click Launch.

The initial job run for an automation controller resource, such as Projects or Job Templates, is excluded from Cleanup Job Details, regardless of retention value.

You can review or set a schedule for cleaning up old job history by performing the same procedure described for activity stream management jobs.

For more information, see Scheduling deletion.

You can also set or review notifications associated with this management job in the same way as described in Notifications for activity stream management jobs, or for more information, see Notifications in the Automation controller User Guide.

Chapter 7. Clustering

Clustering is sharing load between hosts. Each instance must be able to act as an entry point for UI and API access. This must enable the automation controller administrators to use load balancers in front of as many instances as they want and keep good data visibility.

Load balancing is optional, and it is entirely possible to have ingress on one or all instances as needed.

Each instance must be able to join the automation controller cluster and expand its ability to run jobs. This is a simple system where jobs can run anywhere rather than be directed on where to run. Also, you can group clustered instances into different pools or queues, called Instance groups.

Ansible Automation Platform supports container-based clusters by using Kubernetes, meaning you can install new automation controller instances on this platform without any variation or diversion in functionality. You can create instance groups to point to a Kubernetes container. For more information, see the Container and instance groups section.

Supported operating systems

The following operating systems are supported for establishing a clustered environment:

- Red Hat Enterprise Linux 8 or later

Isolated instances are not supported in conjunction with running automation controller in OpenShift.

7.1. Setup considerations

Learn about the initial setup of clusters. To upgrade an existing cluster, see Upgrade Planning in the Ansible Automation Platform Upgrade and Migration Guide.

Note the following important considerations in the new clustering environment:

- PostgreSQL is a standalone instance and is not clustered. Automation controller does not manage replica configuration or database failover (if the user configures standby replicas).

- When you start a cluster, the database node must be a standalone server, and PostgreSQL must not be installed on one of the automation controller nodes.

- PgBouncer is not recommended for connection pooling with automation controller. Automation controller relies on pg_notify for sending messages across various components, and therefore, PgBouncer cannot readily be used in transaction pooling mode.

- The maximum supported instances in a cluster is 20.

- All instances must be reachable from all other instances and they must be able to reach the database. It is also important for the hosts to have a stable address or hostname (depending on how the automation controller host is configured).

- All instances must be geographically collocated, with reliable low-latency connections between instances.

- To upgrade to a clustered environment, your primary instance must be part of the default group in the inventory and it needs to be the first host listed in the default group.

- Manual projects must be manually synced to all instances by the customer, and updated on all instances at once.

- The inventory file for platform deployments should be saved or persisted. If new instances are to be provisioned, the passwords and configuration options, as well as host names, must be made available to the installer.

7.2. Install and configure

Provisioning new instances involves updating the inventory file and re-running the setup playbook. The inventory file must contain all passwords and information used when installing the cluster; otherwise, other instances might be reconfigured. The inventory file contains a single inventory group, automationcontroller.

All instances are responsible for various housekeeping tasks related to task scheduling, such as determining where jobs are supposed to be launched and processing playbook events, as well as periodic cleanup.

[automationcontroller]
hostA
hostB
hostC

[instance_group_east]
hostB
hostC

[instance_group_west]
hostC
hostD

If no groups are selected for a resource, then the automationcontroller group is used, but if any other group is selected, then the automationcontroller group is not used in any way.

The database group remains for specifying an external PostgreSQL. If the database host is provisioned separately, this group must be empty:

[automationcontroller]
hostA
hostB
hostC

[database]
hostDB

When a playbook runs on an individual controller instance in a cluster, the output of that playbook is broadcast to all of the other nodes as part of automation controller’s websocket-based streaming output functionality. You must handle this data broadcast using internal addressing by specifying a private routable address for each node in your inventory:

[automationcontroller]
hostA routable_hostname=10.1.0.2
hostB routable_hostname=10.1.0.3
hostC routable_hostname=10.1.0.4

For more information about routable_hostname, see General variables in the Red Hat Ansible Automation Platform Installation Guide.

Previous versions of automation controller used the variable name rabbitmq_host. If you are upgrading from a previous version of the platform, and you previously specified rabbitmq_host in your inventory, rename rabbitmq_host to routable_hostname before upgrading.

7.2.1. Instances and ports used by automation controller and automation hub

Ports and instances used by automation controller and also required by the on-premise automation hub node are as follows:

- Port 80, 443 (normal automation controller and automation hub ports)

- Port 22 (ssh - ingress only required)

- Port 5432 (database instance - if the database is installed on an external instance, it must be opened to automation controller instances)

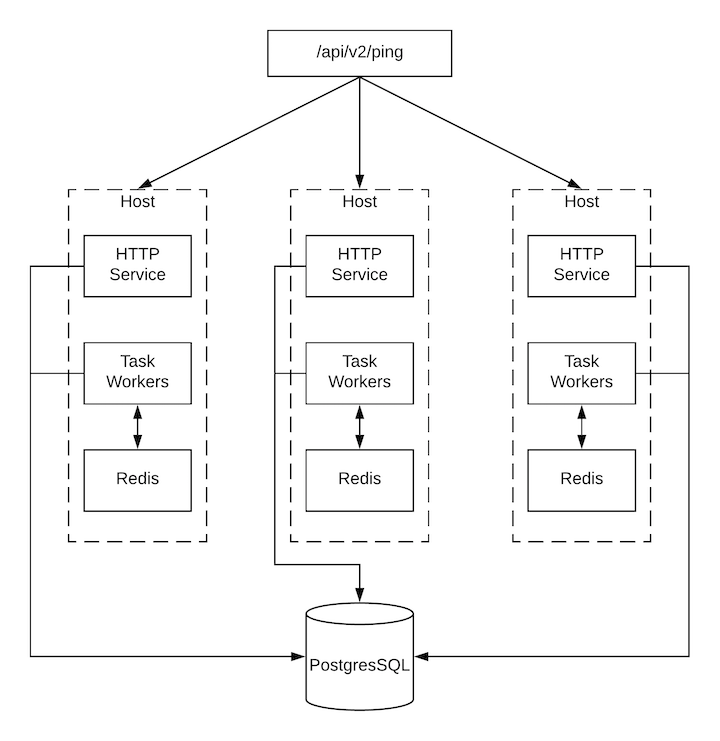

7.3. Status and monitoring by browser API

Automation controller reports as much status as it can using the browser API at /api/v2/ping to validate the health of the cluster. This includes the following:

- The instance servicing the HTTP request

- The timestamps of the last heartbeat of all other instances in the cluster

- Instance Groups and Instance membership in those groups

View more details about Instances and Instance Groups, including running jobs and membership information at /api/v2/instances/ and /api/v2/instance_groups/.
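A monitoring script could use the heartbeat timestamps from the ping response to flag instances that have gone quiet. The sketch below works on an already-decoded response. The key names (instances, node, heartbeat), the timestamp format, and the two-minute threshold are assumptions, so compare them with the output of /api/v2/ping on your cluster.

```python
from datetime import datetime, timedelta, timezone

# Assumed shape of a decoded /api/v2/ping response (trimmed).
SAMPLE_PING = {
    "instances": [
        {"node": "hostA", "heartbeat": "2024-01-01T12:00:00Z"},
        {"node": "hostB", "heartbeat": "2024-01-01T11:50:00Z"},
    ]
}


def stale_instances(ping: dict, now: datetime,
                    max_age: timedelta = timedelta(minutes=2)) -> list[str]:
    """Return the nodes whose last heartbeat is older than max_age."""
    stale = []
    for instance in ping.get("instances", []):
        # Normalize a trailing 'Z' so fromisoformat accepts the value.
        stamp = instance["heartbeat"].replace("Z", "+00:00")
        heartbeat = datetime.fromisoformat(stamp)
        if now - heartbeat > max_age:
            stale.append(instance["node"])
    return stale


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 1, tzinfo=timezone.utc)
    print(stale_instances(SAMPLE_PING, now))
```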

7.4. Instance services and failure behavior

Each automation controller instance is made up of the following different services working collaboratively:

- HTTP services

- This includes the automation controller application itself as well as external web services.

- Callback receiver

- Receives job events from running Ansible jobs.

- Dispatcher

- The worker queue that processes and runs all jobs.

- Redis

- This key value store is used as a queue for event data propagated from ansible-playbook to the application.

- Rsyslog

- The log processing service used to deliver logs to various external logging services.

Automation controller is configured so that if any of these services or their components fail, then all services are restarted. If these fail often in a short span of time, then the entire instance is placed offline in an automated fashion to allow remediation without causing unexpected behavior.

For backing up and restoring a clustered environment, see the Backup and restore clustered environments section.

7.5. Job runtime behavior

The way jobs are run and reported to a normal user of automation controller does not change. On the system side, note the following differences:

When a job is submitted from the API interface it is pushed into the dispatcher queue. Each automation controller instance connects to and receives jobs from that queue using a scheduling algorithm. Any instance in the cluster is just as likely to receive the work and execute the task. If an instance fails while executing jobs, then the work is marked as permanently failed.

- Project updates run successfully on any instance that could potentially run a job. Projects synchronize themselves to the correct version on the instance immediately before running the job. If the required revision is already locally checked out and Galaxy or Collections updates are not required, then a sync might not be performed.

- When the synchronization happens, it is recorded in the database as a project update with a launch_type = sync and job_type = run. Project syncs do not change the status or version of the project; instead, they update the source tree only on the instance where they run.

- If updates are required from Galaxy or Collections, a sync is performed that downloads the required roles, consuming more space in your /tmp directory. In cases where you have a large project (around 10 GB), disk space on /tmp can be an issue.

7.5.1. Job runs

By default, when a job is submitted to the automation controller queue, it can be picked up by any of the workers. However, you can control where a particular job runs, such as restricting the instances on which a job runs.

To support taking an instance offline temporarily, there is a property enabled defined on each instance. When this property is disabled, no jobs are assigned to that instance. Existing jobs finish, but no new work is assigned.

Troubleshooting

When you issue a cancel request on a running automation controller job, automation controller issues a SIGINT to the ansible-playbook process. While this causes Ansible to stop dispatching new tasks and exit, in many cases, module tasks that were already dispatched to remote hosts will run to completion. This behavior is similar to pressing Ctrl-c during a command-line Ansible run.

With respect to software dependencies, if a running job is canceled, the job is removed but the dependencies remain.

7.6. Deprovisioning instances

Re-running the setup playbook does not automatically deprovision instances since clusters do not currently distinguish between an instance that was taken offline intentionally or due to failure. Instead, shut down all services on the automation controller instance and then run the deprovisioning tool from any other instance.

Procedure

- Shut down the instance or stop the service with the command: automation-controller-service stop.

- Run the following deprovision command from another instance to remove it from the automation controller cluster:

$ awx-manage deprovision_instance --hostname=<name used in inventory file>

Example

awx-manage deprovision_instance --hostname=hostB

Deprovisioning instances in automation controller does not automatically deprovision or remove instance groups. For more information, see the Deprovisioning instance groups section.

Chapter 8. Instance and container groups

Automation controller enables you to execute jobs through Ansible playbooks run directly on a member of the cluster or in a namespace of an OpenShift cluster with the necessary service account provisioned. This is called a container group. You can execute jobs in a container group only as-needed per playbook. For more information, see Container groups.

For execution environments, see Execution environments in the Automation controller User Guide.

8.1. Instance groups

Instances can be grouped into one or more instance groups. Instance groups can be assigned to one or more of the following listed resources:

- Organizations

- Inventories

- Job templates

When a job associated with one of the resources executes, it is assigned to the instance group associated with the resource. During the execution process, instance groups associated with job templates are checked before those associated with inventories. Instance groups associated with inventories are checked before those associated with organizations. Therefore, instance group assignments for the three resources form the hierarchy:

Job Template > Inventory > Organization

Consider the following when working with instance groups:

- You can define other groups and group instances in those groups. These groups must be prefixed with instance_group_. Instances are required to be in the automationcontroller or execution_nodes group alongside other instance_group_ groups. In a clustered setup, at least one instance must be present in the automationcontroller group, which appears as controlplane in the API instance groups. For more information and example scenarios, see Group policies for automationcontroller.

- You cannot modify the controlplane instance group, and attempting to do so results in a permission denied error for any user. Therefore, the Disassociate option is not available in the Instances tab of controlplane.

- A default API instance group is automatically created with all nodes capable of running jobs. This is like any other instance group but if a specific instance group is not associated with a specific resource, then the job execution always falls back to the default instance group. The default instance group always exists, and you cannot delete or rename it.

- Do not create a group named instance_group_default.

- Do not name any instance the same as a group name.

8.1.1. Group policies for automationcontroller

Use the following criteria when defining nodes:

- Nodes in the automationcontroller group can define node_type hostvar to be hybrid (default) or control.

- Nodes in the execution_nodes group can define node_type hostvar to be execution (default) or hop.

You can define custom groups in the inventory file by naming groups with instance_group_* where * becomes the name of the group in the API. You can also create custom instance groups in the API after the install has finished.

The current behavior expects a member of an instance_group_* to be part of automationcontroller or execution_nodes group.

Example

[automationcontroller]
126-addr.tatu.home ansible_host=192.168.111.126 node_type=control

[automationcontroller:vars]
peers=execution_nodes

[execution_nodes]

[instance_group_test]
110-addr.tatu.home ansible_host=192.168.111.110 receptor_listener_port=8928

After you run the installer, the following error appears:

TASK [ansible.automation_platform_installer.check_config_static : Validate mesh topology] ***

fatal: [126-addr.tatu.home -> localhost]: FAILED! => {"msg": "The host '110-addr.tatu.home' is not present in either [automationcontroller] or [execution_nodes]"}

To fix this, move the host 110-addr.tatu.home to the execution_nodes group and list it by name in instance_group_test:

[automationcontroller]
126-addr.tatu.home ansible_host=192.168.111.126 node_type=control

[automationcontroller:vars]
peers=execution_nodes

[execution_nodes]
110-addr.tatu.home ansible_host=192.168.111.110 receptor_listener_port=8928

[instance_group_test]
110-addr.tatu.home

This results in:

TASK [ansible.automation_platform_installer.check_config_static : Validate mesh topology] ***

ok: [126-addr.tatu.home -> localhost] => {"changed": false, "mesh": {"110-addr.tatu.home": {"node_type": "execution", "peers": [], "receptor_control_filename": "receptor.sock", "receptor_control_service_name": "control", "receptor_listener": true, "receptor_listener_port": 8928, "receptor_listener_protocol": "tcp", "receptor_log_level": "info"}, "126-addr.tatu.home": {"node_type": "control", "peers": ["110-addr.tatu.home"], "receptor_control_filename": "receptor.sock", "receptor_control_service_name": "control", "receptor_listener": false, "receptor_listener_port": 27199, "receptor_listener_protocol": "tcp", "receptor_log_level": "info"}}}
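The membership rule behind this check can be approximated in a few lines of Python. This is only an illustrative sketch, not the installer's code: the dictionary layout and the function name find_misplaced_hosts are assumptions made for the example, and the host names are taken from the inventories above.

```python
from typing import Dict, List

# Hypothetical in-memory view of an installer inventory: group name -> hosts.
Inventory = Dict[str, List[str]]

# Every member of an instance_group_* group must also be in one of these groups.
BASE_GROUPS = ("automationcontroller", "execution_nodes")


def find_misplaced_hosts(inventory: Inventory) -> List[str]:
    """Return hosts listed in an instance_group_* group but in neither base group."""
    base_members = set()
    for group in BASE_GROUPS:
        base_members.update(inventory.get(group, []))

    misplaced: List[str] = []
    for group, hosts in inventory.items():
        if not group.startswith("instance_group_"):
            continue
        for host in hosts:
            if host not in base_members and host not in misplaced:
                misplaced.append(host)
    return misplaced


# The failing inventory: the host is only in instance_group_test.
broken = {
    "automationcontroller": ["126-addr.tatu.home"],
    "execution_nodes": [],
    "instance_group_test": ["110-addr.tatu.home"],
}
# The corrected inventory: the host is also in execution_nodes.
fixed = {
    "automationcontroller": ["126-addr.tatu.home"],
    "execution_nodes": ["110-addr.tatu.home"],
    "instance_group_test": ["110-addr.tatu.home"],
}

print(find_misplaced_hosts(broken))  # ['110-addr.tatu.home']
print(find_misplaced_hosts(fixed))   # []
```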

After you upgrade from automation controller 4.0 or earlier, the legacy instance_group_ member likely has the awx code installed. This places that node in the automationcontroller group.

8.1.2. Configure instance groups from the API

You can create instance groups by POSTing to /api/v2/instance_groups as a system administrator.

Once created, you can associate instances with an instance group using:

HTTP POST /api/v2/instance_groups/x/instances/ {'id': y}

An instance that is added to an instance group automatically reconfigures itself to listen on the group’s work queue. For more information, see the following section Instance group policies.
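As a rough illustration, the association request could be built with the Python standard library as follows. The controller URL, the token placeholder, the use of bearer-token authentication, and the helper name build_associate_request are assumptions for this sketch; only the endpoint path and the {"id": y} body come from the text above. The request is constructed but not sent.

```python
import json
import urllib.request


def build_associate_request(base_url: str, token: str,
                            group_id: int, instance_id: int) -> urllib.request.Request:
    """Build (but do not send) the POST that adds an instance to an instance group."""
    url = f"{base_url.rstrip('/')}/api/v2/instance_groups/{group_id}/instances/"
    body = json.dumps({"id": instance_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: an OAuth2 token is used for authentication.
            "Authorization": f"Bearer {token}",
        },
    )


# Hypothetical values: instance group 3, instance 7.
request = build_associate_request("https://controller.example.com", "<token>", 3, 7)
print(request.get_method(), request.full_url)
# To send it, a caller would pass the object to urllib.request.urlopen(request).
```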

8.1.3. Instance group policies

You can configure automation controller instances to automatically join instance groups when they come online by defining a policy. These policies are evaluated for every new instance that comes online.

Instance group policies are controlled by the following three optional fields on an Instance Group:

- policy_instance_percentage: This is a number between 0 - 100. It guarantees that this percentage of active automation controller instances are added to this instance group. As new instances come online, if the number of instances in this group relative to the total number of instances is less than the given percentage, then new ones are added until the percentage condition is satisfied.

- policy_instance_minimum: This policy attempts to keep at least this many instances in the instance group. If the number of available instances is lower than this minimum, then all instances are placed in this instance group.

- policy_instance_list: This is a fixed list of instance names to always include in this instance group.

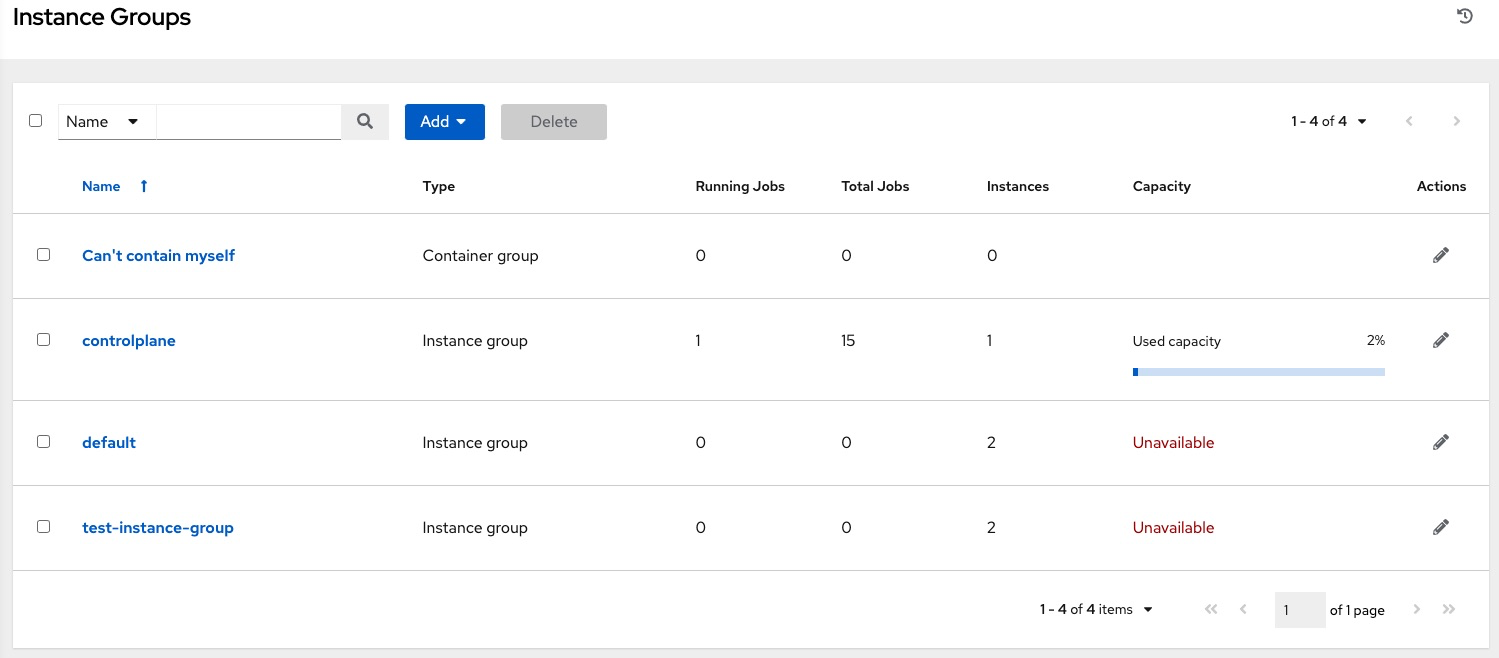

The Instance Groups list view from the automation controller user interface (UI) provides a summary of the capacity levels for each instance group according to instance group policies:

Additional resources

For more information, see the Managing Instance Groups section of the Automation controller User Guide.

8.1.4. Notable policy considerations

Take the following policy considerations into account:

- Both policy_instance_percentage and policy_instance_minimum set minimum allocations. The rule that results in more instances assigned to the group takes effect. For example, if you have a policy_instance_percentage of 50% and a policy_instance_minimum of 2 and you start 6 instances, 3 of them are assigned to the instance group. If you reduce the number of total instances in the cluster to 2, then both of them are assigned to the instance group to satisfy policy_instance_minimum. This enables you to set a lower limit on the amount of available resources.

- Policies do not actively prevent instances from being associated with multiple instance groups, but this can be achieved by making the percentages add up to 100. If you have 4 instance groups, assign each a percentage value of 25 and the instances are distributed among them without any overlap.
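The interaction between the two minimum-allocation policies can be worked through with a small calculation. The sketch below is not the controller's implementation; the function name policy_target is hypothetical, and rounding the percentage up to a whole instance is an assumption made for the example.

```python
def policy_target(total_instances: int, percentage: int = 0, minimum: int = 0) -> int:
    """Estimate how many instances the policies place in one instance group."""
    # Assumption: a fractional share is rounded up to a whole instance.
    by_percentage = (total_instances * percentage + 99) // 100
    # The minimum can never exceed the number of instances that exist.
    by_minimum = min(minimum, total_instances)
    # Whichever rule assigns more instances takes effect.
    return max(by_percentage, by_minimum)


print(policy_target(6, percentage=50, minimum=2))  # 3
print(policy_target(2, percentage=50, minimum=2))  # 2
print(policy_target(4, percentage=25))             # 1
```

The first two calls reproduce the example in the text: with 6 instances the percentage rule wins, and with 2 instances the minimum rule wins. The last call corresponds to four groups at 25% each.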

8.1.5. Pinning instances manually to specific groups

If you have a special instance that must be assigned exclusively to a specific instance group, and you do not want it to automatically join other groups through "percentage" or "minimum" policies, pin it manually:

Procedure

- Add the instance to one or more instance groups' policy_instance_list.

- Update the instance’s managed_by_policy property to be False.

This prevents the instance from being automatically added to other groups based on percentage and minimum policy. It only belongs to the groups you have manually assigned it to:

HTTP PATCH /api/v2/instance_groups/N/
{
    "policy_instance_list": ["special-instance"]
}

HTTP PATCH /api/v2/instances/X/
{
    "managed_by_policy": false
}

8.1.6. Job runtime behavior

When you run a job associated with an instance group, note the following behaviors:

- If you divide a cluster into separate instance groups, then the behavior is similar to the cluster as a whole. If you assign two instances to a group then either one is as likely to receive a job as any other in the same group.

- As automation controller instances are brought online, they effectively expand the work capacity of the system. If you place those instances into instance groups, then they also expand that group’s capacity. If an instance is performing work and it is a member of multiple groups, then capacity is reduced from all groups for which it is a member. De-provisioning an instance removes capacity from the cluster wherever that instance was assigned. For more information, see the Deprovisioning instance groups section.

Not all instances are required to be provisioned with an equal capacity.

8.1.7. Control where a job runs

If you associate instance groups with a job template, inventory, or organization, a job run from that job template is not eligible for the default behavior. This means that if all of the instances inside of the instance groups associated with these three resources are out of capacity, the job remains in the pending state until capacity becomes available.

The order of preference in determining which instance group to submit the job to is as follows:

- Job template

- Inventory

- Organization (by way of project)

If you associate instance groups with the job template, and all of these are at capacity, then the job is submitted to instance groups specified on the inventory, and then the organization. Jobs must execute in those groups in preferential order as resources are available.

You can still associate the global default group with a resource, like any of the custom instance groups defined in the playbook. You can use this to specify a preferred instance group on the job template or inventory, but still enable the job to be submitted to any instance if those are out of capacity.
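The order of preference and the fallback behavior described above can be modeled with a short sketch. It is a simplified illustration rather than the scheduler's actual logic: the function name pick_instance_group and the free_capacity mapping are hypothetical, and capacity is reduced to a single number per group.

```python
from typing import Dict, List, Optional


def pick_instance_group(job_template_groups: List[str],
                        inventory_groups: List[str],
                        organization_groups: List[str],
                        free_capacity: Dict[str, int]) -> Optional[str]:
    """Return the first group with free capacity, or None if the job stays pending."""
    # Preference order: job template, then inventory, then organization.
    candidates = job_template_groups + inventory_groups + organization_groups
    # With no associations at all, execution falls back to the default group.
    if not candidates:
        candidates = ["default"]
    for group in candidates:
        if free_capacity.get(group, 0) > 0:
            return group
    return None  # every associated group is out of capacity


capacity = {"group_a": 0, "default": 4}
# group_a on the job template, default on the inventory: default acts as fallback.
print(pick_instance_group(["group_a"], ["default"], [], capacity))  # default
# group_a only: the job waits even though default has capacity.
print(pick_instance_group(["group_a"], [], [], capacity))           # None
```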

Examples

- If you associate group_a with a job template and also associate the default group with its inventory, you enable the default group to be used as a fallback in case group_a gets out of capacity.

- In addition, it is possible to not associate an instance group with one resource but designate another resource as the fallback. For example, not associating an instance group with a job template and having it fall back to the inventory or the organization’s instance group.

This presents the following two examples:

- Associating instance groups with an inventory (omitting assigning the job template to an instance group) ensures that any playbook run against a specific inventory runs only on the group associated with it. This is useful in the situation where only those instances have a direct link to the managed nodes.

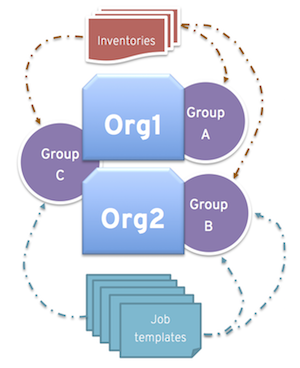

- An administrator can assign instance groups to organizations. This enables the administrator to segment out the entire infrastructure and guarantee that each organization has capacity to run jobs without interfering with any other organization’s ability to run jobs.

An administrator can assign multiple groups to each organization, similar to the following scenario:

- There are three instance groups: A, B, and C. There are two organizations: Org1 and Org2.

- The administrator assigns group A to Org1, group B to Org2 and then assigns group C to both Org1 and Org2 as an overflow for any extra capacity that may be needed.

- The organization administrators are then free to assign inventory or job templates to whichever group they want, or let them inherit the default order from the organization.

Arranging resources this way offers you flexibility. You can also create instance groups with only one instance, enabling you to direct work towards a very specific host in the automation controller cluster.

8.1.8. Instance group capacity limits

There is external business logic that can drive the need to limit the concurrency of jobs sent to an instance group, or the maximum number of forks to be consumed.

For traditional instances and instance groups, you might want to enable two organizations to run jobs on the same underlying instances, but limit each organization’s total number of concurrent jobs. This can be achieved by creating an instance group for each organization and assigning the value for max_concurrent_jobs.

For container groups, automation controller is generally not aware of the resource limits of the OpenShift cluster. There can be limits on the number of pods in a namespace, or, if no auto-scaling is in place, only enough resources to schedule a certain number of pods at a time. In this case, you can adjust the value for max_concurrent_jobs.

Another parameter available is max_forks. This provides additional flexibility for capping the capacity consumed on an instance group or container group. You can use this if jobs with a wide variety of inventory sizes and "forks" values are being run. This enables you to limit an organization to run up to 10 jobs concurrently, but consume no more than 50 forks at a time:

max_concurrent_jobs: 10
max_forks: 50

If 10 jobs that use 5 forks each are run, an eleventh job waits until one of these finishes to run on that group (or be scheduled on a different group with capacity).

If 2 jobs are running with 20 forks each, then a third job with a task_impact of 11 or more waits until one of these finishes to run on that group (or be scheduled on a different group with capacity).
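The two scenarios above can be checked with a small admission test. This is a sketch under stated assumptions, not the controller's scheduler: the GroupLimits class and can_start function are hypothetical, each job is represented by a single number for the forks (or task impact) it consumes, and a limit of 0 is treated as "no limit".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GroupLimits:
    max_concurrent_jobs: int = 0  # assumption: 0 means no limit
    max_forks: int = 0            # assumption: 0 means no limit


def can_start(limits: GroupLimits, running: List[int], new_job: int) -> bool:
    """Return True if a job consuming new_job forks fits on the group right now."""
    if limits.max_concurrent_jobs and len(running) >= limits.max_concurrent_jobs:
        return False  # job-count cap reached
    if limits.max_forks and sum(running) + new_job > limits.max_forks:
        return False  # fork cap would be exceeded
    return True


limits = GroupLimits(max_concurrent_jobs=10, max_forks=50)
print(can_start(limits, [5] * 10, 5))    # False: ten jobs are already running
print(can_start(limits, [20, 20], 11))   # False: 40 + 11 exceeds 50 forks
print(can_start(limits, [20, 20], 10))   # True: 40 + 10 fits exactly
```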

For container groups, using the max_forks value is useful given that all jobs are submitted using the same pod_spec with the same resource requests, irrespective of the "forks" value of the job. The default pod_spec sets requests and not limits, so the pods can "burst" above their requested value without being throttled or reaped. By setting the max_forks value, you can help prevent a scenario where too many jobs with large forks values get scheduled concurrently and cause the OpenShift nodes to be oversubscribed with multiple pods using more resources than their requested value.

To set the maximum values for the concurrent jobs and forks in an instance group, see Creating an instance group in the Automation controller User Guide.

8.1.9. Deprovisioning instance groups

Re-running the setup playbook does not deprovision instances since clusters do not currently distinguish between an instance that you took offline intentionally or due to failure. Instead, shut down all services on the automation controller instance and then run the deprovisioning tool from any other instance.

Procedure

Shut down the instance or stop the service with the following command:

automation-controller-service stop

Run the following deprovision command from another instance to remove it from the controller cluster registry:

awx-manage deprovision_instance --hostname=<name used in inventory file>

Example

awx-manage deprovision_instance --hostname=hostB

Deprovisioning instances in automation controller does not automatically deprovision or remove instance groups, even though re-provisioning often causes these to be unused. They can still show up in API endpoints and stats monitoring. You can remove these groups with the following command:

awx-manage unregister_queue --queuename=<name>

Removing an instance’s membership from an instance group in the inventory file and re-running the setup playbook does not ensure that the instance is not added back to a group. To be sure that an instance is not added back to a group, remove it through the API and also remove it in your inventory file. You can also stop defining instance groups in the inventory file. You can manage instance group topology through the automation controller UI. For more information about managing instance groups in the UI, see Managing Instance Groups in the Automation controller User Guide.

If you have isolated instance groups created in older versions of automation controller (3.8.x and earlier) and want to migrate them to execution nodes to make them compatible for use with the automation mesh architecture, see Migrate isolated instances to execution nodes in the Ansible Automation Platform Upgrade and Migration Guide.

8.2. Container groups

Ansible Automation Platform supports container groups, which enable you to execute jobs in automation controller regardless of whether automation controller is installed as a standalone, in a virtual environment, or in a container. Container groups act as a pool of resources within a virtual environment. You can create instance groups to point to an OpenShift container, which are job environments that are provisioned on-demand as a pod that exists only for the duration of the playbook run. This is known as the ephemeral execution model and ensures a clean environment for every job run.

In some cases, you might want to set container groups to be "always-on", which you can configure through the creation of an instance.

Container groups upgraded from versions prior to automation controller 4.0 revert back to default and remove the old pod definition, clearing out all custom pod definitions in the migration.

Container groups are different from execution environments in that execution environments are container images and do not use a virtual environment. For more information, see Execution environments in the Automation controller User Guide.

8.2.1. Creating a container group

A ContainerGroup is a type of InstanceGroup that has an associated credential that enables you to connect to an OpenShift cluster.

Prerequisites

- A namespace that you can launch into. Every cluster has a "default" namespace, but you can use a specific namespace.

- A service account that has the roles that enable it to launch and manage pods in this namespace.

- If you are using execution environments in a private registry, and have a container registry credential associated with them in automation controller, the service account also needs the roles to get, create, and delete secrets in the namespace. If you do not want to give these roles to the service account, you can pre-create the ImagePullSecrets and specify them on the pod spec for the ContainerGroup. In this case, the execution environment must not have a container registry credential associated, or automation controller attempts to create the secret for you in the namespace.

- A token associated with that service account. An OpenShift or Kubernetes Bearer Token.

- A CA certificate associated with the cluster.

The following procedure explains how to create a service account in an OpenShift cluster or Kubernetes, to be used to run jobs in a container group through automation controller. After the service account is created, its credentials are provided to automation controller in the form of an OpenShift or Kubernetes API Bearer Token credential.

Procedure

- To create a service account, download and use the sample service account, containergroup sa, and modify it as needed to obtain the credentials.

- Apply the configuration from containergroup-sa.yml:

oc apply -f containergroup-sa.yml

- Get the secret name associated with the service account:

export SA_SECRET=$(oc get sa containergroup-service-account -o json | jq '.secrets[0].name' | tr -d '"')

- Get the token from the secret:

oc get secret $(echo ${SA_SECRET}) -o json | jq '.data.token' | xargs | base64 --decode > containergroup-sa.token

- Get the CA certificate:

oc get secret $SA_SECRET -o json | jq '.data["ca.crt"]' | xargs | base64 --decode > containergroup-ca.crt

- Use the contents of containergroup-sa.token and containergroup-ca.crt to provide the information for the OpenShift or Kubernetes API Bearer Token required for the container group.
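The jq and base64 steps in the procedure extract a base64-encoded field from the secret's JSON and decode it. The following sketch shows the same transformation in Python on a made-up secret document; the helper name decode_secret_field and the sample values are illustrative assumptions, and real input would be the output of oc get secret ... -o json.

```python
import base64
import json


def decode_secret_field(secret_json: str, field: str) -> str:
    """Decode one base64-encoded entry from the "data" map of a secret."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"][field]).decode("utf-8")


# Made-up stand-in for the JSON printed by `oc get secret <name> -o json`.
sample = json.dumps({
    "data": {
        "token": base64.b64encode(b"example-token").decode("ascii"),
        "ca.crt": base64.b64encode(b"-----BEGIN CERTIFICATE-----\n").decode("ascii"),
    }
})

print(decode_secret_field(sample, "token"))  # example-token
```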

To create a container group:

Procedure

- Use the automation controller UI to create an OpenShift or Kubernetes API Bearer Token credential to use with your container group. For more information, see Creating a credential in the Automation controller User Guide.

- From the navigation panel select Administration → Instance Groups.

- Click Add and select Create Container Group.

- Enter a name for your new container group and select the credential previously created to associate it to the container group.

8.2.2. Customizing the pod specification

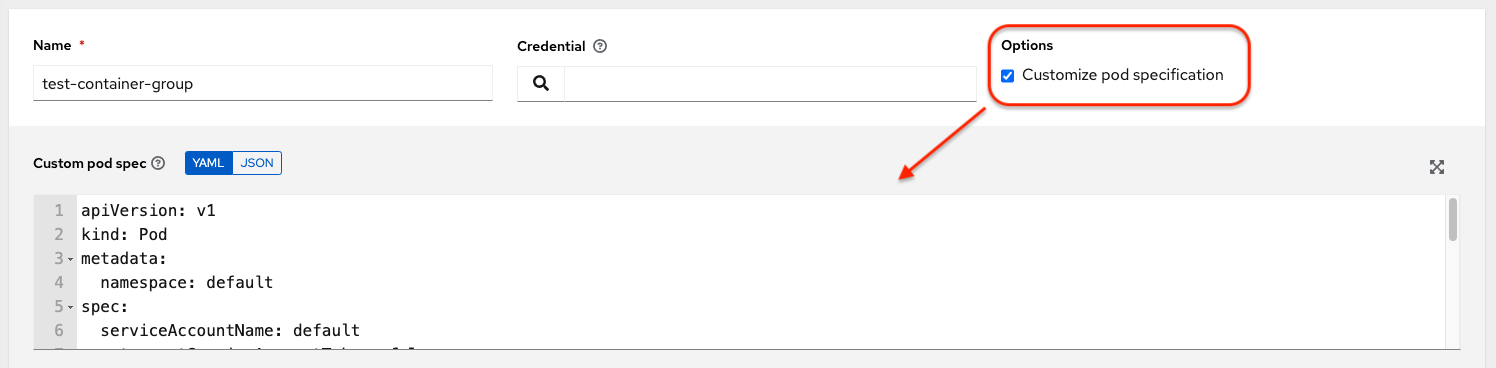

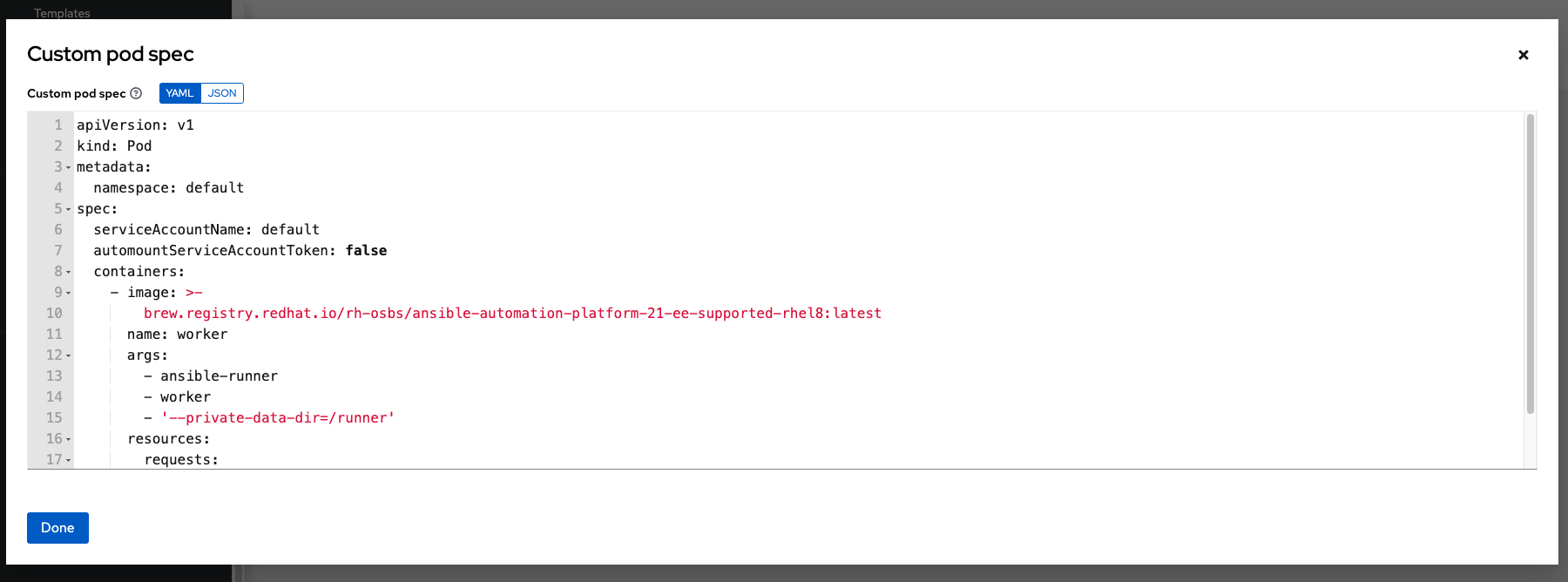

Ansible Automation Platform provides a simple default pod specification; however, you can provide a custom YAML or JSON document that overrides the default pod specification. This field accepts any custom fields, such as ImagePullSecrets, that can be "serialized" as valid pod JSON or YAML. A full list of options can be found in the Pods and Services section of the OpenShift documentation.

Procedure

- To customize the pod specification, specify the namespace in the Pod Spec Override field by using the toggle to enable and expand the Pod Spec Override field.

- Click Save.

You can provide additional customizations, if needed. Click Expand to view the entire customization window:

The image when a job launches is determined by which execution environment is associated with the job. If you associate a container registry credential with the execution environment, then automation controller attempts to make an ImagePullSecret to pull the image. If you prefer not to give the service account permission to manage secrets, you must pre-create the ImagePullSecret and specify it on the pod specification, and omit any credential from the execution environment used.

For more information, see the Allowing Pods to Reference Images from Other Secured Registries section of the Red Hat Container Registry Authentication article.
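As an illustration of a custom pod specification that references a pre-created ImagePullSecret, the sketch below builds the document as a Python dictionary and serializes it to JSON for pasting into the Pod Spec Override field. The namespace, image name, secret name, and function name are hypothetical, and the container name and arguments are assumptions that should be checked against the default specification shown in the Pod Spec Override field.

```python
import json


def build_pod_spec_override(namespace: str, image: str, pull_secret: str) -> dict:
    """Return a pod specification that uses a pre-created image pull secret."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"namespace": namespace},
        "spec": {
            # Secret created ahead of time, so the service account does not
            # need permission to manage secrets.
            "imagePullSecrets": [{"name": pull_secret}],
            "containers": [
                {
                    # Assumption: name and args mirror the default specification.
                    "name": "worker",
                    "image": image,
                    "args": ["ansible-runner", "worker", "--private-data-dir=/runner"],
                }
            ],
        },
    }


# Hypothetical namespace, image, and secret name.
spec = build_pod_spec_override("aap", "registry.example.com/ee-custom:latest", "ee-pull-secret")
print(json.dumps(spec, indent=2))
```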

Once you have created the container group successfully, the Details tab of the newly created container group remains, which enables you to review and edit your container group information. This is the same menu that is opened if you click the Edit icon from the Instance Group link. You can also edit Instances and review Jobs associated with this instance group.

Container groups and instance groups are labeled accordingly.

8.2.3. Verifying container group functions

To verify the deployment and termination of your container:

Procedure

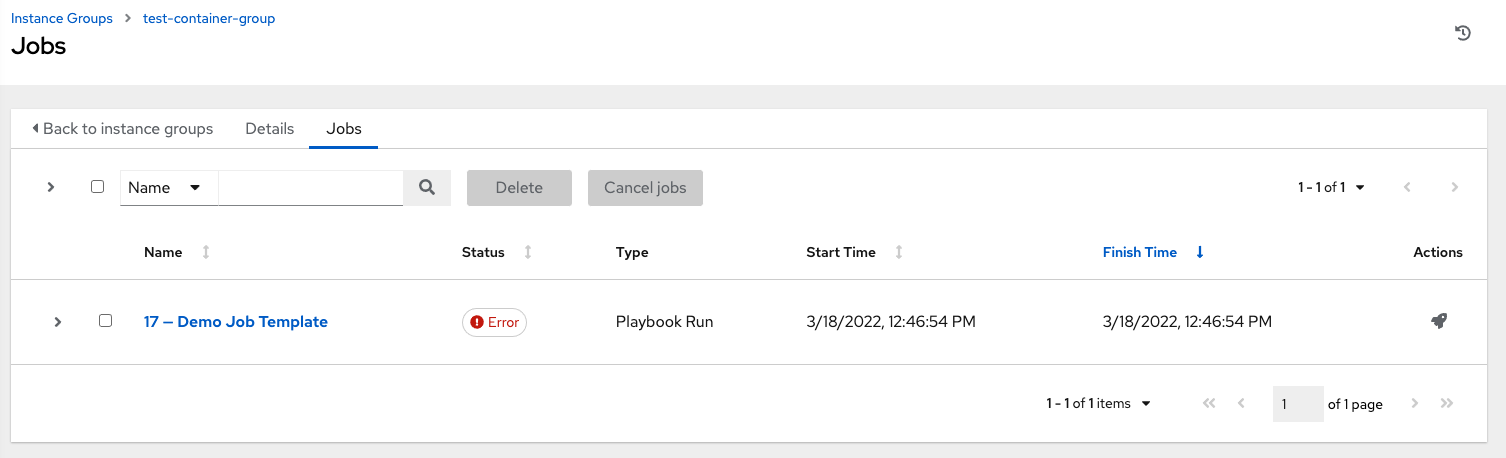

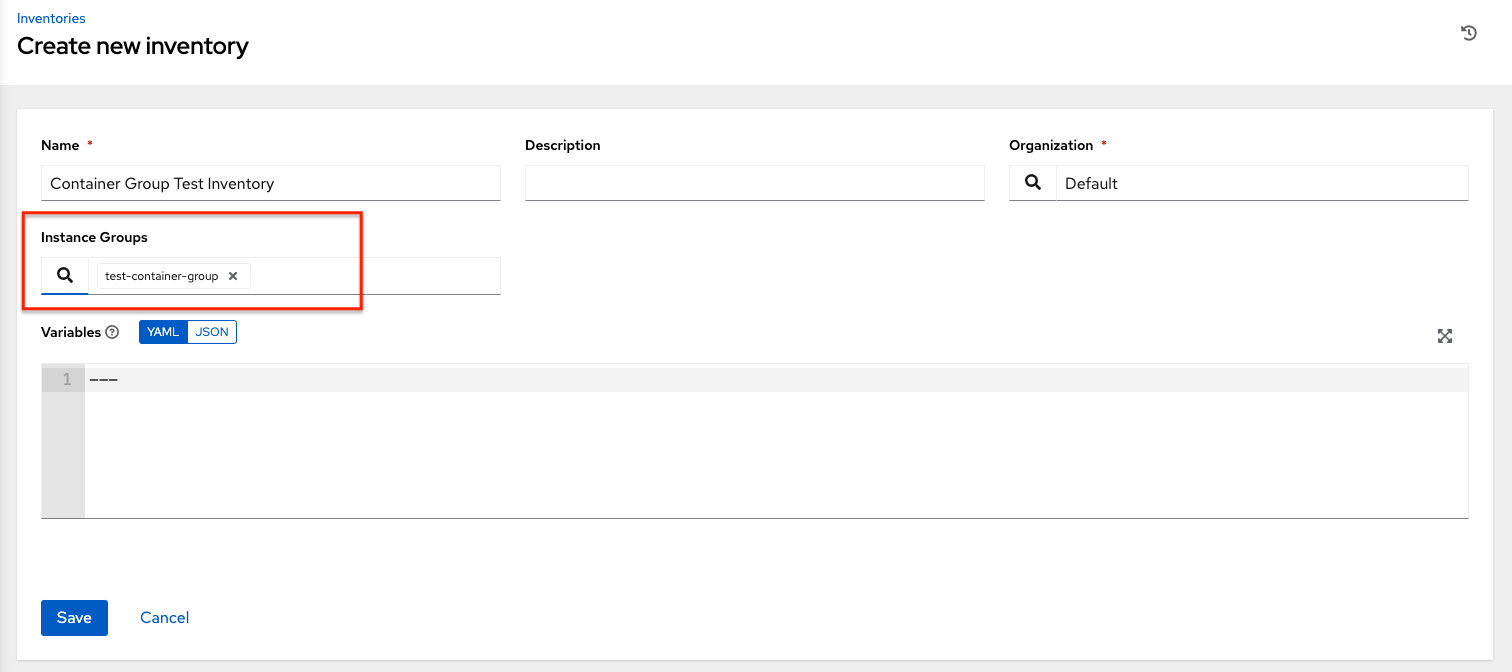

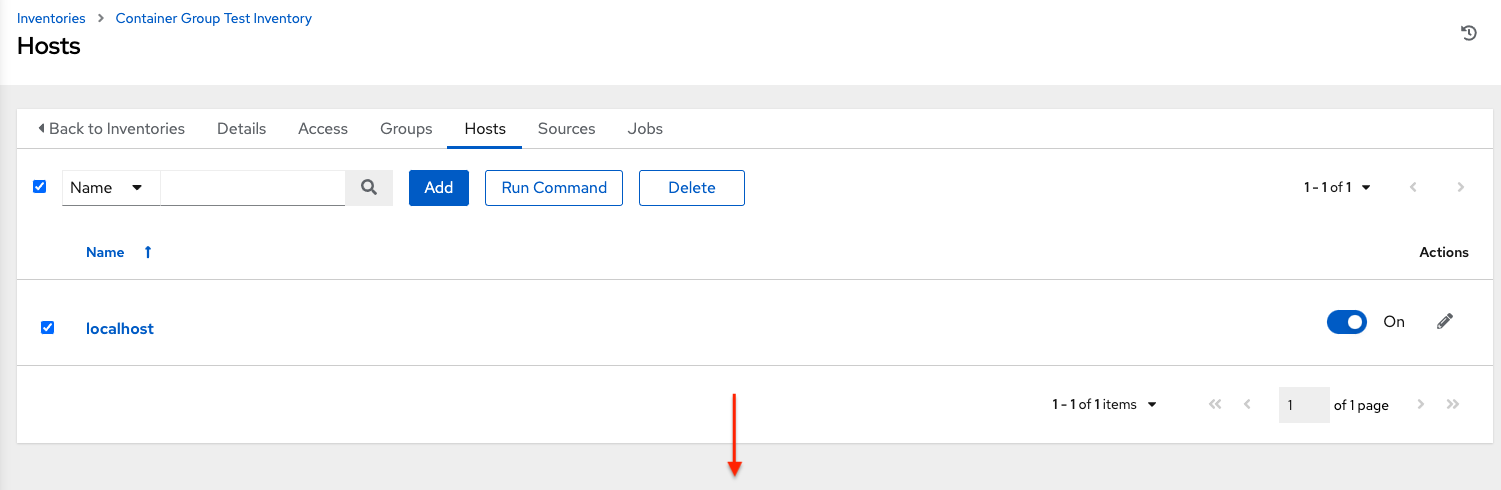

Create a mock inventory and associate the container group to it by populating the name of the container group in the Instance Group field. For more information, see Add a new inventory in the Automation controller User Guide.

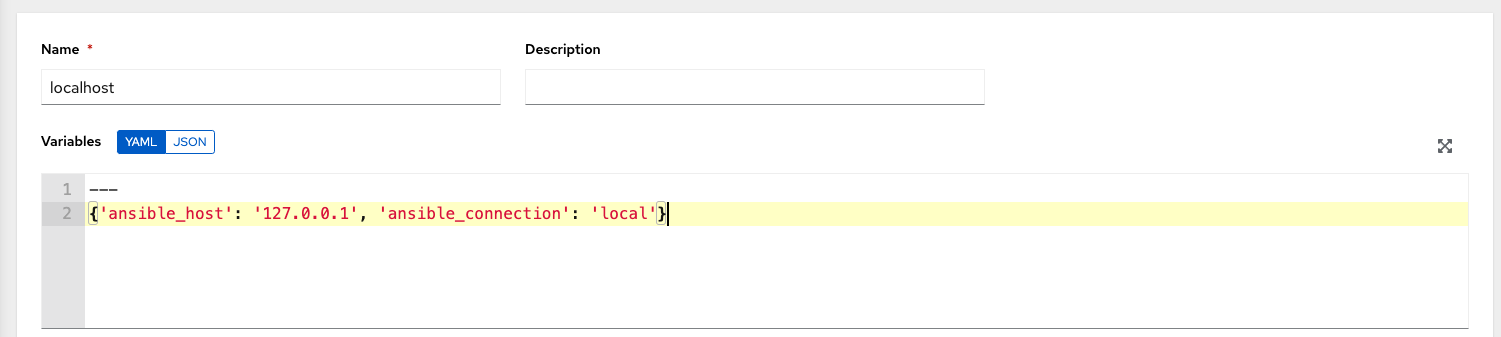

Create the localhost host in the inventory with variables:

{'ansible_host': '127.0.0.1', 'ansible_connection': 'local'}

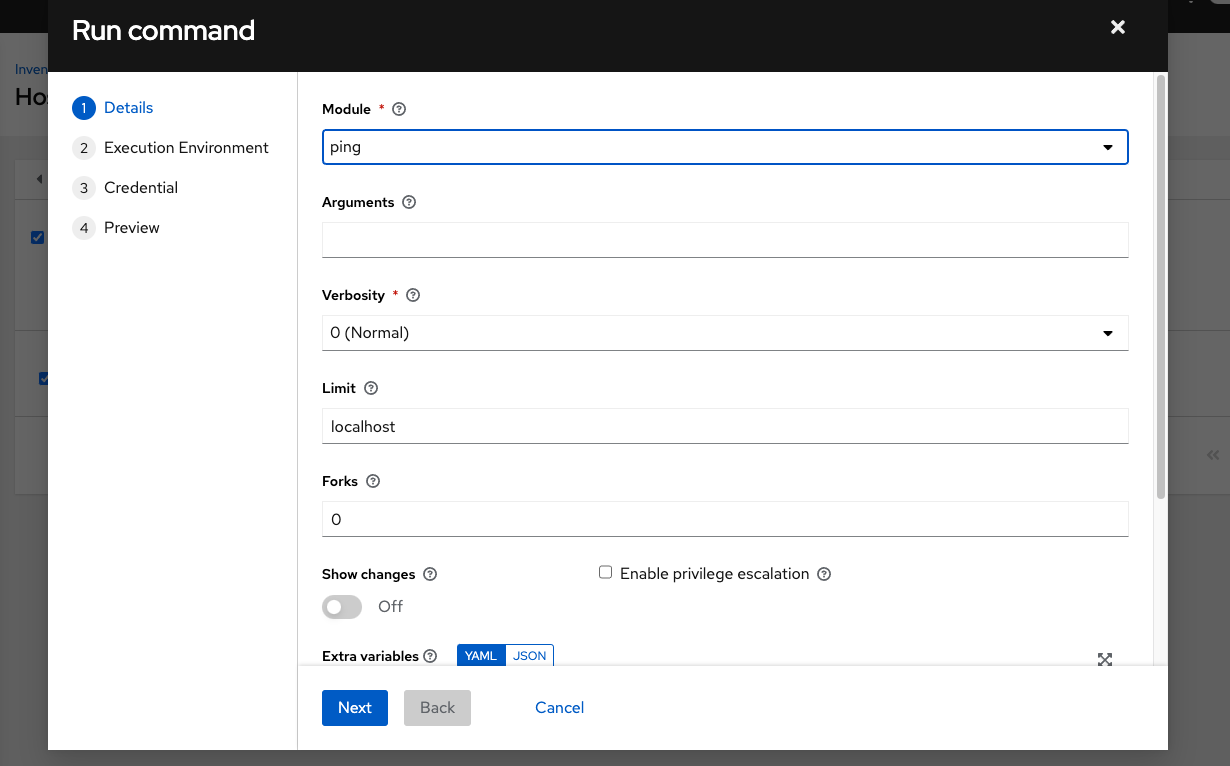

Launch an ad hoc job against the localhost using the ping or setup module. Even though the Machine Credential field is required, it does not matter which one is selected for this test:

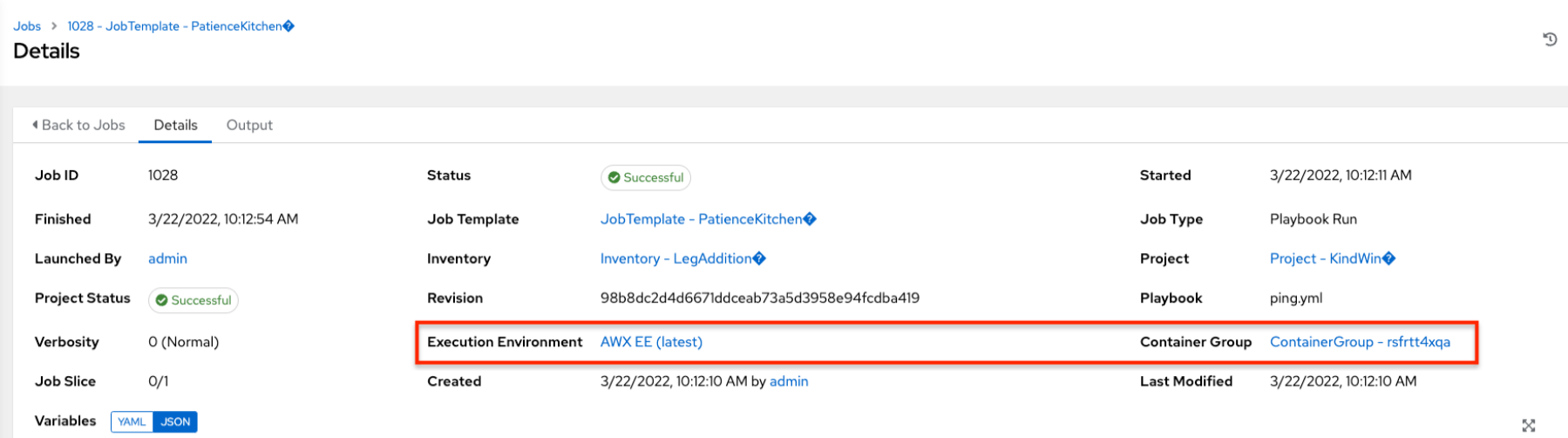

You can see in the Jobs details view that the container was reached successfully using one of the ad hoc jobs.

If you have an OpenShift UI, you can see pods appear and disappear as they deploy and terminate. Alternatively, you can use the CLI to perform a get pod operation on your namespace to watch these same events occurring in real-time.

8.2.4. View container group jobs

When you run a job associated with a container group, you can see the details of that job in the Details view along with its associated container group and the execution environment that spun up.

8.2.5. Kubernetes API failure conditions

When running a container group and the Kubernetes API responds that the resource quota has been exceeded, automation controller keeps the job in pending state. Other failures result in the traceback of the Error Details field showing the failure reason, similar to the following example:

Error creating pod: pods is forbidden: User "system:serviceaccount:aap:example" cannot create resource "pods" in API group "" in the namespace "aap"

8.2.6. Container capacity limits

Capacity limits and quotas for containers are defined by objects in the Kubernetes API:

- To set limits on all pods within a given namespace, use the LimitRange object. For more information see the Quotas and Limit Ranges section of the OpenShift documentation.

- To set limits directly on the pod definition launched by automation controller, see Customizing the pod specification and the Compute Resources section of the OpenShift documentation.

Container groups do not use the capacity algorithm that normal nodes use. You need to set the number of forks at the job template level. If you configure forks in automation controller, that setting is passed along to the container.

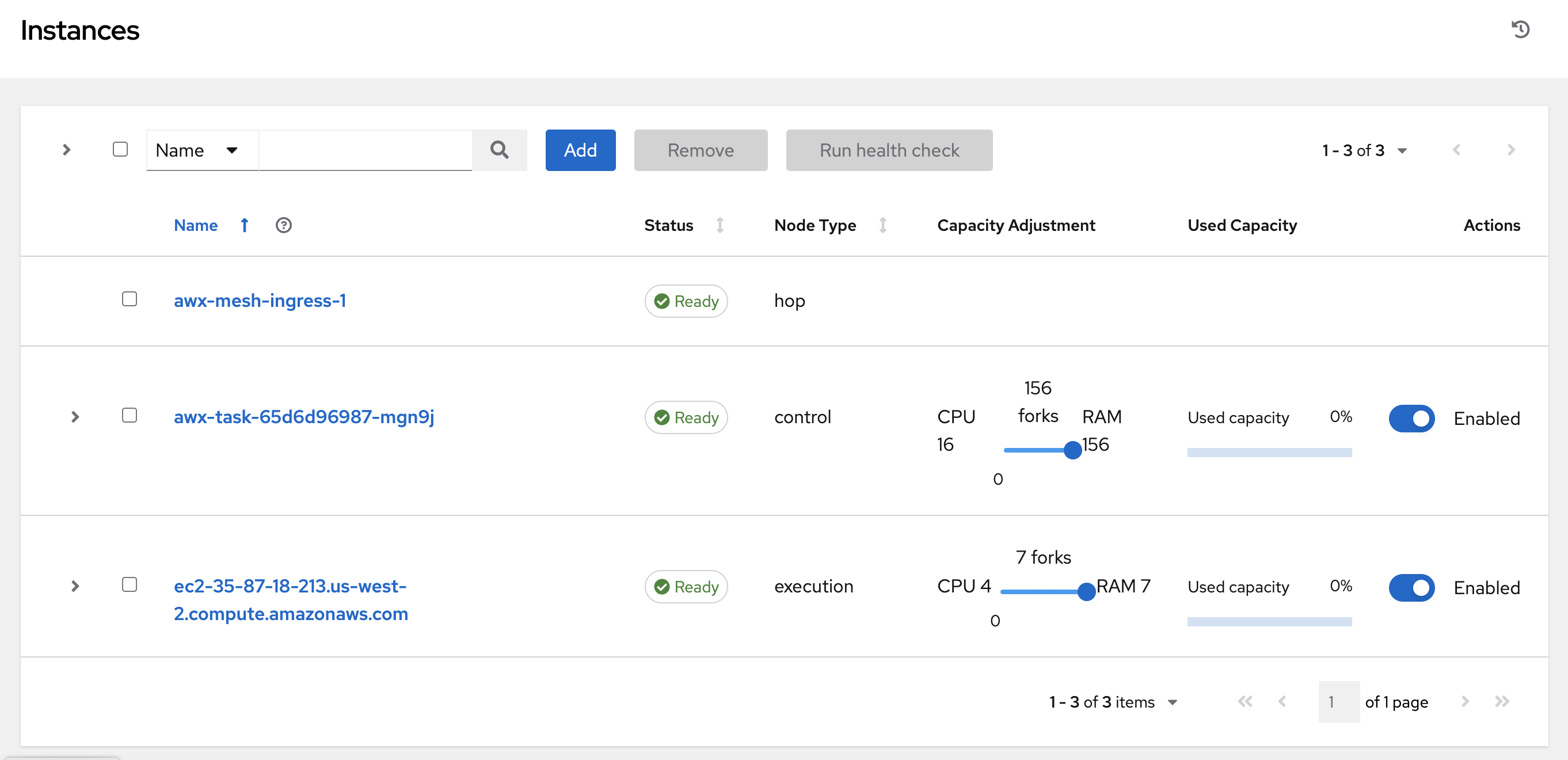

Chapter 9. Managing Capacity With Instances

Scaling your automation mesh is available on OpenShift deployments of Red Hat Ansible Automation Platform and is possible through adding or removing nodes from your cluster dynamically, using the Instances resource of the automation controller UI, without running the installation script.

Instances serve as nodes in your mesh topology. Automation mesh enables you to extend the footprint of your automation. The location where you launch a job can be different from the location where the ansible-playbook runs.

To manage instances from the automation controller UI, you must have System Administrator or System Auditor permissions.

In general, the more processor cores (CPU) and memory (RAM) a node has, the more jobs that can be scheduled to run on that node at once.

For more information, see Automation controller capacity determination and job impact.

9.1. Prerequisites

The automation mesh is dependent on hop and execution nodes running on Red Hat Enterprise Linux (RHEL). Your Red Hat Ansible Automation Platform subscription grants you ten Red Hat Enterprise Linux licenses that can be used for running components of Ansible Automation Platform.

For additional information about Red Hat Enterprise Linux subscriptions, see Registering the system and managing subscriptions in the Red Hat Enterprise Linux documentation.

The following steps prepare the RHEL instances for deployment of the automation mesh.

- You require a Red Hat Enterprise Linux operating system. Each node in the mesh requires a static IP address, or a resolvable DNS hostname that automation controller can access.

- Ensure that you have the minimum requirements for the RHEL virtual machine before proceeding. For more information, see the Red Hat Ansible Automation Platform system requirements.

Deploy the RHEL instances within the remote networks where communication is required. For information about creating virtual machines, see Creating Virtual Machines in the Red Hat Enterprise Linux documentation. Remember to scale the capacity of your virtual machines sufficiently so that your proposed tasks can run on them.

- RHEL ISOs can be obtained from access.redhat.com.

- RHEL cloud images can be built using Image Builder from console.redhat.com.

9.2. Pulling the secret

If you are using the default execution environment (provided with automation controller) to run on remote execution nodes, you must add a pull secret in the automation controller that contains the credential for pulling the execution environment image.

To do this, create a pull secret on the automation controller namespace and configure the ee_pull_credentials_secret parameter in the Operator as follows:

Procedure

Create a secret:

oc create secret generic ee-pull-secret \
    --from-literal=username=<username> \
    --from-literal=password=<password> \
    --from-literal=url=registry.redhat.io

oc edit automationcontrollers <instance name>

Add ee_pull_credentials_secret ee-pull-secret to the specification:

spec.ee_pull_credentials_secret=ee-pull-secret

To manage instances from the automation controller UI, you must have System Administrator or System Auditor permissions.

9.3. Setting up Virtual Machines for use in an automation mesh

Procedure

SSH into each of the RHEL instances and perform the following steps. Depending on your network access and controls, SSH proxies or other access models might be required.

Use the following command:

ssh [username]@[host_ip_address]

For example, for an Ansible Automation Platform instance running on Amazon Web Services:

ssh ec2-user@10.0.0.6

- Create or copy an SSH key that can be used to connect from the hop node to the execution node in later steps. This can be a temporary key used just for the automation mesh configuration, or a long-lived key. The SSH user and key are used in later steps.

Enable your RHEL subscription with baseos and appstream repositories. Ansible Automation Platform RPM repositories are only available through subscription-manager, not the Red Hat Update Infrastructure (RHUI). If you attempt to use any other Linux footprint, including RHEL with RHUI, this causes errors.

sudo subscription-manager register --auto-attach

If Simple Content Access is enabled for your account, use:

sudo subscription-manager register

For more information about Simple Content Access, see Getting started with simple content access.

Enable Ansible Automation Platform subscriptions and the proper Red Hat Ansible Automation Platform channel:

For RHEL 8:

# subscription-manager repos --enable ansible-automation-platform-2.4-for-rhel-8-x86_64-rpms

For RHEL 9:

# subscription-manager repos --enable ansible-automation-platform-2.4-for-rhel-9-x86_64-rpms

Ensure the packages are up to date:

sudo dnf upgrade -y

Install the ansible-core packages:

sudo dnf install -y ansible-core

9.4. Managing instances

To expand job capacity, create a standalone execution node that can be added to run alongside a deployment of automation controller. These execution nodes are not part of the automation controller Kubernetes cluster. The control nodes running in the cluster connect to the execution nodes and submit work to them through Receptor. These execution nodes are registered in automation controller as type execution instances, meaning they are only used to run jobs, not dispatch work or handle web requests as control nodes do.

Hop nodes can be added to sit between the control plane of automation controller and standalone execution nodes. These hop nodes are not part of the Kubernetes cluster and are registered in automation controller as an instance of type hop, meaning they only handle inbound and outbound traffic for otherwise unreachable nodes in different or more strict networks.

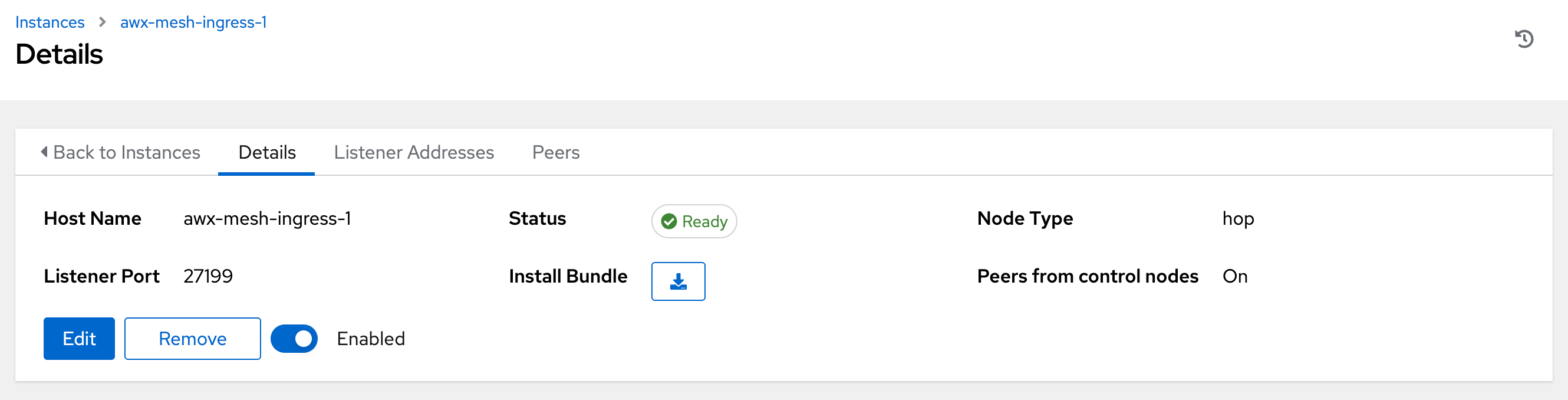

The following procedure demonstrates how to set the node type for the hosts.

Procedure

- From the navigation panel, select Administration → Instances.

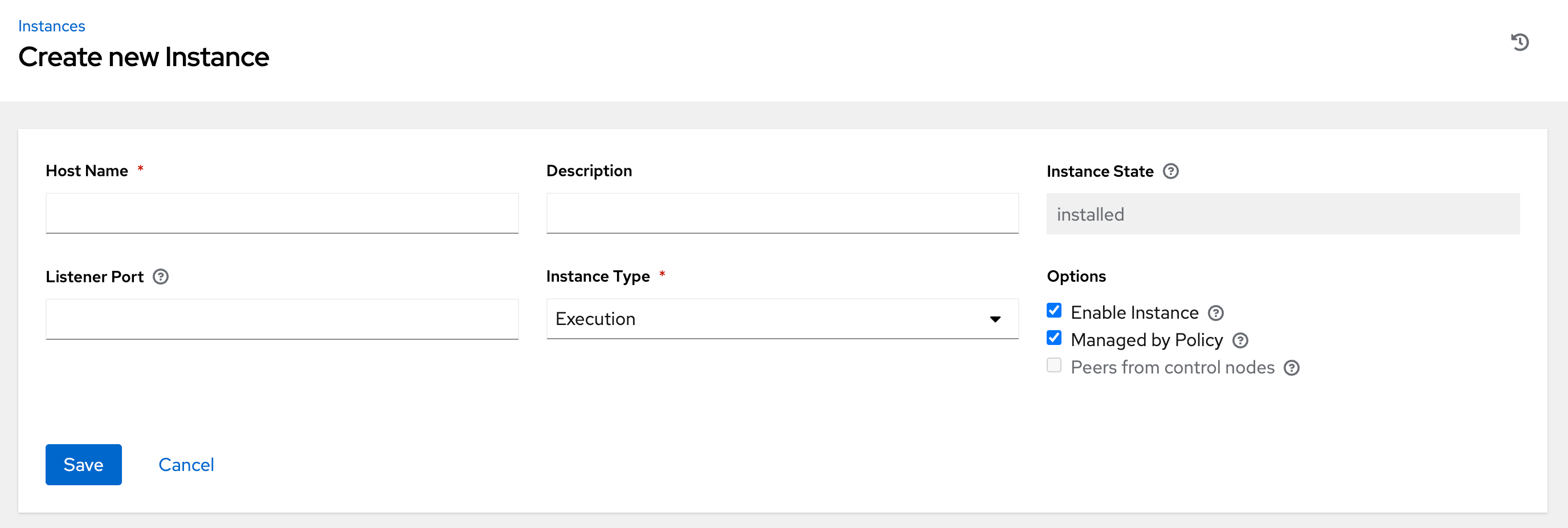

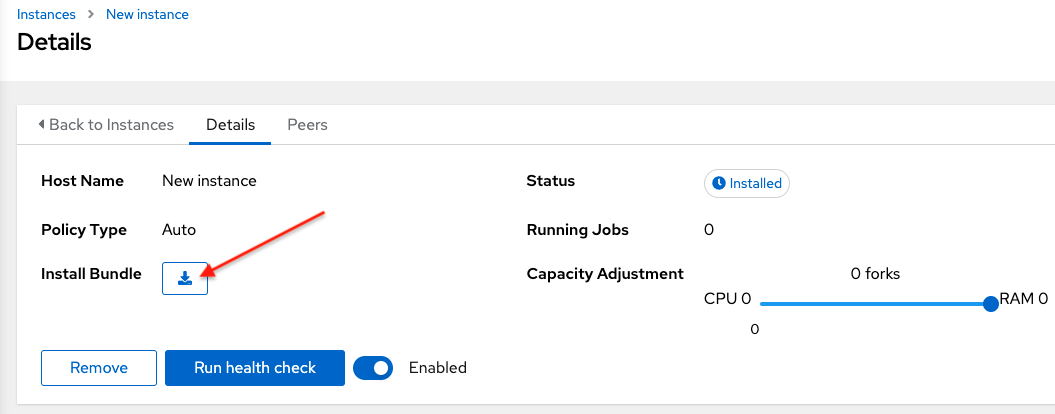

On the Instances list page, click Add. The Create new Instance window opens.

An instance requires the following attributes:

- Host Name: (required) Enter a fully qualified domain name (public DNS) or IP address for your instance. This field is equivalent to hostname for installer-based deployments.

Note: If the instance uses private DNS that cannot be resolved from the control cluster, DNS lookup routing fails, and the generated SSL certificates are invalid. Use the IP address instead.

- Optional: Description: Enter a description for the instance.

- Instance State: This field is auto-populated, indicating that it is being installed, and cannot be modified.

- Listener Port: This port is used for the receptor to listen on for incoming connections. You can set the port to one that is appropriate for your configuration. This field is equivalent to listener_port in the API. The default value is 27199, though you can set your own port value.

- Instance Type: Only execution and hop nodes can be created. Operator-based deployments do not support Control or Hybrid nodes.

- Options:

- Enable Instance: Check this box to make it available for jobs to run on an execution node.

- Check the Managed by Policy box to enable policy to dictate how the instance is assigned.

- Check the Peers from control nodes box to enable control nodes to peer to this instance automatically. For nodes connected to automation controller, checking this box creates a direct communication link between that node and automation controller. For all other nodes:

- If you are not adding a hop node, make sure Peers from Control is checked.

- If you are adding a hop node, make sure Peers from Control is not checked.

- For execution nodes that communicate with hop nodes, do not check this box.

To peer an execution node with a hop node, click the icon next to the Peers field.

The Select Peers window is displayed.

Peer the execution node to the hop node.

Click Save.

To view a graphical representation of your updated topology, see Topology viewer.

Note: Execute the following steps from any computer that has SSH access to the newly created instance.

Click the icon next to Install Bundle to download the tar file that includes this new instance and the files necessary to install the created node into the automation mesh.

The install bundle contains TLS certificates and keys, a certificate authority, and a proper Receptor configuration file.

- receptor-ca.crt

- work-public-key.pem

- receptor.key

- install_receptor.yml

- inventory.yml

- group_vars/all.yml

- requirements.yml

Extract the downloaded tar.gz Install Bundle from the location where you downloaded it. To ensure that these files are in the correct location on the remote machine, the install bundle includes the install_receptor.yml playbook. The playbook requires the Receptor collection. Run the following command to download the collection:

ansible-galaxy collection install -r requirements.yml

Before running the ansible-playbook command, edit the following fields in the inventory.yml file:

    all:
      hosts:
        remote-execution:
          ansible_host: 10.0.0.6
          ansible_user: <username> # user provided
          ansible_ssh_private_key_file: ~/.ssh/<id_rsa>

- Ensure ansible_host is set to the IP address or DNS of the node.

- Set ansible_user to the username running the installation.

- Set ansible_ssh_private_key_file to contain the filename of the private key used to connect to the instance.

- The content of the inventory.yml file serves as a template and contains variables for roles that are applied during the installation and configuration of a receptor node in a mesh topology. You can modify some of the other fields, or replace the file in its entirety for advanced scenarios. For more information, see Role Variables.

For a node that uses a private DNS, add the following line to inventory.yml:

ansible_ssh_common_args: <your ssh ProxyCommand setting>

This instructs the install_receptor.yml playbook to use the proxy command to connect through the local DNS node to the private node.

- When the attributes are configured, click Save. The Details page of the created instance opens.

- Save the file to continue.
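The inventory.yml template ships with angle-bracket placeholders such as <username> and <id_rsa>, so one simple sanity check before running the playbook is to look for any that remain. The following is a minimal sketch under that assumption. The function name check_inventory_placeholders is hypothetical, and the check only detects text of the form <...>; it does not validate the YAML itself.

```shell
# check_inventory_placeholders FILE
# Hypothetical helper: list lines (with line numbers) that still contain an
# <angle-bracket> placeholder. Returns 0 if none remain, 1 if any are found,
# and 2 if the file cannot be read.
check_inventory_placeholders() {
    file="$1"
    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 2
    fi
    # grep -n prints each matching line prefixed with its line number.
    if grep -n '<[^<>]*>' "$file"; then
        echo "replace the placeholders above before running ansible-playbook" >&2
        return 1
    fi
}
```

Run it as `check_inventory_placeholders inventory.yml` from the directory that holds the extracted bundle.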

The system that runs the install bundle to set up the remote node and runs ansible-playbook requires the ansible.receptor collection to be installed:

ansible-galaxy collection install ansible.receptor

or

ansible-galaxy install -r requirements.yml

- Installing the receptor collection dependency from the requirements.yml file consistently retrieves the receptor version specified there. Additionally, it retrieves any other collection dependencies that might be needed in the future.

- Install the receptor collection on all nodes where your playbook will run, otherwise an error occurs.

If receptor_listener_port is defined, the machine also requires an available open port on which to establish inbound TCP connections, for example, 27199. Run the following command to open port 27199 for receptor communication:

sudo firewall-cmd --permanent --zone=public --add-port=27199/tcp

Run the following playbook on the machine where you want to update your automation mesh:

ansible-playbook -i inventory.yml install_receptor.yml

After this playbook runs, your automation mesh is configured.

To remove an instance from the mesh, see Removing instances.
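The command-line part of the procedure above can be sketched as two small shell functions. This is an illustrative outline, not a supported installer: the names run, open_receptor_port, and install_mesh_node are hypothetical, and the bundle directory argument is assumed to be wherever you extracted the install bundle. By default the functions only print the commands; set DRY_RUN=0 to execute them. The sketch adds a `firewall-cmd --reload` step because `--permanent` changes the saved firewalld configuration and does not alter the running firewall until a reload.

```shell
# run: hypothetical wrapper that prints a command instead of executing it,
# unless DRY_RUN=0 is set in the environment.
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        printf '+ %s\n' "$*"
    fi
}

# open_receptor_port [PORT]
# Intended for the node that accepts inbound receptor connections.
# Defaults to 27199, the default listener port named in this guide.
open_receptor_port() {
    port="${1:-27199}"
    run sudo firewall-cmd --permanent --zone=public --add-port="$port/tcp"
    run sudo firewall-cmd --reload
}

# install_mesh_node BUNDLE_DIR
# Intended for the machine that has SSH access to the new instance.
# BUNDLE_DIR is the directory holding the extracted install bundle (assumed).
install_mesh_node() {
    bundle_dir="$1"
    run ansible-galaxy collection install -r "$bundle_dir/requirements.yml"
    run ansible-playbook -i "$bundle_dir/inventory.yml" "$bundle_dir/install_receptor.yml"
}
```

Review the printed commands first, then rerun with `DRY_RUN=0` once they match your environment.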

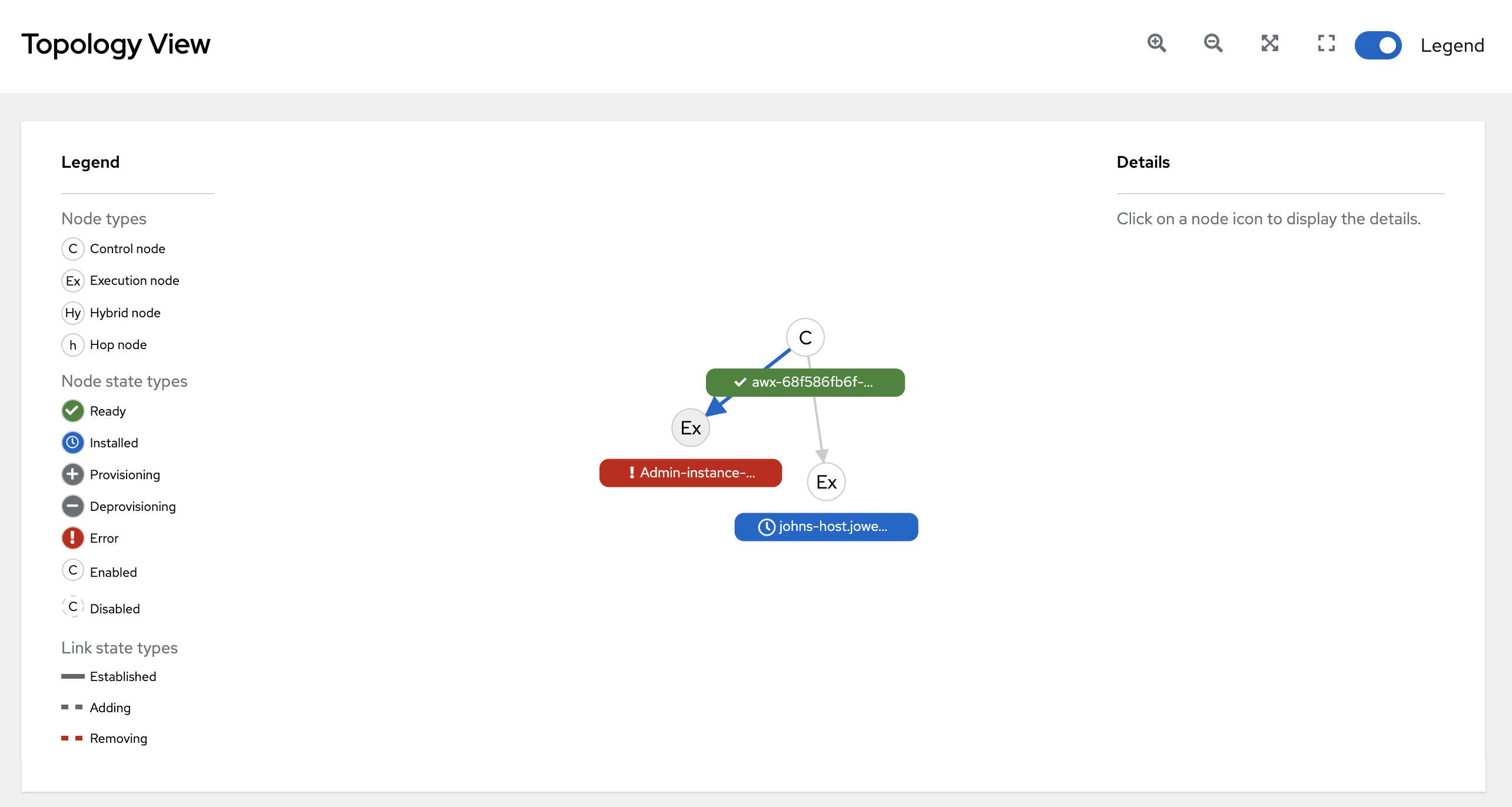

Chapter 10. Topology viewer

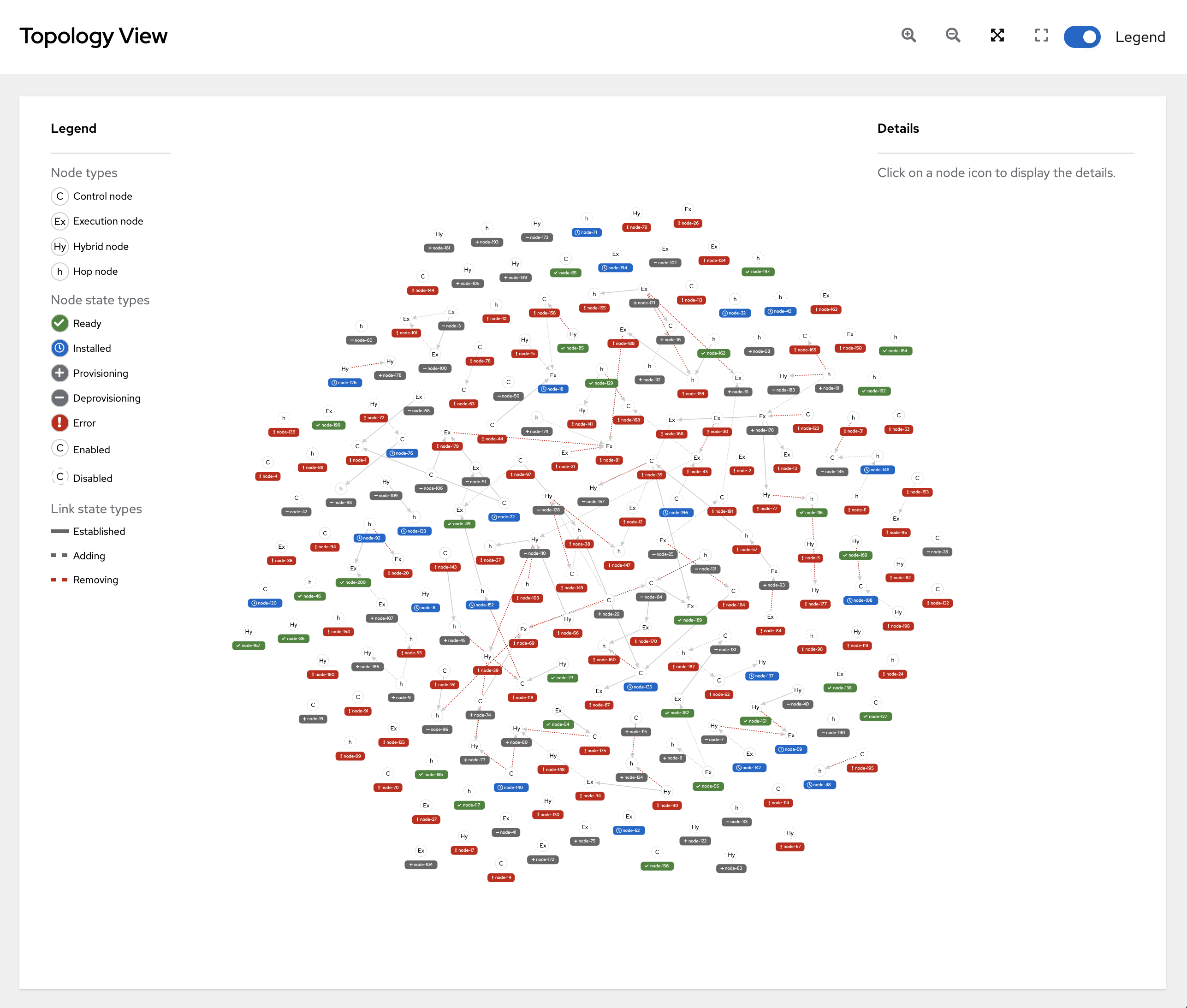

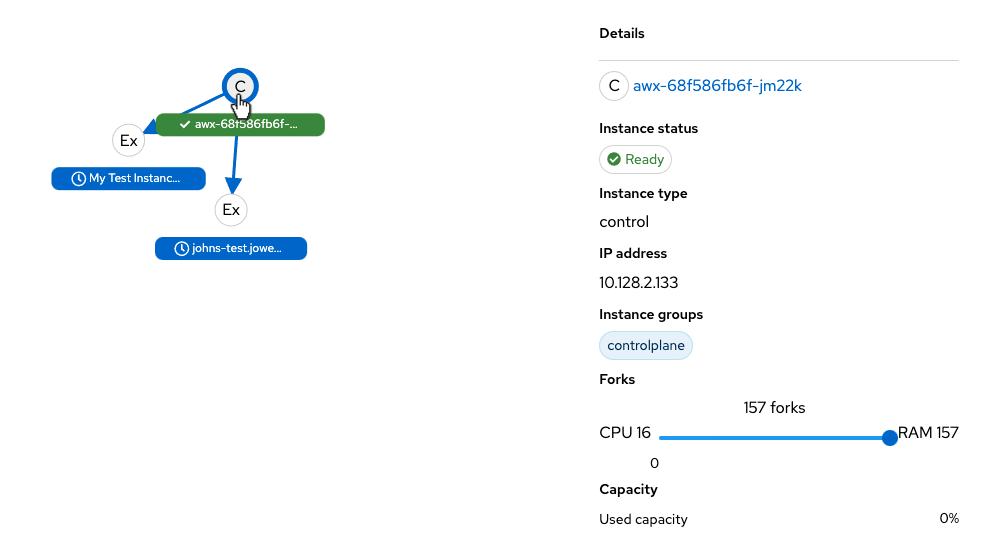

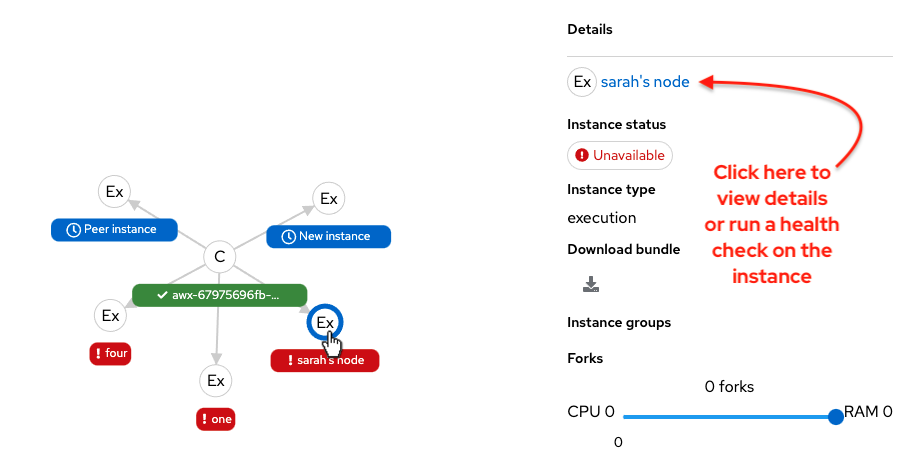

The topology viewer enables you to view node type, node health, and specific details about each node if you already have a mesh topology deployed.

To access the topology viewer from the automation controller UI, you must have System Administrator or System Auditor permissions.

For more information about automation mesh on a VM-based installation, see the Red Hat Ansible Automation Platform automation mesh guide for VM-based installations.

For more information about automation mesh on an operator-based installation, see the Red Hat Ansible Automation Platform automation mesh for operator-based installations.

10.1. Accessing the topology viewer

Use the following procedure to access the topology viewer from the automation controller UI.

Procedure

From the navigation panel, select Administration → Topology View. The Topology View opens and displays a graphical representation of how each receptor node links together.

To adjust the zoom levels, or manipulate the graphic views, use the control icons on the toolbar: zoom-in, zoom-out, expand, and reset.

You can also click and drag to pan around, and scroll using your mouse or trackpad to zoom. The fit-to-screen feature automatically scales the graphic to fit on the screen and repositions it in the center. It is particularly useful when you want to see a large mesh in its entirety.

To reset the view to its default view, click the Reset zoom icon.

Refer to the Legend to identify the type of nodes that are represented. For more information on each type of node, see Control and execution planes for VM-based installations, or Control and execution planes for operator-based installations.

Note: If the Legend is not present, use the toggle on the top bar to enable it.

The Legend shows the node status by color, which is indicative of the health of the node. An Error status in the Legend includes the Unavailable state (as displayed in the Instances list view) plus any future error conditions encountered in later versions of automation controller.

The following link statuses are also shown in the Legend:

- Established: This is a link state that indicates a peer connection between nodes that are either ready, unavailable, or disabled.

- Adding: This is a link state indicating a peer connection between nodes that were selected to be added to the mesh topology.

- Removing: This is a link state indicating a peer connection between nodes that were selected to be removed from the topology.

Hover over a node and the connectors highlight to show its immediate connected nodes (peers) or click on a node to retrieve details about it, such as its hostname, node type, and status.

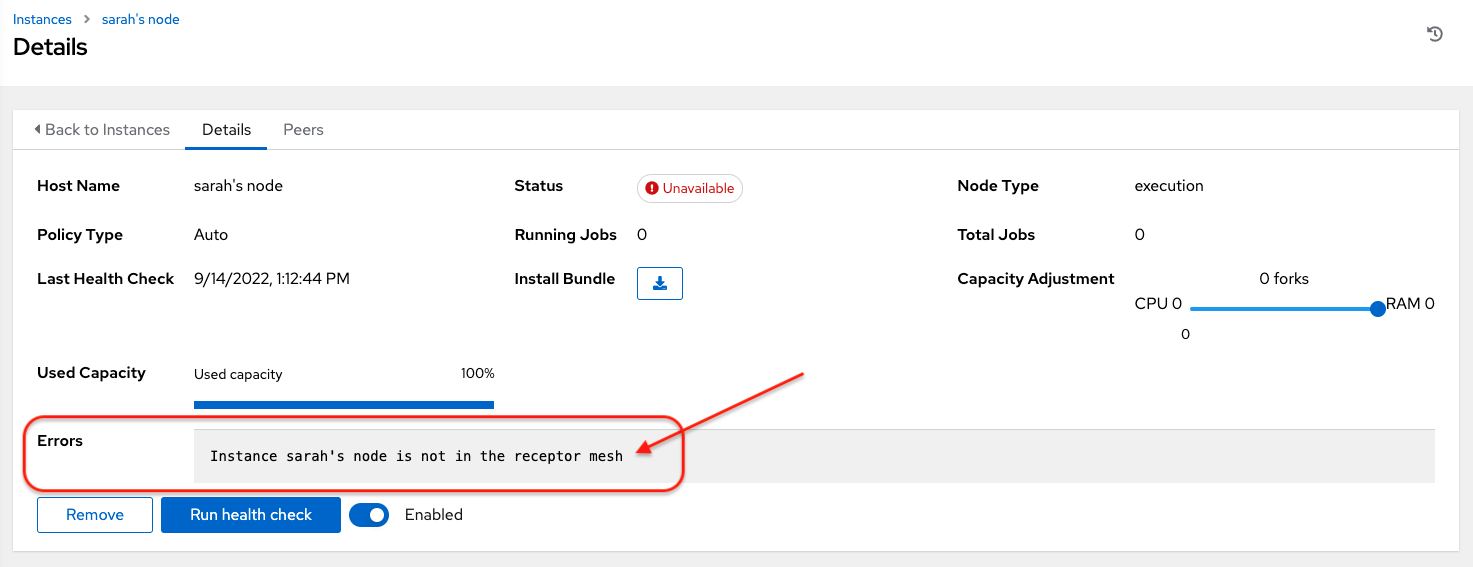

Click the instance hostname link in the displayed details to open its Details page, which provides more information about that node, most notably about an Error status, as in the following example.

You can use the Details page to remove the instance, run a health check on the instance on an as-needed basis, or unassign jobs from the instance. By default, jobs can be assigned to each node. However, you can disable it to exclude the node from having any jobs running on it.

For more information on creating new nodes and scaling the mesh, see Managing Capacity with Instances.

Chapter 11. Automation controller logfiles

Automation controller logfiles can be accessed from two centralized locations:

- /var/log/tower/

- /var/log/supervisor/

In the /var/log/tower/ directory, you can view logfiles captured by:

- tower.log: Captures the log messages such as runtime errors that occur when the job is executed.

- callback_receiver.log: Captures callback receiver logs that handle callback events when running ansible jobs.

- dispatcher.log: Captures log messages for the automation controller dispatcher worker service.

- job_lifecycle.log: Captures details of the job run, whether it is blocked, and what condition is blocking it.

- management_playbooks.log: Captures the logs of management playbook runs, and isolated job runs such as copying the metadata.

- rsyslog.err: Captures rsyslog errors authenticating with external logging services when sending logs to them.

- task_system.log: Captures the logs of tasks that automation controller is running in the background, such as adding cluster instances and logs related to information gathering or processing for analytics.

- tower_rbac_migrations.log: Captures the logs for rbac database migration or upgrade.

- tower_system_tracking_migrations.log: Captures the logs of the controller system tracking migration or upgrade.

- wsbroadcast.log: Captures the logs of websocket connections in the controller nodes.

In the /var/log/supervisor/ directory, you can view logfiles captured by:

- awx-callback-receiver.log: Captures the log of callback receiver that handles callback events when running ansible jobs, managed by supervisord.

- awx-daphne.log: Captures the logs of Websocket communication of WebUI.

- awx-dispatcher.log: Captures the logs that occur when dispatching a task to an automation controller instance, such as when running a job.

- awx-rsyslog.log: Captures the logs for the rsyslog service.

- awx-uwsgi.log: Captures the logs related to uWSGI, which is an application server.

- awx-wsbroadcast.log: Captures the logs of the websocket service that is used by automation controller.

- failure-event-handler.stderr.log: Captures the standard errors for the /usr/bin/failure-event-handler supervisord subprocess.

- supervisord.log: Captures the logs related to supervisord itself.

- wsrelay.log: Captures the communication logs within the websocket relay server.

- ws_heartbeat.log: Captures the periodic checks on the health of services running on the host.

- rsyslog_configurer.log: Captures rsyslog configuration activity associated with authenticating with external logging services.

The /var/log/supervisor/ directory includes stdout files for all services as well.

You can expect the following log paths to be generated by services used by automation controller (and Ansible Automation Platform):

- /var/log/nginx/

- /var/lib/pgsql/data/pg_log/

- /var/log/redis/
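Which of these directories exist depends on which services run on a given host. The following is a minimal sketch that reports the directories named in this chapter as present or missing. The variable CONTROLLER_LOG_DIRS and the function report_log_dirs are hypothetical names for illustration. A directory that the current user cannot access is also reported as missing, so run the check with sufficient privileges.

```shell
# Log directories named in this chapter (space-separated).
CONTROLLER_LOG_DIRS="/var/log/tower /var/log/supervisor /var/log/nginx /var/lib/pgsql/data/pg_log /var/log/redis"

# report_log_dirs [DIR...]
# Hypothetical helper: print "present" or "missing" for each directory.
# With no arguments, it checks the directories listed above.
report_log_dirs() {
    if [ "$#" -eq 0 ]; then
        # Intentionally unquoted so the list splits on spaces.
        set -- $CONTROLLER_LOG_DIRS
    fi
    for dir in "$@"; do
        if [ -d "$dir" ]; then
            printf 'present  %s\n' "$dir"
        else
            printf 'missing  %s\n' "$dir"
        fi
    done
}
```

Calling `report_log_dirs` with no arguments prints one line per directory, which gives a quick view of where logs can be expected on that host.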

Troubleshooting

Error logs can be found in the following locations:

- Automation controller server errors are logged in /var/log/tower.

- Supervisor logs can be found in /var/log/supervisor/.

- Nginx web server errors are logged in the httpd error log.

- Configure other automation controller logging needs in /etc/tower/conf.d/.

Explore client-side issues using the JavaScript console built into most browsers and report any errors to Ansible through the Red Hat Customer portal at: https://access.redhat.com/.
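When looking through a server-side log such as /var/log/tower/tower.log, it can help to narrow the output to recent error lines. The following is a minimal sketch that assumes error entries contain the literal text ERROR, which may not hold for every logfile listed in this chapter. The function name recent_errors is hypothetical.

```shell
# recent_errors FILE [COUNT]
# Hypothetical helper: print the last COUNT (default 20) lines of FILE that
# contain the text "ERROR". Returns 1 if FILE cannot be read.
recent_errors() {
    file="$1"
    count="${2:-20}"
    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 1
    fi
    # Filter first, then keep only the most recent matches.
    grep -- 'ERROR' "$file" | tail -n "$count"
}
```

For example, `recent_errors /var/log/tower/tower.log 50` shows up to the 50 most recent matching lines; reading that file typically requires elevated privileges.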

Chapter 12. Logging and Aggregation

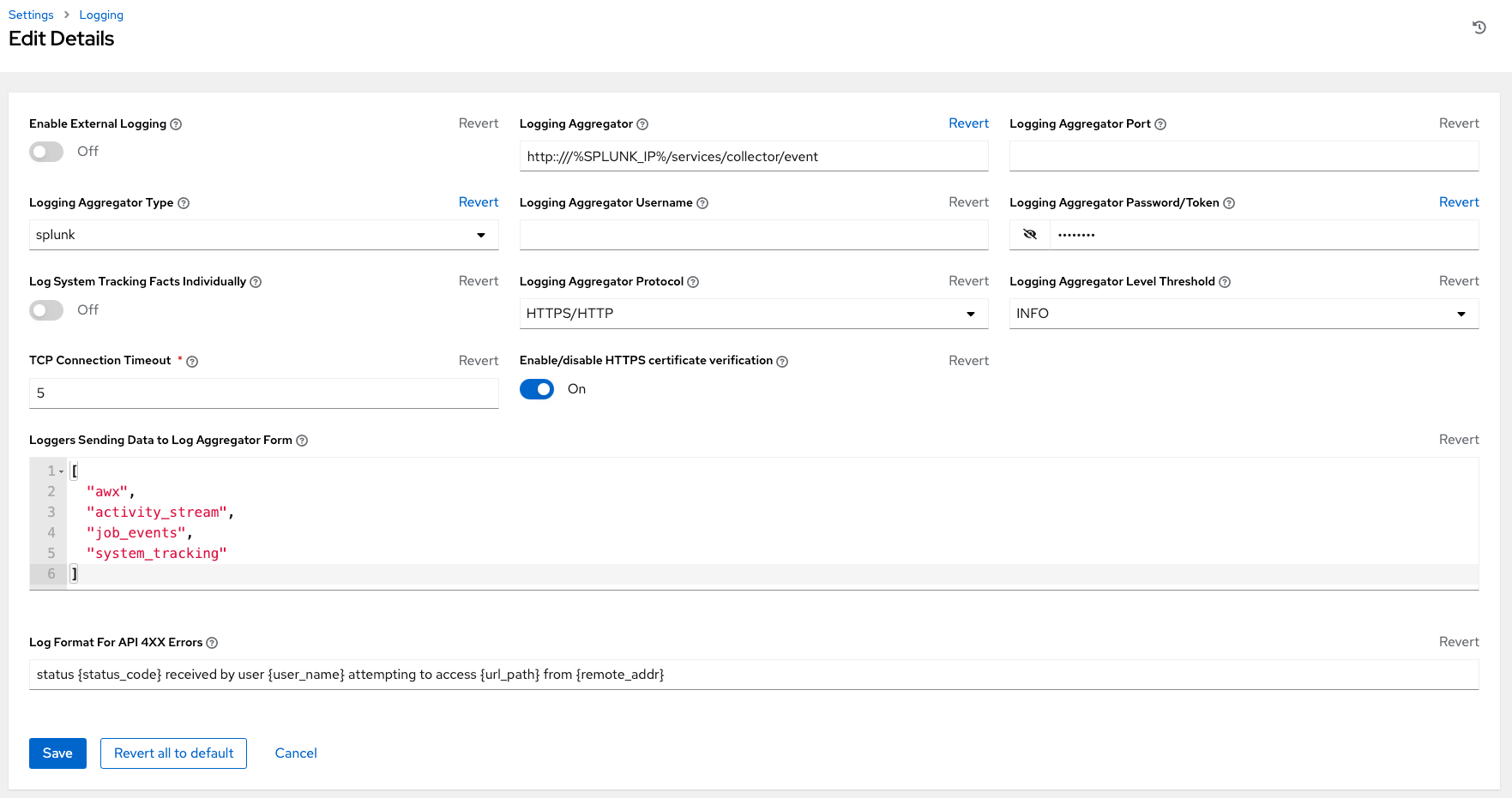

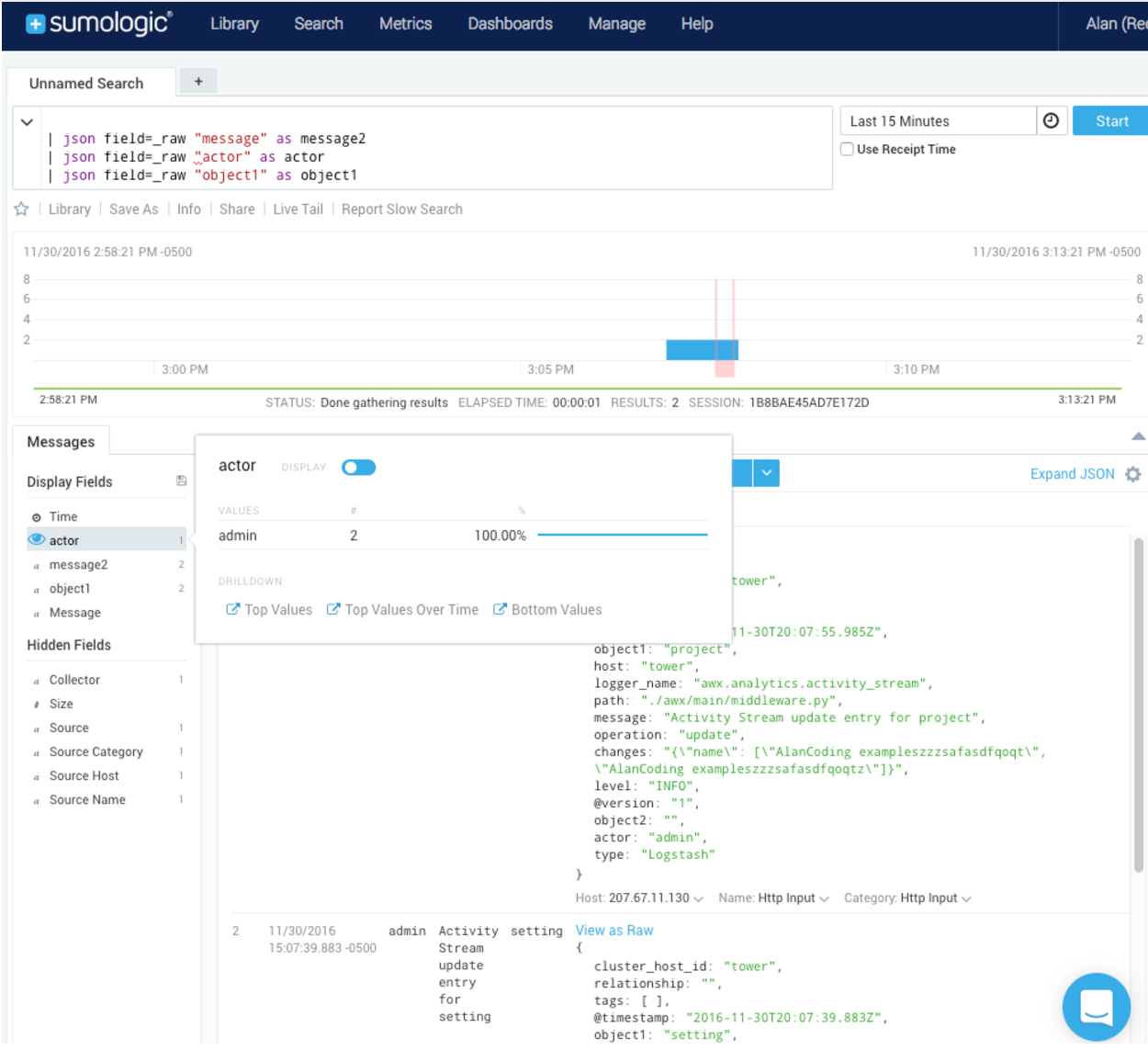

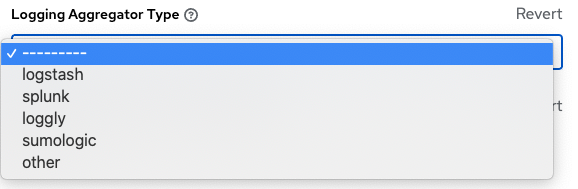

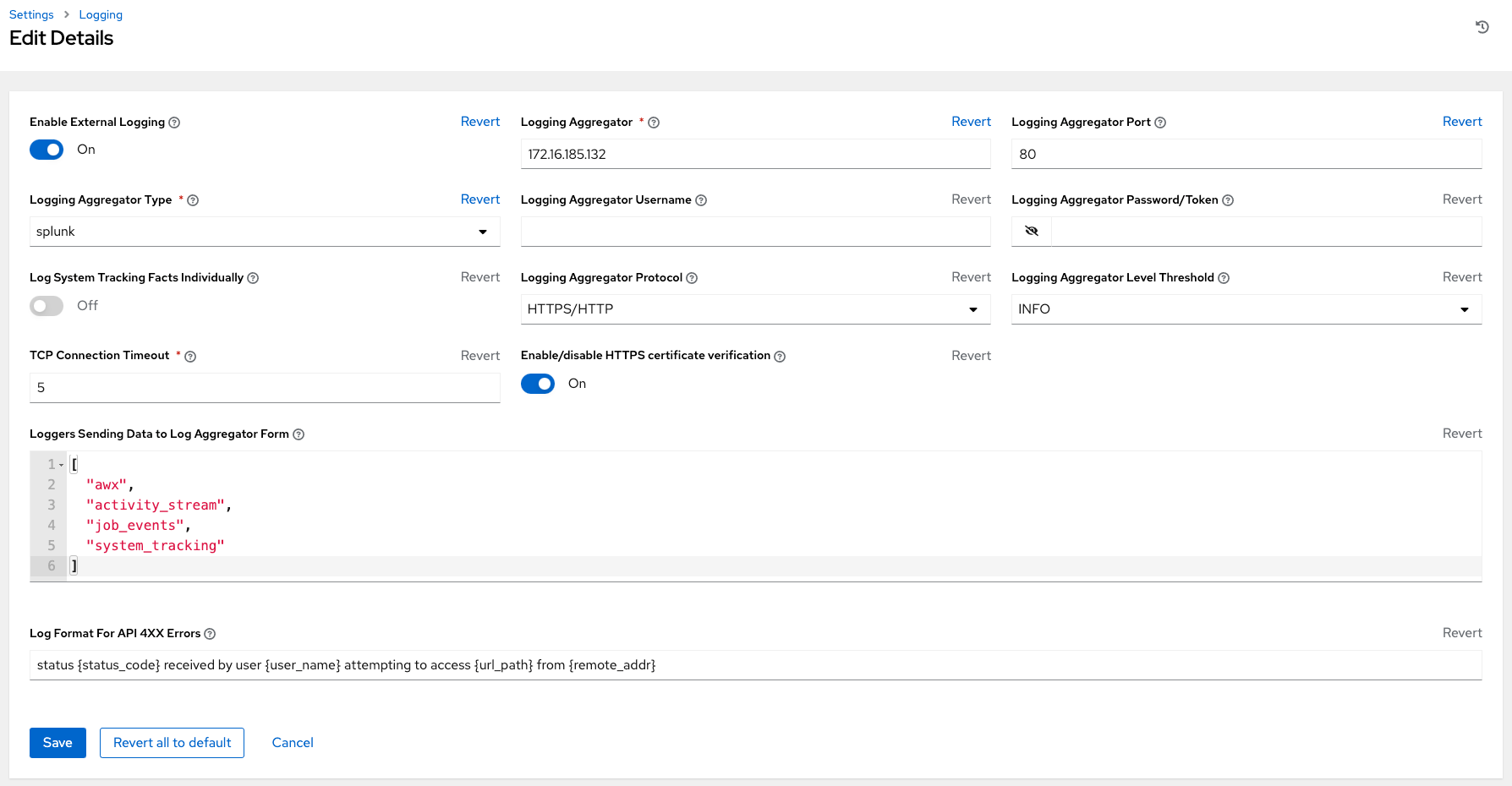

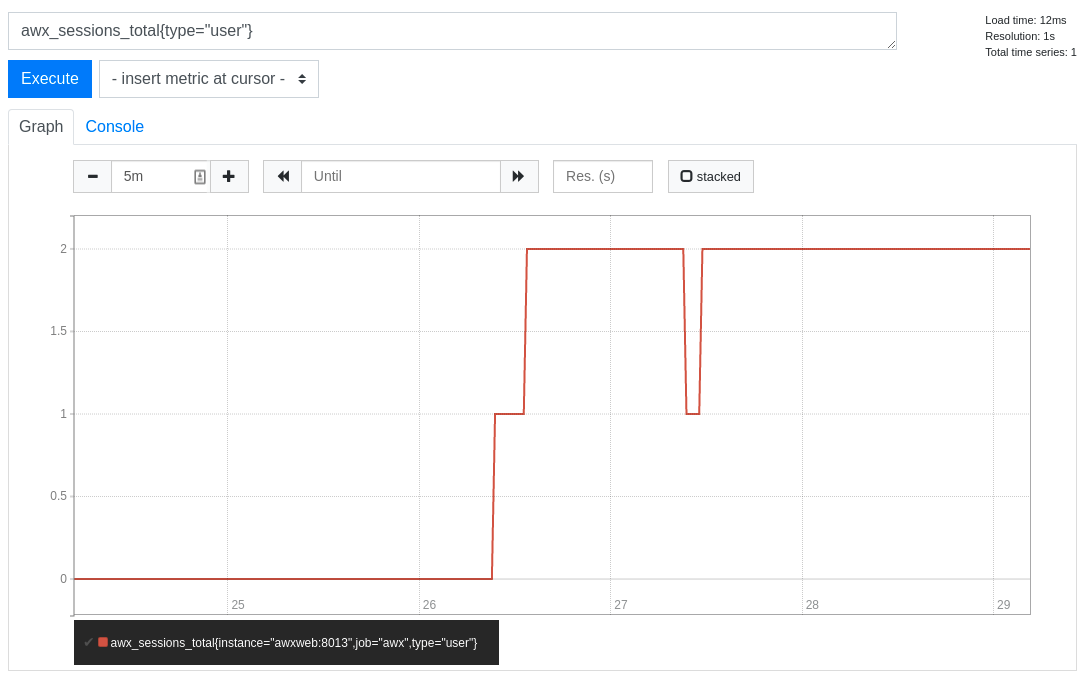

Logging provides the capability to send detailed logs to third-party external log aggregation services. Services connected to this data feed serve as a means of gaining insight into automation controller use or technical trends. The data can be used to analyze events in the infrastructure, monitor for anomalies, and correlate events in one service with events in another.