Deploying Red Hat Ceph Storage and Red Hat OpenStack Platform together with director

Configure director to deploy and use a Red Hat Ceph Storage cluster

OpenStack Documentation Team

rhos-docs@redhat.com

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

We appreciate your input on our documentation. Tell us how we can make it better.

Providing documentation feedback in Jira

Use the Create Issue form to provide feedback on the documentation. The Jira issue will be created in the Red Hat OpenStack Platform Jira project, where you can track the progress of your feedback.

- Ensure that you are logged in to Jira. If you do not have a Jira account, create an account to submit feedback.

- Click the following link to open the Create Issue page: Create Issue

- Complete the Summary and Description fields. In the Description field, include the documentation URL, chapter or section number, and a detailed description of the issue. Do not modify any other fields in the form.

- Click Create.

Chapter 1. Deploying an overcloud and Red Hat Ceph Storage

Red Hat OpenStack Platform (RHOSP) director deploys the cloud environment, also known as the overcloud, and Red Hat Ceph Storage. Director uses Ansible playbooks provided through the tripleo-ansible package to deploy the Ceph Storage cluster. The director also manages the configuration and scaling operations of the Ceph Storage cluster.

For more information about Red Hat Ceph Storage, see Red Hat Ceph Storage Architecture Guide.

For more information about services in the Red Hat OpenStack Platform, see Configuring a basic overcloud with the CLI tools in Installing and managing Red Hat OpenStack Platform with director.

Red Hat OpenStack Platform 17.1 only supports Red Hat Ceph Storage 6 for new deployments. Red Hat Ceph Storage 5 is not supported in new deployment scenarios.

1.1. Red Hat Ceph Storage clusters

Red Hat Ceph Storage is a distributed data object store designed for performance, reliability, and scalability. Distributed object stores use unstructured data to simultaneously service modern and legacy object interfaces.

Ceph Storage is deployed as a cluster. A Ceph Storage cluster consists of two primary types of daemons:

- Ceph Object Storage Daemon (CephOSD) - The CephOSD performs data storage, data replication, rebalancing, recovery, monitoring, and reporting tasks.

- Ceph Monitor (CephMon) - The CephMon maintains the primary copy of the cluster map with the current state of the cluster.

For more information about Red Hat Ceph Storage, see the Red Hat Ceph Storage Architecture Guide.

1.2. Red Hat Ceph Storage node requirements

There are additional node requirements when you use director to create a Ceph Storage cluster:

- Hardware requirements including processor, memory, and network interface card selection and disk layout are available in the Red Hat Ceph Storage Hardware Guide.

- Each Ceph Storage node requires a supported power management interface, such as Intelligent Platform Management Interface (IPMI) functionality, on the motherboard of the server.

- Each Ceph Storage node must have at least two disks. RHOSP director uses cephadm to deploy the Ceph Storage cluster. The cephadm functionality does not support installing Ceph OSD on the root disk of the node.

1.2.1. Red Hat Ceph Storage nodes and RHEL compatibility

RHOSP 17.1 is supported on RHEL 9.2. However, hosts that are mapped to the Red Hat Ceph Storage role update to the latest major RHEL release. Before upgrading, review the Red Hat Knowledgebase article Red Hat Ceph Storage: Supported configurations.

1.3. Deploying Red Hat Ceph Storage

You deploy Red Hat Ceph Storage in two phases:

- Create the Red Hat Ceph Storage cluster before deploying the overcloud.

- Configure the Red Hat Ceph Storage cluster during overcloud deployment.

A Ceph Storage cluster is created ready to serve the Ceph RADOS Block Device (RBD) service. Additionally, the following services are running on the appropriate nodes:

- Ceph Monitor (CephMon)

- Ceph Manager (CephMgr)

- Ceph OSD (CephOSD)

Pools and cephx keys are created during the configuration phase.

The following Ceph Storage components are not available until after the configuration phase:

- Ceph Dashboard (CephDashboard)

- Ceph Object Gateway (CephRGW)

- Ceph MDS (CephMds)

Red Hat Ceph Storage cluster configuration finalizes during overcloud deployment. Daemons and services such as Ceph Object Gateway and Ceph Dashboard deploy according to the overcloud definition. Red Hat OpenStack Platform (RHOSP) services are configured as Ceph Storage cluster clients.

1.4. Red Hat Ceph Storage deployment requirements

Provisioning of network resources and bare metal instances is required before Ceph Storage cluster creation. Configure the following before creating a Red Hat Ceph Storage cluster:

- Provision networks with the openstack overcloud network provision command and the cli-overcloud-network-provision.yaml Ansible playbook.

- Provision bare metal instances with the openstack overcloud node provision command and the cli-overcloud-node-provision.yaml Ansible playbook.

For more information about these tasks, see:

The following elements must be present in the overcloud environment to finalize the Ceph Storage cluster configuration:

- Red Hat OpenStack Platform director installed on an undercloud host. See Installing director in Installing and managing Red Hat OpenStack Platform with director.

- Installation of recommended hardware to support Red Hat Ceph Storage. For more information about recommended hardware, see the Red Hat Ceph Storage Hardware Guide.

1.5. Post deployment verification

Director deploys a Ceph Storage cluster ready to serve Ceph RADOS Block Device (RBD) using tripleo-ansible roles executed by the cephadm command.

Verify the following are in place after cephadm completes Ceph Storage deployment:

- SSH access to a CephMon service node to use the sudo cephadm shell command.

- All OSDs operational.

Note: Check inoperative OSDs for environmental issues like uncleaned disks.

- A Ceph configuration file and client administration keyring file in the /etc/ceph directory of CephMon service nodes.

- The Ceph Storage cluster is ready to serve RBD.

Pools, cephx keys, CephDashboard, and CephRGW are configured during overcloud deployment by the openstack overcloud deploy command. This is for two reasons:

- The Dashboard and RGW services must integrate with haproxy. This is deployed with the overcloud.

- The creation of pools and cephx keys depends on which OpenStack clients are deployed.

These resources are created in the Ceph Storage cluster using the client administration keyring file and the ~/deployed_ceph.yaml file output by the openstack overcloud ceph deploy command.

For more information about cephadm, see Red Hat Ceph Storage Installation Guide.
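The file check from the verification list above can be scripted. The following is a minimal sketch, not part of director: the function name check_ceph_client_files is hypothetical, and the file names assume the <cluster_name>.conf and <cluster_name>.client.admin.keyring pattern with the default cluster name ceph. Run it on a CephMon service node.

```shell
# Hypothetical helper: report whether the Ceph configuration file and the
# client administration keyring are present in a directory.
# $1: directory to inspect (assumed default: /etc/ceph)
# $2: cluster name (assumed default: ceph)
check_ceph_client_files() {
  local dir="${1:-/etc/ceph}"
  local cluster="${2:-ceph}"
  local f
  local missing=0
  for f in "${cluster}.conf" "${cluster}.client.admin.keyring"; do
    if [ -f "${dir}/${f}" ]; then
      echo "found: ${dir}/${f}"
    else
      echo "missing: ${dir}/${f}"
      missing=1
    fi
  done
  # Return 0 only when both files exist.
  return "${missing}"
}
```

For example, check_ceph_client_files /etc/ceph ceph returns a non-zero status when either file is absent. The check only confirms that the files exist; it does not validate their contents.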

Chapter 2. Preparing Ceph Storage nodes for deployment

Red Hat Ceph Storage nodes are bare metal systems with IPMI power management. Director installs Red Hat Enterprise Linux on each node.

Director communicates with each node through the Provisioning network during the introspection and provisioning processes. All nodes connect to the Provisioning network through the native VLAN.

For more information about bare metal provisioning before overcloud deployment, see Provisioning and deploying your overcloud in Installing and managing Red Hat OpenStack Platform with director guide.

For a complete guide to bare metal provisioning, see Configuring the Bare Metal Provisioning service.

2.1. Cleaning Ceph Storage node disks

Ceph Storage OSDs and journal partitions require factory clean disks. All data and metadata must be erased by the Bare Metal Provisioning service (ironic) from these disks before installing the Ceph OSD services.

You can configure director to delete all disk data and metadata by default by using the Bare Metal Provisioning service. When director is configured to perform this task, the Bare Metal Provisioning service performs an additional step to boot the nodes each time a node is set to available.

The Bare Metal Provisioning service uses the wipefs --force --all command. This command deletes all data and metadata on the disk but it does not perform a secure erase. A secure erase takes much longer.

Procedure

- Open /home/stack/undercloud.conf and add the following parameter:

clean_nodes=true

- Save /home/stack/undercloud.conf.

- Update the undercloud configuration:

openstack undercloud install
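Before you rerun the undercloud installation, it can help to confirm that the parameter is actually set. The following is a minimal sketch under stated assumptions: the function name clean_nodes_enabled is hypothetical, and it only looks for an uncommented clean_nodes line set to true, without checking which section of the file the line belongs to.

```shell
# Hypothetical helper: succeed when clean_nodes is set to true in the file.
# $1: path to the configuration file (assumed default: /home/stack/undercloud.conf)
clean_nodes_enabled() {
  local conf="${1:-/home/stack/undercloud.conf}"
  # Match "clean_nodes=true" with optional whitespace, in any letter case.
  grep -Eiq '^[[:space:]]*clean_nodes[[:space:]]*=[[:space:]]*true[[:space:]]*$' "$conf"
}

# Usage (illustrative):
#   if clean_nodes_enabled; then echo "disk cleaning is enabled"; fi
```

A commented-out line such as "#clean_nodes=true" does not match, so the function reports the parameter as not enabled in that case.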

2.2. Registering nodes

Register the nodes to enable communication with director.

Procedure

- Create a node inventory JSON file in /home/stack. Enter hardware and power management details for each node.

For example:

{
  "nodes": [
    {
      "mac": ["b1:b1:b1:b1:b1:b1"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.205"
    },
    {
      "mac": ["b2:b2:b2:b2:b2:b2"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.206"
    },
    {
      "mac": ["b3:b3:b3:b3:b3:b3"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.207"
    },
    {
      "mac": ["c1:c1:c1:c1:c1:c1"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.208"
    },
    {
      "mac": ["c2:c2:c2:c2:c2:c2"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.209"
    },
    {
      "mac": ["c3:c3:c3:c3:c3:c3"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.210"
    },
    {
      "mac": ["d1:d1:d1:d1:d1:d1"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.211"
    },
    {
      "mac": ["d2:d2:d2:d2:d2:d2"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.212"
    },
    {
      "mac": ["d3:d3:d3:d3:d3:d3"],
      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",
      "pm_type": "ipmi", "pm_user": "admin", "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.213"
    }
  ]
}

- Save the new file.

- Initialize the stack user:

$ source ~/stackrc

- Import the JSON inventory file into director and register nodes:

$ openstack overcloud node import <inventory_file>

Replace <inventory_file> with the name of the file created in the first step.

- Assign the kernel and ramdisk images to each node:

$ openstack overcloud node configure <node>
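When many nodes share the same power management credentials, writing the inventory by hand is repetitive. The following is a minimal sketch of generating the file from a list of MAC address and IPMI address pairs. The function name gen_inventory and the output file name nodes.json are hypothetical, and the cpu, memory, disk, and arch values are copied from the example in this section, so adjust them for your hardware.

```shell
# Hypothetical helper: read "MAC IPMI_ADDRESS" pairs from standard input and
# print a node inventory in the same JSON layout as the example above.
# $1: power management user, $2: power management password
# Assumption: the values contain no double quotes or backslashes.
gen_inventory() {
  local pm_user="$1"
  local pm_password="$2"
  local mac addr
  local first=1
  printf '{\n  "nodes": [\n'
  while read -r mac addr; do
    [ -n "$mac" ] || continue            # skip empty lines
    [ "$first" -eq 1 ] || printf ',\n'   # comma between node entries
    first=0
    printf '    {\n'
    printf '      "mac": ["%s"],\n' "$mac"
    # Hardware values copied from the documented example.
    printf '      "cpu": "4", "memory": "6144", "disk": "40", "arch": "x86_64",\n'
    printf '      "pm_type": "ipmi",\n'
    printf '      "pm_user": "%s",\n' "$pm_user"
    printf '      "pm_password": "%s",\n' "$pm_password"
    printf '      "pm_addr": "%s"\n' "$addr"
    printf '    }'
  done
  printf '\n  ]\n}\n'
}

# Example run with two of the nodes from the inventory above.
gen_inventory admin 'p@55w0rd!' > nodes.json <<'EOF'
b1:b1:b1:b1:b1:b1 192.0.2.205
b2:b2:b2:b2:b2:b2 192.0.2.206
EOF
```

You can then pass the generated file to the openstack overcloud node import command. Because the file contains power management passwords, restrict its permissions as you would for a hand-written inventory.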

2.3. Verifying available Red Hat Ceph Storage packages

Verify all required packages are available to avoid overcloud deployment failures.

2.3.1. Verifying cephadm package installation

Verify the cephadm package is installed on at least one overcloud node. The cephadm package is used to bootstrap the first node of the Ceph Storage cluster.

The cephadm package is included in the overcloud-hardened-uefi-full.qcow2 image. The tripleo_cephadm role uses the Ansible package module to ensure it is present in the image.

2.4. Defining the root disk for multi-disk Ceph clusters

Ceph Storage nodes typically use multiple disks. Director must identify the root disk in multiple disk configurations. The overcloud image is written to the root disk during the provisioning process.

Hardware properties are used to identify the root disk. For more information about properties you can use to identify the root disk, see Properties that identify the root disk.

Procedure

- Verify the disk information from the hardware introspection of each node:

(undercloud)$ openstack baremetal introspection data save <node_uuid> --file <output_file_name>

  - Replace <node_uuid> with the UUID of the node.

  - Replace <output_file_name> with the name of the file that contains the output of the node introspection.

For example, the data for one node might show three disks:

[
  {
    "size": 299439751168,
    "rotational": true,
    "vendor": "DELL",
    "name": "/dev/sda",
    "wwn_vendor_extension": "0x1ea4dcc412a9632b",
    "wwn_with_extension": "0x61866da04f3807001ea4dcc412a9632b",
    "model": "PERC H330 Mini",
    "wwn": "0x61866da04f380700",
    "serial": "61866da04f3807001ea4dcc412a9632b"
  },
  {
    "size": 299439751168,
    "rotational": true,
    "vendor": "DELL",
    "name": "/dev/sdb",
    "wwn_vendor_extension": "0x1ea4e13c12e36ad6",
    "wwn_with_extension": "0x61866da04f380d001ea4e13c12e36ad6",
    "model": "PERC H330 Mini",
    "wwn": "0x61866da04f380d00",
    "serial": "61866da04f380d001ea4e13c12e36ad6"
  },
  {
    "size": 299439751168,
    "rotational": true,
    "vendor": "DELL",
    "name": "/dev/sdc",
    "wwn_vendor_extension": "0x1ea4e31e121cfb45",
    "wwn_with_extension": "0x61866da04f37fc001ea4e31e121cfb45",
    "model": "PERC H330 Mini",
    "wwn": "0x61866da04f37fc00",
    "serial": "61866da04f37fc001ea4e31e121cfb45"
  }
]

- Set the root disk for the node by using a unique hardware property:

(undercloud)$ openstack baremetal node set --property root_device='{<property_value>}' <node_uuid>

  - Replace <property_value> with the unique hardware property value from the introspection data to use to set the root disk.

  - Replace <node_uuid> with the UUID of the node.

Note: A unique hardware property is any property from the hardware introspection step that uniquely identifies the disk. For example, the following command uses the disk serial number to set the root disk:

(undercloud)$ openstack baremetal node set --property root_device='{"serial": "61866da04f380d001ea4e13c12e36ad6"}' 1a4e30da-b6dc-499d-ba87-0bd8a3819bc0

- Configure the BIOS of each node to first boot from the network and then the root disk.

Director identifies the specific disk to use as the root disk. When you run the openstack overcloud node provision command, director provisions and writes the overcloud image to the root disk.
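Quoting the root_device value by hand is easy to get wrong. The following is a minimal sketch that builds the value for a single string property, such as serial or wwn, and prints the resulting command for review instead of running it. The function name root_device_hint is hypothetical, and the sketch does not cover non-string properties such as size or rotational.

```shell
# Hypothetical helper: print a root_device value for one string property.
# $1: property name (for example, serial), $2: property value
root_device_hint() {
  printf '{"%s": "%s"}\n' "$1" "$2"
}

# Build the value from the serial number used in the example above and
# print the command for review. <node_uuid> is left as a placeholder.
hint="$(root_device_hint serial 61866da04f380d001ea4e13c12e36ad6)"
echo "openstack baremetal node set --property root_device='${hint}' <node_uuid>"
```

Printing the command first lets you confirm the property and node before you change the node definition.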

2.4.1. Properties that identify the root disk

There are several properties that you can define to help director identify the root disk:

- model (String): Device identifier.

- vendor (String): Device vendor.

- serial (String): Disk serial number.

- hctl (String): Host:Channel:Target:Lun for SCSI.

- size (Integer): Size of the device in GB.

- wwn (String): Unique storage identifier.

- wwn_with_extension (String): Unique storage identifier with the vendor extension appended.

- wwn_vendor_extension (String): Unique vendor storage identifier.

- rotational (Boolean): True for a rotational device (HDD), otherwise false (SSD).

- name (String): The name of the device, for example: /dev/sdb1.

Use the name property for devices with persistent names. Do not use the name property to set the root disk for devices that do not have persistent names because the value can change when the node boots.

2.5. Using the overcloud-minimal image to avoid using a Red Hat subscription entitlement

The default image for a Red Hat OpenStack Platform (RHOSP) deployment is overcloud-hardened-uefi-full.qcow2. The overcloud-hardened-uefi-full.qcow2 image uses a valid Red Hat OpenStack Platform (RHOSP) subscription. You can use the overcloud-minimal image when you do not want to consume your subscription entitlements, to avoid reaching the limit of your paid Red Hat subscriptions. This is useful, for example, when you want to provision nodes with only Ceph daemons, or when you want to provision a bare operating system (OS) where you do not want to run any other OpenStack services. For information about how to obtain the overcloud-minimal image, see Obtaining images for overcloud nodes.

The overcloud-minimal image supports only standard Linux bridges. The overcloud-minimal image does not support Open vSwitch (OVS) because OVS is an OpenStack service that requires a Red Hat OpenStack Platform subscription entitlement. OVS is not required to deploy Ceph Storage nodes. Use linux_bond instead of ovs_bond to define bonds.

Procedure

- Open your /home/stack/templates/overcloud-baremetal-deploy.yaml file.

- Add or update the image property for the nodes that you want to use the overcloud-minimal image. You can set the image to overcloud-minimal on specific nodes, or for all nodes for a role.

Note: The overcloud minimal image is not a whole disk image. The kernel and ramdisk must be specified in the /home/stack/templates/overcloud-baremetal-deploy.yaml file.

Specific nodes

- name: Ceph
  count: 3
  instances:
  - hostname: overcloud-ceph-0
    name: node00
    image:
      href: file:///var/lib/ironic/images/overcloud-minimal.raw
      kernel: file:///var/lib/ironic/images/overcloud-minimal.vmlinuz
      ramdisk: file:///var/lib/ironic/images/overcloud-minimal.initrd
  - hostname: overcloud-ceph-1
    name: node01
    image:
      href: file:///var/lib/ironic/images/overcloud-minimal.raw
      kernel: file:///var/lib/ironic/images/overcloud-minimal.vmlinuz
      ramdisk: file:///var/lib/ironic/images/overcloud-minimal.initrd
  - hostname: overcloud-ceph-2
    name: node02
    image:
      href: file:///var/lib/ironic/images/overcloud-minimal.raw
      kernel: file:///var/lib/ironic/images/overcloud-minimal.vmlinuz
      ramdisk: file:///var/lib/ironic/images/overcloud-minimal.initrd

All nodes for a specific role

- name: Ceph
  count: 3
  defaults:
    image:
      href: file:///var/lib/ironic/images/overcloud-minimal.raw
      kernel: file:///var/lib/ironic/images/overcloud-minimal.vmlinuz
      ramdisk: file:///var/lib/ironic/images/overcloud-minimal.initrd
  instances:
  - hostname: overcloud-ceph-0
    name: node00
  - hostname: overcloud-ceph-1
    name: node01
  - hostname: overcloud-ceph-2
    name: node02

- In the roles_data.yaml role definition file, set the rhsm_enforce parameter to False.

rhsm_enforce: False

- Run the provisioning command:

(undercloud)$ openstack overcloud node provision \
  --stack overcloud \
  --output /home/stack/templates/overcloud-baremetal-deployed.yaml \
  /home/stack/templates/overcloud-baremetal-deploy.yaml

- Pass the overcloud-baremetal-deployed.yaml environment file to the openstack overcloud ceph deploy command.

2.6. Designating nodes for Red Hat Ceph Storage

To designate nodes for Red Hat Ceph Storage, you must create a new role file to configure the CephStorage role, and configure the bare metal nodes with a resource class for CephStorage.

Procedure

- Log in to the undercloud as the stack user.

- Source the stackrc file:

[stack@director ~]$ source ~/stackrc

- Generate a new roles data file named roles_data.yaml that includes the Controller, Compute, and CephStorage roles:

(undercloud)$ openstack overcloud roles \
  generate Controller Compute CephStorage -o /home/stack/templates/roles_data.yaml

- Open roles_data.yaml and ensure it has the following parameters and sections:

Section/Parameter             Value
Role comment                  Role: CephStorage
Role name                     name: CephStorage
description                   Ceph node role
HostnameFormatDefault         %stackname%-novaceph-%index%
deprecated_nic_config_name    ceph.yaml

- Register the Ceph nodes for the overcloud by adding them to your node definition template.

- Inspect the node hardware:

(undercloud)$ openstack overcloud node introspect --all-manageable --provide

- Tag each bare metal node that you want to designate for Ceph with a custom Ceph resource class:

(undercloud)$ openstack baremetal node set \
  --resource-class baremetal.CEPH <node>

Replace <node> with the ID of the bare metal node.

- Add the CephStorage role to your overcloud-baremetal-deploy.yaml file, and define any predictive node placements, resource classes, or other attributes that you want to assign to your nodes:

- name: Controller
  count: 3
- name: Compute
  count: 3
- name: CephStorage
  count: 5
  defaults:
    resource_class: baremetal.CEPH

- Run the provisioning command:

(undercloud)$ openstack overcloud node provision \
  --stack stack \
  --output /home/stack/templates/overcloud-baremetal-deployed.yaml \
  /home/stack/templates/overcloud-baremetal-deploy.yaml

- Monitor the provisioning progress in a separate terminal. When provisioning is successful, the node state changes from available to active:

(undercloud)$ watch openstack baremetal node list

Additional resources

- For more information on node registration, see Section 2.2, “Registering nodes”.

- For more information about inspecting node hardware, see Creating an inventory of the bare-metal node hardware in the Installing and managing Red Hat OpenStack Platform with director guide.

Chapter 3. Configuring the Red Hat Ceph Storage cluster

To deploy the Red Hat Ceph Storage cluster for your Red Hat OpenStack Platform environment, you must first configure the Red Hat Ceph Storage cluster options for your environment.

Configure the Red Hat Ceph Storage cluster options:

- Configuring time synchronization

- Configuring a top level domain

- Configuring the Red Hat Ceph Storage cluster name

- Configuring network options with the network data file

- Configuring network options with a configuration file

- Configuring a CRUSH hierarchy for an OSD

- Configuring Ceph service placement options

- Configuring SSH user options for Ceph nodes

- Configuring the container registry

Prerequisites

Before you can configure and deploy the Red Hat Ceph Storage cluster, use the Bare Metal Provisioning service (ironic) to provision the bare metal instances and networks. For more information, see Configuring the Bare Metal Provisioning service.

3.1. The openstack overcloud ceph deploy command

If you deploy the Ceph cluster using director, you must use the openstack overcloud ceph deploy command. For a complete listing of command options and parameters, see openstack overcloud ceph deploy in the Command line interface reference.

The command openstack overcloud ceph deploy --help provides the current options and parameters available in your environment.

3.2. Ceph configuration file

A standard format initialization file is one way to configure the Ceph cluster. Use one of the following commands to use this file:

- cephadm bootstrap --config <file_name>

- openstack overcloud ceph deploy --config <file_name>

Example

The following example creates a simple initialization file called initial-ceph.conf and then uses the openstack overcloud ceph deploy command to configure the Ceph cluster with it. It demonstrates how to configure the messenger v2 protocol to use a secure mode that encrypts all data passing over the network.

$ cat <<EOF > initial-ceph.conf
[global]
ms_cluster_mode = secure
ms_service_mode = secure
ms_client_mode = secure
EOF
$ openstack overcloud ceph deploy --config initial-ceph.conf ...

3.3. Configuring time synchronization

The Time Synchronization Service (chrony) is enabled for time synchronization by default. You can perform the following tasks to configure the service.

Time synchronization is configured using either a delimited list or an environment file. Use the procedure that is best suited to your administrative practices.

3.3.1. Configuring time synchronization with a delimited list

You can configure the Time Synchronization Service (chrony) to use a delimited list to configure NTP servers.

Procedure

- Log in to the undercloud node as the stack user.

- Configure NTP servers with a delimited list:

openstack overcloud ceph deploy \
  --ntp-server "<ntp_server_list>"

Replace <ntp_server_list> with a comma delimited list of servers. For example:

openstack overcloud ceph deploy \
  --ntp-server "0.pool.ntp.org,1.pool.ntp.org"

3.3.2. Configuring time synchronization with an environment file

You can configure the Time Synchronization Service (chrony) to use an environment file that defines NTP servers.

Procedure

- Log in to the undercloud node as the stack user.

- Create an environment file, such as /home/stack/templates/ntp-parameters.yaml, to contain the NTP server configuration.

- Add the NtpServer parameter. The NtpServer parameter contains a comma delimited list of NTP servers.

parameter_defaults:
  NtpServer: 0.pool.ntp.org,1.pool.ntp.org

- Configure NTP servers with an environment file:

openstack overcloud ceph deploy \
  --ntp-heat-env-file "<ntp_file_name>"

Replace <ntp_file_name> with the name of the environment file you created. For example:

openstack overcloud ceph deploy \
  --ntp-heat-env-file "/home/stack/templates/ntp-parameters.yaml"
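If you keep the NTP server list in a script or inventory, you can generate the environment file rather than edit it by hand. The following is a minimal sketch, assuming the file needs only the NtpServer parameter described in this procedure. The function name write_ntp_env is hypothetical, and the example writes to the current directory instead of /home/stack/templates.

```shell
# Hypothetical helper: write an environment file that sets NtpServer to a
# comma delimited list built from the remaining arguments.
# $1: output file, $2...: NTP server names
write_ntp_env() {
  local out="$1"
  shift
  local servers
  # Join the remaining arguments with commas.
  servers="$(IFS=,; echo "$*")"
  printf 'parameter_defaults:\n  NtpServer: %s\n' "$servers" > "$out"
}

# Example run with the servers used in this section.
write_ntp_env ntp-parameters.yaml 0.pool.ntp.org 1.pool.ntp.org
cat ntp-parameters.yaml
```

The generated file has the same two-line layout as the example in this procedure, so you can pass it to the --ntp-heat-env-file option in the same way.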

3.3.3. Disabling time synchronization

The Time Synchronization Service (chrony) is enabled by default. You can disable the service if you do not want to use it.

Procedure

- Log in to the undercloud node as the stack user.

- Disable the Time Synchronization Service (chrony):

openstack overcloud ceph deploy \
  --skip-ntp

3.4. Configuring a top level domain suffix

You can configure a top level domain (TLD) suffix. This suffix is added to the short hostname to create a fully qualified domain name for overcloud nodes.

A fully qualified domain name is required for TLS-e configuration.

Procedure

- Log in to the undercloud node as the stack user.

- Configure the top level domain suffix:

openstack overcloud ceph deploy \
  --tld "<domain_name>"

Replace <domain_name> with the required domain name. For example:

openstack overcloud ceph deploy \
  --tld "example.local"

3.5. Configuring the Red Hat Ceph Storage cluster name

You can deploy the Red Hat Ceph Storage cluster with a name that you configure. The default name is ceph.

Procedure

- Log in to the undercloud node as the stack user.

- Configure the name of the Ceph Storage cluster by using the following command:

openstack overcloud ceph deploy \
  --cluster <cluster_name>

For example:

$ openstack overcloud ceph deploy \
  --cluster central

Keyring files are not created at this time. Keyring files are created during the overcloud deployment. Keyring files inherit the cluster name configured during this procedure. For more information about overcloud deployment, see Section 8.1, “Initiating overcloud deployment”.

In the example above, the Ceph cluster is named central. The configuration and keyring files for the central Ceph cluster would be created in /etc/ceph during the deployment process.

[root@oc0-controller-0 ~]# ls -l /etc/ceph/
total 16
-rw-------. 1 root root  63 Mar 26 21:49 central.client.admin.keyring
-rw-------. 1  167  167 201 Mar 26 22:17 central.client.openstack.keyring
-rw-------. 1  167  167 134 Mar 26 22:17 central.client.radosgw.keyring
-rw-r--r--. 1 root root 177 Mar 26 21:49 central.conf

Troubleshooting

The following error may be displayed if you configure a custom name for the Ceph Storage cluster:

monclient: get_monmap_and_config cannot identify monitors to contact because

If this error is displayed, use the following command after Ceph deployment:

cephadm shell --config <configuration_file> --keyring <keyring_file>

For example, if this error was displayed when you configured the cluster name to central, you would use the following command:

cephadm shell --config /etc/ceph/central.conf \
  --keyring /etc/ceph/central.client.admin.keyring

The following command could also be used as an alternative:

cephadm shell --mount /etc/ceph:/etc/ceph
export CEPH_ARGS='--cluster central'
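Because the configuration and keyring file names follow the cluster name, the first troubleshooting command can be derived from the name alone. The following is a minimal sketch that prints the command for a given cluster name. The function name cephadm_shell_cmd is hypothetical, and the sketch assumes the files are in /etc/ceph and follow the naming shown in the directory listing above.

```shell
# Hypothetical helper: print the cephadm shell command for a named cluster.
# $1: cluster name (assumed default: ceph)
# $2: directory that holds the files (assumed default: /etc/ceph)
cephadm_shell_cmd() {
  local cluster="${1:-ceph}"
  local dir="${2:-/etc/ceph}"
  printf 'cephadm shell --config %s/%s.conf --keyring %s/%s.client.admin.keyring\n' \
    "$dir" "$cluster" "$dir" "$cluster"
}

# Print the command for the cluster named central.
cephadm_shell_cmd central
```

The function only prints the command so that you can review the paths before you run it on a node that hosts the files.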

3.6. Configuring network options with the network data file

The network data file describes the networks used by the Red Hat Ceph Storage cluster.

Procedure

- Log in to the undercloud node as the stack user.

- Create a YAML format file that defines the custom network attributes called network_data.yaml.

Important: Using network isolation, the standard network deployment consists of two storage networks which map to the two Ceph networks:

  - The storage network, storage, maps to the Ceph network, public_network. This network handles storage traffic such as the RBD traffic from the Compute nodes to the Ceph cluster.

  - The storage network, storage_mgmt, maps to the Ceph network, cluster_network. This network handles storage management traffic such as data replication between Ceph OSDs.

- Use the openstack overcloud ceph deploy command with the --network-data option to deploy the configuration:

openstack overcloud ceph deploy \
  deployed_metal.yaml \
  -o deployed_ceph.yaml \
  --network-data network_data.yaml

Important: The openstack overcloud ceph deploy command uses the network data file specified by the --network-data option to determine the networks to be used as the public_network and cluster_network. The command assumes these networks are named storage and storage_mgmt in the network data file unless a different name is specified by the --public-network-name and --cluster-network-name options.

You must use the --network-data option when deploying with network isolation. The default undercloud network (192.168.24.0/24) is used for both the public_network and cluster_network if you do not use this option.

3.7. Configuring network options with a configuration file

Network options can be specified with a configuration file as an alternative to the network data file.

Using this method to configure network options overwrites automatically generated values in network_data.yaml. Ensure you set all four values when using this network configuration method.

Procedure

- Log in to the undercloud node as the stack user.

- Create a standard format initialization file to configure the Ceph cluster. If you have already created a file to include other configuration options, you can add the network configuration to it.

- Add the following parameters to the [global] section of the file:

  - public_network

  - cluster_network

  - ms_bind_ipv4

  - ms_bind_ipv6

Important: Ensure the public_network and cluster_network map to the same networks as storage and storage_mgmt.

The following is an example of a configuration file entry for a network configuration with multiple subnets and custom networking names:

[global]
public_network = 172.16.14.0/24,172.16.15.0/24
cluster_network = 172.16.12.0/24,172.16.13.0/24
ms_bind_ipv4 = True
ms_bind_ipv6 = False

- Use the command openstack overcloud ceph deploy with the --config option to deploy the configuration file:

$ openstack overcloud ceph deploy \
  --config initial-ceph.conf --network-data network_data.yaml
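Because this method requires all four values, a quick check before deployment can catch an incomplete file. The following is a minimal sketch, assuming the four values are the options shown in the example configuration: public_network, cluster_network, ms_bind_ipv4, and ms_bind_ipv6. The function name check_ceph_network_conf is hypothetical. The check matches only the underscore spelling of each option and does not verify that the options are in the [global] section.

```shell
# Hypothetical helper: succeed only when all four network options are set.
# $1: path to the initialization file, for example initial-ceph.conf
check_ceph_network_conf() {
  local conf="$1"
  local key
  local missing=0
  for key in public_network cluster_network ms_bind_ipv4 ms_bind_ipv6; do
    # Look for an uncommented "key =" line with optional whitespace.
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf"; then
      echo "missing: ${key}"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (illustrative):
#   check_ceph_network_conf initial-ceph.conf && echo "all four values are set"
```

The function prints one line for each missing option and returns a non-zero status if any option is absent.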

3.8. Configuring a CRUSH hierarchy for an OSD

You can configure a custom Controlled Replication Under Scalable Hashing (CRUSH) hierarchy during OSD deployment to add the OSD location attribute to the Ceph Storage cluster hosts specification. The location attribute configures where the OSD is placed within the CRUSH hierarchy.

The location attribute sets only the initial CRUSH location. Subsequent changes of the attribute are ignored.

Procedure

- Log in to the undercloud node as the stack user.

- Source the stackrc undercloud credentials file:

$ source ~/stackrc

- Create a configuration file to define the custom CRUSH hierarchy, for example, crush_hierarchy.yaml.

- Add the following configuration to the file:

<osd_host>:
  root: default
  rack: <rack_num>
<osd_host>:
  root: default
  rack: <rack_num>
<osd_host>:
  root: default
  rack: <rack_num>

  - Replace <osd_host> with the hostnames of the nodes where the OSDs are deployed, for example, ceph-0.

  - Replace <rack_num> with the number of the rack where the OSDs are deployed, for example, r0.

- Deploy the Ceph cluster with your custom OSD layout:

openstack overcloud ceph deploy \
  deployed_metal.yaml \
  -o deployed_ceph.yaml \
  --osd-spec osd_spec.yaml \
  --crush-hierarchy crush_hierarchy.yaml

The Ceph cluster is created with the custom OSD layout.

The example file above would result in the following OSD layout.

ID  CLASS  WEIGHT   TYPE NAME                  STATUS  REWEIGHT  PRI-AFF
-1         0.02939  root default
-3         0.00980      rack r0
-2         0.00980          host ceph-node-00
 0    hdd  0.00980              osd.0              up   1.00000  1.00000
-5         0.00980      rack r1
-4         0.00980          host ceph-node-01
 1    hdd  0.00980              osd.1              up   1.00000  1.00000
-7         0.00980      rack r2
-6         0.00980          host ceph-node-02
 2    hdd  0.00980              osd.2              up   1.00000  1.00000

Device classes are automatically detected by Ceph but CRUSH rules are associated with pools. Pools are still defined and created using the CephCrushRules parameter during the overcloud deployment.

Additional resources

See Red Hat Ceph Storage workload considerations in the Red Hat Ceph Storage Installation Guide for additional information.

3.9. Configuring Ceph service placement options

You can define which nodes run which Ceph services by using a custom roles file. A custom roles file is necessary only when the default role assignments do not suit your environment. For example, when deploying hyperconverged nodes, the predeployed compute nodes should be labeled as osd with a service type of osd to have a placement list containing a list of compute instances.

Service definitions in the roles_data.yaml file determine which bare metal instance runs which service. By default, the Controller role has the CephMon and CephMgr services while the CephStorage role has the CephOSD service. Unlike most composable services, Ceph services do not require heat output to determine how services are configured. The roles_data.yaml file always determines Ceph service placement even though the Ceph deployment process occurs before heat runs.

Procedure

- Log in to the undercloud node as the stack user.

- Create a YAML format file that defines the custom roles.

- Deploy the configuration file:

      $ openstack overcloud ceph deploy \
        deployed_metal.yaml \
        -o deployed_ceph.yaml \
        --roles-data custom_roles.yaml

3.10. Configuring SSH user options for Ceph nodes

The openstack overcloud ceph deploy command creates the user and keys and distributes them to the hosts, so you do not need to perform the procedures in this section. However, creating the user yourself is a supported option.

Cephadm connects to all managed remote Ceph nodes using SSH. The Red Hat Ceph Storage cluster deployment process creates an account and SSH key pair on all overcloud Ceph nodes. The key pair is then given to Cephadm so it can communicate with the nodes.

3.10.1. Creating the SSH user before Red Hat Ceph Storage cluster creation

You can create the SSH user before Ceph cluster creation with the openstack overcloud ceph user enable command.

Procedure

- Log in to the undercloud node as the stack user.

- Create the SSH user:

      $ openstack overcloud ceph user enable <specification_file>

  - Replace <specification_file> with the path and name of a Ceph specification file that describes the cluster where the user is created and the public SSH keys are installed. The specification file provides the information to determine which nodes to modify and whether the private keys are required.

  For more information on creating a specification file, see Generating the service specification.

  Note

  The default user name is ceph-admin. To specify a different user name, use the --cephadm-ssh-user option:

      openstack overcloud ceph user enable --cephadm-ssh-user <custom_user_name>

  It is recommended to use the default name and not use the --cephadm-ssh-user parameter.

  If the user is created in advance, use the --skip-user-create parameter when executing openstack overcloud ceph deploy.

3.10.2. Disabling the SSH user

Disabling the SSH user disables cephadm. Disabling cephadm removes the ability of the service to administer the Ceph cluster and prevents associated commands from working. It also prevents Ceph node overcloud scaling operations. It also removes all public and private SSH keys.

Procedure

- Log in to the undercloud node as the stack user.

- Use the command openstack overcloud ceph user disable --fsid <FSID> <specification_file> to disable the SSH user.

  - Replace <FSID> with the File System ID of the cluster. The FSID is a unique identifier for the cluster. The FSID is located in the deployed_ceph.yaml environment file.

  - Replace <specification_file> with the path and name of a Ceph specification file that describes the cluster where the user was created.

  Important

  The openstack overcloud ceph user disable command is not recommended unless it is necessary to disable cephadm.

  Important

  To enable the SSH user and Ceph orchestrator service after being disabled, use the openstack overcloud ceph user enable --fsid <FSID> <specification_file> command.

  Note

  This command requires the path to a Ceph specification file to determine:

  - Which hosts require the SSH user.

  - Which hosts have the _admin label and require the private SSH key.

  - Which hosts require the public SSH key.

  For more information about specification files and how to generate them, see Generating the service specification.
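The FSID can be read from the deployed_ceph.yaml file before you run the disable or enable command. The following sketch assumes that the file stores the value under a CephClusterFSID key in parameter_defaults; check the key name in your own file before relying on it. The stand-in file and the UUID are placeholders created only for the demonstration.

```shell
# Create a stand-in deployed_ceph.yaml (placeholder content, not real command output).
workdir=$(mktemp -d)
cat > "$workdir/deployed_ceph.yaml" <<'EOF'
parameter_defaults:
  CephClusterFSID: 11111111-2222-3333-4444-555555555555
EOF

# Extract the value of the assumed CephClusterFSID key.
fsid=$(awk '$1 == "CephClusterFSID:" {print $2}' "$workdir/deployed_ceph.yaml")
echo "FSID: $fsid"

# The value would then be supplied to the command from the procedure, for example:
#   openstack overcloud ceph user disable --fsid "$fsid" <specification_file>
```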

3.11. Accessing Ceph Storage containers

Preparing container images in the Installing and managing Red Hat OpenStack Platform with director guide contains procedures and information on how to prepare the registry and your undercloud and overcloud configuration to use container images. Use the information in this section to adapt these procedures to access Ceph Storage containers.

There are two options for accessing Ceph Storage containers from the overcloud.

3.11.1. Downloading containers directly from a remote registry

You can configure Ceph to download containers directly from a remote registry.

Procedure

- Create a containers-prepare-parameter.yaml file using the procedure Preparing container images in the Installing and managing Red Hat OpenStack Platform with director guide.

- Add the remote registry credentials to the containers-prepare-parameter.yaml file using the ContainerImageRegistryCredentials parameter as described in Obtaining container images from private registries.

- When you deploy Ceph, pass the containers-prepare-parameter.yaml file using the openstack overcloud ceph deploy command.

      openstack overcloud ceph deploy \
        --container-image-prepare containers-prepare-parameter.yaml

  Note

  If you do not cache the containers on the undercloud, as described in Caching containers on the undercloud, then you should pass the same containers-prepare-parameter.yaml file to the openstack overcloud ceph deploy command when you deploy Ceph. This will cache containers on the undercloud.

Result

The credentials in the containers-prepare-parameter.yaml file are used by the cephadm command to authenticate to the remote registry and download the Ceph Storage container.

3.11.2. Caching containers on the undercloud

The procedure Modifying images during preparation describes using the following command:

    sudo openstack tripleo container image prepare \
      -e ~/containers-prepare-parameter.yaml \

If you do not use the --container-image-prepare option to provide authentication credentials to the openstack overcloud ceph deploy command and directly download the Ceph containers from a remote registry, as described in Downloading containers directly from a remote registry, you must run the sudo openstack tripleo container image prepare command before deploying Ceph.

Chapter 4. Customizing the Red Hat Ceph Storage cluster

Director deploys Red Hat Ceph Storage with a default configuration. You can customize this default configuration.

Prerequisites

- Ceph Storage nodes deployed with their storage network configured.

- The deployed bare metal file output by openstack overcloud node provision -o ~/deployed_metal.yaml ….

4.1. Configuration options

There are several options for configuring the Red Hat Ceph Storage cluster.

Procedure

- Log in to the undercloud node as the stack user.

- Optional: Use a standard format initialization (ini) file to configure the Ceph cluster.

  - Create the file with configuration options.

    The following is an example of a simple configuration file:

        [global]
        osd_crush_chooseleaf_type = 0
        log_file = /var/log/ceph/$cluster-$type.$id.log

        [mon]
        mon_cluster_log_to_syslog = true

  - Save the configuration file.

  - Use the openstack overcloud ceph deploy --config <configuration_file_name> command to deploy the configuration.

    Replace <configuration_file_name> with the name of the file you created.

        $ openstack overcloud ceph deploy --config initial-ceph.conf

- Optional: Send configuration values to the cephadm bootstrap command:

      openstack overcloud ceph deploy --force \
        --cephadm-extra-args '<optional_arguments>' \

  Replace <optional_arguments> with the configuration values to provide to the underlying command.

  Note

  For example, to pass the arguments --log-to-file and --skip-prepare-host, use the following command:

      openstack overcloud ceph deploy --force \
        --cephadm-extra-args '--log-to-file --skip-prepare-host' \

4.2. Generating the service specification (optional)

The Red Hat Ceph Storage cluster service specification is a YAML file that describes the deployment of Ceph Storage services. It is automatically generated by tripleo before the Ceph Storage cluster is deployed. It does not usually have to be generated separately.

A custom service specification can be created to customize the Red Hat Ceph Storage cluster.

Procedure

- Log in to the undercloud node as the stack user.

- Generate the specification file:

      openstack overcloud ceph spec deployed_metal.yaml -o <specification_file>

  - Replace <specification_file> with the name of the file to generate with the current service specification.

  Note

  The deployed_metal.yaml comes from the output of the openstack overcloud node provision command.

- Edit the generated file with the required configuration.

- Deploy the custom service specification:

      openstack overcloud ceph deploy \
        deployed_metal.yaml \
        -o deployed_ceph.yaml \
        --ceph-spec <specification_file>

  - Replace <specification_file> with the name of the custom service specification file.

4.3. Ceph containers for Red Hat OpenStack Platform with Red Hat Ceph Storage

You must have a Ceph Storage container to configure Red Hat OpenStack Platform (RHOSP) to use Red Hat Ceph Storage with NFS Ganesha. You do not require a Ceph Storage container if the external Ceph Storage cluster only provides Block (through RBD), Object (through RGW), or File (through native CephFS) storage.

RHOSP 17.1 will deploy Red Hat Ceph Storage 6.x (Ceph package 17.x). The Ceph Storage 6.x containers are hosted at registry.redhat.io, a registry that requires authentication. For more information, see Container image preparation parameters.

4.4. Configuring advanced OSD specifications

Configure an advanced OSD specification when the default specification does not provide the necessary functionality for your Ceph Storage cluster.

Procedure

- Log in to the undercloud node as the stack user.

- Create a YAML format file that defines the advanced OSD specification.

  The following is an example of a custom OSD specification.

      data_devices:
        rotational: 1
      db_devices:
        rotational: 0

  This example creates an OSD specification where all rotating devices are data devices and all non-rotating devices are used as shared devices. When the dynamic Ceph service specification is built, the contents of the specification file are appended to the section of the specification where the service_type is osd.

- Save the specification file.

- Deploy the specification:

      openstack overcloud ceph deploy \
        --osd-spec <osd_specification_file>

  Replace <osd_specification_file> with the name of the specification file you created.

      $ openstack overcloud ceph deploy \
        --osd-spec osd_spec.yaml \

Additional resources

For a list of OSD-related attributes used to configure OSDs in the service specification, see Advanced service specifications and filters for deploying OSDs in the Red Hat Ceph Storage Operations Guide.

4.5. Migrating from node-specific overrides

Node-specific overrides were used to manage non-homogeneous server hardware before Red Hat OpenStack Platform 17.0. This is now done with a custom OSD specification file. See Configuring advanced OSD specifications for information on how to create a custom OSD specification file.

4.6. Enabling Ceph on-wire encryption

Enable encryption for all Ceph Storage traffic using the secure mode of the messenger version 2 protocol. To enable Ceph on-wire encryption, configure Ceph Storage as described in Encryption and Key Management in the Red Hat Ceph Storage Data Security and Hardening Guide.

Additional resources

For more information about Ceph on-wire encryption, see Ceph on-wire encryption in the Red Hat Ceph Storage Architecture Guide.

Chapter 5. Customizing the storage service

The director heat template collection contains the necessary templates and environment files to enable a basic Ceph Storage configuration.

Director uses the /usr/share/openstack-tripleo-heat-templates/environments/cephadm/cephadm.yaml environment file to add configuration to the Ceph Storage cluster deployed by openstack overcloud ceph deploy and integrate it with your overcloud during deployment.

5.1. Configuring a custom environment file

Director applies basic, default settings to the deployed Red Hat Ceph Storage cluster. You must define additional configuration in a custom environment file.

Procedure

- Log in to the undercloud as the stack user.

- Create a file to define the custom configuration.

      vi /home/stack/templates/storage-config.yaml

- Add a parameter_defaults section to the file.

- Add the custom configuration parameters. For more information about parameter definitions, see Overcloud parameters.

      parameter_defaults:
        CinderEnableIscsiBackend: false
        CinderEnableRbdBackend: true
        CinderBackupBackend: ceph
        NovaEnableRbdBackend: true
        GlanceBackend: rbd

  Note

  Parameters defined in a custom configuration file override any corresponding default settings in /usr/share/openstack-tripleo-heat-templates/environments/cephadm/cephadm.yaml.

- Save the file.

Additional resources

The custom configuration is applied during overcloud deployment.

5.2. Red Hat Ceph Storage placement groups

Placement groups (PGs) facilitate dynamic and efficient object tracking at scale. In the event of OSD failure or Ceph Storage cluster rebalancing, Ceph can move or replicate a placement group and the contents of the placement group. This allows a Ceph Storage cluster to rebalance and recover efficiently.

The placement group and replica count settings are not changed from the defaults unless the following parameters are included in a Ceph configuration file:

- osd_pool_default_size

- osd_pool_default_pg_num

- osd_pool_default_pgp_num

When the overcloud is deployed with the openstack overcloud deploy command, a pool is created for every enabled Red Hat OpenStack Platform service. For example, the following command creates pools for the Compute service (nova), the Block Storage service (cinder), and the Image service (glance):

    openstack overcloud deploy --templates \
      -e /usr/share/openstack-tripleo-heat-templates/environments/cephadm/cephadm-rbd-only.yaml

Adding -e environments/cinder-backup.yaml to the command creates a pool called backups:

    openstack overcloud deploy --templates \
      -e /usr/share/openstack-tripleo-heat-templates/environments/cephadm/cephadm-rbd-only.yaml \
      -e environments/cinder-backup.yaml

It is not necessary to configure a placement group number per pool; the pg_autoscale_mode attribute is enabled by default. However, it is recommended to configure the target_size_ratio or pg_num attributes. This minimizes data rebalancing.

To set the target_size_ratio attribute per pool, use a configuration file entry similar to the following example:

    parameter_defaults:
      CephPools:
        - name: volumes
          target_size_ratio: 0.4
          application: rbd
        - name: images
          target_size_ratio: 0.1
          application: rbd
        - name: vms
          target_size_ratio: 0.3
          application: rbd

In this example, the percentage of data used per service will be:

- Cinder volumes - 40%

- Glance images - 10%

- Nova vms - 30%

- Free space for other pools - 20%
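The free-space figure is what remains after the configured ratios are summed. As a quick check of the arithmetic, the following sketch uses only the three ratios from the example and follows this section's reading of each ratio as a fraction of the cluster.

```shell
# Sum the target_size_ratio values from the example and derive the remainder.
ratios="0.4 0.1 0.3"   # volumes, images, vms
free=$(echo "$ratios" | awk '{ s = 0; for (i = 1; i <= NF; i++) s += $i; printf "%.0f", (1 - s) * 100 }')
echo "Free space for other pools: ${free}%"
```

If you change a ratio, rerunning the calculation shows how much capacity is left for pools that are not listed under CephPools.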

Set these values based on your expected usage. If you do not override the CephPools parameter, each pool uses the default placement group number. Although the autoscaler adjusts this number automatically over time based on usage, each adjustment moves data within the Ceph cluster, which uses computational resources.

If you prefer to set a placement group number instead of a target size ratio, replace target_size_ratio in the example with pg_num. Use a different integer per pool based on your expected usage.
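A pg_num variant of the earlier example might look like the following sketch. The pool names come from the example above, but the file name and the pg_num integers are hypothetical and chosen only for illustration; size them for your own cluster.

```shell
# Write a hypothetical environment file that sets pg_num per pool.
workdir=$(mktemp -d)
cat > "$workdir/ceph-pools.yaml" <<'EOF'
parameter_defaults:
  CephPools:
    - name: volumes
      pg_num: 128   # illustrative value
      application: rbd
    - name: images
      pg_num: 32    # illustrative value
      application: rbd
    - name: vms
      pg_num: 64    # illustrative value
      application: rbd
EOF

# Confirm that each pool has a pg_num entry and none still has a target_size_ratio entry.
pg_entries=$(grep -c 'pg_num:' "$workdir/ceph-pools.yaml")
ratio_entries=$(grep -c 'target_size_ratio:' "$workdir/ceph-pools.yaml" || true)
echo "pg_num entries: $pg_entries, target_size_ratio entries: $ratio_entries"
```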

See the Red Hat Ceph Storage Hardware Guide for Red Hat Ceph Storage processor, network interface card, and power management interface recommendations.

5.3. Enabling Ceph Metadata Server

The Ceph Metadata Server (MDS) runs the ceph-mds daemon. This daemon manages metadata related to files stored on CephFS. CephFS can be consumed natively or through the NFS protocol.

Red Hat supports deploying Ceph MDS with the native CephFS and CephFS NFS back ends for the Shared File Systems service (manila).

Procedure

To enable Ceph MDS, use the following environment file when you deploy the overcloud:

/usr/share/openstack-tripleo-heat-templates/environments/cephadm/ceph-mds.yaml

By default, Ceph MDS is deployed on the Controller node. You can deploy Ceph MDS on its own dedicated node.

5.4. Ceph Object Gateway object storage

The Ceph Object Gateway (RGW) provides an interface to access object storage capabilities within a Red Hat Ceph Storage cluster.

When you use director to deploy Ceph, director automatically enables RGW. This is a direct replacement for the Object Storage service (swift). Services that normally use the Object Storage service can use RGW instead without additional configuration. The Object Storage service remains available as an object storage option for upgraded Ceph clusters.

There is no requirement for a separate RGW environment file to enable it. For more information about environment files for other object storage options, see Section 5.5, “Deployment options for Red Hat OpenStack Platform object storage”.

By default, Ceph Storage allows 250 placement groups per Object Storage Daemon (OSD). When you enable RGW, Ceph Storage creates the following six additional pools required by RGW:

- .rgw.root

- <zone_name>.rgw.control

- <zone_name>.rgw.meta

- <zone_name>.rgw.log

- <zone_name>.rgw.buckets.index

- <zone_name>.rgw.buckets.data

In your deployment, <zone_name> is replaced with the name of the zone to which the pools belong.
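To see how the names expand for a concrete zone, the following sketch prints the six pool names listed above. The zone name default is an assumption used only for illustration, and the rgw_pools function is a hypothetical helper, not part of any Ceph or director tooling.

```shell
# Print the six RGW pool names for a given zone.
# "default" is an assumed zone name used only for illustration.
rgw_pools() {
  zone=$1
  echo ".rgw.root"
  for suffix in control meta log buckets.index buckets.data; do
    echo "${zone}.rgw.${suffix}"
  done
}

rgw_pools default
```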

Additional resources

- For more information about RGW, see the Red Hat Ceph Storage Object Gateway Guide.

- For more information about using RGW instead of Swift, see the Backing up Block Storage volumes guide.

5.5. Deployment options for Red Hat OpenStack Platform object storage

There are three options for deploying overcloud object storage:

Ceph Object Gateway (RGW)

To deploy RGW as described in Section 5.4, “Ceph Object Gateway object storage”, include the following environment file during overcloud deployment:

-e environments/cephadm/cephadm.yaml

This environment file configures both Ceph block storage (RBD) and RGW.

Object Storage service (swift)

To deploy the Object Storage service (swift) instead of RGW, include the following environment file during overcloud deployment:

-e environments/cephadm/cephadm-rbd-only.yaml

The cephadm-rbd-only.yaml file configures Ceph RBD but not RGW.

Note

If you used the Object Storage service (swift) before upgrading your Red Hat Ceph Storage cluster, you can continue to use the Object Storage service (swift) instead of RGW by replacing the environments/ceph-ansible/ceph-ansible.yaml file with the environments/cephadm/cephadm-rbd-only.yaml during the upgrade. For more information, see Performing a minor update of Red Hat OpenStack Platform.

Red Hat OpenStack Platform does not support migration from the Object Storage service (swift) to Ceph Object Gateway (RGW).

No object storage

To deploy Ceph with RBD but not with RGW or the Object Storage service (swift), include the following environment files during overcloud deployment:

    -e environments/cephadm/cephadm-rbd-only.yaml
    -e environments/disable-swift.yaml

The cephadm-rbd-only.yaml file configures RBD but not RGW. The disable-swift.yaml file ensures that the Object Storage service (swift) does not deploy.
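The three options differ only in which environment files are passed to the overcloud deployment. The following sketch summarizes that mapping. The function name and the option keywords rgw, swift, and none are hypothetical labels introduced for this illustration; the environment file paths are the ones named in this section.

```shell
# Map an object storage choice to the -e arguments named in this section.
# The keywords rgw, swift, and none are hypothetical labels for this sketch.
object_storage_env_args() {
  case "$1" in
    rgw)   echo "-e environments/cephadm/cephadm.yaml" ;;
    swift) echo "-e environments/cephadm/cephadm-rbd-only.yaml" ;;
    none)  echo "-e environments/cephadm/cephadm-rbd-only.yaml -e environments/disable-swift.yaml" ;;
    *)     echo "unknown option: $1" >&2; return 1 ;;
  esac
}

object_storage_env_args none
```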

5.6. Configuring the Block Storage Backup Service to use Ceph

The Block Storage Backup service (cinder-backup) is disabled by default. It must be enabled to use it with Ceph.

Procedure

To enable the Block Storage Backup service (cinder-backup), use the following environment file when you deploy the overcloud:

/usr/share/openstack-tripleo-heat-templates/environments/cinder-backup.yaml

5.7. Configuring multiple bonded interfaces for Ceph nodes

Use a bonded interface to combine multiple NICs and add redundancy to a network connection. If you have enough NICs on your Ceph nodes, you can create multiple bonded interfaces on each node to expand redundancy capability.

Use a bonded interface for each network connection the node requires. This provides both redundancy and a dedicated connection for each network.

See Provisioning the overcloud networks in the Installing and managing Red Hat OpenStack Platform with director guide for information and procedures.

Chapter 6. Deploying the Shared File Systems service with native CephFS

CephFS is the highly scalable, open-source, distributed file system component of Red Hat Ceph Storage, a unified distributed storage platform. Ceph Storage implements object, block, and file storage using Reliable Autonomic Distributed Object Store (RADOS). CephFS, which is POSIX compatible, provides file access to a Ceph Storage cluster.

The Shared File Systems service (manila) enables users to create shares in CephFS and access them using the native CephFS protocol. The Shared File Systems service manages the life cycle of these shares from within OpenStack.

With this release, director can deploy the Shared File Systems service with a native CephFS back end on the overcloud.

This chapter pertains to the deployment and use of native CephFS to provide a self-service Shared File Systems service in your Red Hat OpenStack Platform (RHOSP) cloud through the native CephFS NAS protocol. This type of deployment requires guest VM access to the Ceph public network and infrastructure. Deploy native CephFS with trusted OpenStack Platform tenants only, because it requires a permissive trust model that is not suitable for general purpose OpenStack Platform deployments. For general purpose OpenStack Platform deployments that use a conventional tenant trust model, you can deploy CephFS through the NFS protocol.

6.1. CephFS with native driver

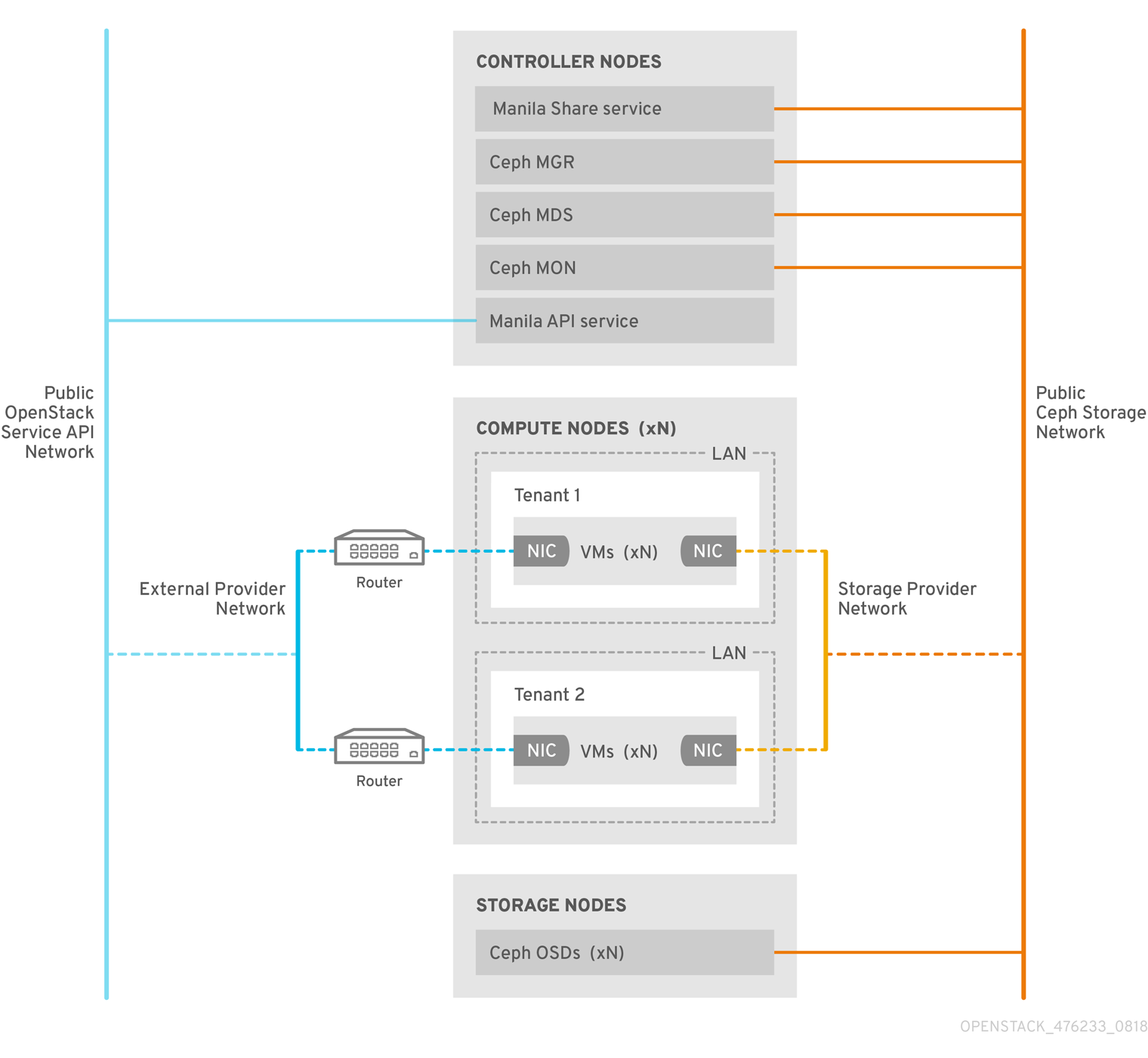

The CephFS native driver combines the OpenStack Shared File Systems service (manila) and Red Hat Ceph Storage. When you use Red Hat OpenStack Platform (RHOSP) director, the Controller nodes host the Ceph daemons, such as the manager, metadata servers (MDS), and monitors (MON), and the Shared File Systems services.

Compute nodes can host one or more projects. Projects, which were formerly referred to as tenants, are represented in the graphic by the white boxes. Projects contain user-managed VMs, which are represented by gray boxes with two NICs. To access the ceph and manila daemons, projects connect to the daemons over the public Ceph storage network.

On this network, you can access data on the storage nodes provided by the Ceph Object Storage Daemons (OSDs). Instances, or virtual machines (VMs), that are hosted on the project boot with two NICs: one dedicated to the storage provider network and the second to project-owned routers to the external provider network.

The storage provider network connects the VMs that run on the projects to the public Ceph storage network. The Ceph public network provides back-end access to the Ceph object storage nodes, metadata servers (MDS), and Controller nodes.

Using the native driver, CephFS relies on cooperation with the clients and servers to enforce quotas, guarantee project isolation, and provide security. CephFS with the native driver works well in an environment with trusted end users on a private cloud. This configuration requires software that is running under user control to cooperate and work correctly.

6.2. Native CephFS back-end security

The native CephFS back end requires a permissive trust model for Red Hat OpenStack Platform (RHOSP) tenants. This trust model is not appropriate for general purpose OpenStack Platform clouds that deliberately block users from directly accessing the infrastructure behind the services that the OpenStack Platform provides.

With native CephFS, user Compute instances connect directly to the Ceph public network where the Ceph service daemons are exposed. CephFS clients that run on user VMs interact cooperatively with the Ceph service daemons, and they interact directly with RADOS to read and write file data blocks.

CephFS quotas, which enforce Shared File Systems (manila) share sizes, are enforced on the client side, such as on VMs that are owned by RHOSP users. The client side software on user VMs might not be current, which can leave critical cloud infrastructure vulnerable to malicious or inadvertently harmful software that targets the Ceph service ports.

Deploy native CephFS as a back end only in environments in which trusted users keep client-side software up to date. Ensure that no software that can impact the Red Hat Ceph Storage infrastructure runs on your VMs.

For a general purpose RHOSP deployment that serves many untrusted users, deploy CephFS-NFS. For more information about using CephFS-NFS, see Deploying Red Hat Ceph Storage and Red Hat OpenStack Platform together with director.

Users might not keep client-side software current, and they might fail to exclude harmful software from their VMs, but using CephFS-NFS, they only have access to the public side of an NFS server, not to the Ceph infrastructure itself. NFS does not require the same kind of cooperative client and, in the worst case, an attack from a user VM can damage the NFS gateway without damaging the Ceph Storage infrastructure behind it.

You can expose the native CephFS back end to all trusted users, but you must enact the following security measures:

- Configure the storage network as a provider network.

- Impose role-based access control (RBAC) policies to secure the Storage provider network.

- Create a private share type.

6.3. Native CephFS deployment

A typical native Ceph file system (CephFS) installation in a Red Hat OpenStack Platform (RHOSP) environment includes the following components:

- RHOSP Controller nodes that run containerized Ceph metadata server (MDS), Ceph monitor (MON) and Shared File Systems (manila) services. Some of these services can coexist on the same node or they can have one or more dedicated nodes.

- Ceph Storage cluster with containerized object storage daemons (OSDs) that run on Ceph Storage nodes.

- An isolated storage network that serves as the Ceph public network on which the clients can communicate with Ceph service daemons. To facilitate this, the storage network is made available as a provider network for users to connect their VMs and mount CephFS shares.

You cannot use the Shared File Systems service (manila) with the CephFS native driver to serve shares to OpenShift Container Platform through Manila CSI, because Red Hat does not support this type of deployment. For more information, contact Red Hat Support.

The Shared File Systems (manila) service provides APIs that allow the tenants to request file system shares, which are fulfilled by driver modules. The driver for Red Hat CephFS, manila.share.drivers.cephfs.driver.CephFSDriver, allows the Shared File Systems service to use native CephFS as a back end. You can install native CephFS in an integrated deployment managed by director.

When director deploys the Shared File Systems service with a CephFS back end on the overcloud, it automatically creates the required data center storage network. However, you must create the corresponding storage provider network on the overcloud.

For more information about network planning, see Overcloud networks in Installing and managing Red Hat OpenStack Platform with director.

Although you can manually configure the Shared File Systems service by editing the /var/lib/config-data/puppet-generated/manila/etc/manila/manila.conf file for the node, any settings can be overwritten by the Red Hat OpenStack Platform director in future overcloud updates. Red Hat only supports deployments of the Shared File Systems service that are managed by director.

6.4. Requirements

You can deploy a native CephFS back end with new or existing Red Hat OpenStack Platform (RHOSP) environments if you meet the following requirements:

- Use Red Hat OpenStack Platform version 17.0 or later.

- Configure a new Red Hat Ceph Storage cluster at the same time as the native CephFS back end. For information about how to deploy Ceph Storage, see Deploying Red Hat Ceph Storage and Red Hat OpenStack Platform together with director.

The RHOSP Shared File Systems service (manila) with the native CephFS back end is supported for use with Red Hat Ceph Storage version 5.2 or later. For more information about how to determine the version of Ceph Storage installed on your system, see Red Hat Ceph Storage releases and corresponding Ceph package versions.

- Install the Shared File Systems service on a Controller node. This is the default behavior.

- Use only a single instance of a CephFS back end for the Shared File Systems service.

6.5. File shares

The Shared File Systems service (manila), Ceph File System (CephFS), and CephFS-NFS manage shares differently.

The Shared File Systems service provides shares, where a share is an individual file system namespace and a unit of storage with a defined size. Shared file system storage allows multiple clients to connect, read, and write data to any given share, but you must give each client access to the share through the Shared File Systems service access control APIs before they can connect.

CephFS manages a share like a directory with a defined quota and a layout that points to a particular storage pool or namespace. CephFS quotas limit the size of a directory to the size of the share that the Shared File Systems service creates.

You control access to native CephFS shares by using Metadata Server (MDS) authentication capabilities. With native CephFS, file shares are provisioned and accessed through the CephFS protocol. Access control is performed with a CephX authentication scheme that uses CephFS usernames.

6.6. Network isolation for native CephFS

Native CephFS deployments use the isolated storage network deployed by director as the Red Hat Ceph Storage public network. Clients use this network to communicate with various Ceph Storage infrastructure service daemons. For more information about isolating networks, see Network isolation in Installing and managing Red Hat OpenStack Platform with director.

6.7. Deploying the native CephFS environment

When you are ready to deploy the environment, use the openstack overcloud deploy command with the custom environments and roles required to configure the native CephFS back end.

The openstack overcloud deploy command has the following options in addition to other required options.

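| Action | Option | Additional Information |
|---|---|---|
| Specify the network configuration with network_data.yaml. | -n /usr/share/openstack-tripleo-heat-templates/network_data.yaml | You can use a custom environment file to override values for the default networks specified in this network data environment file. This is the default network data file that is available when you use isolated networks. You can omit this file from the openstack overcloud deploy command. |
| Deploy the Ceph daemons. | -e /usr/share/openstack-tripleo-heat-templates/environments/cephadm/cephadm.yaml | Initiating overcloud deployment in Deploying Red Hat Ceph Storage and Red Hat OpenStack Platform together with director |
| Deploy the Ceph metadata server with ceph-mds.yaml. | -e /usr/share/openstack-tripleo-heat-templates/environments/cephadm/ceph-mds.yaml | Initiating overcloud deployment in Deploying Red Hat Ceph Storage and Red Hat OpenStack Platform together with director |
| Deploy the manila service with the native CephFS back end. | -e /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config.yaml | |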

The following example shows an openstack overcloud deploy command that includes options to deploy a Ceph cluster, Ceph MDS, the native CephFS back end, and the networks required for the Ceph cluster:

    [stack@undercloud ~]$ openstack overcloud deploy \
    ...
    -n /usr/share/openstack-tripleo-heat-templates/network_data.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
    -e /home/stack/network-environment.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/cephadm/cephadm.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/cephadm/ceph-mds.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config.yaml

For more information about the openstack overcloud deploy command, see Provisioning and deploying your overcloud in Installing and managing Red Hat OpenStack Platform with director.
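Because a mistyped template path only surfaces once the deployment command runs, it can help to confirm that every file exists first. The following is a minimal sketch of such a pre-flight check, not part of director: the function name check_env_files is hypothetical, and the paths are the ones used in the example command above.

```shell
# Pre-flight check (illustrative): report any deployment file that is
# missing or unreadable before running "openstack overcloud deploy".
# check_env_files is a hypothetical helper, not a director command.
check_env_files() {
    missing=0
    for path in "$@"; do
        if [ ! -r "$path" ]; then
            echo "missing or unreadable: $path" >&2
            missing=1
        fi
    done
    return "$missing"
}

THT=/usr/share/openstack-tripleo-heat-templates

# Paths taken from the example deploy command.
if check_env_files \
    "$THT/network_data.yaml" \
    "$THT/environments/network-isolation.yaml" \
    "/home/stack/network-environment.yaml" \
    "$THT/environments/cephadm/cephadm.yaml" \
    "$THT/environments/cephadm/ceph-mds.yaml" \
    "$THT/environments/manila-cephfsnative-config.yaml"
then
    echo "all deployment files are present"
else
    echo "correct the paths listed above before deploying" >&2
fi
```

The check only tests that each file is readable. It does not validate the YAML content or the order of the -e options.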

6.8. Native CephFS back-end environment file

The environment file for defining a native CephFS back end, manila-cephfsnative-config.yaml, is located in the following path of an undercloud node: /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config.yaml.

The manila-cephfsnative-config.yaml environment file contains settings relevant to the deployment of the Shared File Systems service. The back end default settings should work for most environments.

The example shows the default values that director uses during deployment of the Shared File Systems service:

    [stack@undercloud ~]$ cat /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config.yaml
    # A Heat environment file which can be used to enable a
    # a Manila CephFS Native driver backend.
    resource_registry:
      OS::TripleO::Services::ManilaApi: ../deployment/manila/manila-api-container-puppet.yaml
      OS::TripleO::Services::ManilaScheduler: ../deployment/manila/manila-scheduler-container-puppet.yaml
      # Only manila-share is pacemaker managed:
      OS::TripleO::Services::ManilaShare: ../deployment/manila/manila-share-pacemaker-puppet.yaml
      OS::TripleO::Services::ManilaBackendCephFs: ../deployment/manila/manila-backend-cephfs.yaml

    parameter_defaults:
      ManilaCephFSBackendName: cephfs  # (1)
      ManilaCephFSDriverHandlesShareServers: false  # (2)
      ManilaCephFSCephFSAuthId: 'manila'  # (3)
      ManilaCephFSCephFSEnableSnapshots: true  # (4)
      ManilaCephFSCephVolumeMode: '0755'  # (5)
      # manila cephfs driver supports either native cephfs backend - 'CEPHFS'
      # (users mount shares directly from ceph cluster), or nfs-ganesha backend -
      # 'NFS' (users mount shares through nfs-ganesha server)
      ManilaCephFSCephFSProtocolHelperType: 'CEPHFS'  # (6)

The parameter_defaults header signifies the start of the configuration. Specifically, settings under this header let you override default values set in resource_registry. This includes values set by OS::TripleO::Services::ManilaBackendCephFs, which sets defaults for a CephFS back end.

1. ManilaCephFSBackendName sets the name of the manila configuration of your CephFS back end. In this case, the default back end name is cephfs.

2. ManilaCephFSDriverHandlesShareServers controls the lifecycle of the share server. When set to false, the driver does not handle the lifecycle. This is the only supported option for CephFS back ends.

3. ManilaCephFSCephFSAuthId defines the Ceph auth ID that the director creates for the manila service to access the Ceph cluster.

4. ManilaCephFSCephFSEnableSnapshots controls snapshot activation. Snapshots are supported with Ceph Storage 4.1 and later, but the value of this parameter defaults to false. You can set the value to true to ensure that the driver reports the snapshot_support capability to the manila scheduler.

5. ManilaCephFSCephVolumeMode controls the UNIX permissions to set against the manila share created on the native CephFS back end. The value defaults to 755.

6. ManilaCephFSCephFSProtocolHelperType must be set to CEPHFS to use the native CephFS driver.
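To change any of these values without editing the shipped template, one option is a small custom environment file that you pass with -e after manila-cephfsnative-config.yaml, because later environment files take precedence over earlier ones. The following sketch writes such a file from the shell. The function name write_cephfs_overrides, the file name manila-cephfs-overrides.yaml, and the 0750 mode are illustrative assumptions, not recommended values.

```shell
# Write an override environment file (illustrative values only).
# write_cephfs_overrides is a hypothetical helper; $1 is the target path.
write_cephfs_overrides() {
    cat > "$1" <<'EOF'
parameter_defaults:
  # Report the snapshot_support capability to the manila scheduler.
  ManilaCephFSCephFSEnableSnapshots: true
  # Example of tighter share permissions; choose a mode that suits your site.
  ManilaCephFSCephVolumeMode: '0750'
EOF
}

# Hypothetical location; set OVERRIDES_FILE to write elsewhere.
write_cephfs_overrides "${OVERRIDES_FILE:-./manila-cephfs-overrides.yaml}"
```

You would then append the generated file to the deployment command as the last -e option so that its values override the defaults.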

For more information about environment files, see Environment Files in the Installing and managing Red Hat OpenStack Platform with director guide.

Chapter 7. Deploying the Shared File Systems service with CephFS-NFS

When you use the Shared File Systems service (manila) with Ceph File System (CephFS) through an NFS gateway (NFS-Ganesha), you can use the same Red Hat Ceph Storage cluster that you use for block and object storage to provide file shares through the NFS protocol.

CephFS-NFS has been fully supported since Red Hat OpenStack Platform (RHOSP) version 13. The RHOSP Shared File Systems service (manila) with CephFS-NFS for RHOSP 17.0 and later is supported for use with Red Hat Ceph Storage version 5.2 or later. For more information about how to determine the version of Ceph Storage installed on your system, see Red Hat Ceph Storage releases and corresponding Ceph package versions.

CephFS is the highly scalable, open-source distributed file system component of Red Hat Ceph Storage, a unified distributed storage platform. Ceph Storage implements object, block, and file storage using Reliable Autonomic Distributed Object Store (RADOS). CephFS, which is POSIX compatible, provides file access to a Ceph Storage cluster.

The Shared File Systems service enables users to create shares in CephFS and access them with NFS 4.1 through user-space NFS server software, NFS-Ganesha. NFS-Ganesha controls access to the shares and exports them to clients through the NFS 4.1 protocol. The Shared File Systems service manages the life cycle of these shares in RHOSP. When cloud administrators configure the service to use CephFS-NFS, these file shares come from the CephFS cluster, but they are created and accessed as familiar NFS shares.

For more information about the Shared File Systems service, see Configuring the Shared File Systems service (manila) in Configuring persistent storage.

7.1. Prerequisites

- You install the Shared File Systems service on Controller nodes, as is the default behavior.

- You must create a StorageNFS network for storage traffic through RHOSP director.

- You install the NFS-Ganesha gateway service on the Pacemaker cluster of the Controller nodes.

- You configure only a single instance of a CephFS back end to use the Shared File Systems service. You can use other non-CephFS back ends with the single CephFS back end.

7.2. CephFS-NFS driver

The CephFS-NFS back end in the Shared File Systems service (manila) is composed of Ceph metadata servers (MDS), the NFS gateway (NFS-Ganesha), and the Red Hat Ceph Storage cluster service components.

The Shared File Systems service CephFS-NFS driver uses NFS-Ganesha to provide NFSv4 protocol access to CephFS shares. The Ceph MDS service maps the directories and file names of the file system to objects that are stored in RADOS clusters. NFS gateways can serve NFS file shares with different storage back ends, such as Ceph. The NFS-Ganesha service runs on the Controller nodes with the Ceph services.

Deployment with an isolated network is optional but recommended. In this scenario, instances are booted with at least two NICs: one NIC connects to the project router and the second NIC connects to the StorageNFS network, which connects directly to NFS-Ganesha. The instance mounts shares by using the NFS protocol. CephFS shares that are hosted on Ceph Object Storage Daemon (OSD) nodes are provided through the NFS gateway.

NFS-Ganesha improves security by preventing user instances from directly accessing the MDS and other Ceph services. Instances do not have direct access to the Ceph daemons.

7.3. Red Hat Ceph Storage services and client access

When you use Red Hat Ceph Storage to provide object and block storage, you require the following services for deployment:

- Ceph monitor (MON)

- Object Storage Daemon (OSD)

- RADOS Gateway (RGW)

- Manager

For native CephFS, you also require the Ceph Storage Metadata Service (MDS), and for CephFS-NFS, you require the NFS-Ganesha service as a gateway to native CephFS using the NFS protocol.

NFS-Ganesha runs in its own container that interfaces both to the Ceph public network and to a new isolated network, StorageNFS. If you use the composable network feature of Red Hat OpenStack Platform (RHOSP) director, you can deploy the isolated network and connect it to the Controller nodes. As the cloud administrator, you can configure the network as a Networking (neutron) provider network.

NFS-Ganesha accesses CephFS over the Ceph public network and binds its NFS service using an address on the StorageNFS network.

To access NFS shares, you provision Compute (nova) instances with an additional NIC that connects to the StorageNFS network. Export locations for CephFS shares appear as standard NFS IP:<path> tuples that use the NFS-Ganesha server VIP on the StorageNFS network. The network uses the IP address of the instance to perform access control on the NFS shares.
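As an illustration of the IP:<path> form, the following sketch splits an export location into the server address and the exported path from inside an instance. The helper names export_host and export_path, the address 192.0.2.10, and the share path are hypothetical, and the sketch assumes an IPv4 address because it splits on the first colon.

```shell
# Split an NFS export location of the form IP:/path (IPv4 assumed).
# export_host and export_path are hypothetical helpers.
export_host() { printf '%s\n' "${1%%:*}"; }   # text before the first colon
export_path() { printf '%s\n' "${1#*:}"; }    # text after the first colon

# Hypothetical export location; real values come from the share's
# export location list.
location="192.0.2.10:/volumes/_nogroup/example-share"

echo "server: $(export_host "$location")"
echo "path:   $(export_path "$location")"

# Mounting the share from the instance would then look like this
# (not executed here; /mnt/share is a hypothetical mount point):
#   mount -t nfs -o vers=4.1 "$location" /mnt/share
```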

Networking (neutron) security groups prevent an instance that belongs to project 1 from accessing an instance that belongs to project 2 over the StorageNFS network. Projects share the same CephFS file system, but project data path separation is enforced because instances can access files only under export trees: /path/to/share1/…, /path/to/share2/….

7.4. Shared File Systems service with CephFS-NFS fault tolerance

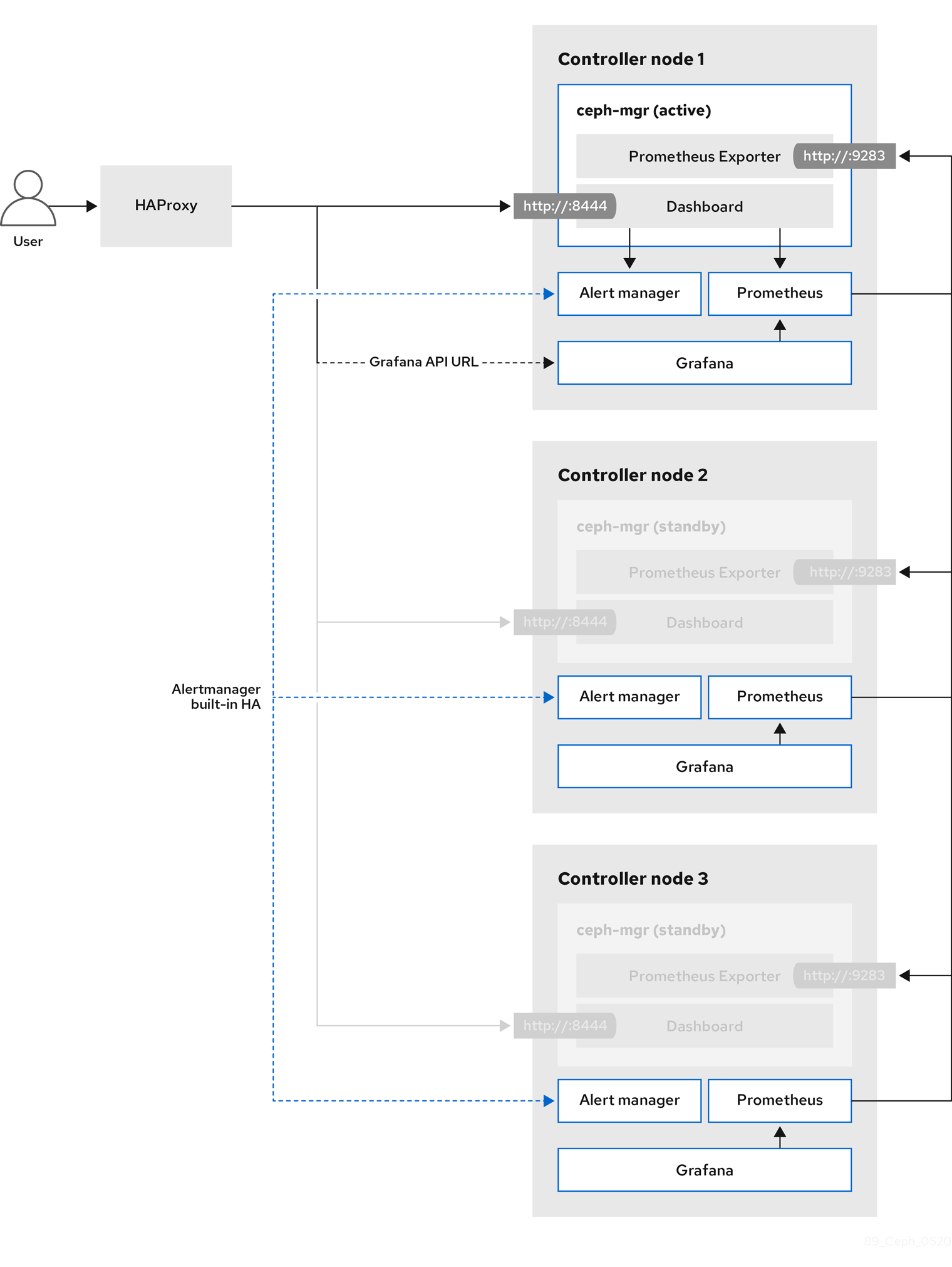

When Red Hat OpenStack Platform (RHOSP) director starts the Red Hat Ceph Storage service daemons, they manage their own high availability (HA) state and, in general, there are multiple instances of these daemons running. By contrast, in this release, only one instance of NFS-Ganesha can serve file shares at a time.

To avoid a single point of failure in the data path for CephFS-NFS shares, NFS-Ganesha runs on a RHOSP Controller node in an active-passive configuration that is managed by a Pacemaker-Corosync cluster. NFS-Ganesha acts across the Controller nodes as a virtual service with a virtual service IP address.

If a Controller node fails or the service on a particular Controller node fails and cannot be recovered on that node, Pacemaker-Corosync starts a new NFS-Ganesha instance on a different Controller node using the same virtual IP address. Existing client mounts are preserved because they use the virtual IP address for the export location of shares.

Using default NFS mount-option settings and NFS 4.1 or later, after a failure, TCP connections are reset and clients reconnect. I/O operations temporarily stop responding during failover, but they do not fail. Application I/O also stops responding but resumes after failover completes.

New connections, new lock states, and so on are refused until after a grace period of up to 90 seconds during which time the server waits for clients to reclaim their locks. NFS-Ganesha keeps a list of the clients and exits the grace period earlier if all clients reclaim their locks.
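Because new connections are refused during the grace period, a client-side script that mounts a share right after a failover might need to retry. The following is a minimal sketch of a generic retry loop. The function name retry_until is hypothetical, and the 120-second budget in the usage comment is an assumption (the 90-second grace period plus a margin), not a documented value.

```shell
# Retry a command until it succeeds or a time budget is used up.
# retry_until is a hypothetical helper.
# Usage: retry_until <timeout_seconds> <interval_seconds> <command...>
retry_until() {
    timeout_s=$1
    interval_s=$2
    shift 2
    waited=0
    until "$@"; do
        if [ "$waited" -ge "$timeout_s" ]; then
            return 1    # budget exhausted, command still failing
        fi
        sleep "$interval_s"
        waited=$((waited + interval_s))
    done
    return 0
}

# Self-contained demonstration with a command that succeeds immediately.
retry_until 3 1 true && echo "command succeeded"

# Against a share, the call might look like this (not executed here;
# the address, path, and mount point are hypothetical):
#   retry_until 120 5 mount -t nfs -o vers=4.1 \
#       192.0.2.10:/volumes/_nogroup/example-share /mnt/share
```

This applies only to operations that fail outright, such as a new mount. I/O on an existing mount pauses and resumes on its own, as described above.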

7.5. CephFS-NFS installation

A typical CephFS-NFS installation in a Red Hat OpenStack Platform (RHOSP) environment includes the following configurations:

- OpenStack Controller nodes that are running the following:

  - Ceph monitor (MON)

  - Containerized Ceph metadata server (MDS)

  - Shared File Systems service (manila)

  - NFS-Ganesha

  Some of these services can coexist on the same node or can have one or more dedicated nodes.

- A Red Hat Ceph Storage cluster with containerized object storage daemons (OSDs) running on Ceph Storage nodes

- An isolated StorageNFS network that provides access from projects to the NFS-Ganesha service for NFS share provisioning

The Shared File Systems service with CephFS-NFS fully supports serving shares to Red Hat OpenShift Container Platform through Manila CSI. This solution is not intended for large scale deployments. For important recommendations, see https://access.redhat.com/articles/6667651.

The Shared File Systems service provides APIs that allow the projects to request file system shares, which are fulfilled by driver modules. If you use the driver for CephFS, manila.share.drivers.cephfs.driver.CephFSDriver, you can use the Shared File Systems service with a CephFS back end. RHOSP director configures the driver to deploy NFS-Ganesha so that the CephFS shares are presented through the NFS 4.1 protocol.

When you prepare your CephFS-NFS deployment, you require the isolated StorageNFS network. You can use director to create this isolated StorageNFS network. For more information, see Configuring overcloud networking in Installing and managing Red Hat OpenStack Platform with director.

Manual configuration options for Shared File Systems service back ends