CephFS NFS Manila-CSI Workload Recommendations for Red Hat OpenStack Platform 16.x and 17.x

In Red Hat OpenStack Platform 16.x and 17.x, OpenStack Manila can be configured with one or more back end storage systems. These recommendations pertain only to the use of CephFS as a back end when exposed via NFS.

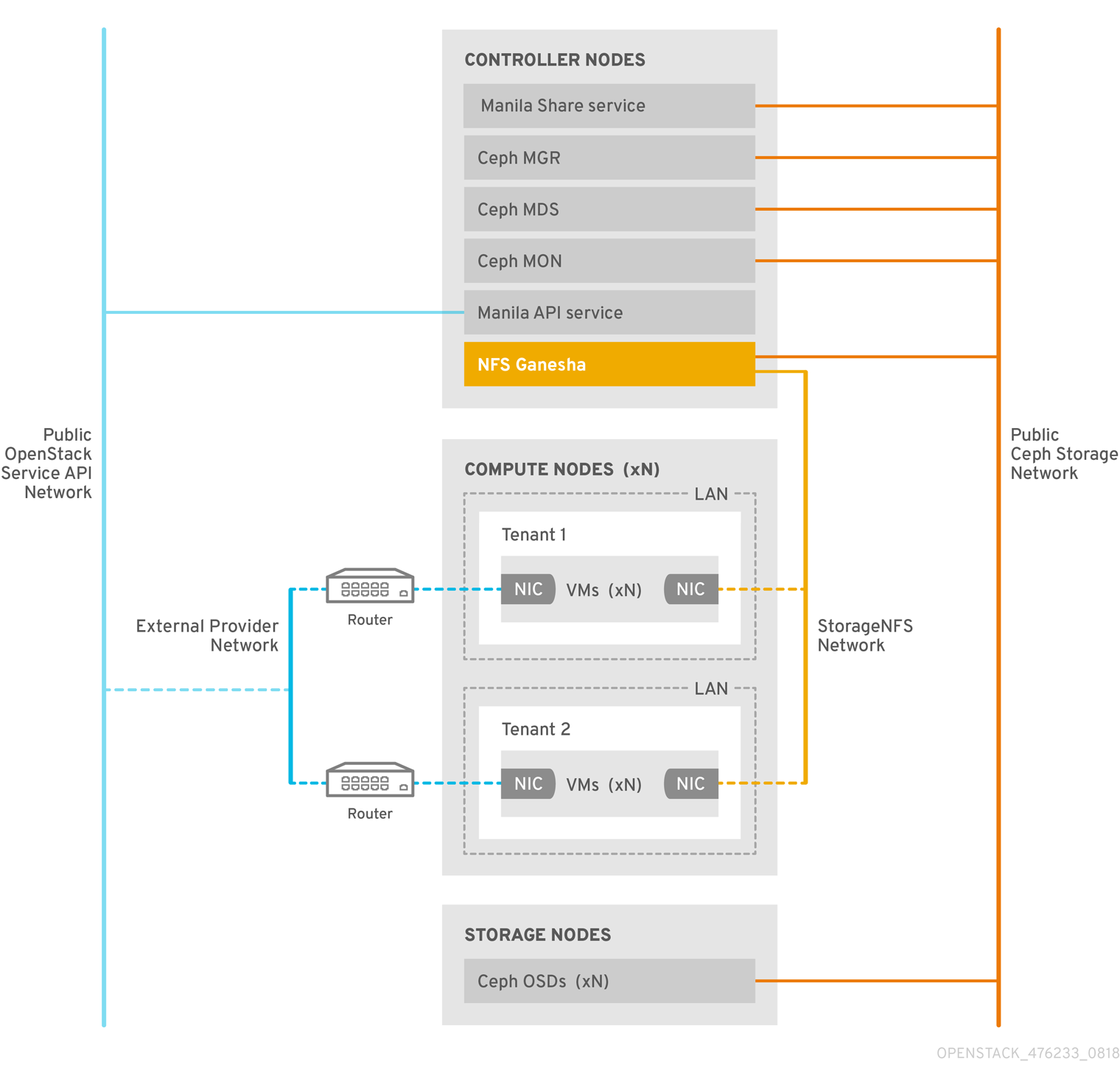

Architecture

The CephFS NFS back end in the Shared File Systems service (Manila) uses multiple components to provide NFSv4.1 protocol access to CephFS shares: the Ceph metadata servers (MDS), the CephFS NFS gateway (NFS-Ganesha), and the Ceph cluster service components. When deployed by Director, the NFS-Ganesha service runs by default on the Controller nodes, alongside the Ceph services.

The following diagram shows how API and NFS clients interact with the service in the Red Hat OpenStack Platform:

The Ceph cluster components manage their own high availability (HA) state and, in general, there are multiple instances of these daemons running. By contrast, in Red Hat OpenStack Platform 16.x and 17.x, only one instance of NFS-Ganesha can serve file shares at a time.

To avoid a single point of failure in the data path for NFS shares, NFS-Ganesha runs on an OpenStack Controller node in an active-passive configuration managed by a Pacemaker-Corosync cluster. Across the Controller nodes, NFS-Ganesha acts as a single virtual service reachable through a virtual service IP address.
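The active-passive pattern can be illustrated with a toy Python model: only one Controller node runs the service at a time, clients always address the same virtual IP, and when the active node fails the service moves to another healthy node. This is a simplified sketch, not how Pacemaker actually makes placement decisions; the class names, node names, and the address `192.0.2.10` are hypothetical, and the failover delay is not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ControllerNode:
    name: str
    healthy: bool = True


@dataclass
class ActivePassiveService:
    """Toy model of one NFS-Ganesha instance behind a virtual IP (VIP).

    Only one node runs the service at a time; clients always use the VIP.
    """
    vip: str
    nodes: list[ControllerNode]
    active: ControllerNode | None = field(default=None)

    def _first_healthy(self) -> ControllerNode | None:
        return next((n for n in self.nodes if n.healthy), None)

    def start(self) -> None:
        # Place the single active instance on the first healthy node.
        self.active = self._first_healthy()

    def fail_node(self, name: str) -> None:
        for n in self.nodes:
            if n.name == name:
                n.healthy = False
        # Only a failure of the active node triggers a failover;
        # losing a passive node leaves the service where it is.
        if self.active is not None and not self.active.healthy:
            self.active = self._first_healthy()

    def endpoint(self) -> str:
        if self.active is None:
            raise RuntimeError("no healthy Controller node can run the service")
        return f"{self.vip} (served by {self.active.name})"


if __name__ == "__main__":
    svc = ActivePassiveService(
        vip="192.0.2.10",  # hypothetical StorageNFS virtual IP
        nodes=[ControllerNode("controller-0"), ControllerNode("controller-1"), ControllerNode("controller-2")],
    )
    svc.start()
    print(svc.endpoint())  # 192.0.2.10 (served by controller-0)
    svc.fail_node("controller-0")
    print(svc.endpoint())  # 192.0.2.10 (served by controller-1)
```

The client-facing address never changes, which is why NFS clients do not need to be reconfigured after a failover; they only experience an interruption while the service restarts elsewhere.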

Manila CSI Internals

The Manila CSI driver orchestrates the creation and lifecycle of NFS shares by interacting with the Manila API. The OpenShift worker nodes then mount the shares and make the ReadWriteMany (RWX) volumes accessible to pods.
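To make this flow concrete, the Python sketch below builds the PersistentVolumeClaim that a workload might submit to request an RWX Manila share. It emits JSON, which `oc apply -f` accepts just as it accepts YAML. The storage class name `csi-manila-default`, the claim name, the namespace, and the size are hypothetical; check which classes exist in your cluster with `oc get storageclass`. Once the claim is created, the Manila CSI driver requests a share through the Manila API, and a worker node mounts it when a pod that uses the claim is scheduled there.

```python
import json


def manila_rwx_pvc(name: str, storage_class: str, size_gi: int, namespace: str = "default") -> dict:
    """Build a PersistentVolumeClaim asking a Manila CSI storage class for an RWX share."""
    if size_gi <= 0:
        raise ValueError("size_gi must be positive")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ReadWriteMany lets pods on several worker nodes mount the same NFS share.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    # Hypothetical names: use a storage class that actually exists in your cluster.
    pvc = manila_rwx_pvc("shared-data", "csi-manila-default", 10, namespace="my-app")
    print(json.dumps(pvc, indent=2))
```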

Recommendations

When planning the deployment of Manila and the NFS-Ganesha network, size the CPU and network capacity of your Controller nodes according to the intended use of this service.

- You must ensure that the network interface carrying the StorageNFS network has sufficient bandwidth for the workloads of all OpenShift and OpenStack consumers. Alternatively, isolate the StorageNFS network on a dedicated NIC to avoid bandwidth saturation, which would affect other networks sharing the same NIC.

- You must also ensure that the Controller nodes have adequate CPU capacity, because the CPU utilization of the NFS-Ganesha gateway increases as more workloads consume Manila shares.

- The workloads using NFS shares must also be resilient to the failover window of the service. A failover occurs when the Controller node hosting the service fails, or during planned maintenance, and can take a few minutes while the service is started on another node.
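As one illustration of resilience to the failover window, the Python sketch below retries an operation with capped exponential backoff until a deadline passes. The function name, the 300-second default (chosen to cover a failover of "a few minutes"), and retrying only on `OSError` are assumptions to tune for each workload. With the default `hard` NFS mount option, the Linux NFS client usually blocks and retries on its own, so application-level retries matter mainly where errors do reach the application, for example with `soft` mounts or client-side timeouts.

```python
from __future__ import annotations

import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_through_failover(
    operation: Callable[[], T],
    deadline_s: float = 300.0,  # assumed upper bound for a failover window
    initial_delay_s: float = 1.0,
    max_delay_s: float = 30.0,
    retry_on: tuple[type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Retry `operation` with capped exponential backoff until `deadline_s` elapses."""
    start = clock()
    delay = initial_delay_s
    while True:
        try:
            return operation()
        except retry_on:
            elapsed = clock() - start
            if elapsed >= deadline_s:
                raise  # The outage outlasted the expected failover window.
            # Back off, but never sleep past the deadline.
            sleep(min(delay, max_delay_s, deadline_s - elapsed))
            delay = min(delay * 2, max_delay_s)


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_read() -> str:
        # Simulated NFS read that fails for the first three attempts.
        attempts["n"] += 1
        if attempts["n"] <= 3:
            raise OSError("NFS server not responding")
        return "data"

    # A no-op sleep keeps the demo instant.
    print(retry_through_failover(flaky_read, sleep=lambda s: None))  # data
    print(attempts["n"])  # 4
```

The `sleep` and `clock` parameters exist only so the behavior can be exercised without real waiting; production code would leave them at their defaults.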

Other limitations for this architecture

Optional OpenStack Manila features, such as creating snapshots or cloning them into new shares, are not available unless the OpenStack administrator explicitly enables these capabilities through share type extra specifications. Be aware that, as of OSP 16.2, the Manila CephFS driver does not support cloning snapshots into new shares. This feature will be available in a future release of Red Hat OpenStack Platform.
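These capabilities are controlled by boolean extra specs on the share type. The Python sketch below shows how a tool might interpret them. `snapshot_support` and `create_share_from_snapshot_support` are standard Manila extra spec names, while the helper functions and the tolerance for the scheduler-style `<is> True` form are illustrative assumptions. An extra spec only requests a capability; the back end must also provide it, so on OSP 16.2 with CephFS, setting `create_share_from_snapshot_support` does not make cloning available.

```python
from __future__ import annotations


def spec_enabled(extra_specs: dict[str, str], key: str) -> bool:
    """Interpret a Manila-style boolean extra spec such as 'True' or '<is> True'."""
    value = extra_specs.get(key, "").strip()
    # Accept the '<is> True' form as well as a plain 'True'.
    if value.lower().startswith("<is>"):
        value = value[len("<is>"):].strip()
    return value.lower() == "true"


def snapshot_capabilities(extra_specs: dict[str, str]) -> dict[str, bool]:
    """Summarize which snapshot-related features a share type requests."""
    return {
        "can_snapshot": spec_enabled(extra_specs, "snapshot_support"),
        "can_create_share_from_snapshot": spec_enabled(extra_specs, "create_share_from_snapshot_support"),
    }


if __name__ == "__main__":
    # Hypothetical extra specs, e.g. as listed by `openstack share type show <type>`.
    specs = {"driver_handles_share_servers": "False", "snapshot_support": "True"}
    print(snapshot_capabilities(specs))
    # {'can_snapshot': True, 'can_create_share_from_snapshot': False}
```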

When you use the ReadWriteOnce access mode for OpenStack Manila persistent volumes in OpenShift, fsGroup settings are not honored. This should not affect read and write operations within the pods. However, these persistent volumes are not suitable for applications that require specific file system ownership or permissions.
