Configuring SAP HANA Scale-Up Multitarget System Replication for disaster recovery

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code and documentation. We are beginning with these four terms: master, slave, blacklist, and whitelist. Due to the enormity of this endeavor, these changes will be gradually implemented over upcoming releases. For more details on making our language more inclusive, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Make sure you are logged in to the Jira website.

- Provide feedback by clicking on this link.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- If you want to be notified about future updates, please make sure you are assigned as Reporter.

- Click Create at the bottom of the dialogue.

Chapter 1. Overview

Due to the growing demands on availability, one copy of data is not enough.

To ensure business continuity, a reliable and highly available architecture must replicate data across more than just one system. Using multitarget system replication, the primary system can replicate data changes to more than one secondary system. For more information, see SAP HANA Multitarget System Replication.

This document describes how to configure a replication site for disaster recovery using SAP HANA Multitarget System Replication on a 2-node cluster, installed as described in Automating SAP HANA Scale-Up System Replication using the RHEL HA Add-On.

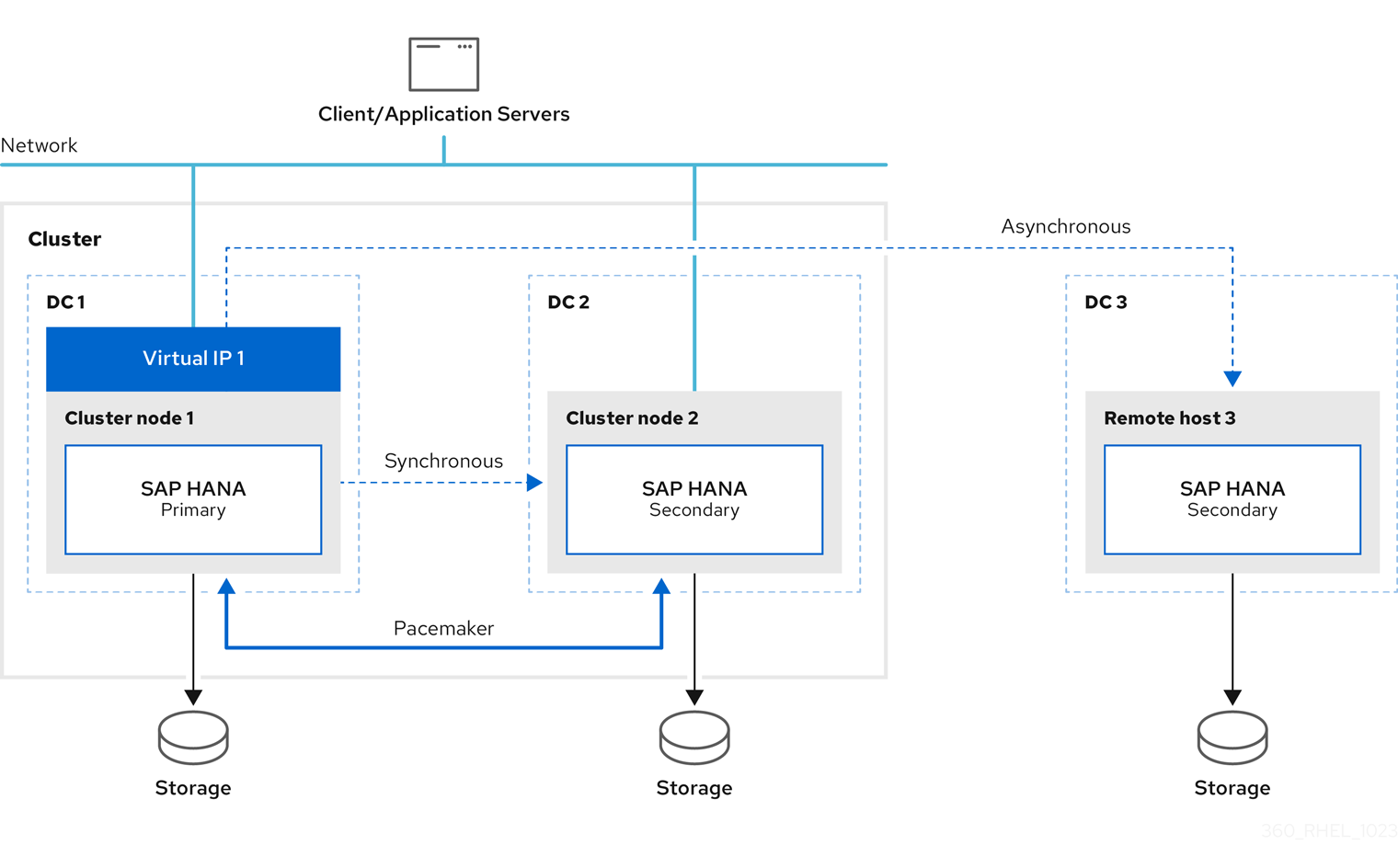

A sample configuration looks like this:

The initial setup is as follows:

- Replicate Primary site 1 (DC1) to Secondary site 2 (DC2)

- Replicate Primary site 1 (DC1) to Secondary site 3 (DC3)

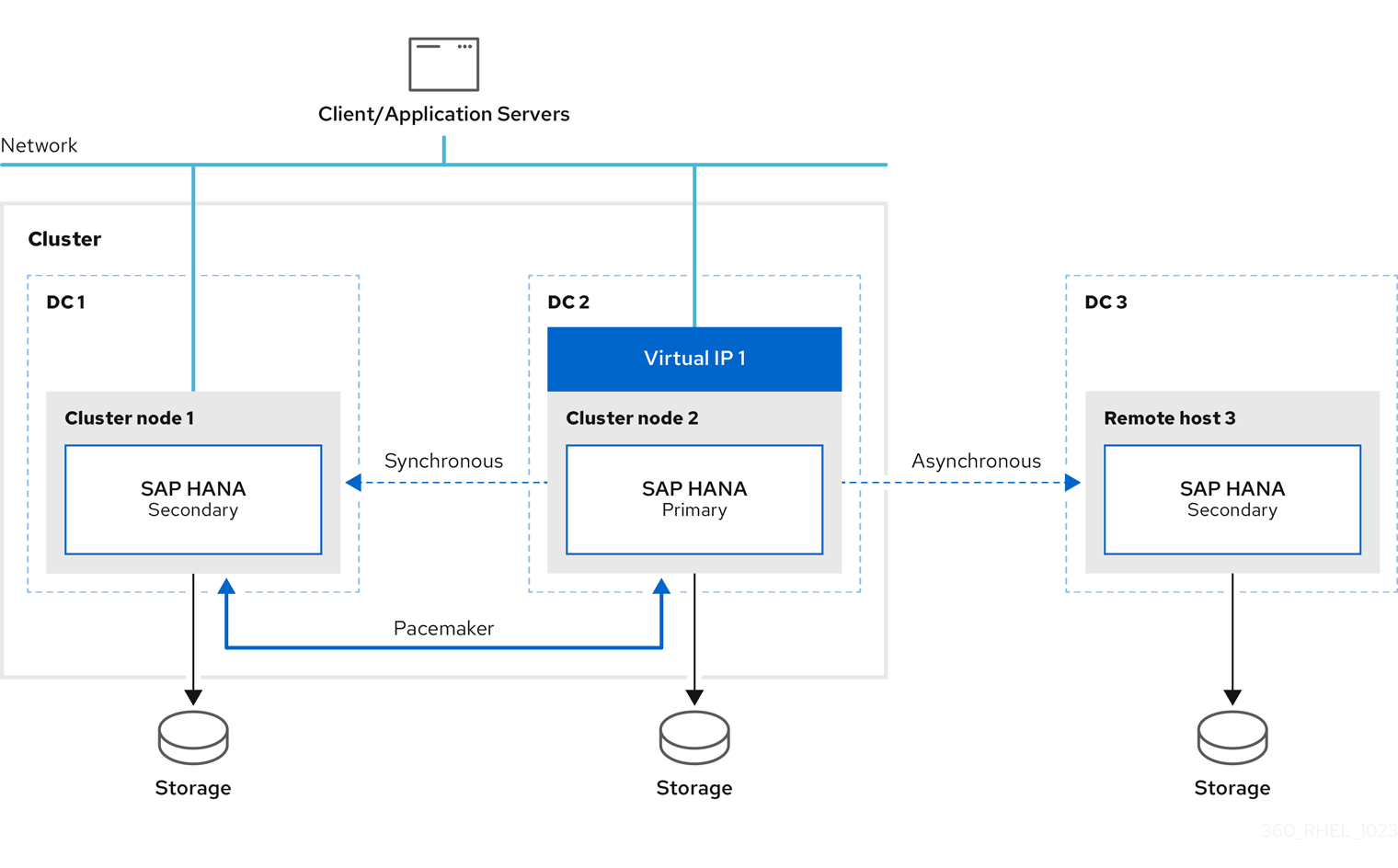

If the primary fails, the primary switches to secondary site 2 (DC2) and the former primary site 1 (DC1) will become the secondary site.

When failover occurs, this solution ensures that the configured primary site is switched at the third DR site as well. The configuration after failover is as follows:

- Primary running on DC2

- Secondary running on DC1 (synced from DC2)

- Secondary running on DC3 (synced from DC2)

The SAP HANA instance on remotehost3 will be automatically re-registered to the new primary as long as this instance is up and running during the failover.

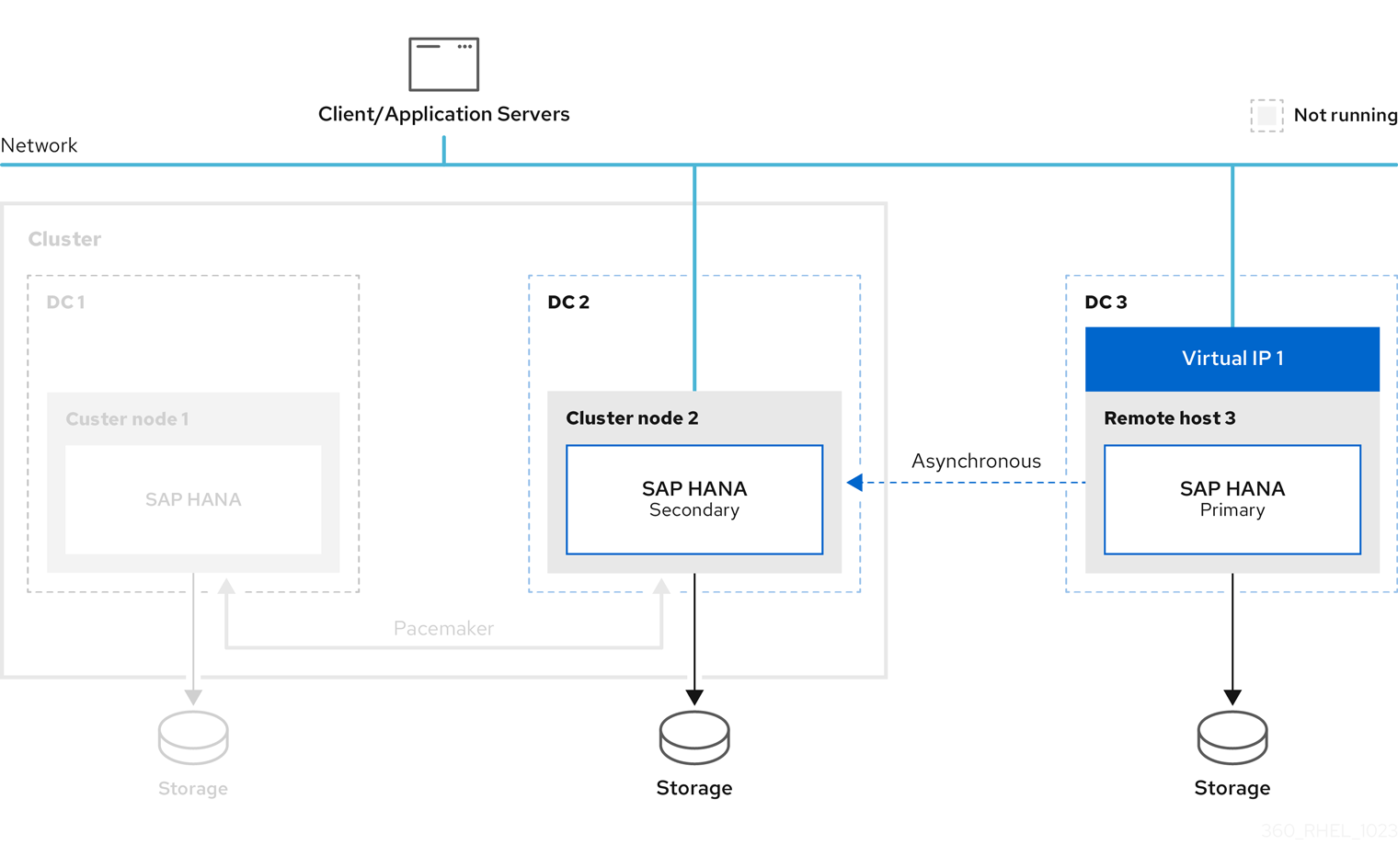

This document also describes the example of switching the primary database to the third site.

Please note that further network configuration is required for the connection of the clients to the database. This is not within the scope of this document.

For further information, please check the following:

Chapter 2. Parameters

These parameters of an existing two-node cluster are used to setup the third site:

| Parameter | Example | Description |

|---|---|---|

| SID | RH2 | System ID of the HANA Database |

| First SITE | DC1 | Name of the first datacenter /site |

| Second SITE | DC2 | Name of the second datacenter / site |

| Third SITE | DC3 | Name of the third datacenter / site |

| InstanceNr | 02 | HANA Instance Number |

| <sid>adm uid | 1000 | User-ID of sidadm user |

| sapsys gid | 980 | Group ID of sapsys |

It is required that all three HANA instances use the same values for the following:

- SID

- InstanceNr

- <sid>adm uid

- sapsys gid

Chapter 3. Prerequisite

For the solution to work, the following requirements must be met.

All nodes must have the same:

- number of CPUs and RAM

- software configuration

- RHEL release

- firewall settings

- SAP HANA release (SAP HANA 2.0 SPS04 or later)

The pacemaker packages are only installed on the cluster nodes and must use the same version of resource-agents-sap-hana (0.162.1 or later).

To be able to support SAP HANA Multitarget System Replication, refer to Add SAP HANA Multitarget System Replication autoregister support. Also, set the following:

-

use

register_secondaries_on_takeover=true -

use

log_mode=normal

The initial setup is based on the installation guide, Automating SAP HANA Scale-Up System Replication using the RHEL HA Add-On.

The system replication configuration of all SAP HANA instances is based on SAP requirements. For more information, refer to the guidelines from SAP based on the SAP HANA Administration Guide .

Chapter 4. Installation

This chapter describes the installation of the additional SAP HANA instance.

4.1. Check the 2-node Base Installation with a failover test

Verify that the installation is done based on Automating SAP HANA Scale-Up System Replication using the RHEL HA Add-On.

To be able to use SAP HANA Multitarget System Replication, the version of resource-agents-sap-hana must be 0.162.1 or later. This can be checked, as shown below:

# rpm -q resource-agents-sap-hana

resource-agents-sap-hana-0.162.1-0.el8_6.1.noarchYou can run a failover test to ensure that the environment is working. You can move the SAPHana resource, which is also described in Failover the SAPHana Resource using Move.

4.2. Install SAP HANA on third site

On the third site, you also need to install SAP HANA using the same version and parameters as for the SAP HANA instances on the two-node Pacemaker cluster, as shown below:

| Parameter | Value |

|---|---|

| SID | RH2 |

| InstanceNumber | 02 |

| <sid>adm user ID | rh2adm 999 |

| sapsys group ID | sapsys 999 |

The SAP HANA installation is done using hdblcm. For more details, see SAP HANA Installation using hdbclm. Optionally, the installation can also be done using Ansible.

In the examples in this chapter, we are using:

- hosts: clusternode1 on site DC1, clusternode2 on site DC2, and remotehost3 on site DC3

- SID RH2

- adminuser rh2adm

4.3. Setup SAP HANA System Replication on the third node

In the existing installation, there is already SAP HANA system replication configured between the primary and secondary SAP HANA instances in a two-node cluster. SAP HANA System Replication is enabled on the up-and-running primary SAP HANA database instance.

This chapter describes how to register the third SAP HANA instance as an additional secondary HANA System Replication site on node remotehost3 at site DC3. This step is similar to the registration of the original secondary HANA instance (DC2) on node clusternode2. More details are described in the following chapters. If you need further information, you can also check General Prerequisites for Configuring SAP HANA System Replication.

4.3.1. Check the primary database

You must check that the other databases are running and the system replication is working properly. Please refer to:

You can discover the primary HANA instance with:

clusternode1:rh2adm> hdbnsutil -sr_state | egrep -e "primary masters|^mode"

mode: primary4.3.2. Copy database keys

Before you are able to register a new secondary HANA instance, the database keys of the primary HANA instance need to be copied to the new additional HANA replication site. In our example, the hostname of the third site is remotehost3.

For example, on the primary node clusternode1, run:

clusternode1:rh2adm> scp -rp

/usr/sap/${SAPSYSTEMNAME}/SYS/global/security/rsecssfs/data/SSFS_${SAPSYSTEMNAME}.DAT remotehost3:/usr/sap/${SAPSYSTEMNAME}/SYS/global/security/rsecssfs/data/SSFS_${SAPSYSTEMNAME}.DAT

clusternode1:rh2adm> scp -rp

/usr/sap/${SAPSYSTEMNAME}/SYS/global/security/rsecssfs/key/SSFS_${SAPSYSTEMNAME}.KEY remotehost3:/usr/sap/${SAPSYSTEMNAME}/SYS/global/security/rsecssfs/key/SSFS_${SAPSYSTEMNAME}.KEY4.3.3. Register the third site as secondary

You need to know the name of the node that is running the primary database.

To monitor the registration, you can run the following command in a separate terminal on the primary node:

clusternode1:rh2adm> watch python

/usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/python_support/systemReplicationStatus.pyThis will show you the progress and any errors if they occur.

To register the HANA instance on the third site (DC3) as an additional secondary SAP HANA instance, run the following command on the third site host remotehost3:

remotehost3:rh2adm> hdbnsutil -sr_register --name=DC3

--remoteHost=clusternode1 --remoteInstance=${TINSTANCE}

--replicationMode=async --operationMode=logreplay --onlineIn this example, DC3 is the name of the third site, clusternode1 is the name of the primary node.

If the database instance is already running, you don’t have to stop it, you can use the option --online, which will register the instance while it is online. The necessary restart (stop and start) of the instance will then be initiated by hdbnsutil itself.

The option --online works in any case, both when the HANA instance is online and offline (this option is available with SAP HANA 2.0 SPS04 and later).

If the HANA instance is offline, you have to start it after the third node is registered. You can find additional information in SAP HANA System Replication.

4.3.4. Add SAP HANA Multitarget System Replication autoregister support

We are using a SAP HANA System Replication option called register_secondaries_on_takeover = true. This will automatically re-register with the new primary site in case of a failover between the previous primary site and the other secondary site. This option must be added to the global.ini file on all potential primary sites.

All HANA instances should have this entry in their global.ini:

[system_replication] register_secondaries_on_takeover = true

The following two chapters describe the global.ini configuration in detail.

Despite the parameter, if the third database is down when the failover is initiated, the third instance needs to be re-registered manually.

4.3.5. Configure global.ini on the pacemaker nodes

The option register_secondaries_on_takeover = true needs to be added to the [system_replication] section in global.ini of the SAP HANA nodes of site 1 and site 2, which are managed by the pacemaker cluster. Please edit the file global.ini always on the respective node, and do not copy the file from another node.

The global.ini file should only be edited if the HANA instance of a site has stopped processing.

Edit the global.ini as the rh2adm user:

clusternode1:rh2adm> vim

/usr/sap/${SAPSYSTEMNAME}/SYS/global/hdb/custom/config/global.iniExample:

# global.ini last modified 2023-07-14 16:31:14.120444 by hdbnsutil -sr_register --remoteHost=hana07 --remoteInstance=02 --replicationMode=syncmem --operationMode=logreplay --name=DC2 [multidb] mode = multidb database_isolation = low singletenant = yes [ha_dr_provider_SAPHanaSR] provider = SAPHanaSR path = /hana/shared/myHooks execution_order = 1 [persistence] basepath_datavolumes = /hana/data/RH2 basepath_logvolumes = /hana/log/RH2 log_mode = normal enable_auto_log_backup = true [system_replication] register_secondaries_on_takeover = true timetravel_logreplay_mode = auto operation_mode = logreplay mode = primary actual_mode = syncmem site_id = 1 site_name = DC2 [system_replication_site_masters] 2 = clusternode1:30201 [trace] ha_dr_saphanasr = info

This option is active as soon as the SAP HANA database instance is started.

4.3.6. Configure global.ini on remotehost3

Edit the global.ini as a <sid>adm user:

% vim /usr/sap/${SAPSYSTEMNAME}/SYS/global/hdb/custom/config/global.ini

On remotehost3, the ha_dr_provider_SAPHanaSR section is not used.

Example of global.ini on remotehost3:

# global.ini last modified 2023-06-22 17:22:54.154508 by hdbnameserver [multidb] mode = multidb database_isolation = low singletenant = yes [persistence] basepath_datavolumes = /hana/data/RH2 basepath_logvolumes = /hana/log/RH2 log_mode = normal enable_auto_log_backup = true [system_replication] operation_mode = logreplay register_secondaries_on_takeover = true reconnect_time_interval = 5 timetravel_logreplay_mode = auto site_id = 3 mode = syncmem actual_mode = syncmem site_name = DC3 [system_replication_site_masters] 2 = clusternode1:30201

4.3.7. Verify installation

After the installation, you have to check if all HANA instances are up and running and that HANA System Replication is working between them. The easiest way is to check the systemReplicationStatus, as described in more detail in Check the System Replication status. Please also refer to the Check Database for further information.

For HANA System Replication to work correctly, please ensure that the “log_mode” parameter is set to “normal”. Please refer to Checking the log_mode of the SAP HANA database for more information.

To verify that the setup is working as expected, please run the Test cases as described in the following chapter.

Chapter 5. Test cases

After finishing the installation, it is recommended to run some basic tests to check the installation and verify how SAP HANA Multitarget System Replication is working and how it recovers from a failure. It is always a good practice to run these test cases before starting production. If possible, you can also prepare a test environment to verify the changes before applying them in production.

All cases will describe:

- Subject of the test

- Test preconditions

- Test steps

- Monitoring the test

- Starting the test

- Expected result(s)

- Ways to return to an initial state

To automatically register a former primary HANA replication site as a new secondary HANA replication site on the HANA instances that are managed by the cluster, you can use the option AUTOMATED_REGISTER=true in the SAPHana resource. For more details, refer to AUTOMATED_REGISTER.

The names of the HA cluster nodes and the HANA replication sites (in brackets) used in the examples are:

- clusternode1 (DC1)

- clusternode2 (DC2)

- remotehost3 (DC3)

The following parameters are used for configuring the HANA instances and the cluster:

- SID=RH2

- INSTANCENUMBER=02

- CLUSTERNAME=cluster1

You can use clusternode1-2, remotehost3 also as alias in the /etc/hosts in your test environment.

The tests are described in more detail, including examples and additional checks of preconditions. At the end, there are examples of how to clean up the environment to be prepared for further testing.

In some cases, if the distance between clusternode1-2 and remotehost3 is too long, you should use –replcationMode=async instead of –replicationMode=syncmem. Please also ask your SAP HANA administrator before choosing the right option.

5.1. Prepare the tests

Before we run a test, the complete environment needs to be in a correct and healthy state. We have to check the cluster and the database via:

-

pcs status --full -

python systemReplicationStatus.py -

df -h

An example for pcs status --full can be found in Check cluster status with pcs status. If there are warnings or previous failures in the "Migration Summary", you should clean up the cluster before you start your test.

[root@clusternode1]# pcs resource clear SAPHana_RH2_02-clone

Cluster Cleanup describes some more ways to do it. It is important that the cluster and all the resources be started.

Besides the cluster, the database should also be up and running and in sync. The easiest way to verify the proper status of the database is to check the system replication status. See also Replication Status. This should be checked on the primary database.

To discover the primary node, you can check Discover Primary Database or use:

-

pcs status | grep -E "Promoted|Master" -

hdbnsutil -sr_stateConfiguration

Check if there is enough space on the file systems by running:

# df -h

Please also follow the guidelines for a system check before you continue. If the environment is clean, it is ready to run the tests. During the test, monitoring is helpful to observe progress.

5.2. Monitor the environment

In this section, we are focusing on monitoring the environment during the tests. This section will only cover the necessary monitors to see the changes. It is recommended to run the monitors from a dedicated terminal. To be able to detect changes during the test, it is recommended to start monitoring before starting the test.

In the Useful Commands section, more examples are shown.

5.2.1. Discover the primary node

You need to discover the primary node to monitor a failover or run certain commands that only provide information about the replication status when executed on the primary node.

To discover the primary node, you can run the following commands as the <sid>adm user:

clusternode1:rh2adm> watch -n 5 'hdbnsutil -sr_stateConfiguration | egrep -e "primary masters|^mode"'

Output example, when clusternode2 is the primary database:

mode: syncmem primary masters: clusternode2

A second way to identify the primary node is to run the following command as root on a cluster node:

# watch -n 5 'pcs status --full'

Output on the node that runs the primary database is:

mode: primary

5.2.2. Check the Replication status

The replication status shows the relationship between primary and secondary database nodes and the current status of the replication.

To discover the replication status, you can run as the <sid>adm user:

clusternode1:rh2adm> hdbnsutil -sr_stateConfiguration

If you want to permanently monitor changes in the system replication status, please run the following command:

clusternode1:rh2adm> watch -n 5 'python

/usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py ; echo Status $?'

This example repeatedly captures the replication status and also determines the current return code.

As long as the return code (status) is 15, the replication status is fine. The other return codes are:

- 10: NoHSR

- 11: Error

- 12: Unknown

- 13: Initializing

- 14: Syncing

- 15: Active

If you register a new secondary, you can run it in a separate window on the primary node, and you will see the progress of the replication. If you want to monitor a failover, you can run it in parallel on the old primary as well as on the new primary database server. For more information, please read Check SAP HANA System Replication Status.

5.2.3. Check /var/log/messages entries

Pacemaker is writing a lot of information into the /var/log/messages file. During a failover, a huge number of messages are written into this message file. To be able to follow only the important messages depending on the SAP HANA resource agent, it is useful to filter the detailed activities of the pacemaker SAP resources. It is enough to check the message file on a single cluster node.

For example, you can use this alias:

# alias tmsl='tail -1000f /var/log/messages | egrep -s "Setting master-rsc_SAPHana_${SAPSYSTEMNAME}_HDB${TINSTANCE}|sr_register|WAITING4LPA|PROMOTED|DEMOTED|UNDEFINED|master_walk|SWAIT|WaitforStopped|FAILED|LPT"'Run this alias in a separate window to monitor the progress of the test. Please also check the example Monitor failover and sync state.

5.2.4. Cluster status

There are several ways to check the cluster status.

Check if the cluster is running:

-

pcs cluster status

-

Check the cluster and all resources:

-

pcs status

-

Check the cluster, all resources and all node attributes:

-

pcs status --full

-

Check the resources only:

-

pcs resource

-

The pcs status --full command will give you all the necessary information. To monitor changes, you can run this command together with watch.

# pcs status --full

If you want to see changes, you can run, in a separate window, the command watch:

# watch pcs status --full

An output example and further options can be found in Check cluster status.

5.2.5. Discover leftovers

To ensure that your environment is ready to run the next test, leftovers from previous tests need to be fixed or removed.

stonithis used to fence a node in the cluster:-

Detect:

[root@clusternode1]# pcs stonith history -

Fix:

[root@clusternode1]# pcs stonith cleanup

-

Detect:

Multiple primary databases:

Detect:

clusternode1:rh2adm> hdbnsutil -sr_stateConfiguration | grep -i primaryAll nodes with the same primary need to be identified.

-

Fix: clusternode1:rh2adm> re-register the wrong primary with option

--force_full_replica

Location Constraints caused by move:

Detect:

[root@clusternode1]# pcs constraint locationCheck the warning section.

-

Fix:

[root@clusternode1]# pcs resource clear <clone-resource-which was moved>

Secondary replication relationship:

-

Detect: on the primary database run

clusternode1:rh2adm> python ${DIR_EXECUTABLES}/python_support/systemReplicationStatus.py - Fix: unregister and re-register the secondary databases.

-

Detect: on the primary database run

Check siteReplicationMode (same output on all SAP HANA nodes

-

clusternode1:rh2adm> hdbnsutil -sr_state --sapcontrol=1 |grep site.*Mode

-

Pcs property:

-

Detect:

[root@clusternode1]# pcs property config -

Fix:

[root@clusternode1]# pcs property set <key=value> -

Clear

maintenance_mode -

[root@clusternode1]# pcs property set maintenance-mode=false

-

Detect:

log_mode:Detect:

clusternode1:rh2adm> python systemReplicationStatus.pyWill respond in the replication status that

log_modenormally is required.log_modecan be detected as described in Usinghdbsqlto checkInifilecontents.-

Fix: change the

log_modeto normal and restart the primary database.

CIB entries:

Detect: SFAIL entries in the cluster information base.

Please refer to Check cluster consistency, to find and remove CIB entries.

Cleanup/clear:

Detect:

[root@clusternode1]# pcs status --fullSometimes it shows errors or warnings. You can cleanup/clear resources and if everything is fine, nothing happens. Before running the next test, you can cleanup your environment.

Examples to fix:

[root@clusternode1]# pcs resource clear <name-of-the-clone-resource>[root@clusternode1]# pcs resource cleanup <name-of-the-clone-resource>

This is also useful if you want to check if there is an issue in an existing environment. For more information, please refer to Useful commands.

5.3. Test 1:Failover of the primary node with an active third site

| Subject of the test | Automatic re-registration of the third site. Sync state changes to SOK after clearing. |

| Test preconditions |

|

| Test steps |

Move the SAPHana resource using the |

| Monitoring the test |

On the third site run as

On the secondary node run as root: |

| Starting the test | Execute the cluster command:

|

| Expected result | In the monitor command on site 3 the primary master changes from clusternode1 to clusternode2.

After clearing the resource the sync state will change from |

| Ways to return to an initial state | Run the test twice. |

(*)

remotehost3:rh2adm> watch hdbnsutil -sr_state [root@clusternode1]# tail -1000f /var/log/messages |egrep -e ‘SOK|SWAIT|SFAIL’

Detailed description

Check the initial state of your cluster as root on clusternode1 or clusternode2:

[root@clusternode1]# pcs status --full Cluster name: cluster1 Cluster Summary: * Stack: corosync * Current DC: clusternode1 (1) (version 2.1.2-4.el8_6.6-ada5c3b36e2) - partition with quorum * Last updated: Mon Sep 4 06:34:46 2023 * Last change: Mon Sep 4 06:33:04 2023 by root via crm_attribute on clusternode1 * 2 nodes configured * 6 resource instances configured Node List: * Online: [ clusternode1 (1) clusternode2 (2) ] Full List of Resources: * auto_rhevm_fence1 (stonith:fence_rhevm): Started clusternode1 * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02]: * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode2 * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode1 * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable): * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Slave clusternode2 * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Master clusternode1 * vip_RH2_02_MASTER (ocf::heartbeat:IPaddr2): Started clusternode1 Node Attributes: * Node: clusternode1 (1): * hana_rh2_clone_state : PROMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode2 * hana_rh2_roles : 4:P:master1:master:worker:master * hana_rh2_site : DC1 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : PRIM * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode1 * lpa_rh2_lpt : 1693809184 * master-SAPHana_RH2_02 : 150 * Node: clusternode2 (2): * hana_rh2_clone_state : DEMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode1 * hana_rh2_roles : 4:S:master1:master:worker:master * hana_rh2_site : DC2 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : SOK * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode2 * lpa_rh2_lpt : 30 * master-SAPHana_RH2_02 : 100 Migration Summary: Tickets: PCSD Status: clusternode1: Online clusternode2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabledThis output shows you that HANA is promoted on clusternode1 which is the primary SAP HANA server, and that the name of the clone resource is SAPHana_RH2_02-clone, which is promotable.

You can run this in a separate window during the test to see the changes.

[root@clusternode1]# watch pcs status --full

Another way to identify the name of the SAP HANA clone resource is:

[root@clusternode2]# pcs resource * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02]: * Started: [ clusternode1 clusternode2 ] * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable): * Promoted: [ clusternode2 ] * Unpromoted: [ clusternode1 ]To see the change of the primary server start monitoring on remotehost3 on a separate terminal window before you start the test.

remotehost3:rh2adm> watch 'hdbnsutil -sr_state | grep "primary masters"

The output will look like:

Every 2.0s: hdbnsutil -sr_state | grep "primary masters" remotehost3: Mon Sep 4 08:47:21 2023 primary masters: clusternode1

During the test the expected output will change to clusternode2.

Start the test by moving the clone resource discovered above to clusternode2:

[root@clusternode1]# pcs resource move SAPhana_RH2_02-clone clusternode2

The output of the monitor on remotehost3 will change to:

Every 2.0s: hdbnsutil -sr_state | grep "primary masters" remotehost3: Mon Sep 4 08:50:31 2023 primary masters: clusternode2

Pacemaker creates a location constraint for moving the clone resource. This needs to be manually removed. You can see the constraint using:

[root@clusternode1]# pcs constraint location

This constraint needs to be removed by executing the following steps.

Clear the clone resource to remove the location constraint:

[root@clusternode1]# pcs resource clear SAPhana_RH2_02-clone Removing constraint: cli-prefer-SAPHana_RH2_02-clone

Cleanup the resource:

[root@clusternode1]# pcs resource cleanup SAPHana_RH2_02-clone Cleaned up SAPHana_RH2_02:0 on clusternode2 Cleaned up SAPHana_RH2_02:1 on clusternode1 Waiting for 1 reply from the controller ... got reply (done)

Result of the test

- The “primary masters” monitor on remotehost3 should show an immediate switch to the new primary node.

-

If you check the cluster status, the former secondary will be promoted, the former primary gets re-registered, and the

Clone_Statechanges fromPromotedtoUndefinedtoWAITINGFORLPAtoDEMOTED. -

The secondary will change the

sync_statetoSFAILwhen theSAPHanamonitor is started for the first time after the failover. Because of existing location constraints, the resource needs to be cleared, and after a short time, thesync_stateof the secondary will change toSOKagain. - Secondary gets promoted.

To restore the initial state you can simply run the next test. After finishing the tests please run a Cluster Cleanup.

5.4. Test 2:Failover of the primary node with passive third site

| Subject of the test | No registration of the third site. Failover works even if the third site is down. |

| Test preconditions |

|

| Test steps |

Move the SAPHana resource using the |

| Starting the test | Execute the cluster command:

|

| Monitoring the test |

On the third site run as

On the cluster nodes run as root: |

| Expected result | No change on DC3. Replication stays on old relationship. |

| Ways to return to an initial state | Re-register DC3 on new primary and start SAP HANA. |

Detailed description

Check the initial state of your cluster as root on clusternode1 or clusternode2:

[root@clusternode1]# pcs status --full Cluster name: cluster1 Cluster Summary: * Stack: corosync * Current DC: clusternode1 (1) (version 2.1.2-4.el8_6.6-ada5c3b36e2) - partition with quorum * Last updated: Mon Sep 4 06:34:46 2023 * Last change: Mon Sep 4 06:33:04 2023 by root via crm_attribute on clusternode1 * 2 nodes configured * 6 resource instances configured Node List: * Online: [ clusternode1 (1) clusternode2 (2) ] Full List of Resources: * auto_rhevm_fence1 (stonith:fence_rhevm): Started clusternode1 * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02]: * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode2 * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode1 * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable): * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Slave clusternode2 * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Master clusternode1 * vip_RH2_02_MASTER (ocf::heartbeat:IPaddr2): Started clusternode1 Node Attributes: * Node: clusternode1 (1): * hana_rh2_clone_state : PROMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode2 * hana_rh2_roles : 4:P:master1:master:worker:master * hana_rh2_site : DC1 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : PRIM * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode1 * lpa_rh2_lpt : 1693809184 * master-SAPHana_RH2_02 : 150 * Node: clusternode2 (2): * hana_rh2_clone_state : DEMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode1 * hana_rh2_roles : 4:S:master1:master:worker:master * hana_rh2_site : DC2 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : SOK * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode2 * lpa_rh2_lpt : 30 * master-SAPHana_RH2_02 : 100 Migration Summary: Tickets: PCSD Status: clusternode1: Online clusternode2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabledThis output of this example shows you that HANA is promoted on clusternode1, which is the primary SAP HANA server, and that the name of the clone resource is

SAPHana_RH2_02-clone, which is promotable. If you run test 3 before HANA, it might be promoted on clusternode2.Stop the database on remotehost3:

remotehost3:rh2adm> HDB stop hdbdaemon will wait maximal 300 seconds for NewDB services finishing. Stopping instance using: /usr/sap/RH2/SYS/exe/hdb/sapcontrol -prot NI_HTTP -nr 02 -function Stop 400 12.07.2023 11:33:14 Stop OK Waiting for stopped instance using: /usr/sap/RH2/SYS/exe/hdb/sapcontrol -prot NI_HTTP -nr 02 -function WaitforStopped 600 2 12.07.2023 11:33:30 WaitforStopped OK hdbdaemon is stopped.

Check the primary database on remotehost3:

remotehost3:rh2adm> hdbnsutil -sr_stateConfiguration| grep -i "primary masters" primary masters: clusternode2

Check the current primary in the cluster on a cluster node:

[root@clusternode1]# pcs resource | grep Masters * Masters: [ clusternode2 ]Check the

sr_stateto see the SAP HANA System Replication relationships:clusternode2remotehost3:rh2adm> hdbnsutil -sr_state System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~ online: true mode: primary operation mode: primary site id: 2 site name: DC1 is source system: true is secondary/consumer system: false has secondaries/consumers attached: true is a takeover active: false is primary suspended: false Host Mappings: ~~~~~~~~~~~~~~ clusternode1 -> [DC3] remotehost3 clusternode1 -> [DC1] clusternode1 clusternode1 -> [DC2] clusternode2 Site Mappings: ~~~~~~~~~~~~~~ DC1 (primary/primary) |---DC3 (syncmem/logreplay) |---DC2 (syncmem/logreplay) Tier of DC1: 1 Tier of DC3: 2 Tier of DC2: 2 Replication mode of DC1: primary Replication mode of DC3: syncmem Replication mode of DC2: syncmem Operation mode of DC1: primary Operation mode of DC3: logreplay Operation mode of DC2: logreplay Mapping: DC1 -> DC3 Mapping: DC1 -> DC2 done.The SAP HANA System Replication relations still have one primary (DC1), which is replicated to DC2 and DC3.

The replication relationship on remotehost3, which is down, can be displayed using:

remothost3:rh2adm> hdbnsutil -sr_stateConfiguration System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~ mode: syncmem site id: 3 site name: DC3 active primary site: 1 primary masters: clusternode1 done.

The database on remotehost3 which is offline checks the entries in the

global.inifile.Starting the test: Initiate a failover in the cluster, moving the

SAPHana-clone-resourceexample:[root@clusternode1]# pcs resource move SAPHana_RH2_02-clone clusternode2

NoteIf SAPHana is promoted on clusternode2, you have to move the clone resource to clusternode1. The example expects that SAPHana is promoted on clusternode1.

There will be no output. Similar to the former test, a location constraint will be created, which can be displayed with:

[root@clusternode1]# pcs constraint location Location Constraints: Resource: SAPHana_RH2_02-clone Enabled on: Node: clusternode1 (score:INFINITY) (role:Started)Even if the cluster looks fine again, this constraint avoids another failover unless the constraint is removed. One way is to clear the resource.

Clear the resource:

[root@clusternode1]# pcs constraint location Location Constraints: Resource: SAPHana_RH2_02-clone Enabled on: Node: clusternode1 (score:INFINITY) (role:Started) [root@clusternode1]# pcs resource clear SAPHana_RH2_02-clone Removing constraint: cli-prefer-SAPHana_RH2_02-cloneCleanup the resource:

[root@clusternode1]# pcs resource cleanup SAPHana_RH2_02-clone Cleaned up SAPHana_RH2_02:0 on clusternode2 Cleaned up SAPHana_RH2_02:1 on clusternode1 Waiting for 1 reply from the controller ... got reply (done)

Check the current status.

There are three ways to display the replication status, which needs to be in sync. Starting with the primary on remotehost3:

remotehost3clusternode2:rh2adm> hdbnsutil -sr_stateConfiguration| grep -i primary active primary site: 1 primary masters: clusternode1

The output shows site 1 or clusternode1, which was the primary before starting the test to move the primary to clusternode2.

Next check the system replication status on the new primary.

First detect the new primary:

[root@clusternode1]# pcs resource | grep Master * Masters: [ clusternode2 ]Here we have an inconsistency, which requires us to re-register remotehost3. You might think that if we run the test again, we might switch the primary back to the original clusternode1. In this case, we have a third way to identify if system replication is working. On the primary node run:

clusternode2:rh2adm> cdpy clusternode2:rh2adm> python ${DIR_EXECUTABLES}/python_support/systemReplicationStatus.py |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replication |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |-------------- |------------ | |SYSTEMDB |clusternode2 |30201 |nameserver | 1 | 2 |DC2 |clusternode1 | 30201 | 1 |DC1 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode2 |30207 |xsengine | 2 | 2 |DC2 |clusternode1 | 30207 | 1 |DC1 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode2 |30203 |indexserver | 3 | 2 |DC2 |clusternode1 | 30203 | 1 |DC1 |YES |SYNCMEM |ACTIVE | | True | status system replication site "1": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 2 site name: DC2If you don’t see remotehost3 in this output, you have to re-register remotehost3. Before registering, please run the following on the primary node to watch the progress of the registration:

clusternode2:rh2adm> watch python ${DIR_EXECUTABLES}/python_support/systemReplicationStatus.pyNow you can re-register remotehost3 using this command:

remotehost3:rh2adm> hdbnsutil -sr_register --remoteHost=clusternode2 --remoteInstance=${TINSTANCE} --replicationMode=async --name=DC3 --remoteName=DC2 --operation Mode=logreplay --online adding site ... collecting information ... updating local ini files ... done.Even if the database on remotehost3 is not started yet, you are able to see the third site in the system replication status output. The registration can be finished by starting the database on remotehost3:

remotehost3:rh2adm> HDB start StartService Impromptu CCC initialization by 'rscpCInit'. See SAP note 1266393. OK OK Starting instance using: /usr/sap/RH2/SYS/exe/hdb/sapcontrol -prot NI_HTTP -nr 02 -function StartWait 2700 2 04.09.2023 11:36:47 Start OK

The monitor started above will immediately show the synchronization of remotehost3.

-

To switch back, run the test again. One optional test is to switch the primary to the node, which is configured on the

global.inion remotehost3 and then starting the database. The database might come up, but it will never be shown in the output of the system replication status unless it is re-registered. - The missing entry will be immediately created, and the system replication will start as soon as the SAP HANA database is started.

You can check this by executing:

sidadm@clusternode1% hdbnsutil -sr_state sidadm@clusternode1% python systemReplicationStatus.py ; echo $?

- You can find more information in Check SAP HANA System Replication status.

5.5. Test 3:Failover of the primary node to the third site

| Subject of the test | Failover the primary to the third site.. Third site becomes primary. Secondary will be re-registered to third site. |

| Test preconditions |

|

| Test steps |

Put the cluster into

Takeover the HANA database form the third node using: |

| Starting the test |

Execute the SAP HANA command on remotehost3:rh2adm>: |

| Monitoring the test |

On the third site run as |

| Expected result |

|

| Ways to return to an initial state |

Detailed description

Check if the databases are running using Check database and check the replication status:

clusternode2:rh2adm> hdbnsutil -sr_state | egrep -e "^mode:|primary masters"

The output is, for example:

mode: syncmem primary masters: clusternode1

In this case, the primary database is clusternode1. If you run this command on clusternode1, you will get:

mode: primary

On this primary node, you can also display the system replication status. It should look like this:

clusternode1:rh2adm> cdpy clusternode1:rh2adm> python systemReplicationStatus.py |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replication |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |-------------- |------------ | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |remotehost3 | 30201 | 3 |DC3 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |remotehost3 | 30207 | 3 |DC3 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |remotehost3 | 30203 | 3 |DC3 |YES |SYNCMEM |ACTIVE | | True | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |clusternode2 | 30201 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |clusternode2 | 30207 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |clusternode2 | 30203 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | status system replication site "3": ACTIVE status system replication site "2": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 1 site name: DC1

Now we have a proper environment, and we can start monitoring the system replication status on all 3 nodes in separate windows. The 3 monitors should be started before the test is started. The output will change when the test is executed. So keep them running as long as the test is not completed.

On the old primary node, clusternode1 ran in a separate window during the test:

clusternode1:rh2adm> watch -n 5 'python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py ; echo Status $?'The output on clusternode1 will be:

Every 5.0s: python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicati... clusternode1: Tue XXX XX HH:MM:SS 2023 |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary | Replication |Replication |Replication |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status | Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- | ----------- |----------- |-------------- |------------ | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |remotehost3 | 30201 | 3 |DC3 |YES | ASYNC |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |remotehost3 | 30207 | 3 |DC3 |YES | ASYNC |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |remotehost3 | 30203 | 3 |DC3 |YES | ASYNC |ACTIVE | | True | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |clusternode2 | 30201 | 2 |DC2 |YES | SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |clusternode2 | 30207 | 2 |DC2 |YES | SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |clusternode2 | 30203 | 2 |DC2 |YES | SYNCMEM |ACTIVE | | True | status system replication site "3": ACTIVE status system replication site "2": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 1 site name: DC1 Status 15On remotehost3, run the same command:

remotehost3:rh2adm> watch -n 5 'python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py ; echo Status $?'The response will be:

this system is either not running or is not primary system replication site

This will change after the test initiates the failover. The output looks similar to the example of the primary node before the test was started.

On the second node, start:

clusternode2:rh2adm> watch -n 10 'hdbnsutil -sr_state | grep masters'

This will show the current master clusternode1 and will switch immediately after the failover is initiated.

-

To ensure that everything is configured correctly, please also check the

global.ini. Check

global.inion DC1, DC2, and DC3:On all three nodes, the

global.inishould contain:[persistent] log_mode=normal [system_replication] register_secondaries_on_takeover=true

You can edit the

global.iniwith:clusternode1:rh2adm>vim /usr/sap/${SAPSYSTEMNAME}/SYS/global/hdb/custom/config/global.ini[Optional] Put the cluster into

maintenance-mode:[root@clusternode1]# pcs property set maintenance-mode=true

During the tests, you will find out that the failover will work with and without setting the

maintenance-mode. So you can run the first test without it. While recovering, it should be done; I just want to show you that it works with and without. This is an option if the primary is not accessible.Start the test: Failover to DC3. On remotehost3, please run:

remotehost3:rh2adm> hdbnsutil -sr_takeover done.

The test has started, and now please check the output of the previously started monitors. On the clusternode1, the system replication status will lose its relationship to remotehost3 and clusternode2 (DC2):

Every 5.0s: python /usr/sap/RH2/HDB02/exe/python_support/systemReplicationStatus.py ; echo Status $? clusternode1: Mon Sep 4 11:52:16 2023 |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replic ation |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |------ ---------------------- |------------ | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |clusternode2 | 30201 | 2 |DC2 |YES |SYNCMEM |ERROR |Commun ication channel closed | False | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |clusternode2 | 30207 | 2 |DC2 |YES |SYNCMEM |ERROR |Commun ication channel closed | False | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |clusternode2 | 30203 | 2 |DC2 |YES |SYNCMEM |ERROR |Commun ication channel closed | False | status system replication site "2": ERROR overall system replication status: ERROR Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 1 site name: DC1 Status 11

The cluster still doesn’t notice this behavior. If you check the return code of the system replication status, Returncode 11 means error, which tells you something is wrong. If you have access, it is a good idea to enter

maintenance-modenow.The remotehost3 becomes the new primary, and clusternode2 (DC2) gets automatically registered as the new primary on the remotehost3.

Example output of the system replication state of remotehost3:

Every 5.0s: python /usr/sap/RH2/HDB02/exe/python_support/systemReplicationStatus.py ; echo Status $? remotehost3: Mon Sep 4 13:55:29 2023 |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replic ation |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |------ -------- |------------ | |SYSTEMDB |remotehost3 |30201 |nameserver | 1 | 3 |DC3 |clusternode2 | 30201 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |remotehost3 |30207 |xsengine | 2 | 3 |DC3 |clusternode2 | 30207 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |remotehost3 |30203 |indexserver | 3 | 3 |DC3 |clusternode2 | 30203 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | status system replication site "2": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 3 site name: DC3 Status 15The returncode 15 also says everything is okay, but clusternode1 is missing. This must be re-registered manually. The former primary clusternode1 is not listed, so the replication relationship is lost.

Set

maintenance-mode.If not already done before, set

maintenance-modeon the cluster on one node of the cluster with the command:[root@clusternode1]# pcs property set maintenance-mode=true

You can check if the

maintenance-modeis active by running this command:[root@clusternode1]# pcs resource * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02] (unmanaged): * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode2node2 (unmanaged) * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode1node1 (unmanaged) * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable, unmanaged): * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Slave clusternode2node2 (unmanaged) * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Master clusternode1node1 (unmanaged) * vip_RH2_02_MASTER (ocf::heartbeat:IPaddr2): Started clusternode1node1 (unmanaged)The resources are displaying unmanaged, this indicates that the cluster is in

maintenance-mode=true. The virtual IP address is still started on clusternode1. If you want to use this IP on another node, please disablevip_RH2_02_MASTERbefore you set maintanence-mode=true.[root@clusternode1]# pcs resource disable vip_RH2_02_MASTER

Re-register clusternode1.

When we check the

sr_stateon clusternode1, you will see a relationship only to DC2:clusternode1:rh2adm> hdbnsutil -sr_state System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~ online: true mode: primary operation mode: primary site id: 1 site name: DC1 is source system: true is secondary/consumer system: false has secondaries/consumers attached: true is a takeover active: false is primary suspended: false Host Mappings: ~~~~~~~~~~~~~~ clusternode1 -> [DC2] clusternode2 clusternode1 -> [DC1] clusternode1 Site Mappings: ~~~~~~~~~~~~~~ DC1 (primary/primary) |---DC2 (syncmem/logreplay) Tier of DC1: 1 Tier of DC2: 2 Replication mode of DC1: primary Replication mode of DC2: syncmem Operation mode of DC1: primary Operation mode of DC2: logreplay Mapping: DC1 -> DC2 done.But when we check DC2, the primary database server is DC3. So the information from DC1 is not correct.

clusternode2:rh2adm> hdbnsutil -sr_state

If we check the system replication status on DC1, the returncode is 12, which is unknown. So DC1 needs to be re-registered.

You can use this command to register the former primary clusternode1 as a new secondary of remotehost3.

clusternode1:rh2adm> hdbnsutil -sr_register --remoteHost=remotehost3 --remoteInstance=${TINSTANCE} --replicationMode=asyncsyncmem --name=DC1 --remoteName=DC3 --operationMode=logreplay --onlineAfter the registration is done, you will see on remotehost3 all three sites replicated, and the status (return code) will change to 15.

If this fails, you have to manually remove the replication relationships on DC1 and DC3. Please follow the instructions described in Register Secondary.

For example, list the existing relationships with:

clusternode1:rh2adm> hdbnsutil -sr_state

To remove the existing relationships you can use:

clusternode1:rh2adm> hdbnsutil -sr_unregister --name=DC2`

This may not usually be necessary. We assume that test 4 will be performed after test 3. So the recovery step is to run test 4.

5.6. Test 4:Failback of the primary node to the first site

| Subject of the test | Primary switch back to a cluster node. Failback and enable the cluster again. Re-register the third site as secondary. |

| Test preconditions |

|

| Test steps | Check the expected primary of the cluster. Failover from the DC3 node to the DC1 node. Check if the former secondary has switched to the new primary. Re-register remotehost3 as a new secondary.

Set cluster |

| Monitoring the test | On the new primary start:

On the secondary start:

|

| Starting the test |

Check the expected primary of the cluster: VIP and promoted SAP HANA resources should run on the same node which is the potential new primary.

On this potential primary run as Re-register the former primary as new secondary:

Cluster continues to work after setting the |

| Expected result | New primary is starting SAP HANA. The replication status will show all 3 sites replicated. Second cluster site gets automatically re-registered to the new primary. DR site becomes an additional replica of the database. |

| Ways to return to an initial state | Run test 3. |

Detailed description

Check if the cluster is put into

maintenance-mode:[root@clusternode1]# pcs property config maintenance-mode Cluster Properties: maintenance-mode: true

If the

maintenance-modeis not true you can set it with:[root@clusternode1]# pcs property set maintenance-mode=true

Check the system replication status and discover the primary database on all nodes.

First of all, discover the primary database using:

clusternode1:rh2adm> hdbnsutil -sr_state | egrep -e "^mode:|primary masters"

The output should be as follows:

On clusternode1:

clusternode1:rh2adm> hdbnsutil -sr_state | egrep -e "^mode:|primary masters" mode: syncmem primary masters: remotehost3

On clusternode2:

clusternode2:rh2adm> hdbnsutil -sr_state | egrep -e "^mode:|primary masters" mode: syncmem primary masters: remotehost3

On remotehost3:

remotehost3:rh2adm> hdbnsutil -sr_state | egrep -e "^mode:|primary masters" mode: primary

On all three nodes, the primary database is remotehost3.

On this primary database, you have to ensure that the system replication status is active for all three nodes and the return code is 15:

remotehost3:rh2adm> python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replication |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |-------------- |------------ | |SYSTEMDB |remotehost3 |30201 |nameserver | 1 | 3 |DC3 |clusternode2 | 30201 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |remotehost3 |30207 |xsengine | 2 | 3 |DC3 |clusternode2 | 30207 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |remotehost3 |30203 |indexserver | 3 | 3 |DC3 |clusternode2 | 30203 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |SYSTEMDB |remotehost3 |30201 |nameserver | 1 | 3 |DC3 |clusternode1 | 30201 | 1 |DC1 |YES |SYNCMEM |ACTIVE | | True | |RH2 |remotehost3 |30207 |xsengine | 2 | 3 |DC3 |clusternode1 | 30207 | 1 |DC1 |YES |SYNCMEM |ACTIVE | | True | |RH2 |remotehost3 |30203 |indexserver | 3 | 3 |DC3 |clusternode1 | 30203 | 1 |DC1 |YES |SYNCMEM |ACTIVE | | True | status system replication site "2": ACTIVE status system replication site "1": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 3 site name: DC3 [rh2adm@remotehost3: python_support]# echo $? 15Check if all three

sr_statesare consistent.Please run on all three nodes,

hdbnsutil -sr_state --sapcontrol=1 |grep site.*Mode:clusternode1:rh2adm>hdbnsutil -sr_state --sapcontrol=1 |grep site.*Mode clusternode2:rh2adm> hsbnsutil -sr_state --sapcontrol=1 | grep site.*Mode remotehost3:rh2adm>hsbnsutil -sr_state --sapcontrol=1 | grep site.*Mode

The output should be the same on all nodes:

siteReplicationMode/DC1=primary siteReplicationMode/DC3=async siteReplicationMode/DC2=syncmem siteOperationMode/DC1=primary siteOperationMode/DC3=logreplay siteOperationMode/DC2=logreplay

Start monitoring in separate windows.

On clusternode1, start:

clusternode1:rh2adm> watch "python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py; echo \$?"On remotehost3, start:

remotehost3:rh2adm>watch "python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py; echo \$?"On clusternode2, start:

clusternode2:rh2adm> watch "hdbnsutil -sr_state --sapcontrol=1 |grep siteReplicationMode"

Start the test.

To failover to clusternode1, start on clusternode1:

clusternode1:rh2adm> hdbnsutil -sr_takeover done.

Check the output of the monitors.

The monitor on clusternode1 will change to:

Every 2.0s: python systemReplicationStatus.py; echo $? clusternode1: Mon Sep 4 23:34:30 2023 |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replication |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |-------------- |------------ | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |clusternode2 | 30201 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |clusternode2 | 30207 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |clusternode2 | 30203 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | status system replication site "2": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 1 site name: DC1 15

Important is also the return code 15.

The monitor on clusternode2 will change to:

Every 2.0s: hdbnsutil -sr_state --sapcontrol=1 |grep site.*Mode clusternode2: Mon Sep 4 23:35:18 2023 siteReplicationMode/DC1=primary siteReplicationMode/DC2=syncmem siteOperationMode/DC1=primary siteOperationMode/DC2=logreplay

DC3 is gone and needs to be re-registered.

On remotehost3, the

systemReplicationStatusreports an error, and the returncode changes to 11.Check if cluster nodes get re-registered:

clusternode1:rh2adm> hdbnsutil -sr_state System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~ online: true mode: primary operation mode: primary site id: 1 site name: DC1 is source system: true is secondary/consumer system: false has secondaries/consumers attached: true is a takeover active: false is primary suspended: false Host Mappings: ~~~~~~~~~~~~~~ clusternode1 -> [DC2] clusternode2 clusternode1 -> [DC1] clusternode1 Site Mappings: ~~~~~~~~~~~~~~ DC1 (primary/primary) |---DC2 (syncmem/logreplay) Tier of DC1: 1 Tier of DC2: 2 Replication mode of DC1: primary Replication mode of DC2: syncmem Operation mode of DC1: primary Operation mode of DC2: logreplay Mapping: DC1 -> DC2 done.The Site Mapping shows that clusternode2 (DC2) was re-registered.

Check or enable the vip resource:

[root@clusternode1]# pcs resource * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02] (unmanaged): * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode2 (unmanaged) * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode1 (unmanaged) * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable, unmanaged): * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Master clusternode2 (unmanaged) * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Slave clusternode1 (unmanaged) * vip_RH2_02_MASTER (ocf::heartbeat:IPaddr2): Stopped (disabled, unmanaged)The vip resource

vip_RH2_02_MASTERis stopped.To start it again run:

[root@clusternode1]# pcs resource enable vip_RH2_02_MASTER Warning: 'vip_RH2_02_MASTER' is unmanaged

The warning is right because the cluster will not start any resources unless

maintenance-mode=false.Stop cluster

maintenance-mode.Before we stop the

maintenance-mode, we should start two monitors in separate windows to see the changes.On clusternode2, run:

[root@clusternode2]# watch pcs status --full

On clusternode1, run:

clusternode1:rh2adm> watch "python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py; echo $?"Now you can unset the

maintenance-modeon clusternode1 by running:[root@clusternode1]# pcs property set maintenance-mode=false

The monitor on clusternode1 should show you that everything is running now as expected:

Every 2.0s: pcs status --full clusternode1: Tue Sep 5 00:01:17 2023 Cluster name: cluster1 Cluster Summary: * Stack: corosync * Current DC: clusternode1 (1) (version 2.1.2-4.el8_6.6-ada5c3b36e2) - partition with quorum * Last updated: Tue Sep 5 00:01:17 2023 * Last change: Tue Sep 5 00:00:30 2023 by root via crm_attribute on clusternode1 * 2 nodes configured * 6 resource instances configured Node List: * Online: [ clusternode1 (1) clusternode2 (2) ] Full List of Resources: * auto_rhevm_fence1 (stonith:fence_rhevm): Started clusternode1 * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02]: * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode2 * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode1 * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable): * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Slave clusternode2 * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Master clusternode1 * vip_RH2_02_MASTER (ocf::heartbeat:IPaddr2): Started clusternode1 Node Attributes: * Node: clusternode1 (1): * hana_rh2_clone_state : PROMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode2 * hana_rh2_roles : 4:P:master1:master:worker:master * hana_rh2_site : DC1 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : PRIM * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode1 * lpa_rh2_lpt : 1693872030 * master-SAPHana_RH2_02 : 150 * Node: clusternode2 (2): * hana_rh2_clone_state : DEMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode1 * hana_rh2_roles : 4:S:master1:master:worker:master * hana_rh2_site : DC2 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : SOK * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode2 * lpa_rh2_lpt : 30 * master-SAPHana_RH2_02 : 100 Migration Summary: Tickets: PCSD Status: clusternode1: Online clusternode2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabledAfter manual interaction, it is always good advice to cleanup the cluster, as described in Cluster Cleanup.

Re-register remotehost3 to the new primary on clusternode1.

Remotehost3 needs to be re-registered. To monitor the progress, please start on clusternode1:

con_cluster_cleanupclusternode1:rh2adm> watch -n 5 'python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py ; echo Status $?'On remotehost3, please start:

remotehost3:rh2adm> watch 'hdbnsutil -sr_state --sapcontrol=1 |grep siteReplicationMode'

Now you can re-register remotehost3 with this command:

remotehost3:rh2adm> hdbnsutil -sr_register --remoteHost=clusternode1 --remoteInstance=${TINSTANCE} --replicationMode=async --name=DC3 --remoteName=DC1 --operationMode=logreplay --onlineThe monitor on clusternode1 will change to:

Every 5.0s: python /usr/sap/${SAPSYSTEMNAME}/HDB${TINSTANCE}/exe/python_support/systemReplicationStatus.py ; echo Status $? clusternode1: Tue Sep 5 00:14:40 2023 |Database |Host |Port |Service Name |Volume ID |Site ID |Site Name |Secondary |Secondary |Secondary |Secondary |Secondary |Replication |Replication |Replication |Secondary | | | | | | | | |Host |Port |Site ID |Site Name |Active Status |Mode |Status |Status Details |Fully Synced | |-------- |------ |----- |------------ |--------- |------- |--------- |--------- |--------- |--------- |--------- |------------- |----------- |----------- |-------------- |------------ | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |remotehost3 | 30201 | 3 |DC3 |YES |ASYNC |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |remotehost3 | 30207 | 3 |DC3 |YES |ASYNC |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |remotehost3 | 30203 | 3 |DC3 |YES |ASYNC |ACTIVE | | True | |SYSTEMDB |clusternode1 |30201 |nameserver | 1 | 1 |DC1 |clusternode2 | 30201 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30207 |xsengine | 2 | 1 |DC1 |clusternode2 | 30207 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | |RH2 |clusternode1 |30203 |indexserver | 3 | 1 |DC1 |clusternode2 | 30203 | 2 |DC2 |YES |SYNCMEM |ACTIVE | | True | status system replication site "3": ACTIVE status system replication site "2": ACTIVE overall system replication status: ACTIVE Local System Replication State ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ mode: PRIMARY site id: 1 site name: DC1 Status 15And the monitor of remotehost3 will change to:

Every 2.0s: hdbnsutil -sr_state --sapcontrol=1 |grep site.*Mode remotehost3: Tue Sep 5 02:15:28 2023 siteReplicationMode/DC1=primary siteReplicationMode/DC3=syncmem siteReplicationMode/DC2=syncmem siteOperationMode/DC1=primary siteOperationMode/DC3=logreplay siteOperationMode/DC2=logreplay

Now we have again 3 entries, and remotehost3 (DC3) is again a secondary site replicated from clusternode1 (DC1).

Check if all nodes are part of the system replication status on clusternode1.

Please run on all three nodes,

hdbnsutil -sr_state --sapcontrol=1 |grep site.*Mode:clusternode1:rh2adm> hdbnsutil -sr_state --sapcontrol=1 |grep site.*ModesiteReplicationMode clusternode2:rh2adm> hsbnsutil -sr_state --sapcontrol=1 | grep site.*Mode remotehost3:rh2adm> hsbnsutil -sr_state --sapcontrol=1 | grep site.*Mode

On all nodes, we should get the same output:

siteReplicationMode/DC1=primary siteReplicationMode/DC3=syncmem siteReplicationMode/DC2=syncmem siteOperationMode/DC1=primary siteOperationMode/DC3=logreplay siteOperationMode/DC2=logreplay

Check pcs status --full and SOK.

Run:

[root@clusternode1]# pcs status --full| grep sync_state

The output should be either PRIM or SOK:

* hana_rh2_sync_state : PRIM * hana_rh2_sync_state : SOKFinally, the cluster status should look like this, including the

sync_statePRIM and SOK:[root@clusternode1]# pcs status --full Cluster name: cluster1 Cluster Summary: * Stack: corosync * Current DC: clusternode1 (1) (version 2.1.2-4.el8_6.6-ada5c3b36e2) - partition with quorum * Last updated: Tue Sep 5 00:18:52 2023 * Last change: Tue Sep 5 00:16:54 2023 by root via crm_attribute on clusternode1 * 2 nodes configured * 6 resource instances configured Node List: * Online: [ clusternode1 (1) clusternode2 (2) ] Full List of Resources: * auto_rhevm_fence1 (stonith:fence_rhevm): Started clusternode1 * Clone Set: SAPHanaTopology_RH2_02-clone [SAPHanaTopology_RH2_02]: * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode2 * SAPHanaTopology_RH2_02 (ocf::heartbeat:SAPHanaTopology): Started clusternode1 * Clone Set: SAPHana_RH2_02-clone [SAPHana_RH2_02] (promotable): * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Slave clusternode2 * SAPHana_RH2_02 (ocf::heartbeat:SAPHana): Master clusternode1 * vip_RH2_02_MASTER (ocf::heartbeat:IPaddr2): Started clusternode1 Node Attributes: * Node: clusternode1 (1): * hana_rh2_clone_state : PROMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode2 * hana_rh2_roles : 4:P:master1:master:worker:master * hana_rh2_site : DC1 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : PRIM * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode1 * lpa_rh2_lpt : 1693873014 * master-SAPHana_RH2_02 : 150 * Node: clusternode2 (2): * hana_rh2_clone_state : DEMOTED * hana_rh2_op_mode : logreplay * hana_rh2_remoteHost : clusternode1 * hana_rh2_roles : 4:S:master1:master:worker:master * hana_rh2_site : DC2 * hana_rh2_sra : - * hana_rh2_srah : - * hana_rh2_srmode : syncmem * hana_rh2_sync_state : SOK * hana_rh2_version : 2.00.062.00 * hana_rh2_vhost : clusternode2 * lpa_rh2_lpt : 30 * master-SAPHana_RH2_02 : 100 Migration Summary: Tickets: PCSD Status: clusternode1: Online clusternode2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled- Refer to Check cluster status and Check database to verify that all works fine again.

Chapter 6. Useful commands

Below are 3 sections of useful commands. In most cases, it should help to verify successful operation or configuration. Examples are listed together with the response. In some cases, the output has been adjusted for formatting reasons.

-

All commands listed in this document when executed by the

<sid>admuser start with>. -

All commands run by the

root userstart with a#. -

To execute the commands, omit the prefix

>or#.

6.1. SAP HANA commands

The SAP HANA commands are executed by the <sid>adm user. Example:

[root@clusternode1]# su - rh2adm clusternode1:rh2adm> cdpy clusternode1:rh2adm> pwd /usr/sap/RH2/HDB02/exe/python_support clusternode1:rh2adm> python systemReplicationStatus.py -h systemReplicationStatus.py [-h|--help] [-a|--all] [-l|--localhost] [-m|--multiTaget] [-s|--site=<site name>] [-t|--printLandscapeTree] [--omitSecondaryActiveStatus] [--sapcontrol=1] clusternode1:rh2adm> python landscapeHostConfiguration.py -h landscapeHostConfiguration.py [-h|--help] [--localhost] [--sapcontrol=1] clusternode1:rh2adm> hdbnsutil # run hdbnsutil without parameters to get help

6.1.1. SAP HANA installation using hdbclm

The installation of the third site is similar to the installation of the second site. The installation can be done with hdblcm as user root. To ensure that nothing is installed before, run hdbuninst to check if SAP HANA is not already installed on this node.

Example output of HANA uninstallation:

[root@remotehost3]# cd /software/DATA_UNITS/HDB_SERVER_LINUX_X86_64 root@DC3/software/DATA_UNITS/HDB_SERVER_LINUX_X86_64# ./hdbuninst Option 0 will remove an already existing HANA Installation No SAP HANA Installation found is the expected answer

Example output of HANA installation on DC3:

----[root@remotehost3]# cd /software/DATA_UNITS/HDB_SERVER_LINUX_X86_64 # ./hdbuninst Option 0 will remove an already existing HANA Installation No SAP HANA Installation found is the expected answer ---- Example output of HANA installation: [source,text] ---- [root@remotehost3]# ./hdblcm 1 install 2 server /hana/shared is default directory Enter Local Hostname [remotehost3]: use the default name additional hosts only during Scale-Out Installation y default is n ENTER SAP HANA System ID: RH2 Enter Instance Number [02]: Enter Local Host Worker Group [default]: Select System Usage / Enter Index [4]: Choose encryption Enter Location of Data Volumes [/hana/data/RH2]: Enter Location of Log Volumes [/hana/log/RH2]: Restrict maximum memory allocation? [n]: Enter Certificate Host Name Enter System Administrator (rh2adm) Password: <Y0urPasswd> Confirm System Administrator (rh2adm) Password: <Y0urPasswd> Enter System Administrator Home Directory [/usr/sap/RH2/home]: Enter System Administrator Login Shell [/bin/sh]: Enter System Administrator User ID [1000]: Enter System Database User (SYSTEM) Password: <Y0urPasswd> Confirm System Database User (SYSTEM) Password: <Y0urPasswd> Restart system after machine reboot? [n]: ----

Before the installation starts, a summary is listed:

SAP HANA Database System Installation

Installation Parameters

Remote Execution: ssh

Database Isolation: low

Install Execution Mode: standard

Installation Path: /hana/shared

Local Host Name: dc3host

SAP HANA System ID: RH2

Instance Number: 02

Local Host Worker Group: default

System Usage: custom

Location of Data Volumes: /hana/data/RH2

Location of Log Volumes: /hana/log/RH2

SAP HANA Database secure store: ssfs

Certificate Host Names: remotehost3 -> remotehost3 System Administrator Home Directory: /usr/sap/RH2/home

System Administrator Login Shell: /bin/sh

System Administrator User ID: 1000

ID of User Group (sapsys): 1010

Software Components

SAP HANA Database

Install version 2.00.052.00.1599235305

Location: /software/DATA_UNITS/HDB_SERVER_LINUX_X86_64/server

SAP HANA Local Secure Store

Do not install

SAP HANA AFL (incl.PAL,BFL,OFL)

Do not install

SAP HANA EML AFL

Do not install

SAP HANA EPM-MDS

Do not install

SAP HANA Database Client

Do not install

SAP HANA Studio

Do not install

SAP HANA Smart Data Access

Do not install

SAP HANA XS Advanced Runtime

Do not install

Log File Locations

Log directory: /var/tmp/hdb_RH2_hdblcm_install_2021-06-09_18.48.13

Trace location: /var/tmp/hdblcm_2021-06-09_18.48.13_31307.trc

Do you want to continue? (y/n):Enter y to start the installation.

6.1.2. Using hdbsql to check Inifile contents

clusternode1:rh2adm> hdbsql -i ${TINSTANCE} -u system -p Y0urP8ssw0rd

Welcome to the SAP HANA Database interactive terminal.

Type: \h for help with commands

\q to quit

hdbsql RH2=> select * from M_INIFILE_CONTENTS where section='system_replication'

FILE_NAME,LAYER_NAME,TENANT_NAME,HOST,SECTION,KEY,VALUE

"global.ini","DEFAULT","","","system_replication","actual_mode","primary"

"global.ini","DEFAULT","","","system_replication","mode","primary"

"global.ini","DEFAULT","","","system_replication","operation_mode","logreplay"

"global.ini","DEFAULT","","","system_replication","register_secondaries_on_takeover

","true"

"global.ini","DEFAULT","","","system_replication","site_id","1"

"global.ini","DEFAULT","","","system_replication","site_name","DC2"

"global.ini","DEFAULT","","","system_replication","timetravel_logreplay_mode","auto

"

"global.ini","DEFAULT","","","system_replication","alternative_sources",""

"global.ini","DEFAULT","","","system_replication","datashipping_logsize_threshold",

"5368709120"

"global.ini","DEFAULT","","","system_replication","datashipping_min_time_interval",

"600"

"global.ini","DEFAULT","","","system_replication","datashipping_parallel_channels",

"4"

"global.ini","DEFAULT","","","system_replication","datashipping_parallel_processing

","true"

"global.ini","DEFAULT","","","system_replication","datashipping_snapshot_max_retent

ion_time","300"

"global.ini","DEFAULT","","","system_replication","enable_data_compression","false"

"global.ini","DEFAULT","","","system_replication","enable_full_sync","false"

"global.ini","DEFAULT","","","system_replication","enable_log_compression","false"

"global.ini","DEFAULT","","","system_replication","enable_log_retention","auto"

"global.ini","DEFAULT","","","system_replication","full_replica_on_failed_delta_syn

c_check","false"

"global.ini","DEFAULT","","","system_replication","hint_based_routing_site_name",""

"global.ini","DEFAULT","","","system_replication","keep_old_style_alert","false"

"global.ini","DEFAULT","","","system_replication","logshipping_async_buffer_size","

67108864"

"global.ini","DEFAULT","","","system_replication","logshipping_async_wait_on_buffer

_full","true"

"global.ini","DEFAULT","","","system_replication","logshipping_max_retention_size",

"1048576"

"global.ini","DEFAULT","","","system_replication","logshipping_replay_logbuffer_cac

he_size","1073741824"

"global.ini","DEFAULT","","","system_replication","logshipping_replay_push_persiste

nt_segment_count","5"

"global.ini","DEFAULT","","","system_replication","logshipping_snapshot_logsize_thr

eshold","3221225472"

"global.ini","DEFAULT","","","system_replication","logshipping_snapshot_min_time_in

terval","900"

"global.ini","DEFAULT","","","system_replication","logshipping_timeout","30"

"global.ini","DEFAULT","","","system_replication","preload_column_tables","true"

"global.ini","DEFAULT","","","system_replication","propagate_log_retention","off"

"global.ini","DEFAULT","","","system_replication","reconnect_time_interval","30"

"global.ini","DEFAULT","","","system_replication","retries_before_register_to_alter

native_source","20"

"global.ini","DEFAULT","","","system_replication","takeover_esserver_without_log_ba

ckup","false"

"global.ini","DEFAULT","","","system_replication","takeover_wait_until_esserver_res

tart","true"

"global.ini","DEFAULT","","","system_replication","timetravel_call_takeover_hooks",

"off"

"global.ini","DEFAULT","","","system_replication","timetravel_log_retention_policy"

,"none"

"global.ini","DEFAULT","","","system_replication","timetravel_max_retention_time","

0"

"global.ini","DEFAULT","","","system_replication","timetravel_snapshot_creation_int

erval","1440"

"indexserver.ini","DEFAULT","","","system_replication","logshipping_async_buffer_si

ze","268435456"

"indexserver.ini","DEFAULT","","","system_replication","logshipping_replay_logbuffe

r_cache_size","4294967296"

"indexserver.ini","DEFAULT","","","system_replication","logshipping_replay_push_per

sistent_segment_count","20"

41 rows selected (overall time 1971.958 msec; server time 31.359 msec)6.1.3. Check database

Check if the database is running and discover the current primary node.

List database instances

clusternode1:rh2adm> sapcontrol -nr ${TINSTANCE} -function

GetSystemInstanceList

23.06.2023 12:08:17

GetSystemInstanceList

OK

hostname, instanceNr, httpPort, httpsPort, startPriority, features, dispstatus

node1, 2, 50213, 50214, 0.3, HDB|HDB_WORKER, GREENIf the output is green the instance is running.

List database processes

clusternode1:rh2adm> sapcontrol -nr ${TINSTANCE} -function GetProcessList

GetProcessList

OK

name, description, dispstatus, textstatus, starttime, elapsedtime, pid

hdbdaemon, HDB Daemon, GREEN, Running, 2023 09 04 14:34:01, 18:41:33, 3788067