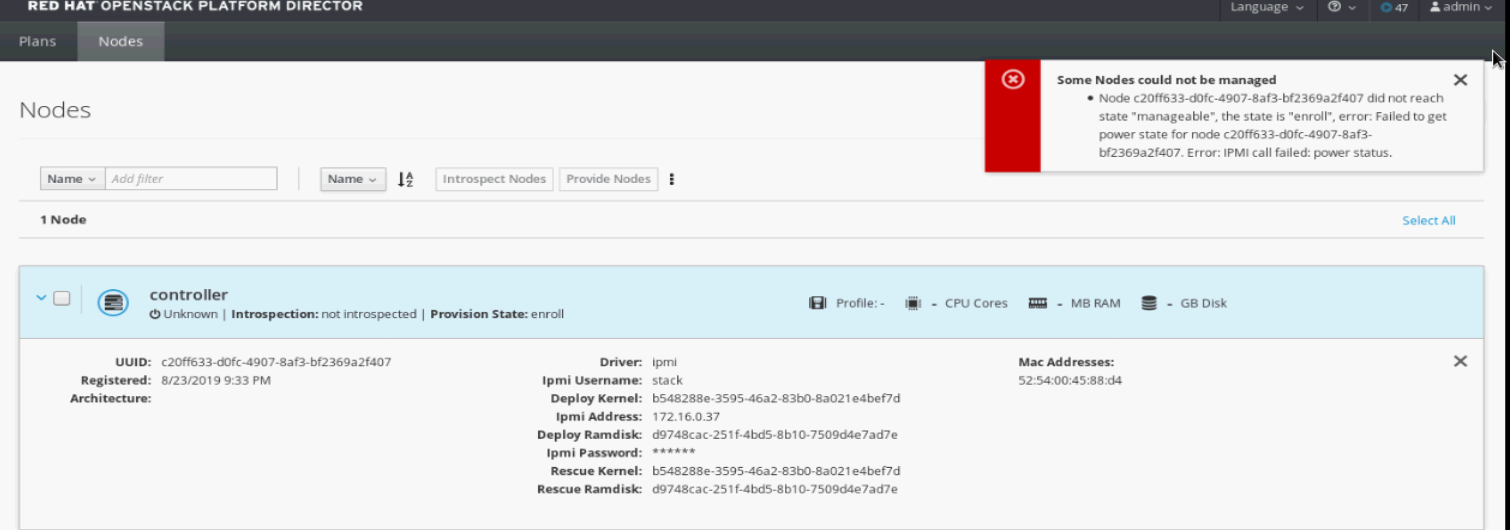

Unable to manage node

The goal is to have a small Red Hat OpenStack setup using virtual machines as nodes.

The setup is an HP DL380 Gen9 running Ubuntu 18.04. The VMs are KVM guests managed with virt-manager.

I currently have the undercloud installed on a VM running Red Hat Enterprise Linux Server 7.6. The undercloud was deployed using the default settings from the undercloud.conf file. The VM has two virtual interfaces, both on the "default" NAT network.

The node is a VM running Red Hat Enterprise Linux Server 7.6. It has two virtual network interfaces: one on the default NAT network, the other a host device (bond0: macvtap) with the IP address 172.16.0.37. I am trying to manage the node via the dashboard, but the dashboard is unable to complete an IPMI call to it.
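
To narrow this down, one check that could be run from the undercloud VM is whether anything answers on the IPMI port at 172.16.0.37 at all. Below is a minimal sketch, assuming IPMI over LAN on the standard UDP port 623 and using an RMCP/ASF presence ping. The function names (`build_presence_ping`, `is_presence_pong`, `probe_ipmi`) are made up for illustration and are not part of any OpenStack tooling.

```python
import socket

IPMI_PORT = 623  # standard UDP port for RMCP / IPMI over LAN


def build_presence_ping(tag: int = 0) -> bytes:
    """Build an RMCP/ASF Presence Ping datagram (12 bytes)."""
    # RMCP header: version 0x06, reserved, sequence 0xFF (no ACK), class 0x06 (ASF)
    rmcp_header = bytes([0x06, 0x00, 0xFF, 0x06])
    # ASF body: IANA enterprise number 4542 (0x000011BE), message type 0x80
    # (Presence Ping), message tag, reserved byte, data length 0
    asf_body = bytes([0x00, 0x00, 0x11, 0xBE, 0x80, tag & 0xFF, 0x00, 0x00])
    return rmcp_header + asf_body


def is_presence_pong(data: bytes) -> bool:
    """Return True if the datagram looks like an ASF Presence Pong (type 0x40)."""
    return (
        len(data) >= 9
        and data[0] == 0x06  # RMCP version
        and data[3] == 0x06  # ASF message class
        and data[8] == 0x40  # Presence Pong
    )


def probe_ipmi(host: str, timeout: float = 2.0) -> bool:
    """Send one presence ping to host:623/udp and wait for a pong."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(build_presence_ping(), (host, IPMI_PORT))
            data, _ = sock.recvfrom(1024)
        except OSError:
            # Timeout, port unreachable, or no route: treat all as "no answer".
            return False
    return is_presence_pong(data)
```

Calling `probe_ipmi("172.16.0.37")` returns `True` only if something replies with a presence pong. A `False` result would suggest that nothing is listening for IPMI at that address, which would point at the node side rather than at the dashboard.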