The redhat-operators catalog fails and references the staging registry

Issue

The OpenShift Marketplace, which manages the Operator life cycle and the installed Operators in a cluster, was temporarily redirected to the Red Hat staging environment, which is not accessible to the public.

Troubleshooting

- Running the following `oc` command shows all affected Operators, as well as whether the Red Hat OpenShift Container Platform 4 cluster is affected by this issue (the command is shown with sample output):

      $ oc get --all-namespaces -o 'custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,PHASE:.status.phase,BundleLookupPath:.status.bundleLookups[].path' installplans.operators.coreos.com | grep 'NAMESPACE\|Failed.*registry[.]stage[.]redhat[.]io'
      NAMESPACE        NAME            PHASE    BundleLookupPath
      namespace-sso    install-fbhlk   Failed   registry.stage.redhat.io/rh-sso-7/sso7-rhel8-operator-bundle@sha256:92604a0b429d49196624dce12924e37fdd767a98eaca3175363358bea39f1d84
      namespace-mce    install-9c2q6   Failed   registry.stage.redhat.io/multicluster-engine/mce-operator-bundle@sha256:c4f901041fa60fae44c0b23a69f91b23e580b72029730b7e4624e2f07399bf45
      namespace-oadp   install-4pl6b   Failed   registry.stage.redhat.io/oadp/oadp-operator-bundle@sha256:be988cfb751174bd5c9d974225c205739b283c94a582b39d5cef5c6261c5314d
      namespace-acm    install-cp5f8   Failed   registry.stage.redhat.io/rhacm2/acm-operator-bundle@sha256:0beabc9346d25388c3fba22f6357eaa742c19cd4167cde3288d6150317e9d4b7

- If the above command returns nothing beyond the header line, then the cluster is not affected by this issue.

- `KubeJobFailed` events in `openshift-marketplace`, as in the example below, may indicate that the Red Hat OpenShift Container Platform 4 cluster is impacted by this issue. To confirm whether the cluster is affected, use the `oc` command mentioned above.

      $ oc get events -n openshift-marketplace | grep "KubeJobFailed"
      KubeJobFailed{namespace="openshift-marketplace",job_name=~"[0-9a-f]{63}"}

- `Event` errors similar to the one shown below may be another indication that the Red Hat OpenShift Container Platform 4 cluster is affected by this issue. Again, to confirm, use the first command provided in this section:

      $ oc get event --all-namespaces | grep "Failed to pull image"
      Failed to pull image "registry.stage.redhat.io/...": rpc error: code = Unknown desc = pinging container registry registry.stage.redhat.io: Get "https://registry.stage.redhat.io/v2/": x509: certificate signed by unknown authority

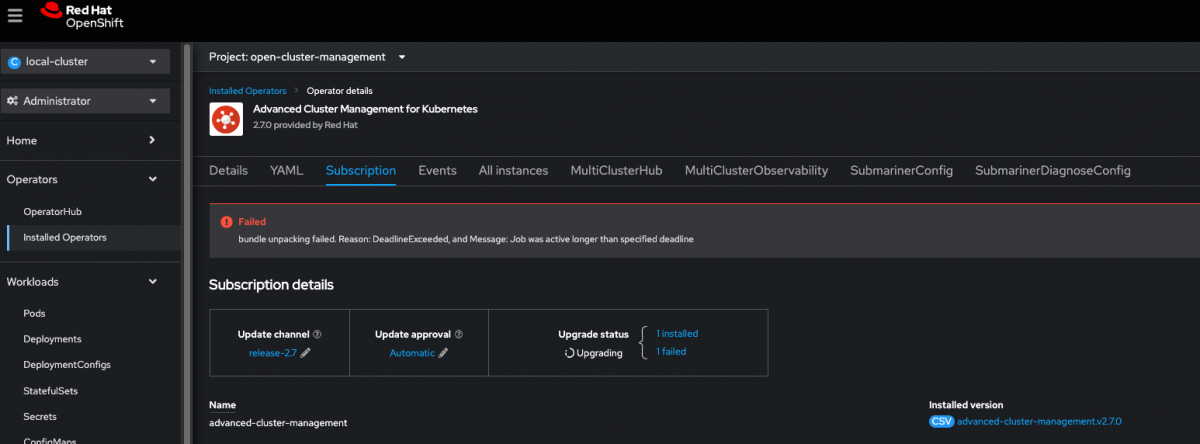

The Red Hat OpenShift Container Platform 4 - Console may eventually also report problems similar as to what is shown in the below picture. To check or see them, navigate to Operators -> Installed Operators -> (select-an-operator)). In case a similar error is reported, Red Hat still does recommend to use the initial

occommand from this section, as it will help to confirm the issue and also show all affected Operators.

Cluster Console - Operator with error
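The first command in this section prints one row per failed InstallPlan. On clusters with many namespaces, a short filter can condense that output. The following is a minimal sketch, not part of the official procedure: `summarize_affected` is a hypothetical helper name, and it assumes the four-column layout (NAMESPACE, NAME, PHASE, BundleLookupPath) produced by that command.

```shell
#!/bin/sh
# Sketch only: summarise failed InstallPlans that reference the staging registry.
# Reads the custom-columns output of the detection command on stdin.
# Assumed column order: NAMESPACE NAME PHASE BundleLookupPath.

summarize_affected() {
    awk '
        # Keep rows whose phase is Failed and whose bundle path is in the staging registry.
        $3 == "Failed" && $4 ~ /^registry[.]stage[.]redhat[.]io\// {
            count++
            print $1 "/" $2
        }
        END {
            printf "affected InstallPlans: %d\n", count
        }
    '
}

# Example (requires cluster access):
#   oc get installplans.operators.coreos.com --all-namespaces \
#     -o 'custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,PHASE:.status.phase,BundleLookupPath:.status.bundleLookups[].path' \
#     | summarize_affected
```

A final count of 0 corresponds to the "not affected" case described above.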

Resolution

While the change that caused this issue was immediately reverted, Operators installed using the Operator Lifecycle Manager (OLM) that fetched the problematic OLM catalog and started to update from it require manual clean-up before further Operator updates are attempted. Operators installed using OLM on Red Hat OpenShift Container Platform 4 that did not fetch the problematic catalog, and for which the above troubleshooting steps therefore report nothing, do not require any action.

Prerequisites for recovering OLM-managed Operators from updates that fail because of the wrongly pushed Operator Lifecycle Manager catalog

- The pod associated with the `redhat-operators` `catalogSource` has been restarted since Tuesday, February 28th, 2023.

  - In Red Hat OpenShift Container Platform 4 clusters that have the default `redhat-operators` `catalogSource` enabled, the following command prints the age of the pod:

        oc get pods -n openshift-marketplace -l olm.catalogSource=redhat-operators

- Role Based Access Control (RBAC) permissions to `get` and `delete` `InstallPlans` in the `namespaces` where installs/upgrades were initiated and are failing are required.

- Role Based Access Control (RBAC) permissions to `get` `configMaps` in the `namespace` that contains the `redhat-operators` `catalogSource` are required.
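The RBAC prerequisites above can be checked before starting, for example with `oc auth can-i`. The following is a minimal sketch under stated assumptions: `check_prereqs` is a hypothetical helper name, the first argument is assumed to be the namespace that contains the `redhat-operators` `catalogSource`, and the remaining arguments are the namespaces with failing InstallPlans.

```shell
#!/bin/sh
# Sketch only: verify the RBAC prerequisites with `oc auth can-i`.
# `oc auth can-i` prints "yes" or "no" on stdout for the current user.

check_prereqs() {
    catalog_ns=$1   # namespace holding the redhat-operators catalogSource
    shift           # remaining arguments: namespaces with failing InstallPlans
    missing=0

    # Permission to get configMaps in the catalog namespace.
    if [ "$(oc auth can-i get configmaps -n "$catalog_ns" 2>/dev/null)" != "yes" ]; then
        echo "missing: get configmaps in $catalog_ns"
        missing=1
    fi

    # Permission to get and delete InstallPlans in each affected namespace.
    for ns in "$@"; do
        for verb in get delete; do
            if [ "$(oc auth can-i "$verb" installplans.operators.coreos.com -n "$ns" 2>/dev/null)" != "yes" ]; then
                echo "missing: $verb installplans in $ns"
                missing=1
            fi
        done
    done

    return "$missing"
}

# Example (namespace names taken from the sample output in Troubleshooting):
#   check_prereqs openshift-marketplace namespace-sso namespace-mce
```

The function prints one line per missing permission and returns a non-zero status if any is missing, so it can gate the recovery steps in a wrapper script.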

Steps to recover OLM-managed Operators from updates that fail because of the wrongly pushed Operator Lifecycle Manager catalog

- Important: The `recovery` procedure removes the associated `ConfigMap` and `InstallPlans` to restore regular operation (update, installation, etc.) for Operators managed by OLM. Removing the `InstallPlan` is required to allow updates of the affected Operator again, and cleaning up the `ConfigMap` is required to save space in `etcd` and to avoid leaving orphaned resources on Red Hat OpenShift Container Platform 4.

- Generate the `recovery` script below:

      # Creates "oc delete commands" for each affected installPlan
      oc get installplans --all-namespaces -o 'custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,PHASE:.status.phase,BundleLookupPath:.status.bundleLookups[].path' | grep Failed | grep 'registry[.]stage[.]redhat[.]io'| awk '{print "oc delete installplan -n", $1, $2}' >> incident_17669_resolution_script.sh

      # Creates "oc delete commands" for each job that failed. In this case, deleting the configMap of the same name will cause Kubernetes garbage collection to cleanup the failed job.
      oc get jobs --all-namespaces -o 'custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,FAILED:.status.failed,IMAGE:.spec.template.spec.containers[0].env[0].value' | grep 'registry[.]stage[.]redhat[.]io' | awk '{ if($3==1) print "oc delete configmap -n", $1, $2}' >> incident_17669_resolution_script.sh

- Inspect the created `recovery` script and validate that only resources affected by the issue are listed and therefore deleted. If in any doubt, contact Red Hat Technical Support.

      cat incident_17669_resolution_script.sh

- Run the `recovery` script, which removes the problematic `InstallPlan` and the associated `ConfigMap` to get the affected Operator back into a proper state and thus resume normal operation.

      chmod u+x incident_17669_resolution_script.sh
      ./incident_17669_resolution_script.sh

- Contact Red Hat Technical Support to raise questions or comments related to the procedure or the overall problem documented.
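As a complement to the manual inspection step above (not a replacement for it), the generated script can also be checked mechanically before it is run. The following is a minimal sketch: `validate_recovery_script` is a hypothetical helper name, and the check assumes that namespace and resource names contain only lowercase letters, digits, `-` and `.`.

```shell
#!/bin/sh
# Sketch only: confirm the generated recovery script contains nothing except
# "oc delete installplan" and "oc delete configmap" commands.

validate_recovery_script() {
    script=${1:-incident_17669_resolution_script.sh}

    # An absent or empty script means no affected resources were found.
    if [ ! -s "$script" ]; then
        echo "nothing to do: $script is missing or empty"
        return 1
    fi

    # Count lines that are NOT exactly:
    #   oc delete <installplan|configmap> -n <namespace> <name>
    bad=$(grep -Evc '^oc delete (installplan|configmap) -n [a-z0-9.-]+ [a-z0-9.-]+$' "$script" || true)
    if [ "$bad" -ne 0 ]; then
        echo "unexpected lines in $script: $bad"
        return 1
    fi

    # grep -c with an empty pattern counts all lines.
    echo "ok: $(grep -c '' "$script") delete command(s)"
}

# Example:
#   validate_recovery_script && sh incident_17669_resolution_script.sh
```

The check only confirms the shape of each command. Whether every listed resource is actually affected by the issue still needs to be reviewed by hand.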

Root Cause

At 15:32 UTC on 2023-02-28, staging OLM catalogues were pushed to production; they were reverted shortly afterwards.

The staging registry holds pre-release and in-development operators, including feature and maintenance updates. These operator versions are not usually publicly available. However, if the staging catalogue's contents are pushed live, even for a brief period of time, a cluster may attempt to update its redhat-operators CatalogSource (every 10 minutes by default) and pull a catalogue image containing references to the staging registry at registry.stage.redhat.io. Operators, mainly those using the auto-update functionality, then try to update using images from registry.stage.redhat.io. This staging registry is typically not accessible to the public or to clusters, which causes the image pull issues noted in the troubleshooting section.

The full upgrade process:

- An upgrade is triggered via the Operator Lifecycle Manager because a new version is available in staging.

- The unpack job pulls the bundle image referenced by the catalogue as the first step in the installation process.

- Because the referenced bundle is in the staging registry, the cluster is unable to pull the image.

- When the unpack job times out, the operator installPlan fails.

In this case, the Operator deployments remain unaffected; the impact is limited to OLM's ability to upgrade the operator.
