Deploy model in Red Hat Openshift AI failed: "RuntimeError: Failed to infer device type"

Environment

- Red Hat Openshift AI 2.19

Issue

- Deploy model in Red Hat Openshift AI failed:

$ oc logs <model name>-predictor-xxxxx-xxx

INFO 07-02 07:02:49 [__init__.py:243] No platform detected, vLLM is running on UnspecifiedPlatform

INFO 07-02 07:02:52 [api_server.py:1034] vLLM API server version 0.8.5.dev411+g7ad990749

... ...

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "/opt/vllm/lib64/python3.12/site-packages/vllm/entrypoints/openai/api_server.py", line 1121, in <module>

uvloop.run(run_server(args))

File "/opt/vllm/lib64/python3.12/site-packages/uvloop/__init__.py", line 109, in run

return __asyncio.run(

^^^^^^^^^^^^^^

File "/usr/lib64/python3.12/asyncio/runners.py", line 195, in run

return runner.run(main)

^^^^^^^^^^^^^^^^

File "/usr/lib64/python3.12/asyncio/runners.py", line 118, in run

return self._loop.run_until_complete(task)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete

File "/opt/vllm/lib64/python3.12/site-packages/uvloop/__init__.py", line 61, in wrapper

return await main

^^^^^^^^^^

File "/opt/vllm/lib64/python3.12/site-packages/vllm/entrypoints/openai/api_server.py", line 1069, in run_server

async with build_async_engine_client(args) as engine_client:

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib64/python3.12/contextlib.py", line 210, in __aenter__

return await anext(self.gen)

^^^^^^^^^^^^^^^^^^^^^

File "/opt/vllm/lib64/python3.12/site-packages/vllm/entrypoints/openai/api_server.py", line 146, in build_async_engine_client

async with build_async_engine_client_from_engine_args(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib64/python3.12/contextlib.py", line 210, in __aenter__

return await anext(self.gen)

^^^^^^^^^^^^^^^^^^^^^

File "/opt/vllm/lib64/python3.12/site-packages/vllm/entrypoints/openai/api_server.py", line 166, in build_async_engine_client_from_engine_args

vllm_config = engine_args.create_engine_config(usage_context=usage_context)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/vllm/lib64/python3.12/site-packages/vllm/engine/arg_utils.py", line 1153, in create_engine_config

device_config = DeviceConfig(device=self.device)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/vllm/lib64/python3.12/site-packages/vllm/config.py", line 2007, in __init__

raise RuntimeError(

RuntimeError: Failed to infer device type, please set the environment variable `VLLM_LOGGING_LEVEL=DEBUG` to turn on verbose logging to help debug the issue.

Resolution

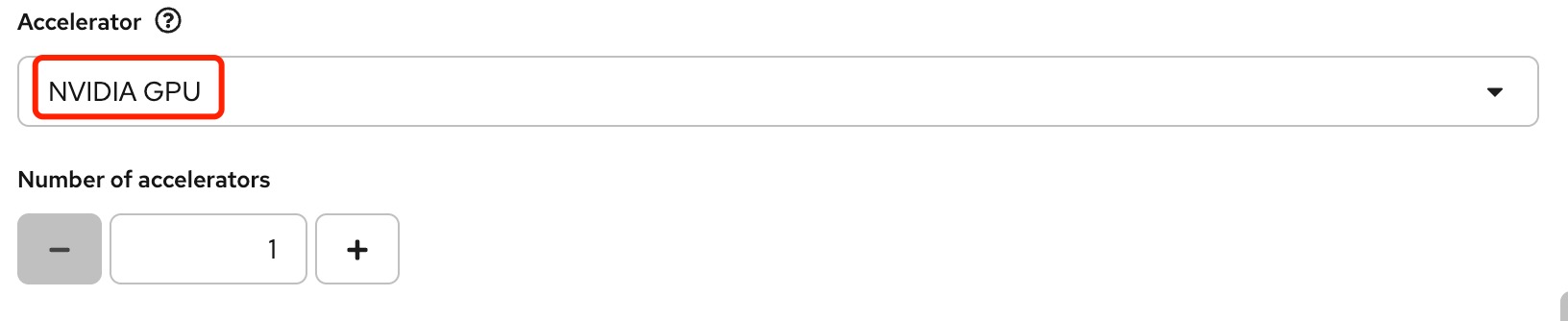

- Delete old model. In new model, configure the same

Acceleratoras theServing runtime, such as usevLLM NVIDIA GPU ServingRuntime for Kserveruntime requires addingNVIDIA GPUas accelerator, then deploy the model.

Root Cause

- Need to add the corresponding

AcceleratorinServing runtimewhen deploy model.

Diagnostic Steps

- Check model deploy page whether

AcceleratorisNone:

This solution is part of Red Hat’s fast-track publication program, providing a huge library of solutions that Red Hat engineers have created while supporting our customers. To give you the knowledge you need the instant it becomes available, these articles may be presented in a raw and unedited form.

Comments