
Chapter 3. Monitor Configuration Reference

Understanding how to configure a Ceph monitor is an important part of building a reliable Red Hat Ceph Storage cluster. All clusters have at least one monitor. A monitor configuration usually remains fairly consistent, but you can add, remove or replace a monitor in a cluster.

3.1. Background

Ceph monitors maintain a "master copy" of the cluster map. That means a Ceph client can determine the location of all Ceph monitors and Ceph OSDs just by connecting to one Ceph monitor and retrieving a current cluster map.

Before Ceph clients can read from or write to Ceph OSDs, they must first connect to a Ceph monitor. With a current copy of the cluster map and the CRUSH algorithm, a Ceph client can compute the location of any object. The ability to compute object locations allows a Ceph client to talk directly to Ceph OSDs, which is an important aspect of Ceph's high scalability and performance.

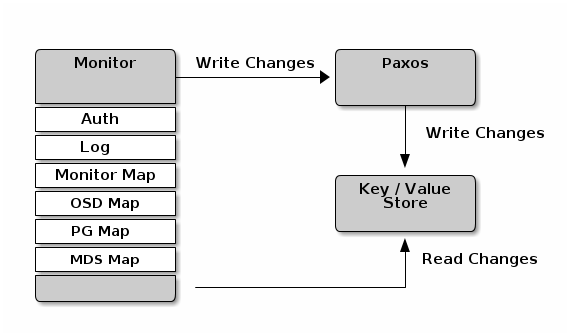

The primary role of the Ceph monitor is to maintain a master copy of the cluster map. Ceph monitors also provide authentication and logging services. Ceph monitors write all changes in the monitor services to a single Paxos instance, and Paxos writes the changes to a key-value store for strong consistency. Ceph monitors can query the most recent version of the cluster map during synchronization operations. Ceph monitors leverage the key-value store’s snapshots and iterators (using the leveldb database) to perform store-wide synchronization.

3.1.1. Cluster Maps

The cluster map is a composite of maps, including the monitor map, the OSD map, and the placement group map. The cluster map tracks a number of important events:

- Which processes are in the Red Hat Ceph Storage cluster.

- Which processes that are in the Red Hat Ceph Storage cluster are up and running or down.

- Whether the placement groups are active or inactive, and clean or in some other state.

- Other details that reflect the current state of the cluster, such as:

  - the total amount of storage space, or

  - the amount of storage used.

When there is a significant change in the state of the cluster (for example, a Ceph OSD goes down or a placement group falls into a degraded state), the cluster map is updated to reflect the current state of the cluster. The Ceph monitor also maintains a history of the prior states of the cluster. The monitor map, OSD map, and placement group map each maintain a history of their map versions. Each version is called an epoch.

When operating the Red Hat Ceph Storage cluster, keeping track of these states is an important part of the cluster administration.

3.1.2. Monitor Quorum

A cluster can run with a single monitor. However, a single monitor is a single point of failure. To ensure high availability in a production Ceph storage cluster, run Ceph with multiple monitors so that the failure of a single monitor does not cause a failure of the entire cluster.

When a Ceph storage cluster runs multiple Ceph monitors for high availability, the monitors use the Paxos algorithm to establish consensus about the master cluster map. Consensus requires a majority of monitors to be running and to form a quorum (for example, 1 out of 1; 2 out of 3; 3 out of 5; 4 out of 6; and so on).
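The majority rule in the preceding paragraph can be written as a formula. As a worked sketch (standard majority arithmetic, not a formula taken from Ceph itself), the quorum size q for n monitors and the number of monitor failures f that the cluster can tolerate are:

```latex
q(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1,
\qquad
f(n) = n - q(n) = \left\lceil \frac{n}{2} \right\rceil - 1
```

For example, q(3) = 2 and f(3) = 1; q(5) = 3 and f(5) = 2; q(6) = 4 and f(6) = 2. A sixth monitor raises the quorum size without letting the cluster survive any additional monitor failure, which is one reason odd monitor counts are common.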

3.1.3. Consistency

When you add monitor settings to the Ceph configuration file, you need to be aware of some of the architectural aspects of Ceph monitors. Ceph imposes strict consistency requirements on a Ceph monitor when it discovers another Ceph monitor within the cluster. Whereas Ceph clients and other Ceph daemons use the Ceph configuration file to discover monitors, monitors discover each other using the monitor map (monmap), not the Ceph configuration file.

A Ceph monitor always refers to the local copy of the monitor map when discovering other Ceph monitors in the Red Hat Ceph Storage cluster. Using the monitor map instead of the Ceph configuration file avoids errors that could break the cluster (for example, typos in the Ceph configuration file when specifying a monitor address or port). Because monitors use monitor maps for discovery and share monitor maps with clients and other Ceph daemons, the monitor map provides monitors with a strict guarantee that their consensus is valid.

Strict consistency also applies to updates to the monitor map. As with any other updates on the Ceph monitor, changes to the monitor map always run through a distributed consensus algorithm called Paxos. The Ceph monitors must agree on each update to the monitor map, such as adding or removing a Ceph monitor, to ensure that each monitor in the quorum has the same version of the monitor map. Updates to the monitor map are incremental, so Ceph monitors have the latest agreed-upon version and a set of previous versions. Maintaining a history enables a Ceph monitor that has an older version of the monitor map to catch up with the current state of the Red Hat Ceph Storage cluster.
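The following Python sketch illustrates the idea of incremental updates: a monitor that stopped at an older epoch replays only the changes committed after it. The MonMapHistory class, the catch_up function, and the (operation, monitor ID, address) shape of each increment are hypothetical simplifications, not Ceph's actual monitor map format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical model, not Ceph's data structures: each agreed-upon change is
# stored as an incremental update (operation, monitor ID, address) keyed by epoch.
Increment = Tuple[str, str, str]


@dataclass
class MonMapHistory:
    increments: Dict[int, Increment] = field(default_factory=dict)
    latest_epoch: int = 0

    def commit(self, op: str, mon_id: str, addr: str = "") -> int:
        """Record one change that the quorum agreed on; return its new epoch."""
        self.latest_epoch += 1
        self.increments[self.latest_epoch] = (op, mon_id, addr)
        return self.latest_epoch

    def increments_since(self, epoch: int) -> List[Increment]:
        """Return the changes committed after `epoch`, oldest first."""
        return [self.increments[e] for e in range(epoch + 1, self.latest_epoch + 1)]


def catch_up(local_map: Dict[str, str], local_epoch: int,
             history: MonMapHistory) -> Tuple[Dict[str, str], int]:
    """Bring a stale monitor map up to date by replaying the newer increments."""
    updated = dict(local_map)
    for op, mon_id, addr in history.increments_since(local_epoch):
        if op == "add":
            updated[mon_id] = addr
        elif op == "remove":
            updated.pop(mon_id, None)
    return updated, history.latest_epoch


history = MonMapHistory()
history.commit("add", "a", "10.0.0.10:6789")  # epoch 1
history.commit("add", "b", "10.0.0.11:6789")  # epoch 2
history.commit("add", "c", "10.0.0.12:6789")  # epoch 3
history.commit("remove", "b")                 # epoch 4

# A monitor that stopped at epoch 2 replays only epochs 3 and 4.
stale = {"a": "10.0.0.10:6789", "b": "10.0.0.11:6789"}
print(catch_up(stale, 2, history))
# ({'a': '10.0.0.10:6789', 'c': '10.0.0.12:6789'}, 4)
```

Keeping the history of increments is what lets the lagging monitor avoid copying the whole map; it only needs the epochs it missed.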

If Ceph monitors discovered each other through the Ceph configuration file instead of through the monitor map, it would introduce additional risks because the Ceph configuration files are not updated and distributed automatically. Ceph monitors might inadvertently use an older Ceph configuration file, fail to recognize a Ceph monitor, fall out of a quorum, or develop a situation where Paxos is not able to determine the current state of the system accurately.

3.1.4. Bootstrapping Monitors

In most configuration and deployment cases, tools that deploy Ceph, such as ceph-deploy, can help bootstrap the Ceph monitors by generating a monitor map for you. A Ceph monitor requires a few explicit settings:

- File System ID: The fsid is the unique identifier for your object store. Since you can run multiple clusters on the same hardware, you must specify the unique ID of the object store when bootstrapping a monitor. Deployment tools usually do this for you (for example, ceph-deploy can call a tool like uuidgen), but you can specify the fsid manually too.

- Monitor ID: A monitor ID is a unique ID assigned to each monitor within the cluster. It is an alphanumeric value, and by convention the identifier usually follows an alphabetical increment (for example, a, b, and so on). This can be set in the Ceph configuration file (for example, [mon.a], [mon.b], and so on), by a deployment tool, or using the ceph command.

- Keys: The monitor must have secret keys. A deployment tool such as ceph-deploy usually does this for you, but you can perform this step manually too.

3.2. Configuring Monitors

To apply configuration settings to the entire cluster, enter the configuration settings under the [global] section. To apply configuration settings to all monitors in the cluster, enter the configuration settings under the [mon] section. To apply configuration settings to specific monitors, specify the monitor instance (for example, [mon.a]). By convention, monitor instance names use alpha notation.

[global]
[mon]
[mon.a]
[mon.b]
[mon.c]
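To illustrate how these sections relate, the following Python sketch resolves an option for one daemon by checking the most specific section first ([mon.a]), then the daemon-type section ([mon]), then [global]. It uses the standard configparser module as an approximation of Ceph's own configuration parser, not a replacement for it. Treating spaces and underscores in option names as interchangeable is an assumption, and the make_parser and lookup helpers and the option values are illustrative only.

```python
import configparser
from typing import Optional

# Illustrative ceph.conf fragment; the fsid and interval values are examples only.
CONF_TEXT = """
[global]
fsid = 00000000-0000-0000-0000-000000000000

[mon]
mon tick interval = 5

[mon.a]
mon_tick_interval = 3
"""


def make_parser(text: str) -> configparser.ConfigParser:
    parser = configparser.ConfigParser(interpolation=None)
    # Assumption: spaces and underscores in option names are interchangeable,
    # so "mon tick interval" and "mon_tick_interval" name the same option.
    parser.optionxform = lambda name: name.strip().lower().replace(" ", "_")
    parser.read_string(text)
    return parser


def lookup(parser: configparser.ConfigParser, daemon_type: str,
           daemon_id: str, option: str) -> Optional[str]:
    """Return the most specific value: [type.id], then [type], then [global]."""
    for section in (f"{daemon_type}.{daemon_id}", daemon_type, "global"):
        if parser.has_section(section) and parser.has_option(section, option):
            return parser.get(section, option)
    return None


conf = make_parser(CONF_TEXT)
print(lookup(conf, "mon", "a", "mon tick interval"))  # 3 (from [mon.a])
print(lookup(conf, "mon", "b", "mon_tick_interval"))  # 5 (from [mon])
print(lookup(conf, "mon", "b", "fsid"))               # value from [global]
```

Because mon.b has no section of its own, it inherits the [mon] value, while mon.a overrides it.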

3.2.1. Minimum Configuration

The bare minimum monitor settings for a Ceph monitor in the Ceph configuration file include a host name for each monitor. You can configure these under [mon] or under the entry for a specific monitor.

[mon]
mon host = hostname1,hostname2,hostname3
mon addr = 10.0.0.10:6789,10.0.0.11:6789,10.0.0.12:6789

Or

[mon.a]
host = hostname1
mon addr = 10.0.0.10:6789

This minimum configuration for monitors assumes that a deployment tool generates the fsid and the mon. key for you.

Once you deploy a Ceph cluster, do not change the IP address of the monitors.

3.2.2. Cluster ID

Each Red Hat Ceph Storage cluster has a unique identifier (fsid). If specified, it usually appears under the [global] section of the configuration file. Deployment tools usually generate the fsid and store it in the monitor map, so the value may not appear in a configuration file. The fsid makes it possible to run daemons for multiple clusters on the same hardware.

- fsid

- Description

- The cluster ID. One per cluster.

- Type

- UUID

- Required

- Yes.

- Default

- N/A. May be generated by a deployment tool if not specified.

Do not set this value if you use a deployment tool that does it for you.

3.2.3. Initial Members

Red Hat recommends running a production Red Hat Ceph Storage cluster with at least three Ceph monitors to ensure high availability. When you run multiple monitors, you can specify the initial monitors that must be members of the cluster in order to establish a quorum. This may reduce the time it takes for the cluster to come online.

[mon]
mon_initial_members = a,b,c

- mon_initial_members

- Description

- The IDs of initial monitors in a cluster during startup. If specified, Ceph requires an odd number of monitors to form an initial quorum (for example, 3).

- Type

- String

- Default

- None

A majority of monitors in your cluster must be able to reach each other in order to establish a quorum. With this setting, you can decrease the number of monitors that must be running to establish the initial quorum.
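The majority check for the initial members can be sketched in Python as follows. The helper names are hypothetical, and the sketch simplifies startup considerably: it assumes you already know which monitor IDs can reach each other and ignores monitor addresses entirely.

```python
from typing import List, Set


def parse_initial_members(value: str) -> List[str]:
    """Split a comma-separated mon_initial_members value such as "a,b,c"."""
    return [m.strip() for m in value.split(",") if m.strip()]


def can_form_initial_quorum(initial_members: str, reachable: Set[str]) -> bool:
    """True if a strict majority of the initial members can reach each other."""
    members = parse_initial_members(initial_members)
    present = sum(1 for m in members if m in reachable)
    return present > len(members) // 2


print(can_form_initial_quorum("a,b,c", {"a", "b"}))  # True
print(can_form_initial_quorum("a,b,c", {"a"}))       # False
```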

3.2.4. Data

Ceph provides a default path where Ceph monitors store data. For optimal performance in a production Red Hat Ceph Storage cluster, Red Hat recommends running Ceph monitors on separate hosts and drives from Ceph OSDs. Ceph monitors call the fsync() function often, which can interfere with Ceph OSD workloads.

Ceph monitors store their data as key-value pairs. Using a key-value store prevents a recovering Ceph monitor from running corrupted versions through Paxos, and it enables multiple modification operations in one single atomic batch, among other advantages.

Red Hat does not recommend changing the default data location. If you modify the default location, make it uniform across Ceph monitors by setting it in the [mon] section of the configuration file.

- mon_data

- Description

- The monitor’s data location.

- Type

- String

- Default

- /var/lib/ceph/mon/$cluster-$id
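The default value contains the metavariables $cluster (the cluster name) and $id (the monitor ID), which Ceph expands itself. The Python sketch below only mimics that substitution with string.Template to show the resulting path; the cluster name ceph, the monitor ID a, and the mon_data_path helper are example assumptions.

```python
from string import Template

MON_DATA_DEFAULT = "/var/lib/ceph/mon/$cluster-$id"


def mon_data_path(cluster: str, mon_id: str, template: str = MON_DATA_DEFAULT) -> str:
    """Expand the $cluster and $id metavariables in a mon_data template."""
    return Template(template).substitute(cluster=cluster, id=mon_id)


print(mon_data_path("ceph", "a"))  # /var/lib/ceph/mon/ceph-a
```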

3.2.5. Storage Capacity

When a Red Hat Ceph Storage cluster gets close to its maximum capacity (specified by the mon_osd_full_ratio parameter), Ceph prevents you from writing to or reading from Ceph OSDs as a safety measure to prevent data loss. Therefore, letting a production Red Hat Ceph Storage cluster approach its full ratio is not a good practice, because it sacrifices high availability. The default full ratio is .95, or 95% of capacity. This is a very aggressive setting for a test cluster with a small number of OSDs.

When monitoring a cluster, be alert to warnings related to the nearfull ratio. Such a warning means that the failure of one or more OSDs could result in a temporary service disruption. Consider adding more OSDs to increase storage capacity.

A common scenario for test clusters involves a system administrator removing a Ceph OSD from the Red Hat Ceph Storage cluster to watch the cluster rebalance, then removing another Ceph OSD, and so on, until the cluster eventually reaches the full ratio and locks up.

Red Hat recommends a bit of capacity planning even with a test cluster. Planning enables you to gauge how much spare capacity you will need in order to maintain high availability. Ideally, you want to plan for a series of Ceph OSD failures where the cluster can recover to an active + clean state without replacing those Ceph OSDs immediately. You can run a cluster in an active + degraded state, but this is not ideal for normal operating conditions.

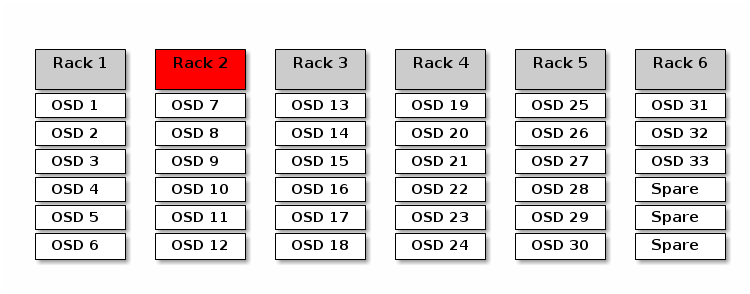

The diagram above depicts a simplistic Red Hat Ceph Storage cluster containing 33 Ceph nodes with one Ceph OSD per host, each Ceph OSD daemon reading from and writing to a 3 TB drive. This example cluster therefore has a maximum actual capacity of 99 TB. With a mon_osd_full_ratio of 0.95, if the cluster falls to about 5 TB (4.95 TB) of remaining capacity, it will not allow Ceph clients to read and write data. So the cluster's operating capacity is about 94 TB (94.05 TB), not 99 TB.
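The arithmetic for this example cluster can be reproduced with a short Python sketch. The operating_capacity_tb function is a hypothetical helper; the inputs are the figures of the example (33 OSDs, 3 TB drives, full ratio 0.95).

```python
from typing import Tuple


def operating_capacity_tb(num_osds: int, drive_tb: float,
                          full_ratio: float) -> Tuple[float, float, float]:
    """Return (raw capacity, capacity usable before the full ratio is reached,
    capacity held back by the full ratio), all in TB."""
    raw = num_osds * drive_tb
    usable = raw * full_ratio
    return raw, usable, raw - usable


raw, usable, reserved = operating_capacity_tb(33, 3.0, 0.95)
print(f"raw={raw:.2f} TB usable={usable:.2f} TB reserved={reserved:.2f} TB")
# raw=99.00 TB usable=94.05 TB reserved=4.95 TB
```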

It is normal in such a cluster for one or two OSDs to fail. A less frequent but reasonable scenario involves a rack's router or power supply failing, which brings down multiple OSDs simultaneously (for example, OSDs 7-12). In such a scenario, you should still strive for a cluster that can remain operational and achieve an active + clean state, even if that means adding a few hosts with additional OSDs in short order. If capacity utilization is too high, you might not lose data, but you could still sacrifice data availability while resolving an outage within a failure domain if the capacity utilization of the cluster exceeds the full ratio. For this reason, Red Hat recommends at least some rough capacity planning.

Identify two numbers for your cluster:

- the number of OSDs

- the total capacity of the cluster

To determine the mean capacity of an OSD within a cluster, divide the total capacity of the cluster by the number of OSDs in the cluster. Multiply that number by the number of OSDs you expect to fail simultaneously during normal operations (a relatively small number). Next, multiply the capacity of the cluster by the full ratio to arrive at a maximum operating capacity. Then, subtract the amount of data on the OSDs you expect to fail to arrive at a reasonable full ratio. Repeat the foregoing process with a higher number of OSD failures (for example, a rack of OSDs) to arrive at a reasonable number for a near full ratio.
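The capacity-planning procedure can be sketched in Python as follows. This is one reading of the steps: dividing the final result by the total capacity, so that it can be used as a ratio, is an interpretation, and suggested_ratio is a hypothetical helper. The inputs reuse the example cluster of 33 OSDs and 99 TB.

```python
def suggested_ratio(total_tb: float, num_osds: int, full_ratio: float,
                    failed_osds: int) -> float:
    """Apply the capacity-planning steps and return the result as a ratio."""
    mean_osd_tb = total_tb / num_osds          # mean capacity of one OSD
    lost_tb = mean_osd_tb * failed_osds        # data on OSDs expected to fail together
    max_operating_tb = total_tb * full_ratio   # capacity usable before the full ratio
    return (max_operating_tb - lost_tb) / total_tb


# Example cluster: 33 OSDs, 99 TB in total, default full ratio of .95.
full = suggested_ratio(99.0, 33, 0.95, failed_osds=2)      # one or two OSD failures
nearfull = suggested_ratio(99.0, 33, 0.95, failed_osds=6)  # a rack of six OSDs
print(f"full ~ {full:.2f}, nearfull ~ {nearfull:.2f}")     # full ~ 0.89, nearfull ~ 0.77
```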

[global]
...
mon_osd_full_ratio = .80
mon_osd_nearfull_ratio = .70

- mon_osd_full_ratio

- Description

- The percentage of disk space used before an OSD is considered full.

- Type

- Float

- Default

- .95

- mon_osd_nearfull_ratio

- Description

- The percentage of disk space used before an OSD is considered nearfull.

- Type

- Float

- Default

- .85

If some OSDs are nearfull, but others have plenty of capacity, you might have a problem with the CRUSH weight for the nearfull OSDs.
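A quick way to spot the pattern described above is to compare each OSD's utilization with the two ratios and look at the spread between the most and least used OSDs. In this Python sketch, the helper names and the utilization readings are hypothetical, and treating a ratio equal to the threshold as crossing it (>=) is an assumption.

```python
from typing import Dict


def classify_osds(used: Dict[str, float], full_ratio: float = 0.95,
                  nearfull_ratio: float = 0.85) -> Dict[str, str]:
    """Label each OSD by comparing its used/total ratio with the two thresholds."""
    labels = {}
    for osd, ratio in used.items():
        if ratio >= full_ratio:
            labels[osd] = "full"
        elif ratio >= nearfull_ratio:
            labels[osd] = "nearfull"
        else:
            labels[osd] = "ok"
    return labels


def utilization_spread(used: Dict[str, float]) -> float:
    """Difference between the most and the least utilized OSDs."""
    return max(used.values()) - min(used.values())


usage = {"osd.0": 0.88, "osd.1": 0.86, "osd.2": 0.41}  # hypothetical readings
print(classify_osds(usage))
# {'osd.0': 'nearfull', 'osd.1': 'nearfull', 'osd.2': 'ok'}
print(round(utilization_spread(usage), 2))  # 0.47
```

A large spread while some OSDs are already nearfull suggests checking their CRUSH weights.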

3.2.6. Heartbeat

Ceph monitors know about the cluster by requiring reports from each OSD and by receiving reports from OSDs about the status of their neighboring OSDs. Ceph provides reasonable default settings for the interaction between monitors and OSDs; however, you can modify them as needed.

3.2.7. Monitor Store Synchronization

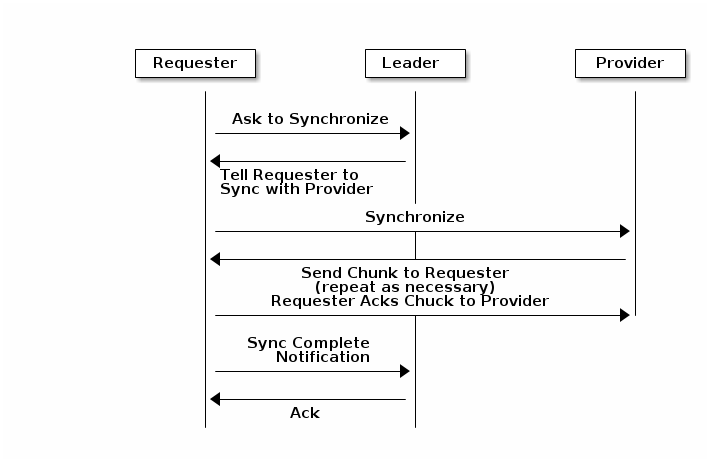

When you run a production cluster with multiple monitors, which is recommended, each monitor checks whether a neighboring monitor has a more recent version of the cluster map, that is, a map with one or more epoch numbers higher than the most current epoch in its own map. Occasionally, one monitor in the cluster might fall behind the other monitors to the point where it must leave the quorum, synchronize to retrieve the most current information about the cluster, and then rejoin the quorum. For the purposes of synchronization, monitors can assume one of three roles:

- Leader: The Leader is the first monitor to achieve the most recent Paxos version of the cluster map.

- Provider: The Provider is a monitor that has the most recent version of the cluster map, but was not the first to achieve the most recent version.

- Requester: The Requester is a monitor that has fallen behind the leader and must synchronize in order to retrieve the most recent information about the cluster before it can rejoin the quorum.

These roles enable a leader to delegate synchronization duties to a provider, which prevents synchronization requests from overloading the leader and improves performance. In the diagram above, the requester has learned that it has fallen behind the other monitors. The requester asks the leader to synchronize, and the leader tells the requester to synchronize with a provider.

Synchronization always occurs when a new monitor joins the cluster. During runtime operations, monitors can receive updates to the cluster map at different times. This means the leader and provider roles may migrate from one monitor to another. If this happens while synchronizing (for example, a provider falls behind the leader), the provider can terminate synchronization with a requester.
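The delegation between the three roles can be illustrated with a simplified Python sketch. The sync_source function, the choice of the alphabetically first provider, and the fallback to the leader when no provider is up to date are all assumptions made for illustration; this is not Ceph's implementation.

```python
from typing import Dict, Optional


def sync_source(paxos_versions: Dict[str, int], leader: str,
                requester: str) -> Optional[str]:
    """Return the monitor that `requester` should synchronize from, or None
    if the requester is already current."""
    latest = paxos_versions[leader]
    if paxos_versions[requester] >= latest:
        return None  # nothing to synchronize
    # Providers: up-to-date monitors other than the leader and the requester.
    providers = sorted(
        mon for mon, version in paxos_versions.items()
        if mon not in (leader, requester) and version >= latest
    )
    # Delegate to a provider when one exists, so the leader is not overloaded.
    return providers[0] if providers else leader


print(sync_source({"a": 120, "b": 120, "c": 97}, leader="a", requester="c"))  # b
print(sync_source({"a": 120, "b": 110, "c": 97}, leader="a", requester="c"))  # a
```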

Once synchronization is complete, Ceph requires trimming across the cluster. Trimming requires that the placement groups are active + clean.

- mon_sync_trim_timeout

- Type

- Double

- Default

- 30.0

- mon_sync_heartbeat_timeout

- Type

- Double

- Default

- 30.0

- mon_sync_heartbeat_interval

- Type

- Double

- Default

- 5.0

- mon_sync_backoff_timeout

- Type

- Double

- Default

- 30.0

- mon_sync_timeout

- Type

- Double

- Default

- 30.0

- mon_sync_max_retries

- Type

- Integer

- Default

- 5

- mon_sync_max_payload_size

- Description

- The maximum size for a sync payload.

- Type

- 32-bit Integer

- Default

- 1045676

- mon_accept_timeout

- Description

- Number of seconds the Leader will wait for the Requester(s) to accept a Paxos update. It is also used during the Paxos recovery phase for similar purposes.

- Type

- Float

- Default

- 10.0

- paxos_propose_interval

- Description

- Gather updates for this time interval before proposing a map update.

- Type

- Double

- Default

- 1.0

- paxos_min_wait

- Description

- The minimum amount of time to gather updates after a period of inactivity.

- Type

- Double

- Default

- 0.05

- paxos_trim_tolerance

- Description

- The number of extra proposals tolerated before trimming.

- Type

- Integer

- Default

- 30

- paxos_trim_disabled_max_versions

- Description

- The maximum number of versions allowed to pass without trimming.

- Type

- Integer

- Default

- 100

- mon_lease

- Description

- The length (in seconds) of the lease on the monitor’s versions.

- Type

- Float

- Default

- 5

- mon_lease_renew_interval

- Description

- The interval (in seconds) for the Leader to renew the other monitors' leases.

- Type

- Float

- Default

- 3

- mon_lease_ack_timeout

- Description

- The number of seconds the Leader will wait for the Providers to acknowledge the lease extension.

- Type

- Float

- Default

- 10.0

- mon_min_osdmap_epochs

- Description

- Minimum number of OSD map epochs to keep at all times.

- Type

- 32-bit Integer

- Default

- 500

- mon_max_pgmap_epochs

- Description

- Maximum number of PG map epochs the monitor should keep.

- Type

- 32-bit Integer

- Default

- 500

- mon_max_log_epochs

- Description

- Maximum number of Log epochs the monitor should keep.

- Type

- 32-bit Integer

- Default

- 500
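The following Python sketch shows one plausible way paxos_propose_interval and paxos_min_wait can combine to decide how long a monitor keeps gathering updates before it proposes a map update. It is a simplified model built from the parameter descriptions above; the propose_delay function and its treatment of the first-ever proposal are assumptions, not Ceph's code.

```python
from typing import Optional


def propose_delay(now: float, last_commit: Optional[float],
                  propose_interval: float = 1.0, min_wait: float = 0.05) -> float:
    """Return the number of seconds to keep gathering updates before proposing."""
    if last_commit is None:
        return 0.0  # nothing committed yet: propose immediately
    if now - last_commit > propose_interval:
        # The monitor has been idle longer than the interval, so it gathers
        # updates only for the short minimum wait.
        return min_wait
    # Otherwise, wait until a full interval has passed since the last commit.
    return last_commit + propose_interval - now


print(propose_delay(now=10.0, last_commit=9.5))  # 0.5
print(propose_delay(now=10.0, last_commit=8.0))  # 0.05
```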

3.2.8. Clock

Ceph daemons pass critical messages to each other, which must be processed before daemons reach a timeout threshold. If the clocks in Ceph monitors are not synchronized, it can lead to a number of anomalies. For example:

- Daemons ignoring received messages (for example, because of outdated timestamps).

- Timeouts triggered too soon or too late when a message was not received in time.

See Monitor Store Synchronization for details.

Install NTP on the Ceph monitor hosts to ensure that the monitor cluster operates with synchronized clocks.

Clock drift may still be noticeable with NTP even though the discrepancy is not yet harmful. Ceph clock drift and clock skew warnings can be triggered even though NTP maintains a reasonable level of synchronization. Increasing the allowed clock drift may be tolerable under such circumstances. However, a number of factors, such as workload, network latency, overrides to default timeouts, and the Monitor Store Synchronization settings, may influence the level of acceptable clock drift without compromising Paxos guarantees.

Ceph provides the following tunable options to allow you to find acceptable values.

- clock_offset

- Description

- How much to offset the system clock. See Clock.cc for details.

- Type

- Double

- Default

- 0

- mon_tick_interval

- Description

- A monitor’s tick interval in seconds.

- Type

- 32-bit Integer

- Default

- 5

- mon_clock_drift_allowed

- Description

- The clock drift in seconds allowed between monitors.

- Type

- Float

- Default

- .050

- mon_clock_drift_warn_backoff

- Description

- Exponential backoff for clock drift warnings.

- Type

- Float

- Default

- 5

- mon_timecheck_interval

- Description

- The time check interval (clock drift check) in seconds for the leader.

- Type

- Float

- Default

- 300.0
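The comparison behind a clock skew warning can be sketched in Python as follows. The skewed_monitors function and the readings are hypothetical; the sketch assumes all timestamps were sampled at the same instant and ignores network latency, which a real time check has to account for.

```python
from typing import Dict, List


def skewed_monitors(leader_time: float, monitor_times: Dict[str, float],
                    drift_allowed: float = 0.050) -> List[str]:
    """Return the IDs of monitors whose clock differs from the leader's by more
    than drift_allowed seconds (the mon_clock_drift_allowed default is .050)."""
    return sorted(
        mon for mon, t in monitor_times.items()
        if abs(t - leader_time) > drift_allowed
    )


# Hypothetical readings, assumed to be taken at the same instant.
print(skewed_monitors(100.0, {"b": 100.02, "c": 99.92}))  # ['c']
```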

3.2.9. Client

- mon_client_hunt_interval

- Description

- The client will try a new monitor every N seconds until it establishes a connection.

- Type

- Double

- Default

- 3.0

- mon_client_ping_interval

- Description

- The client will ping the monitor every N seconds.

- Type

- Double

- Default

- 10.0

- mon_client_max_log_entries_per_message

- Description

- The maximum number of log entries a monitor will generate per client message.

- Type

- Integer

- Default

- 1000

- mon_client_bytes

- Description

- The amount of client message data allowed in memory (in bytes).

- Type

- 64-bit Integer Unsigned

- Default

- 100ul << 20
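The effect of mon_client_hunt_interval can be illustrated with a small simulation. In this Python sketch, the hunt function is hypothetical, trying monitors in round-robin order is an assumption made to keep the example deterministic (the real client's selection order may differ), and the set of reachable monitors is given up front.

```python
from itertools import cycle
from typing import List, Optional, Set, Tuple


def hunt(monitors: List[str], reachable: Set[str], hunt_interval: float = 3.0,
         max_attempts: int = 10) -> Tuple[Optional[str], float]:
    """Simulate a client hunting for a monitor.

    Each failed attempt costs hunt_interval seconds before the next monitor
    is tried. Returns (monitor, elapsed seconds), or (None, elapsed seconds)
    if no monitor answers within max_attempts attempts.
    """
    elapsed = 0.0
    for _, mon in zip(range(max_attempts), cycle(monitors)):
        if mon in reachable:
            return mon, elapsed
        elapsed += hunt_interval
    return None, elapsed


print(hunt(["a", "b", "c"], reachable={"c"}))  # ('c', 6.0)
```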

3.3. Miscellaneous

- mon_max_osd

- Description

- The maximum number of OSDs allowed in the cluster.

- Type

- 32-bit Integer

- Default

- 10000

- mon_globalid_prealloc

- Description

- The number of global IDs to pre-allocate for clients and daemons in the cluster.

- Type

- 32-bit Integer

- Default

- 100

- mon_sync_fs_threshold

- Description

- Synchronize with the file system when writing the specified number of objects. Set it to 0 to disable it.

- Type

- 32-bit Integer

- Default

- 5

- mon_subscribe_interval

- Description

- The refresh interval (in seconds) for subscriptions. The subscription mechanism enables obtaining the cluster maps and log information.

- Type

- Double

- Default

- 300

- mon_stat_smooth_intervals

- Description

- Ceph will smooth statistics over the last N PG maps.

- Type

- Integer

- Default

- 2

- mon_probe_timeout

- Description

- Number of seconds the monitor will wait to find peers before bootstrapping.

- Type

- Double

- Default

- 2.0

- mon_daemon_bytes

- Description

- The message memory cap for metadata server and OSD messages (in bytes).

- Type

- 64-bit Integer Unsigned

- Default

- 400ul << 20

- mon_max_log_entries_per_event

- Description

- The maximum number of log entries per event.

- Type

- Integer

- Default

- 4096
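The defaults for mon_client_bytes and mon_daemon_bytes are written as C expressions: the ul suffix marks an unsigned long literal, and << 20 shifts left by 20 bits, which multiplies by 2^20 (1 MiB). The Python sketch below evaluates both; shifted_mib is a hypothetical helper name.

```python
MIB = 1 << 20  # 1 MiB = 1,048,576 bytes


def shifted_mib(multiplier: int) -> int:
    """Evaluate the C expression `multiplier << 20`, that is, multiplier MiB in bytes."""
    return multiplier << 20


mon_client_bytes = shifted_mib(100)  # default written as 100ul << 20
mon_daemon_bytes = shifted_mib(400)  # default written as 400ul << 20
print(mon_client_bytes, mon_client_bytes // MIB)  # 104857600 100
print(mon_daemon_bytes, mon_daemon_bytes // MIB)  # 419430400 400
```

In other words, the monitor allows about 100 MiB of client message data and about 400 MiB of metadata server and OSD message data in memory by default.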