Chapter 4. Configure OVS-DPDK Support for Virtual Networking

This section deploys DPDK with Open vSwitch (OVS-DPDK) within the Red Hat OpenStack Platform environment. The overcloud usually consists of nodes in predefined roles such as Controller nodes, Compute nodes, and different storage node types. Each of these default roles contains a set of services defined in the core Heat templates on the director node.

See Planning your OVS-DPDK Deployment for details on how to determine the best values for the OVS-DPDK parameters that you set in the network-environment.yaml file to optimize your OpenStack network for OVS-DPDK.

4.1. Naming Conventions

We recommend that you follow a consistent naming convention when you use custom roles in your OpenStack deployment, especially with multiple nodes. This naming convention can assist you when creating the following files and configurations:

- instackenv.json - To differentiate between nodes with different hardware or NIC capabilities. `"name":"computeovsdpdk-0"`

- roles_data.yaml - To differentiate between compute-based roles that support DPDK. `ComputeOvsDpdk`

- network-environment.yaml - To ensure that you match the custom role to the correct flavor name. `OvercloudComputeOvsDpdkFlavor: computeovsdpdk`

- nic-config file names - To differentiate NIC yaml files for compute nodes that support DPDK interfaces.

- Flavor creation - To help you match a flavor and capabilities:profile value to the appropriate bare metal node and custom role.

      # openstack flavor create --id auto --ram 4096 --disk 40 --vcpus 4 computeovsdpdk
      # openstack flavor set --property "cpu_arch"="x86_64" --property "capabilities:boot_option"="local" --property "capabilities:profile"="computeovsdpdk" computeovsdpdk

- Bare metal node - To ensure that you match the bare metal node with the appropriate hardware and capabilities:profile value.

      # openstack baremetal node update computeovsdpdk-0 add properties/capabilities='profile:computeovsdpdk,boot_option:local'

The flavor name does not have to match the capabilities:profile value for the flavor, but the flavor capabilities:profile value must match the profile value in the bare metal node properties/capabilities. All three use computeovsdpdk in this example.
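The matching rule above can be checked with a few lines of shell. This is a minimal sketch, not part of the deployment tooling: `get_capability` is a hypothetical helper, and the two strings are copied by hand from the example commands rather than queried from the `openstack` client.

```bash
#!/bin/bash
# Hypothetical helper: print the value of one key from a comma-separated
# "key:value" capabilities string, or return 1 if the key is absent.
get_capability() {
    local caps=$1 key=$2 pair
    local IFS=','
    for pair in $caps; do
        if [[ $pair == "$key":* ]]; then
            printf '%s\n' "${pair#*:}"
            return 0
        fi
    done
    return 1
}

# Values taken from the example commands in this section.
node_caps='profile:computeovsdpdk,boot_option:local'   # bare metal node properties/capabilities
flavor_profile='computeovsdpdk'                        # flavor capabilities:profile

if [[ $(get_capability "$node_caps" profile) == "$flavor_profile" ]]; then
    echo "profiles match"
else
    echo "profile mismatch"
fi
```

The flavor name itself is deliberately left out of the comparison, because only the two profile values have to agree.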

Ensure that all your nodes used for a custom role and profile have the same CPU, RAM, and PCI hardware topology.

4.2. OVS-DPDK and Composable Roles

With Red Hat OpenStack Platform 11, you can create custom deployment roles, using the composable roles feature, adding or removing services from each role. For more information on Composable Roles, see Composable Roles and Services.

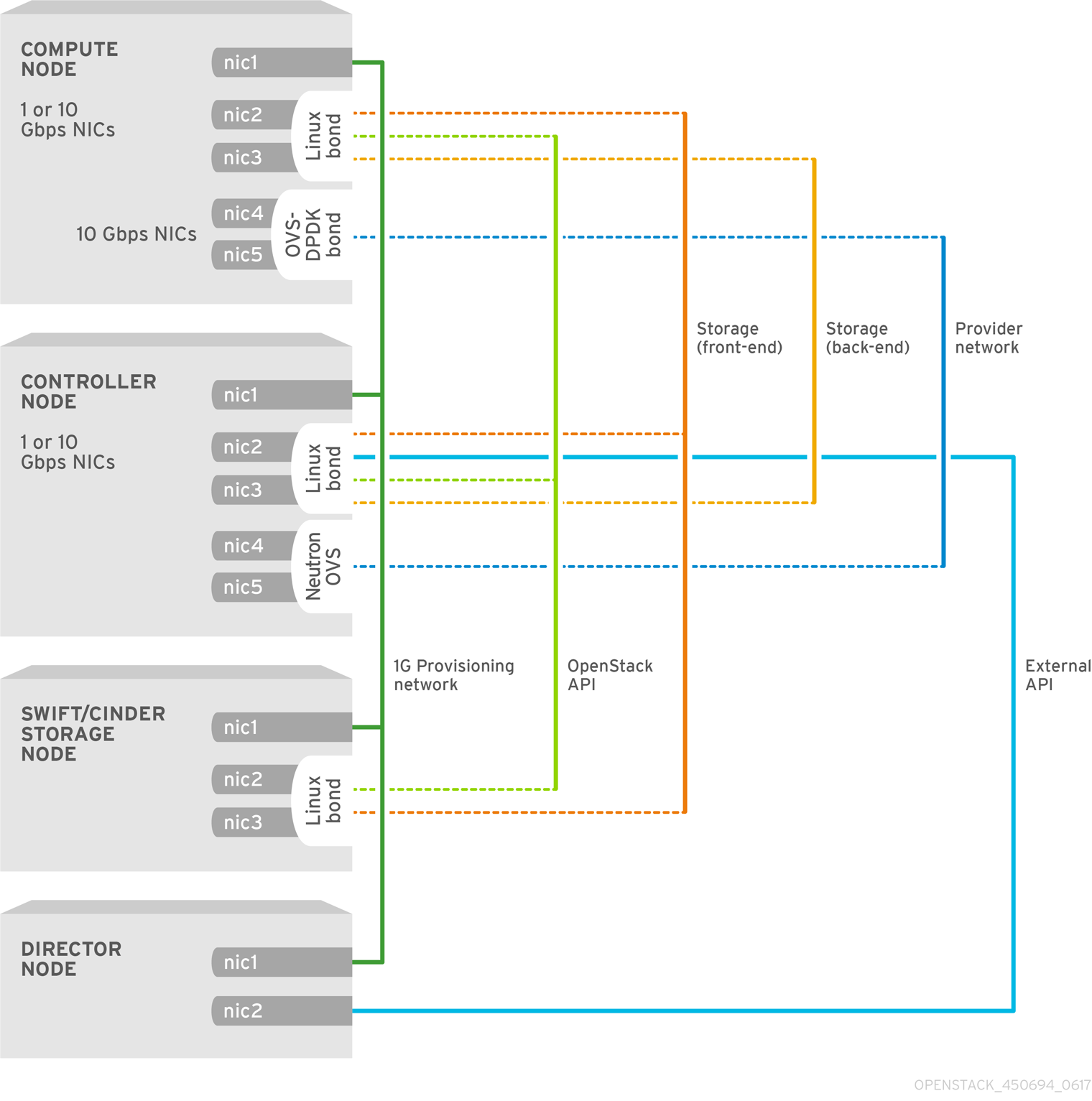

This image shows a sample OVS-DPDK topology with two bonded ports for the control plane and data plane:

Configuring OVS-DPDK comprises the following tasks:

- If you use composable roles, copy and modify the roles_data.yaml file to add the composable role for OVS-DPDK.

- Update the appropriate network-environment.yaml file to include parameters for kernel arguments and DPDK arguments.

- Update the compute.yaml file to include the bridge for DPDK interface parameters.

- Update the controller.yaml file to include the same bridge details for DPDK interface parameters.

- Run the overcloud_deploy.sh script to deploy the overcloud with the DPDK parameters.

This guide provides examples for CPU assignments, memory allocation, and NIC configurations that may vary from your topology and use case. See the Network Functions Virtualization Product Guide and the Network Functions Virtualization Planning and Prerequisite Guide to understand the hardware and configuration options.

Before you begin the procedure, ensure that you have the following:

- Red Hat OpenStack Platform 11 with Red Hat Enterprise Linux 7.3

- OVS 2.6

- DPDK 16.04

- Tested NIC. For a list of tested NICs for NFV, see Tested NICs.

Red Hat OpenStack Platform 11 operates in OVS client mode for OVS-DPDK deployments.

4.3. Configure Single-Port OVS-DPDK with VLAN Tunnelling

This section describes how to configure OVS-DPDK with one data plane port.

4.3.1. Modify first-boot.yaml

Modify the first-boot.yaml file to set up OVS and DPDK parameters and to configure tuned for CPU affinity.

Add additional resources.

    resources:
      userdata:
        type: OS::Heat::MultipartMime
        properties:
          parts:
          - config: {get_resource: boot_config}
          - config: {get_resource: set_ovs_socket_config}
          - config: {get_resource: set_ovs_config}
          - config: {get_resource: set_dpdk_params}
          - config: {get_resource: install_tuned}
          - config: {get_resource: compute_kernel_args}

Determine the NeutronVhostuserSocketDir setting.

      set_ovs_socket_config:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  mkdir -p $NEUTRON_VHOSTUSER_SOCKET_DIR
                  chown -R qemu:qemu $NEUTRON_VHOSTUSER_SOCKET_DIR
                  restorecon $NEUTRON_VHOSTUSER_SOCKET_DIR
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $NEUTRON_VHOSTUSER_SOCKET_DIR: {get_param: NeutronVhostuserSocketDir}

Set the OVS configuration.

      set_ovs_config:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  if [ -f /usr/lib/systemd/system/openvswitch-nonetwork.service ]; then
                    ovs_service_path="/usr/lib/systemd/system/openvswitch-nonetwork.service"
                  elif [ -f /usr/lib/systemd/system/ovs-vswitchd.service ]; then
                    ovs_service_path="/usr/lib/systemd/system/ovs-vswitchd.service"
                  fi
                  grep -q "RuntimeDirectoryMode=.*" $ovs_service_path
                  if [ "$?" -eq 0 ]; then
                    sed -i 's/RuntimeDirectoryMode=.*/RuntimeDirectoryMode=0775/' $ovs_service_path
                  else
                    echo "RuntimeDirectoryMode=0775" >> $ovs_service_path
                  fi
                  grep -Fxq "Group=qemu" $ovs_service_path
                  if [ ! "$?" -eq 0 ]; then
                    echo "Group=qemu" >> $ovs_service_path
                  fi
                  grep -Fxq "UMask=0002" $ovs_service_path
                  if [ ! "$?" -eq 0 ]; then
                    echo "UMask=0002" >> $ovs_service_path
                  fi
                  ovs_ctl_path='/usr/share/openvswitch/scripts/ovs-ctl'
                  grep -q "umask 0002 \&\& start_daemon \"\$OVS_VSWITCHD_PRIORITY\"" $ovs_ctl_path
                  if [ ! "$?" -eq 0 ]; then
                    sed -i 's/start_daemon \"\$OVS_VSWITCHD_PRIORITY.*/umask 0002 \&\& start_daemon \"$OVS_VSWITCHD_PRIORITY\" \"$OVS_VSWITCHD_WRAPPER\" \"$@\"/' $ovs_ctl_path
                  fi
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}

Set the DPDK parameters.

      set_dpdk_params:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                set -x
                get_mask()
                {
                  local list=$1
                  local mask=0
                  declare -a bm
                  max_idx=0
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      bm[$index]=0
                      if [ $max_idx -lt $index ]; then
                         max_idx=$(($index))
                      fi
                  done
                  for ((i=$max_idx;i>=0;i--));
                  do
                      bm[$i]=0
                  done
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      temp=$((1<<$(($core % 32))))
                      bm[$index]=$((${bm[$index]} | $temp))
                  done

                  printf -v mask "%x" "${bm[$max_idx]}"
                  for ((i=$max_idx-1;i>=0;i--));
                  do
                      printf -v hex "%08x" "${bm[$i]}"
                      mask+=$hex
                  done
                  printf "%s" "$mask"
                }

                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  pmd_cpu_mask=$( get_mask $PMD_CORES )
                  host_cpu_mask=$( get_mask $LCORE_LIST )
                  socket_mem=$(echo $SOCKET_MEMORY | sed s/\'//g )
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem=$socket_mem
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $LCORE_LIST: {get_param: HostCpusList}
                $PMD_CORES: {get_param: NeutronDpdkCoreList}
                $SOCKET_MEMORY: {get_param: NeutronDpdkSocketMemory}

Set the tuned configuration to enable CPU affinity.

      install_tuned:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  tuned_conf_path="/etc/tuned/cpu-partitioning-variables.conf"
                  if [ -n "$TUNED_CORES" ]; then
                    grep -q "^isolated_cores" $tuned_conf_path
                    if [ "$?" -eq 0 ]; then
                      sed -i 's/^isolated_cores=.*/isolated_cores=$TUNED_CORES/' $tuned_conf_path
                    else
                      echo "isolated_cores=$TUNED_CORES" >> $tuned_conf_path
                    fi
                    tuned-adm profile cpu-partitioning
                  fi
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $TUNED_CORES: {get_param: HostIsolatedCoreList}

Set the kernel arguments.

      compute_kernel_args:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  sed 's/^\(GRUB_CMDLINE_LINUX=".*\)"/\1 $KERNEL_ARGS isolcpus=$TUNED_CORES"/g' -i /etc/default/grub ;
                  grub2-mkconfig -o /etc/grub2.cfg

                  reboot
                fi
              params:
                $KERNEL_ARGS: {get_param: ComputeKernelArgs}
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $TUNED_CORES: {get_param: HostIsolatedCoreList}
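Every first-boot script starts with the same host name filter, so the following sketch isolates that logic for experimentation outside Heat. The function name `is_compute_host` and the sample host names are illustrative, and the format string `%stackname%-compute-%index%` is assumed to be the value of ComputeHostnameFormat in your deployment.

```bash
#!/bin/bash
# Sketch of the host name filter used by the first-boot scripts: strip the
# %index% and %stackname% placeholders from the format string, then test
# whether what remains is a substring of the host name.
is_compute_host() {
    local format=$1 host=$2
    if [[ -z $format ]]; then
        format="compute"          # same fallback as the scripts above
    else
        format=$(printf '%s' "$format" | sed -e 's/%index%//g' -e 's/%stackname%//g')
    fi
    [[ $host == *"$format"* ]]
}

# Hypothetical host names for illustration.
is_compute_host '%stackname%-compute-%index%' "overcloud-compute-0" && echo "compute node"
is_compute_host '%stackname%-compute-%index%' "overcloud-controller-0" || echo "not a compute node"
```

Because the match is a plain substring test, a custom role whose host name format does not contain the stripped string is skipped by all of these scripts.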

4.3.2. Modify post-install.yaml

Set the tuned configuration to enable CPU affinity.

      ExtraConfig:
        type: OS::Heat::SoftwareConfig
        properties:
          group: script
          config:
            str_replace:
              template: |
                #!/bin/bash

                set -x

                function tuned_service_dependency() {
                  tuned_service=/usr/lib/systemd/system/tuned.service
                  grep -q "network.target" $tuned_service
                  if [ "$?" -eq 0 ]; then
                      sed -i '/After=.*/s/network.target//g' $tuned_service
                  fi
                  grep -q "Before=.*network.target" $tuned_service
                  if [ ! "$?" -eq 0 ]; then
                      grep -q "Before=.*" $tuned_service
                      if [ "$?" -eq 0 ]; then
                          sed -i 's/^\(Before=.*\)/\1 network.target openvswitch.service/g' $tuned_service
                      else
                          sed -i '/After/i Before=network.target openvswitch.service' $tuned_service
                      fi
                  fi
                }

                get_mask()
                {
                  local list=$1
                  local mask=0
                  declare -a bm
                  max_idx=0
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      bm[$index]=0
                      if [ $max_idx -lt $index ]; then
                         max_idx=$(($index))
                      fi
                  done
                  for ((i=$max_idx;i>=0;i--));
                  do
                      bm[$i]=0
                  done
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      temp=$((1<<$(($core % 32))))
                      bm[$index]=$((${bm[$index]} | $temp))
                  done

                  printf -v mask "%x" "${bm[$max_idx]}"
                  for ((i=$max_idx-1;i>=0;i--));
                  do
                      printf -v hex "%08x" "${bm[$i]}"
                      mask+=$hex
                  done
                  printf "%s" "$mask"
                }

                if hiera -c /etc/puppet/hiera.yaml service_names | grep -q neutron_ovs_dpdk_agent; then
                    pmd_cpu_mask=$( get_mask $PMD_CORES )
                    host_cpu_mask=$( get_mask $LCORE_LIST )
                    ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
                    ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
                    tuned_service_dependency
                fi
              params:
                $LCORE_LIST: {get_param: HostCpusList}
                $PMD_CORES: {get_param: NeutronDpdkCoreList}
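To see what the get_mask function produces, the following simplified sketch converts a core list into a hexadecimal mask. It is not the function from the template: `cores_to_mask` is a hypothetical name, and it holds the mask in a single shell integer, so it only handles CPU ids below 63, whereas get_mask builds the mask from 32-bit words and has no such limit.

```bash
#!/bin/bash
# Simplified sketch: turn a comma-separated list of CPU ids (each below 63)
# into the hexadecimal bitmask format used for pmd-cpu-mask and dpdk-lcore-mask.
cores_to_mask() {
    local list=$1 core mask=0
    local IFS=','
    for core in $list; do
        mask=$(( mask | (1 << core) ))   # set the bit for this CPU id
    done
    printf '%x\n' "$mask"
}

cores_to_mask "1,17,9,25"   # example NeutronDpdkCoreList; prints 2020202
cores_to_mask "0,16,8,24"   # example HostCpusList; prints 1010101
```

You can compare these values with the output of `ovs-vsctl get Open_vSwitch . other_config` on a deployed Compute node.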

4.3.3. Modify network-environment.yaml

See Planning your OVS-DPDK Deployment for details on how to determine the best values for the OVS-DPDK parameters that you set in the network-environment.yaml file to optimize your OpenStack network for OVS-DPDK.

Add the custom resources for OVS-DPDK under resource_registry.

    resource_registry:
      # Specify the relative/absolute path to the config files you want to use to override the defaults.
      OS::TripleO::Compute::Net::SoftwareConfig: nic-configs/compute-ovs-dpdk.yaml
      OS::TripleO::Controller::Net::SoftwareConfig: nic-configs/controller.yaml
      OS::TripleO::NodeUserData: first-boot.yaml
      OS::TripleO::NodeExtraConfigPost: post-install.yaml

Under parameter_defaults, disable the tunnel type (set the value to ""), and set the network type to vlan.

    NeutronTunnelTypes: ''
    NeutronNetworkType: 'vlan'

Under parameter_defaults, map the physical network to the virtual bridge.

    NeutronBridgeMappings: 'dpdk_data:br-link0'

Under parameter_defaults, set the OpenStack Networking ML2 and Open vSwitch VLAN mapping range.

    NeutronNetworkVLANRanges: 'dpdk_data:22:22'

This example sets the VLAN ranges on the physical network (dpdk_data).

Under parameter_defaults, set the OVS-DPDK configuration parameters.

Note: NeutronDpdkCoreList and NeutronDpdkMemoryChannels are the required settings for this procedure. Attempting to deploy DPDK without appropriate values causes the deployment to fail or leads to unstable deployments.

Provide a list of cores that can be used as DPDK poll mode drivers (PMDs) in the format - [allowed_pattern: "[0-9,-]+"].

    NeutronDpdkCoreList: "1,17,9,25"

Note: You must assign at least one CPU (with sibling thread) on each NUMA node with or without DPDK NICs present for DPDK PMD to avoid failures in creating guest instances.

To optimize OVS-DPDK performance, consider the following options:

- Select CPUs associated with the NUMA node of the DPDK interface. Use `cat /sys/class/net/<interface>/device/numa_node` to list the NUMA node associated with an interface and use `lscpu` to list the CPUs associated with that NUMA node.

- Group CPU siblings together (in case of hyper-threading). Use `cat /sys/devices/system/cpu/<cpu>/topology/thread_siblings_list` to find the sibling of a CPU.

- Reserve CPU 0 for the host process.

- Isolate CPUs assigned to PMD so that the host process does not use these CPUs.

- Use NovaVcpuPinSet to exclude CPUs assigned to PMD from Compute scheduling.

Provide the number of memory channels in the format - [allowed_pattern: "[0-9]+"].

    NeutronDpdkMemoryChannels: "4"

Set the memory pre-allocated from the hugepage pool for each socket.

    NeutronDpdkSocketMemory: "2048,2048"

This is a comma-separated string, in ascending order of the CPU socket. This example assumes a 2 NUMA node configuration and pre-allocates 2048 MB of huge pages to socket 0, and pre-allocates 2048 MB to socket 1. If you have a single NUMA node system, set this value to 1024,0.
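The socket memory comes out of the same huge page pool that instances use, so it helps to work out what remains. The following is a rough sketch under two assumptions: the pool is the 32 pages of 1 GB each configured through ComputeKernelArgs in this procedure, and each socket's allocation is rounded up to whole pages. The function name `pages_left_for_instances` is illustrative.

```bash
#!/bin/bash
# Sketch: huge pages left for instances after OVS-DPDK takes its share.
# Arguments: total huge pages, page size in MB, NeutronDpdkSocketMemory string.
pages_left_for_instances() {
    local total_pages=$1 page_mb=$2 socket_mem=$3
    local mb used_pages=0
    local IFS=','
    for mb in $socket_mem; do
        # Assumption: each socket's allocation is rounded up to whole pages.
        used_pages=$(( used_pages + (mb + page_mb - 1) / page_mb ))
    done
    echo "$(( total_pages - used_pages ))"
}

pages_left_for_instances 32 1024 "2048,2048"   # prints 28
```

With the example values, OVS-DPDK takes two 1 GB pages per socket and 28 pages remain for instances.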

Set the DPDK driver type and the datapath type for OVS bridges.

    NeutronDpdkDriverType: "vfio-pci"
    NeutronDatapathType: "netdev"

Under parameter_defaults, set the vhost-user socket directory for OVS.

    NeutronVhostuserSocketDir: "/var/lib/vhost_sockets"

Under parameter_defaults, reserve the RAM for the host processes.

    NovaReservedHostMemory: 2048

Under parameter_defaults, set a comma-separated list or range of physical CPU cores to reserve for virtual machine processes.

    NovaVcpuPinSet: "2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"

Under parameter_defaults, list the applicable filters.

Nova scheduler applies these filters in the order they are listed. List the most restrictive filters first to make the filtering process for the nodes more efficient.

    NovaSchedulerDefaultFilters: "RamFilter,ComputeFilter,AvailabilityZoneFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,PciPassthroughFilter,NUMATopologyFilter"

Under parameter_defaults, define the ComputeKernelArgs parameters to be included in the default grub file at first boot.

    ComputeKernelArgs: "default_hugepagesz=1GB hugepagesz=1G hugepages=32 iommu=pt intel_iommu=on"

Note: These huge pages are consumed by the virtual machines, and also by OVS-DPDK using the NeutronDpdkSocketMemory parameter as shown in this procedure. The number of huge pages available for the virtual machines is the boot parameter minus the NeutronDpdkSocketMemory.

You need to add hw:mem_page_size=1GB to the flavor you associate with the DPDK instance. If you do not do this, the instance does not get a DHCP allocation.

Under parameter_defaults, set a list or range of physical CPU cores to be isolated from the host.

The given argument is appended to the tuned cpu-partitioning profile.

    HostIsolatedCoreList: "1,2,3,4,5,6,7,9,10,17,18,19,20,21,22,23,11,12,13,14,15,25,26,27,28,29,30,31"

Under parameter_defaults, set a list of logical cores used by OVS-DPDK processes for dpdk-lcore-mask. These cores must be mutually exclusive from the list of cores in NeutronDpdkCoreList and NovaVcpuPinSet.

    HostCpusList: "0,16,8,24"
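Because a core that appears in more than one list breaks the mutual-exclusion rule, a quick check before deploying can save a failed run. This is a minimal sketch: `overlapping_cores` is a hypothetical helper, and it only understands plain comma-separated ids, not ranges such as 2-7.

```bash
#!/bin/bash
# Sketch: print the CPU ids that appear in more than one of the given
# comma-separated core lists (plain ids only; ranges are not expanded).
overlapping_cores() {
    local list
    for list in "$@"; do
        # De-duplicate within each list so only cross-list repeats remain.
        tr ',' '\n' <<< "$list" | sort -un
    done | sort -n | uniq -d | paste -sd, -
}

# Values from the network-environment.yaml example in this section.
host_cpus="0,16,8,24"
pmd_cores="1,17,9,25"
vcpu_pin="2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"

dupes=$(overlapping_cores "$host_cpus" "$pmd_cores" "$vcpu_pin")
if [[ -z $dupes ]]; then
    echo "core lists are mutually exclusive"
else
    echo "cores assigned more than once: $dupes"
fi
```

The example values pass the check: the host, PMD, and instance cores do not overlap.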

4.3.4. Modify controller.yaml

Create the control plane Linux bond for an isolated network.

      - type: linux_bond
        name: bond_api
        bonding_options: "mode=active-backup"
        use_dhcp: false
        dns_servers: {get_param: DnsServers}
        members:
        - type: interface
          name: nic2
          primary: true
        - type: interface
          name: nic3

Assign VLANs to this Linux bond.

      - type: vlan
        vlan_id: {get_param: InternalApiNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: InternalApiIpSubnet}
      - type: vlan
        vlan_id: {get_param: TenantNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: TenantIpSubnet}
      - type: vlan
        vlan_id: {get_param: StorageNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: StorageIpSubnet}
      - type: vlan
        vlan_id: {get_param: StorageMgmtNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: StorageMgmtIpSubnet}
      - type: vlan
        vlan_id: {get_param: ExternalNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: ExternalIpSubnet}
        routes:
        - default: true
          next_hop: {get_param: ExternalInterfaceDefaultRoute}

Create the OVS bridge to the Compute node.

      - type: ovs_bridge
        name: br-link0
        use_dhcp: false
        dns_servers: {get_param: DnsServers}
        members:
        - type: interface
          name: nic4

4.3.5. Modify compute.yaml

Create the compute-ovs-dpdk.yaml file from the default compute.yaml file and make the following changes:

Create the control plane Linux bond for an isolated network.

      - type: linux_bond
        name: bond_api
        bonding_options: "mode=active-backup"
        use_dhcp: false
        dns_servers: {get_param: DnsServers}
        members:
        - type: interface
          name: nic2
          primary: true
        - type: interface
          name: nic3

Assign VLANs to this Linux bond.

      - type: vlan
        vlan_id: {get_param: InternalApiNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: InternalApiIpSubnet}
      - type: vlan
        vlan_id: {get_param: TenantNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: TenantIpSubnet}
      - type: vlan
        vlan_id: {get_param: StorageNetworkVlanID}
        device: bond_api
        addresses:
        - ip_netmask: {get_param: StorageIpSubnet}

Set a bridge with a DPDK port to link to the controller.

      - type: ovs_user_bridge
        name: br-link0
        use_dhcp: false
        dns_servers: {get_param: DnsServers}
        members:
        - type: ovs_dpdk_port
          name: dpdk0
          members:
          - type: interface
            name: nic4

Note: To include multiple DPDK devices, repeat the type code section for each DPDK device you want to add.

Note: When using OVS-DPDK, all bridges on the same Compute node should be of type ovs_user_bridge. The director may accept the configuration, but Red Hat OpenStack Platform does not support mixing ovs_bridge and ovs_user_bridge on the same node.

4.3.6. Run the overcloud_deploy.sh Script

The following example defines the openstack overcloud deploy command for the OVS-DPDK environment within a bash script:

    #!/bin/bash

    openstack overcloud deploy \
    --templates \
    -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml \
    -e /home/stack/ospd-11-vlan-dpdk-single-port-ctlplane-bonding/network-environment.yaml

- /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml is the location of the default neutron-ovs-dpdk.yaml file, which enables the OVS-DPDK parameters for the Compute role.

- /home/stack/<relative-directory>/network-environment.yaml is the path for the network-environment.yaml file. Use this file to override the default values from the neutron-ovs-dpdk.yaml file.

Reboot the Compute nodes to enforce the tuned profile after the overcloud is deployed.

This configuration of OVS-DPDK does not support security groups or live migration.

4.4. Configure Two-Port OVS-DPDK with VLAN Tunnelling

This section describes how to configure OVS-DPDK with two data plane ports.

4.4.1. Modify first-boot.yaml

Modify the first-boot.yaml file to set up OVS and DPDK parameters and to configure tuned for CPU affinity.

Add additional resources.

    resources:
      userdata:
        type: OS::Heat::MultipartMime
        properties:
          parts:
          - config: {get_resource: boot_config}
          - config: {get_resource: set_ovs_socket_config}
          - config: {get_resource: set_ovs_config}
          - config: {get_resource: set_dpdk_params}
          - config: {get_resource: install_tuned}
          - config: {get_resource: compute_kernel_args}

Determine the NeutronVhostuserSocketDir setting.

      set_ovs_socket_config:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  mkdir -p $NEUTRON_VHOSTUSER_SOCKET_DIR
                  chown -R qemu:qemu $NEUTRON_VHOSTUSER_SOCKET_DIR
                  restorecon $NEUTRON_VHOSTUSER_SOCKET_DIR
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $NEUTRON_VHOSTUSER_SOCKET_DIR: {get_param: NeutronVhostuserSocketDir}

Set the OVS configuration.

      set_ovs_config:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  if [ -f /usr/lib/systemd/system/openvswitch-nonetwork.service ]; then
                    ovs_service_path="/usr/lib/systemd/system/openvswitch-nonetwork.service"
                  elif [ -f /usr/lib/systemd/system/ovs-vswitchd.service ]; then
                    ovs_service_path="/usr/lib/systemd/system/ovs-vswitchd.service"
                  fi
                  grep -q "RuntimeDirectoryMode=.*" $ovs_service_path
                  if [ "$?" -eq 0 ]; then
                    sed -i 's/RuntimeDirectoryMode=.*/RuntimeDirectoryMode=0775/' $ovs_service_path
                  else
                    echo "RuntimeDirectoryMode=0775" >> $ovs_service_path
                  fi
                  grep -Fxq "Group=qemu" $ovs_service_path
                  if [ ! "$?" -eq 0 ]; then
                    echo "Group=qemu" >> $ovs_service_path
                  fi
                  grep -Fxq "UMask=0002" $ovs_service_path
                  if [ ! "$?" -eq 0 ]; then
                    echo "UMask=0002" >> $ovs_service_path
                  fi
                  ovs_ctl_path='/usr/share/openvswitch/scripts/ovs-ctl'
                  grep -q "umask 0002 \&\& start_daemon \"\$OVS_VSWITCHD_PRIORITY\"" $ovs_ctl_path
                  if [ ! "$?" -eq 0 ]; then
                    sed -i 's/start_daemon \"\$OVS_VSWITCHD_PRIORITY.*/umask 0002 \&\& start_daemon \"$OVS_VSWITCHD_PRIORITY\" \"$OVS_VSWITCHD_WRAPPER\" \"$@\"/' $ovs_ctl_path
                  fi
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}

Set the DPDK parameters.

      set_dpdk_params:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                set -x
                get_mask()
                {
                  local list=$1
                  local mask=0
                  declare -a bm
                  max_idx=0
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      bm[$index]=0
                      if [ $max_idx -lt $index ]; then
                         max_idx=$(($index))
                      fi
                  done
                  for ((i=$max_idx;i>=0;i--));
                  do
                      bm[$i]=0
                  done
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      temp=$((1<<$(($core % 32))))
                      bm[$index]=$((${bm[$index]} | $temp))
                  done

                  printf -v mask "%x" "${bm[$max_idx]}"
                  for ((i=$max_idx-1;i>=0;i--));
                  do
                      printf -v hex "%08x" "${bm[$i]}"
                      mask+=$hex
                  done
                  printf "%s" "$mask"
                }

                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  pmd_cpu_mask=$( get_mask $PMD_CORES )
                  host_cpu_mask=$( get_mask $LCORE_LIST )
                  socket_mem=$(echo $SOCKET_MEMORY | sed s/\'//g )
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem=$socket_mem
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
                  ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $LCORE_LIST: {get_param: HostCpusList}
                $PMD_CORES: {get_param: NeutronDpdkCoreList}
                $SOCKET_MEMORY: {get_param: NeutronDpdkSocketMemory}

Set the tuned configuration to enable CPU affinity.

      install_tuned:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  tuned_conf_path="/etc/tuned/cpu-partitioning-variables.conf"
                  if [ -n "$TUNED_CORES" ]; then
                    grep -q "^isolated_cores" $tuned_conf_path
                    if [ "$?" -eq 0 ]; then
                      sed -i 's/^isolated_cores=.*/isolated_cores=$TUNED_CORES/' $tuned_conf_path
                    else
                      echo "isolated_cores=$TUNED_CORES" >> $tuned_conf_path
                    fi
                    tuned-adm profile cpu-partitioning
                  fi
                fi
              params:
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $TUNED_CORES: {get_param: HostIsolatedCoreList}

Set the kernel arguments.

      compute_kernel_args:
        type: OS::Heat::SoftwareConfig
        properties:
          config:
            str_replace:
              template: |
                #!/bin/bash
                FORMAT=$COMPUTE_HOSTNAME_FORMAT
                if [[ -z $FORMAT ]] ; then
                  FORMAT="compute" ;
                else
                  # Assumption: only %index% and %stackname% are the variables in Host name format
                  FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
                fi
                if [[ $(hostname) == *$FORMAT* ]] ; then
                  sed 's/^\(GRUB_CMDLINE_LINUX=".*\)"/\1 $KERNEL_ARGS isolcpus=$TUNED_CORES"/g' -i /etc/default/grub ;
                  grub2-mkconfig -o /etc/grub2.cfg

                  reboot
                fi
              params:
                $KERNEL_ARGS: {get_param: ComputeKernelArgs}
                $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
                $TUNED_CORES: {get_param: HostIsolatedCoreList}

4.4.2. Modify post-install.yaml

Set the tuned configuration to enable CPU affinity.

      ExtraConfig:
        type: OS::Heat::SoftwareConfig
        properties:
          group: script
          config:
            str_replace:
              template: |
                #!/bin/bash

                set -x

                function tuned_service_dependency() {
                  tuned_service=/usr/lib/systemd/system/tuned.service
                  grep -q "network.target" $tuned_service
                  if [ "$?" -eq 0 ]; then
                      sed -i '/After=.*/s/network.target//g' $tuned_service
                  fi
                  grep -q "Before=.*network.target" $tuned_service
                  if [ ! "$?" -eq 0 ]; then
                      grep -q "Before=.*" $tuned_service
                      if [ "$?" -eq 0 ]; then
                          sed -i 's/^\(Before=.*\)/\1 network.target openvswitch.service/g' $tuned_service
                      else
                          sed -i '/After/i Before=network.target openvswitch.service' $tuned_service
                      fi
                  fi
                }

                get_mask()
                {
                  local list=$1
                  local mask=0
                  declare -a bm
                  max_idx=0
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      bm[$index]=0
                      if [ $max_idx -lt $index ]; then
                         max_idx=$(($index))
                      fi
                  done
                  for ((i=$max_idx;i>=0;i--));
                  do
                      bm[$i]=0
                  done
                  for core in $(echo $list | sed 's/,/ /g')
                  do
                      index=$(($core/32))
                      temp=$((1<<$(($core % 32))))
                      bm[$index]=$((${bm[$index]} | $temp))
                  done

                  printf -v mask "%x" "${bm[$max_idx]}"
                  for ((i=$max_idx-1;i>=0;i--));
                  do
                      printf -v hex "%08x" "${bm[$i]}"
                      mask+=$hex
                  done
                  printf "%s" "$mask"
                }

                if hiera -c /etc/puppet/hiera.yaml service_names | grep -q neutron_ovs_dpdk_agent; then
                    pmd_cpu_mask=$( get_mask $PMD_CORES )
                    host_cpu_mask=$( get_mask $LCORE_LIST )
                    ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
                    ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
                    tuned_service_dependency
                fi
              params:
                $LCORE_LIST: {get_param: HostCpusList}
                $PMD_CORES: {get_param: NeutronDpdkCoreList}

4.4.3. Modify network-environment.yaml

See Planning your OVS-DPDK Deployment for details on how to determine the best values for the OVS-DPDK parameters that you set in the network-environment.yaml file to optimize your OpenStack network for OVS-DPDK.

Add the custom resources for OVS-DPDK under resource_registry.

    resource_registry:
      # Specify the relative/absolute path to the config files you want to use to override the defaults.
      OS::TripleO::Compute::Net::SoftwareConfig: nic-configs/compute-ovs-dpdk.yaml
      OS::TripleO::Controller::Net::SoftwareConfig: nic-configs/controller.yaml
      OS::TripleO::NodeUserData: first-boot.yaml
      OS::TripleO::NodeExtraConfigPost: post-install.yaml

Under parameter_defaults, disable the tunnel type (set the value to ""), and set the network type to vlan.

    NeutronTunnelTypes: ''
    NeutronNetworkType: 'vlan'

Under parameter_defaults, map the physical network to the virtual bridge.

    NeutronBridgeMappings: 'dpdk_mgmt:br-link0,dpdk_data:br-link1'

Under parameter_defaults, set the OpenStack Networking ML2 and Open vSwitch VLAN mapping range.

    NeutronNetworkVLANRanges: 'dpdk_mgmt:22:22,dpdk_data:25:28'
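With two physical networks, it is easy to name a network in the VLAN ranges that has no bridge mapping. The following sketch flags that case. `unmapped_physnets` is a hypothetical helper that only compares the two strings as written in network-environment.yaml; it does not query OpenStack Networking.

```bash
#!/bin/bash
# Sketch: list physical networks named in NeutronNetworkVLANRanges that have
# no entry in NeutronBridgeMappings. Both arguments are the comma-separated
# strings used in network-environment.yaml.
unmapped_physnets() {
    local mappings=$1 ranges=$2 entry physnet
    local IFS=','
    for entry in $ranges; do
        physnet=${entry%%:*}            # text before the first colon
        if [[ ",$mappings" != *",$physnet:"* ]]; then
            printf '%s\n' "$physnet"
        fi
    done
}

# Values from this section; prints nothing because every network is mapped.
unmapped_physnets 'dpdk_mgmt:br-link0,dpdk_data:br-link1' \
                  'dpdk_mgmt:22:22,dpdk_data:25:28'
```

Any name that the function prints needs an entry in NeutronBridgeMappings before you deploy.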

Under parameter_defaults, set the OVS-DPDK configuration parameters.

Note: NeutronDpdkCoreList and NeutronDpdkMemoryChannels are the required settings for this procedure. Attempting to deploy DPDK without appropriate values causes the deployment to fail or leads to unstable deployments.

Provide a list of cores that can be used as DPDK poll mode drivers (PMDs) in the format - [allowed_pattern: "[0-9,-]+"].

    NeutronDpdkCoreList: "1,17,9,25"

Note: You must assign at least one CPU (with sibling thread) on each NUMA node with or without DPDK NICs present for DPDK PMD to avoid failures in creating guest instances.

To optimize OVS-DPDK performance, consider the following options:

- Select CPUs associated with the NUMA node of the DPDK interface. Use `cat /sys/class/net/<interface>/device/numa_node` to list the NUMA node associated with an interface and use `lscpu` to list the CPUs associated with that NUMA node.

- Group CPU siblings together (in case of hyper-threading). Use `cat /sys/devices/system/cpu/<cpu>/topology/thread_siblings_list` to find the sibling of a CPU.

- Reserve CPU 0 for the host process.

- Isolate CPUs assigned to PMD so that the host process does not use these CPUs.

- Use NovaVcpuPinSet to exclude CPUs assigned to PMD from Compute scheduling.

Provide the number of memory channels in the format - [allowed_pattern: "[0-9]+"].

    NeutronDpdkMemoryChannels: "4"

Set the memory pre-allocated from the hugepage pool for each socket.

    NeutronDpdkSocketMemory: "2048,2048"

This is a comma-separated string, in ascending order of the CPU socket. This example assumes a 2 NUMA node configuration and pre-allocates 2048 MB of huge pages to socket 0, and pre-allocates 2048 MB to socket 1. If you have a single NUMA node system, set this value to 1024,0.

Set the DPDK driver type and the datapath type for OVS bridges.

    NeutronDpdkDriverType: "vfio-pci"
    NeutronDatapathType: "netdev"

Under parameter_defaults, set the vhost-user socket directory for OVS.

    NeutronVhostuserSocketDir: "/var/lib/vhost_sockets"

Under parameter_defaults, reserve the RAM for the host processes.

    NovaReservedHostMemory: 2048

Under parameter_defaults, set a comma-separated list or range of physical CPU cores to reserve for virtual machine processes.

    NovaVcpuPinSet: "2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"

Under parameter_defaults, list the applicable filters.

Nova scheduler applies these filters in the order they are listed. List the most restrictive filters first to make the filtering process for the nodes more efficient.

    NovaSchedulerDefaultFilters: "RamFilter,ComputeFilter,AvailabilityZoneFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,PciPassthroughFilter,NUMATopologyFilter"

Under parameter_defaults, define the ComputeKernelArgs parameters to be included in the default grub file at first boot.

ComputeKernelArgs: "default_hugepagesz=1GB hugepagesz=1G hugepages=32 iommu=pt intel_iommu=on"

Note: These huge pages are consumed by the virtual machines, and also by OVS-DPDK using the NeutronDpdkSocketMemory parameter as shown in this procedure. The number of huge pages available for the virtual machines is the boot parameter minus the NeutronDpdkSocketMemory.

You need to add hw:mem_page_size=1GB to the flavor you associate with the DPDK instance. If you do not do this, the instance does not get a DHCP allocation.

Under parameter_defaults, set a list or range of physical CPU cores to be isolated from the host.

The given argument is appended to the tuned cpu-partitioning profile.

HostIsolatedCoreList: "1,2,3,4,5,6,7,9,10,17,18,19,20,21,22,23,11,12,13,14,15,25,26,27,28,29,30,31"

Under parameter_defaults, set a list of logical cores used by OVS-DPDK processes for dpdk-lcore-mask. These cores must be mutually exclusive from the list of cores in NeutronDpdkCoreList and NovaVcpuPinSet.

HostCpusList: "0,16,8,24"
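The mutual-exclusion requirement can be checked before deploying. The following is a rough sketch, assuming plain comma-separated lists without ranges. The overlap function is a hypothetical helper, and the NeutronDpdkCoreList value is the one used in this chapter's examples.

```shell
#!/bin/bash
# Print every core that appears in both comma-separated lists (no ranges).
overlap() {
  local a b
  for a in ${1//,/ }; do
    for b in ${2//,/ }; do
      if [ "$a" = "$b" ]; then
        echo "$a"
      fi
    done
  done
}

host_cpus="0,16,8,24"
pmd_cores="1,17,9,25"
vcpu_pin="2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"

if [ -z "$(overlap "$host_cpus" "$pmd_cores")" ] && \
   [ -z "$(overlap "$host_cpus" "$vcpu_pin")" ]; then
  echo "HostCpusList does not overlap the PMD or instance cores"
else
  echo "overlap found"
fi
```

An empty result from overlap means the two lists share no cores.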

4.4.4. Modify controller.yaml

Create the control plane Linux bond for an isolated network.

- type: linux_bond
  name: bond_api
  bonding_options: "mode=active-backup"
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic2
      primary: true
    - type: interface
      name: nic3

Assign VLANs to this Linux bond.

- type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
- type: vlan
  vlan_id: {get_param: TenantNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: TenantIpSubnet}
- type: vlan
  vlan_id: {get_param: StorageNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: StorageIpSubnet}
- type: vlan
  vlan_id: {get_param: StorageMgmtNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: StorageMgmtIpSubnet}
- type: vlan
  vlan_id: {get_param: ExternalNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: ExternalIpSubnet}
  routes:
    - default: true
      next_hop: {get_param: ExternalInterfaceDefaultRoute}

Create two OVS bridges to the Compute node.

- type: ovs_bridge
  name: br-link0
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic4
- type: ovs_bridge
  name: br-link1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic5

4.4.5. Modify compute.yaml

Create the compute-ovs-dpdk.yaml file from the default compute.yaml file and make the following changes:

Create the control plane Linux bond for an isolated network.

- type: linux_bond
  name: bond_api
  bonding_options: "mode=active-backup"
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic2
      primary: true
    - type: interface
      name: nic3

Assign VLANs to this Linux bond.

- type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
- type: vlan
  vlan_id: {get_param: TenantNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: TenantIpSubnet}
- type: vlan
  vlan_id: {get_param: StorageNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: StorageIpSubnet}

Set two bridges with a DPDK port each to link to the controller.

- type: ovs_user_bridge
  name: br-link0
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: ovs_dpdk_port
      name: dpdk0
      members:
        - type: interface
          name: nic4
- type: ovs_user_bridge
  name: br-link1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: ovs_dpdk_port
      name: dpdk1
      members:
        - type: interface
          name: nic5

Note: To include multiple DPDK devices, repeat the type code section for each DPDK device you want to add.

Note: When using OVS-DPDK, all bridges on the same Compute node should be of type ovs_user_bridge. The director may accept the configuration, but Red Hat OpenStack Platform does not support mixing ovs_bridge and ovs_user_bridge on the same node.

4.4.6. Run the overcloud_deploy.sh Script

The following example defines the openstack overcloud deploy command for the OVS-DPDK environment within a bash script:

#!/bin/bash

openstack overcloud deploy \
--templates \
-e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
-e /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml \
-e /home/stack/ospd-11-vlan-dpdk-two-ports-ctlplane-bonding/network-environment.yaml

- /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml is the location of the default neutron-ovs-dpdk.yaml file, which enables the OVS-DPDK parameters for the Compute role.

- /home/stack/<relative-directory>/network-environment.yaml is the path for the network-environment.yaml file. Use this file to overwrite the default values from the neutron-ovs-dpdk.yaml file.

Reboot the Compute nodes to enforce the tuned profile after the overcloud is deployed.

This configuration of OVS-DPDK does not support security groups and live migrations.

4.5. Configure Two-Port OVS-DPDK Data Plane Bonding with VLAN Tunnelling

This section describes how to configure OVS-DPDK with two data plane ports in an OVS-DPDK bond.

4.5.1. Modify first-boot.yaml

Modify the first-boot.yaml file to set up OVS and DPDK parameters and to configure tuned for CPU affinity.

Add additional resources.

resources:
  userdata:
    type: OS::Heat::MultipartMime
    properties:
      parts:
        - config: {get_resource: boot_config}
        - config: {get_resource: set_ovs_socket_config}
        - config: {get_resource: set_ovs_config}
        - config: {get_resource: set_dpdk_params}
        - config: {get_resource: install_tuned}
        - config: {get_resource: compute_kernel_args}

Determine the NeutronVhostuserSocketDir setting.

set_ovs_socket_config:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            mkdir -p $NEUTRON_VHOSTUSER_SOCKET_DIR
            chown -R qemu:qemu $NEUTRON_VHOSTUSER_SOCKET_DIR
            restorecon $NEUTRON_VHOSTUSER_SOCKET_DIR
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $NEUTRON_VHOSTUSER_SOCKET_DIR: {get_param: NeutronVhostuserSocketDir}

Set the OVS configuration.

set_ovs_config:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            if [ -f /usr/lib/systemd/system/openvswitch-nonetwork.service ]; then
              ovs_service_path="/usr/lib/systemd/system/openvswitch-nonetwork.service"
            elif [ -f /usr/lib/systemd/system/ovs-vswitchd.service ]; then
              ovs_service_path="/usr/lib/systemd/system/ovs-vswitchd.service"
            fi
            grep -q "RuntimeDirectoryMode=.*" $ovs_service_path
            if [ "$?" -eq 0 ]; then
              sed -i 's/RuntimeDirectoryMode=.*/RuntimeDirectoryMode=0775/' $ovs_service_path
            else
              echo "RuntimeDirectoryMode=0775" >> $ovs_service_path
            fi
            grep -Fxq "Group=qemu" $ovs_service_path
            if [ ! "$?" -eq 0 ]; then
              echo "Group=qemu" >> $ovs_service_path
            fi
            grep -Fxq "UMask=0002" $ovs_service_path
            if [ ! "$?" -eq 0 ]; then
              echo "UMask=0002" >> $ovs_service_path
            fi
            ovs_ctl_path='/usr/share/openvswitch/scripts/ovs-ctl'
            grep -q "umask 0002 \&\& start_daemon \"\$OVS_VSWITCHD_PRIORITY\"" $ovs_ctl_path
            if [ ! "$?" -eq 0 ]; then
              sed -i 's/start_daemon \"\$OVS_VSWITCHD_PRIORITY.*/umask 0002 \&\& start_daemon \"$OVS_VSWITCHD_PRIORITY\" \"$OVS_VSWITCHD_WRAPPER\" \"$@\"/' $ovs_ctl_path
            fi
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}

Set the DPDK parameters.

set_dpdk_params:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          set -x
          get_mask()
          {
            local list=$1
            local mask=0
            declare -a bm
            max_idx=0
            for core in $(echo $list | sed 's/,/ /g')
            do
              index=$(($core/32))
              bm[$index]=0
              if [ $max_idx -lt $index ]; then
                max_idx=$(($index))
              fi
            done
            for ((i=$max_idx;i>=0;i--));
            do
              bm[$i]=0
            done
            for core in $(echo $list | sed 's/,/ /g')
            do
              index=$(($core/32))
              temp=$((1<<$(($core % 32))))
              bm[$index]=$((${bm[$index]} | $temp))
            done
            printf -v mask "%x" "${bm[$max_idx]}"
            for ((i=$max_idx-1;i>=0;i--));
            do
              printf -v hex "%08x" "${bm[$i]}"
              mask+=$hex
            done
            printf "%s" "$mask"
          }
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            pmd_cpu_mask=$( get_mask $PMD_CORES )
            host_cpu_mask=$( get_mask $LCORE_LIST )
            socket_mem=$(echo $SOCKET_MEMORY | sed s/\'//g )
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem=$socket_mem
            ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $LCORE_LIST: {get_param: HostCpusList}
          $PMD_CORES: {get_param: NeutronDpdkCoreList}
          $SOCKET_MEMORY: {get_param: NeutronDpdkSocketMemory}

Set the tuned configuration to enable CPU affinity.

install_tuned:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            tuned_conf_path="/etc/tuned/cpu-partitioning-variables.conf"
            if [ -n "$TUNED_CORES" ]; then
              grep -q "^isolated_cores" $tuned_conf_path
              if [ "$?" -eq 0 ]; then
                sed -i 's/^isolated_cores=.*/isolated_cores=$TUNED_CORES/' $tuned_conf_path
              else
                echo "isolated_cores=$TUNED_CORES" >> $tuned_conf_path
              fi
              tuned-adm profile cpu-partitioning
            fi
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $TUNED_CORES: {get_param: HostIsolatedCoreList}

Set the kernel arguments.

compute_kernel_args:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            sed 's/^\(GRUB_CMDLINE_LINUX=".*\)"/\1 $KERNEL_ARGS isolcpus=$TUNED_CORES"/g' -i /etc/default/grub ;
            grub2-mkconfig -o /etc/grub2.cfg
            reboot
          fi
        params:
          $KERNEL_ARGS: {get_param: ComputeKernelArgs}
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $TUNED_CORES: {get_param: HostIsolatedCoreList}

4.5.2. Modify post-install.yaml

Set the tuned configuration to enable CPU affinity.

ExtraConfig:
  type: OS::Heat::SoftwareConfig
  properties:
    group: script
    config:
      str_replace:
        template: |
          #!/bin/bash
          set -x
          function tuned_service_dependency() {
            tuned_service=/usr/lib/systemd/system/tuned.service
            grep -q "network.target" $tuned_service
            if [ "$?" -eq 0 ]; then
              sed -i '/After=.*/s/network.target//g' $tuned_service
            fi
            grep -q "Before=.*network.target" $tuned_service
            if [ ! "$?" -eq 0 ]; then
              grep -q "Before=.*" $tuned_service
              if [ "$?" -eq 0 ]; then
                sed -i 's/^\(Before=.*\)/\1 network.target openvswitch.service/g' $tuned_service
              else
                sed -i '/After/i Before=network.target openvswitch.service' $tuned_service
              fi
            fi
          }
          get_mask()
          {
            local list=$1
            local mask=0
            declare -a bm
            max_idx=0
            for core in $(echo $list | sed 's/,/ /g')
            do
              index=$(($core/32))
              bm[$index]=0
              if [ $max_idx -lt $index ]; then
                max_idx=$(($index))
              fi
            done
            for ((i=$max_idx;i>=0;i--));
            do
              bm[$i]=0
            done
            for core in $(echo $list | sed 's/,/ /g')
            do
              index=$(($core/32))
              temp=$((1<<$(($core % 32))))
              bm[$index]=$((${bm[$index]} | $temp))
            done
            printf -v mask "%x" "${bm[$max_idx]}"
            for ((i=$max_idx-1;i>=0;i--));
            do
              printf -v hex "%08x" "${bm[$i]}"
              mask+=$hex
            done
            printf "%s" "$mask"
          }
          if hiera -c /etc/puppet/hiera.yaml service_names | grep -q neutron_ovs_dpdk_agent; then
            pmd_cpu_mask=$( get_mask $PMD_CORES )
            host_cpu_mask=$( get_mask $LCORE_LIST )
            ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
            tuned_service_dependency
          fi
        params:
          $LCORE_LIST: {get_param: HostCpusList}
          $PMD_CORES: {get_param: NeutronDpdkCoreList}
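To see what the get_mask function in the template above produces for the core lists used in this chapter, it can be run on its own. The following is a condensed sketch of the same logic (one bit per core, 32 cores per word, printed as hexadecimal), not a replacement for the template code.

```shell
#!/bin/bash
# Convert a comma-separated core list into a hexadecimal CPU mask.
get_mask() {
  local list=$1 mask=0 core index i hex
  local max_idx=0
  local -a bm
  # Find the highest 32-bit word that any core falls into.
  for core in ${list//,/ }; do
    index=$((core / 32))
    if [ "$max_idx" -lt "$index" ]; then
      max_idx=$index
    fi
  done
  # Zero every word, then set one bit per core.
  for ((i = max_idx; i >= 0; i--)); do
    bm[i]=0
  done
  for core in ${list//,/ }; do
    index=$((core / 32))
    bm[index]=$(( bm[index] | (1 << (core % 32)) ))
  done
  # Highest word without padding, lower words padded to 8 hex digits.
  printf -v mask "%x" "${bm[max_idx]}"
  for ((i = max_idx - 1; i >= 0; i--)); do
    printf -v hex "%08x" "${bm[i]}"
    mask+=$hex
  done
  printf "%s" "$mask"
}

get_mask "1,17,9,25"; echo   # NeutronDpdkCoreList -> 2020202
get_mask "0,16,8,24"; echo   # HostCpusList        -> 1010101
```

The two masks share no set bits, which reflects that the PMD cores and the host cores in the example do not overlap.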

4.5.3. Modify network-environment.yaml

See Planning your OVS-DPDK Deployment for details on how to determine the best values for the OVS-DPDK parameters that you set in the network-environment.yaml file to optimize your OpenStack network for OVS-DPDK.

Add the custom resources for OVS-DPDK under resource_registry.

resource_registry:
  # Specify the relative/absolute path to the config files you want to use to override the default.
  OS::TripleO::Compute::Net::SoftwareConfig: nic-configs/compute.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: nic-configs/controller.yaml
  OS::TripleO::NodeUserData: first-boot.yaml
  OS::TripleO::NodeExtraConfigPost: post-install.yaml

Under parameter_defaults, disable the tunnel type (set the value to ""), and set the network type to vlan.

NeutronTunnelTypes: ''
NeutronNetworkType: 'vlan'

Under parameter_defaults, map the physical network to the virtual bridge.

NeutronBridgeMappings: 'dpdk_mgmt:br-link'

Under parameter_defaults, set the OpenStack Networking ML2 and Open vSwitch VLAN mapping range.

NeutronNetworkVLANRanges: 'dpdk_mgmt:22:22'

This example sets the VLAN ranges on the physical network (dpdk_mgmt).

Under parameter_defaults, set the OVS-DPDK configuration parameters.

Note: NeutronDpdkCoreList and NeutronDpdkMemoryChannels are required settings for this procedure. Attempting to deploy DPDK without appropriate values causes the deployment to fail or leads to unstable deployments.

Provide a list of cores that can be used as DPDK poll mode drivers (PMDs). The value must match the allowed pattern "[0-9,-]+".

NeutronDpdkCoreList: "1,17,9,25"

Note: You must assign at least one CPU (with sibling thread) on each NUMA node, with or without DPDK NICs present, for DPDK PMD to avoid failures in creating guest instances.

To optimize OVS-DPDK performance, consider the following options:

- Select CPUs associated with the NUMA node of the DPDK interface. Use cat /sys/class/net/<interface>/device/numa_node to list the NUMA node associated with an interface and use lscpu to list the CPUs associated with that NUMA node.

- Group CPU siblings together (in case of hyper-threading). Use cat /sys/devices/system/cpu/<cpu>/topology/thread_siblings_list to find the sibling of a CPU.

- Reserve CPU 0 for the host process.

- Isolate CPUs assigned to PMD so that the host process does not use these CPUs.

- Use NovaVcpuPinSet to exclude CPUs assigned to PMD from Compute scheduling.

Provide the number of memory channels. The value must match the allowed pattern "[0-9]+".

NeutronDpdkMemoryChannels: "4"

Set the memory pre-allocated from the hugepage pool for each socket.

NeutronDpdkSocketMemory: "1024,1024"

This is a comma-separated string, in ascending order of the CPU socket. This example assumes a 2 NUMA node configuration and pre-allocates 1024 MB of huge pages to socket 0 and 1024 MB to socket 1. If you have a single NUMA node system, set this value to 1024,0.

Set the DPDK driver type and the datapath type for OVS bridges.

NeutronDpdkDriverType: "vfio-pci"
NeutronDatapathType: "netdev"

Under parameter_defaults, set the vhost-user socket directory for OVS.

NeutronVhostuserSocketDir: "/var/lib/vhost_sockets"

Under parameter_defaults, reserve the RAM for the host processes.

NovaReservedHostMemory: 2048

Under parameter_defaults, set a comma-separated list or range of physical CPU cores to reserve for virtual machine processes.

NovaVcpuPinSet: "2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"
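Because the value can mix single cores and ranges, expanding it into an explicit list makes it easier to compare against the other core lists. The following is a small sketch, assuming well-formed ascending ranges such as 2-7. The expand_cores function is a hypothetical helper, not part of the templates.

```shell
#!/bin/bash
# Expand "2-4,7" into "2,3,4,7". Assumes well-formed ascending ranges.
expand_cores() {
  local out=() part lo hi c
  for part in ${1//,/ }; do
    if [[ $part == *-* ]]; then
      lo=${part%-*}
      hi=${part#*-}
      for ((c = lo; c <= hi; c++)); do
        out+=("$c")
      done
    else
      out+=("$part")
    fi
  done
  # Join the array with commas.
  local IFS=','
  echo "${out[*]}"
}

expand_cores "2-7,18-23,10-15,26-31"
```

The range form shown here expands to the same 24 cores as the NovaVcpuPinSet example above.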

Under parameter_defaults, list the applicable filters.

Nova scheduler applies these filters in the order they are listed. List the most restrictive filters first to make the filtering process for the nodes more efficient.

NovaSchedulerDefaultFilters: "RamFilter,ComputeFilter,AvailabilityZoneFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,PciPassthroughFilter,NUMATopologyFilter"

Under parameter_defaults, define the ComputeKernelArgs parameters to be included in the default grub file at first boot.

ComputeKernelArgs: "default_hugepagesz=1GB hugepagesz=1G hugepages=32 iommu=pt intel_iommu=on"

Note: These huge pages are consumed by the virtual machines, and also by OVS-DPDK using the NeutronDpdkSocketMemory parameter as shown in this procedure. The number of huge pages available for the virtual machines is the boot parameter minus the NeutronDpdkSocketMemory.

You need to add hw:mem_page_size=1GB to the flavor you associate with the DPDK instance. If you do not do this, the instance does not get a DHCP allocation.

Under parameter_defaults, set a list or range of physical CPU cores to be isolated from the host.

The given argument is appended to the tuned cpu-partitioning profile.

HostIsolatedCoreList: "1,2,3,4,5,6,7,9,10,17,18,19,20,21,22,23,11,12,13,14,15,25,26,27,28,29,30,31"

Under parameter_defaults, set a list of logical cores used by OVS-DPDK processes for dpdk-lcore-mask. These cores must be mutually exclusive from the list of cores in NeutronDpdkCoreList and NovaVcpuPinSet.

HostCpusList: "0,16,8,24"

4.5.4. Modify controller.yaml

Create the control plane Linux bond for an isolated network.

- type: linux_bond
  name: bond1
  bonding_options: "mode=active-backup"
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic2
      primary: true
    - type: interface
      name: nic3

Assign VLANs to this Linux bond.

- type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: bond1
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
- type: vlan
  vlan_id: {get_param: ExternalNetworkVlanID}
  device: bond1
  addresses:
    - ip_netmask: {get_param: ExternalIpSubnet}
- type: vlan
  vlan_id: {get_param: TenantNetworkVlanID}
  device: bond1
  addresses:
    - ip_netmask: {get_param: TenantIpSubnet}

Create the OVS bridge to the Compute node.

- type: ovs_bridge
  name: br-link
  use_dhcp: false
  members:
    - type: interface
      name: nic4

4.5.5. Modify compute.yaml

Create the control plane Linux bond for an isolated network.

- type: linux_bond
  name: bond_api
  bonding_options: "mode=active-backup"
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic2
      primary: true
    - type: interface
      name: nic3

Assign VLANs to this Linux bond.

- type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
- type: vlan
  vlan_id: {get_param: TenantNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: TenantIpSubnet}

Set a bridge with two DPDK ports in an OVS-DPDK bond to link to the controller.

- type: ovs_user_bridge
  name: br-link
  use_dhcp: false
  members:
    - type: ovs_dpdk_bond
      name: bond_dpdk0
      members:
        - type: ovs_dpdk_port
          name: dpdk0
          members:
            - type: interface
              name: nic4
        - type: ovs_dpdk_port
          name: dpdk1
          members:
            - type: interface
              name: nic5

Note: To include multiple DPDK devices, repeat the type code section for each DPDK device you want to add.

Note: When using OVS-DPDK, all bridges on the same Compute node should be of type ovs_user_bridge. The director may accept the configuration, but Red Hat OpenStack Platform does not support mixing ovs_bridge and ovs_user_bridge on the same node.

4.5.6. Run the overcloud_deploy.sh Script

The following example defines the openstack overcloud deploy command for the OVS-DPDK environment within a bash script:

#!/bin/bash

openstack overcloud deploy \
--templates \
-e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
-e /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml \
-e /home/stack/ospd-11-vlan-ovs-dpdk-bonding-dataplane-bonding-ctlplane/network-environment.yaml

- /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml is the location of the default neutron-ovs-dpdk.yaml file, which enables the OVS-DPDK parameters for the Compute role.

- /home/stack/<relative-directory>/network-environment.yaml is the path for the network-environment.yaml file. Use this file to overwrite the default values from the neutron-ovs-dpdk.yaml file.

Reboot the Compute nodes to enforce the tuned profile after the overcloud is deployed.

This configuration of OVS-DPDK does not support security groups and live migrations.

4.6. Configure Single-Port OVS-DPDK with VXLAN Tunnelling

This section describes how to configure OVS-DPDK with VXLAN tunnelling.

4.6.1. Modify first-boot.yaml

Modify the first-boot.yaml file to set up OVS and DPDK parameters and to configure tuned for CPU affinity.

Add additional resources.

resources:
  userdata:
    type: OS::Heat::MultipartMime
    properties:
      parts:
        - config: {get_resource: set_ovs_socket_config}
        - config: {get_resource: set_ovs_config}
        - config: {get_resource: set_dpdk_params}
        - config: {get_resource: install_tuned}
        - config: {get_resource: compute_kernel_args}

Determine the NeutronVhostuserSocketDir setting.

set_ovs_socket_config:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            mkdir -p $NEUTRON_VHOSTUSER_SOCKET_DIR
            chown -R qemu:qemu $NEUTRON_VHOSTUSER_SOCKET_DIR
            restorecon $NEUTRON_VHOSTUSER_SOCKET_DIR
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $NEUTRON_VHOSTUSER_SOCKET_DIR: {get_param: NeutronVhostuserSocketDir}

Set the OVS configuration.

set_ovs_config:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            if [ -f /usr/lib/systemd/system/openvswitch-nonetwork.service ]; then
              ovs_service_path="/usr/lib/systemd/system/openvswitch-nonetwork.service"
            elif [ -f /usr/lib/systemd/system/ovs-vswitchd.service ]; then
              ovs_service_path="/usr/lib/systemd/system/ovs-vswitchd.service"
            fi
            grep -q "RuntimeDirectoryMode=.*" $ovs_service_path
            if [ "$?" -eq 0 ]; then
              sed -i 's/RuntimeDirectoryMode=.*/RuntimeDirectoryMode=0775/' $ovs_service_path
            else
              echo "RuntimeDirectoryMode=0775" >> $ovs_service_path
            fi
            grep -Fxq "Group=qemu" $ovs_service_path
            if [ ! "$?" -eq 0 ]; then
              echo "Group=qemu" >> $ovs_service_path
            fi
            grep -Fxq "UMask=0002" $ovs_service_path
            if [ ! "$?" -eq 0 ]; then
              echo "UMask=0002" >> $ovs_service_path
            fi
            ovs_ctl_path='/usr/share/openvswitch/scripts/ovs-ctl'
            grep -q "umask 0002 \&\& start_daemon \"\$OVS_VSWITCHD_PRIORITY\"" $ovs_ctl_path
            if [ ! "$?" -eq 0 ]; then
              sed -i 's/start_daemon \"\$OVS_VSWITCHD_PRIORITY.*/umask 0002 \&\& start_daemon \"$OVS_VSWITCHD_PRIORITY\" \"$OVS_VSWITCHD_WRAPPER\" \"$@\"/' $ovs_ctl_path
            fi
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}

Set the DPDK parameters.

set_dpdk_params:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          set -x
          get_mask()
          {
            local list=$1
            local mask=0
            declare -a bm
            max_idx=0
            for core in $(echo $list | sed 's/,/ /g')
            do
              index=$(($core/32))
              bm[$index]=0
              if [ $max_idx -lt $index ]; then
                max_idx=$(($index))
              fi
            done
            for ((i=$max_idx;i>=0;i--));
            do
              bm[$i]=0
            done
            for core in $(echo $list | sed 's/,/ /g')
            do
              index=$(($core/32))
              temp=$((1<<$(($core % 32))))
              bm[$index]=$((${bm[$index]} | $temp))
            done
            printf -v mask "%x" "${bm[$max_idx]}"
            for ((i=$max_idx-1;i>=0;i--));
            do
              printf -v hex "%08x" "${bm[$i]}"
              mask+=$hex
            done
            printf "%s" "$mask"
          }
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            pmd_cpu_mask=$( get_mask $PMD_CORES )
            host_cpu_mask=$( get_mask $LCORE_LIST )
            socket_mem=$(echo $SOCKET_MEMORY | sed s/\'//g )
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem=$socket_mem
            ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask=$pmd_cpu_mask
            ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=$host_cpu_mask
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $LCORE_LIST: {get_param: HostCpusList}
          $PMD_CORES: {get_param: NeutronDpdkCoreList}
          $SOCKET_MEMORY: {get_param: NeutronDpdkSocketMemory}

Set the tuned configuration to enable CPU affinity.

install_tuned:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            tuned_conf_path="/etc/tuned/cpu-partitioning-variables.conf"
            if [ -n "$TUNED_CORES" ]; then
              grep -q "^isolated_cores" $tuned_conf_path
              if [ "$?" -eq 0 ]; then
                sed -i 's/^isolated_cores=.*/isolated_cores=$TUNED_CORES/' $tuned_conf_path
              else
                echo "isolated_cores=$TUNED_CORES" >> $tuned_conf_path
              fi
              tuned-adm profile cpu-partitioning
            fi
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $TUNED_CORES: {get_param: HostIsolatedCoreList}

Set the kernel arguments.

compute_kernel_args:
  type: OS::Heat::SoftwareConfig
  properties:
    config:
      str_replace:
        template: |
          #!/bin/bash
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            sed 's/^\(GRUB_CMDLINE_LINUX=".*\)"/\1 $KERNEL_ARGS isolcpus=$TUNED_CORES"/g' -i /etc/default/grub ;
            grub2-mkconfig -o /etc/grub2.cfg
            reboot
          fi
        params:
          $KERNEL_ARGS: {get_param: ComputeKernelArgs}
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}
          $TUNED_CORES: {get_param: HostIsolatedCoreList}

4.6.2. Modify post-install.yaml

Set the tuned configuration to enable CPU affinity.

ExtraConfig:
  type: OS::Heat::SoftwareConfig
  properties:
    group: script
    config:
      str_replace:
        template: |
          #!/bin/bash
          set -x
          FORMAT=$COMPUTE_HOSTNAME_FORMAT
          if [[ -z $FORMAT ]] ; then
            FORMAT="compute" ;
          else
            # Assumption: only %index% and %stackname% are the variables in Host name format
            FORMAT=$(echo $FORMAT | sed 's/\%index\%//g' | sed 's/\%stackname\%//g') ;
          fi
          if [[ $(hostname) == *$FORMAT* ]] ; then
            tuned_service=/usr/lib/systemd/system/tuned.service
            grep -q "network.target" $tuned_service
            if [ "$?" -eq 0 ]; then
              sed -i '/After=.*/s/network.target//g' $tuned_service
            fi
            grep -q "Before=.*network.target" $tuned_service
            if [ ! "$?" -eq 0 ]; then
              grep -q "Before=.*" $tuned_service
              if [ "$?" -eq 0 ]; then
                sed -i 's/^\(Before=.*\)/\1 network.target openvswitch.service/g' $tuned_service
              else
                sed -i '/After/i Before=network.target openvswitch.service' $tuned_service
              fi
            fi
            systemctl daemon-reload
          fi
        params:
          $COMPUTE_HOSTNAME_FORMAT: {get_param: ComputeHostnameFormat}

4.6.3. Modify network-environment.yaml

See Planning your OVS-DPDK Deployment for details on how to determine the best values for the OVS-DPDK parameters that you set in the network-environment.yaml file to optimize your OpenStack network for OVS-DPDK.

Add the custom resources for OVS-DPDK under resource_registry.

resource_registry:
  # Specify the relative/absolute path to the config files you want to use to override the default.
  OS::TripleO::Compute::Net::SoftwareConfig: nic-configs/compute-ovs-dpdk.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: nic-configs/controller.yaml
  OS::TripleO::NodeUserData: first-boot.yaml
  OS::TripleO::NodeExtraConfigPost: post-install.yaml

Under parameter_defaults, set the tunnel type and the tenant network type to vxlan.

NeutronTunnelTypes: 'vxlan'
NeutronNetworkType: 'vxlan'

Under parameter_defaults, set the OVS-DPDK configuration parameters.

Note: NeutronDpdkCoreList and NeutronDpdkMemoryChannels are required settings for this procedure. Attempting to deploy DPDK without appropriate values causes the deployment to fail or leads to unstable deployments.

Provide a list of cores that can be used as DPDK poll mode drivers (PMDs). The value must match the allowed pattern "[0-9,-]+".

NeutronDpdkCoreList: "1,17,9,25"

Note: You must assign at least one CPU (with sibling thread) on each NUMA node, with or without DPDK NICs present, for DPDK PMD to avoid failures in creating guest instances.

To optimize OVS-DPDK performance, consider the following options:

- Select CPUs associated with the NUMA node of the DPDK interface. Use cat /sys/class/net/<interface>/device/numa_node to list the NUMA node associated with an interface and use lscpu to list the CPUs associated with that NUMA node.

- Group CPU siblings together (in case of hyper-threading). Use cat /sys/devices/system/cpu/<cpu>/topology/thread_siblings_list to find the sibling of a CPU.

- Reserve CPU 0 for the host process.

- Isolate CPUs assigned to PMD so that the host process does not use these CPUs.

- Use NovaVcpuPinSet to exclude CPUs assigned to PMD from Compute scheduling.

Provide the number of memory channels. The value must match the allowed pattern "[0-9]+".

NeutronDpdkMemoryChannels: "4"
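The sysfs lookups mentioned in the CPU selection tips above can be wrapped in two small helpers. This is only a sketch. The function names and the SYSFS_ROOT override are hypothetical, and the override exists only so that the helpers can be tried against a copy of the tree.

```shell
#!/bin/bash
# SYSFS_ROOT is a hypothetical override; on a real node it stays at /sys.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# Print the NUMA node of a network interface, for example: nic_numa_node eth0
nic_numa_node() {
  cat "$SYSFS_ROOT/class/net/$1/device/numa_node"
}

# Print the hyper-thread siblings of a CPU, for example: cpu_siblings cpu1
cpu_siblings() {
  cat "$SYSFS_ROOT/devices/system/cpu/$1/topology/thread_siblings_list"
}
```

On a Compute node, call nic_numa_node with the name of the DPDK interface, then call cpu_siblings for each candidate PMD core so that both threads of a physical core are assigned together.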

Set the memory pre-allocated from the hugepage pool for each socket.

NeutronDpdkSocketMemory: "2048,2048"

This is a comma-separated string, in ascending order of the CPU socket. This example assumes a 2 NUMA node configuration and pre-allocates 2048 MB of huge pages to socket 0 and 2048 MB to socket 1. If you have a single NUMA node system, set this value to 1024,0.

Set the DPDK driver type and the datapath type for OVS bridges.

NeutronDpdkDriverType: "vfio-pci"
NeutronDatapathType: "netdev"

Under parameter_defaults, set the vhost-user socket directory for OVS.

NeutronVhostuserSocketDir: "/var/lib/vhost_sockets"

Under parameter_defaults, reserve the RAM for the host processes.

NovaReservedHostMemory: 2048

Under parameter_defaults, set a comma-separated list or range of physical CPU cores to reserve for virtual machine processes.

NovaVcpuPinSet: "2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"

Under parameter_defaults, list the applicable filters.

Nova scheduler applies these filters in the order they are listed. List the most restrictive filters first to make the filtering process for the nodes more efficient.

NovaSchedulerDefaultFilters: "RamFilter,ComputeFilter,AvailabilityZoneFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,PciPassthroughFilter,NUMATopologyFilter"

Under parameter_defaults, define the ComputeKernelArgs parameters to be included in the default grub file at first boot.

ComputeKernelArgs: "default_hugepagesz=1GB hugepagesz=1G hugepages=32 iommu=pt intel_iommu=on"

Note: These huge pages are consumed by the virtual machines, and also by OVS-DPDK using the NeutronDpdkSocketMemory parameter as shown in this procedure. The number of huge pages available for the virtual machines is the boot parameter minus the NeutronDpdkSocketMemory.

You need to add hw:mem_page_size=1GB to the flavor you associate with the DPDK instance. If you do not do this, the instance does not get a DHCP allocation.

Under parameter_defaults, set a list or range of physical CPU cores to be isolated from the host.

The given argument is appended to the tuned cpu-partitioning profile.

HostIsolatedCoreList: "1,2,3,4,5,6,7,9,10,17,18,19,20,21,22,23,11,12,13,14,15,25,26,27,28,29,30,31"

Under parameter_defaults, set a list of logical cores used by OVS-DPDK processes for dpdk-lcore-mask. These cores must be mutually exclusive from the list of cores in NeutronDpdkCoreList and NovaVcpuPinSet.

HostCpusList: "0,16,8,24"
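The mutual-exclusivity requirement on the core lists can be checked before deployment. The following Python sketch is one way to do that, using the values from this procedure; the helper name parse_cores is hypothetical. It also prints the isolated cores that are not in NovaVcpuPinSet. Treating those as the cores intended for PMD threads is an assumption, not something this procedure states.

```python
def parse_cores(spec):
    """Parse a core list such as "0,16,8,24" or "2-7,10" into a set of ints."""
    cores = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-", 1)
            cores.update(range(int(first), int(last) + 1))
        else:
            cores.add(int(part))
    return cores


host_cpus = parse_cores("0,16,8,24")
nova_pinset = parse_cores(
    "2,3,4,5,6,7,18,19,20,21,22,23,10,11,12,13,14,15,26,27,28,29,30,31"
)
isolated = parse_cores(
    "1,2,3,4,5,6,7,9,10,17,18,19,20,21,22,23,11,12,13,14,15,25,26,27,28,29,30,31"
)

# HostCpusList must not share any core with NovaVcpuPinSet.
overlap = host_cpus & nova_pinset
if overlap:
    raise SystemExit(f"HostCpusList overlaps NovaVcpuPinSet: {sorted(overlap)}")

# Isolated cores that are not handed to instances.
leftover = sorted(isolated - nova_pinset)
print(leftover)  # [1, 9, 17, 25]
```

Run the same disjointness check against your NeutronDpdkCoreList value, which is set elsewhere in this procedure.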

4.6.4. Modify controller.yaml

Create the control plane Linux bond for an isolated network.

- type: linux_bond
  name: bond_api
  bonding_options: "mode=active-backup"
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic2
      primary: true
    - type: interface
      name: nic3

Assign VLANs to this Linux bond.

- type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
- type: vlan
  vlan_id: {get_param: StorageNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: StorageIpSubnet}
- type: vlan
  vlan_id: {get_param: StorageMgmtNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: StorageMgmtIpSubnet}
- type: vlan
  vlan_id: {get_param: ExternalNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: ExternalIpSubnet}

Create the OVS bridge to the Compute node.

- type: ovs_bridge
  name: br-link
  use_dhcp: false
  members:
    - type: interface
      name: nic4
    - type: vlan
      vlan_id: {get_param: TenantNetworkVlanID}
      addresses:
        - ip_netmask: {get_param: TenantIpSubnet}

4.6.5. Modify compute.yaml

Create the compute-ovs-dpdk.yaml file from the default compute.yaml file and make the following changes:

Create the control plane Linux bond for an isolated network.

- type: linux_bond
  name: bond_api
  bonding_options: "mode=active-backup"
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic2
      primary: true
    - type: interface
      name: nic3

Assign VLANs to this Linux bond.

- type: vlan
  vlan_id: {get_param: InternalApiNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
- type: vlan
  vlan_id: {get_param: StorageNetworkVlanID}
  device: bond_api
  addresses:
    - ip_netmask: {get_param: StorageIpSubnet}

Set a bridge with a DPDK port to link to the controller.

- type: ovs_user_bridge
  name: br-link
  use_dhcp: false
  ovs_extra:
    - str_replace:
        template: set port br-link tag=_VLAN_TAG_
        params:
          _VLAN_TAG_: {get_param: TenantNetworkVlanID}
  addresses:
    - ip_netmask: {get_param: TenantIpSubnet}
  members:
    - type: ovs_dpdk_port
      name: dpdk0
      members:
        - type: interface
          name: nic4

Note

To include multiple DPDK devices, repeat the type code section for each DPDK device you want to add.

Note

When using OVS-DPDK, all bridges on the same Compute node should be of type ovs_user_bridge. The director may accept the configuration, but Red Hat OpenStack Platform does not support mixing ovs_bridge and ovs_user_bridge on the same node.

4.6.6. Run the overcloud_deploy.sh Script

The following example defines the openstack overcloud deploy command for the OVS-DPDK environment within a Bash script:

#!/bin/bash

openstack overcloud deploy \
  --templates \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml \
  -e /home/stack/ospd-11-vxlan-dpdk-single-port-ctlplane-bonding/network-environment.yaml

- /usr/share/openstack-tripleo-heat-templates/environments/neutron-ovs-dpdk.yaml is the location of the default neutron-ovs-dpdk.yaml file, which enables the OVS-DPDK parameters for the Compute role.

- /home/stack/<relative-directory>/network-environment.yaml is the path for the network-environment.yaml file. Use this file to overwrite the default values from the neutron-ovs-dpdk.yaml file.
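Because the network-environment.yaml file is meant to overwrite defaults from the neutron-ovs-dpdk.yaml file, it must come after that file on the command line: later environment files take precedence over earlier ones. The following Python sketch assembles the same command from an ordered list so that the ordering is explicit. The function name build_deploy_command is hypothetical, and the <relative-directory> placeholder is kept as-is.

```python
import shlex

TEMPLATES = "/usr/share/openstack-tripleo-heat-templates"


def build_deploy_command(environment_files):
    """Build the deploy command, passing environment files in list order."""
    cmd = ["openstack", "overcloud", "deploy", "--templates"]
    for path in environment_files:
        cmd += ["-e", path]
    return cmd


# Order matters: defaults first, site-specific overrides last.
env_files = [
    f"{TEMPLATES}/environments/network-isolation.yaml",
    f"{TEMPLATES}/environments/neutron-ovs-dpdk.yaml",
    "/home/stack/<relative-directory>/network-environment.yaml",
]

command = build_deploy_command(env_files)
print(shlex.join(command))
```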

Reboot the Compute nodes to enforce the tuned profile after the overcloud is deployed.

This configuration of OVS-DPDK does not support security groups and live migrations.

4.7. Configure OVS-DPDK Composable Role

This section describes how to configure composable roles for OVS-DPDK and SR-IOV with VLAN tunnelling. The process to create and deploy a composable role includes:

- Defining the new role in a local copy of the roles_data.yaml file.

- Creating the OpenStack flavor that uses this new role.

- Modifying the network-environment.yaml file to include this new role.

- Deploying the overcloud with this updated set of roles.

In this example, ComputeOvsDpdk and ComputeSriov are composable roles for Compute nodes that enable DPDK or SR-IOV only on the nodes that have the SR-IOV NICs. The existing set of default roles provided by the Red Hat OpenStack Platform is stored in the /home/stack/roles_data.yaml file.

4.7.1. Modify roles_data.yaml to Create Composable Roles

Copy the roles_data.yaml file to your /home/stack/templates directory and add the new ComputeOvsDpdk and ComputeSriov roles.

Define the OVS-DPDK composable role.

- name: ComputeOvsDpdk
  CountDefault: 1
  HostnameFormatDefault: compute-ovs-dpdk-%index%
  disable_upgrade_deployment: True
  ServicesDefault:
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::CephClient
    - OS::TripleO::Services::CephExternal
    - OS::TripleO::Services::Timezone
    - OS::TripleO::Services::Ntp
    - OS::TripleO::Services::Snmp
    - OS::TripleO::Services::Sshd
    - OS::TripleO::Services::NovaCompute
    - OS::TripleO::Services::NovaLibvirt
    - OS::TripleO::Services::Kernel
    - OS::TripleO::Services::ComputeNeutronCorePlugin
    - OS::TripleO::Services::ComputeNeutronOvsDpdkAgent
    - OS::TripleO::Services::ComputeCeilometerAgent
    - OS::TripleO::Services::ComputeNeutronL3Agent
    - OS::TripleO::Services::ComputeNeutronMetadataAgent
    - OS::TripleO::Services::TripleoPackages
    - OS::TripleO::Services::TripleoFirewall
    - OS::TripleO::Services::OpenDaylightOvs
    - OS::TripleO::Services::SensuClient
    - OS::TripleO::Services::FluentdClient
    - OS::TripleO::Services::AuditD
    - OS::TripleO::Services::Collectd

In this example, all the services of the new ComputeOvsDpdk role are the same as those of a regular Compute role, with the exception of ComputeNeutronOvsAgent. Replace ComputeNeutronOvsAgent with ComputeNeutronOvsDpdkAgent to map to the OVS-DPDK service.

Define the SR-IOV composable role.

- name: ComputeSriov
  CountDefault: 1
  HostnameFormatDefault: compute-sriov-%index%
  disable_upgrade_deployment: True
  ServicesDefault:
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::CephClient
    - OS::TripleO::Services::CephExternal
    - OS::TripleO::Services::Timezone
    - OS::TripleO::Services::Ntp
    - OS::TripleO::Services::Snmp
    - OS::TripleO::Services::Sshd
    - OS::TripleO::Services::NovaCompute
    - OS::TripleO::Services::NovaLibvirt
    - OS::TripleO::Services::Kernel
    - OS::TripleO::Services::ComputeNeutronCorePlugin
    - OS::TripleO::Services::ComputeNeutronOvsAgent
    - OS::TripleO::Services::ComputeCeilometerAgent
    - OS::TripleO::Services::ComputeNeutronL3Agent
    - OS::TripleO::Services::ComputeNeutronMetadataAgent
    - OS::TripleO::Services::TripleoPackages
    - OS::TripleO::Services::TripleoFirewall
    - OS::TripleO::Services::NeutronSriovAgent
    - OS::TripleO::Services::OpenDaylightOvs
    - OS::TripleO::Services::SensuClient
    - OS::TripleO::Services::FluentdClient
    - OS::TripleO::Services::AuditD
    - OS::TripleO::Services::Collectd
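The relationship between the two composable roles and a regular Compute role can be sketched in a few lines of Python. This is an illustration only: the service list is an abbreviated subset of the roles shown in this section, and the helper name swap_service is hypothetical. In practice you edit roles_data.yaml directly.

```python
def swap_service(services, old, new):
    """Return a copy of services with old replaced by new, keeping the order."""
    if old not in services:
        raise ValueError(f"{old} is not in the base role")
    return [new if name == old else name for name in services]


# Abbreviated subset of a regular Compute role, for illustration.
compute_services = [
    "OS::TripleO::Services::NovaCompute",
    "OS::TripleO::Services::NovaLibvirt",
    "OS::TripleO::Services::Kernel",
    "OS::TripleO::Services::ComputeNeutronCorePlugin",
    "OS::TripleO::Services::ComputeNeutronOvsAgent",
    "OS::TripleO::Services::TripleoFirewall",
]

# ComputeOvsDpdk: swap the OVS agent for the OVS-DPDK agent.
ovs_dpdk_services = swap_service(
    compute_services,
    "OS::TripleO::Services::ComputeNeutronOvsAgent",
    "OS::TripleO::Services::ComputeNeutronOvsDpdkAgent",
)

# ComputeSriov: keep the OVS agent and add the SR-IOV agent.
sriov_services = compute_services + ["OS::TripleO::Services::NeutronSriovAgent"]

for name in ovs_dpdk_services:
    print(f"    - {name}")
```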

4.7.2. Modify network-environment.yaml for the New Composable Roles

Add the resource mapping for the OVS-DPDK and SR-IOV services to the network-environment.yaml file along with the network configuration for this node as follows:

resource_registry:
  # Specify the relative or absolute path to the config files that you want to use to override the defaults.
  OS::TripleO::ComputeSriov::Net::SoftwareConfig: nic-configs/compute-sriov.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: nic-configs/controller.yaml
  OS::TripleO::ComputeOvsDpdk::Net::SoftwareConfig: nic-configs/compute-ovs-dpdk.yaml
  OS::TripleO::Services::ComputeNeutronOvsDpdkAgent: /usr/share/openstack-tripleo-heat-templates/puppet/services/neutron-ovs-dpdk-agent.yaml
  OS::TripleO::Services::NeutronSriovAgent: /usr/share/openstack-tripleo-heat-templates/puppet/services/neutron-sriov-agent.yaml
  OS::TripleO::NodeUserData: first-boot.yaml
  OS::TripleO::NodeExtraConfigPost: post-install.yaml

Configure the remainder of the network-environment.yaml file to override the default parameters from the neutron-ovs-dpdk-agent.yaml and neutron-sriov-agent.yaml files as needed for your OpenStack deployment.

See Planning your OVS-DPDK Deployment for details on how to determine the best values for the OVS-DPDK parameters that you set in the network-environment.yaml file to optimize your OpenStack network for OVS-DPDK.

4.7.3. Run the overcloud_deploy.sh Script

The following example defines the openstack overcloud deploy Bash script that uses composable roles:

#!/bin/bash

openstack overcloud deploy \
  --templates \
  -r /home/stack/ospd-11-vlan-dpdk-sriov-single-port-composable-roles/roles-data.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /home/stack/ospd-11-vlan-dpdk-sriov-single-port-composable-roles/network-environment.yaml

/home/stack/templates/roles_data.yaml is the location of the updated roles_data.yaml file, which defines the OVS-DPDK and the SR-IOV composable roles.

Reboot the Compute nodes to enforce the tuned profile after the overcloud is deployed.

4.8. Set the MTU Value for OVS-DPDK Interfaces

Red Hat OpenStack Platform supports jumbo frames for OVS-DPDK. To set the MTU value for jumbo frames you must:

- Set the global MTU value for networking in the network-environment.yaml file.

- Set the physical DPDK port MTU value in the compute.yaml file. This value is also used by the vhost user interface.

- Set the MTU value within any guest instances on the Compute node to ensure that you have a comparable MTU value from end to end in your configuration.

VXLAN packets include an extra 50 bytes in the header. Calculate your MTU requirements based on these additional header bytes. For example, an MTU value of 9000 means the VXLAN tunnel MTU value is 8950 to account for these extra bytes.
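The arithmetic can be written out as a small Python sketch. The function name is hypothetical, and the 50-byte overhead is the figure stated above.

```python
VXLAN_OVERHEAD_BYTES = 50  # extra header bytes per VXLAN packet


def vxlan_tunnel_mtu(physical_mtu):
    """Return the MTU left for the VXLAN tunnel on a given physical MTU."""
    if physical_mtu <= VXLAN_OVERHEAD_BYTES:
        raise ValueError("physical MTU is too small to carry VXLAN traffic")
    return physical_mtu - VXLAN_OVERHEAD_BYTES


print(vxlan_tunnel_mtu(9000))  # 8950
print(vxlan_tunnel_mtu(2000))  # 1950
```

The second call uses the 2000-byte MTU from the examples in this section, which leaves 1950 bytes for the tunnel.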

You do not need any special configuration for the physical NIC since the NIC is controlled by the DPDK PMD and has the same MTU value set by the compute.yaml file. You cannot set an MTU value larger than the maximum value supported by the physical NIC.

To set the MTU value for OVS-DPDK interfaces:

Set the NeutronGlobalPhysnetMtu parameter in the network-environment.yaml file.

parameter_defaults:
  # Global MTU configuration on Neutron
  NeutronGlobalPhysnetMtu: 2000

Note

Ensure that the NeutronDpdkSocketMemory value in the network-environment.yaml file is large enough to support jumbo frames. See Memory Parameters for details.

Set the MTU value on the bridge to the Compute node in the controller.yaml file.

- type: ovs_bridge
  name: br-link0
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic4
      mtu: 2000
- type: ovs_bridge
  name: br-link1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: interface
      name: nic5
      mtu: 2000

Set the MTU value on the OVS-DPDK interfaces in the compute.yaml file.

- type: ovs_user_bridge
  name: br-link0
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: ovs_dpdk_port
      name: dpdk0
      mtu: 2000
      ovs_extra:
        - set interface $DEVICE options:n_rxq=2
        - set interface $DEVICE mtu_request=$MTU
      members:
        - type: interface
          name: nic4
- type: ovs_user_bridge
  name: br-link1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: ovs_dpdk_port
      name: dpdk1
      mtu: 2000
      ovs_extra:
        - set interface $DEVICE options:n_rxq=2
        - set interface $DEVICE mtu_request=$MTU
      members:
        - type: interface
          name: nic5

4.9. Set Multiqueue for OVS-DPDK Interfaces

To set the same number of queues for interfaces in OVS-DPDK on the Compute node, modify the compute.yaml file as follows:

- type: ovs_user_bridge
  name: br-link0
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: ovs_dpdk_port
      name: dpdk0
      mtu: 2000
      ovs_extra:
        - set interface $DEVICE options:n_rxq=2
        - set interface $DEVICE mtu_request=$MTU
      members:
        - type: interface
          name: nic4
- type: ovs_user_bridge
  name: br-link1
  use_dhcp: false
  dns_servers: {get_param: DnsServers}
  members:
    - type: ovs_dpdk_port
      name: dpdk1
      mtu: 2000
      ovs_extra:
        - set interface $DEVICE options:n_rxq=2
        - set interface $DEVICE mtu_request=$MTU
      members:
        - type: interface
          name: nic5

4.10. Known Limitations

There are certain limitations when configuring OVS-DPDK with Red Hat OpenStack Platform 11 for the NFV use case:

- Use Linux bonds for control plane networks. Ensure both PCI devices used in the bond are on the same NUMA node for optimum performance. Neutron Linux bridge configuration is not supported by Red Hat.

- Huge pages are required for every instance running on the hosts with OVS-DPDK. If huge pages are not present in the guest, the interface appears but does not function.

- There is a performance degradation of services that use tap devices, because these devices do not support DPDK. For example, services such as DVR, FWaaS, and LBaaS use tap devices.

-

With OVS-DPDK, you can enable DVR with