Chapter 3. Configuring Hot Rod Java Clients

3.1. Programmatically Configuring Hot Rod Java Clients

Use the ConfigurationBuilder class to generate immutable configuration objects that you can pass to RemoteCacheManager.

For example, create a client instance with the Java fluent API as follows:

org.infinispan.client.hotrod.configuration.ConfigurationBuilder cb

= new org.infinispan.client.hotrod.configuration.ConfigurationBuilder();

cb.marshaller(new org.infinispan.commons.marshall.ProtoStreamMarshaller())

.statistics()

.enable()

.jmxDomain("org.example")

.addServer()

.host("127.0.0.1")

.port(11222);

RemoteCacheManager rmc = new RemoteCacheManager(cb.build());

3.2. Configuring Hot Rod Java Client Property Files

Add hotrod-client.properties to your classpath so that the client passes configuration to RemoteCacheManager.

Example hotrod-client.properties

# Hot Rod client configuration

infinispan.client.hotrod.server_list = 127.0.0.1:11222

infinispan.client.hotrod.marshaller = org.infinispan.commons.marshall.ProtoStreamMarshaller

infinispan.client.hotrod.async_executor_factory = org.infinispan.client.hotrod.impl.async.DefaultAsyncExecutorFactory

infinispan.client.hotrod.default_executor_factory.pool_size = 1

infinispan.client.hotrod.hash_function_impl.2 = org.infinispan.client.hotrod.impl.consistenthash.ConsistentHashV2

infinispan.client.hotrod.tcp_no_delay = true

infinispan.client.hotrod.tcp_keep_alive = false

infinispan.client.hotrod.request_balancing_strategy = org.infinispan.client.hotrod.impl.transport.tcp.RoundRobinBalancingStrategy

infinispan.client.hotrod.key_size_estimate = 64

infinispan.client.hotrod.value_size_estimate = 512

infinispan.client.hotrod.force_return_values = false

## Connection pooling configuration

maxActive = -1

maxIdle = -1

whenExhaustedAction = 1

minEvictableIdleTimeMillis=300000

minIdle = 1

To load hotrod-client.properties from a location outside your classpath, read the file and pass the properties to the builder:

ConfigurationBuilder b = new ConfigurationBuilder();

Properties p = new Properties();

try(Reader r = new FileReader("/path/to/hotrod-client.properties")) {

p.load(r);

b.withProperties(p);

}

RemoteCacheManager rcm = new RemoteCacheManager(b.build());

Reference

- org.infinispan.client.hotrod.configuration lists and describes Hot Rod client properties.

- org.infinispan.client.hotrod.RemoteCacheManager

- Java system properties

3.3. Client Intelligence

Hot Rod client intelligence refers to mechanisms for locating Data Grid servers to efficiently route requests.

Basic intelligence

Clients do not store any information about Data Grid clusters or key hash values.

Topology-aware

Clients receive and store information about Data Grid clusters. Clients maintain an internal mapping of the cluster topology that changes whenever servers join or leave clusters.

To receive a cluster topology, clients need the address (IP:PORT) of at least one Hot Rod server at startup. After the client connects to the server, Data Grid transmits the topology to the client. When servers join or leave the cluster, Data Grid transmits an updated topology to the client.

Distribution-aware

Clients are topology-aware and store consistent hash values for keys.

For example, take a put(k,v) operation. The client calculates the hash value for the key so it can locate the exact server on which the data resides. Clients can then connect directly to the owner to dispatch the operation.

The benefit of distribution-aware intelligence is that Data Grid servers do not need to look up values based on key hashes, which uses fewer resources on the server side. Another benefit is that servers respond to client requests more quickly because requests skip additional network roundtrips.
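The routing idea behind distribution-aware intelligence can be sketched with a toy hash ring. This is an illustration only, not the consistent hash that Data Grid implements: the `OwnerLocator` class, the CRC32 hash, and the 16 virtual points per server are all assumptions chosen to keep the example short.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.SortedMap;
import java.util.TreeMap;
import java.util.zip.CRC32;

/** Toy hash ring (hypothetical): maps each key to the server that "owns" it. */
public class OwnerLocator {
    private final SortedMap<Long, String> ring = new TreeMap<>();

    public OwnerLocator(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("at least one server is required");
        }
        for (String server : servers) {
            // Place each server at several points on the ring to spread keys more evenly.
            for (int i = 0; i < 16; i++) {
                ring.put(hash(server + "#" + i), server);
            }
        }
    }

    /** Returns the first server at or after the key's ring position, wrapping around. */
    public String ownerOf(String key) {
        SortedMap<Long, String> tail = ring.tailMap(hash(key));
        return tail.isEmpty() ? ring.get(ring.firstKey()) : tail.get(tail.firstKey());
    }

    // CRC32 is an assumption for this sketch; it is not the hash function Data Grid uses.
    private static long hash(String s) {
        CRC32 crc = new CRC32();
        crc.update(s.getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }
}
```

Because the owner depends only on the key and the known server list, a client holding the same topology always resolves a given key to the same server and can send the request there directly.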

3.3.1. Request Balancing

Clients that use topology-aware intelligence use request balancing for all requests. The default balancing strategy is round-robin, so topology-aware clients always send requests to servers in round-robin order.

For example, s1, s2, s3 are servers in a Data Grid cluster. Clients perform request balancing as follows:

RemoteCacheManager cacheContainer = new RemoteCacheManager();

RemoteCache<String, String> cache = cacheContainer.getCache();

//client sends put request to s1

cache.put("key1", "aValue");

//client sends put request to s2

cache.put("key2", "aValue");

//client sends get request to s3

String value = cache.get("key1");

//client dispatches to s1 again

cache.remove("key2");

//and so on...

Clients that use distribution-aware intelligence use request balancing only for failed requests. When requests fail, distribution-aware clients retry the request on the next available server.

Custom balancing policies

You can implement FailoverRequestBalancingStrategy and specify your class in your hotrod-client.properties configuration with the following property:

infinispan.client.hotrod.request_balancing_strategy
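As a rough illustration of what a balancing policy does, the following sketch cycles through servers in order and skips any that are marked as failed. It is a standalone class, not an implementation of FailoverRequestBalancingStrategy: the class name, the method names, and the use of plain strings for server addresses are assumptions, so check the interface's Javadoc for the real signatures before adapting it.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.Set;

/** Hypothetical round-robin policy that skips servers known to have failed. */
public class RoundRobinSketch {
    private final List<String> servers = new ArrayList<>();
    private int index = 0;

    /** Replaces the server list, for example after a topology update. */
    public synchronized void setServers(Collection<String> newServers) {
        servers.clear();
        servers.addAll(newServers);
        index = 0;
    }

    /** Returns the next server in order that is not in failedServers, or null if none remain. */
    public synchronized String nextServer(Set<String> failedServers) {
        for (int attempts = 0; attempts < servers.size(); attempts++) {
            String candidate = servers.get(index);
            index = (index + 1) % servers.size();
            if (!failedServers.contains(candidate)) {
                return candidate;
            }
        }
        return null;
    }
}
```

With servers s1, s2, and s3 and no failures, successive calls return s1, s2, s3, and then s1 again, which matches the request order in the example above.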

3.3.2. Client Failover

Hot Rod clients can automatically fail over when Data Grid cluster topologies change. For instance, Hot Rod clients that are topology-aware can detect when one or more Data Grid servers fail.

In addition to failover between clustered Data Grid servers, Hot Rod clients can fail over between Data Grid clusters.

For example, you have a Data Grid cluster running in New York (NYC) and another cluster running in London (LON). Clients sending requests to NYC detect that no nodes are available, so they switch to the cluster in LON. Clients then maintain connections to LON until you manually switch clusters or failover happens again.
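The NYC and LON scenario can be modelled with a small sketch. It only illustrates the behavior described above (stay on the current cluster while any node is reachable, otherwise move to another cluster and stay there) and says nothing about how the client implements it internally. The class, its methods, and the reachability predicate are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Hypothetical model of cross-cluster failover; not the Hot Rod client's implementation. */
public class ClusterFailoverSketch {
    private final Map<String, List<String>> clusters = new LinkedHashMap<>();
    private String current;

    /** Registers a cluster; the first cluster added becomes the current one. */
    public void addCluster(String name, List<String> nodes) {
        clusters.put(name, nodes);
        if (current == null) {
            current = name;
        }
    }

    /**
     * Keeps the current cluster while at least one of its nodes is reachable.
     * Otherwise switches to the first other cluster that has a reachable node.
     */
    public String selectCluster(Predicate<String> isReachable) {
        if (current != null && clusters.get(current).stream().anyMatch(isReachable)) {
            return current;
        }
        for (Map.Entry<String, List<String>> entry : clusters.entrySet()) {
            if (!entry.getKey().equals(current) && entry.getValue().stream().anyMatch(isReachable)) {
                current = entry.getKey();
                return current;
            }
        }
        return current; // Nothing is reachable, so keep the current selection.
    }
}
```

Note that after switching to LON the sketch does not move back to NYC when NYC recovers, which mirrors the statement that clients stay on LON until a manual switch or another failover.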

Transactional Caches with Failover

Conditional operations, such as putIfAbsent(), replace(), remove(), have strict method return guarantees. Likewise, some operations can require previous values to be returned.

Even though Hot Rod clients can failover, you should use transactional caches to ensure that operations do not partially complete and leave conflicting entries on different nodes.

3.4. Configuring Authentication Mechanisms for Hot Rod Clients

Data Grid servers use different mechanisms to authenticate Hot Rod client connections.

Procedure

- Specify the authentication mechanisms that Data Grid server uses with the saslMechanism() method from the SecurityConfigurationBuilder class.

SCRAM

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.saslMechanism("SCRAM-SHA-512")

.username("myuser")

.password("qwer1234!");

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

DIGEST

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.saslMechanism("DIGEST-MD5")

.username("myuser")

.password("qwer1234!");

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

PLAIN

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.saslMechanism("PLAIN")

.username("myuser")

.password("qwer1234!");

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

OAUTHBEARER

String token = "..."; // Obtain the token from your OAuth2 provider

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.saslMechanism("OAUTHBEARER")

.token(token);

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

OAUTHBEARER authentication with TokenCallbackHandler

You can configure clients with a TokenCallbackHandler to refresh OAuth2 tokens before they expire, as in the following example:

String token = "..."; // Obtain the token from your OAuth2 provider

TokenCallbackHandler tokenHandler = new TokenCallbackHandler(token);

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.saslMechanism("OAUTHBEARER")

.callbackHandler(tokenHandler);

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

// Refresh the token

tokenHandler.setToken("newToken");

EXTERNAL

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.ssl()

// TrustStore stores trusted CA certificates for the server.

.trustStoreFileName("/path/to/truststore")

.trustStorePassword("truststorepassword".toCharArray())

// KeyStore stores valid client certificates.

.keyStoreFileName("/path/to/keystore")

.keyStorePassword("keystorepassword".toCharArray())

.authentication()

.saslMechanism("EXTERNAL");

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

GSSAPI

LoginContext lc = new LoginContext("GssExample", new BasicCallbackHandler("krb_user", "krb_password".toCharArray()));

lc.login();

Subject clientSubject = lc.getSubject();

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.enable()

.saslMechanism("GSSAPI")

.clientSubject(clientSubject)

.callbackHandler(new BasicCallbackHandler());

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

The preceding configuration uses the BasicCallbackHandler to retrieve the client subject and handle authentication. However, this actually invokes different callbacks:

- NameCallback and PasswordCallback construct the client subject.

- AuthorizeCallback is called during SASL authentication.

Custom CallbackHandler

Hot Rod clients set up a default CallbackHandler to pass credentials to SASL mechanisms. In some cases, you might need to provide a custom CallbackHandler.

Your CallbackHandler needs to handle callbacks that are specific to the authentication mechanism that you use. However, it is beyond the scope of this document to provide examples for each possible callback type.

public class MyCallbackHandler implements CallbackHandler {

final private String username;

final private char[] password;

final private String realm;

public MyCallbackHandler(String username, String realm, char[] password) {

this.username = username;

this.password = password;

this.realm = realm;

}

@Override

public void handle(Callback[] callbacks) throws IOException, UnsupportedCallbackException {

for (Callback callback : callbacks) {

if (callback instanceof NameCallback) {

NameCallback nameCallback = (NameCallback) callback;

nameCallback.setName(username);

} else if (callback instanceof PasswordCallback) {

PasswordCallback passwordCallback = (PasswordCallback) callback;

passwordCallback.setPassword(password);

} else if (callback instanceof AuthorizeCallback) {

AuthorizeCallback authorizeCallback = (AuthorizeCallback) callback;

authorizeCallback.setAuthorized(authorizeCallback.getAuthenticationID().equals(

authorizeCallback.getAuthorizationID()));

} else if (callback instanceof RealmCallback) {

RealmCallback realmCallback = (RealmCallback) callback;

realmCallback.setText(realm);

} else {

throw new UnsupportedCallbackException(callback);

}

}

}

}
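One way to check a handler like the one above without a running server is to feed it the JDK callback objects directly and inspect what it fills in. The following sketch does that with a compact stand-in handler so the example is self-contained. `CallbackHandlerCheck`, `handlerFor`, and `resolveIdentity` are hypothetical names, and the set of callbacks a real SASL mechanism issues may differ.

```java
import java.io.IOException;
import javax.security.auth.callback.Callback;
import javax.security.auth.callback.CallbackHandler;
import javax.security.auth.callback.NameCallback;
import javax.security.auth.callback.PasswordCallback;
import javax.security.auth.callback.UnsupportedCallbackException;
import javax.security.sasl.RealmCallback;

public class CallbackHandlerCheck {

    /** Stand-in handler (hypothetical) that answers name, password, and realm callbacks. */
    public static CallbackHandler handlerFor(String username, String realm, char[] password) {
        return callbacks -> {
            for (Callback callback : callbacks) {
                if (callback instanceof NameCallback) {
                    ((NameCallback) callback).setName(username);
                } else if (callback instanceof PasswordCallback) {
                    ((PasswordCallback) callback).setPassword(password);
                } else if (callback instanceof RealmCallback) {
                    ((RealmCallback) callback).setText(realm);
                } else {
                    throw new UnsupportedCallbackException(callback);
                }
            }
        };
    }

    /** Passes the three callbacks to the handler and returns "name@realm". */
    public static String resolveIdentity(CallbackHandler handler)
            throws IOException, UnsupportedCallbackException {
        NameCallback name = new NameCallback("username: ");
        RealmCallback realm = new RealmCallback("realm: ");
        PasswordCallback password = new PasswordCallback("password: ", false);
        handler.handle(new Callback[] {name, realm, password});
        // Clear the copy of the password that the callback holds once it is no longer needed.
        password.clearPassword();
        return name.getName() + "@" + realm.getText();
    }
}
```

Calling `resolveIdentity(handlerFor("myuser", "default", "qwer1234!".toCharArray()))` returns `myuser@default`. A callback type the handler does not recognize results in an UnsupportedCallbackException, the same as in MyCallbackHandler.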

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.authentication()

.enable()

.serverName("myhotrodserver")

.saslMechanism("DIGEST-MD5")

.callbackHandler(new MyCallbackHandler("myuser","default","qwer1234!".toCharArray()));

remoteCacheManager=new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache=remoteCacheManager.getCache("secured");

3.4.1. Hot Rod Endpoint Authentication Mechanisms

Data Grid supports the following SASL authentication mechanisms with the Hot Rod connector:

| Authentication mechanism | Description | Related details |
|---|---|---|
| PLAIN | Uses credentials in plain-text format. You should use ... | Similar to the ... |
| DIGEST | Uses hashing algorithms and nonce values. Hot Rod connectors support ... | Similar to the ... |
| SCRAM | Uses salt values in addition to hashing algorithms and nonce values. Hot Rod connectors support ... | Similar to the ... |
| GSSAPI | Uses Kerberos tickets and requires a Kerberos Domain Controller. You must add a corresponding ... | Similar to the ... |
|  | Uses Kerberos tickets and requires a Kerberos Domain Controller. You must add a corresponding ... | Similar to the ... |
| EXTERNAL | Uses client certificates. | Similar to the ... |
| OAUTHBEARER | Uses OAuth tokens and requires a ... | Similar to the ... |

3.4.2. Creating GSSAPI Login Contexts

To use the GSSAPI mechanism, you must create a LoginContext so your Hot Rod client can obtain a Ticket Granting Ticket (TGT).

Procedure

- Define a login module in a login configuration file.

gss.conf

GssExample {

com.sun.security.auth.module.Krb5LoginModule required client=TRUE;

};

For the IBM JDK:

gss-ibm.conf

GssExample {

com.ibm.security.auth.module.Krb5LoginModule required client=TRUE;

};

- Set the following system properties:

java.security.auth.login.config=gss.conf

java.security.krb5.conf=/etc/krb5.conf

Note: krb5.conf provides the location of your KDC. Use the kinit command to authenticate with Kerberos and verify krb5.conf.
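If passing `-D` options on the command line is not convenient, the same two system properties can be set from code, provided this happens before the LoginContext is created. The sketch below uses only `System.setProperty`. The `GssSystemProperties` class and its `configure` method are hypothetical helper names, and the file locations are the example values from this section.

```java
/** Hypothetical helper that sets the JAAS and Kerberos system properties. */
public class GssSystemProperties {

    /**
     * Sets the login configuration and Kerberos configuration locations.
     * Call this before creating the LoginContext for the GSSAPI mechanism.
     */
    public static void configure(String loginConfigFile, String krb5ConfFile) {
        System.setProperty("java.security.auth.login.config", loginConfigFile);
        System.setProperty("java.security.krb5.conf", krb5ConfFile);
    }
}
```

For example, `GssSystemProperties.configure("gss.conf", "/etc/krb5.conf")` has the same effect as starting the JVM with `-Djava.security.auth.login.config=gss.conf -Djava.security.krb5.conf=/etc/krb5.conf`.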

3.5. Configuring Hot Rod Client Encryption

Data Grid servers that use SSL/TLS encryption present Hot Rod clients with certificates so they can establish trust and negotiate secure connections.

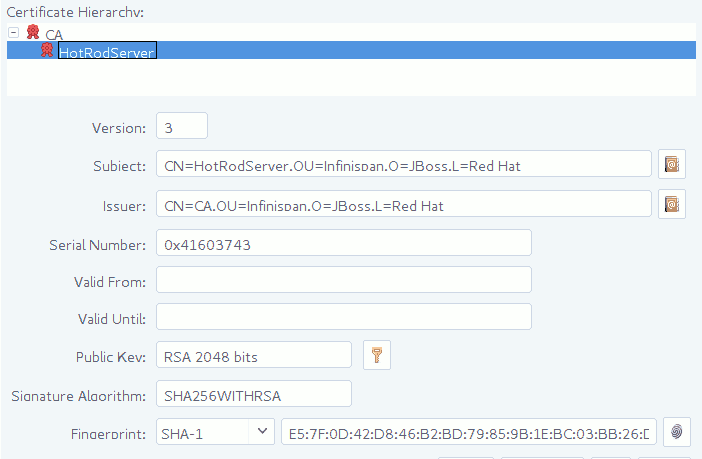

To verify server-issued certificates, Hot Rod clients require part of the TLS certificate chain. For example, the following image shows a certificate authority (CA), named "CA", that has issued a certificate for a server named "HotRodServer":

Figure 3.1. Certificate chain

Procedure

- Create a Java keystore with part of the server certificate chain. In most cases you should use the public certificate for the CA.

- Specify the keystore as a TrustStore in the client configuration with the SslConfigurationBuilder class.

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

.security()

.ssl()

// Server SNI hostname.

.sniHostName("myservername")

// Server certificate keystore.

.trustStoreFileName("/path/to/truststore")

.trustStorePassword("truststorepassword".toCharArray())

// Client certificate keystore.

.keyStoreFileName("/path/to/client/keystore")

.keyStorePassword("keystorepassword".toCharArray());

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

RemoteCache<String, String> cache = remoteCacheManager.getCache("secured");

If you specify a path that contains certificates in PEM format, Hot Rod clients automatically generate trust stores. To do so, use .trustStorePath("/path/to/certificate").
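The first step of the procedure, creating a keystore from the CA certificate, is usually done with keytool, but it can also be done with the JDK security APIs. The following is a minimal sketch under some assumptions: the CA certificate is available as a PEM file, a PKCS12 keystore is acceptable as the trust store, and the `TrustStoreBuilder` class and the `ca-N` aliases are hypothetical names.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.security.cert.Certificate;
import java.security.cert.CertificateFactory;
import java.util.Collection;

/** Hypothetical helper that builds a trust store from PEM-encoded certificates. */
public class TrustStoreBuilder {

    /** Reads every X.509 certificate found in a PEM file. */
    public static Collection<? extends Certificate> readPem(Path pemFile)
            throws IOException, GeneralSecurityException {
        try (InputStream in = Files.newInputStream(pemFile)) {
            return CertificateFactory.getInstance("X.509").generateCertificates(in);
        }
    }

    /** Creates an in-memory PKCS12 keystore with one trusted entry per certificate. */
    public static KeyStore toTrustStore(Collection<? extends Certificate> certificates)
            throws IOException, GeneralSecurityException {
        KeyStore trustStore = KeyStore.getInstance("PKCS12");
        trustStore.load(null, null); // Initializes an empty keystore.
        int i = 0;
        for (Certificate certificate : certificates) {
            trustStore.setCertificateEntry("ca-" + i++, certificate);
        }
        return trustStore;
    }

    /** Writes the keystore to disk, protected by the given password. */
    public static void write(KeyStore trustStore, Path target, char[] password)
            throws IOException, GeneralSecurityException {
        try (OutputStream out = Files.newOutputStream(target)) {
            trustStore.store(out, password);
        }
    }
}
```

The file written by `write` can then be referenced with trustStoreFileName() and trustStorePassword() as in the configuration above.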

3.6. Monitoring Hot Rod Client Statistics

Enable Hot Rod client statistics that include remote and near-cache hits and misses as well as connection pool usage.

Procedure

- Use the StatisticsConfigurationBuilder class to enable and configure Hot Rod client statistics.

import org.infinispan.client.hotrod.configuration.ConfigurationBuilder;

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.statistics()

//Enable client statistics.

.enable()

//Register JMX MBeans for RemoteCacheManager and each RemoteCache.

.jmxEnable()

//Set JMX domain name to which MBeans are exposed.

.jmxDomain("org.example")

.addServer()

.host("127.0.0.1")

.port(11222);

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

3.7. Defining Data Grid Clusters in Client Configuration

Provide the locations of Data Grid clusters in Hot Rod client configuration.

Procedure

- Provide at least one Data Grid cluster name, hostname, and port with the ClusterConfigurationBuilder class.

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addCluster("siteA")

.addClusterNode("hostA1", 11222)

.addClusterNode("hostA2", 11222)

.addCluster("siteB")

.addClusterNodes("hostB1:11222; hostB2:11222");

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

Default Cluster

When adding clusters to your Hot Rod client configuration, you can define a list of Data Grid servers in the format of hostname1:port; hostname2:port. Data Grid then uses the server list as the default cluster configuration.

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServers("hostA1:11222; hostA2:11222")

.addCluster("siteB")

.addClusterNodes("hostB1:11222; hostB2:11223");

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

3.7.1. Manually Switching Data Grid Clusters

Manually switch Hot Rod Java client connections between Data Grid clusters.

Procedure

Call one of the following methods in the RemoteCacheManager class:

- switchToCluster(clusterName) switches to a specific cluster defined in the client configuration.

- switchToDefaultCluster() switches to the default cluster in the client configuration, which is defined as a list of Data Grid servers.


3.8. Configuring Connection Pools

Hot Rod Java clients keep pools of persistent connections to Data Grid servers to reuse TCP connections instead of creating them on each request.

Clients use asynchronous threads that check the validity of connections by iterating over the connection pool and sending pings to Data Grid servers. This improves performance by finding broken connections while they are idle in the pool, rather than on application requests.

Procedure

- Configure Hot Rod client connection pool settings with the ConnectionPoolConfigurationBuilder class.

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder

.addServer()

.host("127.0.0.1")

.port(11222)

//Configure client connection pools.

.connectionPool()

//Set the maximum number of active connections per server.

.maxActive(10)

//Set the minimum number of idle connections

//that must be available per server.

.minIdle(20);

remoteCacheManager = new RemoteCacheManager(clientBuilder.build());

3.9. Hot Rod Java Client Marshalling

Hot Rod is a binary TCP protocol that requires you to transform Java objects into binary format so they can be transferred over the wire or stored to disk.

By default, Data Grid uses the ProtoStream API to encode and decode Java objects into Protocol Buffers (Protobuf), a language-neutral, backwards-compatible format. However, you can also implement and use custom marshallers.

3.9.1. Configuring SerializationContextInitializer Implementations

You can add implementations of the ProtoStream SerializationContextInitializer interface to Hot Rod client configurations so Data Grid marshalls custom Java objects.

Procedure

- Add your SerializationContextInitializer implementations to your Hot Rod client configuration as follows:

hotrod-client.properties

infinispan.client.hotrod.context-initializers=org.infinispan.example.LibraryInitializerImpl,org.infinispan.example.AnotherExampleSciImpl

Programmatic configuration

ConfigurationBuilder builder = new ConfigurationBuilder();

builder

.addServer()

.host("127.0.0.1")

.port(11222)

.addContextInitializers(new LibraryInitializerImpl(), new AnotherExampleSciImpl());

RemoteCacheManager rcm = new RemoteCacheManager(builder.build());

3.9.2. Configuring Custom Marshallers

Configure Hot Rod clients to use custom marshallers.

Procedure

- Implement the org.infinispan.commons.marshall.Marshaller interface.

- Specify the fully qualified name of your class in your Hot Rod client configuration.

- Add your Java classes to the Data Grid deserialization whitelist.

In the following example, only classes with fully qualified names that contain Person or Employee are allowed:

ConfigurationBuilder clientBuilder = new ConfigurationBuilder();

clientBuilder.marshaller("org.infinispan.example.marshall.CustomMarshaller")

.addJavaSerialWhiteList(".*Person.*", ".*Employee.*");

...
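To see which class names the two regular expressions in the example would admit, you can test them with java.util.regex. The sketch below is only an illustration of the patterns: the `AllowListCheck` class is hypothetical, and it assumes that a class name must match a pattern in full, which may not be exactly how the client evaluates its list.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

/** Hypothetical checker that tests class names against allow-list regular expressions. */
public class AllowListCheck {
    private final List<Pattern> patterns;

    public AllowListCheck(String... regexps) {
        this.patterns = Arrays.stream(regexps)
                .map(Pattern::compile)
                .collect(Collectors.toList());
    }

    /** Returns true if the whole class name matches at least one pattern. */
    public boolean isAllowed(String className) {
        return patterns.stream().anyMatch(p -> p.matcher(className).matches());
    }
}
```

With the patterns `.*Person.*` and `.*Employee.*`, a name such as `org.infinispan.example.Person` is allowed and `java.util.ArrayList` is rejected. Note that patterns this broad also admit any class whose package or name merely contains "Person" or "Employee".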

3.10. Configuring Hot Rod Client Data Formats

By default, Hot Rod client operations use the configured marshaller when reading and writing from Data Grid servers for both keys and values.

However, the DataFormat API lets you decorate remote caches so that all operations can happen with custom data formats.

Using different marshallers for key and values

Marshallers for keys and values can be overridden at run time. For example, to bypass all serialization in the Hot Rod client and read the byte[] as they are stored in the server:

// Existing RemoteCache instance

RemoteCache<String, Pojo> remoteCache = ...

// IdentityMarshaller is a no-op marshaller

DataFormat rawKeyAndValues =

DataFormat.builder()

.keyMarshaller(IdentityMarshaller.INSTANCE)

.valueMarshaller(IdentityMarshaller.INSTANCE)

.build();

// Creates a new instance of RemoteCache with the supplied DataFormat

RemoteCache<byte[], byte[]> rawResultsCache =

remoteCache.withDataFormat(rawKeyAndValues);

Using different marshallers and formats for keys, with the keyMarshaller() and keyType() methods, might interfere with the client intelligence routing mechanism and cause extra hops within the Data Grid cluster to perform the operation. If performance is critical, you should use keys in the format stored by the server.

Returning XML Values

Object xmlValue = remoteCache

.withDataFormat(DataFormat.builder()

.valueType(APPLICATION_XML)

.valueMarshaller(new UTF8StringMarshaller())

.build())

.get(key);

The preceding code example returns XML values as follows:

<?xml version="1.0" ?><string>Hello!</string>

Reading data in different formats

Request and send data in different formats specified by a org.infinispan.commons.dataconversion.MediaType as follows:

// Existing remote cache using ProtoStreamMarshaller

RemoteCache<String, Pojo> protobufCache = ...

// Request values returned as JSON

// Use the UTF8StringMarshaller to convert UTF-8 to String

DataFormat jsonString =

DataFormat.builder()

.valueType(MediaType.APPLICATION_JSON)

.valueMarshaller(new UTF8StringMarshaller())

.build();

RemoteCache<String, String> jsonStringCache =

protobufCache.withDataFormat(jsonString);

// Alternatively, use a custom value marshaller

// that returns `org.codehaus.jackson.JsonNode` objects

DataFormat jsonNode =

DataFormat.builder()

.valueType(MediaType.APPLICATION_JSON)

.valueMarshaller(new CustomJacksonMarshaller())

.build();

RemoteCache<String, JsonNode> jsonNodeCache =

protobufCache.withDataFormat(jsonNode);

In the preceding example, data conversion happens in the Data Grid server. Data Grid throws an exception if it does not support conversion to and from a storage format.


3.11. Configuring Near Caching

Hot Rod Java clients can keep local caches that store recently used data, which significantly increases performance of get() and getVersioned() operations because the data is local to the client.

When you enable near caching with Hot Rod Java clients, calls to get() or getVersioned() populate the near cache when entries are retrieved from servers. When entries are updated or removed on the server-side, entries in the near cache are invalidated. If keys are requested after they are invalidated, clients must fetch the keys from the server again.

You can also configure the number of entries that near caches can contain. When the maximum is reached, near-cached entries are evicted.

Do not use maximum idle expiration with near caches because near-cache reads do not propagate the last access time for entries.

- Near caches are cleared when clients fail over to different servers when using clustered cache modes.

- You should always configure the maximum number of entries that can reside in the near cache. Unbounded near caches require you to keep the size of the near cache within the boundaries of the client JVM.

- Near cache invalidation messages can degrade performance of write operations.
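The behavior described above (populate on read, invalidate on server-side change, evict when full) can be modelled in a few lines of plain Java. This sketch is not the Hot Rod near cache implementation. The `NearCacheSketch` class is hypothetical, the remote lookup is passed in as a function, and least-recently-used eviction is an assumption, since the text only states that entries are evicted when the maximum is reached.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical bounded near cache in front of a remote lookup function. */
public class NearCacheSketch<K, V> {
    private final Map<K, V> local;
    private final Function<K, V> remoteGet;

    public NearCacheSketch(int maxEntries, Function<K, V> remoteGet) {
        this.remoteGet = remoteGet;
        // An access-ordered LinkedHashMap drops the least recently used entry
        // once the map grows beyond maxEntries.
        this.local = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > maxEntries;
            }
        };
    }

    /** Serves the value locally when possible; otherwise fetches it and stores it locally. */
    public synchronized V get(K key) {
        V value = local.get(key);
        if (value == null) {
            value = remoteGet.apply(key);
            if (value != null) {
                local.put(key, value);
            }
        }
        return value;
    }

    /** Drops a local entry, as happens when the server reports an update or removal. */
    public synchronized void invalidate(K key) {
        local.remove(key);
    }

    /** Number of entries currently held locally. */
    public synchronized int localSize() {
        return local.size();
    }
}
```

A second `get` for the same key does not call the remote function again until `invalidate` is called for that key, and the local map never holds more than `maxEntries` entries.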

Procedure

- Set the near cache mode to INVALIDATED in the client configuration for the caches you want.

- Define the size of the near cache by specifying the maximum number of entries.

import org.infinispan.client.hotrod.configuration.ConfigurationBuilder;

import org.infinispan.client.hotrod.configuration.NearCacheMode;

...

// Configure different near cache settings for specific caches

ConfigurationBuilder builder = new ConfigurationBuilder();

builder

.remoteCache("bounded")

.nearCacheMode(NearCacheMode.INVALIDATED)

.nearCacheMaxEntries(100)

.remoteCache("unbounded")

.nearCacheMode(NearCacheMode.INVALIDATED)

.nearCacheMaxEntries(-1);

You should always configure near caching on a per-cache basis. Even though Data Grid provides global near cache configuration properties, you should not use them.

3.12. Forcing Return Values

Method calls that modify entries can return previous values for keys, for example:

V remove(Object key);

V put(K key, V value);

To reduce the amount of data sent across the network, RemoteCache operations return null instead of previous values by default.

Procedure

- Use the FORCE_RETURN_VALUE flag for invocations that need return values.

cache.put("aKey", "initialValue");

assert null == cache.put("aKey", "aValue");

assert "aValue".equals(cache.withFlags(Flag.FORCE_RETURN_VALUE).put("aKey",

"newValue"));

3.13. Creating Caches on First Access

When Hot Rod Java clients attempt to access caches that do not exist, they return null for getCache("$cacheName") invocations.

You can change this default behavior so that clients automatically create caches on first access using cache configuration templates or Data Grid cache definitions in XML format.

Programmatic procedure

import org.infinispan.client.hotrod.configuration.ConfigurationBuilder;

import org.infinispan.client.hotrod.configuration.NearCacheMode;

...

ConfigurationBuilder builder = new ConfigurationBuilder();

builder

.remoteCache("my-cache")

.templateName("org.infinispan.DIST_SYNC")

.remoteCache("another-cache")

.configuration("<infinispan><cache-container><distributed-cache name=\"another-cache\"/></cache-container></infinispan>");

Hot Rod client properties

infinispan.client.hotrod.cache.my-cache.template_name=org.infinispan.DIST_SYNC

infinispan.client.hotrod.cache.another-cache.configuration=<infinispan><cache-container><distributed-cache name=\"another-cache\"/></cache-container></infinispan>