Chapter 1. Understanding Operators

1.1. What are Operators?

Conceptually, Operators take human operational knowledge and encode it into software that is more easily shared with consumers.

Operators are pieces of software that ease the operational complexity of running another piece of software. They act like an extension of the software vendor’s engineering team, watching over a Kubernetes environment (such as OpenShift Container Platform) and using its current state to make decisions in real time. Advanced Operators are designed to handle upgrades seamlessly, react to failures automatically, and not take shortcuts, like skipping a software backup process to save time.

More technically, Operators are a method of packaging, deploying, and managing a Kubernetes application.

A Kubernetes application is an app that is both deployed on Kubernetes and managed using the Kubernetes APIs and kubectl or oc tooling. To be able to make the most of Kubernetes, you require a set of cohesive APIs to extend in order to service and manage your apps that run on Kubernetes. Think of Operators as the runtime that manages this type of app on Kubernetes.

1.1.1. Why use Operators?

Operators provide:

- Repeatability of installation and upgrade.

- Constant health checks of every system component.

- Over-the-air (OTA) updates for OpenShift components and ISV content.

- A place to encapsulate knowledge from field engineers and spread it to all users, not just one or two.

- Why deploy on Kubernetes?

- Kubernetes (and by extension, OpenShift Container Platform) contains all of the primitives needed to build complex distributed systems – secret handling, load balancing, service discovery, autoscaling – that work across on-premises and cloud providers.

- Why manage your app with Kubernetes APIs and kubectl tooling?

- These APIs are feature rich, have clients for all platforms, and plug into the cluster’s access control/auditing. An Operator uses the Kubernetes extension mechanism, custom resource definitions (CRDs), so your custom object, for example MongoDB, looks and acts just like the built-in, native Kubernetes objects.

- How do Operators compare with service brokers?

- A service broker is a step towards programmatic discovery and deployment of an app. However, because it is not a long running process, it cannot execute Day 2 operations like upgrade, failover, or scaling. Customizations and parameterization of tunables are provided at install time, versus an Operator that is constantly watching the current state of your cluster. Off-cluster services are a good match for a service broker, although Operators exist for these as well.

1.1.2. Operator Framework

The Operator Framework is a family of tools and capabilities to deliver on the customer experience described above. It is not just about writing code; testing, delivering, and updating Operators is just as important. The Operator Framework components consist of open source tools to tackle these problems:

- Operator SDK

- The Operator SDK assists Operator authors in bootstrapping, building, testing, and packaging their own Operator based on their expertise without requiring knowledge of Kubernetes API complexities.

- Operator Lifecycle Manager

- Operator Lifecycle Manager (OLM) controls the installation, upgrade, and role-based access control (RBAC) of Operators in a cluster. It is deployed by default in OpenShift Container Platform 4.5.

- Operator Registry

- The Operator Registry stores cluster service versions (CSVs) and custom resource definitions (CRDs) for creation in a cluster and stores Operator metadata about packages and channels. It runs in a Kubernetes or OpenShift cluster to provide this Operator catalog data to OLM.

- OperatorHub

- OperatorHub is a web console for cluster administrators to discover and select Operators to install on their cluster. It is deployed by default in OpenShift Container Platform.

- Operator Metering

- Operator Metering collects operational metrics about Operators on the cluster for Day 2 management and aggregating usage metrics.

These tools are designed to be composable, so you can use any that are useful to you.

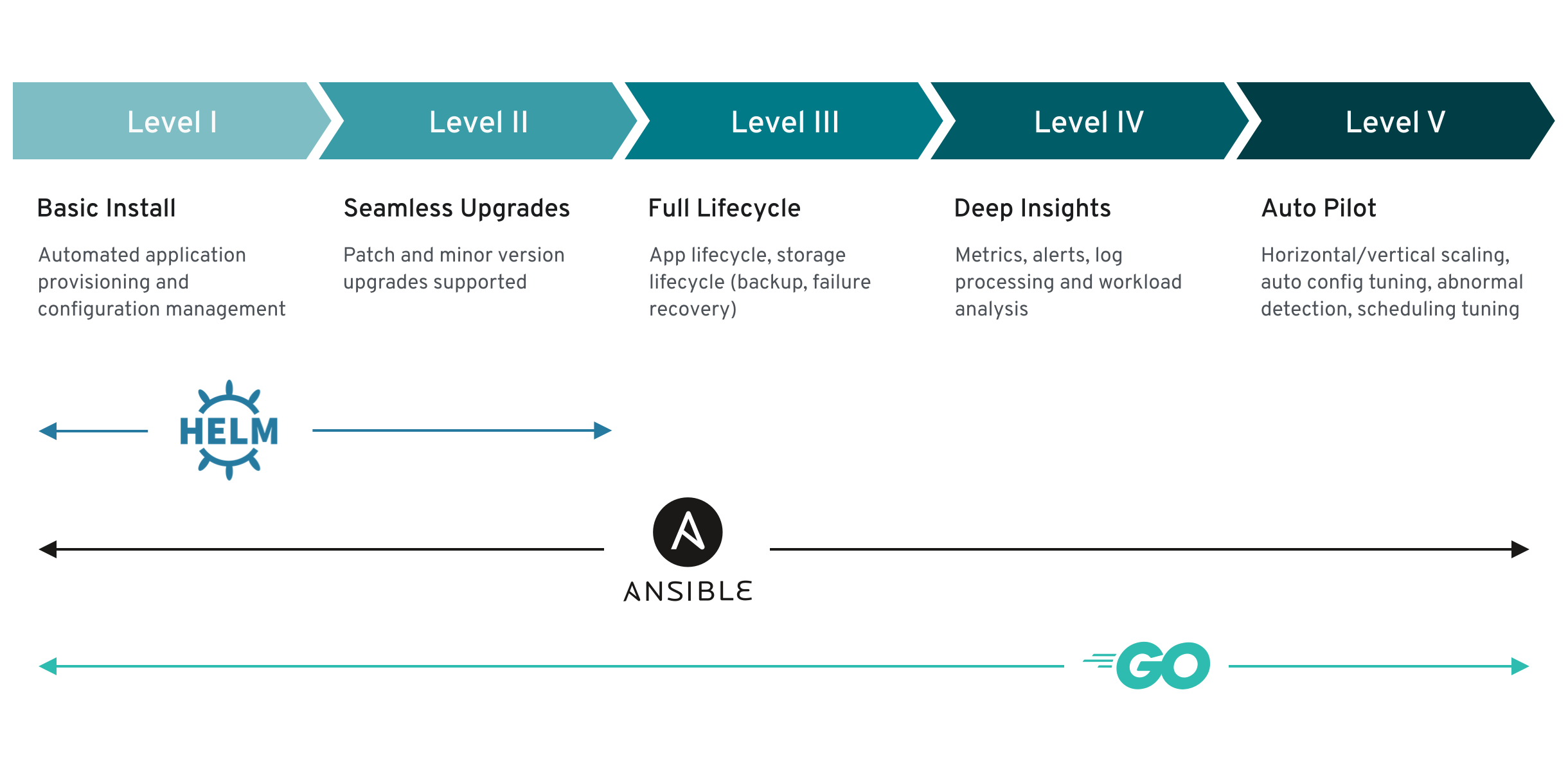

1.1.3. Operator maturity model

The level of sophistication of the management logic encapsulated within an Operator can vary. This logic is also in general highly dependent on the type of the service represented by the Operator.

However, the maturity of the operations encapsulated in an Operator can be generalized for a certain set of capabilities that most Operators can include. To this end, the following Operator maturity model defines five phases of maturity for generic Day 2 operations of an Operator:

Figure 1.1. Operator maturity model

The above model also shows how these capabilities can best be developed through the Helm, Go, and Ansible capabilities of the Operator SDK.

1.2. Operator Framework glossary of common terms

This topic provides a glossary of common terms related to the Operator Framework, including Operator Lifecycle Manager (OLM) and the Operator SDK, for both packaging formats: Package Manifest Format and Bundle Format.

1.2.1. Common Operator Framework terms

1.2.1.1. Bundle

In the Bundle Format, a bundle is a collection of an Operator CSV, manifests, and metadata. Together, they form a unique version of an Operator that can be installed onto the cluster.

1.2.1.2. Bundle image

In the Bundle Format, a bundle image is a container image that is built from Operator manifests and that contains one bundle. Bundle images are stored and distributed by Open Container Initiative (OCI) spec container registries, such as Quay.io or DockerHub.

1.2.1.3. Catalog source

A catalog source is a repository of CSVs, CRDs, and packages that define an application.

1.2.1.4. Catalog image

In the Package Manifest Format, a catalog image is a containerized datastore that describes a set of Operator metadata and update metadata that can be installed onto a cluster using OLM.

1.2.1.5. Channel

A channel defines a stream of updates for an Operator and is used to roll out updates for subscribers. The head points to the latest version of that channel. For example, a stable channel would have all stable versions of an Operator arranged from the earliest to the latest.

An Operator can have several channels, and a subscription binding to a certain channel would only look for updates in that channel.

1.2.1.6. Channel head

A channel head refers to the latest known update in a particular channel.

1.2.1.7. Cluster service version

A cluster service version (CSV) is a YAML manifest created from Operator metadata that assists OLM in running the Operator in a cluster. It is the metadata that accompanies an Operator container image, used to populate user interfaces with information such as its logo, description, and version.

It is also a source of technical information that is required to run the Operator, like the RBAC rules it requires and which custom resources (CRs) it manages or depends on.

1.2.1.8. Dependency

An Operator may have a dependency on another Operator being present in the cluster. For example, the Vault Operator has a dependency on the etcd Operator for its data persistence layer.

OLM resolves dependencies by ensuring that all specified versions of Operators and CRDs are installed on the cluster during the installation phase. This dependency is resolved by finding and installing an Operator in a catalog that satisfies the required CRD API, and is not related to packages or bundles.

1.2.1.9. Index image

In the Bundle Format, an index image refers to an image of a database (a database snapshot) that contains information about Operator bundles including CSVs and CRDs of all versions. This index can host a history of Operators on a cluster and be maintained by adding or removing Operators using the opm CLI tool.

1.2.1.10. Install plan

An install plan is a calculated list of resources to be created to automatically install or upgrade a CSV.

1.2.1.11. Operator group

An Operator group configures all Operators deployed in the same namespace as the OperatorGroup object to watch for their CR in a list of namespaces or cluster-wide.

1.2.1.12. Package

In the Bundle Format, a package is a directory that encloses all released history of an Operator with each version. A released version of an Operator is described in a CSV manifest alongside the CRDs.

1.2.1.13. Registry

A registry is a database that stores bundle images of Operators, each with all of its latest and historical versions in all channels.

1.2.1.14. Subscription

A subscription keeps CSVs up to date by tracking a channel in a package.

1.2.1.15. Update graph

An update graph links versions of CSVs together, similar to the update graph of any other packaged software. Operators can be installed sequentially, or certain versions can be skipped. The update graph is expected to grow only at the head with newer versions being added.

1.3. Operator Framework packaging formats

This guide outlines the packaging formats for Operators supported by Operator Lifecycle Manager (OLM) in OpenShift Container Platform.

1.3.1. Package Manifest Format

The Package Manifest Format for Operators is the legacy packaging format introduced by the Operator Framework. While this format is deprecated in OpenShift Container Platform 4.5, it is still supported and Operators provided by Red Hat are currently shipped using this method.

In this format, a version of an Operator is represented by a single cluster service version (CSV) and typically the custom resource definitions (CRDs) that define the owned APIs of the CSV, though additional objects may be included.

All versions of the Operator are nested in a single directory:

Example Package Manifest Format layout

etcd
├── 0.6.1
│   ├── etcdcluster.crd.yaml
│   └── etcdoperator.clusterserviceversion.yaml
├── 0.9.0
│   ├── etcdbackup.crd.yaml
│   ├── etcdcluster.crd.yaml
│   ├── etcdoperator.v0.9.0.clusterserviceversion.yaml
│   └── etcdrestore.crd.yaml
├── 0.9.2
│   ├── etcdbackup.crd.yaml
│   ├── etcdcluster.crd.yaml
│   ├── etcdoperator.v0.9.2.clusterserviceversion.yaml
│   └── etcdrestore.crd.yaml
└── etcd.package.yaml

The directory also includes a <name>.package.yaml file, which is the package manifest that defines the package name and channel details:

Example package manifest

packageName: etcd
channels:
- name: alpha
  currentCSV: etcdoperator.v0.9.2
- name: beta
  currentCSV: etcdoperator.v0.9.0
- name: stable
  currentCSV: etcdoperator.v0.9.2
defaultChannel: alpha

When loading package manifests into the Operator Registry database, the following requirements are validated:

- Every package has at least one channel.

- Every CSV pointed to by a channel in a package exists.

- Every version of an Operator has exactly one CSV.

- If a CSV owns a CRD, that CRD must exist in the directory of the Operator version.

- If a CSV replaces another, both the old and the new must exist in the package.
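The checks listed above can be sketched in a few lines of Python. This is only an illustration, not the Operator Registry implementation: the `validate_package` function and the `versions` data model (one entry per version directory, listing its CSVs and CRD file names) are hypothetical, and only the package manifest fields come from the example above.

```python
def validate_package(package, versions):
    """Return a list of problems for a Package Manifest Format package.

    package:  dict parsed from <name>.package.yaml.
    versions: hypothetical mapping of version directory name to
              {"csvs": [...], "crds": [...]} describing its contents.
    """
    problems = []

    # Every package has at least one channel.
    channels = package.get("channels") or []
    if not channels:
        problems.append("package has no channels")

    # Collect every CSV name across all version directories.
    csv_names = {csv["name"] for v in versions.values() for csv in v["csvs"]}

    # Every CSV pointed to by a channel exists.
    for channel in channels:
        if channel["currentCSV"] not in csv_names:
            problems.append(
                f"channel {channel['name']}: CSV {channel['currentCSV']} not found"
            )

    for version, content in sorted(versions.items()):
        # Every version of an Operator has exactly one CSV.
        if len(content["csvs"]) != 1:
            problems.append(f"version {version}: expected exactly one CSV")
        for csv in content["csvs"]:
            # An owned CRD must exist in the directory of that version.
            for crd in csv.get("owned_crds", []):
                if crd not in content["crds"]:
                    problems.append(f"version {version}: owned CRD {crd} is missing")
            # A replaced CSV must also exist in the package.
            replaced = csv.get("replaces")
            if replaced and replaced not in csv_names:
                problems.append(f"version {version}: replaced CSV {replaced} not found")
    return problems


PACKAGE = {
    "packageName": "etcd",
    "channels": [
        {"name": "alpha", "currentCSV": "etcdoperator.v0.9.2"},
        {"name": "beta", "currentCSV": "etcdoperator.v0.9.0"},
    ],
    "defaultChannel": "alpha",
}
VERSIONS = {
    "0.9.0": {
        "csvs": [{"name": "etcdoperator.v0.9.0",
                  "owned_crds": ["etcdcluster.crd.yaml"]}],
        "crds": ["etcdcluster.crd.yaml"],
    },
    "0.9.2": {
        "csvs": [{"name": "etcdoperator.v0.9.2",
                  "replaces": "etcdoperator.v0.9.0",
                  "owned_crds": ["etcdcluster.crd.yaml"]}],
        "crds": ["etcdcluster.crd.yaml"],
    },
}

print(validate_package(PACKAGE, VERSIONS))
```

Returning a list of problems rather than raising on the first one makes it easier to report every issue in a package at once.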

1.3.2. Bundle Format

The Bundle Format for Operators is a new packaging format introduced by the Operator Framework. To improve scalability and to better enable upstream users hosting their own catalogs, the Bundle Format specification simplifies the distribution of Operator metadata.

An Operator bundle represents a single version of an Operator. On-disk bundle manifests are containerized and shipped as a bundle image, which is a non-runnable container image that stores the Kubernetes manifests and Operator metadata. Storage and distribution of the bundle image is then managed using existing container tools like podman and docker and container registries such as Quay.

Operator metadata can include:

- Information that identifies the Operator, for example its name and version.

- Additional information that drives the UI, for example its icon and some example custom resources (CRs).

- Required and provided APIs.

- Related images.

When loading manifests into the Operator Registry database, the following requirements are validated:

- The bundle must have at least one channel defined in the annotations.

- Every bundle has exactly one cluster service version (CSV).

- If a CSV owns a custom resource definition (CRD), that CRD must exist in the bundle.
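As a rough sketch of the bundle checks above, the following Python function inspects manifests that are assumed to be already parsed into dictionaries. The `validate_bundle` name is hypothetical and this is not the Operator Registry code. The channel annotation key matches the one used in a bundle's annotations.yaml file, and owned CRDs are read from the `spec.customresourcedefinitions.owned` list of the CSV.

```python
CHANNELS_KEY = "operators.operatorframework.io.bundle.channels.v1"


def validate_bundle(annotations, manifests):
    """Return a list of problems for one bundle (illustrative only)."""
    problems = []

    # The bundle must have at least one channel defined in the annotations.
    raw = annotations.get(CHANNELS_KEY, "")
    channels = [c.strip() for c in raw.split(",") if c.strip()]
    if not channels:
        problems.append("no channel defined in annotations")

    # Every bundle has exactly one CSV.
    csvs = [m for m in manifests if m.get("kind") == "ClusterServiceVersion"]
    if len(csvs) != 1:
        problems.append(f"expected exactly one CSV, found {len(csvs)}")

    # If a CSV owns a CRD, that CRD must exist in the bundle.
    crd_names = {
        m["metadata"]["name"]
        for m in manifests
        if m.get("kind") == "CustomResourceDefinition"
    }
    for csv in csvs:
        crds = csv.get("spec", {}).get("customresourcedefinitions", {})
        for owned in crds.get("owned", []):
            if owned["name"] not in crd_names:
                problems.append(f"owned CRD {owned['name']} is missing from the bundle")
    return problems


ANNOTATIONS = {CHANNELS_KEY: "beta,stable"}
CRD = {
    "kind": "CustomResourceDefinition",
    "metadata": {"name": "etcdclusters.etcd.database.coreos.com"},
}
CSV = {
    "kind": "ClusterServiceVersion",
    "metadata": {"name": "etcdoperator.v0.9.2"},
    "spec": {
        "customresourcedefinitions": {
            "owned": [{"name": "etcdclusters.etcd.database.coreos.com"}]
        }
    },
}

print(validate_bundle(ANNOTATIONS, [CSV, CRD]))
```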

1.3.2.1. Manifests

Bundle manifests refer to a set of Kubernetes manifests that define the deployment and RBAC model of the Operator.

A bundle includes one CSV per directory and typically the CRDs that define the owned APIs of the CSV in its /manifests directory.

Example Bundle Format layout

etcd
├── manifests
│   ├── etcdcluster.crd.yaml
│   ├── etcdoperator.clusterserviceversion.yaml
│   ├── secret.yaml
│   └── configmap.yaml
└── metadata
    ├── annotations.yaml
    └── dependencies.yaml

Optional objects

The following object types can also be optionally included in the /manifests directory of a bundle:

Supported optional objects

- Secrets

- ConfigMaps

When these optional objects are included in a bundle, Operator Lifecycle Manager (OLM) can create them from the bundle and manage their lifecycle along with the CSV:

Lifecycle for optional objects

- When the CSV is deleted, OLM deletes the optional object.

- When the CSV is upgraded:

  - If the name of the optional object is the same, OLM updates it in place.

  - If the name of the optional object has changed between versions, OLM deletes and recreates it.
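The upgrade rules for optional objects can be pictured as a comparison of object names between the old and new bundle versions. The following Python sketch is an assumption about how such a plan could be computed, not OLM's actual code; the `plan_optional_object_actions` function and the object names are hypothetical. A renamed object is modeled as deleting the old name and creating the new one.

```python
def plan_optional_object_actions(old_names, new_names):
    """Plan actions for optional objects when a CSV is upgraded.

    old_names, new_names: sets of object names in the old and new bundle.
    Returns a list of (action, name) tuples.
    """
    actions = []
    # Same name in both versions: update in place.
    for name in sorted(old_names & new_names):
        actions.append(("update", name))
    # Name only in the old version: the object is deleted.
    for name in sorted(old_names - new_names):
        actions.append(("delete", name))
    # Name only in the new version: the object is created.
    for name in sorted(new_names - old_names):
        actions.append(("create", name))
    return actions


print(plan_optional_object_actions({"app-config", "app-secret"},
                                   {"app-config", "app-secret-v2"}))
```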

1.3.2.2. Annotations

A bundle also includes an annotations.yaml file in its /metadata directory. This file defines higher level aggregate data that helps describe the format and package information about how the bundle should be added into an index of bundles:

Example annotations.yaml

annotations:
  operators.operatorframework.io.bundle.mediatype.v1: "registry+v1" # 1
  operators.operatorframework.io.bundle.manifests.v1: "manifests/" # 2
  operators.operatorframework.io.bundle.metadata.v1: "metadata/" # 3
  operators.operatorframework.io.bundle.package.v1: "test-operator" # 4
  operators.operatorframework.io.bundle.channels.v1: "beta,stable" # 5
  operators.operatorframework.io.bundle.channel.default.v1: "stable" # 6

- 1

- The media type or format of the Operator bundle. The registry+v1 format means it contains a CSV and its associated Kubernetes objects.

- 2

- The path in the image to the directory that contains the Operator manifests. This label is reserved for future use and currently defaults to manifests/. The value manifests.v1 implies that the bundle contains Operator manifests.

- 3

- The path in the image to the directory that contains metadata files about the bundle. This label is reserved for future use and currently defaults to metadata/. The value metadata.v1 implies that this bundle has Operator metadata.

- 4

- The package name of the bundle.

- 5

- The list of channels the bundle is subscribing to when added into an Operator Registry.

- 6

- The default channel an Operator should be subscribed to when installed from a registry.

In case of a mismatch, the annotations.yaml file is authoritative because the on-cluster Operator Registry that relies on these annotations only has access to this file.

1.3.2.3. Dependencies

The dependencies of an Operator are listed in a dependencies.yaml file inside the bundle’s metadata/ folder. This file is optional and currently only used to specify explicit Operator-version dependencies.

The dependency list contains a type field for each item to specify what kind of dependency this is. There are two supported types of Operator dependencies:

- olm.package: A package type means this is a dependency for a specific Operator version. The dependency information must include the package name and the version of the package in semver format. For example, you can specify an exact version such as 0.5.2 or a range of versions such as >0.5.1.

- olm.gvk: With a GVK type, the author can specify a dependency with GVK information, similar to existing CRD and API-based usage in a CSV. This is a path to enable Operator authors to consolidate all dependencies, API or explicit versions, to be in the same place.

In the following example, dependencies are specified for a Prometheus Operator and etcd CRDs:

Example dependencies.yaml file

dependencies:
  - type: olm.package
    value:
      packageName: prometheus
      version: ">0.27.0"
  - type: olm.gvk
    value:
      group: etcd.database.coreos.com
      kind: EtcdCluster
      version: v1beta2
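To illustrate how the two dependency types differ, the following Python sketch checks a parsed dependencies list against what is already available. It is a simplification under stated assumptions: OLM resolves dependencies from catalogs rather than only checking installed content, the `unmet_dependencies` function and its inputs are hypothetical, and the version matching handles only a single comparator on plain `major.minor.patch` versions, not the full semver range syntax.

```python
import operator

# Longest symbols first so that ">=" is not mistaken for ">".
_COMPARATORS = [
    (">=", operator.ge), ("<=", operator.le),
    (">", operator.gt), ("<", operator.lt), ("=", operator.eq),
]


def parse_version(text):
    """Parse "0.27.0" into (0, 27, 0); pre-release tags are not supported."""
    return tuple(int(part) for part in text.strip().split("."))


def satisfies(version, constraint):
    """Check a version against an exact version or a single comparator."""
    constraint = constraint.strip()
    for symbol, compare in _COMPARATORS:
        if constraint.startswith(symbol):
            return compare(parse_version(version),
                           parse_version(constraint[len(symbol):]))
    return parse_version(version) == parse_version(constraint)


def unmet_dependencies(dependencies, installed_packages, available_gvks):
    """Return the dependencies that are not satisfied.

    installed_packages: hypothetical mapping of package name to version.
    available_gvks: set of (group, version, kind) tuples on the cluster.
    """
    unmet = []
    for dep in dependencies:
        value = dep["value"]
        if dep["type"] == "olm.package":
            installed = installed_packages.get(value["packageName"])
            if installed is None or not satisfies(installed, value["version"]):
                unmet.append(dep)
        elif dep["type"] == "olm.gvk":
            gvk = (value["group"], value["version"], value["kind"])
            if gvk not in available_gvks:
                unmet.append(dep)
    return unmet


DEPENDENCIES = [
    {"type": "olm.package",
     "value": {"packageName": "prometheus", "version": ">0.27.0"}},
    {"type": "olm.gvk",
     "value": {"group": "etcd.database.coreos.com",
               "kind": "EtcdCluster", "version": "v1beta2"}},
]

print(unmet_dependencies(DEPENDENCIES, {"prometheus": "0.27.0"}, set()))
```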

1.3.2.4. opm CLI

The new opm CLI tool is introduced alongside the new Bundle Format. This tool allows you to create and maintain catalogs of Operators from a list of bundles. Such a catalog is called an index and is equivalent to a repository. The result is a container image, called an index image, which can be stored in a container registry and then installed on a cluster.

An index contains a database of pointers to Operator manifest content that can be queried via an included API that is served when the container image is run. On OpenShift Container Platform, OLM can use the index image as a catalog by referencing it in a CatalogSource, which polls the image at regular intervals to enable frequent updates to installed Operators on the cluster.

1.4. Operator Lifecycle Manager (OLM)

1.4.1. Operator Lifecycle Manager concepts

This guide provides an overview of the concepts that drive Operator Lifecycle Manager (OLM) in OpenShift Container Platform.

1.4.1.1. What is Operator Lifecycle Manager?

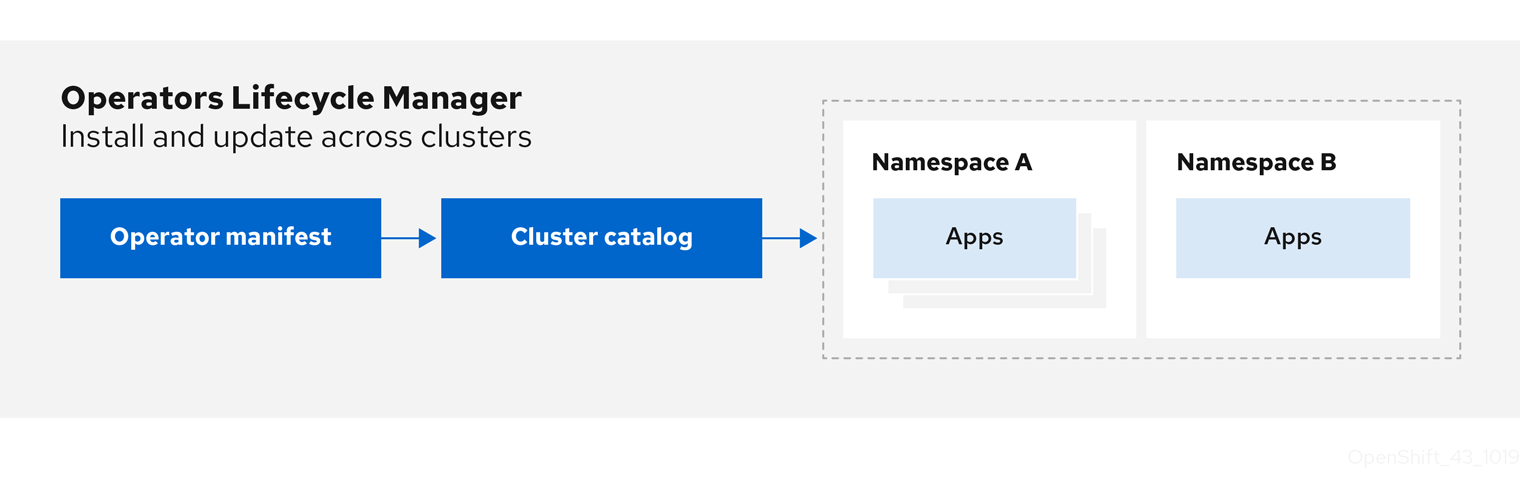

Operator Lifecycle Manager (OLM) helps users install, update, and manage the lifecycle of Kubernetes native applications (Operators) and their associated services running across their OpenShift Container Platform clusters. It is part of the Operator Framework, an open source toolkit designed to manage Operators in an effective, automated, and scalable way.

Figure 1.2. Operator Lifecycle Manager workflow

OLM runs by default in OpenShift Container Platform 4.5, which aids cluster administrators in installing, upgrading, and granting access to Operators running on their cluster. The OpenShift Container Platform web console provides management screens for cluster administrators to install Operators, as well as grant specific projects access to use the catalog of Operators available on the cluster.

For developers, a self-service experience allows provisioning and configuring instances of databases, monitoring, and big data services without having to be subject matter experts, because the Operator has that knowledge baked into it.

1.4.1.2. OLM resources

The following custom resource definitions (CRDs) are defined and managed by Operator Lifecycle Manager (OLM):

Table 1.1. CRDs managed by OLM and Catalog Operators

| Resource | Short name | Description |
|---|---|---|
| ClusterServiceVersion (CSV) | csv | Application metadata. For example: name, version, icon, required resources. |
| CatalogSource | catsrc | A repository of CSVs, CRDs, and packages that define an application. |
| Subscription | sub | Keeps CSVs up to date by tracking a channel in a package. |
| InstallPlan | ip | Calculated list of resources to be created to automatically install or upgrade a CSV. |
| OperatorGroup | og | Configures all Operators deployed in the same namespace as the OperatorGroup object to watch for their custom resource (CR) in a list of namespaces or cluster-wide. |

1.4.1.2.1. Cluster service version

A cluster service version (CSV) represents a specific version of a running Operator on an OpenShift Container Platform cluster. It is a YAML manifest created from Operator metadata that assists Operator Lifecycle Manager (OLM) in running the Operator in the cluster.

OLM requires this metadata about an Operator to ensure that it can be kept running safely on a cluster, and to provide information about how updates should be applied as new versions of the Operator are published. This is similar to packaging software for a traditional operating system; think of the packaging step for OLM as the stage at which you make your rpm, deb, or apk bundle.

A CSV includes the metadata that accompanies an Operator container image, used to populate user interfaces with information such as its name, version, description, labels, repository link, and logo.

A CSV is also a source of technical information required to run the Operator, such as which custom resources (CRs) it manages or depends on, RBAC rules, cluster requirements, and install strategies. This information tells OLM how to create required resources and set up the Operator as a deployment.

1.4.1.2.2. Catalog source

A catalog source represents a store of metadata that OLM can query to discover and install Operators and their dependencies. The spec of a CatalogSource object indicates how to construct a pod or how to communicate with a service that serves the Operator Registry gRPC API.

There are three primary sourceTypes for a CatalogSource object:

- grpc with an image reference: OLM pulls the image and runs the pod, which is expected to serve a compliant API.

- grpc with an address field: OLM attempts to contact the gRPC API at the given address. This should not be used in most cases.

- internal or configmap: OLM parses the ConfigMap data and runs a pod that can serve the gRPC API over it.

The following example defines a catalog source for OperatorHub.io content:

Example CatalogSource object

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: operatorhubio-catalog
  namespace: olm
spec:
  sourceType: grpc
  image: quay.io/operator-framework/upstream-community-operators:latest
  displayName: Community Operators
  publisher: OperatorHub.io

The name of the CatalogSource object is used as input to a subscription, which instructs OLM where to look to find a requested Operator:

Example Subscription object referencing a catalog source

apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: my-operator
  namespace: olm
spec:
  channel: stable
  name: my-operator
  source: operatorhubio-catalog

1.4.1.2.3. Subscription

A subscription, defined by a Subscription object, represents an intention to install an Operator. It is the custom resource that relates an Operator to a catalog source.

Subscriptions describe which channel of an Operator package to subscribe to, and whether to perform updates automatically or manually. If set to automatic, the subscription ensures that Operator Lifecycle Manager (OLM) manages and upgrades the Operator so that the latest version is always running in the cluster.

Example Subscription object

apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: my-operator
  namespace: operators
spec:
  channel: stable
  name: my-operator
  source: my-catalog
  sourceNamespace: operators

This Subscription object defines the name and namespace of the Operator, as well as the catalog from which the Operator data can be found. The channel, such as alpha, beta, or stable, helps determine which Operator stream should be installed from the catalog source.

The names of channels in a subscription can differ between Operators, but the naming scheme should follow a common convention within a given Operator. For example, channel names might follow a minor release update stream for the application provided by the Operator (1.2, 1.3) or a release frequency (stable, fast).

In addition to being easily visible from the OpenShift Container Platform web console, it is possible to identify when there is a newer version of an Operator available by inspecting the status of the related subscription. The value associated with the currentCSV field is the newest version that is known to OLM, and installedCSV is the version that is installed on the cluster.
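As a minimal sketch of that status check, the following Python function compares the two fields on a Subscription object that is assumed to be already loaded into a dictionary, for example from the YAML or JSON output of the CLI. The `update_available` function and the CSV names in the sample are hypothetical.

```python
def update_available(subscription):
    """Return True if OLM knows a newer CSV than the installed one."""
    status = subscription.get("status", {})
    current = status.get("currentCSV")      # newest version known to OLM
    installed = status.get("installedCSV")  # version installed on the cluster
    return current is not None and current != installed


SUBSCRIPTION = {
    "kind": "Subscription",
    "metadata": {"name": "my-operator", "namespace": "operators"},
    "status": {
        "currentCSV": "my-operator.v1.1.0",
        "installedCSV": "my-operator.v1.0.0",
    },
}

print(update_available(SUBSCRIPTION))
```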

1.4.1.2.4. Install plan

An install plan, defined by an InstallPlan object, describes a set of resources to be created to install or upgrade to a specific version of an Operator, as defined by a cluster service version (CSV).

1.4.1.2.5. Operator groups

An Operator group, defined by the OperatorGroup resource, provides multitenant configuration to OLM-installed Operators. An Operator group selects target namespaces in which to generate required RBAC access for its member Operators.

The set of target namespaces is provided by a comma-delimited string stored in the olm.targetNamespaces annotation of a cluster service version (CSV). This annotation is applied to the CSV instances of member Operators and is projected into their deployments.

For more information, see the Operator groups guide.

1.4.2. Operator Lifecycle Manager architecture

This guide outlines the component architecture of Operator Lifecycle Manager (OLM) in OpenShift Container Platform.

1.4.2.1. Component responsibilities

Operator Lifecycle Manager (OLM) is composed of two Operators: the OLM Operator and the Catalog Operator.

Each of these Operators is responsible for managing the custom resource definitions (CRDs) that are the basis for the OLM framework:

Table 1.2. CRDs managed by OLM and Catalog Operators

| Resource | Short name | Owner | Description |
|---|---|---|---|
| ClusterServiceVersion (CSV) | csv | OLM | Application metadata: name, version, icon, required resources, installation, and so on. |
| InstallPlan | ip | Catalog | Calculated list of resources to be created to automatically install or upgrade a CSV. |
| CatalogSource | catsrc | Catalog | A repository of CSVs, CRDs, and packages that define an application. |
| Subscription | sub | Catalog | Used to keep CSVs up to date by tracking a channel in a package. |
| OperatorGroup | og | OLM | Configures all Operators deployed in the same namespace as the OperatorGroup object to watch for their custom resource (CR) in a list of namespaces or cluster-wide. |

Each of these Operators is also responsible for creating the following resources:

Table 1.3. Resources created by OLM and Catalog Operators

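| Resource | Owner |
|---|---|
| Deployments | OLM |
| ServiceAccounts | OLM |
| (Cluster)Roles | OLM |
| (Cluster)RoleBindings | OLM |
| CustomResourceDefinitions (CRDs) | Catalog |
| ClusterServiceVersions | Catalog |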

1.4.2.2. OLM Operator

The OLM Operator is responsible for deploying applications defined by CSV resources after the required resources specified in the CSV are present in the cluster.

The OLM Operator is not concerned with the creation of the required resources; you can choose to manually create these resources using the CLI or using the Catalog Operator. This separation of concern allows users incremental buy-in in terms of how much of the OLM framework they choose to leverage for their application.

The OLM Operator uses the following workflow:

- Watch for cluster service versions (CSVs) in a namespace and check that requirements are met.

- If requirements are met, run the install strategy for the CSV.

Note: A CSV must be an active member of an Operator group for the install strategy to run.

1.4.2.3. Catalog Operator

The Catalog Operator is responsible for resolving and installing cluster service versions (CSVs) and the required resources they specify. It is also responsible for watching catalog sources for updates to packages in channels and upgrading them, automatically if desired, to the latest available versions.

To track a package in a channel, you can create a Subscription object configuring the desired package, channel, and the CatalogSource object you want to use for pulling updates. When updates are found, an appropriate InstallPlan object is written into the namespace on behalf of the user.

The Catalog Operator uses the following workflow:

- Connect to each catalog source in the cluster.

- Watch for unresolved install plans created by a user, and if found:

  - Find the CSV matching the name requested and add the CSV as a resolved resource.

  - For each managed or required CRD, add the CRD as a resolved resource.

  - For each required CRD, find the CSV that manages it.

- Watch for resolved install plans and create all of the discovered resources for it, if approved by a user or automatically.

- Watch for catalog sources and subscriptions and create install plans based on them.
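The resolution step of this workflow can be sketched as a small traversal over catalog entries. The following Python code is an illustration under simplifying assumptions, not the Catalog Operator implementation: the catalog is a plain dictionary, each CSV lists the CRDs it manages (`owned`) and requires (`required`), and all names are hypothetical. The sample data loosely follows the Vault and etcd dependency example from the glossary.

```python
def find_owner(crd, catalog):
    """Return the name of the first CSV in the catalog that manages the CRD."""
    for name, csv in catalog.items():
        if crd in csv["owned"]:
            return name
    return None


def resolve_install_plan(csv_name, catalog):
    """Resolve the CSVs and CRDs needed to install the requested CSV."""
    resolved_csvs = []
    resolved_crds = []
    pending = [csv_name]
    while pending:
        name = pending.pop()
        if name in resolved_csvs:
            continue
        # Find the CSV matching the requested name and add it.
        csv = catalog[name]
        resolved_csvs.append(name)
        # Add each managed or required CRD as a resolved resource.
        for crd in csv["owned"] + csv["required"]:
            if crd not in resolved_crds:
                resolved_crds.append(crd)
        # For each required CRD, find the CSV that manages it.
        for crd in csv["required"]:
            owner = find_owner(crd, catalog)
            if owner is None:
                raise LookupError(f"no CSV in the catalog manages {crd}")
            pending.append(owner)
    return resolved_csvs, resolved_crds


CATALOG = {
    "vault-operator.v1": {"owned": ["vaultservices"], "required": ["etcdclusters"]},
    "etcdoperator.v1": {"owned": ["etcdclusters"], "required": []},
}

print(resolve_install_plan("vault-operator.v1", CATALOG))
```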

1.4.2.4. Catalog Registry

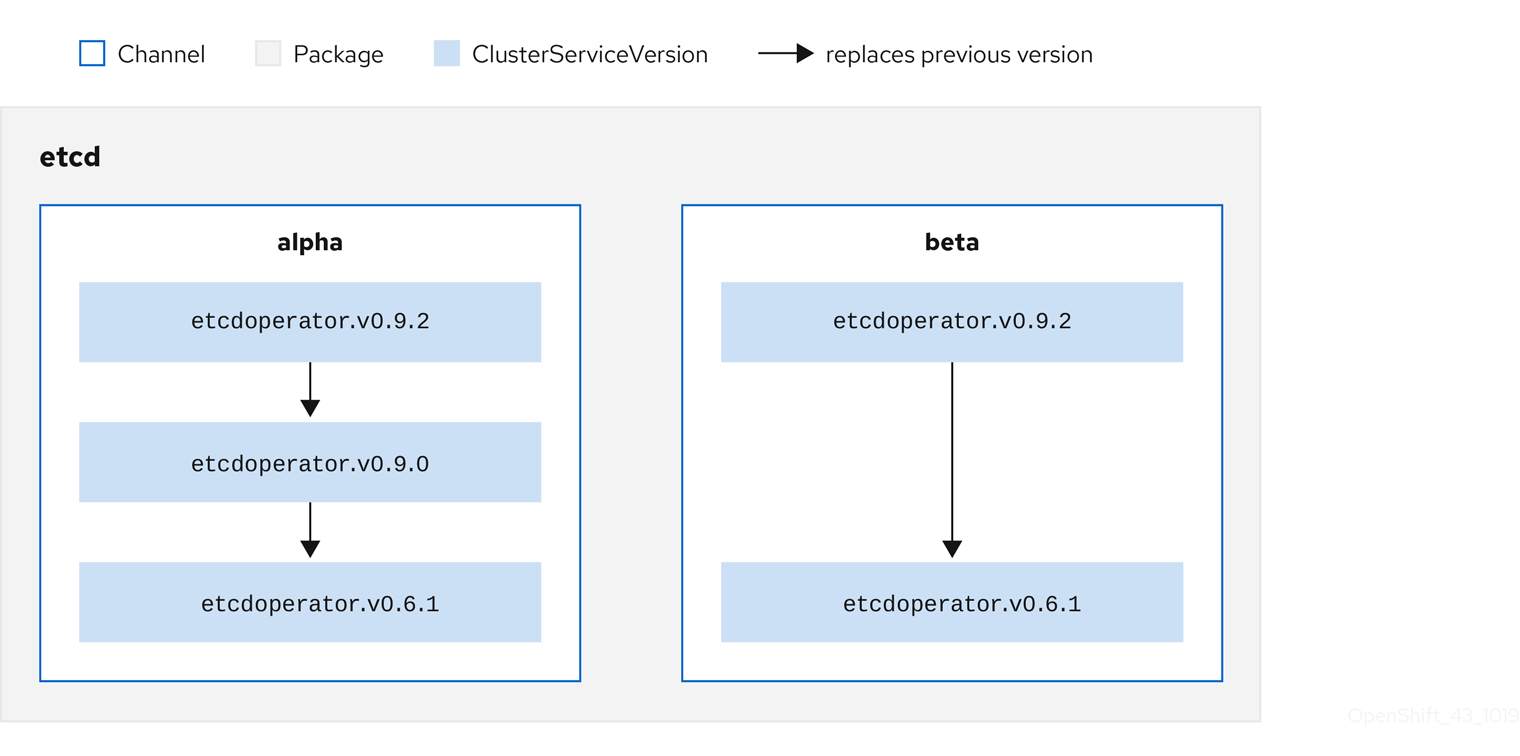

The Catalog Registry stores CSVs and CRDs for creation in a cluster and stores metadata about packages and channels.

A package manifest is an entry in the Catalog Registry that associates a package identity with sets of CSVs. Within a package, channels point to a particular CSV. Because CSVs explicitly reference the CSV that they replace, a package manifest provides the Catalog Operator with all of the information that is required to update a CSV to the latest version in a channel, stepping through each intermediate version.

1.4.3. Operator Lifecycle Manager workflow

This guide outlines the workflow of Operator Lifecycle Manager (OLM) in OpenShift Container Platform.

1.4.3.1. Operator installation and upgrade workflow in OLM

In the Operator Lifecycle Manager (OLM) ecosystem, the following resources are used to resolve Operator installations and upgrades:

- ClusterServiceVersion (CSV)

- CatalogSource

- Subscription

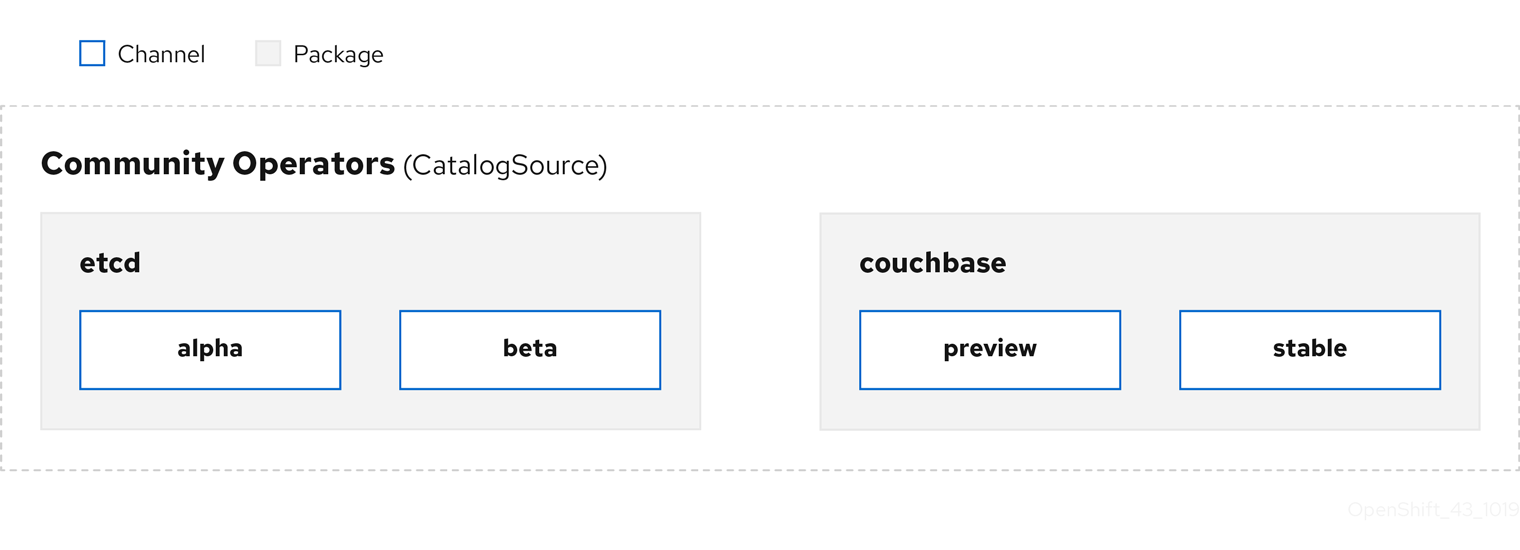

Operator metadata, defined in CSVs, can be stored in a collection called a catalog source. OLM uses catalog sources, which use the Operator Registry API, to query for available Operators as well as upgrades for installed Operators.

Figure 1.3. Catalog source overview

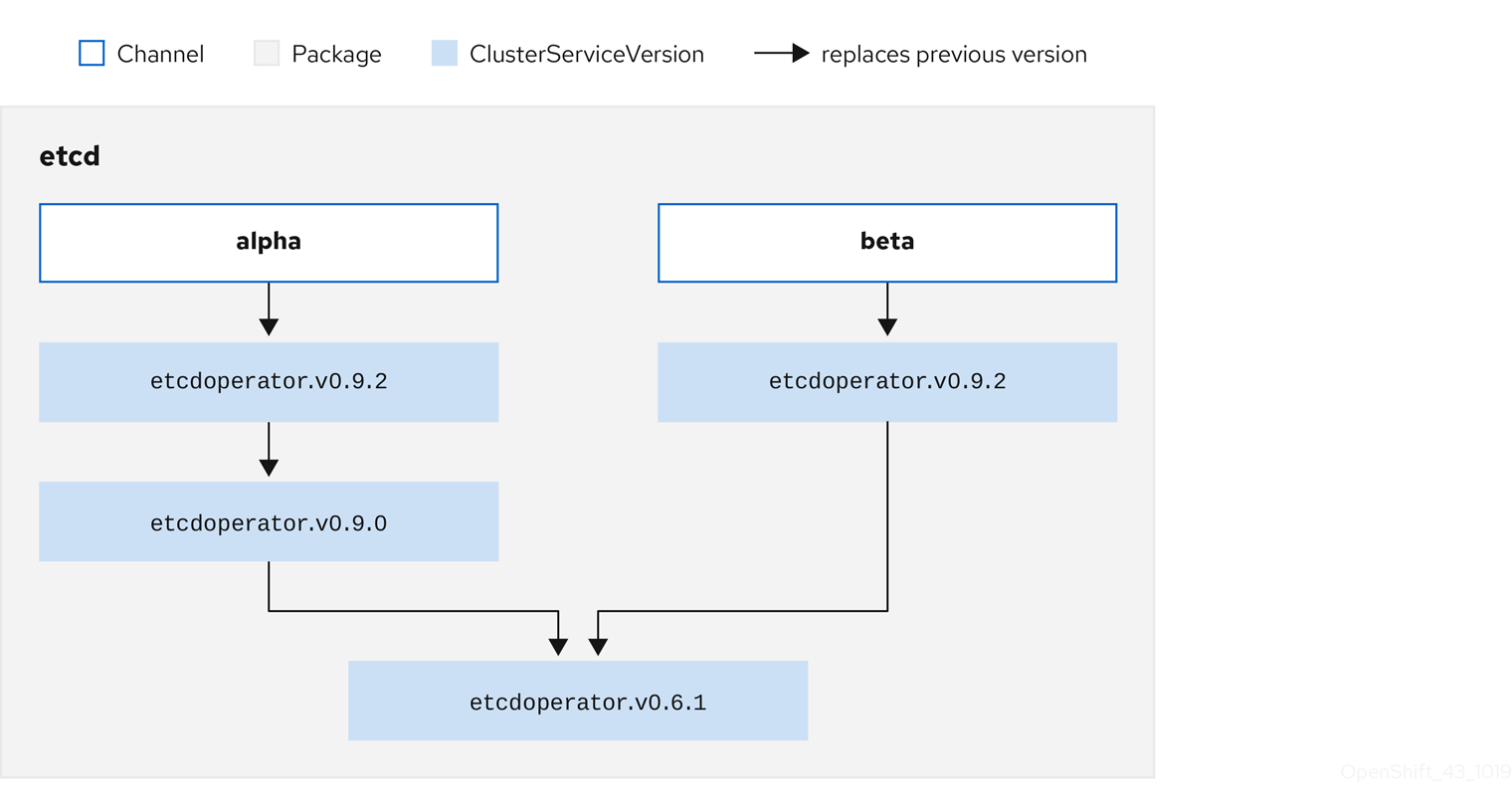

Within a catalog source, Operators are organized into packages and streams of updates called channels, which should be a familiar update pattern from OpenShift Container Platform or other software on a continuous release cycle like web browsers.

Figure 1.4. Packages and channels in a Catalog source

A user indicates a particular package and channel in a particular catalog source in a subscription, for example an etcd package and its alpha channel. If a subscription is made to a package that has not yet been installed in the namespace, the latest Operator for that package is installed.

OLM deliberately avoids version comparisons, so the "latest" or "newest" Operator available from a given catalog → channel → package path does not necessarily need to be the highest version number. It should be thought of more as the head reference of a channel, similar to a Git repository.

Each CSV has a replaces parameter that indicates which Operator it replaces. This builds a graph of CSVs that can be queried by OLM, and updates can be shared between channels. Channels can be thought of as entry points into the graph of updates:

Figure 1.5. OLM graph of available channel updates

Example channels in a package

packageName: example
channels:
- name: alpha
  currentCSV: example.v0.1.2
- name: beta
  currentCSV: example.v0.1.3
defaultChannel: alpha

For OLM to successfully query for updates, given a catalog source, package, channel, and CSV, a catalog must be able to return, unambiguously and deterministically, a single CSV that replaces the input CSV.

1.4.3.1.1. Example upgrade path

For an example upgrade scenario, consider an installed Operator corresponding to CSV version 0.1.1. OLM queries the catalog source and detects an upgrade in the subscribed channel with new CSV version 0.1.3 that replaces an older but not-installed CSV version 0.1.2, which in turn replaces the older and installed CSV version 0.1.1.

OLM walks back from the channel head to previous versions via the replaces field specified in the CSVs to determine the upgrade path 0.1.3 → 0.1.2 → 0.1.1; the direction of the arrow indicates that the former replaces the latter. OLM upgrades the Operator one version at a time until it reaches the channel head.

In this scenario, OLM installs Operator version 0.1.2 to replace the existing Operator version 0.1.1. Then, it installs Operator version 0.1.3 to replace the previously installed Operator version 0.1.2. At this point, the installed Operator version 0.1.3 matches the channel head and the upgrade is complete.
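The walk from the channel head back to the installed version can be sketched as follows. This is a minimal Python illustration of the scenario above, not OLM's code: the `upgrade_path` function is hypothetical, and the `replaces` mapping stands in for the replaces fields of the CSVs in the channel.

```python
def upgrade_path(channel_head, installed, replaces):
    """Return the versions to install, in order, to reach the channel head.

    replaces: mapping of each version to the version it replaces, or None.
    """
    path = []
    current = channel_head
    # Walk back from the channel head until the installed version is found.
    while current is not None and current != installed:
        if current in path:
            raise ValueError(f"cycle detected at {current}")
        path.append(current)
        current = replaces.get(current)
    if current is None:
        raise LookupError(f"{installed} is not reachable from {channel_head}")
    # Upgrades are applied oldest first, one version at a time.
    path.reverse()
    return path


REPLACES = {"0.1.3": "0.1.2", "0.1.2": "0.1.1", "0.1.1": None}

print(upgrade_path("0.1.3", "0.1.1", REPLACES))
```

An empty result means the installed version already matches the channel head.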

1.4.3.1.2. Skipping upgrades

The basic path for upgrades in OLM is:

- A catalog source is updated with one or more updates to an Operator.

- OLM traverses every version of the Operator until reaching the latest version the catalog source contains.

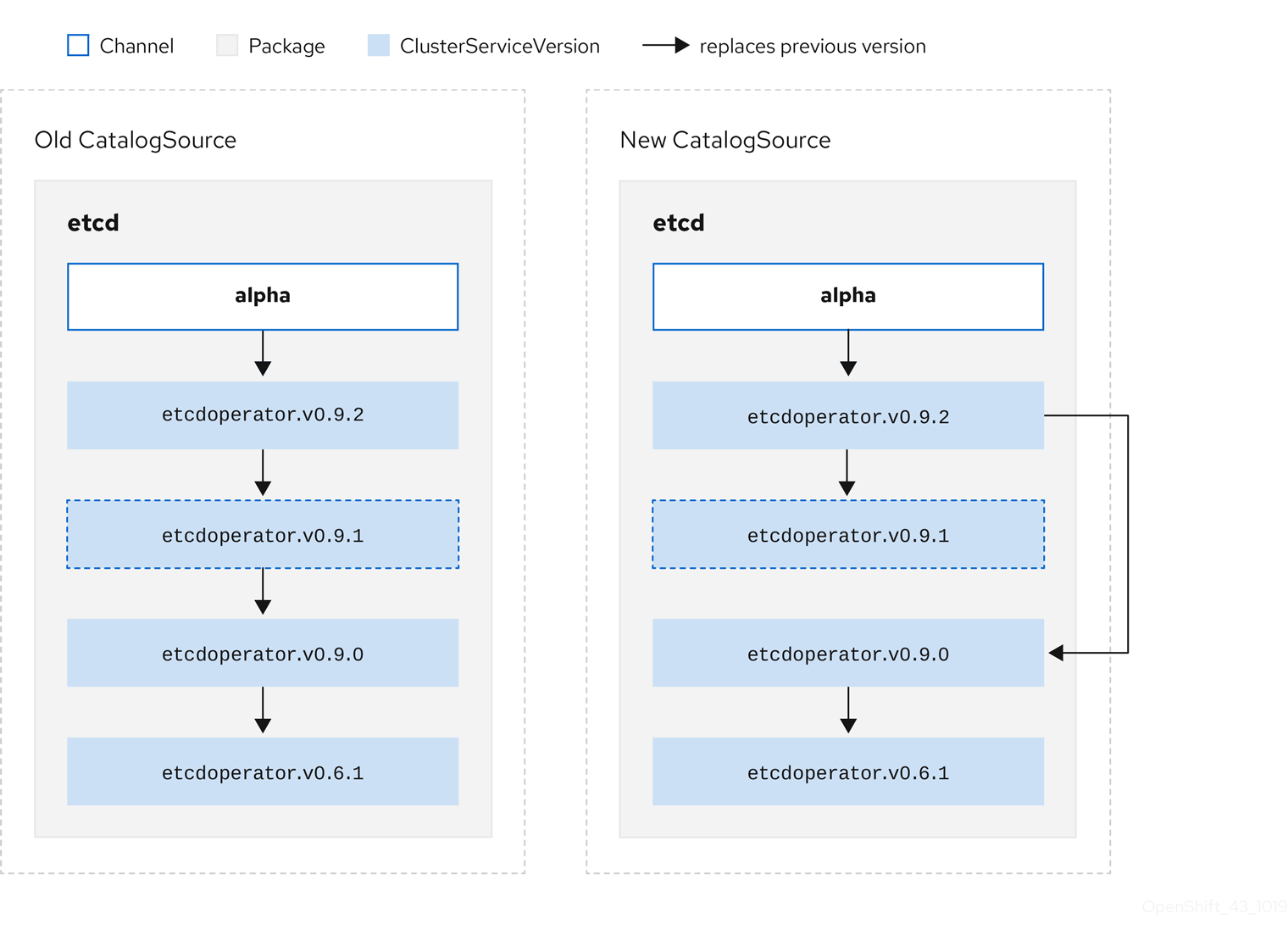

However, sometimes this is not a safe operation to perform. There will be cases where a published version of an Operator should never be installed on a cluster if it has not already been installed, for example because the version introduces a serious vulnerability.

In those cases, OLM must consider two cluster states and provide an update graph that supports both:

- The "bad" intermediate Operator has been seen by the cluster and installed.

- The "bad" intermediate Operator has not yet been installed onto the cluster.

Shipping a new catalog that adds a skipped release ensures that OLM can always get a single unique update, regardless of the cluster state and whether it has seen the bad update yet.

Example CSV with skipped release

    apiVersion: operators.coreos.com/v1alpha1
    kind: ClusterServiceVersion
    metadata:
      name: etcdoperator.v0.9.2
      namespace: placeholder
      annotations:
    spec:
      displayName: etcd
      description: Etcd Operator
      replaces: etcdoperator.v0.9.0
      skips:
      - etcdoperator.v0.9.1

Consider the following example of Old CatalogSource and New CatalogSource.

Figure 1.6. Skipping updates

This graph maintains that:

- Any Operator found in Old CatalogSource has a single replacement in New CatalogSource.

- Any Operator found in New CatalogSource has a single replacement in New CatalogSource.

- If the bad update has not yet been installed, it will never be.
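The following Python sketch shows how a catalog entry with both replaces and skips yields a single update for either cluster state. It is a simplified model under stated assumptions: the next_update function and the list-of-dictionaries catalog are hypothetical, and the real resolution in OLM involves more inputs.

```python
def next_update(entries, installed):
    """Return the name of the single entry that replaces or skips
    `installed`, or None if the installed version is already current."""
    candidates = [
        entry["name"]
        for entry in entries
        if entry.get("replaces") == installed or installed in entry.get("skips", [])
    ]
    if len(candidates) > 1:
        # The catalog must answer unambiguously; more than one match is an error.
        raise ValueError(f"ambiguous update for {installed}: {candidates}")
    return candidates[0] if candidates else None


# Hypothetical new catalog: v0.9.1 is the "bad" release and is only skipped.
new_catalog = [
    {"name": "etcdoperator.v0.9.0"},
    {
        "name": "etcdoperator.v0.9.2",
        "replaces": "etcdoperator.v0.9.0",
        "skips": ["etcdoperator.v0.9.1"],
    },
]

print(next_update(new_catalog, "etcdoperator.v0.9.0"))  # etcdoperator.v0.9.2
print(next_update(new_catalog, "etcdoperator.v0.9.1"))  # etcdoperator.v0.9.2
```

A cluster on 0.9.0 moves straight to 0.9.2 and never sees 0.9.1, while a cluster that already installed 0.9.1 is also offered 0.9.2.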

1.4.3.1.3. Replacing multiple Operators

Creating New CatalogSource as described requires publishing CSVs that replace one Operator, but can skip several. This can be accomplished using the skipRange annotation:

olm.skipRange: <semver_range>

where <semver_range> has the version range format supported by the semver library.

When searching catalogs for updates, if the head of a channel has a skipRange annotation and the currently installed Operator has a version field that falls in the range, OLM updates to the latest entry in the channel.

The order of precedence is:

- Channel head in the source specified by sourceName on the subscription, if the other criteria for skipping are met.

- The next Operator that replaces the current one, in the source specified by sourceName.

- Channel head in another source that is visible to the subscription, if the other criteria for skipping are met.

- The next Operator that replaces the current one in any source visible to the subscription.

Example CSV with skipRange

    apiVersion: operators.coreos.com/v1alpha1
    kind: ClusterServiceVersion
    metadata:
      name: elasticsearch-operator.v4.1.2
      namespace: <namespace>
      annotations:
        olm.skipRange: '>=4.1.0 <4.1.2'

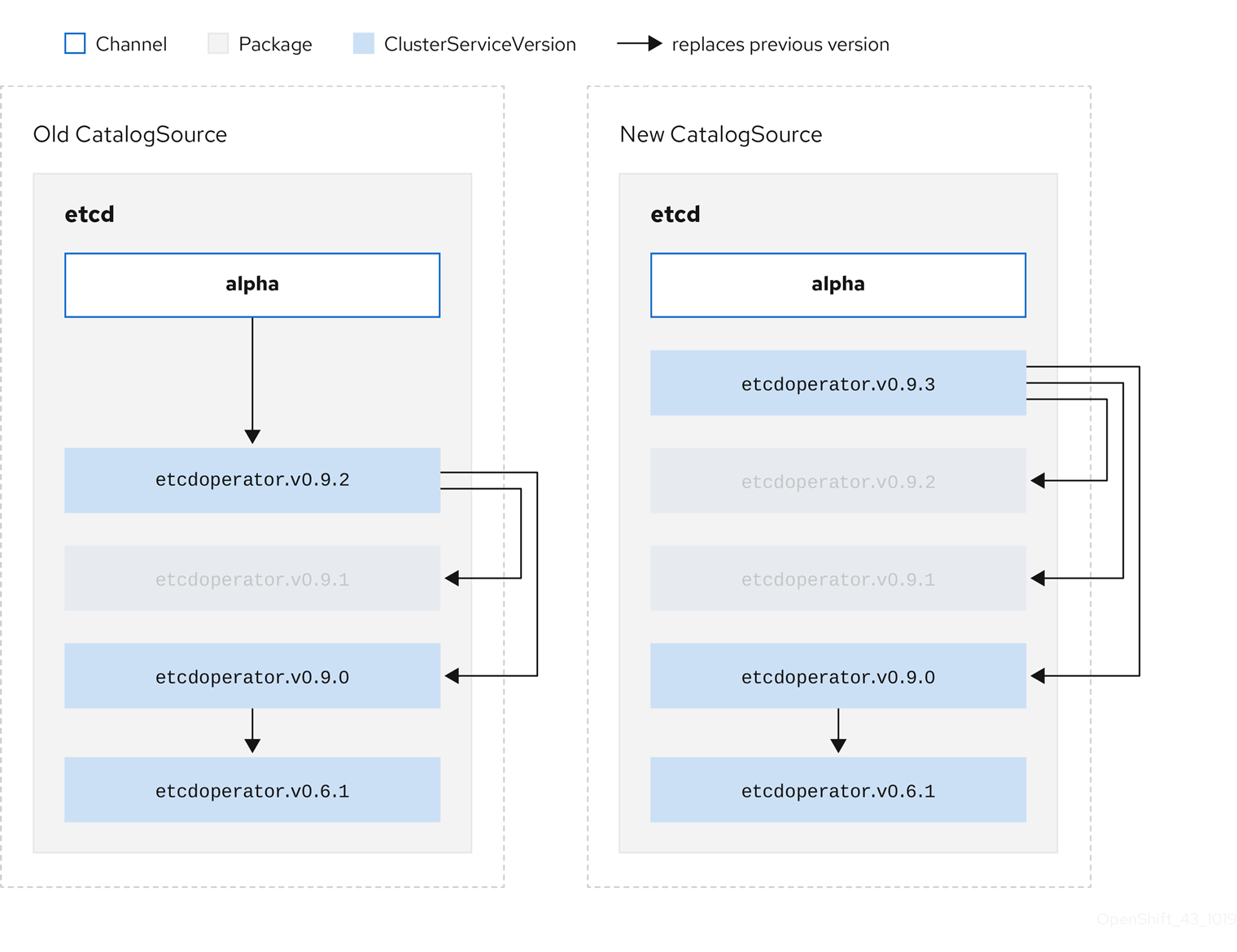
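To make the range check concrete, the sketch below tests whether an installed version falls inside a skipRange value such as '>=4.1.0 <4.1.2'. It is a deliberately minimal stand-in for a semver library: it assumes plain MAJOR.MINOR.PATCH versions and space-separated comparators, and it ignores prerelease tags and the other range syntaxes a full library accepts. The function names are hypothetical.

```python
import operator

# Comparators supported by this sketch; two-character symbols are listed first
# so that ">=" is not mistaken for ">".
_SYMBOLS = (">=", "<=", ">", "<", "=")
_OPS = {
    ">=": operator.ge,
    "<=": operator.le,
    ">": operator.gt,
    "<": operator.lt,
    "=": operator.eq,
}


def parse_version(text):
    """Parse 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in text.split("."))
    return (major, minor, patch)


def in_skip_range(version, skip_range):
    """Return True if `version` satisfies every comparator in `skip_range`."""
    installed = parse_version(version)
    for comparator in skip_range.split():
        for symbol in _SYMBOLS:
            if comparator.startswith(symbol):
                bound = parse_version(comparator[len(symbol):])
                if not _OPS[symbol](installed, bound):
                    return False
                break
        else:
            raise ValueError(f"unsupported comparator: {comparator!r}")
    return True


print(in_skip_range("4.1.1", ">=4.1.0 <4.1.2"))  # True
print(in_skip_range("4.1.2", ">=4.1.0 <4.1.2"))  # False
```

With the example CSV, installed versions 4.1.0 and 4.1.1 fall in the range and can update directly to the channel head, while 4.1.2 itself does not.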

1.4.3.1.4. Z-stream support

A z-stream, or patch release, must replace all previous z-stream releases for the same minor version. OLM does not consider major, minor, or patch versions; it only needs to build the correct graph in a catalog.

In other words, OLM must be able to take a graph as in Old CatalogSource and, similar to before, generate a graph as in New CatalogSource:

Figure 1.7. Replacing several Operators

This graph maintains that:

- Any Operator found in Old CatalogSource has a single replacement in New CatalogSource.

- Any Operator found in New CatalogSource has a single replacement in New CatalogSource.

- Any z-stream release in Old CatalogSource will update to the latest z-stream release in New CatalogSource.

- Unavailable releases can be considered "virtual" graph nodes; their content does not need to exist, the registry just needs to respond as if the graph looks like this.

1.4.4. Operator Lifecycle Manager dependency resolution

This guide outlines dependency resolution and custom resource definition (CRD) upgrade lifecycles with Operator Lifecycle Manager (OLM) in OpenShift Container Platform.

1.4.4.1. About dependency resolution

OLM manages the dependency resolution and upgrade lifecycle of running Operators. In many ways, the problems OLM faces are similar to those faced by operating system package managers such as yum and rpm.

However, there is one constraint that similar systems do not generally have that OLM does: because Operators are always running, OLM attempts to ensure that you are never left with a set of Operators that do not work with each other.

This means that OLM must never:

- install a set of Operators that require APIs that cannot be provided, or

- update an Operator in a way that breaks another that depends upon it.

1.4.4.2. CRD upgrades

OLM upgrades a custom resource definition (CRD) immediately if it is owned by a single cluster service version (CSV). If a CRD is owned by multiple CSVs, then the CRD is upgraded only when it satisfies all of the following backward-compatibility conditions:

- All existing serving versions in the current CRD are present in the new CRD.

- All existing instances, or custom resources, that are associated with the serving versions of the CRD are valid when validated against the validation schema of the new CRD.
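The first condition can be checked mechanically. The sketch below compares two CRD manifests, loaded as Python dictionaries, and reports any serving version that the new CRD drops. It is an illustration only: the function names are hypothetical, and the second condition (validating existing custom resources against the new schema) is not modeled.

```python
def served_versions(crd):
    """Names of the versions that a CRD currently serves."""
    return {v["name"] for v in crd["spec"]["versions"] if v.get("served")}


def missing_served_versions(current_crd, new_crd):
    """Serving versions of the current CRD that are absent from the new CRD.

    An empty result means the first backward-compatibility condition holds.
    """
    new_names = {v["name"] for v in new_crd["spec"]["versions"]}
    return sorted(served_versions(current_crd) - new_names)


current = {"spec": {"versions": [{"name": "v1alpha1", "served": True, "storage": True}]}}
compatible = {
    "spec": {
        "versions": [
            {"name": "v1alpha1", "served": True, "storage": False},
            {"name": "v1beta1", "served": True, "storage": True},
        ]
    }
}
breaking = {"spec": {"versions": [{"name": "v1beta1", "served": True, "storage": True}]}}

print(missing_served_versions(current, compatible))  # []
print(missing_served_versions(current, breaking))    # ['v1alpha1']
```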

1.4.4.2.1. Adding a new CRD version

Procedure

To add a new version of a CRD:

- Add a new entry in the CRD resource under the versions section.

  For example, if the current CRD has a version v1alpha1 and you want to add a new version v1beta1 and mark it as the new storage version, add a new entry for v1beta1:

      versions:
      - name: v1alpha1
        served: true
        storage: false
      - name: v1beta1 # 1
        served: true
        storage: true

  - 1: New entry.

- Ensure the referencing version of the CRD in the owned section of your CSV is updated if the CSV intends to use the new version:

      customresourcedefinitions:
        owned:
        - name: cluster.example.com
          version: v1beta1 # 1
          kind: cluster
          displayName: Cluster

  - 1: Update the version.

- Push the updated CRD and CSV to your bundle.

1.4.4.2.2. Deprecating or removing a CRD version

Operator Lifecycle Manager (OLM) does not allow a serving version of a custom resource definition (CRD) to be removed right away. Instead, a deprecated version of the CRD must be first disabled by setting the served field in the CRD to false. Then, the non-serving version can be removed on the subsequent CRD upgrade.

Procedure

To deprecate and remove a specific version of a CRD:

- Mark the deprecated version as non-serving to indicate this version is no longer in use and may be removed in a subsequent upgrade. For example:

      versions:
      - name: v1alpha1
        served: false # 1
        storage: true

  - 1: Set to false.

- Switch the storage version to a serving version if the version to be deprecated is currently the storage version. For example:

      versions:
      - name: v1alpha1
        served: false
        storage: false
      - name: v1beta1
        served: true
        storage: true

  Note: In order to remove a specific version that is or was the storage version from a CRD, that version must be removed from the storedVersions list in the status of the CRD. OLM will attempt to do this for you if it detects a stored version no longer exists in the new CRD.

- Upgrade the CRD with the above changes.

- In subsequent upgrade cycles, the non-serving version can be removed completely from the CRD. For example:

      versions:
      - name: v1beta1
        served: true
        storage: true

- Ensure the referencing CRD version in the owned section of your CSV is updated accordingly if that version is removed from the CRD.

1.4.4.3. Example dependency resolution scenarios

In the following examples, a provider is an Operator which "owns" a CRD or API service.

Example: Deprecating dependent APIs

A and B are APIs (CRDs):

- The provider of A depends on B.

- The provider of B has a subscription.

- The provider of B updates to provide C but deprecates B.

This results in:

- B no longer has a provider.

- A no longer works.

This is a case OLM prevents with its upgrade strategy.

Example: Version deadlock

A and B are APIs:

- The provider of A requires B.

- The provider of B requires A.

- The provider of A updates to (provide A2, require B2) and deprecates A.

- The provider of B updates to (provide B2, require A2) and deprecates B.

If OLM attempts to update A without simultaneously updating B, or vice-versa, it is unable to progress to new versions of the Operators, even though a new compatible set can be found.

This is another case OLM prevents with its upgrade strategy.

1.4.5. Operator groups

This guide outlines the use of Operator groups with Operator Lifecycle Manager (OLM) in OpenShift Container Platform.

1.4.5.1. About Operator groups

An Operator group, defined by the OperatorGroup resource, provides multitenant configuration to OLM-installed Operators. An Operator group selects target namespaces in which to generate required RBAC access for its member Operators.

The set of target namespaces is provided by a comma-delimited string stored in the olm.targetNamespaces annotation of a cluster service version (CSV). This annotation is applied to the CSV instances of member Operators and is projected into their deployments.

1.4.5.2. Operator group membership

An Operator is considered a member of an Operator group if the following conditions are true:

- The CSV of the Operator exists in the same namespace as the Operator group.

- The install modes in the CSV of the Operator support the set of namespaces targeted by the Operator group.

An install mode in a CSV consists of an InstallModeType field and a boolean Supported field. The spec of a CSV can contain a set of install modes of four distinct InstallModeTypes:

Table 1.4. Install modes and supported Operator groups

| InstallModeType | Description |
|---|---|
| OwnNamespace | The Operator can be a member of an Operator group that selects its own namespace. |
| SingleNamespace | The Operator can be a member of an Operator group that selects one namespace. |
| MultiNamespace | The Operator can be a member of an Operator group that selects more than one namespace. |
| AllNamespaces | The Operator can be a member of an Operator group that selects all namespaces (target namespace set is the empty string ""). |

If the spec of a CSV omits an entry of InstallModeType, then that type is considered unsupported unless support can be inferred by an existing entry that implicitly supports it.
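As a rough illustration of the membership check, the sketch below maps a target namespace set to the install mode it calls for and tests whether a CSV declares that mode as supported. The four type names (OwnNamespace, SingleNamespace, MultiNamespace, AllNamespaces) are the InstallModeType values defined by the OLM API; the functions themselves are hypothetical, and the implicit-support inference mentioned above is not modeled, so an omitted entry is simply treated as unsupported.

```python
def required_install_mode(operator_namespace, target_namespaces):
    """Map an Operator group's target namespace set to an install mode type."""
    targets = set(target_namespaces)
    if not targets:
        raise ValueError("target namespace set must not be empty")
    if targets == {""}:
        # The empty string stands for "all namespaces".
        return "AllNamespaces"
    if len(targets) > 1:
        return "MultiNamespace"
    if targets == {operator_namespace}:
        return "OwnNamespace"
    return "SingleNamespace"


def csv_supports(install_modes, operator_namespace, target_namespaces):
    """True if the CSV's install modes cover the Operator group's targets."""
    needed = required_install_mode(operator_namespace, target_namespaces)
    supported = {mode["type"] for mode in install_modes if mode.get("supported")}
    return needed in supported


# Hypothetical install modes from the spec of a CSV.
modes = [
    {"type": "OwnNamespace", "supported": True},
    {"type": "SingleNamespace", "supported": True},
    {"type": "MultiNamespace", "supported": False},
    {"type": "AllNamespaces", "supported": True},
]

print(csv_supports(modes, "ops", [""]))                  # True
print(csv_supports(modes, "ops", ["team-a", "team-b"]))  # False
```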

1.4.5.3. Target namespace selection

You can explicitly name the target namespace for an Operator group using the spec.targetNamespaces parameter:

    apiVersion: operators.coreos.com/v1
    kind: OperatorGroup
    metadata:
      name: my-group
      namespace: my-namespace
    spec:
      targetNamespaces:
      - my-namespace

You can alternatively specify a namespace using a label selector with the spec.selector parameter:

    apiVersion: operators.coreos.com/v1
    kind: OperatorGroup
    metadata:
      name: my-group
      namespace: my-namespace
    spec:
      selector:
        matchLabels:
          cool.io/prod: "true"

Listing multiple namespaces via spec.targetNamespaces or use of a label selector via spec.selector is not recommended, as the support for more than one target namespace in an Operator group will likely be removed in a future release.

If both spec.targetNamespaces and spec.selector are defined, spec.selector is ignored. Alternatively, you can omit both spec.selector and spec.targetNamespaces to specify a global Operator group, which selects all namespaces:

    apiVersion: operators.coreos.com/v1
    kind: OperatorGroup
    metadata:
      name: my-group
      namespace: my-namespace

The resolved set of selected namespaces is shown in the status.namespaces parameter of an Operator group. The status.namespaces of a global Operator group contains the empty string (""), which signals to a consuming Operator that it should watch all namespaces.
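The selection rules in this section can be summarized in a short Python sketch. It is a simplified model, not OLM code: resolve_target_namespaces is a hypothetical helper, the selector is assumed to use only matchLabels, and a selector without matchLabels is treated the same as an omitted selector.

```python
def resolve_target_namespaces(spec, namespace_labels):
    """Resolve the namespaces an Operator group selects.

    spec             -- the spec of the OperatorGroup as a dictionary
    namespace_labels -- mapping of namespace name to its labels
    """
    explicit = spec.get("targetNamespaces")
    if explicit:
        # spec.targetNamespaces wins; spec.selector is ignored.
        return sorted(explicit)
    match_labels = (spec.get("selector") or {}).get("matchLabels")
    if match_labels:
        return sorted(
            name
            for name, labels in namespace_labels.items()
            if all(labels.get(key) == value for key, value in match_labels.items())
        )
    # Neither field is set: a global Operator group, signalled by "".
    return [""]


# Hypothetical cluster namespaces and their labels.
cluster = {"prod-a": {"cool.io/prod": "true"}, "dev": {}}

print(resolve_target_namespaces({"targetNamespaces": ["my-namespace"]}, cluster))
# ['my-namespace']
print(resolve_target_namespaces({"selector": {"matchLabels": {"cool.io/prod": "true"}}}, cluster))
# ['prod-a']
print(resolve_target_namespaces({}, cluster))
# ['']
```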

1.4.5.4. Operator group CSV annotations

Member CSVs of an Operator group have the following annotations:

| Annotation | Description |
|---|---|
| olm.operatorGroup | Contains the name of the Operator group. |
| olm.operatorNamespace | Contains the namespace of the Operator group. |
| olm.targetNamespaces | Contains a comma-delimited string that lists the target namespace selection of the Operator group. |

All annotations except olm.targetNamespaces are included with copied CSVs. Omitting the olm.targetNamespaces annotation on copied CSVs prevents the duplication of target namespaces between tenants.

1.4.5.5. Provided APIs annotation

A group/version/kind (GVK) is a unique identifier for a Kubernetes API. Information about what GVKs are provided by an Operator group are shown in an olm.providedAPIs annotation. The value of the annotation is a string consisting of <kind>.<version>.<group> delimited with commas. The GVKs of CRDs and API services provided by all active member CSVs of an Operator group are included.
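Because the group part of a GVK can itself contain dots, the annotation value is easiest to parse by splitting each item at the first two dots only. A minimal Python sketch, with parse_provided_apis as a hypothetical helper name:

```python
def parse_provided_apis(annotation):
    """Parse an olm.providedAPIs value into a set of (group, version, kind)."""
    apis = set()
    for item in annotation.split(","):
        item = item.strip()
        if not item:
            continue
        # <kind>.<version>.<group>; the group keeps any remaining dots.
        kind, version, group = item.split(".", 2)
        apis.add((group, version, kind))
    return apis


print(parse_provided_apis("PackageManifest.v1alpha1.packages.apps.redhat.com"))
# {('packages.apps.redhat.com', 'v1alpha1', 'PackageManifest')}
```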

Review the following example of an OperatorGroup object with a single active member CSV that provides the PackageManifest resource:

    apiVersion: operators.coreos.com/v1
    kind: OperatorGroup
    metadata:
      annotations:
        olm.providedAPIs: PackageManifest.v1alpha1.packages.apps.redhat.com
      name: olm-operators
      namespace: local
      ...
    spec:
      selector: {}
      serviceAccount:
        metadata:
          creationTimestamp: null
      targetNamespaces:
      - local
    status:
      lastUpdated: 2019-02-19T16:18:28Z
      namespaces:
      - local

1.4.5.6. Role-based access control

When an Operator group is created, three cluster roles are generated. Each contains a single aggregation rule with a cluster role selector set to match a label, as shown below:

| Cluster role | Label to match |

|---|---|

|

|

|

|

|

|

|

|

|

The following RBAC resources are generated when a CSV becomes an active member of an Operator group, as long as the CSV is watching all namespaces with the AllNamespaces install mode and is not in a failed state with reason InterOperatorGroupOwnerConflict:

- Cluster roles for each API resource from a CRD

- Cluster roles for each API resource from an API service

- Additional roles and role bindings

Table 1.5. Cluster roles generated for each API resource from a CRD

| Cluster role | Settings |

|---|---|

|

|

Verbs on

Aggregation labels:

|

|

|

Verbs on

Aggregation labels:

|

|

|

Verbs on

Aggregation labels:

|

|

|

Verbs on

Aggregation labels:

|

Table 1.6. Cluster roles generated for each API resource from an API service

| Cluster role | Settings |

|---|---|

|

|

Verbs on

Aggregation labels:

|

|

|

Verbs on

Aggregation labels:

|

|

|

Verbs on

Aggregation labels:

|

Additional roles and role bindings

- If the CSV defines exactly one target namespace that contains *, then a cluster role and corresponding cluster role binding are generated for each permission defined in the permissions field of the CSV. All resources generated are given the olm.owner: <csv_name> and olm.owner.namespace: <csv_namespace> labels.

- If the CSV does not define exactly one target namespace that contains *, then all roles and role bindings in the Operator namespace with the olm.owner: <csv_name> and olm.owner.namespace: <csv_namespace> labels are copied into the target namespace.

1.4.5.7. Copied CSVs

OLM creates copies of all active member CSVs of an Operator group in each of the target namespaces of that Operator group. The purpose of a copied CSV is to tell users of a target namespace that a specific Operator is configured to watch resources created there.

Copied CSVs have a status reason Copied and are updated to match the status of their source CSV. The olm.targetNamespaces annotation is stripped from copied CSVs before they are created on the cluster. Omitting the target namespace selection avoids the duplication of target namespaces between tenants.

Copied CSVs are deleted when their source CSV no longer exists or the Operator group that their source CSV belongs to no longer targets the namespace of the copied CSV.

1.4.5.8. Static Operator groups

An Operator group is static if its spec.staticProvidedAPIs field is set to true. As a result, OLM does not modify the olm.providedAPIs annotation of an Operator group, which means that it can be set in advance. This is useful when a user wants to use an Operator group to prevent resource contention in a set of namespaces but does not have active member CSVs that provide the APIs for those resources.

Below is an example of an Operator group that protects Prometheus resources in all namespaces with the something.cool.io/cluster-monitoring: "true" label:

    apiVersion: operators.coreos.com/v1
    kind: OperatorGroup
    metadata:
      name: cluster-monitoring
      namespace: cluster-monitoring
      annotations:
        olm.providedAPIs: Alertmanager.v1.monitoring.coreos.com,Prometheus.v1.monitoring.coreos.com,PrometheusRule.v1.monitoring.coreos.com,ServiceMonitor.v1.monitoring.coreos.com
    spec:
      staticProvidedAPIs: true
      selector:
        matchLabels:
          something.cool.io/cluster-monitoring: "true"

1.4.5.9. Operator group intersection

Two Operator groups are said to have intersecting provided APIs if the intersection of their target namespace sets is not an empty set and the intersection of their provided API sets, defined by olm.providedAPIs annotations, is not an empty set.

A potential issue is that Operator groups with intersecting provided APIs can compete for the same resources in the set of intersecting namespaces.

When checking intersection rules, an Operator group namespace is always included as part of its selected target namespaces.
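The definition amounts to two set intersections, which the following Python sketch spells out. The dictionary layout and function names are assumptions for illustration. Treating a global group (target "") as overlapping every namespace is also an assumption of this sketch, based on the empty string meaning "all namespaces".

```python
ALL_NAMESPACES = ""


def selected_namespaces(group):
    """Target namespaces plus the Operator group's own namespace."""
    return set(group["targets"]) | {group["namespace"]}


def namespaces_overlap(first, second):
    if ALL_NAMESPACES in first or ALL_NAMESPACES in second:
        return True
    return bool(first & second)


def have_intersecting_provided_apis(group_a, group_b):
    """True if both the namespace sets and the provided API sets intersect."""
    shared_apis = group_a["provided_apis"] & group_b["provided_apis"]
    return bool(shared_apis) and namespaces_overlap(
        selected_namespaces(group_a), selected_namespaces(group_b)
    )


# Hypothetical Operator groups.
monitoring = {
    "namespace": "ns-a",
    "targets": ["shared"],
    "provided_apis": {"Prometheus.v1.monitoring.coreos.com"},
}
rival = {
    "namespace": "ns-b",
    "targets": ["shared"],
    "provided_apis": {"Prometheus.v1.monitoring.coreos.com"},
}
elsewhere = dict(rival, targets=["other"])

print(have_intersecting_provided_apis(monitoring, rival))      # True
print(have_intersecting_provided_apis(monitoring, elsewhere))  # False
```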

Rules for intersection

Each time an active member CSV synchronizes, OLM queries the cluster for the set of intersecting provided APIs between the Operator group of the CSV and all others. OLM then checks if that set is an empty set:

- If true and the CSV’s provided APIs are a subset of the Operator group’s:

  - Continue transitioning.

- If true and the CSV’s provided APIs are not a subset of the Operator group’s:

  - If the Operator group is static:

    - Clean up any deployments that belong to the CSV.

    - Transition the CSV to a failed state with status reason CannotModifyStaticOperatorGroupProvidedAPIs.

  - If the Operator group is not static:

    - Replace the Operator group’s olm.providedAPIs annotation with the union of itself and the CSV’s provided APIs.

- If false and the CSV’s provided APIs are not a subset of the Operator group’s:

  - Clean up any deployments that belong to the CSV.

  - Transition the CSV to a failed state with status reason InterOperatorGroupOwnerConflict.

- If false and the CSV’s provided APIs are a subset of the Operator group’s:

  - If the Operator group is static:

    - Clean up any deployments that belong to the CSV.

    - Transition the CSV to a failed state with status reason CannotModifyStaticOperatorGroupProvidedAPIs.

  - If the Operator group is not static:

    - Replace the Operator group’s olm.providedAPIs annotation with the difference between itself and the CSV’s provided APIs.

Failure states caused by Operator groups are non-terminal.
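The rules for intersection form a small decision table, restated here as a Python sketch that follows the four cases exactly as they are listed. It is not OLM's implementation: the sync_decision function, its return values, and the use of plain sets for provided APIs are assumptions made for the example.

```python
STATIC_FAILURE = "CannotModifyStaticOperatorGroupProvidedAPIs"
CONFLICT_FAILURE = "InterOperatorGroupOwnerConflict"


def sync_decision(intersection_is_empty, csv_apis, group_apis, group_is_static):
    """Return (action, resulting olm.providedAPIs set) for one CSV sync.

    action is "continue", "update-annotation", or "fail:<reason>"; a failure
    also implies cleaning up the deployments that belong to the CSV.
    """
    csv_is_subset = csv_apis <= group_apis
    if intersection_is_empty:
        if csv_is_subset:
            return ("continue", group_apis)
        if group_is_static:
            return (f"fail:{STATIC_FAILURE}", group_apis)
        # Non-static group: grow the annotation to include the CSV's APIs.
        return ("update-annotation", group_apis | csv_apis)
    if not csv_is_subset:
        return (f"fail:{CONFLICT_FAILURE}", group_apis)
    if group_is_static:
        return (f"fail:{STATIC_FAILURE}", group_apis)
    # Non-static group: remove the CSV's APIs from the annotation.
    return ("update-annotation", group_apis - csv_apis)


group = {"Prometheus.v1.monitoring.coreos.com"}
csv = {"Prometheus.v1.monitoring.coreos.com", "Alertmanager.v1.monitoring.coreos.com"}

print(sync_decision(True, csv, group, group_is_static=True)[0])
# fail:CannotModifyStaticOperatorGroupProvidedAPIs
print(sync_decision(False, csv, group, group_is_static=False)[0])
# fail:InterOperatorGroupOwnerConflict
```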

The following actions are performed each time an Operator group synchronizes:

- The set of provided APIs from active member CSVs is calculated from the cluster. Note that copied CSVs are ignored.

- The cluster set is compared to olm.providedAPIs, and if olm.providedAPIs contains any extra APIs, then those APIs are pruned.

- All CSVs that provide the same APIs across all namespaces are requeued. This notifies conflicting CSVs in intersecting groups that their conflict has possibly been resolved, either through resizing or through deletion of the conflicting CSV.

1.4.5.10. Troubleshooting Operator groups

Membership

- If more than one Operator group exists in a single namespace, any CSV created in that namespace transitions to a failure state with the reason TooManyOperatorGroups. CSVs in a failed state for this reason transition to pending after the number of Operator groups in their namespaces reaches one.

- If the install modes of a CSV do not support the target namespace selection of the Operator group in its namespace, the CSV transitions to a failure state with the reason UnsupportedOperatorGroup. CSVs in a failed state for this reason transition to pending after either the target namespace selection of the Operator group changes to a supported configuration, or the install modes of the CSV are modified to support the target namespace selection.

1.4.6. Operator Lifecycle Manager metrics

1.4.6.1. Exposed metrics

Operator Lifecycle Manager (OLM) exposes certain OLM-specific resources for use by the Prometheus-based OpenShift Container Platform cluster monitoring stack.

Table 1.7. Metrics exposed by OLM

| Name | Description |

|---|---|

|

| Number of catalog sources. |

|

|

When reconciling a cluster service version (CSV), present whenever a CSV version is in any state other than |

|

| Number of CSVs successfully registered. |

|

|

When reconciling a CSV, represents whether a CSV version is in a |

|

| Monotonic count of CSV upgrades. |

|

| Number of install plans. |

|

| Number of subscriptions. |

|

|

Monotonic count of subscription syncs. Includes the |

1.5. Understanding OperatorHub

1.5.1. About OperatorHub

OperatorHub is the web console interface in OpenShift Container Platform that cluster administrators use to discover and install Operators. With one click, an Operator can be pulled from its off-cluster source, installed and subscribed on the cluster, and made ready for engineering teams to self-service manage the product across deployment environments using Operator Lifecycle Manager (OLM).

Cluster administrators can choose from Operator sources grouped into the following categories:

| Category | Description |

|---|---|

| Red Hat Operators | Red Hat products packaged and shipped by Red Hat. Supported by Red Hat. |

| Certified Operators | Products from leading independent software vendors (ISVs). Red Hat partners with ISVs to package and ship. Supported by the ISV. |

| Community Operators | Optionally-visible software maintained by relevant representatives in the operator-framework/community-operators GitHub repository. No official support. |

| Custom Operators | Operators you add to the cluster yourself. If you have not added any Custom Operators, the Custom category does not appear in the web console on your OperatorHub. |

OperatorHub content automatically refreshes every 60 minutes.

Operators on OperatorHub are packaged to run on OLM. This includes a YAML file called a cluster service version (CSV) containing all of the CRDs, RBAC rules, Deployments, and container images required to install and securely run the Operator. It also contains user-visible information like a description of its features and supported Kubernetes versions.

The Operator SDK can be used to assist developers packaging their Operators for use on OLM and OperatorHub. If you have a commercial application that you want to make accessible to your customers, get it included using the certification workflow provided by Red Hat’s ISV partner portal at connect.redhat.com.

1.5.2. OperatorHub architecture

The OperatorHub UI component is driven by the Marketplace Operator by default on OpenShift Container Platform in the openshift-marketplace namespace.

The Marketplace Operator manages OperatorHub and OperatorSource custom resource definitions (CRDs).

Although some OperatorSource object information is exposed through the OperatorHub UI, it is only used directly by those who are creating their own Operators.

1.5.2.1. OperatorHub CRD

You can use the OperatorHub CRD to change the state of the default OperatorSource objects provided with OperatorHub on the cluster between enabled and disabled. This capability is useful when configuring OpenShift Container Platform in restricted network environments.

Example OperatorHub custom resource

    apiVersion: config.openshift.io/v1
    kind: OperatorHub
    metadata:
      name: cluster
    spec:
      disableAllDefaultSources: true
      sources: [
        {
          name: "community-operators",
          disabled: false
        }
      ]

1.5.2.2. OperatorSource CRD

For each Operator, the OperatorSource CRD is used to define the external data store used to store Operator bundles.

Example OperatorSource custom resource

    apiVersion: operators.coreos.com/v1
    kind: OperatorSource
    metadata:
      name: community-operators
      namespace: marketplace
    spec:
      type: appregistry # 1
      endpoint: https://quay.io/cnr # 2
      registryNamespace: community-operators # 3
      displayName: "Community Operators" # 4
      publisher: "Red Hat" # 5

- 1: To identify the data store as an application registry, type is set to appregistry.

- 2: Currently, Quay is the external data store used by OperatorHub, so the endpoint is set to https://quay.io/cnr for the Quay.io appregistry.

- 3: For a Community Operator, registryNamespace is set to community-operators.

- 4: Optionally, set displayName to a name that appears for the Operator in the OperatorHub UI.

- 5: Optionally, set publisher to the person or organization publishing the Operator that appears in the OperatorHub UI.

1.5.3. Additional resources

1.6. CRDs

1.6.1. Extending the Kubernetes API with custom resource definitions

This guide describes how cluster administrators can extend their OpenShift Container Platform cluster by creating and managing custom resource definitions (CRDs).

1.6.1.1. Custom resource definitions

In the Kubernetes API, a resource is an endpoint that stores a collection of API objects of a certain kind. For example, the built-in Pods resource contains a collection of Pod objects.

A custom resource definition (CRD) object defines a new, unique object type, called a kind, in the cluster and lets the Kubernetes API server handle its entire lifecycle.

Custom resource (CR) objects are created from CRDs that have been added to the cluster by a cluster administrator, allowing all cluster users to add the new resource type into projects.

When a cluster administrator adds a new CRD to the cluster, the Kubernetes API server reacts by creating a new RESTful resource path that can be accessed by the entire cluster or a single project (namespace) and begins serving the specified CR.

Cluster administrators who want to grant access to the CRD to other users can use cluster role aggregation to grant access to users with the admin, edit, or view default cluster roles. Cluster role aggregation allows the insertion of custom policy rules into these cluster roles. This behavior integrates the new resource into the RBAC policy of the cluster as if it were a built-in resource.

Operators in particular make use of CRDs by packaging them with any required RBAC policy and other software-specific logic. Cluster administrators can also add CRDs manually to the cluster outside of the lifecycle of an Operator, making them available to all users.

While only cluster administrators can create CRDs, developers can create the CR from an existing CRD if they have read and write permission to it.

1.6.1.2. Creating a custom resource definition

To create custom resource (CR) objects, cluster administrators must first create a custom resource definition (CRD).

Prerequisites

- Access to an OpenShift Container Platform cluster with cluster-admin user privileges.

Procedure

To create a CRD:

Create a YAML file that contains the following field types:

Example YAML file for a CRD

    apiVersion: apiextensions.k8s.io/v1beta1 # 1
    kind: CustomResourceDefinition
    metadata:
      name: crontabs.stable.example.com # 2
    spec:
      group: stable.example.com # 3
      version: v1 # 4
      scope: Namespaced # 5
      names:
        plural: crontabs # 6
        singular: crontab # 7
        kind: CronTab # 8
        shortNames:
        - ct # 9

- 1: Use the apiextensions.k8s.io/v1beta1 API.

- 2: Specify a name for the definition. This must be in the <plural-name>.<group> format using the values from the group and plural fields.

- 3: Specify a group name for the API. An API group is a collection of objects that are logically related. For example, all batch objects like Job or ScheduledJob could be in the batch API group (such as batch.api.example.com). A good practice is to use a fully qualified domain name (FQDN) of your organization.

- 4: Specify a version name to be used in the URL. Each API group can exist in multiple versions, for example v1alpha, v1beta, v1.

- 5: Specify whether the custom objects are available to a project (Namespaced) or all projects in the cluster (Cluster).

- 6: Specify the plural name to use in the URL. The plural field is the same as a resource in an API URL.

- 7: Specify a singular name to use as an alias on the CLI and for display.

- 8: Specify the kind of objects that can be created. The type can be in CamelCase.

- 9: Specify a shorter string to match your resource on the CLI.

Note: By default, a CRD is cluster-scoped and available to all projects.

Create the CRD object:

$ oc create -f <file_name>.yaml

A new RESTful API endpoint is created at:

/apis/<spec:group>/<spec:version>/<scope>/*/<names-plural>/...

For example, using the example file, the following endpoint is created:

/apis/stable.example.com/v1/namespaces/*/crontabs/...

You can now use this endpoint URL to create and manage CRs. The object kind is based on the spec.names.kind field of the CRD object you created.
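As a sketch of how the endpoint is derived from the CRD fields, the Python function below builds the collection path for a CRD loaded as a dictionary. The collection_path helper is hypothetical, and it assumes the single spec.version field used in the example file rather than a versions list.

```python
def collection_path(crd, namespace=None):
    """Build the REST path that serves the custom resources of a CRD."""
    spec = crd["spec"]
    base = f"/apis/{spec['group']}/{spec['version']}"
    plural = spec["names"]["plural"]
    if spec.get("scope") == "Namespaced":
        if namespace is None:
            raise ValueError("a namespace is required for a Namespaced CRD")
        return f"{base}/namespaces/{namespace}/{plural}"
    # Cluster-scoped resources have no namespace segment.
    return f"{base}/{plural}"


crontab_crd = {
    "spec": {
        "group": "stable.example.com",
        "version": "v1",
        "scope": "Namespaced",
        "names": {"plural": "crontabs", "singular": "crontab", "kind": "CronTab"},
    }
}

print(collection_path(crontab_crd, namespace="default"))
# /apis/stable.example.com/v1/namespaces/default/crontabs
```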

1.6.1.3. Creating cluster roles for custom resource definitions

Cluster administrators can grant permissions to existing cluster-scoped custom resource definitions (CRDs). If you use the admin, edit, and view default cluster roles, you can take advantage of cluster role aggregation for their rules.

You must explicitly assign permissions to each of these roles. The roles with more permissions do not inherit rules from roles with fewer permissions. If you assign a rule to a role, you must also assign that verb to roles that have more permissions. For example, if you grant the get crontabs permission to the view role, you must also grant it to the edit and admin roles. The admin or edit role is usually assigned to the user that created a project through the project template.

Prerequisites

- Create a CRD.

Procedure

Create a cluster role definition file for the CRD. The cluster role definition is a YAML file that contains the rules that apply to each cluster role. An OpenShift Container Platform controller adds the rules that you specify to the default cluster roles.

Example YAML file for a cluster role definition

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1 # 1
    metadata:
      name: aggregate-cron-tabs-admin-edit # 2
      labels:
        rbac.authorization.k8s.io/aggregate-to-admin: "true" # 3
        rbac.authorization.k8s.io/aggregate-to-edit: "true" # 4
    rules:
    - apiGroups: ["stable.example.com"] # 5
      resources: ["crontabs"] # 6
      verbs: ["get", "list", "watch", "create", "update", "patch", "delete", "deletecollection"] # 7
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: aggregate-cron-tabs-view # 8
      labels:
        # Add these permissions to the "view" default role.
        rbac.authorization.k8s.io/aggregate-to-view: "true" # 9
        rbac.authorization.k8s.io/aggregate-to-cluster-reader: "true" # 10
    rules:
    - apiGroups: ["stable.example.com"] # 11
      resources: ["crontabs"] # 12
      verbs: ["get", "list", "watch"] # 13

- 1: Use the rbac.authorization.k8s.io/v1 API.

- 2, 8: Specify a name for the definition.

- 3: Specify this label to grant permissions to the admin default role.

- 4: Specify this label to grant permissions to the edit default role.

- 5, 11: Specify the group name of the CRD.

- 6, 12: Specify the plural name of the CRD that these rules apply to.

- 7, 13: Specify the verbs that represent the permissions that are granted to the role. For example, apply read and write permissions to the admin and edit roles and only read permission to the view role.

- 9: Specify this label to grant permissions to the view default role.

- 10: Specify this label to grant permissions to the cluster-reader default role.

Create the cluster role:

$ oc create -f <file_name>.yaml

1.6.1.4. Creating custom resources from a file

After a custom resource definition (CRD) has been added to the cluster, custom resources (CRs) can be created with the CLI from a file using the CR specification.

Prerequisites

- CRD added to the cluster by a cluster administrator.

Procedure

Create a YAML file for the CR. In the following example definition, the cronSpec and image custom fields are set in a CR of Kind: CronTab. The Kind comes from the spec.names.kind field of the CRD object:

Example YAML file for a CR

    apiVersion: "stable.example.com/v1" # 1
    kind: CronTab # 2
    metadata:
      name: my-new-cron-object # 3
      finalizers: # 4
      - finalizer.stable.example.com
    spec: # 5
      cronSpec: "* * * * /5"
      image: my-awesome-cron-image

- 1: Specify the group name and API version (name/version) from the CRD.

- 2: Specify the type in the CRD.

- 3: Specify a name for the object.

- 4: Specify the finalizers for the object, if any. Finalizers allow controllers to implement conditions that must be completed before the object can be deleted.

- 5: Specify conditions specific to the type of object.

After you create the file, create the object:

$ oc create -f <file_name>.yaml
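
The manifest does not have to be written by hand. As a minimal sketch, assuming the CronTab CRD from this section, the following Python builds the same CR as a dictionary, checks that apiVersion has the group/version form, and serializes it as JSON, a format that oc create -f accepts alongside YAML. The helper names build_crontab and split_api_version are hypothetical.

```python
import json


def build_crontab(name, cron_spec, image, finalizers=None):
    """Build a CronTab CR manifest with the fields used in the example above."""
    manifest = {
        "apiVersion": "stable.example.com/v1",
        "kind": "CronTab",
        "metadata": {"name": name},
        "spec": {"cronSpec": cron_spec, "image": image},
    }
    if finalizers:
        manifest["metadata"]["finalizers"] = list(finalizers)
    return manifest


def split_api_version(api_version):
    """Split 'group/version'. A CR always has a group, so a bare version is rejected."""
    group, sep, version = api_version.partition("/")
    if not sep or not group or not version:
        raise ValueError(f"expected <group>/<version>, got {api_version!r}")
    return group, version


manifest = build_crontab(
    name="my-new-cron-object",
    cron_spec="* * * * /5",
    image="my-awesome-cron-image",
    finalizers=["finalizer.stable.example.com"],
)
group, version = split_api_version(manifest["apiVersion"])
text = json.dumps(manifest, indent=2)
print(text)
```

Saving the printed text to a file gives a manifest that can be passed to the oc create -f command shown above.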

1.6.1.5. Inspecting custom resources

You can inspect custom resource (CR) objects that exist in your cluster using the CLI.

Prerequisites

- A CR object exists in a namespace to which you have access.

Procedure

To get information about a specific kind of CR, run:

$ oc get <kind>

For example:

$ oc get crontab

Example output

NAME                 KIND
my-new-cron-object   CronTab.v1.stable.example.com

Resource names are not case-sensitive, and you can use either the singular or plural forms defined in the CRD, as well as any short name. For example:

$ oc get crontabs

$ oc get crontab

$ oc get ct
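
The name matching described above can be sketched in a few lines of Python. This is only an illustration of the rule, not the CLI's actual lookup code. It assumes a CRD names block with the plural crontabs, the singular crontab, and the short name ct, which matches the three commands shown. The function resolve_resource is hypothetical.

```python
# Assumed names block of the CronTab CRD, inferred from the commands above.
CRD_NAMES = {
    "plural": "crontabs",
    "singular": "crontab",
    "kind": "CronTab",
    "shortNames": ["ct"],
}


def resolve_resource(user_input, names):
    """Map a name typed on the command line to the CRD's plural resource name.

    Matching ignores case and accepts the plural, the singular, or any short name.
    Returns None when nothing matches.
    """
    wanted = user_input.lower()
    candidates = [names["plural"], names["singular"], *names.get("shortNames", [])]
    if wanted in (candidate.lower() for candidate in candidates):
        return names["plural"]
    return None


for typed in ("crontabs", "crontab", "ct", "CronTab"):
    print(typed, "->", resolve_resource(typed, CRD_NAMES))
```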

You can also view the raw YAML data for a CR:

$ oc get <kind> -o yaml

For example:

$ oc get ct -o yaml

Example output

apiVersion: v1
items:
- apiVersion: stable.example.com/v1
  kind: CronTab
  metadata:
    clusterName: ""
    creationTimestamp: 2017-05-31T12:56:35Z
    deletionGracePeriodSeconds: null
    deletionTimestamp: null
    name: my-new-cron-object
    namespace: default
    resourceVersion: "285"
    selfLink: /apis/stable.example.com/v1/namespaces/default/crontabs/my-new-cron-object
    uid: 9423255b-4600-11e7-af6a-28d2447dc82b
  spec:
    cronSpec: '* * * * /5'
    image: my-awesome-cron-image

1.6.2. Managing resources from custom resource definitions

This guide describes how developers can manage custom resources (CRs) that come from custom resource definitions (CRDs).

1.6.2.1. Custom resource definitions

In the Kubernetes API, a resource is an endpoint that stores a collection of API objects of a certain kind. For example, the built-in Pods resource contains a collection of Pod objects.

A custom resource definition (CRD) object defines a new, unique object type, called a kind, in the cluster and lets the Kubernetes API server handle its entire lifecycle.

Custom resource (CR) objects are created from CRDs that have been added to the cluster by a cluster administrator, allowing all cluster users to add the new resource type into projects.

Operators in particular make use of CRDs by packaging them with any required RBAC policy and other software-specific logic. Cluster administrators can also add CRDs manually to the cluster outside of the lifecycle of an Operator, making them available to all users.

While only cluster administrators can create CRDs, developers can create the CR from an existing CRD if they have read and write permission to it.
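
To make the idea of a resource as an API endpoint concrete, the following Python sketch builds the REST path under which the API server serves a custom resource. It follows the standard Kubernetes layout for grouped APIs, /apis/<group>/<version>/namespaces/<namespace>/<plural>/<name>. The function name resource_path is hypothetical, and the group, version, and plural values are taken from the CronTab examples in this chapter.

```python
def resource_path(group, version, plural, namespace=None, name=None):
    """Build the API path for a custom resource collection or a single object.

    Omit `namespace` for a cluster-scoped resource, and omit `name` to address
    the whole collection instead of one object.
    """
    parts = ["apis", group, version]
    if namespace is not None:
        parts += ["namespaces", namespace]
    parts.append(plural)
    if name is not None:
        parts.append(name)
    return "/" + "/".join(parts)


# Collection of CronTab objects in the "default" namespace.
print(resource_path("stable.example.com", "v1", "crontabs", namespace="default"))

# A single CronTab object.
print(resource_path("stable.example.com", "v1", "crontabs",
                    namespace="default", name="my-new-cron-object"))
```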

1.6.2.2. Creating custom resources from a file

After a custom resource definition (CRD) has been added to the cluster, you can create custom resources (CRs) with the CLI from a file that contains the CR specification.

Prerequisites

- CRD added to the cluster by a cluster administrator.

Procedure

Create a YAML file for the CR. In the following example definition, the cronSpec and image custom fields are set in a CR of Kind: CronTab. The Kind comes from the spec.names.kind field of the CRD object:

Example YAML file for a CR

apiVersion: "stable.example.com/v1" # (1)
kind: CronTab # (2)
metadata:
  name: my-new-cron-object # (3)
  finalizers: # (4)
  - finalizer.stable.example.com
spec: # (5)
  cronSpec: "* * * * /5"
  image: my-awesome-cron-image

- (1) Specify the group name and API version (name/version) from the CRD.

- (2) Specify the type in the CRD.

- (3) Specify a name for the object.

- (4) Specify the finalizers for the object, if any. Finalizers allow controllers to implement conditions that must be completed before the object can be deleted.

- (5) Specify conditions specific to the type of object.

After you create the file, create the object:

$ oc create -f <file_name>.yaml
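
The finalizers field in the example manifest affects how the object is deleted. The following Python is a toy model of that behavior under simplifying assumptions: a delete request only marks the object for deletion, and the object is removed once its finalizers list is empty. The class StoredObject and its methods are hypothetical and stand in for what the API server and a controller do together.

```python
class StoredObject:
    """Toy stand-in for an object held by the API server."""

    def __init__(self, name, finalizers=()):
        self.name = name
        self.finalizers = list(finalizers)
        self.deletion_requested = False  # models a set deletionTimestamp
        self.removed = False

    def request_delete(self):
        """Model a delete request: mark for deletion, remove only if unblocked."""
        self.deletion_requested = True
        self._maybe_remove()

    def remove_finalizer(self, finalizer):
        """Model a controller finishing its cleanup and dropping its finalizer."""
        if finalizer in self.finalizers:
            self.finalizers.remove(finalizer)
        self._maybe_remove()

    def _maybe_remove(self):
        # The object goes away only when deletion was requested
        # and no finalizers remain.
        if self.deletion_requested and not self.finalizers:
            self.removed = True


obj = StoredObject("my-new-cron-object", ["finalizer.stable.example.com"])
obj.request_delete()
print(obj.removed)  # False: the finalizer still blocks removal

obj.remove_finalizer("finalizer.stable.example.com")
print(obj.removed)  # True: nothing blocks removal any more
```

An object created without finalizers is removed as soon as deletion is requested.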

1.6.2.3. Inspecting custom resources

You can inspect custom resource (CR) objects that exist in your cluster using the CLI.

Prerequisites

- A CR object exists in a namespace to which you have access.

Procedure

To get information about a specific kind of CR, run:

$ oc get <kind>

For example:

$ oc get crontab

Example output

NAME                 KIND
my-new-cron-object   CronTab.v1.stable.example.com

Resource names are not case-sensitive, and you can use either the singular or plural forms defined in the CRD, as well as any short name. For example:

$ oc get crontabs

$ oc get crontab

$ oc get ct

You can also view the raw YAML data for a CR:

$ oc get <kind> -o yaml

For example:

$ oc get ct -o yaml

Example output

apiVersion: v1
items:
- apiVersion: stable.example.com/v1
  kind: CronTab
  metadata:
    clusterName: ""
    creationTimestamp: 2017-05-31T12:56:35Z
    deletionGracePeriodSeconds: null
    deletionTimestamp: null
    name: my-new-cron-object
    namespace: default
    resourceVersion: "285"
    selfLink: /apis/stable.example.com/v1/namespaces/default/crontabs/my-new-cron-object
    uid: 9423255b-4600-11e7-af6a-28d2447dc82b
  spec:
    cronSpec: '* * * * /5'
    image: my-awesome-cron-image
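
When the output is consumed by a script, the JSON form (oc get ct -o json) is usually easier to parse than YAML. The following Python is a minimal sketch that extracts the custom fields from such a list. The SAMPLE_OUTPUT string is hand-written and abridged from the YAML example above, not captured from a real cluster, and the function summarize is hypothetical.

```python
import json

# Abridged, hand-written JSON equivalent of the example output above.
SAMPLE_OUTPUT = """
{
  "apiVersion": "v1",
  "kind": "List",
  "items": [
    {
      "apiVersion": "stable.example.com/v1",
      "kind": "CronTab",
      "metadata": {
        "name": "my-new-cron-object",
        "namespace": "default"
      },
      "spec": {
        "cronSpec": "* * * * /5",
        "image": "my-awesome-cron-image"
      }
    }
  ]
}
"""


def summarize(list_json):
    """Return (namespace, name, cronSpec, image) for every item in the list."""
    doc = json.loads(list_json)
    rows = []
    for item in doc.get("items", []):
        meta = item.get("metadata", {})
        spec = item.get("spec", {})
        rows.append((
            meta.get("namespace"),
            meta.get("name"),
            spec.get("cronSpec"),
            spec.get("image"),
        ))
    return rows


for row in summarize(SAMPLE_OUTPUT):
    print(row)
```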