Chapter 5. Deploying the HCI nodes

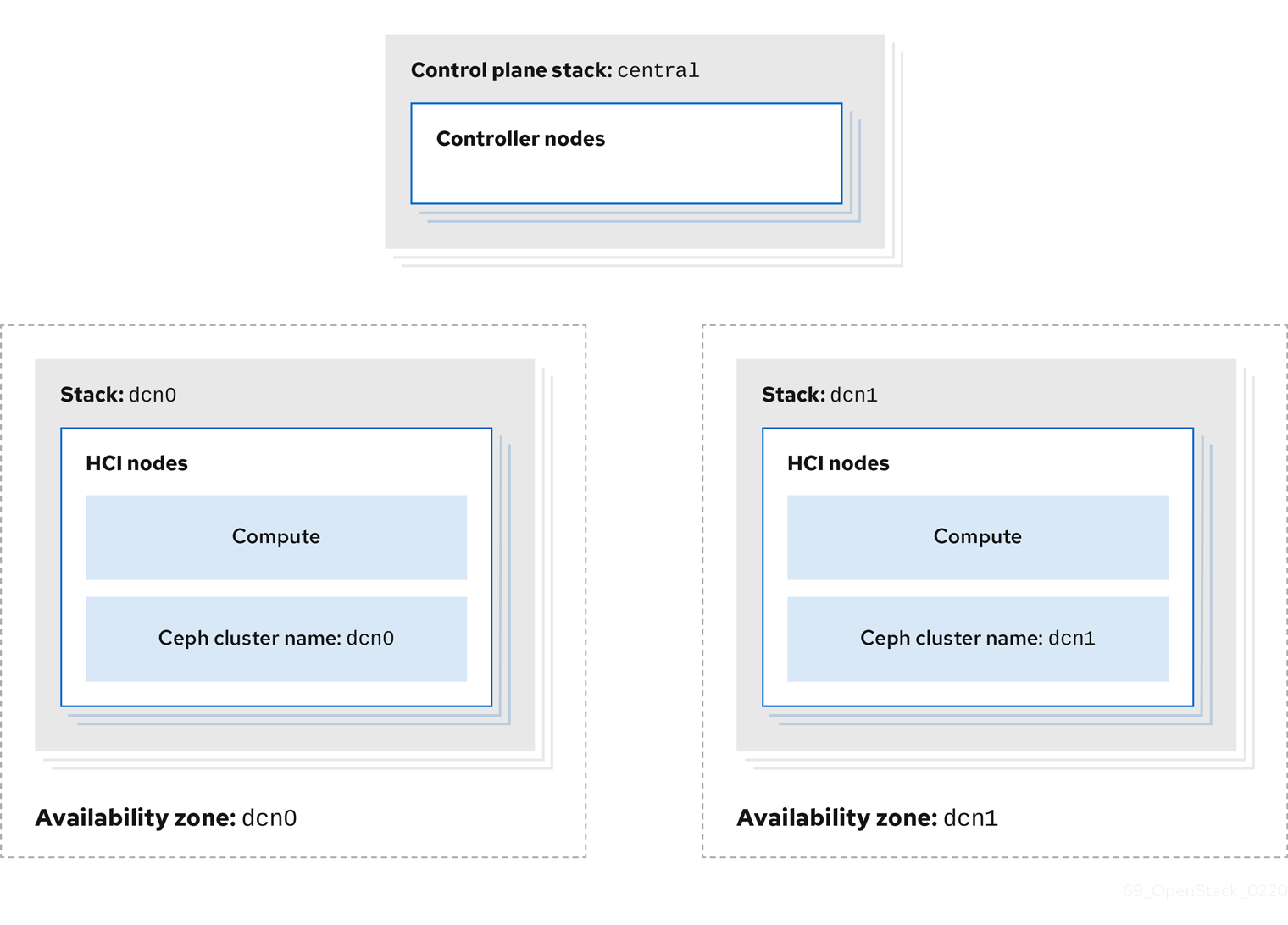

For DCN sites, you can deploy a hyper-converged infrastructure (HCI) stack that uses Compute and Ceph Storage on a single node. For example, the following diagram shows two DCN stacks named dcn0 and dcn1, each in their own availability zone (AZ). Each DCN stack has its own Ceph cluster and Compute services:

The procedures in Section 5.1, Configuring the distributed compute node environment files, and Section 5.2, Deploying HCI nodes to the distributed compute node site, describe this deployment method. These procedures demonstrate how to add a new DCN stack to your deployment and reuse the configuration from the existing heat stack to create new environment files. In the example procedures, the first heat stack deploys an overcloud within a centralized data center. A second heat stack then deploys a batch of Compute nodes to a remote physical location.

5.1. Configuring the distributed compute node environment files

This procedure retrieves the metadata of your central site and then generates the configuration files that the distributed compute node (DCN) sites require:

Procedure

Export stack information from the central stack. You must deploy the control-plane stack before running this command:

    openstack overcloud export \
      --config-download-dir /var/lib/mistral/central \
      --stack central \
      --output-file ~/dcn-common/control-plane-export.yaml

This procedure creates a new control-plane-export.yaml environment file and uses the passwords in the plan-environment.yaml file from the overcloud. The control-plane-export.yaml file contains sensitive security data. To improve security, remove the file when you no longer require it.
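Because the export file holds passwords, one way to limit exposure is to restrict its permissions while it is in use and delete it afterward. The following is a minimal sketch; the helper names lock_export_file and remove_export_file are hypothetical and are not part of any product tooling.

```shell
#!/bin/sh
# Hypothetical helpers for handling the sensitive export file.

# Restrict the file so that only its owner can read or write it.
lock_export_file() {
    chmod 600 -- "$1"
}

# Delete the file once it is no longer required.
remove_export_file() {
    rm -f -- "$1"
}

# Example usage (path taken from the export command above):
#   lock_export_file ~/dcn-common/control-plane-export.yaml
#   remove_export_file ~/dcn-common/control-plane-export.yaml
```

Keeping the two actions in separate functions lets you lock the file immediately after the export and defer the removal until the last deployment that needs it has finished.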

5.2. Deploying HCI nodes to the distributed compute node site

This procedure uses the DistributedComputeHCI role to deploy HCI nodes to an availability zone (AZ) named dcn0. This role is used specifically for distributed compute HCI nodes.

CephMon runs on the HCI nodes and cannot run on the central Controller node. Additionally, the central Controller node is deployed without Ceph.

Procedure

Review the overrides for the distributed compute node (DCN) site in dcn0/overrides.yaml:

    parameter_defaults:
      DistributedComputeHCICount: 3
      DistributedComputeHCIFlavor: baremetal
      DistributedComputeHCISchedulerHints:
        'capabilities:node': '0-ceph-%index%'
      CinderStorageAvailabilityZone: dcn0
      NovaAZAttach: false

Review the proposed Ceph configuration in dcn0/ceph.yaml:

    parameter_defaults:
      CephClusterName: dcn0
      NovaEnableRbdBackend: false
      CephAnsiblePlaybookVerbosity: 3
      CephPoolDefaultPgNum: 256
      CephPoolDefaultSize: 3
      CephAnsibleDisksConfig:
        osd_scenario: lvm
        osd_objectstore: bluestore
        devices:
          - /dev/sda
          - /dev/sdb
          - /dev/sdc
          - /dev/sdd
          - /dev/sde
          - /dev/sdf
          - /dev/sdg
          - /dev/sdh
          - /dev/sdi
          - /dev/sdj
          - /dev/sdk
          - /dev/sdl
      ## Everything below this line is for HCI Tuning
      CephAnsibleExtraConfig:
        ceph_osd_docker_cpu_limit: 1
        is_hci: true
      CephConfigOverrides:
        osd_recovery_op_priority: 3
        osd_recovery_max_active: 3
        osd_max_backfills: 1
      ## Set relative to your hardware:
      # DistributedComputeHCIParameters:
      #   NovaReservedHostMemory: 181000
      # DistributedComputeHCIExtraConfig:
      #   nova::cpu_allocation_ratio: 8.2

Replace the values for the following parameters with values that suit your environment. For more information, see the Deploying an overcloud with containerized Red Hat Ceph and Hyperconverged Infrastructure guides.

- CephAnsibleExtraConfig
- DistributedComputeHCIParameters
- CephPoolDefaultPgNum
- CephPoolDefaultSize
- DistributedComputeHCIExtraConfig
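When you adjust CephPoolDefaultPgNum and CephPoolDefaultSize, a quick arithmetic check can show how many placement group replicas a single pool places on each OSD. The sketch below assumes one OSD per listed device (12 devices on each of the 3 HCI nodes in the example, so 36 OSDs). The function name is hypothetical, and the result is only a rough indicator; consult the Ceph placement group guidance for target values.

```shell
#!/bin/sh
# Hypothetical helper: placement group replicas per OSD for one pool.
# Arguments: PG_NUM POOL_SIZE OSD_COUNT. Uses integer division (rounds down).
pg_replicas_per_osd() {
    echo $(( $1 * $2 / $3 ))
}

# Example values from overrides.yaml and ceph.yaml:
# 3 HCI nodes x 12 devices, assuming one OSD per device.
osd_count=$(( 3 * 12 ))
pg_replicas_per_osd 256 3 "$osd_count"
```

With the example values, this prints 21. A deployment usually creates several pools, so the total per OSD is higher than the figure for any one pool.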

Create a new file called nova-az.yaml with the following contents:

    resource_registry:
      OS::TripleO::Services::NovaAZConfig: /usr/share/openstack-tripleo-heat-templates/deployment/nova/nova-az-config.yaml
    parameter_defaults:
      NovaComputeAvailabilityZone: dcn0
      RootStackName: central
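If you deploy more than one DCN site, the same file can be generated per site instead of edited by hand. The following is a minimal sketch that writes the contents shown above with the AZ name substituted; the function name write_nova_az and the dcn1/ directory in the usage comment are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical helper: write a nova-az.yaml file for a given AZ name.
# Arguments: AZ_NAME OUTPUT_FILE
write_nova_az() {
    cat > "$2" <<EOF
resource_registry:
  OS::TripleO::Services::NovaAZConfig: /usr/share/openstack-tripleo-heat-templates/deployment/nova/nova-az-config.yaml
parameter_defaults:
  NovaComputeAvailabilityZone: $1
  RootStackName: central
EOF
}

# Example usage for a second site (directory layout assumed to mirror dcn0/):
#   write_nova_az dcn1 dcn1/nova-az.yaml
```

Only NovaComputeAvailabilityZone changes between sites in this sketch; RootStackName stays set to central because every DCN stack refers to the same central stack.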

Provided that the overcloud can access the endpoints that are listed in the centralrc file created by the central deployment, this configuration creates an AZ called dcn0, with the new HCI Compute nodes added to that AZ during deployment.

Run the deploy.sh deployment script for dcn0:

    #!/bin/bash
    STACK=dcn0
    source ~/stackrc
    # Generate the roles file only if it does not already exist.
    if [[ ! -e distributed_compute_hci.yaml ]]; then
        openstack overcloud roles generate DistributedComputeHCI -o distributed_compute_hci.yaml
    fi
    time openstack overcloud deploy \
        --stack "$STACK" \
        --templates /usr/share/openstack-tripleo-heat-templates/ \
        -r distributed_compute_hci.yaml \
        -e /usr/share/openstack-tripleo-heat-templates/environments/disable-telemetry.yaml \
        -e /usr/share/openstack-tripleo-heat-templates/environments/podman.yaml \
        -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml \
        -e /usr/share/openstack-tripleo-heat-templates/environments/cinder-volume-active-active.yaml \
        -e ~/dcn-common/control-plane-export.yaml \
        -e ~/containers-env-file.yaml \
        -e ceph.yaml \
        -e nova-az.yaml \
        -e overrides.yaml

When the overcloud deployment finishes, see the post-deployment configuration steps and checks in Chapter 6, Post-deployment configuration.
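Before you run deploy.sh, it can help to confirm that every environment file that the script passes with -e is present, because the script refers to ceph.yaml, nova-az.yaml, and overrides.yaml by relative path. The following is a minimal sketch of such a check; the function name check_env_files is hypothetical and is not part of the deployment tooling.

```shell
#!/bin/sh
# Hypothetical pre-flight helper: report any environment file that is not readable.
# Returns 0 when every file is readable, 1 otherwise.
check_env_files() {
    missing=0
    for f in "$@"; do
        if [ ! -r "$f" ]; then
            echo "missing environment file: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example usage, run from the directory that holds the dcn0 files:
#   check_env_files ~/dcn-common/control-plane-export.yaml \
#       ~/containers-env-file.yaml ceph.yaml nova-az.yaml overrides.yaml || exit 1
```

The check reports every missing file before it returns, so a single run shows the full list rather than stopping at the first problem.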