How to Safely Replace OSDs with Block.db in Cephadm 18.2.4 Reef

I have two scenarios regarding my Cephadm 18.2.4 Reef cluster (using Orchestrator on the monitor node for operations):

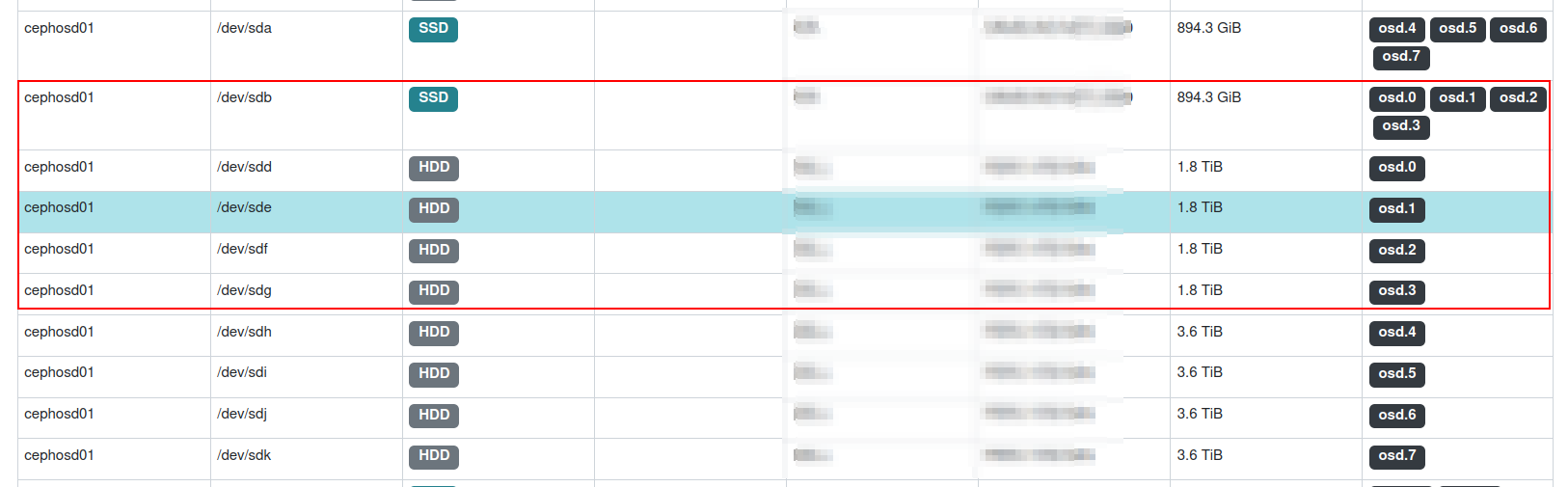

Scenario 1 (shown in the attached image, captioned "Replace all block.db HDDs in one go"):

On one cephosd node (which runs only OSD containers), I want to replace all of the 1.8T OSD disks with 3.6T disks. The 1.8T OSDs currently use SSDs for block.db.

What is the safest way to replace them without significantly impacting the cluster? The data on these OSDs may be lost during the swap, but I expect the replicas on the other cephosd nodes to allow recovery.

My initial thought is to remove the 1.8T OSDs, remove the SSD as well, then add the new HDDs and reassign the block.db. However, this seems crude and not very safe. I found the --replace option for OSD removal, so what is the correct approach for the removal phase, and how should I then add the new OSDs together with the SSD (block.db)? I would greatly appreciate guidance on a safe procedure.
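To make the question concrete, this is roughly the removal step I have in mind, written as a dry-run sketch. It assumes that `ceph orch osd rm <id> --replace` is the right command for the removal phase. The `run` and `replace_osds` helpers and the OSD ids are placeholders of my own, and I have not confirmed that this is the recommended procedure.

```shell
#!/usr/bin/env bash
# Dry-run sketch of the removal phase. By default every command is only
# printed; set RUN=1 to actually execute it on a node with the ceph CLI.

run() {
  if [ "${RUN:-0}" = "1" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

# Schedule each given OSD id for removal with --replace, which (as I
# understand the docs) drains the OSD and marks it "destroyed" instead of
# deleting it, so the id stays reserved for the replacement disk.
replace_osds() {
  local id
  for id in "$@"; do
    run ceph orch osd rm "$id" --replace
  done
  # Show the progress of the removal queue.
  run ceph orch osd rm status
}

# Placeholder ids; substitute the OSDs that live on the host being upgraded.
replace_osds 0 1 2 3
```

Run as-is, this only prints the commands, so the sequence can be reviewed before anything touches the cluster. What I am still unsure about is the step after this: how the new HDDs and the block.db on the SSD get re-associated.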


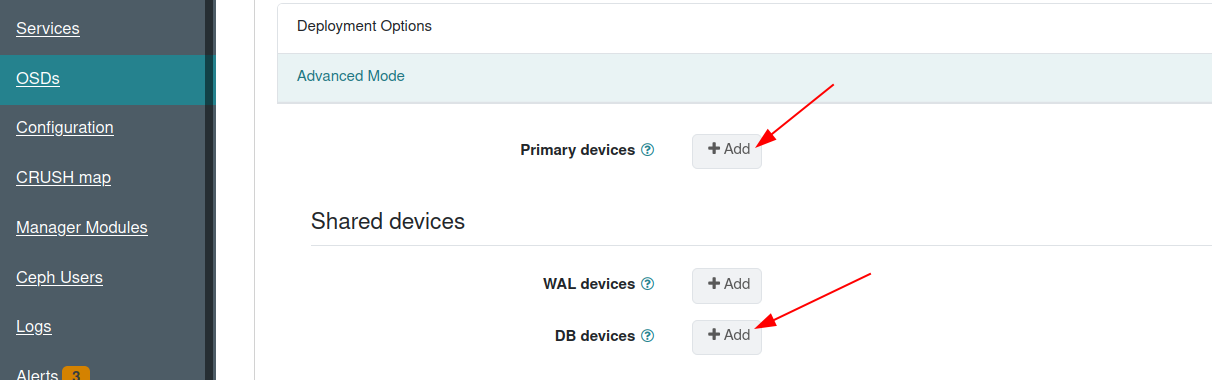

Scenario 2 (shown in the attached image): Suppose osd.0 is faulty and needs to be replaced. After removing osd.0 from the cluster, how can I make the new osd.0 use the same SSD (block.db) that is still in use by osd.1, osd.2, and osd.3? If I use the dashboard to add it (OSDs -> Create), I can only select the new HDD as the primary device and cannot specify the DB device, because the SSD is listed as unavailable (it is still in use by osd.1, osd.2, and osd.3).

How can I proceed in this scenario? I expect this situation to come up frequently in clusters that use block.db. Please note that my OSD nodes run only OSD containers, so I cannot run ceph-volume directly on them.

Thank you all for reading, and I look forward to your advice and assistance.