Accelerating Confidential AI on OpenShift with the Intel E2100 IPU, DPU Operator, and F5 NGINX


Introduction

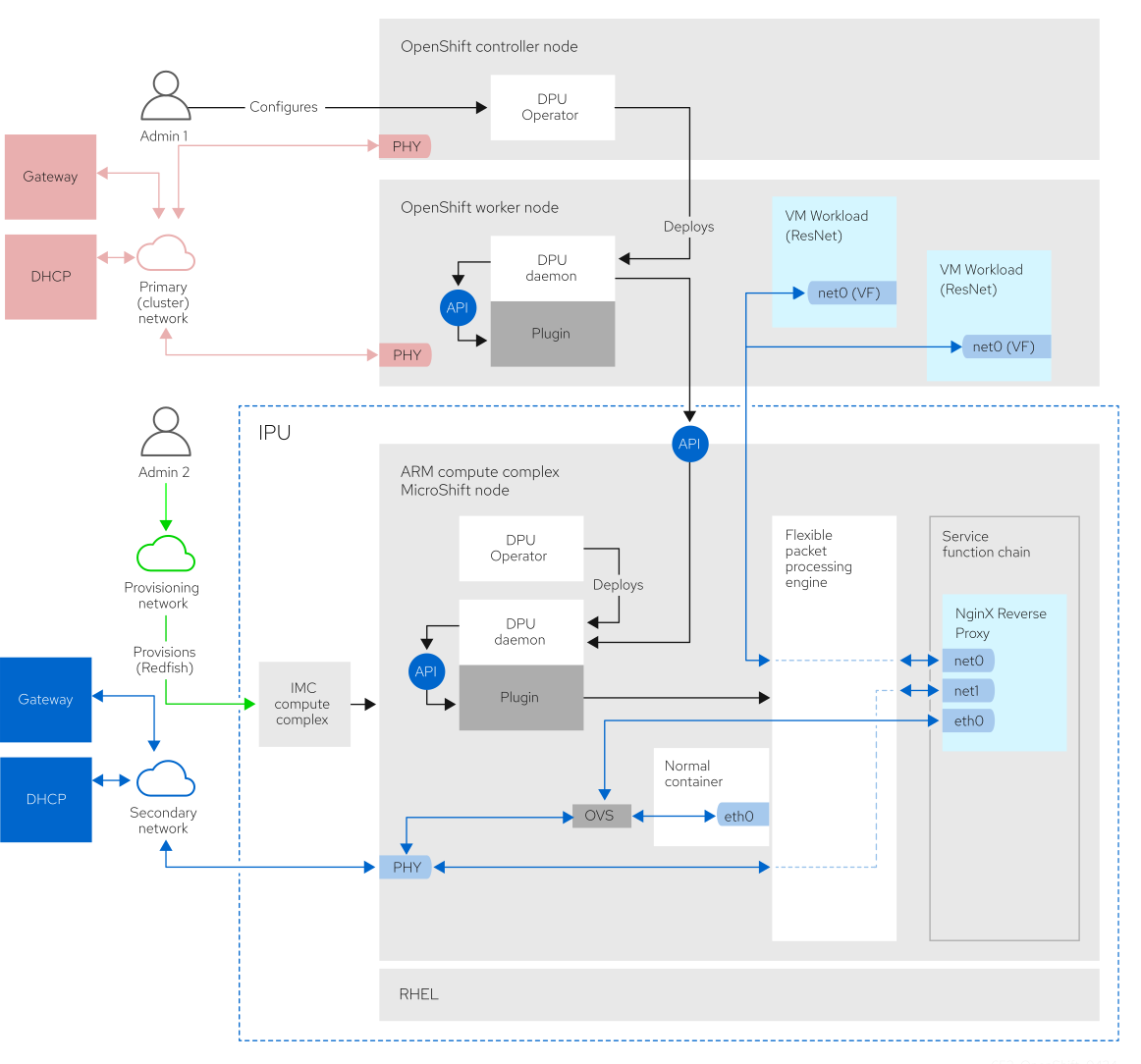

This knowledge base article describes how to deploy a complete end-to-end solution that integrates Intel's Infrastructure Processing Unit (Intel® IPU E2100 Series) with Red Hat OpenShift, the industry-leading hybrid cloud application platform. An IPU is an advanced network interface card with an embedded CPU complex that can offload infrastructure tasks from the host server; some vendors call this class of device a Data Processing Unit (DPU). We demonstrate how to use the OpenShift DPU Operator to offload network functions. Specifically, F5 NGINX runs on the IPU as a reverse proxy for confidential AI workloads that run as pods on the host, improving application performance and security. Most of the steps in this article can be reused for other workloads.

Key components and concepts:

- Intel IPU E2100 Series: A programmable network device that combines a standard Ethernet data path with a powerful embedded compute complex. It contains an Integrated Management Complex (IMC) and an Arm-based Compute Complex (ACC). Its Flexible Programmable Packet Processing Engine (FPPE) enables service function chaining and offloads tasks such as firewalling, packet filtering, and compression. This frees host CPU resources and creates a security boundary by physically separating infrastructure services from the user applications running on the host.

- Arm Compute Complex (ACC): Runs MicroShift on Red Hat Enterprise Linux (RHEL), so the IPU can be managed with standard Red Hat tools like any other server. The ACC is powerful enough to handle significant data plane traffic or to run control plane functions locally.

- OpenShift DPU Operator: Deploys a daemonset on the OpenShift worker nodes. These daemons communicate with a daemon on the IPU through a vendor-agnostic API from the Open Programmable Infrastructure (OPI) project, which lets the operator manage the lifecycle of IPU workloads with a Kubernetes-native workflow.

- Solution Overview: This knowledge base walks through deploying F5 NGINX on the IPU. This NGINX instance functions as a reverse proxy, providing access to ResNet application pods (not VMs) running on the OpenShift worker nodes.

Prerequisites

Before proceeding, ensure that the following prerequisites are met:

- Intel IPU E2100 Series Hardware: Properly installed in your OpenShift worker nodes.

- IPU Firmware: Upgraded to version 2.0.0.11126 or later.

- Redfish:

  - Enabled and reachable on the IPU.

  - The Redfish instance must be able to reach the HTTPS server that hosts the RHEL ISO for the IPU.

  - If your HTTPS server uses self-signed certificates, ensure that the IPU trusts them.

  - Network Time Protocol (NTP) must be configured on the IPU, because validating the certificates of the TLS connections used by Redfish requires an accurate clock.

- IPU Driver Configuration: For RHEL compatibility, the IPU must be configured to use the `idpf` driver instead of the default `iccnet` driver. Refer to the official Intel IPU documentation for instructions on how to perform this configuration.

- OpenShift Container Platform: A functional OpenShift cluster, version 4.19 or later, must be installed and operational. This knowledge base focuses on worker nodes equipped with IPUs.

- Network Connectivity:

  - Each network segment (OpenShift cluster, IPU management, IPU data plane) must have DHCP and DNS services.

  - All components should have internet access for pulling images and packages.
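The firmware and OpenShift minimums above are easy to get wrong with a plain string comparison: as text, "4.9" sorts after "4.19". The following is a minimal Python sketch for checking a reported version against those minimums. It assumes purely numeric dotted versions (no "-rc" style suffixes), and the helper names are hypothetical; how you read the version from the IPU or the cluster is out of scope.

```python
"""Sketch: compare dotted version strings numerically against the prerequisites."""
from __future__ import annotations

MIN_IPU_FIRMWARE = "2.0.0.11126"  # IPU firmware minimum from the prerequisites
MIN_OPENSHIFT = "4.19"            # OpenShift minimum from the prerequisites


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '2.0.0.11126' into (2, 0, 0, 11126); non-numeric parts raise ValueError."""
    return tuple(int(part) for part in version.strip().split("."))


def at_least(actual: str, minimum: str) -> bool:
    """Return True if `actual` >= `minimum`, padding the shorter version with zeros."""
    a, m = parse_version(actual), parse_version(minimum)
    width = max(len(a), len(m))
    a += (0,) * (width - len(a))
    m += (0,) * (width - len(m))
    return a >= m


if __name__ == "__main__":
    print(at_least("2.0.0.11126", MIN_IPU_FIRMWARE))  # True
    print(at_least("1.9.0.9000", MIN_IPU_FIRMWARE))   # False
    print(at_least("4.9", MIN_OPENSHIFT))             # False (text comparison would say True)
```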

Solution architecture and network topology

The deployment involves three networks:

- Red Network (OpenShift Network): The standard OpenShift cluster network for control plane and primary application traffic. This network connects the OpenShift controllers and worker nodes.

- Blue Network (Secondary/Data Plane Network): A secondary network enabling communication between workloads on the IPU (for example NGINX) and pods on the host that are attached to this secondary network.

- Green Network (Provisioning Network): A separate network used to access the management complexes of the systems, such as the IPU management interface.
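When planning these three segments, keep in mind that the kickstart step later in this article reserves 192.168.0.0/24 for traffic inside the IPU, so none of your site networks should overlap it. Here is a minimal Python sketch of that check using the standard `ipaddress` module; the Red/Blue/Green CIDRs in the example plan are hypothetical placeholders for your own addressing.

```python
"""Sketch: detect overlapping subnets in a planned Red/Blue/Green layout."""
from __future__ import annotations

import ipaddress
from itertools import combinations

# Reserved for communication inside the IPU (from the kickstart step).
IPU_INTERNAL = ipaddress.ip_network("192.168.0.0/24")


def find_overlaps(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named networks (plus the IPU-internal range) that overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    parsed["ipu-internal"] = IPU_INTERNAL
    return [(a, b) for a, b in combinations(parsed, 2) if parsed[a].overlaps(parsed[b])]


if __name__ == "__main__":
    plan = {
        "red": "10.0.0.0/16",       # hypothetical OpenShift network
        "blue": "172.16.3.0/24",    # hypothetical; contains the example NGINX IP 172.16.3.200
        "green": "192.168.0.0/23",  # hypothetical; collides with the IPU-internal range
    }
    print(find_overlaps(plan))  # [('green', 'ipu-internal')]
```

An empty result means the plan is free of collisions, including with the IPU-internal range.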

Step-by-Step deployment

Preparing the Intel IPU (Installing RHEL and MicroShift)

This section describes how to deploy RHEL and MicroShift on the IPU. There are two options.

Option A: Install RHEL for Edge

Red Hat Enterprise Linux for Edge is an immutable operating system, which provides a more robust and predictable environment.

- Create a RHEL for Edge image with a kickstart file: Follow the guidance in Creating RHEL for Edge images to build a complete installation image that includes MicroShift; that documentation covers more than just the kickstart file. Include the following line in your kickstart file to enable iSCSI boot for the IPU's Arm Compute Complex (ACC). The `192.168.0.0/24` network is internal to the IPU and must not be used elsewhere.

```
bootloader --location=mbr --driveorder=sda --append="ip=192.168.0.2:::255.255.255.0::enp0s1f0:off netroot=iscsi:192.168.0.1::::iqn.e2000:acc"
```

- Boot the ISO on the IPU via Redfish: Use Redfish virtual media to boot the newly created RHEL for Edge ISO on the IPU. Consult the official Intel IPU documentation for the specific Redfish procedures.
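The `--append` string in the kickstart line packs two dracut kernel arguments: `ip=` (static ACC address, netmask, and interface, with autoconfiguration `off`) and `netroot=iscsi:` (iSCSI target address and IQN), following the argument layouts documented in dracut.cmdline(7). The sketch below assembles the same line from named parameters and rejects addresses outside the IPU-internal network. The function and parameter names are illustrative; the defaults reproduce the line shown above.

```python
"""Sketch: build the kickstart bootloader line for iSCSI boot of the ACC."""
import ipaddress

IPU_INTERNAL = ipaddress.ip_network("192.168.0.0/24")


def acc_bootloader_line(
    acc_ip: str = "192.168.0.2",
    iscsi_target_ip: str = "192.168.0.1",
    netmask: str = "255.255.255.0",
    interface: str = "enp0s1f0",
    target_iqn: str = "iqn.e2000:acc",
) -> str:
    # Both addresses must stay inside the IPU-internal network.
    for addr in (acc_ip, iscsi_target_ip):
        if ipaddress.ip_address(addr) not in IPU_INTERNAL:
            raise ValueError(f"{addr} is outside {IPU_INTERNAL}")
    # dracut: ip=<client-IP>:[<peer>]:<gateway-IP>:<netmask>:<hostname>:<interface>:<autoconf>
    ip_arg = f"ip={acc_ip}:::{netmask}::{interface}:off"
    # dracut: netroot=iscsi:<server>:[<protocol>]:[<port>]:[<LUN>]:<targetname>
    netroot_arg = f"netroot=iscsi:{iscsi_target_ip}::::{target_iqn}"
    return (
        "bootloader --location=mbr --driveorder=sda "
        f'--append="{ip_arg} {netroot_arg}"'
    )


if __name__ == "__main__":
    print(acc_bootloader_line())
```

The empty fields (peer, gateway, hostname, protocol, port, LUN) are deliberate: the ACC reaches its iSCSI target directly on the internal link.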

Option B: Install standard RHEL and then MicroShift

Alternatively, you can install a standard RHEL image and then install MicroShift manually.

- Install RHEL: Install a standard RHEL Server for ARM on the IPU.

- Install and Configure MicroShift: Once RHEL is installed, follow the guidance in the Red Hat build of MicroShift documentation to install MicroShift via RPM packages.

Continuing IPU Configuration (Both Options)

The following steps apply regardless of the RHEL installation method chosen.

- Copy the P4 artifacts to the DPU (IPU): The P4 program defines the packet processing pipeline on the IPU. Obtain the `intel-ipu-acc-components.tar.gz` archive. Note: This archive is not part of the OpenShift DPU Operator and must be obtained from Intel. An example URL might be `https://<your-internal-mirror>/intel-ipu-acc-components-2.0.0.11126.tar.gz`. Download and extract the artifacts to `/opt/p4` on the IPU's ACC:

```bash
# Download the archive on the ACC (replace with the actual URL)
curl -L https://<your-internal-mirror>/intel-ipu-acc-components-2.0.0.11126.tar.gz -o /tmp/p4.tar.gz

# Extract the archive into a clean /opt/p4 directory
rm -rf /opt/p4 && mkdir -p /opt/p4
tar -U -C /opt/p4 -xzf /tmp/p4.tar.gz --strip-components=1

# Rename the directories
mv /opt/p4/p4-cp /opt/p4/p4-cp-nws
mv /opt/p4/p4-sde /opt/p4/p4sde
```

- Configure hugepages on the ACC: Instead of using a `systemd` service, configure hugepages through kernel arguments. This allocates the memory at the earliest point during boot, before memory becomes fragmented. Run the following commands on the ACC:

```bash
# Add kernel arguments that reserve 512 hugepages of 2 MiB each (1 GiB total)
grubby --update-kernel=ALL --args="default_hugepagesz=2M hugepagesz=2M hugepages=512"
# Rebuild the GRUB configuration
grub2-mkconfig -o /boot/grub2/grub.cfg
# Reboot for the changes to take effect
reboot
```

If the ACC runs RHEL for Edge (Option A), which is rpm-ostree based, kernel arguments are typically managed with `rpm-ostree kargs --append=...` (one `--append` per argument) rather than with `grubby`.

- Reload the `idpf` driver on the host: As a workaround for a known issue, reload the `idpf` driver on the OpenShift worker node that hosts the IPU after the IPU is fully set up. If the host OS is not yet installed, perform this step after it has been set up.

```bash
sudo rmmod idpf
sudo modprobe idpf
```
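After the reboot, you can confirm that the hugepage reservation took effect: with the kernel arguments above, `/proc/cmdline` should contain `hugepages=512` and `/proc/meminfo` should report 512 pages of 2048 kB (512 x 2 MiB = 1 GiB). A minimal Python sketch of that check to run on the ACC; the helper names are hypothetical, and it only inspects the default hugepage pool.

```python
"""Sketch: verify that the hugepage kernel arguments took effect on the ACC."""
from __future__ import annotations

from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo into {field: first integer value}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def hugepages_ok(cmdline: str, meminfo: str, pages: int = 512, size_kb: int = 2048) -> bool:
    """True if the kernel was booted with hugepages=<pages> and the pool matches."""
    info = parse_meminfo(meminfo)
    return (
        f"hugepages={pages}" in cmdline.split()
        and info.get("HugePages_Total") == pages
        and info.get("Hugepagesize") == size_kb
    )


if __name__ == "__main__":
    cmdline_path, meminfo_path = Path("/proc/cmdline"), Path("/proc/meminfo")
    if cmdline_path.exists() and meminfo_path.exists():
        ok = hugepages_ok(cmdline_path.read_text(), meminfo_path.read_text())
        print("hugepages OK" if ok else "hugepages NOT configured as expected")
```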

Install OpenShift Container Platform

Ensure you have a fully operational OpenShift cluster. For installation guidance, refer to the OpenShift documentation. The Assisted Installer method supports various deployment platforms with a focus on bare metal.

Install the DPU Operator

The DPU Operator must be installed on both the OpenShift cluster (host) and the MicroShift cluster (IPU).

- Install on your OpenShift cluster (host): Install the DPU Operator from OperatorHub in the OpenShift web console.

- Once the operator is installed, create a `DpuOperatorConfig` resource that sets the mode to `host`:

```bash
cat <<EOF | oc apply -f -
apiVersion: config.openshift.io/v1
kind: DpuOperatorConfig
metadata:
  name: dpu-operator-config
spec:
  mode: host
EOF
```

- Label the worker node that contains the IPU by running the following command:

$ oc label node <node_name> dpu=true
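If you script the setup, note that the `DpuOperatorConfig` for the two sides differs only in `spec.mode` (`host` on OpenShift, `dpu` on MicroShift). A small Python sketch that renders it as JSON, which `oc apply -f -` accepts just as it accepts YAML, so only the standard library is needed. The helper is hypothetical; its fields simply mirror the manifests in this article.

```python
"""Sketch: render the DpuOperatorConfig manifest for either side of the deployment."""
import json

VALID_MODES = {"host", "dpu"}  # host: OpenShift worker side; dpu: MicroShift on the IPU


def dpu_operator_config(mode: str, name: str = "dpu-operator-config") -> dict:
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}, got {mode!r}")
    return {
        "apiVersion": "config.openshift.io/v1",
        "kind": "DpuOperatorConfig",
        "metadata": {"name": name},
        "spec": {"mode": mode},
    }


if __name__ == "__main__":
    print(json.dumps(dpu_operator_config("host"), indent=2))
```

Saved as, for example, `dpu_config.py` (a hypothetical name), it can be used as `python3 dpu_config.py | oc apply -f -`; call `dpu_operator_config("dpu")` for the MicroShift side.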

Install on MicroShift (IPU)

Installing operators on MicroShift requires enabling OLM and adding a catalog source.

- Install the OLM package on the IPU by following the guidance in Installing the Operator Lifecycle Manager (OLM) from an RPM package. For example, run the following command:

$ sudo dnf install microshift-olm

- To apply the manifests from the package to the running cluster, restart MicroShift:

$ sudo systemctl restart microshift

- On the OpenShift cluster, retrieve the YAML definition of the `redhat-operators` CatalogSource by running the following command:

$ oc get catalogsource redhat-operators -n openshift-marketplace -o yaml > redhat-operators-catalog.yaml

- Transfer the `redhat-operators-catalog.yaml` file to the MicroShift system. You might need to edit the file to remove or modify fields that are incompatible with MicroShift, for example the `status` section or OpenShift-specific annotations. Then, apply it to the MicroShift cluster by running the following command:

$ oc apply -f redhat-operators-catalog.yaml -n openshift-marketplace

- Install the DPU Operator through OLM, for example by creating an `OperatorGroup` and a `Subscription` whose `source` is `redhat-operators`.

- Once the operator is installed, create a `DpuOperatorConfig` resource that sets the mode to `dpu`:

```bash
cat <<EOF | oc apply -f -
apiVersion: config.openshift.io/v1
kind: DpuOperatorConfig
metadata:
  name: dpu-operator-config
spec:
  mode: dpu
EOF
```

- Label the MicroShift node by running the following command:

$ oc label node <node_name> dpu=true
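The manual edit of the exported CatalogSource can be scripted. The sketch below assumes you export it as JSON instead of YAML (`oc get catalogsource redhat-operators -n openshift-marketplace -o json > redhat-operators-catalog.json`). It drops the `status` section, server-populated metadata, and annotations whose keys match the patterns listed in the code. That annotation filter is an assumption, not a verified list of MicroShift incompatibilities, so review the output before applying it.

```python
"""Sketch: strip cluster-specific fields from an exported CatalogSource."""
import json
import sys

# Server-populated metadata that should not be copied to another cluster.
SERVER_METADATA = ("uid", "resourceVersion", "generation", "creationTimestamp", "managedFields")
# Assumption: annotation keys containing these substrings are cluster-specific.
DROP_ANNOTATION_PATTERNS = ("openshift.io", "kubectl.kubernetes.io/last-applied-configuration")


def sanitize_catalogsource(obj: dict) -> dict:
    """Return a cleaned copy of an exported CatalogSource; the input is not modified."""
    clean = json.loads(json.dumps(obj))  # deep copy of JSON-compatible data
    clean.pop("status", None)
    meta = clean.setdefault("metadata", {})
    for field in SERVER_METADATA:
        meta.pop(field, None)
    annotations = meta.get("annotations") or {}
    kept = {k: v for k, v in annotations.items()
            if not any(p in k for p in DROP_ANNOTATION_PATTERNS)}
    if kept:
        meta["annotations"] = kept
    else:
        meta.pop("annotations", None)
    return clean


if __name__ == "__main__" and len(sys.argv) == 2:
    # usage: python3 sanitize_catalogsource.py redhat-operators-catalog.json > clean.json
    with open(sys.argv[1]) as f:
        print(json.dumps(sanitize_catalogsource(json.load(f)), indent=2))
```

The cleaned JSON file can then be applied on MicroShift with `oc apply -f clean.json -n openshift-marketplace`.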

Deploy F5 NGINX on the IPU

With the operator installed on both sides, deploy NGINX to the IPU by creating a ServiceFunctionChain custom resource on the MicroShift instance.

- Create a YAML file named `nginx-sfc.yaml`:

```yaml
apiVersion: config.openshift.io/v1
kind: ServiceFunctionChain
metadata:
  name: sfc-test
  namespace: openshift-dpu-operator
spec:
  networkFunctions:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
```

- Apply this manifest to the MicroShift cluster on the IPU by running the following command:

$ oc create -f nginx-sfc.yaml

NOTE: Ensure you are using the correct `kubeconfig` for the MicroShift cluster. On the IPU itself, the MicroShift administrator kubeconfig is typically located at `/var/lib/microshift/resources/kubeadmin/kubeconfig`.
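If you later chain more than one network function, generating the `ServiceFunctionChain` can avoid indentation mistakes. A minimal Python sketch whose field names mirror `nginx-sfc.yaml`; the helper is hypothetical, and the name check applies the general Kubernetes DNS-1123 label rule on the assumption that function names end up in resource names. The output is JSON, which `oc create -f -` accepts.

```python
"""Sketch: render a ServiceFunctionChain manifest for one or more network functions."""
from __future__ import annotations

import json
import re

# Kubernetes DNS-1123 label: lowercase alphanumerics and '-', at most 63 characters.
DNS1123_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


def sfc_manifest(name: str, functions: list[tuple[str, str]],
                 namespace: str = "openshift-dpu-operator") -> dict:
    """Build the manifest from (function name, image) pairs."""
    for fn_name, _ in functions:
        if len(fn_name) > 63 or not DNS1123_LABEL.match(fn_name):
            raise ValueError(f"invalid network function name: {fn_name!r}")
    return {
        "apiVersion": "config.openshift.io/v1",
        "kind": "ServiceFunctionChain",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "networkFunctions": [
                {"name": fn_name, "image": image, "imagePullPolicy": "IfNotPresent"}
                for fn_name, image in functions
            ]
        },
    }


if __name__ == "__main__":
    # Equivalent to nginx-sfc.yaml; pipe into `oc create -f -` with the MicroShift kubeconfig.
    print(json.dumps(sfc_manifest("sfc-test", [("nginx", "nginx")]), indent=2))
```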

Deploy workload pods on the Host

Deploy the ResNet workload pods on the OpenShift worker nodes.

- Create a manifest named `resnet-pod.yaml`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resnet50-model-server-1
  namespace: default
  annotations:
    k8s.v1.cni.cncf.io/networks: default-sriov-net
  labels:
    app: resnet50-model-server-service
spec:
  securityContext:
    runAsUser: 0
  nodeSelector:
    kubernetes.io/hostname: worker-238  # replace with your IPU-equipped worker node
  volumes:
  - name: model-volume
    emptyDir: {}
  initContainers:
  - name: model-downloader
    image: ubuntu:latest
    securityContext:
      runAsUser: 0
    command:
    - bash
    - -c
    - |
      apt-get update && \
      apt-get install -y wget ca-certificates && \
      mkdir -p /models/1 && \
      wget --no-check-certificate https://storage.openvinotoolkit.org/repositories/open_model_zoo/2022.1/models_bin/2/resnet50-binary-0001/FP32-INT1/resnet50-binary-0001.xml -O /models/1/model.xml && \
      wget --no-check-certificate https://storage.openvinotoolkit.org/repositories/open_model_zoo/2022.1/models_bin/2/resnet50-binary-0001/FP32-INT1/resnet50-binary-0001.bin -O /models/1/model.bin
    volumeMounts:
    - name: model-volume
      mountPath: /models
  containers:
  - name: ovms
    image: openvino/model_server:latest
    args:
    - "--model_path=/models"
    - "--model_name=resnet50"
    - "--port=9000"
    - "--rest_port=8000"
    ports:
    - containerPort: 8000
    - containerPort: 9000
    volumeMounts:
    - name: model-volume
      mountPath: /models
    securityContext:
      privileged: true
    resources:
      requests:
        openshift.io/dpu: '1'
      limits:
        openshift.io/dpu: '1'
```

Key points in this manifest:

- `k8s.v1.cni.cncf.io/networks: default-sriov-net`: This annotation attaches the pod to the secondary Blue Network.

- `openshift.io/dpu: '1'`: This resource request ensures that the pod is scheduled on a node with the necessary DPU/IPU hardware resources attached.

- `runAsUser: 0` and `privileged: true`: The pod runs as root with a privileged container, so its service account must be allowed to use a matching security context constraint (SCC), for example with `oc adm policy add-scc-to-user privileged -z default -n default`.

- Apply the manifest by running the following command. The pod is created in the namespace set in its metadata (`default`); to use a different namespace, change `metadata.namespace`, because `oc` rejects a `-n` value that does not match it.

$ oc apply -f resnet-pod.yaml

- Repeat for additional ResNet pods, giving each pod a unique `metadata.name` (for example, `resnet50-model-server-2`).
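Instead of copying `resnet-pod.yaml` by hand for each replica, the pods can be stamped out from one template. The sketch below assumes the template is the pod above exported as JSON (for example with `oc create -f resnet-pod.yaml --dry-run=client -o json > resnet-pod.json`) and that only `metadata.name` must differ between replicas. It emits a Kubernetes `List` that `oc apply -f -` accepts. The script name is hypothetical. A Deployment would be the more usual Kubernetes approach, but this article works with individually named pods.

```python
"""Sketch: generate N ResNet pods from a single pod template."""
import copy
import json
import sys


def replicate_pods(template: dict, count: int, prefix: str = "resnet50-model-server") -> dict:
    """Return a v1 List holding `count` copies of `template` named <prefix>-1..N."""
    items = []
    for i in range(1, count + 1):
        pod = copy.deepcopy(template)  # keep labels, annotations, and resources intact
        pod["metadata"]["name"] = f"{prefix}-{i}"
        items.append(pod)
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python3 replicate_pods.py resnet-pod.json 3 | oc apply -f -
    with open(sys.argv[1]) as f:
        template = json.load(f)
    print(json.dumps(replicate_pods(template, int(sys.argv[2])), indent=2))
```

Because every replica keeps the `app: resnet50-model-server-service` label, the pods can still be selected together when collecting their Blue Network IPs.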

Configure NGINX as a reverse proxy

The NGINX pod must be assigned a static IP address on the Blue Network to serve as a stable endpoint for clients. For this guide, we use the example static IP 172.16.3.200.

The NGINX pod running on the IPU needs configuration to forward requests to the ResNet pods on the Blue Network.

- Obtain the IPs of the ResNet pods: Identify the IP address assigned to each ResNet pod on the Blue Network from the `k8s.v1.cni.cncf.io/network-status` annotation, which lists one entry per interface. Run the following command on the OpenShift cluster for each ResNet pod:

$ oc describe pod/resnet50-model-server-1 | grep -A20 'k8s.v1.cni.cncf.io/network-status'

Look for the IP address associated with the `net1` interface.

- Configure and apply the NGINX configuration: For this article, we exec into the NGINX pod to apply the configuration directly. Create an `nginx.conf` file locally, replacing the server addresses in `upstream model_servers` with the actual pod IPs discovered in the previous step:

```nginx
http {
    server {
        # Use the static IP assigned to the NGINX pod on the Blue Network (example: 172.16.3.200)
        listen 172.16.3.200:443 ssl http2;
        server_name grpc.example.com;

        # Replace with the actual paths to your TLS certificate and key
        ssl_certificate     /etc/nginx/server.crt;
        ssl_certificate_key /etc/nginx/server.key;

        # Proxy gRPC requests to the upstream model servers
        location / {
            grpc_set_header Host $http_host;
            grpc_set_header X-Real-IP $remote_addr;
            grpc_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            grpc_pass grpc://model_servers;
        }

        location /nginx_status {
            stub_status on;
        }
    }

    upstream model_servers {
        # ADJUST THESE IPs with the dynamic Blue Network IPs of your ResNet pods
        server <ResNet_Pod_IP_1>:9000;
        server <ResNet_Pod_IP_2>:9000;
        server <ResNet_Pod_IP_3>:9000;
    }
}

events {
    # ...
}
```

- Copy this file into the NGINX pod running on the IPU (use the MicroShift `kubeconfig`). After modifying the configuration, run `nginx -s reload` to apply the changes without interrupting the service.

For a production environment, this process must be automated, because pods can be created, deleted, or rescheduled at any time. A dedicated tool, script, or Kubernetes controller/operator would:

  - Watch the ResNet pods for lifecycle events (start, stop, scale).

  - Extract the current Blue Network IP addresses from the `network-status` annotation.

  - Update the NGINX configuration file inside the NGINX pod (or on a mounted volume).

  - Send `nginx -s reload` to the NGINX master process so that the new configuration is applied gracefully.
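The IP lookup and the configuration-update steps of that automation can be sketched in Python. Given the `network-status` annotation of each ResNet pod (a JSON list with one entry per interface), the code collects the `net1` addresses and renders the `upstream model_servers` block. Assumptions: the Blue Network interface is `net1`, OVMS serves gRPC on port 9000, and each annotation is fetched with something like `oc get pod resnet50-model-server-1 -o jsonpath='{.metadata.annotations.k8s\.v1\.cni\.cncf\.io/network-status}'`. Watching pods and reloading NGINX are left out, and the function names are hypothetical.

```python
"""Sketch: render the NGINX upstream block from Multus network-status annotations."""
from __future__ import annotations

import ipaddress
import json


def blue_ips(network_status: str, interface: str = "net1") -> list[str]:
    """Return the IPs listed for `interface` in one pod's network-status JSON."""
    entries = json.loads(network_status)
    return [ip for entry in entries if entry.get("interface") == interface
            for ip in entry.get("ips", [])]


def _server_addr(ip: str, port: int) -> str:
    addr = ipaddress.ip_address(ip)
    # NGINX expects IPv6 addresses in square brackets.
    return f"[{addr}]:{port}" if addr.version == 6 else f"{addr}:{port}"


def render_upstream(annotations: list[str], port: int = 9000,
                    name: str = "model_servers") -> str:
    """Build an `upstream` block listing every Blue Network IP found."""
    ips = {ip for ann in annotations for ip in blue_ips(ann)}
    if not ips:
        # NGINX rejects an upstream block that contains no servers.
        raise ValueError("no Blue Network addresses found")
    # Sort numerically (IPv4 before IPv6) so the generated file is stable.
    ordered = sorted(ips, key=lambda ip: (ipaddress.ip_address(ip).version,
                                          ipaddress.ip_address(ip)))
    lines = [f"upstream {name} {{"]
    lines += [f"    server {_server_addr(ip, port)};" for ip in ordered]
    lines.append("}")
    return "\n".join(lines)
```

A deterministic ordering means an unchanged set of pods produces a byte-identical block, so a controller can skip the `nginx -s reload` when nothing changed.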

Accessing the Service and Performing Inference

Once NGINX is configured, clients can send inference requests to it.

- Identify the NGINX access point: This is the IP address and port on which NGINX listens on the IPU's Blue Network interface (for example, `172.16.3.200:443` from the configuration).

- Client access: Clients send their gRPC requests over TLS to `https://<NGINX_IPU_BLUE_NETWORK_IP>`. NGINX terminates TLS, so the TLS processing is offloaded to the IPU. Clients must have network reachability to the NGINX IP on the Blue Network.

- Verification: Send a test request and verify that you receive a response from a ResNet pod.

- Monitor the NGINX logs on the IPU and the application logs on the pods for troubleshooting.
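Besides the logs, the `/nginx_status` location in the configuration exposes the counters of the NGINX `stub_status` module, which give a quick health signal for the proxy. Below is a minimal Python parser for that plain-text output plus an HTTPS fetch helper. The URL `https://grpc.example.com/nginx_status` assumes that this name resolves to 172.16.3.200, and `ca.crt` is a hypothetical path to the CA that signed `server.crt`.

```python
"""Sketch: fetch and parse NGINX stub_status counters for troubleshooting."""
from __future__ import annotations

import re
import ssl
import urllib.request


def parse_stub_status(text: str) -> dict[str, int]:
    """Parse the plain-text output of the NGINX stub_status module."""
    lines = [line.strip() for line in text.strip().splitlines()]
    # Line 1: "Active connections: N"
    stats = {"active": int(lines[0].split(":", 1)[1])}
    # Line 3: cumulative accepts, handled, and requests counters
    accepts, handled, requests = (int(v) for v in lines[2].split())
    stats.update(accepts=accepts, handled=handled, requests=requests)
    # Line 4: "Reading: N Writing: N Waiting: N"
    for key, value in re.findall(r"(Reading|Writing|Waiting):\s*(\d+)", lines[3]):
        stats[key.lower()] = int(value)
    # accepts > handled means connections were dropped, e.g. at the worker_connections limit
    stats["dropped"] = accepts - handled
    return stats


def fetch_stub_status(url: str, cafile: str | None = None) -> dict[str, int]:
    """Fetch /nginx_status over HTTPS, verifying the server certificate."""
    context = ssl.create_default_context(cafile=cafile)
    with urllib.request.urlopen(url, context=context, timeout=5) as resp:
        return parse_stub_status(resp.read().decode())
```

For example, `print(fetch_stub_status("https://grpc.example.com/nginx_status", cafile="ca.crt"))` run from a client on the Blue Network; a growing `dropped` value or a steadily rising `active` count is a hint to check the NGINX error log on the IPU.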

Conclusion

By following this knowledge base, you have successfully:

- Deployed the DPU Operator on OpenShift and MicroShift.

- Offloaded an F5 NGINX reverse proxy to the Intel IPU.

- Exposed ResNet pods running on host worker nodes.

This architecture leverages the IPU's capabilities to free up host CPU resources, improve network performance, and enhance security by isolating network functions. This setup provides a robust, Kubernetes-native approach to managing and utilizing DPUs within an OpenShift environment.

Further Information

- Intel IPU Documentation (for firmware and Redfish)

- Red Hat OpenShift Container Platform Documentation

- Red Hat MicroShift Documentation

- OpenShift DPU Operator Documentation

- F5 NGINX Documentation (for NGINX configuration details)
