Onboarding guide to OCP4

A developer-focused guide for OCP3 users new to OCP4

Red Hat OpenShift 4 is the next generation of Red Hat’s trusted enterprise Kubernetes platform, re-engineered to address the complexity of managing container-based applications in production systems. It is designed as a self-managing platform with automatic software updates and lifecycle management across hybrid cloud environments, built on the trusted foundation of Red Hat Enterprise Linux and Red Hat Enterprise Linux CoreOS.

OpenShift version

This document will describe changed and new features up to and including OpenShift Container Platform 4.8.

Onboarding topics and assumptions

This guide assumes basic knowledge of OpenShift and will highlight changes and new functionality of OpenShift 4 for end-users and developers using the platform.

OpenShift 4 brings many enhancements as well as new functionality. While many of the changes bring major functionality benefits to the platform operators, they are "under the hood" and not visible to end users of the OpenShift platform.

This document highlights some of the major changes for developers and shows added functionality. Platform architecture changes that are not visible to end users are out of scope for this document.

The OpenShift Console

With OpenShift 4, the default web console will have a different look for the developer, as it's split into two perspectives (Developer and Administrator) and defaults to the application-centric Developer perspective with a graphical topology view of deployed applications.

The perspective switcher

Switch between the Developer and Administrator perspectives.

- Use the Administrator perspective to manage workload storage, networking, cluster settings, and more. This may require additional user access.

- Use the Developer perspective to build applications and associated components and services, define how they work together, and monitor their health over time.

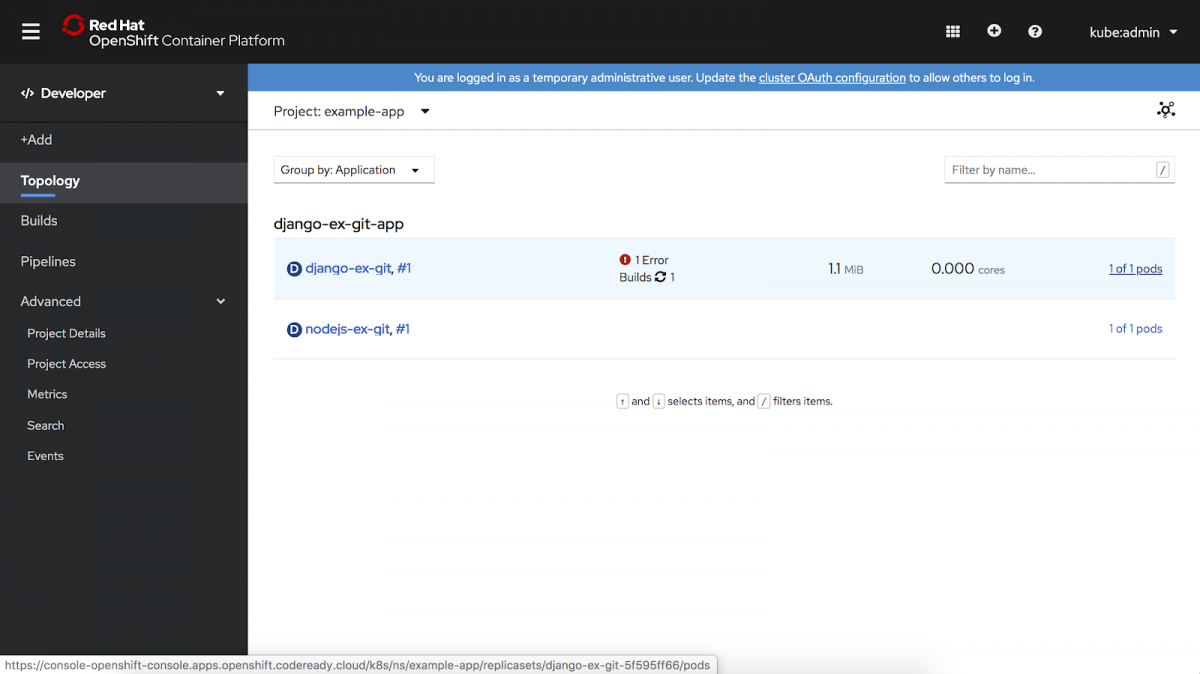

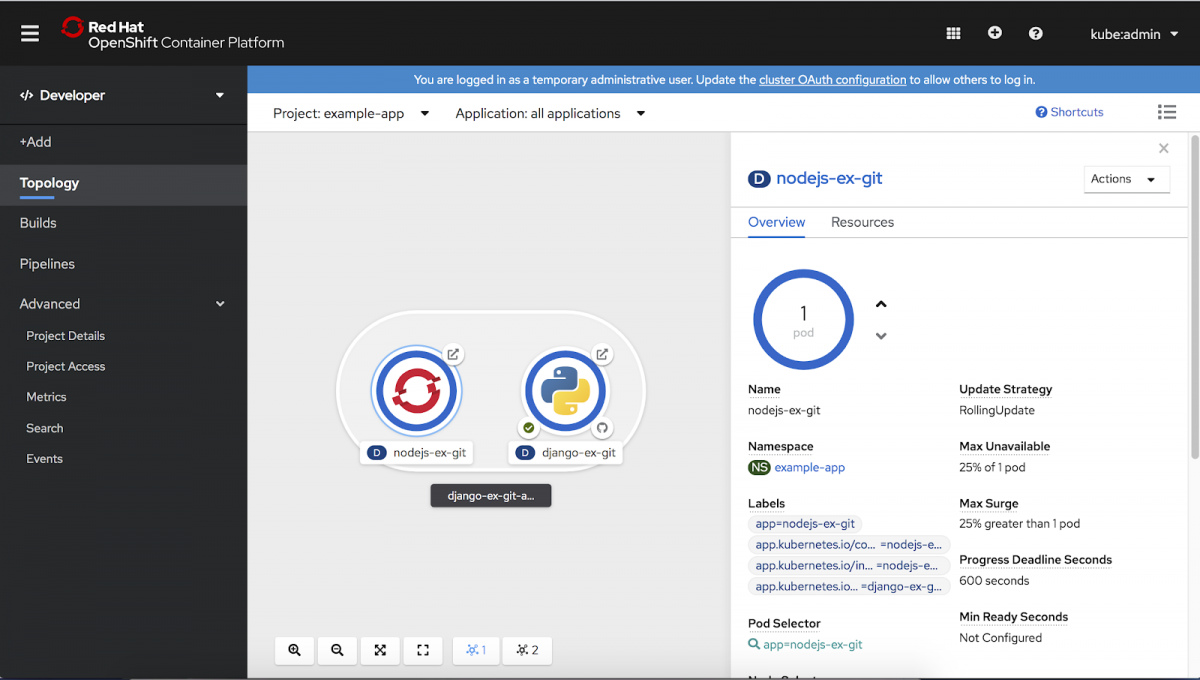

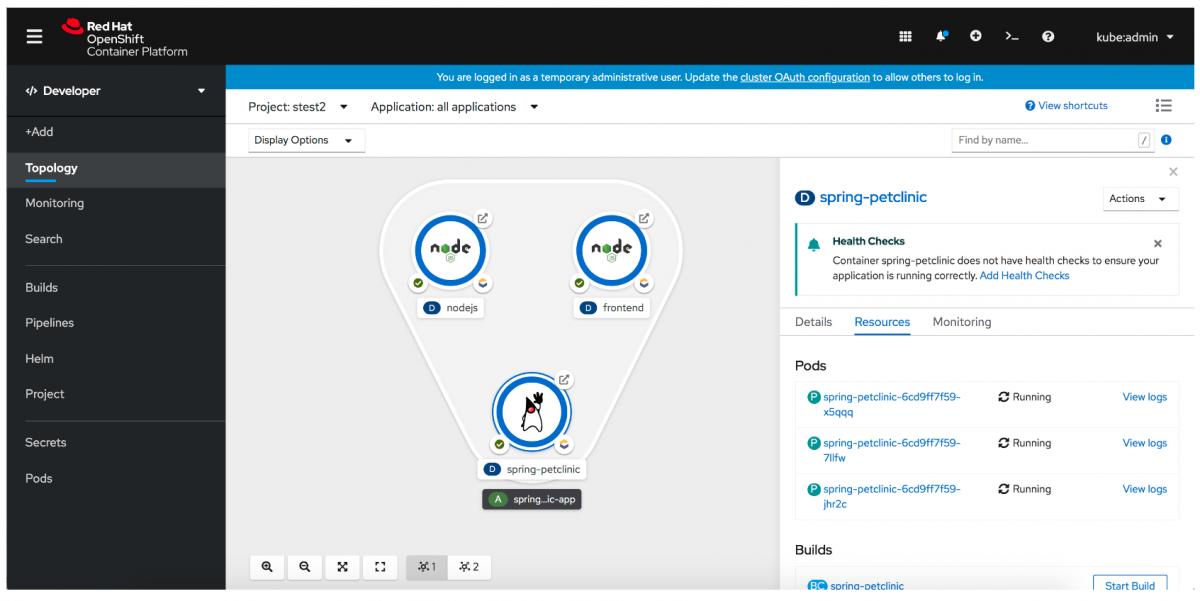

Topology

The Topology view in the Developer perspective of the web console provides a visual representation of all the applications within a project, their build status, and the components and services associated with them.

- Switch between a graphical and list presentation of your project.

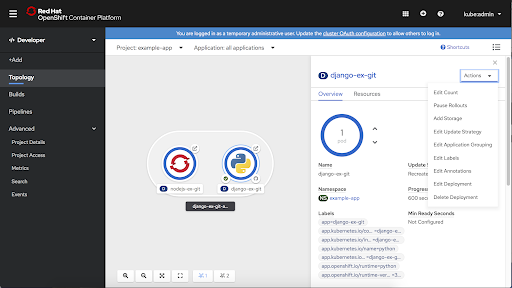

- Access the ability to scale up/down and increase/decrease your pod count easily via the side panel or the associated detail page.

-

View real-time visualization of rolling and recreate rollouts on the component in Topology, as well as in the associated side panel.

-

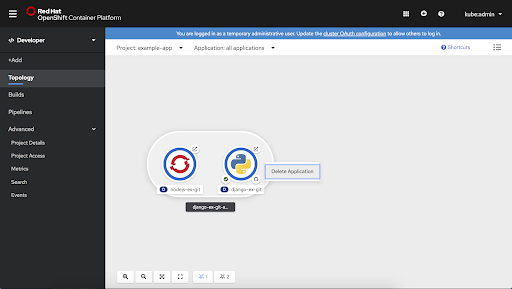

Delete an application, which is accomplished by deleting all components with the associated label, as defined by the Kubernetes-recommended labels.

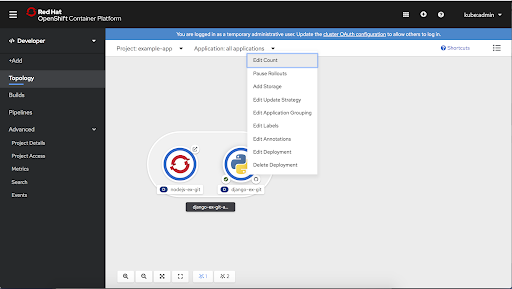

- Access context menus via right-click or through the Actions menu in the associated side panel.

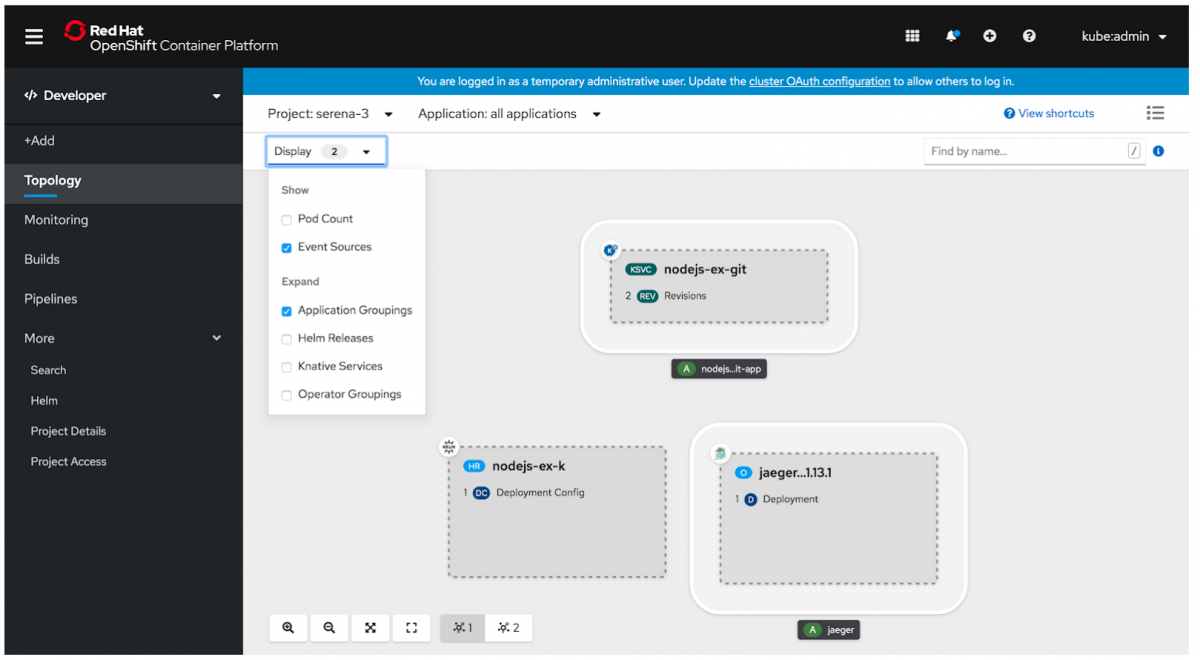

- The developer can collapse all application groupings, Operator Groupings, Knative Services, or Helm Releases from the Display dropdown menu. This feature helps the developer hide some of the complexity of their application and focus on individual pieces.

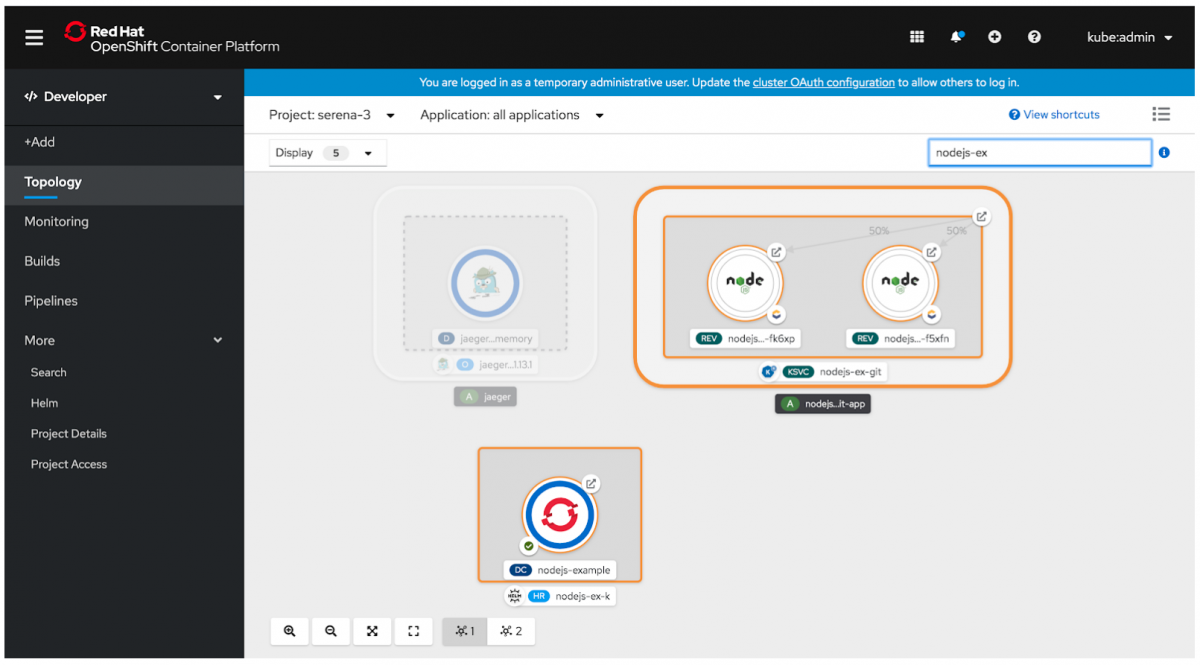

- Developers can easily locate components in a busy application structure with the new find feature, which highlights components based on a string match on the name. This feature increases the discoverability of a component.

- If you have not configured health checks, you will see a notification displayed in the side panel of the new Topology view. The notification supports discoverability and provides a quick link for remediation.

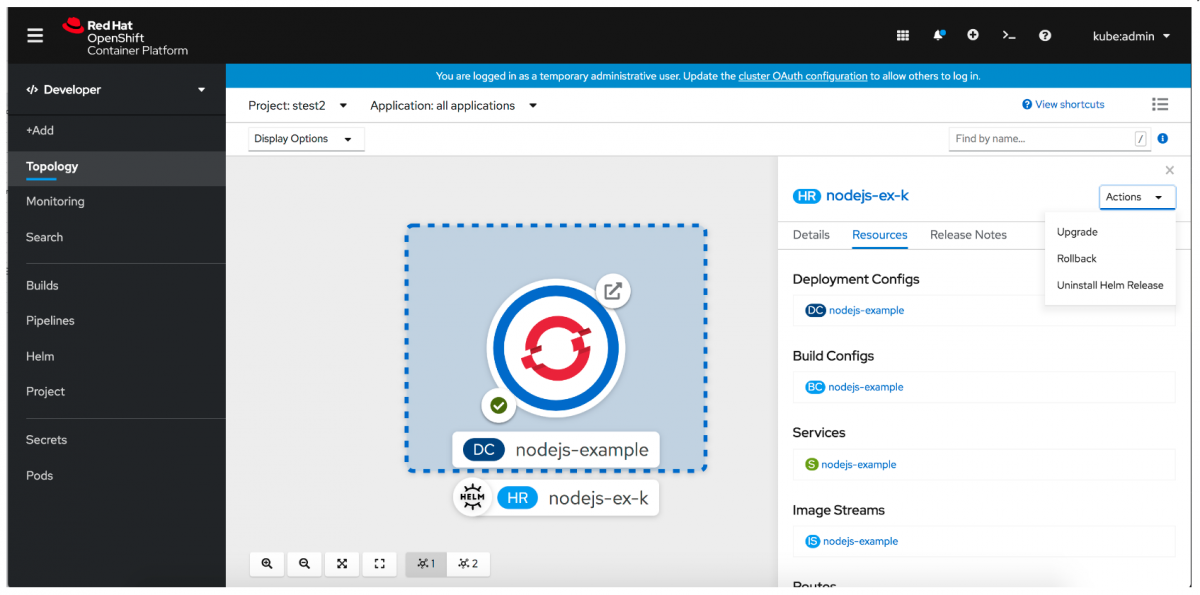

- You can use the side panel in the web console's Topology view to upgrade a Helm release. The new Upgrade action lets you update to a new version or directly edit the YAML file for your current release. The Rollback action lets you open the Revisions tab of a Helm Releases details page and see the revisions to a release. Finally, the new Uninstall action uninstalls and cleans up all of the resources that were added during a Helm chart installation. You can access these actions from the web console's Topology view, using the Actions menu in the Topology side panel. Another option is to use the Actions menu on the Helm Releases page.

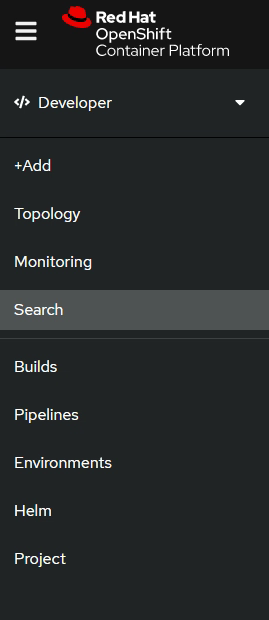

A new navigation scheme for discoverability

To help with discoverability, OpenShift moved to a flat navigation scheme that has three sections in the main navigation.

The first section of the navigation is task-based. In this section, you can use the +Add button to add new applications or components to your project. This section is further organized into three areas:

- Topology: View and interact with the applications in your project.

- Monitoring: Access project details, including a dashboard, metrics, and events.

- Search: Find project resources based on the resource type, label, or name.

The second section is object-based, with the options:

- Builds: Access builds, BuildConfigs, and pipelines.

- Helm: Access Helm releases.

- Project: Access information about the project.

The third section is a customizable area where you can add your most frequently used resources for quick access. We added this section based on feedback that many users were going to the Administrator perspective to access resources such as secrets and config maps. You can now access secrets and config maps in this section by default.

Customizable navigation

You can now use the Search page to find and add items to the navigation.

You can also remove items from the navigation by hovering over the menu item and clicking the blue circle with the minus (-) symbol.

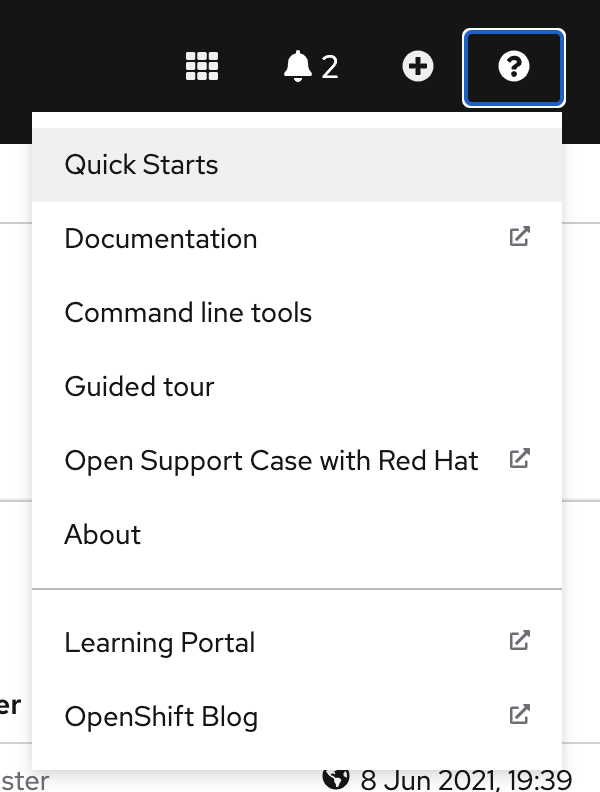

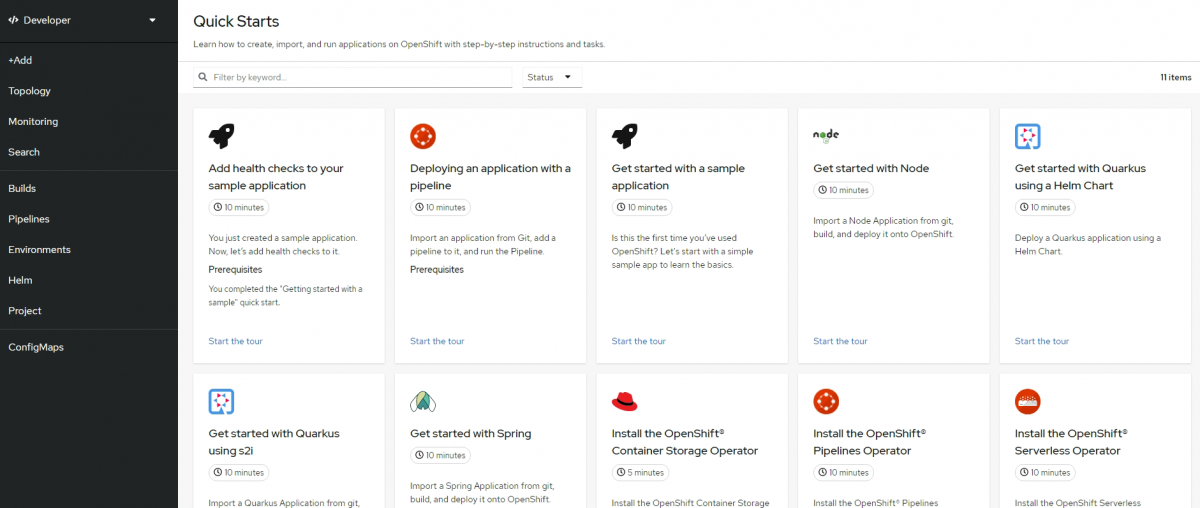

Quickstarts

Quick starts are available from the console shortcuts. In a nutshell, a quick start is a guided tutorial with user tasks. In the OpenShift console, you can access quick starts under the Help menu. They’re especially useful for getting up and running with a product.

Additional information about quickstarts can be found here.

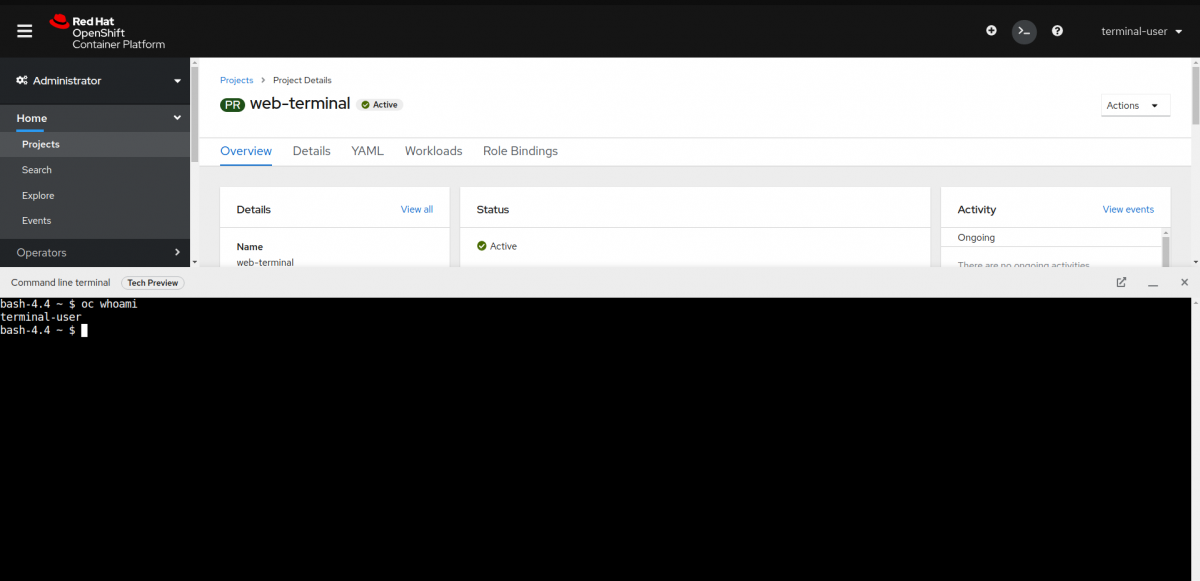

Web terminal

You can launch an embedded command line terminal instance in the web console. You must first install the Web Terminal Operator to use the web terminal.

This terminal instance is preinstalled with common CLI tools for interacting with the cluster, such as oc, kubectl, odo, kn, tkn, helm, kubens, and kubectx. It also has the context of the project you are working on and automatically logs you in using your credentials.

The web terminal is a Technology Preview feature only.

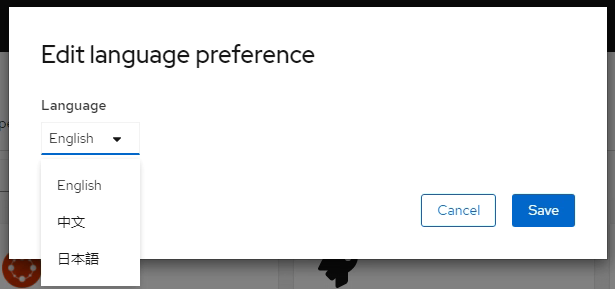

Internationalization (i18n)

OpenShift Console now offers the following features:

- Externalized hard-coded strings in the client code

- Initial language support for Chinese and Japanese, with Korean coming in a z-stream release

- UI-based language selector

- Localized dates and times

More languages are on the way.

Accessibility (A11Y)

When you work on an internationalization project, it’s also important to maintain accessibility.

Red Hat has translated many ARIA attributes to support a great experience for assistive technology users. The attributes we’ve targeted are aria-label, aria-placeholder, aria-roledescription, and aria-valuetext. They will update automatically when the language is changed so we can support users in multiple languages.

Namespaces and network policies for isolation

The default network isolation mode for OpenShift Container Platform 3.11 was the default-to-open ovs-subnet, though users frequently switched to ovs-multitenant, which provided network isolation between namespaces. The default network isolation mode for OpenShift Container Platform 4.8 is controlled by a network policy.

Network policies are supported upstream, are more granular and flexible, and also provide the functionality that ovs-multitenant does. Behaviour mimicking the ovs-multitenant isolation can easily be added to the platform using network policies. With such policies in place, projects and namespaces have the same isolation behaviour as in OpenShift 3.

However, the Network Policy rules for namespace isolation are deployed within each namespace in OpenShift 4 and can be modified or deleted by a project administrator.

- Guide to Kubernetes Ingress Network Policies

- Network Policies: Controlling Cross-Project Communication on OpenShift

- Creating a network policy - Network policy | Networking | OpenShift Container Platform 4.8
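As an illustration of namespace isolation with network policies, the following minimal sketch builds two NetworkPolicy manifests: one that denies all ingress traffic and one that re-allows traffic between pods of the same namespace. The function name, the policy names, and the namespace `my-project` are hypothetical; the manifests are printed as JSON, which can be piped into `oc apply -f -`.

```python
import json


def same_namespace_policies(namespace):
    """Return NetworkPolicy manifests that isolate a namespace from other namespaces."""
    def policy(name, ingress):
        return {
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": name, "namespace": namespace},
            # An empty podSelector applies the policy to every pod in the namespace.
            "spec": {"podSelector": {}, "ingress": ingress},
        }

    return [
        # No ingress rules at all: every incoming connection is denied.
        policy("deny-by-default", []),
        # A peer with only a podSelector matches pods in the policy's own namespace.
        policy("allow-same-namespace", [{"from": [{"podSelector": {}}]}]),
    ]


if __name__ == "__main__":
    # "my-project" is a hypothetical namespace name.
    items = same_namespace_policies("my-project")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

With only these two policies in place, traffic from outside the namespace is blocked as well, so applications exposed through routes would likely need an additional policy that admits the ingress controller. The documentation linked above describes such policies.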

Ingress and route annotations

Routes work similarly to OpenShift 3 from a developer perspective and can be configured through annotations. The list of supported route annotations is described in Route configuration - Configuring Routes | Networking | OpenShift Container Platform 4.8.
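A route annotation can be sketched as follows. The example builds a Route manifest that sets the `haproxy.router.openshift.io/timeout` annotation; the route name, service name, and timeout value are hypothetical, and the full list of annotations should be taken from the documentation referenced above.

```python
import json


def route_with_timeout(name, service, timeout="60s"):
    """Build a Route manifest whose router timeout is set through an annotation."""
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {
            "name": name,
            # Route behaviour is tuned per route through annotations.
            "annotations": {"haproxy.router.openshift.io/timeout": timeout},
        },
        "spec": {"to": {"kind": "Service", "name": service}},
    }


if __name__ == "__main__":
    # "frontend" is a hypothetical route and service name.
    print(json.dumps(route_with_timeout("frontend", "frontend"), indent=2))
```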

Build and deploy

Builds and deployments behave as they do in OpenShift 3, with some added functionality and certain changes.

Builds

The Jenkins Pipeline build strategy is deprecated in OpenShift Container Platform 4. Equivalent and improved functionality is present in the OpenShift Pipelines based on Tekton, as described in the Pipelines section below.

Jenkins images on OpenShift Container Platform are fully supported, and users should follow the Jenkins user documentation for defining their Jenkinsfile in a job or storing it in a source control management system.

Deployments

In addition to OpenShift specific DeploymentConfigs, OpenShift 4 now supports Deployments. Both objects provide two similar but different methods for fine-grained management over common user applications, as described in Understanding Deployments and DeploymentConfigs - Deployments | Applications | OpenShift Container Platform 4.8.

One important difference between Deployment and DeploymentConfig objects is the properties of the CAP theorem that each design has chosen for the rollout process. DeploymentConfig objects prefer consistency, whereas Deployment objects take availability over consistency.

While DeploymentConfig objects support updates based on triggers defined directly in the object, Deployment objects use triggers based on annotations on the object, as described in https://docs.openshift.com/container-platform/4.8/openshift_images/triggering-updates-on-imagestream-changes.html
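The annotation-based trigger can be sketched as below. The helper builds the `image.openshift.io/triggers` annotation, whose value is a JSON document stored as a string. The image stream tag `example:latest` and the container name `web` are hypothetical, and the exact format should be checked against the documentation linked above.

```python
import json


def image_trigger_annotation(image_stream_tag, container):
    """Return the annotation that updates a container image when an image stream tag changes."""
    trigger = [{
        "from": {"kind": "ImageStreamTag", "name": image_stream_tag},
        # JSONPath-style pointer to the image field of the named container.
        "fieldPath": f'spec.template.spec.containers[?(@.name=="{container}")].image',
    }]
    # The annotation value is the JSON list serialized into a single string.
    return {"image.openshift.io/triggers": json.dumps(trigger)}


if __name__ == "__main__":
    # Hypothetical image stream tag and container name.
    patch = {"metadata": {"annotations": image_trigger_annotation("example:latest", "web")}}
    print(json.dumps(patch, indent=2))
```

The printed fragment is meant to be merged into the metadata of an existing Deployment manifest.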

Protecting workloads from disruption (Pod Disruption Budgets)

While not a new feature in OpenShift 4, Pod Disruption Budgets are a Kubernetes capability that has evolved and is fully supported in OCP4. They can be used to ensure that a minimum number or percentage of replicas in a deployment remains available. Pod Disruption Budgets allow the specification of safety constraints on pods during operations, such as draining a node for maintenance.

Setting these in projects can be helpful during node maintenance (such as scaling a cluster down or upgrading a cluster). They are only honored on voluntary evictions (not on node failures).
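A Pod Disruption Budget for a project workload could look like the following minimal sketch. The names `web-pdb` and `web` and the `app` label key are hypothetical. The API version is a parameter because older clusters may only serve `policy/v1beta1`.

```python
import json


def pod_disruption_budget(name, app_label, min_available, api_version="policy/v1"):
    """Build a PodDisruptionBudget that keeps a minimum of matching pods available."""
    return {
        "apiVersion": api_version,
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name},
        "spec": {
            # Either an absolute number (2) or a percentage string ("50%").
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


if __name__ == "__main__":
    # Keep at least half of the hypothetical "web" pods running during node drains.
    print(json.dumps(pod_disruption_budget("web-pdb", "web", "50%"), indent=2))
```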

CI/CD and GitOps

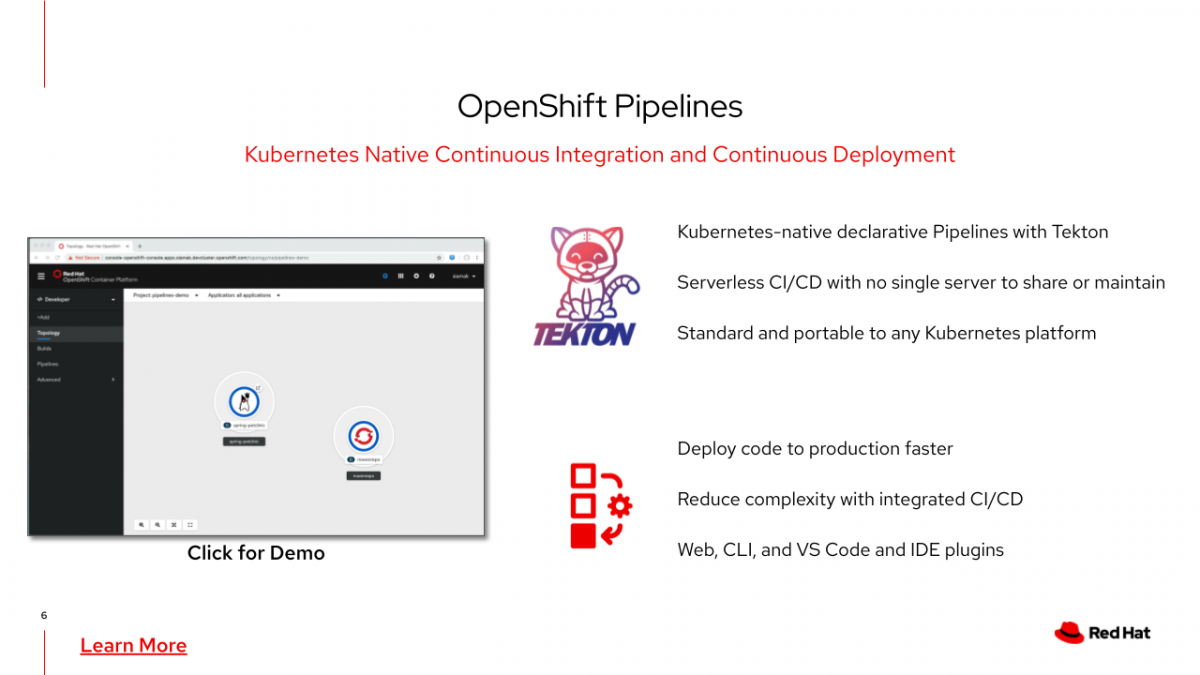

OpenShift Pipelines

OpenShift Pipelines define a set of Kubernetes Custom Resources that act as building blocks from which you can assemble CI/CD pipelines. It uses Tekton building blocks to automate deployments across multiple platforms by abstracting away the underlying implementation details. Tekton introduces a number of standard custom resource definitions (CRDs) for defining CI/CD pipelines that are portable across Kubernetes distributions.
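To make the building blocks concrete, the sketch below assembles a Task with a single step and a Pipeline that references it, using the `tekton.dev/v1beta1` API version. All names and the container image are hypothetical choices for illustration, not part of any default installation.

```python
import json


def hello_pipeline():
    """Return a Task and a Pipeline manifest that runs the Task once."""
    task = {
        "apiVersion": "tekton.dev/v1beta1",
        "kind": "Task",
        "metadata": {"name": "say-hello"},
        "spec": {"steps": [{
            "name": "echo",
            # Assumed image; any image that provides a shell would do.
            "image": "registry.access.redhat.com/ubi8/ubi-minimal",
            "script": "echo 'Hello from Tekton'",
        }]},
    }
    pipeline = {
        "apiVersion": "tekton.dev/v1beta1",
        "kind": "Pipeline",
        "metadata": {"name": "hello-pipeline"},
        # A Pipeline is an ordered collection of references to Tasks.
        "spec": {"tasks": [{"name": "hello", "taskRef": {"name": "say-hello"}}]},
    }
    return [task, pipeline]


if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": hello_pipeline()}, indent=2))
```

Once both resources exist in a project, the pipeline can be started from the Developer perspective or with the tkn CLI.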

Developers using Pipelines can:

- Add triggers to their pipelines (webhook support).

- Mount volumes as workspaces when starting a pipeline.

- Provide credentials for Git repositories and image registries, as needed.

- Work with Pipeline as code with GitHub:

  - Event filtering

  - Task resolution

  - Trigger on approved users and groups

  - Pull-request commands

  - GitHub Checks API

  - GitHub and GitHub Enterprise

- Customize default ClusterTasks and Pipeline templates.

Documentation and tutorials:

- Understanding OpenShift Pipelines - Pipelines | CI/CD | OpenShift Container Platform 4.8

- https://www.openshift.com/learn/topics/ci-cd

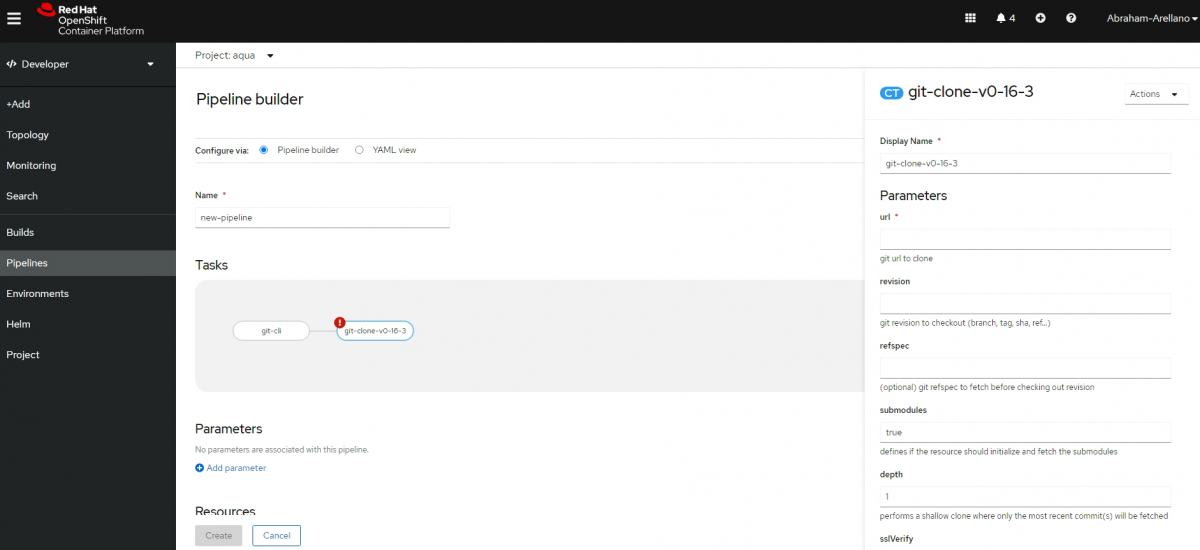

- OpenShift introduces the Pipeline Builder to the developer experience. It provides developers a visually guided experience to build their own pipelines.

- Pipeline UX enhancement highlights in the Dev Console:

  - Metrics tab: pipeline execution metrics

  - TaskRuns tab: list of TaskRuns created by a PipelineRun

  - Events tab: related PipelineRun, TaskRun and Pod events

  - Download PipelineRun logs

- Tekton CLI and IDE plugins (VS Code & IntelliJ):

  - Search and install Tasks from Tekton Hub

  - Install Tasks as ClusterTask

  - Code completion for variables when authoring Pipelines

  - Support for creating PVC for workspaces when starting a pipeline

  - Notifications for PipelineRun status upon completion

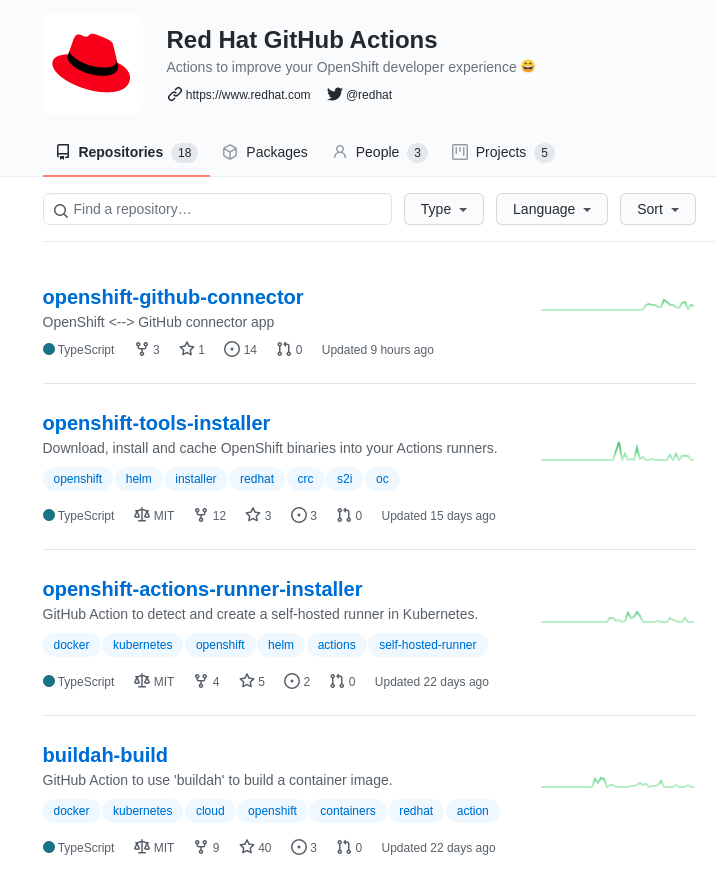

Red Hat GitHub Actions

Red Hat OpenShift now supports GitHub Actions, enabling organizations to standardize and scale their use of open, standardized developer toolchain components like Quay, Buildah, or Source-to-Image (s2i). This helps to meet developers where they are and provides greater choice and flexibility to OpenShift customers in how they build and deploy applications. The new GitHub Actions for Red Hat OpenShift, along with existing actions on GitHub Marketplace and action workflows, make it possible to achieve simple as well as complex application workflows on Red Hat’s enterprise Kubernetes platform using an extensive array of standards-based tools.

With this strategic partnership, developers can:

- Interact with OpenShift from GitHub workflows

- Use verified OpenShift actions on GitHub Marketplace:

  - OpenShift client (oc)

  - OpenShift login

  - S2I build

  - Buildah builds

  - Push image to registry

Dev Tools

CodeReady Containers - OpenShift on your laptop

With Red Hat CodeReady Containers now generally available, team members can develop on OpenShift on a laptop. A preconfigured OpenShift cluster tailored for laptop or desktop development makes it easier to get going quickly with a personal cluster.

https://developers.redhat.com/products/codeready-containers/overview

Built on the open Eclipse Che project, Red Hat CodeReady Workspaces uses Kubernetes and containers to provide any member of the development or IT team with a consistent, secure, and zero-configuration development environment.

The experience is as fast and familiar as an integrated development environment on your laptop.

CodeReady Workspaces is included with your OpenShift subscription and is available in the Operator Hub. It provides development teams a faster and more reliable foundation on which to work, and it gives operations centralized control and peace of mind.

Getting started with CodeReady Workspaces and Red Hat OpenShift Application Runtimes launcher

CLI enhancements

In addition to the traditional oc CLI tool, a developer-oriented tool, odo, has been added.

odo is a fast, iterative, and straightforward CLI tool for developers who write, build, and deploy applications on Kubernetes and OpenShift.

Existing tools such as kubectl and oc are more operations-focused and require a deep understanding of Kubernetes and OpenShift concepts. odo abstracts away complex Kubernetes and OpenShift concepts for the developer.

Red Hat Developers: Kubernetes integration and more in odo 2.0

Understanding odo - Developer CLI (odo) | CLI tools | OpenShift Container Platform 4.8

Additional tools for interacting with specific components such as Helm, OpenShift Pipelines, Knative and the Operator Framework are also available:

- helm (Helm 3 CLI)

- kn (Knative CLI)

- tkn (OpenShift Pipelines CLI)

- opm (Operator Framework bundle format)

- Operator SDK CLI

Additional information can be found at:

https://access.redhat.com/documentation/en-us/openshift_container_platform/4.8/html/cli_tools/index

Red Hat OpenShift Connector

Red Hat OpenShift Connector is available for Microsoft Visual Studio Code, JetBrains IDEs (including IntelliJ), and Eclipse Desktop IDE, making it easier to plug into existing developer pipelines. Developers can develop, build, debug, and deploy their applications on OpenShift without leaving their favorite IDE.

The OpenShift Connector by Red Hat allows a developer to interact with a Red Hat OpenShift cluster or with a local instance of OpenShift such as minishift or Red Hat CodeReady Containers. Leveraging the OpenShift Application Explorer view, you can improve the end-to-end experience of developing applications.

The extension provides a quick, simple way for any developer to work their “inner loop” process of coding, building and testing, directly using VS Code or IntelliJ - no CLI required.

Example of a developer use-case

On a developer workstation, when you load a Spring Boot project, the language support detection automatically proposes to load a Spring Boot language support extension and suggests downloading and installing the OpenShift Connector. You can install the recommended extension in Visual Studio Code.

Once the OpenShift Connector is installed, the OpenShift Application View is enabled on the Explorer panel in Visual Studio Code. You can then access the view, connect to a running OpenShift cluster, and perform the required operations.

Application development

HorizontalPodAutoscaler

The autoscaling/v2beta2 API version makes it possible to use memory utilization as a metric in addition to CPU utilization.

- https://docs.openshift.com/container-platform/4.8/nodes/pods/nodes-pods-autoscaling.html#supported-metrics

- It also provides the option to define scaling policies. A scaling policy controls how the OpenShift Container Platform horizontal pod autoscaler (HPA) scales pods. https://docs.openshift.com/container-platform/4.8/nodes/pods/nodes-pods-autoscaling.html#nodes-pods-autoscaling-policies_nodes-pods-autoscaling
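Both points can be sketched in one manifest. The example below builds an `autoscaling/v2beta2` HorizontalPodAutoscaler that scales on CPU and memory utilization and limits scale-down with a policy. The target Deployment name, the thresholds, and the policy values are hypothetical.

```python
import json


def hpa_manifest(name, deployment, min_replicas, max_replicas, cpu_percent, memory_percent):
    """Build an HPA that scales on CPU and memory and throttles scale-down."""
    def utilization(resource, percent):
        return {
            "type": "Resource",
            "resource": {
                "name": resource,
                "target": {"type": "Utilization", "averageUtilization": percent},
            },
        }

    return {
        "apiVersion": "autoscaling/v2beta2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [utilization("cpu", cpu_percent), utilization("memory", memory_percent)],
            # Scaling policy: remove at most 2 pods per 60 seconds, after a 5 minute window.
            "behavior": {"scaleDown": {
                "stabilizationWindowSeconds": 300,
                "policies": [{"type": "Pods", "value": 2, "periodSeconds": 60}],
            }},
        },
    }


if __name__ == "__main__":
    # Hypothetical workload and thresholds.
    print(json.dumps(hpa_manifest("web-hpa", "web", 2, 10, 75, 80), indent=2))
```

Utilization targets are percentages of the containers' resource requests, so the target workload needs CPU and memory requests for this to work.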

VerticalPodAutoscaler

- The Vertical Pod Autoscaler (VPA) provides an automatic way to set a container’s resource requests and limits.

- It uses historic CPU and memory usage data to fine-tune these values.

- It helps prevent under- and over-utilization of resources.

- It is generally available (GA) in OpenShift 4.8.

Additional information can be found here
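A VerticalPodAutoscaler object for a workload might be sketched as follows. It assumes that the Vertical Pod Autoscaler Operator is installed on the cluster; the object name and the target Deployment `web` are hypothetical.

```python
import json


def vpa_manifest(name, deployment, update_mode="Auto"):
    """Build a VerticalPodAutoscaler that targets a Deployment."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "targetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            # "Off" only produces recommendations; "Auto" also applies them to pods.
            "updatePolicy": {"updateMode": update_mode},
        },
    }


if __name__ == "__main__":
    # Start in recommendation-only mode for the hypothetical "web" Deployment.
    print(json.dumps(vpa_manifest("web-vpa", "web", update_mode="Off"), indent=2))
```

Starting with `updateMode` set to `Off` is a cautious choice, because it lets you inspect the recommendations before pods are restarted with new values.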

PodDisruptionBudget

PodDisruptionBudget is an API object that specifies the minimum number or percentage of replicas that must be up at a time.

Setting these in projects could have an impact during node maintenance (such as scaling a cluster down or a cluster upgrade) and is only honored on voluntary evictions (not on node failures).

See Protecting workloads from disruption (Pod Disruption Budgets) above.

Certified Helm Charts

Red Hat is pleased to introduce an expanded Red Hat OpenShift Certification to further support applications on Kubernetes and container orchestration across hybrid cloud footprints.

With this certification, Red Hat partners can enable and certify their software solutions on OpenShift through either Operators or Helm charts.

Service Serving Certificates

Service serving certificates are intended to support complex middleware applications that require encryption. These certificates are issued as TLS web server certificates.

OpenShift 4 improves the management of service serving certificates (SSC), which is handled by the service-ca Operator.

Additional information can be found here.
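Requesting a service serving certificate is typically done by annotating the Service. The sketch below builds such a Service; the service name, secret name, and port are hypothetical, and the annotation key is the one used in OpenShift 4 as far as this example assumes.

```python
import json


def service_with_serving_cert(name, secret_name, port=8443):
    """Build a Service that asks the service-ca Operator for a TLS certificate."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            # The generated certificate and key are stored in the named secret.
            "annotations": {"service.beta.openshift.io/serving-cert-secret-name": secret_name},
        },
        "spec": {
            "selector": {"app": name},
            "ports": [{"name": "https", "port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical service and secret names.
    print(json.dumps(service_with_serving_cert("backend", "backend-tls"), indent=2))
```

The application pod can then mount the secret as a volume and serve TLS with the certificate and key it contains.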

PID limits

In OCP3, the PID limit for containers was set to 4096. In OCP4, this is set to 1024 for CRI-O.

As a developer, you might reach this limit when running many threads in parallel in a Java program: a thread is merely a process running with shared memory, and each one needs a PID.

To reestablish the old limit on OCP4, Red Hat provides a knowledge base article: https://access.redhat.com/solutions/4717701
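The knowledge base article is not reproduced here. As a rough sketch of what such a change can look like, the example below builds a ContainerRuntimeConfig that raises the CRI-O PID limit for a machine config pool. This is a cluster administrator task, the pool label `custom-crio: high-pid-limit` is hypothetical, and the procedure in the article may differ.

```python
import json


def pids_limit_config(name, pool_labels, pids_limit=4096):
    """Build a ContainerRuntimeConfig that sets the CRI-O pidsLimit for matching pools."""
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "ContainerRuntimeConfig",
        "metadata": {"name": name},
        "spec": {
            # Only machine config pools carrying these labels are affected.
            "machineConfigPoolSelector": {"matchLabels": pool_labels},
            "containerRuntimeConfig": {"pidsLimit": pids_limit},
        },
    }


if __name__ == "__main__":
    # Hypothetical label that an administrator would first add to the worker pool.
    print(json.dumps(pids_limit_config("set-pids-limit", {"custom-crio": "high-pid-limit"}), indent=2))
```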

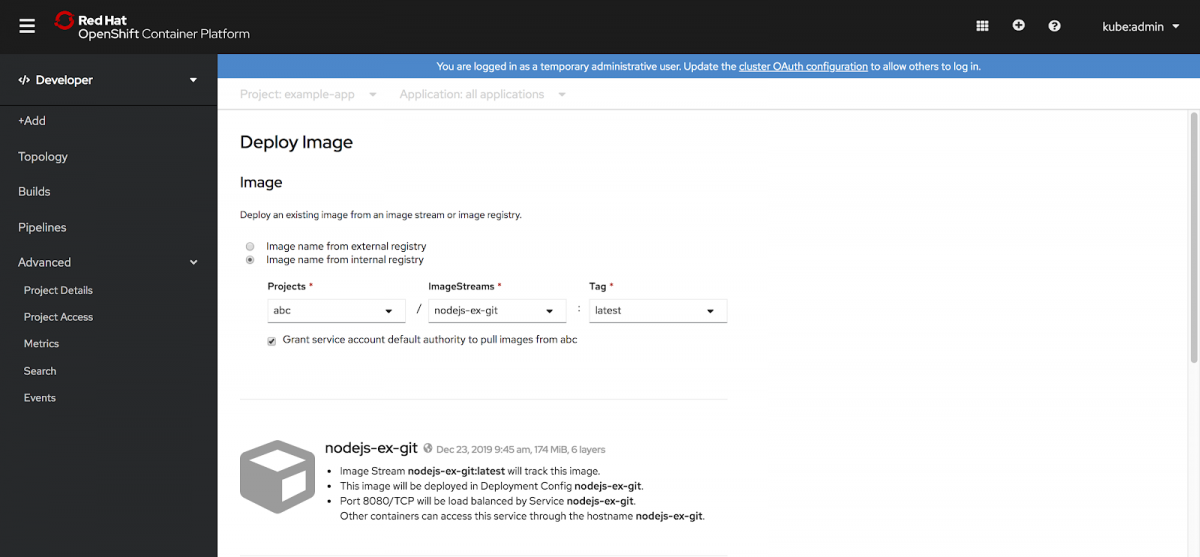

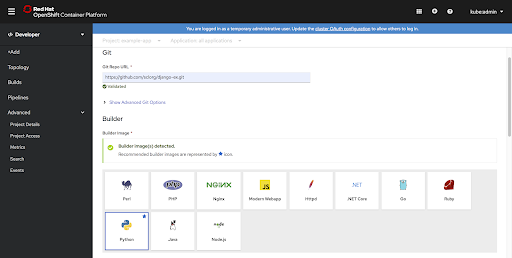

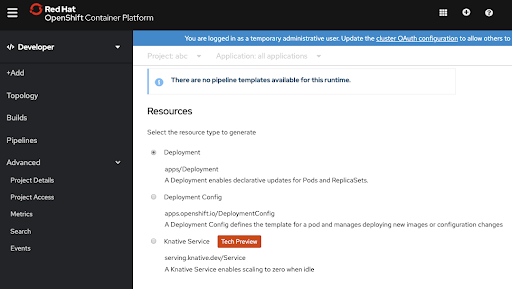

Application deployment is even easier

- Support for the deployment of an image stream from an internal registry.

- Builder image detection in the Import from Git user flow.

- Deployment options between Kubernetes Deployments (default), OpenShift DeploymentConfigs, and Knative services. This option gives the developer easy ways to switch between deployment types without having to learn YAML or other templating solutions.

- The Developer Catalog now has options to allow developers to filter and group items more easily and labels to help visually identify the difference between catalog items.

- Improvements to creating Operator-backed services from the Developer Catalog:

  - Developers can now perform this task via a form, rather than being forced to remain in a YAML editor. In the future, forms will be the default experience.

  - Operator-backed services are exposed as a grouping in Topology. Additionally, the resource that manages the resources that are part of the Operator-backed service is now easily accessible from the side panel.

- Helm Charts:

  - Developers can install Helm Charts through the Developer Catalog.

  - Helm Releases can be seen on the Topology page. They are shown as a grouping, and all high-level components are viewed when the Helm Release is expanded, as shown in Figure 3. This feature allows the developer to quickly understand all of the resources the chart creates.

Monitoring improvements

User workload monitoring

The OpenShift 4 platform can be set up to support monitoring project workloads, enabling Prometheus to scrape metrics from application endpoints by defining a ServiceMonitor or PodMonitor object. The process is described in:

https://www.openshift.com/blog/monitoring-your-own-workloads-in-the-developer-console-in-openshift-container-platform-4.6
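A ServiceMonitor for an application could be sketched as follows. It assumes that monitoring for user-defined projects has been enabled by a cluster administrator and that the application's Service exposes a named port serving metrics at `/metrics`. The namespace, label, and port name are hypothetical.

```python
import json


def service_monitor(name, namespace, app_label, port_name="web"):
    """Build a ServiceMonitor that scrapes services labelled app=<app_label>."""
    return {
        "apiVersion": "monitoring.coreos.com/v1",
        "kind": "ServiceMonitor",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # The port is referenced by the name it has in the Service definition.
            "endpoints": [{"port": port_name, "path": "/metrics", "interval": "30s"}],
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


if __name__ == "__main__":
    # Hypothetical project and application names.
    print(json.dumps(service_monitor("web-monitor", "my-project", "web"), indent=2))
```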

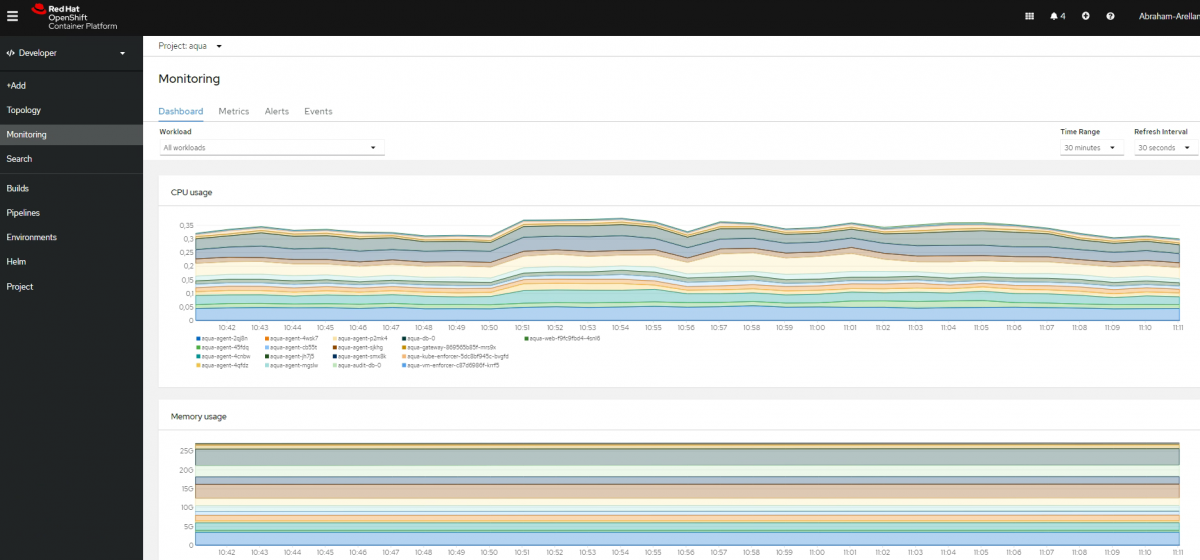

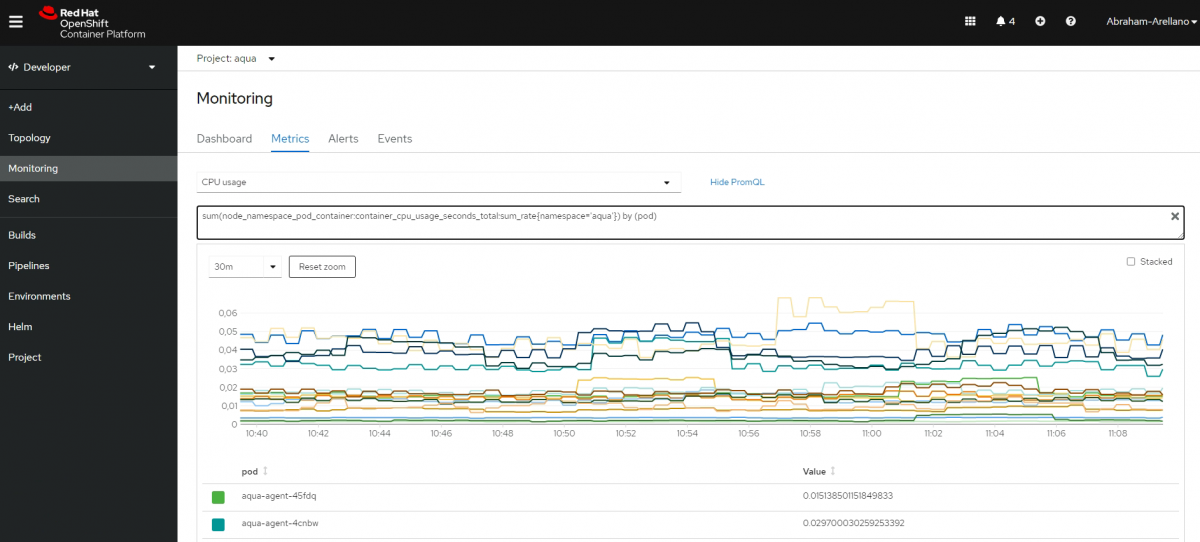

Monitoring Dashboard

You can get insights into your application metrics with your new monitoring dashboard featuring 10 metrics charts. This feature is available on the Dashboard tab of the Monitoring page.

Troubleshoot problems with your applications by running Prometheus Query Language (PromQL) queries on your Project and examining the metrics visualized on a plot. This feature is available on the Metrics page.

Logging

Considerations

- You cannot transition your aggregate logging data from OpenShift Container Platform 3.11 into your new OpenShift Container Platform 4 cluster.

- Some logging configurations that were available in OpenShift Container Platform 3.11 are no longer supported in OpenShift Container Platform 4.8:

  - Configuring default log rotation. You cannot modify the default log rotation configuration.

  - Configuring the collected log location. You cannot change the location of the log collector output file, which by default is /var/log/fluentd/fluentd.log.

  - Throttling log collection. You cannot throttle down the rate at which the logs are read in by the log collector.

  - Configuring log collection JSON parsing. You cannot format log messages in JSON.

  - Configuring the logging collector using environment variables. You cannot use environment variables to modify the log collector.

  - Configuring how the log collector normalizes logs. You cannot modify default log normalization.

  - Configuring Curator in scripted deployments. You cannot configure log curation in scripted deployments.

  - Using the Curator Action file. You cannot use the Curator config map to modify the Curator action file.

Improvements

- OpenShift 4 increases the discoverability of critical logs by parsing JSON logs into objects so that developers can query by individual fields.

  - It is also possible to configure which container logs are parsed and forwarded to either a third-party solution or the managed Elasticsearch.

  - Red Hat’s Elasticsearch stores JSON logs in individual indices per defined schema to reduce possible field explosion scenarios.

  - Query individual fields via Kibana.

- More flexibility to select and filter certain logs to forward.

  - The Log Forwarding API is extended to allow users to select and forward certain logs based on any pod label.

  - Additional information can be found here
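The label-based selection and JSON parsing described above might be expressed in a ClusterLogForwarder along these lines. This is only a sketch: the field names reflect one reading of the `logging.openshift.io/v1` API and should be verified against the installed logging version, the input and pipeline names are hypothetical, and the resource is normally managed by a cluster administrator.

```python
import json


def forward_labelled_json_logs(pod_labels):
    """Build a ClusterLogForwarder that parses JSON logs of pods matching the given labels."""
    return {
        "apiVersion": "logging.openshift.io/v1",
        "kind": "ClusterLogForwarder",
        "metadata": {"name": "instance", "namespace": "openshift-logging"},
        "spec": {
            # Select application logs by pod label.
            "inputs": [{
                "name": "selected-apps",
                "application": {"selector": {"matchLabels": pod_labels}},
            }],
            # Parse the selected logs as JSON and send them to the default log store.
            "pipelines": [{
                "name": "selected-apps-json",
                "inputRefs": ["selected-apps"],
                "outputRefs": ["default"],
                "parse": "json",
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod labels.
    print(json.dumps(forward_labelled_json_logs({"app": "web", "environment": "production"}), indent=2))
```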

Operators

Operator Lifecycle Manager

The Operator Lifecycle Manager (OLM) helps users install, update, and manage the lifecycle of Kubernetes native applications (Operators) and their associated services running across their OpenShift Container Platform clusters.

It is part of the Operator Framework, an open source toolkit designed to manage Operators in an effective, automated, and scalable way.

Binding

The Service Binding Operator enables application developers to more easily bind applications together with Operator-managed backing services such as databases, without having to manually configure secrets, ConfigMaps, etc. In Topology in 4.3, you can quickly and easily create a binding between two components.

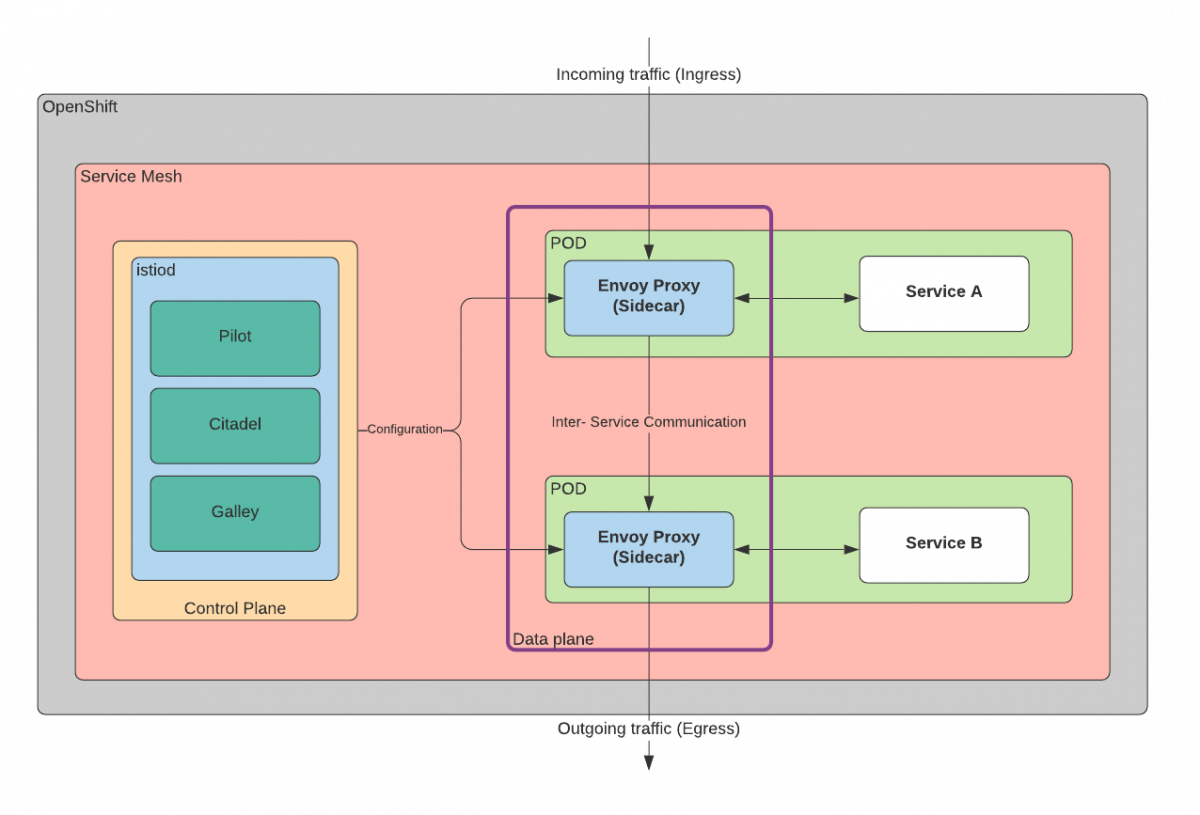

Service Mesh

Based on the Istio (version 1.6.x on OCP 4.7), Kiali, and Jaeger projects and enhanced with Kubernetes Operators, OpenShift Service Mesh is designed to simplify the development, deployment, and ongoing management of applications on OpenShift. It delivers a set of capabilities well suited for modern, cloud native applications such as microservices. This helps to free developer teams from the complex tasks of having to implement bespoke networking services for their applications and business logic.

Serverless

OpenShift Serverless helps developers deploy and run applications that will scale up or scale to zero on demand. It is based on the open source project Knative.

The OpenShift Serverless Operator provides an easy way to get started and install the components necessary to deploy serverless applications or functions with OpenShift. With the Developer-focused Console perspective available in OpenShift, serverless options are enabled for common developer workflows, such as Import from Git or Deploy an Image, allowing users to create serverless applications directly from the console.
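Besides the console workflows, a serverless application can be described as a Knative Service manifest. The sketch below assumes that OpenShift Serverless (Knative Serving) is installed; the service name and image reference are hypothetical.

```python
import json


def knative_service(name, image):
    """Build a Knative Service that runs a single container and can scale to zero."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        # Each change to the template creates a new revision of the service.
        "spec": {"template": {"spec": {"containers": [{"image": image}]}}},
    }


if __name__ == "__main__":
    # Hypothetical image reference.
    print(json.dumps(knative_service("hello", "quay.io/example/hello:latest"), indent=2))
```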

Create event sources in the web console

The Red Hat OpenShift Serverless Operator already enhances the developer experience in OpenShift by letting developers create event sources through the web console.

Event sources provide a mechanism for event providers to connect to your application and send events. In Knative, events are based on the Cloud Events Specification, which lets users describe event data in a cloud-agnostic way. Knative services by nature are scaled down to zero once they've been idle for a set time. With event sources, providers can send events that automatically scale up the Knative service and make it available as needed.

Developers can now use a form-based mechanism to create event sources and then use a Knative service as a "sink" or destination for the events.

API changes

- The current list of API endpoints for OCP 4 is found at: https://docs.openshift.com/container-platform/4.8/rest_api/index.html

- The API reference for OCP 3.11 is found at: https://docs.openshift.com/container-platform/3.11/rest_api/index.html

API Migration Guideline

Event

The events.k8s.io/v1beta1 API version of Event will no longer be served in Kubernetes v1.25.

Migrate manifests and API clients to use the Kubernetes events.k8s.io/v1 API version or the OpenShift core/v1, available since v1.19 and 4.1 respectively.

All existing persisted objects are accessible via the new API

Notable changes in events.k8s.io/v1 and core/v1:

- type is limited to Normal and Warning

- involvedObject is renamed to regarding

- action, reason, reportingComponent, and reportingInstance are required when creating new events.k8s.io/v1 events

- use eventTime instead of the deprecated firstTimestamp field (which is renamed to deprecatedFirstTimestamp and not permitted in new events.k8s.io/v1 Events)

- use reportingComponent instead of the deprecated source.component field.

- use reportingInstance instead of the deprecated source.host field.

PodDisruptionBudget (OpenShift > 4.8)

The policy/v1beta1 API version of PodDisruptionBudget will no longer be served in v1.25.

- Migrate manifests and API clients to use the policy/v1 API version, available since v1.21.

- All existing persisted objects are accessible via the new API

- Notable changes in policy/v1:

- an empty spec.selector ({}) written to a policy/v1 PodDisruptionBudget selects all pods in the namespace (in policy/v1beta1 an empty spec.selector selected no pods). An unset spec.selector selects no pods in either API version.
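The selector change is easy to miss during migration. The following sketch converts a `policy/v1beta1` PodDisruptionBudget manifest (as a Python dict) to `policy/v1` and reports the case where the semantics change. The function name and the warning text are illustrative only.

```python
import copy


def migrate_pdb_to_v1(pdb):
    """Return (migrated manifest, warnings) for a policy/v1beta1 PodDisruptionBudget."""
    if pdb.get("apiVersion") != "policy/v1beta1":
        raise ValueError("expected a policy/v1beta1 PodDisruptionBudget")
    migrated = copy.deepcopy(pdb)
    migrated["apiVersion"] = "policy/v1"
    warnings = []
    # An empty selector selected no pods in v1beta1 but selects all pods in v1.
    if migrated.get("spec", {}).get("selector") == {}:
        warnings.append("empty spec.selector selects ALL pods in the namespace in policy/v1")
    return migrated, warnings


if __name__ == "__main__":
    old = {
        "apiVersion": "policy/v1beta1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": "web-pdb"},
        "spec": {"minAvailable": 1, "selector": {}},
    }
    new, notes = migrate_pdb_to_v1(old)
    print(new["apiVersion"], notes)
```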

PodSecurityPolicy (OpenShift > 4.10)

PodSecurityPolicy in the policy/v1beta1 API version will no longer be served in v1.25, and the PodSecurityPolicy admission controller will be removed.

PodSecurityPolicy replacements are still under discussion, but current use can be migrated to 3rd-party admission webhooks now.

Webhook resources

The admissionregistration.k8s.io/v1beta1 API version of MutatingWebhookConfiguration and ValidatingWebhookConfiguration will no longer be served in v1.22 (~ OpenShift 4.9).

Migrate manifests and API clients to use the admissionregistration.k8s.io/v1 API version, available since v1.16 / OpenShift 4.3.

All existing persisted objects are accessible via the new APIs

Notable changes:

- webhooks[*].failurePolicy default changed from Ignore to Fail for v1

- webhooks[*].matchPolicy default changed from Exact to Equivalent for v1

- webhooks[*].timeoutSeconds default changed from 30s to 10s for v1

- webhooks[*].sideEffects default value is removed, and the field made required, and only None and NoneOnDryRun are permitted for v1

- webhooks[*].admissionReviewVersions default value is removed and the field made required for v1 (supported versions for AdmissionReview are v1 and v1beta1)

- webhooks[*].name must be unique in the list for objects created via admissionregistration.k8s.io/v1

CustomResourceDefinition

The apiextensions.k8s.io/v1beta1 API version of CustomResourceDefinition will no longer be served in v1.22 / OpenShift 4.9.

Migrate manifests and API clients to use the apiextensions.k8s.io/v1 API version, available since v1.16 / OpenShift 4.3.

All existing persisted objects are accessible via the new API

Notable changes:

- spec.scope is no longer defaulted to Namespaced and must be explicitly specified

- spec.version is removed in v1; use spec.versions instead

- spec.validation is removed in v1; use spec.versions[*].schema instead

- spec.subresources is removed in v1; use spec.versions[*].subresources instead

- spec.additionalPrinterColumns is removed in v1; use spec.versions[*].additionalPrinterColumns instead

- spec.conversion.webhookClientConfig is moved to spec.conversion.webhook.clientConfig in v1

- spec.conversion.conversionReviewVersions is moved to spec.conversion.webhook.conversionReviewVersions in v1

- spec.versions[*].schema.openAPIV3Schema is now required when creating v1 CustomResourceDefinition objects, and must be a structural schema

- spec.preserveUnknownFields: true is disallowed when creating v1 CustomResourceDefinition objects; it must be specified within schema definitions as x-kubernetes-preserve-unknown-fields: true

- In additionalPrinterColumns items, the JSONPath field was renamed to jsonPath in v1 (fixes #66531)

CertificateSigningRequest

The certificates.k8s.io/v1beta1 API version of CertificateSigningRequest will no longer be served in v1.22 / OpenShift 4.9.

Migrate manifests and API clients to use the certificates.k8s.io/v1 API version, available since v1.19 / OpenShift 4.6.

All existing persisted objects are accessible via the new API

Notable changes in certificates.k8s.io/v1:

- For API clients requesting certificates:

  - spec.signerName is now required (see known Kubernetes signers), and requests for kubernetes.io/legacy-unknown are not allowed to be created via the certificates.k8s.io/v1 API

  - spec.usages is now required, may not contain duplicate values, and must only contain known usages

- For API clients approving or signing certificates:

  - status.conditions may not contain duplicate types

  - status.conditions[*].status is now required

  - status.certificate must be PEM-encoded, and contain only CERTIFICATE blocks
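As a rough illustration of the requirements on clients requesting certificates, the following Python sketch checks the `spec` of a CertificateSigningRequest (as a dict) before it is submitted to the v1 API. The function name `check_csr_v1_spec` is hypothetical, and the check is deliberately partial: it does not verify that each usage is one of the known usages.

```python
from typing import List


def check_csr_v1_spec(spec: dict) -> List[str]:
    """Return the problems that would block creating a v1 CertificateSigningRequest."""
    problems = []

    signer = spec.get("signerName")
    if not signer:
        problems.append("spec.signerName is required")
    elif signer == "kubernetes.io/legacy-unknown":
        problems.append(
            "kubernetes.io/legacy-unknown cannot be requested via certificates.k8s.io/v1"
        )

    usages = spec.get("usages")
    if not usages:
        problems.append("spec.usages is required")
    elif len(usages) != len(set(usages)):
        problems.append("spec.usages contains duplicate values")

    return problems
```

An empty list means none of the checked rules is violated; it does not guarantee that the API server accepts the request.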

Ingress

The extensions/v1beta1 and networking.k8s.io/v1beta1 API versions of Ingress will no longer be served in v1.22 / OpenShift 4.9.

Migrate manifests and API clients to use the networking.k8s.io/v1 API version, available since v1.19 / OpenShift 4.6.

All existing persisted objects are accessible via the new API

Notable changes:

- spec.backend is renamed to spec.defaultBackend

- The backend serviceName field is renamed to service.name

- Numeric backend servicePort fields are renamed to service.port.number

- String backend servicePort fields are renamed to service.port.name

- pathType is now required for each specified path. Options are Prefix, Exact, and ImplementationSpecific. To match the undefined v1beta1 behavior, use ImplementationSpecific.
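The renames above are mechanical, so they can be sketched in a few lines of Python operating on an Ingress manifest loaded as a dict. The function names are hypothetical. Where no pathType is set, the sketch falls back to ImplementationSpecific to keep the v1beta1 matching behavior; pick Prefix or Exact instead if that is what the application expects.

```python
import copy


def _backend_to_v1(backend):
    """Convert one v1beta1 backend (serviceName/servicePort) to the v1 shape."""
    if "serviceName" not in backend:
        return copy.deepcopy(backend)  # not a service backend; left untouched
    port = backend.get("servicePort")
    # Numeric ports become port.number, string ports become port.name.
    port_field = {"number": port} if isinstance(port, int) else {"name": port}
    return {"service": {"name": backend["serviceName"], "port": port_field}}


def ingress_v1beta1_to_v1(ingress):
    """Return a copy of a v1beta1 Ingress manifest reshaped for networking.k8s.io/v1."""
    out = copy.deepcopy(ingress)
    out["apiVersion"] = "networking.k8s.io/v1"
    spec = out.get("spec", {})

    # spec.backend is renamed to spec.defaultBackend.
    if "backend" in spec:
        spec["defaultBackend"] = _backend_to_v1(spec.pop("backend"))

    for rule in spec.get("rules", []):
        for path in rule.get("http", {}).get("paths", []):
            path["backend"] = _backend_to_v1(path["backend"])
            # pathType is required in v1; this value matches the old behavior.
            path.setdefault("pathType", "ImplementationSpecific")

    return out
```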

RBAC resources

The rbac.authorization.k8s.io/v1beta1 API version of ClusterRole, ClusterRoleBinding, Role, and RoleBinding will no longer be served in v1.22 / OpenShift 4.9.

Migrate manifests and API clients to use the rbac.authorization.k8s.io/v1 API version, available since v1.8 / OpenShift 4.4.

All existing persisted objects are accessible via the new APIs

No notable changes

PriorityClass

The scheduling.k8s.io/v1beta1 API version of PriorityClass will no longer be served in v1.22 / OpenShift 4.9.

Migrate manifests and API clients to use the scheduling.k8s.io/v1 API version, available since v1.14 / OpenShift 4.2.

All existing persisted objects are accessible via the new API

No notable changes

NetworkPolicy

The extensions/v1beta1 API version of NetworkPolicy is no longer served as of v1.16.

Migrate manifests and API clients to use the networking.k8s.io/v1 API version, available since v1.8.

All existing persisted objects are accessible via the new API

DaemonSet

The extensions/v1beta1 and apps/v1beta2 API versions of DaemonSet are no longer served as of v1.16.

Migrate manifests and API clients to use the apps/v1 API version, available since v1.9.

All existing persisted objects are accessible via the new API

Notable changes:

- spec.templateGeneration is removed

- spec.selector is now required and immutable after creation; use the existing template labels as the selector for seamless upgrades

- spec.updateStrategy.type now defaults to RollingUpdate (the default in extensions/v1beta1 was OnDelete)
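A minimal Python sketch of these DaemonSet changes, working on a manifest loaded as a dict, could look as follows. The function name is hypothetical. As a design choice, the sketch pins the update strategy to OnDelete for manifests coming from extensions/v1beta1 so that behavior does not change silently; remove that step to adopt the new RollingUpdate default.

```python
import copy


def daemonset_to_apps_v1(ds):
    """Return a copy of an older DaemonSet manifest reshaped for apps/v1."""
    out = copy.deepcopy(ds)
    old_api = out.get("apiVersion")
    out["apiVersion"] = "apps/v1"
    spec = out["spec"]

    # spec.templateGeneration is removed in apps/v1.
    spec.pop("templateGeneration", None)

    # spec.selector is required; reuse the existing template labels.
    if "selector" not in spec:
        labels = spec["template"]["metadata"]["labels"]
        spec["selector"] = {"matchLabels": dict(labels)}

    # Keep the extensions/v1beta1 default unless a strategy was already chosen.
    if old_api == "extensions/v1beta1":
        spec.setdefault("updateStrategy", {}).setdefault("type", "OnDelete")

    return out
```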

Deployment

The extensions/v1beta1, apps/v1beta1, and apps/v1beta2 API versions of Deployment are no longer served as of v1.16 / OpenShift 4.3.

Migrate manifests and API clients to use the apps/v1 API version, available since v1.9.

All existing persisted objects are accessible via the new API

Notable changes:

- spec.rollbackTo is removed

- spec.selector is now required and immutable after creation; use the existing template labels as the selector for seamless upgrades

- spec.progressDeadlineSeconds now defaults to 600 seconds (the default in extensions/v1beta1 was no deadline)

- spec.revisionHistoryLimit now defaults to 10 (the default in apps/v1beta1 was 2, the default in extensions/v1beta1 was to retain all)

- maxSurge and maxUnavailable now default to 25% (the default in extensions/v1beta1 was 1)
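Because several of these changes are new defaults rather than renamed fields, a manifest can be valid in apps/v1 and still behave differently after migration. The Python sketch below lists which of the changes above affect a given Deployment manifest (as a dict), based only on the fields it leaves unset. The function name and the wording of the notes are illustrative, not part of any tool.

```python
def deployment_migration_notes(dep):
    """List the apps/v1 changes that affect this Deployment manifest."""
    api = dep.get("apiVersion")
    spec = dep.get("spec", {})
    notes = []

    if "rollbackTo" in spec:
        notes.append("spec.rollbackTo is removed in apps/v1")
    if "selector" not in spec:
        notes.append("spec.selector is required; reuse the template labels")

    if api == "extensions/v1beta1":
        if "progressDeadlineSeconds" not in spec:
            notes.append("spec.progressDeadlineSeconds default: no deadline -> 600 seconds")
        if "revisionHistoryLimit" not in spec:
            notes.append("spec.revisionHistoryLimit default: retain all -> 10")
        strategy = spec.get("strategy") or {}
        # maxSurge and maxUnavailable only apply to rolling updates.
        if strategy.get("type", "RollingUpdate") == "RollingUpdate":
            rolling = strategy.get("rollingUpdate") or {}
            for field in ("maxSurge", "maxUnavailable"):
                if field not in rolling:
                    notes.append(f"{field} default: 1 -> 25%")
    elif api == "apps/v1beta1":
        if "revisionHistoryLimit" not in spec:
            notes.append("spec.revisionHistoryLimit default: 2 -> 10")

    return notes
```

Setting the affected fields explicitly before switching to apps/v1 keeps the previous behavior.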

StatefulSet

The apps/v1beta1 and apps/v1beta2 API versions of StatefulSet are no longer served as of v1.16 / OpenShift 4.

Migrate manifests and API clients to use the apps/v1 API version, available since v1.9 / OpenShift 4.1.

All existing persisted objects are accessible via the new API

Notable changes:

- spec.selector is now required and immutable after creation; use the existing template labels as the selector for seamless upgrades

- spec.updateStrategy.type now defaults to RollingUpdate (the default in apps/v1beta1 was OnDelete)

ReplicaSet

The extensions/v1beta1, apps/v1beta1, and apps/v1beta2 API versions of ReplicaSet are no longer served as of v1.16 / OpenShift 4.

Migrate manifests and API clients to use the apps/v1 API version, available since v1.9 / OpenShift 4.1.

All existing persisted objects are accessible via the new API

Notable changes:

- spec.selector is now required and immutable after creation; use the existing template labels as the selector for seamless upgrades

Security

Unauthenticated access to discovery endpoints

In OpenShift Container Platform 3.11, an unauthenticated user could access the discovery endpoints (for example, /api/* and /apis/*). For security reasons, unauthenticated access to the discovery endpoints is no longer allowed in OpenShift Container Platform 4.x. If unauthenticated access is needed, the RBAC settings can be configured to allow it; under the current security requirements, however, this has not been enabled.

Identity providers

Configuration for identity providers has changed for OpenShift Container Platform 4, including the following notable changes:

- The request header identity provider in OpenShift Container Platform 4.x requires mutual TLS, whereas in OpenShift Container Platform 3.11 it did not.

- The configuration of the OpenID Connect identity provider is simplified in OpenShift Container Platform 4.x. Data that previously had to be specified in OpenShift Container Platform 3.11 is now obtained from the provider’s /.well-known/openid-configuration endpoint.
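To illustrate what the discovery endpoint provides, the Python sketch below builds the discovery URL from an issuer URL and picks the endpoint fields out of a discovery document. The field names come from the OpenID Connect Discovery specification; which of them OpenShift actually reads is an assumption here, and the function names and the issuer URL in the usage note are hypothetical.

```python
import json


def discovery_url(issuer: str) -> str:
    """Build the OpenID Connect discovery URL for an issuer."""
    return issuer.rstrip("/") + "/.well-known/openid-configuration"


def endpoints_from_discovery(document: str) -> dict:
    """Extract the endpoint URLs from a discovery document given as JSON text."""
    data = json.loads(document)
    # Standard OpenID Connect Discovery metadata fields (assumed to be the relevant ones).
    wanted = ("authorization_endpoint", "token_endpoint",
              "userinfo_endpoint", "jwks_uri")
    return {key: data[key] for key in wanted if key in data}
```

For an issuer such as `https://idp.example.com`, `discovery_url` returns `https://idp.example.com/.well-known/openid-configuration`; the document served there can then be passed to `endpoints_from_discovery`.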

OAuth token storage format

Newly created OAuth HTTP bearer tokens no longer match the names of their OAuth access token objects. The object names are now a hash of the bearer token and are no longer sensitive. This reduces the risk of leaking sensitive information.
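The idea can be sketched in Python: the object name is derived from the bearer token with a one-way hash, so knowing the name does not reveal the token. The exact scheme used below (a `sha256~` prefix, SHA-256, and unpadded URL-safe base64) is an assumption made for illustration and is not stated in this guide; the function name is hypothetical.

```python
import base64
import hashlib

PREFIX = "sha256~"  # assumed prefix, for illustration only


def token_object_name(bearer_token: str) -> str:
    """Derive an object name from a bearer token using a one-way hash."""
    # Hash only the secret part if the token carries the assumed prefix.
    secret = bearer_token[len(PREFIX):] if bearer_token.startswith(PREFIX) else bearer_token
    digest = hashlib.sha256(secret.encode("utf-8")).digest()
    # URL-safe base64 without padding keeps the name compact.
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return PREFIX + encoded
```

The same token always maps to the same name, while the name cannot be used in place of the token to authenticate.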

Migration tooling to help users upgrade from OpenShift 3 to 4

Existing OpenShift users can move to the latest release of OpenShift Container Platform more easily with the new workload migration tooling available alongside OpenShift 4.

Migration Toolkit for Containers

The Migration Toolkit for Containers (MTC) web console and API, based on Kubernetes custom resources, enable you to migrate stateful application workloads at the granularity of a namespace.

You can use MTC to migrate Kubernetes resources, persistent volume data, and internal container images from OpenShift Container Platform 3 to OpenShift Container Platform 4.8 by using the MTC web console or the Kubernetes API.

MTC migrates the following resources:

- A namespace specified in a migration plan.

- Namespace-scoped resources: When the MTC migrates a namespace, it migrates all the objects and resources associated with that namespace, such as services or pods. Additionally, if a resource that exists in the namespace but not at the cluster level depends on a resource that exists at the cluster level, the MTC migrates both resources.

  For example, a security context constraint (SCC) is a resource that exists at the cluster level and a service account (SA) is a resource that exists at the namespace level. If an SA exists in a namespace that the MTC migrates, the MTC automatically locates any SCCs that are linked to the SA and also migrates those SCCs. Similarly, the MTC migrates persistent volumes that are linked to the persistent volume claims of the namespace.

  NOTE: Cluster-scoped resources might have to be migrated manually, depending on the resource.

- Custom resources (CRs) and custom resource definitions (CRDs): MTC automatically migrates CRs and CRDs at the namespace level.

The MTC console is installed on the target cluster by default. You can configure the Migration Toolkit for Containers Operator to install the console on a remote cluster.

https://docs.openshift.com/container-platform/4.8/migration_toolkit_for_containers/about-mtc.html
