Common kdump Configuration Mistakes

Issue

- Why does the kdump service fail to start?

- Why am I not getting a vmcore when RHEL crashes?

- What are the basic configuration requirements of kdump?

- How do I install and start kdump?

Environment

- Red Hat Enterprise Linux 8

- Red Hat Enterprise Linux 7

- Red Hat Enterprise Linux 6

Resolution

kdump is provided by the kexec-tools package. When the running kernel panics, kexec boots a second kernel, commonly called the kdump or crash kernel, from memory reserved by the live kernel. The kdump kernel creates a virtual memory image (vmcore) of the crashed kernel, copies it to a local or remote dump target, and then (by default) reboots the system. This process has many configuration options that administrators can tune for their environment. That flexibility also increases the potential for user error, which can lead to a misconfigured kdump service and abnormal or unexpected behavior.

The purpose of this document is to explore the most common kdump configuration mistakes, the ways to identify these mistakes, and what steps should be taken to resolve them.

Table of Contents:

- Kdump Deployment

-Install kexec-tools

-Check if Kdump is Running

-Installing Kdump

-Starting Kdump

- Crashkernel Reservation

-Recommended Crashkernel Reservation Size

-Checking the Crashkernel Reservation

-Setting the Crashkernel Reservation in RHEL 6

-Setting the Crashkernel Reservation in RHEL 7

-Setting the Crashkernel Reservation in RHEL 8

-Undersized Crashkernel Reservations

- Local Dump Targets

-Available Storage Space Requirements

-Getting the Smallest Core

-Dump Path Misconfigurations

- Remote Dump Targets

-Dumping a vmcore to an NFS Share

-Dumping a vmcore via SSH

- Additional Considerations

-HPSA Driver Failure

-Kdump in Clustered Environments

-Third Party Kernel Modules

-Kdump Failure on UEFI Secure Boot Systems

Kdump Deployment

Install kexec-tools

Although not having the kexec-tools package installed isn't technically a misconfiguration, we sometimes see situations in which a vmcore is expected to be generated from a crash, but the most basic check hasn't been performed yet.

To check that the kexec-tools package is installed, use the rpm command in RHEL 6, RHEL 7, and RHEL 8:

[root@hostname.rhel6 ~]# rpm -q kexec-tools

kexec-tools-2.0.0-310.el6.x86_64

Check if Kdump is Running

To verify the status of the kdump service in RHEL 6, use both the service kdump status and the chkconfig commands:

[root@hostname.rhel6 ~]# service kdump status

Kdump is operational

[root@hostname.rhel6 ~]# chkconfig | grep kdump

kdump 0:off 1:off 2:off 3:on 4:on 5:on 6:off

The service output above shows that kdump was previously started and is operational. The chkconfig output indicates that the kdump service is enabled at runlevels 3, 4, and 5, so kdump is started whenever the system enters one of these runlevels, i.e., at boot.

With the introduction of systemd in RHEL 7, use systemctl status kdump to check the status of the kdump service in RHEL 7 and later:

[root@hostname.rhel8 ~]# systemctl status kdump

● kdump.service - Crash recovery kernel arming

Loaded: loaded (/usr/lib/systemd/system/kdump.service; enabled; vendor preset: enabled)

Active: active (exited) since Fri 2020-08-21 09:43:38 EDT; 1h 34min ago <<-----

Process: 1150 ExecStart=/usr/bin/kdumpctl start (code=exited, status=0/SUCCESS)

Main PID: 1150 (code=exited, status=0/SUCCESS)

Aug 21 09:43:30 rhel8 dracut[1484]: *** Generating early-microcode cpio image ***

Aug 21 09:43:30 rhel8 dracut[1484]: *** Constructing GenuineIntel.bin ****

Aug 21 09:43:30 rhel8 dracut[1484]: *** Store current command line parameters ***

Aug 21 09:43:30 rhel8 dracut[1484]: Stored kernel commandline:

Aug 21 09:43:30 rhel8 dracut[1484]: rd.lvm.lv=rhel/root

Aug 21 09:43:30 rhel8 dracut[1484]: *** Creating image file '/boot/initramfs-4.18.0-193.el8.x86_64kdump.img' ***

Aug 21 09:43:37 rhel8 dracut[1484]: *** Creating initramfs image file '/boot/initramfs-4.18.0-193.el8.x86_64kdump.img' done ***

Aug 21 09:43:38 rhel8 kdumpctl[1150]: kexec: loaded kdump kernel

Aug 21 09:43:38 rhel8 kdumpctl[1150]: Starting kdump: [OK]

Aug 21 09:43:38 rhel8 systemd[1]: Started Crash recovery kernel arming.
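As a complementary check that does not depend on the init system, the kernel reports whether a crash kernel is currently loaded through /sys/kernel/kexec_crash_loaded (1 means loaded). The following is a minimal sketch of that check; the function name kdump_loaded and its optional file argument are illustrative and not part of kexec-tools.

```shell
# Report whether a crash kernel is currently loaded.
# $1 is the file to read; it defaults to the real sysfs location and is
# parameterised only so that the logic can be tried against a test file
# (an assumption of this sketch).
kdump_loaded() {
    state_file="${1:-/sys/kernel/kexec_crash_loaded}"
    if [ "$(cat "$state_file" 2>/dev/null)" = "1" ]; then
        echo "crash kernel loaded"
    else
        echo "crash kernel NOT loaded"
        return 1
    fi
}
```

Running kdump_loaded with no argument on a system where the service started successfully should report that the crash kernel is loaded.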

Should the kexec-tools package not exist on the system, this will be reflected in the output of the rpm -q kexec-tools command and corresponding RHEL version's status check:

[root@hostname.rhel7 ~]# rpm -q kexec-tools

package kexec-tools is not installed

[root@hostname.rhel7 ~]# systemctl status kdump

Unit kdump.service could not be found.

Installing Kdump

Use yum to install the kdump service in RHEL 6, RHEL 7, and RHEL 8:

[root@hostname.rhel7 ~]# yum install -y kexec-tools

The kdump service is started and enabled differently in RHEL 6 than in RHEL 7 and RHEL 8.

Starting Kdump

For RHEL 6, chkconfig enables the service at boot; to start it immediately, also run service kdump start:

[root@hostname.rhel6 ~]# chkconfig kdump on

For RHEL 7 and RHEL 8, systemctl start starts the service and systemctl enable enables it so that it starts automatically at boot:

[root@hostname.rhel7 ~]# systemctl start kdump

[root@hostname.rhel7 ~]# systemctl enable kdump

Crashkernel Reservation

A portion of memory, known as the crashkernel reservation, must be reserved when the live kernel boots. This is where the kdump/crash kernel that kexec boots resides. The amount of memory required varies with the system architecture and the amount of RAM installed. The reserved memory is unusable by the live kernel, so take care not to over-reserve when setting the size manually. On the other hand, a reservation that is too small can cause the kdump service to fail to start, or to fail to capture a vmcore when the system encounters a kernel panic.

The crashkernel reservation is set on the kernel command line of the GRUB bootloader in the following format:

crashkernel=<size>

Recommended Crashkernel Reservation Size

The default value since RHEL 6.2 is crashkernel=auto, which calculates the reservation size without further user input. This default often works without issue, but exceptions arise on systems with very little memory (<1GB) and when the kdump/crash kernel loads additional third-party drivers. In these cases a manual crashkernel reservation is required.

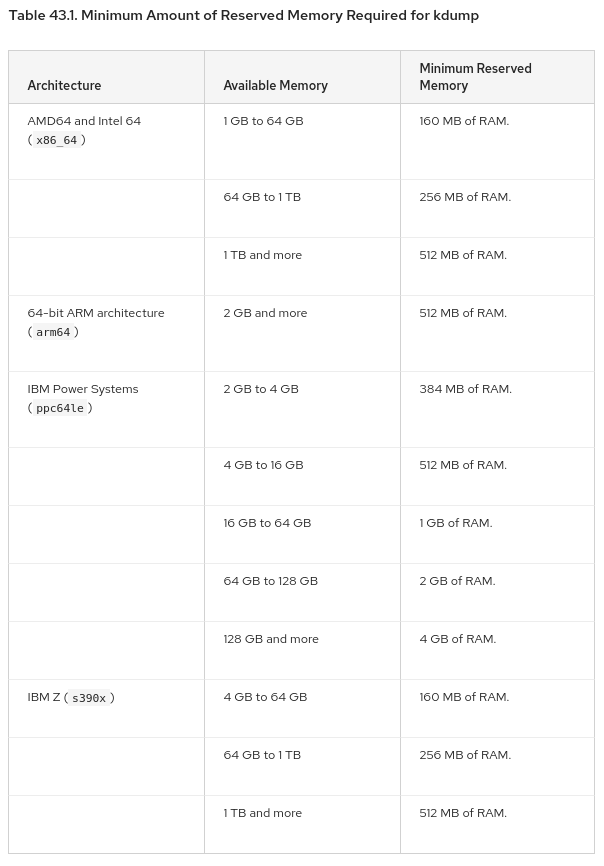

The following table is from Chapter 43 of the RHEL 8 System Design Guide and is a very useful table for what size manual crashkernel reservations are recommended:

Checking the Crashkernel Reservation

Misconfigurations within the crashkernel reservation present in different ways. If no reservation is being made, this is logged in /var/log/messages when the service fails to start:

[root@hostname.rhel6 ~]# grep kdump /var/log/messages

Aug 18 16:01:02 rhel6 kdump: No crashkernel parameter specified for running kernel

We can then check for a crashkernel reservation by examining the /proc/cmdline file:

[root@hostname.rhel6 ~]# cat /proc/cmdline

ro root=/dev/mapper/vg_rhel6-lv_root rd_NO_LUKS LANG=en_US.UTF-8 rd_NO_MD SYSFONT=latarcyrheb-sun16 rd_LVM_LV=vg_rhel6/lv_swap KEYBOARDTYPE=pc KEYTABLE=us rd_LVM_LV=vg_rhel6/lv_root rd_NO_DM console=tty0 console=ttyS0,115200
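Rather than scanning a long command line by eye, the check can be scripted. The sketch below extracts the crashkernel= value from a kernel command line; the function name crashkernel_value is hypothetical, and taking the last occurrence when the parameter appears more than once is an assumption of this example.

```shell
# Print the crashkernel= value from a kernel command line, or fail if absent.
# $1 = file holding the command line (defaults to /proc/cmdline)
crashkernel_value() {
    cmdline_file="${1:-/proc/cmdline}"
    # Put each parameter on its own line, then keep only the crashkernel value.
    # If the parameter is repeated, the last occurrence is reported (assumption).
    value=$(tr ' ' '\n' < "$cmdline_file" | sed -n 's/^crashkernel=//p' | tail -n 1)
    [ -n "$value" ] || return 1
    printf '%s\n' "$value"
}
```

On a booted system, crashkernel_value || echo "no crashkernel parameter" gives a quick answer. The size actually reserved by the kernel can also be read, in bytes, from /sys/kernel/kexec_crash_size.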

Setting the Crashkernel Reservation in RHEL 6

Above we see that no crashkernel reservation is being set on the kernel command line. This is resolved in RHEL 6 by adding the desired value to the kernel line in /boot/grub/grub.conf and rebooting the system:

[root@hostname.rhel6 ~]# grep crashkernel /boot/grub/grub.conf

kernel /vmlinuz-2.6.32-754.el6.x86_64 ro root=/dev/mapper/vg_rhel6-lv_root rd_NO_LUKS LANG=en_US.UTF-8 rd_NO_MD SYSFONT=latarcyrheb-sun16 rd_LVM_LV=vg_rhel6/lv_swap crashkernel=auto KEYBOARDTYPE=pc KEYTABLE=us rd_LVM_LV=vg_rhel6/lv_root rd_NO_DM console=tty0 console=ttyS0,115200 crashkernel=auto

Setting the Crashkernel Reservation in RHEL 7

In RHEL 7 we specify a crashkernel reservation in /etc/default/grub, rebuild the /boot/grub2/grub.cfg configuration file, and reboot the system:

[root@hostname.rhel7 ~]# cat /etc/default/grub

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="crashkernel=128M rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap console=tty0 console=ttyS0,115200" <<<---- crashkernel reservation specified on this line

GRUB_TERMINAL="console serial"

GRUB_SERIAL_COMMAND="serial --speed=115200 --unit=0 --word=8 --parity=no --stop=1"

GRUB_DISABLE_RECOVERY="true"

[root@hostname.rhel7 ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-3.10.0-862.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-862.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-80530fa1fc3d4f879d2ca4089b43e8c6

Found initrd image: /boot/initramfs-0-rescue-80530fa1fc3d4f879d2ca4089b43e8c6.img

done

[root@hostname.rhel7 ~]# reboot now

Setting the Crashkernel Reservation in RHEL 8

In RHEL 8 the crashkernel reservation is set by using the kernelopts line in /boot/grub2/grubenv then rebooting the system:

[root@hostname.rhel8 ~]# grep crashkernel /boot/grub2/grubenv

kernelopts=root=/dev/mapper/rhel-root ro crashkernel=128M@16M resume=/dev/mapper/rhel-swap rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap console=tty0 console=ttyS0,115200

[root@hostname.rhel8 ~]# reboot now
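On RHEL 8 the same change can also be made with grubby instead of editing grubenv by hand. The following is a sketch only: the set_crashkernel wrapper and the APPLY variable are hypothetical conveniences, and 256M in the usage note is a placeholder size, not a recommendation. By default the function prints the command rather than running it.

```shell
# Build, and optionally run, a grubby command that sets crashkernel=
# for all installed kernels.
# $1 = reservation size, for example 256M
set_crashkernel() {
    size="$1"
    cmd="grubby --update-kernel=ALL --args=crashkernel=$size"
    if [ "${APPLY:-0}" = "1" ]; then
        $cmd          # needs root; a reboot is still required afterwards
    else
        echo "$cmd"   # dry run: only show what would be executed
    fi
}
```

For example, set_crashkernel 256M prints the grubby command for review, and APPLY=1 set_crashkernel 256M runs it. After the reboot, /proc/cmdline should show the new value.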

Undersized Crashkernel Reservations

Undersized crashkernel reservations or typos in the configuration can lead to the kdump service failing to start with errors similar to the one shown below:

[root@hostname.rhel8 ~]# systemctl status kdump

● kdump.service - Crash recovery kernel arming

Loaded: loaded (/usr/lib/systemd/system/kdump.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Fri 2020-08-21 15:55:19 EDT; 2s ago

Process: 1153 ExecStart=/usr/bin/kdumpctl start (code=exited, status=1/FAILURE)

Main PID: 1153 (code=exited, status=1/FAILURE)

Aug 21 15:55:17 rhel8 systemd[1]: Starting Crash recovery kernel arming...

Aug 21 15:55:19 rhel8 kdumpctl[1153]: Could not find a free area of memory of 0x2d62000 bytes...

Aug 21 15:55:19 rhel8 kdumpctl[1153]: locate_hole failed

Aug 21 15:55:19 rhel8 kdumpctl[1153]: kexec: failed to load kdump kernel

Aug 21 15:55:19 rhel8 kdumpctl[1153]: Starting kdump: [FAILED]

Aug 21 15:55:19 rhel8 systemd[1]: kdump.service: Main process exited, code=exited, status=1/FAILURE

Aug 21 15:55:19 rhel8 systemd[1]: kdump.service: Failed with result 'exit-code'.

Aug 21 15:55:19 rhel8 systemd[1]: Failed to start Crash recovery kernel arming.
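The size in the "Could not find a free area of memory" message is given in hexadecimal bytes, which is hard to compare with a crashkernel= value expressed in megabytes. As a reading aid, the sketch below converts such a value to MiB, rounded up; the helper name bytes_to_mib is illustrative. The result is the size of the region kexec was trying to place, not a recommended reservation size.

```shell
# Convert a byte count (decimal or 0x-prefixed hexadecimal) to MiB, rounded up.
bytes_to_mib() {
    echo $(( ($1 + 1048575) / 1048576 ))
}

bytes_to_mib 0x2d62000   # size taken from the kdumpctl message above; prints 46
```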

Another potential outcome of an undersized crashkernel reservation is that the kdump service starts but fails to dump a vmcore when a kernel panic occurs.

These situations can be more difficult to troubleshoot, as identifying them requires capturing verbose serial console logs.

As a proactive measure, ensure that an adequate crashkernel reservation is set by following the guidelines in the table above, and keep in mind that third-party kernel modules require additional memory to be reserved beyond the default recommendations.

Local Dump Targets

By default the kdump service uses the local directory /var/crash as the dump target and dump path for vmcores. Since this is one of the most frequently changed settings, it is also one of the most frequently encountered misconfigurations.

Available Storage Space Requirement

The most common misconfiguration seen is kdump using an undersized dump target (directory). This can lead to kdump failing to dump a vmcore entirely, or dumping an incomplete vmcore that stops once all available space is used.

Red Hat recommends a dump target with available space at least equal to the amount of RAM on the system. To check this, compare the space available at the dump target, as reported by the df command, with the total amount of memory shown in /proc/meminfo:

[root@hostname.rhel8 ~]# head -1 /proc/meminfo

MemTotal: 1485228 kB

[root@hostname.rhel8 ~]# df -h | grep Used ; df -h | grep \/var\/crash

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/pvcrash-logvol--crash 5.0G 68M 5.0G 2% /var/crash
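The comparison above can be automated. The sketch below succeeds only when the dump target has at least as much free space as the system has RAM; the function name dump_target_has_room and its optional meminfo argument are assumptions made for this example.

```shell
# Succeed if the dump target has at least as much free space as total RAM.
# $1 = dump directory, $2 = meminfo file (defaults to /proc/meminfo)
dump_target_has_room() {
    target="$1"
    meminfo="${2:-/proc/meminfo}"
    # MemTotal is reported in kB
    mem_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
    # df -Pk prints one line per filesystem in 1024-byte blocks;
    # the fourth column is the available space
    avail_kb=$(df -Pk "$target" | awk 'NR==2 {print $4}')
    echo "MemTotal=${mem_kb}kB available=${avail_kb}kB"
    [ "$avail_kb" -ge "$mem_kb" ]
}
```

For example, dump_target_has_room /var/crash || echo "dump target is smaller than RAM". With compression and dump level 31 a vmcore is usually much smaller than RAM, so a failure here is a warning rather than proof that a dump will not fit.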

Getting the Smallest Core

While this may seem like a simple “yes or no” check, it becomes less clear-cut on large-memory systems. Is it pragmatic to recommend a 9TB dump target for a system with 9TB of RAM? The recommendation doesn’t change, but we can check the makedumpfile configuration in /etc/kdump.conf to ensure the system is using compression and dump level 31:

[root@hostname.rhel8 ~]# grep -v ^# /etc/kdump.conf

path /var/crash

core_collector makedumpfile -l --message-level 1 -d 31

The -l flag instructs makedumpfile to compress the dump data with LZO, and -d 31 sets the dump level to 31. Dump level 31 is the default value and excludes zero pages, non-private cache, private cache, user data, and free pages, all of which are normally unnecessary when investigating a kernel crash.
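The dump level is a bitmask in which each bit excludes one page type (1 zero pages, 2 non-private cache, 4 private cache, 8 user data, 16 free pages), so 31 is simply all five bits set. The sketch below decodes a level into the page types it excludes; the function name and the short labels are illustrative.

```shell
# List the page types that a makedumpfile dump level excludes.
# $1 = dump level, 0 to 31
describe_dump_level() {
    level="$1"
    excluded=""
    [ $((level & 1)) -ne 0 ]  && excluded="$excluded zero"
    [ $((level & 2)) -ne 0 ]  && excluded="$excluded cache"
    [ $((level & 4)) -ne 0 ]  && excluded="$excluded cache-private"
    [ $((level & 8)) -ne 0 ]  && excluded="$excluded user"
    [ $((level & 16)) -ne 0 ] && excluded="$excluded free"
    echo "excluded:${excluded:- none}"
}

describe_dump_level 31   # prints: excluded: zero cache cache-private user free
```

A lower level keeps more pages and therefore produces a larger vmcore; describe_dump_level 17, for instance, reports that only zero and free pages are excluded.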

Dump Path Misconfigurations

Another common mistake in configuring a local dump target is an unintended double declaration of the dump path when specifying the desired dump target. For example this system has the kdump service failing to start, but a cursory glance at the /etc/kdump.conf file doesn’t show any glaring mistakes:

[root@hostname.rhel7 ~]# grep -v ^# /etc/kdump.conf

xfs UUID="54717800-885d-4bec-9af7-d51c2b1c6da1"

path /var/crash

core_collector makedumpfile -l --message-level 1 -d 31

The path is set to /var/crash on the XFS filesystem with UUID "54717800-885d-4bec-9af7-d51c2b1c6da1". Yet when we check the status of the kdump service, we see that it has failed to load, reporting that Dump path /var/crash/var/crash does not exist:

[root@hostname.rhel7 ~]# systemctl status kdump

● kdump.service - Crash recovery kernel arming

Loaded: loaded (/usr/lib/systemd/system/kdump.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Mon 2020-08-24 10:50:24 EDT; 17s ago

Process: 1031 ExecStart=/usr/bin/kdumpctl start (code=exited, status=1/FAILURE)

Main PID: 1031 (code=exited, status=1/FAILURE)

Aug 24 10:50:23 rhel7 kdumpctl[1031]: /etc/fstab

Aug 24 10:50:23 rhel7 kdumpctl[1031]: Rebuilding /boot/initramfs-3.10.0-862.el7.x86_64kdump.img

Aug 24 10:50:24 rhel7 kdumpctl[1031]: df: ‘/var/crash/var/crash’: No such file or directory

Aug 24 10:50:24 rhel7 kdumpctl[1031]: Dump path /var/crash/var/crash does not exist.

Aug 24 10:50:24 rhel7 kdumpctl[1031]: mkdumprd: failed to make kdump initrd

Aug 24 10:50:24 rhel7 kdumpctl[1031]: Starting kdump: [FAILED]

Aug 24 10:50:24 rhel7 systemd[1]: kdump.service: main process exited, code=exited, status=1/FAILURE

Aug 24 10:50:24 rhel7 systemd[1]: Failed to start Crash recovery kernel arming.

Aug 24 10:50:24 rhel7 systemd[1]: Unit kdump.service entered failed state.

Aug 24 10:50:24 rhel7 systemd[1]: kdump.service failed.

This behavior is explained in the man page for kdump.conf:

path <path>

"path" represents the file system path in which vmcore will be saved. If a dump

target is specified in kdump.conf, then "path" is relative to the specified dump

target.

Interpretation of "path" changes a bit if the user didn't specify any dump target

explicitly in kdump.conf. In this case, "path" represents the absolute path from

root.

The path is relative to the target when both are specified. Kdump is looking for a path /var/crash relative to the dump target, confirmed in /etc/fstab to already be mounted at /var/crash:

[root@hostname.rhel7 ~]# grep crash /etc/fstab

UUID=54717800-885d-4bec-9af7-d51c2b1c6da1 /var/crash xfs defaults 0 0

This usage is then interpreted to be /var/crash/var/crash when the kdump.conf file is read.

The correct usage would be to specify a path of / as follows:

[root@hostname.rhel7 ~]# grep -v ^# /etc/kdump.conf

xfs UUID="54717800-885d-4bec-9af7-d51c2b1c6da1"

path /

core_collector makedumpfile -l --message-level 1 -d 31

Note that the path / must be included, as omitting the path directive entirely causes kdump to default to /var/crash, as stated in man kdump.conf:

If unset, will use the default "/var/crash".
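The rule can be summarised as "mount point of the dump target, followed by the path directive". The sketch below reproduces that joining so a configuration can be sanity-checked before restarting the service; effective_dump_dir is a hypothetical helper that illustrates the rule and is not how kdumpctl itself is implemented.

```shell
# Show the directory kdump will look for, given where the dump target is
# mounted and the value of the "path" directive.
# $1 = mount point of the dump target, $2 = value of "path"
effective_dump_dir() {
    mountpoint="$1"
    path="$2"
    # Join the two parts, squeeze repeated slashes, drop a trailing slash
    printf '%s/%s\n' "$mountpoint" "$path" | sed -e 's#//*#/#g' -e 's#\(.\)/$#\1#'
}

effective_dump_dir /var/crash /var/crash   # prints /var/crash/var/crash
effective_dump_dir /var/crash /            # prints /var/crash
```

If the printed directory does not exist on the live system, kdump can be expected to fail with the "Dump path ... does not exist" message shown earlier.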

Remote Dump Target

In some environments a remote dump to an NFS share or over SSH may be preferable to a local one. However as we have seen with other configurations, this added functionality increases the opportunity for a misconfiguration.

Dumping a vmcore to an NFS Share

According to the kdump.conf man page, the proper format for using an NFS share is as follows:

nfs <nfs mount>

With this configuration the dump path is relative to the dump target. The NFS share must be mounted in the live running kernel; you cannot declare an NFS mount that is configured only in the kdump kernel. Also keep in mind where the NFS share is mounted, so as to avoid a double declaration of the dump path, as seen here:

[root@hostname.rhel8 ~]# grep -v ^# /etc/kdump.conf

nfs rhel7:/kdump

path /vmcore

core_collector makedumpfile -l --message-level 1 -d 31

[root@hostname.rhel8 ~]# systemctl restart kdump

Job for kdump.service failed because the control process exited with error code.

See "systemctl status kdump.service" and "journalctl -xe" for details.

[root@hostname.rhel8 ~]# systemctl status kdump -l

● kdump.service - Crash recovery kernel arming

Loaded: loaded (/usr/lib/systemd/system/kdump.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Tue 2020-08-25 13:31:47 EDT; 6s ago

Process: 2056 ExecStart=/usr/bin/kdumpctl start (code=exited, status=1/FAILURE)

Main PID: 2056 (code=exited, status=1/FAILURE)

Aug 25 13:31:47 rhel8 kdumpctl[2056]: /etc/kdump.conf

Aug 25 13:31:47 rhel8 kdumpctl[2056]: /etc/fstab

Aug 25 13:31:47 rhel8 kdumpctl[2056]: Rebuilding /boot/initramfs-4.18.0-193.el8.x86_64kdump.img

Aug 25 13:31:47 rhel8 kdumpctl[2056]: df: /vmcore/vmcore: No such file or directory

Aug 25 13:31:47 rhel8 kdumpctl[2056]: Dump path /vmcore/vmcore does not exist.

Aug 25 13:31:47 rhel8 kdumpctl[2056]: mkdumprd: failed to make kdump initrd

Aug 25 13:31:47 rhel8 kdumpctl[2056]: Starting kdump: [FAILED]

Aug 25 13:31:47 rhel8 systemd[1]: kdump.service: Main process exited, code=exited, status=1/FAILURE

Aug 25 13:31:47 rhel8 systemd[1]: kdump.service: Failed with result 'exit-code'.

Aug 25 13:31:47 rhel8 systemd[1]: Failed to start Crash recovery kernel arming.

[root@hostname.rhel8 ~]# grep vmcore /etc/fstab

rhel7:/kdump /vmcore nfs _netdev 0 0

Above we see the kdump service fails to start and systemd logging shows that the dump path /vmcore/vmcore does not exist. This is again due to the dump path being relative to the dump target which is already mounted at /vmcore. The path directive must point to / for this configuration to work:

[root@hostname.rhel8 ~]# grep -v ^# /etc/kdump.conf

nfs rhel7:/kdump

path /

core_collector makedumpfile -l --message-level 1 -d 31

Note: In kexec-tools versions prior to 2.0.0-232 the nfs directive is not used, and instead is specified as net.

In addition to ensuring that the dump target and dump path are correctly and clearly defined, using an NFS share requires that the permissions and directory ownership needed to write to the share are in place, both on the dumping system and on the system exporting the share. Here a RHEL 8 system uses an NFS share /kdump from a RHEL 7 system. The share is mounted at /vmcore and has the correct ownership and permissions on the directory for vmcores to be captured:

[root@hostname.rhel8 ~]# grep -v ^# /etc/kdump.conf

nfs rhel7:/kdump

path /

core_collector makedumpfile -l --message-level 1 -d 31

[root@hostname.rhel8 ~]# grep vmcore /etc/fstab

rhel7:/kdump /vmcore nfs _netdev 0 0

[root@hostname.rhel8 ~]# ll -d /vmcore

drwxr-xr-x. 3 nobody nobody 49 Aug 25 13:48 /vmcore <<--- dumping system

[root@hostname.rhel7 ~]# ll -d /kdump

drwxr-xr-x. 3 nfsnobody nfsnobody 49 Aug 25 13:48 /kdump <<--- exporting system

Dumping a vmcore via SSH

Using a remote dump target via SSH introduces its own set of requirements in the kdump configuration.

By convention SSH utilizes a user account to write the vmcore to the remote target. This means the specified user on the remote system must exist, and must have write permissions on the dump path directory.

In addition to correct permissions, remote dumping via SSH requires that the necessary SSH key pairs are in place. If they are not, the kdump service will fail to start, and in most cases evidence of the failure will be logged. This system is attempting to dump via SSH as the user kdump, with the following configuration in /etc/kdump.conf:

[root@hostname.rhel8 ~]# grep -v ^# /etc/kdump.conf

ssh kdump@rhel7

path /kdump

core_collector makedumpfile -l --message-level 1 -d 31

And we see the corresponding directory on the rhel7 host has valid directory ownership and permissions:

[root@hostname.rhel7 ~]# ll -d /kdump

drwxr-xr-x. 3 kdump kdump 49 Aug 25 13:48 /kdump

But the kdump service is failing to start:

[root@hostname.rhel8 ~]# systemctl start kdump

Job for kdump.service failed because the control process exited with error code.

See "systemctl status kdump.service" and "journalctl -xe" for details.

We see the following messages being logged:

Aug 25 15:10:54 rhel8 kdumpctl[9403]: Could not create kdump@rhel7:/kdump, you probably need to run "kdumpctl propagate"

Aug 25 15:10:54 rhel8 kdumpctl[9403]: Starting kdump: [FAILED]

Aug 25 15:10:54 rhel8 systemd[1]: kdump.service: Main process exited, code=exited, status=1/FAILURE

Aug 25 15:10:54 rhel8 systemd[1]: kdump.service: Failed with result 'exit-code'.

The messages suggest the command kdumpctl propagate needs to be run to start the service. This command performs the necessary SSH key pair exchange which avoids the administrator having to enter a password for the dump via SSH. However after performing the key pair exchange the kdump service is still failing to start:

[root@hostname.rhel8 ~]# kdumpctl propagate

Generating new ssh keys... done.

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/kdump_id_rsa.pub"

The authenticity of host 'rhel7 (192.168.122.187)' can't be established.

ECDSA key fingerprint is SHA256:hzaNWg6pdLHE0LNfvmpjYxYS3AITFfmwhcYDXJKIWIc.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

kdump@rhel7's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'kdump@rhel7'"

and check to make sure that only the key(s) you wanted were added.

/root/.ssh/kdump_id_rsa has been added to ~kdump/.ssh/authorized_keys on rhel7

[root@hostname.rhel8 ~]# systemctl start kdump

Job for kdump.service failed because the control process exited with error code.

See "systemctl status kdump.service" and "journalctl -xe" for details.

Another check of the message logs shows the following:

[root@hostname.rhel8 ~]# tail /var/log/messages

Aug 25 15:13:59 rhel8 kdumpctl[9522]: /etc/kdump.conf

Aug 25 15:13:59 rhel8 kdumpctl[9522]: /etc/fstab

Aug 25 15:13:59 rhel8 kdumpctl[9522]: Rebuilding /boot/initramfs-4.18.0-193.el8.x86_64kdump.img

Aug 25 15:14:01 rhel8 kdumpctl[9522]: The specified dump target needs makedumpfile "-F" option.

Aug 25 15:14:01 rhel8 kdumpctl[9522]: mkdumprd: failed to make kdump initrd

Aug 25 15:14:01 rhel8 kdumpctl[9522]: Starting kdump: [FAILED]

Aug 25 15:14:01 rhel8 systemd[1]: kdump.service: Main process exited, code=exited, status=1/FAILURE

Aug 25 15:14:01 rhel8 systemd[1]: kdump.service: Failed with result 'exit-code'.

Aug 25 15:14:01 rhel8 systemd[1]: Failed to start Crash recovery kernel arming.

Aug 25 15:21:17 rhel8 sssd[kcm][9515]: Shutting down (status = 0)

Above we see that the -F flag is needed in the makedumpfile configuration. The makedumpfile man page explains:

-F Output the dump data in the flattened format to the standard output for transporting the dump data by SSH.

The flattened format is required to dump the vmcore via SSH. Once added to the configuration the kdump service starts successfully, and the final configuration appears as follows:

[root@hostname.rhel8 ~]# grep -v ^# /etc/kdump.conf

ssh kdump@rhel7

path /kdump

core_collector makedumpfile -l --message-level 1 -d 31 -F
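This requirement is easy to check before restarting the service. The sketch below inspects a kdump.conf-style file and fails when an ssh target is combined with an explicit makedumpfile collector that lacks -F; the function name check_ssh_collector is hypothetical, and configurations without an explicit makedumpfile core_collector line are deliberately not judged.

```shell
# Check that an ssh dump target is paired with a flattened (-F) collector.
# $1 = configuration file to inspect (defaults to /etc/kdump.conf)
check_ssh_collector() {
    conf="${1:-/etc/kdump.conf}"
    # Only relevant when an ssh dump target is configured
    if ! grep -q '^ssh[[:space:]]' "$conf"; then
        echo "no ssh target configured"
        return 0
    fi
    collector=$(grep '^core_collector[[:space:]]' "$conf" | tail -n 1)
    case "$collector" in
        *makedumpfile*) ;;   # explicit makedumpfile collector: check it below
        *) echo "no makedumpfile collector set explicitly"; return 0 ;;
    esac
    # Look for -F as a separate option anywhere on the collector line
    if printf '%s\n' "$collector" | grep -Eq -- '[[:space:]]-F([[:space:]]|$)'; then
        echo "ok: -F present"
    else
        echo "missing -F: $collector"
        return 1
    fi
}
```

Running check_ssh_collector against the first SSH configuration shown above would report the missing flag, while the final configuration passes.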

Additional Considerations

Some misconfigurations are limited to specific environments and are not a concern in every RHEL deployment. Check the following items if vmcore collection continues to fail after the basic configuration requirements have been satisfied and your environment matches the circumstances described below.

HPSA Driver Failure

HPE ProLiant Gen9-series servers running RHEL and configured with an HPE Smart Array (HPSA) or HPE Smart HBA controllers with firmware version 4.52 will stop responding and fail to create a kdump file for disks on the Smart Array.

When this failure occurs, nothing is logged in /var/log/messages to indicate it. However, verbose serial console logging can be enabled and will show the following messages:

hpsa 0000:02:00.0: board not ready, timed out.

hpsa 0000:02:00.0: Failed waiting for board to become ready after

Alternatively, check the HPSA firmware version and upgrade if it is 4.52. The firmware version is typically printed in the dmesg output at boot.

Further information regarding this issue can be found at the following link:

Disclaimer: The following link directs to a 3rd party website belonging to HPE support. Any questions regarding the information provided at the following link should be directed toward HPE support.

Kdump in Clustered Environments

Cluster environments can present their own obstacles to vmcore collection. Some clusterware can fence nodes via SysRq or an NMI, which allows a vmcore to be collected when a node is fenced. Typically the clusterware vendor is the authority on any configuration intended to capture a vmcore upon fence events or node evictions. This means that Red Hat will support configuring this setup for Pacemaker nodes, but users of third-party clusters should seek support from their clusterware provider.

In addition to ensuring that the cluster and kdump configuration is sound, if a system encounters a kernel panic there is the possibility that it can be fenced and rebooted by the cluster before finishing dumping the vmcore. If this is suspected in a cluster environment it may be a good idea to remove the node from the cluster and reproduce the issue as a test.

Third Party Kernel Modules

In some cases it may be necessary or beneficial to blacklist third party modules during the generation of the kdump initrd image. The presence of third party modules may require a larger crashkernel reservation than the standard recommendation. The size of the crashkernel can be increased to compensate for this increased demand, or the modules can simply be blacklisted to eliminate the uncertainty and added complexity they introduce.

Blacklisting modules is accomplished differently depending on the RHEL version. In RHEL 6, kernel modules can be blacklisted by editing the /etc/kdump.conf file:

path /var/crash

core_collector makedumpfile -c --message-level 1 -d 31

blacklist module1 module2 module3 module4 <<<<<------

In RHEL 7 and RHEL 8, modules are blacklisted on the KDUMP_COMMANDLINE_APPEND= line of the /etc/sysconfig/kdump file using the rd.driver.blacklist= directive as follows:

KDUMP_COMMANDLINE_APPEND="irqpoll nr_cpus=1 reset_devices cgroup_disable=memory mce=off numa=off udev.children-max=2 panic=10 rootflags=nofail acpi_no_memhotplug transparent_hugepage=never nokaslr novmcoredd hest_disable rd.driver.blacklist=module1,module2,module3,module4"
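Note that rd.driver.blacklist= takes a comma-separated list, whereas the RHEL 6 directive takes a space-separated one. The sketch below builds the RHEL 7 and RHEL 8 form from a list of module names; blacklist_arg is a hypothetical helper, and module1 and module2 are placeholders as in the example above.

```shell
# Build an rd.driver.blacklist= argument from a list of module names.
blacklist_arg() {
    # "$*" joins the arguments with the first character of IFS;
    # the subshell keeps the IFS change from leaking into the caller
    ( IFS=,; printf 'rd.driver.blacklist=%s\n' "$*" )
}

blacklist_arg module1 module2   # prints rd.driver.blacklist=module1,module2
```

The resulting string is appended inside the quotes of KDUMP_COMMANDLINE_APPEND=, after which the kdump service must be restarted for the change to take effect.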

Kdump failure on UEFI Secure Boot Systems

UEFI systems with Secure Boot enabled that boot into an older kernel version will see the kdump service fail to start with the following message:

kexec_file_load failed: Required key not available

This issue can be fixed by updating the kernel to the latest version, or by specifying the updated kernel version in /etc/sysconfig/kdump on the KDUMP_KERNELVER= line.

While this document covers many potential common kdump misconfigurations, there are less common scenarios not covered here. Remember that issues can often be identified through capturing verbose serial console logs.
