Getting Started with the Subscriptions Service

Abstract

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. To provide feedback, open a Jira issue that describes your concerns. Provide as much detail as possible so that your request can be addressed quickly.

Prerequisites

- You have a Red Hat Customer Portal account. This account enables you to log in to the Red Hat Jira Software instance. If you do not have an account, you will be prompted to create one.

Procedure

To provide your feedback, use the following steps:

- Click the following link: Create Issue

- In the Summary text box, enter a brief description of the issue.

- In the Description text box, provide more details about the issue. Include the URL where you found the issue.

- Provide information for any other required fields. Leave fields that contain default information at their default values.

- Click Create to create the Jira issue for the documentation team.

A documentation issue will be created and routed to the appropriate documentation team. Thank you for taking the time to provide feedback.

Part I. About the subscriptions service

The subscriptions service in the Hybrid Cloud Console provides a visual representation of the subscription experience across your hybrid infrastructure in a dashboard-based application. The subscriptions service is intended to simplify how you interact with your subscriptions, providing both a historical look-back at your subscription usage and an ability to make informed, forward-facing decisions based on that usage and your remaining subscription capacity.

Learn more

To learn more about the subscriptions service, see Chapter 1, What is the subscriptions service?

To learn more about the benefits that the subscriptions service offers, see Chapter 2, What are the benefits of the subscriptions service?

To learn more about the current capabilities of the subscriptions service, see Chapter 3, What does the subscriptions service track?

Chapter 1. What is the subscriptions service?

The subscriptions service provides reporting of subscription usage information across the constituent parts of your hybrid infrastructure, including physical and virtual technology deployments; on-premises and cloud environments; and cluster, instance, and workload use cases for Red Hat product portfolios.

The subscriptions service supports the following product portfolios:

- Unified reporting of Red Hat Enterprise Linux subscription usage information for physical, virtual, hypervisor, and public cloud-based usage. This unified reporting model enhances your ability to consume, track, report, and reconcile your RHEL subscriptions with your purchasing agreements and deployment types.

- Reporting of Red Hat OpenShift subscription usage information. The subscriptions service uses data available from Red Hat internal subscription services, in addition to data from Red Hat OpenShift reporting tools, to show aggregated cluster usage data in the context of Red Hat OpenShift subscription types such as Red Hat OpenShift Container Platform subscriptions. Other Red Hat OpenShift products and add-ons consume resources differently, so the tracking of that usage can vary per product. In general, that usage can be represented as a combination of one or more metrics, such as data transfer and data storage for workload activities and instance availability for the consumption of control plane resources. Usage can also be represented as cluster usage data for the virtual cores.

- Reporting of Red Hat Ansible subscription usage information. The subscriptions service uses data available from Ansible services to show this usage.

The simplified, consistent subscription reporting experience shows your account-wide Red Hat subscriptions compared to your total inventory across all deployments and programs. It is an at-a-glance impression of both your account’s remaining subscription capacity measured against a subscription threshold and the historical record of your software usage.

The subscriptions service provides increased and ongoing visibility of your subscription usage. When you pair the subscriptions service with simple content access, which streamlines the consumption of your subscriptions, your operations experience fewer barriers to Red Hat content deployment and usage tracking.

You can choose to use neither, either, or both of these services. However, the subscriptions service and simple content access are designed as complementary services and function best when they are used in tandem. Simple content access simplifies the subscription experience by allowing more flexible ways of consuming content. The subscriptions service provides account-wide visibility of usage across your subscription profile, adding governance capabilities to this flexible content consumption.

To learn more about the simple content access tool and how you can use it with the subscriptions service, see the Getting Started with Simple Content Access guide.

In October and November of 2024, Red Hat accounts and organizations that primarily use Red Hat Subscription Management for subscription management were migrated to use simple content access.

For Red Hat accounts and organizations that primarily use Satellite, versions 6.15 and earlier can continue to support an entitlement-based workflow for the remainder of the supported lifecycle for those versions. However, Satellite version 6.16 and later versions will support only the simple content access workflow.

Chapter 2. What are the benefits of the subscriptions service?

The subscriptions service provides these benefits:

- Tracks selected Red Hat product usage and capacity at the fleet or account level in a unified inventory and provides a daily snapshot of that data in a digestible, filterable dashboard at console.redhat.com.

- Tracks data over time for self-governance and analytics that can inform purchasing and renewal decisions, ongoing capacity planning, and mitigation for high-risk scenarios.

- Helps procurement officers make data-driven choices with portfolio-centered reporting dashboards that show both inventory-occupying subscriptions and current subscription limits across the entire organization.

- With its robust reporting capabilities, enables the transition to simple content access tooling that features broader, organizational-level subscription enforcement instead of system-level quantity enforcement.

Chapter 3. What does the subscriptions service track?

The subscriptions service currently tracks and reports usage information for Red Hat Enterprise Linux, some Red Hat OpenShift products, and some Red Hat Ansible products.

The subscriptions service identifies subscriptions through their stock-keeping units, or SKUs. Only a subset of Red Hat SKUs are tracked by the subscriptions service. In the usage reporting for a product, the tracked SKUs in your account contribute to the maximum capacity information, also known as the subscription threshold, for that product.

For the SKUs that are not tracked, the subscriptions service maintains an explicit deny list within the source code. To learn more about the SKUs that are not tracked, you can view this deny list in the code repository.
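As a rough illustration of how tracked SKUs relate to the subscription threshold, the following Python sketch sums the capacity of the SKUs for one product while skipping any SKU on a deny list. It assumes that the threshold is a simple sum of per-SKU capacity; the `Subscription` type, the `subscription_threshold` function, and the SKU names are hypothetical and do not come from the subscriptions service source code.

```python
from dataclasses import dataclass

# Hypothetical deny list; the real list is maintained in the subscriptions service source code.
DENY_LIST = {"SKU-DENIED-1"}


@dataclass(frozen=True)
class Subscription:
    sku: str       # stock-keeping unit (hypothetical values in the example)
    product: str   # product that the SKU applies to, for example "RHEL"
    capacity: int  # capacity that the SKU contributes, in the product's unit


def subscription_threshold(subscriptions, product, deny_list=DENY_LIST):
    """Sum the capacity of tracked SKUs for one product, skipping denied SKUs."""
    return sum(
        s.capacity
        for s in subscriptions
        if s.product == product and s.sku not in deny_list
    )


subs = [
    Subscription("SKU-A", "RHEL", 2),
    Subscription("SKU-B", "RHEL", 4),
    Subscription("SKU-DENIED-1", "RHEL", 8),  # on the deny list, so not tracked
]
print(subscription_threshold(subs, "RHEL"))  # 6
```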

3.1. Red Hat Enterprise Linux

The subscriptions service tracks RHEL Annual subscription usage on physical systems, virtual systems, hypervisors, and public cloud. For a limited subset of subscriptions, currently Red Hat Enterprise Linux Extended Life Cycle Support Add-On on Amazon Web Services (AWS), it tracks RHEL pay-as-you-go On-Demand subscription usage for instances running in public cloud providers.

If your RHEL installations predate certificate-based subscription management, the subscriptions service will not track that inventory.

3.1.1. RHEL with a traditional Annual subscription

The subscriptions service tracks RHEL usage in sockets, as follows:

- Tracks physical RHEL usage in CPU sockets, where usage is counted by socket pairs.

- Tracks virtualized RHEL by the installed socket count for standard guest subscriptions with no detectable hypervisor management, where one virtual machine equals one socket.

- Tracks hypervisor RHEL usage in CPU sockets, with the socket-pair method, for virtual data center (VDC) subscriptions and similar virtualized environments. RHEL-based hypervisors are counted both for the copy of RHEL that is used to run the hypervisor and for the copy of RHEL for the virtual guests. Hypervisors that are not RHEL-based are counted only for the copy of RHEL for the virtual guests.

- Tracks public cloud RHEL instance usage in sockets, where one instance equals one socket.

- Additionally, tracks Red Hat Satellite to enable the visibility of RHEL that is bundled with Satellite.
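The following Python sketch applies the socket rules above to a small fleet. It assumes that counting by socket pairs means rounding an odd socket count up to the next even number, and it does not model the separate counting of the copy of RHEL that runs a RHEL-based hypervisor or the RHEL that is bundled with Satellite. The system kinds and function names are hypothetical.

```python
def socket_pairs(sockets: int) -> int:
    """Round a socket count up to whole pairs (assumption: 1 -> 2, 3 -> 4)."""
    return 2 * ((sockets + 1) // 2)


def counted_sockets(kind: str, sockets: int = 0) -> int:
    """Return the sockets counted for one system under the rules listed above."""
    if kind in ("physical", "hypervisor"):
        # Physical systems and VDC-style hypervisors use the socket-pair method.
        return socket_pairs(sockets)
    if kind in ("virtual_guest", "cloud_instance"):
        # One unmanaged virtual machine or one public cloud instance equals one socket.
        return 1
    raise ValueError(f"unknown system kind: {kind!r}")


fleet = [("physical", 1), ("physical", 4), ("virtual_guest", 0), ("cloud_instance", 0)]
print(sum(counted_sockets(kind, sockets) for kind, sockets in fleet))  # 8
```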

3.1.2. RHEL with a pay-as-you-go On-Demand subscription

The subscriptions service tracks metered RHEL in vCPU hours, as follows:

- Tracks pay-as-you-go On-Demand instance usage in virtual CPU hours (vCPU hours), a measurement of availability for computational activity on one virtual core (as defined by the subscription terms), for a total of one hour, measured to the granularity of the meter that is used. For RHEL pay-as-you-go On-Demand subscription usage, availability for computational activity is the availability of the RHEL instance over time.

Currently, Red Hat Enterprise Linux for Third Party Linux Migration with Extended Life Cycle Support Add-on is the only RHEL pay-as-you-go On-Demand subscription offering that is tracked by the subscriptions service.

The subscriptions service ultimately aggregates all instance vCPU hour data in the account into a monthly total, the unit of time that is used by the billing service for the cloud provider marketplace.
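To make the vCPU hour unit concrete, this Python sketch multiplies the vCPUs of an instance by the hours that the instance was available, then groups the results into calendar-month totals. It assumes hourly samples and calendar months as the billing period; the actual meter granularity depends on the metering tool, and the function names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def vcpu_hours(vcpus: int, hours_available: float) -> float:
    """vCPU hours for one sample: vCPUs multiplied by the hours the instance was available."""
    return vcpus * hours_available


def monthly_totals(samples):
    """Group (timestamp, vcpus, hours_available) samples into calendar-month totals."""
    totals = defaultdict(float)
    for timestamp, vcpus, hours_available in samples:
        totals[timestamp.strftime("%Y-%m")] += vcpu_hours(vcpus, hours_available)
    return dict(totals)


samples = [
    (datetime(2024, 5, 1, 0), 4, 1.0),  # 4 vCPUs available for the full hour
    (datetime(2024, 5, 1, 1), 4, 0.5),  # instance stopped halfway through the hour
    (datetime(2024, 6, 1, 0), 2, 1.0),
]
print(monthly_totals(samples))  # {'2024-05': 6.0, '2024-06': 2.0}
```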

3.2. Red Hat OpenShift

Generally, the subscriptions service tracks Red Hat OpenShift usage, such as the usage of Red Hat OpenShift Container Platform, as cluster size on physical and virtual systems. The cluster size is the sum of all subscribed nodes. A subscribed node is a compute or worker node that runs workloads, as opposed to a control plane or infrastructure node that manages the cluster.

Beyond the general rule for cluster-based tracking by cluster size, this tracking is dependent on several factors:

- The Red Hat OpenShift product

- The type of subscription that was purchased for that product

- The version of that product

- The unit of measurement for the product, as defined by the subscription terms, that determines how cluster size and overall usage are calculated

- The structure of nodes, including any labels used to assign node roles and the configuration of scheduling to control pod placement on nodes

However, there are exceptions for some other Red Hat OpenShift products and add-ons that track consumption of resources related to different types of workloads, such as data transfer and data storage for workload activities, or instance availability for consumption of control plane resources.

3.2.1. Impact of various factors on Red Hat OpenShift tracking

The subscriptions service tracks and reports usage for fully managed and self-managed Red Hat OpenShift products in both physical and virtualized environments. Due to changes in the reporting models between Red Hat OpenShift major versions 3 and 4, usage data for version 3 is reported at the node level, while usage data for version 4 is reported and aggregated at the cluster level. The following information is more applicable to the version 4 reporting model, with data aggregated at the cluster level.

Much of the work for the counting of Red Hat OpenShift usage takes place in the monitoring stack tools and OpenShift Cluster Manager. These tools then send core count or vCPU count data, as applicable, to the subscriptions service for usage reporting. The core and vCPU data is based on the subscribed cluster size, which is derived from the cluster nodes that are processing workloads.

For fully managed Red Hat OpenShift products, such as Red Hat OpenShift Dedicated or Red Hat OpenShift AI, usage counting is generally time-based, measured in units such as core hours or vCPU hours. The infrastructure of the Red Hat managed environment is more consistently available to Red Hat, including the monitoring stack tools and OpenShift Cluster Manager. Data for subscribed nodes, the nodes that can accept workloads, is readily discoverable, as is data about cores, vCPUs and other facts that contribute to usage data for the subscriptions service.

For self-managed Red Hat OpenShift products, such as Red Hat OpenShift Container Platform Annual and Red Hat OpenShift Container Platform On-Demand, usage counting is generally based on cores. The infrastructure of a customer-designed environment is less predictable, and in some cases facts pertinent to usage calculations might be less accessible, especially in a virtualized, x86-based environment.

Because some of these facts might be less accessible, the usage counting process contains assumptions about simultaneous multithreading, also known as hyperthreading, that are applied when analyzing and reporting usage data for virtualized Red Hat OpenShift clusters for x86 architectures. These assumptions are necessary because some vendors offer hypervisors that do not expose data about simultaneous multithreading to the guests.

Ongoing analysis and customer feedback have resulted in incremental improvements, both to the subscriptions service and to the associated data pipeline, that have enhanced the accuracy of usage counting for hyperthreading use cases. The foundational assumption currently used in the subscriptions service reporting is that simultaneous multithreading occurs at a factor of 2 threads per core. Internal research has shown that this factor is the most common configuration, applicable to a significant majority of customers. Therefore, assuming 2 threads per core follows common multithreading best practices and errs in favor of the small percentage of customers, approximately 10%, who are not using multithreading. This decision is the most equitable for all customers when deriving the number of cores from the observed number of threads.

A limited number of self-managed Red Hat OpenShift offerings are available as socket-based subscriptions. For those socket-based subscriptions, the hypervisor reports the number of sockets to the operating system, usually Red Hat Enterprise Linux CoreOS, and that socket count is sent to the subscriptions service for usage tracking. The subscriptions service tracks and reports socket-based subscriptions with the socket-pair method that is used for RHEL.

3.2.2. Core-based usage counting workflow for self-managed Red Hat OpenShift products

For self-managed Red Hat OpenShift products such as Red Hat OpenShift Container Platform Annual and Red Hat OpenShift Container Platform On-Demand, the counting process initiated by the monitoring stack tools and OpenShift Cluster Manager works as follows:

- For a cluster, node types and node labels are examined to determine which nodes are subscribed nodes. A subscribed node is a node that can accept workloads. Only subscribed nodes contribute to usage counting for the subscriptions service.

- The chip architecture for the node is examined to determine if the architecture is x86-based. If the architecture is x86-based, then simultaneous multithreading, also known as hyperthreading, must be considered during the usage counting.

- If the chip architecture is not x86-based, the monitoring stack counts usage according to the cores associated with the subscribed nodes and sends that core count to the subscriptions service.

- If the chip architecture is x86-based, the monitoring stack counts usage according to the number of threads on the subscribed nodes. Threads equate to vCPUs, according to the Red Hat definition of vCPUs. This counting method applies whether the multithreading data can accurately be detected, the multithreading data is ambiguous or missing, or multithreading data is specifically set to a value of false on the node. Based on a global assumption of multithreading at a factor of 2, the number of threads is divided by 2 to determine the number of cores. The core count is then sent to the subscriptions service.
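The counting steps above can be sketched in Python as follows. This is not the monitoring stack implementation: the `Node` type, the architecture names, and the choice to keep fractional results for odd thread counts are assumptions. The sketch shows only the documented logic: count subscribed nodes only, use cores on non-x86 nodes, and on x86 nodes divide threads (vCPUs) by the assumed multithreading factor of 2.

```python
from dataclasses import dataclass

SMT_FACTOR = 2  # global assumption of 2 threads per core on x86 nodes
X86_ARCHITECTURES = {"x86_64", "amd64"}  # assumed architecture names


@dataclass
class Node:
    name: str
    architecture: str
    subscribed: bool  # result of the node type and node label check
    cores: int = 0    # reported cores, used for non-x86 nodes
    threads: int = 0  # reported threads (equal to vCPUs), used for x86 nodes


def node_core_count(node: Node) -> float:
    if node.architecture in X86_ARCHITECTURES:
        # Applied whether multithreading data is accurate, ambiguous, missing, or false.
        return node.threads / SMT_FACTOR
    return float(node.cores)


def cluster_core_count(nodes) -> float:
    """Sum the core counts of subscribed nodes only."""
    return sum(node_core_count(n) for n in nodes if n.subscribed)


nodes = [
    Node("worker-0", "x86_64", subscribed=True, threads=16),
    Node("worker-1", "aarch64", subscribed=True, cores=8),
    Node("infra-0", "x86_64", subscribed=False, threads=8),  # not subscribed, ignored
]
print(cluster_core_count(nodes))  # 16.0
```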

3.2.3. Understanding the subscribed cluster size compared to the total cluster size

For Red Hat OpenShift, the subscriptions service does not focus merely on the total size of the cluster and the nodes within it. The subscriptions service focuses on the subscribed portion of clusters, that is, the cluster nodes that are processing workloads. Therefore, the subscriptions service reporting is for the subscribed cluster size, not the entire size of the cluster.

3.2.4. Determining the subscribed cluster size

To determine the subscribed cluster size, the data collection tools and the subscriptions service examine both the node type and the presence of node labels. The subscriptions service uses this data to determine which nodes can accept workloads. The sum of all noninfrastructure nodes plus master nodes that are schedulable is considered available for workload use. The nodes that are available for workload use are counted as subscribed nodes, contribute to the subscribed cluster size, and appear in the usage reporting for the subscriptions service.

The following information provides additional details about how node labels affect the countability of those nodes and in turn affect subscribed cluster size. Analysis of both internal and customer environments shows that these labels and label combinations represent the majority of customer configurations.

| Node label | Usage counted | Exceptions |
|---|---|---|
| worker | yes | Unless there is a combination of the worker label with an infra label |
| worker + infra | no | See Note |
| custom label | yes | Unless there is a combination of the custom label with the master, infra, or control plane label |
| custom label + master, infra, control plane (any combination) | no | |
| master + infra + control plane (any combination) | no | Unless there is a master label present and the node is marked as schedulable |
| schedulable master + infra, control plane (any combination) | yes | |

Note: A known issue with the Red Hat OpenShift monitoring stack tools can result in unexpected core counts for Red Hat OpenShift Container Platform versions earlier than 4.12. For those versions, the number of worker nodes can be artificially elevated.

For OpenShift Container Platform versions earlier than 4.12, the Machine Config Operator does not support a dual assignment of infra and worker roles on a node. The counting of worker nodes is correct in OpenShift Container Platform according to the principles of counting subscribed nodes, and this count will display correctly in the OpenShift Container Platform web console.

However, when the monitoring stack tools analyze this data and send it to the subscriptions service and other services in the Hybrid Cloud Console, the Machine Config Operator ignores the dual roles and sets the role on the node to worker. Therefore, worker node counts will be elevated in the subscriptions service and in OpenShift Cluster Manager.
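The label rules in the table can be expressed as a small Python decision function. The role names are shorthand assumptions for the node role labels, and combinations that the table does not cover unambiguously (for example, a schedulable master node that also carries a custom label) are resolved by the order of the checks, which is also an assumption. The sketch does not model the behavior described in the note for versions earlier than 4.12.

```python
# Shorthand role names standing in for the node role labels (an assumption).
WORKER, INFRA, MASTER, CONTROL_PLANE = "worker", "infra", "master", "control-plane"


def is_subscribed(labels: set, schedulable: bool) -> bool:
    """Return True when a node counts toward the subscribed cluster size."""
    if MASTER in labels and schedulable:
        # Schedulable master nodes count, even with infra or control plane labels.
        return True
    if labels & {INFRA, MASTER, CONTROL_PLANE}:
        # worker + infra, custom + master/infra/control plane, and unschedulable
        # master, infra, or control plane nodes are not counted.
        return False
    # A worker label or a custom label without those roles is counted.
    return True


def subscribed_cluster_size(nodes):
    """Sum the cores of subscribed nodes; nodes are (labels, schedulable, cores) tuples."""
    return sum(cores for labels, schedulable, cores in nodes if is_subscribed(labels, schedulable))


nodes = [
    ({WORKER}, True, 8),
    ({WORKER, INFRA}, True, 8),           # worker + infra: not counted
    ({"gpu"}, True, 16),                  # custom label: counted
    ({MASTER, CONTROL_PLANE}, False, 4),  # unschedulable control plane: not counted
    ({MASTER, CONTROL_PLANE}, True, 4),   # schedulable master: counted
]
print(subscribed_cluster_size(nodes))  # 28
```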

3.2.5. Red Hat OpenShift Container Platform with a traditional Annual subscription

The subscriptions service tracks Red Hat OpenShift Container Platform usage in CPU cores or sockets for clusters and aggregates this data into an account view, as refined by the following version support:

- RHOCP 4.1 and later with Red Hat Enterprise Linux CoreOS-based nodes or a mixed environment of Red Hat Enterprise Linux CoreOS-based and RHEL-based nodes

- RHOCP 3.11

For RHOCP subscription usage, the reporting model changed between major versions 3 and 4. Version 3 usage is considered at the node level and version 4 usage is considered at the cluster level.

The difference in reporting models for the RHOCP major versions also results in some differences in how the subscriptions service and the associated services in the Hybrid Cloud Console calculate usage. For RHOCP version 4, the subscriptions service follows the rules for examining node types and node labels to calculate the subscribed cluster size as described in Determining the subscribed cluster size. The subscriptions service recognizes and ignores the parts of the cluster that perform overhead tasks and do not accept workloads. The subscriptions service recognizes and tracks only the parts of the cluster that do accept workloads.

However, for RHOCP version 3.11, the version 3-era reporting model cannot distinguish the parts of the cluster that perform overhead tasks and do not accept workloads, so it cannot separate subscribed nodes from nonsubscribed nodes. Therefore, for RHOCP version 3.11, you can assume that approximately 15% of the subscription data reported by the subscriptions service is overhead for the nonsubscribed nodes that perform infrastructure-related tasks. This percentage is based on analysis of cluster overhead in RHOCP version 3 installations. In this particular case, usage results that show up to 15% over capacity are likely to still be in compliance.
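The 15% guidance for RHOCP 3.11 can be expressed as simple arithmetic. This Python sketch uses integer percentages so that the comparison is exact at the 15% boundary; the function names are hypothetical, and the results are estimates, not a compliance determination.

```python
OVERHEAD_PERCENT = 15  # approximate RHOCP 3.11 overhead from nonsubscribed infrastructure nodes


def estimated_subscribed_usage(reported_usage):
    """Remove the approximate 15% infrastructure overhead from reported RHOCP 3.11 usage."""
    return reported_usage * (100 - OVERHEAD_PERCENT) / 100


def likely_in_compliance(reported_usage: int, capacity: int) -> bool:
    """Rule of thumb: reported usage up to 15% over capacity is likely still compliant."""
    # Integer comparison avoids floating-point error exactly at the 15% boundary.
    return reported_usage * 100 <= capacity * (100 + OVERHEAD_PERCENT)


print(estimated_subscribed_usage(115))  # 97.75
print(likely_in_compliance(115, 100))   # True
print(likely_in_compliance(116, 100))   # False
```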

3.2.6. Red Hat OpenShift Container Platform or Red Hat OpenShift Dedicated with a pay-as-you-go On-Demand subscription

- RHOCP or OpenShift Dedicated 4.7 and later

The subscriptions service tracks RHOCP or OpenShift Dedicated 4.7 and later usage from a pay-as-you-go On-Demand subscription in core hours, a measurement of cluster size in CPU cores over a range of time. For an OpenShift Dedicated On-Demand subscription, consumption of control plane resources by the availability of the service instance is tracked in instance hours. The subscriptions service ultimately aggregates all cluster core hour and instance hour data in the account into a monthly total, the unit of time that is used by the billing service for Red Hat Marketplace.

As described in the information about RHOCP 4.1 and later, the subscriptions service recognizes and tracks only the parts of the cluster that contain compute nodes, also commonly called worker nodes.
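A minimal Python sketch of core hours and instance hours follows. It assumes that the subscribed cluster size is sampled once per hour, that each sample contributes that many core hours, and that instance availability is recorded per hour; the function names and the data layout are hypothetical.

```python
def core_hours(hourly_core_counts):
    """Sum hourly samples of subscribed cluster size, in cores (hourly sampling is assumed)."""
    return sum(hourly_core_counts)


def instance_hours(hourly_availability):
    """Count the hours in which the OpenShift Dedicated service instance was available."""
    return sum(1 for available in hourly_availability if available)


def account_monthly_core_hours(clusters):
    """clusters maps a cluster ID to that cluster's hourly core counts for one month."""
    return sum(core_hours(counts) for counts in clusters.values())


clusters = {"cluster-a": [8, 8, 12], "cluster-b": [4, 4]}
print(account_monthly_core_hours(clusters))  # 36
print(instance_hours([True, True, False]))   # 2
```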

3.2.7. Red Hat OpenShift Service on AWS Hosted Control Planes with a pre-paid plus On-Demand subscription

The subscriptions service tracks Red Hat OpenShift Service on AWS Hosted Control Planes (ROSA Hosted Control Planes) usage from a pre-paid plus On-Demand subscription in vCPU hours and in control plane hours.

- A vCPU hour is a measurement of availability for computational activity on one virtual core (as defined by the subscription terms) for a total of one hour, measured to the granularity of the meter that is used. For ROSA Hosted Control Planes, availability for computational activity is the availability of the vCPUs for the ROSA Hosted Control Planes subscribed clusters over time. A subscribed cluster consists of subscribed nodes, which are the noninfrastructure nodes plus schedulable master nodes that are available for workload use, if applicable. For ROSA Hosted Control Planes, schedulable master nodes are not applicable, unlike other products that also use this measurement. The vCPUs that are available to run the workloads for a subscribed cluster contribute to the vCPU hour count.

- A control plane hour is a measurement of the availability of the control plane. With ROSA Hosted Control Planes, each cluster has a dedicated control plane that is isolated in a ROSA Hosted Control Planes service account that is owned by Red Hat.

3.2.8. Red Hat OpenShift AI with a pay-as-you-go On-Demand subscription

The subscriptions service tracks Red Hat OpenShift AI (RHOAI) in vCPU hours, a measurement of availability for computational activity on one virtual core (as defined by the subscription terms), for a total of one hour, measured to the granularity of the meter that is used. For RHOAI pay-as-you-go On-Demand subscription usage, the availability for computational activity is the availability of the cluster over time.

The subscriptions service ultimately aggregates all cluster vCPU hour data in the account into a monthly total, the unit of time that is used by the billing service for the cloud provider marketplace.

3.2.9. Red Hat Advanced Cluster Security for Kubernetes with a pay-as-you-go On-Demand subscription

The subscriptions service tracks Red Hat Advanced Cluster Security for Kubernetes (RHACS) in vCPU hours, a measurement of availability for computational activity on one virtual core (as defined by the subscription terms), for a total of one hour, measured to the granularity of the meter that is used. For RHACS pay-as-you-go On-Demand subscription usage, the availability for computational activity is the availability of the cluster over time.

The subscriptions service aggregates all cluster vCPU hour data and then sums the data for each cluster where RHACS is running into a monthly total, the unit of time that is used by the billing service for the cloud provider marketplace.
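The per-cluster aggregation for RHACS can be sketched in Python as follows. It assumes that each record for the month already carries a vCPU hour value and a flag that shows whether RHACS was running on that cluster; the record layout and function name are hypothetical.

```python
from collections import defaultdict


def rhacs_monthly_vcpu_hours(records):
    """Sum vCPU hours per cluster where RHACS is running, then total them for the month.

    records: iterable of (cluster_id, rhacs_running, vcpu_hours) for one month.
    """
    per_cluster = defaultdict(float)
    for cluster_id, rhacs_running, vcpu_hours in records:
        if rhacs_running:
            per_cluster[cluster_id] += vcpu_hours
    return dict(per_cluster), sum(per_cluster.values())


records = [
    ("cluster-1", True, 10.0),
    ("cluster-1", True, 5.0),
    ("cluster-2", False, 20.0),  # RHACS not running here, so it is ignored
    ("cluster-3", True, 2.5),
]
per_cluster, total = rhacs_monthly_vcpu_hours(records)
print(per_cluster, total)  # {'cluster-1': 15.0, 'cluster-3': 2.5} 17.5
```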

3.3. Red Hat Ansible

The subscriptions service tracks usage of Red Hat Ansible products according to the consumption of resources related to different types of workloads, such as the number of managed execution nodes that run playbooks or instance availability for the control plane.

3.3.1. Red Hat Ansible Automation Platform, as a managed service

The subscriptions service tracks usage of the managed service offering of Red Hat Ansible Automation Platform in managed nodes and infrastructure hours.

- A managed node is a measurement of the number of unique managed nodes that are used within the monthly billing cycle. A node is counted when an Ansible task is invoked against it.

- An infrastructure hour is a measurement of the availability of the Ansible Automation Platform infrastructure. Each deployment of Ansible Automation Platform has a dedicated control plane that is isolated in a service account that is owned and managed by Red Hat.
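Counting unique managed nodes differs from summing hours: a node that receives many tasks in one billing cycle still counts once. This Python sketch assumes that each task invocation is available as a (timestamp, node ID) pair and treats the billing cycle as a calendar month; both are assumptions, and the function name is hypothetical.

```python
from datetime import datetime


def managed_nodes_per_month(task_events):
    """Count unique nodes that had at least one Ansible task invoked against them, per month."""
    nodes_by_month = {}
    for timestamp, node_id in task_events:
        nodes_by_month.setdefault(timestamp.strftime("%Y-%m"), set()).add(node_id)
    return {month: len(nodes) for month, nodes in nodes_by_month.items()}


task_events = [
    (datetime(2024, 5, 2), "web-1"),
    (datetime(2024, 5, 9), "web-1"),  # same node again: still counted once
    (datetime(2024, 5, 9), "db-1"),
    (datetime(2024, 6, 1), "web-1"),  # new billing cycle: counted again
]
print(managed_nodes_per_month(task_events))  # {'2024-05': 2, '2024-06': 1}
```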

Additional resources

- For more information about the purpose of the subscriptions service deny list, see the What subscriptions (SKUs) are included in Subscription Usage? article.

- For more information about the contents of the subscriptions service deny list, including the specific SKUs in that list, see the deny list source code in GitHub.

Part II. Requirements and your responsibilities

Before you start using the subscriptions service, review the hardware and software requirements and your responsibilities when you use the service.

Learn more

Review the general requirements for using the subscriptions service in Chapter 4, Requirements.

Review information about the tools that you must use to supply the subscriptions service with data about your subscription usage in Chapter 5, How to select the right data collection tool.

Review information about improving the subscriptions service results by setting the right subscription attributes:

Review information about your responsibilities when you use the subscriptions service:

Chapter 4. Requirements

To begin using the subscriptions service, you must meet the following software requirements. For more complete information about these requirements, contact your Red Hat account team.

4.1. Red Hat Enterprise Linux

You must meet at least one of the following requirements for Red Hat Enterprise Linux management:

RHEL managed by Satellite

- Satellite version 6.9 or later is required (versions that are under full or maintenance support are recommended).

RHEL managed by Red Hat Insights

RHEL managed by Red Hat Subscription Management

Red Hat Enterprise Linux for Third Party Linux Migration with Extended Life Cycle Support Add-on with a pay-as-you-go On-Demand subscription with metered billing configured

- This subscription requires the configuration of metered billing for the relevant cloud instances. You can choose to meter with the host-metering agent or with the cost management service in the Hybrid Cloud Console. For the most recent information about how to configure metered billing using either of these methods, see Enabling metering for Red Hat Enterprise Linux with Extended Lifecycle Support in your cloud environment.

4.2. Red Hat OpenShift

You must meet the following requirements for Red Hat OpenShift management, based on your product version and subscription type:

Red Hat OpenShift Container Platform with an Annual subscription

- RHOCP version 4.1 or later managed with the monitoring stack tools and OpenShift Cluster Manager

- RHOCP version 3.11 with RHEL nodes managed by Insights, Satellite, or Red Hat Subscription Management

RHOCP with a pay-as-you-go On-Demand subscription

- RHOCP version 4.7 or later managed with the monitoring stack tools and OpenShift Cluster Manager

Red Hat OpenShift Dedicated with a pay-as-you-go On-Demand subscription

- OpenShift Dedicated version 4.7 or later. The monitoring stack tools and OpenShift Cluster Manager are always in use for OpenShift Dedicated.

Other managed services managed with the Red Hat OpenShift monitoring stack tools in combination with other Red Hat infrastructure

These managed services include offerings such as Red Hat OpenShift AI and Red Hat Advanced Cluster Security for Kubernetes. Generally, for the Red Hat OpenShift managed services, no user setup of the monitoring stack tools or cloud integration (as applicable) is necessary.

Note: Some of the managed services in the Red Hat OpenShift portfolio might also gather and display their own usage data. This data is independent of the data that is gathered by the Red Hat OpenShift monitoring stack tools to display in the subscriptions service. The data displayed in these service-level dashboards is designed more for the needs of the owners of individual clusters, instances, and so on. However, the Red Hat OpenShift platform core capabilities provided by the monitoring stack tools typically gather and process the data that is used in the subscriptions service.

4.3. Red Hat Ansible

You must meet the following requirements for Red Hat Ansible management:

Red Hat Ansible Automation Platform as a managed service

- Red Hat Ansible Automation Platform with a provisioned Ansible control plane during product configuration. No additional setup is necessary.

Chapter 5. How to select the right data collection tool

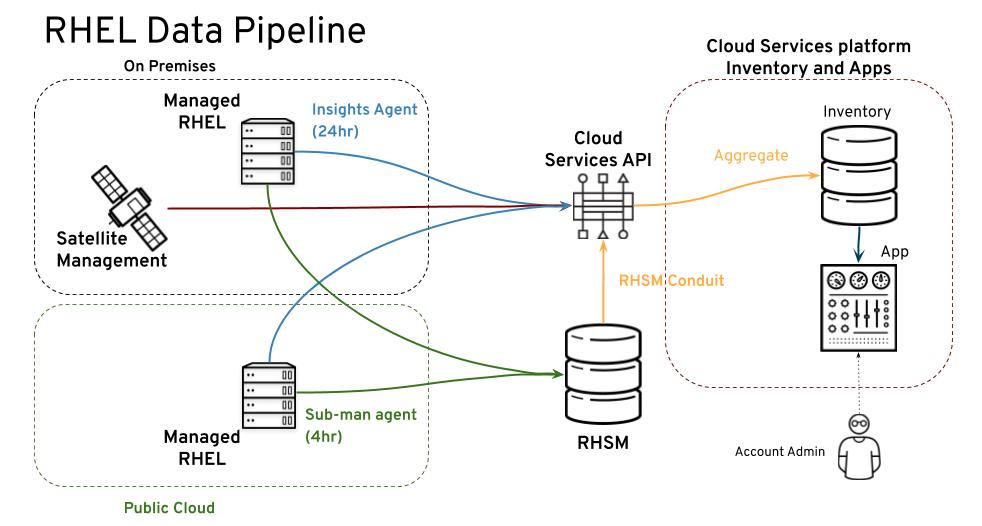

To display data about your subscription usage, the subscriptions service requires a data collection tool to obtain that data. The various data collection tools each have distinguishing characteristics that determine their effectiveness in a particular type of environment.

It is possible that the demands of your environment require more than one of the data collection tools to be running. When more than one data collection tool is supplying data to the services in the Hybrid Cloud Console, the tools that process this data are able to analyze and deduplicate the information from the various data collection tools into standardized facts, or canonical facts.
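Deduplication of this kind can be illustrated with a union-find merge, in which reports from different tools that share any identifier are treated as the same host. This Python sketch only illustrates the idea and is not the Hybrid Cloud Console implementation; the identifier keys and the rule that the first reported value of a fact wins are assumptions.

```python
ID_KEYS = ("insights_id", "subscription_manager_id", "bios_uuid")  # assumed identifier keys


def deduplicate(reports, id_keys=ID_KEYS):
    """Merge host reports that share any identifier value into one record per host."""
    parent = list(range(len(reports)))

    def find(i):
        # Follow parent links to the representative report, halving paths as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    first_seen = {}  # (key, value) -> index of the first report with that identifier
    for i, report in enumerate(reports):
        for key in id_keys:
            value = report.get(key)
            if value is None:
                continue
            if (key, value) in first_seen:
                parent[find(i)] = find(first_seen[(key, value)])
            else:
                first_seen[(key, value)] = i

    merged = {}
    for i, report in enumerate(reports):
        host = merged.setdefault(find(i), {})
        for fact, value in report.items():
            host.setdefault(fact, value)  # the first reported value of a fact wins
    return list(merged.values())


reports = [
    {"source": "insights", "insights_id": "i-1", "bios_uuid": "u-1"},
    {"source": "rhsm", "subscription_manager_id": "s-1", "bios_uuid": "u-1"},
    {"source": "satellite", "subscription_manager_id": "s-2"},
]
hosts = deduplicate(reports)
print(len(hosts))  # 2
```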

The following information can help you determine the best data collection tool or tools for your environment.

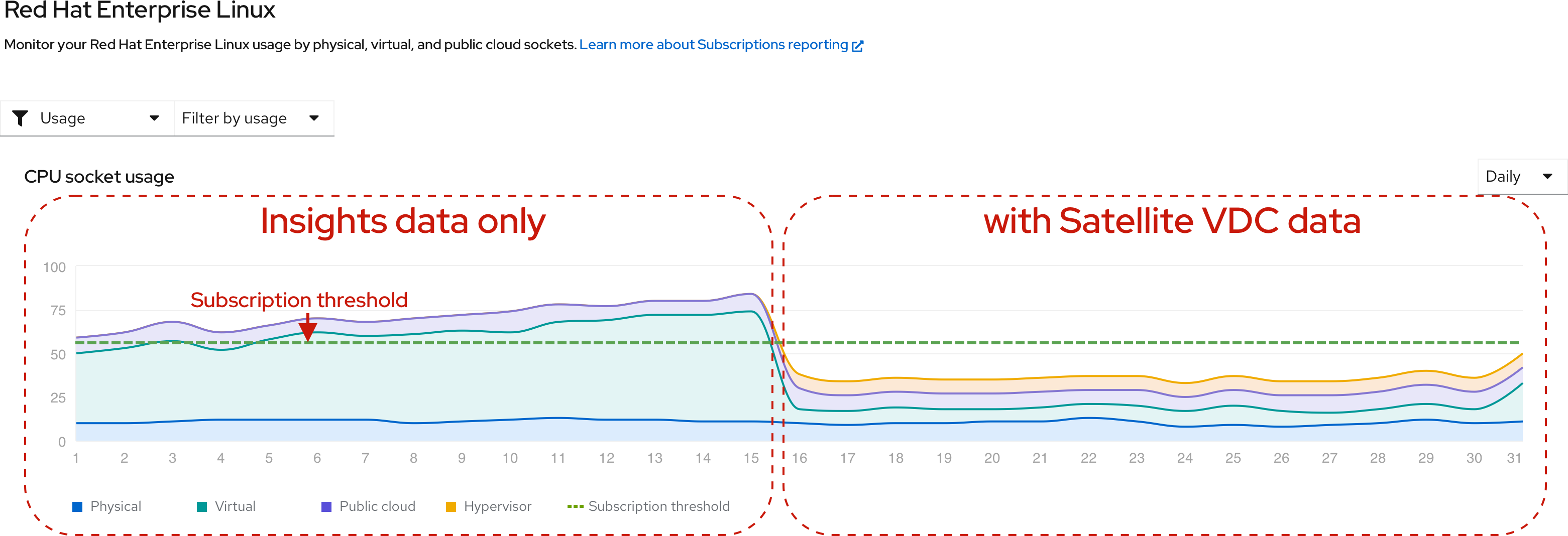

5.1. Red Hat Insights

Insights as a data collection tool is ideal for the always-connected customer. If you fit this profile, you are interested in using Insights not only as a data collection tool, but also as a solution that provides analytics, threat identification, remediation, and reporting capabilities.

With the inclusion of Insights with every Red Hat Enterprise Linux subscription beginning with version 8, and with the availability of Red Hat Insights for Red Hat OpenShift in April 2021, the use of Insights as your data collection tool becomes even more convenient.

However, using Insights as the data collection tool is not ideal if the Insights agent cannot connect directly to the console.redhat.com website or if Red Hat Satellite cannot be used as a proxy for that connection. In addition, it cannot be used as the sole solution if hypervisor host-guest mapping is required for virtual data centers (VDCs) or similar virtualized environments. In that case, Insights must be used in conjunction with Satellite.

5.2. Red Hat Subscription Management

Red Hat Subscription Management is an ideal data collection tool for the connected customer who uses the Subscription Manager agent to send data to Red Hat Subscription Management on the Red Hat Customer Portal.

For customers that are using the subscriptions service, Red Hat Subscription Management automatically synchronizes its data with the Hybrid Cloud Console services. Therefore, wherever Red Hat Subscription Management is in use or required, such as with RHEL 7 or later, it already acts as a data collection tool.

5.3. Red Hat Satellite

The use of Satellite as the data collection tool is useful for customers who have specific needs in their environment that either inhibit or prohibit the use of the Insights agent or the Subscription Manager agent for data collection.

For example, you might be able to connect to the Hybrid Cloud Console directly, but you might find the connection and maintenance of a per-organization Satellite installation is more convenient than the per-system installation of Insights. The use of Satellite also enables you to inspect the information that is being sent to the Hybrid Cloud Console on an organization-wide basis instead of a system-only basis.

As another example, your Satellite installation might not be able to connect directly to the Hybrid Cloud Console because you are running Satellite from a disconnected network. In that case, you must export the Satellite reports to a connected system and then upload that data to the Hybrid Cloud Console. To do this, you must use Satellite 6.9 or later (a version that is under full support), and you must install the Satellite inventory upload plugin on your Satellite server.

Finally, you might need to view the subscriptions service results for RHEL usage from a virtual data center (VDC) subscription or similar virtualized environments. To do so, you must obtain accurate hypervisor host-guest mapping information as part of the data that is collected for analysis. This type of data collection requires the use of Satellite in combination with the Satellite inventory upload plugin and the virt-who tool.
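The guidance in sections 5.1 through 5.3 can be condensed into a small decision helper. The function name, its yes/no arguments, and the output labels are hypothetical and exist only for illustration; the branches follow the rules stated above and are not an official selection tool.

```shell
#!/bin/sh
# Hypothetical helper condensing sections 5.1 to 5.3. Arguments are "yes" or "no":
#   $1 - systems, or Satellite acting as a proxy, can reach console.redhat.com
#   $2 - hypervisor host-guest mapping is required (VDC or similar)
#   $3 - systems are registered with the Subscription Manager agent
suggest_rhel_collection_tools() {
    if [ "$2" = "yes" ]; then
        # Insights alone cannot supply host-guest mapping.
        echo "satellite with inventory upload plugin and virt-who"
        if [ "$1" = "yes" ]; then echo "insights"; fi
    elif [ "$1" = "yes" ]; then
        echo "insights"
    else
        # Disconnected: export Satellite reports and upload them from a connected system.
        echo "satellite with inventory upload plugin (manual upload)"
    fi
    # RHSM data is synchronized automatically, so it counts whenever it is in use.
    if [ "$3" = "yes" ]; then echo "Red Hat Subscription Management"; fi
    return 0
}

# Example: a disconnected site whose systems use subscription-manager.
# suggest_rhel_collection_tools no no yes
```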

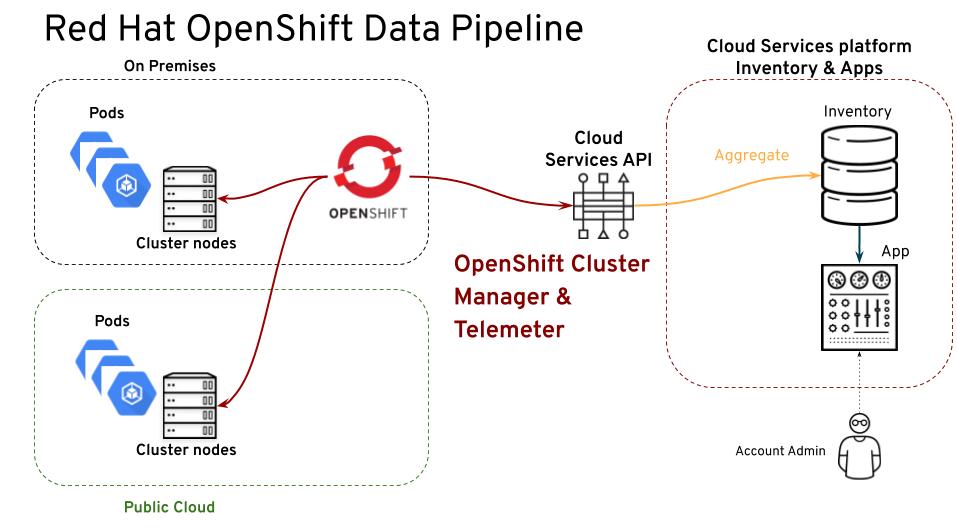

5.4. Red Hat OpenShift monitoring stack and other tools for Red Hat OpenShift data collection

The data collection for Red Hat OpenShift usage is dependent on several tools, including tools developed by the Red Hat OpenShift development team. One tool is Red Hat OpenShift Cluster Manager. Another set of tools is known as the monitoring stack. This set of tools is based on the open source Prometheus project and its ecosystem, and includes Prometheus, Telemetry, Thanos, Observatorium, and others.

The subscriptions service is designed to work with customers who use Red Hat OpenShift 4.1 and later products in connected environments. For the Red Hat OpenShift version 4.1 and later products that the subscriptions service can track, Red Hat OpenShift Cluster Manager and the monitoring stack tools are used to gather and process cluster data before sending it to Red Hat Subscription Management. Red Hat Subscription Management provides the relevant usage data to the Hybrid Cloud Console services such as inventory and the subscriptions service.

Customers with disconnected environments can use the Red Hat OpenShift data collection tools by manually creating each cluster in Red Hat OpenShift Cluster Manager. This workaround enables customers with disconnected environments to simulate an account-level view of their Red Hat OpenShift usage. For example, an organization with disconnected clusters distributed across several departments might find this workaround useful.

For Red Hat OpenShift Container Platform version 3.11, data collection depends on an older, RHEL-based reporting model. Therefore, the RHEL nodes must be connected to one of the RHEL data collection tools, such as Insights, Red Hat Subscription Management, or Satellite.

The Red Hat OpenShift portfolio also includes managed services that rely on Red Hat infrastructure. Part of that infrastructure is the monitoring stack tools that, among other jobs, supply data about subscription usage to the subscriptions service. No additional user action is necessary to set up these data collection tools for the following managed services:

- Red Hat OpenShift AI with a pay-as-you-go On-Demand subscription

- Red Hat Advanced Cluster Security for Kubernetes with a pay-as-you-go On-Demand subscription

5.5. Cloud integrations for Red Hat services

The data collection for some pay-as-you-go On-Demand subscriptions requires a connection known as a cloud integration, configured with the integrations service of the Hybrid Cloud Console.

A cloud integration on the Red Hat Hybrid Cloud Console is a connection to a service, application, or provider that supplies data to another Hybrid Cloud Console service. Through a cloud integration, the connected service can connect with and use data from public cloud providers and other services or tools to collect data for that service.

The following products require the configuration of a cloud integration to enable data collection for the subscriptions service:

- Red Hat Enterprise Linux for Third Party Linux Migration with Extended Life Cycle Support Add-on

Additional resources

- For additional information about registering version 4.1 disconnected clusters in Red Hat OpenShift Cluster Manager, see the chapter about cluster subscriptions and registration in the Managing Clusters guide.

Chapter 6. How to set subscription attributes

Red Hat subscriptions combine technology with use cases to help procurement and technical teams make the best purchasing and deployment decisions for their business needs. When the same product is offered in two different subscriptions, these use cases differentiate between the options. They inform the decision-making process at the time of purchase and remain associated with the subscription throughout its life cycle to help determine how the subscription is used.

Red Hat provides a method for you to associate use case information with products through the application of subscription attributes. These subscription attributes can be supplied at product installation time or as an update to the product.

The subscriptions service helps you to align your software deployments with the use cases that support them and compare actual consumption to the capacity provided by the subscription profile of your account. Proper, automated maintenance of the subscription attributes for your inventory is important to the accuracy of the subscriptions service reporting.

Subscription attributes can generally be organized into the following use cases:

- technical use case

- Attributes that describe how the product will be used upon deployment. Examples include role information for RHEL used as a server or alternatively used as a workstation.

- business use case

- Attributes that describe how the product will be used in relation to your business environment and workflows. Examples include usage as part of a production environment or alternatively as part of a disaster recovery environment.

- operational use case

- Attributes that describe various operational characteristics such as how the product will be supported. Examples include a service level agreement (SLA) of premium, or a service type of L1-L3.

The subscription attributes might be configured from the operating system or its management tools, or they might be configured from settings within the product itself. Collectively, these subscription attributes might be known as system purpose, subscription settings, or similar names across all of these tools.

Subscription attributes are used by the Hybrid Cloud Console services such as the inventory service to build the most accurate usage profile for products in your inventory. The subscriptions service uses the subscription attributes found and reported by these other tools to filter data about your subscriptions, enabling you to view this data with more granularity. For example, filtering your RHEL subscriptions to show only those with an SLA of premium could help you determine the current usage of those premium subscriptions compared to your overall capacity for premium subscriptions.

The quality of subscription attribute data can greatly affect the accuracy and usefulness of the subscriptions service data. Therefore, a best practice is to ensure that these attributes are properly set, both for current use and any possible future expansion of subscription attribute use within the subscriptions service.

6.1. Setting subscription attributes for RHEL

You can set subscription attributes for the RHEL product with RHEL or Satellite. For RHEL, the subscription attributes are known as system purpose.

Set the system purpose subscription attributes from only one tool. If you use multiple tools, the settings might not match. These tools report data to the Hybrid Cloud Console tools at different intervals, or heartbeats, and the subscriptions service shows some information as a once-per-day snapshot based on last-reported data. Therefore, setting subscription attributes in more than one tool could reduce the quality of the subscriptions service data.

Setting the subscription attributes with RHEL

You can use a few different methods to set the system purpose values, depending on whether you set them at RHEL installation or configuration time, whether you are installing RHEL interactively or automatically, and whether you are setting system purpose from a command-line interface or with another method. For more specific guidance related to the installation or configuration process that you are following, see the system purpose information in the RHEL 9 guides for that process, or see similar guides for the applicable version of RHEL.
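As one command-line example, the following minimal sketch assumes RHEL 9, where system purpose is set with the `subscription-manager syspurpose` subcommands; earlier RHEL releases might use different syntax, so confirm against the guide for your version. The `set_system_purpose` function and the `DRY_RUN` switch are hypothetical conveniences, not part of RHEL.

```shell
#!/bin/sh
# Minimal sketch, assuming RHEL 9 "subscription-manager syspurpose" subcommands.
# Set system purpose from one tool only (RHEL or Satellite), not both.
# Hypothetical DRY_RUN=1 switch: print the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

set_system_purpose() {
    # $1 = role, $2 = usage, $3 = service level agreement (SLA)
    run subscription-manager syspurpose role --set "$1"
    run subscription-manager syspurpose usage --set "$2"
    run subscription-manager syspurpose service-level --set "$3"
}

# Example (run as root): a premium, production RHEL server.
# set_system_purpose "Red Hat Enterprise Linux Server" "Production" "Premium"
# Review the result with: subscription-manager syspurpose --show
```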

Setting the subscription attributes from Satellite

For Satellite, the methods to set subscription attributes are described in the instructions for editing the system purpose of a single host or multiple hosts. For more information about setting system purpose, see the section about administering hosts in the Satellite Managing Hosts guide.

6.2. Setting subscription attributes for Red Hat OpenShift

You can set subscription attributes from Red Hat OpenShift Cluster Manager for version 4. For version 3, use the same reporting tools as those described for RHEL.

Setting the subscription attributes for Red Hat OpenShift 4

You can set subscription attributes at the cluster level from Red Hat OpenShift Cluster Manager, where the attributes are described as subscription settings.

- From the Clusters view, select a cluster to display the cluster details.

- Click Edit Subscription Settings on the cluster details page or from the Actions menu.

- Make any needed changes to the values for the subscription attributes and then save those changes.

Setting the subscription attributes for Red Hat OpenShift 3

You can set subscription attributes at the node level by using the same methods that you use for RHEL, setting these values from RHEL itself, Red Hat Subscription Management, or Satellite. As described in the section about setting subscription attributes for RHEL, set subscription attributes by using only one method so that the settings are not duplicated.

If your subscription contains a mix of socket-based and core-based nodes, you can also set subscription attributes that identify this fact for each node. As you view your Red Hat OpenShift usage, you can use a filter to switch between cores and sockets as the unit of measurement.

To set this subscription attribute data, run the applicable command for each node:

For core-based nodes:

# echo '{"ocm.units":"Cores/vCPU"}' | sudo tee /etc/rhsm/facts/openshift-units.facts

For socket-based nodes:

# echo '{"ocm.units":"Sockets"}' | sudo tee /etc/rhsm/facts/openshift-units.facts
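If you apply this fact to many nodes, a small wrapper can validate the unit value before it writes the file. The following sketch writes the same JSON content as the commands above; the function name and the optional directory argument (useful only for testing outside `/etc`) are hypothetical. Run it as root on each node.

```shell
#!/bin/sh
# Sketch: write the ocm.units fact for one Red Hat OpenShift 3 node.
# $1 = "Cores/vCPU" or "Sockets"
# $2 = facts directory (hypothetical override; defaults to /etc/rhsm/facts)
write_openshift_units_fact() {
    local units="$1"
    local facts_dir="${2:-/etc/rhsm/facts}"
    # Accept only the two unit values documented above.
    case "$units" in
        "Cores/vCPU"|"Sockets") ;;
        *) echo "unsupported unit: $units" >&2; return 1 ;;
    esac
    # Same JSON content as the echo commands above.
    printf '{"ocm.units":"%s"}\n' "$units" > "$facts_dir/openshift-units.facts"
}

# Example (as root on a socket-based node):
# write_openshift_units_fact "Sockets"
```

After the file is in place, you can run `subscription-manager facts --update` on the node to send the updated facts right away instead of waiting for the next scheduled report.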

Setting subscription attributes for other Red Hat OpenShift subscriptions

Some offerings are of one subscription type only, for example, Red Hat OpenShift AI or Red Hat Advanced Cluster Security for Kubernetes. Therefore, setting subscription attributes is not required.

6.3. Setting subscription attributes for Red Hat Ansible

Currently, the only Red Hat Ansible offering that is tracked by the subscriptions service is Red Hat Ansible Automation Platform as a managed service. The Red Hat Ansible Automation Platform managed service offering is of one subscription type only. Therefore, setting subscription attributes is not required.

Chapter 7. Your responsibilities

The subscriptions service and the features that make up this service are new and are rapidly evolving. During this rapid development phase, you have the ability to view, and more importantly contribute to, the newest capabilities early in the process. Your feedback is valued and welcome. Work with your Red Hat account team, for example, your technical account manager (TAM) or customer success manager (CSM), to provide this feedback. You might also be asked to provide feedback or request features from within the subscriptions service itself.

As you use the subscriptions service, note the following agreements and contractual responsibilities that remain in effect:

- Customers are responsible for monitoring subscription utilization and complying with applicable subscription terms. The subscriptions service is a customer benefit to manage and view subscription utilization. Red Hat does not intend to create new billing events based on the subscriptions service tooling; rather, the tooling is intended to help the customer gain visibility into utilization so that it can keep track of its environment.

Part III. Setting up the subscriptions service for data collection

To set up the environment for the subscriptions service data collection, connect your Red Hat Enterprise Linux, Red Hat OpenShift, and Red Hat Ansible systems to the Hybrid Cloud Console services through one or more data collection tools.

After you complete the steps to set up this environment, you can continue with the steps to activate and open the subscriptions service.

Do these steps

To gather Red Hat Enterprise Linux usage data, complete at least one of the following steps to connect your Red Hat Enterprise Linux systems to the Hybrid Cloud Console by enabling a data collection tool. This connection enables subscription usage data to show in the subscriptions service.

Deploy Insights on every RHEL system that is managed by Red Hat Satellite:

Ensure that Satellite is configured to manage your RHEL systems and install the Satellite inventory upload plugin:

Ensure that Red Hat Subscription Management is configured to manage your RHEL systems:

For pay-as-you-go On-Demand subscriptions for metered RHEL, ensure that a cloud integration is configured in the Hybrid Cloud Console for collection of the metering data.

To gather Red Hat OpenShift usage data, complete the following step for Red Hat OpenShift data collection on the Hybrid Cloud Console.

Set up the connection between Red Hat OpenShift and the subscriptions service based upon the operating system that is used for clusters:

- To gather Red Hat Ansible usage data, no additional setup steps are necessary. Ansible data collection is configured automatically during the provisioning of the Ansible control plane.

Chapter 8. Deploying Red Hat Insights

If you are using Red Hat Insights as the data collection tool, deploy Red Hat Insights on every RHEL system that is managed by Red Hat Satellite.

Do these steps

To install Red Hat Insights, see the following information:

Learn more

To learn more about what data Red Hat Insights collects and your options for controlling that data, see the following information:

8.1. Installing Red Hat Insights

Install Red Hat Insights to collect information about your inventory.

Procedure

Install the Insights client on every RHEL system that is managed by Red Hat Satellite by using the following instructions:

The Insights client is installed by default on RHEL 8 and later systems unless the minimal installation option was used to install RHEL. However, the client must still be registered, as documented in the client installation instructions.
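For a single RHEL 8 or later system, the install-if-missing and register steps can be sketched as follows. The function name and the `DRY_RUN` switch are hypothetical; `insights-client --register` and `insights-client --status` are the client's own options, but follow the client installation instructions for your environment, especially for systems managed by Satellite.

```shell
#!/bin/sh
# Minimal sketch for one RHEL 8 or later system; run as root.
# Hypothetical DRY_RUN=1 switch: print the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

ensure_insights_registered() {
    # Minimal installations might not include the client, so install it if missing.
    if ! rpm -q insights-client >/dev/null 2>&1; then
        run dnf install -y insights-client
    fi
    # Registration is required even when the client is preinstalled.
    run insights-client --register
    # Report whether the system is now registered.
    run insights-client --status
}
```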

8.2. What data does Red Hat Insights collect?

When the Red Hat Insights client is installed on a system, it collects data about that system daily and sends it to the Red Hat Insights cloud application. The data might also be shared with other applications on the Hybrid Cloud Console, such as the inventory service or the subscriptions service. Insights provides configuration and command options, including options for data obfuscation and data redaction, to manage that data.

For more information, see the Client Configuration Guide for Red Hat Insights, available with the Red Hat Insights product documentation.

You might also want to examine the types of data that Insights collects and sends to Red Hat or add controls to the data that is sent. For additional information that supplements the information available in the product documentation, see the following articles:

- For more information about the use of the insights-client --offline command to generate an offline dump of the data before you register a system to Insights, see How can I see what data is collected by Red Hat Insights?

- For more information about the use of the insights-client --no-upload command to run a test data collection process, see System Information Collected by Red Hat Insights.

- For more information about the use of the remove.conf file and its options to exclude specific data from collection based on file, command, pattern, and keyword settings, see Opting Out of Sending Metadata from Red Hat Insights Client.
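As an illustration of the remove.conf option, the following sketch writes a file with an INI-style `[remove]` section containing the four setting types named above. The entries are placeholders, the function name is hypothetical, and the exact format supported by your client version should be confirmed in the Opting Out of Sending Metadata from Red Hat Insights Client article.

```shell
#!/bin/sh
# Sketch: write an Insights client remove.conf that excludes data from collection.
# Assumes the INI-style [remove] section from the Opting Out article.
# Every value below is a placeholder; replace it with your own settings.
# $1 = target file (defaults to /etc/insights-client/remove.conf)
write_insights_remove_conf() {
    local target="${1:-/etc/insights-client/remove.conf}"
    cat > "$target" <<'EOF'
[remove]
files=/etc/hosts
commands=/bin/hostname
patterns=password
keywords=example-secret
EOF
    # Restrict the file to owner read/write (0600).
    chmod 600 "$target"
}

# Then run a test collection without uploading anything:
#   insights-client --no-upload
```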

Chapter 9. Installing the Satellite inventory upload plugin

When you are using Red Hat Satellite as a data collection tool for the subscriptions service, you must use the Satellite inventory upload plugin in combination with the virt-who tool for accurate reporting of hypervisor host-guest mapping information for Red Hat Enterprise Linux virtual data center (VDC) subscriptions and similar virtualized environments. The use of this plugin enables the uploading of host-based data from Satellite into the inventory service and enables the association of hosts with guests and with the Satellite instance that manages them. If the plugin is not enabled, the subscriptions service cannot accurately report usage for RHEL virtualized subscriptions.

The plugin is also required by other Hybrid Cloud Console applications. In addition to the requirement for the inventory service of Red Hat Insights to enable the uploading of host inventory information, the plugin is also required for the remediations service of Red Hat Insights to enable remediation actions from both Satellite and Red Hat Insights.

After the Satellite inventory upload plugin is enabled, it is active for every Satellite organization, including existing and newly created organizations.

Prerequisites

Red Hat Satellite 6.9 or later (versions that are under full support)

Procedure

For a new installation of Satellite

For a new installation of Satellite, the Satellite inventory upload plugin is installed and enabled by default. No action is required to enable it.

For upgraded Satellite

For Satellite that is upgraded to a currently supported version, the Satellite inventory upload plugin is installed. However, you might have to enable the plugin.

- If the plugin was previously enabled for Satellite before the upgrade, it remains enabled. No action is required to enable it.

- If the plugin was not enabled for Satellite before the upgrade, then you must enable it.

To enable the Satellite inventory upload plugin, use these steps:

- In the Satellite web interface, expand the Configure options and select Red Hat Inventory.

- Follow the instructions on the Red Hat Inventory page to enable the Automatic inventory upload option for Satellite.

Usage tips

When the Automatic inventory upload option is enabled, the Satellite inventory upload plugin automatically reports once per day by default. You can also manually send data and view the status of the extract and upload actions for individual Satellite organizations.

The Satellite inventory upload plugin includes reporting settings that you can use to address data privacy concerns. Use the options on the Red Hat Inventory page to configure the plugin to exclude certain packages, obfuscate host names, and obfuscate host addresses.

Chapter 10. Registering systems to Red Hat Subscription Management

If you are using Red Hat Subscription Management as the data collection tool, register your RHEL systems to Red Hat Subscription Management. Systems that are registered to Red Hat Subscription Management can be found and tracked by the subscriptions service.

Some RHEL images can use the autoregistration feature of the RHEL management bundle and do not have to be manually registered to Red Hat Subscription Management. However, the following specific requirements must be met:

- The image must be based on RHEL 8.4 and later or 8.3.1 and later.

- The image must be an Amazon Web Services (AWS) or Microsoft Azure cloud services image.

- The image can be a Cloud Access Gold Images image or a custom image, such as an image built with Image Builder. If it is a custom image, the subscription-manager tool in the image must be configured to use autoregistration. The image must be associated with an AWS or Azure integration, as configured from the Settings > Integrations option in the Hybrid Cloud Console, with the RHEL management bundle selected for activation.

Note: The integrations service was formerly known as the sources service in the Hybrid Cloud Console.

- The image must be provisioned after this integration is created.

RHEL systems that do not meet these requirements must be registered manually to be tracked by the subscriptions service.
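The version, provider, and timing requirements above can be expressed as a quick pre-check. This is a hypothetical helper: it treats 8.3.1 as the effective minimum version because both stated minimums (8.4 and 8.3.1) satisfy it, and it does not verify the integration itself or the autoregistration configuration of a custom image.

```shell
#!/bin/sh
# Hypothetical pre-check for the autoregistration requirements listed above.
# $1 = RHEL version of the image (for example 8.4)
# $2 = cloud provider: "aws" or "azure"
# $3 = "yes" if the image was provisioned after the integration was created
# Not checked: the integration and the custom-image subscription-manager setup.

version_at_least() {
    # Succeeds when version $1 >= $2; relies on "sort -V" (GNU coreutils).
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

can_autoregister() {
    version_at_least "$1" 8.3.1 || return 1
    case "$2" in
        aws|azure) ;;
        *) return 1 ;;
    esac
    [ "$3" = "yes" ]
}

# Example: can_autoregister 8.4 aws yes && echo "autoregistration possible"
```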

Procedure

Register your RHEL systems to Red Hat Subscription Management, if not already registered. For more information about this process, see the following information:

- Information in the Red Hat Subscription Management product documentation, including information about registering and unregistering systems in the Quick Registration for RHEL guide.

- Supplemental information about registering systems in the How do I register a system to Customer Portal Subscription Management? article.
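As a minimal sketch of manual registration, the following assumes registration with an organization ID and an activation key, which is one of several methods described in the Quick Registration for RHEL guide. `ORG_ID` and `ACTIVATION_KEY` are placeholders, and the function name and `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Minimal sketch: register one RHEL system with an activation key; run as root.
# Hypothetical DRY_RUN=1 switch: print the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

register_system() {
    # $1 = organization ID, $2 = activation key
    run subscription-manager register --org="$1" --activationkey="$2"
    # Show the identity that the system received after registration.
    run subscription-manager identity
}

# Example (placeholders, replace with values from your account):
# register_system "ORG_ID" "ACTIVATION_KEY"
```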

Chapter 11. Connecting Red Hat OpenShift to the subscriptions service

If you use Red Hat OpenShift products, the steps that you must complete to connect the correct data collection tools to the subscriptions service depend on multiple factors. These factors include the installed version of Red Hat OpenShift Container Platform and Red Hat OpenShift Dedicated, whether you are working in a connected or disconnected environment, and whether you are using Red Hat Enterprise Linux, Red Hat Enterprise Linux CoreOS, or both as the operating system for clusters.

The subscriptions service is designed to work with customers who use Red Hat OpenShift in connected environments. One example of this customer profile is a customer who uses RHOCP 4.1 or later with an Annual subscription and connected clusters. For this customer profile, Red Hat OpenShift has a robust set of tools that can perform the data collection. The connected clusters report data to Red Hat through Red Hat OpenShift Cluster Manager, Telemetry, and the other monitoring stack tools to supply information to the data pipeline for the subscriptions service.

Customers with disconnected RHOCP 4.1 and later environments can use Red Hat OpenShift as a data collection tool by manually creating each cluster in Red Hat OpenShift Cluster Manager.

Customers who use Red Hat OpenShift 3.11 can also use the subscriptions service. However, for Red Hat OpenShift version 3.11, the communication with the subscriptions service is enabled through other tools that supply the data pipeline, such as Insights, Satellite, or Red Hat Subscription Management.

For customers who use Red Hat OpenShift Container Platform or Red Hat OpenShift Dedicated 4.7 and later with a pay-as-you-go On-Demand subscription (available for connected clusters only), data collection is done through the same tools as those used by Red Hat OpenShift Container Platform 4.1 and later with an Annual subscription.

Procedure

Complete the following steps, based on your version of Red Hat OpenShift Container Platform and the cluster operating system for worker nodes.

For Red Hat OpenShift Container Platform 4.1 or later with Red Hat Enterprise Linux CoreOS

For this profile, cluster architecture is optimized to report data to Red Hat OpenShift Cluster Manager through the Telemetry tool in the monitoring stack. Therefore, setting up the subscriptions service reporting is mainly a matter of confirming that this monitoring tool is active.

- Make sure that all clusters are connected to Red Hat OpenShift Cluster Manager through the Telemetry monitoring component. If so, no additional configuration is needed. The subscriptions service is ready to track Red Hat OpenShift Container Platform usage and capacity.

For Red Hat OpenShift Container Platform 4.1 or later with a mixed environment with Red Hat Enterprise Linux CoreOS and Red Hat Enterprise Linux

For this profile, data gathering is affected by the change in the Red Hat OpenShift Container Platform reporting models between Red Hat OpenShift major versions 3 and 4. Version 3 relies upon RHEL to report RHEL cluster usage at the node level. This is still the reporting model used for version 4 RHEL nodes. However, the version 4 era reporting model reports Red Hat Enterprise Linux CoreOS usage at the cluster level through Red Hat OpenShift tools.

The tools that are used to gather this data are different. Therefore, setting up the subscriptions service reporting means confirming that both tool sets are configured correctly.

- Make sure that all clusters are connected to Red Hat OpenShift Cluster Manager through the Red Hat OpenShift Container Platform Telemetry monitoring component.

- Make sure that Red Hat Enterprise Linux nodes in all clusters are connected to at least one of the Red Hat Enterprise Linux data collection tools: Insights, Satellite, or Red Hat Subscription Management. For more information, see the instructions about connecting to each of these data collection tools in this guide.

For Red Hat OpenShift Container Platform version 3.11

Red Hat OpenShift Container Platform version 3.11 reports cluster usage based on the Red Hat Enterprise Linux nodes in the cluster. Therefore, for this profile, the subscriptions service reporting uses the standard Red Hat Enterprise Linux data collection tools.

- Make sure that all Red Hat Enterprise Linux nodes in all clusters are connected to at least one of the Red Hat Enterprise Linux data collection tools: Insights, Satellite, or Red Hat Subscription Management. For more information, see the instructions about connecting to each of these data collection tools in this guide.
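The procedure above can be summarized as a lookup from cluster profile to the checks it needs. This hypothetical helper is only a restatement of the three profiles, plus the manual Red Hat OpenShift Cluster Manager registration described for disconnected 4.1 and later clusters; the argument values `rhcos` and `mixed` are illustrative labels.

```shell
#!/bin/sh
# Hypothetical helper mapping this procedure's profiles to the checks they need.
# $1 = Red Hat OpenShift Container Platform version (for example 3.11 or 4.14)
# $2 = worker node OS for 4.x: "rhcos" or "mixed" (ignored for 3.11)
# $3 = "yes" if the clusters are connected (ignored for 3.11)
openshift_collection_checks() {
    local major="${1%%.*}"
    if [ "$major" = "3" ]; then
        # Version 3.11 reports usage through its RHEL nodes.
        echo "connect every RHEL node to Insights, Satellite, or Red Hat Subscription Management"
        return 0
    fi
    if [ "$3" = "yes" ]; then
        echo "confirm every cluster reports to OpenShift Cluster Manager through Telemetry"
    else
        echo "create each disconnected cluster manually in OpenShift Cluster Manager"
    fi
    case "$2" in
        rhcos) ;;
        mixed) echo "connect every RHEL node to Insights, Satellite, or Red Hat Subscription Management" ;;
        *) echo "unknown node OS: $2" >&2; return 1 ;;
    esac
}

# Example: openshift_collection_checks 4.14 mixed yes
```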

Chapter 12. Connecting cloud integrations to the subscriptions service

Data collection for certain pay-as-you-go On-Demand subscriptions requires a connection known as a cloud integration, configured with the integrations service of the Hybrid Cloud Console.

A cloud integration on the Red Hat Hybrid Cloud Console is a connection to a service, application, or provider that supplies data to another Hybrid Cloud Console service. Through a cloud integration, the connected service can connect with and use data from public cloud providers and other services or tools to collect data for that service.

When a cloud integration is required to track the usage of a subscription, the post-purchase enablement steps generally include information about this requirement. These post-purchase enablement instructions might contain more current information about setting up the cloud integration.

The following products require the configuration of a cloud integration to enable data collection for the subscriptions service:

Red Hat Enterprise Linux for Third Party Linux Migration with Extended Life Cycle Support Add-on, metered with the cost management service method

If you want to use the cost management service to meter the usage of RHEL for Third Party Linux Migration with ELS in the subscriptions service, you must create a cloud integration. Configuring this cloud integration includes creating a connection between a cloud provider and the cost management service in the Hybrid Cloud Console. This cloud integration ensures that usage data from the cloud provider and from the cost management service is used to calculate metered usage in the subscriptions service and that usage data is returned to the cloud provider for billing purposes.

Procedure

For Red Hat Enterprise Linux for Third Party Linux Migration with Extended Life Cycle Support Add-on

The post-purchase enablement steps for RHEL for Third Party Linux Migration with ELS include information about setting up the required cloud integration, in addition to other setup information required for the subscription. To ensure that your cloud integration is configured correctly for use by the subscriptions service, review the following information and confirm that the cloud integration configuration steps were completed:

- For more information about the RHEL for Third Party Linux Migration with ELS post-purchase enablement steps, including the step to set up a cloud integration, see the Getting Started with Red Hat Enterprise Linux for Third Party Linux Migration Customer Portal support article.

- For more information about cloud integrations, see Configuring cloud integrations for Red Hat services.

- For more information about setting up a cloud integration for the cost management service and a specific cloud platform, see Adding integrations to cost management in the cost management documentation.

Part IV. Activating and opening the subscriptions service

After you complete the steps to set up the environment for the subscriptions service, you can go to console.redhat.com to request the subscriptions service activation. After activation and the initial data collection cycle, you can open the subscriptions service and begin viewing usage data.

Do these steps

To find out if the subscriptions service activation is needed, see the following information:

To log in to console.redhat.com and activate the subscriptions service, see the following information:

To log in to console.redhat.com and open the subscriptions service after activation, see the following information:

If you cannot activate or log in to the subscriptions service, see the following information:

Chapter 13. Determining whether manual activation of the subscriptions service is necessary

The subscriptions service must be activated to begin tracking usage for the Red Hat account for your organization. The activation process can be automatic or manual.

Procedure

Review the following tasks that activate the subscriptions service automatically. If someone in your organization has completed one or more of these tasks, manual activation of the subscriptions service is not needed.

- Purchasing a pay-as-you-go On-Demand subscription for Red Hat OpenShift Container Platform or Red Hat OpenShift Dedicated through Red Hat Marketplace. As the pay-as-you-go clusters begin reporting usage through OpenShift Cluster Manager and the monitoring stack, the subscriptions service activates automatically for the organization.

- Purchasing a Red Hat OpenShift pay-as-you-go On-Demand subscription through a cloud provider marketplace, such as Red Hat Marketplace or Amazon Web Services (AWS). Examples of these types of products include Red Hat OpenShift AI or Red Hat Advanced Cluster Security for Kubernetes. As these products begin reporting usage through the monitoring stack, the subscriptions service activates automatically for the organization.

- Creating an Amazon Web Services integration through the integrations service in the Hybrid Cloud Console with the RHEL management bundle selected. The process of creating the integration also activates the subscriptions service.

- Creating a Microsoft Azure integration through the integrations service in the Hybrid Cloud Console with the RHEL management bundle selected. The process of creating the integration also activates the subscriptions service.

Note: The integrations service was formerly known as the sources service in the Hybrid Cloud Console.

These tasks, especially purchasing tasks, might be performed by a user who has the Organization Administrator (org admin) role in the Red Hat organization for your company. The integration creation tasks must be performed by a user with the Cloud administrator role in the role-based access control (RBAC) system for the Hybrid Cloud Console.
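The review in this procedure comes down to one rule: manual activation is needed only when none of the automatic-activation tasks has been completed for the organization. The following Python sketch expresses that rule. The `AutoActivationTask` labels and the `needs_manual_activation` function are hypothetical illustrations and are not part of any Red Hat API or tool.

```python
from enum import Enum, auto


class AutoActivationTask(Enum):
    """Hypothetical labels for the tasks that activate the subscriptions service automatically."""
    OPENSHIFT_ON_DEMAND_PURCHASE = auto()        # pay-as-you-go OpenShift through Red Hat Marketplace
    MARKETPLACE_ON_DEMAND_PURCHASE = auto()      # pay-as-you-go product through a cloud provider marketplace
    AWS_INTEGRATION_WITH_RHEL_BUNDLE = auto()    # AWS integration with the RHEL management bundle selected
    AZURE_INTEGRATION_WITH_RHEL_BUNDLE = auto()  # Azure integration with the RHEL management bundle selected


def needs_manual_activation(completed: set[AutoActivationTask]) -> bool:
    """Return True when no automatic-activation task has been completed for the organization."""
    return len(completed) == 0


# Example: an organization that already created an AWS integration with the RHEL management bundle.
print(needs_manual_activation({AutoActivationTask.AWS_INTEGRATION_WITH_RHEL_BUNDLE}))  # False
print(needs_manual_activation(set()))  # True
```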

Chapter 14. Activating the subscriptions service

If none of the tasks that activate the subscriptions service automatically has been completed, the subscriptions service must be activated manually. These tasks are purchasing an On-Demand subscription through Red Hat Marketplace, or creating an Amazon Web Services or Microsoft Azure integration that includes the RHEL management bundle through the integrations service in the Hybrid Cloud Console.

If manual activation is needed, the subscriptions service must be activated by a user with access to the Red Hat account and organization through a Red Hat Customer Portal login. This user does not need to be a Red Hat Customer Portal Organization Administrator (org admin). However, the user must have the Subscriptions administrator role or the Subscriptions viewer role in the user access role-based access control (RBAC) system for console.redhat.com.

If a Red Hat Customer Portal login is associated with an organization that does not have an account relationship with Red Hat, then the subscriptions service cannot be activated.

When the subscriptions service is activated, the Hybrid Cloud Console tools begin analyzing and processing data from the data collection tools for display in the subscriptions service.

The following procedure guides you through the steps to activate the subscriptions service from console.redhat.com. If the subscriptions service is not already activated, you can also access the activation page at the conclusion of the subscriptions service tour.

Procedure

- In a browser window, go to console.redhat.com.

- If prompted, enter your Red Hat Customer Portal login credentials.

- In the Hybrid Cloud Console dashboard, click Services and then click Subscriptions and Spend in the navigation panel.

- On the Subscriptions and Spend page, click the Overview option for Subscription Services.

- In the navigation panel of the Subscription Services page, expand Subscriptions Usage.

- Click one of the Red Hat platform options, Red Hat Enterprise Linux, OpenShift, or Ansible, to check the status of the subscriptions service for your Red Hat account and organization.

Complete one of the following steps, depending on the status of the subscriptions service activation:

- If the subscriptions service is not yet active for the account, the activation page displays. Click Activate Subscriptions.

- If the subscriptions service is activated but not yet ready to display data, the subscriptions service application opens, but it displays an empty graph. Try accessing the subscriptions service later, typically the next day.

- If the subscriptions service is activated and the initial data processing is complete, the subscriptions service application opens and displays data on the graph. You can begin using the subscriptions service to view data about subscription usage and capacity for the account.
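The three outcomes in this step can be read as a lookup from what the page shows to what you do next. The following Python sketch is only an illustration of that mapping: `PageState` and `next_step` are hypothetical names and are not part of any Red Hat API or tool.

```python
from enum import Enum


class PageState(Enum):
    """Hypothetical labels for what the platform page shows after you select a platform."""
    ACTIVATION_PAGE = "activation page"
    EMPTY_GRAPH = "empty graph"
    GRAPH_WITH_DATA = "graph with data"


def next_step(state: PageState) -> str:
    """Map the observed page state to the action described in the procedure."""
    if state is PageState.ACTIVATION_PAGE:
        # Not yet active for the account.
        return "Click Activate Subscriptions."
    if state is PageState.EMPTY_GRAPH:
        # Active, but the initial data processing is not complete.
        return "Try accessing the subscriptions service later, typically the next day."
    # Active and the initial data processing is complete.
    return "View subscription usage and capacity data for the account."


print(next_step(PageState.EMPTY_GRAPH))
```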

Verification steps

Data processing for the initial display of the subscriptions service can take up to 24 hours. Until data for the account is ready, the subscriptions service displays only an empty graph.

Chapter 15. Logging in to the subscriptions service

You access the subscriptions service from the Hybrid Cloud Console after you log in with your Red Hat Customer Portal credentials.

Procedure

- In a browser window, go to console.redhat.com.

- If prompted, enter your Red Hat Customer Portal login credentials.

- In the Hybrid Cloud Console dashboard, click Services and then click Subscriptions and Spend in the navigation panel.

- On the Subscriptions and Spend page, click the Overview option for Subscription Services.

- In the navigation panel of the Subscription Services page, expand Subscriptions Usage.

- Click one of the Red Hat platform options, Red Hat Enterprise Linux, OpenShift, or Ansible, depending on the type of product usage that you want to view.

If more than one product is generating usage for the subscriptions service, the platform page opens to a default view with a specific product variant or architecture in the Variant filter. Select another option in the Variant filter to view information about other architectures or product variants.

If the subscriptions service is activated and the initial data processing is complete, the subscriptions service opens and displays data on the graph. You can begin using the subscriptions service to view data about subscription usage and capacity for the account.

If the subscriptions service opens but displays an empty graph, then the subscriptions service is activated but the initial data processing is not complete. Try accessing the subscriptions service later, typically the next day.

Chapter 16. Verifying access to the subscriptions service

User access to Hybrid Cloud Console services, including the subscriptions service, is controlled through a role-based access control (RBAC) system. User management capabilities for this RBAC system are granted to the Red Hat Organization Administrator (sometimes also referred to as org admin) for your Red Hat organization, and might also be granted to other members of the organization through the User Access administrator RBAC role. These user access administrators then manage the Hybrid Cloud Console RBAC groups, roles, and permissions for the other members in the organization by using the Settings > User Access option at console.redhat.com.

The predefined role Subscriptions viewer controls the ability to activate and access the subscriptions service. By default, every user in the organization has this role. However, if your Organization Administrator has made changes to user access roles and groups, you might not be able to access the subscriptions service.

The RBAC system for console.redhat.com contains two roles for the subscriptions service. The Subscriptions administrator role contains every available permission for the subscriptions service. The Subscriptions viewer role contains a subset of the permissions in the Subscriptions administrator role. This role is intended for users who only need to view high-level report data, but do not need to view more detailed information about individual subscriptions and systems.

By default, all users are assigned the Subscriptions viewer role through the RBAC Default access group. However, the default behavior for role assignments is affected by how an organization is using RBAC groups to manage user access. If custom groups are in use instead of the Default access group, the Organization Administrator or another user with the User Access administrator RBAC role must manually update these groups to contain the subscriptions service roles and manage any default assignment of them to the users in the organization.
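The following Python sketch models how the role assignments described above determine access: users gain permissions through the roles assigned to their groups, the viewer role is a subset of the administrator role, and a custom group that was never updated with a subscriptions role grants no access. The permission labels, `Group`, and the helper functions are hypothetical; this guide does not list the actual RBAC permission strings, and real assignments are managed through Settings > User Access.

```python
from dataclasses import dataclass, field

# Hypothetical permission labels for illustration only.
ADMIN_PERMISSIONS = frozenset({"view_report_data", "view_subscription_details", "view_system_details"})
VIEWER_PERMISSIONS = frozenset({"view_report_data"})  # subset of the administrator permissions

ROLE_PERMISSIONS = {
    "Subscriptions administrator": ADMIN_PERMISSIONS,
    "Subscriptions viewer": VIEWER_PERMISSIONS,
}


@dataclass
class Group:
    """An RBAC group and the names of the roles assigned to it."""
    name: str
    roles: set[str] = field(default_factory=set)


def effective_permissions(groups: list[Group]) -> frozenset[str]:
    """Union of the permissions granted by every role in every group the user belongs to."""
    granted: set[str] = set()
    for group in groups:
        for role in group.roles:
            granted |= ROLE_PERMISSIONS.get(role, frozenset())
    return frozenset(granted)


def can_access_subscriptions(groups: list[Group]) -> bool:
    """True if any group grants either subscriptions role (both include report data)."""
    return "view_report_data" in effective_permissions(groups)


default_access = Group("Default access", {"Subscriptions viewer"})
custom_group = Group("Custom engineering group")  # custom group not yet updated with subscriptions roles

print(can_access_subscriptions([default_access]))  # True
print(can_access_subscriptions([custom_group]))    # False
```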

Procedure

- If you cannot activate or access the subscriptions service, contact your Organization Administrator. Your Organization Administrator can provide information about the status of the subscriptions service for your organization.

Additional resources

- For more information about console.redhat.com user access, see the User Access Configuration Guide for Role-Based Access Control.

Part V. Viewing and understanding the subscriptions service data

After you set up the environment for the subscriptions service (for example, by setting up data collection tools or other data sources), complete any additional required subscriptions service activation steps, and wait for the initial data ingestion, analysis, and processing to finish (usually no longer than 24 hours), you can begin viewing subscription usage and capacity data in the subscriptions service.

Learn more

To learn more about how the subscriptions service displays information about your subscription usage and capacity, see the following information:

To learn more about what data the subscriptions service stores, see the following information:

To learn more about how your data gets to the subscriptions service and how frequently this data refreshes, see the following information:

Chapter 17. How does the subscriptions service show my subscription data?

The subscriptions service shows subscription data for Red Hat offerings such as software products or product sets, organized by the Red Hat software portfolio options in the Hybrid Cloud Console. It shows data for the Red Hat Enterprise Linux, Red Hat OpenShift (including the products from the former Application Services page), and Red Hat Ansible software portfolios.

Only a subset of Red Hat products in each portfolio, identified by their stock-keeping units (SKUs), are tracked by the subscriptions service. The subscriptions service maintains an explicit deny list within the source code for untracked products.

- For more information about the SKUs that are not tracked, see the deny list source code in GitHub.

- For more information about the purpose of the subscriptions service deny list, see the What subscriptions (SKUs) are included in Subscription Usage? article.
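As a simplified illustration of how a deny list works, the following Python sketch assumes that a SKU is tracked unless it appears on the deny list. The SKU identifiers and the `tracked_skus` function are hypothetical; the real deny list and its handling are defined in the subscriptions service source code.

```python
# Hypothetical SKU identifiers; the real values are in the deny list source code.
DENY_LIST = frozenset({"EXAMPLE-SKU-0001", "EXAMPLE-SKU-0002"})


def tracked_skus(account_skus: list[str]) -> list[str]:
    """Keep the SKUs that are not on the deny list, preserving their original order."""
    return [sku for sku in account_skus if sku not in DENY_LIST]


print(tracked_skus(["EXAMPLE-SKU-0001", "EXAMPLE-SKU-1234"]))  # ['EXAMPLE-SKU-1234']
```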

For each software portfolio, the Subscription Services menu shows options for navigating to the subscriptions service product pages for the available products within the selected portfolio. The Subscription Services menu also contains options for viewing other subscription-related data or for functions that are not part of the subscriptions service. These options include services for viewing more details about your subscription inventory or working with manifests.

Each product page for the subscriptions service offers multiple views. These views enable you to explore different aspects of your subscriptions for that product. When combined, the data from these views can help you recognize and mitigate problems or trends with excess subscription usage, organize subscription allocation across all of your resources, and improve decision-making for future purchasing and renewals.

For all of these activities, and for other questions about your subscription usage, the members of your Red Hat account team can provide expertise, guidance, and additional resources. Their assistance can add context to the account data that is reported in the subscriptions service and can help you understand and comply with your responsibilities as a customer. For more information, see Your responsibilities.

17.1. How to use the subscription data in the views

The subscriptions service views fall into two general groups: the graph view and the table view.

The graph view is a visual representation of the subscription usage and capacity for your organization, where your organization is also a Red Hat account. This view helps you track usage trends and determine utilization, which is the percentage of deployed software when measured against your total subscriptions.
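Utilization, as defined above, is usage divided by total subscription capacity, expressed as a percentage. The following Python sketch shows that arithmetic together with remaining capacity. The function names and the example numbers are hypothetical, and the units depend on how usage is measured for the product.

```python
def utilization_percent(usage: float, capacity: float) -> float:
    """Deployed usage as a percentage of total subscription capacity."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return usage / capacity * 100.0


def remaining_capacity(usage: float, capacity: float) -> float:
    """Capacity not yet consumed; negative when usage exceeds the subscription threshold."""
    return capacity - usage


# Hypothetical account: 45 units of usage against 60 units of subscription capacity.
print(utilization_percent(45, 60))  # 75.0
print(remaining_capacity(45, 60))   # 15
```

A utilization above 100 percent, or a negative remaining capacity, corresponds to usage above the subscription threshold shown in the graph view.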

The table view can contain one or more tables that provide more details about the general data in the graph view. The current instances table, also referred to as the current systems table, provides details about subscription usage on individual components of your environment, for example, systems in your inventory or clusters in your cloud infrastructure or restricted network. The current subscriptions table provides details about individual subscriptions in your account. The table view helps you to find where Red Hat software is deployed in your environment, to understand how individual subscriptions contribute to your overall capacity for usage of similar types of subscriptions, to resolve questions you might have about subscription usage, and to refine plans for future deployments.

For some product pages, the table view data is derived from data in the Hybrid Cloud Console inventory service. User access to subscriptions, inventory, and other services is controlled independently by a role-based access control (RBAC) system for the Hybrid Cloud Console services, where individual users belong to groups and groups are associated with roles. More specifically, user access to the inventory service is controlled through the Inventory administrator role.

When the Inventory administrator RBAC role is enabled for the group or groups for your organization, information in the current instances table for the subscriptions service can display as links, where you can open a more detailed record in the inventory application for the listed systems or instances, as applicable. Otherwise, current instances table information displays as nonlinked information. For more information about RBAC usage in your organization, contact the Organization Administrator for your account.

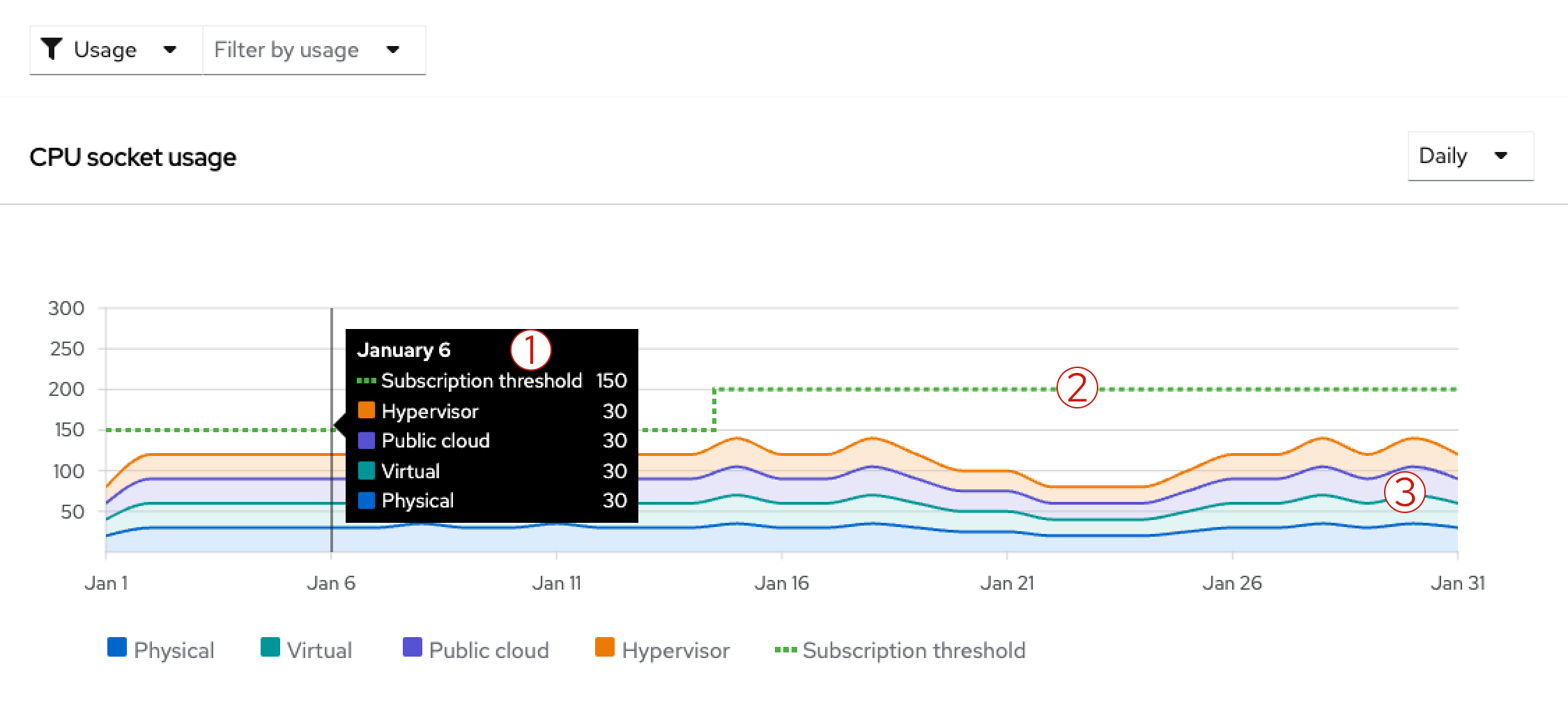

The usage and utilization graph view

The graph view shows your total subscription usage and capacity over time. It provides perspective on your account’s subscription threshold, current subscription utilization, and remaining subscription capacity, along with the historical trend of your software usage. The graph view might contain a single graph or multiple graphs, depending on how subscription usage for a product is measured.