Chapter 5. Configuring an AMQ Streams on RHEL deployment

Use the Kafka and ZooKeeper properties files to configure AMQ Streams.

- ZooKeeper

-

/kafka/config/zookeeper.properties - Kafka

-

/kafka/config/server.properties

The properties files are in the Java format, with each property on separate line in the following format:

<option> = <value>

Lines starting with # or ! will be treated as comments and will be ignored by AMQ Streams components.

# This is a comment

Values can be split into multiple lines by using \ directly before the newline / carriage return.

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \

username="bob" \

password="bobs-password";After you save the changes in the properties files, you need to restart the Kafka broker or ZooKeeper. In a multi-node environment, you will need to repeat the process on each node in the cluster.

5.1. Using standard Kafka configuration properties

Use standard Kafka configuration properties to configure Kafka components.

The properties provide options to control and tune the configuration of the following Kafka components:

- Brokers

- Topics

- Clients (producers and consumers)

- Admin client

- Kafka Connect

- Kafka Streams

Broker and client parameters include options to configure authorization, authentication and encryption.

For AMQ Streams on OpenShift, some configuration properties are managed entirely by AMQ Streams and cannot be changed.

For further information on Kafka configuration properties and how to use the properties to tune your deployment, see the following guides:

5.2. Loading configuration values from environment variables

Use the Environment Variables Configuration Provider plugin to load configuration data from environment variables. You can use the Environment Variables Configuration Provider, for example, to load certificates or JAAS configuration from environment variables.

You can use the provider to load configuration data for all Kafka components, including producers and consumers. Use the provider, for example, to provide the credentials for Kafka Connect connector configuration.

Prerequisites

- AMQ Streams is downloaded and installed on the host

Environment Variables Configuration Provider JAR file

The JAR file is available from the AMQ Streams archive

Procedure

-

Add the Environment Variables Configuration Provider JAR file to the Kafka

libsdirectory. Initialize the Environment Variables Configuration Provider in the configuration properties file of the Kafka component. For example, to initialize the provider for Kafka, add the configuration to the

server.propertiesfile.Configuration to enable the Environment Variables Configuration Provider

config.providers=env config.providers.env.class=io.strimzi.kafka.EnvVarConfigProvider

Add configuration to the properties file to load data from environment variables.

Configuration to load data from an environment variable

option=${env:<MY_ENV_VAR_NAME>}Use capitalized or upper-case environment variable naming conventions, such as

MY_ENV_VAR_NAME.- Save the changes.

Restart the Kafka component.

For information on restarting brokers in a multi-node cluster, see Section 3.3, “Performing a graceful rolling restart of Kafka brokers”.

5.3. Configuring ZooKeeper

Kafka uses ZooKeeper to store configuration data and for cluster coordination. It is strongly recommended to run a cluster of replicated ZooKeeper instances.

5.3.1. Basic configuration

The most important ZooKeeper configuration options are:

tickTime- ZooKeeper’s basic time unit in milliseconds. It is used for heartbeats and session timeouts. For example, minimum session timeout will be two ticks.

dataDir-

The directory where ZooKeeper stores its transaction logs and snapshots of its in-memory database. This should be set to the

/var/lib/zookeeper/directory that was created during installation. clientPort-

Port number where clients can connect. Defaults to

2181.

An example ZooKeeper configuration file named config/zookeeper.properties is located in the AMQ Streams installation directory. It is recommended to place the dataDir directory on a separate disk device to minimize the latency in ZooKeeper.

ZooKeeper configuration file should be located in /opt/kafka/config/zookeeper.properties. A basic example of the configuration file can be found below. The configuration file has to be readable by the kafka user.

tickTime=2000 dataDir=/var/lib/zookeeper/ clientPort=2181

5.3.2. ZooKeeper cluster configuration

In most production environments, we recommend you deploy a cluster of replicated ZooKeeper instances. A stable and highly available ZooKeeper cluster is important for running for a reliable ZooKeeper service. ZooKeeper clusters are also referred to as ensembles.

ZooKeeper clusters usually consist of an odd number of nodes. ZooKeeper requires that a majority of the nodes in the cluster are up and running. For example:

- In a cluster with three nodes, at least two of the nodes must be up and running. This means it can tolerate one node being down.

- In a cluster consisting of five nodes, at least three nodes must be available. This means it can tolerate two nodes being down.

- In a cluster consisting of seven nodes, at least four nodes must be available. This means it can tolerate three nodes being down.

Having more nodes in the ZooKeeper cluster delivers better resiliency and reliability of the whole cluster.

ZooKeeper can run in clusters with an even number of nodes. The additional node, however, does not increase the resiliency of the cluster. A cluster with four nodes requires at least three nodes to be available and can tolerate only one node being down. Therefore it has exactly the same resiliency as a cluster with only three nodes.

Ideally, the different ZooKeeper nodes should be located in different data centers or network segments. Increasing the number of ZooKeeper nodes increases the workload spent on cluster synchronization. For most Kafka use cases, a ZooKeeper cluster with 3, 5 or 7 nodes should be sufficient.

A ZooKeeper cluster with 3 nodes can tolerate only 1 unavailable node. This means that if a cluster node crashes while you are doing maintenance on another node your ZooKeeper cluster will be unavailable.

Replicated ZooKeeper configuration supports all configuration options supported by the standalone configuration. Additional options are added for the clustering configuration:

initLimit-

Amount of time to allow followers to connect and sync to the cluster leader. The time is specified as a number of ticks (see the

tickTimeoption for more details). syncLimit-

Amount of time for which followers can be behind the leader. The time is specified as a number of ticks (see the

tickTimeoption for more details). reconfigEnabled- Enables or disables dynamic reconfiguration. Must be enabled in order to add or remove servers to a ZooKeeper cluster.

standaloneEnabled- Enables or disables standalone mode, where ZooKeeper runs with only one server.

In addition to the options above, every configuration file should contain a list of servers which should be members of the ZooKeeper cluster. The server records should be specified in the format server.id=hostname:port1:port2, where:

id- The ID of the ZooKeeper cluster node.

hostname- The hostname or IP address where the node listens for connections.

port1- The port number used for intra-cluster communication.

port2- The port number used for leader election.

The following is an example configuration file of a ZooKeeper cluster with three nodes:

tickTime=2000 dataDir=/var/lib/zookeeper/ initLimit=5 syncLimit=2 reconfigEnabled=true standaloneEnabled=false server.1=172.17.0.1:2888:3888:participant;172.17.0.1:2181 server.2=172.17.0.2:2888:3888:participant;172.17.0.2:2181 server.3=172.17.0.3:2888:3888:participant;172.17.0.3:2181

To use four letter word commands, specify 4lw.commands.whitelist=* in zookeeper.properties.

myid files

Each node in the ZooKeeper cluster must be assigned a unique ID. Each node’s ID must be configured in a myid file and stored in the dataDir folder, like /var/lib/zookeeper/. The myid files should contain only a single line with the written ID as text. The ID can be any integer from 1 to 255. You must manually create this file on each cluster node. Using this file, each ZooKeeper instance will use the configuration from the corresponding server. line in the configuration file to configure its listeners. It will also use all other server. lines to identify other cluster members.

In the above example, there are three nodes, so each one will have a different myid with values 1, 2, and 3 respectively.

5.3.3. Authentication

By default, ZooKeeper does not use any form of authentication and allows anonymous connections. However, it supports Java Authentication and Authorization Service (JAAS) which can be used to set up authentication using Simple Authentication and Security Layer (SASL). ZooKeeper supports authentication using the DIGEST-MD5 SASL mechanism with locally stored credentials.

5.3.3.1. Authentication with SASL

JAAS is configured using a separate configuration file. It is recommended to place the JAAS configuration file in the same directory as the ZooKeeper configuration (/opt/kafka/config/). The recommended file name is zookeeper-jaas.conf. When using a ZooKeeper cluster with multiple nodes, the JAAS configuration file has to be created on all cluster nodes.

JAAS is configured using contexts. Separate parts such as the server and client are always configured with a separate context. The context is a configuration option and has the following format:

ContextName {

param1

param2;

};SASL Authentication is configured separately for server-to-server communication (communication between ZooKeeper instances) and client-to-server communication (communication between Kafka and ZooKeeper). Server-to-server authentication is relevant only for ZooKeeper clusters with multiple nodes.

Server-to-Server authentication

For server-to-server authentication, the JAAS configuration file contains two parts:

- The server configuration

- The client configuration

When using DIGEST-MD5 SASL mechanism, the QuorumServer context is used to configure the authentication server. It must contain all the usernames to be allowed to connect together with their passwords in an unencrypted form. The second context, QuorumLearner, has to be configured for the client which is built into ZooKeeper. It also contains the password in an unencrypted form. An example of the JAAS configuration file for DIGEST-MD5 mechanism can be found below:

QuorumServer {

org.apache.zookeeper.server.auth.DigestLoginModule required

user_zookeeper="123456";

};

QuorumLearner {

org.apache.zookeeper.server.auth.DigestLoginModule required

username="zookeeper"

password="123456";

};In addition to the JAAS configuration file, you must enable the server-to-server authentication in the regular ZooKeeper configuration file by specifying the following options:

quorum.auth.enableSasl=true quorum.auth.learnerRequireSasl=true quorum.auth.serverRequireSasl=true quorum.auth.learner.loginContext=QuorumLearner quorum.auth.server.loginContext=QuorumServer quorum.cnxn.threads.size=20

Use the KAFKA_OPTS environment variable to pass the JAAS configuration file to the ZooKeeper server as a Java property:

su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about server-to-server authentication, see ZooKeeper wiki.

Client-to-Server authentication

Client-to-server authentication is configured in the same JAAS file as the server-to-server authentication. However, unlike the server-to-server authentication, it contains only the server configuration. The client part of the configuration has to be done in the client. For information on how to configure a Kafka broker to connect to ZooKeeper using authentication, see the Kafka installation section.

Add the Server context to the JAAS configuration file to configure client-to-server authentication. For DIGEST-MD5 mechanism it configures all usernames and passwords:

Server {

org.apache.zookeeper.server.auth.DigestLoginModule required

user_super="123456"

user_kafka="123456"

user_someoneelse="123456";

};After configuring the JAAS context, enable the client-to-server authentication in the ZooKeeper configuration file by adding the following line:

requireClientAuthScheme=sasl authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

You must add the authProvider.<ID> property for every server that is part of the ZooKeeper cluster.

Use the KAFKA_OPTS environment variable to pass the JAAS configuration file to the ZooKeeper server as a Java property:

su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about configuring ZooKeeper authentication in Kafka brokers, see Section 5.4.5, “ZooKeeper authentication”.

5.3.3.2. Enabling server-to-server authentication using DIGEST-MD5

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism between the nodes of the ZooKeeper cluster.

Prerequisites

- AMQ Streams is installed on the host

- ZooKeeper cluster is configured with multiple nodes.

Enabling SASL DIGEST-MD5 authentication

On all ZooKeeper nodes, create or edit the

/opt/kafka/config/zookeeper-jaas.confJAAS configuration file and add the following contexts:QuorumServer { org.apache.zookeeper.server.auth.DigestLoginModule required user_<Username>="<Password>"; }; QuorumLearner { org.apache.zookeeper.server.auth.DigestLoginModule required username="<Username>" password="<Password>"; };The username and password must be the same in both JAAS contexts. For example:

QuorumServer { org.apache.zookeeper.server.auth.DigestLoginModule required user_zookeeper="123456"; }; QuorumLearner { org.apache.zookeeper.server.auth.DigestLoginModule required username="zookeeper" password="123456"; };On all ZooKeeper nodes, edit the

/opt/kafka/config/zookeeper.propertiesZooKeeper configuration file and set the following options:quorum.auth.enableSasl=true quorum.auth.learnerRequireSasl=true quorum.auth.serverRequireSasl=true quorum.auth.learner.loginContext=QuorumLearner quorum.auth.server.loginContext=QuorumServer quorum.cnxn.threads.size=20

Restart all ZooKeeper nodes one by one. To pass the JAAS configuration to ZooKeeper, use the

KAFKA_OPTSenvironment variable.su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

5.3.3.3. Enabling Client-to-server authentication using DIGEST-MD5

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism between ZooKeeper clients and ZooKeeper.

Prerequisites

- AMQ Streams is installed on the host

- ZooKeeper cluster is configured and running.

Enabling SASL DIGEST-MD5 authentication

On all ZooKeeper nodes, create or edit the

/opt/kafka/config/zookeeper-jaas.confJAAS configuration file and add the following context:Server { org.apache.zookeeper.server.auth.DigestLoginModule required user_super="<SuperUserPassword>" user<Username1>_="<Password1>" user<USername2>_="<Password2>"; };The

superautomatically has administrator priviledges. The file can contain multiple users, but only one additional user is required by the Kafka brokers. The recommended name for the Kafka user iskafka.The following example shows the

Servercontext for client-to-server authentication:Server { org.apache.zookeeper.server.auth.DigestLoginModule required user_super="123456" user_kafka="123456"; };On all ZooKeeper nodes, edit the

/opt/kafka/config/zookeeper.propertiesZooKeeper configuration file and set the following options:requireClientAuthScheme=sasl authProvider.<IdOfBroker1>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider authProvider.<IdOfBroker2>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider authProvider.<IdOfBroker3>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

The

authProvider.<ID>property has to be added for every node which is part of the ZooKeeper cluster. An example three-node ZooKeeper cluster configuration must look like the following:requireClientAuthScheme=sasl authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

Restart all ZooKeeper nodes one by one. To pass the JAAS configuration to ZooKeeper, use the

KAFKA_OPTSenvironment variable.su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

5.3.4. Authorization

ZooKeeper supports access control lists (ACLs) to protect data stored inside it. Kafka brokers can automatically configure the ACL rights for all ZooKeeper records they create so no other ZooKeeper user can modify them.

For more information about enabling ZooKeeper ACLs in Kafka brokers, see Section 5.4.7, “ZooKeeper authorization”.

5.3.5. TLS

ZooKeeper supports TLS for encryption or authentication.

5.3.6. Additional configuration options

You can set the following additional ZooKeeper configuration options based on your use case:

maxClientCnxns- The maximum number of concurrent client connections to a single member of the ZooKeeper cluster.

autopurge.snapRetainCount-

Number of snapshots of ZooKeeper’s in-memory database which will be retained. Default value is

3. autopurge.purgeInterval-

The time interval in hours for purging snapshots. The default value is

0and this option is disabled.

All available configuration options can be found in the ZooKeeper documentation.

5.3.7. Logging

ZooKeeper is using log4j as their logging infrastructure. Logging configuration is by default read from the log4j.properties configuration file which should be placed either in the /opt/kafka/config/ directory or in the classpath. The location and name of the configuration file can be changed using the Java property log4j.configuration which can be passed to ZooKeeper using the KAFKA_LOG4J_OPTS environment variable:

su - kafka export KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:/my/path/to/log4j.properties"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about Log4j configurations, see Log4j documentation.

5.4. Configuring Kafka

Kafka uses a properties file to store static configuration. The recommended location for the configuration file is /opt/kafka/config/server.properties. The configuration file has to be readable by the kafka user.

AMQ Streams ships an example configuration file that highlights various basic and advanced features of the product. It can be found under config/server.properties in the AMQ Streams installation directory.

This chapter explains the most important configuration options.

5.4.1. ZooKeeper

Kafka brokers need ZooKeeper to store some parts of their configuration as well as to coordinate the cluster (for example to decide which node is a leader for which partition). Connection details for the ZooKeeper cluster are stored in the configuration file. The field zookeeper.connect contains a comma-separated list of hostnames and ports of members of the zookeeper cluster.

For example:

zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181

Kafka will use these addresses to connect to the ZooKeeper cluster. With this configuration, all Kafka znodes will be created directly in the root of ZooKeeper database. Therefore, such a ZooKeeper cluster could be used only for a single Kafka cluster. To configure multiple Kafka clusters to use single ZooKeeper cluster, specify a base (prefix) path at the end of the ZooKeeper connection string in the Kafka configuration file:

zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181/my-cluster-1

5.4.2. Listeners

Listeners are used to connect to Kafka brokers. Each Kafka broker can be configured to use multiple listeners. Each listener requires a different configuration so it can listen on a different port or network interface.

To configure listeners, edit the listeners property in the configuration file (/opt/kafka/config/server.properties). Add listeners to the listeners property as a comma-separated list. Configure each property as follows:

<listenerName>://<hostname>:<port>

If <hostname> is empty, Kafka uses the java.net.InetAddress.getCanonicalHostName() class as the hostname.

Example configuration for multiple listeners

listeners=internal-1://:9092,internal-2://:9093,replication://:9094

When a Kafka client wants to connect to a Kafka cluster, it first connects to the bootstrap server, which is one of the cluster nodes. The bootstrap server provides the client with a list of all the brokers in the cluster, and the client connects to each one individually. The list of brokers is based on the configured listeners.

Advertised listeners

Optionally, you can use the advertised.listeners property to provide the client with a different set of listener addresses than those given in the listeners property. This is useful if additional network infrastructure, such as a proxy, is between the client and the broker, or an external DNS name is being used instead of an IP address.

The advertised.listeners property is formatted in the same way as the listeners property.

Example configuration for advertised listeners

listeners=internal-1://:9092,internal-2://:9093 advertised.listeners=internal-1://my-broker-1.my-domain.com:1234,internal-2://my-broker-1.my-domain.com:1235

The names of the advertised listeners must match those listed in the listeners property.

Inter-broker listeners

Inter-broker listeners are used for communication between Kafka brokers. Inter-broker communication is required for:

- Coordinating workloads between different brokers

- Replicating messages between partitions stored on different brokers

- Handling administrative tasks from the controller, such as partition leadership changes

The inter-broker listener can be assigned to a port of your choice. When multiple listeners are configured, you can define the name of the inter-broker listener in the inter.broker.listener.name property.

Here, the inter-broker listener is named as REPLICATION:

listeners=REPLICATION://0.0.0.0:9091 inter.broker.listener.name=REPLICATION

Control plane listeners

By default, communication between the controller and other brokers uses the inter-broker listener. The controller is responsible for coordinating administrative tasks, such as partition leadership changes.

You can enable a dedicated control plane listener for controller connections. The control plane listener can be assigned to a port of your choice.

To enable the control plane listener, configure the control.plane.listener.name property with a listener name:

listeners=CONTROLLER://0.0.0.0:9090,REPLICATION://0.0.0.0:9091 ... control.plane.listener.name=CONTROLLER

Enabling the control plane listener might improve cluster performance because controller communications are not delayed by data replication across brokers. Data replication continues through the inter-broker listener.

If control.plane.listener is not configured, controller connections use the inter-broker listener.

5.4.3. Commit logs

Apache Kafka stores all records it receives from producers in commit logs. The commit logs contain the actual data, in the form of records, that Kafka needs to deliver. These are not the application log files which record what the broker is doing.

Log directories

You can configure log directories using the log.dirs property file to store commit logs in one or multiple log directories. It should be set to /var/lib/kafka directory created during installation:

log.dirs=/var/lib/kafka

For performance reasons, you can configure log.dirs to multiple directories and place each of them on a different physical device to improve disk I/O performance. For example:

log.dirs=/var/lib/kafka1,/var/lib/kafka2,/var/lib/kafka3

5.4.4. Broker ID

Broker ID is a unique identifier for each broker in the cluster. You can assign an integer greater than or equal to 0 as broker ID. The broker ID is used to identify the brokers after restarts or crashes and it is therefore important that the id is stable and does not change over time. The broker ID is configured in the broker properties file:

broker.id=1

5.4.5. ZooKeeper authentication

By default, connections between ZooKeeper and Kafka are not authenticated. However, Kafka and ZooKeeper support Java Authentication and Authorization Service (JAAS) which can be used to set up authentication using Simple Authentication and Security Layer (SASL). ZooKeeper supports authentication using the DIGEST-MD5 SASL mechanism with locally stored credentials.

5.4.5.1. JAAS Configuration

SASL authentication for ZooKeeper connections has to be configured in the JAAS configuration file. By default, Kafka will use the JAAS context named Client for connecting to ZooKeeper. The Client context should be configured in the /opt/kafka/config/jass.conf file. The context has to enable the PLAIN SASL authentication, as in the following example:

Client {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafka"

password="123456";

};5.4.5.2. Enabling ZooKeeper authentication

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism when connecting to ZooKeeper.

Prerequisites

- Client-to-server authentication is enabled in ZooKeeper

Enabling SASL DIGEST-MD5 authentication

On all Kafka broker nodes, create or edit the

/opt/kafka/config/jaas.confJAAS configuration file and add the following context:Client { org.apache.kafka.common.security.plain.PlainLoginModule required username="<Username>" password="<Password>"; };The username and password should be the same as configured in ZooKeeper.

Following example shows the

Clientcontext:Client { org.apache.kafka.common.security.plain.PlainLoginModule required username="kafka" password="123456"; };Restart all Kafka broker nodes one by one. To pass the JAAS configuration to Kafka brokers, use the

KAFKA_OPTSenvironment variable.su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

For information on restarting brokers in a multi-node cluster, see Section 3.3, “Performing a graceful rolling restart of Kafka brokers”.

Additional resources

5.4.6. Authorization

Authorization in Kafka brokers is implemented using authorizer plugins.

In this section we describe how to use the AclAuthorizer plugin provided with Kafka.

Alternatively, you can use your own authorization plugins. For example, if you are using OAuth 2.0 token-based authentication, you can use OAuth 2.0 authorization.

5.4.6.1. Simple ACL authorizer

Authorizer plugins, including AclAuthorizer, are enabled through the authorizer.class.name property:

authorizer.class.name=kafka.security.auth.AclAuthorizer

A fully-qualified name is required for the chosen authorizer. For AclAuthorizer, the fully-qualified name is kafka.security.auth.AclAuthorizer.

5.4.6.1.1. ACL rules

AclAuthorizer uses ACL rules to manage access to Kafka brokers.

ACL rules are defined in the format:

Principal P is allowed / denied operation O on Kafka resource R from host H

For example, a rule might be set so that user:

John can view the topic comments from host 127.0.0.1

Host is the IP address of the machine that John is connecting from.

In most cases, the user is a producer or consumer application:

Consumer01 can write to the consumer group accounts from host 127.0.0.1

If ACL rules are not present

If ACL rules are not present for a given resource, all actions are denied. This behavior can be changed by setting the property allow.everyone.if.no.acl.found to true in the Kafka configuration file /opt/kafka/config/server.properties.

5.4.6.1.2. Principals

A principal represents the identity of a user. The format of the ID depends on the authentication mechanism used by clients to connect to Kafka:

-

User:ANONYMOUSwhen connected without authentication. User:<username>when connected using simple authentication mechanisms, such as PLAIN or SCRAM.For example

User:adminorUser:user1.User:<DistinguishedName>when connected using TLS client authentication.For example

User:CN=user1,O=MyCompany,L=Prague,C=CZ.-

User:<Kerberos username>when connected using Kerberos.

The DistinguishedName is the distinguished name from the client certificate.

The Kerberos username is the primary part of the Kerberos principal, which is used by default when connecting using Kerberos. You can use the sasl.kerberos.principal.to.local.rules property to configure how the Kafka principal is built from the Kerberos principal.

5.4.6.1.3. Authentication of users

To use authorization, you need to have authentication enabled and used by your clients. Otherwise, all connections will have the principal User:ANONYMOUS.

For more information on methods of authentication, see Encryption and authentication.

5.4.6.1.4. Super users

Super users are allowed to take all actions regardless of the ACL rules.

Super users are defined in the Kafka configuration file using the property super.users.

For example:

super.users=User:admin,User:operator

5.4.6.1.5. Replica broker authentication

When authorization is enabled, it is applied to all listeners and all connections. This includes the inter-broker connections used for replication of data between brokers. If enabling authorization, therefore, ensure that you use authentication for inter-broker connections and give the users used by the brokers sufficient rights. For example, if authentication between brokers uses the kafka-broker user, then super user configuration must include the username super.users=User:kafka-broker.

5.4.6.1.6. Supported resources

You can apply Kafka ACLs to these types of resource:

- Topics

- Consumer groups

- The cluster

- TransactionId

- DelegationToken

5.4.6.1.7. Supported operations

AclAuthorizer authorizes operations on resources.

Fields with X in the following table mark the supported operations for each resource.

| Topics | Consumer Groups | Cluster | |

|---|---|---|---|

| Read | X | X | |

| Write | X | ||

| Create | X | ||

| Delete | X | ||

| Alter | X | ||

| Describe | X | X | X |

| ClusterAction | X | ||

| All | X | X | X |

5.4.6.1.8. ACL management options

ACL rules are managed using the bin/kafka-acls.sh utility, which is provided as part of the Kafka distribution package.

Use kafka-acls.sh parameter options to add, list and remove ACL rules, and perform other functions.

The parameters require a double-hyphen convention, such as --add.

| Option | Type | Description | Default |

|---|---|---|---|

|

| Action | Add ACL rule. | |

|

| Action | Remove ACL rule. | |

|

| Action | List ACL rules. | |

|

| Action | Fully-qualified class name of the authorizer. |

|

|

| Configuration | Key/value pairs passed to the authorizer for initialization.

For | |

|

| Resource | Host/port pairs to connect to the Kafka cluster. |

Use this option or the |

|

| Resource |

Configuration property file to pass to the Admin Client, which is used in conjunction with the | |

|

| Resource | Specifies a cluster as an ACL resource. | |

|

| Resource | Specifies a topic name as an ACL resource.

An asterisk (

A single command can specify multiple | |

|

| Resource | Specifies a consumer group name as an ACL resource.

A single command can specify multiple | |

|

| Resource | Specifies a transactional ID as an ACL resource. Transactional delivery means that all messages sent by a producer to multiple partitions must be successfully delivered or none of them.

An asterisk ( | |

|

| Resource | Specifies a delegation token as an ACL resource.

An asterisk ( | |

|

| Configuration |

Specifies a type of resource pattern for the

Use

Use |

|

|

| Principal | Principal added to an allow ACL rule.

A single command can specify multiple | |

|

| Principal | Principal added to a deny ACL rule.

A single command can specify multiple | |

|

| Principal |

Principal name used with the

A single command can specify multiple | |

|

| Host |

IP address that allows access to the principals listed in Hostnames or CIDR ranges are not supported. |

If |

|

| Host |

IP address that denies access to the principals listed in Hostnames or CIDR ranges are not supported. |

if |

|

| Operation | Allows or denies an operation.

A single command can specify multipleMultiple | All |

|

| Shortcut | A shortcut to allow or deny all operations needed by a message producer (WRITE and DESCRIBE on topic, CREATE on cluster). | |

|

| Shortcut | A shortcut to allow or deny all operations needed by a message consumer (READ and DESCRIBE on topic, READ on consumer group). | |

|

| Shortcut |

A shortcut to enable idempotence when used with the Idepmotence is enabled automatically if the producer is authorized to send messages based on a specific transactional ID. | |

|

| Shortcut | A shortcut to accept all queries and do not prompt. |

5.4.6.2. Enabling authorization

This procedure describes how to enable the AclAuthorizer plugin for authorization in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

Procedure

Edit the

/opt/kafka/config/server.propertiesKafka configuration file to use theAclAuthorizer.authorizer.class.name=kafka.security.auth.AclAuthorizer

- (Re)start the Kafka brokers.

5.4.6.3. Adding ACL rules

AclAuthorizer uses Access Control Lists (ACLs), which define a set of rules describing what users can and cannot do.

This procedure describes how to add ACL rules when using the AclAuthorizer plugin in Kafka brokers.

Rules are added using the kafka-acls.sh utility and stored in ZooKeeper.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

- Authorization is enabled in Kafka brokers.

Procedure

Run

kafka-acls.shwith the--addoption.Examples:

Allow

user1anduser2access to read frommyTopicusing theMyConsumerGroupconsumer group.bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Read --topic myTopic --allow-principal User:user1 --allow-principal User:user2 bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Describe --topic myTopic --allow-principal User:user1 --allow-principal User:user2 bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Read --operation Describe --group MyConsumerGroup --allow-principal User:user1 --allow-principal User:user2

Deny

user1access to readmyTopicfrom IP address host127.0.0.1.bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Describe --operation Read --topic myTopic --group MyConsumerGroup --deny-principal User:user1 --deny-host 127.0.0.1

Add

user1as the consumer ofmyTopicwithMyConsumerGroup.bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --consumer --topic myTopic --group MyConsumerGroup --allow-principal User:user1

Additional resources

5.4.6.4. Listing ACL rules

This procedure describes how to list existing ACL rules when using the AclAuthorizer plugin in Kafka brokers.

Rules are listed using the kafka-acls.sh utility.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

- Authorization is enabled in Kafka brokers

- ACLs have been added.

Procedure

Run

kafka-acls.shwith the--listoption.For example:

$ bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --list --topic myTopic Current ACLs for resource `Topic:myTopic`: User:user1 has Allow permission for operations: Read from hosts: * User:user2 has Allow permission for operations: Read from hosts: * User:user2 has Deny permission for operations: Read from hosts: 127.0.0.1 User:user1 has Allow permission for operations: Describe from hosts: * User:user2 has Allow permission for operations: Describe from hosts: * User:user2 has Deny permission for operations: Describe from hosts: 127.0.0.1

Additional resources

5.4.6.5. Removing ACL rules

This procedure describes how to remove ACL rules when using the AclAuthorizer plugin in Kafka brokers.

Rules are removed using the kafka-acls.sh utility.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

- Authorization is enabled in Kafka brokers.

- ACLs have been added.

Procedure

Run

kafka-acls.shwith the--removeoption.Examples:

Remove the ACL allowing Allow

user1anduser2access to read frommyTopicusing theMyConsumerGroupconsumer group.bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Read --topic myTopic --allow-principal User:user1 --allow-principal User:user2 bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Describe --topic myTopic --allow-principal User:user1 --allow-principal User:user2 bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Read --operation Describe --group MyConsumerGroup --allow-principal User:user1 --allow-principal User:user2

Remove the ACL adding

user1as the consumer ofmyTopicwithMyConsumerGroup.bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --consumer --topic myTopic --group MyConsumerGroup --allow-principal User:user1

Remove the ACL denying

user1access to readmyTopicfrom IP address host127.0.0.1.bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Describe --operation Read --topic myTopic --group MyConsumerGroup --deny-principal User:user1 --deny-host 127.0.0.1

Additional resources

5.4.7. ZooKeeper authorization

When authentication is enabled between Kafka and ZooKeeper, you can use ZooKeeper Access Control List (ACL) rules to automatically control access to Kafka’s metadata stored in ZooKeeper.

5.4.7.1. ACL Configuration

Enforcement of ZooKeeper ACL rules is controlled by the zookeeper.set.acl property in the config/server.properties Kafka configuration file.

The property is disabled by default and enabled by setting to true:

zookeeper.set.acl=true

If ACL rules are enabled, when a znode is created in ZooKeeper only the Kafka user who created it can modify or delete it. All other users have read-only access.

Kafka sets ACL rules only for newly created ZooKeeper znodes. If the ACLs are only enabled after the first start of the cluster, the zookeeper-security-migration.sh tool can set ACLs on all existing znodes.

Confidentiality of data in ZooKeeper

Data stored in ZooKeeper includes:

- Topic names and their configuration

- Salted and hashed user credentials when SASL SCRAM authentication is used.

But ZooKeeper does not store any records sent and received using Kafka. The data stored in ZooKeeper is assumed to be non-confidential.

If the data is to be regarded as confidential (for example because topic names contain customer IDs), the only option available for protection is isolating ZooKeeper on the network level and allowing access only to Kafka brokers.

5.4.7.2. Enabling ZooKeeper ACLs for a new Kafka cluster

This procedure describes how to enable ZooKeeper ACLs in Kafka configuration for a new Kafka cluster. Use this procedure only before the first start of the Kafka cluster. For enabling ZooKeeper ACLs in a cluster that is already running, see Section 5.4.7.3, “Enabling ZooKeeper ACLs in an existing Kafka cluster”.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

- ZooKeeper cluster is configured and running.

- Client-to-server authentication is enabled in ZooKeeper.

- ZooKeeper authentication is enabled in the Kafka brokers.

- Kafka brokers have not yet been started.

Procedure

Edit the

/opt/kafka/config/server.propertiesKafka configuration file to set thezookeeper.set.aclfield totrueon all cluster nodes.zookeeper.set.acl=true

- Start the Kafka brokers.

5.4.7.3. Enabling ZooKeeper ACLs in an existing Kafka cluster

This procedure describes how to enable ZooKeeper ACLs in Kafka configuration for a Kafka cluster that is running. Use the zookeeper-security-migration.sh tool to set ZooKeeper ACLs on all existing znodes. The zookeeper-security-migration.sh is available as part of AMQ Streams, and can be found in the bin directory.

Prerequisites

- Kafka cluster is configured and running.

Enabling the ZooKeeper ACLs

Edit the

/opt/kafka/config/server.propertiesKafka configuration file to set thezookeeper.set.aclfield totrueon all cluster nodes.zookeeper.set.acl=true

Restart all Kafka brokers one by one.

For information on restarting brokers in a multi-node cluster, see Section 3.3, “Performing a graceful rolling restart of Kafka brokers”.

Set the ACLs on all existing ZooKeeper

znodesusing thezookeeper-security-migration.shtool.su - kafka cd /opt/kafka KAFKA_OPTS="-Djava.security.auth.login.config=./config/jaas.conf"; ./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=<ZooKeeperURL> exitFor example:

su - kafka cd /opt/kafka KAFKA_OPTS="-Djava.security.auth.login.config=./config/jaas.conf"; ./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=zoo1.my-domain.com:2181 exit

5.4.8. Encryption and authentication

AMQ Streams supports encryption and authentication, which is configured as part of the listener configuration.

5.4.8.1. Listener configuration

Encryption and authentication in Kafka brokers is configured per listener. For more information about Kafka listener configuration, see Section 5.4.2, “Listeners”.

Each listener in the Kafka broker is configured with its own security protocol. The configuration property listener.security.protocol.map defines which listener uses which security protocol. It maps each listener name to its security protocol. Supported security protocols are:

PLAINTEXT- Listener without any encryption or authentication.

SSL- Listener using TLS encryption and, optionally, authentication using TLS client certificates.

SASL_PLAINTEXT- Listener without encryption but with SASL-based authentication.

SASL_SSL- Listener with TLS-based encryption and SASL-based authentication.

Given the following listeners configuration:

listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094

the listener.security.protocol.map might look like this:

listener.security.protocol.map=INT1:SASL_PLAINTEXT,INT2:SASL_SSL,REPLICATION:SSL

This would configure the listener INT1 to use unencrypted connections with SASL authentication, the listener INT2 to use encrypted connections with SASL authentication and the REPLICATION interface to use TLS encryption (possibly with TLS client authentication). The same security protocol can be used multiple times. The following example is also a valid configuration:

listener.security.protocol.map=INT1:SSL,INT2:SSL,REPLICATION:SSL

Such a configuration would use TLS encryption and TLS authentication for all interfaces. The following chapters will explain in more detail how to configure TLS and SASL.

5.4.8.2. TLS Encryption

Kafka supports TLS for encrypting communication with Kafka clients.

In order to use TLS encryption and server authentication, a keystore containing private and public keys has to be provided. This is usually done using a file in the Java Keystore (JKS) format. A path to this file is set in the ssl.keystore.location property. The ssl.keystore.password property should be used to set the password protecting the keystore. For example:

ssl.keystore.location=/path/to/keystore/server-1.jks ssl.keystore.password=123456

In some cases, an additional password is used to protect the private key. Any such password can be set using the ssl.key.password property.

Kafka is able to use keys signed by certification authorities as well as self-signed keys. Using keys signed by certification authorities should always be the preferred method. In order to allow clients to verify the identity of the Kafka broker they are connecting to, the certificate should always contain the advertised hostname(s) as its Common Name (CN) or in the Subject Alternative Names (SAN).

It is possible to use different SSL configurations for different listeners. All options starting with ssl. can be prefixed with listener.name.<NameOfTheListener>., where the name of the listener has to be always in lower case. This will override the default SSL configuration for that specific listener. The following example shows how to use different SSL configurations for different listeners:

listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094 listener.security.protocol.map=INT1:SSL,INT2:SSL,REPLICATION:SSL # Default configuration - will be used for listeners INT1 and INT2 ssl.keystore.location=/path/to/keystore/server-1.jks ssl.keystore.password=123456 # Different configuration for listener REPLICATION listener.name.replication.ssl.keystore.location=/path/to/keystore/server-1.jks listener.name.replication.ssl.keystore.password=123456

Additional TLS configuration options

In addition to the main TLS configuration options described above, Kafka supports many options for fine-tuning the TLS configuration. For example, to enable or disable TLS / SSL protocols or cipher suites:

ssl.cipher.suites- List of enabled cipher suites. Each cipher suite is a combination of authentication, encryption, MAC and key exchange algorithms used for the TLS connection. By default, all available cipher suites are enabled.

ssl.enabled.protocols-

List of enabled TLS / SSL protocols. Defaults to

TLSv1.2,TLSv1.1,TLSv1.

5.4.8.3. Enabling TLS encryption

This procedure describes how to enable encryption in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

- Generate TLS certificates for all Kafka brokers in your cluster. The certificates should have their advertised and bootstrap addresses in their Common Name or Subject Alternative Name.

Edit the

/opt/kafka/config/server.propertiesKafka configuration file on all cluster nodes for the following:-

Change the

listener.security.protocol.mapfield to specify theSSLprotocol for the listener where you want to use TLS encryption. -

Set the

ssl.keystore.locationoption to the path to the JKS keystore with the broker certificate. Set the

ssl.keystore.passwordoption to the password you used to protect the keystore.For example:

listeners=UNENCRYPTED://:9092,ENCRYPTED://:9093,REPLICATION://:9094 listener.security.protocol.map=UNENCRYPTED:PLAINTEXT,ENCRYPTED:SSL,REPLICATION:PLAINTEXT ssl.keystore.location=/path/to/keystore/server-1.jks ssl.keystore.password=123456

-

Change the

- (Re)start the Kafka brokers

5.4.8.4. Authentication

For authentication, you can use:

- TLS client authentication based on X.509 certificates on encrypted connections

- A supported Kafka SASL (Simple Authentication and Security Layer) mechanism

- OAuth 2.0 token based authentication

5.4.8.4.1. TLS client authentication

TLS client authentication can be used only on connections which are already using TLS encryption. To use TLS client authentication, a truststore with public keys can be provided to the broker. These keys can be used to authenticate clients connecting to the broker. The truststore should be provided in Java Keystore (JKS) format and should contain public keys of the certification authorities. All clients with public and private keys signed by one of the certification authorities included in the truststore will be authenticated. The location of the truststore is set using field ssl.truststore.location. In case the truststore is password protected, the password should be set in the ssl.truststore.password property. For example:

ssl.truststore.location=/path/to/keystore/server-1.jks ssl.truststore.password=123456

Once the truststore is configured, TLS client authentication has to be enabled using the ssl.client.auth property. This property can be set to one of three different values:

none- TLS client authentication is switched off. (Default value)

requested- TLS client authentication is optional. Clients will be asked to authenticate using TLS client certificate but they can choose not to.

required- Clients are required to authenticate using TLS client certificate.

When a client authenticates using TLS client authentication, the authenticated principal name is the distinguished name from the authenticated client certificate. For example, a user with a certificate which has a distinguished name CN=someuser will be authenticated with the following principal CN=someuser,OU=Unknown,O=Unknown,L=Unknown,ST=Unknown,C=Unknown. When TLS client authentication is not used and SASL is disabled, the principal name will be ANONYMOUS.

5.4.8.4.2. SASL authentication

SASL authentication is configured using Java Authentication and Authorization Service (JAAS). JAAS is also used for authentication of connections between Kafka and ZooKeeper. JAAS uses its own configuration file. The recommended location for this file is /opt/kafka/config/jaas.conf. The file has to be readable by the kafka user. When running Kafka, the location of this file is specified using Java system property java.security.auth.login.config. This property has to be passed to Kafka when starting the broker nodes:

KAFKA_OPTS="-Djava.security.auth.login.config=/path/to/my/jaas.config"; bin/kafka-server-start.sh

SASL authentication is supported both through plain unencrypted connections as well as through TLS connections. SASL can be enabled individually for each listener. To enable it, the security protocol in listener.security.protocol.map has to be either SASL_PLAINTEXT or SASL_SSL.

SASL authentication in Kafka supports several different mechanisms:

PLAIN- Implements authentication based on username and passwords. Usernames and passwords are stored locally in Kafka configuration.

SCRAM-SHA-256andSCRAM-SHA-512- Implements authentication using Salted Challenge Response Authentication Mechanism (SCRAM). SCRAM credentials are stored centrally in ZooKeeper. SCRAM can be used in situations where ZooKeeper cluster nodes are running isolated in a private network.

GSSAPI- Implements authentication against a Kerberos server.

The PLAIN mechanism sends the username and password over the network in an unencrypted format. It should be therefore only be used in combination with TLS encryption.

The SASL mechanisms are configured via the JAAS configuration file. Kafka uses the JAAS context named KafkaServer. After they are configured in JAAS, the SASL mechanisms have to be enabled in the Kafka configuration. This is done using the sasl.enabled.mechanisms property. This property contains a comma-separated list of enabled mechanisms:

sasl.enabled.mechanisms=PLAIN,SCRAM-SHA-256,SCRAM-SHA-512

In case the listener used for inter-broker communication is using SASL, the property sasl.mechanism.inter.broker.protocol has to be used to specify the SASL mechanism which it should use. For example:

sasl.mechanism.inter.broker.protocol=PLAIN

The username and password which will be used for the inter-broker communication has to be specified in the KafkaServer JAAS context using the field username and password.

SASL PLAIN

To use the PLAIN mechanism, the usernames and password which are allowed to connect are specified directly in the JAAS context. The following example shows the context configured for SASL PLAIN authentication. The example configures three different users:

-

admin -

user1 -

user2

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

user_admin="123456"

user_user1="123456"

user_user2="123456";

};The JAAS configuration file with the user database should be kept in sync on all Kafka brokers.

When SASL PLAIN is also used for inter-broker authentication, the username and password properties should be included in the JAAS context:

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="123456"

user_admin="123456"

user_user1="123456"

user_user2="123456";

};SASL SCRAM

SCRAM authentication in Kafka consists of two mechanisms: SCRAM-SHA-256 and SCRAM-SHA-512. These mechanisms differ only in the hashing algorithm used - SHA-256 versus stronger SHA-512. To enable SCRAM authentication, the JAAS configuration file has to include the following configuration:

KafkaServer {

org.apache.kafka.common.security.scram.ScramLoginModule required;

};When enabling SASL authentication in the Kafka configuration file, both SCRAM mechanisms can be listed. However, only one of them can be chosen for the inter-broker communication. For example:

sasl.enabled.mechanisms=SCRAM-SHA-256,SCRAM-SHA-512 sasl.mechanism.inter.broker.protocol=SCRAM-SHA-512

User credentials for the SCRAM mechanism are stored in ZooKeeper. The kafka-configs.sh tool can be used to manage them. For example, run the following command to add user user1 with password 123456:

bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter --add-config 'SCRAM-SHA-256=[password=123456],SCRAM-SHA-512=[password=123456]' --entity-type users --entity-name user1

To delete a user credential use:

bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter --delete-config 'SCRAM-SHA-512' --entity-type users --entity-name user1

SASL GSSAPI

The SASL mechanism used for authentication using Kerberos is called GSSAPI. To configure Kerberos SASL authentication, the following configuration should be added to the JAAS configuration file:

KafkaServer {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/etc/security/keytabs/kafka_server.keytab"

principal="kafka/kafka1.hostname.com@EXAMPLE.COM";

};The domain name in the Kerberos principal has to be always in upper case.

In addition to the JAAS configuration, the Kerberos service name needs to be specified in the sasl.kerberos.service.name property in the Kafka configuration:

sasl.enabled.mechanisms=GSSAPI sasl.mechanism.inter.broker.protocol=GSSAPI sasl.kerberos.service.name=kafka

Multiple SASL mechanisms

Kafka can use multiple SASL mechanisms at the same time. The different JAAS configurations can be all added to the same context:

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

user_admin="123456"

user_user1="123456"

user_user2="123456";

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/etc/security/keytabs/kafka_server.keytab"

principal="kafka/kafka1.hostname.com@EXAMPLE.COM";

org.apache.kafka.common.security.scram.ScramLoginModule required;

};When multiple mechanisms are enabled, clients will be able to choose the mechanism which they want to use.

5.4.8.5. Enabling TLS client authentication

This procedure describes how to enable TLS client authentication in Kafka brokers.

Prerequisites

Procedure

- Prepare a JKS truststore containing the public key of the certification authority used to sign the user certificates.

Edit the

/opt/kafka/config/server.propertiesKafka configuration file on all cluster nodes for the following:-

Set the

ssl.truststore.locationoption to the path to the JKS truststore with the certification authority of the user certificates. -

Set the

ssl.truststore.passwordoption to the password you used to protect the truststore. Set the

ssl.client.authoption torequired.For example:

ssl.truststore.location=/path/to/truststore.jks ssl.truststore.password=123456 ssl.client.auth=required

-

Set the

- (Re)start the Kafka brokers

5.4.8.6. Enabling SASL PLAIN authentication

This procedure describes how to enable SASL PLAIN authentication in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

Edit or create the

/opt/kafka/config/jaas.confJAAS configuration file. This file should contain all your users and their passwords. Make sure this file is the same on all Kafka brokers.For example:

KafkaServer { org.apache.kafka.common.security.plain.PlainLoginModule required user_admin="123456" user_user1="123456" user_user2="123456"; };Edit the

/opt/kafka/config/server.propertiesKafka configuration file on all cluster nodes for the following:-

Change the

listener.security.protocol.mapfield to specify theSASL_PLAINTEXTorSASL_SSLprotocol for the listener where you want to use SASL PLAIN authentication. Set the

sasl.enabled.mechanismsoption toPLAIN.For example:

listeners=INSECURE://:9092,AUTHENTICATED://:9093,REPLICATION://:9094 listener.security.protocol.map=INSECURE:PLAINTEXT,AUTHENTICATED:SASL_PLAINTEXT,REPLICATION:PLAINTEXT sasl.enabled.mechanisms=PLAIN

-

Change the

(Re)start the Kafka brokers using the KAFKA_OPTS environment variable to pass the JAAS configuration to Kafka brokers.

su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

5.4.8.7. Enabling SASL SCRAM authentication

This procedure describes how to enable SASL SCRAM authentication in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

Edit or create the

/opt/kafka/config/jaas.confJAAS configuration file. Enable theScramLoginModulefor theKafkaServercontext. Make sure this file is the same on all Kafka brokers.For example:

KafkaServer { org.apache.kafka.common.security.scram.ScramLoginModule required; };Edit the

/opt/kafka/config/server.propertiesKafka configuration file on all cluster nodes for the following:-

Change the

listener.security.protocol.mapfield to specify theSASL_PLAINTEXTorSASL_SSLprotocol for the listener where you want to use SASL SCRAM authentication. Set the

sasl.enabled.mechanismsoption toSCRAM-SHA-256orSCRAM-SHA-512.For example:

listeners=INSECURE://:9092,AUTHENTICATED://:9093,REPLICATION://:9094 listener.security.protocol.map=INSECURE:PLAINTEXT,AUTHENTICATED:SASL_PLAINTEXT,REPLICATION:PLAINTEXT sasl.enabled.mechanisms=SCRAM-SHA-512

-

Change the

(Re)start the Kafka brokers using the KAFKA_OPTS environment variable to pass the JAAS configuration to Kafka brokers.

su - kafka export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Additional resources

5.4.8.8. Adding SASL SCRAM users

This procedure describes how to add new users for authentication using SASL SCRAM.

Prerequisites

Procedure

Use the

kafka-configs.shtool to add new SASL SCRAM users.bin/kafka-configs.sh --bootstrap-server <broker_address> --alter --add-config 'SCRAM-SHA-512=[password=<Password>]' --entity-type users --entity-name <Username>

For example:

bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter --add-config 'SCRAM-SHA-512=[password=123456]' --entity-type users --entity-name user1

5.4.8.9. Deleting SASL SCRAM users

This procedure describes how to remove users when using SASL SCRAM authentication.

Prerequisites

Procedure

Use the

kafka-configs.shtool to delete SASL SCRAM users./opt/kafka/bin/kafka-configs.sh --bootstrap-server <broker_address> --alter --delete-config 'SCRAM-SHA-512' --entity-type users --entity-name <Username>

For example:

/opt/kafka/bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter --delete-config 'SCRAM-SHA-512' --entity-type users --entity-name user1

5.4.9. Using OAuth 2.0 token-based authentication

AMQ Streams supports the use of OAuth 2.0 authentication using the OAUTHBEARER and PLAIN mechanisms.

OAuth 2.0 enables standardized token-based authentication and authorization between applications, using a central authorization server to issue tokens that grant limited access to resources.

You can configure OAuth 2.0 authentication, then OAuth 2.0 authorization.

Kafka brokers and clients both need to be configured to use OAuth 2.0. OAuth 2.0 authentication can also be used in conjunction with simple or OPA-based Kafka authorization.

Using OAuth 2.0 authentication, application clients can access resources on application servers (called resource servers) without exposing account credentials.

The application client passes an access token as a means of authenticating, which application servers can also use to determine the level of access to grant. The authorization server handles the granting of access and inquiries about access.

In the context of AMQ Streams:

- Kafka brokers act as OAuth 2.0 resource servers

- Kafka clients act as OAuth 2.0 application clients

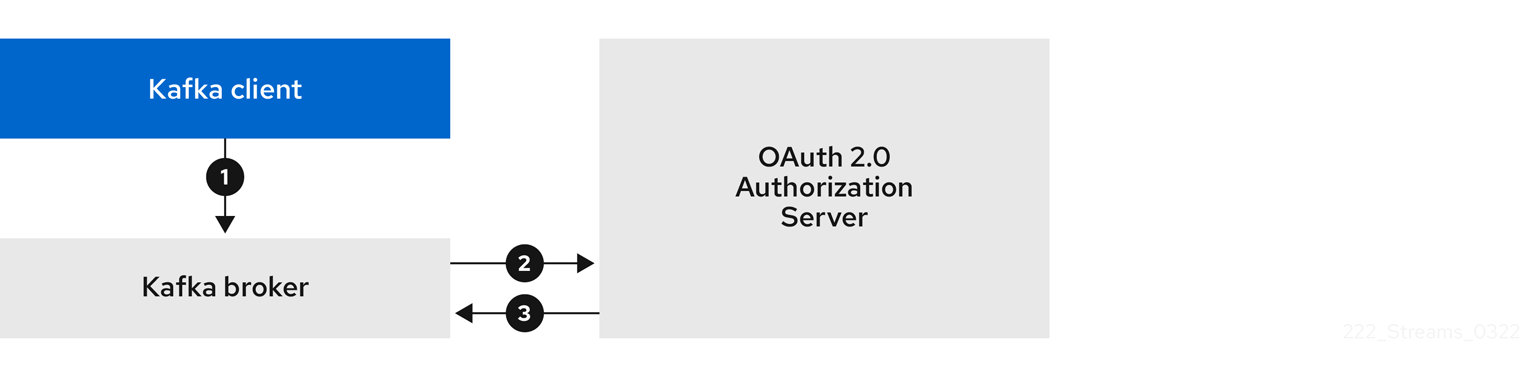

Kafka clients authenticate to Kafka brokers. The brokers and clients communicate with the OAuth 2.0 authorization server, as necessary, to obtain or validate access tokens.

For a deployment of AMQ Streams, OAuth 2.0 integration provides:

- Server-side OAuth 2.0 support for Kafka brokers

- Client-side OAuth 2.0 support for Kafka MirrorMaker, Kafka Connect, and the Kafka Bridge

AMQ Streams on RHEL includes two OAuth 2.0 libraries:

kafka-oauth-client-

Provides a custom login callback handler class named

io.strimzi.kafka.oauth.client.JaasClientOauthLoginCallbackHandler. To handle theOAUTHBEARERauthentication mechanism, use the login callback handler with theOAuthBearerLoginModuleprovided by Apache Kafka. kafka-oauth-common-

A helper library that provides some of the functionality needed by the

kafka-oauth-clientlibrary.

The provided client libraries also have dependencies on some additional third-party libraries, such as: keycloak-core, jackson-databind, and slf4j-api.

We recommend using a Maven project to package your client to ensure that all the dependency libraries are included. Dependency libraries might change in future versions.

Additional resources

5.4.9.1. OAuth 2.0 authentication mechanisms

AMQ Streams supports the OAUTHBEARER and PLAIN mechanisms for OAuth 2.0 authentication. Both mechanisms allow Kafka clients to establish authenticated sessions with Kafka brokers. The authentication flow between clients, the authorization server, and Kafka brokers is different for each mechanism.

We recommend that you configure clients to use OAUTHBEARER whenever possible. OAUTHBEARER provides a higher level of security than PLAIN because client credentials are never shared with Kafka brokers. Consider using PLAIN only with Kafka clients that do not support OAUTHBEARER.

You configure Kafka broker listeners to use OAuth 2.0 authentication for connecting clients. If necessary, you can use the OAUTHBEARER and PLAIN mechanisms on the same oauth listener. The properties to support each mechanism must be explicitly specified in the oauth listener configuration.

OAUTHBEARER overview

To use OAUTHBEARER, set sasl.enabled.mechanisms to OAUTHBEARER in the OAuth authentication listener configuration for the Kafka broker. For detailed configuration, see Section 5.4.9.2, “OAuth 2.0 Kafka broker configuration”.

listener.name.client.sasl.enabled.mechanisms=OAUTHBEARER

Many Kafka client tools use libraries that provide basic support for OAUTHBEARER at the protocol level. To support application development, AMQ Streams provides an OAuth callback handler for the upstream Kafka Client Java libraries (but not for other libraries). Therefore, you do not need to write your own callback handlers. An application client can use the callback handler to provide the access token. Clients written in other languages, such as Go, must use custom code to connect to the authorization server and obtain the access token.

With OAUTHBEARER, the client initiates a session with the Kafka broker for credentials exchange, where credentials take the form of a bearer token provided by the callback handler. Using the callbacks, you can configure token provision in one of three ways:

- Client ID and Secret (by using the OAuth 2.0 client credentials mechanism)

- A long-lived access token, obtained manually at configuration time

- A long-lived refresh token, obtained manually at configuration time

OAUTHBEARER authentication can only be used by Kafka clients that support the OAUTHBEARER mechanism at the protocol level.

PLAIN overview

To use PLAIN, add PLAIN to the value of sasl.enabled.mechanisms.

listener.name.client.sasl.enabled.mechanisms=OAUTHBEARER,PLAIN

PLAIN is a simple authentication mechanism used by all Kafka client tools. To enable PLAIN to be used with OAuth 2.0 authentication, AMQ Streams provides OAuth 2.0 over PLAIN server-side callbacks.

With the AMQ Streams implementation of PLAIN, the client credentials are not stored in ZooKeeper. Instead, client credentials are handled centrally behind a compliant authorization server, similar to when OAUTHBEARER authentication is used.

When used with the OAuth 2.0 over PLAIN callbacks, Kafka clients authenticate with Kafka brokers using either of the following methods:

- Client ID and secret (by using the OAuth 2.0 client credentials mechanism)

- A long-lived access token, obtained manually at configuration time

For both methods, the client must provide the PLAIN username and password properties to pass credentials to the Kafka broker. The client uses these properties to pass a client ID and secret or username and access token.

Client IDs and secrets are used to obtain access tokens.

Access tokens are passed as password property values. You pass the access token with or without an $accessToken: prefix.

-

If you configure a token endpoint (

oauth.token.endpoint.uri) in the listener configuration, you need the prefix. -

If you don’t configure a token endpoint (

oauth.token.endpoint.uri) in the listener configuration, you don’t need the prefix. The Kafka broker interprets the password as a raw access token.

If the password is set as the access token, the username must be set to the same principal name that the Kafka broker obtains from the access token. You can specify username extraction options in your listener using the oauth.username.claim, oauth.fallback.username.claim, oauth.fallback.username.prefix, and oauth.userinfo.endpoint.uri properties. The username extraction process also depends on your authorization server; in particular, how it maps client IDs to account names.

OAuth over PLAIN does not support passing a username and password (password grants) using the (deprecated) OAuth 2.0 password grant mechanism.

5.4.9.1.1. Configuring OAuth 2.0 with properties or variables

You can configure OAuth 2.0 settings using Java Authentication and Authorization Service (JAAS) properties or environment variables.

-

JAAS properties are configured in the

server.propertiesconfiguration file, and passed as key-values pairs of thelistener.name.LISTENER-NAME.oauthbearer.sasl.jaas.configproperty. If using environment variables, you still need to provide the

listener.name.LISTENER-NAME.oauthbearer.sasl.jaas.configproperty in theserver.propertiesfile, but you can omit the other JAAS properties.You can use capitalized or upper-case environment variable naming conventions.

The AMQ Streams OAuth 2.0 libraries use properties that start with:

-

oauth.to configure authentication -

strimzi.to configure OAuth 2.0 authorization

Additional resources

5.4.9.2. OAuth 2.0 Kafka broker configuration

Kafka broker configuration for OAuth 2.0 authentication involves:

- Creating the OAuth 2.0 client in the authorization server

- Configuring OAuth 2.0 authentication in the Kafka cluster

In relation to the authorization server, Kafka brokers and Kafka clients are both regarded as OAuth 2.0 clients.

5.4.9.2.1. OAuth 2.0 client configuration on an authorization server

To configure a Kafka broker to validate the token received during session initiation, the recommended approach is to create an OAuth 2.0 client definition in an authorization server, configured as confidential, with the following client credentials enabled:

-

Client ID of

kafka-broker(for example) - Client ID and secret as the authentication mechanism

You only need to use a client ID and secret when using a non-public introspection endpoint of the authorization server. The credentials are not typically required when using public authorization server endpoints, as with fast local JWT token validation.

5.4.9.2.2. OAuth 2.0 authentication configuration in the Kafka cluster

To use OAuth 2.0 authentication in the Kafka cluster, you enable an OAuth authentication listener configuration for your Kafka cluster, in the Kafka server.properties file. A minimum configuration is required. You can also configure a TLS listener, where TLS is used for inter-broker communication.

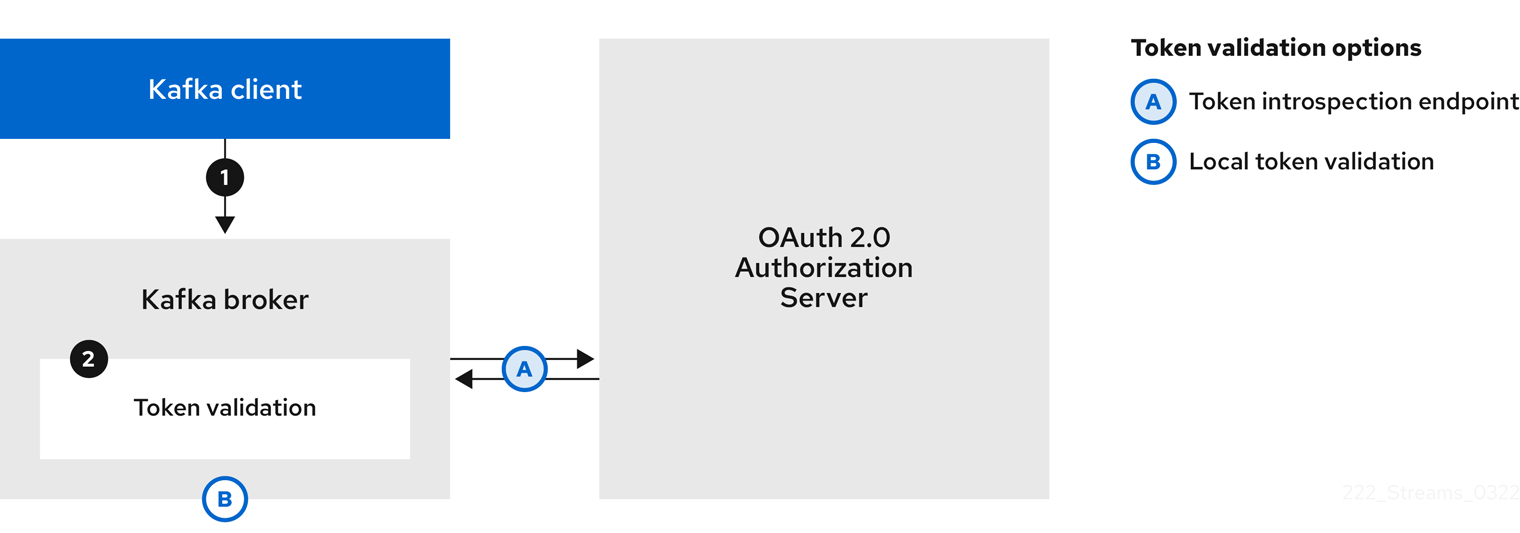

You can configure the broker for token validation by the authorization server using one of the following methods:

- Fast local token validation: a JWKS endpoint in combination with signed JWT-formatted access tokens

- Introspection endpoint

You can configure OAUTHBEARER or PLAIN authentication, or both.

The following example shows a minimum configuration that applies a global listener configuration, which means that inter-broker communication goes through the same listener as application clients.

The example also shows an OAuth 2.0 configuration for a specific listener, where you specify listener.name.LISTENER-NAME.sasl.enabled.mechanisms instead of sasl.enabled.mechanisms. LISTENER-NAME is the case-insensitive name of the listener. Here, we name the listener CLIENT, so the property name is listener.name.client.sasl.enabled.mechanisms.

The example uses OAUTHBEARER authentication.

Example: Minimum listener configuration for OAuth 2.0 authentication using a JWKS endpoint

sasl.enabled.mechanisms=OAUTHBEARER 1 listeners=CLIENT://0.0.0.0:9092 2 listener.security.protocol.map=CLIENT:SASL_PLAINTEXT 3 listener.name.client.sasl.enabled.mechanisms=OAUTHBEARER 4 sasl.mechanism.inter.broker.protocol=OAUTHBEARER 5 inter.broker.listener.name=CLIENT 6 listener.name.client.oauthbearer.sasl.server.callback.handler.class=io.strimzi.kafka.oauth.server.JaasServerOauthValidatorCallbackHandler 7 listener.name.client.oauthbearer.sasl.jaas.config=org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required \ 8 oauth.valid.issuer.uri="https://AUTH-SERVER-ADDRESS" \ 9 oauth.jwks.endpoint.uri="https://AUTH-SERVER-ADDRESS/jwks" \ 10 oauth.username.claim="preferred_username" \ 11 oauth.client.id="kafka-broker" \ 12 oauth.client.secret="kafka-secret" \ 13 oauth.token.endpoint.uri="https://AUTH-SERVER-ADDRESS/token" ; 14 listener.name.client.oauthbearer.sasl.login.callback.handler.class=io.strimzi.kafka.oauth.client.JaasClientOauthLoginCallbackHandler 15 listener.name.client.oauthbearer.connections.max.reauth.ms=3600000 16

- 1

- Enables the OAUTHBEARER mechanism for credentials exchange over SASL.

- 2

- Configures a listener for client applications to connect to. The system

hostnameis used as an advertised hostname, which clients must resolve in order to reconnect. The listener is namedCLIENTin this example. - 3

- Specifies the channel protocol for the listener.

SASL_SSLis for TLS.SASL_PLAINTEXTis used for an unencrypted connection (no TLS), but there is risk of eavesdropping and interception at the TCP connection layer. - 4

- Specifies the OAUTHBEARER mechanism for the CLIENT listener. The client name (

CLIENT) is usually specified in uppercase in thelistenersproperty, in lowercase forlistener.nameproperties (listener.name.client), and in lowercase when part of alistener.name.client.*property. - 5

- Specifies the OAUTHBEARER mechanism for inter-broker communication.

- 6

- Specifies the listener for inter-broker communication. The specification is required for the configuration to be valid.

- 7

- Configures OAuth 2.0 authentication on the client listener.

- 8

- Configures authentication settings for client and inter-broker communication. The

oauth.client.id,oauth.client.secret, andauth.token.endpoint.uriproperties relate to inter-broker configuration. - 9

- A valid issuer URI. Only access tokens issued by this issuer will be accepted. For example, https://AUTH-SERVER-ADDRESS/auth/realms/REALM-NAME.

- 10

- The JWKS endpoint URL. For example, https://AUTH-SERVER-ADDRESS/auth/realms/REALM-NAME/protocol/openid-connect/certs.

- 11

- The token claim (or key) that contains the actual user name in the token. The user name is the principal used to identify the user. The value will depend on the authentication flow and the authorization server used.

- 12

- Client ID of the Kafka broker, which is the same for all brokers. This is the client registered with the authorization server as

kafka-broker. - 13

- Secret for the Kafka broker, which is the same for all brokers.

- 14

- The OAuth 2.0 token endpoint URL to your authorization server. For production, always use

https://urls. For example, https://AUTH-SERVER-ADDRESS/auth/realms/REALM-NAME/protocol/openid-connect/token. - 15

- Enables (and is only required for) OAuth 2.0 authentication for inter-broker communication.

- 16

- (Optional) Enforces session expiry when a token expires, and also activates the Kafka re-authentication mechanism. If the specified value is less than the time left for the access token to expire, then the client will have to re-authenticate before the actual token expiry. By default, the session does not expire when the access token expires, and the client does not attempt re-authentication.

The following example shows a minimum configuration for a TLS listener, where TLS is used for inter-broker communication.

Example: TLS listener configuration for OAuth 2.0 authentication

listeners=REPLICATION://kafka:9091,CLIENT://kafka:9092 1 listener.security.protocol.map=REPLICATION:SSL,CLIENT:SASL_PLAINTEXT 2 listener.name.client.sasl.enabled.mechanisms=OAUTHBEARER inter.broker.listener.name=REPLICATION listener.name.replication.ssl.keystore.password=KEYSTORE-PASSWORD 3 listener.name.replication.ssl.truststore.password=TRUSTSTORE-PASSWORD listener.name.replication.ssl.keystore.type=JKS listener.name.replication.ssl.truststore.type=JKS listener.name.replication.ssl.endpoint.identification.algorithm=HTTPS 4 listener.name.replication.ssl.secure.random.implementation=SHA1PRNG 5 listener.name.replication.ssl.keystore.location=PATH-TO-KEYSTORE 6 listener.name.replication.ssl.truststore.location=PATH-TO-TRUSTSTORE 7 listener.name.replication.ssl.client.auth=required 8 listener.name.client.oauthbearer.sasl.server.callback.handler.class=io.strimzi.kafka.oauth.server.JaasServerOauthValidatorCallbackHandler listener.name.client.oauthbearer.sasl.jaas.config=org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required \ oauth.valid.issuer.uri="https://AUTH-SERVER-ADDRESS" \ oauth.jwks.endpoint.uri="https://AUTH-SERVER-ADDRESS/jwks" \ oauth.username.claim="preferred_username" ; 9

- 1

- Separate configurations are required for inter-broker communication and client applications.

- 2

- Configures the REPLICATION listener to use TLS, and the CLIENT listener to use SASL over an unencrypted channel. The client could use an encrypted channel (

SASL_SSL) in a production environment. - 3

- The

ssl.properties define the TLS configuration. - 4