Bare Metal Provisioning

Install, Configure, and Use Bare Metal Provisioning (Ironic)

Abstract

Preface

This document provides instructions for installing and configuring Bare Metal Provisioning (ironic) as an OpenStack service in the overcloud, and using the service to provision and manage physical machines for end users. Configuring this service allows users to launch instances on physical machines in the same way that they launch virtual machine instances.

The Bare Metal Provisioning components are also used by the Red Hat OpenStack Platform director, as part of the undercloud, to provision and manage the bare metal nodes that make up the OpenStack environment (the overcloud). For information on how the director uses Bare Metal Provisioning, see Director Installation and Usage.

This document describes how to manually install Bare Metal Provisioning on an already deployed overcloud. This manual procedure differs from how Bare Metal Provisioning is integrated into later versions of Red Hat OpenStack Platform director. Upgrading an overcloud that has undergone this process is unsupported, and the results are undefined. Treat this document as a preview of the functionality that is integrated into later versions of Red Hat OpenStack Platform director.

Chapter 1. Install and Configure OpenStack Bare Metal Provisioning (ironic)

OpenStack Bare Metal Provisioning (ironic) provides the components required to provision and manage physical (bare metal) machines for end users. Bare Metal Provisioning in the overcloud interacts with the following OpenStack services:

- OpenStack Compute (nova) provides scheduling, tenant quotas, IP assignment, and a user-facing API for virtual machine instance management, while Bare Metal Provisioning provides the administrative API for hardware management. Choose a single, dedicated openstack-nova-compute host to use the Bare Metal Provisioning drivers and handle Bare Metal Provisioning requests.

- OpenStack Identity (keystone) provides request authentication and assists Bare Metal Provisioning in locating other OpenStack services.

- OpenStack Image service (glance) manages images and image metadata used to boot bare metal machines.

- OpenStack Networking (neutron) provides DHCP and network configuration for the required Bare Metal Provisioning networks.

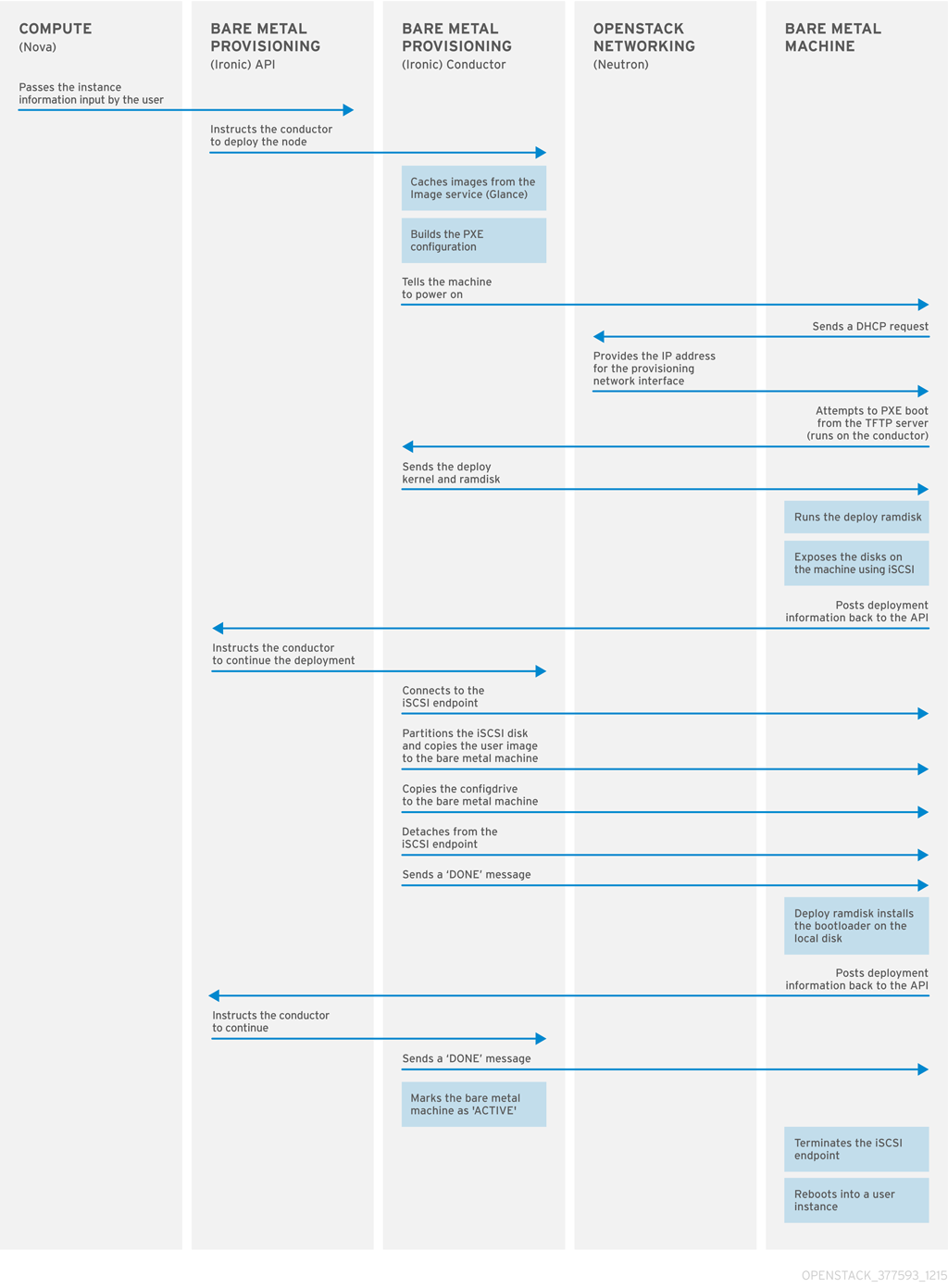

Bare Metal Provisioning uses PXE to provision physical machines. The following diagram outlines how the OpenStack services interact during the provisioning process when a user launches a new bare metal machine.

1.1. Requirements

This section outlines the requirements for setting up Bare Metal Provisioning, including installation assumptions, hardware requirements, and networking requirements.

1.1.1. Bare Metal Provisioning Installation Assumptions

Bare Metal Provisioning is a collection of components that can be configured to run on the same node or on separate nodes. The configuration examples in this guide install and configure all Bare Metal Provisioning components on a single node. This guide assumes that the services for OpenStack Identity, OpenStack Image, OpenStack Compute, and OpenStack Networking have already been installed and configured. Bare Metal Provisioning also requires the following external services, which must also be installed and configured as a prerequisite:

- A database server in which to store hardware information and state. This guide assumes that the MariaDB database service is configured for the Red Hat OpenStack Platform environment.

- A messaging service. This guide assumes that RabbitMQ is configured for the environment.

If you used the director to deploy your OpenStack environment, the database and messaging services are installed on a controller node in the overcloud.

Red Hat OpenStack Platform requires iptables instead of firewalld on Compute nodes and OpenStack Networking nodes running Red Hat Enterprise Linux 7. Firewall rules in this document are set using iptables.

Hardware inspection (ironic-inspector) uses iptables to blacklist the MAC addresses of ironic nodes. If another process holds the iptables lock while ironic-inspector is attempting to make a modification, ironic-inspector waits for the lock by using the iptables -w flag, where supported (iptables version 1.4.21 or later).
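To see whether a host meets that threshold, the following minimal sketch parses the text printed by iptables --version and compares it with 1.4.21. It assumes GNU sort (for sort -V) and the usual "iptables vX.Y.Z" output format; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: decide whether iptables supports -w (wait for the
# xtables lock), using the 1.4.21 threshold quoted in this guide.

# version_ge A B: succeeds when version A >= version B (relies on sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# iptables_supports_wait TEXT: TEXT is the output of `iptables --version`.
iptables_supports_wait() {
  local ver
  ver=$(printf '%s\n' "$1" | sed -n 's/^iptables v\([0-9][0-9.]*\).*/\1/p')
  [ -n "$ver" ] && version_ge "$ver" "1.4.21"
}

# Example usage on a real host:
#   iptables_supports_wait "$(iptables --version)" && echo "iptables -w is supported"
```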

1.1.2. Bare Metal Provisioning Hardware Requirements

A node running all Bare Metal Provisioning components requires the following hardware:

- 64-bit x86 processor with support for the Intel 64 or AMD64 CPU extensions.

- A minimum of 6 GB of RAM.

- A minimum of 40 GB of available disk space.

- A minimum of two 1 Gbps Network Interface Cards. However, a 10 Gbps interface is recommended for Bare Metal Provisioning Network traffic, especially if you are provisioning a large number of bare metal machines.

- Red Hat Enterprise Linux 7.2 (or later) installed as the host operating system.

Alternatively, install and configure Bare Metal Provisioning components on a dedicated openstack-nova-compute node; see Compute Node Requirements in the Director Installation and Usage guide for hardware requirements.
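To compare a candidate host against the memory and disk minimums above, you could use a minimal sketch like the following. It assumes a Linux host with /proc/meminfo and a df that supports -P and -k, checks the file system holding / by default, and treats 1 GB as 1024 * 1024 kB. MemTotal excludes memory reserved by the kernel, so a host with exactly 6 GB installed can fall slightly short; treat a near miss as a prompt to check rather than a hard failure. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: compare this host with the 6 GB RAM and 40 GB disk minimums.

MIN_RAM_KB=$((6 * 1024 * 1024))    # 6 GB in kB, the unit /proc/meminfo uses
MIN_DISK_KB=$((40 * 1024 * 1024))  # 40 GB in 1K blocks, the unit df -k uses

# ram_ok [FILE]: reads MemTotal from a meminfo-format file.
ram_ok() {
  local total
  total=$(awk '/^MemTotal:/ {print $2}' "${1:-/proc/meminfo}")
  [ -n "$total" ] && [ "$total" -ge "$MIN_RAM_KB" ]
}

# disk_ok [PATH]: checks the available space on the file system holding PATH.
disk_ok() {
  local avail
  avail=$(df -Pk "${1:-/}" | awk 'NR == 2 {print $4}')
  [ -n "$avail" ] && [ "$avail" -ge "$MIN_DISK_KB" ]
}

# Example usage:
#   ram_ok && disk_ok / && echo "meets the RAM and disk minimums"
```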

1.1.3. Bare Metal Provisioning Networking Requirements

Bare Metal Provisioning requires at least two networks:

- Provisioning network: This is a private network that Bare Metal Provisioning uses to provision and manage bare metal machines. The Bare Metal Provisioning Network provides DHCP and PXE boot functions to help discover bare metal systems. This network should ideally use a native VLAN on a trunked interface so that Bare Metal Provisioning serves PXE boot and DHCP requests. This is also the network used to control power management through out-of-band drivers on the bare metal machines to be provisioned.

- External network: A separate network for remote connectivity. The interface connecting to this network requires a routable IP address, either defined statically or dynamically through an external DHCP service.

1.1.4. Bare Metal Machine Requirements

Bare metal machines that will be provisioned require the following:

- Two NICs: one for the Bare Metal Provisioning Network, and one for external connectivity.

- A power management interface (e.g. IPMI) connected to the Bare Metal Provisioning Network. If you are using SSH for testing purposes, this is not required.

- PXE boot on the Bare Metal Provisioning Network at the top of the system’s boot order, ahead of hard disks and CD/DVD drives. Disable PXE boot on all other NICs on the system.

Other requirements for bare metal machines that will be provisioned vary depending on the operating system you are installing. For Red Hat Enterprise Linux 7, see the Red Hat Enterprise Linux 7 Installation Guide. For Red Hat Enterprise Linux 6, see the Red Hat Enterprise Linux 6 Installation Guide.

1.2. Configure OpenStack for the Bare Metal Provisioning Service

Every OpenStack service has a user name and password that it uses to authenticate with the Identity service. Each service must also be registered with the Identity service and have endpoint URLs for Internal, Admin, and Public connectivity.

To configure the Bare Metal Provisioning Service from the director node:

Source the overcloudrc file:

# source ~stack/overcloudrc

Create the OpenStack Bare Metal Provisioning user:

# openstack user create --password IRONIC_PASSWORD --enable IRONIC_USER
# openstack role add --project service --user IRONIC_USER admin

Here, IRONIC_USER is the user for the Bare Metal Provisioning service and IRONIC_PASSWORD is the password.

Create the OpenStack Bare Metal Provisioning service:

# openstack service create --name ironic --description "Ironic bare metal provisioning service" baremetal

Verify the virtual IP (VIP) address that the other OpenStack services are using:

# openstack endpoint list -c "Service Name" -c "PublicURL" --long

The output of this command lists the services and their Public URL. These are usually all on the same server and use the same IP address.

Get the Internal API network address of the Compute node that you are installing the Bare Metal Provisioning service on:

# route -n

The output of this command lists the IP routing table with the IP addresses and the interface for each of the IP addresses. You use the Internal API network address to create a service endpoint.

You can check the IP address associated with the NIC to use for the Internal and Admin URLs as follows:

# ifconfig INTERFACE

Create the service endpoint:

# openstack endpoint create --publicurl http://VIP:6385 --internalurl http://COMPUTE_INTERNAL_API_IP:6385 --adminurl http://COMPUTE_INTERNAL_API_IP:6385 --region regionOne SERVICE_ID

Here, VIP is the virtual IP address configured in HAProxy, COMPUTE_INTERNAL_API_IP is the IP address of the Compute node running the Bare Metal Provisioning service that is connected to the Internal API network, and SERVICE_ID is the ID of the Bare Metal Provisioning service created using the service create command.
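The following minimal sketch shows how the placeholders map onto the endpoint command: the public URL uses the HAProxy VIP, while the internal and admin URLs use the Compute node's Internal API address. It assumes the ip command (available on Red Hat Enterprise Linux 7) as an alternative to ifconfig; first_ipv4 and ironic_endpoint_cmd are hypothetical helpers, and ironic_endpoint_cmd only prints the command so that you can review it before running it.

```shell
#!/usr/bin/env bash
# Minimal sketch: derive the values for `openstack endpoint create`.

# first_ipv4: reads `ip -4 -o addr show` output on stdin and prints the
# first IPv4 address without its prefix length.
first_ipv4() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "inet") { split($(i + 1), a, "/"); print a[1]; exit } }'
}

# ironic_endpoint_cmd VIP INTERNAL_IP SERVICE_ID: prints (does not run) the command.
ironic_endpoint_cmd() {
  local vip=${1:?VIP required} ip=${2:?internal IP required} sid=${3:?service ID required}
  printf 'openstack endpoint create --publicurl http://%s:6385 --internalurl http://%s:6385 --adminurl http://%s:6385 --region regionOne %s\n' \
    "$vip" "$ip" "$ip" "$sid"
}

# Example usage (INTERFACE and VIP are placeholders):
#   COMPUTE_INTERNAL_API_IP=$(ip -4 -o addr show dev INTERFACE | first_ipv4)
#   SERVICE_ID=$(openstack service show ironic -f value -c id)
#   ironic_endpoint_cmd VIP "$COMPUTE_INTERNAL_API_IP" "$SERVICE_ID"
```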

Next, configure HAProxy so that requests sent to the Public URL of the endpoint you created in the previous procedure reach the Bare Metal Provisioning service. To configure HAProxy, log in as the root user on your Controller nodes.

Edit the /etc/haproxy/haproxy.cfg file and add the following lines at the end of the file:

listen ironic
  bind VIP:6385 transparent
  server SERVER_NAME COMPUTE_INTERNAL_API_IP:6385 check fall 5 inter 2000 rise 2

In this example:

- VIP is the virtual IP address.

- SERVER_NAME is the HAProxy identifying name for the Compute server where the Bare Metal Provisioning service will be installed and running.

- COMPUTE_INTERNAL_API_IP is the Internal API IP address of the Compute server where the Bare Metal Provisioning service will be installed and running.

- transparent allows HAProxy to bind the IP address even if it does not exist on the Controller node, so that in a clustered environment the virtual IP address can move between controllers.

- check fall 5 inter 2000 rise 2 refers to the following health checks on the back end server:

  - fall 5: the server is considered unavailable after 5 consecutive failed health checks.

  - inter 2000: the interval between health checks is 2000 ms (2 seconds).

  - rise 2: the server is considered available after 2 consecutive successful health checks.
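The following minimal sketch appends the same listen section only if it is not already present, which makes it safe to re-run on each Controller node. It also works out the approximate reaction times of the health checks: about fall x inter = 5 x 2000 ms = 10 seconds before a failed back end is taken out of rotation, and rise x inter = 2 x 2000 ms = 4 seconds before a recovered one is used again. HAPROXY_CFG defaults to a scratch file, and the VIP, SERVER_NAME, and COMPUTE_INTERNAL_API_IP defaults are placeholders, not real values.

```shell
#!/usr/bin/env bash
# Minimal sketch: idempotently append the ironic listen section and estimate
# health-check timing. Point HAPROXY_CFG at /etc/haproxy/haproxy.cfg only
# after reviewing the result on a scratch copy.
set -euo pipefail

HAPROXY_CFG=${HAPROXY_CFG:-$(mktemp)}
VIP=${VIP:-192.0.2.10}                                           # placeholder
SERVER_NAME=${SERVER_NAME:-compute-ironic}                       # placeholder
COMPUTE_INTERNAL_API_IP=${COMPUTE_INTERNAL_API_IP:-172.16.2.15}  # placeholder
FALL=5 INTER_MS=2000 RISE=2

if ! grep -q '^listen ironic$' "$HAPROXY_CFG"; then
  cat >> "$HAPROXY_CFG" <<EOF

listen ironic
  bind ${VIP}:6385 transparent
  server ${SERVER_NAME} ${COMPUTE_INTERNAL_API_IP}:6385 check fall ${FALL} inter ${INTER_MS} rise ${RISE}
EOF
fi

# Approximate timings: fall x inter to mark down, rise x inter to mark up.
DOWN_AFTER_MS=$((FALL * INTER_MS))
UP_AFTER_MS=$((RISE * INTER_MS))
echo "down after ~${DOWN_AFTER_MS} ms, up after ~${UP_AFTER_MS} ms"

# Before restarting HAProxy, you can check the real file's syntax with:
#   haproxy -c -f /etc/haproxy/haproxy.cfg
```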

Restart the HAProxy to make sure the changes take effect:

# systemctl restart haproxy.service

You might see the following message, which states that the back end server is not available: haproxy[4249]: proxy ironic has no server available! You can ignore this message for now, because you have not yet installed or configured the service.

1.3. Configure the Controller Nodes for Bare Metal Provisioning Service

Perform the following steps on all the Controller nodes in your Red Hat OpenStack Platform deployment, logged in as the root user, except for Section 1.3.1, “Create the Bare Metal Provisioning Database”. Perform that procedure on one Controller node only, because all Controller nodes share the database.

On the Controller nodes, you need to make sure your Bare Metal Provisioning Network is connected to Open vSwitch so your OpenStack deployment can reach it.

Add a bridge into Open vSwitch:

# ovs-vsctl add-br br-ironic
# ovs-vsctl add-port br-ironic IRONIC_PROVISIONING_NIC
# ovs-vsctl show

Here, br-ironic is the name of the bridge and IRONIC_PROVISIONING_NIC is the NIC connected to the Bare Metal Provisioning Network.

With the ovs-vsctl show command, you can see that a new bridge is created with the associated port. However, the br-int integration bridge lacks a patch to the new bridge. To add the new bridge to the integration bridge, update the following plugin files:

Update the ML2 configuration file, /etc/neutron/plugins/ml2/ml2_conf.ini, as follows:

- For the type_drivers parameter, make sure flat is listed among the drivers, for example, type_drivers = vxlan,vlan,flat,gre. This is a comma-delimited list.

- For the mechanism_drivers parameter, make sure openvswitch is listed among the drivers, for example, mechanism_drivers = openvswitch. This is a comma-delimited list.

- For the flat_networks parameter, create a name to refer to the Bare Metal Provisioning Network, for example, ironicnet, and make sure this name is listed, for example, flat_networks = datacentre,ironicnet. This is a comma-delimited list.

- If you are using a VLAN for the Bare Metal Provisioning Network, add the network_vlan_ranges parameter with the format ironicnet:VLAN_START:VLAN_END, for example, network_vlan_ranges = datacentre:1:1000,ironicnet:VLAN_START:VLAN_END. This is a comma-delimited list.

- The enable_security_group parameter should already be enabled. If it is not set, change the value to True, for example, enable_security_group = True.

In the /etc/neutron/plugins/ml2/openvswitch_agent.ini file, find the bridge_mappings parameter and update it as follows:

bridge_mappings = datacentre:br-ex,ironicnet:br-ironic

This comma-delimited list of key-value pairs maps each physical network name, such as ironicnet for the Bare Metal Provisioning Network, to the Open vSwitch bridge that is connected to that network.
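Before restarting the services, you might check these edits with a minimal sketch like the following. ini_get and csv_has are hypothetical helpers written in plain awk and shell, not part of OpenStack. The sketch assumes that in the stock files type_drivers and mechanism_drivers live in the [ml2] section, flat_networks in [ml2_type_flat], and bridge_mappings in [ovs]; verify those section names against your own files.

```shell
#!/usr/bin/env bash
# Minimal sketch: read one key from an INI file and test comma-delimited values.

# ini_get FILE SECTION KEY: prints the value of KEY in [SECTION], whitespace removed.
ini_get() {
  awk -F '=' -v sec="[$2]" -v key="$3" '
    /^[[:space:]]*\[/ { in_sec = ($0 == sec); next }
    in_sec {
      k = $1; gsub(/[[:space:]]/, "", k)
      if (k == key) { v = substr($0, index($0, "=") + 1); gsub(/[[:space:]]/, "", v); print v; exit }
    }' "$1"
}

# csv_has LIST ITEM: succeeds when ITEM is one entry of the comma-delimited LIST.
csv_has() {
  case ",$1," in *",$2,"*) return 0 ;; *) return 1 ;; esac
}

# Example usage on a Controller node:
#   ML2=/etc/neutron/plugins/ml2/ml2_conf.ini
#   csv_has "$(ini_get "$ML2" ml2 type_drivers)" flat || echo "flat missing from type_drivers"
#   csv_has "$(ini_get "$ML2" ml2_type_flat flat_networks)" ironicnet || echo "ironicnet missing"
```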

Restart the neutron-openvswitch-agent.service so that the br-ironic bridge becomes part of the integration bridge:

# systemctl restart neutron-openvswitch-agent.service

Restart the neutron-server.service so that it detects the new connection:

# systemctl restart neutron-server.service

Note: If you do not perform this step, creating the Bare Metal Provisioning Network in OpenStack Networking fails with a message that the requested flat network does not exist.

1.3.1. Create the Bare Metal Provisioning Database

Create the database and database user used by Bare Metal Provisioning. All steps in this procedure must be performed on the database server, while logged in as the root user.

Creating the Bare Metal Provisioning Database

Connect to the database service:

# mysql -u root

Create the ironic database:

mysql> CREATE DATABASE ironic CHARACTER SET utf8;

Create an ironic database user and grant the user access to the ironic database:

mysql> GRANT ALL PRIVILEGES ON ironic.* TO 'ironic'@'%' IDENTIFIED BY 'PASSWORD';
mysql> GRANT ALL PRIVILEGES ON ironic.* TO 'ironic'@'localhost' IDENTIFIED BY 'PASSWORD';

Replace PASSWORD with a secure password that will be used to authenticate with the database server as this user.

Flush the database privileges to ensure that they take effect immediately:

mysql> FLUSH PRIVILEGES;

Exit the mysql client:

mysql> quit
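If you prefer to run these statements non-interactively, the following minimal sketch generates a hex-only password, which needs no quoting in SQL or in the database connection URL used later, and prints the same statements so that they can be piped into the mysql client. The function names are hypothetical, and the sketch assumes /dev/urandom and od are available.

```shell
#!/usr/bin/env bash
# Minimal sketch: generate a password and emit the ironic database statements.

# gen_hex_password: 16 random bytes printed as 32 lowercase hex characters.
gen_hex_password() {
  od -An -tx1 -N16 /dev/urandom | tr -d ' \n'
}

# ironic_db_sql PASSWORD: prints the statements from the procedure above.
ironic_db_sql() {
  local pw=${1:?password required}
  cat <<EOF
CREATE DATABASE ironic CHARACTER SET utf8;
GRANT ALL PRIVILEGES ON ironic.* TO 'ironic'@'%' IDENTIFIED BY '${pw}';
GRANT ALL PRIVILEGES ON ironic.* TO 'ironic'@'localhost' IDENTIFIED BY '${pw}';
FLUSH PRIVILEGES;
EOF
}

# Example usage on the database server (record the password for later steps):
#   IRONIC_DB_PASSWORD=$(gen_hex_password)
#   ironic_db_sql "$IRONIC_DB_PASSWORD" | mysql -u root
```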

1.3.2. Configure OpenStack Compute Services For Bare Metal Provisioning

Configure Compute services for the Bare Metal Provisioning driver. Using this driver enables Compute to provision physical machines using the same API that is used to provision virtual machines. Only one driver can be specified for each openstack-nova-compute node; a node with the Bare Metal Provisioning driver can provision only physical machines. It is recommended that you allocate a single openstack-nova-compute node to provision all bare metal nodes using the Bare Metal Provisioning driver. All steps in the following procedure must be performed on a chosen compute node, while logged in as the root user.

Configuring OpenStack Compute for the Bare Metal Provisioning

Set Compute to use the Bare Metal Provisioning scheduler host manager:

# openstack-config --set /etc/nova/nova.conf \
  DEFAULT scheduler_host_manager nova.scheduler.ironic_host_manager.IronicHostManager

Disable the Compute scheduler from tracking changes in instances:

# openstack-config --set /etc/nova/nova.conf DEFAULT scheduler_tracks_instance_changes false

Set the default filters as follows:

# openstack-config --set /etc/nova/nova.conf DEFAULT baremetal_scheduler_default_filters AvailabilityZoneFilter,ComputeFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter

Set Compute to use default Bare Metal Provisioning scheduling filters:

# openstack-config --set /etc/nova/nova.conf \
  DEFAULT scheduler_use_baremetal_filters True

Set Compute to use the correct authentication details for Bare Metal Provisioning:

# openstack-config --set /etc/nova/nova.conf \
  ironic admin_username ironic
# openstack-config --set /etc/nova/nova.conf \
  ironic admin_password PASSWORD
# openstack-config --set /etc/nova/nova.conf \
  ironic admin_url http://IDENTITY_IP:35357/v2.0
# openstack-config --set /etc/nova/nova.conf \
  ironic admin_tenant_name service
# openstack-config --set /etc/nova/nova.conf \
  ironic api_endpoint http://IRONIC_API_IP:6385/v1

Replace the following values:

- Replace PASSWORD with the password that Bare Metal Provisioning uses to authenticate with Identity.

- Replace IDENTITY_IP with the IP address or host name of the server hosting Identity.

- Replace IRONIC_API_IP with the IP address or host name of the server hosting the Bare Metal Provisioning API service.

Set the nova database credentials on the ironic Compute node:

# openstack-config --set /etc/nova/nova.conf database connection "mysql+pymysql://nova:NOVA_DB_PASSWORD@DB_IP/nova"

Restart the Compute scheduler service on the Controller nodes:

# systemctl restart openstack-nova-scheduler.service

Restart the Compute service on the Compute node:

# systemctl restart openstack-nova-compute.service
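The nova.conf settings above can also be applied from a single list so that none is missed. In this minimal sketch, OPENSTACK_CONFIG defaults to "echo openstack-config", which only prints the commands (a dry run); set OPENSTACK_CONFIG=openstack-config to apply them. PASSWORD, IDENTITY_IP, and IRONIC_API_IP remain placeholders, the database connection setting is left as a separate step, and apply_settings is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Minimal sketch: apply the Bare Metal Provisioning settings in nova.conf from a list.

NOVA_CONF=/etc/nova/nova.conf
OPENSTACK_CONFIG=${OPENSTACK_CONFIG:-echo openstack-config}   # dry run by default

# One setting per line: SECTION KEY VALUE (values contain no spaces).
settings='
DEFAULT scheduler_host_manager nova.scheduler.ironic_host_manager.IronicHostManager
DEFAULT scheduler_tracks_instance_changes false
DEFAULT baremetal_scheduler_default_filters AvailabilityZoneFilter,ComputeFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter
DEFAULT scheduler_use_baremetal_filters True
ironic admin_username ironic
ironic admin_password PASSWORD
ironic admin_url http://IDENTITY_IP:35357/v2.0
ironic admin_tenant_name service
ironic api_endpoint http://IRONIC_API_IP:6385/v1
'

apply_settings() {
  local section key value
  while read -r section key value; do
    [ -n "$section" ] || continue   # skip blank lines
    # Unquoted on purpose so "echo openstack-config" splits into two words.
    $OPENSTACK_CONFIG --set "$NOVA_CONF" "$section" "$key" "$value" </dev/null
  done <<< "$settings"
}

# Example usage: review with `apply_settings`, then run
#   OPENSTACK_CONFIG=openstack-config apply_settings
```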

1.3.3. Configure the OpenStack Networking DHCP Agent to Tag iPXE Requests

OpenStack Networking DHCP requests from iPXE need to have a DHCP tag called ipxe to let the DHCP server know that the client needs to perform an HTTP operation to get the boot.ipxe script. You can do this by adding a dhcp-userclass entry to the dnsmasq configuration file used by the OpenStack Networking DHCP Agent service.

On your overcloud controller, verify which dnsmasq file the DHCP Agent is using:

# grep ^dnsmasq_config_file /etc/neutron/dhcp_agent.ini
dnsmasq_config_file =/etc/neutron/dnsmasq-neutron.conf

Edit this file and add the following lines at the end of the file:

# Create the "ipxe" tag if request comes from iPXE user class
dhcp-userclass=set:ipxe,iPXE

Save the file and restart the OpenStack Networking DHCP Agent service:

# systemctl restart neutron-dhcp-agent.service
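The two steps above can be combined into a re-runnable minimal sketch: it reads the dnsmasq file name from dhcp_agent.ini and appends the iPXE user-class lines only if they are missing, so running it twice does not duplicate them. add_ipxe_tag is a hypothetical helper, and the path to dhcp_agent.ini is a parameter so that the logic can be tried on scratch files first.

```shell
#!/usr/bin/env bash
# Minimal sketch: idempotently add the iPXE DHCP tag to the agent's dnsmasq file.

# add_ipxe_tag [DHCP_AGENT_INI]
add_ipxe_tag() {
  local ini=${1:-/etc/neutron/dhcp_agent.ini} conf
  # Value after '=' on the first uncommented dnsmasq_config_file line.
  conf=$(sed -n 's/^dnsmasq_config_file[[:space:]]*=[[:space:]]*//p' "$ini" | head -n1)
  [ -n "$conf" ] || { echo "dnsmasq_config_file not set in $ini" >&2; return 1; }
  if ! grep -qx 'dhcp-userclass=set:ipxe,iPXE' "$conf" 2>/dev/null; then
    printf '%s\n' '# Create the "ipxe" tag if request comes from iPXE user class' \
      'dhcp-userclass=set:ipxe,iPXE' >> "$conf"
  fi
}

# Example usage on a Controller node:
#   add_ipxe_tag && systemctl restart neutron-dhcp-agent.service
```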

1.4. Configure the Compute Node for Bare Metal Provisioning

The following instructions apply only to the Compute node that also runs the Bare Metal Provisioning service. Perform these steps as the root user on that Compute node.

On the Compute node, the Bare Metal Provisioning NIC is, for example, eth6. This procedure has two goals:

- To connect the Bare Metal Provisioning NIC, eth6 in this example, to Open vSwitch.

- To assign an IP address to this connection, because the bare metal nodes download their boot images from this server during the iPXE process.

Connecting eth6 to Open vSwitch

As with the Controller node in Section 1.3, “Configure the Controller Nodes for Bare Metal Provisioning Service”, create a bridge within Open vSwitch on the Compute node running the Bare Metal Provisioning service:

# ovs-vsctl add-br br-ironic
# ovs-vsctl add-port br-ironic IRONIC_PROVISIONING_NIC

Here, br-ironic is the name of the bridge and IRONIC_PROVISIONING_NIC is the NIC connected to the Bare Metal Provisioning Network, for example, eth6.

Note: The only difference between this procedure and Section 1.3, “Configure the Controller Nodes for Bare Metal Provisioning Service” is that you do not restart the OpenStack Networking server (neutron-server.service) on the Compute node.

This adds the bridge and port to Open vSwitch, which you can verify using the ovs-vsctl show command. However, it does not connect the bridge to the integration bridge (br-int) for use by OpenStack.

To create the connection, update the OpenStack Networking plugin files as follows:

Update the ML2 configuration file, /etc/neutron/plugins/ml2/ml2_conf.ini, as follows:

- For the type_drivers parameter, make sure flat is listed among the drivers, for example, type_drivers = vxlan,vlan,flat,gre. This is a comma-delimited list.

- For the mechanism_drivers parameter, make sure openvswitch is listed among the drivers, for example, mechanism_drivers = openvswitch. This is a comma-delimited list.

- For the flat_networks parameter, create a name to refer to the Bare Metal Provisioning Network, for example, ironicnet, and make sure this name is listed, for example, flat_networks = datacentre,ironicnet. This is a comma-delimited list.

- If you are using a VLAN for the Bare Metal Provisioning Network, add the network_vlan_ranges parameter with the format ironicnet:VLAN_START:VLAN_END, for example, network_vlan_ranges = datacentre:1:1000,ironicnet:VLAN_START:VLAN_END. This is a comma-delimited list.

- The enable_security_group parameter should already be enabled. If it is not set, change the value to True, for example, enable_security_group = True.

In the /etc/neutron/plugins/ml2/openvswitch_agent.ini file, find the bridge_mappings parameter and update it as follows:

bridge_mappings = datacentre:br-ex,ironicnet:br-ironic

This comma-delimited list of key-value pairs maps each physical network name, such as ironicnet for the Bare Metal Provisioning Network, to the Open vSwitch bridge that is connected to that network.

Restart the OpenStack Networking Open vSwitch Agent service:

# systemctl restart neutron-openvswitch-agent.service

You have now achieved your first goal from this procedure. Next, you need to assign an IP address to your br-ironic bridge and make sure it persists after a reboot.

Assigning an IP address to the Bare Metal server

Create standard configuration files in the /etc/sysconfig/network-scripts directory. You can copy the ifcfg-* files that already exist for the tenant network and edit the DEVICE, IPADDR, OVS_BRIDGE, bridge name, and MAC address values for br-ironic and eth6. When you have finished updating the new files, they should contain the following values:

ifcfg-br-ironic:

DEVICE=br-ironic
ONBOOT=yes
HOTPLUG=no
NM_CONTROLLED=no
PEERDNS=no
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=static
IPADDR=BARE_METAL_PROVISIONING_IP
NETMASK=255.255.255.0
OVS_EXTRA="set bridge br-ironic other-config:hwaddr=MAC_ADDRESS"

ifcfg-eth6:

DEVICE=eth6
ONBOOT=yes
HOTPLUG=no
NM_CONTROLLED=no
PEERDNS=no
DEVICETYPE=ovs
TYPE=OVSPort
OVS_BRIDGE=br-ironic
BOOTPROTO=none
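If you prefer to generate the two files rather than copy and edit them, the following minimal sketch writes the values listed above from variables. NET_SCRIPTS defaults to a scratch directory; set it to /etc/sysconfig/network-scripts only after checking the output. The IP and MAC defaults are placeholders (a documentation-range address and a locally administered MAC). Reusing the provisioning NIC's own MAC for the bridge, read from /sys/class/net/eth6/address, is an assumption on our part, not a requirement stated in this guide.

```shell
#!/usr/bin/env bash
# Minimal sketch: write ifcfg-br-ironic and ifcfg-<NIC> from variables.

NET_SCRIPTS=${NET_SCRIPTS:-$(mktemp -d)}
NIC=${NIC:-eth6}
BARE_METAL_PROVISIONING_IP=${BARE_METAL_PROVISIONING_IP:-192.0.2.20}  # placeholder
MAC_ADDRESS=${MAC_ADDRESS:-02:00:00:00:00:01}                        # placeholder

cat > "$NET_SCRIPTS/ifcfg-br-ironic" <<EOF
DEVICE=br-ironic
ONBOOT=yes
HOTPLUG=no
NM_CONTROLLED=no
PEERDNS=no
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=static
IPADDR=${BARE_METAL_PROVISIONING_IP}
NETMASK=255.255.255.0
OVS_EXTRA="set bridge br-ironic other-config:hwaddr=${MAC_ADDRESS}"
EOF

cat > "$NET_SCRIPTS/ifcfg-${NIC}" <<EOF
DEVICE=${NIC}
ONBOOT=yes
HOTPLUG=no
NM_CONTROLLED=no
PEERDNS=no
DEVICETYPE=ovs
TYPE=OVSPort
OVS_BRIDGE=br-ironic
BOOTPROTO=none
EOF

# Example usage (assumed MAC source):
#   MAC_ADDRESS=$(cat /sys/class/net/eth6/address) BARE_METAL_PROVISIONING_IP=... ./this-script
```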

Bring up the br-ironic bridge so that its IP address responds to ping:

# ifup br-ironic

Note: If you get disconnected from the node when you restart the network services, reboot the server.

1.4.1. Subscribe to the Required Channels

To install the Bare Metal Provisioning packages, you must register the server or servers with Red Hat Subscription Manager, and subscribe to the required channels. If you are installing Bare Metal Provisioning on a compute node, your server may already be appropriately subscribed. Run yum repolist to check whether the channels in the procedure below have been enabled.

Subscribing to the Required Channels

Register your system with the Content Delivery Network, entering your Customer Portal user name and password when prompted:

# subscription-manager register

Find entitlement pools containing the channels required to install Bare Metal Provisioning:

# subscription-manager list --available | grep -A13 "Red Hat Enterprise Linux Server"
# subscription-manager list --available | grep -A13 "Red Hat OpenStack Platform"

Use the pool identifiers located in the previous step to attach the Red Hat Enterprise Linux 7 Server and Red Hat OpenStack Platform entitlements:

# subscription-manager attach --pool=POOL_ID

Enable the required channels:

# subscription-manager repos --enable=rhel-7-server-rpms \
  --enable=rhel-7-server-openstack-9-rpms \
  --enable=rhel-7-server-rh-common-rpms \
  --enable=rhel-7-server-optional-rpms \
  --enable=rhel-7-server-openstack-9-optools-rpms
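As a complement to yum repolist, the following minimal sketch reads yum repolist output on standard input and prints any of the five repositories above that do not appear in it. It assumes that yum lists repository IDs at the start of each line, optionally prefixed with a status character such as ! and followed by a /release/architecture suffix; missing_repos is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Minimal sketch: report required repositories missing from `yum repolist` output.

REQUIRED_REPOS='rhel-7-server-rpms
rhel-7-server-openstack-9-rpms
rhel-7-server-rh-common-rpms
rhel-7-server-optional-rpms
rhel-7-server-openstack-9-optools-rpms'

missing_repos() {
  local listing repo
  listing=$(cat)   # the repolist text from stdin
  while read -r repo; do
    printf '%s\n' "$listing" | grep -Eq "^[!*]?${repo}(/|[[:space:]])" || echo "$repo"
  done <<< "$REQUIRED_REPOS"
}

# Example usage (prints nothing when every repository is enabled):
#   yum repolist | missing_repos
```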

1.4.2. Install the Bare Metal Provisioning Packages

Bare Metal Provisioning requires the following packages:

openstack-ironic-api

Provides the Bare Metal Provisioning API service.

openstack-ironic-conductor

Provides the Bare Metal Provisioning conductor service. The conductor allows adding, editing, and deleting nodes, powering on or off nodes with IPMI or SSH, and provisioning, deploying, and decommissioning bare metal nodes.

python-ironicclient

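Provides a command-line interface for interacting with the Bare Metal Provisioning services.

ipxe-bootimgs

Provides the iPXE boot images, including the undionly.kpxe image that is copied into place in Section 1.4.3, “Configure iPXE”.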

Install the packages:

# yum install openstack-ironic-api openstack-ironic-conductor python-ironicclient ipxe-bootimgs

1.4.3. Configure iPXE

Create the necessary directories for iPXE, create the map-file, and copy the undionly.kpxe boot image into place:

# mkdir /httpboot
# mkdir /tftpboot
# echo 'r ^([^/]) /tftpboot/\1' > /tftpboot/map-file
# echo 'r ^(/tftpboot/) /tftpboot/\2' >> /tftpboot/map-file
# cp /usr/share/ipxe/undionly.kpxe /tftpboot/
# chown -R ironic:ironic /httpboot
# chown -R ironic:ironic /tftpboot
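The first map-file rule is meant to prefix relative file names, such as the undionly.kpxe boot file name, with /tftpboot/. The following sketch only illustrates that one rule by approximating it with sed -E; it assumes that the TFTP server's remap rules use egrep-style regular expressions with \1 back-references, which sed -E mirrors for this simple case. It does not touch the TFTP server, and remap_relative is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Illustration only: approximate the map-file rule
#   r ^([^/]) /tftpboot/\1
# to show how a relative TFTP request is rewritten.

remap_relative() {
  printf '%s\n' "$1" | sed -E 's|^([^/])|/tftpboot/\1|'
}

remap_relative undionly.kpxe        # relative name gets the /tftpboot/ prefix
remap_relative /tftpboot/map-file   # absolute path: this rule does not match
```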

By default, the Compute node deployed by the director runs SELinux in Enforcing mode. To avoid permission errors when trying iPXE boot, set the appropriate labels on these directories. To apply these labels, run the following commands as the root user:

# semanage fcontext -a -t httpd_sys_content_t "/httpboot(/.*)?"
# restorecon -Rv /httpboot
# semanage fcontext -a -t tftpdir_t "/tftpboot(/.*)?"
# restorecon -Rv /tftpboot

Configure HTTPD so that it can serve requests for the images. The httpd package is already installed, so you only need to create the appropriate virtual host entry and start the service.

Note: The /etc/httpd/conf.d directory contains a number of files. Because Red Hat uses a single overcloud full image for all the nodes, these files are present on every node even though they are only used on the Controller node. You can delete the contents of /etc/httpd/conf.d or move them somewhere else, because they are not used on this node.

Create a new file for the iPXE configuration. You can give this file any name, as long as it has the .conf extension and the following contents:

# cat 10-ipxe_vhost.conf
Listen 8088
<VirtualHost *:8088>
    DocumentRoot "/httpboot"
    <Directory "/httpboot">
        Options Indexes FollowSymLinks
        AllowOverride None
        Order allow,deny
        Allow from all
        Require all granted
    </Directory>
    ## Logging
    ErrorLog "/var/log/httpd/ironic_error.log"
    ServerSignature Off
    CustomLog "/var/log/httpd/ironic_access.log" combined
</VirtualHost>

This virtual host configuration makes HTTPD listen on all addresses on port 8088 and serves all requests to that port from the /httpboot document root.

Save this file, then enable and start the HTTPD service on the Compute node:

# systemctl enable httpd.service
# systemctl start httpd.service

1.4.4. Configure the Bare Metal Provisioning Service

In this section, you will make the necessary changes to the /etc/ironic/ironic.conf file.

1.4.4.1. Configure Bare Metal Provisioning to Communicate with the Database Server

Set the value of the connection configuration key:

# openstack-config --set /etc/ironic/ironic.conf \
  database connection mysql+pymysql://ironic:PASSWORD@IP/ironic

Here, PASSWORD is the password of the ironic database user, and IP is the IP address or host name of the database server.

The IP address or host name specified in the connection configuration key must match the IP address or host name to which the Bare Metal Provisioning database user was granted access when creating the Bare Metal Provisioning database in Section 1.3.1, “Create the Bare Metal Provisioning Database”. Moreover, if the database is hosted locally and you granted permissions to localhost when creating the database, you must enter localhost.

1.4.4.2. Configure Bare Metal Provisioning Authentication

Configure Bare Metal Provisioning to use Identity for authentication. All steps in this procedure must be performed on the server or servers hosting Bare Metal Provisioning, while logged in as the root user.

Configuring Bare Metal Provisioning to Authenticate Through Identity

Set the Identity public and admin endpoints that Bare Metal Provisioning must use:

# openstack-config --set /etc/ironic/ironic.conf \
  keystone_authtoken auth_uri http://IP:5000/v2.0
# openstack-config --set /etc/ironic/ironic.conf \
  keystone_authtoken identity_uri http://IP:35357/

Replace IP with the IP address or host name of the Identity server.

Set Bare Metal Provisioning to authenticate as the service tenant:

# openstack-config --set /etc/ironic/ironic.conf \
  keystone_authtoken admin_tenant_name service

Set Bare Metal Provisioning to authenticate using the ironic administrative user account:

# openstack-config --set /etc/ironic/ironic.conf \
  keystone_authtoken admin_user ironic

Set Bare Metal Provisioning to use the correct ironic administrative user account password:

# openstack-config --set /etc/ironic/ironic.conf \
  keystone_authtoken admin_password PASSWORD

Replace PASSWORD with the password set when the ironic user was created.

1.4.4.3. Configure RabbitMQ Message Broker Settings for Bare Metal Provisioning

RabbitMQ is the default (and recommended) message broker. The RabbitMQ messaging service is provided by the rabbitmq-server package. All steps in the following procedure must be performed on the Controller or Compute nodes hosting Bare Metal Provisioning, while logged in as the root user.

This procedure assumes that the RabbitMQ messaging service has been installed and configured, and an ironic user and associated password have been created on the server hosting the messaging service.

Configuring Bare Metal Provisioning to use the RabbitMQ Message Broker

Set RabbitMQ as the RPC back end:

# openstack-config --set /etc/ironic/ironic.conf \
  DEFAULT rpc_backend ironic.openstack.common.rpc.impl_kombu

Set the Bare Metal Provisioning to connect to the RabbitMQ host:

# openstack-config --set /etc/ironic/ironic.conf \
  oslo_messaging_rabbit rabbit_host RABBITMQ_HOST

Replace RABBITMQ_HOST with the IP address or host name of the server hosting the message broker.

Set the message broker port to 5672:

# openstack-config --set /etc/ironic/ironic.conf \
  oslo_messaging_rabbit rabbit_port 5672

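Set the RabbitMQ user name and password for Bare Metal Provisioning. The following example uses the guest account; if you created a dedicated ironic user when RabbitMQ was configured, substitute its user name and password: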

# openstack-config --set /etc/ironic/ironic.conf \
  oslo_messaging_rabbit rabbit_userid guest
# openstack-config --set /etc/ironic/ironic.conf \
  oslo_messaging_rabbit rabbit_password RABBIT_GUEST_PASSWORD

Replace RABBIT_GUEST_PASSWORD with the RabbitMQ password for the guest user.

When RabbitMQ was launched, the guest user was granted read and write permissions to all resources in the default virtual host, /. Configure Bare Metal Provisioning to connect to this virtual host:

# openstack-config --set /etc/ironic/ironic.conf \
  oslo_messaging_rabbit rabbit_virtual_host /

1.4.4.4. Configure Bare Metal Provisioning Drivers

Bare Metal Provisioning supports multiple drivers for deploying and managing bare metal servers. Some drivers have hardware requirements, and require additional configuration or package installation. See Appendix A, Bare Metal Provisioning Drivers for detailed driver information. The first half of a driver’s name specifies its deployment method (e.g. PXE), and the second half specifies its power management method (e.g. IPMI).

Configuring Bare Metal Provisioning Drivers

Specify the driver or drivers that you will use to provision bare metal servers. Specify multiple drivers using a comma-separated list:

# openstack-config --set /etc/ironic/ironic.conf \
  DEFAULT enabled_drivers DRIVER1,DRIVER2

The following drivers are supported:

- IPMI with PXE deploy: pxe_ipmitool

- DRAC with PXE deploy: pxe_drac

- iLO with PXE deploy: pxe_ilo

- SSH with PXE deploy: pxe_ssh

- iRMC with PXE: pxe_irmc

- AMT with PXE: pxe_amt

- AMT with HTTP: agent_amt

Restart the Bare Metal conductor service:

# systemctl restart openstack-ironic-conductor.service
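Before setting enabled_drivers, you could check the value against the drivers listed in this section with a minimal sketch like the following. The supported list mirrors this guide only, and check_drivers is a hypothetical helper; it prints each unsupported entry and fails if there is at least one.

```shell
#!/usr/bin/env bash
# Minimal sketch: validate a comma-separated enabled_drivers value.

SUPPORTED_DRIVERS='pxe_ipmitool pxe_drac pxe_ilo pxe_ssh pxe_irmc pxe_amt agent_amt'

# check_drivers "pxe_ipmitool,pxe_drac"
check_drivers() {
  local d rc=0
  local IFS=,   # split the argument on commas only
  for d in $1; do
    case " $SUPPORTED_DRIVERS " in
      *" $d "*) ;;
      *) echo "unsupported driver: $d" >&2; rc=1 ;;
    esac
  done
  return "$rc"
}

# Example usage:
#   check_drivers pxe_ipmitool,pxe_drac && \
#     openstack-config --set /etc/ironic/ironic.conf DEFAULT enabled_drivers pxe_ipmitool,pxe_drac
```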

1.4.4.5. Configure the Bare Metal Provisioning Service to use PXE

Set the Bare Metal Provisioning service to use the iPXE configuration template:

# openstack-config --set /etc/ironic/ironic.conf \
    pxe pxe_config_template \$pybasedir/drivers/modules/ipxe_config.template

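Set the TFTP server address (tftp_server) used by the Bare Metal Provisioning service:

# openstack-config --set /etc/ironic/ironic.conf \
    pxe tftp_server BARE_METAL_PROVISIONING_NETWORK_IP

Replace BARE_METAL_PROVISIONING_NETWORK_IP with the IP address of this server on the Bare Metal Provisioning network.

Set the PXE tftp_root:

# openstack-config --set /etc/ironic/ironic.conf \
    pxe tftp_root /tftpboot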

Set the PXE boot file name:

# openstack-config --set /etc/ironic/ironic.conf \
    pxe pxe_bootfile_name undionly.kpxe

Enable the Bare Metal Provisioning service to use iPXE:

# openstack-config --set /etc/ironic/ironic.conf \
    pxe ipxe_enabled true

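Set the URL for the HTTP server:

# openstack-config --set /etc/ironic/ironic.conf \
    deploy http_url http://BARE_METAL_PROVISIONING_IP:8088

Replace BARE_METAL_PROVISIONING_IP with the IP address of the server hosting Bare Metal Provisioning.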

Restart the Bare Metal conductor service:

# systemctl restart openstack-ironic-conductor.service
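Before nodes try to PXE boot, it can help to confirm that the directory set as tftp_root actually contains the file set as pxe_bootfile_name. The following is a hypothetical pre-flight check in bash. check_tftp_root is not part of any OpenStack package, and its defaults match the /tftpboot and undionly.kpxe values used above.

```bash
# Hypothetical pre-flight check: confirm that the TFTP root (tftp_root) exists
# and contains the boot file named by pxe_bootfile_name.
check_tftp_root() {
  local root=${1:-/tftpboot} bootfile=${2:-undionly.kpxe}
  if [ ! -d "$root" ]; then
    echo "missing TFTP root directory: $root"
    return 1
  fi
  if [ ! -r "$root/$bootfile" ]; then
    echo "missing boot file: $root/$bootfile"
    return 1
  fi
  echo "ok: $root/$bootfile"
}
```

Run check_tftp_root with no arguments to check /tftpboot/undionly.kpxe.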

1.4.4.6. Configure Bare Metal Provisioning to Communicate with OpenStack Networking and OpenStack Image

Bare Metal Provisioning uses OpenStack Networking for DHCP and network configuration, and uses the Image service for managing the images used to boot physical machines. Configure Bare Metal Provisioning to connect to and communicate with OpenStack Networking and the Image service. All steps in this procedure must be performed on the server hosting Bare Metal Provisioning, while logged in as the root user.

Configuring Bare Metal Provisioning to Communicate with OpenStack Networking and OpenStack Image

Set Bare Metal Provisioning to use the OpenStack Networking endpoint:

# openstack-config --set /etc/ironic/ironic.conf \
    neutron url http://NEUTRON_IP:9696

Replace NEUTRON_IP with the IP address or host name of the server hosting OpenStack Networking.

Set Bare Metal Provisioning to communicate with the Image service:

# openstack-config --set /etc/ironic/ironic.conf \
    glance glance_host GLANCE_IP

Replace GLANCE_IP with the IP address or host name of the server hosting the Image service.

Start the Bare Metal Provisioning API service, and configure it to start at boot time:

# systemctl start openstack-ironic-api.service
# systemctl enable openstack-ironic-api.service

Create the Bare Metal Provisioning database tables:

# ironic-dbsync --config-file /etc/ironic/ironic.conf create_schema

Start the Bare Metal Provisioning conductor service, and configure it to start at boot time:

# systemctl restart openstack-ironic-conductor.service
# systemctl enable openstack-ironic-conductor.service

1.4.4.7. Configure a Ceph Object Gateway for the Image and Bare Metal Provisioning Services

Red Hat Ceph Storage is a distributed storage system that includes a Ceph object (RADOS) gateway with a Swift-compatible API. To use a RADOS gateway for the Image service, you need to:

- Configure the Image service to grant access to the RADOS gateway.

- Configure the Bare Metal Provisioning service to use the RADOS gateway Swift API to provide bare metal images.

Before You Begin

Ensure that you have configured your Red Hat Ceph Storage with a Ceph object gateway. See Ceph Object Gateway Installation for details.

Configure the Ceph Object Gateway Access for the Image Service

Create the access credentials for the Image service on the Ceph object gateway admin host:

# radosgw-admin user create --uid=GLANCE_USERNAME --display-name="User for Glance"
# radosgw-admin subuser create --uid=GLANCE_USERNAME \
    --subuser=GLANCE_USERNAME:swift --access=full
# radosgw-admin key create --subuser=GLANCE_USERNAME:swift \
    --key-type=swift --secret=STORE_KEY
# radosgw-admin user modify --uid=GLANCE_USERNAME --temp-url-key=TEMP_URL_KEY

Replace GLANCE_USERNAME with a user name for the Image service access, and replace STORE_KEY and TEMP_URL_KEY with suitable keys.
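You choose the values for STORE_KEY and TEMP_URL_KEY. Keys generated with --gen-secret can contain slashes (see the note that follows), so one option is to generate keys from letters and digits only. The following is a minimal bash sketch. gen_key is a hypothetical helper that reads /dev/urandom and assumes GNU head and tr.

```bash
# Hypothetical helper: print a random key of the given length (default 32)
# containing only ASCII letters and digits, so it never includes "/".
gen_key() {
  local length=${1:-32} key=""
  while [ "${#key}" -lt "$length" ]; do
    # Read 64 random bytes and keep only letters and digits.
    key+=$(head -c 64 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9')
  done
  printf '%s\n' "${key:0:$length}"
}
```

For example, STORE_KEY=$(gen_key) and TEMP_URL_KEY=$(gen_key) store the two keys in shell variables for the radosgw-admin commands above.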

Note: Do not use the --gen-secret CLI parameter, because it causes the radosgw-admin utility to generate keys containing slash characters, which do not work with the OpenStack Image service.

Edit the /etc/glance/glance-api.conf file to configure the Image API service to use the Ceph object gateway Swift API as the backend:

[glance_store]
stores = file, http, swift
default_store = swift
swift_store_auth_version = 1
swift_store_auth_address = http://RADOS_IP:PORT/auth/1.0
swift_store_user = GLANCE_USERNAME:swift
swift_store_key = STORE_KEY
swift_store_container = glance
swift_store_create_container_on_put = True

Replace RADOS_IP and PORT with the IP/port of the Ceph object gateway API service.

Note: The Ceph object gateway uses the FastCGI protocol for interacting with the HTTP server. See your HTTP server documentation if you want to enable HTTPS support.

Restart the Image API service.

# systemctl restart openstack-glance-api.service

Configure Bare Metal Provisioning to use a Ceph Object Gateway

Edit the /etc/ironic/ironic.conf file to configure the Bare Metal Provisioning conductor service to use the Ceph object gateway:

[glance]
swift_container = glance
swift_api_version = v1
swift_endpoint_url = http://RADOS_IP:PORT
swift_temp_url_key = TEMP_URL_KEY
temp_url_endpoint_type = radosgw

Replace TEMP_URL_KEY and RADOS_IP:PORT with the values used in the prior procedure.

Restart the Bare Metal Provisioning conductor service.

# systemctl restart openstack-ironic-conductor.service

1.4.5. Configure OpenStack Compute to Use Bare Metal Provisioning Service

In this section, you will update the /etc/nova/nova.conf file to configure the Compute service to use the Bare Metal Provisioning service:

Configuring OpenStack Compute to Use Bare Metal Provisioning

Set Compute to use the clustered compute manager:

# openstack-config --set /etc/nova/nova.conf \
    DEFAULT compute_manager ironic.nova.compute.manager.ClusteredComputeManager

Set the virtual RAM to physical RAM allocation ratio:

# openstack-config --set /etc/nova/nova.conf \
    DEFAULT ram_allocation_ratio 1.0

Set the amount of memory in MB to reserve for the host:

# openstack-config --set /etc/nova/nova.conf \
    DEFAULT reserved_host_memory_mb 0

Set Compute to use the Bare Metal Provisioning driver:

# openstack-config --set /etc/nova/nova.conf \
    DEFAULT compute_driver nova.virt.ironic.IronicDriver

Set Compute to use the correct authentication details for Bare Metal Provisioning:

# openstack-config --set /etc/nova/nova.conf \
    ironic admin_username ironic
# openstack-config --set /etc/nova/nova.conf \
    ironic admin_password PASSWORD
# openstack-config --set /etc/nova/nova.conf \
    ironic admin_url http://IDENTITY_IP:35357/v2.0
# openstack-config --set /etc/nova/nova.conf \
    ironic admin_tenant_name service
# openstack-config --set /etc/nova/nova.conf \
    ironic api_endpoint http://IRONIC_API_IP:6385/v1

Replace the following values:

- Replace PASSWORD with the password that Bare Metal Provisioning uses to authenticate with Identity.

- Replace IDENTITY_IP with the IP address or host name of the server hosting Identity.

- Replace IRONIC_API_IP with the IP address or host name of the server hosting the Bare Metal Provisioning API service.

Restart the Compute scheduler service on the Compute controller nodes:

# systemctl restart openstack-nova-scheduler.service

Restart the compute service on the compute nodes:

# systemctl restart openstack-nova-compute.service

Chapter 2. Configure Bare Metal Deployment

Configure Bare Metal Provisioning, the Image service, and Compute to enable bare metal deployment in the OpenStack environment. The following sections outline the additional configuration steps required to successfully deploy a bare metal node.

2.1. Create OpenStack Configurations for Bare Metal Provisioning Service

2.1.1. Configure the OpenStack Networking Configuration

Configure OpenStack Networking to communicate with Bare Metal Provisioning for DHCP, PXE boot, and other requirements. The procedure below configures OpenStack Networking for a single, flat network use case for provisioning onto bare metal. The configuration uses the ML2 plug-in and the Open vSwitch agent.

Ensure that the network interface used for provisioning is not the same network interface that is used for remote connectivity on the OpenStack Networking node. This procedure creates a bridge using the Bare Metal Provisioning network interface, which drops any remote connections over that interface.

All steps in the following procedure must be performed on the server hosting OpenStack Networking, while logged in as the root user.

Configuring OpenStack Networking to Communicate with Bare Metal Provisioning

Set up the shell to access Identity as the administrative user:

# source ~stack/overcloudrc

Create the flat network over which to provision bare metal instances:

# neutron net-create --tenant-id TENANT_ID sharednet1 --shared \
    --provider:network_type flat --provider:physical_network PHYSNET

Replace TENANT_ID with the unique identifier of the tenant on which to create the network. Replace PHYSNET with the name of the physical network.

Create the subnet on the flat network:

# neutron subnet-create sharednet1 NETWORK_CIDR --name SUBNET_NAME \
    --ip-version 4 --gateway GATEWAY_IP --allocation-pool \
    start=START_IP,end=END_IP --enable-dhcp

Replace the following values:

- Replace NETWORK_CIDR with the Classless Inter-Domain Routing (CIDR) representation of the block of IP addresses that the subnet represents. The range from START_IP to END_IP must fall within NETWORK_CIDR.

- Replace SUBNET_NAME with a name for the subnet.

- Replace GATEWAY_IP with the IP address of the system that will act as the gateway for the new subnet. This address must be within NETWORK_CIDR, but outside the range from START_IP to END_IP.

- Replace START_IP with the first IP address of the range within the new subnet from which IP addresses are allocated to ports.

- Replace END_IP with the last IP address of that range.
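The address rules above are easy to get wrong. The following bash sketch checks them before you run neutron subnet-create. It handles IPv4 only and compares addresses as 32-bit integers. The function names ip_to_int, in_cidr, and check_subnet are hypothetical.

```bash
# Convert a dotted IPv4 address to an integer (10# avoids octal parsing).
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# in_cidr IP CIDR: succeed if IP lies inside CIDR.
in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# check_subnet NETWORK_CIDR GATEWAY_IP START_IP END_IP
check_subnet() {
  local cidr=$1 gw=$2 start=$3 end=$4 s e g
  in_cidr "$start" "$cidr" || { echo "START_IP outside $cidr"; return 1; }
  in_cidr "$end" "$cidr"   || { echo "END_IP outside $cidr"; return 1; }
  s=$(ip_to_int "$start"); e=$(ip_to_int "$end"); g=$(ip_to_int "$gw")
  (( s <= e ))             || { echo "START_IP is after END_IP"; return 1; }
  in_cidr "$gw" "$cidr"    || { echo "GATEWAY_IP outside $cidr"; return 1; }
  if (( g >= s && g <= e )); then
    echo "GATEWAY_IP inside the allocation pool"
    return 1
  fi
  echo "ok"
}
```

For example, check_subnet 192.168.1.0/24 192.168.1.1 192.168.1.100 192.168.1.200 prints ok. Moving the gateway to 192.168.1.150 instead reports that it falls inside the allocation pool.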

Create a router, and attach the subnet to it so that metadata requests are served by the OpenStack Networking service:

# neutron router-create ROUTER_NAME

Replace ROUTER_NAME with a name for the router.

Add the Bare Metal subnet as an interface on this router:

# neutron router-interface-add ROUTER_NAME BAREMETAL_SUBNET

Replace ROUTER_NAME with the name of your router and BAREMETAL_SUBNET with the ID or name of the subnet that you previously created. This allows the metadata requests from cloud-init to be served and the node to be configured.

Update the /etc/ironic/ironic.conf file on the Compute node running the Bare Metal Provisioning service to use the same network for the cleaning service. Log in to the Compute node where the Bare Metal Provisioning service is running, and run the following command as the root user:

# openstack-config --set /etc/ironic/ironic.conf \
    neutron cleaning_network_uuid NETWORK_UUID

Replace NETWORK_UUID with the ID of the Bare Metal Provisioning network created in the previous steps.

Restart the Bare Metal Provisioning service:

# systemctl restart openstack-ironic-conductor.service

2.1.2. Create the Bare Metal Provisioning Flavor

Create a flavor to use as part of the deployment. Its specifications (memory, CPU, and disk) must be equal to or less than what your bare metal node provides.

Set up the shell to access Identity as the administrative user:

# source ~stack/overcloudrc

List existing flavors:

# openstack flavor list

Create a new flavor for the Bare Metal Provisioning service:

# openstack flavor create --id auto --ram RAM --vcpus VCPU --disk DISK --public baremetal

Replace RAM with the amount of memory in MB, VCPU with the number of vCPUs, and DISK with the disk size in GB.

Verify that the new flavor is created with the respective values:

# openstack flavor list
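Because the flavor must not request more than the node provides, you can compare the two sets of values before you create the flavor. The following is a minimal bash sketch, assuming that you already know the node's memory (MB), CPU count, and disk size (GB). flavor_fits_node is a hypothetical name.

```bash
# Hypothetical check: succeed only if the flavor fits the node.
# Usage: flavor_fits_node RAM VCPU DISK NODE_RAM_MB NODE_CPUS NODE_DISK_GB
flavor_fits_node() {
  local ram=$1 vcpu=$2 disk=$3 node_ram=$4 node_cpus=$5 node_disk=$6
  local rc=0
  (( ram <= node_ram ))   || { echo "RAM $ram MB > node $node_ram MB"; rc=1; }
  (( vcpu <= node_cpus )) || { echo "VCPU $vcpu > node $node_cpus"; rc=1; }
  (( disk <= node_disk )) || { echo "DISK $disk GB > node $node_disk GB"; rc=1; }
  return "$rc"
}
```

For example, flavor_fits_node 8192 4 100 8192 4 120 succeeds silently. Any value that exceeds the node produces a message naming the offending resource.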

2.1.3. Create the Bare Metal Images

Bare Metal Provisioning supports deploying whole-disk images or root partition images. A whole-disk image contains the partition table, kernel image, and final user image. A root partition image contains only the root partition of the OS, and requires separate kernel and ramdisk images that the bare metal node uses to boot the final user image. All supported bare metal agent drivers can deploy whole-disk or root partition images.

A whole-disk image requires one image that contains the partition table, boot loader, and user image. Bare Metal Provisioning does not control the subsequent reboot of a node deployed with a whole-disk image, because the node boots locally.

A root partition deployment requires two sets of images: the deploy image and the user image. Bare Metal Provisioning uses the deploy image to boot the node and copy the user image onto the bare metal node. After the deploy image is loaded into the Image service, you can use the bare metal node's properties to associate the deploy image with the node, so that the node uses it as the boot image. A subsequent reboot of the node uses net-boot to pull down the user image.

This section uses a root partition image to provision bare metal nodes in the examples. For a whole-disk image deployment, see Section 3.3, “Create a Whole Windows Image”. For a partition-based deployment, you do not have to create the deploy image, because it was already used when the undercloud deployed the overcloud. The deploy image consists of two images, the kernel image and the ramdisk image:

- ironic-python-agent.kernel

- ironic-python-agent.initramfs

These images will be in the /usr/share/rhosp-director-images/ironic-python-agent*.el7ost.tar file if you have the rhosp-director-images-ipa package installed.

Extract the images and load them to the Image service:

# openstack image create --container-format aki --disk-format aki \
    --public --file ./ironic-python-agent.kernel bm-deploy-kernel
# openstack image create --container-format ari --disk-format ari \
    --public --file ./ironic-python-agent.initramfs bm-deploy-ramdisk

The final image that you need is the actual image that will be deployed on the Bare Metal Provisioning node. For example, you can download a Red Hat Enterprise Linux KVM image since it already has cloud-init.

Load the image to the Image service:

# openstack image create --container-format bare --disk-format qcow2 \
    --property kernel_id=DEPLOY_KERNEL_ID \
    --property ramdisk_id=DEPLOY_RAMDISK_ID --public --file ./IMAGE_FILE rhel

Replace DEPLOY_KERNEL_ID with the UUID of the bm-deploy-kernel image, and DEPLOY_RAMDISK_ID with the UUID of the bm-deploy-ramdisk image, both uploaded to the Image service. Use the openstack image list command to find these UUIDs. Replace IMAGE_FILE with the file name of the image to deploy.
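Instead of copying UUIDs by hand from the openstack image list output, you can extract them in the shell. The following bash and awk sketch assumes that the -f value -c ID -c Name output options of the openstack client print one image per line, as an ID followed by a name. Image names containing spaces are not handled. image_id_by_name is a hypothetical helper.

```bash
# Hypothetical helper: print the ID of the image whose name matches $1 exactly.
# Reads "ID NAME" lines on stdin; returns 1 if no image matches.
image_id_by_name() {
  awk -v name="$1" '
    $2 == name { print $1; found = 1; exit }
    END { exit !found }'
}
```

For example: DEPLOY_KERNEL_ID=$(openstack image list -f value -c ID -c Name | image_id_by_name bm-deploy-kernel)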

2.1.4. Add the Bare Metal Provisioning Node to the Bare Metal Provisioning Service

To add the Bare Metal Provisioning node to the Bare Metal Provisioning service, copy the node's section of the instackenv.json file that was used to instantiate the cloud into a new file (baremetal.json in the following example), and modify it according to your needs.
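If you need to write a one-node file by hand, the following bash sketch prints a node entry using the field names of the instackenv.json format used by the director: mac, cpu, memory, disk, arch, and the pm_* power management fields. make_node_json is a hypothetical helper. Values are inserted without JSON escaping, so they must not contain double quotes or backslashes.

```bash
# Hypothetical helper: print a one-node file in instackenv.json format.
# Usage: make_node_json MAC CPU MEMORY_MB DISK_GB ARCH PM_TYPE PM_ADDR PM_USER PM_PASSWORD
make_node_json() {
  cat <<EOF
{
  "nodes": [
    {
      "mac": ["$1"],
      "cpu": "$2",
      "memory": "$3",
      "disk": "$4",
      "arch": "$5",
      "pm_type": "$6",
      "pm_addr": "$7",
      "pm_user": "$8",
      "pm_password": "$9"
    }
  ]
}
EOF
}
```

For example, make_node_json 52:54:00:aa:bb:cc 4 6144 40 x86_64 pxe_ipmitool 192.0.2.205 admin PASSWORD > baremetal.json writes a file for the import step. All of these values are placeholders.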

Source the overcloudrc file and import the .json file:

# source ~stack/overcloudrc
# openstack baremetal import --json ./baremetal.json

Update the bare metal node in the Bare Metal Provisioning service to use the deploy images as the initial boot image by specifying deploy_kernel and deploy_ramdisk in the driver_info section of the node:

# ironic node-update NODE_UUID add driver_info/deploy_kernel=DEPLOY_KERNEL_ID \
    driver_info/deploy_ramdisk=DEPLOY_RAMDISK_ID

Replace NODE_UUID with the UUID of the bare metal node. You can get this value by executing the ironic node-list command on the director node. Replace DEPLOY_KERNEL_ID with the ID of the deploy kernel image. You can get this value by executing the glance image-list command on the director node. Replace the DEPLOY_RAMDISK_ID with the ID of the deploy ramdisk image. You can get this value by executing the glance image-list command on the director node.

2.1.5. Configure Proxy Services For Image Deployment

You can optionally configure a bare metal node to use Object Storage with HTTP or HTTPS proxy services to download images to the bare metal node. This allows you to cache images in the same physical network segments as the bare metal nodes to reduce overall network traffic and deployment time.

Before You Begin

Configure the proxy server with the following additional considerations:

- Use content caching, even for queries contained in the requested URL.

- Raise the maximum cache size to accommodate your image sizes.

Only configure the proxy server to store images as unencrypted if the images do not contain sensitive information.

Configure Image Proxy

Set up the shell to access Identity as the administrative user:

# source ~stack/overcloudrc

Configure the bare metal node to download images through an HTTPS or HTTP proxy:

# openstack baremetal node set NODE_UUID \
    --driver-info image_https_proxy=HTTPS://PROXYIP:PORT

This example uses the HTTPS protocol. Set the driver_info/image_http_proxy parameter instead if you want to use HTTP.

Set Bare Metal Provisioning to reuse cached Object Storage temporary URLs when an image is requested:

# openstack-config --set /etc/ironic/ironic.conf \
    glance swift_temp_url_cache_enabled true

Reusing cached temporary URLs lets the proxy server serve the same image from its cache. Otherwise, the proxy server creates a new cache entry for each request, because the query part of a temporary URL contains parameters that change each time the URL is regenerated.

Set the duration (in seconds) that the generated temporary URL remains valid:

# openstack-config --set /etc/ironic/ironic.conf \
    glance swift_temp_url_duration DURATION

Only non-expired links to images are returned from the Object Storage temporary URL cache. For example, if swift_temp_url_duration is 1200, the proxy server caches a new copy of the image after 20 minutes. The value of this option must be greater than or equal to swift_temp_url_expected_download_start_delay.

Set the download start delay (in seconds) for your hardware:

# openstack-config --set /etc/ironic/ironic.conf \
    glance swift_temp_url_expected_download_start_delay DELAY

Set DELAY to cover the time between the deploy request (when the temporary URL is generated) and the moment the URL is used to download an image to the bare metal node. This delay allows time for the ramdisk to boot and begin the image download. The value determines whether a cached entry is still valid when the image download starts.
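A cached temporary URL is only useful if it is still valid when the download actually starts, so the two values above interact. The following bash sketch uses the hypothetical name check_temp_url_timing. It checks the documented rule (the duration must be at least the delay) and prints the difference. That difference is roughly how long after generation a cached URL can still be handed out for a new deployment.

```bash
# Hypothetical check for the two [glance] options, both in seconds.
# Usage: check_temp_url_timing DURATION DELAY
check_temp_url_timing() {
  local duration=$1 delay=$2
  if (( duration < delay )); then
    echo "swift_temp_url_duration ($duration) is less than swift_temp_url_expected_download_start_delay ($delay)"
    return 1
  fi
  # Approximate window during which a cached URL can still be reused.
  echo "ok: $(( duration - delay ))s reuse window"
}
```

For example, check_temp_url_timing 1200 600 reports a 600-second reuse window, while check_temp_url_timing 300 600 fails.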

2.1.6. Deploy the Bare Metal Provisioning Node

Deploy the Bare Metal Provisioning node using the nova boot command:

# nova boot --image BAREMETAL_USER_IMAGE --flavor BAREMETAL_FLAVOR --nic net-id=IRONIC_NETWORK_ID --key default MACHINE_HOSTNAME

Replace BAREMETAL_USER_IMAGE with the image that was loaded to the Image service, BAREMETAL_FLAVOR with the flavor for the bare metal deployment, IRONIC_NETWORK_ID with the ID of the Bare Metal Provisioning network in the OpenStack Networking service, and MACHINE_HOSTNAME with the host name that you want the machine to have after it is deployed.

2.2. Configure Hardware Inspection

Hardware inspection allows Bare Metal Provisioning to discover hardware information on a node. Inspection also creates ports for the discovered Ethernet MAC addresses. Alternatively, you can manually add hardware details to each node; see Section 2.3.2, “Add a Node Manually” for more information. All steps in the following procedure must be performed on the server hosting the Bare Metal Provisioning conductor service, while logged in as the root user.

Hardware inspection is supported in-band using the following drivers:

- pxe_drac

- pxe_ipmitool

- pxe_ssh

- pxe_amt

Configuring Hardware Inspection

- Obtain the Ironic Python Agent kernel and ramdisk images used for bare metal system discovery over PXE boot. These images are available in a TAR archive labeled Ironic Python Agent Image for RHOSP director 9.0 at https://access.redhat.com/downloads/content/191/ver=9/rhel---7/9/x86_64/product-software. Download the TAR archive, extract the image files (ironic-python-agent.kernel and ironic-python-agent.initramfs) from it, and copy them to the /tftpboot directory on the TFTP server.

On the server that will host the hardware inspection service, enable the Red Hat OpenStack Platform 9 director for RHEL 7 (RPMs) channel:

# subscription-manager repos --enable=rhel-7-server-openstack-9-director-rpms

Install the openstack-ironic-inspector package:

# yum install openstack-ironic-inspector

Enable inspection in the ironic.conf file:

# openstack-config --set /etc/ironic/ironic.conf \
    inspector enabled True

If the hardware inspection service is hosted on a separate server, set its URL on the server hosting the conductor service:

# openstack-config --set /etc/ironic/ironic.conf \
    inspector service_url http://INSPECTOR_IP:5050

Replace INSPECTOR_IP with the IP address or host name of the server hosting the hardware inspection service.

Provide the hardware inspection service with authentication credentials:

# openstack-config --set /etc/ironic-inspector/inspector.conf \
    keystone_authtoken identity_uri http://IDENTITY_IP:35357
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    keystone_authtoken auth_uri http://IDENTITY_IP:5000/v2.0
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    keystone_authtoken admin_user ironic
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    keystone_authtoken admin_password PASSWORD
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    keystone_authtoken admin_tenant_name services
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    ironic os_auth_url http://IDENTITY_IP:5000/v2.0
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    ironic os_username ironic
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    ironic os_password PASSWORD
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    ironic os_tenant_name service
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    firewall dnsmasq_interface br-ironic
# openstack-config --set /etc/ironic-inspector/inspector.conf \
    database connection sqlite:////var/lib/ironic-inspector/inspector.sqlite

Replace the following values:

- Replace IDENTITY_IP with the IP address or host name of the Identity server.

- Replace PASSWORD with the password that Bare Metal Provisioning uses to authenticate with Identity.

Optionally, set the hardware inspection service to store logs for the ramdisk:

# openstack-config --set /etc/ironic-inspector/inspector.conf \
    processing ramdisk_logs_dir /var/log/ironic-inspector/ramdisk

Optionally, enable an additional data processing plug-in that gathers block devices on bare metal machines with multiple local disks and exposes root devices.

The ramdisk_error, root_disk_selection, scheduler, and validate_interfaces plug-ins are enabled by default, and should not be disabled. The following command adds root_device_hint to the list:

# openstack-config --set /etc/ironic-inspector/inspector.conf \
    processing processing_hooks '$default_processing_hooks,root_device_hint'

Generate the initial ironic inspector database:

# ironic-inspector-dbsync --config-file /etc/ironic-inspector/inspector.conf upgrade

Update the inspector database file to be owned by ironic-inspector:

# chown ironic-inspector /var/lib/ironic-inspector/inspector.sqlite

Open the /etc/ironic-inspector/dnsmasq.conf file in a text editor, and configure the following PXE boot settings for the openstack-ironic-inspector-dnsmasq service:

port=0
interface=br-ironic
bind-interfaces
dhcp-range=START_IP,END_IP
enable-tftp
tftp-root=/tftpboot
dhcp-boot=pxelinux.0

Replace the following values:

- Replace br-ironic in the interface setting with the name of the Bare Metal Provisioning Network interface, if yours differs.

- Replace START_IP with the IP address that denotes the start of the DHCP range that dnsmasq offers to nodes during inspection.

- Replace END_IP with the IP address that denotes the end of that DHCP range.

Copy the syslinux boot loader to the tftp directory:

# cp /usr/share/syslinux/pxelinux.0 /tftpboot/pxelinux.0

Optionally, you can configure the hardware inspection service to store metadata in the swift section of the /etc/ironic-inspector/inspector.conf file:

[swift]
username = ironic
password = PASSWORD
tenant_name = service
os_auth_url = http://IDENTITY_IP:5000/v2.0

Replace the following values:

- Replace IDENTITY_IP with the IP address or host name of the Identity server.

- Replace PASSWORD with the password that Bare Metal Provisioning uses to authenticate with Identity.

Open the /tftpboot/pxelinux.cfg/default file in a text editor, and configure the following options:

default discover

label discover
kernel ironic-python-agent.kernel
append initrd=ironic-python-agent.initramfs ipa-inspection-callback-url=http://INSPECTOR_IP:5050/v1/continue ipa-api-url=http://IRONIC_API_IP:6385
ipappend 3

Replace INSPECTOR_IP with the IP address or host name of the server hosting the hardware inspection service, and IRONIC_API_IP with the IP address or host name of the server hosting the Bare Metal Provisioning API service. Note that the entire append entry must be on a single line.

Reset the security context for the /tftpboot/ directory and its files:

# restorecon -R /tftpboot/

This step ensures that the directory has the correct SELinux security labels, and the dnsmasq service is able to access the directory.
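If you prefer to generate /tftpboot/pxelinux.cfg/default instead of editing it by hand, the following bash sketch writes the same content as the step above, keeping the whole append entry on one line. write_pxelinux_default is a hypothetical helper. Run restorecon -R /tftpboot/ again after writing the file so that it gets the correct SELinux labels.

```bash
# Hypothetical helper: write the inspection PXE menu to FILE.
# Usage: write_pxelinux_default FILE INSPECTOR_IP IRONIC_API_IP
write_pxelinux_default() {
  local file=$1 inspector_ip=$2 ironic_api_ip=$3
  mkdir -p "$(dirname "$file")"
  # The append entry must stay on a single line.
  cat > "$file" <<EOF
default discover

label discover
kernel ironic-python-agent.kernel
append initrd=ironic-python-agent.initramfs ipa-inspection-callback-url=http://${inspector_ip}:5050/v1/continue ipa-api-url=http://${ironic_api_ip}:6385
ipappend 3
EOF
}
```

For example: write_pxelinux_default /tftpboot/pxelinux.cfg/default INSPECTOR_IP IRONIC_API_IP, with the two addresses substituted.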

Start the hardware inspection service and the dnsmasq service, and configure them to start at boot time:

# systemctl start openstack-ironic-inspector.service
# systemctl enable openstack-ironic-inspector.service
# systemctl start openstack-ironic-inspector-dnsmasq.service
# systemctl enable openstack-ironic-inspector-dnsmasq.service

Hardware inspection can be used on nodes after they have been registered with Bare Metal Provisioning.

2.3. Add Physical Machines as Bare Metal Nodes

Add the physical machines onto which you will provision instances as bare metal nodes, and confirm that Compute can see the available hardware. Compute is not notified of new resources immediately, because its resource tracker synchronizes periodically; changes become visible after the next periodic task runs. The period is set by scheduler_driver_task_period in /etc/nova/nova.conf, and defaults to 60 seconds.

After systems are registered as bare metal nodes, hardware details can be discovered using hardware inspection, or added manually.

2.3.1. Add a Node with Hardware Inspection

Register a physical machine as a bare metal node, then use openstack-ironic-inspector to detect the node’s hardware details and create ports for each of its Ethernet MAC addresses. All steps in the following procedure must be performed on the server hosting the Bare Metal Provisioning conductor service, while logged in as the root user.

Adding a Node with Hardware Inspection

Set up the shell to use Identity as the administrative user:

# source ~/keystonerc_admin

Add a new node:

# ironic node-create -d DRIVER_NAME

Replace DRIVER_NAME with the name of the driver that Bare Metal Provisioning will use to provision this node. You must have enabled this driver in the /etc/ironic/ironic.conf file. To create a node, you must, at a minimum, specify the driver name.

Important: Note the unique identifier for the node.

You can refer to a node by a logical name or by its UUID. Optionally assign a logical name to the node:

# ironic node-update NODE_UUID add name=NAME

Replace NODE_UUID with the unique identifier for the node. Replace NAME with a logical name for the node.

Determine the node information that is required by the driver, then update the node driver information to allow Bare Metal Provisioning to manage the node:

# ironic driver-properties DRIVER_NAME
# ironic node-update NODE_UUID add \
    driver_info/PROPERTY=VALUE \
    driver_info/PROPERTY=VALUE

Replace the following values:

- Replace DRIVER_NAME with the name of the driver for which to show properties. The information is not returned unless the driver has been enabled in the /etc/ironic/ironic.conf file.

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace PROPERTY with a required property returned by the ironic driver-properties command.

- Replace VALUE with a valid value for that property.

Specify the deploy kernel and deploy ramdisk for the node driver:

# ironic node-update NODE_UUID add \
    driver_info/deploy_kernel=KERNEL_UUID \
    driver_info/deploy_ramdisk=INITRAMFS_UUID

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace KERNEL_UUID with the unique identifier for the .kernel image that was uploaded to the Image service.

- Replace INITRAMFS_UUID with the unique identifier for the .initramfs image that was uploaded to the Image service.

Configure the node to reboot after initial deployment from a local boot loader installed on the node’s disk, instead of via PXE or virtual media. The local boot capability must also be set on the flavor used to provision the node. To enable local boot, the image used to deploy the node must contain grub2. Configure local boot:

# ironic node-update NODE_UUID add \
    properties/capabilities="boot_option:local"

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node's logical name.

Move the bare metal node to the manageable state:

# ironic node-set-provision-state NODE_UUID manage

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node's logical name.

Start inspection:

# openstack baremetal introspection start NODE_UUID --discoverd-url http://INSPECTOR_IP:5050

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node's logical name. The node discovery and inspection process must run to completion before the node can be provisioned. To check the status of node inspection, run ironic node-list and look for Provision State. Nodes will be in the available state after successful inspection.

- Replace INSPECTOR_IP with the IP address or host name from the service_url value that was previously set in ironic.conf.
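Inspection can take several minutes. Instead of rerunning ironic node-list by hand, you can poll the provision state from a script. The following bash sketch is a generic, hypothetical helper, wait_for_value. It reruns a command until its output matches a target value or a timeout expires.

```bash
# Hypothetical polling helper: rerun COMMAND until its output equals TARGET.
# Usage: wait_for_value TARGET TIMEOUT INTERVAL COMMAND [ARGS...]
# Returns 0 on success, 1 on timeout, 2 if INTERVAL is not positive.
wait_for_value() {
  local target=$1 timeout=$2 interval=$3
  shift 3
  local elapsed=0 value
  (( interval > 0 )) || return 2     # a zero interval would never time out
  while (( elapsed < timeout )); do
    value=$("$@") && [ "$value" = "$target" ] && return 0
    sleep "$interval"
    elapsed=$(( elapsed + interval ))
  done
  return 1
}
```

For example, the following command waits up to 30 minutes for the state described in the step above, checking every 15 seconds: wait_for_value available 1800 15 openstack baremetal node show NODE_UUID -f value -c provision_state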

Validate the node’s setup:

# ironic node-validate NODE_UUID
+------------+--------+----------------------------+
| Interface  | Result | Reason                     |
+------------+--------+----------------------------+
| console    | None   | not supported              |
| deploy     | True   |                            |
| inspect    | True   |                            |
| management | True   |                            |
| power      | True   |                            |
+------------+--------+----------------------------+

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node's logical name. The output of the command above should report either True or None for each interface. Interfaces marked None are those that you have not configured, or those that are not supported for your driver.
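When you validate many nodes, you can check the node-validate output automatically. The following bash and awk sketch uses the hypothetical name check_validate_output. It parses the table layout shown above and prints each interface whose Result is neither True nor None. It assumes that table format and is not a feature of the ironic client.

```bash
# Hypothetical helper: read "ironic node-validate" table output on stdin.
# Prints "interface: result" for each failing interface; returns 1 if any fail.
check_validate_output() {
  awk -F'|' '
    NF >= 4 {
      iface = $2; result = $3
      gsub(/^ +| +$/, "", iface); gsub(/^ +| +$/, "", result)
      if (iface == "Interface" || iface == "") next   # skip the header row
      if (result != "True" && result != "None") { print iface ": " result; bad = 1 }
    }
    END { exit bad + 0 }'
}
```

For example: ironic node-validate NODE_UUID | check_validate_output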

2.3.2. Add a Node Manually

Register a physical machine as a bare metal node, then manually add its hardware details and create ports for each of its Ethernet MAC addresses. All steps in the following procedure must be performed on the server hosting the Bare Metal Provisioning conductor service, while logged in as the root user.

Adding a Node without Hardware Inspection

Set up the shell to use Identity as the administrative user:

# source ~/keystonerc_admin

Add a new node:

# ironic node-create -d DRIVER_NAME

Replace DRIVER_NAME with the name of the driver that Bare Metal Provisioning will use to provision this node. You must have enabled this driver in the /etc/ironic/ironic.conf file. To create a node, you must, at a minimum, specify the driver name.

Important: Note the unique identifier for the node.

You can refer to a node by a logical name or by its UUID. Optionally assign a logical name to the node:

# ironic node-update NODE_UUID add name=NAME

Replace NODE_UUID with the unique identifier for the node. Replace NAME with a logical name for the node.

Determine the node information that is required by the driver, then update the node driver information to allow Bare Metal Provisioning to manage the node:

# ironic driver-properties DRIVER_NAME
# ironic node-update NODE_UUID add \
  driver_info/PROPERTY=VALUE \
  driver_info/PROPERTY=VALUE

Replace the following values:

- Replace DRIVER_NAME with the name of the driver for which to show properties. The information is not returned unless the driver has been enabled in the /etc/ironic/ironic.conf file.

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace PROPERTY with a required property returned by the ironic driver-properties command.

- Replace VALUE with a valid value for that property.
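Several driver_info properties can go into a single node-update call. The following is a minimal sketch. The helpers maybe_run and set_driver_info and the DRY_RUN variable are hypothetical. With DRY_RUN=1 the command is only printed, so you can review it before running it.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# set_driver_info NODE PROPERTY=VALUE [PROPERTY=VALUE ...] (hypothetical helper):
# set several driver_info properties on NODE in a single node-update call.
set_driver_info() {
  local node=$1
  shift
  if [ $# -eq 0 ]; then
    echo "usage: set_driver_info NODE PROPERTY=VALUE..." >&2
    return 1
  fi
  local -a args=()
  local pair
  for pair in "$@"; do
    args+=("driver_info/$pair")   # prefix each pair with its driver_info path
  done
  maybe_run ironic node-update "$node" add "${args[@]}"
}
```

For the pxe_ipmitool driver, the properties include ipmi_address, ipmi_username, and ipmi_password. For example: set_driver_info NODE_UUID ipmi_address=IPMI_IP ipmi_username=IPMI_USER ipmi_password=IPMI_PASSWORD. Running the command with DRY_RUN=1 prints the password to the terminal.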

Specify the deploy kernel and deploy ramdisk for the node driver:

# ironic node-update NODE_UUID add \
  driver_info/deploy_kernel=KERNEL_UUID \
  driver_info/deploy_ramdisk=INITRAMFS_UUID

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace KERNEL_UUID with the unique identifier for the .kernel image that was uploaded to the Image service.

- Replace INITRAMFS_UUID with the unique identifier for the .initramfs image that was uploaded to the Image service.
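Instead of copying the image UUIDs, you can look them up by image name. The following is a minimal sketch. It assumes the openstack command-line client is installed and the shell has been set up with keystonerc_admin. The helper names are hypothetical, and so is the DRY_RUN switch.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# set_deploy_images NODE KERNEL_IMAGE RAMDISK_IMAGE (hypothetical helper):
# resolve the deploy kernel and ramdisk images (by name or ID) to their Image
# service IDs, then store those IDs in the node's driver_info.
set_deploy_images() {
  local node=$1 kernel_image=$2 ramdisk_image=$3 kernel_id ramdisk_id
  kernel_id=$(openstack image show "$kernel_image" -f value -c id) || return 1
  ramdisk_id=$(openstack image show "$ramdisk_image" -f value -c id) || return 1
  maybe_run ironic node-update "$node" add \
    "driver_info/deploy_kernel=$kernel_id" \
    "driver_info/deploy_ramdisk=$ramdisk_id"
}
```

If the lookup fails, for example because no image or more than one image has that name, the helper stops before it changes the node.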

Update the node’s properties to match the hardware specifications on the node:

# ironic node-update NODE_UUID add \
  properties/cpus=CPU \
  properties/memory_mb=RAM_MB \
  properties/local_gb=DISK_GB \
  properties/cpu_arch=ARCH

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace CPU with the number of CPUs to use.

- Replace RAM_MB with the RAM (in MB) to use.

- Replace DISK_GB with the disk size (in GB) to use.

- Replace ARCH with the architecture type to use.
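CPU, memory, and disk values must be plain numbers. For example, RAM_MB is in megabytes, so write 16384 and not 16G. The following is a minimal sketch that rejects non-numeric values before it calls node-update. The helper names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# set_node_properties NODE CPUS RAM_MB DISK_GB ARCH (hypothetical helper):
# catch typos such as "16G" before they reach the API.
set_node_properties() {
  local node=$1 cpus=$2 ram_mb=$3 disk_gb=$4 arch=$5 v
  if [ -z "$arch" ]; then
    echo "usage: set_node_properties NODE CPUS RAM_MB DISK_GB ARCH" >&2
    return 1
  fi
  for v in "$cpus" "$ram_mb" "$disk_gb"; do
    case $v in
      ''|*[!0-9]*) echo "not a whole number: '$v'" >&2; return 1 ;;
    esac
  done
  maybe_run ironic node-update "$node" add \
    "properties/cpus=$cpus" \
    "properties/memory_mb=$ram_mb" \
    "properties/local_gb=$disk_gb" \
    "properties/cpu_arch=$arch"
}
```

For example: set_node_properties NODE_UUID 8 16384 100 x86_64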

Configure the node so that, after the initial deployment, it boots from a boot loader installed on its local disk instead of through PXE or virtual media. The local boot capability must also be set on the flavor used to provision the node. To enable local boot, the image used to deploy the node must contain grub2. Configure local boot:

# ironic node-update NODE_UUID add \
  properties/capabilities="boot_option:local"

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.
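Because local boot must be enabled on both the node and the flavor, the two settings can be applied together. The following is a minimal sketch. It assumes the flavor side is the capabilities:boot_option extra spec set with nova flavor-key. The helper names and the DRY_RUN switch are hypothetical. properties/capabilities holds a single comma-separated string, so the add operation replaces any capabilities already set on the node.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# enable_local_boot NODE FLAVOR (hypothetical helper): set boot_option:local on
# the node and the matching capability on the flavor.
# Note: this overwrites any existing capabilities string on the node.
enable_local_boot() {
  local node=$1 flavor=$2
  if [ -z "$flavor" ]; then
    echo "usage: enable_local_boot NODE FLAVOR" >&2
    return 1
  fi
  maybe_run ironic node-update "$node" add "properties/capabilities=boot_option:local" || return 1
  maybe_run nova flavor-key "$flavor" set "capabilities:boot_option=local"
}
```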

Inform Bare Metal Provisioning of the network interface cards on the node. Create a port with each NIC’s MAC address:

# ironic port-create -n NODE_UUID -a MAC_ADDRESS

Replace NODE_UUID with the unique identifier for the node. Replace MAC_ADDRESS with the MAC address for a NIC on the node.
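A node with several NICs needs one port per MAC address. The following is a minimal sketch that checks every address first, so that a typo does not leave the node with only some of its ports. It accepts only colon-separated addresses. The helper names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# create_ports NODE MAC [MAC ...] (hypothetical helper): validate all MAC
# addresses, then create one Bare Metal Provisioning port per address.
create_ports() {
  local node=$1 mac
  local re='^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
  shift
  if [ $# -eq 0 ]; then
    echo "usage: create_ports NODE MAC..." >&2
    return 1
  fi
  for mac in "$@"; do                 # first pass: validate only
    if ! [[ $mac =~ $re ]]; then
      echo "invalid MAC address: $mac" >&2
      return 1
    fi
  done
  for mac in "$@"; do                 # second pass: create the ports
    maybe_run ironic port-create -n "$node" -a "$mac" || return 1
  done
}
```

For example: create_ports NODE_UUID 52:54:00:aa:bb:01 52:54:00:aa:bb:02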

Validate the node’s setup:

# ironic node-validate NODE_UUID
+------------+--------+----------------------------+
| Interface  | Result | Reason                     |
+------------+--------+----------------------------+
| console    | None   | not supported              |
| deploy     | True   |                            |
| inspect    | None   | not supported              |
| management | True   |                            |
| power      | True   |                            |
+------------+--------+----------------------------+

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name. The output of the command above should report either True or None for each interface. Interfaces marked None are those that you have not configured, or those that are not supported for your driver.

2.3.3. Configure Manual Node Cleaning

When a bare metal server is initially provisioned, or reprovisioned after it is freed from a workload, Bare Metal Provisioning can automatically clean the server to ensure that it is ready for another workload. You can also initiate a manual cleaning cycle when the server is in the manageable state. Manual cleaning cycles are useful for long-running or destructive tasks. You can configure the specific cleaning steps for the bare metal server.

Configure a Cleaning Network

Bare Metal Provisioning uses the cleaning network to run in-band cleaning steps on the bare metal server. You can create a separate network for cleaning or use the provisioning network.

To configure the Bare Metal Provisioning service cleaning network, follow these steps:

Set up the shell to access Identity as the administrative user.

# source ~stack/overcloudrc

Find the network UUID for the network you want Bare Metal Provisioning to use for cleaning bare metal servers.

# openstack network list

Select the UUID from the ID field in the openstack network list output.

Set cleaning_network_uuid in the /etc/ironic/ironic.conf file to the cleaning network UUID:

# openstack-config --set /etc/ironic/ironic.conf neutron cleaning_network_uuid CLEANING_NETWORK_UUID

Replace CLEANING_NETWORK_UUID with the network ID retrieved in the earlier step.

Restart the Bare Metal Provisioning conductor service:

# systemctl restart openstack-ironic-conductor
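The lookup, configuration, and restart steps above can be combined. The following is a minimal sketch. It assumes the cleaning network is identified by a unique name and uses openstack network list --name to resolve that name to a UUID. Network names are not necessarily unique, so the helper stops unless exactly one network matches. The helper names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# set_cleaning_network NETWORK_NAME (hypothetical helper): store the network's
# UUID as [neutron] cleaning_network_uuid in ironic.conf, then restart the
# conductor so that the change takes effect.
set_cleaning_network() {
  local name=$1 uuid
  uuid=$(openstack network list --name "$name" -f value -c ID) || return 1
  # Stop unless exactly one network matched the name.
  if [ -z "$uuid" ] || [ "$(printf '%s\n' "$uuid" | wc -l)" -ne 1 ]; then
    echo "expected exactly one network named '$name'" >&2
    return 1
  fi
  maybe_run openstack-config --set /etc/ironic/ironic.conf \
    neutron cleaning_network_uuid "$uuid" || return 1
  maybe_run systemctl restart openstack-ironic-conductor
}
```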

Configure Manual Cleaning

Ensure that the bare metal server is in the manageable state.

# ironic node-set-provision-state NODE_ID manage

Replace NODE_ID with the bare metal server UUID or node name.

Move the bare metal server to the cleaning state and provide the cleaning steps:

# ironic node-set-provision-state NODE_ID clean --clean-steps CLEAN_STEPS

Replace NODE_ID with the bare metal server UUID or node name. Replace CLEAN_STEPS with the cleaning steps as a JSON string, the path to a file that contains the cleaning steps, or - to read the cleaning steps from standard input. The following is an example of cleaning steps in JSON format:

'[{"interface": "deploy", "step": "erase_devices"}]'

See OpenStack - Node Cleaning for more details.
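Keeping the cleaning steps in a file avoids quoting JSON on the command line and lets you reuse the same steps for several nodes. The following is a minimal sketch. It writes the erase_devices example above to a file, then starts manual cleaning with that file once the node is in the manageable state. It accepts only a file path, not a JSON string or standard input. The helper names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# write_clean_steps FILE (hypothetical helper): write the example cleaning
# steps to FILE as a JSON list.
write_clean_steps() {
  cat > "$1" <<'EOF'
[
  {"interface": "deploy", "step": "erase_devices"}
]
EOF
}

# manual_clean NODE STEPS_FILE (hypothetical helper): start a manual cleaning
# cycle on a node that is already in the manageable state.
manual_clean() {
  local node=$1 steps_file=$2
  if [ ! -r "$steps_file" ]; then
    echo "cannot read cleaning steps file: $steps_file" >&2
    return 1
  fi
  maybe_run ironic node-set-provision-state "$node" clean --clean-steps "$steps_file"
}
```

For example: write_clean_steps /tmp/clean-steps.json, then manual_clean NODE_ID /tmp/clean-steps.json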

2.3.4. Specify the Preferred Root Disk on a Bare Metal Node

When the deploy ramdisk boots on a bare metal node, the first disk that Bare Metal Provisioning discovers becomes the root device (the device where the image is saved). If the bare metal node has more than one SATA, SCSI, or IDE disk controller, the order in which their corresponding disk devices are added is arbitrary and may change at each reboot. For example, devices such as /dev/sda and /dev/sdb may switch on each boot, which would result in Bare Metal Provisioning selecting a different disk each time the bare metal node is being deployed.

With disk hints, you can pass hints to the deploy ramdisk to specify which disk device Bare Metal Provisioning should deploy the image onto. The following table describes the hints you can use to select a preferred root disk on a bare metal node.

| Hint | Type | Description |
|---|---|---|
| model | STRING | Disk device identifier. |
| vendor | STRING | Disk device vendor. |
| serial | STRING | Disk device serial number. |
| size | INT | Size of the disk device in GB. NOTE: The |
| wwn | STRING | Unique storage identifier. |
| wwn_with_extension | STRING | Unique storage identifier with the vendor extension appended. |
| wwn_vendor_extension | STRING | Unique vendor storage identifier. |
| rotational | BOOLEAN | Whether the disk device is rotational (a spinning disk) or not (for example, an SSD). |
| name | STRING | The device name, for example /dev/md0. WARNING: The root device hint name should only be used for devices with constant names (for example, RAID volumes). Do not use this hint for SATA, SCSI, and IDE disk controllers because the order in which the device nodes are added in Linux is arbitrary, resulting in devices such as /dev/sda and /dev/sdb switching at boot time. See Persistent Naming for details. |

To associate one or more disk device hints with a bare metal node, update the node’s properties with a root_device key, for example:

# ironic node-update <node-uuid> add properties/root_device='{"wwn": "0x4000cca77fc4dba1"}'

This example guarantees that Bare Metal Provisioning picks the disk device that has the wwn equal to the specified WWN value, or fails the deployment if no disk device on that node has the specified WWN value.

A hint value can begin with an operator. If no operator is specified, the default is == for numerical values and s== for string values.

| Type | Operator | Description |
|---|---|---|
| numerical | = | equal to or greater than (equivalent to >=) |
| | == | equal to |
| | != | not equal to |
| | >= | greater than or equal to |
| | > | greater than |
| | <= | less than or equal to |
| | < | less than |
| string (python comparisons) | s== | equal to |
| | s!= | not equal to |
| | s>= | greater than or equal to |
| | s> | greater than |
| | s<= | less than or equal to |
| | s< | less than |
| | <in> | substring |
| collections | <all-in> | all elements contained in collection |
| | <or> | find one of these |

The following examples show how to update bare metal node properties to select a particular disk:

- Find a non-rotational (SSD) disk greater than or equal to 60 GB:

# ironic node-update <node-uuid> add properties/root_device='{"size": ">= 60", "rotational": false}'

- Find a Samsung or Winsys disk:

# ironic node-update <node-uuid> add properties/root_device='{"vendor": "<or> samsung <or> winsys"}'

If multiple hints are specified, a disk device must satisfy all the hints.
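Hint values often contain characters that the shell treats specially, such as >, <, and spaces. The whole JSON object must therefore reach ironic as one single-quoted argument, as in the examples above. The following is a minimal sketch that takes the JSON as one argument and makes a shallow check that it looks like a JSON object. It does not validate the JSON fully. The helper names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# set_root_device NODE JSON (hypothetical helper): store JSON as the node's
# root_device hints. The JSON travels as a single argument, so operators such
# as ">=" and "<or>" reach ironic unchanged.
set_root_device() {
  local node=$1 hints=$2
  case $hints in
    '{'*'}') ;;                      # shallow check: must look like an object
    *) echo "root device hints must be a JSON object, got: $hints" >&2; return 1 ;;
  esac
  maybe_run ironic node-update "$node" add "properties/root_device=$hints"
}
```

For example: set_root_device NODE_UUID '{"size": ">= 60", "rotational": false}'. Afterwards, ironic node-show NODE_UUID lists the stored hints under the node properties.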

2.4. Use Host Aggregates to Separate Physical and Virtual Machine Provisioning

Host aggregates are used by OpenStack Compute to partition availability zones and to group nodes with specific shared properties. Key-value pairs are set both on the host aggregate and on instance flavors to define these properties. When an instance is provisioned, the Compute scheduler compares the key-value pairs on the flavor with the key-value pairs assigned to host aggregates, and ensures that the instance is provisioned in the correct aggregate and on the correct host: either on a physical machine or as a virtual machine on an openstack-nova-compute node.

If your Red Hat OpenStack Platform environment is set up to provision both bare metal machines and virtual machines, use host aggregates to direct instances to spawn as either physical machines or virtual machines. The procedure below creates a host aggregate for bare metal hosts, and adds a key-value pair specifying that the host type is baremetal. Any bare metal node grouped in this aggregate inherits this key-value pair. The same key-value pair is then added to the flavor that will be used to provision the instance.

If the image or images you will use to provision bare metal machines were uploaded to the Image service with the hypervisor_type=ironic property set, the scheduler also uses that key-value pair in its scheduling decision. To ensure effective scheduling in situations where image properties may not apply, set up host aggregates in addition to setting image properties. See Section 2.1.3, “Create the Bare Metal Images” for more information on building and uploading images.

Creating a Host Aggregate for Bare Metal Provisioning

Create the host aggregate for baremetal in the default nova availability zone:

# nova aggregate-create baremetal nova

Set metadata on the baremetal aggregate so that hosts added to the aggregate are assigned the hypervisor_type=ironic property:

# nova aggregate-set-metadata baremetal hypervisor_type=ironic

Add the openstack-nova-compute node with Bare Metal Provisioning drivers to the baremetal aggregate:

# nova aggregate-add-host baremetal COMPUTE_HOSTNAME

Replace COMPUTE_HOSTNAME with the host name of the system hosting the openstack-nova-compute service. A single, dedicated compute host should be used to handle all Bare Metal Provisioning requests.

Add the ironic hypervisor property to the flavor or flavors that you have created for provisioning bare metal nodes:

# nova flavor-key FLAVOR_NAME set hypervisor_type="ironic"

Replace FLAVOR_NAME with the name of the flavor.
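The aggregate and flavor steps above can be run as one sequence. The following is a minimal sketch that issues the same nova commands in order and stops at the first failure. The helper names and the DRY_RUN switch are hypothetical. The flavor name in the usage line is only an example.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# setup_baremetal_aggregate COMPUTE_HOSTNAME FLAVOR [FLAVOR ...] (hypothetical
# helper): create the baremetal aggregate in the nova availability zone, tag it
# with hypervisor_type=ironic, add the dedicated compute host, and tag each
# flavor with the same property.
setup_baremetal_aggregate() {
  local host=$1 flavor
  shift
  if [ -z "$host" ] || [ $# -eq 0 ]; then
    echo "usage: setup_baremetal_aggregate COMPUTE_HOSTNAME FLAVOR..." >&2
    return 1
  fi
  maybe_run nova aggregate-create baremetal nova || return 1
  maybe_run nova aggregate-set-metadata baremetal hypervisor_type=ironic || return 1
  maybe_run nova aggregate-add-host baremetal "$host" || return 1
  for flavor in "$@"; do
    maybe_run nova flavor-key "$flavor" set hypervisor_type=ironic || return 1
  done
}
```

For example: DRY_RUN=1 setup_baremetal_aggregate COMPUTE_HOSTNAME baremetal prints the four commands without running them.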

Add the following Compute scheduler filter to the existing list under scheduler_default_filters in /etc/nova/nova.conf:

AggregateInstanceExtraSpecsFilter

This filter ensures that the Compute scheduler processes the key value pairs assigned to host aggregates.
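The filter must be appended to the existing list, not substituted for it. The following is a minimal sketch. It assumes that scheduler_default_filters is set in the [DEFAULT] section of nova.conf, and that the scheduler runs as the openstack-nova-scheduler service on the same host. If the option is not set, the sketch refuses to continue, because setting it to one filter would replace Compute's default filter list. The helper names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# maybe_run (hypothetical helper): run a command, or only print it when DRY_RUN=1.
maybe_run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# append_filter CURRENT FILTER (hypothetical helper): print CURRENT with FILTER
# appended, unless FILTER is already in the comma-separated list.
append_filter() {
  local current=$1 filter=$2
  case ",${current// /}," in          # ignore spaces when matching
    *",$filter,"*) echo "$current" ;;
    ",,") echo "$filter" ;;
    *) echo "$current,$filter" ;;
  esac
}

# enable_aggregate_filter (hypothetical helper): add
# AggregateInstanceExtraSpecsFilter to scheduler_default_filters and restart
# the scheduler so that the change takes effect.
enable_aggregate_filter() {
  local conf=/etc/nova/nova.conf current
  current=$(openstack-config --get "$conf" DEFAULT scheduler_default_filters 2>/dev/null)
  if [ -z "$current" ]; then
    echo "scheduler_default_filters is not set in $conf; add the full list by hand" >&2
    return 1
  fi
  maybe_run openstack-config --set "$conf" DEFAULT scheduler_default_filters \
    "$(append_filter "$current" AggregateInstanceExtraSpecsFilter)" || return 1
  maybe_run systemctl restart openstack-nova-scheduler
}
```

Running the helper twice is safe, because append_filter leaves the list unchanged when the filter is already present.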

2.5. Example: Test Bare Metal Provisioning with SSH and Virsh

Test the Bare Metal Provisioning setup by deploying instances on two virtual machines acting as bare metal nodes on a single physical host. Both virtual machines are virtualized using libvirt and virsh.

The SSH driver is for testing and evaluation purposes only. It is not recommended for Red Hat OpenStack Platform enterprise environments.

This scenario requires the following resources: