File System Guide

Configuring and Mounting Ceph File Systems


Chapter 1. Introduction to the Ceph File System

As a storage administrator, you can gain an understanding of the features, system components, and limitations of the Ceph File System (CephFS) to manage a CephFS environment.

1.1. Ceph File System features and enhancements

The Ceph File System (CephFS) is a POSIX-compatible file system built on top of Ceph’s distributed object store, called RADOS (Reliable Autonomic Distributed Object Storage). CephFS provides file access to a Red Hat Ceph Storage cluster and uses POSIX semantics wherever possible. For example, in contrast to many other common network file systems like NFS, CephFS maintains strong cache coherency across clients. The goal is for processes using the file system to behave the same when they are on different hosts as when they are on the same host. However, in some cases, CephFS diverges from the strict POSIX semantics.

The Ceph File System has the following features and enhancements:

- Scalability: The Ceph File System is highly scalable due to horizontal scaling of metadata servers and direct client reads and writes with individual OSD nodes.

- Shared File System: The Ceph File System is a shared file system, so multiple clients can work on the same file system at once.

- High Availability: The Ceph File System provides a cluster of Ceph Metadata Servers (MDS). One is active and the others are in standby mode. If the active MDS terminates unexpectedly, one of the standby MDS daemons becomes active. As a result, client mounts continue working through a server failure. This behavior makes the Ceph File System highly available. In addition, you can configure multiple active metadata servers.

- Configurable File and Directory Layouts: The Ceph File System allows users to configure file and directory layouts to use multiple pools, pool namespaces, and file striping modes across objects.

- POSIX Access Control Lists (ACL): The Ceph File System supports POSIX Access Control Lists (ACL). ACLs are enabled by default for Ceph File Systems mounted as kernel clients with kernel version kernel-3.10.0-327.18.2.el7 or newer. To use ACLs with Ceph File Systems mounted as FUSE clients, you must enable them.

- Client Quotas: The Ceph File System supports setting quotas on any directory in a system. The quota can restrict the number of bytes or the number of files stored beneath that point in the directory hierarchy. CephFS client quotas are enabled by default.

- Resizing: The Ceph File System size is bounded only by the capacity of the OSDs servicing its data pool. To increase the capacity, add more OSDs to the CephFS data pool. To decrease the capacity, use either client quotas or pool quotas.

- Snapshots: The Ceph File System supports read-only snapshots but not writable clones.

- POSIX file system operations: The Ceph File System supports standard and consistent POSIX file system operations, including the following access patterns:

  - Buffered write operations via the Linux page cache.

  - Cached read operations via the Linux page cache.

  - Direct I/O asynchronous or synchronous read/write operations, bypassing the page cache.

  - Memory mapped I/O.
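Client quotas are set as extended attributes on a directory of a mounted CephFS. The following Python sketch assumes a CephFS mount on a Linux client and uses the ceph.quota.max_bytes and ceph.quota.max_files extended attributes; the helper names quota_xattrs and apply_quota are hypothetical, and setting a value of 0 removes the corresponding quota.

```python
import os


def quota_xattrs(max_bytes=None, max_files=None):
    """Build the CephFS quota extended attributes for a directory.

    Values are written as decimal ASCII strings; 0 removes a quota.
    """
    attrs = {}
    if max_bytes is not None:
        if max_bytes < 0:
            raise ValueError("max_bytes must be >= 0")
        attrs["ceph.quota.max_bytes"] = str(max_bytes).encode("ascii")
    if max_files is not None:
        if max_files < 0:
            raise ValueError("max_files must be >= 0")
        attrs["ceph.quota.max_files"] = str(max_files).encode("ascii")
    return attrs


def apply_quota(directory, max_bytes=None, max_files=None):
    """Set the quota attributes on a directory of a mounted CephFS (Linux only)."""
    for name, value in quota_xattrs(max_bytes, max_files).items():
        os.setxattr(directory, name, value)


if __name__ == "__main__":
    # Only builds the attributes; call apply_quota() with a real CephFS
    # directory (a hypothetical path in your environment) to set them.
    print(quota_xattrs(max_bytes=100 * 1024**3, max_files=10000))
```

Keeping the attribute construction separate from os.setxattr lets you review the values before touching a live file system.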

Additional Resources

- See the Installing Metadata servers section in the Installation Guide to install Ceph Metadata servers.

- See the Deploying Ceph File Systems section in the File System Guide to create Ceph File Systems.

1.2. Ceph File System components

The Ceph File System has two primary components:

- Clients: The CephFS clients, such as ceph-fuse for FUSE clients and kcephfs for kernel clients, perform I/O operations on behalf of applications using CephFS. CephFS clients send metadata requests to an active Metadata Server. In return, the CephFS client learns of the file metadata, and can begin safely caching both metadata and file data.

- Metadata Servers (MDS): The MDS does the following:

  - Provides metadata to CephFS clients.

  - Manages metadata related to files stored on the Ceph File System.

  - Coordinates access to the shared Red Hat Ceph Storage cluster.

  - Caches hot metadata to reduce requests to the backing metadata pool store.

  - Manages the CephFS clients' caches to maintain cache coherence.

  - Replicates hot metadata between active MDS.

  - Coalesces metadata mutations to a compact journal with regular flushes to the backing metadata pool.

CephFS requires at least one Metadata Server daemon (ceph-mds) to run.

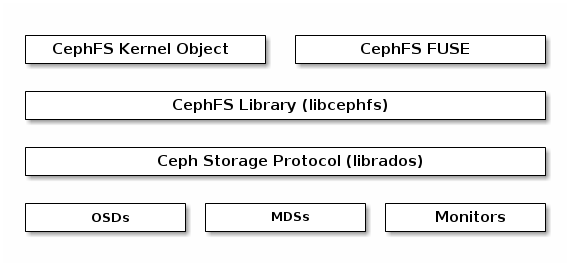

The diagram below shows the component layers of the Ceph File System.

The bottom layer represents the underlying core storage cluster components:

- Ceph OSDs (ceph-osd) where the Ceph File System data and metadata are stored.

- Ceph Metadata Servers (ceph-mds) that manage Ceph File System metadata.

- Ceph Monitors (ceph-mon) that manage the master copy of the cluster map.

The Ceph Storage protocol layer represents the Ceph native librados library for interacting with the core storage cluster.

The CephFS library layer includes the CephFS libcephfs library that works on top of librados and represents the Ceph File System.

The top layer represents two types of Ceph clients that can access the Ceph File Systems.

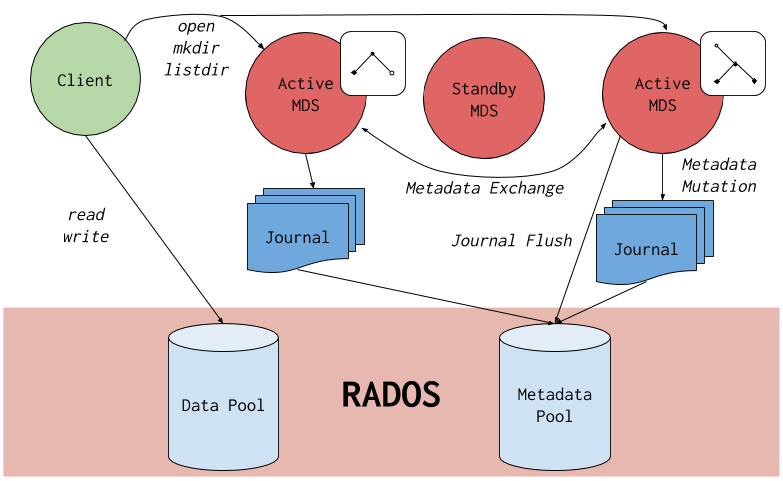

The diagram below shows more details on how the Ceph File System components interact with each other.

Additional Resources

- See the Installing Metadata servers section in the Red Hat Ceph Storage Installation Guide to install Ceph Metadata servers.

- See the Deploying Ceph File Systems section in the Red Hat Ceph Storage File System Guide to create Ceph File Systems.

1.3. Ceph File System and SELinux

Starting with Red Hat Enterprise Linux 8.3 and Red Hat Ceph Storage 4.2, support for using Security-Enhanced Linux (SELinux) on Ceph File Systems (CephFS) environments is available. You can now set any SELinux file type with CephFS, along with assigning a particular SELinux type on individual files. This support applies to the Ceph File System Metadata Server (MDS), the CephFS File System in User Space (FUSE) clients, and the CephFS kernel clients.

Additional Resources

- See the Using SELinux Guide on Red Hat Enterprise Linux 8 for more information on SELinux.

1.4. Ceph File System limitations and the POSIX standards

Creation of multiple Ceph File Systems on one Red Hat Ceph Storage cluster is disabled by default. An attempt to create an additional Ceph File System fails with the following error message:

Error EINVAL: Creation of multiple filesystems is disabled.

While technically possible, Red Hat does not support having multiple Ceph File Systems on one Red Hat Ceph Storage cluster. Doing so can cause the MDS or CephFS client nodes to terminate unexpectedly.

The Ceph File System diverges from the strict POSIX semantics in the following ways:

- If a client’s attempt to write a file fails, the write operations are not necessarily atomic. That is, the client might call the write() system call on a file opened with the O_SYNC flag with an 8 MB buffer and then terminate unexpectedly, and the write operation can be only partially applied. Almost all file systems, even local file systems, have this behavior.

- In situations when the write operations occur simultaneously, a write operation that exceeds object boundaries is not necessarily atomic. For example, writer A writes "aa|aa" and writer B writes "bb|bb" simultaneously, where "|" is the object boundary, and "aa|bb" is written rather than the proper "aa|aa" or "bb|bb".

- POSIX includes the telldir() and seekdir() system calls that allow you to obtain the current directory offset and seek back to it. Because CephFS can fragment directories at any time, it is difficult to return a stable integer offset for a directory. As such, calling the seekdir() system call to a non-zero offset might often work but is not guaranteed to do so. Calling seekdir() to offset 0 always works. This is equivalent to the rewinddir() system call.

- Sparse files propagate incorrectly to the st_blocks field of the stat() system call. Because CephFS does not explicitly track which parts of a file are allocated or written to, the st_blocks field is always populated by the quotient of file size divided by block size. This behavior causes utilities, such as du, to overestimate used space.

- When the mmap() system call maps a file into memory on multiple hosts, write operations are not coherently propagated to caches of other hosts. That is, if a page is cached on host A, and then updated on host B, the page on host A is not coherently invalidated.

- CephFS clients present a hidden .snap directory that is used to access, create, delete, and rename snapshots. Although this directory is excluded from the readdir() system call, any attempt to create a file or directory with the same name returns an error. The name of this hidden directory can be changed at mount time with the -o snapdirname=.<new_name> option or by using the client_snapdir configuration option.
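To see why du overestimates usage on CephFS, it helps to compare two ways of deriving st_blocks for a sparse file. The Python sketch below is a simplified model, not CephFS code: one function derives st_blocks from the file size alone, as the limitation above describes, and the other counts only written ranges, as a file system that tracks allocation would. It assumes 512-byte st_blocks units as on Linux, and rounding up to whole units is an assumption of the model.

```python
BLOCK_UNIT = 512  # st_blocks is reported in 512-byte units on Linux


def cephfs_st_blocks(file_size):
    """Model of CephFS: st_blocks derived from the file size alone (rounded up)."""
    return -(-file_size // BLOCK_UNIT)


def allocated_st_blocks(written_ranges):
    """Model of an allocation-tracking file system: count only written bytes.

    written_ranges is a list of non-overlapping (offset, length) tuples.
    """
    return sum(-(-length // BLOCK_UNIT) for _offset, length in written_ranges)


# A 1 GiB sparse file with only 4 KiB of data written at the start.
size = 1024**3
written = [(0, 4096)]
print("size-derived du estimate:", cephfs_st_blocks(size) * BLOCK_UNIT, "bytes")
print("allocation-based du estimate:", allocated_st_blocks(written) * BLOCK_UNIT, "bytes")
```

In this model, du on CephFS reports the full 1 GiB even though only 4 KiB holds data.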

Additional Resources

- See the Installing Metadata servers section in the Red Hat Ceph Storage Installation Guide to install Ceph Metadata servers.

- See the Deploying Ceph File Systems section in the Red Hat Ceph Storage File System Guide to create Ceph File Systems.

1.5. Additional Resources

- See the Installing Metadata Servers section in the Red Hat Ceph Storage Installation Guide for more details.

- If you want to use NFS Ganesha as an interface to the Ceph File System with Red Hat OpenStack Platform, see the CephFS with NFS-Ganesha deployment section in the Deploying the Shared File Systems service with CephFS through NFS guide for instructions on how to deploy such an environment.

Chapter 2. The Ceph File System Metadata Server

As a storage administrator, you can learn about the different states of the Ceph File System (CephFS) Metadata Server (MDS), the CephFS MDS ranking mechanism, configuring the MDS standby daemon, and cache size limits. Knowing these concepts enables you to configure the MDS daemons for a storage environment.

2.1. Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Installation of the Ceph Metadata Server daemons (ceph-mds).

2.2. Metadata Server daemon states

The Metadata Server (MDS) daemons operate in two states:

- Active: manages metadata for files and directories stored on the Ceph File System.

- Standby: serves as a backup, and becomes active when an active MDS daemon becomes unresponsive.

By default, a Ceph File System uses only one active MDS daemon. However, systems with many clients benefit from multiple active MDS daemons.

You can configure the file system to use multiple active MDS daemons so that you can scale metadata performance for larger workloads. The active MDS daemons dynamically share the metadata workload when metadata load patterns change. Note that systems with multiple active MDS daemons still require standby MDS daemons to remain highly available.

What Happens When the Active MDS Daemon Fails

When the active MDS becomes unresponsive, a Ceph Monitor daemon waits a number of seconds equal to the value specified in the mds_beacon_grace option. If the active MDS is still unresponsive after the specified time period has passed, the Ceph Monitor marks the MDS daemon as laggy. One of the standby daemons becomes active, depending on the configuration.

To change the value of mds_beacon_grace, add this option to the Ceph configuration file and specify the new value.
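The timeout behavior can be pictured as a comparison between the time of the last beacon received from the active MDS and mds_beacon_grace. The following Python sketch is a simplified model, not the Ceph Monitor implementation: the class and function names are hypothetical, the grace value of 15 seconds is an illustrative assumption (check the value configured in your cluster), and the model simply promotes the first standby.

```python
from dataclasses import dataclass

MDS_BEACON_GRACE = 15.0  # seconds; illustrative value, check your cluster configuration


@dataclass
class MdsBeaconState:
    name: str
    last_beacon: float  # timestamp, in seconds, of the last beacon received


def is_laggy(state, now, grace=MDS_BEACON_GRACE):
    """Return True if no beacon arrived within the grace period."""
    return now - state.last_beacon > grace


def pick_replacement(active, standbys, now, grace=MDS_BEACON_GRACE):
    """Return the standby daemon to promote, or None if the active MDS is healthy.

    Real standby selection depends on configuration (for example,
    standby-replay daemons); this model takes the first standby.
    """
    if not is_laggy(active, now, grace):
        return None
    return standbys[0] if standbys else None


active = MdsBeaconState("node1", last_beacon=100.0)
print(pick_replacement(active, ["node2", "node3"], now=110.0))  # None: within the grace period
print(pick_replacement(active, ["node2", "node3"], now=120.0))  # node2: 20 s without a beacon
```

Raising the grace value in this model delays failover, which mirrors the trade-off of increasing mds_beacon_grace: fewer false failovers, slower recovery from a real failure.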

2.3. Metadata Server ranks

Each Ceph File System (CephFS) has a number of ranks, one by default, numbered starting at zero.

Ranks define how the metadata workload is shared between multiple Metadata Server (MDS) daemons. The number of ranks is the maximum number of MDS daemons that can be active at one time. Each MDS daemon handles the subset of the CephFS metadata that is assigned to its rank.

Each MDS daemon initially starts without a rank. The Ceph Monitor assigns a rank to the daemon. The MDS daemon can only hold one rank at a time. Daemons only lose ranks when they are stopped.

The max_mds setting controls how many ranks will be created.

The actual number of ranks in the CephFS is only increased if a spare daemon is available to accept the new rank.
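The relationship between max_mds, the available daemons, and the actual number of ranks can be modeled in a few lines. In this Python sketch, which is a simplification with a hypothetical function name and daemon names, ranks are created up to max_mds but only when a daemon is available to hold each one; every daemon holds at most one rank, and the remaining daemons stay in standby.

```python
def assign_ranks(max_mds, daemons):
    """Return (rank -> daemon mapping, list of remaining standby daemons).

    Ranks are numbered from zero. A rank is only created when a spare daemon
    is available to take it, and each daemon holds at most one rank.
    """
    if max_mds < 1:
        raise ValueError("max_mds must be at least 1")
    active_count = min(max_mds, len(daemons))
    ranks = {rank: daemons[rank] for rank in range(active_count)}
    standbys = daemons[active_count:]
    return ranks, standbys


print(assign_ranks(2, ["node1", "node2", "node3"]))
# ({0: 'node1', 1: 'node2'}, ['node3'])
print(assign_ranks(4, ["node1", "node2"]))
# ({0: 'node1', 1: 'node2'}, []): only two ranks exist until more daemons join
```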

Rank States

Ranks can be:

- Up - A rank that is assigned to the MDS daemon.

- Failed - A rank that is not associated with any MDS daemon.

- Damaged - A rank that is damaged; its metadata is corrupted or missing. Damaged ranks are not assigned to any MDS daemons until the operator fixes the problem and uses the ceph mds repaired command on the damaged rank.

2.4. Metadata Server cache size limits

You can limit the size of the Ceph File System (CephFS) Metadata Server (MDS) cache by:

- A memory limit: Use the mds_cache_memory_limit option. Red Hat recommends a value between 8 GB and 64 GB for mds_cache_memory_limit. Setting more cache can cause issues with recovery. This limit is approximately 66% of the desired maximum memory use of the MDS.

  Important: Red Hat recommends using memory limits instead of inode count limits.

- Inode count: Use the mds_cache_size option. By default, limiting the MDS cache by inode count is disabled.

In addition, you can specify a cache reservation for MDS operations by using the mds_cache_reservation option. The cache reservation is expressed as a percentage of the memory or inode limit and is set to 5% by default. The intent of this parameter is to have the MDS maintain an extra reserve of memory for its cache for new metadata operations to use. As a consequence, the MDS should in general operate below its memory limit, because it recalls old state from clients in order to drop unused metadata in its cache.

The mds_cache_reservation option replaces the mds_health_cache_threshold option in all situations, except when MDS nodes send a health alert to the Ceph Monitors indicating that the cache is too large. By default, mds_health_cache_threshold is 150% of the maximum cache size.

Be aware that the cache limit is not a hard limit. Potential bugs in the CephFS client or MDS or misbehaving applications might cause the MDS to exceed its cache size. The mds_health_cache_threshold option configures the storage cluster health warning message, so that operators can investigate why the MDS cannot shrink its cache.
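The ratios in this section can be combined into a quick sizing calculation. The following Python sketch derives an mds_cache_memory_limit from a desired maximum MDS memory footprint using the approximate 66% ratio, then computes the default 5% reservation and the 150% health-warning threshold described above. The function name is hypothetical, the sketch treats "GB" as GiB (2**30 bytes), and all ratios are approximations.

```python
GIB = 1024**3  # this sketch treats "GB" in the guide as GiB (2**30 bytes)

CACHE_TO_MEMORY_PERCENT = 66     # cache limit is roughly 66% of desired MDS memory use
RESERVATION_PERCENT = 5          # mds_cache_reservation default
HEALTH_THRESHOLD_PERCENT = 150   # mds_health_cache_threshold default
RECOMMENDED_RANGE = (8 * GIB, 64 * GIB)


def mds_cache_plan(desired_max_memory):
    """Return suggested cache figures, in bytes, for a desired MDS memory footprint."""
    limit = desired_max_memory * CACHE_TO_MEMORY_PERCENT // 100
    low, high = RECOMMENDED_RANGE
    return {
        "mds_cache_memory_limit": limit,
        "reservation_bytes": limit * RESERVATION_PERCENT // 100,
        "health_warning_at": limit * HEALTH_THRESHOLD_PERCENT // 100,
        "within_recommended_range": low <= limit <= high,
    }


for key, value in mds_cache_plan(24 * GIB).items():
    print(key, value)
```

Integer arithmetic keeps the byte values exact, which matters when you paste the result into a configuration option.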

Additional Resources

- See the Metadata Server daemon configuration reference section in the Red Hat Ceph Storage File System Guide for more information.

2.5. Configuring multiple active Metadata Server daemons

Configure multiple active Metadata Server (MDS) daemons to scale metadata performance for large systems.

Do not convert all standby MDS daemons to active ones. A Ceph File System (CephFS) requires at least one standby MDS daemon to remain highly available.

The scrubbing process is not currently supported when multiple active MDS daemons are configured.

Prerequisites

- Ceph administration capabilities on the MDS node.

Procedure

Set the max_mds parameter to the desired number of active MDS daemons:

Syntax

ceph fs set NAME max_mds NUMBER

Example

[root@mon ~]# ceph fs set cephfs max_mds 2

This example increases the number of active MDS daemons to two in the CephFS called cephfs.

Note: Ceph only increases the actual number of ranks in the CephFS if a spare MDS daemon is available to take the new rank.

Verify the number of active MDS daemons:

Syntax

ceph fs status NAME

Example

[root@mon ~]# ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+-------+---------------+-------+-------+
| Rank | State  |  MDS  |    Activity   |  dns  |  inos |
+------+--------+-------+---------------+-------+-------+
|  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
|  1   | active | node2 | Reqs:    0 /s |   10  |   12  |
+------+--------+-------+---------------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata |  4638 | 26.7G |
|   cephfs_data   |   data   |    0  | 26.7G |
+-----------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
|    node3    |
+-------------+
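If you script this verification, you can count the active ranks in the status table. The Python sketch below parses the boxed plain-text table layout from the example output above; that layout is an assumption taken from this guide and might differ between releases. The function name active_ranks is hypothetical.

```python
def active_ranks(status_text):
    """Return {rank: mds_name} for rows whose State column is 'active'.

    Expects the boxed table printed by `ceph fs status`, where each data row
    looks like: |  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
    """
    ranks = {}
    for line in status_text.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # Rank rows start with a numeric rank and have at least three columns.
        if len(cells) >= 3 and cells[0].isdigit() and cells[1] == "active":
            ranks[int(cells[0])] = cells[2]
    return ranks


SAMPLE = """\
+------+--------+-------+---------------+-------+-------+
| Rank | State  |  MDS  |    Activity   |  dns  |  inos |
+------+--------+-------+---------------+-------+-------+
|  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
|  1   | active | node2 | Reqs:    0 /s |   10  |   12  |
+------+--------+-------+---------------+-------+-------+
"""

print(active_ranks(SAMPLE))  # {0: 'node1', 1: 'node2'}
```

Comparing len(active_ranks(...)) with the max_mds value you set gives a simple pass or fail check.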

Additional Resources

- See the Metadata Server daemon states section in the Red Hat Ceph Storage File System Guide for more details.

- See the Decreasing the Number of Active MDS Daemons section in the Red Hat Ceph Storage File System Guide for more details.

- See the Managing Ceph users section in the Red Hat Ceph Storage Administration Guide for more details.

2.6. Configuring the number of standby daemons

Each Ceph File System (CephFS) can specify the required number of standby daemons to be considered healthy. This number also includes the standby-replay daemon waiting for a rank failure.

Prerequisites

- User access to the Ceph Monitor node.

Procedure

Set the expected number of standby daemons for a particular CephFS:

Syntax

ceph fs set FS_NAME standby_count_wanted NUMBER

Note: Setting NUMBER to zero disables the daemon health check.

Example

[root@mon]# ceph fs set cephfs standby_count_wanted 2

This example sets the expected standby daemon count to two.
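The health check that standby_count_wanted controls can be modeled as a comparison between the wanted count and the standby daemons available, including standby-replay daemons. The Python sketch below is a simplified illustration with a hypothetical function name and message format; the real check is performed by the Ceph Monitors.

```python
def standby_health(standby_count_wanted, standby, standby_replay):
    """Return 'HEALTH_OK' or a warning string for one file system.

    standby and standby_replay are lists of daemon names. A wanted count of
    zero disables the check.
    """
    if standby_count_wanted == 0:
        return "HEALTH_OK"
    available = len(standby) + len(standby_replay)
    if available < standby_count_wanted:
        return (f"HEALTH_WARN: {available} standby daemon(s) available, "
                f"{standby_count_wanted} wanted")
    return "HEALTH_OK"


print(standby_health(2, ["node3"], []))         # warning: only one standby
print(standby_health(2, ["node3"], ["node4"]))  # HEALTH_OK: standby-replay counts too
print(standby_health(0, [], []))                # HEALTH_OK: check disabled
```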

2.7. Configuring the standby-replay Metadata Server

Configure each Ceph File System (CephFS) by adding a standby-replay Metadata Server (MDS) daemon. Doing this reduces failover time if the active MDS becomes unavailable.

This specific standby-replay daemon follows the active MDS’s metadata journal. The standby-replay daemon is only used by the active MDS of the same rank, and is not available to other ranks.

If using standby-replay, then every active MDS must have a standby-replay daemon.
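Because a standby-replay daemon follows the journal of one rank only, a quick consistency check is that every active rank has its own follower. This Python sketch uses hypothetical data structures, a list of active ranks and a mapping from each standby-replay daemon to the rank it follows, and reports the ranks that lack one.

```python
def ranks_missing_standby_replay(active_ranks, followers):
    """Return the sorted active ranks that no standby-replay daemon follows.

    active_ranks: iterable of rank numbers, for example [0, 1].
    followers: dict mapping standby-replay daemon name -> rank it follows.
    """
    followed = set(followers.values())
    return sorted(rank for rank in set(active_ranks) if rank not in followed)


print(ranks_missing_standby_replay([0, 1], {"node3": 0}))              # [1]
print(ranks_missing_standby_replay([0, 1], {"node3": 0, "node4": 1}))  # []
```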

Prerequisites

- User access to the Ceph Monitor node.

Procedure

Set the standby-replay for a particular CephFS:

Syntax

ceph fs set FS_NAME allow_standby_replay 1

Example

[root@mon]# ceph fs set cephfs allow_standby_replay 1

In this example, the Boolean value is 1, which enables the standby-replay daemons to be assigned to the active Ceph MDS daemons.

Note: Setting the allow_standby_replay Boolean value back to 0 only prevents new standby-replay daemons from being assigned. To also stop the running daemons, mark them as failed with the ceph mds fail command.

Additional Resources

- See the Using the ceph mds fail command section in the Red Hat Ceph Storage File System Guide for details.

2.8. Decreasing the number of active Metadata Server daemons

You can decrease the number of active Ceph File System (CephFS) Metadata Server (MDS) daemons.

Prerequisites

- The rank that you will remove must be active first, meaning that you must have the same number of MDS daemons as specified by the max_mds parameter.

Procedure

Make sure that the number of active MDS daemons matches the max_mds parameter:

Syntax

ceph fs status NAME

Example

[root@mon ~]# ceph fs status cephfs
cephfs - 0 clients
+------+--------+-------+---------------+-------+-------+
| Rank | State  |  MDS  |    Activity   |  dns  |  inos |
+------+--------+-------+---------------+-------+-------+
|  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
|  1   | active | node2 | Reqs:    0 /s |   10  |   12  |
+------+--------+-------+---------------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata |  4638 | 26.7G |
|   cephfs_data   |   data   |    0  | 26.7G |
+-----------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
|    node3    |
+-------------+

On a node with administration capabilities, change the max_mds parameter to the desired number of active MDS daemons:

Syntax

ceph fs set NAME max_mds NUMBER

Example

[root@mon ~]# ceph fs set cephfs max_mds 1

Wait for the storage cluster to stabilize to the new max_mds value by watching the Ceph File System status. Verify the number of active MDS daemons:

Syntax

ceph fs status NAME

Example

[root@mon ~]# ceph fs status cephfs
cephfs - 0 clients
+------+--------+-------+---------------+-------+-------+
| Rank | State  |  MDS  |    Activity   |  dns  |  inos |
+------+--------+-------+---------------+-------+-------+
|  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
+------+--------+-------+---------------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata |  4638 | 26.7G |
|   cephfs_data   |   data   |    0  | 26.7G |
+-----------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
|    node3    |
|    node2    |
+-------------+
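Waiting for the cluster to stabilize can be scripted as a polling loop. In the following Python sketch, count_active is a hypothetical callable that you supply (for example, one that runs ceph fs status and counts the active ranks); the timeout and interval values are illustrative assumptions, and the sleep and clock parameters exist so the loop can be tested without waiting.

```python
import time


def wait_for_active_count(count_active, expected, timeout=300.0, interval=5.0,
                          sleep=time.sleep, clock=time.monotonic):
    """Poll count_active() until it returns expected or the timeout expires.

    Returns True when the expected number of active MDS daemons is reached,
    False on timeout.
    """
    deadline = clock() + timeout
    while True:
        if count_active() == expected:
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


# Usage with a fake status source that settles on the third poll.
readings = iter([2, 2, 1])
print(wait_for_active_count(lambda: next(readings), expected=1, sleep=lambda _s: None))
```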

Additional Resources

- See the Metadata Server daemon states section in the Red Hat Ceph Storage File System Guide.

- See the Configuring multiple active Metadata Server daemons section in the Red Hat Ceph Storage File System Guide.

2.9. Additional Resources

- See the Installing Metadata servers section of the Red Hat Ceph Storage Installation Guide for details.

- See the Red Hat Ceph Storage Installation Guide for details on installing a Red Hat Ceph Storage cluster.

Chapter 3. Deployment of the Ceph File System

As a storage administrator, you can deploy Ceph File Systems (CephFS) in a storage environment and have clients mount those Ceph File Systems to meet the storage needs.

The deployment workflow consists of three steps:

- Create a Ceph File System on a Ceph Monitor node.

- Create a Ceph client user with the appropriate capabilities, and make the client key available on the node where the Ceph File System will be mounted.

- Mount CephFS on a dedicated node, using either a kernel client or a File System in User Space (FUSE) client.

3.1. Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Installation and configuration of the Ceph Metadata Server daemon (ceph-mds).

3.2. Layout, quota, snapshot, and network restrictions

These user capabilities can help you restrict access to a Ceph File System (CephFS) based on your requirements.

All user capability flags, except rw, must be specified in alphabetical order.

Layouts and Quotas

When using layouts or quotas, clients require the p flag, in addition to rw capabilities. Setting the p flag restricts all the attributes being set by special extended attributes, those with a ceph. prefix. Also, this restricts other means of setting these fields, such as openc operations with layouts.

Example

client.0

key: AQAz7EVWygILFRAAdIcuJ10opU/JKyfFmxhuaw==

caps: [mds] allow rwp

caps: [mon] allow r

caps: [osd] allow rw tag cephfs data=cephfs_a

client.1

key: AQAz7EVWygILFRAAdIcuJ11opU/JKyfFmxhuaw==

caps: [mds] allow rw

caps: [mon] allow r

caps: [osd] allow rw tag cephfs data=cephfs_a

In this example, client.0 can modify layouts and quotas on the file system cephfs_a, but client.1 cannot.

Snapshots

When creating or deleting snapshots, clients require the s flag, in addition to rw capabilities. When the capability string also contains the p flag, the s flag must appear after it.

Example

client.0

key: AQAz7EVWygILFRAAdIcuJ10opU/JKyfFmxhuaw==

caps: [mds] allow rw, allow rws path=/temp

caps: [mon] allow r

caps: [osd] allow rw tag cephfs data=cephfs_a

In this example, client.0 can create or delete snapshots in the temp directory of file system cephfs_a.
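The ordering rules for MDS capability flags can be checked before you apply a capability string. The Python sketch below is a hypothetical validator that covers only the flags discussed in this section (r, w, p, and s): rw comes first, p and s are accepted only in addition to rw, and any additional flags follow in alphabetical order, which places p before s.

```python
EXTRA_FLAGS = {"p", "s"}  # layout/quota and snapshot flags covered in this section


def valid_mds_flags(flags):
    """Check an MDS 'allow' flag string such as 'rw', 'rwp', or 'rwps'."""
    if flags in ("r", "rw"):
        return True
    if not flags.startswith("rw"):
        return False  # p and s are only used in addition to rw
    extra = flags[2:]
    return (
        set(extra) <= EXTRA_FLAGS
        and len(set(extra)) == len(extra)   # no repeated flags
        and list(extra) == sorted(extra)    # alphabetical order
    )


for candidate in ["rw", "rwp", "rws", "rwps", "rwsp", "rp"]:
    print(candidate, valid_mds_flags(candidate))
```

In this sketch, rwsp is rejected because s appears before p, and rp is rejected because p requires rw.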

Network

You can restrict clients so that they can connect only from a particular network.

Example

client.0

key: AQAz7EVWygILFRAAdIcuJ10opU/JKyfFmxhuaw==

caps: [mds] allow r network 10.0.0.0/8, allow rw path=/bar network 10.0.0.0/8

caps: [mon] allow r network 10.0.0.0/8

caps: [osd] allow rw tag cephfs data=cephfs_a network 10.0.0.0/8

The optional network and prefix length are specified in CIDR notation, for example, 10.3.0.0/16.
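To check which client addresses a network restriction admits, you can evaluate the CIDR block with Python's standard ipaddress module. This is a local planning sketch with a hypothetical function name; the actual enforcement happens in the Ceph daemons.

```python
import ipaddress


def client_allowed(client_ip, network):
    """Return True if client_ip falls inside the CIDR network, e.g. '10.0.0.0/8'."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(network)


print(client_allowed("10.3.14.7", "10.0.0.0/8"))     # True
print(client_allowed("10.3.14.7", "10.3.0.0/16"))    # True
print(client_allowed("192.168.1.20", "10.0.0.0/8"))  # False
```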

Additional Resources

- See the Creating client users for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on setting the Ceph user capabilities.

3.3. Creating a Ceph File System

You can create a Ceph File System (CephFS) on a Ceph Monitor node.

By default, you can create only one CephFS per Ceph Storage cluster.

Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Installation and configuration of the Ceph Metadata Server daemon (ceph-mds).

- Root-level access to a Ceph Monitor node.

Procedure

Create two pools, one for storing data and one for storing metadata:

Syntax

ceph osd pool create NAME PG_NUM

Example

[root@mon ~]# ceph osd pool create cephfs_data 64
[root@mon ~]# ceph osd pool create cephfs_metadata 64

Typically, the metadata pool can start with a conservative number of Placement Groups (PGs), because it generally has far fewer objects than the data pool. You can increase the number of PGs if needed. Recommended metadata pool sizes range from 64 PGs to 512 PGs. Size the data pool in proportion to the number and sizes of files you expect in the file system.

Important: For the metadata pool, consider using:

- A higher replication level because any data loss to this pool can make the whole file system inaccessible.

- Storage with lower latency such as Solid-State Drive (SSD) disks because this directly affects the observed latency of file system operations on clients.

Create the CephFS:

Syntax

ceph fs new NAME METADATA_POOL DATA_POOL

Example

[root@mon ~]# ceph fs new cephfs cephfs_metadata cephfs_data

Verify that one or more MDS daemons enter the active state, based on your configuration.

Syntax

ceph fs status NAME

Example

[root@mon ~]# ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+-------+---------------+-------+-------+
| Rank | State  |  MDS  |    Activity   |  dns  |  inos |
+------+--------+-------+---------------+-------+-------+
|  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
+------+--------+-------+---------------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata |  4638 | 26.7G |
|   cephfs_data   |   data   |    0  | 26.7G |
+-----------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
|    node3    |
|    node2    |
+-------------+
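The commands in this procedure can be collected into a small script so that pool and file system names stay consistent across steps. The Python sketch below only builds the command lines used in this section; the function name is hypothetical, the default names and PG count mirror the examples, and executing the commands through subprocess is left commented out as an assumption about your environment.

```python
import shlex


def cephfs_create_commands(fs_name="cephfs", data_pool="cephfs_data",
                           metadata_pool="cephfs_metadata", pg_num=64):
    """Return the ceph commands, as argument lists, that create a CephFS."""
    return [
        ["ceph", "osd", "pool", "create", data_pool, str(pg_num)],
        ["ceph", "osd", "pool", "create", metadata_pool, str(pg_num)],
        ["ceph", "fs", "new", fs_name, metadata_pool, data_pool],
        ["ceph", "fs", "status", fs_name],
    ]


for cmd in cephfs_create_commands():
    print(shlex.join(cmd))
    # To execute instead of printing (after `import subprocess`, with
    # administrative access on a Ceph Monitor node):
    # subprocess.run(cmd, check=True)
```

Note that ceph fs new takes the metadata pool before the data pool, which is an easy ordering to get wrong when typing the command by hand.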

Additional Resources

- See the Enabling the Red Hat Ceph Storage Repositories section in Red Hat Ceph Storage Installation Guide for more details.

- See the Pools chapter in the Red Hat Ceph Storage Storage Strategies Guide for more details.

- See the Ceph File System limitations and the POSIX standards section in the Red Hat Ceph Storage File System Guide for more details on the Ceph File System limitations.

- See the Red Hat Ceph Storage Installation Guide for details on installing Red Hat Ceph Storage.

- See the Installing Metadata Servers in the Red Hat Ceph Storage Installation Guide for details.

3.4. Creating Ceph File Systems with erasure coding (Technology Preview)

By default, Ceph uses replicated pools for data pools. You can also add an additional erasure-coded data pool, if needed. Ceph File Systems (CephFS) backed by erasure-coded pools use less overall storage compared to Ceph File Systems backed by replicated pools. While erasure-coded pools use less overall storage, they also use more memory and processor resources than replicated pools.

The Ceph File System using erasure-coded pools is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them for production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. See the support scope for Red Hat Technology Preview features for more details.

For production environments, Red Hat recommends using a replicated pool as the default data pool.
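The storage savings of erasure coding come from the ratio of raw to usable capacity. A replicated pool with size n stores n full copies of each object, while an erasure-coded pool with k data chunks and m coding chunks stores (k + m) / k times the data. The Python sketch below compares the two; the values size 3 and k=4, m=2 are illustrative assumptions, not recommendations from this guide.

```python
from fractions import Fraction


def raw_for_replicated(usable_bytes, size=3):
    """Raw capacity consumed when every object is stored `size` times."""
    return usable_bytes * size


def raw_for_erasure_coded(usable_bytes, k=4, m=2):
    """Raw capacity consumed with k data chunks plus m coding chunks per object."""
    return usable_bytes * Fraction(k + m, k)


TIB = 1024**4
usable = 10 * TIB
print("replicated, size 3:", raw_for_replicated(usable) / TIB, "TiB raw")
print("erasure-coded, k=4 m=2:", float(raw_for_erasure_coded(usable) / TIB), "TiB raw")
```

The lower raw footprint is what you trade against the extra memory and processor cost of encoding and decoding chunks.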

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A running CephFS environment.

- Pools using BlueStore OSDs.

- User-level access to a Ceph Monitor node.

Procedure

Create a replicated metadata pool for CephFS metadata:

Syntax

ceph osd pool create METADATA_POOL PG_NUM

Example

[root@mon ~]# ceph osd pool create cephfs-metadata 64

This example creates a pool named cephfs-metadata with 64 placement groups.

Create a default replicated data pool for CephFS:

Syntax

ceph osd pool create DATA_POOL PG_NUM

Example

[root@mon ~]# ceph osd pool create cephfs-data 64

This example creates a replicated pool named cephfs-data with 64 placement groups.

Create an erasure-coded data pool for CephFS:

Syntax

ceph osd pool create DATA_POOL PG_NUM erasure

Example

[root@mon ~]# ceph osd pool create cephfs-data-ec 64 erasure

This example creates an erasure-coded pool named cephfs-data-ec with 64 placement groups.

Enable overwrites on the erasure-coded pool:

Syntax

ceph osd pool set DATA_POOL allow_ec_overwrites true

Example

[root@mon ~]# ceph osd pool set cephfs-data-ec allow_ec_overwrites true

This example enables overwrites on an erasure-coded pool named cephfs-data-ec.

Add the erasure-coded data pool to the CephFS Metadata Server (MDS):

Syntax

ceph fs add_data_pool cephfs-ec DATA_POOL

Example

[root@mon ~]# ceph fs add_data_pool cephfs-ec cephfs-data-ec

Optionally, verify the data pool was added:

[root@mon ~]# ceph fs ls

Create the CephFS:

Syntax

ceph fs new cephfs METADATA_POOL DATA_POOL

Example

[root@mon ~]# ceph fs new cephfs cephfs-metadata cephfs-data

Important: Using an erasure-coded pool for the default data pool is not recommended.

Create the CephFS using erasure coding:

Syntax

ceph fs new cephfs-ec METADATA_POOL DATA_POOL

Example

[root@mon ~]# ceph fs new cephfs-ec cephfs-metadata cephfs-data-ec

Verify that one or more Ceph File System Metadata Servers (MDS) enter the active state:

Syntax

ceph fs status FS_EC

Example

[root@mon ~]# ceph fs status cephfs-ec
cephfs-ec - 0 clients
======
+------+--------+-------+---------------+-------+-------+
| Rank | State  |  MDS  |    Activity   |  dns  |  inos |
+------+--------+-------+---------------+-------+-------+
|  0   | active | node1 | Reqs:    0 /s |   10  |   12  |
+------+--------+-------+---------------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs-metadata | metadata |  4638 | 26.7G |
|   cephfs-data   |   data   |    0  | 26.7G |
|  cephfs-data-ec |   data   |    0  | 26.7G |
+-----------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
|    node3    |
|    node2    |
+-------------+

To add a new erasure-coded data pool to an existing file system, complete the following steps:

Create an erasure-coded data pool for CephFS:

Syntax

ceph osd pool create DATA_POOL PG_NUM erasure

Example

[root@mon ~]# ceph osd pool create cephfs-data-ec1 64 erasure

Enable overwrites on the erasure-coded pool:

Syntax

ceph osd pool set DATA_POOL allow_ec_overwrites true

Example

[root@mon ~]# ceph osd pool set cephfs-data-ec1 allow_ec_overwrites true

Add the erasure-coded data pool to the CephFS Metadata Server (MDS):

Syntax

ceph fs add_data_pool cephfs-ec DATA_POOL

Example

[root@mon ~]# ceph fs add_data_pool cephfs-ec cephfs-data-ec1

Create the CephFS using erasure coding:

Syntax

ceph fs new cephfs-ec METADATA_POOL DATA_POOL

Example

[root@mon ~]# ceph fs new cephfs-ec cephfs-metadata cephfs-data-ec1

Additional Resources

- See the Ceph File System Metadata Server chapter in the Red Hat Ceph Storage File System Guide for more information on the CephFS MDS.

- See the Installing Metadata Servers section of the Red Hat Ceph Storage Installation Guide for details on installing CephFS.

- See the Erasure-Coded Pools section in the Red Hat Ceph Storage Storage Strategies Guide for more information.

- See the Erasure Coding with Overwrites section in the Red Hat Ceph Storage Storage Strategies Guide for more information.

3.5. Creating client users for a Ceph File System

Red Hat Ceph Storage uses cephx for authentication, which is enabled by default. To use cephx with the Ceph File System, create a user with the correct authorization capabilities on a Ceph Monitor node and make its key available on the node where the Ceph File System will be mounted.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Installation and configuration of the Ceph Metadata Server daemon (ceph-mds).

- Root-level access to a Ceph Monitor node.

- Root-level access to a Ceph client node.

Procedure

On a Ceph Monitor node, create a client user:

Syntax

ceph fs authorize FILE_SYSTEM_NAME client.CLIENT_NAME /DIRECTORY CAPABILITY [/DIRECTORY CAPABILITY] ...

To allow the client to read the whole cephfs_a file system but write only in its temp directory:

Example

[root@mon ~]# ceph fs authorize cephfs_a client.1 / r /temp rw
client.1
key: AQBSdFhcGZFUDRAAcKhG9Cl2HPiDMMRv4DC43A==
caps: [mds] allow r, allow rw path=/temp
caps: [mon] allow r
caps: [osd] allow rw tag cephfs data=cephfs_a

To completely restrict the client to the temp directory, remove the root (/) directory:

Example

[root@mon ~]# ceph fs authorize cephfs_a client.1 /temp rw

Note

Supplying all or an asterisk (*) as the file system name grants access to every file system. Typically, you must quote the asterisk to protect it from the shell.

Verify the created key:

Syntax

ceph auth get client.ID

Example

[root@mon ~]# ceph auth get client.1

Copy the keyring to the client.

On the Ceph Monitor node, export the keyring to a file:

Syntax

ceph auth get client.ID -o ceph.client.ID.keyring

Example

[root@mon ~]# ceph auth get client.1 -o ceph.client.1.keyring
exported keyring for client.1

Copy the client keyring from the Ceph Monitor node to the /etc/ceph/ directory on the client node:

Syntax

scp root@MONITOR_NODE_NAME:/root/ceph.client.ID.keyring /etc/ceph/

Replace MONITOR_NODE_NAME with the Ceph Monitor node name or IP address.

Example

[root@client ~]# scp root@mon:/root/ceph.client.1.keyring /etc/ceph/ceph.client.1.keyring

Set the appropriate permissions for the keyring file:

Syntax

chmod 644 KEYRING

Example

[root@client ~]# chmod 644 /etc/ceph/ceph.client.1.keyring
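Before mounting, it can help to confirm that the copied keyring is usable. The following is a minimal sketch that assumes the usual layout written by ceph auth get -o (a [client.ID] section header followed by a key = ... line) and GNU stat; the function name check_keyring is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a copied client keyring before mounting.
# Assumes the keyring layout written by `ceph auth get -o`:
#   [client.ID]
#       key = <base64 secret>
# check_keyring FILE CLIENT_ID -> exit status 0 if the file looks usable.
check_keyring() {
    local file="$1" id="$2"
    # The file must exist and be readable.
    [ -r "$file" ] || { echo "missing or unreadable: $file" >&2; return 1; }
    # It must contain the section header for this client.
    grep -qF "[client.$id]" "$file" || { echo "no [client.$id] section" >&2; return 1; }
    # It must contain a non-empty key line.
    grep -Eq '^[[:space:]]*key[[:space:]]*=[[:space:]]*[^[:space:]]+' "$file" \
        || { echo "no key line in $file" >&2; return 1; }
    # Report the permission bits (GNU stat) to compare with the chmod step.
    echo "mode $(stat -c %a "$file")"
}
```

For example, check_keyring /etc/ceph/ceph.client.1.keyring 1 prints mode 644 after the chmod step above, and returns a non-zero status if the section or key line is missing.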

Additional Resources

- See the User Management chapter in the Red Hat Ceph Storage Administration Guide for more details.

3.6. Mounting the Ceph File System as a kernel client

You can mount the Ceph File System (CephFS) as a kernel client, either manually or automatically on system boot.

Clients running on other Linux distributions, aside from Red Hat Enterprise Linux, are permitted but not supported. If issues are found in the CephFS Metadata Server or other parts of the storage cluster when using these clients, Red Hat will address them. If the cause is found to be on the client side, then the issue will have to be addressed by the kernel vendor of the Linux distribution.

Prerequisites

- Root-level access to a Linux-based client node.

- User-level access to a Ceph Monitor node.

- An existing Ceph File System.

Procedure

Configure the client node to use the Ceph storage cluster.

Enable the Red Hat Ceph Storage 4 Tools repository:

Red Hat Enterprise Linux 7

[root@client ~]# subscription-manager repos --enable=rhel-7-server-rhceph-4-tools-rpms

Red Hat Enterprise Linux 8

[root@client ~]# subscription-manager repos --enable=rhceph-4-tools-for-rhel-8-x86_64-rpms

Install the ceph-common package:

Red Hat Enterprise Linux 7

[root@client ~]# yum install ceph-common

Red Hat Enterprise Linux 8

[root@client ~]# dnf install ceph-common

Copy the Ceph client keyring from the Ceph Monitor node to the client node:

Syntax

scp root@MONITOR_NODE_NAME:/etc/ceph/KEYRING_FILE /etc/ceph/

Replace MONITOR_NODE_NAME with the Ceph Monitor host name or IP address.

Example

[root@client ~]# scp root@192.168.0.1:/etc/ceph/ceph.client.1.keyring /etc/ceph/

Copy the Ceph configuration file from a Ceph Monitor node to the client node:

Syntax

scp root@MONITOR_NODE_NAME:/etc/ceph/ceph.conf /etc/ceph/ceph.conf

Replace MONITOR_NODE_NAME with the Ceph Monitor host name or IP address.

Example

[root@client ~]# scp root@192.168.0.1:/etc/ceph/ceph.conf /etc/ceph/ceph.conf

Set the appropriate permissions for the configuration file:

[root@client ~]# chmod 644 /etc/ceph/ceph.conf

Create a mount directory on the client node:

Syntax

mkdir -p MOUNT_POINT

Example

[root@client ~]# mkdir -p /mnt/cephfs

Mount the Ceph File System. To specify multiple Ceph Monitor addresses, separate them with commas in the mount command, specify the mount point, and set the client name:

Note

As of Red Hat Ceph Storage 4.1, mount.ceph can read keyring files directly, so a secret file is no longer necessary. Specify the client ID with name=CLIENT_ID, and mount.ceph finds the right keyring file.

Syntax

mount -t ceph MONITOR-1_NAME:6789,MONITOR-2_NAME:6789,MONITOR-3_NAME:6789:/ MOUNT_POINT -o name=CLIENT_ID

Example

[root@client ~]# mount -t ceph mon1:6789,mon2:6789,mon3:6789:/ /mnt/cephfs -o name=1

Note

You can configure a DNS server so that a single host name resolves to multiple IP addresses. Then you can use that single host name with the mount command, instead of supplying a comma-separated list.

Note

You can also replace the Monitor host names with the string :/ and mount.ceph will read the Ceph configuration file to determine which Monitors to connect to.

Verify that the file system is successfully mounted:

Syntax

stat -f MOUNT_POINT

Example

[root@client ~]# stat -f /mnt/cephfs
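When the list of Monitors changes between clusters, the comma-separated device string is easy to get wrong by hand. The following minimal sketch builds the mount command shown in the Example above from a list of Monitor host names; it assumes every Monitor listens on port 6789, as in that example, and the function name kernel_mount_cmd is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the kernel-client mount command from a list of Monitor hosts.
# Assumes every Monitor listens on port 6789, as in the example above.
# kernel_mount_cmd MOUNT_POINT CLIENT_ID MON_HOST...
kernel_mount_cmd() {
    local mount_point="$1" client_id="$2"
    shift 2
    local mons="" host
    for host in "$@"; do
        # Append HOST:6789, adding a comma only after the first entry.
        mons="${mons:+$mons,}${host}:6789"
    done
    echo "mount -t ceph ${mons}:/ ${mount_point} -o name=${client_id}"
}

# Prints: mount -t ceph mon1:6789,mon2:6789,mon3:6789:/ /mnt/cephfs -o name=1
kernel_mount_cmd /mnt/cephfs 1 mon1 mon2 mon3
```

The helper only prints the command, so you can review it before running it as root on the client node.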

Additional Resources

- See the mount(8) manual page.

- See the Ceph user management chapter in the Red Hat Ceph Storage Administration Guide for more details on creating a Ceph user.

- See the Creating a Ceph File System section of the Red Hat Ceph Storage File System Guide for details.

3.7. Mounting the Ceph File System as a FUSE client

You can mount the Ceph File System (CephFS) as a File System in User Space (FUSE) client, either manually or automatically on system boot.

Prerequisites

- Root-level access to a Linux-based client node.

- User-level access to a Ceph Monitor node.

- An existing Ceph File System.

Procedure

Configure the client node to use the Ceph storage cluster.

Enable the Red Hat Ceph Storage 4 Tools repository:

Red Hat Enterprise Linux 7

[root@client ~]# subscription-manager repos --enable=rhel-7-server-rhceph-4-tools-rpms

Red Hat Enterprise Linux 8

[root@client ~]# subscription-manager repos --enable=rhceph-4-tools-for-rhel-8-x86_64-rpms

Install the ceph-fuse package:

Red Hat Enterprise Linux 7

[root@client ~]# yum install ceph-fuse

Red Hat Enterprise Linux 8

[root@client ~]# dnf install ceph-fuse

Copy the Ceph client keyring from the Ceph Monitor node to the client node:

Syntax

scp root@MONITOR_NODE_NAME:/etc/ceph/KEYRING_FILE /etc/ceph/

Replace MONITOR_NODE_NAME with the Ceph Monitor host name or IP address.

Example

[root@client ~]# scp root@192.168.0.1:/etc/ceph/ceph.client.1.keyring /etc/ceph/

Copy the Ceph configuration file from a Ceph Monitor node to the client node:

Syntax

scp root@MONITOR_NODE_NAME:/etc/ceph/ceph.conf /etc/ceph/ceph.conf

Replace MONITOR_NODE_NAME with the Ceph Monitor host name or IP address.

Example

[root@client ~]# scp root@192.168.0.1:/etc/ceph/ceph.conf /etc/ceph/ceph.conf

Set the appropriate permissions for the configuration file:

[root@client ~]# chmod 644 /etc/ceph/ceph.conf

Choose either automatic or manual mounting.

Manually Mounting

On the client node, create a directory for the mount point:

Syntax

mkdir PATH_TO_MOUNT_POINT

Example

[root@client ~]# mkdir /mnt/mycephfs

Note

If you used the path option with MDS capabilities, then the mount point must be within what is specified by path.

Use the ceph-fuse utility to mount the Ceph File System.

Syntax

ceph-fuse -n client.CLIENT_ID MOUNT_POINT

Example

[root@client ~]# ceph-fuse -n client.1 /mnt/mycephfs

Note

If you do not use the default name and location of the user keyring, that is /etc/ceph/ceph.client.CLIENT_ID.keyring, then use the --keyring option to specify the path to the user keyring, for example:

Example

[root@client ~]# ceph-fuse -n client.1 --keyring=/etc/ceph/client.1.keyring /mnt/mycephfs

Note

Use the -r option to instruct the client to treat that path as its root:

Syntax

ceph-fuse -n client.CLIENT_ID MOUNT_POINT -r PATH

Example

[root@client ~]# ceph-fuse -n client.1 /mnt/cephfs -r /home/cephfs

Verify that the file system is successfully mounted:

Syntax

stat -f MOUNT_POINT

Example

[user@client ~]$ stat -f /mnt/cephfs

Automatically Mounting

On the client node, create a directory for the mount point:

Syntax

mkdir PATH_TO_MOUNT_POINT

Example

[root@client ~]# mkdir /mnt/mycephfs

Note

If you used the path option with MDS capabilities, then the mount point must be within what is specified by path.

Edit the /etc/fstab file as follows:

Syntax

#DEVICE PATH TYPE OPTIONS DUMP FSCK
HOST_NAME:PORT,HOST_NAME:PORT,HOST_NAME:PORT:/ MOUNT_POINT fuse.ceph ceph.id=CLIENT_ID,ceph.client_mountpoint=/VOL/SUB_VOL_GROUP/SUB_VOL/UID_SUB_VOL,[ADDITIONAL_OPTIONS] 0 0

The first column sets the Ceph Monitor host names and the port number.

The second column sets the mount point.

The third column sets the file system type, in this case, fuse.ceph, for CephFS.

The fourth column sets the various options, such as the Ceph user ID, using the ceph.id option. Separate the options with commas, without spaces. You can also set specific volumes, sub-volume groups, and sub-volumes using the ceph.client_mountpoint option. Set the _netdev option to ensure that the file system is mounted after the networking subsystem starts, which prevents hanging and networking issues. If you do not need access time information, setting the noatime option can increase performance.

Set the fifth and sixth columns to zero.

Example

#DEVICE PATH TYPE OPTIONS DUMP FSCK
mon1:6789,mon2:6789,mon3:6789:/ /mnt/cephfs fuse.ceph ceph.id=1,ceph.client_mountpoint=/my_vol/my_sub_vol_group/my_sub_vol/0,_netdev,defaults 0 0

The Ceph File System will be mounted on the next system boot.
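Because /etc/fstab expects each entry on a single line, with the options joined by commas, a small helper can assemble the entry from its parts. This is a minimal sketch; the function name fuse_fstab_line is hypothetical, and it always appends _netdev,defaults as in the Example above.

```shell
#!/usr/bin/env bash
# Sketch: print a one-line /etc/fstab entry for a ceph-fuse mount.
# fuse_fstab_line DEVICE MOUNT_POINT CLIENT_ID [CLIENT_MOUNTPOINT]
fuse_fstab_line() {
    local device="$1" mount_point="$2" client_id="$3" sub="${4:-}"
    local opts="ceph.id=${client_id}"
    # Optional: mount a specific path (for example, a subvolume) as the root.
    if [ -n "$sub" ]; then
        opts="${opts},ceph.client_mountpoint=${sub}"
    fi
    # _netdev delays the mount until networking is up.
    opts="${opts},_netdev,defaults"
    # Fields: device, mount point, type, options, dump, fsck.
    printf '%s %s fuse.ceph %s 0 0\n' "$device" "$mount_point" "$opts"
}

fuse_fstab_line mon1:6789,mon2:6789,mon3:6789:/ /mnt/cephfs 1 /my_vol/my_sub_vol_group/my_sub_vol/0
```

Review the printed line before appending it to /etc/fstab as root.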

Additional Resources

- The ceph-fuse(8) manual page.

- See the Ceph user management chapter in the Red Hat Ceph Storage Administration Guide for more details on creating a Ceph user.

- See the Creating a Ceph File System section of the Red Hat Ceph Storage File System Guide for details.

3.8. Additional Resources

- See Section 3.3, “Creating a Ceph File System” for details.

- See Section 3.5, “Creating client users for a Ceph File System” for details.

- See Section 3.6, “Mounting the Ceph File System as a kernel client” for details.

- See Section 3.7, “Mounting the Ceph File System as a FUSE client” for details.

- See the Red Hat Ceph Storage Installation Guide for details on installing the CephFS Metadata Server.

- See Chapter 2, “The Ceph File System Metadata Server” for details on configuring the CephFS Metadata Server daemon.

Chapter 4. Ceph File System administration

As a storage administrator, you can perform common Ceph File System (CephFS) administrative tasks, such as:

- To map a directory to a particular MDS rank, see Section 4.4, “Mapping directory trees to Metadata Server daemon ranks”.

- To disassociate a directory from an MDS rank, see Section 4.5, “Disassociating directory trees from Metadata Server daemon ranks”.

- To work with files and directory layouts, see Section 4.8, “Working with File and Directory Layouts”.

- To add a new data pool, see Section 4.6, “Adding data pools”.

- To work with quotas, see Section 4.7, “Working with Ceph File System quotas”.

- To remove a Ceph File System using the command-line interface, see Section 4.12, “Removing a Ceph File System using the command-line interface”.

- To remove a Ceph File System using Ansible, see Section 4.13, “Removing a Ceph File System using Ansible”.

- To set a minimum client version, see Section 4.14, “Setting a minimum client version”.

- To use the ceph mds fail command, see Section 4.15, “Using the ceph mds fail command”.

4.1. Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Installation and configuration of the Ceph Metadata Server daemons (ceph-mds).

- Create and mount the Ceph File System.

4.2. Unmounting Ceph File Systems mounted as kernel clients

You can unmount a Ceph File System that is mounted as a kernel client.

Prerequisites

- Root-level access to the node doing the mounting.

Procedure

To unmount a Ceph File System mounted as a kernel client:

Syntax

umount MOUNT_POINT

Example

[root@client ~]# umount /mnt/cephfs

Additional Resources

- The umount(8) manual page

4.3. Unmounting Ceph File Systems mounted as FUSE clients

You can unmount a Ceph File System that is mounted as a File System in User Space (FUSE) client.

Prerequisites

- Root-level access to the FUSE client node.

Procedure

To unmount a Ceph File System mounted in FUSE:

Syntax

fusermount -u MOUNT_POINT

Example

[root@client ~]# fusermount -u /mnt/cephfs
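Kernel mounts and FUSE mounts use different unmount commands. The following minimal sketch picks the command from the file system type of the mount. It assumes that kernel CephFS mounts report the type ceph and that ceph-fuse mounts report a type beginning with fuse.ceph (for example, fuse.ceph-fuse); confirm the type on your client with findmnt -n -o FSTYPE MOUNT_POINT. The function name unmount_cmd is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: choose the unmount command from the file system type of a mount.
# Assumption: kernel CephFS mounts report type "ceph"; ceph-fuse mounts
# report a "fuse.ceph*" type such as "fuse.ceph-fuse". Check with:
#   findmnt -n -o FSTYPE MOUNT_POINT
# unmount_cmd FSTYPE MOUNT_POINT
unmount_cmd() {
    local fstype="$1" mount_point="$2"
    case "$fstype" in
        ceph)        echo "umount $mount_point" ;;
        fuse.ceph*)  echo "fusermount -u $mount_point" ;;
        *)           echo "not a CephFS mount: $fstype" >&2; return 1 ;;
    esac
}

# On a client node:
#   unmount_cmd "$(findmnt -n -o FSTYPE /mnt/cephfs)" /mnt/cephfs
```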

Additional Resources

- The ceph-fuse(8) manual page

4.4. Mapping directory trees to Metadata Server daemon ranks

You can map a directory and its subdirectories to a particular active Metadata Server (MDS) rank so that only the MDS daemon holding that rank manages its metadata. This approach enables you to evenly spread application load or to limit the impact of users' metadata requests on the entire storage cluster.

An internal balancer already dynamically spreads the application load. Therefore, only map directory trees to ranks for certain carefully chosen applications.

In addition, when a directory is mapped to a rank, the balancer cannot split it. Consequently, a large number of operations within the mapped directory can overload the rank and the MDS daemon that manages it.

Prerequisites

- At least two active MDS daemons.

- User access to the CephFS client node.

- Verify that the attr package is installed on the CephFS client node with a mounted Ceph File System.

Procedure

Add the p flag to the Ceph user’s capabilities:

Syntax

ceph fs authorize FILE_SYSTEM_NAME client.CLIENT_NAME /DIRECTORY CAPABILITY [/DIRECTORY CAPABILITY] ...

Example

[user@client ~]$ ceph fs authorize cephfs_a client.1 /temp rwp
client.1
key: AQBSdFhcGZFUDRAAcKhG9Cl2HPiDMMRv4DC43A==
caps: [mds] allow r, allow rwp path=/temp
caps: [mon] allow r
caps: [osd] allow rw tag cephfs data=cephfs_a

Set the ceph.dir.pin extended attribute on a directory:

Syntax

setfattr -n ceph.dir.pin -v RANK DIRECTORY

Example

[user@client ~]$ setfattr -n ceph.dir.pin -v 2 /temp

This example assigns the /temp directory and all of its subdirectories to rank 2.
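If you have decided that a few carefully chosen application directories should be pinned, one simple policy is to spread them round-robin across the active ranks. The following minimal sketch only prints the setfattr commands; it assumes that NUM_RANKS equals the number of active MDS ranks in the file system, and the directory paths and the function name pin_round_robin are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: spread several directories across active MDS ranks by pinning
# them round-robin. Prints setfattr commands instead of running them.
# Assumptions: NUM_RANKS equals the number of active MDS ranks, and the
# directory paths are hypothetical paths on a mounted CephFS.
# pin_round_robin NUM_RANKS DIR...
pin_round_robin() {
    local num_ranks="$1" i=0 dir
    shift
    for dir in "$@"; do
        # Rank = position of the directory modulo the number of ranks.
        echo "setfattr -n ceph.dir.pin -v $(( i % num_ranks )) $dir"
        i=$(( i + 1 ))
    done
}

pin_round_robin 2 /mnt/cephfs/app1 /mnt/cephfs/app2 /mnt/cephfs/app3
```

With two ranks, app1 and app3 go to rank 0 and app2 goes to rank 1. A plain round-robin ignores how busy each directory is, so check the load per rank after pinning.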

Additional Resources

- See the Layout, quota, snapshot, and network restrictions section in the Red Hat Ceph Storage File System Guide for more details about the p flag.

- See the Disassociating directory trees from Metadata Server daemon ranks section in the Red Hat Ceph Storage File System Guide for more details.

- See the Configuring multiple active Metadata Server daemons section in the Red Hat Ceph Storage File System Guide for more details.

4.5. Disassociating directory trees from Metadata Server daemon ranks

Disassociate a directory from a particular active Metadata Server (MDS) rank.

Prerequisites

- User access to the Ceph File System (CephFS) client node.

- Ensure that the attr package is installed on the client node with a mounted CephFS.

Procedure

Set the ceph.dir.pin extended attribute to -1 on a directory:

Syntax

setfattr -n ceph.dir.pin -v -1 DIRECTORY

Example

[user@client ~]$ setfattr -n ceph.dir.pin -v -1 /home/ceph-user

Note

Any separately mapped subdirectories of /home/ceph-user/ are not affected.

Additional Resources

- See the Mapping Directory Trees to MDS Ranks section in Red Hat Ceph Storage File System Guide for more details.

4.6. Adding data pools

The Ceph File System (CephFS) supports adding more than one pool to be used for storing data. This can be useful for:

- Storing log data on reduced redundancy pools

- Storing user home directories on an SSD or NVMe pool

- Basic data segregation.

Before using another data pool in the Ceph File System, you must add it as described in this section.

By default, for storing file data, CephFS uses the initial data pool that was specified during its creation. To use a secondary data pool, you must also configure a part of the file system hierarchy to store file data in that pool or optionally within a namespace of that pool, using file and directory layouts.

Prerequisites

- Root-level access to the Ceph Monitor node.

Procedure

Create a new data pool:

Syntax

ceph osd pool create POOL_NAME PG_NUMBER

Replace:

- POOL_NAME with the name of the pool.

- PG_NUMBER with the number of placement groups (PGs).

Example

[root@mon ~]# ceph osd pool create cephfs_data_ssd 64
pool 'cephfs_data_ssd' created

Add the newly created pool under the control of the Metadata Servers:

Syntax

ceph fs add_data_pool FS_NAME POOL_NAME

Replace:

- FS_NAME with the name of the file system.

- POOL_NAME with the name of the pool.

Example

[root@mon ~]# ceph fs add_data_pool cephfs cephfs_data_ssd
added data pool 6 to fsmap

Verify that the pool was successfully added:

Example

[root@mon ~]# ceph fs ls
name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data cephfs_data_ssd]

If you use cephx authentication, make sure that clients can access the new pool.
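Adding the pool does not by itself place any file data in it; a directory layout must point at the pool. The following minimal sketch prints the commands that create a directory on a mounted file system, set its ceph.dir.layout.pool field to the new pool, and read the field back. It assumes the file system is mounted at /mnt/cephfs; the directory name ssd and the function name use_pool_for_dir are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: after adding a data pool, direct new files under one directory
# to it by setting the directory layout's pool field. Prints the commands only.
# Assumptions: the file system is mounted at MOUNT_POINT; DIR is a
# hypothetical directory name such as "ssd".
# use_pool_for_dir MOUNT_POINT DIR POOL_NAME
use_pool_for_dir() {
    local mount_point="$1" dir="$2" pool="$3"
    local path="${mount_point%/}/${dir}"
    echo "mkdir -p $path"
    echo "setfattr -n ceph.dir.layout.pool -v $pool $path"
    # Read the field back to confirm the change.
    echo "getfattr -n ceph.dir.layout.pool $path"
}

use_pool_for_dir /mnt/cephfs ssd cephfs_data_ssd
```

Only files created after the layout is set inherit it; existing files keep their original layout.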

Additional Resources

- See Section 4.8, “Working with File and Directory Layouts” for details.

- See Section 3.5, “Creating client users for a Ceph File System” for details.

4.7. Working with Ceph File System quotas

As a storage administrator, you can view, set, and remove quotas on any directory in the file system. You can place quota restrictions on the number of bytes or the number of files within the directory.

4.7.1. Prerequisites

- Make sure that the attr package is installed.

4.7.2. Ceph File System quotas

The Ceph File System (CephFS) quotas allow you to restrict the number of bytes or the number of files stored in the directory structure.

Limitations

- CephFS quotas rely on the cooperation of the client mounting the file system to stop writing data when it reaches the configured limit. However, quotas alone cannot prevent an adversarial, untrusted client from filling the file system.

- Once processes that write data to the file system reach the configured limit, a short period of time elapses between when the amount of data reaches the quota limit and when the processes stop writing data. This period is generally measured in tenths of seconds. However, processes continue to write data during that time. The amount of additional data that the processes write depends on the amount of time elapsed before they stop.

- Previously, quotas were only supported with the userspace FUSE client. With Linux kernel version 4.17 or newer, the CephFS kernel client supports quotas against Ceph mimic or newer clusters. Those version requirements are met by Red Hat Enterprise Linux 8 and Red Hat Ceph Storage 4, respectively. The userspace FUSE client can be used on older and newer OS and cluster versions. The FUSE client is provided by the ceph-fuse package.

- When using path-based access restrictions, be sure to configure the quota on the directory to which the client is restricted, or on a directory nested beneath it. If the client has restricted access to a specific path based on the MDS capability, and the quota is configured on an ancestor directory that the client cannot access, the client will not enforce the quota. For example, if the client cannot access the /home/ directory and the quota is configured on /home/, the client cannot enforce that quota on the directory /home/user/.

- Snapshot file data that has been deleted or changed does not count towards the quota.

4.7.3. Viewing quotas

Use the getfattr command and the ceph.quota extended attributes to view the quota settings for a directory.

If the attributes appear on a directory inode, then that directory has a configured quota. If the attributes do not appear on the inode, then the directory does not have a quota set, although its parent directory might have a quota configured. If the value of the extended attribute is 0, the quota is not set.

Prerequisites

- Make sure that the attr package is installed.

Procedure

To view CephFS quotas:

Using a byte-limit quota:

Syntax

getfattr -n ceph.quota.max_bytes DIRECTORY

Example

[root@fs ~]# getfattr -n ceph.quota.max_bytes /cephfs/

Using a file-limit quota:

Syntax

getfattr -n ceph.quota.max_files DIRECTORY

Example

[root@fs ~]# getfattr -n ceph.quota.max_files /cephfs/

Additional Resources

- See the getfattr(1) manual page for more information.

4.7.4. Setting quotas

This section describes how to use the setfattr command and the ceph.quota extended attributes to set the quota for a directory.

Prerequisites

- Make sure that the attr package is installed.

Procedure

To set CephFS quotas:

Using a byte-limit quota:

Syntax

setfattr -n ceph.quota.max_bytes -v LIMIT_IN_BYTES DIRECTORY

Example

[root@fs ~]# setfattr -n ceph.quota.max_bytes -v 100000000 /cephfs/

In this example, 100000000 bytes equals 100 MB.

Using a file-limit quota:

Syntax

setfattr -n ceph.quota.max_files -v NUMBER_OF_FILES DIRECTORY

Example

[root@fs ~]# setfattr -n ceph.quota.max_files -v 10000 /cephfs/

In this example, 10000 equals 10,000 files.
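The ceph.quota.max_bytes attribute takes a plain byte count, so it can help to convert a human-readable size first. The following minimal sketch uses decimal suffixes (K, M, G, T as powers of 1000), which matches the statement above that 100000000 bytes equals 100 MB, and binary suffixes (Ki, Mi, Gi, Ti as powers of 1024). The function name to_bytes and the suffix scheme are assumptions made for this illustration.

```shell
#!/usr/bin/env bash
# Sketch: convert a human-readable size to the byte count expected by
# ceph.quota.max_bytes. Decimal suffixes (K, M, G, T) use powers of 1000;
# binary suffixes (Ki, Mi, Gi, Ti) use powers of 1024.
to_bytes() {
    local size="$1" num unit
    # Split the leading digits from the optional unit suffix.
    num="${size%%[!0-9]*}"
    unit="${size#"$num"}"
    [ -n "$num" ] || { echo "invalid size: $size" >&2; return 1; }
    num=$(( 10#$num ))   # force base 10 so "010" is not read as octal
    case "$unit" in
        "")  echo "$num" ;;
        K)   echo $(( num * 1000 )) ;;
        M)   echo $(( num * 1000**2 )) ;;
        G)   echo $(( num * 1000**3 )) ;;
        T)   echo $(( num * 1000**4 )) ;;
        Ki)  echo $(( num * 1024 )) ;;
        Mi)  echo $(( num * 1024**2 )) ;;
        Gi)  echo $(( num * 1024**3 )) ;;
        Ti)  echo $(( num * 1024**4 )) ;;
        *)   echo "unknown unit: $unit" >&2; return 1 ;;
    esac
}

# Prints the command for a 100 MB quota on /cephfs/.
echo "setfattr -n ceph.quota.max_bytes -v $(to_bytes 100M) /cephfs/"
```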

Additional Resources

- See the setfattr(1) manual page for more information.

4.7.5. Removing quotas

This section describes how to use the setfattr command and the ceph.quota extended attributes to remove a quota from a directory.

Prerequisites

- Make sure that the attr package is installed.

Procedure

To remove CephFS quotas:

Using a byte-limit quota:

Syntax

setfattr -n ceph.quota.max_bytes -v 0 DIRECTORY

Example

[root@fs ~]# setfattr -n ceph.quota.max_bytes -v 0 /cephfs/

Using a file-limit quota:

Syntax

setfattr -n ceph.quota.max_files -v 0 DIRECTORY

Example

[root@fs ~]# setfattr -n ceph.quota.max_files -v 0 /cephfs/

Additional Resources

- See the setfattr(1) manual page for more information.

4.7.6. Additional Resources

- See the getfattr(1) manual page for more information.

- See the setfattr(1) manual page for more information.

4.8. Working with File and Directory Layouts

As a storage administrator, you can control how file or directory data is mapped to objects.

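This section describes how to:

- Understand file and directory layouts.

- Set file and directory layout fields.

- View file and directory layout fields.

- View individual layout fields.

- Remove directory layouts.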

4.8.1. Prerequisites

- The installation of the attr package.

4.8.2. Overview of file and directory layouts

This section explains what file and directory layouts are in the context of the Ceph File System.

A layout of a file or directory controls how its content is mapped to Ceph RADOS objects. Directory layouts serve primarily to set an inherited layout for new files in that directory.
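To make the mapping concrete, the following sketch computes which RADOS object (by index within the file) holds a given byte offset, using the RAID 0-style striping scheme described in the upstream Ceph file layout documentation: data is cut into stripe_unit blocks, consecutive blocks are spread across stripe_count objects, and each object holds object_size bytes. This is an illustration written for this guide, not code taken from Ceph, and it assumes object_size is a multiple of stripe_unit. The function name object_for_offset is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of RAID 0-style striping: which object (by index within the file)
# holds a given byte offset, for a given layout. Illustration only; assumes
# object_size is a multiple of stripe_unit.
# object_for_offset OFFSET STRIPE_UNIT STRIPE_COUNT OBJECT_SIZE
object_for_offset() {
    local off="$1" su="$2" sc="$3" os="$4"
    (( su > 0 && sc > 0 && os >= su )) || return 1
    local block=$(( off / su ))          # stripe unit index within the file
    local stripe=$(( block / sc ))       # which stripe (row) the unit is in
    local pos=$(( block % sc ))          # which object within the object set
    local per_obj=$(( os / su ))         # stripe units stored per object
    local objset=$(( stripe / per_obj )) # which object set
    echo $(( objset * sc + pos ))
}

# Layout from the getfattr example in this chapter:
# stripe_unit=4194304 stripe_count=2 object_size=4194304
for off in 0 4194304 8388608 12582912; do
    echo "offset $off -> object $(object_for_offset "$off" 4194304 2 4194304)"
done
```

With that layout, each 4 MiB block lands in a new object (0, 1, 2, 3). With object_size=8388608 instead, the offset 8388608 returns to object 0, because each object then holds two stripe units.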

To view and set a file or directory layout, use virtual extended attributes or extended file attributes (xattrs). The name of the layout attributes depends on whether a file is a regular file or a directory:

- Regular file layout attributes are called ceph.file.layout.

- Directory layout attributes are called ceph.dir.layout.

The File and Directory Layout Fields table lists available layout fields that you can set on files and directories.

Layouts Inheritance

Files inherit the layout of their parent directory when you create them. However, subsequent changes to the parent directory layout do not affect children. If a directory does not have any layouts set, files inherit the layout from the closest directory with layout in the directory structure.

Additional Resources

- See the Layouts Inheritance section for more details.

4.8.3. Setting file and directory layout fields

Use the setfattr command to set layout fields on a file or directory.

When you modify the layout fields of a file, the file must be empty, otherwise an error occurs.

Prerequisites

- Root-level access to the node.

Procedure

To modify layout fields on a file or directory:

Syntax

setfattr -n ceph.TYPE.layout.FIELD -v VALUE PATH

Replace:

- TYPE with file or dir.

- FIELD with the name of the field.

- VALUE with the new value of the field.

- PATH with the path to the file or directory.

Example

[root@fs ~]# setfattr -n ceph.file.layout.stripe_unit -v 1048576 test


Additional Resources

- See the table in the Overview of the file and directory layouts section of the Red Hat Ceph Storage File System Guide for more details.

- See the setfattr(1) manual page.

4.8.4. Viewing file and directory layout fields

Use the getfattr command to view layout fields on a file or directory.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all nodes in the storage cluster.

Procedure

To view layout fields on a file or directory as a single string:

Syntax

getfattr -n ceph.TYPE.layout PATH

Replace:

- TYPE with file or dir.

- PATH with the path to the file or directory.

Example

[root@mon ~]# getfattr -n ceph.dir.layout /home/test
ceph.dir.layout="stripe_unit=4194304 stripe_count=2 object_size=4194304 pool=cephfs_data"

A directory does not have an explicit layout until you set it. Consequently, attempting to view the layout without first setting it fails because there are no changes to display.
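Scripts often need a single field from the layout string shown in the Example above. The following minimal sketch extracts one key=value field from that string; it assumes the fields are separated by spaces, as in the example output. The getattr option --only-values prints the attribute value without the name, which is convenient for capturing it in a variable. The function name layout_field is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: pull one field out of the single-string layout printed by
# `getfattr -n ceph.dir.layout` (or ceph.file.layout), for example:
#   stripe_unit=4194304 stripe_count=2 object_size=4194304 pool=cephfs_data
# layout_field "LAYOUT_STRING" FIELD
layout_field() {
    local layout="$1" field="$2" kv
    for kv in $layout; do                # fields are space-separated key=value pairs
        if [ "${kv%%=*}" = "$field" ]; then
            echo "${kv#*=}"
            return 0
        fi
    done
    return 1                             # field not present in the string
}

# On a client, --only-values prints the value without the attribute name:
#   layout="$(getfattr -n ceph.dir.layout --only-values /home/test)"
layout='stripe_unit=4194304 stripe_count=2 object_size=4194304 pool=cephfs_data'
layout_field "$layout" pool
```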

Additional Resources

- The getfattr(1) manual page.

- For more information, see Setting file and directory layouts section in the Red Hat Ceph Storage File System Guide.

4.8.5. Viewing individual layout fields

Use the getfattr command to view individual layout fields for a file or directory.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all nodes in the storage cluster.

Procedure

To view individual layout fields on a file or directory:

Syntax

getfattr -n ceph.TYPE.layout.FIELD PATH

Replace:

- TYPE with file or dir.

- FIELD with the name of the field.

- PATH with the path to the file or directory.


Example

[root@mon ~]# getfattr -n ceph.file.layout.pool test
ceph.file.layout.pool="cephfs_data"

Note

Pools in the pool field are indicated by name. However, newly created pools can be indicated by ID.

Additional Resources

- The getfattr(1) manual page.

- For more information, see File and directory layout fields.

4.8.6. Removing directory layouts

Use the setfattr command to remove layouts from a directory.

When you set a file layout, you cannot change or remove it.

Prerequisites

- A directory with a layout.

Procedure

To remove a layout from a directory:

Syntax

setfattr -x ceph.dir.layout DIRECTORY_PATH

Example

[user@client ~]$ setfattr -x ceph.dir.layout /home/cephfs

To remove the pool_namespace field:

Syntax

setfattr -x ceph.dir.layout.pool_namespace DIRECTORY_PATH

Example

[user@client ~]$ setfattr -x ceph.dir.layout.pool_namespace /home/cephfs

Note

The pool_namespace field is the only field you can remove separately.

Additional Resources

- The setfattr(1) manual page

4.9. Ceph File System snapshot considerations

As a storage administrator, you can gain an understanding of the data structures, system components, and considerations to manage Ceph File System (CephFS) snapshots.

Snapshots create an immutable view of a file system at the point in time of creation. You can create a snapshot within any directory, and all data in the file system under that directory is covered.

4.9.1. Storing snapshot metadata for a Ceph File System

Storage of snapshot directory entries and their inodes occurs in-line as part of the directory they were in at the time of the snapshot. All directory entries include a first and last snapid for which they are valid.

4.9.2. Ceph File System snapshot writeback

Ceph snapshots rely on clients to help determine which operations apply to a snapshot and flush snapshot data and metadata back to the OSD and MDS clusters. Handling snapshot writeback is an involved process because snapshots apply to subtrees of the file hierarchy, and the creation of snapshots can occur anytime.

Parts of the file hierarchy that belong to the same set of snapshots are referred to by a single SnapRealm. Each snapshot applies to the subdirectory nested beneath a directory and divides the file hierarchy into multiple "realms" where all of the files contained by a realm share the same set of snapshots.

The Ceph Metadata Server (MDS) controls client access to inode metadata and file data by issuing capabilities (caps) for each inode. During snapshot creation, clients acquire dirty metadata on inodes with capabilities to describe the file state at that time. When a client receives a ClientSnap message, it updates the local SnapRealm and its links to specific inodes and generates a CapSnap for the inode. Capability writeback flushes out the CapSnap and, if dirty data exists, the CapSnap is used to block new data writes until the snapshot flushes to the OSDs.

The MDS generates snapshot-representing directory entries as part of the routine process for flushing them. The MDS keeps directory entries with outstanding CapSnap data pinned in memory and the journal until the writeback process flushes them.

Additional Resources

- See the Creating client users for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on setting the Ceph user capabilities.

4.9.3. Ceph File System snapshots and hard links

Ceph moves an inode with multiple hard links to a dummy global SnapRealm. This dummy SnapRealm covers all snapshots in the filesystem. Any new snapshots preserve the inode’s data. This preserved data covers snapshots on any linkage of the inode.

4.9.4. Updating a snapshot for a Ceph File System

The process of updating a snapshot is similar to the process of deleting a snapshot.

If you move (rename) an inode out of its parent SnapRealm, Ceph generates a new SnapRealm for the renamed inode if the SnapRealm does not already exist. Ceph saves the IDs of snapshots that are effective on the original parent SnapRealm into the past_parent_snaps data structure of the new SnapRealm and then follows a process similar to creating a snapshot.

Additional Resources

- For details about snapshot data structures, see Ceph File System snapshot data structures in Red Hat Ceph Storage File System Guide.

4.9.5. Ceph File System snapshots and multiple file systems

Snapshots are known not to function properly with multiple file systems.

If you have multiple file systems sharing a single Ceph pool with namespaces, their snapshots will collide, and deleting one snapshot results in missing file data for other snapshots sharing the same Ceph pool.

4.9.6. Ceph File System snapshot data structures

The Ceph File System (CephFS) uses the following snapshot data structures to store data efficiently:

SnapRealm

A SnapRealm is created whenever you create a snapshot at a new point in the file hierarchy or when you move a snapshotted inode outside its parent snapshot. A single SnapRealm represents the parts of the file hierarchy that belong to the same set of snapshots. A SnapRealm contains an sr_t srnode and the inodes_with_caps that are part of the snapshot.

sr_t

An sr_t is the on-disk snapshot metadata. It contains sequence counters, time-stamps, and a list of associated snapshot IDs and the past_parent_snaps.

SnapServer

A SnapServer manages snapshot ID allocation, snapshot deletion, and maintaining a list of cumulative snapshots in the file system. A file system only has one instance of a SnapServer.

SnapContext

A SnapContext consists of a snapshot sequence ID (snapid) and all the snapshot IDs currently defined for an object. When a write operation occurs, a Ceph client provides a SnapContext to specify the set of snapshots that exist for an object. To generate a SnapContext list, Ceph combines snapids associated with the SnapRealm and all valid snapids in the past_parent_snaps data structure.

File data is stored using RADOS self-managed snapshots. In a self-managed snapshot, the client must provide the current SnapContext on each write. Clients are careful to use the correct SnapContext when writing file data to the Ceph OSDs. SnapClient cached effective snapshots filter out stale snapids.

SnapClient

A SnapClient is used to communicate with a SnapServer and cache cumulative snapshots locally. Each Metadata Server (MDS) rank has a SnapClient instance.
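The combination step in the SnapContext description can be modeled in a few lines. The following is a conceptual sketch only, not MDS or client code: it merges the snapids of a SnapRealm with the valid snapids from past_parent_snaps and emits them the way RADOS expects the snapshot list of a SnapContext, in strictly descending order without duplicates. The function name snap_context and the input values are hypothetical.

```shell
#!/usr/bin/env bash
# Conceptual sketch only (not Ceph code): model how a SnapContext snapshot
# list can be formed by combining the snapids of a SnapRealm with the valid
# snapids from past_parent_snaps. RADOS expects the IDs in a SnapContext
# in descending order, without duplicates.
# snap_context "REALM_SNAPIDS" "PAST_PARENT_SNAPIDS"
snap_context() {
    local realm="$1" past="$2"
    # One ID per line, numeric reverse sort, drop duplicates, join with spaces.
    # $realm and $past are left unquoted on purpose so they split into IDs.
    printf '%s\n' $realm $past | sort -n -r -u | paste -s -d ' ' -
}

snap_context "5 9" "3 9"
```

Here snapid 9 appears in both inputs but only once in the result, which is 9 5 3.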

4.10. Managing Ceph File System snapshots

As a storage administrator, you can take a point-in-time snapshot of a Ceph File System (CephFS) directory. CephFS snapshots are asynchronous, and you can choose the directory in which to create a snapshot.

4.10.1. Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Deployment of a Ceph File System.

4.10.2. Ceph File System snapshots

A Ceph File System (CephFS) snapshot creates an immutable, point-in-time view of a Ceph File System. CephFS snapshots are asynchronous and are kept in a special hidden directory, named .snap, inside the snapshotted directory. You can create a snapshot of any directory within a Ceph File System. A snapshot of a directory also includes all the subdirectories beneath it.

Each Ceph Metadata Server (MDS) cluster allocates the snap identifiers independently. Using snapshots for multiple Ceph File Systems that are sharing a single pool causes snapshot collisions and results in missing file data.

Additional Resources

- See the Creating a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for more details.

4.10.3. Enabling a snapshot for a Ceph File System

New Ceph File Systems enable the snapshotting feature by default, but you must manually enable the feature on existing Ceph File Systems.

Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Deployment of a Ceph File System.

- Root-level access to a Ceph Metadata Server (MDS) node.

Procedure

For existing Ceph File Systems, enable the snapshotting feature:

Syntax

ceph fs set FILE_SYSTEM_NAME allow_new_snaps true

Example

[root@mds ~]# ceph fs set cephfs allow_new_snaps true
enabled new snapshots
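If you need to enable snapshots on several existing file systems, you can run the same command once for each file system. The following is a minimal sketch. The function name enable_snaps is hypothetical, and it assumes that each name you pass is an existing file system. Keep the earlier warning about snapshots and multiple file systems in mind before you enable snapshots on more than one file system.

```shell
# Hypothetical helper: enable_snaps FS_NAME...
# Runs "ceph fs set FS_NAME allow_new_snaps true" for each named file system
# and stops at the first failure.
enable_snaps() {
  if [ "$#" -eq 0 ]; then
    echo "usage: enable_snaps FS_NAME..." >&2
    return 2
  fi
  local fs
  for fs in "$@"; do
    ceph fs set "$fs" allow_new_snaps true || return 1
  done
}
```

For example, enable_snaps cephfs runs the same command as the example above.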

Additional Resources

- See the Creating a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on creating a snapshot.

- See the Deleting a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on deleting a snapshot.

- See the Restoring a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on restoring a snapshot.

4.10.4. Creating a snapshot for a Ceph File System

You can create an immutable, point-in-time view of a Ceph File System by creating a snapshot. Snapshots are kept in a hidden directory, named .snap by default, inside the directory that you snapshot.

Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Deployment of a Ceph File System.

- Root-level access to a Ceph Metadata Server (MDS) node.

Procedure

To create a snapshot, create a new subdirectory inside the .snap directory. The snapshot name is the new subdirectory name.

Syntax

mkdir NEW_DIRECTORY_PATH

Example

[root@mds cephfs]# mkdir .snap/new-snaps

This example creates the new-snaps subdirectory on a Ceph File System that is mounted on /mnt/cephfs and informs the Ceph Metadata Server (MDS) to start making snapshots.

Verification

List the new snapshot directory:

Syntax

ls -l .snap/

The new-snaps subdirectory is listed under the .snap directory.
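You can combine the create and verify steps into a small shell function. The following is a minimal sketch, not part of Ceph. The function name create_snapshot and the timestamped default name are hypothetical. The sketch assumes that the directory is on a mounted CephFS with snapshots enabled and that the snapshot directory uses the default name, .snap.

```shell
# Hypothetical helper: create_snapshot DIRECTORY [SNAPSHOT_NAME]
# Creates a snapshot of DIRECTORY by making a subdirectory under its hidden
# .snap directory, then checks that the snapshot is present.
create_snapshot() {
  local dir="$1"
  # Default to a timestamped name such as snap-20240101-120000.
  local name="${2:-snap-$(date +%Y%m%d-%H%M%S)}"
  if [ -z "$dir" ]; then
    echo "usage: create_snapshot DIRECTORY [SNAPSHOT_NAME]" >&2
    return 2
  fi
  mkdir -- "$dir/.snap/$name" || return 1
  # Verification: the new snapshot must now appear under .snap.
  [ -d "$dir/.snap/$name" ] && echo "$name"
}
```

For example, create_snapshot /mnt/cephfs new-snaps is equivalent to running mkdir .snap/new-snaps from /mnt/cephfs.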

Additional Resources

- See the Deleting a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on deleting a snapshot.

- See the Restoring a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on restoring a snapshot.

4.10.5. Deleting a snapshot for a Ceph File System

You can delete a snapshot by removing the corresponding directory in a .snap directory.

Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Deployment of a Ceph File System.

- Creation of snapshots on a Ceph File System.

- Root-level access to a Ceph Metadata Server (MDS) node.

Procedure

To delete a snapshot, remove the corresponding directory:

Syntax

rmdir DIRECTORY_PATH

Example

[root@mds cephfs]# rmdir .snap/new-snaps

This example deletes the new-snaps subdirectory on a Ceph File System that is mounted on /mnt/cephfs.

Unlike with a regular directory, the rmdir command succeeds on a snapshot directory even if it is not empty, so you do not need to use a recursive rm command.

Attempting to delete root-level snapshots, which might contain underlying snapshots, will fail.
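Because rmdir removes a snapshot even when it is not empty, a wrapper that validates the snapshot name can prevent mistakes. The following is a minimal sketch. The function name delete_snapshot is hypothetical, and the sketch assumes a mounted CephFS that uses the default .snap directory name.

```shell
# Hypothetical helper: delete_snapshot DIRECTORY SNAPSHOT_NAME
# Deletes one snapshot of DIRECTORY with rmdir, after rejecting names that
# could point at .snap itself or outside it.
delete_snapshot() {
  local dir="$1" name="$2"
  if [ -z "$dir" ]; then
    echo "usage: delete_snapshot DIRECTORY SNAPSHOT_NAME" >&2
    return 2
  fi
  case "$name" in
    ""|*/*|.|..)
      echo "delete_snapshot: invalid snapshot name '$name'" >&2
      return 2 ;;
  esac
  # On CephFS, rmdir on a snapshot succeeds even when it is not empty.
  rmdir -- "$dir/.snap/$name"
}
```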

Additional Resources

- See the Restoring a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on restoring a snapshot.

- See the Creating a snapshot for a Ceph File System section in the Red Hat Ceph Storage File System Guide for details on creating a snapshot.

4.10.6. Restoring a snapshot for a Ceph File System

You can restore a file from a snapshot or fully restore a complete snapshot for a Ceph File System (CephFS).

Prerequisites

- A running and healthy Red Hat Ceph Storage cluster.

- Deployment of a Ceph File System.

- Root-level access to a Ceph Metadata Server (MDS) node.

Procedure

To restore a file from a snapshot, copy it from the snapshot directory to the regular tree:

Syntax

cp -a .snap/SNAP_DIRECTORY/FILENAME .

Example

[root@mds dir1]# cp -a .snap/new-snaps/file1 .

This example restores file1 to the current directory.

You can also fully restore a snapshot from the .snap directory tree. Replace the current entries with copies from the desired snapshot:

Syntax

rm -rf *
cp -a .snap/SNAP_DIRECTORY/* .

Example

[root@mds dir1]# rm -rf *
[root@mds dir1]# cp -a .snap/new-snaps/* .

This example removes all files and directories under dir1 and restores the files from the new-snaps snapshot to the current directory, dir1.
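The * wildcard in the full-restore commands does not match hidden files, whose names start with a dot, so those files are neither removed nor restored. The following minimal sketch covers both restore cases and includes hidden files. The function names restore_file and restore_snapshot are hypothetical. The sketch assumes a mounted CephFS, the default .snap directory name, and GNU or BSD versions of cp and find. As a precaution, it excludes .snap when clearing the directory.

```shell
# Hypothetical helpers for restoring data from a CephFS snapshot.

# restore_file DIRECTORY SNAPSHOT FILENAME
# Copies one file from the snapshot back into DIRECTORY, preserving its
# attributes, and overwrites the current copy if one exists.
restore_file() {
  if [ "$#" -ne 3 ]; then
    echo "usage: restore_file DIRECTORY SNAPSHOT FILENAME" >&2
    return 2
  fi
  cp -a -- "$1/.snap/$2/$3" "$1/"
}

# restore_snapshot DIRECTORY SNAPSHOT
# Replaces the contents of DIRECTORY with the contents of SNAPSHOT,
# including hidden files.
restore_snapshot() {
  if [ "$#" -ne 2 ]; then
    echo "usage: restore_snapshot DIRECTORY SNAPSHOT" >&2
    return 2
  fi
  local dir="$1" snap="$2"
  # Confirm that the snapshot exists before deleting anything.
  [ -d "$dir/.snap/$snap" ] || { echo "no such snapshot: $snap" >&2; return 1; }
  # Remove every top-level entry, dot files included, but never .snap.
  find "$dir" -mindepth 1 -maxdepth 1 ! -name .snap -exec rm -rf -- {} + || return 1
  # A trailing "/." copies the snapshot contents, hidden entries included.
  cp -a -- "$dir/.snap/$snap/." "$dir/"
}
```

For example, restore_snapshot /mnt/cephfs/dir1 new-snaps corresponds to the full-restore example above.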

4.10.7. Additional Resources

- See the Deployment of the Ceph File System section in the Red Hat Ceph Storage File System Guide.

4.11. Taking down a Ceph File System cluster

You can take down a Ceph File System (CephFS) cluster by setting the down flag to true. This gracefully shuts down the Metadata Server (MDS) daemons by flushing their journals to the metadata pool, and it stops all client I/O.

You can also take the CephFS cluster down quickly, for example, to test the deletion of a file system or to practice a disaster recovery scenario. Doing this sets the file system's joinable flag to false, which prevents standby MDS daemons from activating the file system.

Prerequisites

- User access to the Ceph Monitor node.

Procedure

To mark the CephFS cluster down:

Syntax

ceph fs set FS_NAME down true

Example

[root@mon]# ceph fs set cephfs down true

To bring the CephFS cluster back up:

Syntax

ceph fs set FS_NAME down false

Example

[root@mon]# ceph fs set cephfs down false

Alternatively, to quickly take down a CephFS cluster:

Syntax

ceph fs fail FS_NAME

Example

[root@mon]# ceph fs fail cephfs
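You can wrap these commands in a single helper. The following is a minimal sketch, and the function name cephfs_power is hypothetical. The rejoin action runs ceph fs set FS_NAME joinable true. Upstream Ceph documents this command for letting standby MDS daemons activate a file system again after ceph fs fail. Confirm it against your release.

```shell
# Hypothetical wrapper: cephfs_power FS_NAME down|up|fail|rejoin
cephfs_power() {
  local fs="$1" action="$2"
  if [ -z "$fs" ]; then
    echo "usage: cephfs_power FS_NAME down|up|fail|rejoin" >&2
    return 2
  fi
  case "$action" in
    down)   ceph fs set "$fs" down true ;;      # graceful: flush journals, stop MDS ranks
    up)     ceph fs set "$fs" down false ;;     # bring a gracefully stopped file system back
    fail)   ceph fs fail "$fs" ;;               # fast: clear joinable and fail the ranks
    rejoin) ceph fs set "$fs" joinable true ;;  # allow standbys to activate it again
    *)
      echo "usage: cephfs_power FS_NAME down|up|fail|rejoin" >&2
      return 2 ;;
  esac
}
```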

4.12. Removing a Ceph File System using the command-line interface

You can remove a Ceph File System (CephFS) using the command-line interface. Before doing so, consider backing up all the data and verifying that all clients have unmounted the file system locally.

This operation is destructive and will make the data stored on the Ceph File System permanently inaccessible.

Prerequisites

- Back up the data.

- All clients have unmounted the Ceph File System (CephFS).

- Root-level access to a Ceph Monitor node.

Procedure

Display the CephFS status to determine the MDS ranks.

Syntax

ceph fs status

Example

[root@mon ~]# ceph fs status
cephfs - 0 clients
======
+------+--------+----------------+---------------+-------+-------+
| Rank | State  |      MDS       |    Activity   |  dns  |  inos |
+------+--------+----------------+---------------+-------+-------+
|  0   | active | cluster1-node6 | Reqs:    0 /s |   10  |   13  |
+------+--------+----------------+---------------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata | 2688k | 15.0G |
|   cephfs_data   |   data   |    0  | 15.0G |
+-----------------+----------+-------+-------+
+----------------+
|  Standby MDS   |
+----------------+
| cluster1-node5 |
+----------------+

In the example above, the rank is 0.

Mark the CephFS as down:

Syntax

ceph fs set FS_NAME down true

Replace FS_NAME with the name of the CephFS you want to remove.

Example

[root@mon]# ceph fs set cephfs down true
marked down

Display the status of the CephFS to confirm that it has stopped:

Syntax

ceph fs status

Example

[root@mon ~]# ceph fs status
cephfs - 0 clients
======
+------+----------+----------------+----------+-------+-------+
| Rank |  State   |      MDS       | Activity |  dns  |  inos |
+------+----------+----------------+----------+-------+-------+
|  0   | stopping | cluster1-node6 |          |   10  |   12  |
+------+----------+----------------+----------+-------+-------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata | 2688k | 15.0G |
|   cephfs_data   |   data   |    0  | 15.0G |
+-----------------+----------+-------+-------+
+----------------+
|  Standby MDS   |
+----------------+
| cluster1-node5 |
+----------------+

After some time, the MDS is no longer listed:

Example

[root@mon ~]# ceph fs status
cephfs - 0 clients
======
+------+-------+-----+----------+-----+------+
| Rank | State | MDS | Activity | dns | inos |
+------+-------+-----+----------+-----+------+
+------+-------+-----+----------+-----+------+
+-----------------+----------+-------+-------+
|       Pool      |   type   |  used | avail |
+-----------------+----------+-------+-------+
| cephfs_metadata | metadata | 2688k | 15.0G |
|   cephfs_data   |   data   |    0  | 15.0G |
+-----------------+----------+-------+-------+
+----------------+
|  Standby MDS   |
+----------------+
| cluster1-node5 |
+----------------+

Fail all MDS ranks shown in the status output from the first step:

Syntax

ceph mds fail RANK

Replace RANK with the rank of the MDS daemon to fail.

Example

[root@mon]# ceph mds fail 0

Remove the CephFS:

Syntax

ceph fs rm FS_NAME --yes-i-really-mean-it

Replace FS_NAME with the name of the Ceph File System you want to remove.

Example

[root@mon]# ceph fs rm cephfs --yes-i-really-mean-it

Verify that the file system is removed:

Syntax

ceph fs ls

Example

[root@mon ~]# ceph fs ls
No filesystems enabled

Optional: Remove the pools that were used by CephFS.

On a Ceph Monitor node, list the pools:

Syntax

ceph osd pool ls

Example

[root@mon ~]# ceph osd pool ls
rbd
cephfs_data
cephfs_metadata

In the example output, cephfs_metadata and cephfs_data are the pools that were used by CephFS.

Remove the metadata pool:

Syntax