OpenShift cluster not present or shown as Stale in OCM

Environment

- Red Hat OpenShift Container Platform (RHOCP)
  - 4
- Azure Red Hat OpenShift (ARO)
  - 4
- OpenShift Managed (Azure)
- Red Hat OpenShift Cluster Manager (OCM)
- Red Hat Hybrid Cloud Console

Issue

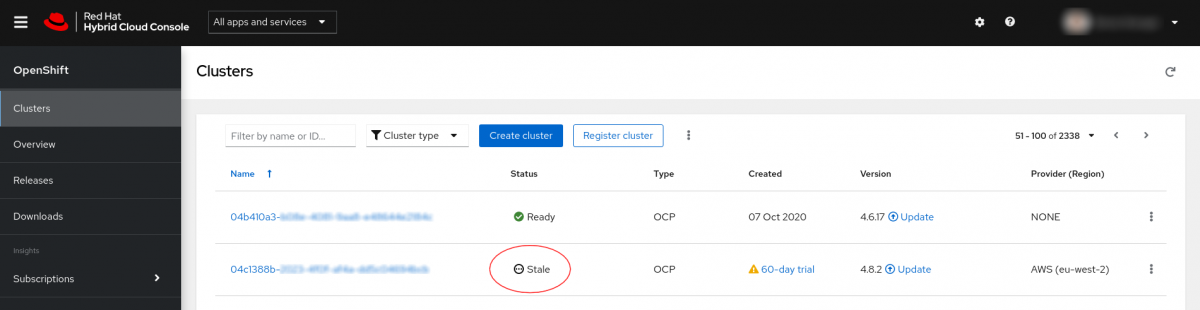

- After an OpenShift cluster is installed, it is not shown on console.redhat.com.

- An OCP cluster does not register on console.redhat.com and does not appear in OpenShift Cluster Manager.

- Ownership of an OCP cluster on console.redhat.com is not switched to the new owner after following the procedure "Transferring ownership of a connected cluster".

- An OpenShift cluster shows up as "Stale" in OpenShift Cluster Manager, in the Red Hat Hybrid Cloud Console (console.redhat.com). Why is that?

- In the OCM console, a cluster is shown as "Stale" even though it is running. How can this be fixed?

Resolution

- Check that the OpenShift Container Platform cluster can connect to the Telemetry endpoints. If the cluster uses a proxy, ensure that the cluster proxy configuration allows access to the Telemetry endpoints. The required Telemetry endpoints are listed in the documentation.

- Follow the Diagnostic Steps section to verify that the cluster was registered with a Red Hat account that is in the same organization as the user viewing console.redhat.com. Also verify that the cluster owner account is active in the Red Hat Customer Portal.

- Follow the Diagnostic Steps section to verify that the pull secret is of the correct type. If it is not, delete and re-create the pull secret:

  ```shell
  oc delete secret/pull-secret -n openshift-config
  oc create secret generic pull-secret -n openshift-config --type=kubernetes.io/dockerconfigjson --from-file=.dockerconfigjson=/path/to/downloaded/pull-secret
  ```

- Follow the Diagnostic Steps section to check for errors in the `telemeter-client` pod, then recreate the pod.

  4.9 and earlier:

  ```shell
  oc delete pod -n openshift-monitoring -l k8s-app=telemeter-client
  ```

  4.10 and later:

  ```shell
  oc delete pod -n openshift-monitoring -l app.kubernetes.io/name=telemeter-client
  ```
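Because the label on the `telemeter-client` pod differs between 4.9 and earlier and 4.10 and later, a small helper can pick whichever selector matches before deleting the pod. This is a minimal sketch, not a supported tool: it assumes a logged-in `oc` session with permission to delete pods in `openshift-monitoring`, and the function names `telemeter_selector` and `recreate_telemeter_client` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): recreate the telemeter-client pod without
# knowing in advance which 4.x label scheme the cluster uses.
# Assumes a logged-in `oc` that may delete pods in openshift-monitoring.

MON_NS=openshift-monitoring
NEW_LABEL='app.kubernetes.io/name=telemeter-client'  # 4.10 and later
OLD_LABEL='k8s-app=telemeter-client'                 # 4.9 and earlier

# Print the first label selector that matches at least one telemeter-client pod.
telemeter_selector() {
  local label
  for label in "$NEW_LABEL" "$OLD_LABEL"; do
    if [ -n "$(oc get pods -n "$MON_NS" -l "$label" -o name 2>/dev/null)" ]; then
      printf '%s\n' "$label"
      return 0
    fi
  done
  echo "no telemeter-client pod found in $MON_NS" >&2
  return 1
}

# Delete the matching pod(s); the owning controller schedules a replacement.
recreate_telemeter_client() {
  local selector
  selector=$(telemeter_selector) || return 1
  oc delete pod -n "$MON_NS" -l "$selector"
}
```

For example, save the functions in a file (the name `telemeter.sh` is only illustrative), run `source telemeter.sh`, then `recreate_telemeter_client`. The 4.10+ label is tried first, so current releases need only one `oc get` before the delete.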

Root Cause

The telemeter-client cannot contact the Red Hat Telemetry endpoints.
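One way to test this root cause is to request a Telemetry endpoint from a cluster node, going through the cluster-wide proxy when one is configured. The sketch below rests on several assumptions: the URL passed in is a placeholder to be replaced with an endpoint from the documentation, nodes provide `curl` through `oc debug node/<node> -- chroot /host`, and the proxy's `noProxy` list is ignored. A node is not exactly the same network path as the `telemeter-client` pod, so a successful probe does not fully rule out the problem. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): check whether a node can reach a Telemetry
# endpoint, using the cluster-wide HTTPS proxy if one is set.
# The URL argument is a placeholder; take real endpoints from the documentation.
# Assumes a logged-in `oc` with cluster-admin rights. noProxy is NOT evaluated.

# HTTPS proxy configured cluster-wide (empty output when no proxy is set).
cluster_https_proxy() {
  oc get proxy/cluster -o jsonpath='{.spec.httpsProxy}'
}

# Usage: probe_from_node <node-name> <endpoint-url>
# curl on the node prints the HTTP status code; 000 means no HTTP response.
probe_from_node() {
  local node=$1 url=$2 proxy
  proxy=$(cluster_https_proxy) || return 1
  local -a curl_args=(-sS -o /dev/null -w '%{http_code}' --max-time 15)
  if [ -n "$proxy" ]; then
    curl_args+=(-x "$proxy")   # route the request through the cluster proxy
  fi
  oc debug "node/$node" -- chroot /host curl "${curl_args[@]}" "$url"
}
```

Any HTTP status code, even an error such as 401 or 404, shows that the endpoint was reached; `000` together with a curl error points to a network, firewall, or proxy problem.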

Diagnostic Steps

- Review the logs of the `telemeter-client` pod running in the `openshift-monitoring` namespace to see if there are any errors.

  Check the pod name in 4.9 and earlier:

  ```shell
  oc get pods -n openshift-monitoring -l k8s-app=telemeter-client
  ```

  Check the pod name in 4.10 and later:

  ```shell
  oc get pods -n openshift-monitoring -l app.kubernetes.io/name=telemeter-client
  ```

  View the logs, replacing `telemeter-client-#########` with the pod name returned above:

  ```shell
  oc logs telemeter-client-######### -n openshift-monitoring -c telemeter-client
  ```

- Check the cluster pull secret with the following command. Confirm that it is of the correct type and that the user account in the secret is still active in the Red Hat Customer Portal:

  ```shell
  oc get secret pull-secret -n openshift-config -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d | jq
  ```

  The secret type should be `kubernetes.io/dockerconfigjson`. The type is not part of the decoded output above; it is shown in the TYPE column of `oc get secret pull-secret -n openshift-config`.

  Note: `jq` is needed to run the above command. If it is not available, remove `| jq` from the command; the output will be harder to read.
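The two pull secret checks can be combined into one helper. This is a minimal sketch, assuming the pull secret was downloaded from console.redhat.com (such pull secrets carry an `email` field in each `auths` entry) and that `jq` is installed. It inspects the `cloud.openshift.com` entry because that is the credential Telemetry authenticates with. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): check the global pull secret's type and show
# which Red Hat account its cloud.openshift.com entry belongs to.
# Requires a logged-in `oc`, plus `base64` and `jq` on the workstation.

PS_NS=openshift-config
EXPECTED_TYPE='kubernetes.io/dockerconfigjson'

# The type is a field of the Secret object itself, not of the decoded data.
pull_secret_type() {
  oc get secret pull-secret -n "$PS_NS" -o jsonpath='{.type}'
}

# Email recorded for the cloud.openshift.com credential ("missing" if absent).
pull_secret_owner_email() {
  oc get secret pull-secret -n "$PS_NS" -o jsonpath='{.data.\.dockerconfigjson}' \
    | base64 -d \
    | jq -r '.auths["cloud.openshift.com"].email // "missing"'
}

# Return non-zero when the type is wrong; otherwise also print the account email.
check_pull_secret() {
  local type
  type=$(pull_secret_type) || return 1
  if [ "$type" != "$EXPECTED_TYPE" ]; then
    echo "unexpected pull secret type: '$type' (expected $EXPECTED_TYPE)" >&2
    return 1
  fi
  echo "type OK: $type"
  echo "cloud.openshift.com account email: $(pull_secret_owner_email)"
}
```

The printed email identifies the account to look up in the Customer Portal: it should be active and belong to the same organization as the user viewing console.redhat.com.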

This solution is part of Red Hat’s fast-track publication program, providing a huge library of solutions that Red Hat engineers have created while supporting our customers. To give you the knowledge you need the instant it becomes available, these articles may be presented in a raw and unedited form.
