Deployment Guide

Installing and Configuring OpenShift Enterprise

Red Hat OpenShift Documentation Team

Abstract

- Introductory information that includes hardware and software prerequisites, architecture information, upgrading from previous installations, and general information about the sample installation.

- Instructions on how to install and configure broker hosts and all necessary components and services.

- Instructions on how to install and configure node hosts and all necessary components and services.

- Information on how to test and validate an OpenShift Enterprise installation, and install and configure a developer workstation.

Chapter 1. Introduction to OpenShift Enterprise

1.1. Product Features

| Feature | Description |
|---|---|
| Ease of administration | With OpenShift Enterprise, system administrators no longer have to create development, testing, and production environments. Developers can create their own application stacks using the OpenShift Enterprise Management Console, client tools, or the REST API. |
| Choice | Developers can choose their tools, languages, frameworks, and services. |
| Automatic scaling | With OpenShift Enterprise, applications can scale out as necessary, adjusting resources based on demand. |
| Avoid lock-in | Using standard languages and middleware runtimes means that customers are not tied to OpenShift Enterprise, and can easily move to another platform. |
| Multiple clouds | OpenShift Enterprise can be deployed on physical hardware, private clouds, public clouds, hybrid clouds, or a mixture of these, allowing full control over where applications are run. |

1.2. What's New in Current Release

Chapter 2. Prerequisites

2.1. Supported Operating Systems

2.2. Hardware Requirements

- AMD64 or Intel® 64 architecture

- Minimum 1 GB of memory

- Minimum 8 GB of hard disk space

- Network connectivity

2.3. Red Hat Subscription Requirements

- Red Hat Enterprise Linux 6 Server

- Red Hat Software Collections 1

- OpenShift Enterprise Infrastructure (broker and supporting services)

- OpenShift Enterprise Application Node

- OpenShift Enterprise Client Tools

- JBoss Enterprise Web Server 2

- JBoss Enterprise Application Platform 6

- Red Hat OpenShift Enterprise JBoss EAP add-on

Chapter 3. Architecture

Figure 3.1. OpenShift Enterprise Components Legend

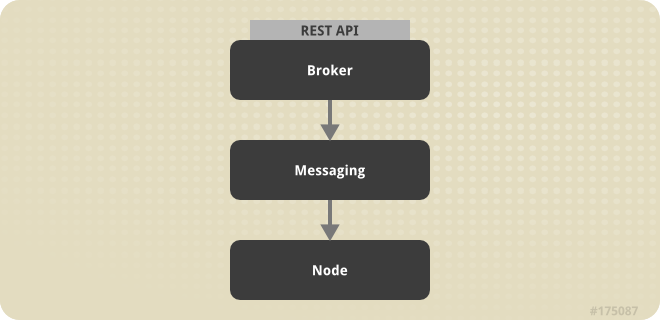

Figure 3.2. OpenShift Enterprise Host Types

3.1. Communication Mechanisms

Figure 3.3. OpenShift Enterprise Communication Mechanisms

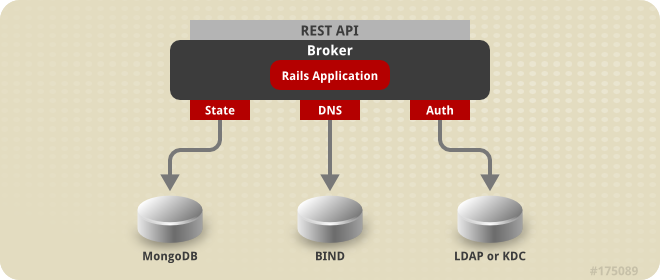

3.2. State Management

Table 3.1. Sections of Application Data

| Section | Description |
|---|---|
| State | This is the general application state where the data is stored using MongoDB by default. |
| DNS | This is the dynamic DNS state where BIND handles the data by default. |
| Auth | This is the user state for authentication and authorization. This state is stored using any authentication mechanism supported by Apache, such as mod_auth_ldap and mod_auth_kerb. |

Figure 3.4. OpenShift Enterprise State Management

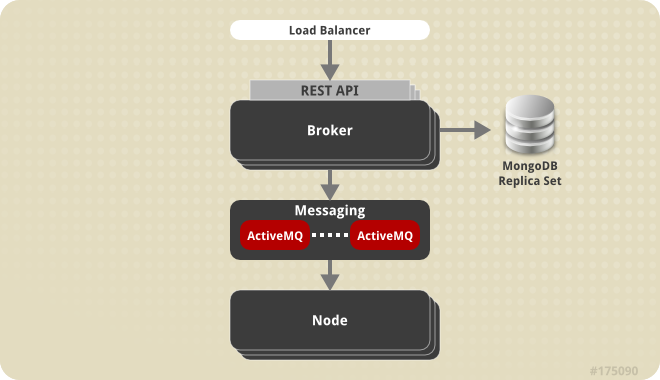

3.3. Redundancy

Figure 3.5. Implementing Redundancy in OpenShift Enterprise

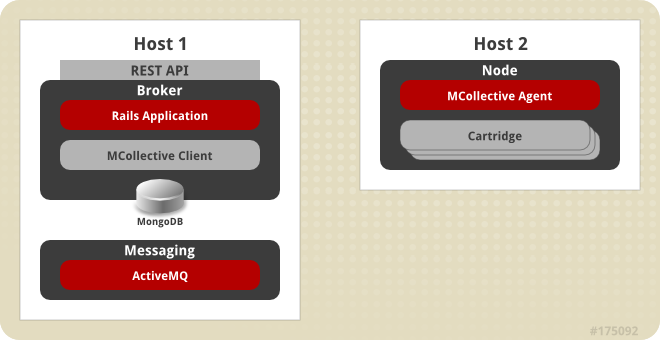

Figure 3.6. Simplified OpenShift Enterprise Installation Topology

3.4. Security

- SELinux

  SELinux is an implementation of a mandatory access control (MAC) mechanism in the Linux kernel. It checks for allowed operations at a level beyond what standard discretionary access controls (DAC) provide. SELinux can enforce rules on files and processes, and on their actions based on defined policy. SELinux provides a high level of isolation between applications running within OpenShift Enterprise because each gear and its contents are uniquely labeled.

- Control Groups (cgroups)

  Control Groups allow you to allocate processor, memory, and input and output (I/O) resources among applications. They provide control of resource utilization in terms of memory consumption, storage and networking I/O utilization, and process priority. This enables the establishment of policies for resource allocation, ensuring that no single gear or process exhausts a system resource and affects other gears or services.

- Kernel Namespaces

  Kernel namespaces separate groups of processes so that they cannot see resources in other groups. From the perspective of a running OpenShift Enterprise application, for example, the application has access to a running Red Hat Enterprise Linux system, although it could be one of many applications running within a single instance of Red Hat Enterprise Linux.

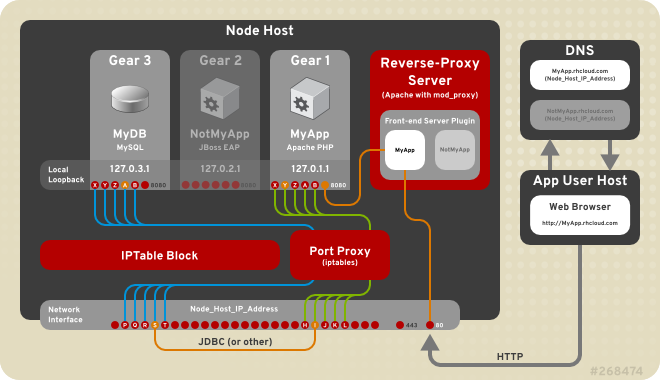

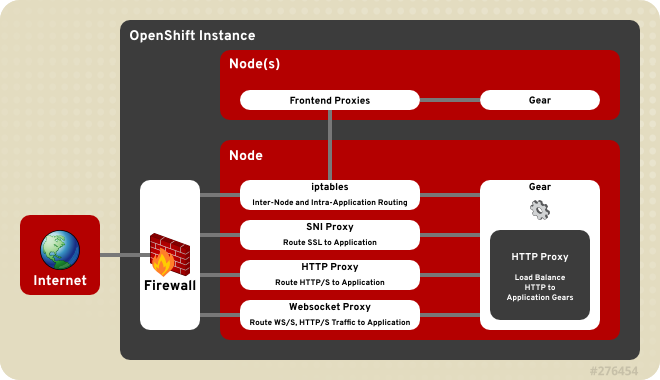

It is important to understand how routing works on a node to better understand the security architecture of OpenShift Enterprise. An OpenShift Enterprise node includes several front ends to proxy traffic to the gears connected to its internal network.

Figure 3.7. OpenShift Enterprise Networking

Chapter 4. Upgrading from Previous Versions

Upgrades between minor versions of OpenShift Enterprise are performed using the ose-upgrade tool. If you are deploying OpenShift Enterprise for the first time, see Section 6.3, “Using the Sample Deployment Steps” for installation instructions. If you are attempting to apply the latest errata within a minor release of OpenShift Enterprise 2 (for example, updating from release 2.1.6 to 2.1.8), see Chapter 15, Asynchronous Errata Updates for specific update instructions.

Upgrade across multiple minor versions one version at a time. For example, to upgrade from 2.0 to 2.2, use the ose-upgrade tool to upgrade from 2.0 to 2.1, then use the tool again to upgrade from 2.1 to 2.2.

- Broker services are disabled during the upgrade.

- Applications are unavailable during certain steps of the upgrade. During the outage, users can still access their gears using SSH, but should be advised against performing any Git pushes. See the section on your relevant upgrade path for more specific outage information.

- Although it may not be necessary, Red Hat recommends rebooting all hosts after an upgrade. Due to the scheduled outage, this is a good time to apply any kernel updates that are included.

yum update command.

4.1. Upgrade Tool

The upgrade is performed as a series of steps that are run with the ose-upgrade tool.

- Each step typically consists of one or more scripts to be executed and varies depending on the type of host.

- Upgrade steps and scripts must be executed in a given order, and are tracked by the ose-upgrade tool. The upgrade tool tracks all steps that have been executed and those that have failed. The next step or script is not executed when a previous one has failed.

- Failed steps can be reattempted after the issues are resolved. Note that only scripts that previously failed are executed again, so ensure you are aware of the impact and that the issue has been resolved correctly. If necessary, use the --skip option to mark a step complete and proceed to the next step. However, only do this when absolutely required.

- The ose-upgrade tool log file is stored at /var/log/openshift/upgrade.log for review if required.

Run the ose-upgrade status command to list the known steps and view the next step that must be performed. Performing all the steps without pausing with the ose-upgrade all command is only recommended for node hosts. For broker hosts, Red Hat recommends that you pause after each step to better understand the process, and understand the next step to be performed.
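The step-by-step approach recommended for broker hosts can be wrapped in a small helper. The following is a minimal sketch, not part of the ose-upgrade tool: the run_step function and the OSE_UPGRADE variable are hypothetical names introduced here for illustration, and the only facts taken from this guide are the ose-upgrade STEP invocation and the log file location.

```bash
#!/usr/bin/env bash
# Sketch: run a single upgrade step and point at the log when it fails.
# OSE_UPGRADE is a hypothetical override (useful for dry runs); it is not
# an option of the real tool and defaults to the ose-upgrade command.

UPGRADE_LOG=/var/log/openshift/upgrade.log   # log location given in this guide

run_step() {
    local step="$1"
    local tool="${OSE_UPGRADE:-ose-upgrade}"

    echo "Running step: ${step}"
    if "$tool" "$step"; then
        echo "Step ${step} completed"
        return 0
    fi

    echo "Step ${step} failed; review ${UPGRADE_LOG} before retrying" >&2
    # Show the end of the log only if it is readable on this host.
    if [ -r "$UPGRADE_LOG" ]; then
        tail -n 20 "$UPGRADE_LOG" >&2
    fi
    return 1
}
```

On a broker host this could be used one step at a time, for example `run_step pre`, pausing to review the output before moving on to the next step.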

4.2. Preparing for an Upgrade

Procedure 4.1. To Prepare OpenShift Enterprise for an Upgrade:

- Perform the required backup steps before starting with the upgrade. Only proceed to the next step after the backup is complete, and the relevant personnel are notified of the upcoming outage.

- Disable any change management software that is being used to manage your OpenShift Enterprise installation configuration, and update it accordingly after the upgrade.

- If a configuration file already exists on disk during an update, the RPM package that provides the file does one of the following, depending on how the package is built:

  - Backs up the existing file with an .rpmsave extension and creates the new file.

  - Leaves the existing file in place and creates the new file with an .rpmnew extension.

  Before updating, find any .rpm* files still on disk from previous updates using the following commands:

      # updatedb
      # locate --regex '\.rpm(save|new)$'

  Compare these files to the relevant configuration files currently in use and note any differences. Manually merge any desired settings into the current configuration files, then either move the .rpm* files to an archive directory or remove them.

- Before attempting to upgrade, ensure the latest errata have been applied for the current minor version of your OpenShift Enterprise installation. Run the yum update command, then check again for any new configuration files that have changed:

      # yum update -y
      # updatedb
      # locate --regex '\.rpm(save|new)$'

  Resolve any .rpm* files found again as described in the previous step.

  Additional steps may also be required depending on the errata being applied. For more information on errata updates, see the relevant OpenShift Enterprise Release Notes at http://access.redhat.com/site/documentation.

- Restart any services that had their configuration files updated.

- Run the oo-admin-chk script on a broker host:

      # oo-admin-chk

  This command checks the integrity of the MongoDB datastore against the actual deployment of application gears on the node hosts. Resolve any issues reported by this script, if possible, prior to performing an upgrade. For more information on using the oo-admin-chk script and fixing gear discrepancies, see the OpenShift Enterprise Troubleshooting Guide at http://access.redhat.com/site/documentation.

- Run the oo-diagnostics script on all hosts:

      # oo-diagnostics

  Keep the output of this command so that you can compare it with the output after the upgrade is complete.
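Comparing leftover .rpmsave and .rpmnew files against the files in use can be partly scripted. The sketch below assumes the leftover paths are supplied one per line, for example from the locate command in this procedure; the function names live_config_for and review_leftovers are hypothetical and introduced only for illustration.

```bash
#!/usr/bin/env bash
# Sketch: pair each leftover .rpmsave/.rpmnew file with its live config file.

# Print the live file path for a leftover path; fail for any other path.
live_config_for() {
    local leftover="$1"
    case "$leftover" in
        *.rpmsave) printf '%s\n' "${leftover%.rpmsave}" ;;
        *.rpmnew)  printf '%s\n' "${leftover%.rpmnew}" ;;
        *)         return 1 ;;
    esac
}

# Read leftover paths from standard input and show a unified diff for each.
review_leftovers() {
    local leftover live
    while IFS= read -r leftover; do
        live="$(live_config_for "$leftover")" || continue
        echo "== ${live} vs ${leftover}"
        diff -u "$live" "$leftover" || true   # diff exits 1 when files differ
    done
}
```

A possible invocation is `locate --regex '\.rpm(save|new)$' | review_leftovers`. The script only reports differences; merging settings and archiving or removing the leftover files remains a manual decision.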

4.3. Upgrading from OpenShift Enterprise 1.2 to OpenShift Enterprise 2.0

The first step of the upgrade, the begin step, adjusts the yum configurations in preparation for the upgrade. Red Hat recommends that you perform this step in advance of the scheduled outage to ensure any subscription issues are resolved before you proceed with the upgrade.

Procedure 4.2. To Bootstrap the Upgrade and Perform the begin Step:

- The openshift-enterprise-release RPM package includes the ose-upgrade tool that guides you through the upgrade process. Install the openshift-enterprise-release package on each host, and update it to the most current version.

      # yum install openshift-enterprise-release

- The begin step of the upgrade process applies to all hosts, and includes those hosts that contain only supporting services such as MongoDB and ActiveMQ. Hosts using Red Hat Subscription Management (RHSM) or Red Hat Network (RHN) Classic are unsubscribed from the 1.2 channels and subscribed to the new 2.0 channels.

  Warning

  This step assumes that the channel names come directly from Red Hat Network. If the package source is an instance of Red Hat Satellite or Subscription Asset Manager and the channel names are remapped differently, you must change this yourself. Examine the scripts in the /usr/lib/ruby/site_ruby/1.8/ose-upgrade/host/upgrades/2/ directory for use as models. You can also add your custom script to a subdirectory to be executed with the ose-upgrade tool.

  In addition to updating the channel set, modifications to the yum configuration give priority to the OpenShift Enterprise, Red Hat Enterprise Linux, and JBoss repositories. However, packages from other sources are excluded as required to prevent certain issues with dependency management that occur between the various channels.

  Run the begin step on each host. Note that the command output is different depending on the type of host. The following example output is from a broker host:

      # ose-upgrade begin
      INFO: OpenShift broker installed.
      INFO: Setting host step 'begin' status to UPGRADING
      INFO: Starting upgrade number 2 to version 2.0.
      [...]
      INFO: Setting host step 'begin' status to COMPLETE
      INFO: To continue the upgrade, install a specific upgrade package.

Procedure 4.3. To Install the Upgrade RPM Specific to a Host:

- Depending on the host type, install the latest upgrade RPM package from the new OpenShift Enterprise 2.0 channels. For broker hosts, install the openshift-enterprise-upgrade-broker package:

      # yum install openshift-enterprise-upgrade-broker

  For node hosts, install the openshift-enterprise-upgrade-node package:

      # yum install openshift-enterprise-upgrade-node

  If the package is already installed because of a previous upgrade, it still must be updated to the latest package version for the OpenShift Enterprise 2.0 upgrade.

- The ose-upgrade tool guides the upgrade process by listing the necessary steps that are specific to the upgrade scenario, and identifies the step to be performed next. The ose-upgrade status command, or ose-upgrade, provides a current status report. The command output varies depending on the type of host. The following example output is from a broker host:

      # ose-upgrade status
      INFO: OpenShift broker installed.
      Current upgrade is number 2 to version 2.0.
      Step sequence:
        begin pre outage rpms conf maintenance_mode pending_ops confirm_nodes data gears end_maintenance_mode post
      Next step is: pre
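When scripting around the status report, the next step can be pulled out of the output. This is a minimal sketch that assumes the report contains a line beginning with "Next step is:", as in the broker example in this procedure; output on other host types or tool versions may differ. The next_step function is a hypothetical helper, not part of the ose-upgrade tool.

```bash
#!/usr/bin/env bash
# Sketch: read a status report on standard input and print the next step.
# Assumes a line of the form "Next step is: <step>"; prints nothing otherwise.
next_step() {
    sed -n 's/^Next step is:[[:space:]]*//p'
}
```

For example, `ose-upgrade status | next_step` would print the name of the step to run next if the report matches the assumed format.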

Procedure 4.4. To Perform the pre Step on Broker and Node Hosts:

- The pre step manages the following actions:

  - Backs up OpenShift Enterprise configuration files.

  - Clears pending operations older than one hour. (Broker hosts only)

  - Performs any pre-upgrade datastore migration steps. (Broker hosts only)

  - Updates authorization indexes. (Broker hosts only)

  Run the pre step on one broker host and each node host:

      # ose-upgrade pre

  When one broker host begins this step, any attempts made by other broker hosts to run the pre step simultaneously will fail.

- After the pre step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.5. To Perform the outage Step on Broker and Node Hosts:

- The outage step stops services as required depending on the type of host.

  Warning

  The broker enters outage mode during this upgrade step. A substantial outage also begins for applications on the node hosts. Scaled applications are unable to contact any child gears during the outage. These outages last until the end_maintenance_mode step is complete.

  Perform this step on all broker hosts first, and then on all node hosts. This begins the broker outage, and all communication between the broker host and the node hosts is stopped. Perform the outage step with the following command:

      # ose-upgrade outage

  After the command completes on all hosts, node and broker hosts can be upgraded simultaneously until the upgrade steps are complete on all node hosts, and the broker host reaches the confirm_nodes step.

- For all other hosts that are not a broker or a node host, run yum update to upgrade any services that are installed, such as MongoDB or ActiveMQ:

      # yum update

Procedure 4.6. To Perform the rpms Step on Broker and Node Hosts:

- The rpms step updates RPM packages installed on the host, and installs any new RPM packages that are required.

  Run the rpms step on each host:

      # ose-upgrade rpms

Procedure 4.7. To Perform the conf Step on Broker and Node Hosts:

- The conf step changes the OpenShift Enterprise configuration to match the new codebase installed in the previous step. Each modified file is first copied to a file with the same name plus a .ugsave extension and a timestamp. This makes it easier to determine what files have changed.

  Run the conf step on each host:

      # ose-upgrade conf

  Warning

  If the configuration files have been significantly modified from the recommended configuration, manual intervention may be required to merge configuration changes so that they can be used with OpenShift Enterprise.

Procedure 4.8. To Perform the maintenance_mode Step on Broker and Node Hosts:

- The maintenance_mode step manages the following actions:

  - Configures the broker to disable the API and return an outage notification to any requests. (Broker hosts only)

  - Starts the broker service and, if installed, the console service in maintenance mode so that they provide clients with an outage notification. (Broker hosts only)

  - Clears the broker and console caches. (Broker hosts only)

  - Enables gear upgrade extensions. (Node hosts only)

  - Starts the ruby193-mcollective service. (Node hosts only)

  Run the maintenance_mode step on each host:

      # ose-upgrade maintenance_mode

Procedure 4.9. To Perform the pending_ops Step on a Broker Host:

- The pending_ops step clears records of any pending application operations; the outage prevents them from ever completing. Run the pending_ops step on one broker host. Do not run this command on multiple broker hosts at the same time. When one broker host begins this step, any attempts made by other broker hosts to run the pending_ops step simultaneously will fail:

      # ose-upgrade pending_ops

- After the pending_ops step completes on the first broker host, run the command on any remaining broker hosts.

Procedure 4.10. To Perform the confirm_nodes Step on Broker Hosts:

- The confirm_nodes step attempts to access all known node hosts to determine whether they have all been upgraded before proceeding. This step fails if the maintenance_mode step has not been completed on all node hosts, or if MCollective cannot access any node hosts.

  Run the confirm_nodes step on a broker host:

      # ose-upgrade confirm_nodes

- If this step fails due to node hosts that are no longer deployed, you may need to skip the confirm_nodes step. Ensure that all node hosts reported missing are not actually expected to respond, then skip the confirm_nodes step with the following command:

      # ose-upgrade --skip confirm_nodes

Procedure 4.11. To Perform the data Step on Broker Hosts:

- The data step runs a data migration against the shared broker datastore. Run the data step on one broker host:

      # ose-upgrade data

  When one broker host begins this step, any attempts made by other broker hosts to run the data step simultaneously will fail.

- After the data step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.12. To Perform the gears Step on Broker Hosts:

- The gears step migrates gears through the required changes so that they can be used in OpenShift Enterprise 2.0. Run the gears step on one broker host:

      # ose-upgrade gears

  When one broker host begins this step, any attempts made by other broker hosts to run the gears step simultaneously will fail.

- After the gears step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.13. To Perform the test_gears_complete Step on Node Hosts:

- The test_gears_complete step verifies the gear migrations are complete before proceeding. This step blocks the upgrade on node hosts by waiting until the gears step has completed on an associated broker host. Run the test_gears_complete step on all node hosts:

      # ose-upgrade test_gears_complete

Procedure 4.14. To Perform the end_maintenance_mode Step on Broker and Node Hosts:

- The end_maintenance_mode step starts the services that were stopped in the maintenance_mode step or added in the interim. It gracefully restarts httpd to complete the node host upgrade, and restarts the broker service and, if installed, the console service. Complete this step on all node hosts first before running it on the broker hosts:

      # ose-upgrade end_maintenance_mode

- Run the oo-accept-node script on each node host to verify that it is correctly configured:

      # oo-accept-node
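The host ordering differs between steps: the outage step runs on broker hosts first and then node hosts, while the end_maintenance_mode step runs on node hosts first and then broker hosts. The sketch below encodes just those two orderings so that a wrapper script does not get them backwards. The host_order function and the host names in the usage note are hypothetical, introduced only for illustration.

```bash
#!/usr/bin/env bash
# Sketch: print hosts in the order this guide gives for a step.
# Usage: host_order STEP "BROKER HOSTS" "NODE HOSTS"
# Only the two steps with an explicit broker/node ordering are covered.
host_order() {
    local step="$1" brokers="$2" nodes="$3"
    case "$step" in
        # Word splitting is intentional: each argument is a space-separated list.
        outage)               echo $brokers $nodes ;;
        end_maintenance_mode) echo $nodes $brokers ;;
        *)                    return 1 ;;
    esac
}
```

For example, `host_order outage "broker1 broker2" "node1 node2"` lists the brokers before the nodes, and the same call with end_maintenance_mode lists the nodes first.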

Procedure 4.15. To Perform the post Step on Broker Hosts:

- The post step manages the following actions on the broker host:

  - Performs any post-upgrade datastore migration steps.

  - Publishes updated district UIDs to the node hosts.

  - Clears the broker and console caches.

  Run the post step on a broker host:

      # ose-upgrade post

  When one broker host begins this step, any attempts made by other broker hosts to run the post step simultaneously will fail.

- After the post step completes on the first broker host, run it on any remaining broker hosts.

- The upgrade is now complete for an OpenShift Enterprise installation. Run oo-diagnostics on each host to diagnose any problems:

      # oo-diagnostics

Although the goal is to make the upgrade process as easy as possible, some known issues must be addressed manually:

- Because Jenkins applications cannot be migrated, follow these steps to regain functionality:

  - Save any modifications made to existing Jenkins jobs.

  - Remove the existing Jenkins application.

  - Add the Jenkins application again.

  - Add the Jenkins client cartridge as required.

  - Reapply the required modifications from the first step.

- There are no notifications when a gear is successfully migrated but fails to start. This may not be a migration failure because there may be multiple reasons why a gear fails to start. However, Red Hat recommends that you verify the operation of your applications after upgrading. The service openshift-gears status command may be helpful in certain situations.

4.4. Upgrading from OpenShift Enterprise 2.0 to OpenShift Enterprise 2.1

The first step of the upgrade, the begin step, adjusts the yum configurations in preparation for the upgrade. Red Hat recommends that you perform this step in advance of the scheduled outage to ensure any subscription issues are resolved before you proceed with the upgrade.

Procedure 4.16. To Bootstrap the Upgrade and Perform the begin Step:

- The openshift-enterprise-release RPM package includes the ose-upgrade tool that guides you through the upgrade process. Install the openshift-enterprise-release package on each host, and update it to the most current version.

      # yum install openshift-enterprise-release

- The begin step of the upgrade process applies to all hosts, and includes those hosts that contain only supporting services such as MongoDB and ActiveMQ. Hosts using Red Hat Subscription Management (RHSM) or Red Hat Network (RHN) Classic are unsubscribed from the 2.0 channels and subscribed to the new 2.1 channels.

  Warning

  This step assumes that the channel names come directly from Red Hat Network. If the package source is an instance of Red Hat Satellite or Subscription Asset Manager and the channel names are remapped differently, you must change this yourself. Examine the scripts in the /usr/lib/ruby/site_ruby/1.8/ose-upgrade/host/upgrades/3/ directory for use as models. You can also add your custom script to a subdirectory to be executed with the ose-upgrade tool.

  In addition to updating the channel set, modifications to the yum configuration give priority to the OpenShift Enterprise, Red Hat Enterprise Linux, and JBoss repositories. However, packages from other sources are excluded as required to prevent certain issues with dependency management that occur between the various channels.

  Run the begin step on each host. Note that the command output is different depending on the type of host. The following example output is from a broker host:

      # ose-upgrade begin
      INFO: OpenShift broker installed.
      INFO: Setting host step 'begin' status to UPGRADING
      INFO: Starting upgrade number 3 to version 2.1.
      [...]
      INFO: updating /etc/openshift-enterprise-release
      INFO: Setting host step 'begin' status to COMPLETE
      INFO: To continue the upgrade, install a specific upgrade package.

  Important

  The oo-admin-yum-validator --oo-version 2.1 --fix-all command is run automatically during the begin step. When using RHN Classic, the command does not automatically subscribe a system to the OpenShift Enterprise 2.1 channels, but instead reports the manual steps required. After the channels are manually subscribed, running the begin step again sets the proper yum priorities and continues as expected.

Procedure 4.17. To Install the Upgrade RPM Specific to a Host:

- Depending on the host type, install the latest upgrade RPM package from the new OpenShift Enterprise 2.1 channels. For broker hosts, install the openshift-enterprise-upgrade-broker package:

      # yum install openshift-enterprise-upgrade-broker

  For node hosts, install the openshift-enterprise-upgrade-node package:

      # yum install openshift-enterprise-upgrade-node

  If the package is already installed because of a previous upgrade, it still must be updated to the latest package version for the OpenShift Enterprise 2.1 upgrade.

- The ose-upgrade tool guides the upgrade process by listing the necessary steps that are specific to the upgrade scenario, and identifies the step to be performed next. The ose-upgrade status command, or ose-upgrade, provides a current status report. The command output varies depending on the type of host. The following example output is from a broker host:

      # ose-upgrade status
      INFO: OpenShift broker installed.
      Current upgrade is number 3 to version 2.1.
      Step sequence:
        begin pre outage rpms conf maintenance_mode pending_ops confirm_nodes data gears end_maintenance_mode post
      Next step is: pre

Procedure 4.18. To Perform the pre Step on Broker and Node Hosts:

- The pre step manages the following actions:

  - Backs up OpenShift Enterprise configuration files.

  - Clears pending operations older than one hour. (Broker hosts only)

  - Performs any pre-upgrade datastore migration steps. (Broker hosts only)

  Run the pre step on one broker host and each node host:

      # ose-upgrade pre

  When one broker host begins this step, any attempts made by other broker hosts to run the pre step simultaneously will fail.

- After the pre step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.19. To Perform the outage Step on Broker and Node Hosts:

- The outage step stops services as required depending on the type of host.

  Warning

  The broker enters outage mode during this upgrade step. A substantial outage also begins for applications on the node hosts. Scaled applications are unable to contact any child gears during the outage. These outages last until the end_maintenance_mode step is complete.

  Perform this step on all broker hosts first, and then on all node hosts. This begins the broker outage, and all communication between the broker host and the node hosts is stopped. Perform the outage step with the following command:

      # ose-upgrade outage

  After the command completes on all hosts, node and broker hosts can be upgraded simultaneously until the upgrade steps are complete on all node hosts, and the broker host reaches the confirm_nodes step.

- For all other hosts that are not a broker or a node host, run yum update to upgrade any services that are installed, such as MongoDB or ActiveMQ:

      # yum update

Procedure 4.20. To Perform the rpms Step on Broker and Node Hosts:

- The rpms step updates RPM packages installed on the host, and installs any new RPM packages that are required. For node hosts, this includes the recommended cartridge dependency metapackages for any cartridge already installed on a node. See Section 9.8.3, “Installing Cartridge Dependency Metapackages” for more information about cartridge dependency metapackages.

  Run the rpms step on each host:

      # ose-upgrade rpms

Procedure 4.21. To Perform the conf Step on Broker and Node Hosts:

- The conf step changes the OpenShift Enterprise configuration to match the new codebase installed in the previous step. Each modified file is first copied to a file with the same name plus a .ugsave extension and a timestamp. This makes it easier to determine what files have changed.

  Run the conf step on each host:

      # ose-upgrade conf

  Warning

  If the configuration files have been significantly modified from the recommended configuration, manual intervention may be required to merge configuration changes so that they can be used with OpenShift Enterprise.

Procedure 4.22. To Perform the maintenance_mode Step on Broker and Node Hosts:

- The maintenance_mode step manages the following actions:

  - Configures the broker to disable the API and return an outage notification to any requests. (Broker hosts only)

  - Starts the broker service and, if installed, the console service in maintenance mode so that they provide clients with an outage notification. (Broker hosts only)

  - Clears the broker and console caches. (Broker hosts only)

  - Enables gear upgrade extensions. (Node hosts only)

  - Saves and regenerates configurations for any apache-vhost front ends. (Node hosts only)

  - Stops the openshift-iptables-port-proxy service. (Node hosts only)

  - Starts the ruby193-mcollective service. (Node hosts only)

  Run the maintenance_mode step on each host:

      # ose-upgrade maintenance_mode

Procedure 4.23. To Perform the pending_ops Step on Broker Hosts:

- The pending_ops step clears records of any pending application operations because the outage prevents them from ever completing. Run the pending_ops step on one broker host only:

      # ose-upgrade pending_ops

- On any remaining broker hosts, run the following command to skip the pending_ops step:

      # ose-upgrade pending_ops --skip
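With several broker hosts, it can help to write down which command goes to which host before starting. The following sketch prints such a plan: the first broker listed runs the step and every other broker skips it, using the two commands from this procedure. The pending_ops_plan function and the host names in the usage note are hypothetical; the script prints commands and does not run them.

```bash
#!/usr/bin/env bash
# Sketch: print the pending_ops command for each broker host.
# The first host given runs the step; the remaining hosts skip it.
pending_ops_plan() {
    local first=1 host
    for host in "$@"; do
        if [ "$first" -eq 1 ]; then
            echo "${host}: ose-upgrade pending_ops"
            first=0
        else
            echo "${host}: ose-upgrade pending_ops --skip"
        fi
    done
}
```

For example, `pending_ops_plan broker1.example.com broker2.example.com` prints one line per host, which can be pasted into an upgrade runbook.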

Procedure 4.24. To Perform the confirm_nodes Step on Broker Hosts:

- The confirm_nodes step attempts to access all known node hosts to determine whether they have all been upgraded before proceeding. This step fails if the maintenance_mode step has not been completed on all node hosts, or if MCollective cannot access any node hosts.

  Run the confirm_nodes step on a broker host:

      # ose-upgrade confirm_nodes

- If this step fails due to node hosts that are no longer deployed, you may need to skip the confirm_nodes step. Ensure that all node hosts reported missing are not actually expected to respond, then skip the confirm_nodes step with the following command:

      # ose-upgrade --skip confirm_nodes

Procedure 4.25. To Perform the data Step on Broker Hosts:

- The data step runs a data migration against the shared broker datastore. Run the data step on one broker host:

      # ose-upgrade data

  When one broker host begins this step, any attempts made by other broker hosts to run the data step simultaneously will fail.

- After the data step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.26. To Perform the gears Step on Broker Hosts:

- The gears step migrates gears through the required changes so that they can be used in OpenShift Enterprise 2.1. Run the gears step on one broker host:

      # ose-upgrade gears

  When one broker host begins this step, any attempts made by other broker hosts to run the gears step simultaneously will fail.

- After the gears step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.27. To Perform the test_gears_complete Step on Node Hosts:

- The test_gears_complete step verifies the gear migrations are complete before proceeding. This step blocks the upgrade on node hosts by waiting until the gears step has completed on an associated broker host. Run the test_gears_complete step on all node hosts:

      # ose-upgrade test_gears_complete

Procedure 4.28. To Perform the end_maintenance_mode Step on Broker and Node Hosts:

- The end_maintenance_mode step starts the services that were stopped in the maintenance_mode step or added in the interim. It gracefully restarts httpd to complete the node host upgrade, and restarts the broker service and, if installed, the console service. Complete this step on all node hosts first before running it on the broker hosts:

      # ose-upgrade end_maintenance_mode

- Run the oo-accept-node script on each node host to verify that it is correctly configured:

      # oo-accept-node

Procedure 4.29. To Perform the post Step on Broker Hosts:

- The post step manages the following actions on the broker host:

  - Imports cartridges to the datastore.

  - Performs any post-upgrade datastore migration steps.

  - Clears the broker and console caches.

  Run the post step on a broker host:

      # ose-upgrade post

  When one broker host begins this step, any attempts made by other broker hosts to run the post step simultaneously will fail.

- After the post step completes on the first broker host, run it on any remaining broker hosts.

- The upgrade is now complete for an OpenShift Enterprise installation. Run oo-diagnostics on each host to diagnose any problems:

      # oo-diagnostics

Although the goal is to make the upgrade process as easy as possible, some known issues must be addressed manually:

- Because Jenkins applications cannot be migrated, follow these steps to regain functionality:

- Save any modifications made to existing Jenkins jobs.

- Remove the existing Jenkins application.

- Add the Jenkins application again.

- Add the Jenkins client cartridge as required.

- Reapply the required modifications from the first step.

- There are no notifications when a gear is successfully migrated but fails to start. This may not be a migration failure because there may be multiple reasons why a gear fails to start. However, Red Hat recommends that you verify the operation of your applications after upgrading. The service openshift-gears status command may be helpful in certain situations.

4.5. Upgrading from OpenShift Enterprise 2.1 to OpenShift Enterprise 2.2

begin step, adjusts the yum configurations in preparation for the upgrade. Red Hat recommends that you perform this step in advance of the scheduled outage to ensure any subscription issues are resolved before you proceed with the upgrade.

Procedure 4.30. To Bootstrap the Upgrade and Perform the begin Step:

- The openshift-enterprise-release RPM package includes the ose-upgrade tool that guides you through the upgrade process. Install the openshift-enterprise-release package on each host, and update it to the most current version.

# yum install openshift-enterprise-release

- The begin step of the upgrade process applies to all hosts, including those hosts that contain only supporting services such as MongoDB and ActiveMQ. Hosts using Red Hat Subscription Management (RHSM) or Red Hat Network (RHN) Classic are unsubscribed from the 2.1 channels and subscribed to the new 2.2 channels.

Warning

This step assumes that the channel names come directly from Red Hat Network. If the package source is an instance of Red Hat Satellite or Subscription Asset Manager and the channel names are remapped differently, you must change this yourself. Examine the scripts in the /usr/lib/ruby/site_ruby/1.8/ose-upgrade/host/upgrades/4/ directory for use as models. You can also add your custom script to a subdirectory to be executed with the ose-upgrade tool.

In addition to updating the channel set, modifications to the yum configuration give priority to the OpenShift Enterprise, Red Hat Enterprise Linux, and JBoss repositories. However, packages from other sources are excluded as required to prevent certain issues with dependency management that occur between the various channels.

Run the begin step on each host. Note that the command output is different depending on the type of host. The following example output is from a broker host:

# ose-upgrade begin
INFO: OpenShift broker installed.
INFO: Setting host step 'begin' status to UPGRADING
INFO: Starting upgrade number 4 to version 2.2.
[...]
INFO: updating /etc/openshift-enterprise-release
INFO: Setting host step 'begin' status to COMPLETE
INFO: To continue the upgrade, install a specific upgrade package.

Important

The oo-admin-yum-validator --oo-version 2.2 --fix-all command is run automatically during the begin step. When using RHN Classic, the command does not automatically subscribe a system to the OpenShift Enterprise 2.2 channels, but instead reports the manual steps required. After the channels are manually subscribed, running the begin step again sets the proper yum priorities and continues as expected.

Procedure 4.31. To Install the Upgrade RPM Specific to a Host:

- Depending on the host type, install the latest upgrade RPM package from the new OpenShift Enterprise 2.2 channels. For broker hosts, install the openshift-enterprise-upgrade-broker package:

# yum install openshift-enterprise-upgrade-broker

For node hosts, install the openshift-enterprise-upgrade-node package:

# yum install openshift-enterprise-upgrade-node

If the package is already installed because of a previous upgrade, it still must be updated to the latest package version for the OpenShift Enterprise 2.2 upgrade.

- The ose-upgrade tool guides the upgrade process by listing the necessary steps that are specific to the upgrade scenario, and identifies the step to be performed next. The ose-upgrade status command, or ose-upgrade, provides a current status report. The command output varies depending on the type of host. The following example output is from a broker host:

# ose-upgrade status
INFO: OpenShift broker installed.
Current upgrade is number 4 to version 2.2.
Step sequence:
  begin pre outage rpms conf maintenance_mode pending_ops confirm_nodes data gears end_maintenance_mode post
Next step is: pre
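When scripting an upgrade, it can be useful to read the next step from the status report rather than tracking it by hand. The following is a minimal sketch, assuming the report contains a "Next step is:" entry as in the example output above. The function name next_upgrade_step is hypothetical and is not part of the ose-upgrade tool.

```shell
#!/bin/sh
# Print the step named after "Next step is:" in `ose-upgrade status` output.
# The status text is read from stdin, so the function can be tried without
# the tool installed. The function name is illustrative only.
next_upgrade_step() {
    sed -n 's/.*Next step is: *\([A-Za-z_]*\).*/\1/p' | head -n 1
}

# Typical use on a host being upgraded (requires ose-upgrade):
#   ose-upgrade status | next_upgrade_step
```

If the report has no "Next step is:" entry, the function prints nothing, which a calling script can treat as "no step pending or output not recognized".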

Procedure 4.32. To Perform the pre Step on Broker and Node Hosts:

- The pre step manages the following actions:

- Backs up OpenShift Enterprise configuration files.

- Clears pending operations older than one hour. (Broker hosts only)

- Performs any pre-upgrade datastore migration steps. (Broker hosts only)

Run the pre step on one broker host and each node host:

# ose-upgrade pre

When one broker host begins this step, any attempts made by other broker hosts to run the pre step simultaneously will fail.

- After the pre step completes on the first broker host, run it on any remaining broker hosts.

- After the pre step completes on all hosts, the ose-upgrade tool allows you to continue through the node and broker host upgrade steps in parallel. On broker hosts, the tool will block the confirm_nodes step if the associated node hosts have not completed their maintenance_mode step. On node hosts, the tool blocks the test_gears_complete step if the associated broker has not completed the gears step.

Continue through the following procedures for instructions on each subsequent step.
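Several steps, including pre, must finish on one broker host before they are run on the remaining broker hosts. The following sketch shows one way to enforce that ordering from an administration workstation. It assumes root SSH access to each broker host; the host names and the function name run_step_on_brokers are placeholders, not part of OpenShift Enterprise.

```shell
#!/bin/sh
# Run one ose-upgrade step on the first broker host, then on the remaining
# broker hosts, strictly one host at a time.
# Host names are placeholders. With DRY_RUN=1 the commands are printed
# instead of executed.
run_step_on_brokers() {
    step=$1
    shift
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh root@$host ose-upgrade $step"
        else
            # Stop at the first failure so later brokers are not touched.
            ssh "root@$host" ose-upgrade "$step" || return 1
        fi
    done
}

# Example (prints the commands only):
#   DRY_RUN=1 run_step_on_brokers pre broker1.example.com broker2.example.com
```

Running the hosts sequentially avoids the failure described above, where a second broker host attempts the step while the first is still running it.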

Procedure 4.33. To Perform the rpms Step on Broker and Node Hosts:

- The rpms step updates RPM packages installed on the host and installs any new RPM packages that are required. For node hosts, this includes the recommended cartridge dependency metapackages for any cartridge already installed on a node. See Section 9.8.3, “Installing Cartridge Dependency Metapackages” for more information about cartridge dependency metapackages.

Run the rpms step on each host:

# ose-upgrade rpms

- For all other hosts that are not a broker or a node host, run yum update to upgrade any services that are installed, such as MongoDB or ActiveMQ:

# yum update

Procedure 4.34. To Perform the conf Step on Broker and Node Hosts:

- The conf step changes the OpenShift Enterprise configuration to match the new codebase installed in the previous step. Each modified file is first copied to a file with the same name plus a .ugsave extension and a timestamp. This makes it easier to determine what files have changed.

This step also disables the SSLv3 protocol on each broker host in favor of TLS due to CVE-2014-3566.

Run the conf step on each host:

# ose-upgrade conf

Warning

If the configuration files have been significantly modified from the recommended configuration, manual intervention may be required to merge configuration changes so that they can be used with OpenShift Enterprise.
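To review what the conf step changed, you can list the backup copies it leaves behind and compare each one with the current file. The following sketch assumes only that backup file names contain ".ugsave"; the exact timestamp suffix is not specified in this guide, so the pattern matches loosely. The function name list_ugsave_backups and the default directory are illustrative assumptions.

```shell
#!/bin/sh
# List backup copies left by the conf step under a directory.
# Assumption: backup names contain ".ugsave" followed by a timestamp whose
# format is not documented here, so the pattern is deliberately loose.
# The default directory is also an assumption; pass another path as needed.
list_ugsave_backups() {
    dir=${1:-/etc/openshift}
    find "$dir" -type f -name '*.ugsave*' | sort
}

# Example: list backups, then compare one with the current file by hand.
#   list_ugsave_backups /etc/openshift
#   diff -u BACKUP_FILE CURRENT_FILE
```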

Procedure 4.35. To Perform the maintenance_mode Step on Broker and Node Hosts:

Warning

end_maintenance_mode step is complete.

- Starting with OpenShift Enterprise 2.2, the apache-mod-rewrite front-end server proxy plug-in is deprecated. New deployments of OpenShift Enterprise 2.2 now use the apache-vhost plug-in as the default.

Important

Any new nodes added to your deployment after the upgrade will use the apache-vhost plug-in by default. Note that the apache-mod-rewrite plug-in is incompatible with the apache-vhost plug-in, and the front-end server configuration on all nodes across a deployment must be consistent. See Section 10.1, “Front-End Server Proxies” for more information.

The default behavior of the maintenance_mode step is to leave the apache-mod-rewrite plug-in in place, if it is installed. Do not set the OSE_UPGRADE_MIGRATE_VHOST environment variable at all, not even to false or 0, if you require this default behavior.

However, if your OpenShift Enterprise 2.1 deployment was configured to use the apache-mod-rewrite plug-in before starting the 2.2 upgrade, you can optionally allow the ose-upgrade tool to migrate your node hosts to the newly-default apache-vhost plug-in. To enable this option, set the OSE_UPGRADE_MIGRATE_VHOST environment variable on each node host:

# export OSE_UPGRADE_MIGRATE_VHOST=true

- The maintenance_mode step manages actions in the following order:

- Configures the broker to disable the API and return an outage notification to any requests. (Broker hosts only)

- Restarts the broker service and, if installed, the console service in maintenance mode so that they provide clients with an outage notification. (Broker hosts only)

- Clears the broker and console caches. (Broker hosts only)

- Stops the ruby193-mcollective service. (Node hosts only)

- Saves the front-end server proxy configuration. (Node hosts only)

- If the OSE_UPGRADE_MIGRATE_VHOST environment variable was set in the previous step, migrates from the apache-mod-rewrite plug-in to the apache-vhost plug-in. (Node hosts only)

- Disables the SSLv3 protocol in favor of TLS due to CVE-2014-3566. (Node hosts only)

- Enables gear upgrade extensions. (Node hosts only)

- Starts the ruby193-mcollective service. (Node hosts only)

Run the maintenance_mode step on each host:

# ose-upgrade maintenance_mode
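Because the default behavior depends on OSE_UPGRADE_MIGRATE_VHOST not being set at all, it can help to check the variable in the shell on each node host before running the maintenance_mode step. The following is a small sketch of such a check; the function name check_vhost_migration_flag is hypothetical, and the check only reports the state of the variable without changing anything.

```shell
#!/bin/sh
# Report whether OSE_UPGRADE_MIGRATE_VHOST is set in the current shell.
# "${VAR+x}" expands to "x" whenever VAR is set, even to an empty value,
# which distinguishes "set to false or 0" from "not set at all".
check_vhost_migration_flag() {
    if [ -n "${OSE_UPGRADE_MIGRATE_VHOST+x}" ]; then
        echo "set to '$OSE_UPGRADE_MIGRATE_VHOST': unset it to keep the default behavior"
    else
        echo "unset: apache-mod-rewrite is left in place"
    fi
}

# Example:
#   check_vhost_migration_flag
#   unset OSE_UPGRADE_MIGRATE_VHOST   # if the default behavior is required
```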

Procedure 4.36. To Perform the pending_ops Step on Broker Hosts:

- The pending_ops step clears records of any pending application operations because the outage prevents them from ever completing. Run the pending_ops step on one broker host:

# ose-upgrade pending_ops

When one broker host begins this step, any attempts made by other broker hosts to run the pending_ops step simultaneously will fail.

- After the pending_ops step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.37. To Perform the confirm_nodes Step on Broker Hosts:

- The confirm_nodes step attempts to access all known node hosts to determine whether they have all been upgraded before proceeding. This step fails if the maintenance_mode step has not been completed on all node hosts, or if MCollective cannot access any node hosts.

Run the confirm_nodes step on a broker host:

# ose-upgrade confirm_nodes

- If this step fails due to node hosts that are no longer deployed, you may need to skip the confirm_nodes step. Ensure that all node hosts reported missing are not actually expected to respond, then skip the confirm_nodes step with the following command:

# ose-upgrade --skip confirm_nodes

Procedure 4.38. To Perform the data Step on Broker Hosts:

- The data step runs a data migration against the shared broker datastore. Run the data step on one broker host:

# ose-upgrade data

When one broker host begins this step, any attempts made by other broker hosts to run the data step simultaneously will fail.

- After the data step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.39. To Perform the gears Step on Broker Hosts:

- The gears step migrates gears through the required changes so that they can be used in OpenShift Enterprise 2.2. Run the gears step on one broker host:

# ose-upgrade gears

When one broker host begins this step, any attempts made by other broker hosts to run the gears step simultaneously will fail.

- After the gears step completes on the first broker host, run it on any remaining broker hosts.

Procedure 4.40. To Perform the test_gears_complete Step on Node Hosts:

- The test_gears_complete step verifies that the gear migrations are complete before proceeding. This step blocks the upgrade on node hosts by waiting until the gears step has completed on an associated broker host. Run the test_gears_complete step on all node hosts:

# ose-upgrade test_gears_complete

Procedure 4.41. To Perform the end_maintenance_mode Step on Broker and Node Hosts:

- The end_maintenance_mode step restarts the following services on the node hosts:

- httpd (Restarts gracefully)

- ruby193-mcollective

- openshift-iptables-port-proxy

- openshift-node-web-proxy

- openshift-sni-proxy

- openshift-watchman

Complete this step on all node hosts first before running it on the broker hosts:

# ose-upgrade end_maintenance_mode

- After the end_maintenance_mode command has completed on all node hosts, run the same command on the broker hosts to disable the outage notification enabled during the broker maintenance_mode step and restart the broker service and, if installed, the console service:

# ose-upgrade end_maintenance_mode

This allows the broker to respond to client requests normally again.

- Run the oo-accept-node script on each node host to verify that it is correctly configured:

# oo-accept-node

Procedure 4.42. To Perform the post Step on Broker Hosts:

- The post step manages the following actions on the broker host:

- Imports cartridges to the datastore.

- Performs any post-upgrade datastore migration steps.

- Clears the broker and console caches.

Run the post step on a broker host:

# ose-upgrade post

When one broker host begins this step, any attempts made by other broker hosts to run the post step simultaneously will fail.

- After the post step completes on the first broker host, run it on any remaining broker hosts.

- The upgrade is now complete for an OpenShift Enterprise installation. Run oo-diagnostics on each host to diagnose any problems:

# oo-diagnostics

Although the goal is to make the upgrade process as easy as possible, some known issues must be addressed manually:

- Because Jenkins applications cannot be migrated, follow these steps to regain functionality:

- Save any modifications made to existing Jenkins jobs.

- Remove the existing Jenkins application.

- Add the Jenkins application again.

- Add the Jenkins client cartridge as required.

- Reapply the required modifications from the first step.

- There are no notifications when a gear is successfully migrated but fails to start. This may not be a migration failure because there may be multiple reasons why a gear fails to start. However, Red Hat recommends that you verify the operation of your applications after upgrading. The service openshift-gears status command may be helpful in certain situations.

Chapter 5. Host Preparation

5.1. Default umask Setting

umask value (022) for Red Hat Enterprise Linux 6 be set on all hosts prior to installing any OpenShift Enterprise packages. If a custom umask setting is used, it is possible for incorrect permissions to be set during installation for many files critical to OpenShift Enterprise operation.

5.2. Network Access

iptables firewall configuration by default to enable network access. If your environment requires a custom or external firewall solution, the configuration must accommodate the port requirements of OpenShift Enterprise.

5.2.1. Custom and External Firewalls

public in the Direction column. Ensure the firewall exposes these ports publicly.

Table 5.1. Required Ports for OpenShift Enterprise

| Host | Port | Protocol | Direction | Use |
|---|---|---|---|---|
| All | 22 | TCP | Inbound internal network | Remote administration. |
| All | 53 | TCP/UDP | Outbound to nameserver | Name resolution. |
| Broker | 22 | TCP | Outbound to node hosts | rsync access to gears for moving gears between nodes. |
| Broker | 80 | TCP | Inbound public traffic | HTTP access. HTTP requests to port 80 are redirected to HTTPS on port 443. |
| Broker | 443 | TCP | Inbound public traffic | HTTPS access to the broker REST API by rhc and Eclipse integration. HTTPS access to the Management Console. |
| Broker | 27017 | TCP | Outbound to datastore host | Optional if the same host has both the broker and datastore components. |
| Broker | 61613 | TCP | Outbound to ActiveMQ hosts | ActiveMQ connections to communicate with node hosts. |
| Node | 22 | TCP | Inbound public traffic | Developers running git push to their gears. Developer remote administration on their gears. |
| Node | 80 | TCP | Inbound public traffic | HTTP requests to applications hosted on OpenShift Enterprise. |
| Node | 443 | TCP | Inbound public traffic | HTTPS requests to applications hosted on OpenShift Enterprise. |
| Node | 8000 | TCP | Inbound public traffic | WebSocket connections to applications hosted on OpenShift Enterprise. Optional if you are not using WebSockets. |
| Node | 8443 | TCP | Inbound public traffic | Secure WebSocket connections to applications hosted on OpenShift Enterprise. Optional if you are not using secure WebSockets. |
| Node | 2303 - 2308 [a] | TCP | Inbound public traffic | Gear access through the SNI proxy. Optional if you are not using the SNI proxy. |
| Node | 443 | TCP | Outbound to broker hosts | REST API calls to broker hosts. |
| Node | 35531 - 65535 [b] | TCP | Inbound public traffic | Gear access through the port-proxy service. Optional unless applications need to expose external ports in addition to the front-end proxies. |
| Node | 35531 - 65535 [b] | TCP | Inbound/outbound with other node hosts | Communications between cartridges running on separate gears. |
| Node | 61613 | TCP | Outbound to ActiveMQ hosts | ActiveMQ connections to communicate with broker hosts. |
| ActiveMQ | 61613 | TCP | Inbound from broker and node hosts | Broker and node host connections to ActiveMQ. |
| ActiveMQ | 61616 | TCP | Inbound/outbound with other ActiveMQ brokers | Communications between ActiveMQ hosts. Optional if no redundant ActiveMQ hosts exist. |
| Datastore | 27017 | TCP | Inbound from broker hosts | Broker host connections to MongoDB. Optional if the same host has both the broker and datastore components. |
| Datastore | 27017 | TCP | Inbound/outbound with other MongoDB hosts | Replication between datastore hosts. Optional if no redundant datastore hosts exist. |
| Nameserver | 53 | TCP/UDP | Inbound from broker hosts | Publishing DNS updates. |
| Nameserver | 53 | TCP/UDP | Inbound public traffic | Name resolution for applications hosted on OpenShift Enterprise. |
| Nameserver | 53 | TCP/UDP | Outbound public traffic | DNS forwarding. Optional unless the nameserver is recursively forwarding requests to other nameservers. |

[a] Note: The size and location of this SNI port range are configurable.

[b] Note: If the value of PROXY_BEGIN in the /etc/openshift/node.conf file changes from 35531, adjust this port range accordingly.

5.2.2. Manually Configuring an iptables Firewall

iptables commands to allow access on each host as needed:

Procedure 5.1. To Configure an iptables Firewall:

- Use the following command to make any changes to an iptables configuration:

# iptables --insert Rule --in-interface Network_Interface --protocol Protocol --source IP_Address --dport Destination_Port --jump ACCEPT

Example 5.1. Allowing Broker Access to MongoDB

The following is an example set of commands for allowing a set of brokers with IP addresses 10.0.0.1-3 access to the MongoDB datastore:

iptables --insert INPUT -i eth0 -p tcp --source 10.0.0.1 --dport 27017 --jump ACCEPT
iptables --insert INPUT -i eth0 -p tcp --source 10.0.0.2 --dport 27017 --jump ACCEPT
iptables --insert INPUT -i eth0 -p tcp --source 10.0.0.3 --dport 27017 --jump ACCEPT

Example 5.2. Allowing Public Access to the Nameserver

The following example allows inbound public DNS requests to the nameserver:

iptables --insert INPUT --protocol tcp --dport 53 -j ACCEPT
iptables --insert INPUT --protocol udp --dport 53 -j ACCEPT

Note that because the command is for public access, there is no --source option.

- Save any firewall changes to make them persistent:

# service iptables save
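When several hosts need the same rule, as in Example 5.1, generating the commands from a list of addresses reduces copy-and-paste mistakes. The following sketch prints the rules for review rather than applying them. The function name print_accept_rules is hypothetical, and the interface name and addresses are the placeholder values from the example.

```shell
#!/bin/sh
# Print one iptables rule per source address, allowing TCP access to a port.
# Nothing is applied: review the output, then run the commands as root.
# The interface name and addresses below are placeholders.
print_accept_rules() {
    iface=$1
    port=$2
    shift 2
    for ip in "$@"; do
        echo "iptables --insert INPUT -i $iface -p tcp --source $ip --dport $port --jump ACCEPT"
    done
}

# Reproduces the three commands from Example 5.1.
print_accept_rules eth0 27017 10.0.0.1 10.0.0.2 10.0.0.3
```

After applying the reviewed rules, save them with the service iptables save command as described in the procedure.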

5.2.3. IPv6 Tolerance

- OpenShift Enterprise client tools (rhc)

- OpenShift Enterprise Management Console

- ActiveMQ and MCollective

- Application access

- MongoDB can be configured to listen on IPv6 so that some client tools can connect over IPv6 if the mongo client is running version 1.10.0 or newer. However, the broker uses mongoid, which currently requires IPv4.

- Broker DNS updates may require IPv4; however, IPv6 connectivity can be used when using the nsupdate DNS plug-in.

Caveats and Known Issues for IPv6 Tolerance

- Inter-gear communication relies on IPv6 to IPv4 fallback. If for some reason the application or library initiating the connection does not properly handle the fallback, then the connection fails.

- The OpenShift Enterprise installation script and Puppet module do not configure MongoDB to use IPv6, and they configure IPv4 addresses for other settings where required, for example in the nsupdate DNS plug-in configuration.

- OpenShift Enterprise internals explicitly query interfaces for IPv4 addresses in multiple places.

- The apache-mod-rewrite and nodejs-websocket front-end server plug-ins have been tested; however, the following components have not:

- The apache-vhost and haproxy-sni-proxy front-end server plug-ins.

- DNS plug-ins other than nsupdate.

- Routing plug-in.

- Rsyslog plug-in.

- Individual cartridges for full IPv6 tolerance.

- Known Issue: BZ#1104337

- Known Issue: BZ#1107816

5.3. Configuring Time Synchronization

ntpdate command to set the system clock, replacing the NTP servers to suit your environment:

# ntpdate clock.redhat.com

/etc/ntp.conf file to keep the clock synchronized during operation.

"the NTP socket is in use, exiting" is displayed after running the ntpdate command, it means that the ntpd daemon is already running. However, the clock may not be synchronized due to a substantial time difference. In this case, run the following commands to stop the ntpd service, set the clock, and start the service again:

# service ntpd stop
# ntpdate clock.redhat.com
# service ntpd start

hwclock command to synchronize the hardware clock to the system clock. Skip this step if you are installing on a virtual machine, such as an Amazon EC2 instance. For a physical hardware installation, run the following command:

# hwclock --systohc

Note

synchronize_clock function performs these steps.
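The manual sequence in this section can be sketched as a small shell function. This is not the installation script's own function; the name resync_clock is hypothetical, and the sketch assumes that the service ntpd status command returns a non-zero exit status when the daemon is stopped. Replace the NTP server to suit your environment.

```shell
#!/bin/sh
# Set the system clock with ntpdate, stopping ntpd first if it is running.
# Assumption: `service ntpd status` exits non-zero when ntpd is not running.
# Run as root. The default server is the one used in the examples above.
resync_clock() {
    server=${1:-clock.redhat.com}
    if service ntpd status >/dev/null 2>&1; then
        # ntpd holds the NTP socket, so stop it, set the clock, and restart.
        service ntpd stop && ntpdate "$server" && service ntpd start
    else
        ntpdate "$server"
    fi
}

# Example:
#   resync_clock clock.redhat.com
#   hwclock --systohc   # physical hardware only
```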

5.4. Enabling Remote Administration

# mkdir /root/.ssh
# chmod 700 /root/.ssh

ssh-keygen command to generate a new key pair, or use an existing public key. In either case, edit the /root/.ssh/authorized_keys file on the host and append the public key, or use the ssh-copy-id command to do the same. For example, on your local workstation, run the following command, replacing the example IP address with the IP address of your broker host:

# ssh-copy-id root@10.0.0.1

Chapter 6. Deployment Methods

- The oo-install installation utility interactively gathers information about a deployment before automating the installation of an OpenShift Enterprise host. This method is intended for trials of simple deployments.

- The installation scripts, available as either a kickstart or bash script, include configurable parameters that help automate the installation of an OpenShift Enterprise host. This method allows for increased customization of the installation process for use in production deployments.

- The sample deployment steps detailed later in this guide describe the various actions of the installation scripts. This method allows for a manual installation of an OpenShift Enterprise host.

6.1. Using the Installation Utility

oo-install installation utility, which is a front end to the basic installation scripts. The installation utility provides a UI for a single- or multi-host deployment either from your workstation, or from one of the hosts to be installed.

~/.openshift/oo-install-cfg.yml, which saves your responses to the installation utility so you can use them in future installations if your initial deployment is interrupted. After completing an initial deployment, only additional node hosts can be added to the deployment using the utility. To add broker, message server, or DB server components to an existing deployment, see Section 8.3, “Separating Broker Components by Host” or Section 8.4, “Configuring Redundancy” for more information.

Before running the installation utility, consider the following:

- Do you have ruby-1.8.7 or later, curl, tar, and gzip installed on your system? If required, the installation utility offers suggestions to install RPM packages of utilities that are missing.

- Does yum repolist show the correct repository setup?

- Plan your host roles. Do you know which of your hosts will be the broker host and node hosts? If running the tool with the -a option, do you have hosts for MongoDB and ActiveMQ?

- Do you have password-less SSH login access into the instances where you will be running the oo-install command? Do your hosts have password-less SSH as well?

- You can use an existing DNS server. During installation, the oo-install tool asks if you would like to install a DNS server on the same host as the broker host. Answering yes results in a BIND server being set up for you. However, answering no requires you to input the settings of your existing DNS server. This BIND instance provides lookup information for applications that are created by any application developers.

There are two methods for using the installation utility. Both are outlined in the following procedures:

Procedure 6.1. To Run the Installation Utility From the Internet:

- You can run the installation utility directly from the Internet with the following command:

$ sh <(curl -s https://install.openshift.com/ose-2.2)

Additional options can be used with the command. These options are outlined later in this section:

$ sh <(curl -s https://install.openshift.com/ose-2.2) -s rhsm -u user@company.com

- Follow the on-screen instructions to either deploy a new OpenShift Enterprise host, or add a node host to an existing deployment.

Procedure 6.2. To Download and Run the Installation Utility:

- Download and unpack the installation utility:

$ curl -o oo-install-ose.tgz https://install.openshift.com/portable/oo-install-ose.tgz
$ tar -zxf oo-install-ose.tgz

- Execute the installation utility to interactively configure one or more hosts:

$ ./oo-install-ose

The oo-install-ose utility automatically runs the installation utility in OpenShift Enterprise mode. Additional options can be used with the command. These options are outlined later in this section:

$ ./oo-install-ose -s rhsm -u user@company.com

- Follow the on-screen instructions to either deploy a new OpenShift Enterprise host, or add a node host to an existing deployment.

The current iteration of the installation utility enables the initial deployment and configuration of OpenShift Enterprise according to the following scenarios:

- Broker, message server (ActiveMQ), and DB server (MongoDB) components on one host, and the node components on separate hosts.

- Broker, message server (ActiveMQ), DB server (MongoDB), and node components on separate hosts (using -a for advanced mode only).

- All components on one host.

Warning

Starting with OpenShift Enterprise 2.2, the installation utility can install a highly-available OpenShift Enterprise deployment by configuring your defined hosts for redundancy within the installation utility prompts. By default, and without the -a option, the installation utility scales and installs ActiveMQ and MongoDB services along with the defined broker hosts. If the -a option is used, you can define redundant services on separate hosts as well.

When you run the installation utility for the first time, you are asked a number of questions related to the components of your planned OpenShift Enterprise deployment, such as the following:

- User names and either the host names or IP addresses for access to hosts.

- DNS configuration for hosts.

- Valid gear sizes for the deployment.

- Default gear capabilities for new users.

- Default gear size for new applications.

- User names and passwords for configured services, with an option to automatically generate passwords.

- Gear size for new node hosts (profile name only).

- District membership for new node hosts.

- Red Hat subscription type. Note that when using the installation utility you can add multiple pool IDs by separating each pool ID with a space. You can find the required pool IDs with the procedure outlined in Section 7.1.1, “Using Red Hat Subscription Management on Broker Hosts”.

The installation utility can be used with the following options:

- -a (--advanced-mode)

- By default, the installation utility installs MongoDB and ActiveMQ on the system designated as the broker host. Use the -a option to install these services on a different host.

- -c (--config-file) FILE_PATH

- Use the -c option with the desired filepath to specify a configuration file other than the default ~/.openshift/oo-install-cfg.yml file. If the specified file does not exist, a file will be created with some basic settings.

- -l (--list-workflows)

- Before using the -w option, use the -l option to find the desired workflow ID.

- -w (--workflow) WORKFLOW_ID

- If you already have an OpenShift Enterprise deployment configuration file, use the install utility with the -w option and the enterprise_deploy workflow ID to run the deployment without any user interaction. The configuration is assessed, then deployed if no problems are found. This is useful for restarting after a failed deployment or for running multiple similar deployments.

- -s (--subscription-type) TYPE

- The -s option determines how the deployment will obtain the RPMs needed to install OpenShift Enterprise, and overrides any method specified in the configuration file. Use the option with one of the following types:

- rhsm: Red Hat Subscription Manager is used to register and configure the OpenShift software channels according to user, password, and pool settings.

- rhn: RHN Classic is used to register and configure the OpenShift software channels according to user, password, and optional activation key settings. RHN Classic is primarily intended for existing, legacy systems. Red Hat strongly recommends that you use Red Hat Subscription Manager for new installations, because RHN Classic is being deprecated.

- yum: New yum repository entries are created in the /etc/yum.repos.d/ directory according to several repository URL settings. This is not a standard subscription and it is assumed you have already created or have access to these repositories in the layout specified in the openshift.sh file.

- none: The default setting. Use this option when the software subscriptions on your deployment hosts are already configured as desired and changes are not needed.

- -u (--username) USERNAME

- Use the -u option to specify the user for the Red Hat Subscription Management or RHN Classic subscription methods from the command line instead of in the configuration file.

- -p (--password) PASSWORD

- Similar to the -u option, use the -p option to specify the password for the Red Hat Subscription Management or RHN Classic subscription methods from the command line instead of in the configuration file. As an alternative, the interactive UI mode also provides an option for entering subscription parameters for a one-time use without them being saved to the system.

- -d (--debug)

- When using the -d option, the installation utility prints information regarding any attempts to establish SSH sessions as it is running. This can be useful for debugging remote deployments.
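For an unattended run with the -w option, the configuration file should already exist, because a missing file is created with only basic settings. The following sketch checks for the file and then prints the command to run. It combines only the -c and -w options described above; the function name unattended_install_cmd is hypothetical, and the command is printed rather than executed so that it can be reviewed first.

```shell
#!/bin/sh
# Print the oo-install-ose command for an unattended deployment, after
# checking that the configuration file exists.
# The default path is the default configuration file named in this section.
unattended_install_cmd() {
    cfg=${1:-$HOME/.openshift/oo-install-cfg.yml}
    if [ ! -f "$cfg" ]; then
        echo "configuration file not found: $cfg" >&2
        return 1
    fi
    echo "./oo-install-ose -c $cfg -w enterprise_deploy"
}

# Example:
#   unattended_install_cmd ~/.openshift/oo-install-cfg.yml
```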

Important

none is used for the subscription type, either by using the -s flag or by not configuring subscription information through the interactive UI or .yml configuration file, you must manually configure the correct yum repositories with the proper priorities before running the installation utility. See Section 7.1, “Configuring Broker Host Entitlements” and Section 9.1, “Configuring Node Host Entitlements” for instructions.

Once the oo-install tool has completed the install without errors, you have a working OpenShift Enterprise installation. Consult the following list for directions on what to do next:

- See information on creating any additional users in Section 12.2, “Creating a User Account”.

- See information on creating an application in the OpenShift Enterprise User Guide.

- See information on adding an external routing layer in Section 8.6, “Using an External Routing Layer for High-Availability Applications”.

6.2. Using the Installation Scripts

The openshift.ks kickstart script is available at:

Example 6.1. Downloading the openshift.ks Kickstart Script

$ curl -O https://raw.githubusercontent.com/openshift/openshift-extras/enterprise-2.2/enterprise/install-scripts/openshift.ks

The openshift.sh bash script is the extracted %post section of the openshift.ks script and is available at:

Example 6.2. Downloading the openshift.sh Bash Script

$ curl -O https://raw.githubusercontent.com/openshift/openshift-extras/enterprise-2.2/enterprise/install-scripts/generic/openshift.sh

Important

When using the openshift.ks script, you can supply parameters as kernel parameters during the kickstart process. When using the openshift.sh script, you can similarly supply parameters as command-line arguments. See the commented notes in the header of the scripts for alternative methods of supplying parameters using the openshift.sh script.

Note

The examples in the following sections run the openshift.sh script by supplying parameters as command-line arguments. The same parameters can be supplied as kernel parameters for kickstarts using the openshift.ks script.

6.2.1. Selecting Components to Install

Using the install_components parameter, the scripts can be configured to install one or more of the following components on a single host:

Table 6.1. Options for the install_components Parameter

| Options | Description |
|---|---|
| broker | Installs the broker application and tools. |
| named | Supporting service. Installs a BIND DNS server. |
| activemq | Supporting service. Installs the messaging bus. |
| datastore | Supporting service. Installs the MongoDB datastore. |
| node | Installs node functionality, including cartridges. |
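The options in Table 6.1 can be checked before the script is run. The following is a minimal sketch, assuming a wrapper of your own: the function name validate_components is hypothetical and is not part of openshift.sh. It rejects any item that is not one of the five documented components and prints the resulting command instead of running it.

```shell
# Hypothetical helper: not part of openshift.sh.
# Returns 0 if every item in a comma-separated list is a documented component.
validate_components() {
    list=$1
    [ -n "$list" ] || { echo "no components given" >&2; return 1; }
    while [ -n "$list" ]; do
        item=${list%%,*}              # text before the first comma
        case "$item" in
            broker|named|activemq|datastore|node) ;;
            *) echo "unknown component: '$item'" >&2; return 1 ;;
        esac
        case "$list" in
            *,*) list=${list#*,} ;;   # drop the item just checked
            *)   list= ;;
        esac
    done
    return 0
}

components=broker,named,activemq,datastore
if validate_components "$components"; then
    # Print the command for review instead of running it.
    echo "sudo sh openshift.sh install_components=$components"
fi
```

Catching a misspelled component name this way avoids starting a long installation with an argument the script does not recognize.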

Warning

The following example runs the openshift.sh script and installs the broker, named, activemq, and datastore components on a single host, using default values for all unspecified parameters:

Example 6.3. Installing the broker, named, activemq, and datastore Components Using openshift.sh

$ sudo sh openshift.sh install_components=broker,named,activemq,datastore

The following example runs the openshift.sh script and installs only the node component on a single host, using default values for all unspecified parameters:

Example 6.4. Installing the node Component Using openshift.sh

$ sudo sh openshift.sh install_components=node

6.2.2. Selecting a Package Source

If you do not specify the install_method parameter, the scripts assume that the installation source has already been configured to provide the required packages. Using the install_method parameter, the scripts can be configured to install packages from one of the following sources:

Table 6.2. Options for the install_method Parameter

| Parameter | Description | Additional Related Parameters |
|---|---|---|
| yum | Configures yum based on supplied additional parameters. | rhel_repo, rhel_optional_repo, jboss_repo_base, rhscl_repo_base, ose_repo_base, ose_extra_repo_base |
| rhsm | Uses Red Hat Subscription Management. | rhn_user, rhn_pass, sm_reg_pool, rhn_reg_opts |
| rhn | Uses RHN Classic. | rhn_user, rhn_pass, rhn_reg_opts, rhn_reg_actkey |
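Table 6.2 pairs each install_method value with a set of related parameters. The following sketch, with the hypothetical helper names related_params and unset_params, lists which related parameters have not been supplied in a planned argument list. The table does not state which of these parameters are mandatory, so the output is a reminder rather than an error.

```shell
# Hypothetical helpers: not part of openshift.sh.
# Prints the related parameters listed in Table 6.2 for an install_method value.
related_params() {
    case "$1" in
        yum)  echo "rhel_repo rhel_optional_repo jboss_repo_base rhscl_repo_base ose_repo_base ose_extra_repo_base" ;;
        rhsm) echo "rhn_user rhn_pass sm_reg_pool rhn_reg_opts" ;;
        rhn)  echo "rhn_user rhn_pass rhn_reg_opts rhn_reg_actkey" ;;
        *)    echo "unknown install_method: $1" >&2; return 1 ;;
    esac
}

# Prints each related parameter that has no name=value argument.
unset_params() {
    method=$1
    shift
    for name in $(related_params "$method"); do
        found=no
        for arg in "$@"; do
            case "$arg" in
                "$name"=*) found=yes ;;
            esac
        done
        [ "$found" = yes ] || echo "$name"
    done
}

unset_params rhsm rhn_user=user@example.com rhn_pass=password
```

For the call shown, the sketch prints sm_reg_pool and rhn_reg_opts, the two rhsm-related parameters that were left out.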

Note

The following example runs the openshift.sh script and uses Red Hat Subscription Management as the package source, using default values for all unspecified parameters:

Example 6.5. Selecting a Package Source Using openshift.sh

$ sudo sh openshift.sh install_method=rhsm rhn_user=user@example.com rhn_pass=password sm_reg_pool=Example_3affb61f013b3ef6a5fe0b9a

6.2.3. Selecting Password Options

Set the no_scramble parameter to true to have default, insecure passwords used across the deployment.

The following table lists the user name and password parameters and the install_components options that use them:

Table 6.3. User Name and Password Parameters

| User Name Parameter | Password Parameter | Description |
|---|---|---|
| mcollective_user (default: mcollective) | mcollective_password | These credentials are shared and must be the same between all brokers and nodes for communicating over the mcollective topic channels in ActiveMQ. They must be specified and shared between separate ActiveMQ and broker hosts. These parameters are used by the install_components options broker and node. |
| mongodb_broker_user (default: openshift) | mongodb_broker_password | These credentials are used by the broker and its MongoDB plug-in to connect to the MongoDB datastore. They must be specified and shared between separate MongoDB and broker hosts, as well as between any replicated MongoDB hosts. These parameters are used by the install_components options datastore and broker. |
| Not available. | mongodb_key | This key is shared and must be the same between any replicated MongoDB hosts. This parameter is used by the install_components option datastore. |
| mongodb_admin_user (default: admin) | mongodb_admin_password | The credentials for this administrative user created in the MongoDB datastore are not used by OpenShift Enterprise, but an administrative user must be added to MongoDB so it can enforce authentication. These parameters are used by the install_components option datastore. |
| openshift_user1 (default: demo) | openshift_password1 | These credentials are created in the /etc/openshift/htpasswd file for the test OpenShift Enterprise user account. This test user can be removed after the installation is completed. These parameters are used by the install_components option broker. |
| Not available (default user: amq) | activemq_amq_user_password | The password set for the ActiveMQ amq user is required by replicated ActiveMQ hosts to communicate with one another. The amq user is enabled only if replicated hosts are specified using the activemq_replicants parameter. If set, ensure the password is the same between all ActiveMQ hosts. These parameters are used by the install_components option activemq. |
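Because several of these passwords must match across hosts, one approach is to generate each value once and reuse it in every openshift.sh invocation that needs it. The following is a minimal sketch under that assumption; random_password is a hypothetical helper, not part of the installation scripts, and the command is printed for review rather than executed.

```shell
# Hypothetical helper: not part of openshift.sh.
# Prints 24 hexadecimal characters read from /dev/urandom.
random_password() {
    head -c 12 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Generate each shared value once, then reuse it on every host that needs it.
mcollective_password=$(random_password)
mongodb_broker_password=$(random_password)
openshift_password1=$(random_password)

# Print the command for review instead of running it.
echo "sudo sh openshift.sh install_components=broker,activemq,datastore" \
    "mcollective_password=$mcollective_password" \
    "mongodb_broker_password=$mongodb_broker_password" \
    "openshift_password1=$openshift_password1"
```

Hexadecimal output is used here only to avoid characters that might need shell quoting; any password scheme that meets your site policy would serve equally well.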

The following example runs the openshift.sh script and sets unique passwords for various configured services, using default values for all unspecified parameters:

Example 6.6. Setting Unique Passwords Using openshift.sh

$ sudo sh openshift.sh install_components=broker,activemq,datastore mcollective_password=password1 mongodb_broker_password=password2 openshift_password1=password3

6.2.4. Setting Broker and Supporting Service Parameters

Table 6.4. Broker and Supporting Service Parameters

| Parameter | Description |
|---|---|
| domain | This sets the network domain under which DNS entries for applications are placed. |
| hosts_domain | If specified and host DNS is to be created, this domain is created and used for creating host DNS records; application records are still placed in the domain specified with the domain parameter. |
| hostname | This is used to configure the host's actual host name. This value defaults to the value of the broker_hostname parameter if the broker component is being installed, otherwise named_hostname if installing named, activemq_hostname if installing activemq, or datastore_hostname if installing datastore. |
| broker_hostname | This is used as a default for the hostname parameter when installing the broker component. It is also used both when configuring the broker and when configuring the node, so that the node can contact the broker's REST API for actions such as scaling applications up or down. It is also used when adding DNS records, if the named_entries parameter is not specified. |
| named_ip_addr | This is used by every host to configure its primary name server. It defaults to the current IP address if installing the named component, otherwise it defaults to the broker_ip_addr parameter. |
| named_entries | This specifies the host DNS entries to be created in comma-separated, colon-delimited hostname:ipaddress pairs, or can be set to none so that no DNS entries are created for hosts. The installation script defaults to creating entries only for other components being installed on the same host when the named component is installed. |
| bind_key | This sets a key for updating BIND instead of generating one. If you are installing the broker component on a separate host from the named component, or are using an external DNS server, configure the BIND key so that the broker can update it. Any Base64-encoded value can be used, but ideally an HMAC-SHA256 key generated by dnssec-keygen should be used. For other key algorithms or sizes, ensure the bind_keyalgorithm and bind_keysize parameters are appropriately set as well. |
| valid_gear_sizes | This is a comma-separated list of gear sizes that are valid for use in applications, and sets the VALID_GEAR_SIZES parameter in the /etc/openshift/broker.conf file. |
| default_gear_size | This is the default gear size used when new gears are created, and sets the DEFAULT_GEAR_SIZE parameter in the /etc/openshift/broker.conf file. |
| default_gear_capabilities | This is a comma-separated list of default gear sizes allowed on a new user account, and sets the DEFAULT_GEAR_CAPABILITIES parameter in the /etc/openshift/broker.conf file. |
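The named_entries format described in the table, comma-separated hostname:ipaddress pairs or the literal none, is easy to mistype. The following sketch uses a hypothetical helper, check_named_entries, that is not part of openshift.sh. It checks only the shape of the value and does not verify that the addresses are valid IP addresses.

```shell
# Hypothetical helper: not part of openshift.sh.
# Accepts "none" or a comma-separated list of hostname:ipaddress pairs.
check_named_entries() {
    entries=$1
    if [ "$entries" = none ]; then
        return 0
    fi
    [ -n "$entries" ] || return 1
    while [ -n "$entries" ]; do
        pair=${entries%%,*}           # first pair in the list
        host=${pair%%:*}              # text before the first colon
        addr=${pair#*:}               # text after the first colon
        case "$pair" in
            *:*) ;;
            *) echo "not a hostname:ipaddress pair: '$pair'" >&2; return 1 ;;
        esac
        if [ -z "$host" ] || [ -z "$addr" ]; then
            echo "empty host name or address in: '$pair'" >&2
            return 1
        fi
        case "$entries" in
            *,*) entries=${entries#*,} ;;
            *)   entries= ;;
        esac
    done
    return 0
}

check_named_entries broker:192.168.0.1,activemq:192.168.0.1,node1:192.168.0.2 \
    && echo "named_entries value is well formed"
```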

The valid_gear_sizes, default_gear_size, and default_gear_capabilities script parameters set the VALID_GEAR_SIZES, DEFAULT_GEAR_SIZE, and DEFAULT_GEAR_CAPABILITIES parameters in the /etc/openshift/broker.conf file.

The following example runs the openshift.sh script and sets various parameters for the broker and supporting services, using default values for all unspecified parameters:

Example 6.7. Setting Broker and Supporting Service Parameters Using openshift.sh

$ sudo sh openshift.sh install_components=broker,named,activemq,datastore domain=apps.example.com hosts_domain=hosts.example.com broker_hostname=broker.hosts.example.com named_entries=broker:192.168.0.1,activemq:192.168.0.1,node1:192.168.0.2 valid_gear_sizes=medium default_gear_size=medium default_gear_capabilities=medium

6.2.5. Setting Node Parameters

Table 6.5. Node Parameters

| Parameter | Description |
|---|---|
| domain | This sets the network domain under which DNS entries for applications are placed. |
| hosts_domain | If specified and host DNS is to be created, this domain is created and used for creating host DNS records; application records are still placed in the domain specified with the domain parameter. |
| hostname | This is used to configure the host's actual host name. |
| node_hostname | This is used as a default for the hostname parameter when installing the node component. It is also used when adding DNS records, if the named_entries parameter is not specified. |
| named_ip_addr | This is used by every host to configure its primary name server. It defaults to the current IP address if installing the named component, otherwise it defaults to the broker_ip_addr parameter. |
| node_ip_addr | This is used by the node to provide a public IP address if different from one on its NIC. It defaults to the current IP address when installing the node component. |
| broker_hostname | This is used by the node to record the host name of its broker, as the node must be able to contact the broker's REST API for actions such as scaling applications up or down. |
| node_profile | This sets the name of the node profile, also known as a gear profile or gear size, to be used on the node being installed. The value must also be a member of the valid_gear_sizes parameter used by the broker. |
| cartridges | This is a comma-separated list of cartridges to install on the node and defaults to standard, which installs all cartridges that do not require add-on subscriptions. See the commented notes in the header of the scripts for the full list of individual cartridges and more detailed usage. |
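The table requires that node_profile be a member of the valid_gear_sizes list used by the broker. A minimal sketch of that membership check follows. The helper name profile_is_valid and the sample values are assumptions for illustration; in practice, use the values you supplied on the broker host.

```shell
# Hypothetical helper: not part of openshift.sh.
# Succeeds if $1 appears in the comma-separated list $2.
profile_is_valid() {
    case ",$2," in
        *,"$1",*) return 0 ;;
        *)        return 1 ;;
    esac
}

valid_gear_sizes=small,medium     # sample value given to the broker
node_profile=medium               # sample value planned for the node

if profile_is_valid "$node_profile" "$valid_gear_sizes"; then
    echo "node_profile=$node_profile is listed in valid_gear_sizes"
else
    echo "node_profile=$node_profile is not listed in valid_gear_sizes" >&2
fi
```

Wrapping the list in commas before matching keeps a partial name such as med from matching medium.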

The following example runs the openshift.sh script and sets various node parameters, using default values for all unspecified parameters:

Example 6.8. Setting Node Parameters Using openshift.sh

$ sudo sh openshift.sh install_components=node domain=apps.example.com hosts_domain=hosts.example.com node_hostname=node1.hosts.example.com broker_ip_addr=192.168.0.1 broker_hostname=broker.hosts.example.com node_profile=medium cartridges=php,ruby,postgresql,haproxy,jenkins

6.2.6. Deploying Sample Broker and Node Hosts Using openshift.sh

The examples in this section deploy sample broker and node hosts using the openshift.sh script. Whereas the preceding openshift.sh examples demonstrate various parameters discussed in their respective sections, the examples in this section use a combination of the parameters discussed up to this point to demonstrate a specific deployment scenario. The broker and supporting service components are installed on one host (Host 1), and the node component is installed on a separate host (Host 2).

openshift.sh

For Host 1, the command shown in the example runs the openshift.sh script with:

- Red Hat Subscription Manager set as the package source.

- The broker, named, activemq, and datastore options set as the installation components.

- Unique passwords set for MCollective, ActiveMQ, MongoDB, and the test OpenShift Enterprise user account.

- Various parameters set for the broker and supporting services.

- Default values set for all unspecified parameters.