
Deploying an Overcloud with Containerized Red Hat Ceph

Configuring the Director to Deploy and Use a Containerized Red Hat Ceph Cluster

OpenStack Documentation Team

rhos-docs@redhat.com

Abstract

Chapter 1. Introduction

Red Hat OpenStack Platform director creates a cloud environment called the overcloud. The director provides the ability to configure extra features for an overcloud. One of these extra features is integration with Red Hat Ceph Storage. This includes both Ceph Storage clusters created with the director and existing Ceph Storage clusters.

The Red Hat Ceph cluster described in this guide features containerized Ceph Storage. For more information about containerized services in OpenStack, see "Configuring a Basic Overcloud with the CLI Tools" in the Director Installation and Usage Guide.

1.1. Defining Ceph Storage

Red Hat Ceph Storage is a distributed data object store designed to provide excellent performance, reliability, and scalability. Distributed object stores are the future of storage, because they accommodate unstructured data, and because clients can use modern object interfaces and legacy interfaces simultaneously. At the heart of every Ceph deployment is the Ceph Storage Cluster, which consists of two types of daemons:

- Ceph OSD (Object Storage Daemon)

- Ceph OSDs store data on behalf of Ceph clients. Additionally, Ceph OSDs utilize the CPU and memory of Ceph nodes to perform data replication, rebalancing, recovery, monitoring and reporting functions.

- Ceph Monitor

- A Ceph monitor maintains a master copy of the Ceph storage cluster map with the current state of the storage cluster.

For more information about Red Hat Ceph Storage, see the Red Hat Ceph Storage Architecture Guide.

This guide only integrates Ceph Block storage and the Ceph Object Gateway (RGW). It does not include Ceph File (CephFS) storage.

1.2. Defining the Scenario

This guide provides instructions for deploying a containerized Red Hat Ceph cluster with your overcloud. To do this, the director uses Ansible playbooks provided through the ceph-ansible package. The director also manages the configuration and scaling operations of the cluster.

1.3. Setting Requirements

This guide acts as supplementary information for the Director Installation and Usage guide. This means the Requirements section also applies to this guide. Implement these requirements as necessary.

If using the Red Hat OpenStack Platform director to create Ceph Storage nodes, note the following requirements for these nodes:

Chapter 2. Ceph Storage node requirements

Ceph Storage nodes are responsible for providing object storage in a Red Hat OpenStack Platform environment.

- Placement Groups

- Ceph uses Placement Groups to facilitate dynamic and efficient object tracking at scale. In the case of OSD failure or cluster rebalancing, Ceph can move or replicate a placement group and its contents, which means a Ceph cluster can rebalance and recover efficiently. The default Placement Group count that the director creates is not always optimal, so it is important to calculate the correct Placement Group count according to your requirements. You can use the Placement Group calculator to calculate the correct count: Placement Groups (PGs) per Pool Calculator

- Processor

- 64-bit x86 processor with support for the Intel 64 or AMD64 CPU extensions.

- Memory

- Red Hat typically recommends a baseline of 16 GB of RAM per OSD host, with an additional 2 GB of RAM per OSD daemon.

- Disk Layout

Sizing is dependent on your storage needs. The recommended Red Hat Ceph Storage node configuration requires at least three disks in a layout similar to the following example:

- /dev/sda - The root disk. The director copies the main overcloud image to the disk. This disk should have a minimum of 40 GB of available disk space.

- /dev/sdb - The journal disk. This disk divides into partitions for Ceph OSD journals, for example, /dev/sdb1, /dev/sdb2, /dev/sdb3, and onward. The journal disk is usually a solid state drive (SSD) to aid with system performance.

- /dev/sdc and onward - The OSD disks. Use as many disks as necessary for your storage requirements.

Note: Red Hat OpenStack Platform director uses ceph-ansible, which does not support installing the OSD on the root disk of Ceph Storage nodes. This means you need at least two disks for a supported Ceph Storage node.

- Network Interface Cards

- A minimum of one 1 Gbps network interface card (NIC), although it is recommended to use at least two NICs in a production environment. Use additional NICs for bonded interfaces or to delegate tagged VLAN traffic. It is recommended to use a 10 Gbps interface for storage nodes, especially if you are creating an OpenStack Platform environment that serves a high volume of traffic.

- Power Management

- Each Ceph Storage node requires a supported power management interface, such as Intelligent Platform Management Interface (IPMI) functionality, on the server’s motherboard.
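The Placement Group calculator linked above remains the tool to use for real deployments. As a rough cross-check, upstream Ceph documentation has long cited a rule of thumb of about 100 PGs per OSD: total PGs is roughly (OSDs × 100) / replica count, rounded up to the nearest power of two, and that cluster-wide total is then divided among pools. The following Python sketch applies that rule together with the memory baseline from the list above (16 GB per OSD host plus 2 GB per OSD daemon). The function names and the 100-PGs-per-OSD target are illustrative assumptions, not director settings.

```python
"""Rough Ceph Storage sizing helpers: a sketch, not a replacement for the PG calculator."""


def next_power_of_two(n: int) -> int:
    """Return the smallest power of two that is greater than or equal to n."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return 1 << (n - 1).bit_length()


def total_pg_count(num_osds: int, replicas: int = 3, target_pgs_per_osd: int = 100) -> int:
    """Rule of thumb: (OSDs * target PGs per OSD) / replicas, rounded up to a power of two.

    The result is a cluster-wide total that you would then divide among pools.
    """
    raw = -(-(num_osds * target_pgs_per_osd) // replicas)  # ceiling division
    return next_power_of_two(raw)


def osd_host_ram_gb(osds_on_host: int, baseline_gb: int = 16, per_osd_gb: int = 2) -> int:
    """Baseline RAM for one OSD host: 16 GB plus 2 GB per OSD daemon."""
    return baseline_gb + per_osd_gb * osds_on_host


if __name__ == "__main__":
    # Example: three Ceph Storage nodes with four OSD disks each, 3x replication.
    print(total_pg_count(num_osds=12, replicas=3))  # 400, rounded up to 512
    print(osd_host_ram_gb(osds_on_host=4))          # 16 + 2 * 4 = 24
```

If the calculator and this estimate disagree, follow the calculator. It also accounts for the share of data each pool is expected to hold.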

This guide also requires the following:

- An Undercloud host with the Red Hat OpenStack Platform director installed. See Installing the Undercloud.

- Any additional hardware recommendations for Red Hat Ceph Storage. See the Red Hat Ceph Storage Hardware Selection Guide for these recommendations.

The Ceph Monitor service is installed on the overcloud’s Controller nodes. This means you must provide adequate resources to alleviate performance issues. Ensure the Controller nodes in your environment use at least 16 GB of RAM for memory and solid-state drive (SSD) storage for the Ceph monitor data. For a medium to large Ceph installation, provide at least 500 GB of storage for Ceph monitor data. This space is necessary to accommodate levelDB growth if the cluster becomes unstable.

2.1. Additional Resources

The /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml environment file instructs the director to use playbooks derived from the ceph-ansible project. These playbooks are installed in /usr/share/ceph-ansible/ of the undercloud. In particular, the following file lists all the default settings applied by the playbooks:

- /usr/share/ceph-ansible/group_vars/all.yml.sample

While ceph-ansible uses playbooks to deploy containerized Ceph Storage, do not edit these files to customize your deployment. Doing this will result in a failed deployment. Rather, use Heat environment files to override the defaults set by these playbooks.

You can also consult the documentation of this project (http://docs.ceph.com/ceph-ansible/master/) to learn more about the playbook collection.

You can also consult the Heat templates in /usr/share/openstack-tripleo-heat-templates/docker/services/ceph-ansible/ for information about the default settings applied by the director for containerized Ceph Storage.

Reading these templates requires a deeper understanding of how environment files and Heat templates work in director. See Understanding Heat Templates and Environment Files for reference.

Lastly, for more information about containerized services in OpenStack, see "Configuring a Basic Overcloud with the CLI Tools" in the Director Installation and Usage Guide.

Chapter 3. Preparing Overcloud Nodes

All nodes in this scenario are bare metal systems using IPMI for power management. These nodes do not require an operating system because the director copies a Red Hat Enterprise Linux 7 image to each node. In addition, the Ceph Storage services on the nodes described here are containerized. The director communicates with each node through the Provisioning network during the introspection and provisioning processes. All nodes connect to this network through the native VLAN.

3.1. Cleaning Ceph Storage Node Disks

The Ceph Storage OSDs and journal partitions require GPT disk labels. This means the additional disks on Ceph Storage nodes require conversion to GPT before installing the Ceph OSD services. For this to happen, all metadata must be deleted from the disks, which allows the director to set GPT labels on them.

You can set the director to delete all disk metadata by default by adding the following setting to your /home/stack/undercloud.conf file:

clean_nodes=true

With this option, the Bare Metal Provisioning service will run an additional step to boot the nodes and clean the disks each time the node is set to available. This adds an additional power cycle after the first introspection and before each deployment. The Bare Metal Provisioning service uses wipefs --force --all to perform the clean.

After setting this option, run the openstack undercloud install command to execute this configuration change.

The wipefs --force --all command deletes all data and metadata on the disk, but does not perform a secure erase. A secure erase takes much longer.
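Editing /home/stack/undercloud.conf by hand is the simplest way to add this setting. If you manage the file with scripts, the following Python sketch sets the option with the standard configparser module. It assumes that clean_nodes belongs in the [DEFAULT] section, which is where undercloud.conf keeps its general options. configparser does not preserve comments when it writes the file, so back up undercloud.conf before running it.

```python
"""Sketch: set clean_nodes=true in undercloud.conf using only the standard library."""
import configparser
import io


def enable_clean_nodes(conf_text: str) -> str:
    """Return the undercloud.conf text with clean_nodes = true in the [DEFAULT] section."""
    # interpolation=None keeps values that contain '%' (for example passwords) intact.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    parser["DEFAULT"]["clean_nodes"] = "true"
    out = io.StringIO()
    parser.write(out)  # note: comments from the original text are not written back
    return out.getvalue()


if __name__ == "__main__":
    path = "/home/stack/undercloud.conf"
    with open(path) as f:
        updated = enable_clean_nodes(f.read())
    with open(path, "w") as f:
        f.write(updated)
```

After the file changes, run openstack undercloud install as described above.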

3.2. Registering Nodes

A node definition template (instackenv.json) is a JSON format file and contains the hardware and power management details for registering nodes. For example:

{
  "nodes": [
    {
      "mac": ["b1:b1:b1:b1:b1:b1"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.205"
    },
    {
      "mac": ["b2:b2:b2:b2:b2:b2"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.206"
    },
    {
      "mac": ["b3:b3:b3:b3:b3:b3"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.207"
    },
    {
      "mac": ["c1:c1:c1:c1:c1:c1"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.208"
    },
    {
      "mac": ["c2:c2:c2:c2:c2:c2"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.209"
    },
    {
      "mac": ["c3:c3:c3:c3:c3:c3"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.210"
    },
    {
      "mac": ["d1:d1:d1:d1:d1:d1"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.211"
    },
    {
      "mac": ["d2:d2:d2:d2:d2:d2"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.212"
    },
    {
      "mac": ["d3:d3:d3:d3:d3:d3"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "p@55w0rd!",
      "pm_addr": "192.0.2.213"
    }
  ]
}
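Writing nine near-identical entries by hand is error-prone. The following Python sketch generates the same template and rejects duplicate MAC or IPMI addresses before writing the file. The inventory below simply reproduces the example values. The helper names are hypothetical, so replace the inventory with the real MAC addresses, IPMI addresses, and credentials of your nodes.

```python
"""Sketch: generate an instackenv.json equivalent to the example above."""
import json
import os

# Hypothetical inventory reproducing the example: MAC prefixes b1..d3 and
# IPMI addresses 192.0.2.205 through 192.0.2.213.
PREFIXES = ["b1", "b2", "b3", "c1", "c2", "c3", "d1", "d2", "d3"]
NODES = [(":".join([p] * 6), f"192.0.2.{205 + i}") for i, p in enumerate(PREFIXES)]


def node_entry(mac, pm_addr, pm_user="admin", pm_password="p@55w0rd!"):
    """Build one node definition with the hardware values used in the example."""
    return {
        "mac": [mac],
        "cpu": "4",
        "memory": "6144",
        "disk": "40",
        "arch": "x86_64",
        "pm_type": "ipmi",
        "pm_user": pm_user,
        "pm_password": pm_password,
        "pm_addr": pm_addr,
    }


def build_instackenv(nodes):
    """Return the template as a dict, rejecting duplicate MAC or IPMI addresses."""
    macs = [mac for mac, _ in nodes]
    addrs = [addr for _, addr in nodes]
    if len(set(macs)) != len(macs) or len(set(addrs)) != len(addrs):
        raise ValueError("duplicate MAC address or pm_addr in node list")
    return {"nodes": [node_entry(mac, addr) for mac, addr in nodes]}


if __name__ == "__main__":
    with open(os.path.expanduser("~/instackenv.json"), "w") as f:
        json.dump(build_instackenv(NODES), f, indent=2)
```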

After creating the template, save the file to the stack user’s home directory (/home/stack/instackenv.json). Source the stackrc file to initialize the stack user’s environment, then import instackenv.json into the director:

$ source ~/stackrc
$ openstack overcloud node import ~/instackenv.json

This imports the template and registers each node from the template into the director.

Assign the kernel and ramdisk images to each node:

$ openstack overcloud node configure <node>

The nodes are now registered and configured in the director.

3.3. Manually Tagging the Nodes

After registering each node, you will need to inspect the hardware and tag the node into a specific profile. Profile tags match your nodes to flavors, and in turn the flavors are assigned to a deployment role.

To inspect and tag new nodes, follow these steps:

Trigger hardware introspection to retrieve the hardware attributes of each node:

$ openstack overcloud node introspect --all-manageable --provide

- The --all-manageable option introspects only nodes in a manageable state. In this example, it is all of them.

- The --provide option resets all nodes to an available state after introspection.

Important: Make sure this process runs to completion. This process usually takes 15 minutes for bare metal nodes.

Retrieve a list of your nodes to identify their UUIDs:

$ openstack baremetal node list

Add a profile option to the properties/capabilities parameter for each node to manually tag the node to a specific profile. For example, a typical deployment uses three profiles: control, compute, and ceph-storage. The following commands tag three nodes for each profile:

$ ironic node-update 1a4e30da-b6dc-499d-ba87-0bd8a3819bc0 add properties/capabilities='profile:control,boot_option:local'
$ ironic node-update 6faba1a9-e2d8-4b7c-95a2-c7fbdc12129a add properties/capabilities='profile:control,boot_option:local'
$ ironic node-update 5e3b2f50-fcd9-4404-b0a2-59d79924b38e add properties/capabilities='profile:control,boot_option:local'
$ ironic node-update 484587b2-b3b3-40d5-925b-a26a2fa3036f add properties/capabilities='profile:compute,boot_option:local'
$ ironic node-update d010460b-38f2-4800-9cc4-d69f0d067efe add properties/capabilities='profile:compute,boot_option:local'
$ ironic node-update d930e613-3e14-44b9-8240-4f3559801ea6 add properties/capabilities='profile:compute,boot_option:local'
$ ironic node-update da0cc61b-4882-45e0-9f43-fab65cf4e52b add properties/capabilities='profile:ceph-storage,boot_option:local'
$ ironic node-update b9f70722-e124-4650-a9b1-aade8121b5ed add properties/capabilities='profile:ceph-storage,boot_option:local'
$ ironic node-update 68bf8f29-7731-4148-ba16-efb31ab8d34f add properties/capabilities='profile:ceph-storage,boot_option:local'

Tip: You can also configure a new custom profile that allows you to tag a node for the Ceph MON and Ceph MDS services. See Chapter 4, Deploying Other Ceph Services on Dedicated Nodes for details.

The addition of the profile option tags the nodes into their respective profiles.
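With many nodes, it can help to generate the tagging commands from a single profile-to-UUID mapping instead of typing each one. The following Python sketch prints the same ironic node-update commands shown above so that you can review them before running them. The mapping reuses the example UUIDs. The helper names are hypothetical, and shlex.join requires Python 3.8 or later.

```python
"""Sketch: generate the profile-tagging commands from a profile-to-UUID mapping."""
import shlex

# UUIDs from the example above; replace them with the UUIDs that
# "openstack baremetal node list" reports in your environment.
PROFILES = {
    "control": [
        "1a4e30da-b6dc-499d-ba87-0bd8a3819bc0",
        "6faba1a9-e2d8-4b7c-95a2-c7fbdc12129a",
        "5e3b2f50-fcd9-4404-b0a2-59d79924b38e",
    ],
    "compute": [
        "484587b2-b3b3-40d5-925b-a26a2fa3036f",
        "d010460b-38f2-4800-9cc4-d69f0d067efe",
        "d930e613-3e14-44b9-8240-4f3559801ea6",
    ],
    "ceph-storage": [
        "da0cc61b-4882-45e0-9f43-fab65cf4e52b",
        "b9f70722-e124-4650-a9b1-aade8121b5ed",
        "68bf8f29-7731-4148-ba16-efb31ab8d34f",
    ],
}


def capabilities(profile, boot_option="local"):
    """Return a capabilities value such as 'profile:control,boot_option:local'."""
    return f"profile:{profile},boot_option:{boot_option}"


def tag_commands(profiles):
    """Yield one shell-quoted 'ironic node-update' command per node."""
    for profile, uuids in profiles.items():
        for uuid in uuids:
            prop = f"properties/capabilities={capabilities(profile)}"
            yield shlex.join(["ironic", "node-update", uuid, "add", prop])


if __name__ == "__main__":
    for cmd in tag_commands(PROFILES):
        print(cmd)
```

The printed commands omit the single quotes used in the examples above because the value contains no characters that the shell treats specially. The shell passes the same argument to ironic in both forms.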

As an alternative to manual tagging, use the Automated Health Check (AHC) Tools to automatically tag larger numbers of nodes based on benchmarking data.

3.4. Defining the root disk

The director must identify the root disk during provisioning in the case of nodes with multiple disks. For example, most Ceph Storage nodes use multiple disks. By default, the director writes the overcloud image to the root disk during the provisioning process.

There are several properties that you can define to help the director identify the root disk:

- model (String): Device identifier.

- vendor (String): Device vendor.

- serial (String): Disk serial number.

- hctl (String): Host:Channel:Target:Lun for SCSI.

- size (Integer): Size of the device in GB.

- wwn (String): Unique storage identifier.

- wwn_with_extension (String): Unique storage identifier with the vendor extension appended.

- wwn_vendor_extension (String): Unique vendor storage identifier.

- rotational (Boolean): True for a rotational device (HDD), otherwise false (SSD).

- name (String): The name of the device, for example: /dev/sdb1.

- by_path (String): The unique PCI path of the device. Use this property if you do not want to use the UUID of the device.

Use the name property only for devices with persistent names. Do not use name to set the root disk for any other device because this value can change when the node boots.

Complete the following steps to specify the root device using its serial number.

Procedure

Check the disk information from the hardware introspection of each node. Run the following command to display the disk information of a node:

(undercloud) $ openstack baremetal introspection data save 1a4e30da-b6dc-499d-ba87-0bd8a3819bc0 | jq ".inventory.disks"

For example, the data for one node might show three disks:

[
  {
    "size": 299439751168,
    "rotational": true,
    "vendor": "DELL",
    "name": "/dev/sda",
    "wwn_vendor_extension": "0x1ea4dcc412a9632b",
    "wwn_with_extension": "0x61866da04f3807001ea4dcc412a9632b",
    "model": "PERC H330 Mini",
    "wwn": "0x61866da04f380700",
    "serial": "61866da04f3807001ea4dcc412a9632b"
  },
  {
    "size": 299439751168,
    "rotational": true,
    "vendor": "DELL",
    "name": "/dev/sdb",
    "wwn_vendor_extension": "0x1ea4e13c12e36ad6",
    "wwn_with_extension": "0x61866da04f380d001ea4e13c12e36ad6",
    "model": "PERC H330 Mini",
    "wwn": "0x61866da04f380d00",
    "serial": "61866da04f380d001ea4e13c12e36ad6"
  },
  {
    "size": 299439751168,
    "rotational": true,
    "vendor": "DELL",
    "name": "/dev/sdc",
    "wwn_vendor_extension": "0x1ea4e31e121cfb45",
    "wwn_with_extension": "0x61866da04f37fc001ea4e31e121cfb45",
    "model": "PERC H330 Mini",
    "wwn": "0x61866da04f37fc00",
    "serial": "61866da04f37fc001ea4e31e121cfb45"
  }
]

Set the root_device parameter in the node definition. The following example shows how to set the root device to disk 2, which has 61866da04f380d001ea4e13c12e36ad6 as the serial number:

(undercloud) $ openstack baremetal node set --property root_device='{"serial": "61866da04f380d001ea4e13c12e36ad6"}' 1a4e30da-b6dc-499d-ba87-0bd8a3819bc0

Note: Ensure that you configure the BIOS of each node to include booting from the root disk that you choose. Configure the boot order to boot from the network first, then to boot from the root disk.

The director identifies the specific disk to use as the root disk. When you run the openstack overcloud deploy command, the director provisions and writes the Overcloud image to the root disk.
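When you set root disks on many nodes, a small script can read the introspection disk list and build the matching command, which prevents typing errors in long serial numbers. The following Python sketch expects the JSON array printed by the jq filter above on standard input. It confirms that exactly one disk has the requested serial and then prints the openstack baremetal node set command for review instead of running it. The script name root_hint.py and the helper names are hypothetical.

```python
"""Sketch: build the root_device hint for a disk selected by serial number."""
import json
import shlex
import sys


def root_device_hint(disks, serial):
    """Return the root_device JSON for the single disk whose serial matches."""
    matches = [d for d in disks if d.get("serial") == serial]
    if len(matches) != 1:
        raise ValueError(f"expected one disk with serial {serial!r}, found {len(matches)}")
    return json.dumps({"serial": matches[0]["serial"]})


def set_root_device_command(node_uuid, disks, serial):
    """Return the 'openstack baremetal node set' command that applies the hint."""
    hint = root_device_hint(disks, serial)
    return shlex.join(["openstack", "baremetal", "node", "set",
                       "--property", f"root_device={hint}", node_uuid])


if __name__ == "__main__":
    # Usage (hypothetical script name):
    #   openstack baremetal introspection data save <uuid> | jq ".inventory.disks" \
    #     | python3 root_hint.py <uuid> <serial>
    node_uuid, serial = sys.argv[1], sys.argv[2]
    print(set_root_device_command(node_uuid, json.load(sys.stdin), serial))
```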

3.5. Using the overcloud-minimal image to avoid using a Red Hat subscription entitlement

By default, the director writes the QCOW2 overcloud-full image to the root disk during the provisioning process. The overcloud-full image uses a valid Red Hat subscription. However, you can also use the overcloud-minimal image if you do not require any other OpenStack services on your node and you do not want to use one of your Red Hat OpenStack Platform subscription entitlements. Use the overcloud-minimal image option to avoid reaching the limit of your paid Red Hat subscriptions.

Procedure

To configure the director to use the overcloud-minimal image, create an environment file that contains the following image definition:

parameter_defaults:
  <roleName>Image: overcloud-minimal

Replace <roleName> with the name of the role and append Image to the name of the role. The following example shows an overcloud-minimal image for Ceph Storage nodes:

parameter_defaults:
  CephStorageImage: overcloud-minimal

Pass the environment file to the openstack overcloud deploy command.

The overcloud-minimal image supports only standard Linux bridges and not OVS because OVS is an OpenStack service that requires an OpenStack subscription entitlement.
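The parameter name follows a fixed pattern: the role name with Image appended. Because of this, the environment file can be generated for several roles at once. The following Python sketch writes such a file. The output file name is a hypothetical choice, and each role name must match a role defined in your roles data file.

```python
"""Sketch: write an environment file that assigns overcloud-minimal to selected roles."""


def minimal_image_env(role_names):
    """Return parameter_defaults YAML setting <roleName>Image: overcloud-minimal."""
    lines = ["parameter_defaults:"]
    for role in role_names:
        # The parameter name is the role name with "Image" appended.
        lines.append(f"  {role}Image: overcloud-minimal")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical file name; pass it to openstack overcloud deploy with -e.
    with open("/home/stack/templates/overcloud-minimal-images.yaml", "w") as f:
        f.write(minimal_image_env(["CephStorage"]))
```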

Chapter 4. Deploying Other Ceph Services on Dedicated Nodes

By default, the director deploys the Ceph MON and Ceph MDS services on the Controller nodes. This is suitable for small deployments. However, with larger deployments we advise that you deploy the Ceph MON and Ceph MDS services on dedicated nodes to improve the performance of your Ceph cluster. You can do this by creating a custom role for either one.

For detailed information about custom roles, see Creating a New Role from the Advanced Overcloud Customization guide.

The director uses the following file as a default reference for all overcloud roles:

- /usr/share/openstack-tripleo-heat-templates/roles_data.yaml

Copy this file to /home/stack/templates/ so you can add custom roles to it:

$ cp /usr/share/openstack-tripleo-heat-templates/roles_data.yaml /home/stack/templates/roles_data_custom.yaml

You invoke the /home/stack/templates/roles_data_custom.yaml file later during overcloud creation (Section 8.2, “Initiating Overcloud Deployment”). The following subsections describe how to configure custom roles for the Ceph MON and Ceph MDS services.

4.1. Creating a Custom Role and Flavor for the Ceph MON Service

This section describes how to create a custom role (named CephMon) and flavor (named ceph-mon) for the Ceph MON role. You should already have a copy of the default roles data file as described in Chapter 4, Deploying Other Ceph Services on Dedicated Nodes.

Open the /home/stack/templates/roles_data_custom.yaml file.

Remove the service entry for the Ceph MON service (namely, OS::TripleO::Services::CephMon) from under the Controller role.

Add the OS::TripleO::Services::CephClient service to the Controller role:

[...]
- name: Controller # the 'primary' role goes first
  CountDefault: 1
  ServicesDefault:
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::CephMds
    - OS::TripleO::Services::CephClient
    - OS::TripleO::Services::CephExternal
    - OS::TripleO::Services::CephRbdMirror
    - OS::TripleO::Services::CephRgw
    - OS::TripleO::Services::CinderApi
    [...]

At the end of roles_data_custom.yaml, add a custom CephMon role containing the Ceph MON service and all the other required node services. For example:

- name: CephMon
  ServicesDefault:
    # Common Services
    - OS::TripleO::Services::AuditD
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::CertmongerUser
    - OS::TripleO::Services::Collectd
    - OS::TripleO::Services::Docker
    - OS::TripleO::Services::FluentdClient
    - OS::TripleO::Services::Kernel
    - OS::TripleO::Services::Ntp
    - OS::TripleO::Services::ContainersLogrotateCrond
    - OS::TripleO::Services::SensuClient
    - OS::TripleO::Services::Snmp
    - OS::TripleO::Services::Timezone
    - OS::TripleO::Services::TripleoFirewall
    - OS::TripleO::Services::TripleoPackages
    - OS::TripleO::Services::Tuned
    # Role-Specific Services
    - OS::TripleO::Services::CephMon

Using the openstack flavor create command, define a new flavor named ceph-mon for this role:

$ openstack flavor create --id auto --ram 6144 --disk 40 --vcpus 4 ceph-mon

Note: For more details about this command, run openstack flavor create --help.

Map this flavor to a new profile, also named ceph-mon:

$ openstack flavor set --property "cpu_arch"="x86_64" --property "capabilities:boot_option"="local" --property "capabilities:profile"="ceph-mon" ceph-mon

Note: For more details about this command, run openstack flavor set --help.

Tag nodes into the new ceph-mon profile:

$ ironic node-update UUID add properties/capabilities='profile:ceph-mon,boot_option:local'

See Section 3.3, “Manually Tagging the Nodes” for more details about tagging nodes. See also Tagging Nodes Into Profiles for related information on custom role profiles.
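The edits to roles_data_custom.yaml in this section can also be applied programmatically. The following Python sketch works on the parsed roles data, which is a list of role dictionaries. It removes CephMon from the Controller role, adds CephClient to that role, and appends a CephMon role with the common services listed above. Loading and saving the file requires a YAML library such as PyYAML, which is an assumption here and is not part of the standard library. The helper names are hypothetical.

```python
"""Sketch: move Ceph MON off the Controller role into a dedicated CephMon role.

The function works on the parsed content of roles_data_custom.yaml (a list of
role dicts). Loading and saving the file needs a YAML library such as PyYAML,
which is an assumption here and is not part of the standard library.
"""
import copy

CEPH_MON = "OS::TripleO::Services::CephMon"
CEPH_CLIENT = "OS::TripleO::Services::CephClient"

# Common services listed for the CephMon role in the example above.
COMMON_SERVICES = [
    f"OS::TripleO::Services::{name}"
    for name in (
        "AuditD", "CACerts", "CertmongerUser", "Collectd", "Docker",
        "FluentdClient", "Kernel", "Ntp", "ContainersLogrotateCrond",
        "SensuClient", "Snmp", "Timezone", "TripleoFirewall",
        "TripleoPackages", "Tuned",
    )
]


def split_ceph_mon(roles):
    """Return a new roles list; the input list is left unchanged."""
    result = copy.deepcopy(roles)
    for role in result:
        if role["name"] == "Controller":
            services = [s for s in role.get("ServicesDefault", []) if s != CEPH_MON]
            if CEPH_CLIENT not in services:
                services.append(CEPH_CLIENT)  # appended at the end of the list
            role["ServicesDefault"] = services
    if not any(role["name"] == "CephMon" for role in result):
        result.append({"name": "CephMon", "ServicesDefault": COMMON_SERVICES + [CEPH_MON]})
    return result
```

With PyYAML installed, you could load the file with yaml.safe_load, pass the result to split_ceph_mon, and write it back with yaml.safe_dump. This round trip drops the comments in the file. The sketch also appends CephClient at the end of the Controller service list rather than after CephMds as in the example above, on the assumption that the order within ServicesDefault does not matter.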

4.2. Creating a Custom Role and Flavor for the Ceph MDS Service

This section describes how to create a custom role (named CephMDS) and flavor (named ceph-mds) for the Ceph MDS role. You should already have a copy of the default roles data file as described in Chapter 4, Deploying Other Ceph Services on Dedicated Nodes.

Open the /home/stack/templates/roles_data_custom.yaml file.

Remove the service entry for the Ceph MDS service (namely, OS::TripleO::Services::CephMds) from under the Controller role:

[...]
- name: Controller # the 'primary' role goes first
  CountDefault: 1
  ServicesDefault:
    - OS::TripleO::Services::CACerts
    # - OS::TripleO::Services::CephMds  # 1
    - OS::TripleO::Services::CephMon
    - OS::TripleO::Services::CephExternal
    - OS::TripleO::Services::CephRbdMirror
    - OS::TripleO::Services::CephRgw
    - OS::TripleO::Services::CinderApi
    [...]

- 1: Comment out this line. This same service will be added to a custom role in the next step.

At the end of roles_data_custom.yaml, add a custom CephMDS role containing the Ceph MDS service and all the other required node services. For example:

- name: CephMDS
  ServicesDefault:
    # Common Services
    - OS::TripleO::Services::AuditD
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::CertmongerUser
    - OS::TripleO::Services::Collectd
    - OS::TripleO::Services::Docker
    - OS::TripleO::Services::FluentdClient
    - OS::TripleO::Services::Kernel
    - OS::TripleO::Services::Ntp
    - OS::TripleO::Services::ContainersLogrotateCrond
    - OS::TripleO::Services::SensuClient
    - OS::TripleO::Services::Snmp
    - OS::TripleO::Services::Timezone
    - OS::TripleO::Services::TripleoFirewall
    - OS::TripleO::Services::TripleoPackages
    - OS::TripleO::Services::Tuned
    # Role-Specific Services
    - OS::TripleO::Services::CephMds
    - OS::TripleO::Services::CephClient  # 1

- 1: The Ceph MDS service requires the admin keyring, which either the Ceph MON or the Ceph Client service can set. Because you are deploying Ceph MDS on a dedicated node (without the Ceph MON service), include the Ceph Client service in the role as well.

Using the openstack flavor create command, define a new flavor named ceph-mds for this role:

$ openstack flavor create --id auto --ram 6144 --disk 40 --vcpus 4 ceph-mds

Note: For more details about this command, run openstack flavor create --help.

Map this flavor to a new profile, also named ceph-mds:

$ openstack flavor set --property "cpu_arch"="x86_64" --property "capabilities:boot_option"="local" --property "capabilities:profile"="ceph-mds" ceph-mds

Note: For more details about this command, run openstack flavor set --help.

Tag nodes into the new ceph-mds profile:

$ ironic node-update UUID add properties/capabilities='profile:ceph-mds,boot_option:local'

See Section 3.3, “Manually Tagging the Nodes” for more details about tagging nodes. See also Tagging Nodes Into Profiles for related information on custom role profiles.
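Because Ceph MDS needs the admin keyring from either Ceph MON or Ceph Client, a quick check of the roles data before deployment can catch a dedicated MDS role that lacks both. The following Python sketch performs that check on the parsed roles data (for example, loaded with PyYAML, an assumption that is not part of the standard library). Commented-out entries, such as the CephMds line in the Controller role above, do not appear in the parsed data, so the check ignores them. The function name is hypothetical.

```python
"""Sketch: check that each role running Ceph MDS can obtain the admin keyring.

As described above, the keyring comes from either the Ceph MON or the Ceph
Client service, so a role with CephMds needs at least one of them.
"""

CEPH_MDS = "OS::TripleO::Services::CephMds"
KEYRING_PROVIDERS = {
    "OS::TripleO::Services::CephMon",
    "OS::TripleO::Services::CephClient",
}


def roles_missing_keyring(roles):
    """Return the names of roles that run CephMds without CephMon or CephClient."""
    missing = []
    for role in roles:
        services = set(role.get("ServicesDefault", []))
        if CEPH_MDS in services and not services & KEYRING_PROVIDERS:
            missing.append(role["name"])
    return missing
```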

Chapter 5. Customizing the Storage Service

The Heat template collection provided by the director already contains the necessary templates and environment files to enable a basic Ceph Storage configuration.

The /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml environment file will create a Ceph cluster and integrate it with your overcloud upon deployment. This cluster will feature containerized Ceph Storage nodes. For more information about containerized services in OpenStack, see "Configuring a Basic Overcloud with the CLI Tools" in the Director Installation and Usage Guide.

Red Hat OpenStack Platform director also applies basic, default settings to the deployed Ceph cluster. You need a custom environment file to pass custom settings to your Ceph cluster. To create one:

Create the file storage-config.yaml in /home/stack/templates/. For the purposes of this document, ~/templates/storage-config.yaml contains most of the overcloud-related custom settings for your environment. Settings in this file override the corresponding default settings that the director applies to your overcloud.

Add a parameter_defaults section to ~/templates/storage-config.yaml. This section contains custom settings for your overcloud. For example, to set vxlan as the network type of the networking service (neutron):

parameter_defaults:
  NeutronNetworkType: vxlan

If needed, set the following options under parameter_defaults as you see fit. Each entry lists the option, its description, and its default value:

- CinderEnableIscsiBackend: Enables the iSCSI back end. Default: false

- CinderEnableRbdBackend: Enables the Ceph Storage back end. Default: true

- CinderBackupBackend: Sets ceph or swift as the back end for volume backups; see Section 5.3, “Configuring the Backup Service to Use Ceph” for related details. Default: ceph

- NovaEnableRbdBackend: Enables Ceph Storage for Nova ephemeral storage. Default: true

- GlanceBackend: Defines which back end the Image service should use: rbd (Ceph), swift, or file. Default: rbd

- GnocchiBackend: Defines which back end the Telemetry service should use: rbd (Ceph), swift, or file. Default: rbd

Note: You can omit an option from ~/templates/storage-config.yaml if you intend to use the default setting.
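Because options left at their defaults can be omitted, you can keep ~/templates/storage-config.yaml short by writing only the values that differ. The following Python sketch renders the parameter_defaults section in that way, using the defaults listed above. It handles only booleans and plain strings, since values containing YAML special characters would need quoting. The function names are hypothetical.

```python
"""Sketch: render storage-config.yaml, omitting options left at their defaults."""

# Defaults as listed above.
DEFAULTS = {
    "CinderEnableIscsiBackend": False,
    "CinderEnableRbdBackend": True,
    "CinderBackupBackend": "ceph",
    "NovaEnableRbdBackend": True,
    "GlanceBackend": "rbd",
    "GnocchiBackend": "rbd",
}


def yaml_scalar(value):
    """Format a bool or a plain string (no YAML special characters) as a YAML scalar."""
    if isinstance(value, bool):
        return "true" if value else "false"
    return str(value)


def render_storage_config(overrides):
    """Return parameter_defaults YAML containing only non-default settings."""
    lines = ["parameter_defaults:"]
    for key, value in overrides.items():
        if key in DEFAULTS and DEFAULTS[key] == value:
            continue  # matches the director default, so it can be omitted
        lines.append(f"  {key}: {yaml_scalar(value)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_storage_config({"NeutronNetworkType": "vxlan",
                                 "CinderBackupBackend": "swift"}), end="")
```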

The contents of your environment file will change depending on the settings you apply in the sections that follow. See Appendix A, Sample Environment File: Creating a Ceph Cluster for a finished example.

The following subsections explain how to override common default storage service settings applied by the director.

5.1. Enabling the Ceph Metadata Server

The Ceph Metadata Server (MDS) runs the ceph-mds daemon, which manages metadata related to files stored on CephFS. CephFS can be consumed via NFS. For related information about using CephFS via NFS, see Ceph File System Guide and CephFS via NFS Back End Guide for the Shared File System Service.

Red Hat only supports deploying Ceph MDS with the CephFS via NFS back end for the Shared File System service.

To enable the Ceph Metadata Server, invoke the following environment file when creating your overcloud:

- /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-mds.yaml

See Section 8.2, “Initiating Overcloud Deployment” for more details. For more information about the Ceph Metadata Server, see Configuring Metadata Server Daemons.

By default, the Ceph Metadata Server will be deployed on the Controller node. You can deploy the Ceph Metadata Server on its own dedicated node; for instructions, see Section 4.2, “Creating a Custom Role and Flavor for the Ceph MDS Service”.

5.2. Enabling the Ceph Object Gateway

The Ceph Object Gateway provides applications with an interface to object storage capabilities within a Ceph storage cluster. Upon deploying the Ceph Object Gateway, you can then replace the default Object Storage service (swift) with Ceph. For more information, see Object Gateway Guide for Red Hat Enterprise Linux.

To enable a Ceph Object Gateway in your deployment, invoke the following environment file when creating your overcloud:

- /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-rgw.yaml

See Section 8.2, “Initiating Overcloud Deployment” for details.

The Ceph Object Gateway acts as a drop-in replacement for the default Object Storage service. As such, all other services that normally use swift can seamlessly start using the Ceph Object Gateway instead without further configuration. Refer to the Block Storage Backup Guide for instructions.

5.3. Configuring the Backup Service to Use Ceph

The Block Storage Backup service (cinder-backup) is disabled by default. To enable it, invoke the following environment file when creating your overcloud:

- /usr/share/openstack-tripleo-heat-templates/environments/cinder-backup.yaml

Refer to the Block Storage Backup Guide for instructions.
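Each of the preceding features is enabled by adding an environment file to the deployment command. The following Python sketch assembles those files in a consistent order: the base ceph-ansible.yaml first, the optional MDS, RGW, and backup files next, and your custom storage-config.yaml last, because environment files passed later override earlier ones. It covers only the files named in this chapter. A real deployment will likely need additional options, so treat the output as a starting point. The function name is hypothetical.

```python
"""Sketch: assemble the environment files named in this chapter into a deploy command."""
import shlex

THT = "/usr/share/openstack-tripleo-heat-templates"


def deploy_command(enable_mds=False, enable_rgw=False, enable_backup=False,
                   roles_file=None,
                   custom_env="/home/stack/templates/storage-config.yaml"):
    """Return an 'openstack overcloud deploy' command line as a string.

    Environment files passed later override earlier ones, so the custom
    storage-config.yaml is placed last.
    """
    args = ["openstack", "overcloud", "deploy", "--templates"]
    if roles_file:
        args += ["-r", roles_file]
    env_files = [f"{THT}/environments/ceph-ansible/ceph-ansible.yaml"]
    if enable_mds:
        env_files.append(f"{THT}/environments/ceph-ansible/ceph-mds.yaml")
    if enable_rgw:
        env_files.append(f"{THT}/environments/ceph-ansible/ceph-rgw.yaml")
    if enable_backup:
        env_files.append(f"{THT}/environments/cinder-backup.yaml")
    env_files.append(custom_env)
    for path in env_files:
        args += ["-e", path]
    return shlex.join(args)


if __name__ == "__main__":
    print(deploy_command(enable_rgw=True, enable_backup=True,
                         roles_file="/home/stack/templates/roles_data_custom.yaml"))
```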

5.4. Configuring Multiple Bonded Interfaces Per Ceph Node

Using a bonded interface allows you to combine multiple NICs to add redundancy to a network connection. If you have enough NICs on your Ceph nodes, you can take this a step further by creating multiple bonded interfaces per node.

With this, you can then use a bonded interface for each network connection required by the node. This provides both redundancy and a dedicated connection for each network.

The simplest implementation of this involves the use of two bonds, one for each storage network used by the Ceph nodes. These networks are the following:

- Front-end storage network (StorageNet) - The Ceph client uses this network to interact with its Ceph cluster.

- Back-end storage network (StorageMgmtNet) - The Ceph cluster uses this network to balance data in accordance with the placement group policy of the cluster. For more information, see Placement Groups (PG) (from the Red Hat Ceph Architecture Guide).

Configuring this involves customizing a network interface template, as the director does not provide any sample templates that deploy multiple bonded NICs. However, the director does provide a template that deploys a single bonded interface — namely, /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/ceph-storage.yaml. You can add a bonded interface for your additional NICs by defining it there.

For more detailed instructions on how to do this, see Creating Custom Interface Templates (from the Advanced Overcloud Customization guide). That section also explains the different components of a bridge and bonding definition.

The following snippet contains the default definition for the single bonded interface defined by /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/ceph-storage.yaml:

type: ovs_bridge  # 1
name: br-bond
members:
  - type: ovs_bond  # 2
    name: bond1  # 3
    ovs_options: {get_param: BondInterfaceOvsOptions}  # 4
    members:  # 5
      - type: interface
        name: nic2
        primary: true
      - type: interface
        name: nic3
  - type: vlan  # 6
    device: bond1  # 7
    vlan_id: {get_param: StorageNetworkVlanID}
    addresses:
      - ip_netmask: {get_param: StorageIpSubnet}
  - type: vlan
    device: bond1
    vlan_id: {get_param: StorageMgmtNetworkVlanID}
    addresses:
      - ip_netmask: {get_param: StorageMgmtIpSubnet}

1. A single bridge named br-bond holds the bond defined by this template. This line defines the bridge type, namely OVS.

2. The first member of the br-bond bridge is the bonded interface itself, named bond1. This line defines the bond type of bond1, which is also OVS.

3. The default bond is named bond1, as defined in this line.

4. The ovs_options entry instructs director to use a specific set of bonding module directives. Those directives are passed through the BondInterfaceOvsOptions parameter, which you can also configure in this same file. For instructions on how to configure this, see Section 5.4.1, “Configuring Bonding Module Directives”.

5. The members section of the bond defines which network interfaces are bonded by bond1. In this case, the bonded interface uses nic2 (set as the primary interface) and nic3.

6. The br-bond bridge has two other members: a VLAN for the front-end (StorageNetwork) storage network and a VLAN for the back-end (StorageMgmtNetwork) storage network.

7. The device parameter defines which device a VLAN uses. In this case, both VLANs use the bonded interface bond1.

With at least two more NICs, you can define an additional bridge and bonded interface. Then, you can move one of the VLANs to the new bonded interface. This results in added throughput and reliability for both storage network connections.

When customizing /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/ceph-storage.yaml for this purpose, it is advisable to also use Linux bonds (type: linux_bond) instead of the default OVS bonds (type: ovs_bond). This bond type is more suitable for enterprise production deployments.

The following edited snippet defines an additional OVS bridge (br-bond2) which houses a new Linux bond named bond2. The bond2 interface uses two additional NICs (namely, nic4 and nic5) and will be used solely for back-end storage network traffic:

- type: ovs_bridge
  name: br-bond
  members:
    - type: linux_bond
      name: bond1
      bonding_options: {get_param: BondInterfaceOvsOptions}  # 1
      members:
        - type: interface
          name: nic2
          primary: true
        - type: interface
          name: nic3
    - type: vlan
      device: bond1
      vlan_id: {get_param: StorageNetworkVlanID}
      addresses:
        - ip_netmask: {get_param: StorageIpSubnet}
- type: ovs_bridge
  name: br-bond2
  members:
    - type: linux_bond
      name: bond2
      bonding_options: {get_param: BondInterfaceOvsOptions}
      members:
        - type: interface
          name: nic4
          primary: true
        - type: interface
          name: nic5
    - type: vlan
      device: bond2
      vlan_id: {get_param: StorageMgmtNetworkVlanID}
      addresses:
        - ip_netmask: {get_param: StorageMgmtIpSubnet}

1. Because bond1 and bond2 are both Linux bonds (instead of OVS bonds), they use bonding_options instead of ovs_options to set bonding directives. For related information, see Section 5.4.1, “Configuring Bonding Module Directives”.

For the full contents of this customized template, see Appendix B, Sample Custom Interface Template: Multiple Bonded Interfaces.
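The goal of the second bond is that the back-end VLAN rides on its own bonded pair of NICs. The following Python sketch is an illustration only: it models the edited layout as plain dictionaries and applies two consistency rules of its own, namely that every VLAN's device is a bond inside the same bridge and that every bond has at least two NICs. NETWORK_CONFIG and check_bridges are hypothetical names; director does not run a check like this.

```python
# Hypothetical in-memory copy of the two-bridge layout shown above.
NETWORK_CONFIG = [
    {"type": "ovs_bridge", "name": "br-bond", "members": [
        {"type": "linux_bond", "name": "bond1", "members": [
            {"type": "interface", "name": "nic2", "primary": True},
            {"type": "interface", "name": "nic3"}]},
        {"type": "vlan", "device": "bond1", "vlan_id": "StorageNetworkVlanID"},
    ]},
    {"type": "ovs_bridge", "name": "br-bond2", "members": [
        {"type": "linux_bond", "name": "bond2", "members": [
            {"type": "interface", "name": "nic4", "primary": True},
            {"type": "interface", "name": "nic5"}]},
        {"type": "vlan", "device": "bond2", "vlan_id": "StorageMgmtNetworkVlanID"},
    ]},
]

BOND_TYPES = {"linux_bond", "ovs_bond"}


def check_bridges(config):
    """Return human-readable problems found in a bridge/bond/VLAN layout."""
    problems = []
    for bridge in config:
        members = bridge.get("members", [])
        # Bonds that live directly inside this bridge, keyed by name.
        bonds = {m["name"]: m for m in members if m["type"] in BOND_TYPES}
        for name, bond in bonds.items():
            nics = [n for n in bond.get("members", []) if n["type"] == "interface"]
            if len(nics) < 2:
                problems.append(f"{name}: a bond needs at least two NICs for redundancy")
        for vlan in (m for m in members if m["type"] == "vlan"):
            if vlan["device"] not in bonds:
                problems.append(
                    f"{bridge['name']}: VLAN {vlan['vlan_id']} uses {vlan['device']}, "
                    "which is not a bond in this bridge"
                )
    return problems


print(check_bridges(NETWORK_CONFIG))  # []
```

A VLAN under br-bond2 that still pointed at bond1 would be reported, which is the kind of copy-and-paste slip this layout invites.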

5.4.1. Configuring Bonding Module Directives

After adding and configuring the bonded interfaces, use the BondInterfaceOvsOptions parameter to set what directives each should use. You can find this in the parameters: section of /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/ceph-storage.yaml. The following snippet shows the default definition of this parameter (namely, empty):

BondInterfaceOvsOptions:
  default: ''
  description: The ovs_options string for the bond interface. Set
    things like lacp=active and/or bond_mode=balance-slb
    using this option.
  type: string

Define the options you need in the default: line. For example, to use 802.3ad (mode 4) and a LACP rate of 1 (fast), use 'mode=4 lacp_rate=1', as in:

BondInterfaceOvsOptions:
  default: 'mode=4 lacp_rate=1'
  description: The bonding_options string for the bond interface. Set
    things like lacp=active and/or bond_mode=balance-slb
    using this option.
  type: string

See Appendix C, Open vSwitch Bonding Options (from the Advanced Overcloud Customization guide), for other supported bonding options. For the full contents of the customized /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/ceph-storage.yaml template, see Appendix B, Sample Custom Interface Template: Multiple Bonded Interfaces.
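For Linux bonds, the default: value is a space-separated list of key=value bonding driver directives. The following Python sketch is for illustration only (director passes the string through and does not parse it this way): it splits such a string and translates numeric mode and lacp_rate values to the names used by the Linux bonding driver. The function names are hypothetical; OVS bonds use different option names, such as bond_mode=balance-slb, which this sketch does not interpret.

```python
# Linux bonding driver modes, by number.
BOND_MODES = {
    "0": "balance-rr", "1": "active-backup", "2": "balance-xor",
    "3": "broadcast", "4": "802.3ad", "5": "balance-tlb", "6": "balance-alb",
}
LACP_RATES = {"0": "slow", "1": "fast"}


def parse_bonding_options(options):
    """Split a space-separated string of key=value directives into a dict."""
    parsed = {}
    for token in options.split():
        key, sep, value = token.partition("=")
        if not sep or not key:
            raise ValueError(f"malformed directive: {token!r}")
        parsed[key] = value
    return parsed


def describe(options):
    """Return (mode name, LACP rate name); already-named or unknown values pass through."""
    parsed = parse_bonding_options(options)
    mode = parsed.get("mode")
    lacp = parsed.get("lacp_rate")
    return BOND_MODES.get(mode, mode), LACP_RATES.get(lacp, lacp)


print(describe("mode=4 lacp_rate=1"))  # ('802.3ad', 'fast')
```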

Chapter 6. Customizing the Ceph Storage Cluster

Deploying containerized Ceph Storage involves the use of /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml during overcloud deployment (as described in Chapter 5, Customizing the Storage Service). This environment file also defines the following resources:

- CephAnsibleDisksConfig: This resource maps the Ceph Storage node disk layout. See Section 6.1, “Mapping the Ceph Storage Node Disk Layout” for more details.

- CephConfigOverrides: This resource applies all other custom settings to your Ceph cluster.

Use these resources to override any defaults set by the director for containerized Ceph Storage.

The /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml environment file uses playbooks provided by the ceph-ansible package. As such, you need to install this package on your undercloud first:

$ sudo yum install ceph-ansible

To customize your Ceph cluster, define your custom parameters in a new environment file, namely /home/stack/templates/ceph-config.yaml. You can apply any global Ceph cluster setting using the following syntax in the parameter_defaults section of your environment file:

parameter_defaults:
  CephConfigOverrides:
    KEY: VALUE

Replace KEY and VALUE with the Ceph cluster settings you want to apply. For example, consider the following snippet:

parameter_defaults:
  CephConfigOverrides:
    max_open_files: 131072

This results in the following setting in the configuration file of your Ceph cluster:

[global]
max_open_files = 131072

See the Red Hat Ceph Storage Configuration Guide for information about supported parameters.

The CephConfigOverrides parameter applies only to the [global] section of the ceph.conf file. You cannot make changes to other sections, for example the [osd] section, with the CephConfigOverrides parameter.
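Because every key in CephConfigOverrides lands in [global], you can think of the parameter as a flat key/value mapping rendered into one INI section. The following Python sketch models that rendering for illustration only; ceph-ansible performs the real rendering, and its key order and spacing may differ. render_global_section is a hypothetical helper.

```python
def render_global_section(overrides):
    """Render a CephConfigOverrides-style mapping as an INI [global] section."""
    lines = ["[global]"]
    for key, value in sorted(overrides.items()):
        if isinstance(value, bool):
            value = str(value).lower()  # write Python True/False as true/false
        lines.append(f"{key} = {value}")
    return "\n".join(lines) + "\n"


print(render_global_section({"max_open_files": 131072}), end="")
# [global]
# max_open_files = 131072
```

Note that there is no way to express an [osd] key in this model, which mirrors the limitation described above.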

The ceph-ansible tool has a group_vars directory that you can use to set many different Ceph parameters. For more information, see 3.2. Installing a Red Hat Ceph Storage Cluster in the Installation Guide for Red Hat Enterprise Linux.

To change the variable defaults in director, you can use the CephAnsibleExtraConfig parameter to pass the new values in heat environment files. For example, to set the ceph-ansible group variable journal_size to 40960, create an environment file with the following journal_size definition:

parameter_defaults:
  CephAnsibleExtraConfig:
    journal_size: 40960

Change ceph-ansible group variables with the override parameters; do not edit group variables directly in the /usr/share/ceph-ansible directory on the undercloud.

6.1. Mapping the Ceph Storage Node Disk Layout

When you deploy containerized Ceph Storage, you need to map the disk layout and specify dedicated block devices for the Ceph OSD service. You can do this in the environment file you created earlier to define your custom Ceph parameters: /home/stack/templates/ceph-config.yaml.

Use the CephAnsibleDisksConfig resource in parameter_defaults to map your disk layout. This resource uses the following variables:

| Variable | Required? | Default value (if unset) | Description |
|---|---|---|---|
| osd_scenario | Yes | lvm (for new deployments using Ceph 3.2 and later) | Sets the OSD scenario. With Ceph 3.2 and later, lvm uses ceph-volume. For FileStore, journals are either co-located on the same device (collocated) or stored on dedicated devices (non-collocated). |
| devices | Yes | NONE. Variable must be set. | A list of block devices to be used on the node for OSDs. |
| dedicated_devices | Yes (only if osd_scenario is non-collocated) | devices | A list of block devices that maps each entry under devices to a dedicated journaling block device. This variable is only usable when osd_scenario is non-collocated. |
| dmcrypt | No | false | Sets whether data stored on OSDs is encrypted (true) or unencrypted (false). |
| osd_objectstore | No | bluestore (for new deployments using Ceph 3.2 and later) | Sets the storage back end used by Ceph. |

If you deployed your Ceph cluster with a version of ceph-ansible older than 3.3 and osd_scenario is set to collocated or non-collocated, OSD reboot failure can occur due to a device naming discrepancy. For more information about this fault, see https://bugzilla.redhat.com/show_bug.cgi?id=1670734. For information about a workaround, see https://access.redhat.com/solutions/3702681.

6.1.1. Using BlueStore in Ceph 3.2 and later

New deployments of OpenStack Platform 13 should use bluestore. Current deployments that use filestore should continue using filestore, as described in Using FileStore in Ceph 3.1 and earlier. Migrations from filestore to bluestore are not supported by default in RHCS 3.x.

To specify the block devices to be used as Ceph OSDs, use a variation of the following:

parameter_defaults:
  CephAnsibleDisksConfig:
    devices:
      - /dev/sdb
      - /dev/sdc
      - /dev/sdd
      - /dev/nvme0n1
    osd_scenario: lvm
    osd_objectstore: bluestore
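The following Python sketch is a simplified model, not the actual ceph-volume algorithm, of how a mixed device list such as the one above is split: rotational devices hold OSD data, and non-rotational devices are shared by those OSDs. The rotational flags are supplied by hand here; on a real node, /sys/block/DEVICE/queue/rotational reports 1 for rotational devices. plan_batch is a hypothetical helper.

```python
def plan_batch(devices):
    """Simplified model of splitting a device list into data and fast devices.

    `devices` maps each device path to True if the device is rotational (HDD)
    and False if it is not (SSD/NVMe). Dict order is preserved.
    """
    hdds = [path for path, rotational in devices.items() if rotational]
    ssds = [path for path, rotational in devices.items() if not rotational]
    if hdds and ssds:
        # Mixed classes: HDDs hold OSD data, the faster devices are shared by them.
        return {"data": hdds, "wal": ssds}
    # One device class only: every device becomes a standalone OSD.
    return {"data": hdds or ssds, "wal": []}


plan = plan_batch({"/dev/sdb": True, "/dev/sdc": True, "/dev/sdd": True, "/dev/nvme0n1": False})
print(plan)  # {'data': ['/dev/sdb', '/dev/sdc', '/dev/sdd'], 'wal': ['/dev/nvme0n1']}
```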

Because /dev/nvme0n1 is in a higher performing device class (it is an SSD and the other devices are HDDs), the example parameter defaults produce three OSDs that run on /dev/sdb, /dev/sdc, and /dev/sdd. The three OSDs use /dev/nvme0n1 as a BlueStore WAL device. The ceph-volume tool does this by using the batch subcommand. The same setup is duplicated per Ceph Storage node and assumes uniform hardware. If the BlueStore WAL data resides on the same disks as the OSDs, change the parameter defaults to the following:

parameter_defaults:
  CephAnsibleDisksConfig:
    devices:
      - /dev/sdb
      - /dev/sdc
      - /dev/sdd
    osd_scenario: lvm
    osd_objectstore: bluestore

6.1.2. Using FileStore in Ceph 3.1 and earlier

The default journaling scenario is set to osd_scenario=collocated, which has lower hardware requirements consistent with most testing environments. In a typical production environment, however, journals are stored on dedicated devices (osd_scenario=non-collocated) to accommodate heavier I/O workloads. For related information, see Identifying a Performance Use Case.

List each block device to be used by the OSDs as a simple list under the devices variable. For example:

devices:
  - /dev/sda
  - /dev/sdb
  - /dev/sdc
  - /dev/sdd

If osd_scenario=non-collocated, you must also map each entry in devices to a corresponding entry in dedicated_devices. For example, notice the following snippet in /home/stack/templates/ceph-config.yaml:

osd_scenario: non-collocated
devices:
  - /dev/sda
  - /dev/sdb
  - /dev/sdc
  - /dev/sdd
dedicated_devices:
  - /dev/sdf
  - /dev/sdf
  - /dev/sdg
  - /dev/sdg

Each Ceph Storage node in the resulting Ceph cluster has the following characteristics:

- /dev/sda has /dev/sdf1 as its journal
- /dev/sdb has /dev/sdf2 as its journal
- /dev/sdc has /dev/sdg1 as its journal
- /dev/sdd has /dev/sdg2 as its journal
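The pairing above follows a simple rule: each entry in devices takes the next free journal partition on the dedicated device listed at the same position. The following Python sketch reproduces that mapping for checking a configuration before deployment. It assumes partitions on each dedicated device are numbered in the order that device appears, as in the example; it does not create partitions, and journal_map is a hypothetical helper.

```python
def journal_map(devices, dedicated_devices):
    """Pair each OSD data device with the journal partition it is expected to get.

    Assumes partitions on each dedicated device are numbered 1, 2, ... in the
    order that device appears in `dedicated_devices`.
    """
    if len(devices) != len(dedicated_devices):
        raise ValueError("devices and dedicated_devices must have the same length")
    used = {}  # partitions handed out so far, per dedicated device
    mapping = {}
    for data, journal in zip(devices, dedicated_devices):
        used[journal] = used.get(journal, 0) + 1
        if "/by-path/" in journal:
            sep = "-part"  # by-path symlinks name partitions ...-part1
        elif journal[-1].isdigit():
            sep = "p"  # kernel names ending in a digit: nvme0n1 -> nvme0n1p1
        else:
            sep = ""  # sdf -> sdf1
        mapping[data] = f"{journal}{sep}{used[journal]}"
    return mapping


print(journal_map(["/dev/sda", "/dev/sdb", "/dev/sdc", "/dev/sdd"],
                  ["/dev/sdf", "/dev/sdf", "/dev/sdg", "/dev/sdg"]))
# {'/dev/sda': '/dev/sdf1', '/dev/sdb': '/dev/sdf2', '/dev/sdc': '/dev/sdg1', '/dev/sdd': '/dev/sdg2'}
```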

6.1.3. Referring to devices with persistent names

On some nodes, disk paths such as /dev/sdb and /dev/sdc may not point to the same block device across reboots. If this is the case with your CephStorage nodes, specify each disk through its /dev/disk/by-path/ symlink.

For example:

parameter_defaults:
  CephAnsibleDisksConfig:
    devices:
      - /dev/disk/by-path/pci-0000:03:00.0-scsi-0:0:10:0
      - /dev/disk/by-path/pci-0000:03:00.0-scsi-0:0:11:0
    dedicated_devices:
      - /dev/nvme0n1
      - /dev/nvme0n1

This ensures that the block device mapping is consistent throughout deployments.

Because the list of OSD devices must be set before overcloud deployment, it may not be possible to identify and set the PCI path of disk devices. In this case, gather the /dev/disk/by-path/ symlink data for block devices during introspection.

In the following example, the first command downloads the introspection data from the undercloud Object Storage service (swift) for the server b08-h03-r620-hci and saves the data in a file called b08-h03-r620-hci.json. The second command greps for “by-path” and the results show the required data.

(undercloud) [stack@b08-h02-r620 ironic]$ openstack baremetal introspection data save b08-h03-r620-hci | jq . > b08-h03-r620-hci.json

(undercloud) [stack@b08-h02-r620 ironic]$ grep by-path b08-h03-r620-hci.json
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:0:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:1:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:3:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:4:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:5:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:6:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:7:0",
"by_path": "/dev/disk/by-path/pci-0000:02:00.0-scsi-0:2:0:0",

For more information about naming conventions for storage devices, see Persistent Naming.

For details about each journaling scenario and disk mapping for containerized Ceph Storage, see the OSD Scenarios section of the project documentation for ceph-ansible.
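As an alternative to grep for the introspection data saved earlier, the following Python sketch walks the JSON and collects every string stored under a "by_path" key, in first-seen order and without duplicates (the grep output above lists scsi-0:2:0:0 twice). It searches every key at any depth, so it does not assume a particular layout of the introspection data. The function names are hypothetical.

```python
import json


def find_by_path(node):
    """Yield every string stored under a "by_path" key, at any depth."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "by_path" and isinstance(value, str):
                yield value
            else:
                yield from find_by_path(value)
    elif isinstance(node, list):
        for item in node:
            yield from find_by_path(item)


def unique_by_path(data):
    """Return by_path values in first-seen order, without duplicates."""
    return list(dict.fromkeys(find_by_path(data)))


def by_path_from_file(filename):
    """Load a saved introspection JSON file, such as b08-h03-r620-hci.json."""
    with open(filename) as handle:
        return unique_by_path(json.load(handle))
```

For example, by_path_from_file("b08-h03-r620-hci.json") returns a list you can paste into the devices list, after removing the operating system disk.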

osd_scenario: lvm is used in the example to default new deployments to bluestore as configured by ceph-volume; this is only available with ceph-ansible 3.2 or later and Ceph Luminous or later. The parameters to support filestore with ceph-ansible 3.2 are backwards compatible. Therefore, in existing FileStore deployments, do not simply change the osd_objectstore or osd_scenario parameters.

6.2. Assigning Custom Attributes to Different Ceph Pools

By default, Ceph pools created through the director have the same placement group counts (pg_num and pgp_num) and sizes. You can use either method in Chapter 6, Customizing the Ceph Storage Cluster, to override these settings globally; doing so applies the same values to all pools.

You can also apply different attributes to each Ceph pool. To do so, use the CephPools parameter, as in:

parameter_defaults:
  CephPools:
    - name: POOL
      pg_num: 128
      application: rbd

Replace POOL with the name of the pool you want to configure, and set pg_num to the number of placement groups for that pool. This overrides the default pg_num for the specified pool.

If you use the CephPools parameter, you must also specify the application type. The application type for Compute, Block Storage, and Image Storage should be rbd, as shown in the examples, but depending on what the pool will be used for, you may need to specify a different application type. For example, the application type for the gnocchi metrics pool is openstack_gnocchi. See Enable Application in the Storage Strategies Guide for more information.

If you do not use the CephPools parameter, director sets the appropriate application type automatically, but only for the default pool list.

You can also create new custom pools through the CephPools parameter. For example, to add a pool called custompool:

parameter_defaults:
  CephPools:
    - name: custompool
      pg_num: 128
      application: rbd

This creates a new custom pool in addition to the default pools.

For typical pool configurations of common Ceph use cases, see the Ceph Placement Groups (PGs) per Pool Calculator. This calculator is normally used to generate the commands for manually configuring your Ceph pools. In this deployment, the director will configure the pools based on your specifications.

Red Hat Ceph Storage 3 (Luminous) introduces a hard limit on the maximum number of PGs an OSD can have. This limit is set by mon_max_pg_per_osd and is 200 by default. Do not raise mon_max_pg_per_osd above 200. If the number of PGs exceeds the maximum, adjust pg_num per pool instead of changing mon_max_pg_per_osd.
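Each PG is stored size times (once per replica), so the average load per OSD is the sum of pg_num × size over all pools, divided by the number of OSDs. The following Python sketch applies that arithmetic to a hypothetical cluster of five pools, each with 128 PGs and three replicas, to check it against the 200 limit before deployment. It computes an average only; the real distribution across OSDs is uneven, so leave headroom.

```python
MON_MAX_PG_PER_OSD = 200  # default limit described above


def pgs_per_osd(pools, num_osds):
    """Average number of PG replicas each OSD carries: sum(pg_num * size) / OSDs."""
    total = sum(pool["pg_num"] * pool["size"] for pool in pools)
    return total / num_osds


# Hypothetical cluster: five pools with 128 PGs each and three replicas.
pools = [{"name": name, "pg_num": 128, "size": 3}
         for name in ("volumes", "vms", "images", "backups", "metrics")]

for osds in (12, 9):
    load = pgs_per_osd(pools, osds)
    status = "OK" if load <= MON_MAX_PG_PER_OSD else "too many PGs; lower pg_num"
    print(f"{osds} OSDs: {load:.1f} PGs per OSD ({status})")
# 12 OSDs: 160.0 PGs per OSD (OK)
# 9 OSDs: 213.3 PGs per OSD (too many PGs; lower pg_num)
```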

6.3. Mapping the Disk Layout to Non-Homogeneous Ceph Storage Nodes

By default, all nodes of a role which will host Ceph OSDs (indicated by the OS::TripleO::Services::CephOSD service in roles_data.yaml), for example CephStorage or ComputeHCI nodes, will use the global devices and dedicated_devices lists set in Section 6.1, “Mapping the Ceph Storage Node Disk Layout”. This assumes that all of these servers have homogeneous hardware. If a subset of these servers do not have homogeneous hardware, then director needs to be aware that each of these servers has different devices and dedicated_devices lists. This is known as a node-specific disk configuration.

To pass director a node-specific disk configuration, pass a Heat environment file, such as node-spec-overrides.yaml, to the openstack overcloud deploy command. The file must identify each server by its machine unique UUID and provide a list of local variables that override the global variables.

You can extract the machine unique UUID from each individual server or from the Ironic database.

To locate the UUID for an individual server, log in to the server and run:

dmidecode -s system-uuid

To extract the UUID from the Ironic database, run the following command on the undercloud:

openstack baremetal introspection data save NODE-ID | jq .extra.system.product.uuid

If undercloud.conf did not set inspection_extras = true before the undercloud was installed or upgraded and the nodes were introspected, the machine unique UUID is not in the Ironic database.

The machine unique UUID is not the Ironic UUID.

A valid node-spec-overrides.yaml file may look like the following:

parameter_defaults:
  NodeDataLookup: {"32E87B4C-C4A7-418E-865B-191684A6883B": {"devices": ["/dev/sdc"]}}

All lines after the first two lines must be valid JSON. An easy way to verify that the JSON is valid is to use the jq command. For example:

1. Temporarily remove the first two lines (parameter_defaults: and NodeDataLookup:) from the file.

2. Run cat node-spec-overrides.yaml | jq .

As the node-spec-overrides.yaml file grows, you can also use jq to check the embedded JSON. For example, because the devices and dedicated_devices lists should be the same length, use the following commands to verify their lengths before starting the deployment:

(undercloud) [stack@b08-h02-r620 tht]$ cat node-spec-c05-h17-h21-h25-6048r.yaml | jq '.[] | .devices | length'
33
30
33
(undercloud) [stack@b08-h02-r620 tht]$ cat node-spec-c05-h17-h21-h25-6048r.yaml | jq '.[] | .dedicated_devices | length'
33
30
33
(undercloud) [stack@b08-h02-r620 tht]$

In the above example, the node-spec-c05-h17-h21-h25-6048r.yaml has three servers in rack c05 in which slots h17, h21, and h25 are missing disks. A more complicated example is included at the end of this section.

After validating the JSON, add back the two lines that make it a valid environment YAML file (parameter_defaults: and NodeDataLookup:), and include the file with -e in the deployment command.
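If you prefer not to edit the file to validate it, the following Python sketch reads the text after NodeDataLookup: directly and runs the same checks as the jq commands above: the JSON must parse, each key must be a well-formed UUID, and devices and dedicated_devices must have the same length. It assumes NodeDataLookup is the last key in the file and that nothing follows the JSON. extract_lookup and validate_node_data_lookup are hypothetical helpers, not part of director.

```python
import json
import uuid


def extract_lookup(env_text):
    """Return the text after 'NodeDataLookup:' (assumed to be the last key in the file)."""
    _, sep, rest = env_text.partition("NodeDataLookup:")
    if not sep:
        raise ValueError("no NodeDataLookup key found")
    return rest


def validate_node_data_lookup(json_text):
    """Return a list of problems; json.loads raises if the JSON itself is invalid."""
    problems = []
    for system_uuid, overrides in json.loads(json_text).items():
        try:
            uuid.UUID(system_uuid)
        except ValueError:
            problems.append(f"{system_uuid}: not a valid system UUID")
        devices = overrides.get("devices")
        dedicated = overrides.get("dedicated_devices")
        if devices is not None and dedicated is not None and len(devices) != len(dedicated):
            problems.append(
                f"{system_uuid}: {len(devices)} devices but {len(dedicated)} dedicated_devices")
    return problems
```

For example, validate_node_data_lookup(extract_lookup(open("node-spec-overrides.yaml").read())) returns an empty list for the short example shown earlier in this section.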

In the following example, the Heat environment file uses NodeDataLookup for Ceph deployment. All of the servers had a devices list with 35 disks, except one server that was missing a disk. This environment file overrides the default devices list for only that single node and gives it the list of 34 disks it should use instead of the global list.

parameter_defaults:

# c05-h01-6048r is missing scsi-0:2:35:0 (00000000-0000-0000-0000-0CC47A6EFD0C)

NodeDataLookup: {

"00000000-0000-0000-0000-0CC47A6EFD0C": {

"devices": [

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:1:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:32:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:2:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:3:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:4:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:5:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:6:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:33:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:7:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:8:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:34:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:9:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:10:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:11:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:12:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:13:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:14:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:15:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:16:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:17:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:18:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:19:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:20:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:21:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:22:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:23:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:24:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:25:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:26:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:27:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:28:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:29:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:30:0",

"/dev/disk/by-path/pci-0000:03:00.0-scsi-0:2:31:0"

],

"dedicated_devices": [

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:81:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1",

"/dev/disk/by-path/pci-0000:84:00.0-nvme-1"

]

}

}

Chapter 7. Deploying second-tier Ceph storage on OpenStack

Using OpenStack director, you can deploy different Red Hat Ceph Storage performance tiers by adding new Ceph nodes dedicated to a specific tier in a Ceph cluster.

For example, you can add new object storage daemon (OSD) nodes with SSD drives to an existing Ceph cluster to create a Block Storage (cinder) backend exclusively for storing data on these nodes. A user creating a new Block Storage volume can then choose the desired performance tier: either HDDs or the new SSDs.

This type of deployment requires Red Hat OpenStack Platform director to pass a customized CRUSH map to ceph-ansible. The CRUSH map allows you to split OSD nodes based on disk performance, but you can also use this feature for mapping physical infrastructure layout.

The following sections demonstrate how to deploy four nodes, two of which use SSDs and two of which use HDDs. The example is kept simple to communicate a repeatable pattern. However, to be supported, a production deployment should use more nodes and more OSDs, as described in the Red Hat Ceph Storage hardware selection guide.

7.1. Create a CRUSH map

The CRUSH map allows you to put OSD nodes into a CRUSH root. By default, a “default” root is created and all OSD nodes are included in it.

Inside a given root, you define the physical topology (racks, rooms, and so forth), and then place the OSD nodes in the desired hierarchy (or bucket). By default, no physical topology is defined; a flat design is assumed, as if all nodes are in the same rack.

See Crush Administration in the Storage Strategies Guide for details about creating a custom CRUSH map.

7.2. Mapping the OSDs

Complete the following step to map the OSDs.

Procedure

Declare the OSDs/journal mapping:

parameter_defaults:
  CephAnsibleDisksConfig:
    devices:
      - /dev/sda
      - /dev/sdb
    dedicated_devices:
      - /dev/sdc
      - /dev/sdc
    osd_scenario: non-collocated
    journal_size: 8192

7.3. Setting the replication factor

Complete the following step to set the replication factor.

A replication factor of two is normally supported only for full SSD deployments. See Red Hat Ceph Storage: Supported configurations.

Procedure

Set the default replication factor to two. This example splits four nodes into two different roots.

parameter_defaults:
  CephPoolDefaultSize: 2

If you upgrade a deployment that uses gnocchi as the backend, you might encounter deployment timeout. To prevent this timeout, use the following CephPool definition to customize the gnocchi pool:

parameter_defaults:
  CephPools: [{"name": "metrics", "pg_num": 128, "pgp_num": 128, "size": 1, "application": "openstack_gnocchi"}]

7.4. Defining the CRUSH hierarchy

Director provides the data for the CRUSH hierarchy, but ceph-ansible reads the CRUSH mapping from the Ansible inventory file. Unless you keep the default root, you must specify the location of the root for each node.

For example if node lab-ceph01 (provisioning IP 172.16.0.26) is placed in rack1 inside the fast_root, the Ansible inventory should resemble the following:

172.16.0.26:
  osd_crush_location: {host: lab-ceph01, rack: rack1, root: fast_root}

When you use director to deploy Ceph, you don’t actually write the Ansible inventory; it is generated for you. Therefore, you must use NodeDataLookup to append the data.

NodeDataLookup works by specifying the system product UUID stored on the motherboard of the systems. The Bare Metal service (ironic) also stores this information after the introspection phase.

To create a CRUSH map that supports second-tier storage, complete the following steps:

Procedure

Run the following commands to retrieve the UUIDs of the four nodes:

for ((x=1; x<=4; x++)); \ { echo "Node overcloud-ceph0${x}"; \ openstack baremetal introspection data save overcloud-ceph0${x} | jq .extra.system.product.uuid; } Node overcloud-ceph01 "32C2BC31-F6BB-49AA-971A-377EFDFDB111" Node overcloud-ceph02 "76B4C69C-6915-4D30-AFFD-D16DB74F64ED" Node overcloud-ceph03 "FECF7B20-5984-469F-872C-732E3FEF99BF" Node overcloud-ceph04 "5FFEFA5F-69E4-4A88-B9EA-62811C61C8B3"NoteIn the example, overcloud-ceph0[1-4] are the Ironic nodes names; they will be deployed as

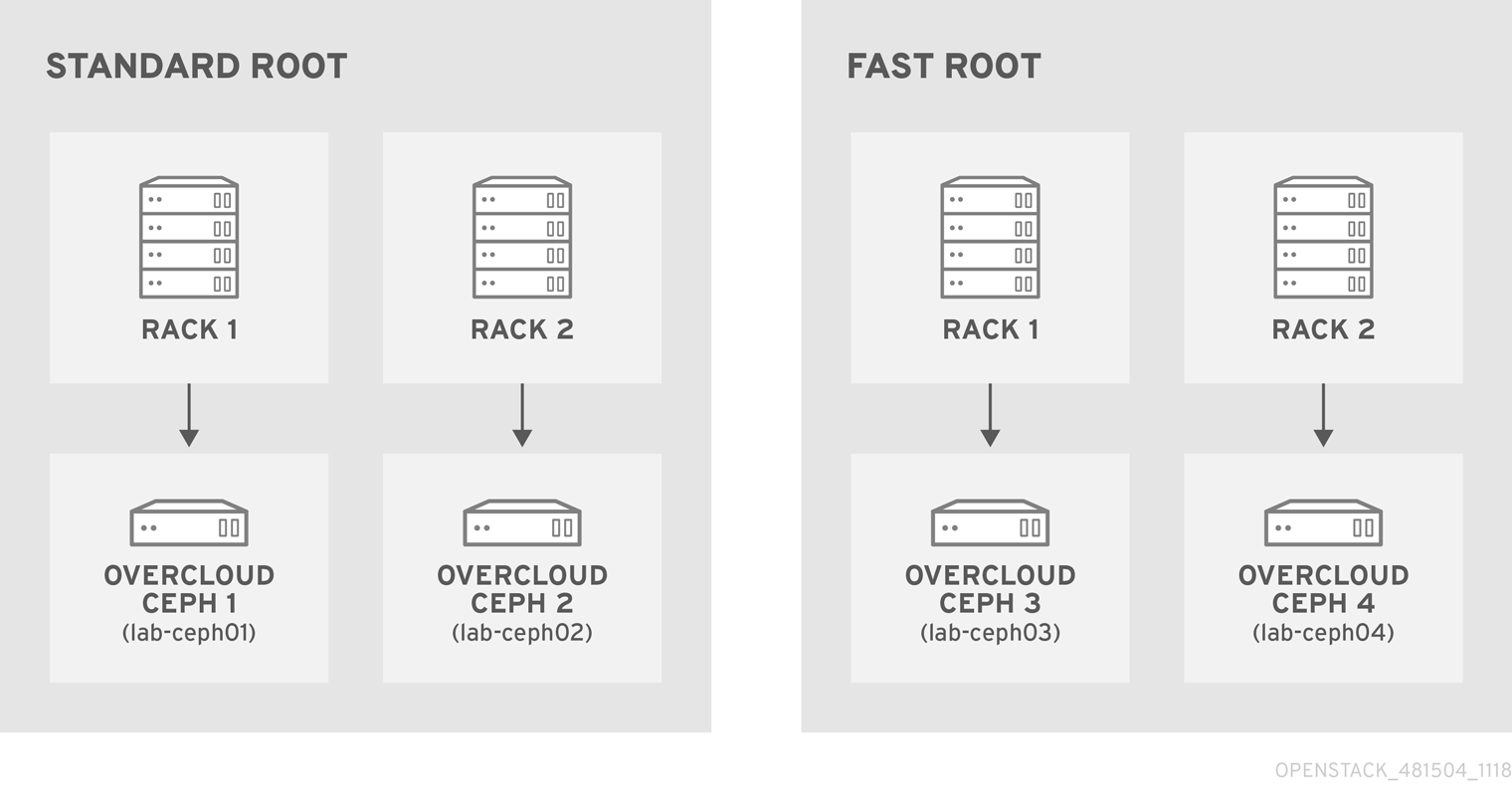

lab-ceph0[1–4](via HostnameMap.yaml).Specify the node placement as follows:

| Root | Rack | Node |
|---|---|---|
| standard_root | rack1_std | overcloud-ceph01 (lab-ceph01) |
| standard_root | rack2_std | overcloud-ceph02 (lab-ceph02) |
| fast_root | rack1_fast | overcloud-ceph03 (lab-ceph03) |
| fast_root | rack2_fast | overcloud-ceph04 (lab-ceph04) |

Note: You cannot have two buckets with the same name. Even if lab-ceph01 and lab-ceph03 are in the same physical rack, you cannot have two buckets called rack1. Therefore, they are named rack1_std and rack1_fast.

Note: This example creates a specific root called “standard_root” to illustrate multiple custom roots. However, you could have kept the HDD OSD nodes in the default root.

Use the following NodeDataLookup syntax:

NodeDataLookup: {"SYSTEM_UUID": {"osd_crush_location": {"root": "$MY_ROOT", "rack": "$MY_RACK", "host": "$OVERCLOUD_NODE_HOSTNAME"}}}

Note: You must specify the system UUID and then the CRUSH hierarchy from top to bottom. Also, the host parameter must point to the node’s overcloud host name, not the Bare Metal service (ironic) node name.

To match the example configuration, enter the following:

parameter_defaults:
  NodeDataLookup: {
    "32C2BC31-F6BB-49AA-971A-377EFDFDB111": {"osd_crush_location": {"root": "standard_root", "rack": "rack1_std", "host": "lab-ceph01"}},
    "76B4C69C-6915-4D30-AFFD-D16DB74F64ED": {"osd_crush_location": {"root": "standard_root", "rack": "rack2_std", "host": "lab-ceph02"}},
    "FECF7B20-5984-469F-872C-732E3FEF99BF": {"osd_crush_location": {"root": "fast_root", "rack": "rack1_fast", "host": "lab-ceph03"}},
    "5FFEFA5F-69E4-4A88-B9EA-62811C61C8B3": {"osd_crush_location": {"root": "fast_root", "rack": "rack2_fast", "host": "lab-ceph04"}}
  }

Enable CRUSH map management at the ceph-ansible level:

parameter_defaults:
  CephAnsibleExtraConfig:
    create_crush_tree: true

Use scheduler hints to ensure the Bare Metal service node UUIDs correctly map to the hostnames:

parameter_defaults:
  CephStorageCount: 4
  OvercloudCephStorageFlavor: ceph-storage
  CephStorageSchedulerHints:
    'capabilities:node': 'ceph-%index%'

Tag the Bare Metal service nodes with the corresponding hint:

openstack baremetal node set --property capabilities='profile:ceph-storage,node:ceph-0,boot_option:local' overcloud-ceph01
openstack baremetal node set --property capabilities='profile:ceph-storage,node:ceph-1,boot_option:local' overcloud-ceph02
openstack baremetal node set --property capabilities='profile:ceph-storage,node:ceph-2,boot_option:local' overcloud-ceph03
openstack baremetal node set --property capabilities='profile:ceph-storage,node:ceph-3,boot_option:local' overcloud-ceph04

Note: For more information about predictive placement, see Assigning Specific Node IDs in the Advanced Overcloud Customization guide.
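Writing the NodeDataLookup by hand becomes error-prone as the node count grows. The following Python sketch builds the osd_crush_location entries from the placement table in this section and rejects the naming mistakes described in the notes above: a bucket name reused at different levels, a rack placed under two roots, or a host listed twice. PLACEMENT and crush_node_data_lookup are hypothetical names. JSON output is generally accepted by YAML parsers, so the printed document can serve as the environment file, but review it before use.

```python
import json

# Example placement from the table above: (system UUID, root, rack, overcloud host name).
PLACEMENT = [
    ("32C2BC31-F6BB-49AA-971A-377EFDFDB111", "standard_root", "rack1_std", "lab-ceph01"),
    ("76B4C69C-6915-4D30-AFFD-D16DB74F64ED", "standard_root", "rack2_std", "lab-ceph02"),
    ("FECF7B20-5984-469F-872C-732E3FEF99BF", "fast_root", "rack1_fast", "lab-ceph03"),
    ("5FFEFA5F-69E4-4A88-B9EA-62811C61C8B3", "fast_root", "rack2_fast", "lab-ceph04"),
]


def crush_node_data_lookup(placement):
    """Build NodeDataLookup entries and reject reused bucket names."""
    bucket_type = {}  # bucket name -> "root", "rack", or "host"
    rack_root = {}    # rack bucket name -> root it belongs to
    lookup = {}
    for system_uuid, root, rack, host in placement:
        for name, kind in ((root, "root"), (rack, "rack"), (host, "host")):
            if bucket_type.setdefault(name, kind) != kind:
                raise ValueError(f"{name!r} is used as both {bucket_type[name]} and {kind}")
        # A rack bucket can belong to only one root; reusing "rack1" under two roots fails.
        if rack_root.setdefault(rack, root) != root:
            raise ValueError(f"rack {rack!r} is under both {rack_root[rack]!r} and {root!r}")
        if any(entry["osd_crush_location"]["host"] == host for entry in lookup.values()):
            raise ValueError(f"host {host!r} is listed more than once")
        # Keys are ordered top to bottom: root, rack, host.
        lookup[system_uuid] = {"osd_crush_location": {"root": root, "rack": rack, "host": host}}
    return lookup


env = {"parameter_defaults": {"NodeDataLookup": crush_node_data_lookup(PLACEMENT)}}
print(json.dumps(env, indent=2))
```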

7.5. Defining CRUSH map rules

Rules define how the data is written on a cluster. After the CRUSH map node placement is complete, define the CRUSH rules.

Procedure

Use the following syntax to define the CRUSH rules:

parameter_defaults:
  CephAnsibleExtraConfig:
    crush_rule_config: true
    crush_rules:
      - name: $RULE_NAME
        root: $ROOT_NAME
        type: $REPLICATION_DOMAIN
        default: true/false

Note: Setting the default parameter to true means that this rule is used when you create a new pool without specifying any rule. There can be only one default rule.

In the following example, rule standard points to the OSD nodes hosted on the standard_root, with one replica per rack. Rule fast points to the OSD nodes hosted on the fast_root, with one replica per rack:

parameter_defaults:
  CephAnsibleExtraConfig:
    crush_rule_config: true
    crush_rules:
      - name: standard
        root: standard_root
        type: rack
        default: true
      - name: fast
        root: fast_root
        type: rack
        default: false

Note: You must set crush_rule_config to true.
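Before deploying, you can sanity-check the crush_rules list against the roots you placed nodes in. The following Python sketch is an illustration, not a ceph-ansible feature: it reports duplicate rule names, more than one default rule, and rules whose root does not exist in your hierarchy. check_crush_rules and RULES are hypothetical names; RULES mirrors the example above.

```python
def check_crush_rules(rules, roots):
    """Return problems with a crush_rules list, given the roots defined in the map."""
    problems = []
    names = [rule["name"] for rule in rules]
    if len(names) != len(set(names)):
        problems.append("rule names must be unique")
    defaults = [rule["name"] for rule in rules if rule.get("default")]
    if len(defaults) > 1:
        problems.append(f"only one default rule is allowed, found {defaults}")
    for rule in rules:
        if rule["root"] not in roots:
            problems.append(f"rule {rule['name']!r} points to unknown root {rule['root']!r}")
    return problems


RULES = [
    {"name": "standard", "root": "standard_root", "type": "rack", "default": True},
    {"name": "fast", "root": "fast_root", "type": "rack", "default": False},
]
print(check_crush_rules(RULES, {"standard_root", "fast_root"}))  # []
```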

7.6. Configuring OSP pools

Ceph pools are configured with CRUSH rules that define how to store data. In this example, all built-in OSP pools use the standard_root (the standard rule), and a new pool uses the fast_root (the fast rule).

Procedure

Use the following syntax to define or change a pool property:

- name: $POOL_NAME
  pg_num: $PG_COUNT
  rule_name: $RULE_NAME
  application: rbd

List all OSP pools and set the appropriate rule (standard, in this case), and create a new pool called tier2 that uses the fast rule. This pool will be used by Block Storage (cinder).

parameter_defaults:
  CephPools:
    - name: tier2
      pg_num: 64
      rule_name: fast
      application: rbd
    - name: volumes
      pg_num: 64
      rule_name: standard
      application: rbd
    - name: vms
      pg_num: 64
      rule_name: standard
      application: rbd
    - name: backups
      pg_num: 64
      rule_name: standard
      application: rbd
    - name: images
      pg_num: 64
      rule_name: standard
      application: rbd
    - name: metrics
      pg_num: 64
      rule_name: standard
      application: openstack_gnocchi
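A CephPools list like this is easy to get subtly wrong, for example by misspelling a rule name. The following Python sketch checks each entry against the rules defined in Section 7.5: every pool sets an application (as required when you use CephPools), every rule_name refers to a defined rule, and pg_num is a power of two, which is the usual Ceph recommendation for even data distribution. check_pools and POOLS are hypothetical names; POOLS mirrors the example above.

```python
def check_pools(pools, rule_names):
    """Return problems with a CephPools list, given the names of the defined CRUSH rules."""
    problems = []
    for pool in pools:
        name = pool.get("name", "<unnamed>")
        if "application" not in pool:
            problems.append(f"{name}: CephPools entries must set an application")
        rule = pool.get("rule_name")
        if rule is not None and rule not in rule_names:
            problems.append(f"{name}: unknown rule {rule!r}")
        pg_num = pool.get("pg_num")
        if pg_num is not None and pg_num & (pg_num - 1):  # non-zero unless a power of two
            problems.append(f"{name}: pg_num {pg_num} is not a power of two")
    return problems


POOLS = [{"name": "tier2", "pg_num": 64, "rule_name": "fast", "application": "rbd"}] + [
    {"name": name, "pg_num": 64, "rule_name": "standard", "application": "rbd"}
    for name in ("volumes", "vms", "backups", "images")
] + [{"name": "metrics", "pg_num": 64, "rule_name": "standard", "application": "openstack_gnocchi"}]

print(check_pools(POOLS, {"standard", "fast"}))  # []
```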

7.7. Configuring Block Storage to use the new pool

Add the Ceph pool to the cinder.conf file to enable Block Storage (cinder) to consume it:

Procedure

Add the new pool to the CinderRbdExtraPools parameter, which director uses to update cinder.conf:

parameter_defaults:
  CinderRbdExtraPools:
    - tier2

7.8. Verifying customized CRUSH map

After the openstack overcloud deploy command creates or updates the overcloud, complete the following step to verify that the customized CRUSH map was correctly applied.

Be careful if you move a host from one root to another.

Procedure

Connect to a Ceph monitor node and run the following command:

# ceph osd tree
ID WEIGHT  TYPE NAME               UP/DOWN REWEIGHT PRIMARY-AFFINITY
-7 0.39996 root standard_root
-6 0.19998     rack rack1_std
-5 0.19998         host lab-ceph02
 1 0.09999             osd.1            up  1.00000          1.00000
 4 0.09999             osd.4            up  1.00000          1.00000
-9 0.19998     rack rack2_std
-8 0.19998         host lab-ceph03
 0 0.09999             osd.0            up  1.00000          1.00000
 3 0.09999             osd.3            up  1.00000          1.00000
-4 0.19998 root fast_root
-3 0.19998     rack rack1_fast
-2 0.19998         host lab-ceph01
 2 0.09999             osd.2            up  1.00000          1.00000
 5 0.09999             osd.5            up  1.00000          1.00000
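To check placement without reading the whole tree by eye, you can reduce the ceph osd tree output to one line per OSD together with the root it sits under. The following is a minimal sketch, assuming the plain-text layout shown above, where a bucket line contains the bucket type followed by its name and an OSD line contains a name such as osd.1. The columns differ between Ceph releases (some add a CLASS column), so the script searches fields instead of relying on fixed positions. osds_by_root is a hypothetical name.

```shell
# Hypothetical helper: read "ceph osd tree" text on stdin and print
# "<osd> <root>" for every OSD, using the most recent "root" bucket seen.
osds_by_root() {
  awk '
    {
      # A root bucket line looks like: "-7 0.39996 root standard_root".
      for (i = 1; i < NF; i++) {
        if ($i == "root") { root = $(i + 1); next }
      }
      # An OSD line contains a field such as "osd.1".
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^osd\.[0-9]+$/) { print $i, root; next }
      }
    }'
}
```

For example, piping the tree above through osds_by_root lists osd.1, osd.4, osd.0, and osd.3 under standard_root, and osd.2 and osd.5 under fast_root.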

Chapter 8. Creating the Overcloud

Once your custom environment files are ready, you can specify which flavors and nodes each role should use and then execute the deployment. The following subsections explain both steps in greater detail.

8.1. Assigning Nodes and Flavors to Roles

Planning an overcloud deployment involves specifying how many nodes and which flavors to assign to each role. Like all Heat template parameters, these role specifications are declared in the parameter_defaults section of your environment file (in this case, ~/templates/storage-config.yaml).

For this purpose, use the following parameters:

Table 8.1. Roles and Flavors for Overcloud Nodes

| Heat Template Parameter | Description |
|---|---|
| ControllerCount | The number of Controller nodes to scale out |
| OvercloudControlFlavor | The flavor to use for Controller nodes (control) |
| ComputeCount | The number of Compute nodes to scale out |
| OvercloudComputeFlavor | The flavor to use for Compute nodes (compute) |
| CephStorageCount | The number of Ceph Storage (OSD) nodes to scale out |
| OvercloudCephStorageFlavor | The flavor to use for Ceph Storage (OSD) nodes (ceph-storage) |
| CephMonCount | The number of dedicated Ceph MON nodes to scale out |
| OvercloudCephMonFlavor | The flavor to use for dedicated Ceph MON nodes (ceph-mon) |
| CephMdsCount | The number of dedicated Ceph MDS nodes to scale out |
| OvercloudCephMdsFlavor | The flavor to use for dedicated Ceph MDS nodes (ceph-mds) |

The CephMonCount, CephMdsCount, OvercloudCephMonFlavor, and OvercloudCephMdsFlavor parameters (along with the ceph-mon and ceph-mds flavors) are valid only if you created custom CephMon and CephMds roles, as described in Chapter 4, Deploying Other Ceph Services on Dedicated Nodes.

For example, to configure the overcloud to deploy three nodes for each role (Controller, Compute, Ceph-Storage, CephMon, and CephMds), add the following to your parameter_defaults:

parameter_defaults:
  ControllerCount: 3
  OvercloudControlFlavor: control
  ComputeCount: 3
  OvercloudComputeFlavor: compute
  CephStorageCount: 3
  OvercloudCephStorageFlavor: ceph-storage
  CephMonCount: 3
  OvercloudCephMonFlavor: ceph-mon
  CephMdsCount: 3
  OvercloudCephMdsFlavor: ceph-mds
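The counts in this example add up to the number of registered nodes the deployment needs (15 here: five roles with three nodes each). The following is a minimal sketch that totals every *Count parameter in an environment file, assuming one parameter per line as in the example. required_node_count is a hypothetical name, and the text match does not understand YAML nesting or comments.

```shell
# Hypothetical helper: sum every "<Name>Count: <n>" line in an environment
# file. Plain text matching; assumes one parameter per line.
required_node_count() {
  awk '$1 ~ /Count:$/ && $2 ~ /^[0-9]+$/ { total += $2 }
       END { print total + 0 }' "$1"
}
```

For example, required_node_count ~/templates/storage-config.yaml prints 15 for the parameters above; you could compare that figure with the nodes listed by openstack baremetal node list before you deploy.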

See Creating the Overcloud with the CLI Tools from the Director Installation and Usage guide for a more complete list of Heat template parameters.

8.2. Initiating Overcloud Deployment

During undercloud installation, set generate_service_certificate=false in the undercloud.conf file. Otherwise, you must inject a trust anchor when you deploy the overcloud, as described in Enabling SSL/TLS on Overcloud Public Endpoints in the Advanced Overcloud Customization guide.

Creating the overcloud requires additional arguments for the openstack overcloud deploy command. For example:

$ openstack overcloud deploy --templates -r /home/stack/templates/roles_data_custom.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-rgw.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-mds.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/cinder-backup.yaml \
  -e /home/stack/templates/storage-config.yaml \
  -e /home/stack/templates/ceph-config.yaml \
  --ntp-server pool.ntp.org

The above command uses the following options:

- --templates: Creates the overcloud from the default Heat template collection (namely, /usr/share/openstack-tripleo-heat-templates/).

- -r /home/stack/templates/roles_data_custom.yaml: Specifies the customized roles definition file from Chapter 4, Deploying Other Ceph Services on Dedicated Nodes, which adds custom roles for either Ceph MON or Ceph MDS services. These roles allow either service to be installed on dedicated nodes.

- -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml: Sets the director to create a Ceph cluster. In particular, this environment file deploys a Ceph cluster with containerized Ceph Storage nodes.

- -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-rgw.yaml: Enables the Ceph Object Gateway, as described in Section 5.2, “Enabling the Ceph Object Gateway”.

- -e /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-mds.yaml: Enables the Ceph Metadata Server, as described in Section 5.1, “Enabling the Ceph Metadata Server”.

- -e /usr/share/openstack-tripleo-heat-templates/environments/cinder-backup.yaml: Enables the Block Storage Backup service (cinder-backup), as described in Section 5.3, “Configuring the Backup Service to Use Ceph”.

- -e /home/stack/templates/storage-config.yaml: Adds the environment file containing your custom Ceph Storage configuration, including the node counts and flavors from Section 8.1, “Assigning Nodes and Flavors to Roles”.

- -e /home/stack/templates/ceph-config.yaml: Adds the environment file containing your custom Ceph cluster settings, as described in Chapter 6, Customizing the Ceph Storage Cluster.

- --ntp-server pool.ntp.org: Sets the NTP server.

You can also use an answers file to invoke all your templates and environment files. For example, you can use the following command to deploy an identical overcloud:

$ openstack overcloud deploy -r /home/stack/templates/roles_data_custom.yaml \
  --answers-file /home/stack/templates/answers.yaml --ntp-server pool.ntp.org

In this case, the answers file /home/stack/templates/answers.yaml contains:

templates: /usr/share/openstack-tripleo-heat-templates/
environments:
  - /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-ansible.yaml
  - /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-rgw.yaml
  - /usr/share/openstack-tripleo-heat-templates/environments/ceph-ansible/ceph-mds.yaml
  - /usr/share/openstack-tripleo-heat-templates/environments/cinder-backup.yaml
  - /home/stack/templates/storage-config.yaml
  - /home/stack/templates/ceph-config.yaml
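The answers file must list the same environment files that you would otherwise pass with -e, and a mistyped path is easy to miss. The following is a minimal sketch that lists the entries under environments: and reports any file that does not exist, assuming the simple layout above (a top-level environments: key followed by one "- path" item per line). answers_env_files and check_answers_file are hypothetical names, and the parsing is plain text matching rather than a YAML parser.

```shell
# Hypothetical helper: print the paths listed under "environments:".
answers_env_files() {
  awk '
    /^environments:/ { in_envs = 1; next }
    /^[^ \t-]/ { in_envs = 0 }            # any other top-level key ends the list
    in_envs && /^[ \t]*-[ \t]+/ {
      sub(/^[ \t]*-[ \t]+/, "")           # drop the "- " list marker
      sub(/[ \t]+$/, "")                  # drop trailing whitespace
      print
    }' "$1"
}

# Hypothetical helper: report missing environment files; return 1 if any.
check_answers_file() {
  local f rc=0
  while IFS= read -r f; do
    [ -n "$f" ] || continue
    if [ ! -f "$f" ]; then
      echo "missing environment file: $f" >&2
      rc=1
    fi
  done <<EOF
$(answers_env_files "$1")
EOF
  return "$rc"
}
```

For example, run check_answers_file /home/stack/templates/answers.yaml on the undercloud before you run openstack overcloud deploy.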

See Including Environment Files in Overcloud Creation for more details.

For a full list of options, run:

$ openstack help overcloud deploy

For more information, see Creating the Overcloud with the CLI Tools in the Director Installation and Usage guide.

The Overcloud creation process begins and the director provisions your nodes. This process takes some time to complete. To view the status of the Overcloud creation, open a separate terminal as the stack user and run:

$ source ~/stackrc
$ openstack stack list --nested
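Instead of rerunning openstack stack list by hand, you can poll the top-level stack until it settles. The following is a minimal sketch, assuming the stack uses the default name overcloud and that openstack stack show with -f value -c stack_status prints only the status string. wait_for_stack is a hypothetical name; it treats any *_FAILED status, or ROLLBACK_COMPLETE, as a failure.

```shell
# Hypothetical helper: poll a Heat stack until it reaches *_COMPLETE
# (returns 0) or *_FAILED / ROLLBACK_COMPLETE (returns 1).
# $1: stack name (default overcloud); $2: seconds between checks (default 60).
wait_for_stack() {
  local stack=${1:-overcloud} delay=${2:-60} status
  while :; do
    status=$(openstack stack show "$stack" -f value -c stack_status)
    case "$status" in
      *_FAILED|ROLLBACK_COMPLETE) echo "$stack: $status" >&2; return 1 ;;
      *_COMPLETE) echo "$stack: $status"; return 0 ;;
    esac
    echo "$stack: ${status:-unknown}, checking again in ${delay}s"
    sleep "$delay"
  done
}
```

For example, after source ~/stackrc, run wait_for_stack overcloud 60 in the separate terminal; it returns when the stack reaches a final state.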

Chapter 9. Post-Deployment

The following subsections describe several post-deployment operations for managing the Ceph cluster.

9.1. Accessing the Overcloud

The director generates a script to configure and help authenticate interactions with your overcloud from the director host. The director saves this file (overcloudrc) in your stack user’s home directory. Run the following command to use this file:

$ source ~/overcloudrc

This loads the necessary environment variables to interact with your overcloud from the director host’s CLI. To return to interacting with the director’s host, run the following command:

$ source ~/stackrc

9.2. Monitoring Ceph Storage Nodes

After you create the overcloud, check the status of the Ceph Storage cluster to ensure that it works correctly.

Procedure

Log in to a Controller node as the heat-admin user. Run nova list on the undercloud (with stackrc sourced) to find the IP address of the node:

$ nova list
$ ssh heat-admin@192.168.0.25

Check the health of the cluster:

$ sudo docker exec ceph-mon-$HOSTNAME ceph health

If the cluster has no issues, the command reports HEALTH_OK, which means the cluster is safe to use.

Log in to an overcloud node that runs the Ceph monitor service and check the status of all OSDs in the cluster:

$ sudo docker exec ceph-mon-$HOSTNAME ceph osd tree

Check the status of the Ceph Monitor quorum:

$ sudo ceph quorum_status

This shows the monitors participating in the quorum and which one is the leader.

Verify that all Ceph OSDs are running:

$ sudo ceph osd stat

For more information on monitoring Ceph Storage clusters, see Monitoring in the Red Hat Ceph Storage Administration Guide.
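Right after a deployment or change, the cluster can take a while to settle, so a single health check may not be conclusive. The following is a minimal sketch that polls ceph health until it reports HEALTH_OK or a retry limit is reached, assuming the ceph CLI is reached through the monitor container used in the commands above. CEPH_CMD and wait_for_health_ok are hypothetical names.

```shell
# Hypothetical wrapper for reaching the ceph CLI; defaults to the monitor
# container naming "ceph-mon-$HOSTNAME". Override it if needed.
CEPH_CMD=${CEPH_CMD:-"sudo docker exec ceph-mon-$HOSTNAME ceph"}

# Poll "ceph health" until the first word of its output is HEALTH_OK.
# $1: maximum attempts (default 30); $2: seconds between attempts (default 10).
wait_for_health_ok() {
  local attempts=${1:-30} delay=${2:-10} i=1 status
  while [ "$i" -le "$attempts" ]; do
    status=$($CEPH_CMD health 2>/dev/null | awk 'NR == 1 {print $1}')
    if [ "$status" = "HEALTH_OK" ]; then
      return 0
    fi
    echo "attempt $i/$attempts: ${status:-no response}" >&2
    i=$((i + 1))
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}
```

With the defaults, wait_for_health_ok gives up after roughly five minutes and returns 1; the messages on standard error show the last reported status.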

Chapter 10. Rebooting the Environment

You might need to reboot the environment, for example, to modify the physical servers or to recover from a power outage. In this situation, it is important to make sure your Ceph Storage nodes boot correctly.

Make sure to boot the nodes in the following order:

- Boot all Ceph Monitor nodes first - This ensures the Ceph Monitor service is active in your high availability cluster. By default, the Ceph Monitor service is installed on the Controller node. If the Ceph Monitor is separate from the Controller in a custom role, make sure this custom Ceph Monitor role is active.

- Boot all Ceph Storage nodes - This ensures the Ceph OSD cluster can connect to the active Ceph Monitor cluster on the Controller nodes.

10.1. Rebooting a Ceph Storage (OSD) cluster

Complete the following steps to reboot a cluster of Ceph Storage (OSD) nodes.

Procedure

Log in to a Ceph MON or Controller node and temporarily disable Ceph Storage cluster rebalancing:

$ sudo ceph osd set noout
$ sudo ceph osd set norebalance

- Select the first Ceph Storage node to reboot and log into the node.

Reboot the node:

$ sudo reboot

- Wait until the node boots.

Log into the node and check the cluster status:

$ sudo ceph -s

Check that the pgmap reports all pgs as normal (active+clean).

- Log out of the node, reboot the next node, and check its status. Repeat this process until you have rebooted all Ceph Storage nodes.

When complete, log in to a Ceph MON or Controller node and enable cluster rebalancing again:

$ sudo ceph osd unset noout
$ sudo ceph osd unset norebalance

Perform a final status check to verify that the cluster reports HEALTH_OK:

$ sudo ceph status

If all overcloud nodes boot at the same time, the Ceph OSD services might not start correctly on the Ceph Storage nodes. In this situation, reboot the Ceph Storage OSDs so that they can connect to the Ceph Monitor service.

Verify a HEALTH_OK status of the Ceph Storage node cluster with the following command:

$ sudo ceph status
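During the rolling reboot, HEALTH_OK is not a useful signal because the noout and norebalance flags keep the cluster in HEALTH_WARN until you unset them, so the procedure relies on the PG states instead. The following is a minimal sketch that reads the one-line summary from ceph pg stat and succeeds only when every PG is active+clean. It assumes output shaped like "v1234: 192 pgs: 192 active+clean; ..." (other Ceph releases may format this line differently) and is deliberately strict: a state such as active+clean+scrubbing is not counted as clean. all_pgs_clean is a hypothetical name.

```shell
# Hypothetical helper: read "ceph pg stat" output on stdin; exit 0 only if
# the number of active+clean PGs equals the total number of PGs.
all_pgs_clean() {
  awk '
    NR == 1 {
      for (i = 1; i < NF; i++) {
        # "192 pgs:" gives the total number of placement groups.
        if ($(i + 1) == "pgs:") total = $i
        # "192 active+clean;" or "180 active+clean," gives the clean count.
        if ($(i + 1) ~ /^active[+]clean[;,]?$/) clean += $i
      }
    }
    END {
      ok = (total != "" && total + 0 > 0 && total + 0 == clean + 0)
      exit (ok ? 0 : 1)
    }'
}
```

For example, after each node comes back, until sudo ceph pg stat | all_pgs_clean; do sleep 10; done waits until all PGs are active+clean before you move on to the next node.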

Chapter 11. Scaling the Ceph Cluster

11.1. Scaling Up the Ceph Cluster

You can scale up the number of Ceph Storage nodes in your overcloud by re-running the deployment with the number of Ceph Storage nodes you need.

Before doing so, ensure that you have enough nodes for the updated deployment. These nodes must be registered with the director and tagged accordingly.

Registering New Ceph Storage Nodes

To register new Ceph storage nodes with the director, follow these steps:

Log in to the director host as the stack user and initialize your director configuration:

$ source ~/stackrc

Define the hardware and power management details for the new nodes in a new node definition template, for example, instackenv-scale.json. Import this file to the OpenStack director:

$ openstack overcloud node import ~/instackenv-scale.json
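The node definition template uses the same JSON layout as the instackenv.json file used to register nodes with the director. The following sketch writes a one-node template that you then edit. Every value in it (node name, MAC address, IPMI address and credentials, CPU, memory, and disk figures) is a placeholder, and pm_type ipmi is an assumption that depends on your release and hardware (older releases used pxe_ipmitool). write_node_template is a hypothetical name.

```shell
# Hypothetical helper: write a one-node definition template to $1.
# All values are placeholders; replace them with your hardware details.
write_node_template() {
  cat > "$1" <<'EOF'
{
  "nodes": [
    {
      "name": "ceph-storage-new-0",
      "mac": ["aa:bb:cc:dd:ee:ff"],
      "cpu": "4",
      "memory": "6144",
      "disk": "40",
      "arch": "x86_64",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "CHANGE_ME",
      "pm_addr": "192.0.2.10"
    }
  ]
}
EOF
}
```

For example, run write_node_template ~/instackenv-scale.json, add one entry per new node with the real values, and then run the import command.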