Composing, installing, and managing RHEL for Edge images

Creating, deploying, and managing Edge systems with Red Hat Enterprise Linux 8

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Introducing RHEL for Edge images

A RHEL for Edge image is an rpm-ostree image that includes system packages to remotely install RHEL on Edge servers.

The system packages include:

- Base OS package

- Podman as the container engine

- Additional RPM content

Unlike standard RHEL images, RHEL for Edge is an immutable operating system: it contains a read-only root directory with the following characteristics:

- The packages are isolated from the root directory

- Package installs create layers that make it easy to roll back to previous versions

- Efficient updates to disconnected environments

- Supports multiple operating system branches and repositories

- Has a hybrid rpm-ostree package system

You can deploy a RHEL for Edge image on bare-metal servers, appliances, and Edge servers.

You can compose customized RHEL for Edge images using the RHEL image builder tool. You can also create RHEL for Edge images by accessing the edge management application in the Red Hat Hybrid Cloud Console platform and configuring automated management.

The edge management application simplifies the way you provision and register your images. To learn more about edge management, see the Create RHEL for Edge images and configure automated management documentation.

The edge management application does not support RHEL for Edge customized images that were created by using the on-premise version of RHEL image builder. See Edge management supportability.

With a RHEL for Edge image, you can achieve the following:

1.1. RHEL for Edge—supported architecture

Currently, you can deploy RHEL for Edge images on AMD and Intel 64-bit systems.

RHEL for Edge does not support ARM systems in RHEL 8.

1.2. How to compose and deploy a RHEL for Edge image

Composing and deploying a RHEL for Edge image involves two phases:

- Composing a RHEL rpm-ostree image using the RHEL image builder tool. You can access RHEL image builder through a command-line interface in the composer-cli tool, or use a graphical user interface in the RHEL web console.

- Deploying the image using the RHEL installer.

While composing a RHEL for Edge image, you can select any of the following image types. Depending on the image type, the deployment might or might not require network access. See the following table:

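Table 1.1. RHEL for Edge image types

| Image type | Description | Suitable for network-based deployments | Suitable for non-network-based deployments |
|---|---|---|---|
| RHEL for Edge Commit (.tar) | Contains a full operating system, but is not directly bootable. | Yes | No |
| RHEL for Edge Container (.tar) | Creates an OSTree commit and embeds it into an OCI container with a web server that serves the commit as an OSTree repository. | No | Yes |
| RHEL for Edge Installer (.iso) | Pulls the OSTree commit from the running container served by the RHEL for Edge Container image and creates an installable boot ISO with a Kickstart file that is configured to use the embedded commit. | No | Yes |
| RHEL for Edge Raw Image (.raw.xz) | … | Yes | Yes |
| RHEL for Edge Simplified Installer | … | Yes | Yes |
| RHEL for Edge AMI | … | Yes | Yes |
| RHEL for Edge VMDK | … | Yes | Yes |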

The image types vary in terms of their contents, and are therefore suitable for different types of deployment environments.

Additional resources

1.3. Non-network-based deployments

Use RHEL image builder to create flexible RHEL rpm-ostree images to suit your requirements, and then use Anaconda to deploy them in your environment.

You can access RHEL image builder through a command-line interface in the composer-cli tool, or use a graphical user interface in the RHEL web console.

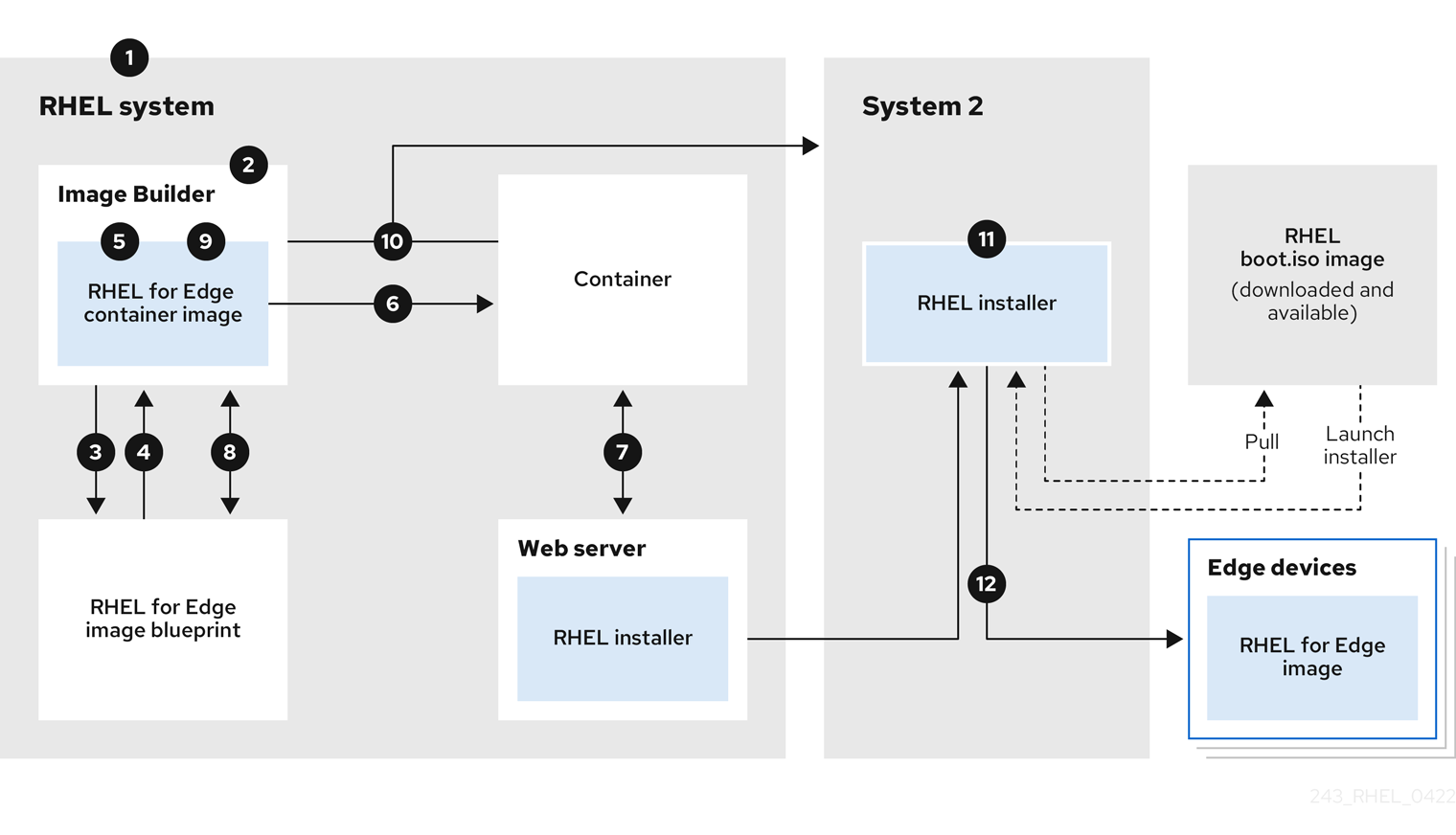

Composing and deploying a RHEL for Edge image in non-network-based deployments involves the following high-level steps:

- Install and register a RHEL system

- Install RHEL image builder

- Using RHEL image builder, create a blueprint with customizations for a RHEL for Edge Container image

- Import the RHEL for Edge blueprint in RHEL image builder

- Create a RHEL for Edge Container image, which embeds the commit in an OCI container with a web server that serves the commit as an OSTree repository

- Download the RHEL for Edge Container image file

- Deploy the container that serves the repository with the RHEL for Edge Container commit

- Using RHEL image builder, create another blueprint for a RHEL for Edge Installer image

- Create a RHEL for Edge Installer image configured to pull the commit from the running RHEL for Edge Container

- Download the RHEL for Edge Installer image

- Run the installation
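The non-network-based steps map onto composer-cli and podman commands. The following is a minimal sketch under stated assumptions, not an official procedure: the blueprint file and blueprint names, the compose UUID, the podman image ID, and the repository URL are hypothetical placeholders; the web server inside the container is assumed to listen on port 8080 (matching the http://10.0.2.2:8080/repo/ example used later in this guide); and the `run` helper only prints each command unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch of the non-network-based workflow (all names are hypothetical).
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Web console default ref; ARCH comes from the host machine.
REF="rhel/8/$(uname -m)/edge"

# Import the Container blueprint and compose the RHEL for Edge Container image.
compose_container() {
  local blueprint_file="$1" blueprint_name="$2"
  run composer-cli blueprints push "$blueprint_file"
  run composer-cli compose start-ostree --ref "$REF" "$blueprint_name" edge-container
}

# Download the finished compose (<uuid>-container.tar), load it, and start it.
# IMAGE_ID is the ID that podman reports after the load step.
deploy_container() {
  local uuid="$1" image_id="$2"
  run composer-cli compose image "$uuid"
  run podman load -i "${uuid}-container.tar"
  run podman tag "$image_id" localhost/edge-container
  run podman run -d --name=edge-container -p 8080:8080 localhost/edge-container
}

# Import the Installer blueprint and compose an ISO that pulls the commit
# from the repository served by the running container.
compose_installer() {
  local blueprint_file="$1" blueprint_name="$2" repo_url="$3"
  run composer-cli blueprints push "$blueprint_file"
  run composer-cli compose start-ostree --ref "$REF" --url "$repo_url" \
    "$blueprint_name" edge-installer
}
```

Run the functions in order and wait for `composer-cli compose status` to report that each compose has finished before downloading it. Printing by default lets you review the whole sequence before anything runs.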

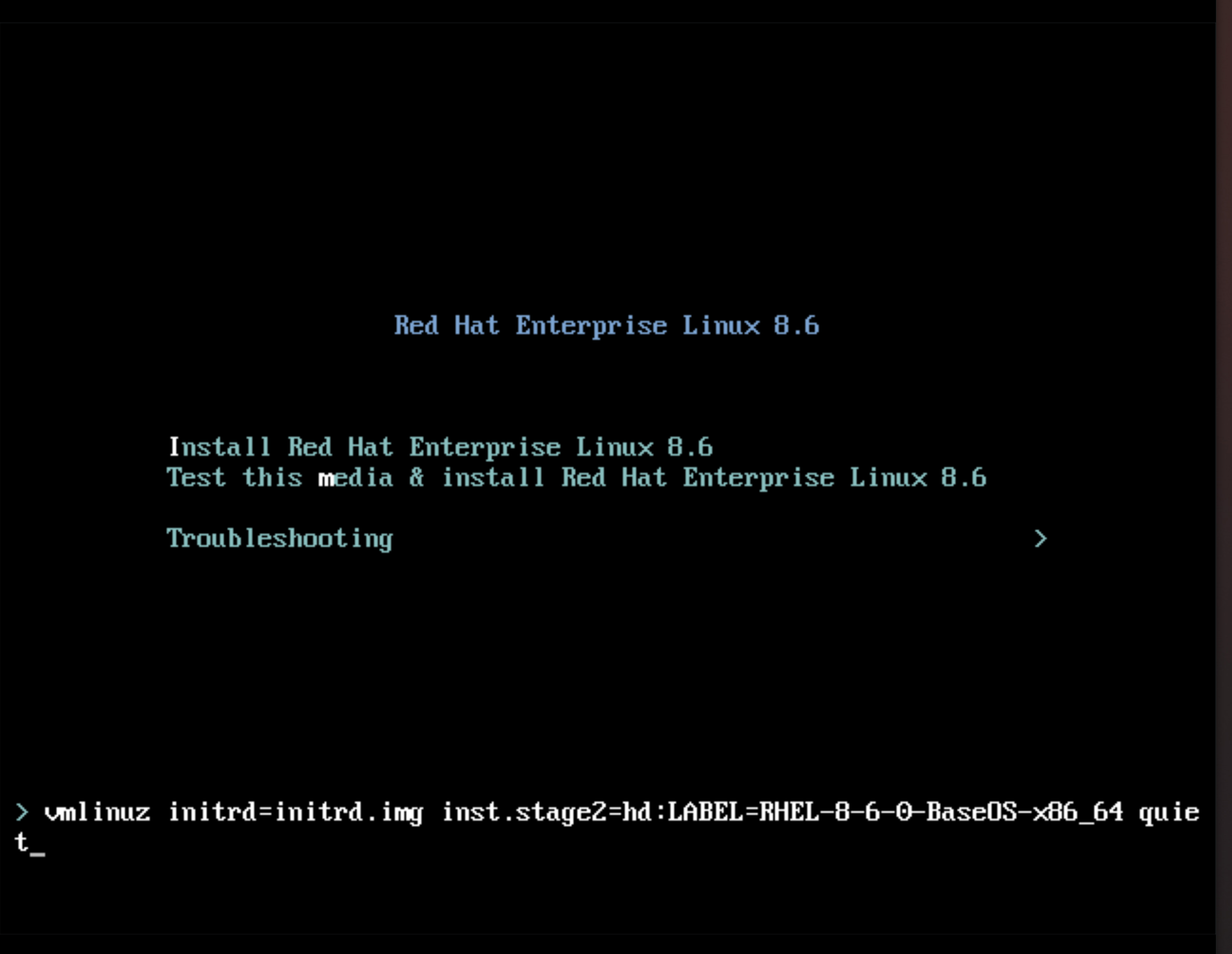

The following diagram represents the RHEL for Edge image non-network deployment workflow:

Figure 1.1. Deploying RHEL for Edge in a non-network-based environment

1.4. Network-based deployments

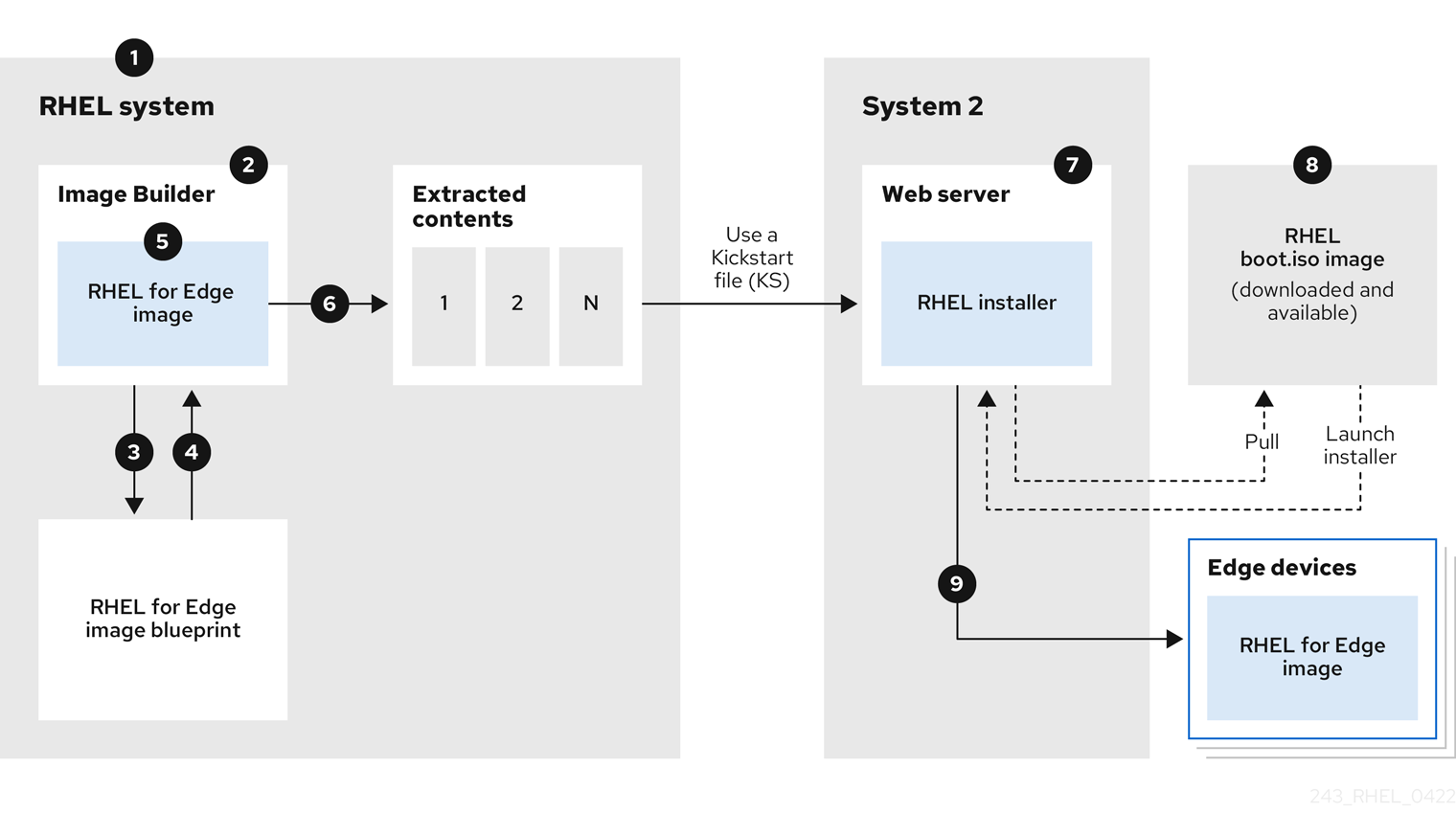

Use RHEL image builder to create flexible RHEL rpm-ostree images to suit your requirements, and then use Anaconda to deploy them in your environment. RHEL image builder automatically identifies the details of your deployment setup and generates the image output as an edge-commit .tar file.

You can access RHEL image builder through a command-line interface in the composer-cli tool, or use a graphical user interface in the RHEL web console.

You can compose and deploy the RHEL for Edge image by performing the following high-level steps:

For an attended installation:

- Install and register a RHEL system

- Install RHEL image builder

- Using RHEL image builder, create a blueprint for a RHEL for Edge image

- Import the RHEL for Edge blueprint in RHEL image builder

- Create a RHEL for Edge Commit (.tar) image

- Download the RHEL for Edge image file

- On the same system where you have installed RHEL image builder, install a web server that you want to serve the RHEL for Edge Commit content. For instructions, see Setting up and configuring NGINX

- Extract the RHEL for Edge Commit (.tar) content to the running web server

- Create a Kickstart file that pulls the OSTree content from the running web server. For details on how to modify the Kickstart to pull the OSTree content, see Extracting the RHEL for Edge image commit

- Boot the RHEL installer ISO on the edge device and provide the Kickstart to it.

For an unattended installation, you can customize the RHEL installation ISO and embed the Kickstart file in it.
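The extraction and Kickstart steps can be sketched as follows. This is a minimal example under assumptions, not the documented procedure: the commit tarball is assumed to contain a repo/ directory, the web server is assumed to use the NGINX default document root /usr/share/nginx/html, and the user name core and its SSH key are hypothetical. The Kickstart uses the Anaconda ostreesetup command to pull the commit; adapt partitioning, locale, and accounts to your environment.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# extract_commit UUID [WEB_ROOT]: download <UUID>-commit.tar and unpack it into
# the web root (assumed: the archive holds repo/, served as WEB_ROOT/repo/).
extract_commit() {
  local uuid="$1" web_root="${2:-/usr/share/nginx/html}"
  run composer-cli compose image "$uuid"
  run tar -xf "${uuid}-commit.tar" -C "$web_root"
}

# write_kickstart OUT REPO_URL SSH_PUBKEY [REF]: write a minimal Kickstart whose
# ostreesetup line installs REF from REPO_URL. "core" is a hypothetical user.
write_kickstart() {
  local out="$1" repo_url="$2" ssh_pubkey="$3" ref="${4:-rhel/8/x86_64/edge}"
  cat > "$out" <<EOF
lang en_US.UTF-8
keyboard us
timezone UTC
text
zerombr
clearpart --all --initlabel
autopart --type=plain --fstype=xfs --nohome
network --bootproto=dhcp
rootpw --lock
user --name=core --groups=wheel
sshkey --username=core "${ssh_pubkey}"
reboot
ostreesetup --nogpg --osname=rhel --remote=edge --url=${repo_url} --ref=${ref}
EOF
}
```

For example, `write_kickstart edge.ks http://192.0.2.10/repo/ "$(cat ~/.ssh/id_ed25519.pub)"` produces a file that you can pass to the installer or embed in a customized ISO.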

The following diagram represents the RHEL for Edge network image deployment workflow:

Figure 1.2. Deploying RHEL for Edge in a network-based environment

1.5. Difference between RHEL RPM images and RHEL for Edge images

You can create RHEL system images in traditional package-based RPM format and also as RHEL for Edge (rpm-ostree) images.

You can use the traditional package-based RPMs to deploy RHEL in traditional data centers. With RHEL for Edge images, you can also deploy RHEL on servers outside traditional data centers: Edge servers, which process large amounts of data close to the source where the data is generated.

RHEL for Edge (rpm-ostree) images are not a package manager. They only support complete bootable file system trees, not individual files. These images do not carry information about individual files, such as how the files were generated or where they originated.

The rpm-ostree images need a separate mechanism, the package manager, to install additional applications in the /var directory. With that, the rpm-ostree image keeps the operating system unchanged, while maintaining the state of the /var and /etc directories. The atomic updates enable rollbacks and background staging of updates.
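On a deployed system, the atomic update and rollback cycle is driven by rpm-ostree. The following sketch assumes an OSTree remote is already configured on the device; the function names are hypothetical, and the `run` helper only prints each command unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# stage_update: show the current deployments, download and stage the newest
# commit from the configured remote, then reboot into it. The running
# deployment is left unchanged until the reboot.
stage_update() {
  run rpm-ostree status
  run rpm-ostree upgrade
  run systemctl reboot
}

# roll_back: make the previous deployment the default again, then reboot.
roll_back() {
  run rpm-ostree rollback
  run systemctl reboot
}
```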

Refer to the following table to know how RHEL for Edge images differ from the package-based RHEL RPM images.

Table 1.2. Difference between RHEL RPM images and RHEL for Edge images

| Key attributes | RHEL RPM image | RHEL for Edge image |
|---|---|---|
| OS assembly | You can assemble the packages locally to form an image. | The packages are assembled in an OSTree, which you can install on a system. |
| OS updates | You can use … | You can use … |
| Repository | The package contains YUM repositories. | The package contains an OSTree remote repository. |
| User access permissions | Read-write | Read-only (…) |
| Data persistence | You can mount the image to any non-tmpfs mount point. | The /etc and /var directories keep their state. |

Chapter 2. Setting up RHEL image builder

Use RHEL image builder to create your customized RHEL for Edge images. After you install RHEL image builder on a RHEL system, RHEL image builder is available as an application in RHEL web console. You can also access RHEL image builder through a command line interface in the composer-cli tool.

It is recommended to install RHEL image builder on a virtual machine.

2.1. Image builder system requirements

The environment where RHEL image builder runs, for example a virtual machine, must meet the requirements that are listed in the following table.

Running RHEL image builder inside a container is not supported.

Table 2.1. Image builder system requirements

| Parameter | Minimum required value |
|---|---|
| System type | A dedicated virtual machine |
| Processor | 2 cores |
| Memory | 4 GiB |
| Disk space | 20 GiB |
| Access privileges | Administrator level (root) |
| Network | Internet connectivity |

The 20 GiB disk space requirement is enough to install and run RHEL image builder on the host. To build and deploy images, you must allocate additional dedicated disk space.
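Before installing, you can compare a host against Table 2.1. This is an illustrative sketch, not a supported tool: it reads nproc, /proc/meminfo, and df; it measures free space on /var, which is an assumed location for build data; and it accepts 90% of 4 GiB of MemTotal because the kernel reserves part of the installed memory (also an assumption).

```shell
#!/usr/bin/env bash
# Minimum values from Table 2.1. MemTotal excludes memory the kernel reserves,
# so the 90% memory allowance is an assumption, not a documented threshold.
MIN_CORES=2
MIN_MEM_KIB=$(( 4 * 1024 * 1024 * 90 / 100 ))
MIN_DISK_KIB=$(( 20 * 1024 * 1024 ))

# meets_requirements CORES MEM_KIB DISK_KIB: returns 0 when every minimum is met
# and prints one line to stderr for each value that falls short.
meets_requirements() {
  local cores="$1" mem_kib="$2" disk_kib="$3" status=0
  if [ "$cores" -lt "$MIN_CORES" ]; then
    echo "processor: $cores cores, need $MIN_CORES" >&2; status=1
  fi
  if [ "$mem_kib" -lt "$MIN_MEM_KIB" ]; then
    echo "memory: $mem_kib KiB, need about 4 GiB" >&2; status=1
  fi
  if [ "$disk_kib" -lt "$MIN_DISK_KIB" ]; then
    echo "disk: $disk_kib KiB free, need 20 GiB" >&2; status=1
  fi
  return "$status"
}

# check_host [PATH]: read the values from this machine; PATH is the file system
# to measure (default /var, an assumption).
check_host() {
  local path="${1:-/var}"
  meets_requirements \
    "$(nproc)" \
    "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" \
    "$(df -Pk "$path" | awk 'NR == 2 {print $4}')"
}
```

Running `check_host && echo OK` on the virtual machine reports any value below the minimum.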

2.2. Installing RHEL image builder

To install RHEL image builder on a dedicated virtual machine, follow these steps:

Prerequisites

- The virtual machine is created and is powered on.

- You have installed RHEL and you have subscribed to RHSM or Red Hat Satellite.

- You have enabled the BaseOS and AppStream repositories to be able to install the RHEL image builder packages.

Procedure

Install the following packages on the virtual machine.

- osbuild-composer

- composer-cli

- cockpit-composer

- bash-completion

- firewalld

# yum install osbuild-composer composer-cli cockpit-composer bash-completion firewalld

RHEL image builder is installed as an application in RHEL web console.

- Reboot the virtual machine

Configure the system firewall to allow access to the web console:

# firewall-cmd --add-service=cockpit && firewall-cmd --add-service=cockpit --permanent

Enable RHEL image builder.

# systemctl enable osbuild-composer.socket cockpit.socket --now

The osbuild-composer and cockpit services start automatically on first access.

Load the shell configuration script so that the autocomplete feature for the composer-cli command starts working immediately without a reboot:

$ source /etc/bash_completion.d/composer-cli

Additional resources

Chapter 3. Configuring RHEL image builder repositories

To use RHEL image builder, you must ensure that the repositories are configured. You can use the following types of repositories in RHEL image builder:

- Official repository overrides

- Use these if you want to download base system RPMs from elsewhere than the Red Hat Content Delivery Network (CDN) official repositories, for example, a custom mirror in your network. Using official repository overrides disables the default repositories, and your custom mirror must contain all the necessary packages.

- Custom third-party repositories

- Use these to include packages that are not available in the official RHEL repositories.

3.1. Adding custom third-party repositories to RHEL image builder

You can add custom third-party sources to your repositories and manage these repositories by using the composer-cli tool.

Prerequisites

- You have the URL of the custom third-party repository.

Procedure

Create a repository source file, such as /root/repo.toml. For example:

id = "k8s"
name = "Kubernetes"
type = "yum-baseurl"
url = "https://server.example.com/repos/company_internal_packages/"
check_gpg = false
check_ssl = false
system = false

The type field accepts the following valid values: yum-baseurl, yum-mirrorlist, and yum-metalink.

- Save the file in the TOML format.

Add the new third-party source to RHEL image builder:

$ composer-cli sources add <file-name>.toml

Verification

Check if the new source was successfully added:

$ composer-cli sources list

Check the new source content:

$ composer-cli sources info <source_id>
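If you manage several sources, a small helper can generate the source file and reject unsupported type values before you call composer-cli sources add. The function name and argument order are hypothetical. Unlike the example above, the sketch enables check_gpg and check_ssl, and it writes any extra arguments as the optional distros list described in the next section. It assumes that the values contain no double quotes.

```shell
#!/usr/bin/env bash
# write_source ID NAME TYPE URL OUTFILE [DISTRO...]: write a composer-cli
# source file in TOML format; returns 1 for an unsupported TYPE.
write_source() {
  local id="$1" name="$2" type="$3" url="$4" out="$5" list="" d
  shift 5
  case "$type" in
    yum-baseurl|yum-mirrorlist|yum-metalink) ;;
    *) echo "invalid type: $type" >&2; return 1 ;;
  esac
  {
    printf 'id = "%s"\n' "$id"
    printf 'name = "%s"\n' "$name"
    printf 'type = "%s"\n' "$type"
    printf 'url = "%s"\n' "$url"
    printf 'check_gpg = true\n'
    printf 'check_ssl = true\n'
    printf 'system = false\n'
    # Optional distros list, for example: distros = ["rhel-8"]
    if [ "$#" -gt 0 ]; then
      for d in "$@"; do list="${list:+$list, }\"$d\""; done
      printf 'distros = [%s]\n' "$list"
    fi
  } > "$out"
}
```

For example, `write_source k8s Kubernetes yum-baseurl https://server.example.com/repos/company_internal_packages/ /root/repo.toml` followed by `composer-cli sources add /root/repo.toml`.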

3.2. Adding third-party repositories with specific distributions to RHEL image builder

You can specify a list of distributions in the custom third-party source file by using the optional distros field. RHEL image builder uses this list of distribution strings when resolving dependencies during the image build.

Any request that specifies rhel-8 uses this source. For example, if you list packages and specify rhel-8, the list includes this source. However, listing packages for the host distribution does not include this source.

Prerequisites

- You have the URL of the custom third-party repository.

- You have the list of distributions that you want to specify.

Procedure

Create a repository source file, such as /root/repo.toml. For example, to specify the distribution:

check_gpg = true
check_ssl = true
distros = ["rhel-8"]
id = "rh9-local"
name = "packages for RHEL"
system = false
type = "yum-baseurl"
url = "https://local/repos/rhel8/projectrepo/"

- Save the file in the TOML format.

Add the new third-party source to RHEL image builder:

$ composer-cli sources add <file-name>.toml

Verification

Check if the new source was successfully added:

$ composer-cli sources list

Check the new source content:

$ composer-cli sources info <source_id>

3.3. Checking repositories metadata with GPG

To detect and avoid corrupted packages, you can use the DNF package manager to check the GNU Privacy Guard (GPG) signature on RPM packages, and also to check if the repository metadata has been signed with a GPG key.

You can either set the gpgkeys field to the URL of the key, so that the key is fetched over HTTPS for the check, or, to improve security, embed the whole key in the gpgkeys field so that it is imported directly instead of being fetched from the URL.

Prerequisites

- The directory that you want to use as a repository exists and contains packages.

Procedure

Access the folder where you want to create a repository:

$ cd repo/

Run createrepo_c to create a repository from RPM packages:

$ createrepo_c .

Access the directory where the repodata is:

$ cd repodata/

Sign your repomd.xml file:

$ gpg -u <gpg-key-email> --yes --detach-sign --armor /srv/repo/example/repomd.xml

To enable GPG signature checks in the repository:

- Set check_repogpg = true in the repository source.

- Enter the gpgkey that you want to use for the check. If your key is available over https, set the gpgkeys field to the URL of the key. You can add as many key URLs as you need. The following is an example:

check_gpg = true
check_ssl = true
id = "signed local packages"
name = "repository_name"
type = "yum-baseurl"
url = "https://local/repos/projectrepo/"
check_repogpg = true
gpgkeys=["https://local/keys/repokey.pub"]

As an alternative, add the GPG key directly in the gpgkeys field, for example:

check_gpg = true
check_ssl = true
check_repogpg = true
id = "custom-local"
name = "signed local packages"
type = "yum-baseurl"
url = "https://local/repos/projectrepo/"
gpgkeys=["https://remote/keys/other-repokey.pub", '''-----BEGIN PGP PUBLIC KEY BLOCK-----
…
-----END PGP PUBLIC KEY BLOCK-----''']

If the test does not find the signature, the GPG tool shows an error similar to the following one:

GPG verification is enabled, but GPG signature is not available. This may be an error or the repository does not support GPG verification: Status code: 404 for http://repo-server/rhel/repodata/repomd.xml.asc (IP: 192.168.1.3)

If the signature is invalid, the GPG tool shows an error similar to the following one:

repomd.xml GPG signature verification error: Bad GPG signature

Verification

Test the signature of the repository manually:

$ gpg --verify /srv/repo/example/repomd.xml.asc
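To embed a key instead of referencing it by URL, you can export the public key in ASCII-armored form (for example, `gpg --armor --export <gpg-key-email> > repokey.pub`) and wrap it in a TOML multi-line literal string delimited by three single quotes. The helper below is a hypothetical sketch that reuses the custom-local example values; adjust id, name, and url for your repository.

```shell
#!/usr/bin/env bash
# write_signed_source KEYFILE OUTFILE: write a source that enables repository
# metadata GPG checks and embeds KEYFILE (an ASCII-armored public key) in
# gpgkeys as a TOML multi-line literal string.
write_signed_source() {
  local keyfile="$1" out="$2"
  {
    printf '%s\n' \
      'id = "custom-local"' \
      'name = "signed local packages"' \
      'type = "yum-baseurl"' \
      'url = "https://local/repos/projectrepo/"' \
      'check_gpg = true' \
      'check_ssl = true' \
      'check_repogpg = true'
    printf "gpgkeys = ['''"
    cat "$keyfile"
    printf "''']\n"
  } > "$out"
}
```

Armored keys never contain three consecutive single quotes, so the literal string needs no escaping.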

3.4. RHEL image builder official repository overrides

The osbuild-composer back end of RHEL image builder does not inherit the system repositories located in the /etc/yum.repos.d/ directory. Instead, it has its own set of official repositories defined in the /usr/share/osbuild-composer/repositories directory. This includes the Red Hat official repository, which contains the base system RPMs to install additional software or update already installed programs to newer versions. If you want to override the official repositories, you must define overrides in /etc/osbuild-composer/repositories/. This directory is for user-defined overrides, and the files located there take precedence over those in the /usr/share/osbuild-composer/repositories/ directory.

The configuration files are not in the usual YUM repository format known from the files in /etc/yum.repos.d/. Instead, they are JSON files.

3.5. Overriding a system repository

You can configure your own repository override for RHEL image builder in the /etc/osbuild-composer/repositories directory.

Before the RHEL 8.5 release, the repository override file was named rhel-8.json. Starting with RHEL 8.5, the file names also include the minor version: rhel-84.json, rhel-85.json, and so on.

Prerequisites

- You have a custom repository that is accessible from your host system.

Procedure

Create the /etc/osbuild-composer/repositories/ directory to store your repository overrides:

$ sudo mkdir -p /etc/osbuild-composer/repositories

Create a JSON file, using a name corresponding to your RHEL version. Alternatively, you can copy the file for your distribution from /usr/share/osbuild-composer/repositories/ and modify its content. For RHEL 8.9, use /etc/osbuild-composer/repositories/rhel-89.json.

Add the following structure to your JSON file. Specify only one of the following attributes, in the string format:

- baseurl - The base URL of the repository.

- metalink - The URL of a metalink file that contains a list of valid mirror repositories.

- mirrorlist - The URL of a mirrorlist file that contains a list of valid mirror repositories.

The remaining fields, such as gpgkey and metadata_expire, are optional. For example:

{
  "x86_64": [
    {
      "name": "baseos",
      "baseurl": "http://mirror.example.com/composes/released/RHEL-8/8.0/BaseOS/x86_64/os/",
      "gpgkey": "-----BEGIN PGP PUBLIC KEY BLOCK-----\n\n (…)",
      "check_gpg": true
    }
  ]
}

Alternatively, you can copy the JSON file for your distribution, replacing rhel-version.json with your RHEL version, for example: rhel-8.json.

$ cp /usr/share/osbuild-composer/repositories/rhel-version.json /etc/osbuild-composer/repositories/

- Optional: Verify the JSON file:

$ json_verify /etc/osbuild-composer/repositories/<file>.json

Edit the baseurl paths in the copied JSON file, for example /etc/osbuild-composer/repositories/rhel-version.json, and save it.

Restart the osbuild-composer.service:

$ sudo systemctl restart osbuild-composer.service

Verification

Check if the repository points to the correct URLs:

$ cat /etc/yum.repos.d/redhat.repo

You can see that the repository points to the correct URLs, which are copied from the /etc/yum.repos.d/redhat.repo file.
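The naming rule and the JSON structure above can be combined in a small generator. This is a sketch under assumptions with hypothetical function names: it applies the RHEL 8.5 and later naming scheme, writes only baseos and appstream entries for x86_64, and sets check_gpg to false purely to keep the example short. For real overrides, keep check_gpg set to true and add gpgkey as in the example above.

```shell
#!/usr/bin/env bash
# override_file VERSION: override file name for RHEL 8.5 and later,
# for example 8.9 -> rhel-89.json
override_file() {
  local major="${1%%.*}" minor="${1#*.}"
  printf 'rhel-%s%s.json\n' "$major" "$minor"
}

# write_override VERSION BASEOS_URL APPSTREAM_URL [DIR]: write the override file
# and print its path. GPG checks are disabled only to shorten the sketch.
write_override() {
  local version="$1" baseos="$2" appstream="$3"
  local dir="${4:-/etc/osbuild-composer/repositories}"
  local out="$dir/$(override_file "$version")"
  mkdir -p "$dir"
  cat > "$out" <<EOF
{
  "x86_64": [
    { "name": "baseos", "baseurl": "$baseos", "check_gpg": false },
    { "name": "appstream", "baseurl": "$appstream", "check_gpg": false }
  ]
}
EOF
  printf '%s\n' "$out"
}
```

After writing the file, validate it with json_verify and restart osbuild-composer.service as described in the procedure.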

Additional resources

3.6. Overriding a system repository that requires subscriptions

You can set up the osbuild-composer service to use system subscriptions that are defined in the /etc/yum.repos.d/redhat.repo file. To use a system subscription in osbuild-composer, define a repository override that has the following details:

- The same baseurl as the repository defined in /etc/yum.repos.d/redhat.repo.

- The value of "rhsm": true defined in the JSON object.

Note: osbuild-composer does not automatically use repositories defined in /etc/yum.repos.d/. You need to manually specify them either as a system repository override or as an additional source by using composer-cli. The "BaseOS" and "AppStream" repositories usually use system repository overrides, whereas all the other repositories use composer-cli sources.

Prerequisites

- Your system has a subscription defined in /etc/yum.repos.d/redhat.repo.

- You have created a repository override. See Overriding a system repository.

Procedure

Get the baseurl from the /etc/yum.repos.d/redhat.repo file:

# cat /etc/yum.repos.d/redhat.repo
[AppStream]
name = AppStream mirror example
baseurl = https://mirror.example.com/RHEL-8/8.0/AppStream/x86_64/os/
enabled = 1
gpgcheck = 0
sslverify = 1
sslcacert = /etc/pki/ca1/ca.crt
sslclientkey = /etc/pki/ca1/client.key
sslclientcert = /etc/pki/ca1/client.crt
metadata_expire = 86400
enabled_metadata = 0

Configure the repository override to use the same baseurl and set rhsm to true:

{
  "x86_64": [
    {
      "name": "AppStream mirror example",
      "baseurl": "https://mirror.example.com/RHEL-8/8.0/AppStream/x86_64/os/",
      "gpgkey": "-----BEGIN PGP PUBLIC KEY BLOCK-----\n\n (…)",
      "check_gpg": true,
      "rhsm": true
    }
  ]
}

Restart the osbuild-composer.service:

$ sudo systemctl restart osbuild-composer.service
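Copying baseurl values by hand is error-prone when redhat.repo lists many repositories. The sketch below, with hypothetical helper names, reads the baseurl of each named section and writes an override entry with "rhsm": true for each one. Because an override disables the default repositories, pass every section your images need, typically the BaseOS and AppStream repositories. GPG fields are omitted for brevity.

```shell
#!/usr/bin/env bash
# repo_baseurl FILE SECTION: print the baseurl value of [SECTION] in a .repo file.
repo_baseurl() {
  awk -v want="[$2]" '
    /^\[/ { in_section = ($0 == want); next }
    in_section && /^[[:space:]]*baseurl[[:space:]]*=/ {
      sub(/^[^=]*=[[:space:]]*/, "")
      print
      exit
    }
  ' "$1"
}

# write_rhsm_override REPO_FILE OUT SECTION...: write one x86_64 entry per
# section, reusing its baseurl and setting "rhsm": true.
write_rhsm_override() {
  local repo_file="$1" out="$2" sep="" section url
  shift 2
  {
    printf '{\n  "x86_64": [\n'
    for section in "$@"; do
      url="$(repo_baseurl "$repo_file" "$section")"
      [ -n "$url" ] || { echo "no baseurl for [$section]" >&2; return 1; }
      printf '%s    { "name": "%s", "baseurl": "%s", "rhsm": true }' "$sep" "$section" "$url"
      sep=$',\n'
    done
    printf '\n  ]\n}\n'
  } > "$out"
}
```

For example, `write_rhsm_override /etc/yum.repos.d/redhat.repo /etc/osbuild-composer/repositories/rhel-89.json <baseos-section> <appstream-section>`, where the section names are the ones shown in your redhat.repo file.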

Additional resources

Chapter 4. Composing a RHEL for Edge image using image builder in RHEL web console

Use RHEL image builder to create a custom RHEL for Edge image (OSTree commit).

To access RHEL image builder and to create your custom RHEL for Edge image, you can either use the RHEL web console interface or the command-line interface.

You can compose RHEL for Edge images using RHEL image builder in RHEL web console by performing the following high-level steps:

- Access RHEL image builder in RHEL web console

- Create a blueprint for RHEL for Edge image.

Create a RHEL for Edge image. You can create the following images:

- RHEL for Edge Commit image.

- RHEL for Edge Container image.

- RHEL for Edge Installer image.

- Download the RHEL for Edge image

4.1. Accessing RHEL image builder in the RHEL web console

To access RHEL image builder in RHEL web console, ensure that you have met the following prerequisites and then follow the procedure.

Prerequisites

- You have installed a RHEL system.

- You have administrative rights on the system.

- You have subscribed the RHEL system to Red Hat Subscription Manager (RHSM) or to Red Hat Satellite Server.

- The system is powered on and accessible over network.

- You have installed RHEL image builder on the system.

Procedure

- On your RHEL system, access https://localhost:9090/ in a web browser.

- For more information about how to remotely access RHEL image builder, see Managing systems using the RHEL 8 web console document.

- Log in to the web console using an administrative user account.

- On the web console, in the left hand menu, click Apps.

- Click Image Builder.

The RHEL image builder dashboard opens in the right pane. You can now proceed to create a blueprint for the RHEL for Edge images.

4.2. Creating a blueprint for a RHEL for Edge image using image builder in the web console

To create a blueprint for a RHEL for Edge image by using RHEL image builder in RHEL web console, ensure that you have met the following prerequisites and then follow the procedure.

Prerequisites

- On a RHEL system, you have opened the RHEL image builder dashboard.

Procedure

On the RHEL image builder dashboard, click Create Blueprint.

The Create Blueprint dialogue box opens.

On the Details page:

- Enter the name of the blueprint and, optionally, its description. Click Next.

Optional: On the Packages page:

- In the Available packages search, enter the package name and click the > button to move it to the Chosen packages field. Search and include as many packages as you want. Click Next.

Note: These customizations are all optional unless otherwise specified.

- On the Kernel page, enter a kernel name and the command-line arguments.

- On the File system page, select Use automatic partitioning. OSTree systems do not support file system customization, because OSTree images have their own mount rules, such as read-only. Click Next.

On the Services page, you can enable or disable services:

- Enter the service names you want to enable or disable, separating them by a comma, by space, or by pressing the Enter key. Click Next.

On the Firewall page, set up your firewall settings:

- Enter the Ports, and the firewall services you want to enable or disable.

- Click the Add zone button to manage your firewall rules for each zone independently. Click Next.

On the Users page, add a user by following these steps:

- Click Add user.

- Enter a Username, a password, and an SSH key. You can also mark the user as a privileged user by selecting the Server administrator checkbox. Click Next.

On the Groups page, add groups by completing the following steps:

- Click the Add groups button.

- Enter a Group name and a Group ID. You can add more groups. Click Next.

On the SSH keys page, add a key:

- Click the Add key button.

- Enter the SSH key.

- Enter a User. Click Next.

On the Timezone page, set your timezone settings:

- In the Timezone field, enter the timezone you want to add to your system image. For example, add the following timezone format: "US/Eastern". If you do not set a timezone, the system uses Coordinated Universal Time (UTC) as default.

- Enter the NTP servers. Click Next.

On the Locale page, complete the following steps:

- In the Keyboard search field, enter the keyboard layout you want to add to your system image. For example: "us".

- In the Languages search field, enter the language you want to add to your system image. For example: ["en_US.UTF-8"]. Click Next.

On the Others page, complete the following steps:

- In the Hostname field, enter the hostname you want to add to your system image. If you do not add a hostname, the operating system determines the hostname.

- Mandatory only for the Simplified Installer image: In the Installation Devices field, enter a valid node for your system image. For example: /dev/sda. Click Next.

-

On the

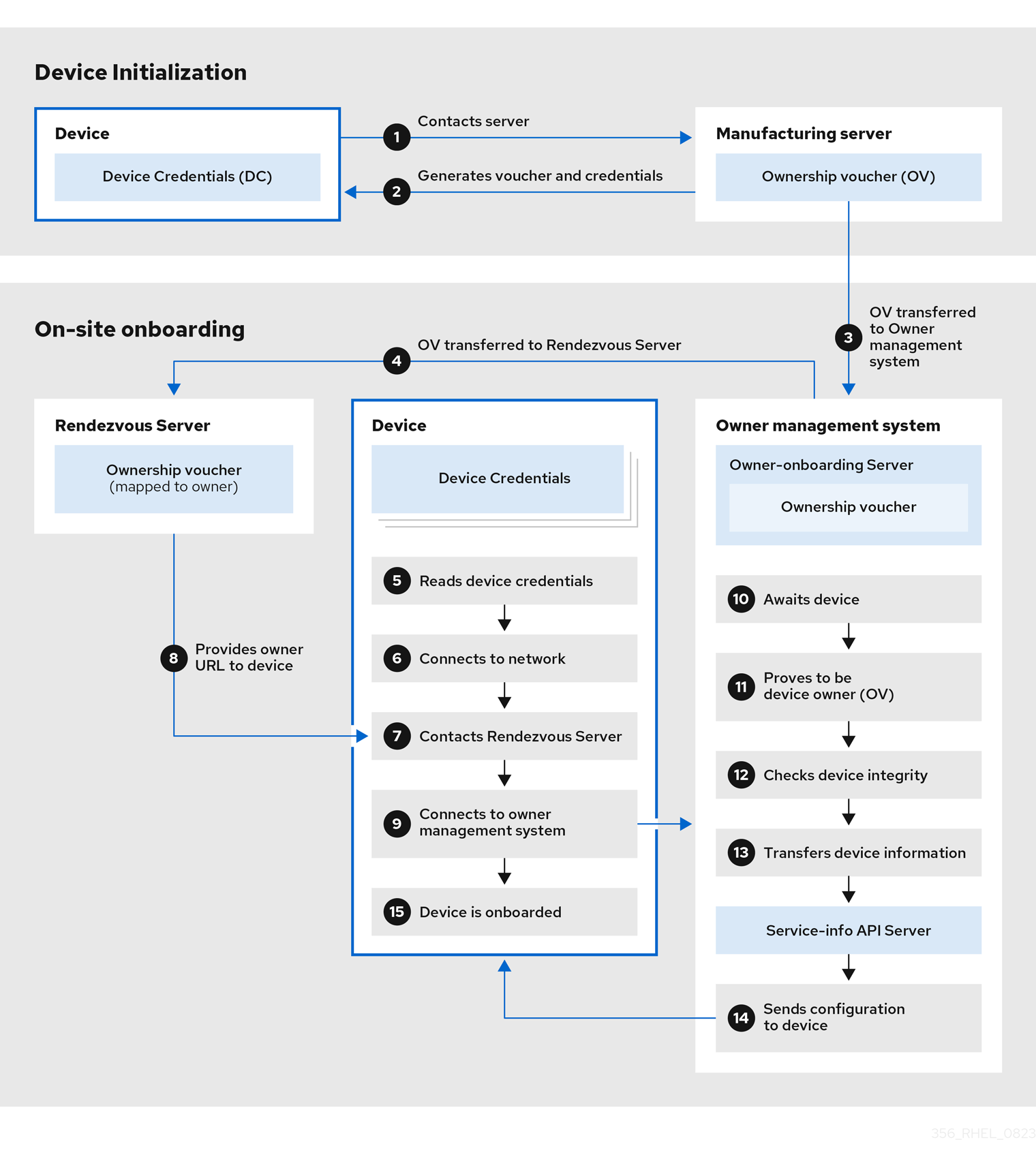

Mandatory only when building FIDO images: On the

FIDO device onboardingpage, complete the following steps:On the

Manufacturing server URLfield, enter the following information:-

On the

DIUN public key insecurefield, enter the insecure public key. -

On the

DIUN public key hashfield, enter the public key hash. -

On the

DIUN public key root certsfield, enter the public key root certs. Click Next.

-

On the

On the OpenSCAP page, complete the following steps:

- In the Datastream field, enter the datastream remediation instructions you want to add to your system image.

- In the Profile ID field, enter the profile_id security profile you want to add to your system image. Click Next.

Mandatory only when building Ignition images: On the Ignition page, complete the following steps:

- In the Firstboot URL field, enter the URL of the Ignition configuration that the system fetches on first boot.

- In the Embedded Data field, drag or upload your file. Click Next.

On the Review page, review the details about the blueprint. Click Create.

The RHEL image builder view opens, listing existing blueprints.
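The choices in the wizard correspond to customizations in a blueprint TOML file, which you can also import with composer-cli instead of the web console. The following sketch writes an example blueprint that covers only a subset of the pages; the blueprint name, package, user, SSH key placeholder, group, kernel argument, and NTP server are hypothetical values to replace with your own.

```shell
#!/usr/bin/env bash
# write_edge_blueprint OUTFILE: write an example RHEL for Edge blueprint
# (all values are hypothetical placeholders).
write_edge_blueprint() {
  cat > "$1" <<'EOF'
name = "edge-blueprint"
description = "Example RHEL for Edge blueprint"
version = "0.0.1"
modules = []
groups = []

[[packages]]
name = "podman"
version = "*"

[customizations]
hostname = "edge-device"

[customizations.kernel]
append = "console=ttyS0"

[customizations.services]
enabled = ["sshd"]

[customizations.firewall]
ports = ["8080:tcp"]

[customizations.firewall.services]
enabled = ["ssh"]

[[customizations.user]]
name = "admin"
key = "ssh-ed25519 AAAA... admin@example.com"
groups = ["wheel"]

[[customizations.group]]
name = "widget"
gid = 1130

[customizations.timezone]
timezone = "US/Eastern"
ntpservers = ["0.pool.ntp.org"]

[customizations.locale]
languages = ["en_US.UTF-8"]
keyboard = "us"
EOF
}
```

For example, run `write_edge_blueprint edge-blueprint.toml`, then `composer-cli blueprints push edge-blueprint.toml` and `composer-cli blueprints depsolve edge-blueprint` to check that the packages resolve.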

4.3. Creating a RHEL for Edge Commit image by using image builder in web console

You can create a “RHEL for Edge Commit” image by using RHEL image builder in RHEL web console. The “RHEL for Edge Commit (.tar)” image type contains a full operating system, but it is not directly bootable. To boot the Commit image type, you must deploy it in a running container.

Prerequisites

- On a RHEL system, you have accessed the RHEL image builder dashboard.

Procedure

- On the RHEL image builder dashboard, click Create Image.

On the Image output page, perform the following steps:

- From the Select a blueprint dropdown menu, select the blueprint you want to use.

- From the Image output type dropdown list, select “RHEL for Edge Commit (.tar)” for network-based deployment.

- Click Next.

On the OSTree settings page, enter:

- Repository URL: specify the URL to the OSTree repository of the commit to embed in the image. For example, http://10.0.2.2:8080/repo/.

- Parent commit: specify a previous commit, or leave it empty if you do not have a commit at this time.

- In the Ref textbox, specify a reference path for where your commit is going to be created. By default, the web console specifies rhel/8/$ARCH/edge. The "$ARCH" value is determined by the host machine. Click Next.

On the Review page, check the customizations and click Create.

RHEL image builder starts to create a RHEL for Edge Commit image for the blueprint that you created.

Note: The image creation process takes up to 20 minutes to complete.

Verification

To check the RHEL for Edge Commit image creation progress:

- Click the Images tab.

After the image creation process is complete, you can download the resulting “RHEL for Edge Commit (.tar)” image.
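You can also start the same Commit compose from the command line. In this sketch, default_ref reproduces the web console default rhel/8/$ARCH/edge from uname -m, the blueprint name is hypothetical, and an optional second argument is passed to composer-cli as --parent, corresponding to the Parent commit field. The `run` helper only prints each command unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# default_ref [ARCH]: rhel/8/$ARCH/edge, with ARCH taken from the host by default.
default_ref() {
  printf 'rhel/8/%s/edge\n' "${1:-$(uname -m)}"
}

# compose_commit BLUEPRINT [PARENT]: start an edge-commit compose, then show
# the compose queue so you can follow its progress.
compose_commit() {
  local blueprint="$1" parent="${2:-}"
  local args=(--ref "$(default_ref)")
  if [ -n "$parent" ]; then
    args+=(--parent "$parent")
  fi
  run composer-cli compose start-ostree "${args[@]}" "$blueprint" edge-commit
  run composer-cli compose status
}
```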

Additional resources

4.4. Creating a RHEL for Edge Container image by using RHEL image builder in RHEL web console

You can create RHEL for Edge images by selecting “RHEL for Edge Container (.tar)”. The RHEL for Edge Container (.tar) image type creates an OSTree commit and embeds it into an OCI container with a web server. When the container is started, the web server serves the commit as an OSTree repository.

Follow the steps in this procedure to create a RHEL for Edge Container image using image builder in RHEL web console.

Prerequisites

- On a RHEL system, you have accessed the RHEL image builder dashboard.

- You have created a blueprint.

Procedure

- On the RHEL image builder dashboard, click Create Image.

- On the Image output page, perform the following steps:

- From the Select a blueprint dropdown menu, select the blueprint you want to use.

- From the Image output type dropdown list, select “RHEL for Edge Container (.tar)” for non-network-based deployment.

- Click Next.

On the OSTree page, enter:

- Repository URL: specify the URL to the OSTree repository of the commit to embed in the image. For example, http://10.0.2.2:8080/repo/. By default, the repository folder for a RHEL for Edge Container image is "/repo".

To find the correct URL to use, access the running container and check the nginx.conf file. Inside the nginx.conf file, find the root directory entry to search for the /repo/ folder information. Note that, if you do not specify a repository URL when creating a RHEL for Edge Container image (.tar) by using RHEL image builder, the default /repo/ entry is created in the nginx.conf file.

- Parent commit: specify a previous commit, or leave it empty if you do not have a commit at this time.

- In the Ref textbox, specify a reference path for where your commit is going to be created. By default, the web console specifies rhel/8/$ARCH/edge. The "$ARCH" value is determined by the host machine. Click Next.

- On the Review page, check the customizations. Click Save blueprint.

Click Create.

RHEL image builder starts to create a RHEL for Edge Container image for the blueprint that you created.

Note: The image creation process takes up to 20 minutes to complete.

Verification

To check the RHEL for Edge Container image creation progress:

- Click the Images tab.

After the image creation process is complete, you can download the resulting “RHEL for Edge Container (.tar)” image.
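After you load the Container image and start it, you can confirm the Repository URL before you use it for an Installer image. This sketch assumes that the container is named edge-container and that nginx.conf is at /etc/nginx/nginx.conf inside the container. It relies on the fact that an OSTree repository has a config file at its top level, so fetching URL/config succeeds only when the URL points at the repository root. The `run` helper only prints each command unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# repo_config_url URL: URL of the repository's top-level config file.
repo_config_url() {
  printf '%s/config\n' "${1%/}"
}

# check_container_repo URL: show the root directives from nginx.conf and fetch
# the repository config (container name and config path are assumptions).
check_container_repo() {
  local url="$1"
  run podman exec edge-container grep -n 'root' /etc/nginx/nginx.conf
  run curl -fsS "$(repo_config_url "$url")"
}
```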

Additional resources

4.5. Creating a RHEL for Edge Installer image by using image builder in RHEL web console

You can create RHEL for Edge Installer images for non-network-based deployment by selecting RHEL for Edge Installer (.iso). The RHEL for Edge Installer (.iso) image type pulls the OSTree commit repository from the running container served by the RHEL for Edge Container (.tar) and creates an installable boot ISO image with a Kickstart file that is configured to use the embedded OSTree commit.

Follow the steps in this procedure to create a RHEL for Edge Installer image by using image builder in the RHEL web console.

Prerequisites

- On a RHEL system, you have accessed the image builder dashboard.

- You created a blueprint.

- You created a RHEL for Edge Container image and loaded it into a running container. See Creating a RHEL for Edge Container image for non-network-based deployments.

Procedure

- On the RHEL image builder dashboard, click Create Image.

On the Image output page, perform the following steps:

- From the Select a blueprint dropdown menu, select the blueprint you want to use.

- From the Image output type dropdown list, select RHEL for Edge Installer (.iso) image.

- Click Next.

On the OSTree settings page, enter:

- Repository URL: specify the URL to the OSTree repository of the commit to embed in the image. For example, http://10.0.2.2:8080/repo/.

- In the Ref textbox, specify a reference path for where your commit is going to be created. By default, the web console specifies rhel/8/$ARCH/edge. The "$ARCH" value is determined by the host machine. Click Next.

- On the Review page, check the customizations. Click Save blueprint.

Click Create.

RHEL image builder starts to create a RHEL for Edge Installer image for the blueprint that you created.

Note: The image creation process takes up to 20 minutes to complete.

Verification


To check the RHEL for Edge Installer image creation progress:

- Click the Images tab.

After the image creation process is complete, you can download the resulting RHEL for Edge Installer (.iso) image and boot the ISO image into a device.


4.6. Downloading a RHEL for Edge image

After you successfully create the RHEL for Edge image by using RHEL image builder, download the image to the local host.

Procedure

To download an image:

From the More Options menu, click Download.

The RHEL image builder tool downloads the file to your default download location.

The downloaded file is either a .tar file that contains an OSTree repository, for RHEL for Edge Commit and RHEL for Edge Container images, or a .iso file that contains an OSTree repository, for RHEL for Edge Installer images. The repository contains the commit and a JSON file with metadata about the repository content.

4.7. Additional resources

Chapter 5. Composing a RHEL for Edge image using image builder command-line

You can use image builder to create a customized RHEL for Edge image (OSTree commit).

To access image builder and to create your custom RHEL for Edge image, you can either use the RHEL web console interface or the command-line interface.

For network-based deployments, the workflow to compose RHEL for Edge images by using the CLI involves the following high-level steps:

- Create a blueprint for RHEL for Edge image

- Create a RHEL for Edge Commit image

- Download the RHEL for Edge Commit image

For non-network-based deployments, the workflow to compose RHEL for Edge images by using the CLI involves the following high-level steps:

- Create a blueprint for RHEL for Edge image

- Create a blueprint for the RHEL for Edge Installer image

- Create a RHEL for Edge Container image

- Create a RHEL for Edge Installer image

- Download the RHEL for Edge image

To perform the steps, use the composer-cli package.

To run the composer-cli commands as non-root, you must be part of the weldr group or you must have administrator access to the system.

5.1. Network-based deployments workflow

This section describes how to build OSTree commits. These OSTree commits contain a full operating system, but are not directly bootable. To boot them, you need to deploy them by using a Kickstart file.

5.1.1. Creating a RHEL for Edge Commit image blueprint using image builder command-line interface

Create a blueprint for RHEL for Edge Commit image using the CLI.

Prerequisite

You do not have an existing blueprint. To verify that, list the existing blueprints:

$ sudo composer-cli blueprints list

Procedure

Create a plain text file in the TOML format, with the following content:

name = "blueprint-name"
description = "blueprint-text-description"
version = "0.0.1"
modules = [ ]
groups = [ ]

Where,

- blueprint-name is the name and blueprint-text-description is the description for your blueprint.

- 0.0.1 is the version number according to the Semantic Versioning scheme.

Modules describe the package name and matching version glob to be installed into the image, for example, the package name = "tmux" and the matching version glob is version = "2.9a".

Notice that currently there are no differences between packages and modules.

Groups are package groups to be installed into the image, for example, the anaconda-tools group.

At this time, if you do not know the modules and groups, leave them empty.

Include the required packages and customize the other details in the blueprint to suit your requirements.

For every package that you want to include in the blueprint, add the following lines to the file:

[[packages]]
name = "package-name"
version = "package-version"

Where,

- package-name is the name of the package, such as httpd, gdb-doc, or coreutils.

- package-version is the version number of the package that you want to use.

The package-version supports the following dnf version specifications:

- For a specific version, use the exact version number such as 8.0.

- For the latest available version, use the asterisk *.

- For the latest minor version, use formats such as 8.*.
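As a minimal sketch, assuming Bash and a single package, the following hypothetical function write_edge_blueprint writes such a blueprint file by using a here-document. The function name and the generated description are illustrative only; add more [[packages]] entries as your image requires.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of composer-cli): write a minimal blueprint
# in TOML format with one package.
# Arguments: output file, blueprint name, package name, version glob (default "*").
write_edge_blueprint() {
    local file="$1" name="$2" pkg="$3" pkg_version="${4:-*}"
    # Unquoted here-document: only the ${...} variables are expanded,
    # and a "*" in the version glob is written literally.
    cat > "$file" <<EOF
name = "${name}"
description = "RHEL for Edge blueprint ${name}"
version = "0.0.1"
modules = [ ]
groups = [ ]

[[packages]]
name = "${pkg}"
version = "${pkg_version}"
EOF
}

# Example: write blueprint-name.toml that installs tmux 2.9a.
# write_edge_blueprint blueprint-name.toml blueprint-name tmux "2.9a"
```

The resulting file is what you push to the RHEL image builder server in the following step.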

Push (import) the blueprint to the RHEL image builder server:

# composer-cli blueprints push blueprint-name.toml

Display the blueprint to check whether it was pushed successfully and exists:

# composer-cli blueprints show blueprint-name

Check whether the components and versions listed in the blueprint and their dependencies are valid:

# composer-cli blueprints depsolve blueprint-name
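The push, show, and depsolve steps can be chained so that the sequence stops at the first command that fails. The following is a minimal Bash sketch; the function name push_and_check_blueprint is hypothetical, and the commands need root access or membership in the weldr group.

```shell
#!/usr/bin/env bash
# Hypothetical helper: push a blueprint file, then show and depsolve it.
# Returns non-zero at the first composer-cli command that fails.
push_and_check_blueprint() {
    local file="$1" name="$2"
    composer-cli blueprints push "$file" || return 1
    composer-cli blueprints show "$name" || return 1
    composer-cli blueprints depsolve "$name" || return 1
}

# Example:
# push_and_check_blueprint blueprint-name.toml blueprint-name
```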


5.1.2. Creating a RHEL for Edge Commit image using image builder command-line interface

To create a RHEL for Edge Commit image by using RHEL image builder command-line interface, ensure that you have met the following prerequisites and follow the procedure.

Prerequisites

- You have created a blueprint for RHEL for Edge Commit image.

Procedure

Create the RHEL for Edge Commit image.

# composer-cli compose start blueprint-name image-type

Where,

- blueprint-name is the RHEL for Edge blueprint name.

- image-type is edge-commit for network-based deployment.

A confirmation that the compose process has been added to the queue appears. It also shows a Universally Unique Identifier (UUID) for the image being created. Use the UUID to track your build, and keep it handy for further tasks.

Check the image compose status.

# composer-cli compose status

The output displays the status in the following format:

<UUID> RUNNING date blueprint-name blueprint-version image-type
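If several composes are queued, you can pick out the status of one build by its UUID. The following hypothetical Bash function is a sketch that assumes the line format shown above, where the UUID is the first field and the status is the second; it ignores any other lines, such as headers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "composer-cli compose status" output on stdin
# and print the status field of the line whose first field is the UUID.
# Returns non-zero if no line matches.
compose_status_of() {
    local uuid="$1"
    awk -v id="$uuid" '$1 == id { print $2; found = 1; exit }
                       END { if (!found) exit 1 }'
}

# Example:
# composer-cli compose status | compose_status_of <UUID>
```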

Note: The image creation process takes up to 20 minutes to complete.

To interrupt the image creation process, run:

# composer-cli compose cancel <UUID>

To delete an existing image, run:

# composer-cli compose delete <UUID>

After the image is ready, you can download it and use it for your network-based deployments.

Additional resources

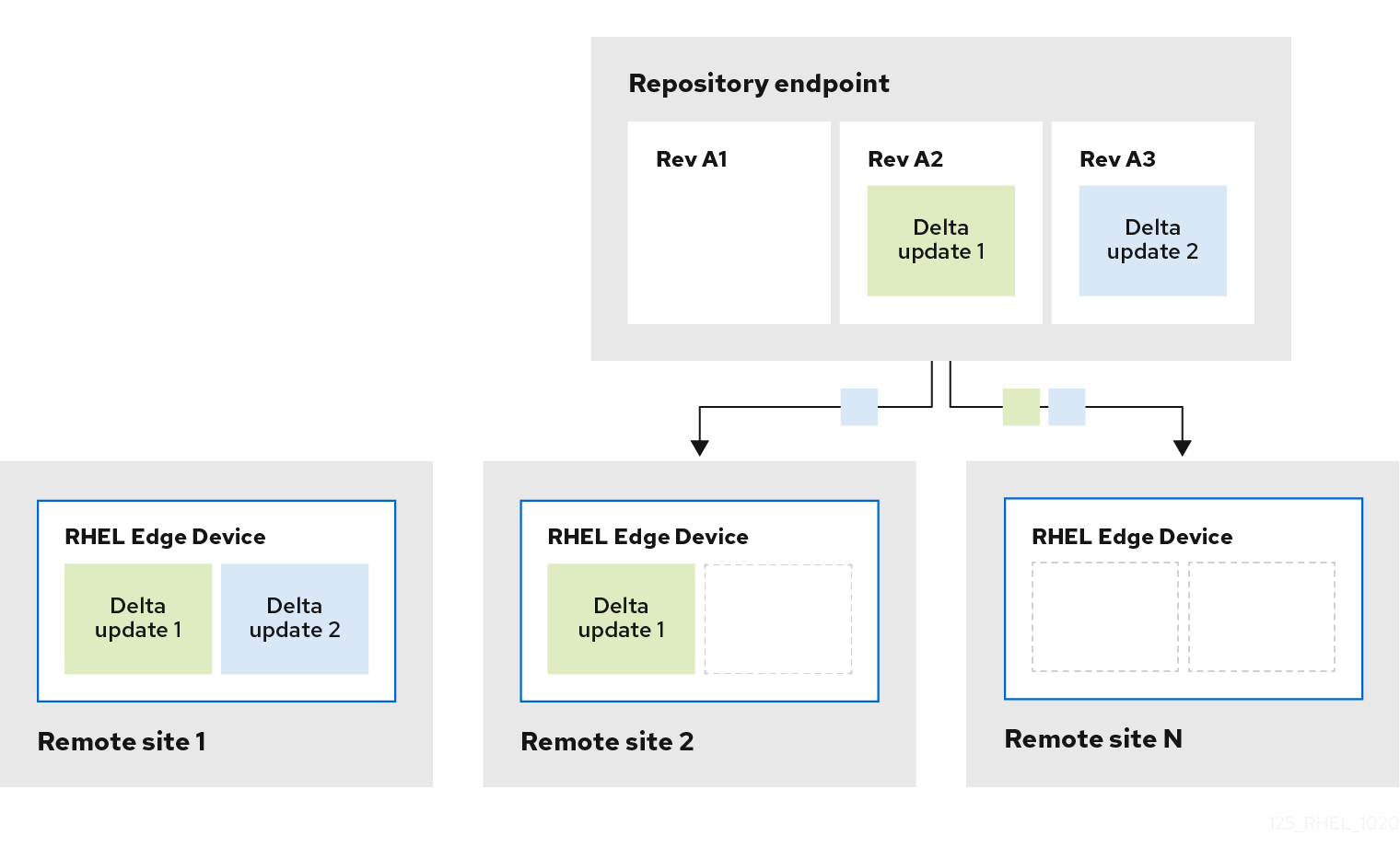

5.1.3. Creating a RHEL for Edge image update with a ref commit by using RHEL image builder CLI

If you changed an existing blueprint, for example, by adding a new package, and you want to update an existing RHEL for Edge image with this new package, you can use the --parent argument to generate an updated RHEL for Edge Commit (.tar) image. The --parent argument can be either a ref that exists in the repository specified by the --url argument, or the Commit ID, which you can find in the extracted .tar image file. Both the ref and the Commit ID retrieve a parent for the new commit that you are building. RHEL image builder can read information from the parent commit that affects parts of the new commit. For example, RHEL image builder reads the parent commit’s user database and preserves the UIDs and GIDs of the package-created system users and groups.

Prerequisites

- You have updated an existing blueprint for RHEL for Edge image.

- You have an existing RHEL for Edge image (OSTree commit). See Extracting RHEL for Edge image commit.

- The ref being built is available at the OSTree repository specified by the URL.

Procedure

Create the RHEL for Edge commit image:

# composer-cli compose start-ostree --ref rhel/8/x86_64/edge --parent parent-OSTree-REF --url URL blueprint-name image-type

For example:

- To create a new RHEL for Edge commit based on a parent and with a new ref, run the following command:

# composer-cli compose start-ostree --ref rhel/8/x86_64/edge --parent rhel/8/x86_64/edge --url http://10.0.2.2:8080/repo rhel_update edge-commit

- To create a new RHEL for Edge commit based on the same ref, run the following command:

# composer-cli compose start-ostree --ref rhel/8/x86_64/edge --url http://10.0.2.2:8080/repo rhel_update edge-commit

Where:

- The --ref argument specifies the same path value that you used to build an OSTree repository.

- The --parent argument specifies the parent commit. It can be a ref to be resolved and pulled, for example rhel/8/x86_64/edge, or the Commit ID that you can find in the extracted .tar file.

- blueprint-name is the RHEL for Edge blueprint name.

- The --url argument specifies the URL to the OSTree repository of the commit to embed in the image, for example, http://10.0.2.2:8080/repo.

- image-type is edge-commit for network-based deployment.

Note:

- The --parent argument can only be used for the RHEL for Edge Commit (.tar) image type. Using the --url and --parent arguments together results in errors with the RHEL for Edge Container (.tar) image type.

- If you omit the parent ref argument, the system falls back to the ref specified by the --ref argument.

A confirmation that the compose process has been added to the queue appears. It also shows a Universally Unique Identifier (UUID) for the image being created. Use the UUID to track your build, and keep it handy for further tasks.
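The two command variants differ only in whether --parent is passed. A minimal Bash sketch, using the hypothetical function name start_edge_update, builds the argument list so that --parent is added only when you provide a parent ref or Commit ID; when you omit it, the parent falls back to the --ref value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start an edge-commit compose that updates an
# existing commit. Arguments: ref, repository URL, blueprint name,
# optional parent (ref or Commit ID).
start_edge_update() {
    local ref="$1" url="$2" blueprint="$3" parent="${4:-}"
    local args=(compose start-ostree --ref "$ref")
    # Only pass --parent when a parent was given.
    if [ -n "$parent" ]; then
        args+=(--parent "$parent")
    fi
    args+=(--url "$url" "$blueprint" edge-commit)
    composer-cli "${args[@]}"
}

# Examples matching the two commands in this procedure:
# start_edge_update rhel/8/x86_64/edge http://10.0.2.2:8080/repo rhel_update rhel/8/x86_64/edge
# start_edge_update rhel/8/x86_64/edge http://10.0.2.2:8080/repo rhel_update
```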

Check the image compose status.

# composer-cli compose status

The output displays the status in the following format:

<UUID> RUNNING date blueprint-name blueprint-version image-type

Note: The image creation process takes a few minutes to complete.

(Optional) To interrupt the image creation process, run:

# composer-cli compose cancel <UUID>

(Optional) To delete an existing image, run:

# composer-cli compose delete <UUID>

After the image creation is complete, to upgrade an existing OSTree deployment, you need to:

- Set up a repository. See Deploying a RHEL for Edge image.

- Add this repository as a remote, that is, the http or https endpoint that hosts the OSTree content.

- Pull the new OSTree commit onto your existing running instance. See Deploying RHEL for Edge image updates manually.
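The remote and pull steps can be sketched as follows. This minimal Bash example is not the procedure from the referenced sections: it assumes that the new commit is served at an HTTP URL such as http://10.0.2.2:8080/repo, that the commits are not GPG-signed (hence --no-gpg-verify), and it uses the hypothetical remote name edge and function name add_remote_and_update. Run it as root on the running RHEL for Edge system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the endpoint that hosts the OSTree content as a
# remote, then rebase the deployment onto the ref from that remote.
add_remote_and_update() {
    local remote="$1" url="$2" ref="$3"
    # --if-not-exists: do nothing if a remote with this name already exists.
    # Assumption: the commits are not GPG-signed, so verification is disabled.
    ostree remote add --if-not-exists --no-gpg-verify "$remote" "$url" || return 1
    # Pull the ref from the remote and stage it as the next deployment.
    rpm-ostree rebase "${remote}:${ref}"
}

# Example:
# add_remote_and_update edge http://10.0.2.2:8080/repo rhel/8/x86_64/edge
# systemctl reboot    # boot into the staged deployment
```

If the deployment already tracks this remote and ref, rpm-ostree upgrade is a simpler alternative to rpm-ostree rebase.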

5.1.4. Downloading a RHEL for Edge image using the image builder command-line interface

To download a RHEL for Edge image by using RHEL image builder command line interface, ensure that you have met the following prerequisites and then follow the procedure.

Prerequisites

- You have created a RHEL for Edge image.

Procedure

Review the RHEL for Edge image status.

# composer-cli compose status

The output must display the following:

<UUID> FINISHED date blueprint-name blueprint-version image-type

Download the image.

# composer-cli compose image <UUID>

RHEL image builder downloads the image as a .tar file to the current directory. The UUID and the image size are displayed alongside:

<UUID>-commit.tar: size MB

The image contains a commit and a JSON file with metadata about the repository content.
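Because the compose can take a while, you might want to wait for it to finish before downloading. The following Bash sketch polls composer-cli compose status and then runs composer-cli compose image. The function name, the 30-second polling interval, the 30-minute default timeout, and the FAILED status value are assumptions of this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until the compose with the given UUID is
# FINISHED, then download it. Arguments: UUID, timeout in seconds
# (default 1800), polling interval in seconds (default 30).
wait_and_download() {
    local uuid="$1" timeout="${2:-1800}" interval="${3:-30}" waited=0 status
    while :; do
        # Status is the second field of the line that starts with the UUID.
        status=$(composer-cli compose status | awk -v id="$uuid" '$1 == id { print $2; exit }')
        case "$status" in
            FINISHED) composer-cli compose image "$uuid"; return ;;
            FAILED) echo "compose $uuid failed" >&2; return 1 ;;
        esac
        if [ "$waited" -ge "$timeout" ]; then
            echo "timed out waiting for compose $uuid" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}

# Example:
# wait_and_download <UUID>
```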


5.2. Non-network-based deployments workflow

Follow the steps in this section to build a boot ISO image that installs an OSTree-based system by using the RHEL for Edge Container and RHEL for Edge Installer images. You can later deploy this image to a device in a disconnected environment.

5.2.1. Creating a RHEL for Edge Container blueprint by using image builder CLI

To create a blueprint for RHEL for Edge Container image, perform the following steps:

Procedure

Create a plain text file in the TOML format, with the following content:

name = "blueprint-name"
description = "blueprint-text-description"
version = "0.0.1"
modules = [ ]
groups = [ ]

Where,

- blueprint-name is the name and blueprint-text-description is the description for your blueprint.

- 0.0.1 is the version number according to the Semantic Versioning scheme.

Modules describe the package name and matching version glob to be installed into the image, for example, the package name = "tmux" and the matching version glob is version = "2.9a".

Notice that currently there are no differences between packages and modules.

Groups are package groups to be installed into the image, for example, the anaconda-tools group.

At this time, if you do not know the modules and groups, leave them empty.

Include the required packages and customize the other details in the blueprint to suit your requirements.

For every package that you want to include in the blueprint, add the following lines to the file:

[[packages]]
name = "package-name"
version = "package-version"

Where,

- package-name is the name of the package, such as httpd, gdb-doc, or coreutils.

- package-version is the version number of the package that you want to use.

The package-version supports the following dnf version specifications:

- For a specific version, use the exact version number such as 8.0.

- For the latest available version, use the asterisk *.

- For the latest minor version, use formats such as 8.*.

Push (import) the blueprint to the RHEL image builder server:

# composer-cli blueprints push blueprint-name.toml

Display the blueprint to check whether it was pushed successfully and exists:

# composer-cli blueprints show blueprint-name

Check whether the components and versions listed in the blueprint and their dependencies are valid:

# composer-cli blueprints depsolve blueprint-name


5.2.2. Creating a RHEL for Edge Installer blueprint using image builder CLI

You can create a blueprint to build a RHEL for Edge Installer (.iso) image, and specify user accounts to automatically create one or more users on the system at installation time.

When you create a user in the blueprint with the customizations.user customization, the user is created in the /usr/lib/passwd file and the password in the /usr/etc/shadow file. Note that you cannot change the password in later versions of the image on a running system by using OSTree updates. Use the users that you create with blueprints only to gain access to the created system. After you access the system, create additional users, for example, by using the useradd command.

To create a blueprint for RHEL for Edge Installer image, perform the following steps:

Procedure

Create a plain text file in the TOML format, with the following content:

name = "blueprint-installer"
description = "blueprint-for-installer-image"
version = "0.0.1"

[[customizations.user]]
name = "user"
description = "account"
password = "user-password"
key = "user-ssh-key"
home = "path"
groups = ["user-groups"]

Where,

- blueprint-installer is the name and blueprint-for-installer-image is the description for your blueprint.

- 0.0.1 is the version number according to the Semantic Versioning scheme.
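As a sketch, assuming Bash, the following hypothetical function writes the installer blueprint with one user. It expects a password hash, not a plain-text password; the wheel group and the /home/USER/ home directory are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an installer blueprint with one user account.
# Arguments: output file, user name, password hash, public SSH key.
# The hash value is inserted as-is; the here-document does not re-expand
# the "$" characters inside it.
write_installer_blueprint() {
    local file="$1" user="$2" hash="$3" key="$4"
    cat > "$file" <<EOF
name = "blueprint-installer"
description = "blueprint-for-installer-image"
version = "0.0.1"

[[customizations.user]]
name = "${user}"
description = "account"
password = "${hash}"
key = "${key}"
home = "/home/${user}/"
groups = ["wheel"]
EOF
}

# Example:
# write_installer_blueprint blueprint-installer.toml core "$HASH" "$(cat ~/.ssh/id_ed25519.pub)"
```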

Push (import) the blueprint to the RHEL image builder server:

# composer-cli blueprints push blueprint-name.toml

Display the blueprint to check whether it was pushed successfully and exists:

# composer-cli blueprints show blueprint-name

Check whether the components and versions listed in the blueprint and their dependencies are valid:

# composer-cli blueprints depsolve blueprint-name


5.2.3. Creating a RHEL for Edge Container image by using image builder CLI

To create a RHEL for Edge Container image by using RHEL image builder command-line interface, ensure that you have met the following prerequisites and follow the procedure.

Prerequisites

- You have created a blueprint for RHEL for Edge Container image.

Procedure

Create the RHEL for Edge Container image.

# composer-cli compose start-ostree --ref rhel/8/x86_64/edge --url URL-OSTree-repository blueprint-name image-type

Where,

- --ref is the same value that you used to build the OSTree repository.

- --url is the URL to the OSTree repository of the commit to embed in the image. For example, http://10.0.2.2:8080/repo/. By default, the repository folder for a RHEL for Edge Container image is "/repo". See Setting up a web server to install RHEL for Edge image.

To find the correct URL to use, access the running container and check the nginx.conf file. Inside the nginx.conf file, find the root directory entry to search for the /repo/ folder information. Note that if you do not specify a repository URL when creating a RHEL for Edge Container image (.tar) by using RHEL image builder, the default /repo/ entry is created in the nginx.conf file.

- blueprint-name is the RHEL for Edge blueprint name.

- image-type is edge-container for non-network-based deployment.

A confirmation that the compose process has been added to the queue appears. It also shows a Universally Unique Identifier (UUID) for the image being created. Use the UUID to track your build, and keep it handy for further tasks.
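To inspect the root entries, you can filter the nginx.conf file for root directives. The following Bash function is a hypothetical sketch that reads a configuration from standard input and prints each root value. The container name and the configuration path in the example comment are assumptions, so check them in your environment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of every "root" directive in an
# nginx configuration read from stdin, for example "root /repo;" -> "/repo".
nginx_roots() {
    sed -n 's/^[[:space:]]*root[[:space:]]\{1,\}\([^;]*\);.*/\1/p'
}

# Example, assuming a container named "edge-container" whose configuration
# is at /etc/nginx.conf (adjust both to your setup):
# podman exec edge-container cat /etc/nginx.conf | nginx_roots
```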

Check the image compose status.

# composer-cli compose status

The output displays the status in the following format:

<UUID> RUNNING date blueprint-name blueprint-version image-type

Note: The image creation process takes up to 20 minutes to complete.

To interrupt the image creation process, run:

# composer-cli compose cancel <UUID>

To delete an existing image, run:

# composer-cli compose delete <UUID>

After the image is ready, you can use it for non-network-based deployments. See Creating a RHEL for Edge Container image for non-network-based deployments.


5.2.4. Creating a RHEL for Edge Installer image using command-line interface for non-network-based deployments

To create a RHEL for Edge Installer image that embeds the OSTree commit by using the RHEL image builder command-line interface, ensure that you have met the following prerequisites and then follow the procedure.

Prerequisites

- You have created a blueprint for RHEL for Edge Installer image.

- You have created a RHEL for Edge Container image and deployed it by using a web server.

Procedure

Begin to create the RHEL for Edge Installer image.

# composer-cli compose start-ostree --ref rhel/8/x86_64/edge --url URL-OSTree-repository blueprint-name image-type

Where,

- ref is the same value that you used to build the OSTree repository.

- URL-OSTree-repository is the URL to the OSTree repository of the commit to embed in the image. For example, http://10.0.2.2:8080/repo. See Creating a RHEL for Edge Container image for non-network-based deployments.

- blueprint-name is the RHEL for Edge Installer blueprint name.

- image-type is edge-installer.

A confirmation that the compose process has been added to the queue appears. It also shows a Universally Unique Identifier (UUID) for the image being created. Use the UUID to track your build, and keep it handy for further tasks.
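Before starting the compose, it can help to confirm that the URL actually serves the OSTree repository and the ref. In an OSTree repository, the file refs/heads/REF contains the checksum of the commit that the ref points to. The following hypothetical Bash function is a sketch based on that layout; it assumes that curl is installed and that the web server exposes the repository files directly.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: print the commit checksum that REF points
# to in the OSTree repository at URL, or fail if it cannot be read.
check_ostree_ref() {
    local url="${1%/}" ref="$2" checksum
    local re='^[0-9a-f]{64}$'    # an OSTree commit checksum is SHA-256 hex
    checksum=$(curl -fsS "${url}/refs/heads/${ref}") || return 1
    if [[ "$checksum" =~ $re ]]; then
        echo "$checksum"
    else
        echo "unexpected content at ${url}/refs/heads/${ref}" >&2
        return 1
    fi
}

# Example:
# check_ostree_ref http://10.0.2.2:8080/repo/ rhel/8/x86_64/edge
```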

Check the image compose status.

# composer-cli compose status

The command output displays the status in the following format:

<UUID> RUNNING date blueprint-name blueprint-version image-type

Note: The image creation process takes a few minutes to complete.

To interrupt the image creation process, run:

# composer-cli compose cancel <UUID>

To delete an existing image, run:

# composer-cli compose delete <UUID>

After the image is ready, you can use it for non-network-based deployments. See Installing the RHEL for Edge image for non-network-based deployments.

5.2.5. Downloading a RHEL for Edge Installer image using the image builder CLI

To download a RHEL for Edge Installer image by using RHEL image builder command line interface, ensure that you have met the following prerequisites and then follow the procedure.

Prerequisites

- You have created a RHEL for Edge Installer image.

Procedure

Review the RHEL for Edge image status.

# composer-cli compose status

The output must display the following:

<UUID> FINISHED date blueprint-name blueprint-version image-type

Download the image.

# composer-cli compose image <UUID>

RHEL image builder downloads the image as an .iso file to the current directory. The UUID and the image size are displayed alongside:

<UUID>-boot.iso: size MB

The resulting image is a bootable ISO image.


5.3. Supported image customizations

You can customize your image by adding customizations to your blueprint, such as:

- Adding an additional RPM package

- Enabling a service

- Customizing a kernel command line parameter

These are only some of the available image customizations. By using the customizations, you can add packages and groups to the image that are not available in the default packages. To use these options, configure the customizations in the blueprint and import (push) it to RHEL image builder.


5.3.1. Selecting a distribution

You can use the distro field to select the distribution to use when composing your images or depsolving the blueprint. If you do not specify a distribution, the blueprint uses the host distribution. If you upgrade the host operating system, blueprints with no distribution set build images by using the new operating system version. You cannot build an operating system image that differs from the RHEL image builder host.

Procedure

Customize the blueprint with the distro to always build the specified RHEL image:

name = "blueprint_name"
description = "blueprint_description"
version = "0.1"
distro = "different_minor_version"

Replace "different_minor_version" with the distribution that you want to build. For example, to build a RHEL 8.8 image, use distro = "rhel-88". On a RHEL 8.9 host, you can build minor versions such as RHEL 8.8 and earlier releases.
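The distro value in the rhel-88 example combines the major and minor release numbers. The following hypothetical Bash helper is a sketch that assumes this naming pattern holds for other RHEL 8 minor releases, for example, rhel-810 for RHEL 8.10.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a MAJOR.MINOR release, such as 8.8, into a
# distro value, such as "rhel-88". The pattern is an assumption based on
# the rhel-88 example.
distro_id() {
    local release="$1"
    local re='^([0-9]+)\.([0-9]+)$'
    if [[ ! "$release" =~ $re ]]; then
        echo "expected MAJOR.MINOR, got: $release" >&2
        return 1
    fi
    echo "rhel-${BASH_REMATCH[1]}${BASH_REMATCH[2]}"
}

# Example: print the blueprint line for RHEL 8.8.
# echo "distro = \"$(distro_id 8.8)\""
```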

5.3.2. Selecting a package group

Customize the blueprint with packages and modules. The name attribute is a required string. The version attribute is an optional string that, if not provided, uses the latest version in the repositories.

Currently, there are no differences between packages and modules in osbuild-composer. Both are treated as an RPM package dependency.

Procedure

Customize your blueprint with a package:

[[packages]]
name = "package_group_name"

Replace "package_group_name" with the name of the group. For example, "tmux":

[[packages]]
name = "tmux"
version = "2.9a"

5.3.3. Setting the image hostname

The customizations.hostname is an optional string that you can use to configure the final image hostname. If you do not set it, the blueprint uses the default hostname.

Procedure

Customize the blueprint to configure the hostname:

[customizations]
hostname = "baseimage"

5.3.4. Specifying additional users

Add a user to the image, and optionally, set their SSH key. All fields for this section are optional except for the name.

Procedure

Customize the blueprint to add a user to the image:

[[customizations.user]]
name = "USER-NAME"
description = "USER-DESCRIPTION"
password = "PASSWORD-HASH"
key = "PUBLIC-SSH-KEY"
home = "/home/USER-NAME/"
shell = "/usr/bin/bash"
groups = ["users", "wheel"]
uid = NUMBER
gid = NUMBER

The GID is optional and must already exist in the image. A package can create it, or you can create it in the blueprint by using the [[customizations.group]] entry.

Replace PASSWORD-HASH with the actual password hash. To generate the password hash, use a command such as:

$ python3 -c 'import crypt,getpass;pw=getpass.getpass();print(crypt.crypt(pw) if (pw==getpass.getpass("Confirm: ")) else exit())'

Replace the other placeholders with suitable values.

Enter the name value and omit any lines you do not need.

Repeat this block for every user to include.
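Before you paste a value into the password field, you can check that it looks like a SHA-512 crypt hash, which is what the Python command typically produces on RHEL. The following hypothetical Bash check is a sketch; it validates only the format, not the password itself.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the argument has the SHA-512 crypt
# format "$6$salt$hash" (optionally "$6$rounds=N$salt$hash"), so that a
# plain-text password is not placed in the blueprint by mistake.
is_sha512_crypt() {
    local re='^\$6\$(rounds=[0-9]+\$)?[./A-Za-z0-9]{1,16}\$[./A-Za-z0-9]{86}$'
    [[ "$1" =~ $re ]]
}

# Example:
# is_sha512_crypt "$HASH" || echo "PASSWORD-HASH does not look like a SHA-512 hash" >&2
```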

5.3.5. Specifying additional groups

Specify a group for the resulting system image. Both the name and the gid attributes are mandatory.

Procedure

Customize the blueprint with a group:

[[customizations.group]]
name = "GROUP-NAME"
gid = NUMBER

Repeat this block for every group to include.

5.3.6. Setting SSH key for existing users

You can use customizations.sshkey to set an SSH key for the existing users in the final image. Both user and key attributes are mandatory.

Procedure

- Customize the blueprint by setting an SSH key for existing users:

[[customizations.sshkey]]
user = "root"
key = "PUBLIC-SSH-KEY"

You can only configure the customizations.sshkey customization for existing users. To create a user and set an SSH key, see the User specifications for the resulting system image customization.

5.3.7. Appending a kernel argument

You can append arguments to the boot loader kernel command line. By default, RHEL image builder builds a default kernel into the image. However, you can customize the kernel by configuring it in the blueprint.

Procedure

Append a kernel boot parameter option to the defaults:

[customizations.kernel]
append = "KERNEL-OPTION"

Define a kernel name to use in an image:

[customizations.kernel]
name = "KERNEL-rt"

5.3.8. Setting time zone and NTP

You can customize your blueprint to configure the time zone and the Network Time Protocol (NTP). The timezone attribute is an optional string and the ntpservers attribute is an optional list of strings. If you do not customize the time zone, the system uses Coordinated Universal Time (UTC). If you do not set NTP servers, the system uses the default NTP servers of the distribution.

Procedure

Customize the blueprint with the timezone and the ntpservers you want:

[customizations.timezone]
timezone = "TIMEZONE"
ntpservers = ["NTP_SERVER"]

For example:

[customizations.timezone]
timezone = "US/Eastern"
ntpservers = ["0.north-america.pool.ntp.org", "1.north-america.pool.ntp.org"]

Note: Some image types, such as Google Cloud, already have NTP servers set up. You cannot override them because the image requires these NTP servers to boot in the selected environment. However, you can customize the time zone in the blueprint.

5.3.9. Customizing the locale settings

You can customize the locale settings for your resulting system image. Both the languages and the keyboard attributes are mandatory. You can add multiple languages. The first language you add is the primary language and the other languages are secondary.

Procedure

- Set the locale settings:

[customizations.locale]
languages = ["LANGUAGE"]
keyboard = "KEYBOARD"

For example:

[customizations.locale]
languages = ["en_US.UTF-8"]
keyboard = "us"

To list the values supported by the languages attribute, run the following command:

$ localectl list-locales

To list the values supported by the keyboard attribute, run the following command:

$ localectl list-keymaps

5.3.10. Customizing firewall

Set the firewall for the resulting system image. By default, the firewall blocks incoming connections, except for services that enable their ports explicitly, such as sshd.

If you do not want to use the [customizations.firewall] or the [customizations.firewall.services], either remove the attributes, or set them to an empty list []. If you only want to use the default firewall setup, you can omit the customization from the blueprint.

The Google and OpenStack templates explicitly disable the firewall for their environment. You cannot override this behavior in the blueprint.

Procedure

Customize the blueprint with the following settings to open other ports and services:

[customizations.firewall]
ports = ["PORTS"]

Where ports is an optional list of strings that contain ports or a range of ports and protocols to open. You can configure the ports by using the port:protocol format. You can configure the port ranges by using the portA-portB:protocol format. For example:

[customizations.firewall]
ports = ["22:tcp", "80:tcp", "imap:tcp", "53:tcp", "53:udp", "30000-32767:tcp", "30000-32767:udp"]

You can use numeric ports, or their names from the /etc/services file, to enable or disable port lists.

Specify which firewall services to enable or disable in the customizations.firewall.services section:

[customizations.firewall.services]
enabled = ["SERVICES"]
disabled = ["SERVICES"]

You can check the available firewall services:

$ firewall-cmd --get-services

For example:

[customizations.firewall.services]
enabled = ["ftp", "ntp", "dhcp"]
disabled = ["telnet"]

Note: The services listed in firewall.services are different from the service-names available in the /etc/services file.
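To catch typos in the ports list before you push the blueprint, you can check each entry against the port:protocol and portA-portB:protocol formats. The following hypothetical Bash function is a sketch; restricting protocols to tcp and udp is an assumption of this example.

```shell
#!/usr/bin/env bash
# Hypothetical check for one entry of the ports list. Accepts
# "port:protocol", "portA-portB:protocol", and "name:protocol", where name is
# a service name from /etc/services. Protocols limited to tcp/udp (assumption).
valid_port_entry() {
    local entry="$1"
    local re='^([0-9]+-[0-9]+|[0-9]+|[A-Za-z][A-Za-z0-9_-]*):(tcp|udp)$'
    [[ "$entry" =~ $re ]]
}

# Example: report invalid entries before adding them to the blueprint.
# for p in "22:tcp" "30000-32767:udp" "imap:tcp"; do
#     valid_port_entry "$p" || echo "invalid port entry: $p" >&2
# done
```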

5.3.11. Enabling or disabling services

You can control which services to enable during the boot time. Some image types already have services enabled or disabled to ensure that the image works correctly and you cannot override this setup. The [customizations.services] settings in the blueprint do not replace these services, but add the services to the list of services already present in the image templates.

Procedure

Customize which services to enable during the boot time:

[customizations.services]
enabled = ["SERVICES"]
disabled = ["SERVICES"]

For example:

[customizations.services]
enabled = ["sshd", "cockpit.socket", "httpd"]
disabled = ["postfix", "telnetd"]

5.3.12. Specifying a custom filesystem configuration

You can specify a custom filesystem configuration in your blueprints and therefore create images with a specific disk layout, instead of the default layout configuration. By using the non-default layout configuration in your blueprints, you can benefit from:

- Security benchmark compliance

- Protection against out-of-disk errors

- Improved performance

- Consistency with existing setups

The filesystem customization is not supported for OSTree systems, because OSTree images have their own mount rules, such as read-only mounts.

The blueprint supports the following mountpoints and their sub-directories:

- / - the root mount point

- /var

- /home

- /opt

- /srv

- /usr

- /app

- /data

- /boot - The blueprint supports the /boot customization from RHEL 8.7 and RHEL 9.1 onward.
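The following hypothetical Bash function sketches a check of a mount point against this list. It treats / as an exact match only, which is an assumption of this sketch: allowing sub-directories of / would permit every path.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if the mount point is "/" or one of the listed
# mount points or a sub-directory of one of them.
allowed_mountpoint() {
    local mp="${1%/}" base
    # "/" becomes empty after removing the trailing slash.
    [ -z "$mp" ] && return 0
    for base in /var /home /opt /srv /usr /app /data /boot; do
        case "$mp" in
            "$base"|"$base"/*) return 0 ;;
        esac
    done
    return 1
}

# Example:
# allowed_mountpoint /var/log && echo "supported"
```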

Customizing mount points is only supported from RHEL 8.5 distributions onward, by using the CLI. In earlier distributions, you can only specify the root partition as a mount point and specify the size argument as an alias for the image size. Beginning with RHEL 8.6, for the osbuild-composer-46.1-1.el8 RPM and later version, the physical partitions are no longer available and filesystem customizations create logical volumes.

If you have more than one partition in the customized image, you can create images with a customized file system partition on LVM and resize those partitions at runtime. To do this, specify a customized filesystem configuration in your blueprint to create images with the required disk layout. If you use plain images without file system customization, the default filesystem layout remains unchanged, and cloud-init resizes the root partition.

The blueprint automatically converts the file system customization to an LVM partition.

You can use the custom file blueprint customization to create new files or to replace existing files. The parent directory of the file you specify must exist, otherwise, the image build fails. Ensure that the parent directory exists by specifying it in the [[customizations.directories]] customization.

If you combine the files customization with other blueprint customizations, it might affect how the other customizations function, or the other customizations might override the files customization.

With the [[customizations.files]] blueprint customization you can:

- Create new text files.

- Modify existing files. WARNING: this can overwrite the existing content.

- Set user and group ownership for the file you are creating.

- Set the mode permission in the octal format.

You cannot create or replace the following files:

- /etc/fstab

- /etc/shadow

- /etc/passwd

- /etc/group

You can create customized files and directories in your image by using the [[customizations.files]] and [[customizations.directories]] blueprint customizations. You can use these customizations only in the /etc directory.

These blueprint customizations are supported by all image types, except the image types that deploy OSTree commits, such as edge-raw-image, edge-installer, and edge-simplified-installer.

If you use customizations.directories with a directory path that already exists in the image and you set mode, user, or group for it, the image build fails. This prevents changing the ownership or permissions of the existing directory.

With the [[customizations.directories]] blueprint customization you can:

- Create new directories.

- Set user and group ownership for the directory you are creating.

- Set the directory mode permission in the octal format.

- Ensure that parent directories are created as needed.
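Here is a minimal sketch of a nested directory that assumes a hypothetical /etc/myapp/conf.d path. With ensure_parents = true, missing parent directories are created as needed. The entry also shows that the leading zero of mode is optional and that the owner can be given as an integer.

```toml
# Hypothetical nested path; /etc/myapp is also created if it is missing.
[[customizations.directories]]
path = "/etc/myapp/conf.d"
mode = "750"          # equivalent to "0750"; the leading zero is optional
user = 0              # owner given as an integer (UID 0 is root)
group = "root"        # owner group given as a string
ensure_parents = true
```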


Procedure

Customize the filesystem configuration in your blueprint:

    [[customizations.filesystem]]
    mountpoint = "MOUNTPOINT"
    size = MINIMUM-PARTITION-SIZE

The MINIMUM-PARTITION-SIZE value has no default size format. The blueprint customization supports the following values and units: kB to TB and KiB to TiB. For example, you can define the mount point size in bytes:

    [[customizations.filesystem]]
    mountpoint = "/var"
    size = 1073741824

Define the mount point size by using units. For example:

    [[customizations.filesystem]]
    mountpoint = "/opt"
    size = "20 GiB"

    [[customizations.filesystem]]
    mountpoint = "/boot"
    size = "1 GiB"

Create customized directories under the /etc directory for your image by using [[customizations.directories]]:

    [[customizations.directories]]
    path = "/etc/directory_name"
    mode = "octal_access_permission"
    user = "user_string_or_integer"
    group = "group_string_or_integer"
    ensure_parents = boolean

The blueprint entries are described as follows:

- path - Mandatory - enter the path to the directory that you want to create. It must be an absolute path under the /etc directory.

- mode - Optional - set the access permission on the directory, in the octal format. If you do not specify a permission, it defaults to 0755. The leading zero is optional.

- user - Optional - set a user as the owner of the directory. If you do not specify a user, it defaults to root. You can specify the user as a string or as an integer.

- group - Optional - set a group as the owner of the directory. If you do not specify a group, it defaults to root. You can specify the group as a string or as an integer.

- ensure_parents - Optional - specify whether you want to create parent directories as needed. If you do not specify a value, it defaults to false.

Create customized files under the /etc directory for your image by using [[customizations.files]]:

    [[customizations.files]]
    path = "/etc/directory_name/file_name"
    mode = "octal_access_permission"
    user = "user_string_or_integer"
    group = "group_string_or_integer"
    data = "Hello world!"

The blueprint entries are described as follows:

- path - Mandatory - enter the path to the file that you want to create. It must be an absolute path under the /etc directory.

- mode - Optional - set the access permission on the file, in the octal format. If you do not specify a permission, it defaults to 0644. The leading zero is optional.

- user - Optional - set a user as the owner of the file. If you do not specify a user, it defaults to root. You can specify the user as a string or as an integer.

- group - Optional - set a group as the owner of the file. If you do not specify a group, it defaults to root. You can specify the group as a string or as an integer.

- data - Optional - specify the content of a plain text file. If you do not specify any content, an empty file is created.
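To write more than one line of content, you can use a TOML multi-line string for data. This sketch assumes a hypothetical /etc/myapp-banner.txt file placed directly under /etc. Because /etc already exists, no directory entry is needed for the parent.

```toml
# Hypothetical file directly under /etc, which already exists in the image.
[[customizations.files]]
path = "/etc/myapp-banner.txt"
mode = "0644"
user = "root"
group = "root"
# TOML trims the newline that immediately follows the opening triple quotes.
data = """
Authorized use only.
All activity may be monitored.
"""
```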

5.4. Packages installed by RHEL image builder

When you create a system image using RHEL image builder, the system installs a set of base package groups.

When you add components to your blueprint, ensure that the packages in those components do not conflict with any other package components. Otherwise, dependency resolution fails and RHEL image builder cannot create your customized image. To check that the packages do not conflict, run the following command:

# composer-cli blueprints depsolve BLUEPRINT-NAME
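For example, you add a package component to a blueprint as a [[packages]] entry. The following sketch uses tmux with the version glob "*", which matches any available version. After you edit the blueprint, push it again and rerun the depsolve command to check for conflicts.

```toml
# Add a package component to the blueprint.
# "*" is a version glob that matches any available version.
[[packages]]
name = "tmux"
version = "*"
```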

By default, RHEL image builder uses the Core group as the base list of packages.

Table 5.1. Default packages to support image type creation

| Image type | Default Packages |
|---|---|


Additional resources

Chapter 6. Building simplified installer images to provision a RHEL for Edge image

You can build a RHEL for Edge Simplified Installer image, which is optimized for unattended installation to a device, and provision the image to a RHEL for Edge image.

6.1. Simplified installer image build and deployment

Build a RHEL for Edge Simplified Installer image by using the edge-simplified-installer image type.

To build a RHEL for Edge Simplified Installer image, provide an existing OSTree commit. The resulting simplified image contains a raw image that has the OSTree commit deployed. After you boot the Simplified installer ISO image, it provisions a RHEL for Edge system that you can use on a hard disk or as a boot image in a virtual machine. You can log in to the deployed system with the user name and password that you specified in the blueprint that you used to create the Simplified Installer image.

The RHEL for Edge Simplified Installer image is optimized for unattended installation to a device and supports both network-based and non-network-based deployments. However, for network-based deployment, it supports only UEFI HTTP boot.

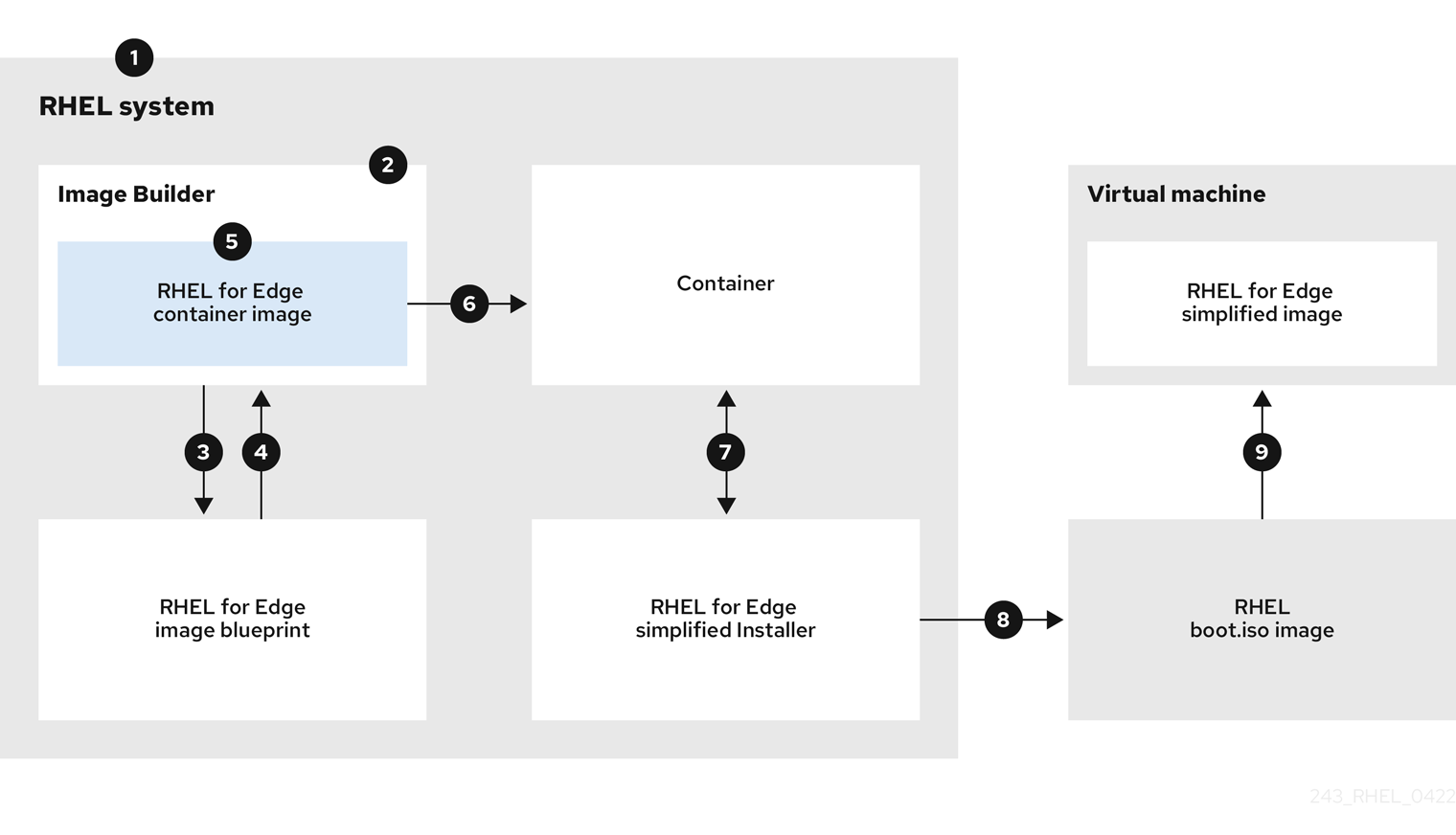

Composing and deploying a simplified RHEL for Edge image involves the following high-level steps:

- Install and register a RHEL system

- Install RHEL image builder

- Using RHEL image builder, create a blueprint with customizations for RHEL for Edge Container image

- Import the RHEL for Edge blueprint into RHEL image builder

- Create a RHEL for Edge image embedded in an OCI container with a web server ready to deploy the commit as an OSTree repository

- Create a blueprint for the edge-simplified-installer image

- Build a simplified RHEL for Edge image

- Download the RHEL for Edge simplified image

- Install the raw image by booting the edge-simplified-installer image with virt-install

The following diagram represents the RHEL for Edge Simplified building and provisioning workflow:

Figure 6.1. Building and provisioning RHEL for Edge in network-based environment

6.2. Creating a blueprint for a Simplified image using RHEL image builder CLI

To create a blueprint for a simplified RHEL for Edge image, you must customize it with a device file location to enable an unattended installation to a device, and a URL to perform the initial device credential exchange. You must also specify users and user groups in the blueprint. To do so, follow these steps:

Procedure

Create a plain text file in the Tom’s Obvious, Minimal Language (TOML) format, with the following content:

    name = "simplified-installer-blueprint"
    description = "blueprint for the simplified installer image"
    version = "0.0.1"
    packages = []
    modules = []
    groups = []
    distro = ""

    [customizations]
    installation_device = "/dev/vda"

    [[customizations.user]]
    name = "admin"
    password = "admin"
    groups = ["users", "wheel"]

    [customizations.fdo]
    manufacturing_server_url = "http://10.0.0.2:8080"
    diun_pub_key_insecure = "true"

Note: The FDO customization in the blueprint is optional. You can build your RHEL for Edge Simplified Installer image without it, with no errors.

- name is the name and description is the description for your blueprint.

- 0.0.1 is the version number according to the Semantic Versioning scheme.

- Modules describe the package name and matching version glob to be installed into the image, for example, the package name = "tmux" and the matching version glob is version = "2.9a". Notice that currently there are no differences between packages and modules.

- Groups are package groups to be installed into the image, for example the anaconda-tools package group. If you do not know the modules and groups, leave them empty.

- installation_device is the customization to enable an unattended installation to your device.

- manufacturing_server_url is the URL to perform the initial device credential exchange.

- name is the user name to log in to the image.

- password is a password of your choice.

- groups are any user groups, such as "widget".

Push (import) the blueprint to the RHEL image builder server:

# composer-cli blueprints push blueprint-name.toml

Show the blueprint to check whether it was successfully pushed and exists:

# composer-cli blueprints show blueprint-name

Check whether the components and versions listed in the blueprint and their dependencies are valid:

# composer-cli blueprints depsolve blueprint-name

Additional resources

6.3. Creating a RHEL for Edge Simplified Installer image using image builder CLI

To create a RHEL for Edge Simplified image by using the RHEL image builder command-line interface, ensure that you meet the following prerequisites and then follow the procedure.

Prerequisites

- You created a blueprint for the RHEL for Edge Simplified image.

- You served an OSTree repository of the commit to embed in the image. For example, http://10.0.2.2:8080/repo. See Setting up a web server to install RHEL for Edge image.

Procedure

Create the bootable ISO image.

    # composer-cli compose start-ostree \
    blueprint-name \
    edge-simplified-installer \
    --ref rhel/8/x86_64/edge \
    --url URL-OSTree-repository

Where,

-

blueprint-name is the RHEL for Edge blueprint name. -