Operations Guide

Operational tasks for Red Hat Ceph Storage

Abstract

Chapter 1. Introduction to the Ceph Orchestrator

As a storage administrator, you can use the Ceph Orchestrator with the Cephadm utility to discover devices and create services in a Red Hat Ceph Storage cluster.

1.1. Use of the Ceph Orchestrator

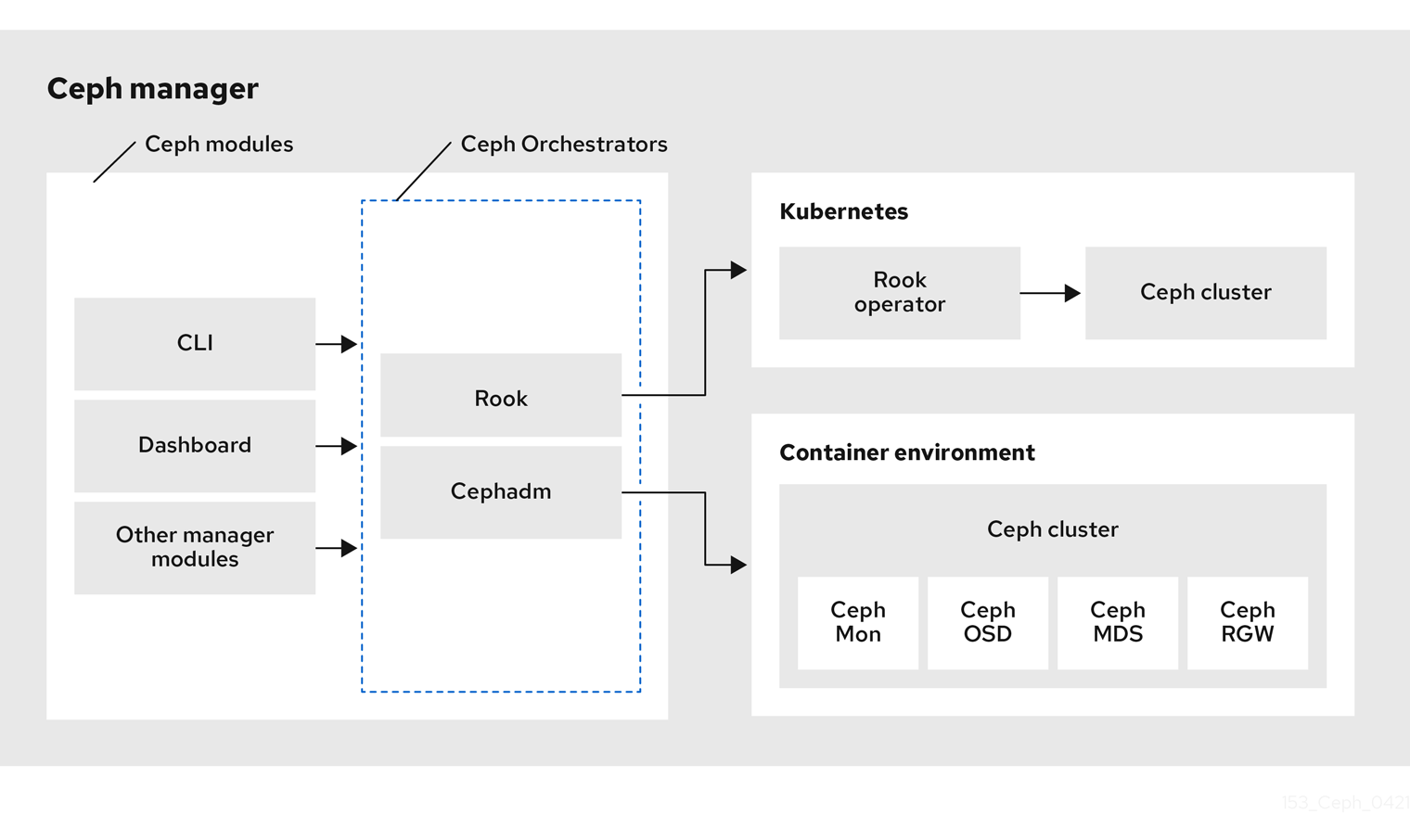

Red Hat Ceph Storage Orchestrators are manager modules that primarily act as a bridge between a Red Hat Ceph Storage cluster and deployment tools like Rook and Cephadm for a unified experience. They also integrate with the Ceph command line interface and Ceph Dashboard.

The following is a workflow diagram of Ceph Orchestrator:

Types of Red Hat Ceph Storage Orchestrators

There are three main types of Red Hat Ceph Storage Orchestrators:

Orchestrator CLI - These are common APIs used in Orchestrators and include a set of commands that can be implemented. These APIs also provide a common command line interface (CLI) to orchestrate ceph-mgr modules with external orchestration services. The following nomenclature is used with the Ceph Orchestrator:

- Host: This is the host name of the physical host and not the pod name, DNS name, container name, or host name inside the container.

- Service type: This is the type of the service, such as nfs, mds, osd, mon, rgw, mgr, and iscsi.

- Service: A functional service provided by a Ceph storage cluster, such as the monitors service, managers service, OSD services, Ceph Object Gateway service, and NFS service.

- Daemon: A specific instance of a service deployed on one or more hosts. For example, a Ceph Object Gateway service can have different Ceph Object Gateway daemons running on three different hosts.

Cephadm Orchestrator - This is a Ceph Orchestrator module that does not rely on an external tool such as Rook or Ansible, but rather manages nodes in a cluster by establishing an SSH connection and issuing explicit management commands. This module is intended for day-one and day-two operations.

Using the Cephadm Orchestrator is the recommended way of installing a Ceph storage cluster without leveraging any deployment frameworks like Ansible. The idea is to provide the manager daemon with access to an SSH configuration and key that is able to connect to all nodes in a cluster to perform any management operations, like creating an inventory of storage devices, deploying and replacing OSDs, or starting and stopping Ceph daemons. In addition, the Cephadm Orchestrator will deploy container images managed by systemd in order to allow independent upgrades of co-located services.

This orchestrator will also likely highlight a tool that encapsulates all necessary operations to manage the deployment of container image based services on the current host, including a command that bootstraps a minimal cluster running a Ceph Monitor and a Ceph Manager.

Rook Orchestrator - Rook is an orchestration tool that uses the Kubernetes Rook operator to manage a Ceph storage cluster running inside a Kubernetes cluster. The rook module provides integration between Ceph’s Orchestrator framework and Rook. Rook is an open source cloud-native storage operator for Kubernetes.

Rook follows the “operator” model, in which a custom resource definition (CRD) object is defined in Kubernetes to describe a Ceph storage cluster and its desired state, and a rook operator daemon is running in a control loop that compares the current cluster state to desired state and takes steps to make them converge. The main object describing Ceph’s desired state is the Ceph storage cluster CRD, which includes information about which devices should be consumed by OSDs, how many monitors should be running, and what version of Ceph should be used. Rook defines several other CRDs to describe RBD pools, CephFS file systems, and so on.

The Rook Orchestrator module is the glue that runs in the ceph-mgr daemon and implements the Ceph orchestration API by making changes to the Ceph storage cluster in Kubernetes that describe desired cluster state. A Rook cluster’s ceph-mgr daemon is running as a Kubernetes pod, and hence, the rook module can connect to the Kubernetes API without any explicit configuration.

Chapter 2. Management of services using the Ceph Orchestrator

As a storage administrator, after installing the Red Hat Ceph Storage cluster, you can monitor and manage the services in a storage cluster using the Ceph Orchestrator. A service is a group of daemons that are configured together.

This section covers the following administrative information:

- Checking service status.

- Checking daemon status.

- Placement specification of the Ceph Orchestrator.

- Deploying the Ceph daemons using the command line interface.

- Deploying the Ceph daemons on a subset of hosts using the command line interface.

- Service specification of the Ceph Orchestrator.

- Deploying the Ceph daemons using the service specification.

2.1. Checking service status

You can check the following status of the services of the Red Hat Ceph Storage cluster using the ceph orch ls command:

- Print a list of services.

- Locate the service whose status you want to check.

- Print the status of the service.

If the services are applied with the ceph orch apply command while bootstrapping, changing the service specification file is complicated. Instead, you can use the --export option with the ceph orch ls command to export the running specification, update the yaml file, and re-apply the service.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Log into the cephadm shell.

Procedure

Print a list of services:

Syntax

ceph orch ls [--service_type SERVICE_TYPE] [--service_name SERVICE_NAME] [--export] [--format FORMAT] [--refresh]

The format can be plain, json, json-pretty, yaml, xml-pretty, or xml.

Example

[ceph: root@host01 /]# ceph orch ls

Check the status of a particular service or a daemon:

Syntax

ceph orch ls [--service_type SERVICE_TYPE] [--service_name SERVICE_NAME] [--refresh]

Example

[ceph: root@host01 /]# ceph orch ls --service-type mds
[ceph: root@host01 /]# ceph orch ls --service-name rgw.realm.myzone

Export the service specification:

Example

[ceph: root@host01 /]# ceph orch ls --service-type mgr --export > mgr.yaml
[ceph: root@host01 /]# ceph orch ls --export > cluster.yaml

This exports the file in the .yaml file format. This file can be used with the ceph orch apply -i command for retrieving the service specification of a single service.
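For scripted checks, the same listing can be requested with --format json and post-processed. The following Python sketch compares running and expected daemon counts per service. It assumes that each JSON entry carries a service_name field and a status object with running and size counts. Those field names, the helper names, and the sample data are assumptions for illustration, so verify them against the output of your own cluster.

```python
import json


def summarize_services(orch_ls_json):
    """Map each service name to a (running, expected) tuple.

    Assumes the JSON layout described above; missing counts default to 0.
    """
    summary = {}
    for service in json.loads(orch_ls_json):
        status = service.get("status", {})
        summary[service["service_name"]] = (
            status.get("running", 0),
            status.get("size", 0),
        )
    return summary


def services_below_target(summary):
    """Return the names of services running fewer daemons than expected."""
    return sorted(
        name for name, (running, size) in summary.items() if running < size
    )


# Hypothetical sample, shaped like the assumed `ceph orch ls --format json` output.
SAMPLE = """
[
  {"service_type": "mgr", "service_name": "mgr",
   "status": {"running": 2, "size": 2}},
  {"service_type": "mon", "service_name": "mon",
   "status": {"running": 2, "size": 3}}
]
"""

if __name__ == "__main__":
    print(services_below_target(summarize_services(SAMPLE)))
```

In practice, the JSON text would come from running ceph orch ls --format json inside the cephadm shell rather than from a hard-coded sample.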

2.2. Checking daemon status

A daemon is a systemd unit that is running and is part of the service.

You can check the following status of the daemons of the Red Hat Ceph Storage cluster using the ceph orch ps command:

- Print a list of all the daemons.

- Query the status of the target daemon.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Log into the cephadm shell.

Procedure

Print a list of daemons:

Syntax

ceph orch ps [--daemon-type DAEMON_TYPE] [--service_name SERVICE_NAME] [--daemon_id DAEMON_ID] [--format FORMAT] [--refresh]

Example

[ceph: root@host01 /]# ceph orch ps

Check the status of a particular service instance:

Syntax

ceph orch ps [--daemon-type DAEMON_TYPE] [--daemon_id DAEMON_ID] [--refresh]

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type osd --daemon_id 0

2.3. Placement specification of the Ceph Orchestrator

You can use the Ceph Orchestrator to deploy OSD, mon, mgr, mds, rgw, and iSCSI services. Red Hat recommends deploying services using placement specifications. To deploy a service using the Ceph Orchestrator, you need to know where to deploy the daemons and how many to deploy. Placement specifications can be passed either as command line arguments or as a service specification in a YAML file.

There are two ways of deploying the services using the placement specification:

Using the placement specification directly in the command line interface. For example, if you want to deploy three monitors on the hosts, running the following command deploys three monitors on host01, host02, and host03.

Example

[ceph: root@host01 /]# ceph orch apply mon --placement="3 host01 host02 host03"

Using the placement specification in the YAML file. For example, if you want to deploy node-exporter on all the hosts, then you can specify the following in the yaml file.

Example

service_type: node-exporter
placement:
  host_pattern: '*'
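When the command line form is generated by a script, the placement string can be assembled programmatically. The following Python sketch builds the explicit "count plus host names" form shown above and the argument list for ceph orch apply. The function names are hypothetical helpers and not part of any Ceph library.

```python
def placement_arg(count, hosts):
    """Build an explicit placement string such as '3 host01 host02 host03'."""
    if count < 1:
        raise ValueError("count must be at least 1")
    if not hosts:
        raise ValueError("at least one host name is required")
    return " ".join([str(count), *hosts])


def apply_command(service_type, placement):
    """Return the argument list for `ceph orch apply` with --placement."""
    return ["ceph", "orch", "apply", service_type, f"--placement={placement}"]


if __name__ == "__main__":
    hosts = ["host01", "host02", "host03"]
    print(apply_command("mon", placement_arg(3, hosts)))
```

Returning an argument list rather than a single string means that the result can be handed to subprocess.run without shell quoting around the spaces in the placement value.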

2.4. Deploying the Ceph daemons using the command line interface

Using the Ceph Orchestrator, you can deploy daemons such as Ceph Manager, Ceph Monitors, Ceph OSDs, the monitoring stack, and others using the ceph orch command. The placement specification is passed as the --placement argument with the Orchestrator commands.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the storage cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Use one of the following methods to deploy the daemons on the hosts:

Method 1: Specify the number of daemons and the host names:

Syntax

ceph orch apply SERVICE_NAME --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2 HOST_NAME_3"

Example

[ceph: root@host01 /]# ceph orch apply mon --placement="3 host01 host02 host03"

Method 2: Add the labels to the hosts and then deploy the daemons using the labels:

Add the labels to the hosts:

Syntax

ceph orch host label add HOSTNAME_1 LABEL

Example

[ceph: root@host01 /]# ceph orch host label add host01 mon

Deploy the daemons with labels:

Syntax

ceph orch apply DAEMON_NAME label:LABEL

Example

[ceph: root@host01 /]# ceph orch apply mon label:mon

Method 3: Add the labels to the hosts and deploy using the --placement argument:

Add the labels to the hosts:

Syntax

ceph orch host label add HOSTNAME_1 LABEL

Example

[ceph: root@host01 /]# ceph orch host label add host01 mon

Deploy the daemons using the label placement specification:

Syntax

ceph orch apply DAEMON_NAME --placement="label:LABEL"

Example

[ceph: root@host01 /]# ceph orch apply mon --placement="label:mon"

Verification

List the service:

Example

[ceph: root@host01 /]# ceph orch ls

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME
ceph orch ps --service_name=SERVICE_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mon
[ceph: root@host01 /]# ceph orch ps --service_name=mon

Additional Resources

- See the Adding hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide.

2.5. Deploying the Ceph daemons on a subset of hosts using the command line interface

You can use the --placement option to deploy daemons on a subset of hosts. In the placement specification, specify the number of daemons followed by the names of the hosts on which to deploy them.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

List the hosts on which you want to deploy the Ceph daemons:

Example

[ceph: root@host01 /]# ceph orch host ls

Deploy the daemons:

Syntax

ceph orch apply SERVICE_NAME --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2 HOST_NAME_3"

Example

[ceph: root@host01 /]# ceph orch apply mgr --placement="2 host01 host02 host03"

In this example, the mgr daemons are deployed on only two of the three listed hosts.

Verification

List the hosts:

Example

[ceph: root@host01 /]# ceph orch host ls

Additional Resources

- See the Listing hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide.

2.6. Service specification of the Ceph Orchestrator

A service specification is a data structure that specifies the service attributes and configuration settings used to deploy the Ceph service. The following is an example of a multi-document YAML file, cluster.yaml, for specifying service specifications:

Example

service_type: mon
placement:
  host_pattern: "mon*"
---
service_type: mgr
placement:
  host_pattern: "mgr*"
---
service_type: osd
service_id: default_drive_group
placement:
  host_pattern: "osd*"
data_devices:
  all: true

The properties of a service specification are defined by the following parameters:

- service_type: The type of service:

  - Ceph services like mon, crash, mds, mgr, osd, rbd, or rbd-mirror.

  - Ceph gateway like nfs or rgw.

  - Monitoring stack like Alertmanager, Prometheus, Grafana or Node-exporter.

  - Container for custom containers.

- service_id: A unique name of the service.

- placement: This is used to define where and how to deploy the daemons.

- unmanaged: If set to true, the Orchestrator will neither deploy nor remove any daemon associated with this service.
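As an illustration of how these parameters combine into a multi-document file, the following Python sketch renders simple specifications that use a host_pattern placement and joins them with the YAML document separator. It is a minimal sketch with hypothetical helper names. It performs no YAML escaping, so a YAML library is the safer choice for anything beyond plain names and patterns.

```python
def render_service_spec(service_type, host_pattern, service_id=None):
    """Render one service specification document as YAML text."""
    lines = [f"service_type: {service_type}"]
    if service_id is not None:
        lines.append(f"service_id: {service_id}")
    lines.append("placement:")
    lines.append(f'  host_pattern: "{host_pattern}"')
    return "\n".join(lines) + "\n"


def render_cluster_spec(documents):
    """Join rendered documents with the YAML document separator."""
    return "---\n".join(documents)


if __name__ == "__main__":
    print(
        render_cluster_spec(
            [
                render_service_spec("mon", "mon*"),
                render_service_spec("mgr", "mgr*"),
            ]
        ),
        end="",
    )
```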

Stateless service of Orchestrators

A stateless service is a service that does not need information of the state to be available. For example, to start an rgw service, additional information is not needed to start or run the service. The rgw service does not create information about this state in order to provide the functionality. Regardless of when the rgw service starts, the state is the same.

2.7. Deploying the Ceph daemons using the service specification

Using the Ceph Orchestrator, you can deploy daemons such as Ceph Manager, Ceph Monitors, Ceph OSDs, the monitoring stack, and others using the service specification in a YAML file.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

Procedure

Create the yaml file:

Example

[root@host01 ~]# touch mon.yaml

This file can be configured in two different ways:

Edit the file to include the host details in placement specification:

Syntax

service_type: SERVICE_NAME
placement:
  hosts:
    - HOST_NAME_1
    - HOST_NAME_2

Example

service_type: mon
placement:
  hosts:
    - host01
    - host02
    - host03

Edit the file to include the label details in placement specification:

Syntax

service_type: SERVICE_NAME
placement:
  label: "LABEL_1"

Example

service_type: mon
placement:
  label: "mon"

Optional: You can also use extra container arguments in the service specification files such as CPUs, CA certificates, and other files while deploying services:

Example

extra_container_args:
  - "-v"
  - "/etc/pki/ca-trust/extracted:/etc/pki/ca-trust/extracted:ro"
  - "--security-opt"
  - "label=disable"
  - "cpus=2"

Mount the YAML file under a directory in the container:

Example

[root@host01 ~]# cephadm shell --mount mon.yaml:/var/lib/ceph/mon/mon.yaml

Navigate to the directory:

Example

[ceph: root@host01 /]# cd /var/lib/ceph/mon/

Deploy the Ceph daemons using service specification:

Syntax

ceph orch apply -i FILE_NAME.yaml

Example

[ceph: root@host01 mon]# ceph orch apply -i mon.yaml

Verification

List the service:

Example

[ceph: root@host01 /]# ceph orch ls

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mon

Additional Resources

- See the Listing hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide.

Chapter 3. Management of hosts using the Ceph Orchestrator

As a storage administrator, you can use the Ceph Orchestrator with Cephadm in the backend to add, list, and remove hosts in an existing Red Hat Ceph Storage cluster.

You can also add labels to hosts. Labels are free-form and have no specific meanings. Each host can have multiple labels. For example, apply the mon label to all hosts that have monitor daemons deployed, mgr for all hosts with manager daemons deployed, rgw for Ceph object gateways, and so on.

Labeling all the hosts in the storage cluster helps to simplify system management tasks by allowing you to quickly identify the daemons running on each host. In addition, you can use the Ceph Orchestrator or a YAML file to deploy or remove daemons on hosts that have specific host labels.

This section covers the following administrative tasks:

- Adding hosts using the Ceph Orchestrator.

- Adding multiple hosts using the Ceph Orchestrator.

- Listing hosts using the Ceph Orchestrator.

- Adding labels to hosts using the Ceph Orchestrator.

- Removing a label from a host.

- Removing hosts using the Ceph Orchestrator.

- Placing hosts in the maintenance mode using the Ceph Orchestrator.

3.1. Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

- The IP addresses of the new hosts should be updated in the /etc/hosts file.

3.2. Adding hosts using the Ceph Orchestrator

You can use the Ceph Orchestrator with Cephadm in the backend to add hosts to an existing Red Hat Ceph Storage cluster.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all nodes in the storage cluster.

- Register the nodes to the CDN and attach subscriptions.

- Ansible user with sudo and passwordless ssh access to all nodes in the storage cluster.

Procedure

From the Ceph administration node, log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Extract the cluster’s public SSH keys to a folder:

Syntax

ceph cephadm get-pub-key > ~/PATH

Example

[ceph: root@host01 /]# ceph cephadm get-pub-key > ~/ceph.pub

Copy the Ceph cluster’s public SSH keys to the root user’s authorized_keys file on the new host:

Syntax

ssh-copy-id -f -i ~/PATH root@HOST_NAME_2

Example

[ceph: root@host01 /]# ssh-copy-id -f -i ~/ceph.pub root@host02

From the Ansible administration node, add the new host to the Ansible inventory file. The default location for the file is /usr/share/cephadm-ansible/hosts. The following example shows the structure of a typical inventory file:

Example

host01
host02
host03

[admin]
host00

Note: If you have previously added the new host to the Ansible inventory file and run the preflight playbook on the host, skip to step 6.

Run the preflight playbook with the --limit option:

Syntax

ansible-playbook -i INVENTORY_FILE cephadm-preflight.yml --extra-vars "ceph_origin=rhcs" --limit NEWHOST

Example

[ceph-admin@admin cephadm-ansible]$ ansible-playbook -i hosts cephadm-preflight.yml --extra-vars "ceph_origin=rhcs" --limit host02

The preflight playbook installs podman, lvm2, chronyd, and cephadm on the new host. After installation is complete, cephadm resides in the /usr/sbin/ directory.

From the Ceph administration node, log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Use the cephadm orchestrator to add hosts to the storage cluster:

Syntax

ceph orch host add HOST_NAME IP_ADDRESS_OF_HOST [--labels=LABEL_NAME_1,LABEL_NAME_2]

The --labels option is optional and adds the labels when adding the hosts. You can add multiple labels to the host.

Example

[ceph: root@host01 /]# ceph orch host add host02 10.10.128.70 --labels=mon,mgr

Verification

List the hosts:

Example

[ceph: root@host01 /]# ceph orch host ls

Additional Resources

- See the Listing hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide.

- For more information about the cephadm-preflight playbook, see the Running the preflight playbook section in the Red Hat Ceph Storage Installation Guide.

- See the Registering Red Hat Ceph Storage nodes to the CDN and attaching subscriptions section in the Red Hat Ceph Storage Installation Guide.

- See the Creating an Ansible user with sudo access section in the Red Hat Ceph Storage Installation Guide.

3.3. Setting the initial CRUSH location of host

You can add the location identifier to the host which instructs cephadm to create a new CRUSH host located in the specified hierarchy.

The location attribute only affects the initial CRUSH location. Subsequent changes of the location property are ignored. Also, removing a host does not remove any CRUSH buckets.

Prerequisites

- A running Red Hat Ceph Storage cluster.

Procedure

Edit the hosts.yaml file to include the following details:

Example

service_type: host
hostname: host01
addr: 192.168.0.11
location:
  rack: rack1

Mount the YAML file under a directory in the container:

Example

[root@host01 ~]# cephadm shell --mount hosts.yaml:/var/lib/ceph/hosts.yaml

Navigate to the directory:

Example

[ceph: root@host01 /]# cd /var/lib/ceph/

Deploy the hosts using service specification:

Syntax

ceph orch apply -i FILE_NAME.yaml

Example

[ceph: root@host01 ceph]# ceph orch apply -i hosts.yaml

Additional Resources

- See the Listing hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide.

3.4. Adding multiple hosts using the Ceph Orchestrator

You can use the Ceph Orchestrator to add multiple hosts to a Red Hat Ceph Storage cluster at the same time using the service specification in YAML file format.

Prerequisites

- A running Red Hat Ceph Storage cluster.

Procedure

Create the hosts.yaml file:

Example

[root@host01 ~]# touch hosts.yaml

Edit the hosts.yaml file to include the following details:

Example

service_type: host
addr: host01
hostname: host01
labels:
- mon
- osd
- mgr
---
service_type: host
addr: host02
hostname: host02
labels:
- mon
- osd
- mgr
---
service_type: host
addr: host03
hostname: host03
labels:
- mon
- osd

Mount the YAML file under a directory in the container:

Example

[root@host01 ~]# cephadm shell --mount hosts.yaml:/var/lib/ceph/hosts.yaml

Navigate to the directory:

Example

[ceph: root@host01 /]# cd /var/lib/ceph/

Deploy the hosts using service specification:

Syntax

ceph orch apply -i FILE_NAME.yaml

Example

[ceph: root@host01 ceph]# ceph orch apply -i hosts.yaml

Verification

List the hosts:

Example

[ceph: root@host01 /]# ceph orch host ls
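For larger clusters, the hosts.yaml file used in this procedure can be generated rather than written by hand. The following Python sketch renders one host document per entry, with optional labels and an optional initial CRUSH location, and joins the documents with the YAML separator. The helper names are hypothetical, and the sketch performs no YAML escaping, so treat it as a starting point only.

```python
def render_host_spec(hostname, addr, labels=(), location=None):
    """Render one `service_type: host` document as YAML text."""
    lines = ["service_type: host", f"addr: {addr}", f"hostname: {hostname}"]
    if labels:
        lines.append("labels:")
        lines.extend(f"- {label}" for label in labels)
    if location:
        # Only the initial CRUSH location is affected by this attribute.
        lines.append("location:")
        lines.extend(f"  {bucket}: {name}" for bucket, name in location.items())
    return "\n".join(lines) + "\n"


def render_hosts_file(hosts):
    """Join host documents into a multi-document YAML string."""
    return "---\n".join(render_host_spec(**host) for host in hosts)


if __name__ == "__main__":
    hosts = [
        {"hostname": "host01", "addr": "host01", "labels": ["mon", "osd", "mgr"]},
        {"hostname": "host03", "addr": "host03", "labels": ["mon", "osd"]},
    ]
    print(render_hosts_file(hosts), end="")
```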

Additional Resources

- See the Listing hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide.

3.5. Listing hosts using the Ceph Orchestrator

You can list hosts of a Ceph cluster with Ceph Orchestrators.

The STATUS of the hosts is blank in the output of the ceph orch host ls command.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the storage cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

List the hosts of the cluster:

Example

[ceph: root@host01 /]# ceph orch host ls

You will see that the STATUS of the hosts is blank, which is expected.

3.6. Adding labels to hosts using the Ceph Orchestrator

You can use the Ceph Orchestrator to add labels to hosts in an existing Red Hat Ceph Storage cluster. A few examples of labels are mgr, mon, and osd based on the service deployed on the hosts.

You can also add the following host labels, which begin with _ and have a special meaning to cephadm:

- _no_schedule: This label prevents cephadm from scheduling or deploying daemons on the host. If it is added to an existing host that already contains Ceph daemons, it causes cephadm to move those daemons elsewhere, except OSDs, which are not removed automatically. When a host is added with the _no_schedule label, no daemons are deployed on it. When the daemons are drained before the host is removed, the _no_schedule label is set on that host.

- _no_autotune_memory: This label does not autotune memory on the host. It prevents the daemon memory from being tuned even when the osd_memory_target_autotune option or other similar options are enabled for one or more daemons on that host.

- _admin: By default, the _admin label is applied to the bootstrapped host in the storage cluster and the client.admin key is set to be distributed to that host with the ceph orch client-keyring {ls|set|rm} function. Adding this label to additional hosts normally causes cephadm to deploy configuration and keyring files in the /etc/ceph directory.
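The interaction between ordinary labels and _no_schedule can be modeled in a few lines. The following Python sketch selects the hosts that a label-based placement would consider, skipping any host that carries _no_schedule. It is a simplified model of the behavior described above with a hypothetical function name, not cephadm's scheduling code.

```python
NO_SCHEDULE = "_no_schedule"


def hosts_for_label(host_labels, label):
    """Return hosts carrying `label`, skipping any host marked _no_schedule.

    `host_labels` maps each host name to a collection of its labels.
    """
    return sorted(
        host
        for host, labels in host_labels.items()
        if label in labels and NO_SCHEDULE not in labels
    )


if __name__ == "__main__":
    cluster = {
        "host01": {"mon", "_admin"},
        "host02": {"mon", "_no_schedule"},
        "host03": {"osd"},
    }
    print(hosts_for_label(cluster, "mon"))
```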

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the storage cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Add labels to the hosts:

Syntax

ceph orch host label add HOST_NAME LABEL_NAME

Example

[ceph: root@host01 /]# ceph orch host label add host02 mon

Verification

List the hosts:

Example

[ceph: root@host01 /]# ceph orch host ls

3.7. Removing a label from a host

You can use the Ceph orchestrator to remove a label from a host.

Prerequisites

- A storage cluster that has been installed and bootstrapped.

- Root-level access to all nodes in the storage cluster.

Procedure

Launch the cephadm shell:

[root@host01 ~]# cephadm shell
[ceph: root@host01 /]#

Remove the label.

Syntax

ceph orch host label rm HOSTNAME LABEL

Example

[ceph: root@host01 /]# ceph orch host label rm host02 mon

Verification

List the hosts:

Example

[ceph: root@host01 /]# ceph orch host ls

3.8. Removing hosts using the Ceph Orchestrator

You can remove hosts of a Ceph cluster with the Ceph Orchestrators. All the daemons are removed with the drain option, which adds the _no_schedule label so that no daemons can be deployed on the host until the operation is complete.

If you are removing the bootstrap host, be sure to copy the admin keyring and the configuration file to another host in the storage cluster before you remove the host.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

- Hosts are added to the storage cluster.

- All the services are deployed.

- Cephadm is deployed on the nodes where the services have to be removed.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Fetch the host details:

Example

[ceph: root@host01 /]# ceph orch host ls

Drain all the daemons from the host:

Syntax

ceph orch host drain HOSTNAME

Example

[ceph: root@host01 /]# ceph orch host drain host02

The _no_schedule label is automatically applied to the host, which blocks deployment.

Check the status of OSD removal:

Example

[ceph: root@host01 /]# ceph orch osd rm status

When no placement groups (PG) are left on the OSD, the OSD is decommissioned and removed from the storage cluster.

Check if all the daemons are removed from the storage cluster:

Syntax

ceph orch ps HOSTNAME

Example

[ceph: root@host01 /]# ceph orch ps host02

Remove the host:

Syntax

ceph orch host rm HOSTNAME

Example

[ceph: root@host01 /]# ceph orch host rm host02
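The order of the steps above matters: the host should be removed only after ceph orch ps reports no remaining daemons on it. The following Python sketch lists the commands of the procedure in order and checks a JSON daemon listing before the final step. It assumes that ceph orch ps HOSTNAME --format json returns a JSON list whose entries have daemon_type and daemon_id fields. Those field names and all helper names are assumptions for illustration.

```python
import json


def removal_plan(hostname):
    """Return the commands of the removal procedure, in order."""
    return [
        ["ceph", "orch", "host", "drain", hostname],
        ["ceph", "orch", "osd", "rm", "status"],
        ["ceph", "orch", "ps", hostname],
        ["ceph", "orch", "host", "rm", hostname],
    ]


def remaining_daemons(orch_ps_json):
    """Return the names of daemons still listed for the host."""
    return sorted(
        f"{daemon.get('daemon_type', '?')}.{daemon.get('daemon_id', '?')}"
        for daemon in json.loads(orch_ps_json)
    )


def safe_to_remove(orch_ps_json):
    """True when the listing shows no daemons left on the host."""
    return not remaining_daemons(orch_ps_json)
```

A wrapper script could run the first three commands, poll until safe_to_remove returns True, and only then run the last command.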

Additional Resources

- See the Adding hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide for more information.

- See the Listing hosts using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide for more information.

3.9. Placing hosts in the maintenance mode using the Ceph Orchestrator

You can use the Ceph Orchestrator to place the hosts in and out of the maintenance mode. The ceph orch host maintenance enter command stops the systemd target which causes all the Ceph daemons to stop on the host. Similarly, the ceph orch host maintenance exit command restarts the systemd target and the Ceph daemons restart on their own.

The orchestrator adopts the following workflow when the host is placed in maintenance:

- Confirms the removal of hosts does not impact data availability by running the orch host ok-to-stop command.

- If the host has Ceph OSD daemons, it applies noout to the host subtree to prevent data migration from triggering during the planned maintenance slot.

- Stops the Ceph target, thereby stopping all the daemons.

- Disables the ceph target on the host, to prevent a reboot from automatically starting Ceph services.

Exiting maintenance reverses the above sequence.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

- Hosts added to the cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

You can either place the host in maintenance mode or place it out of the maintenance mode:

Place the host in maintenance mode:

Syntax

ceph orch host maintenance enter HOST_NAME [--force]

Example

[ceph: root@host01 /]# ceph orch host maintenance enter host02 --force

The --force flag allows the user to bypass warnings, but not alerts.

Place the host out of the maintenance mode:

Syntax

ceph orch host maintenance exit HOST_NAME

Example

[ceph: root@host01 /]# ceph orch host maintenance exit host02

Verification

List the hosts:

Example

[ceph: root@host01 /]# ceph orch host ls

Chapter 4. Management of monitors using the Ceph Orchestrator

As a storage administrator, you can deploy additional monitors using placement specification, add monitors using service specification, add monitors to a subnet configuration, and add monitors to specific hosts. Apart from this, you can remove the monitors using the Ceph Orchestrator.

By default, a typical Red Hat Ceph Storage cluster has three or five monitor daemons deployed on different hosts.

Red Hat recommends deploying five monitors if there are five or more nodes in a cluster.

Red Hat recommends deploying three monitors when Ceph is deployed with the OSP director.

Ceph deploys monitor daemons automatically as the cluster grows, and scales back monitor daemons automatically as the cluster shrinks. The smooth execution of this automatic growing and shrinking depends upon proper subnet configuration.

If your monitor nodes or your entire cluster are located on a single subnet, then Cephadm automatically adds up to five monitor daemons as you add new hosts to the cluster. Cephadm automatically configures the monitor daemons on the new hosts. The new hosts reside on the same subnet as the bootstrapped host in the storage cluster.

Cephadm can also deploy and scale monitors to correspond to changes in the size of the storage cluster.

4.1. Ceph Monitors

Ceph Monitors are lightweight processes that maintain a master copy of the storage cluster map. All Ceph clients contact a Ceph monitor and retrieve the current copy of the storage cluster map, enabling clients to bind to a pool and read and write data.

Ceph Monitors use a variation of the Paxos protocol to establish consensus about maps and other critical information across the storage cluster. Due to the nature of Paxos, Ceph requires a majority of monitors running to establish a quorum, thus establishing consensus.

Red Hat requires at least three monitors on separate hosts to receive support for a production cluster.

Red Hat recommends deploying an odd number of monitors. An odd number of Ceph Monitors has a higher resiliency to failures than an even number of monitors. For example, to maintain a quorum on a two-monitor deployment, Ceph cannot tolerate any failures; with three monitors, one failure; with four monitors, one failure; with five monitors, two failures. This is why an odd number is advisable. Summarizing, Ceph needs a majority of monitors to be running and to be able to communicate with each other, two out of three, three out of four, and so on.
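The failure tolerances listed above follow directly from the majority rule. The following Python sketch reproduces the arithmetic. The function names are illustrative only.

```python
def quorum_size(monitors):
    """Smallest number of monitors that forms a strict majority."""
    if monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors):
    """Number of monitors that can fail while a majority remains."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for count in (2, 3, 4, 5):
        print(count, quorum_size(count), tolerated_failures(count))
```

Going from three to four monitors raises the quorum size from two to three without raising the number of tolerated failures, which is why the even count brings no resiliency benefit.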

For an initial deployment of a multi-node Ceph storage cluster, Red Hat requires three monitors, increasing the number two at a time if a valid need for more than three monitors exists.

Since Ceph Monitors are lightweight, it is possible to run them on the same host as OpenStack nodes. However, Red Hat recommends running monitors on separate hosts.

Red Hat ONLY supports collocating Ceph services in containerized environments.

When you remove monitors from a storage cluster, consider that Ceph Monitors use the Paxos protocol to establish a consensus about the master storage cluster map. You must have a sufficient number of Ceph Monitors to establish a quorum.

Additional Resources

- See the Red Hat Ceph Storage Supported configurations Knowledgebase article for all the supported Ceph configurations.

4.2. Configuring monitor election strategy

The monitor election strategy identifies the net splits and handles failures. You can configure the monitor election strategy in three different modes:

- classic - This is the default mode in which the lowest ranked monitor is voted based on the elector module between the two sites.

- disallow - This mode lets you mark monitors as disallowed, in which case they will participate in the quorum and serve clients, but cannot be an elected leader. This lets you add monitors to a list of disallowed leaders. If a monitor is in the disallowed list, it will always defer to another monitor.

- connectivity - This mode is mainly used to resolve network discrepancies. It evaluates connection scores, based on pings that check liveness, provided by each monitor for its peers and elects the most connected and reliable monitor to be the leader. This mode is designed to handle net splits, which may happen if your cluster is stretched across multiple data centers or otherwise susceptible. This mode incorporates connection score ratings and elects the monitor with the best score. If a specific monitor is desired to be the leader, configure the election strategy so that the specific monitor is the first monitor in the list with a rank of 0.

Red Hat recommends that you stay in the classic mode unless you require features in the other modes.

Before constructing the cluster, change the election_strategy to classic, disallow, or connectivity in the following command:

Syntax

ceph mon set election_strategy {classic|disallow|connectivity}
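To make the difference between the three modes concrete, the following Python sketch models the leader choice in a deliberately simplified way. It is not Ceph's elector implementation: the function `elect_leader`, the `scores` mapping, and the rule that ties in connectivity mode go to the lowest rank are all assumptions made for illustration.

```python
from typing import Dict, Iterable, Optional


def elect_leader(
    strategy: str,
    ranks: Dict[str, int],
    disallowed: Iterable[str] = (),
    scores: Optional[Dict[str, float]] = None,
) -> str:
    """Pick a leader from monitors keyed by name, using a simplified model."""
    if strategy == "classic":
        # Lowest-ranked monitor wins.
        return min(ranks, key=lambda name: ranks[name])
    if strategy == "disallow":
        # Disallowed monitors stay in the quorum but cannot lead.
        blocked = set(disallowed)
        candidates = [name for name in ranks if name not in blocked]
        if not candidates:
            raise ValueError("every monitor is on the disallowed list")
        return min(candidates, key=lambda name: ranks[name])
    if strategy == "connectivity":
        if scores is None:
            raise ValueError("connectivity mode needs connection scores")
        # Best score wins; ties fall back to the lowest rank (assumption).
        return min(ranks, key=lambda name: (-scores[name], ranks[name]))
    raise ValueError(f"unknown strategy: {strategy}")


ranks = {"host01": 0, "host02": 1, "host03": 2}
print(elect_leader("classic", ranks))
print(elect_leader("disallow", ranks, disallowed=["host01"]))
print(elect_leader("connectivity", ranks, scores={"host01": 0.4, "host02": 0.9, "host03": 0.9}))
```

In this model, giving a monitor rank 0 makes it the preferred leader whenever scores are equal, which mirrors the advice given for the connectivity mode.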

4.3. Deploying the Ceph monitor daemons using the command line interface

The Ceph Orchestrator deploys one monitor daemon by default. You can deploy additional monitor daemons by using the placement specification in the command line interface. To deploy a different number of monitor daemons, specify a different number. If you do not specify the hosts where the monitor daemons should be deployed, the Ceph Orchestrator randomly selects the hosts and deploys the monitor daemons to them.

If you are using a cluster in stretched mode, before adding the Ceph Monitor, add the crush_location to the monitor manually:

Syntax

ceph mon add HOST IP_ADDRESS datacenter=DATACENTER

Example

[ceph: root@host01 /]# ceph mon add host01 213.222.226.50 datacenter=DC1
adding mon.host01 at [v2:213.222.226.50:3300/0,v1:213.222.226.50:6789/0]

In this example, datacenter=DC1 is the crush_location.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

- There are four different ways of deploying Ceph monitor daemons:

Method 1

Use placement specification to deploy monitors on hosts:

Note: Red Hat recommends that you use the --placement option to deploy on specific hosts.

Syntax

ceph orch apply mon --placement="HOST_NAME_1 HOST_NAME_2 HOST_NAME_3"

Example

[ceph: root@host01 /]# ceph orch apply mon --placement="host01 host02 host03"

Note: Be sure to include the bootstrap node as the first node in the command.

Important: Do not add the monitors individually, because ceph orch apply mon supersedes the previous placement and will not add the monitors to all the hosts. For example, if you run the following commands, then the first command creates a monitor on host01. Then the second command supersedes the monitor on host01 and creates a monitor on host02. Then the third command supersedes the monitor on host02 and creates a monitor on host03. Eventually, there is a monitor only on the third host.

# ceph orch apply mon host01
# ceph orch apply mon host02
# ceph orch apply mon host03

Method 2

Use a placement specification to deploy a specific number of monitors on specific hosts with labels:

Add the labels to the hosts:

Syntax

ceph orch host label add HOSTNAME_1 LABEL

Example

[ceph: root@host01 /]# ceph orch host label add host01 mon

Deploy the daemons:

Syntax

ceph orch apply mon --placement="HOST_NAME_1:mon HOST_NAME_2:mon HOST_NAME_3:mon"

Example

[ceph: root@host01 /]# ceph orch apply mon --placement="host01:mon host02:mon host03:mon"

Method 3

Use a placement specification to deploy a specific number of monitors on specific hosts:

Syntax

ceph orch apply mon --placement="NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_2 HOST_NAME_3"

Example

[ceph: root@host01 /]# ceph orch apply mon --placement="3 host01 host02 host03"

Method 4

Deploy monitor daemons randomly on the hosts in the storage cluster:

Syntax

ceph orch apply mon NUMBER_OF_DAEMONS

Example

[ceph: root@host01 /]# ceph orch apply mon 3

Verification

List the service:

Example

[ceph: root@host01 /]# ceph orch ls

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mon

4.4. Deploying the Ceph monitor daemons using the service specification

The Ceph Orchestrator deploys one monitor daemon by default. You can deploy additional monitor daemons by using a service specification, such as a YAML file.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

Procedure

Create the mon.yaml file:

Example

[root@host01 ~]# touch mon.yaml

Edit the mon.yaml file to include the following details:

Syntax

service_type: mon
placement:
  hosts:
    - HOST_NAME_1
    - HOST_NAME_2

Example

service_type: mon
placement:
  hosts:
    - host01
    - host02

Mount the YAML file under a directory in the container:

Example

[root@host01 ~]# cephadm shell --mount mon.yaml:/var/lib/ceph/mon/mon.yaml

Navigate to the directory:

Example

[ceph: root@host01 /]# cd /var/lib/ceph/mon/

Deploy the monitor daemons:

Syntax

ceph orch apply -i FILE_NAME.yaml

Example

[ceph: root@host01 mon]# ceph orch apply -i mon.yaml

Verification

List the service:

Example

[ceph: root@host01 /]# ceph orch ls

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mon
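If you maintain host lists elsewhere, the monitor service specification used in this procedure can be generated rather than typed by hand. The following Python sketch only builds the YAML text with the two keys used in this section, service_type and placement.hosts; the helper name `mon_service_spec` is hypothetical, and the sketch does not validate host names or talk to the cluster.

```python
from typing import List


def mon_service_spec(hosts: List[str]) -> str:
    """Return the text of a mon service specification for the given hosts."""
    if not hosts:
        raise ValueError("at least one host is required")
    lines = ["service_type: mon", "placement:", "  hosts:"]
    # One list entry per host, indented under the hosts key.
    lines += [f"    - {host}" for host in hosts]
    return "\n".join(lines) + "\n"


# Print a specification for two example hosts.
print(mon_service_spec(["host01", "host02"]), end="")
```

You could write the returned text to mon.yaml and then apply it as described in the procedure.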

4.5. Deploying the monitor daemons on specific network using the Ceph Orchestrator

The Ceph Orchestrator deploys one monitor daemon by default. You can explicitly specify the IP address or CIDR network for each monitor and control where each monitor is placed.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Disable automated monitor deployment:

Example

[ceph: root@host01 /]# ceph orch apply mon --unmanaged

Deploy monitors on hosts on a specific network:

Syntax

ceph orch daemon add mon HOST_NAME_1:IP_OR_NETWORK

Example

[ceph: root@host01 /]# ceph orch daemon add mon host03:10.1.2.123

Verification

List the service:

Example

[ceph: root@host01 /]# ceph orch ls

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mon

4.6. Removing the monitor daemons using the Ceph Orchestrator

To remove the monitor daemons from the host, you can just redeploy the monitor daemons on other hosts.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

- At least one monitor daemon deployed on the hosts.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Run the ceph orch apply command to deploy the required monitor daemons:

Syntax

ceph orch apply mon "NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_3"

If you want to remove monitor daemons from host02, then you can redeploy the monitors on other hosts.

Example

[ceph: root@host01 /]# ceph orch apply mon "2 host01 host03"

Verification

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mon

Additional Resources

- See Deploying the Ceph monitor daemons using the command line interface section in the Red Hat Ceph Storage Operations Guide for more information.

- See Deploying the Ceph monitor daemons using the service specification section in the Red Hat Ceph Storage Operations Guide for more information.

4.7. Removing a Ceph Monitor from an unhealthy storage cluster

You can remove a ceph-mon daemon from an unhealthy storage cluster. An unhealthy storage cluster is one that has placement groups persistently not in the active + clean state.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the Ceph Monitor node.

- At least one running Ceph Monitor node.

Procedure

Identify a surviving monitor and log into the host:

Syntax

ssh root@MONITOR_ID

Example

[root@admin ~]# ssh root@host00

Log in to each Ceph Monitor host and stop all the Ceph Monitors:

Syntax

cephadm unit --name DAEMON_NAME.HOSTNAME stop

Example

[root@host00 ~]# cephadm unit --name mon.host00 stop

Set up the environment suitable for extended daemon maintenance and to run the daemon interactively:

Syntax

cephadm shell --name DAEMON_NAME.HOSTNAME

Example

[root@host00 ~]# cephadm shell --name mon.host00

Extract a copy of the monmap file:

Syntax

ceph-mon -i HOSTNAME --extract-monmap TEMP_PATH

Example

[ceph: root@host00 /]# ceph-mon -i host00 --extract-monmap /tmp/monmap
2022-01-05T11:13:24.440+0000 7f7603bd1700 -1 wrote monmap to /tmp/monmap

Remove the non-surviving Ceph Monitor(s):

Syntax

monmaptool TEMP_PATH --rm HOSTNAME

Example

[ceph: root@host00 /]# monmaptool /tmp/monmap --rm host01

Inject the monitor map, with the non-surviving monitor(s) removed, into the surviving Ceph Monitor:

Syntax

ceph-mon -i HOSTNAME --inject-monmap TEMP_PATH

Example

[ceph: root@host00 /]# ceph-mon -i host00 --inject-monmap /tmp/monmap

Start only the surviving monitors:

Syntax

cephadm unit --name DAEMON_NAME.HOSTNAME start

Example

[root@host00 ~]# cephadm unit --name mon.host00 start

Verify the monitors form a quorum:

Example

[ceph: root@host00 /]# ceph -s

Optional: Archive the removed Ceph Monitor’s data directory in the /var/lib/ceph/CLUSTER_FSID/mon.HOSTNAME directory.

Chapter 5. Management of managers using the Ceph Orchestrator

As a storage administrator, you can use the Ceph Orchestrator to deploy additional manager daemons. Cephadm automatically installs a manager daemon on the bootstrap node during the bootstrapping process.

In general, you should set up a Ceph Manager on each of the hosts running the Ceph Monitor daemon to achieve the same level of availability.

By default, whichever ceph-mgr instance comes up first is made active by the Ceph Monitors, and others are standby managers. There is no requirement that there should be a quorum among the ceph-mgr daemons.

If the active daemon fails to send a beacon to the monitors for longer than the mon mgr beacon grace period, then it is replaced by a standby.
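The beacon rule can be pictured as a simple timeout check. The following Python sketch is a simplified model and not the monitor's actual logic; the function names and the 30-second grace value passed in the example are illustrative assumptions, so check the configured value on your own cluster.

```python
from typing import List


def manager_considered_failed(seconds_since_last_beacon: float, beacon_grace: float) -> bool:
    """Return True when the last beacon is older than the grace period."""
    return seconds_since_last_beacon > beacon_grace


def next_active(active: str, standbys: List[str],
                seconds_since_last_beacon: float, beacon_grace: float) -> str:
    """Keep the active manager unless it missed the grace period and a standby exists."""
    if manager_considered_failed(seconds_since_last_beacon, beacon_grace) and standbys:
        # Simplification: promote the first standby in the list.
        return standbys[0]
    return active


# The active manager last sent a beacon 45 seconds ago; the grace period is 30 seconds.
print(next_active("host01", ["host02", "host03"], 45.0, 30.0))
```

Because there is no quorum among managers, a single standby is enough for this replacement to happen.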

If you want to pre-empt failover, you can explicitly mark a ceph-mgr daemon as failed with the ceph mgr fail MANAGER_NAME command.

5.1. Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

- Hosts are added to the cluster.

5.2. Deploying the manager daemons using the Ceph Orchestrator

The Ceph Orchestrator deploys two Manager daemons by default. You can deploy additional manager daemons using the placement specification in the command line interface. To deploy a different number of Manager daemons, specify a different number. If you do not specify the hosts where the Manager daemons should be deployed, the Ceph Orchestrator randomly selects the hosts and deploys the Manager daemons to them.

Ensure that each deployment has at least three Ceph Managers.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

- You can deploy manager daemons in two different ways:

Method 1

Deploy manager daemons using a placement specification on a specific set of hosts:

Note: Red Hat recommends that you use the --placement option to deploy on specific hosts.

Syntax

ceph orch apply mgr --placement="HOST_NAME_1 HOST_NAME_2 HOST_NAME_3"

Example

[ceph: root@host01 /]# ceph orch apply mgr --placement="host01 host02 host03"

Method 2

Deploy manager daemons randomly on the hosts in the storage cluster:

Syntax

ceph orch apply mgr NUMBER_OF_DAEMONS

Example

[ceph: root@host01 /]# ceph orch apply mgr 3

Verification

List the service:

Example

[ceph: root@host01 /]# ceph orch ls

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mgr

5.3. Removing the manager daemons using the Ceph Orchestrator

To remove the manager daemons from the host, you can just redeploy the daemons on other hosts.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to all the nodes.

- Hosts are added to the cluster.

- At least one manager daemon deployed on the hosts.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Run the ceph orch apply command to redeploy the required manager daemons:

Syntax

ceph orch apply mgr "NUMBER_OF_DAEMONS HOST_NAME_1 HOST_NAME_3"

If you want to remove manager daemons from host02, then you can redeploy the manager daemons on other hosts.

Example

[ceph: root@host01 /]# ceph orch apply mgr "2 host01 host03"

Verification

List the hosts, daemons, and processes:

Syntax

ceph orch ps --daemon_type=DAEMON_NAME

Example

[ceph: root@host01 /]# ceph orch ps --daemon_type=mgr

Additional Resources

- See Deploying the manager daemons using the Ceph Orchestrator section in the Red Hat Ceph Storage Operations Guide for more information.

5.4. Using the Ceph Manager modules

Use the ceph mgr module ls command to see the available modules and the modules that are presently enabled.

Enable or disable modules with the ceph mgr module enable MODULE command or the ceph mgr module disable MODULE command, respectively.

If a module is enabled, then the active ceph-mgr daemon loads and executes it. In the case of modules that provide a service, such as an HTTP server, the module might publish its address when it is loaded. To see the addresses of such modules, run the ceph mgr services command.

Some modules might also implement a special standby mode which runs on standby ceph-mgr daemon as well as the active daemon. This enables modules that provide services to redirect their clients to the active daemon, if the client tries to connect to a standby.

The following example enables the dashboard module:

[ceph: root@host01 /]# ceph mgr module enable dashboard

[ceph: root@host01 /]# ceph mgr module ls

MODULE

balancer on (always on)

crash on (always on)

devicehealth on (always on)

orchestrator on (always on)

pg_autoscaler on (always on)

progress on (always on)

rbd_support on (always on)

status on (always on)

telemetry on (always on)

volumes on (always on)

cephadm on

dashboard on

iostat on

nfs on

prometheus on

restful on

alerts -

diskprediction_local -

influx -

insights -

k8sevents -

localpool -

mds_autoscaler -

mirroring -

osd_perf_query -

osd_support -

rgw -

rook -

selftest -

snap_schedule -

stats -

telegraf -

test_orchestrator -

zabbix -

[ceph: root@host01 /]# ceph mgr services

{

"dashboard": "http://myserver.com:7789/",

"restful": "https://myserver.com:8789/"

}

The first time the cluster starts, it uses the mgr_initial_modules setting to override which modules to enable. However, this setting is ignored through the rest of the lifetime of the cluster: only use it for bootstrapping. For example, before starting your monitor daemons for the first time, you might add a section like this to your ceph.conf file:

[mon]

mgr initial modules = dashboard balancer

Where a module implements command line hooks, the commands are accessible as ordinary Ceph commands. Ceph automatically incorporates module commands into the standard CLI interface and routes them appropriately to the module:

[ceph: root@host01 /]# ceph <command | help>

You can use the following configuration parameters with the above command:

Table 5.1. Configuration parameters

| Configuration | Description | Type | Default |
|---|---|---|---|
| | Path to load modules from. | String | |
| | Path to load daemon data (such as keyring) | String | |
| | How many seconds between manager beacons to monitors, and other periodic checks. | Integer | |
| | How long after last beacon should a manager be considered failed. | Integer | |

5.5. Using the Ceph Manager balancer module

The balancer is a module for Ceph Manager (ceph-mgr) that optimizes the placement of placement groups (PGs) across OSDs in order to achieve a balanced distribution, either automatically or in a supervised fashion.

Currently the balancer module cannot be disabled. It can only be turned off to customize the configuration.

Modes

There are currently two supported balancer modes:

crush-compat: The CRUSH compat mode uses the compat weight-set feature, introduced in Ceph Luminous, to manage an alternative set of weights for devices in the CRUSH hierarchy. The normal weights should remain set to the size of the device to reflect the target amount of data that you want to store on the device. The balancer then optimizes the weight-set values, adjusting them up or down in small increments in order to achieve a distribution that matches the target distribution as closely as possible. Because PG placement is a pseudorandom process, there is a natural amount of variation in the placement; by optimizing the weights, the balancer counteracts that natural variation.

This mode is fully backwards compatible with older clients. When an OSDMap and CRUSH map are shared with older clients, the balancer presents the optimized weights as the real weights.

The primary restriction of this mode is that the balancer cannot handle multiple CRUSH hierarchies with different placement rules if the subtrees of the hierarchy share any OSDs. Because this configuration makes managing space utilization on the shared OSDs difficult, it is generally not recommended. As such, this restriction is normally not an issue.

upmap: Starting with Luminous, the OSDMap can store explicit mappings for individual OSDs as exceptions to the normal CRUSH placement calculation. These upmap entries provide fine-grained control over the PG mapping. This mode optimizes the placement of individual PGs in order to achieve a balanced distribution. In most cases, this distribution is "perfect", with an equal number of PGs on each OSD +/-1 PG, as they might not divide evenly.

Important: To allow use of this feature, you must tell the cluster that it only needs to support luminous or later clients with the following command:

[ceph: root@host01 /]# ceph osd set-require-min-compat-client luminous

This command fails if any pre-luminous clients or daemons are connected to the monitors.

Due to a known issue, kernel CephFS clients report themselves as jewel clients. To work around this issue, use the --yes-i-really-mean-it flag:

[ceph: root@host01 /]# ceph osd set-require-min-compat-client luminous --yes-i-really-mean-it

You can check what client versions are in use with:

[ceph: root@host01 /]# ceph features

Prerequisites

- A running Red Hat Ceph Storage cluster.

Procedure

Ensure the balancer module is enabled:

Example

[ceph: root@host01 /]# ceph mgr module enable balancer

Turn on the balancer module:

Example

[ceph: root@host01 /]# ceph balancer on

The default mode is upmap. The mode can be changed with:

Example

[ceph: root@host01 /]# ceph balancer mode crush-compat

or

Example

[ceph: root@host01 /]# ceph balancer mode upmap

Status

The current status of the balancer can be checked at any time with:

Example

[ceph: root@host01 /]# ceph balancer status

Automatic balancing

By default, when turning on the balancer module, automatic balancing is used:

Example

[ceph: root@host01 /]# ceph balancer on

The balancer can be turned back off again with:

Example

[ceph: root@host01 /]# ceph balancer off

When automatic balancing is on, the balancer uses the currently configured mode and makes small changes to the data distribution over time to ensure that OSDs are equally utilized. The crush-compat mode is the one that is backward compatible with older clients.

Throttling

No adjustments will be made to the PG distribution if the cluster is degraded, for example, if an OSD has failed and the system has not yet healed itself.

When the cluster is healthy, the balancer throttles its changes such that the percentage of PGs that are misplaced, or need to be moved, is below a threshold of 5% by default. This percentage can be adjusted using the target_max_misplaced_ratio setting. For example, to increase the threshold to 7%:

Example

[ceph: root@host01 /]# ceph config set mgr target_max_misplaced_ratio .07
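The throttling rule can be expressed as a single comparison. The following Python sketch is a simplified model of the behavior described above, not the balancer's source code; the function name `may_move_more_pgs` and its parameters are hypothetical.

```python
def may_move_more_pgs(
    misplaced_pgs: int,
    total_pgs: int,
    target_max_misplaced_ratio: float = 0.05,
    degraded: bool = False,
) -> bool:
    """Return True when the model would allow further PG movement."""
    if total_pgs <= 0:
        raise ValueError("total_pgs must be positive")
    if degraded:
        # No adjustments are made while the cluster is degraded.
        return False
    # Movement is allowed only while the misplaced fraction is below the threshold.
    return misplaced_pgs / total_pgs < target_max_misplaced_ratio


# 60 of 1000 PGs are misplaced: blocked at the 5% default, allowed at 7%.
print(may_move_more_pgs(60, 1000))
print(may_move_more_pgs(60, 1000, target_max_misplaced_ratio=0.07))
```

Raising the threshold lets the balancer converge faster at the cost of more data movement at any one time.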

For automatic balancing:

- Set the number of seconds to sleep in between runs of the automatic balancer:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/balancer/sleep_interval 60

- Set the time of day to begin automatic balancing in HHMM format:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/balancer/begin_time 0000

- Set the time of day to finish automatic balancing in HHMM format:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/balancer/end_time 2359

- Restrict automatic balancing to this day of the week or later. This uses the same conventions as crontab: 0 is Sunday, 1 is Monday, and so on:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/balancer/begin_weekday 0

- Restrict automatic balancing to this day of the week or earlier. This uses the same conventions as crontab: 0 is Sunday, 1 is Monday, and so on:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/balancer/end_weekday 6

- Define the pool IDs to which the automatic balancing is limited. The default for this is an empty string, meaning all pools are balanced. You can get the numeric pool IDs with the ceph osd pool ls detail command:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/balancer/pool_ids 1,2,3
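The time-of-day and weekday settings above combine into one window. The following Python sketch shows one way to reason about such a window; it is not the balancer's implementation. The function name `in_balancing_window` is hypothetical, and the inclusive bounds and the handling of windows that wrap past midnight or past Saturday are assumptions that might differ from the actual module behavior.

```python
from datetime import datetime


def in_balancing_window(
    now: datetime,
    begin_time: str = "0000",
    end_time: str = "2359",
    begin_weekday: int = 0,
    end_weekday: int = 6,
) -> bool:
    """Return True when `now` falls inside the configured window (simplified model)."""
    hhmm = now.strftime("%H%M")
    # Convert to the crontab convention: 0 is Sunday, 1 is Monday, and so on.
    weekday = now.isoweekday() % 7

    if begin_time <= end_time:
        time_ok = begin_time <= hhmm <= end_time
    else:
        # Assumption: a window such as 2200 to 0600 wraps past midnight.
        time_ok = hhmm >= begin_time or hhmm <= end_time

    if begin_weekday <= end_weekday:
        day_ok = begin_weekday <= weekday <= end_weekday
    else:
        # Assumption: a window such as 5 to 1 wraps past Saturday.
        day_ok = weekday >= begin_weekday or weekday <= end_weekday

    return time_ok and day_ok


# Check a Sunday at noon against the example values used in this section.
print(in_balancing_window(datetime(2022, 5, 22, 12, 0)))
```

With the example values shown in this section (0000 to 2359, weekdays 0 to 6), every moment of the week falls inside the window.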

Supervised optimization

The balancer operation is broken into a few distinct phases:

- Building a plan.

- Evaluating the quality of the data distribution, either for the current PG distribution, or the PG distribution that would result after executing a plan.

- Executing the plan.

To evaluate and score the current distribution:

Example

[ceph: root@host01 /]# ceph balancer eval

To evaluate the distribution for a single pool:

Syntax

ceph balancer eval POOL_NAME

Example

[ceph: root@host01 /]# ceph balancer eval rbd

To see greater detail for the evaluation:

Example

[ceph: root@host01 /]# ceph balancer eval-verbose ...

To generate a plan using the currently configured mode:

Syntax

ceph balancer optimize PLAN_NAME

Replace PLAN_NAME with a custom plan name.

Example

[ceph: root@host01 /]# ceph balancer optimize rbd_123

To see the contents of a plan:

Syntax

ceph balancer show PLAN_NAME

Example

[ceph: root@host01 /]# ceph balancer show rbd_123

To discard old plans:

Syntax

ceph balancer rm PLAN_NAME

Example

[ceph: root@host01 /]# ceph balancer rm rbd_123

To see currently recorded plans use the status command:

[ceph: root@host01 /]# ceph balancer status

To calculate the quality of the distribution that would result after executing a plan:

Syntax

ceph balancer eval PLAN_NAME

Example

[ceph: root@host01 /]# ceph balancer eval rbd_123

To execute the plan:

Syntax

ceph balancer execute PLAN_NAME

Example

[ceph: root@host01 /]# ceph balancer execute rbd_123

Note: Only execute the plan if it is expected to improve the distribution. After execution, the plan will be discarded.

5.6. Using the Ceph Manager alerts module

You can use the Ceph Manager alerts module to send simple alert messages about the Red Hat Ceph Storage cluster’s health by email.

This module is not intended to be a robust monitoring solution. The fact that it is run as part of the Ceph cluster itself is fundamentally limiting in that a failure of the ceph-mgr daemon prevents alerts from being sent. This module can, however, be useful for standalone clusters that exist in environments where no monitoring infrastructure exists.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the Ceph Monitor node.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Enable the alerts module:

Example

[ceph: root@host01 /]# ceph mgr module enable alerts

Ensure the alerts module is enabled:

Example

[ceph: root@host01 /]# ceph mgr module ls | more
{
    "always_on_modules": [
        "balancer",
        "crash",
        "devicehealth",
        "orchestrator",
        "pg_autoscaler",
        "progress",
        "rbd_support",
        "status",
        "telemetry",
        "volumes"
    ],
    "enabled_modules": [
        "alerts",
        "cephadm",
        "dashboard",
        "iostat",
        "nfs",
        "prometheus",
        "restful"
    ]

Configure the Simple Mail Transfer Protocol (SMTP):

Syntax

ceph config set mgr mgr/alerts/smtp_host SMTP_SERVER
ceph config set mgr mgr/alerts/smtp_destination RECEIVER_EMAIL_ADDRESS
ceph config set mgr mgr/alerts/smtp_sender SENDER_EMAIL_ADDRESS

Example

[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_host smtp.example.com
[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_destination example@example.com
[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_sender example2@example.com

Optional: By default, the alerts module uses port 465. To use a different port, set the smtp_port parameter:

Syntax

ceph config set mgr mgr/alerts/smtp_port PORT_NUMBER

Example

[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_port 587

Important: SSL is not supported in a Red Hat Ceph Storage 5 cluster. Do not set the smtp_ssl parameter while configuring alerts.

Authenticate to the SMTP server:

Syntax

ceph config set mgr mgr/alerts/smtp_user USERNAME
ceph config set mgr mgr/alerts/smtp_password PASSWORD

Example

[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_user admin1234
[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_password admin1234

Optional: By default, the SMTP From name is Ceph. To change that, set the smtp_from_name parameter:

Syntax

ceph config set mgr mgr/alerts/smtp_from_name CLUSTER_NAME

Example

[ceph: root@host01 /]# ceph config set mgr mgr/alerts/smtp_from_name 'Ceph Cluster Test'

Optional: By default, the alerts module checks the storage cluster’s health every minute, and sends a message when there is a change in the cluster health status. To change the frequency, set the interval parameter:

Syntax

ceph config set mgr mgr/alerts/interval INTERVAL

Example

[ceph: root@host01 /]# ceph config set mgr mgr/alerts/interval "5m"

In this example, the interval is set to 5 minutes.

Optional: Send an alert immediately:

Example

[ceph: root@host01 /]# ceph alerts send

Additional Resources

- See the Health messages of a Ceph cluster section in the Red Hat Ceph Storage Troubleshooting Guide for more information on Ceph health messages.

5.7. Using the Ceph manager crash module

Using the Ceph manager crash module, you can collect information about daemon crashdumps and store it in the Red Hat Ceph Storage cluster for further analysis.

By default, daemon crashdumps are dumped in /var/lib/ceph/crash. You can configure this location with the crash dir option. Crash directories are named by time, date, and a randomly generated UUID, and contain a metadata file, meta, and a recent log file, both with the same crash_id.

You can use ceph-crash.service to submit these crashes automatically and persist them in the Ceph Monitors. The ceph-crash.service watches the crashdump directory and uploads the crashdumps with ceph crash post.

The RECENT_CRASH health message is one of the most common health messages in a Ceph cluster. This health message means that one or more Ceph daemons have crashed recently, and the crash has not yet been archived or acknowledged by the administrator. This might indicate a software bug, a hardware problem like a failing disk, or some other problem. The option mgr/crash/warn_recent_interval controls the time period of what recent means, which is two weeks by default. You can disable the warnings by running the following command:

Example

[ceph: root@host01 /]# ceph config set mgr mgr/crash/warn_recent_interval 0

The option mgr/crash/retain_interval controls the period for which you want to retain the crash reports before they are automatically purged. The default for this option is one year.
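To illustrate how the two intervals relate to a crash ID, the following Python sketch parses the timestamp and UUID from an ID of the form shown in this section and classifies the crash by age. It is a simplified model, not the crash module's code: the function names are hypothetical, one year is approximated as 365 days, and archived crashes, which no longer count as recent, are not modeled.

```python
import uuid
from datetime import datetime, timedelta, timezone
from typing import Tuple


def parse_crash_id(crash_id: str) -> Tuple[datetime, uuid.UUID]:
    """Split a crash ID into its UTC timestamp and UUID parts."""
    stamp, _, raw_uuid = crash_id.partition("_")
    when = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%S.%fZ").replace(tzinfo=timezone.utc)
    return when, uuid.UUID(raw_uuid)


def classify_crash(
    crash_id: str,
    now: datetime,
    warn_recent_interval: timedelta = timedelta(weeks=2),
    retain_interval: timedelta = timedelta(days=365),
) -> str:
    """Return 'recent', 'retained', or 'purge' for a crash (simplified model)."""
    when, _ = parse_crash_id(crash_id)
    age = now - when
    if age > retain_interval:
        return "purge"
    # An interval of zero disables the recent-crash warning.
    if warn_recent_interval > timedelta(0) and age <= warn_recent_interval:
        return "recent"
    return "retained"


crash_id = "2022-05-24T19:58:42.549073Z_b2382865-ea89-4be2-b46f-9a59af7b7a2d"
print(classify_crash(crash_id, datetime(2022, 5, 30, tzinfo=timezone.utc)))
```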

Prerequisites

- A running Red Hat Ceph Storage cluster.

Procedure

Ensure the crash module is enabled:

Example

[ceph: root@host01 /]# ceph mgr module ls | more
{
    "always_on_modules": [
        "balancer",
        "crash",
        "devicehealth",
        "orchestrator_cli",
        "progress",
        "rbd_support",
        "status",
        "volumes"
    ],
    "enabled_modules": [
        "dashboard",
        "pg_autoscaler",
        "prometheus"
    ]

Save a crash dump: The metadata file is a JSON blob stored in the crash dir as meta. You can invoke the ceph command with the -i - option, which reads from stdin.

Example

[ceph: root@host01 /]# ceph crash post -i meta

List the timestamp or the UUID crash IDs for all the new and archived crash info:

Example

[ceph: root@host01 /]# ceph crash ls

List the timestamp or the UUID crash IDs for all the new crash information:

Example

[ceph: root@host01 /]# ceph crash ls-new

List the summary of saved crash information grouped by age:

Example

[ceph: root@host01 /]# ceph crash stat
8 crashes recorded
8 older than 1 days old:
2022-05-20T08:30:14.533316Z_4ea88673-8db6-4959-a8c6-0eea22d305c2
2022-05-20T08:30:14.590789Z_30a8bb92-2147-4e0f-a58b-a12c2c73d4f5
2022-05-20T08:34:42.278648Z_6a91a778-bce6-4ef3-a3fb-84c4276c8297
2022-05-20T08:34:42.801268Z_e5f25c74-c381-46b1-bee3-63d891f9fc2d
2022-05-20T08:34:42.803141Z_96adfc59-be3a-4a38-9981-e71ad3d55e47
2022-05-20T08:34:42.830416Z_e45ed474-550c-44b3-b9bb-283e3f4cc1fe
2022-05-24T19:58:42.549073Z_b2382865-ea89-4be2-b46f-9a59af7b7a2d
2022-05-24T19:58:44.315282Z_1847afbc-f8a9-45da-94e8-5aef0738954e

View the details of the saved crash:

Syntax

ceph crash info CRASH_ID

Example

[ceph: root@host01 /]# ceph crash info 2022-05-24T19:58:42.549073Z_b2382865-ea89-4be2-b46f-9a59af7b7a2d
{
    "assert_condition": "session_map.sessions.empty()",
    "assert_file": "/builddir/build/BUILD/ceph-16.1.0-486-g324d7073/src/mon/Monitor.cc",
    "assert_func": "virtual Monitor::~Monitor()",
    "assert_line": 287,
    "assert_msg": "/builddir/build/BUILD/ceph-16.1.0-486-g324d7073/src/mon/Monitor.cc: In function 'virtual Monitor::~Monitor()' thread 7f67a1aeb700 time 2022-05-24T19:58:42.545485+0000\n/builddir/build/BUILD/ceph-16.1.0-486-g324d7073/src/mon/Monitor.cc: 287: FAILED ceph_assert(session_map.sessions.empty())\n",
    "assert_thread_name": "ceph-mon",
    "backtrace": [
        "/lib64/libpthread.so.0(+0x12b30) [0x7f679678bb30]",
        "gsignal()",
        "abort()",
        "(ceph::__ceph_assert_fail(char const*, char const*, int, char const*)+0x1a9) [0x7f6798c8d37b]",
        "/usr/lib64/ceph/libceph-common.so.2(+0x276544) [0x7f6798c8d544]",
        "(Monitor::~Monitor()+0xe30) [0x561152ed3c80]",
        "(Monitor::~Monitor()+0xd) [0x561152ed3cdd]",
        "main()",
        "__libc_start_main()",
        "_start()"
    ],
    "ceph_version": "16.2.8-65.el8cp",
    "crash_id": "2022-07-06T19:58:42.549073Z_b2382865-ea89-4be2-b46f-9a59af7b7a2d",
    "entity_name": "mon.ceph-adm4",
    "os_id": "rhel",
    "os_name": "Red Hat Enterprise Linux",
    "os_version": "8.5 (Ootpa)",
    "os_version_id": "8.5",
    "process_name": "ceph-mon",
    "stack_sig": "957c21d558d0cba4cee9e8aaf9227b3b1b09738b8a4d2c9f4dc26d9233b0d511",
    "timestamp": "2022-07-06T19:58:42.549073Z",
    "utsname_hostname": "host02",
    "utsname_machine": "x86_64",
    "utsname_release": "4.18.0-240.15.1.el8_3.x86_64",
    "utsname_sysname": "Linux",
    "utsname_version": "#1 SMP Wed Jul 06 03:12:15 EDT 2022"
}

Remove saved crashes older than KEEP days. Here, KEEP must be an integer.

Syntax

ceph crash prune KEEP

Example

[ceph: root@host01 /]# ceph crash prune 60

Archive a crash report so that it is no longer considered for the RECENT_CRASH health check and does not appear in the crash ls-new output. It still appears in the crash ls output.

Syntax

ceph crash archive CRASH_ID

Example

[ceph: root@host01 /]# ceph crash archive 2022-05-24T19:58:42.549073Z_b2382865-ea89-4be2-b46f-9a59af7b7a2d

Archive all crash reports:

Example

[ceph: root@host01 /]# ceph crash archive-all

Remove the crash dump:

Syntax

ceph crash rm CRASH_ID

Example

[ceph: root@host01 /]# ceph crash rm 2022-05-24T19:58:42.549073Z_b2382865-ea89-4be2-b46f-9a59af7b7a2d

Additional Resources

- See the Health messages of a Ceph cluster section in the Red Hat Ceph Storage Troubleshooting Guide for more information on Ceph health messages.

Chapter 6. Management of OSDs using the Ceph Orchestrator

As a storage administrator, you can use the Ceph Orchestrators to manage OSDs of a Red Hat Ceph Storage cluster.

6.1. Ceph OSDs

When a Red Hat Ceph Storage cluster is up and running, you can add OSDs to the storage cluster at runtime.

A Ceph OSD generally consists of one ceph-osd daemon for one storage drive and its associated journal within a node. If a node has multiple storage drives, then map one ceph-osd daemon for each drive.

Red Hat recommends checking the capacity of a cluster regularly to see if it is reaching the upper end of its storage capacity. As a storage cluster reaches its near full ratio, add one or more OSDs to expand the storage cluster’s capacity.

When you want to reduce the size of a Red Hat Ceph Storage cluster or replace hardware, you can also remove an OSD at runtime. If the node has multiple storage drives, you might need to remove the ceph-osd daemon for each affected drive. Before you remove an OSD, check the capacity of the storage cluster and ensure that it is not at its near full ratio.

Do not let a storage cluster reach the full ratio before adding an OSD. OSD failures that occur after the storage cluster reaches the near full ratio can cause the storage cluster to exceed the full ratio. Ceph blocks write access to protect the data until you resolve the storage capacity issues. Do not remove OSDs without considering the impact on the full ratio first.
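The capacity check described above can be sketched in a few lines of Python. This is a simplified illustration, not a Ceph tool: it treats the cluster as a single pool of raw capacity and ignores how data is distributed across individual OSDs. The function names are hypothetical, and the 0.85 threshold is an assumption that mirrors a commonly used near full setting; check the value configured on your own cluster.

```python
# Assumed threshold; the near full ratio configured on a real cluster may differ.
NEARFULL_RATIO = 0.85


def utilization_after_removal(used, osd_capacities, osd_to_remove):
    """Return the raw utilization ratio once one OSD's capacity is gone.

    used and the capacities must share the same unit (for example, TB).
    """
    remaining = sum(
        capacity
        for name, capacity in osd_capacities.items()
        if name != osd_to_remove
    )
    if remaining <= 0:
        raise ValueError("no capacity would remain after removal")
    return used / remaining


def removal_is_safe(used, osd_capacities, osd_to_remove, limit=NEARFULL_RATIO):
    """True if the cluster stays below the limit after removing the OSD."""
    return utilization_after_removal(used, osd_capacities, osd_to_remove) < limit
```

For ten 4 TB OSDs holding 30 TB of data, removing one OSD raises utilization from 75% to about 83%, which stays under the assumed threshold. With 32 TB stored, the same removal pushes utilization to about 89%, so capacity should be added first.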

6.2. Ceph OSD node configuration

Configure Ceph OSDs and their supporting hardware according to the storage strategy for the pools that will use the OSDs. Ceph prefers uniform hardware across pools for a consistent performance profile. For best performance, consider a CRUSH hierarchy with drives of the same type or size.

If you add drives of dissimilar size, adjust their weights accordingly. When you add the OSD to the CRUSH map, consider the weight for the new OSD. Hard drive capacity grows approximately 40% per year, so newer OSD nodes might have larger hard drives than older nodes in the storage cluster, that is, they might have a greater weight.
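As a rough illustration of weighting drives by size, the following Python sketch derives a relative weight from drive capacity. It assumes the common convention of a weight of 1.0 per TiB of capacity; that convention and the function name `crush_weight` are assumptions of this sketch, not values stated in this guide.

```python
TIB = 2**40  # bytes in one tebibyte


def crush_weight(size_bytes):
    """Return a relative weight proportional to drive capacity (1.0 per TiB)."""
    if size_bytes <= 0:
        raise ValueError("drive size must be positive")
    return round(size_bytes / TIB, 5)
```

Under this convention, a drive with twice the capacity of another receives twice the weight, so it is expected to hold roughly twice the data.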

Before doing a new installation, review the Requirements for Installing Red Hat Ceph Storage chapter in the Installation Guide.

6.3. Automatically tuning OSD memory

The OSD daemons adjust the memory consumption based on the osd_memory_target configuration option. The option osd_memory_target sets OSD memory based upon the available RAM in the system.

If Red Hat Ceph Storage is deployed on dedicated nodes that do not share memory with other services, cephadm automatically adjusts the per-OSD consumption based on the total amount of RAM and the number of deployed OSDs.

By default, the osd_memory_target_autotune parameter is set to true in Red Hat Ceph Storage 5.1.

Syntax

ceph config set osd osd_memory_target_autotune true

Once the storage cluster is upgraded to Red Hat Ceph Storage 5.0, for cluster maintenance such as the addition or replacement of OSDs, Red Hat recommends setting the osd_memory_target_autotune parameter to true so that OSD memory is autotuned according to system memory.

Cephadm starts with the fraction mgr/cephadm/autotune_memory_target_ratio, which defaults to 0.7, of the total RAM in the system. It then subtracts any memory consumed by non-autotuned daemons, that is, non-OSD daemons and OSDs for which osd_memory_target_autotune is false, and divides the remainder among the remaining OSDs.

By default, autotune_memory_target_ratio is 0.2 for hyper-converged infrastructure and 0.7 for other environments.

The osd_memory_target parameter is calculated as follows:

Syntax

osd_memory_target = TOTAL_RAM_OF_THE_OSD_NODE (in Bytes) * (autotune_memory_target_ratio) / NUMBER_OF_OSDS_IN_THE_OSD_NODE - (SPACE_ALLOCATED_FOR_OTHER_DAEMONS (in Bytes))

SPACE_ALLOCATED_FOR_OTHER_DAEMONS may optionally include the following daemon space allocations:

- Alertmanager: 1 GB

- Grafana: 1 GB

- Ceph Manager: 4 GB

- Ceph Monitor: 2 GB

- Node-exporter: 1 GB

- Prometheus: 1 GB

For example, if a node has 24 OSDs and 251 GB of RAM, then osd_memory_target is 7860684936, assuming the default ratio of 0.7 and no memory allocated to other daemons.
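The calculation can be reproduced with a short Python sketch. This is an illustration under stated assumptions, not Cephadm's implementation. It follows the order given in the prose description, subtracting the allocation for other daemons before dividing among the OSDs; when that allocation is zero, the order makes no difference. The function and parameter names are hypothetical, and the sketch reads 1 GB as 2^30 bytes, which is the reading that reproduces the figure quoted above.

```python
GIB = 2**30  # assumption: 1 GB is read as 2**30 bytes


def osd_memory_target(total_ram_bytes, num_osds, ratio=0.7, other_daemons_bytes=0):
    """Estimate the per-OSD memory target in bytes.

    ratio stands in for autotune_memory_target_ratio and
    other_daemons_bytes for SPACE_ALLOCATED_FOR_OTHER_DAEMONS.
    """
    if num_osds <= 0:
        raise ValueError("the node must have at least one autotuned OSD")
    budget = int(total_ram_bytes * ratio) - other_daemons_bytes
    if budget <= 0:
        raise ValueError("no memory left for OSDs after other daemons")
    return budget // num_osds


# 24 OSDs on a node with 251 GB of RAM, default ratio, no other daemons.
print(osd_memory_target(251 * GIB, 24))  # 7860684936
```

Passing `ratio=0.2` models the hyperconverged setting, and a non-zero `other_daemons_bytes` lowers the per-OSD target accordingly.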

The final targets are reflected in the configuration database as osd_memory_target options. You can view the limits and the current memory consumed by each daemon in the ceph orch ps output, under the MEM LIMIT column.

In Red Hat Ceph Storage 5.1, the default setting of osd_memory_target_autotune true is unsuitable for hyperconverged infrastructures where compute and Ceph storage services are colocated. In a hyperconverged infrastructure, the autotune_memory_target_ratio can be set to 0.2 to reduce the memory consumption of Ceph.

Example

[ceph: root@host01 /]# ceph config set mgr mgr/cephadm/autotune_memory_target_ratio 0.2

You can manually set a specific memory target for an OSD in the storage cluster.

Example

[ceph: root@host01 /]# ceph config set osd.123 osd_memory_target 7860684936

You can manually set a specific memory target for an OSD host in the storage cluster.

Syntax

ceph config set osd/host:HOSTNAME osd_memory_target TARGET_BYTES

Example

[ceph: root@host01 /]# ceph config set osd/host:host01 osd_memory_target 1000000000

Enabling osd_memory_target_autotune overwrites existing manual OSD memory target settings. To prevent daemon memory from being tuned even when the osd_memory_target_autotune option or other similar options are enabled, set the _no_autotune_memory label on the host.

Syntax

ceph orch host label add HOSTNAME _no_autotune_memory

You can exclude an OSD from memory autotuning by disabling the autotune option and setting a specific memory target.

Example

[ceph: root@host01 /]# ceph config set osd.123 osd_memory_target_autotune false
[ceph: root@host01 /]# ceph config set osd.123 osd_memory_target 16G

6.4. Listing devices for Ceph OSD deployment

You can check the list of available devices before deploying OSDs using the Ceph Orchestrator. The ceph orch device ls command prints a list of devices discoverable by Cephadm. A storage device is considered available if all of the following conditions are met:

- The device must have no partitions.

- The device must not have any LVM state.

- The device must not be mounted.

- The device must not contain a file system.

- The device must not contain a Ceph BlueStore OSD.

- The device must be larger than 5 GB.

Ceph will not provision an OSD on a device that is not available.
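The availability conditions listed above can be expressed as a simple check. The Python sketch below is an illustration only and does not reflect how Cephadm inspects devices. The `Device` class, its field names, and the reading of 5 GB as 5 * 10^9 bytes are all assumptions made for this example.

```python
from dataclasses import dataclass

MIN_SIZE_BYTES = 5 * 10**9  # assumption: "5 GB" read as decimal gigabytes


@dataclass
class Device:
    """Hypothetical summary of a storage device's state."""
    path: str
    size_bytes: int
    has_partitions: bool = False
    has_lvm_state: bool = False
    is_mounted: bool = False
    has_filesystem: bool = False
    has_bluestore_osd: bool = False


def rejection_reasons(dev):
    """Return the list of conditions the device fails; empty means available."""
    reasons = []
    if dev.has_partitions:
        reasons.append("has partitions")
    if dev.has_lvm_state:
        reasons.append("has LVM state")
    if dev.is_mounted:
        reasons.append("is mounted")
    if dev.has_filesystem:
        reasons.append("contains a file system")
    if dev.has_bluestore_osd:
        reasons.append("contains a Ceph BlueStore OSD")
    if dev.size_bytes <= MIN_SIZE_BYTES:
        reasons.append("is not larger than 5 GB")
    return reasons


def is_available(dev):
    """A device is available only if every condition is met."""
    return not rejection_reasons(dev)
```

Returning the reasons rather than a bare boolean makes it easier to see why a device was skipped.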

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

- All manager and monitor daemons are deployed.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

List the available devices to deploy OSDs:

Syntax

ceph orch device ls [--hostname=HOSTNAME_1 HOSTNAME_2] [--wide] [--refresh]

Example

[ceph: root@host01 /]# ceph orch device ls --wide --refresh

Using the --wide option provides all details relating to the device, including any reasons that the device might not be eligible for use as an OSD. This option does not support NVMe devices.

Optional: To enable Health, Ident, and Fault fields in the output of ceph orch device ls, run the following commands:

Note

These fields are supported by the libstoragemgmt library, which currently supports SCSI, SAS, and SATA devices.

As root user outside the Cephadm shell, check your hardware’s compatibility with the libstoragemgmt library to avoid unplanned interruption to services:

Example

[root@host01 ~]# cephadm shell lsmcli ldl

In the output, you see the Health Status as Good with the respective SCSI VPD 0x83 ID.

Note

If you do not get this information, then enabling the fields might cause erratic behavior of devices.

Log back into the Cephadm shell and enable libstoragemgmt support:

Example

[root@host01 ~]# cephadm shell
[ceph: root@host01 /]# ceph config set mgr mgr/cephadm/device_enhanced_scan true

Once this is enabled, ceph orch device ls gives the output of the Health field as Good.

Verification

List the devices:

Example

[ceph: root@host01 /]# ceph orch device ls

6.5. Zapping devices for Ceph OSD deployment

You need to check the list of available devices before deploying OSDs. If there is no space available on the devices, you can clear the data on the devices by zapping them.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

- All manager and monitor daemons are deployed.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

List the available devices to deploy OSDs:

Syntax

ceph orch device ls [--hostname=HOSTNAME_1 HOSTNAME_2] [--wide] [--refresh]

Example

[ceph: root@host01 /]# ceph orch device ls --wide --refresh

Clear the data of a device:

Syntax

ceph orch device zap HOSTNAME FILE_PATH --force

Example

[ceph: root@host01 /]# ceph orch device zap host02 /dev/sdb --force

Verification

Verify the space is available on the device:

Example

[ceph: root@host01 /]# ceph orch device ls

You will see that the field under Available is Yes.

Additional Resources

- See the Listing devices for Ceph OSD deployment section in the Red Hat Ceph Storage Operations Guide for more information.

6.6. Deploying Ceph OSDs on all available devices

You can deploy OSDs on all available devices. Cephadm allows the Ceph Orchestrator to discover and deploy OSDs on any available and unused storage device. To deploy OSDs on all available devices, run the command without the unmanaged parameter, and then re-run the command with the parameter to prevent the creation of future OSDs.

The deployment of OSDs with --all-available-devices is generally used for smaller clusters. For larger clusters, use the OSD specification file.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Hosts are added to the cluster.

- All manager and monitor daemons are deployed.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

List the available devices to deploy OSDs:

Syntax

ceph orch device ls [--hostname=HOSTNAME_1 HOSTNAME_2] [--wide] [--refresh]

Example

[ceph: root@host01 /]# ceph orch device ls --wide --refresh

Deploy OSDs on all available devices:

Example