Chapter 1. About Red Hat Update Infrastructure

Red Hat Update Infrastructure (RHUI) is a highly scalable, highly redundant framework that enables you to manage repositories and content. It also enables cloud providers to deliver content and updates to Red Hat Enterprise Linux (RHEL) instances. Based on the upstream Pulp project, RHUI allows cloud providers to locally mirror Red Hat-hosted repository content, create custom repositories with their own content, and make those repositories available to a large group of end users through a load-balanced content delivery system.

As a system administrator, you can prepare your infrastructure for participation in the Red Hat Certified Cloud and Service Provider program by following the procedures to install and configure the Red Hat Update Appliance (RHUA), content delivery servers (CDSs), repositories, shared storage, and load balancing. Experienced RHEL system administrators are the target audience. System administrators with limited Red Hat Enterprise Linux skills should consider engaging Red Hat Consulting to provide a Red Hat Certified Cloud Provider Architecture Service.

Learn about configuring, managing, and updating RHUI with the following topics:

- the various RHUI components

- content provider types

- the command line interface (CLI) used to manage the components

- utility commands

- certificate management

- content management.

See Appendix A, Red Hat Update Infrastructure Management Tool menus and commands for a list of all of the available menus and commands in the Red Hat Update Infrastructure Management Tool.

See Appendix B, Red Hat Update Infrastructure command line interface for a list of the functions that you can run from a standard shell prompt.

See Appendix C, Resolve common problems in Red Hat Update Infrastructure for some of the common known issues and possible solutions.

See Appendix D, API Reference in Red Hat Update Infrastructure for a list of the Pulp APIs used in Red Hat Update Infrastructure.

See the following resources for more information on the various Red Hat Update Infrastructure components.

1.1. New features

Red Hat Update Infrastructure offers:

- Easy installation using Puppet

- Code rebased to Pulp 2.18 to be consistent with the code base in Red Hat Satellite 6

- Faster access to content due to reworked architecture for automated installations

- Default use of Red Hat Gluster Storage as shared storage to speed up content availability at the CDS and eliminate the need for synchronization

- High-availability deployment to reduce the error of one CDS not being synchronized with another CDS

- A load balancer/HAProxy node that is client-facing. (This functionality was integrated previously into the CDS logic.)

- Certificates managed by the rhui-installer and rhui-manager commands

- Updates to yum.repos.d/*, certificates, and keys to use a new unified URL

- Removal of client-side load balancing functionality from rhui-lb.py

- Support for Docker and OSTree (atomic) content

1.2. Installation options

The following table presents the various Red Hat Update Infrastructure components.

Table 1.1. Red Hat Update Infrastructure components and functions

| Component | Acronym | Function | Alternative |
|---|---|---|---|
| Red Hat Update Appliance | RHUA | Downloads new packages from the Red Hat content delivery network and copies new packages to each CDS node | None |
| Content Delivery Server | CDS | Provides the repositories that clients connect to for updated content | None |
| HAProxy | None | Provides load balancing across CDS nodes | Existing load-balancing solution |
| Gluster Storage | None | Provides shared storage | Existing storage solution |

The following table describes how to perform installation tasks.

Table 1.2. Red Hat Update Infrastructure installation tasks

| Installation Task | Performed on |
|---|---|
| Install Red Hat Enterprise Linux 7 | RHUA, CDS, and HAProxy |
| Subscribe the system | RHUA, CDS, and HAProxy |
| Attach a Red Hat Update Infrastructure subscription | RHUA, CDS, and HAProxy |
| Apply updates | RHUA, CDS, and HAProxy |
| Mount the Red Hat Update Infrastructure ISO (optional) | RHUA, CDS, and HAProxy |
| Run the setup_package_repos script (optional if using the RHUI 3 ISO) | RHUA, CDS, and HAProxy |
| Install rhui-installer | RHUA |
| Run rhui-installer | RHUA |

There are several scenarios for setting up your cloud environment. Option 1: Full installation is described in detail and includes notes and remarks about how to alter the installation for other scenarios.

1.2.1. Option 1: Full installation

- A RHUA

- Three or more CDS nodes with Gluster Storage

- Two or more HAProxy servers

1.2.2. Option 2: Installation with an existing storage solution

- A RHUA

- Two or more CDS nodes with an existing storage solution

- Two or more HAProxy servers

1.2.3. Option 3: Installation with an existing load balancing solution

- A RHUA

- Three or more CDS nodes with Gluster Storage

- Existing load balancer

1.2.4. Option 4: Installation with existing storage and load balancing solutions

- A RHUA

- Two or more CDS nodes with an existing storage solution

- Existing load balancer
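
The four options differ only in whether RHUI or your existing environment supplies the storage and the load balancer. As a rough illustration, the following Python sketch encodes the minimum node counts listed above and checks a planned layout against them. The Deployment class and the check_deployment function are hypothetical names invented for this example; they are not part of RHUI.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical description of a planned RHUI layout (not an RHUI API)."""
    rhua_nodes: int
    cds_nodes: int
    haproxy_nodes: int
    existing_storage: bool = False        # True for Options 2 and 4
    existing_load_balancer: bool = False  # True for Options 3 and 4


def check_deployment(d):
    """Return a list of problems; an empty list means the minimums are met."""
    problems = []
    if d.rhua_nodes != 1:
        problems.append("exactly one RHUA is expected per RHUI installation")
    # Gluster Storage layouts list three or more CDS nodes; layouts with an
    # existing storage solution list two or more.
    min_cds = 2 if d.existing_storage else 3
    if d.cds_nodes < min_cds:
        problems.append(f"at least {min_cds} CDS nodes are needed")
    # HAProxy servers only count when RHUI supplies the load balancer.
    if not d.existing_load_balancer and d.haproxy_nodes < 2:
        problems.append("at least 2 HAProxy servers are needed")
    return problems


# Option 1: full installation with the minimum number of nodes.
full_install = Deployment(rhua_nodes=1, cds_nodes=3, haproxy_nodes=2)
print(check_deployment(full_install))  # prints []
```

A layout with only two CDS nodes passes the check only when existing_storage is set, which mirrors the difference between Options 1 and 2.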

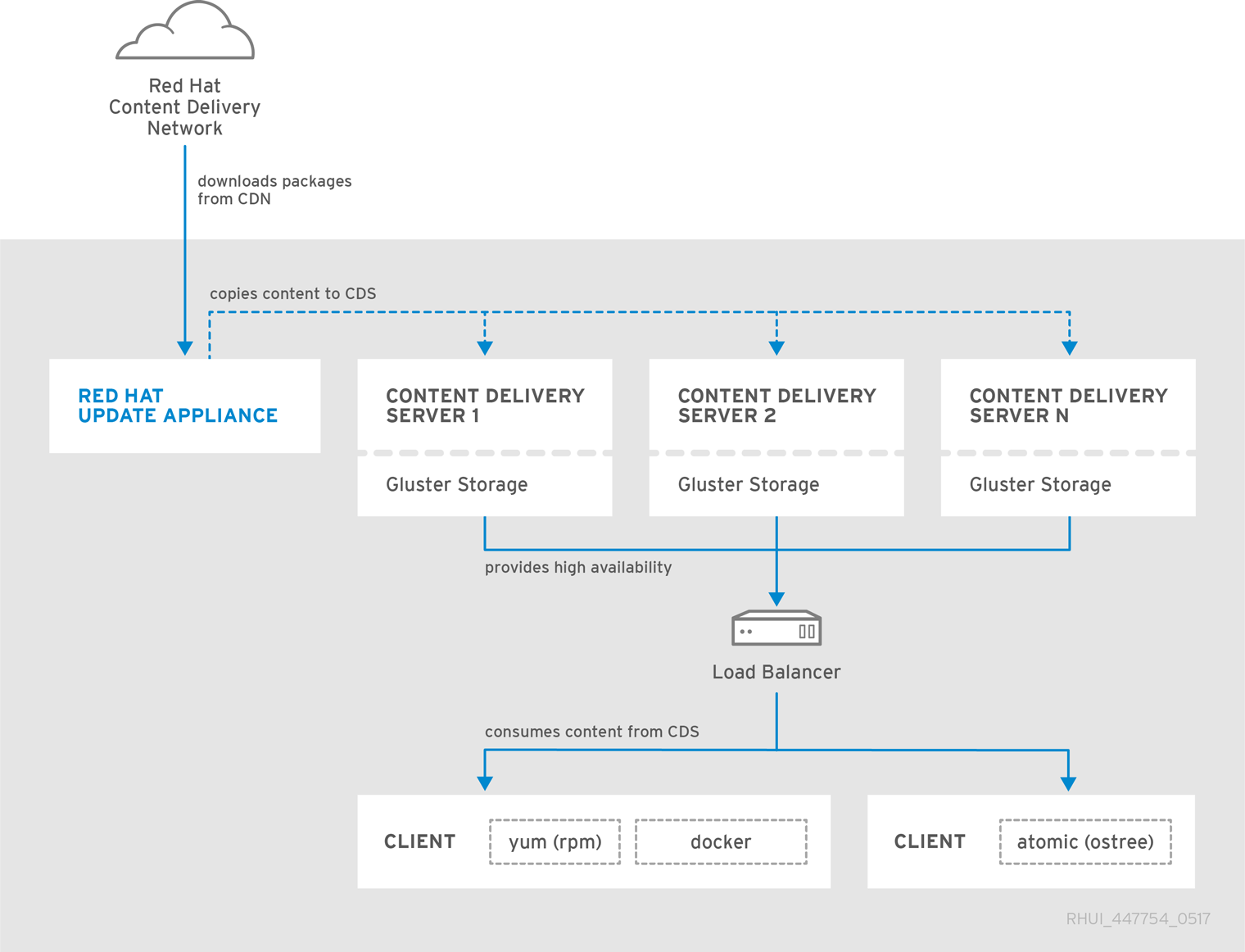

The following figure depicts a high-level view of how the various RHUI components interact.

Figure 1.1. Red Hat Update Infrastructure overview

You need the RHUI ISO and a Red Hat Certified Cloud and Service Provider subscription to install Red Hat Update Infrastructure. You also need an appropriate content certificate.

Install the RHUA and CDS nodes on separate x86_64 servers (bare metal or virtual machines). Ensure all the servers and networks that connect to RHUI can access the ISO or the Red Hat Subscription Management service.

Despite its name, the RHUA is not actually shipped as an appliance; it is an RPM installed on an instance in the cloud.

See the Release Notes before setting up Red Hat Update Infrastructure.

1.3. Red Hat Update Infrastructure components

Each RHUI component is described in detail.

1.3.1. Red Hat Update Appliance

There is one RHUA per RHUI installation, though in many cloud environments there is one RHUI installation per region or data center. For example, Amazon’s EC2 cloud comprises several regions, and every region has a separate RHUI with its own RHUA node.

The RHUA allows you to perform the following tasks:

- Download new packages from the Red Hat content delivery network (CDN). The RHUA is the only RHUI component that connects to Red Hat, and you can configure the RHUA’s synchronization schedule.

- Copy new packages to each CDS node.

- Verify the RHUI installation’s health and write the results to a file located on the RHUA. Monitoring solutions use this file to determine the RHUI installation’s health.

- Provide a human-readable view of the RHUI installation’s health through a CLI tool.

The RHUI uses two main configuration files: /etc/rhui/rhui-tools.conf and /etc/rhui-installer/answers.yaml.

The /etc/rhui/rhui-tools.conf configuration file contains general options used by the RHUA, such as the default file locations for certificates and the default configuration parameters for the Red Hat CDN synchronization. This file normally does not require editing.

The Red Hat Update Infrastructure Management Tool generates the /etc/rhui-installer/answers.yaml configuration file based on the values that you enter. It contains all the information that drives the running of a RHUA in a particular region. For example, the configuration includes the destination on the RHUA for downloaded packages and a list of the CDS nodes (host names) in the RHUI installation.

The RHUA employs several services to synchronize, organize, and distribute content for easy delivery.

RHUA services

- Pulp: The service that oversees management of the supporting services and provides a user interface for users to interact with.

- MongoDB: A NoSQL database used to keep track of currently synchronized repositories, packages, and other crucial metadata. MongoDB stores values in BSON (Binary JSON) objects.

- Qpid: An Apache-based messaging broker system that allows the RHUA to interact securely with the CDSs to inform them of desired actions against the RHUA (synchronize, remove, adjust repositories, and so on). This allows for full control over the RHUI appliance from just the RHUA system.

The following considerations might apply:

- MongoDB’s files can take up a large amount of room on the file system and are sometimes larger than the database content itself. This is normal behavior that results from MongoDB’s allocation method. See RHUIs mongodb files are larger than the actual database contents for more information.

- If MongoDB fails to start, clearing database locks and performing a repair is often effective as outlined in Red Hat Update Infrastructure fails to start due to a MongoDB startup error.

1.3.2. Content delivery server

The CDS nodes provide the repositories that clients connect to for the updated content. There can be as few as one CDS. Because RHUI provides a load balancer with failover capabilities, we recommend that you use multiple CDS nodes.

The CDSs host content for end-user RHEL systems. While there is no required number of systems, the CDSs work in a round-robin, load-balanced fashion (A, B, C, A, B, C) to deliver content to end-user systems. Each CDS serves content to end-user systems over HTTPS through httpd-based yum repositories.
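
To make the round-robin order concrete, the following Python sketch shows which node would serve each of six consecutive requests. It only illustrates the A, B, C, A, B, C pattern; the node names are hypothetical, and the real distribution is performed by the load balancer, not by code like this.

```python
from itertools import cycle, islice

# Hypothetical CDS host names used only for illustration.
cds_nodes = ["cds-a", "cds-b", "cds-c"]


def assign_requests(nodes, request_count):
    """Return the node that serves each request, in round-robin order."""
    return list(islice(cycle(nodes), request_count))


print(assign_requests(cds_nodes, 6))
# ['cds-a', 'cds-b', 'cds-c', 'cds-a', 'cds-b', 'cds-c']
```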

During configuration, you specify the CDS directory where packages are synchronized. Similar to the RHUA, the only requirement is that you mount the directory on the CDS. It is up to the cloud provider to determine the best course of action when allocating the necessary devices. The Red Hat Update Infrastructure Management Tool configuration RPM links the package directory with the Apache configuration to serve it.

If NFS is used, rhui-installer can configure an NFS share on the RHUA to store the content as well as a directory on the CDSs to mount the NFS share. The following rhui-manager options control these settings:

- --remote-fs-mountpoint is the file system location where the remote file system share should be mounted (default: /var/lib/rhui/remote_share)

- --remote-fs-server is the remote mount point for a shared file system to use, for example, nfs.example.com:/path/to/share (default: nfs.example.com:/export)

If these default values are used, the /export directory on the RHUA and the /var/lib/rhui/remote_share directory on each CDS are identical. For example, the published subdirectory has the following structure if yum, docker, and ostree repositories are already synchronized.

    [root@rhua ~]# ls /export/published/
    docker  ostree  yum
    [root@cds01 ~]# ls /var/lib/rhui/remote_share/published/
    docker  ostree  yum
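
The relationship between the two default values can be pictured with a short Python sketch: the remote file system setting splits into a host and an export path, and any path under the export on the RHUA corresponds to the same relative path under the mount point on a CDS. The function names split_remote_fs and cds_view are hypothetical helpers written for this illustration, not RHUI commands.

```python
from pathlib import PurePosixPath


def split_remote_fs(remote_fs_server):
    """Split a 'host:/export/path' value into its host and export path."""
    host, _, export = remote_fs_server.partition(":")
    return host, export


def cds_view(rhua_path, export="/export", mountpoint="/var/lib/rhui/remote_share"):
    """Translate a path under the RHUA export into the path seen on a CDS."""
    relative = PurePosixPath(rhua_path).relative_to(export)
    return str(PurePosixPath(mountpoint) / relative)


print(split_remote_fs("nfs.example.com:/export"))
# ('nfs.example.com', '/export')
print(cds_view("/export/published/yum"))
# /var/lib/rhui/remote_share/published/yum
```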

The expected usage is that each CDS keeps its copy of the packages. It is possible the cloud provider will use some form of shared storage (such as Gluster Storage) that the RHUA writes packages to and each CDS reads from.

The storage solution must provide an NFS endpoint for mounting on the RHUA and CDSs. If local storage is implemented, shared storage is needed for the cluster to work. If you want to provide local storage to the RHUA, configure the RHUA to function as the NFS server with a rhua.example.com:/path/to/nfs/share endpoint configured.

The only nonstandard logic that takes place on each CDS is the entitlement certificate checking. This checking ensures that the client making requests on the yum or ostree repositories is authorized by the cloud provider to access those repositories. The check ensures the following conditions:

- The entitlement certificate was signed by the cloud provider’s Certificate Authority (CA) Certificate. The CA Certificate is installed on the CDS as part of its configuration to facilitate this verification.

- The requested URI matches an entitlement found in the client’s entitlement certificate
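
The second condition amounts to a path match. The following Python sketch shows one way such a match could work; it is not the code that runs on the CDS. It assumes that an entitlement lists content paths that may contain yum-style placeholders such as $releasever and $basearch, each standing for one path segment. The sample paths and the function name uri_matches_entitlement are hypothetical. The first condition, the CA signature check, is not modeled here.

```python
import re


def uri_matches_entitlement(request_uri, entitled_paths):
    """Return True if request_uri falls under any of the entitled paths.

    Assumption: a segment that starts with '$' is a placeholder that
    matches exactly one path segment of the request.
    """
    for path in entitled_paths:
        parts = [
            "[^/]+" if segment.startswith("$") else re.escape(segment)
            for segment in path.strip("/").split("/")
        ]
        # Allow the entitled path itself or anything below it.
        pattern = "^/" + "/".join(parts) + "(/.*)?$"
        if re.match(pattern, request_uri):
            return True
    return False


# Hypothetical entitlement path and requests, for illustration only.
entitled = ["/content/dist/rhel/rhui/server/7/$releasever/$basearch/os"]
allowed = "/content/dist/rhel/rhui/server/7/7Server/x86_64/os/repodata/repomd.xml"
denied = "/content/dist/rhel/rhui/server/6/6Server/x86_64/os/repodata/repomd.xml"

print(uri_matches_entitlement(allowed, entitled))  # True
print(uri_matches_entitlement(denied, entitled))   # False
```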

If the CA verification fails, the client sees an SSL error. See the CDS’s Apache logs under /var/log/httpd/ for more information.

    [root@cds01 ~]# ls -1 /var/log/httpd/
    access_log
    cds.example.com_access_ssl.log
    cds.example.com_error_ssl.log
    crane_access_ssl.log
    crane_error_ssl.log
    default_error.log
    error_log

The Apache configuration is handled through the /etc/httpd/conf.d/cds_ssl.conf file during the CDS installation.

If multiple clients experience problems updating against a repository, this may indicate a problem with the RHUI. See Yum generates 'Errno 14 HTTP Error 401: Authorization Required' while accessing RHUI CDS for more details.

1.3.3. HAProxy

If more than one CDS is used, a load-balancing solution must be in place to spread client HTTPS requests across all servers. RHUI ships with HAProxy, but it is up to you to choose what load-balancing solution (for example, the one from the cloud provider) to use during the installation. If HAProxy is used, you must also decide how many nodes to bring in. See HAProxy Configuration for more information.

Clients are not configured to go directly to a CDS; their repository files are configured to point to HAProxy, the RHUI load balancer. HAProxy is a TCP/HTTP reverse proxy particularly suited for high-availability environments. HAProxy performs the following tasks:

- Routes HTTP requests depending on statically assigned cookies

- Spreads the load among several servers while assuring server persistence through the use of HTTP cookies

- Switches to backup servers in the event a main server fails

- Accepts connections to special ports dedicated to service monitoring

- Stops accepting connections without breaking existing ones

- Adds, modifies, and deletes HTTP headers in both directions

- Blocks requests matching particular patterns

- Persists client connections to the correct application server, depending on application cookies

- Reports detailed status as HTML pages to authenticated users from a URI intercepted from the application

With RHEL 7, the load balancer technology is included in the base operating system. The load balancer must be installed on a separate node.

If you use an existing load balancer, ensure that ports 5000 and 443 are configured in the load balancer for the cds-lb-hostname and forwarded to the pool, and that all CDSs in the cluster are in the load balancer’s pool. You do not need to follow the steps in Chapter 8.

The exact configuration depends on the particular load balancer software you use. See the following configuration, taken from a typical HAProxy setup, to understand how you should configure your load balancer:

    [root@rhui3proxy ~]# cat /etc/haproxy/haproxy.cfg
    # This file managed by Puppet
    global
      chroot  /var/lib/haproxy
      daemon
      group  haproxy
      log  10.13.153.2 local0
      maxconn  4000
      pidfile  /var/run/haproxy.pid
      stats  socket /var/lib/haproxy/stats
      user  haproxy

    defaults
      log  global
      maxconn  8000
      option  redispatch
      retries  3
      stats  enable
      timeout  http-request 10s
      timeout  queue 1m
      timeout  connect 10s
      timeout  client 1m
      timeout  server 1m
      timeout  check 10s

    listen crane00
      bind 10.13.153.2:5000
      balance  roundrobin
      option  tcplog
      option  ssl-hello-chk
      server cds3-2.usersys.redhat.com cds3-2.usersys.redhat.com:5000 check
      server cds3-1.usersys.redhat.com cds3-1.usersys.redhat.com:5000 check

    listen https00
      bind 10.13.153.2:443
      balance  roundrobin
      option  tcplog
      option  ssl-hello-chk
      server cds3-2.usersys.redhat.com cds3-2.usersys.redhat.com:443 check
      server cds3-1.usersys.redhat.com cds3-1.usersys.redhat.com:443 check

See the Load Balancer Administration Guide for Red Hat Enterprise Linux 7 for detailed descriptions of the individual global, default, and "listen" settings.
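
When you adapt a configuration like this one, it can help to confirm that every CDS appears in the pools for both port 5000 and port 443. The following Python sketch does this for a trimmed copy of the two listen sections. It is a simplified reader written for this example, not a complete HAProxy parser: it assumes one directive per line and a bind value in address:port form. The function names are hypothetical.

```python
SAMPLE_CONFIG = """
listen crane00
  bind 10.13.153.2:5000
  balance roundrobin
  server cds3-2.usersys.redhat.com cds3-2.usersys.redhat.com:5000 check
  server cds3-1.usersys.redhat.com cds3-1.usersys.redhat.com:5000 check
listen https00
  bind 10.13.153.2:443
  balance roundrobin
  server cds3-2.usersys.redhat.com cds3-2.usersys.redhat.com:443 check
  server cds3-1.usersys.redhat.com cds3-1.usersys.redhat.com:443 check
"""


def parse_listen_sections(config_text):
    """Map each listen section name to its bind value and server targets."""
    sections = {}
    current = None
    for raw in config_text.splitlines():
        words = raw.split()
        if not words or words[0].startswith("#"):
            continue
        if words[0] in ("global", "defaults", "frontend", "backend"):
            current = None  # leave any listen section we were reading
        elif words[0] == "listen" and len(words) > 1:
            current = sections.setdefault(words[1], {"bind": None, "servers": []})
        elif current is not None and words[0] == "bind" and len(words) > 1:
            current["bind"] = words[1]
        elif current is not None and words[0] == "server" and len(words) > 2:
            current["servers"].append(words[2])  # the host:port target
    return sections


def hosts_by_port(sections):
    """Map each bound port to the set of server host names in its pool."""
    pools = {}
    for section in sections.values():
        if section["bind"] is None:
            continue
        port = int(section["bind"].rsplit(":", 1)[1])
        pools[port] = {t.rsplit(":", 1)[0] for t in section["servers"]}
    return pools


def missing_cds(pools, expected_hosts, required_ports=(5000, 443)):
    """List, per required port, the expected CDS hosts absent from the pool."""
    return {
        port: sorted(set(expected_hosts) - pools.get(port, set()))
        for port in required_ports
    }


CDS_HOSTS = ["cds3-1.usersys.redhat.com", "cds3-2.usersys.redhat.com"]
POOLS = hosts_by_port(parse_listen_sections(SAMPLE_CONFIG))
print(missing_cds(POOLS, CDS_HOSTS))  # {5000: [], 443: []}
```

An empty list for each port means that every expected CDS is present in that pool.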

Keep in mind that when clients fail to connect successfully, it is important to review the httpd logs on the CDS under /var/log/httpd/ to ensure that any requests reached the CDS. If requests do not reach the CDS, issues such as DNS or general network connectivity may be at fault.

1.3.4. Repositories, containers, and content

A repository is a storage location for software packages (RPMs). RHEL uses yum commands to search a repository and to download, install, and configure the RPMs. Each RPM declares the dependencies that its software needs, and yum resolves those dependencies and downloads updates for installed software from your repositories.

RHEL 7 implements Linux containers using core technologies such as control groups (cgroups) for resource management, namespaces for process isolation, and SELinux for security, enabling secure multiple tenancy and reducing the potential for security exploits. Linux containers enable rapid application deployment, simpler testing, maintenance, and troubleshooting while improving security. Using RHEL 7 with Docker allows you to increase staff efficiency, deploy third-party applications faster, enable a more agile development environment, and manage resources more tightly.

There are two general scenarios for using Linux containers in RHEL 7. You can work with host containers as a tool for application sandboxing, or you can use the extended features of image-based containers. When you launch a container from an image, a writable layer is added on top of this image. Every time you commit a container (using the docker commit command), a new image layer is added to store your changes.

Content, as it relates to RHUI, is the software (such as RPMs) that you download from the Red Hat CDN for use on the RHUA and the CDS nodes. The RPMs provide the files necessary to run specific applications and tools. Clients are granted access by a set of SSL content certificates and keys provided by an rpm package, which also provides a set of generated yum repository files.

See What Channels Can Be Delivered at Red Hat’s Certified Cloud & Service Provider (CCSP) Partners? for more information.

1.4. Content provider types

There are three types of cloud computing environments: public cloud, private cloud, and hybrid cloud. This guide focuses on public and private clouds. We assume the audience understands the implications of using public, private, and hybrid clouds.

1.5. Utility and command line interface commands

See Appendix A, Red Hat Update Infrastructure Management Tool menus and commands for a list of the functions you can perform using Red Hat Update Infrastructure Management Tool.

See Appendix B, Red Hat Update Infrastructure command line interface for a list of the functions that can also be run from a standard shell prompt.

1.6. Component communications

All RHUI components use the HTTPS communication protocol over port 443.

Table 1.3. Red Hat Update Infrastructure communication protocols

| Source | Destination | Protocol | Purpose |
|---|---|---|---|
| Red Hat Update Appliance | Red Hat Content Delivery Network | HTTPS | Downloads packages from Red Hat |
| Load Balancer | Content Delivery Server | HTTPS | Forwards the client’s yum, docker, or ostree request |
| Client | Load Balancer | HTTPS | Used by yum, docker, or ostree on the client to download packages from a content delivery server |
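
When you troubleshoot these paths, a basic first check is whether the source can open a TCP connection to port 443 on the destination. The following Python sketch shows such a check using only the standard library. It confirms reachability only; it does not validate certificates or entitlements. The function name can_connect is a hypothetical helper, not an RHUI tool.

```python
import socket


def can_connect(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False
```

For example, you might run can_connect("cds01.example.com") on the load balancer node, where cds01.example.com is a placeholder for one of your CDS host names. A False result points to DNS or general network connectivity rather than to RHUI itself.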