Managing Red Hat Process Automation Manager and KIE Server settings

Abstract

Preface

As a developer or system administrator, you can modify Red Hat Process Automation Manager and KIE Server settings and properties to meet your business needs. You can modify the behavior of the Red Hat Process Automation Manager runtime, the Business Central interface, or the KIE Server.

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Part I. Managing and monitoring KIE Server

As a system administrator, you can install, configure, and upgrade Red Hat Process Automation Manager for production environments, quickly and easily troubleshoot system failures, and ensure that systems are running optimally.

Prerequisites

- Red Hat JBoss Enterprise Application Platform 7.3 is installed. For more information, see Red Hat JBoss Enterprise Application Platform 7.3 Installation Guide.

- Red Hat Process Automation Manager is installed. For more information, see Planning a Red Hat Process Automation Manager installation.

-

Red Hat Process Automation Manager is running and you can log in to Business Central with the

adminrole. For more information, see Planning a Red Hat Process Automation Manager installation.

Chapter 1. Red Hat Process Automation Manager components

Red Hat Process Automation Manager is made up of Business Central and KIE Server.

Business Central is the graphical user interface where you create and manage business rules. You can install Business Central in a Red Hat JBoss EAP instance or on the Red Hat OpenShift Container Platform (OpenShift).

Business Central is also available as a standalone JAR file. You can use the Business Central standalone JAR file to run Business Central without deploying it to an application server.

KIE Server is the server where rules and other artifacts are executed. It is used to instantiate and execute rules and solve planning problems. You can install KIE Server in a Red Hat JBoss EAP instance, in a Red Hat JBoss EAP cluster, on OpenShift, in an Oracle WebLogic server instance, in an IBM WebSphere Application Server instance, or as a part of Spring Boot application.

You can configure KIE Server to run in managed or unmanaged mode. If KIE Server is unmanaged, you must manually create and maintain KIE containers (deployment units). A KIE container is a specific version of a project. If KIE Server is managed, the Process Automation Manager controller manages the KIE Server configuration and you interact with the Process Automation Manager controller to create and maintain KIE containers.

Chapter 2. System integration with Maven

Red Hat Process Automation Manager is designed to be used with Red Hat JBoss Middleware Maven Repository and Maven Central repository as dependency sources. Ensure that both the dependencies are available for projects builds.

Ensure that your project depends on specific versions of an artifact. LATEST or RELEASE are commonly used to specify and manage dependency versions in your application.

-

LATESTrefers to the latest deployed (snapshot) version of an artifact. -

RELEASErefers to the last non-snapshot version release in the repository.

By using LATEST or RELEASE, you do not have to update version numbers when a new release of a third-party library is released, however, you lose control over your build being affected by a software release.

2.1. Preemptive authentication for local projects

If your environment does not have access to the internet, set up an in-house Nexus and use it instead of Maven Central or other public repositories. To import JARs from the remote Maven repository of Red Hat Process Automation Manager server to a local Maven project, turn on pre-emptive authentication for the repository server. You can do this by configuring authentication for guvnor-m2-repo in the pom.xml file as shown below:

<server>

<id>guvnor-m2-repo</id>

<username>admin</username>

<password>admin</password>

<configuration>

<wagonProvider>httpclient</wagonProvider>

<httpConfiguration>

<all>

<usePreemptive>true</usePreemptive>

</all>

</httpConfiguration>

</configuration>

</server>Alternatively, you can set Authorization HTTP header with Base64 encoded credentials:

<server>

<id>guvnor-m2-repo</id>

<configuration>

<httpHeaders>

<property>

<name>Authorization</name>

<!-- Base64-encoded "admin:admin" -->

<value>Basic YWRtaW46YWRtaW4=</value>

</property>

</httpHeaders>

</configuration>

</server>2.2. Duplicate GAV detection in Business Central

In Business Central, all Maven repositories are checked for any duplicated GroupId, ArtifactId, and Version (GAV) values in a project. If a GAV duplicate exists, the performed operation is canceled.

Duplicate GAV detection is disabled for projects in Development Mode. To enable duplicate GAV detection in Business Central, go to project Settings → General Settings → Version and toggle the Development Mode option to OFF (if applicable).

Duplicate GAV detection is executed every time you perform the following operations:

- Save a project definition for the project.

-

Save the

pom.xmlfile. - Install, build, or deploy a project.

The following Maven repositories are checked for duplicate GAVs:

-

Repositories specified in the

<repositories>and<distributionManagement>elements of thepom.xmlfile. -

Repositories specified in the Maven

settings.xmlconfiguration file.

2.3. Managing duplicate GAV detection settings in Business Central

Business Central users with the admin role can modify the list of repositories that are checked for duplicate GroupId, ArtifactId, and Version (GAV) values for a project.

Duplicate GAV detection is disabled for projects in Development Mode. To enable duplicate GAV detection in Business Central, go to project Settings → General Settings → Version and toggle the Development Mode option to OFF (if applicable).

Procedure

- In Business Central, go to Menu → Design → Projects and click the project name.

- Click the project Settings tab and then click Validation to open the list of repositories.

Select or clear any of the listed repository options to enable or disable duplicate GAV detection.

In the future, duplicate GAVs will be reported for only the repositories you have enabled for validation.

NoteTo disable this feature, set the

org.guvnor.project.gav.check.disabledsystem property totruefor Business Central at system startup:$ ~/EAP_HOME/bin/standalone.sh -c standalone-full.xml -Dorg.guvnor.project.gav.check.disabled=true

Chapter 3. Applying patch updates and minor release upgrades to Red Hat Process Automation Manager

Automated update tools are often provided with both patch updates and new minor versions of Red Hat Process Automation Manager to facilitate updating certain components of Red Hat Process Automation Manager, such as Business Central, KIE Server, and the headless Process Automation Manager controller. Other Red Hat Process Automation Manager artifacts, such as the decision engine and standalone Business Central, are released as new artifacts with each minor release and you must re-install them to apply the update.

You can use the same automated update tool to apply both patch updates and minor release upgrades to Red Hat Process Automation Manager 7.10. Patch updates of Red Hat Process Automation Manager, such as an update from version 7.10 to 7.10.1, include the latest security updates and bug fixes. Minor release upgrades of Red Hat Process Automation Manager, such as an upgrade from version 7.9.x to 7.10, include enhancements, security updates, and bug fixes.

Only updates for Red Hat Process Automation Manager are included in Red Hat Process Automation Manager update tools. Updates to Red Hat JBoss EAP must be applied using Red Hat JBoss EAP patch distributions. For more information about Red Hat JBoss EAP patching, see the Red Hat JBoss EAP patching and upgrading guide.

Prerequisites

- Your Red Hat Process Automation Manager and KIE Server instances are not running. Do not apply updates while you are running an instance of Red Hat Process Automation Manager or KIE Server.

Procedure

Navigate to the Software Downloads page in the Red Hat Customer Portal (login required), and select the product and version from the drop-down options.

If you are upgrading to a new minor release of Red Hat Process Automation Manager, such as an upgrade from version 7.9.x to 7.10, first apply the latest patch update to your current version of Red Hat Process Automation Manager and then follow this procedure again to upgrade to the new minor release.

Click Patches, download the Red Hat Process Automation Manager [VERSION] Update Tool, and extract the downloaded

rhpam-$VERSION-update.zipfile to a temporary directory.This update tool automates the update of certain components of Red Hat Process Automation Manager, such as Business Central, KIE Server, and the headless Process Automation Manager controller. Use this update tool first to apply updates and then install any other updates or new release artifacts that are relevant to your Red Hat Process Automation Manager distribution.

If you want to preserve any files from being updated by the update tool, navigate to the extracted

rhpam-$VERSION-updatefolder, open theblacklist.txtfile, and add the relative paths to the files that you do not want to be updated.When a file is listed in the

blacklist.txtfile, the update script does not replace the file with the new version but instead leaves the file in place and in the same location adds the new version with a.newsuffix. If you block files that are no longer being distributed, the update tool creates an empty marker file with a.removedsuffix. You can then choose to retain, merge, or delete these new files manually.Example files to be excluded in

blacklist.txtfile:WEB-INF/web.xml // Custom file styles/base.css // Obsolete custom file kept for record

The contents of the blocked file directories after the update:

$ ls WEB-INF web.xml web.xml.new

$ ls styles base.css base.css.removed

In your command terminal, navigate to the temporary directory where you extracted the

rhpam-$VERSION-update.zipfile and run theapply-updatesscript in the following format:ImportantMake sure that your Red Hat Process Automation Manager and KIE Server instances are not running before you apply updates. Do not apply updates while you are running an instance of Red Hat Process Automation Manager or KIE Server.

On Linux or Unix-based systems:

$ ./apply-updates.sh $DISTRO_PATH $DISTRO_TYPE

On Windows:

$ .\apply-updates.bat $DISTRO_PATH $DISTRO_TYPE

The

$DISTRO_PATHportion is the path to the relevant distribution directory and the$DISTRO_TYPEportion is the type of distribution that you are updating with this update.The following distribution types are supported in Red Hat Process Automation Manager update tool:

-

rhpam-business-central-eap7-deployable: Updates Business Central (business-central.war) rhpam-kie-server-ee8: Updates KIE Server (kie-server.war)NoteThe update tool will update and replace Red Hat JBoss EAP EE7 to Red Hat JBoss EAP EE8. Red Hat JBoss EAP EE7 is used for WebLogic and WebSphere, whereas version EE8 is used for Red Hat JBoss EAP. Make sure that the KIE Server on WebLogic and WebSphere is not updated by the update tool.

-

rhpam-kie-server-jws: Updates KIE Server on Red Hat JBoss Web Server (kie-server.war) -

rhpam-controller-ee7: Updates the headless Process Automation Manager controller (controller.war) rhpam-controller-jws: Updates the headless Process Automation Manager controller on Red Hat JBoss Web Server (controller.war)Example update to Business Central and KIE Server for a full Red Hat Process Automation Manager distribution on Red Hat JBoss EAP:

./apply-updates.sh ~EAP_HOME/standalone/deployments/business-central.war rhpam-business-central-eap7-deployable ./apply-updates.sh ~EAP_HOME/standalone/deployments/kie-server.war rhpam-kie-server-ee8

Example update to headless Process Automation Manager controller, if used:

./apply-updates.sh ~EAP_HOME/standalone/deployments/controller.war rhpam-controller-ee7

The update script creates a

backupfolder in the extractedrhpam-$VERSION-updatefolder with a copy of the specified distribution, and then proceeds with the update.

-

After the update tool completes, return to the Software Downloads page of the Red Hat Customer Portal where you downloaded the update tool and install any other updates or new release artifacts that are relevant to your Red Hat Process Automation Manager distribution.

For files that already exist in your Red Hat Process Automation Manager distribution, such as

.jarfiles for the decision engine or other add-ons, replace the existing version of the file with the new version from the Red Hat Customer Portal.If you use the standalone Red Hat Process Automation Manager 7.10.0 Maven Repository artifact (

rhpam-7.10.0-maven-repository.zip), such as in air-gap environments, download Red Hat Process Automation Manager 7.10.x Maven Repository and extract the downloadedrhpam-7.10.x-maven-repository.zipfile to your existing~/maven-repositorydirectory to update the relevant contents.Example Maven repository update:

$ unzip -o rhpam-7.10.x-maven-repository.zip 'rhba-7.10.1.GA-maven-repository/maven-repository/*' -d /tmp/rhbaMavenRepoUpdate $ mv /tmp/rhbaMavenRepoUpdate/rhba-7.10.0.GA-maven-repository/maven-repository/ $REPO_PATH/

NoteYou can remove the

/tmp/rhbaMavenRepoUpdatefolder after you complete the update.- After you finish applying all relevant updates, start Red Hat Process Automation Manager and KIE Server and log in to Business Central.

Verify that all project data is present and accurate in Business Central, and in the top-right corner of the Business Central window, click your profile name and click About to verify the updated product version number.

If you encounter errors or notice any missing data in Business Central, you can restore the contents in the

backupfolder within therhpam-$VERSION-updatefolder to revert the update tool changes. You can also re-install the relevant release artifacts from your previous version of Red Hat Process Automation Manager in the Red Hat Customer Portal. After restoring your previous distribution, you can try again to run the update.

Chapter 4. Configuring and starting KIE Server

You can configure your KIE Server location, user name, password, and other related properties by defining the necessary configurations when you start KIE Server.

Procedure

Navigate to the Red Hat Process Automation Manager 7.10 bin directory and start the new KIE Server with the following properties. Adjust the specific properties according to your environment.

$ ~/EAP_HOME/bin/standalone.sh --server-config=standalone-full.xml 1 -Dorg.kie.server.id=myserver 2 -Dorg.kie.server.user=kie_server_username 3 -Dorg.kie.server.pwd=kie_server_password 4 -Dorg.kie.server.controller=http://localhost:8080/business-central/rest/controller 5 -Dorg.kie.server.controller.user=controller_username 6 -Dorg.kie.server.controller.pwd=controller_password 7 -Dorg.kie.server.location=http://localhost:8080/kie-server/services/rest/server 8 -Dorg.kie.server.persistence.dialect=org.hibernate.dialect.PostgreSQLDialect 9 -Dorg.kie.server.persistence.ds=java:jboss/datasources/psjbpmDS 10

- 1

- Start command with

standalone-full.xmlserver profile - 2

- Server ID that must match the server configuration name defined in Business Central

- 3

- User name to connect with KIE Server from the Process Automation Manager controller

- 4

- Password to connect with KIE Server from the Process Automation Manager controller

- 5

- Process Automation Manager controller location, Business Central URL with

/rest/controllersuffix - 6

- User name to connect to the Process Automation Manager controller REST API

- 7

- Password to connect to the Process Automation Manager controller REST API

- 8

- KIE Server location (on the same instance as Business Central in this example)

- 9

- Hibernate dialect to be used

- 10

- JNDI name of the data source used for your previous Red Hat JBoss BPM Suite database

If Business Central and KIE Server are installed on separate application server instances (Red Hat JBoss EAP or other), use a separate port for the KIE Server location to avoid port conflicts with Business Central. If a separate KIE Server port has not already been configured, you can add a port offset and adjust the KIE Server port value accordingly in the KIE Server properties.

Example:

-Djboss.socket.binding.port-offset=150 -Dorg.kie.server.location=http://localhost:8230/kie-server/services/rest/server

If the Business Central port is 8080, as in this example, then the KIE Server port, with a defined offset of 150, is 8230.

KIE Server connects to the new Business Central and collects the list of deployment units (KIE containers) to be deployed.

When you use a class inside a dependency JAR file to access KIE Server from KIE Server client, you get the ConversionException and ForbiddenClassException in Business Central. To avoid generating these exceptions in Business Central, do one of the following:

- If the exceptions are generated on the client-side, add following system property to the kie-server client:

System.setProperty("org.kie.server.xstream.enabled.packages", "org.example.**");-

If the exceptions are generated on the server-side, open

standalone-full.xmlfrom the Red Hat Process Automation Manager installation directory, set the following property under the <system-properties> tag:

<property name="org.kie.server.xstream.enabled.packages" value="org.example.**"/>

- Set the following JVM property:

-Dorg.kie.server.xstream.enabled.packages=org.example.**

It is expected that you do not configure the classes that exists in KJAR using these system property. Ensure that only known classes are used in the system property to avoid any vulnerabilities.

The org.example is an example package, you can define any package that you want to use. You can specify multiple packages separated by comma , for example, org.example1.* * , org.example2.* * , org.example3.* *.

You can also add specific classes , for example, org.example1.Mydata1, org.example2.Mydata2.

Chapter 5. Configuring JDBC data sources for KIE Server

A data source is an object that enables a Java Database Connectivity (JDBC) client, such as an application server, to establish a connection with a database. Applications look up the data source on the Java Naming and Directory Interface (JNDI) tree or in the local application context and request a database connection to retrieve data. You must configure data sources for KIE Server to ensure correct data exchange between the servers and the designated database.

For production environments, specify an actual data source. Do not use the example data source in production environments.

Prerequisites

- The JDBC providers that you want to use to create database connections are configured on all servers on which you want to deploy KIE Server, as described in the "Creating Datasources" and "JDBC Drivers" sections of the Red Hat JBoss Enterprise Application Server Configuration Guide.

-

The Red Hat Process Automation Manager 7.10.0 Add Ons (

rhpam-7.10.0-add-ons.zip) file is downloaded from the Software Downloads page in the Red Hat Customer Portal.

Procedure

Complete the following steps to prepare your database:

-

Extract

rhpam-7.10.0-add-ons.zipin a temporary directory, for exampleTEMP_DIR. -

Extract

TEMP_DIR/rhpam-7.10.0-migration-tool.zip. -

Change your current directory to the

TEMP_DIR/rhpam-7.10.0-migration-tool/ddl-scriptsdirectory. This directory contains DDL scripts for several database types. Import the DDL script for your database type into the database that you want to use.

- The following example creates jBPM database structures in PostreSQL

psql jbpm < /ddl-scripts/postgresql/postgresql-jbpm-schema.sql

NoteIf you are using PostgreSQL or Oracle in conjunction with Spring Boot, you must import the respective Spring Boot DDL script, for example

/ddl-scripts/oracle/oracle-springboot-jbpm-schema.sqlor/ddl-scripts/postgresql/postgresql-springboot-jbpm-schema.sql.NoteThe PostgreSQL DDL scripts create the PostgreSQL schema with auto-incrementing integer value (OID) columns for entity attributes annotated with

@LOB. To use other binary column types such as BYTEA instead of OID, you must create the PostgreSQL schema with thepostgresql-bytea-jbpm-schema.sqlscript and set the Red Hat Process Automation Managerorg.kie.persistence.postgresql.useBytea=trueflag. Red Hat Process Automation Manager does not provide a migration tool to change from an OID-based to a BYTEA-based schema.

-

Extract

-

Open

EAP_HOME/standalone/configuration/standalone-full.xmlin a text editor and locate the<system-properties>tag. Add the following properties to the

<system-properties>tag where<DATASOURCE>is the JNDI name of your data source and<HIBERNATE_DIALECT>is the hibernate dialect for your database.NoteThe default value of the

org.kie.server.persistence.dsproperty isjava:jboss/datasources/ExampleDS. The default value of theorg.kie.server.persistence.dialectproperty isorg.hibernate.dialect.H2Dialect.<property name="org.kie.server.persistence.ds" value="<DATASOURCE>"/> <property name="org.kie.server.persistence.dialect" value="<HIBERNATE_DIALECT>"/>

The following example shows how to configure a datasource for the PostgreSQL hibernate dialect:

<system-properties> <property name="org.kie.server.repo" value="${jboss.server.data.dir}"/> <property name="org.kie.example" value="true"/> <property name="org.jbpm.designer.perspective" value="full"/> <property name="designerdataobjects" value="false"/> <property name="org.kie.server.user" value="rhpamUser"/> <property name="org.kie.server.pwd" value="rhpam123!"/> <property name="org.kie.server.location" value="http://localhost:8080/kie-server/services/rest/server"/> <property name="org.kie.server.controller" value="http://localhost:8080/business-central/rest/controller"/> <property name="org.kie.server.controller.user" value="kieserver"/> <property name="org.kie.server.controller.pwd" value="kieserver1!"/> <property name="org.kie.server.id" value="local-server-123"/> <!-- Data source properties. --> <property name="org.kie.server.persistence.ds" value="java:jboss/datasources/KieServerDS"/> <property name="org.kie.server.persistence.dialect" value="org.hibernate.dialect.PostgreSQLDialect"/> </system-properties>

The following dialects are supported:

-

DB2:

org.hibernate.dialect.DB2Dialect -

MSSQL:

org.hibernate.dialect.SQLServer2012Dialect -

MySQL:

org.hibernate.dialect.MySQL5InnoDBDialect -

MariaDB:

org.hibernate.dialect.MySQL5InnoDBDialect -

Oracle:

org.hibernate.dialect.Oracle10gDialect -

PostgreSQL:

org.hibernate.dialect.PostgreSQL82Dialect -

PostgreSQL plus:

org.hibernate.dialect.PostgresPlusDialect -

Sybase:

org.hibernate.dialect.SybaseASE157Dialect

Chapter 6. Managed KIE Server

A managed instance requires an available Process Automation Manager controller to start the KIE Server.

A Process Automation Manager controller manages the KIE Server configuration in a centralized way. Each Process Automation Manager controller can manage multiple configurations at once, and there can be multiple Process Automation Manager controllers in the environment. Managed KIE Server can be configured with a list of Process Automation Manager controllers, but will only connect to one at a time.

All Process Automation Manager controllers should be synchronized to ensure that the same set of configuration is provided to the server, regardless of the Process Automation Manager controller to which it connects.

When the KIE Server is configured with a list of Process Automation Manager controllers, it will attempt to connect to each of them at startup until a connection is successfully established with one of them. If a connection cannot be established, the server will not start, even if there is a local storage available with configuration. This ensures consistency and prevents the server from running with redundant configuration.

To run the KIE Server in standalone mode without connecting to Process Automation Manager controllers, see Chapter 7, Unmanaged KIE Server.

Chapter 7. Unmanaged KIE Server

An unmanaged KIE Server is a standalone instance, and therefore must be configured individually using REST/JMS API from the KIE Server itself. The configuration is automatically persisted by the server into a file and that is used as the internal server state, in case of restarts.

The configuration is updated during the following operations:

- Deploy KIE container

- Undeploy KIE container

- Start KIE container

- Stop KIE container

If the KIE Server is restarted, it will attempt to re-establish the same state that was persisted before shutdown. Therefore, KIE containers (deployment units) that were running will be started, but the ones that were stopped will not.

Chapter 8. Configuring the environment mode in KIE Server and Business Central

You can set KIE Server to run in production mode or in development mode. Development mode provides a flexible deployment policy that enables you to update existing deployment units (KIE containers) while maintaining active process instances for small changes. It also enables you to reset the deployment unit state before updating active process instances for larger changes. Production mode is optimal for production environments, where each deployment creates a new deployment unit.

In a development environment, you can click Deploy in Business Central to deploy the built KJAR file to a KIE Server without stopping any running instances (if applicable), or click Redeploy to deploy the built KJAR file and replace all instances. The next time you deploy or redeploy the built KJAR, the previous deployment unit (KIE container) is automatically updated in the same target KIE Server.

In a production environment, the Redeploy option in Business Central is disabled and you can click only Deploy to deploy the built KJAR file to a new deployment unit (KIE container) on a KIE Server.

Procedure

-

To configure the KIE Server environment mode, set the

org.kie.server.modesystem property toorg.kie.server.mode=developmentororg.kie.server.mode=production. To configure the deployment behavior for a project in Business Central, go to project Settings → General Settings → Version and toggle the Development Mode option.

NoteBy default, KIE Server and all new projects in Business Central are in development mode.

You cannot deploy a project with Development Mode turned on or with a manually added

SNAPSHOTversion suffix to a KIE Server that is in production mode.

Chapter 9. Configuring KIE Server to connect to Business Central

This section provides a sample setup that you can use for testing purposes. Some of the values are unsuitable for a production environment, and are marked as such.

If a KIE Server is not configured in your Red Hat Process Automation Manager environment, or if you require additional KIE Servers in your Red Hat Process Automation Manager environment, you must configure a KIE Server to connect to Business Central.

If you are deploying KIE Server on Red Hat OpenShift Container Platform, see the Deploying a Red Hat Process Automation Manager environment on Red Hat OpenShift Container Platform 4 using Operators document for instructions about configuring it to connect to Business Central.

KIE Server can be managed or unmanaged. If KIE Server is unmanaged, you must manually create and maintain KIE containers (deployment units). If KIE Server is managed, the Process Automation Manager controller manages the KIE Server configuration and you interact with the Process Automation Manager controller to create and maintain the KIE containers.

Make the changes described in this section if KIE Server is managed by Business Central and you have installed Red Hat Process Automation Manager from the ZIP files. If you have installed Business Central, you can use the headless Process Automation Manager controller to manage KIE Server, as described in Chapter 10, Installing and running the headless Process Automation Manager controller.

Prerequisites

-

Business Central and KIE Server are installed in the base directory of the Red Hat JBoss EAP installation (

EAP_HOME).

You must install Business Central and KIE Server on different servers in production environments. In this sample situation, we use only one user named controllerUser, containing both rest-all and the kie-server roles. However, if you install KIE Server and Business Central on the same server, for example in a development environment, make the changes in the shared standalone-full.xml file as described in this section.

Users with the following roles exist:

-

In Business Central, a user with the role

rest-all -

On the KIE Server, a user with the role

kie-server

-

In Business Central, a user with the role

Procedure

-

In your Red Hat Process Automation Manager installation directory, navigate to the

standalone-full.xmlfile. For example, if you use a Red Hat JBoss EAP installation for Red Hat Process Automation Manager, go to$EAP_HOME/standalone/configuration/standalone-full.xml. Open the

standalone-full.xmlfile and under the<system-properties>tag, set the following JVM properties:Table 9.1. JVM Properties for the KIE Server instance

Property Value Note org.kie.server.iddefault-kie-serverThe KIE Server ID.

org.kie.server.controllerhttp://localhost:8080/business-central/rest/controllerThe location of Business Central. The URL for connecting to the API of Business Central.

org.kie.server.controller.usercontrollerUserThe user name with the role

rest-allwho can log in to the Business Central.org.kie.server.controller.pwdcontrollerUser1234;The password of the user who can log in to the Business Central.

org.kie.server.locationhttp://localhost:8080/kie-server/services/rest/serverThe location of the KIE Server. The URL for connecting to the API of KIE Server.

Table 9.2. JVM Properties for the Business Central instance

Property Value Note org.kie.server.usercontrollerUserThe user name with the role

kie-server.org.kie.server.pwdcontrollerUser1234;The password of the user.

The following example shows how to configure a KIE Server instance:

<property name="org.kie.server.id" value="default-kie-server"/> <property name="org.kie.server.controller" value="http://localhost:8080/business-central/rest/controller"/> <property name="org.kie.server.controller.user" value="controllerUser"/> <property name="org.kie.server.controller.pwd" value="controllerUser1234;"/> <property name="org.kie.server.location" value="http://localhost:8080/kie-server/services/rest/server"/>

The following example shows how to configure a for Business Central instance:

<property name="org.kie.server.user" value="controllerUser"/> <property name="org.kie.server.pwd" value="controllerUser1234;"/>

To verify that KIE Server starts successfully, send a GET request to

http://SERVER:PORT/kie-server/services/rest/server/when KIE Server is running. For more information about running Red Hat Process Automation Manager on KIE Server, see Running Red Hat Process Automation Manager.After successful authentication, you receive an XML response similar to the following example:

<response type="SUCCESS" msg="Kie Server info"> <kie-server-info> <capabilities>KieServer</capabilities> <capabilities>BRM</capabilities> <capabilities>BPM</capabilities> <capabilities>CaseMgmt</capabilities> <capabilities>BPM-UI</capabilities> <capabilities>BRP</capabilities> <capabilities>DMN</capabilities> <capabilities>Swagger</capabilities> <location>http://localhost:8230/kie-server/services/rest/server</location> <messages> <content>Server KieServerInfo{serverId='first-kie-server', version='7.5.1.Final-redhat-1', location='http://localhost:8230/kie-server/services/rest/server', capabilities=[KieServer, BRM, BPM, CaseMgmt, BPM-UI, BRP, DMN, Swagger]}started successfully at Mon Feb 05 15:44:35 AEST 2018</content> <severity>INFO</severity> <timestamp>2018-02-05T15:44:35.355+10:00</timestamp> </messages> <name>first-kie-server</name> <id>first-kie-server</id> <version>7.5.1.Final-redhat-1</version> </kie-server-info> </response>Verify successful registration:

- Log in to Business Central.

Click Menu → Deploy → Execution Servers.

If registration is successful, you will see the registered server ID.

Chapter 10. Installing and running the headless Process Automation Manager controller

You can configure KIE Server to run in managed or unmanaged mode. If KIE Server is unmanaged, you must manually create and maintain KIE containers (deployment units). If KIE Server is managed, the Process Automation Manager controller manages the KIE Server configuration and you interact with the Process Automation Manager controller to create and maintain KIE containers.

Business Central has an embedded Process Automation Manager controller. If you install Business Central, use the Execution Server page to create and maintain KIE containers. If you want to automate KIE Server management without Business Central, you can use the headless Process Automation Manager controller.

10.1. Using the installer to configure KIE Server with the Process Automation Manager controller

KIE Server can be managed by the Process Automation Manager controller or it can be unmanaged. If KIE Server is unmanaged, you must manually create and maintain KIE containers (deployment units). If KIE Server is managed, the Process Automation Manager controller manages the KIE Server configuration and you interact with the Process Automation Manager controller to create and maintain KIE containers.

The Process Automation Manager controller is integrated with Business Central. If you install Business Central, you can use the Execution Server page in Business Central to interact with the Process Automation Manager controller.

You can use the installer in interactive or CLI mode to install Business Central and KIE Server, and then configure KIE Server with the Process Automation Manager controller.

Prerequisites

- Two computers with backed-up Red Hat JBoss EAP 7.3 server installations are available.

- Sufficient user permissions to complete the installation are granted.

Procedure

- On the first computer, run the installer in interactive mode or CLI mode. See Installing and configuring Red Hat Process Automation Manager on Red Hat JBoss EAP 7.3 for more information.

- On the Component Selection page, clear the KIE Server box.

- Complete the Business Central installation.

- On the second computer, run the installer in interactive mode or CLI mode.

- On the Component Selection page, clear the Business Central box.

- On the Configure Runtime Environment page, select Perform Advanced Configuration.

- Select Customize KIE Server properties and click Next.

Enter the controller URL for Business Central and configure additional properties for KIE Server. The controller URL has the following form where

<HOST:PORT>is the address of Business Central on the second computer:<HOST:PORT>/business-central/rest/controller

- Complete the installation.

- To verify that the Process Automation Manager controller is now integrated with Business Central, go to the Execution Servers page in Business Central and confirm that the KIE Server that you configured appears under REMOTE SERVERS.

10.2. Installing the headless Process Automation Manager controller

You can install the headless Process Automation Manager controller and use the REST API or the KIE Server Java Client API to interact with it.

Prerequisites

-

A backed-up Red Hat JBoss EAP installation version 7.3 is available. The base directory of the Red Hat JBoss EAP installation is referred to as

EAP_HOME. - Sufficient user permissions to complete the installation are granted.

Procedure

Navigate to the Software Downloads page in the Red Hat Customer Portal (login required), and select the product and version from the drop-down options:

- Product: Process Automation Manager

- Version: 7.10

-

Download Red Hat Process Automation Manager 7.10.0 Add Ons (the

rhpam-7.10.0-add-ons.zipfile). -

Extract the

rhpam-7.10.0-add-ons.zipfile. Therhpam-7.10.0-controller-ee7.zipfile is in the extracted directory. -

Extract the

rhpam-7.10.0-controller-ee7archive to a temporary directory. In the following examples this directory is calledTEMP_DIR. Copy the

TEMP_DIR/rhpam-7.10.0-controller-ee7/controller.wardirectory toEAP_HOME/standalone/deployments/.WarningEnsure that the names of the headless Process Automation Manager controller deployments you copy do not conflict with your existing deployments in the Red Hat JBoss EAP instance.

-

Copy the contents of the

TEMP_DIR/rhpam-7.10.0-controller-ee7/SecurityPolicy/directory toEAP_HOME/bin. - When prompted to overwrite files, select Yes.

-

In the

EAP_HOME/standalone/deployments/directory, create an empty file namedcontroller.war.dodeploy. This file ensures that the headless Process Automation Manager controller is automatically deployed when the server starts.

10.2.1. Creating a headless Process Automation Manager controller user

Before you can use the headless Process Automation Manager controller, you must create a user that has the kie-server role.

Prerequisites

-

The headless Process Automation Manager controller is installed in the base directory of the Red Hat JBoss EAP installation (

EAP_HOME).

Procedure

-

In a terminal application, navigate to the

EAP_HOME/bindirectory. Enter the following command and replace

<USER_NAME>and<PASSWORD>with the user name and password of your choice.$ ./add-user.sh -a --user <USER_NAME> --password <PASSWORD> --role kie-server

NoteMake sure that the specified user name is not the same as an existing user, role, or group. For example, do not create a user with the user name

admin.The password must have at least eight characters and must contain at least one number and one non-alphanumeric character, but not & (ampersand).

- Make a note of your user name and password.

10.2.2. Configuring KIE Server and the headless Process Automation Manager controller

If KIE Server will be managed by the headless Process Automation Manager controller, you must edit the standalone-full.xml file in KIE Server installation and the standalone.xml file in the headless Process Automation Manager controller installation.

Prerequisites

-

KIE Server is installed in an

EAP_HOME. The headless Process Automation Manager controller is installed in an

EAP_HOME.NoteYou should install KIE Server and the headless Process Automation Manager controller on different servers in production environments. However, if you install KIE Server and the headless Process Automation Manager controller on the same server, for example in a development environment, make these changes in the shared

standalone-full.xmlfile.-

On KIE Server nodes, a user with the

kie-serverrole exists. On the server nodes, a user with the

kie-serverrole exists.Procedure

In the

EAP_HOME/standalone/configuration/standalone-full.xmlfile, add the following properties to the<system-properties>section and replace<USERNAME>and<USER_PWD>with the credentials of a user with thekie-serverrole:<property name="org.kie.server.user" value="<USERNAME>"/> <property name="org.kie.server.pwd" value="<USER_PWD>"/>

In the KIE Server

EAP_HOME/standalone/configuration/standalone-full.xmlfile, add the following properties to the<system-properties>section:<property name="org.kie.server.controller.user" value="<CONTROLLER_USER>"/> <property name="org.kie.server.controller.pwd" value="<CONTROLLER_PWD>"/> <property name="org.kie.server.id" value="<KIE_SERVER_ID>"/> <property name="org.kie.server.location" value="http://<HOST>:<PORT>/kie-server/services/rest/server"/> <property name="org.kie.server.controller" value="<CONTROLLER_URL>"/>

- In this file, replace the following values:

-

Replace

<CONTROLLER_USER>and<CONTROLLER_PWD>with the credentials of a user with thekie-serverrole. -

Replace

<KIE_SERVER_ID>with the ID or name of the KIE Server installation, for example,rhpam-7.10.0-kie-server-1. -

Replace

<HOST>with the ID or name of the KIE Server host, for example,localhostor192.7.8.9. Replace

<PORT>with the port of the KIE Server host, for example,8080.NoteThe

org.kie.server.locationproperty specifies the location of KIE Server.-

Replace

<CONTROLLER_URL>with the URL of the headless Process Automation Manager controller. KIE Server connects to this URL during startup.

10.3. Running the headless Process Automation Manager controller

After you have installed the headless Process Automation Manager controller on Red Hat JBoss EAP, use this procedure to run the headless Process Automation Manager controller.

Prerequisites

-

The headless Process Automation Manager controller is installed and configured in the base directory of the Red Hat JBoss EAP installation (

EAP_HOME).

Procedure

-

In a terminal application, navigate to

EAP_HOME/bin. If you installed the headless Process Automation Manager controller on the same Red Hat JBoss EAP instance as the Red Hat JBoss EAP instance where you installed the KIE Server, enter one of the following commands:

On Linux or UNIX-based systems:

$ ./standalone.sh -c standalone-full.xml

On Windows:

standalone.bat -c standalone-full.xml

If you installed the headless Process Automation Manager controller on a separate Red Hat JBoss EAP instance from the Red Hat JBoss EAP instance where you installed the KIE Server, start the headless Process Automation Manager controller with the

standalone.shscript:NoteIn this case, ensure that you made all required configuration changes to the

standalone.xmlfile.On Linux or UNIX-based systems:

$ ./standalone.sh

On Windows:

standalone.bat

To verify that the headless Process Automation Manager controller is working on Red Hat JBoss EAP, enter the following command where

<CONTROLLER>and<CONTROLLER_PWD>is the user name and password. The output of this command provides information about the KIE Server instance.curl -X GET "http://<HOST>:<PORT>/controller/rest/controller/management/servers" -H "accept: application/xml" -u '<CONTROLLER>:<CONTROLLER_PWD>'

Alternatively, you can use the KIE Server Java API Client to access the headless Process Automation Manager controller.

10.4. Clustering KIE Servers with the headless Process Automation Manager controller

The Process Automation Manager controller is integrated with Business Central. However, if you do not install Business Central, you can install the headless Process Automation Manager controller and use the REST API or the KIE Server Java Client API to interact with it.

Prerequisites

-

A backed-up Red Hat JBoss EAP installation version 7.3 or later is available. The base directory of the Red Hat JBoss EAP installation is referred to as

EAP_HOME. - Sufficient user permissions to complete the installation are granted.

- An NFS server with a shared folder is available as described in Installing and configuring Red Hat Process Automation Manager in a Red Hat JBoss EAP clustered environment.

Procedure

Navigate to the Software Downloads page in the Red Hat Customer Portal (login required), and select the product and version from the drop-down options:

- Product: Process Automation Manager

- Version: 7.10

-

Download Red Hat Process Automation Manager 7.10.0 Add Ons (the

rhpam-7.10.0-add-ons.zipfile). -

Extract the

rhpam-7.10.0-add-ons.zipfile. Therhpam-7.10.0-controller-ee7.zipfile is in the extracted directory. -

Extract the

rhpam-7.10.0-controller-ee7archive to a temporary directory. In the following examples this directory is calledTEMP_DIR. Copy the

TEMP_DIR/rhpam-7.10.0-controller-ee7/controller.wardirectory toEAP_HOME/standalone/deployments/.WarningEnsure that the names of the headless Process Automation Manager controller deployments you copy do not conflict with your existing deployments in the Red Hat JBoss EAP instance.

-

Copy the contents of the

TEMP_DIR/rhpam-7.10.0-controller-ee7/SecurityPolicy/directory toEAP_HOME/bin. - When prompted to overwrite files, click Yes.

-

In the

EAP_HOME/standalone/deployments/directory, create an empty file namedcontroller.war.dodeploy. This file ensures that the headless Process Automation Manager controller is automatically deployed when the server starts. -

Open the

EAP_HOME/standalone/configuration/standalone.xmlfile in a text editor. Add the following properties to the

<system-properties>element and replace<NFS_STORAGE>with the absolute path to the NFS storage where the template configuration is stored:<system-properties> <property name="org.kie.server.controller.templatefile.watcher.enabled" value="true"/> <property name="org.kie.server.controller.templatefile" value="<NFS_STORAGE>"/> </system-properties>

Template files contain default configurations for specific deployment scenarios.

If the value of the

org.kie.server.controller.templatefile.watcher.enabledproperty is set to true, a separate thread is started to watch for modifications of the template file. The default interval for these checks is 30000 milliseconds and can be further controlled by theorg.kie.server.controller.templatefile.watcher.intervalsystem property. If the value of this property is set to false, changes to the template file are detected only when the server restarts.To start the headless Process Automation Manager controller, navigate to

EAP_HOME/binand enter the following command:On Linux or UNIX-based systems:

$ ./standalone.sh

On Windows:

standalone.bat

For more information about running Red Hat Process Automation Manager in a Red Hat JBoss Enterprise Application Platform clustered environment, see Installing and configuring Red Hat Process Automation Manager in a Red Hat JBoss EAP clustered environment.

Chapter 11. Configuring Smart Router for TLS support

You can configure Smart Router (KIE Server Router) for TLS support to allow HTTPS traffic.

Prerequisites

- KIE Server is installed on each node of a Red Hat JBoss EAP 7.3 cluster.

- Smart Router is installed and configured. For more information, see Installing and configuring Red Hat Process Automation Manager in a Red Hat JBoss EAP clustered environment.

Procedure

To start Smart Router with TLS support and HTTPS enabled, use the TLS keystore properties, for example:

java -Dorg.kie.server.router.tls.keystore = <KEYSTORE_PATH> -Dorg.kie.server.router.tls.keystore.password = <KEYSTORE_PWD> -Dorg.kie.server.router.tls.keystore.keyalias = <KEYSTORE_ALIAS> -Dorg.kie.server.router.tls.port = <HTTPS_PORT> -jar rhpam-7.9.0-smart-router.jarorg.kie.server.router.tls.portis a property used to configure the HTTPS port. The default HTTPS port value is9443.

Chapter 12. Activating or deactivating a KIE container on KIE Server

You can now stop the creation of new process instances from a given container by deactivating it but at the same time continue working on its existing process instances and tasks. In case the deactivation is temporary, you can activate the container again later. The activation or deactivation of KIE containers do not require restarting of KIE server.

Prerequisites

- A KIE container has been created and configured in Business Central.

Procedure

- Log in to Business Central.

- In the main menu, click Menu → Deploy → Execution Servers.

- From the Server Configurations pane, which is on the left of the page, select your server.

- From the Deployment Units pane, select the deployment unit you want to activate or deactivate.

Click Activate or Deactivate in the upper-right corner of the deployment unit pane.

You cannot create a process instance from a KIE container once it is deactivated.

Chapter 13. Deployment descriptors

Processes and rules are stored in Apache Maven based packaging and are known as knowledge archives, or KJAR. The rules, processes, assets, and other project artifacts are part of a JAR file built and managed by Maven. A file kept inside the META-INF directory of the KJAR called kmodule.xml can be used to define the KIE bases and sessions. This kmodule.xml file, by default, is empty.

Whenever a runtime component such as KIE Server is about to process the KJAR, it looks up kmodule.xml to build the runtime representation.

Deployment descriptors supplement the kmodule.xml file and provide granular control over your deployment. The presence of these descriptors is optional and your deployment will proceed successfully without them. You can set purely technical properties using these descriptors, including meta values such as persistence, auditing, and runtime strategy.

These descriptors allow you to configure the KIE Server on multiple levels, including server level default, different deployment descriptor per KJAR, and other server configurations. You can use descriptors to make simple customizations to the default KIE Server configuration, possibly per KJAR.

You can define these descriptors in a file called kie-deployment-descriptor.xml and place this file next to your kmodule.xml file in the META-INF folder. You can change this default location and the file name by specifying it as a system parameter:

-Dorg.kie.deployment.desc.location=file:/path/to/file/company-deployment-descriptor.xml

13.1. Deployment descriptor configuration

Deployment descriptors allow the user to configure the execution server on multiple levels:

- Server level: The main level and the one that applies to all KJARs deployed on the server.

- KJAR level: This enables you to configure descriptors on a per KJAR basis.

- Deploy time level: Descriptors that apply while a KJAR is being deployed.

The granular configuration items specified by the deployment descriptors take precedence over the server level ones, except in case of configuration items that are collection based, which are merged. The hierarchy works like this: deploy time configuration > KJAR configuration > server configuration.

The deploy time configuration applies to deployments done via the REST API.

For example, if the persistence mode (one of the items you can configure) defined at the server level is NONE but the same mode is specified as JPA at the KJAR level, the actual mode will be JPA for that KJAR. If nothing is specified for the persistence mode in the deployment descriptor for that KJAR (or if there is no deployment descriptor), it will fall back to the server level configuration, which in this case is NONE (or to JPA if there is no server level deployment descriptor).

What Can You Configure?

High level technical configuration details can be configured via deployment descriptors. The following table lists these along with the permissible and default values for each.

Table 13.1. Deployment Descriptors

| Configuration | XML Entry | Permissible Values | Default Value |

|---|---|---|---|

| Persistence unit name for runtime data | persistence-unit | Any valid persistence package name | org.jbpm.domain |

| Persistence unit name for audit data | audit-persistence-unit | Any valid persistence package name | org.jbpm.domain |

| Persistence mode | persistence-mode | JPA, NONE | JPA |

| Audit mode | audit-mode | JPA, JMS or NONE | JPA |

| Runtime Strategy | runtime-strategy | SINGLETON, PER_REQUEST or PER_PROCESS_INSTANCE | SINGLETON |

| List of Event Listeners to be registered | event-listeners |

Valid listener class names as | No default value |

| List of Task Event Listeners to be registered | task-event-listeners |

Valid listener class names as | No default value |

| List of Work Item Handlers to be registered | work-item-handlers |

Valid Work Item Handler classes given as | No default value |

| List of Globals to be registered | globals |

Valid Global variables given as | No default value |

| Marshalling strategies to be registered (for pluggable variable persistence) | marshalling-strategies |

Valid | No default value |

| Required Roles to be granted access to the resources of the KJAR | required-roles | String role names | No default value |

| Additional Environment Entries for KIE session | environment-entries |

Valid | No default value |

| Additional configuration options of KIE session | configurations |

Valid | No default value |

| Classes used for serialization in the remote services | remoteable-class |

Valid | No default value |

Do not use the Singleton runtime strategy with the EJB Timer Scheduler (the default scheduler in KIE Server) in a production environment. This combination can result in Hibernate problems under load. Per process instance runtime strategy is recommended if there is no specific reason to use other strategies. For more information about this limitation, see Hibernate issues with Singleton strategy and EJBTimerScheduler.

13.2. Managing deployment descriptors

Deployment descriptors can be configured in Business Central in Menu → Design → $PROJECT_NAME → Settings → Deployments.

Every time a project is created, a stock kie-deployment-descriptor.xml file is generated with default values.

It is not necessary to provide a full deployment descriptor for all KJARs. Providing partial deployment descriptors is possible and recommended. For example, if you need to use a different audit mode, you can specify that for the KJAR only, all other properties will have the default value defined at the server level.

When using OVERRIDE_ALL merge mode, all configuration items must be specified, because the relevant KJAR will always use specified configuration and will not merge with any other deployment descriptor in the hierarchy.

13.3. Restricting access to the runtime engine

The required-roles configuration item can be edited in the deployment descriptors. This property restricts access to the runtime engine on a per-KJAR or per-server level by ensuring that access to certain processes is only granted to users that belong to groups defined by this property.

The security role can be used to restrict access to process definitions or restrict access at run time.

The default behavior is to add required roles to this property based on repository restrictions. You can edit these properties manually if required by providing roles that match actual roles defined in the security realm.

Procedure

- To open the project deployment descriptors configuration in Business Central, open Menu → Design → $PROJECT_NAME → Settings → Deployments.

- From the list of configuration settings, click Required Roles, then click Add Required Role.

- In the Add Required Role window, type the name of the role that you want to have permission to access this deployment, then click Add.

- To add more roles with permission to access the deployment, repeat the previous steps.

- When you have finished adding all required roles, click Save.

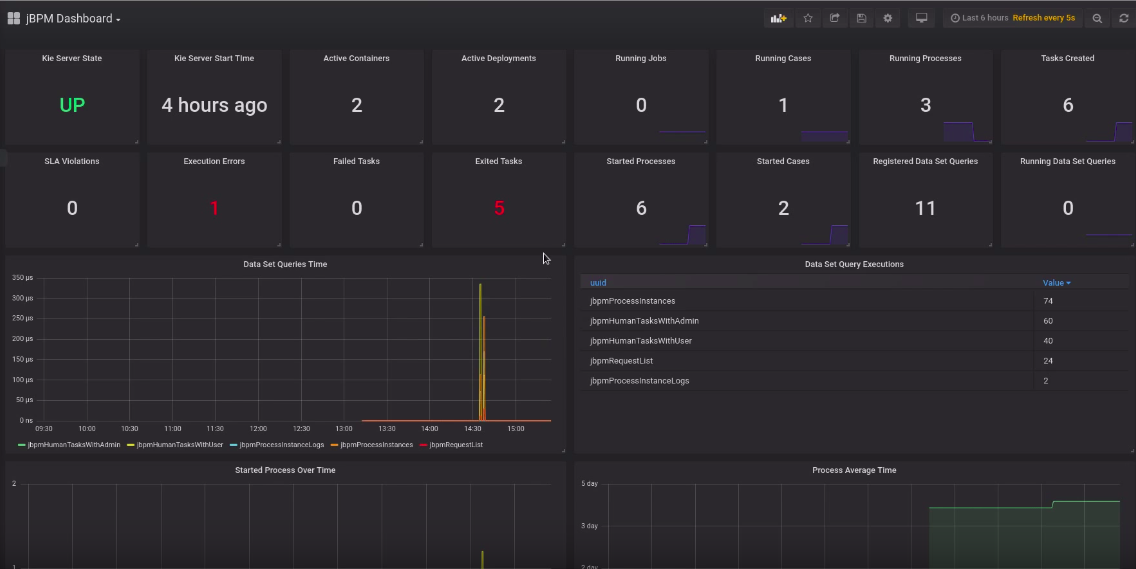

Chapter 14. Accessing runtime data from Business Central

The following pages in Business Central allow you to view the runtime data of the KIE Server:

- Process Reports

- Task Reports

- Process Definitions

- Process Instances

- Execution Errors

- Jobs

- Tasks

These pages use the credentials of the currently logged in user to load data from the KIE Server. Therefore, to be able to view the runtime data in Business Central, ensure that the following conditions are met:

-

The user exists in the KIE container (deployment unit) running the Business Central application. This user must have

admin,analyst, ordeveloperroles assigned, in addition to thekie-serverrole, with full access to the runtime data. Themanagerandprocess_adminroles also allow access to runtime data pages in Business Central. -

The user exists in the KIE container (deployment unit) running the KIE Server and has

kie-serverrole assigned. - Communication between Business Central and the KIE Server is established. That is, the KIE Server is registered in the Process Automation Manager controller, which is part of Business Central.

The

deployment.business-central.warlogin module is present in thestandalone.xmlconfiguration of the server running Business Central:<login-module code="org.kie.security.jaas.KieLoginModule" flag="optional" module="deployment.business-central.war"/>

Chapter 15. Execution error management

When an execution error occurs for a business process, the process stops and reverts to the most recent stable state (the closest safe point) and continues its execution. If an error of any kind is not handled by the process the entire transaction rolls back, leaving the process instance in the previous wait state. Any trace of this is only visible in the logs, and usually displayed to the caller who sent the request to the process engine.

Users with process administrator (process-admin) or administrator (admin) roles are able to access error messages in Business Central. Execution error messaging provides the following primary benefits:

- Better traceability

- Visibility in case of critical processes

- Reporting and analytics based on error situations

- External system error handling and compensation

Configurable error handling is responsible for receiving any technical errors thrown throughout the process engine execution (including task service). The following technical exceptions apply:

-

Anything that extends

java.lang.Throwable - Process level error handling and any other exceptions not previously handled

There are several components that make up the error handling mechanism and allow a pluggable approach to extend its capabilities.

The process engine entry point for error handling is the ExecutionErrorManager. This is integrated with RuntimeManager, which is then responsible for providing it to the underlying KieSession and TaskService.

From an API point of view, ExecutionErrorManager provides access to the following components:

-

ExecutionErrorHandler: The primary mechanism for error handling -

ExecutionErrorStorage: Pluggable storage for execution error information

15.1. Managing execution errors

By definition, every process error that is detected and stored is unacknowledged and must be handled by someone or something (in case of automatic error recovery). Errors are filtered on the basis of whether or not they have been acknowledged. Acknowledging an error saves the user information and time stamp for traceability. You can access the Error Management view at any time.

Procedure

- In Business Central, go to Menu → Manage → Execution Errors.

- Select an error from the list to open the Details tab. This displays information about the error or errors.

Click the Acknowledge button to acknowledge and clear the error. You can view the error later by selecting Yes on the Acknowledged filter in the Manage Execution Errors page.

If the error was related to a task, a Go to Task button is displayed.

Click the Go to Task button, if applicable, to view the associated job information in the Manage Tasks page.

In the Manage Tasks page, you can restart, reschedule, or retry the corresponding task.

15.2. The ExecutionErrorHandler

The ExecutionErrorHandler is the primary mechanism for all process error handling. It is bound to the life cycle of RuntimeEngine; meaning it is created when a new runtime engine is created, and is destroyed when RuntimeEngine is disposed. A single instance of the ExecutionErrorHandler is used within a given execution context or transaction. Both KieSession and TaskService use that instance to inform the error handling about processed nodes/tasks. ExecutionErrorHandler is informed about:

- Starting of processing of a given node instance.

- Completion of processing of a given node instance.

- Starting of processing of a given task instance.

- Completion of processing of a given task instance.

This information is mainly used for errors that are of unknown type; that is, errors that do not provide information about the process context. For example, upon commit time, database exceptions do not carry any process information.

15.3. Execution error storage

ExecutionErrorStorage is a pluggable strategy that permits various ways of persisting information about execution errors. Storage is used directly by the handler that gets an instance of the store when it is created (when RuntimeEngine is created). Default storage implementation is based on the database table, which stores every error and includes all of the available information. Some errors may not contain details, as this depends on the type of error and whether or not it is possible to extract specific information.

15.4. Error types and filters

Error handling attempts to catch and handle any kind of error, therefore it needs a way to categorize errors. By doing this, it is able to properly extract information from the error and make it pluggable, as some users may require specific types of errors to be thrown and handled in different ways than what is provided by default.

Error categorization and filtering is based on ExecutionErrorFilters. This interface is solely responsible for building instances of ExecutionError, which are later stored by way of the ExecutionErrorStorage strategy. It has following methods:

-

accept: indicates if given error can be handled by the filter. -

filter: where the actual filtering, handling, and so on happens. -

getPriority: indicates the priority that is used when calling filters.

Filters process one error at a time and use a priority system to avoid having multiple filters returning alternative “views” of the same error. Priority enables more specialized filters to see if the error can be accepted, or otherwise allow another filter to handle it.

ExecutionErrorFilter can be provided using the ServiceLoader mechanism, which enables the capability of error handling to be easily extended.

Red Hat Process Automation Manager ships with the following ExecutionErrorFilters :

Table 15.1. ExecutionErrorFilters

| Class name | Type | Priority |

|---|---|---|

| org.jbpm.runtime.manager.impl.error.filters.ProcessExecutionErrorFilter | Process | 100 |

| org.jbpm.runtime.manager.impl.error.filters.TaskExecutionErrorFilter | Task | 80 |

| org.jbpm.runtime.manager.impl.error.filters.DBExecutionErrorFilter | DB | 200 |

| org.jbpm.executor.impl.error.JobExecutionErrorFilter | Job | 100 |

Filters are given a higher execution order based on the lowest value of the priority. In the above table, filters are invoked in the following order:

- Task

- Process

- Job

- DB

15.5. Auto acknowledging execution errors

When executions errors occur they are unacknowledged by default, and require a manual acknowledgment to be performed otherwise they are always seen as information that requires attention. In case of larger volumes, manual actions can be time consuming and not suitable in some situations.

Auto acknowledgment resolves this issue. It is based on scheduled jobs by way of the jbpm-executor, with the following three types of jobs available:

org.jbpm.executor.commands.error.JobAutoAckErrorCommand-

Responsible for finding jobs that previously failed but now are either canceled, completed, or rescheduled for another execution. This job only acknowledges execution errors of type

Job. org.jbpm.executor.commands.error.TaskAutoAckErrorCommand-

Responsible for auto acknowledgment of user task execution errors for tasks that previously failed but now are in one of the exit states (completed, failed, exited, obsolete). This job only acknowledges execution errors of type

Task. org.jbpm.executor.commands.error.ProcessAutoAckErrorCommand-

Responsible for auto acknowledgment of process instances that have errors attached. It acknowledges errors where the process instance is already finished (completed or aborted), or the task that the error originated from is already finished. This is based on

init_activity_idvalue. This job acknowledges any type of execution error that matches the above criteria.

Jobs can be registered on the KIE Server. In Business Central you can configure auto acknowledge jobs for errors:

Prerequisites

- Execution errors of one or more type have accumulated during processes execution but require no further attention.

Procedure

- In Business Central, click Menu → Manage → Jobs.

- In the top right of the screen, click New Job.

- Type the process correlation key into the Business Key field.

- In the Type field, add type of the auto acknowledge job type from the list above.

Select a Due On time for the job to be completed:

-

To run the job immediately, select the

Run nowoption. To run the job at a specific time, select

Run later. A date and time field appears next to theRun lateroption. Click the field to open the calendar and schedule a specific time and date for the job.

-

To run the job immediately, select the

- Click Create to create the job and return to the Manage Jobs page.

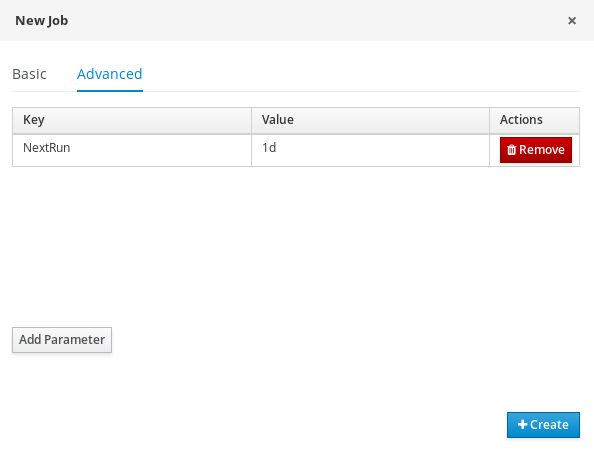

The following steps are optional, and allow you to configure auto acknowledge jobs to run either once (SingleRun), on specific time intervals (NextRun), or using the custom name of an entity manager factory to search for jobs to acknowledge (EmfName).

- Click the Advanced tab.

- Click the Add Parameter button.

Enter the configuration parameter you want to apply to the job:

-

SingleRun:trueorfalse -

NextRun: time expression, such as 2h, 5d, 1m, and so on. EmfName: custom entity manager factory name.

-

15.6. Cleaning up the error list

The ExecutionErrorInfo error list table can be cleaned up to remove redundant information. Depending on the life cycle of the process, errors may remain in the list for some time, and there is no direct API with which to clean up the list. Instead, the ExecutionErrorCleanupCommand command can be scheduled to periodically clean up errors.

The following parameters can be set for the clean up command. The command is restricted to deleting execution errors of already completed or aborted process instances:

DateFormat-

Date format for further date related parameters - if not given

yyyy-MM-ddis used (pattern ofSimpleDateFormatclass).

-

Date format for further date related parameters - if not given

EmfName- Name of the entity manager factory to be used for queries (valid persistence unit name).

SingleRun-

Indicates if execution should be single run only (

true|false).

-

Indicates if execution should be single run only (

NextRun- Provides next execution time (valid time expression, for example: 1d, 5h, and so on)

OlderThan- Indicates what errors should be deleted - older than given date.

OlderThanPeriod- Indicated what errors should be deleted older than given time expression (valid time expression e.g. 1d, 5h, and so on)

ForProcess- Indicates errors to be deleted only for given process definition.

ForProcessInstance- Indicates errors to be deleted only for given process instance.

ForDeployment- Indicates errors to be deleted that are from given deployment ID.

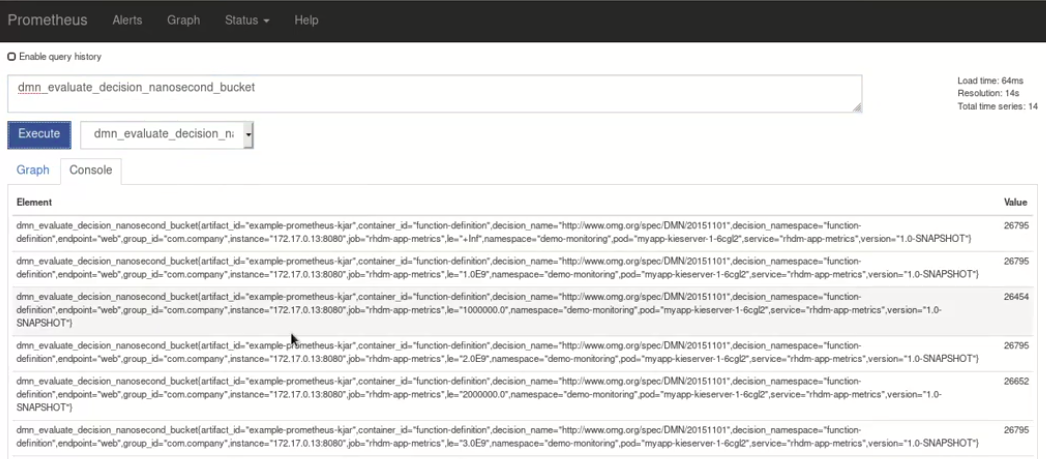

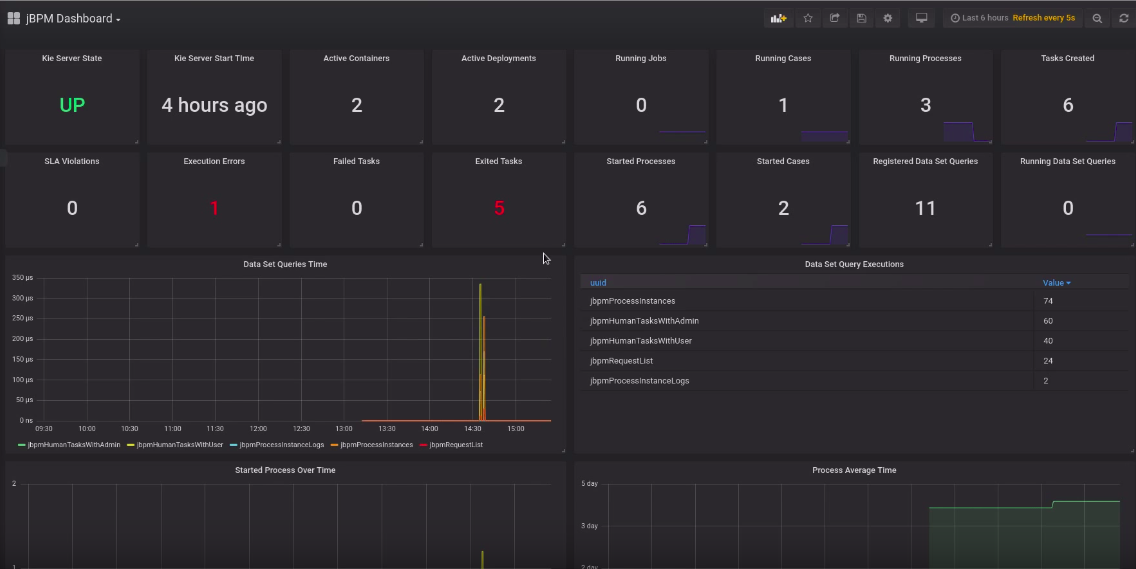

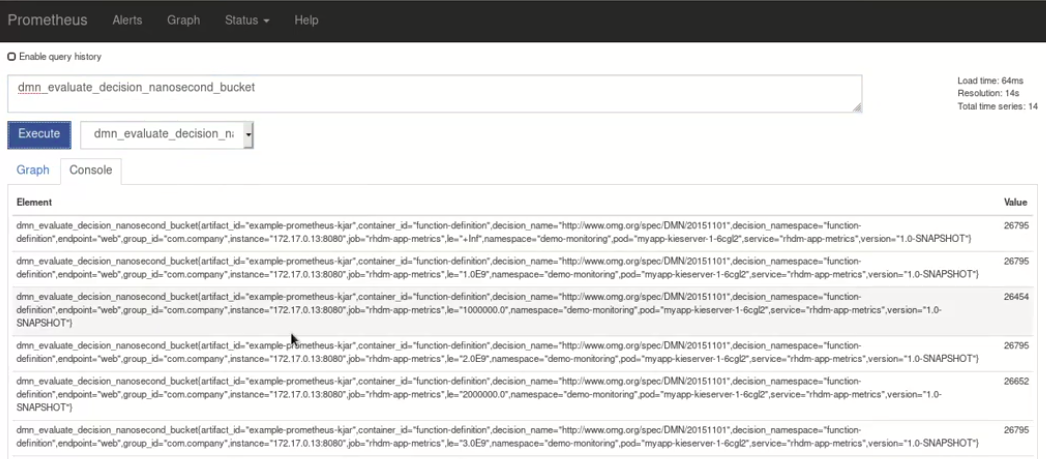

Chapter 16. Prometheus metrics monitoring in Red Hat Process Automation Manager

Prometheus is an open-source systems monitoring toolkit that you can use with Red Hat Process Automation Manager to collect and store metrics related to the execution of business rules, processes, Decision Model and Notation (DMN) models, and other Red Hat Process Automation Manager assets. You can access the stored metrics through a REST API call to the KIE Server, through the Prometheus expression browser, or using a data-graphing tool such as Grafana.

You can configure Prometheus metrics monitoring for an on-premise KIE Server instance, for KIE Server on Spring Boot, or for a KIE Server deployment on Red Hat OpenShift Container Platform.

For the list of available metrics that KIE Server exposes with Prometheus, download the Red Hat Process Automation Manager 7.10.0 Source Distribution from the Red Hat Customer Portal and navigate to ~/rhpam-7.10.0-sources/src/droolsjbpm-integration-$VERSION/kie-server-parent/kie-server-services/kie-server-services-prometheus/src/main/java/org/kie/server/services/prometheus.

Red Hat support for Prometheus is limited to the setup and configuration recommendations provided in Red Hat product documentation.

16.1. Configuring Prometheus metrics monitoring for KIE Server

You can configure your KIE Server instances to use Prometheus to collect and store metrics related to your business asset activity in Red Hat Process Automation Manager. For the list of available metrics that KIE Server exposes with Prometheus, download the Red Hat Process Automation Manager 7.10.0 Source Distribution from the Red Hat Customer Portal and navigate to ~/rhpam-7.10.0-sources/src/droolsjbpm-integration-$VERSION/kie-server-parent/kie-server-services/kie-server-services-prometheus/src/main/java/org/kie/server/services/prometheus.

Prerequisites

- KIE Server is installed.

-

You have

kie-serveruser role access to KIE Server. - Prometheus is installed. For information about downloading and using Prometheus, see the Prometheus documentation page.

Procedure

-

In your KIE Server instance, set the

org.kie.prometheus.server.ext.disabledsystem property tofalseto enable the Prometheus extension. You can define this property when you start KIE Server or in thestandalone.xmlorstandalone-full.xmlfile of Red Hat Process Automation Manager distribution. If you are running Red Hat Process Automation Manager on Spring Boot, configure the required key in the

application.propertiessystem property:Spring Boot application.properties key for Red Hat Process Automation Manager and Prometheus

kieserver.jbpm.enabled=true kieserver.drools.enabled=true kieserver.dmn.enabled=true kieserver.prometheus.enabled=true

In the

prometheus.yamlfile of your Prometheus distribution, add the following settings in thescrape_configssection to configure Prometheus to scrape metrics from KIE Server:Scrape configurations in prometheus.yaml file

scrape_configs: - job_name: 'kie-server' metrics_path: /SERVER_PATH/services/rest/metrics basicAuth: username: USER_NAME password: PASSWORD static_configs: - targets: ["HOST:PORT"]Scrape configurations in prometheus.yaml file for Spring Boot (if applicable)

scrape_configs: - job_name: 'kie' metrics_path: /rest/metrics static_configs: - targets: ["HOST:PORT"]Replace the values according to your KIE Server location and settings.

Start the KIE Server instance.

Example start command for Red Hat Process Automation Manager on Red Hat JBoss EAP

$ cd ~/EAP_HOME/bin $ ./standalone.sh --c standalone-full.xml

After you start the configured KIE Server instance, Prometheus begins collecting metrics and KIE Server publishes the metrics to the REST API endpoint

http://HOST:PORT/SERVER/services/rest/metrics(or on Spring Boot, tohttp://HOST:PORT/rest/metrics).In a REST client or curl utility, send a REST API request with the following components to verify that KIE Server is publishing the metrics:

For REST client:

-

Authentication: Enter the user name and password of the KIE Server user with the

kie-serverrole. HTTP Headers: Set the following header:

-

Accept:application/json

-

-

HTTP method: Set to

GET. -

URL: Enter the KIE Server REST API base URL and metrics endpoint, such as

http://localhost:8080/kie-server/services/rest/metrics(or on Spring Boot,http://localhost:8080/rest/metrics).

For curl utility:

-

-u: Enter the user name and password of the KIE Server user with thekie-serverrole. -H: Set the following header:-

accept:application/json

-

-

-X: Set toGET. -

URL: Enter the KIE Server REST API base URL and metrics endpoint, such as

http://localhost:8080/kie-server/services/rest/metrics(or on Spring Boot,http://localhost:8080/rest/metrics).

Example curl command for Red Hat Process Automation Manager on Red Hat JBoss EAP

curl -u 'baAdmin:password@1' -X GET "http://localhost:8080/kie-server/services/rest/metrics"

Example curl command for Red Hat Process Automation Manager on Spring Boot

curl -u 'baAdmin:password@1' -X GET "http://localhost:8080/rest/metrics"

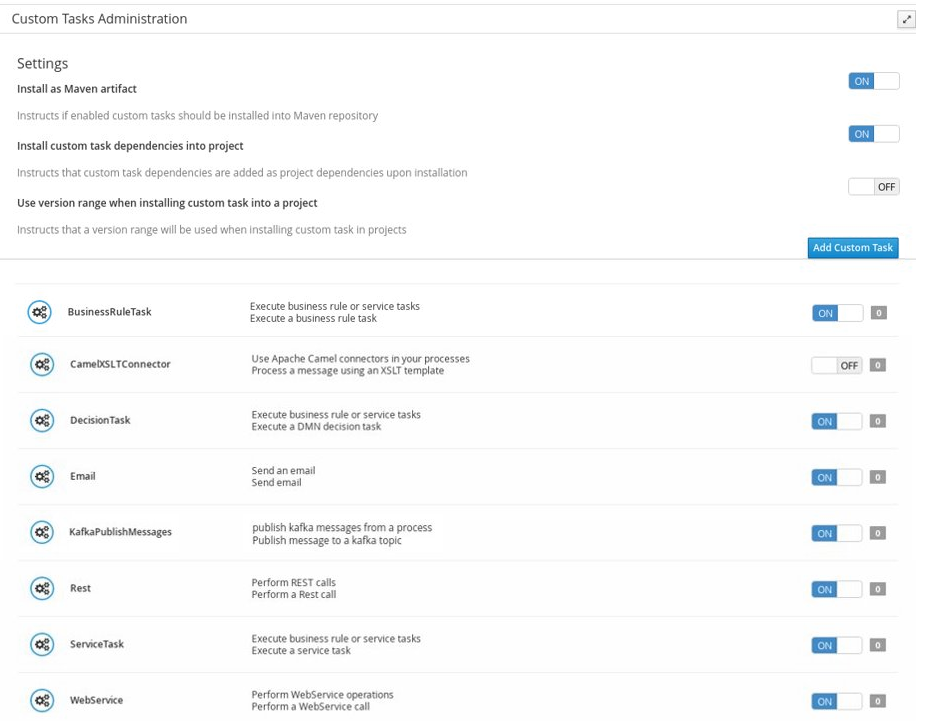

Example server response