Chapter 2. Working with ML2/OVN

Red Hat OpenStack Platform (RHOSP) networks are managed by the Networking service (neutron). The core of the Networking service is the Modular Layer 2 (ML2) plug-in, and the default mechanism driver for the RHOSP ML2 plug-in is the Open Virtual Network (OVN) mechanism driver.

Earlier RHOSP versions used the Open vSwitch (OVS) mechanism driver by default. Red Hat chose ML2/OVN as the default mechanism driver for all new deployments starting with RHOSP 16.0 because it offers immediate advantages over the ML2/OVS mechanism driver for most customers today. Those advantages multiply with each release while Red Hat and the community continue to enhance and improve the ML2/OVN feature set.

If your existing Red Hat OpenStack Platform (RHOSP) deployment uses the ML2/OVS mechanism driver, you must evaluate the benefits and feasibility of replacing the OVS driver with the ML2/OVN mechanism driver. Red Hat does not support a direct migration to ML2/OVN in RHOSP 16.1. You must upgrade to the latest RHOSP 16.2 version before migrating to the ML2/OVN mechanism driver.

2.1. List of components in the RHOSP OVN architecture

The RHOSP OVN architecture replaces the OVS Modular Layer 2 (ML2) mechanism driver with the OVN ML2 mechanism driver to support the Networking API. OVN provides networking services for Red Hat OpenStack Platform.

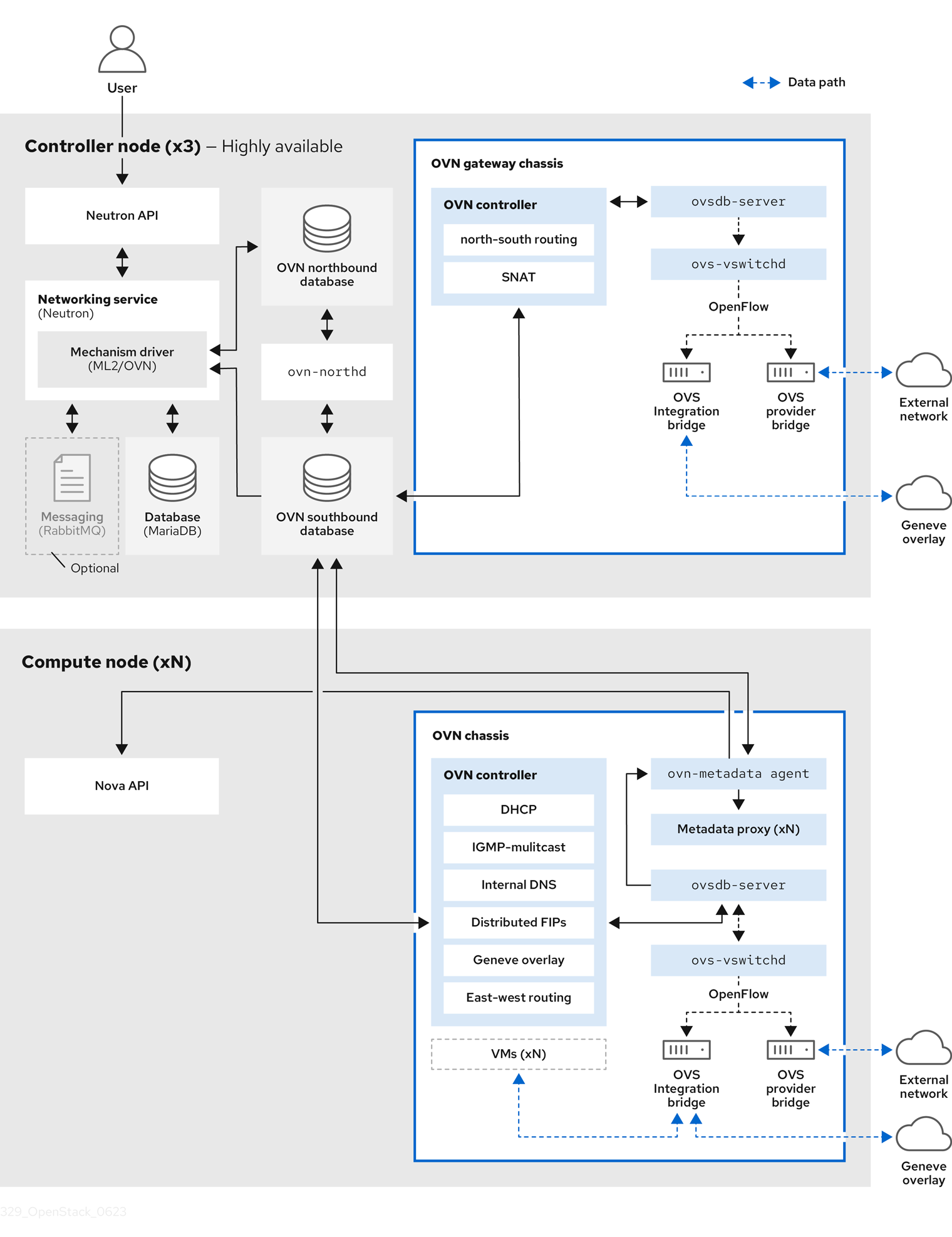

As illustrated in Figure 2.1, the OVN architecture consists of the following components and services:

- ML2 plug-in with OVN mechanism driver: The ML2 plug-in translates the OpenStack-specific networking configuration into the platform-neutral OVN logical networking configuration. It typically runs on the Controller node.

- OVN northbound (NB) database (ovn-nb): This database stores the logical OVN networking configuration from the OVN ML2 plug-in. It typically runs on the Controller node and listens on TCP port 6641.

- OVN northbound service (ovn-northd): This service converts the logical networking configuration from the OVN NB database to the logical data path flows and populates these on the OVN Southbound database. It typically runs on the Controller node.

- OVN southbound (SB) database (ovn-sb): This database stores the converted logical data path flows. It typically runs on the Controller node and listens on TCP port 6642.

- OVN controller (ovn-controller): This controller connects to the OVN SB database and acts as the Open vSwitch controller to control and monitor network traffic. It runs on all Compute and gateway nodes where OS::TripleO::Services::OVNController is defined.

- OVN metadata agent (ovn-metadata-agent): This agent creates the haproxy instances for managing the OVS interfaces, network namespaces, and HAProxy processes used to proxy metadata API requests. The agent runs on all Compute and gateway nodes where OS::TripleO::Services::OVNMetadataAgent is defined.

- OVS database server (OVSDB): Hosts the OVN Northbound and Southbound databases. Also interacts with ovs-vswitchd to host the OVS database conf.db.

The schema file for the NB database is located in /usr/share/ovn/ovn-nb.ovsschema, and the SB database schema file is in /usr/share/ovn/ovn-sb.ovsschema.

Figure 2.1. OVN architecture in a RHOSP environment

2.2. ML2/OVN databases

In Red Hat OpenStack Platform ML2/OVN deployments, network configuration information passes between processes through shared distributed databases. You can inspect these databases to verify the status of the network and identify issues.

- OVN northbound database: The northbound database (OVN_Northbound) serves as the interface between OVN and a cloud management system such as Red Hat OpenStack Platform (RHOSP). RHOSP produces the contents of the northbound database. The northbound database contains the current desired state of the network, presented as a collection of logical ports, logical switches, logical routers, and more. Every RHOSP Networking service (neutron) object is represented in a table in the northbound database.

- OVN southbound database: The southbound database (OVN_Southbound) holds the logical and physical configuration state for the OVN system to support virtual network abstraction. The ovn-controller uses the information in this database to configure OVS to satisfy Networking service (neutron) requirements.

2.3. The ovn-controller service on Compute nodes

The ovn-controller service runs on each Compute node and connects to the OVN southbound (SB) database server to retrieve the logical flows. The ovn-controller translates these logical flows into physical OpenFlow flows and adds the flows to the OVS bridge (br-int). To communicate with ovs-vswitchd and install the OpenFlow flows, the ovn-controller connects to the local ovsdb-server (which hosts conf.db) using the UNIX socket path that was passed when ovn-controller was started (for example unix:/var/run/openvswitch/db.sock).

The ovn-controller service expects certain key-value pairs in the external_ids column of the Open_vSwitch table; puppet-ovn uses puppet-vswitch to populate these fields. The following example shows the key-value pairs that puppet-vswitch configures in the external_ids column:

    hostname=<HOST NAME>
    ovn-encap-ip=<IP OF THE NODE>
    ovn-encap-type=geneve
    ovn-remote=tcp:OVN_DBS_VIP:6642
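To make the role of these key-value pairs concrete, the following minimal sketch checks that the four keys listed above are present in the output of `ovs-vsctl get Open_vSwitch . external_ids`. It assumes the command prints a brace-delimited, comma-separated map in which values may be double-quoted. The function names `parse_external_ids` and `missing_ovn_keys` are hypothetical helpers, not part of any RHOSP tooling.

```python
import re

# Keys that the section above says ovn-controller expects in external_ids.
REQUIRED_KEYS = ("hostname", "ovn-encap-ip", "ovn-encap-type", "ovn-remote")

# One map entry: key=value or key="quoted value" (assumed output format).
ENTRY = re.compile(r'([\w.-]+)=("(?:[^"\\]|\\.)*"|[^,]*)')


def parse_external_ids(raw: str) -> dict:
    """Parse a map such as {a=b, c="d"} into a Python dict."""
    body = raw.strip()
    if body.startswith("{") and body.endswith("}"):
        body = body[1:-1]
    pairs = {}
    for match in ENTRY.finditer(body):
        key, value = match.group(1), match.group(2).strip()
        # Drop the surrounding double quotes, if any.
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]
        pairs[key] = value
    return pairs


def missing_ovn_keys(pairs: dict) -> list:
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not pairs.get(key)]
```

For example, you could pass the captured command output to `parse_external_ids` and treat a non-empty result from `missing_ovn_keys` as a sign that the node was not configured as expected.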

2.4. OVN metadata agent on Compute nodes

The OVN metadata agent is configured in the tripleo-heat-templates/deployment/ovn/ovn-metadata-container-puppet.yaml file and included in the default Compute role through OS::TripleO::Services::OVNMetadataAgent. As such, the OVN metadata agent with default parameters is deployed as part of the OVN deployment.

OpenStack guest instances access the Networking metadata service available at the link-local IP address 169.254.169.254. The neutron-ovn-metadata-agent has access to the host networks where the Compute metadata API exists. Each HAProxy is in a network namespace that cannot reach the appropriate host network. HAProxy adds the necessary headers to the metadata API request and then forwards the request to the neutron-ovn-metadata-agent over a UNIX domain socket.

The OVN Networking service creates a unique network namespace for each virtual network that enables the metadata service. Each network accessed by the instances on the Compute node has a corresponding metadata namespace (ovnmeta-<datapath_uuid>).
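The naming scheme above can be sketched in a few lines. This example builds the expected namespace name for a datapath UUID and extracts datapath UUIDs from a listing of namespaces. It assumes the listing has one namespace per line, optionally followed by extra text such as an ID, which is how `ip netns` commonly prints it. Both function names are hypothetical.

```python
import re
import uuid

# ovnmeta-<datapath_uuid>, with the UUID in canonical lowercase form.
NS_PATTERN = re.compile(
    r"^ovnmeta-([0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12})\b"
)


def metadata_namespace(datapath_uuid: str) -> str:
    """Return the metadata namespace name for a datapath UUID."""
    # uuid.UUID validates the input and normalizes it to lowercase.
    return f"ovnmeta-{uuid.UUID(datapath_uuid)}"


def metadata_datapaths(netns_output: str) -> list:
    """Extract datapath UUIDs from a namespace listing (assumed format)."""
    found = []
    for line in netns_output.splitlines():
        match = NS_PATTERN.match(line.strip())
        if match:
            found.append(match.group(1))
    return found
```

Comparing the result of `metadata_datapaths` with the networks that have instances on a Compute node is one way to spot a missing metadata namespace.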

2.5. The OVN composable service

Red Hat OpenStack Platform usually consists of nodes in pre-defined roles, such as nodes in Controller roles, Compute roles, and different storage role types. Each of these default roles contains a set of services that are defined in the core heat template collection.

In a default RHOSP 16.1 deployment, the ML2/OVN composable service runs on Controller nodes. You can optionally create a custom Networker role and run the OVN composable service on dedicated Networker nodes.

The OVN composable service ovn-dbs is deployed in a container called ovn-dbs-bundle. In a default installation ovn-dbs is included in the Controller role and runs on Controller nodes. Because the service is composable, you can assign it to another role, such as a Networker role.

If you assign the OVN composable service to another role, ensure that the service is co-located on the same node as the pacemaker service, which controls the OVN database containers.


2.6. Layer 3 high availability with OVN

OVN supports Layer 3 high availability (L3 HA) without any special configuration. OVN automatically schedules the router port to all available gateway nodes that can act as an L3 gateway on the specified external network. OVN L3 HA uses the gateway_chassis column in the OVN Logical_Router_Port table. Most functionality is managed by OpenFlow rules with bundled active_passive outputs. The ovn-controller handles the Address Resolution Protocol (ARP) responder and router enablement and disablement. Gratuitous ARPs for FIPs and router external addresses are also periodically sent by the ovn-controller.

L3 HA uses OVN to balance the routers back to the original gateway nodes so that no single node becomes a bottleneck.

BFD monitoring

OVN uses the Bidirectional Forwarding Detection (BFD) protocol to monitor the availability of the gateway nodes. This protocol is encapsulated on top of the Geneve tunnels established from node to node.

Each gateway node monitors all the other gateway nodes in a star topology in the deployment. Gateway nodes also monitor the compute nodes to let the gateways enable and disable routing of packets and ARP responses and announcements.

Each compute node uses BFD to monitor each gateway node and automatically steers external traffic, such as source and destination Network Address Translation (SNAT and DNAT), through the active gateway node for a given router. Compute nodes do not need to monitor other compute nodes.

Unlike an ML2/OVS configuration, ML2/OVN does not detect external network failures.

L3 HA for OVN supports the following failure modes:

- The gateway node becomes disconnected from the network (tunneling interface).

- ovs-vswitchd stops (ovs-vswitchd is responsible for BFD signaling).

- ovn-controller stops (ovn-controller removes itself as a registered node).

This BFD monitoring mechanism only works for link failures, not for routing failures.
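The failover behavior described in this section can be illustrated with a simplified model. The sketch below assumes that each gateway node has a numeric scheduling priority for a router port and that the highest-priority node whose BFD session is up becomes the active gateway. The priorities, node names, and the function `active_gateway` are illustrative assumptions, not output from a real deployment.

```python
from typing import Dict, Optional


def active_gateway(priorities: Dict[str, int],
                   bfd_up: Dict[str, bool]) -> Optional[str]:
    """Pick the live gateway node with the highest priority.

    priorities: gateway node name -> scheduling priority (assumed values)
    bfd_up:     gateway node name -> True if BFD reports the node reachable
    """
    live = [name for name in priorities if bfd_up.get(name, False)]
    if not live:
        return None  # no gateway reachable: external traffic is not routed
    return max(live, key=lambda name: priorities[name])
```

In this model, when the original highest-priority node recovers, it is selected again, which mirrors how routers are balanced back to the original gateway nodes.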

2.7. Limitations of the ML2/OVN mechanism driver

Some features available with the ML2/OVS mechanism driver are not yet supported with the ML2/OVN mechanism driver.

2.7.1. ML2/OVS features not yet supported by ML2/OVN

| Feature | Notes | Track this Feature |
|---|---|---|
| Distributed virtual routing (DVR) with OVN on VLAN project (tenant) networks. | FIP traffic does not pass to a VLAN tenant network with ML2/OVN and DVR. DVR is enabled by default in new ML2/OVN deployments. If you need VLAN tenant networks with OVN, you can disable DVR. To disable DVR, set NeutronEnableDVR: false under parameter_defaults in an environment file. | https://bugzilla.redhat.com/show_bug.cgi?id=1704596 https://bugzilla.redhat.com/show_bug.cgi?id=1766930 |
| Provisioning Baremetal Machines with OVN DHCP | The built-in DHCP server on OVN presently cannot provision baremetal nodes. It cannot serve DHCP for the provisioning networks. Chainbooting iPXE requires tagging ( | |

2.7.2. Core OVN limitations

North/south routing on VF(direct) ports on VLAN tenant networks does not work with SR-IOV because the external ports are not colocated with the logical router’s gateway ports. See https://bugs.launchpad.net/neutron/+bug/1875852.

2.8. Limit for non-secure ports with ML2/OVN

Ports might become unreachable if you disable the port security plug-in extension in Red Hat OpenStack Platform (RHOSP) deployments with the default ML2/OVN mechanism driver and a large number of ports.

In some large ML2/OVN RHOSP deployments, a flow chain limit inside ML2/OVN can drop ARP requests that are targeted to ports where the security plug-in is disabled.

There is no documented maximum limit for the actual number of logical switch ports that ML2/OVN can support, but the limit approximates 4,000 ports.

Attributes that contribute to the approximated limit are the number of resubmits in the OpenFlow pipeline that ML2/OVN generates, and changes to the overall logical topology.

2.9. Using ML2/OVS instead of the default ML2/OVN in a new RHOSP 16.1 deployment

In Red Hat OpenStack Platform (RHOSP) 16.0 and later deployments, the Modular Layer 2 plug-in with Open Virtual Network (ML2/OVN) is the default mechanism driver for the RHOSP Networking service. You can change this setting if your application requires the ML2/OVS mechanism driver.

Procedure

Log in to your undercloud as the stack user.

In the template file, /home/stack/templates/containers-prepare-parameter.yaml, use ovs instead of ovn as the value of the neutron_driver parameter:

    parameter_defaults:
      ContainerImagePrepare:
      - set:
          ...
          neutron_driver: ovs

In the environment file, /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml, ensure that the NeutronNetworkType parameter includes vxlan or gre instead of geneve.

Example

    parameter_defaults:
      ...
      NeutronNetworkType: 'vxlan'

Run the openstack overcloud deploy command and include the core heat templates, environment files, and the files that you modified.

Important

The order of the environment files is important because the parameters and resources defined in subsequent environment files take precedence.

    $ openstack overcloud deploy --templates \
      -e <your_environment_files> \
      -e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml \
      -e /home/stack/templates/containers-prepare-parameter.yaml
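The precedence rule for environment files can be pictured as a left-to-right merge in which later files overwrite earlier values. The following sketch is a deliberately simplified model: it treats each environment file as an already-parsed dictionary and replaces top-level parameter_defaults keys wholesale. The function name `merge_parameter_defaults` is hypothetical, and the real deployment tooling handles more cases than this.

```python
def merge_parameter_defaults(*environments: dict) -> dict:
    """Merge parameter_defaults in the order the files are passed with -e.

    Later environments win, which models why file order matters.
    """
    merged = {}
    for env in environments:
        merged.update(env.get("parameter_defaults", {}))
    return merged


# Illustrative contents only; real environment files hold many more keys.
earlier = {"parameter_defaults": {"NeutronNetworkType": "geneve"}}
later = {"parameter_defaults": {"NeutronNetworkType": "vxlan"}}

result = merge_parameter_defaults(earlier, later)
print(result["NeutronNetworkType"])
```

Swapping the argument order swaps the winning value, which is why the files that you modified belong after the files whose settings they are meant to override.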

Additional resources

- Environment files in the Advanced Overcloud Customization guide

- Including environment files in overcloud creation in the Advanced Overcloud Customization guide

2.10. Deploying a custom role with ML2/OVN

In a default RHOSP 16.1 deployment, the ML2/OVN composable service runs on Controller nodes. You can optionally use supported custom roles like those described in the following examples.

- Networker: Run the OVN composable services on dedicated networker nodes.

- Networker with SR-IOV: Run the OVN composable services on dedicated networker nodes with SR-IOV.

- Controller with SR-IOV: Run the OVN composable services on SR-IOV capable controller nodes.

You can also generate your own custom roles.

Limitations

The following limitations apply to the use of SR-IOV with ML2/OVN and native OVN DHCP in this release.

- All external ports are scheduled on a single gateway node because there is only one HA Chassis Group for all of the ports.

- North/south routing on VF(direct) ports on VLAN tenant networks does not work with SR-IOV because the external ports are not colocated with the logical router’s gateway ports. See https://bugs.launchpad.net/neutron/+bug/1875852.

Prerequisites

- You know how to deploy custom roles. For more information see Composable services and custom roles in the Advanced Overcloud Customization guide.

Procedure

Log in to the undercloud host as the stack user and source the stackrc file.

$ source stackrc

Choose the custom roles file that is appropriate for your deployment. Use it directly in the deploy command if it suits your needs as-is. Or you can generate your own custom roles file that combines other custom roles files.

| Deployment | Role | Role File |
|---|---|---|
| Networker role | Networker | Networker.yaml |
| Networker role with SR-IOV | NetworkerSriov | NetworkerSriov.yaml |
| Co-located control and networker with SR-IOV | ControllerSriov | ControllerSriov.yaml |

- [Optional] Generate a new custom roles data file that combines one of these custom roles files with other custom roles files. Follow the instructions in Creating a roles_data file in the Advanced Overcloud Customization guide. Include the appropriate source role files depending on your deployment.

- [Optional] To identify specific nodes for the role, you can create a specific hardware flavor and assign the flavor to specific nodes. Then use an environment file to define the flavor for the role and to specify a node count. For more information, see the example in Creating a new role in the Advanced Overcloud Customization guide.

Create an environment file as appropriate for your deployment.

| Deployment | Sample Environment File |
|---|---|
| Networker role | neutron-ovn-dvr-ha.yaml |
| Networker role with SR-IOV | ovn-sriov.yaml |

Include the following settings as appropriate for your deployment.

Networker role

    ControllerParameters:
        OVNCMSOptions: ""
    ControllerSriovParameters:
        OVNCMSOptions: ""
    NetworkerParameters:
        OVNCMSOptions: "enable-chassis-as-gw"
    NetworkerSriovParameters:
        OVNCMSOptions: ""

Networker role with SR-IOV

    OS::TripleO::Services::NeutronDhcpAgent: OS::Heat::None

    ControllerParameters:
        OVNCMSOptions: ""
    ControllerSriovParameters:
        OVNCMSOptions: ""
    NetworkerParameters:
        OVNCMSOptions: ""
    NetworkerSriovParameters:
        OVNCMSOptions: "enable-chassis-as-gw"

Co-located control and networker with SR-IOV

    OS::TripleO::Services::NeutronDhcpAgent: OS::Heat::None

    ControllerParameters:
        OVNCMSOptions: ""
    ControllerSriovParameters:
        OVNCMSOptions: "enable-chassis-as-gw"
    NetworkerParameters:
        OVNCMSOptions: ""
    NetworkerSriovParameters:
        OVNCMSOptions: ""

Deploy the overcloud. Include the environment file in your deployment command with the -e option. Include the custom roles data file in your deployment command with the -r option. For example: -r Networker.yaml or -r mycustomrolesfile.yaml.

Verification steps - OVN deployments

Log in to a Controller or Networker node as the overcloud SSH user, which is heat-admin by default.

Example

ssh heat-admin@controller-0

Ensure that ovn_metadata_agent is running on Controller and Networker nodes.

$ sudo podman ps | grep ovn_metadata

Sample output

a65125d9588d undercloud-0.ctlplane.localdomain:8787/rh-osbs/rhosp16-openstack-neutron-metadata-agent-ovn:16.1_20200813.1 kolla_start 23 hours ago Up 21 hours ago ovn_metadata_agent

Ensure that Controller nodes with OVN services or dedicated Networker nodes have been configured as gateways for OVS.

$ sudo ovs-vsctl get Open_Vswitch . external_ids:ovn-cms-options

Sample output

... enable-chassis-as-gw ...
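If you want to script this check, a small helper can decide whether the value returned for ovn-cms-options marks the node as a gateway. The sketch assumes the value is a comma-separated list that may be wrapped in double quotes. The function name `is_gateway_chassis` and the placeholder option `other-option` are hypothetical.

```python
def is_gateway_chassis(cms_options: str) -> bool:
    """Return True if enable-chassis-as-gw is among the CMS options."""
    # Remove the trailing newline and any surrounding double quotes.
    value = cms_options.strip().strip('"')
    options = [option.strip() for option in value.split(",")]
    return "enable-chassis-as-gw" in options


# "other-option" is a made-up placeholder for any additional option.
print(is_gateway_chassis('"enable-chassis-as-gw,other-option"\n'))
print(is_gateway_chassis('""'))
```

Matching on whole list entries rather than on a substring avoids a false positive if another option merely contains the same text.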

Verification steps - SR-IOV deployments

Log in to a Compute node as the overcloud SSH user, which is heat-admin by default.

Example

ssh heat-admin@compute-0

Ensure that neutron_sriov_agent is running on the Compute nodes.

$ sudo podman ps | grep neutron_sriov_agent

Sample output

f54cbbf4523a undercloud-0.ctlplane.localdomain:8787/rh-osbs/rhosp16-openstack-neutron-sriov-agent:16.2_20200813.1 kolla_start 23 hours ago Up 21 hours ago neutron_sriov_agent

Ensure that network-available SR-IOV NICs have been successfully detected.

$ sudo podman exec -uroot galera-bundle-podman-0 mysql nova -e 'select hypervisor_hostname,pci_stats from compute_nodes;'

Sample output

computesriov-1.localdomain {... {"dev_type": "type-PF", "physical_network": "datacentre", "trusted": "true"}, "count": 1}, ... {"dev_type": "type-VF", "physical_network": "datacentre", "trusted": "true", "parent_ifname": "enp7s0f3"}, "count": 5}, ...}

computesriov-0.localdomain {... {"dev_type": "type-PF", "physical_network": "datacentre", "trusted": "true"}, "count": 1}, ... {"dev_type": "type-VF", "physical_network": "datacentre", "trusted": "true", "parent_ifname": "enp7s0f3"}, "count": 5}, ...}

Additional resources

- Composable services and custom roles in the Advanced Overcloud Customization guide.

2.11. SR-IOV with ML2/OVN and native OVN DHCP

You can deploy a custom role to use SR-IOV in an ML2/OVN deployment with native OVN DHCP. See Section 2.10, “Deploying a custom role with ML2/OVN”.

Limitations

The following limitations apply to the use of SR-IOV with ML2/OVN and native OVN DHCP in this release.

- All external ports are scheduled on a single gateway node because there is only one HA Chassis Group for all of the ports.

- North/south routing on VF(direct) ports on VLAN tenant networks does not work with SR-IOV because the external ports are not colocated with the logical router’s gateway ports. See https://bugs.launchpad.net/neutron/+bug/1875852.

Additional resources

- Composable services and custom roles in the Advanced Overcloud Customization guide.