Chapter 2. CephFS with native driver

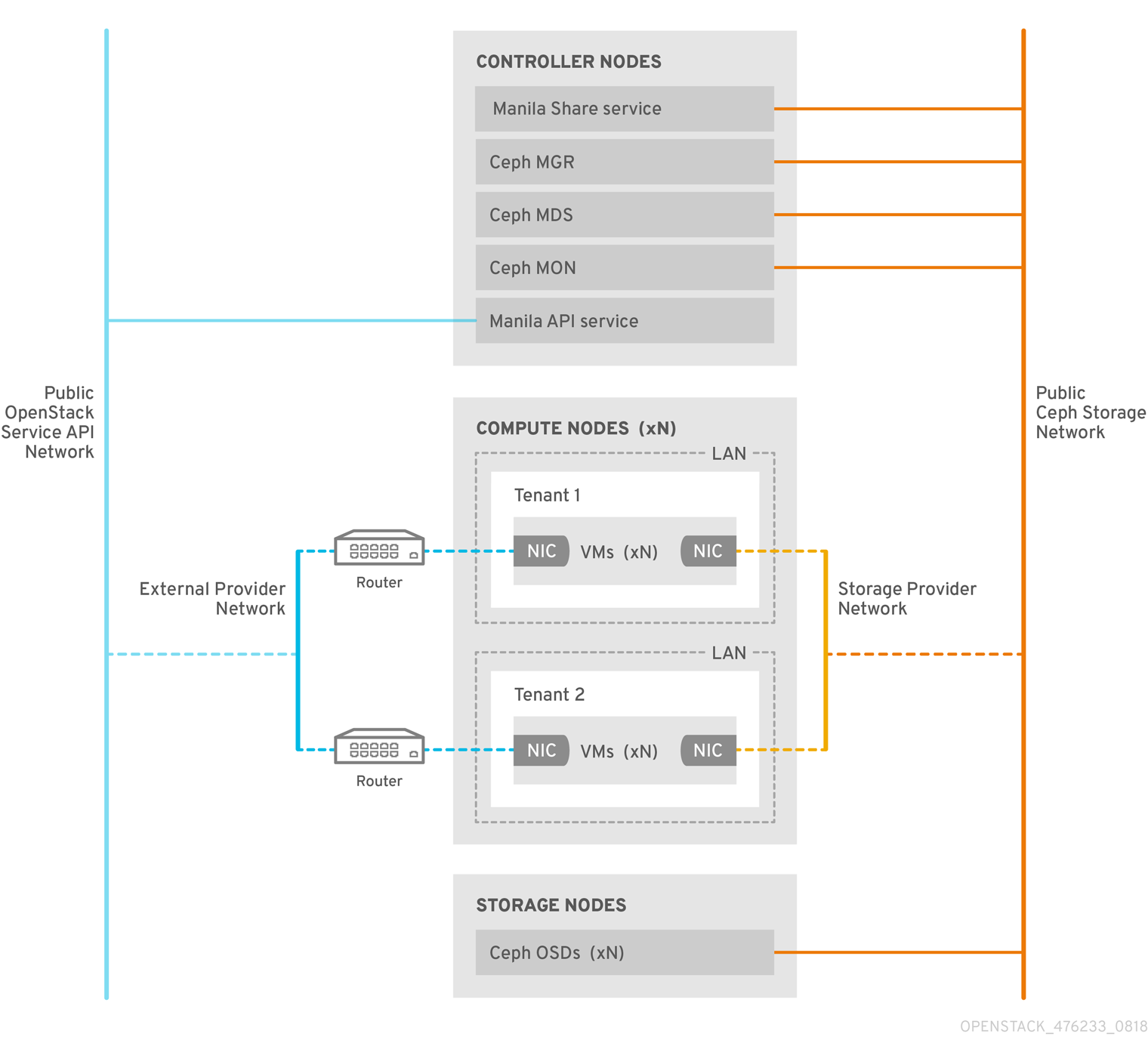

The CephFS native driver combines the OpenStack Shared File Systems service (manila) and Red Hat Ceph Storage. When you use Red Hat OpenStack Platform (RHOSP) director, the Controller nodes host the Ceph daemons, such as the manager, metadata servers (MDS), and monitors (MON), as well as the Shared File Systems services.

Compute nodes can host one or more projects. Projects (formerly known as tenants), which are represented in the preceding graphic by the white boxes, contain user-managed VMs, which are represented by gray boxes with two NICs. To access the Ceph and manila daemons, projects connect to the daemons over the public Ceph storage network. On this network, you can access data on the storage nodes provided by the Ceph Object Storage Daemons (OSDs). Instances (VMs) that are hosted on the project boot with two NICs: one dedicated to the storage provider network and the second connected to project-owned routers that lead to the external provider network.

The storage provider network connects the VMs that run on the projects to the public Ceph storage network. The Ceph public network provides back-end access to the Ceph object storage nodes, metadata servers (MDS), and Controller nodes.

Using the native driver, CephFS relies on cooperation between the clients and servers to enforce quotas, guarantee project isolation, and provide security. CephFS with the native driver works well in an environment with trusted end users on a private cloud. This configuration requires software that is running under user control to cooperate and work correctly.

2.1. Security considerations

The native CephFS back end requires a permissive trust model for OpenStack Platform tenants. This trust model is not appropriate for general purpose OpenStack Platform clouds that deliberately block users from directly accessing the infrastructure behind the services that the OpenStack Platform provides.

With native CephFS, user compute instances (VMs) must connect directly to the Ceph public network to access shares. Ceph infrastructure service daemons are deployed on the Ceph public network where they are exposed to user VMs. CephFS clients that run on user VMs interact cooperatively with the Ceph service daemons, and they interact directly with RADOS to read and write file data blocks.

CephFS quotas, which limit Shared File Systems (manila) share sizes, are enforced on the client side, for example on VMs that are owned by Red Hat OpenStack Platform (RHOSP) users. Client-side software versions on user VMs might not be current, which can leave critical cloud infrastructure vulnerable to malicious or inadvertently harmful software that targets the Ceph service ports.

For these reasons, Red Hat recommends that you deploy native CephFS as a back end only in environments in which trusted users maintain the latest versions of client-side software. Users must also ensure that no software runs on their VMs that can impact the Ceph Storage infrastructure.

For a general purpose RHOSP deployment that serves many untrusted users, Red Hat recommends that you deploy CephFS through NFS. For more information about using CephFS through NFS, see Deploying the Shared File Systems service with CephFS through NFS.

Users might not keep client-side software current, and they might fail to exclude harmful software from their VMs. However, with CephFS through NFS, users have access only to the public side of an NFS server, not to the Ceph infrastructure itself. NFS does not require the same kind of cooperative client and, in the worst case, an attack from a user VM could damage the NFS gateway without damaging the Ceph Storage infrastructure behind it.

Red Hat recommends the following security measures:

- Configure the Storage network as a provider network.

- Impose role-based access control (RBAC) policies to secure the Storage provider network.

- Create a private share type for the native CephFS back end and expose it only to trusted tenants.
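
The last two measures can be sketched as command-line invocations. The following Python example is a minimal sketch, not a supported procedure: it only assembles the `openstack network rbac create`, `manila type-create`, and `manila type-access-add` commands as argument lists so that you can review them before you run anything. The network name `StorageNetwork`, the share type name `cephfsnativetype`, and the project ID are hypothetical placeholders, and the sketch assumes that the Storage provider network already exists and that the back end runs with `driver_handles_share_servers` set to `false`.

```python
import shlex


def security_commands(network, share_type, trusted_projects):
    """Build CLI argument lists for the RBAC and private share type measures.

    All names passed in are placeholders chosen by the caller; nothing is
    executed here, the commands are only assembled for review.
    """
    commands = []

    # RBAC: grant each trusted project shared access to the Storage
    # provider network instead of sharing the network with every project.
    for project in trusted_projects:
        commands.append([
            "openstack", "network", "rbac", "create",
            "--type", "network",
            "--action", "access_as_shared",
            "--target-project", project,
            network,
        ])

    # Private share type: the positional "false" is the
    # driver_handles_share_servers value (an assumption for this back end),
    # and --is_public false hides the type from other projects.
    commands.append([
        "manila", "type-create", share_type, "false",
        "--is_public", "false",
    ])

    # Expose the private share type only to the trusted projects.
    # manila type-access-add expects a project ID.
    for project in trusted_projects:
        commands.append(["manila", "type-access-add", share_type, project])

    return commands


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    for argv in security_commands(
        "StorageNetwork", "cephfsnativetype", ["trusted-project-id"]
    ):
        print(shlex.join(argv))
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere; verify each command against the client versions in your deployment before you use it.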