Managing OpenShift Container Storage

Instructions for cluster and storage administrators

storage-docs@redhat.com

Abstract

Chapter 1. Overview

Managing OpenShift Container Storage is written to help administrators understand how to manage and administer their Red Hat OpenShift Container Storage cluster.

Most management tasks focus on a single resource. This document is divided into chapters based on the resource that an administrator is trying to modify:

- Chapter 2, Configure storage for OpenShift Container Platform services shows you how to use OpenShift Container Storage for core OpenShift Container Platform services.

- Chapter 3, Backing OpenShift Container Platform applications with OpenShift Container Storage provides information about how to configure OpenShift Container Platform applications to use OpenShift Container Storage.

- Chapter 4, Scaling storage nodes provides information about scaling storage capacity of OpenShift Container Storage nodes.

- Chapter 5, Managing Persistent Volume Claims provides information about managing Persistent Volume Claim requests, and automating the fulfillment of those requests.

- Chapter 6, Managing container storage interface (CSI) component placements provides information about setting tolerations to bring up container storage interface components on the nodes.

- Chapter 7, Multicloud Object Gateway provides information about the Multicloud Object Gateway.

- Chapter 9, Replacing storage nodes for OpenShift Container Storage shows you how to replace an operational or failed node on AWS UPI, AWS IPI, and VMware UPI for OpenShift Container Storage.

- Chapter 10, Replacing a storage device provides instructions for replacing a device for OpenShift Container Storage deployed dynamically on VMware infrastructure and OpenShift Container Storage deployed using local storage devices.

- Chapter 11, Updating OpenShift Container Storage provides instructions for upgrading your OpenShift Container Storage cluster.

Chapter 2. Configure storage for OpenShift Container Platform services

You can use OpenShift Container Storage to provide storage for OpenShift Container Platform services such as image registry, monitoring, and logging.

The process for configuring storage for these services depends on the infrastructure used in your OpenShift Container Storage deployment.

Always ensure that you have plenty of storage capacity for these services. If the storage for these critical services runs out of space, the cluster becomes inoperable and very difficult to recover.

Red Hat recommends configuring shorter curation and retention intervals for these services. See Configuring the Curator schedule and the Modifying retention time for Prometheus metrics data subsection of Configuring persistent storage in the OpenShift Container Platform documentation for details.

If you do run out of storage space for these services, contact Red Hat Customer Support.

2.1. Configuring Image Registry to use OpenShift Container Storage

OpenShift Container Platform provides a built-in Container Image Registry that runs as a standard workload on the cluster. A registry is typically used as a publication target for images built on the cluster as well as a source of images for workloads running on the cluster.

Follow the instructions in this section to configure OpenShift Container Storage as storage for the Container Image Registry. On AWS, you are not required to change the storage for the registry. However, on vSphere and bare metal platforms, it is recommended that you change the storage to an OpenShift Container Storage Persistent Volume.

This process does not migrate data from an existing image registry to the new image registry. If you already have container images in your existing registry, back up your registry before you complete this process, and re-register your images when this process is complete.

Prerequisites

- You have administrative access to OpenShift Web Console.

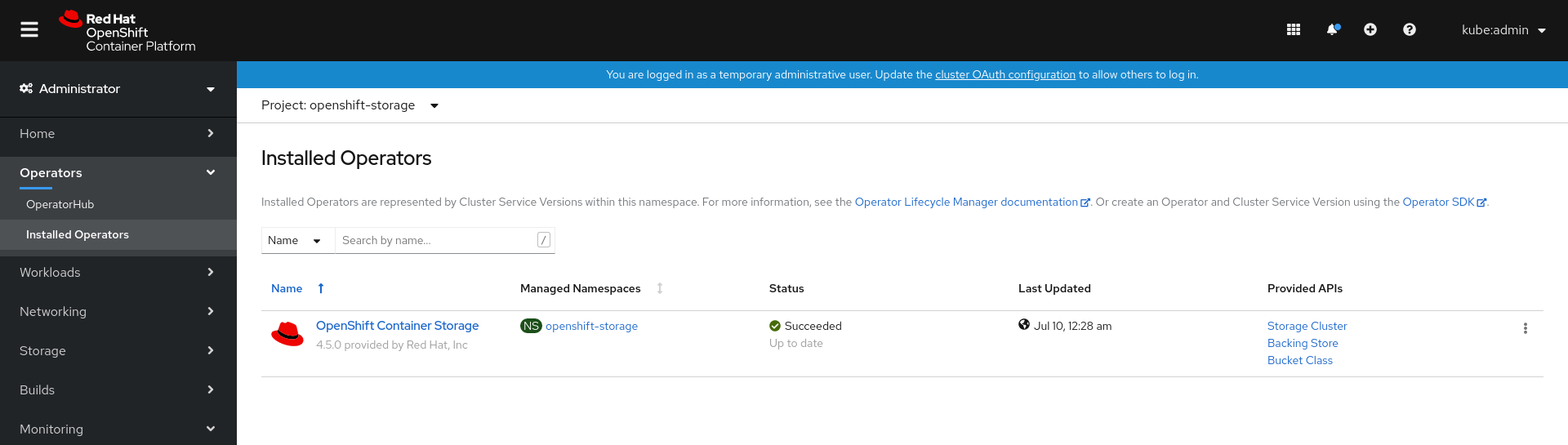

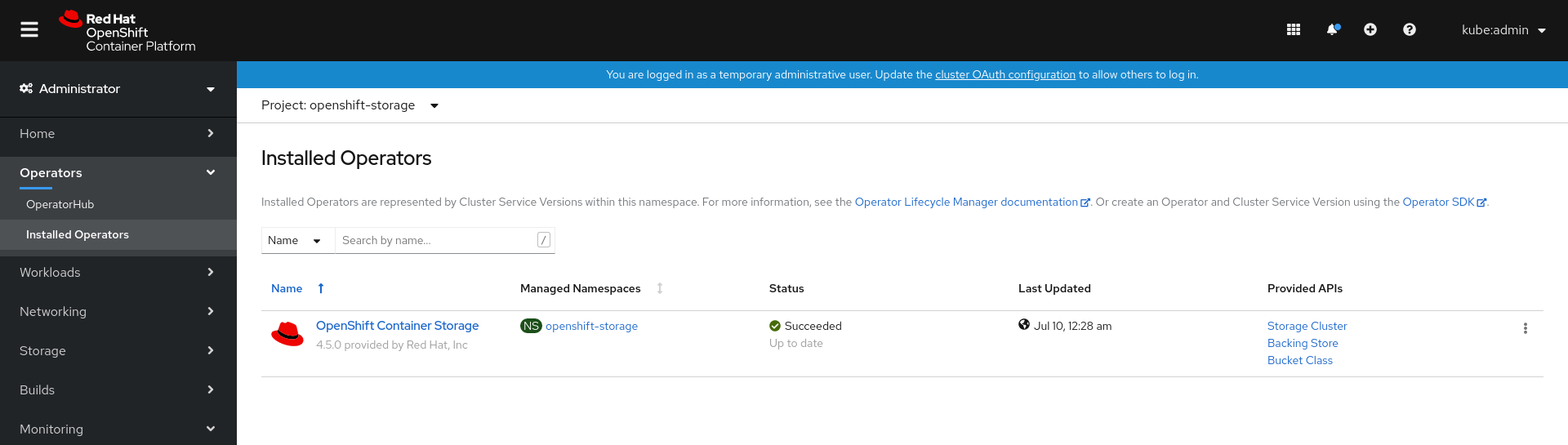

- OpenShift Container Storage Operator is installed and running in the openshift-storage namespace. In OpenShift Web Console, click Operators → Installed Operators to view installed operators.

- Image Registry Operator is installed and running in the openshift-image-registry namespace. In OpenShift Web Console, click Administration → Cluster Settings → Cluster Operators to view cluster operators.

- A storage class with provisioner openshift-storage.cephfs.csi.ceph.com is available. In OpenShift Web Console, click Storage → Storage Classes to view available storage classes.

Procedure

Create a Persistent Volume Claim for the Image Registry to use.

- In OpenShift Web Console, click Storage → Persistent Volume Claims.

- Set the Project to openshift-image-registry.

- Click Create Persistent Volume Claim.

- From the list of available storage classes retrieved above, specify the Storage Class with the provisioner openshift-storage.cephfs.csi.ceph.com.

- Specify the Persistent Volume Claim Name, for example, ocs4registry.

- Specify an Access Mode of Shared Access (RWX).

- Specify a Size of at least 100 GB.

- Click Create.

Wait until the status of the new Persistent Volume Claim is listed as Bound.

Configure the cluster’s Image Registry to use the new Persistent Volume Claim.

- Click Administration → Custom Resource Definitions.

- Click the Config custom resource definition associated with the imageregistry.operator.openshift.io group.

- Click the Instances tab.

- Beside the cluster instance, click the Action Menu (⋮) → Edit Config.

- Add the new Persistent Volume Claim as persistent storage for the Image Registry.

  Add the following under spec:, replacing the existing storage: section if necessary.

      storage:
        pvc:
          claim: <new-pvc-name>

  For example:

      storage:
        pvc:
          claim: ocs4registry

- Click Save.

Verify that the new configuration is being used.

- Click Workloads → Pods.

- Set the Project to openshift-image-registry.

- Verify that the new image-registry-* pod appears with a status of Running, and that the previous image-registry-* pod terminates.

- Click the new image-registry-* pod to view pod details.

- Scroll down to Volumes and verify that the registry-storage volume has a Type that matches your new Persistent Volume Claim, for example, ocs4registry.
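The claim created in the console procedure above can also be described as a manifest. The following is a minimal sketch rather than the documented method: the function name registry_pvc_manifest is hypothetical, the storage class name is passed in as an argument because it depends on your cluster, and 100Gi is used as an approximation of the "at least 100 GB" size. Shared Access (RWX) corresponds to the ReadWriteMany access mode.

```shell
# Print a PersistentVolumeClaim manifest for the image registry.
# Arguments: <claim-name> <size> <storage-class-name>
# (hypothetical helper; it only prints text and does not contact the cluster)
registry_pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
  namespace: openshift-image-registry
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: $3
  resources:
    requests:
      storage: $2
EOF
}

# Example (assumes a logged-in oc session; replace the placeholder with the
# name of the storage class that uses the CephFS provisioner):
#   registry_pvc_manifest ocs4registry 100Gi <cephfs-storage-class> | oc apply -f -
#   oc get pvc ocs4registry -n openshift-image-registry
```

Printing the manifest first lets you review it before it is applied. The claim still has to reach the Bound state before you edit the Image Registry configuration.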

2.2. Configuring monitoring to use OpenShift Container Storage

OpenShift Container Platform provides a monitoring stack that consists of Prometheus and Alertmanager.

Follow the instructions in this section to configure OpenShift Container Storage as storage for the monitoring stack.

Monitoring will not function if it runs out of storage space. Always ensure that you have plenty of storage capacity for monitoring.

Red Hat recommends configuring a short retention interval for this service. See the Modifying retention time for Prometheus metrics data subsection of Configuring persistent storage in the OpenShift Container Platform documentation for details.

Prerequisites

- You have administrative access to OpenShift Web Console.

- OpenShift Container Storage Operator is installed and running in the openshift-storage namespace. In OpenShift Web Console, click Operators → Installed Operators to view installed operators.

- Monitoring Operator is installed and running in the openshift-monitoring namespace. In OpenShift Web Console, click Administration → Cluster Settings → Cluster Operators to view cluster operators.

- A storage class with provisioner openshift-storage.rbd.csi.ceph.com is available. In OpenShift Web Console, click Storage → Storage Classes to view available storage classes.

Procedure

- In OpenShift Web Console, go to Workloads → Config Maps.

- Set the Project dropdown to openshift-monitoring.

- Click Create Config Map.

- Define a new cluster-monitoring-config Config Map using the following example.

  Replace the content in angle brackets (<, >) with your own values, for example, retention: 24h or storage: 40Gi.

  Replace the storageClassName with the storageclass that uses the provisioner openshift-storage.rbd.csi.ceph.com. In the example given below the name of the storageclass is ocs-storagecluster-ceph-rbd.

  Example cluster-monitoring-config Config Map

      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: cluster-monitoring-config
        namespace: openshift-monitoring
      data:
        config.yaml: |
          prometheusK8s:
            retention: <time to retain monitoring files, e.g. 24h>
            volumeClaimTemplate:
              metadata:
                name: ocs-prometheus-claim
              spec:
                storageClassName: ocs-storagecluster-ceph-rbd
                resources:
                  requests:
                    storage: <size of claim, e.g. 40Gi>
          alertmanagerMain:
            volumeClaimTemplate:
              metadata:
                name: ocs-alertmanager-claim
              spec:
                storageClassName: ocs-storagecluster-ceph-rbd
                resources:
                  requests:
                    storage: <size of claim, e.g. 40Gi>

- Click Create to save and create the Config Map.

Verification steps

Verify that the Persistent Volume Claims are bound to the pods.

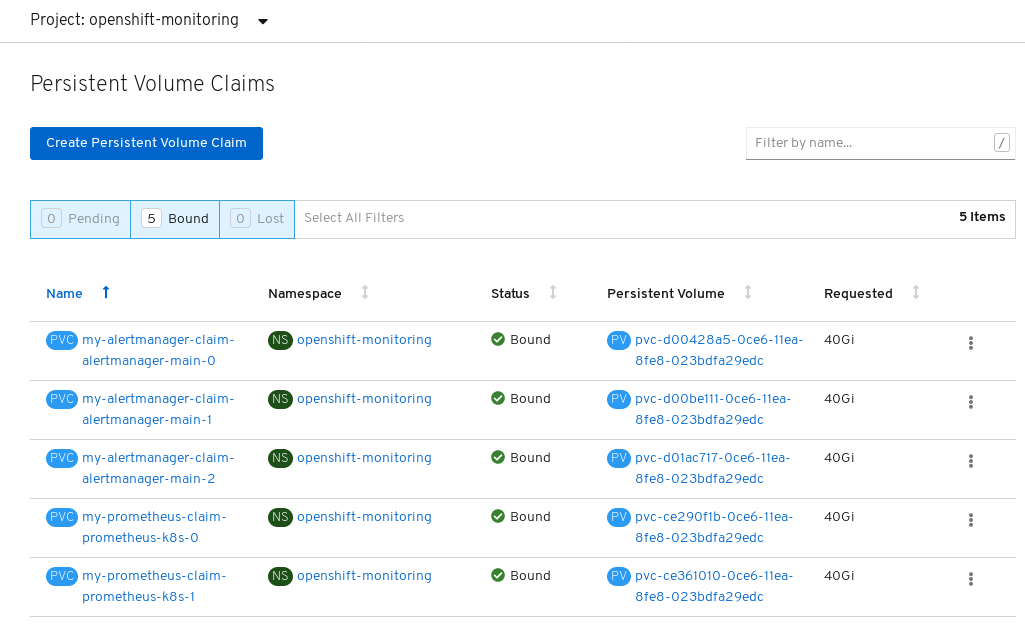

- Go to Storage → Persistent Volume Claims.

-

Set the Project dropdown to

openshift-monitoring. Verify that 5 Persistent Volume Claims are visible with a state of

Bound, attached to threealertmanager-main-*pods, and twoprometheus-k8s-*pods.Monitoring storage created and bound

Verify that the new

alertmanager-main-*pods appear with a state ofRunning.-

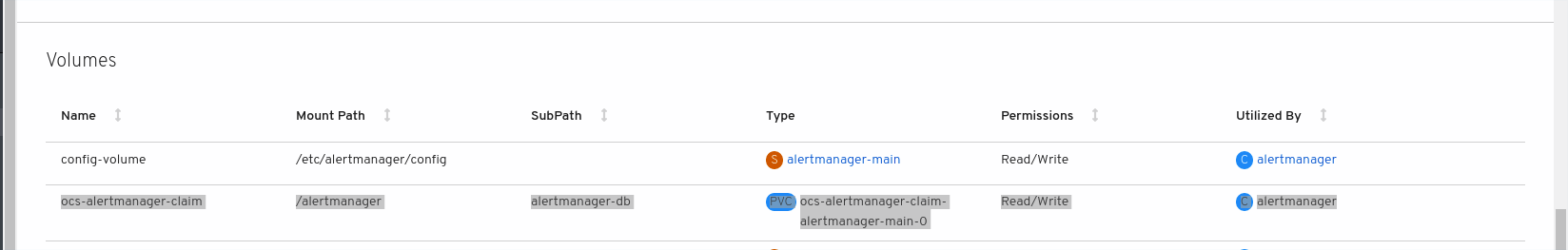

Click the new

alertmanager-main-*pods to view the pod details. Scroll down to Volumes and verify that the volume has a Type,

ocs-alertmanager-claimthat matches one of your new Persistent Volume Claims, for example,ocs-alertmanager-claim-alertmanager-main-0.Persistent Volume Claims attached to

alertmanager-main-*pod

-

Click the new

Verify that the new

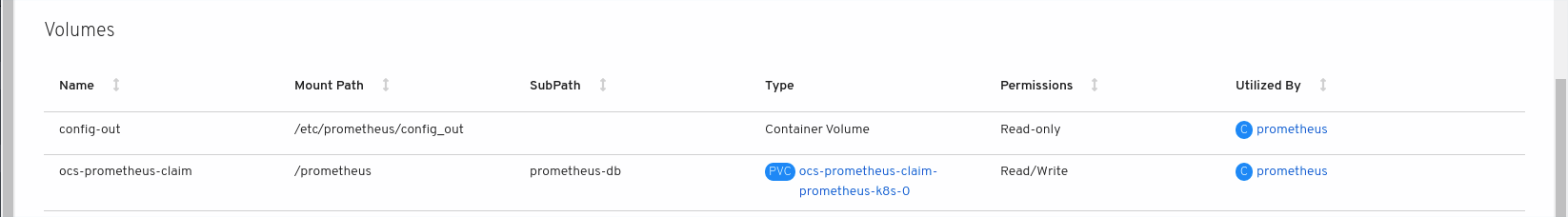

prometheus-k8s-*pods appear with a state ofRunning.-

Click the new

prometheus-k8s-*pods to view the pod details. Scroll down to Volumes and verify that the volume has a Type,

ocs-prometheus-claimthat matches one of your new Persistent Volume Claims, for example,ocs-prometheus-claim-prometheus-k8s-0.Persistent Volume Claims attached to

prometheus-k8s-*pod

-

Click the new
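The bound-claim check in the verification steps can also be done from a terminal. The sketch below is an illustration under assumptions: the function name count_bound_pvcs is hypothetical, and it assumes that the STATUS value is the second column of the oc get pvc listing when headers are suppressed.

```shell
# Count the PVCs whose STATUS column (second field) is "Bound".
# Reads `oc get pvc --no-headers` style output on standard input.
# (hypothetical helper; it only parses text)
count_bound_pvcs() {
  awk '$2 == "Bound" { n++ } END { print n + 0 }'
}

# Example (assumes a logged-in oc session):
#   oc get pvc -n openshift-monitoring --no-headers | count_bound_pvcs
```

With the configuration described in this section, the expected count is 5: three claims for the alertmanager-main-* pods and two for the prometheus-k8s-* pods.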

2.3. Cluster logging for OpenShift Container Storage

You can deploy cluster logging to aggregate logs for a range of OpenShift Container Platform services. For information about how to deploy cluster logging, see Deploying cluster logging.

Upon initial OpenShift Container Platform deployment, OpenShift Container Storage is not configured by default and the OpenShift Container Platform cluster relies solely on the default storage available from the nodes. You can edit the default configuration of OpenShift logging (Elasticsearch) so that it is backed by OpenShift Container Storage.

Always ensure that you have plenty of storage capacity for these services. If you run out of storage space for these critical services, the logging application becomes inoperable and very difficult to recover.

Red Hat recommends configuring shorter curation and retention intervals for these services. See Cluster logging curator in the OpenShift Container Platform documentation for details.

If you run out of storage space for these services, contact Red Hat Customer Support.

2.3.1. Configuring persistent storage

You can configure a persistent storage class and size for the Elasticsearch cluster using the storage class name and size parameters. The Cluster Logging Operator creates a Persistent Volume Claim for each data node in the Elasticsearch cluster based on these parameters. For example:

    spec:
      logStore:
        type: "elasticsearch"
        elasticsearch:
          nodeCount: 3
          storage:
            storageClassName: "ocs-storagecluster-ceph-rbd"
            size: "200G"

This example specifies that each data node in the cluster will be bound to a Persistent Volume Claim that requests 200G of ocs-storagecluster-ceph-rbd storage. Each primary shard will be backed by a single replica. Because of the single redundancy policy, a copy of each shard is replicated across the nodes, is always available, and can be recovered as long as at least two nodes exist. For information about Elasticsearch replication policies, see Elasticsearch replication policy in About deploying and configuring cluster logging.

Omission of the storage block will result in a deployment backed by default storage. For example:

    spec:
      logStore:
        type: "elasticsearch"
        elasticsearch:
          nodeCount: 3
          storage: {}

For more information, see Configuring cluster logging.

2.3.2. Configuring cluster logging to use OpenShift Container Storage

Follow the instructions in this section to configure OpenShift Container Storage as storage for the OpenShift cluster logging.

You can obtain all the logs when you configure logging for the first time in OpenShift Container Storage. However, after you uninstall and reinstall logging, the old logs are removed and only the new logs are processed.

Prerequisites

- You have administrative access to OpenShift Web Console.

- OpenShift Container Storage Operator is installed and running in the openshift-storage namespace.

- Cluster logging Operator is installed and running in the openshift-logging namespace.

Procedure

- Click Administration → Custom Resource Definitions from the left pane of the OpenShift Web Console.

- On the Custom Resource Definitions page, click ClusterLogging.

- On the Custom Resource Definition Overview page, select View Instances from the Actions menu or click the Instances Tab.

- On the Cluster Logging page, click Create Cluster Logging.

  You might have to refresh the page to load the data.

- In the YAML, replace the storageClassName with the storageclass that uses the provisioner openshift-storage.rbd.csi.ceph.com. In the example given below the name of the storageclass is ocs-storagecluster-ceph-rbd:

      apiVersion: "logging.openshift.io/v1"
      kind: "ClusterLogging"
      metadata:
        name: "instance"
        namespace: "openshift-logging"
      spec:
        managementState: "Managed"
        logStore:
          type: "elasticsearch"
          elasticsearch:
            nodeCount: 3
            storage:
              storageClassName: ocs-storagecluster-ceph-rbd
              size: 200G
            redundancyPolicy: "SingleRedundancy"
        visualization:
          type: "kibana"
          kibana:
            replicas: 1
        curation:
          type: "curator"
          curator:
            schedule: "30 3 * * *"
        collection:
          logs:
            type: "fluentd"
            fluentd: {}

- Click Save.

Verification steps

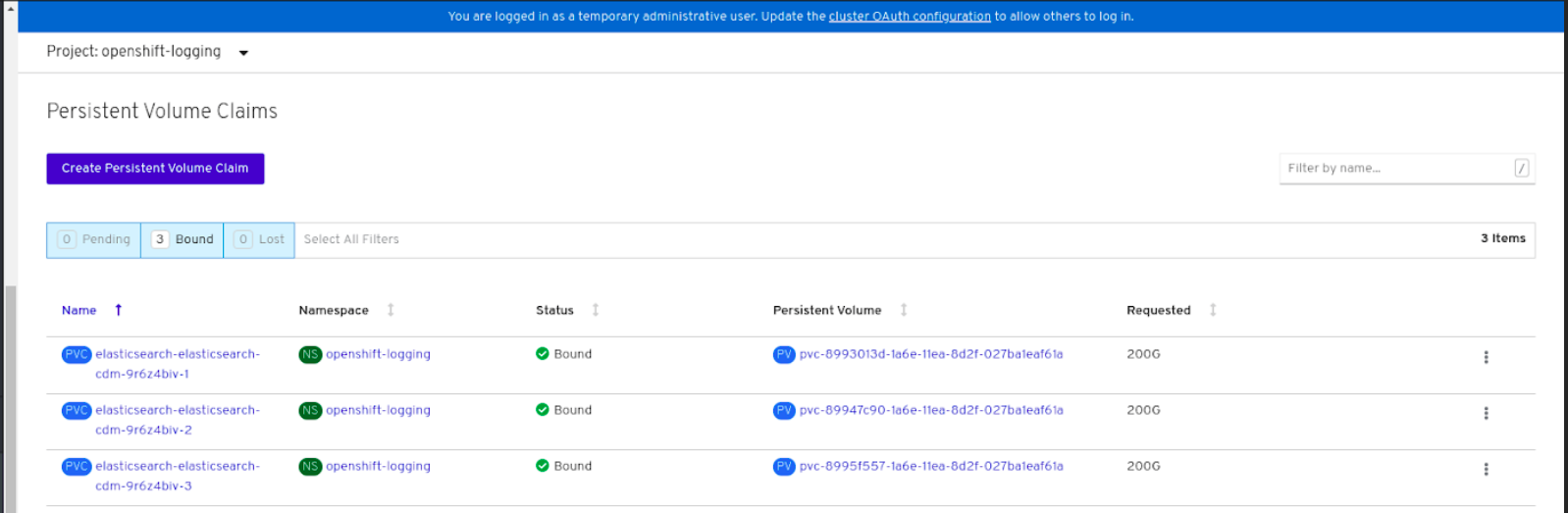

Verify that the Persistent Volume Claims are bound to the

elasticsearchpods.- Go to Storage → Persistent Volume Claims.

-

Set the Project dropdown to

openshift-logging. Verify that Persistent Volume Claims are visible with a state of

Bound, attached toelasticsearch-* pods.Figure 2.1. Cluster logging created and bound

Verify that the new cluster logging is being used.

- Click Workload → Pods.

-

Set the Project to

openshift-logging. -

Verify that the new

elasticsearch-* pods appear with a state ofRunning. -

Click the new

elasticsearch-* pod to view pod details. -

Scroll down to Volumes and verify that the elasticsearch volume has a Type that matches your new Persistent Volume Claim, for example,

elasticsearch-elasticsearch-cdm-9r624biv-3. - Click the Persistent Volume Claim name and verify the storage class name in the PersistenVolumeClaim Overview page.

Use a shorter curator time to avoid filling the PVs attached to the Elasticsearch pods.

You can configure Curator to delete Elasticsearch data based on retention settings. It is recommended that you set the default index data retention to 5 days:

    config.yaml: |
      openshift-storage:
        delete:
          days: 5

For more details, see Curation of Elasticsearch Data.

To uninstall the cluster logging backed by Persistent Volume Claim, follow the procedure for removing the cluster logging operator from OpenShift Container Storage in the uninstall chapter of the respective deployment guide.

Chapter 3. Backing OpenShift Container Platform applications with OpenShift Container Storage

You cannot directly install OpenShift Container Storage during the OpenShift Container Platform installation. However, you can install OpenShift Container Storage on an existing OpenShift Container Platform by using the Operator Hub and then configure the OpenShift Container Platform applications to be backed by OpenShift Container Storage.

Prerequisites

- OpenShift Container Platform is installed and you have administrative access to OpenShift Web Console.

- OpenShift Container Storage is installed and running in the openshift-storage namespace.

Procedure

In the OpenShift Web Console, perform one of the following:

Click Workloads → Deployments.

In the Deployments page, you can do one of the following:

- Select any existing deployment and click Add Storage option from the Action menu (⋮).

Create a new deployment and then add storage.

- Click Create Deployment to create a new deployment.

- Edit the YAML based on your requirement to create a deployment.

- Click Create.

- Select Add Storage from the Actions drop down menu on the top right of the page.

Click Workloads → Deployment Configs.

In the Deployment Configs page, you can do one of the following:

- Select any existing deployment and click Add Storage option from the Action menu (⋮).

Create a new deployment and then add storage.

- Click Create Deployment Config to create a new deployment.

- Edit the YAML based on your requirement to create a deployment.

- Click Create.

- Select Add Storage from the Actions drop down menu on the top right of the page.

In the Add Storage page, you can choose one of the following options:

- Click the Use existing claim option and select a suitable PVC from the drop down list.

Click the Create new claim option.

- Select the appropriate CephFS or RBD storage class from the Storage Class drop down list.

- Provide a name for the Persistent Volume Claim.

- Select ReadWriteOnce (RWO) or ReadWriteMany (RWX) access mode.

  Note: ReadOnlyMany (ROX) is deactivated as it is not supported.

- Select the size of the desired storage capacity.

  Note: You cannot resize the storage capacity after the creation of Persistent Volume Claim.

- Specify the mount path and subpath (if required) for the mount path volume inside the container.

- Click Save.

Verification steps

Depending on your configuration, perform one of the following:

- Click Workloads → Deployments.

- Click Workloads → Deployment Configs.

- Set the Project as required.

- Click the deployment to which you added storage to view the deployment details.

- Scroll down to Volumes and verify that your deployment has a Type that matches the Persistent Volume Claim that you assigned.

- Click the Persistent Volume Claim name and verify the storage class name in the PersistentVolumeClaim Overview page.
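The Use existing claim path of the procedure above has a command-line counterpart in oc set volume. The sketch below only prints the command so that it can be reviewed before it is run. The function name add_storage_command and the workload, claim, path, and project names in the example are hypothetical placeholders, not values from this guide.

```shell
# Print (without running) an `oc set volume` command that mounts an existing
# Persistent Volume Claim into a workload.
# Arguments: <kind/name> <claim-name> <mount-path> <project>
# (hypothetical helper; all example values below are placeholders)
add_storage_command() {
  printf 'oc set volume %s --add --type=persistentVolumeClaim --claim-name=%s --mount-path=%s -n %s\n' \
    "$1" "$2" "$3" "$4"
}

# Example: review the printed command, then run it yourself.
add_storage_command deployment/my-app my-app-data /var/lib/data my-project
```

For a Deployment Config, pass deploymentconfig/<name> as the first argument instead of deployment/<name>.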

Chapter 4. Scaling storage nodes

To scale the storage capacity of OpenShift Container Storage in internal mode, you can do either of the following:

- Scale up storage nodes - Add storage capacity to the existing Red Hat OpenShift Container Storage worker nodes

- Scale out storage nodes - Add new worker nodes containing storage capacity

For scaling your storage in external mode, see Red Hat Ceph Storage documentation.

4.1. Requirements for scaling storage nodes

Before you proceed to scale the storage nodes, refer to the following sections to understand the node requirements for your specific Red Hat OpenShift Container Storage instance:

- Platform requirements

- Storage device requirements

Always ensure that you have plenty of storage capacity.

If storage ever fills completely, it is not possible to add capacity or delete or migrate content away from the storage to free up space. Completely full storage is very difficult to recover.

Capacity alerts are issued when cluster storage capacity reaches 75% (near-full) and 85% (full) of total capacity. Always address capacity warnings promptly, and review your storage regularly to ensure that you do not run out of storage space.

If you do run out of storage space completely, contact Red Hat Customer Support.
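The two alert thresholds described above can be expressed as a small calculation. This is a sketch for illustration only: the function name capacity_state is hypothetical, the inputs are whole numbers in the same unit, and the total must be greater than zero. It is not how the product itself raises alerts.

```shell
# Classify cluster usage against the 75% (near-full) and 85% (full) thresholds.
# Arguments: <used> <total>, whole numbers in the same unit, total > 0.
# (hypothetical helper for illustration)
capacity_state() {
  pct=$(( $1 * 100 / $2 ))   # integer percentage, rounded down
  if [ "$pct" -ge 85 ]; then
    echo full
  elif [ "$pct" -ge 75 ]; then
    echo near-full
  else
    echo ok
  fi
}

capacity_state 1500 2048   # 73% used, prints "ok"
capacity_state 1600 2048   # 78% used, prints "near-full"
capacity_state 1800 2048   # 87% used, prints "full"
```

Because the percentage is rounded down, a cluster is reported as near-full only once usage actually reaches 75%.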

4.1.1. Supported Deployments for Red Hat OpenShift Container Storage

User-provisioned infrastructure:

- Amazon Web Services (AWS)

- VMware

- Bare metal

Installer-provisioned infrastructure:

- Amazon Web Services (AWS)

4.2. Scaling up storage capacity

Depending on the type of your deployment, you can choose one of the following procedures to scale up storage capacity.

- For AWS or VMware infrastructures using dynamic or automated provisioning of storage devices, see Section 4.2.1, “Scaling up storage by adding capacity to your OpenShift Container Storage nodes on AWS or VMware infrastructure”

- For bare metal, Amazon EC2 I3, or VMware infrastructures using local storage devices, see Section 4.2.2, “Scaling up storage by adding capacity to your OpenShift Container Storage nodes using local storage devices”

4.2.1. Scaling up storage by adding capacity to your OpenShift Container Storage nodes on AWS or VMware infrastructure

Use this procedure to add storage capacity and performance to your configured Red Hat OpenShift Container Storage worker nodes.

Prerequisites

- A running OpenShift Container Storage Platform

- Administrative privileges on the OpenShift Web Console

Procedure

- Navigate to the OpenShift Web Console.

- Click on Operators on the left navigation bar.

- Select Installed Operators.

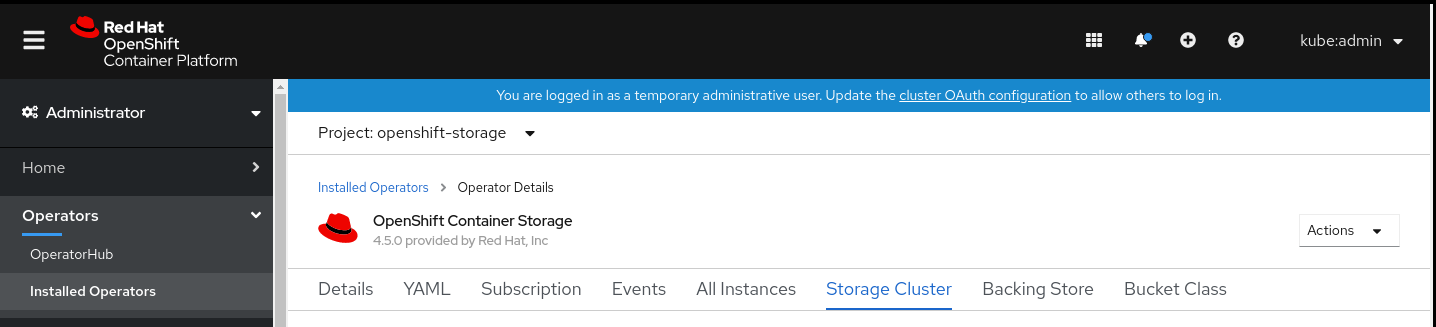

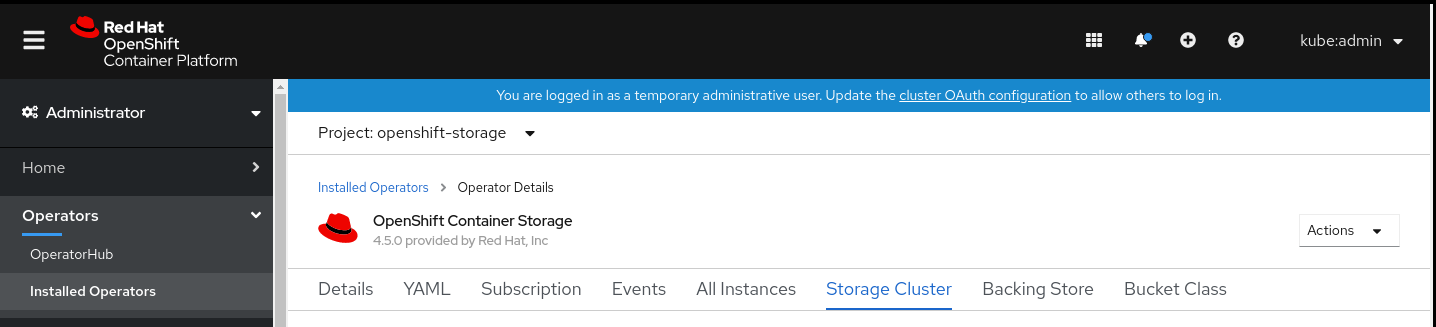

In the window, click OpenShift Container Storage Operator:

In the top navigation bar, scroll right and click Storage Cluster tab.

- The visible list should have only one item. Click (⋮) on the far right to extend the options menu.

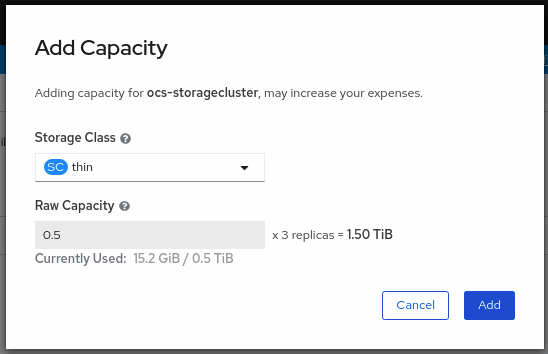

Select Add Capacity from the options menu.

From this dialog box, you can set the requested additional capacity and the storage class. The Add Capacity dialog shows the capacity selected at the time of installation and allows you to add capacity only in this increment. On AWS, the storage class should be set to gp2. On VMware, the storage class should be set to thin.

Note: The effectively provisioned capacity will be three times as much as what you see in the Raw Capacity field because OpenShift Container Storage uses a replica count of 3.

- Once you are done with your setting, click Add. You might need to wait a couple of minutes for the storage cluster to reach Ready state.
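The replica note in this procedure amounts to a simple multiplication. As a worked example, assuming the replica count of 3 stated in the note and a hypothetical helper named provisioned_capacity:

```shell
# Print the capacity that is provisioned in total for a requested amount,
# assuming a replica count of 3.
# Argument: <requested-capacity> (whole number, any unit)
# (hypothetical helper for illustration)
provisioned_capacity() {
  replicas=3
  echo $(( $1 * replicas ))
}

provisioned_capacity 2   # a request of 2 TiB provisions 6 TiB in total
```

Keep this factor in mind when you estimate the cost of the underlying cloud or datastore volumes.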

Verification steps

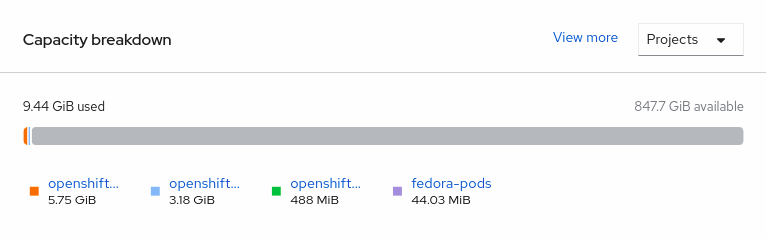

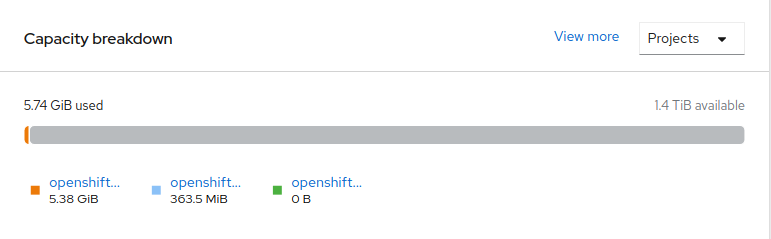

Navigate to Overview → Persistent Storage tab, then check the Capacity breakdown card.

- Note that the capacity increases based on your selections.

As of OpenShift Container Storage 4.2, cluster reduction, whether by reducing OSDs or nodes, is not supported.

4.2.2. Scaling up storage by adding capacity to your OpenShift Container Storage nodes using local storage devices

Use this procedure to add storage capacity (additional storage devices) to your configured local storage based OpenShift Container Storage worker nodes on bare metal and VMware infrastructures.

Scaling up storage on Amazon EC2 I3 is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For Amazon EC2 I3 infrastructure, adding nodes is the only option for adding capacity, as deployment is done using both the available NVMe devices.

Prerequisites

- You must be logged into OpenShift Container Platform cluster.

You must have installed local storage operator. Use the following procedures, see

- You must have three OpenShift Container Platform worker nodes with the same storage type and size attached to each node (for example, 2TB NVMe drive) as the original OCS StorageCluster was created with.

Procedure

To add storage capacity to OpenShift Container Platform nodes with OpenShift Container Storage installed, perform the following steps:

Find the unique by-id identifier for available devices that you want to add, that is, a minimum of one device per worker node. You can follow the procedure for finding available storage devices in the respective deployment guide.

Note: Make sure you perform this process for all the existing nodes (minimum of 3) for which you want to add storage.

Add the unique device ID to the LocalVolume custom resource (CR).

$ oc edit -n local-storage localvolume local-block

Example output:

    spec:
      logLevel: Normal
      managementState: Managed
      nodeSelector:
        nodeSelectorTerms:
        - matchExpressions:
          - key: cluster.ocs.openshift.io/openshift-storage
            operator: In
            values:
            - ""
      storageClassDevices:
      - devicePaths:
        - /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T7_PHLF733402P51P0GGN
        - /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T7_PHLF733402LM1P0GGN
        - /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T7_PHLF733402M21P0GGN
        - /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T7_PHLF733402B71P0GGN   # newly added device by-id
        - /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T7_PHLF733402A31P0GGN   # newly added device by-id
        - /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T7_PHLF733402Q71P0GGN   # newly added device by-id
        storageClassName: localblock
        volumeMode: Block

Make sure to save the changes after editing the CR.

Example output:

localvolume.local.storage.openshift.io/local-block edited

You can see in this CR that new devices using by-id have been added. Each new device maps to one NVMe device on the three worker nodes.

- nvme-INTEL_SSDPE2KX010T7_PHLF733402B71P0GGN

- nvme-INTEL_SSDPE2KX010T7_PHLF733402A31P0GGN

- nvme-INTEL_SSDPE2KX010T7_PHLF733402Q71P0GGN

Display the newly created PVs with the storageclass name used in the localVolume CR.

$ oc get pv | grep localblock | grep Available

Example output:

    local-pv-5ee61dcc   931Gi   RWO   Delete   Available   localblock   2m35s
    local-pv-b1fa607a   931Gi   RWO   Delete   Available   localblock   2m27s
    local-pv-e971c51d   931Gi   RWO   Delete   Available   localblock   2m22s
    ...

There are three more available PVs of the same size, which will be used for new OSDs.

- Navigate to the OpenShift Web Console.

- Click on Operators on the left navigation bar.

- Select Installed Operators.

In the window, click OpenShift Container Storage Operator:

In the top navigation bar, scroll right and click Storage Cluster tab.

- The visible list should have only one item. Click (⋮) on the far right to extend the options menu.

Select Add Capacity from the options menu.

From this dialog box, set the Storage Class name to the name used in the localVolume CR. Available Capacity displayed is based on the local disks available in storage class.

- Once you are done with your setting, click Add. You might need to wait a couple of minutes for the storage cluster to reach Ready state.

Verify that the new OSDs and their corresponding new PVCs are created.

$ oc get -n openshift-storage pods -l app=rook-ceph-osd

Example output:

    NAME                               READY   STATUS    RESTARTS   AGE
    rook-ceph-osd-0-77c4fdb758-qshw4   1/1     Running   0          1h
    rook-ceph-osd-1-8645c5fbb6-656ks   1/1     Running   0          1h
    rook-ceph-osd-2-86895b854f-r4gt6   1/1     Running   0          1h
    rook-ceph-osd-3-dc7f787dd-gdnsz    1/1     Running   0          10m
    rook-ceph-osd-4-554b5c46dd-hbf9t   1/1     Running   0          10m
    rook-ceph-osd-5-5cf94c4448-k94j6   1/1     Running   0          10m

In the above example, osd-3, osd-4, and osd-5 are the newly added pods to the OpenShift Container Storage cluster.

$ oc get pvc -n openshift-storage |grep localblock

Example output:

    ocs-deviceset-0-0-qc29m   Bound   local-pv-fc5562d3   931Gi   RWO   localblock   1h
    ocs-deviceset-0-1-qdmrl   Bound   local-pv-b1fa607a   931Gi   RWO   localblock   10m
    ocs-deviceset-1-0-mpwmk   Bound   local-pv-58cdd0bc   931Gi   RWO   localblock   1h
    ocs-deviceset-1-1-85892   Bound   local-pv-e971c51d   931Gi   RWO   localblock   10m
    ocs-deviceset-2-0-rll47   Bound   local-pv-29d8ad8d   931Gi   RWO   localblock   1h
    ocs-deviceset-2-1-cgth2   Bound   local-pv-5ee61dcc   931Gi   RWO   localblock   10m

In the above example, we see three new PVCs are created.

Verification steps

Navigate to Overview → Persistent Storage tab, then check the Capacity breakdown card.

Note that the capacity increases based on your selections.

Important: OpenShift Container Storage does not support cluster reduction either by reducing OSDs or reducing nodes.
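Before you open the Add Capacity dialog in this procedure, it can help to confirm how many PVs of the local storage class are in the Available state. The sketch below is one possible check, not part of the documented procedure: the function name count_available_pvs is hypothetical, and it assumes that in the oc get pv listing the storage class directly follows the Available status, because an Available PV has an empty CLAIM column.

```shell
# Count Available PVs that belong to one storage class.
# Reads `oc get pv --no-headers` style output on standard input.
# Argument: <storage-class-name>
# (hypothetical helper; it only parses text)
count_available_pvs() {
  awk -v sc="$1" '
    { for (i = 1; i < NF; i++)
        if ($i == "Available" && $(i + 1) == sc) { n++; break } }
    END { print n + 0 }'
}

# Example (assumes a logged-in oc session):
#   oc get pv --no-headers | count_available_pvs localblock
```

For the example output shown in this procedure, the count is 3, one new PV for each of the three worker nodes.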

4.3. Scaling out storage capacity

To scale out storage capacity, you need to perform the following steps:

- Add a new node

- Verify that the new node is added successfully

- Scale up the storage capacity

4.3.1. Adding a node

You can add nodes to increase the storage capacity when existing worker nodes are already running at their maximum supported OSDs, which is an increment of 3 OSDs of the capacity selected during initial configuration.

Depending on the type of your deployment, you can choose one of the following procedures to add a storage node:

- For AWS installer-provisioned infrastructure, see Section 4.3.1.1, “Adding a node on an AWS installer-provisioned infrastructure”

- For AWS or VMware user-provisioned infrastructure, see Section 4.3.1.2, “Adding a node on an AWS or a VMware user-provisioned infrastructure”

- For bare metal, Amazon EC2 I3, or VMware infrastructures, see Section 4.3.1.3, “Adding a node using a local storage device”

4.3.1.1. Adding a node on an AWS installer-provisioned infrastructure

Prerequisites

- You must be logged into OpenShift Container Platform (OCP) cluster.

Procedure

- Navigate to Compute → Machine Sets.

- On the machine set where you want to add nodes, select Edit Machine Count.

- Add the number of nodes, and click Save.

- Click Compute → Nodes and confirm that the new node is in Ready state.

Apply the OpenShift Container Storage label to the new node.

- For the new node, click Action menu (⋮) → Edit Labels.

- Add cluster.ocs.openshift.io/openshift-storage and click Save.

Note: It is recommended to add 3 nodes, each in a different zone. You must add 3 nodes and perform this procedure for all of them.

Verification steps

To verify that the new node is added, see Section 4.3.2, “Verifying the addition of a new node”.
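The label can also be applied with the oc label node command that the user-provisioned procedures in this chapter use. Because three nodes have to be labeled, the sketch below prints one command per node so that the list can be reviewed before it is run. The function name label_commands and the node names in the example are hypothetical.

```shell
# Print one `oc label node` command per node name given as an argument.
# (hypothetical helper; it prints the commands and does not run them)
label_commands() {
  for node in "$@"; do
    printf 'oc label node %s cluster.ocs.openshift.io/openshift-storage=""\n' "$node"
  done
}

# Example with three placeholder node names:
label_commands worker-a worker-b worker-c
```

Take the node names from Compute → Nodes in the console, or from the output of oc get nodes.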

4.3.1.2. Adding a node on an AWS or a VMware user-provisioned infrastructure

Prerequisites

- You must be logged into OpenShift Container Platform (OCP) cluster.

Procedure

Depending on whether you are adding a node on an AWS user-provisioned infrastructure or a VMware user-provisioned infrastructure, perform the following steps:

For AWS

- Create a new AWS machine instance with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform node using the new AWS machine instance.

For VMware:

- Create a new VM on vSphere with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform worker node using the new VM.

Check for certificate signing requests (CSRs) related to OpenShift Container Storage that are in Pending state:

$ oc get csr

Approve all required OpenShift Container Storage CSRs for the new node:

$ oc adm certificate approve <Certificate_Name>

- Click Compute → Nodes and confirm that the new node is in Ready state.

Apply the OpenShift Container Storage label to the new node using any one of the following:

- From User interface

- For the new node, click Action Menu (⋮) → Edit Labels.

- Add cluster.ocs.openshift.io/openshift-storage and click Save.

- From Command line interface

Execute the following command to apply the OpenShift Container Storage label to the new node:

$ oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""

Note: It is recommended to add 3 nodes, each in a different zone. You must add 3 nodes and perform this procedure for all of them.

Verification steps

To verify that the new node is added, see Section 4.3.2, “Verifying the addition of a new node”.
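When several nodes are added at once, the list of CSRs can be long. The sketch below narrows the listing down to the names of pending requests. It rests on an assumption: that the CONDITION value is the last column of the oc get csr listing. The function name pending_csr_names is hypothetical.

```shell
# Print the names of CSRs whose CONDITION (last column) is "Pending".
# Reads `oc get csr --no-headers` style output on standard input.
# (hypothetical helper; it only parses text)
pending_csr_names() {
  awk '$NF == "Pending" { print $1 }'
}

# Example (assumes a logged-in oc session). Review the list, then approve
# each request that belongs to the new node:
#   oc get csr --no-headers | pending_csr_names
#   oc adm certificate approve <Certificate_Name>
```

Approving a CSR grants the requesting node credentials for the cluster, so check that each pending request really comes from a node you added before you approve it.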

4.3.1.3. Adding a node using a local storage device

Use this procedure to add a node on bare metal, Amazon EC2, and VMware infrastructures.

Scaling storage nodes for Amazon EC2 infrastructure is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

Prerequisites

- You must be logged in to the OpenShift Container Platform (OCP) cluster.

- You must have three OpenShift Container Platform worker nodes, each with the same storage type and size attached (for example, a 2TB NVMe drive) as was used when the original OCS StorageCluster was created.

Procedure

Depending on whether you are adding a node on bare metal, Amazon EC2, or VMware infrastructure, perform the following steps:

For Amazon EC2:

- Create a new Amazon EC2 I3 machine instance with the required infrastructure. See Creating a MachineSet in AWS and Platform requirements.

- Create a new OpenShift Container Platform node using the new Amazon EC2 I3 machine instance.

For VMware:

- Create a new VM on vSphere with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform worker node using the new VM.

For bare metal:

- Get a new bare metal machine with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform node using the new bare metal machine.

Check for certificate signing requests (CSRs) related to OpenShift Container Storage that are in Pending state:

$ oc get csr

Approve all required OpenShift Container Storage CSRs for the new node:

$ oc adm certificate approve <Certificate_Name>

- Click Compute → Nodes and confirm that the new node is in Ready state.

Apply the OpenShift Container Storage label to the new node using any one of the following:

- From User interface

- For the new node, click Action Menu (⋮) → Edit Labels

- Add cluster.ocs.openshift.io/openshift-storage and click Save.

- From Command line interface

Execute the following command to apply the OpenShift Container Storage label to the new node:

$ oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""

Note: It is recommended to add 3 nodes, each in a different zone. You must add 3 nodes and perform this procedure for all of them.

Verification steps

To verify that the new node is added, see Section 4.3.2, “Verifying the addition of a new node”.

4.3.2. Verifying the addition of a new node

Execute the following command and verify that the new node is present in the output:

$ oc get nodes --show-labels | grep cluster.ocs.openshift.io/openshift-storage= |cut -d' ' -f1

Click Workloads → Pods and confirm that at least the following pods on the new node are in Running state:

- csi-cephfsplugin-*

- csi-rbdplugin-*

4.3.3. Scaling up storage capacity

To scale up storage capacity, see Scaling up storage by adding capacity.

Chapter 5. Managing Persistent Volume Claims

5.1. Configuring application pods to use OpenShift Container Storage

Follow the instructions in this section to configure OpenShift Container Storage as storage for an application pod.

Prerequisites

- You have administrative access to OpenShift Web Console.

- OpenShift Container Storage Operator is installed and running in the openshift-storage namespace. In OpenShift Web Console, click Operators → Installed Operators to view installed operators.

- The default storage classes provided by OpenShift Container Storage are available. In OpenShift Web Console, click Storage → Storage Classes to view default storage classes.

Procedure

Create a Persistent Volume Claim (PVC) for the application to use.

- In OpenShift Web Console, click Storage → Persistent Volume Claims.

- Set the Project for the application pod.

Click Create Persistent Volume Claim.

- Specify a Storage Class provided by OpenShift Container Storage.

- Specify the PVC Name, for example, myclaim.

- Select the required Access Mode.

- Specify a Size as per application requirement.

- Click Create and wait until the PVC is in Bound status.

Configure a new or existing application pod to use the new PVC.

For a new application pod, perform the following steps:

- Click Workloads → Pods.

- Create a new application pod.

Under the spec: section, add a volumes: section to add the new PVC as a volume for the application pod.

volumes:
  - name: <volume_name>
    persistentVolumeClaim:
      claimName: <pvc_name>

For example:

volumes:
  - name: mypd
    persistentVolumeClaim:
      claimName: myclaim

For an existing application pod, perform the following steps:

- Click Workloads → Deployment Configs.

- Search for the required deployment config associated with the application pod.

- Click on its Action menu (⋮) → Edit Deployment Config.

Under the spec: section, add a volumes: section to add the new PVC as a volume for the application pod and click Save.

volumes:
  - name: <volume_name>
    persistentVolumeClaim:
      claimName: <pvc_name>

For example:

volumes:
  - name: mypd
    persistentVolumeClaim:
      claimName: myclaim

Verify that the new configuration is being used.

- Click Workloads → Pods.

- Set the Project for the application pod.

- Verify that the application pod appears with a status of Running.

- Click the application pod name to view pod details.

- Scroll down to Volumes section and verify that the volume has a Type that matches your new Persistent Volume Claim, for example, myclaim.
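The PVC created through the web console in this section can also be expressed as a manifest and applied from the command line. The following is a minimal sketch under stated assumptions: render_pvc is a hypothetical helper, and the storage class name shown in the usage comment (ocs-storagecluster-ceph-rbd) is an assumption that you should check against the classes listed under Storage → Storage Classes in your cluster.

```shell
# Hypothetical helper: print a PersistentVolumeClaim manifest on stdout.
#   $1 = PVC name, $2 = storage class name, $3 = size, $4 = access mode
render_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - $4
  resources:
    requests:
      storage: $3
  storageClassName: $2
EOF
}

# Usage against a live cluster (not executed here; the class name is an assumption):
#   render_pvc myclaim ocs-storagecluster-ceph-rbd 1Gi ReadWriteOnce | oc apply -n <project> -f -
#   oc get pvc myclaim -n <project>    # wait until STATUS shows Bound
```

The claimName in the pod's volumes: section must match the name passed as the first argument.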

5.2. Viewing Persistent Volume Claim request status

Use this procedure to view the status of a PVC request.

Prerequisites

- Administrator access to OpenShift Container Storage.

Procedure

- Log in to OpenShift Web Console.

- Click Storage → Persistent Volume Claims.

- Search for the required PVC name by using the Filter textbox. You can also filter the list of PVCs by Name or Label to narrow down the list.

- Check the Status column corresponding to the required PVC.

- Click the required Name to view the PVC details.

5.3. Reviewing Persistent Volume Claim request events

Use this procedure to review and address Persistent Volume Claim (PVC) request events.

Prerequisites

- Administrator access to OpenShift Web Console.

Procedure

- Log in to OpenShift Web Console.

- Click Home → Overview → Persistent Storage.

- Locate the Inventory card to see the number of PVCs with errors.

- Click Storage → Persistent Volume Claims.

- Search for the required PVC using the Filter textbox.

- Click on the PVC name and navigate to Events.

- Address the events as required or as directed.

5.4. Expanding Persistent Volume Claims

OpenShift Container Storage 4.5 introduces the ability to expand Persistent Volume Claims as a Technology Preview feature providing more flexibility in the management of persistent storage resources.

Expansion is supported for the following Persistent Volumes:

- PVC with ReadWriteOnce (RWO) and ReadWriteMany (RWX) access that is based on Ceph File System (CephFS) for volume mode Filesystem.

- PVC with ReadWriteOnce (RWO) access that is based on Ceph RADOS Block Devices (RBDs) with volume mode Filesystem.

- PVC with ReadWriteOnce (RWO) access that is based on Ceph RADOS Block Devices (RBDs) with volume mode Block.

Expanding Persistent Volumes is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information, see Technology Preview Features Support Scope.

OSD and MON PVC expansion is not supported by Red Hat.

This Technology Preview feature is only available with fresh installations of OpenShift Container Storage version 4.5. It does not apply to clusters upgraded from previous OpenShift Container Storage releases.

Prerequisites

- Administrator access to OpenShift Web Console.

Procedure

- In OpenShift Web Console, navigate to Storage → Persistent Volume Claims.

- Click the Action Menu (⋮) next to the Persistent Volume Claim you want to expand.

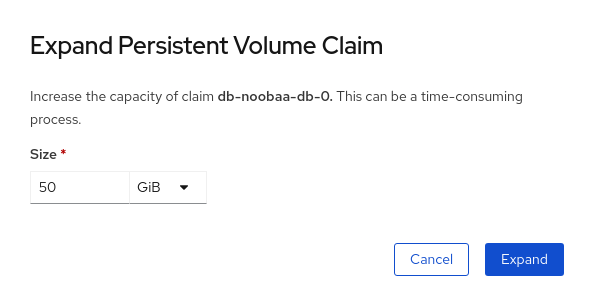

Click Expand PVC.

Select the new size of the Persistent Volume Claim, then click Expand.

To verify the expansion, navigate to the PVC’s details page and verify that the Capacity field has the correct size requested.

Note: When expanding PVCs based on Ceph RADOS Block Devices (RBDs), if the PVC is not already attached to a pod, the Condition type is FileSystemResizePending in the PVC’s details page. Once the volume is mounted, the file system resize succeeds and the new size is reflected in the Capacity field.
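The same expansion can be requested from the command line by raising the storage request on the PVC. The following is a minimal sketch, not taken from this guide: pvc_resize_patch is a hypothetical helper that only builds the JSON merge patch, and the sketch assumes that the storage class backing the PVC allows volume expansion.

```shell
# Hypothetical helper: build a JSON merge patch that sets a new requested size.
#   $1 = new size, for example 2Gi
pvc_resize_patch() {
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}' "$1"
}

# Usage against a live cluster (not executed here):
#   oc patch pvc myclaim -n <project> --type merge -p "$(pvc_resize_patch 2Gi)"
#   oc get pvc myclaim -n <project>    # CAPACITY reflects the new size once the resize completes
```

The requested size must be larger than the current one, because Persistent Volume Claims cannot be shrunk.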

5.5. Dynamic provisioning

5.5.1. About dynamic provisioning

The StorageClass resource object describes and classifies storage that can be requested, as well as provides a means for passing parameters for dynamically provisioned storage on demand. StorageClass objects can also serve as a management mechanism for controlling different levels of storage and access to the storage. Cluster Administrators (cluster-admin) or Storage Administrators (storage-admin) define and create the StorageClass objects that users can request without needing any intimate knowledge about the underlying storage volume sources.

The OpenShift Container Platform persistent volume framework enables this functionality and allows administrators to provision a cluster with persistent storage. The framework also gives users a way to request those resources without having any knowledge of the underlying infrastructure.

Many storage types are available for use as persistent volumes in OpenShift Container Platform. While all of them can be statically provisioned by an administrator, some types of storage are created dynamically using the built-in provider and plug-in APIs.

5.5.2. Dynamic provisioning in OpenShift Container Storage

Red Hat OpenShift Container Storage is software-defined storage that is optimised for container environments. It runs as an operator on OpenShift Container Platform to provide highly integrated and simplified persistent storage management for containers.

OpenShift Container Storage supports a variety of storage types, including:

- Block storage for databases

- Shared file storage for continuous integration, messaging, and data aggregation

- Object storage for archival, backup, and media storage

Version 4.5 uses Red Hat Ceph Storage to provide the file, block, and object storage that backs persistent volumes, and Rook.io to manage and orchestrate provisioning of persistent volumes and claims. NooBaa provides object storage, and its Multicloud Object Gateway allows object federation across multiple cloud environments (available as a Technology Preview).

In OpenShift Container Storage 4.5, the Red Hat Ceph Storage Container Storage Interface (CSI) driver for RADOS Block Device (RBD) and Ceph File System (CephFS) handles the dynamic provisioning requests. When a PVC request comes in dynamically, the CSI driver has the following options:

- Create a PVC with ReadWriteOnce (RWO) and ReadWriteMany (RWX) access that is based on Ceph RBDs with volume mode Block

- Create a PVC with ReadWriteOnce (RWO) access that is based on Ceph RBDs with volume mode Filesystem

- Create a PVC with ReadWriteOnce (RWO) and ReadWriteMany (RWX) access that is based on CephFS for volume mode Filesystem

The choice of driver (RBD or CephFS) is based on the entry in the storageclass.yaml file.

5.5.3. Available dynamic provisioning plug-ins

OpenShift Container Platform provides the following provisioner plug-ins, which have generic implementations for dynamic provisioning that use the cluster’s configured provider’s API to create new storage resources:

| Storage type | Provisioner plug-in name | Notes |
|---|---|---|
| OpenStack Cinder | | |
| AWS Elastic Block Store (EBS) | | For dynamic provisioning when using multiple clusters in different zones, tag each node with |
| AWS Elastic File System (EFS) | | Dynamic provisioning is accomplished through the EFS provisioner pod and not through a provisioner plug-in. |
| Azure Disk | | |
| Azure File | | The |
| GCE Persistent Disk (gcePD) | | In multi-zone configurations, it is advisable to run one OpenShift Container Platform cluster per GCE project to avoid PVs from being created in zones where no node in the current cluster exists. |

Any chosen provisioner plug-in also requires configuration for the relevant cloud, host, or third-party provider as per the relevant documentation.

Chapter 6. Managing container storage interface (CSI) component placements

Each cluster consists of a number of dedicated nodes such as infra and storage nodes. However, an infra node with a custom taint will not be able to use OpenShift Container Storage Persistent Volume Claims (PVCs) on the node. So, if you want to use such nodes, you can set tolerations to bring up csi-plugins on the nodes. For more information, see https://access.redhat.com/solutions/4827161.

Procedure

Edit the configmap to add the toleration for the custom taint. Remember to save before exiting the editor.

$ oc edit configmap rook-ceph-operator-config -n openshift-storage

Display the configmap to check the added toleration.

$ oc get configmap rook-ceph-operator-config -n openshift-storage -o yaml

Example output of the added toleration for the taint, nodetype=infra:NoSchedule:

apiVersion: v1
data:
[...]
  CSI_PLUGIN_TOLERATIONS: |
    - effect: NoSchedule
      key: nodetype
      operator: Equal
      value: infra
    - effect: NoSchedule
      key: node.ocs.openshift.io/storage
      operator: Exists
[...]
kind: ConfigMap
metadata:
[...]

Restart the rook-ceph-operator if the csi-cephfsplugin-* and csi-rbdplugin-* pods fail to come up on their own on the infra nodes.

$ oc delete -n openshift-storage pod <name of the rook_ceph_operator pod>

Example:

$ oc delete -n openshift-storage pod rook-ceph-operator-5446f9b95b-jrn2j
pod "rook-ceph-operator-5446f9b95b-jrn2j" deleted

Verification step

Verify that the csi-cephfsplugin-* and csi-rbdplugin-* pods are running on the infra nodes.
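This verification can also be done from the command line. The following is a minimal sketch under stated assumptions: csi_plugin_pods is a hypothetical helper, and it assumes the default `oc get pods` table layout in which NAME is the first column and STATUS is the third.

```shell
# Hypothetical helper: from `oc get pods` table output on stdin, print the
# name and status of every csi-cephfsplugin-* and csi-rbdplugin-* pod.
# Assumption: column 1 is NAME and column 3 is STATUS.
csi_plugin_pods() {
  awk '$1 ~ /^csi-(cephfs|rbd)plugin-/ { print $1, $3 }'
}

# Usage against a live cluster (not executed here):
#   oc get pods -n openshift-storage --field-selector spec.nodeName=<infra_node_name> | csi_plugin_pods
```

Each infra node that carries the custom taint should list both plug-in pods with a status of Running.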

Chapter 7. Multicloud Object Gateway

7.1. About the Multicloud Object Gateway

The Multicloud Object Gateway (MCG) is a lightweight object storage service for OpenShift, allowing users to start small and then scale as needed on-premise, in multiple clusters, and with cloud-native storage.

7.2. Accessing the Multicloud Object Gateway with your applications

You can access the object service with any application targeting AWS S3 or code that uses AWS S3 Software Development Kit (SDK). Applications need to specify the MCG endpoint, an access key, and a secret access key. You can use your terminal or the MCG CLI to retrieve this information.

For information on accessing the RADOS Object Gateway S3 endpoint, see Chapter 8, Accessing the RADOS Object Gateway S3 endpoint.

Prerequisites

- A running OpenShift Container Storage Platform

- Download the MCG command-line interface for easier management:

# subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
# yum install mcg

- Alternatively, you can install the mcg package from the OpenShift Container Storage RPMs found at the Download Red Hat OpenShift Container Storage page.

You can access the relevant endpoint, access key, and secret access key in two ways:

7.2.1. Accessing the Multicloud Object Gateway from the terminal

Procedure

Run the describe command to view information about the MCG endpoint, including its access key (AWS_ACCESS_KEY_ID value) and secret access key (AWS_SECRET_ACCESS_KEY value):

# oc describe noobaa -n openshift-storage

The output will look similar to the following:

Name:         noobaa
Namespace:    openshift-storage
Labels:       <none>
Annotations:  <none>
API Version:  noobaa.io/v1alpha1
Kind:         NooBaa
Metadata:
  Creation Timestamp:  2019-07-29T16:22:06Z
  Generation:          1
  Resource Version:    6718822
  Self Link:           /apis/noobaa.io/v1alpha1/namespaces/openshift-storage/noobaas/noobaa
  UID:                 019cfb4a-b21d-11e9-9a02-06c8de012f9e
Spec:
Status:
  Accounts:
    Admin:
      Secret Ref:
        Name:       noobaa-admin
        Namespace:  openshift-storage
  Actual Image:         noobaa/noobaa-core:4.0
  Observed Generation:  1
  Phase:                Ready
  Readme:
  Welcome to NooBaa!
  -----------------
  Welcome to NooBaa!
  -----------------
  NooBaa Core Version:
  NooBaa Operator Version:
  Lets get started:
  1. Connect to Management console:
    Read your mgmt console login information (email & password) from secret: "noobaa-admin".
      kubectl get secret noobaa-admin -n openshift-storage -o json | jq '.data|map_values(@base64d)'
    Open the management console service - take External IP/DNS or Node Port or use port forwarding:
      kubectl port-forward -n openshift-storage service/noobaa-mgmt 11443:443 &
      open https://localhost:11443
  2. Test S3 client:
    kubectl port-forward -n openshift-storage service/s3 10443:443 &
    NOOBAA_ACCESS_KEY=$(kubectl get secret noobaa-admin -n openshift-storage -o json | jq -r '.data.AWS_ACCESS_KEY_ID|@base64d')
    NOOBAA_SECRET_KEY=$(kubectl get secret noobaa-admin -n openshift-storage -o json | jq -r '.data.AWS_SECRET_ACCESS_KEY|@base64d')
    alias s3='AWS_ACCESS_KEY_ID=$NOOBAA_ACCESS_KEY AWS_SECRET_ACCESS_KEY=$NOOBAA_SECRET_KEY aws --endpoint https://localhost:10443 --no-verify-ssl s3'
    s3 ls
  Services:
    Service Mgmt:
      External DNS:
        https://noobaa-mgmt-openshift-storage.apps.mycluster-cluster.qe.rh-ocs.com
        https://a3406079515be11eaa3b70683061451e-1194613580.us-east-2.elb.amazonaws.com:443
      Internal DNS:
        https://noobaa-mgmt.openshift-storage.svc:443
      Internal IP:
        https://172.30.235.12:443
      Node Ports:
        https://10.0.142.103:31385
      Pod Ports:
        https://10.131.0.19:8443
    serviceS3:
      External DNS:
        https://s3-openshift-storage.apps.mycluster-cluster.qe.rh-ocs.com
        https://a340f4e1315be11eaa3b70683061451e-943168195.us-east-2.elb.amazonaws.com:443
      Internal DNS:
        https://s3.openshift-storage.svc:443
      Internal IP:
        https://172.30.86.41:443
      Node Ports:
        https://10.0.142.103:31011
      Pod Ports:
        https://10.131.0.19:6443

The output from the oc describe noobaa command lists the internal and external DNS names that are available. When using the internal DNS, the traffic is free. The external DNS uses Load Balancing to process the traffic, and therefore has a cost per hour.
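The access key and secret access key are stored Base64-encoded in the noobaa-admin secret referenced in the output above. The following is a minimal sketch of reading and decoding them without jq. The helper name b64_decode is hypothetical, and the use of `-o jsonpath` in the usage comment is one possible way to extract a single field, not a step taken from this guide.

```shell
# Hypothetical helper: decode a Base64 string given as the first argument.
b64_decode() {
  printf '%s' "$1" | base64 --decode
}

# Usage against a live cluster (not executed here):
#   b64_decode "$(oc get secret noobaa-admin -n openshift-storage -o jsonpath='{.data.AWS_ACCESS_KEY_ID}')"
#   b64_decode "$(oc get secret noobaa-admin -n openshift-storage -o jsonpath='{.data.AWS_SECRET_ACCESS_KEY}')"
```

Treat the decoded values as credentials and avoid writing them to shell history or logs.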

7.2.2. Accessing the Multicloud Object Gateway from the MCG command-line interface

Prerequisites

Download the MCG command-line interface:

# subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
# yum install mcg

Procedure

Run the status command to access the endpoint, access key, and secret access key:

noobaa status -n openshift-storage

The output will look similar to the following:

INFO[0000] Namespace: openshift-storage
INFO[0000]
INFO[0000] CRD Status:
INFO[0003] ✅ Exists: CustomResourceDefinition "noobaas.noobaa.io"
INFO[0003] ✅ Exists: CustomResourceDefinition "backingstores.noobaa.io"
INFO[0003] ✅ Exists: CustomResourceDefinition "bucketclasses.noobaa.io"
INFO[0004] ✅ Exists: CustomResourceDefinition "objectbucketclaims.objectbucket.io"
INFO[0004] ✅ Exists: CustomResourceDefinition "objectbuckets.objectbucket.io"
INFO[0004]
INFO[0004] Operator Status:
INFO[0004] ✅ Exists: Namespace "openshift-storage"
INFO[0004] ✅ Exists: ServiceAccount "noobaa"
INFO[0005] ✅ Exists: Role "ocs-operator.v0.0.271-6g45f"
INFO[0005] ✅ Exists: RoleBinding "ocs-operator.v0.0.271-6g45f-noobaa-f9vpj"
INFO[0006] ✅ Exists: ClusterRole "ocs-operator.v0.0.271-fjhgh"
INFO[0006] ✅ Exists: ClusterRoleBinding "ocs-operator.v0.0.271-fjhgh-noobaa-pdxn5"
INFO[0006] ✅ Exists: Deployment "noobaa-operator"
INFO[0006]
INFO[0006] System Status:
INFO[0007] ✅ Exists: NooBaa "noobaa"
INFO[0007] ✅ Exists: StatefulSet "noobaa-core"
INFO[0007] ✅ Exists: Service "noobaa-mgmt"
INFO[0008] ✅ Exists: Service "s3"
INFO[0008] ✅ Exists: Secret "noobaa-server"
INFO[0008] ✅ Exists: Secret "noobaa-operator"
INFO[0008] ✅ Exists: Secret "noobaa-admin"
INFO[0009] ✅ Exists: StorageClass "openshift-storage.noobaa.io"
INFO[0009] ✅ Exists: BucketClass "noobaa-default-bucket-class"
INFO[0009] ✅ (Optional) Exists: BackingStore "noobaa-default-backing-store"
INFO[0010] ✅ (Optional) Exists: CredentialsRequest "noobaa-cloud-creds"
INFO[0010] ✅ (Optional) Exists: PrometheusRule "noobaa-prometheus-rules"
INFO[0010] ✅ (Optional) Exists: ServiceMonitor "noobaa-service-monitor"
INFO[0011] ✅ (Optional) Exists: Route "noobaa-mgmt"
INFO[0011] ✅ (Optional) Exists: Route "s3"
INFO[0011] ✅ Exists: PersistentVolumeClaim "db-noobaa-core-0"
INFO[0011] ✅ System Phase is "Ready"
INFO[0011] ✅ Exists: "noobaa-admin"

#------------------#
#- Mgmt Addresses -#
#------------------#

ExternalDNS : [https://noobaa-mgmt-openshift-storage.apps.mycluster-cluster.qe.rh-ocs.com https://a3406079515be11eaa3b70683061451e-1194613580.us-east-2.elb.amazonaws.com:443]
ExternalIP  : []
NodePorts   : [https://10.0.142.103:31385]
InternalDNS : [https://noobaa-mgmt.openshift-storage.svc:443]
InternalIP  : [https://172.30.235.12:443]
PodPorts    : [https://10.131.0.19:8443]

#--------------------#
#- Mgmt Credentials -#
#--------------------#

email    : admin@noobaa.io
password : HKLbH1rSuVU0I/souIkSiA==

#----------------#
#- S3 Addresses -#
#----------------#

ExternalDNS : [https://s3-openshift-storage.apps.mycluster-cluster.qe.rh-ocs.com https://a340f4e1315be11eaa3b70683061451e-943168195.us-east-2.elb.amazonaws.com:443]
ExternalIP  : []
NodePorts   : [https://10.0.142.103:31011]
InternalDNS : [https://s3.openshift-storage.svc:443]
InternalIP  : [https://172.30.86.41:443]
PodPorts    : [https://10.131.0.19:6443]

#------------------#
#- S3 Credentials -#
#------------------#

AWS_ACCESS_KEY_ID     : jVmAsu9FsvRHYmfjTiHV
AWS_SECRET_ACCESS_KEY : E//420VNedJfATvVSmDz6FMtsSAzuBv6z180PT5c

#------------------#
#- Backing Stores -#
#------------------#

NAME                           TYPE     TARGET-BUCKET                                               PHASE   AGE
noobaa-default-backing-store   aws-s3   noobaa-backing-store-15dc896d-7fe0-4bed-9349-5942211b93c9   Ready   141h35m32s

#------------------#
#- Bucket Classes -#
#------------------#

NAME                          PLACEMENT                                                             PHASE   AGE
noobaa-default-bucket-class   {Tiers:[{Placement: BackingStores:[noobaa-default-backing-store]}]}   Ready   141h35m33s

#-----------------#
#- Bucket Claims -#
#-----------------#

No OBC's found.

You now have the relevant endpoint, access key, and secret access key in order to connect to your applications.

Example 7.1. Example

If AWS S3 CLI is the application, the following command will list buckets in OCS:

AWS_ACCESS_KEY_ID=<AWS_ACCESS_KEY_ID> AWS_SECRET_ACCESS_KEY=<AWS_SECRET_ACCESS_KEY> aws --endpoint <ENDPOINT> --no-verify-ssl s3 ls

7.3. Allowing user access to the Multicloud Object Gateway Console

To allow access to the Multicloud Object Gateway Console to a user, ensure that the user meets the following conditions:

- User is in cluster-admins group.

- User is in system:cluster-admins virtual group.

Prerequisites

- A running OpenShift Container Storage Platform.

Procedure

Enable access to the Multicloud Object Gateway console.

Perform the following steps once on the cluster:

Create a cluster-admins group.

# oc adm groups new cluster-admins

Bind the group to the cluster-admin role.

# oc adm policy add-cluster-role-to-group cluster-admin cluster-admins

Add or remove users from the cluster-admins group to control access to the Multicloud Object Gateway console.

To add a set of users to the cluster-admins group:

# oc adm groups add-users cluster-admins <user-name> <user-name> <user-name>...

where <user-name> is the name of the user to be added.

Note: If you are adding a set of users to the cluster-admins group, you do not need to bind the newly added users to the cluster-admin role to allow access to the OpenShift Container Storage dashboard.

To remove a set of users from the cluster-admins group:

# oc adm groups remove-users cluster-admins <user-name> <user-name> <user-name>...

where <user-name> is the name of the user to be removed.

Verification steps

- On the OpenShift Web Console, log in as a user with access permission to Multicloud Object Gateway Console.

- Navigate to Home → Overview → Persistent Storage tab → select the noobaa link.

- On the Multicloud Object Gateway Console, log in as the same user with access permission.

- Click Allow selected permissions.

7.4. Adding storage resources for hybrid or Multicloud

7.4.1. Creating a new backing store

Use this procedure to create a new backing store in OpenShift Container Storage.

Prerequisites

- Administrator access to OpenShift.

Procedure

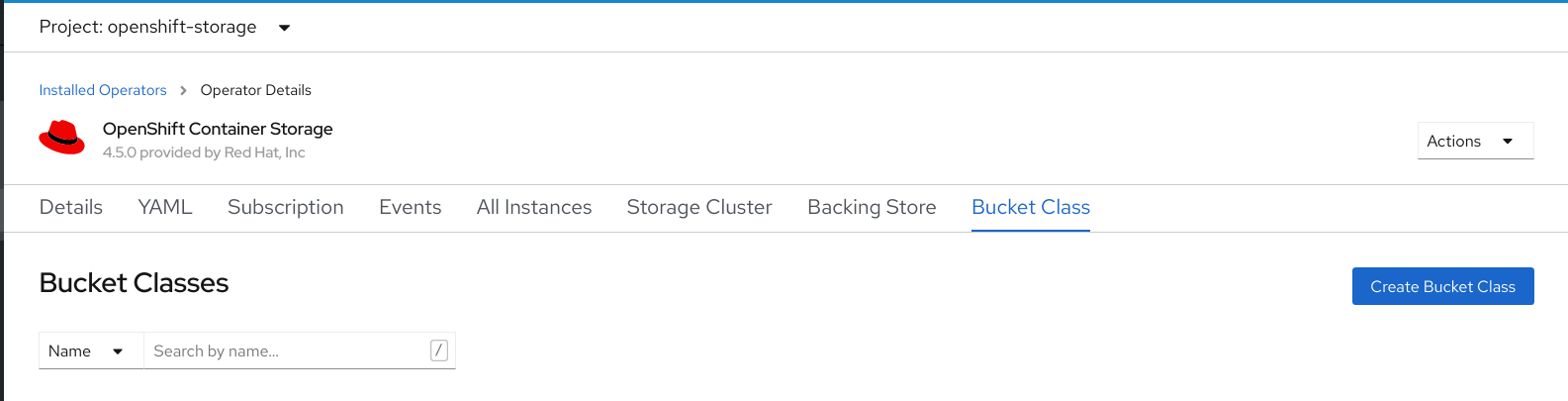

- Click Operators → Installed Operators from the left pane of the OpenShift Web Console to view the installed operators.

- Click OpenShift Container Storage Operator.

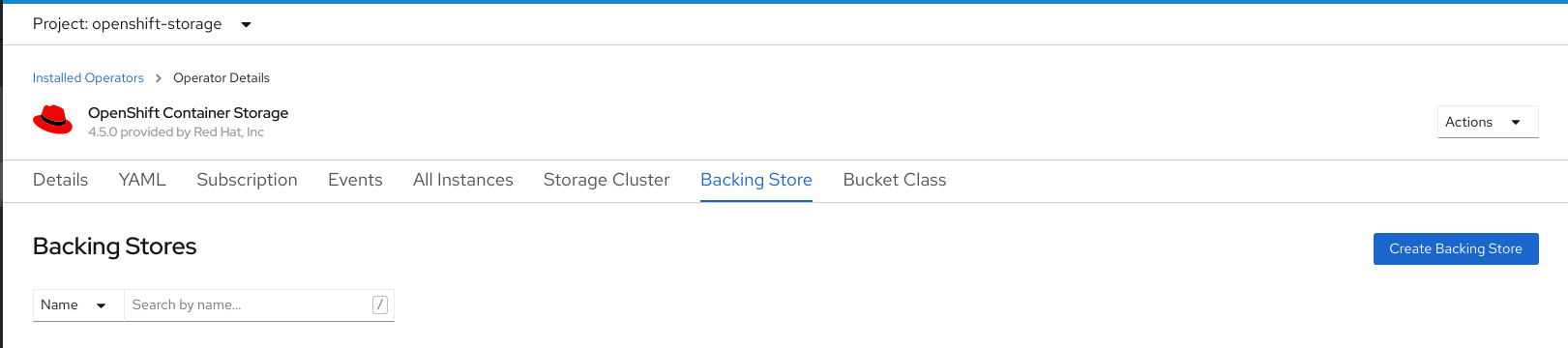

On the OpenShift Container Storage Operator page, scroll right and click the Backing Store tab.

Figure 7.1. OpenShift Container Storage Operator page with backing store tab

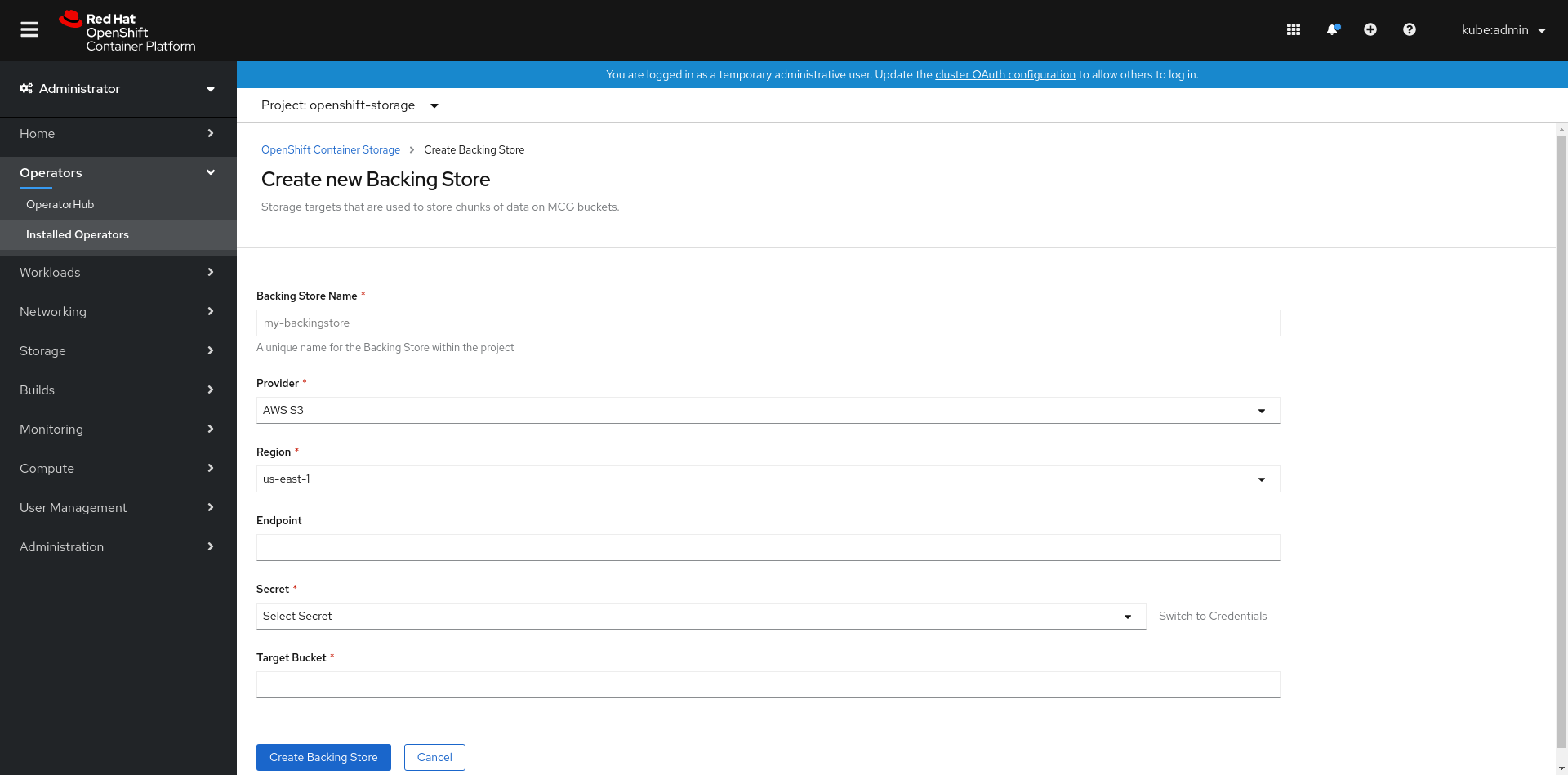

Click Create Backing Store.

Figure 7.2. Create Backing Store page

On the Create New Backing Store page, perform the following:

- Enter a Backing Store Name.

- Select a Provider.

- Select a Region.

- Enter an Endpoint. This is optional.

Select a Secret from the drop-down list, or create your own secret. Optionally, you can Switch to Credentials view, which lets you fill in the required secrets.

For more information on creating an OCP secret, see the section Creating the secret in the OpenShift Container Platform documentation.

Each backingstore requires a different secret. For more information on creating the secret for a particular backingstore, see Section 7.4.2, “Adding storage resources for hybrid or Multicloud using the MCG command line interface” and follow the procedure for the addition of storage resources using a YAML.

Note: This menu is relevant for all providers except Google Cloud and local PVC.

- Enter Target bucket. The target bucket is a container storage that is hosted on the remote cloud service. It allows you to create a connection that tells MCG that it can use this bucket for the system.

- Click Create Backing Store.

Verification steps

- Click Operators → Installed Operators.

- Click OpenShift Container Storage Operator.

- Search for the new backing store or click Backing Store tab to view all the backing stores.

7.4.2. Adding storage resources for hybrid or Multicloud using the MCG command line interface

The Multicloud Object Gateway (MCG) simplifies the process of spanning data across cloud providers and clusters.

You must add a backing storage that can be used by the MCG.

Depending on the type of your deployment, you can choose one of the following procedures to create a backing storage:

- For creating an AWS-backed backingstore, see Section 7.4.2.1, “Creating an AWS-backed backingstore”

- For creating an IBM COS-backed backingstore, see Section 7.4.2.2, “Creating an IBM COS-backed backingstore”

- For creating an Azure-backed backingstore, see Section 7.4.2.3, “Creating an Azure-backed backingstore”

- For creating a GCP-backed backingstore, see Section 7.4.2.4, “Creating a GCP-backed backingstore”

- For creating a local Persistent Volume-backed backingstore, see Section 7.4.2.5, “Creating a local Persistent Volume-backed backingstore”

For VMware deployments, skip to Section 7.4.3, “Creating an s3 compatible Multicloud Object Gateway backingstore” for further instructions.

7.4.2.1. Creating an AWS-backed backingstore

Prerequisites

- Download the Multicloud Object Gateway (MCG) command-line interface:

# subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
# yum install mcg

- Alternatively, you can install the mcg package from the OpenShift Container Storage RPMs found here: https://access.redhat.com/downloads/content/547/ver=4/rhel---8/4/x86_64/packages

Procedure

From the MCG command-line interface, run the following command:

noobaa backingstore create aws-s3 <backingstore_name> --access-key=<AWS ACCESS KEY> --secret-key=<AWS SECRET ACCESS KEY> --target-bucket <bucket-name>

- Replace <backingstore_name> with the name of the backingstore.

- Replace <AWS ACCESS KEY> and <AWS SECRET ACCESS KEY> with an AWS access key ID and secret access key you created for this purpose.

- Replace <bucket-name> with an existing AWS bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

The output will be similar to the following:

INFO[0001] ✅ Exists: NooBaa "noobaa"
INFO[0002] ✅ Created: BackingStore "aws-resource"
INFO[0002] ✅ Created: Secret "backing-store-secret-aws-resource"

You can also add storage resources using a YAML:

Create a secret with the credentials:

apiVersion: v1
kind: Secret
metadata:
  name: <backingstore-secret-name>
type: Opaque
data:
  AWS_ACCESS_KEY_ID: <AWS ACCESS KEY ID ENCODED IN BASE64>
  AWS_SECRET_ACCESS_KEY: <AWS SECRET ACCESS KEY ENCODED IN BASE64>

- You must supply and encode your own AWS access key ID and secret access key using Base64, and use the results in place of <AWS ACCESS KEY ID ENCODED IN BASE64> and <AWS SECRET ACCESS KEY ENCODED IN BASE64>.

- Replace <backingstore-secret-name> with a unique name.
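The Base64 encoding step for the secret can be scripted. The following is a minimal sketch under stated assumptions: b64_encode and render_aws_secret are hypothetical helpers, and the sketch only prints the manifest so that it can be reviewed before it is applied. Note that printf is used instead of echo so that no trailing newline is encoded into the credential.

```shell
# Hypothetical helper: Base64-encode the first argument on a single line.
# tr removes the line wrapping that some base64 implementations add.
b64_encode() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical helper: print a Secret manifest for an AWS-backed backingstore.
#   $1 = secret name, $2 = AWS access key ID, $3 = AWS secret access key
render_aws_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
type: Opaque
data:
  AWS_ACCESS_KEY_ID: $(b64_encode "$2")
  AWS_SECRET_ACCESS_KEY: $(b64_encode "$3")
EOF
}

# Usage against a live cluster (not executed here):
#   render_aws_secret <backingstore-secret-name> "<AWS ACCESS KEY ID>" "<AWS SECRET ACCESS KEY>" | oc apply -n openshift-storage -f -
```

The same pattern applies to the other providers by changing the key names under data: to the ones shown in their sections.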

Apply the following YAML for a specific backing store:

apiVersion: noobaa.io/v1alpha1
kind: BackingStore
metadata:
  finalizers:
  - noobaa.io/finalizer
  labels:
    app: noobaa
  name: bs
  namespace: openshift-storage
spec:
  awsS3:
    secret:
      name: <backingstore-secret-name>
      namespace: noobaa
    targetBucket: <bucket-name>
  type: aws-s3

- Replace <bucket-name> with an existing AWS bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

- Replace <backingstore-secret-name> with the name of the secret created in the previous step.

7.4.2.2. Creating an IBM COS-backed backingstore

Prerequisites

- Download the Multicloud Object Gateway (MCG) command-line interface:

# subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
# yum install mcg

- Alternatively, you can install the mcg package from the OpenShift Container Storage RPMs found here: https://access.redhat.com/downloads/content/547/ver=4/rhel---8/4/x86_64/packages

Procedure

From the MCG command-line interface, run the following command:

noobaa backingstore create ibm-cos <backingstore_name> --access-key=<IBM ACCESS KEY> --secret-key=<IBM SECRET ACCESS KEY> --endpoint=<IBM COS ENDPOINT> --target-bucket <bucket-name>

- Replace <backingstore_name> with the name of the backingstore.

- Replace <IBM ACCESS KEY>, <IBM SECRET ACCESS KEY>, and <IBM COS ENDPOINT> with an IBM access key ID, secret access key, and the appropriate regional endpoint that corresponds to the location of the existing IBM bucket.

To generate the above keys on IBM cloud, you must include HMAC credentials while creating the service credentials for your target bucket.

- Replace <bucket-name> with an existing IBM bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

The output will be similar to the following:

INFO[0001] ✅ Exists: NooBaa "noobaa"
INFO[0002] ✅ Created: BackingStore "ibm-resource"
INFO[0002] ✅ Created: Secret "backing-store-secret-ibm-resource"

You can also add storage resources using a YAML:

Create a secret with the credentials:

apiVersion: v1
kind: Secret
metadata:
  name: <backingstore-secret-name>
type: Opaque
data:
  IBM_COS_ACCESS_KEY_ID: <IBM COS ACCESS KEY ID ENCODED IN BASE64>
  IBM_COS_SECRET_ACCESS_KEY: <IBM COS SECRET ACCESS KEY ENCODED IN BASE64>

- You must supply and encode your own IBM COS access key ID and secret access key using Base64, and use the results in place of <IBM COS ACCESS KEY ID ENCODED IN BASE64> and <IBM COS SECRET ACCESS KEY ENCODED IN BASE64>.

- Replace <backingstore-secret-name> with a unique name.

Apply the following YAML for a specific backing store:

apiVersion: noobaa.io/v1alpha1
kind: BackingStore
metadata:
  finalizers:
  - noobaa.io/finalizer
  labels:
    app: noobaa
  name: bs
  namespace: openshift-storage
spec:
  ibmCos:
    endpoint: <endpoint>
    secret:
      name: <backingstore-secret-name>
      namespace: openshift-storage
    targetBucket: <bucket-name>
  type: ibm-cos

- Replace <bucket-name> with an existing IBM COS bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

- Replace <endpoint> with a regional endpoint that corresponds to the location of the existing IBM bucket name. This argument tells Multicloud Object Gateway which endpoint to use for its backing store, and subsequently, data storage and administration.

- Replace <backingstore-secret-name> with the name of the secret created in the previous step.

7.4.2.3. Creating an Azure-backed backingstore

Prerequisites

Download the Multicloud Object Gateway (MCG) command-line interface:

    # subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
    # yum install mcg

- Alternatively, you can install the mcg package from the OpenShift Container Storage RPMs found here https://access.redhat.com/downloads/content/547/ver=4/rhel---8/4/x86_64/packages

Procedure

From the MCG command-line interface, run the following command:

noobaa backingstore create azure-blob <backingstore_name> --account-key=<AZURE ACCOUNT KEY> --account-name=<AZURE ACCOUNT NAME> --target-blob-container <blob container name>

- Replace <backingstore_name> with the name of the backingstore.

- Replace <AZURE ACCOUNT KEY> and <AZURE ACCOUNT NAME> with an Azure account key and account name you created for this purpose.

- Replace <blob container name> with an existing Azure blob container name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

The output will be similar to the following:

    INFO[0001] ✅ Exists: NooBaa "noobaa"
    INFO[0002] ✅ Created: BackingStore "azure-resource"
    INFO[0002] ✅ Created: Secret "backing-store-secret-azure-resource"

You can also add storage resources using a YAML:

Create a secret with the credentials:

    apiVersion: v1
    kind: Secret
    metadata:
      name: <backingstore-secret-name>
    type: Opaque
    data:
      AccountName: <AZURE ACCOUNT NAME ENCODED IN BASE64>
      AccountKey: <AZURE ACCOUNT KEY ENCODED IN BASE64>

- You must supply and encode your own Azure Account Name and Account Key using Base64, and use the results in place of <AZURE ACCOUNT NAME ENCODED IN BASE64> and <AZURE ACCOUNT KEY ENCODED IN BASE64>.

- Replace <backingstore-secret-name> with a unique name.
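One way to avoid copying encoded values by hand is to have the shell write the secret manifest with the Base64 values already filled in. The following is a sketch under stated assumptions: the account name, account key, secret name, and output file name are all hypothetical placeholders, and encode is a small helper defined here, not an MCG or OpenShift command.

```shell
# Hypothetical placeholder values; substitute your own Azure account name and key.
account_name='examplestorageaccount'
account_key='EXAMPLE_ACCOUNT_KEY'
secret_name='azure-backingstore-secret'   # illustrative secret name

# Helper: Base64-encode a string without a trailing newline or line wrapping.
encode() { printf '%s' "$1" | base64 | tr -d '\n'; }

# Write the Secret manifest with the encoded values substituted in.
cat > azure-backingstore-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${secret_name}
type: Opaque
data:
  AccountName: $(encode "$account_name")
  AccountKey: $(encode "$account_key")
EOF

cat azure-backingstore-secret.yaml
```

The generated file could then be created in the cluster, for example with oc apply -f azure-backingstore-secret.yaml -n openshift-storage, assuming the secret belongs in the openshift-storage namespace that the backing store YAML references.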

Apply the following YAML for a specific backing store:

    apiVersion: noobaa.io/v1alpha1
    kind: BackingStore
    metadata:
      finalizers:
      - noobaa.io/finalizer
      labels:
        app: noobaa
      name: bs
      namespace: openshift-storage
    spec:
      azureBlob:
        secret:
          name: <backingstore-secret-name>
          namespace: openshift-storage
        targetBlobContainer: <blob-container-name>
      type: azure-blob

- Replace <blob-container-name> with an existing Azure blob container name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

- Replace <backingstore-secret-name> with the name of the secret created in the previous step.

7.4.2.4. Creating a GCP-backed backingstore

Prerequisites

Download the Multicloud Object Gateway (MCG) command-line interface:

    # subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
    # yum install mcg

- Alternatively, you can install the mcg package from the OpenShift Container Storage RPMs found here https://access.redhat.com/downloads/content/547/ver=4/rhel---8/4/x86_64/packages

Procedure

From the MCG command-line interface, run the following command:

noobaa backingstore create google-cloud-storage <backingstore_name> --private-key-json-file=<PATH TO GCP PRIVATE KEY JSON FILE> --target-bucket <GCP bucket name>

- Replace <backingstore_name> with the name of the backingstore.

- Replace <PATH TO GCP PRIVATE KEY JSON FILE> with a path to your GCP private key created for this purpose.

- Replace <GCP bucket name> with an existing GCP object storage bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

The output will be similar to the following:

    INFO[0001] ✅ Exists: NooBaa "noobaa"
    INFO[0002] ✅ Created: BackingStore "google-gcp"
    INFO[0002] ✅ Created: Secret "backing-store-google-cloud-storage-gcp"

You can also add storage resources using a YAML:

Create a secret with the credentials:

    apiVersion: v1
    kind: Secret
    metadata:
      name: <backingstore-secret-name>
    type: Opaque
    data:
      GoogleServiceAccountPrivateKeyJson: <GCP PRIVATE KEY ENCODED IN BASE64>

- You must supply and encode your own GCP service account private key using Base64, and use the results in place of <GCP PRIVATE KEY ENCODED IN BASE64>.

- Replace <backingstore-secret-name> with a unique name.
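Because the GCP private key is a multi-line JSON file rather than a short string, the whole file has to be encoded into a single unwrapped Base64 value. A minimal sketch of that step follows. The key file created here is a made-up stand-in with hypothetical contents, not a real service account key, and the file and variable names are illustrative.

```shell
# Hypothetical stand-in for a downloaded service account key file.
key_file='gcp-key-example.json'
printf '%s\n' '{"type": "service_account", "project_id": "example-project"}' > "$key_file"

# Encode the whole file and strip any line wrapping so the value fits on one YAML line.
encoded_key=$(base64 < "$key_file" | tr -d '\n')

# Print the line in the form used by the data section of the secret.
echo "GoogleServiceAccountPrivateKeyJson: $encoded_key"
```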

Apply the following YAML for a specific backing store:

    apiVersion: noobaa.io/v1alpha1
    kind: BackingStore
    metadata:
      finalizers:
      - noobaa.io/finalizer
      labels:
        app: noobaa
      name: bs
      namespace: openshift-storage
    spec:
      googleCloudStorage:
        secret:
          name: <backingstore-secret-name>
          namespace: openshift-storage
        targetBucket: <target bucket>
      type: google-cloud-storage

- Replace <target bucket> with an existing Google storage bucket. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

- Replace <backingstore-secret-name> with the name of the secret created in the previous step.

7.4.2.5. Creating a local Persistent Volume-backed backingstore

Prerequisites

Download the Multicloud Object Gateway (MCG) command-line interface:

    # subscription-manager repos --enable=rh-ocs-4-for-rhel-8-x86_64-rpms
    # yum install mcg

- Alternatively, you can install the mcg package from the OpenShift Container Storage RPMs found here https://access.redhat.com/downloads/content/547/ver=4/rhel---8/4/x86_64/packages

Procedure

From the MCG command-line interface, run the following command:

noobaa backingstore create pv-pool <backingstore_name> --num-volumes=<NUMBER OF VOLUMES> --pv-size-gb=<VOLUME SIZE> --storage-class=<LOCAL STORAGE CLASS>

- Replace <backingstore_name> with the name of the backingstore.

- Replace <NUMBER OF VOLUMES> with the number of volumes you would like to create.

- Replace <VOLUME SIZE> with the required size, in GB, of each volume.

- Replace <LOCAL STORAGE CLASS> with the local storage class. Using ocs-storagecluster-ceph-rbd is recommended.

The output will be similar to the following:

    INFO[0001] ✅ Exists: NooBaa "noobaa"
    INFO[0002] ✅ Exists: BackingStore "local-mcg-storage"

You can also add storage resources using a YAML:

Apply the following YAML for a specific backing store:

    apiVersion: noobaa.io/v1alpha1
    kind: BackingStore
    metadata:
      finalizers:
      - noobaa.io/finalizer
      labels:
        app: noobaa
      name: <backingstore_name>
      namespace: openshift-storage
    spec:
      pvPool:
        numVolumes: <NUMBER OF VOLUMES>
        resources:
          requests:
            storage: <VOLUME SIZE>
        storageClass: <LOCAL STORAGE CLASS>
      type: pv-pool

- Replace <backingstore_name> with the name of the backingstore.

- Replace <NUMBER OF VOLUMES> with the number of volumes you would like to create.

- Replace <VOLUME SIZE> with the required size, in GB, of each volume. Note that the letter G should remain.

- Replace <LOCAL STORAGE CLASS> with the local storage class. Using ocs-storagecluster-ceph-rbd is recommended.
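When choosing the number of volumes and the volume size, it can help to check how much storage the pool will request in total. The following sketch assumes the total requested capacity is simply the number of volumes multiplied by the size of each volume; the usable capacity may be lower. The values 3 and 50 are illustrative only, and the variable names are not MCG parameters.

```shell
# Illustrative values only; choose numbers that fit your cluster.
num_volumes=3
pv_size_gb=50

# Total capacity requested from the storage class across all volumes.
total_gb=$((num_volumes * pv_size_gb))

# Value for the storage field in the YAML form, keeping the G unit suffix.
storage_request="${pv_size_gb}G"

echo "volumes=${num_volumes} size_each=${storage_request} total_requested=${total_gb}G"
# prints: volumes=3 size_each=50G total_requested=150G
```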

7.4.3. Creating an S3 compatible Multicloud Object Gateway backingstore

The Multicloud Object Gateway can use any S3 compatible object storage as a backing store, for example, Red Hat Ceph Storage’s RADOS Gateway (RGW). The following procedure shows how to create an S3 compatible Multicloud Object Gateway backing store for Red Hat Ceph Storage’s RADOS Gateway. Note that when RGW is deployed, the OpenShift Container Storage operator automatically creates an S3 compatible backingstore for Multicloud Object Gateway.

Procedure

From the Multicloud Object Gateway (MCG) command-line interface, run the following NooBaa command:

noobaa backingstore create s3-compatible rgw-resource --access-key=<RGW ACCESS KEY> --secret-key=<RGW SECRET KEY> --target-bucket=<bucket-name> --endpoint=http://rook-ceph-rgw-ocs-storagecluster-cephobjectstore.openshift-storage.svc.cluster.local:80

- To get the <RGW ACCESS KEY> and <RGW SECRET KEY>, run the following command using your RGW user secret name:

      oc get secret <RGW USER SECRET NAME> -o yaml

- Decode the access key ID and the access key from Base64 and keep them.

- Replace <RGW ACCESS KEY> and <RGW SECRET KEY> with the appropriate, decoded data from the previous step.

- Replace <bucket-name> with an existing RGW bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

The output will be similar to the following:

    INFO[0001] ✅ Exists: NooBaa "noobaa"
    INFO[0002] ✅ Created: BackingStore "rgw-resource"
    INFO[0002] ✅ Created: Secret "backing-store-secret-rgw-resource"
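The decoding step for the RGW credentials can be sketched as follows. This is an illustration only: the two encoded values are generated here from made-up strings so that the example runs without a cluster, whereas in practice they would be copied from the data section of the oc get secret output. The variable names are hypothetical.

```shell
# Hypothetical Base64 values standing in for the data fields of the RGW user secret.
encoded_access_key=$(printf '%s' 'EXAMPLE_RGW_ACCESS_KEY' | base64 | tr -d '\n')
encoded_secret_key=$(printf '%s' 'EXAMPLE_RGW_SECRET_KEY' | base64 | tr -d '\n')

# Decode each value before passing it to --access-key and --secret-key.
rgw_access_key=$(printf '%s' "$encoded_access_key" | base64 -d)
rgw_secret_key=$(printf '%s' "$encoded_secret_key" | base64 -d)

echo "access key: $rgw_access_key"
echo "secret key: $rgw_secret_key"
```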

You can also create the backingstore using a YAML:

Create a CephObjectStore user. This also creates a secret containing the RGW credentials:

    apiVersion: ceph.rook.io/v1
    kind: CephObjectStoreUser
    metadata:
      name: <RGW-Username>
      namespace: openshift-storage
    spec:
      store: ocs-storagecluster-cephobjectstore
      displayName: "<Display-name>"

- Replace <RGW-Username> and <Display-name> with a unique username and display name.

Apply the following YAML for an S3-Compatible backing store:

    apiVersion: noobaa.io/v1alpha1
    kind: BackingStore
    metadata:
      finalizers:
      - noobaa.io/finalizer
      labels:
        app: noobaa
      name: <backingstore-name>
      namespace: openshift-storage
    spec:
      s3Compatible:
        endpoint: http://rook-ceph-rgw-ocs-storagecluster-cephobjectstore.openshift-storage.svc.cluster.local:80
        secret:
          name: <backingstore-secret-name>
          namespace: openshift-storage
        signatureVersion: v4
        targetBucket: <RGW-bucket-name>
      type: s3-compatible

- Replace <backingstore-name> with the name of the backingstore.

- Replace <backingstore-secret-name> with the name of the secret that was created with CephObjectStore in the previous step.

- Replace <RGW-bucket-name> with an existing RGW bucket name. This argument tells Multicloud Object Gateway which bucket to use as a target bucket for its backing store, and subsequently, data storage and administration.

7.4.4. Adding storage resources for hybrid and Multicloud using the user interface

Procedure

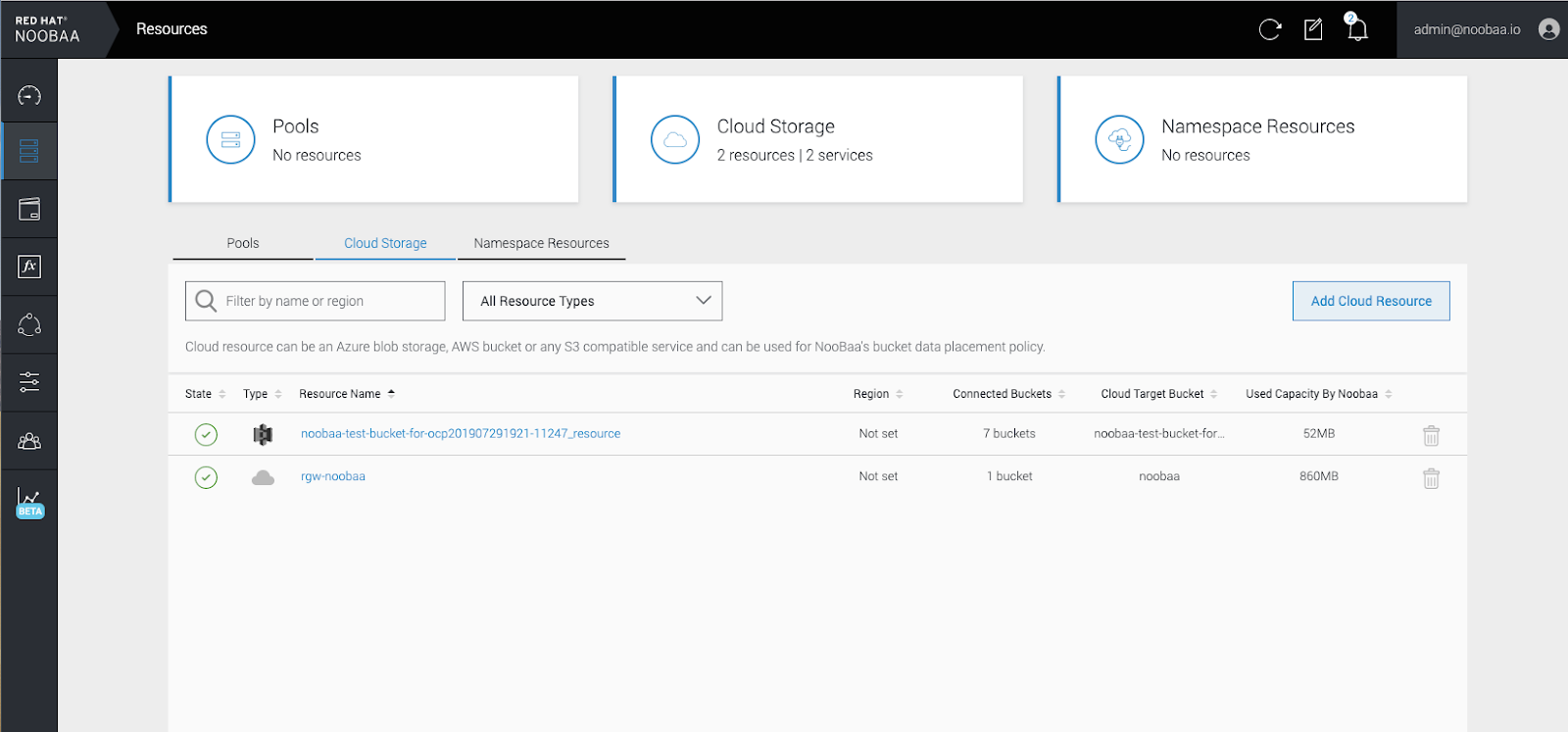

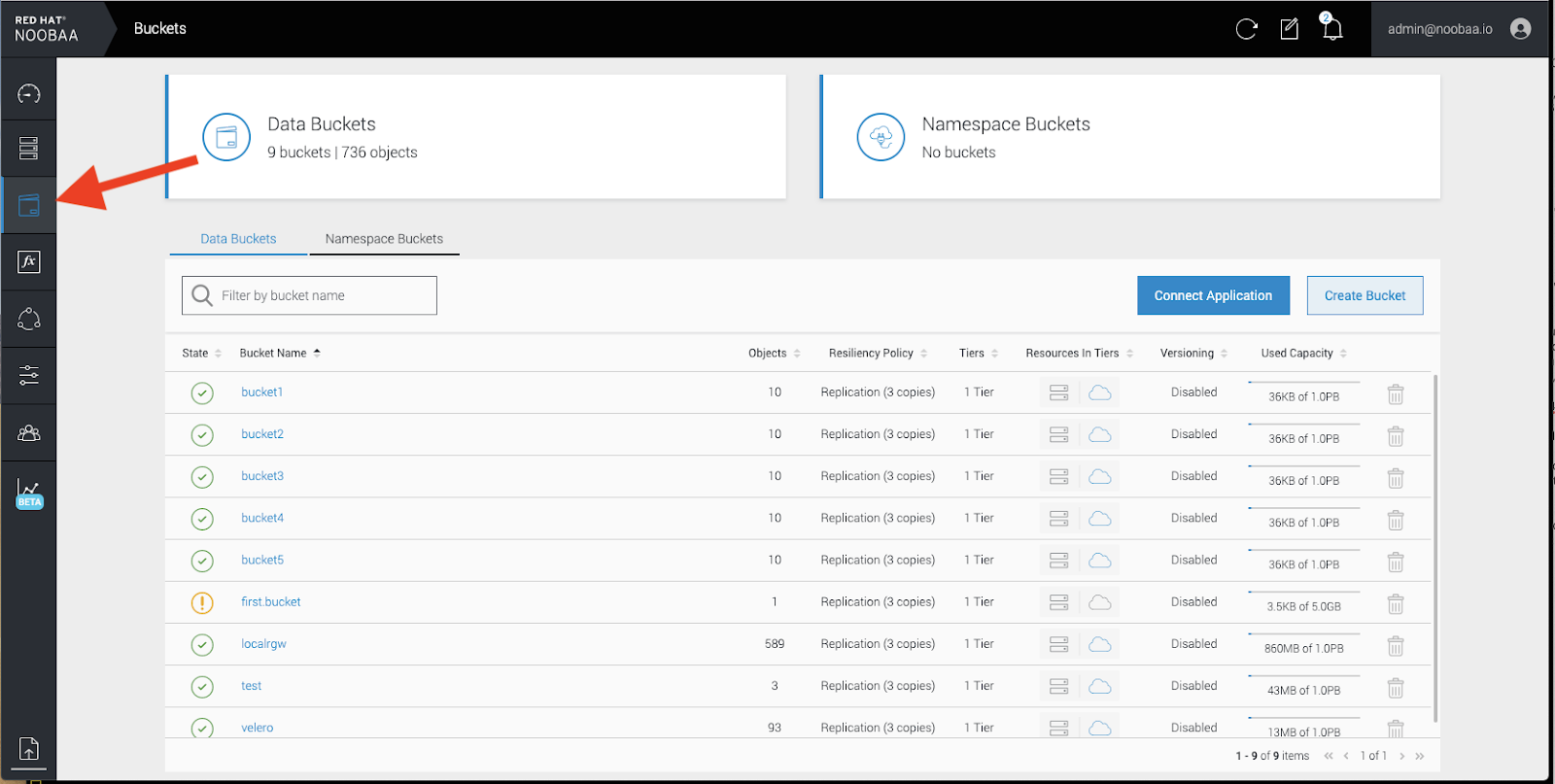

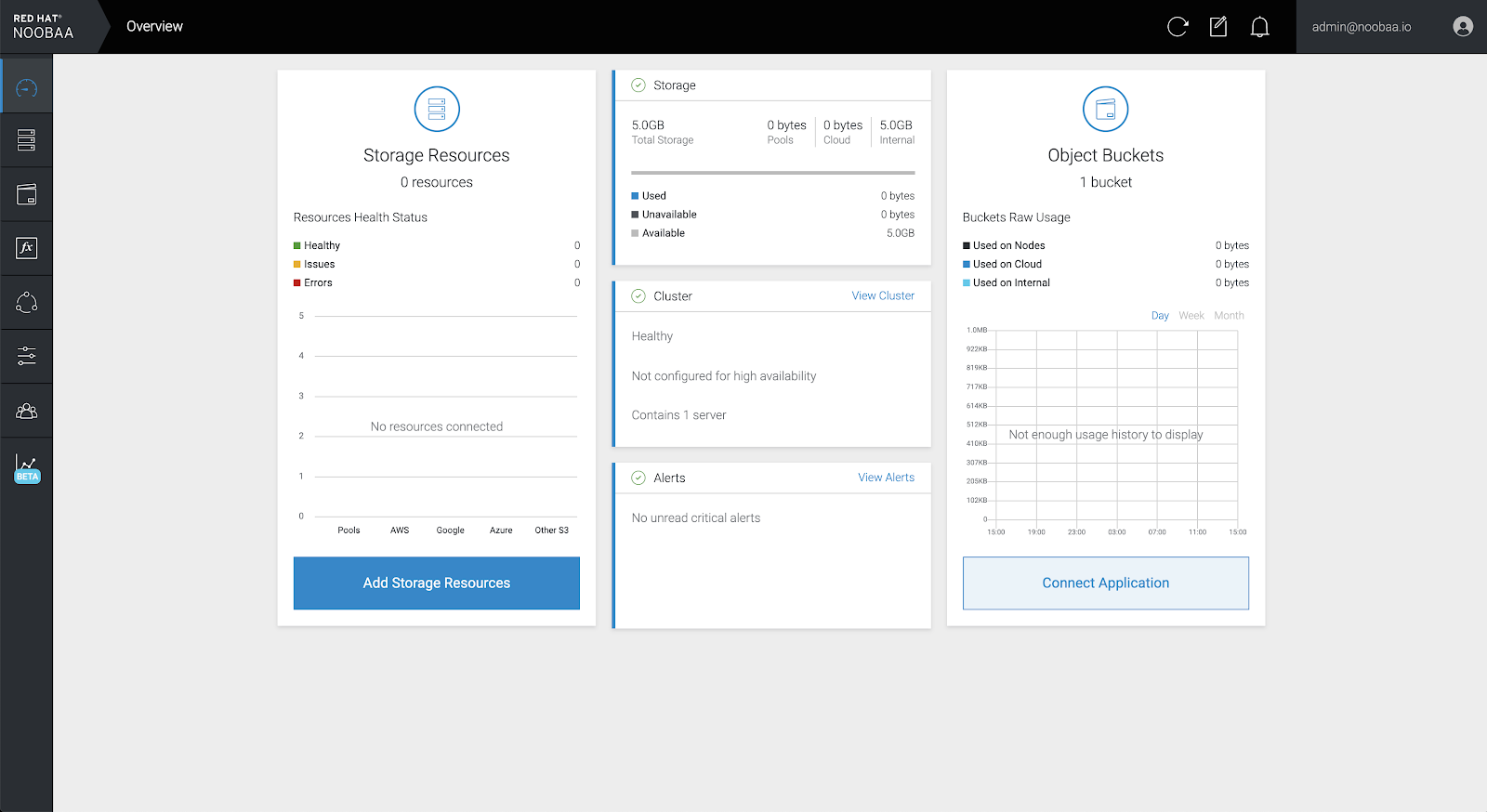

In your OpenShift Storage console, navigate to Overview → Object Service → select the noobaa link:

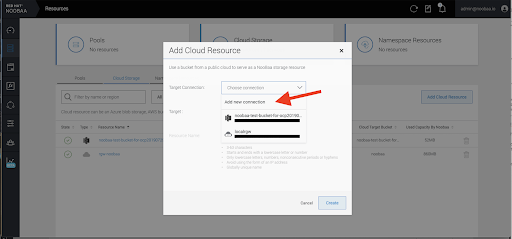

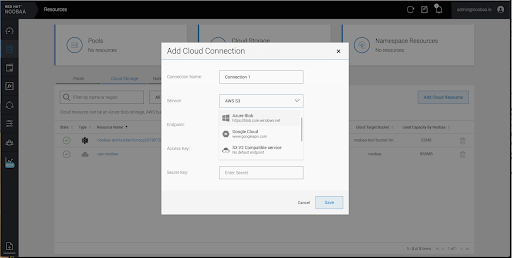

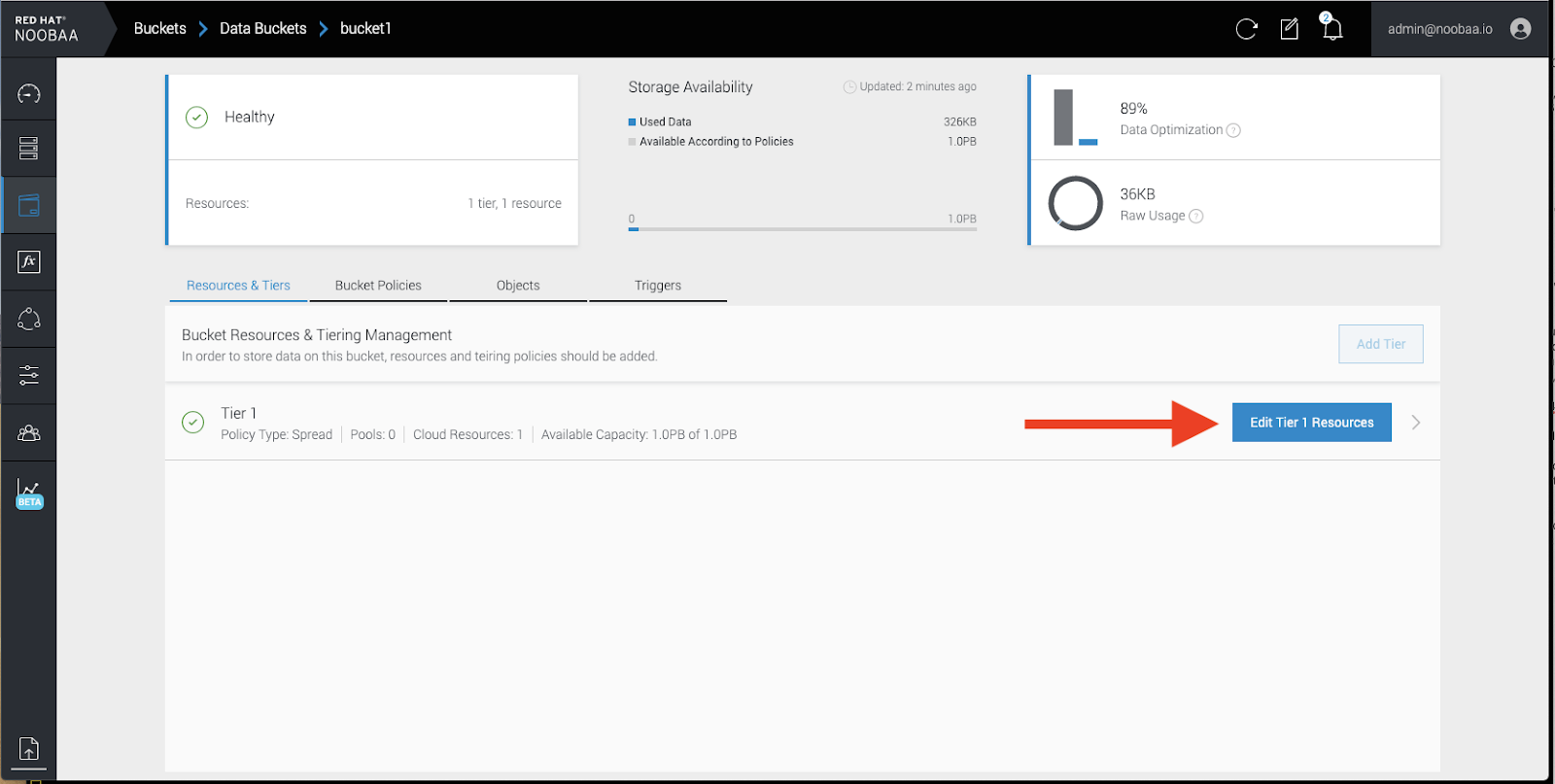

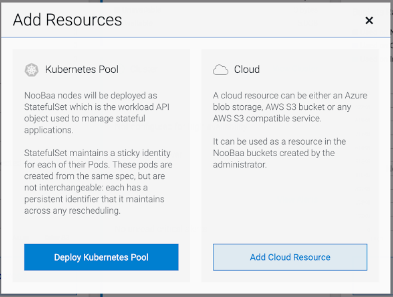

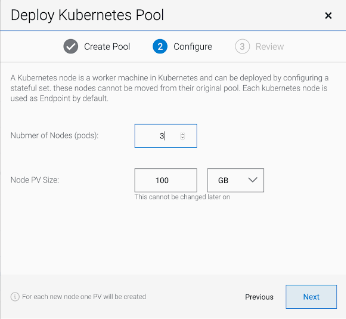

Select the Resources tab on the left, highlighted below. From the list that populates, select Add Cloud Resource:

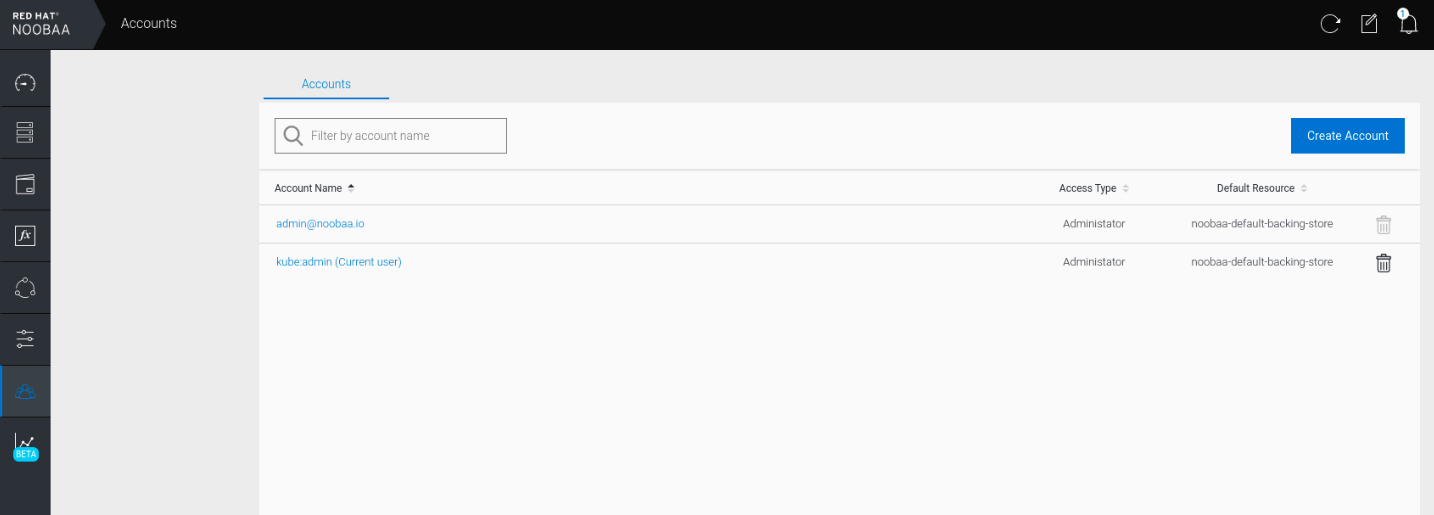

Select Add new connection:

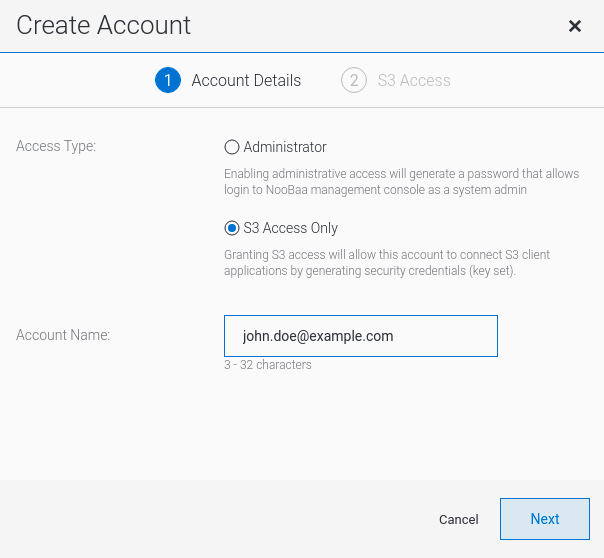

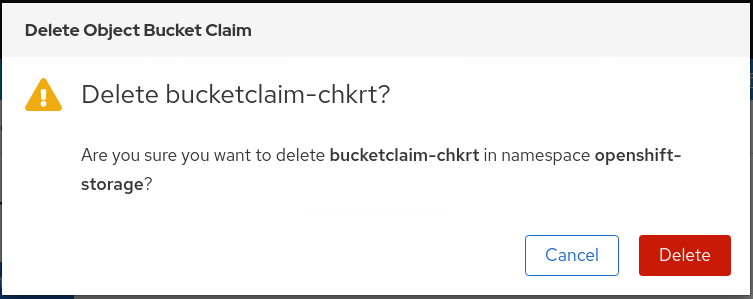

Select the relevant native cloud provider or S3 compatible option and fill in the details: