Configuring and Running JBoss Fuse

Managing the runtime container

Red Hat

Copyright © 2011-2020 Red Hat, Inc. and/or its affiliates.

Abstract

Chapter 1. Configuring the Initial Features in a Standalone Container

Abstract

Overview

On initial start-up, the container reads the etc/org.apache.karaf.features.cfg file to discover the feature URLs (feature repository locations) and to determine which features to load. By default, Red Hat JBoss Fuse loads a large number of features and you may not need all of them. You may also decide you need features that are not included in the default configuration.

The settings in etc/org.apache.karaf.features.cfg are only used the first time the container is started. On subsequent start-ups, the container uses the contents of the InstallDir/data directory to determine what to load. If you need to adjust the features loaded into a container, you can delete the data directory, but this will also destroy any state or persistence information stored by the container.
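Because deleting the data directory is destructive, it can be safer to move it aside instead. The following is a minimal sketch, not part of JBoss Fuse: the function name reset_container_state and the data.bak.TIMESTAMP naming are assumptions made for illustration, and the container is assumed to be stopped before the function is called.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with JBoss Fuse): move InstallDir/data
# aside so that the container re-reads etc/org.apache.karaf.features.cfg
# on its next start. Moving instead of deleting keeps the old state
# recoverable. Assumes the container is not running.
reset_container_state() {
    install_dir="$1"
    backup="$install_dir/data.bak.$(date +%Y%m%d%H%M%S)"
    if [ -d "$install_dir/data" ]; then
        # Print the backup location so that the caller can restore it later.
        mv "$install_dir/data" "$backup" && echo "$backup"
    else
        echo "no data directory under $install_dir" >&2
        return 1
    fi
}
```

For example, `reset_container_state InstallDir` prints the path of the saved copy. Moving that directory back to InstallDir/data while the container is stopped should restore the previous state.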

Modifying the default installed features

Modifying the default set of feature URLs

Chapter 2. Installing Red Hat JBoss Fuse as a Service

Abstract

2.1. Overview

bin/contrib directory.

2.1.1. Running Fuse as a Service

- the init script

- the init configuration file

2.1.2. Customizing karaf-service.sh Utility

Table 2.1.

| Command Line Option | Environment Variable | Description |
|---|---|---|
| -k | KARAF_SERVICE_PATH | Karaf installation path |
| -d | KARAF_SERVICE_DATA | Karaf data path (defaults to \${KARAF_SERVICE_PATH}/data) |
| -c | KARAF_SERVICE_CONF | Karaf configuration file (defaults to \${KARAF_SERVICE_PATH}/etc/\${KARAF_SERVICE_NAME}.conf) |
| -t | KARAF_SERVICE_ETC | Karaf etc path (defaults to \${KARAF_SERVICE_PATH}/etc) |
| -p | KARAF_SERVICE_PIDFILE | Karaf pid path (defaults to \${KARAF_SERVICE_DATA}/\${KARAF_SERVICE_NAME}.pid) |
| -n | KARAF_SERVICE_NAME | Karaf service name (defaults to karaf) |
| -e | KARAF_ENV | Karaf environment variable |
| -u | KARAF_SERVICE_USER | Karaf user |
| -g | KARAF_SERVICE_GROUP | Karaf group (defaults to \${KARAF_SERVICE_USER}) |
| -l | KARAF_SERVICE_LOG | Karaf console log (defaults to \${KARAF_SERVICE_DATA}/log/\${KARAF_SERVICE_NAME}-console.log) |
| -f | KARAF_SERVICE_TEMPLATE | Template file to use |
| -x | KARAF_SERVICE_EXECUTABLE | Karaf executable name (defaults to karaf; the executable must support the daemon and stop commands) |

CONF_TEMPLATE="karaf-service-template.conf"
SYSTEMD_TEMPLATE="karaf-service-template.systemd"
SYSTEMD_TEMPLATE_INSTANCES="karaf-service-template.systemd-instances"
INIT_TEMPLATE="karaf-service-template.init"
INIT_REDHAT_TEMPLATE="karaf-service-template.init-redhat"
INIT_DEBIAN_TEMPLATE="karaf-service-template.init-debian"
SOLARIS_SMF_TEMPLATE="karaf-service-template.solaris-smf"

2.1.3. Systemd

When the karaf-service.sh utility identifies Systemd, it generates three files:

- a systemd unit file to manage the root Apache Karaf container

- a systemd environment file with variables used by the root Apache Karaf container

- a systemd template unit file to manage Apache Karaf child containers

$ ./karaf-service.sh -k /opt/karaf-4 -n karaf-4
Writing service file "/opt/karaf-4/bin/contrib/karaf-4.service"
Writing service configuration file "/opt/karaf-4/etc/karaf-4.conf"
Writing service file "/opt/karaf-4/bin/contrib/karaf-4@.service"
$ cp /opt/karaf-4/bin/contrib/karaf-4.service /etc/systemd/system
$ cp /opt/karaf-4/bin/contrib/karaf-4@.service /etc/systemd/system
$ systemctl enable karaf-4.service

2.1.4. SysV

When the karaf-service.sh utility identifies a SysV system, it generates two files:

- an init script to manage the root Apache Karaf container

- an environment file with variables used by the root Apache Karaf container

$ ./karaf-service.sh -k /opt/karaf-4 -n karaf-4
Writing service file "/opt/karaf-4/bin/contrib/karaf-4"
Writing service configuration file "/opt/karaf-4/etc/karaf-4.conf"
$ ln -s /opt/karaf-4/bin/contrib/karaf-4 /etc/init.d/
$ chkconfig karaf-4 on

2.1.5. Solaris SMF

$ ./karaf-service.sh -k /opt/karaf-4 -n karaf-4
Writing service file "/opt/karaf-4/bin/contrib/karaf-4.xml"
$ svccfg validate /opt/karaf-4/bin/contrib/karaf-4.xml
$ svccfg import /opt/karaf-4/bin/contrib/karaf-4.xml

2.1.6. Windows

- Rename the karaf-service-win.exe file to karaf-4.exe.

- Rename the karaf-service-win.xml file to karaf-4.xml.

- Customize the service descriptor as per your requirements.

- Use the service executable to install, start and stop the service.

C:\opt\apache-karaf-4\bin\contrib> karaf-4.exe install
C:\opt\apache-karaf-4\bin\contrib> karaf-4.exe start

Chapter 3. Basic Security

Abstract

3.1. Configuring Basic Security

Overview

Before you start the container

Create a secure JAAS user

Edit the InstallDir/etc/users.properties file and add a new user field, as follows:

Username=Password,Administrator

Username and Password are the new user credentials. The Administrator role gives this user the privileges to access all administration and management functions of the container. For more details about JAAS, see Chapter 14, Configuring JAAS Security.
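Adding the entry can also be scripted. The sketch below is illustrative only: add_jaas_user is a hypothetical helper, not a Fuse utility, and it assumes the plain Username=Password,Role line format shown above. It refuses to append a second entry for a user name that is already present.

```shell
#!/bin/sh
# Hypothetical helper (not part of JBoss Fuse): append a JAAS user entry of
# the form Username=Password,Role to a users.properties file.
add_jaas_user() {
    file="$1"; user="$2"; pass="$3"; role="${4:-Administrator}"
    # Compare the text before the first "=" on each line with the new name.
    if [ -f "$file" ] && cut -d= -f1 "$file" | grep -Fxq "$user"; then
        echo "user '$user' already exists in $file" >&2
        return 1
    fi
    printf '%s=%s,%s\n' "$user" "$pass" "$role" >> "$file"
}
```

A call such as `add_jaas_user InstallDir/etc/users.properties jdoe 'StrongPassword'` would append `jdoe=StrongPassword,Administrator`. Because the password is stored in clear text in this format, restricting read access to the file is advisable.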

FuseMQ:karaf@root> echo 0123
123
FuseMQ:karaf@root> echo 00.123
0.123
FuseMQ:karaf@root>

Role-based access control

Table 3.1. Standard Roles for Access Control

| Roles | Description |
|---|---|
| Monitor, Operator, Maintainer | Grants read-only access to the container. |
| Deployer, Auditor | Grants read-write access at the appropriate level for ordinary users who want to deploy and run applications, but blocks access to sensitive container configuration settings. |
| Administrator, SuperUser | Grants unrestricted access to the container. |

Ports exposed by the JBoss Fuse container

Figure 3.1. Ports Exposed by the JBoss Fuse Container

- Console port—enables remote control of a container instance, through Apache Karaf shell commands. This port is enabled by default and is secured both by JAAS authentication and by SSH.

- JMX port—enables management of the container through the JMX protocol. This port is enabled by default and is secured by JAAS authentication.

- Web console port—provides access to an embedded Jetty container that can host Web console servlets. By default, the Fuse Management Console is installed in the Jetty container.

Enabling the remote console port

- JAAS is configured with at least one set of login credentials.

- The JBoss Fuse runtime has not been started in client mode (client mode disables the remote console port completely).

./client -u Username -p Password

Username and Password are the credentials of a JAAS user with Administrator privileges. For more details, see Chapter 8, Using Remote Connections to Manage a Container.

Strengthening security on the remote console port

- Make sure that the JAAS user credentials have strong passwords.

- Customize the X.509 certificate (replace the Java keystore file, InstallDir/etc/host.key, with a custom key pair).

Enabling the JMX port

To connect to the JMX port, open a JMX client (for example, jconsole) and connect to the following JMX URI:

service:jmx:rmi:///jndi/rmi://localhost:1099/karaf-root

In general, the tail of the JMX URI has the format /karaf-ContainerName. If you change the container name from root to some other name, you must modify the JMX URI accordingly.
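As a small illustration of how the URI depends on the container name, the following sketch assembles it from its parts. The function name jmx_uri is hypothetical, and the defaults (root, localhost, 1099) are simply the values that appear in the URI shown earlier.

```shell
#!/bin/sh
# Hypothetical helper: build the JMX URI for a container.
# Arguments: container name, host, RMI registry port (all optional).
jmx_uri() {
    container="${1:-root}"
    host="${2:-localhost}"
    port="${3:-1099}"
    printf 'service:jmx:rmi:///jndi/rmi://%s:%s/karaf-%s\n' \
        "$host" "$port" "$container"
}

jmx_uri            # URI for the default container name, root
jmx_uri broker1    # URI for a container renamed to broker1
```

The name broker1 is only an example of a renamed container.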

Strengthening security on the Fuse Management Console port

3.2. Disabling Broker Security

Overview

Standalone server

Open the InstallDir/etc/broker.xml file using a text editor and look for the following lines:

...

<plugins>

<jaasAuthenticationPlugin configuration="karaf" />

</plugins>

...

To disable JAAS authentication, delete or comment out the jaasAuthenticationPlugin element. The next time you start up the Red Hat JBoss Fuse container (using the InstallDir/bin/fusemq script), the broker will run with unsecured ports.

Chapter 4. Starting and Stopping JBoss Fuse

Abstract

4.1. Starting JBoss Fuse

Abstract

Overview

Setting up your environment

You can start JBoss Fuse from the bin subdirectory of your installation without modifying your environment. However, if you want to start it from a different folder, you need to add the bin directory of your JBoss Fuse installation to the PATH environment variable, as follows:

Windows:

set PATH=%PATH%;InstallDir\bin

Linux and UNIX:

export PATH=$PATH:InstallDir/bin

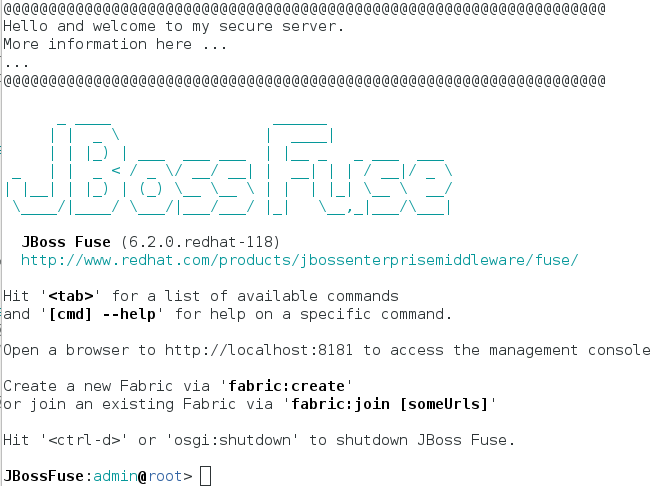

Launching the runtime in console mode

bin\fuse.bat

./bin/fuse

_ ____ ______

| | _ \ | ____|

| | |_) | ___ ___ ___ | |__ _ _ ___ ___

_ | | _ / _ \/ __/ __| | __| | | / __|/ _ \

| |__| | |_) | (_) \__ \__ \ | | | |_| \__ \ __/

\____/|____/ \___/|___/___/ |_| \__,_|___/\___|

JBoss Fuse (6.3.0.redhat-xxx)

http://www.redhat.com/products/jbossenterprisemiddleware/fuse/

Hit '<tab>' for a list of available commands

and '[cmd] --help' for help on a specific command.

Hit '<ctrl-d>' or 'osgi:shutdown' to shutdown JBoss Fuse.

JBossFuse:karaf@root>

Launching in console mode creates two processes: the parent process, ./bin/karaf, which is executing the Karaf console; and the child process, which is executing the Karaf server in a JVM. The shutdown behaviour remains the same as before, however. That is, you can shut down the server from the console using either Ctrl-D or osgi:shutdown, which kills both processes.

Launching the runtime in server mode

bin\start.bat

./bin/start

Launching the runtime in client mode

bin\fuse.bat client

./bin/fuse client

4.2. Stopping JBoss Fuse

Abstract

stop script.

Stopping an instance from a local console

If you launched the instance by running fuse or fuse client, you can stop it by doing one of the following at the karaf> prompt:

- Type shutdown

- Press Ctrl+D

Stopping an instance running in server mode

To stop an instance running in server mode, invoke the stop script from the InstallDir/bin directory, as follows:

bin\stop.bat

./bin/stop

The shutdown mechanism invoked by the stop script is similar to the shutdown mechanism implemented in Apache Tomcat. The Karaf server opens a dedicated shutdown port (not the same as the SSH port) to receive the shutdown notification. By default, the shutdown port is chosen randomly, but you can configure it to use a specific port if you prefer.

You can customize the shutdown port using the following properties in the InstallDir/etc/config.properties file:

karaf.shutdown.port
  Specifies the TCP port to use as the shutdown port. Setting this property to -1 disables the port. Default is 0 (for a random port).

karaf.shutdown.host
  Specifies the hostname to which the shutdown port is bound. This setting could be useful on a multi-homed host. Defaults to localhost.

  Note: If you wanted to use the bin/stop script to shut down the Karaf server running on a remote host, you would need to set this property to the hostname (or IP address) of the remote host. But beware that this setting also affects the Karaf server located on the same host as the etc/config.properties file.

karaf.shutdown.port.file
  After the Karaf instance starts up, it writes the current shutdown port to the file specified by this property. The stop script reads the file specified by this property to discover the value of the current shutdown port. Defaults to ${karaf.data}/port.

  Note: If you wanted to use the bin/stop script to shut down the Karaf server running on a remote host, you would need to set this property equal to the remote host's shutdown port. But beware that this setting also affects the Karaf server located on the same host as the etc/config.properties file.

karaf.shutdown.command
  Specifies the UUID value that must be sent to the shutdown port in order to trigger shutdown. This provides an elementary level of security, as long as the UUID value is kept a secret. For example, the etc/config.properties file could be read-protected to prevent this value from being read by ordinary users.

  When Apache Karaf is started for the very first time, a random UUID value is automatically generated and this setting is written to the end of the etc/config.properties file. Alternatively, if karaf.shutdown.command is already set, the Karaf server uses the pre-existing UUID value (which enables you to customize the UUID setting, if required).

  Note: If you wanted to use the bin/stop script to shut down the Karaf server running on a remote host, you would need to set this property to be equal to the value of the remote host's karaf.shutdown.command. But beware that this setting also affects the Karaf server located on the same host as the etc/config.properties file.
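To check which shutdown settings are currently in effect, the properties can be read back from the file. The following is a rough sketch under simplifying assumptions: get_prop and shutdown_port_setting are hypothetical helper names, and the parser only handles simple single-line key=value entries (no line continuations or escapes).

```shell
#!/bin/sh
# Hypothetical helper: print the value of the last uncommented
# "key=value" (or "key = value") line in a Java-style properties file.
get_prop() {
    file="$1"
    # Escape dots so that they match literally in the sed pattern.
    key=$(printf '%s' "$2" | sed 's/\./\\./g')
    sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*//p" "$file" | tail -n 1
}

# Report the configured shutdown port, falling back to the documented
# default of 0 (random port) when the property is not set.
shutdown_port_setting() {
    value=$(get_prop "$1" karaf.shutdown.port)
    echo "${value:-0}"
}
```

For example, `shutdown_port_setting InstallDir/etc/config.properties` prints 0 when the port is left at its default, in which case the port actually in use is the one written to the file named by karaf.shutdown.port.file.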

Stopping a child container instance

Child containers are created and managed using the admin family of console commands (for example, admin:create, admin:start, admin:stop, and so on). To stop the ChildContainerName child container running on your local host, invoke the admin(.bat) script from the command line, as follows:

bin\admin.bat stop ChildContainerName

./bin/admin stop ChildContainerName

Stopping a remote instance

Chapter 5. Creating a New Fabric

Abstract

Static IP address required for Fabric Server

- For simple examples and tests (with a single Fabric Server) you can work around the static IP requirement by using the loopback address, 127.0.0.1.

- For distributed tests (multiple Fabric Servers) and production deployments, you must assign a static IP address to each of the Fabric Server hosts.

Use the --resolver manualip --manual-ip StaticIPAddress options to specify the static IP address explicitly when creating a new Fabric Server.

Make Quickstart Examples Available

Edit the $FUSE_HOME/fabric/io.fabric8.import.profiles.properties file by uncommenting the line that starts with the following:

# importProfileURLs =

- Check that the fabric is running.

- In the $FUSE_HOME/quickstarts directory, change to the directory in which the quickstart example you want to run is located, for example:

  cd beginner

- In that directory, execute the following command:

  mvn fabric8:deploy

  You would need to run this command in each directory that contains a quickstart example that you want to run.

Procedure

- (Optional) Customise the name of the root container by editing the InstallDir/etc/system.properties file and specifying a different name for this property:

  karaf.name=root

  Note: For the first container in your fabric, this step is optional. But at some later stage, if you want to join a root container to the fabric, you might need to customise the container's name to prevent it from clashing with any existing root containers in the fabric.

- Any existing users in the InstallDir/etc/users.properties file are automatically used to initialize the fabric's user data when you create the fabric. You can populate the users.properties file by adding one or more lines of the following form:

  Username=Password[,RoleA][,RoleB]...

  But there must not be any users in this file that have administrator privileges (Administrator, SuperUser, or admin roles). If the InstallDir/etc/users.properties file already contains users with administrator privileges, you should delete those users before creating the fabric.

  Important: If you leave some administrator credentials in the users.properties file, this represents a security risk because the file could potentially be accessed by other containers in the fabric.

  Note: The initialization of user data from users.properties happens only once, at the time the fabric is created. After the fabric has been created, any changes you make to users.properties will have no effect on the fabric's user data.

- If you use a VPN (virtual private network) on your local machine, it is advisable to log off VPN before you create the fabric and to stay logged off while you are using the local container.

  Note: A local Fabric Server is permanently associated with a fixed IP address or hostname. If VPN is enabled when you create the fabric, the underlying Java runtime is liable to detect and use the VPN hostname instead of your permanent local hostname. This can also be an issue with multi-homed machines.

- Start up your local container. In JBoss Fuse, start the local container as follows:

  cd InstallDir/bin
  ./fuse

- Create a new fabric by entering the following command:

  JBossFuse:karaf@root> fabric:create --new-user AdminUser --new-user-password AdminPass --new-user-role Administrator --zookeeper-password ZooPass --resolver manualip --manual-ip StaticIPAddress --wait-for-provisioning

  The current container, named root by default, becomes a Fabric Server with a registry service installed. Initially, this is the only container in the fabric. The --new-user, --new-user-password, and --new-user-role options specify the credentials for a new Administrator user. The Zookeeper password is used to protect sensitive data in the Fabric registry service (all of the nodes under /fabric). The --manual-ip option specifies the Fabric Server's static IP address StaticIPAddress (see the section called “Static IP address required for Fabric Server”).

  For more details on fabric:create, see section "fabric:create" in "Console Reference".

  For more details about resolver policies, see section "fabric:container-resolver-list" in "Console Reference" and section "fabric:container-resolver-set" in "Console Reference".

Fabric creation process

- The container installs the requisite OSGi bundles to become a Fabric Server.

- The Fabric Server starts a registry service, which listens on TCP port 2181 (which makes fabric configuration data available to all of the containers in the fabric).

  Note: You can customize the value of the registry service port by specifying the --zookeeper-server-port option.

- The Fabric Server installs a new JAAS realm (based on the ZooKeeper login module), which overrides the default JAAS realm and stores its user data in the ZooKeeper registry.

- The new Fabric Ensemble consists of a single Fabric Server (the current container).

- A default set of profiles is imported from InstallDir/fabric/import (can optionally be overridden).

- After the standalone container is converted into a Fabric Server, the previously installed OSGi bundles and Karaf features are completely cleared away and replaced by the default Fabric Server configuration. For example, some of the shell command sets that were available in the standalone container are no longer available in the Fabric Server.

Expanding a Fabric

- Child container, created on the local machine as a child process in its own JVM. Instructions on creating a child container are found in Child Containers.

- SSH container, created on any remote machine for which you have ssh access. Instructions on creating an SSH container are found in SSH Containers.

Chapter 6. Joining a Fabric

Overview

- A managed container is a full member of the fabric and is managed by a Fabric Agent. The agent configures the container based on information provided by the fabric's ensemble. The ensemble knows which profiles are associated with the container and the agent determines what to install based on the contents of the profiles.

- A non-managed container is not managed by a Fabric Agent. Its configuration remains intact after it joins the fabric and is controlled as if the container were a standalone container. Joining the fabric in this manner registers the container with the fabric's ensemble and allows clients to locate the services running in the container using the fabric's discovery mechanism.

Joining a fabric as a managed container

fabric profile. If you want to preserve the previous configuration of the container, however, you must ensure that the fabric has an appropriately configured profile, which you can deploy into the container after it joins the fabric.

The -p option enables you to specify a profile to install into the container once the agent is installed.

Joining a fabric as a non-managed container

osgi:install, features:install, and hot deployment), because a Fabric Agent does not take control of its configuration. The agent only registers the container with the fabric's ensemble and keeps the registry entries for it up to date. This enables the newly joined container to discover services running in the container (through Fabric's discovery mechanisms) and to administer these services.

How to join a fabric

- Get the registry service URL for one of the Fabric Servers in the existing fabric. The registry service URL has the following format:

  Hostname[:IPPort]

  Normally, it is sufficient to specify just the hostname, Hostname, because the registry service uses the fixed port number, 2181, by default. In exceptional cases, you can discover the registry service port by following the instructions in the section called “How to discover the URL of a Fabric Server”.

- Get the ZooKeeper password for the fabric. An administrator can access the fabric's ZooKeeper password at any time by entering the following console command (while logged into one of the Fabric Containers):

  JBossFuse:karaf@root> fabric:ensemble-password

- Connect to the standalone container's command console.

- Join a container in one of the following ways:

  - Join as a managed container with the default profile (the fabric profile):

    JBossFuse:karaf@root> fabric:join --zookeeper-password ZooPass URL ContainerName

  - Join as a managed container, specifying a custom profile:

    JBossFuse:karaf@root> fabric:join --zookeeper-password ZooPass -p Profile URL ContainerName

  - Join as a non-managed container, which preserves the existing container configuration:

    JBossFuse:karaf@root> fabric:join -n --zookeeper-password ZooPass URL ContainerName

  Where you can specify the following values:

  ZooPass
    The existing fabric's ZooKeeper password.

  URL
    The URL for one of the fabric's registry services (usually just the hostname where a Fabric Server is running).

  ContainerName
    The new name of the container when it registers itself with the fabric.

    Warning: If the container you're adding to the fabric has the same name as a container already registered with the fabric, both containers will be reset and will always share the same configuration.

  Profile
    The name of the custom profile to install into the container after it joins the fabric (managed container only).

- If you joined the container as a managed container, you can subsequently deploy a different profile into the container using the fabric:container-change-profile console command.

How to discover the URL of a Fabric Server

- Connect to the command console of one of the containers in the fabric.

- Enter the following sequence of console commands:

JBossA-MQ:karaf@root> config:edit io.fabric8.zookeeper
JBossA-MQ:karaf@root> config:proplist
service.pid = io.fabric8.zookeeper
zookeeper.url = myhostA:2181,myhostB:2181,myhostC:2181,myhostC:2182,myhostC:2183
fabric.zookeeper.pid = io.fabric8.zookeeper
JBossA-MQ:karaf@root> config:cancel

The zookeeper.url property holds a comma-separated list of Fabric Server URLs. You can use any one of these URLs to join the fabric.
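If you copy the zookeeper.url value into a script, the individual URLs can be separated with standard tools. This is only a sketch: list_registry_urls is a hypothetical helper name, and the sample value is the one from the config:proplist output in this section.

```shell
#!/bin/sh
# Hypothetical helper: print one registry URL per line from the
# comma-separated value of the zookeeper.url property.
list_registry_urls() {
    printf '%s\n' "$1" | tr ',' '\n'
}

# Sample value copied from the config:proplist output in this section.
urls="myhostA:2181,myhostB:2181,myhostC:2181,myhostC:2182,myhostC:2183"

# Any one entry can be passed to fabric:join as the URL argument.
list_registry_urls "$urls"
```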

Chapter 7. Shutting Down a Fabric

Overview

Shutting down a managed container

Invoke the fabric:container-stop command and specify the name of the managed container, for example:

fabric:container-stop -f ManagedContainerName

The -f flag is required when shutting down a container that belongs to the ensemble.

The fabric:container-stop command looks up the container name in the registry and retrieves the data it needs to shut down that container. This approach works no matter where the container is deployed: whether locally or on a remote host.

Shutting down a Fabric Server

Consider an ensemble that consists of three Fabric Servers: registry1, registry2, and registry3. You can shut down only one of these Fabric Servers at a time by using the fabric:container-stop command, for example:

fabric:container-stop -f registry3

fabric:container-start registry3

Shutting down an entire fabric

Shutting down and restarting an entire fabric involves the fabric:ensemble-remove and fabric:ensemble-add commands. Each time you execute one of these commands, it creates a new ensemble. This new ensemble URL is propagated to all containers in the fabric and all containers need to reconnect to the new ensemble. There is a risk that TCP port numbers are reallocated, which means that your network configuration might become out-of-date because services might start up on different ports.

- Three Fabric Servers (ensemble servers): registry1, registry2, registry3.

- Four managed containers: managed1, managed2, managed3, managed4.

- Use the client console utility to log on to one of the Fabric Servers in the ensemble. For example, to log on to the registry1 server, enter a command in the following format:

  ./client -u AdminUser -p AdminPass -h Registry1Host

  Replace AdminUser and AdminPass with the credentials of a user with administration privileges. Replace Registry1Host with the name of the host where registry1 is running. It is assumed that the registry1 server is listening for console connections on the default TCP port (that is, 8101).

- Ensure that all managed containers in the fabric are running. Execution of fabric:container-list should display true in the alive column for each container. This is required for execution of the fabric:ensemble-remove command, which is the next step.

- Remove all but one of the Fabric Servers from the ensemble. For example, if you logged on to registry1, enter:

  fabric:ensemble-remove registry2 registry3

- Shut down all managed containers in the fabric, except the container on the Fabric Server you are logged into. In the following example, the first command shuts down managed1, managed2, managed3 and managed4:

  fabric:container-stop -f managed*
  fabric:container-stop -f registry2
  fabric:container-stop -f registry3

- Shut down the last container that is still running. This is the container that is on the Fabric Server you are logged in to. For example:

  shutdown -f

- Use the client console utility to log in to the registry1 container host.

- Start all containers in the fabric.

- Add the other Fabric Servers, for example:

  fabric:ensemble-add registry2 registry3

Note on shutting down a complete fabric

fabric:container-stop -f registry1
fabric:container-stop -f registry2
fabric:container-stop -f registry3

The last invocation of fabric:container-stop fails and throws an error because only one Fabric Server is still running. At least two Fabric Servers must be running to stop a container. With only one Fabric Server running, the registry shuts down and refuses service requests because a quorum of Fabric Servers is no longer available. The fabric:container-stop command needs the registry to be running so it can retrieve details about the container it is trying to shut down.
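The quorum arithmetic can be made explicit with a short sketch. It assumes the majority rule that ZooKeeper ensembles use (more than half of the servers must be running); the function names quorum_size and has_quorum are hypothetical and exist only for this illustration.

```shell
#!/bin/sh
# Smallest number of servers that forms a majority of an ensemble.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Succeeds if the given number of running servers still forms a quorum.
# Arguments: number of running servers, total ensemble size.
has_quorum() {
    running="$1"; ensemble="$2"
    [ "$running" -ge "$(quorum_size "$ensemble")" ]
}

# Walk through the three-server example: 3, then 2, then 1 server running.
for running in 3 2 1; do
    if has_quorum "$running" 3; then
        echo "$running of 3 running: registry available"
    else
        echo "$running of 3 running: no quorum, fabric:container-stop would fail"
    fi
done
```

For a three-server ensemble the quorum size is 2, which matches the statement that at least two Fabric Servers must be running.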

Chapter 8. Using Remote Connections to Manage a Container

Abstract

8.1. Configuring a Container for Remote Access

Overview

Configuring a standalone container for remote access

The SSH host and port used for remote access are set in the InstallDir/etc/org.apache.karaf.shell.cfg configuration file. Example 8.1, “Changing the Port for Remote Access” shows a sample configuration that changes the port used to 8102.

Example 8.1. Changing the Port for Remote Access

sshPort=8102
sshHost=0.0.0.0

Configuring a fabric container for remote access

8.2. Connecting and Disconnecting Remotely

Abstract

8.2.1. Connecting to a Standalone Container from a Remote Container

Overview

Using the ssh:ssh console command

Example 8.2. ssh:ssh Command Syntax

ssh:ssh {-l username} {-P password} {-p port} {hostname}

-l username
  The username used to connect to the remote container. Use valid JAAS login credentials that have admin privileges (see Chapter 14, Configuring JAAS Security).

-P password
  The password used to connect to the remote container.

-p port
  The SSH port used to access the desired container's remote console. By default this value is 8101. See the section called “Configuring a standalone container for remote access” for details on changing the port number.

hostname
  The hostname of the machine that the remote container is running on. See the section called “Configuring a standalone container for remote access” for details on changing the hostname.

etc/users.properties file. See Chapter 14, Configuring JAAS Security for details.

Example 8.3. Connecting to a Remote Console

JBossFuse:karaf@root> ssh:ssh -l smx -P smx -p 8108 hostname

To confirm that you have connected to the correct container, type shell:info at the prompt. Information about the currently connected instance is returned, as shown in Example 8.4, “Output of the shell:info Command”.

Example 8.4. Output of the shell:info Command

Karaf
  Karaf version               2.4.0.redhat-630187
  Karaf home                  /home/jboss-fuse-6.3.0.redhat-187
  Karaf base                  /home/jboss-fuse-6.3.0.redhat-187
  OSGi Framework              org.apache.felix.framework - 4.4.1
JVM
  Java Virtual Machine        Java HotSpot(TM) Server VM version 25.121-b13
  Version                     1.8.0_121
  Vendor                      Oracle Corporation
  Pid                         4647
  Uptime                      32.558 seconds
  Total compile time          45.154 seconds
Threads
  Live threads                87
  Daemon threads              69
  Peak                        88
  Total started               113
Memory
  Current heap size           206,941 kbytes
  Maximum heap size           932,096 kbytes
  Committed heap size         655,360 kbytes
  Pending objects             0
  Garbage collector           Name = 'PS Scavenge', Collections = 13, Time = 0.343 seconds
  Garbage collector           Name = 'PS MarkSweep', Collections = 2, Time = 0.272 seconds
Classes
  Current classes loaded      10,152
  Total classes loaded        10,152
  Total classes unloaded      0
Operating system
  Name                        Linux version 4.8.14-100.fc23.x86_64
  Architecture                i386
  Processors                  4

Disconnecting from a remote console

To disconnect from a remote console, enter logout or press Ctrl+D at the prompt.

8.2.2. Connecting to a Fabric Container From another Fabric Container

Overview

Using the fabric:container-connect command

Example 8.5. fabric:container-connect Command Syntax

fabric:container-connect {-u username} {-p password} {containerName}

-u username
  The username used to connect to the remote console. The default value is admin.

-p password
  The password used to connect to the remote console. The default value is admin.

containerName
  The name of the container.

Example 8.6. Connecting to a Remote Container

JBossFuse:karaf@root> fabric:container-connect -u admin -p admin containerName

To confirm that you have connected to the correct container, type shell:info at the prompt. Information about the currently connected instance is returned, as shown in Example 8.7, “Output of the shell:info Command”.

Example 8.7. Output of the shell:info Command

Karaf
  Karaf version               2.4.0.redhat-630187
  Karaf home                  /home/aaki/Downloads/jboss-fuse-6.3.0.redhat-187
  Karaf base                  /home/aaki/Downloads/jboss-fuse-6.3.0.redhat-187
  OSGi Framework              org.apache.felix.framework - 4.4.1
JVM
  Java Virtual Machine        Java HotSpot(TM) Server VM version 25.121-b13
  Version                     1.8.0_121
  Vendor                      Oracle Corporation
  Pid                         4647
  Uptime                      32.558 seconds
  Total compile time          45.154 seconds
Threads
  Live threads                87
  Daemon threads              69
  Peak                        88
  Total started               113
Memory
  Current heap size           206,941 kbytes
  Maximum heap size           932,096 kbytes
  Committed heap size         655,360 kbytes
  Pending objects             0
  Garbage collector           Name = 'PS Scavenge', Collections = 13, Time = 0.343 seconds
  Garbage collector           Name = 'PS MarkSweep', Collections = 2, Time = 0.272 seconds
Classes
  Current classes loaded      10,152
  Total classes loaded        10,152
  Total classes unloaded      0
Operating system
  Name                        Linux version 4.8.14-100.fc23.x86_64
  Architecture                i386
  Processors                  4

Disconnecting from a remote console

To disconnect from a remote console, enter logout or press Ctrl+D at the prompt.

8.2.3. Connecting to a Container Using the Client Command-Line Utility

Using the remote client

InstallDir/bin directory), as follows:

client

client -a 8101 -h hostname -u username -p password shell:info

If you omit the -p option, you will be prompted to enter a password.

admin privileges.

admin and admin.

client --help

Example 8.8. Karaf Client Help

Apache Karaf client
  -a [port]     specify the port to connect to
  -h [host]     specify the host to connect to
  -u [user]     specify the user name
  -p [password] specify the password (optional, if not provided, the password is prompted)
                NB: this option is deprecated and will be removed in next Karaf version
  --help        shows this help message
  -v            raise verbosity
  -l            set client logging level. Set to 0 for ERROR logging and up to 4 for TRACE.
  -r [attempts] retry connection establishment (up to attempts times)
  -d [delay]    intra-retry delay (defaults to 2 seconds)
  -b            batch mode, specify multiple commands via standard input
  -f [file]     read commands from the specified file
  -k [keyFile]  specify the private keyFile location when using key login
  [commands]    commands to run
If no commands are specified, the client will be put in an interactive mode

Remote client default credentials

bin/client, without supplying any credentials. This is because the remote client program is pre-configured to use default credentials. If no credentials are specified, the remote client automatically tries to use the following default credentials (in sequence):

- Default SSH key: tries to log in using the default Apache Karaf SSH key. The corresponding configuration entry that would allow this login to succeed is commented out by default in the etc/keys.properties file.

- Red Hat Fuse does not use admin/admin as the remote default credential. When you log in, Fuse tries to use the username/password of an entry chosen at random from the etc/users.properties file. However, it is recommended that you do not run the bin/client script without the -u option when you need to use username/password credentials.

For example, if you enable the default admin/admin credentials in users.properties, you will find that the bin/client utility can log in without supplying credentials.

If you are able to log in to your container using bin/client without supplying credentials, this shows that your container is insecure and you must take steps to fix this in a production environment.

Disconnecting from a remote client console

8.2.4. Connecting to a Container Using the SSH Command-Line Utility

Overview

You can also use the ssh command-line utility (a standard utility on UNIX-like operating systems) to log in to the Red Hat JBoss Fuse container, where the authentication mechanism is based on public key encryption (the public key must first be installed in the container). For example, given that the container is configured to listen on TCP port 8101, you could log in as follows:

ssh -p 8101 jdoe@localhost

Prerequisites

- The container must be standalone (Fabric is not supported) with the PublickeyLoginModule installed.

- You must have created an SSH key pair (see the section called “Creating a new SSH key pair”).

- You must install the public key from the SSH key pair into the container (see the section called “Installing the SSH public key in the container”).

Default key location

The ssh command automatically looks for the private key in the default key location. It is recommended that you install your key in the default location, because it saves you the trouble of specifying the location explicitly.

On UNIX-like operating systems, the default locations of the private and public keys are:

~/.ssh/id_rsa
~/.ssh/id_rsa.pub

On Windows, the default locations are:

C:\Documents and Settings\Username\.ssh\id_rsa
C:\Documents and Settings\Username\.ssh\id_rsa.pub

Creating a new SSH key pair

Generate an RSA key pair using the ssh-keygen utility. Open a new command prompt and enter the following command:

ssh-keygen -t rsa -b 2048

Generating public/private rsa key pair.
Enter file in which to save the key (/Users/Username/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Installing the SSH public key in the container

To install the SSH public key in the container, add a user entry to the InstallDir/etc/keys.properties file. Each user entry in this file appears on a single line, in the following format:

Username=PublicKey,Role1,Role2,...

For example, suppose that your public key file, ~/.ssh/id_rsa.pub, has the following contents:

ssh-rsa AAAAB3NzaC1kc3MAAACBAP1/U4EddRIpUt9KnC7s5Of2EbdSPO9EAMMeP4C2USZpRV1AIlH7WT2NWPq/xfW6MPbLm1Vs14E7 gB00b/JmYLdrmVClpJ+f6AR7ECLCT7up1/63xhv4O1fnfqimFQ8E+4P208UewwI1VBNaFpEy9nXzrith1yrv8iIDGZ3RSAHHAAAAFQCX YFCPFSMLzLKSuYKi64QL8Fgc9QAAAnEA9+GghdabPd7LvKtcNrhXuXmUr7v6OuqC+VdMCz0HgmdRWVeOutRZT+ZxBxCBgLRJFnEj6Ewo FhO3zwkyjMim4TwWeotifI0o4KOuHiuzpnWRbqN/C/ohNWLx+2J6ASQ7zKTxvqhRkImog9/hWuWfBpKLZl6Ae1UlZAFMO/7PSSoAAACB AKKSU2PFl/qOLxIwmBZPPIcJshVe7bVUpFvyl3BbJDow8rXfskl8wO63OzP/qLmcJM0+JbcRU/53Jj7uyk31drV2qxhIOsLDC9dGCWj4 7Y7TyhPdXh/0dthTRBy6bqGtRPxGa7gJov1xm/UuYYXPIUR/3x9MAZvZ5xvE0kYXO+rx jdoe@doemachine.local

You can then create the jdoe user with the admin role by adding the following entry to the InstallDir/etc/keys.properties file (on a single line):

jdoe=AAAAB3NzaC1kc3MAAACBAP1/U4EddRIpUt9KnC7s5Of2EbdSPO9EAMMeP4C2USZpRV1AIlH7WT2NWPq/xfW6MPbLm1Vs14E7 gB00b/JmYLdrmVClpJ+f6AR7ECLCT7up1/63xhv4O1fnfqimFQ8E+4P208UewwI1VBNaFpEy9nXzrith1yrv8iIDGZ3RSAHHAAAAFQCX YFCPFSMLzLKSuYKi64QL8Fgc9QAAAnEA9+GghdabPd7LvKtcNrhXuXmUr7v6OuqC+VdMCz0HgmdRWVeOutRZT+ZxBxCBgLRJFnEj6Ewo FhO3zwkyjMim4TwWeotifI0o4KOuHiuzpnWRbqN/C/ohNWLx+2J6ASQ7zKTxvqhRkImog9/hWuWfBpKLZl6Ae1UlZAFMO/7PSSoAAACB AKKSU2PFl/qOLxIwmBZPPIcJshVe7bVUpFvyl3BbJDow8rXfskl8wO63OzP/qLmcJM0+JbcRU/53Jj7uyk31drV2qxhIOsLDC9dGCWj4 7Y7TyhPdXh/0dthTRBy6bqGtRPxGa7gJov1xm/UuYYXPIUR/3x9MAZvZ5xvE0kYXO+rx,admin

Do not insert the entire contents of the id_rsa.pub file here. Insert just the block of symbols which represents the public key itself.
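As a minimal sketch of the step above, the following shell function extracts just the key block from a public key file and prints a line in the Username=PublicKey,Role format. The function name keys_entry and its argument order are hypothetical. It assumes the public key file holds a single line in the usual OpenSSH layout of key type, key block, and optional comment.

```shell
#!/bin/sh
# Sketch: build a keys.properties entry from an OpenSSH public key file.
# keys_entry and its argument order are hypothetical, not part of JBoss Fuse.
keys_entry() {
    user=$1      # user name for the entry, for example jdoe
    pubfile=$2   # path to the public key file, for example ~/.ssh/id_rsa.pub
    roles=$3     # comma-separated roles, for example admin
    # An OpenSSH public key line is "<type> <key block> [comment]";
    # only the second field belongs in keys.properties.
    key=$(awk 'NR == 1 { print $2 }' "$pubfile")
    printf '%s=%s,%s\n' "$user" "$key" "$roles"
}
```

For example, keys_entry jdoe ~/.ssh/id_rsa.pub admin prints a single-line entry that you could review and then append to the InstallDir/etc/keys.properties file.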

Checking that public key authentication is supported

To check that public key authentication is supported, run the jaas:realms console command, as follows:

Index Realm Module Class

1 karaf org.apache.karaf.jaas.modules.properties.PropertiesLoginModule

2 karaf org.apache.karaf.jaas.modules.publickey.PublickeyLoginModule

You should see that the PublickeyLoginModule is installed. With this configuration you can log in to the container using either username/password credentials or public key credentials.

Logging in using key-based SSH

$ ssh -p 8101 jdoe@localhost

_ ____ ______

| | _ \ | ____|

| | |_) | ___ ___ ___ | |__ _ _ ___ ___

_ | | _ / _ \/ __/ __| | __| | | / __|/ _ \

| |__| | |_) | (_) \__ \__ \ | | | |_| \__ \ __/

\____/|____/ \___/|___/___/ |_| \__,_|___/\___|

JBoss Fuse (6.3.0.redhat-xxx)

http://www.redhat.com/products/jbossenterprisemiddleware/fuse/

Hit '<tab>' for a list of available commands

and '[cmd] --help' for help on a specific command.

Hit '<ctrl-d>' or 'osgi:shutdown' to shutdown JBoss Fuse.

JBossFuse:karaf@root>

If you are using an encrypted private key, the ssh utility will prompt you to enter the pass phrase.

8.3. Stopping a Remote Container

Using the remote console

Using the fabric:container-stop console command

For example, to stop the fabric container named child1, you would enter the following console command:

JBossFuse:karaf@root>fabric:container-stop child1

Chapter 9. Managing Child Containers

Abstract

9.1. Standalone Child Containers

Using the admin console commands

Installing the admin console commands

The admin commands are not installed by default. To install the command set, install the admin feature with the following command:

JBossFuse:karaf@root> features:install admin

Cloning a container

To clone a container, use the admin:clone command and set the SSH port of the new child with the -s option. For example, to create a new child with the SSH port number of 8102:

JBossFuse:karaf@root> admin:clone -s 8102 root cloned

Creating a Karaf child container

The admin:create command creates a new Apache Karaf child container. That is, the new child container is not a full JBoss Fuse container, and is missing many of the standard bundles, features, and feature repositories that are normally available in a JBoss Fuse container. What you get is effectively a plain Apache Karaf container with JBoss Fuse branding. You must add any additional feature repositories or features that you require to the child manually.

The child container is created in the InstallDir/instances/containerName directory. The child container is assigned an SSH port number based on an incremental count starting at 8101.

Example 9.1. Creating a Runtime Instance

JBossFuse:karaf@root> admin:create finn
Creating new instance on SSH port 8102 and RMI ports 1100/44445 at: /home/jdoe/apps/fuse/jboss-fuse-6.3.0.redhat-xxx/instances/finn

Changing a child's SSH port

admin:change-port {containerName} {portNumber}

Starting child containers

Listing all child containers

Example 9.2. Listing Instances

JBossFuse:karaf@root> admin:list
  Port   State       Pid  Name
[ 8107] [Started ] [10628] harry
[ 8101] [Started ] [20076] root
[ 8106] [Started ] [15924] dick
[ 8105] [Started ] [18224] tom

Connecting to a child container

Example 9.3. Admin connect Command

admin:connect {containerName} {-u username} {-p password}

containerName

The name of the child to which you want to connect.

-u username

The username used to connect to the child's remote console. Use valid JAAS user credentials that have admin privileges (see Chapter 14, Configuring JAAS Security).

-p password

The password used to connect to the child's remote console.

JBossFuse:karaf@harry>

Stopping a child container

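To stop a child container from within the child itself, enter osgi:shutdown or simply shutdown.

To stop a child container remotely, from the parent container's console, enter admin:stop containerName.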

Destroying a child container

Changing the JVM options on a child container

You can change the JVM options on a child container using the admin:change-opts command. For example, you could change the amount of memory allocated to the child container's JVM, as follows:

JBossFuse:karaf@harry> admin:change-opts tom "-server -Xms128M -Xmx1345m -Dcom.sun.management.jmxremote"

Using the admin script

The admin script in the InstallDir/bin directory provides all of the admin console commands except for admin:connect.

Example 9.4. The admin Script

admin.bat: Ignoring predefined value for KARAF_HOME
Available commands:
  change-port - Changes the port of an existing container instance.
  create - Creates a new container instance.
  destroy - Destroys an existing container instance.
  list - List all existing container instances.
  start - Starts an existing container instance.
  stop - Stops an existing container instance.
Type 'command --help' for more help on the specified command.

For example, to list all of the containers, enter the following command on Windows:

admin.bat list

On UNIX-like operating systems, enter:

./admin list

9.2. Fabric Child Containers

Creating child containers

To create child containers in a fabric, use the fabric:container-create-child console command, which has the following syntax:

karaf@root> fabric:container-create-child parent child [number]

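If you specify the optional number argument, multiple child containers are created and named child1, child2, and so on. For example:

karaf@root> fabric:container-create-child root child 2
The following containers have been created successfully:
child1
child2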

Listing all container instances

To list all of the containers in the fabric, use the fabric:container-list console command. For example:

JBossFuse:karaf@root> fabric:container-list
[id]     [version]  [alive]  [profiles]                       [provision status]
root     1.0        true     fabric, fabric-ensemble-0000-1
child1   1.0        true     default                          success
child2   1.0        true     default                          success

Assigning a profile to a child container

By default, a child container is assigned the default profile when it is created. To assign a new profile (or profiles) to a child container after it has been created, use the fabric:container-change-profile console command.

You can assign a profile other than default to a newly created container by using the fabric:container-create-child command's --profile argument.

For example, to assign the example-camel profile to the child1 container, enter the following console command:

JBossFuse:karaf@root> fabric:container-change-profile child1 example-camel

This command removes the profiles currently assigned to child1 and replaces them with the specified list of profiles (in this case, the list contains just one profile, example-camel).

Connecting to a child container

To connect to a child container, use the fabric:container-connect console command. For example, to connect to child1, enter the following console command:

JBossFuse:karaf@root>fabric:container-connect -u admin -p admin child1

Connecting to host YourHost on port 8102

Connected

_ ____ ______

| | _ \ | ____|

| | |_) | ___ ___ ___ | |__ _ _ ___ ___

_ | | _ < / _ \/ __/ __| | __| | | / __|/ _ \

| |__| | |_) | (_) \__ \__ \ | | | |_| \__ \ __/

\____/|____/ \___/|___/___/ |_| \__,_|___/\___|

JBoss Fuse (6.0.0.redhat-xxx)

http://www.redhat.com/products/jbossenterprisemiddleware/fuse/

Hit '<tab>' for a list of available commands

and '[cmd] --help' for help on a specific command.

Hit '<ctrl-d>' or 'osgi:shutdown' to shutdown JBoss Fuse.

JBossFuse:admin@child1>

To terminate the session, press Ctrl-D.

Starting a child container

To start a child container, use the fabric:container-start console command. For example, to start child1:

JBossFuse:karaf@root>fabric:container-start child1

Stopping a child container

To stop a child container, use the fabric:container-stop console command. For example, to stop child1:

JBossFuse:karaf@root>fabric:container-stop child1

child1 container.

Destroying a child container

To destroy the child1 container instance, enter the following console command:

JBossFuse:karaf@root> fabric:container-delete child1

- stops the child's JVM process

- physically removes all files related to the child container

Chapter 10. Deploying a New Broker Instance

Abstract

Overview

Standalone containers

- Create a template Apache ActiveMQ XML configuration file in a location that is accessible to the container.

- In the JBoss Fuse command console, use the config:edit command to create a new OSGi configuration file.

Important: The PID must start with io.fabric8.mq.fabric.server-.

- Use the config:propset command to associate your template XML configuration with the broker OSGi configuration as shown in Example 10.1, “Specifying a Broker's Template XML Configuration”.

Example 10.1. Specifying a Broker's Template XML Configuration

JBossFuse:karaf@root>config:propset config configFile

- Use the config:propset command to set the required properties. The properties that need to be set depend on the property placeholders you used in the template XML configuration and on the broker's network settings. For information on using config:propset, see section "config:propset, propset" in "Console Reference".

- Save the new OSGi configuration using the config:update command.

You can use the default broker template, ${karaf.base}/etc/broker.xml. You will also need to provide values for the data property, the broker-name property, and the openwire-port property.

Example

To deploy a new broker called myBroker that stores its data in InstallDir/data/myBroker and opens a port at 61617, you would do the following:

- Open the JBoss Fuse command console.

- In the JBoss Fuse command console, use the config:edit command to create a new OSGi configuration file:

JBossFuse:karaf@root>config:edit io.fabric8.mq.fabric.server-myBroker

- Use the config:propset command to associate your template XML configuration with the broker OSGi configuration:

JBossFuse:karaf@root>config:propset config ${karaf.base}/etc/broker.xml

- Use the config:propset command to specify the new broker's data directory:

JBossFuse:karaf@root>config:propset data ${karaf.data}/myBroker

- Use the config:propset command to specify the new broker's name:

JBossFuse:karaf@root>config:propset broker-name myBroker

- Use the config:propset command to specify the new broker's openwire port:

JBossFuse:karaf@root>config:propset openwire-port 61617

- Save the new OSGi configuration using the config:update command.
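If you create brokers often, the console commands from the example can be generated rather than typed. The following sketch prints the same command sequence for a given broker name and port. The helper name broker_commands is hypothetical, and the property names (config, data, broker-name, openwire-port) are taken from the example above.

```shell
#!/bin/sh
# Sketch: print the console commands that define a new broker.
# broker_commands is a hypothetical helper, not part of JBoss Fuse.
broker_commands() {
    name=$1   # broker name, for example myBroker
    port=$2   # openwire port, for example 61617
    # Single quotes keep ${karaf.base} and ${karaf.data} literal, so that
    # the container, not the local shell, resolves them.
    printf 'config:edit io.fabric8.mq.fabric.server-%s\n' "$name"
    printf 'config:propset config ${karaf.base}/etc/broker.xml\n'
    printf 'config:propset data ${karaf.data}/%s\n' "$name"
    printf 'config:propset broker-name %s\n' "$name"
    printf 'config:propset openwire-port %s\n' "$port"
    printf 'config:update\n'
}
```

The client help in Example 8.8 describes a batch mode (-b) that reads commands from standard input, so piping the output of broker_commands myBroker 61617 into bin/client -b is one possible way to apply it. Treat that pipeline as an untested assumption and review the generated commands first.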

Chapter 11. Configuring JBoss Fuse

Abstract

11.1. Introducing JBoss Fuse Configuration

OSGi configuration

The configuration for each OSGi service is stored in a .cfg file in the InstallDir/etc directory. The file is interpreted using the Java properties file format. The filename is mapped to the persistent identifier (PID) of the service that is to be configured. In OSGi, a PID is used to identify a service across restarts of the container.

Configuration files

Table 11.1. JBoss Fuse Configuration Files

| Filename | Description |

|---|---|

| broker.xml | Configures the default Apache ActiveMQ broker in a Fabric (used in combination with the io.fabric8.mq.fabric.server-default.cfg file). |

| config.properties | The main configuration file for the container. See Section 11.2, “Setting OSGi Framework and Initial Container Properties” for details. |

| keys.properties | Lists the users who can access the JBoss Fuse runtime using the SSH key-based protocol. The file's contents take the format username=publicKey,role |

| org.apache.aries.transaction.cfg | Configures the transaction feature. |

| org.apache.felix.fileinstall-deploy.cfg | Configures a watched directory and polling interval for hot deployment. |

| org.apache.karaf.features.cfg | Configures a list of feature repositories to be registered and a list of features to be installed when JBoss Fuse starts up for the first time. |

| org.apache.karaf.features.obr.cfg | Configures the default values for the features OSGi Bundle Resolver (OBR). |

| org.apache.karaf.jaas.cfg | Configures options for the Karaf JAAS login module. Mainly used for configuring encrypted passwords (disabled by default). |

| org.apache.karaf.log.cfg | Configures the output of the log console commands. See Section 16.2, “Logging Configuration”. |

| org.apache.karaf.management.cfg | Configures the JMX system. See Chapter 13, Configuring JMX for details. |

| org.apache.karaf.shell.cfg | Configures the properties of remote consoles. For more information see Section 8.1, “Configuring a Container for Remote Access”. |

| io.fabric8.maven.cfg | Configures the Maven repositories used by the Fabric Maven Proxy when downloading artifacts. (The Fabric Maven Proxy is used for provisioning new containers on a remote host.) |

| io.fabric8.mq.fabric.server-default.cfg | Configures the default Apache ActiveMQ broker in a Fabric (used in combination with the broker.xml file). |

| org.ops4j.pax.logging.cfg | Configures the logging system. For more, see Section 16.2, “Logging Configuration”. |

| org.ops4j.pax.url.mvn.cfg | Configures additional URL resolvers. |

| org.ops4j.pax.web.cfg | Configures the default Jetty container (Web server). See Securing the Web Console. |

| startup.properties | Specifies which bundles are started in the container and their start-levels. Entries take the format bundle=start-level. |

| system.properties | Specifies Java system properties. Any properties set in this file are available at runtime using System.getProperties(). See Setting System and Config Properties for more. |

| users.properties | Lists the users who can access the JBoss Fuse runtime either remotely or via the web console. The file's contents take the format username=password,role |

| setenv or setenv.bat | This file is in the /bin directory. It is used to set JVM options. The file's contents take the format JAVA_MIN_MEM=512M, where 512M is the minimum size of Java memory. See Setting Java Options for more information. |

Configuration file naming convention

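The file naming convention depends on whether the configuration is intended for an OSGi Managed Service or for an OSGi Managed Service Factory. The configuration file for an OSGi Managed Service is named as follows:

<PID>.cfg

Where <PID> is the persistent ID of the OSGi Managed Service (as defined in the OSGi Configuration Admin specification). A persistent ID is normally dot-delimited, for example, org.ops4j.pax.web.

The configuration file for an OSGi Managed Service Factory is named as follows:

<PID>-<InstanceID>.cfg

Where <PID> is the persistent ID of the OSGi Managed Service Factory. In the case of a managed service factory's <PID>, you can append a hyphen followed by an arbitrary instance ID, <InstanceID>. The managed service factory then creates a unique service instance for each <InstanceID> that it finds.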
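To illustrate the naming convention, the following sketch splits a configuration file name into its PID and instance ID. The function names cfg_pid and cfg_instance are hypothetical. The sketch assumes that the PID itself contains no hyphen, so that the first hyphen in the file name separates the factory PID from the instance ID.

```shell
#!/bin/sh
# Sketch: split a configuration file name into PID and instance ID.
# Assumes the PID contains no hyphen; cfg_pid and cfg_instance are
# hypothetical helper names.
cfg_pid() {
    base=${1##*/}        # drop any leading directory
    base=${base%.cfg}    # drop the .cfg extension
    printf '%s\n' "${base%%-*}"
}

cfg_instance() {
    base=${1##*/}
    base=${base%.cfg}
    case $base in
        *-*) printf '%s\n' "${base#*-}" ;;   # text after the first hyphen
        *)   printf '\n' ;;                  # managed service: no instance ID
    esac
}
```

For example, etc/io.fabric8.mq.fabric.server-default.cfg yields the PID io.fabric8.mq.fabric.server and the instance ID default, whereas etc/org.ops4j.pax.web.cfg yields the PID org.ops4j.pax.web and an empty instance ID.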

Setting Java Options

Java options can be set using the bin/setenv file on Linux, or the bin/setenv.bat file on Windows. Use this file to directly set a group of Java options: JAVA_MIN_MEM, JAVA_MAX_MEM, JAVA_PERM_MEM, JAVA_MAX_PERM_MEM. Other Java options can be set using the EXTRA_JAVA_OPTS variable. For example:

JAVA_MIN_MEM=512M # Minimum memory for the JVM

To set a Java option other than the direct options, use:

EXTRA_JAVA_OPTS="Java option"

For example:

EXTRA_JAVA_OPTS="-XX:+UseG1GC"
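Putting these settings together, a bin/setenv file might look like the following sketch. The memory values are illustrative only, not recommendations, and the export line is an assumption that makes the variables visible to child processes; check the setenv file shipped with your installation for the exact form it uses.

```shell
#!/bin/sh
# Sketch of a bin/setenv file; the values are illustrative, not recommendations.
JAVA_MIN_MEM=512M                  # minimum memory for the JVM
JAVA_MAX_MEM=1024M                 # maximum memory for the JVM
EXTRA_JAVA_OPTS="-XX:+UseG1GC"     # any option not covered by the variables above
# Assumption: export the variables so that the start script's child
# processes can see them.
export JAVA_MIN_MEM JAVA_MAX_MEM EXTRA_JAVA_OPTS
```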

11.2. Setting OSGi Framework and Initial Container Properties

Overview

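The OSGi framework and the initial container properties are configured using two files in the etc folder:

- config.properties: specifies the bootstrap properties for the OSGi framework

- system.properties: specifies properties to configure container functions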

OSGi framework properties

The etc/config.properties file contains the properties used to specify which OSGi framework implementation to load and properties for configuring the framework's behaviors. Table 11.2, “Properties for the OSGi Framework” describes the key properties to set.

Table 11.2. Properties for the OSGi Framework

| Property | Description |

|---|---|

| karaf.framework | Specifies the OSGi framework that Red Hat JBoss Fuse uses. The default framework is Apache Felix which is specified using the value felix. |

| karaf.framework.felix | Specifies the path to the Apache Felix JAR on the file system. |

Initial container properties

The etc/system.properties file contains properties that configure how various aspects of the container behave, including:

- the container's name

- the default feature repository used by the container

- the default port used by the OSGi HTTP service

- the initial message broker configuration

Table 11.3. Container Properties

| Property | Description |

|---|---|

| karaf.name | Specifies the name of this container. The default is root. |

| karaf.default.repository | Specifies the location of the feature repository the container will use by default. The default setting is the local feature repository installed with JBoss Fuse. |

| org.osgi.service.http.port | Specifies the default port for the OSGi HTTP Service. |

11.3. Configuring Standalone Containers Using the Command Console

Overview

Listing the current configuration

Example 11.1. Output of the config:list Command

...

----------------------------------------------------------------

Pid: org.ops4j.pax.logging

BundleLocation: mvn:org.ops4j.pax.logging/pax-logging-service/1.4

Properties:

log4j.appender.out.layout.ConversionPattern = %d{ABSOLUTE} | %-5.5p | %-16.16t | %-32.32c{1} | %-32.32C %4L | %m%n

felix.fileinstall.filename = org.ops4j.pax.logging.cfg

service.pid = org.ops4j.pax.logging

log4j.appender.stdout.layout.ConversionPattern = %d{ABSOLUTE} | %-5.5p | %-16.16t | %-32.32c{1} | %-32.32C %4L | %m%n

log4j.appender.out.layout = org.apache.log4j.PatternLayout

log4j.rootLogger = INFO, out, osgi:VmLogAppender

log4j.appender.stdout.layout = org.apache.log4j.PatternLayout

log4j.appender.out.file = /home/apache/jboss-fuse-6.3.0.redhat-xxx/data/log/karaf.log

log4j.appender.stdout = org.apache.log4j.ConsoleAppender

log4j.appender.out.append = true

log4j.appender.out = org.apache.log4j.FileAppender

----------------------------------------------------------------

Pid: org.ops4j.pax.web

BundleLocation: mvn:org.ops4j.pax.web/pax-web-runtime/0.7.1

Properties:

org.apache.karaf.features.configKey = org.ops4j.pax.web

service.pid = org.ops4j.pax.web

org.osgi.service.http.port = 8181

----------------------------------------------------------------

...

Editing the configuration

- Start an editing session by typing config:edit PID.

PID is the PID for the configuration you are editing. It must be entered exactly. If it does not match the desired PID, the container will create a new PID with the specified name.

- Remind yourself of the available properties in a particular configuration by typing config:proplist.

- Use one of the editing commands to change the properties in the configuration. The editing commands include:

  - config:propappend: appends a value to an existing property, or creates the property if it does not exist

  - config:propset: sets the value of a configuration property

  - config:propdel: deletes a property from the configuration

- Update the configuration in memory and save it to disk by typing config:update.

To exit the editing session without saving your changes, type config:cancel.

Example 11.2. Editing a Configuration

JBossFuse:karaf@root>config:edit org.apache.karaf.log
JBossFuse:karaf@root>config:proplist
service.pid = org.apache.karaf.log
size = 500
felix.fileinstall.filename = org.apache.karaf.log.cfg
pattern = %d{ABSOLUTE} | %-5.5p | %-16.16t | %-32.32c{1} | %-32.32C %4L | %m%n
JBossFuse:karaf@root>config:propset size 300
JBossFuse:karaf@root>config:update

11.4. Configuring Fabric Containers

Overview

Profiles

- the Apache Karaf features to be deployed

- OSGi bundles to be deployed

- the feature repositories to be scanned for features

- properties that configure the container's runtime behavior

Best practices

Making changes using the command console

- fabric:version-create—create a new version

- fabric:profile-create—create a new profile

- fabric:profile-edit—edit the properties in a profile

- fabric:container-change-profile—change the profiles assigned to a container

Example 11.3. Editing Fabric Profile

JBossFuse:karaf@root>fabric:version-create
Created version: 1.1 as copy of: 1.0
JBossFuse:karaf@root>fabric:profile-edit -p org.apache.karaf.log/size=300 NEBroker

Using the management console

Chapter 12. Configuring the Hot Deployment System

Abstract

Overview

The hot deployment system is configured by editing the org.apache.felix.fileinstall-deploy PID.

Specifying the hot deployment folder

By default, the container monitors the deploy folder that is relative to the folder from which you launched the container. You change the folder the container monitors by setting the felix.fileinstall.dir property in the org.apache.felix.fileinstall-deploy PID. The value is the absolute path of the folder to monitor. If you set the value to /home/joe/deploy, the container will monitor a folder in Joe's home directory.

Specifying the scan interval

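You change the interval at which the container scans the hot deployment folder by setting the felix.fileinstall.poll property in the org.apache.felix.fileinstall-deploy PID. The value is specified in milliseconds.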

Example

The following example sets /home/karaf/hot as the hot deployment folder and sets the scan interval to half a second.

Example 12.1. Configuring the Hot Deployment Folders

JBossFuse:karaf@root>config:edit org.apache.felix.fileinstall-deploy
JBossFuse:karaf@root>config:propset felix.fileinstall.dir /home/karaf/hot
JBossFuse:karaf@root>config:propset felix.fileinstall.poll 500
JBossFuse:karaf@root>config:update

Chapter 13. Configuring JMX

Abstract

Overview

JMX is configured by editing the org.apache.karaf.management PID.

Changing the RMI port and JMX URL

Table 13.1. JMX Access Properties

| Property | Description |

|---|---|

| rmiRegistryPort | Specifies the RMI registry port. The default value is 1099. |

| serviceUrl | Specifies the URL used to connect to the JMX server. The default URL is service:jmx:rmi://${rmiServerHost}:${rmiServerPort}/jndi/rmi://${rmiRegistryHost}:${rmiRegistryPort}/karaf-${karaf.name}, where karaf.name is the container's name (by default, root). Each ${...} placeholder is replaced by the value of the property named inside the braces. |
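To make the placeholder substitution concrete, the following sketch assembles a JMX service URL from the same five values that the default serviceUrl template refers to. The function name jmx_service_url and its argument order are hypothetical; the container performs this substitution itself, so the sketch is only useful for working out which URL a JMX client should use.

```shell
#!/bin/sh
# Sketch: assemble a JMX service URL following the default serviceUrl template.
# jmx_service_url and its argument order are hypothetical.
jmx_service_url() {
    rmi_server_host=$1     # value of rmiServerHost
    rmi_server_port=$2     # value of rmiServerPort
    rmi_registry_host=$3   # value of rmiRegistryHost
    rmi_registry_port=$4   # value of rmiRegistryPort, 1099 by default
    karaf_name=$5          # value of karaf.name, root by default
    printf 'service:jmx:rmi://%s:%s/jndi/rmi://%s:%s/karaf-%s\n' \
        "$rmi_server_host" "$rmi_server_port" \
        "$rmi_registry_host" "$rmi_registry_port" "$karaf_name"
}
```

For example, jmx_service_url 127.0.0.1 44444 127.0.0.1 1099 root prints service:jmx:rmi://127.0.0.1:44444/jndi/rmi://127.0.0.1:1099/karaf-root.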

Setting the JMX username and password

The default username is admin and the default password is admin.

Restricting JMX to Accept Only Local Connections

service:jmx:rmi://127.0.0.1:44444/jndi/rmi://127.0.0.1:1099/karaf-root

- The RMI registry tells JMX clients where to find the JMX RMI server port; information can be obtained under key jmxrmi.

- The RMI registry port is generally known as it is set through the system properties at JVM startup. The default value is 1099.

- The JMX RMI server port is generally not known as the JVM chooses it at random.

- Add an iptables redirect rule. With this rule in place, calls to port 44444 on any network interface are redirected to 127.0.0.1:44444.

sudo iptables -t nat -I OUTPUT -p tcp -o lo --dport 44444 -j REDIRECT --to-ports 44444

- Before starting the container, set the system property java.rmi.server.hostname to 127.0.0.1. This works even without the iptables redirect rule in place.

export JAVA_OPTS="-Djava.rmi.server.hostname=127.0.0.1"

bin/fuse

etc/org.apache.karaf.management.cfg configuration file.

Troubleshooting on Linux platforms

- Check that the hostname resolves to the correct IP address. For example, if the hostname -i command returns 127.0.0.1, JConsole will not be able to connect to the JMX server. To fix this, edit the /etc/hosts file so that the hostname resolves to the correct IP address.

- Check whether the Linux machine is configured to accept packets from the host where JConsole is running (packet filtering is built into the Linux kernel). You can enter the command, /sbin/iptables --list, to determine whether an external client is allowed to connect to the JMX server.

Use the following command to add a rule to allow an external client such as JConsole to connect:

/usr/sbin/iptables -I INPUT -s JconsoleHost -p tcp --destination-port JMXRemotePort -j ACCEPT

Where JconsoleHost is either the hostname or the IP address of the host on which JConsole is running and JMXRemotePort is the TCP port exposed by the JMX server.

Chapter 14. Configuring JAAS Security

14.1. Alternative JAAS Realms

Overview

Default realm

The default JAAS realm in a JBoss Fuse container has the karaf realm name. The standard administration services in JBoss Fuse (SSH remote console, JMX port, and so on) are all configured to use the karaf realm by default.

Available realm implementations

Standalone JAAS realm

In a standalone container, the default karaf realm installs four JAAS login modules, which are used in parallel:

PropertiesLoginModule

Authenticates username/password credentials and stores the secure user data in the InstallDir/etc/users.properties file.

PublickeyLoginModule

Authenticates SSH key-based credentials (consisting of a username and a public/private key pair). Secure user data is stored in the InstallDir/etc/keys.properties file.

FileAuditLoginModule

Provides an audit trail of successful/failed login attempts, which are logged to an audit file. Does not perform user authentication.

EventAdminAuditLoginModule

Provides an audit trail of successful/failed login attempts, which are logged to the OSGi Event Admin service. Does not perform user authentication.

Fabric JAAS realm

In a fabric, a karaf realm based on the ZookeeperLoginModule login module is automatically installed in every container (the fabric-jaas feature is included in the default profile) and is responsible for securing the SSH remote console and other administrative services. The Zookeeper login module stores the secure user data in the Fabric Registry.

karaf realm with a higher rank.

LDAP JAAS realm

14.2. JAAS Console Commands

Editing user data from the console

JBoss Fuse provides a set of jaas:* console commands, which you can use to edit JAAS user data from the console. This works both for standalone JAAS realms and for Fabric JAAS realms.

The jaas:* console commands are not compatible with the LDAP JAAS module.

Standalone realm configuration

A standalone container (which uses the PropertiesLoginModule and the PublickeyLoginModule) maintains its own database of secure user data, independently of any other containers. To configure the user data for a standalone container, you must log into the specific container (see Connecting and Disconnecting Remotely) whose data you want to modify. Each standalone container must be configured separately.

To start editing the user data, first list the available realms and login modules using the jaas:realms command, as follows:

JBossFuse:karaf@root> jaas:realms

Index Realm Module Class

1 karaf org.apache.karaf.jaas.modules.properties.PropertiesLoginModule

2 karaf org.apache.karaf.jaas.modules.publickey.PublickeyLoginModule

3 karaf org.apache.karaf.jaas.modules.audit.FileAuditLoginModule

4 karaf org.apache.karaf.jaas.modules.audit.EventAdminAuditLoginModule

These login modules all belong to the karaf JAAS realm. Enter the following console command to start editing the properties login module in the karaf realm:

JBossFuse:karaf@root> jaas:manage --index 1

Fabric realm configuration

A Fabric container (which uses the ZookeeperLoginModule by default) shares its secure user data with all of the other containers in the fabric and the user data is stored in the Fabric Registry. To configure the user data for a fabric, you can log into any of the containers. Because the user data is shared in the registry, any modifications you make are instantly propagated to all of the containers in the fabric.

If you enter the jaas:realms console command, you might see a listing similar to this:

Index Realm Module Class

1 karaf io.fabric8.jaas.ZookeeperLoginModule

2 karaf org.apache.karaf.jaas.modules.properties.PropertiesLoginModule

3 karaf org.apache.karaf.jaas.modules.publickey.PublickeyLoginModule

The ZookeeperLoginModule login module has the highest priority and is used by the fabric (you cannot see this from the listing, but its realm is defined to have a higher rank than the other modules). In this example, the ZookeeperLoginModule has the index 1, but it might have a different index number in your container.

Enter the following console command to start editing the Fabric login module (the ZookeeperLoginModule):

JBossFuse:karaf@root> jaas:manage --index 1

Adding a new user to the JAAS realm

Perform the following steps to add a new user, jdoe, to the JAAS realm.

- List the available realms and login modules by entering the following command:

JBossFuse:karaf@root> jaas:realms

- Choose the login module to edit by specifying its index, Index, using a command of the following form:

JBossFuse:karaf@root> jaas:manage --index Index

- Add a new user, jdoe, with password, secret, by entering the following console command:

JBossFuse:karaf@root> jaas:useradd jdoe secret

- Assign the admin role to jdoe, by entering the following console command:

JBossFuse:karaf@root> jaas:roleadd jdoe admin

- Review the pending changes by entering the jaas:pending console command, as follows:

JBossFuse:karaf@root> jaas:pending

Jaas Realm:karaf Jaas Module:org.apache.karaf.jaas.modules.properties.PropertiesLoginModule

UserAddCommand{username='jdoe', password='secret'}

RoleAddCommand{username='jdoe', role='admin'}

- Commit the pending changes by entering jaas:update, as follows:

JBossFuse:karaf@root> jaas:update

The new user data is then persisted (by writing to the etc/users.properties file, in the case of a standalone container, or by storing the user data in the Fabric Registry, in the case of a fabric).

If a password contains special characters, escape each one with a backslash, \. For example, jaas:useradd username hello\!world. Your password will be hello!world.

Canceling pending changes

If you do not want to commit the changes, then instead of entering the jaas:update command, you can abort the pending changes using the jaas:cancel command, as follows:

JBossFuse:karaf@root> jaas:cancel

14.3. Standalone Realm Properties File

Overview

By default, a standalone container authenticates username/password credentials using the PropertiesLoginModule JAAS module. This login module stores its user data in a Java properties file in the following location:

InstallDir/etc/users.properties

Format of users.properties entries

Each user entry in the etc/users.properties file has the following format (on its own line):

Username=Password[,UserGroup|Role][,UserGroup|Role]...
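As a rough illustration of this format, the following sketch splits one entry into its user name and its list of groups or roles. The function names are hypothetical. The sketch assumes that neither the user name nor the password contains an equals sign or a comma, and it does not distinguish groups from roles.

```shell
#!/bin/sh
# Sketch: split a users.properties entry of the form
#   Username=Password[,UserGroup|Role][,UserGroup|Role]...
# Assumes no '=' or ',' inside the user name or password.
# user_entry_name and user_entry_roles are hypothetical helper names.
user_entry_name() {
    printf '%s\n' "${1%%=*}"     # text before the first '='
}

user_entry_roles() {
    value=${1#*=}                # Password[,UserGroup|Role]...
    case $value in
        *,*) printf '%s\n' "${value#*,}" | tr ',' '\n' ;;   # one per line
        *)   : ;;                                           # none assigned
    esac
}
```

For example, for the entry jdoe=secret,admin,manager, user_entry_name prints jdoe and user_entry_roles prints admin and manager on separate lines.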

Changing the default username and password

The etc/users.properties file initially contains a commented out entry for a single user, admin, with password admin and role admin. It is strongly recommended that you create a new user entry that is different from the admin user example.

Username=Password,Administrator

The Administrator role grants full administration privileges to this user.

Chapter 15. Securing Fabric Containers

Abstract

Default authentication system

By default, Fabric uses a username/password authentication system (implemented by the JAAS login module, io.fabric8.jaas.ZookeeperLoginModule). This system allows you to define user accounts and assign passwords and roles to the users. Out of the box, the user credentials are stored in the Fabric registry, unencrypted.

Managing users

You can manage users in the default authentication system using the jaas:* family of console commands. First, attach the jaas:* commands to the ZookeeperLoginModule login module, as follows:

JBossFuse:karaf@root> jaas:realms

Index Realm Module Class

1 karaf org.apache.karaf.jaas.modules.properties.PropertiesLoginModule

2 karaf org.apache.karaf.jaas.modules.publickey.PublickeyLoginModule

3 karaf io.fabric8.jaas.ZookeeperLoginModule

JBossFuse:karaf@root> jaas:manage --index 3

This attaches the jaas:* commands to the ZookeeperLoginModule login module. You can then add users and roles using the jaas:useradd and jaas:roleadd commands. Finally, when you are finished editing the user data, you must commit the changes by entering the jaas:update command, as follows:

JBossFuse:karaf@root> jaas:update

Alternatively, you can abort the pending changes by entering jaas:cancel.

Obfuscating stored passwords

By default, the ZookeeperLoginModule stores passwords in plain text. You can provide additional protection to passwords by storing them in an obfuscated format. This can be done by adding the appropriate configuration properties to the io.fabric8.jaas PID and ensuring that they are applied to all of the containers in the fabric.

Enabling LDAP authentication

Fabric also supports LDAP authentication (implemented by the LDAPLoginModule), which you can enable by adding the requisite configuration to the default profile.

Chapter 16. Logging

Abstract

16.1. Logging Overview

- Apache Log4j

- Apache Commons Logging

- SLF4J

- Java Util Logging

16.2. Logging Configuration

Overview

- etc/system.properties: the configuration file that sets the logging level during the container's boot process. The file contains a single property, org.ops4j.pax.logging.DefaultServiceLog.level, that is set to ERROR by default.

- org.ops4j.pax.logging: the PID used to configure the logging back end service. It sets the logging levels for all of the defined loggers and defines the appenders used to generate log output. It uses standard Log4j configuration. By default, it sets the root logger's level to INFO and defines two appenders: one for the console and one for the log file.

Note

The console's appender is disabled by default. To enable it, add log4j.appender.stdout.append=true to the configuration. For example, to enable the console appender in a standalone container, you would use the following commands:

JBossFuse:karaf@root> config:edit org.ops4j.pax.logging
JBossFuse:karaf@root> config:propappend log4j.appender.stdout.append true
JBossFuse:karaf@root> config:update

- org.apache.karaf.log.cfg: configures the output of the log console commands.

Changing the log levels

To change the log level, edit the org.ops4j.pax.logging PID's log4j.rootLogger property so that the logging level is one of the following:

- TRACE

- DEBUG

- INFO

- WARN

- ERROR

- FATAL

- OFF

Example 16.1. Changing Logging Levels

JBossFuse:karaf@root> config:edit org.ops4j.pax.logging
JBossFuse:karaf@root> config:propset log4j.rootLogger "DEBUG, out, osgi:VmLogAppender"
JBossFuse:karaf@root> config:update

Changing the appenders' thresholds

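Each appender can have a log4j.appender.appenderName.threshold property that controls which level of messages is written to the appender. The appender threshold values are the same as the log level values.

Example 16.2, “Changing the Log Information Displayed on the Console” shows the commands for setting the root logger to DEBUG but limiting the information displayed on the console to WARN.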

Example 16.2. Changing the Log Information Displayed on the Console

JBossFuse:karaf@root> config:edit org.ops4j.pax.logging
JBossFuse:karaf@root> config:propset log4j.rootLogger "DEBUG, out, osgi:VmLogAppender"
JBossFuse:karaf@root> config:propappend log4j.appender.stdout.threshold WARN
JBossFuse:karaf@root> config:update
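The interaction between the logger level and an appender threshold can be modelled in a few lines of Java. This is an illustrative model of standard Log4j level ordering only, not code taken from Pax Logging; the class and method names are hypothetical.

```java
/** Illustrative model only: mirrors Log4j level ordering, not the Pax Logging code. */
final class ThresholdSketch {
    /** Levels in increasing severity, in the order listed for log4j.rootLogger. */
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR, FATAL, OFF }

    /** A message passes a filter when it is at least as severe as the configured level. */
    static boolean passes(Level configured, Level message) {
        return message.compareTo(configured) >= 0;
    }

    /** An appender writes a message only if it passes both the logger level and the appender threshold. */
    static boolean isWritten(Level loggerLevel, Level appenderThreshold, Level message) {
        return passes(loggerLevel, message) && passes(appenderThreshold, message);
    }

    public static void main(String[] args) {
        // Root logger at DEBUG, console threshold at WARN, as in Example 16.2.
        System.out.println("INFO on console:  " + isWritten(Level.DEBUG, Level.WARN, Level.INFO));
        System.out.println("ERROR on console: " + isWritten(Level.DEBUG, Level.WARN, Level.ERROR));
    }
}
```

With the settings from Example 16.2, an INFO message is accepted by the root logger but filtered out by the console threshold, while an ERROR message passes both checks.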

Logging per bundle

To enable per bundle logging, add the sift appender to the Log4j root logger as shown in Example 16.3, “Enabling Per Bundle Logging”.

Example 16.3. Enabling Per Bundle Logging

JBossFuse:karaf@root> config:edit org.ops4j.pax.logging
JBossFuse:karaf@root> config:propset log4j.rootLogger "INFO, out, sift, osgi:VmLogAppender"
JBossFuse:karaf@root> config:update

Once per bundle logging is enabled, each bundle logs to data/log/BundleName.log.

org.ops4j.pax.logging.cfg.

Logging History

When JBoss Fuse shuts down, it saves the history to ~/.karaf/karaf.history. JBoss Fuse can be configured to prevent the history from being saved each time.

Procedure 16.1. Stop JBoss Fuse saving Logging History on shutdown.

- Stop JBoss Fuse.

- Edit $FUSE_HOME/etc/system.properties.

- Uncomment the line that says # karaf.shell.history.maxSize = 0.

- Restart JBoss Fuse.

~/.karaf/karaf.history will still be created, but it will always have a size of 0, and will be empty.

16.3. Logging per Application

Overview

Application key

Enabling per application logging

- In each of your applications, edit the Java source code to define a unique application key.

If you are using slf4j, add the following static method call to your application:

org.slf4j.MDC.put("app.name","MyFooApp");

If you are using log4j, add the following static method call to your application:

org.apache.log4j.MDC.put("app.name","MyFooApp");

- Edit the etc/org.ops4j.pax.logging PID to customize the sift appender.

- Set log4j.appender.sift.key to app.name.

- Set log4j.appender.sift.appender.file to =${karaf.data}/log/$\\{app.name\\}.log.

- Edit the etc/org.ops4j.pax.logging PID to add the sift appender to the root logger.

JBossFuse:karaf@root> config:edit org.ops4j.pax.logging
JBossFuse:karaf@root> config:propset log4j.rootLogger "INFO, out, sift, osgi:VmLogAppender"
JBossFuse:karaf@root> config:update

16.4. Log Commands

- log:display

- Displays the most recent log entries. By default, the number of entries returned and the pattern of the output depend on the size and pattern properties in the org.apache.karaf.log.cfg file. You can override these using the -p and -d arguments.

- log:display-exception

- Displays the most recently logged exception.

- log:get

- Displays the current log level.

- log:set

- Sets the log level.

- log:tail

- Continuously displays log entries.

- log:clear

- Clears log entries.

Chapter 17. Persistence

Abstract

Overview

The container stores its persistent data in a data folder in the directory from which you launch the container. This folder is populated by folders storing information about the message broker used by the container, the OSGi framework, and the Karaf container.

The data folder

The data folder is used by the JBoss Fuse runtime to store persistent state information. It contains the following folders:

- amq: Contains persistent data needed by any Apache ActiveMQ brokers that are started by the container.

- cache: The OSGi bundle cache. The cache contains a directory for each bundle, where the directory name corresponds to the bundle identifier number.

- generated-bundles: Contains bundles that are generated by the container.

- log: Contains the log files.

- maven: A temporary directory used by the Fabric Maven Proxy when uploading files.

- txlog: Contains the log files used by the transaction management system. You can set the location of this directory in the org.apache.aries.transaction.cfg file.

Changing the bundle cache location

By default, the bundle cache is located in InstallDir/data/cache.

config.properties.

Flushing the bundle cache

config.properties. This property is set to none by default.

Changing the generated-bundle cache location

org.apache.felix.fileinstall-deploy.cfg file.

Adjusting the bundle cache buffer

config.properties configuration file. The value is specified in bytes.

Chapter 18. Failover Deployments

Abstract

18.1. Using a Simple Lock File System

Overview

Configuring a lock file system

To configure a lock file system, edit the etc/system.properties file on both the master and the slave installation to include the properties in Example 18.1, “Lock File Failover Configuration”.

Example 18.1. Lock File Failover Configuration

karaf.lock=true
karaf.lock.class=org.apache.karaf.main.SimpleFileLock
karaf.lock.dir=PathToLockFileDirectory
karaf.lock.delay=10000

- karaf.lock: specifies whether the lock file is written.

- karaf.lock.class: specifies the Java class implementing the lock. For a simple file lock it should always be org.apache.karaf.main.SimpleFileLock.

- karaf.lock.dir: specifies the directory into which the lock file is written. This must be the same for both the master and the slave installation.

- karaf.lock.delay: specifies, in milliseconds, the delay between attempts to reacquire the lock.
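The general idea behind lock file failover can be sketched with the standard Java file locking API. This is an illustration of the technique under stated assumptions, not the implementation of org.apache.karaf.main.SimpleFileLock: the class LockFileSketch and the lock file name lock are hypothetical. Whichever instance obtains the exclusive lock acts as master, and the other polls at a fixed delay, in the same way that karaf.lock.delay sets the interval between attempts.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.channels.FileLock;
import java.nio.channels.OverlappingFileLockException;
import java.nio.file.Files;
import java.nio.file.Path;

/** Illustrative sketch of lock file failover; not the SimpleFileLock implementation. */
final class LockFileSketch implements AutoCloseable {
    private final RandomAccessFile file;
    private FileLock lock;

    LockFileSketch(Path lockDir) throws IOException {
        // "lock" is a hypothetical file name chosen for this sketch.
        this.file = new RandomAccessFile(lockDir.resolve("lock").toFile(), "rw");
    }

    /** Tries once to take the exclusive lock; returns false if another holder has it. */
    boolean tryAcquire() throws IOException {
        if (lock != null && lock.isValid()) {
            return true; // already held by this instance
        }
        try {
            // Returns null when another process holds the lock.
            lock = file.getChannel().tryLock();
        } catch (OverlappingFileLockException heldInThisJvm) {
            // Thrown instead of returning null when the holder is in the same JVM.
            lock = null;
        }
        return lock != null;
    }

    /** Polls until the lock is obtained, sleeping delayMillis between attempts. */
    void awaitLock(long delayMillis) throws IOException, InterruptedException {
        while (!tryAcquire()) {
            Thread.sleep(delayMillis);
        }
    }

    @Override
    public void close() throws IOException {
        if (lock != null && lock.isValid()) {
            lock.release();
        }
        file.close();
    }

    public static void main(String[] args) throws Exception {
        Path dir = Files.createTempDirectory("karaf-lock-demo");
        try (LockFileSketch master = new LockFileSketch(dir);
             LockFileSketch slave = new LockFileSketch(dir)) {
            System.out.println("master acquired: " + master.tryAcquire());
            System.out.println("slave acquired: " + slave.tryAcquire());
        }
    }
}
```

In a real deployment the two instances run in separate processes, usually on separate hosts that share the lock directory, so the lock directory must be on storage that supports file locking for both of them.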

18.2. Using a JDBC Lock System

Overview

Adding the JDBC driver to the classpath

- Copy the JDBC driver JAR file to the ESBInstallDir/lib directory for each Red Hat JBoss Fuse instance.

- Modify the bin/karaf start script so that it includes the JDBC driver JAR in its CLASSPATH variable.

For example, given the JDBC JAR file, JDBCJarFile.jar, you could modify the start script as follows (on a *NIX operating system):

...
# Add the jars in the lib dir
for file in "$KARAF_HOME"/lib/karaf*.jar
do
    if [ -z "$CLASSPATH" ]; then
        CLASSPATH="$file"
    else
        CLASSPATH="$CLASSPATH:$file"
    fi
done
CLASSPATH="$CLASSPATH:$KARAF_HOME/lib/JDBCJarFile.jar"

Note

If you are adding a MySQL driver JAR or a PostgreSQL driver JAR, you must rename the driver JAR by prefixing it with the karaf- prefix. Otherwise, Apache Karaf will hang and the log will tell you that Apache Karaf was unable to find the driver.

Configuring a JDBC lock system

To configure a JDBC lock system, update the etc/system.properties file for each instance in the master/slave deployment as shown in Example 18.2, “JDBC Lock File Configuration”.

Example 18.2. JDBC Lock File Configuration

karaf.lock=true
karaf.lock.class=org.apache.karaf.main.DefaultJDBCLock
karaf.lock.level=50
karaf.lock.delay=10000
karaf.lock.jdbc.url=jdbc:derby://dbserver:1527/sample
karaf.lock.jdbc.driver=org.apache.derby.jdbc.ClientDriver
karaf.lock.jdbc.user=user
karaf.lock.jdbc.password=password
karaf.lock.jdbc.table=KARAF_LOCK
karaf.lock.jdbc.clustername=karaf
karaf.lock.jdbc.timeout=30
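Because every instance in the deployment is given its own copy of these settings, it can help to compare the copies before starting the containers. The following Java sketch is a hypothetical pre-flight check, not a JBoss Fuse tool: it reports the karaf.lock properties whose values differ between two system.properties texts. It assumes that the instances are meant to share identical lock settings, which may not hold for every property in every deployment.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Objects;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical pre-flight check, not a JBoss Fuse tool. */
final class LockConfigDiff {
    /** Returns the karaf.lock keys whose values differ between two system.properties texts. */
    static Set<String> differingLockKeys(String masterText, String slaveText) throws IOException {
        Properties master = load(masterText);
        Properties slave = load(slaveText);
        // Collect the keys from both sides so that a key missing on one side is reported too.
        Set<String> keys = new TreeSet<>(master.stringPropertyNames());
        keys.addAll(slave.stringPropertyNames());
        Set<String> differing = new TreeSet<>();
        for (String key : keys) {
            if (key.startsWith("karaf.lock")
                    && !Objects.equals(master.getProperty(key), slave.getProperty(key))) {
                differing.add(key);
            }
        }
        return differing;
    }

    private static Properties load(String text) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(text));
        return props;
    }

    public static void main(String[] args) throws IOException {
        String master = "karaf.lock=true\nkaraf.lock.jdbc.table=KARAF_LOCK\n";
        String slave = "karaf.lock=true\nkaraf.lock.jdbc.table=OTHER_TABLE\n";
        System.out.println(differingLockKeys(master, slave));
    }
}
```

In practice you would read the two texts from each installation's etc/system.properties file. An empty result means that the lock settings in the two files match.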

Configuring JDBC locking on Oracle

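If you are using Oracle as your database in a JDBC locking scenario, the karaf.lock.class property in the etc/system.properties file must point to org.apache.karaf.main.OracleJDBCLock.

Otherwise, configure the system.properties file as normal for your setup, as shown: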

Example 18.3. JDBC Lock File Configuration for Oracle

karaf.lock=true
karaf.lock.class=org.apache.karaf.main.OracleJDBCLock
karaf.lock.jdbc.url=jdbc:oracle:thin:@hostname:1521:XE
karaf.lock.jdbc.driver=oracle.jdbc.OracleDriver
karaf.lock.jdbc.user=user
karaf.lock.jdbc.password=password
karaf.lock.jdbc.table=KARAF_LOCK
karaf.lock.jdbc.clustername=karaf
karaf.lock.jdbc.timeout=30

Configuring JDBC locking on Derby

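If you are using Derby as your database in a JDBC locking scenario, the karaf.lock.class property in the etc/system.properties file should point to org.apache.karaf.main.DerbyJDBCLock. For example, you could configure the system.properties file as shown: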

Example 18.4. JDBC Lock File Configuration for Derby

karaf.lock=true
karaf.lock.class=org.apache.karaf.main.DerbyJDBCLock
karaf.lock.jdbc.url=jdbc:derby://127.0.0.1:1527/dbname
karaf.lock.jdbc.driver=org.apache.derby.jdbc.ClientDriver
karaf.lock.jdbc.user=user
karaf.lock.jdbc.password=password
karaf.lock.jdbc.table=KARAF_LOCK
karaf.lock.jdbc.clustername=karaf
karaf.lock.jdbc.timeout=30

Configuring JDBC locking on MySQL

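If you are using MySQL as your database in a JDBC locking scenario, the karaf.lock.class property in the etc/system.properties file must point to org.apache.karaf.main.MySQLJDBCLock. For example, you could configure the system.properties file as shown: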

Example 18.5. JDBC Lock File Configuration for MySQL

karaf.lock=true
karaf.lock.class=org.apache.karaf.main.MySQLJDBCLock
karaf.lock.jdbc.url=jdbc:mysql://127.0.0.1:3306/dbname
karaf.lock.jdbc.driver=com.mysql.jdbc.Driver
karaf.lock.jdbc.user=user
karaf.lock.jdbc.password=password
karaf.lock.jdbc.table=KARAF_LOCK
karaf.lock.jdbc.clustername=karaf
karaf.lock.jdbc.timeout=30

Configuring JDBC locking on PostgreSQL