Getting Started with JBoss EAP for OpenShift Online

Guide to developing with Red Hat JBoss Enterprise Application Platform for OpenShift Online

Abstract

Chapter 1. Introduction

1.1. What is Red Hat JBoss Enterprise Application Platform (JBoss EAP)?

Red Hat JBoss Enterprise Application Platform 7 (JBoss EAP) is a middleware platform built on open standards and compliant with the Java Enterprise Edition 7 specification. It provides preconfigured options for features such as high-availability clustering, messaging, and distributed caching. It includes a modular structure that allows you to enable services only when required, which results in improved startup speed.

The web-based management console and management command line interface (CLI) make editing XML configuration files unnecessary and add the ability to script and automate tasks. In addition, JBoss EAP includes APIs and development frameworks that allow you to quickly develop, deploy, and run secure and scalable Jakarta EE applications. JBoss EAP 7 is a Jakarta EE 8 compatible implementation for both Web Profile and Full Platform specifications and also a certified implementation of the Java EE 8 Full Platform and Web Profile specifications.

1.2. How Does JBoss EAP Work on OpenShift?

Red Hat offers a containerized image for JBoss EAP that is designed for use with OpenShift. Using this image, developers can quickly and easily build, scale, and test applications that are deployed across hybrid environments.

1.3. Comparison: JBoss EAP and JBoss EAP for OpenShift

There are some notable differences when comparing the JBoss EAP product with the JBoss EAP for OpenShift image. The following table describes these differences and notes which features are included or supported in the current version of JBoss EAP for OpenShift.

Table 1.1. Differences between JBoss EAP and JBoss EAP for OpenShift

| JBoss EAP Feature | Status in JBoss EAP for OpenShift | Description |

|---|---|---|

| JBoss EAP management console | Not included | The JBoss EAP management console is not included in this release of JBoss EAP for OpenShift. |

| JBoss EAP management CLI | Not recommended | The JBoss EAP management CLI is not recommended for use with JBoss EAP running in a containerized environment. Any configuration changes made using the management CLI in a running container will be lost when the container restarts. The management CLI is accessible from within a pod for troubleshooting purposes. |

| Managed domain | Not supported | Although a JBoss EAP managed domain is not supported, creation and distribution of applications are managed in the containers on OpenShift. |

| Default root page | Disabled |

The default root page is disabled, but you can deploy your own application to the root context as |

| Remote messaging | Supported | Red Hat AMQ for inter-pod and remote messaging is supported. ActiveMQ Artemis is only supported for messaging within a single pod with JBoss EAP instances, and is only enabled when Red Hat AMQ is absent. |

| Transaction recovery | Partially supported | There are some unsupported transaction recovery scenarios and caveats when undertaking transaction recovery with the JBoss EAP for OpenShift image. The EAP operator is the only tested and supported option of transaction recovery in OpenShift 4. For more information about recovering transactions using the EAP operator, see EAP Operator for Safe Transaction Recovery. |

| Embedded messaging broker | Deprecated | The use of an embedded messaging broker in OpenShift containers is deprecated. Support for an embedded broker will be removed in a future release. If a container is configured to use an embedded messaging broker, and if no remote broker is configured, a warning is logged.

If the container configuration does not include messaging destinations, set the |

1.4. Version Compatibility and Support

JBoss EAP for OpenShift provides images for JDK 8, JDK 11, and Eclipse OpenJ9.

Two variants of each image are available: an S2I builder image and a runtime image. The S2I builder image contains a complete JBoss EAP server with tooling needed during S2I build. The runtime image contains dependencies needed to run JBoss EAP but does not contain a server. The server is installed in the runtime image during a chained build.

The following modifications have been applied to images in JBoss EAP for OpenShift 7.3.

- Default drivers and modules have been removed.

- Templates for MySQL and PostgreSQL have been removed. You can provision these capabilities with a custom layer.

- The Hawkular agent is not active in these images. If configured, it is ignored.

-

The default datasource,

ExampleDS, is no longer added by default at container startup. If you need the default datasource, use the environment variableENABLE_GENERATE_DEFAULT_DATASOURCEwith a value oftrue(ENABLE_GENERATE_DEFAULT_DATASOURCE=true) to include it.

The following discovery mechanism protocols are deprecated and have been replaced by other protocols:

-

The

openshift.DNS_PINGprotocol was deprecated and is replaced with thedns.DNS_PINGprotocol. If you referenced theopenshift.DNS_PINGprotocol in acustomized standalone-openshift.xmlfile, replace the protocol with thedns.DNS_PINGprotocol. -

The

openshift.KUBE_PINGdiscovery mechanism protocol was deprecated and is replaced with thekubernetes.KUBE_PINGprotocol.

JDK 8 Images

- Red Hat Universal Base Image: 7

- Prefix for template names: eap73-*

- Builder Image: https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap73-openjdk8-openshift-rhel7

- Runtime Image: https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap73-openjdk8-runtime-openshift-rhel7

A JDK 8 image for JBoss EAP is not provided for IBM Z and IBM Power Systems.

JDK 11 Images

- Red Hat Universal Base Image: 8

- Prefix for template names: eap73-openjdk11-*

- Builder Image: https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap73-openjdk11-openshift-rhel8

- Runtime Image: https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap73-openjdk11-runtime-openshift-rhel8

Eclipse OpenJ9 Images

- Red Hat Universal Base Image: 8

- Prefix for template names: eap73-*

- Builder Image: https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap73-openj9-11-openshift-rhel8

- Runtime Image: https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap73-openj9-11-runtime-openshift-rhel8

JBoss EAP for OpenShift is updated frequently. Therefore, it is important to understand which versions of the images are compatible with which versions of OpenShift. See OpenShift and Atomic Platform Tested Integrations on the Red Hat Customer Portal for more information on version compatibility and support.

Additional Resources

1.4.1. OpenShift 4.x Support

Changes in OpenShift 4.1 affect access to Jolokia, and the Open Java Console is no longer available in the OpenShift 4.x web console.

In previous releases of OpenShift, certain kube-apiserver proxied requests were authenticated and passed through to the cluster. This behavior is now considered insecure, and so, accessing Jolokia in this manner is no longer supported.

Due to changes in codebase for the OpenShift console, the link to the Open Java Console is no longer available.

1.4.2. IBM Z and IBM Power Systems Support

The s390x and ppc64le variant of libartemis-native is not included in the image. Thus, any settings related to AIO will not be taken into account.

-

journal-type: Setting thejournal-typetoASYNCIOhas no effect. The value of this attribute defaults toNIOat runtime. -

journal-max-io: This attribute has no effect. -

journal-store-enable-async-io: This attribute has no effect.

1.4.3. Upgrades from JBoss EAP 7.1 to JBoss EAP 7.3 on OpenShift

The file standalone-openshift.xml installed with JBoss EAP 7.1 on OpenShift is not compatible with JBoss EAP 7.3 and later. You must modify a standalone-openshift.xml file installed with JBoss EAP 7.1 before you use it to start a JBoss EAP 7.3 or later container for OpenShift.

1.5. Deployment Options

You can deploy the JBoss EAP Java applications on OpenShift using one of the following options:

- A JBoss EAP for OpenShift template. For more information, see Build and Run a Java Application on the JBoss EAP CD for OpenShift Image.

- The EAP operator, a JBoss EAP-specific controller that extends the OpenShift API to create, configure, and manage instances of complex stateful applications on behalf of an OpenShift user. For more information, see EAP Operator for Automating Application Deployment on OpenShift.

The EAP operator is supported only on OpenShift 4 and later versions.

Chapter 2. Build and Run a Java Application on the JBoss EAP for OpenShift Image

The following workflow demonstrates using the Source-to-Image (S2I) process to build and run a Java application on the JBoss EAP for OpenShift image.

As an example, the kitchensink quickstart is used in this procedure. It demonstrates a Jakarta EE web-enabled database application using JSF, CDI, EJB, JPA, and Bean Validation. See the kitchensink quickstart that ships with JBoss EAP 7 for more information.

2.1. Prerequisites

This workflow assumes that you already have an active OpenShift Online subscription and that you have installed the OpenShift CLI.

2.2. Prepare OpenShift for Application Deployment

-

Log in to your OpenShift instance using the

oc logincommand. Create a new project in OpenShift.

A project allows a group of users to organize and manage content separately from other groups. You can create a project in OpenShift using the following command.

$ oc new-project PROJECT_NAMEFor example, for the

kitchensinkquickstart, create a new project namedeap-demousing the following command.$ oc new-project eap-demo

Optional: Create a keystore and a secret.

NoteCreating a keystore and a secret is required if you are using any HTTPS-enabled features in your OpenShift project. For example, if you are using the

eap73-https-s2itemplate (for JDK 8) or theeap73-openjdk11-https-s2itemplate (for JDK 11), you must create a keystore and secret.This workflow demonstration for the

kitchensinkquickstart does not use an HTTPS template, so a keystore and secret are not required.Create a keystore.

WarningThe following commands generate a self-signed certificate, but for production environments Red Hat recommends that you use your own SSL certificate purchased from a verified Certificate Authority (CA) for SSL-encrypted connections (HTTPS).

You can use the Java

keytoolcommand to generate a keystore using the following command.$ keytool -genkey -keyalg RSA -alias ALIAS_NAME -keystore KEYSTORE_FILENAME.jks -validity 360 -keysize 2048

For example, for the

kitchensinkquickstart, use the following command to generate a keystore.$ keytool -genkey -keyalg RSA -alias eapdemo-selfsigned -keystore keystore.jks -validity 360 -keysize 2048

Create a secret from the keystore.

Create a secret from the previously created keystore using the following command.

$ oc create secret SECRET_NAME KEYSTORE_FILENAME.jks

For example, for the

kitchensinkquickstart, use the following command to create a secret.$ oc create secret eap7-app-secret keystore.jks

2.3. Import the Latest JBoss EAP for OpenShift Imagestreams and Templates

You must import the latest JBoss EAP for OpenShift imagestreams and templates for your JDK into the namespace of your OpenShift project.

Log in to the Red Hat Container Registry using your Customer Portal credentials to import the JBoss EAP imagestreams and templates. For more information, see Red Hat Container Registry Authentication.

Import command for JDK 8

for resource in \

eap73-amq-persistent-s2i.json \

eap73-amq-s2i.json \

eap73-basic-s2i.json \

eap73-https-s2i.json \

eap73-image-stream.json \

eap73-sso-s2i.json \

eap73-starter-s2i.json \

do

oc replace --force -f \

https://raw.githubusercontent.com/jboss-container-images/jboss-eap-7-openshift-image/eap73/templates/${resource}

doneThis command imports the following imagestreams and templates.

-

The JDK 8 builder imagestream:

jboss-eap73-openshift -

The JDK 8 runtime imagestream:

jboss-eap73-runtime-openshift - All templates specified in the command.

Import command for JDK 11

for resource in \

eap73-openjdk11-amq-persistent-s2i.json \

eap73-openjdk11-amq-s2i.json \

eap73-openjdk11-basic-s2i.json \

eap73-openjdk11-https-s2i.json \

eap73-openjdk11-image-stream.json \

eap73-openjdk11-sso-s2i.json \

eap73-openjdk11-starter-s2i.json \

do

oc replace --force -f \

https://raw.githubusercontent.com/jboss-container-images/jboss-eap-7-openshift-image/eap73/templates/${resource}

doneThis command imports the following imagestreams and templates.

-

The JDK 11 builder imagestream:

jboss-eap73-openjdk11-openshift -

The JDK 11 runtime imagestream:

jboss-eap73-openjdk11-runtime-openshift - All templates specified in the command.

Import command for Eclipse OpenJ9 on IBM Z and IBM Power Systems

oc replace --force -f \

https://raw.githubusercontent.com/jboss-container-images/jboss-eap-openshift-templates/eap73/eap73-openj9-image-stream.json

for resource in \

eap73-amq-persistent-s2i.json \

eap73-amq-s2i.json \

eap73-basic-s2i.json \

eap73-https-s2i.json \

eap73-sso-s2i.json \

do

oc replace --force -f \

https://raw.githubusercontent.com/jboss-container-images/jboss-eap-openshift-templates/eap73/templates/${resource}

doneThis command imports the following imagestreams and templates.

-

The Eclipse OpenJ9 builder imagestream:

jboss-eap-7-openj9-11-openshift -

The Eclipse OpenJ9 runtime imagestream:

jboss-eap-7-openj9-11-runtime-openshift - All templates specified in the command.

The JBoss EAP imagestreams and templates imported using these commands are only available within that OpenShift project.

If you want to import the image streams and templates into a different project, add the -n PROJECT_NAME to the oc replace line of the command. For example:

...

oc replace -n PROJECT_NAME --force -f

...If you use the cluster-samples-operator, refer to the OpenShift documentation on configuring the cluster samples operator. See Configuring the Samples Operator for details about configuring the cluster samples operator.

2.4. Deploy a JBoss EAP Source-to-Image (S2I) Application to OpenShift

After you import the images and templates, you can deploy applications to OpenShift.

OpenJDK 8 and Eclipse OpenJ9 template names use the prefix eap73-*; for example, eap73-https-s2i. OpenJDK 11 template names use the prefix eap73-openjdk11-*; for example, eap73-openjdk11-https-s2i.

Prerequisites

Optional: A template can specify default values for many template parameters, and you might have to override some, or all, of the defaults. To see template information, including a list of parameters and any default values, use the command oc describe template TEMPLATE_NAME.

Procedure

Create a new OpenShift application that uses the JBoss EAP for OpenShift image and the source code of your Java application. You can use one of the provided JBoss EAP for OpenShift templates for S2I builds. You can also choose to provision a trimmed server.

For example, to deploy the

kitchensinkquickstart using the JDK 8 builder image, enter the following command to use theeap73-basic-s2itemplate in theeap-demoproject, created in Prepare OpenShift for Application Deployment, with thekitchensinksource code on GitHub. This quickstart does not support the trimming capability.oc new-app --template=eap73-basic-s2i \1 -p IMAGE_STREAM_NAMESPACE=eap-demo \2 -p SOURCE_REPOSITORY_URL=https://github.com/jboss-developer/jboss-eap-quickstarts \3 -p SOURCE_REPOSITORY_REF=7.3.x-openshift \4 -p CONTEXT_DIR=kitchensink5

- 1

- The template to use. The

eap73prefix specifies the JDK 8 template. - 2

- The latest imagestreams and templates were imported into the project’s namespace, so you must specify the namespace where to find the imagestream. This is usually the project’s name

- 3

- URL to the repository containing the application source code.

- 4

- The Git repository reference to use for the source code. This can be a Git branch or tag reference.

- 5

- The directory within the source repository to build.

NoteUse a modified version of this command for the Eclipse OpenJ9 builder image on IBM Z and IBM Power Systems. Include the following image name parameters in the command. The JDK environment uses default values for these parameters.

- EAP_IMAGE_NAME=jboss-eap-7-openj9-11-openshift \

- EAP_RUNTIME_IMAGE_NAME=jboss-eap-7-openj9-11-runtime-openshift \

As another example, to deploy the

helloworld-html5quickstart using the JDK 11 runtime image and trimming JBoss EAP to include only thejaxrs-serverlayer, enter the following command. The command uses theeap73-openjdk11-basic-s2itemplate in theeap-demoproject, created in Prepare OpenShift for Application Deployment, with thehelloworld-html5source code on GitHub.oc new-app --template=eap73-openjdk11-basic-s2i \1 -p IMAGE_STREAM_NAMESPACE=eap-demo \2 -p SOURCE_REPOSITORY_URL=https://github.com/jboss-developer/jboss-eap-quickstarts \3 -p SOURCE_REPOSITORY_REF=7.3.x-openshift \4 -p GALLEON_PROVISION_LAYERS=jaxrs-server \5 -p CONTEXT_DIR=helloworld-html56

- 1

- The template to use. The

eap73-openjdk11prefix specifies the JDK 11 template. - 2

- The latest imagestreams and templates were imported into the project’s namespace, so you must specify the namespace where to find the imagestream. This is usually the project’s name.

- 3

- URL to the repository containing the application source code.

- 4

- The Git repository reference to use for the source code. This can be a Git branch or tag reference.

- 5

- Provision a trimmed server with only the

jaxrs-serverlayer. - 6

- The directory within the source repository to build.

NoteYou might also want to configure environment variables when creating your new OpenShift application.

For example, if you are using an HTTPS template such as

eap73-https-s2i, you must specify the required HTTPS environment variablesHTTPS_NAME,HTTPS_PASSWORD, andHTTPS_KEYSTOREto match your keystore details.NoteIf the template uses AMQ, you must include the

AMQ_IMAGE_NAMEparameter with the appropriate value.If the template uses SSO, you must include the

SSO_IMAGE_NAMEparameter with the appropriate value.Retrieve the name of the build configuration.

$ oc get bc -o name

Use the name of the build configuration from the previous step to view the Maven progress of the build.

$ oc logs -f buildconfig/BUILD_CONFIG_NAMEFor example, for the

kitchensinkquickstart, the following command shows the progress of the Maven build.$ oc logs -f buildconfig/eap-app

Additional Resources

2.5. Post Deployment Tasks

Depending on your application, some tasks might need to be performed after your OpenShift application has been built and deployed. This might include exposing a service so that the application is viewable from outside of OpenShift, or scaling your application to a specific number of replicas.

Get the service name of your application using the following command.

$ oc get service

Expose the main service as a route so you can access your application from outside of OpenShift. For example, for the

kitchensinkquickstart, use the following command to expose the required service and port.$ oc expose service/eap-app --port=8080

NoteIf you used a template to create the application, the route might already exist. If it does, continue on to the next step.

Get the URL of the route.

$ oc get route

Access the application in your web browser using the URL. The URL is the value of the

HOST/PORTfield from previous command’s output.If your application does not use the JBoss EAP root context, append the context of the application to the URL. For example, for the

kitchensinkquickstart, the URL might behttp://HOST_PORT_VALUE/kitchensink/.Optionally, you can also scale up the application instance by running the following command. This increases the number of replicas to

3.$ oc scale deploymentconfig DEPLOYMENTCONFIG_NAME --replicas=3For example, for the

kitchensinkquickstart, use the following command to scale up the application.$ oc scale deploymentconfig eap-app --replicas=3

2.6. Chained Build Support in JBoss EAP for OpenShift

JBoss EAP for OpenShift supports chained builds in OpenShift.

JBoss EAP for OpenShift templates employ chained builds. When you use these templates, two builds result:

-

An intermediate image named

[application name]-build-artifacts -

The final image,

[application name]

For details about chained builds, see the OpenShift documentation.

Additional Resources

Chapter 3. Configuring the JBoss EAP for OpenShift Image for Your Java Application

The JBoss EAP for OpenShift image is preconfigured for basic use with your Java applications. However, you can configure the JBoss EAP instance inside the image. The recommended method is to use the OpenShift S2I process, together with application template parameters and environment variables.

Any configuration changes made on a running container will be lost when the container is restarted or terminated.

This includes any configuration changes made using scripts that are included with a traditional JBoss EAP installation, for example add-user.sh or the management CLI.

It is strongly recommended that you use the OpenShift S2I process, together with application template parameters and environment variables, to make any configuration changes to the JBoss EAP instance inside the JBoss EAP for OpenShift image.

3.1. How the JBoss EAP for OpenShift S2I Process Works

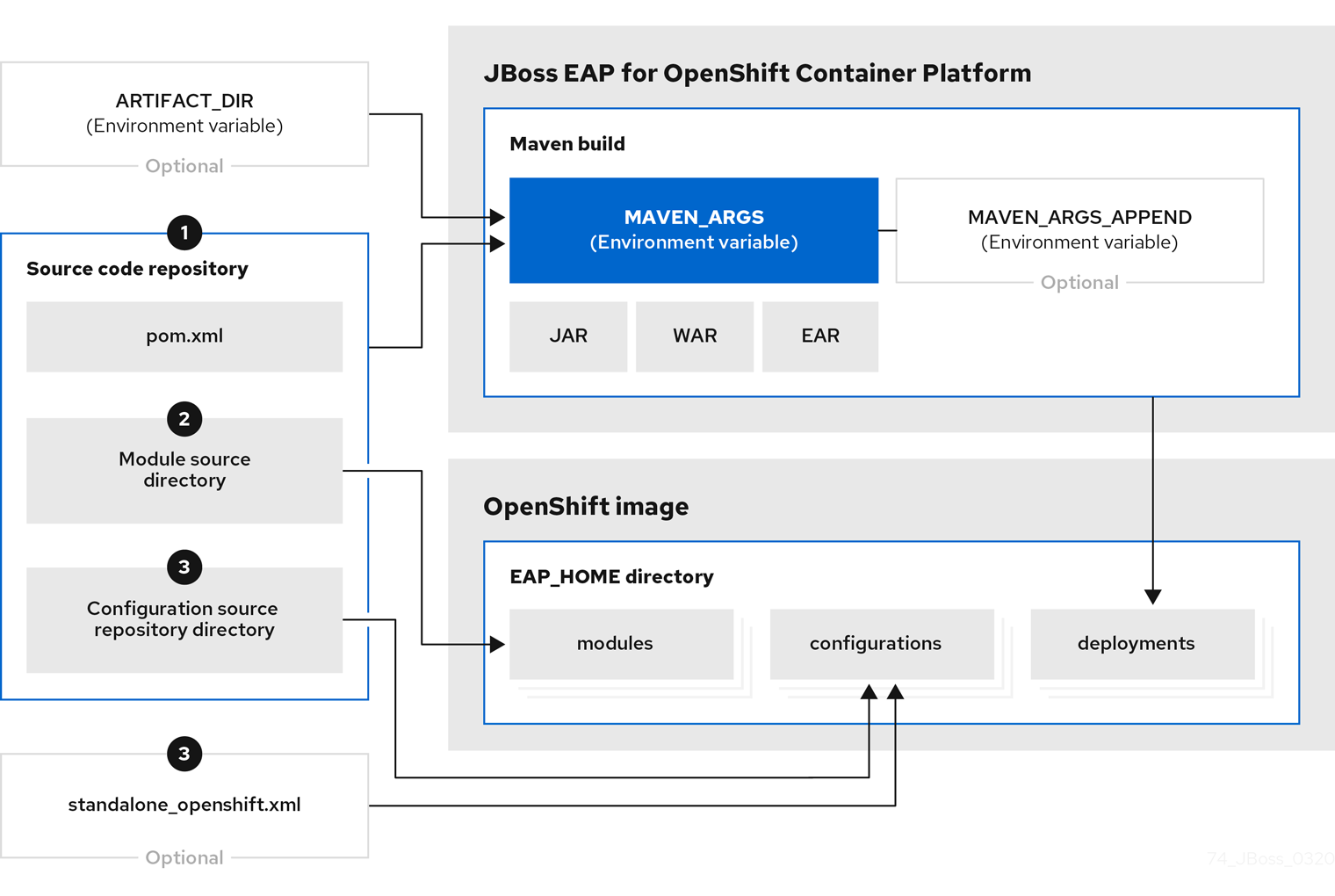

Flowchart illustrating the S2I process for JBoss EAP:

If a

pom.xmlfile is present in the source code repository, the S2I builder image initiates a Maven build process. The Maven build uses the contents of$MAVEN_ARGS.If a

pom.xmlfile is not present in the source code repository, the S2I builder image initiates a binary type build.To add custom Maven arguments or options, use

$MAVEN_ARGS_APPEND. The$MAVEN_ARGS_APPENDvariable appends options to$MAVEN_ARGS.By default, the OpenShift profile uses the Maven

packagegoal, which includes system properties for skipping tests (-DskipTests) and enabling the Red Hat GA repository (-Dcom.redhat.xpaas.repo).The results of a successful Maven build are copied to the

EAP_HOME/standalone/deployments/directory inside the JBoss EAP for OpenShift image. This includes all JAR, WAR, and EAR files from the source repository specified by the$ARTIFACT_DIRenvironmental variable. The default value ofARTIFACT_DIRis the Maven target directory.NoteTo use Maven behind a proxy on JBoss EAP for OpenShift image, set the

$HTTP_PROXY_HOSTand$HTTP_PROXY_PORTenvironment variables. Optionally, you can also set the$HTTP_PROXY_USERNAME,$HTTP_PROXY_PASSWORD, and$HTTP_PROXY_NONPROXYHOSTSvariables.-

All files in the

modulessource repository directory are copied to theEAP_HOME/modules/directory in the JBoss EAP for OpenShift image. -

All files in the

configurationsource repository directory are copied to theEAP_HOME/standalone/configuration/directory in the JBoss EAP for OpenShift image. If you want to use a custom JBoss EAP configuration file, name the filestandalone-openshift.xml.

Additional Resources

- See Binary (local) source on the OpenShift 4.2 documentation for additional information on binary type builds.

- See Artifact Repository Mirrors for additional guidance on how to instruct the S2I process to use the custom Maven artifacts repository mirror.

3.2. Configuring JBoss EAP for OpenShift Using Environment Variables

Using environment variables is the recommended method of configuring the JBoss EAP for OpenShift image. See the OpenShift documentation for instructions on specifying environment variables for application containers and build containers.

For example, you can set the JBoss EAP instance’s management username and password using environment variables when creating your OpenShift application:

oc new-app --template=eap73-basic-s2i \ -p IMAGE_STREAM_NAMESPACE=eap-demo \ -p SOURCE_REPOSITORY_URL=https://github.com/jboss-developer/jboss-eap-quickstarts \ -p SOURCE_REPOSITORY_REF=7.3.x-openshift \ -p CONTEXT_DIR=kitchensink \ -e ADMIN_USERNAME=myspecialuser \ -e ADMIN_PASSWORD=myspecialp@ssw0rd

This example uses the JDK 8 template. For JDK 11, use the eap73-openjdk11-basic-s2i template.

Available environment variables for the JBoss EAP for OpenShift image are listed in Reference Information.

3.2.1. JVM Memory Configuration

The OpenShift EAP image has a mechanism to automatically calculate the default JVM memory settings based on the current environment, but you can also configure the JVM memory settings using environment variables.

3.2.1.1. JVM Default Memory Settings

If a memory limit is defined for the current container, and the limit is lower than the total available memory, the default JVM memory settings are calculated automatically. Otherwise, the default JVM memory settings are the default defined in the standalone.conf file of the EAP version used as the base server for the image.

The container memory limit is retrieved from the file /sys/fs/cgroup/memory/memory.limit_in_bytes. The total available memory is retrieved using the /proc/meminfo command.

When memory settings are calculated automatically, the following formulas are used:

- Maximum heap size (-Xmx): fifty percent (50%) of user memory

- Initial heap size (-Xms): twenty-five percent (25%) of the calculated maximum heap size.

For example, the defined memory limit is 1 GB, and this limit is lower than the total available memory reported by /proc/meminfo, then the memory settings will be: -Xms128m -Xmx512

You can use the following environment variables to modify the JVM settings calculated automatically. Note that these variables are only used when default memory size is calculated automatically (in other words, when a valid container memory limit is defined).

-

JAVA_MAX_MEM_RATIO -

JAVA_INITIAL_MEM_RATIO -

JAVA_MAX_INITIAL_MEM

You can disable automatic memory calculation by setting the value of the following two environment variables to 0.

-

JAVA_INITIAL_MEM_RATIO -

JAVA_MAX_MEM_RATIO

3.2.1.2. JVM Garbage Collection Settings

The EAP image for OpenShift includes settings for both garbage collection and garbage collection logging

Garbage Collection Settings

-XX:+UseParallelOldGC -XX:MinHeapFreeRatio=10 -XX:MaxHeapFreeRatio=20 -XX:GCTimeRatio=4 -XX:AdaptiveSizePolicyWeight=90 -XX:+ExitOnOutOfMemoryError

Garbage Collection Logging Settings for Java 8 (non-modular JVM)

-verbose:gc -Xloggc:/opt/eap/standalone/log/gc.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=5 -XX:GCLogFileSize=3M -XX:-TraceClassUnloading

Garbage Collection Logging Settings for Java 11 (modular JVM)

-Xlog:gc*:file=/opt/eap/standalone/log/gc.log:time,uptimemillis:filecount=5,filesize=3M

3.2.1.3. Resource Limits in Default Settings

If set, additional default settings are included in the image.

-XX:ParallelGCThreads={core-limit} -Djava.util.concurrent.ForkJoinPool.common.parallelism={core-limit} -XX:CICompilerCount=2

The value of {core-limit} is defined using the JAVA_CORE_LIMIT environment variable, or by the CPU core limit imposed by the container.

The value of CICompilerCount is always fixed as 2.

3.2.1.4. JVM Environment Variables

Use these environment variables to configure the JVM in the EAP for OpenShift image.

Table 3.1. JVM Environment Variables

| Variable Name | Example | Default Value | JVM Settings | Description |

|---|---|---|---|---|

| JAVA_OPTS | -verbose:class | No default | Multiple |

JVM options to pass to the

Use

Using

In addition, if automatic memory calculation is not enabled, the inital Java memory (-Xms) and maximum Java memory (-Xmx) are not defined.

Add these defaults if you use |

| JAVA_OPTS_APPEND | -Dsome.property=value | No default | Multiple |

User-specified Java options to append to generated options in |

| JAVA_MAX_MEM_RATIO | 50 | 50 | -Xmx |

Use this variable when the |

| JAVA_INITIAL_MEM_RATIO | 25 | 25 | -Xms |

Use this variable when the |

| JAVA_MAX_INITIAL_MEM | 4096 | 4096 | -Xms |

Use this variable when the |

| JAVA_DIAGNOSTICS | true | false (disabled) | The settings depend on the JDK used by the container.

|

Set the value of this variable to true to include diagnostic information in standard output when events occur. If this variable is defined as true in an environment where |

| DEBUG | true | false | -agentlib:jdwp=transport=dt_socket,address=$DEBUG_PORT,server=y,suspend=n | Enables remote debugging. |

| DEBUG_PORT | 8787 | 8787 | -agentlib:jdwp=transport=dt_socket,address=$DEBUG_PORT,server=y,suspend=n | Specifies the port used for debugging. |

| JAVA_CORE_LIMIT | Undefined | -XX:parallelGCThreads -Djava.util.concurrent.ForkJoinPool.common.parallelism -XX:CICompilerCount | A user-defined limit on the number of cores. If the container reports a limit constraint, the value of the JVM settings is limited to the container core limit. The value of -XXCICompilerCount is always 2. By default, this variable is undefined. In that case, if a limit is not defined on the container, the JVM settings are not set. | |

| GC_MIN_HEAP_FREE_RATIO | 20 | 10 | -XX:MinHeapFreeRatio | Minimum percentage of heap free after garbage collection to avoid expansion. |

| GC_MAX_HEAP_FREE_RATIO | 40 | 20 | -XX:MaxHeapFreeRatio | Maximum percentage of heap free after garbage collection to avoid shrinking. |

| GC_TIME_RATIO | 4 | 4 | -XX:GCTimeRatio | Specifies the ratio of the time spent outside of garbage collection (for example, time spent in application execution) to the time spent in garbage collection. |

| GC_ADAPTIVE_SIZE_POLICY_WEIGHT | 90 | 90 | -XX:AdaptiveSizePolicyWeight | The weighting given to the current garbage collection time versus the previous garbage collection times. |

| GC_METASPACE_SIZE | 20 | 96 | -XX:MetaspaceSize | The initial metaspace size. |

| GC_MAX_METASPACE_SIZE | 100 | 256 | -XX:MaxMetaspaceSize | The maximum metaspace size. |

| GC_CONTAINER_OPTIONS | -XX:+UserG1GC | -XX:-UseParallelOldGC | -XX:-UseParallelOldGC | Specifies the Java garbage collection to use. The value of the variable should be the JRE command-line options to specify the required garbage collection. The JRE command specified overrides the default. |

The following environment variables are deprecated:

-

JAVA_OPTIONS: UseJAVA_OPTS. -

INITIAL_HEAP_PERCENT: UseJAVA_INITIAL_MEM_RATIO. -

CONTAINER_HEAP_PERCENT: UseJAVA_MAX_MEM_RATIO.

3.3. Build Extensions and Project Artifacts

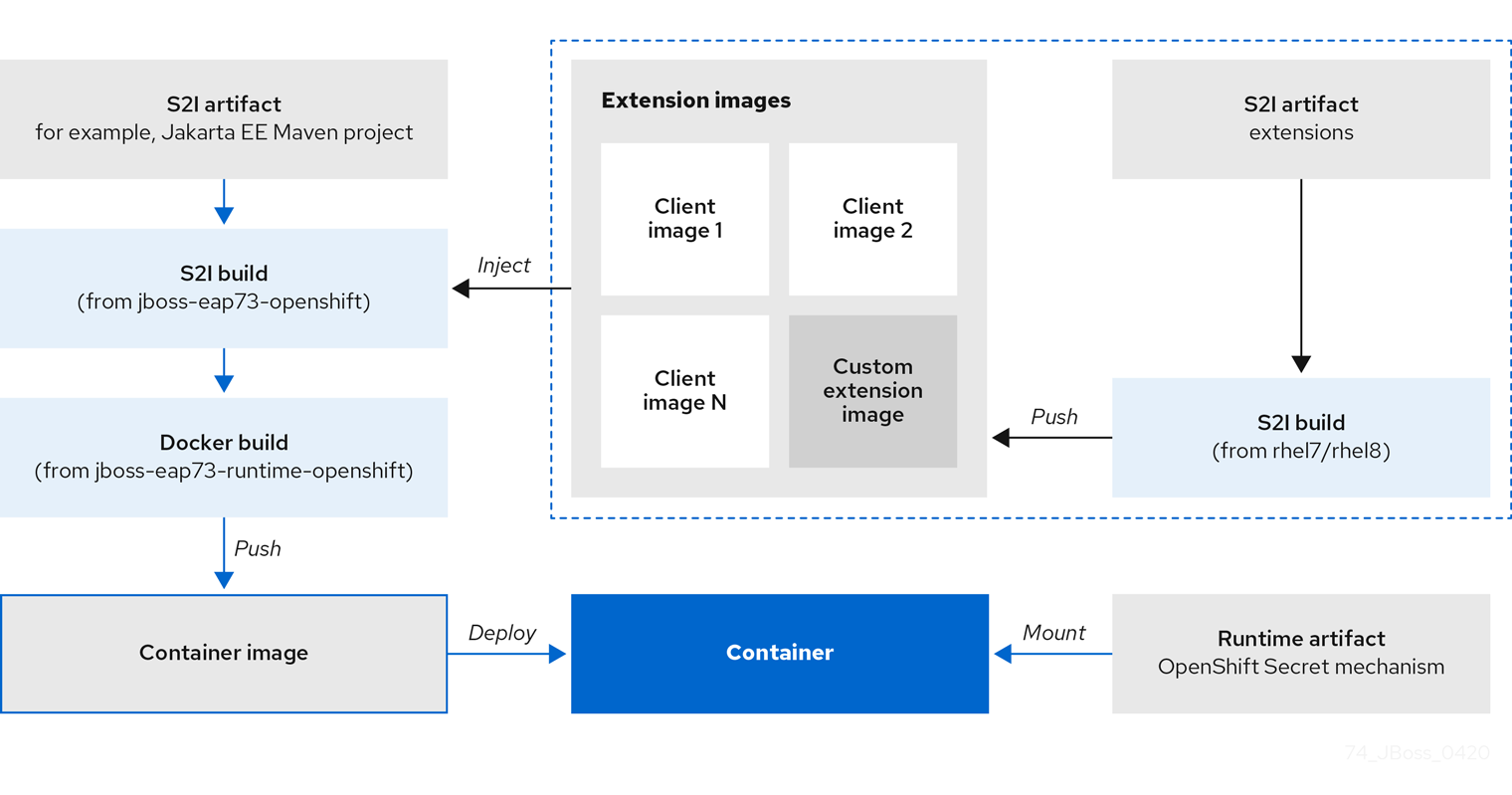

The JBoss EAP for OpenShift image extends database support in OpenShift using various artifacts. These artifacts are included in the built image through different mechanisms:

- S2I artifacts that are injected into the image during the S2I process.

- Runtime artifacts from environment files provided through the OpenShift Secret mechanism.

Support for using the Red Hat-provided internal datasource drivers with the JBoss EAP for OpenShift image is now deprecated. Red Hat recommends that you use JDBC drivers obtained from your database vendor for your JBoss EAP applications.

The following internal datasources are no longer provided with the JBoss EAP for OpenShift image:

- MySQL

- PostgreSQL

For more information about installing drivers, see Modules, Drivers, and Generic Deployments.

For more information on configuring JDBC drivers with JBoss EAP, see JDBC drivers in the JBoss EAP Configuration Guide.

Note that you can also create a custom layer to install these drivers and datasources if you want to add them to a provisioned server.

Additional Resources

3.3.1. S2I Artifacts

The S2I artifacts include modules, drivers, and additional generic deployments that provide the necessary configuration infrastructure required for the deployment. This configuration is built into the image during the S2I process so that only the datasources and associated resource adapters need to be configured at runtime.

See Artifact Repository Mirrors for additional guidance on how to instruct the S2I process to utilize the custom Maven artifacts repository mirror.

3.3.1.1. Modules, Drivers, and Generic Deployments

There are a few options for including these S2I artifacts in the JBoss EAP for OpenShift image:

- Include the artifact in the application source deployment directory. The artifact is downloaded during the build and injected into the image. This is similar to deploying an application on the JBoss EAP for OpenShift image.

Include the

CUSTOM_INSTALL_DIRECTORIESenvironment variable, a list of comma-separated list of directories used for installation and configuration of artifacts for the image during the S2I process. There are two methods for including this information in the S2I:An

install.shscript in the nominated installation directory. The install script executes during the S2I process and operates with impunity.install.shScript Example#!/bin/bash injected_dir=$1 source /usr/local/s2i/install-common.sh install_deployments ${injected_dir}/injected-deployments.war install_modules ${injected_dir}/modules configure_drivers ${injected_dir}/drivers.envThe

install.shscript is responsible for customizing the base image using APIs provided byinstall-common.sh.install-common.shcontains functions that are used by theinstall.shscript to install and configure the modules, drivers, and generic deployments.Functions contained within

install-common.sh:-

install_modules -

configure_drivers install_deploymentsModules

A module is a logical grouping of classes used for class loading and dependency management. Modules are defined in the

EAP_HOME/modules/directory of the application server. Each module exists as a subdirectory, for exampleEAP_HOME/modules/org/apache/. Each module directory then contains a slot subdirectory, which defaults to main and contains themodule.xmlconfiguration file and any required JAR files.For more information about configuring

module.xmlfiles for MySQL and PostgreSQL JDBC drivers, see the Datasource Configuration Examples in the JBoss EAP Configuration Guide.Example

module.xmlFile for PostgreSQL Datasource<?xml version="1.0" encoding="UTF-8"?> <module xmlns="urn:jboss:module:1.0" name="org.postgresql"> <resources> <resource-root path="postgresql-jdbc.jar"/> </resources> <dependencies> <module name="javax.api"/> <module name="javax.transaction.api"/> </dependencies> </module>

Example

module.xmlFile for MySQL Connect/J 8 Datasource<?xml version="1.0" encoding="UTF-8"?> <module xmlns="urn:jboss:module:1.0" name="com.mysql"> <resources> <resource-root path="mysql-connector-java-8.0.Z.jar" /> </resources> <dependencies> <module name="javax.api"/> <module name="javax.transaction.api"/> </dependencies> </module>

NoteThe ".Z" in

mysql-connector-java-8.0.Z.jarindicates the version of theJARfile downloaded. The file can be renamed, but the name must match the name in themodule.xmlfile.The

install_modulesfunction ininstall.shcopies the respective JAR files to the modules directory in JBoss EAP, along with themodule.xml.Drivers

Drivers are installed as modules. The driver is then configured in

install.shby theconfigure_driversfunction, the configuration properties for which are defined in a runtime artifact environment file.Adding Datasource Drivers

The MySQL and PostgreSQL datasources are no longer provided as pre-configured internal datasources. You can still install these drivers as modules; see the description in Modules, Drivers, and Generic Deployments. You can obtain these JDBC drivers from the database vendor for your JBoss EAP applications.

Create a

drivers.envfile for each datasource to be installed.Example

drivers.envFile for MySQL Datasource#DRIVER DRIVERS=MYSQL MYSQL_DRIVER_NAME=mysql MYSQL_DRIVER_MODULE=org.mysql MYSQL_DRIVER_CLASS=com.mysql.cj.jdbc.Driver MYSQL_XA_DATASOURCE_CLASS=com.mysql.jdbc.jdbc2.optional.MysqlXADataSource

Example

drivers.envFile for PostgreSQL Datasource#DRIVER DRIVERS=POSTGRES POSTGRES_DRIVER_NAME=postgresql POSTGRES_DRIVER_MODULE=org.postgresql POSTGRES_DRIVER_CLASS=org.postgresql.Driver POSTGRES_XA_DATASOURCE_CLASS=org.postgresql.xa.PGXADataSource

For information about download locations for various drivers, such as MySQL or PostgreSQL, see JDBC Driver Download Locations in the Configuration Guide.

-

Generic Deployments

Deployable archive files, such as JARs, WARs, RARs, or EARs, can be deployed from an injected image using the install_deployments function supplied by the API in install-common.sh.

If the

CUSTOM_INSTALL_DIRECTORIESenvironment variable has been declared but noinstall.shscripts are found in the custom installation directories, the following artifact directories will be copied to their respective destinations in the built image:-

modules/*copied to$JBOSS_HOME/modules/system/layers/openshift -

configuration/*copied to$JBOSS_HOME/standalone/configuration -

deployments/*copied to$JBOSS_HOME/standalone/deployments

This is a basic configuration approach compared to the

install.shalternative, and requires the artifacts to be structured appropriately.-

3.3.2. Runtime Artifacts

3.3.2.1. Datasources

There are two types of datasources:

- Internal datasources. These datasources run on OpenShift, but are not available by default through the Red Hat Registry. Configuration of these datasources is provided by environment files added to OpenShift Secrets.

- External datasources. These datasources do not run on OpenShift. Configuration of external datasources is provided by environment files added to OpenShift Secrets.

Example: Datasource Environment File

DB_SERVICE_PREFIX_MAPPING=PostgresXA-POSTGRES=DS1 DS1_JNDI=java:jboss/datasources/pgds DS1_DRIVER=postgresql-42.2.5.jar DS1_USERNAME=postgres DS1_PASSWORD=postgres DS1_MAX_POOL_SIZE=20 DS1_MIN_POOL_SIZE=20 DS1_CONNECTION_CHECKER=org.jboss.jca.adapters.jdbc.extensions.postgres.PostgreSQLValidConnectionChecker DS1_EXCEPTION_SORTER=org.jboss.jca.adapters.jdbc.extensions.postgres.PostgreSQLExceptionSorter

The DB_SERVICE_PREFIX_MAPPING property is a comma-separated list of datasource property prefixes. These prefixes are then appended to all properties for that datasource. Multiple datasources can then be included in a single environment file. Alternatively, each datasource can be provided in separate environment files.

Datasources contain two types of properties: connection pool-specific properties and database driver-specific properties. The connection pool-specific properties produce a connection to a datasource. Database driver-specific properties determine the driver for a datasource and are configured as a driver S2I artifact.

In the above example, DS1 is the datasource prefix, CONNECTION_CHECKER specifies a connection checker class used to validate connections for a database, and EXCEPTION_SORTER specifies the exception sorter class used to detect fatal database connection exceptions.

The datasources environment files are added to the OpenShift Secret for the project. These environment files are then called within the template using the ENV_FILES environment property, the value of which is a comma-separated list of fully qualified environment files as shown below.

{

“Name”: “ENV_FILES”,

“Value”: “/etc/extensions/datasources1.env,/etc/extensions/datasources2.env”

}3.3.2.2. Resource Adapters

Configuration of resource adapters is provided by environment files added to OpenShift Secrets.

Table 3.2. Resource Adapter Properties

| Attribute | Description |

|---|---|

| PREFIX_ID | The identifier of the resource adapter as specified in the server configuration file. |

| PREFIX_ARCHIVE | The resource adapter archive. |

| PREFIX_MODULE_SLOT |

The slot subdirectory, which contains the |

| PREFIX_MODULE_ID | The JBoss Module ID where the object factory Java class can be loaded from. |

| PREFIX_CONNECTION_CLASS | The fully qualified class name of a managed connection factory or admin object. |

| PREFIX_CONNECTION_JNDI | The JNDI name for the connection factory. |

| PREFIX_PROPERTY_ParentDirectory | Directory where the data files are stored. |

| PREFIX_PROPERTY_AllowParentPaths |

Set |

| PREFIX_POOL_MAX_SIZE | The maximum number of connections for a pool. No more connections will be created in each sub-pool. |

| PREFIX_POOL_MIN_SIZE | The minimum number of connections for a pool. |

| PREFIX_POOL_PREFILL | Specifies if the pool should be prefilled. Changing this value requires a server restart. |

| PREFIX_POOL_FLUSH_STRATEGY |

How the pool should be flushed in case of an error. Valid values are: |

The RESOURCE_ADAPTERS property is a comma-separated list of resource adapter property prefixes. These prefixes are then appended to all properties for that resource adapter. Multiple resource adapter can then be included in a single environment file. In the example below, MYRA is used as the prefix for a resource adapter. Alternatively, each resource adapter can be provided in separate environment files.

Example: Resource Adapter Environment File

#RESOURCE_ADAPTER RESOURCE_ADAPTERS=MYRA MYRA_ID=myra MYRA_ARCHIVE=myra.rar MYRA_CONNECTION_CLASS=org.javaee7.jca.connector.simple.connector.outbound.MyManagedConnectionFactory MYRA_CONNECTION_JNDI=java:/eis/MySimpleMFC

The resource adapter environment files are added to the OpenShift Secret for the project namespace. These environment files are then called within the template using the ENV_FILES environment property, the value of which is a comma-separated list of fully qualified environment files as shown below.

{

"Name": "ENV_FILES",

"Value": "/etc/extensions/resourceadapter1.env,/etc/extensions/resourceadapter2.env"

}3.4. Results of using JBoss EAP Templates for OpenShift

When you use JBoss EAP templates to compile your application, two images might be generated.

An intermediate image named [application name]-build-artifacts might be generated before the final image, [application name], is created.

You can remove the [application name]-build-artifacts image after your application has been deployed.

3.5. SSO Configuration of Red Hat JBoss Enterprise Application Platform for OpenShift Images

In Red Hat JBoss Enterprise Application Platform for OpenShift images, SSO is configured to use the legacy security subsystem.

The environmment variable SSO_FORCE_LEGACY_SECURITY is set to true in these images.

If you want to use the elytron subsystem for SSO security, update the value of the SSO_FORCE_LEGACY_SECURITY environment variable to false.

3.6. Default Datasource

In JBoss EAP 7.3, the default datasource, ExampleDS, is removed.

Some quickstarts require this datasource:

-

cmt -

thread-racing

Applications developed by customers might also require the default datasource.

If you need the default datasource, use the GENERATE_DEFAULT_DATASOURCE environment variable to include it when provisioning a JBoss EAP server.

ENABLE_GENERATE_DEFAULT_DATASOURCE=true

3.7. Deployment Considerations for the JBoss EAP for OpenShift Image

3.7.1. Scaling Up and Persistent Storage Partitioning

There are two methods for deploying JBoss EAP with persistent storage: single-node partitioning, and multi-node partitioning.

Single-node partitioning stores the JBoss EAP data store directory, including transaction data, in the storage volume.

Multi-node partitioning creates additional, independent split-n directories to store the transaction data for each JBoss EAP pod, where n is an incremental integer. This communication is not altered if a JBoss EAP pod is updated, goes down unexpectedly, or is redeployed. When the JBoss EAP pod is operational again, it reconnects to the associated split directory and continues as before. If a new JBoss EAP pod is added, a corresponding split-n directory is created for that pod.

To enable the multi-node configuration you must set the SPLIT_DATA parameter to true. This results in the server creating independent split-n directories for each instance within the persistent volume which are used as their data store.

Using the environment variables like SPLIT_DATA while using the EAP operator can cause consistency issues. You must use the EAP operator to manage transaction discovery in OpenShift 4 and later versions.

Due to the different storage methods of single-node and multi-node partitioning, changing a deployment from single-node to multi-node results in the application losing all data previously stored in the data directory, including messages, transaction logs, and so on. This is also true if changing a deployment from multi-node to single-node, as the storage paths will not match.

3.7.2. Scaling Down and Transaction Recovery

When the JBoss EAP for OpenShift image is deployed using a multi-node configuration, it is possible for unexpectedly terminated transactions to be left in the data directory of a terminating pod if the cluster is scaled down.

See manual transaction recovery to complete these branches.

Chapter 4. Capability Trimming in JBoss EAP for OpenShift

When building an image that includes JBoss EAP, you can control the JBoss EAP features and subsystems to include in the image.

The default JBoss EAP server included in S2I images includes the complete server and all features. You might want to trim the capabilities included in the provisioned server. For example, you might want to reduce the security exposure of the provisioned server, or you might want to reduce the memory footprint so it is more appropriate for a microservice container.

4.1. Provision a Custom JBoss EAP Server

To provision a custom server with trimmed capabilities, pass the GALLEON_PROVISION_LAYERS environment variable during the S2I build phase.

The value of the environment variable is a comma-separated list of the layers to provision to build the server.

For example, if you specify the environment variable as GALLEON_PROVISION_LAYERS=jaxrs-server,sso, a JBoss EAP server is provisioned with the following capabilities:

- A servlet container

- The ability to configure a datasource

-

The

jaxrs,weld, andjpasubsystems - Red Hat SSO integration

4.2. Available JBoss EAP Layers

Red Hat makes available six layers to customize provisioning of the JBoss EAP server in OpenShift.

Three layers are base layers that provide core functionality. Three are decorator layers that enhance the base layers.

The following Jakarta EE specifications are not supported in any provisioning layer:

- Jakarta Server Faces 2.3

- Jakarta Enterprise Beans 3.2

- Jakarta XML Web Services 2.3

4.2.1. Base Layers

Each base layer includes core functionality for a typical server user case.

datasources-web-server

This layer includes a servlet container and the ability to configure a datasource.

This layer does not include MicroProfile capabilities.

The following are the JBoss EAP subsystems included by default in the datasources-web-server:

-

core-management -

datasources -

deployment-scanner -

ee -

elytron -

io -

jca -

jmx -

logging -

naming -

request-controller -

security-manager -

transactions -

undertow

The following Jakarta EE specifications are supported in this layer:

- Jakarta JSON Processing 1.1

- Jakarta JSON Binding 1.0

- Jakarta Servlet 4.0

- Jakarta Expression Language 3.0

- Jakarta Server Pages 2.3

- Jakarta Standard Tag Library 1.2

- Jakarta Concurrency 1.1

- Jakarta Annotations 1.3

- Jakarta XML Binding 2.3

- Jakarta Debugging Support for Other Languages 1.0

- Jakarta Transactions 1.3

- Jakarta Connectors 1.7

jaxrs-server

This layer enhances the datasources-web-server layer with the following JBoss EAP subsystems:

-

jaxrs -

weld -

jpa

This layer also adds Infinispan-based second-level entity caching locally in the container.

The following MicroProfile capability is included in this layer:

- MicroProfile REST Client

The following Jakarta EE specifications are supported in this layer in addition to those supported in the datasources-web-server layer:

- Jakarta Contexts and Dependency Injection 2.0

- Jakarta Bean Validation 2.0

- Jakarta Interceptors 1.2

- Jakarta RESTful Web Services 2.1

- Jakarta Persistence 2.2

cloud-server

This layer enhances the jaxrs-server layer with the following JBoss EAP subsystems:

-

resource-adapters -

messaging-activemq(remote broker messaging, not embedded messaging)

This layer also adds the following observability features to the jaxrs-server layer:

- MicroProfile Health

- MicroProfile Metrics

- MicroProfile Config

- MicroProfile OpenTracing

The following Jakarta EE specification is supported in this layer in addition to those supported in the jaxrs-server layer:

- Jakarta Security 1.0

4.2.2. Decorator Layers

Decorator layers are not used alone. You can configure one or more decorator layers with a base layer to deliver additional functionality.

sso

This decorator layer adds Red Hat Single Sign-On integration to the provisioned server.

observability

This decorator layer adds the following observability features to the provisioned server:

- MicroProfile Health

- MicroProfile Metrics

- MicroProfile Config

- MicroProfile OpenTracing

This layer is built in to the cloud-server layer. You do not need to add this layer to the cloud-server layer.

web-clustering

This layer adds embedded Infinispan-based web session clustering to the provisioned server.

4.3. Provisioning User-developed Layers in JBoss EAP

In addition to provisioning layers available from Red Hat, you can provision custom layers you develop.

Procedure

Build a custom layer using the Galleon Maven plugin.

For more information, see Building Custom Layers for JBoss EAP.

- Deploy the custom layer to an accessible Maven repository.

Create a custom provisioning file to reference the user-defined layer and supported JBoss EAP layers and store it in your application directory.

For more information, see Custom Provisioning Files for JBoss EAP.

Run the S2I process to provision a JBoss EAP server in OpenShift.

For more information, see Building an Application Provisioned with User-developed Layers.

4.3.1. Building Custom Layers for JBoss EAP

Create your custom layer feature pack as a Maven project.

- Custom layers depend on at least a base layer. Select the base layer that provides the capabilities you need for your custom layer.

Within the Maven project, create your layer content in the directory

src/main/resources.For example, to create layers to provision support for PostgreSQL and a PostgreSQL datasource, in the directory

src/main/resourcescreate thelayers/standalonesubdirectories. Thestandalonesubdirectory includes the following content.postgresql-driverThis directory contains a

layer-spec.xmlfile with the following content.<?xml version="1.0" ?> <layer-spec xmlns="urn:jboss:galleon:layer-spec:1.0" name="postgresql-driver"> <feature spec="subsystem.datasources"> <feature spec="subsystem.datasources.jdbc-driver"> <param name="driver-name" value="postgresql"/> <param name="jdbc-driver" value="postgresql"/> <param name="driver-xa-datasource-class-name" value="org.postgresql.xa.PGXADataSource"/> <param name="driver-module-name" value="org.postgresql.jdbc"/> </feature> </feature> <packages> <package name="org.postgresql.jdbc"/> </packages> </layer-spec>postgresql-datasourceThis directory contains a

layer-spec.xmlfile with the following content.<?xml version="1.0" ?> <layer-spec xmlns="urn:jboss:galleon:layer-spec:1.0" name="postgresql-datasource"> <dependencies> <layer name="postgresql-driver"/> </dependencies> <feature spec="subsystem.datasources.data-source"> <param name="use-ccm" value="true"/> <param name="data-source" value="PostgreSQLDS"/> <param name="enabled" value="true"/> <param name="use-java-context" value="true"/> <param name="jndi-name" value="java:jboss/datasources/${env.POSTGRESQL_DATASOURCE,env.OPENSHIFT_POSTGRESQL_DATASOURCE:PostgreSQLDS}"/> <param name="connection-url" value="jdbc:postgresql://${env.POSTGRESQL_SERVICE_HOST,\ env.OPENSHIFT_POSTGRESQL_DB_HOST}:${env.POSTGRESQL_SERVICE_PORT,\ env.OPENSHIFT_POSTGRESQL_DB_PORT}/${env.POSTGRESQL_DATABASE, env.OPENSHIFT_POSTGRESQL_DB_NAME}"/> <param name="driver-name" value="postgresql"/> <param name="user-name" value="${env.POSTGRESQL_USER, env.OPENSHIFT_POSTGRESQL_DB_USERNAME}"/> <param name="password" value="${env.POSTGRESQL_PASSWORD, env.OPENSHIFT_POSTGRESQL_DB_PASSWORD}"/> <param name="check-valid-connection-sql" value="SELECT 1"/> <param name="background-validation" value="true"/> <param name="background-validation-millis" value="60000"/> <param name="flush-strategy" value="IdleConnections"/> <param name="statistics-enabled" value="${wildfly.datasources.statistics-enabled:${wildfly.statistics-enabled:false}}" /> </feature>

In the

pom.xmlfile used to build your custom feature pack, refer to the JBoss EAP dependencies.<dependency> <groupId>org.jboss.eap</groupId> <artifactId>wildfly-ee-galleon-pack</artifactId>1 <version>7.3.0.GA-redhat-00004</version> <type>zip</type> </dependency>- 1

- When using the JBoss EAP expansion pack (JBoss EAP XP), the value of this element should be

wildfly-galleon-pack.

These dependencies are available in the Red Hat Maven repository: https://maven.repository.redhat.com/ga/

-

Use the

build-user-feature-packgoal in the Galleon Maven plugin to build custom layers.

Additional Resources

WildFly Galleon Maven Plugin Documentation

Example illustrating packaging of drivers and datasources as Galleon layers

4.3.2. Custom Provisioning Files for JBoss EAP

Custom provisioning files are XML files with the file name provisioning.xml that are stored in the galleon subdirectory.

The following code illustrates a custom provisioning file.

<?xml version="1.0" ?>

<installation xmlns="urn:jboss:galleon:provisioning:3.0">

<feature-pack location="eap-s2i@maven(org.jboss.universe:s2i-universe)">1

<default-configs inherit="false"/>2

<packages inherit="false"/>3

</feature-pack>

<feature-pack location="com.example.demo:my-galleon-feature-pack:1.0

">4

<default-configs inherit="false"/>

<packages inherit="false"/>

</feature-pack>

<config model="standalone" name="standalone.xml">5

<layers>

<include name="cloud-server"/>

<include name="my-custom-driver"/>

<include name="my-custom-datasource"/>

</layers>

</config>

<options>6

<option name="optional-packages" value="passive+"/>

</options>

</installation>- 1

- This element instructs the provisioning process to provision the current eap-s2i feature-pack. Note that a builder image includes only one feature pack.

- 2

- This element instructs the provisioning process to exclude default configurations.

- 3

- This element instructs the provisioning process to exclude default packages.

- 4

- This element instructs the provisioning process to provision the

com.example.demo:my-galleon-feature-pack:1.0feature pack. The child elements instruct the process to exclude default configurations and default packages. - 5

- This element instructs the provisioning process to create a custom standalone configuration. The configuration includes the

cloud-serverbase layer and themy-custom-driverandmy-custom-datasourcecustom layers from thecom.example.demo:my-galleon-feature-pack:1.0feature pack. - 6

- This element instructs the provisioning process to optimize provisioning of JBoss EAP modules.

4.3.3. Building an Application Provisioned with User-developed Layers

When you build an application from a directory that includes a custom provisioning file, the S2I build process detects the provisioning file and provisions the JBoss EAP server as instructed.

Prerequisites

- The user-developed layers must exist in an accessible Maven repository.

- The application directory must contain a valid provisioning file that refers to the user-developed layers and the feature pack that contains them.

Procedure

Enter a standard S2I build command to build the application.

For example, assume you create the following custom provisioning file in your application directory.

<?xml version="1.0" ?> <installation xmlns="urn:jboss:galleon:provisioning:3.0"> <feature-pack location="eap-s2i@maven(org.jboss.universe:s2i-universe)"> <default-configs inherit="false"/> <packages inherit="false"/> </feature-pack> <feature-pack location="com.example.demo:my-galleon-feature-pack:1.0"> <default-configs inherit="false"/> <packages inherit="false"/> </feature-pack> <config model="standalone" name="standalone.xml"> <layers> <include name="cloud-server"/> <include name="my-custom-driver"/> <include name="my-custom-datasource"/> </layers> </config> <options> <option name="optional-packages" value="passive+"/> </options> </installation>The following command builds an application using the

com.example.demo:my-galleon-feature-pack:1.0feature pack, which includes themy-custom-driverandmy-custom-datasourcelayers. The resulting application is namedeap-my-custom-db. The connection to the database is configured using environment variables.oc build my-app \ -e DEMO_DB=demo \ -e DEMO_PASSWORD=demo \ -e DEMO_HOST=127.0.0.1 \ -e DEMO_PORT=5432 \ -e DEMO_USER=demo \ eap-my-custom-db

You can log in to the database on port 5432 with the user demo and the password demo.

Additional Resources

Chapter 5. Migration of Applications from JBoss EAP Imagestreams on OpenShift 4 to eap73 Imagestreams

Applications developed for the eap71 and eap72 imagestreams require changes to function correctly in the eap73 imagestream.

5.1. Updates to Liveness and Readiness Probe Configuration for eap73 Imagestreams

The YAML configuration of probes must be adjusted when migrating from the eap72 image running on OpenShift 3.11 to any eap73 image.

On the eap72 image, the default YAML configuration for a liveness probe is similar to the following code example:

Example YAML Configuration for eap72 Image on OpenShift 3.11 Liveness Probe

livenessProbe:

exec:

command:

- /bin/bash

- '-c'

- /opt/eap/bin/livenessProbe.sh

initialDelaySeconds: 60

periodSeconds: 10

successThreshold: 1

failureThreshold: 3

In this example, the liveness probe is located at /opt/eap/bin/livenessProbe.sh within the JBoss EAP image. The probe is triggered the first time after a 60 second initial delay and then every 10 seconds after a pod is started on the JBoss EAP server.

After three unsuccessful probes, the container is deemed unhealthy and OpenShift restarts the container in its pod.

On the eap72 image, a single call lasts 5 seconds before it returns as a success or failure. The call is followed by a 10 second waiting period. This means that 3 calls last approximately 35 seconds before the container inside the pod is restarted if the JBoss EAP image is unhealthy.

On any eap73 image, a single call lasts less than 1 second. Three calls last approximately 23 seconds. The configuration of the probe for eap73 images should be adjusted in the YAML configuration as follows:

Example YAML Configuration for any eap73 Imagestream Liveness Probe

livenessProbe:

exec:

command:

- /bin/bash

- '-c'

- /opt/eap/bin/livenessProbe.sh

initialDelaySeconds: 60

periodSeconds: 16

successThreshold: 1

failureThreshold: 3

In this example, periodSeconds has been increased by 6 seconds. Now the first call lasts 1 second, followed by a 16 second waiting period. Three calls would last approximately 34 seconds, which is nearly equivalent to the eap72 image behavior of the probe.

In the readiness probe, update periodSeconds in the YAML configuration with a similar value.

Example YAML Configuration for any eap73 Imagestream Readiness Probe

readinessProbe:

exec:

command:

- /bin/bash

- '-c'

- /opt/eap/bin/readinessProbe.sh

initialDelaySeconds: 10

periodSeconds: 16

successThreshold: 1

failureThreshold: 3

Additional Resources

5.2. Default Datasource Removed

In JBoss EAP 7.3, the default datasource is removed from JBoss EAP imagestreams.

If you developed custom applications that use the default datasource, you can include it when provisioning a server. Use the ENABLE_GENERATE_DEFAULT_DATASOURCE environment variable with a value of true.

ENABLE_GENERATE_DEFAULT_DATASOURCE=true

5.3. Updates to standalone-openshift.xml when upgrading JBoss EAP 7.1 to JBoss EAP 7.3 on OpenShift

The configuration file standalone-openshift.xml installed with JBoss EAP 7.1 is not compatible with JBoss EAP 7.3 and later.

If you want to use the standalone-openshift.xml file after upgrading from JBoss EAP 7.1 to JBoss EAP 7.3, you must make the following changes to the file:

Update the version of the

loggingsubsystem.Replace

<subsystem xmlns="urn:jboss:domain:logging:3.0">

with

<subsystem xmlns="urn:jboss:domain:logging:8.0">

Update the log formatter in the

loggingsubsystem configuration.Replace

<custom-formatter module="org.jboss.logmanager.ext" class="org.jboss.logmanager.ext.formatters.LogstashFormatter"> <properties> <property name="metaData" value="log-handler=CONSOLE"/> </properties> </custom-formatter>with

<json-formatter> <exception-output-type value="formatted"/> <key-overrides timestamp="@timestamp"/> <meta-data> <property name="@version" value="1"/> </meta-data> </json-formatter>

Chapter 6. Troubleshooting

6.1. Troubleshooting Pod Restarts

Pods can restart for a number of reasons, but a common cause of JBoss EAP pod restarts might include OpenShift resource constraints, especially out-of-memory issues. See the OpenShift documentation for more information on OpenShift pod eviction.

By default, JBoss EAP for OpenShift templates are configured to automatically restart affected containers when they encounter situations like out-of-memory issues. The following steps can help you diagnose and troubleshoot out-of-memory and other pod restart issues.

Get the name of the pod that has been having trouble.

You can see pod names, as well as the number times each pod has restarted with the following command.

$ oc get pods

To diagnose why a pod has restarted, you can examine the JBoss EAP logs of the previous pod, or the OpenShift events.

To see the JBoss EAP logs of the previous pod, use the following command.

oc logs --previous POD_NAMETo see the OpenShift events, use the following command.

$ oc get events

- If a pod has restarted because of a resource issue, you can attempt to modify your OpenShift pod configuration to increase its resource requests and limits. See the OpenShift documentation for more information on configuring pod compute resources.

6.2. Troubleshooting Using the JBoss EAP Management CLI

The JBoss EAP management CLI, EAP_HOME/bin/jboss-cli.sh, is accessible from within a container for troubleshooting purposes.

It is not recommended to make configuration changes in a running pod using the JBoss EAP management CLI. Any configuration changes made using the management CLI in a running container will be lost when the container restarts.

To make configuration changes to JBoss EAP for OpenShift, see Configuring the JBoss EAP for OpenShift Image for Your Java Application.

First open a remote shell session to the running pod.

$ oc rsh POD_NAMERun the following command from the remote shell session to launch the JBoss EAP management CLI:

$ /opt/eap/bin/jboss-cli.sh

Chapter 7. EAP Operator for Automating Application Deployment on OpenShift

EAP operator is a JBoss EAP-specific controller that extends the OpenShift API. You can use the EAP operator to create, configure, manage, and seamlessly upgrade instances of complex stateful applications.

The EAP operator manages multiple JBoss EAP Java application instances across the cluster. It also ensures safe transaction recovery in your application cluster by verifying all transactions are completed before scaling down the replicas and marking a pod as clean for termination. The EAP operator uses StatefulSet for the appropriate handling of EJB remoting and transaction recovery processing. The StatefulSet ensures persistent storage and network hostname stability even after pods are restarted.

You must install the EAP operator using OperatorHub, which can be used by OpenShift cluster administrators to discover, install, and upgrade operators.

In OpenShift Container Platform 4, you can use the Operator Lifecycle Manager (OLM) to install, update, and manage the lifecycle of all operators and their associated services running across multiple clusters.

The OLM runs by default in OpenShift Container Platform 4. It aids cluster administrators in installing, upgrading, and granting access to operators running on their cluster. The OpenShift Container Platform web console provides management screens for cluster administrators to install operators, as well as grant specific projects access to use the catalog of operators available on the cluster.

For more information about operators and the OLM, see the OpenShift documentation.

7.1. Installing EAP Operator Using the Web Console

As a JBoss EAP cluster administrator, you can install an EAP operator from Red Hat OperatorHub using the OpenShift Container Platform web console. You can then subscribe the EAP operator to one or more namespaces to make it available for developers on your cluster.

Here are a few points you must be aware of before installing the EAP operator using the web console:

- Installation Mode: Choose All namespaces on the cluster (default) to have the operator installed on all namespaces or choose individual namespaces, if available, to install the operator only on selected namespaces.

- Update Channel: If the EAP operator is available through multiple channels, you can choose which channel you want to subscribe to. For example, to deploy from the stable channel, if available, select it from the list.

- Approval Strategy: You can choose automatic or manual updates. If you choose automatic updates for the EAP operator, when a new version of the operator is available, the Operator Lifecycle Manager (OLM) automatically upgrades the running instance of EAP operator. If you choose manual updates, when a newer version of the operator is available, the OLM creates an update request. You must then manually approve the update request to have the operator updated to the new version.

The following procedure might change in accordance with the modifications in the OpenShift Container Platform web console. For the latest and most accurate procedure, see the Installing from the OperatorHub using the web console section in the latest version of the Working with Operators in OpenShift Container Platform guide.

Prerequisites

-

Access to an OpenShift Container Platform cluster using an account with

cluster-adminpermissions.

Procedure

- In the OpenShift Container Platform web console, navigate to Operators→ OperatorHub.

-

Scroll down or type

EAPinto the Filter by keyword box to find the EAP operator. - Select JBoss EAP operator and click Install.

On the Create Operator Subscription page:

Select one of the following:

-

All namespaces on the cluster (default) installs the operator in the default

openshift-operatorsnamespace to watch and be made available to all namespaces in the cluster. This option is not always available. - A specific namespace on the cluster installs the operator in a specific, single namespace that you choose. The operator is made available for use only in this single namespace.

-

All namespaces on the cluster (default) installs the operator in the default

- Select an Update Channel.

- Select Automatic or Manual approval strategy, as described earlier.

Click Subscribe to make the EAP operator available to the selected namespaces on this OpenShift Container Platform cluster.

- If you selected a manual approval strategy, the subscription’s upgrade status remains Upgrading until you review and approve its install plan. After you approve the install plan on the Install Plan page, the subscription upgrade status moves to Up to date.

- If you selected an automatic approval strategy, the upgrade status moves to Up to date without intervention.

After the subscription’s upgrade status is Up to date, select Operators → Installed Operators to verify that the EAP ClusterServiceVersion (CSV) shows up and its Status changes to InstallSucceeded in the relevant namespace.

NoteFor the All namespaces… installation mode, the status displayed is InstallSucceeded in the

openshift-operatorsnamespace. In other namespaces the status displayed is Copied.-

If the Status field does not change to InstallSucceeded, check the logs in any pod in the

openshift-operatorsproject (or other relevant namespace if A specific namespace… installation mode was selected) on the Workloads → Pods page that are reporting issues to troubleshoot further.

7.2. Installing EAP Operator Using the CLI

As a JBoss EAP cluster administrator, you can install an EAP operator from Red Hat OperatorHub using the OpenShift Container Platform CLI. You can then subscribe the EAP operator to one or more namespaces to make it available for developers on your cluster.

When installing the EAP operator from the OperatorHub using the CLI, use the oc command to create a Subscription object.

Prerequisites

-

You have access to an OpenShift Container Platform cluster using an account with

cluster-adminpermissions. -

You have installed the

octool in your local system.

Procedure

View the list of operators available to the cluster from the OperatorHub:

$ oc get packagemanifests -n openshift-marketplace | grep eap NAME CATALOG AGE ... eap Red Hat Operators 43d ...

Create a

Subscriptionobject YAML file (for example,eap-operator-sub.yaml) to subscribe a namespace to your EAP operator. The following is an exampleSubscriptionobject YAML file:apiVersion: operators.coreos.com/v1alpha1 kind: Subscription metadata: name: eap namespace: openshift-operators spec: channel: alpha installPlanApproval: Automatic name: eap 1 source: redhat-operators 2 sourceNamespace: openshift-marketplace

For information about channels and approval strategy, see the web console version of this procedure.

Create the

Subscriptionobject from the YAML file:$ oc apply -f eap-operator-sub.yaml $ oc get csv -n openshift-operators NAME DISPLAY VERSION REPLACES PHASE eap-operator.v1.0.0 JBoss EAP 1.0.0 Succeeded

The EAP operator is successfully installed. At this point, the OLM is aware of the EAP operator. A ClusterServiceVersion (CSV) for the operator appears in the target namespace, and APIs provided by the EAP operator is available for creation.

7.3. Java application deployment on OpenShift using the EAP operator

With the EAP operator, you can automate Java application deployment on OpenShift. For information about the EAP operator APIs, see EAP Operator: API Information.

You can choose one of the following application image types for deploying your Java application on OpenShift:

-

An application image based on a builder image or a runtime image. You can use the

eap-s2i-buildtemplate to prepare such an image. -

A bootable JAR application image based on the base image

registry.access.redhat.com/ubi8/openjdk-11or any other Red Hat ubi8 that supplies a higher JDK version.

Some configurations are mandatory while deploying a Java application on OpenShift using the EAP operator. Some other configurations are required only if the EAP operator CustomResource (CR) for your application references a Secret object or a ConfigMap.

Additional resources

-

For information about the

eap-s2i-buildtemplate, see The eap-s2i-build template for creating application images. -

For more information about building an application image using the

eap-s2i-buildtemplate, see Building an application image usingeap-s2i-buildtemplate. - For information about using the bootable JAR application image, see Bootable JAR for packaging EAP server and a Java application.

- For information about packaging your application image as a bootable JAR, see Using a bootable JAR on a JBoss EAP OpenShift platform.

- For information about completing the mandatory configurations while deploying your Java application on OpenShift, see Deploying a Java application using the EAP operator: Completing mandatory configurations.

- For information about completing the optional configurations while deploying your Java application on OpenShift, see Deploying a Java application using the EAP operator:Completing the optional configurations.

7.3.1. The eap-s2i-build template for creating application images

Use the eap-s2i-build template to create your application images. The eap-s2i-build template adds several parameters to configure the location of the application source repository and the EAP S2I images to use to build your application.

The APPLICATION_IMAGE parameter in the eap-s2i-build template specifies the name of the imagestream corresponding to the application image. For example, if you created an application image named my-app from the eap-s2i-build template, you can use the my-app:latest imagestreamtag from the my-app imagestream to deploy your application. For more information about the parameters used in the eap-s2i-build template, see Building an application image using eap-s2i-build template.

With this template, the EAP operator can seamlessly upgrade your applications deployed on OpenShift. To enable seamless upgrades, you must configure a webhook in your GitHub repository and specify the webhook in the build configuration. The webhook notifies OpenShift when your repository is updated and a new build is triggered.

You can use this template to build an application image using an imagestream for any JBoss EAP version, such as JBoss EAP 7.3, JBoss EAP XP, or JBoss EAP CD.

Additional resources

7.3.2. Building an application image using eap-s2i-build template

The eap-s2i-build template adds several parameters to configure the location of your application source repository and the EAP S2I images to use to build the application. With this template, you can use an imagestream for any JBoss EAP version, such as JBoss EAP 7.3, JBoss EAP XP, or JBoss EAP CD.

Procedure

- Import EAP images in OpenShift. For more information, see Importing the latest OpenShift image streams and templates for JBoss EAP XP.

- Configure the imagestream to receive updates about the changes in the application imagestream and to trigger new builds. For more information, see Configuring periodic importing of imagestreamtags.

Create the

eap-s2i-buildtemplate for building the application image using EAP S2I images:$ oc replace --force -f https://raw.githubusercontent.com/jboss-container-images/jboss-eap-openshift-templates/master/eap-s2i-build.yaml

This

eap-s2i-buildtemplate creates two build configurations and two imagestreams corresponding to the intermediate build artifacts and the final application image.Process the

eap-s2i-buildtemplate with parameters to create the resources for the final application image. The following example creates an application image,my-app:$ oc process eap-s2i-build \ -p APPLICATION_IMAGE=my-app \ 1 \ -p EAP_IMAGE=jboss-eap-xp1-openjdk11-openshift:1.0 \ 2 -p EAP_RUNTIME_IMAGE=jboss-eap-xp1-openjdk11-runtime-openshift:1.0 \ 3 -p EAP_IMAGESTREAM_NAMESPACE=$(oc project -q) \ 4 \ -p SOURCE_REPOSITORY_URL=https://github.com/jboss-developer/jboss-eap-quickstarts.git \ 5 -p SOURCE_REPOSITORY_REF=xp-1.0.x \ 6 -p CONTEXT_DIR=microprofile-config | oc create -f - 7

- 1

- The name for the application imagestream. The application image is tagged with the

latesttag. - 2

- The imagestreamtag for EAP builder image.

- 3

- The imagestreamtag for EAP runtime image.

- 4

- The namespace in which the imagestreams for Red Hat Middleware images are installed. If omitted, the

openshiftnamespace is used. Modify this only if you have installed the imagestreams in a namespace other thanopenshift. - 5

- The Git source URL of your application.

- 6

- The Git branch or tag reference

- 7