Business Resource Planner Guide

For JBoss Developers

Red Hat Customer Content Services

brms-docs@redhat.com

Emily Murphy

Gemma Sheldon

Michele Haglund

Mikhail Ramendik

Stetson Robinson

Vidya Iyengar

Abstract

Chapter 1. Business Resource Planner Introduction

1.1. What is Business Resource Planner

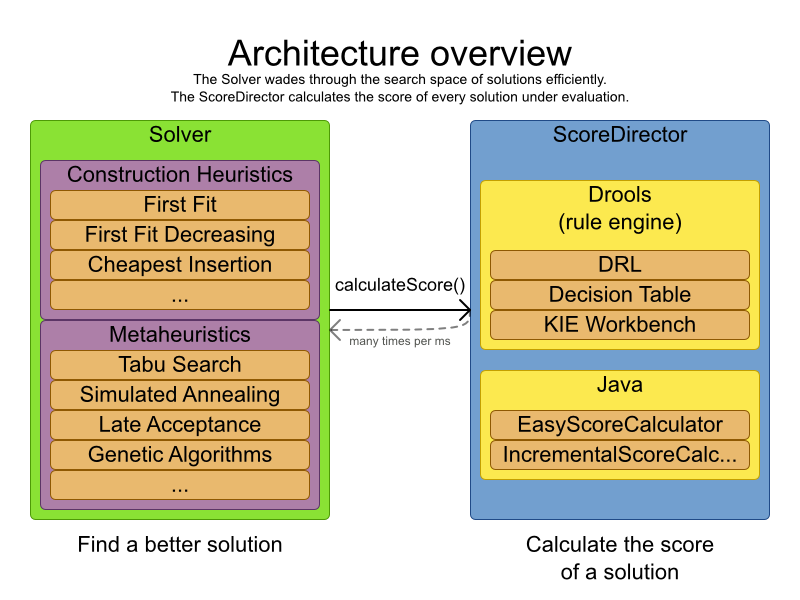

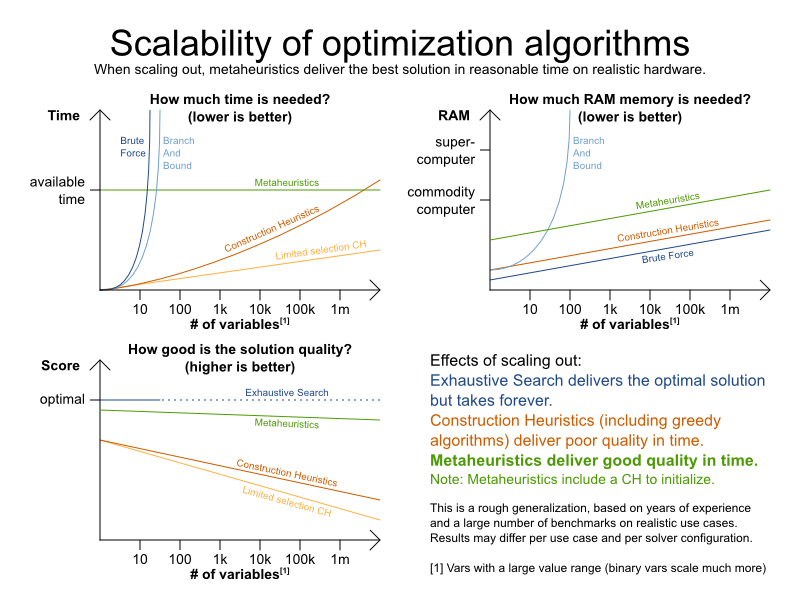

Business Resource Planner is a lightweight, embeddable planning engine that optimizes planning problems. It helps normal Java™ programmers solve planning problems efficiently, and it combines optimization heuristics and metaheuristics with very efficient score calculations.

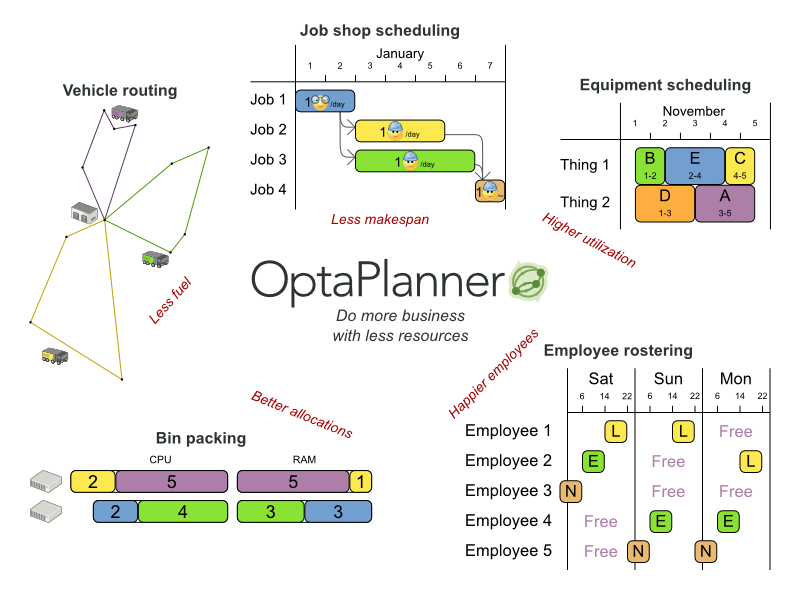

Business Resource Planner helps solve various use cases like the following:

- Employee/Patient Rosters: It helps create timetables for nurses and keeps track of patient bed management.

- Educational Timetables: It helps schedule lessons, courses, exams, and conference presentations.

- Shop Schedules: It tracks car assembly lines, machine queue planning, and workforce task planning.

- Cutting Stock: It minimizes waste by reducing the consumption of resources such as paper and steel.

Every organization faces planning problems; that is, they provide products and services with a limited set of constrained resources (employees, assets, time, and money).

Figure 1.1. Use Case Overview

Business Resource Planner is open source software under the Apache Software License 2.0. It is 100% pure Java™ and runs on any Java virtual machine.

1.2. Download Business Resource Planner

Business Resource Planner is production ready. The API is stable, but backward-incompatible changes can occur. With the recipe called UpgradeFromPreviousVersionRecipe.txt, you can easily upgrade to a newer version and quickly deal with any backward-incompatible changes. This recipe file is included in every release.

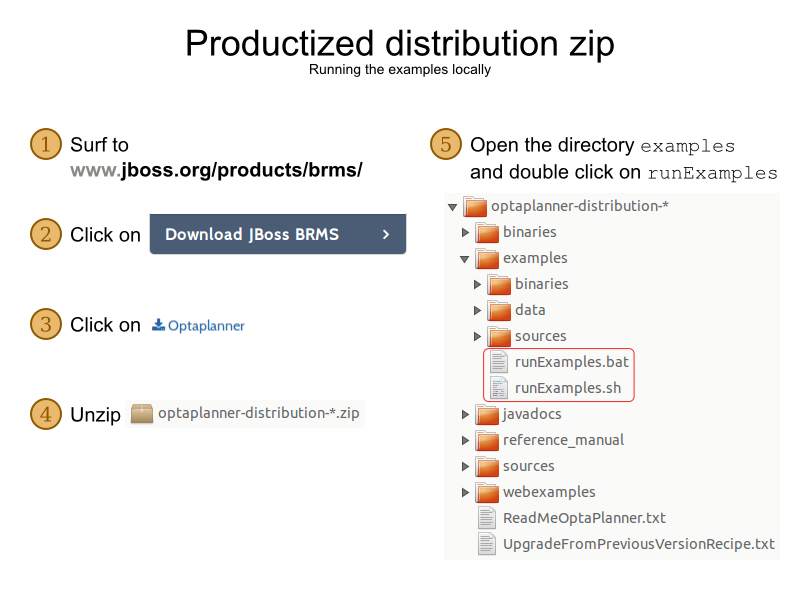

Procedure: Get the release ZIP and run the examples

- Navigate to the Red Hat Customer Portal and log in with your user credentials.

- Select Downloads → Red Hat JBoss Middleware → Download Software.

- From the Products drop-down menu, select BPM Suite or BRMS Platform.

- From the Version drop-down menu, select the product version 6.4.

- Select the Standalone or Deployable file and then click download.

- Unzip the files.

- Open the directory examples and run the following script:

Linux or Mac:

    $ cd examples
    $ ./runExamples.sh

Windows:

    $ cd examples
    $ runExamples.bat

Figure 1.2. Download Business Resource Planner

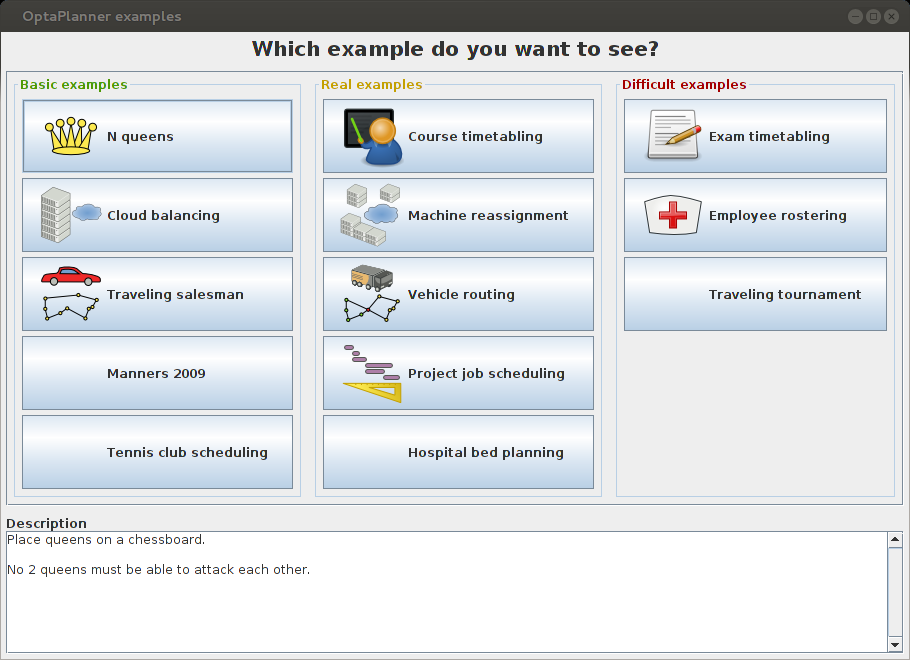

The Examples GUI application will open. Just pick an example:

Business Resource Planner itself has no GUI dependencies. It runs just as well on a server or a mobile JVM as it does on the desktop.

1.3. Run the Examples

Procedure: To run the examples in your favorite IDE:

Configure your IDE:

- In IntelliJ and NetBeans, just open the file examples/sources/pom.xml as a new project; the Maven integration will take care of the rest.

- In Eclipse, open a new project for the directory examples/sources.

- Add all the JARs to the classpath from the directory binaries and the directory examples/binaries, except for the file examples/binaries/optaplanner-examples-*.jar.

- Add the Java source directory src/main/java and the Java resources directory src/main/resources.

Next, create a run configuration:

- Main class: org.optaplanner.examples.app.OptaPlannerExamplesApp

- VM parameters (optional): -Xmx512M -server

- Working directory: examples (this is the directory that contains the directory data)

- Run that run configuration.

Procedure: Use Business Resource Planner with Maven, Gradle, Ivy, Buildr or Ant:

- Get the Business Resource Planner JARs at the Central Repository (and also at the JBoss Nexus Repository).

- If you use Maven, add a dependency to optaplanner-core in your project’s pom.xml:

    <dependency>
      <groupId>org.optaplanner</groupId>
      <artifactId>optaplanner-core</artifactId>
      <version>...</version>
    </dependency>

  To identify the latest version, check the Central Repository. This is similar for Gradle, Ivy, and Buildr.

- If you’re still using Ant (without Ivy), copy all the JARs from the downloaded ZIP’s binaries directory and manually verify that your classpath doesn’t contain duplicate JARs.

The downloaded ZIP’s binaries directory contains far more JARs than optaplanner-core actually uses. It also contains the JARs used by other modules, such as optaplanner-benchmark.

Check the Maven repository pom.xml files to determine the minimal dependency set for a specific version of a specific module.

Examples are not part of the supported distribution. Customers can use them for learning purposes, but Red Hat does not provide support for example code.

Business Resource Planner will be known as Planner for the rest of this book.

Chapter 2. Quick Start

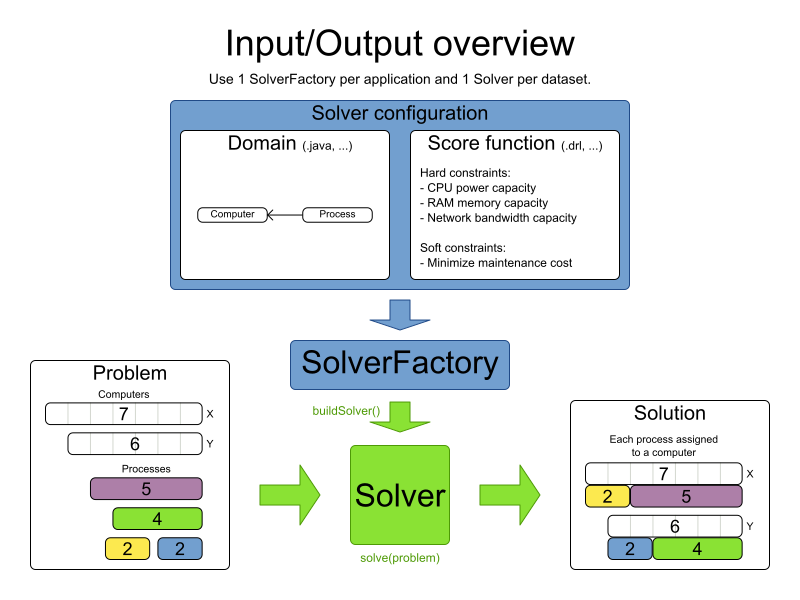

2.1. Cloud Balancing Tutorial

2.1.1. Problem Description

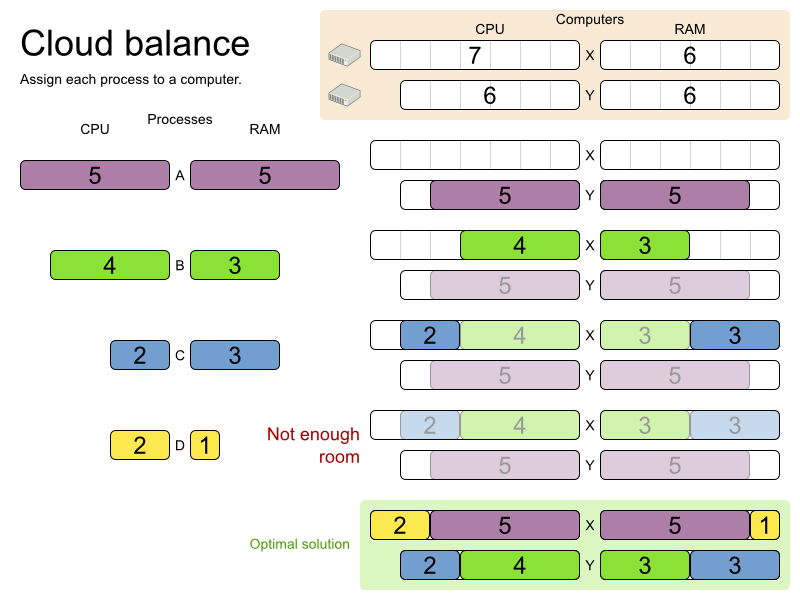

Suppose your company owns a number of cloud computers and needs to run a number of processes on those computers. Assign each process to a computer under the following four constraints.

The following hard constraints must be fulfilled:

Every computer must be able to handle the minimum hardware requirements of the sum of its processes:

- The CPU power of a computer must be at least the sum of the CPU power required by the processes assigned to that computer.

- The RAM memory of a computer must be at least the sum of the RAM memory required by the processes assigned to that computer.

- The network bandwidth of a computer must be at least the sum of the network bandwidth required by the processes assigned to that computer.

The following soft constraints should be optimized:

Each computer that has one or more processes assigned, incurs a maintenance cost (which is fixed per computer).

- Minimize the total maintenance cost.

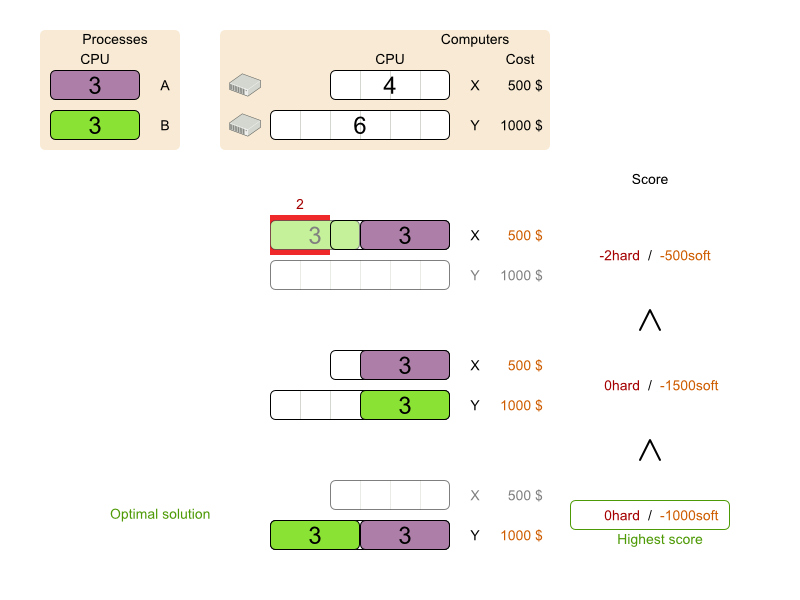

This problem is a form of bin packing. The following is a simplified example, where we assign four processes to two computers with two constraints (CPU and RAM) with a simple algorithm:

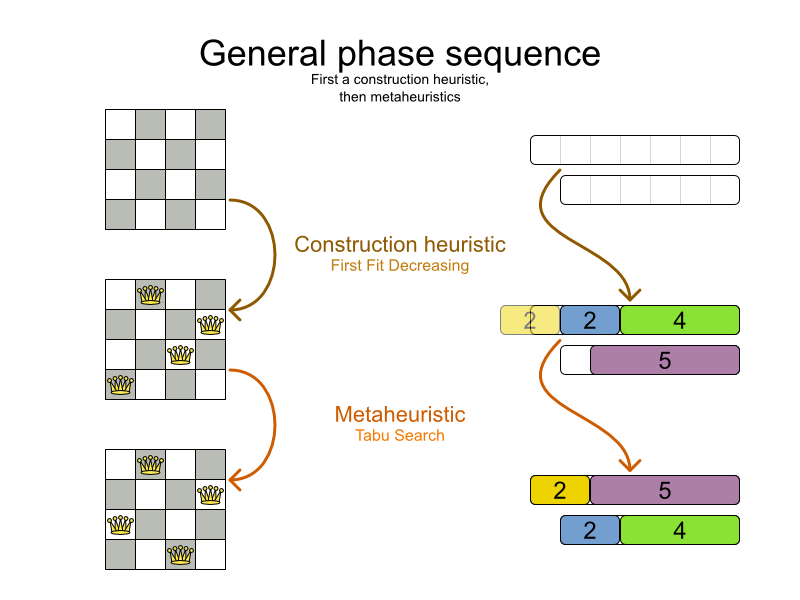

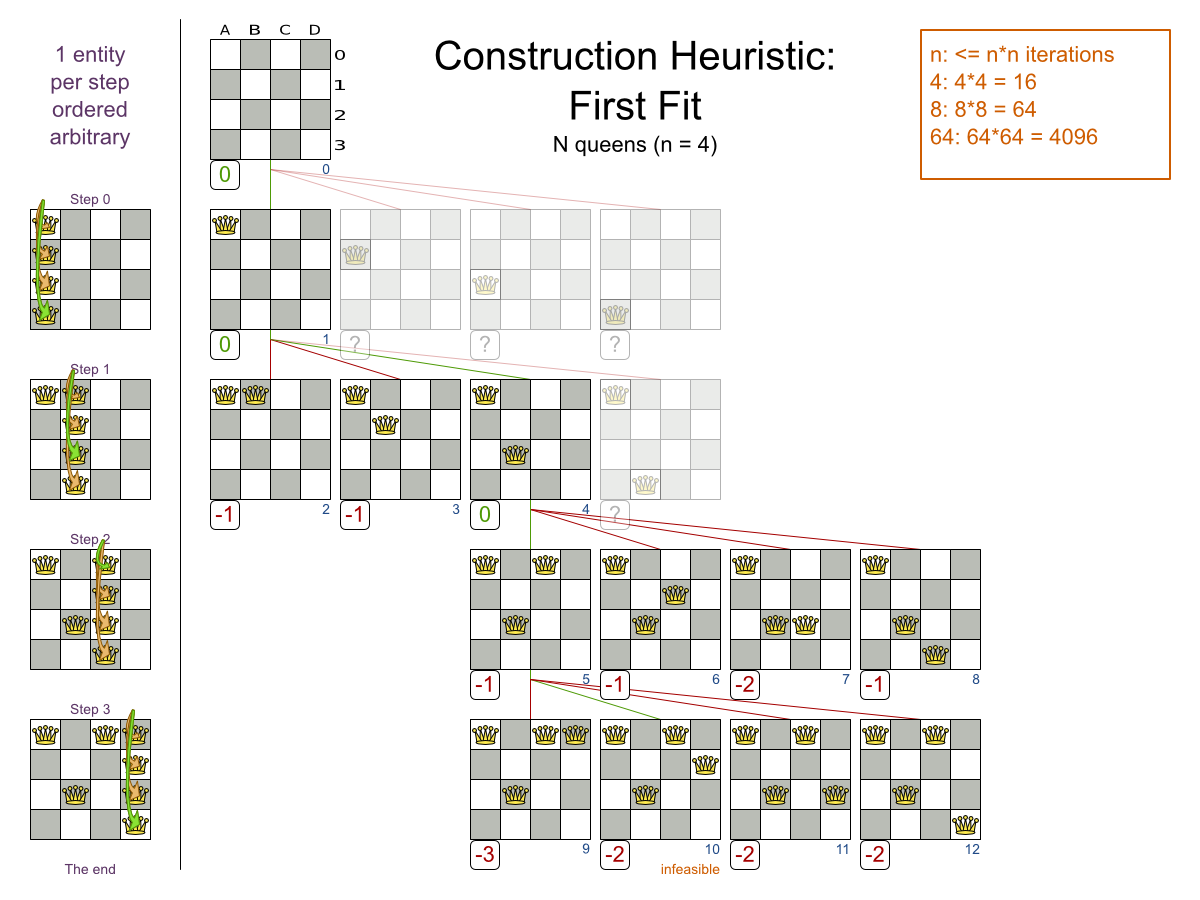

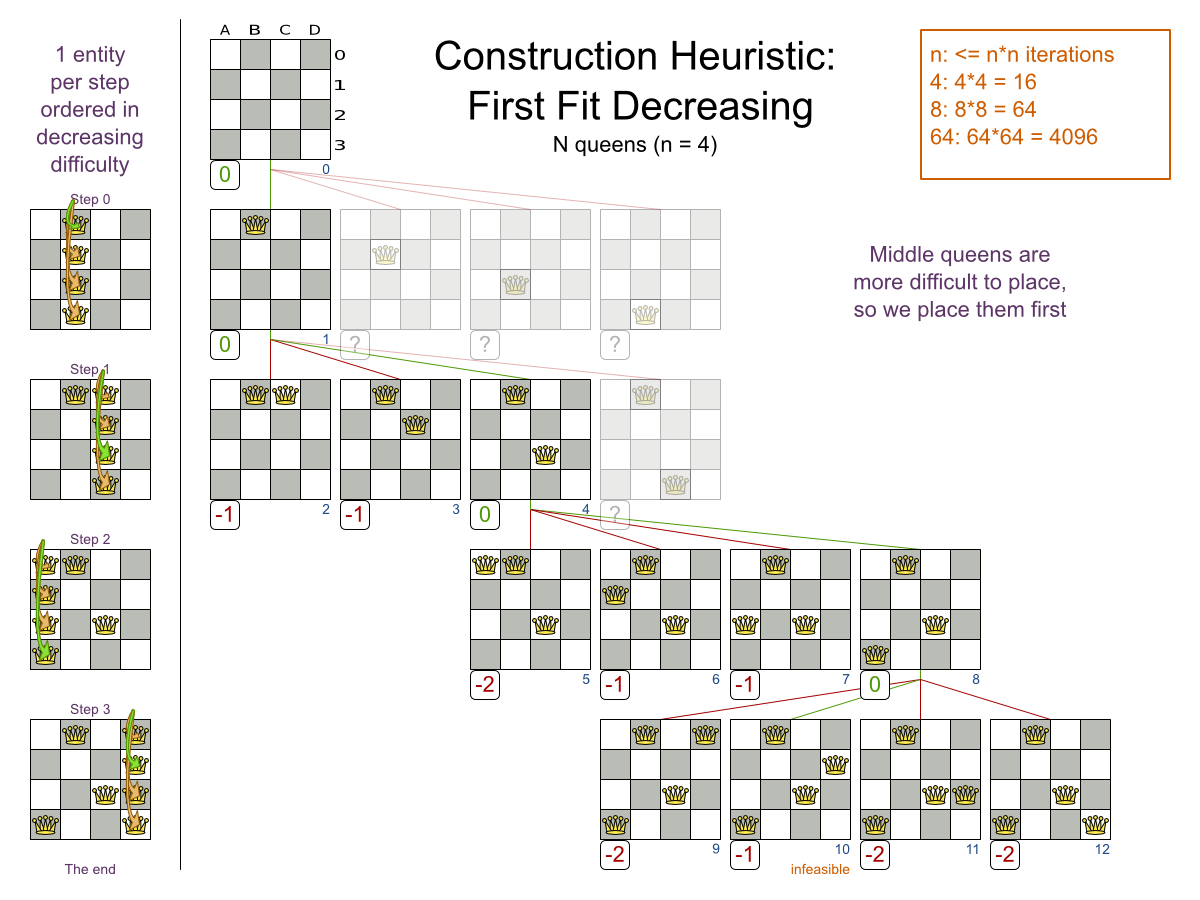

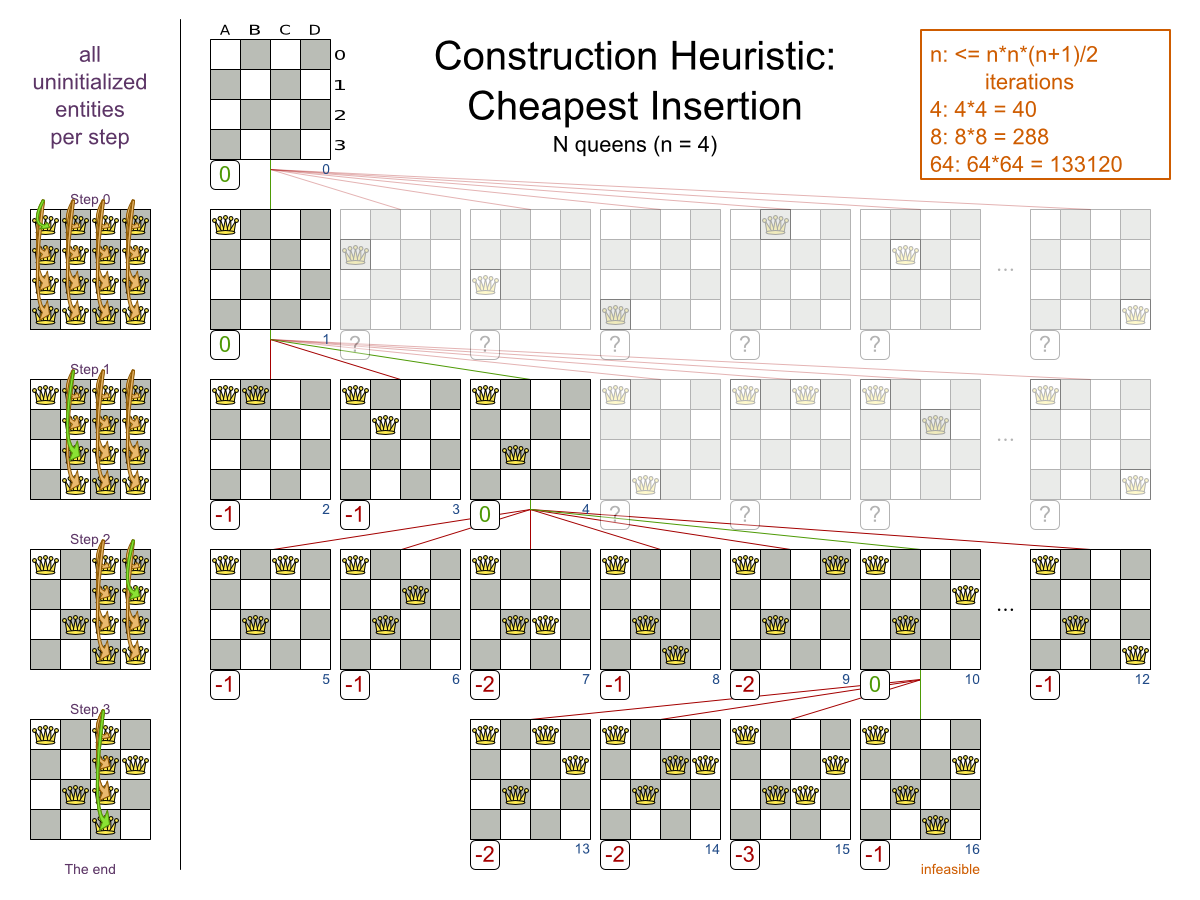

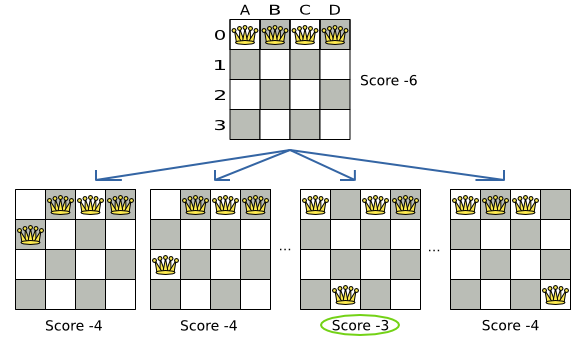

The simple algorithm used here is the First Fit Decreasing algorithm, which assigns the bigger processes first and assigns the smaller processes to the remaining space. As you can see, it is not optimal, as it does not leave enough room to assign the yellow process “D”.
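To make the algorithm concrete, here is a minimal Java sketch of First Fit Decreasing for two resources (CPU and RAM). The class name, the array layout, the ordering rule (CPU first, then RAM) and the sample numbers are assumptions made for illustration; they are not taken from the figures above or from Planner's own code.

```java
import java.util.Arrays;
import java.util.Comparator;

class FirstFitDecreasingSketch {

    /**
     * Returns, for each process, the index of the computer it was assigned to,
     * or -1 if it did not fit anywhere.
     * processes[i] = {requiredCpu, requiredMemory}; computers[j] = {cpu, memory}.
     */
    static int[] assign(int[][] processes, int[][] computers) {
        // Remaining capacity per computer, copied so the input is not modified.
        int[][] free = new int[computers.length][];
        for (int j = 0; j < computers.length; j++) {
            free[j] = computers[j].clone();
        }
        // Visit the processes from biggest to smallest (by CPU, then by memory).
        Integer[] order = new Integer[processes.length];
        for (int i = 0; i < order.length; i++) {
            order[i] = i;
        }
        Arrays.sort(order, Comparator
                .comparingInt((Integer i) -> processes[i][0])
                .thenComparingInt(i -> processes[i][1])
                .reversed());
        int[] assignment = new int[processes.length];
        Arrays.fill(assignment, -1);
        for (int i : order) {
            for (int j = 0; j < free.length; j++) {
                if (processes[i][0] <= free[j][0] && processes[i][1] <= free[j][1]) {
                    free[j][0] -= processes[i][0];
                    free[j][1] -= processes[i][1];
                    assignment[i] = j;
                    break; // first fit: stop at the first computer with enough room
                }
            }
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Made-up data: four processes and two computers, each as {cpu, memory}.
        int[][] processes = {{7, 7}, {5, 5}, {5, 5}, {1, 1}};
        int[][] computers = {{10, 10}, {8, 8}};
        System.out.println(Arrays.toString(assign(processes, computers))); // prints [0, 1, -1, 0]
    }
}
```

With this made-up data, First Fit Decreasing leaves process 2 unassigned, although a feasible assignment exists (processes 1 and 2 on the first computer, processes 0 and 3 on the second).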

Planner finds a better solution quickly by using additional, smarter algorithms. It also scales, both in data (more processes, more computers) and in constraints (more hardware requirements, other constraints). The following sections show how Planner can be used in this scenario.

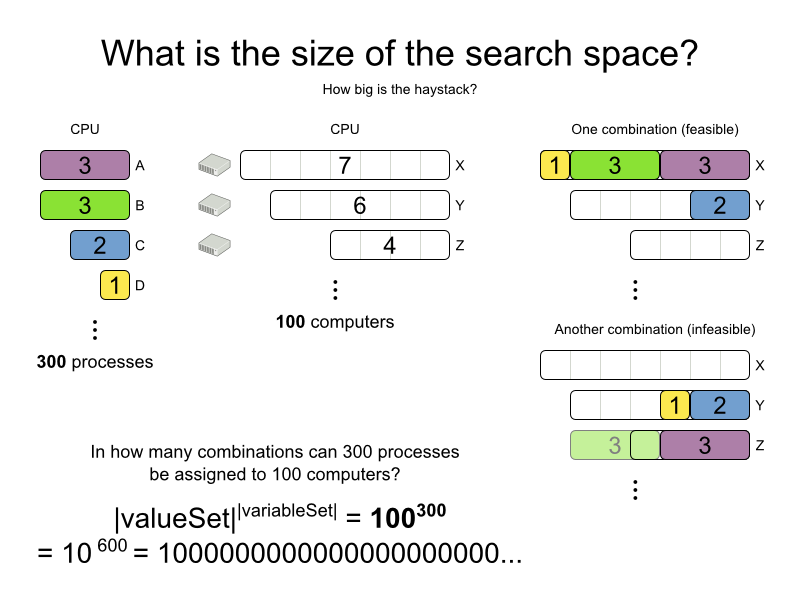

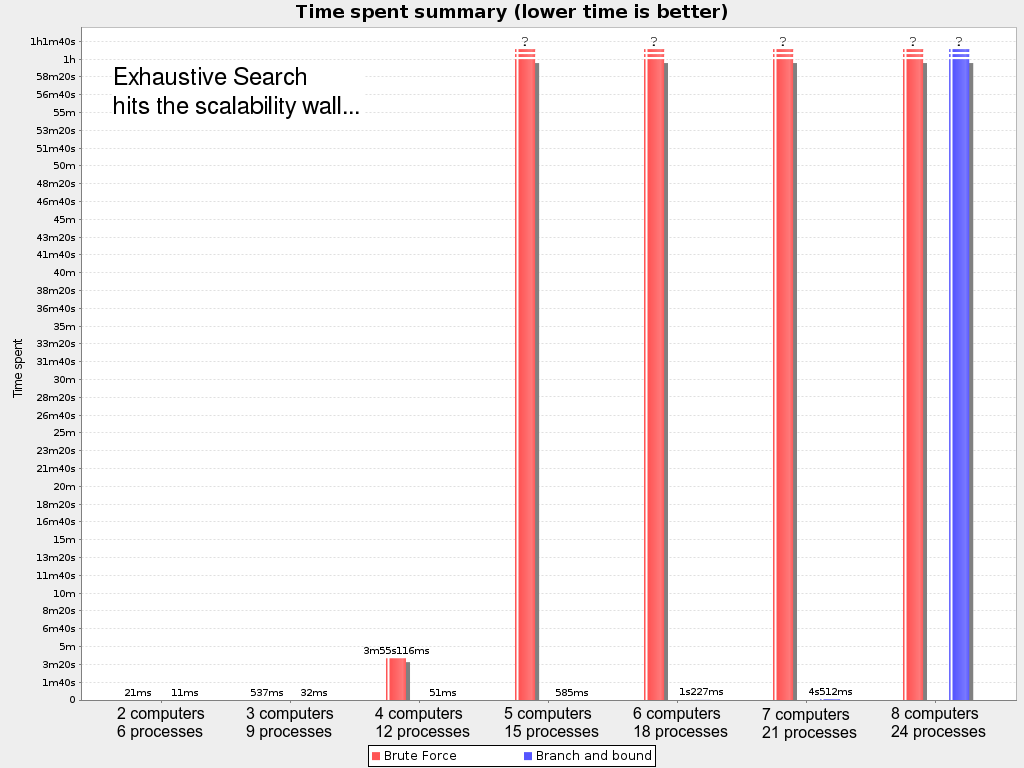

2.1.2. Problem Size

Table 2.1. Cloud Balancing Problem Size

| Problem Size | Computers | Processes | Search Space |

|---|---|---|---|

| 2computers-6processes | 2 | 6 | 64 |

| 3computers-9processes | 3 | 9 | 104 |

| 4computers-012processes | 4 | 12 | 107 |

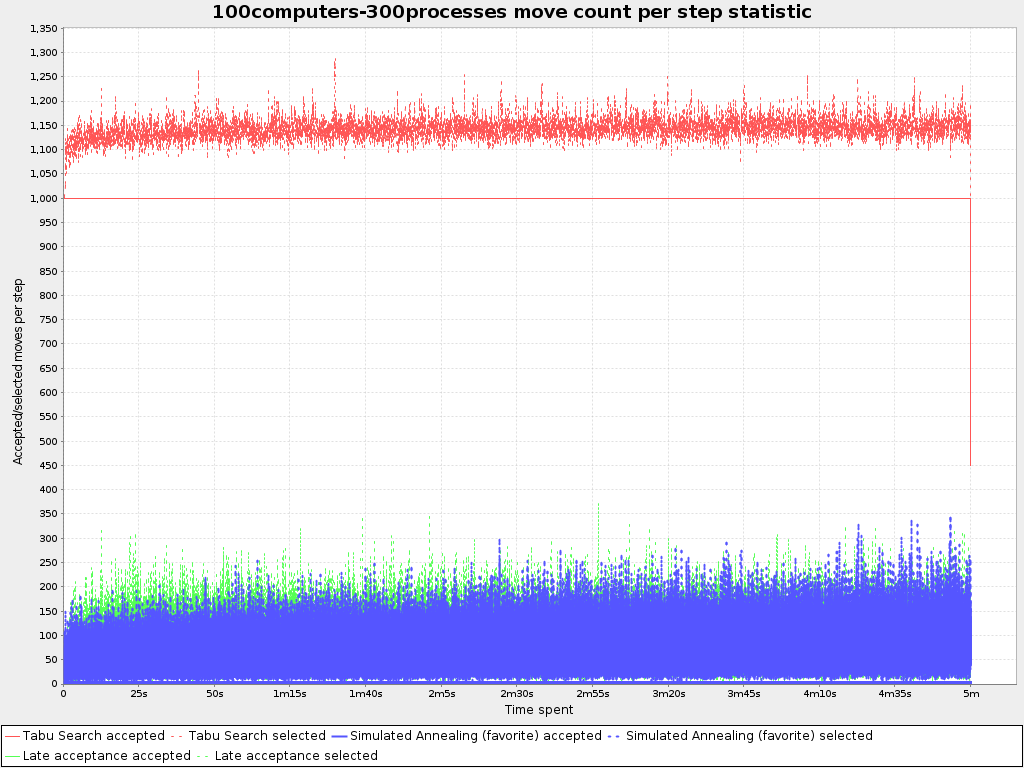

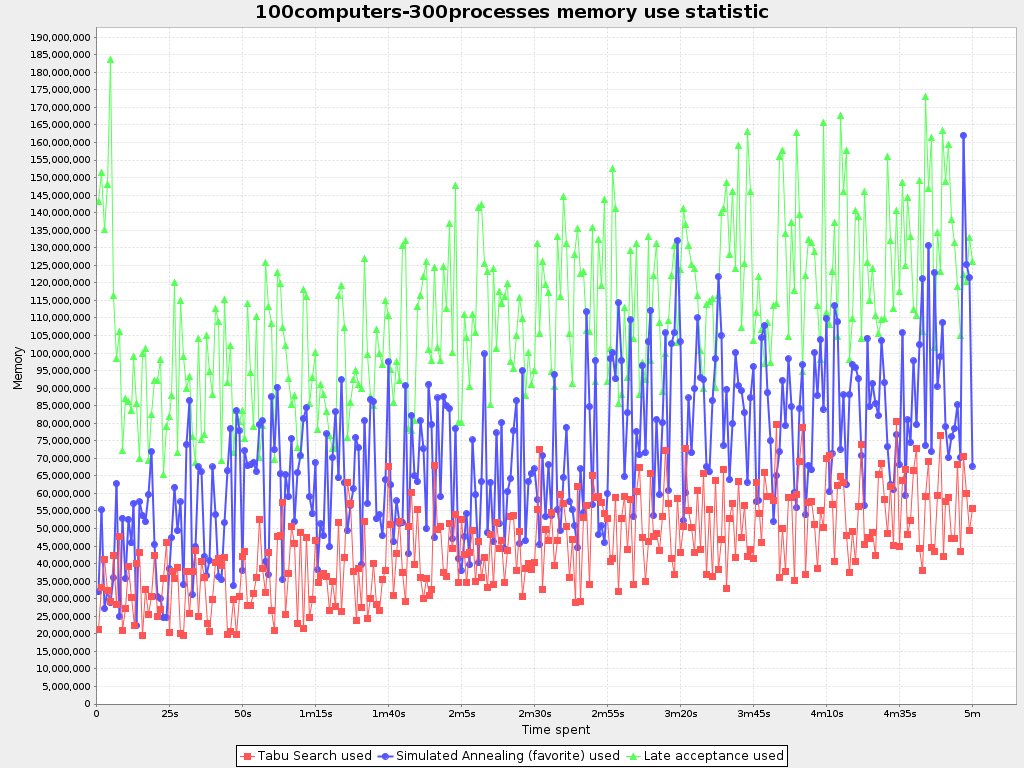

| 100computers-300processes | 100 | 300 | 10600 |

| 200computers-600processes | 200 | 600 | 101380 |

| 400computers-1200processes | 400 | 1200 | 103122 |

| 800computers-2400processes | 800 | 2400 | 106967 |
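The search space values in the table are consistent with every process being assignable to any computer, which gives computers^processes combinations. The following sketch reproduces the numbers under that assumption; the class and method names are hypothetical.

```java
import java.math.BigInteger;

class SearchSpaceSketch {

    /** Exact number of assignments if every process can go on any computer. */
    static BigInteger exactSize(int computers, int processes) {
        return BigInteger.valueOf(computers).pow(processes);
    }

    /** Largest x such that 10^x does not exceed the search space size. */
    static int powerOfTen(int computers, int processes) {
        // A positive integer with d decimal digits lies between 10^(d-1) and 10^d.
        return exactSize(computers, processes).toString().length() - 1;
    }

    public static void main(String[] args) {
        System.out.println(exactSize(2, 6));                // prints 64
        System.out.println("10^" + powerOfTen(3, 9));       // prints 10^4
        System.out.println("10^" + powerOfTen(400, 1200));  // prints 10^3122
    }
}
```

Counting decimal digits of the exact value avoids floating-point rounding, at the cost of building a number with several thousand digits for the largest data sets.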

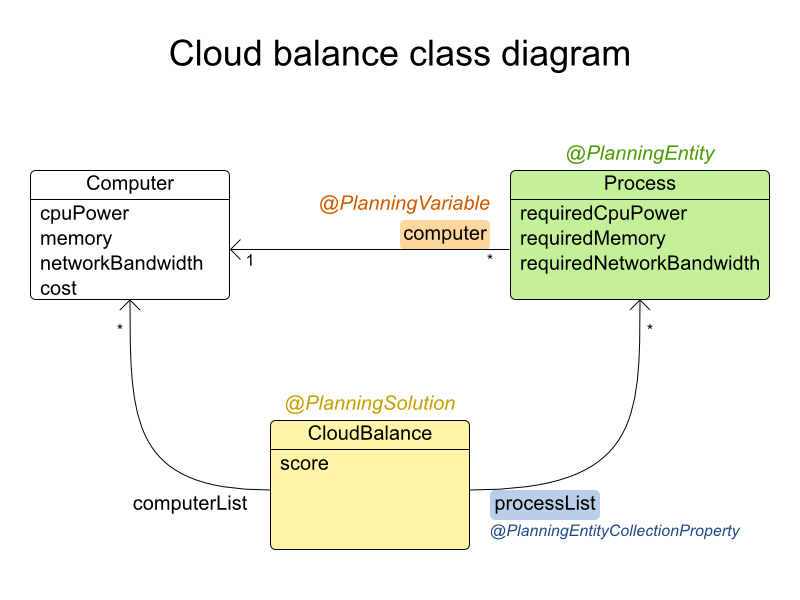

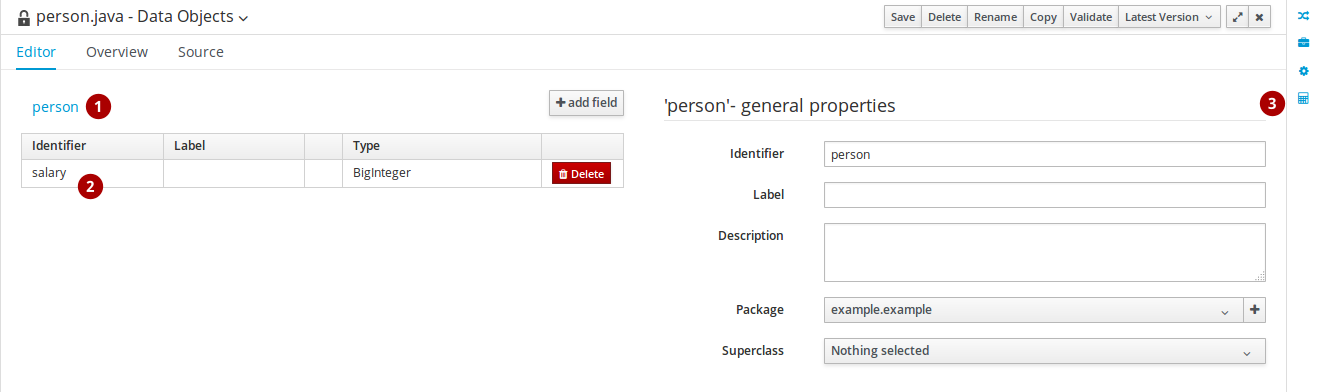

2.1.3. Domain Model Design

Beginning with the domain model:

- Computer: represents a computer with certain hardware (CPU power, RAM memory, network bandwidth) and maintenance cost.

- Process: represents a process with a demand. Needs to be assigned to a Computer by Planner.

- CloudBalance: represents a problem. Contains every Computer and Process for a certain data set.

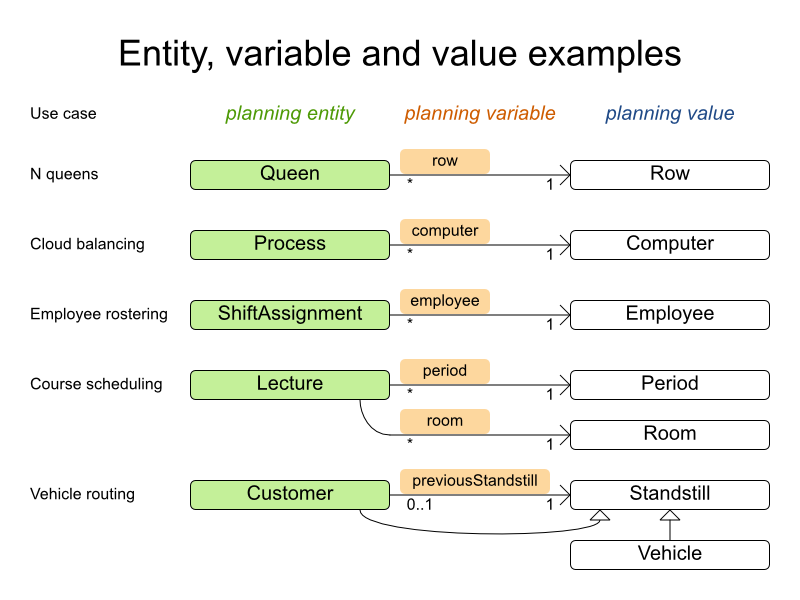

In the UML class diagram above, the Planner concepts are already annotated:

- Planning entity: the class (or classes) that changes during planning. In this example, it is the class Process.

- Planning variable: the property (or properties) of a planning entity class that changes during planning. In this example, it is the property computer on the class Process.

- Solution: the class that represents a data set and contains all planning entities. In this example that is the class CloudBalance.

2.1.4. Main Method

Try it yourself. Download and configure the examples in your preferred IDE. Run org.optaplanner.examples.cloudbalancing.app.CloudBalancingHelloWorld. By default, it is configured to run for 120 seconds. It will execute this code:

Example 2.1. CloudBalancingHelloWorld.java

    public class CloudBalancingHelloWorld {

        public static void main(String[] args) {
            // Build the Solver
            SolverFactory<CloudBalance> solverFactory = SolverFactory.createFromXmlResource(
                    "org/optaplanner/examples/cloudbalancing/solver/cloudBalancingSolverConfig.xml");
            Solver<CloudBalance> solver = solverFactory.buildSolver();

            // Load a problem with 400 computers and 1200 processes
            CloudBalance unsolvedCloudBalance = new CloudBalancingGenerator().createCloudBalance(400, 1200);

            // Solve the problem
            CloudBalance solvedCloudBalance = solver.solve(unsolvedCloudBalance);

            // Display the result
            System.out.println("\nSolved cloudBalance with 400 computers and 1200 processes:\n"
                    + toDisplayString(solvedCloudBalance));
        }

        ...

    }

The code example does the following:

Build the Solver based on a solver configuration (in this case an XML file from the classpath).

    SolverFactory<CloudBalance> solverFactory = SolverFactory.createFromXmlResource(
            "org/optaplanner/examples/cloudbalancing/solver/cloudBalancingSolverConfig.xml");
    Solver<CloudBalance> solver = solverFactory.buildSolver();

Load the problem. CloudBalancingGenerator generates a random problem: you will replace this with a class that loads a real problem, for example from a database.

    CloudBalance unsolvedCloudBalance = new CloudBalancingGenerator().createCloudBalance(400, 1200);

Solve the problem.

    CloudBalance solvedCloudBalance = solver.solve(unsolvedCloudBalance);

Display the result.

    System.out.println("\nSolved cloudBalance with 400 computers and 1200 processes:\n"
            + toDisplayString(solvedCloudBalance));

The only complicated part is building the Solver, as detailed in the next section.

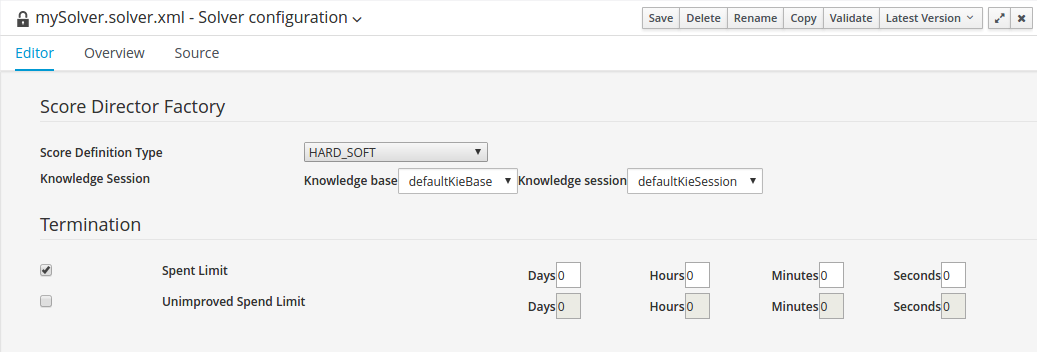

2.1.5. Solver Configuration

Take a look at the solver configuration:

Example 2.2. cloudBalancingSolverConfig.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <solver>
      <!-- Domain model configuration -->
      <scanAnnotatedClasses/>

      <!-- Score configuration -->
      <scoreDirectorFactory>
        <scoreDefinitionType>HARD_SOFT</scoreDefinitionType>
        <easyScoreCalculatorClass>org.optaplanner.examples.cloudbalancing.solver.score.CloudBalancingEasyScoreCalculator</easyScoreCalculatorClass>
        <!--<scoreDrl>org/optaplanner/examples/cloudbalancing/solver/cloudBalancingScoreRules.drl</scoreDrl>-->
      </scoreDirectorFactory>

      <!-- Optimization algorithms configuration -->
      <termination>
        <secondsSpentLimit>30</secondsSpentLimit>
      </termination>
    </solver>

This solver configuration consists of three parts:

Domain model configuration: What can Planner change? We need to make Planner aware of our domain classes. In this configuration, it will automatically scan all classes in your classpath (for an

@PlanningEntityor@PlanningSolutionannotation):<scanAnnotatedClasses/>

Score configuration: How should Planner optimize the planning variables? What is our goal? Since we have hard and soft constraints, we use a

HardSoftScore. But we also need to tell Planner how to calculate the score, depending on our business requirements. Further down, we will look into two alternatives to calculate the score: using an easy Java implementation, or using Drools DRL.<scoreDirectorFactory> <scoreDefinitionType>HARD_SOFT</scoreDefinitionType> <easyScoreCalculatorClass>org.optaplanner.examples.cloudbalancing.solver.score.CloudBalancingEasyScoreCalculator</easyScoreCalculatorClass> <!--<scoreDrl>org/optaplanner/examples/cloudbalancing/solver/cloudBalancingScoreRules.drl</scoreDrl>--> </scoreDirectorFactory>Optimization algorithms configuration: How should Planner optimize it? In this case, we use the default optimization algorithms (because no explicit optimization algorithms are configured) for 30 seconds:

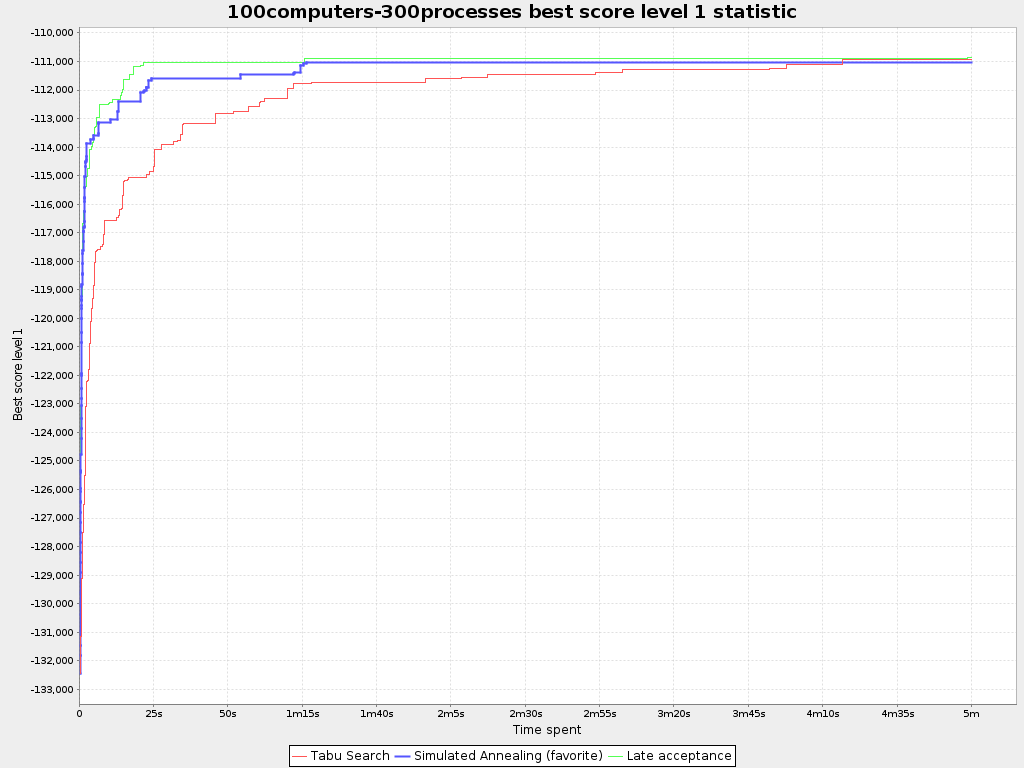

<termination> <secondsSpentLimit>30</secondsSpentLimit> </termination>Planner should get a good result in seconds (and even in less than 15 milliseconds with real-time planning), but the more time it has, the better the result will be. Advanced use cases will likely use a different termination criteria than a hard time limit.

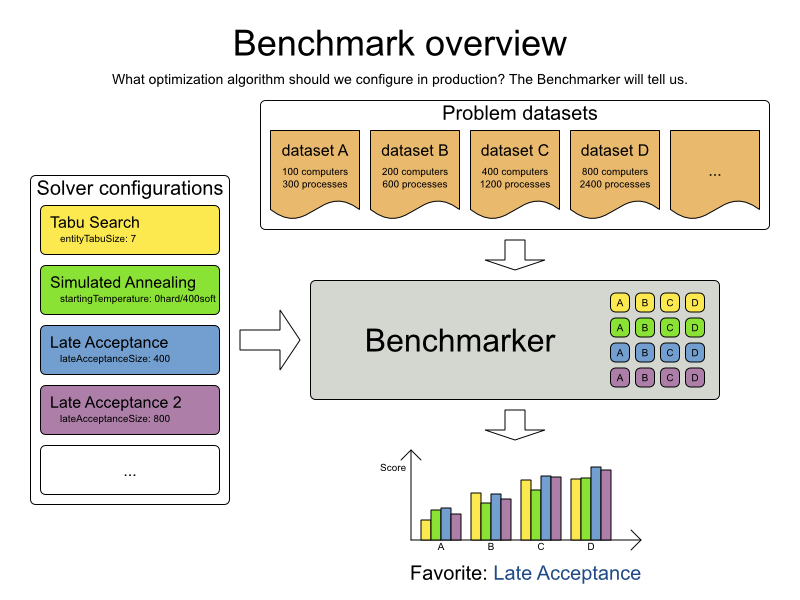

The default algorithms will already easily surpass human planners and most in-house implementations. Use the Benchmarker to power tweak to get even better results.

Let’s examine the domain model classes and the score configuration.

2.1.6. Domain Model Implementation

2.1.6.1. The Computer Class

The Computer class is a POJO (Plain Old Java Object). Usually, you will have more classes of this kind.

Example 2.3. CloudComputer.java

    public class CloudComputer ... {

        private int cpuPower;
        private int memory;
        private int networkBandwidth;
        private int cost;

        ... // getters

    }

2.1.6.2. The Process Class

The Process class is particularly important. We need to tell Planner that it can change the field computer, so we annotate the class with @PlanningEntity and the getter getComputer() with @PlanningVariable:

Example 2.4. CloudProcess.java

    @PlanningEntity(...)
    public class CloudProcess ... {

        private int requiredCpuPower;
        private int requiredMemory;
        private int requiredNetworkBandwidth;

        private CloudComputer computer;

        ... // getters

        @PlanningVariable(valueRangeProviderRefs = {"computerRange"})
        public CloudComputer getComputer() {
            return computer;
        }

        public void setComputer(CloudComputer computer) {
            // "this." is required: without it the parameter would be assigned to itself
            this.computer = computer;
        }

        // ************************************************************************
        // Complex methods
        // ************************************************************************

        ...

    }

The values that Planner can choose from for the field computer are retrieved from a method on the Solution implementation: CloudBalance.getComputerList(), which returns a list of all computers in the current data set. The valueRangeProviderRefs property is used to pass this information to Planner.

Instead of getter annotations, it is also possible to use field annotations.

2.1.6.3. The CloudBalance Class

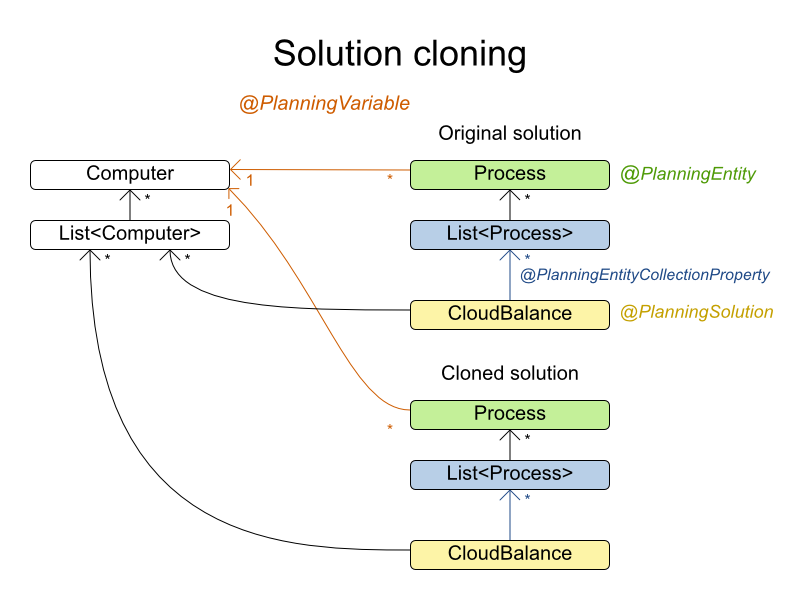

The CloudBalance class implements the Solution interface. It holds a list of all computers and processes. We need to tell Planner how to retrieve the collection of processes that it can change, therefore we must annotate the getter getProcessList with @PlanningEntityCollectionProperty.

The CloudBalance class also has a property score, which is the Score of that Solution instance in its current state:

Example 2.5. CloudBalance.java

    @PlanningSolution
    public class CloudBalance ... implements Solution<HardSoftScore> {

        private List<CloudComputer> computerList;

        private List<CloudProcess> processList;

        private HardSoftScore score;

        @ValueRangeProvider(id = "computerRange")
        public List<CloudComputer> getComputerList() {
            return computerList;
        }

        @PlanningEntityCollectionProperty
        public List<CloudProcess> getProcessList() {
            return processList;
        }

        ...

        public HardSoftScore getScore() {
            return score;
        }

        public void setScore(HardSoftScore score) {
            this.score = score;
        }

        // ************************************************************************
        // Complex methods
        // ************************************************************************

        public Collection<? extends Object> getProblemFacts() {
            List<Object> facts = new ArrayList<Object>();
            facts.addAll(computerList);
            // Do not add the planning entities (processList) because that will be done automatically
            return facts;
        }

        ...

    }

The getProblemFacts() method is only needed for score calculation with Drools. It is not needed for the other score calculation types.

2.1.7. Score Configuration

Planner will search for the Solution with the highest Score. This example uses a HardSoftScore, which means Planner will look for the solution with no hard constraints broken (fulfill hardware requirements) and as few soft constraints broken as possible (minimize maintenance cost).
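One way to picture "highest score" with two levels is a comparison that looks at the hard level first and uses the soft level only to break ties. The sketch below illustrates that ordering with a hypothetical stand-in class, SimpleHardSoft; it is not Planner's HardSoftScore class, and the sample values are invented.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class ScoreComparisonSketch {

    /** Hypothetical stand-in for a hard/soft score; not Planner's HardSoftScore class. */
    static final class SimpleHardSoft implements Comparable<SimpleHardSoft> {
        final int hard;
        final int soft;

        SimpleHardSoft(int hard, int soft) {
            this.hard = hard;
            this.soft = soft;
        }

        @Override
        public int compareTo(SimpleHardSoft other) {
            // The hard level decides first; the soft level only breaks ties.
            if (hard != other.hard) {
                return Integer.compare(hard, other.hard);
            }
            return Integer.compare(soft, other.soft);
        }

        @Override
        public String toString() {
            return hard + "hard/" + soft + "soft";
        }
    }

    /** Returns the highest score in the list. */
    static SimpleHardSoft best(List<SimpleHardSoft> scores) {
        return Collections.max(scores);
    }

    public static void main(String[] args) {
        List<SimpleHardSoft> scores = Arrays.asList(
                new SimpleHardSoft(-1, -10),    // cheap, but breaks a hard constraint
                new SimpleHardSoft(0, -7000),   // feasible, expensive
                new SimpleHardSoft(0, -5000));  // feasible, cheaper
        System.out.println(best(scores)); // prints 0hard/-5000soft
    }
}
```

Under this ordering, a solution that breaks a hard constraint never beats a feasible one, no matter how low its maintenance cost is.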

Of course, Planner needs to be told about these domain-specific score constraints. There are several ways to implement such a score function:

- Easy Java

- Incremental Java

- Drools

Let’s take a look at two different implementations:

2.1.7.1. Easy Java Score Configuration

One way to define a score function is to implement the interface EasyScoreCalculator in plain Java.

    <scoreDirectorFactory>
      <scoreDefinitionType>HARD_SOFT</scoreDefinitionType>
      <easyScoreCalculatorClass>org.optaplanner.examples.cloudbalancing.solver.score.CloudBalancingEasyScoreCalculator</easyScoreCalculatorClass>
    </scoreDirectorFactory>

Just implement the calculateScore(Solution) method to return a HardSoftScore instance.

Example 2.6. CloudBalancingEasyScoreCalculator.java

    public class CloudBalancingEasyScoreCalculator implements EasyScoreCalculator<CloudBalance> {

        /**
         * A very simple implementation. The double loop can easily be removed by using Maps as shown in
         * {@link CloudBalancingMapBasedEasyScoreCalculator#calculateScore(CloudBalance)}.
         */
        public HardSoftScore calculateScore(CloudBalance cloudBalance) {
            int hardScore = 0;
            int softScore = 0;
            for (CloudComputer computer : cloudBalance.getComputerList()) {
                int cpuPowerUsage = 0;
                int memoryUsage = 0;
                int networkBandwidthUsage = 0;
                boolean used = false;

                // Calculate usage
                for (CloudProcess process : cloudBalance.getProcessList()) {
                    if (computer.equals(process.getComputer())) {
                        cpuPowerUsage += process.getRequiredCpuPower();
                        memoryUsage += process.getRequiredMemory();
                        networkBandwidthUsage += process.getRequiredNetworkBandwidth();
                        used = true;
                    }
                }

                // Hard constraints
                int cpuPowerAvailable = computer.getCpuPower() - cpuPowerUsage;
                if (cpuPowerAvailable < 0) {
                    hardScore += cpuPowerAvailable;
                }
                int memoryAvailable = computer.getMemory() - memoryUsage;
                if (memoryAvailable < 0) {
                    hardScore += memoryAvailable;
                }
                int networkBandwidthAvailable = computer.getNetworkBandwidth() - networkBandwidthUsage;
                if (networkBandwidthAvailable < 0) {
                    hardScore += networkBandwidthAvailable;
                }

                // Soft constraints
                if (used) {
                    softScore -= computer.getCost();
                }
            }
            return HardSoftScore.valueOf(hardScore, softScore);
        }

    }
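The Javadoc in the listing mentions that the double loop can be removed by using Maps. The following is a minimal sketch of that idea, not the actual CloudBalancingMapBasedEasyScoreCalculator: SimpleComputer and SimpleProcess are hypothetical stand-ins for the domain classes, the score is returned as a plain int array, and the sample data is invented.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class MapBasedScoreSketch {

    /** Hypothetical, simplified stand-in for CloudComputer. */
    static final class SimpleComputer {
        final int cpuPower;
        final int memory;
        final int networkBandwidth;
        final int cost;

        SimpleComputer(int cpuPower, int memory, int networkBandwidth, int cost) {
            this.cpuPower = cpuPower;
            this.memory = memory;
            this.networkBandwidth = networkBandwidth;
            this.cost = cost;
        }
    }

    /** Hypothetical, simplified stand-in for CloudProcess; computer is null while unassigned. */
    static final class SimpleProcess {
        final int requiredCpuPower;
        final int requiredMemory;
        final int requiredNetworkBandwidth;
        final SimpleComputer computer;

        SimpleProcess(int requiredCpuPower, int requiredMemory, int requiredNetworkBandwidth,
                SimpleComputer computer) {
            this.requiredCpuPower = requiredCpuPower;
            this.requiredMemory = requiredMemory;
            this.requiredNetworkBandwidth = requiredNetworkBandwidth;
            this.computer = computer;
        }
    }

    /** Returns {hardScore, softScore}. Assumes computer capacities are never negative. */
    static int[] calculateScore(List<SimpleProcess> processList) {
        // Single pass over the processes: accumulate {cpu, memory, network} usage per computer.
        Map<SimpleComputer, int[]> usageMap = new HashMap<>();
        for (SimpleProcess process : processList) {
            if (process.computer == null) {
                continue; // unassigned processes use nothing
            }
            int[] usage = usageMap.computeIfAbsent(process.computer, c -> new int[3]);
            usage[0] += process.requiredCpuPower;
            usage[1] += process.requiredMemory;
            usage[2] += process.requiredNetworkBandwidth;
        }
        int hardScore = 0;
        int softScore = 0;
        // Only computers with at least one process appear in the map, so each entry is "used".
        for (Map.Entry<SimpleComputer, int[]> entry : usageMap.entrySet()) {
            SimpleComputer computer = entry.getKey();
            int[] usage = entry.getValue();
            hardScore += Math.min(0, computer.cpuPower - usage[0]);
            hardScore += Math.min(0, computer.memory - usage[1]);
            hardScore += Math.min(0, computer.networkBandwidth - usage[2]);
            softScore -= computer.cost;
        }
        return new int[] {hardScore, softScore};
    }

    public static void main(String[] args) {
        SimpleComputer a = new SimpleComputer(4, 4, 4, 100);
        SimpleComputer b = new SimpleComputer(2, 8, 8, 50);
        List<SimpleProcess> processes = Arrays.asList(
                new SimpleProcess(3, 1, 1, a),
                new SimpleProcess(2, 1, 1, a),
                new SimpleProcess(1, 1, 1, b));
        System.out.println(Arrays.toString(calculateScore(processes))); // prints [-1, -150]
    }
}
```

In the sample data, computer a is one CPU unit short, which gives a hard score of -1, and both computers are in use, which gives a soft score of -150.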

Even if we optimize the code above to use Maps to iterate through the processList only once, it is still slow because it does not do incremental score calculation. To fix that, either use an incremental Java score function or a Drools score function. Let’s take a look at the latter.

2.1.7.2. Drools Score Configuration

To use the Drools rule engine as a score function, simply add a scoreDrl resource in the classpath:

    <scoreDirectorFactory>
      <scoreDefinitionType>HARD_SOFT</scoreDefinitionType>
      <scoreDrl>org/optaplanner/examples/cloudbalancing/solver/cloudBalancingScoreRules.drl</scoreDrl>
    </scoreDirectorFactory>

First, we want to make sure that all computers have enough CPU, RAM and network bandwidth to support all their processes, so we make these hard constraints:

Example 2.7. cloudBalancingScoreRules.drl - Hard Constraints

    ...

    import org.optaplanner.examples.cloudbalancing.domain.CloudBalance;
    import org.optaplanner.examples.cloudbalancing.domain.CloudComputer;
    import org.optaplanner.examples.cloudbalancing.domain.CloudProcess;

    global HardSoftScoreHolder scoreHolder;

    // ############################################################################
    // Hard constraints
    // ############################################################################

    rule "requiredCpuPowerTotal"
        when
            $computer : CloudComputer($cpuPower : cpuPower)
            $requiredCpuPowerTotal : Number(intValue > $cpuPower) from accumulate(
                CloudProcess(
                    computer == $computer,
                    $requiredCpuPower : requiredCpuPower),
                sum($requiredCpuPower)
            )
        then
            scoreHolder.addHardConstraintMatch(kcontext, $cpuPower - $requiredCpuPowerTotal.intValue());
    end

    rule "requiredMemoryTotal"
        ...
    end

    rule "requiredNetworkBandwidthTotal"
        ...
    end

Next, if those constraints are met, we want to minimize the maintenance cost, so we add that as a soft constraint:

Example 2.8. cloudBalancingScoreRules.drl - Soft Constraints

    // ############################################################################
    // Soft constraints
    // ############################################################################

    rule "computerCost"
        when
            $computer : CloudComputer($cost : cost)
            exists CloudProcess(computer == $computer)
        then
            scoreHolder.addSoftConstraintMatch(kcontext, - $cost);
    end

If you use the Drools rule engine for score calculation, you can integrate with other Drools technologies, such as decision tables (XLS or web based) and the KIE Workbench.

2.1.8. Beyond this Tutorial

Now that this simple example works, try going further. Enrich the domain model and add extra constraints such as these:

- Each Process belongs to a Service. A computer might crash, so processes running the same service should be assigned to different computers.

- Each Computer is located in a Building. A building might burn down, so processes of the same services should be assigned to computers in different buildings.

Chapter 3. Use Cases and Examples

3.1. Examples Overview

Planner has several examples. This manual mainly uses the n queens example and the cloud balancing example in its explanations, so it’s advisable to read at least those sections.

The source code of all these examples is available in the distribution ZIP under examples/sources and also in git under optaplanner/optaplanner-examples.

Table 3.1. Examples Overview

| Example | Domain | Size | Competition? | Special features used |

|---|---|---|---|---|

|

|

| None | |

|

|

| ||

|

|

| ||

|

|

|

| |

|

|

| ||

|

|

| ||

|

|

| ||

|

|

| ||

|

|

|

| |

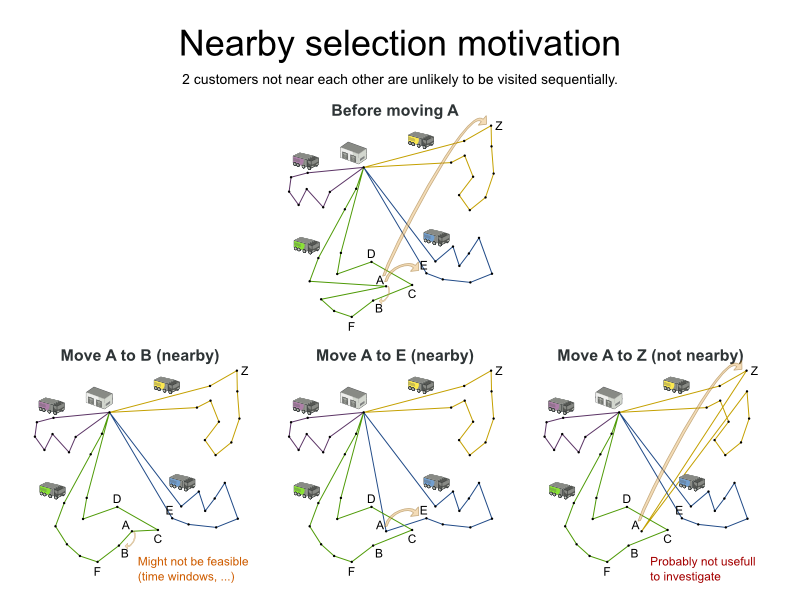

| Vehicle routing with time windows | Extra on Vehicle routing:

|

|

| Extra on Vehicle routing:

|

|

|

| ||

|

|

| ||

|

|

|

| |

|

|

| ||

|

|

|

| |

|

|

| ||

|

|

|

A realistic competition is an official, independent competition:

- that clearly defines a real-world use case

- with real-world constraints

- with multiple, real-world datasets

- that expects reproducible results within a specific time limit on specific hardware

- that has had serious participation from the academic and/or enterprise Operations Research community

These realistic competitions provide an objective comparison of Planner with competitive software and academic research.

3.2. Basic Examples

3.2.1. N Queens

3.2.1.1. Problem Description

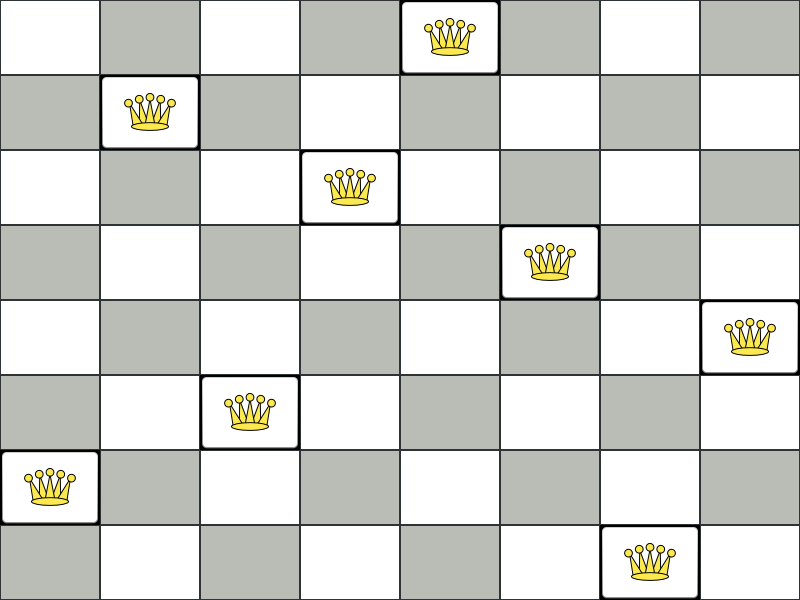

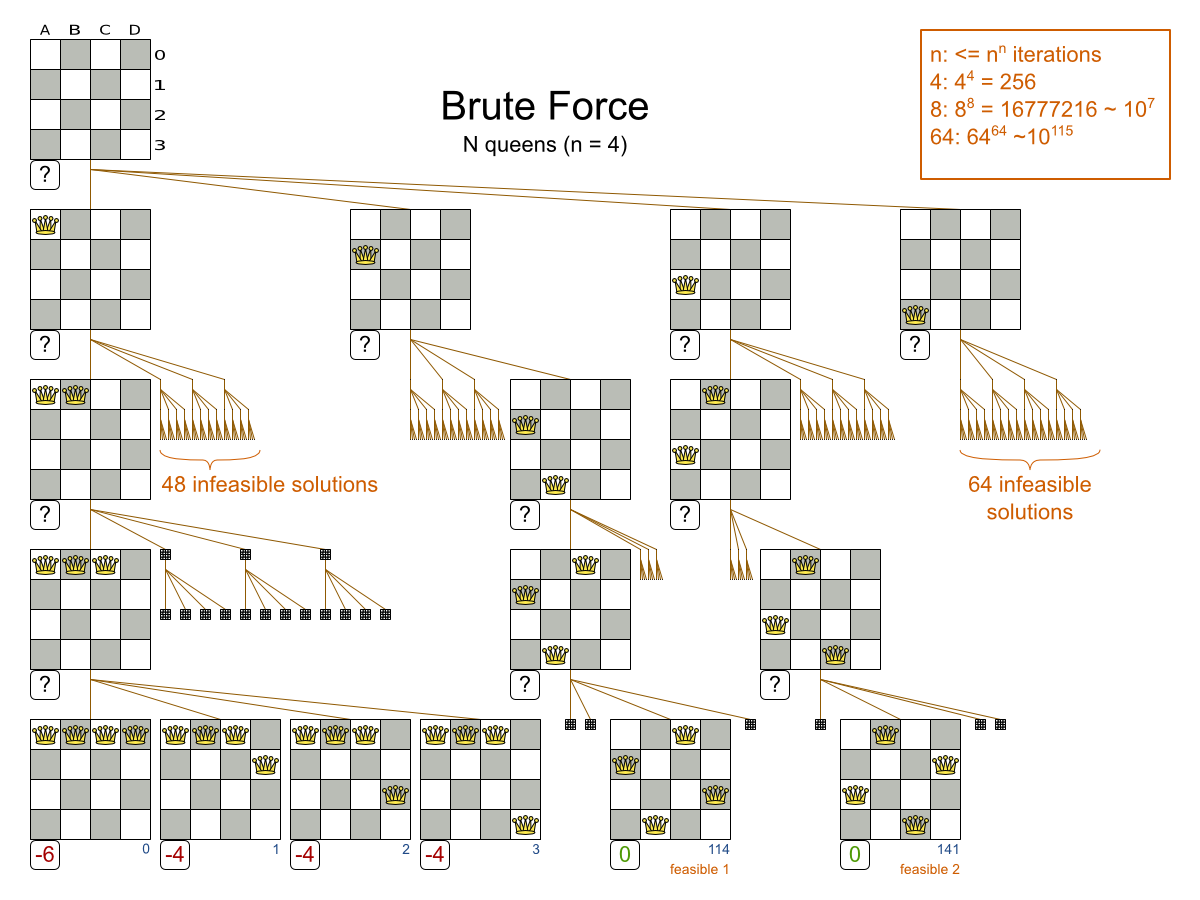

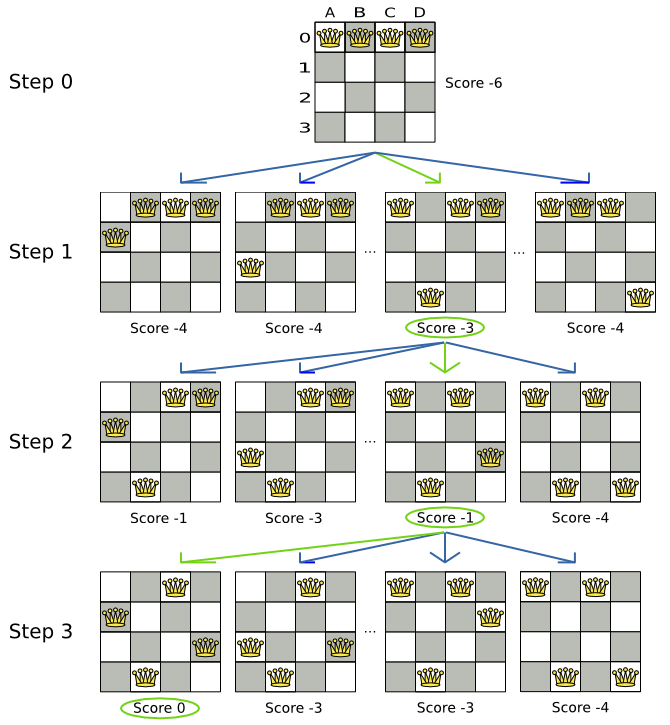

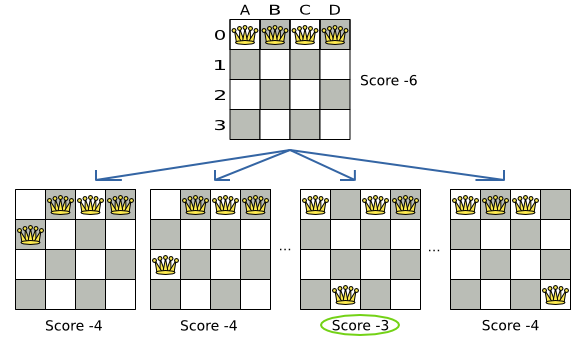

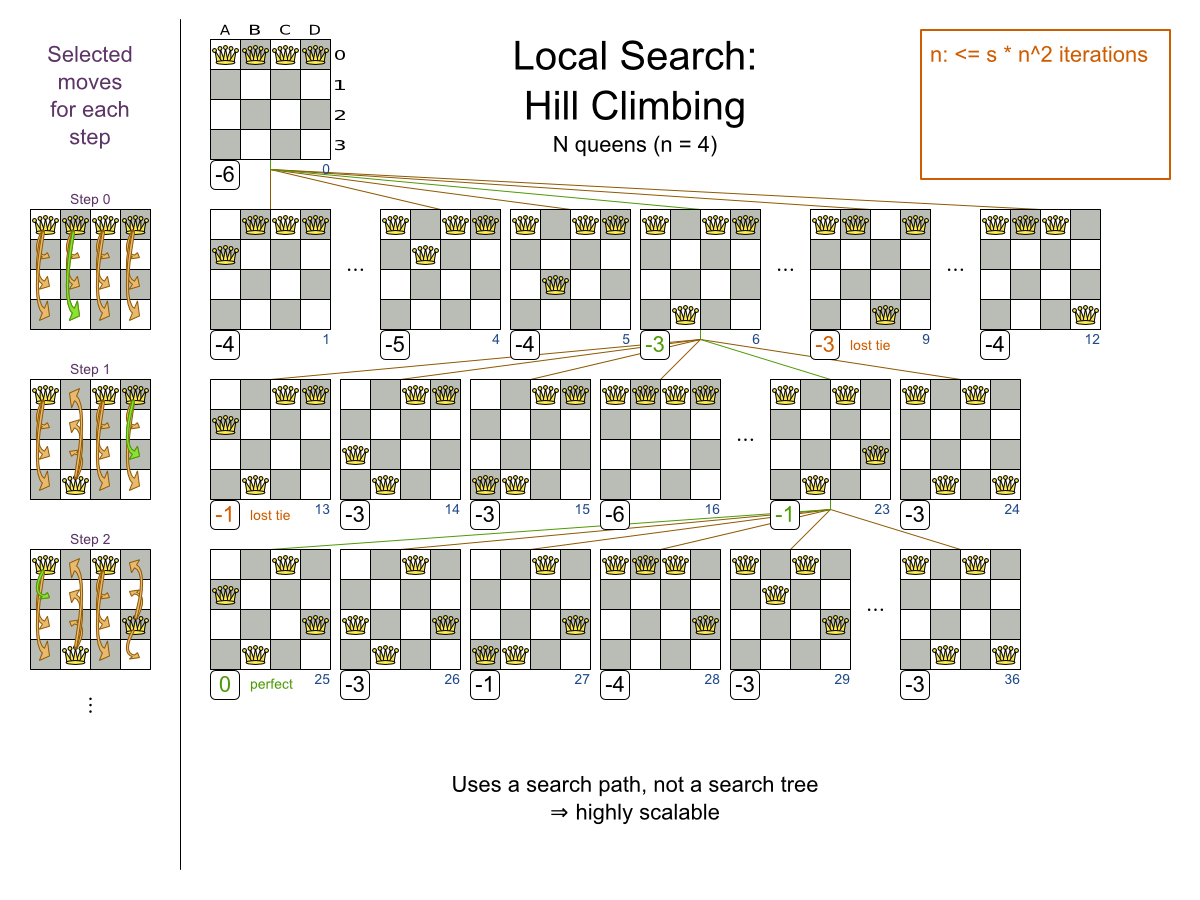

Place n queens on an n sized chessboard so that no 2 queens can attack each other. The most common n queens puzzle is the 8 queens puzzle, with n = 8:

Constraints:

- Use a chessboard of n columns and n rows.

- Place n queens on the chessboard.

- No 2 queens can attack each other. A queen can attack any other queen on the same horizontal, vertical or diagonal line.

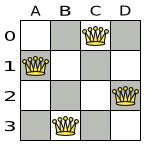

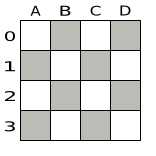

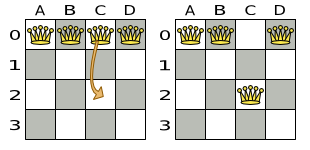

This documentation heavily uses the 4 queens puzzle as the primary example.

A proposed solution could be:

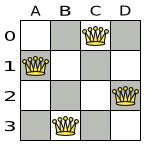

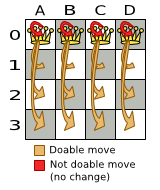

Figure 3.1. A Wrong Solution for the 4 Queens Puzzle

The above solution is wrong because queens A1 and B0 can attack each other (so can queens B0 and D0). Removing queen B0 would respect the “no 2 queens can attack each other” constraint, but would break the “place n queens” constraint.

Below is a correct solution:

Figure 3.2. A Correct Solution for the 4 Queens Puzzle

All the constraints have been met, so the solution is correct. Note that most n queens puzzles have multiple correct solutions. We’ll focus on finding a single correct solution for a given n, not on finding the number of possible correct solutions for a given n.

3.2.1.2. Problem Size

    4queens has 4 queens with a search space of 256.
    8queens has 8 queens with a search space of 10^7.
    16queens has 16 queens with a search space of 10^19.
    32queens has 32 queens with a search space of 10^48.
    64queens has 64 queens with a search space of 10^115.
    256queens has 256 queens with a search space of 10^616.

The implementation of the N queens example has not been optimized because it functions as a beginner example. Nevertheless, it can easily handle 64 queens. With a few changes it has been shown to easily handle 5000 queens and more.

3.2.1.3. Domain Model

Use a good domain model: it will be easier to understand and solve your planning problem. This is the domain model for the n queens example:

    public class Column {

        private int index;

        // ... getters and setters

    }

    public class Row {

        private int index;

        // ... getters and setters

    }

    public class Queen {

        private Column column;
        private Row row;

        public int getAscendingDiagonalIndex() {...}
        public int getDescendingDiagonalIndex() {...}

        // ... getters and setters

    }

A Queen instance has a Column (for example: 0 is column A, 1 is column B, …) and a Row (its row, for example: 0 is row 0, 1 is row 1, …). Based on the column and the row, the ascending diagonal line as well as the descending diagonal line can be calculated. The column and row indexes start from the upper left corner of the chessboard.

    public class NQueens implements Solution<SimpleScore> {

        private int n;
        private List<Column> columnList;
        private List<Row> rowList;

        private List<Queen> queenList;

        private SimpleScore score;

        // ... getters and setters

    }

A single NQueens instance contains a list of all Queen instances. It is the Solution implementation which will be supplied to, solved by and retrieved from the Solver. Notice that in the 4 queens example, NQueens’s getN() method will always return 4.

Table 3.2. A Solution for 4 Queens Shown in the Domain Model

| Queen | columnIndex | rowIndex | ascendingDiagonalIndex (columnIndex + rowIndex) | descendingDiagonalIndex (columnIndex - rowIndex) |
|---|---|---|---|---|
| A1 | 0 | 1 | 1 (**) | -1 |
| B0 | 1 | 0 (*) | 1 (**) | 1 |
| C2 | 2 | 2 | 4 | 0 |
| D0 | 3 | 0 (*) | 3 | 3 |

When 2 queens share the same column, row or diagonal line, such as (*) and (**), they can attack each other.
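The attack check described above can be sketched directly from the two diagonal formulas in the table header. The class below is a hypothetical, self-contained illustration that works on plain column and row indexes instead of the Queen, Column and Row classes; the second placement in main is a known valid 4 queens placement and is not necessarily the one shown in Figure 3.2.

```java
class QueenAttackSketch {

    /** Same formula as in the table header: columnIndex + rowIndex. */
    static int ascendingDiagonalIndex(int columnIndex, int rowIndex) {
        return columnIndex + rowIndex;
    }

    /** Same formula as in the table header: columnIndex - rowIndex. */
    static int descendingDiagonalIndex(int columnIndex, int rowIndex) {
        return columnIndex - rowIndex;
    }

    /** Two queens attack each other if they share a column, a row or a diagonal. */
    static boolean canAttack(int column1, int row1, int column2, int row2) {
        return column1 == column2
                || row1 == row2
                || ascendingDiagonalIndex(column1, row1) == ascendingDiagonalIndex(column2, row2)
                || descendingDiagonalIndex(column1, row1) == descendingDiagonalIndex(column2, row2);
    }

    /** Counts the attacking pairs; queens[i] = {columnIndex, rowIndex}. */
    static int countAttackingPairs(int[][] queens) {
        int count = 0;
        for (int i = 0; i < queens.length; i++) {
            for (int j = i + 1; j < queens.length; j++) {
                if (canAttack(queens[i][0], queens[i][1], queens[j][0], queens[j][1])) {
                    count++;
                }
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // The wrong solution from the table: A1, B0, C2, D0.
        int[][] wrong = {{0, 1}, {1, 0}, {2, 2}, {3, 0}};
        System.out.println(countAttackingPairs(wrong)); // prints 2 (A1-B0 and B0-D0)

        // A valid placement: A1, B3, C0, D2.
        int[][] valid = {{0, 1}, {1, 3}, {2, 0}, {3, 2}};
        System.out.println(countAttackingPairs(valid)); // prints 0
    }
}
```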

3.2.2. Cloud Balancing

This example is explained in a tutorial.

3.2.3. Traveling Salesman (TSP - Traveling Salesman Problem)

3.2.3.1. Problem Description

Given a list of cities, find the shortest tour for a salesman that visits each city exactly once.

The problem is defined on Wikipedia. It is one of the most intensively studied problems in computational mathematics. Yet, in the real world, it’s often only part of a planning problem, along with other constraints, such as employee shift rostering constraints.

3.2.3.2. Problem Size

    dj38 has 38 cities with a search space of 10^58.
    europe40 has 40 cities with a search space of 10^62.
    st70 has 70 cities with a search space of 10^126.
    pcb442 has 442 cities with a search space of 10^1166.
    lu980 has 980 cities with a search space of 10^2927.

3.2.3.3. Problem Difficulty

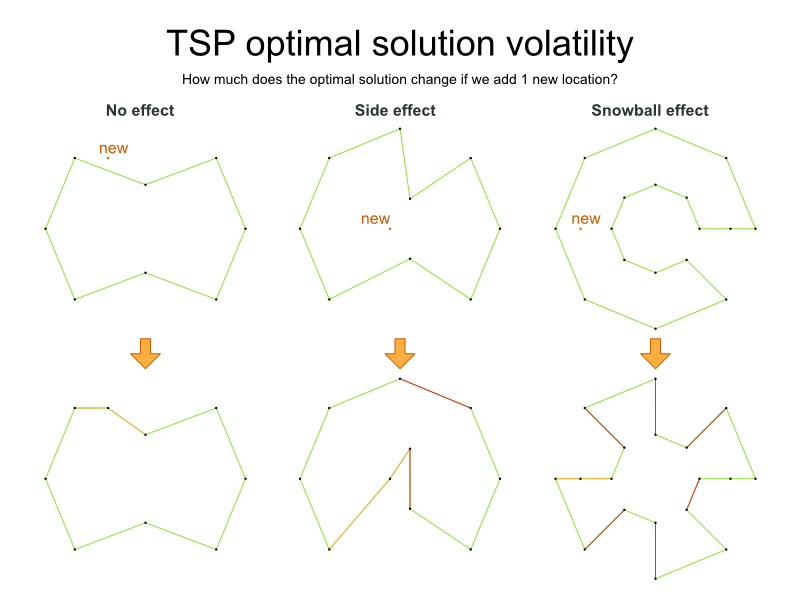

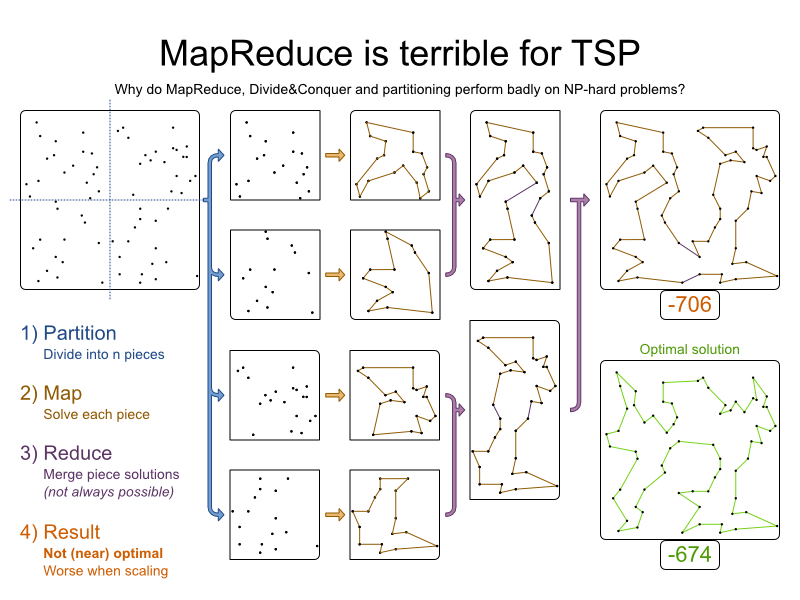

Despite TSP’s simple definition, the problem is surprisingly hard to solve. Because it’s an NP-hard problem (like most planning problems), the optimal solution for a specific problem dataset can change a lot when that problem dataset is slightly altered:

3.2.4. Dinner Party

3.2.4.1. Problem Description

Miss Manners is throwing another dinner party.

- This time she invited 144 guests and prepared 12 round tables with 12 seats each.

- Every guest should sit next to someone (left and right) of the opposite gender.

- And that neighbour should have at least one hobby in common with the guest.

- At every table, there should be 2 politicians, 2 doctors, 2 socialites, 2 coaches, 2 teachers and 2 programmers.

- And the 2 politicians, 2 doctors, 2 coaches and 2 programmers shouldn’t be the same kind at a table.

Drools Expert also has the normal Miss Manners example (which is much smaller) and employs an exhaustive heuristic to solve it. Planner’s implementation is far more scalable because it uses heuristics to find the best solution and Drools Expert to calculate the score of each solution.

3.2.4.2. Problem Size

wedding01 has 18 jobs, 144 guests, 288 hobby practicians, 12 tables and 144 seats with a search space of 10^310.

3.2.5. Tennis Club Scheduling

3.2.5.1. Problem Description

Every week the tennis club has 4 teams playing round robin against each other. Assign those 4 spots to the teams fairly.

Hard constraints:

- Conflict: A team can only play once per day.

- Unavailability: Some teams are unavailable on some dates.

Medium constraints:

- Fair assignment: All teams should play an (almost) equal number of times.

Soft constraints:

- Evenly confrontation: Each team should play against every other team an equal number of times.

3.2.5.2. Problem Size

munich-7teams has 7 teams, 18 days, 12 unavailabilityPenalties and 72 teamAssignments with a search space of 10^60.

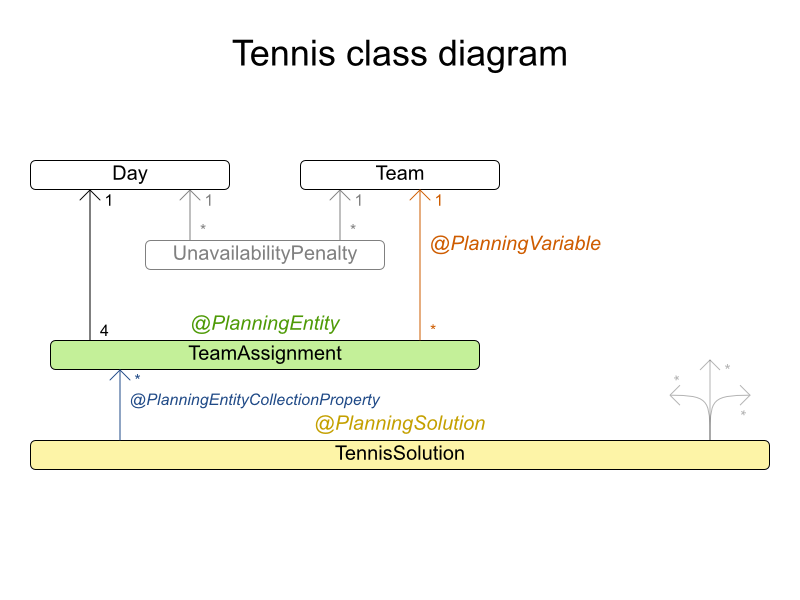

3.2.5.3. Domain Model

3.2.6. Meeting Scheduling

3.2.6.1. Problem Description

Assign each meeting to a starting time and a room. Meetings have different durations.

Hard constraints:

- Room conflict: 2 meetings must not use the same room at the same time.

- Required attendance: A person cannot have 2 required meetings at the same time.

Medium constraints:

- Preferred attendance: A person cannot have 2 preferred meetings at the same time, nor a preferred and a required meeting at the same time.

Soft constraints:

- Sooner rather than later: Schedule all meetings as soon as possible.

3.2.6.2. Problem Size

    50meetings-160timegrains-5rooms has 50 meetings, 160 timeGrains and 5 rooms with a search space of 10^145.
    100meetings-320timegrains-5rooms has 100 meetings, 320 timeGrains and 5 rooms with a search space of 10^320.

3.3. Real Examples

3.3.1. Course Timetabling (ITC 2007 Track 3 - Curriculum Course Scheduling)

3.3.1.1. Problem Description

Schedule each lecture into a timeslot and into a room.

Hard constraints:

- Teacher conflict: A teacher must not have 2 lectures in the same period.

- Curriculum conflict: A curriculum must not have 2 lectures in the same period.

- Room occupancy: 2 lectures must not be in the same room in the same period.

- Unavailable period (specified per dataset): A specific lecture must not be assigned to a specific period.

Soft constraints:

- Room capacity: A room’s capacity should not be less than the number of students in its lecture.

- Minimum working days: Lectures of the same course should be spread out into a minimum number of days.

- Curriculum compactness: Lectures belonging to the same curriculum should be adjacent to each other (so in consecutive periods).

- Room stability: Lectures of the same course should be assigned the same room.

The problem is defined by the International Timetabling Competition 2007 track 3.

3.3.1.2. Problem Size

- comp01 has 24 teachers, 14 curricula, 30 courses, 160 lectures, 30 periods, 6 rooms and 53 unavailable period constraints with a search space of 10^360.

- comp02 has 71 teachers, 70 curricula, 82 courses, 283 lectures, 25 periods, 16 rooms and 513 unavailable period constraints with a search space of 10^736.

- comp03 has 61 teachers, 68 curricula, 72 courses, 251 lectures, 25 periods, 16 rooms and 382 unavailable period constraints with a search space of 10^653.

- comp04 has 70 teachers, 57 curricula, 79 courses, 286 lectures, 25 periods, 18 rooms and 396 unavailable period constraints with a search space of 10^758.

- comp05 has 47 teachers, 139 curricula, 54 courses, 152 lectures, 36 periods, 9 rooms and 771 unavailable period constraints with a search space of 10^381.

- comp06 has 87 teachers, 70 curricula, 108 courses, 361 lectures, 25 periods, 18 rooms and 632 unavailable period constraints with a search space of 10^957.

- comp07 has 99 teachers, 77 curricula, 131 courses, 434 lectures, 25 periods, 20 rooms and 667 unavailable period constraints with a search space of 10^1171.

- comp08 has 76 teachers, 61 curricula, 86 courses, 324 lectures, 25 periods, 18 rooms and 478 unavailable period constraints with a search space of 10^859.

- comp09 has 68 teachers, 75 curricula, 76 courses, 279 lectures, 25 periods, 18 rooms and 405 unavailable period constraints with a search space of 10^740.

- comp10 has 88 teachers, 67 curricula, 115 courses, 370 lectures, 25 periods, 18 rooms and 694 unavailable period constraints with a search space of 10^981.

- comp11 has 24 teachers, 13 curricula, 30 courses, 162 lectures, 45 periods, 5 rooms and 94 unavailable period constraints with a search space of 10^381.

- comp12 has 74 teachers, 150 curricula, 88 courses, 218 lectures, 36 periods, 11 rooms and 1368 unavailable period constraints with a search space of 10^566.

- comp13 has 77 teachers, 66 curricula, 82 courses, 308 lectures, 25 periods, 19 rooms and 468 unavailable period constraints with a search space of 10^824.

- comp14 has 68 teachers, 60 curricula, 85 courses, 275 lectures, 25 periods, 17 rooms and 486 unavailable period constraints with a search space of 10^722.
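The search space figures above appear consistent with every lecture being free to take any period and any room, that is, (periods × rooms)^lectures. This formula is inferred from the numbers and is not stated in this guide, and the class and method names below are hypothetical. A minimal Java sketch that reproduces the exponent under that assumption:

```java
// Hypothetical helper, not part of Planner. It estimates the order of
// magnitude of the search space, ASSUMING every lecture can be placed in
// any (period, room) combination.
final class SearchSpaceEstimate {

    // Returns floor(log10((periods * rooms) ^ lectures)).
    // Working in log space avoids overflowing any numeric type.
    static long exponentOfTen(int periods, int rooms, int lectures) {
        double log10PerLecture = Math.log10((double) periods * rooms);
        return (long) Math.floor(lectures * log10PerLecture);
    }

    public static void main(String[] args) {
        // comp01: 30 periods, 6 rooms, 160 lectures
        System.out.println("comp01: 10^" + exponentOfTen(30, 6, 160)); // prints "comp01: 10^360"
        // comp02: 25 periods, 16 rooms, 283 lectures
        System.out.println("comp02: 10^" + exponentOfTen(25, 16, 283)); // prints "comp02: 10^736"
    }
}
```

The estimate ignores all constraints, so it only bounds the number of candidate assignments, not the number of feasible ones.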

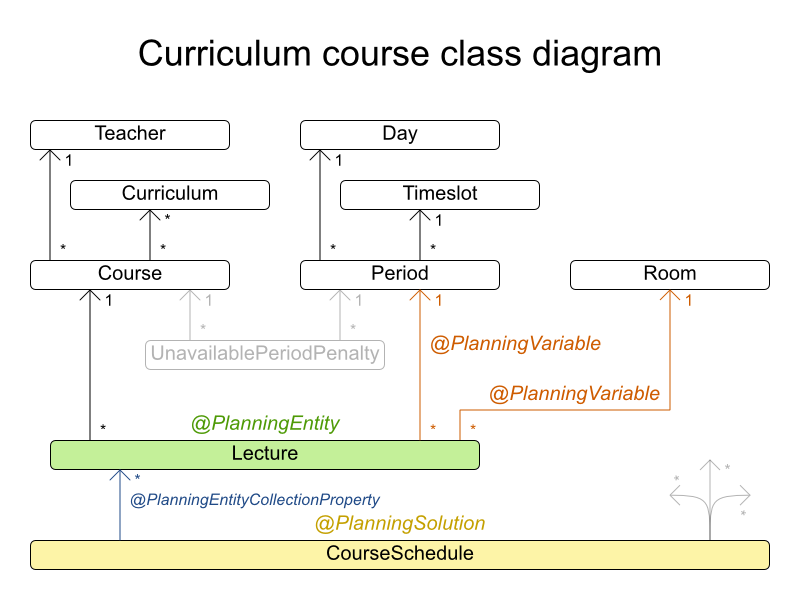

3.3.1.3. Domain Model

3.3.2. Machine Reassignment (Google ROADEF 2012)

3.3.2.1. Problem Description

Assign each process to a machine. Every process already has an original (unoptimized) assignment and requires a certain amount of each resource (such as CPU and RAM). This is a more complex version of the Cloud Balancing example.

Hard constraints:

- Maximum capacity: The maximum capacity for each resource for each machine must not be exceeded.

- Conflict: Processes of the same service must run on distinct machines.

- Spread: Processes of the same service must be spread out across locations.

- Dependency: The processes of a service depending on another service must run in the neighborhood of a process of the other service.

- Transient usage: Some resources are transient and count towards the maximum capacity of both the original machine and the newly assigned machine.

Soft constraints:

- Load: The safety capacity for each resource for each machine should not be exceeded.

- Balance: Leave room for future assignments by balancing the available resources on each machine.

- Process move cost: A process has a move cost.

- Service move cost: A service has a move cost.

- Machine move cost: Moving a process from machine A to machine B has another A-B specific move cost.

The problem is defined by the Google ROADEF/EURO Challenge 2012.
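To make the maximum capacity and transient usage hard constraints more concrete, here is a simplified Java sketch for a single resource. The class, record, and method names are hypothetical and do not come from the Planner example code. It only illustrates how a moved process can count against two machines at once.

```java
import java.util.List;

// Hypothetical, simplified model: one resource only, machines identified by index.
final class CapacityCheck {

    // A process with its usage of the resource, the machine it originally
    // ran on, and the machine it is now assigned to.
    record ProcessAssignment(long usage, int originalMachine, int machine) {}

    // Sums how far each machine exceeds its maximum capacity.
    // For a transient resource, a moved process also counts against the
    // machine it originally ran on.
    static long overload(List<ProcessAssignment> assignments,
                         long[] maximumCapacity, boolean transientResource) {
        long[] used = new long[maximumCapacity.length];
        for (ProcessAssignment p : assignments) {
            used[p.machine()] += p.usage();
            boolean moved = p.machine() != p.originalMachine();
            if (transientResource && moved) {
                used[p.originalMachine()] += p.usage();
            }
        }
        long overload = 0;
        for (int m = 0; m < used.length; m++) {
            overload += Math.max(0, used[m] - maximumCapacity[m]);
        }
        return overload;
    }

    public static void main(String[] args) {
        List<ProcessAssignment> assignments = List.of(
                new ProcessAssignment(6, 0, 1),  // moved from machine 0 to machine 1
                new ProcessAssignment(5, 1, 1)); // stays on machine 1
        long[] capacity = {5, 10};
        System.out.println(overload(assignments, capacity, false)); // prints 1
        System.out.println(overload(assignments, capacity, true));  // prints 2
    }
}
```

An overload of 0 means the constraint is satisfied for this resource; a real score calculation would repeat the check per resource.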

3.3.2.2. Problem Size

- model_a1_1 has 2 resources, 1 neighborhoods, 4 locations, 4 machines, 79 services, 100 processes and 1 balancePenalties with a search space of 10^60.

- model_a1_2 has 4 resources, 2 neighborhoods, 4 locations, 100 machines, 980 services, 1000 processes and 0 balancePenalties with a search space of 10^2000.

- model_a1_3 has 3 resources, 5 neighborhoods, 25 locations, 100 machines, 216 services, 1000 processes and 0 balancePenalties with a search space of 10^2000.

- model_a1_4 has 3 resources, 50 neighborhoods, 50 locations, 50 machines, 142 services, 1000 processes and 1 balancePenalties with a search space of 10^1698.

- model_a1_5 has 4 resources, 2 neighborhoods, 4 locations, 12 machines, 981 services, 1000 processes and 1 balancePenalties with a search space of 10^1079.

- model_a2_1 has 3 resources, 1 neighborhoods, 1 locations, 100 machines, 1000 services, 1000 processes and 0 balancePenalties with a search space of 10^2000.

- model_a2_2 has 12 resources, 5 neighborhoods, 25 locations, 100 machines, 170 services, 1000 processes and 0 balancePenalties with a search space of 10^2000.

- model_a2_3 has 12 resources, 5 neighborhoods, 25 locations, 100 machines, 129 services, 1000 processes and 0 balancePenalties with a search space of 10^2000.

- model_a2_4 has 12 resources, 5 neighborhoods, 25 locations, 50 machines, 180 services, 1000 processes and 1 balancePenalties with a search space of 10^1698.

- model_a2_5 has 12 resources, 5 neighborhoods, 25 locations, 50 machines, 153 services, 1000 processes and 0 balancePenalties with a search space of 10^1698.

- model_b_1 has 12 resources, 5 neighborhoods, 10 locations, 100 machines, 2512 services, 5000 processes and 0 balancePenalties with a search space of 10^10000.

- model_b_2 has 12 resources, 5 neighborhoods, 10 locations, 100 machines, 2462 services, 5000 processes and 1 balancePenalties with a search space of 10^10000.

- model_b_3 has 6 resources, 5 neighborhoods, 10 locations, 100 machines, 15025 services, 20000 processes and 0 balancePenalties with a search space of 10^40000.

- model_b_4 has 6 resources, 5 neighborhoods, 50 locations, 500 machines, 1732 services, 20000 processes and 1 balancePenalties with a search space of 10^53979.

- model_b_5 has 6 resources, 5 neighborhoods, 10 locations, 100 machines, 35082 services, 40000 processes and 0 balancePenalties with a search space of 10^80000.

- model_b_6 has 6 resources, 5 neighborhoods, 50 locations, 200 machines, 14680 services, 40000 processes and 1 balancePenalties with a search space of 10^92041.

- model_b_7 has 6 resources, 5 neighborhoods, 50 locations, 4000 machines, 15050 services, 40000 processes and 1 balancePenalties with a search space of 10^144082.

- model_b_8 has 3 resources, 5 neighborhoods, 10 locations, 100 machines, 45030 services, 50000 processes and 0 balancePenalties with a search space of 10^100000.

- model_b_9 has 3 resources, 5 neighborhoods, 100 locations, 1000 machines, 4609 services, 50000 processes and 1 balancePenalties with a search space of 10^150000.

- model_b_10 has 3 resources, 5 neighborhoods, 100 locations, 5000 machines, 4896 services, 50000 processes and 1 balancePenalties with a search space of 10^184948.

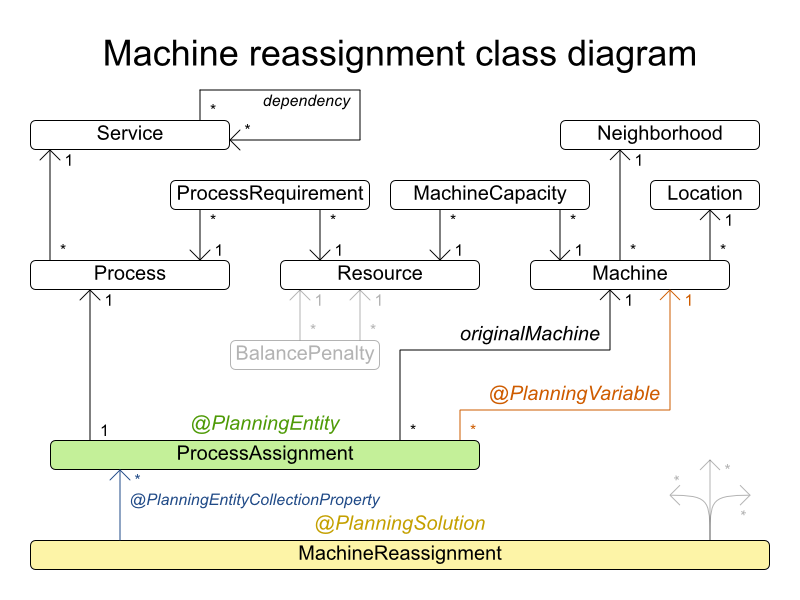

3.3.2.3. Domain Model

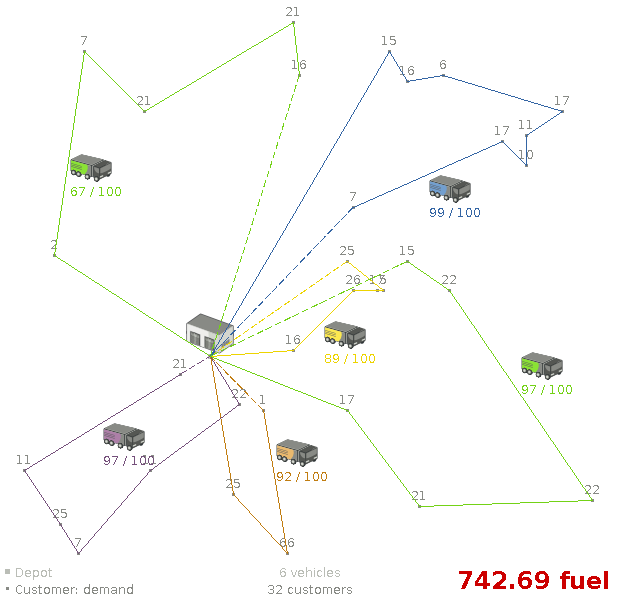

3.3.3. Vehicle Routing

3.3.3.1. Problem Description

Using a fleet of vehicles, pick up the objects of each customer and bring them to the depot. Each vehicle can service multiple customers, but it has a limited capacity.

Besides the basic case (CVRP), there is also a variant with time windows (CVRPTW).

Hard constraints:

- Vehicle capacity: a vehicle cannot carry more items than its capacity.

Time windows (only in CVRPTW):

- Travel time: Traveling from one location to another takes time.

- Customer service duration: a vehicle must stay at the customer for the length of the service duration.

- Customer ready time: a vehicle may arrive before the customer’s ready time, but it must wait until the ready time before servicing.

- Customer due time: a vehicle must arrive on time, before the customer’s due time.

Soft constraints:

- Total distance: minimize the total distance driven (fuel consumption) of all vehicles.

The capacitated vehicle routing problem (CVRP) and its time-windowed variant (CVRPTW) are defined by the VRP web.

3.3.3.2. Problem Size

CVRP instances (without time windows):

- A-n32-k5 has 1 depots, 5 vehicles and 31 customers with a search space of 10^46.

- A-n33-k5 has 1 depots, 5 vehicles and 32 customers with a search space of 10^48.

- A-n33-k6 has 1 depots, 6 vehicles and 32 customers with a search space of 10^48.

- A-n34-k5 has 1 depots, 5 vehicles and 33 customers with a search space of 10^50.

- A-n36-k5 has 1 depots, 5 vehicles and 35 customers with a search space of 10^54.

- A-n37-k5 has 1 depots, 5 vehicles and 36 customers with a search space of 10^56.

- A-n37-k6 has 1 depots, 6 vehicles and 36 customers with a search space of 10^56.

- A-n38-k5 has 1 depots, 5 vehicles and 37 customers with a search space of 10^58.

- A-n39-k5 has 1 depots, 5 vehicles and 38 customers with a search space of 10^60.

- A-n39-k6 has 1 depots, 6 vehicles and 38 customers with a search space of 10^60.

- A-n44-k7 has 1 depots, 7 vehicles and 43 customers with a search space of 10^70.

- A-n45-k6 has 1 depots, 6 vehicles and 44 customers with a search space of 10^72.

- A-n45-k7 has 1 depots, 7 vehicles and 44 customers with a search space of 10^72.

- A-n46-k7 has 1 depots, 7 vehicles and 45 customers with a search space of 10^74.

- A-n48-k7 has 1 depots, 7 vehicles and 47 customers with a search space of 10^78.

- A-n53-k7 has 1 depots, 7 vehicles and 52 customers with a search space of 10^89.

- A-n54-k7 has 1 depots, 7 vehicles and 53 customers with a search space of 10^91.

- A-n55-k9 has 1 depots, 9 vehicles and 54 customers with a search space of 10^93.

- A-n60-k9 has 1 depots, 9 vehicles and 59 customers with a search space of 10^104.

- A-n61-k9 has 1 depots, 9 vehicles and 60 customers with a search space of 10^106.

- A-n62-k8 has 1 depots, 8 vehicles and 61 customers with a search space of 10^108.

- A-n63-k10 has 1 depots, 10 vehicles and 62 customers with a search space of 10^111.

- A-n63-k9 has 1 depots, 9 vehicles and 62 customers with a search space of 10^111.

- A-n64-k9 has 1 depots, 9 vehicles and 63 customers with a search space of 10^113.

- A-n65-k9 has 1 depots, 9 vehicles and 64 customers with a search space of 10^115.

- A-n69-k9 has 1 depots, 9 vehicles and 68 customers with a search space of 10^124.

- A-n80-k10 has 1 depots, 10 vehicles and 79 customers with a search space of 10^149.

- F-n135-k7 has 1 depots, 7 vehicles and 134 customers with a search space of 10^285.

- F-n45-k4 has 1 depots, 4 vehicles and 44 customers with a search space of 10^72.

- F-n72-k4 has 1 depots, 4 vehicles and 71 customers with a search space of 10^131.

CVRPTW instances (with time windows):

- Solomon_025_C101 has 1 depots, 25 vehicles and 25 customers with a search space of 10^34.

- Solomon_025_C201 has 1 depots, 25 vehicles and 25 customers with a search space of 10^34.

- Solomon_025_R101 has 1 depots, 25 vehicles and 25 customers with a search space of 10^34.

- Solomon_025_R201 has 1 depots, 25 vehicles and 25 customers with a search space of 10^34.

- Solomon_025_RC101 has 1 depots, 25 vehicles and 25 customers with a search space of 10^34.

- Solomon_025_RC201 has 1 depots, 25 vehicles and 25 customers with a search space of 10^34.

- Solomon_100_C101 has 1 depots, 25 vehicles and 100 customers with a search space of 10^200.

- Solomon_100_C201 has 1 depots, 25 vehicles and 100 customers with a search space of 10^200.

- Solomon_100_R101 has 1 depots, 25 vehicles and 100 customers with a search space of 10^200.

- Solomon_100_R201 has 1 depots, 25 vehicles and 100 customers with a search space of 10^200.

- Solomon_100_RC101 has 1 depots, 25 vehicles and 100 customers with a search space of 10^200.

- Solomon_100_RC201 has 1 depots, 25 vehicles and 100 customers with a search space of 10^200.

- Homberger_0200_C1_2_1 has 1 depots, 50 vehicles and 200 customers with a search space of 10^460.

- Homberger_0200_C2_2_1 has 1 depots, 50 vehicles and 200 customers with a search space of 10^460.

- Homberger_0200_R1_2_1 has 1 depots, 50 vehicles and 200 customers with a search space of 10^460.

- Homberger_0200_R2_2_1 has 1 depots, 50 vehicles and 200 customers with a search space of 10^460.

- Homberger_0200_RC1_2_1 has 1 depots, 50 vehicles and 200 customers with a search space of 10^460.

- Homberger_0200_RC2_2_1 has 1 depots, 50 vehicles and 200 customers with a search space of 10^460.

- Homberger_0400_C1_4_1 has 1 depots, 100 vehicles and 400 customers with a search space of 10^1040.

- Homberger_0400_C2_4_1 has 1 depots, 100 vehicles and 400 customers with a search space of 10^1040.

- Homberger_0400_R1_4_1 has 1 depots, 100 vehicles and 400 customers with a search space of 10^1040.

- Homberger_0400_R2_4_1 has 1 depots, 100 vehicles and 400 customers with a search space of 10^1040.

- Homberger_0400_RC1_4_1 has 1 depots, 100 vehicles and 400 customers with a search space of 10^1040.

- Homberger_0400_RC2_4_1 has 1 depots, 100 vehicles and 400 customers with a search space of 10^1040.

- Homberger_0600_C1_6_1 has 1 depots, 150 vehicles and 600 customers with a search space of 10^1666.

- Homberger_0600_C2_6_1 has 1 depots, 150 vehicles and 600 customers with a search space of 10^1666.

- Homberger_0600_R1_6_1 has 1 depots, 150 vehicles and 600 customers with a search space of 10^1666.

- Homberger_0600_R2_6_1 has 1 depots, 150 vehicles and 600 customers with a search space of 10^1666.

- Homberger_0600_RC1_6_1 has 1 depots, 150 vehicles and 600 customers with a search space of 10^1666.

- Homberger_0600_RC2_6_1 has 1 depots, 150 vehicles and 600 customers with a search space of 10^1666.

- Homberger_0800_C1_8_1 has 1 depots, 200 vehicles and 800 customers with a search space of 10^2322.

- Homberger_0800_C2_8_1 has 1 depots, 200 vehicles and 800 customers with a search space of 10^2322.

- Homberger_0800_R1_8_1 has 1 depots, 200 vehicles and 800 customers with a search space of 10^2322.

- Homberger_0800_R2_8_1 has 1 depots, 200 vehicles and 800 customers with a search space of 10^2322.

- Homberger_0800_RC1_8_1 has 1 depots, 200 vehicles and 800 customers with a search space of 10^2322.

- Homberger_0800_RC2_8_1 has 1 depots, 200 vehicles and 800 customers with a search space of 10^2322.

- Homberger_1000_C110_1 has 1 depots, 250 vehicles and 1000 customers with a search space of 10^3000.

- Homberger_1000_C210_1 has 1 depots, 250 vehicles and 1000 customers with a search space of 10^3000.

- Homberger_1000_R110_1 has 1 depots, 250 vehicles and 1000 customers with a search space of 10^3000.

- Homberger_1000_R210_1 has 1 depots, 250 vehicles and 1000 customers with a search space of 10^3000.

- Homberger_1000_RC110_1 has 1 depots, 250 vehicles and 1000 customers with a search space of 10^3000.

- Homberger_1000_RC210_1 has 1 depots, 250 vehicles and 1000 customers with a search space of 10^3000.

3.3.3.3. Domain Model

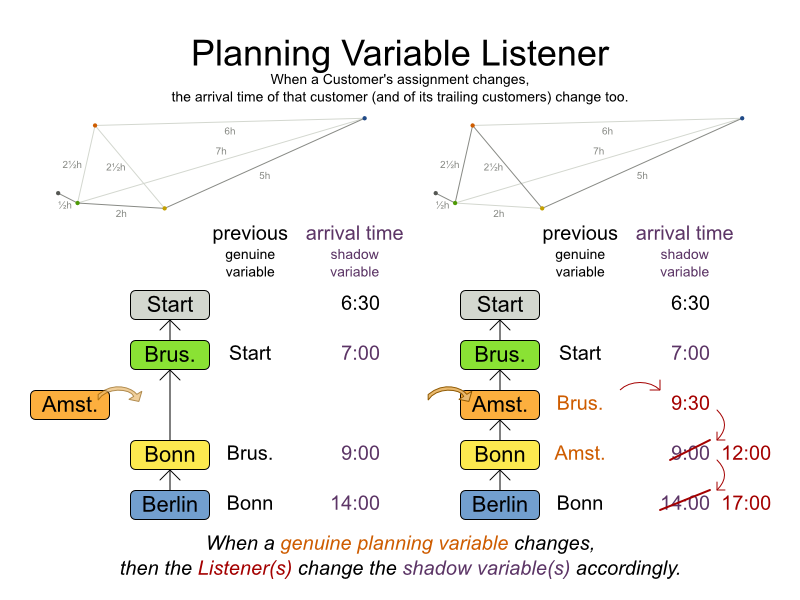

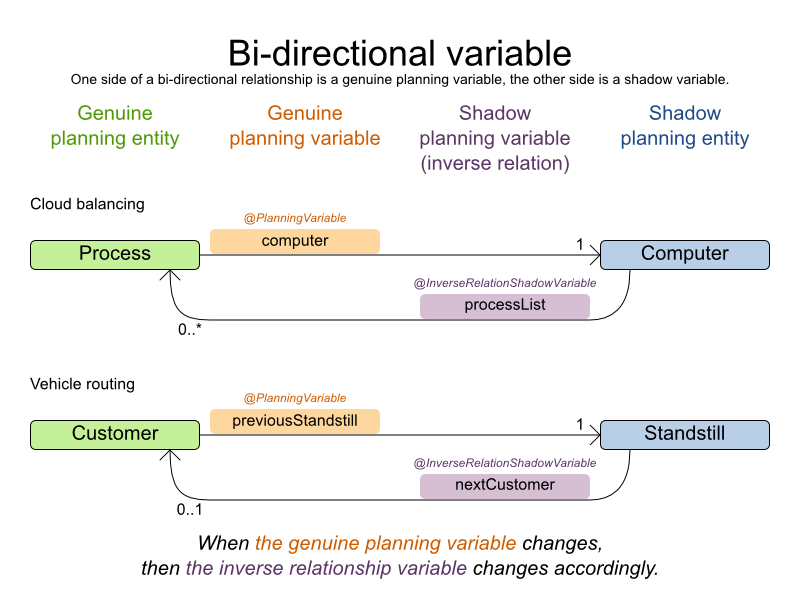

The vehicle routing with time windows domain model makes heavy use of shadow variables. This allows it to express its constraints more naturally, because properties such as arrivalTime and departureTime are directly available on the domain model.
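As an illustration of what values such as arrivalTime and departureTime represent, the following Java sketch recomputes them from scratch along one vehicle's route, using the time window rules from the problem description. It is not how Planner maintains shadow variables internally; the class, record, and method names are hypothetical, and all times are assumed to share one unit.

```java
import java.util.List;

// Hypothetical sketch: derives arrival times along a single vehicle's route.
final class TimeWindowChain {

    // One customer visit; travelTimeFromPrevious is measured from the previous
    // stop (the depot for the first visit).
    record Visit(long travelTimeFromPrevious, long readyTime,
                 long dueTime, long serviceDuration) {}

    // Walks the route in order and returns the arrival time at each visit.
    static long[] arrivalTimes(long depotDepartureTime, List<Visit> route) {
        long[] arrivals = new long[route.size()];
        long previousDeparture = depotDepartureTime;
        for (int i = 0; i < route.size(); i++) {
            Visit v = route.get(i);
            long arrival = previousDeparture + v.travelTimeFromPrevious();
            arrivals[i] = arrival;
            // An early vehicle waits until the ready time before servicing.
            long serviceStart = Math.max(arrival, v.readyTime());
            previousDeparture = serviceStart + v.serviceDuration();
        }
        return arrivals;
    }

    // Total amount by which arrivals exceed the customers' due times.
    static long totalLateness(long depotDepartureTime, List<Visit> route) {
        long[] arrivals = arrivalTimes(depotDepartureTime, route);
        long lateness = 0;
        for (int i = 0; i < arrivals.length; i++) {
            lateness += Math.max(0, arrivals[i] - route.get(i).dueTime());
        }
        return lateness;
    }

    public static void main(String[] args) {
        List<Visit> route = List.of(
                new Visit(10, 20, 30, 5),  // arrives at 10, waits until 20, leaves at 25
                new Visit(10, 0, 30, 5));  // arrives at 35, which is 5 after the due time
        System.out.println(totalLateness(0, route)); // prints 5
    }
}
```

A lateness of 0 corresponds to satisfying the customer due time hard constraint for that route.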

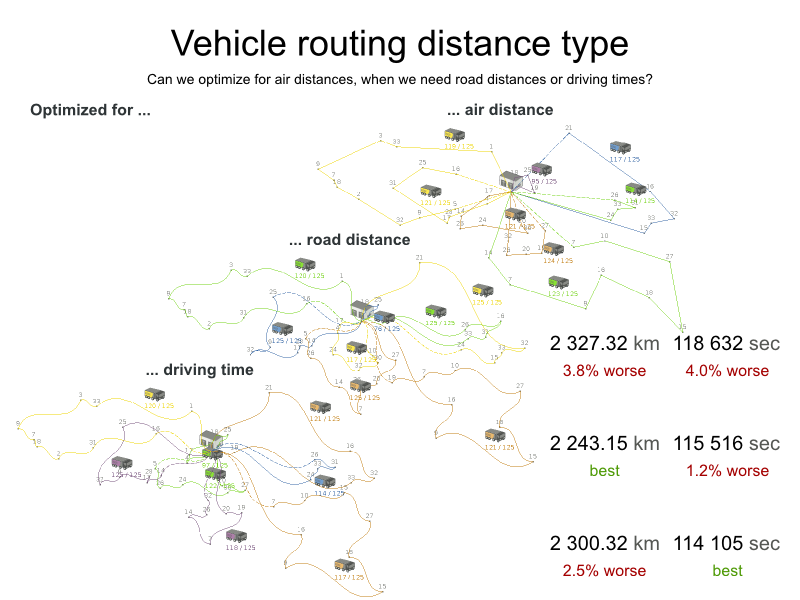

3.3.3.4. Road Distances Instead of Air Distances

In the real world, vehicles can’t follow a straight line from location to location: they have to use roads and highways. From a business point of view, this matters a lot.

For the optimization algorithm, this doesn’t matter much, as long as the distance between 2 points can be looked up (and is preferably precalculated). The road cost doesn’t even need to be a distance: it can also be travel time, fuel cost, or a weighted function of those. There are several technologies available to precalculate road costs, such as GraphHopper (embeddable, offline Java engine), Open MapQuest (web service) and Google Maps Client API (web service).
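A precalculated road cost can be as simple as a matrix that is filled once and then only read during solving. The Java sketch below assumes the distance and travel time matrices were already obtained from some routing engine; it does not call any real routing API, and the class name, parameters, and weights are hypothetical.

```java
// Hypothetical sketch: a precalculated, weighted road cost between locations.
final class RoadCostMatrix {

    private final double[][] cost; // cost[from][to], filled once up front

    // Blends distance and travel time with caller-chosen weights.
    // Both input matrices are assumed to be square and of equal size.
    RoadCostMatrix(double[][] distanceKm, double[][] travelMinutes,
                   double distanceWeight, double timeWeight) {
        int n = distanceKm.length;
        cost = new double[n][n];
        for (int from = 0; from < n; from++) {
            for (int to = 0; to < n; to++) {
                cost[from][to] = distanceWeight * distanceKm[from][to]
                        + timeWeight * travelMinutes[from][to];
            }
        }
    }

    // Constant-time lookup, cheap enough to call for every move evaluation.
    double between(int from, int to) {
        return cost[from][to];
    }

    public static void main(String[] args) {
        double[][] km = {{0, 10}, {12, 0}};      // road distances may be asymmetric
        double[][] minutes = {{0, 15}, {20, 0}};
        RoadCostMatrix matrix = new RoadCostMatrix(km, minutes, 1.0, 2.0);
        System.out.println(matrix.between(0, 1)); // prints 40.0
        System.out.println(matrix.between(1, 0)); // prints 52.0
    }
}
```

Keeping the matrix directional (from, to) matters because one-way streets make road costs asymmetric, unlike air distances.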

There are also several technologies to render the result, such as Leaflet and Google Maps for developers. The optaplanner-webexamples-*.war contains an example that demonstrates such rendering.

It’s even possible to render the actual road routes with GraphHopper or Google Map Directions, but because routes overlap on highways, it can become harder to see the standstill order.

Take special care that the road costs between 2 points use the same optimization criterion as the one used in Planner. For example, GraphHopper and similar engines return the fastest route by default, not the shortest route. Don’t use the km (or miles) distances of the fastest GPS routes to optimize the shortest trip in Planner: this leads to a suboptimal solution.

Contrary to popular belief, most users don’t want the shortest route: they want the fastest route instead. They prefer highways over normal roads, and normal roads over dirt roads. In the real world, the fastest and shortest routes are rarely the same.

3.3.4. Project Job Scheduling

3.3.4.1. Problem Description

Schedule all jobs in time and execution mode to minimize project delays. Each job is part of a project. A job can be executed in different ways: each way is an execution mode that implies a different duration but also different resource usages. This is a form of flexible job shop scheduling.

Hard constraints:

- Job precedence: a job can only start when all its predecessor jobs are finished.

- Resource capacity: do not use more resources than available.

  - Resources are local (shared between jobs of the same project) or global (shared between all jobs).

  - Resources are renewable (capacity available per day) or nonrenewable (capacity available for all days).

Medium constraints:

- Total project delay: minimize the duration (makespan) of each project.

Soft constraints:

- Total makespan: minimize the duration of the whole multi-project schedule.

The problem is defined by the MISTA 2013 challenge.
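The job precedence constraint and the makespan objective can be sketched in a few lines of Java. This is a simplified illustration, not the Planner example code: the names are hypothetical, time is an integer count of days, and makespan is taken here as the latest end time of a set of jobs that starts at time 0.

```java
import java.util.List;

// Hypothetical sketch of job precedence checking and makespan calculation.
final class ProjectSchedule {

    // A scheduled job: chosen start, the duration implied by its chosen
    // execution mode, and the indexes of its predecessor jobs in the same list.
    record Allocation(int start, int duration, List<Integer> predecessors) {
        int end() {
            return start + duration;
        }
    }

    // Total time by which jobs start before one of their predecessors ends.
    static int precedenceViolation(List<Allocation> jobs) {
        int violation = 0;
        for (Allocation job : jobs) {
            for (int p : job.predecessors()) {
                violation += Math.max(0, jobs.get(p).end() - job.start());
            }
        }
        return violation;
    }

    // Latest end time over all jobs.
    static int makespan(List<Allocation> jobs) {
        int latest = 0;
        for (Allocation job : jobs) {
            latest = Math.max(latest, job.end());
        }
        return latest;
    }

    public static void main(String[] args) {
        List<Allocation> jobs = List.of(
                new Allocation(0, 3, List.of()),      // ends at 3
                new Allocation(2, 4, List.of(0)),     // starts 1 day too early
                new Allocation(6, 2, List.of(0, 1))); // ends at 8
        System.out.println(precedenceViolation(jobs)); // prints 1
        System.out.println(makespan(jobs));            // prints 8
    }
}
```

Choosing a different execution mode changes a job's duration, which shifts both numbers; that coupling is what makes the problem a scheduling and mode-selection problem at once.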

3.3.4.2. Problem Size

- Schedule A-1 has 2 projects, 24 jobs, 64 execution modes, 7 resources and 150 resource requirements.

- Schedule A-2 has 2 projects, 44 jobs, 124 execution modes, 7 resources and 420 resource requirements.

- Schedule A-3 has 2 projects, 64 jobs, 184 execution modes, 7 resources and 630 resource requirements.

- Schedule A-4 has 5 projects, 60 jobs, 160 execution modes, 16 resources and 390 resource requirements.

- Schedule A-5 has 5 projects, 110 jobs, 310 execution modes, 16 resources and 900 resource requirements.

- Schedule A-6 has 5 projects, 160 jobs, 460 execution modes, 16 resources and 1440 resource requirements.

- Schedule A-7 has 10 projects, 120 jobs, 320 execution modes, 22 resources and 900 resource requirements.

- Schedule A-8 has 10 projects, 220 jobs, 620 execution modes, 22 resources and 1860 resource requirements.

- Schedule A-9 has 10 projects, 320 jobs, 920 execution modes, 31 resources and 2880 resource requirements.

- Schedule A-10 has 10 projects, 320 jobs, 920 execution modes, 31 resources and 2970 resource requirements.

- Schedule B-1 has 10 projects, 120 jobs, 320 execution modes, 31 resources and 900 resource requirements.

- Schedule B-2 has 10 projects, 220 jobs, 620 execution modes, 22 resources and 1740 resource requirements.

- Schedule B-3 has 10 projects, 320 jobs, 920 execution modes, 31 resources and 3060 resource requirements.

- Schedule B-4 has 15 projects, 180 jobs, 480 execution modes, 46 resources and 1530 resource requirements.

- Schedule B-5 has 15 projects, 330 jobs, 930 execution modes, 46 resources and 2760 resource requirements.

- Schedule B-6 has 15 projects, 480 jobs, 1380 execution modes, 46 resources and 4500 resource requirements.

- Schedule B-7 has 20 projects, 240 jobs, 640 execution modes, 61 resources and 1710 resource requirements.

- Schedule B-8 has 20 projects, 440 jobs, 1240 execution modes, 42 resources and 3180 resource requirements.

- Schedule B-9 has 20 projects, 640 jobs, 1840 execution modes, 61 resources and 5940 resource requirements.

- Schedule B-10 has 20 projects, 460 jobs, 1300 execution modes, 42 resources and 4260 resource requirements.

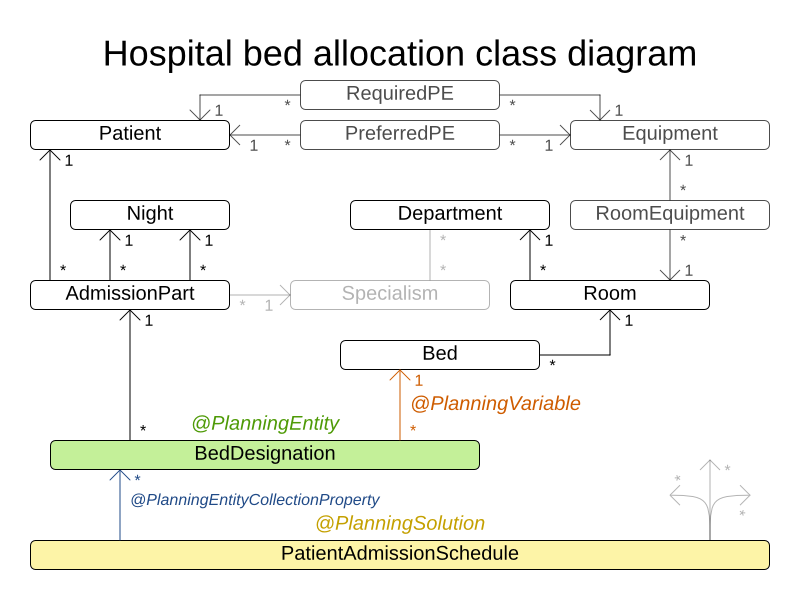

3.3.5. Hospital Bed Planning (PAS - Patient Admission Scheduling)

3.3.5.1. Problem Description

Assign each incoming patient to a bed for each night of the patient’s hospital stay. Each bed belongs to a room and each room belongs to a department. The arrival and departure dates of the patients are fixed: only a bed needs to be assigned for each night.

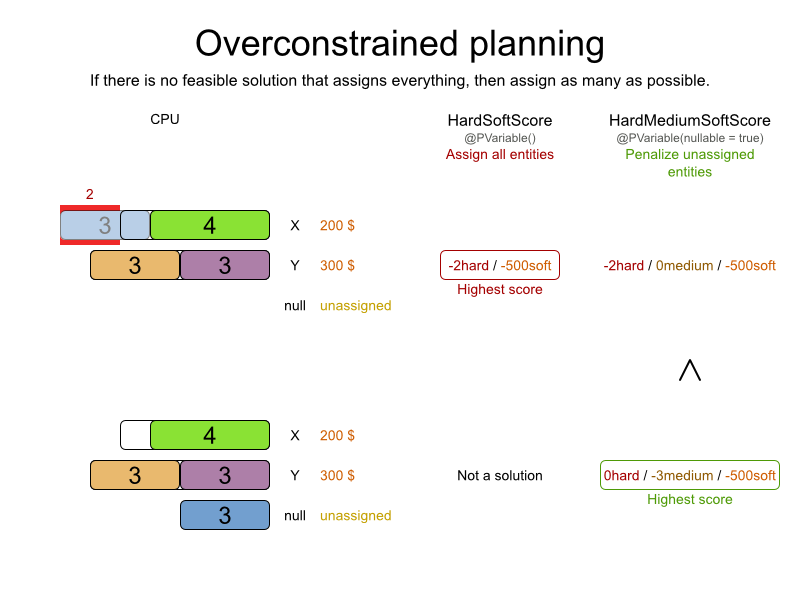

This problem features overconstrained datasets.

Hard constraints:

- 2 patients must not be assigned to the same bed in the same night. Weight: -1000hard * conflictNightCount.

- A room can have a gender limitation: only females, only males, the same gender in the same night or no gender limitation at all. Weight: -50hard * nightCount.

- A department can have a minimum or maximum age. Weight: -100hard * nightCount.

- A patient can require a room with specific equipment(s). Weight: -50hard * nightCount.

Medium constraints:

- Assign every patient to a bed, unless the dataset is overconstrained. Weight: -1medium * nightCount.

Soft constraints:

- A patient can prefer a maximum room size, for example if he/she wants a single room. Weight: -8soft * nightCount.

- A patient is best assigned to a department that specializes in his/her problem. Weight: -10soft * nightCount.

- A patient is best assigned to a room that specializes in his/her problem. Weight: -20soft * nightCount.

  - That room speciality should be priority 1. Weight: -10soft * (priority - 1) * nightCount.

- A patient can prefer a room with specific equipment(s). Weight: -20soft * nightCount.

The problem is a variant on Kaho’s Patient Scheduling and the datasets come from real world hospitals.
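To show how a weight such as -1000hard * conflictNightCount turns into a score contribution, here is a small Java sketch for the same-bed constraint. The names are hypothetical, nights are represented as integer indexes, and only the hard level of the score is modeled.

```java
// Hypothetical sketch: hard score contribution of two stays sharing a bed.
final class BedConflict {

    // A stay occupying one bed from firstNight to lastNight, both inclusive.
    record Stay(int bed, int firstNight, int lastNight) {}

    // Number of nights on which both stays occupy the same bed.
    static int conflictNightCount(Stay a, Stay b) {
        if (a.bed() != b.bed()) {
            return 0;
        }
        int first = Math.max(a.firstNight(), b.firstNight());
        int last = Math.min(a.lastNight(), b.lastNight());
        return Math.max(0, last - first + 1);
    }

    // Applies the weight from the constraint list: -1000hard per conflicting night.
    static int hardScore(Stay a, Stay b) {
        return -1000 * conflictNightCount(a, b);
    }

    public static void main(String[] args) {
        Stay first = new Stay(1, 0, 4);
        Stay second = new Stay(1, 3, 6);              // overlaps on nights 3 and 4
        System.out.println(hardScore(first, second)); // prints -2000
    }
}
```

Scaling the penalty by the number of nights makes a long overlap proportionally worse than a short one, which gives the solver a gradient to follow.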

3.3.5.2. Problem Size

- testdata01 has 4 specialisms, 2 equipments, 4 departments, 98 rooms, 286 beds, 14 nights, 652 patients and 652 admissions with a search space of 10^1601.

- testdata02 has 6 specialisms, 2 equipments, 6 departments, 151 rooms, 465 beds, 14 nights, 755 patients and 755 admissions with a search space of 10^2013.

- testdata03 has 5 specialisms, 2 equipments, 5 departments, 131 rooms, 395 beds, 14 nights, 708 patients and 708 admissions with a search space of 10^1838.

- testdata04 has 6 specialisms, 2 equipments, 6 departments, 155 rooms, 471 beds, 14 nights, 746 patients and 746 admissions with a search space of 10^1994.

- testdata05 has 4 specialisms, 2 equipments, 4 departments, 102 rooms, 325 beds, 14 nights, 587 patients and 587 admissions with a search space of 10^1474.

- testdata06 has 4 specialisms, 2 equipments, 4 departments, 104 rooms, 313 beds, 14 nights, 685 patients and 685 admissions with a search space of 10^1709.

- testdata07 has 6 specialisms, 4 equipments, 6 departments, 162 rooms, 472 beds, 14 nights, 519 patients and 519 admissions with a search space of 10^1387.

- testdata08 has 6 specialisms, 4 equipments, 6 departments, 148 rooms, 441 beds, 21 nights, 895 patients and 895 admissions with a search space of 10^2366.

- testdata09 has 4 specialisms, 4 equipments, 4 departments, 105 rooms, 310 beds, 28 nights, 1400 patients and 1400 admissions with a search space of 10^3487.

- testdata10 has 4 specialisms, 4 equipments, 4 departments, 104 rooms, 308 beds, 56 nights, 1575 patients and 1575 admissions with a search space of 10^3919.

- testdata11 has 4 specialisms, 4 equipments, 4 departments, 107 rooms, 318 beds, 91 nights, 2514 patients and 2514 admissions with a search space of 10^6291.

- testdata12 has 4 specialisms, 4 equipments, 4 departments, 105 rooms, 310 beds, 84 nights, 2750 patients and 2750 admissions with a search space of 10^6851.

- testdata13 has 5 specialisms, 4 equipments, 5 departments, 125 rooms, 368 beds, 28 nights, 907 patients and 1109 admissions with a search space of 10^2845.

3.3.5.3. Domain Model

3.4. Difficult Examples

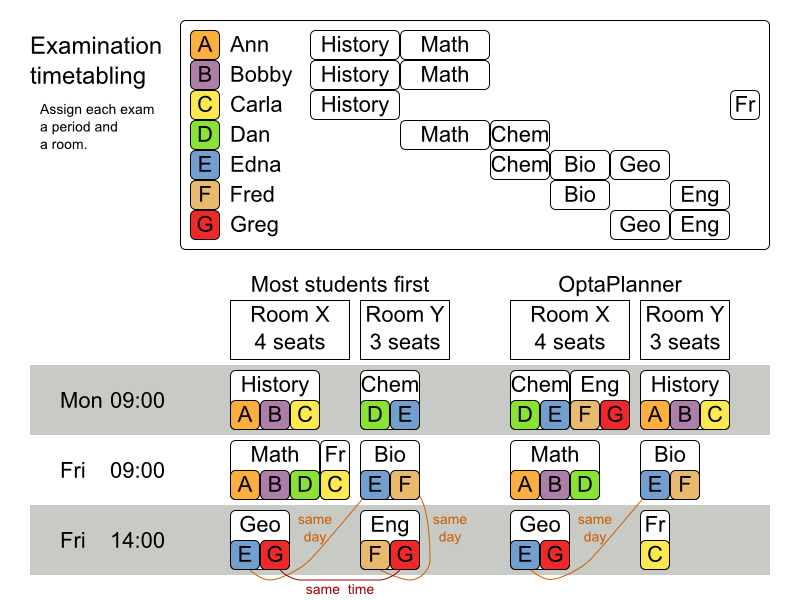

3.4.1. Exam Timetabling (ITC 2007 track 1 - Examination)

3.4.1.1. Problem Description

Schedule each exam into a period and into a room. Multiple exams can share the same room during the same period.

Hard constraints:

- Exam conflict: 2 exams that share students must not occur in the same period.

- Room capacity: A room’s seating capacity must suffice at all times.

- Period duration: A period’s duration must suffice for all of its exams.

Period related hard constraints (specified per dataset):

- Coincidence: 2 specified exams must use the same period (but possibly another room).

- Exclusion: 2 specified exams must not use the same period.

- After: A specified exam must occur in a period after another specified exam’s period.

Room related hard constraints (specified per dataset):

- Exclusive: 1 specified exam should not have to share its room with any other exam.

Soft constraints (each of which has a parametrized penalty):

- The same student should not have 2 exams in a row.

- The same student should not have 2 exams on the same day.

- Period spread: 2 exams that share students should be a number of periods apart.

- Mixed durations: 2 exams that share a room should not have different durations.

- Front load: Large exams should be scheduled earlier in the schedule.

- Period penalty (specified per dataset): Some periods have a penalty when used.

- Room penalty (specified per dataset): Some rooms have a penalty when used.

It uses large test data sets from real-life universities.

The problem is defined by the International Timetabling Competition 2007 track 1. Geoffrey De Smet finished 4th in that competition with a very early version of Planner. Many improvements have been made since then.
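The exam conflict hard constraint can be illustrated with a brute-force pairwise check. The Java sketch below is hypothetical and simplified (students are plain strings, periods are integer indexes); it counts, for every pair of exams in the same period, how many students they share.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of the exam conflict check.
final class ExamConflict {

    // An exam with the students enrolled in it and its assigned period.
    record Exam(Set<String> students, int period) {}

    // Sums, over every pair of exams scheduled in the same period,
    // the number of students the two exams have in common.
    static int sharedStudentsInSamePeriod(List<Exam> exams) {
        int total = 0;
        for (int i = 0; i < exams.size(); i++) {
            for (int j = i + 1; j < exams.size(); j++) {
                Exam a = exams.get(i);
                Exam b = exams.get(j);
                if (a.period() != b.period()) {
                    continue;
                }
                Set<String> shared = new HashSet<>(a.students());
                shared.retainAll(b.students());
                total += shared.size();
            }
        }
        return total;
    }

    public static void main(String[] args) {
        List<Exam> exams = List.of(
                new Exam(Set.of("ann", "bob"), 0),
                new Exam(Set.of("bob", "cy"), 0),  // bob has 2 exams in period 0
                new Exam(Set.of("ann"), 1));
        System.out.println(sharedStudentsInSamePeriod(exams)); // prints 1
    }
}
```

A result of 0 means no student is double-booked. The pairwise loop is quadratic in the number of exams, so it is meant only to show the rule, not to be an efficient score calculation.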

3.4.1.2. Problem Size

- exam_comp_set1 has 7883 students, 607 exams, 54 periods, 7 rooms, 12 period constraints and 0 room constraints with a search space of 10^1564.

- exam_comp_set2 has 12484 students, 870 exams, 40 periods, 49 rooms, 12 period constraints and 2 room constraints with a search space of 10^2864.

- exam_comp_set3 has 16365 students, 934 exams, 36 periods, 48 rooms, 168 period constraints and 15 room constraints with a search space of 10^3023.

- exam_comp_set4 has 4421 students, 273 exams, 21 periods, 1 rooms, 40 period constraints and 0 room constraints with a search space of 10^360.

- exam_comp_set5 has 8719 students, 1018 exams, 42 periods, 3 rooms, 27 period constraints and 0 room constraints with a search space of 10^2138.

- exam_comp_set6 has 7909 students, 242 exams, 16 periods, 8 rooms, 22 period constraints and 0 room constraints with a search space of 10^509.

- exam_comp_set7 has 13795 students, 1096 exams, 80 periods, 15 rooms, 28 period constraints and 0 room constraints with a search space of 10^3374.

- exam_comp_set8 has 7718 students, 598 exams, 80 periods, 8 rooms, 20 period constraints and 1 room constraints with a search space of 10^1678.

3.4.1.3. Domain Model

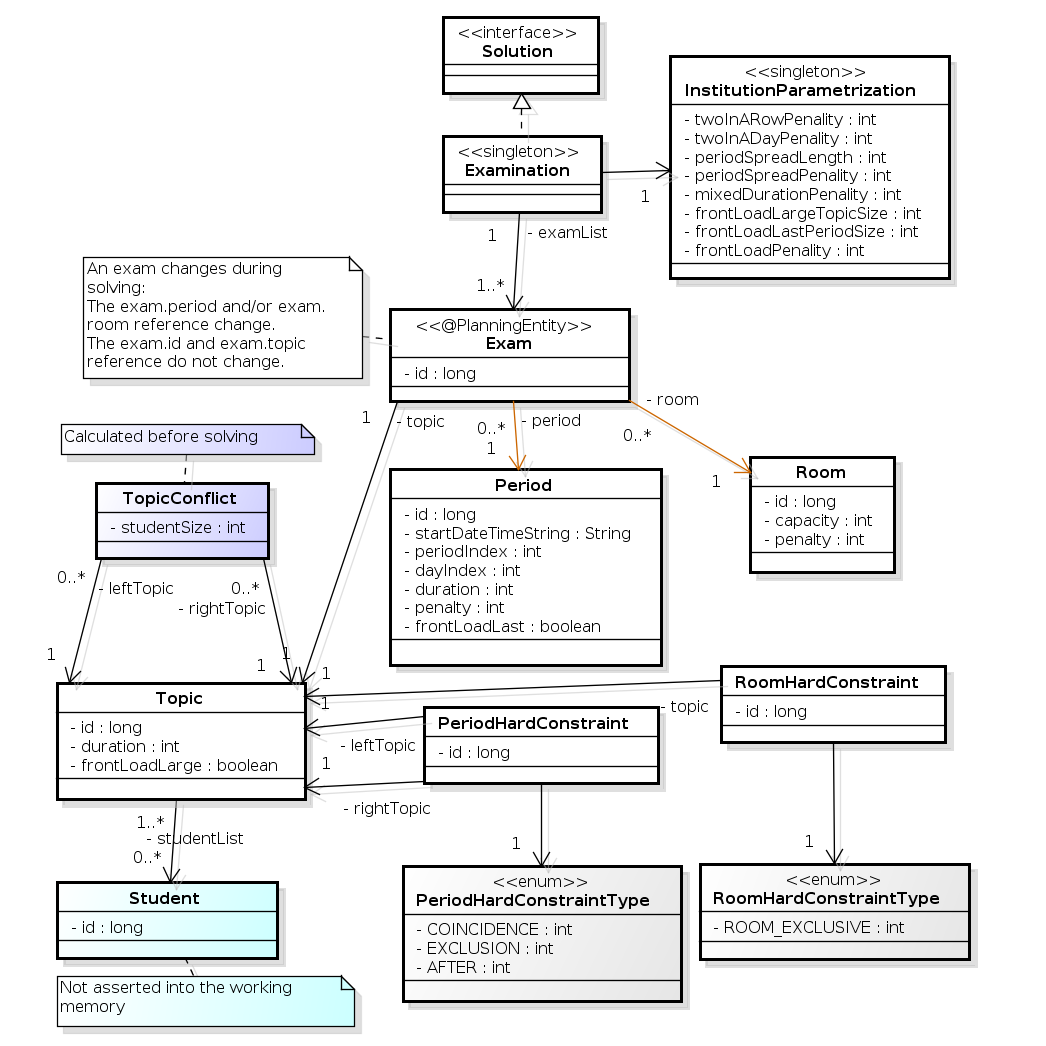

Below you can see the main examination domain classes:

Figure 3.3. Examination Domain Class Diagram

Notice that we’ve split up the exam concept into an Exam class and a Topic class. The Exam instances change during solving (this is the planning entity class), when their period or room property changes. The Topic, Period and Room instances never change during solving (these are problem facts, just like some other classes).
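The split between classes that change during solving and classes that do not can be sketched in plain Java. This is only an illustration of the idea, without any Planner annotations or dependencies; the field names (such as studentCount and capacity) are hypothetical and are not taken from the example's actual domain classes.

```java
// Hypothetical sketch of the Exam / Topic split, without Planner annotations.
final class ExamDomainSketch {

    // Problem facts: never change during solving, so they are immutable here.
    record Topic(String name, int studentCount) {}
    record Period(int index) {}
    record Room(String name, int capacity) {}

    // Planning entity: its period and room are reassigned during solving,
    // while its topic stays fixed.
    static final class Exam {
        private final Topic topic;
        private Period period; // null until a period is assigned
        private Room room;     // null until a room is assigned

        Exam(Topic topic) {
            this.topic = topic;
        }

        Topic getTopic() { return topic; }
        Period getPeriod() { return period; }
        void setPeriod(Period period) { this.period = period; }
        Room getRoom() { return room; }
        void setRoom(Room room) { this.room = room; }
    }

    public static void main(String[] args) {
        Exam exam = new Exam(new Topic("Algebra", 120));
        exam.setPeriod(new Period(3));
        exam.setRoom(new Room("R1", 200));
        System.out.println(exam.getTopic().name() + " in period "
                + exam.getPeriod().index() + ", room " + exam.getRoom().name());
    }
}
```

Keeping the unchanging data in Topic means a solver only has to touch the two mutable properties of Exam when it tries a different assignment.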

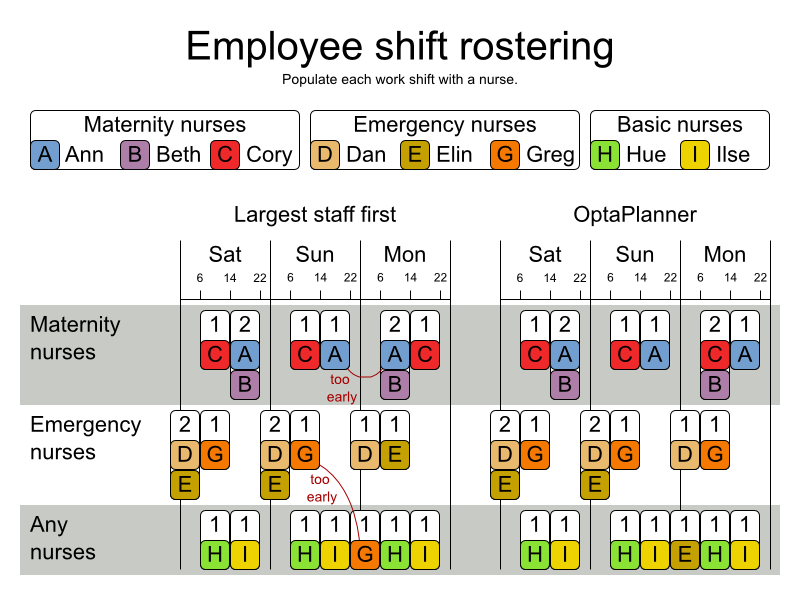

3.4.2. Employee Rostering (INRC 2010 - Nurse Rostering)

3.4.2.1. Problem Description

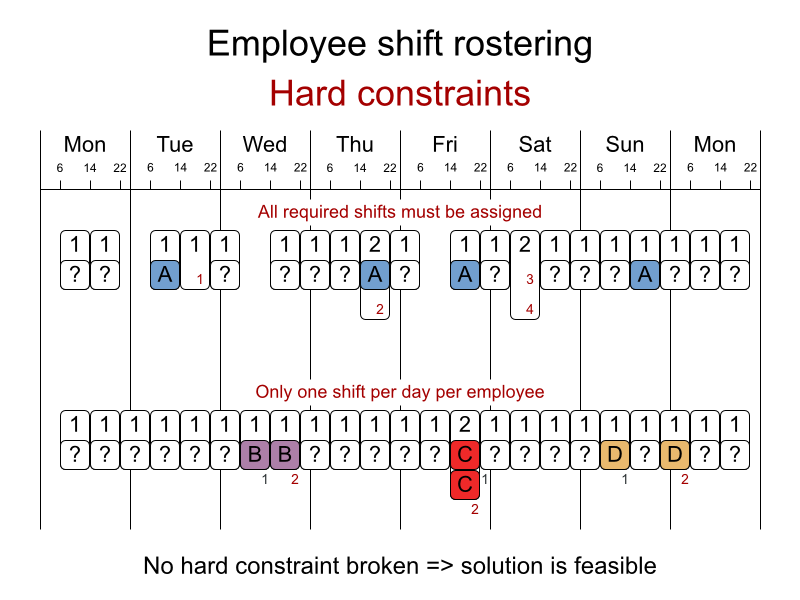

For each shift, assign a nurse to work that shift.

Hard constraints:

- No unassigned shifts (built-in): Every shift needs to be assigned to an employee.

- Shift conflict: An employee can have only 1 shift per day.

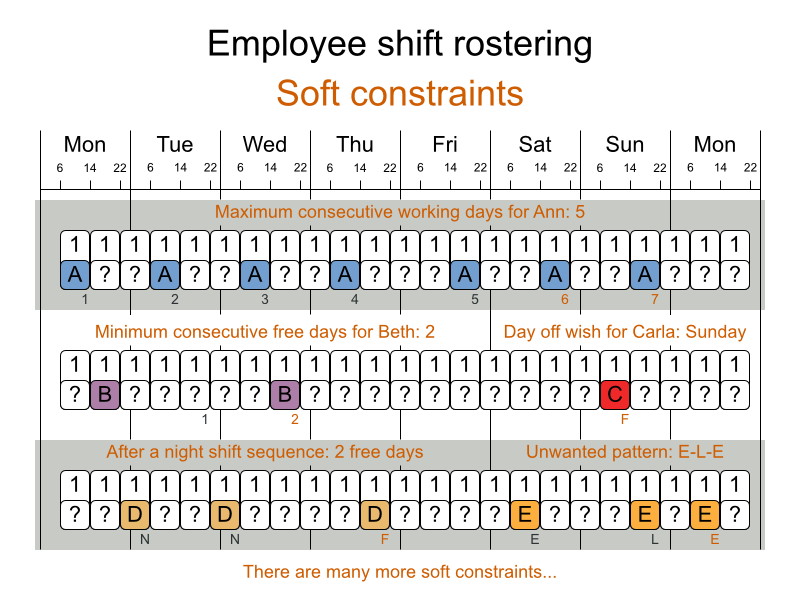

Soft constraints:

Contract obligations. The business frequently violates these, so they are defined as soft constraints instead of hard constraints.

- Minimum and maximum assignments: Each employee needs to work more than x shifts and fewer than y shifts (depending on their contract).

- Minimum and maximum consecutive working days: Each employee needs to work between x and y days in a row (depending on their contract).

- Minimum and maximum consecutive free days: Each employee needs to be free between x and y days in a row (depending on their contract).

- Minimum and maximum consecutive working weekends: Each employee needs to work between x and y weekends in a row (depending on their contract).

- Complete weekends: Each employee needs to work every day in a weekend or not at all.

- Identical shift types during weekend: Each weekend shift for the same weekend of the same employee must be the same shift type.

- Unwanted patterns: A combination of unwanted shift types in a row. For example: a late shift followed by an early shift followed by a late shift.

Employee wishes:

- Day on request: An employee wants to work on a specific day.

- Day off request: An employee does not want to work on a specific day.

- Shift on request: An employee wants to be assigned to a specific shift.

- Shift off request: An employee does not want to be assigned to a specific shift.

- Alternative skill: An employee assigned to a shift should have a proficiency in every skill required by that shift.

The problem is defined by the International Nurse Rostering Competition 2010.
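As an example of how one of the contract obligations could be measured, the Java sketch below evaluates the minimum and maximum consecutive working days rule for a single employee. It is a simplified illustration with hypothetical names: it treats the start and end of the planning window as ordinary run boundaries, which the competition rules may handle differently.

```java
// Hypothetical sketch: consecutive working days violation for one employee.
final class ConsecutiveWorkingDays {

    // worked[d] is true if the employee works on day d.
    // Returns the total number of days by which each run of consecutive
    // working days falls short of min or exceeds max.
    static int violation(boolean[] worked, int min, int max) {
        int violation = 0;
        int run = 0;
        // Iterating one step past the end closes a run that reaches the last day.
        for (int d = 0; d <= worked.length; d++) {
            if (d < worked.length && worked[d]) {
                run++;
            } else if (run > 0) {
                if (run < min) {
                    violation += min - run;
                }
                if (run > max) {
                    violation += run - max;
                }
                run = 0;
            }
        }
        return violation;
    }

    public static void main(String[] args) {
        // A run of 4 days (1 over the maximum of 3) and a run of 1 day
        // (1 under the minimum of 2).
        boolean[] worked = {true, true, true, true, false, true, false};
        System.out.println(violation(worked, 2, 3)); // prints 2
    }
}
```

Because this is a soft constraint, the returned value would typically be multiplied by a weight and subtracted from the soft score rather than making the roster infeasible.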

3.4.2.2. Problem Size

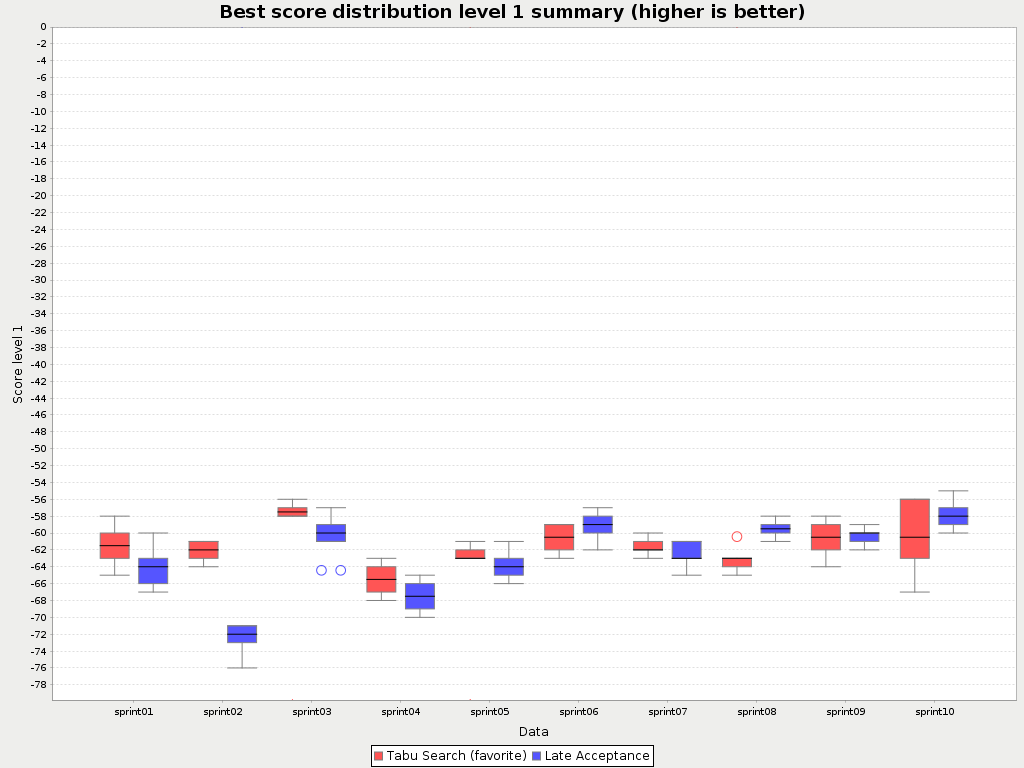

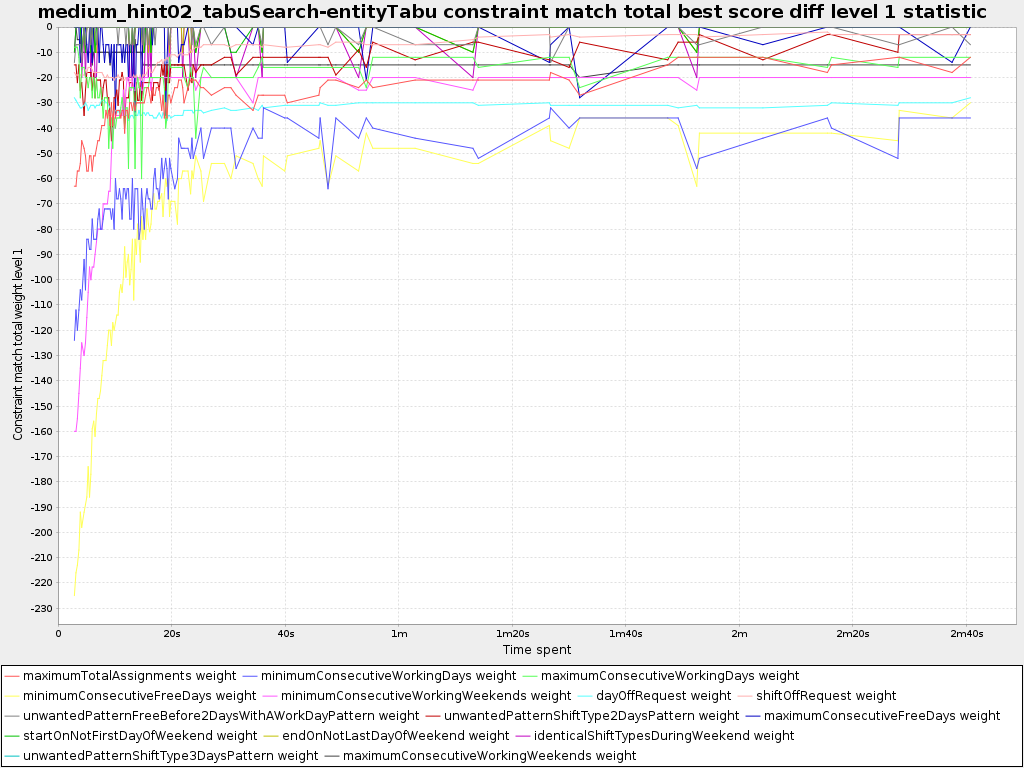

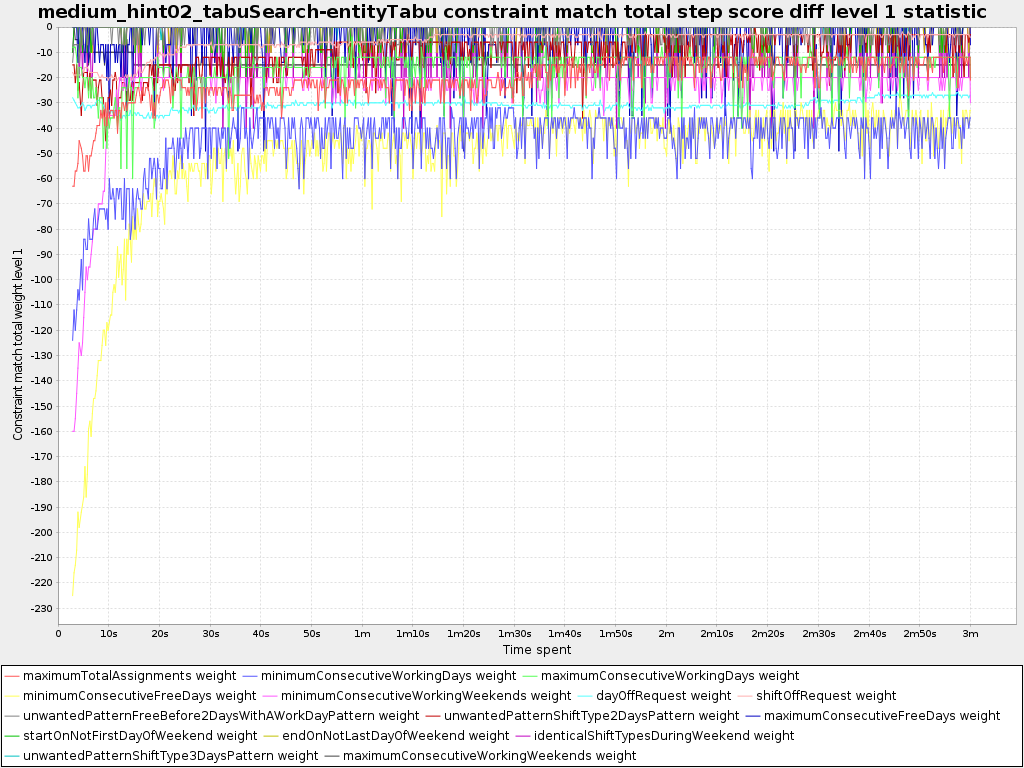

There are 3 dataset types:

- sprint: must be solved in seconds.

- medium: must be solved in minutes.

- long: must be solved in hours.

toy1 has 1 skills, 3 shiftTypes, 2 patterns, 1 contracts, 6 employees, 7 shiftDates, 35 shiftAssignments and 0 requests with a search space of 10^27. toy2 has 1 skills, 3 shiftTypes, 3 patterns, 2 contracts, 20 employees, 28 shiftDates, 180 shiftAssignments and 140 requests with a search space of 10^234. sprint01 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint02 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint03 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint04 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint05 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint06 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint07 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint08 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint09 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. sprint10 has 1 skills, 4 shiftTypes, 3 patterns, 4 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152. 
- sprint_hint01 has 1 skills, 4 shiftTypes, 8 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_hint02 has 1 skills, 4 shiftTypes, 0 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_hint03 has 1 skills, 4 shiftTypes, 8 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_late01 has 1 skills, 4 shiftTypes, 8 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_late02 has 1 skills, 3 shiftTypes, 4 patterns, 3 contracts, 10 employees, 28 shiftDates, 144 shiftAssignments and 139 requests with a search space of 10^144.

- sprint_late03 has 1 skills, 4 shiftTypes, 8 patterns, 3 contracts, 10 employees, 28 shiftDates, 160 shiftAssignments and 150 requests with a search space of 10^160.

- sprint_late04 has 1 skills, 4 shiftTypes, 8 patterns, 3 contracts, 10 employees, 28 shiftDates, 160 shiftAssignments and 150 requests with a search space of 10^160.

- sprint_late05 has 1 skills, 4 shiftTypes, 8 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_late06 has 1 skills, 4 shiftTypes, 0 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_late07 has 1 skills, 4 shiftTypes, 0 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- sprint_late08 has 1 skills, 4 shiftTypes, 0 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 0 requests with a search space of 10^152.

- sprint_late09 has 1 skills, 4 shiftTypes, 0 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 0 requests with a search space of 10^152.

- sprint_late10 has 1 skills, 4 shiftTypes, 0 patterns, 3 contracts, 10 employees, 28 shiftDates, 152 shiftAssignments and 150 requests with a search space of 10^152.

- medium01 has 1 skills, 4 shiftTypes, 0 patterns, 4 contracts, 31 employees, 28 shiftDates, 608 shiftAssignments and 403 requests with a search space of 10^906.

- medium02 has 1 skills, 4 shiftTypes, 0 patterns, 4 contracts, 31 employees, 28 shiftDates, 608 shiftAssignments and 403 requests with a search space of 10^906.

- medium03 has 1 skills, 4 shiftTypes, 0 patterns, 4 contracts, 31 employees, 28 shiftDates, 608 shiftAssignments and 403 requests with a search space of 10^906.

- medium04 has 1 skills, 4 shiftTypes, 0 patterns, 4 contracts, 31 employees, 28 shiftDates, 608 shiftAssignments and 403 requests with a search space of 10^906.

- medium05 has 1 skills, 4 shiftTypes, 0 patterns, 4 contracts, 31 employees, 28 shiftDates, 608 shiftAssignments and 403 requests with a search space of 10^906.

- medium_hint01 has 1 skills, 4 shiftTypes, 7 patterns, 4 contracts, 30 employees, 28 shiftDates, 428 shiftAssignments and 390 requests with a search space of 10^632.

- medium_hint02 has 1 skills, 4 shiftTypes, 7 patterns, 3 contracts, 30 employees, 28 shiftDates, 428 shiftAssignments and 390 requests with a search space of 10^632.

- medium_hint03 has 1 skills, 4 shiftTypes, 7 patterns, 4 contracts, 30 employees, 28 shiftDates, 428 shiftAssignments and 390 requests with a search space of 10^632.

- medium_late01 has 1 skills, 4 shiftTypes, 7 patterns, 4 contracts, 30 employees, 28 shiftDates, 424 shiftAssignments and 390 requests with a search space of 10^626.

- medium_late02 has 1 skills, 4 shiftTypes, 7 patterns, 3 contracts, 30 employees, 28 shiftDates, 428 shiftAssignments and 390 requests with a search space of 10^632.

- medium_late03 has 1 skills, 4 shiftTypes, 0 patterns, 4 contracts, 30 employees, 28 shiftDates, 428 shiftAssignments and 390 requests with a search space of 10^632.

- medium_late04 has 1 skills, 4 shiftTypes, 7 patterns, 3 contracts, 30 employees, 28 shiftDates, 416 shiftAssignments and 390 requests with a search space of 10^614.

- medium_late05 has 2 skills, 5 shiftTypes, 7 patterns, 4 contracts, 30 employees, 28 shiftDates, 452 shiftAssignments and 390 requests with a search space of 10^667.

- long01 has 2 skills, 5 shiftTypes, 3 patterns, 3 contracts, 49 employees, 28 shiftDates, 740 shiftAssignments and 735 requests with a search space of 10^1250.

- long02 has 2 skills, 5 shiftTypes, 3 patterns, 3 contracts, 49 employees, 28 shiftDates, 740 shiftAssignments and 735 requests with a search space of 10^1250.

- long03 has 2 skills, 5 shiftTypes, 3 patterns, 3 contracts, 49 employees, 28 shiftDates, 740 shiftAssignments and 735 requests with a search space of 10^1250.

- long04 has 2 skills, 5 shiftTypes, 3 patterns, 3 contracts, 49 employees, 28 shiftDates, 740 shiftAssignments and 735 requests with a search space of 10^1250.

- long05 has 2 skills, 5 shiftTypes, 3 patterns, 3 contracts, 49 employees, 28 shiftDates, 740 shiftAssignments and 735 requests with a search space of 10^1250.

- long_hint01 has 2 skills, 5 shiftTypes, 9 patterns, 3 contracts, 50 employees, 28 shiftDates, 740 shiftAssignments and 0 requests with a search space of 10^1257.

- long_hint02 has 2 skills, 5 shiftTypes, 7 patterns, 3 contracts, 50 employees, 28 shiftDates, 740 shiftAssignments and 0 requests with a search space of 10^1257.

- long_hint03 has 2 skills, 5 shiftTypes, 7 patterns, 3 contracts, 50 employees, 28 shiftDates, 740 shiftAssignments and 0 requests with a search space of 10^1257.

- long_late01 has 2 skills, 5 shiftTypes, 9 patterns, 3 contracts, 50 employees, 28 shiftDates, 752 shiftAssignments and 0 requests with a search space of 10^1277.

- long_late02 has 2 skills, 5 shiftTypes, 9 patterns, 4 contracts, 50 employees, 28 shiftDates, 752 shiftAssignments and 0 requests with a search space of 10^1277.

- long_late03 has 2 skills, 5 shiftTypes, 9 patterns, 3 contracts, 50 employees, 28 shiftDates, 752 shiftAssignments and 0 requests with a search space of 10^1277.

- long_late04 has 2 skills, 5 shiftTypes, 9 patterns, 4 contracts, 50 employees, 28 shiftDates, 752 shiftAssignments and 0 requests with a search space of 10^1277.

- long_late05 has 2 skills, 5 shiftTypes, 9 patterns, 3 contracts, 50 employees, 28 shiftDates, 740 shiftAssignments and 0 requests with a search space of 10^1257.
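The search-space figures in this listing appear consistent with each shift assignment independently taking any one employee, that is, the number of employees raised to the number of shift assignments, with the exponent rounded down. This reading is inferred from the numbers and is not stated by the guide. A worked check for medium01 (31 employees, 608 shiftAssignments):

```latex
\[
31^{608} = 10^{\,608 \cdot \log_{10} 31} \approx 10^{\,608 \times 1.4914} \approx 10^{906.7}
\]
```

Rounding the exponent down gives the listed 10^906. For the sprint datasets with 10 employees the relation is exact: 152 shiftAssignments give 10^152.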

3.4.2.3. Domain Model

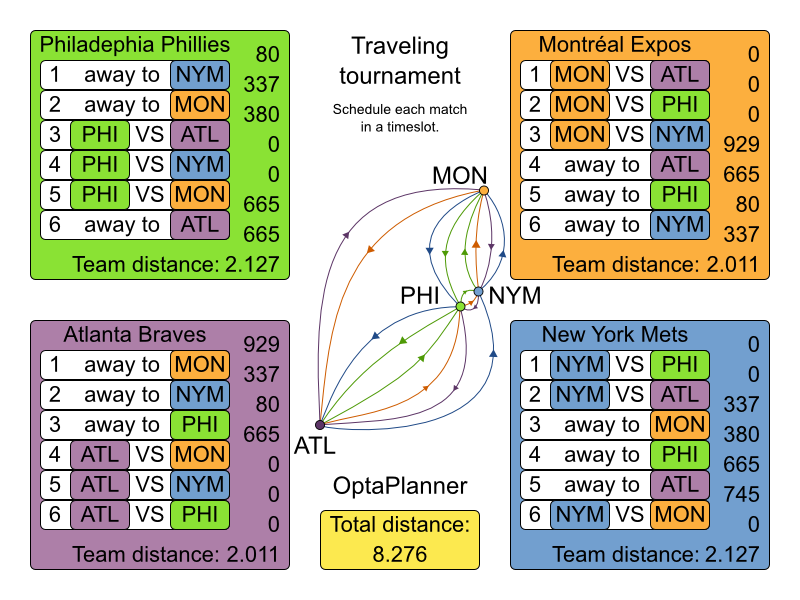

3.4.3. Traveling Tournament Problem (TTP)

3.4.3.1. Problem Description

Schedule matches between n teams.

Hard constraints:

- Each team plays twice against every other team: once home and once away.

- Each team has exactly 1 match on each timeslot.

- No team may have more than 3 consecutive home matches or more than 3 consecutive away matches.

- No repeaters: the same 2 teams must not play each other in 2 consecutive matches.

Soft constraints:

- Minimize the total distance traveled by all teams.

The problem is defined on Michael Trick’s website (which contains the world records too).
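To make two of the hard constraints above concrete, the following is a minimal Java sketch that checks one team's schedule for the consecutive home/away limit and for repeaters. The class `TtpChecks`, its method names, and the array-based schedule representation are hypothetical and chosen only for illustration; they are not part of the Business Resource Planner API, and the shipped example may express these constraints differently.

```java
// Hypothetical helper for illustration only; not part of the Planner API.
final class TtpChecks {

    /** Longest run of equal values, i.e. consecutive home or consecutive away matches. */
    static int longestStreak(boolean[] homeBySlot) {
        int longest = 0;
        int current = 0;
        for (int i = 0; i < homeBySlot.length; i++) {
            // Extend the run if this timeslot has the same venue type as the previous one.
            current = (i > 0 && homeBySlot[i] == homeBySlot[i - 1]) ? current + 1 : 1;
            longest = Math.max(longest, current);
        }
        return longest;
    }

    /** True if the team exceeds the allowed number of consecutive home or away matches. */
    static boolean violatesStreakLimit(boolean[] homeBySlot, int maxConsecutive) {
        return longestStreak(homeBySlot) > maxConsecutive;
    }

    /** True if the team meets the same opponent in two consecutive timeslots. */
    static boolean hasRepeater(int[] opponentBySlot) {
        for (int i = 1; i < opponentBySlot.length; i++) {
            if (opponentBySlot[i] == opponentBySlot[i - 1]) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        boolean[] home = {true, true, true, true, false, false}; // four home matches in a row
        int[] opponents = {2, 3, 4, 2, 3, 4};                    // hypothetical opponent team ids
        System.out.println("Streak limit violated: " + violatesStreakLimit(home, 3));
        System.out.println("Repeater present: " + hasRepeater(opponents));
    }
}
```

Each array holds one entry per timeslot for a single team, so a full check would repeat these calls for every team.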

3.4.3.2. Problem Size

- 1-nl04 has 6 days, 4 teams and 12 matches with a search space of 10^9.

- 1-nl06 has 10 days, 6 teams and 30 matches with a search space of 10^30.

- 1-nl08 has 14 days, 8 teams and 56 matches with a search space of 10^64.

- 1-nl10 has 18 days, 10 teams and 90 matches with a search space of 10^112.

- 1-nl12 has 22 days, 12 teams and 132 matches with a search space of 10^177.

- 1-nl14 has 26 days, 14 teams and 182 matches with a search space of 10^257.

- 1-nl16 has 30 days, 16 teams and 240 matches with a search space of 10^354.

- 2-bra24 has 46 days, 24 teams and 552 matches with a search space of 10^917.

- 3-nfl16 has 30 days, 16 teams and 240 matches with a search space of 10^354.

- 3-nfl18 has 34 days, 18 teams and 306 matches with a search space of 10^468.

- 3-nfl20 has 38 days, 20 teams and 380 matches with a search space of 10^600.

- 3-nfl22 has 42 days, 22 teams and 462 matches with a search space of 10^749.

- 3-nfl24 has 46 days, 24 teams and 552 matches with a search space of 10^917.

- 3-nfl26 has 50 days, 26 teams and 650 matches with a search space of 10^1104.

- 3-nfl28 has 54 days, 28 teams and 756 matches with a search space of 10^1309.

- 3-nfl30 has 58 days, 30 teams and 870 matches with a search space of 10^1534.

- 3-nfl32 has 62 days, 32 teams and 992 matches with a search space of 10^1778.

- 4-super04 has 6 days, 4 teams and 12 matches with a search space of 10^9.

- 4-super06 has 10 days, 6 teams and 30 matches with a search space of 10^30.

- 4-super08 has 14 days, 8 teams and 56 matches with a search space of 10^64.

- 4-super10 has 18 days, 10 teams and 90 matches with a search space of 10^112.

- 4-super12 has 22 days, 12 teams and 132 matches with a search space of 10^177.

- 4-super14 has 26 days, 14 teams and 182 matches with a search space of 10^257.

- 5-galaxy04 has 6 days, 4 teams and 12 matches with a search space of 10^9.

- 5-galaxy06 has 10 days, 6 teams and 30 matches with a search space of 10^30.

- 5-galaxy08 has 14 days, 8 teams and 56 matches with a search space of 10^64.

- 5-galaxy10 has 18 days, 10 teams and 90 matches with a search space of 10^112.

- 5-galaxy12 has 22 days, 12 teams and 132 matches with a search space of 10^177.

- 5-galaxy14 has 26 days, 14 teams and 182 matches with a search space of 10^257.

- 5-galaxy16 has 30 days, 16 teams and 240 matches with a search space of 10^354.

- 5-galaxy18 has 34 days, 18 teams and 306 matches with a search space of 10^468.

- 5-galaxy20 has 38 days, 20 teams and 380 matches with a search space of 10^600.

- 5-galaxy22 has 42 days, 22 teams and 462 matches with a search space of 10^749.

- 5-galaxy24 has 46 days, 24 teams and 552 matches with a search space of 10^917.

- 5-galaxy26 has 50 days, 26 teams and 650 matches with a search space of 10^1104.

- 5-galaxy28 has 54 days, 28 teams and 756 matches with a search space of 10^1309.

- 5-galaxy30 has 58 days, 30 teams and 870 matches with a search space of 10^1534.

- 5-galaxy32 has 62 days, 32 teams and 992 matches with a search space of 10^1778.

- 5-galaxy34 has 66 days, 34 teams and 1122 matches with a search space of 10^2041.

- 5-galaxy36 has 70 days, 36 teams and 1260 matches with a search space of 10^2324.

- 5-galaxy38 has 74 days, 38 teams and 1406 matches with a search space of 10^2628.

- 5-galaxy40 has 78 days, 40 teams and 1560 matches with a search space of 10^2951.
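The sizes listed above follow from the double round-robin structure: n teams play n × (n − 1) matches over 2 × (n − 1) days. The search-space figures also appear consistent with assigning each match to any one day, that is, days raised to the number of matches with the exponent rounded down. That last relation is inferred from the numbers rather than stated by the guide. The Java sketch below reproduces the figures under those assumptions; `TtpProblemSize` and its methods are hypothetical names, not part of the Planner API.

```java
// Hypothetical helper for illustration only; not part of the Planner API.
final class TtpProblemSize {

    /** Double round-robin: every ordered (home, away) pair of teams plays once. */
    static int matches(int teams) {
        return teams * (teams - 1);
    }

    /** Each team plays exactly one match per day, against each opponent twice. */
    static int days(int teams) {
        return 2 * (teams - 1);
    }

    /**
     * Exponent x such that days^matches is about 10^x, rounded down.
     * Assumes every match can independently be placed on any day.
     */
    static long searchSpaceExponent(int teams) {
        return (long) Math.floor(matches(teams) * Math.log10(days(teams)));
    }

    public static void main(String[] args) {
        for (int teams : new int[] {4, 6, 8, 40}) {
            System.out.printf("%d teams: %d days, %d matches, search space 10^%d%n",
                    teams, days(teams), matches(teams), searchSpaceExponent(teams));
        }
    }
}
```

For 4 teams this gives 6 days, 12 matches and 10^9, matching the 1-nl04 entry. Working with the base-10 logarithm avoids computing the huge power itself.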

3.4.4. Cheap Time Scheduling

3.4.4.1. Problem Description

Schedule all tasks in time and on a machine to minimize power cost. Power prices differ over time. This is a form of job shop scheduling.

Hard constraints:

- Start time limits: each task must start between its earliest start and latest start limit.

- Maximum capacity: the maximum capacity for each resource for each machine must not be exceeded.

- Startup and shutdown: each machine must be active in the periods during which it has assigned tasks. Between tasks, a machine may stay idle to avoid startup and shutdown costs.

Medium constraints:

- Power cost: minimize the total power cost of the whole schedule.

  - Machine power cost: each active or idle machine consumes power, which incurs a power cost (depending on the power price during that time).

  - Task power cost: each task consumes power too, which incurs a power cost (depending on the power price during its time).

  - Machine startup and shutdown cost: every time a machine starts up or shuts down, an extra cost is incurred.

Soft constraints (addendum to the original problem definition):

- Start early: prefer starting a task sooner rather than later.

The problem is defined by the ICON challenge.
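The trade-off between idling and restarting a machine can be sketched in Java as follows. This is an illustrative sketch under simplifying assumptions, not the ICON challenge's exact cost formula nor the shipped example's implementation. Time is assumed to be split into equal periods with one price each, cost is assumed to be price times power drawn per period, and a single combined charge stands for one startup plus one shutdown. The class `PowerCostSketch`, its methods, and all numbers are hypothetical.

```java
// Hypothetical sketch for illustration only; not the ICON challenge's exact cost model.
final class PowerCostSketch {

    /** Cost of drawing `power` units in every period of [start, end). */
    static double energyCost(double[] pricePerPeriod, int start, int end, double power) {
        double cost = 0.0;
        for (int t = start; t < end; t++) {
            cost += pricePerPeriod[t] * power; // assumed: cost = price x power per period
        }
        return cost;
    }

    /**
     * Cost of keeping a machine on during [on, off), plus one combined
     * startup-and-shutdown charge (an assumed simplification).
     */
    static double machineCost(double[] pricePerPeriod, int on, int off,
                              double machinePower, double startupShutdownCost) {
        return energyCost(pricePerPeriod, on, off, machinePower) + startupShutdownCost;
    }

    public static void main(String[] args) {
        double[] price = {1.0, 2.0, 4.0, 2.0}; // made-up prices per period
        double machinePower = 1.0;
        double cycleCost = 10.0;

        // Tasks run in periods 0 and 3. Option A: stay on (idle) in between.
        double stayOn = machineCost(price, 0, 4, machinePower, cycleCost);
        // Option B: shut down after period 0 and start up again for period 3.
        double restart = machineCost(price, 0, 1, machinePower, cycleCost)
                + machineCost(price, 3, 4, machinePower, cycleCost);

        System.out.println("Stay on: " + stayOn + ", restart: " + restart);
    }
}
```

With these made-up numbers, idling through the two middle periods is cheaper than paying a second startup and shutdown, which is why the hard constraint allows a machine to stay idle between tasks. The power consumed by the tasks themselves would be added with a further `energyCost` call per task.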

3.4.4.2. Problem Size