Fabric Guide

A system for provisioning containers deployed across a network

Red Hat

Copyright © 2011-2015 Red Hat, Inc. and/or its affiliates.

Abstract

Chapter 1. An Overview of Fuse Fabric

Abstract

1.1. Concepts

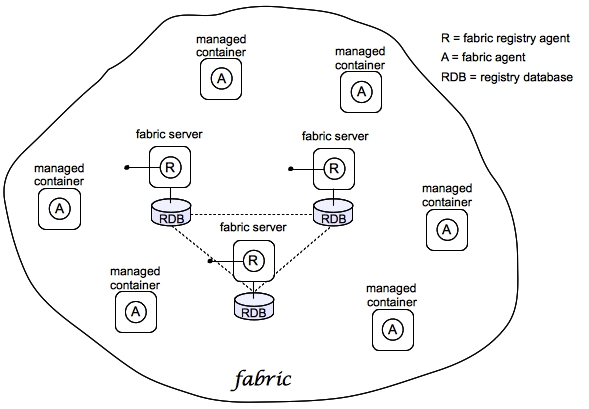

Fabric

A sample fabric

Figure 1.1. Containers in a Fabric

Registry

- Configuration Registry—the logical configuration of your fabric, which typically contains no physical machine information. It contains details of the applications to be deployed and their dependencies.

- Runtime Registry—contains details of how many machines are actually running, their physical location, and what services they are implementing.

Fabric Ensemble

Fabric Server

Fabric Container (managed container)

Fabric Agent

Git

Profile

osgi:install or features:install, respectively), these modifications are impermanent. As soon as you restart the container or refresh its contents, the Fabric agent replaces the container's existing contents with whatever is specified by the deployed profiles.
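One way to picture why manual changes do not survive a restart or refresh is to think of the agent as reconciling the container against the state defined by its profiles. The following Python sketch is only a conceptual model, not Fabric agent code; the function name reconcile and the bundle names are hypothetical.

```python
def reconcile(installed, desired):
    """Return (to_install, to_remove) so that `installed` ends up equal to `desired`."""
    installed, desired = set(installed), set(desired)
    return sorted(desired - installed), sorted(installed - desired)


# A bundle installed by hand is not listed in any profile,
# so the reconciliation step schedules it for removal.
to_install, to_remove = reconcile(
    installed={"camel-core", "manually-installed-bundle"},
    desired={"camel-core", "camel-blueprint"},
)
print("install:", to_install)
print("remove:", to_remove)
```

In this model, the only durable way to change what runs in a container is to change the desired state, that is, the profiles.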

1.2. Containers

- Java processes running directly on hardware.

- A PaaS (Platform as a Service) such as OpenShift for either a public or private cloud.

- OpenStack as an IaaS (Infrastructure as a Service).

- Amazon Web Services, Rackspace or some other IaaS to manage services.

- Docker containers for each service.

- An open hybrid cloud composing all of the above entries.

1.3. Provisioning and Configuration

Overview

Changing the configuration

How discovery works

Part I. Basic Fabric Deployment

Abstract

Chapter 2. Getting Started with Fuse Fabric

Abstract

2.1. Create a Fabric

Overview

Figure 2.1. A Sample Fabric with Child Containers

Steps to create the fabric

- To create the first fabric container, which acts as the seed for the new fabric, enter this console command:

JBossFuse:karaf@root> fabric:create --new-user AdminUser --new-user-password AdminPass --new-user-role Administrator --zookeeper-password ZooPass --resolver manualip --manual-ip 127.0.0.1 --wait-for-provisioning

The current container, named root by default, becomes a Fabric Server with a registry service installed. Initially, this is the only container in the fabric. The --new-user, --new-user-password, and --new-user-role options specify the credentials for a new Administrator user. The Zookeeper password is used to protect sensitive data in the Fabric registry service (all of the nodes under /fabric). The --manual-ip option specifies the loopback address, 127.0.0.1, as the Fabric Server's IP address.

Note: A Fabric Server requires a static IP address. For simple trials and tests, you can use the loopback address, 127.0.0.1, to work around this requirement. But if you are deploying a fabric in production or if you want to create a distributed ensemble, you must assign a static IP address to each of the Fabric Server hosts.

Note: Most of the time, you are not prompted to enter the Zookeeper password when accessing the registry service, because it is cached in the current session. When you join a container to a fabric, however, you must provide the fabric's Zookeeper password.

- Create a child container. Assuming that your root container is named root, enter this console command:

JBossFuse:karaf@root> fabric:container-create-child root child
The following containers have been created successfully:
Container: child.

- Invoke the following command to monitor the status of the child container, as it is being provisioned:

JBossFuse:karaf@root> shell:watch container-list

After the deployment of the child has completed, you should see a listing something like this:

JBossFuse:karaf@root> shell:watch container-list
[id]   [version] [alive] [profiles]                                     [provision status]
root   1.0       true    fabric, fabric-ensemble-0000-1, fuse-esb-full  success
child  1.0       true    default                                        success

Press the Return key to get back to the JBoss Fuse console prompt.

2.2. Deploy a Profile

Deploy a profile to the child container

- Deploy the quickstarts-beginner-camel.log profile into the child container by entering this console command:

JBossFuse:karaf@root> fabric:container-change-profile child quickstarts-beginner-camel.log

- Verify that the quickstarts-beginner-camel.log profile deploys successfully to the child container, using the fabric:container-list command. Enter the following command to monitor the container status:

JBossFuse:karaf@root> shell:watch container-list

Then wait until the child container status changes to success.

View the sample output

The quickstarts-beginner-camel.log profile writes a message to the container's log every five seconds. To verify that the profile is running properly, you can look for these messages in the child container's log, as follows:

- Connect to the child container, by entering the following console command:

JBossFuse:karaf@root> container-connect child

- After logging on to the child container, view the child container's log using the log:tail command, as follows:

JBossFuse:karaf@root> log:tail

You should see some output like the following:

2015-06-16 11:47:51,012 | INFO | #2 - timer://foo | log-route | ? ? | 153 - org.apache.camel.camel-core - 2.15.1.redhat-620123 | >>> Hello from Fabric based Camel route! : child
2015-06-16 11:47:56,011 | INFO | #2 - timer://foo | log-route | ? ? | 153 - org.apache.camel.camel-core - 2.15.1.redhat-620123 | >>> Hello from Fabric based Camel route! : child

- Type Ctrl-C to exit the log view and get back to the child container's console prompt.

- Type Ctrl-D to exit the child container's console, which brings you back to the root container console.

2.3. Update a Profile

Atomic container upgrades

Profile versioning

Upgrade to a new profile

quickstarts-beginner-camel.log profile, when it is deployed and running in a container, follow the recommended procedure:

- Create a new version, 1.1, to hold the pending changes by entering this console command:

JBossFuse:karaf@root> fabric:version-create
Created version: 1.1 as copy of: 1.0

The new version is initialised with a copy of all of the profiles from version 1.0.

- Use the fabric:profile-edit command to change the message that is written to the container log by the Camel route. Enter the following profile-edit command to edit the camel.xml resource:

JBossFuse:karaf@root> fabric:profile-edit --resource camel.xml quickstarts-beginner-camel.log 1.1

This opens the built-in text editor for editing profile resources (see Appendix A, Editing Profiles with the Built-In Text Editor).

Remember to specify version 1.1 to the fabric:profile-edit command, so that the modifications are applied to version 1.1 of the quickstarts-beginner-camel.log profile.

When you are finished editing, type Ctrl-S to save your changes and then type Ctrl-X to quit the text editor and get back to the console prompt.

- Upgrade the child container to version 1.1 by entering this console command:

JBossFuse:karaf@root> fabric:container-upgrade 1.1 child

Roll back to an old profile

quickstarts-beginner-camel.log profile, using the fabric:container-rollback command like this:

JBossFuse:karaf@root> fabric:container-rollback 1.0 child
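The version workflow above (create a version as a copy, edit the copy, upgrade, roll back) can be modelled in a few lines. This is a minimal Python sketch under stated assumptions, not the Fabric implementation: the dictionaries and the helper names version_create, profile_edit, container_set_version, and deployed are all hypothetical, and the resource contents are placeholders.

```python
import copy

# Hypothetical in-memory stand-ins for the fabric's versions and containers.
versions = {"1.0": {"quickstarts-beginner-camel.log": {"camel.xml": "original route"}}}
containers = {"child": "1.0"}


def version_create(new_version, base_version):
    """A new version starts as a full copy of the profiles in the base version."""
    versions[new_version] = copy.deepcopy(versions[base_version])


def profile_edit(version, profile, resource, content):
    """Edits touch only the named version; other versions are unaffected."""
    versions[version][profile][resource] = content


def container_set_version(container, version):
    """Upgrade and rollback both amount to pointing the container at a version."""
    if version not in versions:
        raise KeyError(f"unknown version: {version}")
    containers[container] = version


def deployed(container, profile):
    """Look up the profile content the container currently runs."""
    return versions[containers[container]][profile]


version_create("1.1", "1.0")
profile_edit("1.1", "quickstarts-beginner-camel.log", "camel.xml", "edited route")

container_set_version("child", "1.1")  # upgrade
upgraded = deployed("child", "quickstarts-beginner-camel.log")["camel.xml"]

container_set_version("child", "1.0")  # rollback
rolled_back = deployed("child", "quickstarts-beginner-camel.log")["camel.xml"]
print(upgraded, "|", rolled_back)
```

Because version 1.0 is never modified in this model, rolling back simply restores the earlier content.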

2.4. Shutting Down the Containers

Shutting down the containers

fabric:container-stop command. For example, to shut down the current fabric completely, enter these console commands:

JBossFuse:karaf@root> fabric:container-stop child
JBossFuse:karaf@root> shutdown -f

fabric:container-start console command.

Chapter 3. Creating a New Fabric

Abstract

Static IP address required for Fabric Server

- For simple examples and tests (with a single Fabric Server) you can work around the static IP requirement by using the loopback address, 127.0.0.1.

- For distributed tests (multiple Fabric Servers) and production deployments, you must assign a static IP address to each of the Fabric Server hosts.

--resolver manualip --manual-ip StaticIPAddress options to specify the static IP address explicitly, when creating a new Fabric Server.

Procedure

- (Optional) Customise the name of the root container by editing the InstallDir/etc/system.properties file and specifying a different name for this property:

karaf.name=root

Note: For the first container in your fabric, this step is optional. But at some later stage, if you want to join a root container to the fabric, you might need to customise the container's name to prevent it from clashing with any existing root containers in the fabric.

- Any existing users in the InstallDir/etc/users.properties file are automatically used to initialize the fabric's user data, when you create the fabric. You can populate the users.properties file, by adding one or more lines of the following form:

Username=Password[,RoleA][,RoleB]...

But there must not be any users in this file that have administrator privileges (Administrator, SuperUser, or admin roles). If the InstallDir/etc/users.properties file already contains users with administrator privileges, you should delete those users before creating the fabric.

Warning: If you leave some administrator credentials in the users.properties file, this represents a security risk because the file could potentially be accessed by other containers in the fabric.

Note: The initialization of user data from users.properties happens only once, at the time the fabric is created. After the fabric has been created, any changes you make to users.properties will have no effect on the fabric's user data.

- If you use a VPN (virtual private network) on your local machine, it is advisable to log off VPN before you create the fabric and to stay logged off while you are using the local container.

Note: A local Fabric Server is permanently associated with a fixed IP address or hostname. If VPN is enabled when you create the fabric, the underlying Java runtime is liable to detect and use the VPN hostname instead of your permanent local hostname. This can also be an issue with multi-homed machines.

- Start up your local container. In JBoss A-MQ, start the local container as follows:

cd InstallDir/bin
./amq

- Create a new fabric by entering the following command:

JBossFuse:karaf@root> fabric:create --new-user AdminUser --new-user-password AdminPass --new-user-role Administrator --zookeeper-password ZooPass --resolver manualip --manual-ip StaticIPAddress --wait-for-provisioning

The current container, named root by default, becomes a Fabric Server with a registry service installed. Initially, this is the only container in the fabric. The --new-user, --new-user-password, and --new-user-role options specify the credentials for a new Administrator user. The Zookeeper password is used to protect sensitive data in the Fabric registry service (all of the nodes under /fabric). The --manual-ip option specifies the Fabric Server's static IP address StaticIPAddress (see the section called “Static IP address required for Fabric Server”).

For more details on fabric:create see section "fabric:create" in "Console Reference".

For more details about resolver policies, see section "fabric:container-resolver-list" in "Console Reference" and section "fabric:container-resolver-set" in "Console Reference".
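Before creating the fabric, it can be useful to check users.properties for entries that carry administrator roles, as the procedure requires. The following Python sketch assumes the simple Username=Password[,RoleA][,RoleB]... line form given in the procedure; the function name find_admin_users and the sample entries are hypothetical, and the sketch does not handle passwords that contain commas or any other entry types that a real file might include.

```python
ADMIN_ROLES = {"Administrator", "SuperUser", "admin"}


def find_admin_users(text):
    """Return user names whose role list includes an administrator role."""
    admins = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blanks, comments, and anything that is not Username=...
        if not line or line.startswith("#") or "=" not in line:
            continue
        user, _, rest = line.partition("=")
        # The first comma-separated field is the password; the rest are roles.
        roles = [role.strip() for role in rest.split(",")[1:]]
        if ADMIN_ROLES.intersection(roles):
            admins.append(user.strip())
    return admins


sample = """\
# hypothetical users.properties content
alice=secret,Administrator
bob=pw,viewer
carol=pw
"""
print(find_admin_users(sample))
```

Any user name reported by such a check would need to be removed from the file before running fabric:create.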

Fabric creation process

- The container installs the requisite OSGi bundles to become a Fabric Server.

- The Fabric Server starts a registry service, which listens on TCP port 2181 (which makes fabric configuration data available to all of the containers in the fabric).

Note: You can customize the value of the registry service port by specifying the --zookeeper-server-port option.

- The Fabric Server installs a new JAAS realm (based on the ZooKeeper login module), which overrides the default JAAS realm and stores its user data in the ZooKeeper registry.

- The new Fabric Ensemble consists of a single Fabric Server (the current container).

- A default set of profiles is imported from InstallDir/fabric/import (can optionally be overridden).

- After the standalone container is converted into a Fabric Server, the previously installed OSGi bundles and Karaf features are completely cleared away and replaced by the default Fabric Server configuration. For example, some of the shell command sets that were available in the standalone container are no longer available in the Fabric Server.

Expanding a Fabric

- Child container, created on the local machine as a child process in its own JVM. Instructions on creating a child container are found in Child Containers.

- SSH container, created on any remote machine for which you have ssh access. Instructions on creating an SSH container are found in SSH Containers.

- Cloud container, created on a compute instance in the cloud. Instructions on creating a cloud container are found in Cloud Containers.

Chapter 4. Fabric Containers

4.1. Child Containers

Abstract

Overview

One container or many?

Creating a child container

fabric:container-create-child command, specifying the parent container name and the name of the new child container. For example, to create the new child container, onlychild, with root as its parent, enter the following command:

fabric:container-create-child root onlychild

fabric:container-create-child root child 3

child1 child2 child3
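The naming pattern shown by these two commands can be sketched as follows. This Python snippet is only an illustration of the pattern visible in the examples; the function name child_names is hypothetical, and the behavior for an explicit count of 1 is an assumption, since the examples do not cover it.

```python
def child_names(base, count=None):
    """Names produced for a base name and an optional container count."""
    if count is None:
        # No count given: the base name is used as-is (onlychild).
        return [base]
    # Count given: a numeric suffix is appended (child1, child2, child3).
    # Assumption: this also applies when count is 1.
    return [f"{base}{i}" for i in range(1, count + 1)]


print(child_names("onlychild"))
print(child_names("child", 3))
```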

Stopping and starting a child container

fabric:container-stop command. For example, to shut down the child1 container:

fabric:container-stop child1

fabric:container-start command, as follows:

fabric:container-start child1

ps and kill.

Deleting a child container

fabric:container-delete command, as follows:

fabric:container-delete child1

4.2. SSH Containers

Abstract

Overview

Prerequisites

- Linux or UNIX operating system.

- SSHD running on the target host and either:

- Valid account credentials, or

- Configured public key authentication.

- Java is installed (for supported versions, see Red Hat JBoss A-MQ Supported Configurations).

- Curl installed.

- GNU tar installed.

- Telnet installed.

Creating an SSH container

fabric:container-create-ssh console command, for creating SSH containers.

myhost (accessible from the local network) with the SSH user account, myuser, and the password, mypassword, you could create an SSH container on myhost, using the following console command:

fabric:container-create-ssh --host myhost --user myuser --password mypassword myremotecontainername

myuser user on myhost has configured public key authentication for SSH, you can skip the password option:

fabric:container-create-ssh --host myhost --user myuser myremotecontainername

~/.ssh/id_rsa for authentication. If you need to use a different key, you can specify the key location explicitly with the --private-key option:

fabric:container-create-ssh --host myhost --user myuser --private-key ~/.ssh/fabric_pk myremotecontainername

--pass-phrase option, in case your key requires a pass phrase.

Creating a Fabric server using SSH

fabric:container-create-ssh supports the --ensemble-server option, which can be invoked to create a container which is a Fabric server. For example, the following container-create-ssh command creates a new fabric consisting of one Fabric server on the myhost host:

fabric:container-create-ssh --host myhost --user myuser --ensemble-server myremotecontainername

Managing remote SSH containers

fabric:container-stop myremotecontainername

fabric:container-start myremotecontainername

fabric:container-delete myremotecontainername

References

4.3. Fabric Containers on Windows

Abstract

Overview

Creating a Fabric container on Windows

- Following the instructions in the JBoss Fuse Installation Guide, manually install the JBoss Fuse product on the Windows target host.

- Open a new command prompt and enter the following commands to start the container on the target host:

cd InstallDir\bin
fuse.bat

- If the Fabric servers from the Fabric ensemble are not already running, start them now.

- Join the container to the existing fabric, by entering the following console command:

JBossFuse:karaf@root> fabric:join --zookeeper-password ZooPass ZooHost:ZooPort Name

Where ZooPass is the ZooKeeper password for the Fabric ensemble (specified when you create the fabric with fabric:create); ZooHost is the hostname (or IP address) where the Fabric server is running and ZooPort is the ZooKeeper port (defaults to 2181). If necessary, you can discover the ZooKeeper host and port by logging into the Fabric server and entering the following console command:

config:proplist --pid io.fabric8.zookeeper

The Name argument (which is optional) specifies a new name for the container after it joins the fabric. It is good practice to provide this argument, because all freshly installed containers have the name root by default. If you do not specify a new container name when you join the fabric, there are bound to be conflicts.

Note: The container where you run the fabric:join command must be a standalone container. It is an error to invoke fabric:join in a container that is already part of a fabric.

default profile deployed on it.
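When scripting around fabric:join, it can help to normalise the ZooHost:ZooPort argument first. The following Python sketch shows one way to apply the default port of 2181 when no port is given; the function name parse_zookeeper_address and the sample addresses are hypothetical, and the sketch does not handle IPv6 literals.

```python
DEFAULT_ZOOKEEPER_PORT = 2181


def parse_zookeeper_address(address):
    """Split 'host[:port]' into (host, port), falling back to the default port."""
    host, sep, port = address.rpartition(":")
    if not sep:
        # No colon present: the whole string is the host.
        return address, DEFAULT_ZOOKEEPER_PORT
    return host, int(port)


print(parse_zookeeper_address("zoohost.example.com"))
print(parse_zookeeper_address("10.0.0.5:2182"))
```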

Creating a Fabric server on Windows

- Following the instructions in the JBoss Fuse Installation Guide, manually install the JBoss Fuse product on the Windows target host.

- To start the container on the target host, open a new command prompt and enter the following commands:

cd InstallDir\bin
fuse.bat

- To create a new fabric (thereby turning the current host into a Fabric server), enter the following console command:

JBossFuse:karaf@root> fabric:create --new-user AdminUser --new-user-password AdminPass --new-user-role Administrator --zookeeper-password ZooPass --resolver manualip --manual-ip StaticIPAddress --wait-for-provisioning

The current container, named root by default, becomes a Fabric Server with a registry service installed. Initially, this is the only container in the fabric. The --new-user, --new-user-password, and --new-user-role options specify the credentials for a new Administrator user. The Zookeeper password is used to protect sensitive data in the Fabric registry service (all of the nodes under /fabric). The --manual-ip option specifies the Fabric Server's static IP address StaticIPAddress (see the section called “Static IP address required for Fabric Server”).

Managing remote containers on Windows

fabric:join), there are certain restrictions on which commands you can use to manage it. In particular, the following commands are not supported:

fabric:container-stop
fabric:container-start
fabric:container-delete

4.4. Cloud Containers

Abstract

4.4.1. Preparing to use Fabric in the Cloud

Overview

Prerequisites

- A valid account with one of the cloud providers implemented by JClouds. The list of cloud providers can be found at JClouds supported providers.

Note: In the context of JClouds, the term supported provider does not imply commercial support for the listed cloud providers. It just indicates that there is an available implementation.

Hybrid clusters

- Fabric registry is running inside the public cloud. In this case, local containers will have no problem accessing the registry, as long as they are able to connect to the Internet.

- Cloud and local containers are part of a Virtual Private Network (VPN). If the Fabric registry is running on the premises, the cloud containers will not be able to access the registry, unless you set up a VPN (or make the registry accessible from the Internet, which is not recommended).

- Fabric registry is accessible from the Internet (not recommended).

Preparation

fabric:create command. You cannot access the requisite cloud console commands until you create a Fabric locally.

JBossFuse:karaf@root> fabric:create --new-user AdminUser --new-user-password AdminPass --zookeeper-password ZooPass --wait-for-provisioning

The --new-user and --new-user-password options specify the credentials for a new administrator user. The ZooPass password specifies the password that is used to protect the Zookeeper registry.

JBossFuse:karaf@root> profile-list
[id]              [# containers] [parents]
...
cloud-aws.ec2     0              cloud-base
...
cloud-openstack   0              cloud-base
cloud-servers.uk  0              cloud-base
cloud-servers.us  0              cloud-base
...

cloud-aws.ec2 profile, as follows:

fabric:container-add-profile root cloud-aws.ec2

root is the name of your local container.

Feature naming convention

cloud-aws.ec2 profile is the jclouds-aws-ec2 feature, which provides the necessary bundles for interacting with Amazon EC2:

JBossFuse:karaf@root> profile-display cloud-aws.ec2
Profile id: cloud-aws.ec2
Version : 1.0
...
Container settings
----------------------------
Features : jclouds-aws-ec2
...

- jclouds-aws-ec2: Feature for the Amazon EC2 cloud provider.

- jclouds-cloudservers-us: Feature for the Rackspace cloud provider.

jclouds-ProviderID, where ProviderID is one of the provider IDs listed in the JClouds supported providers page. Or you can list the available JClouds features using the features:list command:

features:list | grep jclouds

fabric:profile-edit --features jclouds-ProviderID MyProfile
fabric:container-add-profile root MyProfile

Registering a cloud provider

fabric:cloud-service-add console command (the registration process will store the provider credentials in the Fabric registry, so that they are available from any Fabric container).

fabric:cloud-service-add --name aws-ec2 --provider aws-ec2 --identity AccessKeyID --credential SecretAccessKey

The --name option is an alias that you use to refer to this registered cloud provider instance. It is possible to register the same cloud provider more than once, with different user accounts. The cloud provider alias thus enables you to distinguish between multiple accounts with the same cloud provider.

4.4.2. Administering Cloud Containers

Creating a new fabric in the cloud

fabric:container-create-cloud with the --ensemble-server option, which creates a new Fabric server. For example, to create a Fabric server on Amazon EC2:

fabric:container-create-cloud --ensemble-server --name aws-ec2 --new-user AdminUser --new-user-password AdminPass --zookeeper-password ZooPass mycontainer

Basic security

fabric:container-create-cloud command, to ensure that the new fabric is adequately protected. You need to specify the following security data:

- JAAS credentials: the --new-user and --new-user-password options define JAAS credentials for a new user with administrative privileges on the fabric. These credentials can subsequently be used to log on to the JMX port or the SSH port of the newly created Fabric server.

- ZooKeeper password: used to protect the data stored in the ZooKeeper registry in the Fabric server. The only time you will be prompted to enter the ZooKeeper password is when you try to join a container to the fabric using the fabric:join command.

Joining a standalone container to the fabric

fabric:join -n --zookeeper-password ZooPass PublicIPAddress

PublicIPAddress is the public host name or the public IP address of the compute instance that hosts the Fabric server (you can get this address either from the JBoss Fuse console output or from the Amazon EC2 console).

Creating a cloud container

fabric:container-create-cloud command to create new Fabric containers in the cloud. For example to create a container on Amazon EC2:

fabric:container-create-cloud --name aws-ec2 mycontainer

fabric:container-create-cloud command with the --os-family option as follows:

fabric:container-create-cloud --name aws-ec2 --os-family centos mycontainer

--os-version option:

fabric:container-create-cloud --name aws-ec2 --os-family centos --os-version 5 mycontainer

--image option.

fabric:container-create-cloud --name aws-ec2 --image myimageid mycontainer

Looking up for compute service.

Creating 1 nodes in the cloud. Using operating system: ubuntu. It may take a while ...

Node fabric-f674a68f has been created.

Configuring firewall.

Installing fabric agent on container cloud. It may take a while...

Overriding resolver to publichostname.

[id] [container] [public addresses] [status]

us-east-1/i-f674a68f cloud [23.20.114.82] success

Images

- Linux O/S

- RedHat or Debian packaging style

- Either no Java installed or Java 1.7+ installed. If there is no Java installed on the image, Fabric will install Java for you. If the wrong Java version is installed, however, the container installation will fail.

fabric:cloud-service-add --name aws-ec2 --provider aws-ec2 --identity AccessKeyID --credential SecretAccessKey --owner myownerid

Locations and hardware

jclouds:location-list

jclouds:hardware-list

fabric:container-create-cloud command. For example:

fabric:container-create-cloud --name aws-ec2 --location eu-west-1 --hardware m2.4xlarge mycontainer

Chapter 5. Shutting Down a Fabric

Overview

Shutting down a managed container

fabric:container-stop command and specify the name of the managed container, for example:

fabric:container-stop -f ManagedContainerName

The -f flag is required when shutting down a container that belongs to the ensemble.

The fabric:container-stop command looks up the container name in the registry and retrieves the data it needs to shut down that container. This approach works no matter where the container is deployed: whether on a remote host or in a cloud.

Shutting down a Fabric Server

registry1, registry2, and registry3. You can shut down only one of these Fabric Servers at a time by using the fabric:container-stop command, for example:

fabric:container-stop -f registry3

fabric:container-start registry3

Shutting down an entire fabric

fabric:ensemble-remove and fabric:ensemble-add commands. Each time you execute one of these commands, it creates a new ensemble. This new ensemble URL is propagated to all containers in the fabric and all containers need to reconnect to the new ensemble. There is a risk for TCP port numbers to be reallocated, which means that your network configuration might become out-of-date because services might start up on different ports.

- Three Fabric Servers (ensemble servers):

registry1, registry2, registry3.

- Four managed containers:

managed1, managed2, managed3, managed4.

- Use the client console utility to log on to one of the Fabric Servers in the ensemble. For example, to log on to the registry1 server, enter a command in the following format:

./client -u AdminUser -p AdminPass -h Registry1Host

Replace AdminUser and AdminPass with the credentials of a user with administration privileges. Replace Registry1Host with the name of the host where registry1 is running. It is assumed that the registry1 server is listening for console connections on the default TCP port (that is, 8101).

- Ensure that all managed containers in the fabric are running. Execution of fabric:container-list should display true in the alive column for each container. This is required for execution of the fabric:ensemble-remove command, which is the next step.

- Remove all but one of the Fabric Servers from the ensemble. For example, if you logged on to registry1, enter:

fabric:ensemble-remove registry2 registry3

- Shut down all managed containers in the fabric, except the container on the Fabric Server you are logged into. In the following example, the first command shuts down managed1, managed2, managed3 and managed4:

fabric:container-stop -f managed*
fabric:container-stop -f registry2
fabric:container-stop -f registry3

- Shut down the last container that is still running. This is the container that is on the Fabric Server you are logged in to. For example:

shutdown -f

- Use the client console utility to log in to the registry1 container host.

- Start all containers in the fabric.

- Add the other Fabric Servers, for example:

fabric:ensemble-add registry2 registry3

Note on shutting down a complete fabric

fabric:container-stop -f registry1
fabric:container-stop -f registry2
fabric:container-stop -f registry3

fabric:container-stop fails and throws an error because only one Fabric Server is still running. At least two Fabric Servers must be running to stop a container. With only one Fabric Server running, the registry shuts down and refuses service requests because a quorum of Fabric Servers is no longer available. The fabric:container-stop command needs the registry to be running so it can retrieve details about the container it is trying to shut down.
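The quorum reasoning in this note can be checked with a small calculation. The following Python sketch assumes the usual majority rule for a ZooKeeper-style ensemble (more than half of the ensemble members must be running); the function names has_quorum and stop_in_sequence are hypothetical and the code models only the counting, not the real commands.

```python
def has_quorum(ensemble_size, running):
    """A strict majority of ensemble members must be running."""
    return running > ensemble_size // 2


def stop_in_sequence(servers):
    """Try to stop each server in turn; a stop needs a quorum at the time it is issued."""
    running = list(servers)
    results = []
    for name in servers:
        if has_quorum(len(servers), len(running)):
            running.remove(name)
            results.append((name, "stopped"))
        else:
            results.append((name, "failed: no quorum"))
    return results


results = stop_in_sequence(["registry1", "registry2", "registry3"])
for name, outcome in results:
    print(name, outcome)
```

With three servers, the first two stop requests are issued while a quorum still exists, but the third is issued with only one server running, so it fails in this model.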

Chapter 6. Fabric Profiles

Abstract

6.1. Introduction to Profiles

Overview

What is in a profile?

- OSGi bundle URLs

- Web ARchive (WAR) URLs

- Fuse Application Bundle (FAB) URLs

- OSGi Configuration Admin PIDs

- Apache Karaf feature repository URLs

- Apache Karaf features

- Maven artifact repository URLs

- Blueprint XML files or Spring XML files (for example, for defining broker configurations or Camel routes)

- Any kind of resource that might be needed by an application (for example, Java properties file, JSON file, XML file, YML file)

- System properties that affect the Apache Karaf container (analogous to editing etc/config.properties)

- System properties that affect installed bundles (analogous to editing etc/system.properties)

Profile hierarchies

Some basic profiles

- [default]

The default profile defines all of the basic requirements for a Fabric container. For example, it specifies the fabric-agent feature, the Fabric registry URL, and the list of Maven repositories from which artifacts can be downloaded.

- [karaf]

Inherits from the default profile and defines the Karaf feature repositories, which makes the Apache Karaf features accessible.

- [feature-camel]

Inherits from karaf, defines the Camel feature repositories, and installs some core Camel features, such as camel-core and camel-blueprint. If you are deploying a Camel application, it is recommended that you inherit from this profile.

- [feature-cxf]

Inherits from karaf, defines the CXF feature repositories, and installs some core CXF features. If you are deploying a CXF application, it is recommended that you inherit from this profile.

- [mq-base]

Inherits from the karaf profile and installs the mq-fabric feature.

- [mq-default]

Inherits from the mq-base profile and provides the configuration for an A-MQ broker. Use this profile if you want to deploy a minimal installation of an ActiveMQ broker.

- [jboss-fuse-full]

Includes all of the features and bundles required for the JBoss Fuse full container.

6.2. Working with Profiles

Changing the profiles in a container

fabric:container-change-profile command as follows:

fabric:container-change-profile mycontainer myprofile

myprofile profile to the mycontainer container. All profiles previously assigned to the container are removed. You can also deploy multiple profiles to the container, with the following command:

fabric:container-change-profile mycontainer myprofile myotherprofile

Adding a profile to a container

The fabric:container-add-profile command gives you a simple way to add profiles to a container, without having to list all of the profiles that were already assigned. For example, to add the example-camel profile to the mycontainer container:

fabric:container-add-profile mycontainer example-camel
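The difference between changing and adding profiles can be summarised in a short model. This Python sketch is illustrative only: the function names change_profile and add_profile are hypothetical stand-ins for the two console commands, and a container's assignment is represented as a plain list of profile names.

```python
def change_profile(assigned, *profiles):
    """Replace the container's profiles: previous assignments are dropped."""
    return list(profiles)


def add_profile(assigned, *profiles):
    """Keep the existing profiles and append any that are not already assigned."""
    return assigned + [p for p in profiles if p not in assigned]


assigned = ["default"]
assigned = change_profile(assigned, "myprofile", "myotherprofile")
after_change = list(assigned)
assigned = add_profile(assigned, "example-camel")
print(after_change)
print(assigned)
```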

Listing available profiles

fabric:profile-list console command:

fabric:profile-list

Inspecting profiles

fabric:profile-display command. For example, to display what is defined in the feature-camel profile, enter the following command:

fabric:profile-display feature-camel

Profile id: feature-camel

Version : 1.0

Attributes:

parents: karaf

Containers:

Container settings

----------------------------

Repositories :

mvn:org.apache.camel.karaf/apache-camel/${version:camel}/xml/features

Features :

camel-core

camel-blueprint

fabric-camel

Configuration details

----------------------------

Other resources

----------------------------

Resource: io.fabric8.insight.metrics.json

feature-camel profile, taking into account all of its ancestors, you must specify the --overlay switch, as follows:

fabric:profile-display --overlay feature-camel

--display-resources switch (or -r for short) to the profile-display command, as follows:

fabric:profile-display -r feature-camel
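To illustrate what taking ancestors into account means, the following Python sketch resolves the features of a profile together with those of its parents. It is a simplified model under stated assumptions, not the Fabric overlay algorithm: the PROFILES table is a hypothetical excerpt (only the feature names mentioned in this chapter are used, and the karaf profile is shown with no features of its own), and the merge rule of ancestors first, then the profile's own features, is an assumption.

```python
# Hypothetical excerpt of a profile hierarchy: default <- karaf <- feature-camel.
PROFILES = {
    "default": {"parents": [], "features": ["fabric-agent"]},
    "karaf": {"parents": ["default"], "features": []},
    "feature-camel": {
        "parents": ["karaf"],
        "features": ["camel-core", "camel-blueprint", "fabric-camel"],
    },
}


def overlay_features(name, profiles=PROFILES):
    """Collect features from all ancestors, then from the profile itself."""
    result = []
    for parent in profiles[name]["parents"]:
        for feature in overlay_features(parent, profiles):
            if feature not in result:
                result.append(feature)
    for feature in profiles[name]["features"]:
        if feature not in result:
            result.append(feature)
    return result


print(PROFILES["feature-camel"]["features"])  # the profile's own features
print(overlay_features("feature-camel"))      # features including ancestors
```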

Creating a new profile

fabric:profile-create command, as follows:

fabric:profile-create myprofile

--parents option to the command:

fabric:profile-create --parents feature-camel myprofile

Adding or removing features

To add or remove features in a profile, use the fabric:profile-edit command. For example, to add the camel-jclouds feature to the feature-camel profile, enter the following command:

fabric:profile-edit --feature camel-jclouds feature-camel

Now invoke the fabric:profile-display command to see what the feature-camel profile looks like. You should see that the camel-jclouds feature appears in the list of features for the feature-camel profile.

Features :

camel-jclouds

camel-blueprint/2.9.0.fuse-7-061

camel-core/2.9.0.fuse-7-061

fabric-camel/99-master-SNAPSHOT

To remove a feature from a profile, use the --delete option. For example, if you need to remove the camel-jclouds feature, you could use the following command:

fabric:profile-edit --delete --feature camel-jclouds feature-camel

Editing PID properties

You can edit PID properties in either of the following ways:

- Edit the PID using the built-in text editor: the Karaf console has a built-in text editor, which you can use to edit profile resources such as PID properties. To start editing a PID using the text editor, enter the following console command:

fabric:profile-edit --pid PID ProfileName

For more details about the built-in text editor, see Appendix A, Editing Profiles with the Built-In Text Editor.

- Edit the PID inline, using console commands: alternatively, you can edit PIDs directly from the console, using the appropriate form of the fabric:profile-edit command. This approach is particularly useful for scripting. For example, to set a specific key-value pair, Key=Value, in a PID, enter the following console command:

fabric:profile-edit --pid PID/Key=Value ProfileName

Editing a PID inline

To edit a PID inline, use the following forms of the fabric:profile-edit command:

- Assign a value to a PID property, as follows:

fabric:profile-edit --pid PID/Key=Value ProfileName

- Append a value to a delimited list (that is, where the property value is a comma-separated list), as follows:

fabric:profile-edit --append --pid PID/Key=ListItem ProfileName

- Remove a value from a delimited list, as follows:

fabric:profile-edit --remove --pid PID/Key=ListItem ProfileName

- Delete a specific property key, as follows:

fabric:profile-edit --delete --pid PID/Key ProfileName

- Delete a complete PID, as follows:

fabric:profile-edit --delete --pid PID ProfileName
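Because the inline forms are intended for scripting, it can help to generate them from a small helper. The following Python sketch only formats the five command forms listed above as strings; the function name profile_edit_command and its parameters are hypothetical, and it does not connect to a Fabric container.

```python
def profile_edit_command(profile, pid, key=None, value=None, action="set"):
    """Format a fabric:profile-edit command line for an inline PID edit.

    Hypothetical helper: it only reproduces the command forms listed in
    this section and never contacts a fabric.
    """
    flags = {
        "set": "",                 # assign a value to a property
        "append": "--append ",     # append an item to a delimited list
        "remove": "--remove ",     # remove an item from a delimited list
        "delete": "--delete ",     # delete a property key or a whole PID
    }
    if action not in flags:
        raise ValueError(f"unknown action: {action}")

    target = pid
    if key is not None:
        target += "/" + key
        if action != "delete":
            if value is None:
                raise ValueError("a value is required for this action")
            target += "=" + value
    elif action != "delete":
        # Only the delete form may address a complete PID.
        raise ValueError("only 'delete' can target a complete PID")

    return f"fabric:profile-edit {flags[action]}--pid {target} {profile}"


print(profile_edit_command("ProfileName", "PID", "Key", "Value"))
print(profile_edit_command("ProfileName", "PID", "Key", "ListItem", action="append"))
print(profile_edit_command("ProfileName", "PID", action="delete"))
```

The generated strings can then be pasted into the console or written to a script file; values that contain spaces or shell metacharacters would need quoting, which this sketch does not attempt.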

Example of editing a PID inline

The following example edits the io.fabric8.agent PID, changing the Maven repository list setting. The default profile contains a section like this:

Agent Properties :

org.ops4j.pax.url.mvn.repositories = http://repo1.maven.org/maven2,

http://repo.fusesource.com/nexus/content/repositories/releases,

http://repo.fusesource.com/nexus/content/groups/ea,

http://repository.springsource.com/maven/bundles/release,

http://repository.springsource.com/maven/bundles/external,

http://scala-tools.org/repo-releases

The agent properties section is represented by the io.fabric8.agent PID. So, by modifying the io.fabric8.agent PID, we effectively change the agent properties. You can modify the list of Maven repositories in the agent properties PID as follows:

fabric:profile-edit --pid io.fabric8.agent/org.ops4j.pax.url.mvn.repositories=http://repositorymanager.mylocalnetwork.net default

Now when you invoke fabric:profile-display on the default profile, you should see agent properties similar to the following:

Agent Properties :

org.ops4j.pax.url.mvn.repositories = http://repositorymanager.mylocalnetwork.net

Setting encrypted PID property values

- Use the fabric:encrypt-message command to encrypt the property value, as follows:

fabric:encrypt-message PropValue

This command returns the encrypted property value, EncryptedValue.

Note: The default encryption algorithm used by Fabric is PBEWithMD5AndDES.

- You can now set the property to the encrypted value, EncryptedValue, using the following syntax:

my.sensitive.property = ${crypt:EncryptedValue}

For example, using the fabric:profile-edit command, you can set an encrypted value as follows:

fabric:profile-edit --pid com.example.my.pid/my.sensitive.property=${crypt:EncryptedValue} Profile

Alternative method for encrypting PID property values

- Use the Jasypt encrypt command-line tool to encrypt the property value, as follows:

./encrypt.sh input="Property value to be encrypted" password=ZooPass verbose=false

This command returns the encrypted property value, EncryptedValue.

Note: The default encryption algorithm used by Fabric is PBEWithMD5AndDES. You must ensure that the encrypt.sh utility is using the same algorithm as Fabric.

Customizing the PID property encryption mechanism

- Customize the master password for encryption, using the following console command:

fabric:crypt-password-set MasterPassword

You can retrieve the current master password by entering the fabric:crypt-password-get command. The default value is the ensemble password (as returned by fabric:ensemble-password).

- Customize the encryption algorithm, using the following console command:

fabric:crypt-algorithm-set Algorithm

Where the encryption algorithm must be one of the algorithms supported by the underlying Jasypt encryption toolkit. You can retrieve the current encryption algorithm by entering the fabric:crypt-algorithm-get command. The default is PBEWithMD5AndDES.

Profile editor

To open a profile in the built-in profile editor, invoke the fabric:profile-edit command without any options, as follows:

fabric:profile-edit Profile [Version]

Editing resources with the profile editor

For example, to edit the broker.xml file in the mq-amq profile, enter the following console command:

fabric:profile-edit --resource broker.xml mq-amq

6.3. Profile Versions

Overview

The Fabric agent, fabric-agent, chooses the defined version and retrieves all the information provided by that specific version of the profile.

Creating a new version

To create a new version, use the fabric:version-create command (analogous to creating a new branch in the underlying Git repository). The default version is 1.0. To create version 1.1, enter the following command:

fabric:version-create 1.1

To attach a description to the new version, add the --description option, for example:

fabric:version-create --description "expanding all camel routes" 1.1

For example, to display version 1.1 of the feature-camel profile:

fabric:profile-display --version 1.1 feature-camel

To modify a specific version of a profile, use the fabric:profile-edit command, specifying the version right after the profile argument. For example, to add the camel-jclouds feature to version 1.1 of the feature-camel profile, enter the following command:

fabric:profile-edit --feature camel-jclouds feature-camel 1.1

Rolling upgrades and rollbacks

To upgrade the mycontainer container to the 1.1 version, invoke the fabric:container-upgrade command as follows:

fabric:container-upgrade 1.1 mycontainer

This command upgrades mycontainer to use version 1.1 of all the profiles currently assigned to it.

To roll the container back to the previous version, use the fabric:container-rollback command, as follows:

fabric:container-rollback 1.0 mycontainer

To upgrade all of the containers in the fabric, use the --all option, as follows:

fabric:container-upgrade --all 1.1 mycontainer

Chapter 7. Fabric8 Maven Plug-In

Abstract

7.1. Preparing to Use the Plug-In

Edit your Maven settings

Edit your ~/.m2/settings.xml file to add the fabric server's user and password, so that the Maven plug-in can log in to the fabric. For example, you could add the following server element to your settings.xml file:

<settings>

<servers>

<server>

<id>fabric8.upload.repo</id>

<username>Username</username>

<password>Password</password>

</server>

...

</servers>

</settings>

Where Username and Password are the credentials of a Fabric user with administrative privileges (for example, the credentials you would use to log on to the Management Console).

Customising the repository ID

The default server ID is fabric8.upload.repo. You can specify additional server elements in your settings.xml file for each of the fabrics you need to work with. To select the relevant credentials, you can set the serverId property in the Fabric8 Maven plug-in configuration section (see Section 7.4, “Configuration Properties”) or set the fabric8.serverId Maven property.

7.2. Using the Plug-In to Deploy a Maven Project

Prerequisites

- Your Maven ~/.m2/settings.xml file is configured as described in Section 7.1, “Preparing to Use the Plug-In”.

- A JBoss Fuse container instance is running on your local machine (alternatively, if the container instance is running on a remote host, you must configure the plug-in's jolokiaUrl property appropriately).

Running the plug-in on any Maven project

mvn io.fabric8:fabric8-maven-plugin:1.0.0.redhat-355:deploy

Adding the plug-in to a Maven POM

Alternatively, add the plug-in to your project's pom.xml file as follows:

<plugins>

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>fabric8-maven-plugin</artifactId>

<version>1.2.0.redhat-621084</version>

<configuration>

<profile>testprofile</profile>

<version>1.2</version>

</configuration>

</plugin>

</plugins>

The plugin/configuration/version element specifies the Fabric8 version of the target system (which is not necessarily the same as the version of the Fabric8 Maven plug-in).

mvn fabric8:deploy

What does the plug-in do?

- Uploads any artifacts into the fabric's maven repository,

- Lazily creates the Fabric profile or version you specify,

- Adds/updates the maven project artifact into the profile configuration,

- Adds any additional parent profile, bundles or features to the profile.

Example

For example, you can deploy one of the quickstart examples, as follows:

cd InstallDir/quickstarts/rest

mvn io.fabric8:fabric8-maven-plugin:1.0.0.redhat-355:deploy

7.3. Configuring the Plug-In

Specifying profile information

To specify the profile that the project is deployed to, add a configuration element to the plug-in configuration in your pom.xml file, as follows:

<plugins>

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>fabric8-maven-plugin</artifactId>

<configuration>

<profile>my-thing</profile>

</configuration>

</plugin>

</plugins>

Multi-module Maven projects

pom.xml

foo/pom.xml

foo/a/pom.xml

foo/b/pom.xml

...

bar/pom.xml

bar/c/pom.xml

bar/d/pom.xml

...

You can define the plug-in once, in the root pom.xml file, as follows:

<plugins>

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>fabric8-maven-plugin</artifactId>

</plugin>

</plugins>

Then, in the foo/pom.xml file you need only define the fabric8.profile property, as follows:

<project>

...

<properties>

<fabric8.profile>my-foo</fabric8.profile>

...

</properties>

...

</project>

All of the projects under the foo folder, such as foo/a and foo/b, will deploy to the same profile (in this case, the my-foo profile). You can use the same approach to put all of the projects under the bar folder into a different profile too.

An individual project can still define its own fabric8.profile property to specify exactly where it gets deployed, along with any other property on the plug-in (see the Property Reference below).
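The way a module picks up its profile in such a layout can be modelled as a lookup that walks from the module directory towards the root. The following Python sketch is only an illustration: it assumes that each module inherits properties from the pom.xml in its parent directory, and the function name effective_profile and the my-bar profile name are hypothetical.

```python
from pathlib import PurePosixPath


def effective_profile(module, properties, default=None):
    """Return the fabric8.profile value that applies to a module directory.

    Illustrative model only: 'properties' maps a directory (relative to the
    project root) to the properties defined in that directory's pom.xml.
    The nearest definition, searching from the module outwards, wins.
    """
    path = PurePosixPath(module)
    for candidate in [path, *path.parents]:
        props = properties.get(str(candidate), {})
        if "fabric8.profile" in props:
            return props["fabric8.profile"]
    return default


# Properties defined in foo/pom.xml and bar/pom.xml (my-bar is hypothetical).
poms = {
    "foo": {"fabric8.profile": "my-foo"},
    "bar": {"fabric8.profile": "my-bar"},
}

print(effective_profile("foo/a", poms))
print(effective_profile("bar/d", poms))
```

A module that defines its own fabric8.profile property would simply add an entry under its own directory, which the lookup finds before any inherited value.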

Specifying features, additional bundles, repositories and parent profiles

<plugins>

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>fabric8-maven-plugin</artifactId>

<configuration>

<profile>my-rest</profile>

<features>fabric-cxf-registry fabric-cxf cxf war swagger</features>

<featureRepos>mvn:org.apache.cxf.karaf/apache-cxf/${version:cxf}/xml/features</featureRepos>

</configuration>

</plugin>

</plugins>

The features element allows you to specify a space-separated list of features to include in the profile.

Configuring with Maven properties

You can also configure the plug-in using Maven properties, which are prefixed by fabric8.. For example, to deploy a Maven project to the cheese profile, enter the command:

mvn fabric8:deploy -Dfabric8.profile=cheese

To skip uploading the artifacts to the fabric's Maven repository, set fabric8.upload=false, for example:

mvn fabric8:deploy -Dfabric8.upload=false
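When several properties are set at once, the command line can be assembled programmatically. The following Python sketch only formats the -Dfabric8.<name>=<value> options described above; the function name deploy_command is hypothetical, and the sketch does not run Maven.

```python
def deploy_command(**plugin_properties):
    """Format an 'mvn fabric8:deploy' command line.

    Each keyword argument becomes a -Dfabric8.<name>=<value> option.
    Booleans are written as the lowercase strings Maven expects.
    """
    options = []
    for name, value in plugin_properties.items():
        if isinstance(value, bool):
            value = "true" if value else "false"
        options.append(f"-Dfabric8.{name}={value}")
    return " ".join(["mvn", "fabric8:deploy", *options])


print(deploy_command(profile="cheese"))
print(deploy_command(profile="cheese", upload=False))
```

Values that contain spaces (for example, a space-separated list of features) would need shell quoting, which this sketch leaves to the caller.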

Specifying profile resources

If you create the directory, src/main/fabric8, in your Maven project and add any resource files or a ReadMe.md file to it, they will automatically be uploaded into the profile as well. For example, if you run the following commands from your Maven project directory:

mkdir -p src/main/fabric8

echo "## Hello World" >> src/main/fabric8/ReadMe.md

mvn fabric8:deploy

The profile will then include the ReadMe.md wiki page.

7.4. Configuration Properties

Specifying properties

You can specify properties in the configuration element of the plug-in in your project's pom.xml file. For example, the profile property can be set as follows:

<plugins>

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>fabric8-maven-plugin</artifactId>

<configuration>

<profile>${fabric8.profile}</profile>

</configuration>

</plugin>

</plugins>

Alternatively, you can specify the properties as Maven properties, prefixed by fabric8.. For example, to set the profile name, you could add the following property to your pom.xml file:

<project>

...

<properties>

<fabric8.profile>my-foo</fabric8.profile>

...

</properties>

...

</project>

Or you can specify the property on the command line:

mvn fabric8:deploy -Dfabric8.profile=my-foo

Property reference

The plug-in supports the following properties (which can be set either in the configuration element in the pom.xml file or as Maven properties, when prefixed by fabric8.):

| Parameter | Description |
|---|---|
| abstractProfile | Specifies whether the profile is abstract. Default is false. |
| artifactBundleType | Type to use for the project artifact bundle reference. |
| artifactBundleClassifier | Classifier to use for the project artifact bundle reference. |
| baseVersion | If the version does not exist, the baseVersion provides the initial values for the newly created version. This is like creating a branch from the baseVersion for a new version branch in Git. |
| bundles | Space-separated list of additional bundle URLs (of the form mvn:groupId/artifactId/version) to add to the newly created profile. Note that you do not have to include the current Maven project artifact; this configuration is intended as a way to list dependent required bundles. |
| featureRepos | Space-separated list of feature repository URLs to add to the profile. The URL has the general form mvn:groupId/artifactId/version/xml/features. |
| features | Space-separated list of features to add to the profile. For example, the following setting would include both the camel feature and the cxf feature: `<features>camel cxf</features>` |
| generateSummaryFile | Whether or not to generate a Summary.md file from the pom.xml file's description element text value. Default is true. |
| ignoreProject | Whether or not to ignore this Maven project in goals like fabric8:deploy or fabric8:zip. Default is false. |
| includeArtifact | Whether or not to add the Maven deployment unit to the Fabric profile. Default is true. |
| includeReadMe | Whether or not to upload the Maven project's ReadMe file, if no specific ReadMe file exists in your profile configuration directory (as set by profileConfigDir). Default is true. |
| jolokiaUrl | The Jolokia URL of the JBoss Fuse Management Console. Defaults to http://localhost:8181/jolokia. |
| locked | Specifies whether or not the profile should be locked. |
| minInstanceCount | The minimum number of instances of this profile that are required to run. Default is 1. |
| parentProfiles | Space-separated list of parent profile IDs to be added to the newly created profile. Defaults to karaf. |
| profile | The name of the Fabric profile to deploy your project to. Defaults to the groupId-artifactId of your Maven project. |
| profileConfigDir | The folder in your Maven project containing resource files to be deployed into the profile, along with the artifact configuration. Defaults to src/main/fabric8. You should create the directory and add any configuration files or documentation you wish to add to your profile. |
| profileVersion | The profile version in which to update the profile. If not specified, it defaults to the current version of the fabric. |
| replaceReadmeLinksPrefix | If provided, then any links in the ReadMe.md files will be replaced to include the given prefix. |
| scope | The Maven scope to filter by, when resolving the dependency tree. Possible values are: compile, provided, runtime, test, system, import. |
| serverId | The server ID used to look up the server element in ~/.m2/settings.xml, in order to find the username and password to log in to the fabric. Defaults to fabric8.upload.repo. |
| upload | Whether or not the deploy goal should upload the local builds to the fabric's Maven repository. You can disable this step if you have configured your fabric's Maven repository to reuse your local Maven repository. Defaults to true. |
| useResolver | Whether or not the OSGi resolver is used for bundles or Karaf-based containers to deduce the additional bundles or features that need to be added to your project's dependencies to satisfy the OSGi package imports. Defaults to true. |
| webContextPath | The context path to use for Web applications, for projects using war packaging. |

Chapter 8. ActiveMQ Brokers and Clusters

Abstract

You can use the fabric:mq-create command to create and deploy clusters of brokers.

8.1. Creating a Single Broker Instance

MQ profiles

- mq-base: An abstract profile, which defines some important properties and resources for the broker, but should never be used directly to instantiate a broker.

- mq-default: A basic single broker, which inherits most of its properties from the mq-base profile.

To view more details about these profiles, use the fabric:profile-display command, as follows:

JBossFuse:karaf@root> fabric:profile-display mq-default

...

JBossFuse:karaf@root> fabric:profile-display mq-base

...

Creating a new broker instance

To create a new broker instance, create a new container that uses the mq-default profile.

For example, to create a new mq-default broker instance called broker1, enter the following console command:

JBossFuse:karaf@root> fabric:container-create-child --profile mq-default root broker1

The following containers have been created successfully:

broker1

This command creates a new container called broker1 with a broker of the same name running on it.

fabric:mq-create command

The fabric:mq-create command provides a shortcut to creating a broker, but with more flexibility, because it also creates a new profile. To create a new broker instance called brokerx using fabric:mq-create, enter the following console command:

JBossFuse:karaf@root> fabric:mq-create --create-container broker --replicas 1 brokerx

MQ profile mq-broker-default.brokerx ready

Just like the basic fabric:container-create-child command, fabric:mq-create creates a container called broker1 and runs a broker instance on it. There are some differences, however:

- The new broker1 container is implicitly created as a child of the current container.

- The new broker has its own profile, mq-broker-default.brokerx, which is based on the mq-base profile template.

- It is possible to edit the mq-broker-default.brokerx profile, to customize the configuration of this new broker.

- The --replicas option lets you specify the number of master/slave broker replicas (for more details, see Section 8.3.2, “Master-Slave Cluster”). In this example, we specify one replica (the default is two).

Note: The new profile gets the name mq-broker-Group.BrokerName by default. If you want the profile to have the same name as the broker (which was the default in JBoss A-MQ version 6.0), you can specify the profile name explicitly using the --profile option.
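The default naming rule, mq-broker-Group.BrokerName, can be written as a one-line function, which is handy when a script needs to refer to the generated profile afterwards. The following Python sketch is illustrative only; the function name default_mq_profile is hypothetical.

```python
def default_mq_profile(broker_name, group="default"):
    """Return the profile name that fabric:mq-create generates by default.

    Follows the mq-broker-Group.BrokerName pattern; 'default' is the group
    a broker registers with when no group is given.
    """
    return f"mq-broker-{group}.{broker_name}"


print(default_mq_profile("brokerx"))
print(default_mq_profile("brokerx", group="loadbal"))
```

If the --profile option is used to name the profile explicitly, this rule no longer applies.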

Starting a broker on an existing container

The fabric:mq-create command can be used to deploy brokers on existing containers. Consider the following example, which creates a new Fuse MQ broker in two steps:

JBossFuse:karaf@root> fabric:container-create-child root broker1

The following containers have been created successfully:

broker1

JBossFuse:karaf@root> fabric:mq-create --assign-container broker1 brokerx

MQ profile mq-broker-default.brokerx ready

The preceding example first creates a simple child container, and then creates and assigns the new mq-broker-default.brokerx profile to the container, by invoking fabric:mq-create with the --assign-container option. Of course, instead of deploying to a local child container (as in this example), we could assign the broker to an SSH container or a cloud container.

Broker groups

Brokers created using the fabric:mq-create command are always registered with a specific broker group. If you do not specify the group name explicitly at the time you create the broker, the broker gets registered with the default group.

To specify the group name explicitly, use the --group option of the fabric:mq-create command. For example, to create a new broker that registers with the west-coast group, enter the following console command:

JBossFuse:karaf@root> fabric:mq-create --create-container broker --replicas 1 --group west-coast brokery

MQ profile mq-broker-default.brokery ready

If the west-coast group does not exist prior to running this command, it is automatically created by Fabric. Broker groups are important for defining clusters of brokers, providing the underlying mechanism for creating load-balancing clusters and master-slave clusters. For details, see Section 8.3, “Topologies”.

8.2. Connecting to a Broker

Overview

default group.

Client URL

discovery:(fabric:GroupName)

For example, to connect to a broker in the default group, the client would use the following URL:

discovery:(fabric:default)

Example client profiles

You can test the broker by deploying the example-mq profile into a container. The example-mq profile instantiates a pair of messaging clients: a producer client, which sends messages continuously to the FABRIC.DEMO queue on the broker; and a consumer client, which consumes messages from the FABRIC.DEMO queue.

Create a new container with the example-mq profile, by entering the following command:

JBossFuse:karaf@root> fabric:container-create-child --profile example-mq root example

Check that the example container is successfully provisioned, using the following console command:

JBossFuse:karaf@root> watch container-list

JBossFuse:karaf@root> container-connect example

JBossFuse:karaf@example> log:display

8.3. Topologies

8.3.1. Load-Balancing Cluster

Overview

Figure 8.1 shows a load-balancing cluster with the group name, loadbal, and with three brokers registered in the group: brokerx, brokery, and brokerz. This type of topology is ideal for load balancing non-persistent messages across brokers and for providing high availability.

Figure 8.1. Load-Balancing Cluster

Create brokers in a load-balancing cluster

- Choose a group name for the load-balancing cluster.

- Each broker in the cluster registers with the chosen group.

- Each broker must be identified by a unique broker name.

- Normally, each broker is deployed in a separate container.

In the following example, the group name is loadbal and the cluster consists of three broker instances with broker names: brokerx, brokery, and brokerz.

- First of all, create some containers:

JBossFuse:karaf@root> container-create-child root broker 3

The following containers have been created successfully:

Container: broker1.

Container: broker2.

Container: broker3.

- Wait until the containers are successfully provisioned. You can conveniently monitor them using the watch command, as follows:

JBossFuse:karaf@root> watch container-list

- You can then assign broker profiles to each of the containers, using the fabric:mq-create command, as follows:

JBossFuse:karaf@root> mq-create --group loadbal --assign-container broker1 brokerx

MQ profile mq-broker-loadbal.brokerx ready

JBossFuse:karaf@root> mq-create --group loadbal --assign-container broker2 brokery

MQ profile mq-broker-loadbal.brokery ready

JBossFuse:karaf@root> mq-create --group loadbal --assign-container broker3 brokerz

MQ profile mq-broker-loadbal.brokerz ready

- You can use the fabric:profile-list command to see the new profiles created for these brokers:

JBossFuse:karaf@root> profile-list --hidden

[id] [# containers] [parents]

...

mq-broker-loadbal.brokerx 1 mq-base

mq-broker-loadbal.brokery 1 mq-base

mq-client-loadbal

...

- You can use the fabric:cluster-list command to view the cluster configuration for this load-balancing cluster:

JBossFuse:karaf@root> cluster-list

[cluster] [masters] [slaves] [services]

...

fusemq/loadbal

brokerx broker1 - tcp://MyLocalHost:50394

brokery broker2 - tcp://MyLocalHost:50604

brokerz broker3 - tcp://MyLocalHost:50395

Configure clients of a load-balancing cluster

To connect a client to a load-balancing cluster, use a URL of the form, discovery:(fabric:GroupName), which automatically load balances the client across the available brokers in the cluster. For example, to connect a client to the loadbal cluster, you would use a URL like the following:

discovery:(fabric:loadbal)

The mq-create command automatically generates a profile named mq-client-GroupName, which you can combine either with the example-mq-consumer profile or with the example-mq-producer profile to create a client of the load-balancing cluster.

For example, to create a consumer client of the loadbal group, you can deploy the mq-client-loadbal profile and the example-mq-consumer profile together in a child container, by entering the following command:

JBossFuse:karaf@root> container-create-child --profile mq-client-loadbal --profile example-mq-consumer root consumer

The following containers have been created successfully:

Container: consumer.

To create a producer client of the loadbal group, you can deploy the mq-client-loadbal profile and the example-mq-producer profile together in a child container, by entering the following command:

JBossFuse:karaf@root> container-create-child --profile mq-client-loadbal --profile example-mq-producer root producer

The following containers have been created successfully:

Container: producer.

JBossFuse:karaf@root> container-connect consumer

JBossFuse:admin@consumer> log:display

2014-01-16 14:31:41,776 | INFO | Thread-42 | ConsumerThread | io.fabric8.mq.ConsumerThread 54 | 110 - org.jboss.amq.mq-client - 6.1.0.redhat-312 | Received test message: 982

2014-01-16 14:31:41,777 | INFO | Thread-42 | ConsumerThread | io.fabric8.mq.ConsumerThread 54 | 110 - org.jboss.amq.mq-client - 6.1.0.redhat-312 | Received test message: 983

8.3.2. Master-Slave Cluster

Overview

Figure 8.2 shows a master-slave cluster with the group name, masterslave, and three brokers that compete with each other to register as the broker, hq-broker. A broker becomes the master by acquiring a lock (where the lock implementation is provided by the underlying ZooKeeper registry). The other two brokers, which fail to acquire the lock, remain as slaves (but they continue trying to acquire the lock at regular time intervals).

Figure 8.2. Master-Slave Cluster
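The lock-based election can be illustrated with a small in-memory model. The following Python sketch is not the ZooKeeper implementation used by Fabric: the LockRegistry class and the elect function are hypothetical stand-ins that show only the behaviour described above, namely that one broker holds the lock as master while the others remain slaves and keep retrying.

```python
class LockRegistry:
    """Toy stand-in for the registry lock: at most one holder at a time."""

    def __init__(self):
        self.holder = None

    def try_acquire(self, container):
        # The lock is granted only if nobody holds it (or we already do).
        if self.holder is None:
            self.holder = container
        return self.holder == container

    def release(self, container):
        # Models a master shutting down or losing its registry session.
        if self.holder == container:
            self.holder = None


def elect(registry, containers):
    """Run one retry round: every live container tries to take the lock."""
    return {
        name: "master" if registry.try_acquire(name) else "slave"
        for name in containers
    }


registry = LockRegistry()
print(elect(registry, ["broker1", "broker2", "broker3"]))

# The master goes away; on the next retry round a slave takes over.
registry.release("broker1")
print(elect(registry, ["broker2", "broker3"]))
```

In this model the winner is simply the first container in the list; in a real cluster, which broker acquires the lock first depends on timing.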

Create brokers in a master-slave cluster

- Choose a group name for the master-slave cluster.

- Each broker in the cluster registers with the chosen group.

- Each broker must be identified by the same virtual broker name.

- Normally, each broker is deployed in a separate container.

In the following example, the group name is masterslave and the cluster consists of three broker instances, each with the same broker name: hq-broker. You can create this cluster by entering a single fabric:mq-create command, as follows:

JBossFuse:karaf@root> mq-create --create-container broker --replicas 3 --group masterslave hq-broker

Alternatively, if you have already created three containers, broker1, broker2 and broker3 (possibly running on separate machines), you can deploy a cluster of three brokers to the containers by entering the following command:

JBossFuse:karaf@root> mq-create --assign-container broker1,broker2,broker3 --group masterslave hq-broker

Configure clients of a master-slave cluster

To connect a client to a master-slave cluster, use a URL of the form, discovery:(fabric:GroupName), which automatically connects the client to the current master server. For example, to connect a client to the masterslave cluster, you would use a URL like the following:

discovery:(fabric:masterslave)

You can use the automatically generated client profile, mq-client-masterslave, to create sample clients. For example, to create an example consumer client in its own container, enter the following console command:

JBossFuse:karaf@root> container-create-child --profile mq-client-masterslave --profile example-mq-consumer root consumer

The following containers have been created successfully:

Container: consumer.

To create an example producer client in its own container, enter the following console command:

JBossFuse:karaf@root> container-create-child --profile mq-client-masterslave --profile example-mq-producer root producer

The following containers have been created successfully:

Container: producer.

Locking mechanism

Re-using containers for multiple clusters

If you have three containers, broker1, broker2, and broker3, already running the hq-broker cluster, it is possible to reuse the same containers for another highly available broker cluster, web-broker. You can assign the web-broker profile to the existing containers with the following command:

mq-create --assign-container broker1,broker2,broker3 web-broker

This command assigns the web-broker profile to the same containers already running hq-broker. Fabric automatically prevents two masters from running on the same container, so the master for hq-broker will run on a different container from the master for web-broker. This arrangement makes optimal use of the available resources.

Configuring persistent data

The fabric:mq-create command enables you to specify the location of the data directory, as follows:

mq-create --assign-container broker1 --data /var/activemq/hq-broker hq-broker

This command creates the hq-broker virtual broker, which uses the /var/activemq/hq-broker directory for the data (and store) location. You can then mount some shared storage to this path and share the storage amongst the brokers in the master-slave cluster.

8.3.3. Broker Networks

Overview

Broker networks

Creating network connectors

To create network connectors, pass the --network option to the fabric:mq-create command.

Example broker network

Figure 8.3. Broker Network with Master-Slave Clusters

- The first cluster has the group name, us-west, and provides high availability with a master-slave cluster of two brokers, us-west1 and us-west2.

- The second cluster has the group name, us-east, and provides high availability with a master-slave cluster of two brokers, us-east1 and us-east2.

To create the pair of master-slave brokers for the us-east group (consisting of the two containers us-east1 and us-east2), you would log on to a root container running in the US East location and enter a command like the following:

mq-create --group us-east --network us-west --networks-username User --networks-password Pass --create-container us-east us-east

Where the --network option specifies the name of the broker group you want to connect to, and User and Pass are the credentials required to log on to the us-west broker cluster. By default, the fabric:mq-create command creates a master/slave pair of brokers.

To create the pair of master-slave brokers for the us-west group (consisting of the two containers us-west1 and us-west2), you would log on to a root container running in the US West location and enter a command like the following:

mq-create --group us-west --network us-east --networks-username User --networks-password Pass --create-container us-west us-west

User and Pass are the credentials required to log on to the us-east broker cluster.

To deploy the brokers on existing containers, use the --assign-container option in place of --create-container.

Connecting to the example broker network

discovery:(fabric:us-east)

discovery:(fabric:us-west)

8.4. Alternative Master-Slave Cluster

Why use an alternative master-slave cluster?

Alternative locking mechanism

- Disable the default Zookeeper locking mechanism (which can be done by setting standalone=true in the broker's io.fabric8.mq.fabric.server-BrokerName PID).

- Enable the shared file system master/slave locking mechanism in the KahaDB persistence layer (see section "Shared File System Master/Slave" in "Fault Tolerant Messaging").

standalone property

The standalone property belongs to the io.fabric8.mq.fabric.server-BrokerName PID and is normally used for a non-Fabric broker deployment (for example, it is set to true in the etc/io.fabric8.mq.fabric.server-broker.cfg file). By setting this property to true, you instruct the broker to stop using the discovery and coordination services provided by Fabric (but it is still possible to deploy the broker in a Fabric container). One consequence of this is that the broker stops using the Zookeeper locking mechanism. But this setting has other side effects as well.

Side effects of setting standalone=true

Setting the property, standalone=true, on a broker deployed in Fabric has the following effects:

- Fabric no longer coordinates the locks for the brokers (hence, the broker's persistence adapter needs to be configured as shared file system master/slave instead).

- The broker no longer uses the ZookeeperLoginModule for authentication and falls back to using the PropertiesLoginModule instead. For the brokers to continue to accept connections, users must be stored in the etc/users.properties file or added to the PropertiesLoginModule JAAS realm in the container where the broker is running.

- Fabric discovery of brokers no longer works (which affects client configuration).

Configuring brokers in the cluster

- Set the property, standalone=true, in each broker's io.fabric8.mq.fabric.server-BrokerName PID. For example, given a broker with the broker name, brokerx, which is configured by the profile, mq-broker-default.brokerx, you could set the standalone property to true using the following console command:

profile-edit --pid io.fabric8.mq.fabric.server-brokerx/standalone=true mq-broker-default.brokerx

- To customize the broker's configuration settings further, you need to create a unique copy of the broker configuration file in the broker's own profile (instead of inheriting the broker configuration file from the base profile, mq-base). If you have not already done so, follow the instructions in the section called “Customizing the broker configuration file” to create a custom broker configuration file for each of the brokers in the cluster.

- Configure each broker's KahaDB persistence adapter to use the shared file system locking mechanism. For this you must customize each broker configuration file, adding or modifying (as appropriate) the following XML snippet:

<broker ... >

...

<persistenceAdapter>

<kahaDB directory="/sharedFileSystem/sharedBrokerData" lockKeepAlivePeriod="5000">

<locker>

<shared-file-locker lockAcquireSleepInterval="10000" />

</locker>

</kahaDB>

</persistenceAdapter>

...

</broker>

You can edit this profile resource either through the Fuse Management Console, through the Git configuration approach (see Section 8.5, “Broker Configuration”), or using the fabric:profile-edit command.

Configuring authentication data

When you set standalone=true on a broker, it can no longer use the default ZookeeperLoginModule authentication mechanism and falls back on the PropertiesLoginModule. This implies that you must populate authentication data in the etc/users.properties file on each of the hosts where a broker is running. Each line of this file takes an entry in the following format:

Username=Password,Role1,Role2,...

Each entry consists of Username and Password credentials followed by a list of one or more roles, Role1, Role2, and so on.
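As a minimal sketch of the entry format above, the following shell function assembles one line for etc/users.properties. The function name users_entry and the sample user jdoe with password secret are hypothetical; Administrator is the role name used elsewhere in this guide.

```shell
#!/bin/sh
# Build one etc/users.properties entry in the form
#   Username=Password,Role1,Role2,...
# Usage: users_entry USERNAME PASSWORD ROLE [ROLE...]
# (users_entry is a hypothetical helper, not part of Fabric.)
users_entry() {
    if [ "$#" -lt 3 ]; then
        echo "usage: users_entry USERNAME PASSWORD ROLE [ROLE...]" >&2
        return 1
    fi
    user=$1
    pass=$2
    shift 2
    entry="$user=$pass"
    # Append each role, separated by commas.
    for role in "$@"; do
        entry="$entry,$role"
    done
    printf '%s\n' "$entry"
}

# Hypothetical credentials; append the printed line to etc/users.properties
# on every host where a standalone broker runs.
users_entry jdoe secret Administrator
```

Because the same file must exist on each broker host, generating the lines from one place can help keep the hosts consistent.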

Configuring a client

failover:(tcp://broker1:61616,tcp://broker2:61616,tcp://broker3:61616)
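Because Fabric discovery of brokers no longer works when standalone=true, a client URL of this kind has to name each broker explicitly. The following sketch shows one way to assemble such a failover URL from a list of hosts; the function name failover_uri is hypothetical, and the host names and port are taken from the example above.

```shell
#!/bin/sh
# Build a static failover URL from a port and a list of broker hosts.
# Usage: failover_uri PORT HOST [HOST...]
# (failover_uri is a hypothetical helper, not part of Fabric or ActiveMQ.)
failover_uri() {
    port=$1
    shift
    list=""
    for host in "$@"; do
        # Add a comma before every entry except the first.
        list="${list:+$list,}tcp://$host:$port"
    done
    printf 'failover:(%s)\n' "$list"
}

failover_uri 61616 broker1 broker2 broker3
```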

8.5. Broker Configuration

Overview

Setting OSGi Config Admin properties

Consider, for example, the broker profile created by entering the following fabric:mq-create command:

fabric:mq-create --create-container broker --replicas 1 --network us-west brokerx

This command creates a profile named mq-broker-default.brokerx and assigns this profile to the newly created broker container.

The profile name has the form mq-broker-Group.BrokerName by default. If you want the profile to have the same name as the broker (which was the default in JBoss A-MQ version 6.0), you can specify the profile name explicitly using the --profile option.

You can inspect the details of the mq-broker-default.brokerx profile using the fabric:profile-display command, as follows:

JBossFuse:karaf@root> profile-display mq-broker-default.brokerx

Profile id: mq-broker-default.brokerx

Version : 1.0

Attributes:

parents: mq-base

Containers: broker

Container settings

----------------------------

Configuration details

----------------------------

PID: io.fabric8.mq.fabric.server-brokerx

replicas 1

standby.pool default

broker-name brokerx

keystore.password JA7GNMZV

data ${runtime.data}brokerx

keystore.file profile:keystore.jks

ssl true

kind MasterSlave

truststore.file profile:truststore.jks

keystore.cn localhost

connectors openwire mqtt amqp stomp

truststore.password JA7GNMZV

keystore.url profile:keystore.jks

config profile:ssl-broker.xml

group default

network us-west

Other resources

----------------------------

Resource: keystore.jks

Resource: truststore.jks

Under the io.fabric8.mq.fabric.server-brokerx PID are a variety of property settings, such as network and group. You can now modify the existing properties or add more properties to this PID to customize the broker configuration.

Modifying basic configuration properties

You can modify the basic configuration properties associated with the io.fabric8.mq.fabric.server-brokerx PID by invoking the fabric:profile-edit command, with the appropriate syntax for modifying PID properties.

For example, to change the value of the network property to us-east, enter the following console command:

profile-edit --pid io.fabric8.mq.fabric.server-brokerx/network=us-east mq-broker-default.brokerx
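The profile-edit syntax used here follows the pattern PID/Property=Value followed by the profile name. As a sketch, the shell function below assembles that command line for a given broker so that it can be pasted into the Fuse console; the function name profile_edit_cmd is hypothetical, and the function only prints text.

```shell
#!/bin/sh
# Print a profile-edit console command that sets one property on a broker's PID.
# Usage: profile_edit_cmd BROKER_NAME PROPERTY VALUE PROFILE
# (profile_edit_cmd is a hypothetical helper; it does not contact Fabric.)
profile_edit_cmd() {
    broker=$1
    property=$2
    value=$3
    profile=$4
    printf 'profile-edit --pid io.fabric8.mq.fabric.server-%s/%s=%s %s\n' \
        "$broker" "$property" "$value" "$profile"
}

# Reproduces the command shown above for the network property.
profile_edit_cmd brokerx network us-east mq-broker-default.brokerx
```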

Customizing the SSL keystore.jks and truststore.jks file

The following JKS files are stored in the mq-broker-default.brokerx profile when SSL is enabled (which is the default case):

- keystore.jks: A Java keystore file containing this broker's own X.509 certificate. The broker uses this certificate to identify itself to other brokers in the network. The password for this file is stored in the io.fabric8.mq.fabric.server-brokerx/keystore.password property.

- truststore.jks: A Java truststore file containing one or more Certificate Authority (CA) certificates or other certificates, which are used to verify the certificates presented by other brokers during the SSL handshake. The password for this file is stored in the io.fabric8.mq.fabric.server-brokerx/truststore.password property.

To customize the keystore.jks and truststore.jks files in the mq-broker-default.brokerx profile, perform the following steps:

- If you have not done so already, clone the git repository that stores all of the profile data in your Fabric. Enter commands like the following:

git clone -b 1.0 http://Username:Password@localhost:8181/git/fabric

cd fabric

Where Username and Password are the credentials of a Fabric user with the Administrator role, and we assume that you are currently working with profiles in version 1.0 (which corresponds to the git branch named 1.0).

Note: In this example, it is assumed that the fabric is set up to use the git cluster architecture (which is the default) and also that the Fabric server running on localhost is currently the master instance of the git cluster.

- The keystore.jks file and the truststore.jks file can be found at the following locations in the git repository:

fabric/profiles/mq/broker/default.brokerx.profile/keystore.jks

fabric/profiles/mq/broker/default.brokerx.profile/truststore.jks

Copy your custom versions of the keystore.jks file and truststore.jks file to these locations, overwriting the default versions of these files.

- You also need to modify the corresponding passwords for the keystore and truststore. To modify the passwords, edit the following file in a text editor:

fabric/profiles/mq/broker/default.brokerx.profile/io.fabric8.mq.fabric.server-brokerx.properties

Modify the keystore.password and truststore.password settings in this file to specify the correct password values for your custom JKS files.

- When you are finished modifying the profile configuration, commit and push the changes back to the Fabric server using git, as follows:

git commit -a -m "Put a description of your changes here!"

git push

- For these SSL configuration changes to take effect, a restart of the affected broker (or brokers) is required. For example, assuming that the modified profile is deployed on the broker container, you would restart the broker container as follows:

fabric:container-stop broker

fabric:container-start broker
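The password step above can also be scripted instead of done in a text editor. The following is a sketch only: set_prop is a hypothetical helper, and it assumes that the properties file stores one Key=Value pair per line with "=" as the separator (optionally surrounded by spaces), which this guide does not state explicitly.

```shell
#!/bin/sh
# Set KEY to VALUE in a properties file, replacing an existing line for KEY
# or appending a new one. Assumes one "key = value" pair per line.
# Usage: set_prop FILE KEY VALUE
# (set_prop is a hypothetical helper, not part of Fabric.)
set_prop() {
    file=$1
    tmp="$file.tmp"
    # Pass key and value through the environment so that awk does not
    # interpret backslashes in a password as escape sequences.
    PROP_KEY=$2 PROP_VALUE=$3 awk '
        BEGIN { key = ENVIRON["PROP_KEY"]; value = ENVIRON["PROP_VALUE"] }
        {
            name = $0
            sub(/[ \t]*=.*$/, "", name)   # keep the text before the first "="
            sub(/^[ \t]+/, "", name)      # drop leading whitespace
            if (name == key) { print key "=" value; found = 1 }
            else { print }
        }
        END { if (!found) print key "=" value }
    ' "$file" > "$tmp" && mv "$tmp" "$file"
}

# Example (run from the root of the cloned repository; NewKeystorePass is a placeholder):
#   props=fabric/profiles/mq/broker/default.brokerx.profile/io.fabric8.mq.fabric.server-brokerx.properties
#   set_prop "$props" keystore.password NewKeystorePass
```

Review the result with git diff before committing, since the passwords are stored in plain text in the profile.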

Customizing the broker configuration file

The mq-base profile provides two default broker configuration files: ssl-broker.xml, for an SSL-enabled broker; and broker.xml, for a non-SSL-enabled broker.

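If you want to customize the broker configuration, create a copy of the broker configuration file in the broker's own profile (instead of inheriting it from the mq-base parent profile). The easiest way to make this kind of change is to use a git repository of profile data that has been cloned from a Fabric ensemble server.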

To customize the broker configuration file for the mq-broker-default.brokerx profile, perform the following steps:

- It is assumed that you have already cloned the git repository of profile data from the Fabric ensemble server (see the section called “Customizing the SSL keystore.jks and truststore.jks file”). Make sure that you have checked out the branch corresponding to the profile version that you want to edit (which is assumed to be 1.0 here). It is also a good idea to do a git pull to ensure that your local git repository is up-to-date. In your git repository, enter the following git commands:

git checkout 1.0

git pull

- The default broker configuration files are stored at the following locations in the git repository:

fabric/profiles/mq/base.profile/ssl-broker.xml

fabric/profiles/mq/base.profile/broker.xml

Depending on whether your broker is configured with SSL or not, you should copy either the ssl-broker.xml file or the broker.xml file into your broker's profile. For example, assuming that your broker uses the mq-broker-default.brokerx profile and is configured to use SSL, you would copy the broker configuration as follows:

cp fabric/profiles/mq/base.profile/ssl-broker.xml fabric/profiles/mq/broker/default.brokerx.profile/

- You can now edit the copy of the broker configuration file, customizing the broker's Spring XML configuration as required.

- When you are finished modifying the broker configuration, commit and push the changes back to the Fabric server using git, as follows:

git commit -a -m "Put a description of your changes here!"

git push

- For the configuration changes to take effect, a restart of the affected broker (or brokers) is required. For example, assuming that the modified profile is deployed on the broker container, you would restart the broker container as follows:

fabric:container-stop broker

fabric:container-start broker
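The copy step above can be sketched as a small shell helper that picks ssl-broker.xml or broker.xml according to the broker's SSL setting. The function names profile_dir and copy_broker_config are hypothetical. The mapping from profile name to directory (hyphens become path separators and .profile is appended) is inferred from the two paths shown in this section and should be checked against your own repository.

```shell
#!/bin/sh
# Map a profile name to its directory in the git repository, following the
# pattern seen in this section:
#   mq-base                   -> fabric/profiles/mq/base.profile
#   mq-broker-default.brokerx -> fabric/profiles/mq/broker/default.brokerx.profile
# (profile_dir is a hypothetical helper; the mapping is an inference.)
profile_dir() {
    printf 'fabric/profiles/%s.profile\n' "$(printf '%s' "$1" | tr '-' '/')"
}

# Copy the default broker configuration from mq-base into a broker's own profile.
# Usage: copy_broker_config REPO_DIR PROFILE_NAME SSL
#   REPO_DIR is the root of the cloned repository; SSL is "true" or "false".
copy_broker_config() {
    repo=$1
    profile=$2
    if [ "$3" = "true" ]; then
        config=ssl-broker.xml
    else
        config=broker.xml
    fi
    cp "$repo/$(profile_dir mq-base)/$config" "$repo/$(profile_dir "$profile")/"
}

# Example (run from the root of the cloned repository):
#   copy_broker_config . mq-broker-default.brokerx true
```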

Additional broker configuration templates in mq-base

You can add extra broker configurations to the mq-base profile, which can then be used as templates for creating new brokers with the fabric:mq-create command. Additional template configurations must be added to the following location in the git repository:

fabric/profiles/mq/base.profile/

You can then reference a template configuration by supplying the --config option to the fabric:mq-create command.

For example, suppose that a new broker configuration template, mybrokertemplate.xml, has just been installed:

fabric/profiles/mq/base.profile/mybrokertemplate.xml

You can create a new broker that uses the mybrokertemplate.xml configuration template by invoking the fabric:mq-create command with the --config option, as follows:

fabric:mq-create --config mybrokertemplate.xml brokerx

The --config option assumes that the configuration file is stored in the current version of the mq-base profile, so you need to specify only the file name (that is, the full ZooKeeper path is not required).

Setting network connector properties

You can set network connector properties by adding properties of the form network.NetworkPropName to the broker's PID. For example, to add the setting, network.bridgeTempDestinations=false, to the PID for brokerx (which has the profile name, mq-broker-default.brokerx), enter the following console command:

profile-edit --pid io.fabric8.mq.fabric.server-brokerx/network.bridgeTempDestinations=false mq-broker-default.brokerx
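When several network connector properties need to be set, one profile-edit command is needed per property. As a sketch, the following shell function prints those commands for a list of Option=Value pairs, adding the network. prefix to each; the function name network_edit_cmds is hypothetical, and the networkTTL value of 2 is only an illustrative choice.

```shell
#!/bin/sh
# Print one profile-edit console command per network connector setting.
# Usage: network_edit_cmds BROKER_NAME PROFILE OPTION=VALUE [OPTION=VALUE...]
# (network_edit_cmds is a hypothetical helper; it only prints text.)
network_edit_cmds() {
    broker=$1
    profile=$2
    shift 2
    for setting in "$@"; do
        # Each setting is stored on the broker PID under the network. prefix.
        printf 'profile-edit --pid io.fabric8.mq.fabric.server-%s/network.%s %s\n' \
            "$broker" "$setting" "$profile"
    done
}

# The first line printed matches the command shown above.
network_edit_cmds brokerx mq-broker-default.brokerx bridgeTempDestinations=false networkTTL=2
```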

Network connector properties by reflection

Any PID property of the form network.OptionName can be used to set the corresponding OptionName property on the org.apache.activemq.network.NetworkBridgeConfiguration class. In particular, this implies you can set any of the following network.OptionName properties:

| Property | Default | Description |
|---|---|---|
| name | bridge | Name of the network. For more than one network connector between the same two brokers, use different names. |
| userName | None | Username for logging on to the remote broker port, if authentication is enabled. |
| password | None | Password for logging on to the remote broker port, if authentication is enabled. |
| dynamicOnly | false | If true, only activate a networked durable subscription when a corresponding durable subscription reactivates. By default, they are activated on start-up. |
| dispatchAsync | true | Determines how the network bridge sends messages to the local broker. If true, the network bridge sends messages asynchronously. |
| decreaseNetworkConsumerPriority | false | If true, starting at priority -5, decrease the priority for dispatching to a network Queue consumer the further away it is (in network hops) from the producer. If false, all network consumers use the same default priority (that is, 0) as local consumers. |
| consumerPriorityBase | -5 | Sets the starting priority for consumers. This base value is decremented by the length of the broker path when decreaseNetworkConsumerPriority is set. |
| networkTTL | 1 | The number of brokers in the network that messages and subscriptions can pass through (sets both messageTTL and consumerTTL). |
| messageTTL | 1 | The number of brokers in the network that messages can pass through. |
| consumerTTL | 1 | The number of brokers in the network that subscriptions can pass through (keep to 1 in a mesh). |
| conduitSubscriptions | true | Multiple consumers subscribing to the same destination are treated as one consumer by the network. |
| duplex | false | If true, a network connection is used both to produce and to consume messages. This is useful for hub and spoke scenarios, when the hub is behind a firewall, and so on. |