Deploying Red Hat Enterprise Linux based RHHI for Virtualization on a single node

Create a hyperconverged configuration with a single server

Laura Bailey

lbailey@redhat.comAbstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Workflow for deploying a single hyperconverged host

- Verify that your planned deployment meets Support Requirements, with exceptions described in Chapter 2, Additional requirements for single node deployments.

- Install the hyperconverged host machine.

- Configure key-based SSH without a password from the node to itself.

- Browse to the Web Console and deploy a single hyperconverged node.

- Browse to the Red Hat Virtualization Administration Console and configure Red Hat Gluster Storage as a Red Hat Virtualization storage domain.

Chapter 2. Additional requirements for single node deployments

Red Hat Hyperconverged Infrastructure for Virtualization is supported for deployment on a single node provided that all Support Requirements are met, with the following additions and exceptions.

A single node deployment requires a physical machine with:

- 1 Network Interface Controller

- at least 12 cores

- at least 64GB RAM

- at most 48TB storage

Single node deployments cannot be scaled, and are not highly available.

Chapter 3. Install host physical machine

Your physical machine needs an operating system and access to the appropriate software repositories in order to be used as a hyperconverged host.

3.1. Installing a Red Hat Enterprise Linux host

Red Hat Enterprise Linux hosts add virtualization capabilities to the Red Hat Enterprise Linux operating system. This allows the host to be used as a hyperconverged host in Red Hat Hyperconverged Infrastructure for Virtualization.

Prerequisites

- Ensure that your physical machine meets the requirements outlined in Physical machines.

Procedure

Download the Red Hat Enterprise Linux 7 ISO image from the Customer Portal:

- Log in to the Customer Portal at https://access.redhat.com.

- Click Downloads in the menu bar.

- Beside Red Hat Enterprise Linux click Versions 7 and below.

-

In the Version drop-down menu, select version

7.6. - Go to Red Hat Enterprise Linux 7.6 Binary DVD and and click Download Now.

- Create a bootable media device. See Making Media in the Red Hat Enterprise Linux Installation Guide for more information.

- Start the machine you are installing as a Red Hat Enterprise Linux host, and boot from the prepared installation media.

From the boot menu, select Install Red Hat Enterprise Linux 7.6 and press Enter.

NoteYou can also press the Tab key to edit the kernel parameters. Kernel parameters must be separated by a space, and you can boot the system using the specified kernel parameters by pressing the Enter key. Press the Esc key to clear any changes to the kernel parameters and return to the boot menu.

- Select a language, and click Continue.

Select a time zone from the Date & Time screen and click Done.

ImportantRed Hat recommends using Coordinated Universal Time (UTC) on all hosts. This helps ensure that data collection and connectivity are not impacted by variation in local time, such as during daylight savings time.

- Select a keyboard layout from the Keyboard screen and click Done.

Specify the installation location from the Installation Destination screen.

Important- Red Hat strongly recommends using the Automatically configure partitioning option.

- All disks are selected by default, so deselect disks that you do not want to use as installation locations.

- At-rest encryption is not supported. Do not enable encryption.

Red Hat recommends increasing the size of

/var/logto at least 15GB to provide sufficient space for the additional logging requirements of Red Hat Gluster Storage.Follow the instructions in Growing a logical volume using the Web Console to increase the size of this partition after installing the operating system.

Click Done.

Select the Ethernet network from the Network & Host Name screen.

- Click Configure… → General and select the Automatically connect to this network when it is available check box.

- Optionally configure Language Support, Security Policy, and Kdump. See Installing Using Anaconda in the Red Hat Enterprise Linux 7 Installation Guide for more information on each of the sections in the Installation Summary screen.

- Click Begin Installation.

Set a root password and, optionally, create an additional user while Red Hat Enterprise Linux installs.

WarningRed Hat strongly recommends not creating untrusted users on the hyperconverged host, as this can lead to exploitation of local security vulnerabilities.

- Click Reboot to complete the installation.

3.2. Enabling software repositories

Register your machine to Red Hat Network.

# subscription-manager register --username=<username> --password=<password>

Attach the pool.

# subscription-manager attach --pool=Pool ID number

Disable the repositories.

# subscription-manager repos --disable="*"

Enable the additional channels required for Red Hat Enterprise Linux hosts.

# subscription-manager repos --enable=rhel-7-server-rpms --enable=rh-gluster-3-for-rhel-7-server-rpms --enable=rhel-7-server-rhv-4-mgmt-agent-rpms --enable=rhel-7-server-ansible-2.9-rpms

3.3. Install and configure RHHI for Virtualization requirements

Install the packages required for RHHI for Virtualization.

# yum install glusterfs-server vdsm-gluster ovirt-hosted-engine-setup cockpit-ovirt-dashboard gluster-ansible-roles

Start and enable the Web Console.

# systemctl start cockpit # systemctl enable cockpit

Configure the firewall for the Web Console traffic

Open ports for the

cockpitservice.# firewall-cmd --add-service=cockpit # firewall-cmd --add-service=cockpit --permanent

Verify that the

cockpitservice is allowed by the firewall.Ensure that

cockpitappears in the output of the following command.# firewall-cmd --list-services | grep cockpit

Chapter 4. Configure key based SSH authentication without a password

Configure key-based SSH authentication without a password for the root user from the host, to the FQDNs of both storage and management interfaces on the same host.

4.1. Generating SSH key pairs without a password

Generating a public/private key pair lets you use key-based SSH authentication. Generating a key pair that does not use a password makes it simpler to use Ansible to automate deployment and configuration processes.

Procedure

- Log in to the first hyperconverged host as the root user.

Generate an SSH key that does not use a password.

Start the key generation process.

# ssh-keygen -t rsa Generating public/private rsa key pair.

Enter a location for the key.

The default location, shown in parentheses, is used if no other input is provided.

Enter file in which to save the key (/home/username/.ssh/id_rsa): <location>/<keyname>

Specify and confirm an empty passphrase by pressing

Entertwice.Enter passphrase (empty for no passphrase): Enter same passphrase again:

The private key is saved in

<location>/<keyname>. The public key is saved in<location>/<keyname>.pub.Your identification has been saved in <location>/<keyname>. Your public key has been saved in <location>/<keyname>.pub. The key fingerprint is SHA256:8BhZageKrLXM99z5f/AM9aPo/KAUd8ZZFPcPFWqK6+M root@server1.example.com The key's randomart image is: +---[ECDSA 256]---+ | . . +=| | . . . = o.o| | + . * . o...| | = . . * . + +..| |. + . . So o * ..| | . o . .+ = ..| | o oo ..=. .| | ooo...+ | | .E++oo | +----[SHA256]-----+

WarningYour identificationin this output is your private key. Never share your private key. Possession of your private key allows someone else to impersonate you on any system that has your public key.

4.2. Copying SSH keys

To access a host using your private key, that host needs a copy of your public key.

Prerequisites

- Generate a public/private key pair.

Procedure

- Log in to the first host as the root user.

Copy your public key to each host that you want to access, including the host on which you execute the command, using both the front-end and the back-end FQDNs.

# ssh-copy-id -i <location>/<keyname>.pub <user>@<hostname>

Enter the password for

<user>@<hostname>when prompted.WarningMake sure that you use the file that ends in

.pub. Never share your private key. Possession of your private key allows someone else to impersonate you on any system that has your public key.For example, if you are logged in as the root user on

server1.example.com, you would run the following commands:# ssh-copy-id -i <location>/<keyname>.pub root@server1front.example.com # ssh-copy-id -i <location>/<keyname>.pub root@server2front.example.com # ssh-copy-id -i <location>/<keyname>.pub root@server3front.example.com # ssh-copy-id -i <location>/<keyname>.pub root@server1back.example.com # ssh-copy-id -i <location>/<keyname>.pub root@server2back.example.com # ssh-copy-id -i <location>/<keyname>.pub root@server3back.example.com

Chapter 5. Configuring a single node RHHI for Virtualization deployment

5.1. Configuring Red Hat Gluster Storage on a single node

Ensure that disks specified as part of this deployment process do not have any partitions or labels.

Log into the Web Console

Browse to the the Web Console management interface of the first hyperconverged host, for example, https://node1.example.com:9090/, and log in with the credentials you created in the previous section.

Start the deployment wizard

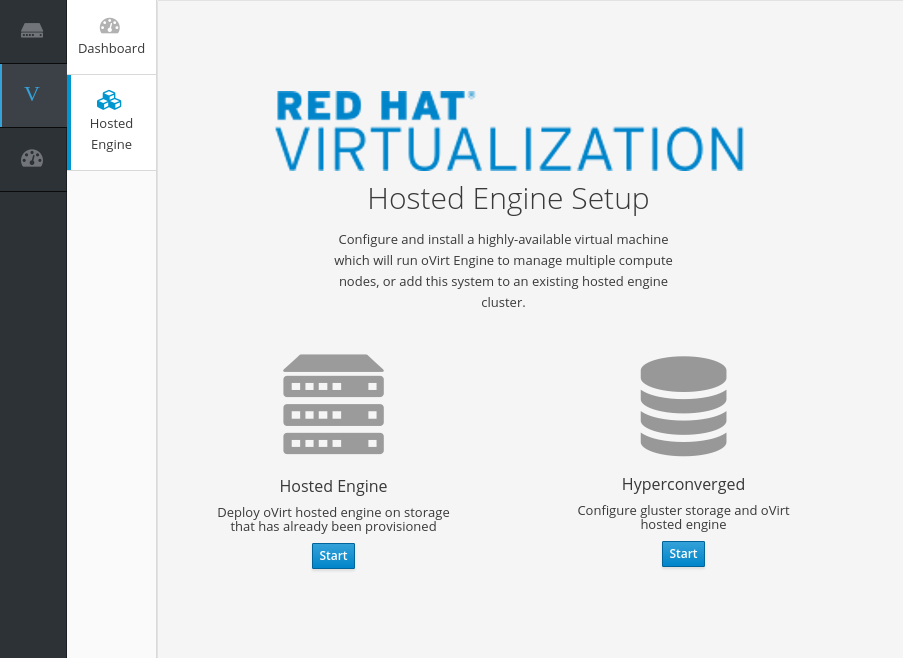

Click Virtualization → Hosted Engine and click Start underneath Hyperconverged.

The Gluster Configuration window opens.

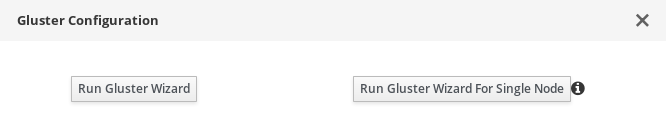

Click the Run Gluster Wizard for Single Node button.

The Gluster Deployment window opens in single node mode.

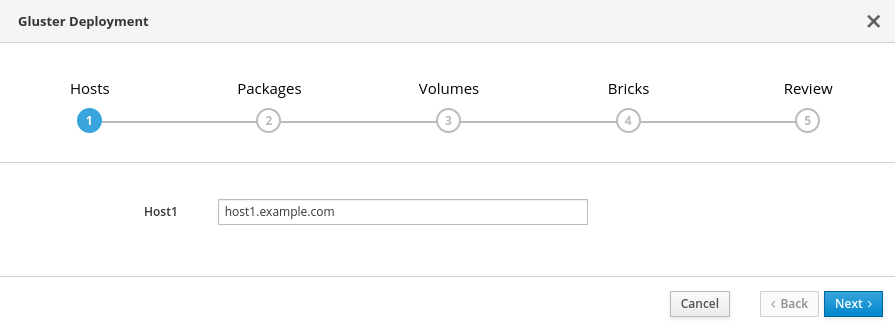

Specify hyperconverged host

Specify the back-end FQDN on the storage network of the hyperconverged host and click Next.

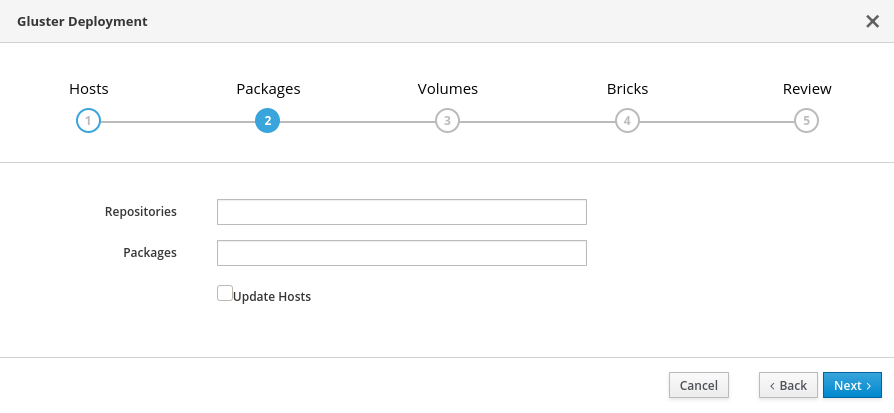

Specify packages

No additional packages are required for Red Hat Hyperconverged Infrastructure for Virtualization, but you can use this tab to install any additional packages you require on the host.

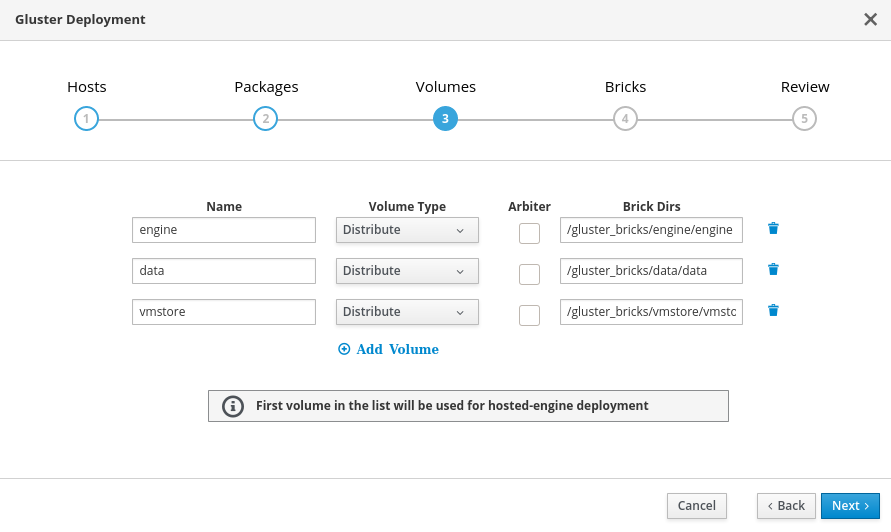

Specify volumes

Specify the volumes to create.

- Name

- Specify the name of the volume to be created.

- Volume Type

- Specify a Distribute volume type. Only distributed volumes are supported for single node deployments.

- Brick Dirs

- The directory that contains this volume’s bricks.

- Add Volume

-

To add more volumes, click the Add Volume option and it will create a blank entry. Specify the name of the volume to be added and if arbiter volume is required, check the arbiter check box. It is recommended to use the brick path as

/gluster_bricks/<volname>/<volname>.

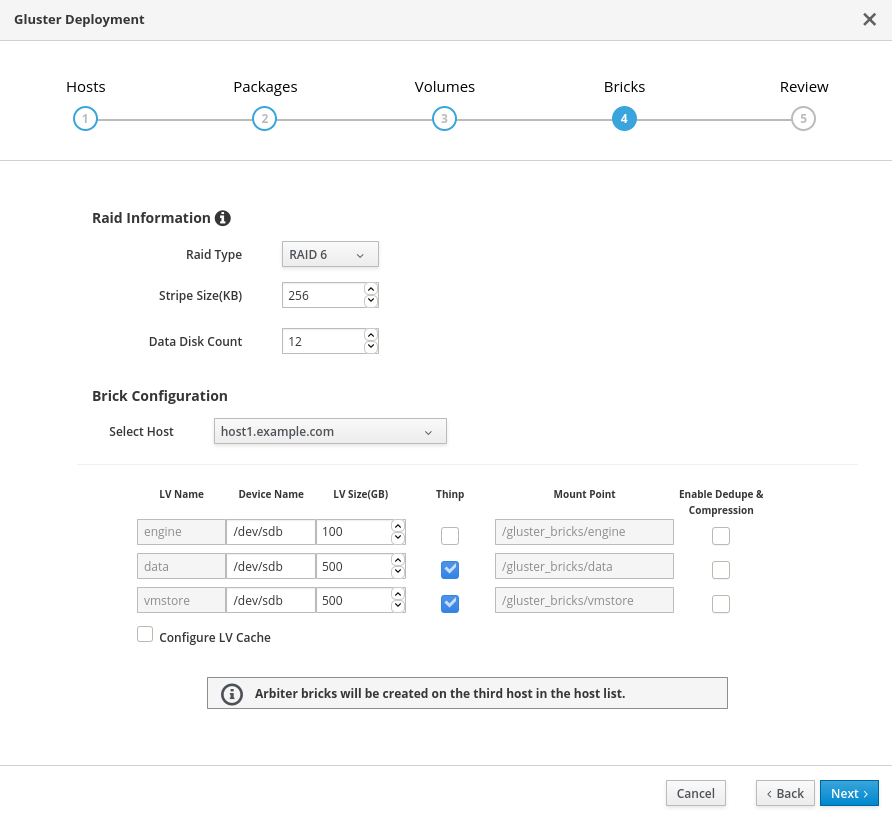

Specify bricks

Specify the bricks to create.

- RAID

- Specify the RAID configuration to use. This should match the RAID configuration of your host. Supported values are raid5, raid6, and jbod. Setting this option ensures that your storage is correctly tuned for your RAID configuration.

- Stripe Size

- Specify the RAID stripe size in KB. Do not enter units, only the number. This can be ignored for jbod configurations.

- Disk Count

- Specify the number of data disks in a RAID volume. This can be ignored for jbod configurations.

- LV Name

- The name of the logical volume to be created. This is pre-filled with the name that you specified on the previous page of the wizard.

- Device

- Specify the raw device you want to use. Red Hat recommends an unpartitioned device.

- Size

- Specify the size of the logical volume to create in GB. Do not enter units, only the number. This number should be the same for all bricks in a replicated set. Arbiter bricks can be smaller than other bricks in their replication set.

- Mount Point

- The mount point for the logical volume. This is pre-filled with the brick directory that you specified on the previous page of the wizard.

- Thinp

-

This option is enabled and volumes are thinly provisioned by default, except for the

enginevolume, which must be thickly provisioned. - Enable Dedupe & Compression

- Specify whether to provision the volume using VDO for compression and deduplication at deployment time.

- Logical Size (GB)

- Specify the logical size of the VDO volume. This can be up to 10 times the size of the physical volume, with an absolute maximum logical size of 4 PB.

- Configure LV Cache

- Optionally, check this checkbox to configure a small, fast SSD device as a logical volume cache for a larger, slower logical volume. Add the device path to the SSD field, the size to the LV Size (GB) field, and set the Cache Mode used by the device.

WarningTo avoid data loss when using write-back mode, Red Hat recommends using two separate SSD/NVMe devices. Configuring the two devices in a RAID-1 configuration (via software or hardware), significantly reduces the potential of data loss from lost writes.

For further information about lvmcache configuration, see Red Hat Enterprise Linux 7 LVM Administration.

(Optional) If your system has multipath devices, additional configuration is required.

To use multipath devices

If you want to use multipath devices in your RHHI for Virtualization deployment, use multipath WWIDs to specify the device. For example, use

/dev/mapper/3600508b1001caab032303683327a6a2einstead of/dev/sdb.To disable multipath device use

If multipath devices exist in your environment, but you do not want to use them for your RHHI for Virtualization deployment, blacklist the devices.

Create a custom multipath configuration file.

# mkdir /etc/multipath/conf.d # touch /etc/multipath/conf.d/99-custom-multipath.conf

Add the following content to the file, replacing

<device>with the name of the device to blacklist:blacklist { devnode "<device>" }For example, to blacklist the

/dev/sdbdevice, add the following:blacklist { devnode "sdb" }Restart multipathd.

# systemctl restart multipathd

Verify that your disks no longer have multipath names by using the

lsblkcommand.If multipath names are still present, reboot hosts.

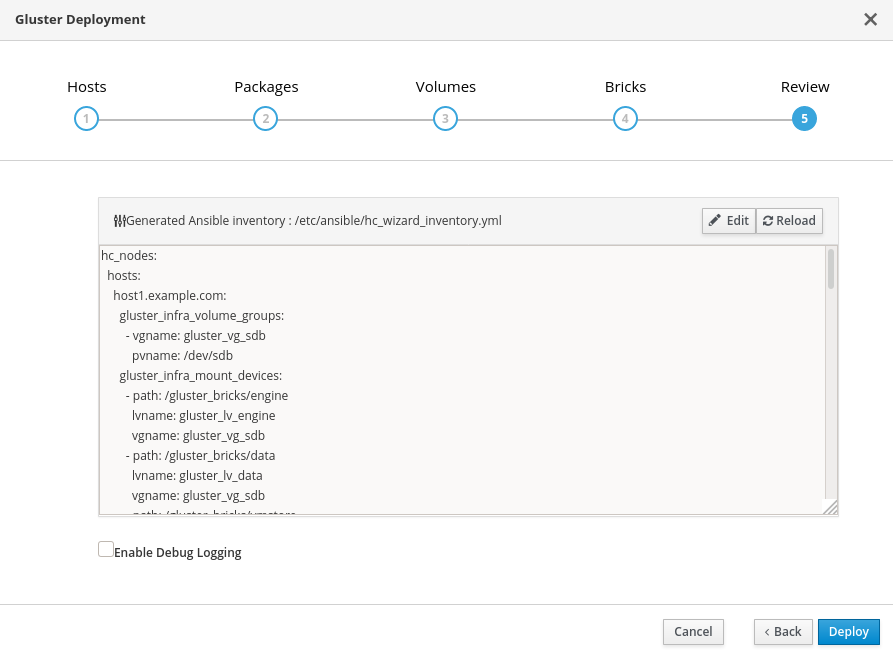

Review and edit configuration

Review the contents of the generated configuration file and click Edit to modify the file, and Save to keep your changes.

Click Deploy when you are satisfied with the configuration file.

Wait for deployment to complete

You can watch the deployment process in the text field.

The window displays Successfully deployed gluster when complete.

Click Continue to Hosted Engine Deployment and continue the deployment process with the instructions in Section 5.2, “Deploy the Hosted Engine on a single node using the Web Console”.

If deployment fails, click Clean up to remove any potentially incorrect changes to the system.

If deployment fails, click the Redeploy button. This returns you to the Review and edit configuration tab so that you can correct any issues in the generated configuration file before reattempting deployment.

5.2. Deploy the Hosted Engine on a single node using the Web Console

This section shows you how to deploy the Hosted Engine on a single node using the Web Console. Following this process results in Red Hat Virtualization Manager running in a virtual machine on your node, and managing that virtual machine. It also configures a Default cluster consisting only of that node, and enables Red Hat Gluster Storage functionality and the virtual-host tuned performance profile for the cluster of one.

Prerequisites

- The RHV-M Appliance is installed during the deployment process; however, if required, you can install it on the deployment host before starting the installation:

# yum install rhvm-appliance

Manually installing the Manager virtual machine is not supported.

- Configure Red Hat Gluster Storage on a single node

Gather the information you need for Hosted Engine deployment

Have the following information ready before you start the deployment process.

- IP address for a pingable gateway to the hyperconverged host

- IP address of the front-end management network

- Fully-qualified domain name (FQDN) for the Hosted Engine virtual machine

- MAC address that resolves to the static FQDN and IP address of the Hosted Engine

Procedure

Open the Hosted Engine Deployment wizard

If you continued directly from the end of Configure Red Hat Gluster Storage on a single node, the wizard is already open.

Otherwise:

- Click Virtualization → Hosted Engine.

- Click Start underneath Hyperconverged.

Click Use existing configuration.

ImportantIf the previous deployment attempt failed, click Clean up instead of Use existing configuration to discard the previous attempt and start from scratch.

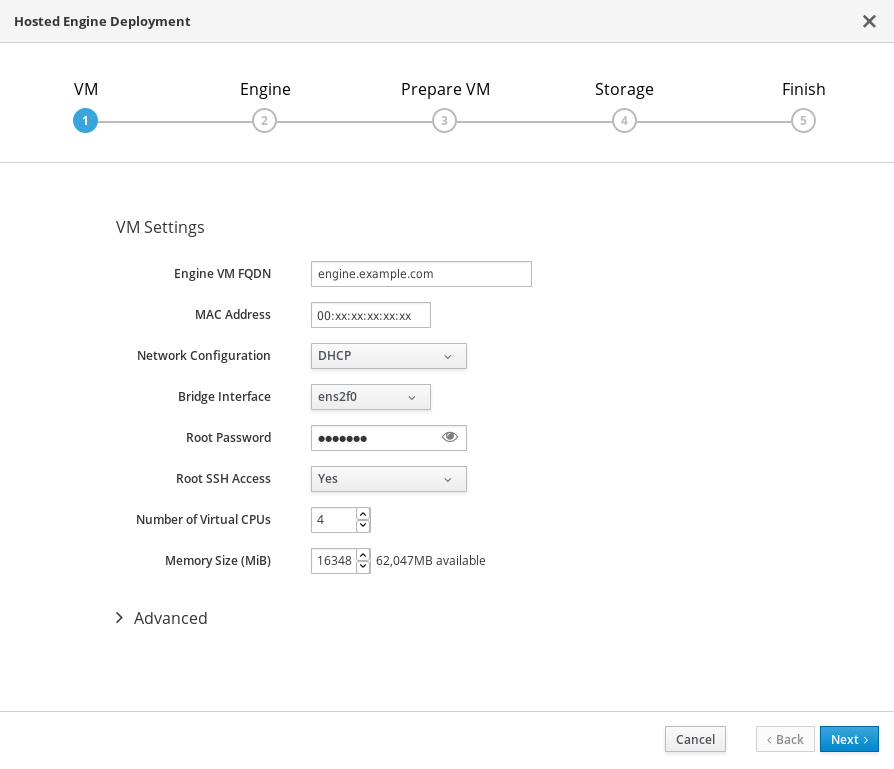

Specify virtual machine details

Enter the following details:

- Engine VM FQDN

-

The fully qualified domain name to be used for the Hosted Engine virtual machine, for example,

engine.example.com. - MAC Address

The MAC address associated with the Engine VM FQDN.

ImportantThe pre-populated MAC address must be replaced.

- Network Configuration

Choose either DHCP or Static from the Network Configuration drop-down list.

- If you choose DHCP, you must have a DHCP reservation for the Hosted Engine virtual machine so that its host name resolves to the address received from DHCP. Specify its MAC address in the MAC Address field.

If you choose Static, enter the following details:

- VM IP Address - The IP address must belong to the same subnet as the host. For example, if the host is in 10.1.1.0/24, the Hosted Engine virtual machine’s IP must be in the same subnet range (10.1.1.1-254/24).

- Gateway Address

- DNS Servers

- Bridge Interface

- Select the Bridge Interface from the drop-down list.

- Root password

- The root password to be used for the Hosted Engine virtual machine.

- Root SSH Access

- Specify whether to allow Root SSH Access.The default value of Root SSH Access is set to Yes.

- Number of Virtual CPUs

- Enter the Number of Virtual CPUs for the virtual machine.

- Memory Size (MiB)

Enter the Memory Size (MiB). The available memory is displayed next to the input field.

NoteRed Hat recommends to retain the values of Root SSH Access, Number of Virtual CPUs and Memory Size to default values.

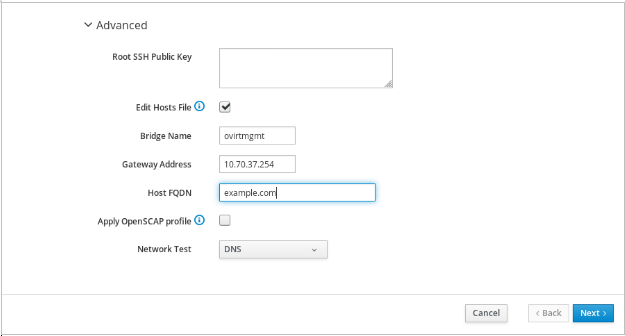

Optionally expand the Advanced fields.

- Root SSH Public Key

- Enter a Root SSH Public Key to use for root access to the Hosted Engine virtual machine.

- Edit Hosts File

- Select or clear the Edit Hosts File check box to specify whether to add entries for the Hosted Engine virtual machine and the base host to the virtual machine’s /etc/hosts file. You must ensure that the host names are resolvable.

- Bridge Name

- Change the management Bridge Name, or accept the default ovirtmgmt.

- Gateway Address

- Enter the Gateway Address for the management bridge.

- Host FQDN

- Enter the Host FQDN of the first host to add to the Manager. This is the front-end FQDN of the base host you are running the deployment on.

- Network Test

-

If you have a static network configuration or are using an isolated environment with addresses defined in

/etc/hosts, set Network Test to Ping.

- Click Next. Your FQDNs are validated before the next screen appears.

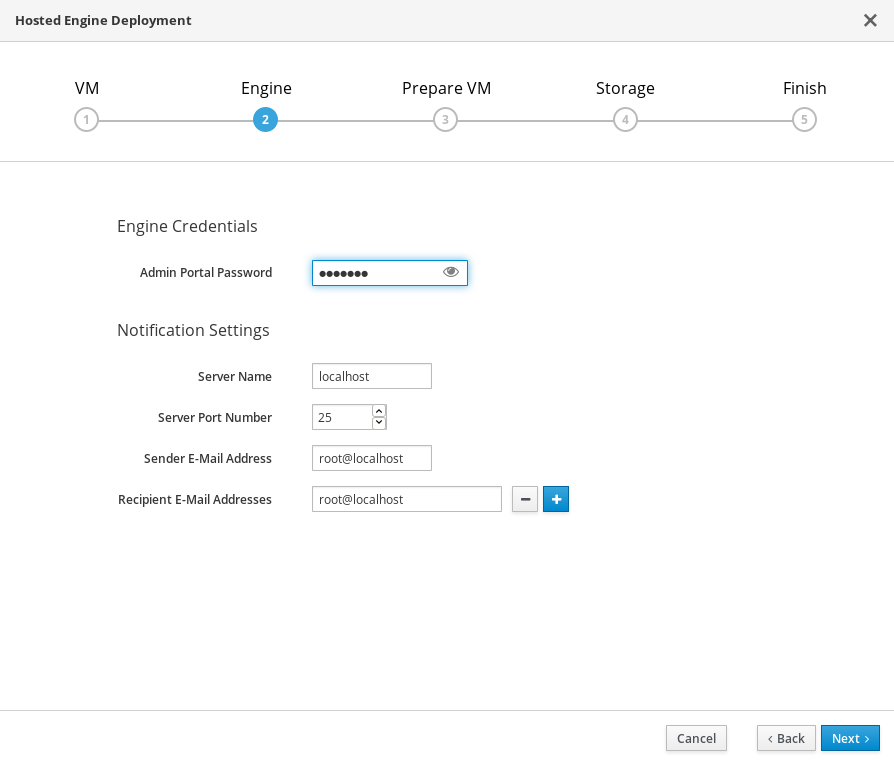

Specify virtualization management details

Enter the password to be used by the

adminaccount in the Administration Portal. You can also specify an email address for notifications, which requires further configuration after deployment; see Chapter 8, Post-deployment configuration suggestions.

- Click Next.

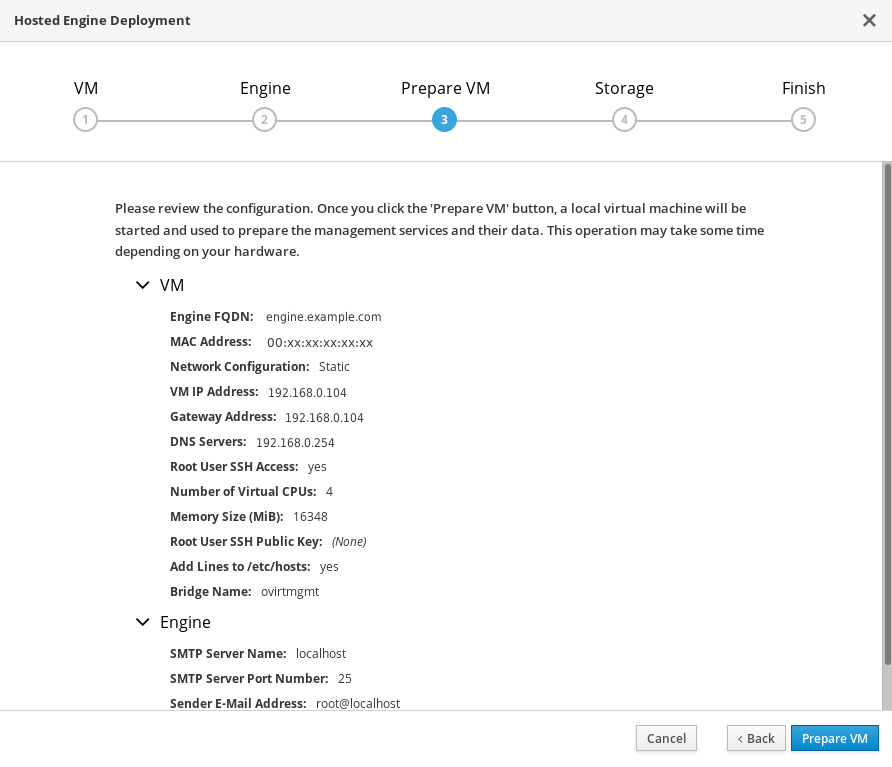

Review virtual machine configuration

Ensure that the details listed on this tab are correct. Click Back to correct any incorrect information.

- Click Prepare VM.

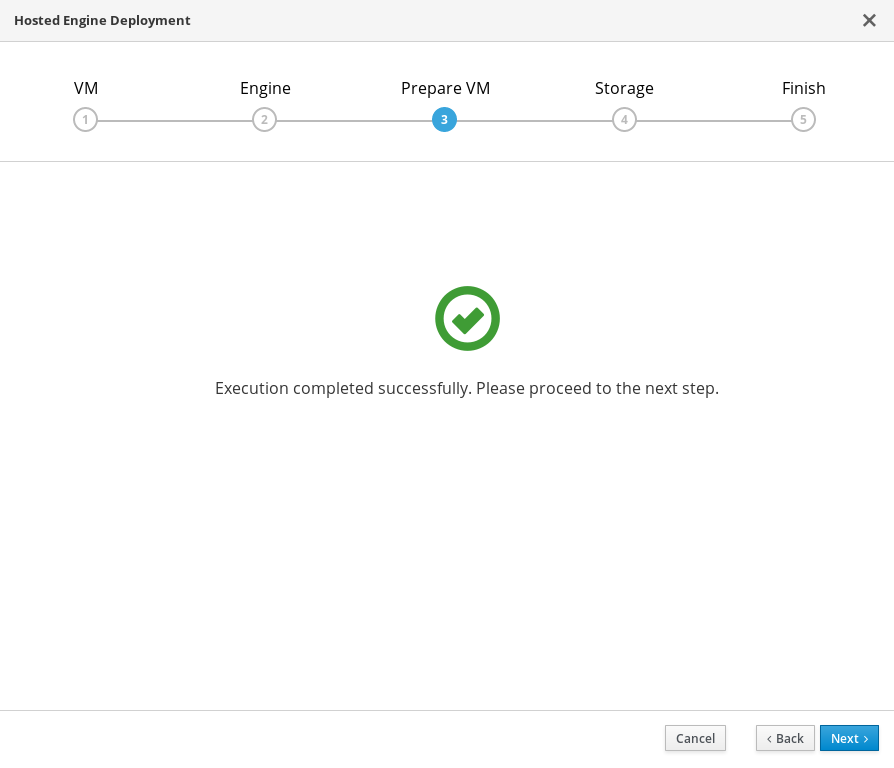

Wait for virtual machine preparation to complete.

If preparation does not occur successfully, see Viewing Hosted Engine deployment errors.

- Click Next.

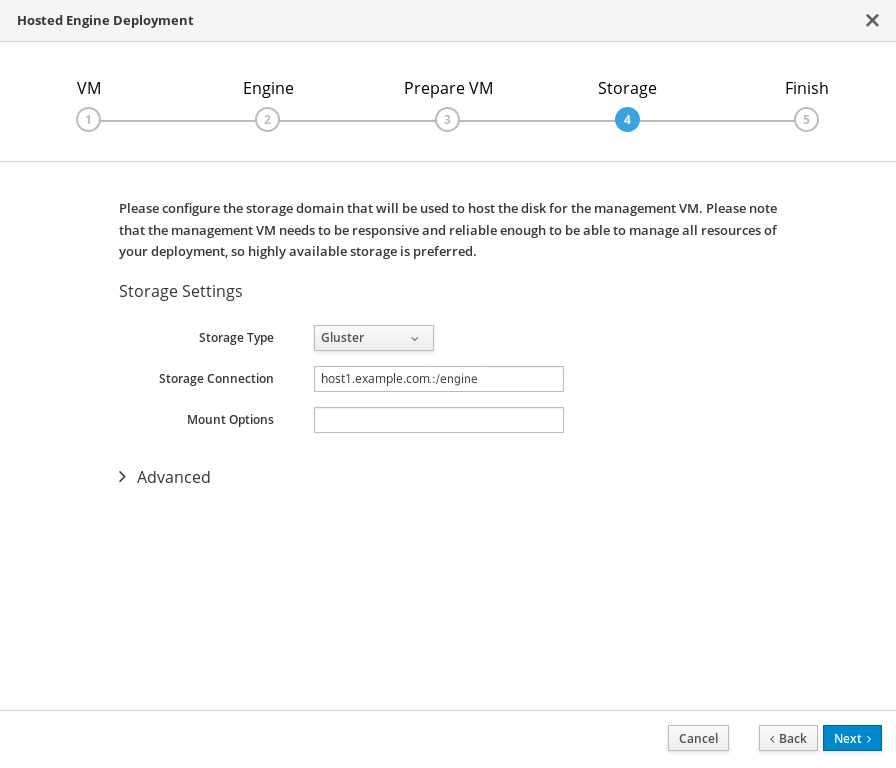

Specify storage for the Hosted Engine virtual machine

Specify the back-end address and location of the

enginevolume.

- Click Next.

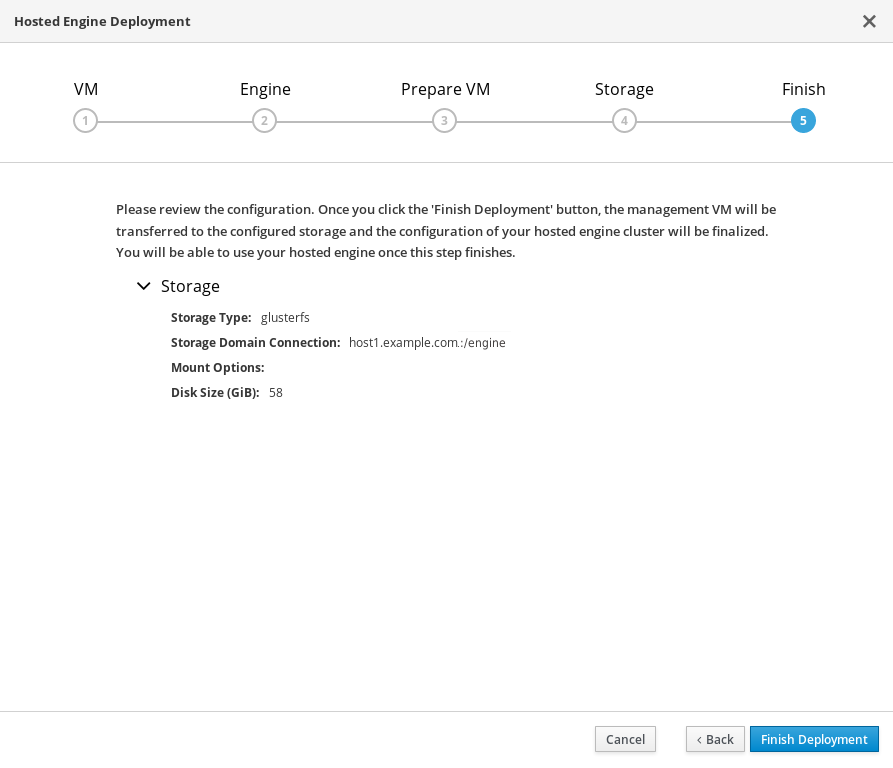

Finalize Hosted Engine deployment

Review your deployment details and verify that they are correct.

NoteThe responses you provided during configuration are saved to an answer file to help you reinstall the hosted engine if necessary. The answer file is created at

/etc/ovirt-hosted-engine/answers.confby default. This file should not be modified manually without assistance from Red Hat Support.

- Click Finish Deployment.

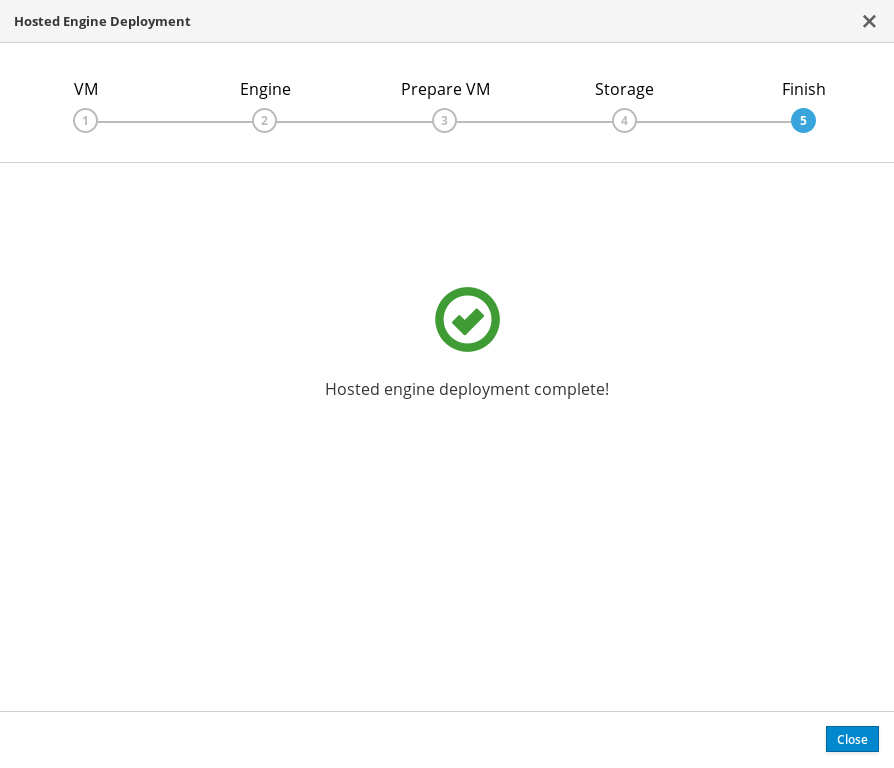

Wait for deployment to complete

This can take some time, depending on your configuration details.

The window displays the following when complete.

Important

ImportantIf deployment does not complete successfully, see Viewing Hosted Engine deployment errors.

Click Close.

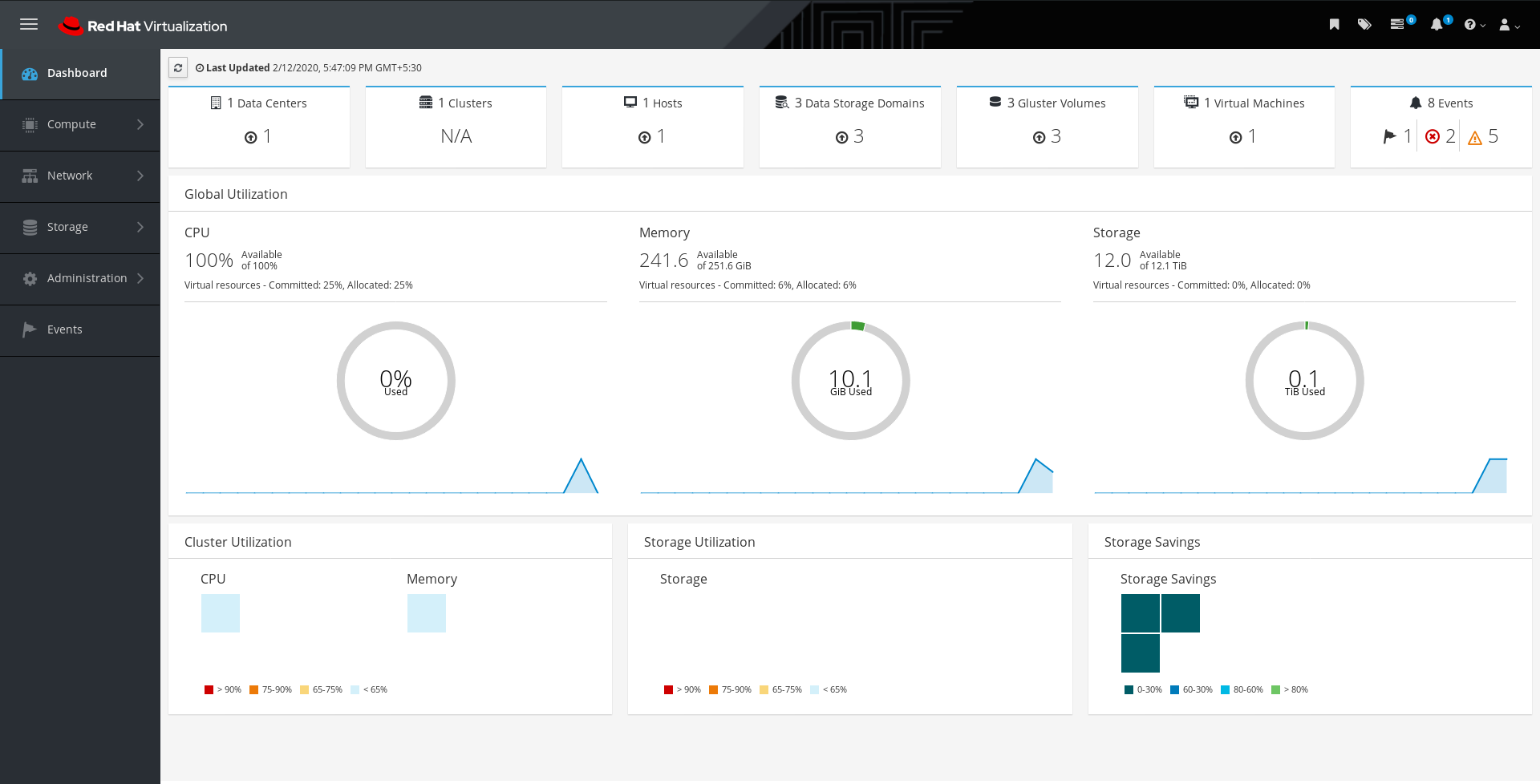

Verify hosted engine deployment

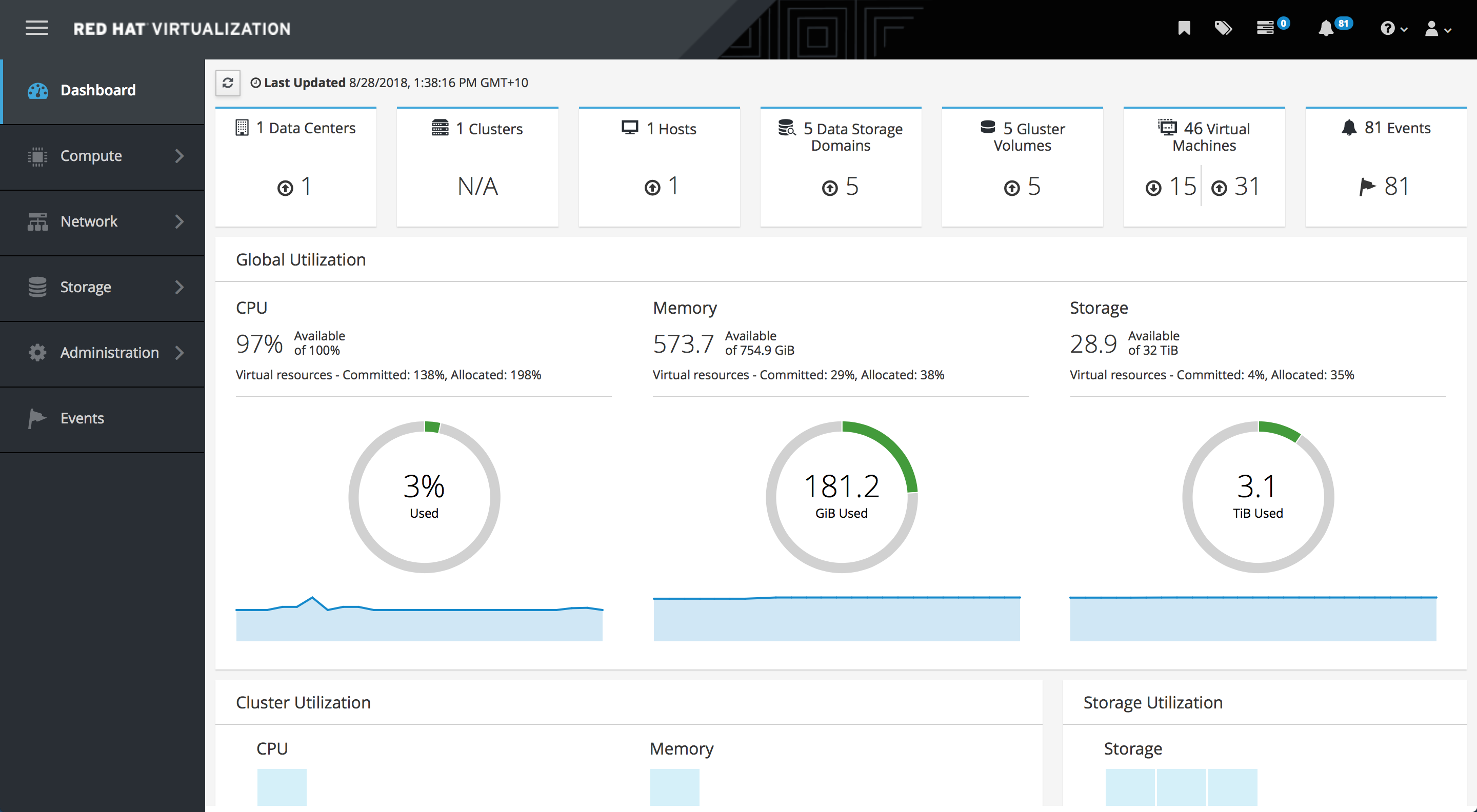

Browse to the Administration Portal (for example, http://engine.example.com/ovirt-engine) and verify that you can log in using the administrative credentials you configured earlier. Click Dashboard and look for your hosts, storage domains, and virtual machines.

Chapter 6. Configure Red Hat Gluster Storage as a Red Hat Virtualization storage domain

The hosted engine storage domain is imported automatically, but other storage domains must be added to be used.

- Click the Storage tab and then click New Domain.

- Select GlusterFS as the Storage Type and provide a Name for the domain.

- Check the Use managed gluster volume option and select the volume to use.

- Click OK to save.

Chapter 7. Verify your deployment

After deployment is complete, verify that your deployment has completed successfully.

Browse to the Administration Portal, for example, http://engine.example.com/ovirt-engine.

Administration Console Login

Log in using the administrative credentials added during hosted engine deployment.

When login is successful, the Dashboard appears.

Administration Console Dashboard

Verify that your cluster is available.

Administration Console Dashboard - Clusters

Verify that one host is available.

- Click Compute → Hosts.

-

Verify that your host is listed with a Status of

Up.

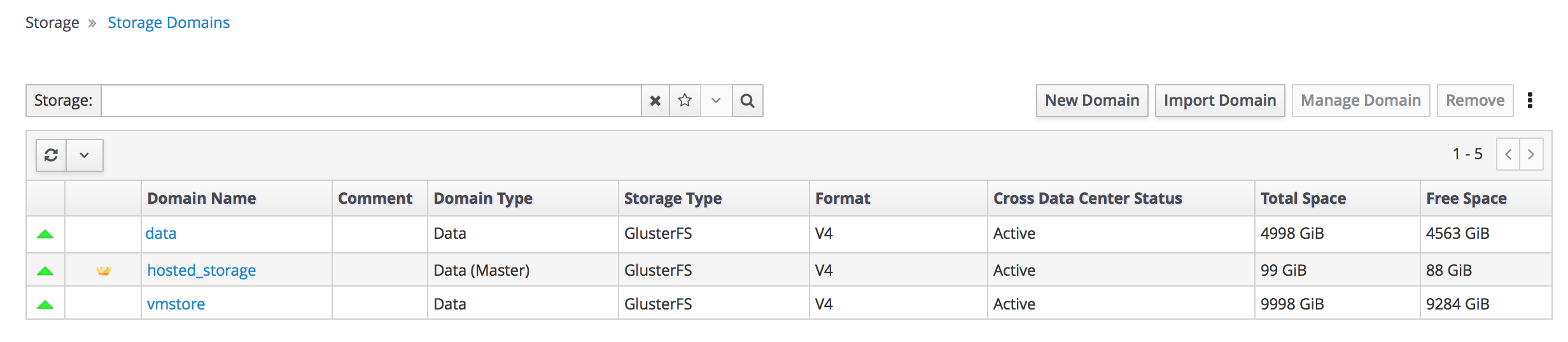

Verify that all storage domains are available.

- Click Storage → Domains.

Verify that the

Activeicon is shown in the first column.Administration Console - Storage Domains

Chapter 8. Post-deployment configuration suggestions

Depending on your requirements, you may want to perform some additional configuration on your newly deployed Red Hat Hyperconverged Infrastructure for Virtualization. This section contains suggested next steps for additional configuration.

Details on these processes are available in Maintaining Red Hat Hyperconverged Infrastructure for Virtualization.

8.1. Configure notifications

See Configuring Event Notifications in the Administration Portal to configure email notifications.

8.2. Configure fencing for high availability

Fencing allows a cluster to enforce performance and availability policies and react to unexpected host failures by automatically rebooting hyperconverged hosts.

See Configure High Availability using fencing policies for further information.

8.3. Configure backup and recovery options

Red Hat recommends configuring at least basic disaster recovery capabilities on all production deployments.

See Configuring backup and recovery options in Maintaining Red Hat Hyperconverged Infrastructure for Virtualization for more information.

Chapter 9. Next steps

- Learn to create and manage Red Hat Gluster Storage using the Administration Portal in Managing Red Hat Gluster Storage using the RHV Administration Portal.

- Learn to create and manage virtual machines in the Red Hat Virtualization Virtual Machine Management Guide.

- Review the RHHI for Virtualization documentation on the Red Hat Customer Portal.

Part I. Reference material

Appendix A. Glossary of terms

A.1. Virtualization terms

- Administration Portal

- A web user interface provided by Red Hat Virtualization Manager, based on the oVirt engine web user interface. It allows administrators to manage and monitor cluster resources like networks, storage domains, and virtual machine templates.

- Hosted Engine

- The instance of Red Hat Virtualization Manager that manages RHHI for Virtualization.

- Hosted Engine virtual machine

- The virtual machine that acts as Red Hat Virtualization Manager. The Hosted Engine virtual machine runs on a virtualization host that is managed by the instance of Red Hat Virtualization Manager that is running on the Hosted Engine virtual machine.

- Manager node

- A virtualization host that runs Red Hat Virtualization Manager directly, rather than running it in a Hosted Engine virtual machine.

- Red Hat Enterprise Linux host

- A physical machine installed with Red Hat Enterprise Linux plus additional packages to provide the same capabilities as a Red Hat Virtualization host. Support for this type of host is limited.

- Red Hat Virtualization

- An operating system and management interface for virtualizing resources, processes, and applications for Linux and Microsoft Windows workloads.

- Red Hat Virtualization host

- A physical machine installed with Red Hat Virtualization that provides the physical resources to support the virtualization of resources, processes, and applications for Linux and Microsoft Windows workloads. This is the only type of host supported with RHHI for Virtualization.

- Red Hat Virtualization Manager

- A server that runs the management and monitoring capabilities of Red Hat Virtualization.

- Self-Hosted Engine node

- A virtualization host that contains the Hosted Engine virtual machine. All hosts in a RHHI for Virtualization deployment are capable of becoming Self-Hosted Engine nodes, but there is only one Self-Hosted Engine node at a time.

- storage domain

- A named collection of images, templates, snapshots, and metadata. A storage domain can be comprised of block devices or file systems. Storage domains are attached to data centers in order to provide access to the collection of images, templates, and so on to hosts in the data center.

- virtualization host

- A physical machine with the ability to virtualize physical resources, processes, and applications for client access.

- VM Portal

- A web user interface provided by Red Hat Virtualization Manager. It allows users to manage and monitor virtual machines.

A.2. Storage terms

- brick

- An exported directory on a server in a trusted storage pool.

- cache logical volume

- A small, fast logical volume used to improve the performance of a large, slow logical volume.

- geo-replication

- One way asynchronous replication of data from a source Gluster volume to a target volume. Geo-replication works across local and wide area networks as well as the Internet. The target volume can be a Gluster volume in a different trusted storage pool, or another type of storage.

- gluster volume

- A logical group of bricks that can be configured to distribute, replicate, or disperse data according to workload requirements.

- logical volume management (LVM)

- A method of combining physical disks into larger virtual partitions. Physical volumes are placed in volume groups to form a pool of storage that can be divided into logical volumes as needed.

- Red Hat Gluster Storage

- An operating system based on Red Hat Enterprise Linux with additional packages that provide support for distributed, software-defined storage.

- source volume

- The Gluster volume that data is being copied from during geo-replication.

- storage host

- A physical machine that provides storage for client access.

- target volume

- The Gluster volume or other storage volume that data is being copied to during geo-replication.

- thin provisioning

- Provisioning storage such that only the space that is required is allocated at creation time, with further space being allocated dynamically according to need over time.

- thick provisioning

- Provisioning storage such that all space is allocated at creation time, regardless of whether that space is required immediately.

- trusted storage pool

- A group of Red Hat Gluster Storage servers that recognise each other as trusted peers.

A.3. Hyperconverged Infrastructure terms

- Red Hat Hyperconverged Infrastructure (RHHI) for Virtualization

- RHHI for Virtualization is a single product that provides both virtual compute and virtual storage resources. Red Hat Virtualization and Red Hat Gluster Storage are installed in a converged configuration, where the services of both products are available on each physical machine in a cluster.

- hyperconverged host

- A physical machine that provides physical storage, which is virtualized and consumed by virtualized processes and applications run on the same host. All hosts installed with RHHI for Virtualization are hyperconverged hosts.

- Web Console

- The web user interface for deploying, managing, and monitoring RHHI for Virtualization. The Web Console is provided by the the Web Console service and plugins for Red Hat Virtualization Manager.