Optimizing RHEL 8 for Real Time for low latency operation

Optimizing the RHEL for Real Time kernel on Red Hat Enterprise Linux

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Real-time kernel tuning in RHEL 8

Latency, or response time, refers to the time between an event and the system's response to it. It is generally measured in microseconds (μs).

For most applications running under a Linux environment, basic performance tuning can improve latency sufficiently. For those industries where latency must be low, accountable, and predictable, Red Hat provides a replacement kernel that can be tuned so that latency meets those requirements. RHEL for Real Time 8 provides seamless integration with RHEL 8 and offers clients the opportunity to measure, configure, and record latency times within their organization.

Use the real-time kernel for well-tuned systems and for applications with extremely high determinism requirements. Kernel system tuning alone can achieve a good improvement in determinism. Before you begin, perform general system tuning of the standard RHEL 8 system, and then deploy the RHEL for Real Time kernel.

Failure to perform these tasks might prevent a RHEL for Real Time deployment from delivering consistent performance.

1.1. Tuning guidelines

Real-time tuning is an iterative process; you will almost never be able to tweak a few variables and know that the change is the best that can be achieved. Be prepared to spend days or weeks narrowing down the set of tuning configurations that work best for your system.

Additionally, always make long test runs. Changing some tuning parameters and then doing a five-minute test run is not a good validation of a particular set of tuning changes. Make the length of your test runs adjustable and run them for longer than a few minutes. You can narrow down to a few different tuning configuration sets with test runs of a few hours, then run those sets for many hours or days at a time to detect corner-cases of highest latency or resource exhaustion.

- Build a measurement mechanism into your application, so that you can accurately gauge how a particular set of tuning changes affects the application’s performance. Anecdotal evidence, for example, "The mouse moves more smoothly", is usually wrong and can vary. Do hard measurements and record them for later analysis.

- It is very tempting to make multiple changes to tuning variables between test runs, but doing so means that you do not have a way to narrow down which tuning parameter affected your test results. Keep the tuning changes between test runs as small as you can.

- It is also tempting to make large changes when tuning, but it is almost always better to make incremental changes. You will find that working your way up from the lowest to highest priority values will yield better results in the long run.

- Use the available tools. The tuna tuning tool makes it easy to change processor affinities for threads and interrupts, change thread priorities, and isolate processors for application use. The taskset and chrt command-line utilities allow you to do most of what tuna does. If you run into performance problems, the ftrace and perf utilities can help locate latency problems.

- Rather than hard-coding values into your application, use external tools to change policy, priority, and affinity. Using external tools allows you to try many different combinations and simplifies your logic. Once you have found some settings that give good results, you can either add them to your application, or set up startup logic to implement the settings when the application starts.
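As an illustration of building measurement into an application, the following is a minimal C sketch, assuming the code path you care about can be bracketed by two clock_gettime() calls on CLOCK_MONOTONIC. The struct and function names (latency_stats, stats_record, stats_measure) are hypothetical, not part of any RHEL API.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <time.h>

/* Running statistics for one measured code path (all values in ns). */
struct latency_stats {
    uint64_t samples;
    int64_t min_ns;
    int64_t max_ns;
    int64_t sum_ns;
};

static int64_t timespec_to_ns(const struct timespec *ts)
{
    return (int64_t)ts->tv_sec * 1000000000LL + ts->tv_nsec;
}

static void stats_init(struct latency_stats *s)
{
    s->samples = 0;
    s->min_ns = INT64_MAX;
    s->max_ns = INT64_MIN;
    s->sum_ns = 0;
}

/* Record the elapsed time between two CLOCK_MONOTONIC timestamps. */
static void stats_record(struct latency_stats *s,
                         const struct timespec *start,
                         const struct timespec *end)
{
    int64_t d = timespec_to_ns(end) - timespec_to_ns(start);

    s->samples++;
    s->sum_ns += d;
    if (d < s->min_ns)
        s->min_ns = d;
    if (d > s->max_ns)
        s->max_ns = d;
}

/* Time one call of `work` (a hypothetical application step) and record it.
 * CLOCK_MONOTONIC is not affected by wall-clock adjustments such as NTP. */
static void stats_measure(struct latency_stats *s, void (*work)(void))
{
    struct timespec start, end;

    clock_gettime(CLOCK_MONOTONIC, &start);
    work();
    clock_gettime(CLOCK_MONOTONIC, &end);
    stats_record(s, &start, &end);
}
```

At the end of a test run, write samples, min_ns, max_ns, and the mean (sum_ns / samples) to a file so that runs with different tuning settings can be compared. The maximum is usually the figure that matters most for real-time work.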

1.2. Balancing logging parameters

The syslog server forwards log messages from programs over a network. The less often this occurs, the larger the pending transaction is likely to be. If the transaction is very large, it can cause an I/O spike. To prevent this, keep the interval reasonably small.

The system logging daemon, syslogd, is used to collect messages from different programs. It also collects information reported by the kernel from the kernel logging daemon, klogd. Typically, syslogd logs to a local file, but it can also be configured to log over a network to a remote logging server.

Procedure

To enable remote logging:

- Configure the machine to which the logs will be sent. For more information, see Remote Syslogging with rsyslog on Red Hat Enterprise Linux.

- Configure each system that will send logs to the remote log server, so that its syslog output is written to the server, rather than to the local file system. To do so, edit the /etc/rsyslog.conf file on each client system. For each of the logging rules defined in that file, replace the local log file with the address of the remote logging server.

      # Log all kernel messages to remote logging host.
      kern.*     @my.remote.logging.server

  The example above configures the client system to log all kernel messages to the remote machine at my.remote.logging.server.

  Alternatively, you can configure syslogd to log all locally generated system messages, by adding the following line to the /etc/rsyslog.conf file:

      # Log all messages to a remote logging server:
      *.*     @my.remote.logging.server

The syslogd daemon does not include built-in rate limiting on its generated network traffic. Therefore, when using RHEL for Real Time systems, Red Hat recommends logging remotely only the messages that your organization requires to be logged remotely, for example, kernel warnings and authentication requests. Log other messages locally.

Additional resources

- syslog(3) man page
- rsyslog.conf(5) man page
- rsyslogd(8) man page

1.3. Improving performance by avoiding running unnecessary applications

Every running application uses system resources. Ensuring that there are no unnecessary applications running on your system can significantly improve performance.

Prerequisites

- You have root permissions on the system.

Procedure

- Do not run the graphical interface where it is not absolutely required, especially on servers.

  Check if the system is configured to boot into the GUI by default:

      # systemctl get-default

  If the output of the command is graphical.target, configure the system to boot to text mode:

      # systemctl set-default multi-user.target

- Unless you are actively using a Mail Transfer Agent (MTA) on the system you are tuning, disable it. If the MTA is required, ensure it is well-tuned or consider moving it to a dedicated machine.

  For more information, refer to the MTA’s documentation.

  Important: MTAs are used to deliver system-generated messages, such as those sent by programs run from cron. This includes reports generated by logging tools like logwatch. You will not be able to receive these messages if the MTAs on your machine are disabled.

- Peripheral devices, such as mice, keyboards, and webcams, send interrupts that may negatively affect latency. If you are not using a graphical interface, remove all unused peripheral devices and disable them.

  For more information, refer to the devices' documentation.

- Check for automated cron jobs that might impact performance.

      # crontab -l

  Disable the crond service or any unneeded cron jobs.

- Check your system for third-party applications and any components added by external hardware vendors, and remove any that are unnecessary.

Additional resources

- cron(8) man page

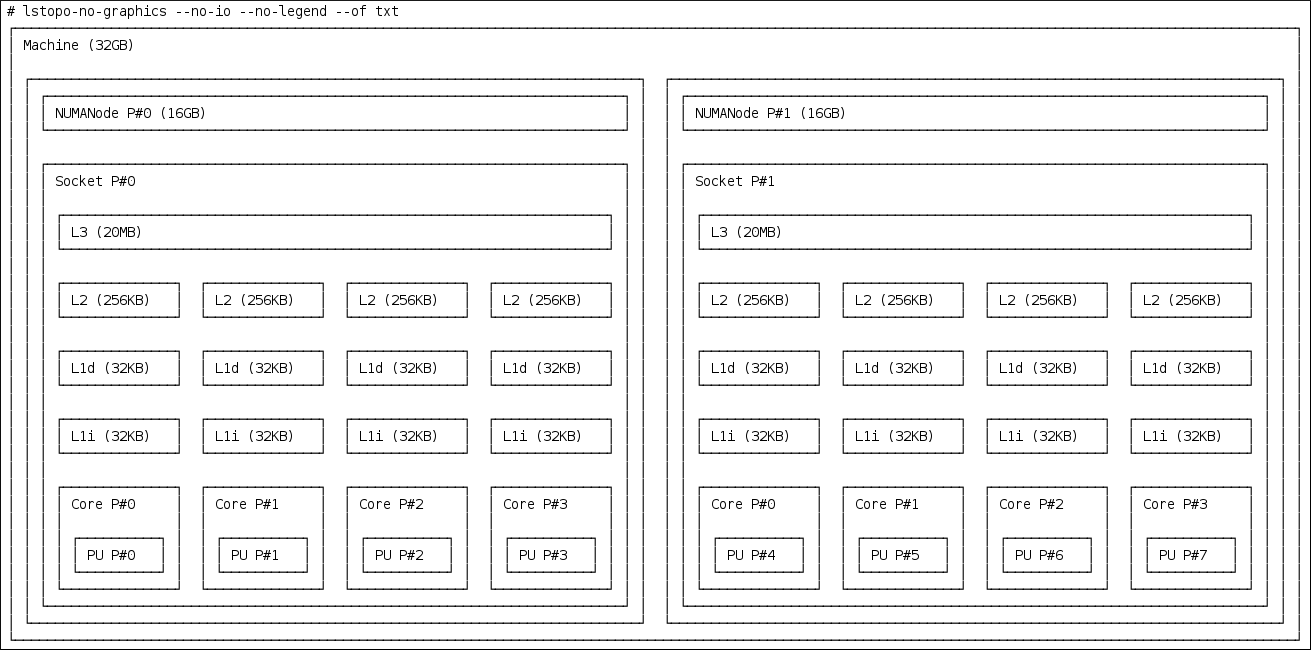

1.4. Non-Uniform Memory Access

The taskset utility only works on CPU affinity and has no knowledge of other NUMA resources such as memory nodes. If you want to perform process binding in conjunction with NUMA, use the numactl command instead of taskset.

For more information about the NUMA API, see Andi Kleen’s whitepaper An NUMA API for Linux.
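When a thread is placed with taskset or numactl, it can be useful to confirm from inside the application which CPU and NUMA node it is actually running on, because memory that the thread touches first is normally allocated on that node. The following is a minimal C sketch; the helper name current_cpu_and_node is hypothetical, and it calls the getcpu system call directly on the assumption that the glibc getcpu() wrapper (added in glibc 2.29) is not available, as on RHEL 8.

```c
#define _GNU_SOURCE
#include <stddef.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Report the CPU and NUMA node the calling thread is running on right now.
 * The raw system call is used because the glibc getcpu() wrapper only
 * exists from glibc 2.29 onward. Returns 0 on success, -1 on failure. */
static int current_cpu_and_node(unsigned int *cpu, unsigned int *node)
{
    return (int)syscall(SYS_getcpu, cpu, node, NULL);
}
```

The result is only a snapshot: unless the thread is pinned, the scheduler may move it to another CPU, and possibly another node, immediately afterwards.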

Additional resources

- numactl(8) man page

1.5. Ensuring that debugfs is mounted

The debugfs file system is specially designed for debugging and making information available to users. It is mounted automatically in RHEL 8 in the /sys/kernel/debug/ directory.

The ftrace and trace-cmd tools use the debugfs file system.

Procedure

To verify that debugfs is mounted, run the following command:

    # mount | grep ^debugfs
    debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime,seclabel)

If debugfs is mounted, the command displays the mount point and properties for debugfs. If debugfs is not mounted, the command returns nothing.
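A tool that depends on debugfs can perform the same check programmatically before it starts. The following C sketch reads a mount table with the glibc getmntent() interface; the function name debugfs_mounted is hypothetical, and passing /proc/self/mounts as the table is an assumption that matches what the mount command reports.

```c
#include <mntent.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Return true if the mount table at `table` (normally /proc/self/mounts)
 * lists a file system of type "debugfs". When `dir` is non-NULL, the mount
 * point is copied into it. Equivalent to `mount | grep ^debugfs`. */
static bool debugfs_mounted(const char *table, char *dir, size_t dirlen)
{
    FILE *f = setmntent(table, "r");
    struct mntent *m;
    bool found = false;

    if (f == NULL)
        return false;
    while ((m = getmntent(f)) != NULL) {
        if (strcmp(m->mnt_type, "debugfs") == 0) {
            if (dir != NULL && dirlen > 0)
                snprintf(dir, dirlen, "%s", m->mnt_dir);
            found = true;
            break;
        }
    }
    endmntent(f);
    return found;
}
```

For example, debugfs_mounted("/proc/self/mounts", buf, sizeof buf) on a default RHEL 8 system is expected to return true and store /sys/kernel/debug in buf.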

1.6. InfiniBand in RHEL for Real Time

InfiniBand is a type of communications architecture often used to increase bandwidth, improve quality of service (QOS), and provide for failover. It can also be used to improve latency by using the Remote Direct Memory Access (RDMA) mechanism.

The support for InfiniBand on RHEL for Real Time is the same as the support available on Red Hat Enterprise Linux 8. For more information, see Configuring InfiniBand and RDMA networks.

1.7. Using RoCEE and High-Performance Networking

RoCEE (RDMA over Converged Enhanced Ethernet) is a protocol that implements Remote Direct Memory Access (RDMA) over Ethernet networks. It allows you to maintain a consistent, high-speed environment in your data centers, while providing deterministic, low latency data transport for critical transactions.

High Performance Networking (HPN) is a set of shared libraries that provides RoCEE interfaces into the kernel. Instead of going through an independent network infrastructure, HPN places data directly into remote system memory using standard Ethernet infrastructure, resulting in less CPU overhead and reduced infrastructure costs.

Support for RoCEE and HPN under RHEL for Real Time does not differ from the support offered under RHEL 8.

Additional resources

1.8. Tuning containers for RHEL for real-time

The main RHEL kernels enable the real time group scheduling feature, CONFIG_RT_GROUP_SCHED, by default. However, for real-time kernels, this feature is disabled.

The CONFIG_RT_GROUP_SCHED feature was developed independently of the PREEMPT_RT patch set used in the kernel-rt package and is intended to operate on real-time processes on the main RHEL kernel. Because the CONFIG_RT_GROUP_SCHED feature might cause latency spikes, it is disabled on PREEMPT_RT-enabled kernels. Therefore, when testing your workload in a container running on the main RHEL kernel, you must allocate some real-time bandwidth to the container to be able to run SCHED_FIFO or SCHED_RR tasks inside it.

Procedure

- Configure the following global setting before using podman’s --cpu-rt-runtime command line option:

      # echo 950000 > /sys/fs/cgroup/cpu,cpuacct/machine.slice/cpu.rt_runtime_us

- For CPU isolation, use the existing recommendations for setting aside a set of cores for the RT workload.

- Run podman run --cpuset-cpus with the list of isolated CPU cores to be used.

- Specify the Non-Uniform Memory Access (NUMA) memory nodes to use:

      podman run --cpuset-mems=memory-nodes

  where memory-nodes is the list of NUMA memory nodes. This avoids cross-NUMA node memory access.

- To verify that the minimal amount of memory required by the real-time workload running on the container is available at container start time, use the podman run --memory-reservation=limit command.
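An application running in such a container may want to check that it received the isolated cores it expects. The following C sketch assumes the usual Linux CPU list syntax, comma-separated CPU numbers and ranges such as 2-5,8, which is the form passed to --cpuset-cpus and shown in /sys/devices/system/cpu/isolated. The function name parse_cpulist is hypothetical; the resulting cpu_set_t can be compared with the mask returned by sched_getaffinity().

```c
#define _GNU_SOURCE
#include <sched.h>
#include <stdlib.h>

/* Parse a CPU list such as "2-5,8" into a cpu_set_t.
 * Returns 0 on success, -1 on malformed input or out-of-range CPUs. */
static int parse_cpulist(const char *list, cpu_set_t *set)
{
    const char *p = list;

    CPU_ZERO(set);
    while (*p != '\0') {
        char *end;
        long first = strtol(p, &end, 10);
        long last = first;

        if (end == p || first < 0)
            return -1;
        if (*end == '-') {              /* a range: first-last */
            p = end + 1;
            last = strtol(p, &end, 10);
            if (end == p || last < first)
                return -1;
        }
        if (last >= CPU_SETSIZE)
            return -1;
        for (long cpu = first; cpu <= last; cpu++)
            CPU_SET(cpu, set);
        if (*end == ',')
            end++;
        else if (*end != '\0')
            return -1;
        p = end;
    }
    return 0;
}
```

Usage example: after parse_cpulist("2-3", &expected), CPU_EQUAL(&expected, &actual) tells whether the container's affinity mask matches the isolated cores.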

Additional resources

- podman-run(1) man page

Chapter 2. Scheduling policies for RHEL for Real Time

On a real-time system, the scheduler is the kernel component that determines which runnable thread to run. Each thread has an associated scheduling policy and a static scheduling priority, known as sched_priority. The scheduling is preemptive, so the currently running thread is preempted when a thread with a higher static priority becomes ready to run. The preempted thread then returns to the wait list for its static priority.

All Linux threads have one of the following scheduling policies:

- SCHED_OTHER or SCHED_NORMAL: the default policy.
- SCHED_BATCH: similar to SCHED_OTHER, but intended for batch-style, non-interactive workloads.
- SCHED_IDLE: a policy with lower priority than SCHED_OTHER.
- SCHED_FIFO: the first-in, first-out real-time policy.
- SCHED_RR: the round-robin real-time policy.
- SCHED_DEADLINE: a policy that prioritizes tasks according to their job deadlines. The job with the earliest absolute deadline runs first.

2.1. Scheduler policies

Real-time threads have higher priority than standard threads. The real-time policies have scheduling priority values that range from the minimum value of 1 to the maximum value of 99.

The following policies are critical to real-time:

SCHED_OTHER or SCHED_NORMAL policy

This is the default scheduling policy for Linux threads. It has a dynamic priority that is changed by the system based on the characteristics of the thread. SCHED_OTHER threads have nice values between -20, which is the highest priority, and 19, which is the lowest priority. The default nice value for SCHED_OTHER threads is 0.

SCHED_FIFO policy

Threads with SCHED_FIFO run with higher priority than SCHED_OTHER tasks. Instead of using nice values, SCHED_FIFO uses a fixed priority between 1, which is the lowest, and 99, which is the highest. A SCHED_FIFO thread with a priority of 1 is always scheduled ahead of any SCHED_OTHER thread.

SCHED_RR policy

The SCHED_RR policy is similar to the SCHED_FIFO policy. Threads of equal priority are scheduled in a round-robin fashion, each running for a time slice before yielding to the next.

SCHED_FIFO and SCHED_RR threads run until one of the following events occurs:

- The thread goes to sleep or waits for an event.
- A higher-priority real-time thread gets ready to run.

Unless one of the above events occurs, the threads run indefinitely on the specified processor, while the lower-priority threads remain in the queue waiting to run. This might starve system service threads, preventing memory from being swapped out and file system data from being flushed.

SCHED_DEADLINE policy

The SCHED_DEADLINE policy specifies the timing requirements. It schedules each task according to the task’s deadline: the task with the earliest deadline runs first (earliest deadline first, EDF). The kernel requires runtime ≤ deadline ≤ period to be true.
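From the shell, chrt sets these policies; from inside a program, the equivalent call is sched_setscheduler(2). The following C sketch switches a thread to SCHED_FIFO and queries the valid priority range instead of hard-coding it. The helper name set_fifo is hypothetical. The call needs root privileges (CAP_SYS_NICE) or a sufficient RLIMIT_RTPRIO limit; for threads created with pthreads, pthread_setschedparam() is the per-thread equivalent.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <sched.h>
#include <sys/types.h>

/* Switch `pid` (0 means the calling thread) to SCHED_FIFO at priority
 * `prio`. The range is queried at run time; on Linux it is 1 to 99.
 * Returns 0 on success, or -1 with errno set. */
static int set_fifo(pid_t pid, int prio)
{
    struct sched_param sp = { .sched_priority = prio };
    int min = sched_get_priority_min(SCHED_FIFO);
    int max = sched_get_priority_max(SCHED_FIFO);

    if (prio < min || prio > max) {
        errno = EINVAL;
        return -1;
    }
    return sched_setscheduler(pid, SCHED_FIFO, &sp);
}
```

Starting with a low priority such as set_fifo(0, 1) and raising it only when measurements justify it follows the incremental approach recommended in the tuning guidelines.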

2.2. Parameters for SCHED_DEADLINE policy

Each SCHED_DEADLINE task is characterized by the period, runtime, and deadline parameters. The values for these parameters are integers expressed in nanoseconds.

Table 2.1. SCHED_DEADLINE parameters

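| Parameter | Description |
|---|---|
| period | The activation pattern of the real-time task. For example, if a video processing task has 60 frames per second to process, a new frame is queued for service every 16 milliseconds. Therefore, the period is 16 milliseconds. |
| runtime | The amount of CPU execution time allotted to the task to produce an output. In real-time, the worst-case execution time (WCET) is used as the runtime. For example, if a video processing tool can take, in the worst case, five milliseconds to process an image, the runtime is five milliseconds. |
| deadline | The maximum time for the output to be produced. For example, if a task needs to deliver the processed frame within ten milliseconds, the deadline is ten milliseconds. |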
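The three parameters map onto the sched_attr structure passed to the sched_setattr(2) system call. The following C sketch assumes an x86_64 or aarch64 RHEL 8 system, where glibc provides no sched_setattr() wrapper, so it declares the kernel's struct sched_attr itself and calls syscall(). The helper names dl_params_valid and set_deadline are hypothetical; using the video example, runtime 5 ms, deadline 10 ms, and period 16 ms satisfy runtime ≤ deadline ≤ period.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <stdbool.h>
#include <stdint.h>
#include <sys/syscall.h>
#include <unistd.h>

#ifndef SCHED_DEADLINE
#define SCHED_DEADLINE 6   /* value from the kernel UAPI headers */
#endif

/* Kernel layout of struct sched_attr, as documented in sched_setattr(2). */
struct sched_attr {
    uint32_t size;
    uint32_t sched_policy;
    uint64_t sched_flags;
    int32_t sched_nice;
    uint32_t sched_priority;
    uint64_t sched_runtime;    /* nanoseconds */
    uint64_t sched_deadline;   /* nanoseconds */
    uint64_t sched_period;     /* nanoseconds */
};

/* The ordering the kernel insists on: runtime <= deadline <= period. */
static bool dl_params_valid(uint64_t runtime, uint64_t deadline, uint64_t period)
{
    return runtime > 0 && runtime <= deadline && deadline <= period;
}

/* Turn the calling thread into a SCHED_DEADLINE task. Parameters that break
 * the ordering are rejected locally with EINVAL; otherwise the kernel does
 * its own checks and admission control, which require root privileges. */
static int set_deadline(uint64_t runtime, uint64_t deadline, uint64_t period)
{
    struct sched_attr attr = {
        .size = sizeof(attr),
        .sched_policy = SCHED_DEADLINE,
        .sched_runtime = runtime,
        .sched_deadline = deadline,
        .sched_period = period,
    };

    if (!dl_params_valid(runtime, deadline, period)) {
        errno = EINVAL;
        return -1;
    }
    return (int)syscall(SYS_sched_setattr, 0, &attr, 0);
}
```

For the video example, set_deadline(5000000, 10000000, 16000000) would be called once at thread start, before entering the periodic loop.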

Chapter 3. Setting persistent kernel tuning parameters

When you have decided on a tuning configuration that works for your system, you can make the changes persistent across reboots.

By default, edited kernel tuning parameters only remain in effect until the system reboots or the parameters are explicitly changed. This is effective for establishing the initial tuning configuration. It also provides a safety mechanism. If the edited parameters cause the machine to behave erratically, rebooting the machine returns the parameters to the previous configuration.

3.1. Making persistent kernel tuning parameter changes

You can make persistent changes to kernel tuning parameters by adding the parameter to the /etc/sysctl.conf file.

This procedure does not change any of the kernel tuning parameters in the current session. The changes entered into /etc/sysctl.conf only affect future sessions.

Prerequisites

- You have root permissions on the system.

Procedure

- Open /etc/sysctl.conf in a text editor.

- Insert the new entry into the file with the parameter’s value.

  Modify the parameter name by removing the /proc/sys/ path, changing the remaining slashes (/) to periods (.), and including the parameter’s value.

  For example, to make the command echo 0 > /proc/sys/kernel/hung_task_panic persistent, enter the following into /etc/sysctl.conf:

      # Do not panic when a hung task is detected
      kernel.hung_task_panic = 0

- Save and close the file.

- Reboot the system for changes to take effect.

Verification

To verify the configuration:

    # cat /proc/sys/kernel/hung_task_panic
    0
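The renaming rule in the procedure is mechanical, so tooling that generates /etc/sysctl.conf entries can apply it automatically. The following C sketch implements exactly that rule: strip the /proc/sys/ prefix and turn the remaining slashes into periods. The function name proc_path_to_sysctl_key is hypothetical.

```c
#include <string.h>

/* Convert a /proc/sys path into its /etc/sysctl.conf key, for example
 * "/proc/sys/kernel/hung_task_panic" -> "kernel.hung_task_panic".
 * Returns 0 on success, -1 if the path is not under /proc/sys/ or the
 * output buffer is too small. */
static int proc_path_to_sysctl_key(const char *path, char *key, size_t keylen)
{
    static const char prefix[] = "/proc/sys/";
    size_t plen = sizeof(prefix) - 1;
    size_t i;

    if (strncmp(path, prefix, plen) != 0)
        return -1;
    path += plen;
    if (strlen(path) + 1 > keylen)
        return -1;
    for (i = 0; path[i] != '\0'; i++)
        key[i] = (path[i] == '/') ? '.' : path[i];
    key[i] = '\0';
    return 0;
}
```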

Chapter 4. Application tuning and deployment

Tuning a real-time kernel with a combination of optimal configurations and settings can help you enhance and develop RHEL for Real Time applications.

In general, try to use POSIX defined APIs (application programming interfaces). RHEL for Real Time is compliant with POSIX standards. Latency reduction in RHEL for Real Time kernel is also based on POSIX.

4.1. Signal processing in real-time applications

Traditional UNIX and POSIX signals have their uses, especially for error handling, but they are not suitable as an event delivery mechanism in real-time applications. This is because the current Linux kernel signal handling code is quite complex, mainly due to legacy behavior and the many APIs that need to be supported. This complexity means that the code paths that are taken when delivering a signal are not always optimal, and long latencies can be experienced by applications.

The original motivation behind UNIX signals was to multiplex one thread of control (the process) between different "threads" of execution. Signals behave somewhat like operating system interrupts. That is, when a signal is delivered to an application, the application’s context is saved and it starts executing a previously registered signal handler. Once the signal handler completes, the application returns to executing where it was when the signal was delivered. This can get complicated in practice.

Signals are too non-deterministic to trust in a real-time application. A better option is to use POSIX Threads (pthreads) to distribute your workload and communicate between various components. You can coordinate groups of threads using the pthreads mechanisms of mutexes, condition variables, and barriers. The code paths through these relatively new constructs are much cleaner than the legacy handling code for signals.
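As an illustration of the pthreads alternative, the following C sketch hands an event from one thread to another with a mutex and a condition variable instead of a signal. It is a minimal one-slot channel under stated assumptions: a second post before the consumer wakes overwrites the first value, and the mutex is configured with priority inheritance (PTHREAD_PRIO_INHERIT) so that a low-priority holder is boosted while a high-priority thread waits for it. The type and function names are hypothetical. Build with -pthread.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* A one-slot event channel used instead of a signal. */
struct event_chan {
    pthread_mutex_t lock;
    pthread_cond_t ready;
    bool pending;
    int value;
};

/* Returns 0 or an error number from the pthread calls. */
static int event_chan_init(struct event_chan *c)
{
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);

    if (rc != 0)
        return rc;
    rc = pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
    if (rc == 0)
        rc = pthread_mutex_init(&c->lock, &attr);
    pthread_mutexattr_destroy(&attr);
    if (rc != 0)
        return rc;
    rc = pthread_cond_init(&c->ready, NULL);
    if (rc != 0) {
        pthread_mutex_destroy(&c->lock);
        return rc;
    }
    c->pending = false;
    c->value = 0;
    return 0;
}

/* Producer side: store the event and wake one waiting thread. */
static void event_post(struct event_chan *c, int value)
{
    pthread_mutex_lock(&c->lock);
    c->value = value;
    c->pending = true;
    pthread_cond_signal(&c->ready);
    pthread_mutex_unlock(&c->lock);
}

/* Consumer side: block until an event is pending, then consume it. The loop
 * re-checks the flag because pthread_cond_wait() may wake spuriously. */
static int event_wait(struct event_chan *c)
{
    int value;

    pthread_mutex_lock(&c->lock);
    while (!c->pending)
        pthread_cond_wait(&c->ready, &c->lock);
    c->pending = false;
    value = c->value;
    pthread_mutex_unlock(&c->lock);
    return value;
}
```

In a real application, one thread calls event_wait() in its processing loop and another calls event_post() whenever work arrives, replacing the signal handler and its saved-context round trip.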

Additional resources

4.2. Synchronizing threads

The sched_yield() function is a synchronization mechanism that can give other threads of the same priority a chance to run. This type of request is prone to failure when issued from within a poorly written application.

A higher priority thread can call sched_yield() to allow other threads a chance to run. The calling process gets moved to the tail of the queue of processes running at that priority. When this occurs in a situation where there are no other processes running at the same priority, the calling process continues running. If the priority of that process is high, it can potentially create a busy loop, rendering the machine unusable.

When a SCHED_DEADLINE task calls sched_yield(), it gives up the configured CPU, and the remaining runtime is immediately throttled until the next period. The sched_yield() behavior allows the task to wake up at the start of the next period.

The scheduler is better able to determine when, and if, there actually are other threads waiting to run. Avoid using sched_yield() on any real-time task.

Procedure

To call the sched_yield() function from a SCHED_DEADLINE task, use a loop such as the following:

    #include <sched.h>

    void do_the_computation(void);  /* one job of the periodic task */

    void periodic_loop(void)
    {
        for (;;) {
            do_the_computation();
            /*
             * Notify the scheduler of the end of the computation.
             * This system call blocks until the next replenishment.
             */
            sched_yield();
        }
    }

The SCHED_DEADLINE task gets throttled by the constant bandwidth server (CBS) algorithm until the next period (the start of the next execution of the loop).

Additional resources

- pthread.h(0p) man page
- sched_yield(2) man page
- sched_yield(3p) man page

4.3. Real-time scheduler priorities

You can use systemd to set the real-time priority for services launched during the boot process. Some kernel threads can be given a very high priority. This allows the default priorities to integrate well with the requirements of the Real Time Specification for Java (RTSJ). RTSJ requires a range of priorities from 10 to 89.

For deployments where RTSJ is not in use, there is a wide range of scheduling priorities below 90 that applications can use. Use extreme caution when scheduling any application thread above priority 49, because it can prevent essential system services from running. This can result in unpredictable behavior, including blocked network traffic, blocked virtual memory paging, and data corruption due to blocked file system journaling.

If any application threads are scheduled above priority 89, ensure that the threads run only a very short code path. Failure to do so would undermine the low latency capabilities of the RHEL for Real Time kernel.

Setting real-time priority for users without mandatory privileges

By default, only users with root permissions can change priority and scheduling information. To grant these privileges to other users, the preferred method is to add the user to the realtime group.

You can also change user privileges by editing the /etc/security/limits.conf file. However, this can result in duplication and render the system unusable for regular users. If you decide to edit this file, exercise caution and always create a backup copy before making changes.
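The rtprio item in /etc/security/limits.conf is applied by pam_limits as the RLIMIT_RTPRIO resource limit, which caps the real-time priority an unprivileged process can request. The following C sketch lets an application read that cap at startup and choose a priority it is actually allowed to use; the function name max_unprivileged_rtprio is hypothetical. Processes with CAP_SYS_NICE, such as those run by root, are not bound by this limit.

```c
#include <sched.h>
#include <sys/resource.h>

/* Return the highest SCHED_FIFO/SCHED_RR priority this process may set
 * without CAP_SYS_NICE, according to RLIMIT_RTPRIO, clamped to the policy
 * maximum. Returns -1 if the limit cannot be read. */
static int max_unprivileged_rtprio(void)
{
    struct rlimit rl;
    int max = sched_get_priority_max(SCHED_FIFO);

    if (max < 0 || getrlimit(RLIMIT_RTPRIO, &rl) != 0)
        return -1;
    if (rl.rlim_cur == RLIM_INFINITY || rl.rlim_cur > (rlim_t)max)
        return max;
    return (int)rl.rlim_cur;
}
```

A result of 0 means the user has not been granted any real-time priority and calls that request SCHED_FIFO or SCHED_RR will fail with EPERM.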

4.4. Loading dynamic libraries

When developing a real-time application, consider resolving symbols at startup to avoid non-deterministic latencies during program execution. Resolving symbols at startup can slow down program initialization. You can instruct the dynamic linker/loader, ld.so, to resolve all symbols at application startup by setting the LD_BIND_NOW environment variable.

For example, the following shell script exports the LD_BIND_NOW variable with a value of 1, then runs a program with a scheduler policy of FIFO and a priority of 1.

    #!/bin/sh

    LD_BIND_NOW=1
    export LD_BIND_NOW

    chrt --fifo 1 /opt/myapp/myapp-server &
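The script relies on LD_BIND_NOW being present in the environment. A program can check at startup whether it was launched that way, for example to log a warning if it was not. The following C sketch is a minimal, hypothetical helper; per ld.so(8), any nonempty value requests immediate binding. Linking the application with -Wl,-z,now records the same request in the executable itself, which this check does not detect.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdbool.h>
#include <stdlib.h>

/* True if the process was started with LD_BIND_NOW set to a nonempty
 * string, which makes ld.so resolve all symbols before main() runs. */
static bool bind_now_requested(void)
{
    const char *v = getenv("LD_BIND_NOW");

    return v != NULL && v[0] != '\0';
}
```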

Additional resources

- ld.so(8) man page

Chapter 5. Setting BIOS parameters for system tuning

The BIOS plays a key role in the functioning of the system. By configuring the BIOS parameters correctly you can significantly improve the system performance.

Every system and BIOS vendor uses different terms and navigation methods. For more information about BIOS settings, see the BIOS documentation or contact the BIOS vendor.

5.1. Disabling power management to improve response times

BIOS power management options help save power by changing the system clock frequency or by putting the CPU into one of various sleep states. These actions are likely to affect how quickly the system responds to external events.

To improve response times, disable all power management options in the BIOS.

5.2. Improving response times by disabling error detection and correction units

Error Detection and Correction (EDAC) units are devices for detecting and correcting errors signaled from Error Correcting Code (ECC) memory. Usually EDAC options range from no ECC checking to a periodic scan of all memory nodes for errors. The higher the EDAC level, the more time the BIOS uses. This may result in missing crucial event deadlines.

To improve response times, turn off EDAC. If this is not possible, configure EDAC to the lowest functional level.

5.3. Improving response time by configuring System Management Interrupts

System Management Interrupts (SMIs) are a hardware vendor facility to ensure that the system is operating correctly. The BIOS code usually services the SMI interrupt. SMIs are typically used for thermal management, remote console management (IPMI), EDAC checks, and various other housekeeping tasks.

If the BIOS contains SMI options, check with the vendor and any relevant documentation to determine the extent to which it is safe to disable them.

While it is possible to completely disable SMIs, Red Hat strongly recommends that you do not do this. Removing the ability of your system to generate and service SMIs can result in catastrophic hardware failure.

Chapter 6. Running and interpreting hardware and firmware latency tests

With the hwlatdetect program, you can test and verify whether a potential hardware platform is suitable for real-time operations.

Prerequisites

- Ensure that the RHEL-RT (RHEL for Real Time) and rt-tests packages are installed.

- Check the vendor documentation for any tuning steps required for low latency operation.

  The vendor documentation can provide instructions to reduce or remove any System Management Interrupts (SMIs) that would transition the system into System Management Mode (SMM). While a system is in SMM, it runs firmware and not operating system code. This means that any timers that expire while in SMM wait until the system transitions back to normal operation. This can cause unexplained latencies, because SMIs cannot be blocked by Linux, and the only indication that an SMI actually occurred can be found in vendor-specific performance counter registers.

Warning: Red Hat strongly recommends that you do not completely disable SMIs, as it can result in catastrophic hardware failure.

6.1. Running hardware and firmware latency tests

It is not required to run any load on the system while running the hwlatdetect program, because the test looks for latencies introduced by the hardware architecture or BIOS or EFI firmware. The default values for hwlatdetect are to poll for 0.5 seconds each second, and report any gaps greater than 10 microseconds between consecutive calls to fetch the time. hwlatdetect returns the best maximum latency possible on the system. Therefore, if you have an application that requires maximum latency values of less than 10us and hwlatdetect reports one of the gaps as 20us, then the system can only guarantee latency of 20us.

If hwlatdetect shows that the system cannot meet the latency requirements of the application, try changing the BIOS settings or working with the system vendor to get new firmware that meets the latency requirements of the application.
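The polling technique described above can be approximated in user space to build intuition about what the gaps mean. The following C sketch is not how hwlatdetect works: hwlatdetect uses the kernel's hwlat tracer, which polls with interrupts disabled, whereas this sketch runs as an ordinary thread and therefore also records interrupts and preemption. Treat its results as an upper bound. The function names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <time.h>

static uint64_t now_ns(void)
{
    struct timespec ts;

    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000ULL + (uint64_t)ts.tv_nsec;
}

/* Busy-poll the clock for `width_ns` (one sampling width) and return the
 * largest gap between two consecutive reads. *over receives the number of
 * gaps larger than `threshold_ns` (10 us is the hwlatdetect default). */
static uint64_t poll_max_gap(uint64_t width_ns, uint64_t threshold_ns,
                             unsigned int *over)
{
    uint64_t start = now_ns(), prev = start, max_gap = 0;

    *over = 0;
    for (;;) {
        uint64_t t = now_ns();
        uint64_t gap = t - prev;

        if (gap > max_gap)
            max_gap = gap;
        if (gap > threshold_ns)
            (*over)++;
        prev = t;
        if (t - start >= width_ns)
            break;
    }
    return max_gap;
}
```

Calling poll_max_gap(500000000, 10000, &over) once per second would mirror the default 0.5-second sampling width within a 1-second window.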

Prerequisites

- Ensure that the RHEL-RT and rt-tests packages are installed.

Procedure

- Run hwlatdetect, specifying the test duration in seconds.

  hwlatdetect looks for hardware and firmware-induced latencies by polling the clock source and looking for unexplained gaps.

      # hwlatdetect --duration=60s
      hwlatdetect:  test duration 60 seconds
         detector: tracer
         parameters:
              Latency threshold: 10us
              Sample window:     1000000us
              Sample width:      500000us
              Non-sampling period: 500000us
              Output File:       None

      Starting test
      test finished
      Max Latency: Below threshold
      Samples recorded: 0
      Samples exceeding threshold: 0

Additional resources

- hwlatdetect man page
- Interpreting hardware and firmware latency tests

6.2. Interpreting hardware and firmware latency test results

The hardware latency detector (hwlatdetect) uses the tracer mechanism to detect latencies introduced by the hardware architecture or BIOS/EFI firmware. By checking the latencies measured by hwlatdetect, you can determine whether potential hardware is suitable to support the RHEL for Real Time kernel.

Examples

- The example result represents a system tuned to minimize system interruptions from firmware. In this situation, the output of hwlatdetect looks like this:

      # hwlatdetect --duration=60s
      hwlatdetect:  test duration 60 seconds
         detector: tracer
         parameters:
              Latency threshold: 10us
              Sample window:     1000000us
              Sample width:      500000us
              Non-sampling period: 500000us
              Output File:       None

      Starting test
      test finished
      Max Latency: Below threshold
      Samples recorded: 0
      Samples exceeding threshold: 0

- The example result represents a system that could not be tuned to minimize system interruptions from firmware. In this situation, the output of hwlatdetect looks like this:

      # hwlatdetect --duration=10s
      hwlatdetect:  test duration 10 seconds
         detector: tracer
         parameters:
              Latency threshold: 10us
              Sample window:     1000000us
              Sample width:      500000us
              Non-sampling period: 500000us
              Output File:       None

      Starting test
      test finished
      Max Latency: 18us
      Samples recorded: 10
      Samples exceeding threshold: 10
      SMIs during run: 0
      ts: 1519674281.220664736, inner:17, outer:15
      ts: 1519674282.721666674, inner:18, outer:17
      ts: 1519674283.722667966, inner:16, outer:17
      ts: 1519674284.723669259, inner:17, outer:18
      ts: 1519674285.724670551, inner:16, outer:17
      ts: 1519674286.725671843, inner:17, outer:17
      ts: 1519674287.726673136, inner:17, outer:16
      ts: 1519674288.727674428, inner:16, outer:18
      ts: 1519674289.728675721, inner:17, outer:17
      ts: 1519674290.729677013, inner:18, outer:17

  The output shows that during the consecutive reads of the system clocksource, there were 10 delays that showed up in the 15-18 us range.

  Note: Previous versions of hwlatdetect used a kernel module rather than the ftrace tracer.

Understanding the results

The information on testing method, parameters, and results helps you understand the latency parameters and the latency values detected by the hwlatdetect utility. Table 6.1 lists these parameters and values, using the second example above.

Table 6.1. Testing method, parameters, and results

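| Parameter | Value | Description |
|---|---|---|
| test duration | 10 seconds | The duration of the test in seconds |
| detector | tracer | The utility that runs the detector thread |
| Latency threshold | 10us | The maximum allowable latency |
| Sample window | 1000000us (1 second) | The length of each sampling window |
| Sample width | 500000us (0.5 seconds) | The part of each window during which the clock is polled |
| Non-sampling period | 500000us (0.5 seconds) | The rest of each window, during which no polling takes place |
| Output File | None | The file to which the output is saved. |
| Max Latency | 18us | The highest latency during the test that exceeded the Latency threshold. If no sample exceeded the threshold, the report shows Below threshold. |
| Samples recorded | 10 | The number of samples recorded by the test. |
| Samples exceeding threshold | 10 | The number of samples recorded by the test where the latency exceeded the Latency threshold. |
| SMIs during run | 0 | The number of System Management Interrupts (SMIs) that occurred during the test run. |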

The values printed by the hwlatdetect utility for inner and outer are the maximum latency values. They are deltas between consecutive reads of the current system clocksource (usually the Time Stamp Counter (TSC) register, but potentially the HPET or ACPI power management clock), and they capture any delays between consecutive reads introduced by the hardware-firmware combination.
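In the kernel's hwlat tracer, inner is the gap between the two clock reads taken within one polling iteration, and outer is the gap between the second read of one iteration and the first read of the next. The following C sketch reproduces the summary lines of the 10-second example from its per-sample ts lines, assuming a sample counts against the threshold when either its inner or its outer value exceeds it. The struct and function names are hypothetical.

```c
#include <stddef.h>

/* One "ts: ..., inner:N, outer:M" line of hwlatdetect output (in us). */
struct hwlat_sample {
    unsigned int inner_us;
    unsigned int outer_us;
};

/* Return the maximum latency over all samples (the larger of inner and
 * outer) and store in *exceeding how many samples were over the threshold. */
static unsigned int hwlat_summary(const struct hwlat_sample *s, size_t n,
                                  unsigned int threshold_us, size_t *exceeding)
{
    unsigned int max = 0;

    *exceeding = 0;
    for (size_t i = 0; i < n; i++) {
        unsigned int worst = s[i].inner_us > s[i].outer_us ? s[i].inner_us
                                                           : s[i].outer_us;
        if (worst > max)
            max = worst;
        if (worst > threshold_us)
            (*exceeding)++;
    }
    return max;
}
```

Feeding it the ten inner/outer pairs from the example with a 10 us threshold yields a maximum of 18 us and 10 samples over the threshold, matching the Max Latency and Samples exceeding threshold lines.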

After finding the suitable hardware-firmware combination, the next step is to test the real-time performance of the system while under a load.

Chapter 7. Running and interpreting system latency tests

RHEL for Real Time provides the rteval utility to test the system real-time performance under load.

7.1. Running system latency tests

With the rteval utility, you can test a system’s real-time performance under load.

Prerequisites

- The RHEL for Real Time package group is installed.
- You have root permissions on the system.

Procedure

Run the

rtevalutility.# rtevalThe

rtevalutility starts a heavy system load ofSCHED_OTHERtasks. It then measures real-time response on each online CPU. The loads are a parallelmakeof the Linux kernel tree in a loop and thehackbenchsynthetic benchmark.The goal is to bring the system into a state, where each core always has a job to schedule. The jobs perform various tasks, such as memory allocation/free, disk I/O, computational tasks, memory copies, and other.

Once the loads start, rteval starts the cyclictest measurement program. This program starts a SCHED_FIFO real-time thread on each online core. It then measures the real-time scheduling response time.

Each measurement thread takes a timestamp, sleeps for an interval, then takes another timestamp after waking up. The latency measured is t1 - (t0 + i), which is the difference between the actual wakeup time t1 and the theoretical wakeup time, that is, the first timestamp t0 plus the sleep interval i.

The details of the rteval run are written to an XML file along with the boot log for the system. This report is displayed on the screen and saved to a compressed file.

The file name is in the form rteval-<date>-N.tar.bz2, where <date> is the date the report was generated and N is a counter for the Nth run on <date>.

The following is an example of an rteval report:

System:
Statistics:
  Samples: 1440463955
  Mean: 4.40624790712us
  Median: 0.0us
  Mode: 4us
  Range: 54us
  Min: 2us
  Max: 56us
  Mean Absolute Dev: 1.0776661507us
  Std.dev: 1.81821060672us

CPU core 0 Priority: 95
Statistics:
  Samples: 36011847
  Mean: 5.46434910711us
  Median: 4us
  Mode: 4us
  Range: 38us
  Min: 2us
  Max: 40us
  Mean Absolute Dev: 2.13785341159us
  Std.dev: 3.50155558554us

The report includes details about the system hardware, length of the run, options used, and the timing results, both per-CPU and system-wide.
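The statistics in the example report are standard descriptive statistics over the latency samples. The following Python sketch computes the same set of fields from a list of per-sample latencies in microseconds. It assumes a population standard deviation and a mean absolute deviation around the mean; rteval's own computation may differ in these details. The function name summarize_latencies is hypothetical.

```python
import statistics


def summarize_latencies(samples_us):
    """Summarize latency samples (in microseconds) using the same field
    names as the rteval report. Hypothetical helper for illustration."""
    if not samples_us:
        raise ValueError("no samples")
    mean = statistics.fmean(samples_us)
    return {
        "Samples": len(samples_us),
        "Mean": mean,
        "Median": statistics.median(samples_us),
        "Mode": statistics.mode(samples_us),
        "Range": max(samples_us) - min(samples_us),
        "Min": min(samples_us),
        "Max": max(samples_us),
        # Average absolute distance of each sample from the mean.
        "Mean Absolute Dev": sum(abs(s - mean) for s in samples_us) / len(samples_us),
        # Population standard deviation (an assumption, see the lead-in).
        "Std.dev": statistics.pstdev(samples_us),
    }


if __name__ == "__main__":
    for name, value in summarize_latencies([2, 4, 4, 6]).items():
        print(f"{name}: {value}")
```

The Max value is usually the most important figure for a real-time system, because it bounds the worst-case response observed during the run.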

Note: To regenerate an rteval report from its generated file, run:

# rteval --summarize rteval-<date>-N.tar.bz2
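The per-core measurement that cyclictest performs, with latency computed as t1 - (t0 + i), can be sketched in Python as follows. This only illustrates the calculation: it runs as an ordinary SCHED_OTHER thread and includes Python interpreter overhead, so its numbers are not comparable to cyclictest results. The function names are hypothetical.

```python
import time


def wakeup_latency_ns(t0_ns: int, t1_ns: int, interval_ns: int) -> int:
    """Latency = t1 - (t0 + i): the actual wakeup time minus the
    theoretical wakeup time (first timestamp plus the sleep interval)."""
    return t1_ns - (t0_ns + interval_ns)


def measure(loops: int = 100, interval_us: int = 1000):
    """Sleep `loops` times for interval_us and return each wakeup latency in microseconds."""
    interval_ns = interval_us * 1000
    latencies_us = []
    for _ in range(loops):
        t0 = time.monotonic_ns()          # timestamp before sleeping
        time.sleep(interval_ns / 1e9)     # sleep for the interval i
        t1 = time.monotonic_ns()          # timestamp after waking up
        latencies_us.append(wakeup_latency_ns(t0, t1, interval_ns) / 1000)
    return latencies_us


if __name__ == "__main__":
    lat = measure()
    print(f"min {min(lat):.1f}us  max {max(lat):.1f}us")
```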

Chapter 8. Setting CPU affinity on RHEL for Real Time

All threads and interrupt sources in the system have a processor affinity property. The operating system scheduler uses this information to determine which threads and interrupts run on which CPU. By setting processor affinity, along with effective policy and priority settings, you can achieve maximum possible performance. Applications always compete for resources, especially CPU time, with other processes. Depending on the application, related threads are often run on the same core. Alternatively, one application thread can be allocated to one core.

Systems that perform multitasking are naturally more prone to indeterminism. Even high priority applications can be delayed from executing while a lower priority application is in a critical section of code. After the low priority application exits the critical section, the kernel safely preempts the low priority application and schedules the high priority application on the processor. Additionally, migrating processes from one CPU to another can be costly due to cache invalidation. RHEL for Real Time includes tools that address some of these issues and allow latency to be better controlled.

Affinity is represented as a bit mask, where each bit in the mask represents a CPU core. If the bit is set to 1, the thread or interrupt can run on that core; if it is 0, the thread or interrupt is excluded from running on that core. The default value for an affinity bit mask is all ones, meaning the thread or interrupt can run on any core in the system.
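The bit-mask representation can be illustrated with two small Python helpers that convert between a set of CPU numbers and the corresponding affinity mask, where bit n of the mask corresponds to CPU n. The helper names are hypothetical.

```python
def cpus_to_mask(cpus):
    """Return an affinity bit mask with bit n set for every CPU n in cpus."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return mask


def mask_to_cpus(mask):
    """Return the sorted list of CPU numbers whose bits are set in mask."""
    cpus = []
    cpu = 0
    while mask:
        if mask & 1:
            cpus.append(cpu)
        mask >>= 1
        cpu += 1
    return cpus


if __name__ == "__main__":
    # CPUs 0 and 1 give mask 0x3; mask 0xa allows CPUs 1 and 3.
    print(hex(cpus_to_mask({0, 1})), mask_to_cpus(0xA))
```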

By default, processes can run on any CPU. However, by changing the affinity of the process, you can define a process to run on a predetermined set of CPUs. Child processes inherit the CPU affinities of their parents.

The following typical affinity setups can help achieve maximum possible performance:

- Using a single CPU core for all system processes and setting the application to run on the remaining cores.
- Configuring an application thread and a specific kernel thread, such as the network softirq or a driver thread, on the same CPU.
- Pairing the producer-consumer threads on each CPU. Producers and consumers are two classes of threads, where producers insert data into a buffer and consumers remove it from the buffer.

The usual good practice for tuning affinities on a real-time system is to determine the number of cores required to run the application and then isolate those cores. You can achieve this with the Tuna tool or with shell commands that modify the bit mask value, such as taskset. The taskset command changes the affinity of a process, and modifying the corresponding /proc/ file system entry changes the affinity of an interrupt.

8.1. Tuning processor affinity using the taskset command

On RHEL for Real Time, the taskset command sets or retrieves the CPU affinity of a running process. The taskset command takes the -p and -c options. The -p or --pid option operates on an existing process and does not start a new task. The -c or --cpu-list option specifies a numerical list of processors instead of a bitmask. The list can contain more than one item, separated by commas, and ranges of processors. For example, 0,5,7,9-11.
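To make the list syntax concrete, the following Python sketch expands a CPU list such as 0,5,7,9-11 into individual CPU numbers. The function name parse_cpu_list is hypothetical, and it handles only comma-separated numbers and ranges, not any other syntax that taskset might accept.

```python
def parse_cpu_list(text):
    """Expand a CPU list such as "0,5,7,9-11" into a sorted list of CPU numbers."""
    cpus = set()
    for item in text.split(","):
        item = item.strip()
        if not item:
            continue
        if "-" in item:
            # A range such as 9-11 includes both endpoints.
            first, last = item.split("-", 1)
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(item))
    return sorted(cpus)


if __name__ == "__main__":
    print(parse_cpu_list("0,5,7,9-11"))
```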

Prerequisites

- You have root permissions on the system.

Procedure

To verify the process affinity for a specific process:

# taskset -p -c 1000
pid 1000’s current affinity list: 0,1

The command prints the affinity of the process with PID 1000. The process is set up to use CPU 0 or CPU 1.

(Optional) To configure a specific CPU to bind a process:

# taskset -p -c 1 1000
pid 1000’s current affinity list: 0,1
pid 1000’s new affinity list: 1

(Optional) To define more than one CPU affinity:

# taskset -p -c 0,1 1000
pid 1000’s current affinity list: 1
pid 1000’s new affinity list: 0,1

(Optional) To configure a priority level and a policy on a specific CPU:

# taskset -c 5 chrt -f 78 /bin/my-app

For further granularity, you can also specify the priority and policy. In the example, the command runs the /bin/my-app application on CPU 5 with SCHED_FIFO policy and a priority value of 78.
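From a program, the same operations are available in Python on Linux through os.sched_getaffinity(), os.sched_setaffinity(), and os.sched_setscheduler(). The following sketch mirrors taskset -p -c and taskset -c ... chrt -f ...; the helper names set_affinity and run_pinned are hypothetical, and a PID of 0 refers to the caller.

```python
import os
import subprocess


def set_affinity(pid, cpus):
    """Set the CPU affinity of pid, like taskset -p -c.

    Prints the current and new affinity lists and returns the new list.
    """
    current = sorted(os.sched_getaffinity(pid))
    print(f"pid {pid}'s current affinity list: {','.join(map(str, current))}")
    os.sched_setaffinity(pid, cpus)
    new = sorted(os.sched_getaffinity(pid))
    print(f"pid {pid}'s new affinity list: {','.join(map(str, new))}")
    return new


def run_pinned(argv, cpu, policy=os.SCHED_FIFO, priority=78):
    """Run argv on a single CPU with the given policy and priority,
    similar to: taskset -c <cpu> chrt -f <priority> <command>.

    SCHED_FIFO requires root or the CAP_SYS_NICE capability.
    """
    def setup_child():
        # Runs in the child process after fork() and before exec().
        os.sched_setaffinity(0, {cpu})
        os.sched_setscheduler(0, policy, os.sched_param(priority))

    return subprocess.run(argv, preexec_fn=setup_child, check=True)
```

For example, set_affinity(1000, {1}) corresponds to taskset -p -c 1 1000, and run_pinned(["/bin/my-app"], cpu=5) corresponds to the taskset -c 5 chrt -f 78 /bin/my-app command.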

8.2. Setting processor affinity using the sched_setaffinity() system call

You can also set processor affinity using the sched_setaffinity() system call.

Prerequisite

- You have root permissions on the system.

Procedure

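To set the processor affinity with sched_setaffinity():

#define _GNU_SOURCE
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sched.h>

int main(void)
{
    int i, online = 0, first = -1;
    long ncores = sysconf(_SC_NPROCESSORS_CONF);
    cpu_set_t *setp = CPU_ALLOC(ncores);
    size_t setsz = CPU_ALLOC_SIZE(ncores);

    /* Read the affinity mask of the calling thread (pid 0). */
    CPU_ZERO_S(setsz, setp);
    if (sched_getaffinity(0, setsz, setp) == -1) {
        perror("sched_getaffinity(2) failed");
        exit(EXIT_FAILURE);
    }
    /* Count the allowed CPUs and remember the first one. */
    for (i = 0; i < ncores; i++) {
        if (CPU_ISSET_S(i, setsz, setp)) {
            if (first < 0)
                first = i;
            online++;
        }
    }
    printf("%ld cores configured, %d cpus allowed in affinity mask\n", ncores, online);

    /* Bind the calling thread to the first allowed CPU. */
    CPU_ZERO_S(setsz, setp);
    CPU_SET_S(first, setsz, setp);
    if (sched_setaffinity(0, setsz, setp) == -1) {
        perror("sched_setaffinity(2) failed");
        exit(EXIT_FAILURE);
    }
    CPU_FREE(setp);
    return 0;
}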

8.3. Isolating a single CPU to run high utilization tasks

With the cpusets mechanism, you can assign a set of CPUs and memory nodes for SCHED_DEADLINE tasks. In a task set that has both high and low CPU-utilizing tasks, isolating a CPU to run the high utilization task and scheduling the low utilization tasks on a different set of CPUs enables all tasks to meet their assigned runtime.

Prerequisites

- You have root permissions on the system.

Procedure

Create two cpuset directories, named cluster and partition:

# cd /sys/fs/cgroup/cpuset/
# mkdir cluster
# mkdir partition

Disable the load balance of the root cpuset to create two new root domains in the cpuset directory:

# echo 0 > cpuset.sched_load_balance

In the cluster cpuset, schedule the low utilization tasks to run on CPUs 1 to 7, assign memory node 0, and mark the CPUs as exclusive:

# cd cluster/
# echo 1-7 > cpuset.cpus
# echo 0 > cpuset.mems
# echo 1 > cpuset.cpu_exclusive

Move all low utilization tasks to the cluster cpuset:

# ps -eLo lwp | while read thread; do echo $thread > tasks ; done

In the partition cpuset, mark the CPU as exclusive, assign memory node 0, and assign CPU 0 to the high utilization task:

# cd ../partition/
# echo 1 > cpuset.cpu_exclusive
# echo 0 > cpuset.mems
# echo 0 > cpuset.cpus

Move the current shell to the partition cpuset and start the deadline workload:

# echo $$ > tasks # /root/d &

With this setup, the task isolated in the partition cpuset directory does not interfere with the tasks in the cluster cpuset directory. This enables all real-time tasks to meet the scheduler deadline.
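The cpuset configuration steps in this procedure can be scripted. The following Python sketch writes the same values (cluster on CPUs 1-7, partition on CPU 0, memory node 0) under a configurable root directory, which defaults to the cgroup v1 cpuset mount point used above. The function names and parameters are hypothetical; against the real cgroup file system it must run as root, and it does not move any tasks.

```python
import os

CPUSET_ROOT = "/sys/fs/cgroup/cpuset"


def write_value(path, value):
    """Write a single value to a cgroup control file (like echo value > path)."""
    with open(path, "w") as f:
        f.write(f"{value}\n")


def setup_cpusets(root=CPUSET_ROOT, cluster_cpus="1-7", partition_cpus="0", mems="0"):
    """Create the cluster and partition cpusets and return their paths."""
    cluster = os.path.join(root, "cluster")
    partition = os.path.join(root, "partition")
    os.makedirs(cluster, exist_ok=True)
    os.makedirs(partition, exist_ok=True)
    # Disable load balancing in the root cpuset to create two root domains.
    write_value(os.path.join(root, "cpuset.sched_load_balance"), 0)
    # Cluster: low utilization tasks on CPUs 1-7, exclusive.
    write_value(os.path.join(cluster, "cpuset.cpus"), cluster_cpus)
    write_value(os.path.join(cluster, "cpuset.mems"), mems)
    write_value(os.path.join(cluster, "cpuset.cpu_exclusive"), 1)
    # Partition: the high utilization task on CPU 0, exclusive.
    write_value(os.path.join(partition, "cpuset.cpu_exclusive"), 1)
    write_value(os.path.join(partition, "cpuset.mems"), mems)
    write_value(os.path.join(partition, "cpuset.cpus"), partition_cpus)
    return cluster, partition
```

Moving tasks is left to the shell commands in the procedure, because writing thread IDs to the tasks files moves them immediately and is easier to review step by step.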

8.4. Reducing CPU performance spikes

A common source of latency spikes is when multiple CPUs contend on common locks in the kernel timer tick handler. The locks usually responsible for the contention are xtime_lock, which is used by the timekeeping system, and the Read-Copy-Update (RCU) structure locks. By using skew_tick=1, you can offset the timer tick per CPU to start at a different time and avoid potential lock conflicts.

The skew_tick kernel command line parameter might prevent latency fluctuations on moderate to large systems with large core counts that run latency-sensitive workloads.

Prerequisites

- You have administrator permissions.

Procedure

Enable the skew_tick=1 parameter with grubby.

# grubby --update-kernel=ALL --args="skew_tick=1"

Reboot for changes to take effect.

# reboot

Enabling skew_tick=1 causes a significant increase in power consumption. Therefore, enable the skew_tick boot parameter only if you are running latency-sensitive real-time workloads and consistent latency is more important than power consumption.

Verification

Display the /proc/cmdline file and ensure that skew_tick=1 is specified. The /proc/cmdline file shows the parameters passed to the kernel.

# cat /proc/cmdline
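The verification can also be done from a script by looking up the parameter in the contents of /proc/cmdline. The following Python sketch is a minimal helper with a hypothetical name; it splits on whitespace, does not handle quoted values that contain spaces, and returns the last occurrence if a parameter appears more than once.

```python
def kernel_param(cmdline, name):
    """Return the value of kernel parameter `name` from a command line string.

    Returns the text after '=' for name=value, True for a bare flag,
    or None when the parameter is absent.
    """
    value = None
    for token in cmdline.split():
        key, sep, val = token.partition("=")
        if key == name:
            value = val if sep else True
    return value
```

For example, kernel_param(open("/proc/cmdline").read(), "skew_tick") returns "1" after the reboot when the parameter is active.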

8.5. Lowering CPU usage by disabling the PC card daemon

The pcscd daemon (the PC/SC Smart Card Daemon) manages connections to smart card readers. Although pcscd is usually a low priority task, it can often use more CPU than any other daemon. Therefore, the additional background noise can lead to higher preemption costs to real-time tasks and other undesirable impacts on determinism.

Prerequisites

- You have root permissions on the system.

Procedure

Check the status of the pcscd daemon.

# systemctl status pcscd
● pcscd.service - PC/SC Smart Card Daemon
     Loaded: loaded (/usr/lib/systemd/system/pcscd.service; indirect; vendor preset: disabled)
     Active: active (running) since Mon 2021-03-01 17:15:06 IST; 4s ago
TriggeredBy: ● pcscd.socket
       Docs: man:pcscd(8)
   Main PID: 2504609 (pcscd)
      Tasks: 3 (limit: 18732)
     Memory: 1.1M
        CPU: 24ms
     CGroup: /system.slice/pcscd.service
             └─2504609 /usr/sbin/pcscd --foreground --auto-exit

The Active parameter shows the status of the pcscd daemon.

If the pcscd daemon is running, stop it.

# systemctl stop pcscd
Warning: Stopping pcscd.service, but it can still be activated by:
  pcscd.socket

Configure the system to ensure that the pcscd daemon does not restart when the system boots.

# systemctl disable pcscd
Removed /etc/systemd/system/sockets.target.wants/pcscd.socket.

Verification steps

Check the status of the pcscd daemon.

# systemctl status pcscd
● pcscd.service - PC/SC Smart Card Daemon
     Loaded: loaded (/usr/lib/systemd/system/pcscd.service; indirect; vendor preset: disabled)
     Active: inactive (dead) since Mon 2021-03-01 17:10:56 IST; 1min 22s ago
TriggeredBy: ● pcscd.socket
       Docs: man:pcscd(8)
   Main PID: 4494 (code=exited, status=0/SUCCESS)
        CPU: 37ms

Ensure that the value for the Active parameter is inactive (dead).

Chapter 9. Using mlock() system calls on RHEL for Real Time

The RHEL for Real Time memory lock (mlock()) functions enable calling real-time processes to lock or unlock a specified range of their address space. Locking prevents Linux from paging out the locked memory when swapping. After the physical page is allocated to the page table entry, references to that page become fast. The mlock() system calls include two functions: mlock() and mlockall(). Similarly, the munlock() system calls include the munlock() and munlockall() functions.

9.1. mlock() and munlock() system calls

The mlock() and mlockall() system calls lock a specified memory range so that this memory is not paged out. The following are the mlock() system call groups:

- mlock() system calls: lock a specified range of addresses.
- munlock() system calls: unlock a specified range of addresses.

The mlock() system call locks pages in the address range starting at addr and continuing for len bytes. When the call returns successfully, all pages that contain a part of the specified address range stay in memory until they are unlocked.

With the mlockall() system call, you can lock all pages mapped into the address space of the calling process. Memory locks do not stack: a page that was locked by several calls is unlocked by a single munlock() call on the specified address range or on the entire region. With the munlockall() system call, you can unlock the entire program space.
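The statement that memory locks do not stack can be checked with a short Python sketch that calls the C library mlock() and munlock() functions through ctypes. It locks the same page twice, unlocks it once, and reads the VmLck field of /proc/self/status (locked memory in kB) after each step. The helper names are hypothetical; the sketch assumes Linux, a C library that ctypes can load with CDLL(None), and an RLIMIT_MEMLOCK of at least one page.

```python
import ctypes
import mmap
import os

libc = ctypes.CDLL(None, use_errno=True)
libc.mlock.argtypes = [ctypes.c_void_p, ctypes.c_size_t]
libc.munlock.argtypes = [ctypes.c_void_p, ctypes.c_size_t]


def check(ret, what):
    """mlock() and munlock() return 0 on success and -1 with errno set on error."""
    if ret == -1:
        err = ctypes.get_errno()
        raise OSError(err, f"{what}: {os.strerror(err)}")


def locked_kb():
    """Return the VmLck value (locked memory, in kB) of the calling process."""
    with open("/proc/self/status") as f:
        for line in f:
            if line.startswith("VmLck:"):
                return int(line.split()[1])
    return 0


def demo():
    size = mmap.PAGESIZE
    buf = mmap.mmap(-1, size)                 # anonymous, page-aligned mapping
    view = ctypes.c_char.from_buffer(buf)
    addr = ctypes.addressof(view)
    del view                                  # release the export so buf can be closed
    before = locked_kb()
    check(libc.mlock(addr, size), "mlock")
    check(libc.mlock(addr, size), "mlock")    # lock the same page a second time
    after_two_locks = locked_kb()
    check(libc.munlock(addr, size), "munlock")  # a single unlock is enough
    after_one_unlock = locked_kb()
    buf.close()
    return before, after_two_locks, after_one_unlock


if __name__ == "__main__":
    print("VmLck kB (before, after 2x mlock, after 1x munlock):", demo())
```

If locks stacked, VmLck would stay raised after the single munlock() call; with non-stacking locks it returns to its starting value.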

The status of the pages contained in a specific range depends on the value in the flags argument of the mlock2() variant. The flags argument can be 0 or MLOCK_ONFAULT.

Memory locks are not inherited by a child process through fork(), and they are automatically removed when a process terminates.

Use mlock() system calls with caution. Excessive use can cause out-of-memory (OOM) errors. When an application is large or if it has a large data domain, the mlock() calls can cause thrashing when the system is not able to allocate memory for other tasks.

When using mlockall() calls for real-time processes, ensure that you reserve sufficient stack pages.

9.2. Using mlock() system calls to lock pages

The real-time mlock() system calls use the addr parameter to specify the start of an address range and len to define the length of the address space in bytes. The alloc_workbuf() function dynamically allocates a memory buffer and locks it. Memory allocation is done by the posix_memalign() function to align the memory area to a page. The free_workbuf() function unlocks and frees the memory area.

Prerequisites

- You have root privileges or the CAP_IPC_LOCK capability to use mlockall() or mlock() on large buffers.

Procedure

To lock pages with the mlock() system call, use the following code:

#include <stdlib.h>
#include <unistd.h>
#include <sys/mman.h>

void *alloc_workbuf(size_t size)
{
    void *ptr;
    int retval;

    // Allocate memory aligned to a page, to prevent two mlock() calls in the same page.
    retval = posix_memalign(&ptr, (size_t) sysconf(_SC_PAGESIZE), size);

    // Return NULL on failure.
    if (retval)
        return NULL;

    // Lock this buffer into RAM.
    if (mlock(ptr, size)) {
        free(ptr);
        return NULL;
    }
    return ptr;
}

void free_workbuf(void *ptr, size_t size)
{
    // Unlock the address range.
    munlock(ptr, size);

    // Free the memory.
    free(ptr);
}

Verification

The real-time mlock() and munlock() calls return 0 when successful. In case of an error, they return -1 and set errno to indicate the error.

9.3. Using mlockall() system calls to lock all mapped pages

To lock and unlock real-time memory with the mlockall() and munlockall() system calls, set the flags argument of mlockall() to MCL_CURRENT, MCL_FUTURE, or both combined with a bitwise OR; munlockall() takes no arguments. With MCL_FUTURE, a later call, such as mmap(), sbrk(), or malloc(), might fail because it causes the number of locked bytes to exceed the permitted maximum.

Prerequisites

- You have root permissions on the system.

Procedure

To use the mlockall() and munlockall() real-time system calls:

Lock all mapped pages by using the mlockall() system call:

#include <sys/mman.h>
int mlockall(int flags);

Unlock all mapped pages by using the munlockall() system call:

#include <sys/mman.h>
int munlockall(void);
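Because locking with MCL_FUTURE can make later allocations fail once the locked bytes exceed the permitted maximum, it can help to check the RLIMIT_MEMLOCK resource limit before calling mlockall(). The following Python sketch uses the resource module and ctypes. The helper names are hypothetical, and the MCL_CURRENT and MCL_FUTURE values 1 and 2 are an assumption that holds on x86_64 and AArch64 but not on every architecture. Processes with the CAP_IPC_LOCK capability are not bound by this limit.

```python
import ctypes
import os
import resource

# Assumed <sys/mman.h> values on x86_64 and AArch64; check your headers.
MCL_CURRENT = 1
MCL_FUTURE = 2

libc = ctypes.CDLL(None, use_errno=True)


def memlock_limit():
    """Return the soft RLIMIT_MEMLOCK in bytes, or None if unlimited."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_MEMLOCK)
    return None if soft == resource.RLIM_INFINITY else soft


def fits_memlock_limit(nbytes):
    """True if nbytes of locked memory stay within the soft limit.
    Ignores memory that is already locked and the CAP_IPC_LOCK exemption."""
    limit = memlock_limit()
    return limit is None or nbytes <= limit


def lock_all_memory():
    """Lock current and future mappings; raise OSError on failure."""
    if libc.mlockall(MCL_CURRENT | MCL_FUTURE) == -1:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))


def unlock_all_memory():
    """Unlock every mapped page of the calling process."""
    if libc.munlockall() == -1:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))
```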

Additional resources

- capabilities(7) man page
- mlock(2) man page
- mlock(3) man page
- move_pages(2) man page
- posix_memalign(3) man page
- posix_memalign(3p) man page

9.4. Using mmap() system calls to map files or devices into memory

For large memory allocations on real-time systems, the malloc() function uses the mmap() system call to find memory space. You can assign and lock memory areas by setting MAP_LOCKED in the flags parameter. As mmap() assigns memory on a page basis, it avoids two locks on the same page, which prevents the double-lock or single-unlock problems.

Prerequisites

- You have root permissions on the system.

Procedure

To map a specific process-address space:

#include <sys/mman.h>
#include <stdlib.h>

void *alloc_workbuf(size_t size)
{
    void *ptr;

    /* Map an anonymous, private region and lock it into RAM. */
    ptr = mmap(NULL, size, PROT_READ | PROT_WRITE,
               MAP_PRIVATE | MAP_ANONYMOUS | MAP_LOCKED, -1, 0);
    if (ptr == MAP_FAILED)
        return NULL;
    return ptr;
}

void free_workbuf(void *ptr, size_t size)
{
    /* Unmapping the region also removes its lock. */
    munmap(ptr, size);
}

Verification

- When the mmap() function completes successfully, it returns a pointer to the mapped area. On error, it returns the MAP_FAILED value and sets errno to indicate the error.
- When the munmap() function completes successfully, it returns 0. On error, it returns -1 and sets errno to indicate the error.

Additional resources

- mmap(2) man page
- mlockall(2) man page

9.5. Parameters for mlock() system calls

The parameters for the memory lock system calls and the functions they perform are listed and described in the mlock parameters table.

Table 9.1. mlock parameters

| Parameter | Description |

|---|---|

| | Specifies the process address space to lock or unlock. When NULL, the kernel chooses the page-aligned arrangement of data in the memory. If … |
| | Specifies the length of the mapping, which must be greater than 0. |
| | Specifies the file descriptor. |
| | Controls the mapping visibility to other processes that map the same file. It takes one of the values: … |
| | Locks all pages that are currently mapped into a process. |
| | Sets the mode to lock subsequent memory allocations. These could be new pages required by a growing heap and stack, new memory-mapped files, or shared memory regions. |

Chapter 10. Minimizing or avoiding system slowdowns due to journaling

The order in which journal changes are written to disk might differ from the order in which they arrive. The kernel I/O system can reorder the journal changes to optimize the use of available storage space. Journal activity can result in system latency by reordering journal changes and committing data and metadata. As a result, journaling file systems can slow down the system.

XFS, the default file system in RHEL 8, is a journaling file system. The older ext2 file system does not use journaling. Unless your organization specifically requires journaling, consider using ext2. Many of Red Hat’s best benchmark results use the ext2 file system, and this is one of the top initial tuning recommendations.

Journaling file systems such as XFS record the time a file was last accessed (the atime attribute). If you need to use a journaling file system, consider disabling atime.

10.1. Disabling atime

Disabling the atime attribute increases performance and decreases power usage by limiting the number of writes to the file-system journal.

Procedure

Open the /etc/fstab file using your chosen text editor and locate the entry for the root mount point.

/dev/mapper/rhel-root / xfs defaults…

Edit the options section to include the terms noatime and nodiratime. The noatime option prevents access timestamps from being updated when a file is read, and the nodiratime option stops directory inode access times from being updated.

/dev/mapper/rhel-root / xfs noatime,nodiratime…

Some applications rely on atime being updated. Therefore, this option is reasonable only on systems where such applications are not used.

Alternatively, you can use the relatime mount option, which ensures that the access time is only updated if the previous access time is older than the current modify time.
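The /etc/fstab edit in this procedure can also be scripted. The following Python sketch rewrites the options column (the fourth whitespace-separated field) of the entry for a given mount point: it replaces a bare defaults with the new options and otherwise appends any that are missing. The function name is hypothetical, and the sample entry used below, including its trailing dump and pass fields, is illustrative.

```python
def add_mount_options(fstab_text, mount_point, new_options=("noatime", "nodiratime")):
    """Return fstab_text with new_options added to the entry for mount_point.

    Comment lines and other entries are left unchanged. Whitespace in the
    modified line is normalized to single spaces.
    """
    out = []
    for line in fstab_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and not fields[0].startswith("#") and fields[1] == mount_point:
            opts = [] if fields[3] == "defaults" else fields[3].split(",")
            for opt in new_options:
                if opt not in opts:
                    opts.append(opt)
            fields[3] = ",".join(opts)
            line = " ".join(fields)
        out.append(line)
    return "\n".join(out) + "\n"
```

For example, the entry /dev/mapper/rhel-root / xfs defaults 0 0 becomes /dev/mapper/rhel-root / xfs noatime,nodiratime 0 0. Review the result before writing it back to /etc/fstab.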

10.2. Additional resources

- mkfs.ext2(8) man page
- mkfs.xfs(8) man page
- mount(8) man page

Chapter 11. Disabling graphics console output for latency sensitive workloads

The kernel starts passing messages to printk() as soon as it boots. It sends these messages to the log file and also displays them on the graphics console, even on a headless server with no monitor attached.

On some systems, the output sent to the graphics console might introduce stalls in the pipeline. This can delay task execution while waiting for data transfers. For example, output sent to teletype0 (/dev/tty0) might cause stalls on some systems.

To prevent unexpected stalls, you can limit or disable the information that is sent to the graphics console by:

- Removing the tty0 definition.
- Changing the order of console definitions.
- Turning off most printk() functions and ensuring that the ignore_loglevel kernel parameter is not configured.

By disabling graphics console logging and by controlling the messages that print on the graphics console, you can improve latency for sensitive workloads.

11.1. Disabling graphics console logging to graphics adapter

The teletype (tty) default kernel console enables your interaction with the system by passing input data to the system and displaying output information on the graphics console.

Not configuring the graphics console prevents it from logging to the graphics adapter. This makes tty0 unavailable to the system and helps disable printing messages on the graphics console.

Disabling graphics console output does not delete information. The information is still printed to the system log, and you can access it using the journalctl or dmesg utilities.

Procedure

Remove the console=tty0 option from the kernel configuration:

# grubby --update-kernel=ALL --remove-args="console=tty0"

11.2. Disabling messages from printing on graphics console

You can control the amount of output messages that are sent to the graphics console by configuring the required log levels in the /proc/sys/kernel/printk file.

Procedure

View the current console log level:

$ cat /proc/sys/kernel/printk
7 4 1 7

The command prints the current settings for system log levels. The numbers correspond to current, default, minimum, and boot-default values for the system logger.

Configure the desired log level in the /proc/sys/kernel/printk file.

# echo "1" > /proc/sys/kernel/printk

The command changes the current console log level. For example, setting log level 1 prints only emergency (level 0) messages and prevents the display of other messages on the graphics console.
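The four fields of /proc/sys/kernel/printk can be parsed into named values. In the following Python sketch, the names are hypothetical. The second helper encodes the kernel rule that a message is printed on the console only when its log level is numerically lower than the current console log level (0 is the most severe); it ignores the ignore_loglevel override.

```python
from typing import NamedTuple


class PrintkLevels(NamedTuple):
    current: int       # current console log level
    default: int       # default level for messages without an explicit level
    minimum: int       # minimum allowed console log level
    boot_default: int  # console log level at boot


def parse_printk(text):
    """Parse the contents of /proc/sys/kernel/printk, for example "7 4 1 7"."""
    return PrintkLevels(*(int(v) for v in text.split()[:4]))


def reaches_console(message_level, levels):
    """True if a message at message_level is printed on the console."""
    return message_level < levels.current
```

For example, with the console log level set to 1, reaches_console(0, levels) is True for emergency messages, and every other level is suppressed on the console while remaining in the system log.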

Chapter 12. Managing system clocks to satisfy application needs

Multiprocessor systems such as NUMA or SMP have multiple instances of hardware clocks. During boot time the kernel discovers the available clock sources and selects one to use. To improve performance, you can change the clock source used to meet the minimum requirements of a real-time system.

12.1. Hardware clocks

Multiprocessor systems, such as non-uniform memory access (NUMA) and symmetric multiprocessing (SMP) systems, contain multiple instances of clock sources. How these clock sources interact with each other, and how they react to system events such as CPU frequency scaling or entering energy-saving modes, determines whether they are suitable clock sources for the real-time kernel.

The preferred clock source is the Time Stamp Counter (TSC). If the TSC is not available, the High Precision Event Timer (HPET) is the second best option. However, not all systems have HPET clocks, and some HPET clocks can be unreliable.

In the absence of TSC and HPET, other options include the ACPI Power Management Timer (ACPI_PM), the Programmable Interval Timer (PIT), and the Real Time Clock (RTC). The last two options are either costly to read or have a low resolution (time granularity), therefore they are sub-optimal for use with the real-time kernel.
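The preference order described in this section can be expressed as a small selection function. In the following Python sketch, the PREFERRED_ORDER tuple and the function name are hypothetical and only encode the first three preferences named here (TSC, then HPET, then ACPI_PM). The kernel's own selection relies on per-clock ratings and stability checks, not on this list.

```python
PREFERRED_ORDER = ("tsc", "hpet", "acpi_pm")


def pick_clocksource(available):
    """Return the most preferred clock source name from `available`.

    `available` is the whitespace-separated content of available_clocksource.
    Falls back to the first listed source if none of the preferred ones is
    present, and returns None for an empty list.
    """
    names = available.split()
    for name in PREFERRED_ORDER:
        if name in names:
            return name
    return names[0] if names else None
```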

12.2. Viewing the available clock sources in your system

The list of available clock sources in your system is in the /sys/devices/system/clocksource/clocksource0/available_clocksource file.

Procedure

Display the available_clocksource file.

# cat /sys/devices/system/clocksource/clocksource0/available_clocksource
tsc hpet acpi_pm

In this example, the available clock sources in the system are TSC, HPET, and ACPI_PM.

12.3. Viewing the clock source currently in use

The currently used clock source in your system is stored in the /sys/devices/system/clocksource/clocksource0/current_clocksource file.

Procedure

Display the current_clocksource file.

# cat /sys/devices/system/clocksource/clocksource0/current_clocksource
tsc

In this example, the current clock source in the system is TSC.

12.4. Temporarily changing the clock source to use

Sometimes the best-performing clock for a system’s main application is not used due to known problems on the clock. After ruling out all problematic clocks, the system can be left with a hardware clock that is unable to satisfy the minimum requirements of a real-time system.

Requirements for crucial applications vary on each system. Therefore, the best clock for each application, and consequently each system, also varies. Some applications depend on clock resolution, and a clock that delivers reliable nanosecond readings can be more suitable. Applications that read the clock very often can benefit from a clock with a smaller reading cost (the time between a read request and the result).

In these cases it is possible to override the clock selected by the kernel, provided that you understand the side effects of the override and can create an environment which will not trigger the known shortcomings of the given hardware clock.

The kernel automatically selects the best available clock source. Overriding the selected clock source is not recommended unless the implications are well understood.

Prerequisites

- You have root permissions on the system.

Procedure

View the available clock sources.

# cat /sys/devices/system/clocksource/clocksource0/available_clocksource
tsc hpet acpi_pm

In this example, the available clock sources in the system are TSC, HPET, and ACPI_PM.

Write the name of the clock source you want to use to the /sys/devices/system/clocksource/clocksource0/current_clocksource file.

# echo hpet > /sys/devices/system/clocksource/clocksource0/current_clocksource

Note: The change applies to the clock source currently in use. When the system reboots, the default clock is used. To make the change persistent, see Making persistent kernel tuning parameter changes.

Verification steps

Display the current_clocksource file to ensure that the current clock source is the specified clock source.

# cat /sys/devices/system/clocksource/clocksource0/current_clocksource
hpet

The example uses HPET as the current clock source in the system.
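The change and its verification can be combined in a script that refuses to write a name that is not listed in available_clocksource. The following Python sketch uses hypothetical helper names, and its base parameter defaults to the sysfs directory used in this procedure. Writing the real file requires root, and, as with the echo command, the change does not persist across reboots.

```python
import os

CLOCKSOURCE_DIR = "/sys/devices/system/clocksource/clocksource0"


def read_sysfs(path):
    """Return the stripped contents of a sysfs attribute file."""
    with open(path) as f:
        return f.read().strip()


def set_clocksource(name, base=CLOCKSOURCE_DIR):
    """Switch the current clock source to `name` and return the name read back.

    Raises ValueError if `name` is not listed in available_clocksource.
    """
    available = read_sysfs(os.path.join(base, "available_clocksource")).split()
    if name not in available:
        raise ValueError(f"{name!r} not in available clock sources {available}")
    with open(os.path.join(base, "current_clocksource"), "w") as f:
        f.write(name)
    # Read the attribute back, like the verification step with cat.
    return read_sysfs(os.path.join(base, "current_clocksource"))
```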

12.5. Comparing the cost of reading hardware clock sources

You can compare the speed of the clocks in your system. Reading from the TSC involves reading a register from the processor. Reading from the HPET clock involves reading a memory area. Reading from the TSC is faster, which provides a significant performance advantage when timestamping hundreds of thousands of messages per second.

Prerequisites

- You have root permissions on the system.

- The clock_timing program must be on the system. For more information, see the clock_timing program.

Procedure

1. Change to the directory in which the clock_timing program is saved.

# cd clock_test

2. View the available clock sources in your system.

# cat /sys/devices/system/clocksource/clocksource0/available_clocksource
tsc hpet acpi_pm

In this example, the available clock sources in the system are TSC, HPET, and ACPI_PM.

3. View the currently used clock source.

# cat /sys/devices/system/clocksource/clocksource0/current_clocksource
tsc

In this example, the current clock source in the system is TSC.

4. Run the time utility in conjunction with the ./clock_timing program. The output displays the duration required to read the clock source 10 million times.

# time ./clock_timing

real    0m0.601s
user    0m0.592s
sys     0m0.002s

The example shows the following parameters:

- real: The total time spent from program invocation until the process ends. real includes user and kernel times, and is usually larger than the sum of the latter two. If this process is interrupted by an application with higher priority, or by a system event such as a hardware interrupt (IRQ), this time spent waiting is also counted in real.
- user: The time the process spent in user space performing tasks that did not require kernel intervention.
- sys: The time spent by the kernel while performing tasks required by the user process. These tasks include opening files, reading and writing to files or I/O ports, memory allocation, thread creation, and network related activities.

5. Write the name of the next clock source you want to test to the /sys/devices/system/clocksource/clocksource0/current_clocksource file.

# echo hpet > /sys/devices/system/clocksource/clocksource0/current_clocksource

In this example, the current clock source is changed to HPET.

6. Repeat steps 4 and 5 for all of the available clock sources.
7. Compare the results of step 4 for all of the available clock sources.
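The repeat-and-compare loop in this procedure can be automated. The following Python sketch writes each available clock source name to current_clocksource, runs the benchmark command, records the elapsed wall-clock time (comparable to the real value reported by time), and restores the original clock source afterwards. The function name, its parameters, and the default command ./clock_timing are assumptions for illustration; against the real sysfs files it must run as root.

```python
import os
import subprocess
import time

CLOCKSOURCE_DIR = "/sys/devices/system/clocksource/clocksource0"


def compare_clocksources(cmd=("./clock_timing",), base=CLOCKSOURCE_DIR):
    """Return {clock source name: elapsed seconds} for running cmd under each source."""
    cur_path = os.path.join(base, "current_clocksource")
    with open(os.path.join(base, "available_clocksource")) as f:
        available = f.read().split()
    with open(cur_path) as f:
        original = f.read().strip()
    results = {}
    try:
        for name in available:
            with open(cur_path, "w") as f:
                f.write(name)
            start = time.perf_counter()
            subprocess.run(list(cmd), check=True)
            results[name] = time.perf_counter() - start
    finally:
        # Restore the clock source that was active before the comparison.
        with open(cur_path, "w") as f:
            f.write(original)
    return results
```

The elapsed time includes process startup, as the real value from time does, so compare the results relative to each other rather than as absolute read costs.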

Additional resources

-

time(1)man page

12.6. Synchronizing the TSC timer on Opteron CPUs

The current generation of AMD64 Opteron processors can be susceptible to a large gettimeofday skew. This skew occurs when both cpufreq and the Time Stamp Counter (TSC) are in use. RHEL for Real Time provides a method to prevent this skew by forcing all processors to simultaneously change to the same frequency. As a result, the TSC on a single processor never increments at a different rate than the TSC on another processor.

Prerequisites

- You have root permissions on the system.

Procedure

Enable the clocksource=tsc and powernow-k8.tscsync=1 kernel options:

# grubby --update-kernel=ALL --args="clocksource=tsc powernow-k8.tscsync=1"

This forces the use of TSC and enables simultaneous core processor frequency transitions.

- Restart the machine.

Additional resources

- gettimeofday(2) man page

12.7. The clock_timing program

The clock_timing program reads the current clock source 10 million times. In conjunction with the time utility it measures the amount of time needed to do this.

Procedure

To create the clock_timing program:

Create a directory for the program files.

$ mkdir clock_test

Change to the created directory.

$ cd clock_test

Create a source file and open it in a text editor.

$ ${EDITOR} clock_timing.c

Enter the following into the file:

#include <time.h>

int main(void)
{
    long i;
    struct timespec ts;

    /* Read the current clock source 10 million times. */
    for (i = 0; i < 10000000; i++)
        clock_gettime(CLOCK_MONOTONIC, &ts);

    return 0;
}

Save the file and exit the editor.

Compile the file.

$ gcc clock_timing.c -o clock_timing -lrt

The clock_timing program is ready and can be run from the directory in which it is saved.
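A rough Python analogue of clock_timing can use time.clock_gettime_ns() with time.CLOCK_MONOTONIC. Interpreter overhead per call is much larger than the cost of reading the TSC, so differences between clock sources are compressed compared to the C program; treat it as an illustration of the method rather than a replacement. The function name clock_read_cost_ns is hypothetical.

```python
import time


def clock_read_cost_ns(reads=1_000_000):
    """Read CLOCK_MONOTONIC `reads` times and return the average nanoseconds per read."""
    start = time.perf_counter_ns()
    for _ in range(reads):
        time.clock_gettime_ns(time.CLOCK_MONOTONIC)
    elapsed = time.perf_counter_ns() - start
    return elapsed / reads


if __name__ == "__main__":
    print(f"{clock_read_cost_ns():.1f} ns per read (including interpreter overhead)")
```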

Chapter 13. Controlling power management transitions

You can control power management transitions to improve latency.

Prerequisites

- You have root permissions on the system.

13.1. Power saving states

Modern processors actively transition to higher power saving states (C-states) from lower states. Unfortunately, transitioning from a high power saving state back to a running state can consume more time than is optimal for a real-time application. To prevent these transitions, an application can use the Power Management Quality of Service (PM QoS) interface.

With the PM QoS interface, the system can emulate the behavior of the idle=poll and processor.max_cstate=1 parameters, but with more fine-grained control of power saving states. idle=poll prevents the processor from entering the idle state. processor.max_cstate=1 prevents the processor from entering deeper C-states (energy-saving modes).

When an application holds the /dev/cpu_dma_latency file open, the PM QoS interface prevents the processor from entering deep sleep states, which cause unexpected latencies when the processor exits them. When the file is closed, the system returns to a power-saving state.

13.2. Configuring power management states

You can control power management transitions by configuring power management states in one of the following ways:

- Write a value to the /dev/cpu_dma_latency file to change the maximum response time for processes in microseconds, and hold the file descriptor open while low latency is required.
- Reference the /dev/cpu_dma_latency file in an application or a script.

Prerequisites

- You have administrator privileges.

Procedure

Specify latency tolerance by writing a 32-bit number that represents a maximum response time in microseconds to /dev/cpu_dma_latency, and keep the file descriptor open throughout the low-latency operation. A value of 0 disables C-states completely. For example:

```python
import os
import os.path
import signal
import sys

if not os.path.exists('/dev/cpu_dma_latency'):
    print("no PM QOS interface on this system!")
    sys.exit(1)

try:
    fd = os.open('/dev/cpu_dma_latency', os.O_WRONLY)
    # Four zero bytes: a 32-bit latency tolerance of 0 microseconds
    os.write(fd, b'\0\0\0\0')
    print("Press ^C to close /dev/cpu_dma_latency and exit")
    # Keep the file descriptor open until a signal arrives
    signal.pause()
except KeyboardInterrupt:
    print("closing /dev/cpu_dma_latency")
    os.close(fd)
    sys.exit(0)
```

Note

The Power Management Quality of Service (pm_qos) interface is only active while it has an open file descriptor. Therefore, any script or program you use to access /dev/cpu_dma_latency must hold the file open until power-state transitions are allowed.
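Because the request lasts only while the file descriptor is open, the open-write-hold pattern maps naturally onto a Python context manager. This is a minimal sketch, assuming Python 3 and that a native-endian 32-bit integer is an acceptable request format (the example above writes four zero bytes). The name `cpu_latency_request` is hypothetical, and the `path` parameter exists only so that the sketch can be exercised against an ordinary file.

```python
import os
import struct
from contextlib import contextmanager

CPU_DMA_LATENCY = "/dev/cpu_dma_latency"


@contextmanager
def cpu_latency_request(max_latency_us, path=CPU_DMA_LATENCY):
    """Hold a PM QoS latency request while the with-block runs.

    max_latency_us is the tolerated response time in microseconds;
    0 asks the kernel to keep the processor out of deeper C-states.
    """
    # The request is a signed 32-bit integer; reject values that do not fit.
    if not 0 <= max_latency_us <= 0x7FFFFFFF:
        raise ValueError("latency must be between 0 and 2**31 - 1 microseconds")
    fd = os.open(path, os.O_WRONLY)
    try:
        # "=i": native byte order, standard 4-byte integer (assumed to match
        # the binary format written by the example above).
        os.write(fd, struct.pack("=i", max_latency_us))
        yield fd
    finally:
        # Closing the descriptor drops the request.
        os.close(fd)
```

For example, wrapping only the time-critical part of an application in `with cpu_latency_request(0):` keeps deep C-states blocked while that block runs and releases the request automatically, even if the block raises an exception.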

Chapter 14. Minimizing system latency by isolating interrupts and user processes

Real-time environments need to minimize or eliminate latency when responding to various events. To do this, you can isolate interrupts (IRQs) and user processes from one another on different dedicated CPUs.

14.1. Interrupt and process binding

Isolating interrupts (IRQs) from user processes on different dedicated CPUs can minimize or eliminate latency in real-time environments.

Interrupts are generally shared evenly between CPUs. This can delay interrupt processing when a CPU has to load new data and instructions into its caches. These interrupt delays can cause conflicts with other processing being performed on the same CPU.

It is possible to allocate time-critical interrupts and processes to a specific CPU (or a range of CPUs). In this way, the code and data structures for processing this interrupt will most likely be in the processor's data and instruction caches. As a result, the dedicated process can run as quickly as possible, while all other non-time-critical processes run on the other CPUs. This can be particularly important where the speeds involved are near or at the limits of memory and available peripheral bus bandwidth. Any wait for memory to be fetched into processor caches will have a noticeable impact on overall processing time and determinism.

In practice, optimal performance is entirely application-specific. For example, when tuning applications with similar functions for different companies, each company required completely different tuning for optimal performance.

- One firm saw optimal results when they isolated 2 out of 4 CPUs for operating system functions and interrupt handling. The remaining 2 CPUs were dedicated purely for application handling.

- Another firm found optimal determinism when they bound the network-related application processes onto a single CPU that was handling the network device driver interrupt.

To bind a process to a CPU, you usually need to know the CPU mask for a given CPU or range of CPUs. The CPU mask is typically represented as a 32-bit bitmask, a decimal number, or a hexadecimal number, depending on the command you are using.

Table 14.1. Example of the CPU mask for given CPUs

| CPUs | Bitmask | Decimal | Hexadecimal |
|---|---|---|---|
| 0 | 00000000000000000000000000000001 | 1 | 0x00000001 |
| 0, 1 | 00000000000000000000000000000011 | 3 | 0x00000003 |
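Each representation in Table 14.1 follows from setting bit n of the mask for CPU n. The following Python sketch derives all three forms from a list of CPU numbers; the function names `cpu_mask` and `mask_representations` are illustrative and not part of any RHEL tool.

```python
def cpu_mask(cpus):
    """Return an integer mask with bit n set for every CPU n in cpus."""
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError(f"invalid CPU number: {cpu}")
        mask |= 1 << cpu
    return mask


def mask_representations(cpus, width=32):
    """Return the bitmask, decimal, and hexadecimal forms used in Table 14.1."""
    mask = cpu_mask(cpus)
    return {
        "bitmask": format(mask, f"0{width}b"),  # zero-padded binary string
        "decimal": mask,
        "hexadecimal": f"0x{mask:08x}",         # eight hex digits = 32 CPUs
    }


print(mask_representations([0, 1]))
```

For CPUs 0 and 1, this yields the decimal value 3 and the hexadecimal value 0x00000003, matching the second row of the table.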

14.2. Disabling the irqbalance daemon

The irqbalance daemon is enabled by default and periodically forces interrupts to be handled by CPUs in an even manner. However, in real-time deployments, irqbalance is not needed, because applications are typically bound to specific CPUs.

Procedure

Check the status of irqbalance.

```
# systemctl status irqbalance
irqbalance.service - irqbalance daemon
   Loaded: loaded (/usr/lib/systemd/system/irqbalance.service; enabled)
   Active: active (running) …
```

If irqbalance is running, disable it and stop it.

```
# systemctl disable irqbalance
# systemctl stop irqbalance
```

Verification

Check that the irqbalance status is inactive.

```
# systemctl status irqbalance
```

14.3. Excluding CPUs from IRQ balancing

You can use the IRQ balancing service to specify which CPUs you want to exclude from consideration for interrupt (IRQ) balancing. The IRQBALANCE_BANNED_CPUS parameter in the /etc/sysconfig/irqbalance configuration file controls these settings. The value of the parameter is a 64-bit hexadecimal bit mask, where each bit of the mask represents a CPU core.

Procedure

Open /etc/sysconfig/irqbalance in your preferred text editor and find the section of the file titled IRQBALANCE_BANNED_CPUS.

```
# IRQBALANCE_BANNED_CPUS
# 64 bit bitmask which allows you to indicate which cpu's should
# be skipped when reblancing irqs. Cpu numbers which have their
# corresponding bits set to one in this mask will not have any
# irq's assigned to them on rebalance
#
#IRQBALANCE_BANNED_CPUS=
```

- Uncomment the IRQBALANCE_BANNED_CPUS variable.

- Enter the appropriate bitmask to specify the CPUs to be ignored by the IRQ balance mechanism.

- Save and close the file.

Restart the irqbalance service for the changes to take effect:

```
# systemctl restart irqbalance
```

If you are running a system with more than 32 (and up to 64) CPU cores, separate each group of eight hexadecimal digits, which covers 32 CPUs, with a comma. For example, IRQBALANCE_BANNED_CPUS=00000001,0000ff00 excludes CPUs 8 to 15 and CPU 32.

Table 14.2. Examples

| CPUs | Bitmask |
|---|---|
| 0 | 00000001 |
| 8 - 15 | 0000ff00 |
| 8 - 15, 33 | 00000002,0000ff00 |
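The comma-separated value can also be generated from a list of CPU numbers. This sketch assumes that each comma-separated group holds 32 CPUs (eight hexadecimal digits) with the most significant group first, which matches the examples in Table 14.2; `irqbalance_banned_cpus` is a hypothetical helper name.

```python
def irqbalance_banned_cpus(cpus):
    """Build an IRQBALANCE_BANNED_CPUS value that excludes the given CPUs."""
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError(f"invalid CPU number: {cpu}")
        mask |= 1 << cpu
    groups = []
    while True:
        # Take the lowest 32 bits as one group of eight hex digits.
        groups.append(f"{mask & 0xFFFFFFFF:08x}")
        mask >>= 32
        if mask == 0:
            break
    # The most significant group is written first.
    return ",".join(reversed(groups))


print("IRQBALANCE_BANNED_CPUS=" + irqbalance_banned_cpus([*range(8, 16), 33]))
```

For CPUs 8 to 15 and 33, the sketch produces 00000002,0000ff00, the same value as the last row of the table.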

In RHEL 7.2 and higher, the irqbalance utility automatically avoids IRQs on CPU cores isolated via the isolcpus kernel parameter if IRQBALANCE_BANNED_CPUS is not set in /etc/sysconfig/irqbalance.

14.4. Manually assigning CPU affinity to individual IRQs

Assigning CPU affinity to an IRQ binds the handling of that interrupt to a specified CPU or range of CPUs. This can reduce caching problems.

Procedure

Check the IRQs in use by each device by viewing the /proc/interrupts file.

```
# cat /proc/interrupts
```

Each line shows the IRQ number, the number of interrupts that happened on each CPU, followed by the IRQ type and a description.

```
           CPU0       CPU1
  0:   26575949         11   IO-APIC-edge  timer
  1:         14          7   IO-APIC-edge  i8042
```

Write the CPU mask to the smp_affinity entry of a specific IRQ. The CPU mask must be expressed as a hexadecimal number.

For example, the following command instructs IRQ number 142 to run only on CPU 0.

```
# echo 1 > /proc/irq/142/smp_affinity
```

The change only takes effect when an interrupt occurs.
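The same write can be performed from a program. This is a minimal sketch: the helper `set_irq_affinity` and its `proc_root` parameter are hypothetical (the parameter only makes the function testable outside /proc), and it assumes every CPU number is below 32 so that a single hexadecimal group is enough.

```python
from pathlib import Path


def set_irq_affinity(irq, cpus, proc_root="/proc"):
    """Restrict an IRQ to the given CPUs through /proc/irq/IRQ/smp_affinity.

    Writing under the real /proc requires root privileges. This sketch only
    handles CPU numbers 0-31, so one hexadecimal group suffices.
    """
    mask = 0
    for cpu in cpus:
        if not 0 <= cpu < 32:
            raise ValueError(f"CPU {cpu} is outside the range this sketch handles")
        mask |= 1 << cpu
    if mask == 0:
        raise ValueError("at least one CPU is required")
    path = Path(proc_root) / "irq" / str(irq) / "smp_affinity"
    # Write the mask as a hexadecimal number, like `echo 1 > .../smp_affinity`.
    path.write_text(f"{mask:x}\n")
    # Return what the file reports now, for a quick sanity check.
    return path.read_text().strip()
```

Calling `set_irq_affinity(142, [0])` as root corresponds to the echo command above; on a real system, the kernel may report the mask back in its own zero-padded format.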

Verification steps

- Perform an activity that will trigger the specified interrupt.

Check /proc/interrupts for changes.

The number of interrupts on the specified CPU for the configured IRQ should have increased, and the number of interrupts for the configured IRQ on CPUs outside the specified affinity should not have increased.
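This check can be automated by comparing two snapshots of /proc/interrupts. The following sketch assumes the layout shown earlier in this procedure: a header row of CPU names, followed by one row per IRQ in which the per-CPU counters come first. The function names are hypothetical.

```python
def parse_interrupts(text):
    """Map each IRQ label in /proc/interrupts text to its per-CPU counters."""
    lines = text.strip().splitlines()
    ncpus = len(lines[0].split())  # header row: CPU0 CPU1 ...
    counts = {}
    for line in lines[1:]:
        label, _, rest = line.partition(":")
        numbers = []
        # Per-CPU counters come first; stop at the first non-numeric field
        # (rows such as ERR can have fewer counters than there are CPUs).
        for field in rest.split()[:ncpus]:
            if not field.isdigit():
                break
            numbers.append(int(field))
        counts[label.strip()] = numbers
    return counts


def affinity_respected(before, after, irq, allowed_cpus):
    """True if the IRQ count grew on an allowed CPU and on no other CPU."""
    delta = [a - b for b, a in zip(before[irq], after[irq])]
    grew_inside = any(delta[cpu] > 0 for cpu in allowed_cpus if cpu < len(delta))
    grew_outside = any(d > 0 for cpu, d in enumerate(delta) if cpu not in allowed_cpus)
    return grew_inside and not grew_outside
```

To use it, read /proc/interrupts into `before`, perform the activity that triggers the interrupt, read the file again into `after`, and call `affinity_respected(before, after, "142", {0})`.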

14.5. Binding processes to CPUs with the taskset utility

The taskset utility uses the process ID (PID) of a task to view or set its CPU affinity. You can use the utility to run a command with a chosen CPU affinity.

To set the affinity with a CPU mask, express the mask as a hexadecimal number; taskset reads the mask as hexadecimal, with or without a leading 0x. The mask argument is a bitmask that specifies which CPU cores are legal for the command or PID being modified.

The taskset utility works on a NUMA (Non-Uniform Memory Access) system, but it does not allow the user to bind threads to CPUs and the closest NUMA memory node. On such systems, taskset is not the preferred tool, and the numactl utility should be used instead for its advanced capabilities.

For more information, see the numactl(8) man page.

Procedure

Run taskset with the necessary options and arguments.

You can specify a CPU list using the -c parameter instead of a CPU mask. In this example, my_embedded_process is being instructed to run only on CPUs 0, 4, and 7 to 11.

```
# taskset -c 0,4,7-11 /usr/local/bin/my_embedded_process
```

This invocation is more convenient in most cases.

To start a new process with a specific CPU affinity, run taskset with the CPU mask and the command to run. In this example, my_embedded_process is being instructed to use only CPU 3 (mask 0x8, binary 1000).

```
# taskset 8 /usr/local/bin/my_embedded_process
```

You can specify more than one CPU in the bitmask. In this example, my_embedded_process is being instructed to execute on processors 4, 5, 6, and 7 (mask 0xF0).

```
# taskset 0xF0 /usr/local/bin/my_embedded_process
```

You can set the CPU affinity for processes that are already running by using the -p (--pid) option with the CPU mask and the PID of the process you want to change. In this example, the process with a PID of 7013 is being instructed to run only on CPU 0.

```
# taskset -p 1 7013
```

You can combine the listed options.
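On Linux, the Python standard library exposes the kernel's CPU affinity interface through `os.sched_setaffinity()` and `os.sched_getaffinity()`, which take a set of CPU numbers rather than a mask. This sketch uses the hypothetical helpers `parse_cpu_list` and `pin_process` to apply a taskset-style CPU list to an existing process; it handles only comma-separated CPU numbers and simple ranges.

```python
import os


def parse_cpu_list(spec):
    """Expand a CPU list such as "0,4,7-11" into a set of CPU numbers."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            first, last = (int(x) for x in part.split("-", 1))
            if first > last:
                raise ValueError(f"invalid range: {part}")
            cpus.update(range(first, last + 1))
        else:
            cpus.add(int(part))
    return cpus


def pin_process(pid, spec):
    """Set the CPU affinity of an existing process (pid 0 = the caller).

    Returns the affinity that the kernel reports afterwards.
    """
    os.sched_setaffinity(pid, parse_cpu_list(spec))
    return os.sched_getaffinity(pid)
```

For example, `pin_process(7013, "0")` has the same effect as `taskset -p 1 7013`. Changing the affinity of a process owned by another user requires root privileges.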

Additional resources

- taskset(1) man page

- numactl(8) man page

Chapter 15. Managing Out of Memory states

Out-of-memory (OOM) is a computing state where all available memory, including swap space, has been allocated. In this state, the system can panic or stop functioning as expected. The following procedures help you control how the system behaves in an OOM state.

Prerequisites

- You have root permissions on the system.

15.1. Changing the Out of Memory value

The /proc/sys/vm/panic_on_oom file contains a value that controls Out of Memory (OOM) behavior. When the file contains 1, the kernel panics on OOM and stops functioning as expected.

The default value is 0, which instructs the kernel to call the oom_killer() function when the system is in an OOM state. Usually, oom_killer() terminates unnecessary processes, which allows the system to survive.

You can change the value of /proc/sys/vm/panic_on_oom.

Procedure

Display the current value of /proc/sys/vm/panic_on_oom.

```
# cat /proc/sys/vm/panic_on_oom
0
```

To change the value in /proc/sys/vm/panic_on_oom, echo the new value to the file.

```
# echo 1 > /proc/sys/vm/panic_on_oom
```

It is recommended that you make the Real-Time kernel panic on OOM (1). Otherwise, when the system encounters an OOM state, it is no longer deterministic.

Verification steps

Display the value of /proc/sys/vm/panic_on_oom.

```
# cat /proc/sys/vm/panic_on_oom
1
```

- Verify that the displayed value matches the value specified.
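A script that applies this setting can combine the change and the verification step. This is a minimal sketch: `set_panic_on_oom` is a hypothetical helper, its `path` parameter exists only for testing, and it deliberately accepts only the values 0 and 1 described in this section. Like the echo command, the change does not persist across reboots.

```python
from pathlib import Path

PANIC_ON_OOM = "/proc/sys/vm/panic_on_oom"


def set_panic_on_oom(value, path=PANIC_ON_OOM):
    """Write panic_on_oom, read it back, and return the value now in effect."""
    # Only the two values described in this section are accepted here.
    if value not in (0, 1):
        raise ValueError("this sketch only handles 0 (call oom_killer) and 1 (panic)")
    knob = Path(path)
    knob.write_text(f"{value}\n")
    # Verification step: the value read back must match the value written.
    current = int(knob.read_text().strip())
    if current != value:
        raise RuntimeError(f"panic_on_oom reads {current}, expected {value}")
    return current
```

Run as root, `set_panic_on_oom(1)` corresponds to the echo command and the verification above.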

15.2. Prioritizing processes to kill when in an Out of Memory state

You can prioritize the processes that get terminated by the oom_killer() function. This can ensure that high-priority processes keep running during an OOM state. Each process has a directory, /proc/PID. Each directory includes the following files:

- oom_adj: Valid scores for oom_adj are in the range -16 to +15. This value is used to calculate the performance footprint of the process, using an algorithm that also takes into account how long the process has been running, among other factors.

- oom_score: Contains the result of the algorithm calculated using the value in oom_adj.

In an Out of Memory state, the oom_killer() function terminates processes with the highest oom_score.

You can prioritize the processes to terminate by editing the oom_adj file for the process.

Prerequisites

- Know the process ID (PID) of the process you want to prioritize.

Procedure

Display the current oom_score for a process.

```
# cat /proc/12465/oom_score
79872
```

Display the contents of oom_adj for the process.

```
# cat /proc/12465/oom_adj
13
```

Edit the value in oom_adj.

```
# echo -5 > /proc/12465/oom_adj
```

Verification steps

Display the current oom_score for the process.

```
# cat /proc/12465/oom_score
78
```

- Verify that the displayed value is lower than the previous value.

15.3. Disabling the Out of Memory killer for a process

You can disable the oom_killer() function for a process by setting oom_adj to the reserved value of -17. This will keep the process alive, even in an OOM state.

Procedure

Set the value in oom_adj to -17.

```
# echo -17 > /proc/12465/oom_adj
```

Verification steps

Display the current oom_score for the process.

```
# cat /proc/12465/oom_score
0
```

- Verify that the displayed value is 0.
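The oom_adj procedures in this chapter can be combined in a small helper that validates the value, writes it, and reports oom_score before and after the change. This is a minimal sketch: `adjust_oom` and its `proc_root` parameter are hypothetical, and the range check follows the values given in this chapter (-16 to +15, plus the reserved value -17).

```python
from pathlib import Path

OOM_DISABLE = -17  # reserved value that exempts a process from oom_killer()


def adjust_oom(pid, adj, proc_root="/proc"):
    """Set oom_adj for a process and return (old_score, new_score).

    Lowering the value of a real process typically requires root privileges.
    """
    if not (adj == OOM_DISABLE or -16 <= adj <= 15):
        raise ValueError(f"oom_adj out of range: {adj}")
    proc = Path(proc_root) / str(pid)
    old_score = int((proc / "oom_score").read_text())
    (proc / "oom_adj").write_text(f"{adj}\n")
    # The kernel recalculates oom_score; re-read it for verification.
    new_score = int((proc / "oom_score").read_text())
    return old_score, new_score
```

For example, `adjust_oom(12465, -5)` mirrors the steps in Section 15.2, and `adjust_oom(12465, OOM_DISABLE)` mirrors Section 15.3; in both cases, compare the returned scores as in the verification steps.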

Chapter 16. Improving latency using the tuna CLI

You can use the tuna CLI to improve latency on your system. The options used with the tuna command determine the method invoked to improve latency. By using the tuna CLI, you can perform the following functions:

- Modify the scheduler tunables

- Tune IRQ handlers and thread priorities

- Isolate CPU cores and sockets

- Reduce the complexity of tuning tasks

16.1. Prerequisites

- The tuna and python-linux-procfs packages are installed.

- You have root permissions on the system.

16.2. The tuna CLI

The tuna command-line interface (CLI) is a tool to help you make tuning changes to your system.