Chapter 1. Overview of available storage options

There are several local, remote, and cluster-based storage options available on RHEL 8.

Local storage implies that the storage devices are either installed on the system or directly attached to the system.

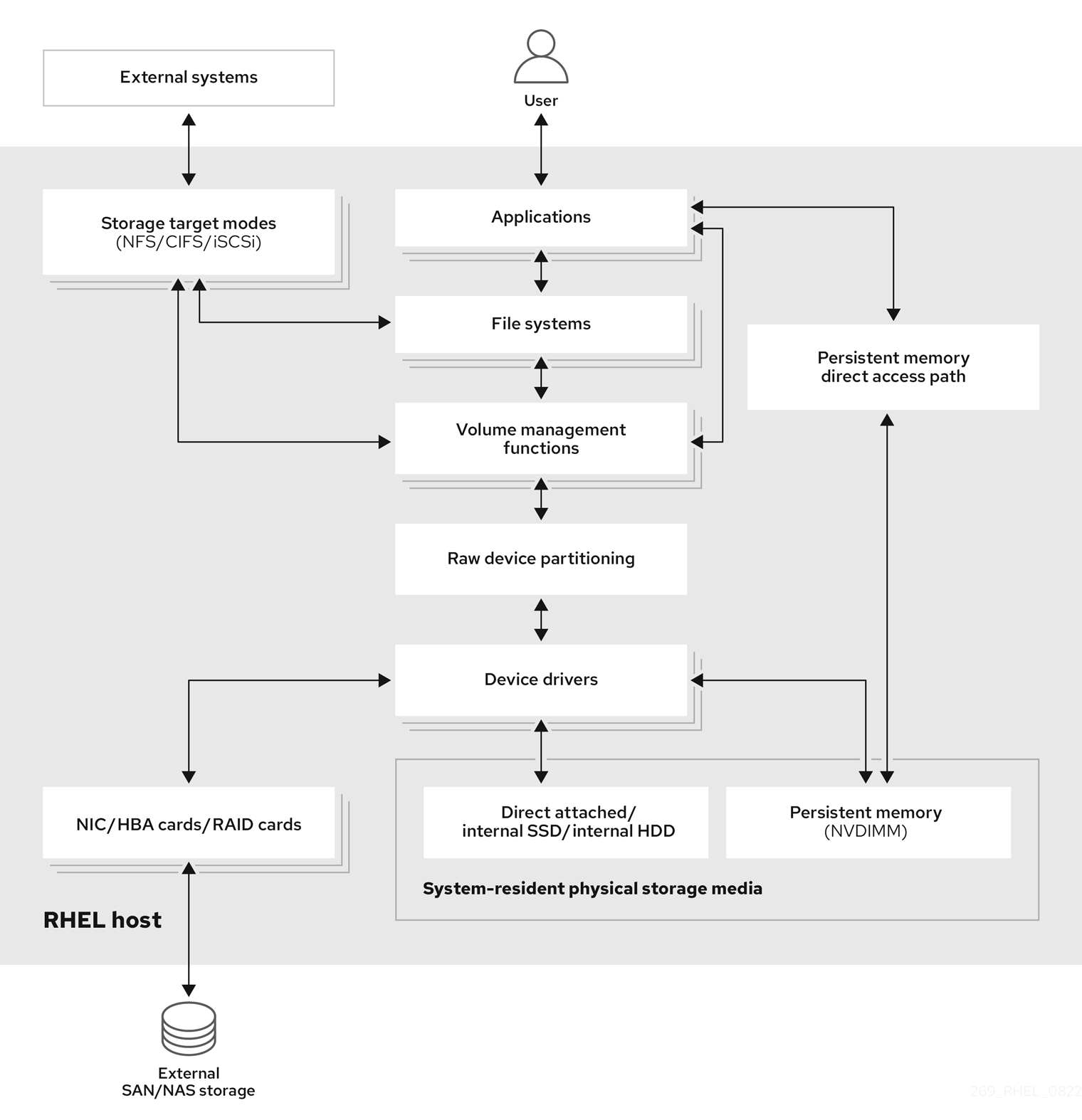

With remote storage, devices are accessed over LAN, the internet, or using a Fibre channel network. The following high level Red Hat Enterprise Linux storage diagram describes the different storage options.

Figure 1.1. High level Red Hat Enterprise Linux storage diagram

1.1. Local storage overview

Red Hat Enterprise Linux 8 offers several local storage options.

- Basic disk administration

Using parted and fdisk, you can create, modify, delete, and view disk partitions. The following are the partitioning layout standards:

- Master Boot Record (MBR)

- It is used with BIOS-based computers. You can create primary, extended, and logical partitions.

- GUID Partition Table (GPT)

- It uses globally unique identifiers (GUIDs) and provides a unique disk GUID and unique partition GUIDs.

To encrypt a partition, you can use Linux Unified Key Setup-on-disk-format (LUKS). Select the encryption option during the installation; a prompt then asks you to enter a passphrase. This passphrase unlocks the encryption key.
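As a rough illustration of how the MBR and GPT layouts differ on disk, the following Python sketch inspects the first two sectors of a disk image. It assumes 512-byte logical sectors and relies on two well-known markers: the `0x55 0xAA` boot signature at the end of sector 0, and the ASCII signature `EFI PART` at the start of the GPT header in sector 1. The function and helper names are hypothetical and exist only for this example.

```python
SECTOR_SIZE = 512  # assumption: 512-byte logical sectors


def detect_partition_table(image: bytes) -> str:
    """Return 'gpt', 'mbr', or 'unknown' for the leading bytes of a disk."""
    if len(image) < SECTOR_SIZE:
        return "unknown"
    # A GPT disk keeps its header in sector 1, starting with "EFI PART".
    if image[SECTOR_SIZE:SECTOR_SIZE + 8] == b"EFI PART":
        return "gpt"
    # An MBR disk ends sector 0 with the 0x55 0xAA boot signature.
    if image[510:512] == b"\x55\xaa":
        return "mbr"
    return "unknown"


def make_mbr_image() -> bytes:
    """Build a minimal in-memory sector 0 that carries only the boot signature."""
    sector0 = bytearray(SECTOR_SIZE)
    sector0[510:512] = b"\x55\xaa"
    return bytes(sector0)


def make_gpt_image() -> bytes:
    """Build a minimal protective MBR followed by a GPT header signature."""
    sector0 = bytearray(make_mbr_image())
    sector0[450] = 0xEE  # partition type of the protective MBR entry
    sector1 = bytearray(SECTOR_SIZE)
    sector1[0:8] = b"EFI PART"
    return bytes(sector0) + bytes(sector1)
```

On a real system, the same check could be run against the first 1024 bytes read from a block device, which typically requires root privileges.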

- Storage consumption options

- Non-Volatile Dual In-line Memory Modules (NVDIMM) Management

- It is a combination of memory and storage. You can enable and manage various types of storage on NVDIMM devices connected to your system.

- Block Storage Management

- Data is stored in the form of blocks where each block has a unique identifier.

- File Storage

- Data is stored at file level on the local system. These data can be accessed locally using XFS (default) or ext4, and over a network by using NFS and SMB.

- Logical volumes

- Logical Volume Manager (LVM)

It creates logical devices from physical devices. A logical volume (LV) is built from physical volumes (PV) that are grouped into volume groups (VG). Configuring LVM includes:

- Creating PV from the hard drives.

- Creating VG from the PV.

- Creating LV from the VG and assigning mount points to the LV.

- Virtual Data Optimizer (VDO)

It is used for data reduction by using deduplication, compression, and thin provisioning. Using LV below VDO helps in:

- Extending the VDO volume

- Spanning the VDO volume over multiple devices

- Local file systems

- XFS

- The default RHEL file system.

- Ext4

- A legacy file system.

- Stratis

- It is available as a Technology Preview. Stratis is a hybrid user-and-kernel local storage management system that supports advanced storage features.
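The LVM configuration steps listed earlier in this section (PV, then VG, then LV with a mount point) can be sketched as a command sequence. The following Python example only builds the commands and does not run them. The device names, volume names, size, and mount point are placeholders, and the `mkfs.xfs` step is an assumption added here because a file system is needed before the LV can be mounted.

```python
import shlex


def lvm_setup_commands(disks, vg_name, lv_name, size, mount_point):
    """Return the command sequence for a basic PV -> VG -> LV setup."""
    lv_path = f"/dev/{vg_name}/{lv_name}"
    return [
        ["pvcreate", *disks],                              # create PVs from the disks
        ["vgcreate", vg_name, *disks],                     # create a VG from the PVs
        ["lvcreate", "-n", lv_name, "-L", size, vg_name],  # create an LV from the VG
        ["mkfs.xfs", lv_path],                             # assumption: format with XFS
        ["mount", lv_path, mount_point],                   # assign a mount point
    ]


if __name__ == "__main__":
    # Placeholder values; review the output before running anything as root.
    commands = lvm_setup_commands(
        ["/dev/sdb", "/dev/sdc"], "vg_data", "lv_data", "10G", "/mnt/data"
    )
    for command in commands:
        print(shlex.join(command))
```

Printing the commands first makes it possible to check them before they touch real devices, because `pvcreate` and `mkfs.xfs` destroy existing data on their targets.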

1.2. Remote storage overview

The following are the remote storage options available in RHEL 8:

- Storage connectivity options

- iSCSI

- RHEL 8 uses the targetcli tool to add, remove, view, and monitor iSCSI storage interconnects.

- Fibre Channel (FC)

RHEL 8 provides the following native Fibre Channel drivers:

- lpfc

- qla2xxx

- zfcp

- Non-volatile Memory Express (NVMe)

An interface that allows host software utilities to communicate with solid-state drives. Use the following types of fabric transport to configure NVMe over fabrics:

- NVMe over fabrics using Remote Direct Memory Access (RDMA).

- NVMe over fabrics using Fibre Channel (FC).

- Device Mapper multipathing (DM Multipath)

- Allows you to configure multiple I/O paths between server nodes and storage arrays into a single device. These I/O paths are physical SAN connections that can include separate cables, switches, and controllers.

- Network file system

- NFS

- SMB
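To check which of the native Fibre Channel drivers named above (lpfc, qla2xxx, zfcp) are currently loaded as kernel modules, one option is to parse the contents of `/proc/modules`, where each line starts with a module name. The following Python sketch works on text passed in by the caller; the function names are hypothetical. Note that a driver built directly into the kernel would not appear in that file.

```python
FC_DRIVERS = ("lpfc", "qla2xxx", "zfcp")


def loaded_modules(proc_modules_text: str) -> set:
    """Collect module names from text in the /proc/modules format."""
    names = set()
    for line in proc_modules_text.splitlines():
        fields = line.split()
        if fields:
            names.add(fields[0])  # the first field is the module name
    return names


def loaded_fc_drivers(proc_modules_text: str) -> list:
    """Return the Fibre Channel drivers that appear in the module list."""
    loaded = loaded_modules(proc_modules_text)
    return [name for name in FC_DRIVERS if name in loaded]


if __name__ == "__main__":
    with open("/proc/modules", encoding="ascii") as handle:
        print(loaded_fc_drivers(handle.read()))
```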

1.3. GFS2 file system overview

The Red Hat Global File System 2 (GFS2) file system is a 64-bit symmetric cluster file system which provides a shared name space and manages coherency between multiple nodes sharing a common block device. A GFS2 file system is intended to provide a feature set which is as close as possible to a local file system, while at the same time enforcing full cluster coherency between nodes. To achieve this, the nodes employ a cluster-wide locking scheme for file system resources. This locking scheme uses communication protocols such as TCP/IP to exchange locking information.

In a few cases, the Linux file system API does not allow the clustered nature of GFS2 to be totally transparent. For example, programs using POSIX locks in GFS2 should avoid using the GETLK function because, in a clustered environment, the returned process ID may belong to a process on a different node in the cluster. In most cases, however, the functionality of a GFS2 file system is identical to that of a local file system.
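One possible way to avoid querying the lock holder with GETLK is to attempt a non-blocking lock and act on success or failure. This pattern is a suggestion for illustration, not something the GFS2 documentation prescribes. The Python sketch below uses `fcntl.lockf`, which wraps the POSIX `fcntl()` locking calls; the helper names are hypothetical, and the file must be open for writing to take an exclusive lock.

```python
import fcntl


def try_exclusive_lock(fd: int) -> bool:
    """Try to take a POSIX write lock without blocking; True on success."""
    try:
        fcntl.lockf(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
        return True
    except OSError:
        # A conflicting lock is held by another process, which on a
        # cluster file system may be running on a different node.
        return False


def release_lock(fd: int) -> None:
    """Release a lock previously taken on this file descriptor."""
    fcntl.lockf(fd, fcntl.LOCK_UN)
```

Because the caller never asks which process ID holds the lock, the ambiguity of a process ID that belongs to another node does not arise.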

The Red Hat Enterprise Linux Resilient Storage Add-On provides GFS2, and it depends on the Red Hat Enterprise Linux High Availability Add-On to provide the cluster management required by GFS2.

The gfs2.ko kernel module implements the GFS2 file system and is loaded on GFS2 cluster nodes.

To get the best performance from GFS2, it is important to take into account the performance considerations which stem from the underlying design. Just like a local file system, GFS2 relies on the page cache in order to improve performance by local caching of frequently used data. In order to maintain coherency across the nodes in the cluster, cache control is provided by the glock state machine.

Additional resources