Configuring and managing virtualization

Setting up your host, creating and administering virtual machines, and understanding virtualization features in Red Hat Enterprise Linux 8

Abstract

- What the capabilities and use cases of virtualization are

- How to manage your host and your virtual machines by using command-line utilities, as well as by using the web console

- What the support limitations of virtualization are on various system architectures, such as Intel 64, AMD64, IBM POWER, and IBM Z

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Introducing virtualization in RHEL

If you are unfamiliar with the concept of virtualization or its implementation in Linux, the following sections provide a general overview of virtualization in RHEL 8: its basics, advantages, components, and other possible virtualization solutions provided by Red Hat.

1.1. What is virtualization?

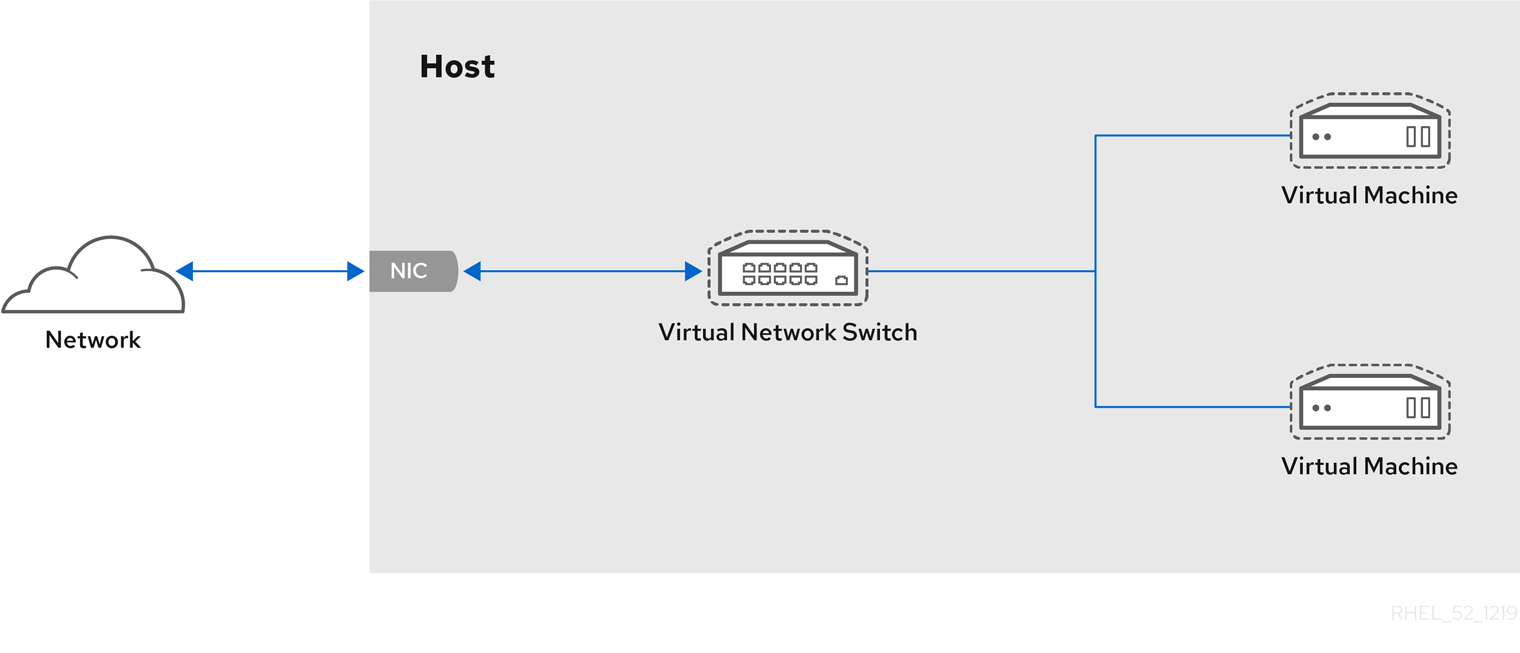

RHEL 8 provides virtualization functionality, which enables a machine running RHEL 8 to host multiple virtual machines (VMs), also referred to as guests. VMs use the host’s physical hardware and computing resources to run a separate, virtualized operating system (guest OS) as a user-space process on the host’s operating system.

In other words, virtualization makes it possible to have operating systems within operating systems.

VMs enable you to safely test software configurations and features, run legacy software, or optimize the workload efficiency of your hardware. For more information about the benefits, see Advantages of virtualization.

For more information about what virtualization is, see the Virtualization topic page.

Next steps

- To try out virtualization in Red Hat Enterprise Linux 8, see Getting started with virtualization.

- In addition to Red Hat Enterprise Linux 8 virtualization, Red Hat offers a number of specialized virtualization solutions, each with a different user focus and features. For more information, see Red Hat virtualization solutions.

1.2. Advantages of virtualization

Using virtual machines (VMs) has the following benefits in comparison to using physical machines:

Flexible and fine-grained allocation of resources

A VM runs on a host machine, which is usually physical, and physical hardware can also be assigned for the guest OS to use. However, the allocation of physical resources to the VM is done on the software level, and is therefore very flexible. A VM uses a configurable fraction of the host memory, CPUs, or storage space, and that configuration can specify very fine-grained resource requests.

For example, what the guest OS sees as its disk can be represented as a file on the host file system, and the size of that disk is less constrained than the available sizes for physical disks.

Software-controlled configurations

The entire configuration of a VM is saved as data on the host, and is under software control. Therefore, a VM can easily be created, removed, cloned, migrated, operated remotely, or connected to remote storage.

Separation from the host

A guest OS runs on a virtualized kernel, separate from the host OS. This means that any OS can be installed on a VM, and even if the guest OS becomes unstable or is compromised, the host is not affected in any way.

Space and cost efficiency

A single physical machine can host a large number of VMs. Therefore, it avoids the need for multiple physical machines to do the same tasks, and thus lowers the space, power, and maintenance requirements associated with physical hardware.

Software compatibility

Because a VM can use a different OS than its host, virtualization makes it possible to run applications that were not originally released for your host OS. For example, using a RHEL 7 guest OS, you can run applications released for RHEL 7 on a RHEL 8 host system.

Note: Not all operating systems are supported as a guest OS in a RHEL 8 host. For details, see Recommended features in RHEL 8 virtualization.

1.3. Virtual machine components and their interaction

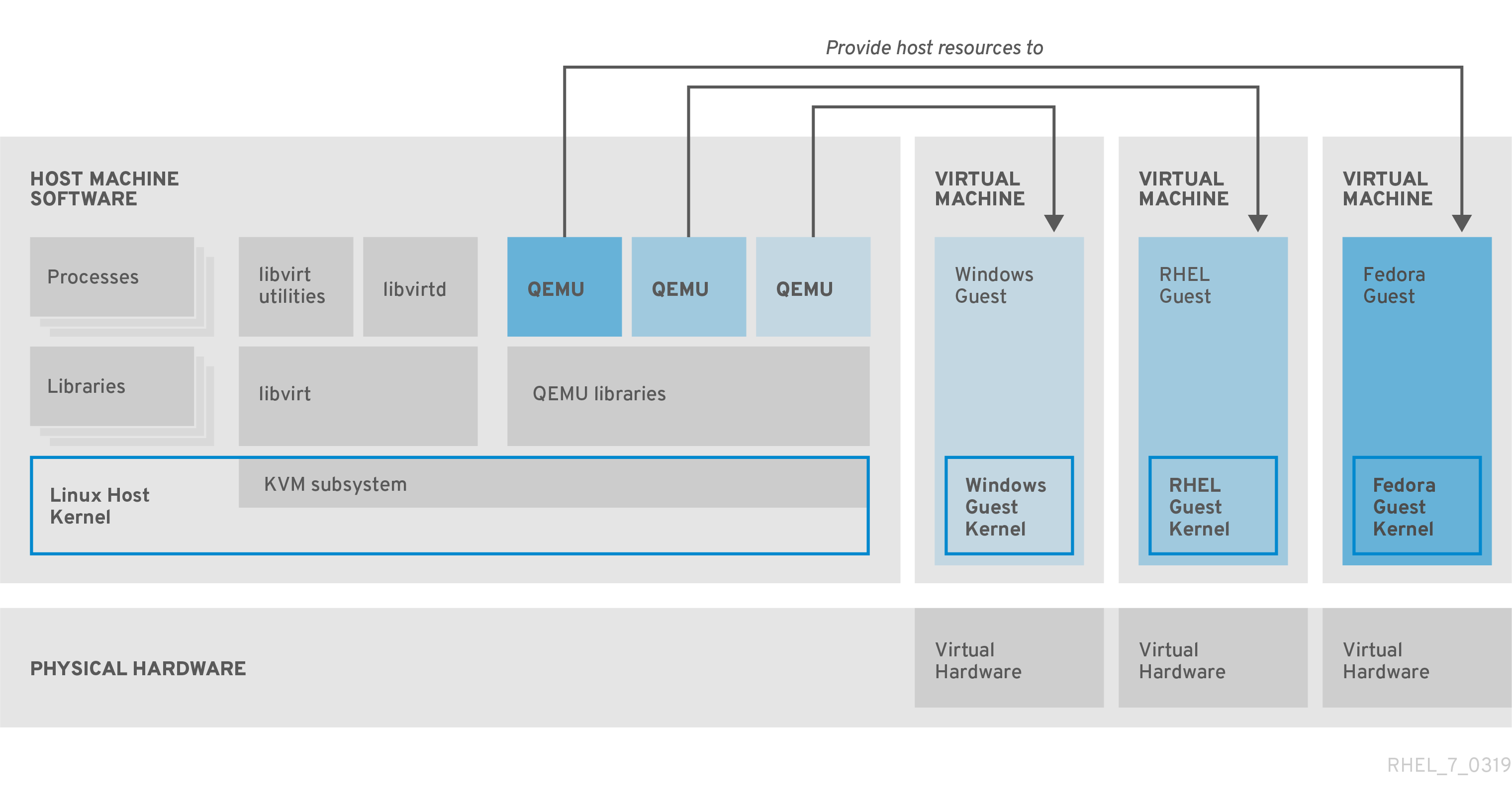

Virtualization in RHEL 8 consists of the following principal software components:

Hypervisor

The basis of creating virtual machines (VMs) in RHEL 8 is the hypervisor, a software layer that controls hardware and enables running multiple operating systems on a host machine.

The hypervisor includes the Kernel-based Virtual Machine (KVM) module and virtualization kernel drivers. These components ensure that the Linux kernel on the host machine provides resources for virtualization to user-space software.

At the user-space level, the QEMU emulator simulates a complete virtualized hardware platform that the guest operating system can run in, and manages how resources are allocated on the host and presented to the guest.

In addition, the libvirt software suite serves as a management and communication layer, making QEMU easier to interact with, enforcing security rules, and providing a number of additional tools for configuring and running VMs.

XML configuration

A host-based XML configuration file (also known as a domain XML file) determines all settings and devices in a specific VM. The configuration includes:

- Metadata such as the name of the VM, time zone, and other information about the VM.

- A description of the devices in the VM, including virtual CPUs (vCPUs), storage devices, input/output devices, network interface cards, and other hardware, real and virtual.

- VM settings such as the maximum amount of memory it can use, restart settings, and other settings about the behavior of the VM.

For more information about the contents of an XML configuration, see Sample virtual machine XML configuration.

Component interaction

When a VM is started, the hypervisor uses the XML configuration to create an instance of the VM as a user-space process on the host. The hypervisor also makes the VM process accessible to the host-based interfaces, such as the virsh, virt-install, and guestfish utilities, or the web console GUI.

When these virtualization tools are used, libvirt translates their input into instructions for QEMU. QEMU communicates the instructions to KVM, which ensures that the kernel appropriately assigns the resources necessary to carry out the instructions. As a result, QEMU can execute the corresponding user-space changes, such as creating or modifying a VM, or performing an action in the VM’s guest operating system.

While QEMU is an essential component of the architecture, it is not intended to be used directly on RHEL 8 systems, due to security concerns. Therefore, qemu-* commands are not supported by Red Hat, and it is highly recommended to interact with QEMU by using libvirt.

For more information about the host-based interfaces, see Tools and interfaces for virtualization management.

Figure 1.1. RHEL 8 virtualization architecture

1.4. Tools and interfaces for virtualization management

You can manage virtualization in RHEL 8 by using the command-line interface (CLI) or several graphical user interfaces (GUIs).

Command-line interface

The CLI is the most powerful method of managing virtualization in RHEL 8. Prominent CLI commands for virtual machine (VM) management include:

- virsh - A versatile virtualization command-line utility and shell with a great variety of purposes, depending on the provided arguments. For example:

  - Starting and shutting down a VM - virsh start and virsh shutdown

  - Listing available VMs - virsh list

  - Creating a VM from a configuration file - virsh create

  - Entering a virtualization shell - virsh

  For more information, see the virsh(1) man page.

- virt-install - A CLI utility for creating new VMs. For more information, see the virt-install(1) man page.

- virt-xml - A utility for editing the configuration of a VM.

- guestfish - A utility for examining and modifying VM disk images. For more information, see the guestfish(1) man page.

Graphical interfaces

You can use the following GUIs to manage virtualization in RHEL 8:

The RHEL 8 web console, also known as Cockpit, provides a remotely accessible and easy to use graphical user interface for managing VMs and virtualization hosts.

For instructions on basic virtualization management with the web console, see Managing virtual machines in the web console.

The Virtual Machine Manager (virt-manager) application provides a specialized GUI for managing VMs and virtualization hosts.

Important: Although still supported in RHEL 8, virt-manager has been deprecated. The web console is intended to become its replacement in a subsequent release. It is, therefore, recommended that you get familiar with the web console for managing virtualization in a GUI.

However, in RHEL 8, some features may only be accessible from either virt-manager or the command line. For details, see Differences between virtualization features in Virtual Machine Manager and the web console.

The Gnome Boxes application is a lightweight graphical interface to view and access VMs and remote systems. Gnome Boxes is primarily designed for use on desktop systems.

Important: Gnome Boxes is provided as a part of the GNOME desktop environment and is supported on RHEL 8, but Red Hat recommends that you use the web console for managing virtualization in a GUI.

1.5. Red Hat virtualization solutions

The following Red Hat products are built on top of RHEL 8 virtualization features and expand the KVM virtualization capabilities available in RHEL 8. In addition, many limitations of RHEL 8 virtualization do not apply to these products:

- OpenShift Virtualization

Based on the KubeVirt technology, OpenShift Virtualization is a part of the Red Hat OpenShift Container Platform, and makes it possible to run virtual machines in containers.

For more information about OpenShift Virtualization, see the Red Hat Hybrid Cloud pages.

- Red Hat OpenStack Platform (RHOSP)

Red Hat OpenStack Platform offers an integrated foundation to create, deploy, and scale a secure and reliable public or private OpenStack cloud.

For more information about Red Hat OpenStack Platform, see the Red Hat Customer Portal or the Red Hat OpenStack Platform documentation suite.

For details on virtualization features that are not supported in RHEL but are supported in other Red Hat virtualization solutions, see Unsupported features in RHEL 8 virtualization.

Chapter 2. Getting started with virtualization

To start using virtualization in RHEL 8, follow the steps below. The default method for this is using the command-line interface (CLI), but for user convenience, some of the steps can be completed in the web console GUI.

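- Enable the virtualization module and install the virtualization packages - see Enabling virtualization.

- Create a virtual machine (VM):

  - For CLI, see Creating virtual machines by using the command-line interface.

  - For GUI, see Creating virtual machines and installing guest operating systems by using the web console.

- Start the VM:

  - For CLI, see Starting a virtual machine by using the command-line interface.

  - For GUI, see Starting virtual machines by using the web console.

- Connect to the VM - see Connecting to virtual machines.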

The web console currently provides only a subset of VM management functions, so using the command line is recommended for advanced use of virtualization in RHEL 8.

2.1. Enabling virtualization

To use virtualization in RHEL 8, you must enable the virtualization module, install virtualization packages, and ensure your system is configured to host virtual machines (VMs).

Prerequisites

- RHEL 8 is installed and registered on your host machine.

Your system meets the following hardware requirements to work as a virtualization host:

The following minimum system resources are available:

- 6 GB free disk space for the host, plus another 6 GB for each intended VM.

- 2 GB of RAM for the host, plus another 2 GB for each intended VM.

- 4 CPUs on the host. VMs can generally run with a single assigned vCPU, but Red Hat recommends assigning 2 or more vCPUs per VM to avoid VMs becoming unresponsive during high load.

The architecture of your host machine supports KVM virtualization.

- Notably, RHEL 8 does not support virtualization on the 64-bit ARM architecture (ARM 64).

The procedure below applies to the AMD64 and Intel 64 architecture (x86_64). To enable virtualization on a host with a different supported architecture, such as IBM POWER or IBM Z, see the section of this document that covers that architecture.
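The minimum disk and RAM figures in the prerequisites scale linearly with the number of VMs you intend to run. As a rough planning aid, the following shell sketch adds them up. The min_host_resources function name is hypothetical and not part of any RHEL tool, and the result is only the documented minimum: real workloads may need considerably more.

```shell
#!/bin/sh
# Hypothetical helper: estimate the minimum host resources for a given
# number of VMs, using the figures from the prerequisites:
#   disk: 6 GB for the host + 6 GB per VM
#   RAM:  2 GB for the host + 2 GB per VM
#   CPUs: 4 on the host
min_host_resources() {
    vms="$1"
    disk_gb=$((6 + 6 * vms))
    ram_gb=$((2 + 2 * vms))
    echo "disk_gb=${disk_gb} ram_gb=${ram_gb} cpus=4"
}

# Example: minimum resources for a host that runs 3 VMs.
min_host_resources 3
```

For 3 VMs, this works out to 24 GB of free disk space and 8 GB of RAM on a host with 4 CPUs.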

Procedure

Install the packages in the RHEL 8 virtualization module:

    # yum module install virt

Install the virt-install and virt-viewer packages:

    # yum install virt-install virt-viewer

Start the libvirtd service:

    # systemctl start libvirtd

Verification

Verify that your system is prepared to be a virtualization host:

    # virt-host-validate
    [...]
    QEMU: Checking for device assignment IOMMU support : PASS
    QEMU: Checking if IOMMU is enabled by kernel : WARN (IOMMU appears to be disabled in kernel. Add intel_iommu=on to kernel cmdline arguments)
    LXC: Checking for Linux >= 2.6.26 : PASS
    [...]
    LXC: Checking for cgroup 'blkio' controller mount-point : PASS
    LXC: Checking if device /sys/fs/fuse/connections exists : FAIL (Load the 'fuse' module to enable /proc/ overrides)

Review the return values of virt-host-validate checks and take appropriate actions:

- If all virt-host-validate checks return the PASS value, your system is prepared for creating VMs.

- If any of the checks return a FAIL value, follow the displayed instructions to fix the problem.

- If any of the checks return a WARN value, consider following the displayed instructions to improve virtualization capabilities.
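To get a quick overview of a long virt-host-validate report, you could tally the results by status. The following is a minimal sketch, not part of the RHEL tooling: the summarize_validate function name is hypothetical, and the pattern matching assumes that each check line contains ": PASS", ": WARN", or ": FAIL", as in the sample output in this section.

```shell
#!/bin/sh
# Hypothetical helper: count PASS, WARN, and FAIL results in
# virt-host-validate-style output read from standard input.
summarize_validate() {
    awk '
        /: PASS/ { pass++ }
        /: WARN/ { warn++ }
        /: FAIL/ { fail++ }
        END { printf "PASS=%d WARN=%d FAIL=%d\n", pass, warn, fail }
    '
}

# Sample lines taken from the verification output in this section.
# On a real host, you would instead run:
#   virt-host-validate | summarize_validate
summarize_validate <<'EOF'
QEMU: Checking for device assignment IOMMU support : PASS
QEMU: Checking if IOMMU is enabled by kernel : WARN (IOMMU appears to be disabled in kernel. Add intel_iommu=on to kernel cmdline arguments)
LXC: Checking for Linux >= 2.6.26 : PASS
LXC: Checking for cgroup 'blkio' controller mount-point : PASS
LXC: Checking if device /sys/fs/fuse/connections exists : FAIL (Load the 'fuse' module to enable /proc/ overrides)
EOF
```

A non-zero FAIL count means that at least one displayed instruction must be followed before the host can be used for VMs. A non-zero WARN count only points to optional improvements.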

Troubleshooting

If KVM virtualization is not supported by your host CPU, virt-host-validate generates the following output:

    QEMU: Checking for hardware virtualization: FAIL (Only emulated CPUs are available, performance will be significantly limited)

However, VMs on such a host system will fail to boot, rather than have performance problems.

To work around this, you can change the <domain type> value in the XML configuration of the VM to qemu. Note, however, that Red Hat does not support VMs that use the qemu domain type, and setting this is highly discouraged in production environments.

2.2. Creating virtual machines

To create a virtual machine (VM) in RHEL 8, use the command-line interface or the RHEL 8 web console.

2.2.1. Creating virtual machines by using the command-line interface

To create a virtual machine (VM) on your RHEL 8 host by using the virt-install utility, follow the instructions below.

Prerequisites

- Virtualization is enabled on your host system.

- You have a sufficient amount of system resources to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values may vary significantly depending on the intended tasks and workload of the VMs.

An operating system (OS) installation source is available locally or on a network. This can be one of the following:

- An ISO image of an installation medium

A disk image of an existing VM installation

Warning: Installing from a host CD-ROM or DVD-ROM device is not possible in RHEL 8. If you select a CD-ROM or DVD-ROM as the installation source when using any VM installation method available in RHEL 8, the installation will fail. For more information, see the Red Hat Knowledgebase.

Also note that Red Hat provides support only for a limited set of guest operating systems.

- Optional: A Kickstart file can be provided for faster and easier configuration of the installation.

Procedure

To create a VM and start its OS installation, use the virt-install command, along with the following mandatory arguments:

- --name: the name of the new machine

- --memory: the amount of allocated memory

- --vcpus: the number of allocated virtual CPUs

- --disk: the type and size of the allocated storage

- --cdrom or --location: the type and location of the OS installation source

Based on the chosen installation method, the necessary options and values can vary. See the commands below for examples:

The listed commands use the VNC remote display protocol instead of the default SPICE protocol. VNC currently does not have some of the features that SPICE does, but is fully supported on RHEL 9. As a result, VMs that use VNC will not stop working if you migrate your host to RHEL 9. For more information, see Considerations in adopting RHEL 9.

The following command creates a VM named demo-guest1 that installs the Windows 10 OS from an ISO image locally stored in the /home/username/Downloads/Win10install.iso file. This VM is also allocated with 2048 MiB of RAM and 2 vCPUs, and an 80 GiB qcow2 virtual disk is automatically configured for the VM.

    # virt-install \
        --graphics vnc \
        --name demo-guest1 --memory 2048 \
        --vcpus 2 --disk size=80 --os-variant win10 \
        --cdrom /home/username/Downloads/Win10install.iso

The following command creates a VM named demo-guest2 that uses the /home/username/Downloads/rhel8.iso image to run a RHEL 8 OS from a live CD. No disk space is assigned to this VM, so changes made during the session will not be preserved. In addition, the VM is allocated with 4096 MiB of RAM and 4 vCPUs.

    # virt-install \
        --graphics vnc \
        --name demo-guest2 --memory 4096 --vcpus 4 \
        --disk none --livecd --os-variant rhel8.0 \
        --cdrom /home/username/Downloads/rhel8.iso

The following command creates a RHEL 8 VM named demo-guest3 that connects to an existing disk image, /home/username/backup/disk.qcow2. This is similar to physically moving a hard drive between machines, so the OS and data available to demo-guest3 are determined by how the image was handled previously. In addition, this VM is allocated with 2048 MiB of RAM and 2 vCPUs.

    # virt-install \
        --graphics vnc \
        --name demo-guest3 --memory 2048 --vcpus 2 \
        --os-variant rhel8.0 --import \
        --disk /home/username/backup/disk.qcow2

Note that the --os-variant option is highly recommended when importing a disk image. If it is not provided, the performance of the created VM will be negatively affected.

The following command creates a VM named demo-guest4 that installs from the http://example.com/OS-install URL. For the installation to start successfully, the URL must contain a working OS installation tree. In addition, the OS is automatically configured by using the /home/username/ks.cfg kickstart file. This VM is also allocated with 2048 MiB of RAM, 2 vCPUs, and a 160 GiB qcow2 virtual disk.

    # virt-install \
        --graphics vnc \
        --name demo-guest4 --memory 2048 --vcpus 2 --disk size=160 \
        --os-variant rhel8.0 --location http://example.com/OS-install \
        --initrd-inject /home/username/ks.cfg --extra-args="inst.ks=file:/ks.cfg console=tty0 console=ttyS0,115200n8"

The following command creates a VM named demo-guest5 that installs from a RHEL8.iso image file in text-only mode, without graphics. It connects the guest console to the serial console. The VM has 16384 MiB of memory, 16 vCPUs, and 280 GiB disk. This kind of installation is useful when connecting to a host over a slow network link.

    # virt-install \
        --name demo-guest5 --memory 16384 --vcpus 16 --disk size=280 \
        --os-variant rhel8.0 --location RHEL8.iso \
        --graphics none --extra-args='console=ttyS0'

The following command creates a VM named demo-guest6, which has the same configuration as demo-guest5, but resides on the 192.0.2.1 remote host.

    # virt-install \
        --connect qemu+ssh://root@192.0.2.1/system --name demo-guest6 --memory 16384 \
        --vcpus 16 --disk size=280 --os-variant rhel8.0 --location RHEL8.iso \
        --graphics none --extra-args='console=ttyS0'

Verification

- If the VM is created successfully, a virt-viewer window opens with a graphical console of the VM and starts the guest OS installation.

Troubleshooting

If virt-install fails with a cannot find default network error:

Ensure that the libvirt-daemon-config-network package is installed:

    # yum info libvirt-daemon-config-network
    Installed Packages
    Name         : libvirt-daemon-config-network
    [...]

Verify that the libvirt default network is active and configured to start automatically:

    # virsh net-list --all
     Name      State    Autostart   Persistent
    --------------------------------------------
     default   active   yes         yes

If it is not, activate the default network and set it to auto-start:

    # virsh net-autostart default
    Network default marked as autostarted

    # virsh net-start default
    Network default started

If activating the default network fails with the following error, the libvirt-daemon-config-network package has not been installed correctly.

    error: failed to get network 'default'
    error: Network not found: no network with matching name 'default'

To fix this, re-install libvirt-daemon-config-network:

    # yum reinstall libvirt-daemon-config-network

If activating the default network fails with an error similar to the following, a conflict has occurred between the default network’s subnet and an existing interface on the host.

    error: Failed to start network default
    error: internal error: Network is already in use by interface ens2

To fix this, use the virsh net-edit default command and change the 192.0.2.* values in the configuration to a subnet not already in use on the host.
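The check of the default network can also be scripted. The following is a minimal sketch under stated assumptions: the default_network_ok function name is hypothetical, and the parsing assumes the column layout of virsh net-list --all shown in this section (name, state, autostart, persistent). It reads the listing from standard input so that it can be tried without a running libvirtd service.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the 'default' network is listed
# as active and marked to start automatically. Expects the output of
# 'virsh net-list --all' on standard input.
default_network_ok() {
    awk '
        $1 == "default" {
            found = 1
            ok = ($2 == "active" && $3 == "yes")
        }
        END {
            if (found && ok) exit 0
            exit 1
        }
    '
}

# Sample listing of an inactive default network. On a real host, you
# would instead run:  virsh net-list --all | default_network_ok
if default_network_ok <<'EOF'
 Name      State      Autostart   Persistent
----------------------------------------------
 default   inactive   no          yes
EOF
then
    echo "default network is active and set to autostart"
else
    echo "default network needs to be started and set to autostart"
fi
```

With the sample listing, the check fails and the second message is printed, which corresponds to the case where you run virsh net-autostart default and virsh net-start default.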

Additional resources

- The virt-install(1) man page

- Creating virtual machines and installing guest operating systems by using the web console

- Cloning virtual machines

2.2.2. Creating virtual machines and installing guest operating systems by using the web console

To manage virtual machines (VMs) in a GUI on a RHEL 8 host, use the web console. The following sections provide information about how to use the RHEL 8 web console to create VMs and install guest operating systems on them.

VMs created by using the web console currently use the SPICE remote desktop protocol by default. However, SPICE is unsupported on RHEL 9, so if you upgrade your host to RHEL 9, the VM will stop working. For more information, see Considerations in adopting RHEL 9.

To create a VM that uses the VNC protocol, which will work correctly on RHEL 9, use the command-line interface.

2.2.2.1. Creating virtual machines by using the web console

To create a virtual machine (VM) on a host machine to which your RHEL 8 web console is connected, use the instructions below.

Prerequisites

- Virtualization is enabled on your host system.

- The web console VM plug-in is installed on your host system.

- You have a sufficient amount of system resources to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values might vary significantly depending on the intended tasks and workload of the VMs.

Procedure

In the Virtual Machines interface of the web console, click Create VM.

The Create new virtual machine dialog appears.

Enter the basic configuration of the VM you want to create.

- Name - The name of the VM.

- Connection - The level of privileges granted to the session. For more details, expand the associated dialog box in the web console.

- Installation type - The installation can use a local installation medium, a URL, a PXE network boot, a cloud base image, or download an operating system from a limited set of operating systems.

Operating system - The guest operating system running on the VM. Note that Red Hat provides support only for a limited set of guest operating systems.

Note: To download and install Red Hat Enterprise Linux directly from the web console, you must add an offline token in the Offline token field.

- Storage - The type of storage.

- Storage Limit - The amount of storage space.

- Memory - The amount of memory.

Create the VM:

- If you want the VM to automatically install the operating system, click Create and run.

- If you want to edit the VM before the operating system is installed, click Create and edit.

2.2.2.2. Creating virtual machines by importing disk images by using the web console

You can create a virtual machine (VM) by importing a disk image of an existing VM installation in the RHEL 8 web console.

Prerequisites

- The web console VM plug-in is installed on your system.

- You have a sufficient amount of system resources to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values can vary significantly depending on the intended tasks and workload of the VMs.

- You have downloaded a disk image of an existing VM installation.

Procedure

In the Virtual Machines interface of the web console, click Import VM.

The Import a virtual machine dialog appears.

Enter the basic configuration of the VM you want to create:

- Name - The name of the VM.

- Disk image - The path to the existing disk image of a VM on the host system.

- Operating system - The operating system running on a VM disk. Note that Red Hat provides support only for a limited set of guest operating systems.

- Memory - The amount of memory to allocate for use by the VM.

Import the VM:

- To install the operating system on the VM without additional edits to the VM settings, click Import and run.

- To edit the VM settings before the installation of the operating system, click Import and edit.

2.2.2.3. Installing guest operating systems by using the web console

When a virtual machine (VM) boots for the first time, you must install an operating system on the VM.

If you click Create and run or Import and run while creating a new VM, the installation routine for the operating system starts automatically when the VM is created.

Prerequisites

Procedure

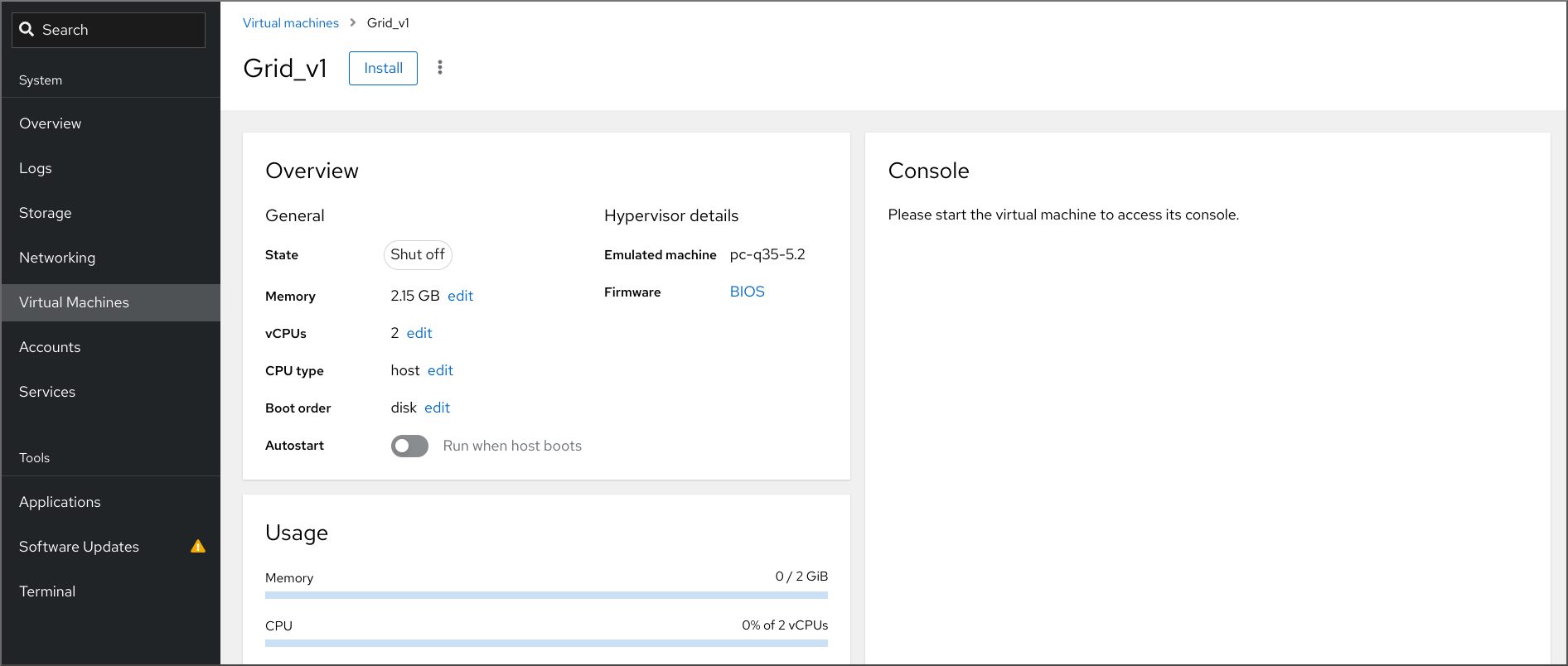

In the Virtual Machines interface, click the VM on which you want to install a guest OS.

A new page opens with basic information about the selected VM and controls for managing various aspects of the VM.

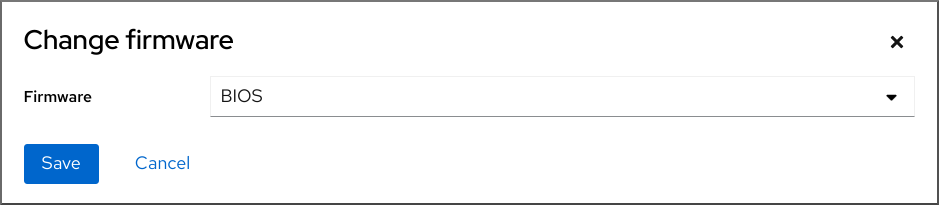

Optional: Change the firmware.

Note: You can change the firmware only if you selected Create and edit or Import and edit while creating a new VM and if the OS is not already installed on the VM.

- Click the firmware.

- In the Change Firmware window, select the required firmware.

- Click Save.

Click Install.

The installation routine of the operating system runs in the VM console.

Troubleshooting

- If the installation routine fails, delete and recreate the VM before starting the installation again.

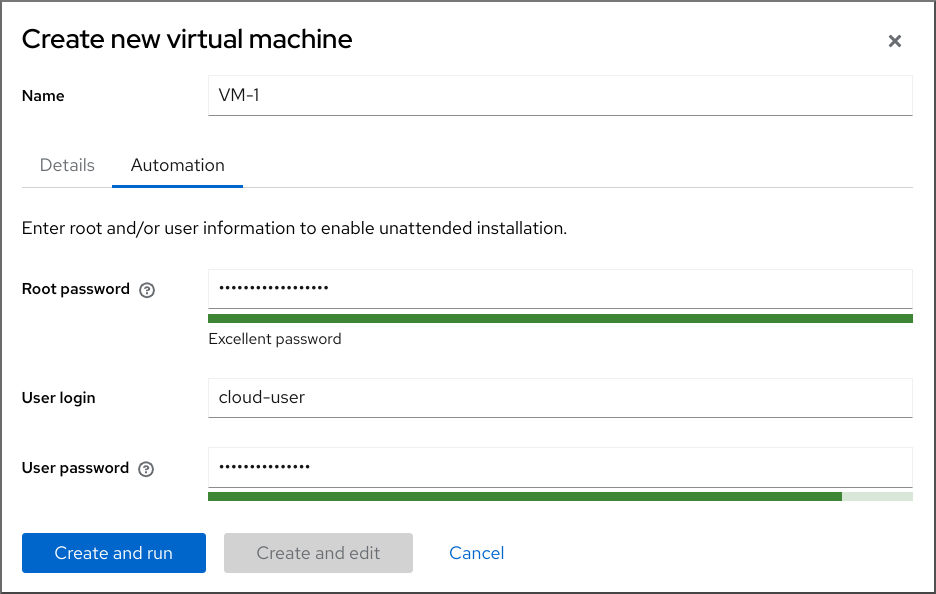

2.2.3. Creating virtual machines with cloud image authentication by using the web console

By default, distro cloud images have no login accounts. However, by using the RHEL web console, you can now create a virtual machine (VM) and specify the root and user account login credentials, which are then passed to cloud-init.

Prerequisites

- The web console VM plug-in is installed on your system.

- Virtualization is enabled on your host system.

- You have a sufficient amount of system resources to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values may vary significantly depending on the intended tasks and workload of the VMs.

Procedure

In the Virtual Machines interface of the web console, click Create VM.

The Create new virtual machine dialog appears.

- In the Name field, enter a name for the VM.

On the Details tab, in the Installation type field, select Cloud base image.

- In the Installation source field, set the path to the image file on your host system.

Enter the configuration for the VM that you want to create.

- Operating system - The VM’s operating system. Note that Red Hat provides support only for a limited set of guest operating systems.

- Storage - The type of storage with which to configure the VM.

- Storage Limit - The amount of storage space with which to configure the VM.

- Memory - The amount of memory with which to configure the VM.

Click on the Automation tab.

Set your cloud authentication credentials.

- Root password - Enter a root password for your VM. Leave the field blank if you do not wish to set a root password.

- User login - Enter a cloud-init user login. Leave this field blank if you do not wish to create a user account.

User password - Enter a password. Leave this field blank if you do not wish to create a user account.

Click Create and run.

The VM is created.

2.3. Starting virtual machines

To start a virtual machine (VM) in RHEL 8, you can use the command line interface or the web console GUI.

Prerequisites

- Before a VM can be started, it must be created and, ideally, also installed with an OS. For instructions on how to do so, see Creating virtual machines.

2.3.1. Starting a virtual machine by using the command-line interface

You can use the command line interface (CLI) to start a shut-down virtual machine (VM) or restore a saved VM. By using the CLI, you can start both local and remote VMs.

Prerequisites

- An inactive VM that is already defined.

- The name of the VM.

For remote VMs:

- The IP address of the host where the VM is located.

- Root access privileges to the host.

Procedure

For a local VM, use the virsh start utility.

For example, the following command starts the demo-guest1 VM.

    # virsh start demo-guest1
    Domain 'demo-guest1' started

For a VM located on a remote host, use the virsh start utility along with the QEMU+SSH connection to the host.

For example, the following command starts the demo-guest1 VM on the 192.0.2.1 host.

    # virsh -c qemu+ssh://root@192.0.2.1/system start demo-guest1

    root@192.0.2.1's password:

    Domain 'demo-guest1' started
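The local and remote forms differ only in the connection URI passed with the -c option. The following sketch shows how the two command lines are composed. The start_vm_command function name is hypothetical, and the function only prints the command instead of running virsh, so it is safe to try on any system.

```shell
#!/bin/sh
# Hypothetical helper: print the virsh command that would start a VM,
# either locally or on a remote host over QEMU+SSH. It only prints the
# command; it does not run virsh.
start_vm_command() {
    vm="$1"
    host="${2:-}"   # optional: IP address or name of the remote host
    if [ -n "$host" ]; then
        echo "virsh -c qemu+ssh://root@${host}/system start ${vm}"
    else
        echo "virsh start ${vm}"
    fi
}

# Local VM, then the same VM on the 192.0.2.1 host.
start_vm_command demo-guest1
start_vm_command demo-guest1 192.0.2.1
```

The root user in the URI matches the prerequisite of root access privileges to the remote host.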

Additional resources

- The virsh start --help command

- Setting up easy access to remote virtualization hosts

- Starting virtual machines automatically when the host starts

2.3.2. Starting virtual machines by using the web console

If a virtual machine (VM) is in the shut off state, you can start it by using the RHEL 8 web console. You can also configure the VM to be started automatically when the host starts.

Prerequisites

- The web console VM plug-in is installed on your system.

- An inactive VM that is already defined.

- The name of the VM.

Procedure

In the Virtual Machines interface, click the VM you want to start.

A new page opens with detailed information about the selected VM and controls for shutting down and deleting the VM.

Click Run.

The VM starts, and you can connect to its console or graphical output.

Optional: To configure the VM to start automatically when the host starts, toggle the Autostart checkbox in the Overview section.

If you use network interfaces that are not managed by libvirt, you must also make additional changes to the systemd configuration. Otherwise, the affected VMs might fail to start. For details, see Starting virtual machines automatically when the host starts.

2.3.3. Starting virtual machines automatically when the host starts

When a host with a running virtual machine (VM) restarts, the VM is shut down, and must be started again manually by default. To ensure a VM is active whenever its host is running, you can configure the VM to be started automatically.

Prerequisites

Procedure

Use the

virsh autostartutility to configure the VM to start automatically when the host starts.For example, the following command configures the demo-guest1 VM to start automatically.

# virsh autostart demo-guest1 Domain 'demo-guest1' marked as autostarted

If you use network interfaces that are not managed by libvirt, you must also make additional changes to the systemd configuration. Otherwise, the affected VMs might fail to start.

Note: These interfaces include for example:

- Bridge devices created by NetworkManager

- Networks configured to use <forward mode='bridge'/>

In the systemd configuration directory tree, create a libvirtd.service.d directory if it does not exist yet.

# mkdir -p /etc/systemd/system/libvirtd.service.d/

Create a 10-network-online.conf systemd unit override file in the previously created directory. The content of this file overrides the default systemd configuration for the libvirtd service.

# touch /etc/systemd/system/libvirtd.service.d/10-network-online.conf

Add the following lines to the 10-network-online.conf file. This configuration change ensures systemd starts the libvirtd service only after the network on the host is ready.

[Unit]
After=network-online.target
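The systemd override steps above can be sketched as a single shell helper. This is a minimal sketch, not part of the documented procedure: the function name write_network_online_dropin is hypothetical, and running systemctl daemon-reload afterwards is an assumption about how you want systemd to pick up the new file.

```shell
#!/bin/sh
# write_network_online_dropin: hypothetical helper name, not a libvirt tool.
# $1: drop-in directory (defaults to the path used in the procedure above)
write_network_online_dropin() {
    dropin_dir="${1:-/etc/systemd/system/libvirtd.service.d}"
    # Create the directory if it does not exist yet
    mkdir -p "$dropin_dir" || return 1
    # Write both lines in one step instead of touch plus a manual edit
    printf '%s\n' '[Unit]' 'After=network-online.target' \
        > "$dropin_dir/10-network-online.conf"
}

# Example, as root (assumption: reload systemd so it reads the override):
#   write_network_online_dropin && systemctl daemon-reload
```

Passing a directory argument makes it possible to try the helper in a scratch location before touching /etc.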

Verification

View the VM configuration, and check that the autostart option is enabled.

For example, the following command displays basic information about the demo-guest1 VM, including the autostart option.

# virsh dominfo demo-guest1
Id: 2
Name: demo-guest1
UUID: e46bc81c-74e2-406e-bd7a-67042bae80d1
OS Type: hvm
State: running
CPU(s): 2
CPU time: 385.9s
Max memory: 4194304 KiB
Used memory: 4194304 KiB
Persistent: yes
Autostart: enable
Managed save: no
Security model: selinux
Security DOI: 0
Security label: system_u:system_r:svirt_t:s0:c873,c919 (enforcing)

If you use network interfaces that are not managed by libvirt, check if the content of the 10-network-online.conf file matches the following output.

$ cat /etc/systemd/system/libvirtd.service.d/10-network-online.conf
[Unit]
After=network-online.target
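If you want to check the autostart setting from a script rather than by reading the full virsh dominfo output, the following sketch extracts only the Autostart field. The helper name autostart_state is hypothetical, and the sketch assumes the "Field: value" layout shown in the example output above.

```shell
#!/bin/sh
# autostart_state: hypothetical helper; reads `virsh dominfo` output on stdin
# and prints the value of the Autostart field (for example "enable").
autostart_state() {
    # Split each line on the first colon and any spaces that follow it
    awk -F': *' '$1 == "Autostart" { print $2 }'
}

# Example on a host with libvirt:
#   virsh dominfo demo-guest1 | autostart_state
```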

Additional resources

- The virsh autostart --help command

- Starting virtual machines by using the web console

2.4. Connecting to virtual machines

To interact with a virtual machine (VM) in RHEL 8, you need to connect to it by doing one of the following:

- When using the web console interface, use the Virtual Machines pane in the web console interface. For more information, see Interacting with virtual machines by using the web console.

- If you need to interact with a VM graphical display without using the web console, use the Virt Viewer application. For details, see Opening a virtual machine graphical console by using Virt Viewer.

- When a graphical display is not possible or not necessary, use an SSH terminal connection.

- When the virtual machine is not reachable from your system by using a network, use the virsh console.

If the VMs to which you are connecting are on a remote host rather than a local one, you can optionally configure your system for more convenient access to remote hosts.

2.4.1. Interacting with virtual machines by using the web console

To interact with a virtual machine (VM) in the RHEL 8 web console, you need to connect to the VM’s console. The available consoles include both graphical and serial consoles.

- To interact with the VM’s graphical interface in the web console, use the graphical console.

- To interact with the VM’s graphical interface in a remote viewer, use the graphical console in remote viewers.

- To interact with the VM’s CLI in the web console, use the serial console.

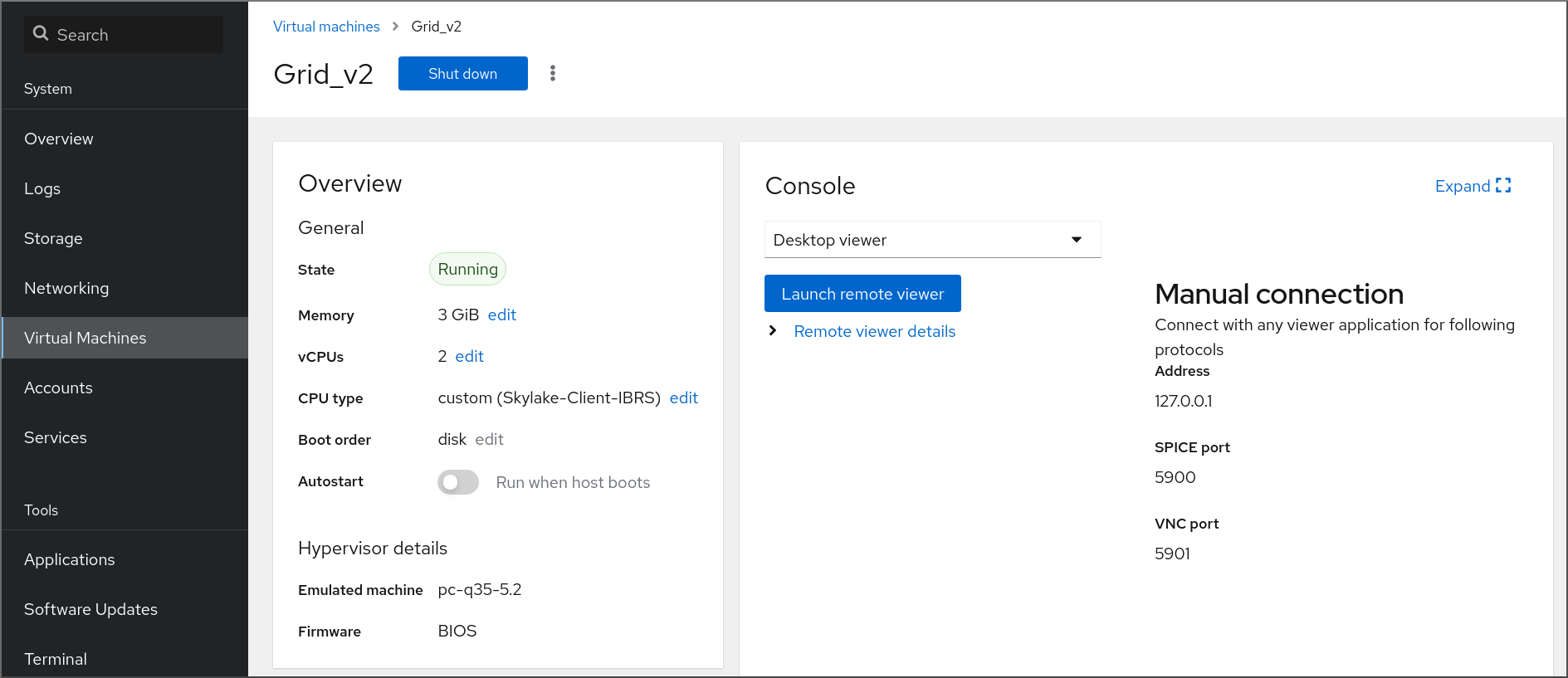

2.4.1.1. Viewing the virtual machine graphical console in the web console

By using the virtual machine (VM) console interface, you can view the graphical output of a selected VM in the RHEL 8 web console.

Prerequisites

- The web console VM plug-in is installed on your system.

- Ensure that both the host and the VM support a graphical interface.

Procedure

In the Virtual Machines interface, click the VM whose graphical console you want to view.

A new page opens with an Overview and a Console section for the VM.

Select VNC console in the console drop down menu.

The VNC console appears below the menu in the web interface.

Click Expand.

You can now interact with the VM console by using the mouse and keyboard in the same manner you interact with a real machine. The display in the VM console reflects the activities being performed on the VM.

The host on which the web console is running may intercept specific key combinations, such as Ctrl+Alt+Del, preventing them from being sent to the VM.

To send such key combinations, click the Send key menu and select the key sequence to send.

For example, to send the Ctrl+Alt+Del combination to the VM, click the Send key menu and select the Ctrl+Alt+Del menu entry.

Troubleshooting

- If clicking in the graphical console does not have any effect, expand the console to full screen. This is a known issue with the mouse cursor offset.

2.4.1.2. Viewing the graphical console in a remote viewer by using the web console

By using the web console interface, you can display the graphical console of a selected virtual machine (VM) in a remote viewer, such as Virt Viewer.

You can launch Virt Viewer from within the web console. Other VNC and SPICE remote viewers can be launched manually.

Prerequisites

- The web console VM plug-in is installed on your system.

- Ensure that both the host and the VM support a graphical interface.

Before you can view the graphical console in Virt Viewer, you must install Virt Viewer on the machine to which the web console is connected.

Click Launch remote viewer.

The Virt Viewer (.vv) file downloads.

Open the file to launch Virt Viewer.

Remote Viewer is available on most operating systems. However, some browser extensions and plug-ins do not allow the web console to open Virt Viewer.

Procedure

In the Virtual Machines interface, click the VM whose graphical console you want to view.

A new page opens with an Overview and a Console section for the VM.

Select Desktop Viewer in the console drop down menu.

Click Launch Remote Viewer.

The graphical console opens in Virt Viewer.

You can interact with the VM console by using the mouse and keyboard in the same manner in which you interact with a real machine. The display in the VM console reflects the activities being performed on the VM.

The server on which the web console is running can intercept specific key combinations, such as Ctrl+Alt+Del, preventing them from being sent to the VM.

To send such key combinations, click the Send key menu and select the key sequence to send.

For example, to send the Ctrl+Alt+Del combination to the VM, click the Send key menu and select the Ctrl+Alt+Del menu entry.

Troubleshooting

- If clicking in the graphical console does not have any effect, expand the console to full screen. This is a known issue with the mouse cursor offset.

If launching the Remote Viewer in the web console does not work or is not optimal, you can manually connect with any viewer application by using the following connection details:

- Address - The default address is 127.0.0.1. You can modify the vnc_listen or the spice_listen parameter in /etc/libvirt/qemu.conf to change it to the host’s IP address.

- SPICE port - 5900

- VNC port - 5901
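As an illustration of a manual connection, the following sketch builds a viewer URI from the address and port values listed above. The helper name viewer_uri is hypothetical. The ports are the defaults named in this section and might differ on your host; the virsh domdisplay command prints the display URI that a given VM actually uses.

```shell
#!/bin/sh
# viewer_uri: hypothetical helper that builds a URI for a remote viewer.
# $1: protocol (vnc or spice), $2: address, $3: port
viewer_uri() {
    case "$1" in
        vnc|spice) printf '%s://%s:%s\n' "$1" "$2" "$3" ;;
        *) echo "unsupported protocol: $1" >&2; return 1 ;;
    esac
}

# Example on a system with Virt Viewer installed:
#   remote-viewer "$(viewer_uri vnc 127.0.0.1 5901)"
```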

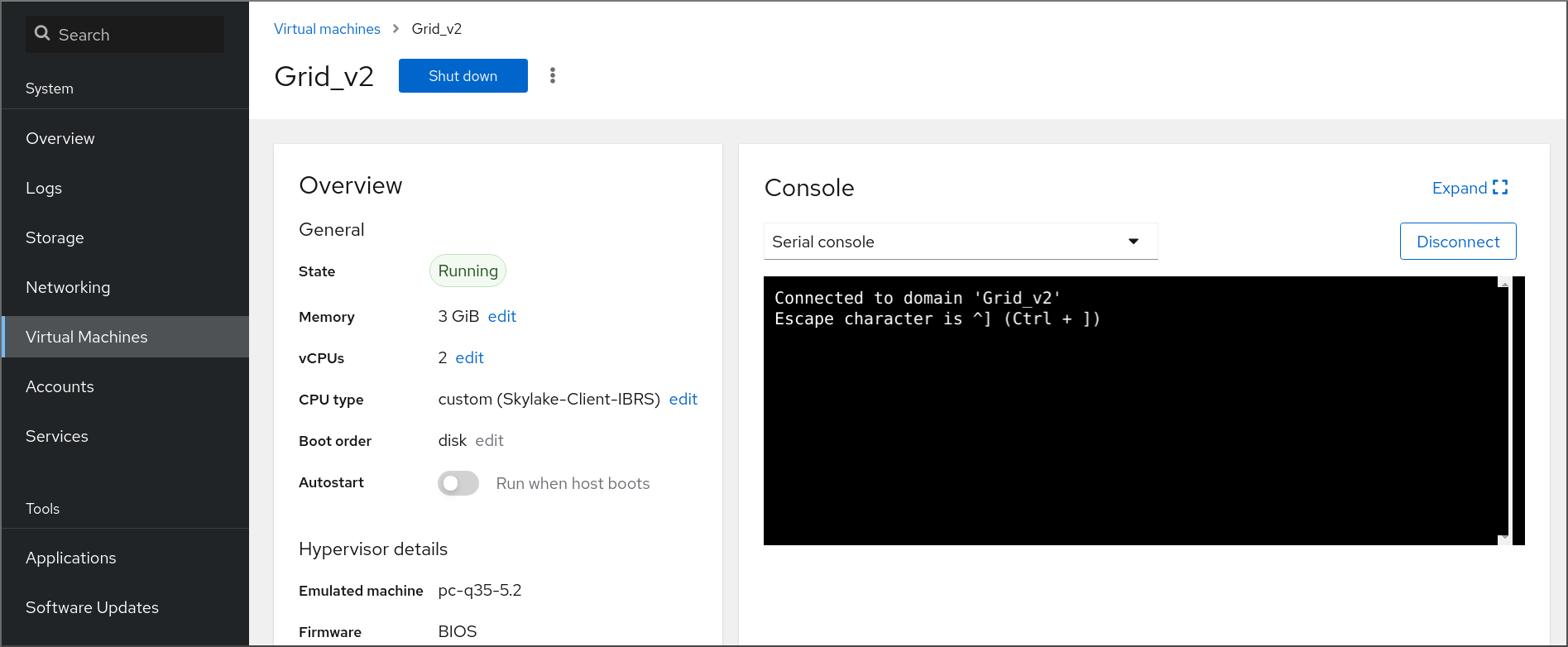

2.4.1.3. Viewing the virtual machine serial console in the web console

You can view the serial console of a selected virtual machine (VM) in the RHEL 8 web console. This is useful when the host machine or the VM is not configured with a graphical interface.

For more information about the serial console, see Opening a virtual machine serial console.

Prerequisites

- The web console VM plug-in is installed on your system.

Procedure

In the Virtual Machines pane, click the VM whose serial console you want to view.

A new page opens with an Overview and a Console section for the VM.

Select Serial console in the console drop down menu.

The serial console appears in the web interface.

You can disconnect and reconnect the serial console from the VM.

- To disconnect the serial console from the VM, click Disconnect.

- To reconnect the serial console to the VM, click Reconnect.

2.4.2. Opening a virtual machine graphical console by using Virt Viewer

To connect to a graphical console of a KVM virtual machine (VM) and open it in the Virt Viewer desktop application, follow the procedure below.

Prerequisites

- Your system, as well as the VM you are connecting to, must support graphical displays.

- If the target VM is located on a remote host, connection and root access privileges to the host are needed.

- Optional: If the target VM is located on a remote host, set up your libvirt and SSH for more convenient access to remote hosts.

Procedure

To connect to a local VM, use the following command and replace guest-name with the name of the VM you want to connect to:

# virt-viewer guest-name

To connect to a remote VM, use the virt-viewer command with the SSH protocol. For example, the following command connects as root to a VM called guest-name, located on remote system 192.0.2.1. The connection also requires root authentication for 192.0.2.1.

# virt-viewer --direct --connect qemu+ssh://root@192.0.2.1/system guest-name
root@192.0.2.1's password:

Verification

If the connection works correctly, the VM display is shown in the Virt Viewer window.

You can interact with the VM console by using the mouse and keyboard in the same manner you interact with a real machine. The display in the VM console reflects the activities being performed on the VM.

Troubleshooting

- If clicking in the graphical console does not have any effect, expand the console to full screen. This is a known issue with the mouse cursor offset.

Additional resources

2.4.3. Connecting to a virtual machine by using SSH

To interact with the terminal of a virtual machine (VM) by using the SSH connection protocol, follow the procedure below.

Prerequisites

- You have network connection and root access privileges to the target VM.

- If the target VM is located on a remote host, you also have connection and root access privileges to that host.

- Your VM network assigns IP addresses by dnsmasq generated by libvirt. This is the case for example in libvirt NAT networks.

Notably, if your VM is using one of the following network configurations, you cannot connect to the VM by using SSH:

- hostdev interfaces

- Direct interfaces

- Bridge interfaces

- The libvirt-nss component is installed and enabled on the VM’s host. If it is not, do the following:

Install the libvirt-nss package:

# yum install libvirt-nss

Edit the /etc/nsswitch.conf file and add libvirt_guest to the hosts line:

...
passwd: compat
shadow: compat
group: compat
hosts: files libvirt_guest dns
...
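To confirm that the hosts line contains the libvirt_guest entry, you can use a check like the following. This is a sketch under assumptions: the helper name hosts_has_libvirt_guest is hypothetical, and it only inspects the file content, not whether the libvirt-nss package is installed.

```shell
#!/bin/sh
# hosts_has_libvirt_guest: hypothetical helper.
# $1: path to the nsswitch configuration (defaults to /etc/nsswitch.conf)
# Returns 0 if the "hosts:" line lists libvirt_guest, 1 otherwise.
hosts_has_libvirt_guest() {
    awk '$1 == "hosts:" {
             for (i = 2; i <= NF; i++) if ($i == "libvirt_guest") found = 1
         }
         END { exit (found ? 0 : 1) }' "${1:-/etc/nsswitch.conf}"
}

# Example:
#   hosts_has_libvirt_guest && echo "libvirt_guest is enabled"
```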

Procedure

When connecting to a remote VM, SSH into its physical host first. The following example demonstrates connecting to a host machine 192.0.2.1 by using its root credentials:

# ssh root@192.0.2.1
root@192.0.2.1's password:
Last login: Mon Sep 24 12:05:36 2021
root~#

Use the VM’s name and user access credentials to connect to it. For example, the following connects to the testguest1 VM by using its root credentials:

# ssh root@testguest1
root@testguest1's password:
Last login: Wed Sep 12 12:05:36 2018
root~]#

Troubleshooting

If you do not know the VM’s name, you can list all VMs available on the host by using the virsh list --all command:

# virsh list --all
Id Name State
----------------------------------------------------
2 testguest1 running
- testguest2 shut off
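Because SSH can only reach a VM that is running, it can help to filter the list for running VMs. The following sketch parses the table format shown above; the helper name running_vms is hypothetical, and it assumes VM names do not contain spaces.

```shell
#!/bin/sh
# running_vms: hypothetical helper; reads `virsh list --all` output on stdin
# and prints the names of VMs whose state is "running".
running_vms() {
    # Skip the two header lines. The state is the last field, and
    # "shut off" spans two fields, so only running rows match.
    awk 'NR > 2 && $NF == "running" { print $2 }'
}

# Example on a host with libvirt:
#   virsh list --all | running_vms
```

If you only need the names of all defined VMs without the table, the virsh list --all --name command prints one name per line.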

Additional resources

2.4.4. Opening a virtual machine serial console

By using the virsh console command, it is possible to connect to the serial console of a virtual machine (VM).

This is useful when the VM:

- Does not provide VNC or SPICE protocols, and thus does not offer video display for GUI tools.

- Does not have a network connection, and thus cannot be interacted with using SSH.

Prerequisites

The VM must have a serial console device configured, such as console type='pty'. To verify, do the following:

# virsh dumpxml vm-name | grep console
<console type='pty' tty='/dev/pts/2'>
</console>

The VM must have the serial console configured in its kernel command line. To verify this, the cat /proc/cmdline command output on the VM should include console=ttyS0. For example:

# cat /proc/cmdline
BOOT_IMAGE=/vmlinuz-3.10.0-948.el7.x86_64 root=/dev/mapper/rhel-root ro console=tty0 console=ttyS0,9600n8 rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb

If the serial console is not set up properly on a VM, using virsh console to connect to the VM connects you to an unresponsive guest console. However, you can still exit the unresponsive console by using the Ctrl+] shortcut.

To set up serial console on the VM, do the following:

On the VM, enable the console=ttyS0 kernel option:

# grubby --update-kernel=ALL --args="console=ttyS0"

Clear the kernel options that might prevent your changes from taking effect.

# grub2-editenv - unset kernelopts

Reboot the VM.
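The kernel command line check described above can also be scripted. The following is a minimal sketch; the helper name has_serial_console is hypothetical, and it accepts both the plain console=ttyS0 option and the form with parameters, such as console=ttyS0,9600n8.

```shell
#!/bin/sh
# has_serial_console: hypothetical helper.
# $1: kernel command line as a single string
# Returns 0 if the command line enables a console on ttyS0, 1 otherwise.
has_serial_console() {
    # Pad with spaces so that the option matches only as a whole word
    case " $1 " in
        *" console=ttyS0 "*|*" console=ttyS0,"*) return 0 ;;
    esac
    return 1
}

# Example, run on the VM:
#   has_serial_console "$(cat /proc/cmdline)" && echo "serial console enabled"
```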

Procedure

On your host system, use the virsh console command. The following example connects to the guest1 VM, if the libvirt driver supports safe console handling:

# virsh console guest1 --safe
Connected to domain 'guest1'
Escape character is ^]

Subscription-name
Kernel 3.10.0-948.el7.x86_64 on an x86_64

localhost login:

- You can interact with the virsh console in the same way as with a standard command-line interface.

Additional resources

- The virsh man page

2.4.5. Setting up easy access to remote virtualization hosts

When managing VMs on a remote host system by using libvirt utilities, it is recommended to use the -c qemu+ssh://root@hostname/system syntax. For example, to use the virsh list command as root on the 192.0.2.1 host:

# virsh -c qemu+ssh://root@192.0.2.1/system list
root@192.0.2.1's password:
Id Name State
---------------------------------
1 remote-guest running

However, you can remove the need to specify the connection details in full by modifying your SSH and libvirt configuration. For example:

# virsh -c remote-host list
root@192.0.2.1's password:
Id Name State
---------------------------------
1 remote-guest running

To enable this improvement, follow the instructions below.

Procedure

Edit the ~/.ssh/config file with the following details, where example-host-alias is a shortened name associated with a specific remote host and an alias for root@192.0.2.1, and the Hostname value is the address of the host:

# vi ~/.ssh/config
Host example-host-alias
  User root
  Hostname 192.0.2.1

Edit the /etc/libvirt/libvirt.conf file with the following details, where example-qemu-host-alias is a host alias that QEMU and libvirt utilities will associate with qemu+ssh://192.0.2.1/system through the intended host example-host-alias:

# vi /etc/libvirt/libvirt.conf
uri_aliases = [
  "example-qemu-host-alias=qemu+ssh://example-host-alias/system",
]

Verification

Confirm that you can manage remote VMs by using libvirt-based utilities on the local system with an added -c qemu-host-alias parameter. This automatically performs the commands over SSH on the remote host.

For example, verify that the following lists VMs on the 192.0.2.1 remote host, the connection to which was set up as example-qemu-host-alias in the previous steps:

# virsh -c example-qemu-host-alias list
root@192.0.2.1's password:
Id Name State
----------------------------------------
1 example-remote-guest running

Note: In addition to virsh, the -c (or --connect) option and the remote host access configuration described above can be used by other libvirt-based utilities, such as virt-install and virt-viewer.

Next steps

If you want to use libvirt utilities exclusively on a single remote host, you can also set a specific connection as the default target for libvirt-based utilities. However, this is not recommended if you also want to manage VMs on your local host or on different remote hosts.

You can edit the /etc/libvirt/libvirt.conf file and set the value of the uri_default parameter to example-qemu-host-alias as a default libvirt target.

# These can be used in cases when no URI is supplied by the application
# (@uri_default also prevents probing of the hypervisor driver).
#
uri_default = "example-qemu-host-alias"

As a result, all libvirt-based commands will automatically be performed on the specified remote host.

$ virsh list
root@192.0.2.1's password:
Id Name State
---------------------------------
1 example-remote-guest running

When connecting to a remote host, you can avoid providing the root password to the remote system. To do so, use one or more of the following methods:

- Set up key-based SSH access to the remote host

- Use SSH connection multiplexing to connect to the remote system

- Kerberos authentication in Identity Management

- The -c (or --connect) option can be used to run the virt-install, virt-viewer, virsh and virt-manager commands on a remote host.
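As an illustration of the SSH connection multiplexing method mentioned above, the following sketch appends multiplexing options for a host alias to an SSH client configuration file. The helper name append_mux_config, the socket path, and the 10-minute persistence value are assumptions chosen for the example; ControlMaster, ControlPath, and ControlPersist are standard OpenSSH client options.

```shell
#!/bin/sh
# append_mux_config: hypothetical helper that appends connection
# multiplexing options for one host alias to an SSH client config file.
# $1: host alias, $2: config file (defaults to ~/.ssh/config)
append_mux_config() {
    cfg="${2:-$HOME/.ssh/config}"
    # The tokens %r, %h, and %p are expanded by ssh, not by the shell
    cat >> "$cfg" <<EOF
Host $1
    ControlMaster auto
    ControlPath ~/.ssh/mux-%r@%h:%p
    ControlPersist 10m
EOF
}

# Example:
#   append_mux_config example-host-alias
```

With such a configuration, the first connection to the alias asks for authentication, and later libvirt commands within the persistence window can reuse the open connection.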

2.5. Shutting down virtual machines

To shut down a running virtual machine hosted on RHEL 8, use the command line interface or the web console GUI.

2.5.1. Shutting down a virtual machine by using the command-line interface

To shut down a responsive virtual machine (VM), do one of the following:

- Use a shutdown command appropriate to the guest OS while connected to the guest.

- Use the virsh shutdown command on the host:

If the VM is on a local host:

# virsh shutdown demo-guest1
Domain 'demo-guest1' is being shutdown

If the VM is on a remote host, in this example 192.0.2.1:

# virsh -c qemu+ssh://root@192.0.2.1/system shutdown demo-guest1
root@192.0.2.1's password:
Domain 'demo-guest1' is being shutdown

To force a VM to shut down, for example if it has become unresponsive, use the virsh destroy command on the host:

# virsh destroy demo-guest1

Domain 'demo-guest1' destroyed

The virsh destroy command does not actually delete or remove the VM configuration or disk images. It only terminates the running instance of the VM, similarly to pulling the power cord from a physical machine. As such, in rare cases, virsh destroy may cause corruption of the VM’s file system, so using this command is only recommended if all other shutdown methods have failed.
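The recommendation to use virsh destroy only as a last resort can be sketched as a helper that first requests a graceful shutdown and forces the VM off only after a timeout. The helper name stop_vm, the VIRSH override variable, and the 60-second default are assumptions made for this example.

```shell
#!/bin/sh
# stop_vm: hypothetical helper; tries a graceful shutdown first and forces
# the VM off only if it is still running when the timeout expires.
# $1: VM name, $2: timeout in seconds (default 60)
# VIRSH can be overridden, for example:
#   VIRSH="virsh -c qemu+ssh://root@192.0.2.1/system"
stop_vm() {
    vm="$1"
    remaining="${2:-60}"
    ${VIRSH:-virsh} shutdown "$vm" || return 1
    while :; do
        state="$(${VIRSH:-virsh} domstate "$vm")"
        [ "$state" = "shut off" ] && return 0
        [ "$remaining" -le 0 ] && break
        sleep 1
        remaining=$((remaining - 1))
    done
    # Last resort: comparable to pulling the power cord
    ${VIRSH:-virsh} destroy "$vm"
}

# Example:
#   stop_vm demo-guest1 120
```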

2.5.2. Shutting down and restarting virtual machines by using the web console

Using the RHEL 8 web console, you can shut down or restart running virtual machines. You can also send a non-maskable interrupt to an unresponsive virtual machine.

2.5.2.1. Shutting down virtual machines in the web console

If a virtual machine (VM) is in the running state, you can shut it down by using the RHEL 8 web console.

Prerequisites

- The web console VM plug-in is installed on your system.

Procedure

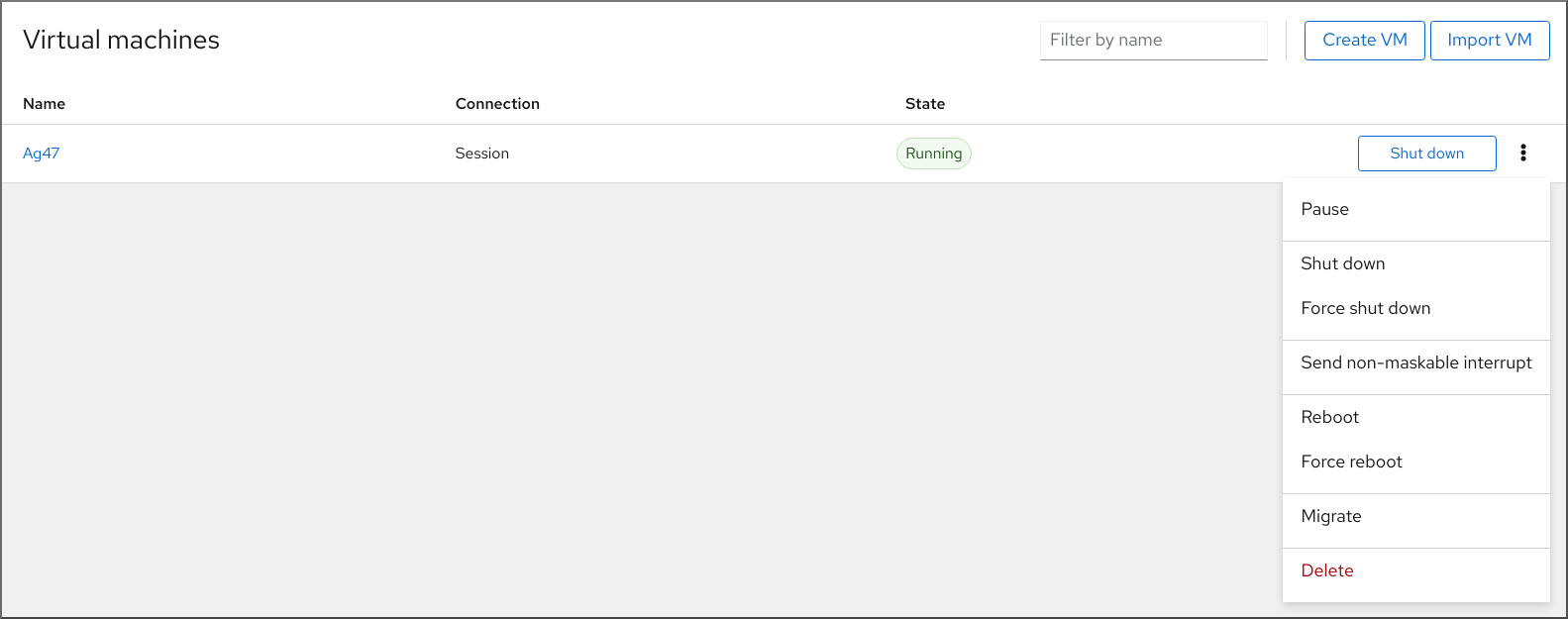

- In the Virtual Machines interface, find the row of the VM you want to shut down.

On the right side of the row, click Shut Down.

The VM shuts down.

Troubleshooting

- If the VM does not shut down, click the Menu button ⋮ next to the Shut Down button and select Force Shut Down.

- To shut down an unresponsive VM, you can also send a non-maskable interrupt.

2.5.2.2. Restarting virtual machines by using the web console

If a virtual machine (VM) is in the running state, you can restart it by using the RHEL 8 web console.

Prerequisites

- The web console VM plug-in is installed on your system.

Procedure

- In the Virtual Machines interface, find the row of the VM you want to restart.

On the right side of the row, click the Menu button ⋮.

A drop-down menu of actions appears.

In the drop-down menu, click Reboot.

The VM shuts down and restarts.

Troubleshooting

- If the VM does not restart, click the Menu button ⋮ next to the Reboot button and select Force Reboot.

- To shut down an unresponsive VM, you can also send a non-maskable interrupt.

2.5.2.3. Sending non-maskable interrupts to VMs by using the web console

Sending a non-maskable interrupt (NMI) may cause an unresponsive running virtual machine (VM) to respond or shut down. For example, you can send an NMI to a VM that is not responding to standard input.

Prerequisites

- The web console VM plug-in is installed on your system.

Procedure

- In the Virtual Machines interface, find the row of the VM to which you want to send an NMI.

On the right side of the row, click the Menu button ⋮.

A drop-down menu of actions appears.

In the drop-down menu, click Send non-maskable interrupt.

An NMI is sent to the VM.

2.6. Deleting virtual machines

To delete virtual machines in RHEL 8, use the command line interface or the web console GUI.

2.6.1. Deleting virtual machines by using the command line interface

To delete a virtual machine (VM), you can remove its XML configuration and associated storage files from the host by using the command line. Follow the procedure below:

Prerequisites

- Back up important data from the VM.

- Shut down the VM.

- Make sure no other VMs use the same associated storage.

Procedure

Use the virsh undefine utility.

For example, the following command removes the guest1 VM, its associated storage volumes, and non-volatile RAM, if any.

# virsh undefine guest1 --remove-all-storage --nvram
Domain 'guest1' has been undefined
Volume 'vda'(/home/images/guest1.qcow2) removed.
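Because the --remove-all-storage option deletes disk images, you might want to review which files a VM uses before running the command above. The following sketch lists the source paths from virsh domblklist output. The helper name vm_disk_sources is hypothetical, and the sketch assumes that the paths do not contain spaces.

```shell
#!/bin/sh
# vm_disk_sources: hypothetical helper; reads `virsh domblklist` output on
# stdin and prints the source path of each disk, skipping empty drives
# that are shown with "-" as their source.
vm_disk_sources() {
    # Skip the header and separator lines, then print the Source column
    awk 'NR > 2 && NF >= 2 && $2 != "-" { print $2 }'
}

# Example on a host with libvirt:
#   virsh domblklist guest1 | vm_disk_sources
```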

Additional resources

- The virsh undefine --help command

- The virsh man page

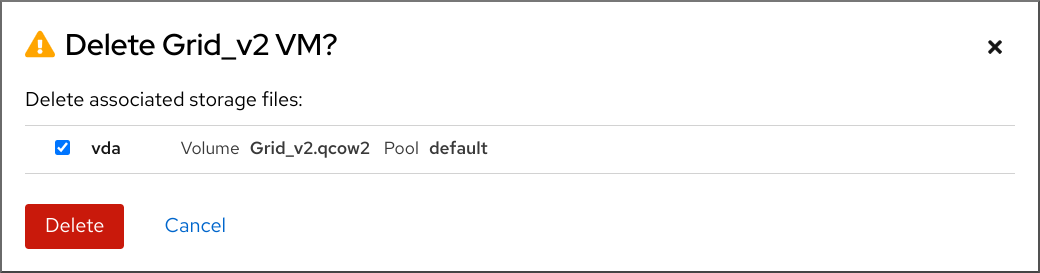

2.6.2. Deleting virtual machines by using the web console

To delete a virtual machine (VM) and its associated storage files from the host to which the RHEL 8 web console is connected, follow the procedure below:

Prerequisites

- The web console VM plug-in is installed on your system.

- Back up important data from the VM.

- Make sure no other VM uses the same associated storage.

- Optional: Shut down the VM.

Procedure

In the Virtual Machines interface, click the Menu button ⋮ of the VM that you want to delete.

A drop down menu appears with controls for various VM operations.

Click Delete.

A confirmation dialog appears.

- Optional: To delete all or some of the storage files associated with the VM, select the checkboxes next to the storage files you want to delete.

Click Delete.

The VM and any selected storage files are deleted.

Chapter 3. Getting started with virtualization on IBM POWER

You can use KVM virtualization when using RHEL 8 on IBM POWER8 or POWER9 hardware. However, enabling the KVM hypervisor on your system requires extra steps compared to virtualization on AMD64 and Intel 64 architectures. Certain RHEL 8 virtualization features also have different or restricted functionality on IBM POWER.

Apart from the information in the following sections, using virtualization on IBM POWER works the same as on AMD64 and Intel 64. Therefore, you can see other RHEL 8 virtualization documentation for more information when using virtualization on IBM POWER.

3.1. Enabling virtualization on IBM POWER

To set up a KVM hypervisor and create virtual machines (VMs) on an IBM POWER8 or IBM POWER9 system running RHEL 8, follow the instructions below.

Prerequisites

- RHEL 8 is installed and registered on your host machine.

The following minimum system resources are available:

- 6 GB free disk space for the host, plus another 6 GB for each intended VM.

- 2 GB of RAM for the host, plus another 2 GB for each intended VM.

- 4 CPUs on the host. VMs can generally run with a single assigned vCPU, but Red Hat recommends assigning 2 or more vCPUs per VM to avoid VMs becoming unresponsive during high load.

Your CPU machine type must support IBM POWER virtualization.

To verify this, query the platform information in your /proc/cpuinfo file.

# grep ^platform /proc/cpuinfo
platform : PowerNV

If the output of this command includes the PowerNV entry, you are running a PowerNV machine type and can use virtualization on IBM POWER.
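The platform check above can be wrapped in a small test for use in setup scripts. This is a sketch only; the helper name is_powernv is hypothetical, and the optional file argument exists so that you can try the check against a saved copy of the cpuinfo data.

```shell
#!/bin/sh
# is_powernv: hypothetical helper.
# $1: cpuinfo path (defaults to /proc/cpuinfo)
# Returns 0 if a platform line reports the PowerNV machine type.
is_powernv() {
    grep '^platform' "${1:-/proc/cpuinfo}" | grep -q 'PowerNV'
}

# Example:
#   is_powernv && echo "PowerNV machine type detected"
```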

Procedure

Load the KVM-HV kernel module:

# modprobe kvm_hv

Verify that the KVM kernel module is loaded:

# lsmod | grep kvm

If KVM loaded successfully, the output of this command includes kvm_hv.

Install the packages in the virtualization module:

# yum module install virt

Install the virt-install package:

# yum install virt-install

Start the libvirtd service.

# systemctl start libvirtd

Verification

Verify that your system is prepared to be a virtualization host:

# virt-host-validate
[...]
QEMU: Checking if device /dev/vhost-net exists : PASS
QEMU: Checking if device /dev/net/tun exists : PASS
QEMU: Checking for cgroup 'memory' controller support : PASS
QEMU: Checking for cgroup 'memory' controller mount-point : PASS
[...]
QEMU: Checking for cgroup 'blkio' controller support : PASS
QEMU: Checking for cgroup 'blkio' controller mount-point : PASS
QEMU: Checking if IOMMU is enabled by kernel : PASS

If all virt-host-validate checks return a PASS value, your system is prepared for creating VMs.

If any of the checks return a FAIL value, follow the displayed instructions to fix the problem.

If any of the checks return a WARN value, consider following the displayed instructions to improve virtualization capabilities.
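The PASS, FAIL, and WARN rules above can be summarized with a short filter over the virt-host-validate output. This is a sketch, assuming the ": PASS" style result markers shown in the example; the helper name summarize_validate is hypothetical.

```shell
#!/bin/sh
# summarize_validate: hypothetical helper; reads virt-host-validate output
# on stdin, prints the number of PASS, WARN, and FAIL results, and returns
# a non-zero status if any check failed.
summarize_validate() {
    awk '/: PASS/ { pass++ }
         /: WARN/ { warn++ }
         /: FAIL/ { fail++ }
         END {
             printf "PASS=%d WARN=%d FAIL=%d\n", pass, warn, fail
             exit (fail > 0 ? 1 : 0)
         }'
}

# Example on a virtualization host:
#   virt-host-validate | summarize_validate
```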

Troubleshooting

If KVM virtualization is not supported by your host CPU, virt-host-validate generates the following output:

QEMU: Checking for hardware virtualization: FAIL (Only emulated CPUs are available, performance will be significantly limited)

However, VMs on such a host system will fail to boot, rather than have performance problems.

To work around this, you can change the <domain type> value in the XML configuration of the VM to qemu. Note, however, that Red Hat does not support VMs that use the qemu domain type, and setting this is highly discouraged in production environments.

3.2. How virtualization on IBM POWER differs from AMD64 and Intel 64

KVM virtualization in RHEL 8 on IBM POWER systems is different from KVM on AMD64 and Intel 64 systems in a number of aspects, notably:

- Memory requirements

- VMs on IBM POWER consume more memory. Therefore, the recommended minimum memory allocation for a virtual machine (VM) on an IBM POWER host is 2GB RAM.

- Display protocols

The SPICE protocol is not supported on IBM POWER systems. To display the graphical output of a VM, use the VNC protocol. In addition, only the following virtual graphics card devices are supported:

- vga - only supported in -vga std mode and not in -vga cirrus mode.

- virtio-vga

- virtio-gpu

- SMBIOS

- SMBIOS configuration is not available.

- Memory allocation errors

POWER8 VMs, including compatibility mode VMs, may fail with an error similar to:

qemu-kvm: Failed to allocate KVM HPT of order 33 (try smaller maxmem?): Cannot allocate memory

This is significantly more likely to occur on VMs that use RHEL 7.3 and prior as the guest OS.

To fix the problem, increase the CMA memory pool available for the guest’s hashed page table (HPT) by adding kvm_cma_resv_ratio=memory to the host’s kernel command line, where memory is the percentage of the host memory that should be reserved for the CMA pool (defaults to 5).

- Huge pages

Transparent huge pages (THPs) do not provide any notable performance benefits on IBM POWER8 VMs. However, IBM POWER9 VMs can benefit from THPs as expected.

In addition, the sizes of static huge pages on IBM POWER8 systems are 16 MiB and 16 GiB, as opposed to 2 MiB and 1 GiB on AMD64, Intel 64, and IBM POWER9. As a consequence, to migrate a VM configured with static huge pages from an IBM POWER8 host to an IBM POWER9 host, you must first set up 1 GiB huge pages on the VM.

- kvm-clock

The kvm-clock service does not have to be configured for time management in VMs on IBM POWER9.

- pvpanic

IBM POWER9 systems do not support the pvpanic device. However, an equivalent functionality is available and activated by default on this architecture. To enable it in a VM, use the <on_crash> XML configuration element with the preserve value.

In addition, make sure to remove the <panic> element from the <devices> section, as its presence can lead to the VM failing to boot on IBM POWER systems.

- Single-threaded host

- On IBM POWER8 systems, the host machine must run in single-threaded mode to support VMs. This is automatically configured if the qemu-kvm packages are installed. However, VMs running on single-threaded hosts can still use multiple threads.

- Peripheral devices

A number of peripheral devices supported on AMD64 and Intel 64 systems are not supported on IBM POWER systems, or a different device is supported as a replacement.

- Devices used for PCI-E hierarchy, including ioh3420 and xio3130-downstream, are not supported. This functionality is replaced by multiple independent PCI root bridges provided by the spapr-pci-host-bridge device.

- UHCI and EHCI PCI controllers are not supported. Use OHCI and XHCI controllers instead.

- IDE devices, including the virtual IDE CD-ROM (ide-cd) and the virtual IDE disk (ide-hd), are not supported. Use the virtio-scsi and virtio-blk devices instead.

- Emulated PCI NICs (rtl8139) are not supported. Use the virtio-net device instead.

- Sound devices, including intel-hda, hda-output, and AC97, are not supported.

- USB redirection devices, including usb-redir and usb-tablet, are not supported.

- v2v and p2v

- The virt-v2v and virt-p2v utilities are only supported on the AMD64 and Intel 64 architecture, and are not provided on IBM POWER.

Additional resources

- For a comparison of selected supported and unsupported virtualization features across system architectures supported by Red Hat, see An overview of virtualization features support in RHEL 8.

Chapter 4. Getting started with virtualization on IBM Z

You can use KVM virtualization when using RHEL 8 on IBM Z hardware. However, enabling the KVM hypervisor on your system requires extra steps compared to virtualization on AMD64 and Intel 64 architectures. Certain RHEL 8 virtualization features also have different or restricted functionality on IBM Z.

Apart from the information in the following sections, using virtualization on IBM Z works the same as on AMD64 and Intel 64. Therefore, you can see other RHEL 8 virtualization documentation for more information when using virtualization on IBM Z.

Running KVM on the z/VM OS is not supported.

4.1. Enabling virtualization on IBM Z

To set up a KVM hypervisor and create virtual machines (VMs) on an IBM Z system running RHEL 8, follow the instructions below.

Prerequisites

RHEL 8.6 or later is installed and registered on your host machine.

Important: If you already enabled virtualization on an IBM Z machine by using RHEL 8.5 or earlier, you should instead reconfigure your virtualization module and update your system. For instructions, see Updating virtualization on IBM Z from RHEL 8.5 to RHEL 8.6 or later.

The following minimum system resources are available:

- 6 GB free disk space for the host, plus another 6 GB for each intended VM.

- 2 GB of RAM for the host, plus another 2 GB for each intended VM.

- 4 CPUs on the host. VMs can generally run with a single assigned vCPU, but Red Hat recommends assigning 2 or more vCPUs per VM to avoid VMs becoming unresponsive during high load.

- Your IBM Z host system is using a z13 CPU or later.

RHEL 8 is installed on a logical partition (LPAR). In addition, the LPAR supports the start-interpretive execution (SIE) virtualization functions.

To verify this, search for sie in your /proc/cpuinfo file.

# grep sie /proc/cpuinfo
features : esan3 zarch stfle msa ldisp eimm dfp edat etf3eh highgprs te sie
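For scripted checks, the SIE verification above can be narrowed to the features line and to whole-word matches, so that another feature name containing the letters "sie" does not produce a false positive. The helper name has_sie is hypothetical, and the sketch assumes a grep implementation that supports the -w option, such as GNU grep.

```shell
#!/bin/sh
# has_sie: hypothetical helper.
# $1: cpuinfo path (defaults to /proc/cpuinfo)
# Returns 0 if the features line lists "sie" as a separate word.
has_sie() {
    grep '^features' "${1:-/proc/cpuinfo}" | grep -qw 'sie'
}

# Example:
#   has_sie && echo "SIE virtualization functions are available"
```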

Procedure

Load the KVM kernel module:

# modprobe kvm

Verify that the KVM kernel module is loaded:

# lsmod | grep kvm

If KVM loaded successfully, the output of this command includes kvm.

Install the packages in the virt:rhel/common module:

# yum module install virt:rhel/common

Start the virtualization services:

# for drv in qemu network nodedev nwfilter secret storage interface; do systemctl start virt${drv}d{,-ro,-admin}.socket; done
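The loop above relies on shell brace expansion, so it can be hard to see which units it starts. The following sketch prints the same unit names with an explicit inner loop, which also works in shells without brace expansion. The helper name virt_socket_units is hypothetical.

```shell
#!/bin/sh
# virt_socket_units: hypothetical helper that prints the socket units that
# the loop in the procedure starts: three sockets for each of the seven
# drivers, 21 units in total.
virt_socket_units() {
    for drv in qemu network nodedev nwfilter secret storage interface; do
        # "" is the main socket; -ro and -admin are its read-only
        # and administrative variants
        for suffix in "" -ro -admin; do
            printf 'virt%sd%s.socket\n' "$drv" "$suffix"
        done
    done
}

# Example: review the list before starting the units
#   virt_socket_units
```

For the qemu driver, for instance, the list contains virtqemud.socket, virtqemud-ro.socket, and virtqemud-admin.socket.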

Verification

Verify that your system is prepared to be a virtualization host.

# virt-host-validate
[...]
QEMU: Checking if device /dev/kvm is accessible : PASS
QEMU: Checking if device /dev/vhost-net exists : PASS
QEMU: Checking if device /dev/net/tun exists : PASS
QEMU: Checking for cgroup 'memory' controller support : PASS
QEMU: Checking for cgroup 'memory' controller mount-point : PASS
[...]

If all virt-host-validate checks return a PASS value, your system is prepared for creating VMs.

If any of the checks return a FAIL value, follow the displayed instructions to fix the problem.

If any of the checks return a WARN value, consider following the displayed instructions to improve virtualization capabilities.
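When the output is long, a summary of the results can make the three cases above easier to tell apart. The following is a minimal sketch that counts result values in text read from standard input. The function name summarize_validate is hypothetical, and the sketch assumes that each check line ends with a colon followed by PASS, FAIL, or WARN, as in the sample output.

```shell
# Count PASS, FAIL, and WARN results in virt-host-validate output read from
# standard input. A line is counted when the result value follows a colon,
# optionally separated from it by whitespace.
summarize_validate() {
  awk '
    /:[[:space:]]*PASS/ { pass++ }
    /:[[:space:]]*FAIL/ { fail++ }
    /:[[:space:]]*WARN/ { warn++ }
    END { printf "PASS=%d FAIL=%d WARN=%d\n", pass, fail, warn }
  '
}
```

A typical use would be to pipe the command output into the function, for example virt-host-validate | summarize_validate.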

Troubleshooting

If KVM virtualization is not supported by your host CPU, virt-host-validate generates the following output:

QEMU: Checking for hardware virtualization: FAIL (Only emulated CPUs are available, performance will be significantly limited)

However, VMs on such a host system will fail to boot, rather than have performance problems.

To work around this, you can change the <domain type> value in the XML configuration of the VM to qemu. Note, however, that Red Hat does not support VMs that use the qemu domain type, and setting this is highly discouraged in production environments.

4.2. Updating virtualization on IBM Z from RHEL 8.5 to RHEL 8.6 or later

If you installed RHEL 8 on IBM Z hardware prior to RHEL 8.6, you had to obtain virtualization RPMs from the AV stream, separate from the base RPM stream of RHEL 8. Starting with RHEL 8.6, virtualization RPMs previously available only from the AV stream are available on the base RHEL stream. In addition, the AV stream will be discontinued in a future minor release of RHEL 8. Therefore, using the AV stream is no longer recommended.

By following the instructions below, you will deactivate your AV stream and enable your access to virtualization RPMs available in RHEL 8.6 and later versions.

Prerequisites

You are using RHEL 8.5 on IBM Z, with the virt:av module installed. To confirm that this is the case:

# hostnamectl | grep "Operating System"
Operating System: Red Hat Enterprise Linux 8.5 (Ootpa)

# yum module list --installed
[...]
Advanced Virtualization for RHEL 8 IBM Z Systems (RPMs)
Name     Stream     Profiles       Summary
virt     av [e]     common [i]     Virtualization module

Procedure

Disable the virt:av module.

# yum module disable virt:av

Remove the pre-existing virtualization packages and modules that your system already contains.

# yum module reset virt -y

Upgrade your packages to their latest RHEL versions.

# yum update

This also automatically enables the virt:rhel module on your system.

Verification

Ensure the virt module on your system is provided by the rhel stream.

# yum module info virt
Name : virt
Stream : rhel [d][e][a]
Version : 8050020211203195115
[...]
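To script this verification, the stream name can be extracted from the command output. The following is a minimal sketch. The function name module_stream is hypothetical, and the sketch assumes the "Stream : name [flags]" line layout shown in the sample output above.

```shell
# Print the stream name from "yum module info" output read from standard
# input. Only the first "Stream" line is used, on the assumption that all
# listed versions of the module belong to the same stream.
module_stream() {
  awk '$1 == "Stream" { print $3; exit }'
}
```

For example, yum module info virt | module_stream is expected to print rhel after a successful update.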


4.3. How virtualization on IBM Z differs from AMD64 and Intel 64

KVM virtualization in RHEL 8 on IBM Z systems differs from KVM on AMD64 and Intel 64 systems in the following:

- PCI and USB devices

Virtual PCI and USB devices are not supported on IBM Z. This also means that virtio-*-pci devices are unsupported, and virtio-*-ccw devices should be used instead. For example, use virtio-net-ccw instead of virtio-net-pci.

Note that direct attachment of PCI devices, also known as PCI passthrough, is supported.

- Supported guest operating system

- Red Hat only supports VMs hosted on IBM Z if they use RHEL 7, 8, or 9 as their guest operating system.

- Device boot order

IBM Z does not support the <boot dev='device'> XML configuration element. To define device boot order, use the <boot order='number'> element in the <devices> section of the XML.

In addition, you can select the required boot entry by using the architecture-specific loadparm attribute in the <boot> element. For example, the following determines that the disk should be used first in the boot sequence and, if a Linux distribution is available on that disk, that the second boot entry is selected:

<disk type='file' device='disk'>
  <driver name='qemu' type='qcow2'/>
  <source file='/path/to/qcow2'/>
  <target dev='vda' bus='virtio'/>
  <address type='ccw' cssid='0xfe' ssid='0x0' devno='0x0000'/>
  <boot order='1' loadparm='2'/>
</disk>

Note: Using <boot order='number'> for boot order management is also preferred on AMD64 and Intel 64 hosts.

- Memory hot plug

- Adding memory to a running VM is not possible on IBM Z. Note that removing memory from a running VM (memory hot unplug) is also not possible on IBM Z, as well as on AMD64 and Intel 64.

- NUMA topology

- Non-Uniform Memory Access (NUMA) topology for CPUs is not supported by libvirt on IBM Z. Therefore, tuning vCPU performance by using NUMA is not possible on these systems.

- vfio-ap

- VMs on an IBM Z host can use the vfio-ap cryptographic device passthrough, which is not supported on any other architecture.

- vfio-ccw

- VMs on an IBM Z host can use the vfio-ccw disk device passthrough, which is not supported on any other architecture.

- SMBIOS

- SMBIOS configuration is not available on IBM Z.

- Watchdog devices

If using watchdog devices in your VM on an IBM Z host, use the diag288 model. For example:

<devices>
  <watchdog model='diag288' action='poweroff'/>
</devices>

- kvm-clock

- The kvm-clock service is specific to AMD64 and Intel 64 systems, and does not have to be configured for VM time management on IBM Z.

- v2v and p2v

- The virt-v2v and virt-p2v utilities are supported only on the AMD64 and Intel 64 architecture, and are not provided on IBM Z.

- Nested virtualization

- Creating nested VMs requires different settings on IBM Z than on AMD64 and Intel 64. For details, see Creating nested virtual machines.

- No graphical output in earlier releases

- When using RHEL 8.3 or an earlier minor version on your host, displaying the VM graphical output is not possible when connecting to the VM by using the VNC protocol. This is because the gnome-desktop utility was not supported in earlier RHEL versions on IBM Z. In addition, the SPICE display protocol does not work on IBM Z.

- Migrations

To successfully migrate to a later host model (for example from IBM z14 to z15), or to update the hypervisor, use the host-model CPU mode. The host-passthrough and maximum CPU modes are not recommended, as they are generally not migration-safe.

If you want to specify an explicit CPU model in the custom CPU mode, follow these guidelines:

- Do not use CPU models that end with -base.

- Do not use the qemu, max or host CPU model.

To successfully migrate to an older host model (such as from z15 to z14), or to an earlier version of QEMU, KVM, or the RHEL kernel, use the CPU type of the oldest available host model without -base at the end.

- If you have both the source host and the destination host running, you can instead use the virsh hypervisor-cpu-baseline command on the destination host to obtain a suitable CPU model. For details, see Verifying host CPU compatibility for virtual machine migration.

- For more information about supported machine types in RHEL 8, see Recommended features in RHEL 8 virtualization.

- PXE installation and booting

When using PXE to run a VM on IBM Z, a specific configuration is required for the pxelinux.cfg/default file. For example:

# pxelinux
default linux
label linux
kernel kernel.img
initrd initrd.img
append ip=dhcp inst.repo=example.com/redhat/BaseOS/s390x/os/

- Secure Execution

- You can boot a VM with a prepared secure guest image by defining <launchSecurity type="s390-pv"/> in the XML configuration of the VM. This encrypts the VM’s memory to protect it from unwanted access by the hypervisor.

Note that the following features are not supported when running a VM in secure execution mode:

- Device passthrough by using vfio

- Obtaining memory information by using virsh domstats and virsh memstat

- The memballoon and virtio-rng virtual devices

- Memory backing by using huge pages

- Live and non-live VM migrations

- Saving and restoring VMs

- VM snapshots, including memory snapshots (using the --memspec option)

- Full memory dumps. Instead, specify the --memory-only option for the virsh dump command.

- 248 or more vCPUs. The vCPU limit for secure guests is 247.

- Nested virtualization
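Two of the differences described in this section, the unsupported <boot dev='device'> element and the diag288 watchdog model, can be checked mechanically in a saved VM definition. The following is a minimal sketch, not a Red Hat tool. The function name lint_ibmz_domain_xml is hypothetical. The sketch assumes that the VM's XML was saved to a file (for example with virsh dumpxml) and that each element sits on a single line, because it matches text line by line instead of parsing XML.

```shell
# Report settings in a saved VM definition that the text above describes as
# unsuitable for IBM Z hosts. Returns 0 if nothing is reported, 1 otherwise.
lint_ibmz_domain_xml() {
  xml_file=$1
  status=0

  # <boot dev='...'> is not supported on IBM Z; <boot order='...'> is.
  if grep -q "<boot dev=" "$xml_file"; then
    echo "found <boot dev=...>: use <boot order=...> in <devices> instead"
    status=1
  fi

  # Any watchdog device should use the diag288 model.
  if grep "<watchdog " "$xml_file" | grep -Eqv "model=['\"]diag288['\"]"; then
    echo "found a watchdog device whose model is not diag288"
    status=1
  fi

  return "$status"
}
```

Because the check is purely textual, a clean result only means that these two patterns were not found. It does not validate the rest of the configuration.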


4.4. Next steps

When setting up a VM on an IBM Z system, it is recommended to protect the guest OS from the "Spectre" vulnerability. To do so, use the virsh edit command to modify the VM’s XML configuration and configure its CPU in one of the following ways:

Use the host CPU model:

<cpu mode='host-model' check='partial'>
  <model fallback='allow'/>
</cpu>

This makes the ppa15 and bpb features available to the guest if the host supports them.

If using a specific host model, add the ppa15 and bpb features. The following example uses the zEC12 CPU model:

<cpu mode='custom' match='exact' check='partial'>
  <model fallback='allow'>zEC12</model>
  <feature policy='force' name='ppa15'/>
  <feature policy='force' name='bpb'/>
</cpu>

Note that when using the ppa15 feature with the z114 and z196 CPU models on a host machine that uses a z12 CPU, you also need to use the latest microcode level (bundle 95 or later).
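After editing, you might want to confirm that a VM definition uses one of the two CPU configurations described above. The following is a minimal sketch. The function name has_spectre_features is hypothetical. The sketch assumes that the XML was saved to a file (for example with virsh dumpxml) and it inspects only the configuration text. It cannot tell whether the host actually provides the ppa15 and bpb features.

```shell
# Succeed if the saved VM definition either uses the host-model CPU mode or
# names both the ppa15 and bpb CPU features explicitly.
has_spectre_features() {
  xml_file=$1
  if grep -q "<cpu mode=['\"]host-model['\"]" "$xml_file"; then
    return 0
  fi
  grep -q "name=['\"]ppa15['\"]" "$xml_file" &&
    grep -q "name=['\"]bpb['\"]" "$xml_file"
}
```

A non-zero exit status indicates that neither configuration was found in the file.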

4.5. Additional resources

Chapter 5. Managing virtual machines in the web console

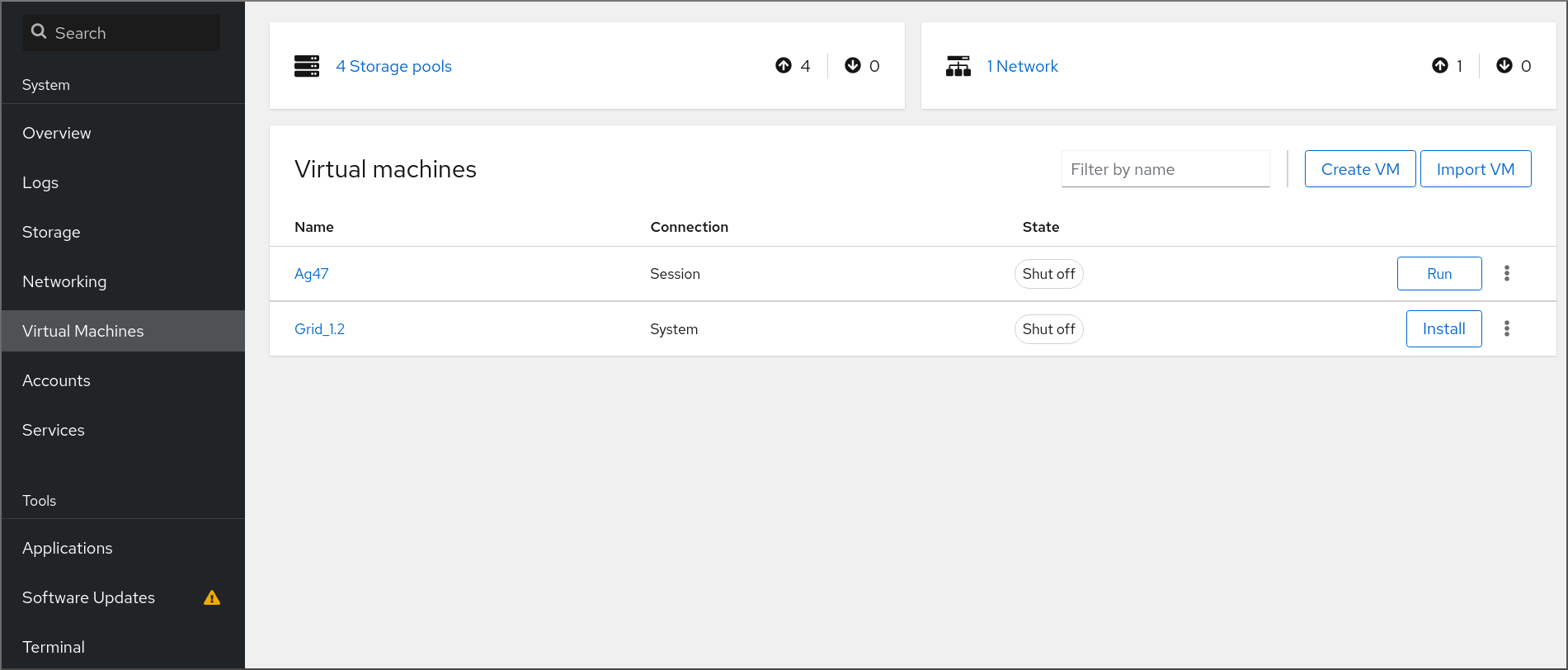

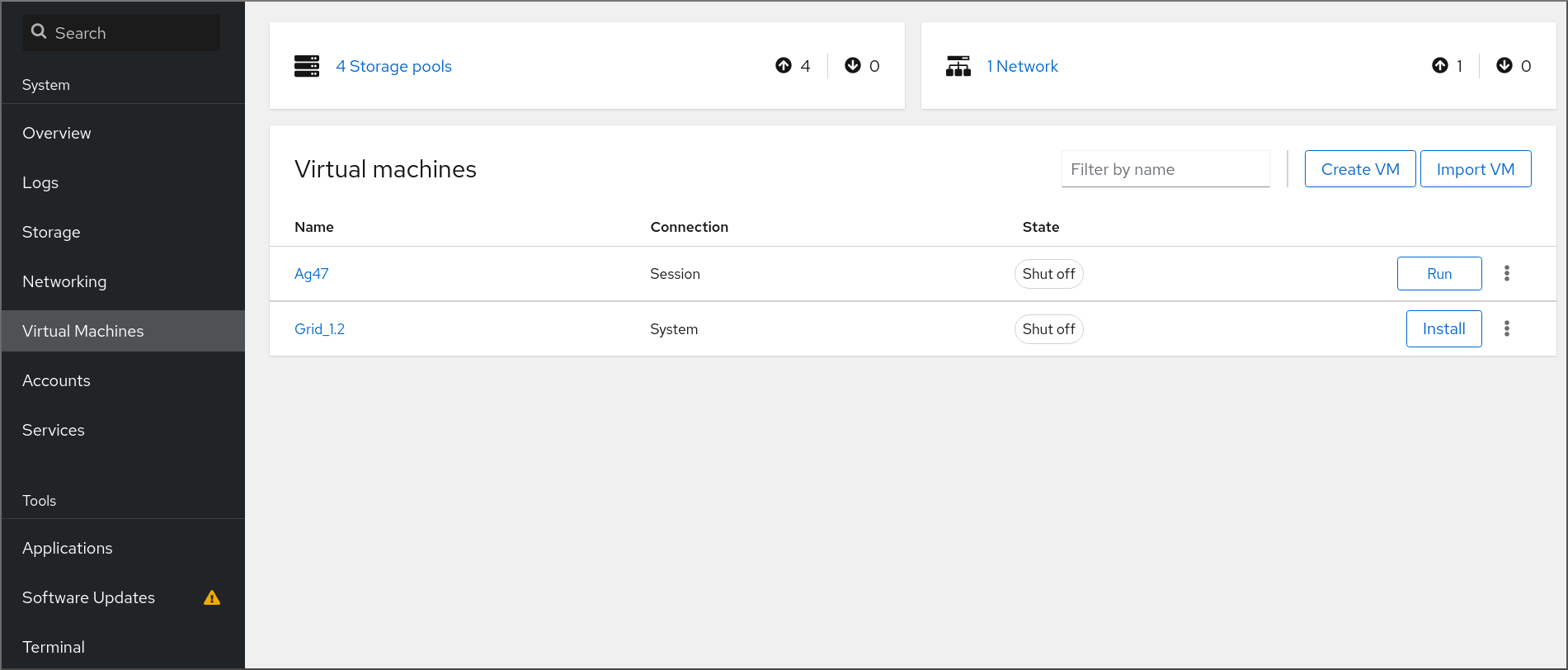

To manage virtual machines in a graphical interface on a RHEL 8 host, you can use the Virtual Machines pane in the RHEL 8 web console.

5.1. Overview of virtual machine management by using the web console

The RHEL 8 web console is a web-based interface for system administration. As one of its features, the web console provides a graphical view of virtual machines (VMs) on the host system, and makes it possible to create, access, and configure these VMs.

Note that to use the web console to manage your VMs on RHEL 8, you must first install a web console plug-in for virtualization.

Next steps

- For instructions on enabling VM management in your web console, see Setting up the web console to manage virtual machines.

- For a comprehensive list of VM management actions that the web console provides, see Virtual machine management features available in the web console.

- For a list of features that are currently not available in the web console but can be used in the virt-manager application, see Differences between virtualization features in Virtual Machine Manager and the web console.

5.2. Setting up the web console to manage virtual machines

Before using the RHEL 8 web console to manage virtual machines (VMs), you must install the web console virtual machine plug-in on the host.

Prerequisites

Ensure that the web console is installed and enabled on your machine.

# systemctl status cockpit.socket
cockpit.socket - Cockpit Web Service Socket
Loaded: loaded (/usr/lib/systemd/system/cockpit.socket
[...]

If this command returns Unit cockpit.socket could not be found, follow the Installing the web console document to enable the web console.

Procedure

Install the cockpit-machines plug-in.

# yum install cockpit-machines

Verification

- Access the web console, for example by entering the https://localhost:9090 address in your browser.

- Log in.

If the installation was successful, Virtual Machines appears in the web console side menu.


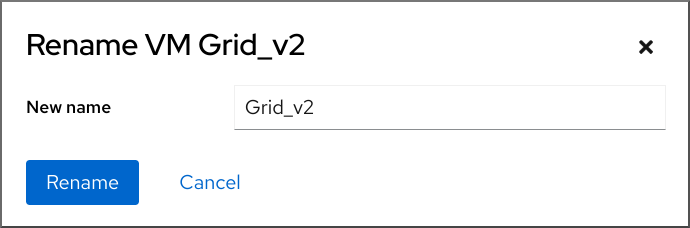

5.3. Renaming virtual machines by using the web console

You might need to rename an existing virtual machine (VM) to avoid naming conflicts or to assign a new unique name based on your use case. To rename the VM, you can use the RHEL web console.

Prerequisites

- The web console VM plug-in is installed on your system.

- The VM is shut down.

Procedure

In the Virtual Machines interface, click the Menu button ⋮ of the VM that you want to rename.

A drop-down menu appears with controls for various VM operations.

Click Rename.

The Rename a VM dialog appears.

- In the New name field, enter a name for the VM.

- Click Rename.

Verification

- Check that the new VM name has appeared in the Virtual Machines interface.

5.4. Virtual machine management features available in the web console

By using the RHEL 8 web console, you can perform the following actions to manage the virtual machines (VMs) on your system.

Table 5.1. VM management tasks that you can perform in the RHEL 8 web console

5.5. Differences between virtualization features in Virtual Machine Manager and the web console