7.1 Release Notes

Red Hat Enterprise Linux 7

Release Notes for Red Hat Enterprise Linux 7.1

Red Hat Customer Content Services

Abstract

The Release Notes document the major new features and enhancements implemented in Red Hat Enterprise Linux 7.1 and the known issues in this release. For detailed information regarding the changes between Red Hat Enterprise Linux 6 and Red Hat Enterprise Linux 7, see the Migration Planning Guide.

Acknowledgements

Red Hat Global Support Services would like to recognize Sterling Alexander and Michael Everette for their outstanding contributions in testing Red Hat Enterprise Linux 7.

Preface

Red Hat Enterprise Linux minor releases are an aggregation of individual enhancement, security, and bug fix errata. The Red Hat Enterprise Linux 7.1 Release Notes document the major changes, features, and enhancements introduced in the Red Hat Enterprise Linux 7 operating system and its accompanying applications for this minor release. In addition, the Red Hat Enterprise Linux 7.1 Release Notes document the known issues in Red Hat Enterprise Linux 7.1.

For information regarding the Red Hat Enterprise Linux life cycle, refer to https://access.redhat.com/support/policy/updates/errata/.

Part I. New Features

This part describes new features and major enhancements introduced in Red Hat Enterprise Linux 7.1.

Chapter 1. Architectures

Red Hat Enterprise Linux 7.1 is available as a single kit on the following architectures: [1]

In this release, Red Hat brings together improvements for servers and systems, as well as for the overall Red Hat open source experience.

1.1. Red Hat Enterprise Linux for POWER, little endian

Red Hat Enterprise Linux 7.1 introduces little endian support on IBM Power Systems servers using IBM POWER8 processors. Previously in Red Hat Enterprise Linux 7, only the big endian variant was offered for IBM Power Systems. Support for little endian on POWER8-based servers aims to improve portability of applications between 64-bit Intel compatible systems (x86_64) and IBM Power Systems.

- Separate installation media are offered for installing Red Hat Enterprise Linux on IBM Power Systems servers in little endian mode. These media are available from the Downloads section of the Red Hat Customer Portal.

- Only IBM POWER8 processor-based servers are supported with Red Hat Enterprise Linux for POWER, little endian.

- Currently, Red Hat Enterprise Linux for POWER, little endian is supported only as a KVM guest under Red Hat Enterprise Virtualization for Power. Installation on bare metal hardware is currently not supported.

- The GRUB2 boot loader is used on the installation media and for network boot. The Installation Guide has been updated with instructions for setting up a network boot server for IBM Power Systems clients using GRUB2.

- All software packages for IBM Power Systems are available for both the little endian and the big endian variant of Red Hat Enterprise Linux for POWER.

- Packages built for Red Hat Enterprise Linux for POWER, little endian use the ppc64le architecture code, for example gcc-4.8.3-9.ael7b.ppc64le.rpm.
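As a rough illustration of where the architecture code sits in a package file name, the following Python sketch splits the example file name given above. The helper name rpm_arch is hypothetical, and the sketch assumes the conventional name-version-release.arch.rpm layout.

```python
def rpm_arch(filename: str) -> str:
    """Return the architecture code from an RPM file name.

    Assumes the conventional <name>-<version>-<release>.<arch>.rpm layout.
    """
    # Split from the right: everything before the arch, the arch, the extension.
    _stem, arch, ext = filename.rsplit(".", 2)
    if ext != "rpm":
        raise ValueError(f"not an RPM file name: {filename!r}")
    return arch


print(rpm_arch("gcc-4.8.3-9.ael7b.ppc64le.rpm"))  # prints "ppc64le"
```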

[1]

Note that the Red Hat Enterprise Linux 7.1 installation is supported only on 64-bit hardware. Red Hat Enterprise Linux 7.1 is able to run 32-bit operating systems, including previous versions of Red Hat Enterprise Linux, as virtual machines.

[2]

Red Hat Enterprise Linux 7.1 (little endian) is currently only supported as a KVM guest under Red Hat Enterprise Virtualization for Power and PowerVM hypervisors.

[3]

Note that Red Hat Enterprise Linux 7.1 supports IBM zEnterprise 196 hardware or later; IBM System z10 mainframe systems are no longer supported and will not boot Red Hat Enterprise Linux 7.1.

Chapter 2. Hardware Enablement

2.1. Intel Broadwell Processor and Graphics Support

Red Hat Enterprise Linux 7.1 added initial support for 5th generation Intel processors (code named Broadwell) with the enablement of the Intel Xeon E3-12xx v4 processor family. Support includes the CPUs themselves, integrated graphics in both 2D and 3D mode, and audio support (Broadwell High Definition Legacy Audio, HDMI audio, and DisplayPort audio).

For detailed information regarding CPU enablement in Red Hat Enterprise Linux, please see the Red Hat Knowledgebase article available at https://access.redhat.com/support/policy/intel .

The turbostat tool (part of the kernel-tools package) has also been updated with support for the new processors.

2.2. Support for TCO Watchdog and I2C (SMBUS) on Intel Communications Chipset 89xx Series

Red Hat Enterprise Linux 7.1 adds support for TCO Watchdog and I2C (SMBUS) on the 89xx series Intel Communications Chipset (formerly Coleto Creek).

2.3. Intel Processor Microcode Update

CPU microcode for Intel processors in the microcode_ctl package has been updated from version 0x17 to version 0x1c in Red Hat Enterprise Linux 7.1.

2.4. AMD Hawaii GPU Support

Red Hat Enterprise Linux 7.1 enables support for hardware acceleration on AMD graphics cards using the Hawaii core (AMD Radeon R9 290 and AMD Radeon R9 290X).

2.5. OSA-Express5s Cards Support in qethqoat

Support for OSA-Express5s cards has been added to the qethqoat tool, part of the s390utils package. This enhancement extends the serviceability of network and card setups for OSA-Express5s cards, and is included as a Technology Preview with Red Hat Enterprise Linux 7.1 on IBM System z.

Chapter 3. Installation and Booting

3.1. Installer

The Red Hat Enterprise Linux installer, Anaconda, has been enhanced in order to improve the installation process for Red Hat Enterprise Linux 7.1.

Interface

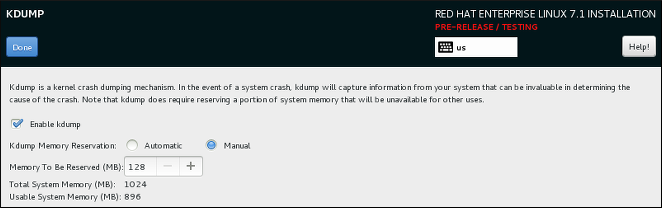

- The graphical installer interface now contains one additional screen which enables configuring the Kdump kernel crash dumping mechanism during the installation. Previously, this was configured after the installation using the firstboot utility, which was not accessible without a graphical interface. Now, you can configure Kdump as part of the installation process on systems without a graphical environment. The new screen is accessible from the main installer menu (Installation Summary).

Figure 3.1. The new Kdump screen

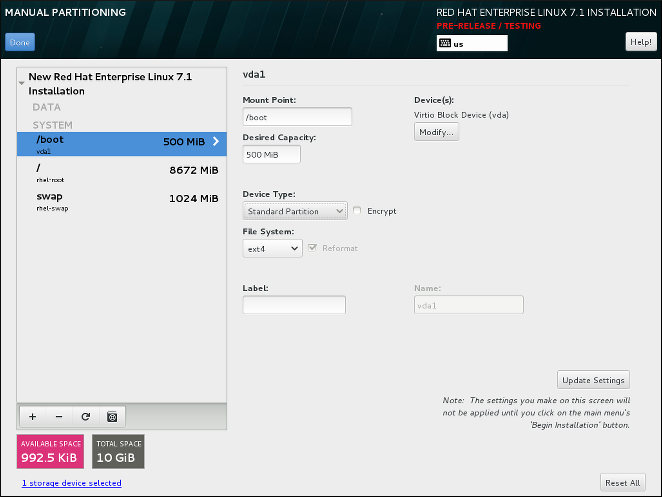

- The manual partitioning screen has been redesigned to improve user experience. Some of the controls have been moved to different locations on the screen.

Figure 3.2. The redesigned Manual Partitioning screen

- You can now configure a network bridge in the Network & Hostname screen of the installer. To do so, click the + button at the bottom of the interface list, select Bridge from the menu, and configure the bridge in the Editing bridge connection dialog window which appears afterwards. This dialog is provided by NetworkManager and is fully documented in the Red Hat Enterprise Linux 7.1 Networking Guide. Several new Kickstart options have also been added for bridge configuration. See below for details.

- The installer no longer uses multiple consoles to display logs. Instead, all logs are in tmux panes in virtual console 1 (tty1). To access logs during the installation, press Ctrl+Alt+F1 to switch to tmux, and then use Ctrl+b X to switch between different windows (replace X with the number of a particular window as displayed at the bottom of the screen). To switch back to the graphical interface, press Ctrl+Alt+F6.

- The command-line interface for Anaconda now includes full help. To view it, use the anaconda -h command on a system with the anaconda package installed. The command-line interface allows you to run the installer on an installed system, which is useful for disk image installations.

Kickstart Commands and Options

- The logvol command has a new option, --profile=. This option enables the user to specify the configuration profile name to use with thin logical volumes. If used, the name will also be included in the metadata for the logical volume. By default, the available profiles are default and thin-performance and are defined in the /etc/lvm/profile directory. See the lvm(8) man page for additional information.

- The behavior of the --size= and --percent= options of the logvol command has changed. Previously, the --percent= option was used together with --grow and --size= to specify how much a logical volume should expand after all statically-sized volumes have been created. Since Red Hat Enterprise Linux 7.1, --size= and --percent= cannot be used on the same logvol command.

- The --autoscreenshot option of the autostep Kickstart command has been fixed, and now correctly saves a screenshot of each screen into the /tmp/anaconda-screenshots directory upon exiting the screen. After the installation completes, these screenshots are moved into /root/anaconda-screenshots.

- The liveimg command now supports installation from tar files as well as disk images. The tar archive must contain the installation media root file system, and the file name must end with .tar, .tbz, .tgz, .txz, .tar.bz2, .tar.gz, or .tar.xz.

- Several new options have been added to the network command for configuring network bridges:

  - When the --bridgeslaves= option is used, the network bridge with device name specified using the --device= option will be created and devices defined in the --bridgeslaves= option will be added to the bridge. For example: network --device=bridge0 --bridgeslaves=em1

  - The --bridgeopts= option takes an optional comma-separated list of parameters for the bridged interface. Available values are stp, priority, forward-delay, hello-time, max-age, and ageing-time. For information about these parameters, see the nm-settings(5) man page.

- The autopart command has a new option, --fstype. This option allows you to change the default file system type (xfs) when using automatic partitioning in a Kickstart file.

- Several new features have been added to Kickstart for better container support. These features include:

  - The new --install option for the repo command saves the provided repository configuration on the installed system in the /etc/yum.repos.d/ directory. Without using this option, a repository configured in a Kickstart file will only be available during the installation process, not on the installed system.

  - The --disabled option for the bootloader command prevents the boot loader from being installed.

  - The new --nocore option for the %packages section of a Kickstart file prevents the system from installing the @core package group. This enables installing extremely minimal systems for use with containers.

Note

Please note that the described options are useful only when combined with containers. Using these options in a general-purpose installation could result in an unusable system.
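The file name rule for tar archives used with the liveimg command, described earlier in this section, can be sketched in Python as follows. This is only an illustration of the listed suffixes; the names TAR_SUFFIXES and is_liveimg_tar are hypothetical, and the check that Anaconda actually performs may differ.

```python
# Suffixes listed in this section for tar archives accepted by liveimg.
TAR_SUFFIXES = (".tar", ".tbz", ".tgz", ".txz", ".tar.bz2", ".tar.gz", ".tar.xz")


def is_liveimg_tar(filename: str) -> bool:
    """Return True if the file name ends with one of the listed tar suffixes."""
    # str.endswith accepts a tuple and matches any of its members.
    return filename.endswith(TAR_SUFFIXES)


print(is_liveimg_tar("rootfs.tar.xz"))  # prints True
print(is_liveimg_tar("rootfs.img"))     # prints False
```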

Entropy Gathering for LUKS Encryption

- If you choose to encrypt one or more partitions or logical volumes during the installation (either during an interactive installation or in a Kickstart file), Anaconda will attempt to gather 256 bits of entropy (random data) to ensure the encryption is secure. The installation will continue after 256 bits of entropy are gathered or after 10 minutes. The attempt to gather entropy happens at the beginning of the actual installation phase when encrypted partitions or volumes are being created. A dialog window will open in the graphical interface, showing progress and remaining time. The entropy gathering process cannot be skipped or disabled. However, there are several ways to speed the process up:

- If you can access the system during the installation, you can supply additional entropy by pressing random keys on the keyboard and moving the mouse.

- If the system being installed is a virtual machine, you can attach a virtio-rng device (a virtual random number generator) as described in the Red Hat Enterprise Linux 7.1 Virtualization Deployment and Administration Guide.

Figure 3.3. Gathering Entropy for Encryption

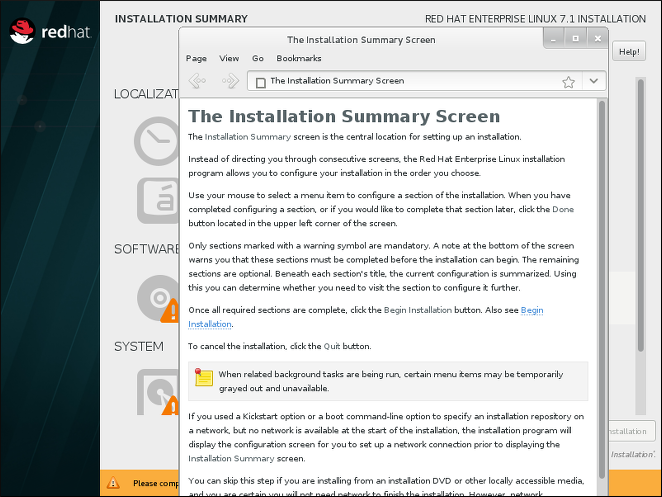
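The "256 bits or 10 minutes, whichever comes first" behavior described above can be modelled with a small Python sketch. This is not Anaconda's code: the function wait_for_entropy and its read_bits callback (which is assumed to return the number of new bits gathered since the previous call) are hypothetical, and only the two limits come from the text.

```python
import time
from typing import Callable

REQUIRED_BITS = 256        # limit stated in the text above
TIMEOUT_SECONDS = 10 * 60  # limit stated in the text above


def wait_for_entropy(
    read_bits: Callable[[], int],
    required_bits: int = REQUIRED_BITS,
    timeout: float = TIMEOUT_SECONDS,
    poll_interval: float = 1.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Return True once enough entropy is gathered, False on timeout.

    In either case the caller carries on, as the installer does.
    """
    deadline = clock() + timeout
    gathered = 0
    while gathered < required_bits:
        if clock() >= deadline:
            return False
        gathered += read_bits()  # hypothetical source of fresh entropy bits
        if gathered < required_bits:
            sleep(poll_interval)
    return True


# A source that yields 64 bits per poll reaches 256 bits after four polls.
print(wait_for_entropy(lambda: 64, poll_interval=0.0))  # prints True
```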

Built-in Help in the Graphical Installer

Each screen in the installer's graphical interface and in the Initial Setup utility now has a Help button in the top right corner. Clicking this button opens the section of the Installation Guide relevant to the current screen using the Yelp help browser.

Figure 3.4. Anaconda built-in help

3.2. Boot Loader

Installation media for IBM Power Systems now use the GRUB2 boot loader instead of the previously offered yaboot. For the big endian variant of Red Hat Enterprise Linux for POWER, GRUB2 is preferred but yaboot can also be used. The newly introduced little endian variant requires GRUB2 to boot.

The Installation Guide has been updated with instructions for setting up a network boot server for IBM Power Systems using GRUB2.

Chapter 4. Storage

LVM Cache

As of Red Hat Enterprise Linux 7.1, LVM cache is fully supported. This feature allows users to create logical volumes with a small fast device performing as a cache to larger slower devices. Please refer to the lvm(7) manual page for information on creating cache logical volumes.

Note the following restrictions on the use of cache logical volumes (LV):

- The cache LV must be a top-level device. It cannot be used as a thin-pool LV, an image of a RAID LV, or any other sub-LV type.

- The cache LV sub-LVs (the origin LV, metadata LV, and data LV) can only be of linear, stripe, or RAID type.

- The properties of the cache LV cannot be changed after creation. To change cache properties, remove the cache and recreate it with the desired properties.

Storage Array Management with libStorageMgmt API

Since Red Hat Enterprise Linux 7.1, storage array management with libStorageMgmt, a storage array independent API, is fully supported. The provided API is stable, consistent, and allows developers to programmatically manage different storage arrays and utilize the hardware-accelerated features provided. System administrators can also use libStorageMgmt to manually configure storage and to automate storage management tasks with the included command-line interface. Please note that the Targetd plug-in is not fully supported and remains a Technology Preview. Supported hardware:

- NetApp Filer (ontap 7-Mode)

- Nexenta (nstor 3.1.x only)

- SMI-S, for the following vendors:

  - HP 3PAR

    - OS release 3.2.1 or later

  - EMC VMAX and VNX

    - Solutions Enabler V7.6.2.48 or later

    - SMI-S Provider V4.6.2.18 hotfix kit or later

  - HDS VSP Array non-embedded provider

    - Hitachi Command Suite v8.0 or later

For more information on libStorageMgmt, refer to the relevant chapter in the Storage Administration Guide.

Support for LSI Syncro

Red Hat Enterprise Linux 7.1 includes code in the megaraid_sas driver to enable LSI Syncro CS high-availability direct-attached storage (HA-DAS) adapters. While the megaraid_sas driver is fully supported for previously enabled adapters, the use of this driver for Syncro CS is available as a Technology Preview. Support for this adapter will be provided directly by LSI, your system integrator, or system vendor. Users deploying Syncro CS on Red Hat Enterprise Linux 7.1 are encouraged to provide feedback to Red Hat and LSI. For more information on LSI Syncro CS solutions, please visit http://www.lsi.com/products/shared-das/pages/default.aspx.

DIF/DIX Support

DIF/DIX is a new addition to the SCSI Standard and a Technology Preview in Red Hat Enterprise Linux 7.1. DIF/DIX increases the size of the commonly used 512-byte disk block from 512 to 520 bytes, adding the Data Integrity Field (DIF). The DIF stores a checksum value for the data block that is calculated by the Host Bus Adapter (HBA) when a write occurs. The storage device then confirms the checksum on receive, and stores both the data and the checksum. Conversely, when a read occurs, the checksum can be verified by the storage device, and by the receiving HBA.

For more information, refer to the section Block Devices with DIF/DIX Enabled in the Storage Administration Guide.
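To make the 512-to-520-byte layout more concrete, the following Python sketch appends an 8-byte integrity field to a 512-byte block and verifies it again. The text above only states that the field carries a checksum; the split into a 2-byte guard tag, 2-byte application tag, and 4-byte reference tag, and the CRC-16 polynomial 0x8BB7, follow the T10 specification rather than this document, and the function names are hypothetical.

```python
import struct

SECTOR_SIZE = 512  # data bytes per block, from the text above
DIF_SIZE = 8       # extra bytes added by DIF (520 - 512)


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16 with polynomial 0x8BB7, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8BB7) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def protect(data: bytes, app_tag: int, ref_tag: int) -> bytes:
    """Return the 520-byte block: data plus guard, application, reference tags."""
    if len(data) != SECTOR_SIZE:
        raise ValueError("expected a 512-byte data block")
    guard = crc16_t10dif(data)
    # Big-endian: 2-byte guard, 2-byte application tag, 4-byte reference tag.
    return data + struct.pack(">HHI", guard, app_tag, ref_tag)


def verify(block: bytes) -> bool:
    """Recompute the checksum over the data and compare it with the guard tag."""
    data, dif = block[:SECTOR_SIZE], block[SECTOR_SIZE:]
    guard, _app_tag, _ref_tag = struct.unpack(">HHI", dif)
    return guard == crc16_t10dif(data)


block = protect(bytes(SECTOR_SIZE), app_tag=0, ref_tag=7)
print(len(block), verify(block))  # prints "520 True"
```

In this model, the writer (the HBA in the description above) calls protect, and each later stage calls verify before accepting the block.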

Enhanced device-mapper-multipath Syntax Error Checking and Output

The device-mapper-multipath tool has been enhanced to verify the multipath.conf file more reliably. As a result, if multipath.conf contains any lines that cannot be parsed, device-mapper-multipath reports an error and ignores these lines to avoid incorrect parsing.

In addition, the following wildcard expressions have been added for the multipathd show paths format command:

- %N and %n for the host and target Fibre Channel World Wide Node Names, respectively.

- %R and %r for the host and target Fibre Channel World Wide Port Names, respectively.

Now, it is easier to associate multipaths with specific Fibre Channel hosts, targets, and their ports, which allows users to manage their storage configuration more effectively.
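As a small illustration, the Python sketch below assembles a multipathd show paths format invocation that uses only the four wildcards listed above. The helper name paths_format_command is hypothetical, and the sketch only builds and prints the command; running it would require a system with multipathd and suitable privileges.

```python
import shlex


def paths_format_command(wildcards):
    """Build the argument list for 'multipathd show paths format <format>'."""
    fmt = " ".join(wildcards)  # the format string is passed as one argument
    return ["multipathd", "show", "paths", "format", fmt]


cmd = paths_format_command(["%N", "%n", "%R", "%r"])
# Print a shell-quoted form of the command for copy and paste.
print(shlex.join(cmd))
```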

Chapter 5. File Systems

Support of Btrfs File System

The Btrfs (B-Tree) file system is supported as a Technology Preview in Red Hat Enterprise Linux 7.1. This file system offers advanced management, reliability, and scalability features. It enables users to create snapshots, and it supports compression and integrated device management.

OverlayFS

The OverlayFS file system service allows the user to "overlay" one file system on top of another. Changes are recorded in the upper file system, while the lower file system remains unmodified. This can be useful because it allows multiple users to share a file-system image, for example containers, or when the base image is on read-only media, for example a DVD-ROM.

In Red Hat Enterprise Linux 7.1, OverlayFS is supported as a Technology Preview. There are currently two restrictions:

- It is recommended to use ext4 as the lower file system; the use of xfs and gfs2 file systems is not supported.

- SELinux is not supported, and to use OverlayFS, it is required to disable enforcing mode.
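The upper/lower behavior described in this section can be mimicked with a toy Python model. This is only an analogy built on collections.ChainMap, not how OverlayFS is implemented; the paths and contents are made up, and file deletion is not modelled.

```python
from collections import ChainMap

# Lower layer: the shared base image, which stays unmodified.
lower = {"/etc/motd": "welcome", "/bin/tool": "v1"}
# Upper layer: records every change made through the merged view.
upper = {}

# Lookups try upper first and fall through to lower; writes go to upper only.
merged = ChainMap(upper, lower)

merged["/etc/motd"] = "changed"  # modify a file that exists in lower
merged["/new"] = "file"          # create a file that exists nowhere yet

print(merged["/etc/motd"])  # prints "changed"
print(lower["/etc/motd"])   # prints "welcome"
print(merged["/bin/tool"])  # prints "v1"
```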

Support of Parallel NFS

Parallel NFS (pNFS) is a part of the NFS v4.1 standard that allows clients to access storage devices directly and in parallel. The pNFS architecture can improve the scalability and performance of NFS servers for several common workloads.

pNFS defines three different storage protocols or layouts: files, objects, and blocks. The client supports the files layout, and since Red Hat Enterprise Linux 7.1, the blocks and object layouts are fully supported.

Red Hat continues to work with partners and open source projects to qualify new pNFS layout types and to provide full support for more layout types in the future.

For more information on pNFS, refer to http://www.pnfs.com/.

Chapter 6. Kernel

Support for Ceph Block Devices

The libceph.ko and rbd.ko modules have been added to the Red Hat Enterprise Linux 7.1 kernel. These RBD kernel modules allow a Linux host to see a Ceph block device as a regular disk device entry which can be mounted to a directory and formatted with a standard file system, such as XFS or ext4.

Note that the CephFS module, ceph.ko, is currently not supported in Red Hat Enterprise Linux 7.1.

Concurrent Flash MCL Updates

Microcode level upgrades (MCL) are enabled in Red Hat Enterprise Linux 7.1 on the IBM System z architecture. These upgrades can be applied without impacting I/O operations to the flash storage media and notify users of the changed flash hardware service level.

Dynamic Kernel Patching

Red Hat Enterprise Linux 7.1 introduces kpatch, a dynamic kernel patching utility, as a Technology Preview. The kpatch utility allows users to manage a collection of binary kernel patches which can be used to dynamically patch the kernel without rebooting. Note that kpatch is supported to run only on AMD64 and Intel 64 architectures.

Crashkernel with More than 1 CPU

Red Hat Enterprise Linux 7.1 enables booting crashkernel with more than one CPU. This function is supported as a Technology Preview.

dm-era Target

Red Hat Enterprise Linux 7.1 introduces the dm-era device-mapper target as a Technology Preview. dm-era keeps track of which blocks were written within a user-defined period of time called an "era". Each era target instance maintains the current era as a monotonically increasing 32-bit counter. This target enables backup software to track which blocks have changed since the last backup. It also enables partial invalidation of the contents of a cache to restore cache coherency after rolling back to a vendor snapshot. The dm-era target is primarily expected to be paired with the dm-cache target.
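The bookkeeping described above can be illustrated with a toy Python model. This is a conceptual sketch only, not the dm-era on-disk format or its message interface; the class EraTracker and its method names are hypothetical.

```python
class EraTracker:
    """Toy model: remember the era in which each block was last written."""

    MAX_ERA = 2**32 - 1  # the era is described as a 32-bit counter

    def __init__(self, nr_blocks: int):
        self.current_era = 0
        self.last_written = [None] * nr_blocks  # None means never written

    def write(self, block: int) -> None:
        """Record that a block was written during the current era."""
        self.last_written[block] = self.current_era

    def checkpoint(self) -> int:
        """Start a new era and return its number; the counter only increases."""
        if self.current_era == self.MAX_ERA:
            raise OverflowError("era counter exhausted")
        self.current_era += 1
        return self.current_era

    def blocks_written_since(self, era: int) -> list:
        """List blocks whose last write happened in the given era or later."""
        return [
            block
            for block, written in enumerate(self.last_written)
            if written is not None and written >= era
        ]


tracker = EraTracker(nr_blocks=8)
tracker.write(1)                   # written before the backup
backup_era = tracker.checkpoint()  # backup taken; a new era begins
tracker.write(3)
tracker.write(5)
print(tracker.blocks_written_since(backup_era))  # prints [3, 5]
```

Backup software in this model only needs to remember the era number returned at backup time in order to ask which blocks changed afterwards.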

Cisco VIC Kernel Driver

The Cisco VIC Infiniband kernel driver has been added to Red Hat Enterprise Linux 7.1 as a Technology Preview. This driver allows the use of Remote Direct Memory Access (RDMA)-like semantics on proprietary Cisco architectures.

Enhanced Entropy Management in hwrng

The paravirtualized hardware RNG (hwrng) support for Linux guests via virtio-rng has been enhanced in Red Hat Enterprise Linux 7.1. Previously, the rngd daemon needed to be started inside the guest and directed to the guest kernel's entropy pool. Since Red Hat Enterprise Linux 7.1, the manual step has been removed. A new khwrngd thread fetches entropy from the virtio-rng device if the guest entropy falls below a specific level. Making this process transparent helps all Red Hat Enterprise Linux guests in utilizing the improved security benefits of having the paravirtualized hardware RNG provided by KVM hosts.

Scheduler Load-Balancing Performance Improvement

Previously, the scheduler load-balancing code balanced for all idle CPUs. In Red Hat Enterprise Linux 7.1, idle load balancing on behalf of an idle CPU is done only when the CPU is due for load balancing. This new behavior reduces the load-balancing rate on non-idle CPUs and therefore the amount of unnecessary work done by the scheduler, which improves its performance.

Improved newidle Balance in Scheduler

The behavior of the scheduler has been modified to stop searching for tasks in the newidle balance code if there are runnable tasks, which leads to better performance.

HugeTLB Supports Per-Node 1GB Huge Page Allocation

Red Hat Enterprise Linux 7.1 has added support for gigantic page allocation at runtime, which allows users of 1GB hugetlbfs pages to specify the Non-Uniform Memory Access (NUMA) node on which the 1GB pages should be allocated at runtime.
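As a hedged sketch of what per-node allocation looks like in practice, the Python code below builds the per-node sysfs path that the kernel exposes for 1GB huge pages and writes a page count to it. The path comes from the kernel's hugetlb sysfs interface rather than from this document, the function names are hypothetical, and the write requires root privileges on hardware that supports 1GB pages.

```python
from pathlib import Path


def gigantic_page_knob(node: int) -> Path:
    """Return the per-node sysfs file controlling the number of 1GB huge pages."""
    # 1GB expressed in kB is 1048576, which is how the kernel names the directory.
    return Path(
        f"/sys/devices/system/node/node{node}"
        "/hugepages/hugepages-1048576kB/nr_hugepages"
    )


def allocate_1gb_pages(node: int, count: int) -> None:
    """Ask the kernel for `count` 1GB pages on the given NUMA node (needs root)."""
    gigantic_page_knob(node).write_text(str(count))


print(gigantic_page_knob(1))
```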

New MCS-based Locking Mechanism

Red Hat Enterprise Linux 7.1 introduces a new locking mechanism, MCS locks. This new locking mechanism significantly reduces spinlock overhead in large systems, which makes spinlocks generally more efficient in Red Hat Enterprise Linux 7.1.

Process Stack Size Increased from 8KB to 16KB

Since Red Hat Enterprise Linux 7.1, the kernel process stack size has been increased from 8KB to 16KB to help large processes that use stack space.

uprobe and uretprobe Features Enabled in perf and systemtap

In Red Hat Enterprise Linux 7.1, the uprobe and uretprobe features work correctly with the perf command and the systemtap script.

End-To-End Data Consistency Checking

End-To-End data consistency checking on IBM System z is fully supported in Red Hat Enterprise Linux 7.1. This enhances data integrity and more effectively prevents data corruption as well as data loss.

DRBG on 32-Bit Systems

In Red Hat Enterprise Linux 7.1, the deterministic random bit generator (DRBG) has been updated to work on 32-bit systems.

NFSoRDMA Available

As a Technology Preview, the NFSoRDMA service has been enabled for Red Hat Enterprise Linux 7.1. This makes the svcrdma module available for users who intend to use Remote Direct Memory Access (RDMA) transport with the Red Hat Enterprise Linux 7 NFS server.

Support for Large Crashkernel Sizes

The Kdump kernel crash dumping mechanism on systems with large memory, that is up to the Red Hat Enterprise Linux 7.1 maximum memory supported limit of 6TB, has become fully supported in Red Hat Enterprise Linux 7.1.

Kdump Supported on Secure Boot Machines

With Red Hat Enterprise Linux 7.1, the Kdump crash dumping mechanism is supported on machines with enabled Secure Boot.

Firmware-assisted Crash Dumping

Red Hat Enterprise Linux 7.1 introduces support for firmware-assisted dump (fadump), which provides an alternative crash dumping tool to kdump. The firmware-assisted feature provides a mechanism to release the reserved dump memory for general use once the crash dump is saved to the disk. This avoids the need to reboot the system after performing the dump, and thus reduces the system downtime. In addition, fadump uses the kdump infrastructure already present in the user space, and works seamlessly with the existing kdump init scripts.

Runtime Instrumentation for IBM System z

As a Technology Preview, support for the Runtime Instrumentation feature has been added for Red Hat Enterprise Linux 7.1 on IBM System z. Runtime Instrumentation enables advanced analysis and execution for a number of user-space applications available with the IBM zEnterprise EC12 system.

Cisco usNIC Driver

Cisco Unified Communication Manager (UCM) servers have an optional feature to provide a Cisco proprietary User Space Network Interface Controller (usNIC), which allows performing Remote Direct Memory Access (RDMA)-like operations for user-space applications. As a Technology Preview, Red Hat Enterprise Linux 7.1 includes the libusnic_verbs driver, which makes it possible to use usNIC devices via standard InfiniBand RDMA programming based on the Verbs API.

Intel Ethernet Server Adapter X710/XL710 Driver Update

The i40e and i40evf kernel drivers have been updated to their latest upstream versions. These updated drivers are included as a Technology Preview in Red Hat Enterprise Linux 7.1.

Chapter 7. Virtualization

Increased Maximum Number of vCPUs in KVM

The maximum number of supported virtual CPUs (vCPUs) in a KVM guest has been increased to 240. This increases the amount of virtual processing units that a user can assign to the guest, and therefore improves its performance potential.

5th Generation Intel Core New Instructions Support in QEMU, KVM, and libvirt API

In Red Hat Enterprise Linux 7.1, support for 5th Generation Intel Core processors has been added to the QEMU hypervisor, the KVM kernel code, and the libvirt API. This allows KVM guests to use the following instructions and features: ADCX, ADOX, RDSEED, PREFETCHW, and supervisor mode access prevention (SMAP).

USB 3.0 Support for KVM Guests

Red Hat Enterprise Linux 7.1 features improved USB support by adding USB 3.0 host adapter (xHCI) emulation as a Technology Preview.

Compression for the dump-guest-memory Command

Since Red Hat Enterprise Linux 7.1, the dump-guest-memory command supports crash dump compression. This makes it possible for users who cannot use the virsh dump command to require less hard disk space for guest crash dumps. In addition, saving a compressed guest crash dump usually takes less time than saving a non-compressed one.

Open Virtual Machine Firmware

The Open Virtual Machine Firmware (OVMF) is available as a Technology Preview in Red Hat Enterprise Linux 7.1. OVMF is a UEFI secure boot environment for AMD64 and Intel 64 guests.

Improve Network Performance on Hyper-V

Several new features of the Hyper-V network driver have been introduced to improve network performance. For example, Receive-Side Scaling, Large Send Offload, and Scatter/Gather I/O are now supported, and network throughput is increased.

hypervfcopyd in hyperv-daemons

The hypervfcopyd daemon has been added to the hyperv-daemons packages. hypervfcopyd is an implementation of file copy service functionality for Linux guests running on a Hyper-V 2012 R2 host. It enables the host to copy a file (over VMBUS) into the Linux guest.

New Features in libguestfs

Red Hat Enterprise Linux 7.1 introduces a number of new features in libguestfs, a set of tools for accessing and modifying virtual machine disk images. Namely:

- virt-builder: a new tool for building virtual machine images. Use virt-builder to rapidly and securely create guests and customize them.

- virt-customize: a new tool for customizing virtual machine disk images. Use virt-customize to install packages, edit configuration files, run scripts, and set passwords.

- virt-diff: a new tool for showing differences between the file systems of two virtual machines. Use virt-diff to easily discover what files have been changed between snapshots.

- virt-log: a new tool for listing log files from guests. The virt-log tool supports a variety of guests including Linux traditional, Linux using journal, and Windows event log.

- virt-v2v: a new tool for converting guests from a foreign hypervisor to run on KVM, managed by libvirt, OpenStack, oVirt, Red Hat Enterprise Virtualization (RHEV), and several other targets. Currently, virt-v2v can convert Red Hat Enterprise Linux and Windows guests running on Xen and VMware ESX.

Flight Recorder Tracing

Support for flight recorder tracing has been introduced in Red Hat Enterprise Linux 7.1. Flight recorder tracing uses SystemTap to automatically capture qemu-kvm data as long as the guest machine is running. This provides an additional avenue for investigating qemu-kvm problems, more flexible than qemu-kvm core dumps.

For detailed instructions on how to configure and use flight recorder tracing, see the Virtualization Deployment and Administration Guide.

LPAR Watchdog for IBM System z

As a Technology Preview, Red Hat Enterprise Linux 7.1 introduces a new watchdog driver for IBM System z. This enhanced watchdog supports Linux logical partitions (LPAR) as well as Linux guests in the z/VM hypervisor, and provides automatic reboot and automatic dump capabilities if a Linux system becomes unresponsive.

RDMA-based Migration of Live Guests

The support for Remote Direct Memory Access (RDMA)-based migration has been added to libvirt. As a result, it is now possible to use the new rdma:// migration URI to request migration over RDMA, which allows for significantly shorter live migration of large guests. Note that prior to using RDMA-based migration, RDMA has to be configured and libvirt has to be set up to use it.

Removal of Q35 Chipset, PCI Express Bus, and AHCI Bus Emulation

Red Hat Enterprise Linux 7.1 removes the emulation of the Q35 machine type, required also for supporting the PCI Express (PCIe) bus and the Advanced Host Controller Interface (AHCI) bus in KVM guest virtual machines. These features were previously available on Red Hat Enterprise Linux as Technology Previews. However, they are still being actively developed and might become available in the future as part of Red Hat products.

Chapter 8. Clustering

Dynamic Token Timeout for Corosync

The token_coefficient option has been added to the Corosync Cluster Engine. The value of token_coefficient is used only when the nodelist section is specified and contains at least three nodes. In such a situation, the token timeout is computed as follows:

token + (number of nodes - 2) * token_coefficient

This allows the cluster to scale without manually changing the token timeout every time a new node is added. The default value of token_coefficient is 650 milliseconds, but it can be set to 0, resulting in effective removal of this feature.

This feature allows Corosync to handle dynamic addition and removal of nodes.
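A short worked example may help. The Python sketch below follows the computation as given in the corosync.conf(5) man page, token + (number of nodes - 2) * token_coefficient, applied only when at least three nodes are listed. The function name is hypothetical, and the token value of 1000 milliseconds used in the example is an assumed configuration value, not taken from this document.

```python
def effective_token_timeout(token_ms: int, nodes: int,
                            token_coefficient_ms: int = 650) -> int:
    """Return the token timeout in milliseconds for a cluster of `nodes` nodes.

    The coefficient applies only when the nodelist has at least three nodes.
    """
    if nodes < 3:
        return token_ms
    return token_ms + (nodes - 2) * token_coefficient_ms


# Assumed base token of 1000 ms; each node beyond two adds 650 ms by default.
for n in (2, 3, 5):
    print(n, effective_token_timeout(1000, n))
# prints:
# 2 1000
# 3 1650
# 5 2950
```

Setting the coefficient to 0 makes the function return the configured token value for any cluster size, which matches the "effective removal" described above.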

Corosync Tie Breaker Enhancement

The auto_tie_breaker quorum feature of Corosync has been enhanced to provide options for more flexible configuration and modification of tie breaker nodes. Users can now select a list of nodes that will retain a quorum in case of an even cluster split, or choose that a quorum will be retained by the node with the lowest node ID or the highest node ID.
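The three choices described above can be pictured with a simplified Python model of an even split. This is not Corosync code: the function name is hypothetical, and the assumption that a configured list is tried in order until one of its nodes is found in either half is a simplification of the real behavior.

```python
def partition_retaining_quorum(half_a, half_b, tie_breaker="lowest"):
    """Pick which half of an evenly split cluster keeps quorum.

    tie_breaker is "lowest", "highest", or an ordered list of node IDs.
    Returns the winning half as a set, or None if no listed node is present.
    """
    halves = (set(half_a), set(half_b))
    all_nodes = halves[0] | halves[1]
    if tie_breaker == "lowest":
        candidates = [min(all_nodes)]
    elif tie_breaker == "highest":
        candidates = [max(all_nodes)]
    else:
        candidates = list(tie_breaker)
    # The first candidate found in either half decides the winner.
    for node in candidates:
        for half in halves:
            if node in half:
                return half
    return None


print(partition_retaining_quorum([1, 2], [3, 4]))             # prints {1, 2}
print(partition_retaining_quorum([1, 2], [3, 4], "highest"))  # prints {3, 4}
print(partition_retaining_quorum([1, 2], [3, 4], [5, 3]))     # prints {3, 4}
```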

Enhancements for Red Hat High Availability

For the Red Hat Enterprise Linux 7.1 release, the Red Hat High Availability Add-On supports the following features. For information on these features, see the High Availability Add-On Reference manual.

- The pcs resource cleanup command can now reset the resource status and failcount for all resources.

- You can specify a lifetime parameter for the pcs resource move command to indicate a period of time that the resource constraint this command creates will remain in effect.

- You can use the pcs acl command to set permissions for local users to allow read-only or read-write access to the cluster configuration by using access control lists (ACLs).

- The pcs constraint command now supports the configuration of specific constraint options in addition to general resource options.

- The pcs resource create command supports the disabled parameter to indicate that the resource being created is not started automatically.

- The pcs cluster quorum unblock command prevents the cluster from waiting for all nodes when establishing a quorum.

- You can configure resource group order with the before and after parameters of the pcs resource create command.

- You can back up the cluster configuration in a tarball and restore the cluster configuration files on all nodes from backup with the backup and restore options of the pcs config command.

Chapter 9. Compiler and Tools

Hot-patching Support for Linux on System z Binaries

GNU Compiler Collection (GCC) implements support for on-line patching of multi-threaded code for Linux on System z binaries. Selecting specific functions for hot-patching is enabled by using a "function attribute" and hot-patching for all functions can be enabled using the -mhotpatch command-line option.

Enabling hot-patching has a negative impact on software size and performance. It is therefore recommended to use hot-patching for specific functions instead of enabling hot patch support for all functions.

Hot-patching support for Linux on System z binaries was a Technology Preview for Red Hat Enterprise Linux 7.0. With the release of Red Hat Enterprise Linux 7.1, it is now fully supported.

Performance Application Programming Interface Enhancement

Red Hat Enterprise Linux 7 includes the Performance Application Programming Interface (PAPI). PAPI is a specification for cross-platform interfaces to hardware performance counters on modern microprocessors. These counters exist as a small set of registers that count events, which are occurrences of specific signals related to a processor's function. Monitoring these events has a variety of uses in application performance analysis and tuning.

In Red Hat Enterprise Linux 7.1, PAPI and the related libpfm libraries have been enhanced to provide support for IBM POWER8, Applied Micro X-Gene, ARM Cortex A57, and ARM Cortex A53 processors. In addition, the event sets have been updated for Intel Xeon, Intel Xeon v2, and Intel Xeon v3 processors.

OProfile

OProfile is a system-wide profiler for Linux systems. The profiling runs transparently in the background and profile data can be collected at any time. In Red Hat Enterprise Linux 7.1, OProfile has been enhanced to provide support for the following processor families: Intel Atom Processor C2XXX, 5th Generation Intel Core Processors, IBM POWER8, AppliedMicro X-Gene, and ARM Cortex A57.

OpenJDK8

Red Hat Enterprise Linux 7.1 features the java-1.8.0-openjdk packages, which contain the latest version of the Open Java Development Kit, OpenJDK8, that is now fully supported. These packages provide a fully compliant implementation of Java SE 8 and may be used in parallel with the existing java-1.7.0-openjdk packages, which remain available in Red Hat Enterprise Linux 7.1.

Java 8 brings numerous new improvements, such as Lambda expressions, default methods, a new Stream API for collections, JDBC 4.2, hardware AES support, and much more. In addition to these, OpenJDK8 contains numerous other performance updates and bug fixes.

sosreport Replaces snap

The deprecated snap tool has been removed from the powerpc-utils package. Its functionality has been integrated into the sosreport tool.

GDB Support for Little-Endian 64-bit PowerPC

Red Hat Enterprise Linux 7.1 implements support for the 64-bit PowerPC little-endian architecture in the GNU Debugger (GDB).

Tuna Enhancement

Tuna is a tool that can be used to adjust scheduler tunables, such as scheduler policy, RT priority, and CPU affinity. In Red Hat Enterprise Linux 7.1, the Tuna GUI has been enhanced to request root authorization when launched, so that the user does not have to run the desktop as root to invoke the Tuna GUI. For further information on Tuna, see the Tuna User Guide.

crash Moved to Debugging Tools

With Red Hat Enterprise Linux 7.1, the crash packages are no longer a dependency of the abrt packages. Therefore, crash has been removed from the default installation of Red Hat Enterprise Linux 7 in order to keep the installation minimal. Now, users have to select the Debugging Tools option in the Anaconda installer GUI for the crash packages to be installed.

Accurate ethtool Output

As a Technology Preview, the network-querying capabilities of the ethtool utility have been enhanced for Red Hat Enterprise Linux 7.1 on IBM System z. As a result, when using hardware compatible with the improved querying, ethtool now provides improved monitoring options, and displays network card settings and values more accurately.

Concerns Regarding Transactional Synchronization Extensions

Intel has issued erratum HSW136 concerning Transactional Synchronization Extensions (TSX) instructions. Under certain circumstances, software using the Intel TSX instructions may result in unpredictable behavior. TSX instructions may be executed by applications built with the Red Hat Enterprise Linux 7.1 GCC under certain conditions. These include the use of GCC's experimental Transactional Memory support (-fgnu-tm) when executed on hardware with TSX instructions enabled. Users of Red Hat Enterprise Linux 7.1 are advised to exercise further caution when experimenting with Transactional Memory at this time, or to disable TSX instructions by applying an appropriate hardware or firmware update.

Chapter 10. Networking

Trusted Network Connect

Red Hat Enterprise Linux 7.1 introduces the Trusted Network Connect functionality as a Technology Preview. Trusted Network Connect is used with existing network access control (NAC) solutions, such as TLS, 802.1X, or IPsec to integrate endpoint posture assessment; that is, collecting an endpoint's system information (such as operating system configuration settings, installed packages, and others, termed as integrity measurements). Trusted Network Connect is used to verify these measurements against network access policies before allowing the endpoint to access the network.

SR-IOV Functionality in the qlcnic Driver

Support for Single-Root I/O virtualization (SR-IOV) has been added to the qlcnic driver as a Technology Preview. Support for this functionality will be provided directly by QLogic, and customers are encouraged to provide feedback to QLogic and Red Hat. Other functionality in the qlcnic driver remains fully supported.

Berkeley Packet Filter

Support for a Berkeley Packet Filter (BPF) based traffic classifier has been added to Red Hat Enterprise Linux 7.1. BPF is used in packet filtering for packet sockets, for sand-boxing in secure computing mode (seccomp), and in Netfilter. BPF has a just-in-time implementation for the most important architectures and has a rich syntax for building filters.

Improved Clock Stability

Previously, test results indicated that disabling the tickless kernel capability could significantly improve the stability of the system clock. The kernel tickless mode can be disabled by adding nohz=off to the kernel boot option parameters. However, recent improvements applied to the kernel in Red Hat Enterprise Linux 7.1 have greatly improved the stability of the system clock and the difference in stability of the clock with and without nohz=off should be much smaller now for most users. This is useful for time synchronization applications using PTP and NTP.

libnetfilter_queue Packages

The libnetfilter_queue package has been added to Red Hat Enterprise Linux 7.1.

libnetfilter_queue is a user space library providing an API to packets that have been queued by the kernel packet filter. It enables receiving queued packets from the kernel nfnetlink_queue subsystem, parsing of the packets, rewriting packet headers, and re-injecting altered packets.

Teaming Enhancements

The libteam packages have been updated to version 1.15 in Red Hat Enterprise Linux 7.1. The update provides a number of bug fixes and enhancements; in particular, teamd can now be automatically re-spawned by systemd, which increases overall reliability.

Intel QuickAssist Technology Driver

The Intel QuickAssist Technology (QAT) driver has been added to Red Hat Enterprise Linux 7.1. The QAT driver enables QuickAssist hardware, which adds hardware offload crypto capabilities to a system.

LinuxPTP timemaster Support for Failover between PTP and NTP

The linuxptp package has been updated to version 1.4 in Red Hat Enterprise Linux 7.1. The update provides a number of bug fixes and enhancements, in particular, support for failover between PTP domains and NTP sources using the timemaster application. When there are multiple PTP domains available on the network, or fallback to NTP is needed, the timemaster program can be used to synchronize the system clock to all available time sources.

Network initscripts

Support for custom VLAN names has been added in Red Hat Enterprise Linux 7.1. Improved support for IPv6 in GRE tunnels has been added; the inner address now persists across reboots.

TCP Delayed ACK

Support for a configurable TCP Delayed ACK has been added to the iproute package in Red Hat Enterprise Linux 7.1. This can be enabled by the ip route quickack command.

NetworkManager

NetworkManager has been updated to version 1.0 in Red Hat Enterprise Linux 7.1.

The support for Wi-Fi, Bluetooth, wireless wide area network (WWAN), ADSL, and team has been split into separate subpackages to allow for smaller installations.

To support smaller environments, this update introduces an optional built-in Dynamic Host Configuration Protocol (DHCP) client that uses less memory.

A new NetworkManager mode for static networking configurations that starts NetworkManager, configures interfaces and then quits, has been added.

NetworkManager provides better cooperation with non-NetworkManager managed devices, specifically by no longer setting the IFF_UP flag on these devices. In addition, NetworkManager is aware of connections created outside of itself and is able to save these to be used within NetworkManager if desired.

In Red Hat Enterprise Linux 7.1, NetworkManager assigns a default route for each interface allowed to have one. The metric of each default route is adjusted to select the global default interface, and this metric may be customized to prefer certain interfaces over others. Default routes added by other programs are not modified by NetworkManager.

Improvements have been made to NetworkManager's IPv6 configuration, allowing it to respect IPv6 router advertisement MTUs and keeping manually configured static IPv6 addresses even if automatic configuration fails. In addition, WWAN connections now support IPv6 if the modem and provider support it.

Various improvements to dispatcher scripts have been made, including support for a pre-up and pre-down script.

Bonding option lacp_rate is now supported in Red Hat Enterprise Linux 7.1. NetworkManager has been enhanced to provide easy device renaming when renaming master interfaces with slave interfaces.

A priority setting has been added to the auto-connect function of NetworkManager. Now, if more than one eligible candidate is available for auto-connect, NetworkManager selects the connection with the highest priority. If all available connections have equal priority values, NetworkManager uses the default behavior and selects the last active connection.
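
The selection rule described above can be sketched in Python as follows. This is only an illustration of the described behavior, not NetworkManager's implementation: the Candidate type and its fields are hypothetical, and applying the "last active" rule to any tie among the highest-priority candidates is an assumption that generalizes the equal-priority case.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    priority: int        # higher value is preferred (hypothetical field)
    last_active: float   # timestamp of the most recent activation (hypothetical)


def pick_autoconnect(candidates):
    """Pick the highest-priority candidate; break ties by most recent activation."""
    if not candidates:
        return None
    # Tuples compare element by element: priority first, then recency.
    return max(candidates, key=lambda c: (c.priority, c.last_active))


if __name__ == "__main__":
    pool = [Candidate("office-wifi", 5, 200.0), Candidate("wired", 10, 100.0)]
    print(pick_autoconnect(pool).name)
```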

This update also introduces numerous improvements to the nmcli command-line utility, including the ability to provide passwords when connecting to Wi-Fi or 802.1X networks.

Network Namespaces and VTI

Support for virtual tunnel interfaces (VTI) with network namespaces has been added in Red Hat Enterprise Linux 7.1. This enables traffic from a VTI to be passed between different namespaces when packets are encapsulated or de-encapsulated.

Alternative Configuration Storage for the MemberOf Plug-In

The configuration of the MemberOf plug-in for the Red Hat Directory Server can now be stored in a suffix mapped to a back-end database. This allows the MemberOf plug-in configuration to be replicated, which makes it easier for the user to maintain a consistent MemberOf plug-in configuration in a replicated environment.

Chapter 11. Red Hat Enterprise Linux Atomic Host

Included in the release of Red Hat Enterprise Linux 7.1 is Red Hat Enterprise Linux Atomic Host - a secure, lightweight, and minimal-footprint operating system optimized to run Linux containers. It has been designed to take advantage of the powerful technology available in Red Hat Enterprise Linux 7. Red Hat Enterprise Linux Atomic Host uses SELinux to provide strong safeguards in multi-tenant environments, and provides the ability to perform atomic upgrades and rollbacks, enabling quicker and easier maintenance with less downtime. Red Hat Enterprise Linux Atomic Host uses the same upstream projects delivered via the same RPM packaging as Red Hat Enterprise Linux 7.

Red Hat Enterprise Linux Atomic Host is pre-installed with the following tools to support Linux containers:

- Docker - For more information, see Get Started with Docker Formatted Container Images on Red Hat Systems.

- Kubernetes, flannel, etcd - For more information, see Get Started Orchestrating Containers with Kubernetes.

Red Hat Enterprise Linux Atomic Host makes use of the following technologies:

- OSTree and rpm-OSTree - These projects provide atomic upgrades and rollback capability.

- systemd - The powerful new init system for Linux that enables faster boot times and easier orchestration.

- SELinux - Enabled by default to provide complete multi-tenant security.

New features in Red Hat Enterprise Linux Atomic Host 7.1.4

- The iptables-service package has been added.

- It is now possible to enable automatic "command forwarding", in which commands that are not found on Red Hat Enterprise Linux Atomic Host are seamlessly retried inside the RHEL Atomic Tools container. The feature is disabled by default (it requires the RHEL Atomic Tools image to be pulled on the system). To enable it, uncomment the export line in the /etc/sysconfig/atomic file so it looks like this:

export TOOLSIMG=rhel7/rhel-tools

- The atomic command:

- You can now pass three options (OPT1, OPT2, OPT3) to the LABEL command in a Dockerfile. Developers can add environment variables to the labels to allow users to pass additional commands using atomic. The following is an example from a Dockerfile:

LABEL docker run ${OPT1}${IMAGE}

This line means that running the following command:

atomic run --opt1="-ti" image_name

is identical to running:

docker run -ti image_name

- You can now use ${NAME} and ${IMAGE} anywhere in your label, and atomic will substitute it with an image and a name.

- The ${SUDO_UID} and ${SUDO_GID} options are set and can be used in image LABEL.

- The atomic mount command attempts to mount the file system belonging to a given container/image ID or image to the given directory. Optionally, you can provide a registry and tag to use a specific version of an image.
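
The placeholder substitution described for LABEL values can be illustrated with a small Python sketch. It is not the atomic tool's implementation: expand_label is a hypothetical helper, and the example template assumes the placeholders are separated by a space so that the expanded command matches the docker run line quoted above.

```python
from string import Template


def expand_label(label, image, name="", **options):
    """Expand ${IMAGE}, ${NAME} and ${OPTn} placeholders in a label string."""
    values = {"IMAGE": image, "NAME": name}
    # Options such as opt1="-ti" map to the upper-case placeholder ${OPT1}.
    values.update({key.upper(): value for key, value in options.items()})
    # safe_substitute leaves unknown placeholders untouched instead of raising.
    return Template(label).safe_substitute(values)


if __name__ == "__main__":
    print(expand_label("docker run ${OPT1} ${IMAGE}", "image_name", opt1="-ti"))
```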

New features in Red Hat Enterprise Linux Atomic Host 7.1.3

- Enhanced rpm-OSTree to provide a unique machine ID for each machine provisioned.

- Support for remote-specific GPG keyring has been added, specifically to associate a particular GPG key with a particular OSTree remote.

- The atomic command:

- atomic upload - allows the user to upload a container image to a docker repository or to a Pulp/Crane instance.

- atomic version - displays the "Name Version Release" container label in the following format: ContainerID;Name-Version-Release;Image/Tag

- atomic verify - inspects an image to verify that the image layers are based on the latest image layers available. For example, if you have a MongoDB application based on rhel7-1.1.2 and a rhel7-1.1.3 base image is available, the command will inform you there is a later image.

- A dbus interface has been added to the verify and version commands.

New features in Red Hat Enterprise Linux Atomic Host 7.1.2

The atomic command-line interface is now available for Red Hat Enterprise Linux 7.1 as well as Red Hat Enterprise Linux Atomic Host. Note that the feature set differs between the two systems. Only Red Hat Enterprise Linux Atomic Host includes support for OSTree updates. The atomic run command is supported on both platforms.

- atomic run allows a container to specify its run-time options via the RUN meta-data label. This is used primarily with privileges.

- atomic install and atomic uninstall allow a container to specify install and uninstall scripts via the INSTALL and UNINSTALL meta-data labels.

- atomic now supports container upgrade and checking for updated images.

The iscsi-initiator-utils package has been added to Red Hat Enterprise Linux Atomic Host. This allows the system to mount iSCSI volumes; Kubernetes has gained a storage plugin to set up iSCSI mounts for containers.

You will also find Integrity Measurement Architecture (IMA), audit and libwrap available from systemd.

Important

Red Hat Enterprise Linux Atomic Host is not managed in the same way as other Red Hat Enterprise Linux 7 variants. Specifically:

- The Yum package manager is not used to update the system and install or update software packages. For more information, see Installing Applications on Red Hat Enterprise Linux Atomic Host.

- There are only two directories on the system with write access for storing local system configuration: /etc/ and /var/. The /usr/ directory is mounted read-only. Other directories are symbolic links to a writable location - for example, the /home/ directory is a symlink to /var/home/. For more information, see Red Hat Enterprise Linux Atomic Host File System.

- The default partitioning dedicates most of available space to containers, using direct Logical Volume Management (LVM) instead of the default loopback.

For more information, see Getting Started with Red Hat Enterprise Linux Atomic Host.

Red Hat Enterprise Linux Atomic Host 7.1.1 provides new versions of Docker and etcd, and maintenance fixes for the atomic command and other components.

Chapter 12. Linux Containers

12.1. Linux Containers Using Docker Technology

Red Hat Enterprise Linux Atomic Host 7.1.4 includes the following updates:

The docker packages have been upgraded to upstream version 1.7.1, which contains various improvements over version 1.7, which, in its turn, contains significant changes from version 1.6 included in Red Hat Enterprise Linux Atomic Host 7.1.3. See the following change log for the full list of fixes and features between version 1.6 and 1.7.1: https://github.com/docker/docker/blob/master/CHANGELOG.md. Additionally, Red Hat Enterprise Linux Atomic Host 7.1.4 includes the following changes:

- Firewalld is now supported for docker containers. If firewalld is running on the system, the rules will be added via the firewalld passthrough. If firewalld is reloaded, the configuration will be re-applied.

- Docker now mounts the cgroup information specific to a container under the /sys/fs/cgroup directory. Some applications make decisions based on the amount of resources available to them. For example, a Java Virtual Machine (JVM) might check how much memory is available to it so that it can allocate a large enough pool to improve its performance. This allows applications to discover the maximum amount of memory available to the container by reading /sys/fs/cgroup/memory.

- The docker run command now emits a warning message if you are using a device mapper on a loopback device. It is strongly recommended to use the dm.thinpooldev option as a storage option for a production environment. Do not use loopback in a production environment.

- You can now run containers in systemd mode with the --init=systemd flag. If you are running a container with systemd as PID 1, this flag will turn on all systemd features to allow it to run in a non-privileged container. Set container_uuid as an environment variable to pass to systemd what to store in the /etc/machine-id file. This file links the journald within the container to the external log. Mount host directories into a container so systemd will not require privileges, then mount the journal directory from the host into the container. If you run journald within the container, the host journalctl utility will be able to display the content. Mount the /run directory as a tmpfs. Then automatically mount the /sys/fs/cgroup directory as read-only into a container if --systemd is specified. Send proper signal to systemd when running in systemd mode.

- The search experience within containers using the docker search command has been improved:

- You can now prepend indices to search results.

- You can prefix a remote name with a registry name.

- You can shorten the index name if it is not an IP address.

- The --no-index option has been added to avoid listing index names.

- The sorting of entries when the index is preserved has been changed: You can sort by index_name, star_count, registry_name, name and description.

- The sorting of entries when the index is omitted has been changed: You can sort by registry_name, star_count, name and description.

- You can now expose the configured registry list using the Docker info API.
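
As a minimal sketch of how an application inside a container might discover its memory limit from the cgroup mount mentioned in the list above, the following Python function reads a limit file under /sys/fs/cgroup/memory. The file name memory.limit_in_bytes is an assumption based on the cgroup v1 memory controller layout and is not named in these notes; the function name is hypothetical.

```python
import os


def container_memory_limit(cgroup_dir="/sys/fs/cgroup/memory"):
    """Return the memory limit in bytes, or None if it cannot be read."""
    # Assumed cgroup v1 file name; adjust if the controller layout differs.
    path = os.path.join(cgroup_dir, "memory.limit_in_bytes")
    try:
        with open(path) as handle:
            return int(handle.read().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    limit = container_memory_limit()
    print("memory limit:", limit if limit is not None else "unknown")
```

Returning None rather than raising lets a caller fall back to its own default when the file is absent, for example when running outside a container.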

Red Hat Enterprise Linux Atomic Host 7.1.3 includes the following updates:

- docker-storage-setup

- docker-storage-setup now relies on the Logical Volume Manager (LVM) to extend thin pools automatically. By default, 60% of free space in the volume group is used for a thin pool and it is grown automatically by LVM. When the thin pool is 60% full, it is grown by 20%.

- A default configuration file for docker-storage-setup is now in /usr/lib/docker-storage-setup/docker-storage-setup. You can override the settings in this file by editing the /etc/sysconfig/docker-storage-setup file.

- Support for passing raw block devices to the docker service for creating a thin pool has been removed. Now the docker-storage-setup service creates an LVM thin pool and passes it to docker.

- The chunk size for thin pools has been increased from 64K to 512K.

- By default, the partition table for the root user is not grown. You can change this behavior by setting the GROWPART=true option in the /etc/sysconfig/docker-storage-setup file.

- A thin pool is now set up with the skip_block_zeroing feature. This means that when a new block is provisioned in the pool, it will not be zeroed. This is done for performance reasons. One can change this behavior by using the --zero option:

lvchange --zero y thin-pool

- By default, docker storage using the devicemapper graphdriver runs on loopback devices. It is strongly recommended to not use this setup, as it is not production ready. A warning message is displayed to warn the user about this. The user has the option to suppress this warning by passing the storage flag dm.no_warn_on_loop_devices=true.

- Updates related to handling storage on Docker-formatted containers:

- NFS Volume Plugins validated with SELinux have been added. This includes using the NFS Volume Plugin to NFS Mount GlusterFS.

- Persistent volume support validated for the NFS volume plugin only has been added.

- Local storage (HostPath volume plugin) validated with SELinux has been added. (requires workaround described in the docs)

- iSCSI Volume Plugins validated with SELinux have been added.

- GCEPersistentDisk Volume Plugins validated with SELinux have been added. (requires workaround described in the docs)
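
The thin pool sizing described for docker-storage-setup in the list above (60% of free volume group space initially, grown by 20% once the pool is 60% full) can be worked through with a short Python sketch. The function names are hypothetical, and interpreting "grown by 20%" as 20% of the current pool size is an assumption.

```python
def initial_pool_size(vg_free_mib, pool_percent=60):
    """Size of the thin pool created from the free space in the volume group."""
    return vg_free_mib * pool_percent / 100


def grow_if_needed(pool_mib, used_mib, threshold_percent=60, growth_percent=20):
    """Return the pool size after one autoextend check."""
    # Compare used * 100 against threshold * size to avoid rounding issues.
    if used_mib * 100 >= threshold_percent * pool_mib:
        return pool_mib * (100 + growth_percent) / 100
    return pool_mib


if __name__ == "__main__":
    pool = initial_pool_size(100000)      # 100000 MiB free in the volume group
    print(pool, grow_if_needed(pool, 36000))
```

Under these assumptions, a volume group with 100000 MiB free yields a 60000 MiB pool, which grows to 72000 MiB once 36000 MiB is in use.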

Red Hat Enterprise Linux Atomic Host 7.1.2 includes the following updates:

- docker-1.6.0-11.el7

- A completely re-architected Registry and a new Registry API supported by Docker 1.6 that significantly enhance image pull performance and reliability.

- A new logging driver API which allows you to send container logs to other systems has been added to the docker utility. The --log-driver option has been added to the docker run command and it takes one of three values: json-file, syslog, or none. The none option can be used with applications with verbose logs that are non-essential.

- Dockerfile instructions can now be used when committing and importing. This also adds the ability to make changes to running images without having to re-build the entire image. The commit --change and import --change options allow you to specify standard changes to be applied to the new image. These are expressed in the Dockerfile syntax and used to modify the image.

- This release adds support for custom cgroups. Using the --cgroup-parent flag, you can pass a specific cgroup to run a container in. This allows you to create and manage cgroups on their own. You can define custom resources for those cgroups and put containers under a common parent group.

- With this update, you can now specify the default ulimit settings for all containers when configuring the Docker daemon. For example:

docker -d --default-ulimit nproc=1024:2048

This command sets a soft limit of 1024 and a hard limit of 2048 child processes for all containers. You can set this option multiple times for different ulimit values, for example:

--default-ulimit nproc=1024:2408 --default-ulimit nofile=100:200

These settings can be overwritten when creating a container as such:

docker run -d --ulimit nproc=2048:4096 httpd

This will overwrite the default nproc value passed into the daemon.

- The ability to block registries with the --block-registry flag.

- Support for searching multiple registries at once.

- Pushing local images to a public registry requires confirmation.

- Short names are resolved locally against a list of registries configured in an order, with the docker.io registry last. This way, pulling is always done with a fully qualified name.
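
The short-name resolution described in the last item above can be sketched in Python. This is an illustration only, not the docker implementation: the function names are hypothetical, the registry host in the example is just a sample value, and the test for whether a name is already fully qualified (a first path component containing a dot or colon, or equal to localhost) is an assumption modeled on common Docker naming rules.

```python
def is_fully_qualified(name):
    """Guess whether an image name already starts with a registry host."""
    if "/" not in name:
        return False
    first = name.split("/", 1)[0]
    return "." in first or ":" in first or first == "localhost"


def pull_candidates(name, registries):
    """List fully qualified names to try, in order, with docker.io last."""
    if is_fully_qualified(name):
        return [name]
    ordered = [r for r in registries if r != "docker.io"] + ["docker.io"]
    return [f"{registry}/{name}" for registry in ordered]


if __name__ == "__main__":
    for candidate in pull_candidates("rhel7/rhel-tools", ["registry.access.redhat.com"]):
        print(candidate)
```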

Red Hat Enterprise Linux Atomic Host 7.1.1 includes the following updates:

- docker-1.5.0-28.el7

- IPv6 support: Support is available for globally routed and local link addresses.

- Read-only containers: This option is used to restrict applications in a container from being able to write to the entire file system.

- Statistics API and endpoint: Statistics on live CPU, memory, network IO and block IO can now be streamed from containers.

- The docker build -f docker_file command, which specifies a file other than Dockerfile to be used by docker build.

- The ability to specify additional registries to use for unqualified pulls and searches. Prior to this, an unqualified name was only searched in the public Docker Hub.

- The ability to block communication with certain registries with the --block-registry=<registry> flag. This includes the ability to block the public Docker Hub and the ability to block all but specified registries.

- Confirmation is required to push to a public registry.

- All repositories are now fully qualified when listed. The output of docker images lists the source registry name for all images pulled. The output of docker search shows the source registry name for all results.

For more information, see Get Started with Docker Formatted Container Images on Red Hat Systems.

12.2. Container Orchestration

Red Hat Enterprise Linux Atomic Host 7.1.5 and Red Hat Enterprise Linux 7.1 include the following updates:

- kubernetes-1.0.3-0.1.gitb9a88a7.el7

- The new kubernetes-client subpackage which provides the kubectl command has been added to the kubernetes component.

- etcd-2.1.1-2.el7

- etcd now provides improved performance when using the peer TLS protocol.

Red Hat Enterprise Linux Atomic Host 7.1.4 and Red Hat Enterprise Linux 7.1 include the following updates:

- kubernetes-1.0.0-0.8.gitb2dafda.el7

- You can now set up a Kubernetes cluster using the Ansible automation platform.

Red Hat Enterprise Linux Atomic Host 7.1.3 and Red Hat Enterprise Linux 7.1 include the following updates:

- kubernetes-0.17.1-4.el7

- Kubernetes nodes no longer need to be explicitly created in the API server; they automatically join and register themselves.

- NFS, GlusterFS and Ceph block plugins have been added to Red Hat Enterprise Linux, and NFS support has been added to Red Hat Enterprise Linux Atomic Host.

- etcd-2.0.11-2.el7

- Fixed bugs with adding or removing cluster members, performance and resource usage improvements.

- The GOMAXPROCS environment variable has been set to use the maximum number of available processors on a system; etcd now uses all processors concurrently.

- The configuration file must be updated to include the -advertise-client-urls flag when setting the -listen-client-urls flag.

Red Hat Enterprise Linux Atomic Host 7.1.2 and Red Hat Enterprise Linux 7.1 include the following updates:

- kubernetes-0.15.0-0.3.git0ea87e4.el7

- Enabled the v1beta3 API and set it as the default API version.

- Added multi-services.

- The Kubelet now listens on a secure HTTPS port.

- The API server now supports client certificate authentication.

- Enabled log collection from the master pod.

- New volume support: iSCSI volume plug-in, GlusterFS volume plug-in, Amazon Elastic Block Store (Amazon EBS) volume support.

- Fixed the NFS volume plug-in.

- Configure the scheduler using JSON.

- Improved messages on scheduler failure.

- Improved messages on port conflicts.

- Improved responsiveness of the master when creating new pods.

- Added support for inter-process communication (IPC) namespaces.

- The --etcd_config_file and --etcd_servers options have been removed from the kube-proxy utility; use the --master option instead.

- etcd-2.0.9-2.el7

- The configuration file format has changed significantly; using old configuration files will cause upgrades of etcd to fail.

- The etcdctl command now supports importing hidden keys from the given snapshot.

- Added support for IPv6.

- The etcd proxy no longer fails to restart after initial configuration.

- The -initial-cluster flag is no longer required when bootstrapping a single member cluster with the -name flag set.

- etcd 2 now uses its own implementation of the Raft distributed consensus protocol; previous versions of etcd used the goraft implementation.

- Added the etcdctl import command to import the migration snap generated in etcd 0.4.8 to the etcd cluster version 2.0.

- The etcdctl utility now takes port 2379 as its default port.

- The cadvisor package has been obsoleted by the kubernetes package. The functionality of cadvisor is now part of the kubelet sub-package.

Red Hat Enterprise Linux 7.1 includes support for orchestration of Linux Containers built using docker technology via kubernetes, flannel and etcd.

Red Hat Enterprise Linux Atomic Host 7.1.1 and Red Hat Enterprise Linux 7.1 include the following updates:

- etcd 0.4.6-0.13.el7 - a new command, etcdctl, was added to make browsing and editing etcd easier for a system administrator.

- flannel 0.2.0-7.el7 - a bug fix to support delaying startup until after network interfaces are up.

For more information see Get Started Orchestrating Containers with Kubernetes.

12.3. Cockpit Enablement

Red Hat Enterprise Linux Atomic Host 7.1.5 and Red Hat Enterprise Linux 7.1 include the following updates:

- The Cockpit Web Service is now available as a privileged container. This allows you to run Cockpit on systems like Red Hat Enterprise Linux Atomic Host where the cockpit-ws package cannot be installed, but other prerequisites of Cockpit are included. To use this privileged container, use the following command:

$

sudo atomic run rhel7/cockpit-ws - Cockpit now includes the ability to access other hosts using a single instance of the Cockpit Web Service. This is useful when only one machine is reachable by the user, or to manage other hosts that do not have the Cockpit Web Service installed. The other hosts should have the cockpit-bridge and cockpit-shell packages installed.

- The authorized SSH keys for a particular user and system can now be configured using the "Administrator Accounts" section.

- Cockpit now uses the new storaged system API to configure and monitor disks and file systems.

Red Hat Enterprise Linux Atomic Host 7.1.2 and Red Hat Enterprise Linux 7.1 include the following updates:

- libssh - a multiplatform C library that implements the SSHv1 and SSHv2 protocols on the client and server side. It can be used to remotely execute programs, transfer files, and provide a secure and transparent tunnel for remote programs. The Secure FTP implementation makes it easier to manage remote files.

- cockpit-ws - The cockpit-ws package contains the web server component used for communication between the browser application and various configuration tools and services like cockpitd. cockpit-ws is automatically started on system boot. The cockpit-ws package has been included in Red Hat Enterprise Linux 7.1 only.

12.4. Containers Using the libvirt-lxc Tooling Have Been Deprecated

The following libvirt-lxc packages are deprecated since Red Hat Enterprise Linux 7.1:

- libvirt-daemon-driver-lxc

- libvirt-daemon-lxc

- libvirt-login-shell

Future development on the Linux containers framework is now based on the docker command-line interface. libvirt-lxc tooling may be removed in a future release of Red Hat Enterprise Linux (including Red Hat Enterprise Linux 7) and should not be relied upon for developing custom container management applications.

Chapter 13. Authentication and Interoperability

Manual Backup and Restore Functionality

This update introduces the ipa-backup and ipa-restore commands to Identity Management (IdM), which allow users to manually back up their IdM data and restore it in case of a hardware failure. For further information, see the ipa-backup(1) and ipa-restore(1) manual pages or the documentation in the Linux Domain Identity, Authentication, and Policy Guide.
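As a rough illustration of the workflow described above, the following sketch creates a full backup and lists the stored backups. It assumes the default backup location named in the ipa-backup(1) manual page and must be run as root on an IdM server; the directory name in the restore comment is a placeholder, not a real backup.

```shell
# Default backup location according to ipa-backup(1); adjust if your site differs.
BACKUP_ROOT=/var/lib/ipa/backup

if command -v ipa-backup >/dev/null 2>&1; then
    # A full backup is taken offline, so IdM services are stopped while it runs.
    ipa-backup
    # Each backup is stored in its own timestamped directory.
    ls -1 "$BACKUP_ROOT"
    # To restore, pass one of the listed directories (placeholder name shown):
    #   ipa-restore "$BACKUP_ROOT/ipa-full-YYYY-MM-DD-HH-MM-SS"
else
    echo "ipa-backup not found; run this on an IdM server" >&2
fi
```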

Support for Migration from WinSync to Trust

This update implements the new ID Views mechanism of user configuration. It enables the migration of Identity Management users from a WinSync synchronization-based architecture used by Active Directory to an infrastructure based on Cross-Realm Trusts. For the details of ID Views and the migration procedure, see the documentation in the Windows Integration Guide.

One-Time Password Authentication

One of the best ways to increase authentication security is to require two-factor authentication (2FA). A very popular option is to use one-time passwords (OTP). This technique began in the proprietary space, but over time some open standards emerged (HOTP: RFC 4226, TOTP: RFC 6238). Identity Management in Red Hat Enterprise Linux 7.1 contains the first implementation of the standard OTP mechanism. For further details, see the documentation in the System-Level Authentication Guide.

SSSD Integration for the Common Internet File System

A plug-in interface provided by SSSD has been added to configure the way in which the cifs-utils utility conducts the ID-mapping process. As a result, an SSSD client can now access a CIFS share with the same functionality as a client running the Winbind service. For further information, see the documentation in the Windows Integration Guide.

Certificate Authority Management Tool

The ipa-cacert-manage renew command has been added to the Identity Management (IdM) client, which makes it possible to renew the IdM Certification Authority (CA) file. This enables users to smoothly install and set up IdM using a certificate signed by an external CA. For details on this feature, see the ipa-cacert-manage(1) manual page.

Increased Access Control Granularity

It is now possible to regulate read permissions of specific sections in the Identity Management (IdM) server UI. This allows IdM server administrators to limit the accessibility of privileged content only to chosen users. In addition, authenticated users of the IdM server no longer have read permissions to all of its contents by default. These changes improve the overall security of the IdM server data.

Limited Domain Access for Unprivileged Users

The domains= option, which overrides the domains= option in the /etc/sssd/sssd.conf file, has been added to the pam_sss module. In addition, this update adds the pam_trusted_users option, which allows the user to add a list of numerical UIDs or user names that are trusted by the SSSD daemon, and the pam_public_domains option, which specifies a list of domains accessible even to untrusted users. These additions allow the configuration of systems where regular users are allowed to access the specified applications but do not have login rights on the system itself. For additional information on this feature, see the documentation in the Linux Domain Identity, Authentication, and Policy Guide.
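The options described above might be combined as in the following sketch, which only prints sample configuration fragments for review and does not modify any file. The service file name, the user names, and the apps.example.com domain are hypothetical placeholders; check the pam_sss(8) and sssd.conf(5) manual pages before adapting it.

```shell
# Print sample fragments; merge them by hand into the real files after review.
sample_pam_config() {
cat <<'EOF'
# /etc/pam.d/<service> (hypothetical service file):
# restrict this PAM service to a single SSSD domain
auth    required    pam_sss.so domains=apps.example.com

# /etc/sssd/sssd.conf:
[pam]
# Users (names or numerical UIDs) trusted to access any domain
pam_trusted_users = root, admin1
# Domains that remain accessible even to untrusted users
pam_public_domains = apps.example.com
EOF
}

sample_pam_config
```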

Automatic data provider configuration

The ipa-client-install command now by default configures SSSD as the data provider for the sudo service. This behavior can be disabled by using the --no-sudo option. In addition, the --nisdomain option has been added to specify the NIS domain name for the Identity Management client installation, and the --no_nisdomain option has been added to avoid setting the NIS domain name. If neither of these options is used, the IPA domain is used instead.
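For illustration, the options above could be combined as follows. The sketch only assembles and prints the command line rather than running the installer, and nis.example.com is a placeholder NIS domain name.

```shell
# Placeholder NIS domain; replace with the value used at your site.
NIS_DOMAIN="nis.example.com"

# Skip the SSSD sudo provider setup and set an explicit NIS domain name.
CMD="ipa-client-install --no-sudo --nisdomain=$NIS_DOMAIN"

# Print the command for review; run it as root on the client to apply it.
echo "$CMD"
```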

Use of AD and LDAP sudo Providers

The AD provider is a back end used to connect to an Active Directory server. In Red Hat Enterprise Linux 7.1, using the AD sudo provider together with the LDAP provider is supported as a Technology Preview. To enable the AD sudo provider, add the sudo_provider=ad setting in the domain section of the sssd.conf file.
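A minimal sketch of such a domain section is printed by the snippet below. The domain name ad.example.com is a placeholder, and listing sudo in the services line is an assumption about a typical setup rather than something stated in these notes; see sssd.conf(5) and sssd-sudo(5) for the authoritative description.

```shell
# Print a sample sssd.conf fragment; nothing is written to disk.
sample_sudo_provider() {
cat <<'EOF'
[sssd]
# Assumption: the sudo responder is enabled alongside nss and pam
services = nss, pam, sudo

[domain/ad.example.com]
id_provider = ad
# Setting described in the release note
sudo_provider = ad
EOF
}

sample_sudo_provider
```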

32-bit Version of krb5-server and krb5-server-ldap Deprecated

The 32-bit version of Kerberos 5 Server is no longer distributed, and the following packages have been deprecated as of Red Hat Enterprise Linux 7.1: krb5-server.i686, krb5-server.s390, krb5-server.ppc, krb5-server-ldap.i686, krb5-server-ldap.s390, and krb5-server-ldap.ppc. There is no need to distribute the 32-bit version of krb5-server on Red Hat Enterprise Linux 7, which is supported only on the following architectures: AMD64 and Intel 64 systems (x86_64), 64-bit IBM Power Systems servers (ppc64), and IBM System z (s390x).

SSSD Leverages GPO Policies to Define HBAC

SSSD is now able to use Group Policy Objects (GPOs) stored on an AD server for access control. This enhancement mimics the functionality of Windows clients, so a single set of access control rules can handle both Windows and Unix machines. In effect, Windows administrators can now use GPOs to control access to Linux clients.

Apache Modules for IPA

A set of Apache modules has been added to Red Hat Enterprise Linux 7.1 as a Technology Preview. The Apache modules can be used by external applications to achieve tighter interaction with Identity Management beyond simple authentication.

Chapter 14. Security

SCAP Security Guide

The scap-security-guide package has been included in Red Hat Enterprise Linux 7.1 to provide security guidance, baselines, and associated validation mechanisms. The guidance is specified in the Security Content Automation Protocol (SCAP), which constitutes a catalog of practical hardening advice. SCAP Security Guide contains the necessary data to perform system security compliance scans regarding prescribed security policy requirements; both a written description and an automated test (probe) are included. By automating the testing, SCAP Security Guide provides a convenient and reliable way to verify system compliance regularly.

The Red Hat Enterprise Linux 7.1 version of the SCAP Security Guide includes the Red Hat Corporate Profile for Certified Cloud Providers (RH CCP), which can be used for compliance scans of Red Hat Enterprise Linux Server 7.1 cloud systems.

Also, the Red Hat Enterprise Linux 7.1 scap-security-guide package contains SCAP datastream content format files for Red Hat Enterprise Linux 6 and Red Hat Enterprise Linux 7, so that remote compliance scanning of both of these products is possible.

The Red Hat Enterprise Linux 7.1 system administrator can use the oscap command line tool from the openscap-scanner package to verify that the system conforms to the provided guidelines. See the scap-security-guide(8) manual page for further information.
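A compliance scan along these lines might look like the following sketch. The datastream path and the profile ID are assumptions about where the scap-security-guide package installs its content and how it names the RH CCP profile; confirm both with the output of oscap info before relying on them. The result and report file names are arbitrary.

```shell
# Assumed install path and profile ID; verify with: oscap info "$DATASTREAM"
DATASTREAM=/usr/share/xml/scap/ssg/content/ssg-rhel7-ds.xml
PROFILE=xccdf_org.ssgproject.content_profile_rht-ccp

if command -v oscap >/dev/null 2>&1 && [ -r "$DATASTREAM" ]; then
    # List the profiles that the datastream actually provides.
    oscap info "$DATASTREAM"
    # Evaluate the system, saving machine-readable results and an HTML report.
    # oscap exits non-zero when at least one rule fails, so do not abort on it.
    oscap xccdf eval --profile "$PROFILE" \
        --results /tmp/ssg-results.xml \
        --report /tmp/ssg-report.html \
        "$DATASTREAM" || echo "scan finished with failed rules or errors" >&2
else
    echo "oscap or the SCAP Security Guide datastream is not available" >&2
fi
```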

SELinux Policy

In Red Hat Enterprise Linux 7.1, the SELinux policy has been modified; services without their own SELinux policy that previously ran in the init_t domain now run in the newly-added unconfined_service_t domain. See the Unconfined Processes chapter in the SELinux User's and Administrator's Guide for Red Hat Enterprise Linux 7.1.
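One way to see which services are affected on a running system is to list processes together with their SELinux context, as in this short sketch. It relies only on the standard -Z option of ps and is meant as a quick check, not a complete audit.

```shell
# Domain introduced for services that have no SELinux policy of their own.
DOMAIN=unconfined_service_t

# Show every process whose SELinux context contains the domain name.
ps -eZ 2>/dev/null | grep "$DOMAIN" || echo "no processes found in $DOMAIN"
```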

New Features in OpenSSH

The OpenSSH set of tools has been updated to version 6.6.1p1, which adds several new features related to cryptography:

- Key exchange using elliptic-curve Diffie-Hellman in Daniel Bernstein's Curve25519 is now supported. This method is now the default provided both the server and the client support it.

- Support has been added for using the Ed25519 elliptic-curve signature scheme as a public key type. Ed25519, which can be used for both user and host keys, offers better security than ECDSA and DSA as well as good performance.

- A new private-key format has been added that uses the bcrypt key-derivation function (KDF). By default, this format is used for Ed25519 keys but may be requested for other types of keys as well.

- A new transport cipher, chacha20-poly1305@openssh.com, has been added. It combines Daniel Bernstein's ChaCha20 stream cipher and the Poly1305 message authentication code (MAC).
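The features listed above can be tried out with the standard OpenSSH command-line tools, as in the following sketch. Keys are written to a temporary directory, the passphrase is a demonstration placeholder, and user@host in the final comment is hypothetical.

```shell
# Scratch directory so that no existing keys are overwritten.
KEY_DIR=$(mktemp -d)

if command -v ssh-keygen >/dev/null 2>&1; then
    # Ed25519 keys use the new bcrypt-protected private-key format by default.
    ssh-keygen -q -t ed25519 -N "demo-only-passphrase" -f "$KEY_DIR/id_ed25519"
    # For other key types, request the new format with -o; -a sets the KDF rounds.
    ssh-keygen -q -t rsa -o -a 64 -N "demo-only-passphrase" -f "$KEY_DIR/id_rsa"
    ls -1 "$KEY_DIR"
fi

if command -v ssh >/dev/null 2>&1; then
    # Check whether the local client offers the new transport cipher.
    ssh -Q cipher | grep chacha20-poly1305 || echo "cipher not offered by this client"
fi

# Requesting the new key exchange and cipher explicitly (placeholder host):
#   ssh -o KexAlgorithms=curve25519-sha256@libssh.org \
#       -c chacha20-poly1305@openssh.com user@host
```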

New Features in Libreswan

The Libreswan implementation of IPsec VPN has been updated to version 3.12, which adds several new features and improvements:

- New ciphers have been added.

- IKEv2 support has been improved.

- Intermediary certificate chain support has been added in IKEv1 and IKEv2.

- Connection handling has been improved.

- Interoperability has been improved with OpenBSD, Cisco, and Android systems.

- systemd support has been improved.

- Support has been added for hashed CERTREQ and traffic statistics.

New Features in TNC

The Trusted Network Connect (TNC) Architecture, provided by the strongimcv package, has been updated and is now based on strongSwan 5.2.0. The following new features and improvements have been added to the TNC:

- The PT-EAP transport protocol (RFC 7171) for Trusted Network Connect has been added.

- The Attestation Integrity Measurement Collector (IMC)/Integrity Measurement Verifier (IMV) pair now supports the IMA-NG measurement format.

- The Attestation IMV support has been improved by implementing a new TPMRA work item.

- Support has been added for a JSON-based REST API with SWID IMV.

- The SWID IMC can now extract all installed packages from the dpkg, rpm, or pacman package managers using the swidGenerator, which generates SWID tags according to the new ISO/IEC 19770-2:2014 standard.

- The libtls TLS 1.2 implementation as used by EAP-(T)TLS and other protocols has been extended by AEAD mode support, currently limited to AES-GCM.

- IMV support for sharing access requestor ID, device ID, and product information of an access requestor via a common imv_session object has been improved.

- Several bugs have been fixed in existing IF-TNCCS (PB-TNC, IF-M (PA-TNC)) protocols, and in the OS IMC/IMV pair.

New Features in GnuTLS

The GnuTLS implementation of the SSL, TLS, and DTLS protocols has been updated to version 3.3.8, which offers a number of new features and improvements:

- Support for DTLS 1.2 has been added.

- Support for Application Layer Protocol Negotiation (ALPN) has been added.

- The performance of elliptic-curve cipher suites has been improved.

- New cipher suites, RSA-PSK and CAMELLIA-GCM, have been added.

- Native support for the Trusted Platform Module (TPM) standard has been added.

- Support for PKCS#11 smart cards and hardware security modules (HSM) has been improved in several ways.

- Compliance with the FIPS 140 security standards (Federal Information Processing Standards) has been improved in several ways.

Chapter 15. Desktop

Mozilla Thunderbird

Mozilla Thunderbird, provided by the thunderbird package, has been added in Red Hat Enterprise Linux 7.1 and offers an alternative to the Evolution mail and newsgroup client.

Support for Quad-buffered OpenGL Stereo Visuals

GNOME Shell and the Mutter compositing window manager now allow you to use quad-buffered OpenGL stereo visuals on supported hardware. You need to have the NVIDIA Display Driver version 337 or later installed to be able to properly use this feature.

Online Account Providers

A new GSettings key, org.gnome.online-accounts.whitelisted-providers, has been added to GNOME Online Accounts (provided by the gnome-online-accounts package). This key provides a list of online account providers that are explicitly allowed to be loaded on startup. By specifying this key, system administrators can enable appropriate providers or selectively disable others.
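As a sketch of how the key might be inspected and changed for the current user, the snippet below uses the gsettings tool. The provider names google and owncloud are assumed examples of provider identifiers; the command that changes the setting is left commented out so that the snippet is safe to run as is.

```shell
SCHEMA=org.gnome.online-accounts
KEY=whitelisted-providers
# Assumed provider identifiers, for illustration only.
ALLOWED="['google', 'owncloud']"

if command -v gsettings >/dev/null 2>&1; then
    # Show the current list of allowed providers.
    gsettings get "$SCHEMA" "$KEY" || echo "schema $SCHEMA is not installed" >&2
    # Uncomment to restrict the providers for the current user:
    # gsettings set "$SCHEMA" "$KEY" "$ALLOWED"
else
    echo "would run: gsettings set $SCHEMA $KEY \"$ALLOWED\""
fi
```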

Chapter 16. Supportability and Maintenance

ABRT Authorized Micro-Reporting