1.9. Cluster Administration Tools

Red Hat Cluster Suite provides a variety of tools to configure and manage your Red Hat Cluster. This section provides an overview of the administration tools available with Red Hat Cluster Suite.

1.9.1. Conga

Conga is an integrated set of software components that provides centralized configuration and management of Red Hat clusters and storage. Conga provides the following major features:

- One Web interface for managing cluster and storage

- Automated Deployment of Cluster Data and Supporting Packages

- Easy Integration with Existing Clusters

- No Need to Re-Authenticate

- Integration of Cluster Status and Logs

- Fine-Grained Control over User Permissions

The primary components in Conga are luci and ricci, which are separately installable. luci is a server that runs on one computer and communicates with multiple clusters and computers via ricci. ricci is an agent that runs on each computer (either a cluster member or a standalone computer) managed by Conga.

luci is accessible through a Web browser and provides three major functions that are accessible through the following tabs:

- homebase — Provides tools for adding and deleting computers, adding and deleting users, and configuring user privileges. Only a system administrator is allowed to access this tab.

- cluster — Provides tools for creating and configuring clusters. Each instance of luci lists the clusters that have been set up with that luci. A system administrator can administer all clusters listed on this tab. Other users can administer only the clusters that they have permission to manage (granted by an administrator).

- storage — Provides tools for remote administration of storage. With the tools on this tab, you can manage storage on computers whether they belong to a cluster or not.

To administer a cluster or storage, an administrator adds (or registers) a cluster or a computer to a luci server. When a cluster or a computer is registered with luci, the FQDN hostname or IP address of each computer is stored in a luci database.

You can populate the database of one luci instance from another luci instance. That capability provides a means of replicating a luci server instance and provides an efficient upgrade and testing path. When you install an instance of luci, its database is empty. However, you can import part or all of a luci database from an existing luci server when deploying a new luci server.

Each luci instance has one user at initial installation — admin. Only the admin user may add systems to a luci server. Also, the admin user can create additional user accounts and determine which users are allowed to access clusters and computers registered in the luci database. It is possible to import users as a batch operation in a new luci server, just as it is possible to import clusters and computers.

When a computer is added to a luci server to be administered, authentication is done once. No authentication is necessary from then on (unless the certificate used is revoked by a CA). After that, you can remotely configure and manage clusters and storage through the luci user interface. luci and ricci communicate with each other via XML.

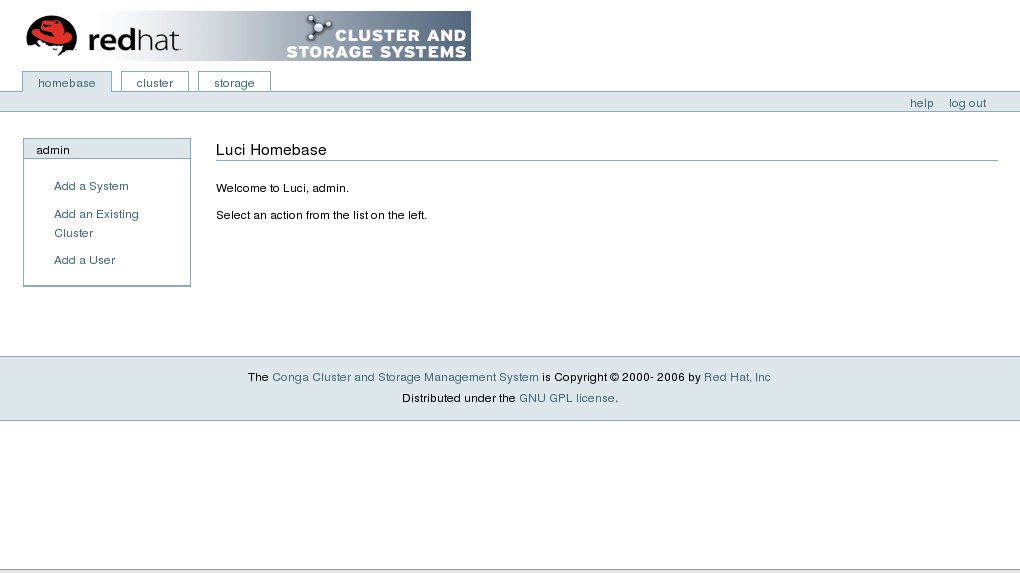

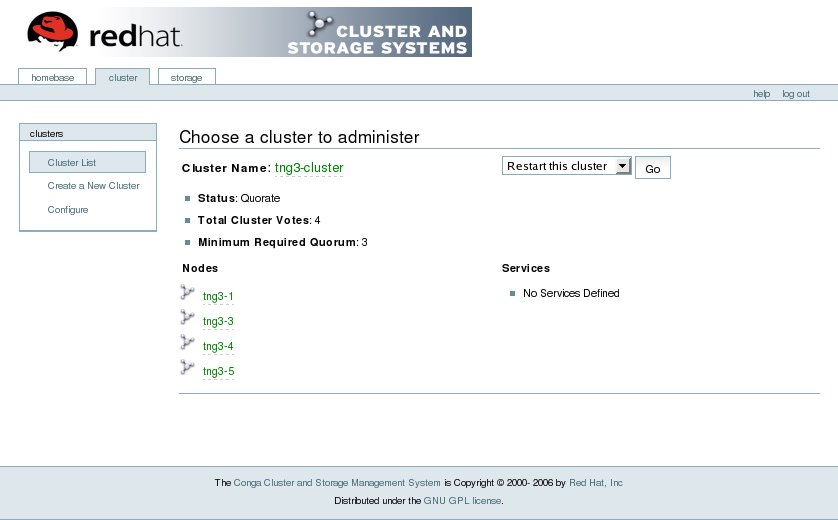

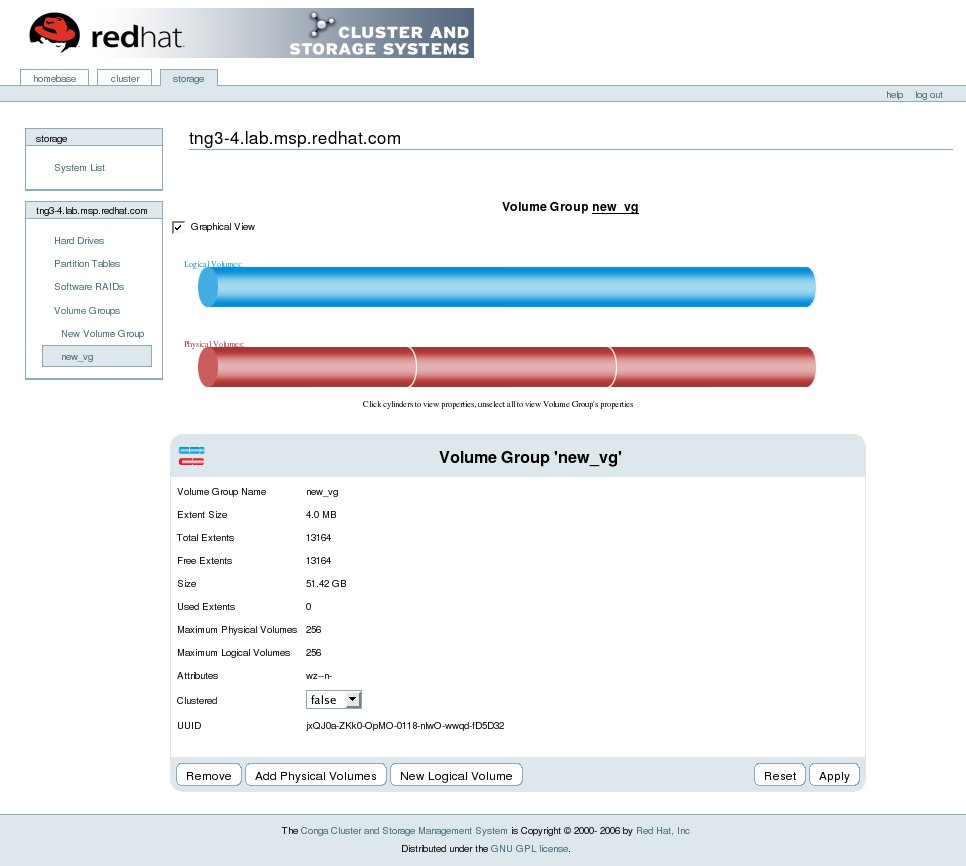

The following figures show sample displays of the three major luci tabs: homebase, cluster, and storage.

For more information about Conga, refer to Configuring and Managing a Red Hat Cluster and the online help available with the luci server.

Figure 1.21. luci homebase Tab

Figure 1.22. luci cluster Tab

Figure 1.23. luci storage Tab

1.9.2. Cluster Administration GUI

This section provides an overview of the system-config-cluster cluster administration graphical user interface (GUI) available with Red Hat Cluster Suite. The GUI is for use with the cluster infrastructure and the high-availability service management components (refer to Section 1.3, “Cluster Infrastructure” and Section 1.4, “High-availability Service Management”). The GUI consists of two major functions: the Cluster Configuration Tool and the Cluster Status Tool. The Cluster Configuration Tool provides the capability to create, edit, and propagate the cluster configuration file (/etc/cluster/cluster.conf). The Cluster Status Tool provides the capability to manage high-availability services. The following sections summarize those functions.

1.9.2.1. Cluster Configuration Tool

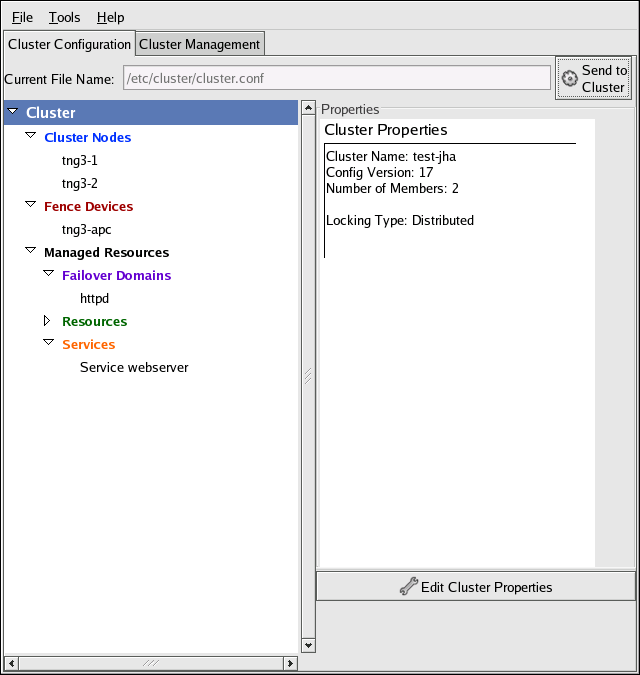

You can access the Cluster Configuration Tool (Figure 1.24, “Cluster Configuration Tool”) through the Cluster Configuration tab in the Cluster Administration GUI.

Figure 1.24. Cluster Configuration Tool

The Cluster Configuration Tool represents cluster configuration components in the configuration file (/etc/cluster/cluster.conf) with a hierarchical graphical display in the left panel. A triangle icon to the left of a component name indicates that the component has one or more subordinate components assigned to it. Clicking the triangle icon expands and collapses the portion of the tree below a component. The components displayed in the GUI are summarized as follows:

- Cluster Nodes — Displays cluster nodes. Nodes are represented by name as subordinate elements under Cluster Nodes. Using configuration buttons at the bottom of the right frame (below Properties), you can add nodes, delete nodes, edit node properties, and configure fencing methods for each node.

- Fence Devices — Displays fence devices. Fence devices are represented as subordinate elements under Fence Devices. Using configuration buttons at the bottom of the right frame (below Properties), you can add fence devices, delete fence devices, and edit fence-device properties. Fence devices must be defined before you can configure fencing (with the Manage Fencing For This Node button) for each node.

- Managed Resources — Displays failover domains, resources, and services.

  - Failover Domains — For configuring one or more subsets of cluster nodes used to run a high-availability service in the event of a node failure. Failover domains are represented as subordinate elements under Failover Domains. Using configuration buttons at the bottom of the right frame (below Properties), you can create failover domains (when Failover Domains is selected) or edit failover domain properties (when a failover domain is selected).

  - Resources — For configuring shared resources to be used by high-availability services. Shared resources consist of file systems, IP addresses, NFS mounts and exports, and user-created scripts that are available to any high-availability service in the cluster. Resources are represented as subordinate elements under Resources. Using configuration buttons at the bottom of the right frame (below Properties), you can create resources (when Resources is selected) or edit resource properties (when a resource is selected).

    Note

    The Cluster Configuration Tool also provides the capability to configure private resources. A private resource is a resource that is configured for use with only one service. You can configure a private resource within a Service component in the GUI.

  - Services — For creating and configuring high-availability services. A service is configured by assigning resources (shared or private), assigning a failover domain, and defining a recovery policy for the service. Services are represented as subordinate elements under Services. Using configuration buttons at the bottom of the right frame (below Properties), you can create services (when Services is selected) or edit service properties (when a service is selected).
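The component hierarchy described above mirrors the XML structure of /etc/cluster/cluster.conf. As a rough illustration only, the following Python sketch parses a heavily trimmed, hypothetical configuration and groups its elements under the same headings the GUI displays. The node, device, domain, and service names are invented, and a real configuration file carries many more attributes and elements than shown here.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed cluster.conf used only for illustration.
# All names and addresses are invented; a real file has more attributes.
SAMPLE_CLUSTER_CONF = """\
<cluster name="example" config_version="1">
  <clusternodes>
    <clusternode name="node1.example.com" nodeid="1" votes="1"/>
    <clusternode name="node2.example.com" nodeid="2" votes="1"/>
  </clusternodes>
  <fencedevices>
    <fencedevice name="apc1" agent="fence_apc"/>
  </fencedevices>
  <rm>
    <failoverdomains>
      <failoverdomain name="web-domain"/>
    </failoverdomains>
    <resources>
      <ip address="10.0.0.50"/>
    </resources>
    <service name="webserver" domain="web-domain"/>
  </rm>
</cluster>
"""


def summarize(conf_xml):
    """Group configuration elements under the headings shown in the GUI tree."""
    root = ET.fromstring(conf_xml)
    return {
        "Cluster Nodes": [n.get("name") for n in root.findall("clusternodes/clusternode")],
        "Fence Devices": [d.get("name") for d in root.findall("fencedevices/fencedevice")],
        "Failover Domains": [
            f.get("name") for f in root.findall("rm/failoverdomains/failoverdomain")
        ],
        # Shared resources have different element types, so report the tag name.
        "Resources": [r.tag for r in root.findall("rm/resources/*")],
        "Services": [s.get("name") for s in root.findall("rm/service")],
    }


if __name__ == "__main__":
    for component, names in summarize(SAMPLE_CLUSTER_CONF).items():
        print(f"{component}: {', '.join(names)}")
```

The sketch only reads the file. To change a configuration, use the Cluster Configuration Tool or the command line tools, which also handle propagating the file to the cluster nodes.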

1.9.2.2. Cluster Status Tool

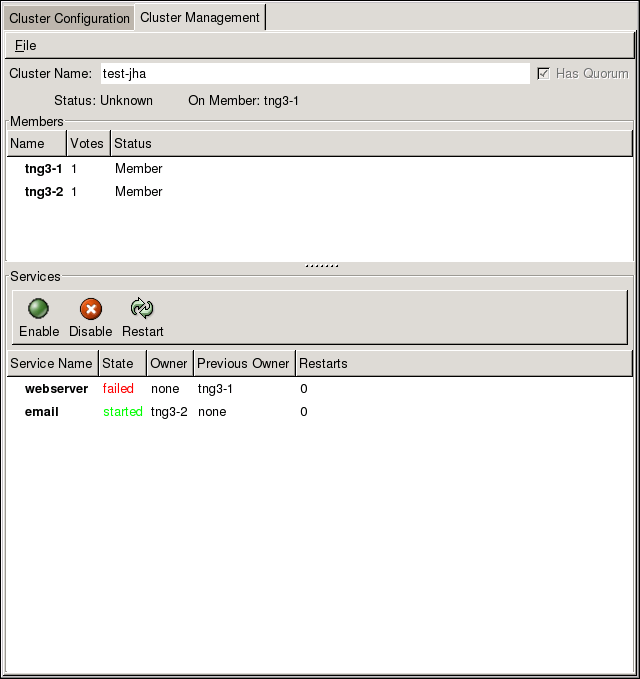

You can access the Cluster Status Tool (Figure 1.25, “Cluster Status Tool”) through the Cluster Management tab in Cluster Administration GUI.

Figure 1.25. Cluster Status Tool

The nodes and services displayed in the Cluster Status Tool are determined by the cluster configuration file (/etc/cluster/cluster.conf). You can use the Cluster Status Tool to enable, disable, restart, or relocate a high-availability service.

1.9.3. Command Line Administration Tools

In addition to Conga and the system-config-cluster Cluster Administration GUI, command line tools are available for administering the cluster infrastructure and the high-availability service management components. The command line tools are used by the Cluster Administration GUI and init scripts supplied by Red Hat. Table 1.1, “Command Line Tools” summarizes the command line tools.

Table 1.1. Command Line Tools

| Command Line Tool | Used With | Purpose |
|---|---|---|
| ccs_tool — Cluster Configuration System Tool | Cluster Infrastructure | ccs_tool is a program for making online updates to the cluster configuration file. It provides the capability to create and modify cluster infrastructure components (for example, creating a cluster, adding and removing a node). For more information about this tool, refer to the ccs_tool(8) man page. |
| cman_tool — Cluster Management Tool | Cluster Infrastructure | cman_tool is a program that manages the CMAN cluster manager. It provides the capability to join a cluster, leave a cluster, kill a node, or change the expected quorum votes of a node in a cluster. For more information about this tool, refer to the cman_tool(8) man page. |
| fence_tool — Fence Tool | Cluster Infrastructure | fence_tool is a program used to join or leave the default fence domain. Specifically, it starts the fence daemon (fenced) to join the domain and kills fenced to leave the domain. For more information about this tool, refer to the fence_tool(8) man page. |
| clustat — Cluster Status Utility | High-availability Service Management Components | The clustat command displays the status of the cluster. It shows membership information, quorum view, and the state of all configured user services. For more information about this tool, refer to the clustat(8) man page. |
| clusvcadm — Cluster User Service Administration Utility | High-availability Service Management Components | The clusvcadm command allows you to enable, disable, relocate, and restart high-availability services in a cluster. For more information about this tool, refer to the clusvcadm(8) man page. |
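Because the command line tools can be called from scripts, the four clusvcadm actions listed in the table can be wrapped in a small helper. The following Python sketch is one possible approach and is not part of Red Hat Cluster Suite. The function names are hypothetical, and the flag mapping (-e, -d, -r, -R, and -m for the target member) reflects the clusvcadm(8) man page as commonly shipped, so verify it against the version installed on your systems before relying on it.

```python
import subprocess

# Assumed flag mapping; confirm against clusvcadm(8) on your system.
ACTION_FLAGS = {
    "enable": "-e",
    "disable": "-d",
    "relocate": "-r",
    "restart": "-R",
}


def build_clusvcadm_command(action, service, member=None):
    """Return the argument list for a clusvcadm call without running it."""
    if action not in ACTION_FLAGS:
        raise ValueError(f"unsupported action: {action}")
    command = ["clusvcadm", ACTION_FLAGS[action], service]
    if member is not None:
        # -m names the preferred target member for enable or relocate.
        command += ["-m", member]
    return command


def run(command):
    """Run a prepared command on a cluster node and return its output.

    Raises subprocess.CalledProcessError if the tool exits non-zero.
    """
    result = subprocess.run(command, check=True, capture_output=True, text=True)
    return result.stdout
```

For example, `build_clusvcadm_command("relocate", "webserver", "node2.example.com")` produces the argument list for relocating a hypothetical service named webserver to a hypothetical member. Keeping command construction separate from execution makes it possible to log or review the command before it runs on a live cluster.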