
Chapter 1. Red Hat Cluster Configuration and Management Overview

Red Hat Cluster allows you to connect a group of computers (called nodes or members) to work together as a cluster. It provides a wide variety of ways to configure hardware and software to suit your clustering needs (for example, a cluster for sharing files on a GFS file system or a cluster with high-availability service failover). This book provides information about how to use configuration tools to configure your cluster and provides considerations to take into account before deploying a Red Hat Cluster. To ensure that your deployment of Red Hat Cluster fully meets your needs and can be supported, consult with an authorized Red Hat representative before you deploy it.

1.1. Configuration Basics

To set up a cluster, you must connect the nodes to certain cluster hardware and configure the nodes into the cluster environment. This chapter provides an overview of cluster configuration and management, and of the tools available for configuring and managing a Red Hat Cluster.

Note

For information on best practices for deploying and upgrading Red Hat Enterprise Linux 5 Advanced Platform (Clustering and GFS/GFS2), refer to the article "Red Hat Enterprise Linux Cluster, High Availability, and GFS Deployment Best Practices" on Red Hat Customer Portal at https://access.redhat.com/site/articles/40051.

Configuring and managing a Red Hat Cluster consists of the following basic steps:

- Setting up hardware. Refer to Section 1.1.1, “Setting Up Hardware”.

- Installing Red Hat Cluster software. Refer to Section 1.1.2, “Installing Red Hat Cluster software”.

- Configuring Red Hat Cluster Software. Refer to Section 1.1.3, “Configuring Red Hat Cluster Software”.

1.1.1. Setting Up Hardware

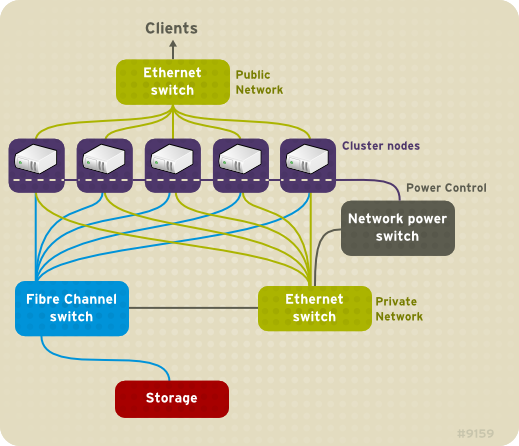

Setting up hardware consists of connecting cluster nodes to the other hardware required to run a Red Hat Cluster. The amount and type of hardware varies according to the purpose and availability requirements of the cluster. Typically, an enterprise-level cluster requires the following types of hardware (refer to Figure 1.1, “Red Hat Cluster Hardware Overview”). For considerations about hardware and other cluster configuration concerns, refer to "Before Configuring a Red Hat Cluster" or check with an authorized Red Hat representative.

- Cluster nodes — Computers that are capable of running Red Hat Enterprise Linux 5 software, with at least 1 GB of RAM. The maximum number of nodes supported in a Red Hat Cluster is 16.

- Ethernet switch or hub for the public network — This is required for client access to the cluster.

- Ethernet switch or hub for the private network — This is required for communication among the cluster nodes and other cluster hardware such as network power switches and Fibre Channel switches.

- Network power switch — A network power switch is recommended to perform fencing in an enterprise-level cluster.

- Fibre Channel switch — A Fibre Channel switch provides access to Fibre Channel storage. Other options are available for storage according to the type of storage interface; for example, iSCSI or GNBD. A Fibre Channel switch can be configured to perform fencing.

- Storage — Some type of storage is required for a cluster. The type required depends on the purpose of the cluster.

Figure 1.1. Red Hat Cluster Hardware Overview
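The hardware list above can be turned into a simple pre-deployment checklist. The following is a minimal sketch, assuming a hypothetical `ClusterPlan` record that you fill in by hand; only the 16-node maximum and the 1 GB RAM minimum come from this section, and the check is no substitute for the review with a Red Hat representative recommended above.

```python
from dataclasses import dataclass

# Limits from the hardware list above (Red Hat Cluster on RHEL 5).
MAX_NODES = 16
MIN_RAM_GB = 1.0


@dataclass
class Node:
    name: str
    ram_gb: float


@dataclass
class ClusterPlan:
    # Hypothetical planning record; field names are illustrative only.
    nodes: list[Node]
    has_public_switch: bool = True
    has_private_switch: bool = True
    has_fencing_device: bool = True  # network power switch or fencing-capable FC switch
    has_storage: bool = True


def check_plan(plan: ClusterPlan) -> list[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if len(plan.nodes) > MAX_NODES:
        problems.append(f"{len(plan.nodes)} nodes exceeds the supported maximum of {MAX_NODES}")
    for node in plan.nodes:
        if node.ram_gb < MIN_RAM_GB:
            problems.append(f"{node.name}: {node.ram_gb} GB RAM is below the {MIN_RAM_GB} GB minimum")
    if not plan.has_public_switch:
        problems.append("no public network switch or hub for client access")
    if not plan.has_private_switch:
        problems.append("no private network switch or hub for cluster communication")
    if not plan.has_fencing_device:
        # The text recommends (rather than requires) a network power switch for fencing.
        problems.append("no fencing device; a network power switch is recommended")
    if not plan.has_storage:
        problems.append("no storage configured")
    return problems


if __name__ == "__main__":
    plan = ClusterPlan(nodes=[Node("node1", 2), Node("node2", 0.5)])
    for problem in check_plan(plan):
        print(problem)
```

The checks are deliberately shallow: they only flag missing hardware categories and the two numeric limits stated above, leaving storage type and interface choices (Fibre Channel, iSCSI, GNBD) to the cluster's purpose.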

1.1.2. Installing Red Hat Cluster software

To install Red Hat Cluster software, you must have entitlements for the software. If you are using the Conga configuration GUI, you can let it install the cluster software. If you are using other tools to configure the cluster, obtain and install the software as you would any other Red Hat Enterprise Linux software.

1.1.2.1. Upgrading the Cluster Software

It is possible to upgrade the cluster software on a given minor release of Red Hat Enterprise Linux without taking the cluster out of production. Doing so requires disabling the cluster software on one host at a time, upgrading the software, and restarting the cluster software on that host.

- Shut down all cluster services on a single cluster node. For instructions on stopping cluster software on a node, refer to Section 6.1, “Starting and Stopping the Cluster Software”. You may want to relocate cluster-managed services and virtual machines off the host manually before stopping rgmanager.

- Run the yum update command to install the new RPMs. For example:

```
yum update -y openais cman rgmanager lvm2-cluster gfs2-utils
```

- Reboot the cluster node or restart the cluster services manually. For instructions on starting cluster software on a node, refer to Section 6.1, “Starting and Stopping the Cluster Software”.
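The rolling upgrade described above amounts to repeating the same stop, update, and start sequence on one node at a time. The sketch below only builds and prints that sequence for review; it does not execute anything. The `service ... stop/start` commands and their order (rgmanager, gfs2, clvmd, cman, started again in reverse) are an assumption based on the RHEL 5 init scripts referred to in Section 6.1; drop `gfs2` or `clvmd` if you do not use GFS2 or CLVM, and use `gfs` instead of `gfs2` for GFS. The helper names and node names are hypothetical.

```python
import shlex

# Assumed stop order (RHEL 5 init scripts); services are restarted in reverse.
STOP_ORDER = ("rgmanager", "gfs2", "clvmd", "cman")

# Package list taken from the yum example above.
PACKAGES = ("openais", "cman", "rgmanager", "lvm2-cluster", "gfs2-utils")


def node_upgrade_steps(services=STOP_ORDER, packages=PACKAGES):
    """Commands for upgrading a single node: stop, update, then restart."""
    steps = [f"service {svc} stop" for svc in services]
    steps.append("yum update -y " + " ".join(packages))
    steps += [f"service {svc} start" for svc in reversed(services)]
    return steps


def rolling_upgrade_plan(nodes, services=STOP_ORDER, packages=PACKAGES):
    """Return (node, command) pairs; every step for one node precedes the next node."""
    plan = []
    for node in nodes:
        for cmd in node_upgrade_steps(services, packages):
            plan.append((node, cmd))
    return plan


if __name__ == "__main__":
    # Print each step as an ssh invocation for review; nothing is run here.
    for node, cmd in rolling_upgrade_plan(["node1", "node2"]):
        print(f"ssh root@{node} {shlex.quote(cmd)}")
```

Keeping each node's full sequence together mirrors the requirement above that only one host at a time has its cluster software disabled. In practice you would also confirm that a node has rejoined the cluster before moving on to the next one.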

1.1.3. Configuring Red Hat Cluster Software

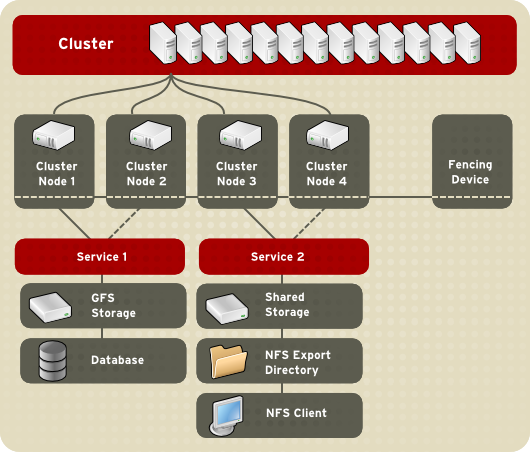

Configuring Red Hat Cluster software consists of using configuration tools to specify the relationship among the cluster components. Figure 1.2, “Cluster Configuration Structure” shows an example of the hierarchical relationship among cluster nodes, high-availability services, and resources. The cluster nodes are connected to one or more fencing devices. Nodes can be grouped into a failover domain for a cluster service. The services comprise resources such as NFS exports, IP addresses, and shared GFS partitions.

Figure 1.2. Cluster Configuration Structure
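The hierarchy in Figure 1.2 is what the configuration tools ultimately write to the cluster configuration file. The sketch below uses Python's `xml.etree.ElementTree` to build a minimal `cluster.conf`-style tree with nodes that each reference a fencing device, a failover domain, one IP address resource, and a service that uses it. The element and attribute names reflect our understanding of the RHEL 5 `cluster.conf` schema and should be checked against it; the function name, cluster name, addresses, and credentials are hypothetical. Conga or system-config-cluster normally generate this file for you.

```python
import xml.etree.ElementTree as ET


def build_cluster_conf(name, nodes, fence_device, domain, service):
    """Build a minimal cluster.conf-style tree (hypothetical helper)."""
    cluster = ET.Element("cluster", name=name, config_version="1")

    # Cluster nodes, each connected to a port on the fencing device.
    clusternodes = ET.SubElement(cluster, "clusternodes")
    for nodeid, (node, port) in enumerate(nodes, start=1):
        cn = ET.SubElement(clusternodes, "clusternode", name=node, nodeid=str(nodeid), votes="1")
        method = ET.SubElement(ET.SubElement(cn, "fence"), "method", name="1")
        ET.SubElement(method, "device", name=fence_device["name"], port=port)

    # The fencing device itself (for example, a network power switch).
    fencedevices = ET.SubElement(cluster, "fencedevices")
    ET.SubElement(fencedevices, "fencedevice", **fence_device)

    # Resource manager section: failover domain, resources, and the service.
    rm = ET.SubElement(cluster, "rm")
    fds = ET.SubElement(rm, "failoverdomains")
    fd = ET.SubElement(fds, "failoverdomain", name=domain, ordered="1", restricted="1")
    for priority, (node, _) in enumerate(nodes, start=1):
        ET.SubElement(fd, "failoverdomainnode", name=node, priority=str(priority))

    resources = ET.SubElement(rm, "resources")
    ET.SubElement(resources, "ip", address=service["ip"], monitor_link="1")

    # The service runs in the failover domain and references the IP resource.
    svc = ET.SubElement(rm, "service", name=service["name"], domain=domain, autostart="1")
    ET.SubElement(svc, "ip", ref=service["ip"])
    return cluster


if __name__ == "__main__":
    conf = build_cluster_conf(
        "example",
        nodes=[("node1", "1"), ("node2", "2"), ("node3", "3")],
        fence_device={"name": "apc1", "agent": "fence_apc", "ipaddr": "10.0.0.50",
                      "login": "admin", "passwd": "secret"},
        domain="web_domain",
        service={"name": "web_svc", "ip": "10.0.0.100"},
    )
    ET.indent(conf)  # pretty-print; requires Python 3.9+
    print(ET.tostring(conf, encoding="unicode"))
```

The example uses three nodes on purpose: a two-node cluster needs additional quorum settings that are outside the scope of this overview. A shared GFS partition or NFS export would be added as further entries under `resources` and referenced from the service in the same way as the IP address.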

The following cluster configuration tools are available with Red Hat Cluster:

- Conga — This is a comprehensive user interface for installing, configuring, and managing Red Hat clusters, computers, and storage attached to clusters and computers.

- system-config-cluster — This is a user interface for configuring and managing a Red Hat cluster.

- Command line tools — This is a set of command line tools for configuring and managing a Red Hat cluster.

A brief overview of each configuration tool is provided in the following sections.

In addition, information about using Conga and system-config-cluster is provided in subsequent chapters of this document. Information about the command line tools is available in the man pages for the tools.