System Administration Guide

For Red Hat Enterprise Linux 4

Edition 2

Copyright © 2008 Red Hat, Inc

Abstract

Introduction

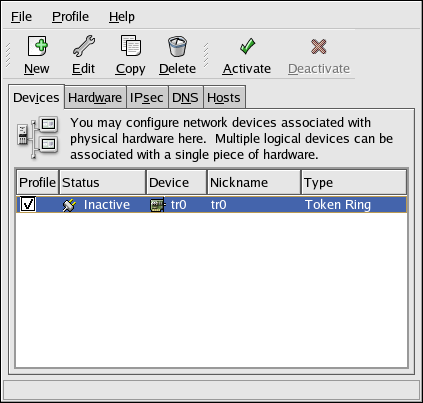

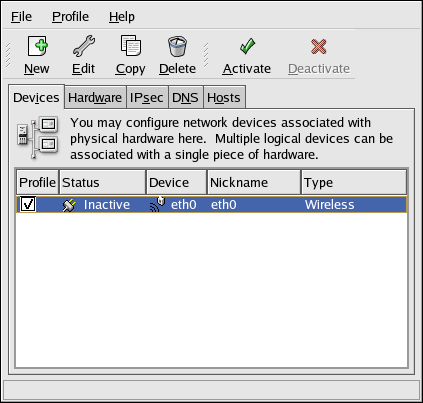

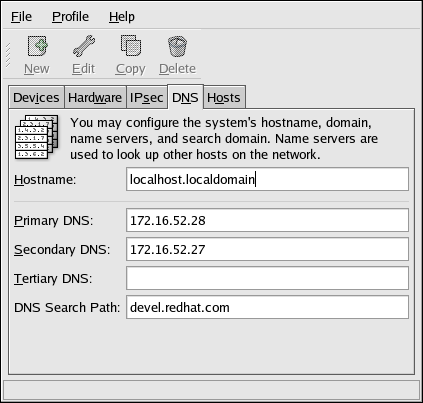

- Setting up a network interface card (NIC)

- Performing a Kickstart installation

- Configuring Samba shares

- Managing your software with RPM

- Determining information about your system

- Upgrading your kernel

- Installation-Related Reference

- File Systems Reference

- Package Management

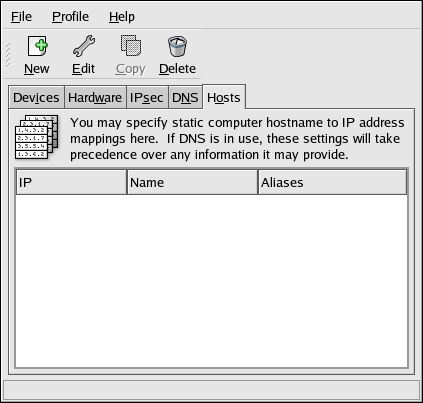

- Network Configuration

- System Configuration

- System Monitoring

1. Changes To This Manual

- Updated Kernel Modules and Manually Updating the Kernel Chapters

- The Kernel Modules and the Upgrading the Kernel Manually chapters include updated information in regards to the 2.6 kernel. Special thanks to Arjan van de Ven for his hard work in helping to complete this chapter.

- An Updated Network File System (NFS) Chapter

- The Network File System (NFS) chapter has been revised and reorganized to include NFSv4. Special thanks to Steve Dickson for his hard work in helping to complete this chapter.

- An Updated OProfile Chapter

- The OProfile chapter has been revised and reorganized to include updated information in regards to the 2.6 kernel. Special thanks to Will Cohen for his hard work in helping to complete this chapter.

- An Updated X Window System Chapter

- The X Window System chapter has been revised to include information on the X11R6.8 release developed by the X.Org team.

2. More to Come

2.1. Send in Your Feedback

rh-sag.

rh-sag

Part I. Installation-Related Information

Chapter 1. Kickstart Installations

1.1. What are Kickstart Installations?

1.2. How Do You Perform a Kickstart Installation?

- Create a kickstart file.

- Create a boot media with the kickstart file or make the kickstart file available on the network.

- Make the installation tree available.

- Start the kickstart installation.

1.3. Creating the Kickstart File

You can create a kickstart file by editing a copy of the sample.ks file found in the RH-DOCS directory of the Red Hat Enterprise Linux Documentation CD, by using the Kickstart Configurator application, or by writing it from scratch. The Red Hat Enterprise Linux installation program also creates a sample kickstart file based on the options that you selected during installation. It is written to the file /root/anaconda-ks.cfg. You can edit it with any text editor or word processor that can save files as ASCII text.

- Sections must be specified in order. Items within the sections do not have to be in a specific order unless otherwise specified. The section order is:

- Command section: Refer to Section 1.4, “Kickstart Options” for a list of kickstart options. You must include the required options.

- The %packages section: Refer to Section 1.5, “Package Selection” for details.

- The %pre and %post sections: These two sections can be in any order and are not required. Refer to Section 1.6, “Pre-installation Script” and Section 1.7, “Post-installation Script” for details.

- Items that are not required can be omitted.

- Omitting any required item results in the installation program prompting the user for an answer to the related item, just as the user would be prompted during a typical installation. Once the answer is given, the installation continues unattended (unless it finds another missing item).

- Lines starting with a pound (or hash) sign (#) are treated as comments and are ignored.

- For kickstart upgrades, the following items are required:

- Language

- Language support

- Installation method

- Device specification (if device is needed to perform the installation)

- Keyboard setup

- The upgrade keyword

- Boot loader configuration

If any other items are specified for an upgrade, those items are ignored (note that this includes package selection).
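As a rough illustration of the upgrade requirements listed above, a minimal kickstart file for an upgrade might look like the following sketch. The language, keyboard, and installation method values are assumptions chosen for the example, and no device line is included on the assumption that no extra driver is needed to reach the installation media.

```text
# Hypothetical minimal kickstart file for an upgrade (all values are examples)
lang en_US
langsupport en_US
keyboard us
# Installation method; cdrom is assumed here
cdrom
upgrade
# Keep the existing boot loader entries
bootloader --upgrade
```

Anything else placed in such a file, including package selection, would be ignored during the upgrade.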

1.4. Kickstart Options

Note

autopart (optional)

Automatically create partitions: a root (/) partition of 1 GB or more, a swap partition, and an appropriate boot partition for the architecture. One or more of the default partition sizes can be redefined with the part directive.

ignoredisk (optional)

Causes the installer to ignore the specified disks. This is useful if you use autopartition and want to be sure that some disks are ignored. For example, without ignoredisk, an attempt to deploy on a SAN cluster would fail, because the installer detects passive paths to the SAN that return no partition table.

The ignoredisk option is also useful if you have multiple paths to your disks.

The syntax is:

ignoredisk --drives=drive1,drive2,...

where driveN is one of sda, sdb, ..., hda, and so on.

autostep (optional)

Similar to interactive except it goes to the next screen for you. It is used mostly for debugging.

auth or authconfig (required)

Sets up the authentication options for the system. It is similar to the authconfig command, which can be run after the install. By default, passwords are normally encrypted and are not shadowed.

- --enablemd5: Use md5 encryption for user passwords.

- --enablenis: Turns on NIS support. By default, --enablenis uses whatever domain it finds on the network. A domain should almost always be set by hand with the --nisdomain= option.

- --nisdomain=: NIS domain name to use for NIS services.

- --nisserver=: Server to use for NIS services (broadcasts by default).

- --useshadow or --enableshadow: Use shadow passwords.

- --enableldap: Turns on LDAP support in /etc/nsswitch.conf, allowing your system to retrieve information about users (UIDs, home directories, shells, etc.) from an LDAP directory. To use this option, you must install the nss_ldap package. You must also specify a server and a base DN (distinguished name) with --ldapserver= and --ldapbasedn=.

- --enableldapauth: Use LDAP as an authentication method. This enables the pam_ldap module for authentication and changing passwords, using an LDAP directory. To use this option, you must have the nss_ldap package installed. You must also specify a server and a base DN with --ldapserver= and --ldapbasedn=.

- --ldapserver=: If you specified either --enableldap or --enableldapauth, use this option to specify the name of the LDAP server to use. This option is set in the /etc/ldap.conf file.

- --ldapbasedn=: If you specified either --enableldap or --enableldapauth, use this option to specify the DN in your LDAP directory tree under which user information is stored. This option is set in the /etc/ldap.conf file.

- --enableldaptls: Use TLS (Transport Layer Security) lookups. This option allows LDAP to send encrypted usernames and passwords to an LDAP server before authentication.

- --enablekrb5: Use Kerberos 5 for authenticating users. Kerberos itself does not know about home directories, UIDs, or shells. If you enable Kerberos, you must make users' accounts known to this workstation by enabling LDAP, NIS, or Hesiod or by using the /usr/sbin/useradd command. If you use this option, you must have the pam_krb5 package installed.

- --krb5realm=: The Kerberos 5 realm to which your workstation belongs.

- --krb5kdc=: The KDC (or KDCs) that serve requests for the realm. If you have multiple KDCs in your realm, separate their names with commas (,).

- --krb5adminserver=: The KDC in your realm that is also running kadmind. This server handles password changing and other administrative requests. This server must be run on the master KDC if you have more than one KDC.

- --enablehesiod: Enable Hesiod support for looking up user home directories, UIDs, and shells. More information on setting up and using Hesiod on your network is in /usr/share/doc/glibc-2.x.x/README.hesiod, which is included in the glibc package. Hesiod is an extension of DNS that uses DNS records to store information about users, groups, and various other items.

- --hesiodlhs: The Hesiod LHS ("left-hand side") option, set in /etc/hesiod.conf. This option is used by the Hesiod library to determine the name to search DNS for when looking up information, similar to LDAP's use of a base DN.

- --hesiodrhs: The Hesiod RHS ("right-hand side") option, set in /etc/hesiod.conf. This option is used by the Hesiod library to determine the name to search DNS for when looking up information, similar to LDAP's use of a base DN.

Note

To look up user information for "jim", the Hesiod library looks up jim.passwd<LHS><RHS>, which should resolve to a TXT record that looks like what his passwd entry would look like (jim:*:501:501:Jungle Jim:/home/jim:/bin/bash). For groups, the situation is identical, except jim.group<LHS><RHS> would be used.

Looking up users and groups by number is handled by making "501.uid" a CNAME for "jim.passwd", and "501.gid" a CNAME for "jim.group". Note that the LHS and RHS do not have periods (.) put in front of them when the library determines the name for which to search, so the LHS and RHS usually begin with periods.

- --enablesmbauth: Enables authentication of users against an SMB server (typically a Samba or Windows server). SMB authentication support does not know about home directories, UIDs, or shells. If you enable SMB, you must make users' accounts known to the workstation by enabling LDAP, NIS, or Hesiod or by using the /usr/sbin/useradd command. To use this option, you must have the pam_smb package installed.

- --smbservers=: The name of the server(s) to use for SMB authentication. To specify more than one server, separate the names with commas (,).

- --smbworkgroup=: The name of the workgroup for the SMB servers.

- --enablecache: Enables the nscd service. The nscd service caches information about users, groups, and various other types of information. Caching is especially helpful if you choose to distribute information about users and groups over your network using NIS, LDAP, or Hesiod.
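To show how several of the authentication options above combine on one line, here is a small sketch. The NIS domain and server names are placeholders assumed for the example, not values from this guide.

```text
# Example values only: example.com and nis.example.com are placeholders
auth --useshadow --enablemd5 --enablenis --nisdomain=example.com --nisserver=nis.example.com --enablecache
```

Setting --nisdomain= explicitly follows the advice above that the domain should almost always be set by hand rather than discovered on the network.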

bootloader (required)

Specifies how the GRUB boot loader should be installed. This option is required for both installations and upgrades. For upgrades, if GRUB is not the current boot loader, the boot loader is changed to GRUB. To preserve other boot loaders, use bootloader --upgrade.

- --append=: Specifies kernel parameters. To specify multiple parameters, separate them with spaces. For example:

bootloader --location=mbr --append="hdd=ide-scsi ide=nodma"

- --driveorder: Specify which drive is first in the BIOS boot order. For example:

bootloader --driveorder=sda,hda

- --location=: Specifies where the boot record is written. Valid values are the following: mbr (the default), partition (installs the boot loader on the first sector of the partition containing the kernel), or none (do not install the boot loader).

- --password=: Sets the GRUB boot loader password to the one specified with this option. This should be used to restrict access to the GRUB shell, where arbitrary kernel options can be passed.

- --md5pass=: Similar to --password= except the password should already be encrypted.

- --upgrade: Upgrade the existing boot loader configuration, preserving the old entries. This option is only available for upgrades.
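The following sketch combines the location, drive order, and password options on one line. The password value is a placeholder for a string you have encrypted beforehand (for instance with GRUB's grub-md5-crypt utility, which is assumed to be available on an existing system).

```text
# <encrypted-password> is a placeholder for an already-encrypted password string
bootloader --location=mbr --driveorder=sda,hda --md5pass=<encrypted-password>
```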

clearpart (optional)

Removes partitions from the system, prior to creation of new partitions. By default, no partitions are removed.

Note

If the clearpart command is used, then the --onpart command cannot be used on a logical partition.

- --all: Erases all partitions from the system.

- --drives=: Specifies which drives to clear partitions from. For example, the following clears all the partitions on the first two drives on the primary IDE controller:

clearpart --drives=hda,hdb --all

- --initlabel: Initializes the disk label to the default for your architecture (for example, msdos for x86 and gpt for Itanium). It is useful so that the installation program does not ask if it should initialize the disk label if installing to a brand new hard drive.

- --linux: Erases all Linux partitions.

- --none (default): Do not remove any partitions.
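For an unattended installation onto a single drive, the clearpart options are often paired with zerombr, which is described later in this section. The sketch below assumes the target is the first IDE drive (hda); it destroys all data on that drive.

```text
# Initialize any invalid partition tables, then clear only the first IDE drive
zerombr yes
clearpart --all --drives=hda --initlabel
```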

cmdline (optional)

Perform the installation in a completely non-interactive command line mode. Any prompt for interaction halts the install. This mode is useful on S/390 systems with the x3270 console.

device (optional)

On most PCI systems, the installation program autoprobes for Ethernet and SCSI cards properly. On older systems and some PCI systems, however, kickstart needs a hint to find the proper devices. The device command, which tells the installation program to install extra modules, is in this format:

device <type> <moduleName> --opts=<options>

- <type>: Replace with either scsi or eth.

- <moduleName>: Replace with the name of the kernel module which should be installed.

- --opts=: Options to pass to the kernel module. To pass multiple options, enclose them in quotation marks.

driverdisk (optional)

Driver diskettes can be used during kickstart installations. You must copy the driver diskette's contents to the root directory of a partition on the system's hard drive. Then you must use the driverdisk command to tell the installation program where to look for the driver disk.

driverdisk <partition> [--type=<fstype>]

Alternatively, a network location can be specified for the driver diskette:

driverdisk --source=ftp://path/to/dd.img

driverdisk --source=http://path/to/dd.img

driverdisk --source=nfs:host:/path/to/img

- <partition>: Partition containing the driver disk.

- --type=: File system type (for example, vfat or ext2).

firewall (optional)

This option corresponds to the Firewall Configuration screen in the installation program:

firewall --enabled|--disabled [--trust=] <device> [--port=]

- --enabled: Reject incoming connections that are not in response to outbound requests, such as DNS replies or DHCP requests. If access to services running on this machine is needed, you can choose to allow specific services through the firewall.

- --disabled: Do not configure any iptables rules.

- --trust=: Listing a device here, such as eth0, allows all traffic coming from that device to go through the firewall. To list more than one device, use --trust eth0 --trust eth1. Do NOT use a comma-separated format such as --trust eth0, eth1.

- <incoming>: Replace with one or more of the following to allow the specified services through the firewall: --ssh, --telnet, --smtp, --http, --ftp

- --port=: You can specify that ports be allowed through the firewall using the port:protocol format. For example, to allow IMAP access through your firewall, specify imap:tcp. Numeric ports can also be specified explicitly; for example, to allow UDP packets on port 1234 through, specify 1234:udp. To specify multiple ports, separate them by commas.
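Putting the firewall options above together, a line along the following lines would enable the firewall while admitting a few services. It assumes eth1 is an internal interface you are willing to trust; the services and ports are chosen only for illustration.

```text
# eth1 is assumed to be an internal, trusted interface in this example
firewall --enabled --trust eth1 --ssh --http --port=imap:tcp,1234:udp
```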

firstboot (optional)

Determine whether the Setup Agent starts the first time the system is booted. If enabled, the firstboot package must be installed. If not specified, this option is disabled by default.

- --enable: The Setup Agent is started the first time the system boots.

- --disable: The Setup Agent is not started the first time the system boots.

- --reconfig: Enable the Setup Agent to start at boot time in reconfiguration mode. This mode enables the language, mouse, keyboard, root password, security level, time zone, and networking configuration options in addition to the default ones.

halt (optional)

Halt the system after the installation has successfully completed. This is similar to a manual installation, where anaconda displays a message and waits for the user to press a key before rebooting. During a kickstart installation, if no completion method is specified, the reboot option is used as default.

The halt option is roughly equivalent to the shutdown -h command.

For other completion methods, refer to the poweroff, reboot, and shutdown kickstart options.

install (optional)

Tells the system to install a fresh system rather than upgrade an existing system. This is the default mode. For installation, you must specify the type of installation from cdrom, harddrive, nfs, or url (for FTP or HTTP installations). The install command and the installation method command must be on separate lines.

- cdrom: Install from the first CD-ROM drive on the system.

- harddrive: Install from a Red Hat installation tree on a local drive, which must be either vfat or ext2.

- --partition=: Partition to install from (such as sdb2).

- --dir=: Directory containing the RedHat directory of the installation tree.

For example:

harddrive --partition=hdb2 --dir=/tmp/install-tree

- nfs: Install from the NFS server specified.

- --server=: Server from which to install (hostname or IP).

- --dir=: Directory containing the RedHat directory of the installation tree.

For example:

nfs --server=nfsserver.example.com --dir=/tmp/install-tree

- url: Install from an installation tree on a remote server via FTP or HTTP.

For example:

url --url http://<server>/<dir>

or:

url --url ftp://<username>:<password>@<server>/<dir>
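Because the install command and the installation method must be on separate lines, the two appear in a kickstart file roughly as in this sketch, which reuses the NFS example values from above.

```text
# The install keyword and the installation method are on separate lines
install
nfs --server=nfsserver.example.com --dir=/tmp/install-tree
```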

interactive (optional)

Uses the information provided in the kickstart file during the installation, but allows for inspection and modification of the values given. You are presented with each screen of the installation program with the values from the kickstart file. Either accept the values by clicking Next or change the values and click Next to continue. Refer to the autostep command.

keyboard (required)

Sets system keyboard type. Here is the list of available keyboards on i386, Itanium, and Alpha machines:

be-latin1, bg, br-abnt2, cf, cz-lat2, cz-us-qwertz, de, de-latin1, de-latin1-nodeadkeys, dk, dk-latin1, dvorak, es, et, fi, fi-latin1, fr, fr-latin0, fr-latin1, fr-pc, fr_CH, fr_CH-latin1, gr, hu, hu101, is-latin1, it, it-ibm, it2, jp106, la-latin1, mk-utf, no, no-latin1, pl, pt-latin1, ro_win, ru, ru-cp1251, ru-ms, ru1, ru2, ru_win, sg, sg-latin1, sk-qwerty, slovene, speakup, speakup-lt, sv-latin1, trq, ua, uk, us, us-acentos

The file /usr/lib/python2.2/site-packages/rhpl/keyboard_models.py also contains this list and is part of the rhpl package.

lang (required)

Sets the language to use during installation. For example, to set the language to English, the kickstart file should contain the following line:

lang en_US

The file /usr/share/system-config-language/locale-list provides a list of the valid language codes in the first column of each line and is part of the system-config-language package.

langsupport (required)

Sets the language(s) to install on the system. The same language codes used with lang can be used with langsupport.

To install one language, specify it. For example, to install and use the French language fr_FR:

langsupport fr_FR

- --default=: If language support for more than one language is specified, a default must be identified.

For example, to install English and French and use English as the default language:

langsupport --default=en_US fr_FR

If you use --default with only one language, all languages are installed with the specified language set to the default.

logvol (optional)

Create a logical volume for Logical Volume Management (LVM) with the syntax:

logvol <mntpoint> --vgname=<name> --size=<size> --name=<name> <options>

The options are as follows:

- --noformat: Use an existing logical volume and do not format it.

- --useexisting: Use an existing logical volume and reformat it.

- --pesize: Set the size of the physical extents.

Create the partition first, create the logical volume group, and then create the logical volume. For example:

part pv.01 --size 3000

volgroup myvg pv.01

logvol / --vgname=myvg --size=2000 --name=rootvol

For a detailed example of logvol in action, refer to Section 1.4.1, “Advanced Partitioning Example”.

mouse (required)

Configures the mouse for the system, both in GUI and text modes. Options are:

- --device=: Device the mouse is on (such as --device=ttyS0).

- --emulthree: If present, simultaneous clicks on the left and right mouse buttons are recognized as the middle mouse button by the X Window System. This option should be used if you have a two button mouse.

After options, the mouse type may be specified as one of the following:

alpsps/2, ascii, asciips/2, atibm, generic, generic3, genericps/2, generic3ps/2, genericwheelps/2, genericusb, generic3usb, genericwheelusb, geniusnm, geniusnmps/2, geniusprops/2, geniusscrollps/2, geniusscrollps/2+, thinking, thinkingps/2, logitech, logitechcc, logibm, logimman, logimmanps/2, logimman+, logimman+ps/2, logimmusb, microsoft, msnew, msintelli, msintellips/2, msintelliusb, msbm, mousesystems, mmseries, mmhittab, sun, none

This list can also be found in the /usr/lib/python2.2/site-packages/rhpl/mouse.py file, which is part of the rhpl package.

If the mouse command is given without any arguments, or it is omitted, the installation program attempts to automatically detect the mouse. This procedure works for most modern mice.

network (optional)

Configures network information for the system. If the kickstart installation does not require networking (in other words, it is not installed over NFS, HTTP, or FTP), networking is not configured for the system. If the installation does require networking and network information is not provided in the kickstart file, the installation program assumes that the installation should be done over eth0 via a dynamic IP address (BOOTP/DHCP), and configures the final, installed system to determine its IP address dynamically. The network option configures networking information for kickstart installations via a network as well as for the installed system.

- --bootproto=: One of dhcp, bootp, or static.

It defaults to dhcp. bootp and dhcp are treated the same.

The DHCP method uses a DHCP server system to obtain its networking configuration. As you might guess, the BOOTP method is similar, requiring a BOOTP server to supply the networking configuration. To direct a system to use DHCP:

network --bootproto=dhcp

To direct a machine to use BOOTP to obtain its networking configuration, use the following line in the kickstart file:

network --bootproto=bootp

The static method requires that you enter all the required networking information in the kickstart file. As the name implies, this information is static and is used during and after the installation. The line for static networking is more complex, as you must include all network configuration information on one line. You must specify the IP address, netmask, gateway, and nameserver. For example (the "\" only indicates that this should be read as one continuous line; do not type it in the kickstart file):

network --bootproto=static --ip=10.0.2.15 --netmask=255.255.255.0 \
--gateway=10.0.2.254 --nameserver=10.0.2.1

If you use the static method, be aware of the following two points:

- All static networking configuration information must be specified on one line; you cannot wrap lines using a backslash, for example.

- You can also configure multiple nameservers here. To do so, specify them as a comma-delimited list in the command line. For example:

network --bootproto=static --ip=10.0.2.15 --netmask=255.255.255.0 \
--gateway=10.0.2.254 --nameserver 192.168.2.1,192.168.3.1

- --device=: Used to select a specific Ethernet device for installation. Note that using --device= is not effective unless the kickstart file is a local file (such as ks=floppy), since the installation program configures the network to find the kickstart file. For example:

network --bootproto=dhcp --device=eth0

- --ip=: IP address for the machine to be installed.

- --gateway=: Default gateway as an IP address.

- --nameserver=: Primary nameserver, as an IP address.

- --nodns: Do not configure any DNS server.

- --netmask=: Netmask for the installed system.

- --hostname=: Hostname for the installed system.

- --nostorage: Do not auto-probe storage devices such as ISCI, IDE or RAID.
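As a sketch of how the static options fit together, the following shows a complete static configuration entered as a single unwrapped line. The addresses are the ones used in the examples above; the hostname is a placeholder, and --device=eth0 assumes the kickstart file is read from local media, since the option has no effect otherwise.

```text
# Entered as ONE line in the kickstart file; host1.example.com is a placeholder
network --bootproto=static --device=eth0 --ip=10.0.2.15 --netmask=255.255.255.0 --gateway=10.0.2.254 --nameserver=10.0.2.1 --hostname=host1.example.com
```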

part or partition (required for installs, ignored for upgrades)

Creates a partition on the system.

If more than one Red Hat Enterprise Linux installation exists on the system on different partitions, the installation program prompts the user and asks which installation to upgrade.

Warning

All partitions created are formatted as part of the installation process unless --noformat and --onpart are used.

For a detailed example of part in action, refer to Section 1.4.1, “Advanced Partitioning Example”.

- <mntpoint>: The <mntpoint> is where the partition is mounted and must be of one of the following forms:

- /<path>: For example, /, /usr, /home

- swap: The partition is used as swap space.

To determine the size of the swap partition automatically, use the --recommended option:

swap --recommended

The automatically generated swap partition is no smaller than the amount of RAM in the system and no larger than twice the amount of RAM in the system.

Using the --recommended option limits the swap partition to 8 GB. If you want to create a larger swap partition, specify the correct size in the kickstart file or create the partitions manually.

- raid.<id>: The partition is used for software RAID (refer to raid).

- pv.<id>: The partition is used for LVM (refer to logvol).

- --size=: The minimum partition size in megabytes. Specify an integer value here such as 500. Do not append MB to the number.

- --grow: Tells the partition to grow to fill available space (if any), or up to the maximum size setting.

- --maxsize=: The maximum partition size in megabytes when the partition is set to grow. Specify an integer value here, and do not append MB to the number.

- --noformat: Tells the installation program not to format the partition, for use with the --onpart command.

- --onpart= or --usepart=: Put the partition on the already existing device. For example:

partition /home --onpart=hda1

puts /home on /dev/hda1, which must already exist.

- --ondisk= or --ondrive=: Forces the partition to be created on a particular disk. For example, --ondisk=sdb puts the partition on the second SCSI disk on the system.

- --asprimary: Forces automatic allocation of the partition as a primary partition, or the partitioning fails.

- --type= (replaced by fstype): This option is no longer available. Use fstype.

- --fstype=: Sets the file system type for the partition. Valid values are ext2, ext3, swap, and vfat.

- --start=: Specifies the starting cylinder for the partition. It requires that a drive be specified with --ondisk= or --ondrive=. It also requires that the ending cylinder be specified with --end= or the partition size be specified with --size=.

- --end=: Specifies the ending cylinder for the partition. It requires that the starting cylinder be specified with --start=.
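A simple layout built from the partition options above might look like the following sketch. The sizes, file system types, and the choice of the first IDE disk (hda) are assumptions made for the example.

```text
# Sizes are in megabytes and are illustrative
part /boot --fstype=ext3 --size=100 --asprimary --ondisk=hda
part swap --recommended
# Root starts at 1024 MB and grows to fill free space, up to 8192 MB
part / --fstype=ext3 --size=1024 --grow --maxsize=8192 --ondisk=hda
```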

Note

If partitioning fails for any reason, diagnostic messages appear on virtual console 3.

poweroff (optional)

Shut down and power off the system after the installation has successfully completed. Normally during a manual installation, anaconda displays a message and waits for the user to press a key before rebooting. During a kickstart installation, if no completion method is specified, the reboot option is used as default.

The poweroff option is roughly equivalent to the shutdown -p command.

Note

The poweroff option is highly dependent on the system hardware in use. Specifically, certain hardware components such as the BIOS, APM (advanced power management), and ACPI (advanced configuration and power interface) must be able to interact with the system kernel. Contact your manufacturer for more information on your system's APM/ACPI abilities.

For other completion methods, refer to the halt, reboot, and shutdown kickstart options.

raid (optional)

Assembles a software RAID device. This command is of the form:

raid <mntpoint> --level=<level> --device=<mddevice> <partitions*>

- <mntpoint>: Location where the RAID file system is mounted. If it is /, the RAID level must be 1 unless a boot partition (/boot) is present. If a boot partition is present, the /boot partition must be level 1 and the root (/) partition can be any of the available types. The <partitions*> (which denotes that multiple partitions can be listed) lists the RAID identifiers to add to the RAID array.

- --level=: RAID level to use (0, 1, or 5).

- --device=: Name of the RAID device to use (such as md0 or md1). RAID devices range from md0 to md7, and each may only be used once.

- --spares=: Specifies the number of spare drives allocated for the RAID array. Spare drives are used to rebuild the array in case of drive failure.

- --fstype=: Sets the file system type for the RAID array. Valid values are ext2, ext3, swap, and vfat.

- --noformat: Use an existing RAID device and do not format the RAID array.

- --useexisting: Use an existing RAID device and reformat it.

The following example shows how to create a RAID level 1 partition for /, and a RAID level 5 for /usr, assuming there are three SCSI disks on the system. It also creates three swap partitions, one on each drive.

part raid.01 --size=60 --ondisk=sda
part raid.02 --size=60 --ondisk=sdb
part raid.03 --size=60 --ondisk=sdc

part swap --size=128 --ondisk=sda
part swap --size=128 --ondisk=sdb
part swap --size=128 --ondisk=sdc

part raid.11 --size=1 --grow --ondisk=sda
part raid.12 --size=1 --grow --ondisk=sdb
part raid.13 --size=1 --grow --ondisk=sdc

raid / --level=1 --device=md0 raid.01 raid.02 raid.03
raid /usr --level=5 --device=md1 raid.11 raid.12 raid.13

For a detailed example of raid in action, refer to Section 1.4.1, “Advanced Partitioning Example”.

reboot (optional)

Reboot after the installation is successfully completed (no arguments). Normally during a manual installation, anaconda displays a message and waits for the user to press a key before rebooting.

The reboot option is roughly equivalent to the shutdown -r command.

Note

Use of the reboot option may result in an endless installation loop, depending on the installation media and method.

The reboot option is the default completion method if no other methods are explicitly specified in the kickstart file.

For other completion methods, refer to the halt, poweroff, and shutdown kickstart options.

rootpw (required)

Sets the system's root password to the <password> argument.

rootpw [--iscrypted] <password>

- --iscrypted: If this is present, the password argument is assumed to already be encrypted.
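Because a kickstart file is plain text, it may be preferable to store the root password in its encrypted form. A minimal sketch, in which the password value is a placeholder rather than a real encrypted string:

```text
# <encrypted-password> is a placeholder, not a real encrypted value
rootpw --iscrypted <encrypted-password>
```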

selinux (optional)

Sets the system's SELinux mode to one of the following arguments:

- --enforcing: Enables SELinux with the default targeted policy being enforced.

Note

If the selinux option is not present in the kickstart file, SELinux is enabled and set to --enforcing by default.

- --permissive: Outputs warnings only based on the SELinux policy, but does not actually enforce the policy.

- --disabled: Disables SELinux completely on the system.

For complete information regarding SELinux for Red Hat Enterprise Linux, refer to the Red Hat, Inc.

shutdown (optional)

Shut down the system after the installation has successfully completed. During a kickstart installation, if no completion method is specified, the reboot option is used as default.

The shutdown option is roughly equivalent to the shutdown command.

For other completion methods, refer to the halt, poweroff, and reboot kickstart options.

skipx (optional)

If present, X is not configured on the installed system.

text (optional)

Perform the kickstart installation in text mode. Kickstart installations are performed in graphical mode by default.

timezone (required)

Sets the system time zone to <timezone> which may be any of the time zones listed by timeconfig.

timezone [--utc] <timezone>

- --utc: If present, the system assumes the hardware clock is set to UTC (Greenwich Mean) time.
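For instance, a system whose hardware clock is kept in UTC could be configured as in this sketch. The zone name is only an example; substitute one of the names listed by timeconfig.

```text
# America/New_York is an example; use a zone name listed by timeconfig
timezone --utc America/New_York
```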

upgrade (optional)

Tells the system to upgrade an existing system rather than install a fresh system. You must specify one of cdrom, harddrive, nfs, or url (for FTP and HTTP) as the location of the installation tree. Refer to install for details.

xconfig (optional)

Configures the X Window System. If this option is not given, the user must configure X manually during the installation, if X was installed; this option should not be used if X is not installed on the final system.

- --noprobe: Do not probe the monitor.

- --card=: Use specified card; this card name should be from the list of cards in /usr/share/hwdata/Cards from the hwdata package. The list of cards can also be found on the X Configuration screen of the Kickstart Configurator. If this argument is not provided, the installation program probes the PCI bus for the card. Since AGP is part of the PCI bus, AGP cards are detected if supported. The probe order is determined by the PCI scan order of the motherboard.

- --videoram=: Specifies the amount of video RAM the video card has.

- --monitor=: Use specified monitor; monitor name should be from the list of monitors in /usr/share/hwdata/MonitorsDB from the hwdata package. The list of monitors can also be found on the X Configuration screen of the Kickstart Configurator. This is ignored if --hsync or --vsync is provided. If no monitor information is provided, the installation program tries to probe for it automatically.

- --hsync=: Specifies the horizontal sync frequency of the monitor.

- --vsync=: Specifies the vertical sync frequency of the monitor.

- --defaultdesktop=: Specify either GNOME or KDE to set the default desktop (assumes that GNOME Desktop Environment and/or KDE Desktop Environment has been installed through %packages).

- --startxonboot: Use a graphical login on the installed system.

- --resolution=: Specify the default resolution for the X Window System on the installed system. Valid values are 640x480, 800x600, 1024x768, 1152x864, 1280x1024, 1400x1050, 1600x1200. Be sure to specify a resolution that is compatible with the video card and monitor.

- --depth=: Specify the default color depth for the X Window System on the installed system. Valid values are 8, 16, 24, and 32. Be sure to specify a color depth that is compatible with the video card and monitor.

volgroup (optional) - Use to create a Logical Volume Management (LVM) group with the syntax:

volgroup <name> <partition> <options>

The options are as follows:

--noformat - Use an existing volume group and do not format it.

--useexisting - Use an existing volume group and reformat it.

Create the partition first, create the logical volume group, and then create the logical volume. For example:

part pv.01 --size 3000

volgroup myvg pv.01

logvol / --vgname=myvg --size=2000 --name=rootvol

For a detailed example of volgroup in action, refer to Section 1.4.1, “Advanced Partitioning Example”.

zerombr (optional) - If zerombr is specified, and yes is its sole argument, any invalid partition tables found on disks are initialized. This destroys all of the contents of disks with invalid partition tables. This command should be in the following format:

zerombr yes

No other format is effective.

%include - Use the %include /path/to/file command to include the contents of another file in the kickstart file as though the contents were at the location of the %include command in the kickstart file.

1.4.1. Advanced Partitioning Example

The following example shows the clearpart, raid, part, volgroup, and logvol kickstart options in action:

clearpart --drives=hda,hdc --initlabel

# Raid 1 IDE config

part raid.11 --size 1000 --asprimary --ondrive=hda

part raid.12 --size 1000 --asprimary --ondrive=hda

part raid.13 --size 2000 --asprimary --ondrive=hda

part raid.14 --size 8000 --ondrive=hda

part raid.15 --size 1 --grow --ondrive=hda

part raid.21 --size 1000 --asprimary --ondrive=hdc

part raid.22 --size 1000 --asprimary --ondrive=hdc

part raid.23 --size 2000 --asprimary --ondrive=hdc

part raid.24 --size 8000 --ondrive=hdc

part raid.25 --size 1 --grow --ondrive=hdc

# You can add --spares=x

raid / --fstype ext3 --device md0 --level=RAID1 raid.11 raid.21

raid /safe --fstype ext3 --device md1 --level=RAID1 raid.12 raid.22

raid swap --fstype swap --device md2 --level=RAID1 raid.13 raid.23

raid /usr --fstype ext3 --device md3 --level=RAID1 raid.14 raid.24

raid pv.01 --fstype ext3 --device md4 --level=RAID1 raid.15 raid.25

# LVM configuration so that we can resize /var and /usr/local later

volgroup sysvg pv.01

logvol /var --vgname=sysvg --size=8000 --name=var

logvol /var/freespace --vgname=sysvg --size=8000 --name=freespacetouse

logvol /usr/local --vgname=sysvg --size=1 --grow --name=usrlocal
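The mirrored part lines in the example above follow a regular naming pattern (raid.11 on the first drive pairs with raid.21 on the second). As a minimal sketch, assuming you generate such lines from a script rather than typing them, the pattern could be produced as follows. The function name mirror_parts is hypothetical, and the sketch deliberately omits --asprimary and --grow, which the example applies to some partitions only.

```shell
#!/bin/sh
# mirror_parts <slot> <size> <drive>...
# Print one "part raid.<drive-index><slot>" line per drive, so that the
# members of a RAID 1 pair share the same slot number and size.
mirror_parts() {
    slot=$1
    size=$2
    shift 2
    idx=1
    for drive in "$@"; do
        printf 'part raid.%s%s --size %s --ondrive=%s\n' "$idx" "$slot" "$size" "$drive"
        idx=$((idx + 1))
    done
}

# Prints the raid.11 and raid.21 lines for hda and hdc.
mirror_parts 1 1000 hda hdc
```

The output can be appended to a file that the kickstart file pulls in with %include.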

1.5. Package Selection

Use the %packages command to begin a kickstart file section that lists the packages you would like to install (this is for installations only, as package selection during upgrades is not supported).

Refer to the RedHat/base/comps.xml file on the first Red Hat Enterprise Linux CD-ROM for a list of groups. Each group has an id, user visibility value, name, description, and package list. In the package list, packages marked as mandatory are always installed if the group is selected, packages marked default are selected by default if the group is selected, and packages marked optional must be specifically selected even if the group is selected to be installed.

The Core and Base groups are always selected by default, so it is not necessary to specify them in the %packages section.

Here is an example %packages selection:

%packages

@ X Window System

@ GNOME Desktop Environment

@ Graphical Internet

@ Sound and Video

dhcp

Specify groups one to a line, starting with an @ symbol, a space, and then the full group name as given in the comps.xml file. Groups can also be specified using the id for the group, such as gnome-desktop. Specify individual packages with no additional characters (the dhcp line in the example above is an individual package).

To exclude a package from the default package list, prefix its name with a dash:

-autofs

The following options are available for the %packages option:

--resolvedeps - Install the listed packages and automatically resolve package dependencies. If this option is not specified and there are package dependencies, the automated installation pauses and prompts the user. For example:

%packages --resolvedeps

--ignoredeps - Ignore the unresolved dependencies and install the listed packages without the dependencies. For example:

%packages --ignoredeps

--ignoremissing - Ignore the missing packages and groups instead of halting the installation to ask if the installation should be aborted or continued. For example:

%packages --ignoremissing
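When kickstart files are generated by a script, the %packages section can be assembled from a list of items. The following is a minimal sketch under that assumption; the function name emit_packages and its argument convention (a leading @ marks a group) are hypothetical and not part of kickstart itself.

```shell
#!/bin/sh
# emit_packages <item>...
# Print a %packages section. An item written as "@Group Name" becomes a
# group line ("@", a space, the group name); any other item is printed
# unchanged, which covers package names and -name exclusions.
emit_packages() {
    printf '%s\n' "%packages"
    for item in "$@"; do
        case $item in
            @*) printf '@ %s\n' "${item#@}" ;;
            *)  printf '%s\n' "$item" ;;
        esac
    done
}

emit_packages "@X Window System" "@GNOME Desktop Environment" dhcp -autofs
```

printf is used instead of echo so that an item beginning with a dash is never mistaken for an option.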

1.6. Pre-installation Script

You can add commands to run on the system immediately after ks.cfg has been parsed. This section must be at the end of the kickstart file (after the commands) and must start with the %pre command. You can access the network in the %pre section; however, name service has not been configured at this point, so only IP addresses work.

Note

--interpreter /usr/bin/python - Allows you to specify a different scripting language, such as Python. Replace /usr/bin/python with the scripting language of your choice.

1.6.1. Example

Here is an example %pre section:

%pre

#!/bin/sh

hds=""

mymedia=""

for file in /proc/ide/h*

do

mymedia=`cat $file/media`

if [ $mymedia == "disk" ] ; then

hds="$hds `basename $file`"

fi

done

set $hds

numhd=`echo $#`

drive1=`echo $hds | cut -d' ' -f1`

drive2=`echo $hds | cut -d' ' -f2`

#Write out partition scheme based on whether there are 1 or 2 hard drives

if [ $numhd == "2" ] ; then

#2 drives

echo "#partitioning scheme generated in %pre for 2 drives" > /tmp/part-include

echo "clearpart --all" >> /tmp/part-include

echo "part /boot --fstype ext3 --size 75 --ondisk hda" >> /tmp/part-include

echo "part / --fstype ext3 --size 1 --grow --ondisk hda" >> /tmp/part-include

echo "part swap --recommended --ondisk $drive1" >> /tmp/part-include

echo "part /home --fstype ext3 --size 1 --grow --ondisk hdb" >> /tmp/part-include

else

#1 drive

echo "#partitioning scheme generated in %pre for 1 drive" > /tmp/part-include

echo "clearpart --all" >> /tmp/part-include

echo "part /boot --fstype ext3 --size 75" >> /tmp/part-include

echo "part swap --recommended" >> /tmp/part-include

echo "part / --fstype ext3 --size 2048" >> /tmp/part-include

echo "part /home --fstype ext3 --size 2048 --grow" >> /tmp/part-include

fi

%include /tmp/part-include
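The branching in the %pre script above can also be written as a function that prints the scheme, which makes it easy to check outside the installer. This is a sketch, not the script from the example: the function name emit_partitions is hypothetical, and unlike the example it uses the detected drive names for every --ondisk argument instead of the hard-coded hda and hdb.

```shell
#!/bin/sh
# emit_partitions <drive> [<drive>]
# Print a partitioning scheme: two arguments select the two-drive layout,
# anything else selects the single-drive layout.
emit_partitions() {
    if [ "$#" -eq 2 ]; then
        printf '%s\n' \
            "clearpart --all" \
            "part /boot --fstype ext3 --size 75 --ondisk $1" \
            "part / --fstype ext3 --size 1 --grow --ondisk $1" \
            "part swap --recommended --ondisk $1" \
            "part /home --fstype ext3 --size 1 --grow --ondisk $2"
    else
        printf '%s\n' \
            "clearpart --all" \
            "part /boot --fstype ext3 --size 75" \
            "part swap --recommended" \
            "part / --fstype ext3 --size 2048" \
            "part /home --fstype ext3 --size 2048 --grow"
    fi
}

emit_partitions hda hdb
```

Inside %pre, the output would be redirected to /tmp/part-include, for example emit_partitions $hds > /tmp/part-include.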

1.7. Post-installation Script

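Commands to run on the system once the installation is complete go in a section that starts with the %post command. This section is useful for functions such as installing additional software and configuring an additional nameserver.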

Note

If you configured the network with static IP information, including a nameserver, you can access the network and resolve IP addresses in the %post section. If you configured the network for DHCP, the /etc/resolv.conf file has not been completed when the installation executes the %post section. You can access the network, but you cannot resolve IP addresses. Thus, if you are using DHCP, you must specify IP addresses in the %post section.

Note

--nochroot - Allows you to specify commands that you would like to run outside of the chroot environment.

The following example copies the file /etc/resolv.conf to the file system that was just installed.

%post --nochroot

cp /etc/resolv.conf /mnt/sysimage/etc/resolv.conf

--interpreter /usr/bin/python - Allows you to specify a different scripting language, such as Python. Replace /usr/bin/python with the scripting language of your choice.

1.7.1. Examples

Turn services on and off:

/sbin/chkconfig --level 345 telnet off

/sbin/chkconfig --level 345 finger off

/sbin/chkconfig --level 345 lpd off

/sbin/chkconfig --level 345 httpd on

Run a script named runme from an NFS share:

mkdir /mnt/temp

mount -o nolock 10.10.0.2:/usr/new-machines /mnt/temp

open -s -w -- /mnt/temp/runme

umount /mnt/temp

Note

The -o nolock option is required when mounting an NFS mount.

Add a user to the system:

/usr/sbin/useradd bob

/usr/bin/chfn -f "Bob Smith" bob

/usr/sbin/usermod -p 'kjdf$04930FTH/ ' bob

1.8. Making the Kickstart File Available

- On a boot diskette

- On a boot CD-ROM

- On a network

1.8.1. Creating Kickstart Boot Media

ks.cfg.

To perform a CD-ROM-based kickstart installation, the kickstart file must be named ks.cfg and must be located in the boot CD-ROM's top-level directory. Since a CD-ROM is read-only, the file must be added to the directory used to create the image that is written to the CD-ROM. Refer to the Installation Guide for instructions on creating boot media; however, before making the file.iso image file, copy the ks.cfg kickstart file to the isolinux/ directory.

To perform a flash memory-based kickstart installation, the kickstart file must be named ks.cfg and must be located in the flash memory's top-level directory. Create the boot image first, and then copy the ks.cfg file.

For example, the following transfers a boot image to the flash memory device (/dev/sda) using the dd command:

dd if=diskboot.img of=/dev/sda bs=1M

1.8.2. Making the Kickstart File Available on the Network

Here is an example of a line from the dhcpd.conf file for the DHCP server:

filename "/usr/new-machine/kickstart/";

next-server blarg.redhat.com;

Replace the value after filename with the name of the kickstart file (or the directory in which the kickstart file resides) and the value after next-server with the NFS server name.

When the value is a directory, the kickstart file in it is expected to be named:

<ip-addr>-kickstart

The <ip-addr> section of the file name should be replaced with the client's IP address in dotted decimal notation. For example, the file name for a computer with an IP address of 10.10.0.1 would be 10.10.0.1-kickstart.

If you do not specify a path or file name, the client system tries to mount /kickstart from the BOOTP/DHCP server and tries to find the kickstart file using the same <ip-addr>-kickstart file name as described above.

1.9. Making the Installation Tree Available

1.10. Starting a Kickstart Installation

The installation program looks for a kickstart file if the ks command line argument is passed to the kernel.

- CD-ROM #1 and Diskette

- The linux ks=floppy command also works if the ks.cfg file is located on a vfat or ext2 file system on a diskette and you boot from the Red Hat Enterprise Linux CD-ROM #1.

An alternate boot command is to boot off the Red Hat Enterprise Linux CD-ROM #1 and have the kickstart file on a vfat or ext2 file system on a diskette. To do so, enter the following command at the boot: prompt:

linux ks=hd:fd0:/ks.cfg

- With Driver Disk

- If you need to use a driver disk with kickstart, specify the dd option as well. For example, to boot off a boot diskette and use a driver disk, enter the following command at the boot: prompt:

linux ks=floppy dd

- Boot CD-ROM

- If the kickstart file is on a boot CD-ROM as described in Section 1.8.1, “Creating Kickstart Boot Media”, insert the CD-ROM into the system, boot the system, and enter the following command at the boot: prompt (where ks.cfg is the name of the kickstart file):

linux ks=cdrom:/ks.cfg

ks=nfs:<server>:/<path> - The installation program looks for the kickstart file on the NFS server <server>, as file <path>. The installation program uses DHCP to configure the Ethernet card. For example, if your NFS server is server.example.com and the kickstart file is in the NFS share /mydir/ks.cfg, the correct boot command would be ks=nfs:server.example.com:/mydir/ks.cfg.

ks=http://<server>/<path> - The installation program looks for the kickstart file on the HTTP server <server>, as file <path>. The installation program uses DHCP to configure the Ethernet card. For example, if your HTTP server is server.example.com and the kickstart file is in the HTTP directory /mydir/ks.cfg, the correct boot command would be ks=http://server.example.com/mydir/ks.cfg.

ks=floppy - The installation program looks for the file ks.cfg on a vfat or ext2 file system on the diskette in /dev/fd0.

ks=floppy:/<path> - The installation program looks for the kickstart file on the diskette in /dev/fd0, as file <path>.

ks=hd:<device>:/<file> - The installation program mounts the file system on <device> (which must be vfat or ext2), and looks for the kickstart configuration file as <file> in that file system (for example, ks=hd:sda3:/mydir/ks.cfg).

ks=file:/<file> - The installation program tries to read the file <file> from the file system; no mounts are done. This is normally used if the kickstart file is already on the initrd image.

ks=cdrom:/<path> - The installation program looks for the kickstart file on CD-ROM, as file <path>.

ks - If ks is used alone, the installation program configures the Ethernet card to use DHCP. The kickstart file is read from the "bootServer" from the DHCP response as if it is an NFS server sharing the kickstart file. By default, the bootServer is the same as the DHCP server. The name of the kickstart file is one of the following:

- If DHCP is specified and the boot file begins with a /, the boot file provided by DHCP is looked for on the NFS server.

- If DHCP is specified and the boot file begins with something other than a /, the boot file provided by DHCP is looked for in the /kickstart directory on the NFS server.

- If DHCP did not specify a boot file, then the installation program tries to read the file /kickstart/1.2.3.4-kickstart, where 1.2.3.4 is the numeric IP address of the machine being installed.
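The three rules for a bare ks argument can be expressed as a small lookup, which is handy for checking where a file must be placed on the NFS server. This is a sketch of the rules as listed above only, not the installation program's code; the function name ks_path is hypothetical.

```shell
#!/bin/sh
# ks_path <client-ip> [<boot-file>]
# Print the path the kickstart file is looked for on the NFS server,
# following the three cases listed for "ks" used alone.
ks_path() {
    ip=$1
    bootfile=${2-}
    case $bootfile in
        "") printf '/kickstart/%s-kickstart\n' "$ip" ;;  # DHCP gave no boot file
        /*) printf '%s\n' "$bootfile" ;;                  # absolute boot file
        *)  printf '/kickstart/%s\n' "$bootfile" ;;       # relative boot file
    esac
}

ks_path 1.2.3.4
ks_path 1.2.3.4 /mydir/ks.cfg
ks_path 1.2.3.4 ks.cfg
```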

ksdevice=<device> - The installation program uses this network device to connect to the network. For example, to start a kickstart installation with the kickstart file on an NFS server that is connected to the system through the eth1 device, use the command ks=nfs:<server>:/<path> ksdevice=eth1 at the boot: prompt.

Chapter 2. Kickstart Configurator

To start Kickstart Configurator, type the command /usr/sbin/system-config-kickstart.

2.1. Basic Configuration

Figure 2.1. Basic Configuration

system-config-language) after installation.

2.2. Installation Method

Figure 2.2. Installation Method

- CD-ROM — Choose this option to install or upgrade from the Red Hat Enterprise Linux CD-ROMs.

- NFS — Choose this option to install or upgrade from an NFS shared directory. In the text field for the NFS server, enter a fully-qualified domain name or IP address. For the NFS directory, enter the name of the NFS directory that contains the RedHat directory of the installation tree. For example, if the NFS server contains the directory /mirrors/redhat/i386/RedHat/, enter /mirrors/redhat/i386/ for the NFS directory.

- FTP — Choose this option to install or upgrade from an FTP server. In the FTP server text field, enter a fully-qualified domain name or IP address. For the FTP directory, enter the name of the FTP directory that contains the RedHat directory. For example, if the FTP server contains the directory /mirrors/redhat/i386/RedHat/, enter /mirrors/redhat/i386/ for the FTP directory. If the FTP server requires a username and password, specify them as well.

- HTTP — Choose this option to install or upgrade from an HTTP server. In the text field for the HTTP server, enter the fully-qualified domain name or IP address. For the HTTP directory, enter the name of the HTTP directory that contains the RedHat directory. For example, if the HTTP server contains the directory /mirrors/redhat/i386/RedHat/, enter /mirrors/redhat/i386/ for the HTTP directory.

- Hard Drive — Choose this option to install or upgrade from a hard drive. Hard drive installations require the use of ISO (or CD-ROM) images. Be sure to verify that the ISO images are intact before you start the installation. To verify them, use an md5sum program as well as the linux mediacheck boot option as discussed in the Installation Guide. Enter the hard drive partition that contains the ISO images (for example, /dev/hda1) in the Hard Drive Partition text box. Enter the directory that contains the ISO images in the Hard Drive Directory text box.

2.3. Boot Loader Options

/boot partition). Install the boot loader on the MBR if you plan to use it as your boot loader.

cdrecord by configuring hdd=ide-scsi as a kernel parameter (where hdd is the CD-ROM device).

2.4. Partition Information

Figure 2.4. Partition Information

msdos for x86 and gpt for Itanium), select Initialize the disk label if you are installing on a brand new hard drive.

2.4.1. Creating Partitions

- In the Additional Size Options section, choose to make the partition a fixed size, up to a chosen size, or fill the remaining space on the hard drive. If you selected swap as the file system type, you can select to have the installation program create the swap partition with the recommended size instead of specifying a size.

- Force the partition to be created as a primary partition.

- Create the partition on a specific hard drive. For example, to make the partition on the first IDE hard disk (/dev/hda), specify hda as the drive. Do not include /dev in the drive name.

- Use an existing partition. For example, to make the partition on the first partition on the first IDE hard disk (/dev/hda1), specify hda1 as the partition. Do not include /dev in the partition name.

- Format the partition as the chosen file system type.

Figure 2.5. Creating Partitions

2.4.1.1. Creating Software RAID Partitions

- Click the RAID button.

- Select Create a software RAID partition.

- Configure the partitions as previously described, except select Software RAID as the file system type. Also, you must specify a hard drive on which to make the partition or specify an existing partition to use.

Figure 2.6. Creating a Software RAID Partition

- Click the RAID button.

- Select Create a RAID device.

- Select a mount point, file system type, RAID device name, RAID level, RAID members, number of spares for the software RAID device, and whether to format the RAID device.

Figure 2.7. Creating a Software RAID Device

- Click OK to add the device to the list.

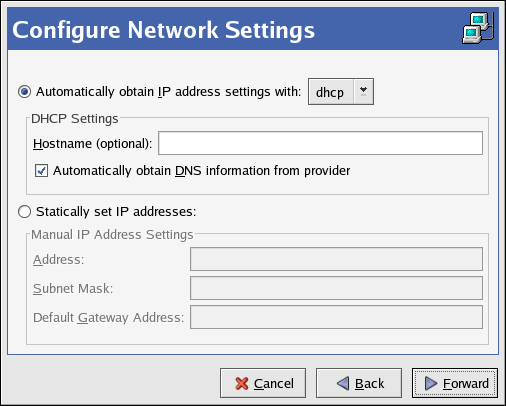

2.5. Network Configuration

Figure 2.8. Network Configuration

system-config-network). Refer to Chapter 17, Network Configuration for details.

2.6. Authentication

Figure 2.9. Authentication

- NIS

- LDAP

- Kerberos 5

- Hesiod

- SMB

- Name Switch Cache

2.7. Firewall Configuration

Figure 2.10. Firewall Configuration

Additional ports are specified in the format port:protocol. For example, to allow IMAP access through the firewall, specify imap:tcp. Numeric ports can also be specified; to allow UDP packets on port 1234 through the firewall, enter 1234:udp. To specify multiple ports, separate them with commas.
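To check a port list before entering it, the comma-separated port:protocol format can be split with standard shell tools. The following is a sketch only; the function name split_ports is hypothetical, and it assumes no spaces after the commas.

```shell
#!/bin/sh
# split_ports "<port:protocol>[,<port:protocol>...]"
# Print one "port protocol" pair per line; entries missing either part
# are reported on standard error and skipped.
split_ports() {
    printf '%s\n' "$1" | tr ',' '\n' | while IFS=: read -r port proto; do
        if [ -z "$port" ] || [ -z "$proto" ]; then
            printf 'bad entry: %s\n' "$port" >&2
            continue
        fi
        printf '%s %s\n' "$port" "$proto"
    done
}

split_ports "imap:tcp,1234:udp"
```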

2.8. Display Configuration

skipx option is written to the kickstart file.

2.8.1. General

Figure 2.11. X Configuration - General

/etc/inittab configuration file.

2.8.2. Video Card

Figure 2.12. X Configuration - Video Card

2.8.3. Monitor

Figure 2.13. X Configuration - Monitor

2.9. Package Selection

Figure 2.14. Package Selection

%packages section of the kickstart file after you save it. Refer to Section 1.5, “Package Selection” for details.

2.10. Pre-Installation Script

Figure 2.15. Pre-Installation Script

/usr/bin/python2.2 can be specified for a Python script. This option corresponds to using %pre --interpreter /usr/bin/python2.2 in your kickstart file.

Warning

Do not include the %pre command. It is added for you.

2.11. Post-Installation Script

Figure 2.16. Post-Installation Script

Warning

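Do not include the %post command. It is added for you.

For example, the following command in the %post section changes the message of the day on the newly installed system:

echo "Hackers will be punished!" > /etc/motd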

2.11.1. Chroot Environment

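Running the script outside of the chroot environment is equivalent to using the --nochroot option in the %post section.

To change the newly installed file system from a script that runs outside of the chroot environment, prepend the directory name with /mnt/sysimage/.

For example, the previous example must be changed to the following:

echo "Hackers will be punished!" > /mnt/sysimage/etc/motd

2.11.2. Use an Interpreter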

/usr/bin/python2.2 can be specified for a Python script. This option corresponds to using %post --interpreter /usr/bin/python2.2 in your kickstart file.

2.12. Saving the File

Figure 2.17. Preview

Chapter 3. PXE Network Installations

askmethod boot option with the Red Hat Enterprise Linux CD #1. Alternatively, if the system to be installed contains a network interface card (NIC) with Pre-Execution Environment (PXE) support, it can be configured to boot from files on another networked system rather than local media such as a CD-ROM.

tftp server (which provides the files necessary to start the installation program), and the location of the files on the tftp server. This is possible because of PXELINUX, which is part of the syslinux package.

- Configure the network (NFS, FTP, HTTP) server to export the installation tree.

- Configure the files on the tftp server necessary for PXE booting.

- Configure which hosts are allowed to boot from the PXE configuration.

- Start the tftp service.

- Configure DHCP.

- Boot the client, and start the installation.

3.1. Setting up the Network Server

3.2. PXE Boot Configuration

tftp server so they can be found when the client requests them. The tftp server is usually the same server as the network server exporting the installation tree.

The Network Booting Tool requires the system-config-netboot RPM package to be installed. To start the Network Booting Tool from the desktop, go to Applications (the main menu on the panel) => System Settings => Server Settings => Network Booting Service. Or, type the command system-config-netboot at a shell prompt (for example, in an XTerm or a GNOME terminal).

Figure 3.1. Network Installation Setup

- Operating system identifier — Provide a unique name using one word to identify the Red Hat Enterprise Linux version and variant. It is used as the directory name in the /tftpboot/linux-install/ directory.

- Description — Provide a brief description of the Red Hat Enterprise Linux version and variant.

- Select protocol for installation — Select NFS, FTP, or HTTP as the network installation type depending on which one was configured previously. If FTP is selected and anonymous FTP is not being used, uncheck Anonymous FTP and provide a valid username and password combination.

- Kickstart — Specify the location of the kickstart file. The file can be a URL or a file stored locally (diskette). The kickstart file can be created with the Kickstart Configurator. Refer to Chapter 2, Kickstart Configurator for details.

- Server — Provide the IP address or domain name of the NFS, FTP, or HTTP server.

- Location — Provide the directory shared by the network server. If FTP or HTTP was selected, the directory must be relative to the default directory for the FTP server or the document root for the HTTP server. For all network installations, the directory provided must contain the RedHat/ directory of the installation tree.

The initrd.img and vmlinuz files necessary to boot the installation program are transferred from images/pxeboot/ in the provided installation tree to /tftpboot/linux-install/<os-identifier>/ on the tftp server (the one you are running the Network Booting Tool on).

3.2.1. Command Line Configuration

The pxeos command line utility, which is part of the system-config-netboot package, can be used to configure the tftp server files:

pxeos -a -i "<description>" -p <NFS|HTTP|FTP> -D 0 -s client.example.com \
-L <net-location> -k <kernel> -K <kickstart> <os-identifier>

The options are as follows:

-a — Specifies that an OS instance is being added to the PXE configuration.

-i "<description>" — Replace "<description>" with a description of the OS instance. This corresponds to the Description field in Figure 3.1, “Network Installation Setup”.

-p <NFS|HTTP|FTP> — Specify which of the NFS, FTP, or HTTP protocols to use for installation. Only one may be specified. This corresponds to the Select protocol for installation menu in Figure 3.1, “Network Installation Setup”.

-D <0|1> — Specify "0", which indicates that this is not a diskless configuration; pxeos can also be used to configure a diskless environment.

-s client.example.com — Provide the name of the NFS, FTP, or HTTP server after the -s option. This corresponds to the Server field in Figure 3.1, “Network Installation Setup”.

-L <net-location> — Provide the location of the installation tree on that server after the -L option. This corresponds to the Location field in Figure 3.1, “Network Installation Setup”.

-k <kernel> — Provide the specific kernel version of the server installation tree for booting.

-K <kickstart> — Provide the location of the kickstart file, if available.

<os-identifier> — Specify the OS identifier, which is used as the directory name in the /tftpboot/linux-install/ directory. This corresponds to the Operating system identifier field in Figure 3.1, “Network Installation Setup”.

If FTP is selected as the installation protocol and anonymous FTP is not being used, specify a username and password with the following options:

-A 0 -u <username> -p <password>

For more information on the pxeos command, refer to the pxeos man page.

3.3. Adding PXE Hosts

Figure 3.2. Add Hosts

Figure 3.3. Add a Host

- Hostname or IP Address/Subnet — The IP address, fully qualified hostname, or a subnet of systems that should be allowed to connect to the PXE server for installations.

- Operating System — The operating system identifier to install on this client. The list is populated from the network install instances created from the Network Installation Dialog.

- Serial Console — This option allows use of a serial console.

- Kickstart File — The location of a kickstart file to use, such as http://server.example.com/kickstart/ks.cfg. This file can be created with the Kickstart Configurator. Refer to Chapter 2, Kickstart Configurator for details.

3.3.1. Command Line Configuration

The pxeboot utility, a part of the system-config-netboot package, can be used to add hosts which are allowed to connect to the PXE server:

pxeboot -a -K <kickstart> -O <os-identifier> -r <value> <host>

The options are as follows:

-a — Specifies that a host is to be added.

-K <kickstart> — The location of the kickstart file, if available.

-O <os-identifier> — Specifies the operating system identifier as defined in Section 3.2, “PXE Boot Configuration”.

-r <value> — Specifies the ram disk size.

<host> — Specifies the IP address or hostname of the host to add.

For more information on the pxeboot command, refer to the pxeboot man page.

Chapter 4. Diskless Environments

- Install Red Hat Enterprise Linux on a system so that the files can be copied to the NFS server. (Refer to the Installation Guide for details.) Any software to be used on the clients must be installed on this system and the busybox-anaconda package must be installed.

- Create a directory on the NFS server to contain the diskless environment such as /diskless/i386/RHEL4-AS/. For example:

mkdir -p /diskless/i386/RHEL4-AS/

This directory is referred to as the diskless directory.

- Create a subdirectory of this directory named root/:

mkdir -p /diskless/i386/RHEL4-AS/root/

- Copy Red Hat Enterprise Linux from the client system to the server using rsync. For example:

rsync -a -e ssh installed-system.example.com:/ /diskless/i386/RHEL4-AS/root/

The length of this operation depends on the network connection speed as well as the size of the file system on the installed system. Depending on these factors, this operation may take a while.

- Start the tftp server

- Configure the DHCP server

- Finish creating the diskless environment as discussed in Section 4.2, “Finish Configuring the Diskless Environment”.

- Configure the diskless clients as discussed in Section 4.3, “Adding Hosts”.

- Configure each diskless client to boot via PXE and boot them.

4.1. Configuring the NFS Server

Configure NFS to export the root/ and snapshot/ directories by adding them to /etc/exports. For example:

/diskless/i386/RHEL4-AS/root/ *(ro,sync,no_root_squash)

/diskless/i386/RHEL4-AS/snapshot/ *(rw,sync,no_root_squash)

Replace * with one of the hostname formats discussed in Section 21.3.2, “Hostname Formats”. Make the hostname declaration as specific as possible, so unwanted systems cannot access the NFS mount.
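As a sketch of how the two export lines relate, the following function prints them for a given diskless directory and host specification. The function name exports_for and the example.com wildcard are hypothetical; the export options are the ones shown above.

```shell
#!/bin/sh
# exports_for <diskless-dir> <host-spec>
# Print the /etc/exports lines for a diskless directory: root/ is
# exported read-only and snapshot/ read-write, both to <host-spec>.
exports_for() {
    dir=${1%/}   # tolerate a trailing slash on the directory argument
    printf '%s/root/ %s(ro,sync,no_root_squash)\n' "$dir" "$2"
    printf '%s/snapshot/ %s(rw,sync,no_root_squash)\n' "$dir" "$2"
}

exports_for /diskless/i386/RHEL4-AS/ '*.example.com'
```

Review the output before appending it to /etc/exports on the NFS server.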

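If the NFS service is not running, start it:

service nfs start

If the NFS service is already running, reload the configuration file:

service nfs reload

4.2. Finish Configuring the Diskless Environment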

The Network Booting Tool requires the system-config-netboot RPM package to be installed. To start the Network Booting Tool from the desktop, go to Applications (the main menu on the panel) => System Settings => Server Settings => Network Booting Service. Or, type the command system-config-netboot at a shell prompt (for example, in an XTerm or a GNOME terminal).

- Click Forward on the first page.

- On the Diskless Identifier page, enter a Name and Description for the diskless environment. Click Forward.

- Enter the IP address or domain name of the NFS server configured in Section 4.1, “Configuring the NFS Server” as well as the directory exported as the diskless environment. Click Forward.

- The kernel versions installed in the diskless environment are listed. Select the kernel version to boot on the diskless system.

- Click Apply to finish the configuration.

/tftpboot/linux-install/<os-identifier>/. The directory snapshot/ is created in the same directory as the root/ directory (for example, /diskless/i386/RHEL4-AS/snapshot/) with a file called files in it. This file contains a list of files and directories that must be read/write for each diskless system. Do not modify this file. If additional entries must be added to the list, create a files.custom file in the same directory as the files file, and add each additional file or directory on a separate line.

4.3. Adding Hosts

- Hostname or IP Address/Subnet — Specify the hostname or IP address of a system to add it as a host for the diskless environment. Enter a subnet to specify a group of systems.

- Operating System — Select the diskless environment for the host or subnet of hosts.

- Serial Console — Select this checkbox to perform a serial installation.

- Snapshot name — Provide a subdirectory name to be used to store all of the read/write content for the host.

- Ethernet — Select the Ethernet device on the host to use to mount the diskless environment. If the host only has one Ethernet card, select eth0.

Figure 4.1. Add Diskless Host

In the snapshot/ directory in the diskless directory, a subdirectory is created with the Snapshot name specified as the file name. Then, all of the files listed in snapshot/files and snapshot/files.custom are copied from the root/ directory to this new directory.
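To make the copy step concrete, the sketch below mimics it in plain shell. It is an illustration of the description above, not the Network Booting Tool's own code: the function name populate_snapshot is hypothetical, and it assumes that files and files.custom hold one absolute path per line.

```shell
#!/bin/sh
# populate_snapshot <diskless-dir> <snapshot-name>
# For every path listed in snapshot/files and snapshot/files.custom,
# copy it from root/ into snapshot/<snapshot-name>/, keeping its
# directory layout. Listed paths that do not exist in root/ are skipped.
populate_snapshot() {
    base=$1
    name=$2
    dest="$base/snapshot/$name"
    mkdir -p "$dest"
    for list in "$base/snapshot/files" "$base/snapshot/files.custom"; do
        [ -f "$list" ] || continue
        while IFS= read -r entry; do
            [ -n "$entry" ] || continue
            rel=${entry#/}                    # path relative to root/
            [ -e "$base/root/$rel" ] || continue
            parent=$(dirname "$rel")
            mkdir -p "$dest/$parent"
            cp -R "$base/root/$rel" "$dest/$parent/"
        done < "$list"
    done
}
```

Try it against a scratch directory rather than a live diskless directory, for example populate_snapshot /tmp/diskless-test client1.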

4.4. Booting the Hosts

When a diskless client boots, it mounts the remote root/ directory in the diskless directory as read-only. It also mounts its individual snapshot directory as read/write. Then it mounts all the files and directories listed in the files and files.custom files using mount -o bind over the read-only diskless directory, so that applications can write to the root directory of the diskless environment if they need to.

Chapter 5. Basic System Recovery

5.1. Common Problems

- You are unable to boot normally into Red Hat Enterprise Linux (runlevel 3 or 5).

- You are having hardware or software problems, and you want to get a few important files off of your system's hard drive.

- You forgot the root password.

5.1.1. Unable to Boot into Red Hat Enterprise Linux

If the partition number of your / partition changes, the boot loader might not be able to find it to mount the partition. To fix this problem, boot in rescue mode and modify the /boot/grub/grub.conf file.

5.1.2. Hardware/Software Problems

5.2. Booting into Rescue Mode

- By booting the system from an installation boot CD-ROM.

- By booting the system from other installation boot media, such as USB flash devices.

- By booting the system from the Red Hat Enterprise Linux CD-ROM #1.

Add the keyword rescue as a kernel parameter. For example, for an x86 system, type the following command at the installation boot prompt:

linux rescue

The rescue environment will now attempt to find your Linux installation and mount it under the directory /mnt/sysimage. You can then make any changes required to your system. If you want to proceed with this step choose 'Continue'. You can also choose to mount your file systems read-only instead of read-write by choosing 'Read-only'. If for some reason this process fails you can choose 'Skip' and this step will be skipped and you will go directly to a command shell.

If you select Continue, it attempts to mount your file system under the directory /mnt/sysimage/. If it fails to mount a partition, it notifies you. If you select Read-Only, it attempts to mount your file system under the directory /mnt/sysimage/, but in read-only mode. If you select Skip, your file system is not mounted. Choose Skip if you think your file system is corrupted.

sh-3.00b#

chroot /mnt/sysimagerpm that require your root partition to be mounted as /. To exit the chroot environment, type exit to return to the prompt.

/foo, and typing the following command:

mount -t ext3 /dev/mapper/VolGroup00-LogVol02 /foo

/foo is a directory that you have created and /dev/mapper/VolGroup00-LogVol02 is the LVM2 logical volume you want to mount. If the partition is of type ext2, replace ext3 with ext2.

fdisk -l

pvdisplay

vgdisplay

lvdisplay

- ssh, scp, and ping if the network is started

- dump and restore for users with tape drives

- parted and fdisk for managing partitions

- rpm for installing or upgrading software

- joe for editing configuration files

Note

If you try to start other popular editors such as emacs, pico, or vi, the joe editor is started.

5.2.1. Reinstalling the Boot Loader

- Boot the system from an installation boot medium.

- Type linux rescue at the installation boot prompt to enter the rescue environment.

- Type chroot /mnt/sysimage to mount the root partition.

- Type /sbin/grub-install /dev/hda to reinstall the GRUB boot loader, where /dev/hda is the boot partition.

- Review the /boot/grub/grub.conf file, as additional entries may be needed for GRUB to control additional operating systems.

- Reboot the system.

5.3. Booting into Single-User Mode

- At the GRUB splash screen at boot time, press any key to enter the GRUB interactive menu.

- Select Red Hat Enterprise Linux with the version of the kernel that you wish to boot and type a to append the line.

- Go to the end of the line and type single as a separate word (press the Spacebar and then type single). Press Enter to exit edit mode.

5.4. Booting into Emergency Mode

init files are not loaded. If init is corrupted or not working, you can still mount file systems to recover data that could be lost during a re-installation.

single with the keyword emergency.
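To make the edit concrete, the following sketch prints what a kernel line might look like after the keyword is appended. The edit itself is made interactively in the GRUB menu, not in a shell; the kernel version and root device shown here are hypothetical and will differ on your system.

```shell
# Append a boot keyword (single or emergency) to a GRUB kernel line.
# This only builds the text; it does not modify any boot configuration.
append_boot_keyword() {
    printf '%s %s\n' "$1" "$2"
}

# Hypothetical kernel line: yours shows your own kernel version and root device.
KERNEL_LINE='kernel /vmlinuz-2.6.9-5.EL ro root=/dev/VolGroup00/LogVol00 rhgb quiet'

append_boot_keyword "$KERNEL_LINE" single      # line for single-user mode
append_boot_keyword "$KERNEL_LINE" emergency   # line for emergency mode
```

In both cases the keyword is a separate word at the end of the line, preceded by a space.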

Part II. File Systems

parted utility to manage partitions and access control lists (ACLs) to customize file permissions.

Chapter 6. The ext3 File System

6.1. Features of ext3

- Availability

- After an unexpected power failure or system crash (also called an unclean system shutdown), each mounted ext2 file system on the machine must be checked for consistency by the e2fsck program. This is a time-consuming process that can delay system boot time significantly, especially with large volumes containing a large number of files. During this time, any data on the volumes is unreachable.

The journaling provided by the ext3 file system means that this sort of file system check is no longer necessary after an unclean system shutdown. The only time a consistency check occurs using ext3 is in certain rare hardware failure cases, such as hard drive failures. The time to recover an ext3 file system after an unclean system shutdown does not depend on the size of the file system or the number of files; rather, it depends on the size of the journal used to maintain consistency. The default journal size takes about a second to recover, depending on the speed of the hardware.

- Data Integrity

- The ext3 file system provides stronger data integrity in the event that an unclean system shutdown occurs. The ext3 file system allows you to choose the type and level of protection that your data receives. By default, the ext3 volumes are configured to keep a high level of data consistency with regard to the state of the file system.

- Speed

- Despite writing some data more than once, ext3 has a higher throughput in most cases than ext2 because ext3's journaling optimizes hard drive head motion. You can choose from three journaling modes to optimize speed, but doing so involves trade-offs with regard to data integrity.

- Easy Transition

- It is easy to migrate from ext2 to ext3 and gain the benefits of a robust journaling file system without reformatting. Refer to Section 6.3, “Converting to an ext3 File System” for more on how to perform this task.

6.2. Creating an ext3 File System

- Create the partition using parted or fdisk.

- Format the partition with the ext3 file system using mkfs.

- Label the partition using e2label.

- Create the mount point.

- Add the partition to the /etc/fstab file.
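The steps above can be sketched as a short script. This is an illustration under stated assumptions, not a definitive procedure: the partition /dev/hdb1, the label /work, and the mount point /work are hypothetical, and the partition is assumed to have been created already with parted or fdisk. By default the script only prints the commands it would run.

```shell
# Print commands by default; set DRY_RUN=0 and run as root to execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical names: substitute your own partition, label, and mount point.
PARTITION=/dev/hdb1
LABEL=/work
MOUNT_POINT=/work

# Build the /etc/fstab entry that mounts the new file system by label.
fstab_line() {
    printf 'LABEL=%s\t%s\text3\tdefaults\t1 2\n' "$LABEL" "$MOUNT_POINT"
}

create_ext3() {
    run /sbin/mkfs -t ext3 "$PARTITION"      # format the partition as ext3
    run /sbin/e2label "$PARTITION" "$LABEL"  # label the partition
    run mkdir -p "$MOUNT_POINT"              # create the mount point
    # The fstab entry is only printed; add it to /etc/fstab by hand.
    echo "fstab entry: $(fstab_line)"
}

create_ext3
```

Printing the fstab entry instead of appending it keeps the sketch from modifying /etc/fstab unattended; review the line before adding it.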

6.3. Converting to an ext3 File System

tune2fs program can add a journal to an existing ext2 file system without altering the data already on the partition. If the file system is already mounted while it is being transitioned, the journal is visible as the file .journal in the root directory of the file system. If the file system is not mounted, the journal is hidden and does not appear in the file system at all.

Note

/sbin/tune2fs -j <file_system>

- A mapped device: A logical volume in a volume group, for example, /dev/mapper/VolGroup00-LogVol02.

- A static device: A traditional storage volume, for example, /dev/hdbX, where hdb is a storage device name and X is the partition number.

df command to display mounted file systems. For more detailed information on the LVM file system, refer to Chapter 8, LVM Configuration.

/dev/mapper/VolGroup00-LogVol02

/etc/fstab file.

initrd image (or RAM disk) to boot. To create this, run the mkinitrd program. For information on using the mkinitrd command, type man mkinitrd. Also, make sure your GRUB configuration loads the initrd.
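As a rough sketch of the conversion, the script below prints the tune2fs command for the sample file system and previews the matching /etc/fstab change, on the assumption that the entry's type field must read ext3 once the journal has been added. The helper fstab_to_ext3 and the /data mount point are hypothetical names introduced for illustration.

```shell
# Print commands by default; set DRY_RUN=0 and run as root to execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

FILE_SYSTEM=/dev/mapper/VolGroup00-LogVol02

# Rewrite the type field (third column) from ext2 to ext3 for one device.
# Reads fstab-format text on stdin and writes to stdout. The changed line
# is re-joined with single spaces; all other lines pass through untouched.
fstab_to_ext3() {
    awk -v dev="$1" '$1 == dev && $3 == "ext2" { $3 = "ext3" } { print }'
}

# Add a journal to the existing ext2 file system.
run /sbin/tune2fs -j "$FILE_SYSTEM"

# Preview the fstab edit on a sample entry (the /data mount point is hypothetical).
printf '%s\n' "$FILE_SYSTEM /data ext2 defaults 1 2" | fstab_to_ext3 "$FILE_SYSTEM"
```

To preview the change against the real file, run fstab_to_ext3 "$FILE_SYSTEM" < /etc/fstab and compare the output with the original before editing anything.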

6.4. Reverting to an ext2 File System

resize2fs, which does not yet support ext3. In this situation, it may be necessary to temporarily revert a file system to ext2.

umount /dev/mapper/VolGroup00-LogVol02

/sbin/tune2fs -O ^has_journal /dev/mapper/VolGroup00-LogVol02

/sbin/e2fsck -y /dev/mapper/VolGroup00-LogVol02

mount -t ext2 /dev/mapper/VolGroup00-LogVol02 /mount/point

.journal file at the root level of the partition by changing to the directory where it is mounted and typing:

rm -f .journal

/etc/fstab file.
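The revert sequence can be collected into one sketch. It uses the sample file system from this section and keeps /mount/point as a placeholder for the real mount point. By default it only prints the commands; it does no error handling, so when running the commands for real, check the result of each one before continuing.

```shell
# Print commands by default; set DRY_RUN=0 and run as root to execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

FILE_SYSTEM=/dev/mapper/VolGroup00-LogVol02
MOUNT_POINT=/mount/point   # placeholder: replace with the real mount point

revert_to_ext2() {
    run umount "$FILE_SYSTEM"                            # unmount first
    run /sbin/tune2fs -O '^has_journal' "$FILE_SYSTEM"   # remove the journal feature
    run /sbin/e2fsck -y "$FILE_SYSTEM"                   # check for errors
    run mount -t ext2 "$FILE_SYSTEM" "$MOUNT_POINT"      # mount again as ext2
    run rm -f "$MOUNT_POINT/.journal"                    # remove a leftover journal file
}

revert_to_ext2
```

The ^has_journal argument is quoted so that no shell treats the caret as a special character.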

Note

ext2online. ext2online allows you to increase the size of an ext3 file system once it is mounted (online) and on a resizable logical volume. The root file system is set up by default on LVM2 logical volumes during installation.

ext2online will only work on ext3 file systems. For more information, refer to man ext2online.

Chapter 7. Logical Volume Manager (LVM)

7.1. What is LVM?

/boot/ partition. The /boot/ partition cannot be on a logical volume group because the boot loader cannot read it. If the root (/) partition is on a logical volume, create a separate /boot/ partition which is not a part of a volume group.

Figure 7.1. Logical Volume Group

/home and /, and file system types, such as ext2 or ext3. When "partitions" reach their full capacity, free space from the logical volume group can be added to the logical volume to increase the size of the partition. When a new hard drive is added to the system, it can be added to the logical volume group, and partitions that are logical volumes can be expanded.

Figure 7.2. Logical Volumes

7.2. What is LVM2?

7.3. Additional Resources

7.3.1. Installed Documentation

- rpm -qd lvm: This command shows all the documentation available from the lvm package, including man pages.

- lvm help: This command shows all LVM commands available.

7.3.2. Useful Websites

- http://sourceware.org/lvm2 — LVM2 webpage, which contains an overview, link to the mailing lists, and more.

- http://tldp.org/HOWTO/LVM-HOWTO/ — LVM HOWTO from the Linux Documentation Project.

Chapter 8. LVM Configuration

lvm package to create your own LVM configuration post-installation, but these instructions focus on using Disk Druid during installation to complete this task.

- Creating physical volumes from the hard drives.

- Creating volume groups from the physical volumes.

- Creating logical volumes from the volume groups and assigning mount points to the logical volumes.

Note

/dev/sda and /dev/sdb) are used in the following examples. They detail how to create a simple configuration using a single LVM volume group with associated logical volumes during installation.
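For comparison with the installer-based procedure, the same three steps can be sketched with the command-line LVM utilities on an installed system. Everything named here is an assumption made for illustration: the spare partitions /dev/sdc1 and /dev/sdd1 (assumed to exist already), the volume group VolGroup01, the logical volume LogVol00, its 10 GB size, and the /data mount point. The script prints the commands by default rather than running them.

```shell
# Print commands by default; set DRY_RUN=0 and run as root to execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical names and sizes: substitute your own.
PHYSICAL_VOLUMES="/dev/sdc1 /dev/sdd1"
VOLUME_GROUP=VolGroup01
LOGICAL_VOLUME=LogVol00
SIZE=10G
MOUNT_POINT=/data

create_lvm() {
    # 1. Create physical volumes from the partitions
    #    (left unquoted on purpose so each partition is its own argument).
    run pvcreate $PHYSICAL_VOLUMES
    # 2. Create a volume group from the physical volumes.
    run vgcreate "$VOLUME_GROUP" $PHYSICAL_VOLUMES
    # 3. Create a logical volume, put ext3 on it, and give it a mount point.
    run lvcreate -L "$SIZE" -n "$LOGICAL_VOLUME" "$VOLUME_GROUP"
    run /sbin/mkfs -t ext3 "/dev/$VOLUME_GROUP/$LOGICAL_VOLUME"
    run mkdir -p "$MOUNT_POINT"
    run mount -t ext3 "/dev/$VOLUME_GROUP/$LOGICAL_VOLUME" "$MOUNT_POINT"
}

create_lvm
```

Review the printed commands carefully before setting DRY_RUN=0, since pvcreate and mkfs overwrite whatever is on the named devices.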

8.1. Automatic Partitioning

- The /boot/ partition resides on its own non-LVM partition. In the following example, it is the first partition on the first drive (/dev/sda1). Bootable partitions cannot reside on LVM logical volumes.

- A single LVM volume group (VolGroup00) is created, which spans all selected drives and all remaining space available. In the following example, the remainder of the first drive (/dev/sda2), and the entire second drive (/dev/sdb1) are allocated to the volume group.

- Two LVM logical volumes (LogVol00 and LogVol01) are created from the newly created spanned volume group. In the following example, the recommended swap space is automatically calculated and assigned to LogVol01, and the remainder is allocated to the root file system, LogVol00.

Figure 8.1. Automatic LVM Configuration With Two SCSI Drives

Note

/home/ or /var/, so that each file system has its own independent quota configuration limits.

Note

8.2. Manual LVM Partitioning

8.2.1. Creating the /boot/ Partition

Figure 8.2. Two Blank Drives, Ready For Configuration

Warning

/boot/ partition cannot reside on an LVM volume group because the GRUB boot loader cannot read it.

- Select New.

- Select /boot from the Mount Point pulldown menu.

- Select ext3 from the File System Type pulldown menu.

- Select only the sda checkbox from the Allowable Drives area.

- Leave 100 (the default) in the Size (MB) menu.

- Leave the Fixed size (the default) radio button selected in the Additional Size Options area.

- Select Force to be a primary partition to make the partition a primary partition. A primary partition is one of the first four partitions on the hard drive. If unselected, the partition is created as a logical partition. If other operating systems are already on the system, consider leaving this option unselected. For more information on primary versus logical/extended partitions, refer to the appendix section of the Installation Guide.

Figure 8.3. Creation of the Boot Partition

Figure 8.4. The /boot/ Partition Displayed

8.2.2. Creating the LVM Physical Volumes

- Select New.

- Select physical volume (LVM) from the File System Type pulldown menu as shown in Figure 8.5, “Creating a Physical Volume”.

Figure 8.5. Creating a Physical Volume

- You cannot enter a mount point yet (you can once you have created all your physical volumes and then all volume groups).

- A physical volume must be constrained to one drive. For Allowable Drives, select the drive on which the physical volume is created. If you have multiple drives, all drives are selected, and you must deselect all but one drive.

- Enter the size that you want the physical volume to be.

- Select Fixed size to make the physical volume the specified size, select Fill all space up to (MB) and enter a size in MB to give a range for the physical volume size, or select Fill to maximum allowable size to make it grow to fill all available space on the hard disk. If you make more than one physical volume growable, they share the available free space on the disk.

- Select Force to be a primary partition if you want the partition to be a primary partition.

- Click OK to return to the main screen.

Figure 8.6. Two Physical Volumes Created

8.2.3. Creating the LVM Volume Groups

- Click the LVM button to collect the physical volumes into volume groups. A volume group is basically a collection of physical volumes. You can have multiple logical volume groups, but a physical volume can only be in one volume group.

Note

There is overhead disk space reserved in the logical volume group. The summation of the physical volumes may not equal the size of the volume group; however, the size of the logical volumes shown is correct.

Figure 8.7. Creating an LVM Volume Group

- Change the Volume Group Name if desired.

- All logical volumes inside the volume group must be allocated in physical extent units. By default, the physical extent is set to 32 MB; thus, logical volume sizes must be multiples of 32 MB. If you enter a size that is not a multiple of 32 MB, the installation program automatically selects the closest size that is. It is not recommended that you change this setting.

- Select which physical volumes to use for the volume group.
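The physical extent rounding described above comes down to simple arithmetic. The following sketch rounds a requested size to the nearest multiple of the extent size; the function name is hypothetical, and rounding exact ties upward is an assumption, since the text does not say how the installation program breaks ties.

```shell
# Round a requested size in MB to the nearest multiple of the physical
# extent size (32 MB by default). Exact ties round up in this sketch.
round_to_extent() {
    size_mb=$1
    extent_mb=${2:-32}
    echo $(( ($size_mb + $extent_mb / 2) / $extent_mb * $extent_mb ))
}

round_to_extent 1000   # 31.25 extents, rounds down to 992
round_to_extent 1010   # 31.56 extents, rounds up to 1024
round_to_extent 100    # 3.125 extents, rounds down to 96
```

A request for 1000 MB therefore becomes a 992 MB logical volume (31 extents), while a request for 1010 MB becomes 1024 MB (32 extents).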

8.2.4. Creating the LVM Logical Volumes

/, /home/, and swap space. Remember that /boot cannot be a logical volume. To add a logical volume, click the Add button in the Logical Volumes section. A dialog window as shown in Figure 8.8, “Creating a Logical Volume” appears.

Figure 8.8. Creating a Logical Volume

Note

Figure 8.9. Pending Logical Volumes

Figure 8.10. Final Manual Configuration