Chapter 20. Client/Server

Red Hat Data Grid offers two alternative access methods: embedded mode and client-server mode.

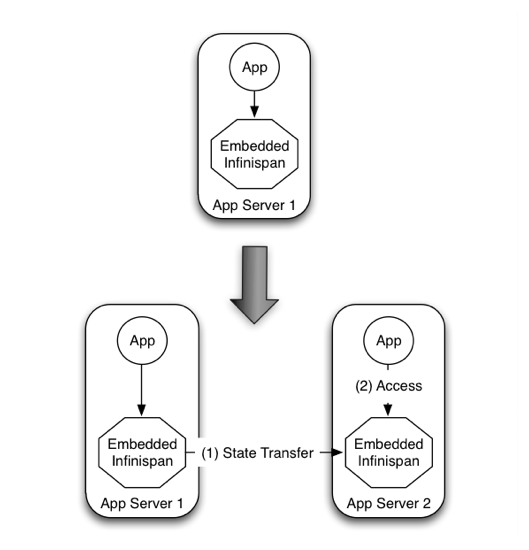

- In Embedded mode the Red Hat Data Grid libraries co-exist with the user application in the same JVM as shown in the following diagram

Figure 20.1. Peer-to-peer access

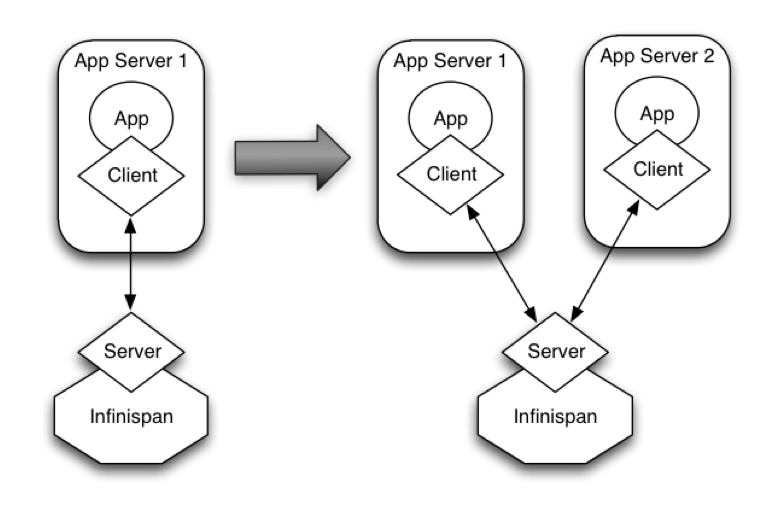

- Client-server mode is when applications access the data stored in a remote Red Hat Data Grid server using some kind of network protocol

20.1. Why Client/Server?

There are situations when accessing Red Hat Data Grid in a client-server mode might make more sense than embedding it within your application, for example, when trying to access Red Hat Data Grid from a non-JVM environment. Since Red Hat Data Grid is written in Java, if someone had a C\\ application that wanted to access it, it couldn’t just do it in a p2p way. On the other hand, client-server would be perfectly suited here assuming that a language neutral protocol was used and the corresponding client and server implementations were available.

Figure 20.2. Non-JVM access

In other situations, Red Hat Data Grid users want to have an elastic application tier where you start/stop business processing servers very regularly. Now, if users deployed Red Hat Data Grid configured with distribution or state transfer, startup time could be greatly influenced by the shuffling around of data that happens in these situations. So in the following diagram, assuming Red Hat Data Grid was deployed in p2p mode, the app in the second server could not access Red Hat Data Grid until state transfer had completed.

Figure 20.3. Elasticity issue with P2P

This effectively means that bringing up new application-tier servers is impacted by things like state transfer because applications cannot access Red Hat Data Grid until these processes have finished and if the state being shifted around is large, this could take some time. This is undesirable in an elastic environment where you want quick application-tier server turnaround and predictable startup times. Problems like this can be solved by accessing Red Hat Data Grid in a client-server mode because starting a new application-tier server is just a matter of starting a lightweight client that can connect to the backing data grid server. No need for rehashing or state transfer to occur and as a result server startup times can be more predictable which is very important for modern cloud-based deployments where elasticity in your application tier is important.

Figure 20.4. Achieving elasticity

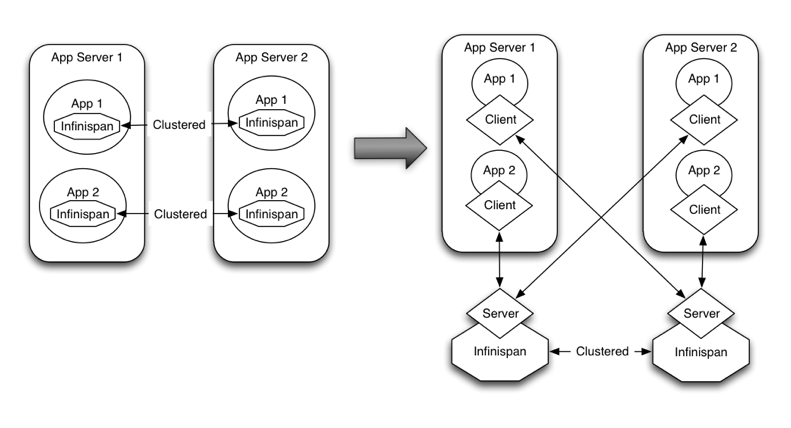

Other times, it’s common to find multiple applications needing access to data storage. In this cases, you could in theory deploy an Red Hat Data Grid instance per each of those applications but this could be wasteful and difficult to maintain. Think about databases here, you don’t deploy a database alongside each of your applications, do you? So, alternatively you could deploy Red Hat Data Grid in client-server mode keeping a pool of Red Hat Data Grid data grid nodes acting as a shared storage tier for your applications.

Figure 20.5. Shared data storage

Deploying Red Hat Data Grid in this way also allows you to manage each tier independently, for example, you can upgrade you application or app server without bringing down your Red Hat Data Grid data grid nodes.

20.2. Why use embedded mode?

Before talking about individual Red Hat Data Grid server modules, it’s worth mentioning that in spite of all the benefits, client-server Red Hat Data Grid still has disadvantages over p2p. Firstly, p2p deployments are simpler than client-server ones because in p2p, all peers are equals to each other and hence this simplifies deployment. So, if this is the first time you’re using Red Hat Data Grid, p2p is likely to be easier for you to get going compared to client-server.

Client-server Red Hat Data Grid requests are likely to take longer compared to p2p requests, due to the serialization and network cost in remote calls. So, this is an important factor to take in account when designing your application. For example, with replicated Red Hat Data Grid caches, it might be more performant to have lightweight HTTP clients connecting to a server side application that accesses Red Hat Data Grid in p2p mode, rather than having more heavyweight client side apps talking to Red Hat Data Grid in client-server mode, particularly if data size handled is rather large. With distributed caches, the difference might not be so big because even in p2p deployments, you’re not guaranteed to have all data available locally.

Environments where application tier elasticity is not so important, or where server side applications access state-transfer-disabled, replicated Red Hat Data Grid cache instances are amongst scenarios where Red Hat Data Grid p2p deployments can be more suited than client-server ones.

20.3. Server Modules

So, now that it’s clear when it makes sense to deploy Red Hat Data Grid in client-server mode, what are available solutions? All Red Hat Data Grid server modules are based on the same pattern where the server backend creates an embedded Red Hat Data Grid instance and if you start multiple backends, they can form a cluster and share/distribute state if configured to do so. The server types below primarily differ in the type of listener endpoint used to handle incoming connections.

Here’s a brief summary of the available server endpoints.

Hot Rod Server Module - This module is an implementation of the Hot Rod binary protocol backed by Red Hat Data Grid which allows clients to do dynamic load balancing and failover and smart routing.

- A variety of clients exist for this protocol.

- If you’re clients are running Java, this should be your defacto server module choice because it allows for dynamic load balancing and failover. This means that Hot Rod clients can dynamically detect changes in the topology of Hot Rod servers as long as these are clustered, so when new nodes join or leave, clients update their Hot Rod server topology view. On top of that, when Hot Rod servers are configured with distribution, clients can detect where a particular key resides and so they can route requests smartly.

- Load balancing and failover is dynamically provided by Hot Rod client implementations using information provided by the server.

REST Server Module - The REST server, which is distributed as a WAR file, can be deployed in any servlet container to allow Red Hat Data Grid to be accessed via a RESTful HTTP interface.

- To connect to it, you can use any HTTP client out there and there’re tons of different client implementations available out there for pretty much any language or system.

- This module is particularly recommended for those environments where HTTP port is the only access method allowed between clients and servers.

- Clients wanting to load balance or failover between different Red Hat Data Grid REST servers can do so using any standard HTTP load balancer such as mod_cluster . It’s worth noting though these load balancers maintain a static view of the servers in the backend and if a new one was to be added, it would require manual update of the load balancer.

Memcached Server Module - This module is an implementation of the Memcached text protocol backed by Red Hat Data Grid.

- To connect to it, you can use any of the existing Memcached clients which are pretty diverse.

- As opposed to Memcached servers, Red Hat Data Grid based Memcached servers can actually be clustered and hence they can replicate or distribute data using consistent hash algorithms around the cluster. So, this module is particularly of interest to those users that want to provide failover capabilities to the data stored in Memcached servers.

- In terms of load balancing and failover, there’re a few clients that can load balance or failover given a static list of server addresses (perl’s Cache::Memcached for example) but any server addition or removal would require manual intervention.

20.4. Which protocol should I use?

Choosing the right protocol depends on a number of factors.

| Hot Rod | HTTP / REST | Memcached | |

|---|---|---|---|

| Topology-aware | Y | N | N |

| Hash-aware | Y | N | N |

| Encryption | Y | Y | N |

| Authentication | Y | Y | N |

| Conditional ops | Y | Y | Y |

| Bulk ops | Y | N | N |

| Transactions | N | N | N |

| Listeners | Y | N | N |

| Query | Y | Y | N |

| Execution | Y | N | N |

| Cross-site failover | Y | N | N |

20.5. Using Hot Rod Server

The Red Hat Data Grid Server distribution contains a server module that implements Red Hat Data Grid’s custom binary protocol called Hot Rod. The protocol was designed to enable faster client/server interactions compared to other existing text based protocols and to allow clients to make more intelligent decisions with regards to load balancing, failover and even data location operations. Please refer to Red Hat Data Grid Server’s documentation for instructions on how to configure and run a HotRod server.

To connect to Red Hat Data Grid over this highly efficient Hot Rod protocol you can either use one of the clients described in this chapter, or use higher level tools such as Hibernate OGM.

20.6. Hot Rod Protocol

The following articles provides detailed information about each version of the custom TCP client/server Hot Rod protocol.

20.6.1. Hot Rod Protocol 1.0

This version of the protocol is implemented since Infinispan 4.1.0.Final

All key and values are sent and stored as byte arrays. Hot Rod makes no assumptions about their types.

Some clarifications about the other types:

vInt : Variable-length integers are defined defined as compressed, positive integers where the high-order bit of each byte indicates whether more bytes need to be read. The low-order seven bits are appended as increasingly more significant bits in the resulting integer value making it efficient to decode. Hence, values from zero to 127 are stored in a single byte, values from 128 to 16,383 are stored in two bytes, and so on:

ValueFirst byteSecond byteThird byte000000000100000001200000010…12701111111128100000000000000112910000001000000011301000001000000001…16,383111111110111111116,38410000000100000000000000116,385100000011000000000000001…- signed vInt: The vInt above is also able to encode negative values, but will always use the maximum size (5 bytes) no matter how small the endoded value is. In order to have a small payload for negative values too, signed vInts uses ZigZag encoding on top of the vInt encoding. More details here

- vLong : Refers to unsigned variable length long values similar to vInt but applied to longer values. They’re between 1 and 9 bytes long.

- String : Strings are always represented using UTF-8 encoding.

20.6.1.1. Request Header

The header for a request is composed of:

Table 20.1. Request header

| Field Name | Size | Value |

|---|---|---|

| Magic | 1 byte | 0xA0 = request |

| Message ID | vLong | ID of the message that will be copied back in the response. This allows for Hot Rod clients to implement the protocol in an asynchronous way. |

| Version | 1 byte | Hot Rod server version. In this particular case, this is 10 |

| Opcode | 1 byte |

Request operation code: |

| Cache Name Length | vInt | Length of cache name. If the passed length is 0 (followed by no cache name), the operation will interact with the default cache. |

| Cache Name | string | Name of cache on which to operate. This name must match the name of predefined cache in the Red Hat Data Grid configuration file. |

| Flags | vInt |

A variable length number representing flags passed to the system. Each flags is represented by a bit. Note that since this field is sent as variable length, the most significant bit in a byte is used to determine whether more bytes need to be read, hence this bit does not represent any flag. Using this model allows for flags to be combined in a short space. Here are the current values for each flag: |

| Client Intelligence | 1 byte |

This byte hints the server on the client capabilities: |

| Topology Id | vInt | This field represents the last known view in the client. Basic clients will only send 0 in this field. When topology-aware or hash-distribution-aware clients will send 0 until they have received a reply from the server with the current view id. Afterwards, they should send that view id until they receive a new view id in a response. |

| Transaction Type | 1 byte |

This is a 1 byte field, containing one of the following well-known supported transaction types (For this version of the protocol, the only supported transaction type is 0): |

| Transaction Id | byte array | The byte array uniquely identifying the transaction associated to this call. Its length is determined by the transaction type. If transaction type is 0, no transaction id will be present. |

20.6.1.2. Response Header

The header for a response is composed of:

Table 20.2. Response header

| Field Name | Size | Value |

|---|---|---|

| Magic | 1 byte | 0xA1 = response |

| Message ID | vLong | ID of the message, matching the request for which the response is sent. |

| Opcode | 1 byte |

Response operation code: |

| Status | 1 byte |

Status of the response, possible values: |

| Topology Change Marker | string | This is a marker byte that indicates whether the response is prepended with topology change information. When no topology change follows, the content of this byte is 0. If a topology change follows, its contents are 1. |

Exceptional error status responses, those that start with 0x8 …, are followed by the length of the error message (as a vInt ) and error message itself as String.

20.6.1.3. Topology Change Headers

The following section discusses how the response headers look for topology-aware or hash-distribution-aware clients when there’s been a cluster or view formation change. Note that it’s the server that makes the decision on whether it sends back the new topology based on the current topology id and the one the client sent. If they’re different, it will send back the new topology.

20.6.1.4. Topology-Aware Client Topology Change Header

This is what topology-aware clients receive as response header when a topology change is sent back:

| Field Name | Size | Value |

|---|---|---|

| Response header with topology change marker | variable | See previous section. |

| Topology Id | vInt | Topology ID |

| Num servers in topology | vInt | Number of Hot Rod servers running within the cluster. This could be a subset of the entire cluster if only a fraction of those nodes are running Hot Rod servers. |

| m1: Host/IP length | vInt | Length of hostname or IP address of individual cluster member that Hot Rod client can use to access it. Using variable length here allows for covering for hostnames, IPv4 and IPv6 addresses. |

| m1: Host/IP address | string | String containing hostname or IP address of individual cluster member that Hot Rod client can use to access it. |

| m1: Port | 2 bytes (Unsigned Short) | Port that Hot Rod clients can use to communicate with this cluster member. |

| m2: Host/IP length | vInt | |

| m2: Host/IP address | string | |

| m2: Port | 2 bytes (Unsigned Short) | |

| …etc |

20.6.1.5. Distribution-Aware Client Topology Change Header

This is what hash-distribution-aware clients receive as response header when a topology change is sent back:

| Field Name | Size | Value |

|---|---|---|

| Response header with topology change marker | variable | See previous section. |

| Topology Id | vInt | Topology ID |

| Num Key Owners | 2 bytes (Unsigned Short) | Globally configured number of copies for each Red Hat Data Grid distributed key |

| Hash Function Version | 1 byte | Hash function version, pointing to a specific hash function in use. See Hot Rod hash functions for details. |

| Hash space size | vInt | Modulus used by Red Hat Data Grid for for all module arithmetic related to hash code generation. Clients will likely require this information in order to apply the correct hash calculation to the keys. |

| Num servers in topology | vInt | Number of Red Hat Data Grid Hot Rod servers running within the cluster. This could be a subset of the entire cluster if only a fraction of those nodes are running Hot Rod servers. |

| m1: Host/IP length | vInt | Length of hostname or IP address of individual cluster member that Hot Rod client can use to access it. Using variable length here allows for covering for hostnames, IPv4 and IPv6 addresses. |

| m1: Host/IP address | string | String containing hostname or IP address of individual cluster member that Hot Rod client can use to access it. |

| m1: Port | 2 bytes (Unsigned Short) | Port that Hot Rod clients can use to communicat with this cluster member. |

| m1: Hashcode | 4 bytes | 32 bit integer representing the hashcode of a cluster member that a Hot Rod client can use indentify in which cluster member a key is located having applied the CSA to it. |

| m2: Host/IP length | vInt | |

| m2: Host/IP address | string | |

| m2: Port | 2 bytes (Unsigned Short) | |

| m2: Hashcode | 4 bytes | |

| …etc |

It’s important to note that since hash headers rely on the consistent hash algorithm used by the server and this is a factor of the cache interacted with, hash-distribution-aware headers can only be returned to operations that target a particular cache. Currently ping command does not target any cache (this is to change as per ISPN-424) , hence calls to ping command with hash-topology-aware client settings will return a hash-distribution-aware header with "Num Key Owners", "Hash Function Version", "Hash space size" and each individual host’s hash code all set to 0. This type of header will also be returned as response to operations with hash-topology-aware client settings that are targeting caches that are not configured with distribution.

20.6.1.6. Operations

Get (0x03)/Remove (0x0B)/ContainsKey (0x0F)/GetWithVersion (0x11)

Common request format:

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

Get response (0x04):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if key retrieved |

| Value Length | vInt | If success, length of value |

| Value | byte array | If success, the requested value |

Remove response (0x0C):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if key removed |

| Previous value Length | vInt | If force return previous value flag was sent in the request and the key was removed, the length of the previous value will be returned. If the key does not exist, value length would be 0. If no flag was sent, no value length would be present. |

| Previous value | byte array | If force return previous value flag was sent in the request and the key was removed, previous value. |

ContainsKey response (0x10):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if key exists |

GetWithVersion response (0x12):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if key retrieved |

| Entry Version | 8 bytes | Unique value of an existing entry’s modification. The protocol does not mandate that entry_version values are sequential. They just need to be unique per update at the key level. |

| Value Length | vInt | If success, length of value |

| Value | byte array | If success, the requested value |

BulkGet

Request (0x19):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Entry count | vInt | Maximum number of Red Hat Data Grid entries to be returned by the server (entry == key + associated value). Needed to support CacheLoader.load(int). If 0 then all entries are returned (needed for CacheLoader.loadAll()). |

Response (0x20):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, data follows |

| More | 1 byte | One byte representing whether more entries need to be read from the stream. So, when it’s set to 1, it means that an entry follows, whereas when it’s set to 0, it’s the end of stream and no more entries are left to read. For more information on BulkGet look here |

| Key 1 Length | vInt | Length of key |

| Key 1 | byte array | Retrieved key |

| Value 1 Length | vInt | Length of value |

| Value 1 | byte array | Retrieved value |

| More | 1 byte | |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| Value 2 Length | vInt | |

| Value 2 | byte array | |

| … etc |

Put (0x01)/PutIfAbsent (0x05)/Replace (0x07)

Common request format:

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

| Lifespan | vInt | Number of seconds that a entry during which the entry is allowed to life. If number of seconds is bigger than 30 days, this number of seconds is treated as UNIX time and so, represents the number of seconds since 1/1/1970. If set to 0, lifespan is unlimited. |

| Max Idle | vInt | Number of seconds that a entry can be idle before it’s evicted from the cache. If 0, no max idle time. |

| Value Length | vInt | Length of value |

| Value | byte-array | Value to be stored |

Put response (0x02):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if stored |

| Previous value Length | vInt | If force return previous value flag was sent in the request and the key was put, the length of the previous value will be returned. If the key does not exist, value length would be 0. If no flag was sent, no value length would be present. |

| Previous value | byte array | If force return previous value flag was sent in the request and the key was put, previous value. |

Replace response (0x08):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if stored |

| Previous value Length | vInt | If force return previous value flag was sent in the request, the length of the previous value will be returned. If the key does not exist, value length would be 0. If no flag was sent, no value length would be present. |

| Previous value | byte array | If force return previous value flag was sent in the request and the key was replaced, previous value. |

PutIfAbsent response (0x06):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if stored |

| Previous value Length | vInt | If force return previous value flag was sent in the request, the length of the previous value will be returned. If the key does not exist, value length would be 0. If no flag was sent, no value length would be present. |

| Previous value | byte array | If force return previous value flag was sent in the request and the key was replaced, previous value. |

ReplaceIfUnmodified

Request (0x09):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

| Lifespan | vInt | Number of seconds that a entry during which the entry is allowed to life. If number of seconds is bigger than 30 days, this number of seconds is treated as UNIX time and so, represents the number of seconds since 1/1/1970. If set to 0, lifespan is unlimited. |

| Max Idle | vInt | Number of seconds that a entry can be idle before it’s evicted from the cache. If 0, no max idle time. |

| Entry Version | 8 bytes | Use the value returned by GetWithVersion operation. |

| Value Length | vInt | Length of value |

| Value | byte-array | Value to be stored |

Response (0x0A):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if replaced |

| Previous value Length | vInt | If force return previous value flag was sent in the request, the length of the previous value will be returned. If the key does not exist, value length would be 0. If no flag was sent, no value length would be present. |

| Previous value | byte array | If force return previous value flag was sent in the request and the key was replaced, previous value. |

RemoveIfUnmodified

Request (0x0D):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

| Entry Version | 8 bytes | Use the value returned by GetWithMetadata operation. |

Response (0x0E):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if removed |

| Previous value Length | vInt | If force return previous value flag was sent in the request, the length of the previous value will be returned. If the key does not exist, value length would be 0. If no flag was sent, no value length would be present. |

| Previous value | byte array | If force return previous value flag was sent in the request and the key was removed, previous value. |

Clear

Request (0x13):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

Response (0x14):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if cleared |

PutAll

Bulk operation to put all key value entries into the cache at the same time.

Request (0x2D):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Lifespan | vInt | Number of seconds that provided entries are allowed to live. If number of seconds is bigger than 30 days, this number of seconds is treated as UNIX time and so, represents the number of seconds since 1/1/1970. If set to 0, lifespan is unlimited. |

| Max Idle | vInt | Number of seconds that each entry can be idle before it’s evicted from the cache. If 0, no max idle time. |

| Entry count | vInt | How many entries are being inserted |

| Key 1 Length | vInt | Length of key |

| Key 1 | byte array | Retrieved key |

| Value 1 Length | vInt | Length of value |

| Value 1 | byte array | Retrieved value |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| Value 2 Length | vInt | |

| Value 2 | byte array | |

| … continues until entry count is reached |

Response (0x2E):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if all put |

GetAll

Bulk operation to get all entries that map to a given set of keys.

Request (0x2F):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Key count | vInt | How many keys to find entries for |

| Key 1 Length | vInt | Length of key |

| Key 1 | byte array | Retrieved key |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| … continues until key count is reached |

Response (0x30):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte | |

| Entry count | vInt | How many entries are being returned |

| Key 1 Length | vInt | Length of key |

| Key 1 | byte array | Retrieved key |

| Value 1 Length | vInt | Length of value |

| Value 1 | byte array | Retrieved value |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| Value 2 Length | vInt | |

| Value 2 | byte array | |

| … continues until entry count is reached |

0x00 = success, if the get returned sucessfully |

Stats

Returns a summary of all available statistics. For each statistic returned, a name and a value is returned both in String UTF-8 format. The supported stats are the following:

| Name | Explanation |

|---|---|

| timeSinceStart | Number of seconds since Hot Rod started. |

| currentNumberOfEntries | Number of entries currently in the Hot Rod server. |

| totalNumberOfEntries | Number of entries stored in Hot Rod server. |

| stores | Number of put operations. |

| retrievals | Number of get operations. |

| hits | Number of get hits. |

| misses | Number of get misses. |

| removeHits | Number of removal hits. |

| removeMisses | Number of removal misses. |

Request (0x15):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

Response (0x16):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if stats retrieved |

| Number of stats | vInt | Number of individual stats returned. |

| Name 1 length | vInt | Length of named statistic. |

| Name 1 | string | String containing statistic name. |

| Value 1 length | vInt | Length of value field. |

| Value 1 | string | String containing statistic value. |

| Name 2 length | vInt | |

| Name 2 | string | |

| Value 2 length | vInt | |

| Value 2 | String | |

| …etc |

Ping

Application level request to see if the server is available.

Request (0x17):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

Response (0x18):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if no errors |

Error Handling

Error response (0x50)

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x8x = error response code |

| Error Message Length | vInt | Length of error message |

| Error Message | string | Error message. In the case of 0x84 , this error field contains the latest version supported by the Hot Rod server. Length is defined by total body length. |

Multi-Get Operations

A multi-get operation is a form of get operation that instead of requesting a single key, requests a set of keys. The Hot Rod protocol does not include such operation but remote Hot Rod clients could easily implement this type of operations by either parallelizing/pipelining individual get requests. Another possibility would be for remote clients to use async or non-blocking get requests. For example, if a client wants N keys, it could send send N async get requests and then wait for all the replies. Finally, multi-get is not to be confused with bulk-get operations. In bulk-gets, either all or a number of keys are retrieved, but the client does not know which keys to retrieve, whereas in multi-get, the client defines which keys to retrieve.

20.6.1.7. Example - Put request

- Coded request

| Byte | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

|---|---|---|---|---|---|---|---|---|

| 8 | 0xA0 | 0x09 | 0x41 | 0x01 | 0x07 | 0x4D ('M') | 0x79 ('y') | 0x43 ('C') |

| 16 | 0x61 ('a') | 0x63 ('c') | 0x68 ('h') | 0x65 ('e') | 0x00 | 0x03 | 0x00 | 0x00 |

| 24 | 0x00 | 0x05 | 0x48 ('H') | 0x65 ('e') | 0x6C ('l') | 0x6C ('l') | 0x6F ('o') | 0x00 |

| 32 | 0x00 | 0x05 | 0x57 ('W') | 0x6F ('o') | 0x72 ('r') | 0x6C ('l') | 0x64 ('d') |

|

- Field explanation

| Field Name | Value | Field Name | Value |

|---|---|---|---|

| Magic (0) | 0xA0 | Message Id (1) | 0x09 |

| Version (2) | 0x41 | Opcode (3) | 0x01 |

| Cache name length (4) | 0x07 | Cache name(5-11) | 'MyCache' |

| Flag (12) | 0x00 | Client Intelligence (13) | 0x03 |

| Topology Id (14) | 0x00 | Transaction Type (15) | 0x00 |

| Transaction Id (16) | 0x00 | Key field length (17) | 0x05 |

| Key (18 - 22) | 'Hello' | Lifespan (23) | 0x00 |

| Max idle (24) | 0x00 | Value field length (25) | 0x05 |

| Value (26-30) | 'World' |

- Coded response

| Byte | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

|---|---|---|---|---|---|---|---|---|

| 8 | 0xA1 | 0x09 | 0x01 | 0x00 | 0x00 |

|

|

|

- Field Explanation

| Field Name | Value | Field Name | Value |

|---|---|---|---|

| Magic (0) | 0xA1 | Message Id (1) | 0x09 |

| Opcode (2) | 0x01 | Status (3) | 0x00 |

| Topology change marker (4) | 0x00 |

|

20.6.2. Hot Rod Protocol 1.1

This version of the protocol is implemented since Infinispan 5.1.0.FINAL

20.6.2.1. Request Header

The version field in the header is updated to 11.

20.6.2.2. Distribution-Aware Client Topology Change Header

This section has been modified to be more efficient when talking to distributed caches with virtual nodes enabled.

This is what hash-distribution-aware clients receive as response header when a topology change is sent back:

| Field Name | Size | Value |

|---|---|---|

| Response header with topology change marker | variable | See previous section. |

| Topology Id | vInt | Topology ID |

| Num Key Owners | 2 bytes (Unsigned Short) | Globally configured number of copies for each Red Hat Data Grid distributed key |

| Hash Function Version | 1 byte | Hash function version, pointing to a specific hash function in use. See Hot Rod hash functions for details. |

| Hash space size | vInt | Modulus used by Red Hat Data Grid for for all module arithmetic related to hash code generation. Clients will likely require this information in order to apply the correct hash calculation to the keys. |

| Num servers in topology | vInt | Number of Hot Rod servers running within the cluster. This could be a subset of the entire cluster if only a fraction of those nodes are running Hot Rod servers. |

| Num Virtual Nodes Owners | vInt | Field added in version 1.1 of the protocol that represents the number of configured virtual nodes. If no virtual nodes are configured or the cache is not configured with distribution, this field will contain 0. |

| m1: Host/IP length | vInt | Length of hostname or IP address of individual cluster member that Hot Rod client can use to access it. Using variable length here allows for covering for hostnames, IPv4 and IPv6 addresses. |

| m1: Host/IP address | string | String containing hostname or IP address of individual cluster member that Hot Rod client can use to access it. |

| m1: Port | 2 bytes (Unsigned Short) | Port that Hot Rod clients can use to communicat with this cluster member. |

| m1: Hashcode | 4 bytes | 32 bit integer representing the hashcode of a cluster member that a Hot Rod client can use indentify in which cluster member a key is located having applied the CSA to it. |

| m2: Host/IP length | vInt | |

| m2: Host/IP address | string | |

| m2: Port | 2 bytes (Unsigned Short) | |

| m2: Hashcode | 4 bytes | |

| …etc |

20.6.2.3. Server node hash code calculation

Adding support for virtual nodes has made version 1.0 of the Hot Rod protocol impractical due to bandwidth it would have taken to return hash codes for all virtual nodes in the clusters (this number could easily be in the millions). So, as of version 1.1 of the Hot Rod protocol, clients are given the base hash id or hash code of each server, and then they have to calculate the real hash position of each server both with and without virtual nodes configured. Here are the rules clients should follow when trying to calculate a node’s hash code:

1\. With virtual nodes disabled : Once clients have received the base hash code of the server, they need to normalize it in order to find the exact position of the hash wheel. The process of normalization involves passing the base hash code to the hash function, and then do a small calculation to avoid negative values. The resulting number is the node’s position in the hash wheel:

public static int getNormalizedHash(int nodeBaseHashCode, Hash hashFct) {

return hashFct.hash(nodeBaseHashCode) & Integer.MAX_VALUE; // make sure no negative numbers are involved.

}2\. With virtual nodes enabled : In this case, each node represents N different virtual nodes, and to calculate each virtual node’s hash code, we need to take the the range of numbers between 0 and N-1 and apply the following logic:

- For virtual node with 0 as id, use the technique used to retrieve a node’s hash code, as shown in the previous section.

- For virtual nodes from 1 to N-1 ids, execute the following logic:

public static int virtualNodeHashCode(int nodeBaseHashCode, int id, Hash hashFct) {

int virtualNodeBaseHashCode = id;

virtualNodeBaseHashCode = 31 * virtualNodeBaseHashCode + nodeBaseHashCode;

return getNormalizedHash(virtualNodeBaseHashCode, hashFct);

}20.6.3. Hot Rod Protocol 1.2

This version of the protocol is implemented since Red Hat Data Grid 5.2.0.Final. Since Red Hat Data Grid 5.3.0, HotRod supports encryption via SSL. However, since this only affects the transport, the version number of the protocol has not been incremented.

20.6.3.1. Request Header

The version field in the header is updated to 12.

Two new request operation codes have been added:

- 0x1B = getWithMetadata request

- 0x1D = bulkKeysGet request

Two new flags have been added too:

- 0x0002 = use cache-level configured default lifespan

- 0x0004 = use cache-level configured default max idle

20.6.3.2. Response Header

Two new response operation codes have been added:

- 0x1C = getWithMetadata response

- 0x1E = bulkKeysGet response

20.6.3.3. Operations

GetWithMetadata

Request (0x1B):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

Response (0x1C):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if key retrieved |

| Flag | 1 byte |

A flag indicating whether the response contains expiration information. The value of the flag is obtained as a bitwise OR operation between INFINITE_LIFESPAN (0x01) and |

| Created | Long | (optional) a Long representing the timestamp when the entry was created on the server. This value is returned only if the flag’s INFINITE_LIFESPAN bit is not set. |

| Lifespan | vInt | (optional) a vInt representing the lifespan of the entry in seconds. This value is returned only if the flag’s INFINITE_LIFESPAN bit is not set. |

| LastUsed | Long |

(optional) a Long representing the timestamp when the entry was last accessed on the server. This value is returned only if the flag’s |

| MaxIdle | vInt |

(optional) a vInt representing the maxIdle of the entry in seconds. This value is returned only if the flag’s |

| Entry Version | 8 bytes | Unique value of an existing entry’s modification. The protocol does not mandate that entry_version values are sequential. They just need to be unique per update at the key level. |

| Value Length | vInt | If success, length of value |

| Value | byte array | If success, the requested value |

BulkKeysGet

Request (0x1D):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Scope | vInt |

0 = Default Scope - This scope is used by RemoteCache.keySet() method. If the remote cache is a distributed cache, the server launch a stream operation to retrieve all keys from all of the nodes. (Remember, a topology-aware Hot Rod Client could be load balancing the request to any one node in the cluster). Otherwise, it’ll get keys from the cache instance local to the server receiving the request (that is because the keys should be the same across all nodes in a replicated cache). |

Response (0x1E):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, data follows |

| More | 1 byte | One byte representing whether more keys need to be read from the stream. So, when it’s set to 1, it means that an entry follows, whereas when it’s set to 0, it’s the end of stream and no more entries are left to read. For more information on BulkGet look here |

| Key 1 Length | vInt | Length of key |

| Key 1 | byte array | Retrieved key |

| More | 1 byte | |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| … etc |

20.6.4. Hot Rod Protocol 1.3

This version of the protocol is implemented since Infinispan 6.0.0.Final.

20.6.4.1. Request Header

The version field in the header is updated to 13.

A new request operation code has been added:

- 0x1F = query request

20.6.4.2. Response Header

A new response operation code has been added:

- 0x20 = query response

20.6.4.3. Operations

Query

Request (0x1F):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Query Length | vInt | The length of the protobuf encoded query object |

| Query | byte array | Byte array containing the protobuf encoded query object, having a length specified by previous field. |

Response (0x20):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response payload Length | vInt | The length of the protobuf encoded response object |

| Response payload | byte array | Byte array containing the protobuf encoded response object, having a length specified by previous field. |

As of Infinispan 6.0, the query and response objects are specified by the protobuf message types 'org.infinispan.client.hotrod.impl.query.QueryRequest' and 'org.infinispan.client.hotrod.impl.query.QueryResponse' defined in remote-query/remote-query-client/src/main/resources/org/infinispan/query/remote/client/query.proto. These definitions could change in future Infinispan versions, but as long as these evolutions will be kept backward compatible (according to the rules defined here) no new Hot Rod protocol version will be introduced to accommodate this.

20.6.5. Hot Rod Protocol 2.0

This version of the protocol is implemented since Infinispan 7.0.0.Final.

20.6.5.1. Request Header

The request header no longer contains Transaction Type and Transaction ID elements since they’re not in use, and even if they were in use, there are several operations for which they would not make sense, such as ping or stats commands. Once transactions are implemented, the protocol version will be upped, with the necessary changes in the request header.

The version field in the header is updated to 20.

Two new flags have been added:

- 0x0008 = operation skips loading from configured cache loader.

- 0x0010 = operation skips indexing. Only relevant when the query module is enabled for the cache

The following new request operation codes have been added:

- 0x21 = auth mech list request

- 0x23 = auth request

- 0x25 = add client remote event listener request

- 0x27 = remove client remote event listener request

- 0x29 = size request

20.6.5.2. Response Header

The following new response operation codes have been added:

- 0x22 = auth mech list response

- 0x24 = auth mech response

- 0x26 = add client remote event listener response

- 0x28 = remove client remote event listener response

- 0x2A = size response

Two new error codes have also been added to enable clients more intelligent decisions, particularly when it comes to fail-over logic:

- 0x87 = Node suspected. When a client receives this error as response, it means that the node that responded had an issue sending an operation to a third node, which was suspected. Generally, requests that return this error should be failed-over to other nodes.

- 0x88 = Illegal lifecycle state. When a client receives this error as response, it means that the server-side cache or cache manager are not available for requests because either stopped, they’re stopping or similar situation. Generally, requests that return this error should be failed-over to other nodes.

Some adjustments have been made to the responses for the following commands in order to better handle response decoding without the need to keep track of the information sent. More precisely, the way previous values are parsed has changed so that the status of the command response provides clues on whether the previous value follows or not. More precisely:

-

Put response returns

0x03status code when put was successful and previous value follows. -

PutIfAbsent response returns

0x04status code only when the putIfAbsent operation failed because the key was present and its value follows in the response. If the putIfAbsent worked, there would have not been a previous value, and hence it does not make sense returning anything extra. -

Replace response returns

0x03status code only when replace happened and the previous or replaced value follows in the response. If the replace did not happen, it means that the cache entry was not present, and hence there’s no previous value that can be returned. -

ReplaceIfUnmodified returns

0x03status code only when replace happened and the previous or replaced value follows in the response. -

ReplaceIfUnmodified returns

0x04status code only when replace did not happen as a result of the key being modified, and the modified value follows in the response. -

Remove returns

0x03status code when the remove happened and the previous or removed value follows in the response. If the remove did not occur as a result of the key not being present, it does not make sense sending any previous value information. -

RemoveIfUnmodified returns

0x03status code only when remove happened and the previous or replaced value follows in the response. -

RemoveIfUnmodified returns

0x04status code only when remove did not happen as a result of the key being modified, and the modified value follows in the response.

20.6.5.3. Distribution-Aware Client Topology Change Header

In Infinispan 5.2, virtual nodes based consistent hashing was abandoned and instead segment based consistent hash was implemented. In order to satisfy the ability for Hot Rod clients to find data as reliably as possible, Red Hat Data Grid has been transforming the segment based consistent hash to fit Hot Rod 1.x protocol. Starting with version 2.0, a brand new distribution-aware topology change header has been implemented which suppors segment based consistent hashing suitably and provides 100% data location guarantees.

| Field Name | Size | Value |

|---|---|---|

| Response header with topology change marker | variable | |

| Topology Id | vInt | Topology ID |

| Num servers in topology | vInt | Number of Red Hat Data Grid Hot Rod servers running within the cluster. This could be a subset of the entire cluster if only a fraction of those nodes are running Hot Rod servers. |

| m1: Host/IP length | vInt | Length of hostname or IP address of individual cluster member that Hot Rod client can use to access it. Using variable length here allows for covering for hostnames, IPv4 and IPv6 addresses. |

| m1: Host/IP address | string | String containing hostname or IP address of individual cluster member that Hot Rod client can use to access it. |

| m1: Port | 2 bytes (Unsigned Short) | Port that Hot Rod clients can use to communicat with this cluster member. |

| m2: Host/IP length | vInt | |

| m2: Host/IP address | string | |

| m2: Port | 2 bytes (Unsigned Short) | |

| … | … | |

| Hash Function Version | 1 byte | Hash function version, pointing to a specific hash function in use. See Hot Rod hash functions for details. |

| Num segments in topology | vInt | Total number of segments in the topology |

| Number of owners in segment | 1 byte | This can be either 0, 1 or 2 owners. |

| First owner’s index | vInt | Given the list of all nodes, the position of this owner in this list. This is only present if number of owners for this segment is 1 or 2. |

| Second owner’s index | vInt | Given the list of all nodes, the position of this owner in this list. This is only present if number of owners for this segment is 2. |

Given this information, Hot Rod clients should be able to recalculate all the hash segments and be able to find out which nodes are owners for each segment. Even though there could be more than 2 owners per segment, Hot Rod protocol limits the number of owners to send for efficiency reasons.

20.6.5.4. Operations

Auth Mech List

Request (0x21):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

Response (0x22):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Mech count | vInt | The number of mechs |

| Mech 1 | string | String containing the name of the SASL mech in its IANA-registered form (e.g. GSSAPI, CRAM-MD5, etc) |

| Mech 2 | string | |

| …etc |

The purpose of this operation is to obtain the list of valid SASL authentication mechs supported by the server. The client will then need to issue an Authenticate request with the preferred mech.

Authenticate

Request (0x23):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Mech | string | String containing the name of the mech chosen by the client for authentication. Empty on the successive invocations |

| Response length | vInt | Length of the SASL client response |

| Response data | byte array | The SASL client response |

Response (0x24):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Completed | byte | 0 if further processing is needed, 1 if authentication is complete |

| Challenge length | vInt | Length of the SASL server challenge |

| Challenge data | byte array | The SASL server challenge |

The purpose of this operation is to authenticate a client against a server using SASL. The authentication process, depending on the chosen mech, might be a multi-step operation. Once complete the connection becomes authenticated

Add client listener for remote events

Request (0x25):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Listener ID | byte array | Listener identifier |

| Include state | byte |

When this byte is set to |

| Key/value filter factory name | string |

Optional name of the key/value filter factory to be used with this listener. The factory is used to create key/value filter instances which allow events to be filtered directly in the Hot Rod server, avoiding sending events that the client is not interested in. If no factory is to be used, the length of the string is |

| Key/value filter factory parameter count | byte | The key/value filter factory, when creating a filter instance, can take an arbitrary number of parameters, enabling the factory to be used to create different filter instances dynamically. This count field indicates how many parameters will be passed to the factory. If no factory name was provided, this field is not present in the request. |

| Key/value filter factory parameter 1 | byte array | First key/value filter factory parameter |

| Key/value filter factory parameter 2 | byte array | Second key/value filter factory parameter |

| … | ||

| Converter factory name | string |

Optional name of the converter factory to be used with this listener. The factory is used to transform the contents of the events sent to clients. By default, when no converter is in use, events are well defined, according to the type of event generated. However, there might be situations where users want to add extra information to the event, or they want to reduce the size of the events. In these cases, a converter can be used to transform the event contents. The given converter factory name produces converter instances to do this job. If no factory is to be used, the length of the string is |

| Converter factory parameter count | byte | The converter factory, when creating a converter instance, can take an arbitrary number of parameters, enabling the factory to be used to create different converter instances dynamically. This count field indicates how many parameters will be passed to the factory. If no factory name was provided, this field is not present in the request. |

| Converter factory parameter 1 | byte array | First converter factory parameter |

| Converter factory parameter 2 | byte array | Second converter factory parameter |

| … |

Response (0x26):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

Remove client listener for remote events

Request (0x27):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Listener ID | byte array | Listener identifier |

Response (0x28):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

Size

Request (0x29):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

Response (0x2A):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Size | vInt | Size of the remote cache, which is calculated globally in the clustered set ups, and if present, takes cache store contents into account as well. |

Exec

Request (0x2B):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Script | string | Name of the task to execute |

| Parameter Count | vInt | The number of parameters |

| Parameter 1 Name | string | The name of the first parameter |

| Parameter 1 Length | vInt | The length of the first parameter |

| Parameter 1 Value | byte array | The value of the first parameter |

Response (0x2C):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if execution completed successfully |

| Value Length | vInt | If success, length of return value |

| Value | byte array | If success, the result of the execution |

20.6.5.5. Remote Events

Starting with Hot Rod 2.0, clients can register listeners for remote events happening in the server. Sending these events commences the moment a client adds a client listener for remote events.

Event Header:

| Field Name | Size | Value |

|---|---|---|

| Magic | 1 byte | 0xA1 = response |

| Message ID | vLong | ID of event |

| Opcode | 1 byte |

Event type: |

| Status | 1 byte |

Status of the response, possible values: |

| Topology Change Marker | 1 byte |

Since events are not associated with a particular incoming topology ID to be able to decide whether a new topology is required to be sent or not, new topologies will never be sent with events. Hence, this marker will always have |

Table 20.3. Cache entry created event

| Field Name | Size | Value |

|---|---|---|

| Header | variable |

Event header with |

| Listener ID | byte array | Listener for which this event is directed |

| Custom marker | byte |

Custom event marker. For created events, this is |

| Command retried | byte |

Marker for events that are result of retried commands. If command is retried, it returns |

| Key | byte array | Created key |

| Version | long | Version of the created entry. This version information can be used to make conditional operations on this cache entry. |

Table 20.4. Cache entry modified event

| Field Name | Size | Value |

|---|---|---|

| Header | variable |

Event header with |

| Listener ID | byte array | Listener for which this event is directed |

| Custom marker | byte |

Custom event marker. For created events, this is |

| Command retried | byte |

Marker for events that are result of retried commands. If command is retried, it returns |

| Key | byte array | Modified key |

| Version | long | Version of the modified entry. This version information can be used to make conditional operations on this cache entry. |

Table 20.5. Cache entry removed event

| Field Name | Size | Value |

|---|---|---|

| Header | variable |

Event header with |

| Listener ID | byte array | Listener for which this event is directed |

| Custom marker | byte |

Custom event marker. For created events, this is |

| Command retried | byte |

Marker for events that are result of retried commands. If command is retried, it returns |

| Key | byte array | Removed key |

Table 20.6. Custom event

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Event header with event specific operation code |

| Listener ID | byte array | Listener for which this event is directed |

| Custom marker | byte |

Custom event marker. For custom events, this is |

| Event data | byte array | Custom event data, formatted according to the converter implementation logic. |

20.6.6. Hot Rod Protocol 2.1

This version of the protocol is implemented since Infinispan 7.1.0.Final.

20.6.6.1. Request Header

The version field in the header is updated to 21.

20.6.6.2. Operations

Add client listener for remote events

An extra byte parameter is added at the end which indicates whether the client prefers client listener to work with raw binary data for filter/converter callbacks. If using raw data, its value is 1 otherwise 0.

Request format:

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Listener ID | byte array | … |

| Include state | byte | … |

| Key/value filter factory parameter count | byte | … |

| … | ||

| Converter factory name | string | … |

| Converter factory parameter count | byte | … |

| … | ||

| Use raw data | byte |

If filter/converter parameters should be raw binary, then |

Custom event

Starting with Hot Rod 2.1, custom events can return raw data that the Hot Rod client should not try to unmarshall before passing it on to the user. The way this is transmitted to the Hot Rod client is by sending 2 as the custom event marker. So, the format of the custom event remains like this:

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Event header with event specific operation code |

| Listener ID | byte array | Listener for which this event is directed |

| Custom marker | byte |

Custom event marker. For custom events whose event data needs to be unmarshalled before returning to user the value is |

| Event data | byte array |

Custom event data. If the custom marker is |

20.6.7. Hot Rod Protocol 2.2

This version of the protocol is implemented since Infinispan 8.0

Added support for different time units.

20.6.7.1. Operations

Put/PutAll/PutIfAbsent/Replace/ReplaceIfUnmodified

Common request format:

| Field Name | Size | Value |

|---|---|---|

| TimeUnits | Byte |

Time units of lifespan (first 4 bits) and maxIdle (last 4 bits). Special units DEFAULT and INFINITE can be used for default server expiration and no expiration respectively. Possible values: |

| Lifespan | vLong | Duration which the entry is allowed to life. Only sent when time unit is not DEFAULT or INFINITE |

| Max Idle | vLong | Duration that each entry can be idle before it’s evicted from the cache. Only sent when time unit is not DEFAULT or INFINITE |

20.6.8. Hot Rod Protocol 2.3

This version of the protocol is implemented since Infinispan 8.0

20.6.8.1. Operations

Iteration Start

Request (0x31):

| Field Name | Size | Value |

|---|---|---|

| Segments size | signed vInt |

Size of the bitset encoding of the segments ids to iterate on. The size is the maximum segment id rounded to nearest multiple of 8. |

| Segments | byte array |

(Optional) Contains the segments ids bitset encoded, where each bit with value 1 represents a segment in the set. Byte order is little-endian. |

| FilterConverter size | signed vInt | The size of the String representing a KeyValueFilterConverter factory name deployed on the server, or -1 if no filter will be used |

| FilterConverter | UTF-8 byte array | (Optional) KeyValueFilterConverter factory name deployed on the server. Present if previous field is not negative |

| BatchSize | vInt | number of entries to transfers from the server at one go |

Response (0x32):

| Field Name | Size | Value |

|---|---|---|

| IterationId | String | The unique id of the iteration |

Iteration Next

Request (0x33):

| Field Name | Size | Value |

|---|---|---|

| IterationId | String | The unique id of the iteration |

Response (0x34):

| Field Name | Size | Value |

|---|---|---|

| Finished segments size | vInt | size of the bitset representing segments that were finished iterating |

| Finished segments | byte array | bitset encoding of the segments that were finished iterating |

| Entry count | vInt | How many entries are being returned |

| Key 1 Length | vInt | Length of key |

| Key 1 | byte array | Retrieved key |

| Value 1 Length | vInt | Length of value |

| Value 1 | byte array | Retrieved value |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| Value 2 Length | vInt | |

| Value 2 | byte array | |

| … continues until entry count is reached |

Iteration End

Request (0x35):

| Field Name | Size | Value |

|---|---|---|

| IterationId | String | The unique id of the iteration |

Response (0x36):

| Header | variable | Response header |

|---|---|---|

| Response status | 1 byte |

0x00 = success, if execution completed successfully |

20.6.9. Hot Rod Protocol 2.4

This version of the protocol is implemented since Infinispan 8.1

This Hot Rod protocol version adds three new status code that gives the client hints on whether the server has compatibility mode enabled or not:

-

0x06: Success status and compatibility mode is enabled. -

0x07: Success status and return previous value, with compatibility mode is enabled. -

0x08: Not executed and return previous value, with compatibility mode is enabled.

The Iteration Start operation can optionally send parameters if a custom filter is provided and it’s parametrised:

20.6.9.1. Operations

Iteration Start

Request (0x31):

| Field Name | Size | Value |

|---|---|---|

| Segments size | signed vInt | same as protocol version 2.3. |

| Segments | byte array | same as protocol version 2.3. |

| FilterConverter size | signed vInt | same as protocol version 2.3. |

| FilterConverter | UTF-8 byte array | same as protocol version 2.3. |

| Parameters size | byte | the number of params of the filter. Only present when FilterConverter is provided. |

| Parameters | byte[][] | an array of parameters, each parameter is a byte array. Only present if Parameters size is greater than 0. |

| BatchSize | vInt | same as protocol version 2.3. |

The Iteration Next operation can optionally return projections in the value, meaning more than one value is contained in the same entry.

Iteration Next

Response (0x34):

| Field Name | Size | Value |

|---|---|---|

| Finished segments size | vInt | same as protocol version 2.3. |

| Finished segments | byte array | same as protocol version 2.3. |

| Entry count | vInt | same as protocol version 2.3. |

| Number of value projections | vInt | Number of projections for the values. If 1, behaves like version protocol version 2.3. |

| Key1 Length | vInt | same as protocol version 2.3. |

| Key1 | byte array | same as protocol version 2.3. |

| Value1 projection1 length | vInt | length of value1 first projection |

| Value1 projection1 | byte array | retrieved value1 first projection |

| Value1 projection2 length | vInt | length of value2 second projection |

| Value1 projection2 | byte array | retrieved value2 second projection |

| … continues until all projections for the value retrieved | Key2 Length | vInt |

| same as protocol version 2.3. | Key2 | byte array |

| same as protocol version 2.3. | Value2 projection1 length | vInt |

| length of value 2 first projection | Value2 projection1 | byte array |

| retrieved value 2 first projection | Value2 projection2 length | vInt |

| length of value 2 second projection | Value2 projection2 | byte array |

| retrieved value 2 second projection | … continues until entry count is reached |

- Stats:

Statistics returned by previous Hot Rod protocol versions were local to the node where the Hot Rod operation had been called. Starting with 2.4, new statistics have been added which provide global counts for the statistics returned previously. If the Hot Rod is running in local mode, these statistics are not returned:

| Name | Explanation |

|---|---|

| globalCurrentNumberOfEntries | Number of entries currently across the Hot Rod cluster. |

| globalStores | Total number of put operations across the Hot Rod cluster. |

| globalRetrievals | Total number of get operations across the Hot Rod cluster. |

| globalHits | Total number of get hits across the Hot Rod cluster. |

| globalMisses | Total number of get misses across the Hot Rod cluster. |

| globalRemoveHits | Total number of removal hits across the Hot Rod cluster. |

| globalRemoveMisses | Total number of removal misses across the Hot Rod cluster. |

20.6.10. Hot Rod Protocol 2.5

This version of the protocol is implemented since Infinispan 8.2

This Hot Rod protocol version adds support for metadata retrieval along with entries in the iterator. It includes two changes:

- Iteration Start request includes an optional flag

- IterationNext operation may include metadata info for each entry if the flag above is set

Iteration Start

Request (0x31):

| Field Name | Size | Value |

|---|---|---|

| Segments size | signed vInt | same as protocol version 2.4. |

| Segments | byte array | same as protocol version 2.4. |

| FilterConverter size | signed vInt | same as protocol version 2.4. |

| FilterConverter | UTF-8 byte array | same as protocol version 2.4. |

| Parameters size | byte | same as protocol version 2.4. |

| Parameters | byte[][] | same as protocol version 2.4. |

| BatchSize | vInt | same as protocol version 2.4. |

| Metadata | 1 byte | 1 if metadata is to be returned for each entry, 0 otherwise |

Iteration Next

Response (0x34):

| Field Name | Size | Value |

|---|---|---|

| Finished segments size | vInt | same as protocol version 2.4. |

| Finished segments | byte array | same as protocol version 2.4. |

| Entry count | vInt | same as protocol version 2.4. |

| Number of value projections | vInt | same as protocol version 2.4. |

| Metadata (entry 1) | 1 byte | If set, entry has metadata associated |

| Expiration (entry 1) | 1 byte |

A flag indicating whether the response contains expiration information. The value of the flag is obtained as a bitwise OR operation between INFINITE_LIFESPAN (0x01) and |

| Created (entry 1) | Long | (optional) a Long representing the timestamp when the entry was created on the server. This value is returned only if the flag’s INFINITE_LIFESPAN bit is not set. |

| Lifespan (entry 1) | vInt | (optional) a vInt representing the lifespan of the entry in seconds. This value is returned only if the flag’s INFINITE_LIFESPAN bit is not set. |

| LastUsed (entry 1) | Long |

(optional) a Long representing the timestamp when the entry was last accessed on the server. This value is returned only if the flag’s |

| MaxIdle (entry 1) | vInt |

(optional) a vInt representing the maxIdle of the entry in seconds. This value is returned only if the flag’s |

| Entry Version (entry 1) | 8 bytes | Unique value of an existing entry’s modification. Only present if Metadata flag is set |

| Key 1 Length | vInt | same as protocol version 2.4. |

| Key 1 | byte array | same as protocol version 2.4. |

| Value 1 Length | vInt | same as protocol version 2.4. |

| Value 1 | byte array | same as protocol version 2.4. |

| Metadata (entry 2) | 1 byte | Same as for entry 1 |

| Expiration (entry 2) | 1 byte | Same as for entry 1 |

| Created (entry 2) | Long | Same as for entry 1 |

| Lifespan (entry 2) | vInt | Same as for entry 1 |

| LastUsed (entry 2) | Long | Same as for entry 1 |

| MaxIdle (entry 2) | vInt | Same as for entry 1 |

| Entry Version (entry 2) | 8 bytes | Same as for entry 1 |

| Key 2 Length | vInt | |

| Key 2 | byte array | |

| Value 2 Length | vInt | |

| Value 2 | byte array | |

| … continues until entry count is reached |

20.6.11. Hot Rod Protocol 2.6

This version of the protocol is implemented since Infinispan 9.0

This Hot Rod protocol version adds support for streaming get and put operations. It includes two new operations:

- GetStream for retrieving data as a stream, with an optional initial offset

- PutStream for writing data as a stream, optionally by specifying a version

GetStream

Request (0x37):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Offset | vInt | The offset in bytes from which to start retrieving. Set to 0 to retrieve from the beginning |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

GetStream

Response (0x38):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

| Response status | 1 byte |

0x00 = success, if key retrieved |

| Flag | 1 byte |

A flag indicating whether the response contains expiration information. The value of the flag is obtained as a bitwise OR operation between INFINITE_LIFESPAN (0x01) and |

| Created | Long | (optional) a Long representing the timestamp when the entry was created on the server. This value is returned only if the flag’s INFINITE_LIFESPAN bit is not set. |

| Lifespan | vInt | (optional) a vInt representing the lifespan of the entry in seconds. This value is returned only if the flag’s INFINITE_LIFESPAN bit is not set. |

| LastUsed | Long |

(optional) a Long representing the timestamp when the entry was last accessed on the server. This value is returned only if the flag’s |

| MaxIdle | vInt |

(optional) a vInt representing the maxIdle of the entry in seconds. This value is returned only if the flag’s |

| Entry Version | 8 bytes | Unique value of an existing entry’s modification. The protocol does not mandate that entry_version values are sequential. They just need to be unique per update at the key level. |

| Value Length | vInt | If success, length of value |

| Value | byte array | If success, the requested value |

PutStream

Request (0x39)

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Entry Version | 8 bytes |

Possible values |

| Key Length | vInt | Length of key. Note that the size of a vint can be up to 5 bytes which in theory can produce bigger numbers than Integer.MAX_VALUE. However, Java cannot create a single array that’s bigger than Integer.MAX_VALUE, hence the protocol is limiting vint array lengths to Integer.MAX_VALUE. |

| Key | byte array | Byte array containing the key whose value is being requested. |

| Value Chunk 1 Length | vInt | The size of the first chunk of data. If this value is 0 it means the client has completed transferring the value and the operation should be performed. |

| Value Chunk 1 | byte array | Array of bytes forming the fist chunk of data. |

| …continues until the value is complete |

Response (0x3A):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Response header |

On top of these additions, this Hot Rod protocol version improves remote listener registration by adding a byte that indicates at a global level, which type of events the client is interested in. For example, a client can indicate that only created events, or only expiration and removal events…etc. More fine grained event interests, e.g. per key, can be defined using the key/value filter parameter.

So, the new add listener request looks like this:

Add client listener for remote events

Request (0x25):

| Field Name | Size | Value |

|---|---|---|

| Header | variable | Request header |

| Listener ID | byte array | Listener identifier |

| Include state | byte |

When this byte is set to |

| Key/value filter factory name | string |

Optional name of the key/value filter factory to be used with this listener. The factory is used to create key/value filter instances which allow events to be filtered directly in the Hot Rod server, avoiding sending events that the client is not interested in. If no factory is to be used, the length of the string is |

| Key/value filter factory parameter count | byte | The key/value filter factory, when creating a filter instance, can take an arbitrary number of parameters, enabling the factory to be used to create different filter instances dynamically. This count field indicates how many parameters will be passed to the factory. If no factory name was provided, this field is not present in the request. |

| Key/value filter factory parameter 1 | byte array | First key/value filter factory parameter |

| Key/value filter factory parameter 2 | byte array | Second key/value filter factory parameter |

| … | ||

| Converter factory name | string |

Optional name of the converter factory to be used with this listener. The factory is used to transform the contents of the events sent to clients. By default, when no converter is in use, events are well defined, according to the type of event generated. However, there might be situations where users want to add extra information to the event, or they want to reduce the size of the events. In these cases, a converter can be used to transform the event contents. The given converter factory name produces converter instances to do this job. If no factory is to be used, the length of the string is |