Administration and Configuration Guide

For use with Red Hat JBoss Data Grid 6.6.1

Abstract

Chapter 1. Setting up Red Hat JBoss Data Grid

1.1. Prerequisites

1.2. Steps to Set up Red Hat JBoss Data Grid

Procedure 1.1. Set Up JBoss Data Grid

Set Up the Cache Manager

The first step in a JBoss Data Grid configuration is a cache manager. Cache managers can retrieve cache instances and create cache instances quickly and easily using previously specified configuration templates. For details about setting up a cache manager, see Part I, “Set Up a Cache Manager”.

Set Up JVM Memory Management

An important step in configuring your JBoss Data Grid is to set up memory management for your Java Virtual Machine (JVM). JBoss Data Grid offers features such as eviction and expiration to help manage the JVM memory.

Set Up Eviction

Use eviction to specify the logic used to remove entries from the in-memory cache implementation based on how often they are used. JBoss Data Grid offers different eviction strategies for finer control over entry eviction in your data grid. Eviction strategies and instructions to configure them are available in Chapter 3, Set Up Eviction.

Set Up Expiration

To set upper limits to an entry's time in the cache, attach expiration information to each entry. Use expiration to set up the maximum period an entry is allowed to remain in the cache and how long the retrieved entry can remain idle before being removed from the cache. For details, see Chapter 4, Set Up Expiration.

Monitor Your Cache

JBoss Data Grid uses logging via JBoss Logging to help users monitor their caches.

Set Up Logging

It is not mandatory to set up logging for your JBoss Data Grid, but it is highly recommended. JBoss Data Grid uses JBoss Logging, which allows the user to easily set up automated logging for operations in the data grid. Logs can subsequently be used to troubleshoot errors and identify the cause of an unexpected failure. For details, see Chapter 5, Set Up Logging.

Set Up Cache Modes

Cache modes are used to specify whether a cache is local (simple, in-memory cache) or a clustered cache (replicates state changes over a small subset of nodes). Additionally, if a cache is clustered, either replication, distribution or invalidation mode must be applied to determine how the changes propagate across the subset of nodes. For details, see Part IV, “Set Up Cache Modes”.

Set Up Locking for the Cache

When replication or distribution is in effect, copies of entries are accessible across multiple nodes. As a result, copies of the data can be accessed or modified concurrently by different threads. To maintain consistency for all copies across nodes, configure locking. For details, see Part VI, “Set Up Locking for the Cache” and Chapter 16, Set Up Isolation Levels.

Set Up and Configure a Cache Store

JBoss Data Grid offers the passivation feature (or cache writing strategies if passivation is turned off) to temporarily store entries removed from memory in a persistent, external cache store. To set up passivation or a cache writing strategy, you must first set up a cache store.

Set Up a Cache Store

The cache store serves as a connection to the persistent store. Cache stores are primarily used to fetch entries from the persistent store and to push changes back to the persistent store. For details, see Part VII, “Set Up and Configure a Cache Store”.

Set Up Passivation

Passivation stores entries evicted from memory in a cache store. This feature allows entries to remain available despite not being present in memory and prevents potentially expensive write operations to the persistent cache. For details, see Part VIII, “Set Up Passivation”.

Set Up a Cache Writing Strategy

If passivation is disabled, every attempt to write to the cache results in writing to the cache store. This is the default Write-Through cache writing strategy. Set the cache writing strategy to determine whether these cache store writes occur synchronously or asynchronously. For details, see Part IX, “Set Up Cache Writing”.

Monitor Caches and Cache Managers

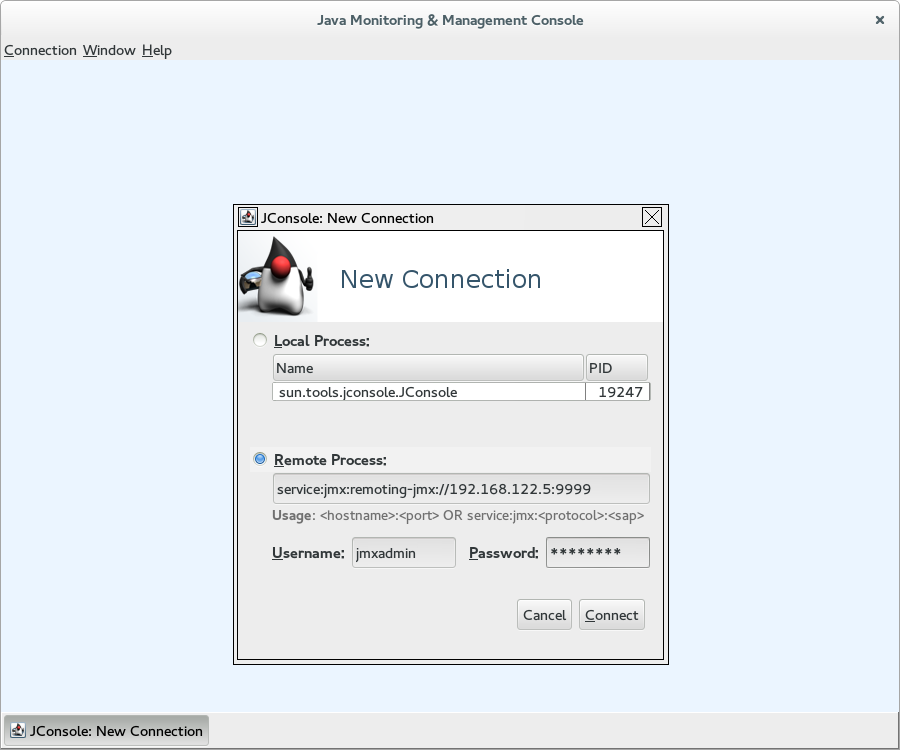

JBoss Data Grid includes two primary tools to monitor the cache and cache managers once the data grid is up and running.

Set Up JMX

JMX is the standard statistics and management tool used for JBoss Data Grid. Depending on the use case, JMX can be configured at a cache level or a cache manager level or both. For details, see Chapter 21, Set Up Java Management Extensions (JMX).

Set Up Red Hat JBoss Operations Network (JON)

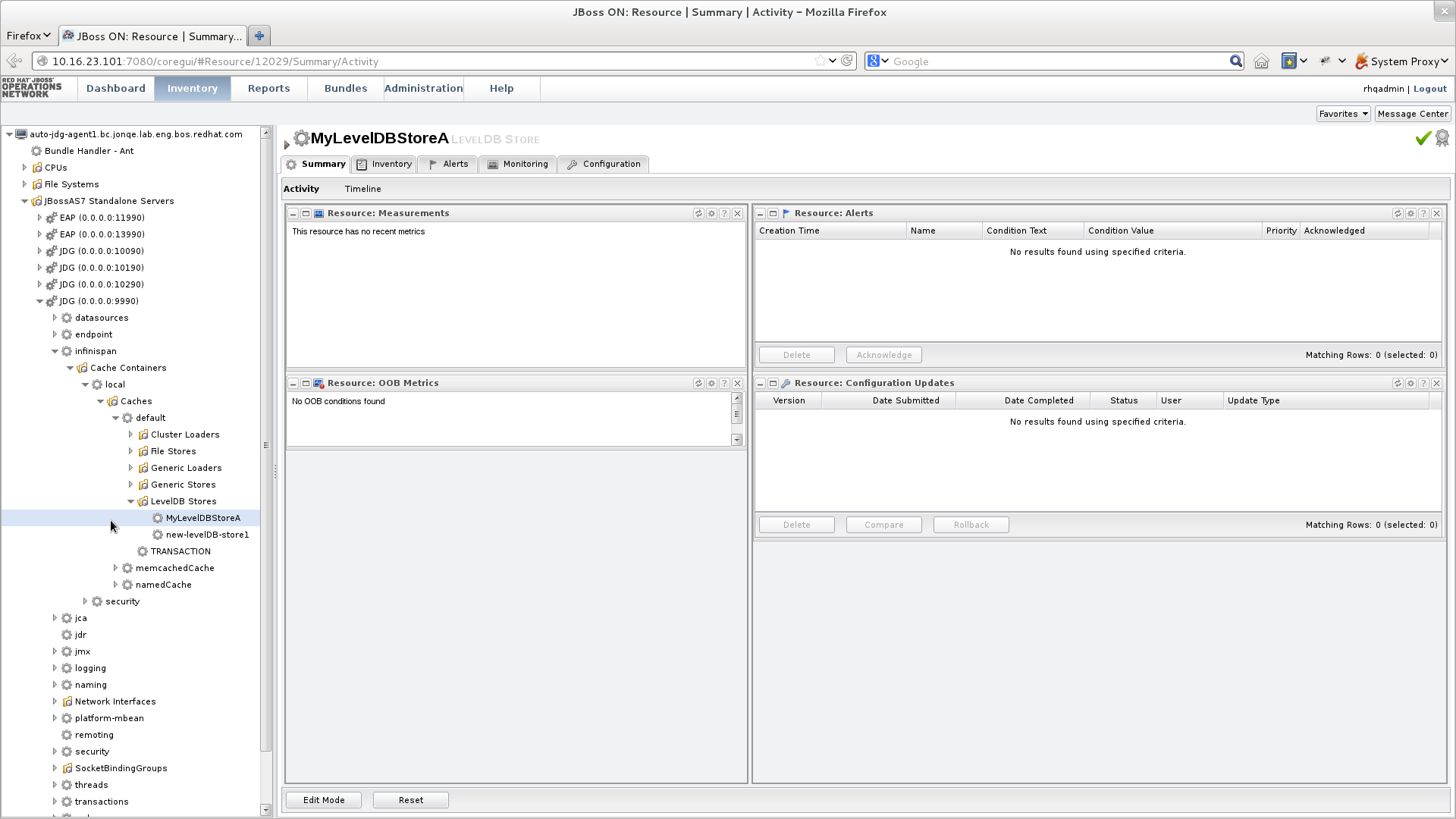

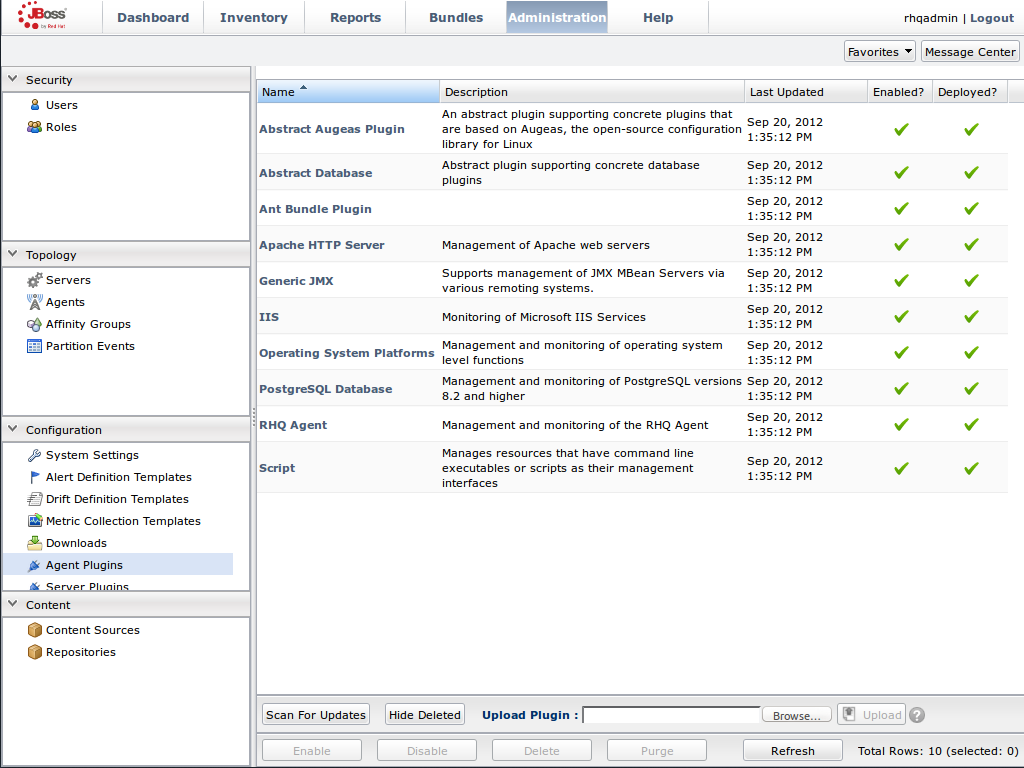

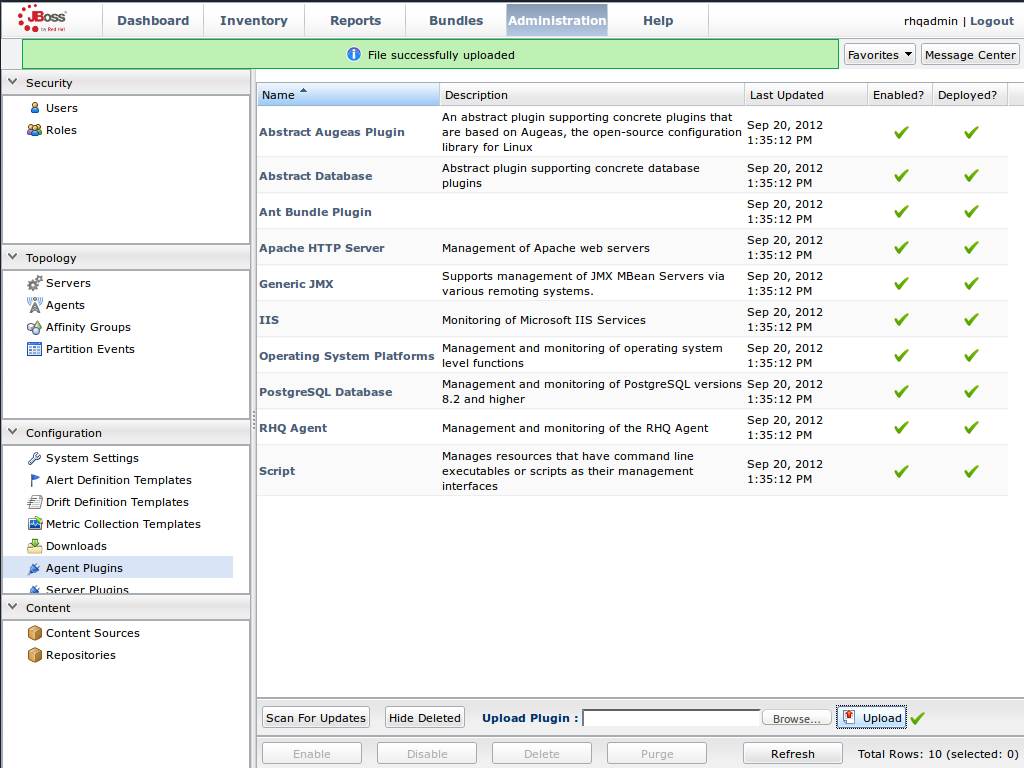

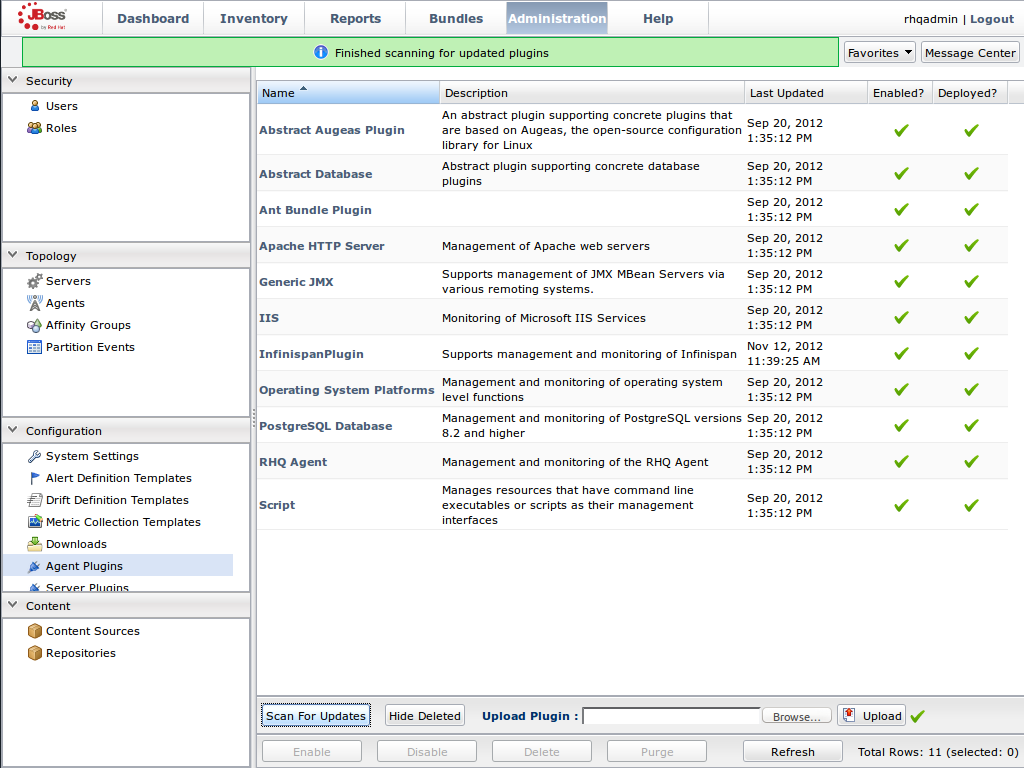

Red Hat JBoss Operations Network (JON) is the second monitoring solution available for JBoss Data Grid. JBoss Operations Network (JON) offers a graphical interface to monitor runtime parameters and statistics for caches and cache managers. For details, see Chapter 22, Set Up JBoss Operations Network (JON)

Introduce Topology Information

Optionally, introduce topology information to your data grid to specify where specific types of information or objects in your data grid are located. Server hinting is one of the ways to introduce topology information in JBoss Data Grid.

Set Up Server Hinting

When set up, server hinting provides high availability by ensuring that the original and backup copies of data are not stored on the same physical server, rack or data center. This is optional in cases such as a replicated cache, where all data is backed up on all servers, racks and data centers. For details, see Chapter 30, High Availability Using Server Hinting.

Part I. Set Up a Cache Manager

Chapter 2. Cache Managers

- it creates cache instances on demand.

- it retrieves existing cache instances (i.e. caches that have already been created).
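As a rough illustration of these two responsibilities, the following sketch uses only JDK collections. `SimpleCacheManager`, `getCache(String)` and `cacheCount()` are hypothetical names chosen for this example; they are not the JBoss Data Grid API, and a real cache manager also applies configuration templates and manages lifecycle.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for a cache manager; not the JBoss Data Grid API.
final class SimpleCacheManager {
    // Named caches that have been created so far.
    private final Map<String, Map<String, Object>> caches = new ConcurrentHashMap<>();

    // Returns the existing cache for this name, or creates it on the first request.
    Map<String, Object> getCache(String name) {
        return caches.computeIfAbsent(name, n -> new ConcurrentHashMap<>());
    }

    // Number of caches created so far.
    int cacheCount() {
        return caches.size();
    }

    public static void main(String[] args) {
        SimpleCacheManager manager = new SimpleCacheManager();
        Map<String, Object> first = manager.getCache("users");  // created on demand
        Map<String, Object> second = manager.getCache("users"); // retrieved, not recreated
        System.out.println(first == second);
        System.out.println(manager.cacheCount());
    }
}
```

Requesting the same name twice yields the same instance, which is the behavior the two list items describe.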

2.1. Types of Cache Managers

- EmbeddedCacheManager is a cache manager that runs within the Java Virtual Machine (JVM) used by the client. Currently, JBoss Data Grid offers only the DefaultCacheManager implementation of the EmbeddedCacheManager interface.

- RemoteCacheManager is used to access remote caches. When started, the RemoteCacheManager instantiates connections to the Hot Rod server (or multiple Hot Rod servers). It then manages the persistent TCP connections while it runs. As a result, RemoteCacheManager is resource-intensive. The recommended approach is to have a single RemoteCacheManager instance for each Java Virtual Machine (JVM).

2.2. Creating CacheManagers

2.2.1. Create a New RemoteCacheManager

Procedure 2.1. Configure a New RemoteCacheManager

import org.infinispan.client.hotrod.RemoteCache;

import org.infinispan.client.hotrod.RemoteCacheManager;

import org.infinispan.client.hotrod.configuration.Configuration;

import org.infinispan.client.hotrod.configuration.ConfigurationBuilder;

Configuration conf = new

ConfigurationBuilder().addServer().host("localhost").port(11222).build();

RemoteCacheManager manager = new RemoteCacheManager(conf);

RemoteCache defaultCache = manager.getCache();

- Use the ConfigurationBuilder() constructor to create a new configuration builder. The .addServer() method adds a remote server, configured via the .host(<hostname|ip>) and .port(<port>) properties.

- Create a new RemoteCacheManager using the supplied configuration.

- Retrieve the default cache from the remote server.

2.2.2. Create a New Embedded Cache Manager

Procedure 2.2. Create a New Embedded Cache Manager

- Create a configuration XML file. For example, create the my-config-file.xml file on the classpath (in the resources/ folder) and add the configuration information in this file.

- Use the following programmatic configuration to create a cache manager using the configuration file:

EmbeddedCacheManager manager = new DefaultCacheManager("my-config-file.xml");

Cache defaultCache = manager.getCache();

my-config-file.xml.

2.2.3. Create a New Embedded Cache Manager Using CDI

Procedure 2.3. Use CDI to Create a New EmbeddedCacheManager

- Specify a default configuration:

public class Config {

    @Produces
    public EmbeddedCacheManager defaultCacheManager() {
        ConfigurationBuilder builder = new ConfigurationBuilder();
        Configuration configuration = builder.eviction().strategy(EvictionStrategy.LRU).maxEntries(100).build();
        return new DefaultCacheManager(configuration);
    }
}

- Inject the default cache manager.

// Additional configuration information here
@Inject
EmbeddedCacheManager cacheManager;
// Additional configuration information here

2.3. Multiple Cache Managers

2.3.1. Create Multiple Caches with a Single Cache Manager

2.3.2. Using Multiple Cache Managers

TCP protocol and the other uses the UDP protocol, multiple cache managers must be used.

2.3.3. Create Multiple Cache Managers

infinispan.xml file to a new configuration file. Edit the new file for the desired configuration and then use the new file for a new cache manager.

Part II. Set Up JVM Memory Management

Chapter 3. Set Up Eviction

3.1. About Eviction

3.2. Eviction Strategies

Table 3.1. Eviction Strategies

| Strategy Name | Operations | Details |

|---|---|---|

| EvictionStrategy.NONE | No eviction occurs. | This is the default eviction strategy in Red Hat JBoss Data Grid. |

| EvictionStrategy.LRU | Least Recently Used eviction strategy. This strategy evicts entries that have not been used for the longest period. This ensures that entries that are reused periodically remain in memory. | |

| EvictionStrategy.UNORDERED | Unordered eviction strategy. This strategy evicts entries without any ordered algorithm and may therefore evict entries that are required later. However, this strategy saves resources because no algorithm-related calculations are required before eviction. | This strategy is recommended for testing purposes and not for a real-world implementation. |

| EvictionStrategy.LIRS | Low Inter-Reference Recency Set eviction strategy. | LIRS is an eviction algorithm that suits a large variety of production use cases. |
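To make the LRU row of the table more concrete, here is a minimal sketch of least-recently-used eviction built on the JDK's `LinkedHashMap`. The class name `LruSketch` is hypothetical, and this is not how JBoss Data Grid implements its data container; it only shows the policy of evicting the entry that has gone unused for the longest time once a maximum entry count is exceeded.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative LRU container built on the JDK; not JBoss Data Grid's data container.
final class LruSketch<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    LruSketch(int maxEntries) {
        // accessOrder = true keeps entries ordered from least to most recently used.
        super(16, 0.75f, true);
        this.maxEntries = maxEntries;
    }

    // Called by LinkedHashMap after each insertion; returning true evicts the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }

    public static void main(String[] args) {
        LruSketch<String, Integer> cache = new LruSketch<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // "a" becomes the most recently used entry
        cache.put("c", 3); // limit exceeded, so the least recently used entry "b" is evicted
        System.out.println(cache.keySet());
    }
}
```

Because "a" is read before "c" is inserted, "b" is the entry that is evicted, which shows how periodically reused entries remain in memory.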

3.2.1. LRU Eviction Algorithm Limitations

- Single use access entries are not replaced in time.

- Entries that are accessed first are unnecessarily replaced.

3.3. Using Eviction

When the <eviction /> element is used to enable eviction without any strategy or maximum entries settings, the following default values are automatically implemented:

- Strategy: If no eviction strategy is specified, EvictionStrategy.NONE is assumed as a default.

- max-entries/maxEntries: If no value is specified, the max-entries/maxEntries value is set to -1, which allows unlimited entries.

3.3.1. Initialize Eviction

max-entries attribute's value to a number greater than zero. Adjust the value set for max-entries to discover the optimal value for your configuration. It is important to remember that if the value set for max-entries is too large, Red Hat JBoss Data Grid can run out of memory.

Procedure 3.1. Initialize Eviction

Add the Eviction Tag

Add the <eviction> tag to your project's <cache> tags as follows:

<eviction />

Set the Eviction Strategy

Set the strategy value to set the eviction strategy employed. Possible values are LRU, UNORDERED and LIRS (or NONE if no eviction is required). The following is an example of this step:

<eviction strategy="LRU" />

Set the Maximum Entries

Set the maximum number of entries allowed in memory. The default value is -1 for unlimited entries.

- In Library mode, set the maxEntries parameter as follows:

<eviction strategy="LRU" maxEntries="200" />

- In Remote Client Server mode, set the max-entries as follows:

<eviction strategy="LRU" max-entries="200" />

Eviction is configured for the target cache.

3.3.2. Eviction Configuration Examples

- A sample XML configuration for Library mode is as follows:

<eviction strategy="LRU" maxEntries="2000"/>

- A sample XML configuration for Remote Client Server Mode is as follows:

<eviction strategy="LRU" max-entries="20"/>

- A sample programmatic configuration for Library Mode is as follows:

Configuration c = new ConfigurationBuilder().eviction().strategy(EvictionStrategy.LRU).maxEntries(2000).build();

Note

Library mode uses the maxEntries parameter while Remote Client-Server mode uses the max-entries parameter to configure eviction.

3.3.3. Changing the Maximum Entries Value at Runtime

- Invoking the setMaxEntries operation sets the maximum number of entries in the data container.

- If the data container does not support eviction, setting it will raise an exception.

- Defining a value less than 0 will throw an error.

3.3.4. Eviction Configuration Troubleshooting

max-entries parameter of the eviction element. This is because although the max-entries value can be configured to a value that is not a power of two, the underlying algorithm will alter the value to V, where V is the closest power of two value that is larger than the max-entries value. Eviction algorithms are in place to ensure that the size of the cache container will never exceed the value V.
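The rounding described above can be sketched with plain integer arithmetic. This is only an illustration of the stated rule, not JBoss Data Grid's internal code; the class and method names are hypothetical, and the sketch assumes that a value that is already a power of two is left unchanged, which the text does not state explicitly.

```java
// Illustrative only: mirrors the rounding rule described in the text.
final class MaxEntriesRounding {
    // Returns the smallest power of two that is greater than or equal to maxEntries.
    // Assumption: an exact power of two is kept as-is.
    static int effectiveLimit(int maxEntries) {
        if (maxEntries <= 0) {
            throw new IllegalArgumentException("maxEntries must be greater than zero");
        }
        if (maxEntries > (1 << 30)) {
            throw new IllegalArgumentException("no larger power of two fits in an int");
        }
        int highest = Integer.highestOneBit(maxEntries);
        return highest == maxEntries ? maxEntries : highest << 1;
    }

    public static void main(String[] args) {
        System.out.println(effectiveLimit(20));   // a max-entries value of 20 is rounded up to 32
        System.out.println(effectiveLimit(2000)); // a value of 2000 is rounded up to 2048
    }
}
```

Under this rule a cache configured with max-entries="20" can hold up to 32 entries before eviction keeps it within bounds, which explains why the observed size can exceed the configured value.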

3.3.5. Eviction and Passivation

Chapter 4. Set Up Expiration

4.1. About Expiration

- A lifespan value.

- A maximum idle time value.

lifespan or maxIdle value.

lifespan or maxIdle defined are mortal, as they will eventually be removed from the cache once one of these conditions is met.

- expiration removes entries based on the period they have been in memory. Expiration only removes entries when the life span period concludes or when an entry has been idle longer than the specified idle time.

- eviction removes entries based on how recently (and often) they are used. Eviction only removes entries when too many entries are present in the memory. If a cache store has been configured, evicted entries are persisted in the cache store.
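The expiration rules can be sketched as a small piece of entry metadata. The class below is a hypothetical helper written for this illustration, not part of JBoss Data Grid. It assumes times in milliseconds and treats -1 as "no limit", following the default values mentioned for the expiration attributes.

```java
// Hypothetical entry metadata; names follow the lifespan and maxIdle terms used in the text.
final class ExpiringEntry {
    private final long createdAt; // creation time in milliseconds
    private final long lifespan;  // maximum time in the cache, or -1 for no limit
    private final long maxIdle;   // maximum idle time, or -1 for no limit
    private long lastUsedAt;      // time of the most recent access

    ExpiringEntry(long now, long lifespan, long maxIdle) {
        this.createdAt = now;
        this.lastUsedAt = now;
        this.lifespan = lifespan;
        this.maxIdle = maxIdle;
    }

    // Records an access, which resets the idle timer but not the lifespan.
    void touch(long now) {
        lastUsedAt = now;
    }

    // An entry with either limit defined is mortal; otherwise it never expires.
    boolean isMortal() {
        return lifespan >= 0 || maxIdle >= 0;
    }

    // Expired once the lifespan has concluded or the entry has been idle longer than maxIdle.
    boolean isExpired(long now) {
        boolean lifespanOver = lifespan >= 0 && now - createdAt >= lifespan;
        boolean idleTooLong = maxIdle >= 0 && now - lastUsedAt > maxIdle;
        return lifespanOver || idleTooLong;
    }

    public static void main(String[] args) {
        ExpiringEntry entry = new ExpiringEntry(0, 1000, 300);
        entry.touch(200);
        System.out.println(entry.isExpired(450)); // idle for 250 ms, lifespan not yet over
        System.out.println(entry.isExpired(600)); // idle for 400 ms, longer than maxIdle
    }
}
```

Unlike eviction, nothing here depends on how many entries are in memory; only elapsed time decides whether an entry is removed.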

4.2. Expiration Operations

lifespan) or maximum idle time (maxIdle in Library Mode and max-idle in Remote Client-Server Mode) defined for an individual key/value pair overrides the cache-wide default for the entry in question.

4.3. Eviction and Expiration Comparison

lifespan) and idle time (maxIdle in Library Mode and max-idle in Remote Client-Server Mode) values are replicated alongside each cache entry.

4.4. Cache Entry Expiration Behavior

- An entry is passivated/overflowed to disk and is discovered to have expired.

- The expiration maintenance thread discovers that an entry it has found is expired.

4.5. Configure Expiration

Procedure 4.1. Configure Expiration in Library Mode

Add the Expiration Tag

Add the <expiration> tag to your project's <cache> tags as follows:

<expiration />

Set the Expiration Lifespan

Set the lifespan value to set the period of time (in milliseconds) an entry can remain in memory. The following is an example of this step:

<expiration lifespan="1000" />

Set the Maximum Idle Time

Set the time that entries are allowed to remain idle (unused) after which they are removed (in milliseconds). The default value is -1 for unlimited time.

<expiration lifespan="1000" maxIdle="1000" />

Set the Background Reaper Thread

Enable or disable the background reaper thread to test entries for expiration. Regardless of whether a reaper is used, entries are tested for expiration lazily when they are touched. The default value is true.

<expiration lifespan="1000" maxIdle="1000" reaperEnabled="true" />

Set the Expiration Interval

Set the interval (in milliseconds) between subsequent runs to purge expired entries from memory and any associated cache stores. To disable the periodic eviction process set the interval to -1. The default value is 1000.

<expiration lifespan="1000" maxIdle="1000" reaperEnabled="true" wakeUpInterval="5000" />

Procedure 4.2. Configure Expiration in Remote Client-Server Mode

Set the Expiration Lifespan

Set the lifespan value to set the period of time (in milliseconds) an entry can remain in memory. The following is an example of this step:

<expiration lifespan="1000" />

Set the Maximum Idle Time

Set the time that entries are allowed to remain idle (unused) after which they are removed (in milliseconds). The default value is -1 for unlimited time.

<expiration lifespan="1000" max-idle="1000" />

Set the Expiration Interval

Set the interval (in milliseconds) between subsequent runs to purge expired entries from memory and any associated cache stores. To disable the periodic eviction process set the interval to -1. The default value is 5000.

<expiration lifespan="1000" max-idle="1000" interval="10000" />

Reaper Thread in Remote Client-Server Mode

In Remote Client-Server Mode the background reaper thread is only enabled if interval is greater than 0. As interval defaults to 5000, the background reaper thread is automatically enabled if expiration is configured.

4.6. Troubleshooting Expiration

put() are passed a life span value as a parameter. This value defines the interval after which the entry must expire. In cases where eviction is not configured and the life span interval expires, it can appear as if Red Hat JBoss Data Grid has not removed the entry. For example, when viewing JMX statistics, such as the number of entries, you may see an out of date count, or the persistent store associated with JBoss Data Grid may still contain this entry. Behind the scenes, JBoss Data Grid has marked it as an expired entry, but has not removed it. Removal of such entries happens as follows:

- An entry is passivated/overflowed to disk and is discovered to have expired.

- The expiration maintenance thread discovers that an entry it has found is expired.

get() or containsKey() for the expired entry causes JBoss Data Grid to return a null value. The expired entry is later removed by the expiration thread.
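The gap between an entry being marked as expired and being physically removed can be sketched as follows. `LazyExpiryStore` and its methods are hypothetical names for this illustration and do not reflect JBoss Data Grid internals. Reads hide expired entries immediately, while a separate purge step, standing in for the expiration maintenance thread, removes them later.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

// Hypothetical store that separates "expired" from "removed"; not JBoss Data Grid code.
final class LazyExpiryStore {
    private static final class Stored {
        final String value;
        final long expiresAt; // absolute time in milliseconds

        Stored(String value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<String, Stored> entries = new HashMap<>();

    // Stores a value together with the time at which its lifespan ends.
    void put(String key, String value, long lifespanMillis, long now) {
        entries.put(key, new Stored(value, now + lifespanMillis));
    }

    // Expired entries are reported as null but stay in the map until purgeExpired runs.
    String get(String key, long now) {
        Stored stored = entries.get(key);
        return (stored == null || now >= stored.expiresAt) ? null : stored.value;
    }

    // Stand-in for the expiration maintenance thread: physically removes expired entries.
    int purgeExpired(long now) {
        int removed = 0;
        for (Iterator<Stored> it = entries.values().iterator(); it.hasNext();) {
            if (now >= it.next().expiresAt) {
                it.remove();
                removed++;
            }
        }
        return removed;
    }

    // Count of physically stored entries, including expired ones not yet purged.
    int storedEntries() {
        return entries.size();
    }

    public static void main(String[] args) {
        LazyExpiryStore store = new LazyExpiryStore();
        store.put("k", "v", 1000, 0);
        System.out.println(store.get("k", 1500));  // expired, so the read returns null
        System.out.println(store.storedEntries()); // the entry is still counted until purged
        store.purgeExpired(1500);
        System.out.println(store.storedEntries());
    }
}
```

This is why an entry count read from statistics can look out of date: the count reflects stored entries until the purge step has run.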

Part III. Monitor Your Cache

Chapter 5. Set Up Logging

5.1. About Logging

5.2. Supported Application Logging Frameworks

- JBoss Logging, which is included with Red Hat JBoss Data Grid 6.

5.2.1. About JBoss Logging

5.2.2. JBoss Logging Features

- Provides an innovative, easy to use typed logger.

- Full support for internationalization and localization. Translators work with message bundles in properties files while developers can work with interfaces and annotations.

- Build-time tooling to generate typed loggers for production, and runtime generation of typed loggers for development.

5.3. Boot Logging

5.3.1. Configure Boot Logging

logging.properties file to configure the boot log. This file is a standard Java properties file and can be edited in a text editor. Each line in the file has the format of property=value.

logging.properties file is available in the $JDG_HOME/standalone/configuration folder.

5.3.2. Default Log File Locations

Table 5.1. Default Log File Locations

| Log File | Location | Description |

|---|---|---|

| boot.log | $JDG_HOME/standalone/log/ | The Server Boot Log. Contains log messages related to the start up of the server. |

| server.log | $JDG_HOME/standalone/log/ | The Server Log. Contains all log messages once the server has launched. |

5.4. Logging Attributes

5.4.1. About Log Levels

- TRACE

- DEBUG

- INFO

- WARN

- ERROR

- FATAL

WARN will only record messages of the levels WARN, ERROR and FATAL.

5.4.2. Supported Log Levels

Table 5.2. Supported Log Levels

| Log Level | Value | Description |

|---|---|---|

| FINEST | 300 | - |

| FINER | 400 | - |

| TRACE | 400 | Used for messages that provide detailed information about the running state of an application. TRACE level log messages are captured when the server runs with the TRACE level enabled. |

| DEBUG | 500 | Used for messages that indicate the progress of individual requests or activities of an application. DEBUG level log messages are captured when the server runs with the DEBUG level enabled. |

| FINE | 500 | - |

| CONFIG | 700 | - |

| INFO | 800 | Used for messages that indicate the overall progress of the application. Used for application start up, shut down and other major lifecycle events. |

| WARN | 900 | Used to indicate a situation that is not in error but is not considered ideal. Indicates circumstances that can lead to errors in the future. |

| WARNING | 900 | - |

| ERROR | 1000 | Used to indicate an error that has occurred that could prevent the current activity or request from completing but will not prevent the application from running. |

| SEVERE | 1000 | - |

| FATAL | 1100 | Used to indicate events that could cause critical service failure and application shutdown and possibly cause JBoss Data Grid to shut down. |
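The numeric values in Table 5.2 determine which messages a given level captures. The sketch below copies the values for the six commonly used levels from the table. The enum `LogLevelSketch` and its methods are hypothetical helpers for this illustration and are not JBoss Logging classes.

```java
import java.util.ArrayList;
import java.util.List;

// Numeric values are taken from Table 5.2; the enum itself is a hypothetical helper.
enum LogLevelSketch {
    TRACE(400), DEBUG(500), INFO(800), WARN(900), ERROR(1000), FATAL(1100);

    final int value;

    LogLevelSketch(int value) {
        this.value = value;
    }

    // A message is recorded when its value is at or above the configured threshold.
    boolean isRecordedAt(LogLevelSketch threshold) {
        return value >= threshold.value;
    }

    // Lists every level that a logger set to the given threshold would record.
    static List<LogLevelSketch> recordedAt(LogLevelSketch threshold) {
        List<LogLevelSketch> recorded = new ArrayList<>();
        for (LogLevelSketch level : values()) {
            if (level.isRecordedAt(threshold)) {
                recorded.add(level);
            }
        }
        return recorded;
    }

    public static void main(String[] args) {
        System.out.println(recordedAt(WARN));
    }
}
```

With the threshold set to WARN, only the levels with values 900, 1000 and 1100 are recorded.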

5.4.3. About Log Categories

WARNING log level results in log values of 900, 1000 and 1100 being captured.

5.4.4. About the Root Logger

server.log. This file is sometimes referred to as the server log.

5.4.5. About Log Handlers

- Console

- File

- Periodic

- Size

- Async

- Custom

5.4.6. Log Handler Types

Table 5.3. Log Handler Types

| Log Handler Type | Description | Use Case |

|---|---|---|

| Console | Console log handlers write log messages to either the host operating system’s standard out (stdout) or standard error (stderr) stream. These messages are displayed when JBoss Data Grid is run from a command line prompt. | The Console log handler is preferred when JBoss Data Grid is administered using the command line. In such a case, the messages from a Console log handler are not saved unless the operating system is configured to capture the standard out or standard error stream. |

| File | File log handlers are the simplest log handlers. Their primary use is to write log messages to a specified file. | File log handlers are most useful if the requirement is to store all log entries according to the time in one place. |

| Periodic | Periodic file handlers write log messages to a named file until a specified period of time has elapsed. Once the time period has elapsed, the specified time stamp is appended to the file name. The handler then continues to write into the newly created log file with the original name. | The Periodic file handler can be used to accumulate log messages on a weekly, daily, hourly or other basis depending on the requirements of the environment. |

| Size | Size log handlers write log messages to a named file until the file reaches a specified size. When the file reaches a specified size, it is renamed with a numeric suffix and the handler continues to write into a newly created log file with the original name. Each size log handler must specify the maximum number of files to be kept in this fashion. | The Size handler is best suited to an environment where the log file size must be consistent. |

| Async | Async log handlers are wrapper log handlers that provide asynchronous behavior for one or more other log handlers. These are useful for log handlers that have high latency or other performance problems such as writing a log file to a network file system. | The Async log handlers are best suited to an environment where high latency is a problem or when writing to a network file system. |

| Custom | Custom log handlers enable you to configure new types of log handlers that have been implemented. A custom handler must be implemented as a Java class that extends java.util.logging.Handler and be contained in a module. | Custom log handlers create customized log handler types and are recommended for advanced users. |
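As the Custom row notes, a custom handler is a Java class that extends `java.util.logging.Handler`. The following is a minimal sketch of such a class. `InMemoryHandler` is a hypothetical example that keeps messages in a list, and packaging it as a module and registering it in the server configuration are not shown.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;

// Hypothetical custom handler that keeps formatted messages in memory.
final class InMemoryHandler extends Handler {
    private final List<String> messages = new ArrayList<>();

    // Stores the record if it passes the handler's level and filter checks.
    @Override
    public synchronized void publish(LogRecord record) {
        if (isLoggable(record)) {
            messages.add(record.getLevel() + ": " + record.getMessage());
        }
    }

    // Nothing is buffered outside the list, so there is nothing to flush.
    @Override
    public void flush() {
    }

    // No external resources are held, so there is nothing to release.
    @Override
    public void close() {
    }

    // Returns a copy so callers cannot modify the handler's internal list.
    synchronized List<String> messages() {
        return new ArrayList<>(messages);
    }

    public static void main(String[] args) {
        InMemoryHandler handler = new InMemoryHandler();
        handler.setLevel(Level.WARNING);
        handler.publish(new LogRecord(Level.INFO, "ignored"));
        handler.publish(new LogRecord(Level.SEVERE, "disk full"));
        System.out.println(handler.messages());
    }
}
```

The three overridden methods are the abstract methods of `java.util.logging.Handler`; level checks are inherited through `isLoggable`.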

5.4.7. Selecting Log Handlers

- The Console log handler is preferred when JBoss Data Grid is administered using the command line. In such a case, errors and log messages appear on the console window and are not saved unless separately configured to do so.

- The File log handler is used to direct log entries into a specified file. This simplicity is useful if the requirement is to store all log entries according to the time in one place.

- The Periodic log handler is similar to the File handler but creates files according to the specified period. As an example, this handler can be used to accumulate log messages on a weekly, daily, hourly or other basis depending on the requirements of the environment.

- The Size log handler also writes log messages to a specified file, but only while the log file size is within a specified limit. Once the file size reaches the specified limit, log messages are written to a new log file. This handler is best suited to an environment where the log file size must be consistent.

- The Async log handler is a wrapper that forces other log handlers to operate asynchronously. This is best suited to an environment where high latency is a problem or when writing to a network file system.

- The Custom log handler creates new, customized types of log handlers. This is an advanced log handler.

5.4.8. About Log Formatters

java.util.Formatter class.

5.5. Logging Sample Configurations

5.5.1. Logging Sample Configuration Location

standalone.xml or clustered.xml.

5.5.2. Sample XML Configuration for the Root Logger

Procedure 5.1. Configure the Root Logger

Set the level Property

The level property sets the lowest level of log message that the root logger records.

<subsystem xmlns="urn:jboss:domain:logging:1.4">
    <root-logger>
        <level name="INFO"/>
    </root-logger>
</subsystem>

List handlers

handlers is a list of log handlers that are used by the root logger.

<subsystem xmlns="urn:jboss:domain:logging:1.4">
    <root-logger>
        <level name="INFO"/>
        <handlers>
            <handler name="CONSOLE"/>
            <handler name="FILE"/>
        </handlers>
    </root-logger>
</subsystem>

5.5.3. Sample XML Configuration for a Log Category

Procedure 5.2. Configure a Log Category

<subsystem xmlns="urn:jboss:domain:logging:1.4">

<logger category="com.company.accounts.rec" use-parent-handlers="true">

<level name="WARN"/>

<handlers>

<handler name="accounts-rec"/>

</handlers>

</logger>

</subsystem>

- Use the category property to specify the log category from which log messages will be captured. The use-parent-handlers property is set to "true" by default. When set to "true", this category will use the log handlers of the root logger in addition to any other assigned handlers.

- Use the level property to set the lowest level of log message that the log category records.

- The handlers element contains a list of log handlers.

5.5.4. Sample XML Configuration for a Console Log Handler

Procedure 5.3. Configure the Console Log Handler

<subsystem xmlns="urn:jboss:domain:logging:1.4">

<console-handler name="CONSOLE" autoflush="true">

<level name="INFO"/>

<encoding value="UTF-8"/>

<target value="System.out"/>

<filter-spec value="not(match(&quot;JBAS.*&quot;))"/>

<formatter>

<pattern-formatter pattern="%K{level}%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>

</formatter>

</console-handler>

</subsystem>

Add the Log Handler Identifier Information

The name property sets the unique identifier for this log handler. When autoflush is set to "true" the log messages will be sent to the handler's target immediately upon request.

Set the level Property

The level property sets the lowest level of log messages recorded.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Define the target Value

The target property defines the system output stream where the output of the log handler goes. This can be System.err for the system error stream, or System.out for the standard out stream.

Define the filter-spec Property

The filter-spec property is an expression value that defines a filter. The example provided defines a filter that does not match a pattern: not(match("JBAS.*")).

Specify the formatter

Use formatter to list the log formatter used by the log handler.

5.5.5. Sample XML Configuration for a File Log Handler

Procedure 5.4. Configure the File Log Handler

<file-handler name="accounts-rec-trail" autoflush="true">

<level name="INFO"/>

<encoding value="UTF-8"/>

<file relative-to="jboss.server.log.dir" path="accounts-rec-trail.log"/>

<formatter>

<pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>

</formatter>

<append value="true"/>

</file-handler>

Add the File Log Handler Identifier Information

The name property sets the unique identifier for this log handler. When autoflush is set to "true" the log messages will be sent to the handler's target immediately upon request.

Set the level Property

The level property sets the lowest level of log message that the log handler records.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Set the file Object

The file object represents the file where the output of this log handler is written to. It has two configuration properties: relative-to and path.

The relative-to property is the directory where the log file is written to. JBoss Enterprise Application Platform 6 file path variables can be specified here. The jboss.server.log.dir variable points to the log/ directory of the server.

The path property is the name of the file where the log messages will be written. It is a relative path name that is appended to the value of the relative-to property to determine the complete path.

Specify the formatter

Use formatter to list the log formatter used by the log handler.

Set the append Property

When the append property is set to "true", all messages written by this handler will be appended to an existing file. If set to "false" a new file will be created each time the application server launches. Changes to append require a server reboot to take effect.

5.5.6. Sample XML Configuration for a Periodic Log Handler

Procedure 5.5. Configure the Periodic Log Handler

<periodic-rotating-file-handler name="FILE" autoflush="true">

<level name="INFO"/>

<encoding value="UTF-8"/>

<formatter>

<pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>

</formatter>

<file relative-to="jboss.server.log.dir" path="server.log"/>

<suffix value=".yyyy-MM-dd"/>

<append value="true"/>

</periodic-rotating-file-handler>

Add the Periodic Log Handler Identifier Information

The name property sets the unique identifier for this log handler. When autoflush is set to "true" the log messages will be sent to the handler's target immediately upon request.

Set the level Property

The level property sets the lowest level of log message that the log handler records.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Specify the formatter

Use formatter to list the log formatter used by the log handler.

Set the file Object

The file object represents the file where the output of this log handler is written to. It has two configuration properties: relative-to and path.

The relative-to property is the directory where the log file is written to. JBoss Enterprise Application Platform 6 file path variables can be specified here. The jboss.server.log.dir variable points to the log/ directory of the server.

The path property is the name of the file where the log messages will be written. It is a relative path name that is appended to the value of the relative-to property to determine the complete path.

Set the suffix Value

The suffix is appended to the filename of the rotated logs and is used to determine the frequency of rotation. The format of the suffix is a dot (.) followed by a date string, which is parsable by the java.text.SimpleDateFormat class. The log is rotated on the basis of the smallest time unit defined by the suffix. For example, yyyy-MM-dd will result in daily log rotation. See http://docs.oracle.com/javase/6/docs/api/index.html?java/text/SimpleDateFormat.html

Set the append Property

When the append property is set to "true", all messages written by this handler will be appended to an existing file. If set to "false" a new file will be created each time the application server launches. Changes to append require a server reboot to take effect.
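To see how a suffix value such as .yyyy-MM-dd turns into a rotated file name, the sketch below formats a date with `java.text.SimpleDateFormat` and appends the result to the log file name. The class and method names are hypothetical, and the handler's actual rotation logic is not reproduced here. The time zone is passed explicitly only to keep the example deterministic.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

// Illustrative only: shows how a SimpleDateFormat suffix maps to a rotated file name.
final class RotationSuffixSketch {
    // Appends the formatted suffix for the given period to the original file name.
    static String rotatedName(String fileName, String suffixPattern, Date periodStart, TimeZone zone) {
        SimpleDateFormat format = new SimpleDateFormat(suffixPattern);
        format.setTimeZone(zone);
        return fileName + format.format(periodStart);
    }

    public static void main(String[] args) {
        TimeZone utc = TimeZone.getTimeZone("UTC");
        // The epoch is used as a fixed sample date so the output does not depend on the clock.
        System.out.println(rotatedName("server.log", ".yyyy-MM-dd", new Date(0L), utc));
    }
}
```

Because the smallest unit in the pattern is the day, two timestamps within the same day produce the same name, which corresponds to daily rotation.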

5.5.7. Sample XML Configuration for a Size Log Handler

Procedure 5.6. Configure the Size Log Handler

<size-rotating-file-handler name="accounts_debug" autoflush="false">

<level name="DEBUG"/>

<encoding value="UTF-8"/>

<file relative-to="jboss.server.log.dir" path="accounts-debug.log"/>

<rotate-size value="500k"/>

<max-backup-index value="5"/>

<formatter>

<pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>

</formatter>

<append value="true"/>

</size-rotating-file-handler>

Add the Size Log Handler Identifier Information

The name property sets the unique identifier for this log handler.

When autoflush is set to "true" the log messages will be sent to the handler's target immediately upon request.

Set the level Property

The level property sets the maximum level of log message that the root logger records.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Set the file Object

The file object represents the file to which the output of this log handler is written. It has two configuration properties: relative-to and path.

The relative-to property is the directory where the log file is written. JBoss Enterprise Application Platform 6 file path variables can be specified here. The jboss.server.log.dir variable points to the log/ directory of the server.

The path property is the name of the file where the log messages will be written. It is a relative path name that is appended to the value of the relative-to property to determine the complete path.

Specify the rotate-size Value

The maximum size that the log file can reach before it is rotated. A single character appended to the number indicates the size units: b for bytes, k for kilobytes, m for megabytes, g for gigabytes. For example: 50m for 50 megabytes.

Set the max-backup-index Number

The maximum number of rotated logs that are kept. When this number is reached, the oldest log is reused.

Specify the formatter

Use formatter to list the log formatter used by the log handler.

Set the append Property

When the append property is set to "true", all messages written by this handler will be appended to an existing file. If set to "false", a new file will be created each time the application server launches. Changes to append require a server reboot to take effect.
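To make the rotate-size notation concrete, the sketch below converts values such as 500k or 50m into a byte count. It is a hypothetical parser written for illustration, not the handler's own code, and it assumes binary multiples (1 k = 1024 bytes); the guide does not state which multiplier the handler applies.

```java
import java.util.Locale;

// Hypothetical parser for values such as "500k" or "50m". The binary
// multiplier (1 k = 1024 bytes) is an assumption, not taken from the guide.
final class RotateSizeSketch {

    static long toBytes(String value) {
        String trimmed = value.trim().toLowerCase(Locale.ROOT);
        char unit = trimmed.charAt(trimmed.length() - 1);
        if (Character.isDigit(unit)) {
            return Long.parseLong(trimmed); // no unit suffix: treat as bytes
        }
        long number = Long.parseLong(trimmed.substring(0, trimmed.length() - 1));
        switch (unit) {
            case 'b': return number;
            case 'k': return number * 1024L;
            case 'm': return number * 1024L * 1024L;
            case 'g': return number * 1024L * 1024L * 1024L;
            default: throw new IllegalArgumentException("Unknown size unit: " + unit);
        }
    }

    public static void main(String[] args) {
        System.out.println(toBytes("500k"));
        System.out.println(toBytes("50m"));
    }
}
```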

5.5.8. Sample XML Configuration for an Async Log Handler

Procedure 5.7. Configure the Async Log Handler

<async-handler name="Async_NFS_handlers">

<level name="INFO"/>

<queue-length value="512"/>

<overflow-action value="block"/>

<subhandlers>

<handler name="FILE"/>

<handler name="accounts-record"/>

</subhandlers>

</async-handler>

- The name property sets the unique identifier for this log handler.

- The level property sets the maximum level of log message that the root logger records.

- The queue-length defines the maximum number of log messages that will be held by this handler while waiting for sub-handlers to respond.

- The overflow-action defines how this handler responds when its queue length is exceeded. This can be set to BLOCK or DISCARD. BLOCK makes the logging application wait until there is available space in the queue. This is the same behavior as a non-async log handler. DISCARD allows the logging application to continue but the log message is deleted.

- The subhandlers list is the list of log handlers to which this async handler passes its log messages.
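The difference between the two overflow actions can be pictured with a bounded queue from java.util.concurrent. The sketch below is only an analogy under that assumption (the class OverflowSketch is hypothetical and is not how JBoss Logging is implemented): offer() behaves like DISCARD, and put() behaves like BLOCK.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical stand-in for an async handler's queue; not JBoss Logging code.
final class OverflowSketch {

    private final BlockingQueue<String> queue;

    OverflowSketch(int queueLength) {
        this.queue = new ArrayBlockingQueue<>(queueLength);
    }

    // DISCARD-like: returns false and drops the message when the queue is full.
    boolean publishDiscard(String message) {
        return queue.offer(message);
    }

    // BLOCK-like: waits until a consumer has taken a message and freed space.
    void publishBlock(String message) throws InterruptedException {
        queue.put(message);
    }

    int pending() {
        return queue.size();
    }

    public static void main(String[] args) {
        OverflowSketch handler = new OverflowSketch(2);
        System.out.println(handler.publishDiscard("first"));
        System.out.println(handler.publishDiscard("second"));
        // The queue is full here, so this message is dropped.
        System.out.println(handler.publishDiscard("third"));
        System.out.println(handler.pending());
    }
}
```

With DISCARD the caller never waits but may lose messages; with BLOCK no message is lost but a slow sub-handler slows the application down.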

Part IV. Set Up Cache Modes

Chapter 6. Cache Modes

- Local mode is the only non-clustered cache mode offered in JBoss Data Grid. In local mode, JBoss Data Grid operates as a simple single-node in-memory data cache. Local mode is most effective when scalability and failover are not required and provides high performance in comparison with clustered modes.

- Clustered mode replicates state changes to a small subset of nodes. The subset size is sufficient for fault tolerance purposes but not large enough to hinder scalability. Before attempting to use clustered mode, it is important to first configure JGroups for a clustered configuration. For details about configuring JGroups, see Section 26.2, “Configure JGroups (Library Mode)”

6.1. About Cache Containers

The cache-container element acts as a parent of one or more (local or clustered) caches. To add clustered caches to the container, a transport must be defined.

Procedure 6.1. How to Configure the Cache Container

<subsystem xmlns="urn:infinispan:server:core:6.2" default-cache-container="local">
    <cache-container name="local" default-cache="default" statistics="true" listener-executor="infinispan-listener" start="EAGER">
        <local-cache name="default" start="EAGER" statistics="false">
            <!-- Additional configuration information here -->
        </local-cache>
    </cache-container>
</subsystem>

Configure the Cache Container

The cache-container element specifies information about the cache container using the following parameters:

- The name parameter defines the name of the cache container.

- The default-cache parameter defines the name of the default cache used with the cache container.

- The statistics attribute is optional and is true by default. Statistics are useful in monitoring JBoss Data Grid via JMX or JBoss Operations Network; however, they adversely affect performance. Disable this attribute by setting it to false if it is not required.

- The listener-executor defines the executor used for asynchronous cache listener notifications.

- The start parameter indicates when the cache container starts, i.e. whether it will start lazily when requested or "eagerly" when the server starts up. Valid values for this parameter are EAGER and LAZY.

Configure Per-cache Statistics

If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

6.2. Local Mode

- Write-through and write-behind caching to persist data.

- Entry eviction to prevent the Java Virtual Machine (JVM) running out of memory.

- Support for entries that expire after a defined period.

ConcurrentMap, resulting in a simple migration process from a map to JBoss Data Grid.

6.2.1. Configure Local Mode (Remote Client-Server Mode)

local-cache element.

Procedure 6.2. The local-cache Element

<cache-container name="local"

default-cache="default"

statistics="true">

<local-cache name="default"

start="EAGER"

batching="false"

statistics="true">

<indexing index="NONE">

<property name="default.directory_provider">ram</property>

</indexing>

</local-cache>

</cache-container>

The local-cache element specifies information about the local cache used with the cache container using the following parameters:

- The name parameter specifies the name of the local cache to use.

- The start parameter indicates when the cache starts, i.e. whether it will start lazily when requested or "eagerly" when the server starts up. Valid values for this parameter are EAGER and LAZY.

- The batching parameter specifies whether batching is enabled for the local cache.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

- The index attribute of the indexing parameter specifies the type of indexing used for the local cache. Valid values for this attribute are NONE, LOCAL and ALL.

DefaultCacheManager with the "no-argument" constructor. Both of these methods create a local default cache.

<transport/> it can only contain local caches. The container used in the example can only contain local caches as it does not have a <transport/>.

ConcurrentMap and is compatible with multiple cache systems.

6.2.2. Configure Local Mode (Library Mode)

mode parameter to local equals not specifying a clustering mode at all. In the case of the latter, the cache defaults to local mode, even if its cache manager defines a transport.

<clustering mode="local" />

6.3. Clustered Modes

- Replication Mode replicates any entry that is added across all cache instances in the cluster.

- Invalidation Mode does not share any data, but signals remote caches to initiate the removal of invalid entries.

- Distribution Mode stores each entry on a subset of nodes instead of on all nodes in the cluster.

6.3.1. Asynchronous and Synchronous Operations

6.3.2. Cache Mode Troubleshooting

6.3.2.1. Invalid Data in ReadExternal

readExternal, it can be because when using Cache.putAsync(), starting serialization can cause your object to be modified, causing the datastream passed to readExternal to be corrupted. This can be resolved if access to the object is synchronized.

6.3.2.2. About Asynchronous Communications

local-cache, distributed-cache and replicated-cache elements respectively. Each of these elements contains a mode property, the value of which can be set to SYNC for synchronous or ASYNC for asynchronous communications.

Example 6.1. Asynchronous Communications Example Configuration

<replicated-cache name="default"

start="EAGER"

mode="ASYNC"

batching="false"

statistics="true">

<!-- Additional configuration information here -->

</replicated-cache>

Note

6.3.2.3. Cluster Physical Address Retrieval

The physical address can be retrieved using an instance method call. For example: AdvancedCache.getRpcManager().getTransport().getPhysicalAddresses().

6.4. State Transfer

numOwners copies of each key in the cache (as determined through consistent hashing). In invalidation mode the initial state transfer is similar to replication mode, the only difference being that the nodes are not guaranteed to have the same state. When a node leaves, a replicated mode or invalidation mode cache does not perform any state transfer. A distributed cache needs to make additional copies of the keys that were stored on the leaving nodes, again to keep numOwners copies of each key.

ClusterLoader must be configured, otherwise a node will become the owner or backup owner of a key without the data being loaded into its cache. In addition, if State Transfer is disabled in distributed mode then a key will occasionally have fewer than numOwners owners.

6.4.1. Non-Blocking State Transfer

- Minimize the interval(s) where the entire cluster cannot respond to requests because of a state transfer in progress.

- Minimize the interval(s) where an existing member stops responding to requests because of a state transfer in progress.

- Allow state transfer to occur with a drop in the performance of the cluster. However, the drop in the performance during the state transfer does not throw any exception, and allows processes to continue.

- Allow a GET operation to successfully retrieve a key from another node without returning a null value during a progressive state transfer.

- The blocking protocol queues the transaction delivery during the state transfer.

- State transfer control messages (such as CacheTopologyControlCommand) are sent according to the total order information.

6.4.2. Suppress State Transfer via JMX

getCache() call will time out after stateTransfer.timeout expires unless rebalancing is re-enabled or stateTransfer.awaitInitialTransfer is set to false.

6.4.3. The rebalancingEnabled Attribute

rebalancingEnabled JMX attribute, and requires no specific configuration.

rebalancingEnabled attribute can be modified for the entire cluster from the LocalTopologyManager JMX MBean on any node. This attribute is true by default, and is configurable programmatically.

<await-initial-transfer="false"/>

Chapter 7. Set Up Distribution Mode

7.1. About Distribution Mode

7.2. Distribution Mode's Consistent Hash Algorithm

numSegments and cannot be changed without restarting the cluster. The mapping of keys to segments is also fixed — a key maps to the same segment, regardless of how the topology of the cluster changes.
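The idea of a fixed key-to-segment mapping can be sketched in a few lines. This is a simplified illustration only: it uses Object.hashCode() as the hash function, which is an assumption and not the hash JBoss Data Grid applies, and the class name SegmentSketch is hypothetical. The point is that the segment depends only on the key and numSegments, never on which nodes are currently in the cluster.

```java
// Hypothetical illustration of a fixed key-to-segment mapping. The hash
// function here is Object.hashCode(), not the one JBoss Data Grid uses.
final class SegmentSketch {

    static int segmentOf(Object key, int numSegments) {
        // Math.floorMod keeps the result in [0, numSegments) even for negative hashes.
        return Math.floorMod(key.hashCode(), numSegments);
    }

    public static void main(String[] args) {
        int numSegments = 20;
        // The same key always lands in the same segment, whatever the topology.
        System.out.println(segmentOf("K", numSegments));
        System.out.println(segmentOf("K", numSegments));
    }
}
```

What changes when nodes join or leave is the assignment of segments to owners, not the segment a key belongs to.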

7.3. Locating Entries in Distribution Mode

PUT operation can result in as many remote calls as specified by the num_copies parameter, while a GET operation executed on any node in the cluster results in a single remote call. In the background, the GET operation results in the same number of remote calls as a PUT operation (specifically the value of the num_copies parameter), but these occur in parallel and the returned entry is passed to the caller as soon as one returns.
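The "ask every owner in parallel, use the first answer" behavior described above resembles ExecutorService.invokeAny from the Java standard library. The sketch below models each owner as a Callable; the class ParallelGetSketch and its method are hypothetical and do not reflect the actual remote-call code in JBoss Data Grid.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical model of a distributed GET: every owner is asked in
// parallel and the first successful response is returned to the caller.
final class ParallelGetSketch {

    static String getFromOwners(List<Callable<String>> owners) {
        ExecutorService pool = Executors.newFixedThreadPool(owners.size());
        try {
            // invokeAny returns as soon as one task succeeds and cancels the rest.
            return pool.invokeAny(owners);
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("No owner returned a value", e);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        List<Callable<String>> owners = new ArrayList<>();
        owners.add(() -> "value-of-K");
        // A slower owner: its answer is not waited for.
        owners.add(() -> { Thread.sleep(200); return "value-of-K"; });
        System.out.println(getFromOwners(owners));
    }
}
```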

7.4. Return Values in Distribution Mode

7.5. Configure Distribution Mode (Remote Client-Server Mode)

Procedure 7.1. The distributed-cache Element

<cache-container name="clustered" default-cache="default" statistics="true">
    <transport executor="infinispan-transport" lock-timeout="60000"/>
    <distributed-cache name="default" mode="SYNC" segments="20" start="EAGER" owners="2" statistics="true">
        <!-- Additional configuration information here -->
    </distributed-cache>
</cache-container>

distributed-cache element configures settings for the distributed cache using the following parameters:

- The name parameter provides a unique identifier for the cache.

- The mode parameter sets the clustered cache mode. Valid values are SYNC (synchronous) and ASYNC (asynchronous).

- The (optional) segments parameter specifies the number of hash space segments per cluster. The recommended value for this parameter is ten multiplied by the cluster size and the default value is 20.

- The start parameter specifies whether the cache starts when the server starts up or when it is requested or deployed.

- The owners parameter indicates the number of nodes that will contain the hash segment.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

Important

cache-container, locking, and transaction elements, see the appropriate chapter.

7.6. Configure Distribution Mode (Library Mode)

Procedure 7.2. Distributed Cache Configuration

Configure the Clustering Element

<clustering mode="distribution">
    <sync replTimeout="${TIME}" />
    <stateTransfer chunkSize="${SIZE}"
                   fetchInMemoryState="{true/false}"
                   awaitInitialTransfer="{true/false}"
                   timeout="${TIME}" />
</clustering>

- The clustering element's mode parameter's value determines the clustering mode selected for the cache.

- The sync element's replTimeout parameter specifies the maximum time period in milliseconds for an acknowledgment after a remote call. If the time period ends without any acknowledgment, an exception is thrown.

- The stateTransfer element specifies how state is transferred when a node leaves or joins the cluster. It uses the following parameters:

  - The chunkSize parameter is the number of cache entries to be transferred in one chunk. The default chunkSize value is 512. The best chunkSize depends on many factors (for example, the size of entries) and needs to be measured. For larger cache entries, smaller chunks are advisable; for smaller cache entries, increasing the chunkSize offers better performance.

  - The fetchInMemoryState parameter, when set to true, requests state information from neighboring caches on start up. This impacts the start up time for the cache. Set the value to true if there is no shared cache store behind the cache, to avoid cache inconsistency.

  - The awaitInitialTransfer parameter causes the first call to method CacheManager.getCache() on the joiner node to block and wait until the joining is complete and the cache has finished receiving state from neighboring caches (if fetchInMemoryState is enabled). This option applies to distributed and replicated caches only and is enabled by default.

  - The timeout parameter specifies the maximum time (in milliseconds) the cache waits for responses from neighboring caches with the requested states. If no response is received within the timeout period, the start up process aborts and an exception is thrown. As a result of the failed state transfer, the cache is not available on that instance.

Specify Transport Configuration

<global>
    <transport clusterName="${NAME}"
               distributedSyncTimeout="${TIME}"
               transportClass="${CLASS}" />
</global>

The transport element defines the transport configuration for the cache container as follows:

- The clusterName parameter specifies the name of the cluster. Nodes can only connect to clusters that share the same name.

- The distributedSyncTimeout parameter specifies the time to wait to acquire the distributed lock. This distributed lock ensures that only a single cache can transfer state or rehash state at a time.

- The transportClass parameter specifies a class that represents a network transport for the cache container.

7.7. Synchronous and Asynchronous Distribution

Example 7.1. Communication Mode example

A, B and C, and a key K that maps to nodes A and B. Perform an operation on node C that requires a return value, for example Cache.remove(K). To execute successfully, the operation must first synchronously forward the call to both node A and B, and then wait for a result returned from either node A or B. If asynchronous communication were used, the usefulness of the returned values could not be guaranteed, despite the operation behaving as expected.

7.8. GET and PUT Usage in Distribution Mode

GET command before a write command. This occurs because certain methods (for example, Cache.put()) return the previous value associated with the specified key according to the java.util.Map contract. When this is performed on an instance that does not own the key and the entry is not found in the L1 cache, the only reliable way to elicit this return value is to perform a remote GET before the PUT.

GET operation that occurs before the PUT operation is always synchronous, whether the cache is synchronous or asynchronous, because Red Hat JBoss Data Grid must wait for the return value.
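The return-value requirement comes from the java.util.Map contract itself, which can be observed with a plain HashMap. The sketch below uses only the standard library; the class name PreviousValueSketch is hypothetical. In a distributed cache, producing the second return value on a node that does not own the key is what forces the remote GET.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Shows the java.util.Map contract that Cache.put() also follows:
// put() returns the value previously associated with the key.
final class PreviousValueSketch {

    static List<String> previousValues(Map<String, String> cache) {
        List<String> returned = new ArrayList<>();
        returned.add(cache.put("K", "v1")); // null: the key had no previous value
        returned.add(cache.put("K", "v2")); // "v1": the value being replaced
        return returned;
    }

    public static void main(String[] args) {
        System.out.println(previousValues(new HashMap<String, String>()));
    }
}
```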

7.8.1. Distributed GET and PUT Operation Resource Usage

GET operation before executing the desired PUT operation.

GET operation does not wait for all responses, which would result in wasted resources. The GET process accepts the first valid response received, which allows its performance to be unrelated to cluster size.

Flag.SKIP_REMOTE_LOOKUP flag for a per-invocation setting if return values are not required for your implementation.

java.util.Map interface contract. The contract breaks because unreliable and inaccurate return values are provided to certain methods. As a result, ensure that these return values are not used for any important purpose on your configuration.

Chapter 8. Set Up Replication Mode

8.1. About Replication Mode

8.2. Optimized Replication Mode Usage

8.3. Configure Replication Mode (Remote Client-Server Mode)

Procedure 8.1. The replicated-cache Element

<cache-container name="clustered"

default-cache="default"

statistics="true">

<transport executor="infinispan-transport"

lock-timeout="60000"/>

<replicated-cache name="default"

mode="SYNC"

start="EAGER"

statistics="true">

<transaction mode="NONE" />

</replicated-cache>

</cache-container>

Important

The replicated-cache element configures settings for the replicated cache using the following parameters:

- The name parameter provides a unique identifier for the cache.

- The mode parameter sets the clustered cache mode. Valid values are SYNC (synchronous) and ASYNC (asynchronous).

- The start parameter specifies whether the cache starts when the server starts up or when it is requested or deployed.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

- The transaction element sets up the transaction mode for the replicated cache.

Important

In Remote Client-Server mode, the transactions element is set to NONE unless JBoss Data Grid is used in a compatibility mode where the cluster contains both JBoss Data Grid server and library instances. In this case, if transactions are configured in the library mode instance, they must also be configured in the server instance.

cache-container and locking, see the appropriate chapter.

8.4. Configure Replication Mode (Library Mode)

Procedure 8.2. Replication Mode Configuration

Configure the Clustering Element

<clustering mode="replication">
    <sync replTimeout="${TIME}" />
    <stateTransfer chunkSize="${SIZE}"
                   fetchInMemoryState="{true/false}"
                   awaitInitialTransfer="{true/false}"
                   timeout="${TIME}" />
</clustering>

- The clustering element's mode parameter's value determines the clustering mode selected for the cache.

- The sync element's replTimeout parameter specifies the maximum time period in milliseconds for an acknowledgment after a remote call. If the time period ends without any acknowledgment, an exception is thrown.

- The stateTransfer element specifies how state is transferred when a node leaves or joins the cluster. It uses the following parameters:

  - The chunkSize parameter is the number of cache entries to be transferred in one chunk. The default chunkSize value is 512. The best chunkSize depends on many factors (for example, the size of entries) and needs to be measured. For larger cache entries, smaller chunks are advisable; for smaller cache entries, increasing the chunkSize offers better performance.

  - The fetchInMemoryState parameter, when set to true, requests state information from neighboring caches on start up. This impacts the start up time for the cache. Set the value to true if there is no shared cache store behind the cache, to avoid cache inconsistency.

  - The awaitInitialTransfer parameter causes the first call to method CacheManager.getCache() on the joiner node to block and wait until the joining is complete and the cache has finished receiving state from neighboring caches (if fetchInMemoryState is enabled). This option applies to distributed and replicated caches only and is enabled by default.

  - The timeout parameter specifies the maximum time (in milliseconds) the cache waits for responses from neighboring caches with the requested states. If no response is received within the timeout period, the start up process aborts and an exception is thrown. As a result of the failed state transfer, the cache is not available on that instance.

Specify Transport Configuration

<global>
    <transport clusterName="${NAME}"
               distributedSyncTimeout="${TIME}"
               transportClass="${CLASS}" />
</global>

The transport element defines the transport configuration for the cache container as follows:

- The clusterName parameter specifies the name of the cluster. Nodes can only connect to clusters that share the same name.

- The distributedSyncTimeout parameter specifies the time to wait to acquire the distributed lock. This distributed lock ensures that only a single cache can transfer state or rehash state at a time.

- The transportClass parameter specifies a class that represents a network transport for the cache container.

8.5. Synchronous and Asynchronous Replication

- Synchronous replication blocks a thread or caller (for example on a put() operation) until the modifications are replicated across all nodes in the cluster. By waiting for acknowledgments, synchronous replication ensures that all replications are successfully applied before the operation is concluded.

- Asynchronous replication operates significantly faster than synchronous replication because it does not need to wait for responses from nodes. Asynchronous replication performs the replication in the background and the call returns immediately. Errors that occur during asynchronous replication are written to a log. As a result, a transaction can be successfully completed despite the fact that replication of the transaction may not have succeeded on all the cache instances in the cluster.

8.5.1. Troubleshooting Asynchronous Replication Behavior

- Disable state transfer and use a ClusteredCacheLoader to lazily look up remote state as and when needed.

- Enable state transfer and REPL_SYNC. Use the Asynchronous API (for example, cache.putAsync(k, v)) to activate 'fire-and-forget' capabilities.

- Enable state transfer and REPL_ASYNC. All RPCs end up becoming synchronous, but client threads will not be held up if a replication queue is enabled (which is recommended for asynchronous mode).

8.6. The Replication Queue

- Previously set intervals.

- The queue size exceeding the number of elements.

- A combination of previously set intervals and the queue size exceeding the number of elements.
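The flush triggers listed above can be modeled with a small in-memory queue. The sketch below is a hypothetical model, not the JBoss Data Grid implementation: it flushes when the number of queued modifications reaches a configured size (whether the real trigger fires on reaching or on exceeding the limit is an assumption here), and exposes a flush() method that a timer could call at a fixed interval.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a replication queue with a size-triggered flush.
final class ReplicationQueueSketch {

    private final int queueSize;
    private final List<String> pending = new ArrayList<>();
    private final List<List<String>> flushedBatches = new ArrayList<>();

    ReplicationQueueSketch(int queueSize) {
        this.queueSize = queueSize;
    }

    // Queues a modification and flushes once the size limit is reached.
    void add(String modification) {
        pending.add(modification);
        if (pending.size() >= queueSize) {
            flush();
        }
    }

    // Sends all queued modifications as one batch. A scheduled task could
    // also call this periodically to model the interval-based trigger.
    void flush() {
        if (!pending.isEmpty()) {
            flushedBatches.add(new ArrayList<>(pending));
            pending.clear();
        }
    }

    int batchCount() {
        return flushedBatches.size();
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        ReplicationQueueSketch queue = new ReplicationQueueSketch(2);
        queue.add("put k1");
        queue.add("put k2"); // size limit reached: first batch is flushed
        queue.add("put k3"); // stays queued until the next flush
        System.out.println(queue.batchCount() + " batch(es), " + queue.pendingCount() + " pending");
    }
}
```

Batching in this way trades a short replication delay for fewer, larger network messages.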

8.6.1. Replication Queue Usage

- Disable asynchronous marshalling.

- Set the max-threads count value to 1 for the transport executor. The transport executor is defined in standalone.xml or clustered.xml as follows:

<transport executor="infinispan-transport"/>

queue-flush-interval, value is in milliseconds) and queue size (queue-size) as follows:

Example 8.1. Replication Queue in Asynchronous Mode

<replicated-cache name="asyncCache"

start="EAGER"

mode="ASYNC"

batching="false"

indexing="NONE"

statistics="true"

queue-size="1000"

queue-flush-interval="500">

<!-- Additional configuration information here -->

</replicated-cache>

8.7. About Replication Guarantees

8.8. Replication Traffic on Internal Networks

IP addresses than for traffic over public IP addresses, or do not charge at all for internal network traffic (for example, GoGrid). To take advantage of lower rates, you can configure Red Hat JBoss Data Grid to transfer replication traffic using the internal network. With such a configuration, it is difficult to know the internal IP address you are assigned. JBoss Data Grid uses JGroups interfaces to solve this problem.

Chapter 9. Set Up Invalidation Mode

9.1. About Invalidation Mode

9.2. Configure Invalidation Mode (Remote Client-Server Mode)

Procedure 9.1. The invalidation-cache Element

<cache-container name="local"

default-cache="default"

statistics="true">

<invalidation-cache name="default"

mode="ASYNC"

start="EAGER"

statistics="true">

<!-- Additional configuration information here -->

</invalidation-cache>

</cache-container>

The invalidation-cache element configures settings for the invalidation cache using the following parameters:

- The name parameter provides a unique identifier for the cache.

- The mode parameter sets the clustered cache mode. Valid values are SYNC (synchronous) and ASYNC (asynchronous).

- The start parameter specifies whether the cache starts when the server starts up or when it is requested or deployed.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

Important

cache-container, locking, and transaction elements, see the appropriate chapter.

9.3. Configure Invalidation Mode (Library Mode)

Procedure 9.2. Invalidation Mode Configuration

Configure the Clustering Element

<clustering mode="invalidation">
    <sync replTimeout="${TIME}" />
    <stateTransfer chunkSize="${SIZE}"
                   fetchInMemoryState="{true/false}"
                   awaitInitialTransfer="{true/false}"
                   timeout="${TIME}" />
</clustering>

- The clustering element's mode parameter's value determines the clustering mode selected for the cache.

- The sync element's replTimeout parameter specifies the maximum time period in milliseconds for an acknowledgment after a remote call. If the time period ends without any acknowledgment, an exception is thrown.

- The stateTransfer element specifies how state is transferred when a node leaves or joins the cluster. It uses the following parameters:

  - The chunkSize parameter specifies the size of cache entry state batches to be transferred. If this value is greater than 0, the value set is the size of chunks sent. If the value is less than 0, all states are transferred at the same time.

  - The fetchInMemoryState parameter, when set to true, requests state information from neighboring caches on start up. This impacts the start up time for the cache.

  - The awaitInitialTransfer parameter causes the first call to method CacheManager.getCache() on the joiner node to block and wait until the joining is complete and the cache has finished receiving state from neighboring caches (if fetchInMemoryState is enabled). This option applies to distributed and replicated caches only and is enabled by default.

  - The timeout parameter specifies the maximum time (in milliseconds) the cache waits for responses from neighboring caches with the requested states. If no response is received within the timeout period, the start up process aborts and an exception is thrown.

Specify Transport Configuration

<global>
    <transport clusterName="${NAME}"
               distributedSyncTimeout="${TIME}"
               transportClass="${CLASS}" />
</global>

The transport element defines the transport configuration for the cache container as follows:

- The clusterName parameter specifies the name of the cluster. Nodes can only connect to clusters that share the same name.

- The distributedSyncTimeout parameter specifies the time to wait to acquire the distributed lock. This distributed lock ensures that only a single cache can transfer state or rehash state at a time.

- The transportClass parameter specifies a class that represents a network transport for the cache container.

9.4. Synchronous/Asynchronous Invalidation

- Synchronous invalidation blocks the thread until all caches in the cluster have received invalidation messages and evicted the obsolete data.

- Asynchronous invalidation operates in a fire-and-forget mode that allows invalidation messages to be broadcast without blocking a thread to wait for responses.

9.5. The L1 Cache and Invalidation

Part V. Remote Client-Server Mode Interfaces

- The Asynchronous API (can only be used in conjunction with the Hot Rod Client in Remote Client-Server Mode)

- The REST Interface

- The Memcached Interface

- The Hot Rod Interface

- The RemoteCache API

Chapter 10. The Asynchronous API

Async appended to each method name. Asynchronous methods return a Future that contains the result of the operation.

Cache<String, String>, Cache.put(String key, String value) returns a String, while Cache.putAsync(String key, String value) returns a Future<String>.

10.1. Asynchronous API Benefits

- The guarantee of synchronous communication, with the added ability to handle failures and exceptions.

- Not being required to block a thread's operations until the call completes.

Example 10.1. Using the Asynchronous API

Set<Future<?>> futures = new HashSet<Future<?>>();

futures.add(cache.putAsync("key1", "value1"));

futures.add(cache.putAsync("key2", "value2"));

futures.add(cache.putAsync("key3", "value3"));
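To show how the collected futures are typically consumed, the sketch below issues three non-blocking puts and then waits for all of them. Because a real cache is not available in a standalone example, putAsync here is a hypothetical stand-in built on CompletableFuture and a ConcurrentHashMap; it only mimics the shape of Cache.putAsync and is not the JBoss Data Grid API.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

// Hypothetical stand-in for a cache with an asynchronous put operation.
final class AsyncPutSketch {

    private final Map<String, String> store = new ConcurrentHashMap<>();

    // Mimics Cache.putAsync: performs the put on another thread and completes
    // the future with the previous value (null if there was none).
    Future<String> putAsync(String key, String value) {
        return CompletableFuture.supplyAsync(() -> store.put(key, value));
    }

    // Issues three puts without blocking, then waits for all of them.
    int putAllAndWait() {
        Set<Future<?>> futures = new HashSet<>();
        futures.add(putAsync("key1", "value1"));
        futures.add(putAsync("key2", "value2"));
        futures.add(putAsync("key3", "value3"));
        for (Future<?> future : futures) {
            try {
                future.get(); // blocks until this particular put has finished
            } catch (InterruptedException | ExecutionException e) {
                throw new IllegalStateException("Asynchronous put failed", e);
            }
        }
        return store.size();
    }

    public static void main(String[] args) {
        System.out.println(new AsyncPutSketch().putAllAndWait());
    }
}
```

The three puts overlap in time, so the total wait is roughly that of the slowest call rather than the sum of all three.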

10.2. About Asynchronous Processes

- Network calls

- Marshalling

- Writing to a cache store (optional)

- Locking

10.3. Return Values and the Asynchronous API

Future or the NotifyingFuture in order to query the previous value.

Note

NotifyingFutures are only available in JBoss Data Grid Library mode.

Future.get()

Chapter 11. The REST Interface

Important

security-domain and auth-method parameters from the connector.

11.1. Ruby Client Code

Example 11.1. Using the REST API with Ruby

require 'net/http'

http = Net::HTTP.new('localhost', 8080)

#An example of how to create a new entry

http.post('/rest/MyData/MyKey', 'DATA_HERE', {"Content-Type" => "text/plain"})

#An example of using a GET operation to retrieve the key

puts http.get('/rest/MyData/MyKey').body

#An Example of using a PUT operation to overwrite the key

http.put('/rest/MyData/MyKey', 'MORE DATA', {"Content-Type" => "text/plain"})

#An example of Removing the remote copy of the key

http.delete('/rest/MyData/MyKey')

#An example of creating binary data

http.put('/rest/MyImages/Image.png', File.read('/Users/michaelneale/logo.png'), {"Content-Type" => "image/png"})

11.2. Using JSON with Ruby Example

To use JavaScript Object Notation (JSON) with Ruby to interact with Red Hat JBoss Data Grid's REST Interface, install the JSON Ruby library (see your platform's package manager or the Ruby documentation) and declare the requirement using the following code:

require 'json'

The following code is an example of how to use JavaScript Object Notation (JSON) in conjunction with Ruby to send specific data, in this case the name and age of an individual, using the PUT function.

data = {:name => "michael", :age => 42 }

http.put('/rest/Users/data/0', data.to_json, {"Content-Type" => "application/json"})

11.3. Python Client Code

Example 11.2. Using the REST API with Python

import httplib

#How to insert data

conn = httplib.HTTPConnection("localhost:8080")

data = "SOME DATA HERE !" #could be string, or a file...

conn.request("POST", "/rest/default/0", data, {"Content-Type": "text/plain"})

response = conn.getresponse()

print response.status

#How to retrieve data

import httplib

conn = httplib.HTTPConnection("localhost:8080")

conn.request("GET", "/rest/default/0")

response = conn.getresponse()

print response.status

print response.read()

11.4. Java Client Code

Example 11.3. Defining Imports

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.OutputStreamWriter;

import java.net.HttpURLConnection;

import java.net.URL;

Example 11.4. Adding a String Value to a Cache

public class RestExample {

/**

* Method that puts a String value in cache.

* @param urlServerAddress

* @param value

* @throws IOException

*/

public void putMethod(String urlServerAddress, String value) throws IOException {

System.out.println("----------------------------------------");

System.out.println("Executing PUT");

System.out.println("----------------------------------------");

URL address = new URL(urlServerAddress);

System.out.println("executing request " + urlServerAddress);

HttpURLConnection connection = (HttpURLConnection) address.openConnection();

System.out.println("Executing put method of value: " + value);

connection.setRequestMethod("PUT");

connection.setRequestProperty("Content-Type", "text/plain");

connection.setDoOutput(true);

OutputStreamWriter outputStreamWriter = new OutputStreamWriter(connection.getOutputStream());

outputStreamWriter.write(value);

connection.connect();

outputStreamWriter.flush();

System.out.println("----------------------------------------");

System.out.println(connection.getResponseCode() + " " + connection.getResponseMessage());

System.out.println("----------------------------------------");

connection.disconnect();

}

Example 11.5. Get a String Value from a Cache

/**

* Method that gets a value by a key in url as param value.

* @param urlServerAddress

* @return String value

* @throws IOException

*/

public String getMethod(String urlServerAddress) throws IOException {

String line = new String();

StringBuilder stringBuilder = new StringBuilder();

System.out.println("----------------------------------------");

System.out.println("Executing GET");

System.out.println("----------------------------------------");

URL address = new URL(urlServerAddress);

System.out.println("executing request " + urlServerAddress);

HttpURLConnection connection = (HttpURLConnection) address.openConnection();

connection.setRequestMethod("GET");

connection.setRequestProperty("Content-Type", "text/plain");

connection.setDoOutput(true);

BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(connection.getInputStream()));

connection.connect();

while ((line = bufferedReader.readLine()) != null) {

stringBuilder.append(line + '\n');

}

System.out.println("Executing get method of value: " + stringBuilder.toString());

System.out.println("----------------------------------------");

System.out.println(connection.getResponseCode() + " " + connection.getResponseMessage());

System.out.println("----------------------------------------");

connection.disconnect();

return stringBuilder.toString();

}

Example 11.6. Using a Java Main Method

/**

* Main method example.

* @param args

* @throws IOException

*/

public static void main(String[] args) throws IOException {

//Note that the cache name is "cacheX"

RestExample restExample = new RestExample();

restExample.putMethod("http://localhost:8080/rest/cacheX/1", "Infinispan REST Test");

restExample.getMethod("http://localhost:8080/rest/cacheX/1");

}

}

11.5. The REST Interface Connector

<rest-connector virtual-server="default-host" cache-container="local" security-domain="other" auth-method="BASIC"/>

11.5.1. Configure REST Connectors

Use the following procedure to configure the rest-connector element in Red Hat JBoss Data Grid's Remote Client-Server mode.

Procedure 11.1. Configuring REST Connectors for Remote Client-Server Mode

<subsystem xmlns="urn:infinispan:server:endpoint:6.1">

<rest-connector virtual-server="default-host"

cache-container="local"

context-path="${CONTEXT_PATH}"

security-domain="${SECURITY_DOMAIN}"

auth-method="${METHOD}"

security-mode="${MODE}" />

</subsystem>

The rest-connector element specifies the configuration information for the REST connector.

- The virtual-server parameter specifies the virtual server used by the REST connector. The default value for this parameter is default-host. This is an optional parameter.

- The cache-container parameter names the cache container used by the REST connector. This is a mandatory parameter.

- The context-path parameter specifies the context path for the REST connector. The default value for this parameter is an empty string (""). This is an optional parameter.

- The security-domain parameter specifies that the specified domain, declared in the security subsystem, should be used to authenticate access to the REST endpoint. This is an optional parameter. If this parameter is omitted, no authentication is performed.

- The auth-method parameter specifies the method used to retrieve credentials for the end point. The default value for this parameter is BASIC. Supported alternate values include BASIC, DIGEST, and CLIENT-CERT. This is an optional parameter.

- The security-mode parameter specifies whether authentication is required only for write operations (such as PUT, POST and DELETE) or for read operations (such as GET and HEAD) as well. Valid values for this parameter are WRITE for authenticating write operations only, or READ_WRITE to authenticate read and write operations. The default value for this parameter is READ_WRITE.
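When the connector is configured with a security-domain and auth-method="BASIC", a client sends its credentials in the standard HTTP Authorization header. The following Python 3 sketch shows one way to build that header. The helper name basic_auth_header and the credentials are placeholders chosen for illustration; they are not part of any JBoss Data Grid API.

```python
import base64


def basic_auth_header(username, password):
    """Return a headers dict carrying HTTP Basic credentials."""
    # HTTP Basic authentication Base64-encodes the string "username:password".
    token = base64.b64encode("{}:{}".format(username, password).encode("utf-8"))
    return {"Authorization": "Basic " + token.decode("ascii")}


# Placeholder credentials; merge the result into the headers of any request.
headers = {"Content-Type": "text/plain"}
headers.update(basic_auth_header("user", "secret"))
```

With security-mode set to WRITE, such a header would only be needed on PUT, POST and DELETE requests.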

11.6. Using the REST Interface

- Adding data

- Retrieving data

- Removing data

11.6.1. Adding Data Using REST

- HTTP PUT method

- HTTP POST method

When the PUT and POST methods are used, the body of the request contains this data, which includes any information added by the user.

PUT and POST methods require a Content-Type header.

11.6.1.1. About PUT /{cacheName}/{cacheKey}

A PUT request in the provided URL form places the payload (from the request body) in the targeted cache using the provided key. The targeted cache must exist on the server for this task to complete successfully.

In the following example URL, hr is the cache name and payRoll%2F3 is the key. The value %2F indicates that a / was used in the key.

http://someserver/rest/hr/payRoll%2F3

Time-To-Live and Last-Modified values are updated, if an update is required.

Note

A request with a cache key that contains the value %2F to represent a / in the key (as in the provided example) can be successfully run if the server is started using the following argument:

-Dorg.apache.tomcat.util.buf.UDecoder.ALLOW_ENCODED_SLASH=true
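As a minimal Python 3 sketch of the key encoding described above, the following helper percent-encodes the cache name and key before building the entry URL. The function name entry_url is illustrative only; the host and path are taken from the example URL in this section.

```python
from urllib.parse import quote


def entry_url(base_url, cache_name, cache_key):
    """Build the URL of a cache entry, percent-encoding the name and key."""
    # safe="" forces a "/" inside the key to be encoded as %2F.
    return "{}/{}/{}".format(
        base_url.rstrip("/"), quote(cache_name, safe=""), quote(cache_key, safe="")
    )


# prints http://someserver/rest/hr/payRoll%2F3
print(entry_url("http://someserver/rest", "hr", "payRoll/3"))
```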

11.6.1.2. About POST /{cacheName}/{cacheKey}

A POST method in the provided URL form places the payload (from the request body) in the targeted cache using the provided key. However, in a POST method, if a value already exists for the cache/key, an HTTP CONFLICT status is returned and the content is not updated.
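The difference between POST and PUT can be handled on the client by checking for the CONFLICT (409) status. The following Python 3 sketch assumes an http.client.HTTPConnection (the Python 3 name for httplib) and a REST endpoint under /rest, as in the earlier examples. The function name create_entry is hypothetical.

```python
import http.client


def create_entry(conn, cache_name, cache_key, value):
    """POST a text value; return True if stored, False if the key existed."""
    path = "/rest/{}/{}".format(cache_name, cache_key)
    conn.request("POST", path, body=value, headers={"Content-Type": "text/plain"})
    response = conn.getresponse()
    response.read()  # drain the body so the connection can be reused
    if response.status == http.client.CONFLICT:  # 409: entry already exists
        return False
    if 200 <= response.status < 300:
        return True
    raise RuntimeError("unexpected status {}".format(response.status))


# Example use against a local server (assumed address):
# conn = http.client.HTTPConnection("localhost", 8080)
# create_entry(conn, "default", "0", "SOME DATA HERE")
```

A caller that wants to overwrite an existing entry would fall back to PUT when create_entry returns False.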

11.6.2. Retrieving Data Using REST

- HTTP GET method.

- HTTP HEAD method.

11.6.2.1. About GET /{cacheName}/{cacheKey}

The GET method returns the data located in the supplied cacheName, matched to the relevant key, as the body of the response. The Content-Type header provides the type of the data. A browser can directly access the cache.

11.6.2.2. About HEAD /{cacheName}/{cacheKey}

The HEAD method operates in a manner similar to the GET method; however, it returns no content (only header fields are returned).
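The following Python 3 sketch contrasts the two retrieval methods. It assumes an http.client.HTTPConnection to a server that exposes the REST endpoint under /rest, as in the earlier examples. The function name read_entry and its headers_only flag are illustrative only.

```python
import http.client


def read_entry(conn, cache_name, cache_key, headers_only=False):
    """Return (status, content_type, body) for a cache entry.

    With headers_only=True a HEAD request is sent, so the body is empty.
    """
    method = "HEAD" if headers_only else "GET"
    conn.request(method, "/rest/{}/{}".format(cache_name, cache_key))
    response = conn.getresponse()
    body = response.read()  # empty for HEAD responses
    return response.status, response.getheader("Content-Type"), body


# Example use against a local server (assumed address):
# conn = http.client.HTTPConnection("localhost", 8080)
# status, content_type, body = read_entry(conn, "default", "0")
```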

11.6.3. Removing Data Using REST

Use the DELETE method to remove data from the cache. The DELETE method can:

- Remove a cache entry/value. (DELETE /{cacheName}/{cacheKey})

- Remove all entries from a cache. (DELETE /{cacheName})

11.6.3.1. About DELETE /{cacheName}/{cacheKey}

Used in this context (DELETE /{cacheName}/{cacheKey}), the DELETE method removes the key/value from the cache for the provided key.

11.6.3.2. About DELETE /{cacheName}

Used in this context (DELETE /{cacheName}), the DELETE method removes all entries in the named cache. After a successful DELETE operation, the HTTP status code 200 is returned.

11.6.3.3. Background Delete Operations

Set the value of the performAsync header to true to ensure an immediate return while the removal operation continues in the background.
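The three DELETE variants can be sketched in Python 3 as a small helper that only assembles the request parts. The function name delete_request is hypothetical, and the /rest prefix and the MyData cache name are assumed from the earlier client examples.

```python
def delete_request(cache_name, cache_key=None, background=False):
    """Return (method, path, headers) for a DELETE request.

    Omitting cache_key targets every entry in the named cache.
    """
    path = "/rest/" + cache_name
    if cache_key is not None:
        path += "/" + cache_key
    # performAsync asks the server to return before the removal completes.
    headers = {"performAsync": "true"} if background else {}
    return "DELETE", path, headers


# Clear the whole cache in the background.
method, path, headers = delete_request("MyData", background=True)

# Sending it with http.client (server address assumed):
# conn = http.client.HTTPConnection("localhost", 8080)
# conn.request(method, path, headers=headers)
```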

11.6.4. REST Interface Operation Headers

Table 11.1. Header Types

| Headers | Mandatory/Optional | Values | Default Value | Details |
|---|---|---|---|---|
| Content-Type | Mandatory | - | - | If the Content-Type is set to application/x-java-serialized-object, it is stored as a Java object. |
| performAsync | Optional | True/False | - | If set to true, an immediate return occurs, followed by a replication of data to the cluster on its own. This feature is useful when dealing with bulk data inserts and large clusters. |
| timeToLiveSeconds | Optional | Numeric (positive and negative numbers) | -1 (This value prevents expiration as a direct result of timeToLiveSeconds. Expiration values set elsewhere override this default value.) | Reflects the number of seconds before the entry in question is automatically deleted. Setting a negative value for timeToLiveSeconds provides the same result as the default value. |
| maxIdleTimeSeconds | Optional | Numeric (positive and negative numbers) | -1 (This value prevents expiration as a direct result of maxIdleTimeSeconds. Expiration values set elsewhere override this default value.) | Contains the number of seconds after the last usage when the entry will be automatically deleted. Passing a negative value provides the same result as the default value. |

Note the following about the timeToLiveSeconds and maxIdleTimeSeconds headers:

- If both the timeToLiveSeconds and maxIdleTimeSeconds headers are assigned the value 0, the cache uses the default timeToLiveSeconds and maxIdleTimeSeconds values configured either using XML or programmatically.

- If only the maxIdleTimeSeconds header value is set to 0, the timeToLiveSeconds value should be passed as the parameter (or the default -1, if the parameter is not present). Additionally, the maxIdleTimeSeconds parameter value defaults to the values configured either using XML or programmatically.

- If only the timeToLiveSeconds header value is set to 0, expiration occurs immediately and the maxIdleTimeSeconds value is set to the value passed as a parameter (or the default -1 if no parameter was supplied).
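As an illustration of how a client might attach the expiration headers to a PUT or POST request, the following Python 3 sketch builds the header dictionary and leaves out any header that the caller does not supply. The function name expiration_headers and the values shown are assumptions made for this example.

```python
def expiration_headers(content_type, time_to_live=None, max_idle=None):
    """Build PUT/POST headers, adding expiration headers only when given."""
    headers = {"Content-Type": content_type}
    if time_to_live is not None:
        headers["timeToLiveSeconds"] = str(time_to_live)
    if max_idle is not None:
        headers["maxIdleTimeSeconds"] = str(max_idle)
    return headers


# Entry lives at most 60 seconds and is removed after 30 idle seconds.
headers = expiration_headers("text/plain", time_to_live=60, max_idle=30)

# Sending it with http.client (server address and entry path assumed):
# conn = http.client.HTTPConnection("localhost", 8080)
# conn.request("PUT", "/rest/default/0", body="SOME DATA", headers=headers)
```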

ETags (Entity Tags) are returned for each REST Interface entry, along with a Last-Modified header that indicates the state of the data at the supplied URL. ETags are used in HTTP operations to request data exclusively in cases where the data has changed to save bandwidth. The following headers support ETags (Entity Tags) based optimistic locking:

Table 11.2. Entity Tag Related Headers

| Header | Algorithm | Example | Details |
|---|---|---|---|
| If-Match | If-Match = "If-Match" ":" ( "*" \| 1#entity-tag ) | - | Used in conjunction with a list of associated entity tags to verify that a specified entity (that was previously obtained from a resource) remains current. |
| If-None-Match | - | - | Used in conjunction with a list of associated entity tags to verify that none of the specified entities (that were previously obtained from a resource) are current. This feature facilitates efficient updates of cached information when required and with minimal transaction overhead. |
| If-Modified-Since | If-Modified-Since = "If-Modified-Since" ":" HTTP-date | If-Modified-Since: Sat, 29 Oct 1994 19:43:31 GMT | Compares the requested variant's last modification time and date with a supplied time and date value. If the requested variant has not been modified since the specified time and date, a 304 (not modified) response is returned without a message-body instead of an entity. |
| If-Unmodified-Since | If-Unmodified-Since = "If-Unmodified-Since" ":" HTTP-date | If-Unmodified-Since: Sat, 29 Oct 1994 19:43:31 GMT | Compares the requested variant's last modification time and date with a supplied time and date value. If the requested resource has not been modified since the supplied date and time, the specified operation is performed. If the requested resource has been modified since the supplied date and time, the operation is not performed and a 412 (Precondition Failed) response is returned. |
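A conditional GET can be sketched in Python 3 as follows. The helper builds If-None-Match and If-Modified-Since headers from values saved from an earlier response; a 304 status on the next GET then indicates that the locally held copy is still current. The function name conditional_headers and the sample ETag value are hypothetical.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def conditional_headers(etag=None, modified_since=None):
    """Build headers for a conditional GET.

    etag is the ETag value from an earlier response (quotes included);
    modified_since is a timezone-aware UTC datetime.
    """
    headers = {}
    if etag is not None:
        headers["If-None-Match"] = etag
    if modified_since is not None:
        # usegmt=True produces the HTTP-date form that ends in "GMT".
        headers["If-Modified-Since"] = format_datetime(modified_since, usegmt=True)
    return headers


stamp = datetime(1994, 10, 29, 19, 43, 31, tzinfo=timezone.utc)
headers = conditional_headers(etag='"abc123"', modified_since=stamp)
# headers["If-Modified-Since"] is "Sat, 29 Oct 1994 19:43:31 GMT"
```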

11.7. REST Interface Security

11.7.1. Publish REST Endpoints as a Public Interface

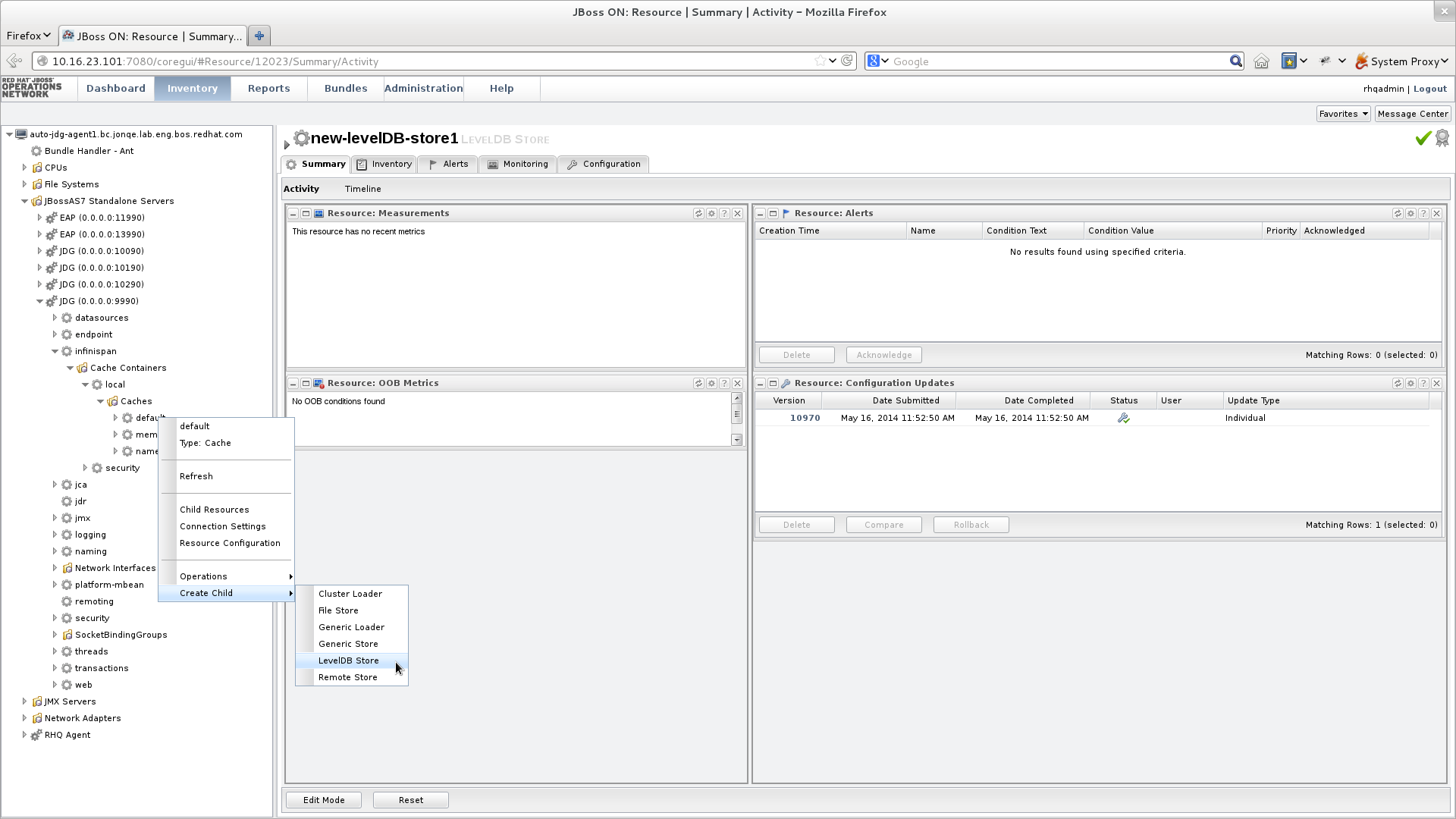

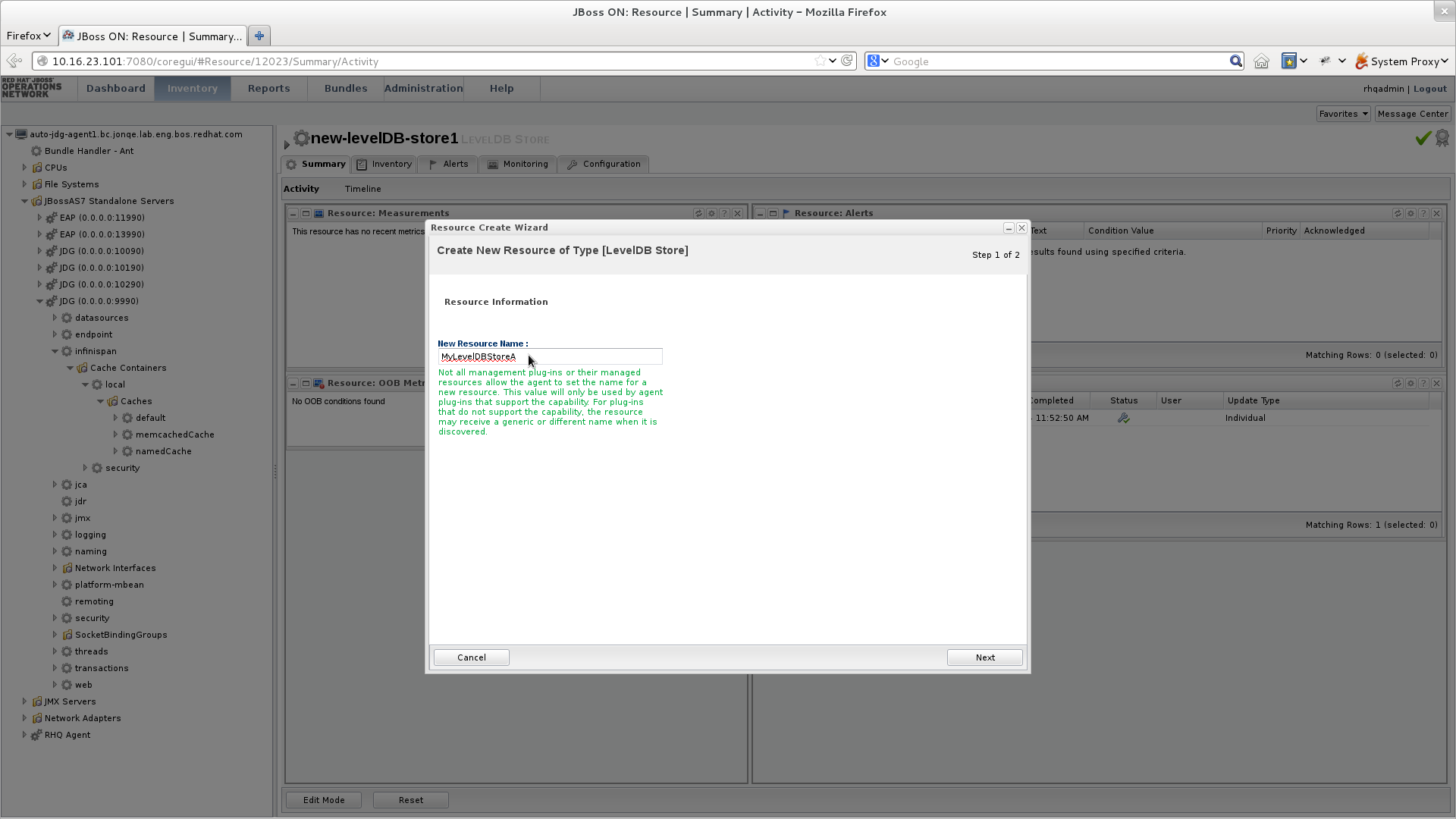

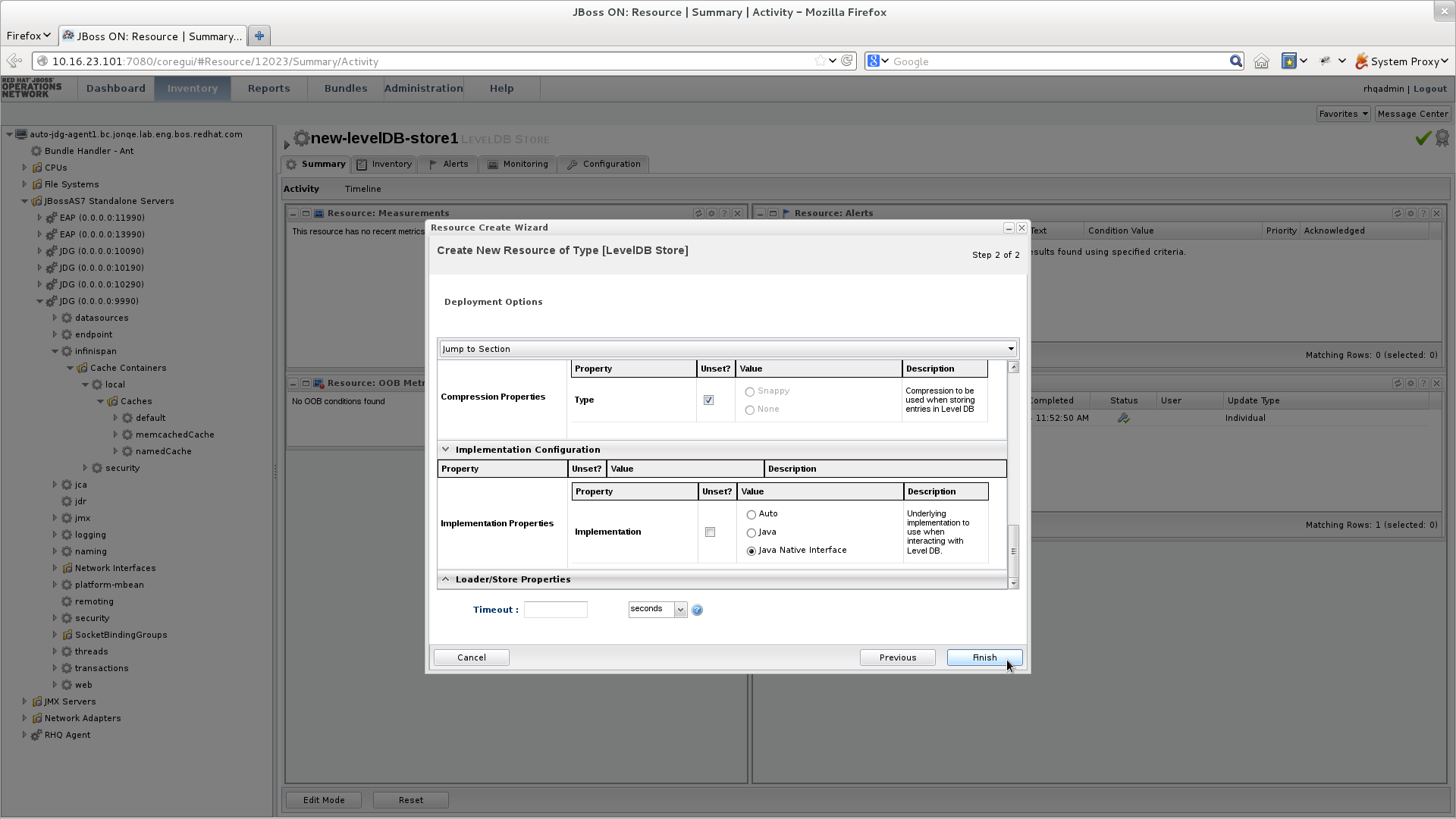

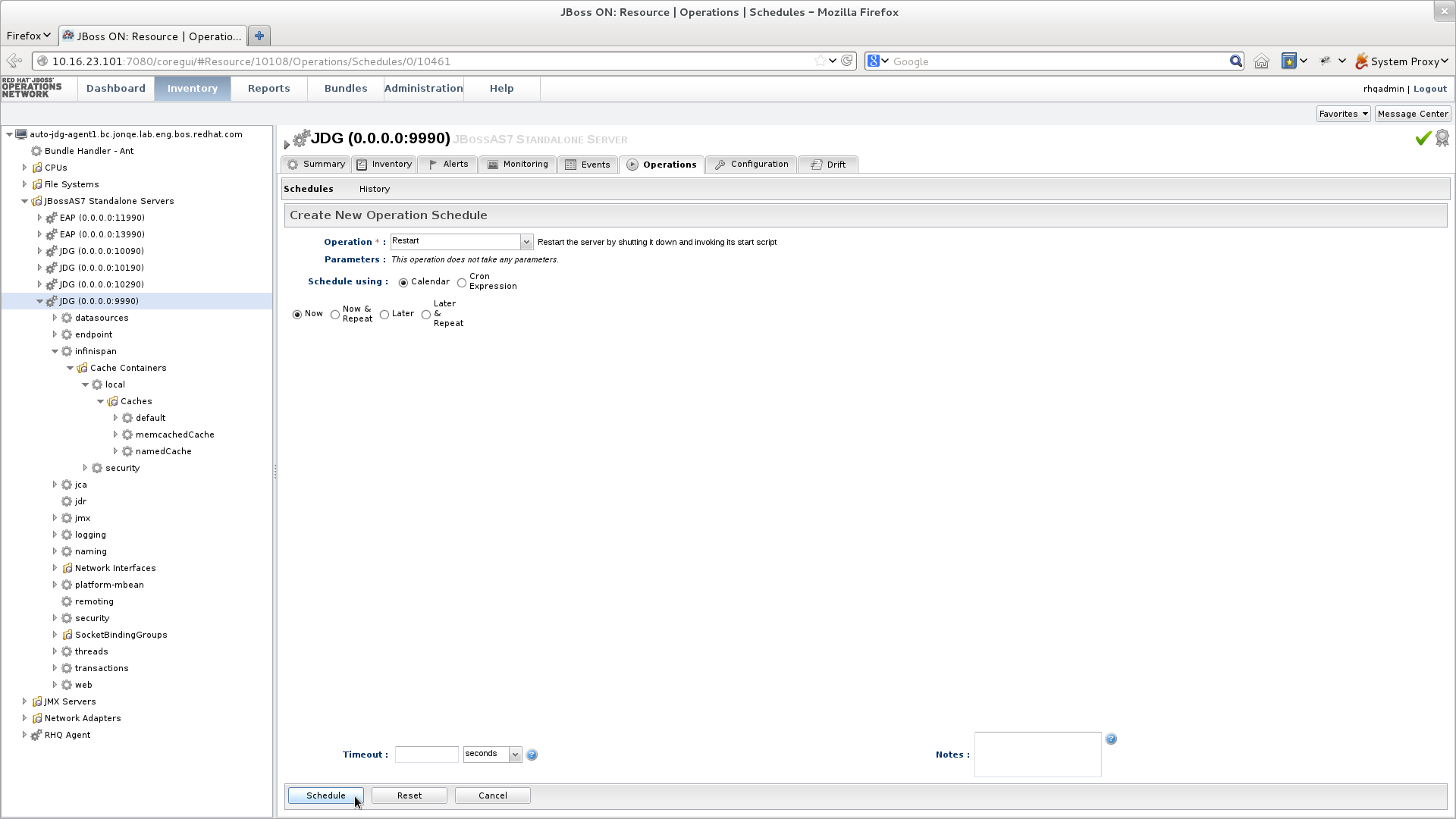

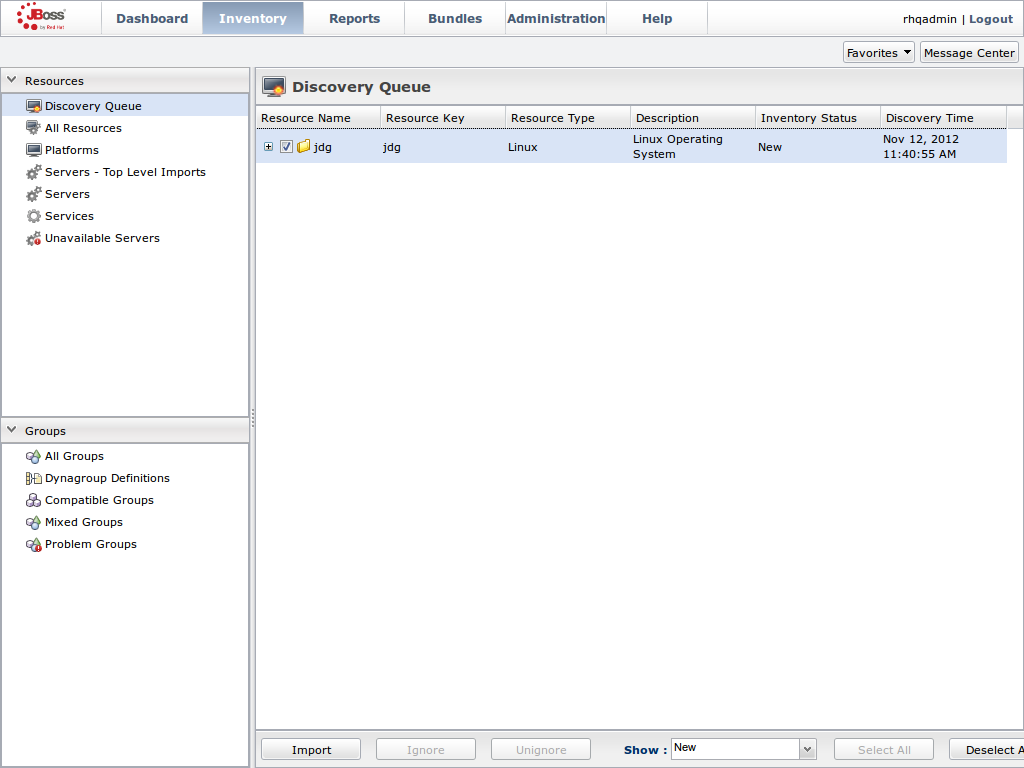

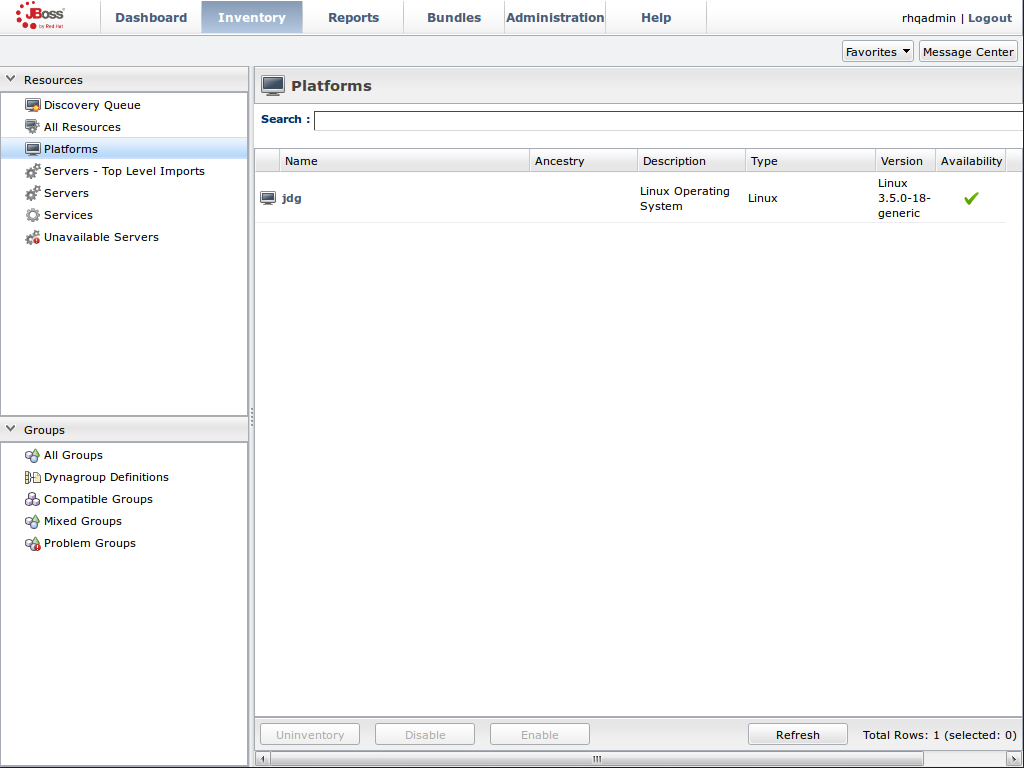

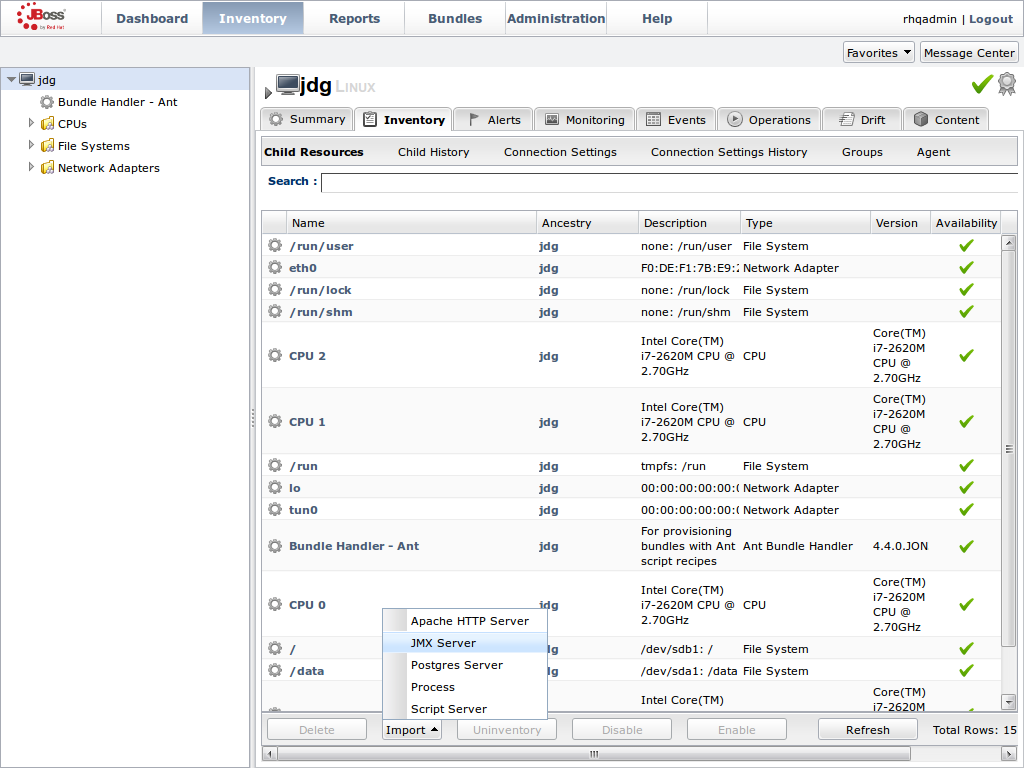

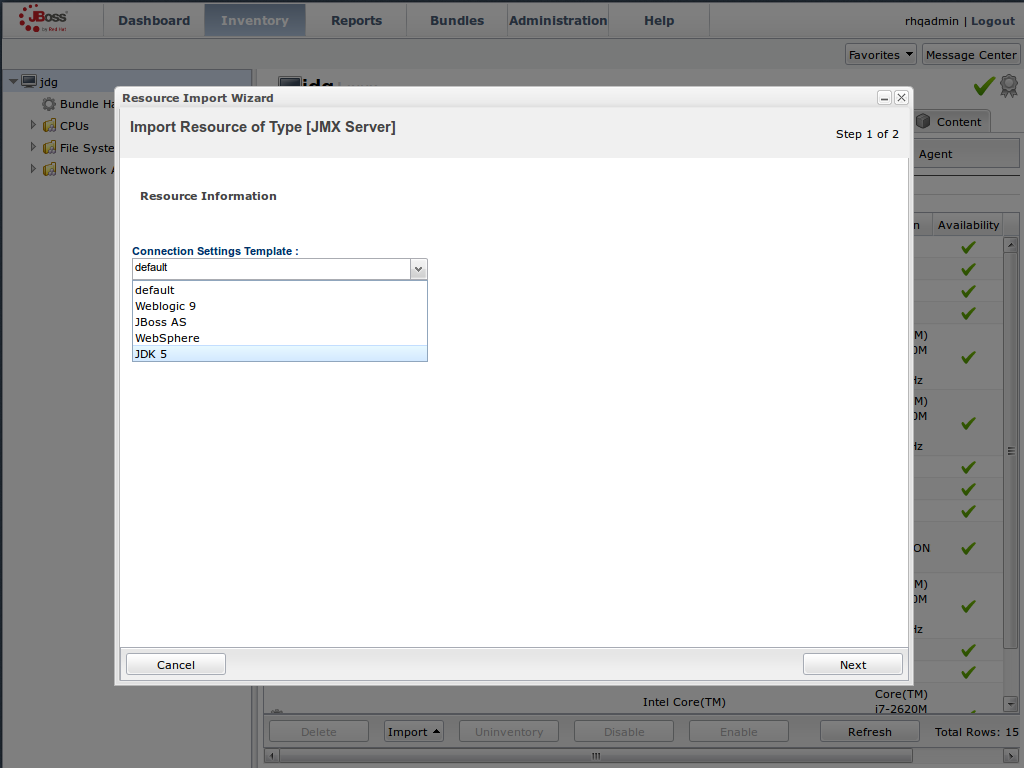

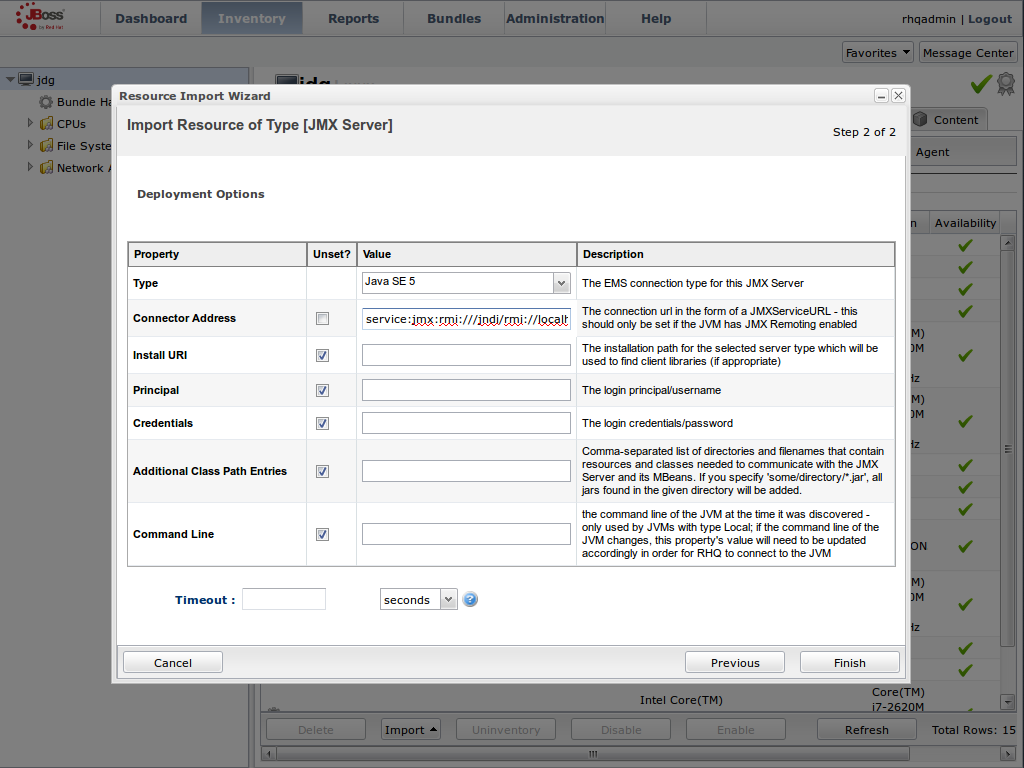

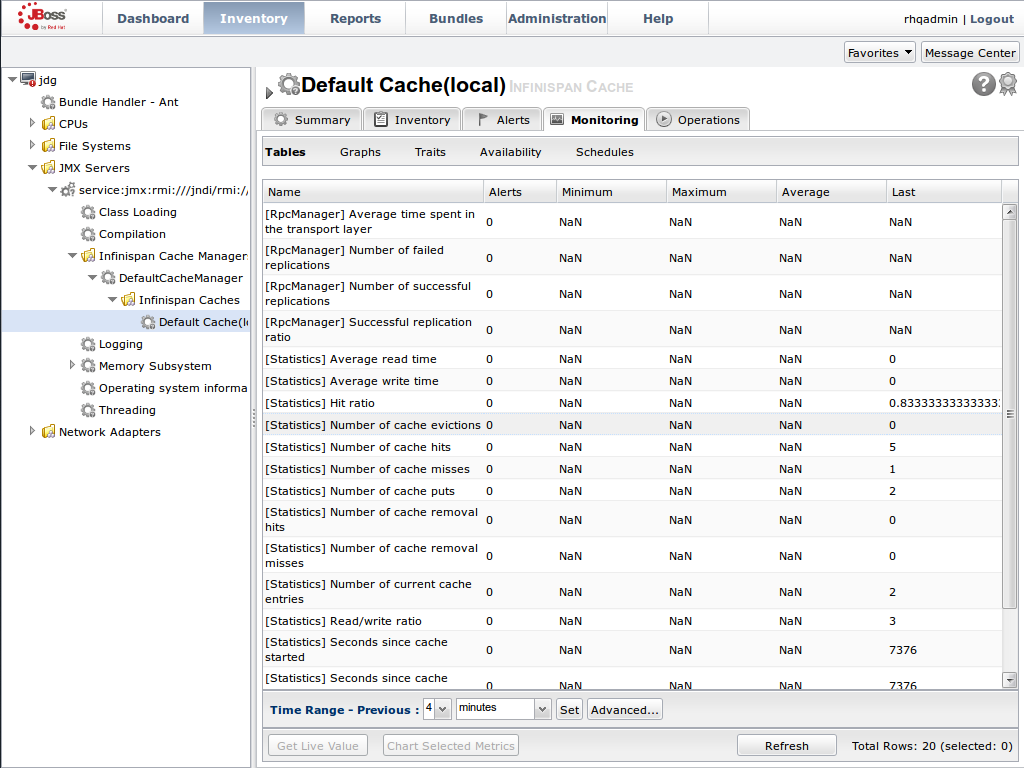

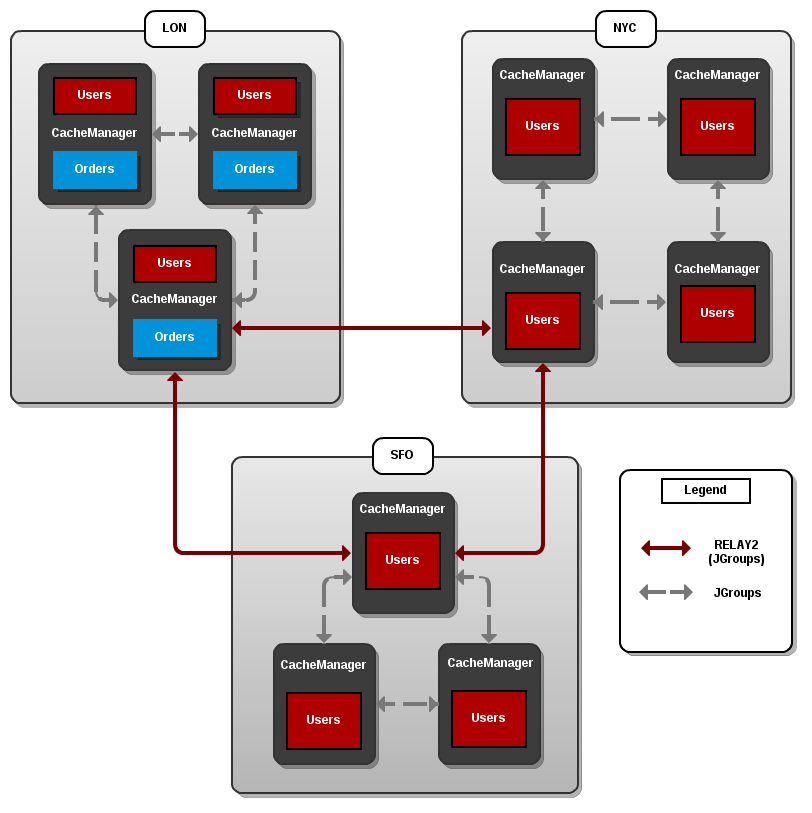

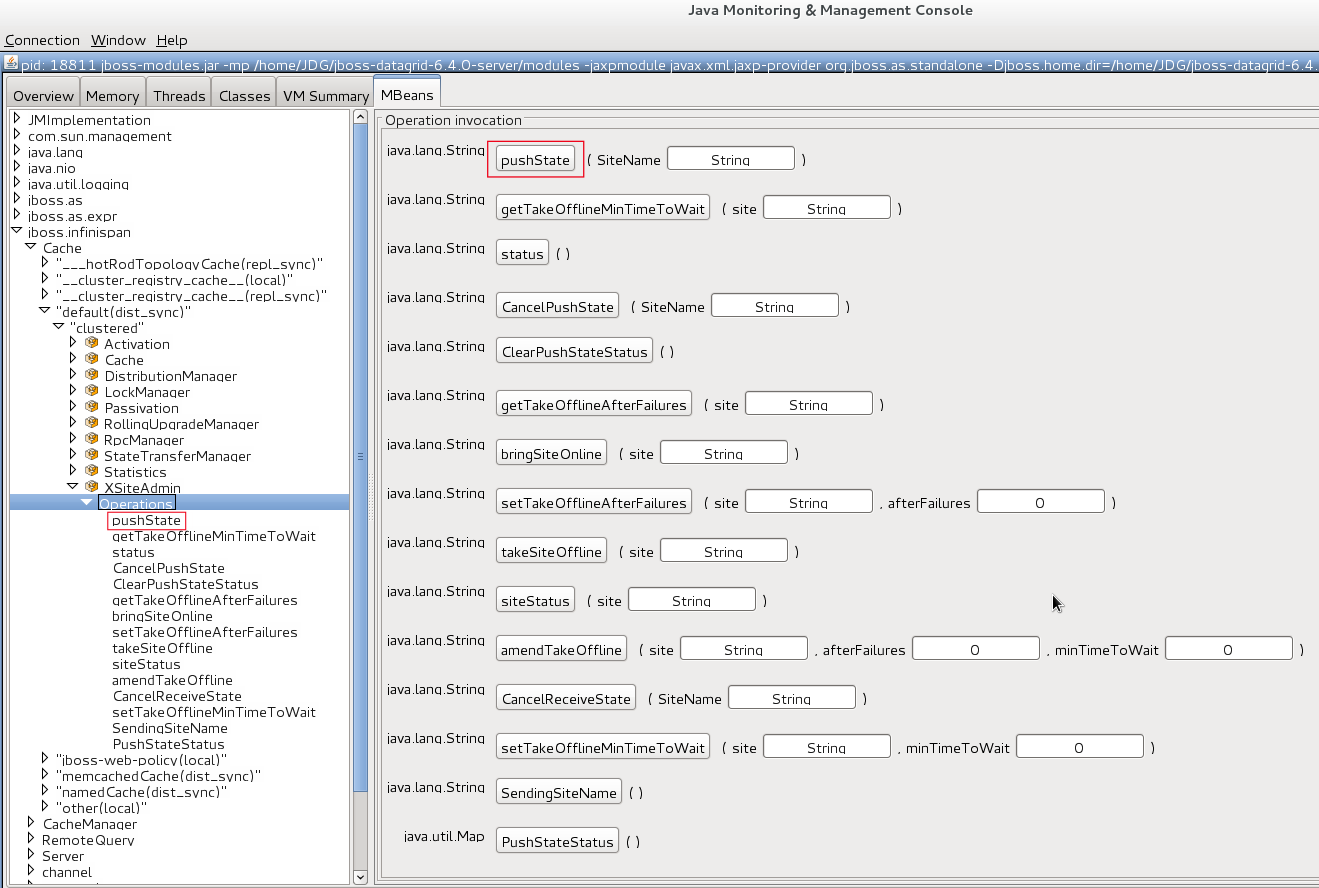

To publish the REST endpoints on the public interface, change the value of the interface parameter in the socket-binding element from management to public as follows: