-

Language:

English

-

Language:

English

Developer Guide

For use with Red Hat JBoss Data Grid 6.1

Edition 2

Abstract

Preface

Chapter 1. JBoss Data Grid

1.1. About JBoss Data Grid

- Schemaless key-value store – Red Hat JBoss Data Grid is a NoSQL database that provides the flexibility to store different objects without a fixed data model.

- Grid-based data storage – Red Hat JBoss Data Grid is designed to easily replicate data across multiple nodes.

- Elastic scaling – Adding and removing nodes is achieved simply and is non-disruptive.

- Multiple access protocols – It is easy to access the data grid using REST, Memcached, Hot Rod, or simple map-like API.

1.2. JBoss Data Grid Supported Configurations

1.3. JBoss Data Grid Usage Modes

1.3.1. JBoss Data Grid Usage Modes

- Remote Client-Server mode

- Library mode

1.3.2. Remote Client-Server Mode

- easier scaling of the data grid.

- easier upgrades of the data grid without impact on client applications.

1.3.3. Library Mode

- transactions.

- listeners and notifications.

1.4. JBoss Data Grid Benefits

Benefits of JBoss Data Grid

- Performance

- Accessing objects from local memory is faster than accessing objects from remote data stores (such as a database). JBoss Data Grid provides an efficient way to store in-memory objects coming from a slower data source, resulting in faster performance than a remote data store. JBoss Data Grid also offers optimization for both clustered and non clustered caches to further improve performance.

- Consistency

- Storing data in a cache carried the inherent risk: at the time it is accessed, the data may be outdated (stale). To address this risk, JBoss Data Grid uses mechanisms such as cache invalidation and expiration to remove stale data entries from the cache. Additionally, JBoss Data Grid supports JTA, distributed (XA) and two-phase commit transactions along with transaction recovery and a version API to remove or replace data according to saved versions.

- Massive Heap and High Availability

- In JBoss Data Grid, applications no longer need to delegate the majority of their data lookup processes to a large single server database for performance benefits. JBoss Data Grid employs techniques such as replication and distribution to completely remove the bottleneck that exists in the majority of current enterprise applications.

Example 1.1. Massive Heap and High Availability Example

In a sample grid with 16 blade servers, each node has 2 GB storage space dedicated for a replicated cache. In this case, all the data in the grid is copies of the 2 GB data. In contrast, using a distributed grid (assuming the requirement of one copy per data item, resulting in the capacity of the overall heap being divided by two) the resulting memory backed virtual heap contains 16 GB data. This data can now be effectively accessed from anywhere in the grid. In case of a server failure, the grid promptly creates new copies of the lost data and places them on operational servers in the grid. - Scalability

- A significant benefit of a distributed data grid over a replicated clustered cache is that a data grid is scalable in terms of both capacity and performance. Add a node to JBoss Data Grid to increase throughput and capacity for the entire grid. JBoss Data Grid uses a consistent hashing algorithm that limits the impact of adding or removing a node to a subset of the nodes instead of every node in the grid.Due to the even distribution of data in JBoss Data Grid, the only upper limit for the size of the grid is the group communication on the network. The network's group communication is minimal and restricted only to the discovery of new nodes. Nodes are permitted by all data access patterns to communicate directly via peer-to-peer connections, facilitating further improved scalability. JBoss Data Grid clusters can be scaled up or down in real time without requiring an infrastructure restart. The result of the real time application of changes in scaling policies results in an exceptionally flexible environment.

- Data Distribution

- JBoss Data Grid uses consistent hash algorithms to determine the locations for keys in clusters. Benefits associated with consistent hashing include:Data distribution ensures that sufficient copies exist within the cluster to provide durability and fault tolerance, while not an abundance of copies, which would reduce the environment's scalability.

- cost effectiveness.

- speed.

- deterministic location of keys with no requirements for further metadata or network traffic.

- Persistence

- JBoss Data Grid exposes a

CacheStoreinterface and several high-performance implementations, including the JDBC Cache stores and file system based cache stores. Cache stores can be used to populate the cache when it starts and to ensure that the relevant data remains safe from corruption. The cache store also overflows data to the disk when required if a process runs out of memory. - Language bindings

- JBoss Data Grid supports both the popular Memcached protocol, with existing clients for a large number of popular programming languages, as well as an optimized JBoss Data Grid specific protocol called Hot Rod. As a result, instead of being restricted to Java, JBoss Data Grid can be used for any major website or application. Additionally, remote caches can be accessed using the HTTP protocol via a RESTful API.

- Management

- In a grid environment of several hundred or more servers, management is an important feature. JBoss Operations Network, the enterprise network management software, is the best tool to manage multiple JBoss Data Grid instances. JBoss Operations Network's features allow easy and effective monitoring of the Cache Manager and cache instances.

- Remote Data Grids

- Rather than scale up the entire application server architecture to scale up your data grid, JBoss Data Grid provides a Remote Client-Server mode which allows the data grid infrastructure to be upgraded independently from the application server architecture. Additionally, the data grid server can be assigned different resources than the application server and also allow independent data grid upgrades and application redeployment within the data grid.

1.5. JBoss Data Grid Prerequisites

1.6. JBoss Data Grid Version Information

1.7. JBoss Data Grid Cache Architecture

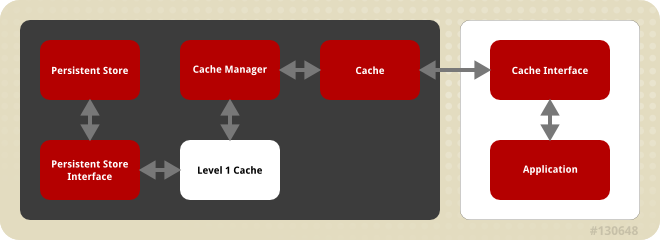

Figure 1.1. JBoss Data Grid Cache Architecture

- Elements that a user cannot directly interact with (depicted within a dark box), which includes the Cache, Cache Manager, Level 1 Cache, Persistent Store Interfaces and the Persistent Store.

- Elements that a user can interact directly with (depicted within a white box), which includes Cache Interfaces and the Application.

JBoss Data Grid's cache architecture includes the following elements:

- The Persistent Store permanently stores cache instances and entries.

- JBoss Data Grid offers two Persistent Store Interfaces to access the persistent store. Persistent store interfaces can be either:

- A cache loader is a read only interface that provides a connection to a persistent data store. A cache loader can locate and retrieve data from cache instances and from the persistent store.

- A cache store extends the cache loader functionality to include write capabilities by exposing methods that allow the cache loader to load and store states.

- The Level 1 Cache (or L1 Cache) stores remote cache entries after they are initially accessed, preventing unnecessary remote fetch operations for each subsequent use of the same entries.

- The Cache Manager is the primary mechanism used to retrieve a Cache instance in JBoss Data Grid, and can be used as a starting point for using the Cache.

- The Cache stores cache instances retrieved by a Cache Manager.

- Cache Interfaces use protocols such as Memcached and Hot Rod, or REST to interface with the cache. For details about the remote interfaces, refer to the Developer Guide.

- Memcached is a distributed memory object caching system used to store key-values in-memory. The Memcached caching system defines a text based, client-server caching protocol called the Memcached protocol.

- Hot Rod is a binary TCP client-server protocol used in JBoss Data Grid. It was created to overcome deficiencies in other client/server protocols, such as Memcached. Hot Rod enables clients to do smart routing of requests in partitioned or distributed JBoss Data Grid server clusters.

- The REST protocol eliminates the need for tightly coupled client libraries and bindings. The REST API introduces an overhead, and requires a REST client or custom code to understand and create REST calls.

- An application allows the user to interact with the cache via a cache interface. Browsers are a common example of such end-user applications.

1.8. JBoss Data Grid APIs

- Cache

- Batching

- Grouping

- CacheStore and ConfigurationBuilder

- Externalizable

- Notification (also known as the Listener API because it deals with Notifications and Listeners)

- The Asynchronous API (can only be used in conjunction with the Hot Rod Client in Remote Client-Server Mode)

- The REST Interface

- The Memcached Interface

- The Hot Rod Interface

- The RemoteCache API

Part I. Programmable APIs

Chapter 2. The Cache API

2.1. About the Cache API

ConcurrentMap interface. How entries are stored depends on the cache mode in use. For example, an entry may be replicated to a remote node or an entry may be looked up in a cache store.

Note

2.2. Using the ConfigurationBuilder API to Configure the Cache API

ConfigurationBuilder helper object.

Configuration c = new ConfigurationBuilder().clustering().cacheMode(CacheMode.REPL_SYNC).build();

String newCacheName = "repl";

manager.defineConfiguration(newCacheName, c);

Cache<String, String> cache = manager.getCache(newCacheName);

An explanation of each line of the provided configuration is as follows:

Configuration c = new ConfigurationBuilder().clustering().cacheMode(CacheMode.REPL_SYNC).build();

In the first line of the configuration, a new cache configuration object (namedc) is created using theConfigurationBuilder. Configurationcis assigned the default values for all cache configuration options except the cache mode, which is overridden and set to synchronous replication (REPL_SYNC).String newCacheName = "repl";

In the second line of the configuration, a new variable (of typeString) is created and assigned the valuerepl.manager.defineConfiguration(newCacheName, c);

In the third line of the configuration, the cache manager is used to define a named cache configuration for itself. This named cache configuration is calledrepland its configuration is based on the configuration provided for cache configurationcin the first line.Cache<String, String> cache = manager.getCache(newCacheName);

In the fourth line of the configuration, the cache manager is used to obtain a reference to the unique instance of thereplthat is held by the cache manager. This cache instance is now ready to be used to perform operations to store and retrieve data.

2.3. Per-Invocation Flags

2.3.1. About Per-Invocation Flags

2.3.2. Per-Invocation Flag Functions

putForExternalRead() method in JBoss Data Grid's Cache API uses flags internally. This method can load a JBoss Data Grid cache with data loaded from an external resource. To improve the efficiency of this call, JBoss Data Grid calls a normal put operation passing the following flags:

- The

ZERO_LOCK_ACQUISITION_TIMEOUTflag: JBoss Data Grid uses an almost zero lock acquisition time when loading data from an external source into a cache. - The

FAIL_SILENTLYflag: If the locks cannot be acquired, JBoss Data Grid fails silently without throwing any lock acquisition exceptions. - The

FORCE_ASYNCHRONOUSflag: If clustered, the cache replicates asynchronously, irrespective of the cache mode set. As a result, a response from other nodes is not required.

putForExternalRead calls of this type are used because the client can retrieve the required data from a persistent store if the data cannot be found in memory. If the client encounters a cache miss, it should retry the operation.

2.3.3. Configure Per-Invocation Flags

withFlags() method call. For example:

Cache cache = ...

cache.getAdvancedCache()

.withFlags(Flag.SKIP_CACHE_STORE, Flag.CACHE_MODE_LOCAL)

.put("local", "only");

Note

withFlags() method for each invocation. If the cache operation must be replicated onto another node, the flags are also carried over to the remote nodes.

2.3.4. Per-Invocation Flags Example

put(), should not return the previous value, two flags are used. The two flags prevent a remote lookup (to get the previous value) in a distributed environment, which in turn prevents the retrieval of the undesired, potential, previous value. Additionally, if the cache is configured with a cache loader, the two flags prevent the previous value from being loaded from its cache store.

Cache cache = ...

cache.getAdvancedCache()

.withFlags(Flag.IGNORE_RETURN_VALUES)

.put("local", "only")

2.4. The AdvancedCache Interface

2.4.1. About the AdvancedCache Interface

AdvancedCache interface, geared towards extending JBoss Data Grid, in addition to its simple Cache Interface. The AdvancedCache Interface can:

- Inject custom interceptors.

- Access certain internal components.

- Apply flags to alter the behavior of certain cache methods.

AdvancedCache:

AdvancedCache advancedCache = cache.getAdvancedCache();

2.4.2. Flag Usage with the AdvancedCache Interface

AdvancedCache.withFlags() to apply any number of flags to a cache invocation, for example:

advancedCache.withFlags(Flag.CACHE_MODE_LOCAL, Flag.SKIP_LOCKING)

.withFlags(Flag.FORCE_SYNCHRONOUS)

.put("hello", "world");

2.4.3. Custom Interceptors and the AdvancedCache Interface

AdvancedCache Interface provides a mechanism that allows advanced developers to attach custom interceptors. Custom interceptors can alter the behavior of the Cache API methods and the AdvacedCache Interface can be used to attach such interceptors programmatically at run time.

2.4.4. Custom Interceptors

2.4.4.1. About Custom Interceptors

2.4.4.2. Custom Interceptor Design

- A custom interceptor must extend the

CommandInterceptor. - A custom interceptor must declare a public, empty constructor to allow for instantiation.

- A custom interceptor must have JavaBean style setters defined for any property that is defined through the

propertyelement.

2.4.4.3. Add Custom Interceptors

2.4.4.3.1. Adding Custom Interceptors Declaratively

<namedCache name="cacheWithCustomInterceptors">

<!--

Define custom interceptors. All custom interceptors need to extend org.jboss.cache.interceptors.base.CommandInterceptor

-->

<customInterceptors>

<interceptor position="FIRST" class="com.mycompany.CustomInterceptor1">

<properties>

<property name="attributeOne" value="value1" />

<property name="attributeTwo" value="value2" />

</properties>

</interceptor>

<interceptor position="LAST" class="com.mycompany.CustomInterceptor2"/>

<interceptor index="3" class="com.mycompany.CustomInterceptor1"/>

<interceptor before="org.infinispanpan.interceptors.CallInterceptor" class="com.mycompany.CustomInterceptor2"/>

<interceptor after="org.infinispanpan.interceptors.CallInterceptor" class="com.mycompany.CustomInterceptor1"/>

</customInterceptors>

</namedCache>

Note

2.4.4.3.2. Adding Custom Interceptors Programmatically

AdvancedCache.

CacheManager cm = getCacheManager();

Cache aCache = cm.getCache("aName");

AdvancedCache advCache = aCache.getAdvancedCache();

addInterceptor() method to add the interceptor.

advCache.addInterceptor(new MyInterceptor(), 0);

Chapter 3. The Batching API

3.1. About the Batching API

Note

3.2. About Java Transaction API Transactions

- First, it retrieves the transactions currently associated with the thread.

- If not already done, it registers

XAResourcewith the transaction manager to receive notifications when a transaction is committed or rolled back.

Important

ExceptionTimeout where JBoss Data Grid is Unable to acquire lock after {time} on key {key} for requester {thread}, enable transactions. This occurs because non-transactional caches acquire locks on each node they write on. Using transactions prevents deadlocks because caches acquire locks on a single node. This problem is resolved in JBoss Data Grid 6.1.

3.3. Batching and the Java Transaction API (JTA)

- Locks acquired during an invocation are retained until the transaction commits or rolls back.

- All changes are replicated in a batch on all nodes in the cluster as part of the transaction commit process. Ensuring that multiple changes occur within the single transaction, the replication traffic remains lower and improves performance.

- When using synchronous replication or invalidation, a replication or invalidation failure causes the transaction to roll back.

- If a

CacheLoaderthat is compatible with a JTA resource, for example a JTADataSource, is used for a transaction, the JTA resource can also participate in the transaction. - All configurations related to a transaction apply for batching as well.

<transaction syncRollbackPhase="false" syncCommitPhase="false" useEagerLocking="true" eagerLockSingleNode="true" />The configuration attributes can be used for both transactions and batching, using different values.

Note

3.4. Using the Batching API

3.4.1. Enable the Batching API

<distributed-cache name="default" batching="true"> ... </distributed-cache>

3.4.2. Configure the Batching API

To configure the Batching API in the XML file:

<invocationBatching enabled="true" />

To configure the Batching API programmatically use:

Configuration c = new ConfigurationBuilder().invocationBatching().enable().build();

3.4.3. Use the Batching API

startBatch() and endBatch() on the cache as follows to use batching:

Cache cache = cacheManager.getCache();

Example 3.1. Without Using Batch

cache.put("key", "value");

cache.put(key, value); line executes, the values are replaced immediately.

Example 3.2. Using Batch

cache.startBatch();

cache.put("k1", "value");

cache.put("k2", "value");

cache.put("k3", "value");

cache.endBatch(true);

cache.startBatch();

cache.put("k1", "value");

cache.put("k2", "value");

cache.put("k3", "value");

cache.endBatch(false);

cache.endBatch(true); executes, all modifications made since the batch started are replicated.

cache.endBatch(false); executes, changes made in the batch are discarded.

3.4.4. Batching API Usage Example

Example 3.3. Batching API Usage Example

Chapter 4. The Grouping API

4.1. About the Grouping API

4.2. Grouping API Operations

- Intrinsic to the entry, which means it was generated by the key class.

- Extrinsic to the entry, which means it was generated by an external function.

4.3. Grouping API Configuration

To configure JBoss Data Grid using the programmatic API, call the following:

Configuration c = new ConfigurationBuilder().clustering().hash().groups().enabled().build();

To configure JBoss Data Grid using XML, use the following:

<clustering>

<hash>

<groups enabled="true" />

</hash>

</clustering>

@Group annotation within the method to specify the intrinsic group. For example:

class User {

...

String office;

...

int hashCode() {

// Defines the hash for the key, normally used to determine location

...

}

// Override the location by specifying a group, all keys in the same

// group end up with the same owner

@Group

String getOffice() {

return office;

}

}

Note

String.

Grouper interface. The computeGroup method within the Grouper interface returns the group.

Grouper operates as an interceptor and passes previously computed values to the computeGroup() method. If defined, @Group determines which group is passed to the first Grouper, providing improved group control when using intrinsic groups.

grouper to determine a key's group, its keyType must be assignable from the target key.

Grouper:

public class KXGrouper implements Grouper<String> {

// A pattern that can extract from a "kX" (e.g. k1, k2) style key

// The pattern requires a String key, of length 2, where the first character is

// "k" and the second character is a digit. We take that digit, and perform

// modular arithmetic on it to assign it to group "1" or group "2".

private static Pattern kPattern = Pattern.compile("(^k)(<a>\\d</a>)$");

public String computeGroup(String key, String group) {

Matcher matcher = kPattern.matcher(key);

if (matcher.matches()) {

String g = Integer.parseInt(matcher.group(2)) % 2 + "";

return g;

} else

return null;

}

public Class<String> getKeyType() {

return String.class;

}

}

grouper uses the key class to extract the group from a key using a pattern. Information specified on the key class is ignored. Each grouper must be registered to be used.

When configuring JBoss Data Grid programmatically:

Configuration c = new ConfigurationBuilder().clustering().hash().groups().addGrouper(new KXGrouper()).build();

Or when configuring JBoss Data Grid using XML:

<clustering>

<hash>

<groups enabled="true">

<grouper class="com.acme.KXGrouper" />

</groups>

</hash>

</clustering>

Chapter 5. The CacheStore and ConfigurationBuilder APIs

5.1. About the CacheStore API

5.2. The ConfigurationBuilder API

5.2.1. About the ConfigurationBuilder API

- Chain coding of configuration options in order to make the coding process more efficient

- Improve the readability of the configuration

5.2.2. Using the ConfigurationBuilder API

5.2.2.1. Programmatically Create a CacheManager and Replicated Cache

Procedure 5.1. Steps for Programmatic Configuration in JBoss Data Grid

- Create a CacheManager as a starting point in an XML file. If required, this CacheManager can be programmed in runtime to the specification that meets the requirements of the use case. The following is an example of how to create a CacheManager:

EmbeddedCacheManager manager = new DefaultCacheManager("my-config-file.xml"); Cache defaultCache = manager.getCache(); - Create a new synchronously replicated cache programmatically.

- Create a new configuration object instance using the ConfigurationBuilder helper object:

Configuration c = new ConfigurationBuilder().clustering().cacheMode(CacheMode.REPL_SYNC) .build();

In the first line of the configuration, a new cache configuration object (namedc) is created using theConfigurationBuilder. Configurationcis assigned the default values for all cache configuration options except the cache mode, which is overridden and set to synchronous replication (REPL_SYNC). - Set the cache mode to synchronous replication:

String newCacheName = "repl";

In the second line of the configuration, a new variable (of typeString) is created and assigned the valuerepl. - Define or register the configuration with a manager:

manager.defineConfiguration(newCacheName, c);

In the third line of the configuration, the cache manager is used to define a named cache configuration for itself. This named cache configuration is calledrepland its configuration is based on the configuration provided for cache configurationcin the first line. Cache<String, String> cache = manager.getCache(newCacheName);

In the fourth line of the configuration, the cache manager is used to obtain a reference to the unique instance of thereplthat is held by the cache manager. This cache instance is now ready to be used to perform operations to store and retrieve data.

5.2.2.2. Create a Customized Cache Using the Default Named Cache

infinispan-config-file.xml specifies the configuration for a replicated cache as a default and a distributed cache with a customized lifespan value is required. The required distributed cache must retain all aspects of the default cache specified in the infinispan-config-file.xml file except the mentioned aspects.

Procedure 5.2. Customize the Default Cache

- Read an instance of a default Configuration object to get the default configuration:

EmbeddedCacheManager manager = new DefaultCacheManager("infinispan-config-file.xml"); Configuration dcc = cacheManager.getDefaultCacheConfiguration(); - Use the ConfigurationBuilder to construct and modify the cache mode and L1 cache lifespan on a new configuration object:

Configuration c = new ConfigurationBuilder().read(dcc).clustering() .cacheMode(CacheMode.DIST_SYNC).l1().lifespan(60000L) .build();

- Register/define your cache configuration with a cache manager:

Cache<String, String> cache = manager.getCache(newCacheName);

5.2.2.3. Create a Customized Cache Using a Non-Default Named Cache

replicatedCache as the base instead of the default cache.

Procedure 5.3. Create a Customized Cache Using a Non-Default Named Cache

- Read the

replicatedCacheto get the default configuration:EmbeddedCacheManager manager = new DefaultCacheManager("infinispan-config-file.xml"); Configuration rc = cacheManager.getCacheConfiguration("replicatedCache"); - Use the ConfigurationBuilder to construct and modify the desired configuration on a new configuration object:

Configuration c = new ConfigurationBuilder().read(rc).clustering() .cacheMode(CacheMode.DIST_SYNC).l1().lifespan(60000L) .build();

- Register/define your cache configuration with a cache manager:

Cache<String, String> cache = manager.getCache(newCacheName);

5.2.2.4. Using the Configuration Builder to Create Caches Programmatically

5.2.2.5. Global Configuration Examples

5.2.2.5.1. Globally Configure the Transport Layer

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder() .globalJmxStatistics() .build();

5.2.2.5.2. Globally Configure the Cache Manager Name

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.globalJmxStatistics()

.cacheManagerName("SalesCacheManager")

.mBeanServerLookupClass(JBossMBeanServerLookup.class)

.build();

5.2.2.5.3. Globally Customize Thread Pool Executors

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.replicationQueueScheduledExecutor()

.factory(DefaultScheduledExecutorFactory.class)

.addProperty("threadNamePrefix", "RQThread")

.build();

5.2.2.6. Cache Level Configuration Examples

5.2.2.6.1. Cache Level Configuration for the Cluster Mode

Configuration config = new ConfigurationBuilder()

.clustering()

.cacheMode(CacheMode.DIST_SYNC)

.sync()

.l1().lifespan(25000L)

.hash().numOwners(3)

.build();

5.2.2.6.2. Cache Level Eviction and Expiration Configuration

Configuration config = new ConfigurationBuilder()

.eviction()

.maxEntries(20000).strategy(EvictionStrategy.LIRS).expiration()

.wakeUpInterval(5000L)

.maxIdle(120000L)

.build();

5.2.2.6.3. Cache Level Configuration for JTA Transactions

Configuration config = new ConfigurationBuilder()

.locking()

.concurrencyLevel(10000).isolationLevel(IsolationLevel.REPEATABLE_READ)

.lockAcquisitionTimeout(12000L).useLockStriping(false).writeSkewCheck(true)

.transaction()

.recovery()

.transactionManagerLookup(new GenericTransactionManagerLookup())

.jmxStatistics()

.build();

5.2.2.6.4. Cache Level Configuration Using Chained Persistent Stores

Configuration config = new ConfigurationBuilder()

.loaders()

.shared(false).passivation(false).preload(false)

.addFileCacheStore().location("/tmp").streamBufferSize(1800).async().enable().threadPoolSize(20).build();

5.2.2.6.5. Cache Level Configuration for Advanced Externalizers

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.serialization()

.addAdvancedExternalizer(PersonExternalizer.class)

.addAdvancedExternalizer(999, AddressExternalizer.class)

.build();

5.2.2.6.6. Cache Level Configuration for Custom Interceptors

Configuration config = new ConfigurationBuilder()

.customInterceptors().interceptors()

.add(new FirstInterceptor()).first()

.add(new LastInterceptor()).last()

.add(new FixPositionInterceptor()).atIndex(8)

.add(new AfterInterceptor()).after(LockingInterceptor.class)

.add(new BeforeInterceptor()).before(CallInterceptor.class)

.build();

Chapter 6. The Externalizable API

6.1. About Externalizer

Externalizer is a class that can:

- Marshall a given object type to a byte array.

- Unmarshall the contents of a byte array into an instance of the object type.

6.2. About the Externalizable API

6.3. Using the Externalizable API

6.3.1. The Externalizable API Usage

- Transform an object class into a serialized class

- Read an object class from an output.

readObject() implementations create object instances of the target class. This provides flexibility in the creation of instances and allows target classes to persist immutably.

Note

6.3.2. The Externalizable API Configuration Example

- Provide an

externalizerimplementation for the type of object to be marshalled/unmarshalled. - Annotate the marshalled type class using {@link SerializeWith} to indicate the

externalizerclass.

import org.infinispan.marshall.Externalizer;

import org.infinispan.marshall.SerializeWith;

@SerializeWith(Person.PersonExternalizer.class)

public class Person {

final String name;

final int age;

public Person(String name, int age) {

this.name = name;

this.age = age;

}

public static class PersonExternalizer implements Externalizer<Person> {

@Override

public void writeObject(ObjectOutput output, Person person)

throws IOException {

output.writeObject(person.name);

output.writeInt(person.age);

}

@Override

public Person readObject(ObjectInput input)

throws IOException, ClassNotFoundException {

return new Person((String) input.readObject(), input.readInt());

}

}

}

- The payload size generated using this method can be inefficient due to constraints within the model.

- An Externalizer can be required for a class for which the source code is not available, or the source code cannot be modified.

- The use of annotations can limit framework developers or service providers attempting to abstract lower level details, such as marshalling layer.

6.3.3. Linking Externalizers with Marshaller Classes

readObject() and writeObject() methods link with the type classes they are configured to externalize by providing a getTypeClasses() implementation.

import org.infinispan.util.Util;

...

@Override

public Set<Class<? extends ReplicableCommand>> getTypeClasses() {

return Util.asSet(LockControlCommand.class, RehashControlCommand.class,

StateTransferControlCommand.class, GetKeyValueCommand.class,

ClusteredGetCommand.class, MultipleRpcCommand.class,

SingleRpcCommand.class, CommitCommand.class,

PrepareCommand.class, RollbackCommand.class,

ClearCommand.class, EvictCommand.class,

InvalidateCommand.class, InvalidateL1Command.class,

PutKeyValueCommand.class, PutMapCommand.class,

RemoveCommand.class, ReplaceCommand.class);

}

@Override

public Set<Class<? extends List>> getTypeClasses() {

return Util.<Class<? extends List>>asSet(

Util.loadClass("java.util.Collections$SingletonList"));

}

6.4. The AdvancedExternalizer

6.4.1. About the AdvancedExternalizer

6.4.2. AdvancedExternalizer Example Configuration

import org.infinispan.marshall.AdvancedExternalizer;

public class Person {

final String name;

final int age;

public Person(String name, int age) {

this.name = name;

this.age = age;

}

public static class PersonExternalizer implements AdvancedExternalizer<Person> {

@Override

public void writeObject(ObjectOutput output, Person person)

throws IOException {

output.writeObject(person.name);

output.writeInt(person.age);

}

@Override

public Person readObject(ObjectInput input)

throws IOException, ClassNotFoundException {

return new Person((String) input.readObject(), input.readInt());

}

@Override

public Set<Class<? extends Person>> getTypeClasses() {

return Util.<Class<? extends Person>>asSet(Person.class);

}

@Override

public Integer getId() {

return 2345;

}

}

}

6.4.3. Externalizer Identifiers

getId()implementations.- Declarative or Programmatic configuration that identifies the externalizer when unmarshalling a payload.

GetId() will either return a positive integer or a null value:

- A positive integer allows the externalizer to be identified when read and assigned to the correct Externalizer capable of reading the contents.

- A null value indicates that the identifier of the AdvancedExternalizer will be defined via declarative or programmatic configuration.

6.4.4. Registering Advanced Externalizers

The following is an example of a declarative configuration for an advanced Externalizer implementation:

<infinispan>

<global>

<serialization>

<advancedExternalizers>

<advancedExternalizer externalizerClass="Person$PersonExternalizer"/>

</advancedExternalizers>

</serialization>

</global>

...

</infinispan>

The following is an example of a programmatic configuration for an advanced Externalizer implementation:

GlobalConfigurationBuilder builder = ... builder.serialization() .addAdvancedExternalizer(new Person.PersonExternalizer());

The following is a declarative configuration for the location of the identifier definition during registration:

<infinispan>

<global>

<serialization>

<advancedExternalizers>

<advancedExternalizer id="123"

externalizerClass="Person$PersonExternalizer"/>

</advancedExternalizers>

</serialization>

</global>

...

</infinispan>

The following is a programmatic configuration for the location of the identifier definition during registration:

GlobalConfigurationBuilder builder = ... builder.serialization() .addAdvancedExternalizer(123, new Person.PersonExternalizer());

6.4.5. Register Multiple Externalizers Programmatically

@Marshalls annotation.

builder.serialization()

.addAdvancedExternalizer(new Person.PersonExternalizer(),

new Address.AddressExternalizer());

6.5. Internal Externalizer Implementation Access

6.5.1. Internal Externalizer Implementation Access

public static class ABCMarshallingExternalizer implements AdvancedExternalizer<ABCMarshalling> {

@Override

public void writeObject(ObjectOutput output, ABCMarshalling object) throws IOException {

MapExternalizer ma = new MapExternalizer();

ma.writeObject(output, object.getMap());

}

@Override

public ABCMarshalling readObject(ObjectInput input) throws IOException, ClassNotFoundException {

ABCMarshalling hi = new ABCMarshalling();

MapExternalizer ma = new MapExternalizer();

hi.setMap((ConcurrentHashMap<Long, Long>) ma.readObject(input));

return hi;

}

...

public static class ABCMarshallingExternalizer implements AdvancedExternalizer<ABCMarshalling> {

@Override

public void writeObject(ObjectOutput output, ABCMarshalling object) throws IOException {

output.writeObject(object.getMap());

}

@Override

public ABCMarshalling readObject(ObjectInput input) throws IOException, ClassNotFoundException {

ABCMarshalling hi = new ABCMarshalling();

hi.setMap((ConcurrentHashMap<Long, Long>) input.readObject());

return hi;

}

...

}

Chapter 7. The Notification/Listener API

7.1. About the Listener API

7.2. Listener Example

@Listener

public class PrintWhenAdded {

@CacheEntryCreated

public void print(CacheEntryCreatedEvent event) {

System.out.println("New entry " + event.getKey() + " created in the cache");

}

}

7.3. Cache Entry Modified Listener Configuration

Cache.get() when isPre (an Event method) is false. For more information about isPre(), refer to the JBoss Data Grid API Documentation's listing for the org.infinispan.notifications.cachelistener.event package.

CacheEntryModifiedEvent.getValue() to retrieve the new value of the modified entry.

7.4. Notifications

7.4.1. About Listener Notifications

@Listener. A Listenable is an interface that denotes that the implementation can have listeners attached to it. Each listener is registered using methods defined in the Listenable.

7.4.2. About Cache-level Notifications

7.4.3. Cache Manager-level Notifications

- Nodes joining or leaving a cluster;

- The starting and stopping of caches

7.4.4. About Synchronous and Asynchronous Notifications

@Listener (sync = false)public class MyAsyncListener { .... }

<asyncListenerExecutor/> element in the configuration file to tune the thread pool that is used to dispatch asynchronous notifications.

7.5. Notifying Futures

7.5.1. About NotifyingFutures

Futures, but a sub-interface known as a NotifyingFuture. Unlike a JDK Future, a listener can be attached to a NotifyingFuture to notify the user about a completed future.

Note

NotifyingFutures are only available in Library mode.

7.5.2. NotifyingFutures Example

NotifyingFutures in JBoss Data Grid:

FutureListener futureListener = new FutureListener() {

public void futureDone(Future future) {

try {

future.get();

} catch (Exception e) {

// Future did not complete successfully

System.out.println("Help!");

}

}

};

cache.putAsync("key", "value").attachListener(futureListener);

Part II. Remote Client-Server Mode Interfaces

Chapter 8. The Asynchronous API

8.1. About the Asynchronous API

Async appended to each method name. Asynchronous methods return a Future that contains the result of the operation.

Cache(String, String), Cache.put(String key, String value) returns a String, while Cache.putAsync(String key, String value) returns a Future(String).

8.2. Asynchronous API Benefits

- The guarantee of synchronous communication, with the added ability to handle failures and exceptions.

- Not being required to block a thread's operations until the call completes.

Set<Future<?>> futures = new HashSet<Future<?>>();

futures.add(cache.putAsync("key1", "value1"));

futures.add(cache.putAsync("key2", "value2"));

futures.add(cache.putAsync("key3", "value3"));

futures.add(cache.putAsync(key1, value1));futures.add(cache.putAsync(key2, value2));futures.add(cache.putAsync(key3, value3));

8.3. About Asynchronous Processes

- Network calls

- Marshalling

- Writing to a cache store (optional)

- Locking

8.4. Return Values and the Asynchronous API

Future or the NotifyingFuture in order to query the previous value.

Note

Future.get()

Chapter 9. The REST Interface

9.1. About the REST Interface in JBoss Data Grid

9.2. Ruby Client Code

require 'net/http'

http = Net::HTTP.new('localhost', 8080)

#An example of how to create a new entry

http.post('/rest/MyData/MyKey', DATA HERE', {"Content-Type" => "text/plain"})

#An example of using a GET operation to retrieve the key

puts http.get('/rest/MyData/MyKey').body

#An Example of using a PUT operation to overwrite the key

http.put('/rest/MyData/MyKey', 'MORE DATA', {"Content-Type" => "text/plain"})

#An example of Removing the remote copy of the key

http.delete('/rest/MyData/MyKey')

#An example of creating binary data

http.put('/rest/MyImages/Image.png', File.read('/Users/michaelneale/logo.png'), {"Content-Type" => "image/png"})

9.3. Using JSON with Ruby Example

To use JavaScript Object Notation (JSON) with ruby to interact with JBoss Data Grid's REST Interface, install the JSON Ruby library (refer to your platform's package manager or the Ruby documentation) and declare the requirement using the following code:

require 'json'

The following code is an example of how to use JavaScript Object Notation (JSON) in conjunction with Ruby to send specific data, in this case the name and age of an individual, using the PUT function.

data = {:name => "michael", :age => 42 }

http.put('/infinispan/rest/Users/data/0', data.to_json, {"Content-Type" => "application/json"})

9.4. Python Client Code

import httplib

#How to insert data

conn = httplib.HTTPConnection("localhost:8080")

data = "SOME DATA HERE \!" #could be string, or a file...

conn.request("POST", "/rest/Bucket/0", data, {"Content-Type": "text/plain"})

response = conn.getresponse()

print response.status

#How to retrieve data

import httplib

conn = httplib.HTTPConnection("localhost:8080")

conn.request("GET", "/rest/Bucket/0")

response = conn.getresponse()

print response.status

print response.read()

9.5. Java Client Code

Define imports as follows:

import java.io.BufferedReader;import java.io.IOException; import java.io.InputStreamReader;import java.io.OutputStreamWriter; import java.net.HttpURLConnection;import java.net.URL;

The following is an example of using Java to add a string value to a cache:

public class RestExample {

/**

* Method that puts a String value in cache.

* @param urlServerAddress

* @param value

* @throws IOException

*/

public void putMethod(String urlServerAddress, String value) throws IOException {

System.out.println("----------------------------------------");

System.out.println("Executing PUT");

System.out.println("----------------------------------------");

URL address = new URL(urlServerAddress);

System.out.println("executing request " + urlServerAddress);

HttpURLConnection connection = (HttpURLConnection) address.openConnection();

System.out.println("Executing put method of value: " + value);

connection.setRequestMethod("PUT");

connection.setRequestProperty("Content-Type", "text/plain");

connection.setDoOutput(true);

OutputStreamWriter outputStreamWriter = new OutputStreamWriter(connection.getOutputStream());

outputStreamWriter.write(value);

connection.connect();

outputStreamWriter.flush();

System.out.println("----------------------------------------");

System.out.println(connection.getResponseCode() + " " + connection.getResponseMessage());

System.out.println("----------------------------------------");

connection.disconnect();

}

The following code is an example of a method used that reads a value specified in a URL using Java to interact with the JBoss Data Grid REST Interface.

/**

* Method that gets an value by a key in url as param value.

* @param urlServerAddress

* @return String value

* @throws IOException

*/

public String getMethod(String urlServerAddress) throws IOException {

String line = new String();

StringBuilder stringBuilder = new StringBuilder();

System.out.println("----------------------------------------");

System.out.println("Executing GET");

System.out.println("----------------------------------------");

URL address = new URL(urlServerAddress);

System.out.println("executing request " + urlServerAddress);

HttpURLConnection connection = (HttpURLConnection) address.openConnection();

connection.setRequestMethod("GET");

connection.setRequestProperty("Content-Type", "text/plain");

connection.setDoOutput(true);

BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(connection.getInputStream()));

connection.connect();

while ((line = bufferedReader.readLine()) \!= null) {

stringBuilder.append(line + '\n');

}

System.out.println("Executing get method of value: " + stringBuilder.toString());

System.out.println("----------------------------------------");

System.out.println(connection.getResponseCode() + " " + connection.getResponseMessage());

System.out.println("----------------------------------------");

connection.disconnect();

return stringBuilder.toString();

}

/**

* Main method example.

* @param args

* @throws IOException

*/

public static void main(String\[\] args) throws IOException {

//Note that the cache name is "cacheX"

RestExample restExample = new RestExample();

restExample.putMethod("http://localhost:8080/rest/cacheX/1", "Infinispan REST Test");

restExample.getMethod("http://localhost:8080/rest/cacheX/1");

}

}

}

9.6. Configure the REST Interface

9.6.1. About JBoss Data Grid Connectors

- The

hotrod-connectorelement, which defines the configuration for a Hot Rod based connector. - The

memcached-connectorelement, which defines the configuration for a memcached based connector. - The

rest-connectorelement, which defines the configuration for a REST interface based connector.

9.6.2. Configure REST Connectors

rest-connector element in JBoss Data Grid's Remote Client-Server mode.

<subsystem xmlns="urn:jboss:domain:datagrid:1.0">

<rest-connector virtual-server="default-host"

cache-container="default"

context-path="$CONTEXT_PATH"

security-domain="other"

auth-method="BASIC"

security-mode="WRITE" />

</subsystem>

9.6.3. REST Connector Attributes

- The

rest-connectorelement specifies the configuration information for the REST connector.- The

virtual-serverparameter specifies the virtual server used by the REST connector. The default value for this parameter isdefault-host. This is an optional parameter. - The

cache-containerparameter names the cache container used by the REST connector. This is a mandatory parameter. - The

context-pathparameter specifies the context path for the REST connector. The default value for this parameter is an empty string (""). This is an optional parameter. - the

security-domainparameter specifies that the specified domain, declared in the security subsystem, should be used to authenticate access to the REST endpoint. This is an optional parameter. If this parameter is omitted, no authentication is performed. - The

auth-methodparameter specifies the method used to retrieve credentials for the end point. The default value for this parameter isBASIC. Supported alternate values includeDIGEST,CLIENT-CERTandSPNEGO. This is an optional parameter. - The

security-modeparameter specifies whether authentication is required only for write operations (such as PUT, POST and DELETE) or for read operations (such as GET and HEAD) as well. Valid values for this parameter areWRITEfor authenticating write operations only, orREAD_WRITEto authenticate read and write operations.

9.7. Using the REST Interface

9.7.1. REST Interface Operations

- Adding data.

- Retrieving data.

- Removing data.

9.7.2. Adding Data

9.7.2.1. Adding Data Using the REST Interface

- HTTP

PUTmethod - HTTP

POSTmethod

PUT and POST methods are used, the body of the request contains this data, which includes any information added by the user.

PUT and POST methods require a Content-Type header.

9.7.2.2. About PUT /{cacheName}/{cacheKey}

PUT request from the provided URL form places the payload, (from the request body) in the targeted cache using the provided key. The targeted cache must exist on the server for this task to successfully complete.

hr is the cache name and payRoll%2F3 is the key. The value %2F indicates that a / was used in the key.

http://someserver/rest/hr/payRoll%2F3

Time-To-Live and Last-Modified values are updated, if an update is required.

Note

%2F to represent a / in the key (as in the provided example) can be successfully run if the server is started using the following argument:

-Dorg.apache.tomcat.util.buf.UDecoder.ALLOW_ENCODED_SLASH=true

9.7.2.3. About POST /{cacheName}/{cacheKey}

POST method from the provided URL form places the payload (from the request body) in the targeted cache using the provided key. However, in a POST method, if a value in a cache/key exists, a HTTP CONFLICT status is returned and the content is not updated.

9.7.3. Retrieving Data

9.7.3.1. Retrieving Data Using the REST Interface

- HTTP

GETmethod. - HTTP

HEADmethod.

9.7.3.2. About GET /{cacheName}/{cacheKey}

GET method returns the data located in the supplied cacheName, matched to the relevant key, as the body of the response. The Content-Type header provides the type of the data. A browser can directly access the cache.

9.7.3.3. About HEAD /{cacheName}/{cacheKey}

HEAD method operates in a manner similar to the GET method, however returns no content (header fields are returned).

9.7.4. Removing Data

9.7.4.1. Removing Data Using the REST Interface

DELETE method to retrieve data from the cache. The DELETE method can:

- Remove a cache entry/value. (

DELETE /{cacheName}/{cacheKey}) - Remove a cache. (

DELETE /{cacheName})

9.7.4.2. About DELETE /{cacheName}/{cacheKey}

DELETE /{cacheName}/{cacheKey}), the DELETE method removes the key/value from the cache for the provided key.

9.7.4.3. About DELETE /{cacheName}

DELETE /{cacheName}), the DELETE method removes all entries in the named cache. After a successful DELETE operation, the HTTP status code 200 is returned.

9.7.4.4. Background Delete Operations

performAsync header to true to ensure an immediate return while the removal operation continues in the background.

9.7.5. REST Interface Operation Headers

9.7.5.1. Headers

Table 9.1. Header Types

| Headers | Mandatory/Optional | Values | Default Value | Details |

|---|---|---|---|---|

| Content-Type | Mandatory | - | - | If the Content-Type is set to application/x-java-serialized-object, it is stored as a Java object. |

| performAsync | Optional | True/False | - | If set to true, an immediate return occurs, followed by a replication of data to the cluster on its own. This feature is useful when dealing with bulk data inserts and large clusters. |

| timeToLiveSeconds | Optional | Numeric (positive and negative numbers) | -1 (This value prevents expiration as a direct result of timeToLiveSeconds. Expiration values set elsewhere override this default value.) | Reflects the number of seconds before the entry in question is automatically deleted. Setting a negative value for timeToLiveSeconds provides the same result as the default value. |

| maxIdleTimeSeconds | Optional | Numeric (positive and negative numbers) | -1 (This value prevents expiration as a direct result of maxIdleTimeSeconds. Expiration values set elsewhere override this default value.) | Contains the number of seconds after the last usage when the entry will be automatically deleted. Passing a negative value provides the same result as the default value. |

timeToLiveSeconds and maxIdleTimeSeconds headers:

- If both the

timeToLiveSecondsandmaxIdleTimeSecondsheaders are assigned the value0, the cache uses the defaulttimeToLiveSecondsandmaxIdleTimeSecondsvalues configured either using XML or programatically. - If only the

maxIdleTimeSecondsheader value is set to0, thetimeToLiveSecondsvalue should be passed as the parameter (or the default-1, if the parameter is not present). Additionally, themaxIdleTimeSecondsparameter value defaults to the values configured either using XML or programatically. - If only the

timeToLiveSecondsheader value is set to0, expiration occurs immediately and themaxIdleTimeSecondsvalue is set to the value passed as a parameter (or the default-1if no parameter was supplied).

ETags (Entity Tags) are returned for each REST Interface entry, along with a Last-Modified header that indicates the state of the data at the supplied URL. ETags are used in HTTP operations to request data exclusively in cases where the data has changed to save bandwidth. The following headers support ETags (Entity Tags) based optimistic locking:

Table 9.2. Entity Tag Related Headers

| Header | Algorithm | Example | Details |

|---|---|---|---|

| If-Match | If-Match = "If-Match" ":" ( "*" | 1#entity-tag ) | - | Used in conjunction with a list of associated entity tags to verify that a specified entity (that was previously obtained from a resource) remains current. |

| If-None-Match | - | Used in conjunction with a list of associated entity tags to verify that none of the specified entities (that was previously obtained from a resource) are current. This feature facilitates efficient updates of cached information when required and with minimal transaction overhead. | |

| If-Modified-Since | If-Modified-Since = "If-Modified-Since" ":" HTTP-date | If-Modified-Since: Sat, 29 Oct 1994 19:43:31 GMT | Compares the requested variant's last modification time and date with a supplied time and date value. If the requested variant has not been modified since the specified time and date, a 304 (not modified) response is returned without a message-body instead of an entity. |

| If-Unmodified-Since | If-Unmodified-Since = "If-Unmodified-Since" ":" HTTP-date | If-Unmodified-Since: Sat, 29 Oct 1994 19:43:31 GMT | Compares the requested variant's last modification time and date with a supplied time and date value. If the requested resources has not been modified since the supplied date and time, the specified operation is performed. If the requested resource has been modified since the supplied date and time, the operation is not performed and a 412 (Precondition Failed) response is returned. |

9.8. REST Interface Security

Note

9.8.1. Enable Security for the REST Endpoint

JBoss Data Grid includes an example standalone-rest-auth.xml file located within the JBoss Data Grid directory at the location /docs/examples/configs).

$JDG_HOME/standalone/configuration directory to use the configuration. From the $JDG_HOME location, enter the following command to create a copy of the standalone-rest-auth.xml in the appropriate location:

$ cp docs/examples/configs/standalone-rest-auth.xml standalone/configuration/standalone.xml

standalone-rest-auth.xml to start with a new configuration template.

Procedure 9.1. Enable Security for the REST Endpoint

standalone.xml:

Specify Security Parameters

Ensure that the rest endpoint specifies a valid value for thesecurity-domainandauth-methodparameters. Recommended settings for these parameters are as follows:<subsystem xmlns="urn:jboss:domain:datagrid:1.0"> <rest-connector virtual-server="default-host" cache-container="local" security-domain="other" auth-method="BASIC"/> </subsystem>Check Security Domain Declaration

Ensure that the security subsystem contains the corresponding security-domain declaration. For details about setting up security-domain declarations, refer to the JBoss Application Server 7 or JBoss Enterprise Application Platform 6 documentation.Add an Application User

Run the relevant script and enter the configuration settings to add an application user.- Run the

adduser.shscript (located in$JDG_HOME/bin).- On a Windows system, run the

adduser.batfile (located in$JDG_HOME/bin) instead.

- When prompted about the type of user to add, select

Application User (application-users.properties)by enteringb. - Accept the default value for realm (

ApplicationRealm) by pressing the return key. - Specify a username and password.

- When prompted for a role for the created user, enter

REST. - Ensure the username and application realm information is correct when prompted and enter "yes" to continue.

Verify the Created Application User

Ensure that the created application user is correctly configured.- Check the configuration listed in the

application-users.propertiesfile (located in$JDG_HOME/standalone/configuration/). The following is an example of what the correct configuration looks like in this file:user1=2dc3eacfed8cf95a4a31159167b936fc

- Check the configuration listed in the

application-roles.propertiesfile (located in$JDG_HOME/standalone/configuration/). The following is an example of what the correct configuration looks like in this file:user1=REST

Test the Server

Start the server and enter the following link in a browser window to access the REST endpoint:http://localhost:8080/rest/namedCache

Note

If testing using a GET request, a405response code is expected and indicates that the server was successfully authenticated.

Chapter 10. The Memcached Interface

10.1. About the Memcached Protocol

10.2. About Memcached Servers in JBoss Data Grid

- Standalone, where each server acts independently without communication with any other memcached servers.

- Clustered, where servers replicate and distribute data to other memcached servers.

10.3. Using the Memcached Interface

10.3.1. Memcached Statistics

Table 10.1. Memcached Statistics

| Statistic | Data Type | Details |

|---|---|---|

| uptime | 32-bit unsigned integer. | Contains the time (in seconds) that the memcached instance has been available and running. |

| time | 32-bit unsigned integer. | Contains the current time. |

| version | String | Contains the current version. |

| curr_items | 32-bit unsigned integer. | Contains the number of items currently stored by the instance. |

| total_items | 32-bit unsigned integer. | Contains the total number of items stored by the instance during its lifetime. |

| cmd_get | 64-bit unsigned integer | Contains the total number of get operation requests (requests to retrieve data). |

| cmd_set | 64-bit unsigned integer | Contains the total number of set operation requests (requests to store data). |

| get_hits | 64-bit unsigned integer | Contains the number of keys that are present from the keys requested. |

| get_misses | 64-bit unsigned integer | Contains the number of keys that were not found from the keys requested. |

| delete_hits | 64-bit unsigned integer | Contains the number of keys to be deleted that were located and successfully deleted. |

| delete_misses | 64-bit unsigned integer | Contains the number of keys to be deleted that were not located and therefore could not be deleted. |

| incr_hits | 64-bit unsigned integer | Contains the number of keys to be incremented that were located and successfully incremented |

| incr_misses | 64-bit unsigned integer | Contains the number of keys to be incremented that were not located and therefore could not be incremented. |

| decr_hits | 64-bit unsigned integer | Contains the number of keys to be decremented that were located and successfully decremented. |

| decr_misses | 64-bit unsigned integer | Contains the number of keys to be decremented that were not located and therefore could not be decremented. |

| cas_hits | 64-bit unsigned integer | Contains the number of keys to be compared and swapped that were found and successfully compared and swapped. |

| cas_misses | 64-bit unsigned integer | Contains the number of keys to be compared and swapped that were not found and therefore not compared and swapped. |

| cas_badvalue | 64-bit unsigned integer | Contains the number of keys where a compare and swap occurred but the original value did not match the supplied value. |

| evictions | 64-bit unsigned integer | Contains the number of eviction calls performed. |

| bytes_read | 64-bit unsigned integer | Contains the total number of bytes read by the server from the network. |

| bytes_written | 64-bit unsigned integer | Contains the total number of bytes written by the server to the network. |

10.4. Configure the Memcached Interface

10.4.1. About JBoss Data Grid Connectors

- The

hotrod-connectorelement, which defines the configuration for a Hot Rod based connector. - The

memcached-connectorelement, which defines the configuration for a memcached based connector. - The

rest-connectorelement, which defines the configuration for a REST interface based connector.

10.4.2. Configure Memcached Connectors

memcached-connector element in JBoss Data Grid's Remote Client-Server Mode.

<subsystem xmlns="urn:jboss:domain:datagrid:1.0">

<memcached-connector socket-binding="memcached"

cache-container="default"

worker-threads="4"

idle-timeout="-1"

tcp-nodelay="true"

send-buffer-size="0"

receive-buffer-size="0" />

</subsystem>

10.4.3. Memcached Connector Attributes

- The following is a list of attributes used to configure the memcached connector within the

connectorselement in JBoss Data Grid's Remote Client-Server Mode.- The

memcached-connectorelement defines the configuration elements for use with memcached.- The

socket-bindingparameter specifies the socket binding port used by the memcached connector. This is a mandatory parameter. - The

cache-containerparameter names the cache container used by the memcached connector. This is a mandatory parameter. - The

worker-threadsparameter specifies the number of worker threads available for the memcached connector. The default value for this parameter is the number of cores available multiplied by two. This is an optional parameter. - The

idle-timeoutparameter specifies the time (in milliseconds) the connector can remain idle before the connection times out. The default value for this parameter is-1, which means that no timeout period is set. This is an optional parameter. - The

tcp-nodelayparameter specifies whether TCP packets will be delayed and sent out in batches. Valid values for this parameter aretrueandfalse. The default value for this parameter istrue. This is an optional parameter. - The

send-buffer-sizeparameter indicates the size of the send buffer for the memcached connector. The default value for this parameter is the size of the TCP stack buffer. This is an optional parameter. - The

receive-buffer-sizeparameter indicates the size of the receive buffer for the memcached connector. The default value for this parameter is the size of the TCP stack buffer. This is an optional parameter.

Chapter 11. The Hot Rod Interface

11.1. About Hot Rod

11.2. The Benefits of Using Hot Rod over Memcached

- Memcached

- The memcached protocol causes the server endpoint to use the memcached text wire protocol. The memcached wire protocol has the benefit of being commonly used, and is available for almost any platform. All of JBoss Data Grid's functions, including clustering, state sharing for scalability, and high availability, are available when using memcached.However the memcached protocol lacks dynamicity, resulting in the need to manually update the list of server nodes on your clients in the event one of the nodes in a cluster fails. Also, memcached clients are not aware of the location of the data in the cluster. This means that they will request data from a non-owner node, incurring the penalty of an additional request from that node to the actual owner, before being able to return the data to the client. This is where the Hot Rod protocol is able to provide greater performance than memcached.

- Hot Rod

- JBoss Data Grid's Hot Rod protocol is a binary wire protocol that offers all the capabilities of memcached, while also providing better scaling, durability, and elasticity.The Hot Rod protocol does not need the hostnames and ports of each node in the remote cache, whereas memcached requires these parameters to be specified. Hot Rod clients automatically detect changes in the topology of clustered Hot Rod servers; when new nodes join or leave the cluster, clients update their Hot Rod server topology view. Consequently, Hot Rod provides ease of configuration and maintenance, with the advantage of dynamic load balancing and failover.Additionally, the Hot Rod wire protocol uses smart routing when connecting to a distributed cache. This involves sharing a consistent hash algorithm between the server nodes and clients, resulting in faster read and writing capabilities than memcached.

11.3. About Hot Rod Servers in JBoss Data Grid

11.4. Hot Rod Hash Functions

Integer.MAX_INT). This value is returned to the client using the Hot Rod protocol each time a hash-topology change is detected to prevent Hot Rod clients assuming a specific hash space as a default. The hash space can only contain positive numbers ranging from 0 to Integer.MAX_INT.

11.5. Hot Rod Server Nodes

11.5.1. About Consistent Hashing Algorithms

11.5.2. The hotrod.properties File

infinispan.client.hotrod.server_list=remote-server:11222

infinispan.client.hotrod.request_balancing_strategy- For replicated (vs distributed) Hot Rod server clusters, the client balances requests to the servers according to this strategy.The default value for this property is

org.infinispan.client.hotrod.impl.transport.tcp.RoundRobinBalancingStrategy. infinispan.client.hotrod.server_list- This is the initial list of Hot Rod servers to connect to, specified in the following format: host1:port1;host2:port2... At least one host:port must be specified.The default value for this property is

127.0.0.1:11222. infinispan.client.hotrod.force_return_values- Whether or not to enable Flag.FORCE_RETURN_VALUE for all calls.The default value for this property is

false. infinispan.client.hotrod.tcp_no_delay- Affects TCP NODELAY on the TCP stack.The default value for this property is

true. infinispan.client.hotrod.ping_on_startup- If true, a ping request is sent to a back end server in order to fetch cluster's topology.The default value for this property is

true. infinispan.client.hotrod.transport_factory- Controls which transport will be used. Currently only the TcpTransport is supported.The default value for this property is

org.infinispan.client.hotrod.impl.transport.tcp.TcpTransportFactory. infinispan.client.hotrod.marshaller- Allows you to specify a custom Marshaller implementation to serialize and deserialize user objects.The default value for this property is

org.infinispan.marshall.jboss.GenericJBossMarshaller. infinispan.client.hotrod.async_executor_factory- Allows you to specify a custom asynchronous executor for async calls.The default value for this property is

org.infinispan.client.hotrod.impl.async.DefaultAsyncExecutorFactory. infinispan.client.hotrod.default_executor_factory.pool_size- If the default executor is used, this configures the number of threads to initialize the executor with.The default value for this property is

10. infinispan.client.hotrod.default_executor_factory.queue_size- If the default executor is used, this configures the queue size to initialize the executor with.The default value for this property is

100000. infinispan.client.hotrod.hash_function_impl.1- This specifies the version of the hash function and consistent hash algorithm in use, and is closely tied with the Hot Rod server version used.The default value for this property is the

Hash function specified by the server in the responses as indicated in ConsistentHashFactory. infinispan.client.hotrod.key_size_estimate- This hint allows sizing of byte buffers when serializing and deserializing keys, to minimize array resizing.The default value for this property is

64. infinispan.client.hotrod.value_size_estimate- This hint allows sizing of byte buffers when serializing and deserializing values, to minimize array resizing.The default value for this property is

512. infinispan.client.hotrod.socket_timeout- This property defines the maximum socket read timeout before giving up waiting for bytes from the server.The default value for this property is

60000 (equals 60 seconds). infinispan.client.hotrod.protocol_version- This property defines the protocol version that this client should use. Other valid values include 1.0.The default value for this property is

1.1. infinispan.client.hotrod.connect_timeout- This property defines the maximum socket connect timeout before giving up connecting to the server.The default value for this property is

60000 (equals 60 seconds).

11.6. Hot Rod Headers

11.6.1. Hot Rod Header Data Types

Table 11.1. Header Data Types

| Data Type | Size | Details |

|---|---|---|

| vInt | Between 1-5 bytes. | Unsigned variable length integer values. |

| vLong | Between 1-9 bytes. | Unsigned variable length long values. |

| string | - | Strings are always represented using UTF-8 encoding. |

11.6.2. Request Header

Table 11.2. Request Header Fields

| Field Name | Data Type/Size | Details |

|---|---|---|

| Magic | 1 byte | Indicates whether the header is a request header or response header. |

| Message ID | vLong | Contains the message ID. Responses use this unique ID when responding to a request. This allows Hot Rod clients to implement the protocol in an asynchronous manner. |

| Version | 1 byte | Contains the Hot Rod server version. |

| Opcode | 1 byte | Contains the relevant operation code. In a request header, opcode can only contain the request operation codes. |

| Cache Name Length | vInt | Stores the length of the cache name. If Cache Name Length is set to 0 and no value is supplied for Cache Name, the operation interacts with the default cache. |

| Cache Name | string | Stores the name of the target cache for the specified operation. This name must match the name of a predefined cache in the cache configuration file. |

| Flags | vInt | Contains a numeric value of variable length that represents flags passed to the system. Each bit represents a flag, except the most significant bit, which is used to determine whether more bytes must be read. Using a bit to represent each flag facilitates the representation of flag combinations in a condensed manner. |

| Client Intelligence | 1 byte | Contains a value that indicates the client capabilities to the server. |

| Topology ID | vInt | Contains the last known view ID in the client. Basic clients supply the value 0 for this field. Clients that support topology or hash information supply the value 0 until the server responds with the current view ID, which is subsequently used until a new view ID is returned by the server to replace the current view ID. |

| Transaction Type | 1 byte | Contains a value that represents one of two known transaction types. Currently, the only supported value is 0. |

| Transaction ID | byte-array | Contains a byte array that uniquely identifies the transaction associated with the call. The transaction type determines the length of this byte array. If the value for Transaction Type was set to 0, no Transaction ID is present. |

11.6.3. Response Header

Table 11.3. Response Header Fields

| Field Name | Data Type | Details |

|---|---|---|

| Magic | 1 byte | Indicates whether the header is a request or response header. |

| Message ID | vLong | Contains the message ID. This unique ID is used to pair the response with the original request. This allows Hot Rod clients to implement the protocol in an asynchronous manner. |

| Opcode | 1 byte | Contains the relevant operation code. In a response header, opcode can only contain the response operation codes. |

| Status | 1 byte | Contains a code that represents the status of the response. |

| Topology Change Marker | 1 byte | Contains a marker byte that indicates whether the response is included in the topology change information. |

11.6.4. Topology Change Headers

11.6.4.1. About Topology Change Headers

topology ID and the topology ID sent by the client and, if the two differ, it returns a new topology ID.

11.6.4.2. Topology Change Marker Values

Topology Change Marker field in a response header:

Table 11.4. Topology Change Marker Field Values

| Value | Details |

|---|---|

| 0 | No topology change information is added. |

| 1 | Topology change information is added. |

11.6.4.3. Topology Change Headers for Topology-Aware Clients

Table 11.5. Topology Change Header Fields

| Response Header Fields | Data Type/Size | Details |

|---|---|---|

| Response Header with Topology Change Marker | - | - |

| Topology ID | vInt | - |

| Num Servers in Topology | vInt | Contains the number of Hot Rod servers running in the cluster. This value can be a subset of the entire cluster if only some nodes are running Hot Rod servers. |

| mX: Host/IP Length | vInt | Contains the length of the hostname or IP address of an individual cluster member. Variable length allows this element to include hostnames, IPv4 and IPv addresses. |

| mX: Host/IP Address | string | Contains the hostname or IP address of an individual cluster member. The Hot Rod client uses this information to access the individual cluster member. |

| mX: Port | Unsigned Short. 2 bytes | Contains the port used by Hot Rod clients to communicate with the cluster member. |

mX, are repeated for each server in the topology. The first server in the topology's information fields will be prefixed with m1 and the numerical value is incremented by one for each additional server till the value of X equals the number of servers specified in the num servers in topology field.

11.6.4.4. Topology Change Headers for Hash Distribution-Aware Clients

Table 11.6. Topology Change Header Fields

| Field | Data Type/Size | Details |

|---|---|---|

| Response Header with Topology Change Marker | - | - |

| Topology ID | vInt | - |

| Number Key Owners | Unsigned short. 2 bytes. | Contains the number of globally configured copies for each distributed key. Contains the value 0 if distribution is not configured on the cache. |

| Hash Function Version | 1 byte | Contains a pointer to the hash function in use. Contains the value 0 if distribution is not configured on the cache. |

| Hash Space Size | vInt | Contains the modulus used by JBoss Data Grid for all module arithmetic related to hash code generation. Clients use this information to apply the correct hash calculations to the keys. Contains the value 0 if distribution is not configured on the cache. |

| Number servers in topology | vInt | Contains the number of Hot Rod servers running in the cluster. This value can be a subset of the entire cluster if only some nodes are running Hot Rod servers. This value also represents the number of host to port pairings included in the header. |

| Number Virtual Nodes Owners | vInt | Contains the number of configured virtual nodes. Contains the value 0 if no virtual nodes are configured or if distribution is not configured on the cache. |

| mX: Host/IP Length | vInt | Contains the length of the hostname or IP address of an individual cluster member. Variable length allows this element to include hostnames, IPv4 and IPv6 addresses. |

| mX: Host/IP Address | string | Contains the hostname or IP address of an individual cluster member. The Hot Rod client uses this information to access the individual cluster member. |

| mX: Port | Unsigned short. 2 bytes. | Contains the port used by Hot Rod clients to communicate with the cluster member. |

| mX: Hashcode | 4 bytes. |

mX, are repeated for each server in the topology. The first server in the topology's information fields will be prefixed with m1 and the numerical value is incremented by one for each additional server till the value of X equals the number of servers specified in the num servers in topology field.

11.7. Hot Rod Operations

11.7.1. Hot Rod Operations

- Get

- BulkGet

- GetWithVersion

- Put

- PutIfAbsent

- Remove

- RemoveIfUnmodified

- Replace

- ReplaceIfUnmodified

- Clear

- ContainsKey

- Ping

- Stats

11.7.2. Hot Rod Get Operation

Get operation uses the following request format:

Table 11.7. Get Operation Request Format

| Field | Data Type | Details |

|---|---|---|

| Header | - | - |

| Key Length | vInt | Contains the length of the key. The vInt data type is used because of its size (up to 6 bytes), which is larger than the size of Integer.MAX_VALUE. However, Java disallows single array sizes to exceed the size of Integer.MAX_VALUE. As a result, this vInt is also limited to the maximum size of Integer.MAX_VALUE. |

| Key | Byte array | Contains a key, the corresponding value of which is requested. |

Table 11.8. Get Operation Response Format

| Response Status | Details |

|---|---|

| 0x00 | Successful operation. |

| 0x02 | The key does not exist. |

get operation's response when the key is found is as follows:

Table 11.9. Get Operation Response Format

| Field | Data Type | Details |

|---|---|---|

| Header | - | - |

| Value Length | vInt | Contains the length of the value. |

| Value | Byte array | Contains the requested value. |

11.7.3. Hot Rod BulkGet Operation