Administration Guide

Administering Red Hat CodeReady Workspaces 2.3

Robert Kratky

rkratky@redhat.com

Michal Maléř

mmaler@redhat.com

Fabrice Flore-Thébault

ffloreth@redhat.com

Yana Hontyk

yhontyk@redhat.com

devtools-docs@redhat.com

Abstract

Chapter 1. Customizing the devfile and plug-in registries

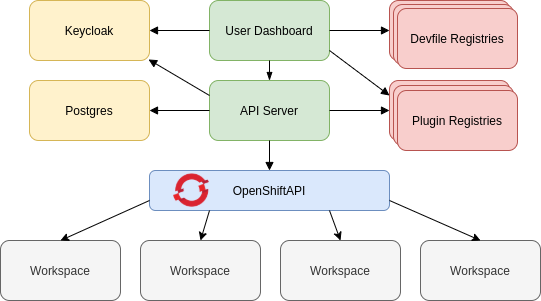

CodeReady Workspaces 2.3 introduces two registries: the plug-in registry and the devfile registry. They are static websites that publish the metadata of CodeReady Workspaces plug-ins and devfiles.

The plug-in registry makes it possible to share a plug-in definition across all the users of the same instance of CodeReady Workspaces. Only plug-ins that are published in a registry can be used in a devfile.

The devfile registry holds the definitions of the CodeReady Workspaces stacks, which are available on the CodeReady Workspaces user dashboard when selecting Create Workspace. The registry contains the list of CodeReady Workspaces technological stack samples with example projects.

The devfile and plug-in registries run in two separate pods and are deployed when the CodeReady Workspaces server is deployed (that is the default behavior of the CodeReady Workspaces Operator). The metadata of the plug-ins and devfiles are versioned on GitHub and follow the CodeReady Workspaces server life cycle.

This document describes the following two ways to customize the default registries that are deployed with CodeReady Workspaces (to modify the plug-in or devfile metadata):

- Building a custom image of the registries

- Running the default images but modifying them at runtime

1.1. Building and running a custom registry image

This section describes how to build the registries and how to update a running CodeReady Workspaces server to point to them.

1.1.1. Building a custom devfile registry

This section describes how to build a custom devfile registry. The following operations are covered:

- Getting a copy of the source code necessary to build a devfile registry.

- Adding a new devfile.

- Building the devfile registry.

Procedure

Clone the devfile registry repository:

$ git clone git@github.com:redhat-developer/codeready-workspaces.git
$ cd codeready-workspaces/dependencies/che-devfile-registry

In the ./che-devfile-registry/devfiles/ directory, create a subdirectory <devfile-name>/ and add the devfile.yaml and meta.yaml files.

File organization for a devfile

./che-devfile-registry/devfiles/
└── <devfile-name>
    ├── devfile.yaml
    └── meta.yaml

Add valid content to the devfile.yaml file. For a detailed description of the devfile format, see Making a workspace portable using a Devfile.

Ensure that the meta.yaml file conforms to the following structure:

Table 1.1. Parameters for a devfile meta.yaml

| Attribute | Description |
|---|---|
| description | Description as it appears on the user dashboard. |
| displayName | Name as it appears on the user dashboard. |
| globalMemoryLimit | The sum of the expected memory consumed by all the components launched by the devfile. This number is visible on the user dashboard. It is informative and is not taken into account by the CodeReady Workspaces server. |
| icon | Link to an .svg file that is displayed on the user dashboard. |
| tags | List of tags. Tags usually include the tools included in the stack. |

Example devfile meta.yaml

displayName: Rust
description: Rust Stack with Rust 1.39
tags: ["Rust"]
icon: https://www.eclipse.org/che/images/logo-eclipseche.svg
globalMemoryLimit: 1686Mi

Build the containers for the custom devfile registry:

$ docker build -t my-devfile-registry .
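Before running docker build, the meta.yaml requirements from Table 1.1 can be checked locally. The following is a minimal sketch in POSIX shell, not part of the registry tooling: it scaffolds a hypothetical my-stack entry in a scratch directory and reports any Table 1.1 attribute that meta.yaml does not define. It only checks that each key is present, not that its value is valid.

```shell
#!/bin/sh
# Sketch: scaffold a devfile registry entry in a scratch directory and check
# that meta.yaml defines every attribute from Table 1.1.
# The stack name and all attribute values are hypothetical examples.
set -eu

DEVFILE_DIR="$(mktemp -d)/devfiles/my-stack"
mkdir -p "${DEVFILE_DIR}"

# Placeholder devfile; replace it with real devfile content.
touch "${DEVFILE_DIR}/devfile.yaml"

cat > "${DEVFILE_DIR}/meta.yaml" <<'EOF'
displayName: My Stack
description: Example stack for a custom devfile registry
tags: ["Example"]
icon: https://www.eclipse.org/che/images/logo-eclipseche.svg
globalMemoryLimit: 1686Mi
EOF

# check_devfile_meta FILE: report every Table 1.1 attribute that is missing.
check_devfile_meta() {
  missing=0
  for attr in description displayName globalMemoryLimit icon tags; do
    if ! grep -q "^${attr}:" "$1"; then
      echo "missing attribute: ${attr}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

check_devfile_meta "${DEVFILE_DIR}/meta.yaml" && echo "meta.yaml OK"
```

To check a real entry, run check_devfile_meta ./devfiles/<devfile-name>/meta.yaml from the che-devfile-registry directory.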

1.1.2. Building a custom plug-in registry

This section describes how to build a custom plug-in registry. The following operations are covered:

- Getting a copy of the source code necessary to build a custom plug-in registry.

- Adding a new plug-in.

- Building the custom plug-in registry.

Procedure

Clone the plug-in registry repository:

$ git clone git@github.com:redhat-developer/codeready-workspaces.git
$ cd codeready-workspaces/dependencies/che-plugin-registry

In the ./che-plugin-registry/v3/plugins/ directory, create new directories <publisher>/<plugin-name>/<plugin-version>/ and a meta.yaml file in the last directory.

File organization for a plug-in

./che-plugin-registry/v3/plugins/
├── <publisher>
│   └── <plugin-name>
│       ├── <plugin-version>
│       │   └── meta.yaml
│       └── latest.txt

Add valid content to the meta.yaml file. See the “Using a Visual Studio Code extension in CodeReady Workspaces” section or the README.md file in the eclipse/che-plugin-registry repository for a detailed description of the meta.yaml file format.

Create a file named latest.txt whose content is the name of the latest <plugin-version> directory.

Example

$ tree che-plugin-registry/v3/plugins/redhat/java/
che-plugin-registry/v3/plugins/redhat/java/
├── 0.38.0
│   └── meta.yaml
├── 0.43.0
│   └── meta.yaml
├── 0.45.0
│   └── meta.yaml
├── 0.46.0
│   └── meta.yaml
├── 0.50.0
│   └── meta.yaml
└── latest.txt
$ cat che-plugin-registry/v3/plugins/redhat/java/latest.txt
0.50.0

Build the containers for the custom plug-in registry:

$ ./build.sh
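The latest.txt file must name the highest <plugin-version> directory, and a plain alphabetical sort gets this wrong once a version component reaches two digits. A minimal sketch, assuming a sort that supports -V (GNU coreutils sort does), that writes latest.txt for a hypothetical my-org/my-plug-in plug-in created in a scratch directory:

```shell
#!/bin/sh
# Sketch: write latest.txt for one plug-in directory.
# Assumes a sort that supports -V (version sort), as GNU coreutils sort does.
set -eu

# write_latest DIR: store the name of the highest <plugin-version>
# subdirectory of DIR in DIR/latest.txt.
write_latest() {
  plugin_dir="$1"
  latest=$(find "${plugin_dir}" -mindepth 1 -maxdepth 1 -type d -exec basename {} \; |
    sort -V | tail -n 1)
  printf '%s\n' "${latest}" > "${plugin_dir}/latest.txt"
}

# Hypothetical plug-in with two versions in a scratch directory. A plain
# lexical sort would pick 0.9.0 here; version sort picks 0.10.0.
PLUGIN_DIR="$(mktemp -d)/my-org/my-plug-in"
for version in 0.9.0 0.10.0; do
  mkdir -p "${PLUGIN_DIR}/${version}"
  touch "${PLUGIN_DIR}/${version}/meta.yaml"
done

write_latest "${PLUGIN_DIR}"
cat "${PLUGIN_DIR}/latest.txt"
```

For a real plug-in, run write_latest ./v3/plugins/<publisher>/<plugin-name> from the che-plugin-registry directory before building the registry.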

1.1.3. Deploying the registries

Prerequisites

The my-plug-in-registry and my-devfile-registry images used in this section are built using the docker command. This section assumes that these images are available on the OpenShift cluster where CodeReady Workspaces is deployed.

This is true on Minikube, for example, if the user ran the eval $(minikube docker-env) command (or the eval $(minishift docker-env) command for Minishift) before running the docker build commands.

Otherwise, these images can be pushed to a container registry: a public one, such as quay.io or Docker Hub, or a private one.
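If the images are pushed to a container registry, each one needs a fully qualified name before docker push. A minimal sketch, assuming the local images are named my-plug-in-registry and my-devfile-registry as above; REGISTRY, REGISTRY_ORG and TAG are hypothetical values, and the script only prints the docker commands unless DRY_RUN=0 is set:

```shell
#!/bin/sh
# Sketch: tag and push the locally built registry images so that the
# cluster can pull them. REGISTRY and REGISTRY_ORG are hypothetical values.
# With DRY_RUN=1 (the default) the docker commands are only printed.
set -eu

REGISTRY="${REGISTRY:-quay.io}"
REGISTRY_ORG="${REGISTRY_ORG:-my-user}"
TAG="${TAG:-latest}"
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print CMD in dry-run mode, execute it otherwise.
run() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

for image in my-plug-in-registry my-devfile-registry; do
  target="${REGISTRY}/${REGISTRY_ORG}/${image}:${TAG}"
  run docker tag "${image}" "${target}"
  run docker push "${target}"
done
```

The pushed repository name (for example quay.io/my-user/my-plug-in-registry) is then presumably the value to pass as IMAGE_NAME to the OpenShift templates below.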

1.1.3.1. Deploying registries in OpenShift

Procedure

An OpenShift template to deploy the plug-in registry is available in the openshift/ directory of the GitHub repository.

To deploy the plug-in registry using the OpenShift template, run the following command:

NAMESPACE=<namespace-name> 1
IMAGE_NAME="my-plug-in-registry"
IMAGE_TAG="latest"
oc new-app -f openshift/che-plugin-registry.yml \
  -n "${NAMESPACE}" \
  -p IMAGE="${IMAGE_NAME}" \
  -p IMAGE_TAG="${IMAGE_TAG}" \
  -p PULL_POLICY="IfNotPresent"

- 1

- If installed using crwctl, the default CodeReady Workspaces project is workspaces. The OperatorHub installation method deploys CodeReady Workspaces to the user's current project.

The devfile registry has an OpenShift template in the deploy/openshift/ directory of the GitHub repository. To deploy it, run the command:

NAMESPACE=<namespace-name> 1
IMAGE_NAME="my-devfile-registry"
IMAGE_TAG="latest"
oc new-app -f openshift/che-devfile-registry.yml \
  -n "${NAMESPACE}" \
  -p IMAGE="${IMAGE_NAME}" \
  -p IMAGE_TAG="${IMAGE_TAG}" \
  -p PULL_POLICY="IfNotPresent"

- 1

- If installed using crwctl, the default CodeReady Workspaces project is workspaces. The OperatorHub installation method deploys CodeReady Workspaces to the user's current project.

Check if the registries are deployed successfully on OpenShift.

To verify that the new plug-in is correctly published to the plug-in registry, make a request to the registry path /v3/plugins/index.json (or /devfiles/index.json for the devfile registry).

$ URL=$(oc get -o 'custom-columns=URL:.spec.rules[0].host' \
  -l app=che-plugin-registry route --no-headers)
$ INDEX_JSON=$(curl -sSL http://${URL}/v3/plugins/index.json)
$ echo "${INDEX_JSON}" | grep -A 4 -B 5 "\"name\":\"my-plug-in\""
,{
 "id": "my-org/my-plug-in/1.0.0",
 "displayName":"This is my first plug-in for CodeReady Workspaces",
 "version":"1.0.0",
 "type":"VS Code extension",
 "name":"my-plug-in",
 "description":"This plugin shows that we are able to add plugins to the registry",
 "publisher":"my-org",
 "links": {"self":"/v3/plugins/my-org/my-plug-in/1.0.0" }
}
--
--
,{
 "id": "my-org/my-plug-in/latest",
 "displayName":"This is my first plug-in for CodeReady Workspaces",
 "version":"latest",
 "type":"VS Code extension",
 "name":"my-plug-in",
 "description":"This plugin shows that we are able to add plugins to the registry",
 "publisher":"my-org",
 "links": {"self":"/v3/plugins/my-org/my-plug-in/latest" }
}

Verify that the CodeReady Workspaces server points to the URL of the registry. To do this, compare the value of the CHE_WORKSPACE_PLUGIN__REGISTRY__URL parameter in the codeready ConfigMap (or CHE_WORKSPACE_DEVFILE__REGISTRY__URL for the devfile registry):

$ oc get \
  -o "custom-columns=URL:.data['CHE_WORKSPACE_PLUGIN__REGISTRY__URL']" \
  --no-headers cm/che
URL
http://che-plugin-registry-che.192.168.99.100.mycluster.mycompany.com/v3

with the URL of the route:

$ oc get -o 'custom-columns=URL:.spec.rules[0].host' \
  -l app=che-plugin-registry route --no-headers
che-plugin-registry-che.192.168.99.100.mycluster.mycompany.com

If they do not match, update the ConfigMap and restart the CodeReady Workspaces server.

$ oc edit cm/che
(...)
$ oc scale --replicas=0 deployment/che
$ oc scale --replicas=1 deployment/che

When the new registries are deployed and the CodeReady Workspaces server is configured to use them, the new plug-ins are available in the Plugin view of a workspace and the new stacks are displayed in the New Workspace tab of the user dashboard.
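The ConfigMap check in the procedure above compares two differently shaped values: the ConfigMap stores a full URL with a scheme and a /v3 path, while the route only reports a host. A minimal sketch, assuming the values are pasted in as strings (the literals below are the example values from this section), that reduces both to a host name before comparing; host_of and urls_match are hypothetical helper names:

```shell
#!/bin/sh
# Sketch: decide whether the registry URL stored in the ConfigMap points to
# the host reported by the route.
set -eu

# host_of URL: drop an optional scheme (such as http://) and any path.
host_of() {
  printf '%s\n' "$1" | sed -e 's#^[a-z]*://##' -e 's#/.*$##'
}

# urls_match CONFIGMAP_URL ROUTE_HOST: succeed when both name the same host.
urls_match() {
  [ "$(host_of "$1")" = "$(host_of "$2")" ]
}

# Example values from this section.
CM_URL="http://che-plugin-registry-che.192.168.99.100.mycluster.mycompany.com/v3"
ROUTE_HOST="che-plugin-registry-che.192.168.99.100.mycluster.mycompany.com"

if urls_match "${CM_URL}" "${ROUTE_HOST}"; then
  echo "The ConfigMap points to the route."
else
  echo "Mismatch: update the ConfigMap and restart the server."
fi
```

On a live cluster, the two literals can be replaced by the output of the oc get commands shown above. Comparing hosts only is a deliberate simplification: a mismatch in scheme (http versus https) is not detected.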

1.2. Including the plug-in binaries in the registry image

The plug-in registry of CodeReady Workspaces differs from the Eclipse Che version. The Eclipse Che plug-in registry only hosts plug-in metadata, whereas the CodeReady Workspaces plug-in registry also hosts the corresponding binaries and is built in offline mode by default. This means that the binaries are already hosted in the plug-in registry image.

This section describes how to add a new plug-in or reference a different version of a plug-in. This is achieved by modifying the plug-in meta.yaml file to point to a new plug-in and building a new registry in offline mode that contains the modified plug-in meta.yaml file and the plug-in binary file.

Prerequisites

- An instance of CodeReady Workspaces is available.

- The oc tool is available.

Procedure

Clone the codeready-workspaces repository:

$ git clone https://github.com/redhat-developer/codeready-workspaces
$ cd codeready-workspaces/dependencies/che-plugin-registry

Identify the binaries you wish to change in the plug-in registry.

The meta.yaml file includes the extensions section, which defines the URLs of required extensions for the plug-in. For example, the redhat/java11/0.63.0 plug-in lists the following two extensions:

meta.yaml

extensions:
  - https://download.jboss.org/jbosstools/vscode/3rdparty/vscode-java-debug/vscode-java-debug-0.26.0.vsix
  - https://download.jboss.org/jbosstools/static/jdt.ls/stable/java-0.63.0-2222.vsix

Change the first extension to reference the version hosted on GitHub and rebuild the plug-in registry. When using the redhat/java11/0.63.0 plug-in, the binary will be fetched from the custom plug-in registry server. Set the following environment variables to help with the subsequent commands:

ORG=redhat
NAME=java11
CHE_PLUGIN_VERSION=0.63.0
VSCODE_JAVA_DEBUG_VERSION=0.26.0
VSCODE_JAVA_DEBUG_URL="https://github.com/microsoft/vscode-java-debug/releases/download/0.26.0/vscjava.vscode-java-debug-0.26.0.vsix"
OLD_JAVA_DEBUG_META_YAML_URL="https://download.jboss.org/jbosstools/vscode/3rdparty/vscode-java-debug/vscode-java-debug-0.26.0.vsix"

Get the plug-in registry URL:

$ oc get route plugin-registry -o jsonpath='{.spec.host}' -n ${CHE_NAMESPACE}

Save this value in a variable called PLUGIN_REGISTRY_URL.

Update the URLs in the meta.yaml file to point to the VS Code extension binaries that are saved in the registry container:

$ sed -i -e "s#${OLD_JAVA_DEBUG_META_YAML_URL}#${VSCODE_JAVA_DEBUG_URL}#g" \
  ./v3/plugins/${ORG}/${NAME}/${CHE_PLUGIN_VERSION}/meta.yaml

Important: By default, CodeReady Workspaces is deployed with TLS enabled. For installations that do not use TLS, use http:// in the NEW_JAVA_DEBUG_URL and NEW_JAVA_LS_URL variables.

Confirm that the meta.yaml file has the correctly substituted URLs:

$ cat ./v3/plugins/${ORG}/${NAME}/${CHE_PLUGIN_VERSION}/meta.yaml

meta.yaml

extensions:
  - https://plugin-registry-che.apps-crc.testing/v3/plugins/redhat/java11/0.63.0/vscode-java-debug-0.26.0.vsix
  - https://plugin-registry-che.apps-crc.testing/v3/plugins/redhat/java11/0.63.0/java-0.63.0-2222.vsix

- Build and deploy the plug-in registry using the instructions in the Building and running a custom registry image section.
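The sed substitution from this procedure can be rehearsed on a scratch file before touching the registry checkout. A minimal sketch, assuming a sample meta.yaml that contains only the two extension URLs quoted above; it writes through a temporary file rather than using sed -i, because GNU and BSD sed take different arguments for in-place editing:

```shell
#!/bin/sh
# Sketch: rehearse the extension URL substitution on a scratch meta.yaml.
set -eu

OLD_JAVA_DEBUG_META_YAML_URL="https://download.jboss.org/jbosstools/vscode/3rdparty/vscode-java-debug/vscode-java-debug-0.26.0.vsix"
VSCODE_JAVA_DEBUG_URL="https://github.com/microsoft/vscode-java-debug/releases/download/0.26.0/vscjava.vscode-java-debug-0.26.0.vsix"

# Sample file holding the two extensions listed for redhat/java11/0.63.0.
META_YAML="$(mktemp)"
cat > "${META_YAML}" <<EOF
extensions:
  - ${OLD_JAVA_DEBUG_META_YAML_URL}
  - https://download.jboss.org/jbosstools/static/jdt.ls/stable/java-0.63.0-2222.vsix
EOF

# '#' is the sed delimiter because the URLs contain '/'. The dots in the old
# URL act as regex wildcards, which still match the literal dots.
sed -e "s#${OLD_JAVA_DEBUG_META_YAML_URL}#${VSCODE_JAVA_DEBUG_URL}#g" \
  "${META_YAML}" > "${META_YAML}.new"
mv "${META_YAML}.new" "${META_YAML}"

# Stop if the old URL is still present, then show the result.
if grep -qF "${OLD_JAVA_DEBUG_META_YAML_URL}" "${META_YAML}"; then
  echo "substitution failed" >&2
  exit 1
fi
cat "${META_YAML}"
```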

1.3. Editing a devfile and plug-in at runtime

An alternative to building a custom registry image is to:

- Start a registry

- Modify its content at runtime

This approach is simpler and faster, but the modifications are lost as soon as the container is deleted.

1.3.1. Adding a plug-in at runtime

Procedure

To add a plug-in:

Check out the plug-in registry sources.

$ git clone https://github.com/redhat-developer/codeready-workspaces; \
  cd codeready-workspaces/dependencies/che-plugin-registry

Create a meta.yaml in some local folder. This can be done from scratch or by copying from an existing plug-in’s meta.yaml file.

$ PLUGIN="v3/plugins/new-org/new-plugin/0.0.1"; \
  mkdir -p ${PLUGIN}; cp v3/plugins/che-incubator/cpptools/0.1/* ${PLUGIN}/; \
  echo "${PLUGIN##*/}" > ${PLUGIN}/../latest.txt

- If copying from an existing plug-in, make changes to the meta.yaml file to suit your needs. Make sure the new plug-in has a unique title, displayName and description. Update the firstPublicationDate to today’s date. The following fields in meta.yaml must match the path defined in PLUGIN above:

publisher: new-org
name: new-plugin
version: 0.0.1

Get the name of the Pod that hosts the plug-in registry container. To do this, filter the component=plugin-registry label:

$ PLUGIN_REG_POD=$(oc get -o custom-columns=NAME:.metadata.name \
  --no-headers pod -l component=plugin-registry)

Regenerate the registry’s index.json file to include the new plug-in.

$ cd codeready-workspaces/dependencies/che-plugin-registry; \
  "$(pwd)/build/scripts/generate_latest_metas.sh" v3 && \
  "$(pwd)/build/scripts/check_plugins_location.sh" v3 && \
  "$(pwd)/build/scripts/set_plugin_dates.sh" v3 && \
  "$(pwd)/build/scripts/check_plugins_viewer_mandatory_fields.sh" v3 && \
  "$(pwd)/build/scripts/index.sh" v3 > v3/plugins/index.json

Copy the new index.json and meta.yaml files from the new local plug-in folder to the container.

$ cd codeready-workspaces/dependencies/che-plugin-registry; \
  LOCAL_FILES="$(pwd)/${PLUGIN}/meta.yaml $(pwd)/v3/plugins/index.json"; \
  oc exec ${PLUGIN_REG_POD} -i -t -- mkdir -p /var/www/html/${PLUGIN}; \
  for f in $LOCAL_FILES; do e=${f/$(pwd)\//}; echo "Upload ${f} -> /var/www/html/${e}"; \
  oc cp "${f}" ${PLUGIN_REG_POD}:/var/www/html/${e}; done

- The new plug-in can now be used from the existing CodeReady Workspaces instance of the plug-in registry. To discover it, go to the CodeReady Workspaces dashboard, then click the Workspaces link. From there, click the gear icon to configure one of your workspaces. Select the Plugins tab to see the updated list of available plug-ins.
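The rule that publisher, name and version in meta.yaml must match the path in PLUGIN can be checked before regenerating index.json. A minimal sketch, assuming simple unquoted top-level key: value lines; the check_plugin_path and yaml_value helpers are hypothetical and not part of the registry build scripts. The demo plug-in is created in a scratch directory:

```shell
#!/bin/sh
# Sketch: check that publisher, name and version in meta.yaml match the
# .../<publisher>/<name>/<version> path of the plug-in directory.
# Assumes unquoted top-level "key: value" lines in meta.yaml.
set -eu

# yaml_value FILE KEY: print the value of the first top-level "KEY: value" line.
yaml_value() {
  sed -n "s/^$2:[[:space:]]*//p" "$1" | head -n 1
}

# check_plugin_path DIR: compare the last three path segments of DIR
# with the publisher, name and version fields of DIR/meta.yaml.
check_plugin_path() {
  dir="${1%/}"
  version="${dir##*/}"
  rest="${dir%/*}"
  name="${rest##*/}"
  rest="${rest%/*}"
  publisher="${rest##*/}"
  meta="${dir}/meta.yaml"
  [ "$(yaml_value "${meta}" publisher)" = "${publisher}" ] &&
    [ "$(yaml_value "${meta}" name)" = "${name}" ] &&
    [ "$(yaml_value "${meta}" version)" = "${version}" ]
}

# Hypothetical demo plug-in in a scratch directory.
DEMO_PLUGIN="$(mktemp -d)/v3/plugins/new-org/new-plugin/0.0.1"
mkdir -p "${DEMO_PLUGIN}"
printf 'publisher: new-org\nname: new-plugin\nversion: 0.0.1\n' > "${DEMO_PLUGIN}/meta.yaml"

if check_plugin_path "${DEMO_PLUGIN}"; then
  echo "meta.yaml matches its path"
else
  echo "meta.yaml does not match its path" >&2
fi
```

To check the plug-in created in this procedure, run check_plugin_path "${PLUGIN}" from the che-plugin-registry directory.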

1.3.2. Adding a devfile at runtime

Procedure

To add a devfile:

Check out the devfile registry sources.

$ git clone https://github.com/redhat-developer/codeready-workspaces; \
  cd codeready-workspaces/dependencies/che-devfile-registry

Create a devfile.yaml and a meta.yaml in some local folder. This can be done from scratch or by copying from an existing devfile.

$ STACK="new-stack"; \
  mkdir -p devfiles/${STACK}; cp devfiles/03_web-nodejs-simple/* devfiles/${STACK}/

- If copying from an existing devfile, make changes to the devfile to suit your needs. Make sure the new devfile has a unique displayName and description.

Get the name of the Pod that hosts the devfile registry container. To do this, filter the component=devfile-registry label:

$ DEVFILE_REG_POD=$(oc get -o custom-columns=NAME:.metadata.name \
  --no-headers pod -l component=devfile-registry)

Regenerate the registry’s index.json file to include the new devfile.

$ cd codeready-workspaces/dependencies/che-devfile-registry; \
  "$(pwd)/build/scripts/check_mandatory_fields.sh" devfiles; \
  "$(pwd)/build/scripts/index.sh" > index.json

Copy the new index.json, devfile.yaml and meta.yaml files from the new local devfile folder to the container.

$ cd codeready-workspaces/dependencies/che-devfile-registry; \
  oc exec ${DEVFILE_REG_POD} -i -t -- mkdir -p /var/www/html/devfiles/${STACK}; \
  oc cp $(pwd)/devfiles/${STACK}/meta.yaml ${DEVFILE_REG_POD}:/var/www/html/devfiles/${STACK}/meta.yaml; \
  oc cp $(pwd)/devfiles/${STACK}/devfile.yaml ${DEVFILE_REG_POD}:/var/www/html/devfiles/${STACK}/devfile.yaml; \
  oc cp $(pwd)/index.json ${DEVFILE_REG_POD}:/var/www/html/devfiles/index.json

- The new devfile can now be used from the existing CodeReady Workspaces instance of the devfile registry. To discover it, go to the CodeReady Workspaces dashboard, then click the Workspaces link. From there, click Add Workspace to see the updated list of available devfiles.

1.4. Using a Visual Studio Code extension in CodeReady Workspaces

Starting with Red Hat CodeReady Workspaces 2.3, Visual Studio Code (VS Code) extensions can be installed to extend the functionality of a CodeReady Workspaces workspace. VS Code extensions can run in the Che-Theia editor container, or they can be packaged in their own isolated and pre-configured containers with their prerequisites.

This document describes:

- Use of a VS Code extension in CodeReady Workspaces workspaces.

- The CodeReady Workspaces Plugins panel.

- How to publish a VS Code extension in the CodeReady Workspaces plug-in registry (to share the extension with other CodeReady Workspaces users). The extension-hosting sidecar container and the use of the extension in a devfile are optional for this.

- How to review the compatibility of VS Code extensions to find out whether a specific API is supported or has not been implemented yet.

1.4.1. Publishing a VS Code extension into the CodeReady Workspaces plug-in registry

CodeReady Workspaces users can use a workspace devfile to add any plug-in, also known as a Visual Studio Code (VS Code) extension. Such a plug-in can be added to the plug-in registry and then reused by anyone in the same organization with access to that CodeReady Workspaces installation.

Some plug-ins need a dedicated runtime container for code compilation, which makes them a combination of a runtime sidecar container and a VS Code extension.

The following section describes the portability of a plug-in configuration and how to associate an extension with the runtime container that the plug-in needs.

1.4.1.1. Writing a meta.yaml file and adding it to a plug-in registry

The plug-in meta information is required to publish a VS Code extension in a Red Hat CodeReady Workspaces plug-in registry. This meta information is provided as a meta.yaml file. This section describes how to create a meta.yaml file for an extension.

Procedure

- Create a meta.yaml file in the following plug-in registry directory: <apiVersion>/plugins/<publisher>/<plug-inName>/<plug-inVersion>/.

- Edit the meta.yaml file and provide the necessary information. The configuration file must adhere to the following structure:

apiVersion: v2 1
publisher: myorg 2
name: my-vscode-ext 3
version: 1.7.2 4
type: value 5
displayName: 6
title: 7
description: 8
icon: https://www.eclipse.org/che/images/logo-eclipseche.svg 9
repository: 10
category: 11
spec:
  containers: 12
    - image: 13
      memoryLimit: 14
      memoryRequest: 15
      cpuLimit: 16
      cpuRequest: 17
  extensions: 18
    - https://github.com/redhat-developer/vscode-yaml/releases/download/0.4.0/redhat.vscode-yaml-0.4.0.vsix
    - https://github.com/SonarSource/sonarlint-vscode/releases/download/1.16.0/sonarlint-vscode-1.16.0.vsix

- 1

- Version of the file structure.

- 2

- Name of the plug-in publisher. Must be the same as the publisher in the path.

- 3

- Name of the plug-in. Must be the same as in the path.

- 4

- Version of the plug-in. Must be the same as in the path.

- 5

- Type of the plug-in. Possible values: Che Plugin, Che Editor, Theia plugin, VS Code extension.

- 6

- A short name of the plug-in.

- 7

- Title of the plug-in.

- 8

- A brief explanation of the plug-in and what it does.

- 9

- The link to the plug-in logo.

- 10

- Optional. The link to the source-code repository of the plug-in.

- 11

- Defines the category that this plug-in belongs to. Should be one of the following: Editor, Debugger, Formatter, Language, Linter, Snippet, Theme, or Other.

- 12

- If this section is omitted, the VS Code extension is added into the Che-Theia IDE container.

- 13

- The Docker image from which the sidecar container is started. Example: eclipse/che-theia-endpoint-runtime:next.

- 14

- The maximum RAM which is available for the sidecar container. Example: "512Mi". This value might be overridden by the user in the component configuration.

- 15

- The RAM which is given for the sidecar container by default. Example: "256Mi". This value might be overridden by the user in the component configuration.

- 16

- The maximum CPU amount in cores or millicores (suffixed with "m") which is available for the sidecar container. Examples: "500m", "2". This value might be overridden by the user in the component configuration.

- 17

- The CPU amount in cores or millicores (suffixed with "m") which is given for the sidecar container by default. Example: "125m". This value might be overridden by the user in the component configuration.

- 18

- A list of VS Code extensions that run in this sidecar container.
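Callouts 5 and 11 restrict type and category to fixed sets of values. A minimal sketch, assuming simple top-level key: value lines in meta.yaml, that rejects any other value; the helper names are hypothetical, and the registry's own build scripts may validate more fields:

```shell
#!/bin/sh
# Sketch: check the type (callout 5) and category (callout 11) fields of a
# plug-in meta.yaml. Assumes plain top-level "key: value" lines.
set -eu

# yaml_value FILE KEY: print the value of the first top-level "KEY: value" line.
yaml_value() {
  sed -n "s/^$2:[[:space:]]*//p" "$1" | head -n 1
}

# in_list VALUE ITEM...: succeed if VALUE equals one of the ITEMs.
in_list() {
  value="$1"
  shift
  for item in "$@"; do
    [ "${value}" = "${item}" ] && return 0
  done
  return 1
}

# check_meta FILE: report an invalid type or category; non-zero on error.
check_meta() {
  status=0
  plugin_type=$(yaml_value "$1" type)
  category=$(yaml_value "$1" category)
  in_list "${plugin_type}" "Che Plugin" "Che Editor" "Theia plugin" "VS Code extension" ||
    { echo "invalid type: ${plugin_type}" >&2; status=1; }
  in_list "${category}" Editor Debugger Formatter Language Linter Snippet Theme Other ||
    { echo "invalid category: ${category}" >&2; status=1; }
  return "${status}"
}

# Hypothetical sample file in a scratch location.
META="$(mktemp)"
printf 'apiVersion: v2\ntype: VS Code extension\ncategory: Language\n' > "${META}"
check_meta "${META}" && echo "type and category OK"
```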

1.4.2. Adding a plug-in registry VS Code extension to a workspace

When the required VS Code extension is added to a CodeReady Workspaces plug-in registry, the user can add it to the workspace through the CodeReady Workspaces Plugins panel or through the workspace configuration.

1.4.2.1. Adding a VS Code extension using the CodeReady Workspaces Plugins panel

Prerequisites

- A running instance of Red Hat CodeReady Workspaces. To install an instance of Red Hat CodeReady Workspaces, see Installing CodeReady Workspaces on OpenShift Container Platform.

Procedure

To add a VS Code extension using the CodeReady Workspaces Plugins panel:

- Open the CodeReady Workspaces Plugins panel by pressing CTRL+SHIFT+J or by navigating to View/Plugins.

- Change the current registry to the registry in which the VS Code extension was added.

- In the search bar, click the Menu button and then click Change Registry to choose the registry from the list. If the required registry is not in the list, add it using the Add Registry menu option. The registry link points to the plugins segment of the registry, for example: https://my-registry.com/v3/plugins/index.json.

- To update the list of plug-ins after adding a new registry link, use the Refresh command from the search bar menu.

- Search for the required plug-in using the filter, and then click the Install button.

- Restart the workspace for the changes to take effect.

1.4.2.2. Adding a VS Code extension using the workspace configuration

Prerequisites

- A running instance of Red Hat CodeReady Workspaces. To install an instance of Red Hat CodeReady Workspaces, see Installing CodeReady Workspaces on OpenShift Container Platform.

- An existing workspace defined on this instance of Red Hat CodeReady Workspaces (see Creating a workspace from user dashboard).

Procedure

To add a VS Code extension using the workspace configuration:

- Click the Workspaces tab on the Dashboard and select the workspace in which you want to add the plug-in. The Workspace <workspace-name> window is opened showing the details of the workspace.

- Click the devfile tab.

Locate the components section, and add a new entry with the following structure:

- type: chePlugin
  id: 1

- 1

- ID format: <publisher>/<plug-inName>/<plug-inVersion>

CodeReady Workspaces automatically adds the other fields to the new component.

Alternatively, you can link to a meta.yaml file hosted on GitHub, using the dedicated reference field.

- type: chePlugin
  reference: 1

- 1

- https://raw.githubusercontent.com/<username>/<registryRepository>/v3/plugins/<publisher>/<plug-inName>/<plug-inVersion>/meta.yaml
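The id field must follow the <publisher>/<plug-inName>/<plug-inVersion> format. A minimal sketch with hypothetical helper names that checks an ID has exactly three non-empty segments and prints the corresponding chePlugin component entry for the components section:

```shell
#!/bin/sh
# Sketch: validate a chePlugin ID of the form
# <publisher>/<plug-inName>/<plug-inVersion> and print a devfile component.
set -eu

# is_plugin_id ID: exactly three non-empty segments separated by '/'.
is_plugin_id() {
  printf '%s\n' "$1" | grep -Eq '^[^/]+/[^/]+/[^/]+$'
}

# plugin_component ID: print a chePlugin entry for the components section.
plugin_component() {
  if ! is_plugin_id "$1"; then
    echo "invalid plug-in ID: $1" >&2
    return 1
  fi
  printf '%s\n' '- type: chePlugin' "  id: $1"
}

plugin_component redhat/java11/latest
```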

1.4.2.2.1. Testing a VS Code extension using GitHub gist

Each workspace can have its own set of plug-ins. The list of plug-ins and the list of projects to clone are defined in the devfile.yaml file.

For example, to enable an AsciiDoc plug-in from the Red Hat CodeReady Workspaces dashboard, add the following snippet to the devfile:

components:
  - id: joaopinto/vscode-asciidoctor/latest
    type: chePlugin

To add a plug-in that is not in the default plug-in registry, build a custom plug-in registry (see Building a custom plug-in registry) or, alternatively, use GitHub and the gist service.

Prerequisites

- A running instance of Red Hat CodeReady Workspaces. To install an instance of Red Hat CodeReady Workspaces, see Installing CodeReady Workspaces on OpenShift Container Platform.

- A GitHub account.

Procedure

-

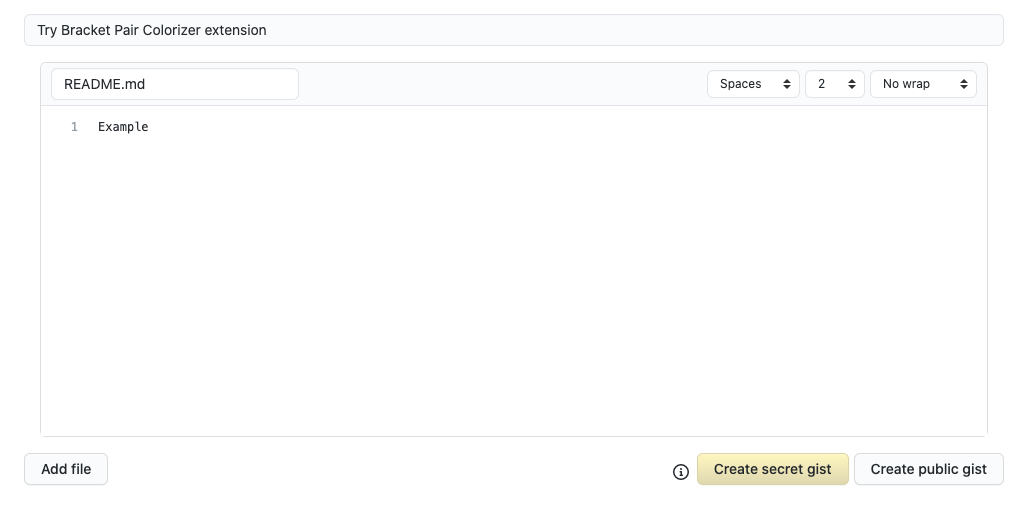

Go to the gist webpage and create a

README.mdfile with the following description:Try Bracket Pair Colorizer extension in Red Hat CodeReady Workspacesand content:Example VS Code extension. (Bracket Pair Colorizer is a popular VS Code extension.) Click the Create secret gist button:

Clone the gist repository by using the URL from the navigation bar of the browser:

$ git clone https://gist.github.com/<your-github-username>/<gist-id>

Example of the output of the git clone command

git clone https://gist.github.com/benoitf/85c60c8c439177ac50141d527729b9d9 1
Cloning into '85c60c8c439177ac50141d527729b9d9'...
remote: Enumerating objects: 3, done.
remote: Counting objects: 100% (3/3), done.
remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0
Unpacking objects: 100% (3/3), done.

- 1

- Each gist has a unique ID.

Change the directory:

$ cd <gist-directory-name> 1

- 1

- Directory name matching the gist ID.

- Download the plug-in from the VS Code marketplace or from its GitHub page, and store the plug-in file in the cloned directory.

Create a plugin.yaml file in the cloned directory to add the definition of this plug-in.

Example of the plugin.yaml file referencing the .vsix binary file extension

apiVersion: v2
publisher: CoenraadS
name: bracket-pair-colorizer
version: 1.0.61
type: VS Code extension
displayName: Bracket Pair Colorizer
title: Bracket Pair Colorizer
description: Bracket Pair Colorizer
icon: https://raw.githubusercontent.com/redhat-developer/codeready-workspaces/master/dependencies/che-plugin-registry/resources/images/default.svg?sanitize=true
repository: https://github.com/CoenraadS/BracketPair
category: Language
firstPublicationDate: '2020-07-30'
spec: 1
  extensions:
    - "{{REPOSITORY}}/CoenraadS.bracket-pair-colorizer-1.0.61.vsix" 2
latestUpdateDate: "2020-07-30"

Define a memory limit and volumes:

spec:
  containers:
    - image: "quay.io/crw/che-sidecar-java:8-0cfbacb"
      name: vscode-java
      memoryLimit: "1500Mi"
      volumes:
        - mountPath: "/home/theia/.m2"
          name: m2

Create a devfile.yaml that references the plugin.yaml file:

apiVersion: 1.0.0
metadata:
  generateName: java-maven-
projects:
  - name: console-java-simple
    source:
      type: git
      location: "https://github.com/che-samples/console-java-simple.git"
      branch: java1.11
components:
  - type: chePlugin
    id: redhat/java11/latest
  - type: chePlugin 1
    reference: "{{REPOSITORY}}/plugin.yaml"
  - type: dockerimage
    alias: maven
    image: quay.io/crw/che-java11-maven:nightly
    memoryLimit: 512Mi
    mountSources: true
    volumes:
      - name: m2
        containerPath: /home/user/.m2
commands:
  - name: maven build
    actions:
      - type: exec
        component: maven
        command: "mvn clean install"
        workdir: ${CODEREADY_PROJECTS_ROOT}/console-java-simple
  - name: maven build and run
    actions:
      - type: exec
        component: maven
        command: "mvn clean install && java -jar ./target/*.jar"
        workdir: ${CODEREADY_PROJECTS_ROOT}/console-java-simple

- 1

- Any other devfile definition is also accepted. The important part of this devfile is the lines defining this external component: an external reference defines the plug-in, instead of an ID pointing to a definition in the default plug-in registry.

Verify there are 4 files in the current Git directory, in addition to the .git directory:

$ ls -la
.git
CoenraadS.bracket-pair-colorizer-1.0.61.vsix
README.md
devfile.yaml
plugin.yaml

Before committing the files, add a pre-commit hook to update the {{REPOSITORY}} variable to the public external raw gist link:

Create a .git/hooks/pre-commit file with this content:

#!/bin/sh

# get modified files
FILES=$(git diff --cached --name-only --diff-filter=ACMR "*.yaml" | sed 's| |\\ |g')

# exit fast if no files found
[ -z "$FILES" ] && exit 0

# grab remote origin
origin=$(git config --get remote.origin.url)
url="${origin}/raw"

# iterate on files and add the good prefix pattern
for FILE in ${FILES}; do
  sed -e "s#{{REPOSITORY}}#${url}#g" "${FILE}" > "${FILE}.back"
  mv "${FILE}.back" "${FILE}"
done

# Add back to staging
echo "$FILES" | xargs git add

exit 0

The hook replaces the {{REPOSITORY}} macro and adds the external raw link to the gist.

Make the script executable:

$ chmod u+x .git/hooks/pre-commit

Commit and push the files:

# Add files
$ git add *
# Commit
$ git commit -m "Initial Commit for the test of our extension"
[master 98dd370] Initial Commit for the test of our extension
 3 files changed, 61 insertions(+)
 create mode 100644 CoenraadS.bracket-pair-colorizer-1.0.61.vsix
 create mode 100644 devfile.yaml
 create mode 100644 plugin.yaml
# and push the files to the main branch
$ git push origin

Visit the gist website and verify that all links have the correct public URL and do not contain any {{REPOSITORY}} variables. To reach the devfile:

$ echo "$(git config --get remote.origin.url)/raw/devfile.yaml"

or:

$ echo "https://<che-server>/f?url=$(git config --get remote.origin.url)/raw/devfile.yaml"

- Restart the workspace for the changes to take effect.
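Before opening the devfile, it is worth confirming that the pre-commit hook replaced every {{REPOSITORY}} macro. A minimal sketch, meant to run inside the cloned gist directory; CHE_SERVER is a hypothetical placeholder for the CodeReady Workspaces host, and the factory URL format is the one shown in the procedure above:

```shell
#!/bin/sh
# Sketch: check the committed YAML files for a leftover {{REPOSITORY}} macro
# and print the raw devfile URL plus the factory URL. CHE_SERVER is a
# hypothetical placeholder for the CodeReady Workspaces host.
set -eu

CHE_SERVER="${CHE_SERVER:-codeready.example.com}"

# leftover_macros FILE...: list the files that still contain the macro.
leftover_macros() {
  grep -lF '{{REPOSITORY}}' "$@" 2>/dev/null || true
}

# devfile_urls ORIGIN: print the raw devfile URL, then the factory URL.
devfile_urls() {
  raw="$1/raw/devfile.yaml"
  echo "${raw}"
  echo "https://${CHE_SERVER}/f?url=${raw}"
}

# Only run the checks inside the cloned gist repository.
if origin=$(git config --get remote.origin.url 2>/dev/null); then
  left=$(leftover_macros ./*.yaml)
  if [ -n "${left}" ]; then
    echo "still contains {{REPOSITORY}}: ${left}" >&2
    exit 1
  fi
  devfile_urls "${origin}"
fi
```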

1.4.3. Verifying the VS Code extension API compatibility level

Che-Theia does not fully support the VS Code extensions API. The vscode-theia-comparator is used to analyze the compatibility between the Che-Theia plug-in API and the VS Code extension API. This tool runs nightly, and the results are published on the vscode-theia-comparator GitHub page.

Prerequisites

- Personal GitHub access token. See Creating a personal access token for the command line. A GitHub access token is required to increase the GitHub download limit for your IP address.

Procedure

To run the vscode-theia comparator manually:

- Clone the vscode-theia-comparator repository, and build it using the yarn command.

- Set the GITHUB_TOKEN environment variable to your token.

- Execute the yarn run generate command to generate a report.

- Open the out/status.html file to view the report.

1.5. Testing a Visual Studio Code extension in CodeReady Workspaces

Visual Studio Code (VS Code) extensions work in a workspace. VS Code extensions can run in the Che-Theia editor container, or in their own isolated and preconfigured containers with their prerequisites.

This section describes how to test a VS Code extension in CodeReady Workspaces with workspaces and how to review the compatibility of VS Code extensions to check whether a specific API is available.

The extension-hosting sidecar container and the use of the extension in a devfile are optional.

1.5.1. Testing a VS Code extension using GitHub gist

Each workspace can have its own set of plug-ins. The list of plug-ins and the list of projects to clone are defined in the devfile.yaml file.

For example, to enable an AsciiDoc plug-in from the Red Hat CodeReady Workspaces dashboard, add the following snippet to the devfile:

components:
  - id: joaopinto/vscode-asciidoctor/latest
    type: chePlugin

To add a plug-in that is not in the default plug-in registry, build a custom plug-in registry (see Building a custom plug-in registry) or, alternatively, use GitHub and the gist service.

Prerequisites

- A running instance of Red Hat CodeReady Workspaces. To install an instance of Red Hat CodeReady Workspaces, see Installing CodeReady Workspaces on OpenShift Container Platform.

- A GitHub account.

Procedure

- Go to the gist webpage and create a README.md file with the following description: Try Bracket Pair Colorizer extension in Red Hat CodeReady Workspaces, and content: Example VS Code extension. (Bracket Pair Colorizer is a popular VS Code extension.) Click the Create secret gist button.

Clone the gist repository by using the URL from the navigation bar of the browser:

$ git clone https://gist.github.com/<your-github-username>/<gist-id>

Example of the output of the git clone command:

git clone https://gist.github.com/benoitf/85c60c8c439177ac50141d527729b9d9 1
Cloning into '85c60c8c439177ac50141d527729b9d9'...
remote: Enumerating objects: 3, done.
remote: Counting objects: 100% (3/3), done.
remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0
Unpacking objects: 100% (3/3), done.

- 1

- Each gist has a unique ID.

Change the directory:

$ cd <gist-directory-name> 1

- 1

- Directory name matching the gist ID.

- Download the plug-in from the VS Code marketplace or from its GitHub page, and store the plug-in file in the cloned directory.

Create a plugin.yaml file in the cloned directory to add the definition of this plug-in.

Example of the plugin.yaml file referencing the .vsix binary file extension:

apiVersion: v2
publisher: CoenraadS
name: bracket-pair-colorizer
version: 1.0.61
type: VS Code extension
displayName: Bracket Pair Colorizer
title: Bracket Pair Colorizer
description: Bracket Pair Colorizer
icon: https://raw.githubusercontent.com/redhat-developer/codeready-workspaces/master/dependencies/che-plugin-registry/resources/images/default.svg?sanitize=true
repository: https://github.com/CoenraadS/BracketPair
category: Language
firstPublicationDate: '2020-07-30'
spec:
  extensions:
    - "{{REPOSITORY}}/CoenraadS.bracket-pair-colorizer-1.0.61.vsix"
latestUpdateDate: "2020-07-30"

Define a memory limit and volumes:

spec:
  containers:
    - image: "quay.io/crw/che-sidecar-java:8-0cfbacb"
      name: vscode-java
      memoryLimit: "1500Mi"
      volumes:
        - mountPath: "/home/theia/.m2"
          name: m2

Create a devfile.yaml file that references the plugin.yaml file:

apiVersion: 1.0.0
metadata:
  generateName: java-maven-
projects:
  - name: console-java-simple
    source:
      type: git
      location: "https://github.com/che-samples/console-java-simple.git"
      branch: java1.11
components:
  - type: chePlugin
    id: redhat/java11/latest
  - type: chePlugin 1
    reference: "{{REPOSITORY}}/plugin.yaml"
  - type: dockerimage
    alias: maven
    image: quay.io/crw/che-java11-maven:nightly
    memoryLimit: 512Mi
    mountSources: true
    volumes:
      - name: m2
        containerPath: /home/user/.m2
commands:
  - name: maven build
    actions:
      - type: exec
        component: maven
        command: "mvn clean install"
        workdir: ${CODEREADY_PROJECTS_ROOT}/console-java-simple
  - name: maven build and run
    actions:
      - type: exec
        component: maven
        command: "mvn clean install && java -jar ./target/*.jar"
        workdir: ${CODEREADY_PROJECTS_ROOT}/console-java-simple

- 1

- Any other devfile definition is also accepted. The important lines in this devfile are the ones defining this external component: an external reference defines the plug-in, instead of an ID pointing to a definition in the default plug-in registry.

Verify there are 4 files in the current Git directory:

$ ls -la
.git
CoenraadS.bracket-pair-colorizer-1.0.61.vsix
README.md
devfile.yaml
plugin.yaml
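The same check can be scripted so that it does not depend on reading the ls output by eye. The following is a minimal sketch: check_gist_files is a hypothetical helper name, and the four file names are the ones used in this example.

```shell
# Hypothetical helper: print every expected file that is missing from the
# given directory (default: current directory). Returns 1 if any is missing.
check_gist_files() {
  dir="${1:-.}"
  status=0
  for f in CoenraadS.bracket-pair-colorizer-1.0.61.vsix README.md devfile.yaml plugin.yaml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      status=1
    fi
  done
  return "$status"
}
```

Run it from the cloned gist directory as check_gist_files . and add any file it reports before continuing.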

Before committing the files, add a pre-commit hook to update the {{REPOSITORY}} variable to the public external raw gist link:

Create a .git/hooks/pre-commit file with this content:

#!/bin/sh

# get modified files
FILES=$(git diff --cached --name-only --diff-filter=ACMR "*.yaml" | sed 's| |\\ |g')

# exit fast if no files found
[ -z "$FILES" ] && exit 0

# grab remote origin
origin=$(git config --get remote.origin.url)
url="${origin}/raw"

# iterate on files and add the good prefix pattern
for FILE in ${FILES}; do
  sed -e "s#{{REPOSITORY}}#${url}#g" "${FILE}" > "${FILE}.back"
  mv "${FILE}.back" "${FILE}"
done

# Add back to staging
echo "$FILES" | xargs git add

exit 0

The hook replaces the {{REPOSITORY}} macro and adds the external raw link to the gist.

Make the script executable:

$ chmod u+x .git/hooks/pre-commit
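Before the first commit, the substitution that the hook performs can be tried on a single file without changing it. This is a minimal sketch that reuses the sed expression of the hook; replace_repository_macro is a hypothetical helper name.

```shell
# Hypothetical helper: print FILE with every {{REPOSITORY}} macro replaced by
# URL, using the same sed expression as the pre-commit hook. FILE is not modified.
# Caveat: like the hook, this breaks if URL contains '#' or '&'.
replace_repository_macro() {
  file="$1"   # file that contains the {{REPOSITORY}} macro
  url="$2"    # replacement, for example <remote origin URL>/raw
  sed -e "s#{{REPOSITORY}}#${url}#g" "$file"
}
```

For example: $ replace_repository_macro plugin.yaml "$(git config --get remote.origin.url)/raw"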

Commit and push the files:

# Add files
$ git add *
# Commit
$ git commit -m "Initial Commit for the test of our extension"
[master 98dd370] Initial Commit for the test of our extension
 3 files changed, 61 insertions(+)
 create mode 100644 CoenraadS.bracket-pair-colorizer-1.0.61.vsix
 create mode 100644 devfile.yaml
 create mode 100644 plugin.yaml
# and push the files to the main branch
$ git push origin

Visit the gist website and verify that all links have the correct public URL and do not contain any {{REPOSITORY}} variables.

To reach the devfile:

$ echo "$(git config --get remote.origin.url)/raw/devfile.yaml"

or:

$ echo "https://<che-server>/f?url=$(git config --get remote.origin.url)/raw/devfile.yaml"
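Both checks in this step can also be done from a terminal. The following minimal sketch assumes the CodeReady Workspaces server is reached over HTTPS at the host name you pass in; has_unreplaced_macro and factory_url are hypothetical helper names.

```shell
# Hypothetical helper: succeed if at least one of the given files still
# contains the {{REPOSITORY}} macro (that is, the hook did not run on it).
has_unreplaced_macro() {
  grep -qs '{{REPOSITORY}}' "$@"
}

# Hypothetical helper: print the factory URL that opens the published devfile.
# $1: host name of the CodeReady Workspaces server (assumed to use HTTPS)
# $2: remote origin URL of the gist clone
factory_url() {
  printf 'https://%s/f?url=%s/raw/devfile.yaml\n' "$1" "$2"
}
```

For example, has_unreplaced_macro *.yaml && echo "macro still present" flags files that still need the hook, and factory_url <che-server> "$(git config --get remote.origin.url)" prints the same link as the second echo command.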

1.5.2. Verifying the VS Code extension API compatibility level

Che-Theia does not fully support the VS Code extensions API. The vscode-theia-comparator is used to analyze the compatibility between the Che-Theia plug-in API and the VS Code extension API. This tool runs nightly, and the results are published on the vscode-theia-comparator GitHub page.

Prerequisites

- Personal GitHub access token. See Creating a personal access token for the command line. A GitHub access token is required to increase the GitHub download limit for your IP address.

Procedure

To run the vscode-theia comparator manually:

- Clone the vscode-theia-comparator repository, and build it using the yarn command.

- Set the GITHUB_TOKEN environment variable to your token.

- Execute the yarn run generate command to generate a report.

- Open the out/status.html file to view the report.
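The manual steps can be wrapped in a single function. This is a minimal sketch, not an official script: it assumes git and yarn are installed and that the repository is hosted at github.com/che-incubator/vscode-theia-comparator; run_vscode_theia_comparator is a hypothetical name. It stops before cloning when GITHUB_TOKEN is not set.

```shell
# Hypothetical wrapper around the manual steps: clone, build, generate, report.
# Assumption: the comparator lives at github.com/che-incubator/vscode-theia-comparator.
run_vscode_theia_comparator() {
  # The token raises the GitHub download limit; fail early without it.
  if [ -z "${GITHUB_TOKEN:-}" ]; then
    echo "GITHUB_TOKEN is not set" >&2
    return 1
  fi
  export GITHUB_TOKEN
  workdir="${1:-vscode-theia-comparator}"
  git clone https://github.com/che-incubator/vscode-theia-comparator.git "$workdir" || return 1
  # Build with yarn, then generate the report; the subshell keeps the caller's cwd.
  (cd "$workdir" && yarn && yarn run generate) || return 1
  echo "Report: $workdir/out/status.html"
}
```

Usage: run export GITHUB_TOKEN=<your-token>, then run_vscode_theia_comparator, and open the printed out/status.html path.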

Chapter 2. Retrieving CodeReady Workspaces logs

For information about obtaining various types of logs in CodeReady Workspaces, see the following sections:

2.1. Accessing OpenShift events on OpenShift

For high-level monitoring of OpenShift projects, view the OpenShift events that the project performs.

This section describes how to access these events in the OpenShift web console.

Prerequisites

- A running OpenShift web console.

Procedure

- In the left panel of the OpenShift web console, click Home → Events.

- To view the list of all events for a particular project, select the project from the list.

- The details of the events for the current project are displayed.

Additional resources

- For a list of OpenShift events, see Comprehensive List of Events in OpenShift documentation.

2.2. Viewing the state of the CodeReady Workspaces cluster deployment using OpenShift 4 CLI tools

This section describes how to view the state of the CodeReady Workspaces cluster deployment using OpenShift 4 CLI tools.

Prerequisites

- An instance of Red Hat CodeReady Workspaces running on OpenShift.

- An installation of the OpenShift command-line tool, oc.

Procedure

Run the following command to select the crw project:

$ oc project <project_name>

Run the following command to get the name and status of the Pods running in the selected project:

$ oc get pods

Check that the status of all the Pods is Running.

Example 2.1. Pods with status Running

NAME                                  READY   STATUS    RESTARTS   AGE
codeready-8495f4946b-jrzdc            0/1     Running   0          86s
codeready-operator-578765d954-99szc   1/1     Running   0          42m
keycloak-74fbfb9654-g9vp5             1/1     Running   0          4m32s
postgres-5d579c6847-w6wx5             1/1     Running   0          5m14s
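Checking the STATUS column by eye is error-prone when many Pods are listed. The following minimal sketch filters the oc get pods output; pods_not_running is a hypothetical helper name, and it assumes the default column layout, where STATUS is the third column.

```shell
# Hypothetical helper: read `oc get pods` output on stdin and print the name
# of every Pod whose STATUS column is not "Running". Exits with status 1 if
# at least one such Pod is found.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: oc get pods | pods_not_running. It inspects STATUS only: in Example 2.1 the codeready Pod is Running while its READY column still shows 0/1, which typically means the server has not finished starting.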

To see the state of the CodeReady Workspaces cluster deployment, run:

$ oc logs --tail=10 -f $(oc get pods -o name | grep operator)

Example 2.2. Logs of the Operator:

2.3. Viewing CodeReady Workspaces server logs

This section describes how to view the CodeReady Workspaces server logs using the command line.

2.3.1. Viewing the CodeReady Workspaces server logs using the OpenShift CLI

This section describes how to view the CodeReady Workspaces server logs using the OpenShift CLI (command line interface).

Procedure

In the terminal, run the following command to get the Pods:

$ oc get pods

Example

$ oc get pods
NAME                 READY   STATUS    RESTARTS   AGE
codeready-11-j4w2b   1/1     Running   0          3m

To get the logs for a deployment, run the following command:

$ oc logs <name-of-pod>

Example

$ oc logs codeready-11-j4w2b
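Because the Pod name changes with each redeployment, it can be picked from the oc get pods output instead of being copied by hand. A minimal sketch, assuming the default naming where the server Pod name starts with codeready- and the Operator Pod name starts with codeready-operator-; server_pod_name is a hypothetical helper.

```shell
# Hypothetical helper: read `oc get pods` output on stdin and print the first
# Pod whose name starts with "codeready-" but is not the Operator Pod.
server_pod_name() {
  awk 'NR > 1 && $1 ~ /^codeready-/ && $1 !~ /^codeready-operator-/ { print $1; exit }'
}
```

Usage: oc logs "$(oc get pods | server_pod_name)"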

2.4. Viewing external service logs

This section describes how to view the logs from external services related to the CodeReady Workspaces server.

2.4.1. Viewing RH-SSO logs

The RH-SSO OpenID provider consists of two parts: Server and IDE. It writes its diagnostics or error information to several logs.

2.4.1.1. Viewing the RH-SSO server logs

This section describes how to view the RH-SSO OpenID provider server logs.

Procedure

- In the OpenShift Web Console, click Deployments.

- In the Filter by label search field, type keycloak to see the RH-SSO logs.

- In the Deployment Configs section, click the keycloak link to open it.

- In the History tab, click the View log link for the active RH-SSO deployment.

- The RH-SSO logs are displayed.

Additional resources

- See the active CodeReady Workspaces deployment log for diagnostics and error messages related to the RH-SSO IDE Server.

2.4.1.2. Viewing the RH-SSO client logs on Firefox

This section describes how to view the RH-SSO IDE client diagnostics or error information in the Firefox WebConsole.

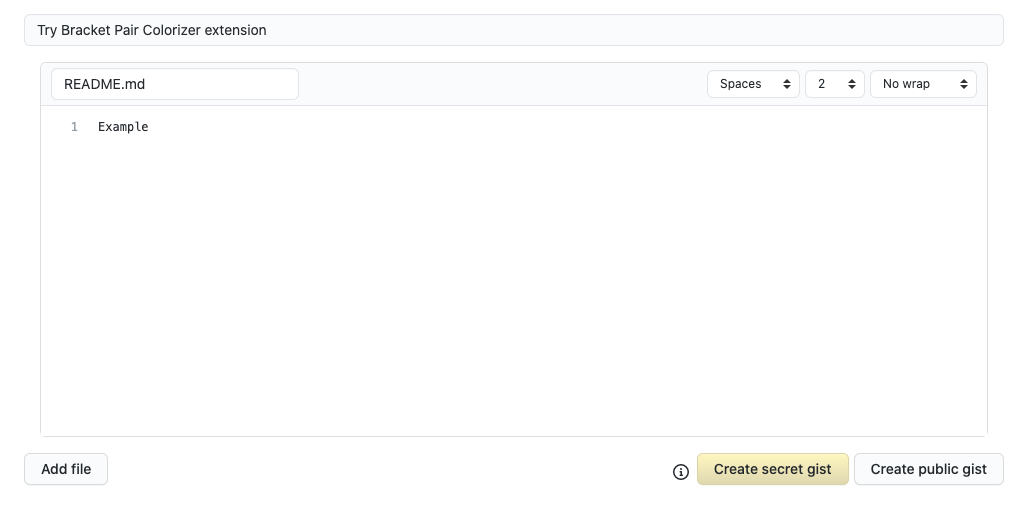

Procedure

- Click Menu > Web Developer > Web Console.

2.4.1.3. Viewing the RH-SSO client logs on Google Chrome

This section describes how to view the RH-SSO IDE client diagnostics or error information in the Google Chrome Console tab.

Procedure

- Click Menu > More Tools > Developer Tools.

- Click the Console tab.

2.4.2. Viewing the CodeReady Workspaces database logs

This section describes how to view the database logs in CodeReady Workspaces, such as PostgreSQL server logs.

Procedure

- In the OpenShift Web Console, click Deployments.

- In the Find by label search field, type app=che and press Enter, then type component=postgres and press Enter. The OpenShift Web Console now filters the deployments by those two labels and displays the PostgreSQL deployment.

- Click the postgres deployment to open it.

- Click the View log link for the active PostgreSQL deployment.

The OpenShift Web Console displays the database logs.

Additional resources

- Some diagnostics or error messages related to the PostgreSQL server can be found in the active CodeReady Workspaces deployment log. For details on accessing the active CodeReady Workspaces deployment logs, see the Viewing the CodeReady Workspaces server logs section.

2.5. Viewing CodeReady Workspaces workspaces logs

This section describes how to view CodeReady Workspaces workspaces logs.

2.5.1. Viewing Che-Theia IDE logs

This section describes how to view Che-Theia IDE logs.

2.5.1.1. Viewing Che-Theia editor logs using the OpenShift CLI

Observing Che-Theia editor logs helps you gain insight into the plug-ins loaded by the editor. This section describes how to access the Che-Theia editor logs using the OpenShift CLI (command-line interface).

Prerequisites

- CodeReady Workspaces is deployed in an OpenShift cluster.

- A workspace is created.

- The current project is the CodeReady Workspaces installation project.

Procedure

Obtain the list of the available Pods:

$ oc get pods

Example

$ oc get pods
NAME                                               READY   STATUS    RESTARTS   AGE
codeready-9-xz6g8                                  1/1     Running   1          15h
workspace0zqb2ew3py4srthh.go-cli-549cdcf69-9n4w2   4/4     Running   0          1h

Obtain the list of the available containers in the particular Pod:

$ oc get pods <name-of-pod> --output jsonpath='{.spec.containers[*].name}'

Example:

$ oc get pods workspace0zqb2ew3py4srthh.go-cli-549cdcf69-9n4w2 -o jsonpath='{.spec.containers[*].name}'
go-cli che-machine-exechr7 theia-idexzb vscode-gox3r

Get logs from the theia-ide container:

$ oc logs --follow <name-of-pod> --container <name-of-container>

Example:

$ oc logs --follow workspace0zqb2ew3py4srthh.go-cli-549cdcf69-9n4w2 --container theia-idexzb
root INFO unzipping the plug-in 'task_plugin.theia' to directory: /tmp/theia-unpacked/task_plugin.theia
root INFO unzipping the plug-in 'theia_yeoman_plugin.theia' to directory: /tmp/theia-unpacked/theia_yeoman_plugin.theia
root WARN A handler with prefix term is already registered.
root INFO [nsfw-watcher: 75] Started watching: /home/theia/.theia
root WARN e.onStart is slow, took: 367.4600000013015 ms
root INFO [nsfw-watcher: 75] Started watching: /projects
root INFO [nsfw-watcher: 75] Started watching: /projects/.theia/tasks.json
root INFO [4f9590c5-e1c5-40d1-b9f8-ec31ec3bdac5] Sync of 9 plugins took: 62.26000000242493 ms
root INFO [nsfw-watcher: 75] Started watching: /projects
root INFO [hosted-plugin: 88] PLUGIN_HOST(88) starting instance
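The editor container name carries a random suffix (theia-idexzb in the example), so a small filter can pick it out of the jsonpath output. A minimal sketch; theia_container is a hypothetical helper, and it assumes the editor container name starts with theia-ide.

```shell
# Hypothetical helper: read the space-separated container names printed by
# the jsonpath query on stdin and print the first one starting with
# "theia-ide". Exits with status 1 if no such container is listed.
theia_container() {
  awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^theia-ide/) { print $i; found = 1; exit } }
       END { exit !found }'
}
```

Usage, with POD set to the workspace Pod name:
$ oc logs --follow "$POD" --container "$(oc get pods "$POD" -o jsonpath='{.spec.containers[*].name}' | theia_container)"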

2.5.2. Viewing logs from language servers and debug adapters

2.5.2.1. Checking important logs

This section describes how to check important logs.

Procedure

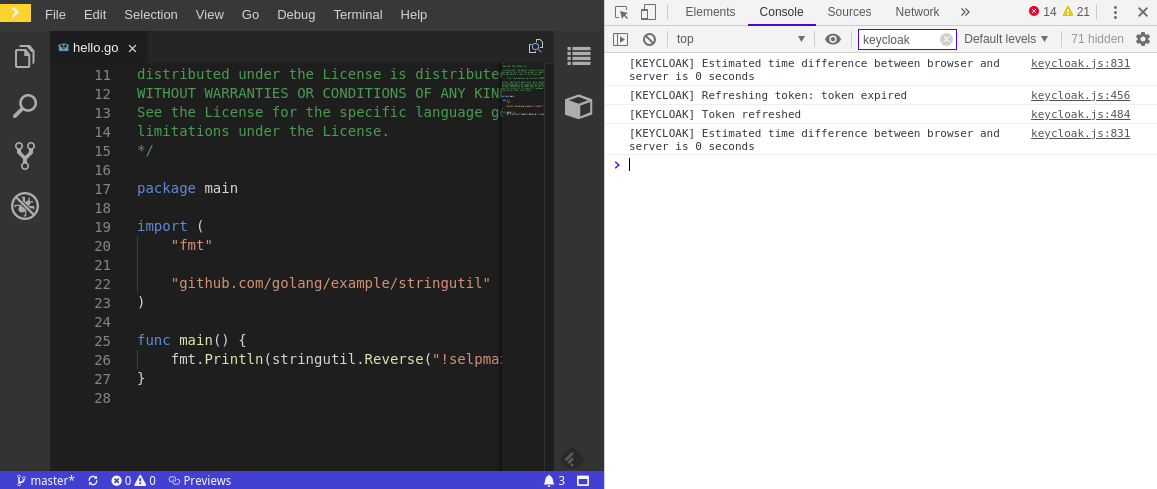

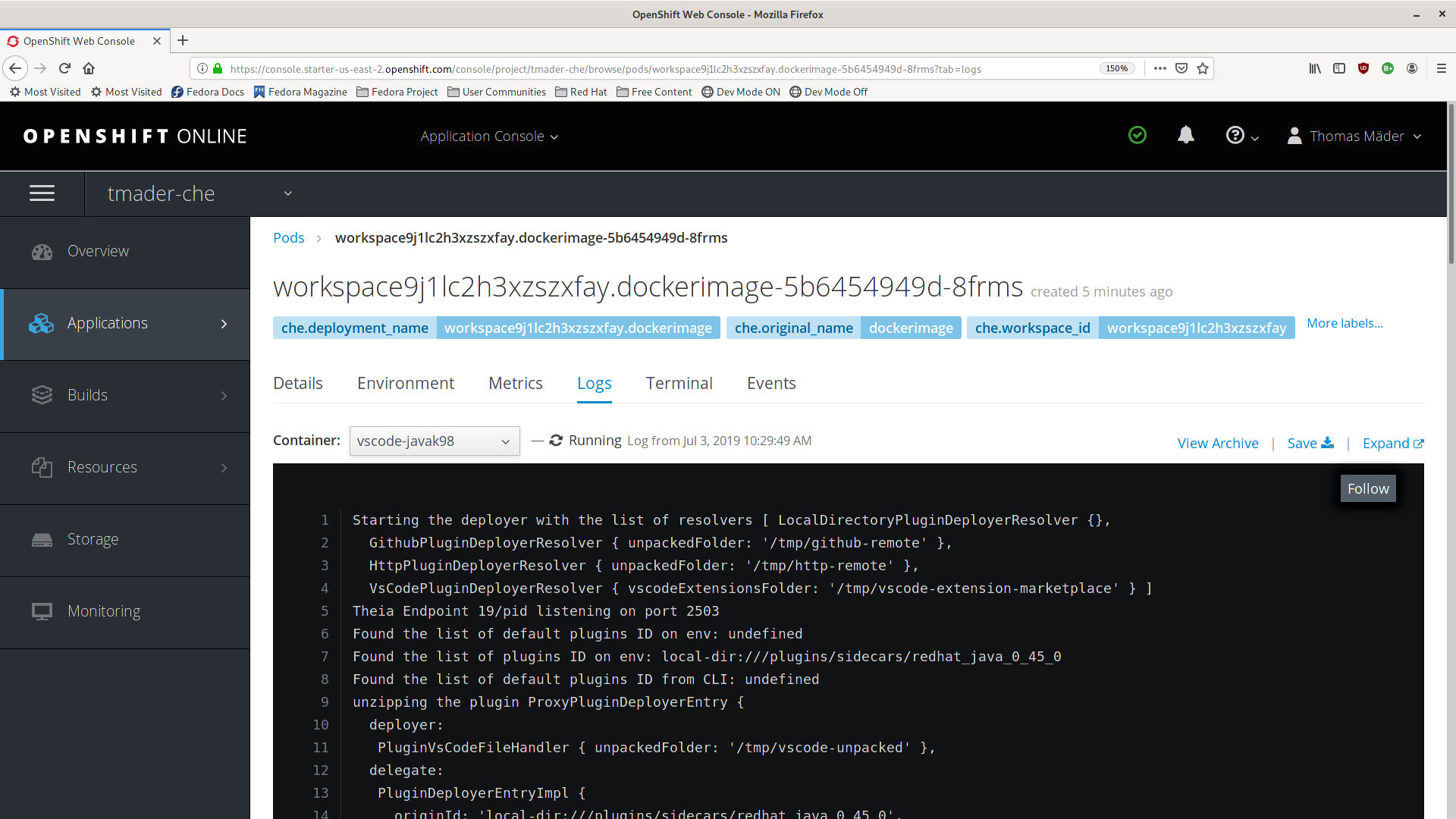

- In the OpenShift web console, click Applications → Pods to see a list of all the active workspaces.

- Click the name of the Pod where the workspace is running. The Pod screen contains the list of all containers with additional information.

Choose a container and click the container name.

Tip: The most important logs are the theia-ide container and the plug-in container logs.

On the container screen, navigate to the Logs section.

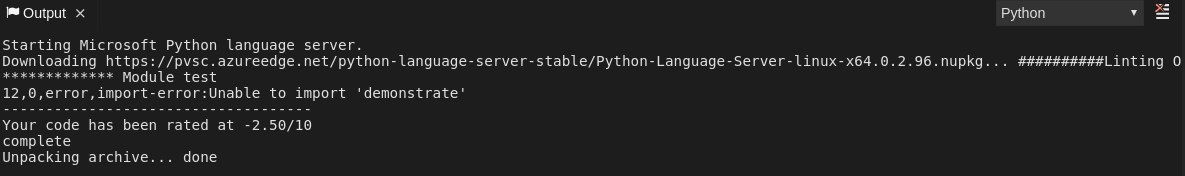

Example: The following is an output log of the sidecar container running the Java plug-in.

2.5.2.2. Detecting memory problems

This section describes how to detect memory problems related to a plug-in running out of memory. The following are the two most common problems related to a plug-in running out of memory:

- The plug-in container runs out of memory

This can happen during plug-in initialization when the container does not have enough RAM to execute the entrypoint of the image. The user can detect this in the logs of the plug-in container. In this case, the logs contain OOMKilled, which implies that the processes in the container requested more memory than is available in the container.

- A process inside the container runs out of memory without the container noticing this

For example, the Java language server (Eclipse JDT Language Server, started by the vscode-java extension) throws an OutOfMemoryError. This can happen any time after the container is initialized, for example, when a plug-in starts a language server or when a process runs out of memory because of the size of the project it has to handle.

To detect this problem, check the logs of the primary process running in the container. For example, to check the log file of Eclipse JDT Language Server for details, see the relevant plug-in-specific sections.
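A quick way to search a saved log for the two symptoms described above is sketched below. It assumes the log was first saved to a file, for example with oc logs <name-of-pod> --container <name-of-container> > plugin.log; find_oom_markers is a hypothetical helper, and the search strings cover only the indicators named in this section. OOMKilled is also reported as the termination reason in the output of oc describe pod <name-of-pod>.

```shell
# Hypothetical helper: print lines (with line numbers) that mention OOMKilled
# or an OutOfMemory error; grep exits with status 1 when nothing matches.
find_oom_markers() {
  grep -n -E 'OOMKilled|OutOfMemory' "$@"
}
```

Usage: find_oom_markers plugin.log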

2.5.2.3. Logging the client-server traffic for debug adapters

This section describes how to log the exchange between Che-Theia and a debug adapter into the Output view.

Prerequisites

- A debug session must be started for the Debug adapters option to appear in the list.

Procedure

- Click File → Settings and then open Preferences.

- Expand the Debug section in the Preferences view.

- Set the trace preference value to true (default is false).

- All the communication events are now logged.

- To watch these events, click View → Output and select Debug adapters from the drop-down list at the upper right corner of the Output view.

2.5.2.4. Viewing logs for Python

This section describes how to view logs for the Python language server.

Procedure

Navigate to the Output view and select Python in the drop-down list.

2.5.2.5. Viewing logs for Go

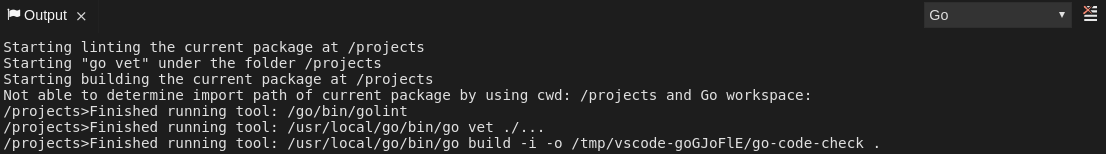

This section describes how to view logs for the Go language server.

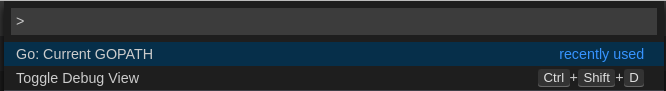

2.5.2.5.1. Finding the gopath

This section describes how to find where the GOPATH variable points to.

Procedure

Execute the Go: Current GOPATH command.
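From a terminal in the Go plug-in container, go env GOPATH reports the authoritative value. Where the go binary is not on the PATH, the following approximation applies Go's documented default (since Go 1.8, GOPATH defaults to $HOME/go when unset). effective_gopath is a hypothetical helper and ignores settings stored with go env -w.

```shell
# Hypothetical helper: mirror Go's default rule, GOPATH if set and non-empty,
# otherwise $HOME/go. A GOPATH list of several directories is printed unchanged.
effective_gopath() {
  printf '%s\n' "${GOPATH:-$HOME/go}"
}
```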

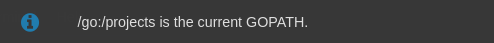

2.5.2.5.2. Viewing the Debug Console log for Go

This section describes how to view the log output from the Go debugger.

Procedure

Set the showLog attribute to true in the debug configuration.

{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "go",
      "showLog": true
      ....
    }
  ]
}

To enable debugging output for a component, add the package to the comma-separated list value of the logOutput attribute:

{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "go",
      "showLog": true,
      "logOutput": "debugger,rpc,gdbwire,lldbout,debuglineerr"
      ....
    }
  ]
}

The additional information is printed in the debug console.

2.5.2.5.3. Viewing the Go logs output in the Output panel

This section describes how to view the Go logs output in the Output panel.

Procedure

- Navigate to the Output view.

Select Go in the drop-down list.

2.5.2.6. Viewing logs for the NodeDebug NodeDebug2 adapter

No specific diagnostics exist other than the general ones.

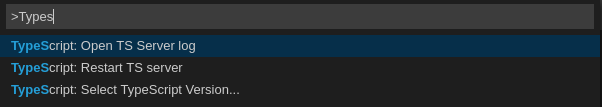

2.5.2.7. Viewing logs for Typescript

2.5.2.7.1. Enabling the Language Server Protocol (LSP) tracing

Procedure

- To enable the tracing of messages sent to the Typescript (TS) server, in the Preferences view, set the typescript.tsserver.trace attribute to verbose. Use this to diagnose TS server issues.

- To enable logging of the TS server to a file, set the typescript.tsserver.log attribute to verbose. Use this log to diagnose TS server issues. The log contains the file paths.

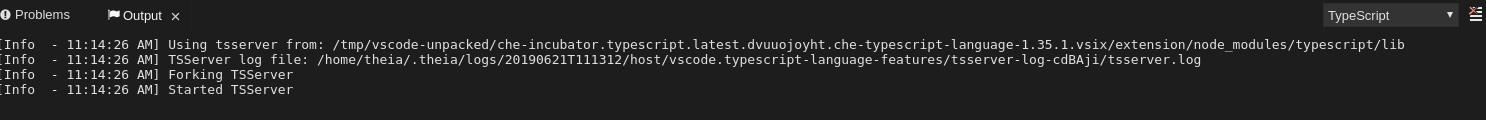

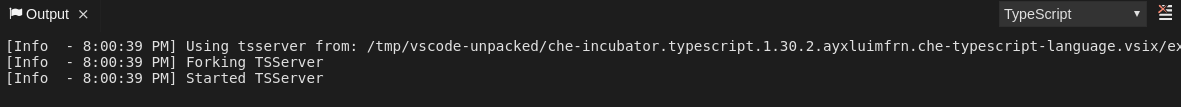

2.5.2.7.2. Viewing the Typescript language server log

This section describes how to view the Typescript language server log.

Procedure

To get the path to the log file, see the Typescript Output console:

To open the log file, use the Open TS Server log command.

2.5.2.7.3. Viewing the Typescript logs output in the Output panel

This section describes how to view the Typescript logs output in the Output panel.

Procedure

- Navigate to the Output view.

Select TypeScript in the drop-down list.

2.5.2.8. Viewing logs for Java

In addition to the general diagnostics, the user can perform the following actions specific to the Language Support for Java (Eclipse JDT Language Server) plug-in.

2.5.2.8.1. Verifying the state of the Eclipse JDT Language Server

Procedure

Check if the container that is running the Eclipse JDT Language Server plug-in is running the Eclipse JDT Language Server main process.

- Open a terminal in the container that is running the Eclipse JDT Language Server plug-in (an example name for the container: vscode-javaxxx).

- Inside the terminal, run the ps aux | grep jdt command to check if the Eclipse JDT Language Server process is running in the container. If the process is running, the output is similar to:

usr/lib/jvm/default-jvm/bin/java --add-modules=ALL-SYSTEM --add-opens java.base/java.util

This message also shows the VS Code Java extension used. If the process is not running, the language server has not been started inside the container.

- Check all logs described in Checking important logs
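The ps aux | grep <pattern> check also lists the grep process itself, which can make a stopped language server look alive at a glance. The following minimal sketch avoids that by filtering with awk and passing the pattern through the environment, so the filter's own command line does not match; matching_processes is a hypothetical helper name.

```shell
# Hypothetical helper: read `ps aux` output on stdin and print the lines that
# contain the literal string $1. Exits with status 1 if nothing matches.
matching_processes() {
  # The pattern travels in MATCH_PATTERN, not on awk's command line, so the
  # awk process listed by `ps aux` is not reported as a match.
  MATCH_PATTERN="$1" awk 'index($0, ENVIRON["MATCH_PATTERN"]) { print; found = 1 }
                          END { exit !found }'
}
```

Usage: ps aux | matching_processes jdt. The same helper fits the XML, YAML, OmniSharp, and Camel process checks later in this chapter, for example ps aux | matching_processes OmniSharp.exe.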

2.5.2.8.2. Verifying the Eclipse JDT Language Server features

Procedure

If the Eclipse JDT Language Server process is running, check if the language server features are working:

- Open a Java file and use the hover or autocomplete functionality. In case of an erroneous file, the user sees Java in the Outline view or in the Problems view.

2.5.2.8.3. Viewing the Java language server log

Procedure

The Eclipse JDT Language Server has its own workspace where it logs errors, information about executed commands, and events.

- To open this log file, open a terminal in the container that is running the Eclipse JDT Language Server plug-in. You can also view the log file by running the Java: Open Java Language Server log file command.

- Run cat <PATH_TO_LOG_FILE>, where PATH_TO_LOG_FILE is /home/theia/.theia/workspace-storage/<workspace_name>/redhat.java/jdt_ws/.metadata/.log.
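When the workspace name is not known, the log file can be located with find. A minimal sketch: find_jdt_log is a hypothetical helper, and its default search root, /home/theia/.theia/workspace-storage, comes from the path above.

```shell
# Hypothetical helper: list every JDT language server log file under the
# workspace storage directory (default root taken from the documented path).
find_jdt_log() {
  root="${1:-/home/theia/.theia/workspace-storage}"
  find "$root" -path '*/redhat.java/jdt_ws/.metadata/.log' -type f 2>/dev/null
}
```

Usage: cat "$(find_jdt_log | head -n 1)"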

2.5.2.8.4. Logging the Java language server protocol (LSP) messages

Procedure

To log the LSP messages to the VS Code Output view, enable tracing by setting the java.trace.server attribute to verbose.

Additional resources

For troubleshooting instructions, see the VS Code Java GitHub repository.

2.5.2.9. Viewing logs for Intelephense

2.5.2.9.1. Logging the Intelephense client-server communication

Procedure

To configure the PHP Intelephense language support to log the client-server interexchange in the Output view:

- Click File → Settings.

- Open the Preferences view.

- Expand the Intelephense section and set the trace.server.verbose preference value to verbose to see all the communication events (the default value is off).

2.5.2.9.2. Viewing Intelephense events in the Output panel

This procedure describes how to view Intelephense events in the Output panel.

Procedure

- Click View → Output.

- Select Intelephense in the drop-down list for the Output view.

2.5.2.10. Viewing logs for PHP-Debug

This procedure describes how to configure the PHP Debug plug-in to log the PHP Debug plug-in diagnostic messages into the Debug Console view. Configure this before the start of the debug session.

Procedure

- In the launch.json file, add the "log": true attribute to the selected launch configuration.

- Start the debug session.

- The diagnostic messages are printed into the Debug Console view along with the application output.

2.5.2.11. Viewing logs for XML

In addition to the general diagnostics, the user can perform the following XML plug-in specific actions.

2.5.2.11.1. Verifying the state of the XML language server

Procedure

- Open a terminal in the container named vscode-xml-<xxx>.

- Run ps aux | grep java to verify that the XML language server has started. If the process is running, the output is similar to:

java ***/org.eclipse.ls4xml-uber.jar

If it is not, see the Checking important logs section.

2.5.2.11.2. Checking XML language server feature flags

Procedure

Check if the features are enabled. The XML plug-in provides multiple settings that can enable and disable features:

- xml.format.enabled: Enable the formatter

- xml.validation.enabled: Enable the validation

- xml.documentSymbols.enabled: Enable the document symbols

- To diagnose whether the XML language server is working, create a simple XML element, such as <hello></hello>, and confirm that it appears in the Outline panel on the right.

- If the document symbols do not show, ensure that the xml.documentSymbols.enabled attribute is set to true. If it is true, and there are no symbols, the language server may not be hooked to the editor. If there are document symbols, then the language server is connected to the editor.

- Ensure that the features that the user needs are set to true in the settings (they are set to true by default). If any of the features are not working, or not working as expected, file an issue against the Language Server.

2.5.2.11.3. Enabling XML Language Server Protocol (LSP) tracing

Procedure

To log LSP messages to the VS Code Output view, enable tracing by setting the xml.trace.server attribute to verbose.

2.5.2.11.4. Viewing the XML language server log

Procedure

The log from the language server can be found in the plug-in sidecar at /home/theia/.theia/workspace-storage/<workspace_name>/redhat.vscode-xml/lsp4xml.log.

2.5.2.12. Viewing logs for YAML

This section describes the YAML plug-in specific actions that the user can perform, in addition to the general diagnostics ones.

2.5.2.12.1. Verifying the state of the YAML language server

This section describes how to verify the state of the YAML language server.

Procedure

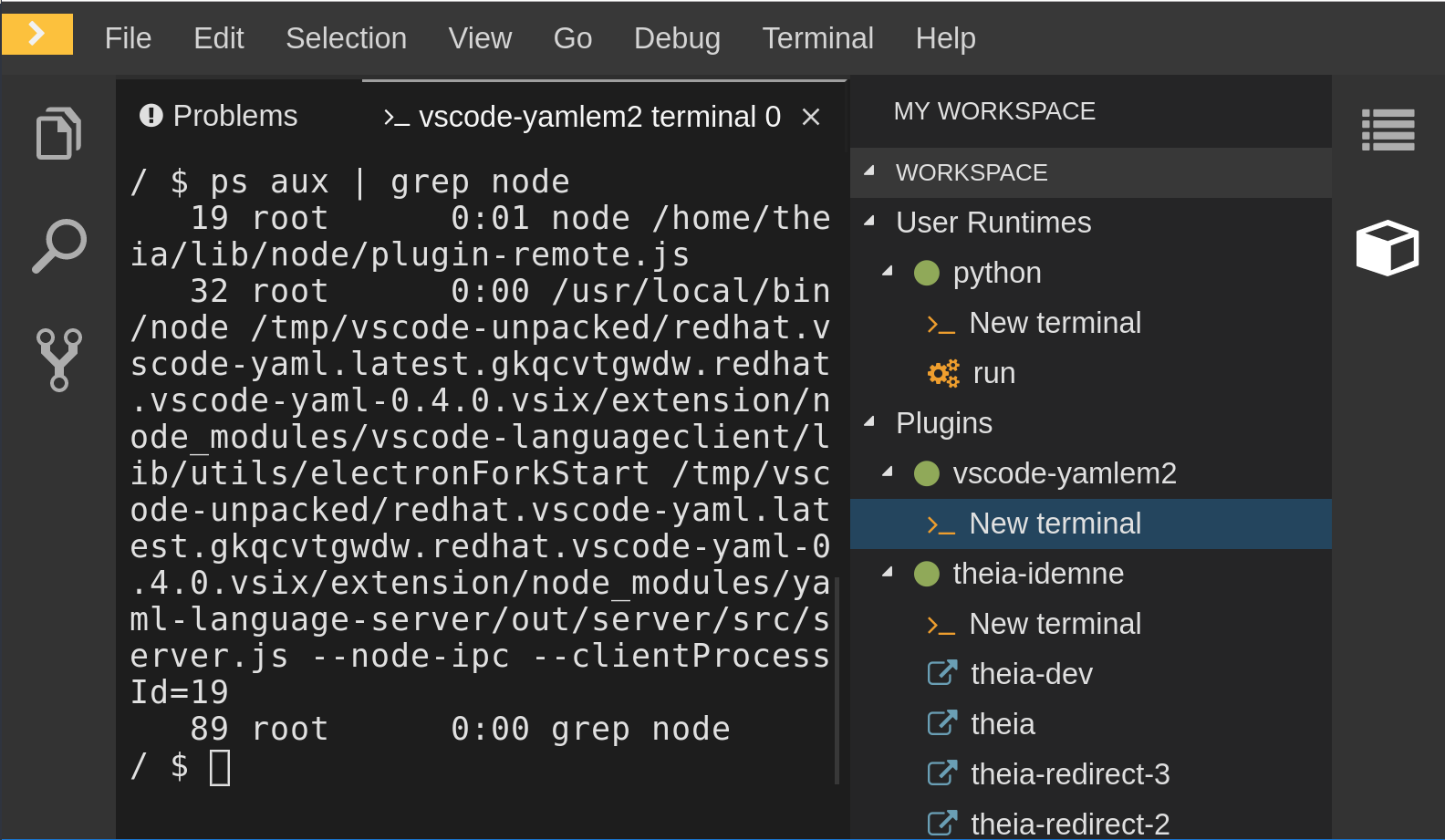

Check if the container running the YAML plug-in is running the YAML language server.

- In the editor, open a terminal in the container that is running the YAML plug-in (an example name of the container: vscode-yaml-<xxx>).

- In the terminal, run the ps aux | grep node command. This command searches all the node processes running in the current container. Verify that a command node **/server.js is running.

The node **/server.js running in the container indicates that the language server is running. If it is not running, the language server has not started inside the container. In this case, see Checking important logs.

2.5.2.12.2. Checking the YAML language server feature flags

Procedure

To check the feature flags:

Check if the features are enabled. The YAML plug-in provides multiple settings that can enable and disable features, such as:

- yaml.format.enable: Enables the formatter

- yaml.validate: Enables validation

- yaml.hover: Enables the hover function

- yaml.completion: Enables the completion function

- To check if the plug-in is working, type the simplest YAML, such as hello: world, and then open the Outline panel on the right side of the editor.

- Verify if there are any document symbols. If yes, the language server is connected to the editor.

- If any feature is not working, make sure that the settings listed above are set to true (they are set to true by default). If a feature is still not working, file an issue against the Language Server.

2.5.2.12.3. Enabling YAML Language Server Protocol (LSP) tracing

Procedure

To log LSP messages to the VS Code Output view, enable tracing by setting the yaml.trace.server attribute to verbose.

2.5.2.13. Viewing logs for Dotnet with Omnisharp-Theia plug-in

2.5.2.13.1. Omnisharp-Theia plug-in

CodeReady Workspaces uses the Omnisharp-Theia plug-in as a remote plug-in. It is located at github.com/redhat-developer/omnisharp-theia-plugin. In case of an issue, report it, or contribute your fix in the repository.

This plug-in registers omnisharp-roslyn as a language server and provides project dependencies and language syntax for C# applications.

The language server runs on .NET SDK 2.2.105.

2.5.2.13.2. Verifying the state of the Omnisharp-Theia plug-in language server

Procedure

To check if the container running the Omnisharp-Theia plug-in is running OmniSharp, execute the ps aux | grep OmniSharp.exe command. If the process is running, the following is an example output:

/tmp/theia-unpacked/redhat-developer.che-omnisharp-plugin.0.0.1.zcpaqpczwb.omnisharp_theia_plugin.theia/server/bin/mono /tmp/theia-unpacked/redhat-developer.che-omnisharp-plugin.0.0.1.zcpaqpczwb.omnisharp_theia_plugin.theia/server/omnisharp/OmniSharp.exe

If the output is different, the language server has not started inside the container. Check the logs described in Checking important logs.

2.5.2.13.3. Checking Omnisharp Che-Theia plug-in language server features

Procedure

- If the OmniSharp.exe process is running, check if the language server features are working by opening a .cs file and trying the hover or completion features, or opening the Problems or Outline view.

2.5.2.13.4. Viewing Omnisharp-Theia plug-in logs in the Output panel

Procedure

If Omnisharp.exe is running, it logs all information in the Output panel. To view the logs, open the Output view and select C# from the drop-down list.

2.5.2.14. Viewing logs for Dotnet with NetcoredebugOutput plug-in

2.5.2.14.1. NetcoredebugOutput plug-in

The NetcoredebugOutput plug-in provides the netcoredbg tool. This tool implements the VS Code Debug Adapter protocol and allows users to debug .NET applications under the .NET Core runtime.

The container where the NetcoredebugOutput plug-in is running contains Dotnet SDK v.2.2.105.

2.5.2.14.2. Verifying the state of the NetcoredebugOutput plug-in

Procedure

To test the plug-in initialization:

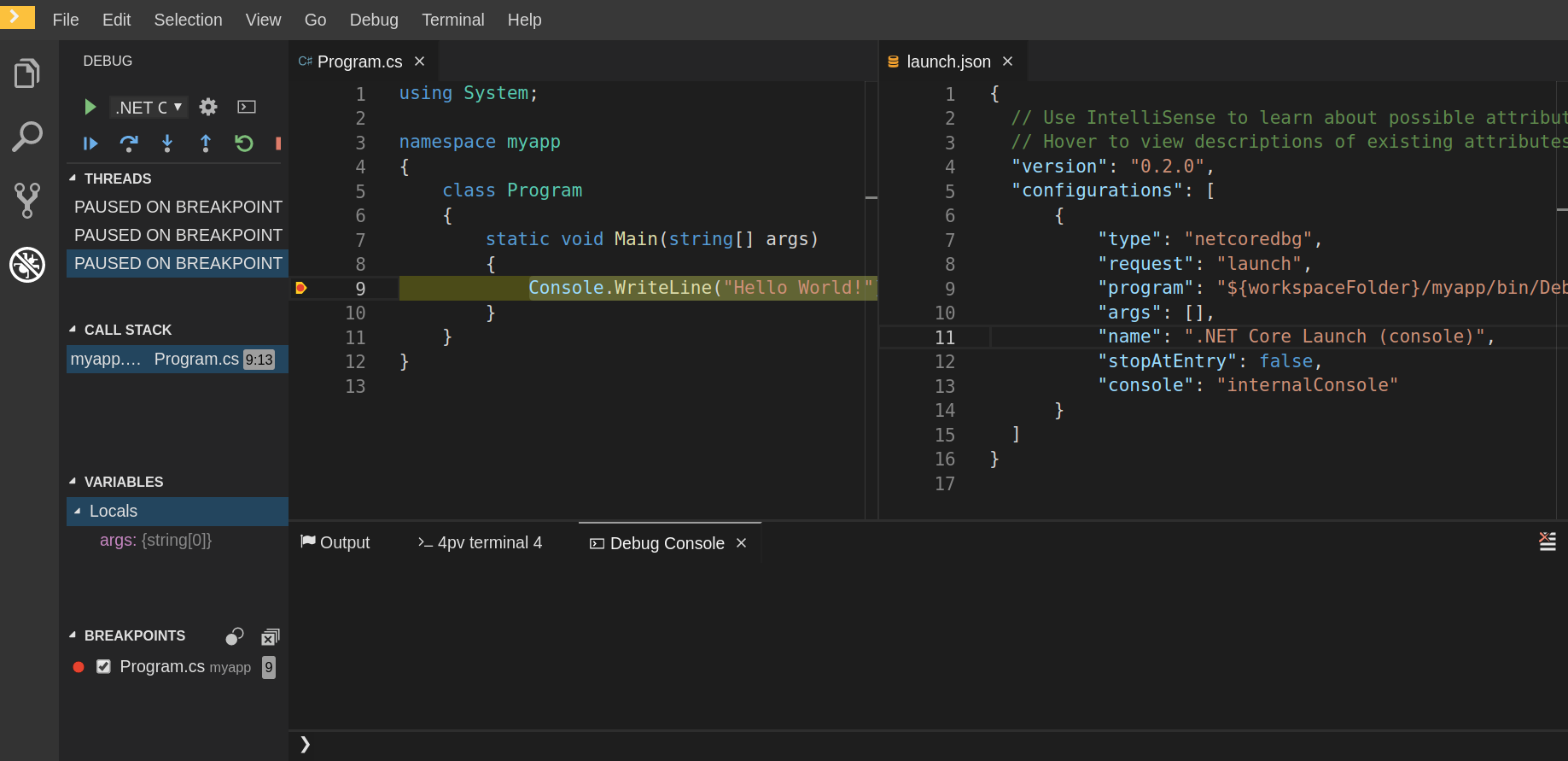

- Check if there is a netcoredbg debug configuration in the launch.json file. The following is an example debug configuration:

{
  "type": "netcoredbg",
  "request": "launch",
  "program": "${workspaceFolder}/bin/Debug/<target-framework>/<project-name.dll>",
  "args": [],
  "name": ".NET Core Launch (console)",
  "stopAtEntry": false,
  "console": "internalConsole"
}

- To test if it exists, test the autocompletion feature within the braces of the configuration section of the launch.json file. If you can find netcoredbg, the Che-Theia plug-in is correctly initialized. If not, see Checking important logs.
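The first check can also be run from a terminal. This minimal sketch only confirms that a netcoredbg configuration is present in the file; it does not prove that the plug-in is initialized, for which the autocompletion test remains the check. has_netcoredbg_config is a hypothetical helper, and it uses a plain text match rather than a JSON parser.

```shell
# Hypothetical helper: succeed if the given launch.json declares a debug
# configuration whose "type" is "netcoredbg" (text match, not JSON parsing).
has_netcoredbg_config() {
  grep -Eq '"type"[[:space:]]*:[[:space:]]*"netcoredbg"' "$1"
}
```

Usage, assuming the file sits in the project's .theia directory next to the tasks.json file that appears in the editor log: has_netcoredbg_config /projects/.theia/launch.json && echo "netcoredbg configuration found"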

2.5.2.14.3. Viewing NetcoredebugOutput plug-in logs in the Output panel

This section describes how to view NetcoredebugOutput plug-in logs in the Output panel.

Procedure

Open the Debug console.

2.5.2.15. Viewing logs for Camel

2.5.2.15.1. Verifying the state of the Camel language server

Procedure

The user can inspect the log output of the sidecar container using the Camel language tools that are stored in the vscode-apache-camel<xxx> Camel container.

To verify the state of the language server:

- Open a terminal inside the vscode-apache-camel<xxx> container.

- Run the ps aux | grep java command. The following is an example language server process:

java -jar /tmp/vscode-unpacked/camel-tooling.vscode-apache-camel.latest.euqhbmepxd.camel-tooling.vscode-apache-camel-0.0.14.vsix/extension/jars/language-server.jar

- If you cannot find it, see Checking important logs.

2.5.2.15.2. Viewing Camel logs in the Output panel

The Camel language server is a Spring Boot application that writes its log to the ${java.io.tmpdir}/log-camel-lsp.out file. Typically, ${java.io.tmpdir} points to the /tmp directory, so the filename is /tmp/log-camel-lsp.out.
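To read this file directly from a terminal in the vscode-apache-camel<xxx> container, a minimal sketch follows. show_camel_lsp_log is a hypothetical helper; it assumes the typical /tmp location described above, and the path can be passed as an argument when java.io.tmpdir points elsewhere.

```shell
# Hypothetical helper: print the last N lines (default 50) of the Camel
# language server log. Returns 1 if the log file does not exist.
show_camel_lsp_log() {
  log="${1:-/tmp/log-camel-lsp.out}"
  lines="${2:-50}"
  if [ ! -f "$log" ]; then
    echo "no log file at $log" >&2
    return 1
  fi
  tail -n "$lines" "$log"
}
```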

Procedure

The Camel language server logs are printed in the Output channel named Language Support for Apache Camel.

The output channel is created only when the first log entry is created on the client side. It may be absent when everything is going well.

2.6. Viewing the plug-in broker logs

This section describes how to view the plug-in broker logs.

The che-plugin-broker Pod itself is deleted when its work is complete. Therefore, its event logs are only available while the workspace is starting.

Procedure

To see logged events from temporary Pods:

- Start a CodeReady Workspaces workspace.

- From the main OpenShift Container Platform screen, go to Workload → Pods.

- Use the OpenShift terminal console located in the Pod’s Terminal tab.

Verification step

- OpenShift terminal console displays the plug-in broker logs while the workspace is starting

2.7. Collecting logs using crwctl

It is possible to get all Red Hat CodeReady Workspaces logs from an OpenShift cluster using the crwctl tool.

- crwctl server:start automatically starts collecting Red Hat CodeReady Workspaces server logs during installation of Red Hat CodeReady Workspaces.

- crwctl server:logs collects existing Red Hat CodeReady Workspaces server logs.

- crwctl workspace:logs collects workspace logs.

Chapter 3. Monitoring CodeReady Workspaces

This chapter describes how to configure CodeReady Workspaces to expose metrics and how to build an example monitoring stack with external tools to process data exposed as metrics by CodeReady Workspaces.

3.1. Enabling and exposing CodeReady Workspaces metrics

This section describes how to enable and expose CodeReady Workspaces metrics.

Procedure

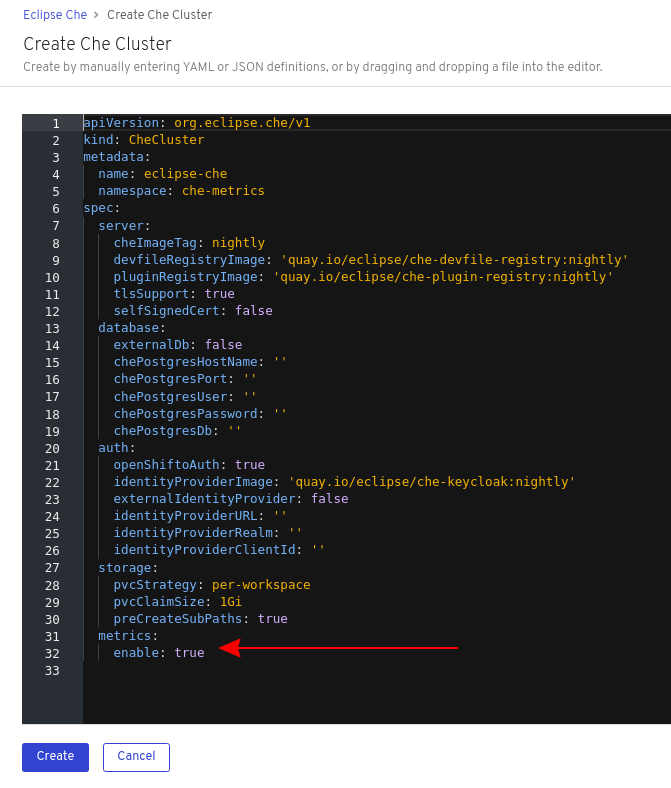

- Set the CHE_METRICS_ENABLED=true environment variable, which exposes the 8087 port as a service on the che-master host.

When Red Hat CodeReady Workspaces is installed from the OperatorHub, the environment variable is set automatically if the default CheCluster CR is used:

spec:
  metrics:
    enable: true

3.2. Collecting CodeReady Workspaces metrics with Prometheus

This section describes how to use the Prometheus monitoring system to collect, store and query metrics about CodeReady Workspaces.

Prerequisites

- CodeReady Workspaces is exposing metrics on port 8087. See Enabling and exposing CodeReady Workspaces metrics.

- Prometheus 2.9.1 or higher is running. The Prometheus console is running on port 9090 with a corresponding service and route. See First steps with Prometheus.

Procedure

Configure Prometheus to scrape metrics from port 8087:

Prometheus configuration example

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
    data:
      prometheus.yml: |-
        global:
          scrape_interval: 5s            # 1
          evaluation_interval: 5s        # 2
        scrape_configs:                  # 3
          - job_name: 'che'
            static_configs:
              - targets: ['[che-host]:8087']   # 4

1. Rate at which a target is scraped.

2. Rate at which recording and alerting rules are re-checked (not used in the system at the moment).

3. Resources that Prometheus monitors. In the default configuration, there is a single job called che, which scrapes the time series data exposed by the CodeReady Workspaces server.

4. Scrape metrics from port 8087.
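Each scrape of the che job is an HTTP GET request to the target. Because the configuration above sets no metrics_path, Prometheus uses its default path, /metrics, so the request goes to http://[che-host]:8087/metrics and the response is plain text in the Prometheus exposition format. The following Java sketch, which assumes Java 11 or later for java.net.http, fetches that page and reads it into a map so you can check what Prometheus will see. The class name ExpositionParser is hypothetical, and the parser is deliberately simplified: it assumes that no timestamps follow the values and that label values contain no spaces.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: a simplified reader for the Prometheus text
// exposition format (no timestamps, no spaces inside label values).
final class ExpositionParser {

    // Fetches the page Prometheus scrapes, e.g. fetch("che-host:8087").
    static String fetch(String hostAndPort) throws Exception {
        HttpRequest request = HttpRequest
                .newBuilder(URI.create("http://" + hostAndPort + "/metrics"))
                .GET()
                .build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    // Maps each series (name plus optional {labels}) to its value.
    static Map<String, Double> parse(String body) {
        Map<String, Double> samples = new LinkedHashMap<>();
        for (String raw : body.split("\n")) {
            String line = raw.trim();
            // Skip blank lines and the "# HELP" / "# TYPE" comment lines.
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            int sep = line.lastIndexOf(' ');
            if (sep < 0) {
                continue; // malformed line without a value
            }
            samples.put(line.substring(0, sep).trim(), parseValue(line.substring(sep + 1)));
        }
        return samples;
    }

    // Prometheus writes infinities as "+Inf"/"-Inf", which Double.parseDouble rejects.
    private static double parseValue(String value) {
        switch (value) {
            case "+Inf":
                return Double.POSITIVE_INFINITY;
            case "-Inf":
                return Double.NEGATIVE_INFINITY;
            default:
                return Double.parseDouble(value); // also accepts "NaN"
        }
    }
}
```

For example, ExpositionParser.parse(ExpositionParser.fetch("che-host:8087")) returns every sample keyed by series name, where che-host stands for the same host as the [che-host] placeholder in the configuration.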

Verification steps

Use the Prometheus console to query and view metrics.

Metrics are available at: http://<che-server-url>:9090/metrics.

For more information, see Using the expression browser in the Prometheus documentation.
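Besides the expression browser, Prometheus answers the same queries over its HTTP API at /api/v1/query. The following Java sketch builds such a query for up{job="che"}: the built-in up series is 1 when the last scrape of a target in the che job succeeded and 0 when it failed, which makes it a quick check that the configuration above works. The base URL http://prometheus:9090 and the class name PromQuery are placeholders; use the route of your Prometheus console. Java 11 or later is assumed.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for the Prometheus HTTP API instant-query endpoint.
final class PromQuery {

    // Builds the URL; the PromQL expression must be URL-encoded.
    static URI instantQuery(String prometheusBaseUrl, String promql) {
        String encoded = URLEncoder.encode(promql, StandardCharsets.UTF_8);
        return URI.create(prometheusBaseUrl + "/api/v1/query?query=" + encoded);
    }

    // Sends the query and returns the raw JSON response body.
    static String run(String prometheusBaseUrl, String promql) throws Exception {
        HttpRequest request = HttpRequest
                .newBuilder(instantQuery(prometheusBaseUrl, promql))
                .GET()
                .build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }
}
```

PromQuery.run("http://prometheus:9090", "up{job=\"che\"}") returns the JSON response; a status of success with a value of "1" in data.result indicates that Prometheus reaches port 8087 on the CodeReady Workspaces server.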

Additional resources

3.3. Extending CodeReady Workspaces monitoring metrics

This section describes how to create a metric or a group of metrics to extend the monitoring metrics that CodeReady Workspaces is exposing.

CodeReady Workspaces has two major metrics modules:

- che-core-metrics-core: contains the core metrics module.

- che-core-api-metrics: contains metrics that depend on core CodeReady Workspaces components, such as workspace or user managers.

Procedure

Create a class that implements the MeterBinder interface. This allows registering the created metric in the overridden bindTo(MeterRegistry registry) method.

The following is an example of a metric whose value is supplied by a function:

Example metric

    import io.micrometer.core.instrument.Gauge;
    import io.micrometer.core.instrument.MeterRegistry;
    import io.micrometer.core.instrument.binder.MeterBinder;
    import javax.inject.Inject;
    import org.eclipse.che.api.core.ServerException;
    import org.eclipse.che.api.user.server.UserManager;

    public class UserMeterBinder implements MeterBinder {

        private final UserManager userManager;

        @Inject
        public UserMeterBinder(UserManager userManager) {
            this.userManager = userManager;
        }

        @Override
        public void bindTo(MeterRegistry registry) {
            // The gauge calls count() every time the metric is read.
            Gauge.builder("che.user.total", this::count)
                .description("Total amount of users")
                .register(registry);
        }

        private double count() {
            try {
                return userManager.getTotalCount();
            } catch (ServerException e) {
                // Report "no value" instead of failing the whole scrape.
                return Double.NaN;
            }
        }
    }

Alternatively, the metric can be stored with a reference and updated manually in some other place in the code.
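The alternative of keeping a reference to the metric and updating it from other code can be modeled without any dependencies. In the following Java sketch, MiniRegistry is a hypothetical stand-in for Micrometer's MeterRegistry, and RunningWorkspacesMeterBinder and the metric name che.workspace.running are illustrative only; they are not part of CodeReady Workspaces. The binder owns an AtomicLong, registers a gauge that reads it, and exposes methods that other components would call when workspaces start or stop.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.DoubleSupplier;

// Hypothetical stand-in for Micrometer's MeterRegistry: it stores named
// value suppliers and reads them only when scraped.
final class MiniRegistry {
    private final Map<String, DoubleSupplier> gauges = new LinkedHashMap<>();

    void gauge(String name, DoubleSupplier supplier) {
        gauges.put(name, supplier);
    }

    // Reads every registered gauge, as a Prometheus scrape would.
    Map<String, Double> scrape() {
        Map<String, Double> values = new LinkedHashMap<>();
        gauges.forEach((name, supplier) -> values.put(name, supplier.getAsDouble()));
        return values;
    }
}

// Hypothetical binder that keeps a reference to its metric state;
// other components update that state when events happen.
final class RunningWorkspacesMeterBinder {
    private final AtomicLong running = new AtomicLong();

    void bindTo(MiniRegistry registry) {
        // The gauge only reads the stored value; it never computes it.
        registry.gauge("che.workspace.running", running::get);
    }

    void onWorkspaceStarted() {
        running.incrementAndGet();
    }

    void onWorkspaceStopped() {
        running.decrementAndGet();
    }
}
```

With Micrometer itself, the same pattern usually registers the AtomicLong through MeterRegistry.gauge(String, T), or uses a Counter and calls increment() where the event happens. A function-backed gauge such as UserMeterBinder is simpler when the value can be computed on demand; a stored reference fits values that only the event source knows.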

Additional resources

Chapter 4. Tracing CodeReady Workspaces

Tracing helps gather timing data to troubleshoot latency problems in microservice architectures and helps to understand a complete transaction or workflow as it propagates through a distributed system. Tracing every transaction can reveal performance anomalies at an early phase, when new services are being introduced by independent teams.

Tracing the CodeReady Workspaces application may help analyze the execution of various operations, such as workspace creation and workspace startup, by breaking down the duration of sub-operation executions. This helps find bottlenecks and improve the overall state of the platform.

Tracers live in applications. They record timing and metadata about operations that take place. They often instrument libraries, so that their use is transparent to users. For example, an instrumented web server records when it received a request and when it sent a response. The trace data collected is called a span. A span has a context that contains information such as trace and span identifiers and other kinds of data that can be propagated down the line.
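To make the span and context terminology concrete, the following Java sketch models a span whose context carries a trace identifier and a span identifier. A child span copies the trace identifier from its parent's context and records the parent's span identifier, which is how one operation stays linked as it crosses components. This is a conceptual model only: it is not the OpenTracing or Jaeger API, the class names are hypothetical, and identifiers come from a counter instead of being random.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical span context: the data propagated down the line.
final class SpanContext {
    final long traceId;
    final long spanId;

    SpanContext(long traceId, long spanId) {
        this.traceId = traceId;
        this.spanId = spanId;
    }
}

// Hypothetical span: timing plus a context and a link to the parent.
final class Span {
    private static final AtomicLong IDS = new AtomicLong();

    final String operationName;
    final SpanContext context;
    final Long parentSpanId; // null for the root span of a trace
    final long startNanos;
    long endNanos = -1;

    private Span(String operationName, long traceId, Long parentSpanId) {
        this.operationName = operationName;
        this.context = new SpanContext(traceId, IDS.incrementAndGet());
        this.parentSpanId = parentSpanId;
        this.startNanos = System.nanoTime();
    }

    // Starts a new trace, for example when a request arrives.
    static Span root(String operationName) {
        return new Span(operationName, IDS.incrementAndGet(), null);
    }

    // Starts a sub-operation: the trace ID is propagated from this context.
    Span child(String operationName) {
        return new Span(operationName, context.traceId, context.spanId);
    }

    void finish() {
        endNanos = System.nanoTime();
    }

    long durationNanos() {
        return endNanos - startNanos;
    }
}
```

For example, a root span for a workspace start and a child span for one of its sub-operations share the same traceId, which is what lets a tracing back end draw them as one tree.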

4.1. Tracing API

CodeReady Workspaces uses the OpenTracing API, a vendor-neutral framework for instrumentation. This means that if a developer wants to try a different tracing back end, then instead of repeating the whole instrumentation process for the new distributed tracing system, the developer can simply change the configuration of the tracer back end.

4.2. Tracing back end

By default, CodeReady Workspaces uses Jaeger as the tracing back end. Jaeger was inspired by Dapper and OpenZipkin, and it is a distributed tracing system released as open source by Uber Technologies. Compared to those systems, Jaeger uses a more complex architecture designed for a larger scale of requests and higher performance.

4.3. Installing the Jaeger tracing tool

The following sections describe the installation methods for the Jaeger tracing tool. Jaeger can then be used for gathering traces in CodeReady Workspaces.

Installation methods available:

- Installing the Jaeger tracing tool for CodeReady Workspaces on OpenShift 4

- Installing Jaeger using OperatorHub on OpenShift 4

For tracing a CodeReady Workspaces instance using Jaeger, version 1.12.0 or above is required. For additional information about Jaeger, see the Jaeger website.

4.3.1. Installing the Jaeger tracing tool for CodeReady Workspaces on OpenShift 4

This section provides information about using the Jaeger tracing tool for testing and evaluation purposes.

To install the Jaeger tracing tool from a CodeReady Workspaces project in OpenShift Container Platform, follow the instructions in this section.

Prerequisites

- The user is logged in to the OpenShift Container Platform web console.

- An instance of CodeReady Workspaces is running in an OpenShift Container Platform cluster.

Procedure

- In the CodeReady Workspaces installation project of the OpenShift Container Platform cluster, use the oc client to create a new application for the Jaeger deployment:

    $ oc new-app -f ${CHE_LOCAL_GIT_REPO}/deploy/openshift/templates/jaeger-all-in-one-template.yml

    --> Deploying template "<project_name>/jaeger-template-all-in-one" for "/home/user/crw-projects/crw/deploy/openshift/templates/jaeger-all-in-one-template.yml" to project <project_name>

         Jaeger (all-in-one)
         ---------
         Jaeger Distributed Tracing Server (all-in-one)

         * With parameters:
            * Jaeger Service Name=jaeger
            * Image version=latest
            * Jaeger Zipkin Service Name=zipkin

    --> Creating resources ...
        deployment.apps "jaeger" created
        service "jaeger-query" created
        service "jaeger-collector" created
        service "jaeger-agent" created
        service "zipkin" created
        route.route.openshift.io "jaeger-query" created
    --> Success
        Access your application using the route: 'jaeger-query-<project_name>.apps.ci-ln-whx0352-d5d6b.origin-ci-int-aws.dev.rhcloud.com'
        Run 'oc status' to view your app.

- From the left menu of the main OpenShift Container Platform screen, select Workloads → Deployments and monitor the Jaeger deployment until it finishes successfully.

- Select Networking → Routes from the left menu of the main OpenShift Container Platform screen, and click the URL link to access the Jaeger dashboard.

- Follow the steps in Enabling CodeReady Workspaces traces collections to finish the procedure.

4.3.2. Installing Jaeger using OperatorHub on OpenShift 4

This section provides information about installing the Jaeger tracing tool through OperatorHub, which can be used for testing and evaluation purposes as well as in production.

To install the Jaeger tracing tool from the OperatorHub interface in OpenShift Container Platform, follow the instructions below.

Prerequisites

- The user is logged in to the OpenShift Container Platform Web Console.

- A CodeReady Workspaces instance is available in a project.

Procedure

- Open the OpenShift Container Platform console.

- From the left menu of the main OpenShift Container Platform screen, navigate to Operators → OperatorHub.

- In the Search by keyword search bar, type Jaeger Operator.

- Click the Jaeger Operator tile.

- Click the Install button in the Jaeger Operator pop-up window.

- Select the installation method: A specific project on the cluster where CodeReady Workspaces is deployed, and leave the rest at the default values.

- Click the Subscribe button.

- From the left menu of the main OpenShift Container Platform screen, navigate to the Operators → Installed Operators section.

- Jaeger Operator is displayed as an Installed Operator, as indicated by the InstallSucceeded status.

- Click the Jaeger Operator name in the list of installed Operators.

- Navigate to the Overview tab.

- In the Conditions section at the bottom of the page, wait for this message: install strategy completed with no errors.

- The Jaeger Operator and the additional Elasticsearch Operator are installed.

- Navigate to the Operators → Installed Operators section.

- Click Jaeger Operator in the list of installed Operators.

- The Jaeger Cluster page is displayed.

- In the lower left corner of the window, click Create Instance.

- Click Save.

- OpenShift creates the Jaeger cluster jaeger-all-in-one-inmemory.

- Follow the steps in Enabling CodeReady Workspaces traces collections to finish the procedure.

4.4. Enabling CodeReady Workspaces traces collections

Prerequisites

- Jaeger v1.12.0 or above is installed. See instructions at Section 4.3, “Installing the Jaeger tracing tool”.

Procedure

For Jaeger tracing to work, set the following environment variables in your CodeReady Workspaces deployment:

    # Activating CodeReady Workspaces tracing modules
    CHE_TRACING_ENABLED=true

    # The following variables are the basic Jaeger client library configuration.
    JAEGER_ENDPOINT="http://jaeger-collector:14268/api/traces"

    # Service name
    JAEGER_SERVICE_NAME="che-server"

    # URL to remote sampler
    JAEGER_SAMPLER_MANAGER_HOST_PORT="jaeger:5778"

    # Type and param of sampler (constant sampler for all traces)
    JAEGER_SAMPLER_TYPE="const"
    JAEGER_SAMPLER_PARAM="1"

    # Maximum queue size of reporter
    JAEGER_REPORTER_MAX_QUEUE_SIZE="10000"
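The JAEGER_SAMPLER_TYPE and JAEGER_SAMPLER_PARAM values above select a constant sampler: with a parameter of 1 every trace is recorded, and with 0 none is. Jaeger clients also support other sampler types, such as probabilistic, where the parameter is the fraction of traces to keep. The following Java sketch models only the sampling decision for these two types to show how the parameter is interpreted. It is a simplified illustration with hypothetical names (SamplerSketch, Sampler, fromConfig), not the Jaeger client implementation.

```java
import java.util.Random;

// Hypothetical model of the sampling decision for two Jaeger sampler types.
final class SamplerSketch {

    interface Sampler {
        boolean isSampled();
    }

    static Sampler fromConfig(String type, double param, Random random) {
        switch (type) {
            case "const":
                // 1 keeps every trace, 0 drops every trace.
                boolean keepAll = param != 0;
                return () -> keepAll;
            case "probabilistic":
                if (param < 0 || param > 1) {
                    throw new IllegalArgumentException("probability must be in [0, 1]: " + param);
                }
                // nextDouble() is in [0, 1), so about param * 100 percent pass.
                return () -> random.nextDouble() < param;
            default:
                throw new IllegalArgumentException("sampler type not modeled here: " + type);
        }
    }
}
```

Sampling every trace, as the const setting with 1 does, is convenient for testing and evaluation; on a busy instance, a probabilistic value such as 0.1 would keep roughly one trace in ten.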

To set these environment variables:

In the YAML source of the CheCluster Custom Resource of the CodeReady Workspaces deployment, add the following configuration variables under spec.server.customCheProperties:

    customCheProperties:
      CHE_TRACING_ENABLED: 'true'
      JAEGER_SAMPLER_TYPE: const
      JAEGER_REPORTER_MAX_QUEUE_SIZE: '10000'
      JAEGER_SERVICE_NAME: che-server
      JAEGER_ENDPOINT: 'http://jaeger-collector:14268/api/traces'
      JAEGER_SAMPLER_MANAGER_HOST_PORT: 'jaeger:5778'
      JAEGER_SAMPLER_PARAM: '1'

Edit the JAEGER_ENDPOINT value to match the name of the Jaeger collector service in your deployment. From the left menu of the main OpenShift Container Platform screen, obtain the value of JAEGER_ENDPOINT by navigating to Networking → Services. Alternatively, execute the following oc command:

    $ oc get services

The requested value is included in the service name that contains the collector string.
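This lookup can also be scripted. The following Java sketch takes the text printed by oc get services, finds the first service whose name contains collector, and builds the JAEGER_ENDPOINT value using port 14268 and the /api/traces path from the example configuration. It assumes the default table output, where the first column is NAME, and that Jaeger runs in the same project as CodeReady Workspaces so that the short service name resolves. The class name CollectorEndpoint is hypothetical.

```java
import java.util.Optional;

// Hypothetical helper: derives JAEGER_ENDPOINT from `oc get services` output.
final class CollectorEndpoint {
    // Port and path taken from the JAEGER_ENDPOINT example in this section.
    private static final String PORT_AND_PATH = ":14268/api/traces";

    static Optional<String> fromServiceTable(String ocOutput) {
        for (String line : ocOutput.split("\n")) {
            // First whitespace-separated column is the service name.
            String name = line.trim().split("\\s+")[0];
            if (!name.equals("NAME") && name.contains("collector")) {
                return Optional.of("http://" + name + PORT_AND_PATH);
            }
        }
        return Optional.empty(); // no collector service in this project
    }
}
```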

Additional resources

- For additional information about custom environment properties and how to define them in CheCluster Custom Resource, see Advanced configuration options for the CodeReady Workspaces server component.

- For custom configuration of Jaeger, see the list of Jaeger client environment variables.

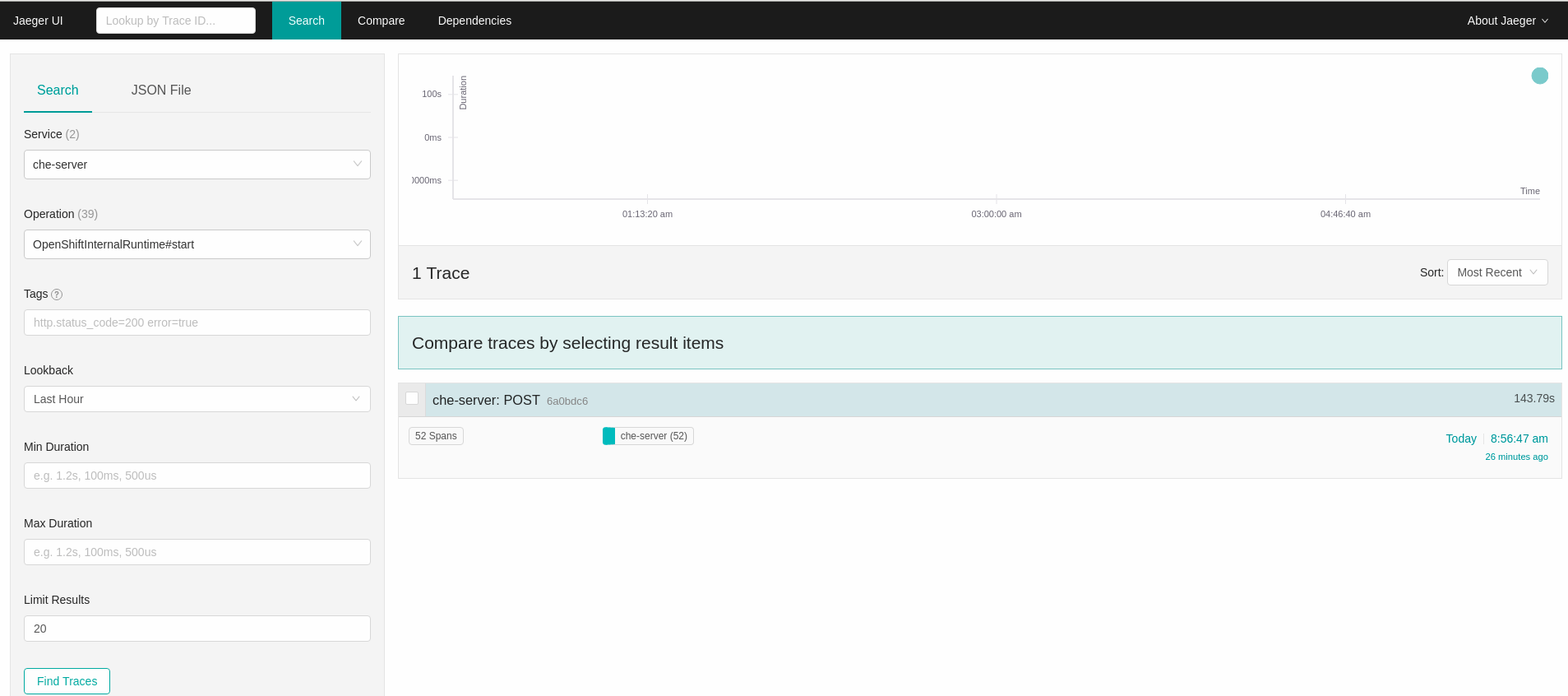

4.5. Viewing CodeReady Workspaces traces in Jaeger UI

This section demonstrates how to use the Jaeger UI to view an overview of traces of CodeReady Workspaces operations.

Procedure

In this example, the CodeReady Workspaces instance has been running for some time and one workspace start has occurred.

To inspect the trace of the workspace start:

In the Search panel on the left, filter spans by the operation name (span name), tags, or time and duration.

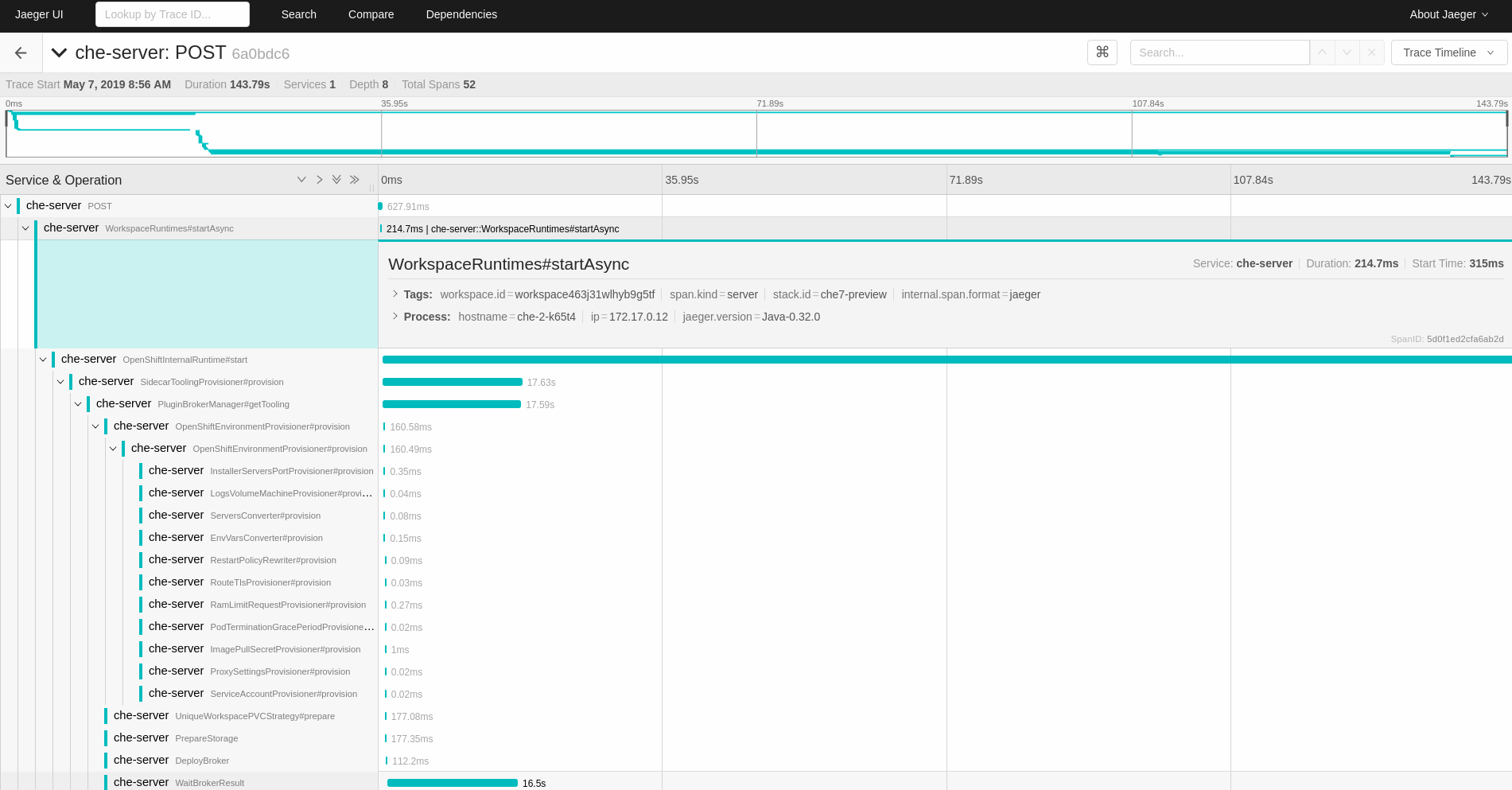

Figure 4.1. Using Jaeger UI to trace CodeReady Workspaces

Select the trace to expand it and show the tree of nested spans and additional information about the highlighted span, such as tags or durations.

Figure 4.2. Expanded tracing tree

4.6. CodeReady Workspaces tracing codebase overview and extension guide

The core of the tracing implementation for CodeReady Workspaces is in the che-core-tracing-core and che-core-tracing-web modules.

Every HTTP request to the tracing API has its own trace. This is done by TracingFilter from the OpenTracing library, which is bound to the whole server application. Adding a @Traced annotation to a method causes the TracingInterceptor to add a tracing span for it.
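The following Java sketch illustrates the idea behind annotation-driven tracing: calls to methods marked with a runtime-retained annotation are wrapped so that their duration is recorded as a span, while other methods pass through unchanged. It swaps in a JDK dynamic proxy (java.lang.reflect.Proxy) as the interception mechanism, which is not necessarily how TracingInterceptor is wired into the server, and the @Traced annotation, WorkspaceService interface, and TracingProxy class defined here are hypothetical stand-ins.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical marker annotation, retained at runtime so reflection sees it.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Traced {}

// Hypothetical service interface used only for this example.
interface WorkspaceService {
    @Traced
    String startWorkspace(String workspaceId);

    String describe(String workspaceId); // not annotated: no span
}

final class TracingProxy {
    // Finished "spans", recorded as "<method name> <duration>us".
    static final List<String> RECORDED = new ArrayList<>();

    static <T> T wrap(Class<T> iface, T target) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (!method.isAnnotationPresent(Traced.class)) {
                return call(method, target, args); // pass through untraced
            }
            long start = System.nanoTime();
            try {
                return call(method, target, args);
            } finally {
                // Record the span even when the traced method throws.
                long micros = (System.nanoTime() - start) / 1_000;
                RECORDED.add(method.getName() + " " + micros + "us");
            }
        };
        return iface.cast(Proxy.newProxyInstance(
                iface.getClassLoader(), new Class<?>[] {iface}, handler));
    }

    private static Object call(Method method, Object target, Object[] args) throws Throwable {
        try {
            return method.invoke(target, args);
        } catch (InvocationTargetException e) {
            throw e.getCause(); // rethrow the method's own exception
        }
    }
}
```

Wrapping an implementation with TracingProxy.wrap(WorkspaceService.class, impl) records one entry per startWorkspace call and none for describe.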

4.6.1. Tagging

Spans may contain standard tags, such as operation name, span origin, error, and other tags that may help users with querying and filtering spans. Workspace-related operations (such as starting or stopping workspaces) have additional tags, including userId, workspaceId, and stackId. Spans created by TracingFilter also have an HTTP status code tag.

Declaring tags in a traced method is done statically by setting fields from the TracingTags class:

TracingTags.WORKSPACE_ID.set(workspace.getId());

TracingTags is a class where all commonly used tags are declared, as respective AnnotationAware tag implementations.
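Because tags are set from static fields, a tag must find the span that is active while the traced method runs. The following Java sketch shows one way to model this, with a ThreadLocal that holds the tags of the current span. ActiveSpan, Tag, and TracingTagsSketch are hypothetical names and the tag keys are assumptions; this is not the actual TracingTags or AnnotationAware implementation.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical holder for the tags of the span active on this thread.
final class ActiveSpan {
    private static final ThreadLocal<Map<String, String>> CURRENT = new ThreadLocal<>();

    static void start() {
        CURRENT.set(new HashMap<>());
    }

    // Ends the active span and returns its tags (null if none was active).
    static Map<String, String> finish() {
        Map<String, String> tags = CURRENT.get();
        CURRENT.remove();
        return tags;
    }

    static void tag(String key, String value) {
        Map<String, String> tags = CURRENT.get();
        if (tags != null) { // no active span: the tag is ignored
            tags.put(key, value);
        }
    }
}

// Hypothetical tag declared once and set from anywhere in traced code.
final class Tag {
    private final String key;

    Tag(String key) {
        this.key = key;
    }

    void set(String value) {
        ActiveSpan.tag(key, value);
    }
}

// Hypothetical counterpart of a class that declares common tags statically.
final class TracingTagsSketch {
    static final Tag WORKSPACE_ID = new Tag("workspaceId");
    static final Tag USER_ID = new Tag("userId");
    static final Tag STACK_ID = new Tag("stackId");
}
```

A call such as TracingTagsSketch.WORKSPACE_ID.set(workspace.getId()) inside a traced method then attaches the workspace identifier to that method's span, mirroring the TracingTags line above.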

Additional resources

For more information about how to use the Jaeger UI, see the Jaeger documentation: Jaeger Getting Started Guide.

Chapter 5. Managing users