Chapter 1. Ceph dashboard overview

As a storage administrator, you can use the Red Hat Ceph Storage Dashboard to administer and configure the cluster, and to visualize information and performance statistics related to it. The dashboard uses a web server hosted by the ceph-mgr daemon.

The dashboard is accessible from a web browser and includes many management and monitoring features, for example, configuring manager modules and monitoring the state of OSDs.
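Because the dashboard is served by the active ceph-mgr daemon, the ceph-mgr itself advertises where it is listening. The following minimal sketch assumes a host with the ceph CLI and a client keyring, and reads the dashboard URL from the JSON output of `ceph mgr services`. The function names are hypothetical helpers, not part of any Ceph API.

```python
import json
import subprocess
from typing import Optional


def query_mgr_services() -> str:
    """Run `ceph mgr services` and return its JSON output.

    Assumes the ceph CLI and a client keyring are available on this host.
    """
    result = subprocess.run(
        ["ceph", "mgr", "services", "--format", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def dashboard_url(services_json: str) -> Optional[str]:
    """Return the dashboard URL advertised by ceph-mgr, or None if it is not listed."""
    services = json.loads(services_json)
    return services.get("dashboard")
```

For example, `print(dashboard_url(query_mgr_services()))` prints the URL to open in a web browser. Host names and ports in the output differ from cluster to cluster.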

Prerequisites

- System administrator level experience.

1.1. Ceph Dashboard components

The functionality of the dashboard is provided by multiple components.

- The Cephadm application for deployment.

- The embedded dashboard ceph-mgr module.

- The embedded Prometheus ceph-mgr module.

- The Prometheus time-series database.

- The Prometheus node-exporter daemon, running on each host of the storage cluster.

- The Grafana platform to provide the monitoring user interface and alerting.
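To illustrate how these components connect, the Prometheus database scrapes metrics that the embedded Prometheus ceph-mgr module exposes over HTTP in the Prometheus text format, and Grafana then queries Prometheus. The following sketch assumes the module's default port 9283 and the `/metrics` path, and uses a deliberately simplified parser that ignores comments, timestamps, and label escaping rules. The helper names are hypothetical.

```python
import urllib.request
from typing import Dict, Tuple

# Assumed default port of the ceph-mgr Prometheus module's metrics endpoint.
PROMETHEUS_MODULE_PORT = 9283


def fetch_metrics(mgr_host: str, port: int = PROMETHEUS_MODULE_PORT) -> str:
    """Download the text exposition served by the active ceph-mgr Prometheus module."""
    url = f"http://{mgr_host}:{port}/metrics"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


def parse_samples(text: str) -> Dict[Tuple[str, str], float]:
    """Parse sample lines into {(metric_name, raw_labels): value}.

    Simplified: skips HELP/TYPE comments, drops optional timestamps,
    and does not unescape label values.
    """
    samples: Dict[Tuple[str, str], float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or HELP/TYPE comment
        if "{" in line:
            name, rest = line.split("{", 1)
            labels, rest = rest.rsplit("}", 1)
        else:
            name, rest = line.split(None, 1)
            labels = ""
        value = rest.split()[0]  # first token is the value; a timestamp may follow
        samples[(name.strip(), labels)] = float(value)
    return samples
```

In a real deployment, Prometheus performs this scrape itself; the sketch only shows what travels between the ceph-mgr module and the time-series database.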

Additional Resources

- For more information, see the Prometheus website.

- For more information, see the Grafana website.

1.2. Ceph Dashboard features

The Ceph dashboard provides the following features:

- Multi-user and role management: The dashboard supports multiple user accounts with different permissions and roles. You can manage user accounts and roles using both the command line and the web user interface. The dashboard supports various methods to enhance password security: you can configure password complexity rules and require users to change their password after the first login or after a configurable time period.

- Single Sign-On (SSO): The dashboard supports authentication with an external identity provider using the SAML 2.0 protocol.

- Auditing: The dashboard backend can be configured to log all PUT, POST and DELETE API requests in the Ceph manager log.
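API request auditing is switched on from the command line. The following minimal sketch builds the upstream `ceph dashboard set-audit-api-enabled` and `ceph dashboard set-audit-api-log-payload` commands as argument lists; the function name is hypothetical, and the commands are only built here, not run.

```python
from typing import List


def _as_flag(value: bool) -> str:
    """Render a Python bool the way the ceph CLI expects it."""
    return "true" if value else "false"


def audit_commands(enabled: bool, log_payload: bool) -> List[List[str]]:
    """Build commands that toggle API request auditing and payload logging."""
    return [
        ["ceph", "dashboard", "set-audit-api-enabled", _as_flag(enabled)],
        ["ceph", "dashboard", "set-audit-api-log-payload", _as_flag(log_payload)],
    ]
```

To apply the settings on a host with the ceph CLI and an admin keyring, pass each list to `subprocess.run(argv, check=True)`. Disabling payload logging keeps request arguments out of the log while still recording which requests were made.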

Management features

- View cluster hierarchy: You can view the CRUSH map, for example, to determine which host a specific OSD ID is running on. This is helpful if there is an issue with an OSD.

- Configure manager modules: You can view and change parameters for Ceph manager modules.

- Embedded Grafana dashboards: Ceph Dashboard Grafana dashboards can be embedded in external applications and web pages to surface information and performance metrics gathered by the Prometheus module.

- View and filter logs: You can view event and audit cluster logs and filter them based on priority, keyword, date, or time range.

- Toggle dashboard components: You can enable and disable dashboard components so only the features you need are available.

- Manage OSD settings: You can set cluster-wide OSD flags using the dashboard. You can also mark OSDs up, down, or out, purge and reweight OSDs, perform scrub operations, modify various scrub-related configuration options, and select profiles to adjust the level of backfilling activity. You can set and change the device class of an OSD, and display and sort OSDs by device class. You can deploy OSDs on new drives and hosts.

- View alerts: The alerts page shows details of current alerts.

- Quality of Service for images: You can set performance limits on images, for example, limiting IOPS or read BPS burst rates.
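The cluster-wide OSD flags that the dashboard sets are the same flags that the `ceph osd set` and `ceph osd unset` commands manage. The following sketch validates a flag against a small, illustrative subset of those flags and builds the matching command. The helper name and the flag subset are assumptions for this example, not an exhaustive list.

```python
from typing import List

# Illustrative subset of cluster-wide OSD flags; not an exhaustive list.
KNOWN_OSD_FLAGS = {
    "noout", "noin", "noup", "nodown", "norebalance",
    "nobackfill", "norecover", "noscrub", "nodeep-scrub", "pause",
}


def osd_flag_command(flag: str, enable: bool = True) -> List[str]:
    """Build a `ceph osd set|unset <flag>` command after checking the flag name."""
    if flag not in KNOWN_OSD_FLAGS:
        raise ValueError(f"unknown or unsupported OSD flag: {flag}")
    return ["ceph", "osd", "set" if enable else "unset", flag]
```

For example, setting `noout` before host maintenance keeps Ceph from marking the stopped OSDs out, and `osd_flag_command("noout", enable=False)` builds the command that clears the flag afterward.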

Monitoring features

- Username and password protection: You can access the dashboard only by providing a configurable user name and password.

- Overall cluster health: Displays performance and capacity metrics, the overall cluster status, and storage utilization, for example, the number of objects, raw capacity, usage per pool, and a list of pools with their status and usage statistics.

- Hosts: Provides a list of all hosts associated with the cluster along with the running services and the installed Ceph version.

- Performance counters: Displays detailed statistics for each running service.

- Monitors: Lists all Monitors, their quorum status and open sessions.

- Configuration editor: Displays all the available configuration options, their descriptions, types, default, and currently set values. These values are editable.

- Cluster logs: Displays and filters the latest updates to the cluster’s event and audit log files by priority, date, or keyword.

- Device management: Lists all hosts known by the Orchestrator. Lists all drives attached to a host and their properties. Displays drive health predictions and SMART data, and blinks enclosure LEDs.

- View storage cluster capacity: You can view raw storage capacity of the Red Hat Ceph Storage cluster in the Capacity panels of the Ceph dashboard.

- Pools: Lists and manages all Ceph pools and their details. For example: applications, placement groups, replication size, EC profile, quotas, CRUSH ruleset, etc.

- OSDs: Lists and manages all OSDs, their status, and usage statistics, as well as detailed information such as attributes, OSD map, metadata, and performance counters for read and write operations. Lists all drives associated with an OSD.

- Images: Lists all RBD images and their properties such as size, objects, and features. Create, copy, modify, and delete RBD images. Create, delete, and roll back snapshots of selected images, and protect or unprotect these snapshots against modification. Copy or clone snapshots, and flatten cloned images.

Note: The performance graph for I/O changes in the Overall Performance tab for a specific image shows values only after you specify the pool that contains that image by setting the rbd_stats_pools parameter in Cluster > Manager modules > Prometheus.

- RBD Mirroring: Enables and configures RBD mirroring to a remote Ceph server. Lists all active sync daemons and their status, as well as pools and RBD images, including their synchronization state.

- Ceph File Systems: Lists all active Ceph file system (CephFS) clients and associated pools, including their usage statistics. Evict active CephFS clients, manage CephFS quotas and snapshots, and browse a CephFS directory structure.

- Object Gateway (RGW): Lists all active object gateways and their performance counters. Displays and manages (adds, edits, and deletes) object gateway users and their details, for example, quotas, as well as the users’ buckets and their details, for example, owner or quotas.

- NFS: Manages NFS exports of CephFS and Ceph Object Gateway S3 buckets using NFS Ganesha.
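The per-image performance graphs described in the Images note depend on the Prometheus module collecting RBD statistics for the pool that contains the image. Besides the Manager modules page, the same setting can be made from the command line. The following sketch assumes the upstream option name mgr/prometheus/rbd_stats_pools, which takes a comma-separated list of pool names. The helper name is hypothetical.

```python
from typing import Iterable, List


def rbd_stats_pools_command(pools: Iterable[str]) -> List[str]:
    """Build a `ceph config set` command that enables RBD stats for the given pools."""
    names = [p.strip() for p in pools if p.strip()]  # drop blanks and stray spaces
    if not names:
        raise ValueError("at least one pool name is required")
    return [
        "ceph", "config", "set", "mgr",
        "mgr/prometheus/rbd_stats_pools", ",".join(names),
    ]
```

Collecting per-image statistics adds load to the ceph-mgr daemon, so listing only the pools whose images you need to graph is a reasonable default.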

Security features

- SSL and TLS support: All HTTP communication between the web browser and the dashboard is secured with SSL/TLS. You can create a self-signed certificate with a built-in command, or import custom certificates signed and issued by a Certificate Authority (CA).
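The two certificate options map to the upstream `ceph dashboard create-self-signed-cert` command and to the `ceph dashboard set-ssl-certificate` and `set-ssl-certificate-key` commands, which read the files passed with `-i`. The following minimal sketch builds either sequence and then disables and re-enables the dashboard module so that it loads the new certificate. The helper name and the file paths are illustrative assumptions.

```python
from typing import List, Optional


def dashboard_ssl_commands(
    cert: Optional[str] = None, key: Optional[str] = None
) -> List[List[str]]:
    """Build commands that install a dashboard certificate and restart the module.

    With no arguments, a self-signed certificate is generated; otherwise the
    given CA-signed certificate and key files are imported.
    """
    if (cert is None) != (key is None):
        raise ValueError("provide both cert and key, or neither")
    if cert is None or key is None:
        commands = [["ceph", "dashboard", "create-self-signed-cert"]]
    else:
        commands = [
            ["ceph", "dashboard", "set-ssl-certificate", "-i", cert],
            ["ceph", "dashboard", "set-ssl-certificate-key", "-i", key],
        ]
    # Restart the dashboard module so it serves the new certificate.
    commands += [
        ["ceph", "mgr", "module", "disable", "dashboard"],
        ["ceph", "mgr", "module", "enable", "dashboard"],
    ]
    return commands
```

For example, `dashboard_ssl_commands("dashboard.crt", "dashboard.key")` imports a CA-signed pair. A self-signed certificate is convenient for testing, but browsers warn about it until the issuing CA is trusted.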

Additional Resources

- See Toggling Ceph dashboard features in the Red Hat Ceph Storage Dashboard Guide for more information.

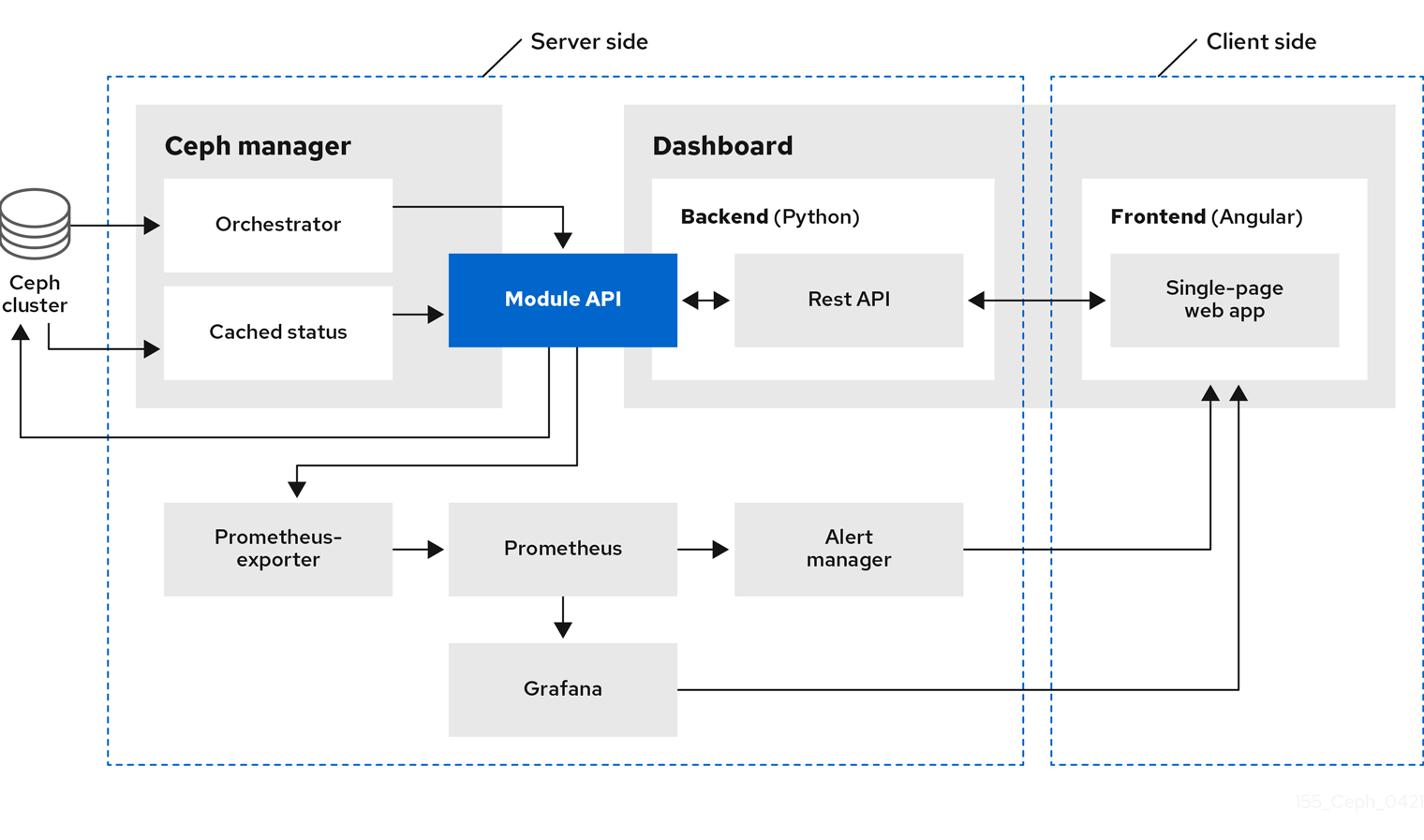

1.3. Red Hat Ceph Storage Dashboard architecture

The Dashboard architecture depends on the Ceph manager dashboard plugin and other components. See the diagram below to understand how they work together.