Deploying AMQ Broker on OpenShift

For Use with AMQ Broker 7.6

Abstract

Chapter 1. Introduction to AMQ Broker on OpenShift Container Platform

Red Hat AMQ Broker 7.6 is available as a containerized image that is provided for use with OpenShift Container Platform (OCP) 3.11 and later.

AMQ Broker is based on Apache ActiveMQ Artemis. It provides a message broker that is JMS-compliant. After you have set up the initial broker pod, you can quickly deploy duplicates by using OpenShift Container Platform features.

AMQ Broker on OCP provides similar functionality to Red Hat AMQ Broker, but some aspects of the functionality need to be configured specifically for use with OpenShift Container Platform.

1.1. Version compatibility and support

For details about OpenShift Container Platform image version compatibility, see:

1.2. Unsupported features

Master-slave-based high availability

High availability (HA) achieved by configuring master and slave pairs is not supported. Instead, when pods are scaled down, HA is provided in OpenShift by using the scaledown controller, which enables message migration.

External clients that connect to a cluster of brokers, either through the OpenShift proxy or by using bind ports, may need to be configured for HA accordingly. In a clustered scenario, a broker informs certain clients of the host and port information of all brokers in the cluster. Because these addresses are accessible only from within OpenShift, certain client features either do not work or must be disabled.

Client configuration

Core JMS clients

Because external Core Protocol JMS clients do not support HA or any type of failover, the connection factories must be configured with useTopologyForLoadBalancing=false.

AMQP clients

AMQP clients do not support failover lists.
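Connection URLs for Apache ActiveMQ Artemis Core clients accept connection factory parameters as URL query parameters, so one way to apply the setting above is to add it to the URL that a client uses. The following Python sketch shows the idea. The helper name and the host broker.example.com are hypothetical, and the sketch only rewrites a URL string; it does not configure a real client.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def disable_topology_load_balancing(url: str) -> str:
    """Return url with useTopologyForLoadBalancing=false as a query parameter.

    Hypothetical helper: existing query parameters are kept, and any existing
    useTopologyForLoadBalancing value is overridden.
    """
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    params["useTopologyForLoadBalancing"] = "false"
    return urlunsplit(parts._replace(query=urlencode(params)))


print(disable_topology_load_balancing("tcp://broker.example.com:61616"))
# tcp://broker.example.com:61616?useTopologyForLoadBalancing=false
print(disable_topology_load_balancing("tcp://broker.example.com:61616?sslEnabled=true"))
# tcp://broker.example.com:61616?sslEnabled=true&useTopologyForLoadBalancing=false
```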

Durable subscriptions in a cluster

When a durable subscription is created, it is represented as a durable queue on the broker to which the client is connected. When a cluster runs within OpenShift, the client does not know on which broker the durable subscription queue has been created. If the subscription is durable and the client reconnects, there is currently no way for the load balancer to reconnect the client to the same node. In this case, the client might connect to a different broker and create a duplicate subscription queue. For this reason, using durable subscriptions with a cluster of brokers is not recommended.

Chapter 2. Deploying AMQ Broker on OpenShift Container Platform using an Operator

2.1. Overview of the AMQ Broker Operator

Kubernetes (and, by extension, OpenShift Container Platform) includes features such as secret handling, load balancing, service discovery, and autoscaling that enable you to build complex distributed systems. Operators are programs that enable you to package, deploy, and manage Kubernetes applications. Often, Operators automate common or complex tasks.

Commonly, Operators are intended to provide:

- Consistent, repeatable installations

- Health checks of system components

- Over-the-air (OTA) updates

- Managed upgrades

Operators use Kubernetes extension mechanisms called Custom Resource Definitions and corresponding Custom Resources to ensure that your custom objects look and act just like native, built-in Kubernetes objects. Custom Resource Definitions and Custom Resources are how you specify the configuration of the OpenShift objects that you plan to deploy.

Previously, you could use only application templates to deploy AMQ Broker on OpenShift Container Platform. While templates are effective for creating an initial deployment, they do not provide a mechanism for updating the deployment. Operators enable you to make changes while your broker instances are running, because they are always listening for changes to your Custom Resources, where you specify your configuration. When you make changes to a Custom Resource, the Operator reconciles the changes with the existing broker installation in your project, and makes it reflect the changes you have made.
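The reconcile behavior can be illustrated with a deliberately simplified Python model. This is not the AMQ Broker Operator's code (the real Operator watches the Kubernetes API); the names BrokerDeployment and reconcile are hypothetical, and only the deployment size is modeled. The model shows why the values in your CR win over manual changes: on every pass, the observed state is compared with the CR and any drift is corrected.

```python
from dataclasses import dataclass


@dataclass
class BrokerDeployment:
    """Hypothetical, simplified view of what is currently running."""
    actual_size: int


def reconcile(desired_size: int, deployment: BrokerDeployment) -> list[str]:
    """Move the running deployment toward the size requested in the CR.

    Returns a description of each step taken; an empty list means that the
    running state already matches the CR.
    """
    steps = []
    while deployment.actual_size < desired_size:
        deployment.actual_size += 1
        steps.append(f"scale up to {deployment.actual_size}")
    while deployment.actual_size > desired_size:
        deployment.actual_size -= 1
        steps.append(f"scale down to {deployment.actual_size}")
    return steps


# The CR asks for 3 brokers, but the deployment was manually scaled to 2.
deployment = BrokerDeployment(actual_size=2)
print(reconcile(desired_size=3, deployment=deployment))  # ['scale up to 3']
# Nothing is left to do on the next pass: the state matches the CR.
print(reconcile(desired_size=3, deployment=deployment))  # []
```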

2.2. Overview of Custom Resource Definitions

In general, a Custom Resource Definition (CRD) is a schema of configuration items that you can modify for a custom OpenShift object deployed with an Operator. An accompanying Custom Resource (CR) file enables you to specify values for configuration items in the CRD. If you are an Operator developer, what you expose through a CRD essentially becomes the API for how a deployed object is configured and used. Because Kubernetes automatically exposes the CRD through its API, you can access the CRD directly with ordinary HTTP requests, for example by using curl. Like the kubectl command, the Operator interacts with Kubernetes by sending HTTP requests to the Kubernetes API.

The main broker CRD for AMQ Broker 7.6 is the broker_activemqartemis_crd file in the deploy/crds directory of the archive that you download and extract when installing the Operator. This CRD enables you to configure a broker deployment in a given OpenShift project. The other CRDs in the deploy/crds directory are for configuring addresses and for the Operator to use when instantiating a scaledown controller.

When deployed, each CRD is a separate controller, running independently within the Operator.

For a complete configuration reference for each CRD see:

2.2.1. Sample broker Custom Resources

The AMQ Broker Operator archive that you download and extract during installation includes sample Custom Resource (CR) files in the deploy/crs directory. These sample CR files enable you to:

- Deploy a minimal broker without SSL or clustering.

- Define addresses.

The broker Operator archive that you download and extract also includes CRs for example deployments in the deploy/examples directory, as listed below.

- artemis-basic-deployment.yaml: Basic broker deployment.

- artemis-persistence-deployment.yaml: Broker deployment with persistent storage.

- artemis-cluster-deployment.yaml: Deployment of clustered brokers.

- artemis-persistence-cluster-deployment.yaml: Deployment of clustered brokers with persistent storage.

- artemis-ssl-deployment.yaml: Broker deployment with SSL security.

- artemis-ssl-persistence-deployment.yaml: Broker deployment with SSL security and persistent storage.

- artemis-aio-journal.yaml: Use of asynchronous I/O (AIO) with the broker journal.

- address-queue-create.yaml: Address and queue creation.

The procedures in the following sections show you how to use the Operator and CRs to create some container-based broker deployments on OpenShift Container Platform. When you have successfully completed the procedures, you will have the Operator running in an individual Pod. Each broker instance that you create will run in a separate StatefulSet containing a Pod in the project. You will use a dedicated CR to define addresses in your broker deployments.

You cannot create more than one broker deployment in a given OpenShift project by deploying multiple broker CR instances. However, when you have created a broker deployment in a project, you can deploy multiple CR instances for addresses.
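The rule above can be expressed as a small validation check on the CRs that you plan to deploy to one project. In this Python sketch, each CR is a plain dictionary, as it would be after parsing the YAML. The kind ActiveMQArtemis comes from the sample broker CR; ActiveMQArtemisAddress is assumed here to be the kind used by the address CRs. The function name is hypothetical, and the second check simply reflects the order described above (broker deployment first, then addresses).

```python
from collections import Counter


def check_project_crs(crs: list[dict]) -> list[str]:
    """Return problems with the CRs planned for one OpenShift project.

    Assumes broker CRs use kind ActiveMQArtemis and address CRs use kind
    ActiveMQArtemisAddress. At most one broker CR is allowed; any number of
    address CRs may be deployed once the broker deployment exists.
    """
    kinds = Counter(cr.get("kind") for cr in crs)
    problems = []
    if kinds["ActiveMQArtemis"] > 1:
        problems.append("more than one ActiveMQArtemis CR in the project")
    if kinds["ActiveMQArtemisAddress"] and not kinds["ActiveMQArtemis"]:
        problems.append("address CRs found, but no broker deployment")
    return problems


planned = [
    {"kind": "ActiveMQArtemis", "metadata": {"name": "ex-aao"}},
    {"kind": "ActiveMQArtemisAddress", "metadata": {"name": "address-a"}},
    {"kind": "ActiveMQArtemisAddress", "metadata": {"name": "address-b"}},
]
print(check_project_crs(planned))  # []
```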

2.3. Installing the AMQ Broker Operator using the CLI

The procedures in this section show how to use the OpenShift command-line interface (CLI) to install and deploy the latest version of the Operator for AMQ Broker 7.6 in a given OpenShift project. In subsequent procedures, you use this Operator to deploy some broker instances.

For an alternative method of installing the AMQ Broker Operator that uses the OperatorHub graphical interface, see Section 2.4, “Managing the AMQ Broker Operator using the Operator Lifecycle Manager”.

- Deploying the Custom Resource Definitions (CRDs) that accompany the AMQ Broker Operator requires cluster administrator privileges for your OpenShift cluster. When the Operator is deployed, non-administrator users can create broker instances via corresponding Custom Resources (CRs). To enable regular users to deploy CRs, the cluster administrator must first assign roles and permissions to the CRDs. For more information, see Creating cluster roles for Custom Resource Definitions in the OpenShift Container Platform documentation.

- If you previously deployed the Operator for AMQ Broker 7.4 (that is, version 0.6), you must remove all CRDs in your cluster and the main broker Custom Resource (CR) in your OpenShift project before installing the latest version of the Operator for AMQ Broker 7.6. When you have installed the latest version of the Operator in your project, you can deploy CRs that correspond to the latest CRDs to create a new broker deployment. These steps are described in the procedures in this section.

- If you previously deployed the Operator for AMQ Broker 7.5 (that is, version 0.9), you can upgrade your project to use the latest version of the AMQ Broker Operator without removing the existing CRDs in your cluster. However, as part of this upgrade, you must delete the main broker CR from your project. These steps are described in Section 4.1.2, “Upgrading the Operator using the CLI”.

- When you update your cluster with the latest CRDs (required as part of a new Operator installation or an upgrade), this update affects all projects in the cluster. In particular, any broker Pods previously deployed from version 0.6 or version 0.9 of the AMQ Broker Operator become unable to update their status in the OpenShift Container Platform web console. When you click the Logs tab of a running broker Pod, you see messages in the log indicating that 'UpdatePodStatus' has failed. Despite this update failure, the broker Pods and broker Operator in that project continue to work as expected. To fix this issue for an affected project, that project must also be updated to use the latest version of the AMQ Broker Operator, either through a new installation or an upgrade.
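The version-dependent preparation described in the preceding list can be summarized as a lookup. This Python sketch is only a reading aid: the function name and the wording of each step are hypothetical, and the procedures referenced in this chapter remain the authoritative instructions.

```python
from typing import Optional


def preparation_steps(previous_operator_version: Optional[str]) -> list[str]:
    """Summarize the preparation for moving a project to the AMQ Broker 7.6 Operator.

    previous_operator_version is the Operator version already deployed:
    "0.6" (AMQ Broker 7.4), "0.9" (AMQ Broker 7.5), or None for a new installation.
    """
    if previous_operator_version is None:
        return ["deploy the latest CRDs", "deploy the latest Operator"]
    if previous_operator_version == "0.6":
        return [
            "delete the main broker CR from the project",
            "delete the earlier Operator from the project",
            "remove all AMQ Broker CRDs from the cluster",
            "deploy the latest CRDs",
            "deploy the latest Operator",
        ]
    if previous_operator_version == "0.9":
        return [
            "delete the main broker CR from the project",
            "upgrade the Operator as described in Section 4.1.2 (existing CRDs need not be removed first)",
        ]
    raise ValueError(f"no path documented here for Operator version {previous_operator_version}")


for version in (None, "0.6", "0.9"):
    print(version, preparation_steps(version))
```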

2.3.1. Getting the Operator code

This procedure shows you how to access and prepare the code you need to install the latest version of the Operator for AMQ Broker 7.6.

Procedure

- In your web browser, navigate to the AMQ Broker Software Downloads page.

- In the Version drop-down box, ensure that the value is set to the latest Broker version, 7.6.0. Next to AMQ Broker 7.6 Operator Installation Files, click Download.

Download of the amq-broker-operator-7.6.0-ocp-install-examples.zip compressed archive automatically begins.

When the download has completed, move the archive to your chosen installation directory. The following example moves the archive to a directory called /broker/operator.

$ sudo mv amq-broker-operator-7.6.0-ocp-install-examples.zip /broker/operator

In your chosen installation directory, extract the contents of the archive. For example:

$ cd /broker/operator
$ sudo unzip amq-broker-operator-7.6.0-ocp-install-examples.zip

Switch to the directory for the Operator archive that you extracted. For example:

$ cd amq-broker-operator-7.6.0-ocp-install-examples

Log in to OpenShift Container Platform as a cluster administrator. For example:

$ oc login -u system:admin

Specify the project in which you want to install the Operator. You can create a new project or switch to an existing one.

Create a new project:

$ oc new-project <project_name>

Or, switch to an existing project:

$ oc project <project_name>

Specify a service account to use with the Operator.

- In the deploy directory of the Operator archive that you extracted, open the service_account.yaml file.

- Ensure that the kind element is set to ServiceAccount.

- In the metadata section, assign a custom name to the service account, or use the default name. The default name is amq-broker-operator.

Create the service account in your project.

$ oc create -f deploy/service_account.yaml

Specify a role name for the Operator.

- Open the role.yaml file. This file specifies the resources that the Operator can use and modify.

- Ensure that the kind element is set to Role.

- In the metadata section, assign a custom name to the role, or use the default name. The default name is amq-broker-operator.

Create the role in your project.

$ oc create -f deploy/role.yaml

Specify a role binding for the Operator. The role binding binds the previously created service account to the Operator role, based on the names you specified.

Open the role_binding.yaml file. Ensure that the name values for ServiceAccount and Role match those specified in the service_account.yaml and role.yaml files. For example:

metadata:
  name: amq-broker-operator
subjects:
- kind: ServiceAccount
  name: amq-broker-operator
roleRef:
  kind: Role
  name: amq-broker-operator

Create the role binding in your project.

$ oc create -f deploy/role_binding.yaml
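To catch a name mismatch before running the oc create commands, you could compare the three files programmatically. This Python sketch assumes that service_account.yaml, role.yaml, and role_binding.yaml have already been parsed into dictionaries (for example, with a YAML library, which is not shown). The function name is hypothetical.

```python
def binding_matches(service_account: dict, role: dict, role_binding: dict) -> bool:
    """Check that role_binding refers to the given service account and role by name.

    Each argument is a manifest already parsed into a dict. In a Kubernetes
    RoleBinding, subjects is a list and roleRef is a single mapping.
    """
    sa_name = service_account["metadata"]["name"]
    role_name = role["metadata"]["name"]
    subject_ok = any(
        subject.get("kind") == "ServiceAccount" and subject.get("name") == sa_name
        for subject in role_binding.get("subjects", [])
    )
    role_ref = role_binding.get("roleRef", {})
    role_ok = role_ref.get("kind") == "Role" and role_ref.get("name") == role_name
    return subject_ok and role_ok


service_account = {"kind": "ServiceAccount", "metadata": {"name": "amq-broker-operator"}}
role = {"kind": "Role", "metadata": {"name": "amq-broker-operator"}}
role_binding = {
    "metadata": {"name": "amq-broker-operator"},
    "subjects": [{"kind": "ServiceAccount", "name": "amq-broker-operator"}],
    "roleRef": {"kind": "Role", "name": "amq-broker-operator"},
}
print(binding_matches(service_account, role, role_binding))  # True
```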

2.3.2. Deploying the AMQ Broker Operator using the CLI

The procedure in this section shows how to use the OpenShift command-line interface (CLI) to deploy the latest version of the Operator for AMQ Broker 7.6 in your OpenShift project.

If you previously deployed the Operator for AMQ Broker 7.5, you can upgrade your project to use the latest version of the Operator for AMQ Broker 7.6 without performing a new deployment. For more information, see Section 4.1.2, “Upgrading the Operator using the CLI”.

Prerequisites

- You have already prepared your OpenShift project for the Operator deployment. See Section 2.3.1, “Getting the Operator code”.

- Starting in AMQ Broker 7.3, you use a new version of the Red Hat Container Registry to access container images. This new version of the registry requires you to become an authenticated user before you can access images. Before you can follow the procedure in this section, you must first complete the steps described in Red Hat Container Registry Authentication.

If you intend to deploy brokers with persistent storage and do not have container-native storage in your OpenShift cluster, you need to manually provision persistent volumes and ensure that they are available to be claimed by the Operator. For example, if you want to create a cluster of two brokers with persistent storage (that is, by setting persistenceEnabled=true in your Custom Resource), you need to have two persistent volumes available. By default, each broker instance requires storage of 2 GiB.

If you specify persistenceEnabled=false in your Custom Resource, the deployed brokers use ephemeral storage. Ephemeral storage means that every time you restart the broker Pods, any existing data is lost.

For more information about provisioning persistent storage in OpenShift Container Platform, see Understanding persistent storage in the OpenShift Container Platform documentation.
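The sizing rule above is simple arithmetic: with persistenceEnabled=true, each broker claims its own persistent volume, and by default each broker requires 2 GiB. The following Python sketch (the function name is hypothetical) computes how many volumes, and how much storage in total, must be available before you deploy. It uses the default per-broker size unless you pass a different value.

```python
def persistent_volumes_needed(size: int, persistence_enabled: bool,
                              gib_per_broker: int = 2) -> tuple[int, int]:
    """Return (number of PVs, total GiB) that must be available for the Operator to claim.

    size is the number of brokers in the deployment. With persistence disabled,
    the brokers use ephemeral storage, so no persistent volumes are needed.
    """
    if not persistence_enabled:
        return 0, 0
    return size, size * gib_per_broker


print(persistent_volumes_needed(size=2, persistence_enabled=True))   # (2, 4)
print(persistent_volumes_needed(size=3, persistence_enabled=True))   # (3, 6)
print(persistent_volumes_needed(size=2, persistence_enabled=False))  # (0, 0)
```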

Procedure

In the OpenShift Container Platform web console, open the project in which you want your broker deployment.

If you created a new project, it is currently empty. Observe that there are no deployments, StatefulSets, Pods, Services, or Routes.

If you deployed an earlier version of the AMQ Broker Operator in the project, remove the main broker Custom Resource (CR) from the project. Deleting the main CR removes the existing broker deployment in the project. For example:

$ oc delete -f deploy/crs/broker_v1alpha1_activemqartemis_cr.yaml

If you deployed an earlier version of the AMQ Broker Operator in the project, delete this Operator instance. For example:

$ oc delete -f deploy/operator.yaml

If you deployed Custom Resource Definitions (CRDs) in your OpenShift cluster for an earlier version of the AMQ Broker Operator, remove these CRDs from the cluster. For example:

$ oc delete -f deploy/crds/broker_v1alpha1_activemqartemis_crd.yaml
$ oc delete -f deploy/crds/broker_v1alpha1_activemqartemisaddress_crd.yaml
$ oc delete -f deploy/crds/broker_v1alpha1_activemqartemisscaledown_crd.yaml

Deploy the CRDs that are included in the deploy/crds directory of the Operator archive that you downloaded and extracted. You must install the latest CRDs in your OpenShift cluster before deploying and starting the Operator.

Deploy the main broker CRD.

$ oc create -f deploy/crds/broker_activemqartemis_crd.yaml

Deploy the addressing CRD.

$ oc create -f deploy/crds/broker_activemqartemisaddress_crd.yaml

Deploy the scaledown controller CRD.

$ oc create -f deploy/crds/broker_activemqartemisscaledown_crd.yaml

Link the pull secret associated with the account used for authentication in the Red Hat Container Registry with the default, deployer, and builder service accounts for your OpenShift project.

$ oc secrets link --for=pull default <secret-name>
$ oc secrets link --for=pull deployer <secret-name>
$ oc secrets link --for=pull builder <secret-name>

Note: In OpenShift Container Platform 4.1 or later, you can also use the web console to associate a pull secret with a project in which you want to deploy container images such as the AMQ Broker Operator. To do this, click Administration → Service Accounts. Specify the pull secret associated with the account that you use for authentication in the Red Hat Container Registry.

In the deploy directory of the Operator archive that you downloaded and extracted, open the operator.yaml file. Update the image value under spec.template.spec.containers with the full path to the latest Operator image for AMQ Broker 7.6 in the Red Hat Container Registry.

spec:
  template:
    spec:
      containers:
        image: registry.redhat.io/amq7/amq-broker-rhel7-operator:0.13

Deploy the Operator.

$ oc create -f deploy/operator.yaml

In your OpenShift project, the amq-broker-operator image that you deployed starts in a new Pod.

The information on the Events tab of the new Pod confirms that OpenShift has deployed the Operator image you specified, assigned a new container to a node in your OpenShift cluster, and started the new container.

In addition, if you click the Logs tab within the Pod, the output should include lines resembling the following:

...
{"level":"info","ts":1553619035.8302743,"logger":"kubebuilder.controller","msg":"Starting Controller","controller":"activemqartemisaddress-controller"}
{"level":"info","ts":1553619035.830541,"logger":"kubebuilder.controller","msg":"Starting Controller","controller":"activemqartemis-controller"}
{"level":"info","ts":1553619035.9306898,"logger":"kubebuilder.controller","msg":"Starting workers","controller":"activemqartemisaddress-controller","worker count":1}
{"level":"info","ts":1553619035.9311671,"logger":"kubebuilder.controller","msg":"Starting workers","controller":"activemqartemis-controller","worker count":1}

The preceding output confirms that the newly deployed Operator is communicating with Kubernetes, that the controllers for the broker and addressing are running, and that these controllers have started some workers.
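If you save the Operator log to a file (for example, with oc logs <operator-pod-name>), a short script can look for the same confirmation. This Python sketch parses one JSON object per line, skips lines that are not valid JSON, and reports which controllers have logged "Starting workers". It relies only on the msg and controller fields that appear in the sample output; the function name is hypothetical.

```python
import json


def controllers_with_workers(log_text: str) -> set[str]:
    """Return the names of controllers that logged "Starting workers"."""
    started = set()
    for line in log_text.splitlines():
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip "..." and other non-JSON lines
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or malformed entries
        if entry.get("msg") == "Starting workers":
            started.add(entry.get("controller"))
    return started


sample = """...
{"level":"info","ts":1553619035.8302743,"logger":"kubebuilder.controller","msg":"Starting Controller","controller":"activemqartemisaddress-controller"}
{"level":"info","ts":1553619035.830541,"logger":"kubebuilder.controller","msg":"Starting Controller","controller":"activemqartemis-controller"}
{"level":"info","ts":1553619035.9306898,"logger":"kubebuilder.controller","msg":"Starting workers","controller":"activemqartemisaddress-controller","worker count":1}
{"level":"info","ts":1553619035.9311671,"logger":"kubebuilder.controller","msg":"Starting workers","controller":"activemqartemis-controller","worker count":1}"""

expected = {"activemqartemis-controller", "activemqartemisaddress-controller"}
print(expected <= controllers_with_workers(sample))  # True
```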

It is recommended that you deploy only a single instance of the AMQ Broker Operator in a given OpenShift project. Specifically, setting the replicas element of your Operator deployment to a value greater than 1 or deploying the Operator more than once in the same project is not recommended.

Additional resources

- For an alternative method of installing the AMQ Broker Operator that uses the OperatorHub graphical interface, see Section 2.4, “Managing the AMQ Broker Operator using the Operator Lifecycle Manager”.

2.4. Managing the AMQ Broker Operator using the Operator Lifecycle Manager

2.4.1. Overview of the Operator Lifecycle Manager

In OpenShift Container Platform 4.0 and later, the Operator Lifecycle Manager (OLM) helps users install, update, and generally manage the lifecycle of all Operators and their associated services running across their clusters. It is part of the Operator Framework, an open source toolkit designed to manage Kubernetes native applications (Operators) in an effective, automated, and scalable way.

The OLM runs by default in OpenShift Container Platform 4.0 and later, and helps cluster administrators to install, upgrade, and grant access to Operators running on their cluster. The OpenShift Container Platform web console provides management screens for cluster administrators to install Operators, as well as grant specific projects access to use the catalog of Operators available on the cluster.

OperatorHub is the graphical interface that OpenShift cluster administrators use to discover, install, and upgrade Operators. With one click, these Operators can be pulled from OperatorHub, installed on the cluster, and managed by the OLM, ready for engineering teams to self-service manage the software in development, test, and production environments.

When you have installed the AMQ Broker Operator in OperatorHub, you can deploy Custom Resources (CRs) to create broker deployments such as standalone and clustered brokers.

2.4.2. Installing the AMQ Broker Operator in OperatorHub

If you do not see the latest version of the Operator for AMQ Broker 7.6 automatically available in OperatorHub, follow this procedure to manually install the latest version of the Operator in OperatorHub.

Procedure

- In your web browser, navigate to the AMQ Broker Software Downloads page.

- In the Version drop-down box, ensure that the value is set to the latest Broker version, 7.6.0. Next to AMQ Broker 7.6 Operator Installation Files, click Download.

Download of the amq-broker-operator-7.6.0-ocp-install-examples.zip compressed archive automatically begins.

When the download has completed, move the archive to your chosen installation directory. The following example moves the archive to a directory called /broker/operator.

$ sudo mv amq-broker-operator-7.6.0-ocp-install-examples.zip /broker/operator

In your chosen installation directory, extract the contents of the archive. For example:

$ cd /broker/operator
$ unzip amq-broker-operator-7.6.0-ocp-install-examples.zip

Switch to the directory for the Operator archive that you extracted. For example:

$ cd amq-broker-operator-7.6.0-ocp-install-examples

Log in to OpenShift Container Platform as a cluster administrator. For example:

$ oc login -u system:admin

Install the Operator in OperatorHub.

$ oc create -f deploy/catalog_resources/amq-broker-operatorsource.yaml

After a few minutes, the AMQ Broker Operator is available in the OperatorHub section of the OpenShift Container Platform web console.

2.4.3. Deploying the AMQ Broker Operator from OperatorHub

This procedure shows how to deploy the latest version of the Operator for AMQ Broker 7.6 from OperatorHub to a specified OpenShift project.

Prerequisites

- The latest version of the AMQ Broker Operator must be available in OperatorHub. For more information, see Section 2.4.2, “Installing the AMQ Broker Operator in OperatorHub”.

Procedure

- From the Projects drop-down menu in the OpenShift Container Platform web console, select the project in which you want to install the AMQ Broker Operator.

- In the web console, click Operators → OperatorHub.

In OperatorHub, click the AMQ Broker Operator.

If more than one instance of the AMQ Broker Operator is installed in OperatorHub, click each instance and review the information pane that opens. The major version of the latest Operator for AMQ Broker 7.6 is 0.13.

- To install the Operator, click Install.

On the Operator Subscription page:

- Under Installation Mode, ensure that the radio button entitled A specific namespace on the cluster is selected. From the drop-down menu, select the project in which you want to install the AMQ Broker Operator.

- Under Update Channel, ensure that the radio button entitled current-76 is selected. This option specifies the channel used to track and receive updates for the Operator. The current-76 value specifies that the AMQ Broker 7.6 channel is used.

- Under Approval Strategy, ensure that the radio button entitled Automatic is selected. This option specifies that updates to the Operator do not require manual approval for installation to take place.

- Click Subscribe.

When the Operator installation is complete, the Installed Operators page opens. You should see that the AMQ Broker Operator is installed in the project namespace that you specified.

Additional resources

- To learn how to create a broker deployment in a project that has the AMQ Broker Operator installed, see Section 2.5, “Deploying a basic broker”.

2.5. Deploying a basic broker

The following procedure shows how to deploy a basic broker instance in your OpenShift project when you have installed the AMQ Broker Operator.

You cannot create more than one broker deployment in a given OpenShift project by deploying multiple Custom Resource (CR) instances. However, when you have created a broker deployment in a project, you can deploy multiple CR instances for addresses.

Prerequisites

You have already installed the AMQ Broker Operator.

- To use the OpenShift command-line interface (CLI) to install the AMQ Broker Operator, see Section 2.3, “Installing the AMQ Broker Operator using the CLI”.

- To use the OperatorHub graphical interface to install the AMQ Broker Operator, see Section 2.4, “Managing the AMQ Broker Operator using the Operator Lifecycle Manager”.

- Starting in AMQ Broker 7.3, you use a new version of the Red Hat Container Registry to access container images. This new version of the registry requires you to become an authenticated user before you can access images. Before you can follow the procedure in this section, you must first complete the steps described in Red Hat Container Registry Authentication.

Procedure

When you have successfully installed the Operator, the Operator is running and listening for changes related to your CRs. This example procedure shows how to use a CR to deploy a basic broker in your project.

In the deploy/crs directory of the Operator archive that you downloaded and extracted, open the broker_activemqartemis_cr.yaml file. This file is an instance of a basic broker CR. The contents of the file look as follows:

apiVersion: broker.amq.io/v2alpha2
kind: ActiveMQArtemis
metadata:
  name: ex-aao
  application: ex-aao-app
spec:
  deploymentPlan:
    size: 2
    image: registry.redhat.io/amq7/amq-broker:7.6
    ...

- The deploymentPlan.size value specifies the number of brokers to deploy. The default value of 2 specifies a clustered broker deployment of two brokers. However, to deploy a single broker instance, change the value to 1.

- The deploymentPlan.image value specifies the container image to use to launch the broker. Ensure that this value specifies the latest version of the AMQ Broker 7.6 broker container image in the Red Hat Container Registry, as shown below.

image: registry.redhat.io/amq7/amq-broker:7.6

- Save any changes that you made to the CR.

Deploy the CR.

$ oc create -f deploy/crs/broker_activemqartemis_cr.yaml

In the OpenShift Container Platform web console, click Workloads → Stateful Sets (OpenShift Container Platform 4.1 or later) or Applications → Stateful Sets (OpenShift Container Platform 3.11). You see a new Stateful Set called ex-aao-ss.

Expand the ex-aao-ss Stateful Set section. If you set deploymentPlan.size to 1, you see one Pod, corresponding to the single broker that you defined in the CR.

On the Events tab of the running Pod, you see that the broker container has started. The Logs tab shows that the broker itself is running.
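As an alternative to editing the sample YAML file by hand, you could generate the CR. Because oc create -f accepts JSON as well as YAML, the following Python sketch prints a single-broker variant of the sample CR as JSON, using only the apiVersion, kind, metadata.name, and spec.deploymentPlan fields shown in this section. The function name and the script and output file names in the comments are hypothetical; the sample file's application field and the settings elided with ... are not reproduced.

```python
import json


def basic_broker_cr(name: str, size: int,
                    image: str = "registry.redhat.io/amq7/amq-broker:7.6") -> dict:
    """Build a minimal ActiveMQArtemis CR with only the fields shown in the sample file."""
    if size < 0:
        raise ValueError("deploymentPlan.size cannot be negative")
    return {
        "apiVersion": "broker.amq.io/v2alpha2",
        "kind": "ActiveMQArtemis",
        "metadata": {"name": name},
        "spec": {"deploymentPlan": {"size": size, "image": image}},
    }


if __name__ == "__main__":
    # Redirect the output to a file, then deploy it, for example:
    #   python basic_broker_cr.py > broker_single.json
    #   oc create -f broker_single.json
    print(json.dumps(basic_broker_cr("ex-aao", size=1), indent=2))
```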

Additional resources

- For a complete configuration reference for the main broker Custom Resource Definition (CRD), see Section 10.1, “Custom Resource configuration reference”.

- To learn how to connect a running broker to the AMQ Broker management console, see Section 2.7.5, “Connecting to the AMQ Broker management console”.

2.6. Applying Custom Resource changes to running broker deployments

The following are some important things to note about applying Custom Resource (CR) changes to running broker deployments:

- You cannot dynamically update the persistenceEnabled attribute in your CR. To change this attribute, scale your cluster down to zero brokers. Delete the existing CR. Then, recreate and redeploy the CR with your changes, also specifying a deployment size.

- If the image attribute in your CR uses a floating tag such as 7.6, then your deployment automatically pulls new image versions as they become available in the Red Hat Container Registry, provided that the imagePullPolicy attribute in your Stateful Set is set to Always. For example, if your deployment currently uses a broker image version, 7.6-2, and a newer broker image version, 7.6-3, becomes available, then your deployment automatically pulls and uses the new image version. To use the new image, each broker in the deployment is restarted. If you have multiple brokers in your deployment, brokers are restarted one at a time.

- The value of the deploymentPlan.size attribute in your CR overrides any change you make to the size of your broker deployment via the oc scale command. For example, suppose you use oc scale to change the size of a deployment from three brokers to two, but the value of deploymentPlan.size in your CR is still 3. In this case, OpenShift initially scales the deployment down to two brokers. However, when the scaledown operation is complete, the Operator restores the deployment to three brokers, as specified in the CR.

- As described in Section 2.3.2, “Deploying the AMQ Broker Operator using the CLI”, if you create a broker deployment with persistent storage (that is, by setting persistenceEnabled=true in your CR), you might need to provision persistent volumes (PVs) for the AMQ Broker Operator to claim for your broker Pods. If you scale down the size of your broker deployment, the Operator releases any PVs that it previously claimed for the broker Pods that are now shut down. However, if you remove your broker deployment by deleting your CR, the AMQ Broker Operator does not release persistent volume claims for any broker Pods that are still in the deployment. In addition, these unreleased PVs are unavailable to any new deployment. In this case, you need to manually release the volumes. For more information, see Releasing volumes in the OpenShift documentation.

- During an active scaling event, any further changes that you apply are queued by the Operator and executed only when scaling is complete. For example, suppose that you scale the size of your deployment down from four brokers to one. Then, while scaledown is taking place, you also change the values of the broker administrator user name and password. In this case, the Operator queues the user name and password changes until the deployment is running with one active broker.

- All Custom Resource changes (apart from changing the size of your deployment, or changing the value of the expose attribute for acceptors, connectors, or the console) cause existing brokers to be restarted. If you have multiple brokers in your deployment, only one broker restarts at a time.
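The restart behavior described in this list can be condensed into a small decision function. This Python sketch is a reading aid, not Operator code: the function name is hypothetical, and attribute paths such as acceptors.expose are shorthand chosen for the example rather than exact CR field paths. It does not model the queuing of changes during an active scaling event.

```python
# Shorthand attribute paths used only in this sketch, not exact CR field paths.
NO_RESTART = {
    "deploymentPlan.size",
    "acceptors.expose",
    "connectors.expose",
    "console.expose",
}


def effect_of_change(changed: set[str]) -> str:
    """Classify a set of changed CR attributes according to the rules above.

    - "recreate": persistenceEnabled changed; it cannot be updated dynamically,
      so scale to zero, delete the CR, and redeploy it.
    - "no restart": only the deployment size or expose settings changed.
    - "rolling restart": anything else; brokers restart one at a time.
    """
    if "deploymentPlan.persistenceEnabled" in changed:
        return "recreate"
    if changed <= NO_RESTART:
        return "no restart"
    return "rolling restart"


print(effect_of_change({"deploymentPlan.size"}))                      # no restart
print(effect_of_change({"deploymentPlan.image"}))                     # rolling restart
print(effect_of_change({"acceptors.expose", "deploymentPlan.size"}))  # no restart
```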

2.7. Configuring Operator-based broker deployments for client connections

2.7.1. Configuring acceptors

To enable client connections to a broker Pod in your OpenShift deployment, you define acceptors on the Pod. Acceptors define how the broker accepts connections. You define acceptors in the Custom Resource (CR) used for your broker deployment. When you create an acceptor, you specify information such as the messaging protocols to enable on the acceptor, and the port on the broker Pod to use for these protocols.

The following procedure shows how to define a new acceptor in the CR for your broker deployment.

Prerequisites

- To configure acceptors, your broker deployment must be based on version 0.9 or greater of the AMQ Broker Operator. For more information about installing the latest version of the Operator, see Section 2.3, “Installing the AMQ Broker Operator using the CLI”.

- The information in this section applies only to broker deployments based on the AMQ Broker Operator. If you used application templates to create your broker deployment, you cannot define individual protocol-specific acceptors. For more information, see Chapter 6, "Connecting external clients to templates-based broker deployments".

Procedure

- In the deploy/crs directory of the Operator archive that you downloaded and extracted during your initial installation, open the broker_activemqartemis_cr.yaml Custom Resource (CR).

- In the acceptors element, add a named acceptor. Typically, you also specify a minimal set of attributes such as the protocols to be used by the acceptor and the port on the broker Pod to expose for those protocols. An example is shown below.

spec:
  ...
  acceptors:
  - name: amqp_acceptor
    protocols: amqp
    port: 5672
    sslEnabled: false
  ...

The preceding example shows configuration of a simple AMQP acceptor. The acceptor exposes port 5672 to AMQP clients.
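To reason about which acceptors a deployment ends up with, the acceptors element can be modeled as a list of dictionaries. The Python sketch below applies two defaults described in Section 2.7.1.1: if no acceptors are defined, a single Core acceptor on port 61616 is used, and expose defaults to false. The fallback entry's name, default-core, and its protocols value are placeholders invented for this sketch, and treating the default acceptor's expose value as false is an assumption. The function names are hypothetical.

```python
from typing import Optional

# Placeholder for the acceptor created by default when the CR defines none.
# The name and protocols value are invented for this sketch.
DEFAULT_ACCEPTOR = {"name": "default-core", "protocols": "core", "port": 61616}


def effective_acceptors(cr_acceptors: Optional[list[dict]]) -> list[dict]:
    """Apply the documented defaults to the acceptors element of a CR.

    - No acceptors defined: a single Core acceptor on port 61616 is used.
    - expose defaults to False (assumed here to apply to the default acceptor too).
    """
    acceptors = cr_acceptors or [DEFAULT_ACCEPTOR]
    return [{"expose": False, **acceptor} for acceptor in acceptors]


def externally_exposed(cr_acceptors: Optional[list[dict]]) -> list[str]:
    """Return the names of acceptors that are also reachable from outside OpenShift."""
    return [a["name"] for a in effective_acceptors(cr_acceptors) if a["expose"]]


acceptors = [{"name": "amqp_acceptor", "protocols": "amqp", "port": 5672, "sslEnabled": False}]
print(externally_exposed(acceptors))                          # []
print(externally_exposed([dict(acceptors[0], expose=True)]))  # ['amqp_acceptor']
print(effective_acceptors(None)[0]["port"])                   # 61616
```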

2.7.1.1. Additional acceptor configuration notes

This section describes some additional things to note about acceptor configurations.

- You can define acceptors either for internal clients (that is, client applications in the same OpenShift cluster as the broker Pod), or for both internal and external clients (that is, applications outside OpenShift). To also expose an acceptor to external clients, set the expose parameter of the acceptor configuration to true. The default value of this parameter is false.

- A single acceptor can accept multiple client connections, up to a maximum limit specified by the connectionsAllowed parameter of your acceptor configuration.

- If you do not define any acceptors in your CR, the broker Pods in your deployment use a single acceptor, created by default, on port 61616. This default acceptor has only the Core protocol specified.

- Port 8161 is automatically exposed on the broker Pod for use by the AMQ Broker management console. Within the OpenShift network, this port can be accessed via the headless service that runs in your broker deployment. For more information, see Section 2.7.5.1, “Accessing the broker management console”.

- You can enable SSL on the acceptor by setting sslEnabled to true. You can specify additional information such as:

  - The secret name used to store SSL credentials (required).
  - The cipher suites and protocols to use for SSL communication.
  - Whether the acceptor uses two-way SSL, that is, mutual authentication between the broker and the client.

- If the acceptor that you define uses SSL, then the SSL credentials used by the acceptor must be stored in a secret. You can create your own secret and specify this secret name in the sslSecret parameter of your acceptor configuration. If you do not specify a custom secret name in the sslSecret parameter, the acceptor uses a default secret. The default secret name uses the format <CustomResourceName>-<AcceptorName>-secret. For example, ex-aao-amqp-secret.

  The SSL credentials required in the secret are broker.ks, which must be a base64-encoded keystore; client.ts, which must be a base64-encoded truststore; and keyStorePassword and trustStorePassword, which are passwords specified in raw text. This requirement is the same for any connectors that you configure. For information about generating credentials for SSL connections, see Section 2.7.3, “Generating credentials for SSL connections”.
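The default naming rule for acceptor secrets can be expressed as a small shell helper. This is a minimal sketch for a POSIX shell; the function name acceptor_secret_name is hypothetical and is not part of the Operator or the oc client.

```shell
# Hypothetical helper: derive the default SSL secret name that an acceptor
# uses when sslSecret is not set, following the documented format
# <CustomResourceName>-<AcceptorName>-secret.
acceptor_secret_name() {
  cr_name=$1        # name of the broker Custom Resource, for example ex-aao
  acceptor_name=$2  # name of the acceptor, for example amqp
  printf '%s-%s-secret\n' "$cr_name" "$acceptor_name"
}
```

For example, assuming a CR named ex-aao, an acceptor named amqp, and credential files generated as described in Section 2.7.3, you could create the secret with oc create secret generic, which stores the contents of each --from-file argument base64-encoded in the secret:

$ oc create secret generic "$(acceptor_secret_name ex-aao amqp)" --from-file=broker.ks --from-file=client.ts --from-literal=keyStorePassword=<password> --from-literal=trustStorePassword=<password>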

Additional resources

- For a complete configuration reference for the main broker Custom Resource Definition (CRD), including configuration of acceptors, see Section 10.1, “Custom Resource configuration reference”.

2.7.2. Connecting to the broker from internal and external clients

- An internal client can connect to the broker Pod by specifying an address in the format <PodName>:<AcceptorPortNumber>. OpenShift DNS successfully resolves addresses in this format because the Stateful Sets created by Operator-based broker deployments provide stable Pod names.

- When you expose an acceptor to external clients, a dedicated Service and Route are automatically created. To see the Routes configured on a given broker Pod, select the Pod in the OpenShift Container Platform web console and click the Routes tab.

- An external client can connect to the broker by specifying the full host name of the Route created for the acceptor. You can use a curl command to test external access to this full host name. For example:

  $ curl https://ex-aao-0-svc-my_project.my_openshift_domain

  The full host name for the Route must resolve to the node that’s hosting the OpenShift router. The OpenShift router uses the host name to determine where to send the traffic inside the OpenShift internal network.

  By default, the OpenShift router listens on port 80 for non-secured (that is, non-SSL) traffic and port 443 for secured (that is, SSL-encrypted) traffic. For an HTTP connection, the router automatically directs traffic to port 443 if you specify a secure connection URL (that is, https), or to port 80 if you specify a non-secure connection URL (that is, http).

  By contrast, a messaging client that uses TCP must explicitly specify the port number as part of the connection URL. For example:

  tcp://ex-aao-0-svc-my_project.my_openshift_domain:443

- As an alternative to using a Route, an OpenShift administrator can configure a NodePort to connect to a broker Pod from a client outside OpenShift. The NodePort should map to one of the protocol-specific ports specified by the acceptors configured for the broker. By default, NodePorts are in the range 30000 to 32767, which means that a NodePort typically does not match the intended port on the broker Pod. To connect from a client outside OpenShift to the broker via a NodePort, you specify a URI in the format <Protocol>://<OCPNodeIP>:<NodePortNumber>.
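The three address forms described above can be sketched as shell helpers that only format strings. This is a sketch under stated assumptions: the function names are hypothetical, the Pod name, host name, and IP address values are examples, and the port check uses the default NodePort range, which an administrator may have changed on your cluster.

```shell
# Hypothetical helpers; they only assemble address strings and do not contact OpenShift.

# Internal client: <PodName>:<AcceptorPortNumber>
internal_address() {
  printf '%s:%s\n' "$1" "$2"
}

# External TCP client through a Route: the port must be given explicitly.
# The OpenShift router listens on port 443 for SSL traffic by default.
route_tcp_url() {
  printf 'tcp://%s:443\n' "$1"
}

# External client through a NodePort: <Protocol>://<OCPNodeIP>:<NodePortNumber>.
# Rejects ports outside the default NodePort range (30000-32767).
nodeport_url() {
  protocol=$1 node_ip=$2 node_port=$3
  if [ "$node_port" -lt 30000 ] || [ "$node_port" -gt 32767 ]; then
    echo "NodePort $node_port is outside the default range 30000-32767" >&2
    return 1
  fi
  printf '%s://%s:%s\n' "$protocol" "$node_ip" "$node_port"
}
```

For example, internal_address ex-aao-ss-0 5672 prints ex-aao-ss-0:5672, which matches the AMQP acceptor port used earlier in this section.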

Additional resources

For more information about using methods such as Routes and NodePorts for communicating from outside an OpenShift cluster with services running in the cluster, see:

- Configuring ingress cluster traffic overview (OpenShift Container Platform 4.1 and later)

- Getting Traffic into a Cluster (OpenShift Container Platform 3.11)

2.7.3. Generating credentials for SSL connections

For SSL connections, AMQ Broker requires a broker keystore, a client keystore, and a client truststore that includes the broker certificate. This procedure shows you how to generate the credentials. The procedure uses Java Keytool, a package included with the Java Development Kit.

Procedure

Generate a self-signed certificate for the broker keystore.

$ keytool -genkey -alias broker -keyalg RSA -keystore broker.ks

Export the certificate, so that it can be shared with clients.

$ keytool -export -alias broker -keystore broker.ks -file broker_cert

Generate a self-signed certificate for the client keystore.

$ keytool -genkey -alias client -keyalg RSA -keystore client.ks

Create a client truststore that imports the broker certificate.

$ keytool -import -alias broker -keystore client.ts -file broker_cert

Use the broker keystore file to create a secret to store the SSL credentials, as shown in the example below.

$ oc secrets new amq-app-secret broker.ks client.ts

Add the secret to the service account that you created when installing the Operator, as shown in the example below.

$ oc secrets add sa/amq-broker-operator secret/amq-app-secret
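If you need to script the keytool steps above, a non-interactive variant might look like the following sketch. The single shared password, the placeholder distinguished names (CN=broker, CN=client), and the 365-day validity are assumptions for illustration, not values required by AMQ Broker. The options -genkeypair, -exportcert, and -importcert are the current keytool names for -genkey, -export, and -import; generate_ssl_credentials is a hypothetical function.

```shell
# Hypothetical helper: run the keytool steps above without interactive prompts.
# Assumes one password for every store (keytool requires at least 6 characters).
generate_ssl_credentials() {
  dir=$1   # output directory
  pass=$2  # store and key password
  mkdir -p "$dir" || return 1
  # Broker keystore with a self-signed key pair
  keytool -genkeypair -alias broker -keyalg RSA -keystore "$dir/broker.ks" \
    -storepass "$pass" -keypass "$pass" -dname "CN=broker" -validity 365 || return 1
  # Export the broker certificate so that it can be shared with clients
  keytool -exportcert -alias broker -keystore "$dir/broker.ks" \
    -storepass "$pass" -file "$dir/broker_cert" || return 1
  # Client keystore with its own self-signed key pair
  keytool -genkeypair -alias client -keyalg RSA -keystore "$dir/client.ks" \
    -storepass "$pass" -keypass "$pass" -dname "CN=client" -validity 365 || return 1
  # Client truststore that imports the broker certificate; -noprompt skips the trust confirmation
  keytool -importcert -alias broker -keystore "$dir/client.ts" \
    -storepass "$pass" -file "$dir/broker_cert" -noprompt
}
```

For example, generate_ssl_credentials ./ssl-creds <password> would write broker.ks, broker_cert, client.ks, and client.ts to a hypothetical ./ssl-creds directory, from which you can create the secret as shown in the steps above.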

2.7.4. Networking services in your broker deployments

On the Networking pane of the OpenShift Container Platform web console for your broker deployment, there are two running services: a headless service and a ping service. The default name of the headless service uses the format <Custom Resource name>-hdls-svc, for example, ex-aao-hdls-svc. The default name of the ping service uses the format <Custom Resource name>-ping-svc, for example, ex-aao-ping-svc.

The headless service provides access to ports 8161 and 61616 on each broker Pod. Port 8161 is used by the broker management console, and port 61616 is used for broker clustering.

The ping service is used by the brokers for discovery, and enables brokers to form a cluster within the OpenShift environment. Internally, this service exposes port 8888.
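To check from the command line that both services exist, a small helper can print their default names for a given Custom Resource. This is a sketch; default_service_names is a hypothetical function that only reproduces the naming formats described above.

```shell
# Hypothetical helper: print the default headless and ping service names
# for a broker Custom Resource, one per line.
default_service_names() {
  cr_name=$1  # for example, ex-aao
  printf '%s-hdls-svc\n%s-ping-svc\n' "$cr_name" "$cr_name"
}
```

For example, assuming a CR named ex-aao:

$ for svc in $(default_service_names ex-aao); do oc get service "$svc"; done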

2.7.5. Connecting to the AMQ Broker management console

The broker hosts its own management console at port 8161. Each broker Pod in your deployment has a Service and Route that provide access to the console.

The following procedure shows how to connect a running broker instance to the AMQ Broker management console.

Prerequisites

- You have deployed a basic broker using the AMQ Broker Operator. For more information, see Deploying a basic broker.

2.7.5.1. Accessing the broker management console

Each broker Pod in your deployment has a Service that provides access to the console. The default name of this Service uses the format <Custom Resource name>-wconsj-<broker Pod ordinal>-svc, for example, ex-aao-wconsj-0-svc. Each Service has a corresponding Route that uses the format <Custom Resource name>-wconsj-<broker Pod ordinal>-svc-rte, for example, ex-aao-wconsj-0-svc-rte.
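The naming formats above can be expanded for a whole deployment with a short loop. This is a minimal sketch: console_names is hypothetical, and it assumes that broker Pod ordinals start at 0 and run up to the deployment size minus one, as Stateful Set ordinals do.

```shell
# Hypothetical helper: list the console Service and Route names for a
# deployment of <size> brokers, one "<service> <route>" pair per line.
console_names() {
  cr_name=$1 size=$2
  i=0
  while [ "$i" -lt "$size" ]; do
    printf '%s-wconsj-%s-svc %s-wconsj-%s-svc-rte\n' "$cr_name" "$i" "$cr_name" "$i"
    i=$((i + 1))
  done
}
```

The host name of one of these Routes can then be read with oc, for example:

$ oc get route ex-aao-wconsj-0-svc-rte -o jsonpath='{.spec.host}'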

This procedure shows you how to access the AMQ Broker management console for a running broker instance.

Procedure

In the OpenShift Container Platform web console, click Networking → Routes (OpenShift Container Platform 4.1 or later) or Applications → Routes (OpenShift Container Platform 3.11).

On the Routes pane, you see a Route corresponding to the wconsj Service.

- Under Hostname, note the complete URL. You need to specify this URL to access the console.

In a web browser, enter the host name URL.

- If your console configuration does not use SSL, specify http in the URL. In this case, DNS resolution of the host name directs traffic to port 80 of the OpenShift router.

- If your console configuration uses SSL, specify https in the URL. In this case, your browser defaults to port 443 of the OpenShift router. This enables a successful connection to the console if the OpenShift router also uses port 443 for SSL traffic, which the router does by default.


To log in to the management console, enter the user name and password specified in the adminUser and adminPassword parameters of your broker deployment Custom Resource. If there are no values specified for adminUser and adminPassword, follow the instructions in Accessing management console login credentials to retrieve the credentials required to log in to the console.
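The choice between http and https in the steps above can be captured in a helper. This is a sketch only: console_url is hypothetical, and it assumes that the router uses its default ports (80 for non-SSL traffic, 443 for SSL traffic), so no port appears in the URL.

```shell
# Hypothetical helper: build the console URL from a Route host name.
# http  -> router's non-SSL port (80 by default)
# https -> router's SSL port (443 by default)
console_url() {
  host=$1 ssl=$2  # ssl: "true" if the console configuration uses SSL
  if [ "$ssl" = "true" ]; then
    printf 'https://%s\n' "$host"
  else
    printf 'http://%s\n' "$host"
  fi
}
```

For example, assuming the default Route name for the first broker of a CR named ex-aao:

$ console_url "$(oc get route ex-aao-wconsj-0-svc-rte -o jsonpath='{.spec.host}')" false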

2.7.5.2. Accessing management console login credentials

If you did not specify a value for adminUser and adminPassword in your broker Custom Resource (CR), the Operator automatically generates the broker user name and password (required to log in to the AMQ Broker management console) and stores these credentials in a secret. The default secret name has a format of <Custom Resource name>-credentials-secret, for example, ex-aao-credentials-secret.

This procedure shows you how to access the login credentials required to log in to the management console.

Procedure

See the complete list of secrets in your OpenShift project.

- From the OpenShift Container Platform web console, click Workload → Secrets (OpenShift Container Platform 4.1 or later) or Resources → Secrets (OpenShift Container Platform 3.11).

From the command line:

$ oc get secrets

Open the appropriate secret to reveal the console login credentials.

- From the OpenShift Container Platform web console, click the secret that includes your broker Custom Resource instance in its name. To see the base64-encoded user name and password values, click the YAML tab (OpenShift Container Platform 4.1 or later) or Actions → Edit YAML (OpenShift Container Platform 3.11).

From the command line:

$ oc edit secret <my_custom_resource_name-credentials-secret>

2.8. Operator-based broker deployment examples

2.8.1. Deploying clustered brokers

If there are two or more broker Pods running in your project, the Pods automatically form a broker cluster. A clustered configuration enables brokers to connect to each other and redistribute messages as needed, for load balancing.

The following procedure shows you how to deploy clustered brokers. By default, the brokers in this deployment use on-demand load balancing, meaning that brokers forward messages only to other brokers that have matching consumers.

Prerequisites

- A basic broker is already deployed. See Deploying a basic broker.

Procedure

- In the deploy/crs directory of the Operator archive that you downloaded and extracted, open the broker_activemqartemis_cr.yaml Custom Resource file.

- For a minimally-sized clustered deployment, ensure that the value of deploymentPlan.size is 2.

At the command line, apply the change:

$ oc apply -f deploy/crs/broker_activemqartemis_cr.yaml

In the OpenShift Container Platform web console, a second Pod starts in your project, for the additional broker that you specified in your CR. By default, the two brokers running in your project are clustered.

Open the Logs tab of each Pod. The logs show that OpenShift has established a cluster connection bridge on each broker. Specifically, the log output includes a line like the following:

targetConnector=ServerLocatorImpl (identity=(Cluster-connection-bridge::ClusterConnectionBridge@6f13fb88
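Instead of reading each Logs tab, you can search the log text from the command line. This is a minimal sketch that assumes the ClusterConnectionBridge marker in the log line above reliably indicates an established cluster connection bridge; has_cluster_bridge is a hypothetical helper.

```shell
# Hypothetical helper: read broker log text on standard input and succeed
# if it contains the ClusterConnectionBridge marker.
has_cluster_bridge() {
  grep -q 'ClusterConnectionBridge'
}
```

For example, assuming a broker Pod named ex-aao-ss-0:

$ oc logs ex-aao-ss-0 | has_cluster_bridge && echo 'cluster bridge found'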

2.8.2. Creating queues in a broker cluster

The following procedure shows you how to use a Custom Resource Definition (CRD) and example Custom Resource (CR) to add and remove a queue from a broker cluster deployed using an Operator.

Prerequisites

- You have already deployed a broker cluster. See Deploying clustered brokers.

Procedure

Deploy the addressing CRD.

$ oc create -f deploy/crds/broker_activemqartemisaddress_crd.yaml

An example CR file, broker_activemqartemisaddress_cr.yaml, was included in the Operator archive that you downloaded and extracted. The example Custom Resource includes the following:

spec:
  # Add fields here
  addressName: myAddress0
  queueName: myQueue0
  routingType: anycast

With your broker cluster already deployed and running via the Operator, use the example Custom Resource to create an address on every running broker in your cluster.

$ oc create -f deploy/crs/broker_activemqartemisaddress_cr.yaml

Deploying the example CR creates an address myAddress0 with a queue named myQueue0 that has an anycast routing type. This address is created on every running broker.

Note: To create multiple addresses and/or queues in your broker cluster, you need to create separate CR files and deploy them individually, specifying new address and/or queue names in each case.

Note: If you add brokers to your cluster after deploying the addressing CR, the new brokers will not have the address you previously created. In this case, you need to delete the addresses and redeploy the addressing CR.

To delete queues created from the example CR, use the following command:

$ oc delete -f deploy/crs/broker_activemqartemisaddress_cr.yaml
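Because each address and queue pair needs its own CR file, the copies can be generated from the example instead of being edited by hand. This is a minimal sketch under stated assumptions: the example file uses the values myAddress0 and myQueue0, its metadata.name is the only line indented by exactly two spaces that starts with name:, and a numeric suffix is appended to that name so that each CR is unique. The function make_address_crs and the output file names are hypothetical.

```shell
# Hypothetical helper: write <count> CR files derived from the example,
# with address myAddress<i>, queue myQueue<i>, and a -<i> suffix on metadata.name.
make_address_crs() {
  template=$1 outdir=$2 count=$3
  mkdir -p "$outdir" || return 1
  i=1
  while [ "$i" -le "$count" ]; do
    sed -e "s/myAddress0/myAddress$i/" \
        -e "s/myQueue0/myQueue$i/" \
        -e 's/^\(  name: .*\)$/\1-'"$i"'/' \
        "$template" > "$outdir/address_${i}_cr.yaml" || return 1
    i=$((i + 1))
  done
}
```

For example, make_address_crs deploy/crs/broker_activemqartemisaddress_cr.yaml address-crs 2 writes two files that you then deploy individually:

$ for f in address-crs/*.yaml; do oc create -f "$f"; done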

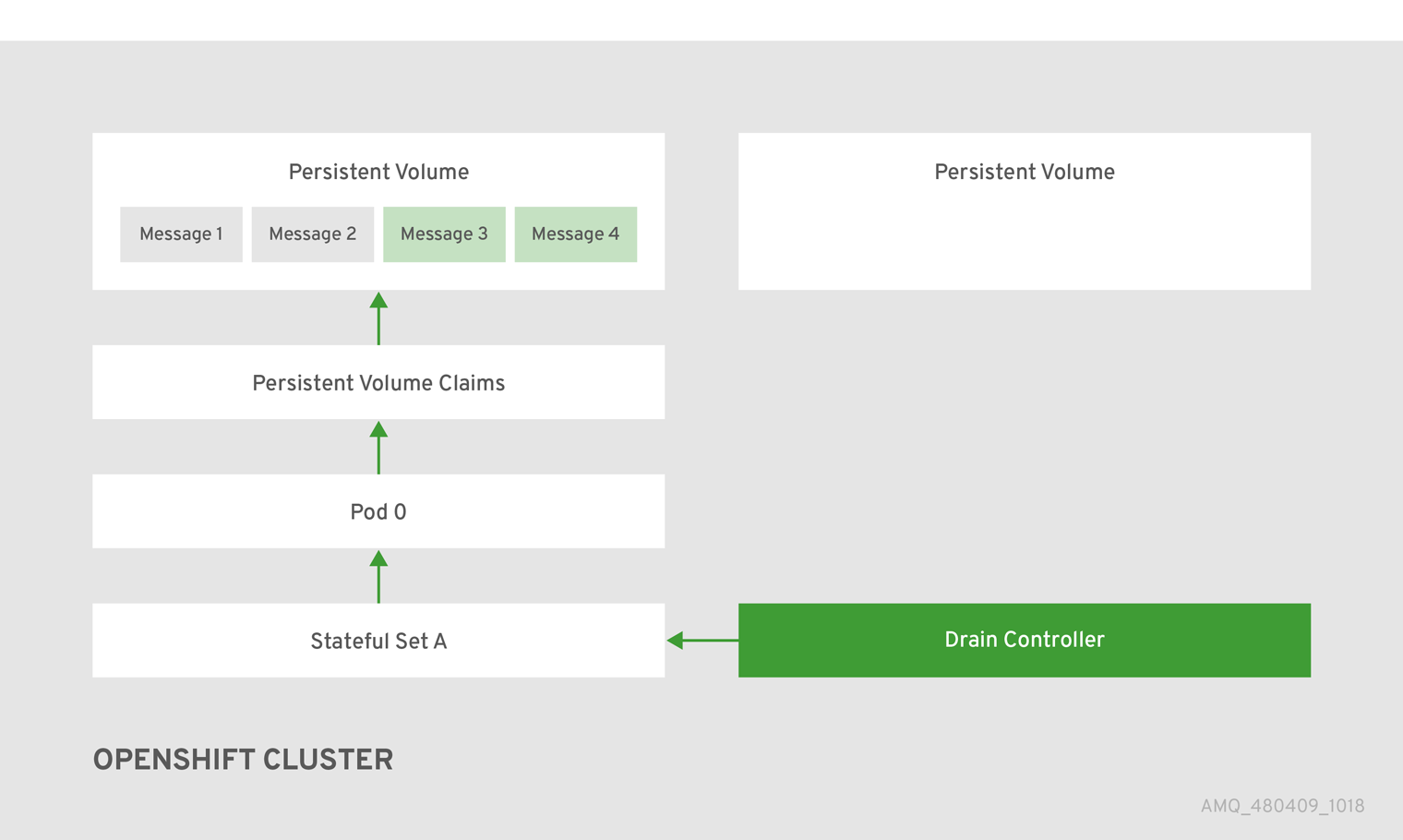

2.9. Migrating messages upon scaledown

To migrate messages upon scaledown of your broker deployment, use the main broker Custom Resource (CR) to enable message migration. The AMQ Broker Operator automatically runs a dedicated scaledown controller to execute message migration when you scale down a clustered broker deployment.

With message migration enabled, the scaledown controller within the Operator detects shutdown of a broker Pod and starts a drainer Pod to execute message migration. The drainer Pod connects to one of the other live broker Pods in the cluster and migrates messages over to that live broker Pod. After migration is complete, the scaledown controller shuts down.

A scaledown controller operates only within a single OpenShift project. The controller cannot migrate messages between brokers in separate projects.

If you scale a broker deployment down to 0 (zero), message migration does not occur, since there is no running broker Pod to which the messaging data can be migrated. However, if you scale a deployment down to zero brokers and then back up to only some of the brokers that were in the original deployment, drainer Pods are started for the brokers that remain shut down.

The following example procedure shows the behavior of the scaledown controller.

Prerequisites

- You already have a basic broker deployment. See Deploying a basic broker.

- You should understand how message migration works. For more information, see Message migration.

Procedure

- In the deploy/crs directory of the Operator repository that you originally downloaded and extracted, open the main broker CR, broker_activemqartemis_cr.yaml.

- In the main broker CR, set messageMigration and persistenceEnabled to true.

These settings mean that when you later scale down the size of your clustered broker deployment, the Operator automatically starts a scaledown controller and migrates messages to a broker Pod that is still running.

In your existing broker deployment, verify which Pods are running.

$ oc get pods

You see output that looks like the following.

activemq-artemis-operator-8566d9bf58-9g25l   1/1   Running   0     3m38s
ex-aao-ss-0                                  1/1   Running   0     112s
ex-aao-ss-1                                  1/1   Running   0     8s

The preceding output shows that there are three Pods running: one for the broker Operator itself, and a separate Pod for each broker in the deployment.

Log in to each Pod and send some messages to each broker.

Supposing that Pod ex-aao-ss-0 has a cluster IP address of 172.17.0.6, run the following command:

$ /opt/amq-broker/bin/artemis producer --url tcp://172.17.0.6:61616 --user admin --password admin

Supposing that Pod ex-aao-ss-1 has a cluster IP address of 172.17.0.7, run the following command:

$ /opt/amq-broker/bin/artemis producer --url tcp://172.17.0.7:61616 --user admin --password admin

The preceding commands create a queue called TEST on each broker and add 1000 messages to each queue.

Scale the cluster down from two brokers to one.

- Open the main broker CR, broker_activemqartemis_cr.yaml.

- In the CR, set deploymentPlan.size to 1.

At the command line, apply the change:

$ oc apply -f deploy/crs/broker_activemqartemis_cr.yaml

You see that the Pod ex-aao-ss-1 starts to shut down. The scaledown controller starts a new drainer Pod of the same name. This drainer Pod also shuts down after it migrates all messages from broker Pod ex-aao-ss-1 to the other broker Pod in the cluster, ex-aao-ss-0.


When the drainer Pod is shut down, check the message count on the TEST queue of broker Pod ex-aao-ss-0. You see that the number of messages in the queue is 2000, indicating that the drainer Pod successfully migrated 1000 messages from the broker Pod that shut down.
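The scaling steps in this procedure edit and re-apply broker_activemqartemis_cr.yaml. As an alternative, oc patch with a JSON merge patch can change deploymentPlan.size directly. The sketch below only builds the patch document; size_patch is hypothetical, and the resource name activemqartemis and the CR name ex-aao in the usage line are assumptions based on the examples in this chapter.

```shell
# Hypothetical helper: print a JSON merge patch that sets deploymentPlan.size.
# Rejects anything that is not a non-negative integer.
size_patch() {
  case $1 in
    ''|*[!0-9]*) echo "size must be a non-negative integer" >&2; return 1 ;;
  esac
  printf '{"spec":{"deploymentPlan":{"size":%s}}}\n' "$1"
}
```

For example:

$ oc patch activemqartemis ex-aao --type merge -p "$(size_patch 1)"

If you use this approach, also update deploymentPlan.size in broker_activemqartemis_cr.yaml; otherwise, the next oc apply of that file sets the size back to the value in the file.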

Chapter 3. Deploying AMQ Broker on OpenShift Container Platform using Application Templates

The procedures in this section show:

- How to install the AMQ Broker image streams and application templates

- How to prepare a templates-based broker deployment

- An example of using the OpenShift Container Platform web console to deploy a basic broker instance using an application template. For examples of deploying other broker configurations using templates, see Templates-based broker deployment examples.

3.1. Installing the image streams and application templates

The AMQ Broker on OpenShift Container Platform image streams and application templates are not available in OpenShift Container Platform by default. You must manually install them using the procedure in this section. When you have completed the manual installation, you can then instantiate a template that enables you to deploy a chosen broker configuration on your OpenShift cluster. For examples of creating various broker configurations in this way, see Deploying AMQ Broker on OpenShift Container Platform using application templates and Templates-based broker deployment examples.

Procedure

At the command line, log in to OpenShift as a cluster administrator (or as a user that has namespace-specific administrator access for the global openshift project namespace), for example:

$ oc login -u system:admin
$ oc project openshift

Using the openshift project makes the image stream and application templates that you install later in this procedure globally available to all projects in your OpenShift cluster. If you want to explicitly specify that image streams and application templates are imported to the openshift project, you can also add -n openshift as an optional parameter with the oc replace commands that you use later in the procedure.

As an alternative to using the openshift project (for example, if a cluster administrator is unavailable), you can log in to a specific OpenShift project to which you have administrator access and in which you want to create a broker deployment, for example:

$ oc login -u <USERNAME>
$ oc project <PROJECT_NAME>

Logging into a specific project makes the image stream and templates that you install later in this procedure available only in that project’s namespace.

Note: AMQ Broker on OpenShift Container Platform uses StatefulSet resources with all *-persistence*.yaml templates. For templates that are not *-persistence*.yaml, AMQ Broker uses Deployment resources. Both types of resources are Kubernetes-native resources that can consume image streams only from the same project namespace in which the template will be instantiated.

At the command line, run the following command to import the broker image streams to your project namespace. Using the --force option with the oc replace command updates the resources, or creates them if they don’t already exist.

$ oc replace --force -f \
https://raw.githubusercontent.com/jboss-container-images/jboss-amq-7-broker-openshift-image/76-7.6.0.GA/amq-broker-7-image-streams.yaml

Run the following command to update the AMQ Broker application templates.

$ for template in amq-broker-76-basic.yaml \
amq-broker-76-ssl.yaml \
amq-broker-76-custom.yaml \
amq-broker-76-persistence.yaml \
amq-broker-76-persistence-ssl.yaml \
amq-broker-76-persistence-clustered.yaml \
amq-broker-76-persistence-clustered-ssl.yaml;
do
oc replace --force -f \
https://raw.githubusercontent.com/jboss-container-images/jboss-amq-7-broker-openshift-image/76-7.6.0.GA/templates/${template}
done

3.2. Preparing a templates-based broker deployment

Prerequisites

- Before deploying a broker instance on OpenShift Container Platform, you must have installed the AMQ Broker image streams and application templates. For more information, see Installing the image streams and application templates.

- The following procedure assumes that the broker image stream and application templates you installed are available in the global openshift project. If you installed the image and application templates in a specific project namespace, then continue to use that project instead of creating a new project such as amq-demo.

Procedure

Use the command prompt to create a new project:

$ oc new-project amq-demo

Create a service account to be used for the AMQ Broker deployment:

$ echo '{"kind": "ServiceAccount", "apiVersion": "v1", "metadata": {"name": "amq-service-account"}}' | oc create -f -

Add the view role to the service account. The view role enables the service account to view all the resources in the amq-demo namespace, which is necessary for managing the cluster when using the OpenShift dns-ping protocol for discovering the broker cluster endpoints.

$ oc policy add-role-to-user view system:serviceaccount:amq-demo:amq-service-account

AMQ Broker requires a broker keystore, a client keystore, and a client truststore that includes the broker certificate. This example uses Java Keytool, a package included with the Java Development Kit, to generate dummy credentials for use with the AMQ Broker installation.

Generate a self-signed certificate for the broker keystore:

$ keytool -genkey -alias broker -keyalg RSA -keystore broker.ks

Export the certificate so that it can be shared with clients:

$ keytool -export -alias broker -keystore broker.ks -file broker_cert

Generate a self-signed certificate for the client keystore:

$ keytool -genkey -alias client -keyalg RSA -keystore client.ks

Create a client truststore that imports the broker certificate:

$ keytool -import -alias broker -keystore client.ts -file broker_cert

Use the broker keystore file to create the AMQ Broker secret:

$ oc create secret generic amq-app-secret --from-file=broker.ks

Add the secret to the service account created earlier:

$ oc secrets add sa/amq-service-account secret/amq-app-secret

3.3. Deploying a basic broker

The procedure in this section shows you how to deploy a basic broker that is ephemeral and does not support SSL.

This broker does not support SSL and is not accessible to external clients. Only clients running internally on the OpenShift cluster can connect to the broker. For examples of creating broker configurations that support SSL, see Templates-based broker deployment examples.

Prerequisites

- You have already prepared the broker deployment. See Preparing a templates-based broker deployment.

- The following procedure assumes that the broker image stream and application templates you installed in Installing the image streams and application templates are available in the global openshift project. If you installed the image and application templates in a specific project namespace, then continue to use that project instead of creating a new project such as amq-demo.

- Starting in AMQ Broker 7.3, you use a new version of the Red Hat Container Registry to access container images. This new version of the registry requires you to become an authenticated user before you can access images and pull them into an OpenShift project. Before following the procedure in this section, you must first complete the steps described in Red Hat Container Registry Authentication.

3.3.1. Creating the broker application

Procedure

Log in to the amq-demo project space, or another, existing project in which you want to deploy a broker.

$ oc login -u <USER_NAME>
$ oc project <PROJECT_NAME>

Create a new broker application, based on the template for a basic broker. The broker created by this template is ephemeral and does not support SSL.

$ oc new-app --template=amq-broker-76-basic \
   -p AMQ_PROTOCOL=openwire,amqp,stomp,mqtt,hornetq \
   -p AMQ_QUEUES=demoQueue \
   -p AMQ_ADDRESSES=demoTopic \
   -p AMQ_USER=amq-demo-user \
   -p AMQ_PASSWORD=password

The basic broker application template sets the environment variables shown in the following table.

Table 3.1. Basic broker application template

Environment variable | Display Name | Value | Description
AMQ_PROTOCOL | AMQ Protocols | openwire,amqp,stomp,mqtt,hornetq | The protocols to be accepted by the broker
AMQ_QUEUES | Queues | demoQueue | Creates an anycast queue called demoQueue
AMQ_ADDRESSES | Addresses | demoTopic | Creates an address (or topic) called demoTopic. By default, this address has no assigned routing type.
AMQ_USER | AMQ Username | amq-demo-user | User name that the client uses to connect to the broker
AMQ_PASSWORD | AMQ Password | password | Password that the client uses with the user name to connect to the broker

3.3.2. About sensitive credentials

In the AMQ Broker application templates, the values of the following environment variables are stored in a secret:

- AMQ_USER

- AMQ_PASSWORD

- AMQ_CLUSTER_USER (clustered broker deployments)

- AMQ_CLUSTER_PASSWORD (clustered broker deployments)

- AMQ_TRUSTSTORE_PASSWORD (SSL-enabled broker deployments)

- AMQ_KEYSTORE_PASSWORD (SSL-enabled broker deployments)

To retrieve and use the values for these environment variables, the AMQ Broker application templates access the secret specified in the AMQ_CREDENTIAL_SECRET environment variable. By default, the secret name specified in this environment variable is amq-credential-secret. Even if you specify a custom value for any of these variables when deploying a template, OpenShift Container Platform uses the value currently stored in the named secret. Furthermore, the application templates always use the default values stored in amq-credential-secret unless you edit the secret to change the values, or create and specify a new secret with new values. You can edit a secret using the OpenShift command-line interface, as shown in this example:

$ oc edit secrets amq-credential-secret

Values in the amq-credential-secret use base64 encoding. To decode a value in the secret, use a command that looks like this:

$ echo 'dXNlcl9uYW1l' | base64 --decode
user_name
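Editing a secret also requires encoding new values. The following minimal sketch pairs an encoder with the decoder shown above. It uses printf '%s' instead of echo, because echo appends a newline that would otherwise end up inside the encoded value (for example, echo 'user_name' | base64 yields dXNlcl9uYW1lCg==, not dXNlcl9uYW1l). The function names are hypothetical.

```shell
# Hypothetical helpers for values stored in amq-credential-secret.
# Encode without a trailing newline; tr removes any line wrapping added by base64.
encode_secret_value() {
  printf '%s\n' "$(printf '%s' "$1" | base64 | tr -d '\n')"
}

# Decode a base64-encoded value copied from the secret.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}
```

To change a value, paste the output of encode_secret_value into the corresponding field while running oc edit secrets amq-credential-secret.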

3.3.3. Deploying and starting the broker application

After the broker application is created, you need to deploy it. Deploying the application creates a Pod for the broker to run in.

Procedure

- Click Deployments in the OpenShift Container Platform web console.

- Click the broker-amq application.

Click Deploy.

Note: If the application does not deploy, you can check the configuration by clicking the Events tab. If something is incorrect, edit the deployment configuration by clicking the Actions button.

After you deploy the broker application, inspect the current state of the broker Pod.

- Click Deployment Configs.

Click the broker-amq Pod and then click the Logs tab to verify the state of the broker. You should see the queue previously created via the application template.

If the logs show that:

- The broker is running, skip to step 9 of this procedure.

- The broker logs have not loaded, and the Pod status shows ErrImagePull or ImagePullBackOff, your deployment configuration was not able to directly pull the specified broker image from the Red Hat Container Registry. In this case, continue to step 5 of this procedure.

To prepare the Pod for installation of the broker container image, scale the number of running brokers to 0.

- Click Deployment Configs → broker-amq.
- Click Actions → Edit Deployment Configs.
- In the deployment config .yaml file, set the value of the replicas attribute to 0.
- Click Save.
- The Pod restarts, with zero broker instances running.

Install the latest broker container image.

- In your web browser, navigate to the Red Hat Container Catalog.

- In the search box, enter AMQ Broker. Click Search.

- In the search results, click AMQ Broker. The amq7/amq-broker repository opens, with the most recent image version automatically selected. If you want to change to an earlier image version, click the Tags tab and choose another version tag.

- Click the Get This Image tab.

Under Authentication with registry tokens, review the on-page instructions in the Using OpenShift secrets section. The instructions describe how to add references to the broker image and the image pull secret name associated with the account used for authentication in the Red Hat Container Registry to your Pod deployment configuration file.

For example, to reference the broker image and pull secret in the broker-amq deployment configuration in the amq-demo project namespace, include lines that look like the following:

apiVersion: apps.openshift.io/v1
kind: DeploymentConfig
..
metadata:
  name: broker-amq
  namespace: amq-demo
..
spec:
  containers:
  - name: broker-amq
    image: 'registry.redhat.io/amq7/amq-broker:7.6'
  ..
  imagePullSecrets:
  - name: {PULL-SECRET-NAME}

- Click Save.

Import the latest broker image version to your project namespace. For example:

$ oc import-image amq7/amq-broker:7.6 --from=registry.redhat.io/amq7/amq-broker --confirm

Edit the broker-amq deployment config again, as previously described. Set the value of the replicas attribute back to its original value.

The broker Pod restarts, with all running brokers referencing the new broker image.

Click the Terminal tab to access a shell where you can start the broker and use the CLI to test sending and consuming messages.

sh-4.2$ ./broker/bin/artemis run
sh-4.2$ ./broker/bin/artemis producer --destination queue://demoQueue
Producer ActiveMQQueue[demoQueue], thread=0 Started to calculate elapsed time ...

Producer ActiveMQQueue[demoQueue], thread=0 Produced: 1000 messages
Producer ActiveMQQueue[demoQueue], thread=0 Elapsed time in second : 4 s
Producer ActiveMQQueue[demoQueue], thread=0 Elapsed time in milli second : 4584 milli seconds

sh-4.2$ ./broker/bin/artemis consumer --destination queue://demoQueue
Consumer:: filter = null
Consumer ActiveMQQueue[demoQueue], thread=0 wait until 1000 messages are consumed
Received 1000
Consumer ActiveMQQueue[demoQueue], thread=0 Consumed: 1000 messages
Consumer ActiveMQQueue[demoQueue], thread=0 Consumer thread finished

Alternatively, use the OpenShift client to access the shell using the Pod name, as shown in the following example.

# Get the Pod names and internal IP addresses
$ oc get pods -o wide

# Access a broker Pod by name
$ oc rsh <broker-pod-name>

Chapter 4. Upgrading broker deployments on OpenShift Container Platform

The procedures in this section show how to upgrade:

- The version for an existing instance of the AMQ Broker Operator, using both the OpenShift command-line interface (CLI) and the Operator Lifecycle Manager

- The broker container image for both Operator- and templates-based broker deployments

The procedures in this section show how to manually upgrade your image specifications between major versions, as represented by floating tags (for example, from 7.x to 7.y). When you specify a floating tag such as 7.y in your image specification, your deployment automatically pulls and uses a new minor image version (that is, 7.y-z) when it becomes available in the Red Hat Container Registry, provided that the imagePullPolicy attribute in your Stateful Set or Deployment Config is set to Always.

For example, suppose that the image attribute of your deployment specifies a floating tag of 7.6. If the deployment currently uses minor version 7.6-2, and a newer minor version, 7.6-3, becomes available in the registry, then your deployment automatically pulls and uses the new minor version. To use the new image, each broker in the deployment is restarted. If you have multiple brokers in your deployment, brokers are restarted one at a time.
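To check whether a deployment picks up new minor versions automatically, you can read back its image reference and pull policy. The following is a minimal sketch. It assumes that the broker container is the first container in the Pod template, so it works for a DeploymentConfig such as dc/broker-amq or for a StatefulSet created by the Operator. Both function names are hypothetical. floating_tag only illustrates the relationship described above, in which a minor version such as 7.6-3 belongs to the floating tag 7.6.

```shell
#!/bin/sh
# Sketch: inspect how a broker deployment tracks image updates.
# Assumption: the broker container is the first (index 0) container in
# the Pod template. show_image_policy and floating_tag are hypothetical names.

# Print the image reference and the imagePullPolicy of a workload, for
# example dc/broker-amq (templates) or statefulset/<name> (Operator).
show_image_policy() {
  oc get "$1" -o jsonpath='{.spec.template.spec.containers[0].image}{"\n"}{.spec.template.spec.containers[0].imagePullPolicy}{"\n"}'
}

# Reduce a minor version tag such as 7.6-3 to its floating tag (7.6) by
# removing everything from the first hyphen onward.
floating_tag() {
  printf '%s\n' "${1%%-*}"
}
```

If show_image_policy dc/broker-amq prints an image tagged 7.6 and a pull policy of Always, the deployment pulls new 7.6-z images as they are published.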

To upgrade an existing AMQ Broker deployment on OpenShift Container Platform 3.11 to run on OpenShift Container Platform 4.1 or later, you must first upgrade your OpenShift Container Platform installation and then perform a clean installation of AMQ Broker that matches your existing deployment. To perform a clean AMQ Broker installation, use one of these methods:

4.1. Upgrading an Operator-based broker deployment

The following procedures show you how to upgrade:

- The broker container image for an Operator-based broker deployment

- The Operator version used by your OpenShift project

4.1.1. Upgrading the broker container image for an Operator-based deployment

The following procedure shows how to upgrade the broker container image for a broker deployment based on the AMQ Broker Operator.

The procedure assumes that you used the Operator for AMQ Broker 7.5 (version 0.9) to create your previous broker deployment. The name of the main broker Custom Resource (CR) instance included with 0.9-x versions of the Operator was broker_v2alpha1_activemqartemis_cr.yaml.

You can also use instructions similar to those below to upgrade the broker container image for a deployment based on the Operator for AMQ Broker 7.4 (version 0.6). The name of the main broker Custom Resource (CR) instance included with 0.6-x versions of the Operator was broker_v1alpha1_activemqartemis_cr.yaml.

Prerequisites

- You have previously used the AMQ Broker Operator to create a broker deployment. For more information, see Deploying AMQ Broker on OpenShift Container Platform using an Operator.

Procedure

In the deploy/crs directory of the Operator archive that you downloaded and extracted during your previous installation, open the broker_v2alpha1_activemqartemis_cr.yaml Custom Resource (CR).

Edit the image element to specify the latest AMQ Broker 7.6 container image, as shown below.

image: registry.redhat.io/amq7/amq-broker:7.6

Apply the CR change.

$ oc apply -f deploy/crs/broker_v2alpha1_activemqartemis_cr.yaml

When you apply the CR change, each broker Pod in your deployment shuts down and then restarts using the new broker container image. If you have multiple brokers in your deployment, only one broker Pod shuts down and restarts at a time.
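You can combine the edit and apply steps above in a small script. The following is a minimal sketch. It assumes that you run it from the directory that contains deploy/crs and that the broker image line is the only line in the CR that starts with image:. The helper name upgrade_cr_image is hypothetical. sed -i.bak keeps a backup copy of the original CR and works with both GNU and BSD sed.

```shell
#!/bin/sh
# Sketch: point the broker CR at the AMQ Broker 7.6 image and reapply it.
# Assumption: the CR contains exactly one "image:" line (the broker image);
# every line made of optional spaces followed by "image:" is rewritten.
# upgrade_cr_image is a hypothetical name.
upgrade_cr_image() {
  cr_file="$1"   # e.g. deploy/crs/broker_v2alpha1_activemqartemis_cr.yaml
  new_image="${2:-registry.redhat.io/amq7/amq-broker:7.6}"

  # Keep the original indentation and replace only the value after "image:".
  sed -i.bak "s|^\([[:space:]]*image:\).*|\1 ${new_image}|" "$cr_file" || return 1

  # Applying the change triggers the rolling restart described above.
  oc apply -f "$cr_file"
}
```

After you run upgrade_cr_image deploy/crs/broker_v2alpha1_activemqartemis_cr.yaml, you can follow the broker Pods as they restart with oc get pods -w.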

4.1.2. Upgrading the Operator using the CLI

This procedure shows how to use the OpenShift command-line interface (CLI) to upgrade an installation of the Operator for AMQ Broker 7.5 (version 0.9) to the latest version for AMQ Broker 7.6.

- As part of the Operator upgrade, you must delete and then recreate any existing broker deployment in your project. These steps are described in the procedure that follows.

- You cannot directly upgrade an Operator installation for AMQ Broker 7.4 (version 0.6) to the latest version for AMQ Broker 7.6. The Custom Resource Definitions (CRDs) used by version 0.6 are not compatible with the latest version of the Operator. To upgrade your Operator from version 0.6, you must delete your previously deployed CRDs before performing a new installation of the Operator. When you have installed the new version of the Operator, you then need to recreate your broker deployments. For more information, see Section 2.3, “Installing the AMQ Broker Operator using the CLI”.

- For an alternative method of upgrading the AMQ Broker Operator that uses the OperatorHub graphical interface, see Section 4.1.3, “Upgrading the Operator using the Operator Lifecycle Manager”.
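You can remove the version 0.6 CRDs, as described above, from the CLI. The following is a minimal sketch. It assumes that all AMQ Broker Operator CRDs belong to the broker.amq.io API group, so verify the list with oc get crd before you delete anything. Deleting a CRD also deletes every custom resource of that type in all projects in the cluster. Run it only as a cluster administrator, and only when no other project still needs those CRDs. The helper name remove_broker_crds is hypothetical.

```shell
#!/bin/sh
# Sketch: delete the AMQ Broker Operator CRDs from the cluster.
# Assumption: the CRDs belong to the broker.amq.io API group.
# Requires cluster administrator rights and affects all projects.
# remove_broker_crds is a hypothetical name.
remove_broker_crds() {
  # "oc get crd -o name" prints one name per line, for example
  # customresourcedefinition.apiextensions.k8s.io/<plural>.<group>
  for crd in $(oc get crd -o name | grep '\.broker\.amq\.io$'); do
    oc delete "$crd" || return 1
  done
}
```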

Prerequisites

- You previously installed and deployed an instance of the Operator for AMQ Broker 7.5 (version 0.9).

Procedure

- In your web browser, navigate to the AMQ Broker Software Downloads page.

In the Version drop-down box, ensure that the value is set to the latest Broker version, 7.6.0. Next to AMQ Broker 7.6 Operator Installation Files, click Download.

Download of the amq-broker-operator-7.6.0-ocp-install-examples.zip compressed archive automatically begins.

When the download has completed, move the archive to your chosen installation directory. The following example moves the archive to a directory called /broker/operator.

sudo mv amq-broker-operator-7.6.0-ocp-install-examples.zip /broker/operator

In your chosen installation directory, extract the contents of the archive. For example:

cd /broker/operator
sudo unzip amq-broker-operator-7.6.0-ocp-install-examples.zip

Log in to OpenShift Container Platform as a cluster administrator. For example:

$ oc login -u system:admin

Switch to the OpenShift project in which you want to upgrade your Operator version.

$ oc project <project_name>

Delete the main CR for the existing broker deployment in your project. For example:

$ oc delete -f deploy/crs/broker_v2alpha1_activemqartemis_cr.yaml

Update the main broker CRD in your OpenShift cluster to the latest version included with AMQ Broker 7.6.

$ oc apply -f deploy/crds/broker_activemqartemis_crd.yaml

Note: You do not need to update your cluster with the latest versions of the CRDs for addressing or the scaledown controller. In AMQ Broker 7.6, these CRDs are fully compatible with those included with AMQ Broker 7.5.

In the deploy directory of the Operator archive that you downloaded and extracted during your previous installation, open the operator.yaml file.

Update the spec.containers.image attribute to specify the full path to the latest AMQ Broker Operator image for AMQ Broker 7.6 in the Red Hat Container Registry.

spec:
  template:
    spec:
      containers:
        image: registry.redhat.io/amq7/amq-broker-rhel7-operator:0.13

When you have updated the spec.containers.image attribute to specify your desired Operator version, apply the changes.

$ oc apply -f deploy/operator.yaml

OpenShift updates your project to use the latest Operator version.

- To recreate your previous broker deployment, deploy a new instance of the main broker CR in your project. For more information, see Section 2.5, “Deploying a basic broker”.
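You can collect the CLI steps of this procedure into one script. The following is a minimal sketch. It assumes that you run it from a directory whose deploy/ subdirectory contains the CR, CRD, and operator.yaml files named above. It also assumes that the Operator image line is the only image: line that mentions amq-broker-rhel7-operator. The helper name upgrade_operator_cli is hypothetical. Downloading the archive, logging in, and recreating the broker CR are not included.

```shell
#!/bin/sh
# Sketch: the CLI steps of the Operator upgrade in one function.
# Assumptions: run from the directory that contains deploy/, and only one
# "image:" line in operator.yaml refers to amq-broker-rhel7-operator.
# upgrade_operator_cli is a hypothetical name.
upgrade_operator_cli() {
  project="$1"
  operator_image="${2:-registry.redhat.io/amq7/amq-broker-rhel7-operator:0.13}"

  oc project "$project" || return 1
  # Remove the existing broker deployment; it is recreated afterwards.
  oc delete -f deploy/crs/broker_v2alpha1_activemqartemis_cr.yaml || return 1
  # Update the main broker CRD to the AMQ Broker 7.6 version.
  oc apply -f deploy/crds/broker_activemqartemis_crd.yaml || return 1
  # Point the Operator at the new Operator image, keeping the indentation.
  sed -i.bak "s|^\([[:space:]]*image:\).*amq-broker-rhel7-operator.*|\1 ${operator_image}|" deploy/operator.yaml || return 1
  oc apply -f deploy/operator.yaml
}
```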

4.1.3. Upgrading the Operator using the Operator Lifecycle Manager

This procedure shows how to use the Operator Lifecycle Manager (OLM) to upgrade an instance of the AMQ Broker Operator.

- In AMQ Broker 7.6, you cannot use the OLM to seamlessly upgrade a previous installation of the AMQ Broker Operator to the latest version for AMQ Broker 7.6. Instead, you must first uninstall the existing Operator and then install the latest version from OperatorHub, as described in the procedure that follows. As part of this upgrade, you must also delete and then recreate any existing broker deployment in your project.

- If you plan to upgrade an installation of the Operator for AMQ Broker 7.4 (version 0.6) to the latest version for AMQ Broker 7.6, you must delete the Custom Resource Definitions (CRDs) previously deployed in your cluster before performing a new installation of the Operator. These steps are described in the procedure that follows.

Procedure

Log in to OpenShift Container Platform as an administrator. For example:

$ oc login -u system:admin

Switch to the OpenShift project in which you want to upgrade your Operator version.

$ oc project <project_name>

Delete the main Custom Resource (CR) for the existing broker deployment in your project. For example:

$ oc delete -f deploy/crs/broker_v2alpha1_activemqartemis_cr.yaml

- Log in to the OpenShift Container Platform web console as a cluster administrator.

In the OpenShift Container Platform web console, uninstall the existing AMQ Broker Operator.

- Click Operators → Installed Operators.

- From the Projects drop-down menu, select the project in which you want to uninstall the Operator.

- From the Actions drop-down menu in the top-right corner, select Uninstall Operator.

- On the Remove Operator Subscription dialog box, ensure that the check box entitled Also completely remove the Operator from the selected namespace is selected.

- Click Remove.

If you are upgrading the AMQ Broker 7.4 Operator (that is, version 0.6):

- Access the latest CRDs included with AMQ Broker 7.6. See Section 2.3.1, “Getting the Operator code”.

Remove existing CRDs in your cluster and manually deploy the latest CRDs. See Section 2.3.2, “Deploying the AMQ Broker Operator using the CLI”.

Note:

- If you are upgrading the AMQ Broker 7.5 Operator (that is, version 0.9), the OLM automatically updates the CRDs in your OpenShift cluster when you install the latest Operator version from OperatorHub. You do not need to remove existing CRDs.

- When you update your cluster with the latest CRDs, this update affects all projects in the cluster. In particular, any broker Pods previously deployed from version 0.6 or version 0.9 of the AMQ Broker Operator become unable to update their status in the OpenShift Container Platform web console. When you click the Logs tab of a running broker Pod, you see messages in the log indicating that 'UpdatePodStatus' has failed. Despite this update failure, however, the broker Pods and broker Operator in that project continue to work as expected. To fix this issue for an affected project, that project must also use the latest version of the AMQ Broker Operator.

- Use OperatorHub to install the latest version of the Operator for AMQ Broker 7.6. For more information, see Section 2.4.3, “Deploying the AMQ Broker Operator from OperatorHub”.

- To recreate your previous broker deployment, deploy a new instance of the main broker CR in your project. For more information, see Section 2.5, “Deploying a basic broker”.
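If you prefer to use the CLI instead of the web console for the uninstall step, the OLM lets you remove an Operator by deleting its Subscription and its ClusterServiceVersion (CSV). The following is a minimal sketch. It assumes that you know the Subscription name, which you can list with oc get subscriptions -n <project_name>. The helper name uninstall_operator_olm is hypothetical. Read the CSV name before you delete the Subscription, because the name comes from the Subscription's status.

```shell
#!/bin/sh
# Sketch: CLI counterpart of "Uninstall Operator" in the web console.
# Assumption: the Subscription name is known.
# uninstall_operator_olm is a hypothetical name.
uninstall_operator_olm() {
  ns="$1"    # project that contains the Operator
  sub="$2"   # Subscription name, from "oc get subscriptions -n <project>"

  # The Subscription status records its current CSV; read it first.
  csv="$(oc get subscription "$sub" -n "$ns" -o jsonpath='{.status.currentCSV}')" || return 1

  # Deleting the Subscription stops OLM from managing the Operator...
  oc delete subscription "$sub" -n "$ns" || return 1

  # ...and deleting the CSV removes the Operator deployment itself.
  if [ -n "$csv" ]; then
    oc delete clusterserviceversion "$csv" -n "$ns"
  fi
}
```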

4.2. Upgrading a templates-based broker deployment

The following procedures show how to upgrade the broker container image for a deployment that is based on application templates.

4.2.1. Upgrading non-persistent broker deployments

This procedure shows you how to upgrade a non-persistent broker deployment. The non-persistent broker templates in the OpenShift Container Platform service catalog have labels that resemble the following:

- Red Hat AMQ Broker 7.x (Ephemeral, no SSL)

- Red Hat AMQ Broker 7.x (Ephemeral, with SSL)

- Red Hat AMQ Broker 7.x (Custom Config, Ephemeral, no SSL)

Prerequisites

- Starting in AMQ Broker 7.3, you use a new version of the Red Hat Container Registry to access container images. This new version of the registry requires you to become an authenticated user before you can access images and pull them into an OpenShift project. Before following the procedure in this section, you must first complete the steps described in Red Hat Container Registry Authentication.

Procedure

- Navigate to the OpenShift Container Platform web console and log in.

- Click the project in which you want to upgrade a non-persistent broker deployment.

Select the Deployment Config (DC) corresponding to your broker deployment.

- In OpenShift Container Platform 4.1, click Workloads → Deployment Configs.

- In OpenShift Container Platform 3.11, click Applications → Deployments. Within your broker deployment, click the Configuration tab.

From the Actions menu, click Edit Deployment Config (OpenShift Container Platform 4.1) or Edit YAML (OpenShift Container Platform 3.11).

The YAML tab of the Deployment Config opens, with the .yaml file in an editable mode.

Edit the image attribute to specify the latest AMQ Broker 7.6 container image, registry.redhat.io/amq7/amq-broker:7.6.

Add the imagePullSecrets attribute to specify the image pull secret associated with the account used for authentication in the Red Hat Container Registry.

Changes based on the previous two steps are shown in the example below: