Using AMQ Streams on Red Hat Enterprise Linux (RHEL)

For Use with AMQ Streams 1.1.0

Chapter 1. Overview of AMQ Streams

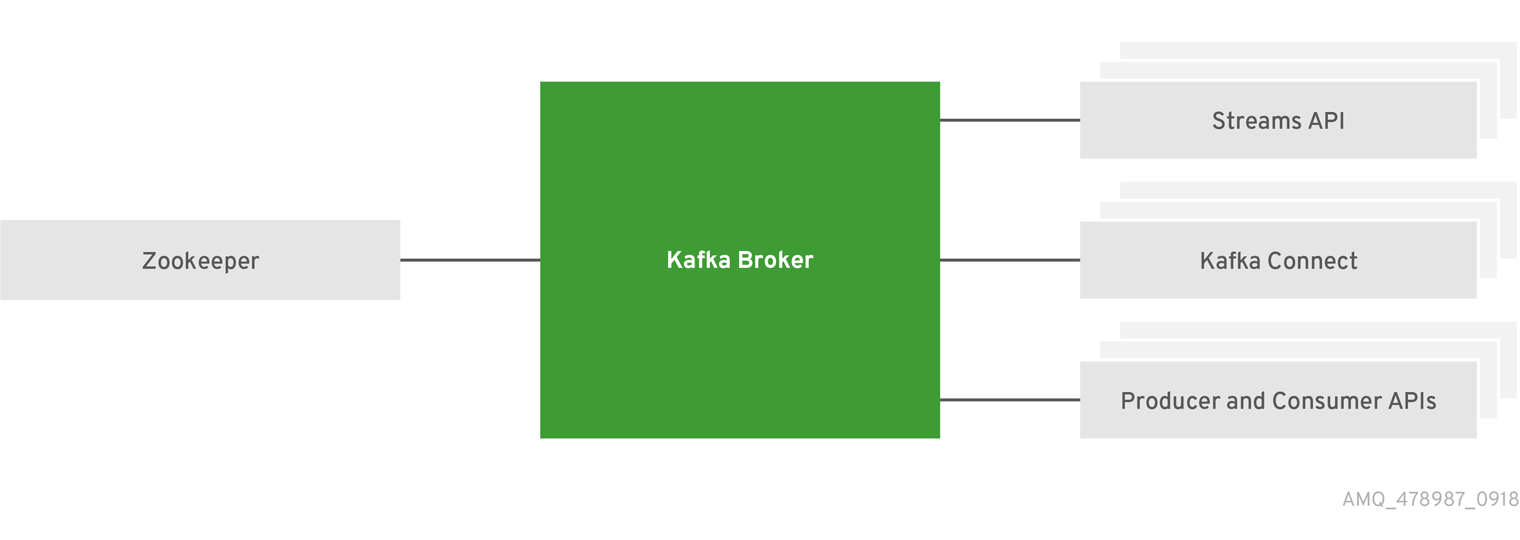

Red Hat AMQ Streams is a massively scalable, distributed, and high-performance data streaming platform based on the Apache Zookeeper and Apache Kafka projects. It consists of the following main components:

- Zookeeper: Service for highly reliable distributed coordination.

- Kafka Broker: Messaging broker responsible for delivering records from producing clients to consuming clients.

- Kafka Connect: A toolkit for streaming data between Kafka brokers and other systems using Connector plugins.

- Kafka Consumer and Producer APIs: Java-based APIs for producing and consuming messages to and from Kafka brokers.

- Kafka Streams API: API for writing stream processor applications.

A cluster of Kafka brokers is the hub connecting all these components. The broker uses Apache Zookeeper for storing configuration data and for cluster coordination. Before running Apache Kafka, an Apache Zookeeper cluster has to be ready.

Figure 1.1. Example Architecture diagram of AMQ Streams

1.1. Key features

Scalability and performance

- Designed for horizontal scalability

Message ordering guarantee

- At partition level

Message rewind/replay

- "Long term" storage

- Allows application state to be reconstructed by replaying the messages

- Combined with compacted topics, allows Kafka to be used as a key-value store

1.2. Supported Configurations

To run in a supported configuration, AMQ Streams must run on one of the following JVM versions and on one of the supported operating systems.

Table 1.1. List of supported Java Virtual Machines

| Java Virtual Machine | Version |
|---|---|
| OpenJDK | 1.8 |
| OracleJDK | 1.8 |
| IBM JDK | 1.8 |

Table 1.2. List of supported Operating Systems

| Operating System | Architecture | Version |
|---|---|---|
| Red Hat Enterprise Linux | x86_64 | 7.x |

1.3. Document conventions

Replaceables

In this document, replaceable text is styled in monospace and surrounded by angle brackets.

For example, in the following code, you will want to replace <bootstrap-address> and <topic-name> with your own address and topic name:

bin/kafka-console-consumer.sh --bootstrap-server <bootstrap-address> --topic <topic-name> --from-beginning

Chapter 2. Getting started

2.1. AMQ Streams distribution

AMQ Streams is distributed as a single ZIP file. This ZIP file contains all AMQ Streams components:

- Apache Zookeeper

- Apache Kafka

- Apache Kafka Connect

- Apache Kafka Mirror Maker

2.2. Downloading an AMQ Streams Archive

An archived distribution of AMQ Streams is available for download from the Red Hat website. You can download a copy of the distribution by following the steps below.

Procedure

- Download the latest version of the Red Hat AMQ Streams archive from the Customer Portal.

2.3. Installing AMQ Streams

Follow this procedure to install the latest version of AMQ Streams on Red Hat Enterprise Linux. For instructions on upgrading an existing cluster to AMQ Streams version 1.1.0, see Upgrading an AMQ Streams cluster from 1.0.0 to 1.1.0.

Prerequisites

- Download the installation archive.

- Review Section 1.2, “Supported Configurations”.

Procedure

Add a new kafka user and group.

sudo groupadd kafka
sudo useradd -g kafka kafka
sudo passwd kafka

Create the /opt/kafka directory.

sudo mkdir /opt/kafka

Create a temporary directory and extract the contents of the AMQ Streams ZIP file.

mkdir /tmp/kafka
unzip amq-streams_y.y-x.x.x.zip -d /tmp/kafka

Move the extracted contents into the /opt/kafka directory and delete the temporary directory.

sudo mv /tmp/kafka/kafka_y.y-x.x.x/* /opt/kafka/
rm -r /tmp/kafka

Change the ownership of the /opt/kafka directory to the kafka user.

sudo chown -R kafka:kafka /opt/kafka

Create the /var/lib/zookeeper directory for storing Zookeeper data and set its ownership to the kafka user.

sudo mkdir /var/lib/zookeeper
sudo chown -R kafka:kafka /var/lib/zookeeper

Create the /var/lib/kafka directory for storing Kafka data and set its ownership to the kafka user.

sudo mkdir /var/lib/kafka
sudo chown -R kafka:kafka /var/lib/kafka

2.4. Data storage considerations

An efficient data storage infrastructure is essential to the optimal performance of AMQ Streams.

AMQ Streams requires block storage and works well with cloud-based block storage solutions, such as Amazon Elastic Block Store (EBS). The use of file storage is not recommended.

Choose local storage when possible. If local storage is not available, you can use a Storage Area Network (SAN) accessed by a protocol such as Fibre Channel or iSCSI.

2.4.1. Apache Kafka and Zookeeper storage support

Use separate disks for Apache Kafka and Zookeeper.

Kafka supports JBOD (Just a Bunch of Disks) storage, a data storage configuration of multiple disks or volumes. JBOD provides increased data storage for Kafka brokers. It can also improve performance.

Solid-state drives (SSDs), though not essential, can improve the performance of Kafka in large clusters where data is sent to and received from multiple topics asynchronously. SSDs are particularly effective with Zookeeper, which requires fast, low latency data access.

You do not need to provision replicated storage because Kafka and Zookeeper both have built-in data replication.

2.4.2. File systems

It is recommended that you configure your storage system to use the XFS file system. AMQ Streams is also compatible with the ext4 file system, but this might require additional configuration for best results.

Additional resources

- For more information about XFS, see The XFS File System.

2.5. Running single node AMQ Streams cluster

This procedure shows how to run a basic AMQ Streams cluster consisting of a single Zookeeper node and a single Apache Kafka node, both running on the same host. It uses the default configuration files for both Zookeeper and Kafka.

A single-node AMQ Streams cluster does not provide reliability or high availability and is suitable only for development purposes.

Prerequisites

- AMQ Streams is installed on the host

Running the cluster

Edit the Zookeeper configuration file /opt/kafka/config/zookeeper.properties. Set the dataDir option to /var/lib/zookeeper/.

dataDir=/var/lib/zookeeper/

Start Zookeeper.

su - kafka
/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

Make sure that Apache Zookeeper is running.

jcmd | grep zookeeper

Edit the Kafka configuration file /opt/kafka/config/server.properties. Set the log.dirs option to /var/lib/kafka/.

log.dirs=/var/lib/kafka/

Start Kafka.

su - kafka
/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Make sure that Kafka is running.

jcmd | grep kafka

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

2.6. Using the cluster

Prerequisites

- AMQ Streams is installed on the host

- Zookeeper and Kafka are up and running

Procedure

Start the Kafka console producer.

bin/kafka-console-producer.sh --broker-list <bootstrap-address> --topic <topic-name>

For example:

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic my-topic

- Type your message into the console where the producer is running.

- Press Enter to send.

- Press Ctrl+C to exit the Kafka console producer.

Start the message receiver.

bin/kafka-console-consumer.sh --bootstrap-server <bootstrap-address> --topic <topic-name> --from-beginning

For example:

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic my-topic --from-beginning

- Confirm that you see the incoming messages in the consumer console.

- Press Ctrl+C to exit the Kafka console consumer.

2.7. Stopping the AMQ Streams services

You can stop the Kafka and Zookeeper services by running a script. All connections to the Kafka and Zookeeper services will be terminated.

Prerequisites

- AMQ Streams is installed on the host

- Zookeeper and Kafka are up and running

Procedure

Stop the Kafka broker.

su - kafka
/opt/kafka/bin/kafka-server-stop.sh

Confirm that the Kafka broker is stopped.

jcmd | grep kafka

Stop Zookeeper.

su - kafka
/opt/kafka/bin/zookeeper-server-stop.sh

2.8. Configuring AMQ Streams

Prerequisites

- AMQ Streams is downloaded and installed on the host

Procedure

Open the Zookeeper and Kafka broker configuration files in a text editor. The configuration files are located at:

- Zookeeper: /opt/kafka/config/zookeeper.properties

- Kafka: /opt/kafka/config/server.properties

Edit the configuration options. The configuration files are in the Java properties format. Every configuration option should be on a separate line in the following format:

<option> = <value>

Lines starting with # or ! are treated as comments and are ignored by AMQ Streams components.

# This is a comment

Values can be split across multiple lines by placing \ directly before the newline or carriage return.

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \
    username="bob" \
    password="bobs-password";

- Save the changes

- Restart the Zookeeper or Kafka broker

- Repeat this procedure on all the nodes of the cluster.
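The format rules above (one option per line, # and ! comments, backslash continuation) can be illustrated with a small parser. This is only a sketch of a subset of the Java properties format: the function name parse_properties is hypothetical, and it assumes = is the only separator and ignores escape sequences that the real format supports.

```python
def parse_properties(text):
    """Parse a small subset of the Java properties format into a dict."""
    options = {}
    pending = ""  # accumulates a value continued with a trailing backslash
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Blank lines and comment lines are ignored (unless mid-continuation).
        if not pending and (not line or line[0] in "#!"):
            continue
        if line.endswith("\\"):
            pending += line[:-1]  # drop the backslash and keep reading
            continue
        key, _, value = (pending + line).partition("=")
        options[key.strip()] = value.strip()
        pending = ""
    return options


example = """\
# This is a comment
dataDir = /var/lib/zookeeper/
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \\
    username="bob" \\
    password="bobs-password";
"""
for name, value in parse_properties(example).items():
    print(name, "->", value)
```

A check like this can help confirm that a continued value, such as the sasl.jaas.config example, is read back as one logical line before the broker is restarted.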

Chapter 3. Configuring Zookeeper

Kafka uses Zookeeper to store configuration data and for cluster coordination. It is strongly recommended to run a cluster of replicated Zookeeper instances.

3.1. Basic configuration

The most important Zookeeper configuration options are:

- tickTime: Zookeeper’s basic time unit in milliseconds. It is used for heartbeats and session timeouts. For example, the minimum session timeout is two ticks.

- dataDir: The directory where Zookeeper stores its transaction log and snapshots of its in-memory database. This should be set to the /var/lib/zookeeper/ directory created during installation.

- clientPort: Port number where clients can connect. Defaults to 2181.

An example Zookeeper configuration file config/zookeeper.properties is located in the AMQ Streams installation directory. It is recommended to place the dataDir directory on a separate disk device to minimize the latency in Zookeeper.

The Zookeeper configuration file should be located at /opt/kafka/config/zookeeper.properties. A basic example of the configuration file can be found below. The configuration file has to be readable by the kafka user.

tickTime=2000
dataDir=/var/lib/zookeeper/
clientPort=2181

3.2. Zookeeper cluster configuration

For a reliable Zookeeper service, deploy Zookeeper in a cluster. For production use cases, you must run a cluster of replicated Zookeeper instances. Zookeeper clusters are also referred to as ensembles.

Zookeeper clusters usually consist of an odd number of nodes. Zookeeper requires a majority of the nodes in the cluster to be up and running. For example, in a cluster with three nodes, at least two of them must be up and running, so the cluster can tolerate one node being down. In a cluster of five nodes, at least three nodes must be available, so it can tolerate two nodes being down. In a cluster of seven nodes, at least four nodes must be available, so it can tolerate three nodes being down. Having more nodes in the Zookeeper cluster delivers better resiliency and reliability of the whole cluster.

Zookeeper can run in clusters with an even number of nodes. The additional node, however, does not increase the resiliency of the cluster. A cluster with four nodes requires at least three nodes to be available and can tolerate only one node being down. Therefore it has exactly the same resiliency as a cluster with only three nodes.

The different Zookeeper nodes should ideally be placed in different data centers or network segments. Increasing the number of Zookeeper nodes increases the workload spent on cluster synchronization. For most Kafka use cases, a Zookeeper cluster with 3, 5, or 7 nodes should be fully sufficient.

A Zookeeper cluster with 3 nodes can tolerate only 1 unavailable node. This means that if a cluster node crashes while you are doing maintenance on another node, your Zookeeper cluster will be unavailable.
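The majority rule described above reduces to simple integer arithmetic. The following sketch, with hypothetical function names, computes the number of nodes that must be available and the number of failures a cluster of a given size can tolerate.

```python
def quorum_size(nodes):
    """Smallest number of nodes that forms a strict majority."""
    return nodes // 2 + 1


def tolerated_failures(nodes):
    """Nodes that can be down while a majority is still available."""
    return nodes - quorum_size(nodes)


for n in (3, 4, 5, 7):
    print(f"{n} nodes: need {quorum_size(n)} up, tolerate {tolerated_failures(n)} down")
```

Running it for three and four nodes shows the same tolerance of one failure, which is why an even-sized cluster adds no resiliency over the next smaller odd size.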

Replicated Zookeeper configuration supports all configuration options supported by the standalone configuration. Additional options are added for the clustering configuration:

- initLimit: Amount of time to allow followers to connect and sync to the cluster leader. The time is specified as a number of ticks (see the tickTime option for more details).

- syncLimit: Amount of time for which followers can be behind the leader. The time is specified as a number of ticks (see the tickTime option for more details).
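Because these limits are expressed in ticks, their wall-clock values depend on the tick length. As a rough sketch (the function name is hypothetical), the conversion to milliseconds looks like this, using the values that appear in this chapter's example configurations:

```python
def zookeeper_timeouts_ms(tick_time, init_limit, sync_limit):
    """Convert tick-based Zookeeper settings into milliseconds."""
    return {
        # The minimum session timeout is two ticks.
        "min_session_timeout": 2 * tick_time,
        # initLimit and syncLimit are both counted in ticks.
        "init_limit": init_limit * tick_time,
        "sync_limit": sync_limit * tick_time,
    }


print(zookeeper_timeouts_ms(tick_time=2000, init_limit=5, sync_limit=2))
```

With a 2000 ms tick, an initLimit of 5 gives followers 10 seconds to connect and sync, and a syncLimit of 2 allows them to lag the leader by up to 4 seconds.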

In addition to the options above, every configuration file should contain a list of servers which should be members of the Zookeeper cluster. The server records should be specified in the format server.id=hostname:port1:port2, where:

- id: The ID of the Zookeeper cluster node.

- hostname: The hostname or IP address where the node listens for connections.

- port1: The port number used for intra-cluster communication.

- port2: The port number used for leader election.

The following is an example configuration file of a Zookeeper cluster with three nodes:

tickTime=2000
dataDir=/var/lib/zookeeper/
clientPort=2181
initLimit=5
syncLimit=2
server.1=172.17.0.1:2888:3888
server.2=172.17.0.2:2888:3888
server.3=172.17.0.3:2888:3888

Each node in the Zookeeper cluster must be assigned a unique ID. Each node’s ID must be configured in a myid file stored in the dataDir directory, for example /var/lib/zookeeper/. The myid file should contain only a single line with the ID written as text. The ID can be any integer from 1 to 255. You must manually create this file on each cluster node. Using this file, each Zookeeper instance uses the configuration from the corresponding server.id line in the configuration file to configure its listeners. It also uses all other server.id lines to identify other cluster members.

In the above example, there are three nodes, so each one will have a different myid with values 1, 2, and 3 respectively.
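The relationship between the server records and the myid files can be sketched as follows. The helper name is hypothetical, and the sketch assumes that IDs are assigned sequentially from 1 in the order the hosts are listed and that every node uses the same two ports as in the example above.

```python
def zookeeper_server_lines(hosts, peer_port=2888, election_port=3888):
    """Return (server_lines, myid_by_host) with IDs numbered from 1."""
    if not 1 <= len(hosts) <= 255:
        raise ValueError("IDs must fall in the range 1 to 255")
    lines = []
    myids = {}
    for node_id, host in enumerate(hosts, start=1):
        # One server.<id> record per cluster member, identical on every node.
        lines.append(f"server.{node_id}={host}:{peer_port}:{election_port}")
        # The myid file on each host holds only that host's own ID.
        myids[host] = f"{node_id}\n"
    return lines, myids


lines, myids = zookeeper_server_lines(["172.17.0.1", "172.17.0.2", "172.17.0.3"])
print("\n".join(lines))
print(myids)
```

The server lines are the same in every node's configuration file; only the content of the myid file differs from node to node.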

3.3. Running multi-node Zookeeper cluster

This procedure will show you how to configure and run Zookeeper as a multi-node cluster.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Zookeeper cluster nodes.

Running the cluster

Create the myid file in /var/lib/zookeeper/. Enter ID 1 for the first Zookeeper node, 2 for the second Zookeeper node, and so on.

su - kafka
echo "<NodeID>" > /var/lib/zookeeper/myid

For example:

su - kafka
echo "1" > /var/lib/zookeeper/myid

Edit the Zookeeper /opt/kafka/config/zookeeper.properties configuration file for the following:

- Set the option dataDir to /var/lib/zookeeper/.

- Configure the initLimit and syncLimit options.

- Add a list of all Zookeeper nodes. The list should also include the current node.

Example configuration for a node of a Zookeeper cluster with five members

tickTime=2000
dataDir=/var/lib/zookeeper/
clientPort=2181
initLimit=5
syncLimit=2
server.1=172.17.0.1:2888:3888
server.2=172.17.0.2:2888:3888
server.3=172.17.0.3:2888:3888
server.4=172.17.0.4:2888:3888
server.5=172.17.0.5:2888:3888

Start Zookeeper with the default configuration file.

su - kafka
/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

Verify that Zookeeper is running.

jcmd | grep zookeeper

- Repeat this procedure on all the nodes of the cluster.

Once all nodes of the cluster are up and running, verify that all nodes are members of the cluster by sending a stat command to each of the nodes using the ncat utility.

Use ncat stat to check the node status

echo stat | ncat localhost 2181

In the output you should see information that the node is either leader or follower.

Example output from the ncat command

Zookeeper version: 3.4.13-2d71af4dbe22557fda74f9a9b4309b15a7487f03, built on 06/29/2018 00:39 GMT
Clients:
 /0:0:0:0:0:0:0:1:59726[0](queued=0,recved=1,sent=0)
Latency min/avg/max: 0/0/0
Received: 2
Sent: 1
Connections: 1
Outstanding: 0
Zxid: 0x200000000
Mode: follower
Node count: 4
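When checking many nodes, the Mode line can be picked out of the stat output programmatically. The following is a minimal sketch that assumes the output text has already been captured, for example from the ncat command above; the function name zookeeper_mode is hypothetical.

```python
def zookeeper_mode(stat_output):
    """Return the value of the 'Mode:' line, or None if it is absent."""
    for line in stat_output.splitlines():
        name, sep, value = line.partition(":")
        if sep and name.strip() == "Mode":
            return value.strip()
    return None


sample = """\
Latency min/avg/max: 0/0/0
Received: 2
Sent: 1
Zxid: 0x200000000
Mode: follower
Node count: 4
"""
print(zookeeper_mode(sample))
```

In a healthy cluster, each node is expected to report either leader or follower, as the procedure describes.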

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

3.4. Authentication

By default, Zookeeper does not use any form of authentication and allows anonymous connections. However, it supports Java Authentication and Authorization Service (JAAS) which can be used to set up authentication using Simple Authentication and Security Layer (SASL). Zookeeper supports authentication using the DIGEST-MD5 SASL mechanism with locally stored credentials.

3.4.1. Authentication with SASL

JAAS is configured using a separate configuration file. It is recommended to place the JAAS configuration file in the same directory as the Zookeeper configuration (/opt/kafka/config/). The recommended file name is zookeeper-jaas.conf. When using a Zookeeper cluster with multiple nodes, the JAAS configuration file has to be created on all cluster nodes.

JAAS is configured using contexts. Separate parts such as the server and client are always configured with a separate context. The context is a configuration option and has the following format:

ContextName {
    param1
    param2;
};

SASL Authentication is configured separately for server-to-server communication (communication between Zookeeper instances) and client-to-server communication (communication between Kafka and Zookeeper). Server-to-server authentication is relevant only for Zookeeper clusters with multiple nodes.

Server-to-Server authentication

For server-to-server authentication, the JAAS configuration file contains two parts:

- The server configuration

- The client configuration

When using the DIGEST-MD5 SASL mechanism, the QuorumServer context is used to configure the authentication server. It must contain all the usernames that are allowed to connect, together with their passwords in unencrypted form. The second context, QuorumLearner, has to be configured for the client which is built into Zookeeper. It also contains the password in unencrypted form. An example of the JAAS configuration file for the DIGEST-MD5 mechanism can be found below:

QuorumServer {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_zookeeper="123456";
};

QuorumLearner {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    username="zookeeper"
    password="123456";
};

In addition to the JAAS configuration file, you must enable the server-to-server authentication in the regular Zookeeper configuration file by specifying the following options:

quorum.auth.enableSasl=true
quorum.auth.learnerRequireSasl=true
quorum.auth.serverRequireSasl=true
quorum.auth.learner.loginContext=QuorumLearner
quorum.auth.server.loginContext=QuorumServer
quorum.cnxn.threads.size=20

Use the EXTRA_ARGS environment variable to pass the JAAS configuration file to the Zookeeper server as a Java property:

su - kafka
export EXTRA_ARGS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about server-to-server authentication, see the Zookeeper wiki.
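Since the QuorumServer and QuorumLearner contexts must agree on the username and password, generating both from a single pair of values can help avoid mismatches. The sketch below uses hypothetical helper names, follows the context layout shown earlier in this section, and assumes the values contain no double quotes (no escaping is performed).

```python
DIGEST_MODULE = "org.apache.zookeeper.server.auth.DigestLoginModule"


def render_jaas_context(name, login_module, options):
    """Render one JAAS context; the last parameter is terminated with ';'."""
    lines = [f"{name} {{", f"    {login_module} required"]
    for key, value in options.items():
        lines.append(f'    {key}="{value}"')
    lines[-1] += ";"
    lines.append("};")
    return "\n".join(lines)


def quorum_contexts(username, password):
    """Render matching QuorumServer and QuorumLearner contexts."""
    server = render_jaas_context(
        "QuorumServer", DIGEST_MODULE, {f"user_{username}": password})
    learner = render_jaas_context(
        "QuorumLearner", DIGEST_MODULE,
        {"username": username, "password": password})
    return server + "\n\n" + learner + "\n"


print(quorum_contexts("zookeeper", "123456"))
```

The printed text has the same shape as the example zookeeper-jaas.conf content above and would need to be placed on every cluster node.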

Client-to-Server authentication

Client-to-server authentication is configured in the same JAAS file as the server-to-server authentication. However, unlike the server-to-server authentication, it contains only the server configuration. The client part of the configuration has to be done in the client. For information on how to configure a Kafka broker to connect to Zookeeper using authentication, see the Kafka installation section.

Add the Server context to the JAAS configuration file to configure client-to-server authentication. For the DIGEST-MD5 mechanism, it configures all usernames and passwords:

Server {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_super="123456"
    user_kafka="123456"
    user_someoneelse="123456";
};

After configuring the JAAS context, enable the client-to-server authentication in the Zookeeper configuration file by adding the following lines:

requireClientAuthScheme=sasl
authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

You must add the authProvider.<ID> property for every server that is part of the Zookeeper cluster.

Use the EXTRA_ARGS environment variable to pass the JAAS configuration file to the Zookeeper server as a Java property:

su - kafka
export EXTRA_ARGS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about configuring Zookeeper authentication in Kafka brokers, see Section 4.6, “Zookeeper authentication”.
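The rule that one authProvider.<ID> property is needed per Zookeeper server can be sketched as a small generator. The function name is hypothetical, and the sketch assumes the server IDs run from 1 to the number of nodes, as in the three-node examples in this chapter.

```python
SASL_PROVIDER = "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"


def client_auth_options(node_count):
    """Return the client-to-server authentication lines for a cluster."""
    lines = ["requireClientAuthScheme=sasl"]
    # One authProvider.<ID> entry for each server in the cluster.
    lines += [f"authProvider.{node_id}={SASL_PROVIDER}"
              for node_id in range(1, node_count + 1)]
    return lines


print("\n".join(client_auth_options(3)))
```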

3.4.2. Enabling Server-to-server authentication using DIGEST-MD5

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism between the nodes of the Zookeeper cluster.

Prerequisites

- AMQ Streams is installed on the host

- Zookeeper cluster is configured with multiple nodes.

Enabling SASL DIGEST-MD5 authentication

On all Zookeeper nodes, create or edit the /opt/kafka/config/zookeeper-jaas.conf JAAS configuration file and add the following contexts:

QuorumServer {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_<Username>="<Password>";
};

QuorumLearner {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    username="<Username>"
    password="<Password>";
};

The username and password must be the same in both JAAS contexts. For example:

QuorumServer {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_zookeeper="123456";
};

QuorumLearner {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    username="zookeeper"
    password="123456";
};

On all Zookeeper nodes, edit the /opt/kafka/config/zookeeper.properties Zookeeper configuration file and set the following options:

quorum.auth.enableSasl=true
quorum.auth.learnerRequireSasl=true
quorum.auth.serverRequireSasl=true
quorum.auth.learner.loginContext=QuorumLearner
quorum.auth.server.loginContext=QuorumServer
quorum.cnxn.threads.size=20

Restart all Zookeeper nodes one by one. To pass the JAAS configuration to Zookeeper, use the EXTRA_ARGS environment variable.

su - kafka
export EXTRA_ARGS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Zookeeper cluster, see Section 3.3, “Running multi-node Zookeeper cluster”.

3.4.3. Enabling Client-to-server authentication using DIGEST-MD5

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism between Zookeeper clients and Zookeeper.

Prerequisites

- AMQ Streams is installed on the host

- Zookeeper cluster is configured and running.

Enabling SASL DIGEST-MD5 authentication

On all Zookeeper nodes, create or edit the /opt/kafka/config/zookeeper-jaas.conf JAAS configuration file and add the following context:

Server {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_super="<SuperUserPassword>"
    user_<Username1>="<Password1>"
    user_<Username2>="<Password2>";
};

The super user automatically has administrator privileges. The file can contain multiple users, but only one additional user is required by the Kafka brokers. The recommended name for the Kafka user is kafka.

The following example shows the Server context for client-to-server authentication:

Server {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_super="123456"
    user_kafka="123456";
};

On all Zookeeper nodes, edit the /opt/kafka/config/zookeeper.properties Zookeeper configuration file and set the following options:

requireClientAuthScheme=sasl
authProvider.<IdOfBroker1>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.<IdOfBroker2>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.<IdOfBroker3>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

The authProvider.<ID> property has to be added for every node which is part of the Zookeeper cluster. An example three-node Zookeeper cluster configuration looks like the following:

requireClientAuthScheme=sasl
authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

Restart all Zookeeper nodes one by one. To pass the JAAS configuration to Zookeeper, use the EXTRA_ARGS environment variable.

su - kafka
export EXTRA_ARGS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Zookeeper cluster, see Section 3.3, “Running multi-node Zookeeper cluster”.

3.5. Authorization

Zookeeper supports access control lists (ACLs) to protect data stored inside it. Kafka brokers can automatically configure the ACL rights for all Zookeeper records they create so no other Zookeeper user can modify them.

For more information about enabling Zookeeper ACLs in Kafka brokers, see Section 4.7, “Zookeeper authorization”.

3.6. TLS

The version of Zookeeper which is part of AMQ Streams currently does not support TLS for encryption or authentication.

3.7. Additional configuration options

You can set the following options based on your use case:

- maxClientCnxns: The maximum number of concurrent client connections to a single member of the Zookeeper cluster.

- autopurge.snapRetainCount: Number of snapshots of Zookeeper’s in-memory database which will be retained. The default value is 3.

- autopurge.purgeInterval: The time interval in hours for purging snapshots. The default value is 0, which disables this option.

All available configuration options can be found in the Zookeeper documentation.

3.8. Logging

Zookeeper uses log4j as its logging infrastructure. By default, the logging configuration is read from the log4j.properties configuration file, which should be placed either in the /opt/kafka/config/ directory or on the classpath. The location and name of the configuration file can be changed using the Java property log4j.configuration, which can be passed to Zookeeper using the KAFKA_LOG4J_OPTS environment variable:

su - kafka
export KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:/my/path/to/log4j.properties"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about Log4j configurations, see the Log4j documentation.

Chapter 4. Configuring Kafka

Kafka uses a properties file to store static configuration. The recommended location for the configuration file is /opt/kafka/config/server.properties. The configuration file has to be readable by the kafka user.

AMQ Streams ships an example configuration file that highlights various basic and advanced features of the product. It can be found under config/server.properties in the AMQ Streams installation directory.

This chapter explains the most important configuration options. For a complete list of supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

4.1. Zookeeper

Kafka brokers need Zookeeper to store some parts of their configuration as well as to coordinate the cluster (for example, to decide which node is the leader for which partition). Connection details for the Zookeeper cluster are stored in the configuration file. The field zookeeper.connect contains a comma-separated list of hostnames and ports of members of the Zookeeper cluster.

For example:

zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181

Kafka will use these addresses to connect to the Zookeeper cluster. With this configuration, all Kafka znodes will be created directly in the root of the Zookeeper database. Therefore, such a Zookeeper cluster can be used only for a single Kafka cluster. To configure multiple Kafka clusters to use a single Zookeeper cluster, specify a base (prefix) path at the end of the Zookeeper connection string in the Kafka configuration file:

zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181/my-cluster-1
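The structure of the connection string, a comma-separated server list followed by an optional base path, can be made explicit with a small parser. This is only an illustrative sketch with a hypothetical function name; it assumes every entry carries an explicit port and does not handle IPv6 literals.

```python
def parse_zookeeper_connect(value):
    """Split a zookeeper.connect value into ([(host, port), ...], base_path)."""
    servers_part, slash, path = value.partition("/")
    # An empty base path means znodes are created in the Zookeeper root.
    base_path = slash + path if slash else ""
    servers = []
    for entry in servers_part.split(","):
        host, _, port = entry.strip().rpartition(":")
        servers.append((host, int(port)))
    return servers, base_path


servers, base_path = parse_zookeeper_connect(
    "zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,"
    "zoo3.my-domain.com:2181/my-cluster-1")
print(servers)
print(base_path)
```

Two Kafka clusters sharing one Zookeeper cluster would then differ only in the base path portion of this value.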

4.2. Listeners

Kafka brokers can be configured to use multiple listeners. Each listener can be used to listen on a different port or network interface and can have a different configuration. Listeners are configured in the listeners property in the configuration file. The listeners property contains a list of listeners with each listener configured as <listenerName>://<hostname>:<port>. When the hostname value is empty, Kafka will use java.net.InetAddress.getCanonicalHostName() as the hostname. The following example shows how multiple listeners might be configured:

listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094

When a Kafka client wants to connect to a Kafka cluster, it first connects to a bootstrap server. The bootstrap server is one of the cluster nodes. It will provide the client with a list of all other brokers which are part of the cluster and the client will connect to them individually. By default the bootstrap server will provide the client with a list of nodes based on the listeners field.

Advertised listeners

It is possible to give the client a different set of addresses than given in the listeners property. It is useful in situations when additional network infrastructure, such as a proxy, is between the client and the broker, or when an external DNS name should be used instead of an IP address. Here, the broker allows defining the advertised addresses of the listeners in the advertised.listeners configuration property. This property has the same format as the listeners property. The following example shows how to configure advertised listeners:

listeners=INT1://:9092,INT2://:9093
advertised.listeners=INT1://my-broker-1.my-domain.com:1234,INT2://my-broker-1.my-domain.com:9093

The names of the listeners have to match the names of the listeners from the listeners property.
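The name-matching requirement can be checked before a broker is started. The following sketch uses hypothetical helper names and assumes each entry follows the <listenerName>://<hostname>:<port> form with an explicit port; it reports advertised listener names that have no counterpart in the listeners property.

```python
def parse_listeners(value):
    """Map listener name -> (hostname, port) for a listeners-style value."""
    result = {}
    for entry in value.split(","):
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        result[name] = (host, int(port))  # an empty host means "not set"
    return result


def unmatched_advertised(listeners, advertised):
    """Names used in advertised.listeners but missing from listeners."""
    missing = set(parse_listeners(advertised)) - set(parse_listeners(listeners))
    return sorted(missing)


print(parse_listeners("INT1://:9092,INT2://:9093,REPLICATION://:9094"))
print(unmatched_advertised("INT1://:9092,INT2://:9093",
                           "INT1://broker.example.com:1234"))
```

An empty result from unmatched_advertised indicates that every advertised name refers to a configured listener. The hostname broker.example.com is a placeholder chosen for this illustration.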

Inter-broker listeners

When the cluster has replicated topics, the brokers responsible for such topics need to communicate with each other in order to replicate the messages in those topics. When multiple listeners are configured, the configuration field inter.broker.listener.name can be used to specify the name of the listener which should be used for replication between brokers. For example:

inter.broker.listener.name=REPLICATION

4.3. Commit logs

Apache Kafka stores all records it receives from producers in commit logs. The commit logs contain the actual data, in the form of records, that Kafka needs to deliver. These are not the application log files which record what the broker is doing.

Log directories

You can use the log.dirs property to store commit logs in one or more log directories. It should be set to the /var/lib/kafka directory created during installation:

log.dirs=/var/lib/kafka

For performance reasons, you can configure log.dirs to multiple directories and place each of them on a different physical device to improve disk I/O performance. For example:

log.dirs=/var/lib/kafka1,/var/lib/kafka2,/var/lib/kafka3

4.4. Broker ID

The broker ID is a unique identifier for each broker in the cluster. You can assign any integer greater than or equal to 0 as the broker ID. The broker ID is used to identify the brokers after restarts or crashes, so it is important that the ID is stable and does not change over time. The broker ID is configured in the broker properties file:

broker.id=1
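As a rough sketch of how unique broker IDs might be assigned when configuration files are generated by a script, the following Python example renders one properties file per host. The function names, the host names and the shared base settings are assumptions chosen for illustration only.

```python
# Hypothetical settings shared by every broker in the cluster.
BASE_CONFIG = {
    "zookeeper.connect": "zoo1.my-domain.com:2181",
    "log.dirs": "/var/lib/kafka",
}


def render_broker_config(broker_id, base=BASE_CONFIG):
    """Render the text of a properties file for one broker."""
    if not isinstance(broker_id, int) or broker_id < 0:
        raise ValueError("broker.id must be an integer >= 0")
    lines = [f"broker.id={broker_id}"]
    lines += [f"{key}={value}" for key, value in base.items()]
    return "\n".join(lines) + "\n"


def render_cluster(hosts):
    """Give each host the ID matching its position in the list."""
    return {host: render_broker_config(i) for i, host in enumerate(hosts)}


configs = render_cluster(["kafka0.example.com", "kafka1.example.com"])
print(configs["kafka1.example.com"])
```

Because the ID is derived from the position in the list, the list order has to stay fixed; otherwise a broker would come back with a different ID after its configuration is regenerated.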

4.5. Running a multi-node Kafka cluster

This procedure will show you how to configure and run Kafka as a multi-node cluster.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

- Zookeeper cluster is configured and running.

Running the cluster

Edit the /opt/kafka/config/server.properties Kafka configuration file for the following:

- Set the broker.id field to 0 for the first broker, 1 for the second broker, and so on.

- Configure the details for connecting to Zookeeper in the zookeeper.connect option.

- Configure the Kafka listeners.

- Set the directories where the commit logs should be stored in the log.dirs option.

The following example shows the configuration for a Kafka broker:

broker.id=0
zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181
listeners=REPLICATION://:9091,PLAINTEXT://:9092
inter.broker.listener.name=REPLICATION
log.dirs=/var/lib/kafka

In a typical installation where each Kafka broker is running on identical hardware, only the broker.id configuration property will differ between each broker config.

Start the Kafka broker with the default configuration file.

su - kafka
/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Verify that the Kafka broker is running.

jcmd | grep Kafka

- Repeat this procedure on all the nodes of the Kafka cluster.

Once all nodes of the cluster are up and running, verify that all nodes are members of the Kafka cluster by sending a dump command to one of the Zookeeper nodes using the ncat utility. The command will print all Kafka brokers registered in Zookeeper.

Use ncat dump to check all Kafka brokers registered in Zookeeper

echo dump | ncat zoo1.my-domain.com 2181

The output should contain all Kafka brokers you just configured and started.

Example output from the ncat command for a Kafka cluster with 3 nodes

SessionTracker dump: org.apache.zookeeper.server.quorum.LearnerSessionTracker@28848ab9
ephemeral nodes dump:
Sessions with Ephemerals (3):
0x20000015dd00000:
    /brokers/ids/1
0x10000015dc70000:
    /controller
    /brokers/ids/0
0x10000015dc70001:
    /brokers/ids/2
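The check against the dump output can also be scripted. The following Python sketch assumes that the output of the ncat command has already been captured as text; the function name `registered_broker_ids` is hypothetical, and the sample text is taken from the example output above.

```python
import re


def registered_broker_ids(dump_output):
    """Extract broker IDs from the /brokers/ids/<id> paths in the dump text."""
    return sorted(int(m) for m in re.findall(r"/brokers/ids/(\d+)", dump_output))


sample = """\
Sessions with Ephemerals (3):
0x20000015dd00000:
    /brokers/ids/1
0x10000015dc70000:
    /controller
    /brokers/ids/0
0x10000015dc70001:
    /brokers/ids/2
"""

expected = {0, 1, 2}  # the broker.id values configured for this cluster
found = set(registered_broker_ids(sample))
print("missing brokers:", sorted(expected - found))  # prints: missing brokers: []
```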

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Zookeeper cluster, see Section 3.3, “Running multi-node Zookeeper cluster”.

- For a complete list of supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

4.6. Zookeeper authentication

By default, connections between Zookeeper and Kafka are not authenticated. However, Kafka and Zookeeper support Java Authentication and Authorization Service (JAAS) which can be used to set up authentication using Simple Authentication and Security Layer (SASL). Zookeeper supports authentication using the DIGEST-MD5 SASL mechanism with locally stored credentials.

4.6.1. JAAS Configuration

SASL authentication for Zookeeper connections has to be configured in the JAAS configuration file. By default, Kafka will use the JAAS context named Client for connecting to Zookeeper. The Client context should be configured in the /opt/kafka/config/jaas.conf file. The context has to enable the PLAIN SASL authentication, as in the following example:

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafka"
    password="123456";
};

4.6.2. Enabling Zookeeper authentication

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism when connecting to Zookeeper.

Prerequisites

- Client-to-server authentication is enabled in Zookeeper

Enabling SASL DIGEST-MD5 authentication

On all Kafka broker nodes, create or edit the /opt/kafka/config/jaas.conf JAAS configuration file and add the following context:

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="<Username>"
    password="<Password>";
};

The username and password should be the same as configured in Zookeeper.

The following example shows the Client context:

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafka"
    password="123456";
};

Restart all Kafka broker nodes one by one. To pass the JAAS configuration to Kafka brokers, use the KAFKA_OPTS environment variable.

su - kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Additional resources

- For more information about configuring client-to-server authentication in Zookeeper, see Section 3.4, “Authentication”.

4.7. Zookeeper authorization

When authentication is enabled between Kafka and Zookeeper, Kafka can be configured to automatically protect all its records with Access Control List (ACL) rules which will allow only the Kafka user to change the data. All other users will have read-only access.

4.7.1. ACL Configuration

Enforcement of ACL rules is controlled by the zookeeper.set.acl property in the config/server.properties Kafka configuration file and is disabled by default. To enable ACL protection, set zookeeper.set.acl to true:

zookeeper.set.acl=true

Kafka will set the ACL rules only for newly created Zookeeper znodes. When the ACLs are only enabled after the first start of the cluster, the tool zookeeper-security-migration.sh can be used to set ACLs on all existing znodes.

The data stored in Zookeeper includes information such as topic names and their configuration. The Zookeeper database also contains the salted and hashed user credentials when SASL SCRAM authentication is used. However, it does not include any records sent and received using Kafka. Kafka, in general, considers the data stored in Zookeeper as non-confidential. If this data is considered confidential (for example, because topic names contain customer identification), the only way to protect it is to isolate Zookeeper on the network level and allow access only to Kafka brokers.

4.7.2. Enabling Zookeeper ACLs for a new Kafka cluster

This procedure describes how to enable Zookeeper ACLs in Kafka configuration for a new Kafka cluster. Use this procedure only before the first start of the Kafka cluster. For enabling Zookeeper ACLs in an already running cluster, see Section 4.7.3, “Enabling Zookeeper ACLs in an existing Kafka cluster”.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

- Zookeeper cluster is configured and running.

- Client-to-server authentication is enabled in Zookeeper.

- Zookeeper authentication is enabled in the Kafka brokers.

- Kafka brokers have not yet been started.

Procedure

Edit the /opt/kafka/config/server.properties Kafka configuration file to set the zookeeper.set.acl field to true on all cluster nodes.

zookeeper.set.acl=true

- Start the Kafka brokers.

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Zookeeper cluster, see Section 3.3, “Running multi-node Zookeeper cluster”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

4.7.3. Enabling Zookeeper ACLs in an existing Kafka cluster

The zookeeper-security-migration.sh tool can be used to set Zookeeper ACLs on all existing znodes. The tool is available as part of AMQ Streams and can be found in the bin directory.

Prerequisites

- Kafka cluster is configured and running.

Enabling the Zookeeper ACLs

Edit the /opt/kafka/config/server.properties Kafka configuration file to set the zookeeper.set.acl field to true on all cluster nodes.

zookeeper.set.acl=true

- Restart all Kafka brokers one by one.

Set the ACLs on all existing Zookeeper znodes using the zookeeper-security-migration.sh tool.

su - kafka
cd /opt/kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=./config/jaas.conf"; ./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=<ZookeeperURL>
exit

For example:

su - kafka
cd /opt/kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=./config/jaas.conf"; ./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=zoo1.my-domain.com:2181
exit

4.8. Encryption and authentication

Kafka supports TLS for encrypting the communication with Kafka clients. Additionally, it supports two types of authentication:

- TLS client authentication based on X.509 certificates

- SASL Authentication based on a username and password

4.8.1. Listener configuration

Encryption and authentication in Kafka brokers are configured per listener. For more information about Kafka listener configuration, see Section 4.2, “Listeners”.

Each listener in the Kafka broker is configured with its own security protocol. The configuration property listener.security.protocol.map defines which listener uses which security protocol. It maps each listener name to its security protocol. Supported security protocols are:

- PLAINTEXT: Listener without any encryption or authentication.

- SSL: Listener using TLS encryption and, optionally, authentication using TLS client certificates.

- SASL_PLAINTEXT: Listener without encryption but with SASL-based authentication.

- SASL_SSL: Listener with TLS-based encryption and SASL-based authentication.

Given the following listeners configuration:

listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094

the listener.security.protocol.map might look like this:

listener.security.protocol.map=INT1:SASL_PLAINTEXT,INT2:SASL_SSL,REPLICATION:SSL

This would configure the listener INT1 to use unencrypted connections with SASL authentication, the listener INT2 to use encrypted connections with SASL authentication and the listener REPLICATION to use TLS encryption (possibly with TLS client authentication). The same security protocol can be used multiple times. The following example is also a valid configuration:

listener.security.protocol.map=INT1:SSL,INT2:SSL,REPLICATION:SSL

Such a configuration would use TLS encryption and TLS authentication for all listeners. The following sections explain in more detail how to configure TLS and SASL.
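To make the mapping concrete, the following Python sketch translates a listener.security.protocol.map value into the encryption and SASL settings it implies for each listener. It only covers the four security protocols listed above, and the function name `describe_listeners` is an assumption made for this illustration.

```python
# security protocol -> (TLS encryption, SASL authentication)
PROTOCOLS = {
    "PLAINTEXT": (False, False),
    "SSL": (True, False),
    "SASL_PLAINTEXT": (False, True),
    "SASL_SSL": (True, True),
}


def describe_listeners(protocol_map):
    """Return {listener name: (tls_encrypted, sasl_authenticated)}."""
    result = {}
    for entry in protocol_map.split(","):
        name, _, protocol = entry.strip().partition(":")
        if protocol not in PROTOCOLS:
            raise ValueError(f"unsupported security protocol: {protocol!r}")
        result[name] = PROTOCOLS[protocol]
    return result


value = "INT1:SASL_PLAINTEXT,INT2:SASL_SSL,REPLICATION:SSL"
for name, (tls, sasl) in describe_listeners(value).items():
    print(f"{name}: TLS={tls} SASL={sasl}")
```

TLS client authentication is not visible in this mapping, because it is enabled separately for listeners that use TLS.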

4.8.2. TLS Encryption

In order to use TLS encryption and server authentication, a keystore containing private and public keys has to be provided. This is usually done using a file in the Java Keystore (JKS) format. A path to this file is set in the ssl.keystore.location property. The ssl.keystore.password property should be used to set the password protecting the keystore. For example:

ssl.keystore.location=/path/to/keystore/server-1.jks
ssl.keystore.password=123456

In some cases, an additional password is used to protect the private key. Any such password can be set using the ssl.key.password property.

Kafka is able to use keys signed by certification authorities as well as self-signed keys. Using keys signed by certification authorities should always be the preferred method. In order to allow clients to verify the identity of the Kafka broker they are connecting to, the certificate should always contain the advertised hostname(s) as its Common Name (CN) or in the Subject Alternative Names (SAN).

It is possible to use different SSL configurations for different listeners. All options starting with ssl. can be prefixed with listener.name.<NameOfTheListener>., where the name of the listener must always be in lowercase. This will override the default SSL configuration for that specific listener. The following example shows how to use different SSL configurations for different listeners:

listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094
listener.security.protocol.map=INT1:SSL,INT2:SSL,REPLICATION:SSL

# Default configuration - will be used for listeners INT1 and INT2
ssl.keystore.location=/path/to/keystore/server-1.jks
ssl.keystore.password=123456

# Different configuration for listener REPLICATION
listener.name.replication.ssl.keystore.location=/path/to/keystore/server-1.jks
listener.name.replication.ssl.keystore.password=123456
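The override rule can be pictured as a lookup that starts from the default ssl. options and then applies any option carrying the listener prefix. The Python sketch below models only that rule as described in this section; it is not how the broker itself resolves its configuration, and the function name and keystore paths are hypothetical.

```python
def effective_ssl_config(config, listener_name):
    """Return the ssl.* options that apply to one listener."""
    # The listener name in the prefix is always written in lowercase.
    prefix = f"listener.name.{listener_name.lower()}."
    # Start from the default options shared by all listeners.
    effective = {k: v for k, v in config.items() if k.startswith("ssl.")}
    # Apply the listener-specific overrides, with the prefix stripped.
    for key, value in config.items():
        if key.startswith(prefix + "ssl."):
            effective[key[len(prefix):]] = value
    return effective


config = {
    "ssl.keystore.location": "/path/to/keystore/default.jks",
    "ssl.keystore.password": "123456",
    "listener.name.replication.ssl.keystore.location": "/path/to/keystore/replication.jks",
}
print(effective_ssl_config(config, "REPLICATION")["ssl.keystore.location"])
# prints /path/to/keystore/replication.jks
```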

Additional TLS configuration options

In addition to the main TLS configuration options described above, Kafka supports many options for fine-tuning the TLS configuration. For example, to enable or disable TLS / SSL protocols or cipher suites:

- ssl.cipher.suites: List of enabled cipher suites. Each cipher suite is a combination of authentication, encryption, MAC and key exchange algorithms used for the TLS connection. By default, all available cipher suites are enabled.

- ssl.enabled.protocols: List of enabled TLS / SSL protocols. Defaults to TLSv1.2,TLSv1.1,TLSv1.

For a complete list of supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

4.8.3. Enabling TLS encryption

This procedure describes how to enable encryption in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

- Generate TLS certificates for all Kafka brokers in your cluster. The certificates should have their advertised and bootstrap addresses in their Common Name or Subject Alternative Name.

Edit the /opt/kafka/config/server.properties Kafka configuration file on all cluster nodes for the following:

- Change the listener.security.protocol.map field to specify the SSL protocol for the listener where you want to use TLS encryption.

- Set the ssl.keystore.location option to the path to the JKS keystore with the broker certificate.

- Set the ssl.keystore.password option to the password you used to protect the keystore.

For example:

listeners=UNENCRYPTED://:9092,ENCRYPTED://:9093,REPLICATION://:9094
listener.security.protocol.map=UNENCRYPTED:PLAINTEXT,ENCRYPTED:SSL,REPLICATION:PLAINTEXT
ssl.keystore.location=/path/to/keystore/server-1.jks
ssl.keystore.password=123456

- (Re)start the Kafka brokers.

Additional resources

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

- For more information about configuring TLS encryption in clients, see Appendix D, Producer configuration parameters and Appendix C, Consumer configuration parameters.

4.8.4. Authentication

Kafka supports two methods of authentication. On all connections, authentication using one of the supported SASL (Simple Authentication and Security Layer) mechanisms can be used. On encrypted connections, TLS client authentication based on X.509 certificates can be used.

4.8.4.1. TLS client authentication

TLS client authentication can be used only on connections which are already using TLS encryption. To use TLS client authentication, a truststore with public keys can be provided to the broker. These keys can be used to authenticate clients connecting to the broker. The truststore should be provided in Java Keystore (JKS) format and should contain public keys of the certification authorities. All clients with public and private keys signed by one of the certification authorities included in the truststore will be authenticated. The location of the truststore is set using the ssl.truststore.location property. If the truststore is password protected, the password should be set in the ssl.truststore.password property. For example:

ssl.truststore.location=/path/to/keystore/server-1.jks
ssl.truststore.password=123456

Once the truststore is configured, TLS client authentication has to be enabled using the ssl.client.auth property. This property can be set to one of three different values:

- none: TLS client authentication is switched off. (Default value)

- requested: TLS client authentication is optional. Clients will be asked to authenticate using a TLS client certificate but they can choose not to.

- required: Clients are required to authenticate using a TLS client certificate.

When a client authenticates using TLS client authentication, the authenticated principal name is the distinguished name from the authenticated client certificate. For example, a user with a certificate which has a distinguished name CN=someuser will be authenticated with the following principal CN=someuser,OU=Unknown,O=Unknown,L=Unknown,ST=Unknown,C=Unknown. When TLS client authentication is not used and SASL is disabled, the principal name will be ANONYMOUS.

4.8.4.2. SASL authentication

SASL authentication is configured using Java Authentication and Authorization Service (JAAS). JAAS is also used for authentication of connections between Kafka and Zookeeper. JAAS uses its own configuration file. The recommended location for this file is /opt/kafka/config/jaas.conf. The file has to be readable by the kafka user. When running Kafka, the location of this file is specified using Java system property java.security.auth.login.config. This property has to be passed to Kafka when starting the broker nodes:

export KAFKA_OPTS="-Djava.security.auth.login.config=/path/to/my/jaas.config"; bin/kafka-server-start.sh

SASL authentication is supported both through plain unencrypted connections as well as through TLS connections. SASL can be enabled individually for each listener. To enable it, the security protocol in listener.security.protocol.map has to be either SASL_PLAINTEXT or SASL_SSL.

SASL authentication in Kafka supports several different mechanisms:

- PLAIN: Implements authentication based on usernames and passwords. Usernames and passwords are stored locally in Kafka configuration.

- SCRAM-SHA-256 and SCRAM-SHA-512: Implement authentication using Salted Challenge Response Authentication Mechanism (SCRAM). SCRAM credentials are stored centrally in Zookeeper. SCRAM can be used in situations where Zookeeper cluster nodes are running isolated in a private network.

- GSSAPI: Implements authentication against a Kerberos server.

The PLAIN mechanism sends the username and password over the network in an unencrypted format. It should therefore be used only in combination with TLS encryption.

The SASL mechanisms are configured via the JAAS configuration file. Kafka uses the JAAS context named KafkaServer. After they are configured in JAAS, the SASL mechanisms have to be enabled in the Kafka configuration. This is done using the sasl.enabled.mechanisms property. This property contains a comma-separated list of enabled mechanisms:

sasl.enabled.mechanisms=PLAIN,SCRAM-SHA-256,SCRAM-SHA-512

In case the listener used for inter-broker communication is using SASL, the property sasl.mechanism.inter.broker.protocol has to be used to specify the SASL mechanism which it should use. For example:

sasl.mechanism.inter.broker.protocol=PLAIN

The username and password which will be used for the inter-broker communication have to be specified in the KafkaServer JAAS context using the username and password fields.

SASL PLAIN

To use the PLAIN mechanism, the usernames and passwords which are allowed to connect are specified directly in the JAAS context. The following example shows the context configured for SASL PLAIN authentication. The example configures three different users:

- admin

- user1

- user2

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    user_admin="123456"
    user_user1="123456"
    user_user2="123456";
};

The JAAS configuration file with the user database should be kept in sync on all Kafka brokers.
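One way to keep the user database identical on all brokers is to generate the context from a single list of users and distribute the result. The following Python sketch renders a context in the format shown above; the function name `render_plain_jaas` is hypothetical, and the sketch assumes that passwords contain no double quotes, because it does no escaping.

```python
def render_plain_jaas(users, context="KafkaServer"):
    """Render a JAAS context for SASL PLAIN from a {username: password} dict."""
    if not users:
        raise ValueError("at least one user is required")
    entries = [f'    user_{name}="{password}"' for name, password in users.items()]
    body = "\n".join(entries)
    return (
        f"{context} {{\n"
        "    org.apache.kafka.common.security.plain.PlainLoginModule required\n"
        f"{body};\n"  # the semicolon terminates the login module entry
        "};\n"
    )


print(render_plain_jaas({"admin": "123456", "user1": "123456", "user2": "123456"}))
```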

When SASL PLAIN is also used for inter-broker authentication, the username and password properties should be included in the JAAS context:

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="123456"
    user_admin="123456"
    user_user1="123456"
    user_user2="123456";
};

SASL SCRAM

SCRAM authentication in Kafka consists of two mechanisms: SCRAM-SHA-256 and SCRAM-SHA-512. These mechanisms differ only in the hashing algorithm used - SHA-256 versus stronger SHA-512. To enable SCRAM authentication, the JAAS configuration file has to include the following configuration:

KafkaServer {
    org.apache.kafka.common.security.scram.ScramLoginModule required;
};

When enabling SASL authentication in the Kafka configuration file, both SCRAM mechanisms can be listed. However, only one of them can be chosen for the inter-broker communication. For example:

sasl.enabled.mechanisms=SCRAM-SHA-256,SCRAM-SHA-512
sasl.mechanism.inter.broker.protocol=SCRAM-SHA-512

User credentials for the SCRAM mechanism are stored in Zookeeper. The kafka-configs.sh tool can be used to manage them. For example, run the following command to add user user1 with password 123456:

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --alter --add-config 'SCRAM-SHA-256=[password=123456],SCRAM-SHA-512=[password=123456]' --entity-type users --entity-name user1

To delete a user credential use:

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --alter --delete-config 'SCRAM-SHA-512' --entity-type users --entity-name user1
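When SCRAM users are managed from a script, the command line can be assembled as an argument list rather than a single quoted string, which avoids shell quoting problems around the square brackets. The Python sketch below only builds the arguments, using the same options as the commands above; the function names are hypothetical, and actually running the command (for example with `subprocess.run`) is left to the caller.

```python
def scram_add_user_args(zookeeper, username, password,
                        mechanisms=("SCRAM-SHA-512",)):
    """Build the kafka-configs.sh arguments that add SCRAM credentials."""
    config = ",".join(f"{m}=[password={password}]" for m in mechanisms)
    return [
        "bin/kafka-configs.sh", "--zookeeper", zookeeper, "--alter",
        "--add-config", config,
        "--entity-type", "users", "--entity-name", username,
    ]


def scram_delete_user_args(zookeeper, username, mechanism="SCRAM-SHA-512"):
    """Build the kafka-configs.sh arguments that delete one SCRAM credential."""
    return [
        "bin/kafka-configs.sh", "--zookeeper", zookeeper, "--alter",
        "--delete-config", mechanism,
        "--entity-type", "users", "--entity-name", username,
    ]


args = scram_add_user_args("zoo1.my-domain.com:2181", "user1", "123456",
                           mechanisms=("SCRAM-SHA-256", "SCRAM-SHA-512"))
print(" ".join(args))
```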

SASL GSSAPI

The SASL mechanism used for authentication using Kerberos is called GSSAPI. To configure Kerberos SASL authentication, the following configuration should be added to the JAAS configuration file:

KafkaServer {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="/etc/security/keytabs/kafka_server.keytab"
    principal="kafka/kafka1.hostname.com@EXAMPLE.COM";
};

The domain name in the Kerberos principal must always be in uppercase.

In addition to the JAAS configuration, the Kerberos service name needs to be specified in the sasl.kerberos.service.name property in the Kafka configuration:

sasl.enabled.mechanisms=GSSAPI
sasl.mechanism.inter.broker.protocol=GSSAPI
sasl.kerberos.service.name=kafka

Multiple SASL mechanisms

Kafka can use multiple SASL mechanisms at the same time. The different JAAS configurations can all be added to the same context:

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    user_admin="123456"
    user_user1="123456"
    user_user2="123456";

    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="/etc/security/keytabs/kafka_server.keytab"
    principal="kafka/kafka1.hostname.com@EXAMPLE.COM";

    org.apache.kafka.common.security.scram.ScramLoginModule required;
};

When multiple mechanisms are enabled, clients will be able to choose the mechanism which they want to use.

4.8.5. Enabling TLS client authentication

This procedure describes how to enable TLS client authentication in Kafka brokers.

Prerequisites

Procedure

- Prepare a JKS truststore containing the public key of the certification authority used to sign the user certificates.

Edit the /opt/kafka/config/server.properties Kafka configuration file on all cluster nodes for the following:

- Set the ssl.truststore.location option to the path to the JKS truststore with the certification authority of the user certificates.

- Set the ssl.truststore.password option to the password you used to protect the truststore.

- Set the ssl.client.auth option to required.

For example:

ssl.truststore.location=/path/to/truststore.jks
ssl.truststore.password=123456
ssl.client.auth=required

- (Re)start the Kafka brokers.

Additional resources

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

- For more information about configuring TLS client authentication in clients, see Appendix D, Producer configuration parameters and Appendix C, Consumer configuration parameters.

4.8.6. Enabling SASL PLAIN authentication

This procedure describes how to enable SASL PLAIN authentication in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

Edit or create the /opt/kafka/config/jaas.conf JAAS configuration file. This file should contain all your users and their passwords. Make sure this file is the same on all Kafka brokers.

For example:

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    user_admin="123456"
    user_user1="123456"
    user_user2="123456";
};

Edit the /opt/kafka/config/server.properties Kafka configuration file on all cluster nodes for the following:

- Change the listener.security.protocol.map field to specify the SASL_PLAINTEXT or SASL_SSL protocol for the listener where you want to use SASL PLAIN authentication.

- Set the sasl.enabled.mechanisms option to PLAIN.

For example:

listeners=INSECURE://:9092,AUTHENTICATED://:9093,REPLICATION://:9094
listener.security.protocol.map=INSECURE:PLAINTEXT,AUTHENTICATED:SASL_PLAINTEXT,REPLICATION:PLAINTEXT
sasl.enabled.mechanisms=PLAIN

(Re)start the Kafka brokers using the KAFKA_OPTS environment variable to pass the JAAS configuration to Kafka brokers.

su - kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Additional resources

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

- For more information about configuring SASL PLAIN authentication in clients, see Appendix D, Producer configuration parameters and Appendix C, Consumer configuration parameters.

4.8.7. Enabling SASL SCRAM authentication

This procedure describes how to enable SASL SCRAM authentication in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

Edit or create the /opt/kafka/config/jaas.conf JAAS configuration file. Enable the ScramLoginModule for the KafkaServer context. Make sure this file is the same on all Kafka brokers.

For example:

KafkaServer {
    org.apache.kafka.common.security.scram.ScramLoginModule required;
};

Edit the /opt/kafka/config/server.properties Kafka configuration file on all cluster nodes for the following:

- Change the listener.security.protocol.map field to specify the SASL_PLAINTEXT or SASL_SSL protocol for the listener where you want to use SASL SCRAM authentication.

- Set the sasl.enabled.mechanisms option to SCRAM-SHA-256 or SCRAM-SHA-512.

For example:

listeners=INSECURE://:9092,AUTHENTICATED://:9093,REPLICATION://:9094
listener.security.protocol.map=INSECURE:PLAINTEXT,AUTHENTICATED:SASL_PLAINTEXT,REPLICATION:PLAINTEXT
sasl.enabled.mechanisms=SCRAM-SHA-512

(Re)start the Kafka brokers using the KAFKA_OPTS environment variable to pass the JAAS configuration to Kafka brokers.

su - kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Additional resources

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

- For more information about adding SASL SCRAM users, see Section 4.8.8, “Adding SASL SCRAM users”.

- For more information about deleting SASL SCRAM users, see Section 4.8.9, “Deleting SASL SCRAM users”.

- For more information about configuring SASL SCRAM authentication in clients, see Appendix D, Producer configuration parameters and Appendix C, Consumer configuration parameters.

4.8.8. Adding SASL SCRAM users

This procedure describes how to add new users for authentication using SASL SCRAM.

Prerequisites

Procedure

Use the kafka-configs.sh tool to add new SASL SCRAM users.

bin/kafka-configs.sh --zookeeper <ZookeeperAddress> --alter --add-config 'SCRAM-SHA-512=[password=<Password>]' --entity-type users --entity-name <Username>

For example:

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --alter --add-config 'SCRAM-SHA-512=[password=123456]' --entity-type users --entity-name user1

Additional resources

- For more information about configuring SASL SCRAM authentication in clients, see Appendix D, Producer configuration parameters and Appendix C, Consumer configuration parameters.

4.8.9. Deleting SASL SCRAM users

This procedure describes how to remove users when using SASL SCRAM authentication.

Prerequisites

Procedure

Use the kafka-configs.sh tool to delete SASL SCRAM users.

bin/kafka-configs.sh --zookeeper <ZookeeperAddress> --alter --delete-config 'SCRAM-SHA-512' --entity-type users --entity-name <Username>

For example:

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --alter --delete-config 'SCRAM-SHA-512' --entity-type users --entity-name user1

Additional resources

- For more information about configuring SASL SCRAM authentication in clients, see Appendix D, Producer configuration parameters and Appendix C, Consumer configuration parameters.

4.9. Logging

Kafka brokers use Log4j as their logging infrastructure. By default, the logging configuration is read from the log4j.properties configuration file, which should be placed either in the /opt/kafka/config/ directory or on the classpath. The location and name of the configuration file can be changed using the Java property log4j.configuration, which can be passed to Kafka using the KAFKA_LOG4J_OPTS environment variable:

su - kafka
export KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:/my/path/to/log4j.config"; /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

For more information about Log4j configurations, see Log4j manual.

Chapter 5. Topics

Messages in Kafka are always sent to or received from a topic. This chapter describes how to configure and manage Kafka topics.

5.1. Partitions and replicas

Messages in Kafka are always sent to or received from a topic. A topic is always split into one or more partitions. Partitions act as shards. That means that every message sent by a producer is always written only into a single partition. Thanks to the sharding of messages into different partitions, topics are easy to scale horizontally.

Each partition can have one or more replicas, which will be stored on different brokers in the cluster. When creating a topic you can configure the number of replicas using the replication factor. Replication factor defines the number of copies which will be held within the cluster. One of the replicas for given partition will be elected as a leader. The leader replica will be used by the producers to send new messages and by the consumers to consume messages. The other replicas will be follower replicas. The followers replicate the leader.

If the leader fails, one of the followers will automatically become the new leader. Each server acts as a leader for some of its partitions and a follower for others so the load is well balanced within the cluster.

NOTE: The replication factor determines the number of replicas including the leader and the followers. For example, if you set the replication factor to 3, then there will be one leader and two follower replicas.
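The relationship between partitions, the replication factor, leaders and followers can be illustrated with a toy assignment. The Python sketch below spreads replicas over brokers in a simple round-robin order; this is a deliberately simplified model written for this example and is not the assignment algorithm that Kafka itself uses. The function name `assign_replicas` is hypothetical.

```python
def assign_replicas(partitions, brokers, replication_factor):
    """Toy round-robin placement: {partition: {"leader": id, "followers": [ids]}}."""
    if replication_factor > len(brokers):
        # Each replica of a partition is stored on a different broker.
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for p in range(partitions):
        replicas = [brokers[(p + i) % len(brokers)]
                    for i in range(replication_factor)]
        # The first replica acts as leader, the remaining ones as followers.
        assignment[p] = {"leader": replicas[0], "followers": replicas[1:]}
    return assignment


for partition, roles in assign_replicas(3, [0, 1, 2], 3).items():
    print(partition, roles)
```

With three partitions, three brokers and a replication factor of 3, every partition has one leader and two followers, and each broker leads exactly one partition.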

5.2. Message retention

The message retention policy defines how long the messages will be stored on the Kafka brokers. It can be defined based on time, partition size or both.

For example, you can define that the messages should be kept:

- For 7 days

- Until the partition has 1GB of messages. Once the limit is reached, the oldest messages will be removed.

- For 7 days or until the 1GB limit has been reached. Whichever limit is reached first will be used.

Kafka brokers store messages in log segments. The messages which are past their retention policy will be deleted only when a new log segment is created. New log segments are created when the previous log segment exceeds the configured log segment size. Additionally, users can request new segments to be created periodically.
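
The interaction between retention limits and log segments can be sketched as follows. This is a simplified model rather than the broker's exact algorithm: the Segment class, the segments_to_delete function, and the rule that the newest (active) segment is never removed are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    size_bytes: int
    newest_message_ms: int  # timestamp of the newest message in the segment


def segments_to_delete(segments, now_ms, retention_ms=None, retention_bytes=None):
    """Return indexes of closed segments that exceed either retention limit.

    `segments` is ordered oldest to newest. The last entry is the active
    segment and is never selected in this sketch, which is why expired
    messages only disappear after a new segment has been created.
    """
    closed = segments[:-1]
    selected = set()
    if retention_ms is not None:
        for index, segment in enumerate(closed):
            if now_ms - segment.newest_message_ms > retention_ms:
                selected.add(index)
    if retention_bytes is not None:
        total = sum(segment.size_bytes for segment in segments)
        for index, segment in enumerate(closed):
            if total <= retention_bytes:
                break
            selected.add(index)
            total -= segment.size_bytes
    return sorted(selected)


DAY_MS = 24 * 60 * 60 * 1000
log = [Segment(400, 0), Segment(400, 5 * DAY_MS), Segment(400, 9 * DAY_MS)]
print(segments_to_delete(log, now_ms=10 * DAY_MS, retention_ms=7 * DAY_MS))
```

When both limits are supplied, a segment is selected as soon as either one is exceeded, which corresponds to the "whichever comes first" behavior in the list above.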

Additionally, Kafka brokers support a compacting policy.

For a topic with the compacted policy, the broker will always keep only the last message for each key. The older messages with the same key will be removed from the partition. Because compaction is executed periodically, it does not happen immediately when a new message with the same key is sent to the partition. Instead, it might take some time until the older messages are removed.
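
The end result of compaction can be sketched in a few lines. This models only the outcome for one partition, not when or how the broker performs the cleanup; the function name compact and the representation of messages as (key, value) tuples are assumptions made for illustration.

```python
def compact(log):
    """Keep only the most recent (key, value) message for each key.

    `log` is a list of (key, value) tuples in offset order. Surviving
    messages keep their original relative order.
    """
    last_offset = {key: offset for offset, (key, _value) in enumerate(log)}
    return [
        message
        for offset, message in enumerate(log)
        if last_offset[message[0]] == offset
    ]


partition = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
print(compact(partition))
```

After compaction, replaying the partition yields the latest value for every key, which is what allows a compacted topic to be used as a key-value store.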

For more information about the message retention configuration options, see Section 5.5, “Topic configuration”.

5.3. Topic auto-creation

When a producer or consumer tries to send messages to or receive messages from a topic which does not exist, Kafka will, by default, automatically create that topic. This behavior is controlled by the auto.create.topics.enable configuration property, which is set to true by default.

To disable it, set auto.create.topics.enable to false in the Kafka broker configuration file:

auto.create.topics.enable=false

5.4. Topic deletion

Kafka offers the possibility to disable deletion of topics. This is configured through the delete.topic.enable property, which is set to true by default (that is, deleting topics is possible). When this property is set to false, it is not possible to delete topics: all attempts to delete a topic will return success, but the topic will not be deleted.

delete.topic.enable=false

5.5. Topic configuration

Auto-created topics will use the default topic configuration which can be specified in the broker properties file. However, when creating topics manually, their configuration can be specified at creation time. It is also possible to change a topic’s configuration after it has been created. The main topic configuration options for manually created topics are:

cleanup.policy

- Configures the retention policy to delete or compact. The delete policy will delete old records. The compact policy will enable log compaction. The default value is delete. For more information about log compaction, see Kafka website.

compression.type

- Specifies the compression which is used for stored messages. Valid values are gzip, snappy, lz4, uncompressed (no compression) and producer (retain the compression codec used by the producer). The default value is producer.

max.message.bytes

- The maximum size of a batch of messages allowed by the Kafka broker, in bytes. The default value is 1000012.

min.insync.replicas

- The minimum number of replicas which must be in sync for a write to be considered successful. The default value is 1.

retention.ms

- Maximum number of milliseconds for which log segments will be retained. Log segments older than this value will be deleted. The default value is 604800000 (7 days).

retention.bytes

- The maximum number of bytes a partition will retain. Once the partition size grows over this limit, the oldest log segments will be deleted. Value of -1 indicates no limit. The default value is -1.

segment.bytes

- The maximum file size of a single commit log segment file in bytes. When the segment reaches its size, a new segment will be started. The default value is 1073741824 bytes (1 gibibyte).

For a list of all supported topic configuration options, see Appendix B, Topic configuration parameters.
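
Because retention.ms and segment.bytes take raw millisecond and byte counts, it can help to derive the values rather than type them by hand. The following sketch reproduces the default values quoted above; the helper names days_to_ms and gib_to_bytes are hypothetical.

```python
def days_to_ms(days):
    """Convert a number of days to milliseconds, as used by retention.ms."""
    return days * 24 * 60 * 60 * 1000


def gib_to_bytes(gib):
    """Convert gibibytes to bytes, as used by segment.bytes and retention.bytes."""
    return gib * 1024 ** 3


# Topic-level options set to the defaults listed in this section.
topic_config = {
    "cleanup.policy": "delete",
    "retention.ms": days_to_ms(7),
    "retention.bytes": -1,  # -1 means no size limit
    "segment.bytes": gib_to_bytes(1),
}

for option, value in topic_config.items():
    print(f"{option}={value}")
```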

The defaults for auto-created topics can be specified in the Kafka broker configuration using similar options:

log.cleanup.policy

- See cleanup.policy above.

compression.type

- See compression.type above.

message.max.bytes

- See max.message.bytes above.

min.insync.replicas

- See min.insync.replicas above.

log.retention.ms

- See retention.ms above.

log.retention.bytes

- See retention.bytes above.

log.segment.bytes

- See segment.bytes above.

default.replication.factor

- Default replication factor for automatically created topics. Default value is 1.

num.partitions

- Default number of partitions for automatically created topics. Default value is 1.

For a list of all supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.
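
The correspondence between topic-level options and their broker-level defaults can be captured in a small lookup table. The sketch below renders lines suitable for the broker properties file from topic-level settings; the names BROKER_DEFAULT_FOR and broker_properties are hypothetical, and the mapping covers only the options listed in this section.

```python
# Topic-level option name -> broker-level option that sets its default.
BROKER_DEFAULT_FOR = {
    "cleanup.policy": "log.cleanup.policy",
    "compression.type": "compression.type",
    "max.message.bytes": "message.max.bytes",
    "min.insync.replicas": "min.insync.replicas",
    "retention.ms": "log.retention.ms",
    "retention.bytes": "log.retention.bytes",
    "segment.bytes": "log.segment.bytes",
}


def broker_properties(topic_level, replication_factor=1, partitions=1):
    """Render broker-file lines that give auto-created topics these defaults."""
    lines = [
        f"{BROKER_DEFAULT_FOR[option]}={value}"
        for option, value in topic_level.items()
    ]
    lines.append(f"default.replication.factor={replication_factor}")
    lines.append(f"num.partitions={partitions}")
    return "\n".join(lines)


print(broker_properties({"cleanup.policy": "delete", "retention.ms": 604800000}))
```

Note that max.message.bytes is the one option whose broker-level name reorders the words (message.max.bytes) instead of adding a log. prefix.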

5.6. Internal topics

Internal topics are created and used internally by the Kafka brokers and clients. Kafka has several internal topics. These are used to store consumer offsets (__consumer_offsets) or transaction state (__transaction_state). These topics can be configured using dedicated Kafka broker configuration options starting with prefix offsets.topic. and transaction.state.log.. The most important configuration options are:

offsets.topic.replication.factor

- Number of replicas for __consumer_offsets topic. The default value is 3.

offsets.topic.num.partitions

- Number of partitions for __consumer_offsets topic. The default value is 50.

transaction.state.log.replication.factor

- Number of replicas for __transaction_state topic. The default value is 3.

transaction.state.log.num.partitions

- Number of partitions for __transaction_state topic. The default value is 50.

transaction.state.log.min.isr

- Minimum number of replicas that must acknowledge a write to __transaction_state topic to be considered successful. If this minimum cannot be met, then the producer will fail with an exception. The default value is 2.

5.7. Creating a topic

The kafka-topics.sh tool can be used to manage topics. kafka-topics.sh is part of the AMQ Streams distribution and can be found in the bin directory.

Prerequisites

- AMQ Streams cluster is installed and running

Creating a topic

Create a topic using the kafka-topics.sh utility and specify the following:

- Zookeeper URL in the --zookeeper option.

- The --create option, to indicate that a new topic is to be created.

- Topic name in the --topic option.

- The number of partitions in the --partitions option.

- Replication factor in the --replication-factor option.

You can also override some of the default topic configuration options using the --config option. This option can be used multiple times to override different options.

bin/kafka-topics.sh --zookeeper <ZookeeperAddress> --create --topic <TopicName> --partitions <NumberOfPartitions> --replication-factor <ReplicationFactor> --config <Option1>=<Value1> --config <Option2>=<Value2>

Example of the command to create a topic named mytopic

bin/kafka-topics.sh --zookeeper zoo1.my-domain.com:2181 --create --topic mytopic --partitions 50 --replication-factor 3 --config cleanup.policy=compact --config min.insync.replicas=2

Verify that the topic exists using kafka-topics.sh.

bin/kafka-topics.sh --zookeeper <ZookeeperAddress> --describe --topic <TopicName>

Example of the command to describe a topic named mytopic

bin/kafka-topics.sh --zookeeper zoo1.my-domain.com:2181 --describe --topic mytopic
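
When topics are created from a script, the command line can be assembled programmatically. The following sketch only builds and prints the command shown above; it does not run it. The function name create_topic_command is hypothetical, and the sketch uses only the options described in this section.

```python
import shlex


def create_topic_command(zookeeper, topic, partitions, replication_factor, config=None):
    """Build the kafka-topics.sh argument list for creating a topic."""
    command = [
        "bin/kafka-topics.sh",
        "--zookeeper", zookeeper,
        "--create",
        "--topic", topic,
        "--partitions", str(partitions),
        "--replication-factor", str(replication_factor),
    ]
    # --config may be repeated, once per overridden option.
    for option, value in (config or {}).items():
        command += ["--config", f"{option}={value}"]
    return command


command = create_topic_command(
    "zoo1.my-domain.com:2181",
    "mytopic",
    partitions=50,
    replication_factor=3,
    config={"cleanup.policy": "compact", "min.insync.replicas": 2},
)
print(shlex.join(command))
```

Keeping the command as a list of arguments, rather than one string, means it can later be passed to subprocess.run from the AMQ Streams installation directory without any shell quoting concerns.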

Additional resources

- For more information about topic configuration, see Section 5.5, “Topic configuration”.

- For a list of all supported topic configuration options, see Appendix B, Topic configuration parameters.

5.8. Listing and describing topics

The kafka-topics.sh tool can be used to list and describe topics. kafka-topics.sh is part of the AMQ Streams distribution and can be found in the bin directory.

Prerequisites

- AMQ Streams cluster is installed and running

- Topic mytopic exists

Describing a topic

Describe a topic using the kafka-topics.sh utility.

- Specify the Zookeeper URL in the --zookeeper option.

- Use the --describe option to specify that you want to describe a topic.

- Specify the topic name in the --topic option. When the --topic option is omitted, the command describes all available topics.

bin/kafka-topics.sh --zookeeper <ZookeeperAddress> --describe --topic <TopicName>

Example of the command to describe a topic named mytopic

bin/kafka-topics.sh --zookeeper zoo1.my-domain.com:2181 --describe --topic mytopic

The describe command will list all partitions and replicas which belong to this topic. It will also list all topic configuration options.

Additional resources

- For more information about topic configuration, see Section 5.5, “Topic configuration”.

- For more information about creating topics, see Section 5.7, “Creating a topic”.

5.9. Modifying a topic configuration

The kafka-configs.sh tool can be used to modify topic configurations. kafka-configs.sh is part of the AMQ Streams distribution and can be found in the bin directory.

Prerequisites

- AMQ Streams cluster is installed and running

- Topic mytopic exists

Modify topic configuration

Use the kafka-configs.sh tool to get the current configuration.

- Specify the Zookeeper URL in the --zookeeper option.

- Set --entity-type to topics and --entity-name to the name of your topic.

- Use the --describe option to get the current configuration.

bin/kafka-configs.sh --zookeeper <ZookeeperAddress> --entity-type topics --entity-name <TopicName> --describe

Example of the command to get configuration of a topic named mytopic

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --entity-type topics --entity-name mytopic --describe

Use the kafka-configs.sh tool to change the configuration.

- Specify the Zookeeper URL in the --zookeeper option.

- Set --entity-type to topics and --entity-name to the name of your topic.

- Use the --alter option to modify the current configuration.

- Specify the options you want to add or change in the --add-config option.

bin/kafka-configs.sh --zookeeper <ZookeeperAddress> --entity-type topics --entity-name <TopicName> --alter --add-config <Option>=<Value>

Example of the command to change configuration of a topic named mytopic

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --entity-type topics --entity-name mytopic --alter --add-config min.insync.replicas=1

Use the kafka-configs.sh tool to delete an existing configuration option.

- Specify the Zookeeper URL in the --zookeeper option.

- Set --entity-type to topics and --entity-name to the name of your topic.

- Use the --alter option to modify the current configuration.

- Specify the options you want to remove in the --delete-config option.

bin/kafka-configs.sh --zookeeper <ZookeeperAddress> --entity-type topics --entity-name <TopicName> --alter --delete-config <Option>

Example of the command to remove a configuration option from a topic named mytopic

bin/kafka-configs.sh --zookeeper zoo1.my-domain.com:2181 --entity-type topics --entity-name mytopic --alter --delete-config min.insync.replicas
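
The three kafka-configs.sh invocations in this procedure differ only in their trailing options, which the following sketch makes explicit. It builds the argument lists without running them. The function name topic_config_command and the action names describe, set, and unset are hypothetical; the command-line options are the ones used in the commands above.

```python
def topic_config_command(zookeeper, topic, action, option=None, value=None):
    """Build a kafka-configs.sh argument list for one topic.

    `action` is one of "describe", "set", or "unset" (names chosen for
    this sketch only).
    """
    base = [
        "bin/kafka-configs.sh",
        "--zookeeper", zookeeper,
        "--entity-type", "topics",
        "--entity-name", topic,
    ]
    if action == "describe":
        return base + ["--describe"]
    if action == "set":
        if option is None or value is None:
            raise ValueError("set requires both an option and a value")
        return base + ["--alter", "--add-config", f"{option}={value}"]
    if action == "unset":
        if option is None:
            raise ValueError("unset requires an option")
        return base + ["--alter", "--delete-config", option]
    raise ValueError(f"unknown action: {action}")


zookeeper = "zoo1.my-domain.com:2181"
print(" ".join(topic_config_command(zookeeper, "mytopic", "describe")))
print(" ".join(topic_config_command(zookeeper, "mytopic", "set", "min.insync.replicas", 1)))
print(" ".join(topic_config_command(zookeeper, "mytopic", "unset", "min.insync.replicas")))
```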

Additional resources

- For more information about topic configuration, see Section 5.5, “Topic configuration”.

- For more information about creating topics, see Section 5.7, “Creating a topic”.

- For a list of all supported topic configuration options, see Appendix B, Topic configuration parameters.

5.10. Deleting a topic

The kafka-topics.sh tool can be used to manage topics. kafka-topics.sh is part of the AMQ Streams distribution and can be found in the bin directory.

Prerequisites

- AMQ Streams cluster is installed and running

- Topic mytopic exists

Deleting a topic

Delete a topic using the kafka-topics.sh utility.

- Specify the Zookeeper URL in the --zookeeper option.

- Use --delete option to specify that an existing topic should be deleted.

- Topic name has to be specified in the --topic option.

bin/kafka-topics.sh --zookeeper <ZookeeperAddress> --delete --topic <TopicName>

Example of the command to delete a topic named